Abstract

Humans rely heavily on the visual and oculomotor systems during social interactions. This study examined individual differences in gaze behavior in two types of face-to-face social interactions: a screen-based interview and a live interview. The study examined how stable these individual differences are across scenarios and how they relate to individuals’ traits of social anxiety, autism, and neuroticism. Extending previous studies, we distinguished between individuals’ tendency to look at the face and the tendency to look at the eyes if the face was fixated. These gaze measures demonstrated high internal consistencies (correlation between two halves of the data within a scenario) within both the screen-based and live interview scenarios. Furthermore, individuals who had a tendency to look more at the eyes during one type of interview tended to display the same behavior during the other interview type. More socially anxious participants looked less at faces in both scenarios, but no link with social anxiety was observed for the tendency to look at the eyes. This research highlights the robustness of individual variations in gaze behavior across and within interview scenarios, as well as the usefulness of measuring the tendency to look at faces separately from the tendency to look at eyes.

Keywords: social interaction, face-to-face, face preference, social anxiety, eye movements, individual differences

Introduction

Social interactions constitute one of the fundamental pillars of human behavior. During face-to-face interactions, people exchange social signals through speech, gestures, gaze direction, and facial expressions. Understanding these signals relies heavily on gaze behavior, which determines which visual information is collected and processed. Thus, gaze deployment plays an essential role in shaping percepts (e.g., emotion recognition; Vaidya, Jin, & Fellows, 2014), in molding higher cognitive processes (e.g., memory; Pertzov, Avidan, & Zohary, 2009), and, by extension, in human social behavior. Consequently, a research avenue has emerged that examines gaze behavior during social interactions and encompasses a range of social interaction scenarios, such as the decision to initiate or participate in a social interaction (Hirschauer, 2005; Laidlaw, Foulsham, Kuhn, & Kingstone, 2011). The current study focuses on a particular type of social interaction: face-to-face interaction (for a review, see Hessels, 2020).

Although social presence and interactivity are key characteristics of face-to-face interaction, until recently, gaze behavior has been mainly studied in face-to-face scenarios that are not interactive or that lack social presence (e.g., Langton, Watt, & Bruce, 2000; Võ, Smith, Mital, & Henderson, 2012), which are not necessarily representative of gaze behavior during most real-life interactions. Some findings were generalized to more realistic face-to-face interactions. For example, the tendency to look at the mouth when the companion talks was shown in a dynamic noninteractive scenario (Võ et al., 2012) and was later shown also in an interactive social interaction with social presence (Rogers, Speelman, Guidetti, & Longmuir, 2018). However, no study has tested the same participants in both types of scenarios. Therefore, it remains unclear if individual differences in gaze behavior are consistent across different types of face-to-face scenarios. The current study examines this issue and specifically whether individual differences in gaze behavior tendencies (e.g., the duration individuals look at others’ eyes and face) are similar between a screen-based interview scenario that is not interactive and without social presence (pre-recorded clips of the interviewers asking questions) and an interactive live scenario that includes a conversation with a human companion.

Two main types of gaze behavior have been studied in the context of face-to-face interactions: the tendency to look at others’ eyes and the tendency to look at others’ faces (eye preference and face preference, respectively). These two measures typically exhibit high internal consistency (the extent to which different subsets of the data produce similar values, often measured by split-half correlations; see Peterson, Lin, Zaun, & Kanwisher, 2016; Guy et al., 2019; Rubo, Huestegge, & Gamer, 2020; and Hessels et al., 2020). However, it is yet to be established whether eye and face preferences are stable across different types of face-to-face interaction scenarios, such as live and screen-based interviews (tested by correlating individuals’ gaze tendencies across scenarios; see Peterson et al., 2016, and Grossman, Zane, Mertens, & Mitchell, 2019). As far as we know, the first study to examine individuals’ face-related gaze preferences across different viewing scenarios was Peterson et al. (2016). This study showed that individuals optimize face identification by fixating at a particular location within a face (Peterson & Eckstein, 2013), that this location is specific to the observer, and that it is similar when viewing faces on a screen and when walking in a corridor (Peterson et al., 2016). Notably, this study did not include face-to-face interactions and did not examine how individuals’ tendency relates to various traits. A recent study did examine live and screen-based face-to-face interactions and found that the tendency to look at a face is similar in live and screen-based interactions (Grossman et al., 2019). However, this study had a few limitations, which were discussed by the authors. First, participants were asked to speak and communicate in the real-life interaction; however, in the screen-based design, participants were instructed to look freely at clips of people talking about their own life experiences.
Second, the periods in which participants were speaking and listening were examined together, leaving out a factor that influences gaze behavior considerably (Freeth, Foulsham, & Kingstone, 2013; Mansour & Kuhn, 2019; Rogers et al., 2018). Finally, a large amount of the data was lost in the real-life scenario. The authors suggested that this occurred because participants were allowed to move freely in their chairs and that this data loss issue could have been less substantial if a glasses-based eye tracker had been used, as done in the current experiment. The current study addressed the points mentioned above by taking into consideration whether a person is speaking or listening, as well as the data quality and data loss in each scenario.

The amount of time observers look directly at others’ eyes and face has been shown to reflect various processes and traits of the observer. It was shown to signal to the other person when they start to speak (Ho, Foulsham, & Kingstone, 2015), to predict motor intentions (Castiello, 2003; Palanica & Itier, 2014), and to relate to face recognition abilities (Haas, Iakovidis, Schwarzkopf, & Gegenfurtner, 2019). A few studies showed that gaze behavior reflects understanding others’ mental states or emotions (Adolphs et al., 2005; Baron-Cohen, Wheelwright, Hill, Raste, & Plumb, 2001). However, emotional expressions can be identified even in peripheral vision when presented for less than 150 ms (Bayle, Schoendorff, Hénaff, & Krolak-Salmon, 2011) and are not entirely dependent on the distribution of gaze within the face (Yitzhak, Pertzov, Guy, & Aviezer, 2020). This finding suggests that, although gaze often corresponds with the observed emotional expression, it is not always necessary for the accurate recognition of emotions (Hessels, 2020). Furthermore, the tendency to look at the eyes and the face seems to be related to socially related traits, such as social anxiety, autism, and neuroticism. However, the nature of this relation is still not clear owing to mixed results when considering social anxiety (Chen, van den Bos, Velthuizen, & Westenberg, 2020; Chen, van den Bos, Karch, & Westenberg, 2022; Tönsing et al., 2022), autism traits (Hessels, Holleman, Cornelissen, Hooge, & Kemner, 2018; Vabalas & Freeth, 2016), and neuroticism (Harrison, Binetti, Coutrot, Johnston, & Mareschal, 2018; Perlman et al., 2009; Simplicio et al., 2014) within neurotypical populations.

There are a few potential factors that might explain the discrepancies between previous studies. First, whether participants observe static faces or interact with another human has been found to be a meaningful factor in studies of autism. Studies that have used more realistic stimuli, such as dynamic ones, have observed that individuals with high levels of autism-like symptoms tend to look less at others (Speer, Cook, McMahon, & Clark, 2007). On the other hand, studies using static stimuli have produced mixed results (Klin, Jones, Schultz, Volkmar, & Cohen, 2002; Fletcher-Watson, Leekam, Benson, Frank, & Findlay, 2009). A review of the literature (Risko, Laidlaw, Freeth, Foulsham, & Kingstone, 2012) highlighted the importance of considering the realism and dynamic nature of social stimuli in autism research. Second, the setting of face-to-face interactions has been found to significantly impact the results of studies on social anxiety. For instance, research has shown that when tasks include asking personal questions as part of the interaction, individuals with high levels of social anxiety tend to exhibit decreased face preference (Chen et al., 2022). However, in studies that do not include self-related questions, this relation was not observed (Rösler, Göhring, Strunz, & Gamer, 2021). These findings highlight the importance of considering the specific setting and context of social interactions in research on social anxiety. Finally, most previous studies did not dissociate the time in which observers look at the face from the time in which they look at the eyes, sometimes even treating the two as equivalent. Research has shown that the amount of time gaze is directed toward the eyes does not necessarily correlate with the accuracy of emotion recognition (Yitzhak et al., 2020), suggesting that valuable social information can be gleaned from other facial features as well (Palanica & Itier, 2014).
This finding highlights the potential value of differentiating between an individual's tendency to look at the eyes versus their tendency to look at the whole face. Studies that have only used one of these measures may not fully capture the nuances of social gaze behavior. For example, Freeth et al. (2013) measured the preference to look at the face and compared it with the Chen and Yoon (2011) study that measured the preference to look at the eyes. The current study differentiates between these influential factors to better understand individuals' social gaze behavior.

The current study seeks to answer two questions. First, are gaze behavior preferences during a screen-based interview scenario similar to those during a live interview scenario? To answer this question, participants performed two interview scenarios: one screen-based and one face-to-face (in a counterbalanced order). Both scenarios include self-related questions; however, they differ in various factors, including their interactivity and social presence. Finding consistent results in both scenarios would demonstrate the robustness of individual variability in eye and face preference. The second aim of this study was to examine whether the tendency to look at faces has additional value above the preference to look at the eyes. To that end, we measured participants’ traits related to social behavior and examined whether they are associated with the main gaze behavior measures used in previous studies: percent of fixation time on faces (face preference) and percent of fixation time on the eye region (eye preference). To measure eye preference in a way that is not confounded with face preference (because the eyes are always within the face), we introduced an additional measure, namely, the percent of fixation time on the eye region out of the total fixation time on the face region (eyes within face preference). Although the current study focused on face preference, eyes within face preference, and eye preference, mouth preference was also analyzed to enable a more direct comparison with previous studies.

Methods

Participants

Fifty-two undergraduate native Hebrew speaking students, mean age 23.9 ± 2.16 years, 20 males, took part in the experiment. All participants signed a written informed consent prior to the experiment. Participant data for a specific scenario were excluded if any of the following criteria was met: the participant did not complete the task, the precision error (RMS-S2S; Holmqvist et al., 2011) was more than 2 standard deviations above the mean (as in Holleman, Hessels, Kemner, & Hooge, 2020), or the percent of data loss was greater than 30% (the percent of samples in which gaze was not detected properly owing to blinks or technical issues). Overall, three participants were excluded from the screen-based interview scenario and nine participants were excluded from the live interview scenario, resulting in 49 participants in the screen-based interview scenario and 43 in the live interview scenario. For further details regarding the comparison of data quality between scenarios, see the last part of the Results section (Eye tracking data quality assessment of screen-based and live interview scenarios). Based on pilot studies, we expected Pearson r values of 0.4 between the personality traits and the gaze behavior measures. Thus, to achieve a power of 80% at a significance level of 0.05, 46 participants were required (power analysis was performed using the “pwr” R package; Champely et al., 2018).
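The sample size reasoning above can be approximated with a short calculation. The sketch below uses the textbook Fisher z approximation for a two-sided test of a Pearson correlation; it is not the algorithm implemented in the “pwr” package, so its result may differ from the reported 46 by a participant or so. The function name `n_for_correlation` is hypothetical.

```python
import math
from statistics import NormalDist


def n_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate n needed to detect a Pearson correlation r (two-sided test).

    Assumption: Fisher z approximation, n = ((z_alpha + z_power) / atanh(r))^2 + 3.
    This is a rough stand-in, not the exact method used by the pwr package.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_power = NormalDist().inv_cdf(power)          # quantile for desired power
    effect = math.atanh(r)                         # Fisher z-transformed r
    return math.ceil(((z_alpha + z_power) / effect) ** 2 + 3)


# Expected effect size, alpha, and power as stated in the Participants section.
n_required = n_for_correlation(0.4, alpha=0.05, power=0.80)
print(n_required)
```

Smaller expected correlations require markedly larger samples under this approximation, which is why the assumed r of 0.4 from the pilot studies drives the required sample size.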

Procedure

The experiment involved two types of interactions: a screen-based and a live face-to-face interview. To conceal the real objective of the experiment (comparing gaze behavior across live and screen-based interview scenarios and its relationship to personality traits), participants were told that they would engage in two separate experiments, a short screen-based interview task and a face-to-face intelligence test named the “block design task.” In fact, the block design task was composed of a short interview (approximately 5 minutes), which is reported here, followed by the block reconstruction task itself (approximately 10 minutes), which will be reported in another manuscript. Participants were randomly assigned to start with one of the tasks. At the end of the experiment, participants filled out four questionnaires: the Social Phobia Inventory (SPIN; Connor et al., 2000), the Autism Quotient (Baron-Cohen, Wheelwright, Skinner, Raste, & Plumb, 2001), the Rosenberg self-esteem scale (Rosenberg, 1965), and a Big-5 questionnaire (Goldberg, 1992) to measure neuroticism.

During the screen-based interview task, participants’ eyes and voice were recorded while viewing short clips presented on a laptop. These included 14 people asking 28 personal questions, such as, “What is the last book you read?” (see the Supplementary materials). Each interviewer asked two questions, which appeared in separate clips. Before each question, participants were instructed to focus their gaze on a fixation point located at the bottom of the screen for 500 ms. The clips started once the eye tracking system validated that the participant had looked as instructed; thus, the first fixation was directed at the interviewer's neck, rather than facial features. After each question, participants were instructed to answer the question while viewing a white screen with the instruction, “Please answer the question now. Press on the spacebar when you are done” (in Hebrew).

The live interview scenario (presented to the participants as a preliminary step before a block reconstruction test) was performed using the Tobii-Pro-Glasses-2 eye tracking system (Tobii, Danderyd Municipality, Sweden). Participants were instructed to answer a few questions before taking the test and to avoid excessive head and body movements. As in the screen-based interview, the questions were personal, but this time were more closely related to academic achievement and intelligence (e.g., “What was your SAT score?”; see the Supplementary materials). The experimental room was clean and uncluttered (the same for all participants). The participant and experimenter sat on either side of a table. A few papers and a phone were placed on a table nearby (see stimulus examples in Figure 1). The live interview scenario started by calibrating the glasses-mounted eye tracker. Then, the experimenter took the control laptop out of the room and returned to her seat to start the interview. The live interview was conducted by one of two female experimenters who wore the same plain blouse. Because the experimenter's identity can influence the observer's gaze behavior, it was added as a covariate to the analysis. In both interview scenarios, the experimenters were instructed to look directly at the participants/camera.

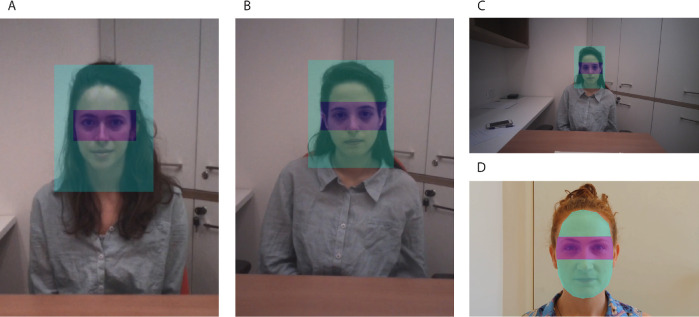

Figure 1.

Stimulus examples. (A) and (B) show the two experimenters in the live interview scenario. (C) The whole field of view captured by the front camera of the Tobii-Pro-Glasses-2 (the experimenter in B appears in C). (D) One of the interviewers who appeared in the screen-based scenario.

Eye tracking systems

In the screen-based interview, a RED250-SMI (SensoMotoric Instruments, Teltow, Germany) eye tracker was used in combination with a 15-inch DELL laptop. The sampling rate was 250 Hz. Before the interview, each participant performed a 5-point calibration process provided by SMI. Only participants with an average error of less than 1° of visual angle performed the experiment. The size of the face region was approximately 8° × 12° and that of the eye region was approximately 8.0° × 3.5°.

In the live interview, a Tobii-Pro-Glasses-2 eye tracker was used with a sampling rate of 100 Hz. The calibration was performed using the Tobii calibration card after participants were seated in a chair at a distance of approximately 120 cm from the observer. The experimenters were instructed to continue with the experiment only if the gaze position shown in the Tobii software was inside a calibration circle with a diameter of approximately 1°. The live interview task did not include additional accuracy measures. The size of the face region was approximately 5.5° × 6.5° and that of the eye region was approximately 3.5° × 2.0°.

Data preprocessing

In both scenarios, three main gaze-related measures were considered: 1) percent of fixation time in the face region (“face preference”), 2) percent of fixation time in the eye region (“eye preference”), and 3) percent of fixation time in the eye region out of the total fixation time in the face region (“eyes within face preference”). Although individuals’ preference to look at others’ mouths was not included in our original analysis, we report on this measure, mainly in the Supplementary materials, to provide a more complete picture of face region preferences.
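As an illustration of how the three measures relate to one another, the following minimal sketch computes them from a list of labeled fixations. The data layout (duration and region label per fixation), the region names, and the function name `gaze_preferences` are assumptions made for this example and are not taken from the study’s analysis pipeline.

```python
# Hypothetical region labels; every region except "outside" lies within the face.
FACE_REGIONS = {"eyes", "mouth", "face_other"}


def gaze_preferences(fixations):
    """Compute the three gaze measures (in percent) from labeled fixations.

    fixations: list of (duration_ms, region) tuples, where region is one of
    "eyes", "mouth", "face_other", or "outside" (assumed labels).
    """
    total = sum(duration for duration, _ in fixations)
    if total == 0:
        raise ValueError("no fixation time recorded")
    eyes = sum(d for d, region in fixations if region == "eyes")
    face = sum(d for d, region in fixations if region in FACE_REGIONS)
    return {
        # Share of all fixation time spent on the face (eyes included).
        "face_preference": 100 * face / total,
        # Share of all fixation time spent on the eyes.
        "eye_preference": 100 * eyes / total,
        # Share of face fixation time spent on the eyes; undefined if the
        # face was never fixated.
        "eyes_within_face_preference": 100 * eyes / face if face else float("nan"),
    }


example = [(300, "eyes"), (200, "mouth"), (100, "face_other"), (400, "outside")]
measures = gaze_preferences(example)
print(measures)
```

In this toy example, eye preference is 30% of all fixation time, yet the eyes account for half of the time spent on the face, which shows how the third measure separates the two tendencies.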

In the screen-based interview scenario, we used the SMI BeGaze program (SensoMotoric Instruments, Teltow, Germany) to define the regions of interest and to extract the total fixation duration in each region (face, eyes, and mouth; see Figure 1, stimulus examples). The regions of interest were defined manually by a research assistant before running the experiment. Each region was a polygon that covered the relevant region as tightly as possible. Fixations were detected using a velocity-based detection algorithm with a peak velocity threshold of 40°/s and a minimum duration of 50 ms (the system's default settings).

In the live interview scenario, we used the Tobii Pro mapping software to define the regions of interest. For each participant, we selected a frame from the middle of the interview and defined two regions on it: the face and eyes of the experimenter (see Figure 1, stimulus examples). Each region was defined by a rectangle. The face region included the neck and hair. The eye region included the eyebrows and the nose bridge. Then, we transformed the regions from the selected frame to all other frames using the software's mapping feature. Next, we extracted a sample report that included the gaze position of each recording sample, whether the sample was part of a fixation, and whether or not the fixation was directed to the eyes or the face. Fixations were detected using a velocity-based detection algorithm with a peak velocity threshold of 100°/s and a minimum duration of 60 ms (the system's default settings for the glasses eye tracker).

Statistical analyses

The analysis consisted of four parts. First, we examined the internal consistency of the gaze behavior measures (face preference, eye preference, and eyes within face preference) in each of the two scenarios by calculating Pearson correlations across individuals’ values. In the screen-based interview scenario, the correlation was performed between the first and the second questions of each interviewer appearing in the clip (each one asked two questions). In the live interview scenario, the correlation was performed between odd and even questions. Next, in the second part of the analysis, we examined the stability of the measures across scenarios by examining the correlation across participants’ measures across the two interview scenarios. The magnitude of the correlation was described according to the guidelines provided by Navarro and Foxcroft (2022).
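The split-half logic of the first analysis step can be sketched as follows. This is a simplified illustration under stated assumptions: it averages a per-question measure within the odd and even halves rather than recomputing the percentage of fixation time over each half, and the names `pearson_r` and `split_half_consistency` as well as the toy values are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def split_half_consistency(per_question):
    """Correlate odd-question and even-question halves across participants.

    per_question: dict mapping participant id -> list of a gaze measure
    (e.g., face preference in percent) per question, in presentation order.
    """
    odd_half, even_half = [], []
    for values in per_question.values():
        odd = values[0::2]   # questions 1, 3, 5, ...
        even = values[1::2]  # questions 2, 4, 6, ...
        odd_half.append(sum(odd) / len(odd))
        even_half.append(sum(even) / len(even))
    return pearson_r(odd_half, even_half)


# Toy data: four participants, four questions each (made-up values).
toy = {
    "p1": [80, 82, 78, 81],
    "p2": [60, 63, 59, 61],
    "p3": [90, 88, 92, 91],
    "p4": [70, 69, 73, 72],
}
r_split = split_half_consistency(toy)
print(round(r_split, 3))
```

The same `pearson_r` helper applies to the cross-scenario stability step, where each participant contributes one value per scenario instead of one value per half.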

In the third part of the analysis, we examined whether these measures were related to the observers’ traits. We fitted one linear mixed model for each gaze measure to test for the influence of personality traits and interview scenario: screen-based (only listening), live interview listening, and live interview speaking. To capture the differences between listening stages across scenarios and between speaking and listening in the live interview scenario, contrasts were set accordingly. The first contrast compares the screen-based and live interview scenarios during listening, and the second contrast compares the two stages within the live interview scenario. The models incorporated the traits’ scores (centered and standardized) and their interaction with the interview scenario (full model formula: dv ∼ interview scenario * (Social Anxiety + Neuroticism + Autism-like) + (1|Participant)). The full models’ statistics are in the Supplementary Material. In addition, to verify whether the sex of the participant (participant sex) or the experimenter identity (experimenter) influences the gaze measures, an additional set of models was fitted with these factors included (see the Supplementary Results, section “Exploring personality traits using face and eye preferences: Potential interfering factors”). The mixed model allowed us to include participants with data from only one of the interview scenarios. The models were computed using the “lme4” package and p values were computed based on the “lmerTest” package in R. Because three models were applied, the significance level was set to 0.016 (approximately 0.05/3). Multicollinearity was tested using variance inflation factors (vif in the “car” package in R) and was found not to be substantial (all variance inflation factor values were lower than 2). Furthermore, in each scenario the gaze behavior measures were tested for normality using the Kolmogorov–Smirnov test. None of the measures differed significantly from the normal distribution (p > 0.1).
In the last part of the analysis, we compared the data quality measures between scenarios. For each participant, we computed precision and data loss values. Precision was defined as the root mean squared sample-to-sample deviation in visual degrees (RMS-S2S; Holmqvist et al., 2011) across all recorded samples, including both fixations and saccades. Data loss was defined as the percent of samples in which gaze was not detected properly (e.g., blinks, tracking loss) out of the total samples. Then, we examined the individual differences in data quality values and their relation to the personality traits examined in the study to rule out the possibility that data quality measures are somehow related to the effects found in the previous parts of the analysis.
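The two data quality measures can be sketched in a few lines. The example below assumes that gaze samples are already expressed in degrees of visual angle and that lost samples are marked as `None`; both the data layout and the function names `rms_s2s` and `data_loss_percent` are assumptions for illustration, not the study’s actual code.

```python
import math


def rms_s2s(samples):
    """Root mean squared sample-to-sample deviation, in degrees.

    samples: list of (x_deg, y_deg) tuples, or None for a lost sample.
    Only pairs of consecutive valid samples contribute (an assumption;
    the handling of gaps is not specified in the text).
    """
    squared = []
    for prev, cur in zip(samples, samples[1:]):
        if prev is None or cur is None:
            continue  # skip any pair that touches a lost sample
        squared.append((cur[0] - prev[0]) ** 2 + (cur[1] - prev[1]) ** 2)
    if not squared:
        raise ValueError("no consecutive valid samples")
    return math.sqrt(sum(squared) / len(squared))


def data_loss_percent(samples):
    """Percent of samples in which gaze was not detected."""
    return 100 * sum(s is None for s in samples) / len(samples)


recording = [(0.0, 0.0), (0.3, 0.4), None, (1.0, 1.0), (1.0, 1.0)]
precision = rms_s2s(recording)
loss = data_loss_percent(recording)
print(round(precision, 3), loss)
```

A participant’s scenario data would then be flagged for exclusion when `loss` exceeds 30 or when `precision` lies more than 2 standard deviations above the sample mean, following the criteria in the Participants section.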

Results

Internal consistency of gaze measures in the screen-based and live interview scenarios

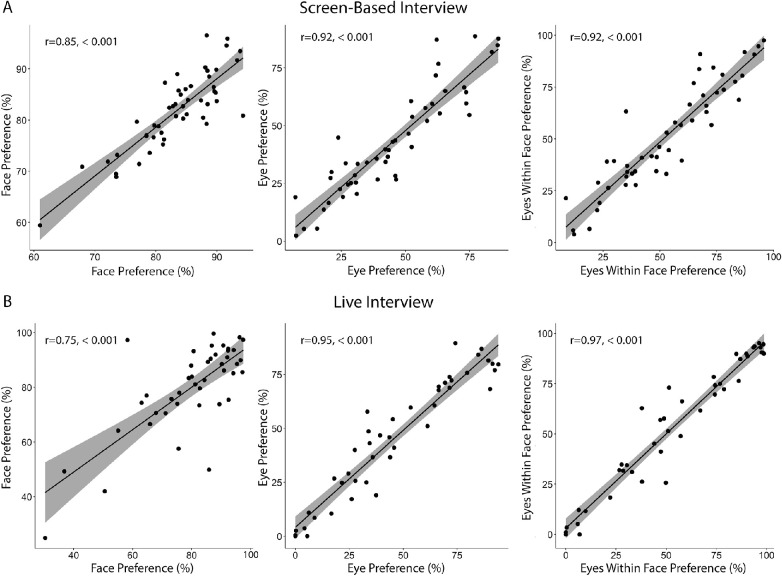

To establish whether participants’ gaze deployment on faces and eyes is consistent within each scenario, we correlated each measure extracted from one half of the data with the same measure extracted from the other half. Specifically, in the screen-based interview scenario, we correlated the measures extracted from the first questions (of each interviewer) with the measures extracted from the second questions (Figure 2A, top). In the live interview scenario, we split the data into odd and even questions according to their order (Figure 2B, bottom). All the correlations were strong (r > 0.75), indicating that all gaze measures have a high level of internal consistency (see Table S1 in the Supplementary Materials). This finding converges with other studies of individual differences in scanning patterns of faces (Guy et al., 2019; Hessels, 2020; Mehoudar, Arizpe, Baker, & Yovel, 2014; Peterson & Eckstein, 2013; Rogers et al., 2018).

Figure 2.

Internal consistency of participants’ gaze behavior within each interview scenario. Face preference—the percent of fixation time in the face region. Eye preference—the percent of fixation time in the eye region. Eyes within face preference—percent of fixation time in the eye region out of the total fixation time in the face region. (A) Correlations of each gaze behavior measure within the screen-based interview scenario. (B) Correlations within the live interview scenario.

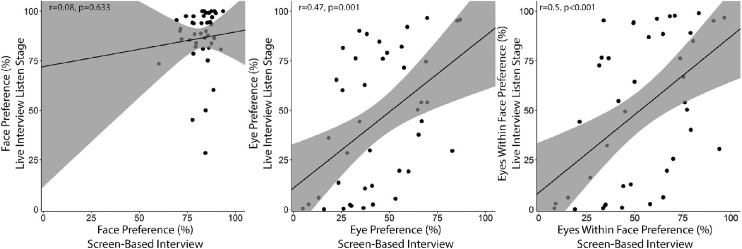

Figure 3.

Stability of measures across scenarios. Scatter plots of the correlations between the screen-based interview scenario and the listening stage in the live interview scenario for face preference (left), eye preference (center), and the eyes within face preference (right).

Stability of measures across scenarios

The stability of each measure across both scenarios was assessed using a Pearson correlation across participants. Correlations of both eye preference and eyes within face preference across scenario types were moderate (r = 0.47 and r = 0.5, respectively). However, the cross-scenario correlation for face preference was negligible (r = 0.08). All correlations are presented in Figure 3 and the full statistics are described in Table 1.

Table 1.

Cross-scenario correlations for all gaze measures. The correlation coefficients between the screen-based interview and the live interview when participants listen (first row) and speak (second row), and between the speaking and listening stages within the live interview (third row).

| Stability across tasks | Eye preference | Face preference | Eyes within face preference |
|---|---|---|---|
| Screen-based <-> live interview, listening (n = 42) | r = 0.47, p = 0.001 | r = 0.08, p = 0.633 | r = 0.5, p < 0.001 |
| Screen-based <-> live interview, speaking (n = 42) | r = 0.4, p = 0.008 | r = 0.1, p = 0.532 | r = 0.46, p = 0.002 |
| Live interview, speaking <-> listening (n = 43) | r = 0.8, p < 0.001 | r = 0.59, p < 0.001 | r = 0.81, p < 0.001 |

Individuals’ traits and gaze preferences

A potential explanation for the cross-individual variability in gaze behavior during social interactions is the observers’ traits, in particular those traits that relate to social interactions such as neuroticism, social anxiety and autism-like symptoms.

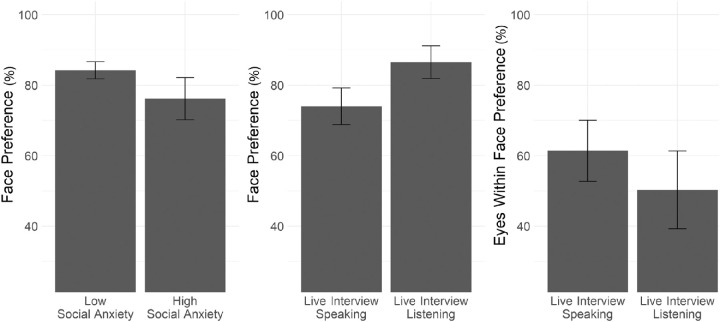

The face preference model revealed two significant effects. One was a negative relation between social anxiety and face preference, β = −6.08, p = 0.003, meaning that individuals who scored higher on the social anxiety questionnaire directed their gaze less to the face of the interviewers. Figure 4 visualizes this effect by comparing more anxious and less anxious participants (median split). The comparison between groups revealed a difference, t(22.56) = 2.45, p = 0.023, between the face preference of highly socially anxious participants, mean 76.2% ± 12.9%, and that of low socially anxious participants, mean 84.2% ± 6.04%, with a moderate effect size, Cohen's d = 0.797. Face preference also differed significantly between listening and speaking stages, β = −7.15, p < 0.001 (interaction-condition2 in Table S2), reflecting a higher tendency to look at the face while listening compared with speaking periods (see Figure 4). These findings are consistent with the findings reported by Rogers et al. (2018). In addition, the interaction between social anxiety and scenario type (contrast comparing the listening stages of the screen-based and live interview scenarios) revealed a negative nonsignificant estimate, β = 3.8, p = 0.072, suggesting a possible difference between scenarios in the relation between social anxiety and face preference, in which the negative relation is more prominent in the live interview scenario. Note that because this interaction was not significant, no clear conclusions can be drawn from it. The full models’ statistics are reported in the Supplementary material (Supplementary Table S2).
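The group comparison used for visualization can be illustrated with a short sketch. The fractional degrees of freedom reported above suggest an unequal-variance (Welch) t test, and the reported effect size is consistent with a pooled-standard-deviation Cohen's d; both are assumptions of this example rather than statements from the text. The function names and the toy values are hypothetical and are not the study’s data.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def cohens_d(a, b):
    """Cohen's d using the pooled standard deviation of the two groups."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)


# Toy face preference values (percent) for two median-split groups.
low_anxiety = [84, 90, 78, 86, 82]
high_anxiety = [76, 60, 90, 70, 84]

t_value, df_value = welch_t(low_anxiety, high_anxiety)
d_value = cohens_d(low_anxiety, high_anxiety)
print(round(t_value, 3), round(df_value, 2), round(d_value, 3))
```

Because the two groups differ in spread, the Welch degrees of freedom fall well below the pooled value of n1 + n2 − 2, which mirrors the pattern of a fractional df in the reported comparison.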

Figure 4.

Difference in face and eyes within face preferences. (Left) Differences between low and high socially anxious participants in face preference across all scenario types (between-subject comparison). The graph includes only participants that were not excluded in any of the scenarios. The other two graphs represent the difference between speaking and listening stages (within-subject comparison) in face preference (center) and eyes within face-preference (right). Error bars reflect 95% of the confidence interval.

The eye preference model did not reveal any significant effect, neither for the observers’ traits nor for the interaction condition. The eyes within face preference model did reveal one significant effect, between listening and speaking stages within the live interview scenario, reflecting a higher eyes within face preference in the speaking stage (61%) compared with the listening stage (50%). Note that this effect, which is in the opposite direction to that of face preference (see Figure 4), is consistent with previous findings (Holleman et al., 2021; Rogers et al., 2018). Although this effect was absent from the eye preference model, it did emerge in the eyes within face preference model. Even though it is possible that with greater power the same effect would emerge in the eye preference measure, this discrepancy could be considered as evidence for a unique signal that is captured by the eyes within face preference and not by eye preference.

To examine whether the effects mentioned above are driven by the experimenter’s identity or the participants’ sex, additional models including these variables were fitted. Adding these variables did not qualitatively change the results (Table S3 in the Supplementary materials).

Finally, to get a complete picture of the main facial features, we also examined the mouth within face preference (percent of fixation time in the mouth region out of the total time in the face region). The full statistics, including internal consistency reliability and stability across scenarios, are described in the Supplementary materials. Consistent with the results mentioned elsewhere in this article, the mouth within face preference was found to be significantly higher when participants listened (19%) compared with when they were speaking (11%). In addition, a higher mouth within face preference was found in the screen-based interview scenario (25% vs. 19% in the real-life scenario), which might be a result of the different stimulus types (prerecorded video and live interview). Finally, more neurotic participants were found to look more at the mouth region, β = 9.9, p = 0.003; however, this effect did not remain significant (after correction for multiple comparisons) while controlling for participant sex and experimenter identity (p = 0.037).

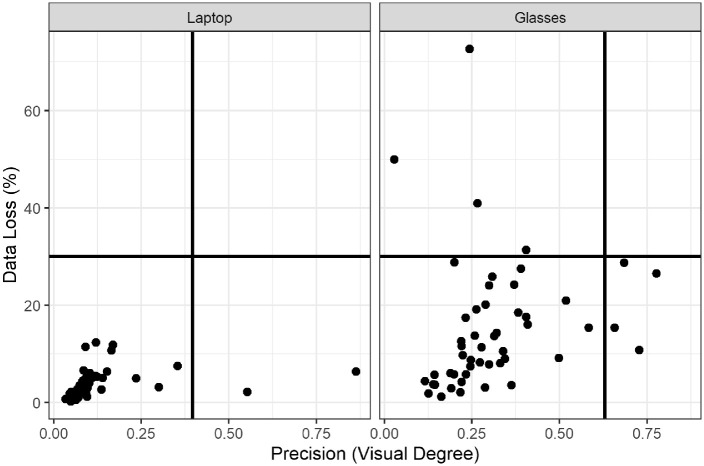

Data quality assessment

Data quality in each scenario was assessed using two measures: precision error, defined as the root mean squared sample-to-sample deviation in degrees of visual angle (RMS-S2S; Holmqvist et al., 2011), and data loss, defined as the percent of samples in which gaze was not detected properly (e.g., blinks) out of the total samples. As presented in Figure 5, data loss in the live interview scenario, mean 15.4% ± 13.44%, was significantly higher than in the screen-based interview scenario, mean 3.88% ± 2.93%; t(52.37) = −5.87, p < 0.001. The mean precision error was also significantly higher in the live interview scenario, mean 0.30° ± 0.16°, than in the screen-based interview scenario, mean 0.12° ± 0.14°; t(48.01) = −7.96, p < 0.001.

Figure 5.

Data quality in each interview scenario. Precision error and data loss values of the screen-based interview (left) and the live interview (right) scenarios. Each dot represents a participant. The x value reflects the precision error (RMS-S2S) and the y value reflects the data loss. Lines represent the exclusion criteria—30% data loss (horizontal line) and the value of 2 standard deviations from the mean precision error (vertical lines). Participants above the horizontal lines or to the right of the vertical lines were excluded from the analysis of the specific task.
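The exclusion rule described in the caption can be sketched as follows. This is a hedged illustration rather than the authors' code: the function name and the example values are hypothetical, and the use of the sample standard deviation (ddof=1) computed within a single scenario is an assumption.

```python
import numpy as np

def exclusion_mask(precision_deg, data_loss_pct, max_loss_pct=30.0, n_sd=2.0):
    """Flag participants whose data quality exceeds either exclusion criterion."""
    precision = np.asarray(precision_deg, dtype=float)
    loss = np.asarray(data_loss_pct, dtype=float)
    # Precision cutoff: mean plus n_sd standard deviations (assumed sample SD).
    cutoff = precision.mean() + n_sd * precision.std(ddof=1)
    # A participant is excluded if either criterion is exceeded.
    excluded = (loss > max_loss_pct) | (precision > cutoff)
    return excluded, float(cutoff)

# Hypothetical per-participant values for one scenario.
precision = np.array([0.10, 0.12, 0.11, 0.09, 0.13, 0.10, 0.12, 0.11, 0.10, 0.60])
loss = np.array([2.0, 4.0, 35.0, 3.0, 5.0, 1.0, 6.0, 2.5, 3.5, 4.0])

excluded, cutoff = exclusion_mask(precision, loss)
print("Excluded participant indices:", np.flatnonzero(excluded).tolist())
```

In this example, one participant is flagged for data loss above 30% and another for a precision error beyond the cutoff; applying the rule separately per scenario matches the caption's statement that exclusion was specific to each task.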

To examine whether the stability of eye and face preferences across scenarios is a result of individual differences in data quality, a Pearson correlation analysis was conducted for each data quality measure across scenarios. The correlations across scenarios were weak for both measures, 0 < r < 0.06, and nonsignificant, p > 0.69. Furthermore, to test whether the data quality measures explain the relation with traits related to social behavior, a Pearson correlation was computed between each data quality measure in each scenario type and each social-related trait (after data exclusion as performed in Individuals’ traits and gaze preferences). All correlations were weak, –0.16 < r < 0.14, and nonsignificant, p > 0.25.
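The logic of this control analysis can be illustrated with a short sketch: if data quality were a stable property of individuals, a given quality measure should correlate across the two scenarios. The values and the helper name below are hypothetical, and only the correlation coefficient is computed; a p value would require an additional statistics routine.

```python
import numpy as np

def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length arrays."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.corrcoef(a, b)[0, 1])

# Hypothetical per-participant data loss (%) in each scenario, same participant order.
loss_screen = np.array([2.0, 4.0, 3.0, 5.0, 1.0, 6.0])
loss_live = np.array([12.0, 9.0, 20.0, 8.0, 15.0, 11.0])

r = pearson_r(loss_screen, loss_live)
print(f"Cross-scenario correlation of data loss: r = {r:.2f}")
```

A correlation near zero, as reported in the text, would suggest that stable individual differences in data quality are unlikely to account for the cross-scenario stability of the gaze measures.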

Discussion

Gaze behavior during face-to-face interactions was examined by measuring the amount of time gaze was directed at faces (face preference) and at the eyes (eye preference) across two interview scenarios, screen-based and live interview. To decouple face and eye preferences, we introduced the “eyes within face preference” measure, which captures the proportion of time individuals look at the eyes out of the total time on the whole face. Gaze behavior measures exhibited high internal consistency within scenarios; however, only eye-related preferences (eye preference and eyes within face preference) were correlated across the screen-based and live interview scenarios. The comparison of individuals’ gaze preferences and their traits revealed a moderate correlation between social anxiety and face preference, in which more socially anxious participants fixated less on faces (this effect was somewhat more pronounced in the real-life interview). Furthermore, during listening, face preference was higher and eyes within face preference was lower, in comparison with speaking. These two opposite effects resulted in no effect of conversation stage on the overall eye preference per se.
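A small worked example may help show how opposite changes in face preference and eyes within face preference can leave overall eye preference unchanged. The sketch assumes that the eye region lies inside the face region and that eye preference is computed out of total viewing time, in which case eye preference equals face preference multiplied by eyes within face preference. The function name and all dwell times are hypothetical and chosen only to illustrate the arithmetic.

```python
def gaze_preferences(eyes_ms, face_ms, total_ms):
    """Return (face preference, eyes within face preference, eye preference)."""
    face_pref = face_ms / total_ms        # share of total time spent on the face
    eyes_within_face = eyes_ms / face_ms  # share of face time spent on the eyes
    eye_pref = eyes_ms / total_ms         # share of total time spent on the eyes
    return face_pref, eyes_within_face, eye_pref

# Hypothetical dwell times (ms); face_ms is assumed to include time on the eyes.
listening = gaze_preferences(eyes_ms=4800, face_ms=8000, total_ms=10000)
speaking = gaze_preferences(eyes_ms=4800, face_ms=6000, total_ms=10000)

for label, (face, within, eye) in (("listening", listening), ("speaking", speaking)):
    print(f"{label}: face={face:.2f}, eyes within face={within:.2f}, eye={eye:.2f}")
```

In these illustrative numbers, face preference is higher while listening and eyes within face preference is higher while speaking, yet the product of the two, the overall eye preference, is identical in both stages.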

Stability of gaze measures across different interview scenarios

An important aspect of gaze behavior during social interactions is whether gaze preferences are stable measures that generalize across different scenarios. In this study, we used two very different face-to-face interaction scenarios, a screen-based interview and a live interview. Despite the differences between the two scenarios in the viewing platform (screen vs. live) and type of questions (personal vs. academic achievements, both self-related questions), individuals’ preference to look at the eyes was correlated between the two. The stability of the eye-related preferences suggests that how people look at the regions within a face is a trait-like characteristic of each individual. This extends recent studies that reported gaze preference stability over time (Mehoudar et al., 2014) and demonstrates that these measures are also stable across face-to-face interaction scenarios.

The tendency to look at faces was not correlated across the two scenarios. This difference may be attributed to three (not necessarily mutually exclusive) factors. First, the live interview scenario included the social presence of the experimenter and an interactive dialog, whereas the screen-based scenario included prerecorded questions. These differences are expected to influence cognitive processes and gaze behavior (Freeth et al., 2013; Risko et al., 2012). The second factor is the interview characteristics (number of interviewers and the questions asked) and the content of the questions asked (academic achievement or personal). Last, in the screen-based scenario, the faces covered most of the screen, and thus limited the variance in individuals’ face preference. Presenting the face of the interlocutor with a restricted visual field surrounding it is common in most previous studies dealing with face-to-face interview-like interactions, including ones with the presence of a live confederate (Hessels et al., 2018; Hessels, Holleman, Kingstone, Hooge, & Kemner, 2019; Holleman et al., 2020). This strategy might decrease the intersubject variability of the time participants look at the face. Importantly, the significant internal consistency of face preference and its relationship to social anxiety suggest that face preference can convey valuable information about one's gaze behavior during social interactions. Thus, future studies could benefit from using a face embedded within a setting containing other meaningful objects, rather than a face presented on the whole screen.

Face preference and social anxiety in social interaction scenarios

The current study found a moderate difference between highly and less socially anxious participants: highly socially anxious individuals fixated less (8%) on faces in both scenarios. These findings are consistent with previous studies that used interviews with self-related questions (Chen et al., 2022). Apart from face-to-face interactions, other social situations might also reveal a reduced face preference among individuals with high social anxiety. Two studies examined the relationship between social anxiety and gaze behavior during public speaking (Chen et al., 2021) and while walking in a train station (Rubo et al., 2020). Both studies revealed a reduced face preference in socially anxious individuals (but did not examine eye preference). The observed association between social anxiety and face preference showed a nonsignificant variation across interview scenarios (p = 0.072). This potential difference, if validated, could be viewed as evidence for the contribution of social presence to face avoidance in participants with high levels of social anxiety.

Research on social anxiety and gaze behavior has used various gaze behavior measures, which underscores the importance of precise terminology and differentiation between these measures. For example, one study reported reduced face preference in highly socially anxious participants (Dechant, Trimpl, Wolff, Mühlberger, & Shiban, 2017), and another study found reduced eye preference during an interaction that took place on a screen (the face covered most of the screen) in socially anxious participants (Hessels et al., 2018). Although both studies dealt with social avoidance, it remains unclear whether the observed avoidance was more strongly related to the eyes or face, or whether both tendencies should be addressed together. The significant relationship with face preference, and not eye preference, presented in the current study suggests that it is preferable to separate social avoidance behavior into two components: avoidance of faces (regardless of the facial features) and avoidance of the eyes (or eye contact) while viewing faces. The distinction between these two components could lead to a better understanding of social avoidance in neurotypical and abnormal populations during different social interactions. For example, as demonstrated in the current study, listening and speaking changed the face preference (face preference was higher while listening) in the opposite way that it influenced the relative amount of time the gaze focuses on the eyes versus other facial regions (eyes within face preference was higher while speaking). The same pattern could emerge in relation to other factors during social interactions.

Although this study establishes an association between social anxiety and gaze behavior during face-to-face social interactions, it is plausible that other traits may also be involved in, or even mediate, this association. For instance, one study found that individuals with super recognition abilities (a heightened capacity to recall faces) tend to direct their attention toward faces more frequently than normal recognizers (Bobak, Parris, Gregory, Bennetts, & Bate, 2017). Future studies should explore face processing abilities or other cognitive abilities that might be related to the relation between social anxiety and gaze behavior during face-to-face interactions.

Limitations

The current study examined gaze directed to the eyes, mouth, and face. Unlike state-of-the-art desktop eye tracking systems, the eye trackers used in live interview scenarios (glasses-based eye trackers) are prone to slippage, which can jeopardize data quality and spatial accuracy (Vehlen, Standard, & Domes, 2022). In the current study, we tried to minimize slippage by asking both the participants and the experimenters to limit their body and head movements (Niehorster, Cornelissen, Holmqvist, Hooge, & Hessels, 2018). The high internal consistency of all measures, the strong correlation of eye preference (the smaller area in our study) between scenarios, and the relation between face preference and social anxiety scores indicate that the current settings enabled good spatial precision. Moreover, the analysis of the data quality and precision indicates that the results could not be explained by individual differences in data quality.

In both scenarios, the experimenters were instructed to look directly at the participants, which is known to influence gaze behavior (Rogers et al., 2018). Thus, it remains unclear whether the experimenter's direct gaze was an intervening factor related to social anxiety. In other words, it is not clear whether the reduced face preference in participants with high social anxiety would emerge even when the experimenter is not instructed to look at the participants directly. Furthermore, the live interview scenario included questions related to academic achievement, which could be stressful and influence gaze behavior in more socially anxious participants. Last, in this study, interviewers and participants did not know each other. Talking with a familiar interviewer or partner might elicit different gaze behavior.

Conclusions

In social interactions, people rely heavily on the visual and oculomotor systems. Classically, gaze behavior during social interactions has been measured using the preference to look at faces and eyes in an interchangeable manner. This study demonstrates that individuals’ tendency to look at the eyes is similar across very different face-to-face interaction scenarios, differing in social presence (screen-based vs. live), type of questions, and visual background. The tendency to look at faces, rather than the eyes per se, is related to the level of social anxiety, as more socially anxious individuals looked less at faces, especially in the live interview scenario. Thus, future studies should differentiate between the tendency to look in the general direction of the face and the tendency to look at the eyes, and would preferably include live interactions that involve a conversation with a human companion.

Supplementary Material

Acknowledgments

The authors thank Alona Rimon, Hodaya Abadi, and Liat Refaeli for data collection and preprocessing the data.

Supported by Israeli Science Foundation (ISF) grant 2414/20 and JOY fund research grant to Y.P. Written informed consent was obtained from the two persons for the publication of identifying information and images in an open-access publication. We thank them for the approval.

Data availability: The dataset analyzed and code of the current study are available in the Open Science Framework repository—https://osf.io/zdu9n/?view_only=db381e49665a439c8e383a1f76db7032.

Commercial relationships: none.

Corresponding authors: Nitzan Guy and Yoni Pertzov.

Emails: nitzan.guy@mail.huji.ac.il, yoni.pertzov@mail.huji.ac.il.

Address: Mt. Scopus, 9190501 Jerusalem, Israel.

References

- Adolphs, R., Gosselin, F., Buchanan, T. W., Tranel, D., Schyns, P., & Damasio, A. R. (2005). A mechanism for impaired fear recognition after amygdala damage. Nature, 433(7021), 68–72.

- Baron-Cohen, S., Wheelwright, S., Hill, J., Raste, Y., & Plumb, I. (2001). The “reading the mind in the eyes” test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. Journal of Child Psychology and Psychiatry, and Allied Disciplines, 42(2), 241–251.

- Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J., & Clubley, E. (2001). The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders, 31(1), 5–17.

- Bayle, D. J., Schoendorff, B., Hénaff, M.-A., & Krolak-Salmon, P. (2011). Emotional facial expression detection in the peripheral visual field. PloS One, 6(6), e21584, 10.1371/journal.pone.0021584.

- Bobak, A. K., Parris, B. A., Gregory, N. J., Bennetts, R. J., & Bate, S. (2017). Eye-movement strategies in developmental prosopagnosia and “super” face recognition. Quarterly Journal of Experimental Psychology, 70(2), 201–217, 10.1080/17470218.2016.1161059.

- Castiello, U. (2003). Understanding other people's actions: Intention and attention. Journal of Experimental Psychology. Human Perception and Performance, 29(2), 416–430, 10.1037/0096-1523.29.2.416.

- Champely, S., Ekstrom, C., Dalgaard, P., Gill, J., Weibelzahl, S., Kumar, A., & De Rosario, M. H. (2018). Package ‘pwr’. R Package Version, 1(2).

- Chen, F. S., & Yoon, J. M. D. (2011). Brief report: Broader autism phenotype predicts spontaneous reciprocity of direct gaze. Journal of Autism and Developmental Disorders, 41(8), 1131–1134, 10.1007/s10803-010-1136-2.

- Chen, J., van den Bos, E., Karch, J. D., & Westenberg, P. M. (2022). Social anxiety is related to reduced face gaze during a naturalistic social interaction. Anxiety, Stress, & Coping, 25, 1–15, 10.1080/10615806.2022.2125961.

- Chen, J., van den Bos, E., Velthuizen, S. L. M., & Westenberg, P. M. (2021). Visual avoidance of faces in socially anxious individuals: The moderating effect of type of social situation. Journal of Experimental Psychopathology, 12(1), 2043808721989628, 10.1177/2043808721989628.

- Chen, J., van den Bos, E., & Westenberg, P. M. (2020). A systematic review of visual avoidance of faces in socially anxious individuals: Influence of severity, type of social situation, and development. Journal of Anxiety Disorders, 70, 102193, 10.1016/j.janxdis.2020.102193.

- Connor, K. M., Davidson, J. R. T., Churchill, L. E., Sherwood, A., Weisler, R. H., & Foa, E. (2000). Psychometric properties of the Social Phobia Inventory (SPIN): New self-rating scale. British Journal of Psychiatry, 176(4), 379–386, 10.1192/bjp.176.4.379.

- Dechant, M., Trimpl, S., Wolff, C., Mühlberger, A., & Shiban, Y. (2017). Potential of virtual reality as a diagnostic tool for social anxiety: A pilot study. Computers in Human Behavior, 76, 128–134, 10.1016/j.chb.2017.07.005.

- Fletcher-Watson, S., Leekam, S. R., Benson, V., Frank, M. C., & Findlay, J. M. (2009). Eye-movements reveal attention to social information in autism spectrum disorder. Neuropsychologia, 47(1), 248–257.

- Freeth, M., Foulsham, T., & Kingstone, A. (2013). What affects social attention? Social presence, eye contact and autistic traits. PLoS One, 8(1), e53286, 10.1371/journal.pone.0053286.

- Goldberg, L. R. (1992). The development of markers for the Big-Five factor structure. Psychological Assessment, 4(1), 26.

- Grossman, R. B., Zane, E., Mertens, J., & Mitchell, T. (2019). Facetime vs. screentime: Gaze patterns to live and video social stimuli in adolescents with ASD. Scientific Reports, 9(1), 12643, 10.1038/s41598-019-49039-7.

- Guy, N., Azulay, H., Kardosh, R., Weiss, Y., Hassin, R. R., Israel, S., et al. (2019). A novel perceptual trait: Gaze predilection for faces during visual exploration. Scientific Reports, 9(1), 1–12, 10.1038/s41598-019-47110-x.

- Haas, B. de, Iakovidis, A. L., Schwarzkopf, D. S., & Gegenfurtner, K. R. (2019). Individual differences in visual salience vary along semantic dimensions. Proceedings of the National Academy of Sciences of the United States of America, 201820553, 10.1073/pnas.1820553116.

- Harrison, C., Binetti, N., Coutrot, A., Johnston, A., & Mareschal, I. (2018). Personality traits do not predict how we look at faces. Perception, 0301006618788754, 10.1177/0301006618788754.

- Hessels, R. S. (2020). How does gaze to faces support face-to-face interaction? A review and perspective. Psychonomic Bulletin & Review, 27(5), 856–881, 10.3758/s13423-020-01715-w.

- Hessels, R. S., Benjamins, J. S., van Doorn, A. J., Koenderink, J. J., Holleman, G. A., & Hooge, I. T. C. (2020). Looking behavior and potential human interactions during locomotion. Journal of Vision, 20(10), 5, 10.1167/jov.20.10.5.

- Hessels, R. S., Holleman, G. A., Cornelissen, T. H. W., Hooge, I. T. C., & Kemner, C. (2018). Eye contact takes two—Autistic and social anxiety traits predict gaze behavior in dyadic interaction. Journal of Experimental Psychopathology, 9(2), jep.062917, 10.5127/jep.062917.

- Hessels, R. S., Holleman, G. A., Kingstone, A., Hooge, I. T. C., & Kemner, C. (2019). Gaze allocation in face-to-face communication is affected primarily by task structure and social context, not stimulus-driven factors. Cognition, 184, 28–43, 10.1016/j.cognition.2018.12.005.

- Hirschauer, S. (2005). On doing being a stranger: The practical constitution of civil inattention. Journal for the Theory of Social Behaviour, 35(1), 41–67, 10.1111/j.0021-8308.2005.00263.x.

- Ho, S., Foulsham, T., & Kingstone, A. (2015). Speaking and Listening with the Eyes: Gaze Signaling during Dyadic Interactions. PLoS One, 10(8), e0136905, 10.1371/journal.pone.0136905.

- Holleman, G. A., Hessels, R. S., Kemner, C., & Hooge, I. T. C. (2020). Implying social interaction and its influence on gaze behavior to the eyes. PLoS One, 15(2), e0229203, 10.1371/journal.pone.0229203.

- Holleman, G. A., Hooge, I. T. C., Huijding, J., Deković, M., Kemner, C., & Hessels, R. S. (2021). Gaze and speech behavior in parent–child interactions: The role of conflict and cooperation. Current Psychology, 1–22, 10.1007/s12144-021-02532-7.

- Holmqvist, K., Nyström, M., Andersson, R., Dewhurst, R., Jarodzka, H., & Van de Weijer, J. (2011). Eye tracking: A comprehensive guide to methods and measures. Oxford: Oxford University Press.

- Klin, A., Jones, W., Schultz, R., Volkmar, F., & Cohen, D. (2002). Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry, 59(9), 809–816.

- Laidlaw, K. E. W., Foulsham, T., Kuhn, G., & Kingstone, A. (2011). Potential social interactions are important to social attention. Proceedings of the National Academy of Sciences of the United States of America, 108(14), 5548–5553, 10.1073/pnas.1017022108.

- Langton, S. R. H., Watt, R. J., & Bruce, V. (2000). Do the eyes have it? Cues to the direction of social attention. Trends in Cognitive Sciences, 4(2), 50–59, 10.1016/S1364-6613(99)01436-9.

- Mansour, H., & Kuhn, G. (2019). Studying “natural” eye movements in an “unnatural” social environment: The influence of social activity, framing, and sub-clinical traits on gaze aversion. Quarterly Journal of Experimental Psychology, 72(8), 1913–1925, 10.1177/1747021818819094.

- Mehoudar, E., Arizpe, J., Baker, C. I., & Yovel, G. (2014). Faces in the eye of the beholder: Unique and stable eye scanning patterns of individual observers. Journal of Vision, 14(7), 6–6.

- Navarro, D. J., & Foxcroft, D. R. (2022). Learning statistics with jamovi: A tutorial for psychology students and other beginners (Version 0.75). 10.24384/Hgc3-7p15. Retrieved from https://www.learnstatswithjamovi.com/.

- Niehorster, D. C., Cornelissen, T. H. W., Holmqvist, K., Hooge, I. T. C., & Hessels, R. S. (2018). What to expect from your remote eye-tracker when participants are unrestrained. Behavior Research Methods, 50(1), 213–227, 10.3758/s13428-017-0863-0.

- Palanica, A., & Itier, R. J. (2014). Effects of peripheral eccentricity and head orientation on gaze discrimination. Visual Cognition, 22(9–10), 1216–1232, 10.1080/13506285.2014.990545.

- Perlman, S. B., Morris, J. P., Wyk, B. C. V., Green, S. R., Doyle, J. L., & Pelphrey, K. A. (2009). Individual differences in personality predict how people look at faces. PLoS One, 4(6), e5952, 10.1371/journal.pone.0005952.

- Pertzov, Y., Avidan, G., & Zohary, E. (2009). Accumulation of visual information across multiple fixations. Journal of Vision, 9(10), 2–2, 10.1167/9.10.2.

- Peterson, M. F., & Eckstein, M. P. (2013). Individual differences in eye movements during face identification reflect observer-specific optimal points of fixation. Psychological Science, 24(7), 1216–1225, 10.1177/0956797612471684.

- Peterson, M. F., Lin, J., Zaun, I., & Kanwisher, N. (2016). Individual differences in face-looking behavior generalize from the lab to the world. Journal of Vision, 16(7), 12–12.

- Risko, E. F., Laidlaw, K. E., Freeth, M., Foulsham, T., & Kingstone, A. (2012). Social attention with real versus reel stimuli: Toward an empirical approach to concerns about ecological validity. Frontiers in Human Neuroscience, 6, 143, 10.3389/fnhum.2012.00143.

- Rogers, S. L., Speelman, C. P., Guidetti, O., & Longmuir, M. (2018). Using dual eye tracking to uncover personal gaze patterns during social interaction. Scientific Reports, 8(1), 4271, 10.1038/s41598-018-22726-7.

- Rosenberg, M. (1965). Rosenberg self-esteem scale. Journal of Religion and Health.

- Rösler, L., Göhring, S., Strunz, M., & Gamer, M. (2021). Social anxiety is associated with heart rate but not gaze behavior in a real social interaction. Journal of Behavior Therapy and Experimental Psychiatry, 70, 101600.

- Rubo, M., Huestegge, L., & Gamer, M. (2020). Social anxiety modulates visual exploration in real life—But not in the laboratory. British Journal of Psychology, 111(2), 233–245, 10.1111/bjop.12396.

- Simplicio, M. D., Doallo, S., Costoloni, G., Rohenkohl, G., Nobre, A. C., & Harmer, C. J. (2014). ‘Can you look me in the face?’ Short-term SSRI Administration Reverts Avoidant Ocular Face Exploration in Subjects at Risk for Psychopathology. Neuropsychopharmacology, 39(13), 3059–3066, 10.1038/npp.2014.159.

- Speer, L. L., Cook, A. E., McMahon, W. M., & Clark, E. (2007). Face processing in children with autism: Effects of stimulus contents and type. Autism, 11(3), 265–277, 10.1177/1362361307076925.

- Tönsing, D., Schiller, B., Vehlen, A., Spenthof, I., Domes, G., & Heinrichs, M. (2022). No evidence that gaze anxiety predicts gaze avoidance behavior during face-to-face social interaction. Scientific Reports, 12(1), Article 1, 10.1038/s41598-022-25189-z.

- Vabalas, A., & Freeth, M. (2016). Brief report: Patterns of eye movements in face to face conversation are associated with autistic traits: Evidence from a student sample. Journal of Autism and Developmental Disorders, 46(1), 305–314, 10.1007/s10803-015-2546-y.

- Vaidya, A. R., Jin, C., & Fellows, L. K. (2014). Eye spy: The predictive value of fixation patterns in detecting subtle and extreme emotions from faces. Cognition, 133(2), 443–456, 10.1016/j.cognition.2014.07.004.

- Vehlen, A., Standard, W., & Domes, G. (2022). How to choose the size of facial areas of interest in interactive eye tracking. PLoS One, 17(2), e0263594, 10.1371/journal.pone.0263594.

- Võ, M. L.-H., Smith, T. J., Mital, P. K., & Henderson, J. M. (2012). Do the eyes really have it? Dynamic allocation of attention when viewing moving faces. Journal of Vision, 12(13), 3, 10.1167/12.13.3.

- Yitzhak, N., Pertzov, Y., Guy, N., & Aviezer, H. (2020). Many ways to see your feelings: Successful facial expression recognition occurs with diverse patterns of fixation distributions. Emotion, 22(5), 844–860, 10.1037/emo0000812.
