Abstract

The emergence of the global coronavirus pandemic in 2019 (COVID-19 disease) created a need for remote methods to detect and continuously monitor patients with infectious respiratory diseases. Many different devices, including thermometers, pulse oximeters, smartwatches, and rings, were proposed to monitor the symptoms of infected individuals at home. However, these consumer-grade devices are typically not capable of automated monitoring during both day and night. This study aims to develop a method to classify and monitor breathing patterns in real time using tissue hemodynamic responses and a deep convolutional neural network (CNN)-based classification algorithm. Tissue hemodynamic responses at the sternal manubrium were collected from 21 healthy volunteers using a wearable near-infrared spectroscopy (NIRS) device during three different breathing conditions. The classification algorithm was designed by improving and modifying the pre-activation residual network (Pre-ResNet), which was originally developed to classify two-dimensional (2D) images. Three different one-dimensional CNN (1D-CNN) classification models based on Pre-ResNet were developed. These models achieved average classification accuracies of 88.79% without Stage 1 (a data-size-reducing convolutional layer), 90.58% with a 1 × 3 Stage 1, and 91.77% with a 1 × 5 Stage 1.

Keywords: COVID-19, deep learning, convolutional neural network, respiratory disease, NIRS, wearable device

1. Introduction

Infectious respiratory diseases are caused by various microorganisms, such as viruses, fungi, parasites, and bacteria [1,2]. Examples of infectious respiratory diseases include tuberculosis, diphtheria, bacterial pneumonia, and viral pneumonia, such as that caused by influenza or COVID-19 [3,4,5]. These diseases affect not only individuals but, depending on their extent, can also have a significant impact on society. COVID-19 was declared a global pandemic by the World Health Organization (WHO) in 2020 and has had a great economic and social impact worldwide [5]. To prevent the severe consequences of infectious disease, it is of utmost importance to develop an effective method to monitor infected individuals. The current practice for treating individuals with infectious respiratory disease requires in-person examination, chest radiography, and, if necessary, blood and sputum tests [6,7,8]. In a global pandemic, monitoring each patient individually becomes impractical because of the large number of patients and the limited number of medical personnel [9,10]. Additionally, for highly contagious infectious diseases, in-person examination poses an increased risk of infection to healthcare providers [11]. Therefore, remote methods to monitor patients with infectious diseases are needed to enable more efficient treatment and prevent the spread of infection [12,13].

Common features of infectious respiratory diseases are symptoms caused by abnormalities in the respiratory system, such as cough and rapid, shallow breathing (tachypnea) [14,15]. These symptoms are often accompanied by fever, headache, fatigue, and lethargy [16,17]. To examine these symptoms without direct contact with the patient, various methods for analyzing abnormal breathing patterns are being investigated. Among these methods, radar [18,19], CT scans [20], X-rays [21], and ultrasound [22] have shown promising results. However, limitations regarding accessibility, patient movement, and high costs make these techniques unsuitable for real-time, continuous patient monitoring [18,19,20,21,22,23,24,25]. To overcome these difficulties, various wearable biosensors that can test and monitor patients in real time are being developed [26]. Among them, near-infrared spectroscopy (NIRS) is receiving great attention due to its simple optical device structure, low cost, and capability to non-invasively monitor changes in tissue hemodynamics and oxygenation in response to respiratory infections [27]. In addition to heart rate and respiratory rate, commercially available wearable sensors such as smartwatches can measure peripheral arterial oxygen saturation (SpO2), which has been reported as a critical indicator of deterioration in patients with infectious respiratory diseases [28]. Although commercial wearable smart sensors make it convenient to observe biological signals, they can give false readings due to various factors, including poor circulation, skin thickness, and skin color [29]. While SpO2 is measured from arterial blood, NIRS measures changes in hemoglobin concentration within the tissue microvasculature. This provides a significant advantage over standard pulse oximeters, as venous capillary blood contributes most of the hemoglobin absorption spectrum [30].
Furthermore, NIRS can measure tissue oxygenation without pulsatile flow, which is a significant advantage when monitoring critically ill patients [31]. Additionally, Cheung et al. found that transcutaneous muscle NIRS can detect the effects of hypoxia significantly sooner than pulse oximetry [32]. In patients with severe respiratory disease, early diagnosis and treatment are essential to improve prognosis and reduce long-term negative health consequences [33]. Hence, to detect the effects of infectious respiratory diseases on patients earlier, we used a NIRS device to measure tissue hemodynamics.

In our previous studies, we employed a wearable NIRS device to monitor tissue hemodynamic responses, including changes in tissue oxygenated hemoglobin (O2Hb), deoxygenated hemoglobin (HHb), total hemoglobin (THb), and tissue saturation index (TSI), from the chest of healthy volunteers during different simulated breathing tasks [34]. The measured O2Hb signals were then processed to extract three features, O2Hb change, breathing interval, and breathing depth, each averaged over a 60-s period. These features were fed to a well-known machine learning model (Random Forest) to classify three simulated breathing tasks: baseline (rest), rapid/shallow, and loaded breathing. This approach achieved a classification accuracy of 87%. However, the extra feature extraction step can hinder the real-time monitoring capability of this methodology. To eliminate this time-consuming step, in this study we propose using a deep learning model to classify the three breathing patterns.
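The feature-based pipeline described above can be sketched as follows. The exact feature definitions are not given here, so this sketch assumes hypothetical ones: the mean O2Hb level of a 60-s window, the mean time between successive O2Hb peaks (breathing interval), and the mean drop from each peak to the next trough (breathing depth). The function names are illustrative, not the authors' code.

```python
import math


def local_extrema(x):
    """Return indices of local maxima (peaks) and local minima (troughs)."""
    peaks = [i for i in range(1, len(x) - 1) if x[i - 1] < x[i] >= x[i + 1]]
    troughs = [i for i in range(1, len(x) - 1) if x[i - 1] > x[i] <= x[i + 1]]
    return peaks, troughs


def breathing_features(o2hb, fs=10.0):
    """Hypothetical window features: (mean O2Hb level,
    mean breathing interval in seconds, mean breathing depth)."""
    peaks, troughs = local_extrema(o2hb)
    mean_level = sum(o2hb) / len(o2hb)
    # Breathing interval: time between successive peaks
    intervals = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]
    # Breathing depth: drop from each peak to the first trough after it
    depths = []
    for p in peaks:
        later = [t for t in troughs if t > p]
        if later:
            depths.append(o2hb[p] - o2hb[later[0]])
    mean_interval = sum(intervals) / len(intervals) if intervals else float("nan")
    mean_depth = sum(depths) / len(depths) if depths else float("nan")
    return mean_level, mean_interval, mean_depth


# Synthetic 60-s window at 10 Hz: 0.25-Hz breathing (15 breaths/min), unit amplitude
window = [math.sin(2 * math.pi * 0.25 * n / 10.0) for n in range(600)]
print(breathing_features(window))
```

On this synthetic signal the sketch returns a mean level close to 0, an interval of 4.0 s, and a depth of 2.0. Real O2Hb recordings would likely need filtering first so that noise does not create spurious peaks.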

When the AlexNet model, based on a deep CNN, won the 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC) with an overwhelming performance [35], various types of convolutional neural network (CNN) models were proposed in fields including image classification, image enhancement, computer vision, medical imaging, and network security. These deep learning algorithms have high potential for the classification and monitoring of infectious respiratory diseases. Cho et al. used a thermal camera to track breathing patterns from temperature changes around an individual's nose [36]. Building on this, a CNN-based algorithm was used to classify psychological stress levels automatically. Shah et al. proposed a deep learning method to identify characteristic patterns of COVID-19 in CT scan images [37]. Chen proposed a deep learning model that adds a 3D attention module to the 3D U-Net model [38], enabling the segmentation of COVID-19 lung lesions from CT images [39]. Rabbah et al., Haritha et al., and Qjidaa et al. proposed methods for detecting COVID-19-infected individuals from chest X-ray images using CNN-based algorithms [40,41,42]. Wang et al. used a depth camera to measure depth variations in the chest, abdomen, and shoulders of participants to classify six breathing patterns (eupnea, tachypnea, bradypnea, Biot's, Cheyne-Stokes, and central apnea) using the BI-AT-GRU algorithm, which combines bidirectional and attention mechanisms in a gated recurrent unit (GRU) neural network [15]. Sarno et al. proposed a method that uses an electronic nose (E-nose) to collect sweat samples from the human axilla and a stacked deep neural network to classify individuals as having or not having respiratory conditions [43].

All of these methods showed promising results for classifying patients with respiratory disease. However, their data acquisition methods are unsuitable for continuous monitoring because of the constant inconvenience they cause patients. To solve this problem, we aimed to apply a deep learning algorithm to data acquired from a wearable NIRS device. We hypothesize that the ability of a deep learning model to perform automatic feature extraction will enable the use of a wearable NIRS device for real-time monitoring and increase classification accuracy.

2. Materials and Methods

2.1. Data Collection

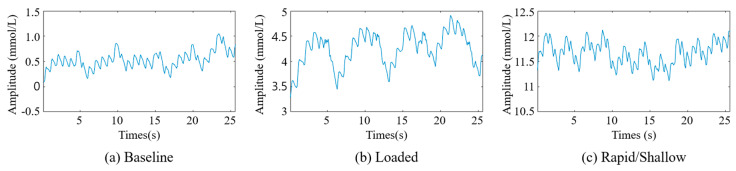

Tissue hemodynamic indices, including O2Hb, HHb, THb, and TSI, were measured at the manubrium using a wearable NIRS device. The NIRS device consists of three light sources, emitting light at two wavelengths (760 nm and 850 nm), and one light detector. Data were collected from 21 healthy adult volunteers (12 males and 9 females; mean age = 29.47 ± 9.73 years) under a clinical protocol approved by the Clinical Research Ethics Board at the University of British Columbia. Each participant performed three separate breathing conditions: baseline (3 min), loaded (5 min), and rapid/shallow (5 min). During the baseline phase, participants breathed normally through the nose or mouth at a relaxed pace. To simulate dyspnea, a common breathing problem observed during acute pneumonia, a respiratory trainer was used during the loaded breathing phase. The respiratory trainer increases resistance to breathing, forcing the respiratory muscles to work harder. The rapid/shallow breathing phase was designed to simulate tachypnea, the rapid, shallow breathing seen in acute pneumonia when patients are unable to take deep breaths. Participants were instructed to breathe 25 times per minute during this phase. The data acquisition rate was 10 Hz, and the NIRS device outputs each index as a one-dimensional time series. Figure 1 shows the O2Hb signal obtained with the NIRS device during the three phases. Among the three phases, the signal recorded during the loaded phase has the largest amplitude, while the signal recorded during the rapid/shallow phase has the shortest period. The data for each condition were cropped into 6.4-s segments (64 samples at 10 Hz), which were used as input data for model training and testing. Further details of the data collection can be found in our previous publication [34].

Figure 1.

O2Hb signal during three breathing phases.
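A minimal sketch of the cropping step, assuming consecutive, non-overlapping 64-sample windows (6.4 s at 10 Hz) and that an incomplete trailing window is discarded; the text does not state how overlaps or remainders were handled, and the all-zero placeholder recording is hypothetical.

```python
def crop_windows(signal, window=64):
    """Split a 1D signal into consecutive, non-overlapping windows of `window` samples.

    Trailing samples that do not fill a complete window are discarded.
    """
    n_windows = len(signal) // window
    return [signal[i * window:(i + 1) * window] for i in range(n_windows)]


FS_HZ = 10.0   # NIRS acquisition rate reported in the text
WINDOW = 64    # samples per segment -> 64 / 10 Hz = 6.4 s

baseline = [0.0] * int(3 * 60 * FS_HZ)   # placeholder for one 3-min baseline recording
segments = crop_windows(baseline, WINDOW)
print(len(segments), WINDOW / FS_HZ)     # 28 6.4
```

The counts reported later in the paper are smaller than this naive per-recording count because segments with movement artifacts were removed.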

2.2. Classification Model for Simulated Breathing Patterns

In this paper, we propose a classification model based on a deep CNN to classify simulated breathing patterns. The classification algorithm was developed by improving and modifying the pre-activation residual network (Pre-ResNet), a two-dimensional (2D) image classification algorithm [44]. The parameters of a CNN are learned by stochastic gradient descent (SGD) [45] with backpropagation [46]. However, this approach suffers from the vanishing gradient problem, in which the gradient becomes smaller as the depth of the network increases [47,48,49]. To solve this problem, He et al. proposed a residual unit that adds a shortcut connection around building blocks to enable residual learning [44]. The building block is designed to allow deeper networks and is composed of standardized layers. Shortcut connections mitigate gradient loss by allowing the gradient to skip one or more layers during backpropagation. Such shortcut connections have been applied to various CNN models [50,51,52]. The residual unit is defined as follows:

$$x_{l+1} = x_l + \mathcal{F}(x_l, \mathcal{W}_l) \tag{1}$$

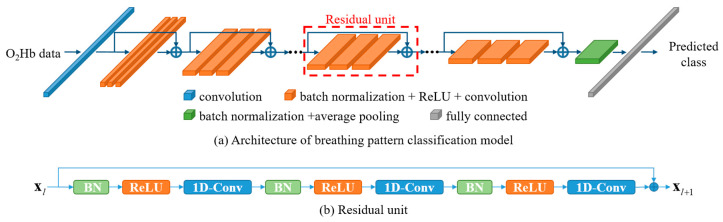

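where $x_l$ and $x_{l+1}$ represent the input and output features of the $l$-th residual unit, respectively, $\mathcal{W}_l$ denotes the weight parameters of the residual unit, and $\mathcal{F}$ is the residual function. The residual function consists of convolutional layers, a ReLU activation function [53], and batch normalization (BN) [54]; in the pre-activation design, BN and ReLU are applied before each convolution, as shown in Figure 2b.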
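Unrolling Equation (1), $x_{l+1} = x_l + \mathcal{F}(x_l, \mathcal{W}_l)$, from unit $l$ to a deeper unit $L$ shows why the shortcut counters the vanishing gradient problem. The derivation below follows the analysis of He et al. [44] and assumes identity shortcuts with no activation after the addition, as in Pre-ResNet; $\varepsilon$ denotes the loss.

$$x_L = x_l + \sum_{i=l}^{L-1} \mathcal{F}(x_i, \mathcal{W}_i)$$

$$\frac{\partial \varepsilon}{\partial x_l} = \frac{\partial \varepsilon}{\partial x_L}\left(1 + \frac{\partial}{\partial x_l}\sum_{i=l}^{L-1} \mathcal{F}(x_i, \mathcal{W}_i)\right)$$

The additive term 1 carries the gradient from unit $L$ directly back to unit $l$, so the gradient is not solely a product of per-layer factors that can shrink toward zero as depth grows.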

Figure 2.

Breathing pattern classification model. (a) The framework of the proposed method: the proposed CNN model consists of multiple residual units. (b) The structure of a residual unit.
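The following is a minimal, single-channel sketch of a 1D pre-activation residual unit implementing $x_{l+1} = x_l + \mathcal{F}(x_l, \mathcal{W}_l)$, with $\mathcal{F}$ built from BN, ReLU, and convolution in pre-activation order. It is a simplification under stated assumptions, not the authors' implementation: one channel instead of 16 to 256, two convolutions instead of the three-layer bottleneck listed in Table 1, training-mode batch statistics, and BN's learnable scale and shift fixed to 1 and 0. All function names are hypothetical.

```python
import math


def batch_norm(batch, eps=1e-5):
    """Training-mode BN for single-channel 1D signals (scale=1, shift=0).

    Statistics are pooled over all sequences and time steps in the batch.
    """
    values = [v for seq in batch for v in seq]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [[(v - mean) / math.sqrt(var + eps) for v in seq] for seq in batch]


def relu(batch):
    return [[max(0.0, v) for v in seq] for seq in batch]


def conv1d_same(batch, kernel):
    """1D cross-correlation with zero padding; output length equals input length
    for an odd kernel size."""
    pad = len(kernel) // 2
    out = []
    for seq in batch:
        padded = [0.0] * pad + list(seq) + [0.0] * pad
        out.append([sum(w * padded[i + j] for j, w in enumerate(kernel))
                    for i in range(len(seq))])
    return out


def residual_function(batch, w1, w2):
    """F(x, W): two pre-activation steps, each BN -> ReLU -> convolution."""
    h = conv1d_same(relu(batch_norm(batch)), w1)
    return conv1d_same(relu(batch_norm(h)), w2)


def pre_activation_residual_unit(batch, w1, w2):
    """x_{l+1} = x_l + F(x_l, W_l): identity shortcut plus residual branch."""
    f = residual_function(batch, w1, w2)
    return [[a + b for a, b in zip(x, fx)] for x, fx in zip(batch, f)]


x = [[0.0, 1.0, 0.5, -0.5, 2.0], [1.0, -1.0, 0.0, 0.5, 0.0]]
y = pre_activation_residual_unit(x, w1=[0.2, 0.5, 0.2], w2=[0.0, 0.0, 0.0])
print(y == x)  # True: a zero residual branch leaves only the identity shortcut
```

Setting the last kernel to zeros makes $\mathcal{F}$ vanish, which illustrates how a residual unit can fall back to the identity mapping.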

The Pre-ResNet model is designed for 2D inputs of size 32 × 32. To apply this model to the 1D signal obtained from the NIRS device, the 2D convolutional layers were replaced with 1D convolutional layers. Additionally, we added a residual unit with a 1 × 5 kernel at the front of the network to extract global features while reducing the data size. We confirmed experimentally that adding a residual unit with a 1 × 5 kernel yields better classification performance than simply downsampling the data. Figure 2a shows the architecture of the CNN model for the classification of breathing patterns. The detailed parameters are listed in Table 1, where BN and ReLU functions are omitted. All convolution blocks belonging to the same group have the same kernel sizes and numbers of kernels. The block structure gives the kernel size and number of kernels of each convolutional layer in a block. The block number gives the number of residual units in each group. The input size and output size give the sizes of the input and output features of each stage, respectively.

Table 1.

Architecture of the CNN classification model with a 1 × 5 kernel in Stage 1.

| Group Name | Input Size | Output Size | Block Structure (Kernel Size, Number) | Block Numbers (113 Layers) |
|---|---|---|---|---|
| Stage 0 | 1 × 64 | 1 × 64 | [1 × 5, 16] | 1 |
| Stage 1 | 1 × 64 | 1 × 32 | [1 × 1, 16; 1 × 5, 16; 1 × 1, 16] | 1 |
| Stage 2 | 1 × 32 | 1 × 32 | [1 × 1, 16; 1 × 3, 16; 1 × 1, 64] | 12 |
| Stage 3 | 1 × 32 | 1 × 16 | [1 × 1, 32; 1 × 3, 32; 1 × 1, 128] | 12 |
| Stage 4 | 1 × 16 | 1 × 8 | [1 × 1, 64; 1 × 3, 64; 1 × 1, 256] | 12 |
| Average pooling | 1 × 8 | 1 × 256 | 1 × 8 | 1 |
| Fully connected layer | 1 × 256 | Number of classes | | 1 |
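As a consistency check on Table 1, the sketch below traces the feature length through the stages. It assumes "same" padding and, as stated in the text, stride-2 downsampling in the first (1 × 1) convolution of the first residual unit of Stages 1, 3, and 4. Counting only convolutional and fully connected layers (BN and ReLU are omitted, as in Table 1) reproduces the stated total of 113 layers. The helper names and the `STAGES` encoding are illustrative.

```python
def conv_out_len(length, kernel, stride=1, padding=None):
    """Output length of a 1D convolution; default padding keeps length at stride 1."""
    if padding is None:
        padding = kernel // 2
    return (length + 2 * padding - kernel) // stride + 1


# (name, kernel sizes in one bottleneck unit, output channels, units, stride of first conv)
STAGES = [
    ("Stage 1", (1, 5, 1), 16, 1, 2),
    ("Stage 2", (1, 3, 1), 64, 12, 1),
    ("Stage 3", (1, 3, 1), 128, 12, 2),
    ("Stage 4", (1, 3, 1), 256, 12, 2),
]


def trace_table1(input_len=64):
    length = conv_out_len(input_len, kernel=5)   # Stage 0: one 1 x 5 conv, 16 kernels
    shapes = [("Stage 0", length, 16)]
    n_layers = 1
    for name, kernels, out_ch, units, stride in STAGES:
        # Downsampling happens in the first (1 x 1) convolution of the first unit.
        length = conv_out_len(length, kernel=kernels[0], stride=stride)
        n_layers += len(kernels) * units
        shapes.append((name, length, out_ch))
    n_layers += 1  # fully connected layer after global average pooling
    return shapes, n_layers


shapes, n_layers = trace_table1()
for name, length, channels in shapes:
    print(f"{name}: 1 x {length}, {channels} channels")
print("layers:", n_layers)  # layers: 113
```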

As the network deepens, the length of the feature maps is halved using a stride of 2 in the first convolutional layer of the first residual unit of Stage 1, Stage 3, and Stage 4, while the number of feature maps increases (Table 1), producing shorter but higher-dimensional feature maps. The network parameters were optimized using SGD. SGD is an iterative optimization algorithm widely used in machine learning to find model parameters that minimize the error between the predicted output and the ground truth. Standard gradient descent computes the gradient over the entire training dataset at every iteration, whereas SGD estimates it from a single randomly selected training example or, in practice, a small random mini-batch. Because each update is much cheaper to compute, SGD typically trains faster than full-batch gradient descent. Cross-entropy loss was used as the loss function.
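To illustrate mini-batch SGD with momentum and the cross-entropy loss, the sketch below trains a linear softmax classifier on a toy one-hot dataset. It is not the CNN from Table 1; the model, data, and hyperparameters are assumptions chosen only to show the update rule. The momentum update has the form v ← μv + g, θ ← θ − ηv, the convention used by PyTorch's `torch.optim.SGD` without dampening or Nesterov momentum.

```python
import math
import random


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(W, x):
    return softmax([sum(w * xi for w, xi in zip(row, x)) for row in W])


def mean_cross_entropy(W, data):
    return sum(-math.log(predict_proba(W, x)[y]) for x, y in data) / len(data)


def train_sgd_momentum(data, n_classes, lr=0.1, momentum=0.9,
                       batch_size=4, epochs=20, seed=0):
    """Mini-batch SGD with momentum on a linear softmax classifier."""
    rng = random.Random(seed)
    dim = len(data[0][0])
    W = [[0.0] * dim for _ in range(n_classes)]
    V = [[0.0] * dim for _ in range(n_classes)]  # momentum buffers
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)  # random mini-batches each epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad = [[0.0] * dim for _ in range(n_classes)]
            for x, y in batch:
                p = predict_proba(W, x)
                for c in range(n_classes):
                    # d(cross-entropy)/d(logit_c) = p_c - 1[c == y], averaged over the batch
                    g = (p[c] - (1.0 if c == y else 0.0)) / len(batch)
                    for d in range(dim):
                        grad[c][d] += g * x[d]
            for c in range(n_classes):
                for d in range(dim):
                    V[c][d] = momentum * V[c][d] + grad[c][d]
                    W[c][d] -= lr * V[c][d]
    return W


toy = [([1.0, 0.0, 0.0], 0), ([0.0, 1.0, 0.0], 1), ([0.0, 0.0, 1.0], 2)] * 4
W0 = [[0.0] * 3 for _ in range(3)]
W = train_sgd_momentum(toy, n_classes=3)
print(round(mean_cross_entropy(W0, toy), 4))  # 1.0986 (ln 3, uniform prediction)
print(round(mean_cross_entropy(W, toy), 4))
```

The first value is the loss of a uniform prediction over three classes; training drives the loss well below it.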

3. Results

We used data obtained from a customized wearable NIRS device for the classification experiments. The outputs of the NIRS device are O2Hb, HHb, THb, and TSI. Customized data acquisition software collects the measured signals and converts them to changes in these outputs [34]. The collected data were preprocessed, and signals containing movement artifacts were removed. The preprocessed signals were cropped into 64-sample segments, equivalent to a signal length of 6.4 s. This produced 531 baseline, 780 loaded, and 874 rapid/shallow samples. The dataset was randomly split at a ratio of 80:20 for model training and evaluation. Only the O2Hb and HHb datasets were used for classification. THb (total hemoglobin, the sum of O2Hb and HHb) and TSI (the ratio of O2Hb to THb) were excluded because they are derived directly from O2Hb and HHb. Table 2 lists the number of O2Hb and HHb samples used for training and testing.

Table 2.

Number of samples in the training and test sets.

| Class | Train | Test |
|---|---|---|
| Baseline | 425 | 106 |
| Loaded | 624 | 156 |
| Rapid/shallow | 700 | 174 |
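The text states only that the data were split randomly at 80:20. One rule that reproduces the per-class counts in Table 2 exactly is a per-class (stratified) random split that rounds each class's training share up. The sketch below assumes that rule, which may differ from the authors' procedure; the integer "windows" are stand-ins for the 64-sample segments, and the function name is hypothetical.

```python
import math
import random
from fractions import Fraction


def per_class_split(samples_by_class, train_ratio=Fraction(4, 5), seed=0):
    """Randomly split each class into training and test sets.

    The training share of every class is rounded up; exact rational arithmetic
    avoids floating-point rounding at exact multiples.
    """
    rng = random.Random(seed)
    train, test = {}, {}
    for label, samples in samples_by_class.items():
        order = list(range(len(samples)))
        rng.shuffle(order)
        n_train = math.ceil(train_ratio * len(samples))
        train[label] = [samples[i] for i in order[:n_train]]
        test[label] = [samples[i] for i in order[n_train:]]
    return train, test


counts = {"Baseline": 531, "Loaded": 780, "Rapid/shallow": 874}
windows = {label: list(range(n)) for label, n in counts.items()}
train, test = per_class_split(windows)
for label in counts:
    print(label, len(train[label]), len(test[label]))
```

This prints 425/106, 624/156, and 700/174, matching Table 2.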

The classification model used in this experiment consists of 113 layers in total, as shown in Table 1. The model was trained using SGD with a batch size of 64 samples and a momentum of 0.9 for 120 epochs. The initial learning rate was 0.1 and was divided by 10 every 30 epochs. All classification models used in the experiments were trained from scratch.
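The learning-rate schedule described above (0.1, divided by 10 every 30 epochs over 120 epochs) can be written as a step function of the epoch index. The function name is hypothetical; the paper does not state which framework was used.

```python
def step_learning_rate(epoch, base_lr=0.1, divisor=10, step_size=30):
    """Learning rate for a 0-indexed epoch: base_lr divided by `divisor` every `step_size` epochs."""
    return base_lr / divisor ** (epoch // step_size)


schedule = [step_learning_rate(e) for e in range(120)]
print(sorted(set(schedule), reverse=True))
```

In PyTorch, an equivalent schedule is `torch.optim.lr_scheduler.StepLR(optimizer, step_size=30, gamma=0.1)`, stepped once per epoch.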

Table 3 shows the test results of the classification models. Each CNN-based method was tested five times (this does not apply to the Random Forest method), and Table 3 reports the mean accuracy, standard deviation (STD), and best accuracy. Accuracy is calculated using Equation (2).

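$$\text{Accuracy} = \frac{\text{Number of correctly classified test samples}}{\text{Total number of test samples}} \times 100\% \tag{2}$$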
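The sketch below computes accuracy as the percentage of correctly classified test windows, the usual definition that Equation (2) is assumed to express. With the 436 test windows in Table 2 (106 + 156 + 174), a best accuracy of 92.43% corresponds to 403 correctly classified windows; the label lists are placeholders, not the study's predictions.

```python
def accuracy_percent(y_true, y_pred):
    """Correctly classified test samples / all test samples x 100."""
    if len(y_true) != len(y_pred):
        raise ValueError("label sequences must have the same length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return 100.0 * correct / len(y_true)


n_test = 106 + 156 + 174                   # test-set sizes from Table 2
y_true = [0] * n_test                      # placeholder labels
y_pred = [0] * 403 + [1] * (n_test - 403)  # hypothetical: 403 correct predictions
print(n_test, round(accuracy_percent(y_true, y_pred), 2))  # 436 92.43
```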

Table 3.

Classification results; DS denotes downsampling.

| Method | Data Type | Mean Accuracy (%) | STD | Best Accuracy (%) |
|---|---|---|---|---|
| Random Forest | O2Hb | - | - | 87.00 |
| Pre-ResNet with DS | O2Hb | 88.79 | 0.423 | 89.44 |
| | HHb | 86.28 | 0.550 | 87.16 |
| | O2Hb and HHb | 88.02 | 0.490 | 88.76 |
| Pre-ResNet with Stage 1, (1 × 3) | O2Hb | 90.58 | 0.488 | 91.51 |
| | HHb | 89.63 | 0.639 | 90.37 |
| | O2Hb and HHb | 90.23 | 0.658 | 91.28 |
| Pre-ResNet with Stage 1, (1 × 5) | O2Hb | 91.77 | 0.456 | 92.43 |
| | HHb | 89.68 | 0.490 | 90.60 |
| | O2Hb and HHb | 90.78 | 0.523 | 91.74 |

The Random Forest method is a classification model we tested in our previous research [34]. This model contains 100 trees and uses the average amplitude, interval, and magnitude of the signal as features for training and evaluation. Pre-ResNet with DS denotes the model without Stage 1, in which the input signal is instead downsampled to half its length.

Overall, the CNN-based classification models perform better than the Random Forest method. Adding Stage 1 improves classification performance compared with directly downsampling the signal. The CNN-based model using a 1 × 5 kernel in Stage 1 achieved the highest performance on the O2Hb dataset, with a best accuracy of 92.43%. For each CNN-based model in Table 3, classification was compared using the individual O2Hb and HHb signals and the combined O2Hb and HHb signals, and the best performance was achieved using only the O2Hb data.

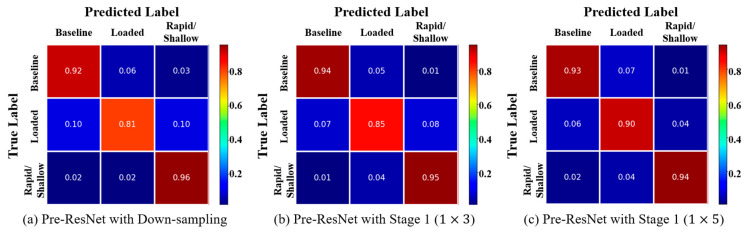

Figure 3 shows the normalized confusion matrices of the three CNN-based methods. The true label is the actual label of each data sample, and the predicted label is the label estimated by the model. The loaded class has the lowest classification accuracy in all three models.

Figure 3.

Normalized confusion matrices of the CNN-based models on the test set.
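A minimal sketch of a normalized confusion matrix, assuming each row is normalized by the number of samples with that true label (a common convention, e.g. scikit-learn's `normalize="true"`); Figure 3 does not state which normalization was used. Under this convention each diagonal entry is the recall of that class. The labels are toy values, not study data, and the function name is hypothetical.

```python
def normalized_confusion_matrix(y_true, y_pred, n_classes):
    """Row-normalized confusion matrix: rows are true labels, columns predicted labels."""
    counts = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        counts[t][p] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # Each row sums to 1, so the diagonal entry is that class's recall.
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix


classes = ["Baseline", "Loaded", "Rapid/shallow"]
y_true = [0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2]   # toy labels, not study data
y_pred = [0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2, 2]
for name, row in zip(classes, normalized_confusion_matrix(y_true, y_pred, 3)):
    print(f"{name:>14}", [round(v, 2) for v in row])
```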

Table 4 shows the per-class recall and the balanced accuracy of the run with the best classification accuracy among the five test runs of each model. The balanced accuracies in Table 4 are similar to the best accuracies in Table 3.

Table 4.

Per-class recall and balanced accuracy of each CNN-based method on the O2Hb dataset.

| Metric | Pre-ResNet with DS | Pre-ResNet with Stage 1, (1 × 3) | Pre-ResNet with Stage 1, (1 × 5) |
|---|---|---|---|
| Recall Baseline | 0.92 | 0.94 | 0.93 |
| Recall Loaded | 0.81 | 0.85 | 0.90 |
| Recall Rapid | 0.96 | 0.95 | 0.94 |
| Balanced Accuracy | 89.66% | 91.33% | 92.33% |
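Balanced accuracy is the unweighted mean of the per-class recalls, which is why it is insensitive to the unequal class sizes in Table 2. The sketch below computes recall from labels and then applies the mean to the rounded recalls in Table 4; the function names are illustrative.

```python
def recall_per_class(y_true, y_pred, n_classes):
    """Recall of class c = correctly predicted c / all samples whose true label is c."""
    hits = [0] * n_classes
    totals = [0] * n_classes
    for t, p in zip(y_true, y_pred):
        totals[t] += 1
        hits[t] += int(t == p)
    return [h / n if n else 0.0 for h, n in zip(hits, totals)]


def balanced_accuracy(recalls):
    """Balanced accuracy: unweighted mean of the per-class recalls."""
    return sum(recalls) / len(recalls)


# Rounded recalls from Table 4 (Baseline, Loaded, Rapid)
table4 = {
    "Pre-ResNet with DS": [0.92, 0.81, 0.96],
    "Pre-ResNet with Stage 1, (1 x 3)": [0.94, 0.85, 0.95],
    "Pre-ResNet with Stage 1, (1 x 5)": [0.93, 0.90, 0.94],
}
for name, recalls in table4.items():
    print(f"{name}: {100 * balanced_accuracy(recalls):.2f}%")
```

This prints 89.67%, 91.33%, and 92.33%. The last two match Table 4 exactly; the 0.01-point difference for Pre-ResNet with DS is consistent with the recalls in Table 4 being rounded to two decimals.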

Table 5 shows the results of the classification experiment using a dataset split at the participant level. Of the 21 participants, the data of 17 randomly selected participants were used for model training, and the data of the remaining 4 participants were used for testing. Classification with the participant-level split yields slightly lower accuracy than classification with the dataset pooled across all participants (Table 3).

Table 5.

Classification results on the dataset split on the subject level.

| Method | Data Type | Number of Parameters | FLOPs | Mean Accuracy (%) | STD | Best Accuracy (%) |
|---|---|---|---|---|---|---|
| Pre-ResNet with DS | O2Hb | 0.7 M | 15 M | 87.25 | 0.472 | 88.07 |
| Pre-ResNet with Stage 1, (1 × 5) | | 0.7 M | 15 M | 90.14 | 0.649 | 91.28 |
| EfficientNetV2-M with DS [55] | | 52 M | 225 M | 91.05 | 0.562 | 91.97 |
| PyramidNet with DS [51] | | 17 M | 396 M | 87.39 | 0.481 | 88.89 |
| CF-CNN with DS [52] | | 29.7 M | 627 M | 89.27 | 0.531 | 90.74 |
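A participant-level split keeps all windows from one participant on the same side of the split, so the test set contains only unseen participants. The sketch below assumes each window is tagged with a participant ID; the IDs, the 10 windows per participant, and the function name are hypothetical.

```python
import random


def subject_level_split(subject_of_window, n_train_subjects=17, seed=0):
    """Split window indices so that no participant contributes to both sets."""
    subjects = sorted(set(subject_of_window))
    rng = random.Random(seed)
    train_subjects = set(rng.sample(subjects, n_train_subjects))
    train_idx = [i for i, s in enumerate(subject_of_window) if s in train_subjects]
    test_idx = [i for i, s in enumerate(subject_of_window) if s not in train_subjects]
    return train_idx, test_idx


# Hypothetical: 21 participants (IDs 1-21), each contributing 10 windows
subject_of_window = [pid for pid in range(1, 22) for _ in range(10)]
train_idx, test_idx = subject_level_split(subject_of_window)
train_ids = {subject_of_window[i] for i in train_idx}
test_ids = {subject_of_window[i] for i in test_idx}
print(len(train_ids), len(test_ids), train_ids.isdisjoint(test_ids))  # 17 4 True
```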

To compare the classification performance of our proposed method with that of other advanced deep learning classification algorithms, we used EfficientNetV2-M [55], PyramidNet [51], and CF-CNN [52]. EfficientNetV2-M and PyramidNet are CNN-based algorithms developed for image classification and are used in various fields [56,57,58,59,60].

CF-CNN is a classification model that uses multiple coarse sub-networks and a multilevel label augmentation method to enhance the training performance of the base model. For the comparison experiments, we replaced the 2D convolutional layers of this model with 1D convolutional layers. PyramidNet is composed of 272 layers, with the widening factor set to 200. To train and test the CF-CNN model, we used PyramidNet as the base model. The coarse 1, coarse 2, and fine networks had 26, 52, and 272 layers, respectively, with group labels of 1, 2, and 3.

Each classification model was trained from scratch and tested five times. The training parameters and input data size were the same as those used in the experiments reported in Table 3. The results show that, despite having far fewer parameters and floating-point operations (FLOPs), the Pre-ResNet model with Stage 1 (1 × 5) achieves classification performance similar to that of the EfficientNetV2-M model.

4. Discussion

In this study, we modified a conventional deep convolutional neural network (CNN) to classify three different breathing patterns: baseline, loaded, and rapid/shallow breathing. Because a CNN shares its kernel weights across all positions of the input, it produces features that are robust to temporal shifts in the data. In addition, because the CNN performs convolution with learned kernels, it is effective at detecting repetitive patterns. As a result, the CNN achieved a high classification accuracy (92.43%; Table 3).
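The weight-sharing argument can be checked on a toy example. Because the same kernel is applied at every position, delaying the input delays the convolution output by the same amount (shift equivariance). A global average over the feature map, like the average pooling layer in Table 1, is then unchanged as long as nothing is shifted past the signal boundary. The kernel and signal below are arbitrary toy values, and the function names are illustrative.

```python
def conv1d_valid(x, kernel):
    """1D 'valid' cross-correlation: one kernel slid along the whole signal (shared weights)."""
    k = len(kernel)
    return [sum(w * x[i + j] for j, w in enumerate(kernel)) for i in range(len(x) - k + 1)]


def delay(x, s):
    """Shift a signal s samples later, zero-filling the start and keeping its length."""
    return [0] * s + list(x[:len(x) - s])


kernel = [1, -2, 1]                          # toy kernel with integer weights
x = [0, 0, 3, 1, 4, 1, 5, 0, 0, 0, 0, 0]     # toy signal, zero-padded at the end
s = 3
y = conv1d_valid(x, kernel)
y_shifted = conv1d_valid(delay(x, s), kernel)

# Shift equivariance: delaying the input by s delays the feature map by s.
print(y_shifted[s:] == y[:len(y) - s])       # True
# The global average of the features is unchanged (only zeros were shifted out).
print(sum(y) / len(y) == sum(y_shifted) / len(y_shifted))  # True
```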

In this experiment, samples from all participants were randomly divided into training and test sets at an 80:20 ratio. The classification models based on 1D-CNN (92.43%) performed much better than the classification model using Random Forest (87%). For traditional machine learning methods such as Random Forest to perform well, features and classification methods that can distinguish breathing patterns must be designed, and creating such features and/or classification models by hand is not easy. The CNN-based classification model performs better because it automatically learns numerous features and performs classification simultaneously during training. Additionally, Stage 1 with the 1 × 5 kernel gave better classification performance than Stage 1 with the 1 × 3 kernel, owing to its ability to detect important features over a wider temporal window. In Figure 3, the loaded class showed the lowest classification accuracy because its characteristics are intermediate between those of baseline and rapid/shallow breathing.

The results in Table 3 reveal that using only the O2Hb data yields higher accuracy than using both O2Hb and HHb data. This may be related to the characteristics of the data. The O2Hb signal has a higher signal-to-noise ratio and higher reproducibility than the HHb signal [61,62,63]. In addition, the lower classification accuracy when using both O2Hb and HHb could be because HHb contains information unrelated to respiration. O2Hb is formed when hemoglobin binds oxygen molecules during respiration, whereas HHb is hemoglobin that has released the oxygen it was carrying and travels back to the lungs to pick up more oxygen [64]. HHb is only indirectly linked to oxygenation and can be influenced by factors such as changes in blood flow, vascular conditions, and tissue metabolism, which are not solely related to respiration.

The similarity between the overall accuracy (Table 3) and the balanced accuracy (Table 4) indicates that the classification model is well trained despite the different numbers of samples in each class. Based on our results, the O2Hb data obtained with the NIRS device have suitable characteristics for classifying breathing patterns. Furthermore, by automatically generating appropriate features for classification, the CNN-based algorithm performs better than the Random Forest algorithm, which uses handcrafted features (average amplitude, interval, and magnitude).

The high classification accuracies obtained when the dataset was split at the participant level (Table 5) support the generalizability of the proposed model to unseen participants. Additionally, we compared the performance of the proposed model with the state-of-the-art models EfficientNetV2-M, PyramidNet, and CF-CNN. The proposed method performed similarly to EfficientNetV2-M while using far fewer parameters and FLOPs than the other models.

While this study has yielded promising results, it is important to acknowledge a notable limitation. Specifically, the NIRS respiratory data utilized in our research were obtained exclusively from healthy individuals. Consequently, the simulated symptoms of acute respiratory diseases such as COVID-19 and viral pneumonia were based on loaded breathing and rapid/shallow breathing patterns. To enhance the classification method, future studies should aim to broaden the scope by collecting respiratory signals from individuals diagnosed with acute pneumonia and incorporating this data into the analysis. This would provide valuable insights and improve the applicability of our findings.

5. Conclusions

In this study, we proposed a CNN-based method to classify breathing patterns that simulate those of patients with infectious respiratory diseases. This method uses the oxygenated hemoglobin change measured with a wearable NIRS device as its input. The wearable NIRS device used for data acquisition is small, portable, and attachable to the human body. Additionally, the NIRS device has no environmental constraints, which allows continuous monitoring. We designed a 1D-CNN-based classifier by improving and modifying the pre-activation residual network, originally developed for 2D image classification, to classify respiratory patterns. With the developed classification model, we obtained a maximum classification accuracy of 92.43%. The proposed method could potentially be used for the remote detection and real-time monitoring of various respiratory diseases, including COVID-19, tuberculosis, influenza, and pneumonia.

Acknowledgments

We would like to thank the Intramural Research Program at Eunice Kennedy Shriver National Institute of Child Health and Human Development, National Institutes of Health for supporting us in performing this study. This research was supported by the Peter Wall Institute for Advanced Studies, grant number PHAX-GR017213. Babak Shadgan holds a scholar award from the Michael Smith Health Research BC.

Author Contributions

Conceptualization, J.P., B.S. and A.H.G.; Data curation, A.J.M., T.N. and L.G.Z.; Formal analysis, J.P. and S.P.; Investigation, J.P., A.J.M., T.N., S.P. and L.G.Z.; Methodology, J.P., A.J.M., T.N. and S.P.; Project administration, J.P., B.S. and A.H.G.; Resources, B.S. and A.H.G.; Software, J.P.; Supervision, B.S. and A.H.G.; Validation, J.P., A.J.M., T.N., S.P., L.G.Z., B.S. and A.H.G.; Visualization, J.P. and S.P.; Writing—original draft, J.P., A.J.M., T.N. and S.P.; Writing—review and editing, J.P., A.J.M., T.N., S.P., L.G.Z., B.S. and A.H.G. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The data collection was conducted in accordance with the guidelines of the Declaration of Helsinki and approved by the Clinical Research Ethics Board at the University of British Columbia.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study at the beginning of the data collection process.

Data Availability Statement

Our code and data are available at https://github.com/dkskzmffps/BPC_NIRSdata (accessed on 7 June 2023).

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research was funded by the intramural research program at the Eunice Kennedy Shriver National Institute of Child Health and Human Development (Z01-HD000261).

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1.Schulze F., Gao X., Virzonis D., Damiati S., Schneider M.R., Kodzius R. Air quality effects on human health and approaches for its assessment through microfluidic chips. Genes. 2017;8:244. doi: 10.3390/genes8100244. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Lin H.-H., Ezzati M., Murray M. Tobacco smoke, indoor air pollution and tuberculosis: A systematic review and meta-analysis. PLoS Med. 2007;4:e20. doi: 10.1371/journal.pmed.0040020.

- 3. Monto A.S., Gravenstein S., Elliott M., Colopy M., Schweinle J. Clinical signs and symptoms predicting influenza infection. Arch. Intern. Med. 2000;160:3243–3247. doi: 10.1001/archinte.160.21.3243.

- 4. Tasci S.S., Kavalci C., Kayipmaz A.E. Relationship of meteorological and air pollution parameters with pneumonia in elderly patients. Emerg. Med. Int. 2018;2018:4183203. doi: 10.1155/2018/4183203.

- 5. Zheng Y.-Y., Ma Y.-T., Zhang J.-Y., Xie X. COVID-19 and the cardiovascular system. Nat. Rev. Cardiol. 2020;17:259–260. doi: 10.1038/s41569-020-0360-5.

- 6. Huang S., Wiszniewski L., Constant S. The Use of In Vitro 3D Cell Models in Drug Development for Respiratory Diseases. Volume 8 Chapter; Toronto, ON, Canada: 2011.

- 7. Echavarria M., Sanchez J.L., Kolavic-Gray S.A., Polyak C.S., Mitchell-Raymundo F., Innis B.L., Vaughn D., Reynolds R., Binn L.N. Rapid detection of adenovirus in throat swab specimens by PCR during respiratory disease outbreaks among military recruits. J. Clin. Microbiol. 2003;41:810–812. doi: 10.1128/JCM.41.2.810-812.2003.

- 8. Peeling R.W., Olliaro P.L., Boeras D.I., Fongwen N. Scaling up COVID-19 rapid antigen tests: Promises and challenges. Lancet Infect. Dis. 2021;21:e290–e295. doi: 10.1016/S1473-3099(21)00048-7.

- 9. Brindle M.E., Gawande A. Managing COVID-19 in surgical systems. Ann. Surg. 2020;272:e1–e2. doi: 10.1097/SLA.0000000000003923.

- 10. Cheng K.J.G., Sun Y., Monnat S.M. COVID-19 death rates are higher in rural counties with larger shares of Blacks and Hispanics. J. Rural Health. 2020;36:602–608. doi: 10.1111/jrh.12511.

- 11. Wang J., Zhou M., Liu F. Reasons for healthcare workers becoming infected with novel coronavirus disease 2019 (COVID-19) in China. J. Hosp. Infect. 2020;105:100–101. doi: 10.1016/j.jhin.2020.03.002.

- 12. Salathé M., Althaus C.L., Neher R., Stringhini S., Hodcroft E., Fellay J., Zwahlen M., Senti G., Battegay M., Wilder-Smith A. COVID-19 epidemic in Switzerland: On the importance of testing, contact tracing and isolation. Swiss Med. Wkly. 2020;150:w20225. doi: 10.4414/smw.2020.20225.

- 13. Lewnard J.A., Lo N.C. Scientific and ethical basis for social-distancing interventions against COVID-19. Lancet Infect. Dis. 2020;20:631–633. doi: 10.1016/S1473-3099(20)30190-0.

- 14. Pan L., Mu M., Yang P., Sun Y., Wang R., Yan J., Li P., Hu B., Wang J., Hu C. Clinical characteristics of COVID-19 patients with digestive symptoms in Hubei, China: A descriptive, cross-sectional, multicenter study. Am. J. Gastroenterol. 2020;115:766–773. doi: 10.14309/ajg.0000000000000620.

- 15. Wang Y., Hu M., Li Q., Zhang X.-P., Zhai G., Yao N. Abnormal respiratory patterns classifier may contribute to large-scale screening of people infected with COVID-19 in an accurate and unobtrusive manner. arXiv. 2020. arXiv:2002.05534.

- 16. Tleyjeh I.M., Saddik B., Ramakrishnan R.K., AlSwaidan N., AlAnazi A., Alhazmi D., Aloufi A., AlSumait F., Berbari E.F., Halwani R. Long term predictors of breathlessness, exercise intolerance, chronic fatigue and well-being in hospitalized patients with COVID-19: A cohort study with 4 months median follow-up. J. Infect. Public Health. 2022;15:21–28. doi: 10.1016/j.jiph.2021.11.016.

- 17. Martelletti P., Bentivegna E., Spuntarelli V., Luciani M. Long-COVID headache. SN Compr. Clin. Med. 2021;3:1704–1706. doi: 10.1007/s42399-021-00964-7.

- 18. Rahman A., Lubecke V.M., Boric-Lubecke O., Prins J.H., Sakamoto T. Doppler radar techniques for accurate respiration characterization and subject identification. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018;8:350–359. doi: 10.1109/JETCAS.2018.2818181.

- 19. Purnomo A.T., Lin D.-B., Adiprabowo T., Hendria W.F. Non-contact monitoring and classification of breathing pattern for the supervision of people infected by COVID-19. Sensors. 2021;21:3172. doi: 10.3390/s21093172.

- 20. Ceniccola G.D., Castro M.G., Piovacari S.M.F., Horie L.M., Corrêa F.G., Barrere A.P.N., Toledo D.O. Current technologies in body composition assessment: Advantages and disadvantages. Nutrition. 2019;62:25–31. doi: 10.1016/j.nut.2018.11.028.

- 21. Wang L., Lin Z.Q., Wong A. COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest x-ray images. Sci. Rep. 2020;10:19549. doi: 10.1038/s41598-020-76550-z.

- 22. Taylor W., Abbasi Q.H., Dashtipour K., Ansari S., Shah S.A., Khalid A., Imran M.A. A Review of the State of the Art in Non-Contact Sensing for COVID-19. Sensors. 2020;20:5665. doi: 10.3390/s20195665.

- 23. Cippitelli E., Fioranelli F., Gambi E., Spinsante S. Radar and RGB-depth sensors for fall detection: A review. IEEE Sens. J. 2017;17:3585–3604. doi: 10.1109/JSEN.2017.2697077.

- 24. Le Kernec J., Fioranelli F., Ding C., Zhao H., Sun L., Hong H., Lorandel J., Romain O. Radar signal processing for sensing in assisted living: The challenges associated with real-time implementation of emerging algorithms. IEEE Signal Process. Mag. 2019;36:29–41. doi: 10.1109/MSP.2019.2903715.

- 25. Gurbuz S.Z., Amin M.G. Radar-based human-motion recognition with deep learning: Promising applications for indoor monitoring. IEEE Signal Process. Mag. 2019;36:16–28. doi: 10.1109/MSP.2018.2890128.

- 26. Kim J., Campbell A.S., de Ávila B.E.-F., Wang J. Wearable biosensors for healthcare monitoring. Nat. Biotechnol. 2019;37:389–406. doi: 10.1038/s41587-019-0045-y.

- 27. Nguyen T., Khaksari K., Khare S.M., Park S., Anderson A.A., Bieda J., Jung E., Hsu C.-D., Romero R., Gandjbakhche A.H. Non-invasive transabdominal measurement of placental oxygenation: A step toward continuous monitoring. Biomed. Opt. Express. 2021;12:4119–4130. doi: 10.1364/BOE.424969.

- 28. Torjesen I. COVID-19: Patients to use pulse oximetry at home to spot deterioration. BMJ. 2020;371:m4151. doi: 10.1136/bmj.m4151.

- 29. Todd B. Pulse oximetry may be inaccurate in patients with darker skin. Am. J. Nurs. 2021;121:16. doi: 10.1097/01.NAJ.0000742448.35686.f9.

- 30. Samraj R.S., Nicolas L. Near infrared spectroscopy (NIRS) derived tissue oxygenation in critical illness. Clin. Investig. Med. 2015;38:E285–E295. doi: 10.25011/cim.v38i5.25685.

- 31. Takegawa R., Hayashida K., Rolston D.M., Li T., Miyara S.J., Ohnishi M., Shiozaki T., Becker L.B. Near-infrared spectroscopy assessments of regional cerebral oxygen saturation for the prediction of clinical outcomes in patients with cardiac arrest: A review of clinical impact, evolution, and future directions. Front. Med. 2020;7:587930. doi: 10.3389/fmed.2020.587930.

- 32. Cheung A., Tu L., Macnab A., Kwon B.K., Shadgan B. Detection of hypoxia by near-infrared spectroscopy and pulse oximetry: A comparative study. J. Biomed. Opt. 2022;27:077001. doi: 10.1117/1.JBO.27.7.077001.

- 33. Kim P.S., Read S.W., Fauci A.S. Therapy for early COVID-19: A critical need. JAMA. 2020;324:2149–2150. doi: 10.1001/jama.2020.22813.

- 34. Mah A.J., Nguyen T., Ghazi Zadeh L., Shadgan A., Khaksari K., Nourizadeh M., Zaidi A., Park S., Gandjbakhche A.H., Shadgan B. Optical Monitoring of Breathing Patterns and Tissue Oxygenation: A Potential Application in COVID-19 Screening and Monitoring. Sensors. 2022;22:7274. doi: 10.3390/s22197274.

- 35. Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM. 2017;60:84–90. doi: 10.1145/3065386.

- 36. Cho Y., Bianchi-Berthouze N., Julier S.J. DeepBreath: Deep learning of breathing patterns for automatic stress recognition using low-cost thermal imaging in unconstrained settings; Proceedings of the 2017 Seventh International Conference on Affective Computing and Intelligent Interaction (ACII); San Antonio, TX, USA. 23–26 October 2017; pp. 456–463.

- 37. Shah V., Keniya R., Shridharani A., Punjabi M., Shah J., Mehendale N. Diagnosis of COVID-19 using CT scan images and deep learning techniques. Emerg. Radiol. 2021;28:497–505. doi: 10.1007/s10140-020-01886-y.

- 38. Çiçek Ö., Abdulkadir A., Lienkamp S.S., Brox T., Ronneberger O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation; Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2016: 19th International Conference; Athens, Greece. 17–21 October 2016; pp. 424–432.

- 39. Chen C., Zhou K., Zha M., Qu X., Guo X., Chen H., Wang Z., Xiao R. An effective deep neural network for lung lesions segmentation from COVID-19 CT images. IEEE Trans. Ind. Inform. 2021;17:6528–6538. doi: 10.1109/TII.2021.3059023.

- 40. Rabbah J., Ridouani M., Hassouni L. A new classification model based on stacknet and deep learning for fast detection of COVID 19 through X rays images; Proceedings of the 2020 Fourth International Conference on Intelligent Computing in Data Sciences (ICDS); Fez, Morocco. 21–23 October 2020; pp. 1–8.

- 41. Haritha D., Pranathi M.K., Reethika M. COVID detection from chest X-rays with DeepLearning: CheXNet; Proceedings of the 2020 5th International Conference on Computing, Communication and Security (ICCCS); Patna, India. 14–16 October 2020; pp. 1–5.

- 42. Qjidaa M., Ben-Fares A., Mechbal Y., Amakdouf H., Maaroufi M., Alami B., Qjidaa H. Development of a clinical decision support system for the early detection of COVID-19 using deep learning based on chest radiographic images; Proceedings of the 2020 International Conference on Intelligent Systems and Computer Vision (ISCV); Fez, Morocco. 9–11 June 2020; pp. 1–6.

- 43. Sarno R., Inoue S., Ardani M.S.H., Purbawa D.P., Sabilla S.I., Sungkono K.R., Fatichah C., Sunaryono D., Bakhtiar A., Prakoeswa C.R. Detection of Infectious Respiratory Disease Through Sweat From Axillary Using an E-Nose With Stacked Deep Neural Network. IEEE Access. 2022;10:51285–51298.

- 44. He K., Zhang X., Ren S., Sun J. Identity mappings in deep residual networks; Proceedings of the Computer Vision–ECCV 2016: 14th European Conference; Amsterdam, The Netherlands. 11–14 October 2016; pp. 630–645.

- 45. Robbins H., Monro S. A stochastic approximation method. Ann. Math. Stat. 1951;22:400–407. doi: 10.1214/aoms/1177729586.

- 46. LeCun Y., Boser B., Denker J.S., Henderson D., Howard R.E., Hubbard W., Jackel L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989;1:541–551. doi: 10.1162/neco.1989.1.4.541.

- 47. Bengio Y., Simard P., Frasconi P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994;5:157–166. doi: 10.1109/72.279181.

- 48. Glorot X., Bengio Y. Understanding the difficulty of training deep feedforward neural networks; Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics; Chia Laguna Resort, Sardinia, Italy. 13–15 May 2010; pp. 249–256.

- 49. Basodi S., Ji C., Zhang H., Pan Y. Gradient amplification: An efficient way to train deep neural networks. Big Data Min. Anal. 2020;3:196–207. doi: 10.26599/BDMA.2020.9020004.

- 50. Zagoruyko S., Komodakis N. Wide residual networks. arXiv. 2016. arXiv:1605.07146.

- 51. Han D., Kim J., Kim J. Deep pyramidal residual networks; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 5927–5935.

- 52. Park J., Kim H., Paik J. CF-CNN: Coarse-to-fine convolutional neural network. Appl. Sci. 2021;11:3722. doi: 10.3390/app11083722.

- 53. Nair V., Hinton G.E. Rectified linear units improve restricted Boltzmann machines; Proceedings of the 27th International Conference on Machine Learning (ICML-10); Haifa, Israel. 21–24 June 2010; pp. 807–814.

- 54. Ioffe S., Szegedy C. Batch normalization: Accelerating deep network training by reducing internal covariate shift; Proceedings of the International Conference on Machine Learning; Lille, France. 6–11 July 2015; pp. 448–456.

- 55. Tan M., Le Q. EfficientNetV2: Smaller models and faster training; Proceedings of the International Conference on Machine Learning; Virtual. 18–24 July 2021; pp. 10096–10106.

- 56. Huang Y., Kang D., Jia W., He X., Liu L. Channelized Axial Attention for Semantic Segmentation – Considering Channel Relation within Spatial Attention for Semantic Segmentation. arXiv. 2021. arXiv:2101.07434. doi: 10.1609/aaai.v36i1.19985.

- 57. Long X., Deng K., Wang G., Zhang Y., Dang Q., Gao Y., Shen H., Ren J., Han S., Ding E. PP-YOLO: An effective and efficient implementation of object detector. arXiv. 2020. arXiv:2007.12099.

- 58. Tan M., Pang R., Le Q.V. EfficientDet: Scalable and efficient object detection; Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; Virtual. 14–19 June 2020; pp. 10781–10790.

- 59. Kim S., Rim B., Choi S., Lee A., Min S., Hong M. Deep learning in multi-class lung diseases’ classification on chest X-ray images. Diagnostics. 2022;12:915. doi: 10.3390/diagnostics12040915.

- 60. Paoletti M.E., Haut J.M., Fernandez-Beltran R., Plaza J., Plaza A.J., Pla F. Deep pyramidal residual networks for spectral–spatial hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2018;57:740–754. doi: 10.1109/TGRS.2018.2860125.

- 61. Herold F., Wiegel P., Scholkmann F., Müller N.G. Applications of functional near-infrared spectroscopy (fNIRS) neuroimaging in exercise–cognition science: A systematic, methodology-focused review. J. Clin. Med. 2018;7:466. doi: 10.3390/jcm7120466.

- 62. Strangman G., Culver J.P., Thompson J.H., Boas D.A. A quantitative comparison of simultaneous BOLD fMRI and NIRS recordings during functional brain activation. NeuroImage. 2002;17:719–731. doi: 10.1006/nimg.2002.1227.

- 63. Kumar V., Shivakumar V., Chhabra H., Bose A., Venkatasubramanian G., Gangadhar B.N. Functional near infra-red spectroscopy (fNIRS) in schizophrenia: A review. Asian J. Psychiatry. 2017;27:18–31. doi: 10.1016/j.ajp.2017.02.009.

- 64. Wright R.O., Lewander W.J., Woolf A.D. Methemoglobinemia: Etiology, pharmacology, and clinical management. Ann. Emerg. Med. 1999;34:646–656. doi: 10.1016/S0196-0644(99)70167-8.


Data Availability Statement

Our code and data are available at https://github.com/dkskzmffps/BPC_NIRSdata (accessed on 7 June 2023).