Abstract

Demographic factors confound large-scale imaging surveys, and the volumetric structure of the orbit and eye shows substantial anthropometric variation. This variability makes it harder to localize the anatomical features of the eye organs for population analysis. To accommodate this variability with stable registration transfer, we propose an unbiased eye atlas template followed by a hierarchical coarse-to-fine approach that provides generalized eye organ context across populations. We retrieved volumetric scans from 1842 healthy patients for generating an eye atlas template with minimal bias. Briefly, we select 20 subject scans and use an iterative approach to generate an initial unbiased template. We then register the remaining samples to the unbiased template with metric-based registration and generate coarse registered outputs. The coarse registered outputs are further used to train a deep probabilistic network, which refines the organ deformation in an unsupervised setting. Computed tomography (CT) scans of 100 de-identified subjects are used to generate and evaluate the unbiased atlas template with the hierarchical pipeline. The refined registration shows stable transfer of the eye organs, which are well localized in the high-resolution (0.5 mm³) atlas space, and significantly improves inverse label transfer performance by 2.37% Dice. Subject-wise surface renderings illustrate the transferred organ context in detail and show that the atlas generalizes the morphological variation across patients.

Keywords: Computed Tomography, Eye Atlas, Unbiased Template, Medical Image Registration

1. INTRODUCTION

Significant effort has been invested by the Human BioMolecular Atlas Program (HuBMAP) to relate molecular findings to organ anatomy across scales (from the cellular to the organ system level). Previous efforts have focused on mapping the organization and molecular profiles at cellular resolution [3], while several studies have focused on generating an initial imaging template to adapt contextual information at the organ scale. Medical imaging such as computed tomography (CT) and magnetic resonance imaging (MRI) provides the imaging platform to visualize organ anatomy at the system level. Contrast enhancement, achieved by injecting a contrast agent, emphasizes the structural and anatomical context between neighboring organs and guides the extraction of information from regions of interest (ROIs). However, the morphology of organs varies significantly across demographics, especially for the eye organs: the orbital shape and the length of the optic nerve vary with age and sex [4]. To adapt and generalize the population profiles of eye organs, it is important to contextualize the variable anatomy of organs in a well-defined reference template (atlas) that acts as a common framework for mapping correspondence across patients [5, 6]. From a technical perspective, current studies have shown that anatomical variability can be adapted to a single atlas template with image registration. However, the transferred anatomical context is biased toward the chosen template subject, and the registration algorithm is limited by the large deformation field needed to localize the organ anatomy [7]. Therefore, we aim to adapt the conventional information of eye organs with minimal bias and to capture the contextual variability across a large number of patients with robust registration algorithms, following the work of [2, 8, 9].

Previous works have developed atlas platforms in neuroimaging [10, 11]. Aging brain atlases have been built to visualize the variability of the brain in both adult and infant populations [12–14]. Apart from characterizing anatomy, a single atlas reference can be chosen to perform segmentation in an unsupervised setting [15, 16]. Alternatively, multiple atlas references are randomly picked and registration is performed between the moving subject scans and each of the atlases [17]; segmentation predictions are then computed with joint label fusion, guided by the multiple registered outputs. Beyond such applications of atlas templates, an organ-specific atlas template has been proposed to adapt the multi-contrast characteristics and the significant shape variability across a large number of patients. A contrast-specific substructure template has also been proposed to capture the fine-grained anatomical details of kidney substructures and stably generalize the variation of small substructures in a single template. Furthermore, unbiased brain atlas templates have been built from brain MRI to reveal the anatomical characteristics of populations from different perspectives such as age and disease condition. However, no such work has addressed unbiased atlas frameworks for the eye organs. Beyond the limited work on organ-specific unbiased atlases, the robustness of registration pipelines remains a challenge. Significant efforts have been made in image registration with metric-based and deep learning-based approaches [1, 2]. VoxelMorph has been proposed as a network basis to perform deformable registration in an unsupervised setting [2]. However, the network input needs to be affinely aligned, and the basic architecture is limited in providing the diffeomorphism required for inverse transformation [8]. It is also challenging to align the correspondence between the atlas template and the moving image well [9]. Therefore, a robust pipeline is needed to generate an eye-specific template with minimal bias.

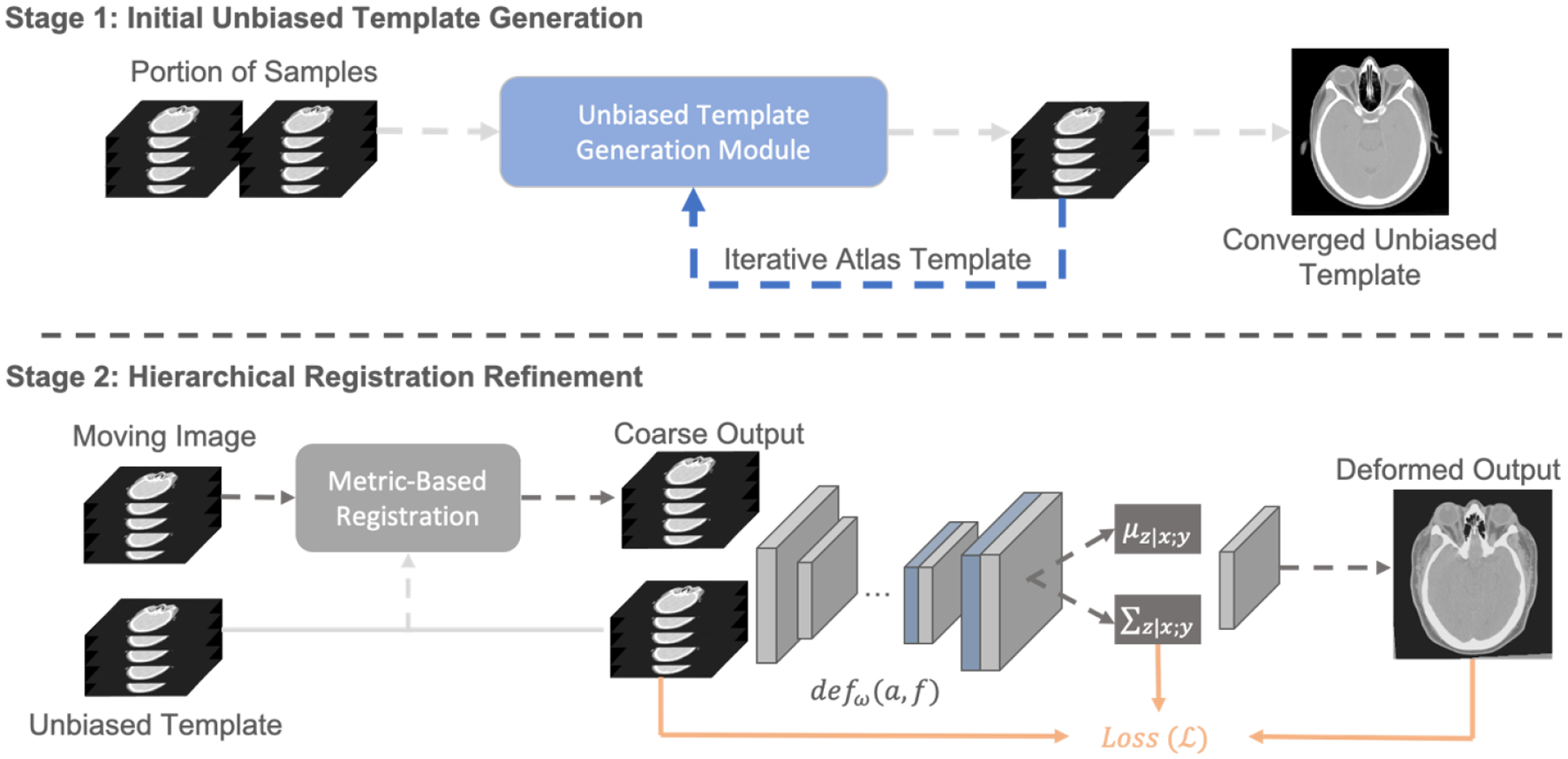

In this study, we propose a hierarchical registration approach to construct an unbiased atlas template in an unsupervised setting, with the aim of increasing the generalizability of adapting eye organ context across populations. Using a total of 100 contrast-enhanced head CT scans from subjects without known ocular disease, we first perform iterative registration to generate an unbiased average mapping from a small portion of the subject scans (~20 scans). We then apply a hierarchical registration pipeline that takes the coarse output from metric-based registration and refines it with a deep learning network. Furthermore, we introduce probabilistic estimation to model the diffeomorphism and compute an accurate transformation, which enhances the stability of the registration across patients. The generated atlas target and the moving subject scans are downsampled as input to the deep registration pipeline. The predicted deformation field is upsampled back to the atlas resolution, and the inverse transformation is applied to the atlas label for quantitative label evaluation. Qualitative visualization further demonstrates the convergence of the unbiased template and the performance of the proposed registration at the image level.

2. METHODS

2.1. Initial Unbiased Template Generation

To adapt the variability of eye organs across patients, image registration is typically performed to match the anatomical context to a single spatially defined template using registration tools such as ANTs [1] and NiftyReg [18]. However, the contextual information of each organ in the registered output is then biased toward the anatomical structure of that single template. Here, we introduce an unbiased template generation module that generates an initial coarse atlas from a small portion of the data samples and minimizes the registration bias, instead of using a single fixed template. Specifically, we first take 20 samples and directly compute an average map to coarsely align the morphological structure of the head. Hierarchical metric-based registration (rigid, followed by affine and deformable registration) is then performed with ANTs, and an average template is computed from all registered outputs. We use the average template computed in each iteration as the next fixed template and repeat the same hierarchical procedure until the registration loss across all samples converges. We hypothesize that such a template has minimal bias and is beneficial for further adapting the population characteristics of eye organs.
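The iterative averaging loop described above can be sketched in a few lines. This is a toy illustration only: the real pipeline uses ANTs rigid, affine, and deformable registration on 3D CT volumes, whereas the hypothetical `register_translation` below is a stand-in that aligns 1D signals by an integer circular shift, and the function names, tolerance, and synthetic data are all assumptions made for the example.

```python
import numpy as np

def register_translation(moving, fixed):
    """Toy stand-in for the rigid/affine/deformable chain: circularly shift
    `moving` by the integer offset that maximizes its correlation with `fixed`."""
    scores = [np.dot(np.roll(moving, s), fixed) for s in range(len(moving))]
    return np.roll(moving, int(np.argmax(scores)))

def build_template(subjects, register=register_translation, max_iters=20, tol=1e-6):
    """Register every subject to the current average, re-average, and repeat
    until the template stops changing (mean squared change below `tol`)."""
    template = np.mean(subjects, axis=0)              # initial average map
    for iteration in range(1, max_iters + 1):
        warped = [register(s, template) for s in subjects]
        new_template = np.mean(warped, axis=0)
        change = float(np.mean((new_template - template) ** 2))
        template = new_template                       # next fixed template
        if change < tol:
            break
    return template, iteration

# Synthetic "subjects": the same bump placed at different positions.
grid = np.arange(64)
centers = [20, 28, 36, 30]
subjects = [np.exp(-((grid - c) ** 2) / (2 * 3.0 ** 2)) for c in centers]
template, n_iters = build_template(subjects)
print(n_iters, round(float(template.max()), 3))
```

In this toy setting the naive average is blurred because the bumps are misaligned, while the converged template recovers a sharp bump, which mirrors the sharpening of anatomical boundaries expected over template iterations.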

2.2. Hierarchical Registration Refinement

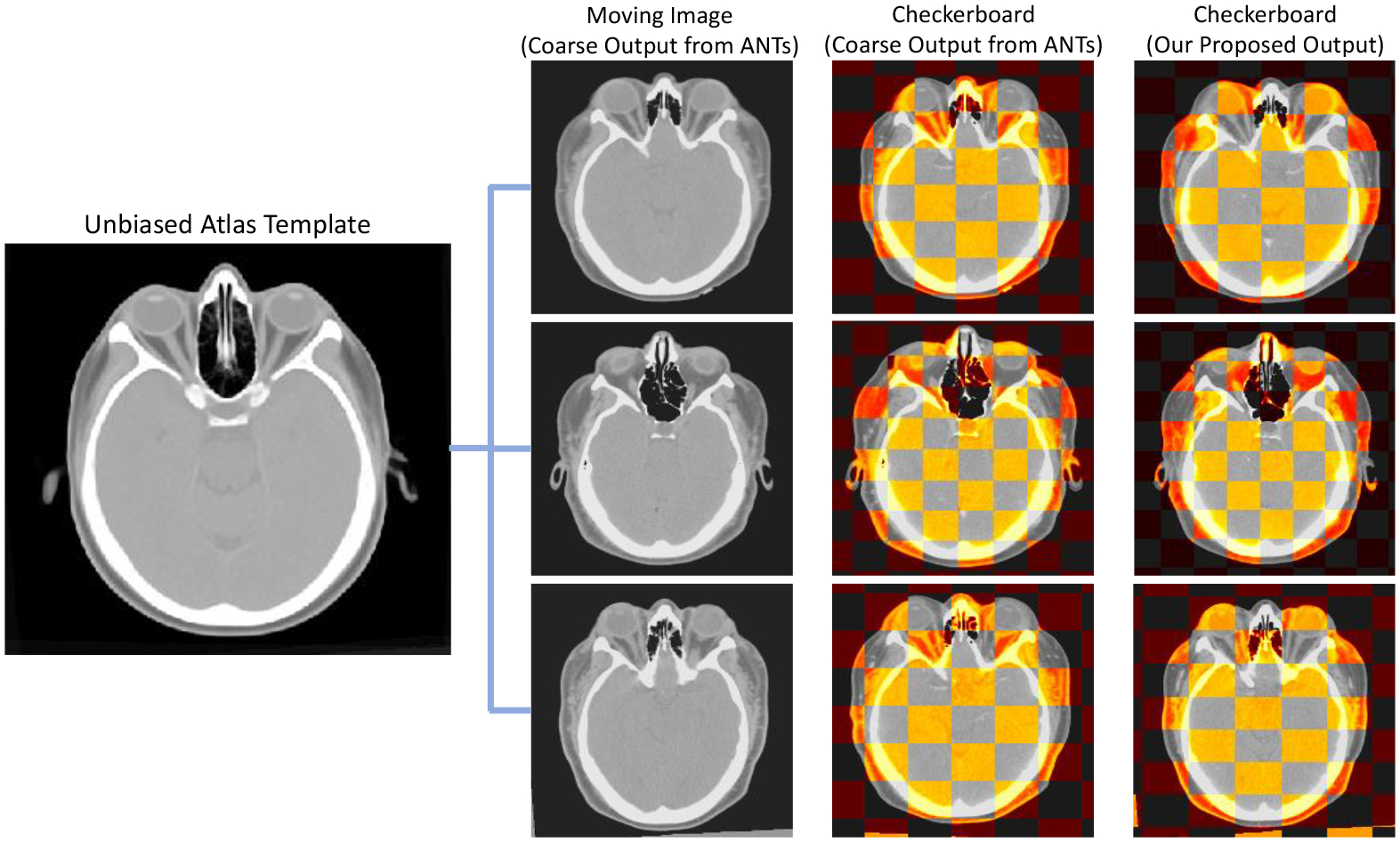

After generating the initial template with minimal bias, we register the remaining samples to it, with the aim of generalizing the anatomical characteristics of eye organs across populations. However, the checkerboard overlay in Figure 3 shows that metric-based registration is limited in its ability to produce the large deformations needed to align the head boundaries and the orbital anatomy. Motivated by VoxelMorph and probabilistic networks, we introduce a deep probabilistic model defψ(a, m) that combines a convolutional neural network (CNN) with diffeomorphic integration and spatial transform layers. This registration model is trained in an unsupervised setting and is better able to generate large deformations towards specific organ anatomies. We define a and m as the 3D volumetric atlas image and moving image, respectively, and z as the latent representation that parametrizes the transformation function φz. In our registration scenario, the deformation field is defined through the following ordinary differential equation (ODE):

$$\frac{\partial \phi^{(t)}}{\partial t} = v\left(\phi^{(t)}\right) \tag{1}$$

where φ(0) = Id is the identity transformation and t denotes time. We integrate the stationary velocity field v over t ∈ [0, 1] to obtain a deformation field that is differentiable and invertible [8]. To model the diffeomorphism with a CNN, we model the prior probability of z as follows:

$$p(z) = \mathcal{N}\left(z;\, 0,\, \Sigma_z\right) \tag{2}$$

where N(·; μ, Σ) is the multivariate normal distribution with mean μ and covariance Σ. In our registration scenario, the representation z is the stationary velocity field that specifies the diffeomorphism through Equation 1. Building on this model, we can use the prior in Equation 2 to estimate the posterior registration probability given the fixed template and the moving image as follows:

$$p(z \mid a; m) \propto p(a \mid z; m)\, p(z) \tag{3}$$

where p(a | z; m) is the likelihood of the atlas template given the moving image warped by φz.
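To make the link between the stationary velocity field and the deformation in Equation 1 concrete, the sketch below integrates a velocity field with scaling and squaring, the scheme commonly used for this purpose in diffeomorphic networks such as [8]. It is a minimal 1D NumPy illustration under stated assumptions: the function name `integrate_velocity_1d`, the number of squaring steps, linear interpolation, and the sinusoidal test field are all chosen for the example and are not taken from the paper.

```python
import numpy as np

def integrate_velocity_1d(velocity, num_steps=7):
    """Integrate a stationary 1D velocity field (voxel units) over t in [0, 1]
    by scaling and squaring. Returns the displacement u, with phi(x) = x + u(x)."""
    grid = np.arange(velocity.size, dtype=float)
    disp = velocity / (2.0 ** num_steps)      # small step: phi ~ Id + v / 2^T
    for _ in range(num_steps):
        # Squaring: phi <- phi o phi, i.e. u(x) <- u(x) + u(x + u(x)).
        disp = disp + np.interp(grid + disp, grid, disp)
    return disp

grid_size = 64
x = np.arange(grid_size, dtype=float)
velocity = 4.0 * np.sin(2.0 * np.pi * x / grid_size)
forward = x + integrate_velocity_1d(velocity)     # phi_z
backward = x + integrate_velocity_1d(-velocity)   # approximate inverse, from -v
```

Negating the velocity field before integration yields an approximate inverse transform, which is the property that the inverse label transfer used for evaluation relies on. The resulting maps stay monotonic (no folding) for a smooth velocity field.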

With Equation 3, we can approximately predict the diffeomorphic registration field φz that warps the moving image m to the atlas template a via maximum a posteriori (MAP) estimation. Furthermore, to evaluate p(z|a; m), we use a variational approach that introduces an approximate posterior probability qψ(z|a; m) parameterized by ψ. We minimize the difference between the posterior probability p(z|a; m) and its approximation qψ(z|a; m) through the KL divergence for unsupervised training as follows:

$$\min_{\psi}\; \mathrm{KL}\left[\, q_{\psi}(z \mid a; m) \,\|\, p(z \mid a; m) \,\right] \tag{4}$$
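In variational inference, minimizing a KL divergence of this form is equivalent to minimizing a negative evidence lower bound made of a reconstruction term and a KL term between the approximate posterior and the prior. The sketch below shows that structure in NumPy under simplifying assumptions that are not taken from the paper: a diagonal Gaussian posterior, a standard normal prior in place of the smoothness-encoding prior used in [8], a Gaussian image likelihood with a hypothetical `image_sigma`, and an already warped moving image passed in directly.

```python
import numpy as np

def kl_diag_gaussian_to_standard_normal(mu, log_var):
    """KL[ N(mu, diag(exp(log_var))) || N(0, I) ], summed over all elements."""
    return 0.5 * float(np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var))

def sample_velocity(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, I)."""
    return mu + np.exp(0.5 * log_var) * rng.standard_normal(mu.shape)

def negative_elbo(atlas, warped_moving, mu, log_var, image_sigma=1.0):
    """Reconstruction term (Gaussian likelihood, constants dropped) plus KL term."""
    recon = float(np.sum((atlas - warped_moving) ** 2)) / (2.0 * image_sigma ** 2)
    return recon + kl_diag_gaussian_to_standard_normal(mu, log_var)

rng = np.random.default_rng(0)
mu, log_var = np.zeros(4), np.zeros(4)
z = sample_velocity(mu, log_var, rng)            # one velocity-field sample
loss = negative_elbo(np.zeros(3), np.ones(3), mu, log_var)
```

In a full model, the sampled z would be integrated into a deformation, used to warp the moving image, and the loss would be backpropagated through the network that predicts `mu` and `log_var`.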

Figure 3.

Qualitative comparison of registration performance between the metric-based method (coarse output) and the final output of our proposed hierarchical registration pipeline. The checkerboard overlay shows that the second-stage refinement produces larger deformations and adapts to the variability in organ boundaries and anatomical structure.
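A checkerboard overlay of the kind described in this caption can be produced by alternating tiles from the atlas slice and the registered slice, so that misaligned boundaries show up as breaks at tile edges. The following is a minimal sketch; the function name and tile size are hypothetical, and both slices are assumed to be 2D arrays on the same grid.

```python
import numpy as np

def checkerboard_overlay(fixed_slice, warped_slice, tile=32):
    """Alternate square tiles taken from the fixed (atlas) slice and the
    registered slice; both inputs must share the same 2D shape."""
    if fixed_slice.shape != warped_slice.shape:
        raise ValueError("slices must have the same shape")
    rows, cols = np.indices(fixed_slice.shape)
    use_fixed = ((rows // tile) + (cols // tile)) % 2 == 0
    return np.where(use_fixed, fixed_slice, warped_slice)

# Tiny example: zeros stand in for the atlas, ones for the registered scan.
overlay = checkerboard_overlay(np.zeros((4, 4)), np.ones((4, 4)), tile=2)
```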

3. DATA AND EXPERIMENTS

3.1. Data and Parameters

To evaluate the unbiased atlas template and our proposed hierarchical registration pipeline, head CT volumetric scans from 1842 patients were retrieved in de-identified form from ImageVU with the approval of the Institutional Review Board (IRB 131461). Of these, 100 subjects were retrospectively selected to generate and evaluate the atlas template. All selected scans have ground-truth labels for four organs: optic nerve, rectus muscle, globe, and orbital fat. To generate the unbiased atlas, we selected 20 subject scans and resampled them to an isotropic resolution of 0.8 mm × 0.8 mm × 0.8 mm, with a dimension of 512 × 512 × 224, for iterative registration. Subjects for unbiased template generation were chosen based on the morphological characteristics of the eye organs and the resolution of the volumetric image. For the probabilistic registration, both the atlas template and the moving subject scans are downsampled to an isotropic voxel resolution of 1.0 mm × 1.0 mm × 1.0 mm with a dimension of 256 × 256 × 224. The batch size is 1 and the initial learning rate is 0.0001. The predicted deformation field is upsampled back to the original atlas resolution, and the inverse transform is computed for label evaluation.
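One detail that is easy to miss when upsampling a predicted deformation field is that displacement vectors stored in voxel units must be rescaled along with the grid. The sketch below illustrates this under several assumptions not stated in the paper: displacements are stored in voxel units with shape (3, X, Y, Z), the upsampling factors are the integer ratios of the grid sizes given above (256 to 512 in-plane, 224 to 224 through-plane), and nearest-neighbour repetition is used in place of trilinear interpolation to keep the example dependency-free. If displacements are stored in millimetres instead, the rescaling step is not needed.

```python
import numpy as np

def upsample_displacement(disp, factors):
    """Upsample a (3, X, Y, Z) displacement field by integer factors per axis
    and rescale each vector component to the finer voxel grid."""
    up = disp
    for axis, factor in enumerate(factors):
        up = np.repeat(up, factor, axis=axis + 1)   # nearest-neighbour upsampling
    scale = np.asarray(factors, dtype=float).reshape(3, 1, 1, 1)
    return up * scale                               # one coarse voxel = `factor` fine voxels

# Small stand-in for a 256 x 256 x 224 field: one-voxel shift along every axis.
coarse = np.ones((3, 4, 4, 2))
fine = upsample_displacement(coarse, factors=(2, 2, 1))
```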

3.2. Experiments

3.2.1. Iterative Template Comparison

We use the conventional registration tool ANTs as our registration baseline and apply its template generation tool (antsMultivariateTemplateConstruction) to a small portion of the subject scans for coarse atlas construction. We first apply both rigid and affine registration to align the anatomical location of the skull and eye organs. SyN is then used for deformable registration with cross-correlation (CC) as the similarity metric. In total, there are 4 resolution levels for registration, with the number of iterations set to 100 × 100 × 70 × 20. The registration loss converges after the template has been updated for 6 iterations. Figure 2 shows the unbiased template qualitatively in tri-planar view.
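For readers who want to reproduce a single pairwise registration with comparable settings, the sketch below assembles an `antsRegistration` argument list without running it. Only the stage order (rigid, affine, SyN), the CC metric for SyN, and the 100x100x70x20 iteration schedule come from the text. Everything else is an assumption made for illustration: the file names, the mutual information metric for the linear stages, reuse of the same schedule for every stage, the gradient steps, the CC radius, the shrink factors, and the smoothing sigmas. The template construction script wraps calls of this kind but exposes its own options.

```python
def build_ants_command(fixed, moving, out_prefix):
    """Return an antsRegistration argument list (built only, not executed)."""
    levels = {
        "--convergence": "[100x100x70x20,1e-6,10]",  # iterations per level (from the text)
        "--shrink-factors": "8x4x2x1",               # assumed
        "--smoothing-sigmas": "3x2x1x0vox",          # assumed
    }
    stages = [
        ("Rigid[0.1]", f"MI[{fixed},{moving},1,32,Regular,0.25]"),   # metric assumed
        ("Affine[0.1]", f"MI[{fixed},{moving},1,32,Regular,0.25]"),  # metric assumed
        ("SyN[0.1,3,0]", f"CC[{fixed},{moving},1,4]"),               # CC from the text
    ]
    cmd = [
        "antsRegistration",
        "--dimensionality", "3",
        "--output", f"[{out_prefix},{out_prefix}Warped.nii.gz]",
        "--initial-moving-transform", f"[{fixed},{moving},1]",
    ]
    for transform, metric in stages:
        cmd += ["--transform", transform, "--metric", metric]
        for flag, value in levels.items():
            cmd += [flag, value]
    return cmd

# Hypothetical file names, for illustration only.
command = build_ants_command("template.nii.gz", "subject.nii.gz", "subject_to_template_")
print(" ".join(command))
```

The list can be passed to `subprocess.run` on a machine where ANTs is installed.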

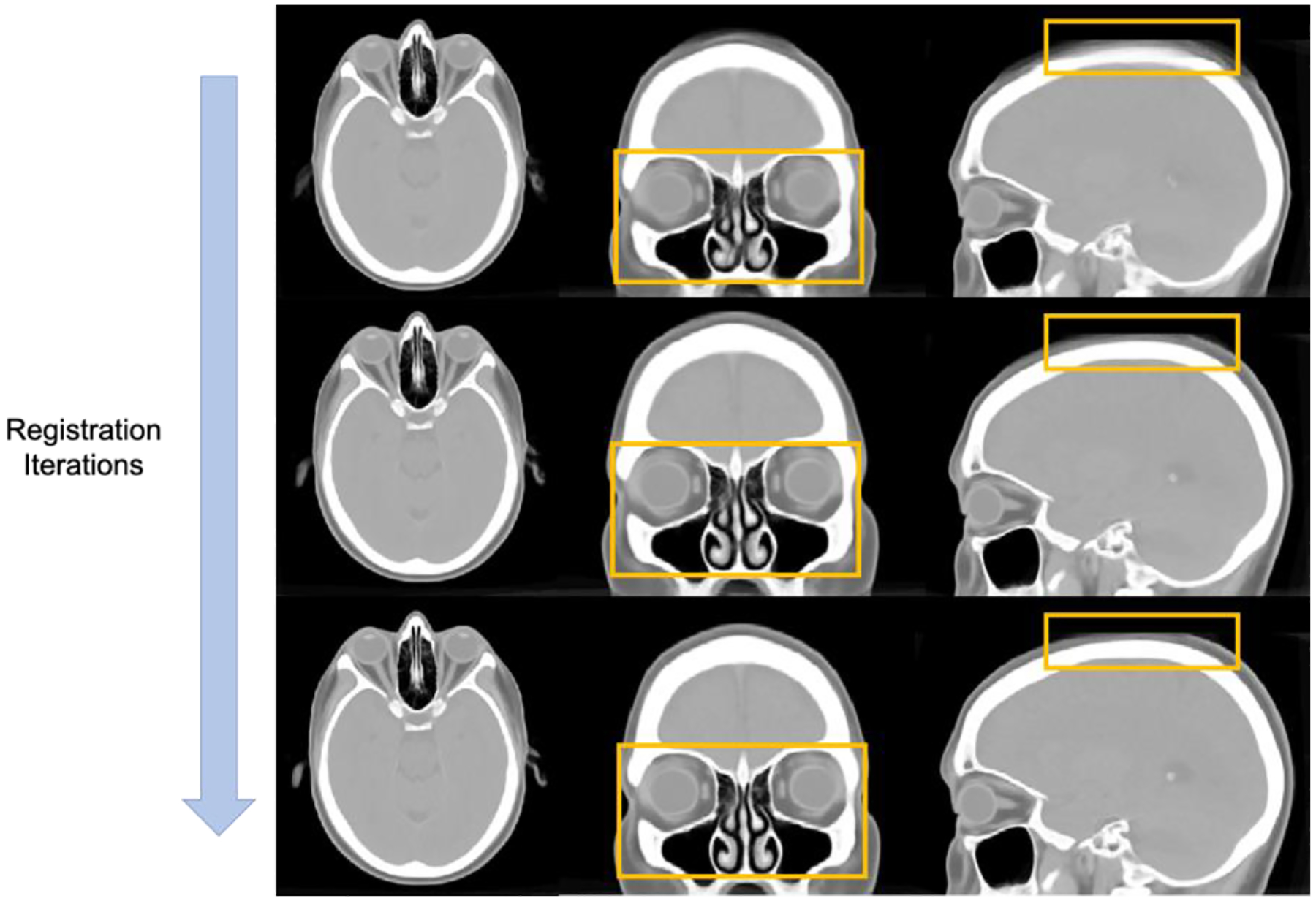

Figure 2.

Qualitative visualization of the convergence of anatomical details across the eye organs and the skull boundaries during generation of the unbiased template.

3.2.2. Hierarchical Registration Refinement

After generating the unbiased template, we use it to register the remaining samples, both to adapt the eye organ context and to evaluate the registration pipeline. We first perform ANTs registration on the remaining 80 subjects in the same hierarchical manner as in atlas generation (rigid, affine, and deformable). We then apply a deep learning registration algorithm to enhance the contextual matching of the eye organs and the skull boundaries. We use VoxelMorph as our baseline network for this refinement. We further integrate the probabilistic formulation on top of VoxelMorph to smooth the deformation field and prevent over-deformation of the eye organs. We compare registration performance by applying the inverse of the predicted deformation field to the atlas label, which transforms it back to the moving image space. The Dice coefficient is used to measure the overlap between the transferred labels and the ground-truth labels.
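The Dice coefficient used for this evaluation is 2|P ∩ T| / (|P| + |T|) for a transferred label mask P and a ground-truth mask T. A minimal per-label sketch is shown below; the integer label IDs and the tiny 1D arrays are hypothetical and only serve to make the arithmetic easy to follow.

```python
import numpy as np

def dice_per_label(pred, truth, labels):
    """Dice overlap for each integer label between two label maps of equal shape."""
    scores = {}
    for label in labels:
        p = pred == label
        t = truth == label
        denom = int(p.sum()) + int(t.sum())
        overlap = int(np.logical_and(p, t).sum())
        scores[label] = 2.0 * overlap / denom if denom else float("nan")
    return scores

# Hypothetical label IDs: 1 and 2 stand for two organs, 0 is background.
transferred = np.array([0, 1, 1, 2, 2, 2])
ground_truth = np.array([0, 1, 1, 2, 2, 0])
scores = dice_per_label(transferred, ground_truth, labels=[1, 2])
```

Here label 1 overlaps perfectly (Dice 1.0), while label 2 has two shared voxels out of 3 + 2, giving 2·2/5 = 0.8.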

4. RESULTS

To minimize the bias of using a single atlas template for registration, we first investigate the quality of the unbiased atlas template and then its effectiveness. We evaluate the quality of the unbiased template with qualitative visualization. As shown in Figure 2, as the number of registration iterations increases, significant changes can be seen in particular anatomies within the orange bounding boxes. After several iterations, the skull boundaries become clearer and their contrast is enhanced, which provides better guidance for localizing the skull. Furthermore, some of the eye organs, especially the rectus muscle and the globe, show enhanced contrast in both their boundaries and the morphology of the organ structure. Regions near the eye organs also appear to be stably localized, with clearer structural characteristics than in the initial iterations.

After generating the minimally biased atlas template from a small portion of the data, we further enhance the generalizability of the atlas template by adapting the anatomical context of the remaining samples using different registration approaches. We first compare the registration performance qualitatively by visually inspecting the quality of the registered eye organs. As shown in Figure 3, the registered outputs from ANTs demonstrate fair registration quality: they localize the boundaries of the skull and align some of the morphological characteristics of the eye organs. However, when we overlay the registered output on the atlas template as a checkerboard, the boundaries of the eye organs are not well aligned, especially for the globe and the nearby orbital muscle. The deformable registration of ANTs is limited in generating the large deformations needed to match the atlas context when the eye organ morphology or the skull shape differs significantly. With the hierarchical registration refinement, the anatomical context of both the skull and the eye organs is comparable to the atlas-defined space without over-deformation. The checkerboard overlay for our proposed registration method shows clearer alignment of the eye organs and well-matched boundaries. The contrast and morphological characteristics are transferred stably across the population. Apart from the qualitative measures, we applied the inverse of the predicted deformation field to the atlas label and transformed it back to the original moving image space for label similarity evaluation (Table 1). Using ANTs registration only, the transferred labels achieve a mean Dice of 0.727 across all organs against the ground-truth labels. After we add the second-stage registration refinement with the VoxelMorph network, the label transfer performance increases significantly from 0.727 to 0.758 across all organs. This increase demonstrates that the coarse output from metric-based registration can be refined with further deformation to adapt to the variability of organs across the population. Furthermore, by introducing probabilistic estimation into the registration network, the label transfer performance surpasses VoxelMorph, with another significant increase in Dice from 0.758 to 0.776.
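The reported numbers can be cross-checked with simple arithmetic. The sketch below recomputes the mean organ Dice from the per-organ values in Table 1 and the relative gains between methods. The interpretation that the 2.37% improvement quoted in the abstract is the relative gain of the probabilistic model over VoxelMorph is an inference from these numbers, not a statement made in the text.

```python
# Per-organ Dice from Table 1: optic nerve, rectus muscle, globe, orbital fat.
table = {
    "ANTs":       [0.586, 0.634, 0.904, 0.782],
    "VoxelMorph": [0.635, 0.687, 0.920, 0.790],
    "Ours":       [0.651, 0.712, 0.931, 0.810],
}
mean_dice = {name: sum(values) / len(values) for name, values in table.items()}

def relative_gain(new, old):
    """Relative improvement of `new` over `old`, in percent."""
    return 100.0 * (new - old) / old

gain_vs_voxelmorph = relative_gain(0.776, 0.758)   # about 2.37 %
gain_vs_ants = relative_gain(0.776, 0.727)         # about 6.74 %
print(mean_dice, gain_vs_voxelmorph, gain_vs_ants)
```

The recomputed means agree with the reported 0.727, 0.758, and 0.776 to three decimals, and the absolute gain of the full pipeline over ANTs is 0.049 Dice.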

5. DISCUSSION AND CONCLUSION

With the qualitative and quantitative results above, the contrast and morphological context of the eye organs are stably localized with minimal bias using the hierarchical registration pipeline. The label transfer performance in all sub-organ regions of the eye improves significantly, with a decreasing trend in variance. The deep learning registration network in the second stage is trained in an unsupervised setting and uses an estimated probability distribution to enhance the accuracy of the predicted deformation field. By integrating metric-based registration and deep learning registration into a hierarchical pipeline, we exploit the ability of deep learning networks to generate large deformations and overcome the limitation of metric-based registration in adapting to the morphological variability of both the eye organs and the skull. Furthermore, the probabilistic estimation provides diffeomorphic registration within the deep learning network and enhances stability by comparing the probabilistic representations in the latent space. In this paper, we constructed an unbiased anatomical reference to localize the context of eye organs and proposed a hierarchical registration pipeline that integrates metric-based and deep learning registration. The unbiased average mapping shows the contrast characteristics of the eye organs, which are well localized relative to the skull morphology across patients. The atlas target stably adapts the eye organ information, as illustrated by the checkerboard overlay, and our proposed registration pipeline significantly improves label transfer performance. As a long-term goal, we aim to create a minimally biased average template that adapts multi-modality imaging of the eye organs and to analyze variability across demographics.

Figure 1.

The complete pipeline for generating an unbiased eye atlas template is divided into two stages: 1) initial unbiased template generation and 2) hierarchical registration refinement. We use a small portion of the samples to generate an unbiased template with iterative registration. The average template generated in each iteration is used as the fixed template for the next registration iteration. After the registration loss converges, we use the generated template to perform metric-based registration on the remaining samples. Because the coarse output is limited in adapting to the significant variability of organ structure, a probabilistic refinement network is used to generate large deformations and further align the anatomical details of the eye organs and skull.

Table 1.

Quantitative evaluation (Dice) of the inverse-transferred labels for eye organs across all patients (*: p < 0.001)

| Methods | Optic Nerve | Rectus Muscle | Globe | Orbital Fat | Mean Organ |
|---|---|---|---|---|---|
| ANTs [1] | 0.586 ± 0.0532 | 0.634 ± 0.0339 | 0.904 ± 0.0253 | 0.782 ± 0.0362 | 0.727 ± 0.132 |
| VoxelMorph [2] | 0.635 ± 0.0420 | 0.687 ± 0.0420 | 0.920 ± 0.0274 | 0.790 ± 0.0343 | 0.758 ± 0.140 |
| Ours | 0.651 ± 0.0358 | 0.712 ± 0.0301 | 0.931 ± 0.0227 | 0.810 ± 0.0319 | 0.776 ± 0.115* |

ACKNOWLEDGEMENTS

This research is supported by NIH Common Fund and National Institute of Diabetes, Digestive and Kidney Diseases U54DK120058 (Spraggins), NSF CAREER 1452485, NIH 2R01EB006136, NIH 1R01EB017230 (Landman), and NIH R01NS09529. This study was conducted in part using the resources of the Advanced Computing Center for Research and Education (ACCRE) at Vanderbilt University, Nashville, TN. The identified datasets used for the analysis described were obtained from the Research Derivative (RD), a database of clinical and related data. The imaging dataset(s) used for the analysis described were obtained from ImageVU, a research repository of medical imaging data and image-related metadata. ImageVU and RD are supported by the VICTR CTSA award (ULTR000445 from NCATS/NIH) and Vanderbilt University Medical Center institutional funding. ImageVU pilot work was also funded by PCORI (contract CDRN-1306-04869).

REFERENCES

- [1] Avants BB, Epstein CL, Grossman M, and Gee JC, “Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain,” Medical Image Analysis, vol. 12, no. 1, pp. 26–41, 2008.

- [2] Balakrishnan G, Zhao A, Sabuncu MR, Guttag J, and Dalca AV, “VoxelMorph: a learning framework for deformable medical image registration,” IEEE Transactions on Medical Imaging, vol. 38, no. 8, pp. 1788–1800, 2019.

- [3] Rozenblatt-Rosen O, Stubbington MJ, Regev A, and Teichmann SA, “The Human Cell Atlas: from vision to reality,” Nature News, vol. 550, no. 7677, p. 451, 2017.

- [4] Weaver AA, Loftis KL, Tan JC, Duma SM, and Stitzel JD, “CT based three-dimensional measurement of orbit and eye anthropometry,” Investigative Ophthalmology & Visual Science, vol. 51, no. 10, pp. 4892–4897, 2010.

- [5] Lee HH et al., “Supervised deep generation of high-resolution arterial phase computed tomography kidney substructure atlas,” in Medical Imaging 2022: Image Processing, 2022, vol. 12032: SPIE, pp. 736–743.

- [6] Lee HH et al., “Multi-contrast computed tomography healthy kidney atlas,” Computers in Biology and Medicine, vol. 146, p. 105555, 2022.

- [7] Mok TC and Chung A, “Large deformation diffeomorphic image registration with Laplacian pyramid networks,” in International Conference on Medical Image Computing and Computer-Assisted Intervention, 2020: Springer, pp. 211–221.

- [8] Dalca AV, Balakrishnan G, Guttag J, and Sabuncu MR, “Unsupervised learning for fast probabilistic diffeomorphic registration,” in International Conference on Medical Image Computing and Computer-Assisted Intervention, 2018: Springer, pp. 729–738.

- [9] Dalca A, Rakic M, Guttag J, and Sabuncu M, “Learning conditional deformable templates with convolutional networks,” Advances in Neural Information Processing Systems, vol. 32, 2019.

- [10] Gholipour A et al., “A normative spatiotemporal MRI atlas of the fetal brain for automatic segmentation and analysis of early brain growth,” Scientific Reports, vol. 7, no. 1, pp. 1–13, 2017.

- [11] Oishi K et al., “Multi-contrast human neonatal brain atlas: application to normal neonate development analysis,” NeuroImage, vol. 56, no. 1, pp. 8–20, 2011.

- [12] James GA, Hazaroglu O, and Bush KA, “A human brain atlas derived via n-cut parcellation of resting-state and task-based fMRI data,” Magnetic Resonance Imaging, vol. 34, no. 2, pp. 209–218, 2016.

- [13] Rajashekar D et al., “High-resolution T2-FLAIR and non-contrast CT brain atlas of the elderly,” Scientific Data, vol. 7, no. 1, pp. 1–7, 2020.

- [14] Kuklisova-Murgasova M et al., “A dynamic 4D probabilistic atlas of the developing brain,” NeuroImage, vol. 54, no. 4, pp. 2750–2763, 2011.

- [15] Wang J, Zu H, Guo H, Bi R, Cheng Y, and Tamura S, “Patient-specific probabilistic atlas combining modified distance regularized level set for automatic liver segmentation in CT,” Computer Assisted Surgery, pp. 1–7, 2019.

- [16] Li D et al., “Augmenting atlas-based liver segmentation for radiotherapy treatment planning by incorporating image features proximal to the atlas contours,” Physics in Medicine & Biology, vol. 62, no. 1, p. 272, 2016.

- [17] Heinrich MP, Maier O, and Handels H, “Multi-modal Multi-Atlas Segmentation using Discrete Optimisation and Self-Similarities,” VISCERAL Challenge@ ISBI, vol. 1390, p. 27, 2015.

- [18] Modat M et al., “Fast free-form deformation using graphics processing units,” Computer Methods and Programs in Biomedicine, vol. 98, no. 3, pp. 278–284, 2010.