Abstract

There is rich variety in the activity of single neurons recorded during behaviour. Yet, these diverse single neuron responses can be well described by relatively few patterns of neural co-modulation. The study of such low-dimensional structure of neural population activity has provided important insights into how the brain generates behaviour. Virtually all of these studies have used linear dimensionality reduction techniques to estimate these population-wide co-modulation patterns, constraining them to a flat “neural manifold”. Here, we hypothesised that since neurons have nonlinear responses and make thousands of distributed and recurrent connections that likely amplify such nonlinearities, neural manifolds should be intrinsically nonlinear. Combining neural population recordings from monkey, mouse, and human motor cortex, and mouse striatum, we show that: 1) neural manifolds are intrinsically nonlinear; 2) their nonlinearity becomes more evident during complex tasks that require more varied activity patterns; and 3) manifold nonlinearity varies across architecturally distinct brain regions. Simulations using recurrent neural network models confirmed the proposed relationship between circuit connectivity and manifold nonlinearity, including the differences across architecturally distinct regions. Thus, neural manifolds underlying the generation of behaviour are inherently nonlinear, and properly accounting for such nonlinearities will be critical as neuroscientists move towards studying numerous brain regions involved in increasingly complex and naturalistic behaviours.

Introduction

Behaviour is ultimately generated by the orchestrated activity of neural populations across the brain. An increasing number of studies show that the coordinated activity of tens or hundreds of neurons within a given brain region can be captured by relatively few covariation patterns, which we call neural modes1,2. This observation holds strikingly well across a variety of species, brain regions, and tasks, from the locust olfactory system during odour presentation3 to the human frontal cortex during memory and categorization4. These low-dimensional activity patterns are thought to reflect fundamental constraints on neural population activity1 that arise from biophysical phenomena including circuit connectivity5–8 and neuromodulators9,10, among others. As such, the neural manifolds spanned by these modes capture the potential “states” that the collective activity of a neural population can take.

The investigation of the neural modes and their time-varying activation, or latent dynamics, has shed light on questions about the generation of behaviour that had remained elusive when studying the independent function of single neurons. These insights range from behavioural flexibility11,12 and stability13,14, to motor learning5,15–19, principles of overt20–23 and covert behaviour24–27, and representations of time28–30 and space7,31. All these findings are based on the idea that neural manifolds and their latent dynamics capture the functional processes underlying motor control13,14,20,21, sensory processing32–34 (including how these change along the neuraxis35), and abstract cognition7,8,31. Neural manifolds also seem to shape how the brain may deploy new activity patterns to adapt to novel situations5,15,18,36–38. Thus, accurately capturing the geometrical properties of neural manifolds is necessary to advance our understanding of the neural processes underlying behaviour.

Virtually all previous studies adopted linear dimensionality reduction methods such as Principal Component Analysis (PCA) or Factor Analysis to identify the neural modes and their associated latent dynamics from the firing rates of the recorded neural population39,40. As such, they implicitly assumed that the neural modes capturing the population activity define a lower dimensional surface, or neural manifold1,41, that is effectively flat (exceptions exist7,8,31,42,43, but outside the motor system, which is the focus of this work). However, the activity of single neurons is inherently nonlinear. For example, firing is bounded below by zero: at any given moment, a single neuron fires either no action potentials or a positive, finite number of them. Moreover, each neuron makes up to thousands of connections with other neurons, creating intricate connectivity patterns44–46. These patterns, in turn, should make the interactions between neurons equally complex, and likely nonlinear. This combination of nonlinear neurons and complex interactions suggests that the neural manifolds underlying neural population activity during behaviour may be similarly nonlinear (Figure 1A).

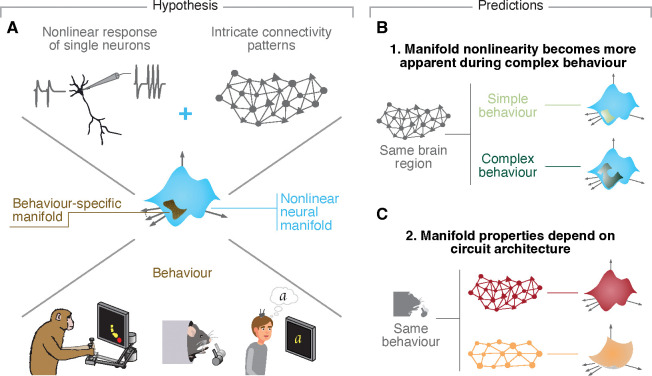

Figure 1: Hypothesis.

A. Due to the nonlinear activity profiles of single neurons and the complex connectivity profiles of neural circuits, we hypothesized that neural manifolds underlying behaviour should be nonlinear. B. We predicted that more complex behavioural tasks that require a broader range of activity patterns will make the intrinsic nonlinearity of neural manifolds more apparent. C. We further predicted that, if the geometry of neural manifolds indeed reflects circuit properties, neural manifolds from cytoarchitecturally different brain regions would exhibit distinct degrees of nonlinearity.

The assumptions underpinning our hypothesis that neural manifolds are intrinsically nonlinear lead to two testable predictions. First, any nonlinear object can be locally approximated by a (hyper)plane, but this approximation becomes progressively worse, and the nonlinearity more apparent, as more of the object's surface is sampled. Studying a more complex behaviour, which samples a larger extent of the manifold, should therefore better reveal the difference between a flat approximation and a more accurate nonlinear description. Thus, we predict that for any given brain region, “complex” tasks that require more varied behaviour, and thus elicit a broader range of neural activity patterns, should have more apparently nonlinear manifolds than simple tasks that only require a few different activity patterns (Figure 1B). In practice, linear methods may reconstruct the underlying neural manifold almost as accurately as nonlinear methods during relatively simple, constrained tasks, but we expect their accuracy to degrade as task complexity increases. Second, following our hypothesis that complex circuit connectivity makes neural manifolds nonlinear, we predict that brain regions with very different circuit architectures should display different degrees of nonlinearity during the same behaviour (Figure 1C). In practice, this implies that the accuracy of the neural manifold estimates provided by linear methods may differ considerably across regions.
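The first prediction can be illustrated with a toy example. The NumPy sketch below samples an arc of a circle (a one-dimensional curved manifold), maps it into a 50-neuron "population" through a random linear map followed by rectification, and counts how many principal components are needed to reach a variance threshold. The function names and the rectified random embedding are illustrative assumptions, not the analysis used in this study. Because every call uses the same seed, all arcs lie on the same manifold, so only the sampled extent changes.

```python
import numpy as np

def embed_arc(arc_fraction, n_points=500, n_neurons=50, seed=0):
    """Sample an arc of the unit circle (a 1-D curved manifold) and embed it in
    an n_neurons-dimensional space via a random linear map plus rectification,
    a crude stand-in for non-negative firing rates (illustrative assumption)."""
    rng = np.random.default_rng(seed)
    theta = np.linspace(0.0, 2.0 * np.pi * arc_fraction, n_points)
    circle = np.stack([np.cos(theta), np.sin(theta)], axis=1)  # n_points x 2
    W = rng.normal(size=(2, n_neurons))
    b = rng.normal(size=n_neurons)
    return np.maximum(circle @ W + b, 0.0)  # n_points x n_neurons

def n_pca_modes(X, var_threshold=0.8):
    """Number of principal components needed to explain var_threshold of the variance."""
    Xc = X - X.mean(axis=0)
    s = np.linalg.svd(Xc, compute_uv=False)
    cum_var = np.cumsum(s**2) / np.sum(s**2)
    return int(np.argmax(cum_var >= var_threshold)) + 1

# Sampling more of the same curved manifold requires more flat dimensions.
for frac in (0.02, 0.25, 0.5, 1.0):
    print(f"arc = {frac:.2f} of the circle -> {n_pca_modes(embed_arc(frac), 0.9)} PCA modes for 90% variance")
```

A short arc is captured by a single flat mode, whereas the full circle, which is still intrinsically one-dimensional, needs at least two.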

We addressed these three hypotheses using a combination of computational models and neural population recordings from monkeys, mice, and humans performing reaching, grasping and pulling, and attempted writing tasks, respectively. We first show that even during a relatively simple centre-out reaching task, the activity of neural populations from monkey motor cortex is better described by a nonlinear than by a flat manifold. In good agreement with our second hypothesis, the nonlinearity of this neural manifold was much more apparent when considering all eight reach directions as opposed to a single one, a result that we extended by examining population recordings from human motor cortex22: there, manifolds underlying a broad range of attempted movements were much more nonlinear than those underlying a more limited repertoire. Next, we addressed our third hypothesis, that manifold nonlinearity is determined by circuit properties, by comparing manifolds underlying the activity of simultaneously recorded populations from two cytoarchitecturally distinct motor regions of the mouse brain, motor cortex and the dorsolateral striatum, during a grasping and pulling task14,47,48. Manifold nonlinearity was indeed markedly different between these two regions, with striatal manifolds being much more nonlinear than motor cortical manifolds. Finally, we used computational models to examine how manipulating circuit connectivity influences manifold nonlinearity. The nonlinearity of manifolds underlying the activity of recurrent neural network (RNN) models18,37,49–51 trained to perform the monkey centre-out reaching task was tightly linked to their degree of recurrent connectivity, an observation that held across various network architectures and hyperparameter configurations.
Combined, these results show that intrinsically nonlinear manifolds underlie neural population activity during behaviour, and that the degree of nonlinearity is shaped by both the circuit connectivity and behavioural “complexity”. Considering these nonlinearities will likely be crucial as the field moves toward the study of a broader range of brain regions during ever more complex behaviours.

Results

Nonlinear manifolds underlie motor cortical population activity during reaching

We trained two macaque monkeys (C and M) to perform an instructed delay centre-out reaching task using a planar manipulandum (Figure 2A) (Methods). Monkey C performed the task in two sets of experiments, first using the right arm and then the left arm, which we denote as Monkeys CL and CR, respectively (L and R refer to the contralateral side of the brain). We recorded neural activity using chronically implanted microelectrode arrays inserted into the arm area of the primary motor cortex (Monkeys CR, CL, and M) and dorsal premotor cortex (Monkeys CL and M). These recordings, which we combined across areas due to similarities in their activity52, allowed us to identify the activity of tens to hundreds of putative single motor cortical neurons during each session (46–290 depending on the session; average, 154±86) (Figure 2B). To better account for the variability in neural activity and behaviour across trials, we performed all analyses on single-trial rather than trial-averaged data.

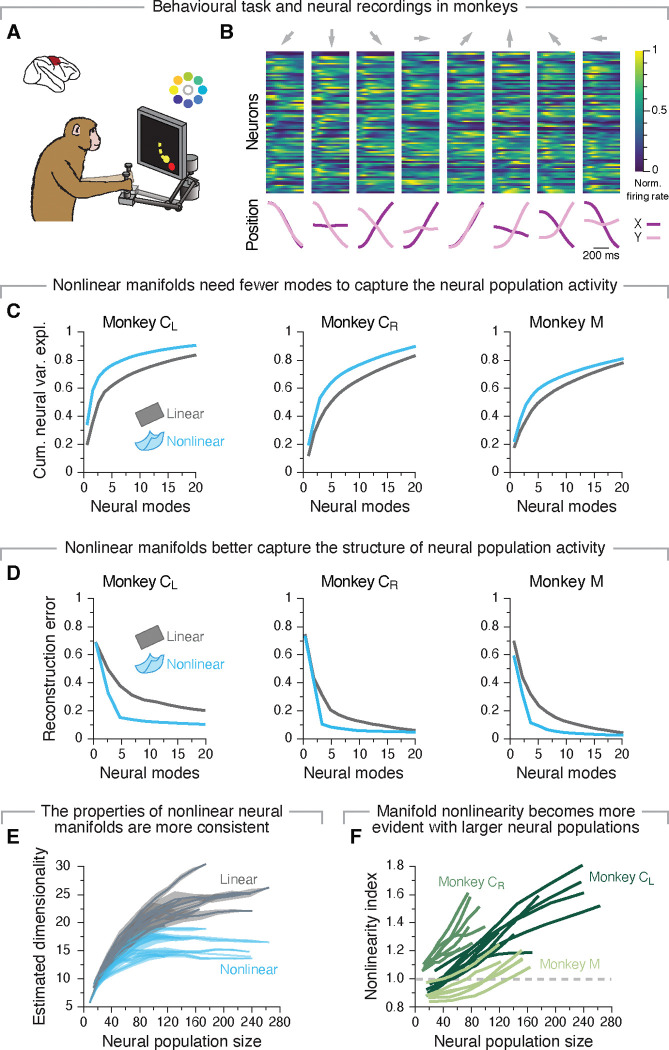

Figure 2: A nonlinear manifold underlies motor cortical population activity during a centre-out reaching task.

A. Monkeys performed a centre-out reaching task with eight targets. B. Average single neuron firing rates and hand positions as a monkey reached to each target. Data from a representative session from Monkey CR. C. Cumulative neural variance explained by flat (grey) and nonlinear (blue) manifolds as a function of the number of dimensions. Shown is one example dataset for each monkey. D. Reconstruction error after fitting flat (grey) and nonlinear (blue) manifolds with increasing dimensionality. Data from the same three example sessions as in C. E. Estimated dimensionality of flat (grey) and nonlinear (blue) manifolds as a function of the number of neurons used to sample them. Lines and shaded areas, mean±s.d. across 10 random subsets of neurons. Data from all sessions from Monkey CL. F. Nonlinearity index, the ratio of the estimated dimensionality of flat manifolds to that of nonlinear manifolds, as a function of the number of neurons used to sample them. Shown are all sessions from each monkey, colour coded by animal (legend). Values greater than one (dashed grey line) indicate that nonlinear manifolds capture the data better than flat manifolds.

We first identified flat manifolds spanning the neural population activity during movement using PCA11,13,16,20,39. As expected11,14,16,21,53, a large portion of the variance in the neural data was captured by relatively few neural modes (principal components in the case of PCA; grey trace in Figure 2C, and Figure S1). To determine whether nonlinear manifolds capture the neural population activity better than flat manifolds, we used a standard nonlinear dimensionality reduction method, Isomap54, to find a nonlinear manifold underlying the same neural population activity. As shown in Figure 2C, a nonlinear manifold explained the same amount of variance as a flat manifold with considerably fewer neural modes (compare the blue and grey traces; Figure S1 shows additional examples, and Figure S2 validates this approach on known manifolds and on synthetic neural data55). Since explaining more variance with fewer modes is a hallmark of a better representation of the (neural) data, this result indicates that motor cortical manifolds may be intrinsically nonlinear even during a relatively simple task.

We performed additional analyses to verify this observation. First, we assessed whether flat or nonlinear manifolds better capture the structure of neural population activity by quantifying how well their respective latent dynamics can be used to reconstruct the full-dimensional activity, using a “reconstruction error” metric (called “residual variance” in the original Isomap paper54). For all 24 datasets, flat manifolds had considerably larger reconstruction errors than their nonlinear counterparts until at least 10–20 neural modes were considered (Figure 2D; Figure S1; Figure S3A shows that this metric is robust across both different sets of trials and neurons). Moreover, the Isomap reconstruction errors had a clear “elbow”, a dimensionality value at which the error abruptly saturated, indicating that additional nonlinear neural modes did not greatly improve the low-dimensional representation of the neural population activity.
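Following the residual-variance definition of the original Isomap paper54, the reconstruction error can be sketched as 1 − R², where R is the correlation between the distances an embedding is meant to preserve (geodesic distances for Isomap, Euclidean distances for PCA) and the Euclidean distances between the low-dimensional latent states. The NumPy sketch below applies this to PCA on synthetic data and then locates the elbow with a simple rule: the first dimensionality after which one more mode lowers the error by less than a tolerance. The tolerance, the elbow rule, and the function names are illustrative assumptions.

```python
import numpy as np

def pairwise_distances(X):
    sq = np.sum(X**2, axis=1)
    return np.sqrt(np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0))

def residual_variance(D_ref, Y):
    """1 - R^2 between reference distances D_ref and distances in the embedding Y."""
    iu = np.triu_indices_from(D_ref, k=1)  # each pair once, no diagonal
    r = np.corrcoef(D_ref[iu], pairwise_distances(Y)[iu])[0, 1]
    return 1.0 - r**2

def pca_error_curve(X, max_dim):
    """Residual variance of PCA embeddings with 1..max_dim modes."""
    Xc = X - X.mean(axis=0)
    U, s, _ = np.linalg.svd(Xc, full_matrices=False)
    scores = U * s  # principal component scores, ordered by variance
    D_ref = pairwise_distances(X)
    return np.array([residual_variance(D_ref, scores[:, :d]) for d in range(1, max_dim + 1)])

def find_elbow(errors, tol=0.01):
    """Smallest dimensionality after which adding one mode lowers the error by < tol (assumed rule)."""
    drops = errors[:-1] - errors[1:]
    small = np.nonzero(drops < tol)[0]
    return int(small[0]) + 1 if small.size else len(errors)

# Synthetic population: 3 latent factors, linearly mixed into 20 neurons plus noise.
rng = np.random.default_rng(0)
latents = rng.normal(size=(300, 3)) * np.array([3.0, 2.0, 1.5])
Q, _ = np.linalg.qr(rng.normal(size=(20, 3)))
X = latents @ Q.T + 0.01 * rng.normal(size=(300, 20))
errors = pca_error_curve(X, max_dim=8)
print(np.round(errors, 4), "elbow at", find_elbow(errors))
```

For Isomap, `D_ref` would be the geodesic distance matrix and `Y` the Isomap embedding.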

The previous analyses indicate that nonlinear manifolds require fewer dimensions than flat manifolds to capture the variance in neural population activity (Figure 2C), while also allowing for better reconstruction (Figure 2D). As a final analysis to establish the nonlinearity of neural manifolds, we investigated whether the properties of the estimated nonlinear manifolds were also more robust than those of their linear counterparts against changes in the neurons used for estimation. We focused on the estimated dimensionality of the neural manifold and hypothesised that if a method provides a good estimate, using a sufficiently large number of neurons should consistently give the same estimated dimensionality (see examples for known manifolds and synthetic data in Figure S2; Figure S3A–C show that this metric is robust against dropping trials and data points). In contrast, if the low-dimensional projection onto the neural manifold fails to fully capture the structure of the population activity, then the estimated dimensionality will likely increase as more neurons are considered. As expected, for virtually all datasets, the estimated dimensionality of the nonlinear manifolds plateaued after 30–40 neurons were considered (blue traces in Figure 2E; Figure S3D), whereas that of flat manifolds never reached a plateau even when considering all recorded neurons (65–250). This trend was most apparent when we computed a “nonlinearity index” as the ratio between the estimated dimensionality of the linear and nonlinear manifolds for the same number of neurons. The nonlinearity index mostly took values greater than one and increased monotonically with the number of neurons, indicating that nonlinear manifolds provided progressively better approximations of the neural population activity as the number of neurons increased (Figure 2F).
These results were obtained by estimating the manifold dimensionality using the “participation ratio”, defined as the number of neural modes required to explain ~80 % of the total variance2,56, but we obtained similar results when using a different dimensionality estimation metric55,57 (Figure S3E).
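The dimensionality-versus-neurons analysis and the nonlinearity index can be sketched as follows. Using the threshold definition above, dimensionality is taken as the number of modes needed to explain 80% of the variance, computed here from classical-MDS spectra of Euclidean (flat, equivalent to PCA) or geodesic (nonlinear, Isomap-like) distances. Repeatedly subsampling neurons gives dimensionality curves whose ratio, flat over nonlinear, is the nonlinearity index. The synthetic ring-tuned population, the neighbourhood size, and the subsampling scheme are illustrative assumptions, not the study's exact settings.

```python
import numpy as np

def pairwise_distances(X):
    sq = np.sum(X**2, axis=1)
    return np.sqrt(np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0))

def geodesic_distances(X, n_neighbors=8):
    """Shortest paths on a symmetric k-nearest-neighbour graph (as in Isomap)."""
    D = pairwise_distances(X)
    n = len(D)
    G = np.full((n, n), np.inf)
    nbrs = np.argsort(D, axis=1)[:, 1:n_neighbors + 1]
    rows = np.repeat(np.arange(n), n_neighbors)
    G[rows, nbrs.ravel()] = D[rows, nbrs.ravel()]
    G = np.minimum(G, G.T)
    np.fill_diagonal(G, 0.0)
    for k in range(n):  # Floyd-Warshall
        G = np.minimum(G, G[:, [k]] + G[[k], :])
    if np.isinf(G).any():
        raise ValueError("neighbourhood graph is disconnected; increase n_neighbors")
    return G

def n_modes(D, var_threshold=0.8):
    """Number of classical-MDS modes of D needed to reach var_threshold of the variance."""
    n = len(D)
    J = np.eye(n) - 1.0 / n
    evals = np.clip(np.linalg.eigvalsh(-0.5 * J @ (D**2) @ J)[::-1], 0.0, None)
    cum_var = np.cumsum(evals) / evals.sum()
    return int(np.argmax(cum_var >= var_threshold)) + 1

def dimensionality_curves(X, sizes, n_reps=3, seed=0):
    """Mean flat and nonlinear dimensionality over random subsets of neurons,
    and the nonlinearity index (flat / nonlinear) for each subset size."""
    rng = np.random.default_rng(seed)
    flat, nonlin = [], []
    for m in sizes:
        f, g = [], []
        for _ in range(n_reps):
            Xs = X[:, rng.choice(X.shape[1], size=m, replace=False)]
            f.append(n_modes(pairwise_distances(Xs)))
            g.append(n_modes(geodesic_distances(Xs)))
        flat.append(np.mean(f))
        nonlin.append(np.mean(g))
    flat, nonlin = np.array(flat), np.array(nonlin)
    return flat, nonlin, flat / nonlin

# Synthetic population (assumption): 60 neurons with von Mises tuning to a circular variable.
rng = np.random.default_rng(0)
theta = rng.uniform(0.0, 2.0 * np.pi, size=300)
pref = rng.uniform(0.0, 2.0 * np.pi, size=60)
X = np.exp(8.0 * (np.cos(theta[:, None] - pref[None, :]) - 1.0))
X += 0.01 * rng.normal(size=X.shape)
flat, nonlin, index = dimensionality_curves(X, sizes=[30, 45, 60])
print("flat:", flat, "nonlinear:", nonlin, "index:", np.round(index, 2))
```

Sharply tuned neurons spread a one-dimensional ring over several Fourier-like principal components, so the flat estimate exceeds the nonlinear one.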

We performed additional controls to establish the nonlinearity of neural manifolds. First, we verified that the dimensionality estimates for the linear and nonlinear manifolds were independent of the dimensionality reduction technique used for manifold estimation. Reassuringly, we obtained qualitatively similar results when we used Factor Analysis5,58 instead of PCA to identify the flat neural manifolds (Figure S3F), and when we used nonlinear PCA59 instead of Isomap to identify the nonlinear neural manifolds (Figure S3G). Nonlinear manifolds were also more informative about behaviour: decoders trained on the latent dynamics within nonlinear manifolds outperformed those trained on linear manifolds given the same number of neural modes (Figure S3H), indicating that the nonlinearities capture behaviourally relevant information. Taken together, these results suggest that the presence of nonlinear manifolds reflects fundamental features of neural population activity, and not just the greater ability of nonlinear dimensionality reduction methods to fit data. Motor cortical manifolds thus exhibit nonlinear features even for an eight-target centre-out reaching task, and linear approximations of these intrinsically nonlinear structures become progressively more inaccurate as more neurons are considered.
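A minimal way to compare how informative different latent dynamics are about behaviour is a cross-validated linear decoder. The NumPy sketch below fits ridge regression from latent states to a behavioural variable such as hand velocity and reports the cross-validated R². Ridge regression without time lags, the regularisation strength, and random (rather than trial-wise) folds are our assumptions, not the study's decoder. In use, one would call `cv_r2` with the first d modes of the flat and of the nonlinear latent dynamics and compare the results at matched d.

```python
import numpy as np

def ridge_fit(Z, y, alpha=1.0):
    """Ridge regression weights (with an unpenalised intercept) mapping latents Z to behaviour y."""
    Z1 = np.hstack([Z, np.ones((len(Z), 1))])
    reg = alpha * np.eye(Z1.shape[1])
    reg[-1, -1] = 0.0  # do not shrink the intercept
    return np.linalg.solve(Z1.T @ Z1 + reg, Z1.T @ y)

def ridge_predict(W, Z):
    return np.hstack([Z, np.ones((len(Z), 1))]) @ W

def cv_r2(Z, y, n_folds=5, alpha=1.0, seed=0):
    """Cross-validated R^2 (pooled over output dimensions) of a ridge decoder.
    Assumption: random folds; for trial-structured data, split folds by trial instead."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(Z)), n_folds)
    y_hat = np.empty(y.shape, dtype=float)
    for test in folds:
        train = np.setdiff1d(np.arange(len(Z)), test)
        W = ridge_fit(Z[train], y[train], alpha)
        y_hat[test] = ridge_predict(W, Z[test])
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean(axis=0)) ** 2)
    return 1.0 - ss_res / ss_tot

# Toy check: "velocity" that is a linear function of two of three latents.
rng = np.random.default_rng(0)
Z = rng.normal(size=(500, 3))
vel = Z[:, :2] @ np.array([[1.0, 0.5], [-0.3, 2.0]]) + 0.01 * rng.normal(size=(500, 2))
print("informative latents:", round(cv_r2(Z, vel), 3))
print("unrelated latents:  ", round(cv_r2(rng.normal(size=(500, 3)), vel), 3))
```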

More varied behaviours reveal greater manifold nonlinearities

We have shown that neural manifolds in monkey motor cortex during a relatively simple centre-out reaching task are nonlinear (Figure 2). Our second hypothesis stated that, during tasks that require a broader range of movements, neural activity would explore a larger portion of the underlying neural manifold thus revealing more clearly its intrinsic nonlinearity (Figure 1B).

To study the relationship between manifold nonlinearity and task complexity, we analysed the activity of neural populations from the “hand knob” area of motor cortex while a paralysed participant attempted to perform a broad range of movements, including writing straight lines, single letters, and symbols according to a visual cue with their contralateral hand (data from Ref. 22, including ~200 multi-units per session) (Figure 3A,B). We first studied attempted drawing of one-stroke lines of three different lengths across 16 directions, comparing neural manifolds underlying population activity while the participant attempted to draw lines in a single direction with those underlying all 48 length × direction combinations. The neural manifold during the single-direction task was rather flat: PCA and Isomap gave similar reconstruction errors (Figure 3C; Figure S4B), and both of their estimated dimensionalities plateaued before all the neurons in the population were considered (Figure S4C). In contrast, when many line lengths and directions were considered, all our measures indicated that the motor cortical manifold was nonlinear (Figure 3C,D; Figure S4B,C; Figure S4D shows how nonlinearity tends to increase with the number of conditions).

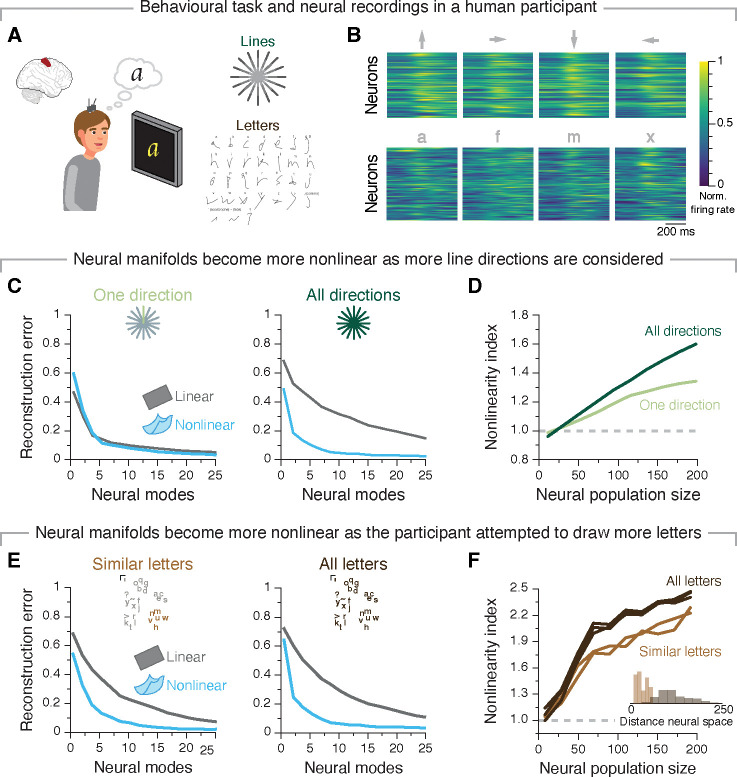

Figure 3: More complex tasks that require more varied neural population activity patterns reveal greater manifold nonlinearity in human motor cortex.

A. One participant implanted with microelectrode arrays in the hand “knob” area of motor cortex performed a variety of attempted drawing (“Lines task”) and handwriting tasks. B. Average firing rates of twelve example units when attempting to draw lines in four different directions (top) and when attempting to write four different letters (bottom). C. Reconstruction error after fitting flat (grey) and nonlinear (blue) manifolds with increasing dimensionality to the neural population activity as the participant attempted to draw strokes in one direction (left) or across all sixteen directions (right). D. Nonlinearity index, the ratio of the estimated dimensionality of flat manifolds to that of nonlinear manifolds, as a function of the number of neurons used to sample them, for the simple and complex versions of the stroke drawing task. E. Same as C but comparing attempts to write similar letters (left) with attempts to write all the letters in the English alphabet (right). F. Same as D but for the comparison between simple and complex writing tasks. Inset: Neural states associated with attempting to write letters with similar shapes are closer than those for different letters, as confirmed by a Euclidean distance analysis.

To further investigate this relationship, we re-visited the monkey centre-out reaching task and compared the nonlinearity of neural manifolds underlying reaches to all eight targets to those underlying reaches to one single target (Figure S4G–H). As predicted, the difference in quality of fit between nonlinear and flat neural manifolds was much smaller when only considering a single reaching direction, indicating that manifold nonlinearity increases with task complexity.

We further compared the nonlinearity of neural manifolds underlying even more complex tasks by focusing on the attempted handwriting task. We isolated trials where the participant attempted to write letters of the English alphabet with very similar shapes60, and compared the estimated neural manifolds with those underlying attempts to write all letters. The nonlinear manifolds underlying both tasks were considerably lower dimensional than their flat counterparts (Figure 3E; Figure S4J,K). Moreover, as expected, manifold nonlinearity increased for the larger group of dissimilar letters (Figure 3F), a difference likely driven by the limited set of similar letters exploring a smaller region of neural space (inset in Figure 3F). The observation that task complexity increases manifold nonlinearity across three different tasks in two different species supports the hypothesis that more complex tasks, which require more varied behaviour, make the intrinsic nonlinearity of neural manifolds more apparent.
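The distance analysis in the inset of Figure 3F can be approximated by trial-averaging population activity for each letter and computing Euclidean distances between the resulting neural states. In the NumPy sketch below, `X` (trials × features, for example neurons × time bins flattened) and `labels` are hypothetical inputs, and the exact distance measure used in the study may differ. Comparing `mean_pairwise_distance` for the similar-letter subset with that for all letters indicates how much more of neural space the larger set explores.

```python
import numpy as np
from itertools import combinations

def condition_means(X, labels):
    """Trial-averaged neural state for each condition (e.g. each attempted letter)."""
    labels = np.asarray(labels)
    conds = np.unique(labels)
    return conds, np.stack([X[labels == c].mean(axis=0) for c in conds])

def mean_pairwise_distance(states):
    """Mean Euclidean distance between all pairs of condition-averaged states."""
    return float(np.mean([np.linalg.norm(states[i] - states[j])
                          for i, j in combinations(range(len(states)), 2)]))

# Toy usage (hypothetical data): three trials of two conditions in a 2-D "neural space".
X = np.array([[0.0, 0.0], [0.0, 0.2], [3.0, 4.1]])
conds, states = condition_means(X, ["a", "a", "b"])
print(conds, states, mean_pairwise_distance(states))
```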

Neural manifold nonlinearity changes across cytoarchitecturally distinct brain regions

Our third hypothesis, that manifold nonlinearity is shaped by network connectivity properties, implies that activity in different brain regions may be better or worse approximated by flat manifolds depending upon their circuit properties. To test this presumed relationship in vivo, we compared the nonlinearity of motor cortical manifolds to that of another area critical for forelimb movement but with very different microarchitecture and cell types: the dorsolateral striatum (henceforth just striatum). For example, motor cortex has more recurrent excitatory connectivity and a broad range of cellular nonlinearities61,62 (due to large dendritic trees and many interneuron types), whereas striatum lacks this diversity of neuron types and has a very different circuitry dominated by feedforward and recurrent inhibitory connectivity63,64.

We analysed simultaneous motor cortical and striatal population recordings from four mice performing a reaching, grasping and pulling task14,47 (Methods; Figure 4A,B) (motor cortical neurons: 54–96 depending on the session; average, 76±15, mean±s.d.; striatal neurons: 62–106 depending on the session; average, 83±14, mean±s.d.). Comparing the nonlinearity of motor cortical and striatal manifolds provided direct evidence that manifold nonlinearity can be strikingly different across brain regions: all three of our measures revealed that striatal manifolds are nonlinear, whereas motor cortical manifolds estimated during this behaviour appear mostly flat (Figure 4C,D; Figures S5A,B and S6). This was the case even though single neuron firing rate statistics were similar between the two regions (Figure S5C), and despite the nonlinear aspects of striatal neuron biophysics being less marked than those of motor cortical neurons62,65. Thus, our experimental findings suggest that circuit properties may indeed be the primary factor underlying neural manifold nonlinearity.

Figure 4: The nonlinearity of neural manifolds changes across architecturally distinct brain regions.

A. Mice performed a reaching, grasping, and pulling task with four conditions (two positions × two targets). B. Average single neuron firing rates for motor cortex (top) and striatum (bottom), and hand positions as one mouse performed each condition. C. Reconstruction error after fitting flat (grey) and nonlinear (blue) manifolds with increasing dimensionality to the motor cortical (left) and striatal (right) population activity. Data from the same example mouse as in B. D. Nonlinearity index, the ratio of the estimated dimensionality of flat manifolds to that of nonlinear manifolds, as a function of the number of neurons used to sample them. Shown are all mouse sessions, colour coded by region (legend). Values greater than one (dashed grey line) indicate that nonlinear manifolds capture the data better than flat manifolds.

A neural network model to understand the emergence of manifold nonlinearity

The previous analyses provided support for our hypotheses that neural manifolds are intrinsically nonlinear (Figures 2 and 3), and that manifold nonlinearity is influenced by circuit connectivity properties (Figure 4). Since synaptic connectivity could not be manipulated during our behavioural experiments, we investigated the relationship between manifold nonlinearity and circuit connectivity directly by developing RNN models of motor cortical activity with different structure in their recurrent weights. We trained RNNs on the same instructed delay reaching task that the monkeys performed (Methods), which underlies our core experimental results. Our model architecture was based on previous studies using RNNs to simulate motor cortical activity18,37,49–51, but adapted to account for trial-to-trial variability during reaches to the same target. We achieved this by training RNNs to produce the monkey’s hand velocity during each recorded trial using the actual trial-specific preparatory neural activity as input, rather than the typical target cue (Methods; Figure 5A,B; Figure S7A,B). RNNs trained with this approach recapitulated key features of the actual neural activity, both at the population level (Figure 5C; Figure S7D) and at the single unit level, including clear, albeit more moderate, fluctuations across different trials to the same target (Figure S7C). These observations established our RNNs as suitable models of the actual neural activity.

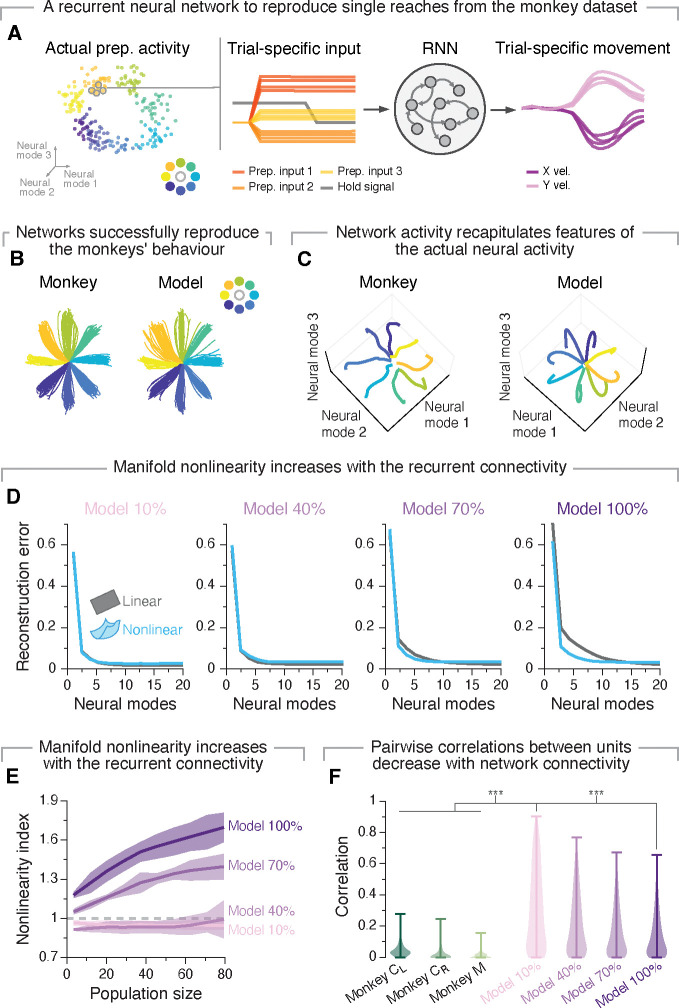

Figure 5: A recurrent neural network model supports the relationship between circuit connectivity and manifold nonlinearity.

A. We trained recurrent neural network models to produce the hand velocities recorded from the monkeys, using trial-specific preparatory activity as inputs. B. These models reproduced the variability across reaches to the same target observed experimentally. C. The latent dynamics of the network activity recapitulated the structure of the monkey latent dynamics (systematic quantifications in Figure S7). Individual traces, trial-averaged activity for each target. D. Reconstruction error after fitting flat (grey) and nonlinear (blue) manifolds with increasing dimensionality. Data for example networks with increasing degrees of recurrent connectivity (10%, 40%, 70% and 100%). E. Nonlinearity index, the ratio of the estimated dimensionality of flat manifolds to that of nonlinear manifolds, as a function of the number of sampled units. We compare results for networks with increasing degrees of recurrent connectivity (legend). Values greater than one (dashed grey line) indicate that nonlinear manifolds capture the data better than flat manifolds. Lines and shaded areas, mean±s.d. across 15 repetitions for each of the 10 seeds. F. Strength of pairwise correlations between units from networks with different degrees of recurrent connectivity, compared to the actual monkey data. Violin, probability density for each network connectivity level and for the monkey data. ***P < 0.001, two-sided Wilcoxon rank-sum test.

We next investigated the relationship between the degree of recurrent connectivity of the network and manifold nonlinearity. We originally hypothesized that manifold nonlinearity is in part due to the extensive number of connections between neurons in the brain. Consequently, we predicted that, for networks with the same architecture, manifold nonlinearity would increase with the degree of recurrent connectivity. The population activity of networks with low connectivity (10% and 40%) was spanned by a relatively flat manifold, as indicated by the similarity in variance explained (Figure S7E), reconstruction error (Figure 5D) and estimated dimensionality (Figure S7F) between flat and nonlinear manifolds. High connectivity networks (70% and 100%), in contrast, had manifolds that were clearly nonlinear based on all three metrics (Figure 5D; Figure S7E,F). Most notably, the dimensionality of nonlinear manifolds reached a plateau after relatively few units, while that of the flat manifolds continued to increase as more units were considered (Figure S7F). This resulted in nonlinearity indices well above one (Figure 5E). These differences were driven by differences in recurrent connection probability rather than in the strength of the recurrent weights, since the weight distributions were similar across all trained networks (Figure S7G). Therefore, when considering these “standard” RNN models, only the activity of networks with dense recurrent connections was spanned by nonlinear manifolds, with manifold nonlinearity increasing with the level of recurrent connectivity. This trend held for a different class of recurrent network models (Figure S9), and even when we trained RNNs with linear rather than nonlinear units to perform the same task (Figure S8A). In stark contrast, fully connected feedforward networks that lacked recurrent connectivity had flat manifolds (Figure S8B).
Combined, these results suggest that dense recurrent connectivity may be both necessary and sufficient for neural manifolds to become nonlinear.
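To make "degree of recurrent connectivity" concrete, the NumPy sketch below builds a rate RNN whose recurrent weights are multiplied by a fixed random binary mask with connection probability `p_connect`; 10% connectivity then means each possible recurrent synapse exists with probability 0.1. The Euler-integrated dynamics, tanh units, weight scaling, absence of self-connections, and feeding preparatory activity as a constant input are illustrative assumptions, not the study's architecture. Training (for example, gradient descent on the velocity error with the mask re-applied) is not shown. Passing `nonlinearity=lambda x: x` gives a linear-unit variant.

```python
import numpy as np

def make_masked_rnn(n_units, n_inputs, n_outputs, p_connect, seed=0):
    """Random rate-RNN parameters with a fixed binary recurrent connectivity mask."""
    rng = np.random.default_rng(seed)
    mask = (rng.random((n_units, n_units)) < p_connect).astype(float)
    np.fill_diagonal(mask, 0.0)  # assumption: no self-connections
    # Keep the summed recurrent input variance per unit roughly independent of p_connect.
    scale = 1.0 / np.sqrt(max(p_connect * n_units, 1.0))
    return {
        "mask": mask,
        "W_rec": rng.normal(0.0, scale, size=(n_units, n_units)) * mask,
        "W_in": rng.normal(0.0, 1.0 / np.sqrt(n_inputs), size=(n_units, n_inputs)),
        "W_out": rng.normal(0.0, 1.0 / np.sqrt(n_units), size=(n_outputs, n_units)),
    }

def run_rnn(params, inputs, dt_over_tau=0.1, nonlinearity=np.tanh):
    """Euler-integrate x <- x + dt/tau * (-x + W_rec f(x) + W_in u) over inputs (time x n_inputs).
    Returns unit rates f(x) (time x units) and linear readouts (time x n_outputs)."""
    W_rec = params["W_rec"] * params["mask"]  # re-apply mask so absent synapses stay at zero
    x = np.zeros(W_rec.shape[0])
    rates, outputs = [], []
    for u in inputs:
        x = x + dt_over_tau * (-x + W_rec @ nonlinearity(x) + params["W_in"] @ u)
        r = nonlinearity(x)
        rates.append(r)
        outputs.append(params["W_out"] @ r)
    return np.array(rates), np.array(outputs)

# Example: a 10%-connected and a fully connected network driven by the same input.
prep = np.random.default_rng(1).normal(size=20)  # hypothetical preparatory activity of 20 neurons
inputs = np.tile(prep, (100, 1))                 # held constant over 100 time steps (assumption)
for p in (0.1, 1.0):
    net = make_masked_rnn(n_units=200, n_inputs=20, n_outputs=2, p_connect=p)
    rates, vel = run_rnn(net, inputs)
    print(f"p = {p}: mask density {net['mask'].mean():.2f}, output shape {vel.shape}")
```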

While the RNN models above exhibited clear similarities to the recorded neural activity (Figure 5; Figure S7), the pairwise correlations between units were much larger than those observed experimentally34,66–68 (compare “Monkey” and “Model” distributions in Figure 5F). Intriguingly, increasing the degree of recurrent connectivity, which brought manifold nonlinearity closer to the larger experimentally observed values (Figure 2), also moderately decreased the pairwise correlations between units. This inverse relationship between manifold nonlinearity and pairwise correlations was also present in the mouse recordings: striatum showed lower pairwise correlations between units (Figure S5D) and higher manifold nonlinearity than motor cortex (Figure 4C–D), suggesting a potential fundamental association between these two measures.
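Pairwise correlation strength can be summarised by the distribution of Pearson correlations between all unit pairs. In the NumPy sketch below, `rates` is a hypothetical time × units matrix (for example, concatenated single-trial firing rates). Units with zero variance are dropped because their correlation is undefined, and using the mean absolute correlation as a one-number "strength" is our assumption. Two such distributions could then be compared with a two-sided Wilcoxon rank-sum test, as in Figure 5F, for instance with `scipy.stats.ranksums`.

```python
import numpy as np

def pairwise_correlations(rates):
    """Pearson correlation for every pair of units (upper triangle of the correlation matrix).
    rates: time x units. Units with zero variance are excluded."""
    active = rates.std(axis=0) > 0
    C = np.corrcoef(rates[:, active], rowvar=False)
    iu = np.triu_indices_from(C, k=1)
    return C[iu]

def correlation_strength(rates):
    """Mean absolute pairwise correlation, a single-number summary (assumption)."""
    return float(np.mean(np.abs(pairwise_correlations(rates))))

t = np.linspace(0.0, 1.0, 100)
toy = np.stack([t, 2.0 * t + 1.0, -t, np.zeros_like(t)], axis=1)  # last unit is silent
print(pairwise_correlations(toy), correlation_strength(toy))
```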

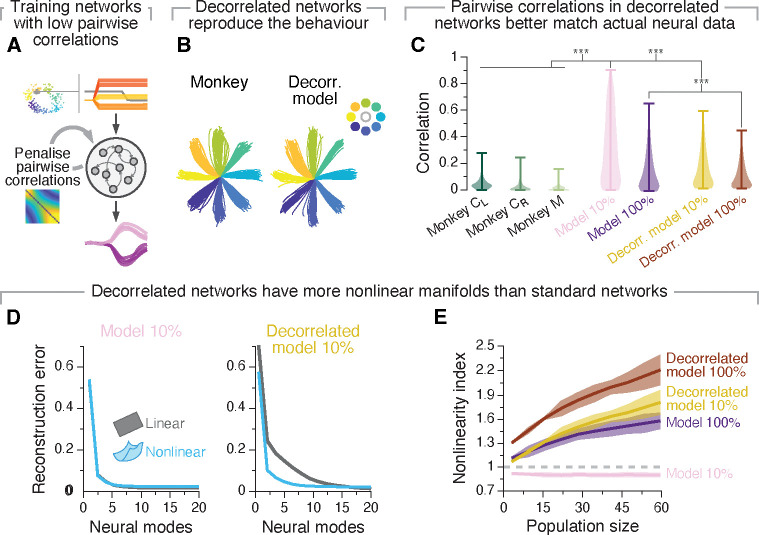

We explored this relationship by training a new set of RNNs with the additional constraint of producing lower pairwise unit correlations (Methods; Figure 6A) to test more directly the association between these metrics. These “decorrelated” networks also learned the task successfully (Figure 6B; Figure S10A) and produced activity patterns similar to those observed in monkey motor cortex (Figure S10D), while, as intended, their unit correlations were much closer to the experimentally observed correlations than those of our previous standard models (Figure 6C; Figure S10B). The manifolds of the decorrelated networks were much more nonlinear than those of the standard networks, even for matching degrees of recurrent connectivity (Figure 6D,E; Figure S10E), while still exhibiting an association between recurrent connectivity level and manifold nonlinearity (Figure S10C). In agreement with our hypothesis that circuit connectivity shapes manifold nonlinearity, the differences in pairwise correlations and manifold nonlinearity between standard and decorrelated networks corresponded to differences in their connectivity: overall, the weight changes required by the standard networks to learn the task were higher dimensional than those of decorrelated networks (Figure S10G), even though their distributions did not exhibit striking differences (compare Figure S7G and S10F). Combined, our simulations provide strong support for our experimental observation that network connectivity is indeed an important factor shaping manifold nonlinearity. While the activity of sparsely connected standard networks could be captured by a flat manifold, the more physiologically relevant condition of dense recurrent connectivity led to the emergence of nonlinear manifolds.
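One plausible form of the decorrelation constraint is a penalty on the mean squared off-diagonal correlation between units, added to the velocity error with a weight λ. The dimensionality of the learned weight changes can likewise be summarised by how many singular values of W_trained − W_init are needed to capture most of their squared norm. Both the penalty form and this dimensionality measure are assumptions made for illustration, not the study's Methods. In training, the penalty would be written in an automatic-differentiation framework; the NumPy version below only evaluates it.

```python
import numpy as np

def decorrelation_penalty(rates, eps=1e-8):
    """Mean squared off-diagonal Pearson correlation between units.
    rates: samples (time bins pooled over trials) x units."""
    Z = rates - rates.mean(axis=0)
    Z = Z / (Z.std(axis=0) + eps)
    C = Z.T @ Z / len(Z)  # correlation matrix
    off_diag = C[~np.eye(C.shape[0], dtype=bool)]
    return float(np.mean(off_diag**2))

def total_loss(vel_pred, vel_true, rates, lam=0.1):
    """Velocity mean squared error plus the weighted decorrelation penalty (lam is hypothetical)."""
    return float(np.mean((vel_pred - vel_true) ** 2)) + lam * decorrelation_penalty(rates)

def weight_change_dim(W_init, W_trained, var_threshold=0.8):
    """Singular modes of the weight change needed to reach var_threshold of its squared norm."""
    s = np.linalg.svd(W_trained - W_init, compute_uv=False)
    frac = np.cumsum(s**2) / np.sum(s**2)
    return int(np.argmax(frac >= var_threshold)) + 1

rng = np.random.default_rng(0)
shared = rng.normal(size=(1000, 1))
print("correlated units:  ", decorrelation_penalty(np.hstack([shared, shared, shared])))
print("independent units: ", decorrelation_penalty(rng.normal(size=(1000, 10))))
W0 = rng.normal(size=(50, 50))
print("rank-1 change dim: ", weight_change_dim(W0, W0 + np.outer(rng.normal(size=50), rng.normal(size=50))))
```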

Figure 6: A recurrent neural network model constrained to have low pairwise correlation between units has more nonlinear manifolds.

A. We modified the training procedure of our models to limit the pairwise correlations between their units, to better match experimental neural data. B. These models also reproduced the experimentally recorded hand trajectories. C. “Decorrelated networks” have pairwise correlations between units that are similar in magnitude to those experimentally observed in monkeys. Shown is the strength of pairwise correlations between units from “decorrelated networks” with two degrees of recurrent connectivity, compared to “standard networks” with similar degrees of connectivity and to the pairwise correlations experimentally observed in monkeys. Violin, probability density for each network connectivity level and monkey. ***P < 0.001, two-sided Wilcoxon rank-sum test. D. Reconstruction error after fitting flat (grey) and nonlinear (blue) manifolds to “standard” (left) and “decorrelated” (right) models with similar levels of recurrent connectivity (10%). E. Nonlinearity index, i.e., the ratio of the estimated dimensionality of flat manifolds to that of nonlinear manifolds, as a function of the number of sampled units. We compare results between various standard and decorrelated networks (results for additional degrees of recurrent connectivity are shown in Figure S10C). Lines and shaded areas, mean±s.d. across seeds.

Discussion

Technological, computational, and theoretical advances have fostered an expansion from the study of single-neuron activity and the encompassing neural circuits to the investigation of the latent dynamics reflecting the coordinated activity of neural populations1,41,69,70. In practice, these latent dynamics are often inferred by projecting the recorded neural activity onto a neural manifold that is identified using a particular dimensionality reduction or manifold learning method39,40. As such, the particular choice of methodology translates into implicit assumptions about the properties of the neural manifold. Most studies have relied on linear methods such as PCA or Factor Analysis to identify the latent dynamics, thereby assuming that the neural population activity lies in a flat neural manifold. Here, we tested the hypothesis that neural manifolds underlying behaviour are intrinsically nonlinear by comparing the relative performance of linear and nonlinear dimensionality reduction methods on recordings from a variety of tasks, brain regions, and species. We showed that, in virtually all cases, the activity of neural populations from motor regions of the brain is best described by a nonlinear rather than a flat manifold. This difference between linear and nonlinear methods became more dramatic as task complexity (e.g., number of different movements) increased. During the same task, architecturally distinct brain regions differed in the degree of nonlinearity of their manifolds, an observation that we further supported by manipulating network connectivity in RNNs performing the same task.

Influence of task complexity on manifold nonlinearity

The nonlinearities inherent in neural manifolds became more apparent as the complexity of behaviours increased, an observation that held for the motor cortex in both human (Figure 3) and monkey (Figure S4E,F). We propose that this can be intuitively explained by simple behaviours exploring only a small portion of the available neural states. Even if the “landscape” defined by these states were intrinsically nonlinear, these small explorations could be well approximated locally by a flat manifold. However, as the complexity of behaviours increases, activity explores a larger region of state space, making manifold nonlinearities more apparent (Figure 1B).

A previous theoretical study on the properties of flat motor cortical manifolds proposed that the upper bound on their dimensionality should increase with task complexity2. Our results suggest an alternative explanation: increasing the number of movements an animal performs may make the neural activity explore a larger portion of an intrinsically nonlinear manifold. This should in turn increase the difference in the number of dimensions needed by linear and nonlinear manifolds to accurately capture the neural population activity3,71,72.

Our data from monkey and mouse motor cortex seemed to indicate a difference between these two species: during the studied motor tasks, monkey motor cortical manifolds were nonlinear (Figure 2) while mouse motor cortical manifolds were flat (Figure 4). Differences in cytoarchitecture between these two species73 could be one factor driving this difference, but our data suggest that behavioural complexity is the primary cause. The monkeys reached to eight different targets by producing a relatively large variety of muscle activation patterns74,75, including arm flexion and extension. The mice, in contrast, reached in only one direction, away from the body14,47. Thus, the mice performed a far simpler behaviour, and their neural activity may have explored only a small portion of the motor cortical state space. This interpretation is supported by our direct comparison between reaches to all eight targets and reaches to a single target during the monkey centre-out reaching task (Figure S4D–F). In the latter case, which is more akin to the mouse dataset, manifolds were almost flat, as the nonlinearity indices became similar (Figure S4D).

For practical and conceptual reasons, most neuroscientists study relatively low-dimensional behaviours produced by animals with extensive practice in a given task. Linear methods that estimate a flat manifold defined by a set of orthogonal directions may be the best approach for these kinds of tasks, especially given that their geometric interpretation may be more intuitive. However, our results indicate that as the field moves toward the study of more complex naturalistic behaviours (e.g., Refs. 76–78), we will need to consider nonlinear aspects of manifold geometry. This poses the additional challenge of choosing among the many nonlinear dimensionality reduction or manifold learning methods. In practice, since each method has its inherent assumptions and these may lead to different geometries (and distortions), e.g., depending on how well they preserve global vs. local features40, one may need to try several methods to achieve an accurate view of a manifold’s geometry, especially when a priori predictions are not available. Defining the dimensionality of behaviour also remains a looming challenge. Here, we have assumed that tasks requiring more varied outputs, whether intended (as for the human BCI participant; Figure 3) or actual (as for the monkeys; Figure S4E,F), are more complex. While this assumption is reasonable for the present study, a recent proposal to define the dimensionality of behaviour based on the number of past features that maximally predict future movements79 could establish a more rigorous relationship between the dimensionalities of the neural manifold and behaviour.

Factors driving the region-specificity of manifold nonlinearity

We investigated possible biophysical factors leading to the intrinsic nonlinearity of neural manifolds. We predicted that circuit connectivity would be a key determinant of manifold nonlinearity, and found that increasing recurrent connectivity monotonically increased manifold nonlinearity for two different artificial neural network architectures (Figure 5; Figure S7, S9). Moreover, flat manifolds accurately captured the latent dynamics for only a subset of sparsely connected networks (Figure 5; Figure S9). This association between recurrent connectivity and manifold nonlinearity held for RNN models with both nonlinear and linear units (Figure S8A) but was absent in purely feedforward fully-connected networks with nonlinear units (Figure S8B). Thus, manifold nonlinearity may result not from the properties of the individual units, but rather from the interactions among the constituent units in an emergent fashion80,81.

We further confirmed the link between circuit connectivity and manifold nonlinearity experimentally. Neural manifolds from the architecturally distinct mouse motor cortex and dorsolateral striatum showed different degrees of nonlinearity during the same behaviour even while providing comparable predictions of movement kinematics14,47. Based on our RNN results, we argue that this difference arises at least in part from their distinct cytoarchitecture61–64. Yet, the difference in manifold nonlinearity between motor cortex and striatum could also be driven by differences in the types of inputs they receive. Indeed, compared to M1, striatum may integrate inputs from a wider variety of brain regions, encompassing motor, associative, sensory, and limbic regions63,82,83. Integration of such varied information streams may lead to more intricate population-wide activity patterns that are better approximated by a nonlinear manifold.

Both our RNN models and the comparison between mouse motor cortex and striatum showed that more nonlinear manifolds are associated with lower pairwise correlations between neurons (Figures 5F and 4D, respectively). Moreover, when we limited the value of these correlations during training, RNNs with comparable degrees of recurrent connectivity exhibited stronger manifold nonlinearities (Figure 6). Interestingly, a previous study linked lower pairwise correlations to a higher dimensionality of flat manifolds84. Our results suggest that this increase in the dimensionality of the embedding flat manifold may be driven by an increase in nonlinearity, perhaps without a comparably large increase in the intrinsic dimensionality85. That is, the number of variables needed to characterise the neural population activity may remain constant even as the pairwise correlations between neurons decrease and the dimensionality of the flat “embedding” manifold increases.

Manifold nonlinearity beyond the motor system

While we have largely focused on regions of the motor system in the present work, we believe that nonlinear manifolds may be a ubiquitous feature of neural population activity throughout the brain. Yet, the different roles of different systems may translate into fundamental differences in the geometric and topological properties of these underlying nonlinear manifolds. For example, primary sensory regions process rapidly changing stimuli, and are thus likely more input-driven than motor regions controlling smooth limb movements. A recent study analysing the responses of large neural populations in mouse primary visual cortex (V1) found that the dimensionality of flat manifolds increases with the number of visual stimuli32, a trend that parallels our findings in human (Figure 3) and monkey (Figure S4E,F) motor cortex. Our results suggest that these V1 manifolds of increasing dimensionality may actually approximate a much lower-dimensional neural manifold that is strikingly nonlinear. Relatedly, a recent study of the mouse whisker system suggests that responses of somatosensory populations are best described by nonlinear manifolds33, providing evidence of nonlinear manifolds in primary somatosensory regions. The amount of nonlinearity likely varies with distance from the primary sensory organs, consistent with the recent observation of a gradient in linear manifold dimensionality along the visual system86.

“Higher” brain regions that are less directly linked to the production of behaviour or to sensory processing also seem to have neural manifolds with complex and interesting nonlinear geometries. For example, the activity of populations of head-orientation cells in the thalamus lies on a ring-shaped manifold whose coordinates map onto the animal’s heading direction8. Similarly, the activity of entorhinal “grid cells” lies on a toroidal manifold that forms a tessellated, robust, and accurate representation of the environment7. Both these manifolds are preserved between awake behaviour and different phases of the sleep cycle7,8,87, suggesting that their features may be dominated by biophysical constraints (e.g., circuit properties) on neural population activity that are invariant to behavioural states. This conservation of manifold geometry across behavioural states seems at odds with the changes reported for the motor system, where producing different behaviours leads to dramatic changes in the orientation of flat manifolds12, although these changes are absent when animals produce various related behaviours11.

Finally, the activity of neural populations in the hippocampus, a brain region involved in representing abstract maps of concepts88 including space89, also lies on a nonlinear manifold whose geometry seems to be flexibly shaped by experience31,90. Indeed, hippocampal manifold geometry changes as animals familiarise themselves with a new environment90, or when they link their spatial maps to other cognitive variables, such as value31. Therefore, growing evidence suggests that nonlinear neural manifolds may be a universal feature across many cytoarchitecturally distinct regions in the brain.

Conclusion

Investigating the coordinated activity of neural populations has furthered our understanding of how the brain generates behaviour. However, leveraging this approach to understand more naturalistic behaviours will likely depend upon adequately estimating the neural manifolds underlying the population activity. Here, we have shown that, during a variety of motor tasks, neural manifolds are intrinsically nonlinear, that their degree of nonlinearity varies across cytoarchitecturally different brain regions, and that it becomes more evident during more complex behaviours. These results extend recent reports of nonlinear manifolds across a variety of non-motor regions of the brain7,8,28,31, to which we expect our findings to also translate.

From a translational point of view, accounting for the nonlinear geometry of neural manifolds across the sensorimotor system may be key to developing brain-computer interfaces that restore function across a broad range of behaviours by “decoding” control signals from brain activity91,92. From a fundamental science point of view, accounting for the region-specific nonlinearity of neural manifolds, which is present in cortical and subcortical regions during both overt and covert behaviour, may be crucial to understand the neural basis of more complex and naturalistic behaviours.

Methods

Subjects and tasks

Monkey

Two monkeys (both male, Macaca mulatta; Monkey C, 6–8 years old during these experiments, and Monkey M, 6–7 years old) were trained to perform a standard centre-out reaching task using a planar manipulandum. Both had performed a similar task for several months prior to the neural recordings, so they were proficient at it. In this task, the monkey moved its hand to the centre of the workspace to begin each trial. After a variable instructed delay period (0.5–1.5 s), the monkey was presented with one of eight outer targets, equally spaced in a circle and selected randomly with uniform probability. Then, an auditory go cue signalled the animal to reach to the target. The trial was considered successful if the monkey reached the target within 1 s after the go cue and held the position for 0.5 s. As the monkey performed this task, we recorded the position of the endpoint of the manipulandum at a sampling frequency of 1 kHz using encoders in each joint, and digitally logged the timing of task events, such as the go cue. Portions of these data have been previously published and analysed in Refs. 13,16,93, among others, and are publicly available on Dryad (https://doi.org/10.5061/dryad.xd2547dkt).

Mouse

After habituation to head fixation and the recording setup, four 8–16-week-old mice were trained for approximately one month to reach, grasp, and pull a manipulandum (similar to the tasks in Refs. 48,94). In this task, mice had to reach and pull a joystick positioned approximately 1.5 cm away from the initial hand position. The joystick was placed in one of two positions (left or right, < 1 cm apart) and was weighted with one of two loads (3 g or 6 g), for a total of four trial types. During the experiments, mice could self-initiate a reach to the joystick, followed by an inward pull, to obtain a liquid reward, which was delivered ~1 s after pull onset in successful trials only. A minimum inter-trial period of 7 s was required. Each trial type was repeated 20 times before the task parameters were switched to the next trial type without any cue. Each session comprised two repetitions of the set of four trial types, presented in the same order, making up 2 × 4 × 20 = 160 trials.

Two high-speed, high-resolution monochrome cameras (Point Grey Flea3; 1.3 MP Mono USB3 Vision VITA 1300; Point Grey Research Inc., Richmond, BC, Canada) with 6-mm to 15-mm (f/1.4) lenses (C-Mount; Tokina, Japan) were placed perpendicularly in front of and to the right of the animal. A custom-made near-infrared light-emitting diode light source was mounted on each camera. The cameras were synchronised to each other and captured at 500 frames/s at a resolution of 352 × 260 pixels. Video was recorded using custom-made software developed by the Janelia Research Campus Scientific Computing Department and IO Rodeo (Pasadena, CA). This software controlled and synchronised all facets of the experiment, including the auditory cue, turntable rotation, and high-speed cameras. Fiji software was used to timestamp the videos. Behaviour was annotated using the Janelia Automatic Animal Behavior Annotator95 (JAABA).

Human

We analysed publicly available data from Willett et al.22 (https://doi.org/10.5061/dryad.wh70rxwmv). These data were recorded while a BrainGate study participant (T5) attempted to write a variety of digits, letters, and symbols. Participant T5 was a 65-year-old man at the time of data collection, with a C4 AIS C (ASIA Impairment Scale C, motor incomplete) spinal cord injury that occurred approximately 9 years prior to study enrollment. As a result of this injury, his hand movements were limited to twitching and micromotions. Among the three tasks in this dataset, we analysed the two that allowed us to most consistently explore a broad range of attempted movements, which we hypothesised would reveal an increase in manifold nonlinearity. These were: 1) attempting to draw straight lines of three different lengths in 16 different directions using a single pen stroke; and 2) attempting to write individual letters and symbols of the English alphabet.

In both tasks, Participant T5 was presented with visual cues displayed on a computer monitor. Each trial began with an instructed delay period of variable length (2.0–3.0 s), during which a single character appeared on the screen above a red square that served as a hold cue. During the delay period, T5 waited and prepared to attempt to draw the appropriate stroke or write the corresponding character. Then, the red square in the centre of the screen turned green, instructing T5 to begin attempting to draw the stroke or write the character. After the 1-s go period, the next trial’s instructed delay period began.

Neural recordings

Monkey

All surgical and experimental procedures were approved by the Institutional Animal Care and Use Committee of Northwestern University under protocol #IS00000367. For recording, we used 96-channel Utah microelectrode arrays implanted in the primary motor cortex (M1) and dorsal premotor cortex (PMd) using standard surgical procedures. When recordings from both areas were available, they were pooled together and denoted as motor cortex. Implants were located in the hemisphere contralateral to the hand the animal used in the task. Monkey C received two sets of implants: a single array in the right M1 while performing the task with the left hand; and, later, two arrays in the left hemisphere (M1 and PMd) while using the right hand for the task. These sessions are denoted CR and CL, respectively. Monkey M received dual implants in the right M1 and PMd. We analysed data from seven sessions from each of the three monkey datasets (CL, CR, and M).

Neural activity was recorded during the behaviour using a Cerebus system (Blackrock Microsystems). The recordings on each channel were band-pass filtered (250 Hz–5 kHz), digitised (30 kHz), and then converted to spike times based on threshold crossings. The threshold was set to −5.5× the root-mean-square activity on each channel. We manually spike sorted all the recordings to identify putative neurons (Offline Sorter v3, Plexon, Inc, Dallas, TX). Overall, we identified an average of 278 ± 33 neurons per session from monkey CL (range, 207–309), 72 ± 17 neurons per session from monkey CR (range, 46–93), and 125 ± 23 neurons per session from monkey M (range, 92–159).

Mouse

All surgical and experimental procedures were approved by the Institutional Animal Care and Use Committee of Janelia Research Campus. A brief (<2 h) surgery was first performed to implant a 3D-printed headplate96. Following recovery, the water consumption of the mice was restricted to 1.2 ml per day to train them in a behavioural task. Following training, a small craniotomy for acute recording was made 0.5 mm anterior and 1.7 mm lateral to bregma in the left hemisphere. A Neuropixels probe was centred above the craniotomy and lowered at a 10° angle from the axis perpendicular to the skull surface at a speed of 0.2 mm/min. The tip of the probe was located 3 mm ventral to the pial surface. After a slow and smooth descent, the probe was allowed to sit still at the target depth for at least 5 min before recording began, to allow the electrodes to settle. We analysed data from two sessions from each of mouse 38 and mouse 40, and one session from each of mouse 39 and mouse 44.

Neural activity was filtered (high-pass at 300 Hz), amplified (200× gain), multiplexed, digitised (30 kHz), and recorded using the SpikeGLX software (https://github.com/billkarsh/SpikeGLX). Recorded data were pre-processed using the open-source software Kilosort 2.0 (https://github.com/MouseLand/Kilosort) and manually curated using Phy (https://github.com/cortex-lab/phy) to identify putative single units in the primary motor cortex and dorsolateral striatum. A total of six experimental sessions (from four different mice) with simultaneous motor cortical and striatal recordings were included in this work. We identified an average of 76 ± 15 M1 neurons (range, 54–96) and 83 ± 15 striatal neurons (range, 62–106) in each session.

Human

Participant T5 was implanted with two 96-channel Utah microelectrode arrays in the hand “knob” area of the precentral gyrus. The publicly available neural data include “multi-unit activity”, consisting of binned spike counts (10-ms bins) indicating the number of times the voltage time series on a given electrode crossed a threshold set to −3.5× the root-mean-square activity on that channel, for 192 channels per session. We analysed data from a total of three sessions.

Data analysis

We used a similar approach to analyse the monkey, mouse, and human data. First, we discarded all unsuccessful trials (in the case of the animals, unrewarded trials). An equal number of trials per condition was then randomly selected (eight targets for the monkeys and four trial types for the mice). We focused our analyses on the following windows: for the monkey data, −100 to 400 ms with respect to the go cue; for the mouse data, −100 to 400 ms with respect to movement onset; and for the human data, −100 to 600 ms with respect to the go cue.

Before performing any dimensionality reduction to identify the underlying linear or nonlinear neural manifold, we computed the smoothed firing rate as a function of time for each single neuron (or multi-unit, in the case of the human data). We obtained these smoothed firing rates by binning the spikes of each single unit or multi-unit (henceforth, simply “units”) in 20-ms bins, square-root transforming the binned counts, and convolving them with a Gaussian kernel. We excluded only units with a low mean firing rate (< 1 Hz across all time bins).
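The pre-processing above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: it assumes spikes have already been binned into 20-ms counts per unit, and the Gaussian kernel width (`kernel_sd`, 50 ms here) is a hypothetical choice because the text does not state it.

```python
import numpy as np


def gaussian_kernel(bin_size: float, sd: float) -> np.ndarray:
    """Unit-area Gaussian kernel sampled at bin resolution, truncated at +/-3 s.d."""
    half_width = int(np.ceil(3 * sd / bin_size))
    t = np.arange(-half_width, half_width + 1) * bin_size
    kernel = np.exp(-0.5 * (t / sd) ** 2)
    return kernel / kernel.sum()


def preprocess(counts: np.ndarray, bin_size: float = 0.02,
               kernel_sd: float = 0.05, min_rate_hz: float = 1.0):
    """Smooth square-root-transformed spike counts and drop low-rate units.

    counts: (n_units, n_bins) spike counts in bins of `bin_size` seconds.
    Returns (smoothed activity of the kept units, indices of the kept units).
    kernel_sd = 0.05 s is an assumed value, not taken from the text.
    """
    kernel = gaussian_kernel(bin_size, kernel_sd)
    if counts.shape[1] < kernel.size:
        raise ValueError("need at least as many bins as kernel samples")
    mean_rate_hz = counts.mean(axis=1) / bin_size       # mean rate over all bins
    keep = np.flatnonzero(mean_rate_hz >= min_rate_hz)  # exclude units below 1 Hz
    sqrt_counts = np.sqrt(counts[keep].astype(float))   # square-root transform
    smoothed = np.array([np.convolve(u, kernel, mode="same") for u in sqrt_counts])
    return smoothed.reshape(keep.size, counts.shape[1]), keep
```

Square-rooting before smoothing follows the order given in the text; `mode="same"` keeps every unit's trace aligned with the original time bins.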

Estimating flat and nonlinear manifolds using linear and nonlinear dimensionality reduction techniques

Following the pre-processing described in the previous section, we concatenated all the trials, producing a neural data matrix X of dimension n by T, where n is the number of units and T is the total number of time points from all trials. Note that for each session all trials were truncated to the same duration, and thus T equals the number of trials × the number of time bins per trial. For each session, the simultaneous activity of all n recorded units was represented in a neural state space. In this space, the joint recorded neural activity at each time bin is represented as a single point whose coordinates are given by the firing rates of the corresponding units. As the activity evolves over time, this point traces a trajectory in neural state space: the latent dynamics. We estimated the linear and nonlinear manifolds underlying this neural population activity to determine whether nonlinear manifolds outperform their flat counterparts at capturing its properties. We used Principal Component Analysis (PCA) to compute flat manifolds and Isomap54 to compute nonlinear manifolds, although we later replicated the main analysis using different linear and nonlinear dimensionality reduction methods (see section “Additional analyses including controls” below).

PCA is a linear dimensionality reduction technique that identifies a set of orthogonal directions (eigenvectors) that capture the greatest variance in the data. These directions are ranked by the amount of variance they explain, which is quantified by the associated eigenvalue. In contrast, Isomap is a nonlinear dimensionality reduction technique that finds a nonlinear manifold that embeds the data. The Isomap algorithm starts by constructing a neighbourhood graph based on the pairwise distances between data points: it connects each data point to its nearest neighbours, forming a graph whose edges are weighted by the distances between connected points. Next, Isomap estimates the pairwise geodesic distances between all data points as shortest-path distances on this graph. Once the geodesic distances are computed, Isomap uses classical multidimensional scaling to embed the data points into a lower-dimensional manifold. We refer to the dimensions identified by any dimensionality reduction method simply as neural modes.
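To make the two procedures concrete, here is a minimal NumPy sketch of the PCA eigenvalues and of the three Isomap steps described above (k-nearest-neighbour graph, shortest-path geodesic distances, classical multidimensional scaling). The neighbourhood size `n_neighbors` is a hypothetical default, and the O(T³) Floyd-Warshall shortest-path loop is only suitable for small toy examples; for real datasets an established implementation such as scikit-learn's `sklearn.manifold.Isomap` would normally be used. This sketch is not claimed to match the authors' code.

```python
import numpy as np


def pca_eigenvalues(X: np.ndarray) -> np.ndarray:
    """Eigenvalues (descending) of the unit covariance matrix; X is (n_units, T)."""
    Xc = X - X.mean(axis=1, keepdims=True)
    cov = Xc @ Xc.T / (X.shape[1] - 1)
    return np.sort(np.linalg.eigvalsh(cov))[::-1]


def geodesic_distances(points: np.ndarray, n_neighbors: int = 10) -> np.ndarray:
    """Shortest-path distances on a symmetric k-NN graph; points is (T, n_units)."""
    diff = points[:, None, :] - points[None, :, :]
    d = np.sqrt((diff ** 2).sum(axis=-1))                # Euclidean distances
    T = d.shape[0]
    g = np.full((T, T), np.inf)
    nn = np.argsort(d, axis=1)[:, 1:n_neighbors + 1]     # skip self at position 0
    rows = np.repeat(np.arange(T), n_neighbors)
    g[rows, nn.ravel()] = d[rows, nn.ravel()]            # keep only k-NN edges
    g = np.minimum(g, g.T)                               # make the graph undirected
    np.fill_diagonal(g, 0.0)
    for k in range(T):                                   # Floyd-Warshall
        g = np.minimum(g, g[:, [k]] + g[[k], :])
    return g


def isomap(X: np.ndarray, n_components: int = 3, n_neighbors: int = 10):
    """Returns (embedding (T, n_components), MDS eigenvalues descending, geodesics)."""
    G = geodesic_distances(X.T, n_neighbors)
    if not np.all(np.isfinite(G)):
        raise ValueError("neighbourhood graph is disconnected; increase n_neighbors")
    T = G.shape[0]
    J = np.eye(T) - np.ones((T, T)) / T                  # centring matrix
    B = -0.5 * J @ (G ** 2) @ J                          # double-centred squared geodesics
    evals, evecs = np.linalg.eigh(B)
    order = np.argsort(evals)[::-1]
    evals, evecs = evals[order], evecs[:, order]
    emb = evecs[:, :n_components] * np.sqrt(np.maximum(evals[:n_components], 0.0))
    return emb, evals, G
```

On a toy half circle traced in three "units", PCA needs two modes, whereas the first Isomap mode already recovers the arc coordinate, which is the kind of discrepancy the nonlinearity measures below are designed to detect.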

We used three different measures to establish how well flat and nonlinear manifolds captured the neural population activity. First, we calculated the total neural variance explained by manifolds with increasing dimensionality by computing the cumulative sum of the eigenvalues of the covariance matrix (for PCA) and the double-centered geodesic distance matrix (for Isomap) (see, e.g., Figure 2C). In both cases, the eigenvalue corresponding to a given dimension was divided by the total sum of the eigenvalues, bounding the values between zero and one.
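A small sketch of this normalisation, assuming the eigenvalues have already been computed (from the covariance matrix for PCA, or from the double-centred geodesic matrix for Isomap). Classical scaling on geodesic distances can return negative eigenvalues; clipping them to zero before normalising is our assumption, made so that the curve stays between zero and one.

```python
import numpy as np


def cumulative_variance_explained(eigenvalues) -> np.ndarray:
    """Fraction of the total variance captured by the first 1, 2, ... modes."""
    lam = np.sort(np.asarray(eigenvalues, dtype=float))[::-1]  # largest first
    lam = np.clip(lam, 0.0, None)  # assumption: discard negative MDS eigenvalues
    return np.cumsum(lam) / lam.sum()
```

For example, eigenvalues `[3, 1, 0, 0]` give `[0.75, 1.0, 1.0, 1.0]`: a one-dimensional manifold explains 75% of the variance and two dimensions explain all of it.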

As a second measure, we computed the reconstruction error, $E$, provided by each method54:

$$E = 1 - R^2\left(\hat{D}, D_Y\right) \tag{1}$$

where $D_Y$ is the matrix of Euclidean distances in the low-dimensional embedding found by each method, $\hat{D}$ is each method’s best distance estimate, and $R$ is the linear correlation coefficient computed over all matrix entries. In the case of Isomap, $\hat{D}$ corresponds to the matrix of geodesic distances, and for PCA, to the matrix of Euclidean distances in the full-dimensional neural state space. Reconstruction errors are bounded between zero and one, with zero indicating perfect reconstruction (see, e.g., Figure 2D). Note that this metric is called residual variance in the original Isomap paper54; we have adopted a different nomenclature to avoid confusion with our first variance explained metric.
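The reconstruction error can be sketched in a few lines, following the residual-variance definition of the original Isomap paper (one minus the squared correlation between the two distance matrices, taken over all entries). The function names are hypothetical; `D_hat` would be the geodesic matrix for Isomap or the full-dimensional Euclidean distance matrix for PCA.

```python
import numpy as np


def pairwise_euclidean(points: np.ndarray) -> np.ndarray:
    """(T, k) points -> (T, T) matrix of Euclidean distances."""
    diff = points[:, None, :] - points[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def reconstruction_error(D_hat: np.ndarray, embedding: np.ndarray) -> float:
    """1 - R^2 between D_hat and the distances D_Y in the low-dimensional embedding.

    D_hat: (T, T) best distance estimate of the method.
    embedding: (T, k) low-dimensional coordinates returned by the method.
    """
    D_Y = pairwise_euclidean(embedding)
    r = np.corrcoef(D_hat.ravel(), D_Y.ravel())[0, 1]  # correlation over all entries
    return 1.0 - r ** 2
```

Keeping every coordinate of a 2-D point cloud gives an error of essentially zero, whereas projecting the same cloud onto one axis distorts the distances and yields a clearly positive error.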

As a third measure, we assessed the estimated dimensionality of the linear and nonlinear manifolds underlying the neural population activity as a function of the number of units. We posited that if a linear or nonlinear manifold captured the geometric structure of the latent dynamics well, including more units should not change the estimated dimensionality. In contrast, if the approximation provided by a method is inaccurate, the estimated dimensionality would grow monotonically. We used the Participation Ratio2, $PR$, to estimate the dimensionality of the flat and nonlinear manifolds:

$$PR = \frac{\left(\sum_i \lambda_i\right)^2}{\sum_i \lambda_i^2} \tag{2}$$

where $\lambda_i$ are the eigenvalues obtained with either PCA or Isomap. Since the eigenvalues measure the amount of variance explained by each mode, the Participation Ratio summarises how the variance is distributed across modes. In the extreme case that all the eigenvalues are equal, $PR$ equals the number of eigenvalues, meaning that the variance is evenly distributed across all dimensions. In contrast, if $PR$ is close to one, nearly all the variance is captured by the first dimension. In this way, the Participation Ratio estimates the effective dimensionality, defined as the number of dimensions necessary to explain ~80% of the total neural variance2,56. To test the stability (or lack thereof) of the estimated manifold dimensionality as a function of the number of neurons, we took 10 random subsets of neurons of sizes ranging from 5 to the total number of recorded neurons, in steps of 10. Results are reported as mean±s.d.; Figure 2E shows a representative example. Note that, in contrast to Ref. 2, we used single-trial activity because our goal was to consistently compare the dimensionality of manifolds for different numbers of neurons, rather than to estimate the actual dimensionality of the neural population activity. Moreover, we observed that the value of the Participation Ratio did not change once enough trials were included (~15 trials per target for the centre-out reaching task).

Finally, to directly compare the estimated dimensionality of linear and nonlinear manifolds as a function of the number of units used to estimate them, we computed a “nonlinearity index” as the ratio of the estimated dimensionality of the flat manifold to that of the nonlinear manifold for the same number of units (see, e.g., Figure 2F).
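The Participation Ratio, its dependence on the number of sampled units, and the nonlinearity index can be sketched together. This minimal NumPy version assumes `X` is the units × time matrix and that an eigenvalue function is supplied for each method (a PCA one is included; an Isomap one would return the classical-scaling eigenvalues). Reusing the same `seed` for the flat and nonlinear curves makes both methods see identical random subsets of units; this seems a natural way to compare them at matched unit counts, but the text does not say whether the authors did so.

```python
import numpy as np


def participation_ratio(eigenvalues) -> float:
    """PR = (sum of eigenvalues)^2 / (sum of squared eigenvalues)."""
    lam = np.asarray(eigenvalues, dtype=float)
    return float(lam.sum() ** 2 / np.sum(lam ** 2))


def pca_eigenvalues(X: np.ndarray) -> np.ndarray:
    """Eigenvalues of the unit-by-unit covariance of X (n_units, T)."""
    Xc = X - X.mean(axis=1, keepdims=True)
    return np.linalg.eigvalsh(Xc @ Xc.T / (X.shape[1] - 1))


def pr_curve(X, eig_fn, start=5, step=10, n_repeats=10, seed=0):
    """Mean and s.d. of PR over random unit subsets of increasing size."""
    rng = np.random.default_rng(seed)
    n_units = X.shape[0]
    sizes = np.arange(start, n_units + 1, step)  # 5, 15, 25, ... units
    prs = np.array([[participation_ratio(eig_fn(X[rng.choice(n_units, m, replace=False)]))
                     for _ in range(n_repeats)]
                    for m in sizes])
    return sizes, prs.mean(axis=1), prs.std(axis=1)


def nonlinearity_index(pr_flat, pr_nonlinear) -> np.ndarray:
    """Ratio of flat to nonlinear manifold dimensionality at matched unit counts."""
    return np.asarray(pr_flat, dtype=float) / np.asarray(pr_nonlinear, dtype=float)
```

For unstructured noise the PCA curve keeps rising with the number of units, which is the signature of a poor low-dimensional description; an index well above one indicates that the flat manifold needs more dimensions than the nonlinear one.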

Establishing the influence of task complexity on manifold nonlinearity

We investigated whether more complex behaviours requiring a broader range of actions would reveal greater manifold nonlinearity by directly comparing manifolds underlying a “simple” and a “complex” version of the same task. We defined the simple task as a subset comprising one (for the monkey data) or several conditions (for the human data) of the full set of conditions, which constituted the complex task. We matched the total number of trials between simple and complex task by randomly subsampling from the complex task while keeping the same number of trials per condition, to avoid biasing our results based on the number of data points.
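A sketch of the trial-matching step: from the complex task, draw the same total number of trials as in the simple task, spread as evenly as possible across conditions. When the total is not divisible by the number of conditions (e.g., 30 trials across 16 stroke directions), we assume the remainder is assigned to randomly chosen conditions; the text does not specify how this case was handled, and `matched_complex_subset` is a hypothetical helper.

```python
import numpy as np


def matched_complex_subset(labels, n_total: int, rng: np.random.Generator) -> np.ndarray:
    """Indices of n_total trials drawn from all conditions, balanced per condition."""
    labels = np.asarray(labels)
    conditions = np.unique(labels)
    base, extra = divmod(n_total, conditions.size)
    counts = np.full(conditions.size, base)
    # Assumption: leftover trials go to randomly chosen conditions, one each.
    counts[rng.choice(conditions.size, extra, replace=False)] += 1
    picks = []
    for cond, k in zip(conditions, counts):
        pool = np.flatnonzero(labels == cond)
        if k > pool.size:
            raise ValueError(f"condition {cond} has only {pool.size} trials")
        picks.append(rng.choice(pool, k, replace=False))
    return np.sort(np.concatenate(picks))
```

For the monkey comparison, a simple task with 16 trials to one target would be matched by 2 trials to each of the eight targets.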

Monkey

We defined the simple and complex tasks in the monkey data as reaching to a single target and reaching to all eight targets, respectively. We performed the comparison between simple and complex tasks considering each of the eight directions as a different simple task. For each reach direction, we repeated the analysis 10 times, taking different samples of trials for the complex task. The results, shown in Figure S4E, represent the average estimated dimensionality of flat and nonlinear manifolds across all 10 repetitions of the eight simple tasks.

Human

For the human data, we investigated the influence of task complexity on manifold nonlinearity using one session in which the participant attempted to draw straight-line strokes, and two sessions in which the participant attempted to write single letters. For the straight-line session, we defined the simple and complex tasks as we did for the monkeys: the simple task comprised lines in only one direction but of all three lengths (for a total of 30 trials), and the complex task comprised the same number of trials including lines in all 16 directions. We repeated this analysis 10 times per stroke direction (taking different subsets of trials for the complex task), and averaged the results.

For the sessions in which the participant attempted to write individual letters, we defined the simple and complex tasks slightly differently. Intuitively, and in agreement with Figure 1e in Ref. 22, letters and symbols with similar shapes should require more similar M1 activity patterns than letters with different shapes. We thus defined the simple task by selecting five letters with similar morphology60. We verified that letters grouped by morphology indeed required more similar activity patterns than randomly selected pairs of letters by computing the Euclidean distance between neural states in the full-dimensional state space (inset in Figure 3G). For this analysis, we defined the simple task by taking 50 trials in which the participant attempted to write similar letters or symbols. As before, for the complex task, we selected a matched number of trials from all the different letters and symbols. We repeated this process for five groups of similar letters, taking 50 different subsets of trials to define the complex task for each group, and report the average result.
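A minimal sketch of the distance check follows. It assumes each letter is summarised by its trial-averaged activity (time bins × neurons) flattened into a single full-dimensional state vector; this summary, the helper names, and the random-pair baseline are hypothetical illustrations rather than the exact procedure used.

```python
import numpy as np
from scipy.spatial.distance import pdist

def mean_state_distance(cond_means, group):
    """Mean pairwise Euclidean distance between the neural states of a group.

    cond_means: dict mapping each letter to its trial-averaged activity,
        an array of shape (time_bins, neurons).
    group: letters whose states are compared.
    """
    # flatten each (time, neuron) trajectory into one full-dimensional state vector
    states = np.stack([cond_means[c].ravel() for c in group])
    return pdist(states, metric="euclidean").mean()

def random_pair_distances(cond_means, n_pairs, rng):
    """Distances between randomly selected pairs of letters (baseline)."""
    letters = list(cond_means)
    out = []
    for _ in range(n_pairs):
        a, b = rng.choice(len(letters), 2, replace=False)
        out.append(mean_state_distance(cond_means, [letters[a], letters[b]]))
    return np.array(out)
```

A morphologically defined group passes the check when its mean distance is smaller than the typical random-pair distance.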

Additional analyses including controls

Pairwise correlation analysis

We simply defined the pairwise correlation between pairs of units as their Pearson’s correlation coefficient.
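For a (time bins × units) firing rate matrix, this amounts to the following sketch, which uses NumPy's `corrcoef`; the function name is ours.

```python
import numpy as np

def pairwise_correlations(rates):
    """Pearson correlation for every pair of units.

    rates: array of shape (time_bins, units).
    Returns a 1-D array with one coefficient per unordered pair (i < j).
    """
    corr = np.corrcoef(rates, rowvar=False)   # units x units correlation matrix
    i, j = np.triu_indices_from(corr, k=1)    # upper triangle, excluding the diagonal
    return corr[i, j]
```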

Simulation of neural signals

We generated synthetic data to evaluate whether the nonlinearity index reflects manifold nonlinearity, replicating the methods in Altan et al.55. First, we generated d-dimensional signals by drawing (d × M) random samples from a distribution of firing rates derived from multi-electrode array recordings of neural activity in the macaque primary motor cortex (M1) during a center-out task. These firing rates were binned in 30 ms intervals, and samples were drawn randomly from all neurons and time bins recorded during successful trials. These signals served as a basis of known dimension d (d = 10) that preserved the firing statistics of the M1 recordings. The 10-dimensional latent signals were smoothed with a Gaussian kernel and then multiplied by an N × d mixing matrix W, whose entries were sampled from a Gaussian distribution with zero mean and unit variance. We used N = 84 to match the number of neurons in that dataset. We then created a nonlinear embedding of the synthetic neural data by passing each simulated neural signal through an exponential activation function:

| (3) |

We varied the degree of nonlinearity by setting the parameter to 1, 4, or 8, following Ref. 55.
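The simulation can be sketched as follows, with d = 10 and N = 84 as stated above. Because Equation (3) did not survive, the exponential nonlinearity below (each neuron rescaled to [0, 1] and passed through exp(αu), with α standing in for the varied parameter) is a hypothetical stand-in, as are the Gaussian smoothing width and the gamma-distributed rate pool used in place of the recorded M1 firing rates.

```python
import numpy as np
from scipy.ndimage import gaussian_filter1d

def simulate_population(rate_pool, d=10, n_neurons=84, n_bins=1000,
                        alpha=1.0, smooth_sd=2.0, seed=0):
    """Return (flat, nonlinear) synthetic population activity, each n_bins x n_neurons."""
    rng = np.random.default_rng(seed)
    # d latent signals built by resampling binned firing rates (n_bins x d)
    latents = rng.choice(rate_pool, size=(n_bins, d), replace=True)
    # smooth each latent signal over time (kernel SD in bins is a placeholder)
    latents = gaussian_filter1d(latents, sigma=smooth_sd, axis=0)
    # N x d mixing matrix with entries drawn from N(0, 1)
    W = rng.normal(0.0, 1.0, size=(n_neurons, d))
    flat = latents @ W.T                      # linear (flat) embedding
    # hypothetical stand-in for Eq. (3): rescale each neuron to [0, 1], then exponentiate
    u = (flat - flat.min(axis=0)) / (flat.max(axis=0) - flat.min(axis=0))
    return flat, np.exp(alpha * u)
```

Larger values of `alpha` bend the embedding more strongly, while the flat embedding keeps a linear dimensionality of exactly d.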

Results do not depend on the specific dimensionality reduction technique used to identify the flat and nonlinear manifolds

We used PCA and Isomap as linear and nonlinear methods to estimate a flat and a nonlinear manifold underlying the neural population activity, respectively. To verify that our results were not a consequence of our choice of dimensionality reduction techniques, we repeated our main analyses using two alternative methods: Factor Analysis and Nonlinear PCA. Factor analysis (FA), like PCA, is often used to find a flat manifold underlying neural population recordings39. FA identifies a low-dimensional space that preserves the variance shared across units while discarding the variance that is private to each unit. We then estimated the dimensionality of these flat manifolds by computing the Participation Ratio from the eigenvalues of the shared covariance matrix. Importantly, the estimated dimensionality of flat manifolds identified using PCA and FA was highly correlated (Figure S3C), indicating that our results do not arise from the inherent assumptions of PCA.
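The Participation Ratio, PR = (Σλᵢ)² / Σλᵢ², can be computed from either set of eigenvalues. In the sketch below, the PCA eigenvalues come from the full covariance matrix and the FA eigenvalues from the shared covariance LᵀL built from scikit-learn's `FactorAnalysis.components_` loadings; the number of factors passed to FA is an assumption, since the text does not specify it.

```python
import numpy as np
from sklearn.decomposition import FactorAnalysis

def participation_ratio(eigvals):
    """PR = (sum of eigenvalues)^2 / sum of squared eigenvalues."""
    lam = np.clip(np.asarray(eigvals, dtype=float), 0.0, None)  # drop tiny negative round-off
    return lam.sum() ** 2 / (lam ** 2).sum()

def pr_pca(rates):
    """PR from the eigenvalues of the full covariance matrix (rates: time x units)."""
    return participation_ratio(np.linalg.eigvalsh(np.cov(rates, rowvar=False)))

def pr_fa(rates, n_factors):
    """PR from the eigenvalues of the FA shared covariance L^T L."""
    fa = FactorAnalysis(n_components=n_factors, random_state=0).fit(rates)
    L = fa.components_              # (n_factors, units) loading matrix
    shared_cov = L.T @ L            # private variances (fa.noise_variance_) are excluded
    return participation_ratio(np.linalg.eigvalsh(shared_cov))
```

Because the shared covariance has rank at most `n_factors`, the FA-based PR can never exceed the number of factors.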

Similarly, we verified that the better ability of Isomap to capture the neural population activity compared to PCA generalised to other nonlinear dimensionality reduction techniques by repeating the main analyses using Nonlinear PCA. Nonlinear PCA is an autoencoder-based approach that finds a latent representation of the input firing rates and orders those latent signals (“nonlinear PCs”) by their variance explained, enforcing a PCA-like structure on the nonlinear low-dimensional embedding59. We used the MATLAB package from Scholz et al.59. Since nonlinear PCA does not yield eigenvalues associated with the latent signals, explained variance was defined based on the quality of data reconstruction. We used an 80% threshold to define the dimensionality of these nonlinear manifolds, since the Participation Ratio yields dimensionality estimates that capture approximately this fraction of the variance. Our direct comparison between nonlinear PCA and Isomap shows a high correlation between the estimated dimensionality of their respective nonlinear manifolds (Figure S3E). Thus, our results do not arise from the implicit assumptions of Isomap alone, but generalise to other nonlinear dimensionality reduction techniques.
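The threshold rule can be sketched as below. Since the Scholz et al. package is MATLAB code, this Python sketch uses linear PCA reconstructions purely as a stand-in for the nonlinear PCA reconstruction curve; any model that reconstructs the data from its first k latent signals could supply `ve_per_k`.

```python
import numpy as np

def variance_explained(X, X_hat):
    """Fraction of total variance reconstructed: 1 - SSE / total sum of squares."""
    Xc = X - X.mean(axis=0)
    return 1.0 - ((X - X_hat) ** 2).sum() / (Xc ** 2).sum()

def dim_at_threshold(ve_per_k, threshold=0.8):
    """Smallest k whose reconstruction reaches the threshold (None if never).
    ve_per_k[k - 1] is the variance explained using the first k latent signals."""
    hits = np.flatnonzero(np.asarray(ve_per_k) >= threshold)
    return int(hits[0]) + 1 if hits.size else None

def pca_reconstruction_curve(X, max_k):
    """Variance explained by rank-k PCA reconstructions, k = 1..max_k (stand-in model)."""
    mu = X.mean(axis=0)
    U, S, Vt = np.linalg.svd(X - mu, full_matrices=False)
    return [variance_explained(X, mu + (U[:, :k] * S[:k]) @ Vt[:k])
            for k in range(1, max_k + 1)]
```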

Finally, we verified that our results were not contingent on our choice of the Participation Ratio as a metric for manifold dimensionality by repeating the analysis with a recently proposed principled alternative, Parallel Analysis55. Parallel Analysis generates a null distribution for each eigenvalue by repeatedly and independently permuting each of the n firing rate vectors. This shuffling destroys the covariation across units while preserving each unit's firing rate distribution, so eigenvalues that exceed the null distribution reflect covariation structure that is not due to chance. Similar to Ref. 55, we repeated the shuffling procedure 200 times, resulting in a null distribution for each eigenvalue based on 200 samples. The eigenvalues that exceeded the 95th percentile of their null distribution were identified as significant, and the number of significant eigenvalues determined the dimensionality of the flat manifold. Figure S3D shows that the results obtained by applying Parallel Analysis to the PCA eigenvalues are similar to those obtained using the Participation Ratio, establishing that the estimated dimensionality of flat manifolds does not depend on our adopting a particular metric.
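A minimal Parallel Analysis sketch, following the description above (independent permutation of each unit's firing rate vector, 200 shuffles, 95th percentile). Using the eigenvalues of the sample covariance matrix is our assumption about how the PCA eigenvalues are obtained.

```python
import numpy as np

def parallel_analysis(rates, n_shuffles=200, percentile=95, seed=0):
    """Number of covariance eigenvalues exceeding their shuffled null distribution.
    rates: (time_bins, units)."""
    rng = np.random.default_rng(seed)

    def eig_desc(X):
        # covariance eigenvalues sorted from largest to smallest
        return np.linalg.eigvalsh(np.cov(X, rowvar=False))[::-1]

    real = eig_desc(rates)
    null = np.empty((n_shuffles, rates.shape[1]))
    for s in range(n_shuffles):
        # permute each unit's time series independently: keeps each unit's
        # firing-rate distribution but destroys covariation across units
        shuffled = np.column_stack([rng.permutation(col) for col in rates.T])
        null[s] = eig_desc(shuffled)
    threshold = np.percentile(null, percentile, axis=0)   # one threshold per eigenvalue
    return int(np.sum(real > threshold))
```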

Establishing the behavioural relevance of nonlinear manifolds

To test whether the nonlinear neural modes identified with Isomap were behaviourally relevant, we built standard Wiener filter decoders to predict continuous hand movements from the latent dynamics within flat (identified with PCA) and nonlinear (identified with Isomap) manifolds of increasing dimensionality. For decoding, we used Ridge Regression (the Ridge class in Ref. 97). Since the hand trajectory is a two-dimensional signal, we built separate decoders to predict the hand trajectories along the X and Y axes and report the average performance across these two axes. Decoder performance was quantified by R², defined as the squared correlation coefficient between actual and predicted hand trajectories. Figure S3E shows that linear decoders trained on latent dynamics within nonlinear manifolds provide more accurate behavioural predictions than their counterparts trained on latent dynamics within flat manifolds.
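The decoding step might look like the sketch below. The number of time lags in the Wiener-filter design matrix and the Ridge penalty `alpha` are hypothetical values, and scikit-learn's `Ridge` is used on the assumption that Ref. 97 refers to that library. R² is computed as the squared correlation between actual and predicted trajectories, as defined above.

```python
import numpy as np
from sklearn.linear_model import Ridge

def lagged(latents, n_lags):
    """Wiener-filter design matrix: each row holds the current bin and the previous
    n_lags - 1 bins of the latent dynamics. The first n_lags - 1 bins are dropped."""
    T = latents.shape[0]
    return np.hstack([latents[n_lags - 1 - k: T - k] for k in range(n_lags)])

def decode_r2(lat_train, hand_train, lat_test, hand_test, n_lags=3, alpha=1.0):
    """Fit one Ridge decoder per hand axis and return the mean R^2 across axes,
    with R^2 defined as the squared correlation between actual and predicted."""
    Xtr, Xte = lagged(lat_train, n_lags), lagged(lat_test, n_lags)
    ytr, yte = hand_train[n_lags - 1:], hand_test[n_lags - 1:]
    r2 = []
    for axis in range(ytr.shape[1]):                 # separate X and Y decoders
        pred = Ridge(alpha=alpha).fit(Xtr, ytr[:, axis]).predict(Xte)
        r2.append(np.corrcoef(pred, yte[:, axis])[0, 1] ** 2)
    return float(np.mean(r2))
```

Running `decode_r2` on flat and nonlinear latents of the same dimensionality gives the paired comparison described above.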

Neural network models

Recurrent neural network models

Model Architecture

To better understand the factors underlying manifold nonlinearity, we trained recurrent neural networks (RNNs) to perform the same centre-out reaching task as the monkeys. These models were implemented using PyTorch98. Similar to previous studies simulating motor cortical dynamics during reaching18,36,37,49, we described the RNN dynamics with the following dynamical system:

| (4) |

where is the hidden state of the i-th unit and is the corresponding firing rate following tanh activation of . All networks had units and inputs, a time constant , and an integration time step . Each unit had an offset bias, , initially set to zero. The initial states were sampled from the uniform random distribution . To understand the influence of recurrent connectivity on manifold nonlinearity, we trained networks with an increasing percentage of recurrent connections (10%, 40%, 70%, and 100%). The recurrent weights were initially sampled from the Gaussian distribution , where . The time-dependent inputs (specified below) fed into the network had input weights initially sampled from the uniform distribution .
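Because Equation (4) was lost, the sketch below assumes a standard continuous-time rate network of the kind commonly used in such models, τ dxᵢ/dt = −xᵢ + Σⱼ W^rec_ij rⱼ + Σₖ W^in_ik uₖ + bᵢ with rᵢ = tanh(xᵢ), integrated with Euler steps. The time constant, step size, gain `g`, and the variance scaling of the sparse recurrent weights are hypothetical placeholders for the values missing from the text.

```python
import numpy as np

def make_recurrent_weights(n_units, frac_connected, g=1.2, seed=0):
    """Sparse Gaussian recurrent weights with a fraction `frac_connected` of
    nonzero connections. Variance g^2 / (frac_connected * n_units) is a
    hypothetical choice that keeps the overall gain comparable across sparsities."""
    rng = np.random.default_rng(seed)
    mask = rng.random((n_units, n_units)) < frac_connected
    W = rng.normal(0.0, g / np.sqrt(frac_connected * n_units), (n_units, n_units))
    return W * mask, mask

def simulate_rnn(W_rec, W_in, b, inputs, x0, tau=0.05, dt=0.01):
    """Euler integration of tau dx/dt = -x + W_rec r + W_in u + b, with r = tanh(x).
    inputs: (time_steps, n_inputs); x0: initial hidden state.
    Returns firing rates of shape (time_steps, n_units)."""
    x = np.array(x0, dtype=float)
    rates = np.empty((inputs.shape[0], x.size))
    for t, u in enumerate(inputs):
        r = np.tanh(x)
        x = x + (dt / tau) * (-x + W_rec @ r + W_in @ u + b)
        rates[t] = np.tanh(x)
    return rates
```

During training, the connectivity mask would need to be reapplied (for example, by multiplying the weights by `mask` after each update) so that absent connections remain zero.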

To replicate the experimental trial-to-trial variability across reaches to the same target, we designed the networks so that they took as input the actual preparatory activity recorded on single trials during a representative session and produced the corresponding single reaches. The inputs were four-dimensional, consisting of a one-dimensional fixation signal and a three-dimensional target signal. The target signal remained at 0 until the target was presented (set to ). The fixation signal started at 1 and went to 0 at movement onset (set to ). The three-dimensional target signal was derived from trial-specific preparatory activity in the monkey neural data by integrating over time the latent dynamics along the first three neural modes, obtained by performing PCA on activity during the instructed delay period99 (−210 to +30 ms with respect to the go cue).
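The four input channels can be sketched as follows, assuming delay-period activity is available as a (trials × bins × neurons) array covering the −210 to +30 ms window and that PCA is fit on activity pooled across trials. The time integral is approximated by a sum over bins, and the step indices for target presentation and movement onset are hypothetical arguments, since their values are missing from the text.

```python
import numpy as np

def target_signals(delay_rates, n_modes=3):
    """Trial-specific target signals from preparatory activity.
    delay_rates: (trials, bins, neurons) activity in the delay window.
    Returns (trials, n_modes): latent dynamics along the leading neural modes,
    integrated over time (approximated by a sum over bins)."""
    n_neurons = delay_rates.shape[-1]
    flat = delay_rates.reshape(-1, n_neurons)
    mean = flat.mean(axis=0)
    _, _, Vt = np.linalg.svd(flat - mean, full_matrices=False)   # PCA via SVD
    latents = (delay_rates - mean) @ Vt[:n_modes].T               # trials x bins x n_modes
    return latents.sum(axis=1)

def build_inputs(target_vec, n_steps, target_on, move_onset):
    """Input matrix (n_steps x 4): column 0 is the fixation signal, columns 1-3 the target."""
    u = np.zeros((n_steps, 4))
    u[:move_onset, 0] = 1.0          # fixation: 1 until movement onset, then 0
    u[target_on:, 1:] = target_vec   # target: 0 until the target is presented
    return u
```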

The networks were trained to produce two-dimensional outputs corresponding to the x and y velocities of the experimentally recorded reach trajectories, which were read out via the linear mapping:

| (5) |

where the output weights were sampled from the uniform distribution .

Training

Networks were trained to generate the velocities of experimental reach trajectories, using 600 trials from Monkey CL and the Adam optimiser with a learning rate , first moment estimates decay rate , second moment estimates decay rate , and . Networks were trained for 2500 trials with a batch size . The loss function was defined as the mean squared error between the two-dimensional output and the target velocities over each time step , with the total number of time steps (equal to 750 ms with ):

| (6) |

To produce dynamics that align more closely with experimentally estimated dynamics, we added L2 regularization terms on the firing rates and network weights to the overall loss function used for optimization49:

| (7) |

where

| (8) |

and

| (9) |

where and . We clipped the gradient norm at 0.2 before applying the optimization step.
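A single optimisation step combining the MSE loss, the L2 penalties on rates and weights, and gradient-norm clipping at 0.2 might look like this in PyTorch. The penalty weights (`beta_rate`, `beta_weight`) are placeholders for the missing values, and `TinyRNN`, a discrete-time `nn.RNN`, is a hypothetical stand-in for the continuous-time network of Equation (4).

```python
import torch
import torch.nn as nn

class TinyRNN(nn.Module):
    """Stand-in network: a discrete-time tanh RNN with a linear velocity readout."""
    def __init__(self, n_inputs=4, n_units=64):
        super().__init__()
        self.rnn = nn.RNN(n_inputs, n_units, nonlinearity="tanh", batch_first=True)
        self.readout = nn.Linear(n_units, 2)

    def forward(self, u):
        rates, _ = self.rnn(u)                 # (batch, time, units)
        return self.readout(rates), rates

def training_step(model, optimizer, inputs, target_vel,
                  beta_rate=1e-3, beta_weight=1e-4, clip=0.2):
    optimizer.zero_grad()
    out, rates = model(inputs)
    mse = ((out - target_vel) ** 2).mean()     # mean over batch, time and x/y
    rate_reg = (rates ** 2).mean()             # L2 penalty on firing rates
    weight_reg = sum((p ** 2).sum() for n, p in model.named_parameters() if "weight" in n)
    loss = mse + beta_rate * rate_reg + beta_weight * weight_reg
    loss.backward()
    torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=clip)  # clip gradient norm
    optimizer.step()
    return loss.item()
```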

To model the experimental observation of lower pairwise correlations between neurons, we trained a set of “decorrelated networks” with an additional loss term that penalized the magnitude of pairwise correlations:

| (10) |

where is the Pearson correlation coefficient between the i-th and j-th units, and . For both the “standard” and the “decorrelated” networks, we trained ten networks initialised from different random seeds.
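The exact form of Equation (10) was lost; the sketch below assumes the penalty is the mean squared off-diagonal Pearson correlation across units, scaled by a hypothetical coefficient `beta_corr`. It relies on `torch.corrcoef`, which treats rows as variables, and remains differentiable so that it can simply be added to the training loss.

```python
import torch

def correlation_penalty(rates, beta_corr=1e-2):
    """Hypothetical form of the decorrelation term: beta_corr times the mean
    squared off-diagonal Pearson correlation between units.
    rates: (time, units) tensor; correlations are computed across time bins.
    Units with zero variance would yield NaN correlations."""
    corr = torch.corrcoef(rates.T)                     # (units, units)
    n = corr.shape[0]
    off_diag = corr[~torch.eye(n, dtype=torch.bool)]   # exclude self-correlations
    return beta_corr * (off_diag ** 2).mean()
```

For batched activity of shape (batch, time, units), the tensor can be reshaped to (batch × time, units) before calling the function.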

LSTM model

To establish that our core model results did not depend critically on the type of neural network architecture we adopted, we repeated our main analyses using a long short-term memory (LSTM) architecture. We chose this architecture given its ability to predict movement during various tasks100. We replicated single-trial variability as for the RNNs: we used the actual preparatory activity as inputs and trained the LSTMs on the actual hand velocities that the monkeys produced during each trial.

We again trained the networks using the Adam optimiser with a learning rate , and used the hyperbolic tangent (tanh) as the network's nonlinearity. Networks were trained until they explained at least 70% of the variance of the target velocity signals, according to the error function:

| (11) |

where and are the predicted and measured two-dimensional velocities, respectively, is the number of time bins , and is the mini-batch size ( trials).

To compare the network activity and the neural data, we rectified, square-root-transformed, and smoothed the unit activity using the same parameters as for the neural recordings.
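This preprocessing amounts to the short sketch below. The Gaussian kernel width is a placeholder, because the smoothing parameters applied to the neural recordings are not restated here.

```python
import numpy as np
from scipy.ndimage import gaussian_filter1d

def preprocess_unit_activity(activity, sigma_bins=5.0):
    """activity: (time_bins, units). Rectify, square-root transform, then smooth
    each unit over time with a Gaussian kernel (width in bins is a placeholder)."""
    rectified = np.maximum(activity, 0.0)
    return gaussian_filter1d(np.sqrt(rectified), sigma=sigma_bins, axis=0)
```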

Model validation

We used Canonical Correlation Analysis (CCA), a method that finds linear transformations that maximize the correlation between pairs of signals101, to quantify the similarity between the neural network activity and the recorded neural data36,49. First, we separately applied PCA to the processed neural firing rates and model activations, and then performed CCA on their 15-dimensional latent dynamics; this provided a vector of 15 monotonically decreasing correlation values. To establish the relevance of these canonical correlations, we compared their values to various lower bounds obtained by shuffling the data in different ways (over time, across targets, or both over time and across targets). In all cases the actual canonical correlations greatly exceeded the shuffled lower bounds (e.g., Figure S7D, S10D).
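The PCA-then-CCA comparison and a time-shuffled lower bound can be sketched in NumPy as below. Canonical correlations are obtained as the singular values of Q_aᵀQ_b, where Q_a and Q_b come from QR decompositions of the centred latent signals; this is a standard way to compute CCA and is our choice here, not necessarily the implementation in Ref. 101. The helper names are hypothetical, and both datasets are assumed to have the same number of time bins.

```python
import numpy as np

def pca_latents(X, k):
    """Project (time x units) activity onto its first k principal components."""
    Xc = X - X.mean(axis=0)
    U, S, _ = np.linalg.svd(Xc, full_matrices=False)
    return U[:, :k] * S[:k]

def canonical_correlations(A, B):
    """Canonical correlations between two (time x k) signal sets, in decreasing order."""
    Qa, _ = np.linalg.qr(A - A.mean(axis=0))
    Qb, _ = np.linalg.qr(B - B.mean(axis=0))
    return np.linalg.svd(Qa.T @ Qb, compute_uv=False)

def model_data_similarity(neural, model, k=15):
    """PCA on each dataset separately, then CCA on their k-dimensional latents."""
    return canonical_correlations(pca_latents(neural, k), pca_latents(model, k))

def shuffle_over_time(X, rng):
    """Lower-bound control: permute time bins, destroying temporal alignment."""
    return X[rng.permutation(X.shape[0])]
```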

Supplementary Material

Acknowledgements

We thank Sara A. Solla for discussions about this research and feedback on the manuscript. L.E.M. received funding from the NIH National Institute of Neurological Disorders and Stroke (NS053603 and NS074044). J.A.G. received funding from the EPSRC (EP/T020970/1) and the European Research Council (ERC-2020-StG-949660). The funders had no role in study design, data collection and analysis, the decision to publish, or preparation of the manuscript.

Footnotes

Competing Interests

J.A.G. receives funding from Meta Platform Technologies, LLC.

Code availability

All code to reproduce the analyses in the paper will be made freely available upon peer-reviewed publication on GitHub.

Data availability

Many of the monkey datasets and the human dataset used in this study are publicly available on Dryad (https://datadryad.org/stash/dataset/doi:10.5061/dryad.xd2547dkt and https://datadryad.org/stash/dataset/doi:10.5061/dryad.wh70rxwmv, respectively). The remaining data that support the findings in this study are available from the corresponding author upon reasonable request.

References

- 1. Gallego Juan A., Perich Matthew G., Miller Lee E., and Solla Sara A. Neural manifolds for the control of movement. Neuron, 94(5):978–984, 2017.

- 2. Gao Peiran, Trautmann Eric, Yu Byron, Santhanam Gopal, Ryu Stephen, Shenoy Krishna, and Ganguli Surya. A theory of multineuronal dimensionality, dynamics and measurement. BioRxiv, page 214262, 2017.

- 3. Stopfer Mark, Jayaraman Vivek, and Laurent Gilles. Intensity versus identity coding in an olfactory system. Neuron, 39(6):991–1004, 2003.