Abstract

We assessed eight acoustic indices as proxies for bird species richness in the National Science Complex (NSC), University of the Philippines Diliman. The acoustic indices were the normalized Acoustic Complexity Index (nACI), Acoustic Diversity Index (ADI), inverse Acoustic Evenness Index (1-AEI), Bioacoustic Index (BI), Acoustic Entropy Index (H), Temporal Entropy Index (Ht), Spectral Entropy Index (Hf), and Acoustic Richness Index (AR). Low-cost, automated sound recorders built with a Raspberry Pi were placed at three sites in the NSC to continuously collect 5-min sound samples from July 2020 to January 2022. Through stratified sampling, we selected 840 5-min sound samples, equivalent to 70 hours, and pre-processed them. We then conducted acoustic index analysis on both the raw and the pre-processed data. We measured Spearman’s correlation between each acoustic index and the bird species richness obtained from manual spectrogram scanning and listening to recordings. To assess the robustness of the indices, we used Fisher’s z-transformation to compare the correlation coefficients from the raw and pre-processed .wav files. Additionally, we used generalized linear mixed models (GLMMs) to examine how acoustic indices relate to bird species richness, season, and time of day. The Spearman’s rank correlation and GLMM analyses showed significant but weak negative correlations between bird species richness and the nACI, 1-AEI, Ht, and AR. The weak correlations suggest that the performance of acoustic indices depends on various factors, such as local noise conditions, bird species composition, season, and time of day. Thus, acoustic indices should be ground-truthed before they are applied in studies. Among the eight indices, the nACI performed best, consistently across sites and independently of season and time of day.
We highlight the importance of pre-processing sound data from urban settings and other noisy environments before acoustic index analysis, as this strengthens the correlation between index values and bird species richness.

Introduction

The presence of most bird species can be confirmed based on their distinct vocalizations [1]. Because of this, passive acoustic monitoring is suitable for studying bird communities [2, 3], and deploying sound recorders overcomes the logistical challenges posed by active point count and line-transect surveys [4]. If enough recorders are deployed, they can cover a greater area than human observers and can be left in the field for extended periods, especially if programmed to only record at specific time intervals [5]. In areas with mobile or Wi-Fi network connections, the collected digital sound files can automatically be transmitted to a central server for long-term data storage and analysis [6]. However, the challenge with acoustic surveys is the processing effort required to analyze extensive sound data collections and translate them into biodiversity measures that can give timely information relevant to species conservation and management [7].

Several ecoacoustic indices have been proposed to summarize the acoustic features of soundscapes using the sound spectrum [8]. Acoustic indices are mathematical functions that measure the distribution of acoustic energy across time and frequency in a recording [9]. These indices enable quick processing of sound data and measure the acoustic complexity, diversity, evenness, entropy, and richness of recordings. They are based on species diversity indices and are thought to characterize the variation in sound production in animal communities across time. They therefore serve as proxies for diversity metrics such as species abundance, richness, evenness, and diversity [10]. Because these indices are easy to calculate with R packages such as soundecology [11] and seewave [12], they are attractive tools for rapid biodiversity assessment and monitoring.

Despite the growing use of acoustic indices in ecoacoustic surveys, the relationships between index values and ground-truthed measures of biodiversity remain inconclusive [13]. The studies in which these indices were developed reported positive correlations with bird species richness [14–18], but some studies found the opposite [19–21]. Retamosa Izaguirre et al. [22] and Moreno-Gómez et al. [23] evaluated acoustic indices as proxies for bird species richness, and both studies reported low to moderate correlations. Alcocer et al. [24] conducted a meta-analysis of acoustic indices as proxies for biodiversity. They found that acoustic indices had a moderate positive relationship with diversity metrics such as species abundance, species richness, and species diversity. Because acoustic indices measure the amplitude and frequency properties of soundscapes, they cannot discriminate between species and may only weakly to moderately predict bird species richness.

Acoustic index values should be significantly correlated with the number of vocalizing bird species in recordings to reflect bird species richness accurately. However, field conditions can affect the performance of the acoustic indices [25, 26]. Noise and other environmental factors can confound the index values [27]. Outdoor environments have a diverse array of biophonies, such as sounds produced by birds, mammals, amphibians, and insects. Sounds from running water or rain falling through a canopy may also be present in the forest soundscape [28]. In rural and urban areas, geophony from breezes and drizzle is a source of bias when using acoustic indices [20]. At sites near farmlands, roads, or human settlements, sounds generated by vehicles, sirens, machines, and human speech may affect acoustic index values [27]. These unavoidable ambient sounds highlight the importance of pre-processing sound data [17, 18]. They also show the need to ground-truth the acoustic indices (i.e., validate index values with survey data) before applying them in studies.

We assessed eight acoustic indices as proxies for bird species richness in an urban green space in Metro Manila. The anthropause [29], or slowdown of human activity during the COVID-19 pandemic, was an opportune time to measure bird vocalizations with reduced human disturbance. The acoustic indices assessed in the study were the normalized Acoustic Complexity Index (nACI), Acoustic Diversity Index (ADI), inverse Acoustic Evenness Index (1-AEI), Bioacoustic Index (BI), Acoustic Entropy Index (H), Temporal Entropy Index (Ht), Spectral Entropy Index (Hf), and Acoustic Richness Index (AR). We measured the correlation between each acoustic index and the bird species richness obtained from manual spectrogram scanning and listening to recordings. To assess the robustness of the acoustic indices, we also compared the correlations obtained from two datasets: the unprocessed (raw) and the pre-processed sound files. We used generalized linear mixed models (GLMMs) to examine how acoustic indices predict bird species richness in pre-processed 5-min sound samples depending on season and time of day. We hypothesized that if acoustic index values are significantly correlated with the number of species heard, then the selected indices can be used as proxies for bird species richness in the field.

Materials and methods

Ethics statement

No specific ethical approval was needed because automated sound recorders were used to collect data remotely.

Study area

We conducted the study at the National Science Complex (NSC) in the University of the Philippines Diliman (UPD) campus, one of the last urban green spaces in Metro Manila, Philippines. The study area is home to 42 bird species, including endemic, resident, and migrant bird species [30]. Located within the NSC is the one-hectare UP Biology—EDC BINHI Threatened Species Arboretum, home to endemic and threatened tree species [31, 32] and various bird species [33]. The anthropause [29], or slowdown of human activity during the COVID-19 pandemic, provided an opportune time to measure bird vocalizations with reduced human disturbance. With COVID-19 restrictions and suspended face-to-face classes, entering the campus was restricted to authorized personnel only. Metro Manila has a Type I climate, marked by two pronounced seasons: the dry season from November to April, and the wet season from May to October. The months of June to September receive the highest rainfall [34].

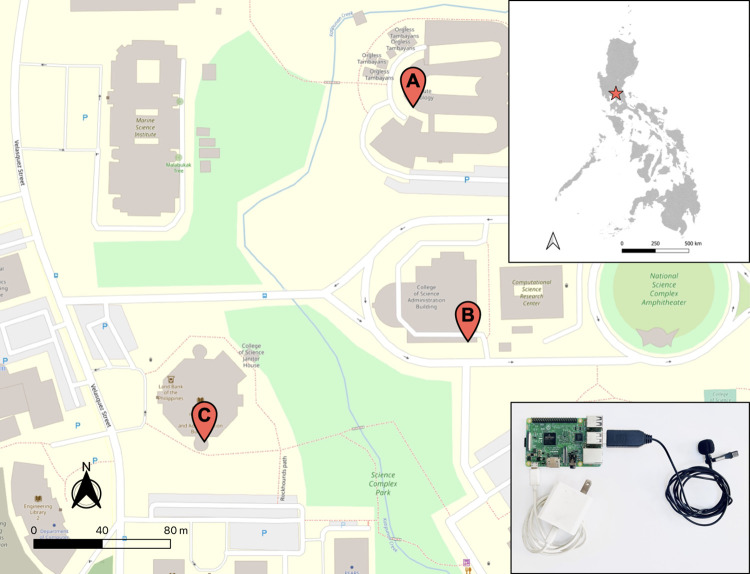

We established three recording sites: the Institute of Biology (A), the College of Science Administrative Building (B), and the College of Science Library (C) (Fig 1). The recording sites were selected based on access to Wi-Fi and electricity. The sites are spaced more than 100 m apart to avoid overlap between recording points. Site A directly faces the UP Biology—EDC BINHI Threatened Species Arboretum but is disturbed by ongoing construction and maintenance work. Site B is approximately 50 m from the closest tree line and is surrounded by a road network. Site C is enclosed by vegetation. Its recorder is less than 10 m from the closest tree line and about 30 m from the nearest road. Guard posts are also located at the entrance to Site C.

Fig 1. Map of three sound recording sites within the National Science Complex, University of the Philippines Diliman, and its relative location in the Philippines.

This map was generated with data from OpenStreetMap [35] and Wikimedia Commons [36]. (A) Institute of Biology, (B) College of Science Administrative Building, and (C) College of Science Library. The inset shows the recording system (USB microphone attached to Raspberry Pi 3 Model B+).

Acoustic data collection

At each recording point, we placed a low-cost sound recorder built from an omnidirectional USB microphone attached to a Raspberry Pi 3 Model B+ (Fig 1) [37]. All three systems ran Raspberry Pi OS and were programmed to record a 5-min sound clip every 6 min at a sampling rate of 44.1 kHz. This left a 1-min gap between recording bouts. The systems recorded continuously from July 2020 to January 2022 to cover the wet and dry seasons and to account for migratory birds. Recording was done with the program arecord [38], called from a script that also uploaded the WAV files to Google Drive for long-term data storage and analysis.
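The recording schedule described above can be sketched in Python. This is a minimal illustration, not the authors' script. It assumes that each clip is named with the YYYY-MM-DD-HH-mm timestamp used later for sampling. The ALSA device name (`plughw:1,0`), sample format (`S16_LE`), and mono channel count are hypothetical placeholders. Only standard `arecord` options are used, and the Google Drive upload step is omitted.

```python
import datetime
import subprocess
import time

# Values from the text: 5-min clips, one every 6 min, 44.1 kHz sampling rate.
CLIP_SECONDS = 5 * 60
INTERVAL_SECONDS = 6 * 60
SAMPLE_RATE = 44_100


def clip_filename(start):
    """Name a clip after its start time as YYYY-MM-DD-HH-mm.wav."""
    return start.strftime("%Y-%m-%d-%H-%M") + ".wav"


def arecord_command(start, device="plughw:1,0"):
    """arecord arguments for one clip; device, format and channels are hypothetical."""
    return [
        "arecord",
        "-D", device,                 # ALSA capture device of the USB microphone
        "-f", "S16_LE",               # 16-bit little-endian samples (assumed)
        "-c", "1",                    # mono (assumed)
        "-r", str(SAMPLE_RATE),       # sampling rate in Hz
        "-d", str(CLIP_SECONDS),      # stop recording after this many seconds
        "-t", "wav",                  # write a WAV container
        clip_filename(start),
    ]


def clip_starts(first, count):
    """Start times of `count` consecutive clips on the 6-min grid."""
    step = datetime.timedelta(seconds=INTERVAL_SECONDS)
    return [first + i * step for i in range(count)]


def record_forever(first):
    """Record one clip per 6-min slot (never called here; upload step not shown)."""
    start = first
    while True:
        wait = (start - datetime.datetime.now()).total_seconds()
        if wait > 0:
            time.sleep(wait)
        subprocess.run(arecord_command(start), check=True)
        start += datetime.timedelta(seconds=INTERVAL_SECONDS)
```

Each clip is scheduled from a fixed start time rather than by sleeping 1 min after the previous clip finishes. This keeps the 6-min grid from drifting when a recording or upload runs slightly long.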

Low-cost recorders may differ in sensitivity, resulting in different responses in frequency range and signal intensity [39]. We therefore tested the response of each low-cost recorder across a range of frequencies. Using version 3.0.0 of the Audacity® recording and editing software [40], we generated a 30-sec tone sweeping from 1 Hz to 10,000 Hz. We played it through a loudspeaker held at breast level, gradually increasing the distance from the recorder. We plotted the spectrum data of the generated 30-sec tone against the average response of each recorder (S1 Fig). The frequency response was not flat over the entire frequency range, and higher frequencies were increasingly attenuated, except for recorder A (S1 Fig).
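A sweep test like this can also be sketched in code. The sketch is illustrative only and rests on two assumptions that the text does not state. First, the 1 Hz to 10,000 Hz tone is a linear sweep; Audacity's Chirp generator also offers logarithmic interpolation. Second, the recorded signal has already been time-aligned with the generated one. Because a linear sweep maps time to frequency, the level ratio in each time window approximates the recorder's relative response near that window's center frequency. This time-window approach is a substitute for, not a reproduction of, the spectrum comparison used for S1 Fig. The 0.8 amplitude is arbitrary.

```python
import math


def linear_sweep(f0=1.0, f1=10_000.0, duration=30.0, fs=44_100, amplitude=0.8):
    """Linear sine sweep from f0 to f1 Hz (an assumed stand-in for the test tone)."""
    n = int(round(duration * fs))
    rate = (f1 - f0) / duration  # sweep rate in Hz per second
    # Phase is the integral of the instantaneous frequency f0 + rate * t.
    return [amplitude * math.sin(2 * math.pi * (f0 * t + 0.5 * rate * t * t))
            for t in (i / fs for i in range(n))]


def rms(x):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def response_db(reference, recorded, fs, n_windows, f0=1.0, f1=10_000.0, duration=30.0):
    """Level of `recorded` relative to `reference`, in dB, per time window.

    Returns (center_frequency_hz, gain_db) pairs. Assumes both signals are
    time-aligned and contain the same linear sweep."""
    size = min(len(reference), len(recorded)) // n_windows
    rate = (f1 - f0) / duration
    result = []
    for w in range(n_windows):
        a, b = w * size, (w + 1) * size
        center_freq = f0 + rate * ((a + b) / 2) / fs  # sweep frequency at window midpoint
        gain = rms(recorded[a:b]) / rms(reference[a:b])
        result.append((center_freq, 20 * math.log10(gain)))
    return result
```

A recorder that halves the signal at every frequency gives about -6.02 dB in every window. A response that falls toward the last windows would correspond to the high-frequency attenuation described above.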

Data pre-processing

We randomly selected 840 .wav files from the database based on their YYYY-MM-DD-HH-mm timestamps, totaling 4,200 minutes (≈70 hours). Through stratified sampling, we selected 20 sound samples from each of the 14 hourly strata between 5:00 AM and 6:00 PM. This covered the daily activity period, including the dawn and dusk choruses, and yielded 280 samples for each recording point. We did not remove recordings with heavy rain or strong wind from the sample.
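The hourly stratified sampling can be expressed as a short sketch. It assumes that file names begin with the YYYY-MM-DD-HH-mm timestamp. It treats the 14 clock hours starting at 5:00 AM through 6:00 PM as strata, so 14 × 20 = 280 samples per recording point, which matches the totals above. The function names are hypothetical.

```python
import random
from collections import defaultdict
from datetime import datetime

HOURS = range(5, 19)   # hourly strata 05:00 ... 18:00 (14 strata, assumed)
PER_HOUR = 20          # samples drawn per stratum, as in the text


def parse_timestamp(name):
    """Parse a 'YYYY-MM-DD-HH-mm.wav' file name into a datetime."""
    return datetime.strptime(name[:16], "%Y-%m-%d-%H-%M")


def stratified_sample(file_names, per_hour=PER_HOUR, hours=HOURS, seed=None):
    """Draw `per_hour` files at random, without replacement, from each hourly stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for name in file_names:
        hour = parse_timestamp(name).hour
        if hour in hours:
            strata[hour].append(name)
    picked = []
    for hour in hours:
        # sorted() makes the draw reproducible for a given seed.
        picked.extend(rng.sample(sorted(strata[hour]), per_hour))
    return picked
```

If an hourly stratum holds fewer than 20 files, `random.sample` raises `ValueError`. That flags gaps in the recordings instead of silently under-sampling an hour.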

We pre-processed the 840 .wav files with Audacity® macros [40] to reduce anthropogenic and geophonic background noise [18]. Each file was passed through a high-pass filter (400 Hz), followed by noise reduction and signal amplification. We used the default settings to streamline the processing of multiple .wav files. Upon visual inspection, the noise reduction setting (12 dB) was sufficient to reduce unwanted background noise.
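The filtering and amplification steps can be approximated as below. This sketch is a simplification, not Audacity's implementation. It assumes a first-order (6 dB per octave) high-pass filter at 400 Hz and uses peak normalization as the amplification step. Audacity's profile-based noise reduction is omitted entirely.

```python
import math


def high_pass(samples, fs=44_100, cutoff=400.0):
    """First-order RC high-pass filter (6 dB/octave), starting from silence."""
    rc = 1.0 / (2 * math.pi * cutoff)
    dt = 1.0 / fs
    alpha = rc / (rc + dt)
    out = []
    prev_x = prev_y = 0.0
    for x in samples:
        # y[n] = alpha * (y[n-1] + x[n] - x[n-1]) passes changes and blocks DC.
        y = alpha * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


def normalize_peak(samples, peak=1.0):
    """Scale so the largest absolute sample equals `peak` (simple amplification)."""
    largest = max((abs(v) for v in samples), default=0.0)
    if largest == 0:
        return list(samples)
    return [v * peak / largest for v in samples]
```

A constant (DC) offset decays to zero after filtering, whereas a signal near the Nyquist frequency passes with about 97% of its amplitude. Low-frequency rumble from traffic or wind is reduced in the same way.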

Manual spectrogram scanning and listening to recordings

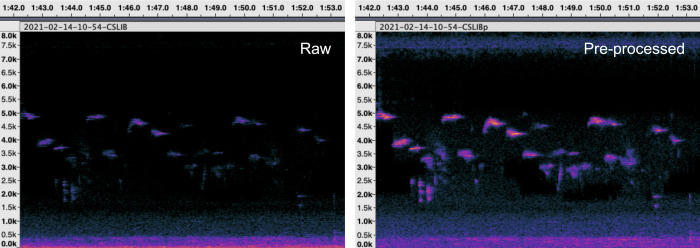

We used the 840 pre-processed sound samples for manual spectrogram scanning and listening to recordings. We viewed each 5-min sound sample in Audacity® in Spectrogram View, set to Linear Scale with the Minimum Frequency at 0 kHz and the Max Frequency at 8 kHz (Fig 2). To reduce variability between observers, a single primary observer (S. Diaz) analyzed all the recordings. We listened to the selected recordings and identified all bird vocalizations to species level, so that each WAV file had a list of species heard. Recordings from online repositories of avian vocalizations, such as Xeno-canto [41] and eBird [42], served as references for identifying bird species. Experienced birders (J. Gan, C. Española, and A. Constantino) were also consulted for further species identification. Unidentified vocalizations with distinct time-frequency characteristics were still included as sonospecies.

Fig 2. Sample spectrograms for raw and pre-processed .wav files opened in Audacity® Spectrogram View.

The x-axis represents the time expressed in seconds, while the y-axis represents the frequency expressed in kHz. Spectrogram parameters: linear scale, 8 kHz maximum frequency, and window size = 4096. Amplitude ranges from -60 dB to -44 dB (raw) and -60 dB to -29 dB (pre-processed).

A limitation of our study is that we did not measure bird species abundance, because individuals are difficult to tell apart from their calls alone. Hence, we focused on the relationship between acoustic indices and bird species richness.

Acoustic index analysis

We used two data sets for the acoustic index analysis: raw (direct from recording) and pre-processed .wav files. We calculated eight acoustic indices: Acoustic Complexity Index (ACI) [14], Acoustic Diversity Index (ADI) [15], Acoustic Evenness Index (AEI) [15], Bioacoustic Index (BI) [16], Acoustic Entropy Index (H) [17], Temporal Entropy Index (Ht) [17], Spectral Entropy Index (Hf) [17], and Acoustic Richness Index (AR) [18] using the soundecology [11] and seewave [12] R packages [43]. We used a sampling frequency of 44,100 Hz and a fast Fourier transform (FFT) window of 512 points (S1 Table). This corresponds to a frequency resolution of FR = 86.133 Hz and a time resolution of TR = 0.0116 s.
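Both resolutions follow directly from the sampling frequency f_s and the FFT window length N. The time resolution below assumes non-overlapping windows, so that one frame spans N samples:

```latex
\mathrm{FR} = \frac{f_s}{N} = \frac{44100\ \mathrm{Hz}}{512} \approx 86.133\ \mathrm{Hz},
\qquad
\mathrm{TR} = \frac{N}{f_s} = \frac{512}{44100\ \mathrm{Hz}} \approx 0.0116\ \mathrm{s}
```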

The ACI, ADI, AEI, and BI were calculated using the multiple_sounds function in soundecology with the default settings (S1 Table). The maximum frequency of ADI and AEI was set to 10 kHz, with a frequency step of 1 kHz. The minimum and maximum frequencies of BI were set to 2 kHz and 8 kHz, respectively. We calculated H and AR in seewave using the default settings (S1 Table). We extracted Ht from the AR output and divided each H value by its corresponding Ht value to obtain Hf.

ACI values are cumulative (i.e., very long samples return very large values) [11]. We therefore divided each ACI value by the ACI value of a silent recording (nACI = ACI/8843.141) to obtain values that are easier to compare, herein referred to as the normalized Acoustic Complexity Index (nACI). We also calculated the inverse Acoustic Evenness Index (1-AEI) to facilitate comparison with the ADI [21]. No further calculations were applied to the other indices.

Normalized Acoustic Complexity Index (nACI)

The ACI is calculated from a spectrogram divided into frequency bins and temporal steps [14]. Within each frequency bin and temporal step, the index sums the absolute differences between adjacent intensity values and divides this sum by the total intensity in that step. These values are summed over all temporal steps and frequency bins to obtain the ACI for the entire recording [14]. High ACI values therefore correspond to strong intensity modulations, which are assumed to come mainly from bird vocalizations. In contrast, low ACI values correspond to constant intensities, such as persistent insect buzzing and anthrophony (i.e., human-generated sounds). The ACI correlated positively with the number of bird vocalizations in a beech forest in Italy [14].
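A minimal sketch of the ACI and of the normalization described above follows. It assumes that the spectrogram is given as a list of frequency bins, each holding a list of intensities over time. The temporal step is expressed as `frames_per_step` FFT frames, a hypothetical parameter; soundecology sets its step in seconds. Only the silent-recording reference value, 8843.141, comes from the text.

```python
SILENT_ACI = 8843.141  # ACI of a silent recording, as reported in the text


def aci(spectrogram, frames_per_step):
    """Acoustic Complexity Index of a spectrogram.

    spectrogram: list of frequency bins, each a list of intensities over time.
    frames_per_step: number of FFT frames in one temporal step (assumed)."""
    total = 0.0
    for row in spectrogram:
        for start in range(0, len(row), frames_per_step):
            step = row[start:start + frames_per_step]
            energy = sum(step)
            if len(step) < 2 or energy == 0:
                continue  # nothing to compare, or an all-zero step
            # Sum of absolute differences between adjacent frames, relative to step energy.
            diffs = sum(abs(b - a) for a, b in zip(step, step[1:]))
            total += diffs / energy
    return total


def normalized_aci(value, reference=SILENT_ACI):
    """nACI: ACI divided by the ACI of a silent recording."""
    return value / reference
```

A bin with constant intensity contributes nothing, because all of its adjacent differences are zero. This is why steady sounds push the index down.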

Acoustic Diversity Index (ADI) and Inverse Acoustic Evenness Index (1-AEI)

The ADI and AEI are calculated on a matrix of amplitude values extracted from a spectrogram divided into frequency bins. For each bin, both indices first obtain the proportion of amplitude values above a dB threshold [15]. The ADI applies the Shannon diversity index to these values, whereas the AEI applies the Gini index. A higher Shannon index indicates higher diversity, whereas a higher Gini index indicates lower evenness and diversity. Consequently, ADI values should correlate positively, and AEI values negatively, with bird species richness. In Indiana, USA, ADI values were higher and AEI values were lower during the dawn and dusk choruses, indicating the presence of more vocalizing bird species during these periods [15]. To facilitate comparability between the two indices, we calculated the inverse of AEI, herein referred to as the inverse Acoustic Evenness Index (1-AEI) [21]. Higher ADI and 1-AEI values should indicate higher frequency occupation of the spectrum and higher sound diversity.
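The ADI, AEI, and 1-AEI computations can be sketched as follows. The sketch assumes that the spectrogram has already been split into frequency bands of dB values, for example ten 1-kHz bands up to 10 kHz as described above. The -50 dBFS threshold is an assumed default; check S1 Table for the value actually used. The Gini formula is the standard one for sorted values and may differ in minor details from soundecology's implementation.

```python
import math


def band_proportions(bands_db, threshold_db=-50.0):
    """Fraction of cells above threshold_db in each frequency band.

    bands_db: list of bands, each a flat list of dB values (dBFS)."""
    return [sum(v > threshold_db for v in band) / len(band) for band in bands_db]


def adi(props):
    """Acoustic Diversity Index: Shannon index of the band proportions."""
    total = sum(props)
    if total == 0:
        return 0.0
    return -sum((p / total) * math.log(p / total) for p in props if p > 0)


def gini(values):
    """Gini coefficient (AEI): 0 = perfectly even, near 1 = concentrated in one band."""
    xs = sorted(values)
    n, total = len(xs), sum(xs)
    if total == 0:
        return 0.0
    return sum((2 * i - n - 1) * x for i, x in enumerate(xs, start=1)) / (n * total)


def inverse_aei(props):
    """1-AEI, which rises with evenness like the ADI does."""
    return 1.0 - gini(props)
```

When all ten bands are equally occupied, ADI reaches ln(10) and 1-AEI equals 1. When sound occupies a single band, ADI is 0 and 1-AEI drops to 0.1.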

Bioacoustic Index (BI)

The BI is calculated from a spectrogram generated by a fast Fourier transform (FFT). It is the area under the mean spectrum (in dB) between 2 and 8 kHz, the typical frequency range of bird vocalizations, measured above the lowest dB value in that range [16]. The BI therefore reflects both the sound level and the number of frequency bands in use. The BI was strongly and positively correlated with avian abundance in Hawaii [16].
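The BI calculation can be sketched as the area between the mean dB spectrum and its minimum within 2 to 8 kHz. This follows the description above. The input format (a list of frequency/level pairs) and the multiplication by bin width are assumptions, and soundecology may scale the area differently.

```python
def bioacoustic_index(mean_spectrum_db, bin_width_hz, min_freq=2000.0, max_freq=8000.0):
    """Area under the mean dB spectrum between min_freq and max_freq,
    measured above the lowest level in that range.

    mean_spectrum_db: list of (center_frequency_hz, mean_level_db) pairs."""
    band = [db for f, db in mean_spectrum_db if min_freq <= f <= max_freq]
    if not band:
        return 0.0
    floor = min(band)  # the quietest bin in 2-8 kHz sets the baseline
    return sum(db - floor for db in band) * bin_width_hz
```

A flat spectrum gives a BI of zero, however loud it is. Energy outside 2 to 8 kHz, such as low-frequency traffic noise, does not enter the sum at all.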

Acoustic Entropy Index (H): Temporal Entropy Index (Ht) and Spectral Entropy Index (Hf)

The H is calculated as the product of Ht and Hf [17]. Ht is the Shannon entropy of the amplitude envelope, and Hf is the Shannon entropy of the frequency spectrum, each normalized to range from 0 to 1. A flat amplitude envelope (constant intensity) maximizes Ht, and a flat spectrum maximizes Hf. Consequently, H tends toward 0 for a single pure tone, increases with frequency and amplitude modulation, and tends toward 1 for random noise [17]. In Tanzanian lowland coastal forests, H increased with bird species richness [17].
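The entropy indices can be sketched as normalized Shannon entropies. The envelope and spectrum are assumed to be precomputed, non-negative values; seewave derives them from the amplitude envelope and the mean spectrum of the recording. Because H = Ht × Hf, dividing H by Ht recovers Hf, which is how Hf was obtained from the seewave outputs.

```python
import math


def normalized_entropy(values):
    """Shannon entropy of values/sum(values), divided by log(n) so it lies in [0, 1]."""
    n, total = len(values), sum(values)
    if n < 2 or total == 0:
        return 0.0
    h = -sum((v / total) * math.log(v / total) for v in values if v > 0)
    return h / math.log(n)


def temporal_entropy(envelope):
    """Ht: entropy of the amplitude envelope (1 for a perfectly flat envelope)."""
    return normalized_entropy(envelope)


def spectral_entropy(spectrum):
    """Hf: entropy of the frequency spectrum (0 for a single pure tone)."""
    return normalized_entropy(spectrum)


def acoustic_entropy(envelope, spectrum):
    """H = Ht * Hf."""
    return temporal_entropy(envelope) * spectral_entropy(spectrum)
```

A constant envelope gives Ht = 1, and a single short pulse gives Ht = 0. For any recording with Ht > 0, `acoustic_entropy(env, spec) / temporal_entropy(env)` returns `spectral_entropy(spec)`.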

Acoustic Richness Index (AR)

The AR combines the ranks of Ht and of the median of the amplitude envelope (M) across all recordings in a dataset [18]. Lower AR values (i.e., lower-ranked Ht and M) indicate lower acoustic richness and the presence of fewer bird species. In contrast, higher AR values correspond to higher acoustic richness and more bird species. In France, the AR correlated positively with bird species richness [18].
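Because AR is built from ranks, a recording's AR value depends on the other recordings in the same dataset. The sketch below assumes AR = rank(Ht) × rank(M) / n², with tied values given their average rank as in R's `rank()`. The Ht and M values are taken as already computed.

```python
def average_ranks(values):
    """1-based ranks; tied values receive their average rank (like R's rank())."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def acoustic_richness(ht_values, m_values):
    """AR for each recording: rank(Ht) * rank(M) / n**2 over the whole dataset."""
    n = len(ht_values)
    r_ht, r_m = average_ranks(ht_values), average_ranks(m_values)
    return [a * b / n ** 2 for a, b in zip(r_ht, r_m)]
```

Adding or removing recordings changes every AR value in the set. AR values are therefore comparable only within one dataset, such as the pooled 840 samples.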

Statistical analysis

Spearman’s correlation

We calculated Spearman’s rank correlation between each acoustic index and the bird species richness obtained from manual spectrogram scanning and listening to recordings. We used two data sets, the 840 raw and the 840 pre-processed .wav files, herein referred to as the pooled raw data and the pooled pre-processed data. We also calculated Spearman’s rank correlation separately for each recording point. We used Fisher’s z-transformation to compare the correlations between the raw and pre-processed datasets.
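Spearman’s correlation and the Fisher z comparison can be sketched in plain Python. The comparison shown is the textbook test for two independent correlations: z = atanh(r) and SE = sqrt(1/(n1 - 3) + 1/(n2 - 3)). The text does not state which standard error was used. The raw and pre-processed coefficients also share the same richness values, so this sketch is not expected to reproduce the statistics in Table 3.

```python
import math


def average_ranks(values):
    """1-based ranks; ties receive their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the (tie-averaged) ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def compare_independent_correlations(r1, n1, r2, n2):
    """Fisher z test of r1 vs r2 from independent samples.

    Returns (z1, z2, z2 - z1, test statistic, two-sided p)."""
    z1, z2 = math.atanh(r1), math.atanh(r2)
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    stat = (z2 - z1) / se
    p = math.erfc(abs(stat) / math.sqrt(2))  # two-sided normal p-value
    return z1, z2, z2 - z1, stat, p
```

Tie-averaged ranks matter here because species richness takes only a few integer values, so many recordings share the same rank.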

Generalized linear mixed models (GLMMs)

We used generalized linear mixed models (GLMMs) to examine how acoustic indices predict bird species richness in pre-processed 5-min sound samples depending on season and time of day. For each sound sample, we specified the acoustic index as the dependent variable; bird species richness, season, and time of day as fixed effects; and the recording point as a random effect. For each acoustic index, we fitted a set of three models, comprising the full model and fixed-effects models, in R using the lme4 [44] and glmmTMB [45] packages (S2 Table). We fitted nACI, ADI, and BI with a Gaussian distribution and identity link function [46]; AR with a Gaussian distribution and log link function; and 1-AEI, H, Ht, and Hf with a beta distribution [47]. We computed the corrected Akaike Information Criterion (AICc) using the AICcmodavg [48] R package and chose the model with the lowest AICc value as the best model for each acoustic index [49]. We considered all models with ΔAICc < 4 as equally plausible [49]. Lastly, we compared the plausible models using likelihood-ratio tests (LRT).
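The model-selection rule above can be sketched once each fitted model's log-likelihood and parameter count are known. The rule is: take the lowest AICc, treat models with ΔAICc < 4 as equally plausible, then compare them with likelihood-ratio tests. The model fitting itself (lme4, glmmTMB) is not reproduced. The chi-square tail uses closed forms valid for integer degrees of freedom.

```python
import math


def aicc(log_lik, k, n):
    """Second-order Akaike Information Criterion for k parameters and n samples."""
    aic = -2.0 * log_lik + 2.0 * k
    return aic + (2.0 * k * (k + 1)) / (n - k - 1)


def rank_models(models, n, delta_max=4.0):
    """models: dict name -> (log_likelihood, n_parameters).

    Returns [(name, AICc, delta)] sorted by AICc, keeping models with delta < delta_max."""
    scores = {name: aicc(ll, k, n) for name, (ll, k) in models.items()}
    best = min(scores.values())
    ranked = sorted((s, name) for name, s in scores.items())
    return [(name, s, s - best) for s, name in ranked if s - best < delta_max]


def chi2_sf(x, df):
    """Upper-tail chi-square probability for integer df (closed forms)."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # exp(-x/2) * sum_{i < df/2} (x/2)^i / i!
        term = total = 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # Odd df: erfc(sqrt(x/2)) + 2*phi(sqrt(x)) * sum_r x^((2r-1)/2) / (1*3*...*(2r-1))
    total, term = 0.0, math.sqrt(x)
    for r in range(1, (df - 1) // 2 + 1):
        if r > 1:
            term *= x / (2 * r - 1)
        total += term
    pdf = math.exp(-x / 2) / math.sqrt(2 * math.pi)
    return math.erfc(math.sqrt(x / 2)) + 2 * pdf * total


def likelihood_ratio_test(ll_reduced, k_reduced, ll_full, k_full):
    """LRT of a reduced model nested in a full model: (statistic, df, p)."""
    stat = 2.0 * (ll_full - ll_reduced)
    df = k_full - k_reduced
    return stat, df, chi2_sf(stat, df)
```

For example, suppose a hypothetical full model has log-likelihood -100 with 6 parameters and a model without season has -102 with 5 parameters, both with n = 840. The two models lie within 2 AICc units of each other, and the LRT statistic is 4.0 on 1 df (p ≈ 0.046).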

Results

Summary of bird species heard

From the 840 pre-processed 5-min sound samples analyzed, we heard 21 bird species from 20 families in the recordings from the NSC (Table 1). This represented only 52.5% of the 40 species sighted at the NSC in eBird checklists [50] submitted from July 2020 to January 2022. Two species heard in the recordings, the Philippine Nightjar and the Pygmy Flowerpecker, were not on that eBird list because insufficient eBird data are available for both species. Of the 21 detected bird species, seven (33%) are Philippine endemics (Table 1), thirteen are resident species, and the Brown Shrike is the only migrant species. Seventeen (81.0%) bird species were found at all three sites. Given the proximity of the three sites, they most likely share the same bird community. We therefore assume that bird abundance and bird richness are similar across the three sites.

Table 1. Summary of bird species heard and the number of sound samples in which each species was detected, out of 280 samples per recording point.

| Family | Common Name | Scientific Name | Site A | Site B | Site C |
|---|---|---|---|---|---|
| Acanthizidae | Golden-bellied Gerygone | Gerygone sulphurea | 32 | 127 | 143 |
| Alcedinidae | Collared Kingfisher | Todiramphus chloris | 46 | 76 | 105 |
| Ardeidae | Black-crowned Night Heron | Nycticorax nycticorax | 2 | 0 | 2 |
| Artamidae | White-breasted Woodswallow | Artamus leucorynchus | 3 | 2 | 0 |
| Campephagidae | Pied Triller | Lalage nigra | 2 | 5 | 3 |
| Caprimulgidae | Philippine Nightjar* | Caprimulgus manillensis | 2 | 1 | 2 |
| Columbidae | Zebra Dove | Geopelia striata | 1 | 2 | 11 |
| Corvidae | Large-billed Crow | Corvus macrorhynchos | 32 | 127 | 143 |
| Dicaeidae | Red-keeled Flowerpecker* | Dicaeum australe | 24 | 4 | 35 |
| | Pygmy Flowerpecker* | Dicaeum pygmaeum | 0 | 0 | 1 |
| Laniidae | Brown Shrike | Lanius cristatus | 13 | 6 | 1 |
| Megalaimidae | Coppersmith Barbet | Psilopogon haemacephalus | 0 | 7 | 31 |
| Muscicapidae | Philippine Magpie-robin* | Copsychus mindanensis | 5 | 1 | 1 |
| Nectariniidae | Olive-backed Sunbird | Cinnyris jugularis | 69 | 47 | 28 |
| Oriolidae | Black-naped Oriole | Oriolus chinensis | 130 | 121 | 86 |
| Passeridae | Eurasian Tree Sparrow | Passer montanus | 84 | 49 | 86 |
| Picidae | Philippine Pygmy Woodpecker* | Yungipicus maculatus | 15 | 3 | 4 |
| Psittaculidae | Philippine Hanging Parrot* | Loriculus philippensis | 21 | 16 | 33 |
| Pycnonotidae | Yellow-vented Bulbul | Pycnonotus goiavier | 115 | 155 | 97 |
| Rhipiduridae | Philippine Pied Fantail* | Rhipidura nigritorquis | 36 | 21 | 23 |
| Zosteropidae | Lowland White-eye | Zosterops meyeni | 18 | 66 | 8 |

From July 2020 to January 2022, 21 species from 20 families were heard in the National Science Complex. Asterisk (*) indicates Philippine endemic species.

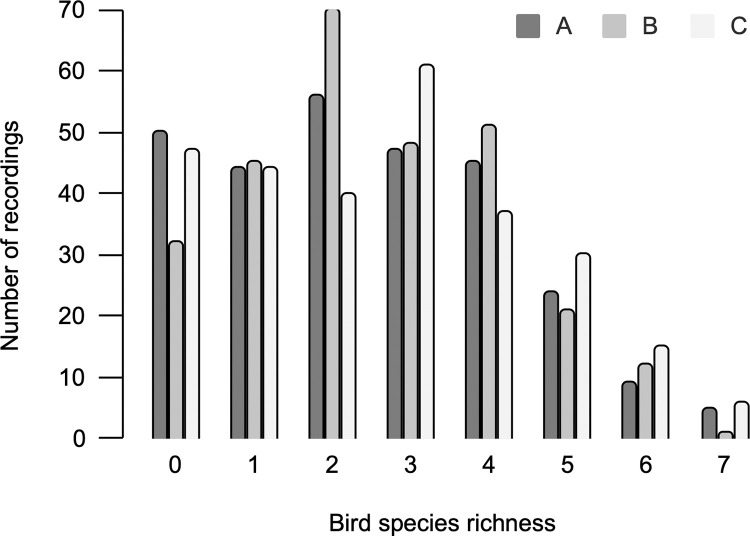

The number of bird species heard was unevenly distributed across recordings (Fig 3). The highest number of bird species heard in a single recording was seven, but only twelve (1.43%) of the 840 pre-processed .wav files reached this number. Seventy percent (588 .wav files) had one to four species recorded, and 15% (129 .wav files) had no bird species heard.

Fig 3. Bird species richness and number of recordings per site in 840 pre-processed 5-min sound samples.

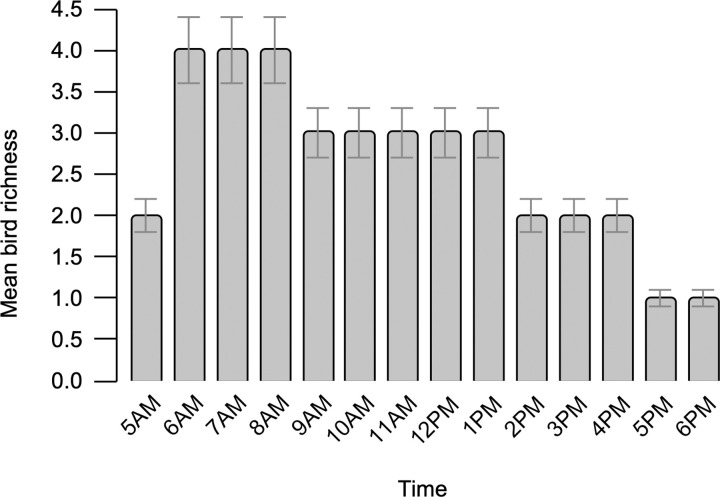

In the 840 pre-processed 5-min sound samples, mean richness was highest in recordings made between 6:00 AM and 8:00 AM, corresponding to the dawn chorus (Fig 4). The dusk chorus was less pronounced.

Fig 4. Mean bird species richness across time from 5:00 AM to 6:00 PM in 840 pre-processed 5-min sound samples.

In addition to bird sounds, we detected various other biotic sounds, including those produced by mammals, amphibians, reptiles, and insects. These include the sounds of chickens, dogs, geckos, frogs, sheep, and crickets. We also identified anthrophonies, such as human speech, construction noise, and road traffic, as well as geophonies, such as rain and wind. Although we detected these non-avian sounds in our recordings, we did not quantify how often they occurred.

Spearman’s correlation between acoustic indices and bird species richness

The Spearman’s correlation and Fisher’s z-transformation analyses revealed that, for most indices, bird species richness correlated more strongly with index values from the pre-processed data than with those from the raw data (Tables 2 and 3). In the pooled pre-processed data, five acoustic indices (nACI, ADI, 1-AEI, Ht, and AR) had significant but weak negative correlations with the number of bird species heard in the recordings (Table 2). The other three acoustic indices, BI, H, and Hf, had no significant correlation with bird species richness.

Table 2. The Spearman’s rank correlation coefficients between acoustic indices and bird species richness.

| Site | nACI | ADI | 1-AEI | BI | H | Ht | Hf | AR |
|---|---|---|---|---|---|---|---|---|
| Raw (unprocessed) | | | | | | | | |
| Pooled | -0.10 ** | -0.02 | -0.02 | -0.01 | -0.07 * | 0.02 | -0.07 * | -0.001 |
| A | -0.25 *** | -0.14 * | -0.15 * | -0.01 | -0.19 ** | 0.02 | -0.19 ** | -0.09 |
| B | -0.05 | -0.01 | -0.01 | -0.16 ** | -0.01 | 0.08 | -0.02 | 0.01 |
| C | -0.04 | 0.15 * | 0.17 ** | 0.05 | 0.01 | 0.12 | 0.004 | 0.09 |
| Pre-processed | | | | | | | | |
| Pooled | -0.15 **** | -0.09 ** | -0.09 ** | -0.01 | 0.02 | -0.10 ** | 0.05 | -0.11 ** |
| A | -0.25 **** | -0.20 ** | -0.20 *** | 0.01 | -0.08 | -0.05 | -0.05 | -0.13 * |
| B | -0.05 | -0.10 | -0.10 | -0.18 ** | 0.15 * | -0.03 | 0.16 ** | -0.04 |
| C | -0.20 *** | -0.05 | -0.05 | 0.06 | 0.05 | -0.20 *** | 0.10 | -0.16 ** |

Based on the pooled pre-processed data, the nACI, ADI, 1-AEI, Ht, and AR had significant but weak negative correlations with bird species richness. Asterisks indicate significance levels: * p < 0.05; ** p < 0.01; *** p < 0.001.

Table 3. The results of Fisher’s z-transformation comparing Spearman’s rank correlation coefficients for the raw and pre-processed data sets.

| Index | z (raw) | z (pre-processed) | z difference | t | p |
|---|---|---|---|---|---|
| nACI | -0.104 | -0.148 | -0.044 | -1.817 | 0.069 |
| ADI | -0.023 | -0.092 | -0.069 | -2.839 | <0.01 |
| 1-AEI | -0.015 | -0.093 | -0.077 | -3.165 | <0.01 |
| BI | -0.007 | -0.006 | 0.001 | 0.040 | 0.968 |
| H | -0.072 | 0.024 | 0.096 | 3.927 | <0.001 |
| Ht | 0.022 | -0.099 | -0.121 | -4.942 | <0.001 |
| Hf | -0.074 | 0.052 | 0.125 | 5.133 | <0.001 |
| AR | -0.001 | -0.111 | -0.110 | -4.514 | <0.001 |

Pre-processing significantly strengthened the ADI, 1-AEI, Ht, and AR correlations.

Fisher’s z-transformation results showed no significant improvement in the correlation between the nACI and bird species richness (Table 3). Pre-processing significantly strengthened the correlations for ADI, 1-AEI, Ht, and AR, whereas the correlations for H and Hf became significantly weaker (Table 3).

Generalized linear mixed models (GLMMs)

The 1-AEI, Ht, and AR predicted bird species richness in pre-processed 5-min sound samples, and they also varied significantly with season and time of day (Table 4). Specifically, the 1-AEI, Ht, and AR were significantly higher during the wet season than during the dry season. The nACI also predicted bird species richness, but it showed no significant differences with season or time of day (Table 4). Conversely, the ADI, BI, H, and Hf did not significantly predict bird species richness (Table 4). For these indices, season and time of day were the stronger predictors.

Table 4. The results of GLMM examining how eight acoustic indices reflect bird species richness, season, and time of day, using the 840 pre-processed 5-min sound samples dataset.

| | Coefficient | SE | t | p |
|---|---|---|---|---|
| Normalized Acoustic Complexity Index (nACI) | | | | |
| Intercept | 1.039 | 0.011 | 94.470 | <0.001 |
| Richness | -0.003 | 0.001 | -4.698 | <0.001 |
| Season [wet] | -0.003 | 0.002 | -1.422 | 0.156 |
| Hour | -0.0004 | 0.0002 | -1.651 | 0.099 |
| Acoustic Diversity Index (ADI) | | | | |
| Intercept | 1.787 | 0.233 | 7.672 | <0.01 |
| Richness | -0.013 | 0.010 | -1.348 | 0.178 |
| Season [wet] | 0.253 | 0.033 | 7.652 | <0.001 |
| Hour | -0.014 | 0.004 | -3.285 | <0.01 |
| Inverse Acoustic Evenness Index (1-AEI) | | | | |
| Intercept | 0.574 | 0.447 | 1.283 | 0.200 |
| Richness | -0.068 | 0.020 | -3.417 | <0.001 |
| Season [wet] | 0.369 | 0.067 | 5.529 | <0.001 |
| Hour | -0.021 | 0.009 | -2.419 | <0.05 |
| Bioacoustic Index (BI) | | | | |
| Intercept | 7.735 | 2.552 | 3.031 | 0.087 |
| Richness | -0.086 | 0.065 | -1.335 | 0.182 |
| Season [wet] | 1.065 | 0.214 | 4.966 | <0.001 |
| Hour | -0.079 | 0.028 | -2.822 | <0.01 |
| Acoustic Entropy Index (H) | | | | |
| Intercept | 1.714 | 0.152 | 11.282 | <0.001 |
| Richness | 0.001 | 0.008 | 0.072 | 0.942 |
| Season [wet] | 0.075 | 0.028 | 2.693 | <0.01 |
| Hour | -0.008 | 0.004 | -2.230 | <0.05 |
| Temporal Entropy Index (Ht) | | | | |
| Intercept | 3.915 | 0.162 | 24.201 | <0.001 |
| Richness | -0.030 | 0.010 | -2.991 | <0.01 |
| Season [wet] | 0.125 | 0.034 | 3.667 | <0.001 |
| Hour | -0.009 | 0.004 | -2.103 | <0.05 |
| Spectral Entropy Index (Hf) | | | | |
| Intercept | 1.854 | 0.150 | 12.340 | <0.001 |
| Richness | 0.006 | 0.009 | 0.684 | 0.494 |
| Season [wet] | 0.064 | 0.029 | 2.197 | <0.05 |
| Hour | -0.008 | 0.004 | -2.137 | <0.05 |
| Acoustic Richness Index (AR) | | | | |
| Intercept | -1.066 | 0.135 | -7.880 | <0.001 |
| Richness | -0.072 | 0.018 | -4.021 | <0.001 |
| Season [wet] | 0.580 | 0.068 | 8.588 | <0.001 |
| Hour | -0.038 | 0.008 | -5.028 | <0.001 |

We specified the acoustic index as the dependent variable, bird species richness, season, and time of day as fixed effects, and the recording point as the random effect.

Discussion

Acoustic indices and bird species richness

Our study showed that the nACI, 1-AEI, Ht, and AR estimated bird species richness in pre-processed 5-min sound samples, with a significant negative relationship between index values and bird richness. We found the nACI to be the most robust, as it predicted bird species richness independently of season and time of day and consistently across sites. The negative relationship between nACI and bird species richness may be attributed to overlapping vocalizations of multiple birds in the recordings, which reduce the differences in sound intensities and lower nACI values. The ACI also showed a significant negative correlation with bird species richness in a grassland ecosystem in the USA [51] and a tropical rainforest in Costa Rica [52]. In those studies, the negative correlation was explained by less complex bird calls at the study sites and the presence of dominant species, which resulted in less variable sound intensities [51, 52].

While the nACI was the most robust index, the 1-AEI, Ht, and AR were also reliable predictors of bird species richness in urban settings when pre-processing was applied to the .wav files. After pre-processing, the 1-AEI, Ht, and AR showed stronger correlations with bird species richness (Table 3). Therefore, using the 1-AEI, Ht, and AR with pre-processed data can lead to better predictions of bird species richness in green spaces and other urban environments.

The negative correlation between 1-AEI and bird species richness could be due to bird species occupying a wide frequency range, resulting in high index values. In addition, because recordings with heavy rain and intense anthrophony were not excluded, these recordings yielded high 1-AEI values despite having few or no audible bird species.

The Ht also had a significant negative relationship with bird species richness in a rainforest in Chile [23]. The low diversity of birds in the recordings and the presence of one or a few dominant species may explain the negative correlation between Ht and bird species richness. However, Ht is highest for a flat amplitude envelope and decreases as amplitude modulations become more distinct [17]. The mechanism by which a few dominant vocalizing species would raise Ht values therefore warrants further investigation.

In contrast, the BI, H, and Hf performed poorly in predicting bird species richness, and their correlations remained inconsistent across the three sites, even after pre-processing. Therefore, we do not recommend using the BI, H, and Hf as proxies for bird species richness in urban settings.

Given the performance of the eight acoustic indices assessed, we recommend using only the nACI, 1-AEI, Ht, and AR as proxies for bird species richness in urban settings. However, the acoustic indices should be ground-truthed before they are applied to studies in different environments, as their performance depends on various factors, including local noise conditions, the bird species composition of the site, season, and time of day.

Although most bird species in the NSC were heard at all three sites, differences in local noise conditions (construction and maintenance work at site A, the proximity of site B to the road, and the proximity of site C to the tree line) resulted in inconsistent correlations between index values and bird species richness. However, pre-processing strengthened the correlation between select acoustic indices (ADI, 1-AEI, Ht, AR) and bird species richness. Our study highlights the importance of pre-processing sound data from urban and other noisy environments before using acoustic indices. If background noise is first removed from recordings by applying frequency cut-off filters (e.g., a high-pass filter), noise reduction, and signal amplification, acoustic indices can perform more consistently across diverse acoustic conditions and better reflect bird species richness [17, 18].
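Below is a minimal Python sketch of two of the pre-processing steps named above: a first-order high-pass filter and peak normalization as a simple form of signal amplification. It is illustrative only and does not claim to reproduce the study's pre-processing. The 22,050 Hz sample rate, the 1 kHz cut-off aimed at low-frequency rumble, the 0.9 target peak, and the synthetic test signal are all assumed values. Noise reduction is not shown.

```python
import math


def high_pass(samples, sample_rate, cutoff_hz):
    """First-order (RC) high-pass filter that attenuates content below cutoff_hz."""
    if not samples:
        return []
    rc = 1.0 / (2 * math.pi * cutoff_hz)   # time constant for the cut-off
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out = [samples[0]]
    for i in range(1, len(samples)):
        # Keep fast sample-to-sample changes; slow (low-frequency) drift decays
        out.append(alpha * (out[i - 1] + samples[i] - samples[i - 1]))
    return out


def peak_normalize(samples, target_peak=0.9):
    """Scale samples so the largest absolute value equals target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0:
        return list(samples)  # silence: nothing to amplify
    gain = target_peak / peak
    return [s * gain for s in samples]


if __name__ == "__main__":
    rate = 22050       # assumed sample rate (Hz)
    cutoff = 1000.0    # assumed cut-off (Hz)
    t = [n / rate for n in range(rate // 10)]
    # Hypothetical mix: strong 100 Hz hum plus a quieter 4 kHz tone
    mix = [0.8 * math.sin(2 * math.pi * 100 * x) + 0.1 * math.sin(2 * math.pi * 4000 * x)
           for x in t]
    cleaned = peak_normalize(high_pass(mix, rate, cutoff))
    print(max(abs(s) for s in cleaned))
```

A first-order filter rolls off gently, only about 6 dB per octave. A steeper filter would remove more low-frequency noise, at the cost of a longer example.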

Conclusions

We demonstrate the use of passive acoustic monitoring with low-cost sound recorders to study urban bird communities in the Philippines and attempt to address the challenge of processing and analyzing large collections of sound data. To our knowledge, this is the first study in the country to assess acoustic indices as proxies for bird species richness in an urban green space. Among the eight acoustic indices assessed, the nACI was the best-performing and most robust, while the 1-AEI, Ht, and AR were also good predictors of bird species richness. We emphasize the importance of ground-truthing the indices before applying them to studies. Further research on acoustic indices and their relationship to bird species richness is needed to fully understand the inconsistent correlations.

The frequency response of our recorders was not flat across the entire frequency range, which affects the calculation of acoustic indices. To offset this limitation, low-cost recorders should be calibrated before use in ecoacoustic studies. We also recognize the importance of pre-processing sound data, such as applying high-pass filters, noise reduction, and signal amplification, to account for differences among recorders, remove unwanted ambient sounds, and improve the detection of bird species.
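One simple calibration approach is to record the same test signal with a reference microphone and with each low-cost recorder, derive a per-band gain correction in dB, and add that correction to the recorder's band levels before computing indices. The Python sketch below illustrates this under stated assumptions. The band levels are hypothetical, and the approach is offered as an illustration, not as a procedure used in this study.

```python
def band_corrections_db(reference_db, recorder_db):
    """Per-band gain (dB) mapping a recorder's measured response onto the reference."""
    if len(reference_db) != len(recorder_db):
        raise ValueError("reference and recorder must cover the same bands")
    return [ref - rec for ref, rec in zip(reference_db, recorder_db)]


def apply_corrections(levels_db, corrections_db):
    """Add the per-band corrections to a recorder's band levels (in dB)."""
    if len(levels_db) != len(corrections_db):
        raise ValueError("levels and corrections must cover the same bands")
    return [level + corr for level, corr in zip(levels_db, corrections_db)]


if __name__ == "__main__":
    # Hypothetical test-signal levels (dB) in four bands, e.g. 1, 2, 4, 8 kHz
    reference = [-30.0, -30.0, -30.0, -30.0]
    recorder = [-36.0, -30.0, -28.5, -41.0]   # uneven low-cost response
    corrections = band_corrections_db(reference, recorder)
    print(corrections)                                 # [6.0, 0.0, -1.5, 11.0]
    print(apply_corrections(recorder, corrections))    # [-30.0, -30.0, -30.0, -30.0]
```

Applying the corrections in the dB domain treats each band's deviation as a fixed gain. This assumption holds only if the recorder responds linearly over the levels encountered in the field.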

Because acoustic indices were designed to quickly measure different characteristics of soundscapes, they are not species-specific and cannot recognize individual sounds. For this reason, machine learning with convolutional neural networks (CNNs) is increasingly used in bioacoustic studies for birdsong classification [53–55]. Neural networks trained on Xeno-canto and Macaulay Library recordings have successfully classified North American and European bird species [53]; this approach remains to be explored for Philippine bird species. Other analyses, such as principal component analysis (PCA) [56] and cluster analysis [57], can also help discriminate among urban soundscapes based on ecoacoustic indices.

Supporting information


Acknowledgments

The authors appreciate the help of expert birders, Dr. Carmela P. Española and Adrian Constantino, in species identification.

Data Availability

All relevant data are within the manuscript and its Supporting Information files.

Funding Statement

The author(s) received no specific funding for this work.

References

1. Byers BE, Kroodsma DE. Avian vocal behavior. In: Cornell Lab of Ornithology. Handbook of Bird Biology. 2016. p. 355–405.

2. Bardeli R, Wolff D, Kurth F, Koch M, Tauchert KH, Frommolt KH. Detecting bird sounds in a complex acoustic environment and application to bioacoustic monitoring. Pattern Recognition Letters. 2010 Sep 1;31(12):1524–34. doi: 10.1016/j.patrec.2009.09.014

3. Penar W, Magiera A, Klocek C. Applications of bioacoustics in animal ecology. Ecological Complexity. 2020 Aug 1;43:100847. doi: 10.1016/j.ecocom.2020.100847

4. Abrahams C. Bird bioacoustic surveys: developing a standard protocol. In Practice. 2018;(102):20–3.

5. Zwart MC, Baker A, McGowan PJ, Whittingham MJ. The use of automated bioacoustic recorders to replace human wildlife surveys: an example using nightjars. PLoS ONE. 2014 Jul 16;9(7):e102770. doi: 10.1371/journal.pone.0102770

6. Aide TM, Corrada-Bravo C, Campos-Cerqueira M, Milan C, Vega G, Alvarez R. Real-time bioacoustics monitoring and automated species identification. PeerJ. 2013 Jul 16;1:e103. doi: 10.7717/peerj.103

7. Gibb R, Browning E, Glover-Kapfer P, Jones KE. Emerging opportunities and challenges for passive acoustics in ecological assessment and monitoring. Methods in Ecology and Evolution. 2019 Feb;10(2):169–85. doi: 10.1111/2041-210X.13101

8. Eldridge A, Casey M, Moscoso P, Peck M. A new method for ecoacoustics? Toward the extraction and evaluation of ecologically-meaningful soundscape components using sparse coding methods. PeerJ. 2016 Jun 30;4:e2108. doi: 10.7717/peerj.2108

9. Sueur J. Sound analysis and synthesis with R. Cham: Springer; 2018 Jun 6. doi: 10.1007/978-3-319-77647-7

10. Sueur J, Farina A, Gasc A, Pieretti N, Pavoine S. Acoustic indices for biodiversity assessment and landscape investigation. Acta Acustica united with Acustica. 2014 Jul 1;100(4):772–81. doi: 10.3813/AAA.918757

11. Villanueva-Rivera LJ, Pijanowski BC. Soundecology: soundscape ecology. R package version 1.3.3. 2018. Available from: https://cran.r-project.org/package=soundecology

12. Sueur J, Aubin T, Simonis C. Seewave: sound analysis and synthesis. R package version 2.2.0. 2022. Available from: https://CRAN.R-project.org/package=seewave

13. Bateman J, Uzal A. The relationship between the Acoustic Complexity Index and avian species richness and diversity: a review. Bioacoustics. 2022 Sep 3;31(5):614–27. doi: 10.1080/09524622.2021.2010598

14. Pieretti N, Farina A, Morri D. A new methodology to infer the singing activity of an avian community: The Acoustic Complexity Index (ACI). Ecological Indicators. 2011 May 1;11(3):868–73. doi: 10.1016/j.ecolind.2010.11.005

15. Villanueva-Rivera LJ, Pijanowski BC, Doucette J, Pekin B. A primer of acoustic analysis for landscape ecologists. Landscape Ecology. 2011 Nov;26(9):1233–46. doi: 10.1007/s10980-011-9636-9

16. Boelman NT, Asner GP, Hart PJ, Martin RE. Multi-trophic invasion resistance in Hawaii: bioacoustics, field surveys, and airborne remote sensing. Ecological Applications. 2007 Dec;17(8):2137–44. doi: 10.1890/07-0004.1

17. Sueur J, Pavoine S, Hamerlynck O, Duvail S. Rapid acoustic survey for biodiversity appraisal. PLoS ONE. 2008 Dec 30;3(12):e4065. doi: 10.1371/journal.pone.0004065

18. Depraetere M, Pavoine S, Jiguet F, Gasc A, Duvail S, Sueur J. Monitoring animal diversity using acoustic indices: implementation in a temperate woodland. Ecological Indicators. 2012 Feb 1;13(1):46–54. doi: 10.1016/j.ecolind.2011.05.006

19. Machado RB, Aguiar L, Jones G. Do acoustic indices reflect the characteristics of bird communities in the savannas of Central Brazil? Landscape and Urban Planning. 2017 Jun 1;162:36–43. doi: 10.1016/j.landurbplan.2017.01.014

20. Mammides C, Goodale E, Dayananda SK, Kang L, Chen J. Do acoustic indices correlate with bird diversity? Insights from two biodiverse regions in Yunnan Province, south China. Ecological Indicators. 2017 Nov 1;82:470–7. doi: 10.1016/j.ecolind.2017.07.017

21. Dröge S, Martin DA, Andriafanomezantsoa R, Burivalova Z, Fulgence TR, Osen K, et al. Listening to a changing landscape: Acoustic indices reflect bird species richness and plot-scale vegetation structure across different land-use types in north-eastern Madagascar. Ecological Indicators. 2021 Jan 1;120:106929. doi: 10.1016/j.ecolind.2020.106929

22. Retamosa Izaguirre M, Barrantes-Madrigal J, Segura Sequeira D, Spínola-Parallada M, Ramírez-Alán O. It is not just about birds: what do acoustic indices reveal about a Costa Rican tropical rainforest? Neotropical Biodiversity. 2021 Jan 1;7(1):431–42. doi: 10.1080/23766808.2021.1971042

23. Moreno-Gómez FN, Bartheld J, Silva-Escobar AA, Briones R, Márquez R, Penna M. Evaluating acoustic indices in the Valdivian rainforest, a biodiversity hotspot in South America. Ecological Indicators. 2019 Aug 1;103:1–8. doi: 10.1016/j.ecolind.2019.03.024

24. Alcocer I, Lima H, Sugai LS, Llusia D. Acoustic indices as proxies for biodiversity: a meta-analysis. Biological Reviews. 2022 Dec;97(6):2209–36. doi: 10.1111/brv.12890

25. Gasc A, Pavoine S, Lellouch L, Grandcolas P, Sueur J. Acoustic indices for biodiversity assessments: Analyses of bias based on simulated bird assemblages and recommendations for field surveys. Biological Conservation. 2015 Nov 1;191:306–12. doi: 10.1016/j.biocon.2015.06.018

26. Eldridge A, Guyot P, Moscoso P, Johnston A, Eyre-Walker Y, Peck M. Sounding out ecoacoustic metrics: Avian species richness is predicted by acoustic indices in temperate but not tropical habitats. Ecological Indicators. 2018 Dec 1;95:939–52. doi: 10.1016/j.ecolind.2018.06.012

27. Fairbrass AJ, Rennert P, Williams C, Titheridge H, Jones KE. Biases of acoustic indices measuring biodiversity in urban areas. Ecological Indicators. 2017 Dec 1;83:169–77. doi: 10.1016/j.ecolind.2017.07.064

28. Pijanowski BC, Farina A, Gage SH, Dumyahn SL, Krause BL. What is soundscape ecology? An introduction and overview of an emerging new science. Landscape Ecology. 2011 Nov;26(9):1213–32. doi: 10.1007/s10980-011-9600-8

29. Rutz C, Loretto MC, Bates AE, Davidson SC, Duarte CM, Jetz W, et al. COVID-19 lockdown allows researchers to quantify the effects of human activity on wildlife. Nature Ecology & Evolution. 2020 Sep;4(9):1156–9. doi: 10.1038/s41559-020-1237-z

30. Vallejo BM Jr, Aloy AB, Ong PS. The distribution, abundance and diversity of birds in Manila’s last greenspaces. Landscape and Urban Planning. 2009 Feb 15;89(3–4):75–85. doi: 10.1016/j.landurbplan.2008.10.013

31. Energy Development Corporation. EDC establishes threatened species arboretum in UP. 2014 December 11. Available from: https://www.energy.com.ph/2014/12/11/edc-establishes-threatened-species-arboretum-in-up/

32. Cabel IC. Walk and learn to save PH native tree species. Philippine Daily Inquirer. 2019 April 8. Available from: https://newsinfo.inquirer.net/1104179/walk-and-learn-to-save-ph-native-tree-species

33. Villasper J. Destinations: The University of the Philippines Diliman Campus–Choose Your Own Adventure. Birdwatch. 2020 March 2. Available from: http://birdwatch.ph/2020/03/02/destinations-the-university-of-the-philippines-diliman-campus-choose-your-own-adventure-2/

34. Philippine Atmospheric, Geophysical and Astronomical Services Administration (PAGASA). Climate of the Philippines. Available from: https://www.pagasa.dost.gov.ph/information/climate-philippines

35. OpenStreetMap Contributors, CC BY-SA 2.0. 2015. Available from: https://openstreetmap.org

36. HueMan1. Own work, CC BY-SA 4.0. Blank map of the Philippines. 2021. Available from: https://commons.wikimedia.org/w/index.php?curid=108050493

37. Fletcher AC, Mura C. Ten quick tips for using a Raspberry Pi. PLoS Computational Biology. 2019 May 2;15(5):e1006959. doi: 10.1371/journal.pcbi.1006959

38. Kysela J. arecord (1): command-line sound recorder and player for ALSA. [Cited 2021 August 31]. Available from: http://manpages.org/arecord

39. Benocci R, Potenza A, Bisceglie A, Roman HE, Zambon G. Mapping of the Acoustic Environment at an Urban Park in the City Area of Milan, Italy, Using Very Low-Cost Sensors. Sensors. 2022 May 6;22(9):3528. doi: 10.3390/s22093528

40. Team Audacity. Audacity®: free audio editor and recorder [Computer application]. Version 3.0.0. 2021. Audacity® software is copyright © 1999–2021 Audacity Team. Available from: https://www.audacityteam.org/. It is free software distributed under the terms of the GNU General Public License. The name Audacity® is a registered trademark.

41. Planqué B, Vellinga WP, Pieterse S, Jongsma J, de By RA. Xeno-canto. 2005. Available from: https://www.xeno-canto.org

42. Sullivan BL, Wood CL, Iliff MJ, Bonney RE, Fink D, Kelling S. eBird: A citizen-based bird observation network in the biological sciences. Biological Conservation. 2009 Oct 1;142(10):2282–92. Available from: https://www.ebird.org/

43. R Development Core Team. The R Project for Statistical Computing. R Version 4.0.0. 2020. Available from: https://www.r-project.org/

44. Bates D, Maechler M, Bolker B, Walker S. lme4: Linear Mixed-Effects Models using 'Eigen' and S4. R package version 1.1-32. 2023. Available from: https://cran.r-project.org/package=lme4

45. Magnusson A, Skaug H, Nielsen A, Berg C, Kristensen K, Maechler M, et al. glmmTMB: Generalized Linear Mixed Models using Template Model Builder. R package version 1.1.6. 2023. Available from: https://CRAN.R-project.org/package=glmmTMB

46. Budka M, Sokołowska E, Muszyńska A, Staniewicz A. Acoustic indices estimate breeding bird species richness with daily and seasonally variable effectiveness in lowland temperate Białowieża forest. Ecological Indicators. 2023 Apr 1;148:110027. doi: 10.1016/j.ecolind.2023.110027

47. Sánchez-Giraldo C, Bedoya CL, Morán-Vásquez RA, Isaza CV, Daza JM. Ecoacoustics in the rain: understanding acoustic indices under the most common geophonic source in tropical rainforests. Remote Sensing in Ecology and Conservation. 2020 Sep;6(3):248–61. doi: 10.1002/rse2.162

48. Mazerolle MJ. AICcmodavg: Model Selection and Multimodel Inference Based on (Q)AIC(c). R package version 2.3-2. 2023. Available from: https://CRAN.R-project.org/package=AICcmodavg

49. Burnham KP, Anderson DR. Model selection and multimodel inference: A practical information-theoretic approach. New York, NY: Springer New York; 2002. doi: 10.1007/b97636

50. eBird. eBird: An online database of bird distribution and abundance. eBird, Cornell Lab of Ornithology. UP Diliman–MSI Hotspot. 2022. Available from: https://ebird.org/hotspot/L10877450?yr=all&m=&rank=mrec

51. Shamon H, Paraskevopoulou Z, Kitzes J, Card E, Deichmann JL, Boyce AJ, et al. Using ecoacoustics metrices to track grassland bird richness across landscape gradients. Ecological Indicators. 2021 Jan 1;120:106928. doi: 10.1016/j.ecolind.2020.106928

52. Retamosa Izaguirre MI, Ramírez-Alán O, De la O Castro J. Acoustic indices applied to biodiversity monitoring in a Costa Rica dry tropical forest. Journal of Ecoacoustics. 2018 Feb 26;2. doi: 10.22261/JEA.TNW2NP

53. Kahl S, Wood CM, Eibl M, Klinck H. BirdNET: A deep learning solution for avian diversity monitoring. Ecological Informatics. 2021 Mar 1;61:101236. doi: 10.1016/j.ecoinf.2021.101236

54. Marchal J, Fabianek F, Aubry Y. Software performance for the automated identification of bird vocalisations: the case of two closely related species. Bioacoustics. 2022 Jul 4;31(4):397–413. doi: 10.1080/09524622.2021.1945952

55. Liu J, Zhang Y, Lv D, Lu J, Xie S, Zi J, et al. Birdsong classification based on ensemble multi-scale convolutional neural network. Scientific Reports. 2022 May 23;12(1):1–4. doi: 10.1038/s41598-022-12121-8

56. Benocci R, Roman HE, Bisceglie A, Angelini F, Brambilla G, Zambon G. Eco-acoustic assessment of an urban park by statistical analysis. Sustainability. 2021 Jul 14;13(14):7857. doi: 10.3390/su13147857

57. Benocci R, Brambilla G, Bisceglie A, Zambon G. Eco-acoustic indices to evaluate soundscape degradation due to human intrusion. Sustainability. 2020 Dec 14;12(24):10455. doi: 10.3390/su122410455