Abstract

Unmeasured confounding is a key threat to reliable causal inference based on observational studies. Motivated from two powerful natural experiment devices, the instrumental variables and difference-in-differences, we propose a new method called instrumented difference-in-differences that explicitly leverages exogenous randomness in an exposure trend to estimate the average and conditional average treatment effect in the presence of unmeasured confounding. We develop the identification assumptions using the potential outcomes framework. We propose a Wald estimator and a class of multiply robust and efficient semiparametric estimators, with provable consistency and asymptotic normality. In addition, we extend the instrumented difference-in-differences to a two-sample design to facilitate investigations of delayed treatment effect and provide a measure of weak identification. We demonstrate our results in simulated and real datasets.

Keywords: causal inference, effect modification, exclusion restriction, instrumental variables, multiple robustness

1 |. INTRODUCTION

Unmeasured confounding is a key threat to reliable causal inference based on observational studies (Lawlor et al., 2004; Rutter, 2007). A popular approach to handle unmeasured confounding is the instrumental variable (IV) method, which requires an IV that satisfies three core assumptions (Angrist et al., 1996; Baiocchi et al., 2014; Hernan & Robins, 2020): (i) (relevance) it is associated with the exposure; (ii) (independence) it is independent of any unmeasured confounder of the exposure–outcome relationship; (iii) (exclusion restriction) it has no direct effect on the outcome. By extracting exogenous variation in the exposure that is independent of the unmeasured confounder, IVs can be used to estimate the causal effect.

Meanwhile, the increasing availability of large longitudinal datasets such as administrative claims and electronic health records has created new opportunities to expand study designs to take the advantage of the longitudinal structure. One method that is widely used in economics and other social sciences is difference-in-differences (DID) (Card & Krueger, 1994; Angrist & Pischke, 2008). The method of DID is based on a comparison of the trends in outcome for two exposure groups, where one group consists of individuals who switch from being unexposed to exposed and the other group consists of individuals who are never exposed. Under the parallel trends assumption, which says that the outcomes in the two exposure groups evolve in the same way over time in the absence of the exposure, DID is able to remove time-invariant bias from the unmeasured confounder. However, because the setup and assumptions of DID are motivated from applications in social sciences, its applicability is limited in biomedical sciences. For example, in social sciences it is relatively common for a new policy to be applied to one region of the country but not another, creating a circumstance in which key assumptions such as parallel trends are likely to hold and facilitating a DID design. In assignment of pharmacologic or other treatments in health care, such clear natural, exogenous sources of cleavage between exposed and unexposed groups are rare, making it more difficult to identify situations in which all assumptions of DID will be met.

In this paper, we connect these two powerful natural experiment devices (referred to as the standard IV and standard DID) and propose a new method called instrumented DID to estimate the causal effect of the exposure in the presence of unmeasured confounding. Unlike the standard DID, the instrumented DID exploits a haphazard encouragement targeted at a subpopulation toward faster uptake of the exposure or a surrogate of such encouragement, which we call IV for DID. Then, any observed nonparallel trends in outcome between the encouraged and unencouraged groups provides evidence for causation, as long as their trends in outcome are parallel if all individuals were counterfactually not exposed. A prototypical example of instrumented DID is a longitudinal randomized experiment, where after a baseline period, some individuals are randomly selected to be encouraged to take the treatment regardless of their treatment history. If the encouragement is effective, the exposure rate would increase more for the encouraged group than the unencouraged group. If additionally the encouragement has no direct effect on the trend in outcome, then any nonparallel trends in outcome must be due to the nonparallel trends in exposure. Therefore, through exploiting haphazard encouragement that affects the exposure trend, the instrumented DID is able to extract some variation in the exposure trend that is independent of the unmeasured confounder and relax some of the most disputable assumptions of the standard IV and standard DID method, particularly the exclusion restriction for the standard IV method and the parallel trends for the standard DID method; see Section 2 for more discussion.

Reasoning similar to the instrumented DID has been applied informally in prior studies. A prominent example is the differential trends in smoking prevalence for men and women as a consequence of targeted tobacco advertising to women, which were associated with disproportional trends for men and women in lung cancer mortality (Burbank, 1972; Meigs, 1977; Patel et al., 2004). Specifically, because of marketing efforts designed to introduce specific women’s brands of cigarettes such as Virginia Slims in 1967, there was a considerable increase in smoking initiation by young women, which lasted through the mid-1970s (Pierce & Gilpin, 1995). Thirty years later, the lung cancer mortality rates for women at the age of 55 years or older had increased to almost four times the 1970 rate, whereas rates among men had no such dramatic change (Bailar & Gornik, 1997). In Section 7, we will analyze this example using the proposed method.

The rest of this paper is organized as follows. In Section 2, we establish the identification assumptions for the instrumented DID. In Section 3, we develop various estimation and inference approaches. In Section 4, we extend the instrumented DID to a two-sample design. In Section 5, we provide a measure of weak identification. Results from simulation studies and a real-data application are in Sections 6 and 7, respectively. The paper concludes with a discussion in Section 8. A review of IV and DID designs can be found in Section S1 of the Supporting information.

2 |. INSTRUMENTED DIFFERENCE-IN-DIFFERENCES: IDENTIFICATION

Suppose that random samples of a target population are collected at two time points and , and there is no overlap between individuals in these two samples. We leave consideration of overlapping samples and multiple time points to future work. For each individual in the pooled sample, we observe , where is a time indicator that equals one if this individual appears at , equals zero if is a binary IV for DID observed at the baseline, is a vector of baseline covariates, is a binary exposure variable, is a real-valued outcome of interest. We assume that are independent and identically distributed (i.i.d.) realizations of . This data setup is also commonly known as repeated cross-sectional data (Abadie, 2005).

We define causal effects using the potential outcomes framework (Neyman, 1923; Rubin, 1974). For each individual, let be the potential exposure if this individual were observed at time and if were externally set to be the potential outcome if this individual were observed and exposed to at time , and had the same value it actually had. The full data vector for each individual is . Moreover, let be the potential outcome if this individual were exposed to at the time point it actually got sampled and had the same value it actually had. Our target estimand is the average treatment effect (ATE) and conditional average treatment effect (CATE) , where is a pre-specified subset of , representing the effect modifiers of interest; for example, setting to be an empty set gives the unconditional ATE . Note that the separation of and separates the need to adjust for possible confounding and the specification of effect modifiers of interest, which provides great flexibility and allows researchers to define the target estimand a priori. Throughout the paper, we consider treatment effect on the additive scale.

We make the following identification assumptions for using the instrumented DID.

Assumption 1.

(Consistency) and .

(Positivity) for with probability 1.

(Random sampling) .

Assumption 1(a) states that the observed exposure is if and only if and , and the observed outcome is if and only if and . Implicit in this assumption is that an individual’s observed outcome is not affected by others’ exposure level or this individual’s exposure level at the other time point; this is known as the Stable Unit Treatment Value Assumption (Rubin, 1978, 1990). Assumption 1(b) postulates that there is a positive probability of receiving each combination within each level of , or equivalently, the support of is the same for each level of . Assumption 1(c) is often assumed for repeated cross-sectional studies and says that for each level of , the collected data at every time point are a random sample from the underlying population; see Section 3.2.1 of Abadie (2005) that makes a similar assumption.

Assumption 2.

(Instrumented DID). With probability 1,

(Trend relevance) .

(Independence & exclusion restriction) .

(No unmeasured common effect modifier) for .

(Stable treatment effect over time) .

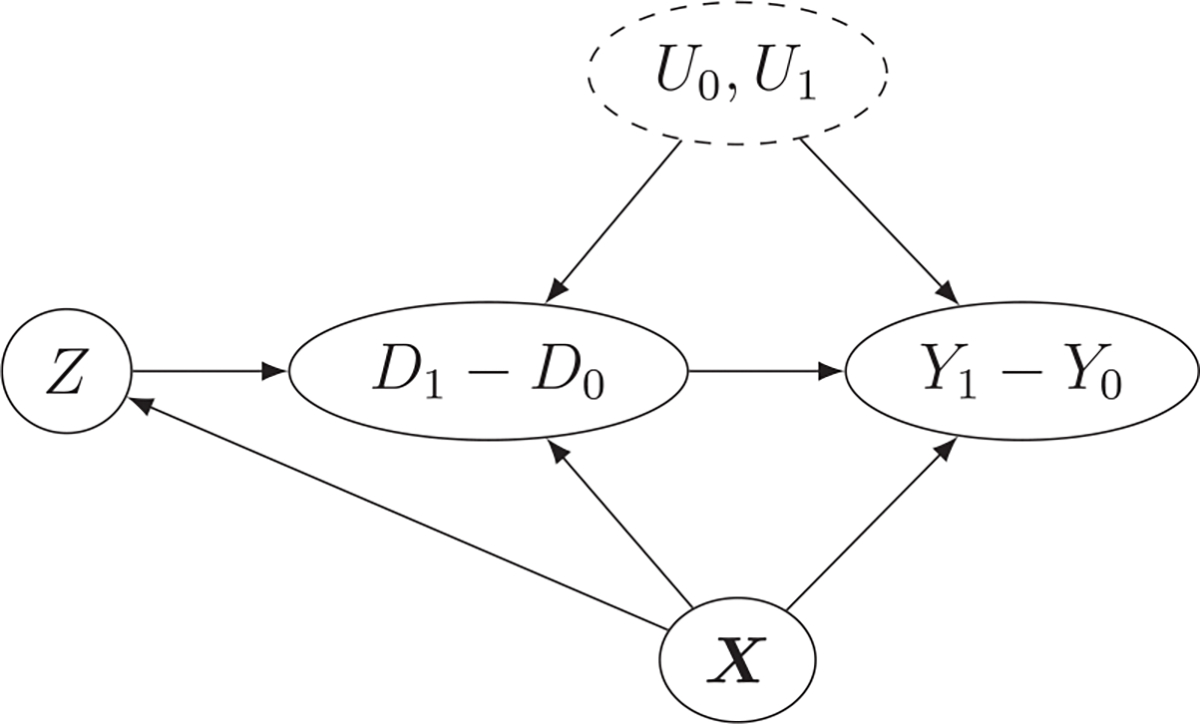

Assumptions 2(a) and (b) formalize the core assumptions that an IV for DID needs to satisfy, which are illustrated by a directed acyclic graph (DAG) in Figure 1. Assumptions 2(a) and (b) are also parallel to the core assumptions for the standard IV introduced in Section 1.

FIGURE 1.

Directed acyclic graph (DAG) for instrumented difference-in-differences (DID). Suppose the existence of an unmeasured confounder such that . Assumption 2(a) states that must be associated with the change in exposure , Assumption 2(b) states that is independent of any unmeasured confounders and cannot have any direct effect on the change in outcome and does not modify the treatment effect.

Assumption 2(a) says that the IV for DID , as an encouragement that disproportionately acts on only a subpopulation, affects the trend in exposure. For example, can be a random encouragement for some subjects in a longitudinal experiment, an advertisement campaign targeted at a certain geographic region or subpopulation, or a change in reimbursement policies for a certain insurance plan. Under Assumption 1, Assumption 2(a) is equivalent to with probability 1, thus is checkable from observed data.

Assumption 2(b) is an integration of the independence and exclusion restriction assumptions. To see this, we adopt a more elaborated definition of the potential outcomes and define as the potential outcome if the individual were observed and exposed to at time , and if were externally set to , then Assumption 2(b) is implied by (independence) and (exclusion restriction) and , where means having the same distribution; see Tan (2006) for a parallel statement for the standard IV and Hernán and Robins (2006) for connections and comparisons between different definitions of the standard IV. Hence, Assumption 2(b) essentially states that is unconfounded, has no direct effect on the trend in outcome, and does not modify the treatment effect. Here, we see the main advantage of using as an IV for DID compared to as a standard IV: as an IV for DID is allowed to have a direct effect on the outcome, as long as it has no direct effect on the trend in outcome and does not modify the treatment effect. For example, Newman et al. (2012) considered using a hospital’s preference for phototherapy when treating newborns with hyperbilirubinemia as a standard IV to study the effect of phototherapy but found evidence that hospitals that use more phototherapy also have greater use of infant formula, which is thought to be an effective treatment for hyperbilirubinemia. Hence, the hospital’s preference is a potentially invalid standard IV as it can have a direct effect on the outcome through the use of infant formula. However, it may still qualify as an IV for DID if the use of phototherapy evolves differently between the high and low preference hospitals over time, but the use of infant formula in the two groups of hospitals does not change over time. These features imply that variables like hospital’s preference may be more likely to be an IV for DID, compared to being a standard IV.

Assumption 2(c) is developed in Cui and Tchetgen Tchetgen (2021) and a slightly stronger version is proposed earlier in Wang and Tchetgen Tchetgen (2018). Suppose in this paragraph only the existence of an unmeasured confounder such that , then Assumption 2(c) holds if either (i) there is no additive interaction in ; or (ii) there is no additive interaction in .

Assumption 2(d) requires that the CATE does not change over time. This is a strong assumption but may be plausible in many applications when the study period only spans a short period of time. In our application in Section 7, we conduct a sensitivity analysis to gauge the sensitivity of the study conclusion to violation of this assumption.

Two additional remarks on Assumption 2 are in order. First, an attractive feature of Assumptions 2(c) and (d) is that they are guaranteed to be true under the sharp null hypothesis of no treatment effect for all individuals. This means that the instrumented DID method can be used for testing the sharp null hypothesis under Assumptions 2(a) and (b). Second, from the definition of potential exposures , the IV for DID is considered causal for the exposure. In Section S4.2, we present another version of notations and assumptions which does not require to be causal, that is, is allowed to be correlated with a cause that affects the trend in exposure, and is more suitable for our application in Section 7 in which we use gender as the IV for DID for its correlation with the encouragement from targeted tobacco advertising.

For , let , , and let and denote their counterparts without observed covariates. The next proposition indicates that the (conditional) ATE can be identified under the above assumptions.

Proposition 1.

If Assumptions 1 and 2 hold, then

| (1) |

This and all the other proofs in this paper are in Section S3.

Now we contrast the instrumented DID with standard DID. As discussed in Section 1, the standard DID identifies the ATE for the treated in the post-treatment period from comparing the trends in outcome between two exposure groups, where every individual in one group switches from being unexposed to exposed between two time points, and every individual in the other group is never exposed. However, its key assumption, the parallel trends, will be violated if there is a time-invariant unmeasured confounder that has time-varying effects or there is a time-varying unmeasured confounder in the exposure–outcome relationship. We use time-varying unmeasured confounding to refer to either case. In contrast, the instrumented DID explicitly probes the relationship between the trend in outcome and the trend in exposure using an exogenous variable which often results in partial compliance with exposure within groups defined by levels of . Compared with standard DID, instrumented DID is robust to time-varying unmeasured confounding in the exposure–outcome relationship by making use of an exogenous variable that is not subject to this time-varying unmeasured confounding.

We remark that when there are no observed covariates, has been derived in alternative ways in econometrics under different assumptions. It is the same as the standard IV Wald ratio after first differencing the exposure and outcome when each individual is observed at both time points (Wooldridge, 2010, Chap. 15.8), as motivated from the linear structural equation models. Importantly, Proposition 1 provides a justification of this approach using the potential outcomes framework without any modeling assumption. It is also the same as the Wald ratio in the fuzzy DID method for identification of a local ATE under the assumption that individuals can switch treatment in only one direction within each treatment group (de Chaisemartin & D’HaultfŒuille, 2018), as motivated from social science applications (e.g., Duflo 2001). Compared with this derivation, our proposed instrumented DID is less stringent in terms of the direction in which each individual can switch treatment, thus is better suited for applications using healthcare data where individuals can switch treatment in any direction. In addition, we complement the proposed instrumented DID with a novel semiparametric estimation and inference method in Section 3.2, two-sample design in Section 4, and measure of weak identification in Section 5.

Finally, we note that Assumption 2(c) can be replaced by the monotonicity assumption for with probability 1, under which in (1) identifies a complier ATE; see Section S3.3 for details.

3 |. ESTIMATION AND INFERENCE

3.1 |. Wald estimator

When there are no observed covariates, based on Proposition 1, we can simply replace the conditional expectations in Equation (1) with their sample analogues and obtain the Wald estimator

| (2) |

where , , for . In Theorem S1, we prove consistency and asymptotic normality of and give a consistent variance estimator.

3.2 |. Semiparametric theory and multiply robust estimators

Consider the case with a baseline observed covariate vector . Suppose that we have a parametric model for , written as for some finite-dimensional parameter . Importantly, we do not assume that this model is necessarily correct, but instead treat it as a working model and formulate our estimand as the projection of the CATE onto the working model . Specifically, we use the weighted least-squares projection given by

| (3) |

where is a user-specified weight function, which can be tailored if there is subject matter knowledge for emphasizing specific parts of the support of ; otherwise, we can set . By definition, is the best least-squares approximation to the CATE . For example, when effect modification is not of interest, we can specify such that is projected onto a constant , which can be interpreted as the ATE; if we want to estimate a linear approximation of the CATE, we can specify , with including the intercept. This working model approach is also adopted in Abadie (2003), Ogburn et al. (2015), and Kennedy et al. (2019).

Let , , , , and , for . Consider three sets of model assumptions:

: models for are correct.

: models for , are correct.

: models for , are correct.

In what follows, we first discuss three different estimators for that are consistent and asymptotically normal under , respectively. Bounded semiparametric estimators analogous to those in Wang and Tchetgen Tchetgen (2018) are developed in Section S2.4. Let be the empirical average. Under model , we present a regression-based estimator that solves

where is a parametric specification of , the solution to a vector of the same dimension as , and are respectively estimators of . Under model , we present an inverse probability weighting (IPW) estimator that solves

where is a parametric specification of , the solution to an estimator of , and a vector of the same dimension as . Finally, under model , we present an estimator based on g-estimation, defined as the solution to

where is the solution to . These three classes of estimators are consistent and asymptotically normal in three different models , following standard arguments, for example, as in Newey and McFadden (1994, Chap. 6.1). Depending on the specific applications, some classes may be more preferable when knowledge about certain nuisance parameters is available. In practice, when we are uncertain about which models are correctly specified, it is of interest to develop a multiply robust estimator that is guaranteed to deliver valid inference about provided that one, but not necessarily more than one, of models holds (Vansteelandt et al., 2008; Wang & Tchetgen Tchetgen, 2018; Shi et al., 2020).

The next theorem derives the efficient influence function for (Bickel et al., 1993; van der Vaart, 2000), which provides the basis of constructing a multiply robust estimator.

Theorem 1.

If Assumptions 1 and 2 hold, and exists and is continuous. Under a nonparametric model, the efficient influence function for is proportional to

| (4) |

where denotes the vector of nuisance parameters, , and .

Note that the efficient influence function gives an estimator defined as a solution to , where is a vector of the estimated nuisance parameters. Among the nuisance parameters, can be estimated directly from likelihood or moment equations, whereas the estimation of and relies on additional nuisance parameters. To achieve multiple robustness, we need to construct a consistent estimator of in the union of and , as well as a consistent estimator of in the union of and . We achieve these goals by using doubly robust g-estimation (Robins, 1994). Specifically, we solve for and respectively from

We prove in the Supporting information that is multiply robust, in the sense that the estimator is consistent as long as either one of the three models holds.

Next, we derive the asymptotic properties of . Let denote convergence in probability, the Euclidean norm for any column vector the norm for any real-valued function , and for any collection of real-valued functions , where denotes the distribution of . Moreover, let denote the true values of the nuisance parameters.

Assumption 3.

, where with either (i) ; or (ii) and ; or (iii) and .

For each in an open subset of Euclidean space and each in a metric space, let be a measurable function such that the class of functions is Donsker for some , and such that as . The maps are differentiable at , uniformly in in a neighborhood of with nonsingular derivative matrices .

Assumption 3(a) describes the multiple robustness of our estimator. Assumption 3(b) is standard for M-estimators (van der Vaart, 2000, Chap. 5.4).

Theorem 2.

Under Assumptions 1–3, is consistent with rate of convergence

Suppose further that

then is asymptotically normal and semiparametric efficient, satisfying

| (5) |

The first part of Theorem 2 describes the convergence rate of , which again indicates the multiple robustness of our estimator. That is, is consistent provided that (i) either one of or is consistent, and (ii) either one of or is consistent. The multiple robustness property is important in practice, because nuisance parameters such as , and may be easier to estimate than and . When all the nuisance parameters are consistently estimated, we can still benefit from using the semiparametric methods, in that even the nuisance parameters are estimated at slower rates, can still have the fast convergence rate. For example, if all the nuisance parameters are estimated at rates, then can still achieve fast rate. The second part of Theorem 2 says that if the nuisance parameters are consistently estimated with fast rates, for example, if they are estimated using parametric methods, then their variance contributions are negligible, and achieves the semiparametric efficiency bound.

When Equation (5) holds, a plug-in variance estimator for can be easily constructed as , with . Even if Equation (5) does not hold, for example, when only one of holds, but all the nuisance parameters are finite-dimensional and in the form of M-estimators, is still consistent and asymptotically normal from standard M-estimation theory (Newey & McFadden, 1994, Chap. 6). Thus, a consistent variance estimator for can be constructed by stacking the efficient influence function and the estimation equations for the nuisance parameters, solving for simultaneously, and taking the corresponding diagonal component of the joint sandwich variance estimator. Alternatively, the nonparametric bootstrap is commonly used in practice (Cheng & Huang, 2010).

4 |. TWO-SAMPLE INSTRUMENTED DIFFERENCE-IN-DIFFERENCES

In some applications, it is hard to collect the exposure and outcome variables for the same individual, especially when the outcome is defined to reflect a delayed treatment effect. For instance, in the smoking and lung cancer example in Section 1, the outcome of interest is lung cancer mortality after 35 years and it is infeasible to follow the same individuals for 35 years. Motivated from Angrist and Krueger (1992, 1995)’s influential two-sample standard IV analysis, we extend the instrumented DID to a two-sample design.

Suppose there are i.i.d. realizations of from one sample, and i.i.d. realizations of from another sample. These two samples are independent of each other and we never observe and . We write the observed data as and , which are respectively referred to as the outcome dataset and the exposure dataset. Let , be as defined in Equations (1) and (2) but evaluated correspondingly using the outcome dataset and exposure dataset. Suppose that Assumptions 1 and 2 hold for the data-generating processes in both datasets, and , then the ATE is identified by . Analogously, the two-sample instrumented DID Wald estimator is obtained as . In Theorem S2, we establish the consistency and asymptotic normality of and provide a consistent variance estimator. Both and its variance estimator can be conveniently calculated based on solely summary statistics and and their standard errors (SEs).

5 |. MEASURE OF WEAK IDENTIFICATION

Weak identification is a general challenge for IV-type methods and has recently received increased attention among theoretical and applied researchers; see Stock et al. (2002) for a survey. For standard IV, weak identification refers to that the IVs are only weakly associated with the exposure. For instrumented DID, weak identification refers to that the trends in exposure for and are near-parallel. Under weak identification, the sampling distribution for the point estimators is generally non-normal and the standard inference can be unreliable (Bound et al., 1995). Therefore, it is important to have a measure of weak identification tailored for the instrumented DID as diagnostic checks to make sure the developed asymptotic inference procedures can be reliably applied.

Consider first the case when there are no observed covariates. We take the one-sample estimator as an example; the result for the two-sample estimator is similar. Note that and can be respectively obtained from fitting a saturated model of or on 1, , and , where is the interaction term. Let be the -dimensional vector of residuals from regressing on 1, , and . By using the Frisch–Waugh–Lovell theorem (Davidson & MacKinnon, 1993), in Equation (2) can be equivalently formulated as

where is the hat matrix. Interestingly, the above formula indicates that can be alternatively obtained from a conventional two-stage least squares: the exposure is first regressed on (first-stage regression) and the outcome is then regressed on the predicted values from the first-stage regression. This provides a perception that as an IV for DID is equivalent to using as the standard IV while further controlling for 1, , and . Hence, the concentration parameter of as the standard IV (controlling for 1, , and ) serves here as a measure of weak identification using as the IV for DID. Specifically, this measure is defined as , where is defined in Proposition 1, is the population residual variance from the first-stage regression. Heuristically, increases if we have a larger sample size , larger , or a larger limit of . For the usual inference based on normal approximation to be accurate, must be large.

A commonly used estimate of is the F-statistic from the first-stage regression. When only summary-data are available, that is, only and its SE are available, one can also use the squared -score as an estimate of , where the -score is the ratio of to its SE. When there are observed covariates, a measure of weak identification can also be easily calculated by defining as the vector of residuals from regressing on 1, . We follow Stock et al. (2002) and recommend checking to make sure that an estimated is larger than 10 before applying the inference methods in Sections 3 and 4.

6 |. SIMULATIONS

To evaluate the finite sample performance of the proposed instrumented DID (iDID) methods, we simulate data as follows: , , , , , , for . We simulate random samples from and let The observed data are .

Under this data-generating process, Assumptions 1 and 2 do not hold unconditionally, but do hold in each of the four strata defined by . Hence, the Wald estimator in Equation (2) is valid when considering each stratum separately; we denote the obtained stratum-specific Wald estimators as and they are respectively estimating the stratum-specific ATE: −0.60, 1, 1, 2.60. On the other hand, Assumptions 1 and 2 hold when conditioning on , and thus the three classes of semiparametric estimators and the multiply robust estimator proposed in Section 3.2 are all valid. For the semiparametric iDID method, we consider two working models for the CATE: a constant working model with and a linear working model , with . The true values of are all equal to 1 because and . The weight function in Equation (3) is set to be 1.

We also examine the effect of model misspecification for the semiparametric iDID estimators. Note that the data-generating process implies that , and , , , , , , , are all linear in . The misspecified model we fit for is a product of two logistic regressions, one for and the other for , both in terms of , the misspecified models for , are linear in , and for , , , , , are linear in .

We compare with two other methods, direct treated-versus-control outcome comparison using ordinary least squares (OLS) and the standard IV method using as the IV, where the latter is implemented using the R package ivpack (Jiang & Small, 2014). Direct outcome comparison is invalid because of the unmeasured confounder ; the standard IV method is also invalid due to the direct effect of on the outcome. Table 1 shows the simulation results based on 1,000 repetitions, which includes: (i) the simulation average bias and standard deviation (SD) of each estimator; (ii) the mean standard errors (SEs), which are calculated according to Equation (S4) in the supplementary materials for the Wald estimator, using standard M-estimation theory for the semiparametric estimators; (iii) simulation coverage probability (CP) of 95% confidence intervals.

TABLE 1.

Bias, standard deviation (SD), average standard error (SE), and coverage probability (CP) of 95% asymptotic confidence interval based on 1,000 repetitions with

| Method | Correct model | Estimator | Bias | SD | SE | CP |

|---|---|---|---|---|---|---|

| OLS | 2.466 | 0.024 | 0.023 | 0.000 | ||

| Standard IV | −38.525 | 3.385 | 3.316 | 0.000 | ||

| iDID-Wald | −0.014 | 0.247 | 0.251 | 0.950 | ||

| 0.007 | 0.253 | 0.259 | 0.958 | |||

| −0.008 | 0.250 | 0.259 | 0.961 | |||

| 0.000 | 0.289 | 0.284 | 0.943 | |||

| Constant working model | ||||||

| iDID-MR | all correct | −0.002 | 0.111 | 0.114 | 0.956 | |

| correct | −0.001 | 0.110 | 0.114 | 0.960 | ||

| correct | −0.003 | 0.136 | 0.139 | 0.944 | ||

| correct | −0.003 | 0.137 | 0.140 | 0.945 | ||

| none | −0.355 | 0.144 | 0.142 | 0.293 | ||

| iDID-Reg | correct | −0.002 | 0.109 | 0.114 | 0.960 | |

| incorrect | −0.351 | 0.144 | 0.149 | 0.335 | ||

| iDID-IPW | correct | −0.021 | 0.225 | 0.225 | 0.948 | |

| incorrect | −0.271 | 0.234 | 0.242 | 0.816 | ||

| iDID-G | correct | −0.021 | 0.225 | 0.224 | 0.948 | |

| incorrect | −0.276 | 0.235 | 0.233 | 0.814 | ||

| Linear working model | ||||||

| iDID-MR | all correct | −0.002 | 0.110 | 0.114 | 0.956 | |

| 0.004 | 0.113 | 0.115 | 0.950 | |||

| correct | −0.001 | 0.110 | 0.114 | 0.960 | ||

| 0.004 | 0.115 | 0.118 | 0.946 | |||

| correct | −0.003 | 0.136 | 0.139 | 0.944 | ||

| 0.003 | 0.146 | 0.150 | 0.960 | |||

| correct | −0.003 | 0.137 | 0.140 | 0.946 | ||

| 0.004 | 0.144 | 0.149 | 0.958 | |||

| None | −0.355 | 0.144 | 0.142 | 0.292 | ||

| −0.129 | 0.221 | 0.175 | 0.908 | |||

| iDID-Reg | correct | −0.001 | 0.109 | 0.114 | 0.960 | |

| −0.005 | 0.114 | 0.118 | 0.949 | |||

| incorrect | −0.351 | 0.144 | 0.149 | 0.332 | ||

| −0.110 | 0.473 | 0.478 | 0.942 | |||

| iDID-IPW | correct | −0.021 | 0.225 | 0.225 | 0.948 | |

| −0.010 | 0.270 | 0.269 | 0.957 | |||

| incorrect | −0.271 | 0.234 | 0.242 | 0.813 | ||

| −0.072 | 0.267 | 0.313 | 0.957 | |||

| iDID-G | correct | −0.021 | 0.225 | 0.224 | 0.949 | |

| −0.007 | 0.245 | 0.247 | 0.953 | |||

| incorrect | −0.276 | 0.235 | 0.233 | 0.812 | ||

| −0.234 | 0.246 | 0.241 | 0.863 | |||

Abbreviations: iDID, instrumented difference-in-differences; OLS, ordinary least squares.

The following is a summary based on the results in Table 1. The OLS and standard IV estimators have large bias due to violations of their assumptions. The stratum-specific iDID Wald estimators show negligible bias and adequate coverage probability. The three classes of semiparametric iDID estimators that rely on have negligible bias and adequate coverage probability when the corresponding models are correctly specified but are biased when misspecified. The multiply robust semiparametric iDID estimators exhibit negligible bias and adequate coverage probabilities when at least one of is correct, which supports the multiple robustness property.

7 |. APPLICATION

We apply the proposed method to analyze the effect of cigarette smoking on lung cancer mortality. Given the lag between smoking exposure and lung cancer mortality, we adopt the two-sample instrumented DID design. Our analysis is based upon two datasets arranged by 10-year birth cohort: the 1970 National Health Interview Survey (NHIS) for nationally representative estimates of smoking prevalence (National Health Interview Survey, 1970), and the US Centers for Disease Control and Prevention’s (CDC) Wide-ranging ONline Data for Epidemiologic Research (WONDER) system for estimates of national lung cancer (ICD-8/9: 162; ICD-10: C33-C34) mortality rates (CDC, 2000a, 2000b, 2016). Only the 1970 NHIS is used because it is the first NHIS that records the initiation and cessation time of smoking such that a longitudinal structure is available. We closely follow the approach taken by Tolley et al. (1991, Chapter 3) to calculate the smoking prevalence rates.

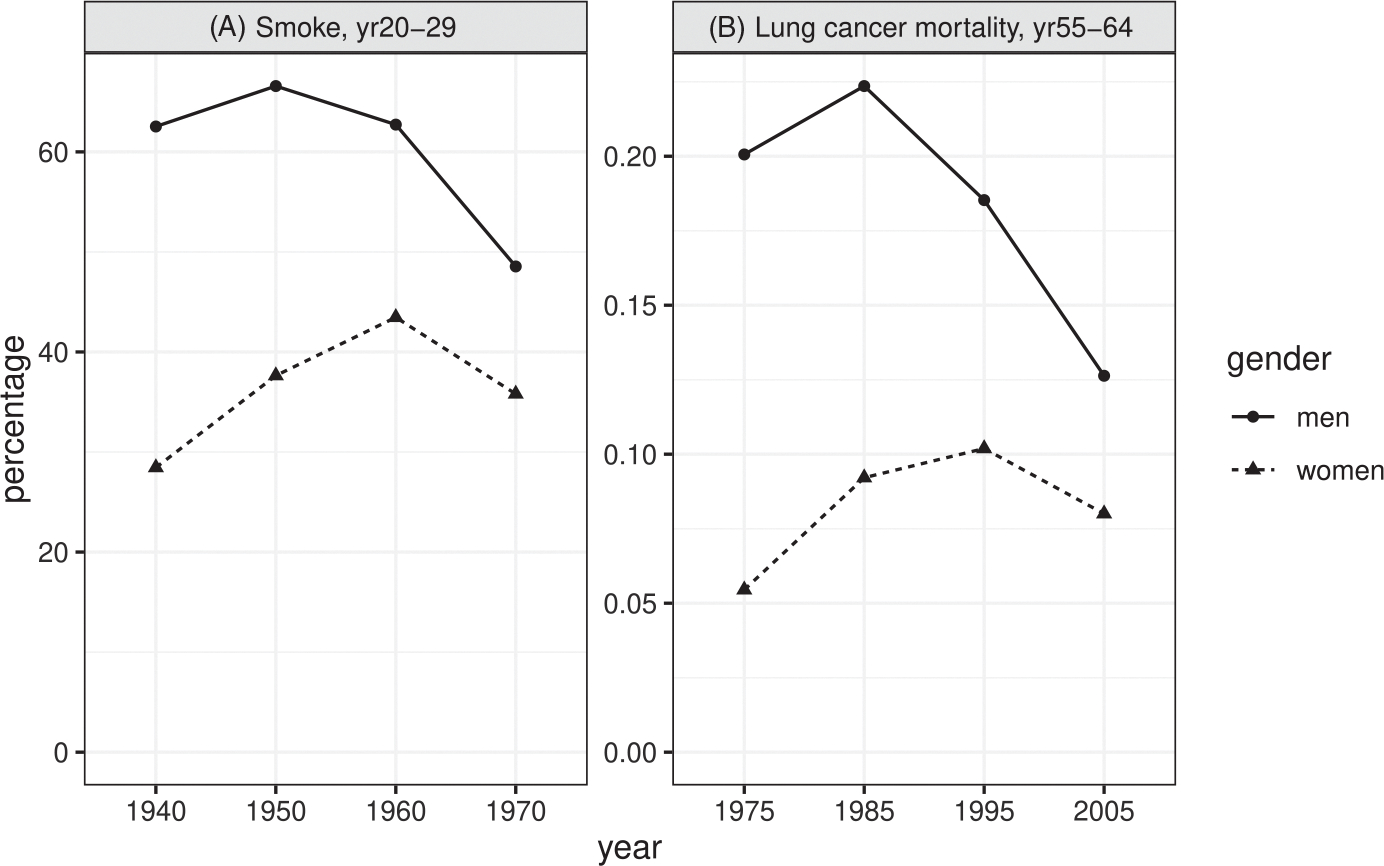

Based on the data availability, we focus on four successive 10-year birth cohorts: 1911–1920, 1921–1930, 1931–1940, 1941–1950, whose smoking prevalence is estimated respectively at year 1940, 1950, 1960, 1970 when they are at age 20–29, whose lung cancer mortality rates are estimated respectively at year 1975, 1985, 1995, 2005 when they are at age 55–64. Here, cohort of birth plays the role of time. Figure 2 shows the changes in prevalence of cigarette smoking among men and women aged 20–29 years, and the changes in lung cancer mortality rates 35 years later in the United States. From Figure 2, we see that the trends in lung cancer mortality rates follow the trends in smoking prevalence, with a lag of 35 years, which provides evidence that smoking increases lung cancer mortality rate.

FIGURE 2.

Changes in prevalence of cigarette smoking for men and women aged 20–29, lung cancer mortality rates for men and women aged 55–64 years among four successive 10-year birth cohorts: 1911–1920, 1921–1930, 1931–1940, 1941–1950

There have been many direct comparisons of the lung cancer mortality rates between smokers and non-smokers which have found higher rates among smokers (International Agency for Research on Cancer, 1986). Additional studies that replicate direct comparisons of smokers and non-smokers may not add much evidence beyond the first comparison. It is argued in Rosenbaum (2010) that “in such a situation, it may be possible to find haphazard nudges that, at the margin, enable or discourage [the exposure]. ... These nudges may be biased in various ways, but there may be no reason for them to be consistently biased in the same direction, so similar estimates of effect from studies subject to different potential biases gradually reduce ambiguity about what part is effect and what part is bias.” The instrumented DID is one such method that attempts to exploit the “haphazard nudges”, that is, the targeted tobacco advertising to women in the 1960s that led to a rapid increase in smoking among young women in a way that is presumably independent of other causes of lung cancer mortality.

To quantitatively evaluate the effect of cigarette smoking on lung cancer mortality, we take gender—a surrogate of whether each individual received encouragement (targeted tobacco advertising) or not—as the IV for DID. Note that gender does not need to have a causal effect on smoking; as proved in the Supporting information, it suffices that gender is correlated with smoking due to the encouragement from targeted tobacco advertising. We consider two successive 10-year birth cohorts, setting the earlier birth cohort as and the later birth cohort as . Gender is likely a valid IV for DID, as it clearly satisfies the trend relevance assumption, the lung cancer mortality rates for men and women would have evolved similarly had all subjects counterfactually not smoked, and there is no evident gender difference in the cancer-causing effects of cigarette smoking (Patel et al., 2004).

Table 2 summarizes (i) the F-statistic proposed in Section 5 to measure weak identification; and (ii) the two-sample iDID Wald estimators defined in Section 4 and their SEs defined in Equation (S6). More details on the application are also in the Supporting information. From Table 2, under the assumption that gender is a valid IV for DID and the treatment effect is stable over time, we find evidence that smoking leads to significantly higher lung cancer mortality rates. Specifically, we find that smoking in one’s 20s leads to an elevated annual lung cancer mortality rate at age 55–64 years, with the effect size ranging from 0.285% to 0.568%. This is of a similar magnitude as the findings in Thun et al. (1982, 2013). Using different birth cohorts gives slightly different point estimates, but they are within two SEs of each other. Nonetheless, there is still concern about violating the stable treatment effect over time assumption (Assumption 2(d)), possibly because the cigarette design and composition have undergone changes that promote deeper inhalation of smoke (Thun et al., 2013; Warren et al., 2014). In Section S4, we perform a sensitivity analysis and find that increasing risk of smoking over time does not explain away the observed treatment effect.

TABLE 2.

Two-sample iDID Wald estimates and their standard errors (in parentheses) using two successive birth cohorts (in %)

| 1911–1920 | 1921–1930 | 1931–1940 | |

|---|---|---|---|

| Birth cohort | 1921–1930 | 1931–1940 | 1941–1950 |

| F-statistic | 13.94 | 47.28 | 21.33 |

| 0.285 (0.089) | 0.497 (0.076) | 0.568 (0.127) |

0Notes: F-statistic is the squared -score, defined in Section 4 estimates the ATE of smoking on lung cancer mortality. Abbreviations: ATE, average treatment effect; iDID, instrumented difference-in-differences.

8 |. RESULTS AND DISCUSSION

In this paper, we have proposed a new method called instrumented DID that explicitly leverages exogenous randomness in the exposure trends, and controls for unmeasured confounding in repeated cross-sectional studies. The instrumented DID method evolves from two powerful natural experiment devices, the standard IV and standard DID, but is able to relax some of their most disputable assumptions. Our motivation of assessing the causal effect by linking the change in outcome mean and the change in exposure rate is also related to the trend-in-trend design (Ji et al., 2017) and etiologic mixed design (Lash et al., 2021).

In principle, any variable that satisfies Assumptions 2(a)–(c) can be chosen as the IV for DID. Here, we list two common sources of the IV for DID: (i) administrative information, such as geographic region and insurance type; and (ii) variables that are commonly used as standard IVs, such as physician preference, distance to care provider, and genetic variants—see Baiocchi et al. (2014) for more examples; as discussed in Section 2, these variables are more likely to be an IV for DID compared to being a standard IV, because IVs for DID are allowed to have direct effects on the outcome.

Supplementary Material

ACKNOWLEDGMENTS

We gratefully acknowledge support by grant R01AG064589 from the National Institutes of Health. We would like to thank the anonymous referees, an Associate Editor and the Editor for their constructive comments that led to a much improved paper.

Funding information

National Institutes of Health, Grant/Award Number: R01AG064589

Footnotes

OPEN RESEARCH BADGES

This article has earned Open Data and Open Materials badges. Data and materials are available at https://github.com/jfiksel/compregpaper, https://github.com/jfiksel/codalm.

SUPPORTING INFORMATION

Web Appendices referenced in Sections 2–7 are available with this paper at the Biometrics website on Wiley Online Library. Code and data for replicating the simulations and data analyses in this paper are being made available online with the paper. R package idid, available at https://github.com/tye27/idid, implements the proposed method.

Table S1: Sample sizes for 1970 NHIS datasets and 1975, 1985, 1995, 2005 CDC WONDER compressed mortality datasets by birth cohort and gender

Data and codes are available in the Supporting Information of this article.

DATA AVAILABILITY STATEMENT

The data that support the findings of this paper are openly available in a GitHub repository at https://github.com/jfiksel/compregpaper.

REFERENCES

- Abadie A (2003) Semiparametric instrumental variable estimation of treatment response models. Journal of Econometrics, 113, 231–263. [Google Scholar]

- Abadie A (2005) Semiparametric difference-in-differences estimators. The Review of Economic Studies, 72, 1–19. [Google Scholar]

- Angrist JD, Imbens GW & Rubin DB (1996) Identification of causal effects using instrumental variables. Journal of the American Statistical Association, 91, 444–455. [Google Scholar]

- Angrist JD & Krueger AB (1992) The effect of age at school entry on educational attainment: an application of instrumental variables with moments from two samples. Journal of the American statistical Association, 87, 328–336. [Google Scholar]

- Angrist JD & Krueger AB (1995) Split-sample instrumental variables estimates of the return to schooling. Journal of Business & Economic Statistics, 13, 225–235. [Google Scholar]

- Angrist JD & Pischke J-S (2008) Mostly harmless econometrics: an empiricist’s companion. Princeton, NJ: Princeton University Press. [Google Scholar]

- Bailar JC & Gornik HL (1997) Cancer undefeated. New England Journal of Medicine, 336, 1569–1574. [DOI] [PubMed] [Google Scholar]

- Baiocchi M, Cheng J & Small DS (2014) Instrumental variable methods for causal inference. Statistics in Medicine, 33, 2297–2340. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bickel P, Klaassen C, Ritov Y & Wellner J (1993) Efficient and adaptive estimation for semiparametric Models. Springer. [Google Scholar]

- Burbank F (1972) U.S. lung cancer death rates begin to rise proportionately more rapidly for females than for males: a dose-response effect? Journal of Chronic Diseases, 25, 473–479. [DOI] [PubMed] [Google Scholar]

- Card D & Krueger AB (1994) Minimum wages and employment: a case study of the fast food industry in new jersey and pennsylvania. American Economic Review, 84, 772–793. [Google Scholar]

- CDC (2000a). Centers for disease control and prevention, national center for health statistics. compressed mortality file 1968–1978. CDC WONDER online database, compiled from compressed mortality file CMF 1968–1988, series 20, no. 2A, 2000. Available from: http://wonder.cdc.gov/cmf-icd8.html [Accessed 27th Aug 2020]. [Google Scholar]

- CDC (2000b). Centers for disease control and prevention, national center for health statistics. compressed mortality file 1979–1998. CDC WONDER online database, compiled from compressed mortality file CMF 1979–1998, series 20, no. 2A, 2000 and CMF 1989–1998, series 20, no. 2E, 2003. Available from: http://wonder.cdc.gov/cmf-icd9.html [Accessed 27th Aug 2020]. [Google Scholar]

- CDC (2016) Centers for disease control and prevention, national center for health statistics. compressed mortality file 1999–2016 on cdc wonder online database, released june 2017. Data are from the compressed mortality file 1999–2016 series 20 no. 2U, 2016. Available from: http://wonder.cdc.gov/cmf-icd10.html [Accessed 28th Aug 2020]. [Google Scholar]

- Cheng G & Huang JZ (2010) Bootstrap consistency for general semiparametric M-estimation. The Annals of Statistics, 38, 2884–2915. [Google Scholar]

- Cui Y & Tchetgen Tchetgen E (2021) A semiparametric instrumental variable approach to optimal treatment regimes under endogeneity. Journal of the American Statistical Association, 116, 162–173. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davidson R & MacKinnon JG (1993) Estimation and inference in econometrics. Oxford University Press. [Google Scholar]

- de Chaisemartin C & D’HaultfŒuille X (2018) Fuzzy differences-in-differences. The Review of Economic Studies, 85, 999–1028. [Google Scholar]

- Duflo E (2001) Schooling and labor market consequences of school construction in indonesia: evidence from an unusual policy experiment. American Economic Review, 91, 795–813. [Google Scholar]

- Hernán MA & Robins JM (2006) Instruments for causal inference: an epidemiologist’s dream? Epidemiology, 17, 360–372. [DOI] [PubMed] [Google Scholar]

- Hernan MA & Robins JM (2020) Causal inference: what if. Boca Raton, FL: Chapman & Hall. [Google Scholar]

- International Agency for Research on Cancer (1986) Tobacco smoking, vol. 38. World Health Organization. [Google Scholar]

- Ji X, Small DS, Leonard CE & Hennessy S (2017) The trend-in-trend research design for causal inference. Epidemiology, 28, 529–536. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jiang Y & Small DS (2014) ivpack: instrumental Variable Estimation. R package version 1.2. [Google Scholar]

- Kennedy EH, Lorch S & Small DS (2019) Robust causal inference with continuous instruments using the local instrumental variable curve. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 81, 121–143. [Google Scholar]

- Lash TL, VanderWeele TJ, Haneuse S & Rothman KJ (2021) Modern epidemiology, vol. 4. Wolters Kluwer Health. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lawlor DA, Davey Smith G, Kundu D, Bruckdorfer KR & Ebrahim S (2004) Those confounded vitamins: what can we learn from the differences between observational versus randomised trial evidence? Lancet, 363, 1724–1727. [DOI] [PubMed] [Google Scholar]

- Meigs JW (1977) Epidemic lung cancer in women. JAMA, 238, 1055–1055. [PubMed] [Google Scholar]

- National Health Interview Survey (1970) Available from: ftp://ftp.cdc.gov/pub/health_statistics/nchs/datasets/nhis/1970 [Accessed 31st Aug 2020].

- Newey WK & McFadden D (1994) Large sample estimation and hypothesis testing. Chap. 36. Handbook of Econometrics, 4, 2111–2245. [Google Scholar]

- Neyman J (1923) On the application of probability theory to agricultural experiments. Essay on principles. section 9. Statistical Science, 5, 465–472. Trans. Dabrowska Dorota M. and Speed Terence P. (1990). [Google Scholar]

- Ogburn EL, Rotnitzky A & Robins JM (2015) Doubly robust estimation of the local average treatment effect curve. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 77, 373–396. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Patel JD, Bach PB & Kris MG (2004) Lung cancer in US women: a contemporary epidemic. JAMA, 291, 1763–1768. [DOI] [PubMed] [Google Scholar]

- Pierce JP & Gilpin EA (1995) A historical analysis of tobacco marketing and the uptake of smoking by youth in the United States: 1890–1977. Health Psychology, 14, 500. [DOI] [PubMed] [Google Scholar]

- Robins JM (1994) Correcting for non-compliance in randomized trials using structural nested mean models. Communications in Statistics: Theory and Methods, 23, 2379–2412. [Google Scholar]

- Rosenbaum PR (2010) Design of observational studies. Springer. [Google Scholar]

- Rubin DB (1974) Estimating causal effects of treatments in randomized and nonrandomized studies. Journal of Educational Psychology, 6, 688–701. [Google Scholar]

- Rubin DB (1978) Bayesian inference for causal effects: the role of randomization. Annals of Statistics, 6, 34–58. [Google Scholar]

- Rubin DB (1990) Comment: Neyman (1923) and causal inference in experiments and observational studies. Statistical Science, 5, 472–480. [Google Scholar]

- Rutter M (2007) Identifying the environmental causes of disease: how should we decide what to believe and when to take action? Report Synopsis. Academy of Medical Sciences. [Google Scholar]

- Shi X, Miao W, Nelson JC & Tchetgen Tchetgen EJ (2020) Multiply robust causal inference with double-negative control adjustment for categorical unmeasured confounding. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 82, 521–540. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stock JH, Wright JH & Yogo M (2002) A survey of weak instruments and weak identification in generalized method of moments. Journal of Business & Economic Statistics, 20, 518–529. [Google Scholar]

- Tan Z (2006) Regression and weighting methods for causal inference using instrumental variables. Journal of the American Statistical Association, 101, 1607–1618. [Google Scholar]

- Thun JM, Day-Lally C, Myers GD, Calle EE, Flanders WD, Zhu B-P et al. (1982) Trends in tobacco smoking and mortality from cigarette use in cancer prevention studies I (1959–1965) and II (1982–1988). Changes in cigarette-related disease risks and their implication for prevention and control: smoking and tobacco control monograph. Vol. 8. Bethesda, MD: U.S. Department of Health and Human Services, National Institutes of Health, National Cancer Institute. NIH Pub. [Google Scholar]

- Thun MJ, Carter BD, Feskanich D, Freedman ND, Prentice R, Lopez AD, et al. (2013) 50-year trends in smoking-related mortality in the United States. New England Journal of Medicine, 368, 351–364. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tolley H, Crane L & Shipley N (1991) Strategies to control tobacco use in the United States—a blueprint for public health action in the 1990s. NIH publication no. 92–3316 pp. 75–144. Bethesda, MD: U.S. Department of Health and Human Services, Public Health Service, National Institutes of Health, National Cancer Institute. [Google Scholar]

- van der Vaart A (2000) Asymptotic statistics. Cambridge University Press. [Google Scholar]

- Vansteelandt S, VanderWeele TJ, Tchetgen Tchetgen EJ & Robins JM (2008) Multiply robust inference for statistical interactions. Journal of the American Statistical Association, 103, 1693–1704. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wang L & Tchetgen Tchetgen E (2018) Bounded, efficient and multiply robust estimation of average treatment effects using instrumental variables. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 80, 531–550. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Warren GW, Alberg AJ, Kraft AS & Cummings KM (2014) The 2014 surgeon general’s report:“the health consequences of smoking—50 years of progress”: a paradigm shift in cancer care. Cancer, 120, 1914–1916. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wooldridge JM (2010) Econometric analysis of cross section and panel data. MIT press. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

The data that support the findings of this paper are openly available in a GitHub repository at https://github.com/jfiksel/compregpaper.