Abstract

Background

Deimplementing overused health interventions is essential to maximizing quality and value while minimizing harm, waste, and inefficiencies. Three national guidelines discourage continuous pulse oximetry (SpO2) monitoring in children who are not receiving supplemental oxygen, but the guideline-discordant practice remains prevalent, making it a prime target for deimplementation. This paper details the statistical analysis plan for the Eliminating Monitor Overuse (EMO) SpO2 trial, which compares the effect of two competing deimplementation strategies (unlearning only vs. unlearning plus substitution) on the sustainment of deimplementation of SpO2 monitoring in children with bronchiolitis who are in room air.

Methods

The EMO Trial is a hybrid type III effectiveness-deimplementation trial with a longitudinal cluster-randomized design, conducted in Pediatric Research in Inpatient Settings Network hospitals. The primary outcome is deimplementation sustainment, analyzed as a longitudinal difference-in-differences comparison between study arms. This analysis will use hierarchical generalized mixed-effects models for longitudinal, clustered outcomes. Secondary outcomes include the length of hospital stay and oxygen supplementation duration, modeled using linear mixed-effects regressions. Using the well-established counterfactual approach, we will also perform a mediation analysis of hospital-level mechanistic measures on the association between the deimplementation strategy and the sustainment outcome.

Discussion

We anticipate that the EMO Trial will advance the science of deimplementation by providing new insights into the processes, mechanisms, and likelihood of sustained practice change using rigorously designed deimplementation strategies. This pre-specified statistical analysis plan will mitigate reporting bias and support data-driven approaches.

Trial registration

ClinicalTrials.gov NCT05132322. Registered on 24 November 2021.

Keywords: Bronchiolitis, Deimplementation sustainment, Overuse, Pulse oximetry, Cluster randomized trial

1. Introduction

To maximize quality and value and minimize harm, waste, and inefficiencies in health care, it is essential to deimplement overused interventions [1,2]. The Eliminating Monitor Overuse (EMO) Trial is a hybrid type III effectiveness-deimplementation trial [3] with a longitudinal cluster-randomized design, conducted in Pediatric Research in Inpatient Settings (PRIS) Network hospitals [4]. Hybrid effectiveness-implementation trials combine questions about the effectiveness of interventions with questions about how best to implement or deimplement them; type III denotes trials in which the emphasis is on testing strategies that promote implementation or deimplementation while secondarily measuring clinical outcomes [3].

The EMO Trial is focused on overuse of pulse oximetry (SpO2) for inpatient pediatric treatment of viral bronchiolitis (“bronchiolitis”)—a common acute lung disease in children under 2 years old [[5], [6], [7]], which leads to over 100,000 hospitalizations annually in the US [8]. Although national guidelines now discourage the use of continuous SpO2 monitoring in hospitalized children with bronchiolitis who are not receiving supplemental oxygen [6,9,10]—recommending that these children instead have their oxygen saturation measured intermittently via “spot checks”—continuous SpO2 monitoring remains overused in this population [11], making it an ideal target for deimplementation.

Based on Helfrich's Dual Process Theory-based deimplementation framework [12], the EMO trial will compare the effects of an unlearning-only strategy vs. an unlearning plus substitution strategy on deimplementation of SpO2 monitoring in children with bronchiolitis who are in room air (i.e., not requiring supplemental oxygen to maintain oxygen saturations) [13]. We hypothesize that routinization (clinicians developing new routines supporting practice change) and institutionalization (the organization embedding practice change into existing systems) support the sustainment of practice change [14]. We also hypothesize that unlearning strategies alone are insufficient to promote sustained deimplementation compared to a combination of unlearning and substitution strategies [13]. Finally, we will examine the effects of deimplementation on clinical effectiveness outcomes. Ethics and institutional approval were obtained from all hospitals supporting recruitment, and the study protocol was published [13].

This paper provides a detailed description of the statistical analysis plan for the EMO Trial, which has been prepared in accordance with published guidelines for the content of statistical analysis plans [15]. The main publication of the trial results will adhere to this statistical analysis plan as approved by the EMO Trial Steering Committee.

2. Study design

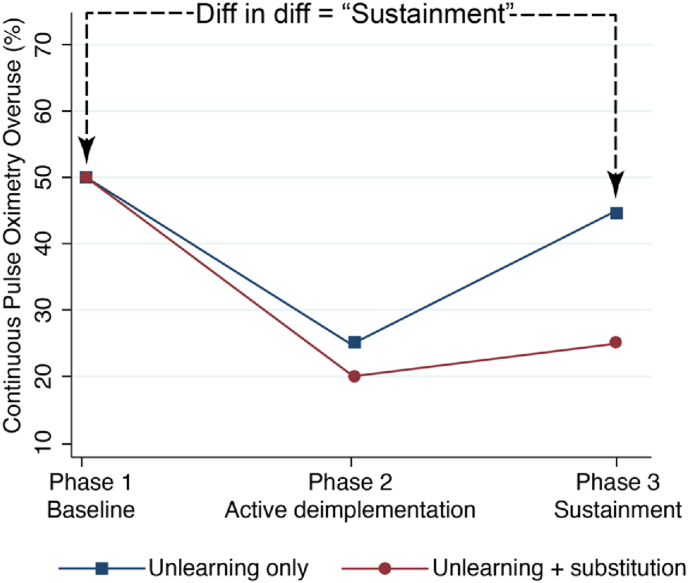

The primary objective of the EMO Trial (ClinicalTrials.gov, NCT05132322) is to compare the effects of two deimplementation strategies on longitudinal hospital-level deimplementation of SpO2 monitoring in children with bronchiolitis who are in room air. The study's specific aims and hypotheses were previously published [13] (for more detail, see Supplemental Material). The three phases of the trial are baseline measurement (Phase 1, P1), active deimplementation (P2), and sustainment (P3) [13] (Supplemental Fig. 1). Fig. 1 shows the hypothesized effect of the deimplementation strategies on overuse, assessed by a difference-in-differences comparison between study arms of the overuse of continuous SpO2 monitoring at P1 vs. P3. This hospital-level deimplementation sustainment will be inferred from patient-level continuous SpO2 monitoring data through statistical modeling (see Section 5).

Fig. 1.

Hypothesized hospital-level changes in continuous SpO2 monitoring overuse across study phases, between trial arms.

As described in detail previously [13], the trial will be conducted within PRIS Network hospitals, and the cluster randomization will be at the hospital level. The patient population includes children aged 2–23 months with bronchiolitis who are hospitalized in general medical-surgical inpatient units at participating hospitals. Detailed inclusion and exclusion criteria for hospitals and patients are described in the main protocol publication and Supplemental Fig. 1 [13].

3. Methods

3.1. Deimplementation strategy arm assignment

All deimplementation strategies are assigned and delivered at the hospital level, including educational outreach, audit and feedback, and the substitution strategy. The main protocol provides a description of the deimplementation strategies in detail [13].

3.2. Randomization and blinding

Eligible hospitals will be randomized to either the unlearning only or the unlearning + substitution arm using a covariate-constrained randomization approach [16] to achieve an optimal balance between arms, as previously described [13]. Specifically, we will generate 100,000 1:1 allocation schemes for the hospitals to be randomized, stratified on the presence of pre-existing EHR clinical decision support and on baseline overuse rate dichotomized at the median, so that the two arms are balanced or similarly distributed on these two variables. We will calculate the L2 imbalance metric for each allocation based on hospital type and baseline overuse rate [17]. The final randomization scheme will be randomly selected from the 10% of schemes with the smallest imbalance metric. We will use the R package “cvcrand” [18] to perform the randomization. Given the nature of the intervention, it is not possible to blind the participating sites in this trial.
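The selection step described above can be sketched in a few lines of Python. This is an illustration only and not the cvcrand implementation: the function names, the unweighted sum-of-squared-differences imbalance score, the seed, and the example covariate values are all assumptions, and the stratification step is omitted for brevity.

```python
import random


def imbalance_l2(allocation, covariates):
    """Sum over covariates of the squared difference in arm means (unweighted)."""
    arm1 = [row for row, a in zip(covariates, allocation) if a == 1]
    arm0 = [row for row, a in zip(covariates, allocation) if a == 0]
    score = 0.0
    for k in range(len(covariates[0])):
        mean1 = sum(row[k] for row in arm1) / len(arm1)
        mean0 = sum(row[k] for row in arm0) / len(arm0)
        score += (mean1 - mean0) ** 2
    return score


def constrained_randomization(covariates, n_schemes=100_000,
                              keep_fraction=0.10, seed=0):
    """Draw candidate 1:1 allocations, keep the best-balanced fraction,
    and pick one of those at random."""
    rng = random.Random(seed)
    n = len(covariates)
    template = [1] * (n // 2) + [0] * (n - n // 2)
    # Sample candidate schemes; the set removes duplicate allocations.
    candidates = {tuple(rng.sample(template, n)) for _ in range(n_schemes)}
    # Rank by imbalance (ties broken by the allocation itself for determinism).
    ranked = sorted(candidates, key=lambda a: (imbalance_l2(a, covariates), a))
    n_keep = max(1, int(len(ranked) * keep_fraction))
    return list(rng.choice(ranked[:n_keep]))


if __name__ == "__main__":
    # Hypothetical hospitals: (children's hospital indicator, baseline overuse rate)
    hospitals = [(1, 0.55), (0, 0.40), (1, 0.62), (0, 0.35),
                 (1, 0.48), (0, 0.51), (1, 0.30), (0, 0.66)]
    print(constrained_randomization(hospitals, n_schemes=1000, seed=1))
```

In practice the covariates would be standardized or weighted before scoring so that no single variable dominates the metric; that choice is left open here.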

4. Sample size and power estimations

The power analysis was based on the primary outcome measured at the hospital level, i.e., deimplementation sustainment [13]. This is a difference-in-differences comparison between study arms of the overuse of continuous SpO2 monitoring at baseline vs. in the sustainment phase. Before completing the power analysis, we consulted content experts, clinicians, and the EMO Steering Committee and determined that a clinically meaningful difference in the outcome was a 15-percentage-point reduction in overmonitoring. Sample size and power estimations were previously published [13]. Briefly, we estimate that if 24 hospitals (12 per arm) complete P2 and P3, we will have 80% power at a significance level of 0.05 to detect a difference of 16 percentage points [13]. This detectable difference is within the range of the pre-specified clinically meaningful difference. To account for the impact of the COVID-19 pandemic on recruitment and follow-up, our power calculation allows for a high dropout rate of 37% over the course of the trial (i.e., a reduction from 19 hospitals per arm at randomization to 12 hospitals per arm at the time of analysis). The power calculations used a two-group comparison of mean change in a repeated measures design, as implemented in Power and Sample Size software (PASS, NCSS, Kaysville, UT, USA).
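The arithmetic behind the dropout allowance and the difference-in-differences contrast can be checked with a short sketch. The function names and the example overuse percentages below are hypothetical and are not trial data; only the 19 and 12 hospitals per arm come from the text.

```python
def dropout_rate(n_randomized, n_analyzed):
    """Fraction of randomized hospitals lost before analysis."""
    return (n_randomized - n_analyzed) / n_randomized


def difference_in_differences(substitution_arm, unlearning_arm):
    """Between-arm difference in the P1-to-P3 change in overuse.

    Each argument maps phase labels to the arm's mean overuse percentage.
    A negative result means overuse fell further in the substitution arm.
    """
    change_sub = substitution_arm["P3"] - substitution_arm["P1"]
    change_unl = unlearning_arm["P3"] - unlearning_arm["P1"]
    return change_sub - change_unl


if __name__ == "__main__":
    # 19 hospitals per arm at randomization, 12 per arm at analysis.
    print(f"dropout: {dropout_rate(19, 12):.1%}")
    # Hypothetical arm means (percent of patients continuously monitored).
    did = difference_in_differences({"P1": 50.0, "P3": 20.0},
                                    {"P1": 50.0, "P3": 36.0})
    print(f"difference-in-differences: {did} percentage points")
```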

5. Statistical analysis

5.1. General analysis principles and considerations

All analyses will be intention-to-treat and will include all randomized hospitals, irrespective of dropout or noncompliance with the assigned intervention. We will perform a per-protocol analysis as a secondary analysis. Estimates of the deimplementation effect with corresponding 95% confidence intervals (CIs) and p-values will be reported for comparisons between trial arms. A two-sided statistical significance level of 0.05 will be used. The Benjamini-Hochberg procedure will be used to adjust the p-values from analyses of secondary outcomes for multiple testing [19]. All statistical analyses will be performed in Stata (StataCorp, College Station, Texas, USA). The outcomes were predefined as primary, secondary, and exploratory. This paper describes the statistical analysis plan for the primary and secondary outcomes only.
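For readers unfamiliar with the Benjamini-Hochberg adjustment [19], the step-up computation of adjusted p-values can be sketched as follows. The function name and the example p-values are illustrative assumptions; the trial analyses themselves will be run in Stata.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


if __name__ == "__main__":
    # Hypothetical raw p-values from four secondary comparisons.
    print(benjamini_hochberg([0.01, 0.04, 0.03, 0.20]))
```

An adjusted p-value below 0.05 then corresponds to rejection while controlling the false discovery rate at 5%.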

5.2. Descriptive statistics for baseline characteristics

Patient demographic and clinical characteristics as well as hospital variables will be collected at baseline and in each study phase. Patient-level variables will include age, gestational age at delivery, time since weaning from supplemental oxygen, presence of an enteral feeding tube, and whether data were captured during an overnight shift (to allow for possible systematic difference in nurse:patient ratios, differences in family presence at the bedside, and local monitoring culture in the hospital at nighttime). Hospital-level variables will include the hospital type (freestanding children's hospitals vs. general or community hospitals), presence of pre-existing EHR clinical decision support for bronchiolitis, and the standardized overuse rate of continuous pulse oximetry [11].

Participants' (i.e., hospitals') progress through the study will be summarized using a flow diagram according to the CONSORT extension for cluster randomized trials [20], as diagrammed in the previous publication [13]. Baseline characteristics will be summarized overall and by intervention arm using standard descriptive statistics and presented in a table. Continuous variables will be summarized using means and standard deviations or medians and interquartile ranges, depending on the distribution of the data. Categorical variables will be presented as frequencies and percentages. The degree of missingness, if any, will be reported.

5.3. Statistical analysis for primary outcome

The primary outcome of this study is deimplementation sustainment, analyzed as the hospital-level change in the percentage of bronchiolitis patients in room air who are continuously SpO2-monitored across the 3 study phases.

5.3.1. Primary model

We will calculate unadjusted guideline-discordant monitoring percentages for each hospital at each study phase, using as the denominator all directly observed patients with bronchiolitis who were not receiving supplemental oxygen and as the numerator those patients who were simultaneously receiving continuous SpO2 monitoring. We will evaluate the clinical effectiveness of the two deimplementation intervention strategies by analyzing the binary patient-level continuous SpO2 monitoring status using hierarchical generalized linear mixed-effects models [21] with a logit link for hospital panel data with patients clustered in hospitals. The general analysis model will include intervention arm, time (i.e., P1, P2, P3), and arm-by-time interaction as fixed effects, where the interaction term allows us to assess whether and how much the change in deimplementation penetration over the study phases differs between the arms. We will consider linear spline-based (i.e., piecewise) regression [22] in the mixed-effects model to capture any potential nonlinear trajectories over time, with the start of P2 and the start of P3 being the pre-specified knots of the spline. This model will incorporate hospital-specific random effects to account for within-hospital correlation due to the repeated measures design and the fact that patients are clustered within hospitals. Since repeated measures of continuous SpO2 monitoring status are permitted as long as they are separated by at least 6 h, some patients may contribute more than one data point to the study. If this happens, we will employ two-level clustered random effects with patients nested within hospitals.

For each hospital, we will then obtain the post-hoc aggregated hospital-level continuous SpO2 monitoring percentage at each study phase with a 95% CI using ‘margins’ in Stata, as well as the change in the percentage between phases (e.g., between P1 and P3) with a 95% CI as the estimate of deimplementation sustainment, and summarize the results in a figure similar to Fig. 1.
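One way to write the general analysis model is given below as a sketch, under the simplifying assumptions that time enters as phase indicators rather than spline terms and that covariates are omitted. The symbols are introduced here for illustration and do not appear in the protocol: Y_{ijk} is the monitoring status at observation k of patient i in hospital j, A_j is the arm indicator, and P2_{ijk} and P3_{ijk} indicate the study phase in which the observation was made.

```latex
\operatorname{logit}\,\Pr(Y_{ijk} = 1)
  = \beta_0 + \beta_1 A_j + \beta_2 P2_{ijk} + \beta_3 P3_{ijk}
  + \beta_4 \,(A_j \times P2_{ijk}) + \beta_5 \,(A_j \times P3_{ijk})
  + u_j + v_{ij},
\qquad
u_j \sim N(0, \sigma_u^2), \quad v_{ij} \sim N(0, \sigma_v^2)
```

In this parameterization, \beta_5 captures the between-arm difference in the change from P1 to P3 on the log-odds scale, u_j is the hospital-specific random intercept, and v_{ij} is the patient-within-hospital random intercept that is needed only if patients contribute repeated observations.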

5.3.2. Covariate adjustment

To account for patient-level factors that cannot be balanced by the site-level randomization process, we will adjust for the same covariates used in previous research [11], including patient age, gestational age at delivery, time since weaning from supplemental oxygen, presence of an enteral feeding tube, and whether data are collected during an overnight shift. In addition, we will adjust for neurological impairment in the analyses.

5.3.3. Moderators

We hypothesize that some characteristics of hospitals and clinicians may moderate or modify the effects of the deimplementation strategy on the primary outcome. Potential moderators include hospital implementation climate and clinician psychological reactance to the deimplementation strategy, which will be collected through questionnaires. Select subscales of the Implementation Climate Scale (i.e., Focus on Evidence-Based Practice and Recognition for Evidence-Based Practice) will help us understand whether hospital clinicians and staff perceive that their deimplementation of continuous SpO2 monitoring is expected, supported, and rewarded [23]; this measure has demonstrated criterion-related and predictive validity in prior implementation studies [[24], [25], [26], [27]]. The psychological reactance scale will assess clinicians’ responses to deimplementation messaging: perceptions of threats to their freedom, emotional responses, and cognitive responses [[28], [29], [30]]. The moderating effects of these variables will be tested separately by adding terms for each moderator and its interaction with deimplementation strategy to the mixed-effects models for the primary outcome.

5.4. Mediation analysis for sustainment outcome

We hypothesize that hospital-level mechanistic measures may mediate the effect of the deimplementation strategy on the sustainment outcome. We consider two potential mediators which are closely related but conceptually distinct processes: routinization and institutionalization [14]. Specifically, we will assess these two mediators by distributing the Slaghuis Measurement Instrument for Sustainability of Work Practices [14] to eligible clinical staff at two time points: at baseline following randomization (P1) and in the final month of the initial sustainment phase (P2), which is when we would expect the hypothesized mediation mechanisms to have occurred.

The hypothesized mechanism and the relationships linking the exposure, mediators, and outcomes are shown conceptually in Supplemental Fig. 2, where path ‘a’ represents the effect of the deimplementation strategy on the hospital-level mediators, and path ‘b’ represents the effect of the hospital-level mediators on the outcomes, adjusted for the exposure effect. Path ‘c’ corresponds to the direct effect of the deimplementation strategy on the sustainment outcome, independent of the influence of the mediators. We will use the counterfactual approach to mediation analysis, sometimes called the causal mediation approach, as described by VanderWeele [31], Muthén and Asparouhov [32], and Nguyen et al. [33], and as implemented in Mplus [34]. In this approach, the total effect of the deimplementation strategy is parsed into direct and indirect effects by estimating two simultaneous regressions and combining the parameters from these models using counterfactually defined formulas. In the first regression, path ‘a’ will be modeled using linear regression at the hospital level, with the mediator at P2 as the dependent variable and the deimplementation strategy assignment as the independent variable. To improve the plausibility of the underlying model assumptions [35], this model will control for the hospital-level P1 mediator variable and the hospital outcome rate from P1. Per the unbiased estimate of Nguyen et al. [33], path ‘b’ will be estimated using a probit regression model with robust standard errors to account for the clustering of patients within hospitals. In this model, the patient-level binary outcome at P3 will be the dependent variable, and the P2 hospital-level mediator and the deimplementation strategy assignment will be the independent variables. Indirect, direct, and total effects will be obtained on the probability scale, conditional on the mean-centered values of the control variables, using counterfactually defined formulas described by VanderWeele [35] and Muthén et al. [34].
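To make the counterfactually defined formulas concrete, the sketch below computes direct, indirect, and total effects on the probability scale for a binary exposure, a continuous mediator modeled by linear regression, and a binary outcome modeled by probit regression. It is a simplified single-level illustration, not the Mplus implementation: it ignores control variables, clustering, and exposure-mediator interaction, and the parameter names and example values are assumptions.

```python
from math import erf, sqrt


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def outcome_probability(x, x_star, a0, a, b0, b, c, sigma2_m):
    """P(Y = 1) when the strategy is set to x and the mediator takes the
    distribution it would have under strategy x_star.

    Mediator model:  M  = a0 + a * X + e,  e ~ N(0, sigma2_m)
    Outcome model:   Y* = b0 + b * M + c * X + eps,  eps ~ N(0, 1)
    """
    mediator_mean = a0 + a * x_star
    # Integrating over the mediator residual inflates the probit scale.
    scale = sqrt(1.0 + b * b * sigma2_m)
    return normal_cdf((b0 + c * x + b * mediator_mean) / scale)


def mediation_effects(a0, a, b0, b, c, sigma2_m):
    """Pure natural direct, total natural indirect, and total effects."""
    p00 = outcome_probability(0, 0, a0, a, b0, b, c, sigma2_m)
    p10 = outcome_probability(1, 0, a0, a, b0, b, c, sigma2_m)
    p11 = outcome_probability(1, 1, a0, a, b0, b, c, sigma2_m)
    return {"direct": p10 - p00, "indirect": p11 - p10, "total": p11 - p00}


if __name__ == "__main__":
    # Hypothetical coefficients chosen only to exercise the formulas.
    print(mediation_effects(a0=3.0, a=0.5, b0=0.8, b=-0.4, c=-0.3, sigma2_m=0.25))
```

By construction the direct and indirect effects sum to the total effect, and the indirect effect is zero whenever path ‘a’ or path ‘b’ is zero.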

5.5. Statistical analysis for secondary outcomes

We plan to collect two secondary clinical outcomes among the enrolled bronchiolitis patients (measured in hours): length of hospital stay and oxygen supplementation duration. We will investigate the effect of hospital-level deimplementation penetration for each study phase (baseline, active deimplementation, and sustainment) on these clinical outcomes. For the two clinical outcomes, we will consider data transformation (e.g., logarithm or square root, depending on the distribution of the data) in order to meet the normality assumption for regression analysis. We will use linear mixed-effects regressions to model the two outcomes with the hospital-level deimplementation penetration at each study phase as fixed effects. We will also incorporate hospital-specific random effects to account for the clustering of patients within hospitals.

5.6. Safety and adverse events

The overall PIs, Site PIs, Medical Monitors, and the Data and Safety Monitoring Board will oversee the safety of bronchiolitis patients at the unit and institutional levels. Adverse event (AE) data will be collected through the study and classified as serious adverse events (SAE) and other adverse events (Other AE). The process of conducting proactive surveillance for adverse events is outlined in the protocol publication [13].

5.7. Interim analyses

Planned interim analyses will occur prior to each meeting of the Data and Safety Monitoring Board (DSMB). Therefore, we will conduct these analyses prior to trial launch, after P1, after P2, and after P3. Interim analyses will summarize participant baseline characteristics, overuse proportions/penetration, equity of overuse, clinical outcomes, and safety data overall, by intervention arm (with investigators blinded to arm), and by site when necessary. The results of the blinded-by-arm analysis and the pooled analysis will be presented to the study team. Access to the unblinded interim data and results will be limited to the DSMB members and statisticians performing the analysis. Following the unblinded review of the safety data, the DSMB will make a recommendation to either (1) allow the study to continue according to protocol, (2) allow the study to continue with modifications to the protocol, or (3) discontinue the study in accordance with good medical practice.

5.8. Missing data

Overall, we anticipate minimal missing data for the outcomes, given that the repeated measurement of outcomes in this study occurs at the hospital level rather than the patient level. The study procedures involve data entry forms that enforce data entry with required fields, training of site research staff, and independent remote data monitoring by the Data Coordinating Center (DCC). The DCC will contact site investigators to retrieve any missing data values. Although we do not expect many hospitals to drop out, we anticipate that the primary cause of missing data will be hospital withdrawal during P2 or P3. We will perform descriptive analysis on these hospitals and compare them with hospitals continuing in the study. Analyses for primary and secondary outcomes will be based on participants for whom outcome data are available (i.e., available case analysis). Thus, no imputation will be performed for the primary outcome analyses.

5.9. Sensitivity analysis

If randomized hospitals drop out of the trial at any point, one or more study phases may have sparse or completely missing data, preventing measurement of their performance in the active deimplementation and/or the sustainment phase. If many (e.g., 6 or more in one arm) hospitals drop out, this could threaten the interpretability of the trial results. To estimate the potential ways that hospital dropout could impact the trial results, we will first examine the percentiles of sustainment (i.e., percent overuse in P3 minus percent overuse in P1, divided by the percent overuse in P1) in the hospitals that complete P2 and P3. We will then conduct the following sensitivity analyses: (1) assume the hospitals with incomplete P2 and P3 data performed similarly to the hospitals with poor sustainment (20th percentile), (2) assume the hospitals with incomplete P2 and P3 data performed similarly to the hospitals with average sustainment (50th percentile), and (3) assume the hospitals with incomplete P2 and P3 data performed similarly to the hospitals with the best sustainment (80th percentile). We will then simulate the effect of each of these scenarios on the overall trial outcome in a mixed-effects model [36].
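The three scenarios can be illustrated with a small sketch that assigns a P3 overuse percentage to a hospital that dropped out, based on the distribution among completers. Several choices here are assumptions rather than part of the plan: sustainment is expressed as a relative reduction, (P1 − P3) / P1, so that a higher percentile means better sustainment; percentiles use linear interpolation; and the function names and example values are hypothetical. The subsequent simulation in a mixed-effects model is not shown.

```python
def percentile(values, q):
    """Percentile q (0-100) of values using linear interpolation."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lower = int(pos)
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (pos - lower)


def relative_reduction(p1, p3):
    """Relative fall in overuse from P1 to P3 (higher = better sustainment)."""
    return (p1 - p3) / p1


def scenario_p3(p1_dropout, completer_reductions, q):
    """P3 overuse assigned to a dropout hospital if it had performed like
    the q-th percentile of hospitals that completed P2 and P3."""
    return p1_dropout * (1.0 - percentile(completer_reductions, q))


if __name__ == "__main__":
    # Hypothetical (P1, P3) overuse percentages for five completing hospitals.
    completers = [(50.0, 45.0), (40.0, 32.0), (60.0, 42.0), (50.0, 30.0), (40.0, 20.0)]
    reductions = [relative_reduction(p1, p3) for p1, p3 in completers]
    for label, q in [("poor", 20), ("average", 50), ("best", 80)]:
        print(label, round(scenario_p3(50.0, reductions, q), 1))
```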

6. Discussion

We designed a hybrid type III effectiveness-deimplementation trial with a longitudinal cluster-randomized design to compare two deimplementation strategies on the sustainment of deimplementation of SpO2 monitoring in children with bronchiolitis who are in room air. The limitations of the trial design have been discussed previously in detail [13].

This work provided details on the statistical analysis methods for our prespecified primary and secondary outcomes and moderation and mediation analyses. Specifically, the proposed statistical models will analyze the patient-level data, allowing us to adjust for both patient- and hospital-specific covariates. More importantly, we will be able to answer the key ‘sustainment’ question in our specific aims by calculating the aggregated hospital-level continuous SpO2 monitoring rate at each study phase for each hospital. This analysis approach may inform the audience interested in conducting similar cluster-randomized trials with the outcome(s) defined at the institutional/cluster level.

We anticipate that the EMO SpO2 trial will advance the science of deimplementation by providing new insights from a pediatric research network into the processes, mechanisms, costs, and sustainment of rigorously designed deimplementation strategies.

Funding

Research reported in this publication was supported by a cooperative agreement with the National Heart, Lung, and Blood Institute of the National Institutes of Health under award number U01HL159880 (MPIs: Bonafide, Beidas). The funder had no role in any of the following: study design; collection, management, analysis, or interpretation of the data; writing of the report; the decision to submit the report for publication; or ultimate authority over any of these activities. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Availability of data and materials

Drs. Xiao and Bonafide had full access to all of the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

Author contributions

Concept and design: Bonafide, Brady, Landrigan, Beidas, Schondelmeyer.

Acquisition, analysis, or interpretation of data: All authors.

Drafting of the manuscript: Bonafide, Xiao.

Critical revision of the manuscript for important intellectual content: All authors.

Statistical analysis: Xiao, Bonafide.

Obtained funding: Bonafide, Beidas.

Administrative, technical, or material support: Brent.

Supervision: Xiao, Bonafide, Schisterman.

Declaration of competing interest

The authors declare the following financial interests/personal relationships which may be considered as potential competing interests: Dr. Beidas is principal at Implementation Science & Practice, LLC. She receives royalties from Oxford University Press, consulting fees from United Behavioral Health and OptumLabs, and serves on the advisory boards for Optum Behavioral Health, AIM Youth Mental Health Foundation, and the Klingenstein Third Generation Foundation outside of the submitted work.

Acknowledgments

Editorial support provided by Claire Daniele, PhD, of SciPubSupport, LLC and funded by Children's Hospital of Philadelphia.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.conctc.2023.101219.

Contributor Information

Rui Xiao, Email: rxiao@pennmedicine.upenn.edu.

Christopher P. Bonafide, Email: bonafide@chop.edu.

Nathaniel J. Williams, Email: natewilliams@boisestate.edu.

Zuleyha Cidav, Email: Zuleyha.Cidav@Pennmedicine.upenn.edu.

Christopher P. Landrigan, Email: christopher.landrigan@childrens.harvard.edu.

Jennifer Faerber, Email: faerberj@chop.edu.

Spandana Makeneni, Email: makenenis@chop.edu.

Courtney Benjamin Wolk, Email: Courtney.wolk@pennmedicine.upenn.edu.

Amanda C. Schondelmeyer, Email: Amanda.schondelmeyer@cchmc.org.

Patrick W. Brady, Email: patrick.brady@cchmc.org.

Rinad S. Beidas, Email: rinad.beidas@northwestern.edu.

Enrique F. Schisterman, Email: enrique.schisterman@pennmedicine.upenn.edu.

Data availability

No data was used for the research described in the article.

References

- 1.Norton W.E., Chambers D.A. Unpacking the complexities of de-implementing inappropriate health interventions. Implement. Sci. 2020;15:2. doi: 10.1186/s13012-019-0960-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Nilsen P., Ingvarsson S., Hasson H., von Thiele Schwarz U., Augustsson H. Theories, models, and frameworks for de-implementation of low-value care: a scoping review of the literature. Implementation Research and Practice. 2020;1 doi: 10.1177/2633489520953762. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Curran G.M., Bauer M., Mittman B., Pyne J.M., Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50:217–226. doi: 10.1097/MLR.0b013e3182408812. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Simon T.D., Starmer A.J., Conway P.H., Landrigan C.P., Shah S.S., Shen M.W., Sectish T.C., Spector N.D., Tieder J.S., Srivastava R., Willis L.E., Wilson K.M. Quality improvement research in pediatric hospital medicine and the role of the Pediatric Research in Inpatient Settings (PRIS) network. Acad Pediatr. 2013;13:S54–S60. doi: 10.1016/j.acap.2013.04.006. [DOI] [PubMed] [Google Scholar]

- 5.Keren R., Luan X., Localio R., Hall M., McLeod L., Dai D., Srivastava R. Pediatric Research in Inpatient Settings (PRIS) Network, Prioritization of comparative effectiveness research topics in hospital pediatrics. Arch. Pediatr. Adolesc. Med. 2012;166:1155–1164. doi: 10.1001/archpediatrics.2012.1266. [DOI] [PubMed] [Google Scholar]

- 6.Ralston S.L., Lieberthal A.S., Meissner H.C., Alverson B.K., Baley J.E., Gadomski A.M., Johnson D.W., Light M.J., Maraqa N.F., Mendonca E.A., Phelan K.J., Zorc J.J., Stanko-Lopp D., Brown M.A., Nathanson I., Rosenblum E., Sayles S., Hernandez-Cancio S. American Academy of Pediatrics, Clinical practice guideline: the diagnosis, management, and prevention of bronchiolitis. Pediatrics. 2014;134:e1474–e1502. doi: 10.1542/peds.2014-2742. [DOI] [PubMed] [Google Scholar]

- 7.Hasegawa K., Tsugawa Y., Brown D.F.M., Mansbach J.M., Camargo C.A. Trends in bronchiolitis hospitalizations in the United States. Pediatrics. 2013;132:28–36. doi: 10.1542/peds.2012-3877. 2000-2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Fujiogi M., Goto T., Yasunaga H., Fujishiro J., Mansbach J.M., Camargo C.A., Hasegawa K. Trends in bronchiolitis hospitalizations in the United States: 2000-2016. Pediatrics. 2019;144 doi: 10.1542/peds.2019-2614. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Quinonez R.A., Garber M.D., Schroeder A.R., Alverson B.K., Nickel W., Goldstein J., Bennett J.S., Fine B.R., Hartzog T.H., McLean H.S., Mittal V., Pappas R.M., Percelay J.M., Phillips S.C., Shen M., Ralston S.L. Choosing wisely in pediatric hospital medicine: five opportunities for improved healthcare value. J. Hosp. Med. 2013;8:479–485. doi: 10.1002/jhm.2064. [DOI] [PubMed] [Google Scholar]

- 10.Schondelmeyer A.C., Dewan M.L., Brady P.W., Timmons K.M., Cable R., Britto M.T., Bonafide C.P. Cardiorespiratory and pulse oximetry monitoring in hospitalized children: a delphi process. Pediatrics. 2020;146 doi: 10.1542/peds.2019-3336. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Bonafide C.P., Xiao R., Brady P.W., Landrigan C.P., Brent C., Wolk C.B., Bettencourt A.P., McLeod L., Barg F., Beidas R.S., Schondelmeyer A. Pediatric research in inpatient Settings (PRIS) network, prevalence of continuous pulse oximetry monitoring in hospitalized children with bronchiolitis not requiring supplemental oxygen. JAMA. 2020;323:1467–1477. doi: 10.1001/jama.2020.2998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Helfrich C.D., Rose A.J., Hartmann C.W., van Bodegom-Vos L., Graham I.D., Wood S.J., Majerczyk B.R., Good C.B., Pogach L.M., Ball S.L., Au D.H., Aron D.C. How the dual process model of human cognition can inform efforts to de-implement ineffective and harmful clinical practices: a preliminary model of unlearning and substitution. J. Eval. Clin. Pract. 2018;24:198–205. doi: 10.1111/jep.12855. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Bonafide C.P., Xiao R., Schondelmeyer A.C., Pettit A.R., Brady P.W., Landrigan C.P., Wolk C.B., Cidav Z., Ruppel H., Muthu N., Williams N.J., Schisterman E., Brent C.R., Albanowski K., Beidas R.S. Pediatric Research in Inpatient Settings (PRIS) Network, Sustainable deimplementation of continuous pulse oximetry monitoring in children hospitalized with bronchiolitis: study protocol for the eliminating monitor overuse (EMO) type III effectiveness-deimplementation cluster-randomized trial. Implement. Sci. 2022;17:72. doi: 10.1186/s13012-022-01246-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Slaghuis S.S., Strating M.M.H., Bal R.A., Nieboer A.P. A framework and a measurement instrument for sustainability of work practices in long-term care. BMC Health Serv. Res. 2011;11:314. doi: 10.1186/1472-6963-11-314. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Gamble C., Krishan A., Stocken D., Lewis S., Juszczak E., Doré C., Williamson P.R., Altman D.G., Montgomery A., Lim P., Berlin J., Senn S., Day S., Barbachano Y., Loder E. Guidelines for the content of statistical analysis plans in clinical trials. JAMA. 2017;318:2337–2343. doi: 10.1001/jama.2017.18556.

- 16. Moulton L.H. Covariate-based constrained randomization of group-randomized trials. Clin. Trials. 2004;1:297–305. doi: 10.1191/1740774504cn024oa.

- 17. Raab G.M., Butcher I. Balance in cluster randomized trials. Stat. Med. 2001;20:351–365. doi: 10.1002/1097-0258(20010215)20:3<351::aid-sim797>3.0.co;2-c.

- 18. Yu H., Li F., Gallis J.A., Turner E.L. cvcrand: a package for covariate-constrained randomization and the clustered permutation test for cluster randomized trials. The R Journal. 2019;11:191. doi: 10.32614/RJ-2019-027.

- 19. Benjamini Y., Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. Roy. Stat. Soc. B. 1995;57:289–300.

- 20. Campbell M.K., Piaggio G., Elbourne D.R., Altman D.G., CONSORT Group. Consort 2010 statement: extension to cluster randomised trials. BMJ. 2012;345. doi: 10.1136/bmj.e5661.

- 21. Skrondal A., Rabe-Hesketh S. Chapman & Hall/CRC Press; 2004. Generalized Latent Variable Modeling: Multilevel, Longitudinal, and Structural Equation Models.

- 22. Harrell F.E., Jr. Springer; 2015. Regression Modeling Strategies: with Applications to Linear Models, Logistic and Ordinal Regression, and Survival Analysis.

- 23. Ehrhart M.G., Aarons G.A., Farahnak L.R. Assessing the organizational context for EBP implementation: the development and validity testing of the Implementation Climate Scale (ICS). Implement. Sci. 2014;9:157. doi: 10.1186/s13012-014-0157-1.

- 24. Williams N.J., Becker-Haimes E.M., Schriger S.H., Beidas R.S. Linking organizational climate for evidence-based practice implementation to observed clinician behavior in patient encounters: a lagged analysis. Implementation Science Communications. 2022;3:64. doi: 10.1186/s43058-022-00309-y.

- 25. Williams N.J., Hugh M.L., Cooney D.J., Worley J.A., Locke J. Testing a theory of implementation leadership and climate across autism evidence-based interventions of varying complexity. Behav. Ther. 2022;53:900–912. doi: 10.1016/j.beth.2022.03.001.

- 26. Williams N.J., Wolk C.B., Becker-Haimes E.M., Beidas R.S. Testing a theory of strategic implementation leadership, implementation climate, and clinicians' use of evidence-based practice: a 5-year panel analysis. Implement. Sci. 2020;15:10. doi: 10.1186/s13012-020-0970-7.

- 27. Williams N.J., Ramirez N.V., Esp S., Watts A., Marcus S.C. Organization-level variation in therapists' attitudes toward and use of measurement-based care. Adm. Policy Ment. Health. 2022;49:927–942. doi: 10.1007/s10488-022-01206-1.

- 28. Dillard J.P., Shen L. On the nature of reactance and its role in persuasive health communication. Commun. Monogr. 2005;72:144–168. doi: 10.1080/03637750500111815.

- 29. Silvia P.J. Reactance and the dynamics of disagreement: multiple paths from threatened freedom to resistance to persuasion. Eur. J. Soc. Psychol. 2006;36:673–685. doi: 10.1002/ejsp.309.

- 30. Reynolds-Tylus T., Bigsby E., Quick B.L. A comparison of three approaches for measuring negative cognitions for psychological reactance. Commun. Methods Meas. 2021;15:43–59. doi: 10.1080/19312458.2020.1810647.

- 31. VanderWeele T.J. Oxford University Press; New York: 2015. Explanation in Causal Inference: Methods for Mediation and Interaction.

- 32. Muthén B., Asparouhov T. Causal effects in mediation modeling: an introduction with applications to latent variables. Struct. Equ. Model. 2015;22:12–23. doi: 10.1080/10705511.2014.935843.

- 33. Nguyen T.Q., Webb-Vargas Y., Koning I.M., Stuart E.A. Causal mediation analysis with a binary outcome and multiple continuous or ordinal mediators: simulations and application to an alcohol intervention. Struct. Equ. Model. 2016;23:368–383. doi: 10.1080/10705511.2015.1062730.

- 34. Muthén B.O., Muthén L.K., Asparouhov T. Muthén & Muthén; Los Angeles, CA: 2017. Regression and Mediation Analysis Using Mplus. https://www.statmodel.com/Mplus_Book.shtml

- 35. VanderWeele T.J. Explanation in causal inference: developments in mediation and interaction. Int. J. Epidemiol. 2016;45:1904–1908. doi: 10.1093/ije/dyw277.

- 36. Schisterman E.F., DeVilbiss E.A., Perkins N.J. A method to visualize a complete sensitivity analysis for loss to follow-up in clinical trials. Contemp. Clin. Trials Commun. 2020;19. doi: 10.1016/j.conctc.2020.100586.


Data Availability Statement

Drs. Xiao and Bonafide had full access to all of the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

No data were used for the research described in the article.