Abstract

Trustworthy science requires research practices that center issues of ethics, equity, and inclusion. We announce the Leadership in the Equitable and Ethical Design (LEED) of Science, Technology, Engineering, Mathematics, and Medicine (STEM) initiative to create best practices for integrating ethical expertise and fostering equitable collaboration.

Introduction

As Francis Collins prepared to step down from his 12-year tenure as Director of the National Institutes of Health (NIH), the esteemed genome scientist and celebrated government leader reflected frankly on the failures of the biomedical sciences to win the trust of the American public. In an interview with the New York Times in October of 2021, he described his expectation that Americans were “people of the truth,” and expressed his “heartbreak” at discovering the high levels of unwillingness to accept “accurate medical information” about COVID-19 vaccines.1 Indeed, a February 2022 report by the Pew Research Center found that after decades of expressing a relatively high degree of trust, Americans reporting a “great deal of confidence in scientists” dropped from 39% at the beginning of the pandemic to 29% in November of 2021.2 A report issued two months later explored in depth why trust among “Black Americans” had dropped and found that the main reasons were concerns about abuse within scientific research (e.g., from the United States Public Health Service Study of Untreated Syphilis at Tuskegee) and negative interactions with doctors and health care providers.3

In line with these findings, we argue that public mistrust in science is due substantially to lack of adequate attention to these legacies and ongoing realities of injustice, and not primarily the result of public misunderstanding or miscommunication of scientific procedures and results, as is often assumed. Research initiatives launched in fields as diverse as genomics, neuroscience, and AI too often incorporate concerns with justice and ethics in limited and marginal ways. Scholars with expertise in these domains are typically brought on board too late in the process to provide meaningful input into decisions about the categories that frame research and the questions and aims that guide it. Instead of helping shape studies so that questions of ethics and justice are substantively engaged from the very beginning, such experts are tasked with helping cope with public implications, miscommunications, and misunderstandings. We introduce below a framework for re-centering questions of ethics and justice in the research process, a realignment that we argue is fundamental to building public trust in science.

Misrecognizing the roots of mistrust

The problem of public mistrust in science has been commonly interpreted as a deficit in communication and education. Collins, for example, explained that the NIH was considering launching an initiative on health communication focused on framing messages in a more effective way.4 Although such efforts are important, they treat the problem of trust as existing outside of the realm of science. We argue instead that science must also be concerned with its own trustworthiness, and to do this it must center issues of ethics and justice. Scientists are rightly concerned with troubling instances of fraud and irreproducibility and have undertaken many reforms to promote research integrity as well as transparency, data availability, and replication practices under the banner of open science. These efforts to strengthen epistemic reliability constitute one critical component of trustworthiness. However, as philosophers, anthropologists, sociologists, and historians of science have emphasized, trustworthiness is also a socio-ethical problem that demands better, more trusting relations between scientists and scientific institutions, on the one side, and their many publics on the other. It requires not just technical competence and integrity, but also acting to benefit these publics—whether they be potential research participants, patients, community research partners, or the growing number of people who depend on scientific and technical knowledge and innovations to live their lives.

When looking at the roots of mistrust, a common approach is to highlight how distrust of science, and expertise more broadly, is increasingly grounded in social and political identities. However, this approach assumes that these identities have no relationship to scientific and biomedical institutions. Identities are in part made through experiences of exclusion, discrimination, and mistreatment. Within the life sciences and biomedicine, they are shaped by experiences of a medical research complex that for too long has categorized too many as objects of research, rather than participants worthy of offering expertise and receiving care, and that too frequently promotes the commercialization and privatization of knowledge in a manner that many suspect contravenes the public good.5 As the philosopher Naomi Scheman explains, “Institutional reputations for trust-eroding practices … make such mistrust rational, and—insofar as various insiders bear some responsibility for those practices—make those insiders less trustworthy”.6

Addressing these deeper roots of mistrust requires revolutionizing scientific theory and practice in a manner that centers questions of ethics, justice, and the public good. Here, we lay out the challenges that must be overcome to achieve this transformation and present a new initiative to create a framework for integrating into scientific research the expertise needed to build ethical and equitable, and thus trustworthy, science.

Cultivating trustworthy science: Current challenges

Despite widespread recognition of the importance of questions of ethics and justice in science, technology, engineering, mathematics, and medicine (STEM), currently no adequate practices exist to ensure that these questions are addressed throughout the research process. Ethical review by Institutional Review Boards, with its focus on respect for individuals, is explicitly precluded from taking into account harms to groups and society at large. Grant-funded required courses on “Responsible Conduct of Research” focus largely on scientists’ responsibilities to each other, such as data sharing, and not on social responsibilities. Requirements that scientists include “Broader Impacts” statements in applications for research funding are also of questionable efficacy in assuring actual societal benefits.7

Those scholars and practitioners working in the broad area of the ethical, legal, and social implications (ELSI) of science may be best positioned to partner with scientists to build the socio-ethical-scientific practices that are needed. However, even here there are challenges. ELSI was not designed to transform science so that it could account for and respond to the potential harms and inequities of research. Instead, as its first director, Eric Juengst, reflected, “the enterprise of genome research and the knowledge generated by it were to be treated as ‘unalloyed prima facie goods’”.8 The goal of the ELSI program was to create the policy tools that would ensure its growth, not to ensure equitable public benefit from the massive investment in genomics. These instrumental origins of ELSI undermine its legitimacy and have led to efforts to implement it that too often fail to address the deeper entangled issues of science, ethics, and justice that scientists must address to build trustworthiness.

Consider the following scenario typical of the requests scientists make when seeking support for the mandatory ELSI—and, increasingly, diversity, equity and inclusion (DEI)—components of research grants: a biomedical scientist contacts their social science colleague when submitting a grant to map the organization of cells in the human brain because the Request for Proposals requires addressing ELSI issues. Like the Human Genome Project, this project promises to produce fundamental data that will inform the future of neurosciences and the medical treatment of brain disorders. To ensure that all will benefit from this foundational research, the biomedical scientist explains to their social science colleague that it is critical to include brain tissues from different racial and ethnic groups. They believe the social scientist’s expertise is essential to achieve this. The social scientist responds with interest and questions. What does the biomedical scientist mean by “race” and “ethnicity”? Will the use of these categories facilitate the understanding of brain variation that the scientist seeks to achieve? The biomedical scientist acknowledges that these are good questions, but states that the grant must be submitted in two weeks and asks if the social scientist can write the ELSI and DEI portions of the grant.

The problem in this familiar scenario is not merely that the biomedical scientist contacted their social science colleague two weeks before the grant deadline. Last-minute work is nothing unusual in the grant-writing world. The problem is instead an approach to research in which scientific and technical goals are prioritized, and the goals of diverse, equitable, and ethical science are circumscribed and merely facilitate an already determined process. The biomedical scientist has not asked their social science colleague to help conceptualize the research, even though they have pertinent expertise. The reason the social scientist asked about how the study defines “race” and “ethnicity” was not so that they might better understand how to recruit subjects into the study, but because these questions relate to the conceptualization of the study itself. For example, how are race and ethnicity used in the study to improve understanding of diverse brains? Are these the most useful variables, or do they function as proxies for other more relevant factors? In what ways might organizing data collection in this way promote misunderstanding or even harm?

These questions are not unique to natural scientists. Social scientists too must grapple with these critical questions. Indeed, when looking at the Pew study cited above, it is important to understand how the researchers defined “Black Americans,” and what intersectional differences (e.g., of class, gender, sexuality, religion, political orientation, citizenship status) such a category might occlude. For all scientists—natural and social—the racial and ethnic categories most readily available for use are those created to order human beings for the purposes of governing and—in too many cases—oppressing them. Although some argue that these categories should be cast out of science, replaced by new apolitical groupings, the solution is not so simple or straightforward.9 As the focus on diversity in genomic research has increasingly targeted racialized populations for recruitment, expertise on the complexities of the use and downstream implications of categories of race and ethnicity is sorely needed.

Consider the case of the stubbornly persistent lack of racial and ethnic diversity in genomic databases.10 This lack of diversity could have tangible clinical implications. For example, several studies have found that patients identified as Black, Asian, Native American, or Hispanic are much more likely than those identified as White to have a genomic “variant of uncertain significance”, meaning that these clinical findings cannot be interpreted and used to direct clinical care. Other studies suggest that variant classification (i.e., deciding whether a given genetic change is disease-associated) is enhanced when data from groups of diverse ethnic backgrounds are considered. However, simply increasing the diversity of research samples is insufficient to make science trustworthy. If the claimed benefits of that science do not accrue to underrepresented communities who donate those samples, scientists will not be trusted, and we will be left with “the illusion of inclusion”.11 Additionally, categorizing by race and ethnicity raises the risk that genetics will be conflated with race and ethnicity in a manner that harms more than it helps.

As these cases make clear, analyzing data using racial and ethnic categories only helps if it is accompanied by careful analysis of the definition and risks of using these categories. Categories frame scientific questions and embed decisions about who the research is for, and whose lives it might help or hinder.12 They shape not only the production of knowledge, but the creation of common goods. Cultivating trustworthy science requires forging scientific practices that account for and respond to these entanglements. It entails integrating a concern for equity and ethics at the very start of the research process, as well as in every aspect of it. In the scenarios noted above, for example, it means asking what variation in brain organization or in the human genome means. Is it the product of physiology? Does it reflect only biological differences, or social ones as well? How can we know?

Ethics and justice are fundamental to trustworthy science

The dominant approach to the design of scientific studies assumes that scientists and engineers conceptualize research, and then social scientists, historians, ethicists and—increasingly—artists facilitate its acceptance through addressing ELSI and DEI issues. This approach will continue to disappoint because it misunderstands the relationships between science, technology, ethics, and justice. Ethics and justice are not downstream implications of science. Rather, ethical interrogation and a commitment to equity are fundamental to creating trustworthy science. To create trusted research, practices for addressing questions of ethics and justice must join technical rigor and empirical practices as core elements of science and engineering.

Achieving this important goal requires action on multiple fronts. First, it requires re-imagining what is meant by innovation, what counts as good science, and who counts as a scientist. This work must be done not just in the academy, but across society. We are encouraged and inspired by the recent work of the US Office of Science and Technology Policy (OSTP) to implement policies that ensure equity and justice are foundational to how we conceive of and enact innovation.13 This means increasing the number and kind of avenues that exist to enter a career in science and engineering. It also means that, as we collect data for research, not only are the rights of individuals considered, but affected communities are engaged, and the sovereign status of groups, such as Tribal Nations, is respected.14

Within the academy, universities must examine how they support research, and how they structure the spaces and incentives that shape how research teams form. Transforming DEI work will be central to these efforts. This work must not seek simply to include more diverse people in the STEM workforce. Rather, it must address the lack of incentives and underlying structural barriers that too often prevent their full participation, even once included.15

At the same time, how we address questions of ethics and justice in research must be transformed. As the exemplary case described above demonstrates, too often science and engineering projects grant the power to define research questions and approaches to those with perceived “scientific” and/or “technical” expertise. Demonstrating to funders that bioethics, the social sciences, humanities, and the arts have been included is an insufficient goal. Rather, science that is more equitable and just, and thus more trustworthy, necessitates transforming the power structures that organize research to ensure that a concern for equity and ethics is integrated into the very conceptualization of research, and in all phases of the research development process, including, critically, in decisions about budgets and administration.

Leadership in the Equitable and Ethical Design of STEM

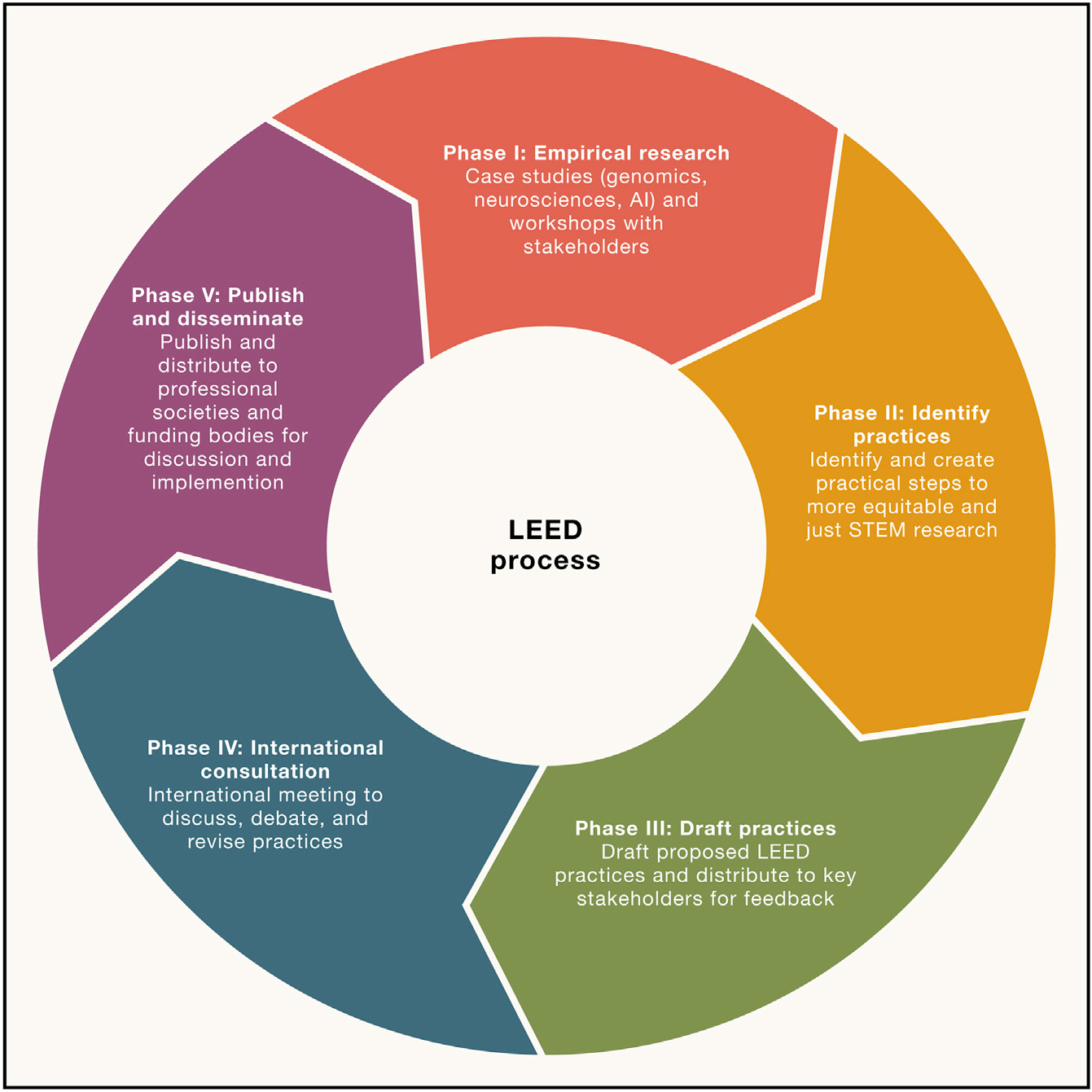

To facilitate this transformation, we propose the creation of Leadership in the Equitable and Ethical Design (LEED) of STEM. Like the work currently being led by the OSTP and its new Science and Society program, we seek to learn from existing and past efforts to center equity and justice in order to make recommendations for change. Inspired by the original LEED, Leadership in Energy and Environmental Design Building Certification, we seek to create a set of concrete practices to achieve our goal: the creation of equitable and just science and technology (Figure 1).

Figure 1. LEED Process.

Process for developing, refining, and revising LEED Practices.

In its first phase, LEED will create the empirical data needed to assess current efforts to incorporate a concern for ethics and equity in the research process—namely, ELSI and DEI. This evidence base will be built up in two ways: (1) a content analysis of documents and literature that describe existing approaches to ELSI research and DEI objectives and (2) an in-depth case-study comparison of these approaches in the fields of genomics, neuroscience, and artificial intelligence (AI). For each case study we will conduct semi-structured, in-depth interviews with key stakeholders—including scientists, social scientists, bioethicists, artists, and community research partners—who can offer their perspectives on the rationale, experience, and expectations of the ELSI and DEI components of research. The core questions to be examined in each case are described in Box 1 below.

Box 1. Core LEED Questions.

How are commitments to ethics and the values of diversity, equity, and inclusion currently translated into practice by research teams?

Which of these practices best facilitate collaborative relationships that foster accountability and demonstrate trustworthiness?

Which of these practices create outcomes that realize goals of equity, ethics, and justice in STEM research?

Results from this research will be used to outline clear, practical steps for building research teams and designing scientific studies that do not merely check DEI and ELSI boxes, but substantively build more equitable and ethical STEM research. These steps will be discussed and further refined at open, on-line workshops that allow for the participation of a larger and broader range of stakeholders, including funders, representatives of professional societies, and affected communities. The results of these workshops will then be used to form the basis of a provisional set of proposed LEED practices.

Once these practices are drafted, we will organize an international meeting to discuss, debate, and further revise them. Key stakeholders, and their funders, will be invited to this meeting. Insights from the meeting will guide a final revision of the practices, which then will be published and distributed to professional societies and funding bodies for discussion and possible implementation.

It is important to recognize that we do not envision producing a consensus document. We believe that such a document is neither possible nor desirable. There will be important differences of opinion about how to organize this most essential function of societies—the crafting of trustworthy scientific and technical knowledge that informs and guides them. Also, importantly, learning will happen over time as the proposed practices are tested in different contexts, leading to further revisions of the practices. Thus, we imagine that LEED will be a dynamic, interactive project, adopted in different ways in different contexts over time.

Conclusion

It should be unacceptable to support science that does not center ethics and justice throughout the research process. As recent declines in trust in science make clear, the era is long past in which it is viable to ignore these issues or to tack them onto an ELSI or DEI work package. Ethical, just, and trustworthy science cannot be made from the margins. LEED seeks to shine a spotlight on this critical issue, and to seed the transformation required to ensure that ethics and justice are at the heart of our best research. It is only then that we can begin to regain the trust people have lost in science.

ACKNOWLEDGMENTS

We acknowledge funding from UC Santa Cruz Office of Research, the National Center for Advancing Translational Sciences (NCATS) (award no 5UL1TR001873), the National Institutes of Health (award no 1RF1MH117800–01), and the National Science Foundation (award no SES 2220631). Chessa Adsit-Morris assisted with the creation of Figure 1.

Footnotes

DECLARATION OF INTERESTS

The authors declare no competing interests.

REFERENCES

- 1. Weiland N, and Kolata G (2021). Francis Collins, who guided N.I.H. through Covid-19 Crisis, is exiting. The New York Times, 5 October. https://www.nytimes.com/2021/10/05/us/politics/francis-collins-nih.html.

- 2. Kennedy B, Tyson A, and Funk C (2022). Americans’ trust in scientists, other groups declines. Pew Research Center. 15 February. https://www.pewresearch.org/science/2022/02/15/americans-trust-in-scientists-other-groups-declines/.

- 3. Funk C (2022). Black Americans’ views of and engagement with science. Pew Research Center. 7 April. https://www.pewresearch.org/science/2022/04/07/black-americans-views-of-and-engagement-with-science/.

- 4. Simmons-Duffin S (2021). The NIH director on why Americans aren’t getting healthier, despite medical advances. NPR. 7 December. https://www.npr.org/sections/health-shots/2021/12/07/1061940326/the-nih-director-on-why-americans-arent-getting-healthier-despite-medical-advanc.

- 5. Roberts D (2012). Fatal invention: How science, politics and big business re-create race in the twenty-first century (New York: The New Press).

- 6. Scheman N (2020). Trust and Trustworthiness. In The Routledge Handbook of Trust and Philosophy, Simon J, ed. (New York and London: Routledge), 29.

- 7. Roberts MR (2009). Realizing societal benefit from academic research: analysis of the National Science Foundation’s broader impacts criterion. Soc. Epistemol. 23(3–4), 199–219. 10.1080/02691720903364035.

- 8. Juengst E (1996). Self-critical federal science? The ethics experiment within the U.S. Human Genome Project. Soc. Philos. Pol. 13, 68.

- 9. Trujillo AK, Kessé EN, and Rollins O (2022). A discussion of the notion of race in cognitive neuroscience research. Cortex 150, 153–164.

- 10. Popejoy AB, and Fullerton SM (2016). Genomics is failing on diversity. Nature 538, 161–164. 10.1038/538161a.

- 11. Fox K (2020). The illusion of inclusion – The “All of Us” research program and indigenous peoples’ DNA. N. Engl. J. Med. 383, 411–413. 10.1056/NEJMp1915987.

- 12. Lee SS, Mountain J, Koenig B, Altman R, Brown M, Camarillo A, Cavalli-Sforza L, Cho M, Eberhardt J, Feldman M, et al. (2008). The ethics of characterizing difference: guiding principles on using racial categories in human genetics. Genome Biol. 9, 1–4. 10.1186/gb-2008-9-7-404.

- 13. Nelson A, and Kearney W (2021). Science and technology now sit in the center of every policy and social issue. Issues Sci. Technol. 38, 26–29. https://issues.org/wp-content/uploads/2021/10/26-29-Interview-with-Alondra-Nelson-Fall-2021.pdf.

- 14. Tsosie K, Claw K, and Garrison N (2021). Considering ‘Respect for Sovereignty’ beyond the Belmont Report and the Common Rule: Ethical and legal implications for American Indians and Alaska Native Peoples. Am. J. Bioeth. 21, 27–30. 10.1080/15265161.2021.1968068.

- 15. Hammonds E, Taylor V, and Hutton R (2022). Transforming trajectories for women of color in tech (Washington DC: The National Academies Press).