Abstract

Objective:

A limited focus on dissemination and implementation (D&I) science has hindered the uptake of evidence-based interventions (EBIs) that reduce workplace morbidity and mortality. D&I science methods can be used in the occupational safety and health (OSH) field to advance the adoption, implementation, and sustainment of EBIs for complex workplaces. These approaches should be responsive to contextual factors, including the needs of partners and beneficiaries (such as employers, employees, and intermediaries).

Methods:

By synthesizing seminal literature and texts and leveraging our collective knowledge as D&I science and/or OSH researchers, we developed a D&I science primer for OSH. First, we provide an overview of common D&I terminology and concepts. Second, we describe several key and evolving issues in D&I science: balancing adaptation with intervention fidelity and specifying implementation outcomes and strategies. Next, we review D&I theories, models, and frameworks and offer examples for applying these to OSH research. We also discuss widely used D&I research designs, methods, and measures. Finally, we discuss future directions for D&I science application to OSH and provide resources for further exploration.

Results:

We compiled a D&I science primer for OSH appropriate for practitioners and evaluators, especially those newer to the field.

Conclusion:

This article fills a gap in OSH research by providing an overview of D&I science to enhance understanding of the key concepts, issues, models, designs, methods, and measures needed to translate effective OSH interventions into practice and advance the safety, health, and well-being of workers.

Keywords: Dissemination and implementation science, Translational research, Occupational safety and health, Workplace safety and health, Evidence-based interventions, Research-to-practice

1. Introduction

Many occupational safety and health (OSH) interventions have been demonstrated to improve worker safety and health. Examples of positive effects range from preventing occupational injuries and hearing loss to reducing musculoskeletal, skin, and lung diseases (Keefe et al., 2020; Teufer et al., 2019) to reducing work-related stress (Richardson and Rothstein, 2008). However, effective OSH research programs are not broadly translated to other settings (Cunningham et al., 2020; Dugan and Punnett, 2017; Guerin et al., 2021; Rondinone et al., 2010; Schulte et al., 2017). This research-to-practice lag has substantial implications for the health and well-being of the global workforce (Schulte et al., 2017). For example, according to Lucas et al. (2014), only 17% of fishing safety research has been adopted in workplaces to the benefit of workers. Similar results were reported in a review by Tinc and colleagues (2018). More adaptive, innovative, accelerated and transdisciplinary OSH research has been called for (Schulte et al., 2019; Tamers et al., 2018). This includes scientific approaches that speed translation for addressing the multi-level and interconnected real-world challenges of a rapidly changing global economy and workforce (Schulte et al., 2019), and global public health crises such as the COVID-19 pandemic. These approaches should include dissemination and implementation (D&I) science—a growing field that examines the complex processes by which scientific evidence is adopted, implemented, and maintained/sustained in clinical and community-based settings, bridging the gap between research and everyday practice (Estabrooks et al., 2018; National Institutes of Health [NIH], 2021). D&I science is variously referred to as implementation science, (T3-T4) translational science, knowledge translation, and knowledge transfer and exchange (Cunningham et al., 2020; Guerin et al., 2021; Rabin and Brownson, 2018; Schulte et al., 2017). 
The application of D&I science methods has been shown to shorten the research-to-practice pipeline (e.g., Fixsen et al., 2007; Harden et al., 2021; Khan et al., 2021), increasing the speed of translation to benefit the public.

In the United States, the National Institute for Occupational Safety and Health (NIOSH), within the Centers for Disease Control and Prevention (CDC), advanced the use of D&I methods in response to reviews by the National Academies of Science (NAS) highlighting the research-to-practice gap in OSH (and at NIOSH) (NAS & NRC, 2009). These efforts were also aimed at answering calls within the OSH community to promote the study of factors that facilitate or limit the development, transfer, use, and sustainability of OSH interventions (Schulte et al., 2003; NAS, 2009). D&I science approaches have been integrated into strategic NIOSH initiatives and funding opportunities (Dugan and Punnett, 2017; Guerin et al., 2021), and D&I is a topic of scholarly interest at national and international OSH conferences and workshops. Despite increased awareness and some early progress in integrating D&I research methods into OSH initiatives, critical gaps persist (Cunningham et al., 2020; Guerin et al., 2021; Lucas et al., 2014; Redeker et al., 2019; Tinc et al., 2018). The reasons for this lag are numerous. First, as is the case with many scientific fields, D&I science suffers from inconsistent terminology that may confuse those seeking to learn its methods (Cunningham et al., 2020; Dugan and Punnett, 2017; Guerin et al., 2021; Rabin and Brownson, 2018). Second, OSH and other researchers new to the D&I field may have difficulty knowing where and how to get started. Third, D&I approaches may appear as burdensome “add-ons” to existing studies that are primarily focused on providing efficacy or effectiveness evidence and have little funding or other support for additional evaluation or dissemination activities. Finally, the complexity and diversity of workplaces and the difficulty of accessing some worker populations (e.g., immigrant and contingent workers and small business employees) can make conducting D&I research in OSH settings challenging (Cunningham et al., 2020).
Notwithstanding these difficulties, substantial opportunities exist for further integrating D&I approaches into OSH research and practice in the U.S. context and internationally. Such a move will fill important knowledge gaps related to moving OSH research into sustained practice to protect workers.

The purpose of this primer is to provide OSH researchers and practitioners who are newer to D&I with resources and an overview of D&I science methods and approaches that hold promise for translating effective OSH interventions into practice to improve safety, health, and well-being outcomes for working people. To do this, we first provide an overview of terminology, topics, and concepts in D&I relevant to OSH. Second, we discuss some of the most important foci of D&I science, including how D&I fits into the typical conceptualization of the translational research pipeline; the importance of balancing adaptation of interventions to fit context with fidelity to core program components; and the differences among implementation outcomes, effectiveness outcomes, and implementation strategies. Third, we provide guidance and resources for applying D&I theories, models, and frameworks (TMFs) to OSH research; give examples of D&I science in OSH; and, as an example, develop an extended application of the Practical, Robust Implementation and Sustainability Model (PRISM) (Feldstein and Glasgow, 2008; Glasgow et al., 1999; Glasgow et al., 2019). We also introduce appropriate research designs, methods, and measures for D&I science. Finally, we discuss future directions for D&I science generally, and specific key applications to OSH research and practice efforts.

2. What is dissemination and implementation science? A brief overview

2.1. D&I science defined

In OSH, where D&I science is still gaining a foothold, terminology is varied and inconsistent, as it is in the still-young D&I field itself (Lobb and Colditz, 2013). For example, the term “translation research” is promoted by NIOSH and used among its grantees (Cunningham et al., 2020; Schulte et al., 2017), while “knowledge transfer” (Crawford et al., 2016; Duryan et al., 2020; Rondinone et al., 2010) and “knowledge transfer and exchange” (Van Eerd and Saunders, 2017) are terms commonly used among OSH scientists in Europe and Canada to conceptualize overlapping (but not synonymous) areas of investigation. Increasingly, OSH researchers in the United States are adopting the terminology of mainstream D&I science (Dugan and Punnett, 2017). Rabin and colleagues (2008, 2018a) have assembled extensive glossaries to capture and synthesize the multitude of terms and definitions used by D&I researchers, practitioners, and funders (Colquhoun et al., 2014; McKibbon et al., 2010; Powell et al., 2015). With more than 150 D&I science TMFs identified to date (e.g., Birken et al., 2017a; Strifler et al., 2018; Tabak et al., 2013), harmonizing terminology in the field is an ongoing challenge.

For purposes of this paper, we use (slightly revised) NIH (2019) definitions: implementation research is the systematic investigation of the use of strategies to enhance adoption, integration, and sustainment of evidence-based health interventions in clinical and community settings to improve individual and population health; dissemination research is the scientific study of targeted distribution of information and intervention materials to a specific public health audience. Dissemination strategies are often informed by Rogers’ widely-used theory, Diffusion of Innovations (Dearing, 2008; Dearing et al., 2018; Rogers, 2003), and are concerned with promoting the use of evidence-based practices by important decision-makers through communication of information tailored to their specific needs (Brownson et al., 2018a). D&I science also addresses “designing for dissemination, implementation and sustainment” in the development of new, evidence-based programs, by grounding their development and evaluation in key collaborator/recipient perspectives (Brownson et al., 2013; Rabin and Brownson, 2018; Rabin et al., 2018). Within the D&I field, research methods do not typically foster only implementation or only dissemination, but it is recognized that certain D&I approaches and models are better suited to “I” than “D,” and vice versa (Lobb and Colditz, 2013). As more scientific knowledge has been generated to date about the methods that successfully promote implementation as compared to those promoting dissemination, one may consider that the approaches discussed in this article are most relevant to successful implementation of interventions. The D&I field overall continues to wrestle with how to best disseminate successful programs (Brownson et al., 2013).

Dissemination, “scale-up,” and “scale-out” are distinct concepts within D&I science. Dissemination research is the study of how best to spread and sustain knowledge of an evidence-based intervention through the systematic distribution of information to a specific audience (NIH, 2019). Scale-up, by contrast, refers to the planned spreading of an evidence-based intervention to additional units in the same or a similar context, often focusing on the same population for which the program/practice was originally shown to be effective (Aarons et al., 2017). Scale-out refers to the use of strategies to implement, assess, adapt, and sustain an evidence-based policy/practice/program as it is delivered to new populations and/or in new settings differing from those tested in effectiveness trials (Aarons et al., 2017). The potential promise of scale-out is that this approach may “borrow strength” from evidence in a prior effectiveness trial, thereby speeding up the translational pipeline (Aarons et al., 2017; Smith et al., 2018). As an OSH example, Buller and colleagues (2020) are conducting a randomized trial (guided by multiple D&I models and theories) to compare two methods of national scale-up of an effective occupational sun protection program for outdoor workers experiencing an elevated risk of skin cancer.

2.2. Characteristics of D&I science

2.2.1. The translational pipeline

The D&I field has a long history with roots in agriculture, sociology, education, communication, marketing, and management (Colditz and Emmons, 2018; Dearing et al., 2018; Estabrooks et al., 2018; Rabin and Brownson, 2018; Rogers, 2003), and has expanded to other disciplines. Health-related fields leading D&I efforts currently include health services, HIV prevention, school health, mental health, nursing, cancer control, cardiovascular risk reduction, and violence prevention, among others (Rabin and Brownson, 2018). D&I science relies on transdisciplinary approaches that cross fields of inquiry, integrating multiple perspectives and methods (Bauer et al., 2015; Estabrooks et al., 2018) to address the “leaky pipeline” that hinders the transfer of scientific knowledge to practice (Green, 2009). This pipeline is often characterized by the estimate that it takes 17 years for 14 percent of original research to reach practice and benefit program recipients (Balas and Boren, 2000; Brownson et al., 2018b; Green, 2009). Several interacting factors have limited the uptake of evidence-based practices and programs, including characteristics of the intervention (e.g., high cost, not developed with user needs in mind), the setting/context (e.g., competing demands, limited time and resources), inadequate or inappropriate research designs (e.g., not relevant to or representative of the population of interest), and interactions among these factors (Colditz and Emmons, 2018; Glasgow and Emmons, 2007). D&I science systematically addresses the gaps in the research-to-practice pipeline by engaging partners and beneficiaries to tailor the ways that an intervention will be implemented to fit the context and setting of end users (Aarons et al., 2012; Chambers et al., 2013). The application of D&I methods has been shown to shorten the time needed to advance research to practice (e.g., Fixsen et al., 2007; Harden et al., 2021; Khan et al., 2021).

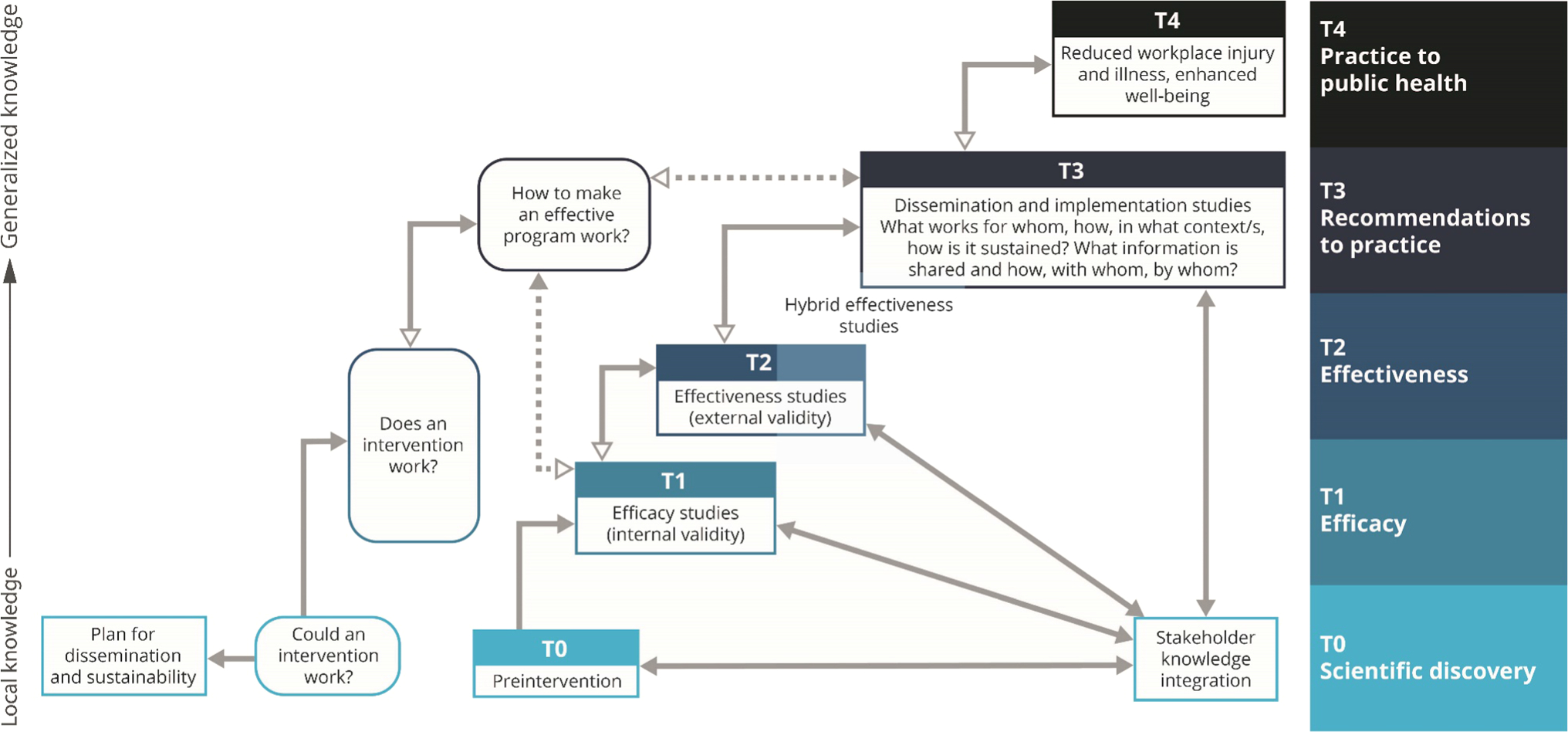

To explain the movement of scientific innovations from discovery to widespread adoption and population impact, clinical and public health researchers have used pipeline or “T” (translational) stage models (Khoury et al., 2010; Brown et al., 2017; Westfall et al., 2007). Fig. 1 presents an adapted “T” stage model for OSH that emphasizes the interactions and iterations of the research translation process. As illustrated in Fig. 1, for implementation success, it is important to: a) plan for dissemination and sustainability from the outset (Brownson et al., 2013) and b) engage stakeholders/beneficiaries on an ongoing basis across all stages of the research continuum (Glasgow et al., 2012). T0 translation focuses on the “pre-intervention” scientific discovery stage, identifying research challenges and opportunities and asking the question “could an intervention work?” The T1 phase emphasizes internal validity (efficacy) and results in knowledge creation about “does an intervention work?” under optimal conditions (Fort et al., 2017; Khoury et al., 2007; Rabin and Brownson, 2018). T2 translation involves effectiveness research that investigates, through randomized trials or other methods simulating “real world conditions,” whether an intervention improves health and safety outcomes (Fort et al., 2017; Glasgow et al., 2012; Rabin and Brownson, 2018). T3 translational research continues to assess effectiveness, but also systematically explores pragmatic (Loudon et al., 2015) and realist (Pawson, 2013) questions, such as what works, for whom, how, and in what contexts, and how it is sustained over time (Gaglio and Glasgow, 2018). T3 questions may focus on how to make an effective intervention work in diverse, multi-level settings (Khoury et al., 2007; Rabin and Brownson, 2018) and how it can be adapted to fit various contexts and resource constraints, such as in large vs. small workplaces. Hybrid effectiveness studies, as indicated in Fig. 1, promote examination of both effectiveness and implementation outcomes within the same study, with the aim of accelerating the research-to-practice process by combining aspects of T2 and T3 research (Curran et al., 2012). Finally, T4 translational research is focused on producing public health impact and developing the most generalizable knowledge about the positive and sustained health and safety outcomes at the population level that result from disseminating and implementing interventions known to be effective (Agency for Healthcare Research and Quality [AHRQ], 2014; Khoury et al., 2010; Rabin and Brownson, 2018). As stated previously, the translational research cycle is recursive. Information at a later stage informs research at earlier stages and, depending on the outcomes at any given timepoint, it may be necessary to go back to an earlier stage. Considering partner/beneficiary engagement at the early T1-T2 phases is important yet often overlooked, resulting in the unintended consequence of developing an intervention that is highly efficacious but fundamentally ill-suited to the needs of partners who would adopt it in the T3-T4 phases (Brownson et al., 2013).

Fig. 1.

The Translational Research Cycle

Adapted from: AHRQ, 2014; Brown et al., 2017; Khoury et al., 2010; PAR-19-274 Dissemination and Implementation Research in Health; Westfall et al., 2007.

2.3. Defining Evidence

Within D&I science, evidence-based interventions are defined broadly and may include programs, practices, policies, recommendations and guidelines (Rabin et al., 2010). Brown and colleagues (2017) refer to seven types (the “7 Ps”) of public health and health services interventions relevant to D&I efforts that can be delivered in different contexts, and which have varying degrees of applicability to OSH: programs, practices, principles, procedures, products, pills, and policies. Brownson and colleagues (2009) delineate three types of evidence generated through and from public health interventions: 1) Type 1 evidence defines the etiology of diseases and the magnitude, severity, and extent to which the risk factors for these conditions, and the conditions themselves, can be prevented; 2) Type 2 evidence describes the relative effectiveness of specific interventions to improve people’s safety and health; and 3) Type 3 evidence, which is the most scarce in OSH, demonstrates how and under which contextual conditions interventions are (successfully) implemented and sustained. In its most basic terms, research in D&I science seeks to take programs that already have sufficient Type 2 evidence, typically framed as consistent effectiveness demonstrated through meta-analyses and/or Cochrane reviews or strong recommendations in public health guidelines (Proctor et al., 2012), and to study them in order to generate Type 3 evidence. To provide a simple comparison between these types of evidence and the translational research cycle (Fig. 1), Type 2 evidence is typically derived from T2 effectiveness research studies, whereas Type 3 evidence is derived from T3 and/or T4 research studies that evaluate implementation strategies and outcomes and consider how an intervention’s effects relate to context.

It has been argued that requiring definitive evidence at a given stage of the translational pipeline before moving to the next has resulted in a lack of “rapid and relevant” movement of research to practice (Kessler and Glasgow, 2011). In public health, not all types of evidence (e.g., qualitative research) are equally represented in systematic reviews, and gray literature—such as government reports, book chapters, conference proceedings, and other materials—may provide useful information (Jacobs et al., 2012). In addition to the traditional terminology of evidence-based interventions, some contexts also define promising interventions as valid targets of dissemination and implementation activities. For example, the U.S. Department of Veterans Affairs (VA, 2021) maintains a warehouse of promising practices that meet a set of criteria regarding potential for impact. These criteria for the Office of Rural Health within the VA include increased access, strong partnerships, clinical impact, return on investment, operational feasibility, and customer satisfaction.

In the OSH field, Type 2 evidence is more typically represented by “strong recommendations in public health guidelines” (Proctor et al., 2012) because there is often limited evidence from randomized controlled trials (Anger et al., 2015; Hempel et al., 2016; Howard et al., 2017; Nold and Bochmann, 2010; Robson et al., 2012). OSH evidence is generated from and through diverse and multidisciplinary sources, including human and animal studies and observational, epidemiological and worker case studies (Hempel et al., 2016). Examples of factors relevant to OSH recommendations and guidelines that may or may not be included in an evidence synthesis include projected costs of the preventive action or policy, current industry standards, context-dependent values and practices, technical feasibility (Hempel et al., 2016; Nold and Bochmann, 2010), partner and program recipient concerns (e.g. needs of employers, employees, and intermediaries such as labor or professional organizations; Sinclair et al., 2013), and occupational health equity issues (Ahonen et al., 2018).

2.4. Defining “Context”

The D&I field uses the term “context” to capture the complex web of factors to be considered when implementing interventions (Huebschmann et al., 2019; NIH, 2019). More specifically, Movsisyan et al. (2019) define context as the “set of characteristics and circumstances that consist of active and unique factors within which the implementation of an intervention is embedded” (p. 2). Context is multilevel and cuts across economic, social, political, and temporal domains (Neta et al., 2015). D&I research seeks to address barriers to adoption of evidence-based interventions arising from multiple, interacting influences (Burke et al., 2015) crossing socioecological (e.g., policy, community, organizational, interpersonal, and individual) levels (Bronfenbrenner, 1979; Sallis et al., 2008). Context is not a fixed organizational structure but a process that is dynamic, iterative, and negotiated (May et al., 2016; Chambers et al., 2013). A key premise of D&I science is that it is critical to package and convey the evidence necessary to improve health in ways that fit local settings and meet the needs of end users (including workers, employers, intermediary groups such as professional organizations, and policy makers). This is because even the most robust evidence-based program can fail if context is not explicitly considered. Partners/collaborators find it challenging to implement programs with fidelity when those programs are unacceptable or infeasible in their setting, or when they prefer alternative approaches.

An example of OSH research incorporating contextual factors is Tenney and colleagues’ (2019) study of the adoption of the NIOSH Total Worker Health® (TWH) approach among 382 businesses. TWH programs are designed to integrate protection from work-related safety and health hazards with promotion of injury and illness prevention efforts to advance worker well-being (NIOSH, 2020). The authors found that larger businesses (>200 employees) implemented more comprehensive health and safety strategies in their workplaces compared to smaller businesses (≤50 employees), highlighting contextual factors related to size of business that were associated with TWH implementation. At the organizational level, contextual factors including business structure, age, organization of work/workflows, characteristics of the workforce including employee demographics (such as age), use of contingent labor, management and leadership characteristics, financial resources, and organizational climate were identified as key in understanding the differential adoption of TWH initiatives by smaller versus larger businesses (Tenney et al., 2019). In another example from Carlan and colleagues (2012), the authors explore how the organization of work in the Canadian construction sector (decentralized, non-linear, and nonhierarchical) requires moving away from top-down approaches for disseminating workplace safety and health information to identifying effective networks and intermediaries (such as unions) through which OSH knowledge may be communicated and transferred in these complex, dynamic contexts.

Overall, as shown in Fig. 1, research in D&I science should generate new knowledge on the feasibility and acceptability of specific implementation strategies (discussed in further detail below) to deliver an evidence-based program in a given context, leading to a better understanding of how and why the program works, for whom it works and in what settings. Research that is more relevant, actionable, tailored, responsive and iterative holds the promise of creating a “pull” not just for evidence-based practice, but for practice-based evidence (Lobb and Colditz, 2013; Green, 2007).

3. Key D&I science concepts

The following section provides a brief overview of several key concepts in D&I science including fidelity and adaptation, implementation strategies, and implementation outcomes.

3.1. Fidelity and adaptation

Systematically monitoring and documenting adaptations to an evidence-based program is critical for understanding how these modifications influence intervention outcomes (Rabin and Brownson, 2018), and is closely linked to the concept of fidelity, or the extent to which a program is implemented as intended by its designers (Backer, 2001). Balancing fidelity and adaptation has been a topic of scholarly interest and debate for many years (see, e.g., Bopp et al., 2013; Carvalho et al., 2013; Castro et al., 2004; Chambers and Norton, 2016; Dearing, 2008; Rohrbach et al., 2007), including in OSH (von Thiele Schwarz et al., 2015). There is a growing recognition among D&I researchers that adaptation is inevitable, and even desirable, to meet the local needs and constraints of program providers and recipients (Allen et al., 2018). The value of adaptation and fidelity may be different for various partners and program recipients, and recommendations have been proposed for reconciling their respective roles in the research-to-practice translational pathway (von Thiele Schwarz et al., 2019). How to identify what is essential to an evidence-based intervention, that is, its core components or functions (or “the intervention’s basic purpose”; Perez Jolles et al., 2019, p. 1032), is an important challenge in the successful implementation of evidence-based programs (Backer, 2001; Durlak and DuPre, 2008) and is critical to the measurement and assessment of implementation fidelity. Core functions or components are directly related to an intervention’s theory of change, which delineates the mechanisms by which the intervention works (Blasé and Fixsen, 2013). They are, in other words, the “special sauce” that characterizes an effective program. A recent study (Nykänen et al., 2021) sought to identify the “active ingredients” of a safety training intervention for young workers delivered in Finnish vocational schools.
Grounded in social cognitive theory (Bandura, 1986), the study tested the association between the core intervention components (safety skills training, safety inoculation training, a positive atmosphere for safety learning, and active learning techniques) and study outcomes (safety preparedness, internal safety locus of control, risk attitudes, and safety motivation). The study team found, for example, that quality of program delivery was associated with student motivational outcomes. Identifying the core components of the safety training intervention will facilitate efforts to replicate or adapt it to other settings while keeping the key elements intact (Nykänen et al., 2021).

A current area for exploration in D&I science is the use of systematic frameworks (e.g., Stirman et al., 2013, 2019) to plan for, capture, and characterize adaptations of implementation strategies and/or interventions during the implementation process (Escoffery et al., 2019; Finley et al., 2018; Rabin et al., 2018), with particular attention to the rationale for each adaptation and to the preservation of core components. Several recommendations for categorizing and understanding adaptations have been advanced in the D&I field (Escoffery et al., 2019; Glasgow et al., 2020; Kirk et al., 2020; Miller et al., 2021; Perez Jolles et al., 2019; Stirman et al., 2013, 2019). Investigations related to measuring and monitoring intervention fidelity and adaptations in OSH are limited, and more research is needed in this area.

3.2. Implementation strategies

Colloquially referred to as “how” an evidence-based program is implemented (Proctor et al., 2013), implementation strategies are the methods used to enhance program implementation outcomes (e.g., adoption, fidelity, and sustainability; see section 3.3 below) (Proctor et al., 2011). Powell and colleagues (2015) identified 73 discrete implementation strategies that can be grouped into 9 categories (Waltz et al., 2015). For example, the category “Train and educate stakeholders” consists of multiple strategies, including using train-the-trainer methods and making training interactive, while the category “Develop stakeholder interrelationships” includes discrete strategies such as “identify and prepare champions” and “identify early adopters.” It is common to use multiple strategies, or “strategy bundles,” during implementation to address multiple determinants of (that is, barriers and facilitators to) intervention implementation. The selection of strategies may vary depending on the phase of implementation and may require a variety of techniques to ensure that the strategies fit the local context (NCI, 2019; Powell et al., 2017). Implementation Mapping can be used to identify barriers and facilitators to program implementation and to select specific strategies, such as those delineated by Powell et al. (2015), to address these determinants and optimally deliver an intervention (Fernandez et al., 2019). However, it should be noted that systematic evidence of effectiveness exists for only a minority of implementation strategies (Grimshaw et al., 2012; Wolfenden et al., 2018) and that implementation strategies do not always lead to improved implementation or sustainment. Research is needed in OSH on tailoring implementation strategies to context using robust and systematic methods (such as those described above) and leveraging what is already known about fitting strategies to other settings to achieve program impact.

3.3. Implementation outcomes

As previously mentioned, the outcomes assessed in D&I research are related to but distinct from those assessed in intervention studies. Generally speaking, implementation outcomes are associated with the effects of implementation strategies, while effectiveness outcomes are intended to analyze the impact of the program, policy or practice on specific health outcomes, such as a reduction in work-related injuries or improvement in work-related fatigue (Table 1). Proctor and colleagues (2011) developed a frequently cited taxonomy of eight conceptually distinct implementation outcomes. These include acceptability, adoption, appropriateness, feasibility, fidelity, implementation cost, penetration, and sustainability. Implementation outcomes are typically assessed at the organizational, community, or policy level (Rabin et al., 2008), but several, such as feasibility and acceptability, are also examined at the individual (program recipient) level. Approaches to examine these eight implementation outcomes should be adapted to account for health equity (e.g., assessing feasibility in a low-resource setting) (Brownson et al., 2021). In sum, implementation outcomes are key, intermediate outcomes that are critical for monitoring the successful implementation of evidence-based programs (NCI, 2019).

Table 1.

Examples of key implementation outcomes and OSH effectiveness outcomes

| Implementation outcomes* | Organizational effectiveness outcomes | Individual (worker/employer) effectiveness outcomes |
|---|---|---|
| • Acceptability: Perception among key partners/beneficiaries that the OSH program or practice is agreeable or satisfactory. • Adoption: Intention among key partners/beneficiaries to employ an OSH intervention (i.e., “uptake”). • Appropriateness: Perceived fit of the OSH innovation or intervention for a given context/population/health and safety problem. • Costs: Costs of an OSH implementation effort. • Feasibility: Extent to which the OSH intervention can be successfully used within a given setting. • Fidelity: Degree to which an OSH intervention was implemented as intended by the program developers. • Penetration: Extent of integration of an OSH intervention within a community, organization, or system. • Sustainability: Extent to which a newly implemented program/intervention is maintained or institutionalized within an organization/workplace. | • Safety culture/climate • Supervisory support • Absenteeism • Presenteeism • Turnover • Occupational health equity • Occupational injuries, illnesses and fatalities | • Well-being • Physical health • Mental health • Changes in attitude, intention and behavior • Occupational injuries, illnesses and fatalities • Occupational health equity • Fatigue • Stress • Depression • Burnout • Social connectedness • Job performance • Job satisfaction • Job commitment • Intent to leave • Work-life balance • Positive self-concept |

Source: Adapted from Friedland & Price, 2003; Lewis et al., 2015; NCI, 2019; NIOSH, 2013; *Proctor et al., 2011.

4. Commonly used D&I science theories, models and frameworks

The terms “theory,” “model,” and “framework” (TMFs) in D&I science publications have distinct technical meanings, but they are often used interchangeably (Bauer et al., 2015). TMFs generally describe approaches or systematic ways to plan, implement, and evaluate the implementation of evidence-based interventions and can help researchers understand context, D&I processes, and outcomes (Damschroder, 2020; Nilsen, 2015; Nilsen and Bernhardsson, 2019). TMFs can be used to assess why an intervention works (or fails to) and increase the interpretability of study findings (Tabak et al., 2018). As stated previously, more than 150 D&I TMFs have been identified (e.g. Birken et al., 2017a; Strifler et al., 2018; Tabak et al., 2013) and applied to varying degrees (Skolarus et al., 2017). Prior reviews (Damschroder, 2020; Nilsen, 2015; Nilsen and Bernhardsson, 2019) have categorized D&I TMFs, depending upon their purpose, as process models, evaluation frameworks, and determinant theories/frameworks. Many TMFs are hybrid in the sense that they fall into more than one of the process, determinants, or evaluation categories (Damschroder, 2020). An overview of some commonly used D&I TMFs is provided below. (For a link to an interactive webtool to help researchers and practitioners select D&I science TMFs for their research, see Appendix A).

The earliest and still one of the most widely used theories in D&I science is Rogers’ Diffusion of Innovations (Rogers, 2003), which seeks to explain the processes influencing the spread and adoption of innovations through certain channels over time, considering factors such as adopters’ perceptions of the innovation (such as its cost, effectiveness, compatibility, complexity, trialability and observability); innovativeness of the adopter; and characteristics of social system(s), individual adoption processes, and the diffusion system, including the important roles of “opinion leaders” and “champions” (Damschroder, 2020; Dearing et al., 2018; Nilsen, 2015; Rogers, 2003). The Theoretical Domains Framework (TDF; Michie et al., 2005; Michie et al., 2011), which resulted from a systematic review of 19 published D&I frameworks, provides guidance for studying behavior change in terms of implementation activities and outcomes (Michie, 2014). For example, the TDF was recently used by OSH researchers to develop and psychometrically test a questionnaire to identify determinants of safety behaviors among workers in critical industries, such as transportation (Morgan et al., 2021). Organizational change theories in D&I science (Weiner et al., 2008, 2009, 2020) hold promise for OSH research for exploring implementation processes and outcomes within multilevel systems/complex workplaces (Carlan et al., 2012; Hofmann et al., 2017; Robertson et al., 2021).

Process models provide general guiding principles and “phases” of research planning and implementation rather than explicitly indicating a set of specific steps needed within each phase of implementation (Estabrooks et al., 2018; Nilsen, 2015; Tabak et al., 2018). An example of a process model used in public health research is the Knowledge to Action (K2A) framework advanced by the CDC, which includes three (non-linear) components (research, translation, and institutionalization) as well as the implementer, deliverer, and recipient interactions, support structures, and evaluation capacity needed to move knowledge to sustainable practice (Wilson and Brady, 2011). Many other process models are used in public health research and practice (see e.g., Birken et al., 2017a).

Consisting of four phases that describe the implementation process, the Exploration, Preparation, Implementation, Sustainment (EPIS) framework (Aarons et al., 2011) is considered a hybrid process and determinant model (Damschroder, 2020). Within EPIS, diverse factors from the inner and outer context that may hinder or facilitate the implementation of programs are considered in each phase (Brown et al., 2017; Moullin et al., 2019). This framework also articulates outer system contextual factors, such as the regulatory/policy environment, and inner context factors, such as organizational leadership, considered key to implementation processes. These factors may apply across many or all implementation stages. Another component of EPIS is the factors that relate to the intervention, such as its fit and cost. Finally, “bridging factors,” such as interagency collaboration, intermediaries (e.g., unions and professional organizations) and community-academic partnerships, create linkages between inner and outer contextual factors (Moullin et al., 2019). EPIS also provides some guidance regarding the temporal relations of D&I outcomes (Becan et al., 2018; Lewis et al., 2018a). For example, perceived fit of the intervention would primarily be assessed in the preparation phase, fidelity would be measured while the program is being implemented, and institutionalization of the intervention would be assessed in the sustainment phase (Lewis et al., 2018a). While EPIS has had limited uptake in OSH to date, it may be useful for assessing the multilevel factors that hinder/facilitate implementation of new innovations in workplaces.

Another hybrid model that is most often used as an evaluation framework is RE-AIM (Glasgow et al., 1999), with its five specific dimensions (Reach, Effectiveness, Adoption, Implementation, and Maintenance) and its contextually expanded version, PRISM (Practical Robust Implementation and Sustainability Model, described later in this paper; Feldstein and Glasgow, 2008; Glasgow et al., 2019). Used frequently in research and grant applications to the CDC and the NIH (Glasgow et al., 1999; Glasgow et al., 2019; Vinson et al., 2018), RE-AIM was designed to enhance the quality, efficiency, and public health impact of efforts to translate research into practice. Cutting across all five of the RE-AIM implementation outcomes are equity concerns related to the representativeness of those who participate in or benefit from the evidence-based program (Glasgow et al., 2019; Gaglio et al., 2013; Woodward et al., 2021). Although RE-AIM is most widely applied as an evaluation framework, it has also been used for guiding initial intervention planning with partners and beneficiaries (Holtrop et al., 2018). RE-AIM can also be used iteratively during program implementation to guide adaptations to implementation strategies if interim RE-AIM outcomes are not being met to the extent expected or intended (Glasgow et al., 2019).

Recognizing that organizations may have limited capacity and resources, the intended goal of RE-AIM is to improve intervention monitoring and reporting across the dimensions, while not necessarily requiring comprehensive assessment of the intervention across all five dimensions (Glasgow and Estabrooks, 2018; Glasgow et al., 2019). Such “pragmatic” uses of the framework suggest the importance of engaging partners and beneficiaries early on and throughout the design, implementation, and evaluation of interventions, to establish a priori the dimensions and research questions that are most suitable to the setting, audience, needs, and resources, and the stage of research (Glasgow et al., 2019). Recent reconsiderations of RE-AIM promote an enhanced focus on sustainability (Glasgow et al., 2018; Shelton et al., 2020a) by addressing dynamic context, focusing on multi-faceted cost and economic issues, and promoting health equity (Shelton et al., 2020a).

A scan of the past decade of OSH literature for use of implementation and evaluation models and frameworks suggests only modest uptake of RE-AIM, which has been suggested as a useful tool for the evaluation of OSH interventions (Schelvis et al., 2015). However, some examples of the use of the framework in OSH as an evaluation and planning tool are available (e.g., Cocker et al., 2018; Jenny et al., 2015; Schwatka et al., 2018; Storm et al., 2016; Viester et al., 2014). Issues of sustainability and dynamic context, including impacts on health equity (Baumann and Cabassa, 2020; Shelton et al., 2020; Woodward et al., 2019, 2021) are topics of current interest in OSH (Ahonen et al., 2018), which may be systematically investigated through an application of RE-AIM. Appendix A contains a link to RE-AIM resources, which include guidance on using the framework, measures, checklists and a planning tool. An applied example of the use of RE-AIM to conduct a process evaluation for an RCT of a worksite behavior change intervention to prevent musculoskeletal disorders (MSDs) among construction workers in the Netherlands is summarized in Appendix B (Viester et al., 2014). Through their use of RE-AIM, the research team was able to demonstrate that the study achieved satisfactory adoption and representative reach among workers while also identifying challenge areas, including difficulties delivering the intervention with fidelity.

Determinant models/frameworks specify barriers and facilitators to implementation processes or outcomes and include the Consolidated Framework for Implementation Research (CFIR; Damschroder et al., 2009) — one of the most widely used TMFs in D&I science (Birken et al., 2017b). CFIR was developed based on multiple, published implementation theories to identify and categorize known determinants of implementation outcomes. The framework consists of five domains known to influence implementation outcomes and interact with each other: Intervention Characteristics, Outer Setting, Inner Setting, Characteristics of Individuals, and Process (by which implementation is achieved). Within each of these domains are multiple constructs reflecting determinants (i.e., barriers and facilitators) of implementation (Damschroder et al., 2009). Determinants can act as independent variables with a direct effect on implementation outcomes (the dependent variable); as moderators (“effect modifiers”) of the effectiveness of D&I interventions; or as mediators that are links in a causal chain of a D&I mechanism (Flottorp et al., 2013; Lewis et al., 2018b; Nilsen, 2015). Developed to advance health services research by consolidating many existing implementation theories, the CFIR has been cited extensively across disciplines and is currently being updated (“CFIR 2.0”) to reflect more diverse settings, provide clarification on and elaboration of key constructs, include additional constructs of interest and relevance (e.g., “mass disruptions” such as global pandemics in the outer setting domain), and to expand the focus on health equity and implementation outcomes (Damschroder, 2021).

CFIR has received limited attention in OSH, but one interesting application is in research by Tinc et al. (2020). Tractor overturns have been a leading cause of death on American farms, and rollover protection structures (ROPS), first introduced in the 1960s, are highly effective in preventing death and serious injury when used with a seatbelt. While ROPS have been standard issue for decades on new tractors, many farmers use older equipment that needs to be retrofitted. Research was conducted using CFIR to gain an understanding of the barriers to farmers’ uptake of ROPS (Tinc et al., 2020). Participants in the National ROPS Rebate Program (NRRP) were surveyed at four time points. The surveys measured 14 CFIR constructs and their correlations with three intervention outcomes: intakes (the number of people who signed up for the program), funding progress, and tractor retrofits with ROPS. Findings revealed that eight CFIR survey items covering four constructs in two domains (access to knowledge and information [inner setting], leadership engagement [inner setting], engaging [process], and reflecting and evaluating [process]) were correlated (rho ≥ 0.50) with at least one of the three outcome measures. Items correlated with all three outcome measures included those related to access to knowledge and information (inner setting) and engaging (process), indicating that these constructs may be the most important for expanding the NRRP (Tinc et al., 2020). In terms of the utility of applying the CFIR in OSH to scale-up initiatives, research indicates challenges when using this D&I framework in agricultural settings versus single site implementation studies (Tinc et al., 2018). More research is needed to understand CFIR’s utility for other OSH interventions and settings.
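The screening rule described above (flagging survey items whose rank correlation with an outcome reaches a threshold such as rho ≥ 0.50) can be sketched in a few lines. This is a minimal illustration only and is not the analysis code of Tinc et al. (2020): the item names, item scores, and intake counts are invented for demonstration, and the helper functions `average_ranks`, `pearson_r`, and `spearman_rho` are hypothetical names.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman_rho(x, y):
    """Spearman's rho computed as the Pearson correlation of the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


# Invented example data: mean item scores and program intakes for six observations.
items = {
    "access_to_knowledge": [2, 3, 3, 4, 5, 5],
    "leadership_engagement": [3, 2, 4, 3, 5, 4],
    "relative_priority": [4, 1, 3, 5, 2, 3],
}
intakes = [10, 14, 15, 22, 30, 28]

THRESHOLD = 0.50  # cut-off mentioned in the text
flagged = [name for name, scores in items.items()
           if spearman_rho(scores, intakes) >= THRESHOLD]
print(flagged)  # ['access_to_knowledge', 'leadership_engagement']
```

With so few observations a correlation of this size is only descriptive, so a screen like this is better read as a way to prioritize constructs for follow-up than as a significance test.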

Another determinant TMF (Nilsen and Bernhardsson, 2019) is the Practical Robust Implementation and Sustainability Model (PRISM; Feldstein and Glasgow, 2008; Glasgow et al., 2019), an extension of RE-AIM which addresses key multilevel contextual factors that influence RE-AIM outcomes and considers the perspectives of multiple partners and beneficiaries (as depicted in Fig. 2 and adapted to reflect the OSH context).

Fig. 2.

The Practical, Robust, Implementation and Sustainability Model (PRISM) for OSH. Adapted from: Feldstein and Glasgow, 2008.

The PRISM contextual factors include: the program characteristics from the perspective of organizational and individual recipients; the characteristics of diverse, multilevel recipients of the program; the implementation and sustainability infrastructure; and the external environment (Feldstein and Glasgow, 2008; Glasgow et al., 2019; McCreight et al., 2019). PRISM contextual factors may be used to guide researchers during the program planning, implementation, evaluation and dissemination phases (Glasgow et al., 2019).

For the OSH practitioner, PRISM could be used to consider employers’, managers’, and workers’ perspectives and to identify factors influencing successful implementation of programs, policies and guidelines in the workplace. For instance, in the program planning phase, PRISM could guide the selection of appropriate evidence-based interventions to address context-specific needs and priorities, as well as engage workers and managers in the identification of barriers/facilitators to successful implementation and sustainability. During implementation, PRISM could be used to improve the fit between the evidence-based practice and the workplace by systematically assessing organizational, employer and worker characteristics and perspectives, and the existing implementation and sustainability infrastructure. Using iterative, qualitative approaches, such as focus groups and interviews, OSH researchers and practitioners could tailor implementation strategies or adapt the evidence-based intervention to provide a better match to the workplace, and to improve RE-AIM outcomes (such as Maintenance; Fig. 1). In general, understanding factors that influence the program end users, whether employers, managers/supervisors, or workers/employees, will likely improve Reach and Effectiveness; addressing organizational characteristics (such as industry, business size, geography, and firm structure; Schwatka et al., 2018; Tenney et al., 2019) should lead to improved Adoption, Implementation and Maintenance, at both the individual and organizational level.

Table 2 provides examples of key questions and probes for OSH practitioners and researchers when applying the PRISM/RE-AIM framework (as illustrated in Fig. 2) to the multilevel implementation of an evidence-based intervention within a worksite, business or organization.

Table 2.

Key PRISM* questions and probes for OSH practitioners and researchers.

| PRISM domain | Key questions | Probes |
|---|---|---|
| PROGRAM INTERVENTION | How does the intervention design influence implementation? | |
| Organizational perspective | What organizational-level factors impede/facilitate successful intervention implementation? | Is the intervention: • Aligned with the organization’s mission and readiness for change? • In line with employers’/managers’ preferences, beliefs, and priorities? • Supported by strong evidence? • Addressing a gap or need within the organization? • Observed to be beneficial? • Easy to use and cost-effective? |
| Employee/worker perspective | What individual, employee-level factors impede/facilitate successful intervention implementation? | Is the intervention: • Addressing key employee concerns? • In line with employee preferences, beliefs, and priorities? • Accessible to workers with diverse backgrounds? • Easy to use and cost-effective? |
| PROGRAM RECIPIENTS | How do characteristics of (multilevel) recipients influence implementation? | |
| Organizational level (leadership, management) | What characteristics of the organization (e.g., financial health and resources, tendency to take risks or be an early adopter, and morale) can impact the successful implementation of an intervention? | • Is management supportive? • Are the goals realistic and clearly communicated? • Is input provided across all organizational levels? • Who has knowledge, ideas and opinions on the program/problem? Is there previous experience with similar programs? • Who will be naysayers and what will they say? • Who are the key players (champions) to get on board? |
| Employee/worker level | What characteristics of workers/employees, including socioeconomic and demographic factors, can impact the success of an intervention? | • Who has knowledge, ideas and opinions on the program/problem? Is there previous experience with similar programs? • Who are the key players (champions) to get on board? • Are there demographic or baseline health and/or social determinants that facilitate or hinder participation? |
| IMPLEMENTATION AND SUSTAINABILITY INFRASTRUCTURE | How can the implementation plan be developed with partner/beneficiary input that adequately considers dissemination and sustainability from the beginning? | |
| | Are there established procedures or personnel whose responsibilities will include performance related to this program (e.g., audit and feedback; allocated budget)? | • Can the intervention be paired with an already institutionalized process or collective understanding? • How likely is the intervention to produce lasting effects for participants? • How can the intervention be monitored for an extended period? • How will success be tracked and reported? • How will lessons learned be communicated/disseminated? • What are likely modifications or adaptations that will need to be made to sustain the initiative over time (e.g., lower cost, different staff/expertise, reduced intensity, different settings)? |
| EXTERNAL ENVIRONMENT | How do outside factors influence the organization? | |
| | What external influences potentially hinder/facilitate program implementation? | • Are there regulations and/or policies or guidelines that may hinder/facilitate program implementation? • What is the economic climate? Are there endogenous shocks (e.g., an economic downturn), or sociodemographic shifts/changes (e.g., an aging workforce) that could affect program adoption, implementation and maintenance? |

*Practical, Robust, Implementation and Sustainability Model (PRISM).

TMFs are foundational to D&I science, in that they inform the design, evaluation, and outcomes assessed, and they may be used in combination with each other. Combining frameworks may help researchers to address multiple study purposes and multiple conceptual levels (Birken et al., 2017b). For example, CFIR (Damschroder et al., 2009) did not originally specify implementation outcomes and has been used in combination with the Proctor Implementation Outcomes Model (Proctor et al., 2011) or RE-AIM (Glasgow et al., 2019). Relatively recent studies (e.g., Damschroder et al., 2017; King et al., 2020) provide examples of the integration of CFIR and RE-AIM where CFIR is used to assess context and RE-AIM to describe diverse implementation and effectiveness outcomes. Birken and colleagues (2017b) conducted a systematic review and provide in-depth analysis of the combination of CFIR and TDF in D&I science studies where CFIR served as the overarching D&I TMF and the TDF allowed for more focused assessment of provider-level behavior change. Bowser and colleagues (2019) combined EPIS with multiple models and theories to guide an assessment of the environmental, organizational, and economic factors affecting delivery of behavioral health services for justice-involved youth. D&I TMFs can also be combined with other approaches, such as the Pragmatic Explanatory Continuum Indicator Summary (PRECIS-2) model (Loudon et al., 2015) to determine how pragmatic and generalizable a study is (Gaglio et al., 2014; Luoma et al., 2017). In contrast to explanatory studies that rely on highly controlled methods to establish efficacy, pragmatic studies are those that address the effectiveness of an intervention in real-world settings, are conducted under ‘usual care’ conditions, and produce data that are directly relevant to real-world beneficiaries.

When designing for dissemination, implementation, sustainment, and impact in OSH, low-burden, cost-effective, pragmatic approaches should be considered (Zohar and Polachek, 2014). The PRECIS-2 (Loudon et al., 2015) and the newer PRECIS-2 PS (Norton et al., 2021) are particularly relevant to pragmatic implementation research, and include key domains of importance to multiple relevant beneficiaries (Huebschmann et al., 2019), each scored from 1 (very explanatory, i.e., with a focus on internal validity) to 5 (very pragmatic, i.e., with a focus on external validity). In OSH research, PRECIS-2 PS could be combined with another model (e.g., EPIS or CFIR) to design a study, to write a review article to evaluate the level of pragmatism of existing study designs in the OSH literature, or to inform a funding or grant application. It should be noted that, while combining D&I models in a single study can be useful for exploring multiple study purposes and conceptual levels and domains, this approach may result in unnecessary complexity and redundancy (Birken et al., 2017b).
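As a rough illustration of the 1-to-5 scoring described above, the sketch below records a score for each of the nine PRECIS-2 domains (Loudon et al., 2015) and reports which design choices sit toward the explanatory end. The example scores describe an invented worksite trial, the function name `summarize_precis2` is hypothetical, and the mean score and the cut-off of 2 are simplifying assumptions made here for illustration; PRECIS-2 ratings are usually presented domain by domain rather than as a single number.

```python
# The nine PRECIS-2 domains (Loudon et al., 2015), written as identifiers.
PRECIS2_DOMAINS = (
    "eligibility",
    "recruitment",
    "setting",
    "organisation",
    "flexibility_delivery",
    "flexibility_adherence",
    "follow_up",
    "primary_outcome",
    "primary_analysis",
)


def summarize_precis2(scores, explanatory_cutoff=2):
    """Validate 1-5 domain scores; return (mean score, explanatory-leaning domains).

    The mean and the cut-off are illustrative assumptions, not part of PRECIS-2.
    """
    missing = [d for d in PRECIS2_DOMAINS if d not in scores]
    if missing:
        raise ValueError(f"Missing domain scores: {missing}")
    for domain in PRECIS2_DOMAINS:
        if not 1 <= scores[domain] <= 5:
            raise ValueError(f"Score for {domain} must be between 1 and 5")
    mean_score = sum(scores[d] for d in PRECIS2_DOMAINS) / len(PRECIS2_DOMAINS)
    explanatory = [d for d in PRECIS2_DOMAINS if scores[d] <= explanatory_cutoff]
    return mean_score, explanatory


# Invented ratings for a hypothetical worksite intervention trial.
example_scores = {
    "eligibility": 4,
    "recruitment": 5,
    "setting": 5,
    "organisation": 3,
    "flexibility_delivery": 4,
    "flexibility_adherence": 4,
    "follow_up": 2,
    "primary_outcome": 4,
    "primary_analysis": 5,
}

mean_score, explanatory_domains = summarize_precis2(example_scores)
print(mean_score)           # 4.0
print(explanatory_domains)  # ['follow_up']
```

In this invented case the design is largely pragmatic, and the low follow-up score points to the one design feature (for example, intensive extra data collection) that departs most from usual workplace conditions.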

Although the examples above highlight different TMFs, it is also important to recognize that there are many more commonalities than differences across D&I science TMFs and that many key issues—such as the importance of context and multi-level perspectives, understanding and tracking implementation and adaptations, and engaging partners and beneficiaries—are addressed to a greater or lesser extent in all. More guidance and practical tools, such as those provided free-of-charge on the Dissemination and Implementation Science Models in Health webtool [https://dissemination-implementation.org] which also allows for the comparison of multiple models, are needed (Birken et al., 2017b).

5. D&I science study designs and methods

With their strong focus on internal validity (and limited emphasis on external validity or pragmatism), traditional randomized controlled trials (RCTs) are not always desirable or feasible for investigating D&I questions, including in workplace settings, and several alternative approaches have been advanced (Brown et al., 2017). As mentioned previously, hybrid effectiveness-implementation trial designs (Curran et al., 2012; Kemp et al., 2019; Landes et al., 2020) may be of particular interest and value to OSH researchers, as these approaches combine aspects of effectiveness trials and implementation research, allowing for a timelier translation of results to public health impact (Wolfenden et al., 2016). However, hybrid designs are typically more complex to execute than traditional RCTs (Curran et al., 2012), and may require additional resources and expertise to deploy successfully.

Other D&I study designs include the Multiphase Optimization Strategy (MOST), a framework for developing multicomponent interventions involving a three-stage process (preparation, optimization, evaluation) through which the most effective intervention can be identified within key constraints (such as program cost) (Collins et al., 2007; Collins et al., 2011; Guastaferro and Collins, 2019). Other pragmatic approaches include iterative designs [e.g., Sequential Multiple Assignment Randomized Trials (SMART); Nahum-Shani et al., 2012], user-centered designs (Lyon and Koerner, 2016), cluster randomized and stepped wedge designs (Brown and Lilford, 2006; Handley et al., 2018) in which all settings receive the intervention. While the above approaches are promising in terms of their ability to address key D&I science issues such as dynamic context and adaptation, their application can be challenging and is beyond the scope of this primer. Interested readers are referred to the citations above.

Statistical power is also a critical design issue in implementation studies (Brown et al., 2017; Landsverk et al., 2018). D&I science research often tests system-level interventions in which power is influenced most strongly by the number of units at the highest (group) level (e.g., work units/teams or organizations) rather than by the number of individuals (e.g., workers) within those units (Brown et al., 2017; Landsverk et al., 2018). Earlier, simpler techniques for calculating statistical power and sample size are typically not appropriate for implementation studies because of the multilevel clustering and longitudinal nature of D&I data (Landsverk et al., 2018). Newer tools exist for adequately planning/powering these complex study designs (e.g., Optimal Design from the W.T. Grant Foundation; http://wtgrantfoundation.org/resource/optimal-design-with-empirical-information-od0).
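The dominance of the group level can be seen with the standard design-effect approximation for equal-sized clusters, DEFF = 1 + (m - 1) × ICC, where m is the number of workers per cluster and ICC is the intraclass correlation. The sketch below is a simplified illustration and not a substitute for dedicated power software; the function names and the example values (20 worksites, 50 workers each, ICC = 0.05) are assumptions chosen for demonstration.

```python
def design_effect(cluster_size, icc):
    """Variance inflation from clustering, assuming equal cluster sizes."""
    return 1 + (cluster_size - 1) * icc


def effective_sample_size(n_clusters, cluster_size, icc):
    """Number of independent observations the clustered sample is 'worth'."""
    total_n = n_clusters * cluster_size
    return total_n / design_effect(cluster_size, icc)


ICC = 0.05  # assumed intraclass correlation for worker outcomes within a worksite

# Baseline: 20 worksites with 50 workers each (1,000 workers in total).
baseline = effective_sample_size(20, 50, ICC)

# Two ways of doubling the total sample to 2,000 workers.
more_workers_per_site = effective_sample_size(20, 100, ICC)
more_sites = effective_sample_size(40, 50, ICC)

print(round(baseline))               # 290
print(round(more_workers_per_site))  # 336
print(round(more_sites))             # 580
```

Under these assumed values, doubling the number of worksites doubles the effective sample size, whereas doubling the number of workers per worksite adds comparatively little, which is why recruiting enough organizations or work units is usually the binding constraint.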

D&I science methods are varied, with an increasing focus on the use of pragmatic, participatory approaches, including community-based participatory research (CBPR) (Minkler, 2010). CBPR emphasizes equitable representation and engagement of multilevel and multisectoral partners and beneficiaries throughout the research process. Building strong community linkages is integral to participatory research approaches, as it is to successful D&I efforts. Moreover, improving the relevance of evidence-based interventions through participatory research approaches may help to expedite the use of new practices and programs by relevant collaborators and program recipients (Lobb and Colditz, 2013). Mixed methods research (Creswell et al., 2011) is also frequently used in D&I scholarship to appropriately evaluate complex, multilevel research translation challenges (Rabin and Brownson, 2018). In mixed methods studies, researchers intentionally integrate (or combine in a meaningful and systematic way) quantitative and qualitative data to maximize the strengths and minimize the weaknesses of each type of data (Creswell et al., 2011). Researchers most commonly use mixed methods approaches in D&I science to identify the barriers and facilitators to successful intervention dissemination and implementation, but these techniques can also be used to plan and monitor all stages of the implementation process (Palinkas and Cooper, 2018; Palinkas et al., 2011).

6. D&I measures

In D&I science, qualitative assessments, such as through interviews and focus groups (e.g., Aarons et al., 2012; Hamilton and Finley, 2019; McCreight et al., 2019), are the predominant assessment approach (Weiner, 2021). Because D&I science is a relatively new field, quantitative assessment is challenged by measurement issues, and work in this area is underdeveloped but rapidly expanding (Lewis et al., 2015; Lewis et al., 2018a, 2018b; Martinez et al., 2014; Weiner, 2021). Advancements in D&I science necessitate the development and widespread use of reliable, valid, and pragmatic measures (Glasgow, 2013; Glasgow and Riley, 2013; Stanick et al., 2021; Weiner, 2021) to assess the effects of context, implementation strategies and adaptations on outcome variables and constructs (Lewis et al., 2018a). Glasgow and Riley (2013) describe pragmatic measures as those that are important to collaborators, have low burden for respondents and staff, have broad applicability, are sensitive to change over time, and are actionable (e.g., easy to score and interpret in real-world settings).

Tools available to researchers wishing to qualitatively assess D&I constructs include, for example, a customizable interview guide based on the CFIR constructs that are the focus of an evaluation (cfirguide.org). Free templates of focus group and one-on-one interview guides are also available for assessing RE-AIM constructs before, during and after program implementation (re-aim.org). Examples of quantitative D&I measures that have been shown to be reliable, have validity data, and meet criteria for being pragmatic include:

Measures by Weiner and colleagues (2017) to assess intervention acceptability, appropriateness and feasibility (12 items, four for each construct).

A measure by Jacobs et al. (2014) with three dimensions and two items per subscale to assess implementation climate—or the extent to which organizational members perceive that innovation use is expected, supported, and rewarded by their organization.

An 18-item, pragmatic measure by Ehrhart et al. (2014) of strategic climate for implementation of evidence-based interventions, that assesses six dimensions of organizational context.

A 12-item measure of implementation leadership (with four subscales of three items each) by Aarons and colleagues (2014).

A 12-item measure of organizational readiness for implementing change from Shea and colleagues (2014).

A brief and pragmatic measure from Moullin et al. (2018) to assess providers’ intentions to use a specific innovation or intentions to use new practices.

The Program Sustainability Assessment Tool (PSAT) from Luke and colleagues (2014), a reliable, 40-item instrument with eight domains (5 items per domain) that can be used to assess the capacity for the sustainability of public health programs. The newer Clinical Sustainability Assessment Tool (CSAT) has seven domains (35 items) and is a self-assessment used by both clinical staff and recipients to evaluate the sustainability capacity of a clinical practice [https://sustaintool.org/csat/assess/].

As demonstrated by these examples, progress has been made in developing pragmatic rating criteria for D&I science to inform measure development and evaluation (Lewis et al., 2015; Stanick et al., 2021). See Appendix A for links to some commonly used D&I science measures.
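Part of what makes such measures pragmatic is that they are easy to score. As a minimal sketch, an instrument laid out like the PSAT (eight domains of five items each) could be scored by averaging items within each domain and then averaging the domain scores. The 1-to-7 response range, the treatment of skipped items, the generic domain ordering, and the function name `score_instrument` are assumptions made here for illustration; the published scoring instructions for any specific instrument should be followed in practice.

```python
from statistics import mean

ITEMS_PER_DOMAIN = 5  # PSAT layout described in the text: 8 domains x 5 items


def score_instrument(responses, items_per_domain=ITEMS_PER_DOMAIN):
    """Return (domain_scores, overall_score) for a flat list of item responses.

    Responses are assumed to be ordered domain by domain. Skipped items are
    passed as None and ignored within their domain (an assumed rule).
    """
    if len(responses) % items_per_domain != 0:
        raise ValueError("Number of responses must be a multiple of items per domain")
    domain_scores = []
    for start in range(0, len(responses), items_per_domain):
        block = responses[start:start + items_per_domain]
        answered = [r for r in block if r is not None]
        domain_scores.append(mean(answered) if answered else None)
    scored = [d for d in domain_scores if d is not None]
    overall = mean(scored) if scored else None
    return domain_scores, overall


# Invented responses from one respondent on an assumed 1-7 scale (40 items).
responses = [4] * 5 + [6] * 5 + [2, 3, None, 3, 4] + [5] * 25

domain_scores, overall = score_instrument(responses)
print(domain_scores)  # [4, 6, 3, 5, 5, 5, 5, 5]
print(overall)        # 4.75
```

Reporting the domain scores alongside the overall score keeps the result actionable, because a low score in a single domain identifies where sustainability planning might be focused.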

7. Additional topics and emerging issues

Although it is impossible in this relatively brief overview to capture the range, depth, and complexity of issues being addressed in the D&I field today, this primer presents some main themes, concepts and methods of investigation and provides guidance for OSH researcher engagement in D&I. Additional and emerging topics of interest and areas for future study are briefly described below.

7.1. Designing for dissemination, implementation and sustainment (D4DIS)

Although this primer refers to both “D” and “I” research, it is generally acknowledged that dissemination research, and what is known about the active process of spreading evidence-based information to key audiences through defined channels and strategies (Rabin and Brownson, 2018), lags behind implementation research. To address this gap, and to improve the spread of evidence-based interventions across public health domains, Brownson and colleagues (2013) have advanced strategies for Designing for Dissemination, Implementation and Sustainability (D4DIS) (Rabin and Brownson, 2018). This entails ensuring that the products of research (including technologies and messages) are developed to align with the needs of the audience and the characteristics of the context (Brownson et al., 2018a). Practical tools exist for helping researchers to plan D4DIS efforts (see, for example, Designing for Dissemination, 2018 and the Stakeholder Engagement Selection Tool, https://dicemethods.com/tool). Similarly, until relatively recently, the issue of sustainability of intervention programs and results had not received adequate attention. Inter-related issues of sustainability, cost and other economic issues, and health equity are the focus of developing D&I science research initiatives (Brownson et al., 2021; Chambers et al., 2013; Proctor et al., 2015; Shelton et al., 2018; Woodward et al., 2019, 2021) and have obvious and timely relevance to OSH.

7.2. D&I mechanisms

As mentioned previously, mechanisms of change/action describe the process by which an implementation strategy brings about specified implementation outcomes. However, implementation strategies are frequently misaligned with the contexts where programs are being implemented (Lewis et al., 2018a). As a hypothetical example in OSH, workers may receive training on the proper donning and doffing of personal protective equipment (PPE) to protect them from workplace exposures (an intrapersonal-level strategy), but if the problem is provision of PPE to the workplace, an organizational- and/or societal-level determinant, this intervention will be ineffective at achieving the desired health and safety outcomes. Without understanding how implementation strategies work, they will likely fail to achieve positive impact (Grimshaw et al., 2012). D&I science research on mechanisms of action sheds light on the “how and why” questions of health interventions and programs. In these studies, causal pathway models and analyses illustrate how mechanisms can be mediators (but not all mediators are mechanisms) or can be moderators of the effects on implementation outcomes. These models can delineate how an implementation strategy operates by illustrating the specific actions that lead from the deployment of the strategy to the desired implementation outcomes (Lewis et al., 2018b). Research on mechanisms of action is an emerging topic of interest among D&I researchers and funders (Brownson et al., 2018b; Lewis et al., 2018b), and may be an area for future exploration in the OSH field.
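To make the idea of a mediator in a causal pathway model more concrete, the following is a minimal sketch of a single-mediator analysis using the product-of-coefficients approach. It is an illustration only and is not drawn from the studies cited above. The data are simulated, and the variable roles are hypothetical assumptions: `x` marks whether a worksite received an implementation strategy, `m` is a candidate mechanism (for example, a perceived safety climate score), and `y` is an implementation outcome (for example, a fidelity score). The function name `mediation_paths` and the effect sizes used to generate the data are likewise invented for the example.

```python
import random


def _mean(values):
    return sum(values) / len(values)


def _cov(u, v):
    """Sample covariance of two equal-length sequences."""
    mu, mv = _mean(u), _mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def mediation_paths(x, m, y):
    """Ordinary least squares estimates for a single-mediator model.

    a:      effect of strategy X on mediator M          (M ~ X)
    b:      effect of M on outcome Y, adjusting for X   (Y ~ X + M)
    direct: effect of X on Y, adjusting for M           (Y ~ X + M)
    total:  effect of X on Y ignoring the mediator      (Y ~ X)
    """
    s_xx, s_mm = _cov(x, x), _cov(m, m)
    s_xm, s_xy, s_my = _cov(x, m), _cov(x, y), _cov(m, y)
    det = s_xx * s_mm - s_xm ** 2  # determinant of the 2x2 normal equations
    a = s_xm / s_xx
    b = (s_xx * s_my - s_xm * s_xy) / det
    direct = (s_mm * s_xy - s_xm * s_my) / det
    return {
        "a": a,
        "b": b,
        "indirect": a * b,  # portion of the effect carried through M
        "direct": direct,
        "total": s_xy / s_xx,
    }


# Simulated (not real) data for 2,000 hypothetical worksites.
rng = random.Random(42)
x = [i % 2 for i in range(2000)]              # 1 = site received the strategy
m = [0.5 * xi + rng.gauss(0, 1) for xi in x]  # assumed effect of X on M
y = [0.1 * xi + 0.4 * mi + rng.gauss(0, 1) for xi, mi in zip(x, m)]

paths = mediation_paths(x, m, y)
for name, value in paths.items():
    print(f"{name:>8}: {value:.3f}")
```

In a linear model of this kind, the total effect equals the direct effect plus the indirect effect. A nonzero indirect effect is consistent with, but does not by itself establish, a mechanism, which echoes the caution above that not all mediators are mechanisms.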

7.3. Systems science

Systems science involves methods for simulating and modeling complex systems to inform practice and policy (Luke and Stamatakis, 2012). Complex OSH challenges (including global public health crises such as the COVID-19 pandemic, the changing nature of work and the workforce, and the interaction of work and nonwork factors) will increasingly require the application of systems science or similar approaches (Guerin et al., 2021; Schulte et al., 2019). Complex systems consist of heterogeneous components that are nonlinear, interact with one another, have collective properties that are not explained by studying the individual elements of the system, persist over time, and are dynamic and adaptive to changing circumstances (Luke and Stamatakis, 2012). Lags between cause and effect, nonlinear relationships between variables, and unplanned system behavior at various socioecological levels are hallmarks of complexity in D&I (Burke et al., 2015; Neta et al., 2018). Systems science investigations use methods (e.g., social network analysis, system dynamics modeling, and agent-based modeling) developed in other disciplines, including sociology, business, political science, organizational behavior, computer science, and engineering (Luke et al., 2018; Neta et al., 2018). For example, in D&I science, systems science has been used to model the impact of alternate implementation approaches over time to “test not guess” the expected outcomes before proceeding with pilot testing (Zimmerman et al., 2016) and to be responsive to the needs of practitioners and decision makers (Chambers, 2020; Estabrooks et al., 2018). As Estabrooks and colleagues (2018) note, systems-based approaches, by their very nature, cannot be successful without representation and sustained engagement from the systems that these activities are intended to change.
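As a rough illustration of how a simulation can be used to compare alternate implementation approaches before pilot testing, the sketch below steps a simple stock-and-flow model forward in time. It uses a Bass-style diffusion structure, chosen here for illustration and not taken from the studies cited above. All names and numbers are hypothetical assumptions: `n_sites` is the number of eligible workplaces, `outreach_rate` stands for adoption driven by direct dissemination, and `peer_rate` stands for adoption driven by contact with workplaces that have already adopted.

```python
DT = 0.05  # simulation time step, in years


def simulate_adoption(n_sites, outreach_rate, peer_rate, years, dt=DT):
    """Euler integration of a Bass-style diffusion (stock-and-flow) model.

    Stock: number of workplaces that have adopted the intervention.
    Flow:  new adoptions per year, driven by outreach to non-adopters
           and by peer influence from existing adopters.
    """
    adopters = 0.0
    trajectory = [adopters]
    for _ in range(round(years / dt)):
        potential = n_sites - adopters  # workplaces yet to adopt
        inflow = (outreach_rate + peer_rate * adopters / n_sites) * potential
        adopters += inflow * dt
        trajectory.append(adopters)
    return trajectory


# Two hypothetical strategies that differ only in outreach intensity.
baseline = simulate_adoption(n_sites=500, outreach_rate=0.02, peer_rate=0.3, years=10)
enhanced = simulate_adoption(n_sites=500, outreach_rate=0.06, peer_rate=0.3, years=10)

for year in (2, 5, 10):
    step = round(year / DT)
    print(f"year {year:>2}: baseline {baseline[step]:6.1f} sites, "
          f"enhanced {enhanced[step]:6.1f} sites")
```

A model of this kind captures nonlinearity and lags between cause and effect in a very simplified way. In practice, the structure and parameters would need to be developed with, and checked against the experience of, the partners whose systems are being modeled.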

8. Conclusion

The limited focus on D&I science within the OSH field (Dugan and Punnett, 2017; Guerin et al., 2021; Schulte et al., 2017) has real implications for the timely and relevant translation of research knowledge to practice for preventing workplace injuries and illnesses, and improving worker well-being. It is both concerning and a missed opportunity that an extensive evidence base of OSH research and accumulated knowledge is not often applied in real-world settings, including in areas related to prevalent, and generally well-understood, occupational exposures and health effects (Schulte et al., 2017). Even less is known about translating research on newer, psychosocial, and other emergent hazards related to work, workplaces and nonwork factors to improve public health. D&I science approaches are uniquely suited to addressing the complex challenges faced by OSH researchers as they scan the horizon for emerging threats and risks to today’s and tomorrow’s workers (Guerin et al., 2021; Schulte et al., 2019; Tamers et al., 2020). To seize the promise of D&I science, there is a need to build expertise and capacity (Colditz and Emmons, 2018; Proctor and Chambers, 2017); explore pragmatic and cost-effective practices and programs that emphasize external validity, representative reach, and health equity (Colditz and Emmons, 2018; Green and Nasser, 2018; Glasgow et al., 2012, 2019); and build active community partnerships (Chambers and Azrin, 2013). A key premise of D&I science is packaging and conveying the evidence necessary to improve public health in ways that are relevant to local communities, settings, and end-users (Brownson et al., 2018a; Dearing and Kreuter, 2010; Huebschmann et al., 2019) and that reduce OSH inequities (Ahonen et al., 2018).

In conclusion, this primer offers an overview of the promise, opportunities, and challenges of integrating D&I science into OSH, as well as examples, guidance, and resources for exploring these approaches to enhance the impact of OSH efforts. In light of the global COVID-19 pandemic and other emergent and dynamic OSH risks, these challenges have never been more salient, or more urgent, than they are today.

Acknowledgements

Special thanks to Drs. Samantha Harden, Lauren-Menger Ogle, Andrea Okun, Paul Schulte, Christina Studts, Liliana Tenney and Pamela Tinc for their thoughtful input to and feedback on earlier versions of this manuscript and to Samantha Newman, NIOSH, for graphic design expertise and assistance.

Funding

This research was primarily supported by internal CDC/NIOSH funding. Research reported in this publication was also supported by the National Cancer Institute of the National Institutes of Health (NIH) under Center P50 grant award number 5P50CA244688. Dr. Tyler is supported by grant number K08HS026512 from the Agency for Healthcare Research and Quality.

Appendix A

Building dissemination & implementation (D&I) science capacity in OSH

More widespread subject matter expertise in D&I science is needed in the OSH field, as it is more broadly across scientific disciplines (Proctor and Chambers, 2017). Training programs in D&I science include Implementation Science 2 (IS2) and the Training Institute for Dissemination and Implementation Research in Health (TIDIRH) programs in Australia and Ireland that follow the former NIH model. Other professional development opportunities in D&I science are listed on the Society for Implementation Research Collaboration (SIRC) website, and several websites provide regularly updated D&I resources, interactive tools, example applications, and information about conferences and upcoming events.

Select D&I Science Resource Websites.

Models, frameworks and tools

Dissemination & Implementation Models in Health. Provides a searchable guide to multiple D&I theories, models and frameworks (TMFs), including measures for key constructs: www.dissemination-implementation.org

RE-AIM. Provides resources and tools for using the RE-AIM framework in research and practice activities: www.re-aim.org.

Consolidated Framework for Implementation Research (CFIR). Provides resources and tools for using the CFIR framework in research and practice activities: https://Cfirguide.org

Exploration, Preparation, Implementation, Sustainment (EPIS) framework. Provides resources and tools for using the EPIS framework in research and practice activities: https://episframework.com

The Stakeholder Engagement Selection Tool. A free, interactive tool research teams can use to plan for stakeholder engagement activities at various stages of the project: https://dicemethods.com/tool

The Stages of Implementation Completion® (SIC) framework. Focuses on eight stages of implementation activities. The Cost of Implementing New Strategies (COINS) is a cost-mapping tool that works with the SIC: Oregon Social Learning Center (www.oslc.org).

The Program Sustainability Framework and Assessment Tool (PSAT). The PSAT is a self-assessment used by both program staff and stakeholders to evaluate the sustainability capacity of a program/intervention. https://sustaintool.org/psat/assess/#about-assessment

D&I science programs and other resources

Society for Implementation Research Collaboration (SIRC). Provides resources for disseminating and implementing EBPs, including DIS measures, and DIS conferences and training opportunities. https://societyforimplementationresearchcollaboration.org/

Dissemination and Implementation Science Program, University of Colorado Adult and Child Center for Outcomes Research and Delivery Science, Anschutz Medical Campus. Provides regularly updated D&I references and resources, such as the use of PRECIS-2, pragmatic trials e-books, and tips on getting D&I grants funded: https://medschool.cuanschutz.edu/accords/cores-and-programs/dissemination-implementation-science-program

Pennsylvania State University Evidence-based Prevention and Intervention Support project. Provides free access to research, resources and tools to support data collection and reporting for EBPs: http://www.episcenter.psu.edu/

University of California San Diego, Dissemination and Implementation (D&I) Science Center. Includes information and resources on training, technical assistance, community engagement, and research advancement. https://medschool.ucsd.edu/research/actri/centers/DIR/Pages/default.aspx

University of North Carolina. Includes funded D&I grant examples: https://sph.unc.edu/research/explore/implementation-science

University of North Carolina School of Medicine, North Carolina Translational and Clinical Sciences (NC TraCS), Dissemination and Implementation (D&I) Methods Unit. Supports the development and integration of frameworks, methods, and metrics in community-engaged research. https://tracs.unc.edu/index.php/services/implementation-science

University of Washington, Implementation Science Resource Hub. Provides information on D&I resources, training and funding opportunities. https://impsciuw.org/

Washington University Institute for Clinical and Translational Science, Dissemination and Implementation Research Core (DIRC). Provides methodological expertise to advance translational (T3 and T4) research. https://icts.wustl.edu/items/dissemination-and-implementation-research-core-dirc/

The National Implementation Research Network’s Active Implementation Hub. Provides a free, online learning environment for researchers and practitioners active in implementation and scaling up of interventions: https://implementation.fpg.unc.edu/

The Community Toolbox. Provides resources and training for assessing community needs, addressing social determinants of health, engaging collaborators, action planning, building leadership, improving cultural competency, planning an evaluation, and sustaining intervention/program efforts: https://ctb.ku.edu/en

NIH resources and other U.S. government-supported D&I science initiatives

Center for Prevention Implementation Methodology (NIH-funded), Northwestern University: http://cepim.northwestern.edu/

NIH Collaboratory: https://www.rethinkingclinicaltrials.org/

National Cancer Institute Implementation Science: https://cancercontrol.cancer.gov/IS

National Cancer Institute, Grid-Enabled Measures Database (GEM): https://www.gem-measures.org/Public/Home.aspx

National Institute of Nursing Research: https://www.ninr.nih.gov

U.S. Department of Veterans Affairs Quality Enhancement Research Initiative (QUERI): https://www.queri.research.va.gov/default.cfm

Evidence-based practices and programs

HHS, Substance Abuse and Mental Health Services Administration (SAMHSA), National Registry of Evidence-based Programs and Practices (behavioral health): https://www.samhsa.gov/nrepp

The Community Guide: https://www.thecommunityguide.org/

Research-Tested Intervention Programs (NCI): https://rtips.cancer.gov/rtips/index.do

International D&I science initiatives and programs

European Implementation Collaborative: https://implementation.eu/

Global Implementation Society. Provides resources to promote and establish coherent and collaborative approaches to implementation practice, science, and policy. https://globalimplementation.org/

King's College London. Implementation Science Master Class. https://www.kcl.ac.uk/events/implementation-science-masterclass-4

Knowledge Translation Canada: http://ktcanada.net/

World Health Organization. Has a free toolkit to foster deeper learning on implementation research that can be used to compare across regions and countries: https://www.who.int/tdr/publications/topics/ir-toolkit/en/