Abstract

The emergence of artificial emotional intelligence technology is revolutionizing the fields of computers and robotics, allowing for a new level of communication and understanding of human behavior that was once thought impossible. While recent advancements in deep learning have transformed the field of computer vision, automated understanding of evoked or expressed emotions in visual media remains in its infancy. This foundering stems from the absence of a universally accepted definition of “emotion,” coupled with the inherently subjective nature of emotions and their intricate nuances. In this article, we provide a comprehensive, multidisciplinary overview of the field of emotion analysis in visual media, drawing on insights from psychology, engineering, and the arts. We begin by exploring the psychological foundations of emotion and the computational principles that underpin the understanding of emotions from images and videos. We then review the latest research and systems within the field, accentuating the most promising approaches. We also discuss the current technological challenges and limitations of emotion analysis, underscoring the necessity for continued investigation and innovation. We contend that this represents a “Holy Grail” research problem in computing and delineate pivotal directions for future inquiry. Finally, we examine the ethical ramifications of emotion-understanding technologies and contemplate their potential societal impacts. Overall, this article endeavors to equip readers with a deeper understanding of the domain of emotion analysis in visual media and to inspire further research and development in this captivating and rapidly evolving field.

Keywords: Artificial emotional intelligence (AEI), bodily expressed emotion understanding (BEEU), deep learning, ethics, evoked emotion, expressed emotion, human behavior, intelligent robots, movement analysis, psychology

I. INTRODUCTION

As artificial intelligence (AI) technology becomes more prevalent and capable of performing a wide range of tasks, the need for effective communication between humans and AI systems is becoming increasingly important. The adoption of smart home products and services is projected to reach 400 million worldwide, with smart devices, such as Alexa and Astro, becoming increasingly common in households [1]. However, these devices are currently limited to executing specific commands and do not possess the capability to understand or respond to human emotions [2]. This lack of emotional intelligence (EQ) limits their potential applications, and this constraint is particularly relevant for future robotic applications, such as personal assistant robots, social robots, service robots, factory/warehouse robots, and police robots, which require close collaboration and a comprehensive understanding of human behavior and emotions.

The ability to impart EQ to AI when dealing with visual information is a topic of growing interest. This article aims to address the fundamental question of how to “teach” AI to understand and respond to human emotions based on images and videos. The potential technical solutions to these questions have far-reaching implications for various application domains, including human–AI interaction, autonomous driving, social media, entertainment, information management and retrieval, design, industrial safety, and education.

To provide a comprehensive and well-balanced view of this complex subject, it is essential to draw on the expertise of various fields, including computer and information science and engineering, psychology, data science, movement analysis, and performing arts. The interdisciplinary nature of this topic highlights the need for collaboration and cooperation among researchers from different fields in order to achieve a deeper understanding of the subject.

In this article, we focus on the topic of affective visual information analysis as it represents a highly nuanced and complex area of study with strong connections to well-established scholarly fields, such as computer vision, multimedia, and image and video processing. However, it is important to note that the techniques presented here can be integrated with other data modalities, such as speech, sensor-generated streaming data, and text, in order to enhance the performance of real-world applications.

The primary objective of this article is to introduce the technical communities to the emerging field of affective visual information analysis. Recognizing the breadth and dynamic nature of this field, we do not aim to provide a comprehensive survey of all subareas. Instead, our discussion focuses on the fundamental psychological and computational principles (see Sections II and III), recent advancements and developments (see Section IV), core challenges and open issues (see Section V), connections to other areas of research and development (see Section VI), and ethical considerations related to this new technology (see Section VII). We apologize in advance for any important publications that may have been omitted in our discussion.

Recently, there have been some other surveys and reviews on artificial EQ (AEI), such as facial expression recognition (FER) [3], [4], [5], [6], microexpression recognition (MER) [7], [8], [9], textual sentiment classification [10], [11], [12], music and speech emotion recognition [13], [14], [15], affective image content analysis [16], emotional body gesture recognition [17], bodily expressed emotion recognition [18], emotion recognition from physiological signals [19], [20], multimodal emotion recognition [21], [22], and affective theory use [23]. These articles mainly focus on emotion and sentiment analysis for a specific modality from the perspective of machine learning and pattern recognition or focus on the psychological emotion theories. Cambria [24] summarized the common tasks of affective computing and sentiment analysis, and classified existing methods into three main categories: knowledge-based, statistical, and hybrid approaches. Poria et al. [25] and Wang et al. [26] reviewed both unimodal and multimodal emotion recognition before the year of 2017 and between the years of 2017 and 2020, respectively. As opposed to those reviews, this article aims to provide a comprehensive overview of emotion analysis from visual media (e.g., both images and videos) with insights drawn from multiple disciplines.

II. EMOTION: THE PSYCHOLOGICAL FOUNDATION

How we define emotion largely descends from the theoretical framework used to study it. In this section, we provide an overview of the most prominent emotion theories, beginning with Darwin, and underscore how contemporary dimensional approaches to understanding emotion align with both the processing of emotion by the human brain and current computer vision approaches for modeling emotion to make predictions about human perception (see Section II-A). In addition, we examine the intrinsic link between emotion and adaptive behavior, a contention that is largely shared across different emotion theories (see Section II-B).

A. Definitions and Models of Emotion

One of the first emotion theories put forth was Charles Darwin’s in his seminal book “On the Expression of the Emotions in Man and Animals” [32]. This book proposed that humans possess a finite set of biologically privileged emotions that evolved to confer upon us survival-related behavior. William James [33] later added to this arguing that the experience of emotion is ultimately our experience of distinct patterns of physiological arousal and physical behaviors associated with each emotion [34]. Building upon these assumptions, Ekman’s Neurocultural Theory of Emotion [35] further perpetuated the notion that there exists a “universal affect program” that underlies the experience and expression of several discrete emotions, such as anger, fear, sadness, and happiness. According to this theory, basic emotional experiences and emotional displays evolved as adaptive responses to specific environmental contingencies and, thus, felt, expressed, and recognized emotions are uniform across all people and cultures, and are marked by specific patterns of physiological and neural responsivity.

Considerable research has since questioned the utility of this approach. This includes findings that people: 1) are often ill-equipped to describe their own emotions in discrete emotion terms, both in research and clinical settings [36]; 2) show low consensus in their ability to categorize both facial and vocal expressions of emotions in discrete emotion terms [37]; and 3) show high intercorrelations across the emotional experiences they do report [38]. Such findings have prompted many researchers to explore alternative approaches to conceptualizing and measuring emotional experience that necessarily involve a cognitive component.

Magda Arnold’s cognitive appraisal theory of emotion was the first to introduce the necessity of cognition in emotion elicitation [39]. Although she did not disagree with Darwin and James that emotions are adaptive states spurring on survival-related behavior, she, nonetheless, took them to task for not considering vast individual variation in emotional experiences. Arnold rightly underscored the capacity for the same emotion-evoking events to lead to different emotional experiences in different people. This becomes readily apparent when considering one’s own emotional experiences. For example, diving off a cliff may generate an aversive fear state in one person but an enjoyable thrill state in another. The difference in how an event is evaluated, therefore, shapes the emotion that results. Central to her theory was the importance of cognitive appraisal in initially eliciting an emotion. Once elicited, she largely agreed with Darwin’s functional assumptions regarding the survival benefits of emotion-related behavior. Later, Schachter and Singer [40] drew on these insights to help resolve ongoing debates regarding James’ theory of embodied emotional experience. Their research demonstrated that the emotion we experience when adrenaline is released in our body depends on our cognitive framing and context. Those injected with adrenaline reported feeling happier when in a fun context and more irritated when in an angering context. The only difference was the cognitive appraisal that framed the experience of that arousal.

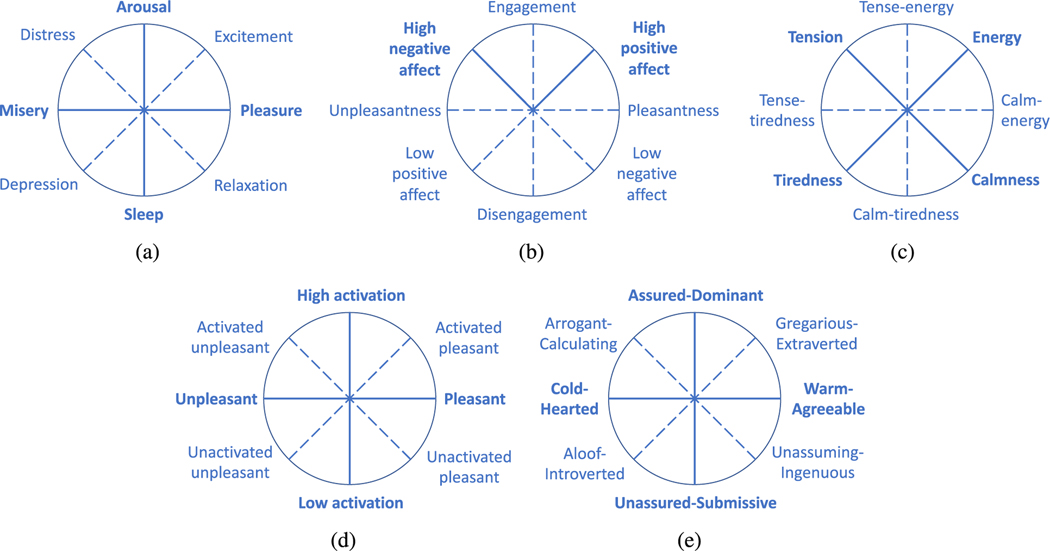

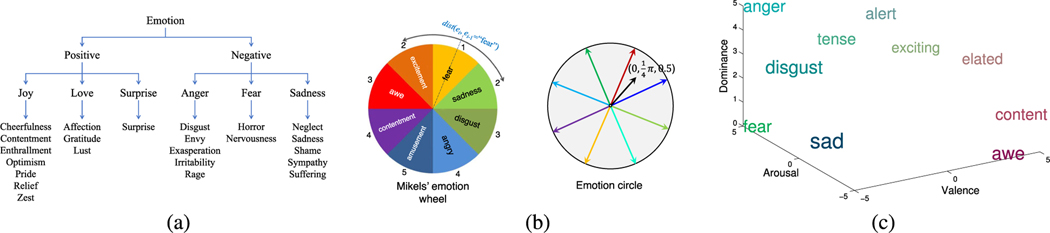

Building upon these ideas further, Mehrabian and Russell [41] proposed the Pleasure, Arousal, and Dominance (PAD) Emotional State Model, which suggests a dimensional account of emotion, one in which PAD constitutes the fundamental dimensions that represent all emotions. Later, Russell dropped dominance as a key dimension and focused on what he refers to as “core affect,” suggesting that all emotions can be reduced to the fundamental psychological and biological dimensions of pleasantness and arousal [42]. Dimensional approaches offer a way of conceptualizing and assessing emotion that closely approximates how the human brain processes emotion (see Fig. 1 for a comparison of different dimensional/circumplex models).

Fig. 1.

Five influential circumplex emotion models developed in the psychology literature [27], [28], [29], [30], [31]. (a) Russell, 1980. (b) Watson and Tellegen, 1985. (c) Thayer, 1989. (d) Larsen and Diener, 1992. (e) Knutson, 1996.

Notably, some early attempts to use computational methods to predict human emotions elicited by visual scenes employed the discrete emotion approach described at the outset of this section. For example, Mikels et al. [43] and Machajdik and Hanbury [44] used categorical approaches to assess the visual properties of stimuli taken from the International Affective Picture System (IAPS) [45], a widely used set of emotionally evocative photographs in the emotion literature. However, such approaches resulted in high levels of multicollinearity between emotions, making it difficult to disentangle emotions using traditional regression models. In contrast, adopting a dimensional approach not only aligns well with emerging theoretical accounts of emotion but has been validated by James Wang and his colleagues in the successful assessment of human aesthetics and emotions evoked by visual scenes, as well as bodily expressed emotion [46], [47], [48], [49], [50], [51], [52]. This offers a methodological approach that is consistent with dimensional theories of emotion.

B. Interplay Between Emotion and Behavior

Fridlund’s behavioral ecology perspective of emotion argues that emotional expression evolved primarily as a means of signaling behavioral intent [53]. The view that facial expression evolved specifically as a way to forecast behavioral intentions and consequences to others drew from Darwin’s seminal writings on expression [32] even though Darwin himself argued that expressions did not evolve for social communication per se. Fridlund’s argument is based on the idea that perceiving behavioral intentions is adaptive. From this perspective, anger may primarily convey to an observer a readiness to attack, whereas fear may primarily convey a readiness to submit or retreat (see [54]). From this perspective, behavioral intentions are considered “syndromes of correlated components” [53, p. 151]. Fridlund is not alone in these assumptions. Some researchers have gone so far as to suggest that feeling states associated with emotions are merely conscious perceptions of underlying behavioral intentions or action tendencies, which implies that emotional feeling is simply the experience of behavioral intention, similar to William James’s theory [55].

It is worth noting that empirical research has provided support for the idea that behavioral intention is conveyed through emotional expression. For example, one study demonstrated that action tendencies and emotion labels are attributed to faces at comparable levels of consistency [55]. Similarly, in forced-choice paradigms [54], cross-cultural evidence indicates that participants assign behavioral intention descriptors with about equal consistency as they do with emotion descriptors.

A focus on approach-avoidance tendencies has been highlighted in most of the research conducted to date. The ability to detect another’s intention to approach or avoid us is thought of as a principal factor governing social exchange. However, much of the work on approach-avoidance behavioral motivations has also tended to concentrate on the experience or response of an observer to a stimulus event [56]. One common method of operationalizing approach and avoidance then stems from traditional behavioral learning paradigms that link behavioral motivation and emotion through reward versus punishment contingencies [57]. Approach motivation is defined by appetitive, reward-related behavior, while avoidance motivation is defined by aversive, punishment-related behavior, where appetitive behavior is movement toward a reward, and aversive behavior is movement away from a punishment.

Much research has focused on the relationship between approach and avoidance tendencies and emotional experience [58]. However, there has been less attention paid to whether approach and avoidance tendencies are fundamentally signaled by the external expression of emotion. It stands to reason that, if the experience of emotion is associated with approach and avoidance tendencies, these tendencies should be signaled to others when expressed. This distinction is important as the approach-avoidance tendencies attributed to expressive faces may not always match the approach-avoidance reactions elicited by them. For example, the expression of joy arguably conveys a heightened likelihood of approach by the expressor and a reaction of approach from the observer [56]. In contrast, anger expressions signal approach by the expressor but tend to elicit avoidance by the observer.

Recent insights from the embodiment literature also provide evidence that emotional experiences are grounded in specific action tendencies [34]. This means that emotional experiences can be expressed through stored action tendencies in the body, rather than through semantic cues. For example, studies have examined the coherence between emotional experience (positive or negative) and approach (arm flexion, i.e., pulling toward) versus avoidance behavior (arm extension, i.e., pushing away) [59]. In one study, participants were randomly assigned to an arm flexion (approach behavior) or arm extension (avoidance behavior) condition, either during the reading of a story about a fictional character or during a positive versus negative semantic priming task before reading the story. Participants in the congruent conditions (happy prime and arm flexion, and sad prime and arm extension) were able to remember more items from the story.

Although important for explaining behavioral responding, these studies did not address whether basic tendencies to approach or avoid were also fundamentally signaled by emotional expressions. If they were, expressions coupled with approach and avoidant behaviors should impact the efficiency of emotion recognition. In one set of studies, anger expressions were found to facilitate judgments of approach versus withdrawing faces compared with fear expressions [60]. Similarly, perceived movement of a face toward or away from an observer likewise facilitated angry or fearful expression perception [61]. Thus, approach and avoidance movement are associated in a fundamental way with the recognition of anger and fear displays, respectively, supporting the conclusion that basic action tendencies are inherently associated with the perception of emotion.

In sum, despite widely debatable assumptions about the nature of emotion and emotional expression across various theories, most tend to agree that emotion expression conveys fundamental information regarding basic behavioral tendencies [60].

III. EMOTION : COMPUTATIONAL PRINCIPLES AND FOUNDATIONS

In this section, we aim to establish computational foundations for analyzing and recognizing emotions from visual media. Emotion recognition systems typically involve several fundamental data-related stages, including data collection (see Section III-A), data reliability assessment (see Section III-B), and data representation (see Sections III-D–III-F for general computer vision-based representation, movement coding, and context and function purpose detection, respectively). As we present specific examples at each stage, we will emphasize the underlying principles that they adhere to. We provide a list of representative datasets in Section III-C. We will also introduce the factors of acted portrayals (see Section III-G), cultural and gender dialects (see Section III-H), structure (see Section III-I), personality (see Section III-J), and affective style (see Section III-K) in inferring emotion, based on prior research.

A. Data Collection

Because the categories of emotions are not well-defined, it is not possible to program a computer to recognize all emotion categories based on a set of predefined logic rules, computational instructions, or procedures. Thus, researchers must take a data-driven approach in which computers learn from a large quantity of labeled, partially labeled, and/or unlabeled examples. To enable such research and subsequent real-world applications, it is essential to collect large-scale, high-quality, ecologically valid datasets. To highlight the complexity of the data collection problem and to introduce best practices, we describe a few data collection approaches that incorporate psychological principles in their design.

1). Evoked Emotion—Immediate Response:

In the field of modeling evoked emotion, earlier researchers utilized the IAPS dataset, which consisted of only 1082 images rated for evoked emotional response [46]. In 2017, Lu et al. [48] introduced one of the first large-scale datasets, the EmoSet, utilizing a human subject study. The EmoSet dataset is much larger, and all images are complex scenes that humans regularly encounter in daily life.

To create a diverse image collection, the researchers employed a data-crawling approach to gather nearly 44 000 images from social media and obtained emotion labels (both dimensional and categorical) using crowdsourcing via the Amazon Mechanical Turk (AMT) platform. They used the valence, arousal, and dominance (VAD) dimensional model [27], which is similar to the PAD model. The researchers followed strict psychological subject study procedures and validation approaches. The images were collected from more than 1000 users’ Web albums on Flickr using 558 emotional words as search terms. These words were summarized by Averill [62].

The researchers carefully designed their online crowdsourcing human subject study to ensure the quality of the data. For example, each image was presented to a subject for exactly 6 s. This design differed from conventional object recognition data annotation tasks, where the subject was often given no restrictions on the amount of time to view an image. This design followed psychological convention, as the intention was to collect the subject’s immediate affective response to the visual stimuli. If subjects were given varying amounts of time to view an image before rating it, the data would not be a reliable capture of their immediate affective response. To accommodate for this, subjects were given the option to click a “Reshow Image” button if they needed to refer back to the image. In addition, recognizing that categorical emotions may not cover all feelings, this method allowed the subject to enter other feelings that they may have had.

2). Evoked Emotion—Test–Retest Reliability:

The data collection method proposed by Lu et al. [48] aimed to understand immediate affective responses to visual content, but it did not ensure retest reliability of affective picture stimuli over time and across a population. Many psychological studies, from behavioral to neuroimaging studies, have used visual stimuli that consistently elicited specific emotions in human subject. While the IAPS and other pictorial datasets have validated their data, they have not examined the retest reliability or agreement over time of their picture stimuli.

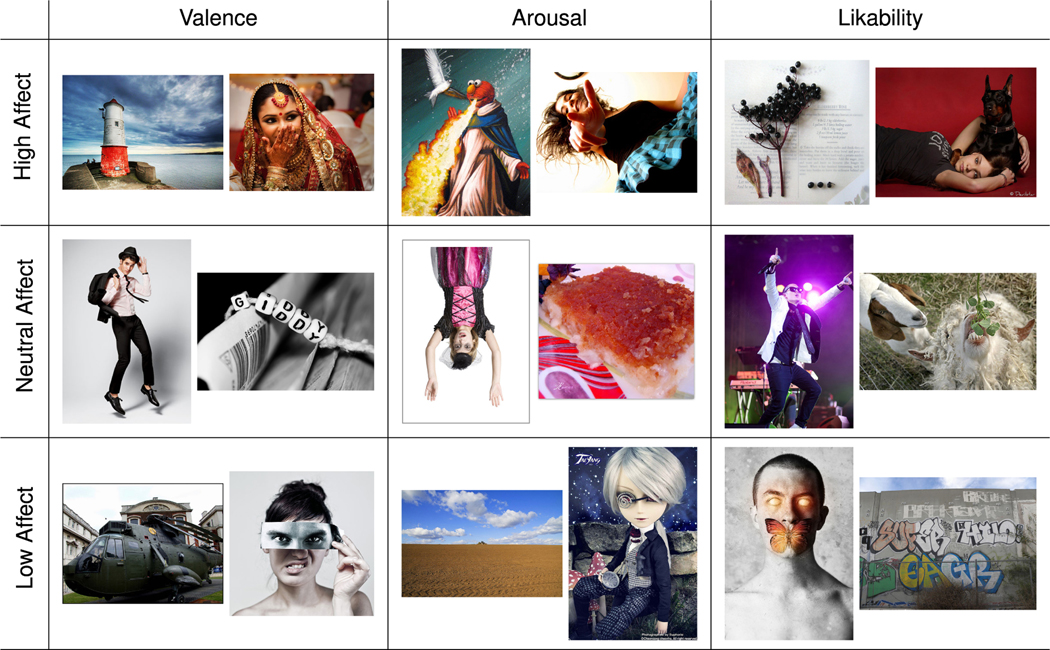

To address this issue, Kim et al. [50] developed the Image Stimuli for Emotion Elicitation (ISEE) as the first set of stimuli for which there was an unbiased initial selection method and with images specifically selected for high retest correlation coefficients and high within-person agreement across time. The ISEE dataset used a subset of 10 696 images from the Flickr-crawled EmoSet. In the initial screening study, study participants rated stimuli twice for emotion elicitation across a one-week interval, resulting in the selection of 1620 images based on the number of ratings and retest reliability of each picture. Using this set of stimuli, a second phase of the study was conducted, again having participants rate images twice with a one-week interval, in which the researchers found a total of 158 unique images that elicited various levels of emotionality with both good reliability and good agreement over time. Fig. 2 shows 18 example images in the ISEE dataset.

Fig. 2.

Example images in the ISEE dataset [50]. Images were selected after a thorough test–retest reliability study.

3). Expressed Emotion—Body:

In the field of expressed emotion recognition, the collection of data on bodily expressed emotions has received less attention compared to the more widely studied areas of facial expression and microexpression data collection. In addition, whereas earlier studies often relied on data collected in controlled laboratory environments, recent advancements in technology have made it possible to collect data in more naturalistic, real-world settings. These “in-the-wild” datasets are more challenging to collect, but they offer the opportunity to capture a more diverse range of emotions and expressions. Whereas laboratory environments provide the advantage of advanced sensors, such as Motion Capture (MoCap), body temperature, and brain electroencephalogram (EEG) for collecting data, and it is possible to capture self-identified rather than perceived emotional expression, it is impossible to accurately replicate the vast array of diverse real-world scenarios within a controlled laboratory setting.

Using video clips from movies, TV shows, sporting, and wedding events as a source of data for emotion recognition has several advantages. These videos provide a wide range of scenarios, environments, and situations that can be used to train computer systems to understand human behavior, expression, and movement. For instance, these videos have recorded scenes during natural and man-made disasters, providing valuable information for understanding human emotions under extreme conditions. In addition, a large proportion of video shots in movies is of outdoor human activities, providing a diverse range of contexts for training.

However, it is important to note that using publicly available video clips as a source of data has its limitations. One such limitation is that this approach can only capture perceived emotions, as opposed to self-identified emotions. In many applications, perceived emotions are a sufficient proxy for actual emotions, particularly when the goal is for robots to “perceive” or “express” emotions in a way that is similar to humans for efficient communication with humans. A further constraint is that the videos mainly feature staged or user-selected scenes, rather than depicting natural everyday interactions. This topic will be further explored in Section III-G.

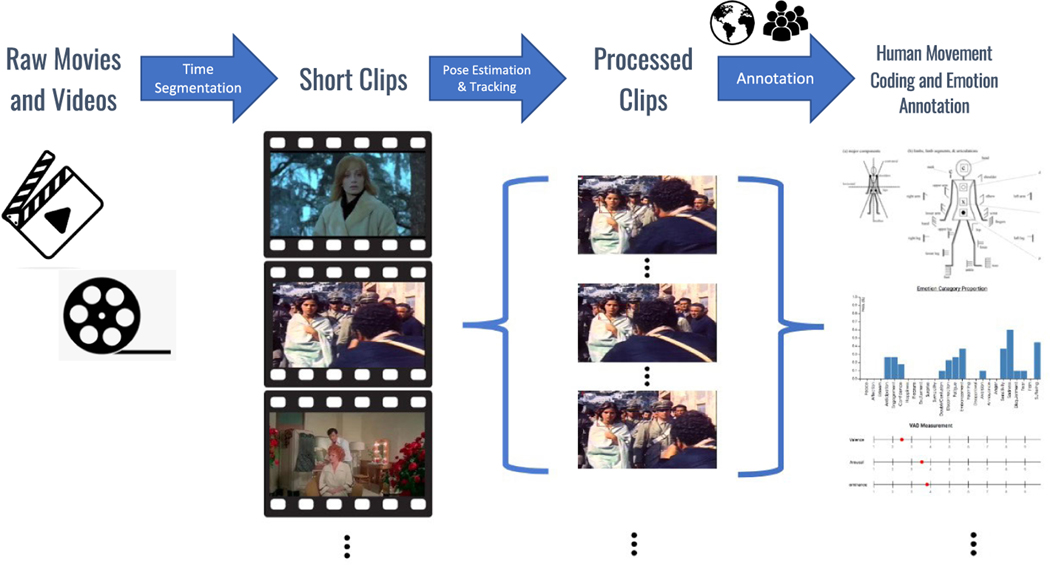

Luo et al. [51] developed the first dataset for bodily expressed emotion understanding (BEEU), named the Body Language Dataset (BoLD), using this approach. The data collection pipeline is illustrated in Fig. 3. The researchers collected hundreds of movies from the Internet and cut them into short clips. An identified character with landmark tracking in a single clip is called an instance. They used the AMT platform for crowdsourcing emotion annotations of a total of over 48 000 instances. The emotion annotation included the VAD dimensional model [27] and 26 emotion categories [63].

Fig. 3.

Data collection pipeline was developed by Luo et al. [51] to utilize crowdsourcing to annotate vast amounts of videos available on the Internet. The pipeline involved obtaining raw movies and videos from the Internet, segmenting them into short clips, processing the clips using computer vision techniques to extract posture information and track individual characters, and utilizing crowdsourcing and expert annotation to assign emotion and movement labels to the clips.

B. Data Quality Assurance

Quality control is a crucial aspect for crowdsourcing, particularly for affect annotations. Different individuals may have varying perceptions of affect, and their understanding can be influenced by factors such as cultural background, current mood, gender, and personal experiences. Even an honest participant may provide uninformative affect annotations, leading to poor-quality data. In this case, the variance in acquiring affect usually comes from two kinds of participants, i.e., dishonest ones, who give useless annotations for economic motivation, and exotic ones, who give inconsistent annotations compared with others. The existence of exotic participants is inherent in emotion studies. The annotations provided by an exotic participant could be valuable when aggregating the final ground truth or investigating cultural or gender effects of affect. However, we typically want to reduce the risk of high variance caused by dishonest and exotic participants in order to collect generalizable annotations.

In the case of the BoLD dataset [51], five complementary mechanisms were used, including three online approaches (i.e., analyzing while collecting the data) and two offline (i.e., postcollection analysis), based on a recent technological breakthrough for crowdsourced affective data collection [49]. These mechanisms were participant EQ screening [64], annotation sanity/consistency check [51], gold standard test based on control instances [51], and probabilistic multigraph modeling for reliability analysis [49].

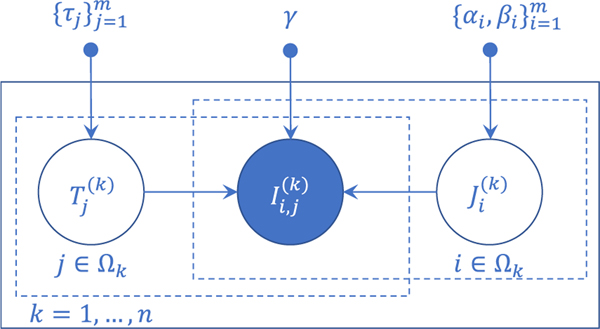

Particularly critical is the probabilistic graphical model Ye et al. developed to jointly model subjective reliability, which is independent of supplied questions, and regularity [49]. For brevity of discussion, we focus on using the mode(s) of the posterior as point estimates. We assumed that each subject had a reliability parameter and regularity parameters , characterizing their agreement behavior with the population, for . We also used the parameter for the rate of agreement between subjects by pure chance. Let be the set of parameters. Let be a random subsample from subjects who labeled the stimulus , where . We also assumed that sets ’s were created independently of each other. For each image , every subject paired from , i.e., (, ) with , had a binary indicator coding whether their opinions agreed on the respective stimulus. We assumed that was generated from a probabilistic process involving two latent variables. The first latent variable indicated whether subject was reliable or not. Given that it was binary, a natural choice of model was the Bernoulli distribution. The second latent variable , lying between 0 and 1, measured the extent to which subject agreed with other reliable responses. We used the beta distribution parameterized by and to model because it was a widely used and flexible parametric distribution for quantities on the interval [0, 1].

In a nutshell, is a latent switch (a.k.a. gate) that controls whether can be used for the posterior inference of the latent variable . Hence, the researchers referred to the model as the gated latent beta allocation (GLBA). A graphical illustration of the model is shown in Fig. 4. If an uninformative annotator was in the subject pool, their reliability parameter was zero though others could still agree with their answers by chance at a rate of . On the other hand, if an individual was very reliable yet often provided controversial answers, their reliability could be one, while they typically disagreed with others, as indicated by their high irregularity

We were interested in finding both types of participants. Most participants were between these two extremes. The quantitative characterization of participants by GLBA will assist in selecting subsets of the data collection for quality control or gaining a comprehensive understanding of subjectivity. For more details, please refer to [49], [65].

Fig. 4.

Probabilistic graphical model, GLBA, was developed by Ye et al. [49] to model the subjective reliability and regularity in a crowdsourced affective data collection. The work enables researchers in affective computing to effectively identify and exclude highly subjective annotation data provided by uninformative human participants, thereby improving the overall quality of the collected data.

A recent study [66] presented a Python-based software program called MuSe-Toolbox, which combines emotion annotations from multiple individuals. The software includes several existing annotation fusion methods, such as estimator weighted evaluator (EWE) [67] and generic-canonical time warping (GCTW) [68]. In addition, the authors have developed a new fusion method based on EWE, named rater-aligned annotation weighting (RAAW), which is also included in the software. Furthermore, MuSe-Toolbox includes the capability to convert continuous emotion annotations into categorical labels.

C. Existing Datasets

Several recent literature surveys have provided an overview of existing datasets for emotions in visual media. In order to avoid duplication of effort, readers are directed to these papers for further information, which includes surveys on evoked emotion [16], BEEU [17], FER [4], MER [7], and multimodal emotion [22], [69], [70]. Table 1 presents a comparison of the properties of some representative datasets. Researchers are advised to thoroughly review the data collection protocol used before utilizing a dataset to ensure that the data have been collected in accordance with appropriate psychological guidelines. In addition, when crowdsourcing is utilized, effective mechanisms are essential for filtering out uninformative annotations.

Table 1.

Recent Representative Datasets for Emotion Recognition

| Dataset Name | Labeled Samples | Data Type | Categorical Emotions | Continuous Emotions | Lab Controlled | Year | Primary Application |

|---|---|---|---|---|---|---|---|

| IAPS [45] | 1.2k | I | - | VAD | 2005 | Evoked | |

| FI [71] | 23.3k | I | 8§ | - | 2016 | Evoked | |

| VideoEmotion-8 [72] | 1.2k | V | 8† | - | 2014 | Evoked | |

| Ekman-6 [73] | 1.6k | V | 6† | - | 2018 | Evoked | |

| E-Walk [74] | 1k | V | 4‡ | - | 2019 | BEEU | |

| BoLD [51] | 13k | V | 26† | VAD | 2020 | BEEU | |

| iMiGUE [75] | 0.4k | V | 2 | - | 2021 | BEEU★ | |

| CK+ [76] | 0.6k | V | 7‡ | - | ✓ | 2010 | FER |

| Aff-Wild [77] | 0.3k | V | - | VA | 2017 | FER | |

| AffectNet [78] | 450k | I | 7‡ | - | 2017 | FER | |

| EMOTIC [79] | 34k | I | 26† | VAD | 2017 | FER* | |

| AFEW 8.0 [80] | 1.8k | V | 7‡ | - | 2018 | FER | |

| CAER [81] | 13k | V | 7‡ | - | 2019 | FER* | |

| DFEW [82] | 16k | V | 7‡ | - | 2020 | FER | |

| FERV39k [83] | 39k | V | 7‡ | - | 2022 | FER | |

| SAMM [84] | 0.2k | V | 7‡ | - | ✓ | 2016 | MER |

| CAS(ME)2 [85] | 0.06k | V | 4‡ | - | ✓ | 2017 | MER |

| ICT-MMMO [86] | 0.4k | V,A,T | - | Sentiment | 2013 | Multi-Modal | |

| MOSEI [87] | 23.5k | V.A.T | 6† | Sentiment | 2018 | Multi-Modal |

A superset of Ekman’s basic emotions

Ekman’s basic emotions + neutral

Mikels’ emotions

Micro-gesture understanding and emotion analysis dataset Data Type Key: (I)mage, (V)ideo, (A)udio, (T)ext

Context-aware emotion dataset

Data Type Key: (I)mage, (V)ideo, (A)udio, (T)ext

D. Data Representations

After the data collection and quality assurance stages, a significant technological challenge is to represent the emotion-relevant information present in the raw data in a concise form. While current deep neural network (DNN) approaches often utilize raw data, such as matrices of pixels, as input in the modeling process, utilizing a compact data representation can potentially improve the efficiency of the learning process, allowing for larger-scale experiments to be conducted with limited computational resources. In addition, a semantically meaningful data representation can facilitate interpretability, which is crucial for certain applications. There are numerous methods for compactly representing raw visual data, and we discuss several intriguing or widely used data representations for emotion modeling in the following.

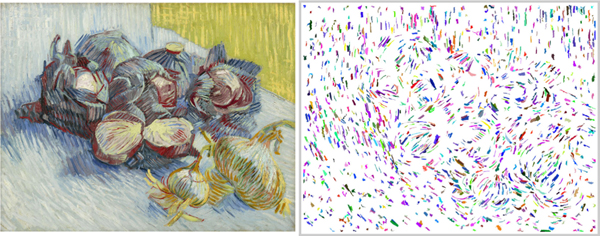

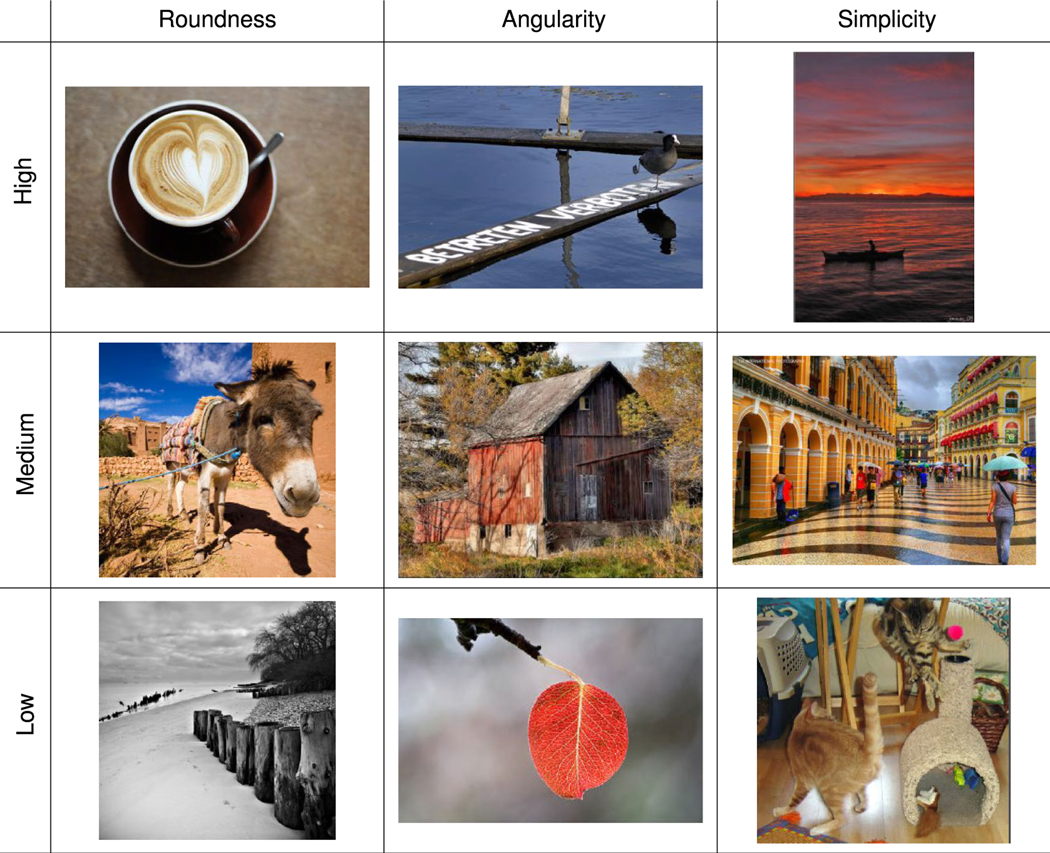

1). Roundness, Angularity, Simplicity, and Complexity:

Colors and textures are commonly used in image analysis tasks to represent the content of an image, but research has shown that shape can also be an effective representation when analyzing evoked emotions. In both visual art and psychology, the characteristics of shapes, such as roundness, angularity, simplicity, and complexity, have been linked to specific emotional responses in humans. For example, round and simple shapes tend to evoke positive emotions, while angular and complex shapes evoke negative emotions. Leveraging this understanding, Lu et al. [46] developed a system that predicted evoked emotion based on line segments, curves, and angles extracted from an image. They used ellipse fitting to implicitly estimate roundness and angularity, and used features from the visual elements to estimate complexity. Later, they developed algorithms to explicitly estimate these representations [48]. Fig. 5 shows some example images with different levels of roundness, angularity, and simplicity. The researchers found that these three physically interpretable visual constructs achieved comparable classification accuracy to the hundreds of shape, texture, composition, and facial feature characteristics previously examined. This result was thought-provoking because just a few numerical-value representations could effectively predict evoked emotions.

Fig. 5.

Researchers developed algorithms to compute the roundness, angularity, and simplicity/complexity of a scene as the representation for estimating the evoked emotion [48].

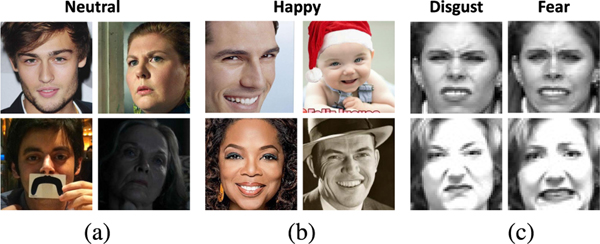

2). Facial Action Coding System (FACS) and Facial Landmarks:

People use particular facial muscles to express certain facial expressions. For instance, people can express anger by frowning and pursing their lips. Consequently, each facial expression can be viewed as a combination of some facial muscle movements. Ekman and Friesen [88] developed the FACS in 1976, which encodes all movements of facial muscles. FACS defines a total of 32 atomic facial muscle actions, called Action Units (AUs), including Lids Tight (AU7), Cheek Raise (AU6), and so on. By detecting all AUs of a person and linking them to specific expressions, we can identify the individual’s facial expressions.

The problem of AU detection can be approached as a multilabel binary classification problem for each AU. Early work on AU detection used facial landmarks to identify regions of corresponding muscles and then applied neural networks [89] or support vector machines (SVMs) [90] for classification. More recent work has developed end-to-end AU detection networks [91]. Survey papers provide detailed introductions to facial AU detection [92], [93] and face landmark detection [94], [95]. Some researchers also used facial landmarks directly as a representation of facial information in their recognition work.

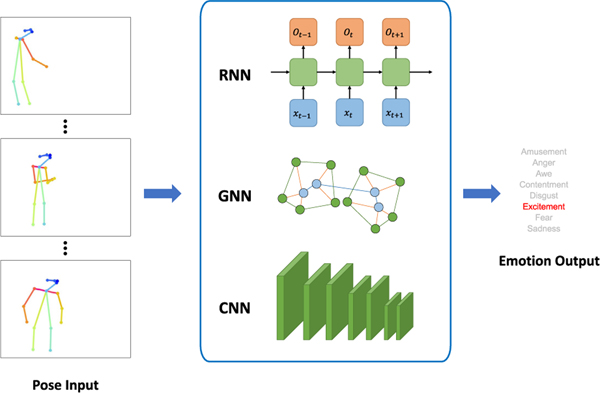

3). Body Pose and Body Mesh:

People can express emotions through body posture and movement. By manipulating the positioning of body parts (e.g., the shoulders and arms), people produce various postures and movements. The coordinates of human joints can serve as a representation of body language, reflecting the individual’s bodily expression. In the field of computer vision, 2-D pose estimation is a well-studied task for detecting the 2-D position of human joints in an image. Leveraging large-scale 2-D pose datasets (e.g., COCO [96]), researchers have proposed several high-performing pose networks [97], [98]. Even with challenging scenes, such as crowded or occluded scenes, these networks are able to provide comprehensive joint detection and linking.

However, 2-D pose estimation does not fully capture the 3-D nature of human posture and movement. 3-D human pose estimation, on the other hand, aims to predict the 3-D coordinates of human joints in space. Single-person 3-D pose estimation methods determine the 3-D joint coordinates relative to the person’s root joint (i.e., the torso) [99], [100]. In addition, some multiperson 3-D pose estimation approaches comprehensively estimate the absolute distance between the camera and the individuals in the image [101].

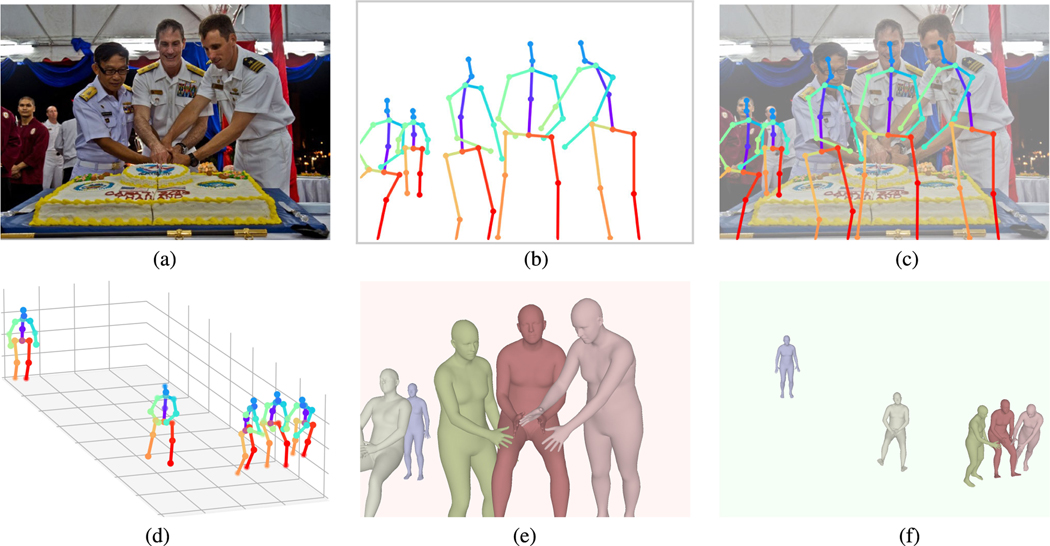

3-D human mesh estimation, which provides the 3-D coordinates of each point on the human mesh, is a further extension of the 3-D pose estimation. Researchers often utilize SMPL [103], [104] or other human graph models to represent the mesh. Fig. 6 illustrates an example of 2-D pose, 3-D pose, and 3-D mesh automatically generated from a scene with multiple people [102].

Fig. 6.

Example of state-of-the-art deep learning-based human pose and mesh detection with individuals in the scene separated properly [102]. The input to the methods is a standard 2-D RGB photograph. The 3-D mesh method provides an estimation of the relative depths of the people in the scene. (a) Original photograph. (b) 2-D pose. (c) 2-D pose, superimposed. (d) 3-D pose. (e) 3-D mesh. (f) 3-D mesh, alternative view.

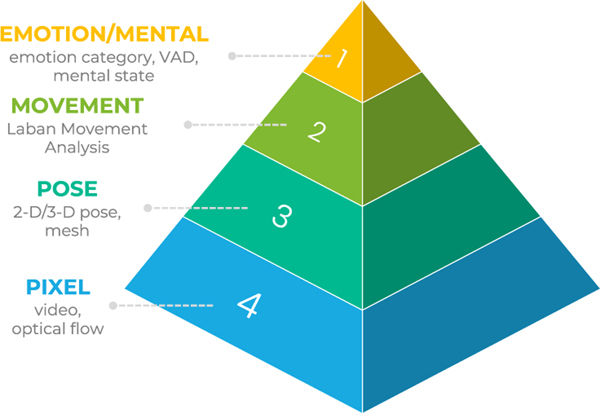

While human pose and human mesh representations provide a higher level of abstraction compared to low-level representations, such as raw video or optical flow, there is still a significant gap between these intermediate-level representations and high-level emotion representations. To bridge this gap, an intermediate-level representation that effectively describes human movements is proposed. Specifically, Laban movement analysis (LMA) is a movement coding system initially developed by the dance community, similar to sheet music for describing music. Fig. 7 illustrates the layers of data representation for BEEU, from low pixel-level representations to the ultimate high-level emotion representation. Elevating each layer higher in this information pyramid requires considerable advancements in abstraction technology.

Fig. 7.

For BEEU, LMA as a movement coding system can serve as an intermediate level of representation to bridge the significant gap between the pose and the emotion/mental levels [51].

E. Human Movement Coding and LMA

Expressive human movement is a complex phenomenon. The human body has 360 joints, many of which can move various distances at different velocities and accelerations, and in two (and depending on the joint, sometimes more) different directions, resulting in an astronomical number of possible combinations. These variables create an infinite number of movements and postures that can convey different emotions. Beyond this array of body parts moving in space, the expressive movement also involves multiple qualitative motor components that appear in unique patterns in different individuals and situations. The complexity of human movement, thus, raises the question: how do we determine which of the numerous components present in the expressive movement are significant to code for emotional expression in movement? Thus, when choosing a coding system, the early stages of each research project can benefit from deeply considering which aspects of movements are central to the expression being studied. A multistage methodology, such as first identifying what is potentially relevant and then using preliminary analyses to refine the selection of movements most promising to code, can be helpful before selecting a method to code or quantify the multitude of variables present in unscripted movement (e.g., [105] and [106]).

After deliberating about which movement variables are relevant and meaningful, we must then consider the three main types of coding systems that have been used in various fields, such as psychology, computer vision, animation, robotics, and AI.

Lists of specific motor behaviors that have been found in scientific studies to be typical to the expressions of specific emotions, such as head down and moving slowly as characterizing sadness; moving backward and bringing the arms in front of the body as characterizing fear; jumping, expanding, and upward movements as characterizing happiness; and so on (for review of these studies and lists of these behaviors, see [107] and [108]).

Kinematic description of the human body models, such as skeleton-based models, contour-based models, or volume-based models. Most work in the field of emotion recognition is based on skeleton-based models [109]. This type of model uses 3-D coordinates of markers that were placed on (using various MoCap systems) or were mapped (using pose estimation techniques) to the main joints of the body to create a moving “skeleton,” which enables researchers to quantitatively capture the movement kinematics (e.g., [110] and [111]).

LMA, a comprehensive movement analysis system that depicts qualitative aspects of movement and, theoretically [112], [113], as well as through scientific research [114], [115], relates the different LMA motor elements (movement qualities) to cognitive and emotional aspects of the moving individual.

The first coding system, based on lists of motor behaviors, was primarily used in earlier studies in the field of psychology, where the encoding and decoding of motor behaviors into emotions and vice versa were done manually by human coders in a labor-intensive process. Other limitations of this coding system include the following.

It is based on a limited number of behaviors that have been used in prior scientific studies. However, people can physically express emotions in many different ways, so the list of previously observed and studied whole-body expressions may not be exhaustive or inclusive of cultural variations.

Likewise, because each study used different lists of behaviors, this method makes it difficult to compare results or to review them additively to arrive at larger verification. Thus, this coding system may miss parts of the range of bodily emotional expression, such as those never observed and coded before. This limitation is especially pronounced because many of these previous studies examined emotional expressions performed by actors, whose movement tended to rely upon more stereotypical bodily emotional expressions that were widely recognized by the audience, rather than naturally occurring motor expressions.

When using the second coding system, kinematic description, in particular, the skeleton-based models, researchers usually employ a set of markers similar to, or smaller than that provided by the Kinect Software Development Kit, and transform a large amount of 3-D data into various low- and/or high-level kinematic and dynamic motion features, which are easier to interpret than the raw data. Researchers using this method have studied specific features, such as head and elbow angles [111], maximum acceleration of hand and elbow relative to spine [116], and distance between joints [117], among others. For a review of such studies, please refer to [17]. In recent years, instead of computing handcrafted features on the sequence of body joints, researchers have employed various deep learning methods to generate representations of the dynamics of the human body embedded within the joint sequence, such as spatiotemporal graph convolutional network (ST-GCN) [51], [118], [119]. Although the 3-D data from joint markers can provide a relatively detailed, objective description of whole-body movement, this coding system has two main limitations.

Movement is often captured by a camera from a single view (usually the frontal), which can result in long segments of missing data from markers that are hidden by other body parts, people, or objects in a video frame. Automatic imputations of such missing data are often impractical as they tend to create incorrect and unrealistic movement trajectories.

The SDK system has only three markers along the torso, which are insufficient for capturing subtle movements in the chest area, movements that are usually observed during authentic emotional expressions, as opposed to acted (and often exaggerated) bodily emotional expressions. Another disadvantage is that these systems have not yet been able to successfully and reliably detect many qualitative changes in movement that are significant for perceiving emotional expression.

In contrast to the quantitative data from joint markers, which enable the capture of detailed movement of every body part, the third coding system mentioned above, LMA, describes qualitative aspects of movement and can relate to a general impression from movements of the entire body or to the movement of specific body parts. By identifying the association between LMA motor components and emotions, and characterizing the typical kinematics of these components using high-level features (e.g., [51], [120], [121], [122], [123], and [124]), researchers can overcome the limitations of other coding systems. If people express their emotions with movements that have never been observed in previous studies, we can still decode their emotions based on the quality of their movement. Similarly, if parts of the body, which are usually used to express a certain emotion, are not visible, it is possible that the emotion could still be decoded by identifying the motor elements composing the visible part of the movement. Moreover, by slightly changing the kinematics of a movement of a robot or animation (i.e., adding to a gesture or a functional movement the kinematics of certain LMA motor elements associated with a specific emotion), we can “color” this functional movement with an expression of that emotion, even when the movement is not the typical movement for expressing that emotion (e.g., [125] and [126]). Similarly, identifying the quality of a movement can enable decoding the expressed emotion even from functional actions, such as walking [127] or reaching and grasping. These advantages and the fact that LMA features have been found to be positively correlated with emotional expressions are why LMA coding is becoming popular in studies that encode or decode bodily emotion expressions (e.g., [51] and [123]). In addition, LMA offers the option to link our coding systems to diverse ways in which humans talk about and describe expressive movement—it is a comprehensive movement-theory system that is and can be used across disciplines for application in acting [128], therapy [129], education [130], and animation [131], among others. The last advantage to consider is that LMA is a comprehensive theory of body movement, much like art theory or music theory, including theories of harmony, and, thus, has been used by artists to attune to aesthetics including movement-perception of visual art (such as that discussed in Section VI-A) and visual, auditory, and movement elements of film and theater (as discussed in Section III-G). Like music theory, LMA is capable of attending to rhythm and phrasing as elements shift and unfold over time, aspects that may be crucial to communicating and interpreting emotional expression.

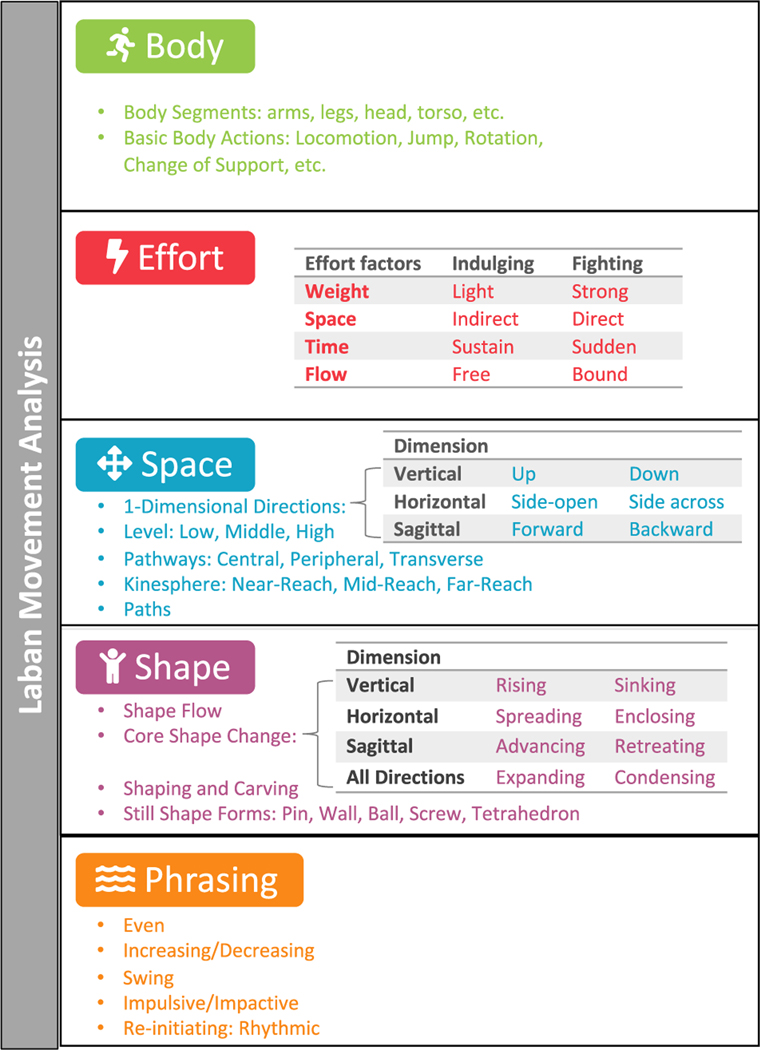

LMA identifies four major categories of movement: Body, Effort, Shape, and Space. Each category encompasses several subsets of motor components (LMA terms are spelled with capital letters to differentiate them from regular usage of these words). Fig. 8 illustrates some basic components of LMA, which are often used in coding.

Fig. 8.

Some basic components of LMA, which are often used in coding.

The Body category describes what is moving, and it is composed of the elements of Body Segments (e.g., arms, legs, and head), their coordination, and basic Body Actions, such as locomotion, jump, rotation, change of support, and so on.

The Effort category describes the qualitative aspect of movement or how we move. It expresses a person’s inner attitude toward movement, and it has four main factors, each describing the continuum between two extremes: indulging in the motor quality of that factor and fighting against that quality. An effort has four factors.

Weight effort, meaning the amount of force or pressure exerted by the body. Activated Weight Effort can be Strong or Light. Alternatively, there may be a lack of weight activation when we give in to the pull of gravity, which we describe as Passive or Heavy Weight.

Space effort describes attention to space, denoting the focus or attitude toward a chosen pathway, i.e., is the movement Direct or flexibly Indirect.

Time effort, describing the mover’s degree of urgency or acceleration/deceleration involved in a movement, i.e., is the movement Sudden or Sustained.

Flow effort, reflecting the element of control or the degree to which a movement is Bound, i.e., restrained or controlled by muscle contraction (usually cocontraction of agonist and antagonist’s muscles), versus Free, i.e., being released and liberated.

The Shape category reflects why we move: Shape describes how the body adapts its shape as we respond to our needs or the environment: do I want to connect with or avoid something, dominate, or cower under? The way the body sculpts itself in space reflects a relationship to self, others, or to the environment. This component includes Shape Flow that describes how the body changes to relate to oneself; it includes Shape Change that describes changes in the form or shape of the body and includes the motor components of Expanding the body or Condensing it in all directions and Rising or Sinking in the vertical dimension, Spreading and Enclosing in the horizontal dimension, and Advancing or Retreating in the sagittal dimension. Another Shape component is Shaping and Carving, which describes how a person shapes their body to shape or affect the environment or other people. For example, when we hug somebody, we might shape and carve the shape of our body, adjusting it to the shape of the other person’s body, or we might shape our ideas by carving or manipulating them through posture and gesture.

The Space category describes where the movement goes in the environment. It describes many spatial factors, such as the Direction, where the movement goes in space, such as Up and Down in the vertical dimension, Side open and Side across in the horizontal dimension, and Forward and Backward in the sagittal dimension; the Level of the movement in space relative to the entire body or parts of the body, such as Low level (movement toward the ground), Middle level, (movement maintaining level, without lowering or elevating), or High level (moving upward in space); Paths or how we travel through space by locomoting; and Pathways through the Kinesphere (the personal bubble of reach-space around each mover that can be moved in and through without locomoting or traveling.) Movement in the Kinesphere might take Central pathways, crossing the space close to the mover’s body center, Peripheral pathways along the periphery of the mover’s reach space, or Transverse pathways cutting across the reach space.

In addition, another important aspect of LMA that is particularly helpful and meaningful to expression is the Phrasing of movements. Phrasing describes changes over time, such as changes in the intensity of the movement over time, similar to musical phrases, which can be Increasing, Decreasing, or Rhythmic, among others. It can also depict how movement components shift during the same action or a series of actions occurring over time, for example, beginning emphatically with strength and then ending by making a light direct point conclusion.

Previous research has highlighted the lack of a notation system that directly encodes the correspondence between bodily expression and body movements in a way similar to FACS for face [51], [105]. LMA, by its nature, has the potential to serve as such a coding system for emotional expressions through body movement. Shafir et al. [115] identified the LMA motor components (qualities), whose existence in a movement could evoke each of the four basic emotions: anger, fear, sadness, and happiness. Melzer et al. [114] identified the LMA components whose existence in a movement caused that movement to be identified as expressing one of those four basic emotions. In an additional experiment by the Shafir group, Gilor, for her Master’s thesis, has been studying the motor elements that are used for expressing sadness and happiness. In this series of studies, the LMA motor components found to evoke these emotions through movement were, for the most part, the same as those used to identify or recognize each emotion from movement, or to express each emotion through movement. For example, anger was associated with Strong Weight Effort, Sudden Time Effort, Direct Space Effort, and Advancing Shape during both emotion elicitation and emotion recognition. Fear was associated with Retreating Shape, moving backward in Space for both emotion elicitation and recognition. In addition, Enclosing and Condensing Shape, and Bound Flow Effort, were also found for emotion elicitation through movement. Sadness was associated with Passive Weight Effort, Sinking Shape, and Head drop for emotion elicitation, emotional expression, and emotion recognition, and arms touching the upper body were also significant indicators for emotion elicitation and expression. Sadness expression was also associated with using Near-Reach Space and Stillness. In contrast, Happiness was associated with jumping, rhythmic movement, Free and Light Efforts, Spreading and Rising Shape, and moving upward in Space for emotion elicitation, emotion expression, and emotion recognition. Happiness expression was also associated with Sudden Time Effort and Rotation. These findings for Happiness were also validated by van Geest et al. [132]. While these studies represent a promising start, further research is needed to create a comprehensive LMA coding system for bodily emotional expressions.

F. Context and Functional Purpose

While human emotions are shown through the face and body, they are closely connected to context and purpose. Thus, the image context surrounding the human can also be used to further identify human emotions. The context information includes the semantic information of the background and what the person is holding, which can assist in identifying activities of the human, thereby allowing for more accurate prediction of the human’s emotion. For instance, people are more likely to feel happy than sad during a birthday party. Context information also includes interactions among humans, which can help infer emotion. For example, people are more likely to be angry when they are engaged in a heated argument with others.

Since the early 2000s, researchers in the field of image retrieval have been developing context recognition systems using machine learning and statistical modeling [133], [134], [135]. With the advent of deep learning and the widespread use of modern graphics processing units (GPUs) or AI processors, accurate object annotation from images has become more feasible. Several deep learning-based approaches have been proposed to leverage context information to enhance basic emotion recognition networks [81], [136], [137]. Sections IV-B3 and IV-E provide more details.

In addition to contextual information, the functional purpose of a person’s movement can also provide valuable insights when inferring emotions. Movement is a combination of both functional and emotional expression, and thus, emotion recognition systems must be able to differentiate between movement that serves a functional purpose and movement that expresses emotions. Action recognition [138], an actively studied topic in computer vision, has the potential to provide information on the function of a person’s movement and assist in disentangling functional and emotional components of movement.

G. Acted Portrayals of Emotion

Research on emotions has often turned to acted portrayals of emotion to overcome some of the challenges inherent in accessing adequate datasets, particularly because evoking intense, authentic emotions in a laboratory can be problematic both ethically [139] and practically. This is because, as noted in Section II, emotional responses vary. Datasets relying upon actors have been useful in overcoming challenges of obtaining ample, adequately labeled emotion-expression data because, compared to unscripted behavior (“in the wild”), where emotion expression often appears in blended or complex ways, actors are typically serving a narrative, in which emotion is part of the story. In addition, sampling emotional expression in the wild encounters cultural distinctions for the expressions themselves, as well as social norms for emotion expression or its repression [140], which may also be culturally scripted for gender. Thus, researchers interested in emotional expressivity and nonverbal communication of emotion often turn to trained actors [139] both to generate new datasets of emotionally expressive movement (e.g., [141]) and for the sampling of emotion expression (e.g., [51]). Such datasets are useful because actors coordinate all three channels of emotions expression, namely, vocal, facial, and body, to produce natural or authentic-seeming expressions of emotions. Some researchers have validated the perception of actor-based and expert movement-based datasets in the lab by showing them to lay observers [114], [141]. This approach also entails problems, in which, while it may capture norms of emotion expression and its clear communication, it may miss distinctions related to demographics such as gender [142], ethnolinguistic heritage, individual, or generational norms [143], [144]. According to cultural dialect theory, we all have nonverbal emotion accents [144], meaning that emotion is expressed differently by different people in different regions, from different cultures. Only some of those cultural dialects appear when sampling films. Such films have often been edited so that viewers beyond that cultural dialect can “read” the emotional expression central to the narrative. Nuance in nonverbal dialects may be excluded in favor of general appeal.

Yet, an advantage of generating datasets from actors or other trained expressive movers is that ground truth can be better established. The intention of the emotion expressed, the emotion felt, and later the emotion perceived from viewing can all be assessed when generating the dataset. Likewise, because actors coordinate image, voice, and movement in the service of storytelling, the context and purpose are clarified, and thus, many of the multiple expressive modes can be organized into individual emotion expression [145], [146]. Moreover, because performing arts productions, such as movies and films made of theater, music, and dance performances, integrate multiple communication modes, the creative team collaborates frequently about the emotional tone or intent of each work, coordinating lighting, scenery/background, objects, camerawork, and sound, with the performers (actors, dancers, and musicians). While the team articulates their intentions during the creative process, the resulting produced art often resonates with wider variation to different audiences, according to their perceptions and tastes.

For researchers relying upon acted emotions, it may be helpful to understand some ways in which actor training considers the role of emotions in theater and film [147]. The role of emotion in narrative arts may reflect some of the theories about the role of emotion itself [148]—to approximately inform the character (organism) about their needs, in order to drive them to take action to meet their needs, or to provide feedback on how recent actions meet or do not meet their needs. As actors prepare, they identify a character’s needs (called their objective) and are moved by emotion to drive the character’s action to overcome obstacles as they pursue the character’s objective. Thus, when collecting data from acted examples, emotion expression can often be found preceding action in the narrative or in response to it. Actors are also trained to listen with their whole being to their scene partner to “hold space” during dialog for emotion expression to fully unfold and complete its purpose of moving either the speaker or the listener to response or action. This expectation is particularly true in opera or other musical theater genres, which often extends the time for emotion expression.

In terms of 3-D modeling, actors trained for theater not only highly develop the specific emotion-related action tendencies of the body but also consider viewer perception from 3-D, for example, when performing on a thrust stage or theater-in-the-round. Thus, their emotion expression may be more easily picked up by systems marking parts of the body during movement. Because bodily emotion expression is so crucial to a narrative, an important application of this field might be to automate audio description of emotion expression in film for the visually impaired or audio description of movement features salient to emotion expression.

While we often do not recognize subtle emotion expression in strangers, and we can in those we know, when actors portray characters within the circumstances of a play, the audience, gradually over the arc of the play, comes to perceive the emotional expression of each character as revealed over time. Understanding how this works in art can help us develop systems that take the time to learn and better understand emotion expression in diverse contexts and individuals, similar to how current voice recognition learns individual accents.

H. Cultural and Gender Dialects

In a meta-analysis examining cultural dialects in the nonverbal expression of emotion, measurable variations were found in response to facial expression across cultures [149]. Moreover, it has long since been acknowledged that learned display rules [150] and decoding rules [151] vary across different cultures. For example, in Japanese culture, overt displays of negative emotional expression are discouraged. Furthermore, smiling behavior in this context is often considered an attempt to conceal negative emotions. In contrast, in American culture, negative expressions are considered more appropriate to display [151].

Previous research has similarly demonstrated a powerful influence of gender-related emotion stereotypes in driving differential expectations for the type of emotions that men and women are likely to experience and express. Men are expected to experience and express more power-related emotions, such as anger and contempt, whereas women are expected to experience and express more powerless emotions, such as sadness and fear [152]. These findings match self-reported emotional experiences and expressions with strong cultural consistency across 37 different countries worldwide [153]. Furthermore, there are even differences in the extent to which neutral facial appearance resembles different emotions, with male faces physically resembling anger expressions more (low, hooded brows, thin lips, and angular features) and female faces resembling fear expressions more [154].

While cultural norms affect how and whether emotions are displayed, such norms also influence how displays of emotion are perceived. For example, when a culture’s norm is to express emotion, less intensity is read into such displays, whereas, in cultures where the norm is not to express emotion intensely, the same displays are read as more intense [155]. In this way, visual percepts derived from objective stimulus characteristics can generate different subjective experiences based on culture and other individual factors. For instance, there is notable cultural variation in the extent to which basic visual information, such as background versus foreground, is integrated into observers’ perceptual experiences [156].

Culture and gender add complexity to human emotional expression, yet little research to date has examined individual variation in responses to visual scenes, either in terms of basic aesthetics or the emotional responses that people have. Future work assessing simple demographic details (e.g., gender and age) will begin to explore this important source of variation.

I. Structure

Some basic visual properties have been found that characterize positive versus negative experiences and preferences. Most notably, round features—whether represented in faces or objects—elicit feelings of positivity and warmth, and tend to be preferred over angular visual features. This preference has been used to explain the roundness of smiling faces and the angularity of anger displays [157]. Such visual curvature has also been found to influence attitudes toward and preference for even meaningless patterns represented in abstract visual designs [158]. The connection between affective responses and these basic visual forms has helped computer vision predict emotions evoked from pictorial scenes, as mentioned earlier [46].

Importantly, the dimensional approach to assessing visual properties underlying emotional experience can be used to examine both visual scenes and faces found within those scenes. Indeed, the dimensional approach adequately captures both “pure” and mixed emotional facial expressions [159], as well as affective responses to visual scenes, as demonstrated by the IAPS. Critically, even neutral displays have been found to elicit strong spontaneous impressions [160], ones that are effortless, nonreflective, and highly consensual. Recent research utilizing computer-based models suggests that these inferences are largely driven by the same physical properties found in emotional expressions. For instance, Said et al. [161] employed a Bayesian network trained to detect expressions in faces and then applied this analysis to images of neutral faces that had been rated on a number of common personality traits. The results showed that the trait ratings of faces were meaningfully associated with the perceptual resemblances that these “neutral” faces had with emotional expressions. Thus, these results speak to a mechanism of perceptual overlap, whereby expression and identity cues can both trigger similar emotional responses.

A reverse engineering model of emotional inferences has suggested that perceptions of stable personality traits can be derived from emotional expressions as well [162]. This work implicates appraisal theory as a primary mechanism by which observing facial expressions can inform stable personality inferences made of others. This account suggests that people use appraisals that are associated with specific emotions to reconstruct inferences of others’ underlying motives, intents, and personal dispositions, which they then use to derive stable impressions. It has likewise been shown that emotion-resembling features in an otherwise neutral face can drive person perception [152]. Finally, research has also suggested that facial expressions actually take on the form that they do to resemble static facial appearance cues associated with certain functional affordance, such as facial maturity [163] and gender-related appearance [152].

J. Personality

Personality describes an individual’s relatively stable patterns of thinking, feeling, and behaving. These are characterized by certain personality traits that represent a person’s disposition toward the world. Emotions, on the other hand, are the consequence of the individual’s response to external or internal stimuli. They may change when the stimuli change and, therefore, are considered as states (as opposed to traits). Just as a full-blown emotion represents an integration of feeling, action, appraisal, and wants at a particular time and location, personality represents the integration of these components over time and space. Researchers have tried to characterize the relationships between personality and emotions. Several studies found correlations between certain personality traits and specific emotions. For instance, the trait neuroticism was found to be correlated with negative emotions, while extroverted people were found to experience higher levels of positive emotions than introverted people [164]. These correlations were explained by relating different personality traits to specific emotion regulation strategies e.g., [165], or by demonstrating that evaluation mediates between certain personality traits and negative or positive affect [166]. Whatever the reason for these correlations is, the fact that they are correlated might help to create state-to-trait inferences. Therefore, if emotional states can be mapped to personality, the ability to automatically recognize emotions could provide tools for the automatic detection of personality.

Advances in computationally, data-driven methods offer promising strides toward personality traits being predicted based on a dataset of individuals’ behavior, self-reported personality traits, or physiological measures. It is also possible to use factor analyses to identify underlying dimensions of personality, such as the Big Five personality traits, or openness, conscientiousness, extraversion, agreeableness, and neuroticism (OCEAN) [167], [168] (see Fig. 9). Personality can likewise be inferred from facial expressions of emotion [162] and even from emotion-resembling facial appearance [169]. Machine learning algorithms have recently been successfully employed to predict human perceptions of personality traits based on such facial emotion cues [170]. Furthermore, network analysis, such as social network analysis, can also be incorporated to identify patterns of connectivity between different personality traits or behavioral measures. Finally, interpreting a person’s emotional expression might also depend on the perception of their personality when additional data are available for inferring personality [171]. For further information, readers can refer to recent research in this area, e.g., [172] and [173].

Fig. 9.

Big Five personality traits or OCEAN.

K. Affective Style

Affective styles driven by the tendencies to approach reward versus avoid punishments found their way into early conflict theories [174] and remain a mainstay of contemporary psychology in theories such as Carver and Scheier’s Control Theory [175] and Higgins’s Self-discrepancy Theory [176].

Evidence for the specific association between emotion and approach-avoidance motivation has largely involved the examination of differential hemispheric asymmetries in cortical activation. Greater right frontal activation has been associated with avoidance motivation, as well as with flattened positive affect and increased negative affect. Greater left frontal activation has been associated with approach motivation and positive affect [177]. Supporting the meaningfulness of these findings, Davidson [177] argued that projections from the mesolimbic reward system, including basal ganglia and ventral striatum, which are associated with dopamine release, give rise to greater left frontal activation. Projections from the amygdala associated with the release of the primary vigilance-related transmitter norepinephrine give rise to greater right frontal activation.

Further evidence supporting the emotion/behavior orientation link stems from evidence accumulated in studies using measures of behavioral motivation based on Gray’s [178] proposed emotion systems. The most widely studied of these are the behavioral activation system (BAS) and the behavioral inhibition system (BIS). The BAS is argued to be highly related to appetitive or approach-oriented behavior in response to reward, whereas the BIS is argued to be related to inhibited or avoidance-oriented behavior in response to punishment. Carver and White [179] developed a BIS/BAS self-report rating measure that is thought to tap into these fundamental behavioral dispositions. They found that extreme scores on BIS/BAS scales were linked to behavioral sensitivity toward reward versus punishment contingencies, respectively [179]. BIS/BAS measures have been shown to be related to emotional predisposition, with positive emotionality being related to the dominance of BAS over BIS, and depressiveness and fearful anxiety being related to the dominance of BIS over BAS [180].

Notably, for many years, there existed a valence (positive/negative) versus motivational (approach/avoidance) confound in all work conducted in the emotion/behavior domain. Negative emotions were associated with avoidance-oriented and positive emotions with approach-oriented behavior, a contention supported by much of the work reviewed above. The valence/motivation confound led researchers Harmon-Jones and Allen [181] to test for hemispheric asymmetries in activation associated with anger, a negative emotion with an approach orientation (aggression). They argued that, if left hemispheric lateralization was associated with anger, this would indicate that the hemispheric lateralization in activation previously found was in fact due to behavioral motivation. However, right hemispheric lateralization would indicate that they were due to valence. In these studies, they found that dispositional anger [181] was associated with left lateralized EEG activation, consistent with the first interpretation and with that previously reported only for positive emotion. They supported this conclusion by showing that the dominance of BAS over BIS was associated with anger [58].

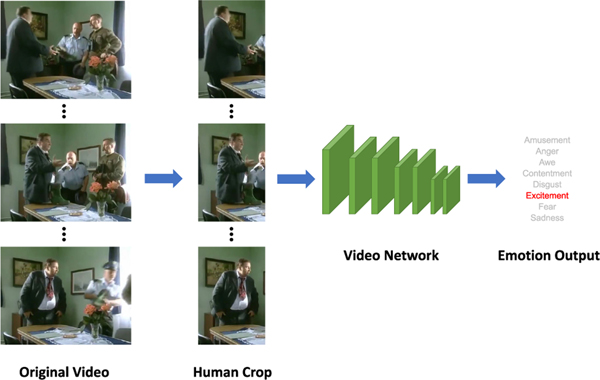

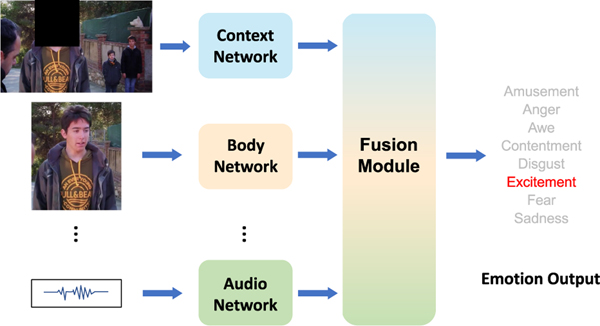

IV. EMOTION RECOGNITION: KEY IDEAS AND SYSTEMS

The field of computer-based emotion recognition from visual media is nascent but has seen encouraging developments in the past two decades. Interest in this area has also grown sharply (see Section IV-A). We will highlight some existing research ideas and systems related to modeling evoked emotion (see Section IV-B), facial expression (see Section IV-C), and bodily expression (see Section IV-D). In addition, we will discuss integrated approaches to emotion recognition (see Sections IV-E and IV-F). Because of the breadth of the field, it is not possible to cover all exciting developments, so we will focus our review on the evolution of the field and some of the most current, cutting-edge results.

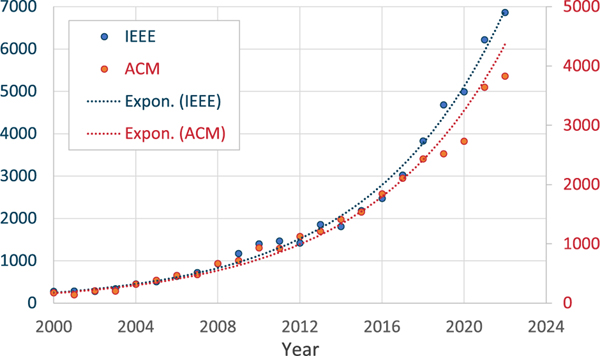

A. Exponential Growth of the Field

To gain insight into the growing interest of the IEEE and computing communities in emotion recognition research, we conducted a survey of publications in the IEEE Xplore and ACM Digital Library (DL) databases. Results revealed an exponential increase in the number of publications related to emotion or sentiment in images and videos over the last two decades (see Fig. 10).

Fig. 10.

Exponential growth in the number of IEEE and ACM publications related to emotion in images and videos in the recent two decades was observed. Statistics are based on querying the IEEE and ACM publication databases. The lower rate of expansion observed in the year 2020 can be attributed to the disruptions caused by the COVID-19 pandemic and the limitations that it imposed on human subject studies.

As of February 2023, a total of 48 154 publications were found in IEEE Xplore, with the majority (35 247 or 73.2%) being in conference proceedings, followed by journals (8214 or 17.1%), books (2400 or 5.0%), magazines (1413 or 2.9%), and early access journal articles (831 or 1.7%). The field has experienced substantial growth, with a 25-fold increase during the period from the early 2000s to 2022, rising from an average of 275 publications per year to about 7000 per year in 2022.

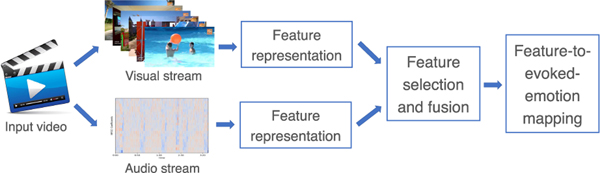

In the ACM DL, a total of 30 185 publications were found, with conference proceedings making up the majority (24 817 or 82.2%), followed by journals (3851 or 12.8%), magazines (780 or 2.6%), newsletters (505 or 1.7%), and books (264 or 0.9%). In the ACM community, the field has seen a 22-fold growth during the same period, with an average of 170 publications per year in the early 2000s rising to about 3800 per year in 2022.