Abstract

We present a framework for learning Granger causality networks for multivariate categorical time series based on the mixture transition distribution (MTD) model. Traditionally, MTD is plagued by a nonconvex objective, non-identifiability, and the presence of local optima. To circumvent these problems, we recast inference in the MTD as a convex problem. The new formulation facilitates the application of MTD to high-dimensional multivariate time series. As a baseline, we also formulate a multi-output logistic autoregressive model (mLTD), which, while a straightforward extension of autoregressive Bernoulli generalized linear models, has not been previously applied to the analysis of multivariate categorical time series. We establish identifiability conditions of the MTD model and compare them to those for mLTD. We further devise novel and efficient optimization algorithms for MTD based on our proposed convex formulation, and compare the MTD and mLTD in both simulated and real data experiments. Finally, we establish consistency of the convex MTD in high dimensions. Our approach simultaneously provides a comparison of methods for network inference in categorical time series and opens the door to modern, regularized inference with the MTD model.

Keywords: time series, Granger causality, categorical data, structured sparsity, convex, 68Q25, 68R10, 68U05

1. Introduction.

Granger causality [17] is a popular framework for assessing the relationships between time series, and has been widely applied in econometrics, neuroscience, and genomics, amongst other fields. Given two time series $x$ and $y$, the idea is to use the temporal structure of the data to assess whether the past values of one, say $x$, are predictive of future values of the other, $y$, beyond what the past of $y$ can predict alone; if so, $x$ is said to Granger cause $y$. Recently, the focus has shifted to inferring Granger causality networks from multivariate time series data, with the goal of uncovering a sparse set of Granger causal relationships amongst the individual univariate time series. Building on the typical autoregressive framework for assessing Granger causality, the majority of approaches for inferring Granger causal networks have focused on real-valued Gaussian time series using the vector autoregressive model (VAR) with sparsity inducing penalties [19, 42]. More recently, this approach has been extended to non-Gaussian data such as multivariate point processes using sparse Hawkes processes [48], count data using autoregressive Poisson generalized linear models [18], or even time series with heavy tails using VAR models with elliptical errors [36]. In contrast, inferring networks for multivariate categorical time series under this paradigm has received less attention.

Multivariate categorical time series arise naturally in many domains. For example, we might have health states from various indicators for a patient over time, voting records for a set of politicians, action labels for players on a team, social behaviors for kids in a school, or musical notes in an orchestrated piece. There are also many datasets that can be viewed as binary multivariate time series based on the presence or absence of an action for some set of entities. Furthermore, in some applications, collections of continuous-valued time series are each quantized into a small set of discrete values, like the weather data from multiple stations [12], wind data [39], stock returns [32], or sales volume for a collection of products [10]. Our work develops both interpretable and computationally efficient methodology for Granger causality network estimation in such cases using sparse penalties [19, 42]. Existing approaches to modeling categorical series neither scale to higher dimensional series nor offer Granger causal interpretability, which hampers their ability to estimate large Granger causality networks. We first discuss the specific drawbacks of existing approaches and then introduce the contributions of our proposed framework.

The mixture transition distribution (MTD) model [4, 39], originally proposed for parsimonious modeling of higher order Markov chains, can provide an approach to modeling multivariate categorical time series [10, 32, 49]. The MTD model reduces each categorical interaction to a standard single dimensional Markov transition probability table. While alluring due to its elegant construction and intuitive interpretation, widespread use of the MTD model has been limited by a non-convex objective with many local optima, a large number of parameter constraints, and unknown identifiability conditions [32, 49, 3]. For these reasons, the few applications of the MTD model to multivariate time series have looked at a maximum of three or four time series. To bypass the limitations of MTD, autoregressive generalized linear models have been advocated for categorical time series. In particular, autoregressive generalized linear binomial models are often used for the special case of two categories per series [18, 2]. While their multinomial-output extension to a larger number of states per series has not been widely adopted, they have been applied to the univariate time series case [24].

We refer to the autoregressive multinomial GLM as the multinomial logistic transition distribution (mLTD). The mLTD model uses a logistic function to bypass parameter constraints, results in a convex objective, and has well-known identifiability conditions. However, these advantages of mLTD come at the cost of reduced interpretability, mainly because the transition distribution in mLTD depends nonlinearly on the model parameters. Recently, a constrained autoregressive probit model that improves interpretability has been proposed [32]. However, the probit model is non-convex and its inference is computationally intensive, limiting applications to higher dimensional series. As such, one is still torn between computational tractability and interpretability. Methods for learning Granger causality networks among general time series based on transfer entropy or directed information have been proposed. In particular, the empirical estimator [37] and the context tree weighting estimator [23] for directed information are specifically applicable to categorical time series. However, consistency guarantees of these estimators are derived under the pairwise (group-wise) Markov assumption, and implementing these algorithms can be computationally intensive.

We address these issues by going back to the interpretable MTD framework and showing how one can improve its computational drawbacks. In particular, we recast inference in the MTD model as a convex problem through a novel re-parameterization. We further develop a regularized estimation framework for identifying Granger causality for multivariate categorical time series. We also establish, for the first time, conditions for identifiability in the MTD model and compare the identifiability conditions for MTD and mLTD models. We find that while the identifiability conditions for the MTD model are given by a non-convex set, we may easily enforce the constraints using our convex re-parameterization by augmenting the likelihood with appropriate convex penalties. We then develop efficient projected gradient and Frank-Wolfe algorithms for optimizing the penalized convex MTD objective. Our projected gradient algorithm depends on a Dykstra splitting method for projection onto the constraint sets of the MTD model. This computational approach for MTD enables this model to be applied to large, modern datasets for the first time. Importantly, the computational insights we provide carry over to the suite of other applications of MTD models, such as higher-order Markov chains, beyond the multivariate categorical time series which are the focus herein.

As a comparison benchmark we also develop a penalized mLTD model for Granger causality in multivariate Markov chains. While straightforward, the application of the penalized mLTD framework to multivariate categorical time series with more than two categories is new. We compare MTD and mLTD methods under multiple simulation conditions and use the MTD method to uncover Granger causality structure in both music [27] and iEEG brain recording [9] data sets. Finally, we also establish, for the first time, consistency of the convex MTD in high dimensions, which facilitates future theoretical developments in this area.

2. Categorical Time Series and Granger Causality.

2.1. Granger Causality.

Let $x_t$ denote a $d$-dimensional categorical random variable indexed by time $t$ where $x_{it} \in \mathcal{X}_i$, with $\mathcal{X}_i$ denoting the set of possible values of $x_i$. Let $m_i = |\mathcal{X}_i|$ be the cardinality of set $\mathcal{X}_i$, i.e., the number of categories that series $i$ may take. A length $T$ multivariate categorical time series is the sequence $x_1, \ldots, x_T$.

An order $k$ multivariate Markov chain models the transition probability between the categories at lagged times $t-1, \ldots, t-k$ and those at time $t$ using a transition probability distribution:

$$p(x_t \mid x_{t-1}, \ldots, x_{t-k}). \tag{2.1}$$

Due to the complexity of fully parameterizing this transition distribution, it is common to simplify the model and assume that the categories at time $t$ are conditionally independent of one another given the past realizations:

$$p(x_t \mid x_{t-1}, \ldots, x_{t-k}) = \prod_{i=1}^{d} p(x_{it} \mid x_{t-1}, \ldots, x_{t-k}). \tag{2.2}$$

For simplicity, we assume $k = 1$, but stress that all models and results equally apply to higher orders $k$. By the decomposition assumption (2.2), the problem of estimation and inference can be divided into independent subproblems over each series $i$. Using this decomposition, we define Granger non-causality for two categorical time series $x_i$ and $x_j$ as follows.

Definition 2.1.

Time series $x_j$ is not Granger causal for time series $x_i$ iff, for all $x_{j(t-1)}, x'_{j(t-1)} \in \mathcal{X}_j$, every value of $x_{it}$, and every value of the remaining lagged series,

$$p(x_{it} \mid x_{1(t-1)}, \ldots, x_{j(t-1)}, \ldots, x_{d(t-1)}) = p(x_{it} \mid x_{1(t-1)}, \ldots, x'_{j(t-1)}, \ldots, x_{d(t-1)}).$$

Definition 2.1 states that $x_j$ is not Granger causal for time series $x_i$ if the probability that $x_i$ is in any state at time $t$ is conditionally independent of the value of $x_j$ at time $t-1$ given the values of all other series $x_l$, $l \neq j$, at time $t-1$. Definition 2.1 is natural since it implies that if $x_j$ does not Granger cause $x_i$, then knowing $x_{j(t-1)}$ does not help predict the future state of series $x_i$. For real-valued data, classical definitions of Granger non-causality generally state that the conditional mean, in homoskedastic models, or conditional variance, in heteroskedastic models, of $x_{it}$ does not depend on the past values of $x_j$. Thus, Definition 2.1 is a generalization of the classical case to multivariate categorical data, where notions like conditional mean and variance are less applicable. The same definition has been considered before, for example, in [14].

2.2. Tensor Representation for Categorical Time Series.

Each individual conditional distribution in Equation (2.2) can be represented as a conditional probability tensor with modes of dimension . Each entry of the tensor is given by

| (2.3) |

Definition 2.1 may be stated equivalently using the language of tensors: does not Granger cause if all subtensors along the mode associated with are equal. Specifically,

| (2.4) |

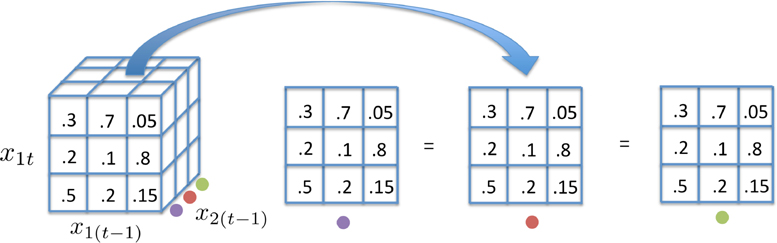

This subtensor view of Granger non-causality in categorical time series is displayed graphically in Figure 1.

Figure 1.

Illustration of Granger non-causality in an example with and . Since the tensor represents conditional probabilities, the columns of the front face of the tensor, the vertical axis, must sum to one. Here, is not Granger causal for since each slice of the conditional probability tensor along the mode is equal.
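The subtensor condition can be checked directly on a small example. The following is a minimal sketch, assuming the conditional probability tensor is stored as a NumPy array whose axis 0 indexes the state of the target series at time $t$ and whose axis $j$ indexes the lagged state of series $j$; the function name `is_granger_noncausal` and the tolerance are illustrative choices, not part of the paper.

```python
import numpy as np

def is_granger_noncausal(T, j, tol=1e-12):
    """True if all slices of T along the lagged mode of series j coincide.

    T has shape (m_i, m_1, ..., m_d); axis 0 is the state of the target
    series at time t and axis j (1-based) is the lagged state of series j.
    """
    slices = np.moveaxis(T, j, 0)  # bring mode j to the front
    return bool(np.all(np.abs(slices - slices[0]) <= tol))

# Toy example with d = 2 lagged series and 3 categories each.
rng = np.random.default_rng(0)
base = rng.dirichlet(np.ones(3), size=3).T  # shape (3, 3), columns sum to one
# The target depends on series 1 only: copy `base` along the mode of series 2.
T = np.repeat(base[:, :, None], 3, axis=2)  # shape (3, 3, 3)

caused_by_1 = not is_granger_noncausal(T, 1)
caused_by_2 = not is_granger_noncausal(T, 2)
```

Here series 2 is non-causal by construction, because every slice along its mode is a copy of the same table.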

The tensor interpretation suggests a naive penalized likelihood method for Granger non-causality selection in categorical time series: perform penalized maximum likelihood estimation of the conditional probability tensor with a penalty that enforces equality among the subtensors of each mode. While we have explored the above approach in low dimensions, e.g. for , memory, and in turn, computational requirements for storing the complete probability tensor become infeasible for even moderate dimensions since has entries. Other authors have modeled the conditional probability distribution of Markov chains using a Bayesian nonparametric higher order singular value decomposition [41] that adaptively shrinks the number of parameters needed to represent the high-dimensional tensor. We take an alternative approach and, instead, in Sections 2.3 and 2.4, present tensor parameterizations where the number of parameters needed to represent the full conditional probability tensor grows linearly with . We establish Granger non-causality conditions and associated penalized likelihood methods for estimation under these parameterizations in Sections 3 and 4, respectively.

In specifying our models, and throughout the remainder of the paper, we focus on a single conditional of given in Equation (2.2). For notational simplicity, we drop the index.

2.3. The MTD Model.

The MTD model as in [39] provides an elegant and intuitive parameterization of a high-order Markov chain. Here, we extend this model to the case of multiple time series, and model the multivariate Markov transition as a convex combination of pairwise transition probabilities. The MTD model is given by:

| (2.5) |

where is a probability vector, is a pairwise transition probability table between and and is a dimensional probability distribution such that with . We let the matrix denote the pairwise transition probability . Thus, . We also let denote the intercept, where . While past formulations of the MTD model neglect the intercept term, we show below that the intercept is crucial for model identifiability and, consequently, Granger causality inference. Finally, we note that the MTD model may be extended by adding interaction terms for pairwise effects [4], such as , though we focus our presentation on the simple case above.
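To make the convex-combination structure concrete, the sketch below assembles the full conditional probability tensor from MTD parameters. It assumes the mixture weights are held in a vector `gamma` (with `gamma[0]` weighting the intercept), the intercept in a probability vector `p0`, and the pairwise tables in column-stochastic matrices `P[j]`; these names and the array layout are assumptions made for illustration.

```python
import numpy as np

def mtd_tensor(gamma, p0, P):
    """Conditional probability tensor of an MTD model.

    gamma : (d + 1,) mixture weights, gamma[0] weights the intercept.
    p0    : (m,) intercept probability vector.
    P     : list of d arrays of shape (m, m_j); P[j][a, b] is the pairwise
            probability of target state a given lagged state b of series j.
    """
    d = len(P)
    m = p0.shape[0]
    T = (gamma[0] * p0).reshape((m,) + (1,) * d)
    for j, Pj in enumerate(P):
        shape = [m] + [1] * d
        shape[j + 1] = Pj.shape[1]
        T = T + gamma[j + 1] * Pj.reshape(shape)  # broadcast over other modes
    return T

rng = np.random.default_rng(0)
d, m = 3, 4
gamma = rng.dirichlet(np.ones(d + 1))
p0 = rng.dirichlet(np.ones(m))
P = [rng.dirichlet(np.ones(m), size=m).T for _ in range(d)]  # columns sum to one
T = mtd_tensor(gamma, p0, P)

n_mtd = (d + 1) + m + d * m * m  # raw parameter count, grows linearly in d
n_full = m ** (d + 1)            # number of entries of the full tensor
```

Each fiber of `T` along axis 0 sums to one because the weights and every table column do, which is the sense in which the model stays a valid transition distribution.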

2.4. The mLTD Model.

The multinomial logistic transition distribution model (mLTD) is given by:

| (2.6) |

where and . The probit model in [32] is not a natural fit for inferring Granger causality networks both due to the non-convexity of the probit model and the non-convex constraints imposed on the matrices. Note that, like the MTD model, the mLTD model naturally allows adding interaction terms, though we focus again on the simple case above.
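For comparison, a multinomial logistic transition can be sketched as a softmax over summed columns of unrestricted matrices. This is an illustration under assumed notation (an intercept vector `z0` and one real-valued matrix `Z[j]` per series), not a transcription of Equation (2.6).

```python
import numpy as np

def mltd_probs(z0, Z, x_prev):
    """Softmax transition probabilities for one target series.

    z0     : (m,) real-valued intercept.
    Z      : list of d real-valued arrays of shape (m, m_j).
    x_prev : length-d sequence of lagged integer states.
    """
    logits = z0.astype(float).copy()
    for Zj, b in zip(Z, x_prev):
        logits += Zj[:, b]        # column selected by the lagged state
    logits -= logits.max()        # stabilize the exponentials
    weights = np.exp(logits)
    return weights / weights.sum()

rng = np.random.default_rng(1)
d, m = 3, 4
z0 = rng.normal(size=m)
Z = [rng.normal(size=(m, m)) for _ in range(d)]
probs = mltd_probs(z0, Z, x_prev=[0, 2, 3])

# Setting one matrix to zero removes that series' influence entirely.
Z[1] = np.zeros((m, m))
p_a = mltd_probs(z0, Z, [0, 0, 3])
p_b = mltd_probs(z0, Z, [0, 3, 3])
```

No constraints are needed on the parameters, but each entry of `Z[j]` affects the probabilities only through the nonlinear normalization.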

2.5. Comparing MTD and mLTD Models.

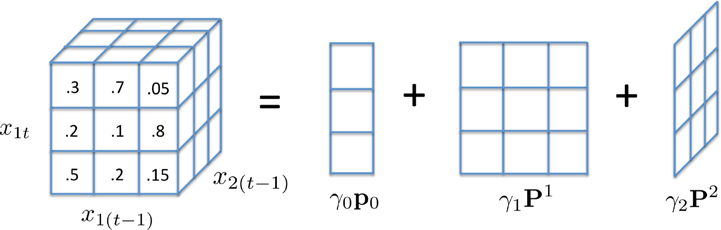

Both MTD and mLTD models represent the full conditional probability tensor using individual matrices for each series, for MTD and for mLTD. However, how these matrices are composed and restrictions on their domains differ substantially between the two models. The MTD model is a convex combination of pairwise probability tables, whereas mLTD is a nonlinear function of the unrestricted . MTD may thus be thought of as a linear tensor factorization method for conditional probability tensors, where the tensor is created by summing probability table slices along each dimension. This interpretation of MTD is displayed graphically in Figure 2.

Figure 2.

Schematic of the MTD factorization of the conditional probability tensor for time series and categories.

3. Convexity, Identifiability and Granger Causality.

In this section, we first introduce a novel re-parameterization of the MTD model that renders the log likelihood of the MTD model convex. The convex formulation alone opens up an array of possibilities for the MTD framework beyond our multivariate categorical time series focus, eliminating the primary barrier to adoption of this model, i.e., non-convexity and associated computationally demanding inference procedures. The proposed change-of-variables also allows us to derive both novel identifiability conditions for the MTD model and Granger causality restrictions that hold for both MTD and mLTD models. The non-identifiability of the MTD model was first pointed out by [28], but no explicit conditions or general framework for identifiability were given. We show that while the identifiability conditions for MTD are non-convex, they may be enforced implicitly by adding an appropriate convex penalty to the convex log-likelihood objective. The proofs of all results are given in the Supplementary Material.

3.1. Convex MTD.

The maximum likelihood estimator for the MTD model under the parameterization is defined by the non-convex optimization problem:

| (3.1) |

The log-likelihood surface is highly non-convex, following from the multiplication of and in the log term. It also contains many local optima due to the general non-identifiability. Indeed, the set of equivalent models forms a non-convex region in the parameterization (i.e., the convex combination of equivalent models is not necessarily another equivalent model). This limitation may lead to many non-convex shaped ridges and sets of equal probability.

Fortunately, the optimization problem in (3.1) may be recast as a convex program using the re-parameterization and . Using this reparameterization, we can rewrite the factorization of the conditional probability tensor for MTD in Equation (2.5) as

| (3.2) |

The full optimization problem for maximum log-likelihood including constraints then becomes:

| (3.3) |

Problem (3.3) is convex since the objective function is a linear function composed with a log function and only involves linear equality and inequality constraints [6].
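The convexity claim can be probed numerically. The sketch below assumes the reparameterized variables are stored as an intercept vector `z0` and a stacked array `Z` whose slice `Z[j]` plays the role of the weight of series $j$ times its pairwise table; it evaluates the negative log-likelihood at two feasible points and at their midpoint. The helper names and the random data are hypothetical.

```python
import numpy as np

def mtd_nll(z0, Z, target, lagged):
    """Negative log-likelihood in the (z0, Z) parameterization.

    target : (n,) integer states of the modeled series.
    lagged : (n, d) integer states of all series one step earlier.
    Z      : (d, m, m) array, Z[j, a, b] for target state a, lagged state b.
    """
    probs = z0[target].copy()
    for j in range(Z.shape[0]):
        probs += Z[j, target, lagged[:, j]]
    return -np.sum(np.log(probs))

def random_feasible(rng, d, m):
    """Draw a random point satisfying the MTD constraints."""
    gamma = rng.dirichlet(np.ones(d + 1))
    p0 = rng.dirichlet(np.ones(m))
    P = rng.dirichlet(np.ones(m), size=(d, m)).transpose(0, 2, 1)
    return gamma[0] * p0, gamma[1:, None, None] * P

rng = np.random.default_rng(2)
d, m, n_obs = 3, 4, 200
target = rng.integers(0, m, size=n_obs)
lagged = rng.integers(0, m, size=(n_obs, d))

z0_a, Z_a = random_feasible(rng, d, m)
z0_b, Z_b = random_feasible(rng, d, m)
nll_a = mtd_nll(z0_a, Z_a, target, lagged)
nll_b = mtd_nll(z0_b, Z_b, target, lagged)
nll_mid = mtd_nll(0.5 * (z0_a + z0_b), 0.5 * (Z_a + Z_b), target, lagged)
```

Because each likelihood term is the negative logarithm of a linear function of the parameters, `nll_mid` never exceeds the average of `nll_a` and `nll_b`; a single check like this illustrates the property but does not prove it.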

The reparameterization in Equation (3.2) also provides clear intuition for why the MTD model may not be identifiable. Since the probability function is a linear sum of s, one may move probability mass around, taking mass from some and moving to some or , while keeping the conditional probability tensor constant. These sets of equivalent MTD parameterizations have the following appealing property:

Proposition 3.1.

The set of MTD parameters, , that yield the same factorized conditional distribution forms a convex set.

Taken together, the convex reparameterization and Proposition 3.1 imply that the convex function given in Equation (3.3) has no local optima other than global ones, and that the globally optimal solution to Problem (3.3) is given by a convex set of equivalent MTD models.

3.2. Identifiability.

3.2.1. Identifiability for the MTD Model.

The re-parameterization of the MTD model in terms of s, instead of and , combined with the introduction of an intercept term, allows us to explicitly characterize identifiability conditions for the MTD model.

Theorem 3.2.

Every MTD distribution has a unique parameterization where the minimal element in each row of (and thus ) is zero for all .

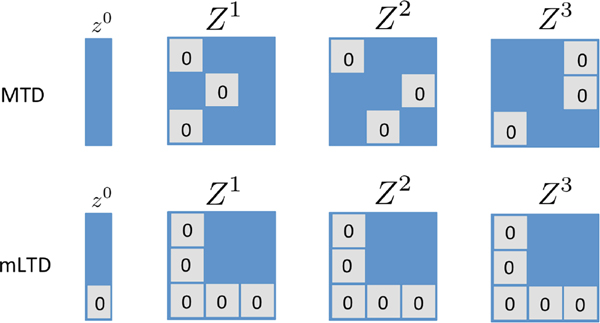

The intuition for this result is simple: any excess probability mass on a row of each may be pushed onto the same row of the intercept term without changing the full conditional probability. This operation may be done until the smallest element in each row is zero, but no more without violating the positivity constraints of the pairwise transitions. The identifiability condition in Theorem 3.2 also offers an interpretation of the parameters in the MTD model. Specifically, the element denotes the additive increase in probability that is in state given that is in state . Furthermore, the parameters now represent the total amount of probability mass in the full conditional distribution explained by categorical variable , providing an interpretable notion of dependence in categorical time series. The mLTD model, however, does not readily suggest a simple and interpretable notion of dependence from the matrix due to the non-linearity of the link function. The identifiability conditions are displayed pictorially in Figure 3.

Figure 3.

Schematic displaying the identifiability conditions for the MTD model (top) and the mLTD model (bottom) for an example with and . Identifiability for MTD requires a zero entry in each row of , while for mLTD the first column and last row must all be zero. In MTD the columns of each must also sum to the same value, and must sum to one across all .
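The mass-shifting argument behind Theorem 3.2 can be sketched in a few lines. The code assumes the same hypothetical storage as before (intercept `z0`, stacked matrices `Z` with rows indexed by the target state); it moves the smallest entry of every row into the intercept and confirms that the conditional probabilities are unchanged.

```python
import itertools
import numpy as np

def cond_prob(z0, Z, a, x_prev):
    """Probability of target state a given the lagged states x_prev."""
    return z0[a] + sum(Z[j, a, b] for j, b in enumerate(x_prev))

def to_identifiable(z0, Z):
    """Shift each row's minimum from Z[j] onto the same row of the intercept."""
    row_min = Z.min(axis=2)  # shape (d, m): minimum over lagged states
    return z0 + row_min.sum(axis=0), Z - row_min[:, :, None]

rng = np.random.default_rng(3)
d, m = 2, 3
gamma = rng.dirichlet(np.ones(d + 1))
p0 = rng.dirichlet(np.ones(m))
P = rng.dirichlet(np.ones(m), size=(d, m)).transpose(0, 2, 1)
z0, Z = gamma[0] * p0, gamma[1:, None, None] * P

z0_id, Z_id = to_identifiable(z0, Z)

max_gap = max(
    abs(cond_prob(z0, Z, a, xp) - cond_prob(z0_id, Z_id, a, xp))
    for a in range(m)
    for xp in itertools.product(range(m), repeat=d)
)
weights_id = Z_id.sum(axis=1)[:, 0]  # mass attributed to each series
```

After the shift every row has a zero entry, the columns of each matrix still share a common sum, and that sum is the probability mass attributed to the corresponding series.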

Unfortunately, the necessary constraint set for identifiability specified in Theorem 3.2 is a non-convex set since the locations of the zero elements in each row of are unknown. Naively, one could search over all possible locations for the zero element in each row of each ; however, this quickly becomes infeasible as both and grow. Instead, we add a penalty term , or prior, that biases the solution towards the uniqueness constraints. This regularization also aids convergence of optimization since the maximum likelihood solution without identifiability constraints is not unique. Letting

| (3.4) |

the regularized estimation problem is given by

| (3.5) |

Theorem 3.3.

For any and that does not depend on and is increasing with respect to the absolute value of entries in , the solution to the problem in Equation (3.5) is contained in the set of identifiable MTD models described in Theorem 3.2.

Intuitively, by penalizing the entries of the matrices, but not the intercept term, solutions will be biased to having the intercept contain the excess probability mass, rather than the matrices. Thus, even with a very small penalty, we constrain the solution space to the set of identifiable models. Theorem 3.3 characterizes an entire class of regularizers that enforce the identifiability constraints for MTD. As we explain in Section 4.1, a simple choice for is a regularizer that also selects for Granger causality.

3.2.2. Identifiability for the mLTD Model.

The non-identifiability of multinomial logistic models is also well-known, as is the non-identifiability of generalized linear models with categorical covariates. Combining the standard identifiability restrictions for both settings gives the following result.

Proposition 3.4.

([1]) Every mLTD has a unique parameterization such that first column and last row of are zero for all and the last element of is zero.

These conditions are displayed pictorially in Figure 3. Under the identifiability constraints for both MTD and mLTD models, at least one element in each row must be zero. For MTD this zero may be in any column, while for mLTD the zero may, without loss of generality, be placed in the first column of each row. For mLTD, the last row of must also be zero due to the logistic output (one category serves as the ‘baseline’); in MTD, instead, each column of must sum to one.

3.3. Granger Causality in MTD and mLTD.

Under the parameterization for MTD and mLTD specification of Equation (2.6), we have the following simple result for Granger non-causality conditions:

Proposition 3.5.

In both the MTD model of Equation (3.2) and the mLTD model of Equation (2.6), time series is Granger non-causal for time series if and only if the columns of are all equal. Furthermore, all equivalent MTD model parameterizations give the same Granger causality conclusions.

Intuitively, if all columns of are equal, the transition distribution for does not depend on . This result for mLTD and MTD models is analogous to the general Granger non-causality result, in which the slices of the conditional probability tensor along the mode are all equal. Based on Proposition 3.5, we might select for Granger non-causality by penalizing the columns of to be the same. While this approach is potentially interesting, a more direct, stable method takes into account the conditions required for identifiability of the under both models.

Under the identifiability constraints for both MTD and mLTD given in Theorem 3.2 and Proposition 3.4, respectively, is Granger non-causal for if and only if (a special case of all columns being equal). For both MTD and mLTD models this fact follows from each row having at least one zero element; for all the columns to be equal, as stated in Proposition 3.5, all elements in each row must also be equal to zero. Taken together, if we enforce the identifiability constraints, we may uniquely select for Granger non-causality by encouraging some to be zero.

4. Granger Causality Selection.

We now turn to procedures for inferring Granger non-causality statements from observed multivariate categorical time series. In Section 3, we derived that if , then is Granger non-causal for in both MTD and mLTD models. To perform model selection, we take a penalized likelihood approach and present a set of penalty terms that encourage , while maintaining convexity of the overall objective. The final parameter estimates automatically satisfy the identifiability constraints for MTD. We also develop an analogous penalized criterion for selecting Granger causality in the mLTD model.

4.1. Model Selection in MTD.

We now explore penalties that encourage the matrices to be zero. Under the parameterization, this is equivalent to encouraging the to be zero. We first introduce an penalized problem in terms of the original parameterization, and then show how convex relaxations of the norm on lead to natural convex penalties on . Ideally, we would solve the penalized problem:

| (4.1) |

where is a regularization parameter and is the norm over the weights; the intercept weight is not regularized. The penalty simply counts the number of non-zero , which is equivalent to the number of non-zero . This results in a non-convex objective. Instead, we develop alternative convex penalties suited to model selection in MTD. Importantly, we require that any such penalty, , fall in the intersection of two penalty classes: 1) must be a convex relaxation to the norm in Problem (4.1) to promote sparse solutions and 2) must satisfy the conditions of Theorem 3.3 to ensure the final parameter estimates satisfy the MTD identifiability constraints. We propose and compare two penalties that satisfy these criteria.

Our first proposal is the standard relaxation, as in lasso regression, which simply sums the absolute values of . This penalty encourages soft-thresholding, where some estimated are set exactly to zero while others are shrunk relative to the estimates from the unpenalized objective. Note that due to the non-negativity constraint, the norm on is simply given by . If were included in the regularization, the relaxation would fail because, under the simplex constraints, the norm would always be equal to one over the feasible set [35]. Our addition of an unpenalized intercept to the MTD model allows us to sidestep this issue and leverage the sparsity promoting properties of the penalty for model selection in MTD. The regularized MTD problem is thus given by

| (4.2) |

Equation (4.2) may be rewritten solely in terms of the s by noting that . Defining , and assuming, for simplicity of presentation, , we can rewrite the MTD constraints as

where

| (4.3) |

and is a -dimensional identity matrix. This gives the final penalized optimization problem only in terms of as

| (4.4) |

Writing the penalized problem in this form shows that the penalty increases with the absolute value of the entries in and does not penalize the intercept; it thus satisfies the conditions of Theorem 3.3. As a result, the solution to the problem given in Equation (4.4) automatically satisfies the MTD identifiability constraints. Furthermore, the solution will lead to Granger causality estimates since many of the will be zero due to the penalty.

Another natural convex relaxation of the objective in Equation (4.1) is given by a group lasso penalty on each [47]. The relaxation is derived by writing the norm as a rank constraint in terms of , which is then relaxed to a group lasso. Specifically, assume all time series have the same number of categories, i.e., . Due to the equality and non-negativity constraints,

where

Thus, we can use the nuclear norm on as a convex relaxation of . Furthermore, the nuclear norm of is given by the sum of Frobenius norms of . More specifically, denoting by the nuclear norm and by the Frobenius norm,

This group lasso penalty gives the final problem

| (4.5) |

Here, we penalize directly, rather than indirectly via . The group lasso penalty drives all elements of to zero together, such that the optimal solution sets some to be all zero. This effect naturally coincides with our conditions of Granger non-causality that all elements of . The group lasso penalty also satisfies the conditions of Theorem 3.3 since the norm is increasing with respect to each element in and the intercept is not penalized. Thus, solutions to Problem (4.5) automatically enforce the MTD identifiability constraints.

The group lasso penalty tends to favor larger groups [20]. When the time series have different numbers of categories, the sizes of the coefficient matrices differ. In this case, one can use penalties that scale with the group size, for example, . For simplicity, we focus on the case where all time series have the same number of categories hereafter, and omit the dependence of the penalty on group sizes.
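The two penalties can be written compactly in the reparameterized variables. The sketch below assumes a list `Z` of non-negative matrices whose columns share a common sum (the weight of the corresponding series); the function names are illustrative, and the unscaled group penalty corresponds to the equal-category case discussed above.

```python
import numpy as np

def l1_weight_penalty(Z):
    """Sum of the mixture weights of the non-intercept terms.

    Under the MTD constraints every column of Z[j] sums to the weight of
    series j, so the first column's sum recovers that weight.
    """
    return float(sum(Zj[:, 0].sum() for Zj in Z))

def group_lasso_penalty(Z):
    """Sum of Frobenius norms, one group per matrix Z[j]."""
    return float(sum(np.linalg.norm(Zj) for Zj in Z))

P = np.array([[0.5, 0.1],
              [0.5, 0.9]])            # columns sum to one
Z = [0.6 * P, np.zeros((2, 2))]       # weights 0.6 and 0; intercept weight 0.4

l1_value = l1_weight_penalty(Z)
gl_value = group_lasso_penalty(Z)
active = [j for j, Zj in enumerate(Z) if np.linalg.norm(Zj) > 0]
```

Neither function touches the intercept, and the indices in `active` are the series that would be declared Granger causal for the target.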

4.2. Model Selection in mLTD.

To select for Granger causality in the mLTD model, we add a group lasso penalty to each of the matrices, similar to Equation (4.5), leading to the following optimization problem:

| (4.6) |

For two categories, , this problem reduces to sparse logistic regression for binary time series, which was recently studied theoretically [18]. As in the MTD case, the group lasso penalty shrinks some entirely to zero.

5. Optimization.

Here we present fast proximal algorithms for fitting both penalized MTD and mLTD models. The convex formulation invites new optimization routines for fitting MTD models since many options exist for solving problems with convex objectives with linear equality and inequality constraints. In the Supplementary Material, we present alternative MTD solvers based on Frank-Wolfe [22] and Majorization-Minimization (MM) [21], and discuss their trade-offs. Both Frank-Wolfe and MM algorithms for MTD take elegant and simple forms. Furthermore, the MM algorithm for the non-penalized convex Problem (3.3) is equivalent to an EM algorithm for the MTD model in the original non-convex parameterization of Problem (3.1). As a byproduct, this equivalence shows that the EM algorithm under the non-convex parameterization converges to a global optimum. Here we focus on proximal algorithms since the MM algorithm for MTD is applicable only to the non-penalized MTD objective and Frank-Wolfe converges slowly relative to proximal gradient for the dimensions we consider; see the Supplementary Material for more details.

For the mLTD model, we perform gradient steps with respect to the mLTD likelihood followed by a proximal step with respect to the group lasso penalty. This leads to a gradient step of the smooth likelihood followed by separate soft group thresholding [33] on each .
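The proximal step for a group lasso penalty has a closed form that shrinks each matrix toward zero as a block. The following is a minimal sketch of that operator and of one proximal gradient update; the gradient is passed in as an argument because computing it for the mLTD likelihood is outside the scope of this illustration, and all names are hypothetical.

```python
import numpy as np

def group_soft_threshold(Zj, thresh):
    """Proximal operator of thresh * ||Zj||_F (block soft-thresholding)."""
    norm = np.linalg.norm(Zj)
    if norm <= thresh:
        return np.zeros_like(Zj)
    return (1.0 - thresh / norm) * Zj

def proximal_step(Z, grads, step, lam):
    """Gradient step on the smooth loss, then group shrinkage on each block."""
    return [group_soft_threshold(Zj - step * Gj, step * lam)
            for Zj, Gj in zip(Z, grads)]

A = np.array([[3.0, 0.0],
              [0.0, 4.0]])                 # Frobenius norm 5
shrunk = group_soft_threshold(A, 1.0)      # scaled by 1 - 1/5
zeroed = group_soft_threshold(A, 6.0)      # threshold exceeds the norm

updated = proximal_step([A], [np.zeros_like(A)], step=0.5, lam=2.0)
```

A whole matrix is set to zero whenever its norm falls below the threshold, which is what yields exact Granger non-causality estimates rather than merely small coefficients.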

For the MTD model, our proximal algorithm reduces to a projected gradient algorithm [33]. Projected gradient algorithms take steps along the gradient of the objective, and then project the result onto the feasible region defined by the constraints. Compared to other MTD optimization methods, our projected gradient algorithm under the parameterization is guaranteed to reach a global optimum of the MTD log-likelihood. The gradient of the regularized MTD model with respect to entries in over the feasible set is given by

| (5.1) |

For the norm, is not differentiable when an element in any is zero. For the group norm, is not differentiable when every element in at least one is zero. However, the MTD constraints enforce that . Since the point of non-differentiability for the norm in our case occurs when elements are identically zero, we modify the constraints so that for some small when using the group penalty. This allows us to ignore non-differentiability issues, and instead take gradient steps directly along the penalized MTD objective.

Following the notation from the end of Section 4.1, let the set denote the modified MTD constraints with respect to the parameterization. We perform projected gradient descent by taking a step along the regularized MTD gradient of Equation (5.1) and then projecting the result onto . Specifically, the algorithm iterates the following recursion starting at iteration :

| (5.2) |

where is a step size chosen by line search [33]. For ease of presentation, here we have written the projected gradient steps in terms of the vectorized variables , rather than the matrices. The operation is the projection of a vector onto the modified MTD constraint set :

with for the penalty and but small for the group lasso penalty. While this is a standard quadratic program for which we may use the dual method [16], as implemented, e.g., in the R quadratic programming package quadprog [43], we have found that standard solvers may scale poorly as the number of time series becomes large. To mitigate this inefficiency, we develop a fast projection algorithm based on Dykstra’s splitting algorithm [7] that exploits the particular structure of the constraint set for much faster projection, as described in Section 5.1.
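The projected gradient recursion with a backtracking line search can be sketched generically as follows. This is not the MTD implementation: the objective, the box constraint set, and all names (`projected_gradient`, `project_box`, `target`) are toy assumptions chosen so that the example is self-contained, and the specific backtracking rule is one standard choice rather than necessarily the one used here.

```python
def projected_gradient(f, grad, project, x0, step=1.0, shrink=0.5,
                       tol=1e-10, max_iter=1000):
    """Minimize f over a convex set, given its Euclidean projection `project`."""
    x = project(x0)
    for _ in range(max_iter):
        g, fx, t = grad(x), f(x), step
        while True:
            # Gradient step followed by projection onto the feasible set.
            x_new = project([xi - t * gi for xi, gi in zip(x, g)])
            d = [a - b for a, b in zip(x_new, x)]
            # Backtracking: accept once the quadratic upper bound holds.
            bound = (fx + sum(gi * di for gi, di in zip(g, d))
                     + sum(di * di for di in d) / (2.0 * t))
            if f(x_new) <= bound + 1e-12 or t < 1e-12:
                break
            t *= shrink
        if max(abs(di) for di in d) < tol:
            return x_new
        x = x_new
    return x

# Toy problem (an assumption): closest point to `target` inside the box [0, 1]^3.
target = [2.0, -1.0, 0.3]

def f(x):
    return 0.5 * sum((xi - ci) ** 2 for xi, ci in zip(x, target))

def grad(x):
    return [xi - ci for xi, ci in zip(x, target)]

def project_box(x):
    return [min(max(xi, 0.0), 1.0) for xi in x]

solution = projected_gradient(f, grad, project_box, [0.5, 0.5, 0.5])
```

In the MTD setting, `project` would be replaced by the projection onto the modified constraint set, which is the expensive part of each iteration.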

5.1. Dykstra’s Splitting Algorithm for Projection onto the MTD Constraints.

The set may be written as the intersection of two simpler sets: , where is the simplex constraint set of the first column of each matrix and the non-negativity constraint for all entries of . Specifically,

| (5.3) |

On the other hand, , where is the constraint set that all columns in sum to the same value:

| (5.4) |

where the matrix is given in Equation (4.3). Dykstra’s algorithm alternates between projecting onto the simplex constraints and the equal column sums by iterating the following steps. Let . Denote by the projection onto the set and by the projection onto the set . Dykstra’s algorithm amounts to the following iterations starting with :

The projection may be split into a simplex projection for the constraint and a non-negativity constraint and . We perform the simplex projection in time using the algorithm of [13]; the non-negativity projection is simply thresholding elements at zero and is performed in linear time. The linear projection is performed separately for each :

| (5.5) |

where may be precomputed so the per-iteration complexity for the full projection is since is a matrix. Importantly, this projection scheme harnesses the structure of the constraint set by splitting the projections into components that admit fast and simple low-dimensional projections. The full projection algorithm is given in Algorithm 5.2.
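To make the splitting idea concrete, the following minimal sketch runs Dykstra's algorithm on a toy problem that loosely mirrors the structure above. A vector holds the two stacked columns of a hypothetical 2 x 2 matrix. The first set requires the first column to lie on the simplex and all entries to be non-negative, and the second set requires the two column sums to be equal. The sort-based simplex projection is a standard method and may differ from the algorithm of [13]; all function names are assumptions.

```python
def project_simplex(v, total=1.0):
    """Sort-based Euclidean projection onto {x >= 0, sum(x) = total}."""
    cumulative, theta = 0.0, 0.0
    for j, uj in enumerate(sorted(v, reverse=True), start=1):
        cumulative += uj
        candidate = (cumulative - total) / j
        if uj - candidate > 0:  # holds for a prefix of the sorted entries
            theta = candidate
    return [max(x - theta, 0.0) for x in v]

def project_first_set(x, m):
    """First column on the simplex, remaining entries thresholded at zero."""
    return project_simplex(x[:m]) + [max(value, 0.0) for value in x[m:]]

def project_equal_sums(x, m):
    """Projection onto {x : sum(x[:m]) - sum(x[m:]) = 0} for two columns."""
    shift = (sum(x[:m]) - sum(x[m:])) / (2.0 * m)
    return [value - shift for value in x[:m]] + [value + shift for value in x[m:]]

def dykstra(v, proj_a, proj_b, n_iter=500):
    """Dykstra's alternating projections with one correction term per set."""
    x, p, q = list(v), [0.0] * len(v), [0.0] * len(v)
    for _ in range(n_iter):
        z = [xi + pi for xi, pi in zip(x, p)]
        y = proj_a(z)
        p = [zi - yi for zi, yi in zip(z, y)]
        w = [yi + qi for yi, qi in zip(y, q)]
        x = proj_b(w)
        q = [wi - xi for wi, xi in zip(w, x)]
    return x

m = 2
v = [0.9, 0.4, 0.2, -0.5]
projected = dykstra(v, lambda x: project_first_set(x, m),
                    lambda x: project_equal_sums(x, m))
# In this toy case the intersection is a product of two simplices, so the
# answer can be checked against column-wise simplex projections.
reference = project_simplex(v[:m]) + project_simplex(v[m:])
```

Unlike plain alternating projections, the correction terms `p` and `q` ensure convergence to the Euclidean projection onto the intersection rather than to an arbitrary feasible point.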

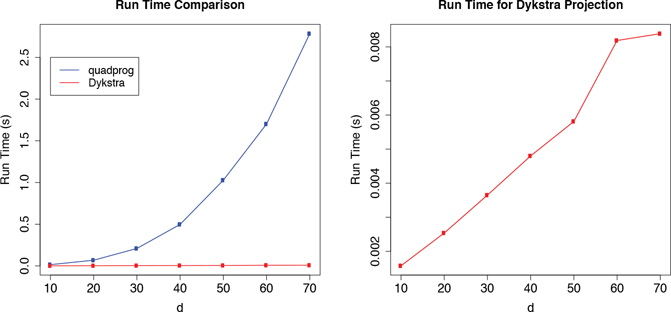

We compare projection times of the Dykstra algorithm to the active set method of [16] implemented in the R package quadprog [43]. The Dykstra projection for the MTD constraints was implemented in C++. Elements of were drawn independently from a normal distribution with standard deviation 0.7 and then projected onto . Average run times across 10 random realizations for series and categories are displayed in Figure 4. The Dykstra algorithm was run until iterates changed by less than $10^{-10}$. For each run, the elementwise maximum difference between the Dykstra projection and the quadprog projection was always on the scale of $10^{-10}$. Across this range of , the quadprog runtime appears to scale quadratically in , with a total run time on the scale of seconds for . The Dykstra projection method, however, appears to scale near linearly in this range with run times on the order of milliseconds. We also performed experiments with differing standard deviations for the independent draws of and observed very similar results.

Figure 4.

(left) A runtime comparison of the quadprog projection method and the Dykstra projection method on a range of time series dimensions. (right) A zoom in of only the compute time of the Dykstra method.

Algorithm 5.1.

Projected gradient algorithm for MTD using Dykstra projections.

| Initialize |

| while not converged do |

| compute via Equation 5.1 |

| determine by line search [33] |

| end while |

| return |

5.2. Comparing Model Selection and Optimization in MTD and mLTD.

Approaches to model selection in MTD and mLTD models are conceptually similar; both add regularizing penalties that shrink elements in to zero. However, these two approaches differ in practice. We explore the differences in selecting for Granger causality between these two approaches via extensive simulations in Section 7.

Both MTD and mLTD models take gradient steps followed by a proximal operation. In the mLTD model, this proximal operation is given by soft thresholding on the elements of . In the MTD optimization the proximal operation reduces to a projection onto the MTD constraint set. Importantly, due to the restricted domain of the MTD parameter set, the normally non-smooth penalty terms become smooth over the constraint set and we thus include them in the gradient step. In mLTD, the soft threshold proximal operation is performed in linear time while in MTD the projection is performed by iteratively using the Dykstra algorithm, where each step of the Dykstra algorithm is performed in log-linear time.

Algorithm 5.2.

DykstraMTD: Dykstra algorithm for projection onto the MTD constraints.

| Let be the ordered indices of whose elements belong in the first column of some or in |

| Let () refer to ordered indices of whose elements belong to . |

| while not converged do |

| via [13] |

| for do |

| via Equation 5.5 |

| end for |

| end while |

| return |

6. Estimation Consistency of MTD Model.

In this section, we establish an upper bound for estimation error of MTD parameters under the group lasso penalty. Analogous results can be obtained for the standard lasso penalty.

We first note that the MTD likelihood is of the same form as a multinomial GLM with identity link, i.e., with probability modeled as a linear combination of covariates. However, the dependence in the time series and the identity link create additional technicalities in the proof, and we will use newly developed concentration and entropy results in the dependent sample setting to overcome these difficulties.

We begin by stating the assumptions. Recall that is a Markov chain with state space . The transition kernel is given by (2.2) and (2.5). As in [34], we say that is -irreducible, if there exists a non-zero -finite measure on such that for all with and for all , there exists a positive integer such that . Here, is the distribution of given . Our first assumption concerns the nature of the data generating model and is rather mild.

Assumption 1.

is aperiodic and -irreducible, and has a unique stationary distribution .

For ease of presentation, we will write the MTD likelihood as a multinomial model with identity link. Let be the indicator function. Define where , and hence indicates the state of time series at time . We define , for each , where and . Hence, indicates both the state of time series at time and the state of time series at time . Define a new covariate vector as . We note that each component of can take values only in {0, 1}, and denote the possible values of as . The MTD model can then be written as

| (6.1) |

where is the coefficient of interest defined in terms of ’s. Specifically, for a general set of MTD parameters, we let for and define . In other words, the first components correspond to the intercept and each subsequent consecutive components correspond to a transition matrix. The superscript 0 denotes the true parameter value.
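As a rough illustration of the identity-link form, the sketch below evaluates conditional probabilities as an intercept plus one selected column from each transition block. The additive layout (an intercept vector followed by one matrix per lagged series), the function name `mtd_conditional_probs`, and the numerical values are assumptions made for this example; they are chosen so that the intercept and column sums total one.

```python
def mtd_conditional_probs(intercept, blocks, previous_states):
    """Identity-link probabilities: intercept plus one column per lagged series.

    intercept       -- list of length m (unpenalized part)
    blocks          -- list of m x m nested lists, one per lagged series
    previous_states -- previous state index of each lagged series
    """
    probs = list(intercept)
    for block, state in zip(blocks, previous_states):
        for k in range(len(probs)):
            # The indicator covariates simply pick out column `state`.
            probs[k] += block[k][state]
    return probs

# Hypothetical parameters: intercept mass 0.2, block column sums 0.5 and 0.3.
intercept = [0.1, 0.1]
blocks = [
    [[0.5, 0.0], [0.0, 0.5]],
    [[0.3, 0.0], [0.0, 0.3]],
]
probs = mtd_conditional_probs(intercept, blocks, previous_states=(0, 1))
```

Because the probabilities are linear in the parameters, the negative log-likelihood is convex in this parameterization.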

Denote the group lasso penalty by , where the intercept is left unpenalized. The MTD optimization problem can be written as

| (6.2) |

| (6.3) |

Let and be the empirical and conditional expected negative log-likelihood risks, respectively

| (6.4) |

where is the -algebra generated by . Furthermore, let denote the active set of , i.e., and denote its complement in . We define and . With this formulation, we are now ready to state the next assumptions.

Assumption 2.

For all such that for some function that only depends on and . Moreover, we assume that

| (6.5) |

Assumption 3.

Define a semi-norm as . For a stretching factor , define

| (6.6) |

| (6.7) |

where is the set of all that can be written as a scaled difference between two vectors that satisfy the MTD model constraints and identifiability constraints. We assume that for some for some constant .

Assumption 2 states that the transition probabilities are either 0 or bounded away from 0 by some quantity that only depends on the sample size and dimension. We further assume that this quantity is larger than the estimation error, which we will derive later. It ensures that when the parameter estimates are close to the true value, the likelihoods are also close. This in general may not be the case, as log(⋅) is unbounded when its argument approaches 0 and is not Lipschitz-continuous. Assumption 3 is a compatibility condition, often used in establishing estimation consistency of lasso-type estimators [8]. It is slightly weaker than the restricted eigenvalue condition which is also commonly used. Intuitively, this assumption requires that inactive groups are not too correlated with the active ones. The requirement that constrains the inherent co-linearity among the covariates.

Due to the Markovian structure, the design has to be treated as random, yet the compatibility constant is defined using population quantities. Hence, we need to show that the sample version of the compatibility constant converges to its population counterpart defined in Assumption 3. To this end, we use concentration results for Markov chains developed in [34] based on spectral methods.

A key quantity for the concentration results is the pseudo spectral gap of the chain [34]. We re-state the relevant definitions here for completeness. Let be the Hilbert space of complex valued measurable functions on that are square integrable with respect to . We equip with the inner product . Define a linear operator on as , which is induced from the transition kernel . The spectrum of a chain is defined as

| (6.8) |

If is a self-adjoint operator, the spectral gap is defined as

| (6.9) |

The self-adjointness of corresponds to the reversibility of the Markov chain with transition kernel . In general, the chain specified by the MTD model may not be reversible. In this case, define the time reversal of as the transition kernel

| (6.10) |

Then, the induced linear operator is the adjoint of on . Note that when the chain is indeed reversible, we have . Finally, the pseudo spectral gap of is defined as

| (6.11) |

where denotes the spectral gap of the self-adjoint operator . See Section 3.1 in [34] for additional discussion on the pseudo spectral gap. We make the following assumption on the pseudo spectral gap:

Assumption 4.

The pseudo spectral gap satisfies .

This assumption requires that as grows, the pseudo spectral gap of the chain does not approach 0 too fast. For a uniformly ergodic chain, the pseudo spectral gap is closely related to its mixing time, and this assumption requires that the mixing time does not grow too large. If is lower bounded by some constant, Assumption 4 reduces to an assumption on the dimension and sparsity relative to the sample size. Methods have been proposed to estimate the pseudo spectral gap [44], which can be used to assess the validity of this assumption empirically.

We are now ready to state our main theorem on the estimation error of the MTD model.

Theorem 6.1.

(Estimation error) Let . Suppose that there exists such that for all

| (6.12) |

and

| (6.13) |

Take . Then, under Assumption 1 and Assumption 4, for sufficiently large , we have that

| (6.14) |

Furthermore, under Assumption 3, the RHS is upper bounded by where is a constant depending on .

This theorem states that the estimation error defined in terms of is closely related to . The next lemma quantifies the magnitude of .

Lemma 6.2.

Under Assumption 2 and Assumption 3, we can take and to satisfy (6.12) and (6.13), and

| (6.15) |

Combining Theorem 6.1 and Lemma 6.2, we have the following corollary.

Corollary 6.3. Under Assumptions 1–4, we have that

| (6.16) |

If the minimal nonzero transition probability is large enough so that , we get a convergence rate of . Compared with the classical results on the estimation error of lasso (see, for example, [5]), we have an extra term. This is due to a concentration result in the dependent data setting [40]. Investigating whether this log factor can be removed would be an interesting question for future research.

Based on the estimation error bound, one can consider a thresholded version of the MTD estimator to achieve variable selection consistency. The thresholding step helps eliminate false positives without the stringent irrepresentable condition, which is required for variable selection consistency of the lasso [30]. Specifically, we can use a threshold of for some appropriately chosen . If we additionally assume that the minimal signal strength is of order larger than the estimation error bound, we can achieve variable selection consistency asymptotically.

7. Experiments.

We study the performance of our approaches to Granger causality detection in categorical time series. First, we compare penalized mLTD and MTD methods across multiple simulated data scenarios in Section 7.1. In Section 7.2 we apply our penalized MTD method to detect Granger causal connectivity between musical elements in a music dataset of Bach chorales and in Section 7.3 between iEEG sensors during seizures in an awake canine.

7.1. Simulated Data.

We perform a set of simulation experiments to compare the MTD and mLTD model selection methods. Specifically, we compare the MTD group lasso, -MTD, and mLTD group lasso methods on simulated categorical time series generated from a sparse MTD model, a sparse mLTD model and a sparse latent vector autoregressive model (VAR) with quantized outputs. In the sparse VAR setting, we also compare the three proposed methods to a penalized VAR fit using the ordinal categorical variables. For all experiments, we consider time series of length , , and number of categories . We first explain the details of each simulation condition and then discuss the results.

Sparse MTD.

For the MTD model, we randomly generate parameters by where and . We let . Columns of are generated according to with . (Note that here we have added a superscript to to specifically indicate the to interaction, whereas previously we dropped the index for notational simplicity by assuming we were just looking at the series term.) To ensure that the columns are not close to identical in (which would imply Granger non-causality), is sampled until the average total variation norm between the columns is greater than some tolerance . This ensures that non-causality occurs only when are zero, and not due to equal columns in the simulation. For our simulations, we set . A lower value of makes it more difficult to learn the Granger causality graph since some true interactions might be extremely weak.
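The rejection step for the transition matrix columns can be sketched as follows. The Dirichlet concentration, the tolerance, the number of categories, and the convention of total variation as half the L1 distance are all assumptions for illustration, as are the function names.

```python
import random
from itertools import combinations

def sample_dirichlet(alpha, m, rng):
    """Symmetric Dirichlet draw obtained by normalizing independent gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(m)]
    total = sum(draws)
    return [value / total for value in draws]

def total_variation(p, q):
    """Total variation distance, taken here as half the L1 distance."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

def average_pairwise_tv(columns):
    pairs = list(combinations(range(len(columns)), 2))
    return sum(total_variation(columns[a], columns[b]) for a, b in pairs) / len(pairs)

def sample_transition_columns(m, alpha, tolerance, rng, max_tries=10000):
    """Resample columns until their average pairwise distance exceeds `tolerance`."""
    for _ in range(max_tries):
        columns = [sample_dirichlet(alpha, m, rng) for _ in range(m)]
        if average_pairwise_tv(columns) > tolerance:
            return columns
    raise RuntimeError("no sample met the tolerance; consider lowering it")

rng = random.Random(0)
columns = sample_transition_columns(m=3, alpha=1.0, tolerance=0.3, rng=rng)
```

Raising `tolerance` yields stronger simulated interactions at the cost of more rejected draws.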

Sparse mLTD.

For the mLTD model, the nonzero parameters are generated by where with .

Sparse Latent VAR.

To examine data generated from neither of the models considered, we simulate data from a continuous time series according to a sparse VAR(1):

| (7.1) |

where . The sparse matrix A is generated by first sampling entries and then setting , where with . We then quantize each dimension, , into categories to create a categorical time series . For example, when if is in the (0,.33) quantile of , and so forth.
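A minimal sketch of this pipeline simulates a VAR(1) process and then quantizes each dimension into equal-probability bins using empirical ranks. The coefficient matrix, noise scale, series length, and function names are hypothetical, and rank-based binning is one assumed way to implement the quantile rule.

```python
import random

def simulate_var1(A, length, rng, noise_sd=1.0):
    """Simulate x_t = A x_{t-1} + e_t with independent Gaussian noise."""
    d = len(A)
    x = [0.0] * d
    series = []
    for _ in range(length):
        x = [sum(A[i][j] * x[j] for j in range(d)) + rng.gauss(0.0, noise_sd)
             for i in range(d)]
        series.append(x)
    return series

def quantize(values, m):
    """Assign category k to values in the k-th of m empirical quantile bins."""
    order = sorted(range(len(values)), key=lambda t: values[t])
    categories = [0] * len(values)
    for rank, t in enumerate(order):
        categories[t] = rank * m // len(values)
    return categories

rng = random.Random(1)
A = [[0.5, 0.0], [0.4, 0.5]]  # hypothetical sparse, stable coefficient matrix
latent = simulate_var1(A, length=300, rng=rng)
categorical = [quantize([row[i] for row in latent], m=3) for i in range(len(A))]
```

Here series 0 drives series 1 in the latent process, so a Granger causality method applied to `categorical` would ideally recover the edge from 0 to 1.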

Results.

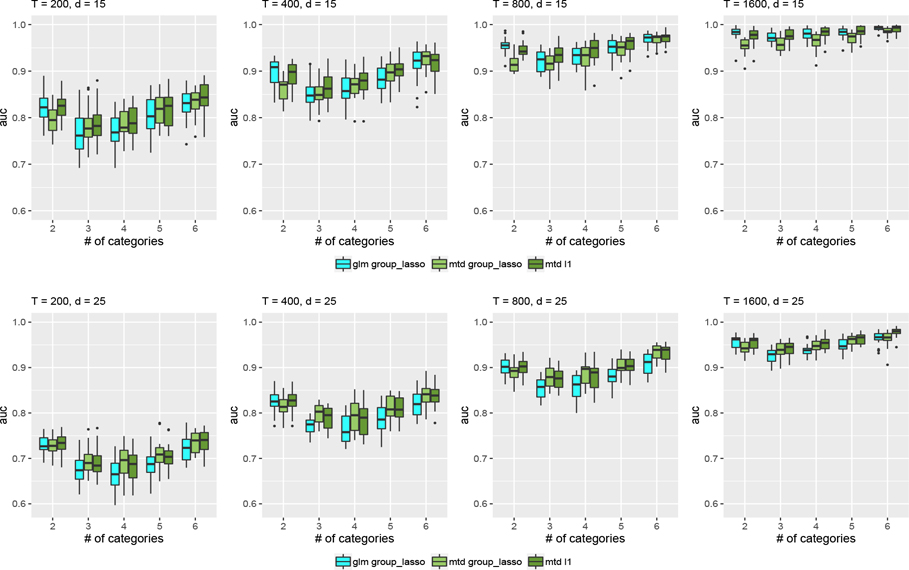

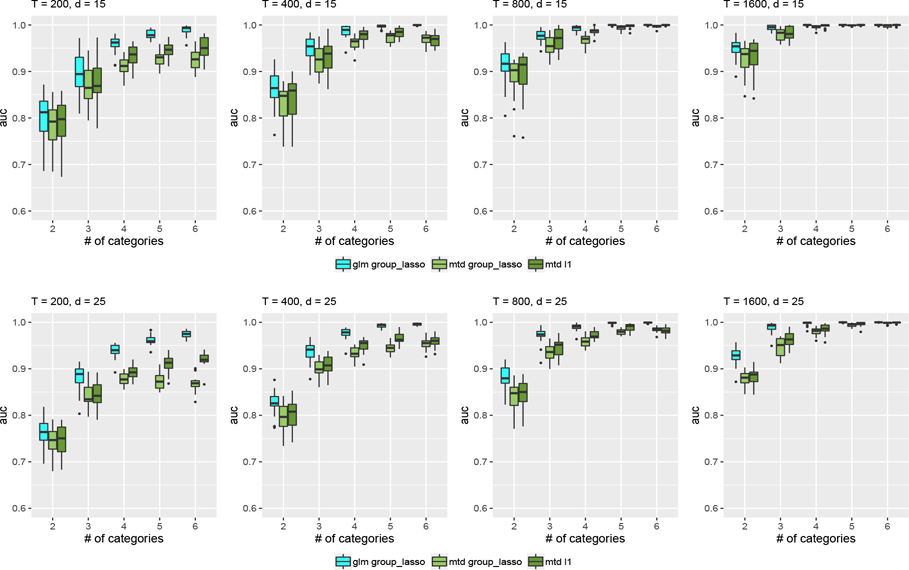

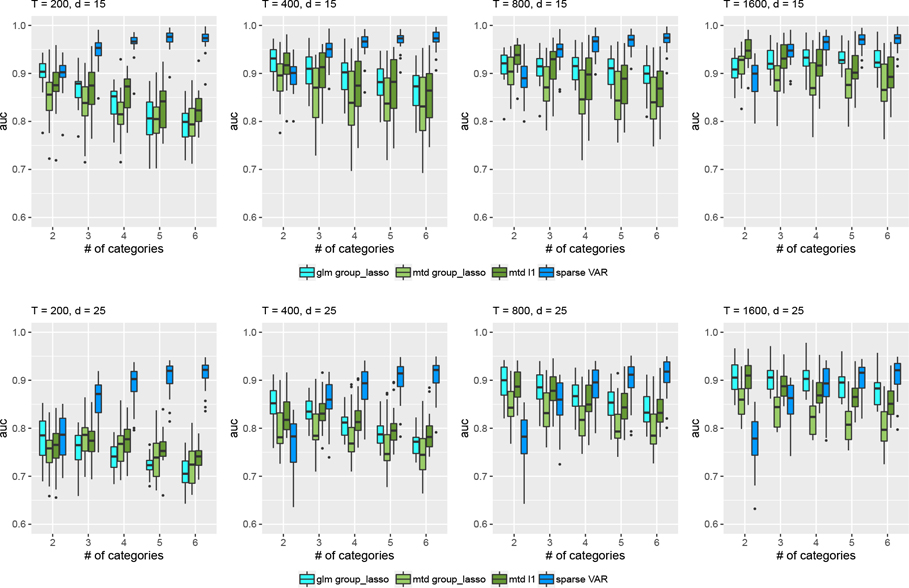

For all methods (MTD , MTD group lasso, and mLTD group lasso), we compute the true positive rate and false positive rate over a grid of values, and trace out the ROC curve. We then compute the area under the ROC curve. The results are displayed as boxplots across 20 simulation runs in Figures 5, 6, and 7 for the categorical time series generated by MTD, mLTD, and latent VAR, respectively. We note that the mLTD group lasso model performs best when the data are generated from an mLTD, and likewise the MTD and MTD group lasso perform better when the data are generated from an MTD. As pointed out in [20], when the groups are homogeneous in the sense that most coefficients in the active group are nonzero, group lasso tends to perform well. This is the case in the MTD model as the coefficients in nonzero are generated from a Dirichlet distribution. However, this principle is less applicable when the data are generated from an mLTD model as we have model misspecification. MTD with either group lasso or lasso penalty tries to find the best MTD approximation to the true data generating mechanism.

Interestingly, for data generated from mLTD, we see improved performance as a function of the number of categories for all and settings, while for MTD performance starts high, dips and goes back up with increasing . This is probably due to the simulation conditions, as in both MTD and mLTD models Granger causality can be quantified as the difference between the columns of . When there are more categories, there is higher probability under our simulation conditions that there will be some columns with large deviation from other columns in . This leads to improved Granger causality detection when it exists. Furthermore, we notice that in general the performances of all three methods are better when the data are generated from an mLTD model compared to an MTD model. This is again related to the simulation conditions. In the MTD model, the columns of are generated from a Dirichlet distribution with values constrained between 0 and 1, and the differences among columns are in general smaller than those in the mLTD model where the coefficients are generated using a normal distribution. Thus the connections among time series in the sense of Granger causality are weaker in the MTD model than the mLTD model. The difference in the signal strengths is illustrated in Figure SM3 in the Supplementary Material.
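The ROC and AUC computation over a grid of tuning parameters can be sketched as below. The edge sets are hypothetical, the candidate edge list (for example, whether self-loops count) is an assumption, and the trapezoidal rule with added endpoints (0, 0) and (1, 1) is one common way to compute the area.

```python
def rates(estimated, truth, candidates):
    """Return (false positive rate, true positive rate) for one estimated graph."""
    positives = len(truth)
    negatives = len(candidates) - positives
    true_pos = len(estimated & truth)
    false_pos = len(estimated - truth)
    return false_pos / negatives, true_pos / positives

def area_under_roc(points):
    """Trapezoidal area after adding the endpoints (0, 0) and (1, 1)."""
    ordered = sorted(set(points) | {(0.0, 0.0), (1.0, 1.0)})
    area = 0.0
    for (x0, y0), (x1, y1) in zip(ordered, ordered[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area

# Hypothetical example: four candidate edges, two of which are true.
candidates = {("a", "b"), ("b", "a"), ("a", "c"), ("c", "a")}
truth = {("a", "b"), ("b", "a")}
# Estimated edge sets along a decreasing grid of penalty values.
path = [
    set(),
    {("a", "b")},
    {("a", "b"), ("b", "a")},
    {("a", "b"), ("b", "a"), ("a", "c")},
    set(candidates),
]
auc = area_under_roc([rates(estimated, truth, candidates) for estimated in path])
```

In this toy path every true edge enters before any false edge, so the curve hugs the top-left corner.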

Figure 5.

AUC for data generated by a sparse MTD process. Boxplots over 20 simulation runs.

Figure 6.

AUC for data generated by a sparse mLTD process. Boxplots over 20 simulation runs.

Figure 7.

AUC for data generated by a sparse latent VAR process. Boxplots over 20 simulation runs.

In the latent VAR simulation, the MTD and the mLTD methods have comparable performance, and both outperform the MTD group lasso approach. However, under model misspecification, the relative performance of these methods might depend on how well they approximate the true data generating mechanism. There is also evidence of worsened performance for all three methods as the quantization of the latent VAR processes becomes finer, that is, as the number of categories increases. This might be due to the increased extent of model misspecification. We additionally compare the proposed methods to a sparse VAR fit, where we use the ordinal categorical variables directly. We observe that when the number of categories is small, our proposed methods perform similarly to the sparse VAR approach, as not much information is lost by ignoring the order. However, as the number of categories increases, the sparse VAR approach performs better by taking the order into account.

As expected, across all simulation conditions and estimation methods increasing the sample size leads to improved performance while increasing the dimension worsens performance.

We additionally present the median ROC curves in the Supplementary Material, along with points on the ROC curves chosen by cross-validation and BIC. In general, our numerical experiments indicate that the values of the tuning parameter selected by cross-validation tend to over-select edges, which has been observed in previous studies [29]. This highlights the importance of the thresholding step to reduce false positives. In contrast, BIC tends to give a large tuning parameter and results in an overly sparse graph when the sample size is small compared to the dimension; however, its performance improves considerably with large sample sizes.

7.2. Music Data Analysis.

We analyze Granger causality connections in the ‘Bach Choral Harmony’ data set available at the UCI machine learning repository [27] (https://archive.ics.uci.edu/ml/datasets/Bach+Chorales). This data set, which has been used previously [38, 15], consists of 60 chorales for a total of 5665 time steps. At each time step, 15 unique discrete events are recorded. There are 12 harmony notes, {C, C#, D, F#, D#, E, F, G, G#, A, A#, B}, that take values either ‘on’ (played) or ‘off’ (not played), i.e., for . There is a ‘meter’ category taking values in , where lower numbers indicate less accented events and higher numbers higher accented events. There is also the ‘pitch class of the base note’, taking 12 different values and a ‘chord’ category. We group all chords that occur less than 200 times into one group, giving a total of 12 chord categories.

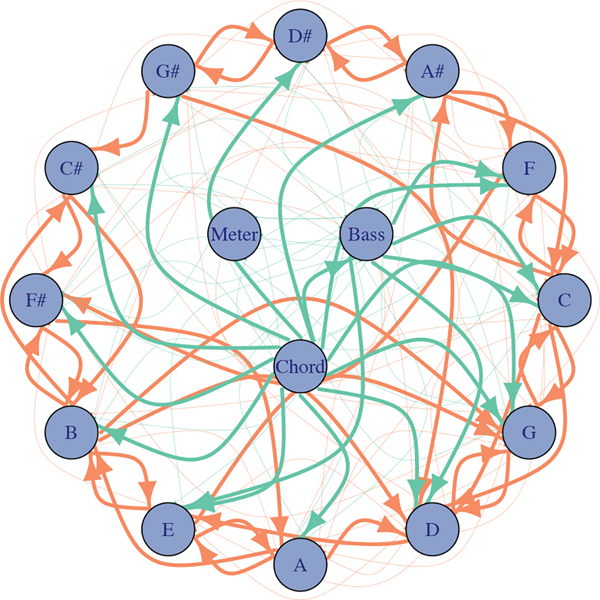

We apply the sparse MTD model for Granger causality selection. As the sample size is relatively small compared to the number of time series and number of categories per series, we choose the tuning parameter by five-fold cross validation over a grid of values. However, since cross-validation tends to over-select Granger causality relationships, we threshold the weights at 0.01. The resulting estimated Granger causality graph is plotted in Figure 8. To aid in the presentation of our structural analysis below, we bold all edges with weight magnitudes greater than 0.06.

Figure 8.

The Granger causality graph for the ‘Bach Choral Harmony’ data set using the penalized MTD method. The harmony notes are displayed around the edge in a circle corresponding to the circle of fifths. Orange links display directed interactions between the harmony notes while green links display interactions to and from the ‘bass’, ‘chord’, and ‘meter’ variables.

The harmony notes in the graph are displayed in a circle corresponding to the circle of fifths; the circle of fifths is a sequence of pitches where the next pitch in the circle is found seven semitones higher or lower, and it is a common way of displaying and understanding relationships between pitches in western classical music. Plotting the graph in this way shows substantially higher connections with respect to sequences on this circle. For example, moving both clockwise and counter-clockwise around the circle of fifths we see strong connections between adjacent pitches, and in some cases strong connections between pitches that are two hops away on the circle of fifths. Strong connections to pitches far away on the circle of fifths are much rarer. Together, the results suggest that in these chorales there is strong dependence in time between pitches moving in both the clockwise and counter-clockwise direction on the circle of fifths.
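The circle-of-fifths layout and the notion of "hops" between pitches can be made concrete with a short sketch. The helper names `CIRCLE` and `hops` are illustrative; the construction simply steps seven semitones at a time through the twelve pitch classes.

```python
# The twelve pitch classes in chromatic (semitone) order.
NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Stepping seven semitones at a time visits every pitch class exactly once.
CIRCLE = [NOTES[(7 * i) % 12] for i in range(12)]

def hops(a, b):
    """Number of steps between two pitches along the circle of fifths."""
    distance = abs(CIRCLE.index(a) - CIRCLE.index(b))
    return min(distance, 12 - distance)
```

For example, G and F are each one hop from C (clockwise and counter-clockwise, respectively), D is two hops away, and F# is the farthest pitch at six hops.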

We also note that the ‘chord’ category has very strong outgoing connections, implying it has strong Granger causality relationships with all harmony pitches. This result is intuitive, as it implies that there is strong dependence between what chord is played at time step and what harmony notes are played at time step . The ‘bass’ pitch is also influenced by ‘chord’ and tends to both influence and be influenced by most harmony pitches. Finally, we note that the ‘meter’ category has far fewer and weaker incoming and outgoing connections, capturing the intuitive notion that the level of accentuation of certain notes does not really relate to what notes are played.

As mentioned in Section 3.2.1, the MTD model is much more appropriate than the mLTD model for this type of exploratory Granger causality analysis: The weights intuitively describe the amount of probability mass that is accounted for in the conditional probability table, giving an intuitive notion of dependence between categorical variables. In the mLTD model, in contrast, there is no comparably intuitive interpretation of ‘link strength’ between two categorical variables due to the non-linearity of the softmax function. For this reason, it is not clear how to define the strength of interaction and dependence given a set of estimated parameters. We still attempted to draw such a comparison. We chose to use the normalized norm of each matrix, , as a measure of connection strength in the mLTD model. However, this metric does not have a direct interpretation with respect to the conditional probability tensor. Due to these interpretational difficulties, we present the results of the mLTD Bach analysis in the Supplementary Material. We note here that the final graph shows some of the structure of the MTD analysis: strong connections between chord and the harmony notes and some strong connections between notes on the circle of fifths. However, in general, the resulting graph is much less sparse and less interpretable than the MTD graph.

7.3. Functional Connectivity in Canine iEEG.

We analyze functional connectivity among intracranial electroencephalogram (iEEG) sensors during seizures in an awake canine [11]. The data was collected from a single canine undergoing seizures and is available at ieeg.org [9]. Recent time series segmentation of iEEG data around seizure events has shown that different discrete dynamic states are active before, during, and after a seizure onset [45, 11]. We analyze Granger causal connectivity between the iEEG recording channels at the level of these discrete dynamic states, providing a Granger causal analysis at a more abstract level. Specifically, we segment the continuous measurements into nominal categorical states using a Markov switching autoregressive model. This analysis illustrates which channel’s dynamic states are predictive of another channel’s states.

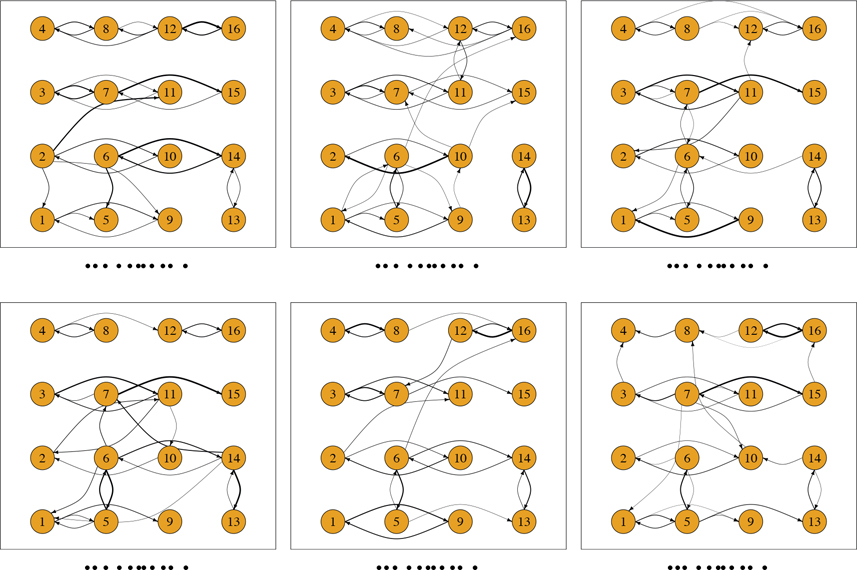

Each of 18 iEEG recordings from a single dog contains channels and time points corresponding to a two minute window around a seizure event. The time series for each channel was segmented into a categorical time series with states using a Markov switching autoregressive model of multiple time series [46, 45]. See the Supplementary Material for details on the segmentation model and procedure.

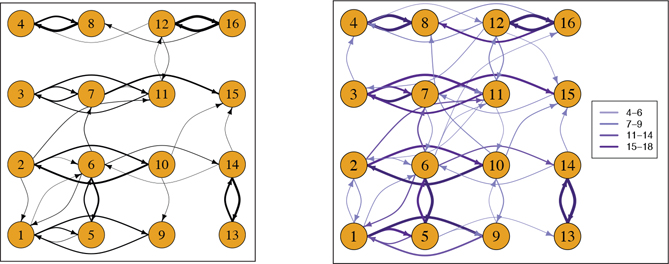

We separately apply our sparse MTD model to the resulting iEEG multivariate categorical time series from 18 different seizure events. For each seizure, the hyperparameter was varied over 800 values sampled uniformly between 0.01 and 100000. As the sample size is large, the final model was selected by the Bayesian information criterion (BIC). The resulting estimated graphs for six representative seizure events are shown in Figure 9. To aid interpretability, only edges that contribute more than 1% of the total conditional probability tensor are displayed. In Figure 10 we display two graphs that summarize Granger causality across all 18 seizures. In the first, we compute the average edge weight across all seizures and threshold the final graph at 0.5%. In the second, for each edge we display the number of times that edge is included across all seizures.
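The two summary graphs can be computed along the following lines. The dictionary representation of each per-seizure graph, the treatment of an absent edge as weight zero when averaging, and the example weights are assumptions for illustration.

```python
def summarize_graphs(graphs, average_threshold=0.005):
    """Summarize per-seizure graphs given as {(source, target): weight} dicts.

    Returns the thresholded average edge weights and, for every edge, the
    number of graphs in which that edge appears.
    """
    edges = set().union(*graphs)  # union of the edge keys of all graphs
    n = len(graphs)
    averages = {edge: sum(g.get(edge, 0.0) for g in graphs) / n for edge in edges}
    averages = {edge: w for edge, w in averages.items() if w > average_threshold}
    counts = {edge: sum(1 for g in graphs if edge in g) for edge in edges}
    return averages, counts

# Hypothetical edge weights for two seizures.
graphs = [
    {(1, 9): 0.02, (3, 7): 0.004},
    {(1, 9): 0.04},
]
averages, counts = summarize_graphs(graphs)
```

The averaged graph emphasizes strong edges, while the inclusion counts highlight edges that persist across seizures even when individually weak.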

Figure 9.

Granger causality graphs estimated from a sparse MTD model across six different seizure events for canine iEEG data.

Figure 10.

(left) Graph weighted by the average across 18 seizures and (right) graph weighted and colored by the number of edge inclusions across 18 seizures.

The graphs in Figures 9 and 10 indicate persistent shared structure across seizures. The four nodes in the same row represent a strip of four electrodes that were placed along the anterior-posterior direction. There were two parallel strips of four electrodes on each hemisphere. Most connections appear horizontally across the sensor locations, corresponding to anterior-posterior connections among regions within the same strip, which should be close both spatially and functionally. The few vertical connections are between adjacent rows, which represent connections between strips next to each other. Some groups of edges like 1 → 9, 14 → 13, 13 → 14, 3 → 7, 7 → 3, 4 → 8, 8 → 4, 12 → 16, 16 → 12 and others, appear in at least fifteen of the seizure graphs, showing the persistence in some Granger causal connectivity across different seizure events. Future work aims to assess the clinical significance of these findings. At a high level, however, we have identified that AR states, which capture the frequency content in individual channel signals, are correlated across time in a structured and sparse manner during seizure events.

8. Discussion.

We have proposed a novel convex framework for the MTD model, as well as two penalized estimation strategies that simultaneously promote sparsity in Granger causality estimation and constrain the solution to an identifiable space. We have also introduced the mLTD model as a baseline for multivariate categorical time series that, although straightforward, has not been explored in the literature. Novel identifiability conditions for the MTD have been derived and compared to those for the mLTD model. Finally, we have developed both projected gradient and Frank-Wolfe algorithms for the MTD model that harness the new convex formulation. For the projected gradient optimization, we also developed a Dykstra projection method to quickly project onto the MTD constraint set, allowing the MTD model to scale to much higher dimensions. Our experiments demonstrate the utility of both the MTD and mLTD models for inferring Granger causality networks from categorical time series, even under model misspecification.

There are a number of potential directions for future work. Consistency of high dimensional autoregressive GLMs with univariate natural parameters for each series has been recently established [18]. With less stringent parametric assumptions, the MTD model offers a more flexible framework than autoregressive GLMs. To handle this additional flexibility, we need additional assumptions on the Gram matrix and the spectral properties of the process when deriving an upper bound for the estimation error. We also have an extra factor in the upper bound compared to the results for lasso-type estimators in the independent data setting. This log factor also appears in [18]. Whether it can be removed or not would be an interesting question for future research. Further theoretical comparison between mLTD and MTD is also important. For example, to what extent may an mLTD distribution be represented by an MTD one, and vice versa; or, to what extent are both models consistent for Granger causality estimation under model misspecification? Our simulation results suggest that both methods perform well under model misspecification but more general theoretical results are certainly needed. Our sparse MTD framework also presents a simple approach to sparsity estimation under simplex constraints. As mentioned in Section 4.1, typically penalties are avoided under simplex constraints since the sum is constrained to equal one. Many authors have proposed a variety of non-convex sparsity regularizers that demand more involved optimization routines [35]. Inspired by our work with MTD, a simple solution is to leave some of the important coefficients known to be in the model unpenalized. Examples include treasury bonds in a sparse portfolio optimization [26] or large background clusters in sparse clustering and density estimation [25, 35].

It would also be interesting to explore other regularized MTD objectives, such as the nuclear norm on when the number of categories per time series is large. This penalty would both select for sparse dependencies, while simultaneously sharing information about transitions within each . While we have considered sparsity in , in other applications including categorical time series with large state-spaces, such as language modeling, the entries within each might be sparse. Comparing the projected gradient and Frank-Wolfe algorithms in these sparse, large state-space settings would be interesting. Another possible extension includes the hierarchical group lasso over lags for higher order Markov chains [31] to automatically obtain the order of the Markov chain. Overall, the methods presented herein open many new opportunities for analyzing multivariate categorical time series both in practice and theoretically.

Supplementary Material

Funding:

This work was partially funded by ONR grant N00014-18-1-2862 and grants from the National Science Foundation (CAREER IIS-1350133, DMS-1161565, DMS-1561814) and the National Institutes of Health (R01GM114029).

Footnotes

Submitted to the editors April 9th, 2020.

REFERENCES

- [1].Agresti A and Kateri M, Categorical Data Analysis, Springer Berlin Heidelberg, Berlin, Heidelberg, 2011, pp. 206–208, 10.1007/978-3-642-04898-2.

- [2].Bahadori MT, Liu Y, and Xing EP, Fast structure learning in generalized stochastic processes with latent factors, in Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’13, New York, NY, USA, 2013, ACM, pp. 284–292, 10.1145/2487575.2487578, http://doi.acm.org/10.1145/2487575.2487578.

- [3].Berchtold A, Estimation in the mixture transition distribution model, Journal of Time Series Analysis, 22 (2001), pp. 379–397, 10.1111/1467-9892.00231, https://onlinelibrary.wiley.com/doi/abs/10.1111/1467-9892.00231.

- [4].Berchtold A and Raftery A, The mixture transition distribution model for high-order Markov chains and non-Gaussian time series, Statist. Sci., 17 (2002), pp. 328–356, 10.1214/ss/1042727943.

- [5].Bickel PJ, Ritov Y, Tsybakov AB, et al., Simultaneous analysis of lasso and Dantzig selector, The Annals of Statistics, 37 (2009), pp. 1705–1732.

- [6].Boyd S and Vandenberghe L, Convex optimization, Cambridge University Press, 2004.

- [7].Boyle JP and Dykstra RL, A method for finding projections onto the intersection of convex sets in Hilbert spaces, in Advances in Order Restricted Statistical Inference, Dykstra R, Robertson T, and Wright FT, eds., New York, NY, 1986, Springer New York, pp. 28–47.

- [8].Bühlmann P and Van De Geer S, Statistics for high-dimensional data: methods, theory and applications, Springer Science & Business Media, 2011.

- [9].Mayo Clinic and University of Pennsylvania, ieeg.org.

- [10].Ching W, Fung ES, and Ng MK, A multivariate Markov chain model for categorical data sequences and its applications in demand predictions, IMA Journal of Management Mathematics, 13 (2002), pp. 187–199, 10.1093/imaman/13.3.187.

- [11].Davis KA, Ung H, Wulsin D, Wagenaar J, Fox E, Patterson N, Vite C, Worrell G, and Litt B, Mining continuous intracranial EEG in focal canine epilepsy: Relating interictal bursts to seizure onsets, Epilepsia, 57 (2016), pp. 89–98, 10.1111/epi.13249, https://onlinelibrary.wiley.com/doi/abs/10.1111/epi.13249.

- [12].Doshi-Velez F, Wingate D, Tenenbaum J, and Roy N, Infinite dynamic Bayesian networks, in Proceedings of the 28th International Conference on Machine Learning, ICML ’11, USA, 2011, Omnipress, pp. 913–920, http://dl.acm.org/citation.cfm?id=3104482.3104597.

- [13].Duchi J, Shalev-Shwartz S, Singer Y, and Chandra T, Efficient projections onto the l1-ball for learning in high dimensions, in Proceedings of the 25th International Conference on Machine Learning, ICML ’08, New York, NY, USA, 2008, ACM, pp. 272–279, 10.1145/1390156.1390191, http://doi.acm.org/10.1145/1390156.1390191.

- [14].Eichler M, Graphical modelling of multivariate time series, Probability Theory and Related Fields, 153 (2012), pp. 233–268.

- [15].Esposito R and Radicioni DP, Carpediem: Optimizing the Viterbi algorithm and applications to supervised sequential learning, Journal of Machine Learning Research, 10 (2009), pp. 1851–1880.

- [16].Goldfarb D and Idnani A, Dual and primal-dual methods for solving strictly convex quadratic programs, in Numerical Analysis, Hennart JP, ed., Berlin, Heidelberg, 1982, Springer Berlin Heidelberg, pp. 226–239.

- [17].Granger C, Investigating causal relations by econometric models and cross-spectral methods, Rational Expectations and Econometric Practice, 1 (1981), p. 371.

- [18].Hall EC, Raskutti G, and Willett R, Inference of high-dimensional autoregressive generalized linear models, arXiv preprint arXiv:1605.02693, (2016).

- [19].Han F, Lu H, and Liu H, A direct estimation of high dimensional stationary vector autoregressions, The Journal of Machine Learning Research, 16 (2015), pp. 3115–3150.

- [20].Huang J, Zhang T, et al., The benefit of group sparsity, The Annals of Statistics, 38 (2010), pp. 1978–2004.

- [21].Hunter DR and Lange K, A tutorial on MM algorithms, The American Statistician, 58 (2004), pp. 30–37, 10.1198/0003130042836.

- [22].Jaggi M, Revisiting Frank-Wolfe: Projection-free sparse convex optimization, in ICML (1), 2013, pp. 427–435.

- [23].Jiao J, Permuter HH, Zhao L, Kim Y-H, and Weissman T, Universal estimation of directed information, IEEE Transactions on Information Theory, 59 (2013), pp. 6220–6242.

- [24].Kedem B and Fokianos K, Regression Models for Time Series Analysis, vol. 488, John Wiley & Sons, 2005, ch. Regression models for categorical time series.

- [25].Kyrillidis A, Becker S, Cevher V, and Koch C, Sparse projections onto the simplex, in International Conference on Machine Learning, 2013, pp. 235–243.

- [26].Kyrillidis A, Becker S, Cevher V, and Koch C, Sparse simplex projections for portfolio optimization, in 2013 IEEE Global Conference on Signal and Information Processing, Dec 2013, p. 1141, 10.1109/GlobalSIP.2013.6737104.

- [27].Lichman M et al., UCI machine learning repository, 2013.

- [28].Lèbre S and Bourguignon P-Y, An EM algorithm for estimation in the mixture transition distribution model, Journal of Statistical Computation and Simulation, 78 (2008), pp. 713–729, 10.1080/00949650701266666.

- [29].Meinshausen N, Bühlmann P, et al., High-dimensional graphs and variable selection with the lasso, The Annals of Statistics, 34 (2006), pp. 1436–1462.

- [30].Meinshausen N, Yu B, et al., Lasso-type recovery of sparse representations for high-dimensional data, The Annals of Statistics, 37 (2009), pp. 246–270.

- [31].Nicholson WB, Bien J, and Matteson DS, Hierarchical vector autoregression, arXiv preprint arXiv:1412.5250, (2014).

- [32].Nicolau J, A new model for multivariate Markov chains, Scandinavian Journal of Statistics, 41 (2014), pp. 1124–1135.

- [33].Parikh N, Boyd S, et al., Proximal algorithms, Foundations and Trends in Optimization, 1 (2014).

- [34].Paulin D et al., Concentration inequalities for Markov chains by Marton couplings and spectral methods, Electronic Journal of Probability, 20 (2015).

- [35].Pilanci M, Ghaoui LE, and Chandrasekaran V, Recovery of sparse probability measures via convex programming, in Advances in Neural Information Processing Systems 25, Pereira F, Burges CJC, Bottou L, and Weinberger KQ, eds., Curran Associates, Inc., 2012, pp. 2420–2428, http://papers.nips.cc/paper/4504-recovery-of-sparse-probability-measures-via-convex-programming.pdf.

- [36].Qiu H, Xu S, Han F, Liu H, and Caffo B, Robust estimation of transition matrices in high dimensional heavy-tailed vector autoregressive processes, in Proceedings of the... International Conference on Machine Learning. International Conference on Machine Learning, vol. 37, NIH Public Access, 2015, p. 1843.

- [37].Quinn CJ, Kiyavash N, and Coleman TP, Directed information graphs, IEEE Transactions on Information Theory, 61 (2015), pp. 6887–6909.

- [38].Radicioni DP and Esposito R, BREVE: An HMPerceptron-Based Chord Recognition System, Springer Berlin Heidelberg, Berlin, Heidelberg, 2010, pp. 143–164, 10.1007/978-3-642-11674-2.

- [39].Raftery AE, A model for high-order Markov chains, Journal of the Royal Statistical Society: Series B (Methodological), 47 (1985), pp. 528–539, 10.1111/j.2517-6161.1985.tb01383.x, https://rss.onlinelibrary.wiley.com/doi/abs/10.1111/j.2517-6161.1985.tb01383.x.

- [40].Rakhlin A, Sridharan K, and Tewari A, Sequential complexities and uniform martingale laws of large numbers, Probability Theory and Related Fields, 161 (2015), pp. 111–153.

- [41].Sarkar A and Dunson DB, Bayesian nonparametric modeling of higher order Markov chains, Journal of the American Statistical Association, 111 (2016), pp. 1791–1803, 10.1080/01621459.2015.1115763.

- [42].Shojaie A and Michailidis G, Discovering graphical Granger causality using the truncating lasso penalty, Bioinformatics, 26 (2010), pp. i517–i523, 10.1093/bioinformatics/btq377.

- [43].Turlach B and Weingessel A, quadprog R package, available online, 2013.

- [44].Wolfer G and Kontorovich A, Estimating the mixing time of ergodic Markov chains, arXiv preprint arXiv:1902.01224, (2019).

- [45].Wulsin D, Fox E, and Litt B, Parsing epileptic events using a Markov switching process model for correlated time series, in International Conference on Machine Learning, 2013, pp. 356–364.

- [46].Wulsin DF, Fox EB, and Litt B, Modeling the complex dynamics and changing correlations of epileptic events, Artificial Intelligence, 216 (2014), pp. 55–75, 10.1016/j.artint.2014.05.006, http://www.sciencedirect.com/science/article/pii/S0004370214000599.

- [47].Yuan M and Lin Y, Model selection and estimation in regression with grouped variables, Journal of the Royal Statistical Society: Series B (Statistical Methodology), 68 (2006), pp. 49–67.

- [48].Zhou K, Zha H, and Song L, Learning social infectivity in sparse low-rank networks using multidimensional Hawkes processes, in Artificial Intelligence and Statistics, 2013, pp. 641–649.

- [49].Zhu D and Ching W, A new estimation method for multivariate Markov chain model with application in demand predictions, in 2010 Third International Conference on Business Intelligence and Financial Engineering, Aug 2010, pp. 126–130, 10.1109/BIFE.2010.39.
