Abstract

Deep neural network models of sensory systems are often proposed to learn representational transformations with invariances like those in the brain. To reveal these invariances, we generated ‘model metamers’, stimuli whose activations within a model stage are matched to those of a natural stimulus. Metamers for state-of-the-art supervised and unsupervised neural network models of vision and audition were often completely unrecognizable to humans when generated from late model stages, suggesting differences between model and human invariances. Targeted model changes improved human recognizability of model metamers but did not eliminate the overall human–model discrepancy. The human recognizability of a model’s metamers was well predicted by their recognizability by other models, suggesting that models contain idiosyncratic invariances in addition to those required by the task. Metamer recognizability dissociated from both traditional brain-based benchmarks and adversarial vulnerability, revealing a distinct failure mode of existing sensory models and providing a complementary benchmark for model assessment.

Subject terms: Sensory processing, Object vision, Auditory system

The authors test artificial neural networks with stimuli whose activations are matched to those of a natural stimulus. These ‘model metamers’ are often unrecognizable to humans, demonstrating a discrepancy between human and model sensory systems.

Main

A central goal of neuroscience is to build models that reproduce brain responses and behavior. The hierarchical nature of biological sensory systems1 has motivated the use of hierarchical neural network models that transform sensory inputs into task-relevant representations2,3. As such models have become the top-performing machine perception systems over the last decade, they have also emerged as the leading models of both the visual and auditory systems4,5.

One hypothesis for why artificial neural network models might replicate computations found in biological sensory systems is that they instantiate invariances that mirror those in such systems6,7. For instance, visual object recognition must often be invariant to pose and to the direction of illumination. Similarly, speech recognition must be invariant to speaker identity and to details of the prosodic contour. Sensory systems are hypothesized to build up invariances8,9 that enable robust recognition. Such invariances plausibly arise in neural network models as a consequence of optimization for recognition tasks or other training objectives.

Although biological and artificial neural networks might be supposed to have similar internal invariances, there are some known human–model discrepancies that suggest that the invariances of the two systems do not perfectly match. For instance, model judgments are often impaired by stimulus manipulations to which human judgments are invariant, such as additive noise10,11 or small translations of the input12,13. Another such discrepancy is the vulnerability to adversarial perturbations (small changes to stimuli that alter model decisions despite being imperceptible to humans14,15). Although these findings illustrate that current task-optimized models lack some of the invariances of human perception, they leave many questions unresolved. For instance, because the established discrepancies rely on only the model’s output decisions, they do not reveal where in the model the discrepancies arise. It also remains unclear whether observed discrepancies are specific to supervised learning procedures that are known to deviate from biological learning. Finally, because we have lacked a general method to assess model invariances in the absence of a specific hypothesis, it remains possible that current models possess many other invariances that humans lack.

Here, we present a general test of whether the invariances present in computational models of the auditory and visual systems are also present in human perception. Rather than target particular known human invariances, we visualize or sonify model invariances by synthesizing stimuli that produce approximately the same activations in a model. We draw inspiration from human perceptual metamers (stimuli that are physically distinct but that are indistinguishable to human observers because they produce the same response at some stage of a sensory system), which have previously been characterized in the domains of color perception16,17, texture18–20, cue combination21, Bayesian decision-making22 and visual crowding23,24. We call the stimuli we generate ‘model metamers’ because they are metameric for a computational model25.

We generated model metamers from a variety of deep neural network models of vision and audition by synthesizing stimuli that yielded the same activations in a model stage as particular natural images or sounds. We then evaluated human recognition of the model metamers. If the model invariances match those of humans, humans should be able to recognize the model metamer as belonging to the same class as the natural signal to which it is matched.

Across both visual and auditory task-optimized neural networks, metamers from late model stages were nearly always misclassified by humans, suggesting that many of their invariances are not present in human sensory systems. The same phenomenon occurred for models trained with unsupervised learning, demonstrating that the model failure is not specific to supervised classifiers. Model metamers could be made more recognizable to humans with selective changes to the training procedure or architecture. However, late-stage model metamers remained much less recognizable than natural stimuli in every model we tested regardless of architecture or training. Some model changes that produced more recognizable metamers did not improve conventional neural prediction metrics or evaluations of robustness, demonstrating that the metamer test provides a complementary tool to guide model improvements. Notably, the human recognizability of a model’s metamers was well predicted by other models’ recognition of the same metamers, suggesting that the discrepancy with humans lies in idiosyncratic model-specific invariances. Model metamers demonstrate a qualitative gap between current models of sensory systems and their biological counterparts and provide a benchmark for future model evaluation.

Results

General procedure

The goal of our metamer generation procedure (Fig. 1a) was to generate stimuli that produce nearly identical activations at some stage within a model but that were otherwise unconstrained and thus could differ in ways to which the model was invariant. We first measured the activations evoked by a natural image or sound at a particular model stage. The metamer for the natural image or sound was then initialized as a white noise signal (either an image or a sound waveform; white noise was chosen to sample the metamers as broadly as possible subject to the model constraints without biasing the initialization toward a specific object class). The noise signal was then modified to minimize the difference between its activations at the model stage of interest and those for the natural signal to which it was matched. The optimization procedure performed gradient descent on the input, iteratively updating the input while holding the model parameters fixed. Model metamers can be generated in this way for any model stage constructed from differentiable operations. Because the models that we considered are hierarchical, if the image or sound was matched with high fidelity at a particular stage, all subsequent stages were also matched (including the final classification stage in the case of supervised models, yielding the same decision).
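The procedure can be illustrated with a minimal sketch, which is not the authors' implementation. It assumes a hypothetical one-stage "model" that averages non-overlapping windows of the input, and it uses the squared normalized Euclidean distance as a stand-in loss. The names `stage`, `loss_and_grad` and `generate_metamer`, the pooling width, the step size and the number of steps are all illustrative assumptions. The input is initialized as white noise and updated by gradient descent while the model itself stays fixed.

```python
import random

def stage(x, pool=4):
    """Toy model stage (an assumption): mean of each non-overlapping window.
    The input length is assumed to be a multiple of `pool`."""
    return [sum(x[i:i + pool]) / pool for i in range(0, len(x), pool)]

def loss_and_grad(x, target, pool=4):
    """Squared error between stage(x) and target, normalized by ||target||^2,
    together with its analytic gradient with respect to the input x."""
    diff = [a - t for a, t in zip(stage(x, pool), target)]
    norm = sum(t * t for t in target)
    loss = sum(d * d for d in diff) / norm
    # Each input sample contributes 1/pool to exactly one pooled activation.
    grad = [2.0 * diff[i // pool] / (pool * norm) for i in range(len(x))]
    return loss, grad

def generate_metamer(reference, steps=500, lr=1.0, pool=4, seed=0):
    rng = random.Random(seed)
    target = stage(reference, pool)               # activations of the natural signal
    x = [rng.gauss(0.0, 1.0) for _ in reference]  # white-noise initialization
    for _ in range(steps):
        _, grad = loss_and_grad(x, target, pool)
        x = [v - lr * g for v, g in zip(x, grad)]  # update the input only
    final_loss, _ = loss_and_grad(x, target, pool)
    return x, final_loss

reference = [float(i % 5) for i in range(16)]
metamer, final_loss = generate_metamer(reference)
```

In this toy case the optimized signal matches the pooled activations of the reference but keeps the within-window structure of the noise, which is exactly the kind of variation to which the averaging stage is invariant.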

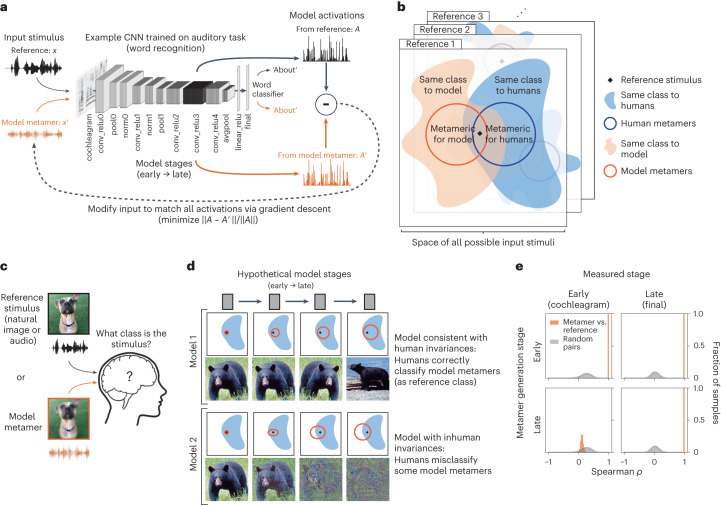

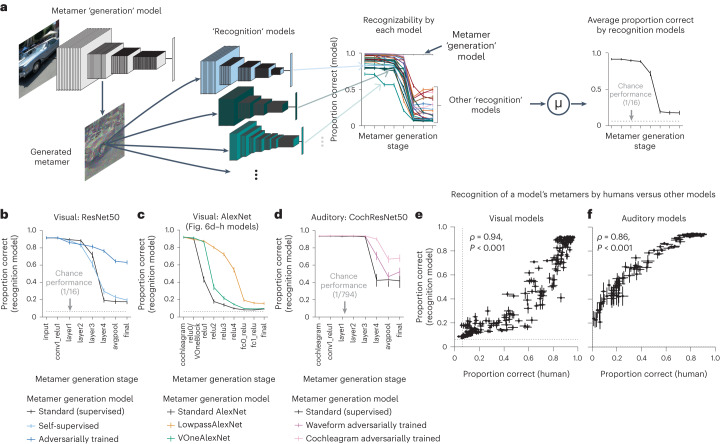

Fig. 1. Overview of model metamers methodology.

a, Model metamer generation. Metamers are synthesized by performing gradient descent on a noise signal to minimize the difference (normalized Euclidean distance) between its activations at a model stage and those of a natural signal. The architecture shown is the CochCNN9 auditory model. b, Each reference stimulus has an associated set of stimuli that are categorized as the same class by humans (blue) or by models (orange, if models have a classification decision). Metamers for humans and metamers for models are also sets of stimuli in the space of all possible stimuli (subsets of the set of same-class stimuli). Here, model metamers are derived for a specific model stage, taking advantage of access to the internal representations of the model at each stage. c, General experimental setup. Because we do not have high-resolution access to the internal brain representations of humans, we test for shared invariances behaviorally, asking humans to make classification judgments on natural stimuli or model metamers. See text for justification of the use of a classification task. d, Possible scenarios for how model metamers could relate to human classification decisions. Each square depicts sets of stimuli in the input space. Model 1 represents a model that passes our proposed behavioral test. The set of metamers for a reference stimulus grows over the course of the model, but even at the last stage, all model metamers are classified as the reference category by humans. Model 2 represents a model whose invariances diverge from those of humans. By the late stages of the model, many model metamers are no longer recognizable by humans as the reference stimulus class. The metamer test results thus reveal the model stage at which model invariances diverge from those of humans. e, Example distributions of activation similarity for pairs of metamers (a natural reference stimulus and its corresponding metamer) along with random pairs of natural stimuli from the training set. 
The latter provides a null distribution that we used to verify the success of the model metamer generation. Distributions were generated from the first and last stage of the CochCNN9 auditory model.

Experimental logic

The logic of our approach can be related to four sets of stimuli. For a given ‘reference’ stimulus, there is a set of stimuli for which humans produce the same classification judgment as the reference (Fig. 1b). A subset of these are stimuli that are indistinguishable from the reference stimulus (that is, metameric) to human observers. If a model performs a classification task, it will also have a set of stimuli judged to be the same category as the reference stimulus, and a subset of these stimuli will produce the same activations at a given model stage (model metamers). Even if the model does not perform classification, it could instantiate invariances that define sets of model metamers for the reference stimulus at each model stage.

In our experiments, we generate stimuli (sounds or images) that are metameric to a model and present these stimuli to humans performing a classification task (Fig. 1c). Because we have access to the internal representations of the model, we can generate metamers for each model stage (Fig. 1d). In many models there is limited invariance in the early stages (as is believed to be true of early stages of biological sensory systems9), with model metamers closely approximating the stimulus from which they are generated (Fig. 1d, left). But successive stages of a model may build up invariance, producing successively larger sets of model metamers. In a feedforward model, if two distinct inputs map onto the same representation at a given model stage, then any differences in the inputs cannot be recovered in subsequent stages, such that invariance cannot decrease from one stage to the next. If a model replicates a human sensory system, every model metamer from each stage should also be classified as the reference class by human observers (Fig. 1d, top). Such a result does not imply that all human invariances will be shared by the model, but it is a necessary condition for a model to replicate human invariances.
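The claim that invariance cannot decrease across feedforward stages can be checked on a toy example. The two-stage model below is purely hypothetical (rectification followed by a global sum) and is meant only as a sketch of the logic: any input matched at the first stage is necessarily matched at the second, whereas an input matched only at the second stage need not be matched at the first.

```python
def stage1(x):
    # Rectification: discards the sign of each input value.
    return [abs(v) for v in x]

def stage2(a):
    # Global sum: discards which position carried the energy.
    return [sum(a)]

def model(x):
    # Feedforward composition of the two stages.
    return stage2(stage1(x))

reference = [1.0, -2.0, 3.0]
early_metamer = [-1.0, 2.0, 3.0]   # same stage1 activations, different input
late_metamer = [6.0, 0.0, 0.0]     # same stage2 activations only

# Matched at stage 1 implies matched at every later stage.
early_matches_stage1 = stage1(early_metamer) == stage1(reference)
early_matches_stage2 = model(early_metamer) == model(reference)

# Matched at stage 2 does not imply matched at stage 1.
late_matches_stage1 = stage1(late_metamer) == stage1(reference)
late_matches_stage2 = model(late_metamer) == model(reference)
```

The set of late-stage metamers therefore contains the set of early-stage metamers, which is the nesting depicted schematically in Fig. 1d.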

Discrepancies in human and model invariances could result in model metamers that are not recognizable by human observers (Fig. 1d, bottom). The model stage at which this occurs could provide insight into where any discrepancies with humans arise within the model.

Our approach differs from classical work on metamers17 in that we do not directly assess whether model metamers are also metamers for human observers (that is, indistinguishable). The reason for this is that a human judgment of whether two stimuli are the same or different could rely on any representations within their sensory system that distinguish the stimuli (rather than just those that are relevant to a particular behavior). By contrast, most current neural network models of sensory systems are trained to perform a single behavioral task. As a result, we do not expect metamers of such models to be fully indistinguishable to a human, and the classical metamer test is likely to be too sensitive for our purposes. Models might fail the classical test even if they capture human invariances for a particular task. But if a model succeeds in reproducing human invariances for a task, its metamers should produce the same human behavioral judgment on that task because they should be indistinguishable to the human representations that mediate the judgment. We thus use recognition judgments as the behavioral assay of whether model metamers reflect the same invariances that are instantiated in an associated human sensory system. We note that if humans cannot recognize a model metamer, they would also be able to discriminate it from the reference stimulus, and the model would also fail a traditional metamerism test.

We sought to answer several questions. First, we asked whether the learned invariances of commonly used neural network models are shared by human sensory systems. Second, we asked where any discrepancies with human perception arise within models. Third, we asked whether any discrepancies between model and human invariances would also be present in models obtained without supervised learning. Fourth, we explored whether model modifications intended to improve robustness would also make model metamers more recognizable to humans. Fifth, we asked whether metamer recognition identifies model discrepancies that are not evident using other methods of model assessment, such as brain predictions or adversarial vulnerability. Sixth, we asked whether metamers are shared across models.

Metamer optimization

Because metamer generation relies on an iterative optimization procedure, it was important to measure optimization success. We considered the procedure to have succeeded only if it satisfied two conditions. First, measures of the match between the activations for the natural reference stimulus and its model metamer at the matched stage had to be much higher than would be expected by chance, as quantified with a null distribution (Fig. 1e) measured between randomly chosen pairs of examples from the training dataset. This criterion was adopted in part because it is equally applicable to models that do not perform a task. Metamers had to pass this criterion for each of three different measures of the match (Pearson and Spearman correlations and signal-to-noise ratio (SNR) expressed in decibels (dB); Methods). Second, for models that performed a classification task, the metamer had to result in the same classification decision by the model as the reference stimulus. In practice, we trained linear classifiers on top of all unsupervised models, such that we were also able to apply this second criterion for them (to be conservative).
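The first criterion can be sketched as follows. This is an illustration under stated assumptions rather than the procedure given in Methods: SNR is taken here to be the ratio of reference power to error power, and a metamer is counted as passing a measure only if its value exceeds every value in the null distribution. Both choices, and the function names, are assumptions made for this example.

```python
import math

def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)

def ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return r

def spearman(x, y):
    return pearson(ranks(x), ranks(y))

def snr_db(reference, metamer):
    """Assumed definition: 10*log10(reference power / error power)."""
    signal = sum(a * a for a in reference)
    noise = sum((a - b) ** 2 for a, b in zip(reference, metamer))
    return math.inf if noise == 0 else 10.0 * math.log10(signal / noise)

def passes_fidelity(ref_act, met_act, null_pairs):
    """True only if all three measures exceed their null distributions."""
    for measure in (pearson, spearman, snr_db):
        null = [measure(a, b) for a, b in null_pairs]
        if measure(ref_act, met_act) <= max(null):
            return False
    return True
```

Here `null_pairs` would hold the activations for randomly chosen pairs of training examples at the matched stage. The second criterion (an identical model classification decision) is a separate check and is not shown.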

Example distributions of the match fidelity (using Spearman’s ρ in this example) are shown in Fig. 1e. Activations of the matched model stage have a correlation close to 1, as intended, and are well outside the null distribution for random pairs of training examples. As expected, given the feedforward nature of the model, matching at an early stage produces matched activations in a late stage (Fig. 1e). But because the models we consider build up invariances over a series of feedforward stages, stages earlier than the matched stage need not have the same activations and in general these differ from those for the original stimulus to which the metamer was matched (Fig. 1e). The match fidelity of this example was typical, and optimization summaries for each analyzed model are included at https://github.com/jenellefeather/model_metamers_pytorch.

Metamers of standard visual deep neural networks

We generated metamers for multiple stages of five standard visual neural networks trained to recognize objects26–29 (trained on the ImageNet1K dataset30; Fig. 2a). The five models spanned a range of architectural building blocks and depths. Such models have been posited to capture features similar to those of primate visual representations, and, at the time the experiments were run, the five models placed 1st, 2nd, 4th, 11th and 59th on a neural prediction benchmark26,31. We subsequently ran a second experiment on an additional five models pretrained on larger datasets that became available at later stages of the project32–34. To evaluate human recognition of the model metamers, humans performed a 16-way categorization task on the natural stimuli and model metamers (Fig. 2b)10.

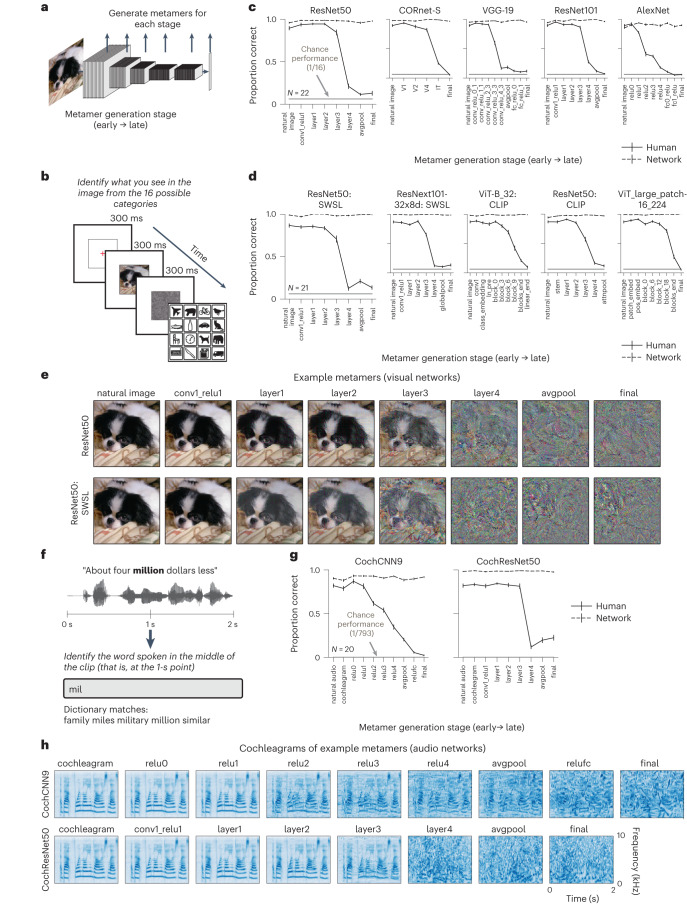

Fig. 2. Metamers of standard-trained visual and auditory deep neural networks are often unrecognizable to human observers.

a, Model metamers are generated from different stages of the model. Here and elsewhere, in models with residual connections, we only generated metamers from stages where all branches converge, which ensured that all subsequent model stages, and the model decision, remained matched. b, Experimental task used to assess human recognition of visual model metamers. Humans were presented with an image (a natural image or a model metamer of a natural image) followed by a noise mask. They were then presented with 16 icons representing 16 object categories and classified each image as belonging to one of the categories by clicking on the icon. c, Human recognition of visual model metamers (N = 22). At the time of the experiments the five models tested here placed 11th, 1st, 2nd, 4th and 59th (left to right) on a neural prediction benchmark26,31. For all tested models, human recognition of model metamers declined for late model stages, while model recognition remained high (as expected). Error bars plot s.e.m. across participants (or participant-matched stimulus subsets for model curves). d, Human recognition of visual model metamers (N = 21) trained on larger datasets. Error bars plot s.e.m. across participants (or participant-matched stimulus subsets for model curves). e, Example metamers from standard-trained and semi-weakly-supervised-learning (SWSL)-trained ResNet50 visual models. f, Experimental task used to assess human recognition of auditory model metamers. Humans classified the word that was present at the midpoint of a 2-s sound clip. Participants selected from 793 possible words by typing any part of the word into a response box and seeing matching dictionary entries from which to complete their response. A response could only be submitted if it matched an entry in the dictionary. g, Human recognition of auditory model metamers (N = 20). 
For both tested models, human recognition of model metamers decreased at late model stages, while model recognition remained high, as expected. When plotted, chance performance (1/793) is indistinguishable from the x axis. Error bars plot s.e.m. across participants (or participant-matched stimulus subsets for model curves). h, Cochleagram visualizations of example auditory model metamers from CochCNN9 and CochResNet50 architectures. Color intensity denotes instantaneous sound amplitude in a frequency channel (arbitrary units).

Contrary to the idea that the trained neural networks learned human-like invariances, human recognition of the model metamers decreased across model stages, reaching near-chance performance at the latest stages even though the model metamers remained as recognizable to the models as the corresponding natural stimuli, as intended (Fig. 2c,d). This reduction in human recognizability was evident as a main effect of observer and an interaction between the metamer generation stage and the observer, both of which were statistically significant for each of the ten models (P < 0.0001 in all cases; Supplementary Table 1).

From visual inspection, many of the metamers from late stages resemble noise rather than natural images (Fig. 2e and see Extended Data Fig. 1a for metamers generated from different noise initializations). Moreover, analysis of confusion matrices revealed that for the late model stages, there was no detectably reliable structure in participant responses (Extended Data Fig. 2). Although the specific optimization strategies we used had some effect on the subjective appearance of model metamers, human recognition remained poor regardless of the optimization procedure (Supplementary Fig. 1). The poor recognizability of late-stage metamers was also not explained by less successful optimization; the activation matches achieved by the optimization were generally good (for example, with correlations close to 1), and what variation we did observe was not predictive of metamer recognizability (Extended Data Fig. 3).

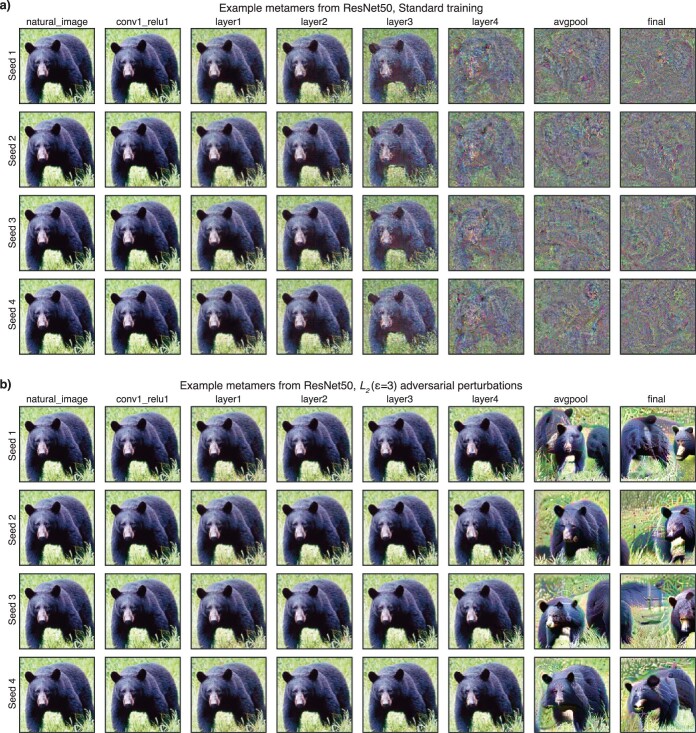

Extended Data Fig. 1. Model metamers generated from different noise initializations.

a,b, Model metamers generated from four different white noise initializations for the Standard ResNet50 (a) and an adversarially trained ResNet50 (b).

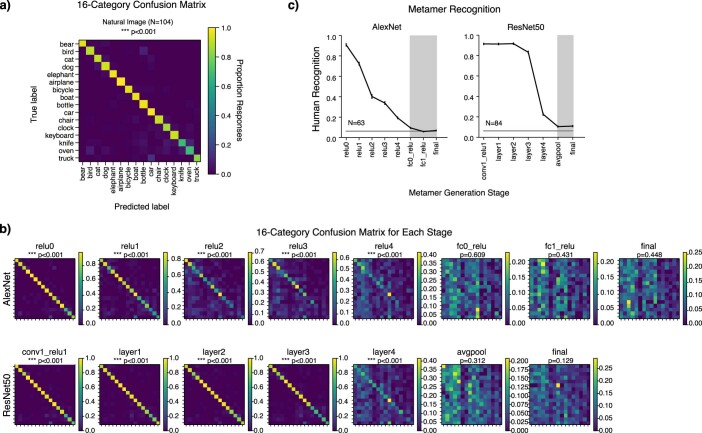

Extended Data Fig. 2. Analysis of consistency of human recognition errors for model metamers.

a, 16-way confusion matrix for natural images. Here and in b and c, results incorporate human responses from all experiments that contained the AlexNet Standard architecture or the ResNet50 Standard architecture (N = 104 participants). Statistical test for confusion matrix described in b. b, Confusion matrices for human recognition judgments of model metamers from each stage of the AlexNet and ResNet50 models (using data from all experiments that contained the AlexNet Standard architecture or the ResNet50 Standard architecture). We performed a split-half reliability analysis of the confusion matrices to determine whether the confusions were reliable across participants. We measured the correlation between the confusion matrices for splits of human participants, and assessed whether this correlation was significantly greater than 0 (one-sided test). P-values from this analysis are given above each confusion matrix. For the later stages of each model, the confusion matrices are no more consistent than would be expected by chance, consistent with the metamers being completely unrecognizable (that is, containing no information about the visual category of the natural image they are matched to). c, Human recognizability of model metamers from different stages of AlexNet (N = 63 participants) and ResNet50 models (N = 84 participants). Error bars are s.e.m. across participants. Stages whose confusions were not consistent across splits of human observers are noted by the shaded region. The stages for which recognition is near chance show inconsistent confusion patterns, ruling out the possibility that the chance levels of recognition are driven by systematic errors (for example consistently recognizing metamers for cats as dogs).

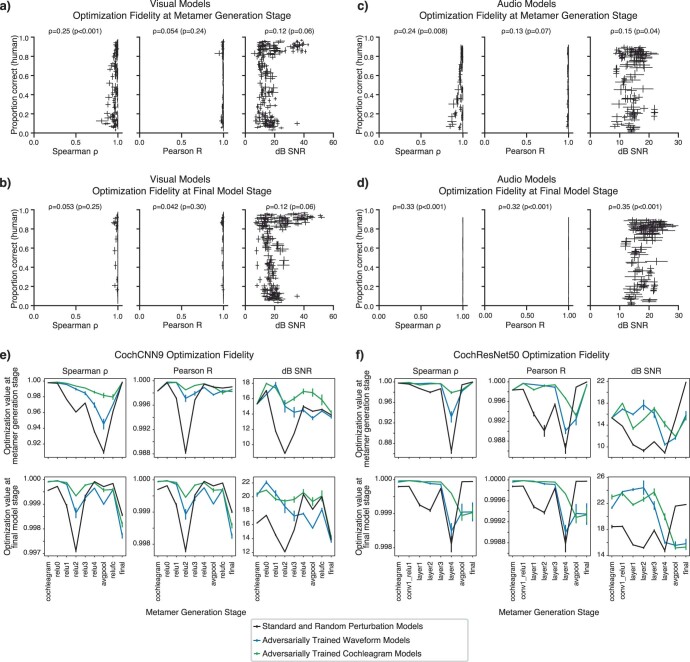

Extended Data Fig. 3. Optimization fidelity vs. human recognition of model metamers.

a,b, Optimization fidelity for visual model metamers at the metamer generation stage (a) and at the final model stage corresponding to a categorization decision (b; N = 219 model stages). Visual models are those in Figs. 2–4 and Fig. 6g. Note that most data points are very close to 1 for the final stage correlation metrics (for example 209/219 stages exceed an average Spearman ρ of 0.99). Each point corresponds to a single stage of a single model. c,d, Optimization fidelity for auditory model metamers at the metamer generation stage (c) and at the final model stage corresponding to a categorization decision (d). Auditory models are those in Fig. 2 and Fig. 5 (N = 127 model stages). Note that most data points are again very close to 1 for the final stage correlation metrics (all 127 stages exceed an average Spearman ρ of 0.99). In all cases, optimization fidelity is high for both the metamer generation stage and the final stage, and human recognition is not predicted by the optimization fidelity, or only weakly correlated with the optimization fidelity for the generated model metamers (accounting for a very small fraction of the variance). Error bars on each data point are standard deviation across generated model metamers that passed the optimization criteria to be included in the psychophysical experiment. e,f, Final stage optimization fidelity plotted vs. model metamer generation stage for CochCNN9 auditory model (e) and CochResNet50 auditory model (f). Note the y axis limits, which differ across plots to show the small variations near 1 for the correlation measures. It is apparent that for any given model, some stages are somewhat less well optimized than others, but these variations do not account for the recognizability differences found in our experiments (compare these plots to the recognition plots in Figs. 2 and 5).

Metamers of standard auditory deep neural networks

We performed an analogous experiment with two auditory neural networks trained to recognize speech (the word recognition task in the Word–Speaker–Noise dataset25). Each model consisted of a biologically inspired ‘cochleagram’ representation35,36, followed by a convolutional neural network (CNN) whose parameters were optimized during training. We tested two model architectures: a ResNet50 architecture (henceforth referred to as CochResNet50) and a convolutional model with nine stages similar to that used in a previous publication4 (henceforth referred to as CochCNN9). Model metamers were generated for clean speech examples from the validation set. Humans performed a 793-way classification task4 to identify the word in the middle of the stimulus (Fig. 2f).

As with the visual models, human recognition of auditory model metamers decreased markedly at late model stages for both architectures (Fig. 2g), yielding a significant main effect of human versus model observer and an interaction between the model stage and the observer (P < 0.0001 for each comparison; Supplementary Table 1). Subjectively, the model metamers from later stages sound like noise (and appear noise-like when visualized as cochleagrams; Fig. 2h). This result suggests that many of the invariances present in these models are not invariances for the human auditory system.

Overall, these results demonstrate that the invariances of many common visual and auditory neural networks are substantially misaligned with those of human perception, even though these models are currently the best predictors of brain responses in each modality.

Unsupervised models also exhibit discrepant metamers

Biological systems typically do not have access to labels at the scale that is needed for supervised learning37 and instead must rely in large part on unsupervised learning. Do the divergent invariances evident in neural network models result in some way from supervised training with explicit category labels? Metamers are well suited to address this question because their generation does not depend on a classifier, so they can be generated for any sensory model.

At present, the leading unsupervised models are ‘self-supervised’, being trained with a loss function favoring representations in which variants of a single training example (different crops of an image, for instance) are similar, whereas those from different training examples are not38 (Fig. 3a). We generated model metamers for four such models38–41 along with supervised comparison models with the same architectures.
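A loss of this kind can be sketched generically. The function below is a simplified contrastive objective in the style of SimCLR, written only to illustrate the idea of pulling together two views of the same example and pushing apart views of different examples. It is not the exact objective of any of the models tested here, and the temperature value and function names are assumptions.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def contrastive_loss(views_a, views_b, temperature=0.5):
    """views_a[i] and views_b[i] are embeddings of two views of example i.
    Each view is scored against all other views in the batch; the loss is low
    when its partner view is the most similar one."""
    z = views_a + views_b
    n = len(views_a)
    total = 0.0
    for i in range(2 * n):
        partner = (i + n) % (2 * n)  # index of the other view of the same example
        logits = [cosine(z[i], z[k]) / temperature for k in range(2 * n) if k != i]
        positive = cosine(z[i], z[partner]) / temperature
        total += math.log(sum(math.exp(l) for l in logits)) - positive
    return total / (2 * n)

anchors = [[1.0, 0.0], [0.0, 1.0]]
matched = [[0.9, 0.1], [0.1, 0.9]]      # views close to their own anchor
mismatched = [[0.1, 0.9], [0.9, 0.1]]   # views close to the other anchor
loss_matched = contrastive_loss(anchors, matched)
loss_mismatched = contrastive_loss(anchors, mismatched)
```

Because no category labels enter the loss, any invariances learned this way arise from the choice of views rather than from supervised classification.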

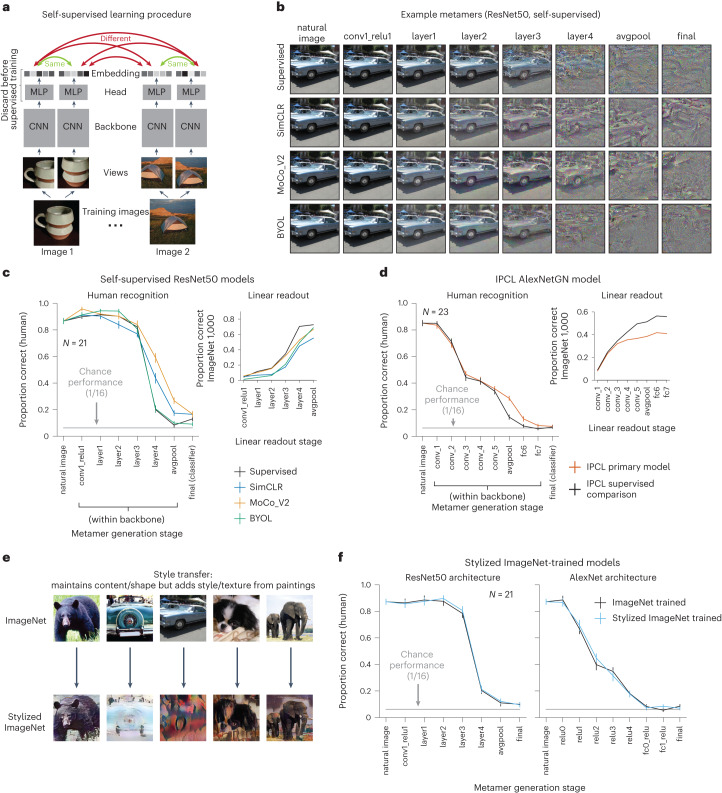

Fig. 3. Model metamers are unrecognizable to humans even with alternative training procedures.

a, Overview of self-supervised learning, inspired by Chen et al.38. Each input was passed through a learnable convolutional neural network (CNN) backbone and a multi-layer perceptron (MLP) to generate an embedding vector. Models were trained to map multiple views of the same image to nearby points in the embedding space. Three of the self-supervised models (SimCLR, MoCo_V2 and BYOL) used a ResNet50 backbone. The other self-supervised model (IPCL) had an AlexNet architecture modified to use group normalization. In both cases, we tested comparison supervised models with the same architecture. The SimCLR, MoCo_V2 and IPCL models also had an additional training objective that explicitly pushed apart embeddings from different images. b, Example metamers from select stages of ResNet50 supervised and self-supervised models. In all models, late-stage metamers were mostly unrecognizable. c, Human recognition of metamers from supervised and self-supervised models (left; N = 21) along with classification performance of a linear readout trained on the ImageNet1K task at each stage of the models (right). Readout classifiers were trained without changing any of the model weights. For self-supervised models, model metamers from the ‘final’ stage were generated from a linear classifier at the avgpool stage. Model recognition curves of model metamers were close to ceiling, as in Fig. 2, and are omitted here and in later figures for brevity. Here and in d, error bars plot s.e.m. across participants (left) or across three random seeds of model evaluations (right). d, Same as c but for the IPCL self-supervised model and supervised comparison with the same dataset augmentations (N = 23). e, Examples of natural and stylized images using the Stylized ImageNet augmentation. Training models on Stylized ImageNet was previously shown to reduce a model’s dependence on texture cues for classification43. 
f, Human recognition of model metamers for ResNet50 and AlexNet architectures trained with Stylized ImageNet (N = 21). Removing the texture bias of models by training on Stylized ImageNet does not result in more recognizable model metamers than the standard model. Error bars plot s.e.m. across participants.

As shown in Fig. 3b–d, the self-supervised models produced results similar to those for supervised models. Human recognition of model metamers declined at late model stages, approaching chance levels for the final stages. Some of the models had more recognizable metamers at intermediate stages (significant interaction between model type and model stage; ResNet50 models: F(21,420) = 16.0, P < 0.0001; IPCL model: F(9,198) = 3.13, P = 0.0018). However, for both architectures, recognition was low in absolute terms, with the metamers bearing little resemblance to the original image they were matched to. Overall, the results suggest that the failure of standard neural network models to pass our metamer test is not specific to the supervised training procedure. This result also demonstrates the generality of the metamers method, as it can be applied to models that do not have a behavioral readout. Analogous results with two classical sensory system models (HMAX3,8 and a spectrotemporal modulation filterbank42), which further illustrate the general applicability of the method, are shown in Extended Data Figs. 4 and 5.

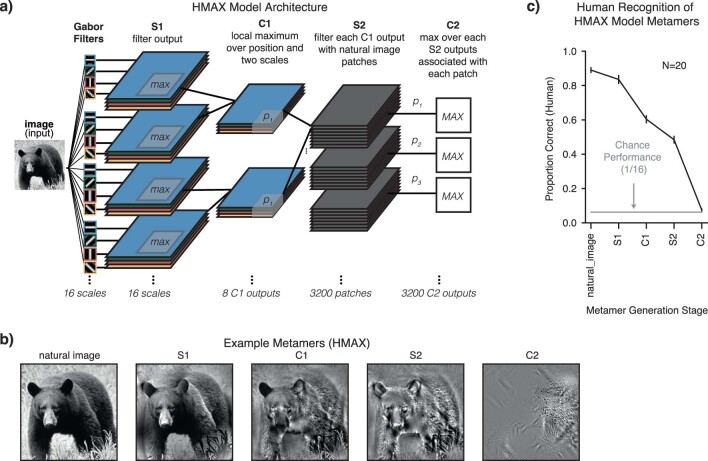

Extended Data Fig. 4. Metamers from a classic vision model.

a, Schematic of HMAX vision model. The HMAX vision model is a biologically-motivated architecture with cascaded filtering and pooling operations inspired by simple and complex cells in the primate visual system and was intended to capture aspects of biological object recognition3,8. We generated model metamers by matching all units at the S1, C1, S2, or C2 stage of the model. b, Example HMAX model metamers. c, Although HMAX is substantially shallower than the “deep” neural network models investigated in the rest of this paper, it is evident that by the C2 model stage its model metamers are comparably unrecognizable to humans (significant main effect of model stage, F(4,76) = 351.9, p < 0.0001). This classical model thus also has invariances that differ from those of the human object recognition system. Error bars plot s.e.m. across participants (N = 20). HMAX metamers were black and white, while all metamers from all other models were in color.

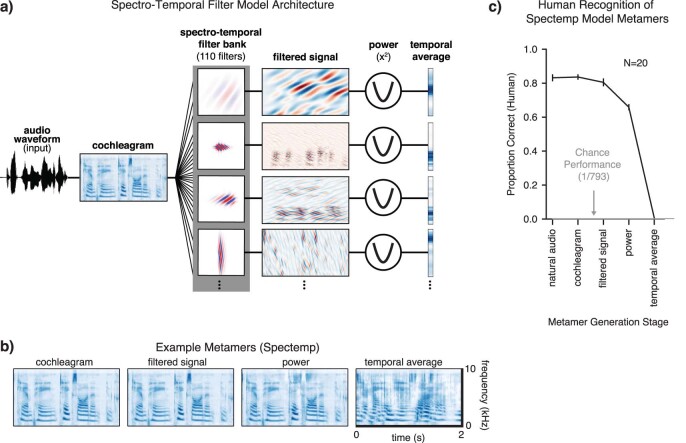

Extended Data Fig. 5. Metamers from a classic auditory model.

a, Schematic of spectro-temporal auditory filterbank model (Spectemp), a classical model of auditory cortex consisting of a set of spectrotemporal filters applied to a cochleagram representation42. We used a version of the model in which the convolutional responses are summarized with the mean power in each filter4,103,104. b, Cochleagrams of example Spectemp model metamers. c, Human recognition of Spectemp model metamers. Metamers from the first two stages were fully recognizable, and subjectively resembled the original audio, indicating that these stages instantiate few invariances, as expected for overcomplete filter-bank decompositions. By contrast, metamers from the temporal average representation were unrecognizable (significant main effect of model stage, F(4,76) = 515.3, p < 0.0001), indicating that this model stage produces invariances that humans do not share (plausibly because the human speech recognition system retains information that is lost in the averaging stage). Error bars plot s.e.m. across participants (N = 20). Overall, these results and those in Extended Data Fig. 4 show how metamers can reveal the invariances present in classical models as well as state-of-the-art deep neural networks, and demonstrate that both types of models fail to fully capture the invariances of biological sensory systems.
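To illustrate why a temporal-average stage can discard information, the following minimal sketch computes the mean power of a single filter response and shows that reordering the samples in time leaves it unchanged. It is an illustration only, not the Spectemp implementation; the function name `mean_power` and the toy response values are assumptions made for the example.

```python
def mean_power(response):
    """Time-averaged power of one filter's response (a list of samples)."""
    return sum(r * r for r in response) / len(response)


# Hypothetical filter response over time (integers keep the arithmetic exact).
original = [0, 3, -1, 4, 0, -2, 5, 1]
reversed_in_time = original[::-1]
sorted_in_time = sorted(original)

# The three signals differ in their temporal structure,
# yet they share the same time-averaged summary statistic.
print(mean_power(original))
print(mean_power(reversed_in_time))
print(mean_power(sorted_in_time))
```

Any stimulus pair that differs only in such temporal rearrangements of a filter's response would be indistinguishable at this stage, which is one way a model stage can acquire invariances that listeners do not share.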

Discrepant metamers are not explained by texture bias

Another commonly noted discrepancy between current models and humans is the tendency for models to base their judgments on texture rather than shape43–45. This ‘texture bias’ can be reduced with training datasets of ‘stylized’ images (Fig. 3e) that increase a model’s reliance on shape cues, making the model more human-like in this respect43. To assess whether these changes also serve to make model metamers less discrepant, we generated metamers from two models trained on Stylized ImageNet. As shown in Fig. 3f, these models had metamers that were about as unrecognizable to humans as those from models trained on the standard ImageNet1K training set (no interaction between model type and model stage; ResNet50: F(7,140) = 0.225, P = 0.979; AlexNet: F(8,160) = 0.949, P = 0.487). This result suggests that metamer discrepancies are not simply due to texture bias in the models.

Effects of adversarial training on visual model metamers

A known peculiarity of contemporary artificial neural networks is their vulnerability to small adversarial perturbations designed to change the class label predicted by a model14,15. Such perturbations are typically imperceptible to humans due to their small magnitude but can drastically alter model decisions and have been the subject of intense interest in part due to the security risk they pose for machine systems. One way to reduce this vulnerability is via ‘adversarial training’ in which adversarial perturbations are generated during training, and the model is forced to learn to recognize the perturbed images as the ‘correct’ human-interpretable class46 (Fig. 4a). This adversarial training procedure yields models that are less susceptible to adversarial examples for reasons that remain debated47.
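The contrast between adversarial and random perturbations of the same size can be sketched on a toy problem. The example below uses a two-class linear classifier and a one-step L∞ perturbation along the sign of the input gradient; it is a simplified illustration under these assumptions, not the procedure used to train the networks studied here, and all names and values are hypothetical. In adversarial training, the perturbed input (rather than the clean one) would then be used to update the model parameters.

```python
import math
import random


def logistic_loss(w, b, x, y):
    """Cross-entropy loss of a linear classifier for one example (y is 0 or 1)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def input_gradient(w, b, x, y):
    """Gradient of the loss with respect to the input x."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    return [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def adversarial_perturbation(w, b, x, y, eps):
    """One-step L-infinity perturbation along the sign of the loss gradient."""
    g = input_gradient(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]


def random_perturbation(x, eps, rng):
    """Control: same L-infinity size, but a random direction."""
    return [xi + eps * rng.choice((-1, 1)) for xi in x]


# Hypothetical toy model, input, label and perturbation size.
w, b = [1.5, -2.0, 0.5, 1.0], 0.1
x, y = [0.4, -0.3, 0.2, 0.1], 1
eps = 0.1
rng = random.Random(0)

clean = logistic_loss(w, b, x, y)
adv = logistic_loss(w, b, adversarial_perturbation(w, b, x, y, eps), y)
rand = logistic_loss(w, b, random_perturbation(x, eps, rng), y)
print(f"clean={clean:.3f} adversarial={adv:.3f} random={rand:.3f}")
```

For a linear model, the sign-of-gradient step is the worst-case perturbation within the L∞ ball, so the adversarial loss is never smaller than the loss for a random perturbation of the same size.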

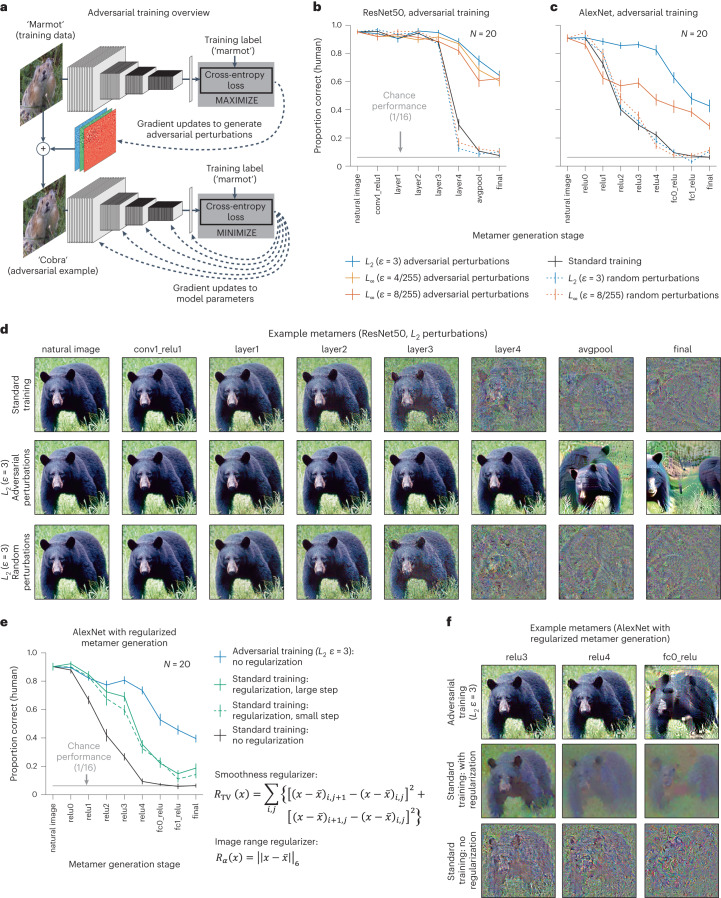

Fig. 4. Adversarial training increases human recognizability of visual model metamers.

a, Adversarial examples are derived at each training step by finding an additive perturbation to the input that moves the classification label away from the training label class (top). These derived adversarial examples are provided to the model as training examples and used to update the model parameters (bottom). The resulting model is subsequently more robust to adversarial perturbations than if standard training was used. As a control experiment, we also trained models with random perturbations to the input. b, Human recognition of metamers from ResNet50 models (N = 20) trained with and without adversarial or random perturbations. Here and in c and e, error bars plot s.e.m. across participants. c, Same as b but for AlexNet models (N = 20). In both architectures, adversarial training led to more recognizable metamers at deep model stages (repeated measures analysis of variance (ANOVA) tests comparing human recognition of standard and each adversarial model; significant main effects in each case, F(1,19) > 104.61, P < 0.0001), although in both cases, the metamers remain less than fully recognizable. Random perturbations did not produce the same effect (repeated measures ANOVAs comparing random to adversarial; significant main effect of random versus adversarial for each perturbation of the same type and size, F(1,19) > 121.38, P < 0.0001). d, Example visual metamers for models trained with and without adversarial or random perturbations. e, Recognizability of model metamers from standard-trained models with and without regularization compared to that for an adversarially trained model (N = 20). Two regularization terms were included in the optimization: a total variation regularizer to promote smoothness and a constraint to stay within the image range51. Two optimization step sizes were evaluated.
Smoothness priors increased recognizability for the standard model (repeated measures ANOVAs comparing human recognition of metamers with and without regularization; significant main effects for each step size, F(1,19) > 131.8246, P < 0.0001). However, regularized metamers remained less recognizable than those from the adversarially trained model (repeated measures ANOVAs comparing standard model metamers with regularization to metamers from adversarially trained models; significant main effects for each step size, F(1,19) > 80.8186, P < 0.0001). f, Example metamers for adversarially trained and standard models with and without regularization.

We asked whether adversarial training would improve human recognition of model metamers. A priori, it was not clear what to expect. Making models robust to adversarial perturbations causes them to exhibit more of the invariances of humans (the shaded orange covers more of the blue outline in Fig. 1b), but it is not obvious that this will reduce the model invariances that are not shared by humans (that is, decrease the orange outlined regions that do not overlap with the blue shaded region in Fig. 1b). Previous work visualizing latent representations of visual neural networks suggested that robust training might make model representations more human-like48, but human recognition of model metamers had not been behaviorally evaluated.

We first generated model metamers for five adversarially trained vision models48 with different architectures and perturbation sizes. As a control, we also trained models with equal magnitude perturbations in random, rather than adversarial, directions, which are typically ineffective at preventing adversarial attacks49. As intended, adversarially trained models were more robust to adversarial perturbations than the standard-trained model or models trained with random perturbations (Supplementary Fig. 2a,b).

Metamers for the adversarially trained models were in all cases significantly more recognizable than those from the standard model (Fig. 4b–d and Extended Data Fig. 1), evident as a main effect of training type in each case. Training with random perturbations did not yield the same benefit (Supplementary Table 2). Despite some differences across adversarial training variants, all variants that we tried produced a human recognition benefit. It was nonetheless the case that metamers for late stages remained less than fully recognizable to humans for all model variants. We note that performance is inflated by the use of a 16-way alternative forced-choice task, for which above-chance performance is possible even with severely distorted images. See Extended Data Figs. 6 and 7 for an analysis of the consistency of metamer recognition across human observers and examples of the most and least recognizable metamers.

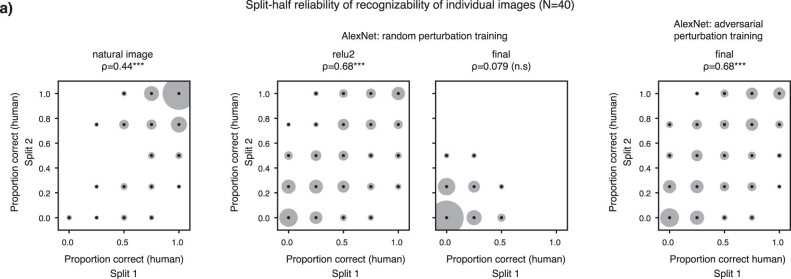

Extended Data Fig. 6. Consistency of human recognition of individual metamers from models trained with random or adversarial perturbations.

a, Consistency of recognizability of individual stimuli across splits of participants. Graph plots the proportion correct for individual stimuli for one random split. Circle size represents the number of stimuli at that particular value. Correlation values were determined by averaging the Spearman ρ over 1000 random splits of participants (p-values were computed non-parametrically by shuffling participant responses for each condition, and computing the number of times the true Spearman ρ averaged across splits was lower than the shuffled correlation value; one-sided test). We only included images that had at least 4 trials in each split of participants, and we only included 4 trials in the average, to avoid having some images exert more influence on the result than others (resulting in quantized values for proportion correct). Recognizability of individual stimuli was reliable for natural images, relu2 of AlexNet trained with random perturbation training and the final stage of adversarially trained AlexNet (p < 0.001 in each case). By contrast, recognizability of individual metamers from the final stage of AlexNet trained with random perturbations showed no consistency across participants (p = 0.485).
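The split-half consistency measure described in this caption can be sketched as follows. This is a simplified illustration, assuming a complete participants-by-stimuli matrix of correct/incorrect responses; it omits the minimum-trial criterion and the shuffling-based p-value described above, and the function names and toy data are hypothetical.

```python
import random


def ranks(values):
    """Average ranks (1-based); tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            out[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return out


def pearson(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


def spearman(a, b):
    """Spearman rho is the Pearson correlation of the ranks."""
    return pearson(ranks(a), ranks(b))


def split_half_consistency(correct, n_splits=1000, seed=0):
    """correct[p][s] is 1 if participant p recognized stimulus s, else 0.
    Returns the Spearman rho between per-stimulus proportion correct in two
    random halves of the participants, averaged over n_splits splits."""
    rng = random.Random(seed)
    n_participants, n_stimuli = len(correct), len(correct[0])
    total = 0.0
    for _ in range(n_splits):
        ids = list(range(n_participants))
        rng.shuffle(ids)
        half_a = ids[: n_participants // 2]
        half_b = ids[n_participants // 2:]
        prop_a = [sum(correct[p][s] for p in half_a) / len(half_a)
                  for s in range(n_stimuli)]
        prop_b = [sum(correct[p][s] for p in half_b) / len(half_b)
                  for s in range(n_stimuli)]
        total += spearman(prop_a, prop_b)
    return total / n_splits


# Hypothetical responses: 6 participants (rows) by 6 stimuli (columns).
toy_responses = [
    [1, 1, 1, 0, 0, 0],
    [1, 1, 0, 1, 0, 0],
    [1, 1, 1, 0, 0, 0],
    [1, 0, 1, 0, 1, 0],
    [1, 1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
]
toy_rho = split_half_consistency(toy_responses, n_splits=200)
print(round(toy_rho, 3))
```

The sketch assumes that per-stimulus scores vary within each half; a half in which every stimulus has the same proportion correct would have an undefined rank correlation.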

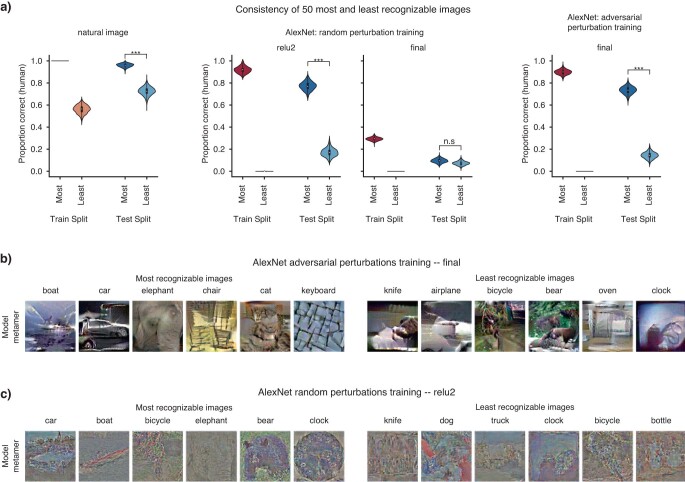

Extended Data Fig. 7. Analysis of most and least recognizable visual model metamers.

a, Using half of the N = 40 participants, we selected the 50 images with the highest recognizability and the 50 images with the lowest recognizability (“train” split). We then measured the recognizability for these most and least recognizable images in the other half of participants (“test” split). We analyzed 1000 random participant splits; p-values were computed by measuring the number of times the “most” recognizable images had higher recognizability than the “least” recognizable images (one-sided test). Graph shows violin plots of test split results for the 1000 splits. Images were only included in analysis if they had responses from at least 4 participants in each split. The difference between the most and least recognizable metamers replicated across splits for the model stages with above-chance recognizability (p < 0.001), indicating that human observers agree on which metamers are recognizable (but not for the final stage of AlexNet trained with random perturbations, p = 0.165). Box plots within violins are defined with a center dot as the median value, bounds of box as the 25th-75th percentile, and the whiskers as the 1.5x interquartile range. b,c, Example model metamers from the 50 “most” and “least” recognizable metamers for the final stage of adversarially trained AlexNet (b) and for the relu2 stage of AlexNet trained with random perturbations (evaluated with data from all participants; c). All images shown had at least 8 responses across participants for both the natural image and model metamer condition, had 100% correct responses for the natural image condition, and had 100% correct (for “most” recognizable images) or 0% correct (for “least” recognizable images).

Given that metamers from adversarially trained models look less noise-like than those from standard models and that standard models may overrepresent high spatial frequencies50, we wondered whether the improvement in recognizability could be replicated in a standard-trained model by including a smoothness regularizer in metamer optimization. Such regularizers are common in neural network visualizations51, and although they sidestep the goal of human–model comparison, it was nonetheless of interest to assess their effect. We implemented the regularizer used in a well-known visualization paper51. Adding smoothness regularization to the metamer generation procedure for the standard-trained AlexNet model improved the recognizability of its metamers (Fig. 4e) but not as much as did adversarial training (and did not come close to generating metamers as recognizable as natural images; see Extended Data Fig. 8 for examples generated with different regularization coefficients). This result suggests that the benefit of adversarial training is not simply replicated by imposing smoothness constraints and that discrepant metamers more generally cannot be resolved with the addition of a smoothness prior.
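As a rough illustration of what a smoothness regularizer penalizes, the sketch below computes a simple anisotropic total variation of a grayscale image and adds it to a matching loss. The exact form of the regularizer in the cited work may differ (for example, in how neighboring differences are combined), so the functions `total_variation` and `regularized_loss`, the weight `tv_weight` and the toy images should be read as assumptions.

```python
def total_variation(image):
    """Sum of absolute differences between vertically and horizontally
    adjacent pixels of a 2D image given as a list of rows."""
    rows, cols = len(image), len(image[0])
    tv = 0.0
    for r in range(rows):
        for c in range(cols):
            if r + 1 < rows:
                tv += abs(image[r + 1][c] - image[r][c])
            if c + 1 < cols:
                tv += abs(image[r][c + 1] - image[r][c])
    return tv


def regularized_loss(feature_loss, image, tv_weight):
    """Activation-matching loss plus a weighted smoothness penalty."""
    return feature_loss + tv_weight * total_variation(image)


# Hypothetical 4 x 4 images: a uniform image and a checkerboard.
smooth = [[0.5] * 4 for _ in range(4)]
noisy = [[(r + c) % 2 for c in range(4)] for r in range(4)]

print(total_variation(smooth))
print(total_variation(noisy))
print(regularized_loss(1.0, noisy, 0.5))
```

Because the penalty grows with pixel-to-pixel fluctuations, minimizing the combined loss pushes the optimization toward images with less high-frequency, noise-like structure, independently of what the model itself represents.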

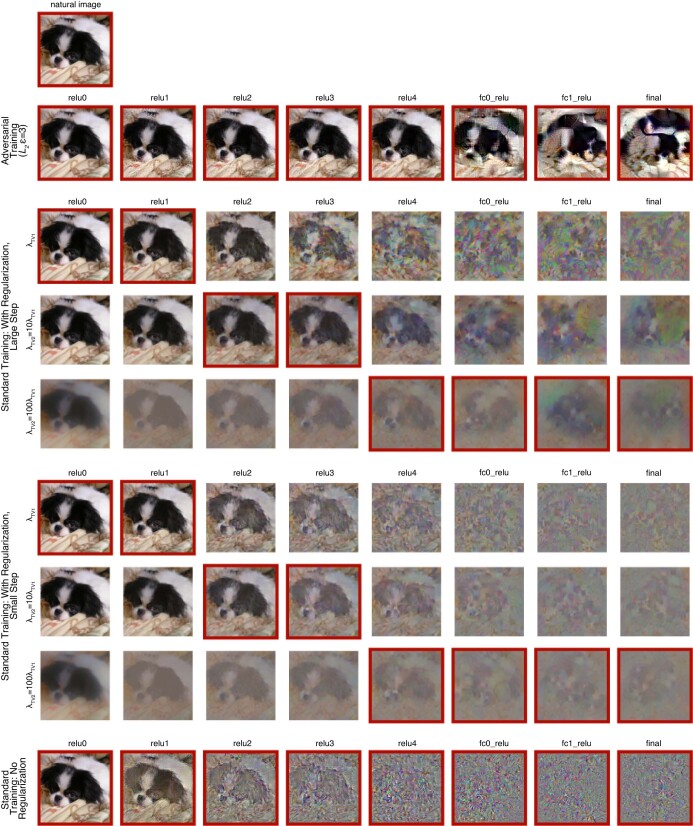

Extended Data Fig. 8. Examples of model metamers generated with regularization.

Metamers were generated with terms for smoothness and image range included in the loss function. Three coefficients for the smoothness regularizer were used. Red outlines are present on conditions that were used in the human classification experiment (chosen to maximize the recognizability, and to match the choices in the original paper that introduced this type of regularization51).

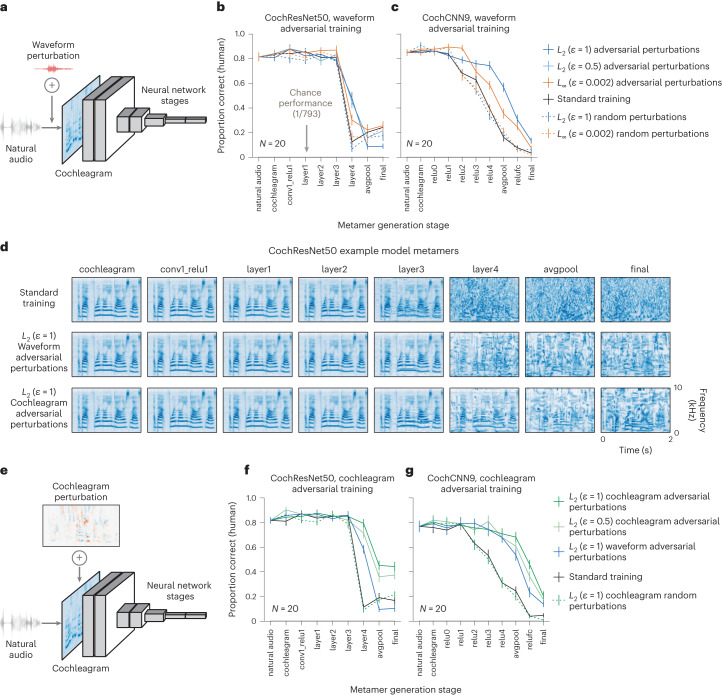

Effects of adversarial training on auditory model metamers

We conducted analogous experiments with auditory models, again using two architectures and several perturbation types. Because the auditory models contain a fixed cochlear stage at their front end, there are two natural places to generate adversarial examples: perturbations can be added to the waveform or to the cochleagram. We explored both for completeness and found that adversarial training at either location resulted in adversarial robustness (Supplementary Fig. 2c–f).

We first investigated adversarial training with perturbations to the waveform (Fig. 5a). As with the visual models, human recognition was generally better for metamers from adversarially trained models but not for models trained with random perturbations (Fig. 5b,c and Supplementary Table 2). The model metamers from the robust models were visibly less noise-like when viewed in the cochleagram representation (Fig. 5d).

Fig. 5. Adversarial training increases human recognition of auditory model metamers.

a, Schematic of auditory CNNs with adversarial perturbations applied to the waveform input. b,c, Human recognition of auditory model metamers from CochResNet50 (b; N = 20) and CochCNN9 (c; N = 20) models with adversarial perturbations generated in the waveform space (models trained with random perturbations are also included for comparison). When plotted here and in f and g, chance performance (1/793) is indistinguishable from the x axis, and error bars plot s.e.m. across participants. L2 (ε = 0.5) waveform adversaries were only included in the CochResNet50 experiment. ANOVAs comparing standard and each adversarial model showed significant main effects in four of five cases (F(1,19) > 9.26, P < 0.0075) and no significant main effect for CochResNet50 with L2 (ε = 1) perturbations (F(1,19) = 0.29, P = 0.59). Models trained with random perturbations did not show the same benefit (ANOVAs comparing each random and adversarial perturbation model with the same ε type and size; significant main effect in each case, F(1,19) > 4.76, P < 0.0444). d, Cochleagrams of example model metamers from CochResNet50 models trained with waveform and cochleagram adversarial perturbations. e, Schematic of auditory CNNs with adversarial perturbations applied to the cochleagram stage. f,g, Metamers from models trained with cochleagram adversarial perturbations are more recognizable to humans for CochResNet50 (f) and CochCNN9 (g) models than those from models trained with waveform perturbations. ANOVAs comparing each model trained with cochleagram perturbations versus the same architecture trained with waveform perturbations showed significant main effects in each case (F(1,19) > 4.6, P < 0.04). ANOVAs comparing each model trained with cochleagram perturbations to the standard model showed a significant main effect in each case (F(1,19) > 102.25, P < 0.0001).
The effect on metamer recognition was again specific to adversarial perturbations (ANOVAs comparing effect of training with adversarial versus random perturbations with the same ε type and size; F(1,19) > 145.07, P < 0.0001).

We also trained models with adversarial perturbations to the cochleagram representation (Fig. 5e). These models had significantly more recognizable metamers than both the standard models and the models adversarially trained on waveform perturbations (Fig. 5f,g and Supplementary Table 2), and the benefit was again specific to models trained with adversarial (rather than random) perturbations. These results suggest that the improvements from intermediate-stage perturbations may in some cases be more substantial than those from perturbations to the input representation.

Overall, these results suggest that adversarial training can cause model invariances to become more human-like in both visual and auditory domains. However, substantial discrepancies remain, as many model metamers from late model stages remain unrecognizable even after adversarial training.

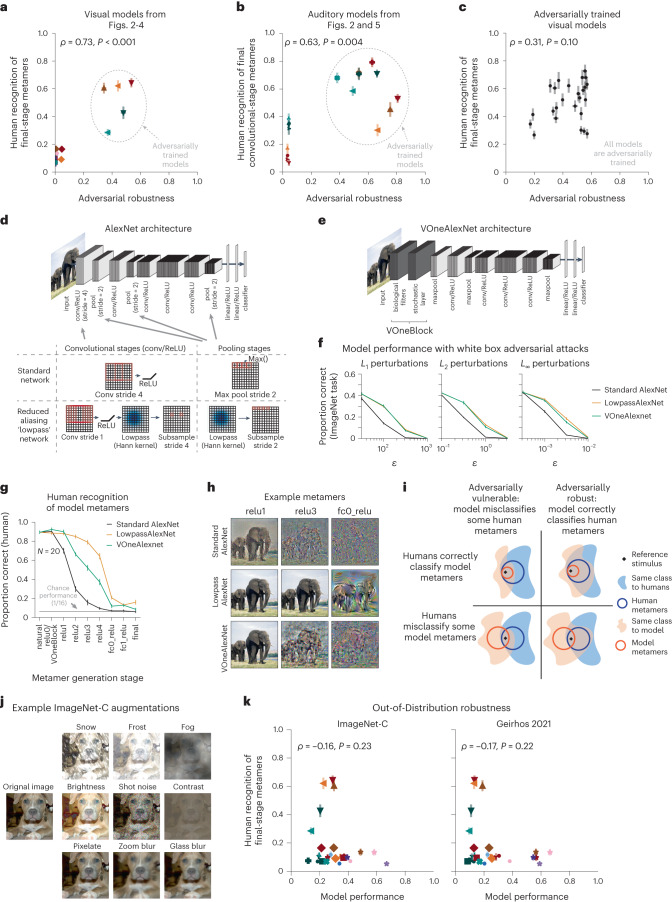

Metamer recognizability dissociates from adversarial robustness

Although adversarial training increased human recognizability of model metamers, the degree of robustness from the training was not itself predictive of metamer recognizability. We first examined all the visual models from Figs. 2–5 and compared their adversarial robustness to the recognizability of their metamers from the final model stage (this stage was chosen because it exhibited considerable variation in recognizability across models). There was a correlation between robustness and metamer recognizability (ρ = 0.73, P < 0.001), but it was mostly driven by the overall difference between two groups of models, those that were adversarially trained and those that were not (Fig. 6a).
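One plausible reading of the robustness measure used in this comparison (average accuracy under the attacks, normalized by accuracy on unperturbed inputs) is sketched below. The function name and the accuracy values are hypothetical, and the exact normalization used for the figure may differ in detail.

```python
def normalized_robustness(clean_accuracy, attacked_accuracies):
    """Mean accuracy under attack divided by accuracy on unperturbed inputs."""
    if clean_accuracy <= 0:
        raise ValueError("clean accuracy must be positive")
    mean_attacked = sum(attacked_accuracies) / len(attacked_accuracies)
    return mean_attacked / clean_accuracy


# Hypothetical accuracies (not values from this study): each list holds the
# accuracy under one L2 attack and one L-infinity attack.
standard_score = normalized_robustness(0.75, [0.0, 0.0])
robust_score = normalized_robustness(0.5, [0.25, 0.25])
print(standard_score, robust_score)
```

Normalizing by clean accuracy keeps a model with low overall accuracy from appearing non-robust merely because it performs poorly on natural inputs.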

Fig. 6. Human recognition of model metamers dissociates from adversarial robustness.

a, Adversarial robustness of visual models versus human recognizability of final-stage model metamers (N = 26 models). Robustness was quantified as average robustness to L2 (ε = 3) and L∞ (ε = 4/255) adversarial examples, normalized by performance on natural images. Symbols follow those in Fig. 7a. Here and in b and c, error bars for abscissa represent s.e.m. across 5 random samples of 1,024 test examples, and error bars for ordinate represent s.e.m. across participants. b, Same as a but for final convolutional stage (CochCNN9) or block (CochResNet50) of auditory models (N = 17 models). Robustness was quantified as average robustness to L2 (ε = 10^−0.5) and L∞ (ε = 10^−2.5) adversarial perturbations of the waveform, normalized by performance on natural audio. Symbols follow those in Fig. 7c. c, Adversarial robustness of a set of adversarially trained visual models versus human recognizability of final-stage model metamers (N = 25 models). d, Operations included in the AlexNet architecture to more closely obey the sampling theorem (the resulting model is referred to as ‘LowpassAlexNet’). e, Schematic of VOneAlexNet. f, Adversarial vulnerability as assessed via accuracy on a 1,000-way ImageNet classification task with adversarial perturbations of different types and sizes. LowpassAlexNet and VOneAlexNet were equally robust to adversarial perturbations (F(1,8) < 4.5, P > 0.1 for all perturbation types), and both exhibited greater robustness than the standard model (F(1,8) > 137.4, P < 0.031 for all adversarial perturbation types for both architectures). Error bars plot s.e.m. across 5 random samples of 1,024 test images. g, Human recognition of model metamers from LowpassAlexNet, VOneAlexNet and standard AlexNet models. LowpassAlexNet had more recognizable metamers than VOneAlexNet (main effect of architecture: F(1,19) = 71.7, P < 0.0001; interaction of architecture and model stage: F(8,152) = 21.8, P < 0.0001). Error bars plot s.e.m. across participants (N = 20).
h, Example model metamers from the experiment in d. i, Schematic depiction of how adversarial vulnerability could dissociate from human recognizability of metamers. j, Example augmentations applied to images in tests of out-of-distribution robustness. k, Scatter plot of out-of-distribution robustness versus human recognizability of final-stage model metamers (N = 26 models). Models with large-scale training are denoted with ★ symbols. Other symbols follow those in Fig. 7a; the abscissa value is a single number, and error bars for ordinate represent s.e.m. across participants.

The auditory models showed a relationship similar to that of the visual models (Fig. 6b). When standard and adversarially trained models were analyzed together, metamer recognizability and robustness were correlated (ρ = 0.63, P = 0.004), driven by the overall difference between the two groups of models, but there was no obvious relationship when considering just the adversarially trained models.

To further assess whether variations in robustness produce variation in metamer recognizability, we compared the robustness of a large set of adversarially trained models (taken from a well-known robustness evaluation52) to the recognizability of their metamers from the final model stage. Despite considerable variation in both robustness and metamer recognizability, the two measures were not significantly correlated (ρ = 0.31, P = 0.099; Fig. 6c). Overall, it seems that something about the adversarial training procedure leads to more recognizable metamers but that robustness per se does not drive the effect.

Adversarial training is not the only means of making models adversarially robust. But when examining other sources of robustness, we again found examples where a model’s robustness was not predictive of the recognizability of its metamers. Here, we present results for two models with similar robustness, one of which had much more recognizable metamers than the other.

The first model was a CNN that was modified to reduce aliasing (LowpassAlexNet). Because many traditional neural networks contain downsampling operations (for example, pooling) without a preceding lowpass filter, they violate the sampling theorem25,53 (Fig. 6d). It is nonetheless possible to modify the architecture to reduce aliasing, and such modifications have been suggested to improve model robustness to small image translations12,13. The second model was a CNN that contained an initial processing block inspired by the primary visual cortex in primates54. This block contained hard-coded Gabor filters, had noise added to its responses during training (VOneAlexNet; Fig. 6e) and had been previously demonstrated to increase adversarial robustness55. A priori, it was unclear whether either model modification would improve human recognizability of the model metamers.
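The aliasing problem that motivates the first modification can be illustrated in one dimension. The sketch below compares plain subsampling with blur-then-subsample on a signal at the highest representable frequency; the three-tap kernel, circular padding and function names are assumptions chosen for simplicity and are not the operations used in LowpassAlexNet.

```python
def downsample(signal, stride=2):
    """Keep every stride-th sample with no filtering."""
    return signal[::stride]


def lowpass(signal, kernel=(0.25, 0.5, 0.25)):
    """Circular convolution with a small symmetric blur kernel."""
    n, half = len(signal), len(kernel) // 2
    return [
        sum(k * signal[(i + j - half) % n] for j, k in enumerate(kernel))
        for i in range(n)
    ]


def blur_then_downsample(signal, stride=2):
    """Remove high frequencies before discarding samples."""
    return downsample(lowpass(signal), stride)


# Samples alternate between +1 and -1: the highest representable frequency.
signal = [1.0, -1.0] * 4

# Without filtering, the alternation is misread as a constant signal.
print(downsample(signal))
# With filtering, the frequency that cannot be represented is removed first.
print(blur_then_downsample(signal))
```

In the unfiltered case, shifting the input by a single sample flips every retained value from +1 to −1, which is one intuition for why aliasing can make a network sensitive to small translations.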

Both architectures were comparably robust and more robust than the standard AlexNet to adversarial perturbations (Fig. 6f) as well as ‘fooling images’14 (Extended Data Fig. 9a) and ‘feature adversaries’56 (Extended Data Fig. 9b,c). However, metamers generated from LowpassAlexNet were substantially more recognizable than metamers generated from VOneAlexNet (Fig. 6g,h). This result provides further evidence that model metamers can differentiate models even when adversarial robustness does not.

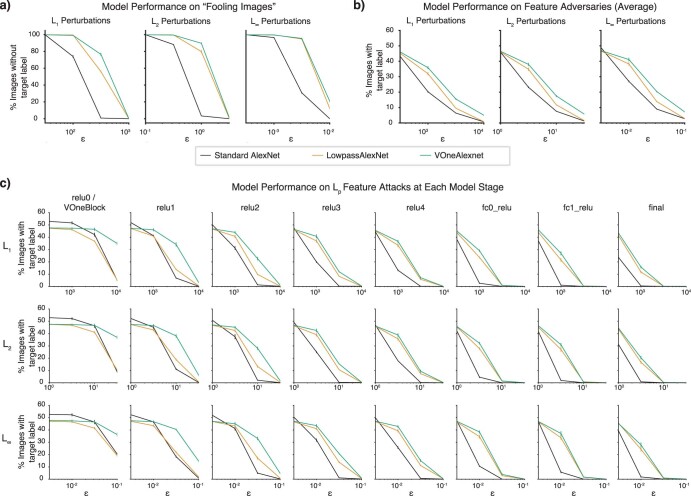

Extended Data Fig. 9. Adversarial robustness of VOneAlexNet and LowpassAlexNet to different types of adversarial examples.

a, Adversarial robustness to “Fooling images”. Fooling images14 are constructed from random noise initializations (the same noise type used for initialization during model metamer generation) by making small Lp-constrained perturbations to cause the model to classify the noise as a particular target class. LowpassAlexNet and VOneAlexNet are more robust than the standard AlexNet for all perturbation types (ANOVA comparing VOneAlexNet or LowpassAlexNet to the standard architecture; main effect of architecture; F(1,8) > 6787.0, p < 0.031, for all adversarial perturbation types in both cases), and although there was a significant robustness difference between LowpassAlexNet and VOneAlexNet, it was in the opposite direction to the difference in metamer recognizability: VOneAlexNet was more robust (ANOVA comparing VOneAlexNet to LowpassAlexNet; main effect of architecture; F(1,8) > 98.6, p < 0.031, for all perturbation types). Error bars plot s.e.m. across five sets of target labels. b, Adversarial robustness to feature adversaries. Feature adversaries56 are constructed by perturbing a natural “source” image so that it yields model activations (at a particular stage) that are close to those evoked by a different natural “target” image, while constraining the perturbed image to remain within a small distance from the original natural image in pixel space. The robustness measure plotted here is averaged across adversaries generated for all stages of a model.
LowpassAlexNet and VOneAlexNet were more robust than the standard AlexNet for all perturbation types (ANOVA comparing VOneAlexNet or LowpassAlexNet to the standard architecture; main effect of architecture; F(1,8) > 90.8, p < 0.031, for all adversarial perturbation types), and although there was a significant robustness difference between LowpassAlexNet and VOneAlexNet, it was again in the opposite direction to the difference in metamer recognizability: VOneAlexNet was more robust (ANOVA comparing VOneAlexNet to LowpassAlexNet; main effect of architecture; F(1,8) > 69.0, p < 0.031, for all perturbation types). Here and in (c), error bars plot s.e.m. across five samples of target and source images. c, Performance on feature adversaries for each model stage used to obtain the average curve in b. LowpassAlexNet is not more robust than VOneAlexNet to any type of adversarial example, even though it has more recognizable model metamers (Fig. 6g), illustrating that metamers reveal a different type of model discrepancy than that revealed with typical metrics of adversarial robustness.

These adversarial robustness-related results may be understood in terms of configurations of the four types of stimulus sets originally shown in Fig. 1b (Fig. 6i). Adversarial examples are stimuli that are metameric to a reference stimulus for humans but are classified differently from the reference stimulus by a model. Adversarial robustness thus corresponds to a situation where the human metamers for a reference stimulus fall completely within the set of stimuli that are recognized as the reference class by a model (blue outline contained within the orange shaded region in Fig. 6i, right column). This situation does not imply that all model metamers will be recognizable to humans (orange outline contained within the blue shaded region in the top row). These theoretical observations motivate the use of model metamers as a complementary model test and are confirmed by the empirical observations of this section.

Metamer recognizability and out-of-distribution robustness

Neural network models have also been found to be less robust than humans to images that fall outside their training distribution (for example, line drawings, silhouettes and highpass-filtered images that qualitatively differ from the photos in the common ImageNet1K training set; Fig. 6j)10,57,58. This type of robustness has been found to be improved by training models on substantially larger datasets59. We compared model robustness for such ‘out-of-distribution’ images to the recognizability of their metamers from the final model stage (the model set included several models trained on large-scale datasets taken from Fig. 2d, along with all other models from Figs. 2c, 3 and 4). This type of robustness (measured by two common benchmarks) was again not correlated with metamer recognizability (ImageNet-C: ρ = –0.16, P = 0.227; Geirhos 2021: ρ = –0.17, P = 0.215; Fig. 6k).

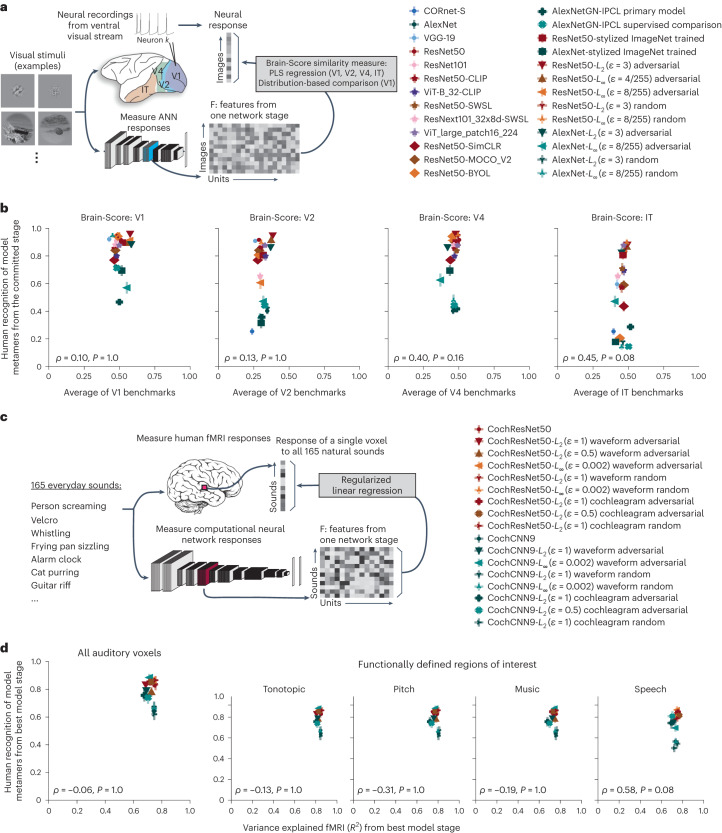

Metamer recognizability dissociates from model–brain similarity

Are the differences between models shown by metamer recognizability similarly evident when using standard brain comparison benchmarks? To address this question, we used such benchmarks to evaluate the visual and auditory models described above in Figs. 2–5. For the visual models, we used the Brain-Score platform to measure the similarity of model representations to neural benchmarks for visual areas V1, V2 and V4 and the inferior temporal cortex (IT26,31; Fig. 7a). The platform’s similarity measure combines a set of model–brain similarity metrics, primarily measures of variance explained by regression-derived predictions. For each model, the score was computed for each visual area using the model stage that gave the highest similarity in held-out data for that visual area. We then compared this neural benchmark score to the recognizability of the model’s metamers from the same stage used to obtain the neural predictions. This analysis showed modest correlations between the two measures for V4 and IT, but these were not significant after Bonferroni correction and were well below the presumptive noise ceiling (Fig. 7b). Moreover, the neural benchmark scores were overall fairly similar across models. Thus, most of the variation in metamer recognizability was not captured by standard model–brain comparison benchmarks.
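A noise ceiling of this kind can be reasoned about with the classical attenuation bound, under which the correlation observable between two noisy measures is limited by the geometric mean of their reliabilities. The sketch below shows that bound; whether it matches the exact estimator used for the values reported here is an assumption, and the reliabilities in the example are hypothetical.

```python
import math


def correlation_noise_ceiling(reliability_x, reliability_y):
    """Upper bound on the observable correlation between two noisy measures,
    given the reliability (for example, split-half correlation) of each."""
    return math.sqrt(reliability_x * reliability_y)


# Hypothetical reliabilities (not values from this study).
ceiling = correlation_noise_ceiling(0.81, 1.0)
print(round(ceiling, 3))
```

A measured correlation well below such a ceiling cannot be attributed to measurement noise alone, which is the sense in which the two measures are said to dissociate.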

Fig. 7. Human recognition of model metamers dissociates from model predictions of brain responses.

a, Procedure for neural benchmarks; ANN, artificial neural network. b, Human recognizability of a model’s metamers versus model–brain similarity for four areas of the ventral stream assessed by a commonly used set of benchmarks26,31. The benchmarks mostly consisted of the neurophysiological variance explained by model features via regression. A single model stage was chosen for each model and brain region that produced highest similarity in a separate dataset; graphs plot results for this stage (N = 26 models). Error bars on each data point plot s.e.m. across participant metamer recognition; benchmark results are a single number. None of the correlations were significant after Bonferroni correction. Given the split-half reliability of the metamer recognizability scores and the model–brain similarity scores81, the noise ceiling of the correlation was ρ = 0.92 for IT. c, Procedure for auditory brain predictions. Time-averaged unit responses in each model stage were used to predict each voxel’s response to natural sounds using a linear mapping fit to the responses to a subset of the sounds with ridge regression. Model predictions were evaluated on held-out sounds. d, Average voxel response variance explained by the best-predicting stage of each auditory model from Figs. 2 and 5 plotted against metamer recognizability for that model stage obtained from the associated experiment. We performed this analysis across all voxels in the auditory cortex (left) and within four auditory functional ROIs (right). Variance explained (R2) was measured for the best-predicting stage of the models (N = 17 models) chosen individually for each participant and ROI (N = 8 participants). For each participant, the other participants’ data were used to choose the best-predicting stage. Error bars on each data point plot s.e.m. of metamer recognition and variance explained across participants. 
No correlations were significant after Bonferroni correction, and they were again below the noise ceiling (the presumptive noise ceiling ranged from ρ = 0.78 to ρ = 0.87 depending on the ROI).

We performed an analogous analysis for the auditory models using a large dataset of human auditory cortical functional magnetic resonance imaging (fMRI) responses to natural sounds60 that had previously been used to evaluate neural network models of the auditory system4,61. We analyzed voxel responses within four regions of interest in addition to all of the auditory cortex, in each case again choosing the best-predicting model stage, measuring the variance it explained in held-out data and comparing that to the recognizability of the metamers from that stage (Fig. 7c). The correlation between metamer recognizability and explained variance in the brain response was not significant when all voxels were considered (ρ = –0.06 and P = 1.0 with Bonferroni correction; Fig. 7d). We did find a modest correlation within one of the regions of interest (ROIs; speech: ρ = 0.58 and P = 0.08 with Bonferroni correction), but it was well below the presumptive noise ceiling (ρ = 0.78).
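For reference, Bonferroni correction multiplies each p-value by the number of comparisons and caps the result at 1, which is why corrected values of exactly 1.0 appear above. A minimal sketch follows; the assumption that the correction spans five comparisons (all voxels plus four ROIs) and the p-values themselves are illustrative only.

```python
def bonferroni(p_values):
    """Multiply each p-value by the number of tests, capping at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical uncorrected p-values for five comparisons
# (not values from this study).
corrected = bonferroni([0.4, 0.3, 0.5, 0.9, 0.01])
print(corrected)
```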

We conducted analogous analyses using representational similarity analysis instead of regression-based explained variance to evaluate auditory model–brain similarity; these analyses yielded conclusions similar to those of the regression-based analyses (Extended Data Fig. 10). Overall, the results indicate that the metamer test is complementary to traditional metrics of model–brain fit (and often distinguishes models better than these traditional metrics).
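The representational similarity comparison mentioned above can be sketched as follows. This is a toy illustration on synthetic responses, not the study's pipeline. The dissimilarity measure (1 minus Pearson correlation), the array sizes and the simplified rank computation (ties are not averaged) are assumptions made for the example.

```python
import numpy as np

def rdm(responses):
    """Dissimilarity (1 - Pearson r) between response patterns; rows are stimuli."""
    return 1.0 - np.corrcoef(responses)

def upper_triangle(matrix):
    """Off-diagonal upper-triangle entries as a flat vector."""
    rows, cols = np.triu_indices(matrix.shape[0], k=1)
    return matrix[rows, cols]

def spearman(a, b):
    """Spearman rho as the Pearson correlation of ranks (ties are not averaged)."""
    rank_a = np.argsort(np.argsort(a))
    rank_b = np.argsort(np.argsort(b))
    return np.corrcoef(rank_a, rank_b)[0, 1]

rng = np.random.default_rng(0)
n_sounds = 30                                            # hypothetical stimulus count
latent = rng.standard_normal((n_sounds, 3))              # shared stimulus structure
brain = latent @ rng.standard_normal((3, 50)) + 0.1 * rng.standard_normal((n_sounds, 50))
model = latent @ rng.standard_normal((3, 40)) + 0.1 * rng.standard_normal((n_sounds, 40))

# Compare the two RDMs using only their off-diagonal entries.
similarity = spearman(upper_triangle(rdm(brain)), upper_triangle(rdm(model)))
print(round(float(similarity), 3))
```

Because the comparison uses only the rank order of pairwise dissimilarities, it does not require fitting a mapping between model units and voxels, which is the main practical difference from the regression-based analysis.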

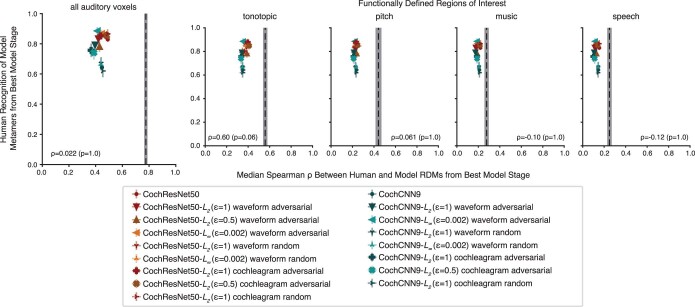

Extended Data Fig. 10. Representational Similarity Analysis of auditory fMRI data.

The median Spearman ρ between the representational dissimilarity matrix (RDM) from fMRI activations to natural sounds (N = 8 participants) and the RDM from model activations at the best model stage as determined with held-out data, compared with the metamer recognition by humans at this chosen model stage. The dashed black line shows the upper bound on the RDM similarity that could be measured given the data reliability, estimated by comparing a participant’s RDM with the average of the RDMs from each of the other participants. Error bars are s.e.m. across participants. The correlation between metamer recognizability and the human–model RDM similarity was not statistically significant for any of the ROIs following Bonferroni correction (all: ρ = 0.02, p = 1.0; tonotopic: ρ = 0.60, p = 0.06; pitch: ρ = 0.06, p = 1.0; music: ρ = 0.10, p = 1.0; speech: ρ = 0.12, p = 1.0), and was again well below the presumptive noise ceiling (which ranged from ρ = 0.79 to ρ = 0.89, depending on the ROI). We also note that the variation in metamer recognizability across models is substantially greater than the variation in RDM similarity, indicating that metamers better differentiate this set of models than does the RDM similarity with this fMRI dataset.

Metamer transfer across models

Are one model’s metamers recognizable by other models? We addressed this issue by taking all the models trained for one modality, holding one model out as the ‘generation’ model and presenting its metamers to each of the other models (‘recognition’ models), measuring the accuracy of their class predictions (Fig. 8a). We repeated this procedure with each model as the generation model. As a summary measure for each generation model, we averaged the accuracy across the recognition models (Fig. 8a and Supplementary Figs. 3 and 4). To facilitate comparison, we analyzed models that were different variants of the same architecture. We used permutation tests to evaluate differences between generation models (testing for main effects).
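The hold-one-out summary described above can be sketched as follows for a single model stage. The accuracy values are made up for illustration, and the function name `transfer_summary` is hypothetical. Each row is a generation model, each column is a recognition model, and the diagonal (a model recognizing its own metamers) is excluded from the average.

```python
import numpy as np

def transfer_summary(acc):
    """Mean and s.e.m. of recognition accuracy over all models except the generator.

    acc[g, r] is the accuracy of recognition model r on metamers from generation model g.
    """
    n_models = acc.shape[0]
    others = ~np.eye(n_models, dtype=bool)   # mask that drops the generation model itself
    means = np.array([acc[g, others[g]].mean() for g in range(n_models)])
    sems = np.array([acc[g, others[g]].std(ddof=1) / np.sqrt(n_models - 1)
                     for g in range(n_models)])
    return means, sems

# Made-up accuracies for three models at one stage.
acc = np.array([[0.9, 0.2, 0.4],
                [0.6, 0.9, 0.8],
                [0.1, 0.3, 0.9]])
means, sems = transfer_summary(acc)
print(means, sems)
```

Repeating this for every stage of a generation model gives one transfer curve per model, which is the quantity plotted against model stage.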

Fig. 8. Human recognition of a model’s metamers is correlated with their recognition by other models.

a, Model metamers were generated for each stage of a ‘generation’ model (one of the models from Figs. 2c,d, 3, 4 and 6g for visual models and from Figs. 2f and 5 for auditory models). These metamers were presented to ‘recognition’ models (all other models from the listed figures). We measured recognition of the generating model’s metamers by each recognition model, averaging accuracy over all recognition models (excluding the generation model), as shown here for a standard-trained ResNet50 image model. Error bars represent s.e.m. over N = 28 recognition models. b, Average model recognition of metamers from the standard ResNet50, the three self-supervised ResNet50 models and the three adversarially trained ResNet50 models. To obtain self-supervised and adversarially trained results, we averaged each recognition model’s accuracy curve across all generating models and averaged these curves across recognition models. Error bars represent s.e.m. over N = 28 recognition models for standard models and N = 29 recognition models for adversarially trained and self-supervised models. c, Same as b but for Standard AlexNet, LowpassAlexNet and VOneAlexNet models from Fig. 6d–h. Error bars are over N = 28 recognition models. d, Same as b but for auditory models, with metamers generated from the standard CochResNet50, the three CochResNet50 models with waveform adversarial perturbations and the two CochResNet50 models with cochleagram adversarial perturbations. Chance performance is 1/794 for models because they had a ‘null’ class in addition to 793 word labels. Error bars represent s.e.m. over N = 16 recognition models for the standard model and N = 17 recognition models for adversarially trained models. e,f, Correlation between human and model recognition of another model’s metamers for visual (e; N = 219 model stages) and auditory (f; N = 144 model stages) models. 
Abscissa plots average human recognition accuracy of metamers generated from one stage of a model, and error bars represent s.e.m. across participants. Ordinate plots average recognition by other models of those metamers, and error bars represent s.e.m. across recognition models. Human recognition of a model’s metamers is highly correlated with other models’ recognition of those same model metamers.

Metamers from late stages of the standard-trained ResNet50 were generally not recognized by other models (Fig. 8b). A similar trend held for the models trained with self-supervision. By contrast, metamers from the adversarially trained models were more recognizable to other models (Fig. 8b; P < 0.0001 compared to either standard or self-supervised models). We saw an analogous metamer transfer boost from the model with reduced aliasing (LowpassAlexNet), for which metamers for intermediate stages were more recognizable to other models (Fig. 8c; P < 0.0001 compared to either standard or VOneAlexNet models). Similar results held for auditory models (Fig. 8d; waveform adversarially trained versus standard, P = 0.011; cochleagram adversarially trained versus standard, P < 0.001), although metamers from the standard-trained CochResNet50 transferred better to other models than did those for the supervised vision model, perhaps due to the shared cochlear representation present in all auditory models, which could increase the extent of shared invariances.

These results suggest that models tend to contain idiosyncratic invariances, in that their metamers vary in ways that render them unrecognizable to other models. This finding is loosely consistent with findings that the representational dissimilarity matrices for natural images can vary between individual neural network models62. The results also clarify the effect of adversarial training. Specifically, they suggest that adversarial training removes some of the idiosyncratic invariances of standard-trained deep neural networks rather than learning new invariances that are not shared with other models (in which case their metamers would not have been better recognized by other models). The architectural change that reduced aliasing had a similar effect, albeit limited to the intermediate model stages.

The average model recognition of metamers generated from a given stage of another model is strikingly similar to human recognition of the metamers from that stage (compare Fig. 8b–d to Figs. 3c, 4b, 5f and 6g). To quantify this similarity, we plotted the average model recognition for metamers from each stage of each generating model against human recognition of the same stimuli, revealing a strong correlation for both visual (Fig. 8e) and auditory (Fig. 8f) models. This result suggests that the human–model discrepancy revealed by model metamers reflects invariances that are often idiosyncratic properties of a specific neural network, leading to impaired recognition by both other models and human observers.

Discussion

We used model metamers to reveal invariances of deep artificial neural networks and compared these invariances to those of humans by measuring human recognition of visual and auditory model metamers. Metamers of standard deep neural networks were dominated by invariances that are absent from human perceptual systems, in that metamers from late model stages were typically completely unrecognizable to humans. This was true across modalities (visual and auditory) and training methods (supervised versus self-supervised training). The effect was driven by invariances that are idiosyncratic to a model, as human recognizability of a model’s metamers was well predicted by their recognizability to other models. We identified ways to make model metamers more human-recognizable in both the auditory and visual domains, including a new type of adversarial training for auditory models using perturbations at an intermediate model stage. Although there was a substantial metamer recognizability benefit from one common training method to reduce adversarial vulnerability, we found that metamers revealed model differences that were not evident by measuring adversarial vulnerability alone. Moreover, the model improvements revealed by model metamers were not obvious from standard brain prediction metrics. These results show that metamers provide a model comparison tool that complements the standard benchmarks that are in widespread use. Although some models produced more recognizable metamers than others, metamers from late model stages remained less recognizable than natural images or sounds in all cases we tested, suggesting that further improvements are needed to align model representations with those of biological sensory systems.

Might humans analogously have invariances that are specific to an individual? This possibility is difficult to explicitly test given that we cannot currently sample human metamers (metamer generation relies on having access to the model’s parameters and responses, which are currently beyond reach for biological systems). If idiosyncratic invariances were also present in humans, the phenomenon we have described here might not represent a human–model discrepancy and could instead be a common property of recognition systems. The main argument against this interpretation is that several model modifications (different forms of adversarial training and architectural modifications to reduce aliasing) substantially reduced the idiosyncratic invariances present in standard deep neural network models. These results suggest that idiosyncratic invariances are not unavoidable in a recognition system. Moreover, the set of modifications explored here was far from exhaustive, and it seems plausible that idiosyncratic invariances could be further alleviated with alternative training or architecture changes in the future.

Relation to previous work

Previous work has also used gradient descent on the input to visualize neural network representations51,63. However, the significance of these visualizations for evaluating neural network models of biological sensory systems has received little attention. One contributing factor may be that model visualizations have often been constrained by added natural image priors or other forms of regularization64 that help make visualizations look more natural but mask the extent to which they otherwise diverge from a perceptually meaningful stimulus. By contrast, we intentionally avoided priors or other regularization when generating model metamers, as they defeat the purpose of the metamer test. When we explicitly measured the benefit of regularization, we found that it did boost recognizability somewhat but that it was not sufficient to render model metamers fully recognizable or reproduce the benefits of model modifications that improve metamer recognizability (Fig. 4e).
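The unregularized procedure referred to above, gradient descent on the input until the activations at a chosen stage match those of a reference stimulus, can be sketched with a toy example. This is not any of the networks studied here. The single random-weight stage, the softplus nonlinearity (chosen for smoothness), the random starting input, the squared-error loss, the step size and the iteration count are all assumptions made for the example.

```python
import numpy as np

def softplus(u):
    return np.logaddexp(0.0, u)

def sigmoid(u):
    return 1.0 / (1.0 + np.exp(-u))

def stage(W, x):
    """Toy model stage: linear projection followed by a smooth nonlinearity."""
    return softplus(W @ x)

def normalized_loss(W, x, target):
    """Squared activation error, normalized by the squared norm of the target."""
    diff = stage(W, x) - target
    return float(diff @ diff / (target @ target))

def generate_metamer(W, x_ref, x_init, lr=0.05, n_steps=5000):
    """Gradient descent on the input only; no priors or regularization are added."""
    target = stage(W, x_ref)
    x = x_init.copy()
    for _ in range(n_steps):
        u = W @ x
        # Gradient of ||softplus(W x) - target||^2 with respect to x.
        grad = W.T @ (2.0 * (softplus(u) - target) * sigmoid(u))
        x -= lr * grad
    return x

rng = np.random.default_rng(0)
n_inputs, n_units = 32, 8                      # fewer units than inputs: many-to-one stage
W = rng.standard_normal((n_units, n_inputs)) / np.sqrt(n_inputs)
x_ref = rng.standard_normal(n_inputs)          # stand-in for a natural stimulus
x_init = rng.standard_normal(n_inputs)         # random starting point

metamer = generate_metamer(W, x_ref, x_init)
initial_loss = normalized_loss(W, x_init, stage(W, x_ref))
final_loss = normalized_loss(W, metamer, stage(W, x_ref))
input_distance = float(np.linalg.norm(metamer - x_ref))
print(initial_loss, final_loss, input_distance)
```

Because the toy stage maps 32 inputs onto 8 units, many inputs produce the same activations. The optimized input therefore matches the reference at the model stage while remaining far from it in input space, which is the sense in which a metamer exposes a model's invariances.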

Another reason the discrepancies we report here have not been widely discussed within neuroscience is that most studies of neural network visualizations have not systematically measured recognizability to human observers (in part because these visualizations are primarily reported within computer science, where such experiments are not the norm). We found controlled experiments to be essential. Before running full-fledged experiments, we always conducted the informal exercise of generating examples and evaluating them subjectively. Although the largest effects were evident informally, the variability of natural images and sounds made it difficult to predict with certainty how an experiment would turn out. It was thus critical to substantiate informal observation with controlled experiments in humans.

Metamers are also methodologically related to a type of adversarial example generated by adding small perturbations to an image from one class such that the activations of a classifier (or internal stage) match those of a reference image from a different class56,65, despite being seen as different classes by humans when tested informally66,67. Our method differs in probing model invariances without any explicit bias to cause metamers to appear different to humans. We found models in which vulnerability to these adversarial examples dissociated from metamer recognizability (Extended Data Fig. 9), suggesting that metamers may reflect distinct model properties.

Effects of unsupervised training

Unsupervised learning potentially provides a more biologically plausible computational theory of learning41,68 but produced model metamers that were qualitatively similar to those from supervised learning. This finding is consistent with evidence that the classification errors of self-supervised models are no more human-like than those of supervised models69. The metamer-related discrepancies are particularly striking for self-supervised models because they are trained with the goal of invariance, being explicitly optimized to become invariant to the augmentations performed on the input. We also found that the divergence with human recognition had a similar dependence on model stage irrespective of whether models were trained with or without supervision. These findings raise the possibility that factors common to supervised and unsupervised neural networks underlie the divergence with humans.

Differences in metamers across stages

The metamer test differs from some other model metrics (for example, behavioral judgments of natural images or sounds, or measures of adversarial vulnerability) in that metamers can be generated from every stage of a model, with the resulting discrepancies associated with particular model stages. For instance, metamers revealed that intermediate stages were more human-like in some models than others. Reducing aliasing produced large improvements in the human recognizability of metamers from intermediate stages (Fig. 6g), consistent with the idea that biological systems also avoid aliasing. By contrast, metamers from the final stages showed little improvement. This result indicates that this model change produces intermediate representations with more human-like invariances despite not resolving the discrepancy introduced at the final model stages. The consistent discrepancies at the final model stages highlight these late stages as targets for model improvements45.