Abstract

Recent progress in engineering highly promising biocatalysts has increasingly involved machine learning methods. These methods leverage existing experimental and simulation data to aid in the discovery and annotation of promising enzymes, as well as in suggesting beneficial mutations for improving known targets. The field of machine learning for protein engineering is gathering steam, driven by recent success stories and notable progress in other areas. It already encompasses ambitious tasks such as understanding and predicting protein structure and function, catalytic efficiency, enantioselectivity, protein dynamics, stability, solubility, aggregation, and more. Nonetheless, the field is still evolving, with many challenges to overcome and questions to address. In this Perspective, we provide an overview of ongoing trends in this domain, highlight recent case studies, and examine the current limitations of machine learning-based methods. We emphasize the crucial importance of thorough experimental validation of emerging models before their use for rational protein design. We present our opinions on the fundamental problems and outline the potential directions for future research.

Keywords: activity, artificial intelligence, biocatalysis, deep learning, protein design

1. Introduction

Biocatalysis is a promising field that offers diverse possibilities for creating sustainable and environmentally friendly solutions in various industries. Its potential stems from its ability to mimic and harness the power of nature using cells and enzymes that have evolved over millions of years to perform specific chemical reactions with high efficiency. This makes it possible to transform chemical compounds selectively and efficiently, providing an alternative to traditional chemical catalysis, which often requires harsh conditions and toxic chemicals.1 Biocatalysts could therefore be valuable in the production of fine chemicals, pharmaceuticals, and food ingredients as well as in the development of sustainable processes for the production of energy and materials.2 In addition, biocatalysis is an exciting area of research and development with great promise for the future because of the potential to unlock new solutions for diverse challenges by providing green alternatives to traditional chemical processes, new energy sources, and tools for improving the overall efficiency of industrial processes or biological removal of recalcitrant waste.3−5 It is also a highly interdisciplinary research area that makes heavy use of advanced experimental techniques and computational methods.6

Many research fields are undergoing a gradual transition from near-exclusive reliance on experimental work to hybrid approaches that incorporate computational simulations and data-driven methods.7−9 In the past, researchers would accumulate observations from individual experiments and use the resulting data to formulate fundamental rules. They then created simulations based on these rules to better understand the system under investigation. As computational power has increased, researchers have been able to shift toward data-driven methods that rely on machine learning (ML, see the glossary in Table 1 for terms in bold) algorithms to deduce rules directly from data.10,11 This transition has made it possible to efficiently and comprehensively analyze large and complex data sets that are often generated by high-throughput technologies. Particularly, very powerful deep learning algorithms are finding a wide range of applications in life sciences and will be discussed in great detail in this Perspective. While experimental science and computational simulations still play essential roles, the trend toward data-driven methods will likely continue as technology and data collection methods evolve further.7

Table 1. Glossary of Terms Used Frequently in the Context of Machine Learning for Enzyme Engineeringa.

| accuracy | metric primarily used for classification tasks, measuring the ratio of correct predictions to all predictions produced by a machine learning model |

| active learning | a type of machine learning in which the learning algorithm queries a person for providing labels for particular data points during training iteratively. the first iteration usually starts with many unlabeled and few labeled data points, e.g., protein sequences. after training on this data set, the algorithm proposes a next set of data points for labeling to the experimenter, e.g., more sequences to be characterized in the lab. their labels are then provided to the algorithm for the next iteration, and the cycle repeats several times |

| artificial intelligence (AI) | artificial intelligence as defined by McCarthy is “the science and engineering of making intelligent machines, especially intelligent computer programs” |

| continual learning | a concept in which a model can train on new data while maintaining the abilities acquired from earlier training on old data |

| cross-validation (K-fold) | an approach to evaluating the performance of a model whereby a data set is split into K parts, the model is retrained K times on all but one part, and the performance is evaluated on the excluded part. this way each data point is used once for validation, and K different evaluations are produced to provide a distribution of the values |

| deep learning (DL) | a branch of machine learning that uses multiple-layer neural network architectures. deep networks generally include many more parameters (sometimes, billions) and hyperparameters than traditional machine learning models. this gives deep neural networks tremendous expressive power and design flexibility, which has made them a major driver of modern technology with applications ranging from on-the-fly text generation to protein structure prediction |

| diffusion | deep learning paradigm based on denoising diffusion probabilistic modeling. diffusion models learn to generate novel objects, e.g., images or proteins, by reconstructing artificially corrupted training examples |

| embedding | representation of high-dimensional data, e.g., text, images, or proteins, in a lower-dimensional vector space while preserving important information |

| end-to-end learning | a type of ML that requires minimal to no data transformation (e.g., just one-hot encoding of the input) to train a predictor. This is often the case in deep learning, when abundant data are available to establish direct input-to-output correspondence, in contrast to classical ML approaches using small data sets, which typically require feature and label engineering before the data can be used for training |

| equivariance | an ML model is said to be equivariant with respect to a particular transformation if the order of applying the transformation and the model to an input does not change the outcome. for example, if we pass a rotated input to a model that is equivariant to rotation, the result will be the same as if the model was applied to the original input and the output was then rotated |

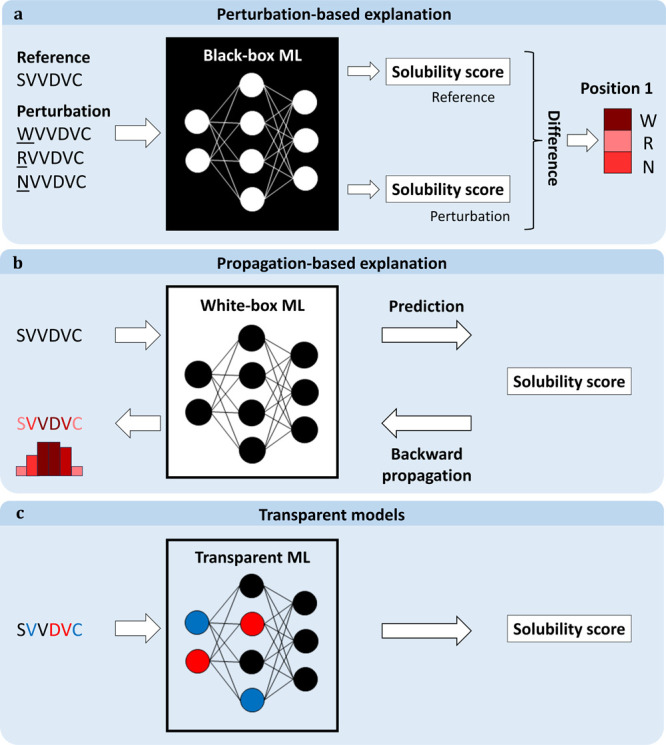

| explainable artificial intelligence (XAI) | AI or ML-based algorithms designed such that humans can understand the reasons for their predictions. its core principles are transparency, interpretability, and explainability |

| findable, accessible, interoperable, reusable (FAIR principles) | principles for the management and stewardship of scientific data to ensure findability, accessibility, interoperability, and reusability |

| fine-tuning | an approach to transfer learning (see “transfer learning” below) in which all or part of the weights of an artificial neural network pretrained on another task are further adjusted (“fine-tuned”) during the training on a new task |

| generalizability | in the context of ML models, generalizability refers to a model’s ability to perform well on new data not used during the training process |

| generative models | algorithms aiming to capture the data distribution of the training samples to be capable of generating novel samples resembling the data that they was trained on |

| inductive bias | set of assumptions of a model used for predictions over unknown inputs. for example, the model can be built such that it can only predict values within a certain range consistent with the expected range of values for a particular problem |

| learning rate | a parameter influencing the speed of the training of an ML model. a higher learning rate increases the effect of a single pass of the training data on the model’s parameters |

| loss function/cost function | a function used to evaluate a model during training. by iteratively minimizing this function, the model updates the values of its parameters. a typical example is mean-squared error of prediction |

| machine learning (ML) | machine learning, according to Mitchell, is the science that is “concerned with the question of how to construct computer programs that automatically improve with experience”. the terms ML and AI are often used interchangeably, but such usage is an oversimplification, as ML involves learning from data whereas AI can be more general |

| masking | deep learning paradigm for self-supervised learning. neural networks trained in a masked-modeling regime acquire powerful understanding of data by learning to reconstruct masked parts of inputs, e.g., masked words in a sentence, numerical features artificially set to zeros, or hidden side-chains in a protein structure |

| multilayer perceptron (MLP) | a basic architecture of artificial neural networks. it consists of multiple fully connected layers of neurons. each neuron calculates a weighted sum of inputs from the previous layer, applies a nonlinear activation function, and sends the result to the neurons in the next layer |

| multiple sequence alignment (MSA) | a collection of protein or nucleic acid sequences aligned based on specific criteria, e.g., allowing introductions of gaps with a given penalty or providing substitution values for pairs of residue types, to maximize similarity at aligned sequence positions. sequence alignments provide useful insights into the evolutionary conservation of sequences |

| one-hot encoding for protein sequence | each amino acid residue is represented by a 20-dimensional vector with a value of one at the position of the corresponding amino acid in the 20-letter alphabet and zeros elsewhere |

| overfitting | case of an inappropriate training of ML model in which the model has too many degrees of freedom, and it is allowed to use these degrees of freedom to fit the noise in the training data during training. as a result, the model can reach seemingly excellent performance on the training data, but it will fail to generalize to new data |

| regularization | in ML, regularization is a process used to prevent model overfitting. regularization techniques typically include adding a penalty on the magnitude of model parameters into the loss function to favor the use of low parameter magnitudes and thereby compress the parameter space. another popular regularization method for DL is to use a dropout layer, which randomly switches network neurons on and off during training |

| reinforcement learning (RL) | a machine learning paradigm in which the problem is defined as finding an optimal sequence of actions in an environment where the value of each action is quantified using a system of rewards. for example, if RL were used to learn to play a boardgame, the model would function as a player, the actions would represent the moves available to the player, and the game would constitute the environment. using game simulations, the model learns to perform stronger actions based on the “rewards” (feedback from the environment, e.g., winning/losing the game) it receives for its past actions |

| self-supervised learning | a machine learning paradigm of utilization of unlabeled data in a setting of supervised learning. In self-supervised learning, the key is to define a proxy task for which labels can be synthetically generated for the unlabeled data, and then the task can be learned in a supervised manner. for example, in natural language processing, a popular task is to take sentences written in natural language, mask (see “masking” above) words in those sentences, and learn to predict the missing word. such a proxy task can help the model to learn the distribution of the data it is supposed to work with |

| semisupervised learning | a machine learning paradigm for tasks where the amount of labeled data is limited but there is an abundance of unlabeled data available. the unlabeled data are used to learn a general distribution of the data, aiding the learning of a supervised model. for example, all the data can be clustered by an unsupervised algorithm, and the unlabeled samples can be automatically labeled based on the labels present in the cluster, leading to enhancement of the data set for supervised learning, which can benefit its performance despite the lower quality of labeling |

| supervised learning | a machine learning paradigm in which the goal is to predict a particular property known as a label for each data point. for example, the datapoints can be protein sequences and the property to be predicted would be the solubility (soluble/insoluble). training a model in a supervised way requires having the training data equipped with labels |

| transfer learning | a machine learning technique in which a model is first trained for a particular task and then used (“transferred”) as a starting point for a different task. some of the learned weights can further be tuned to the new task (see “fine-tuning” above), or the transferred model can be used as a part of a new model that includes, for example, additional layers trained for the new task |

| transformer | transformers learn to perform complex tasks by deducing how all parts of input objects, e.g., words in a sentence or amino acids in a protein sequence, are related to each other, using a mechanism called “attention”. the transformer architecture is currently one of the most prominent neural network architectures |

| underfitting | the case of an insufficient training of a ML model, where the model could not capture the patterns in the available data well and exhibits high training error. it can be caused for example by a wrongly chosen model class, too strong regularization, an inappropriate learning rate, or too short training time |

| unsupervised learning | a machine learning paradigm in which the goal is to identify patterns in unlabeled data and the data distribution. Typical examples of unsupervised learning techniques include clustering algorithms and data compression or projection methods such as principal component analysis. the advantage of these methods is the capability of handling unlabeled data, often at the expense of their predictive power |

Focusing on their meaning in the context of this Perspective. The terms from this table are highlighted in bold upon their first usage in the text.

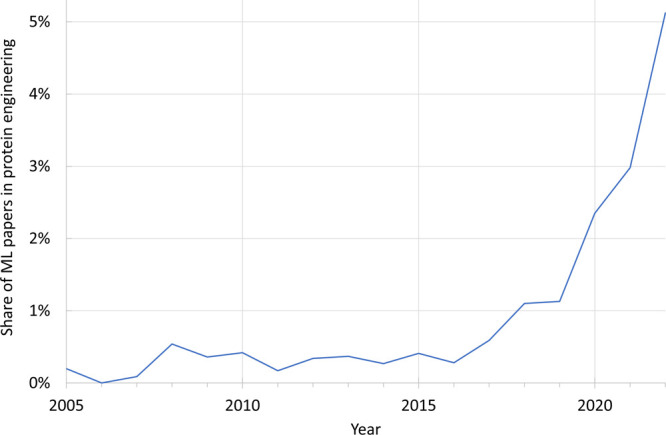

This paradigm shift is illustrated by the exponentially growing number of scientific articles describing the use of machine learning for protein engineering (Figure 1). The trend toward data-driven methods is expected to continue as technological advances allow us to accumulate, deposit, and reuse biological and biochemical data more effectively. This is being facilitated by initiatives such as the FAIR principles, which promote the findability, accessibility, interoperability, and reusability of data, and the European Open Science Cloud, which is designed to promote best practices in handling data.12 These large-scale initiatives are expected to accelerate the adoption of data-driven methods by making it easier for researchers to access, use, and share existing data and ensuring that these data are of high quality.

Figure 1.

The trend in the use of machine learning in the literature on protein engineering. The graph shows the ratio of publications mentioning “machine learning” and “protein engineering” to all papers mentioning “protein engineering” in their title, abstract, or keywords, based on the Scopus database. This trend illustrates the increasing attention ML is receiving as a generally applicable and useful technology for protein engineering.

This Perspective focuses on the application of machine learning to protein engineering, which means improving the properties of biocatalysts by optimizing their sequences and tertiary structures using molecular biology techniques. Time-wise, we will primarily cover the period since our previous review on the same topic, published in 2019.13 As for particular areas, we will be focusing mainly on the applications regarding engineering by mutating known proteins rather than designing proteins de novo. While readers with a particular interest in de novo design might also find this Perspective helpful, as we cover many techniques common for various protein design tasks, for particular details on de novo design, such as deep generative modeling, we refer to other reviews.14−16 We will also introduce high-level concepts from machine learning to familiarize the reader with a broader context and will not cover in depth technical aspects such as specifics of various neural network architectures. We refer the readers to several excellent recent reviews on those topics.10,17−23 We will consider new methods from a user’s perspective. We find this important because the methods presented in research papers, while being exciting and innovative, often have only a limited impact if the wider community cannot quickly and easily adopt them. Moreover, we will draw inspiration from other domains, as we believe that identifying parallels between tasks in different fields can accelerate the development of more powerful and practically useful methods. In particular, by examining how solutions have been developed in other disciplines, we can learn effective ways of making methods and software tools applicable to a broader range of users.24,25

This paper is organized as follows. Section 2 briefly reviews the basics of machine learning and underlines the similarities and differences between data related to proteins and data in other domains. Section 3 provides a comprehensive review of machine learning in protein engineering and highlights recent progress in the field. In section 4, we examine a series of exciting recent case studies in which machine learning methods were applied to create new enzyme designs for use in the laboratory and in practical applications. In section 5, we identify gaps in the field that remain to be filled. Finally, in section 6, we investigate what inspiration we can draw from other disciplines to bring ML-based enzyme design to a new level.

2. Principles of Machine Learning

As some of our readers might be unfamiliar with machine learning (ML), we start with a brief introduction to the topic. We will cover the basics of ML-based pipelines and vocabulary, highlight similarities between protein engineering and other domains from a ML perspective, outline the main traits that distinguish protein data from other data types regularly used in ML, and summarize the challenges of performing ML with protein data.

2.1. Machine Learning Basics

Machine learning is often seen as a subcategory of artificial intelligence (AI). Its primary purpose is to learn patterns directly from available data and use the learned patterns to generate predictions for new data. Its main difference from other methods for modeling a system’s behavior, such as quantum mechanical calculations, is that ML does not rely on hard-coded rules to make predictions. Instead, ML models are mathematical functions that depend on generic parameters whose values are obtained (learned) through optimization using available data and an optimization criterion, the so-called loss function.

Since the final model is derived from the input data, careful data collection is vitally important for machine learning. In particular, any biases, measurement noise, and imbalances must be recognized and accounted for. Moreover, as ML is based on mathematical functions, every data point in a data set typically needs to be represented as a vector of numbers that are commonly referred to as features. Features may be obtained by simple encoding of the raw data, e.g., end-to-end learning and one-hot encoding, but they may also represent more involved quantities derived from the raw data. For example, when predicting solubility from a protein sequence, the features may be simple amino acid counts, propensities of different residues to form secondary structures, conservation scores of proteins, or variables representing aggregated physicochemical properties.26 Choosing informative and discriminating features that provide relevant information about the underlying pattern in the data is crucial in ML because the features are the only data characteristics that the algorithm will exploit during training and when making predictions based on future inputs.

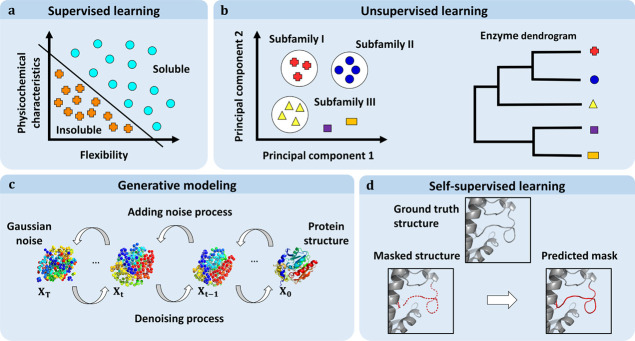

Several different categories of ML problems exist. In supervised learning problems, the goal is to predict a particular property (known as a label) for each data point (Figure 2A). For example, if we were seeking to predict protein solubility, each data point could be labeled “soluble” or “insoluble” based on experimental results. Data points can have multiple labels, so a given protein could have the labels “soluble”, “from a thermophilic organism”, and “globular”. Labels can form a set of classes or fall within a range of numerical values, giving rise to two subtypes of supervised learning problems: classification problems involving labels with no inherent order (e.g., “soluble” or “insoluble”) and regression problems involving labels corresponding to numerical values (e.g., protein yields). In contrast, the goal in unsupervised learning problems is to identify patterns in unlabeled data. Unsupervised learning techniques include clustering algorithms and data compression or projection methods, such as principal component analysis (Figure 2B). Semisupervised learning problems are those where the amount of labeled data is limited but there is an abundance of unlabeled data available. The unlabeled data are used to learn a general distribution of the data, aiding the learning of a supervised model. For example, all the data can be clustered by an unsupervised algorithm, and the unlabeled samples can be automatically labeled based on the labels present in the cluster, leading to the enhancement of the data set for supervised learning, which can benefit its performance despite the lower quality of labeling.

Figure 2.

Four main categories of machine learning methods. (a) Supervised learning methods use labels. For example, each protein in a data set might be labeled “soluble” or “insoluble”, and the model would then aim to find the optimal decision boundary between these two classes in the feature space. The learned boundary is then used to make predictions about new data for which labels are unavailable. (b) Unsupervised learning methods typically find patterns, e.g., clusters or groups) in unlabeled data. Examples include clustering enzymes into subfamilies or grouping them into a dendrogram. (c) Generative models learn the distribution of the training data to generate new instances corresponding to that distribution. Models of this type include diffusion models, which are trained to denoise synthetically noised inputs. The trained models can then be applied to random noise on the input to create a sample resembling the training data, e.g., a new protein structure. (d) Self-supervised learning methods transform an unsupervised problem into a supervised problem, for example, by masking a part of a sequence or structure (red dashed loop) and then predicting the masked information (red loop). Such proxy tasks enable the model to learn important characteristics of the underlying data and can lead the model to perform well even on different tasks.

The boundary between supervised and unsupervised machine learning has been blurred by the emergence of methods that can create labels synthetically. For example, in a data compression method, the label might be the input itself and the algorithm may impose constraints (e.g., a bottleneck in the architecture) that force the model to learn a more compact way of representing the data and their distribution. The algorithms that aim to capture the data distribution to generate new samples belong to a class of ML models called generative models. The most recent examples of this class include diffusion models, which have recently been used to generate protein backbone structures27,28 and predict the binding of a flexible ligand to a protein29 (Figure 2C). In diffusion models, synthetic training data are generated by gradually noising true data (X0) in a stepwise manner to ultimately obtain a maximally noised sample (XT). The sequences of increasingly noisy data are then reversed and used to train a model to denoise each individual step by having the (less noisy) sample Xt-1 serve as a “label” for the (more noisy) following sample Xt. For more details on diffusion generative models and their applications in bioinformatics, see the recent review.30 Alternatively, we can avoid the need for labels by masking a part of the input, e.g., a residue in a protein sequence or structure,31−33 and training a model that will predict the masked part. In other words, the original data (e.g., the amino acid that was masked) are treated as the label for the corresponding data point. Such approaches belong to the methods of self-supervised learning (Figure 2D) and are currently attracting considerable attention because of their great success in large language models; it turns out that this “self-supervision” approach allows algorithms to learn useful characteristics of the data, such as grammar and semantics in the case of natural language models. The following sections present some applications of self-supervised learning in the enzymology domain.

In supervised learning, underfitting and overfitting are two critical concepts that must always be considered. Underfitting refers to a situation in which either the selected class of models is insufficient to approximate patterns in the available data, the regularization is too strong, or the parameters of the training process such as the duration of the training or the learning rate were inappropriate. As a result, the model fails to capture the relationship between the input and output and has a high training error. Conversely, overfitting happens when a model has too many degrees of freedom, allowing it to start fitting noise in the training data during the training process. This leads to poor generalization and a significant drop in performance when the model is applied to new inputs. Robust evaluation of trained models is therefore crucial in machine learning to obtain feedback on the training process and develop improved training protocols or model hyperparameters.

The best practice in machine learning is to split the available data into three disjoint subsets: a training set, a validation set, and a test set. A model learns the underlying patterns within the data by fitting its parameters to the training set. The validation set is provided to the model at certain stages of the training for basic evaluation, and the results of these evaluations are used to select the model’s hyperparameters. Finally, the test set is used to get a realistic estimate of the model’s performance and is therefore only used once training has been completed and the final values for the hyperparameters have been set. Since the model does not see the test set during the training process, the model’s performance, when applied to this “new” data set, should be similar to that achieved with the test set if the test set accurately represents the general distribution of the studied data.

The choice of evaluation metric depends on the task at hand. Classification problems are mainly evaluated based on model accuracy, i.e., the ratio of correct predictions to the number of total predictions. Regression problems are typically evaluated based on the difference between the predicted and ground truth labels, so popular metrics include measures of the correlation between these labels as well as the mean squared error (MSE) and related metrics such as the root-mean-square error (RMSE). More complex problems often require customized metrics. For instance, in a protein structure prediction task, one might use the MSE between the predicted and actual locations of Cα atoms, which can be expressed using a fixed coordinate system (global alignment) or in terms of the local coordinates of each residue (local alignment). Both metrics indicate how well a predicted structure aligns with the corresponding actual structure.

2.2. Parallels between Machine Learning Tasks in Biocatalysis and Those in Other Domains

One of the strengths of machine learning is its universality, as the algorithms used for protein engineering tasks are similar to those used in other domains. Therefore, scientists working with protein data can reuse and build upon existing solutions from other fields, such as natural language processing, computer vision, and network analysis.

Natural language processing (NLP) is a field of computer science that aims to teach computers how to understand and handle natural languages. New ML techniques have enabled great advances in this field in recent years. For instance, a common task in NLP is to generate semantically and grammatically correct sentences. In protein engineering, the strings of amino acids representing primary sequences can be regarded as words constructed from an alphabet of twenty letters representing the canonical amino acids. These words can represent secondary structures or other motifs that can be combined in meaningful ways to create sentences in the language of protein structure that correspond to functional proteins. Another common task in NLP is the assignment of labels to individual words (e.g., to predict lexical categories or identify relevant information) or phrases (e.g., for sentiment analysis). This data structure resembles that of annotated protein data sets with labels representing protein stability, binding affinity, specificity, or other characteristics.34 Moreover, the complexities of the relationships between protein sequence, structure, and function are reminiscent of those in human languages, prompting researchers to adapt transformer-based large language models used in NLP to protein engineering tasks.35

Another field of computer science that has benefited greatly from recent advances in ML is computer vision, and techniques developed for use in this field have also found applications in the study of the protein structure. For example, protein structures can be converted to arrays of voxels (3D pixels) via the application of a discrete grid. The resulting representations are similar to those of volumetric 3D images, enabling the application of ML architectures, such as convolutional neural networks, that were originally designed to process image data. These networks learn representations through convolution and hierarchical aggregation and have recently been used to predict protein mutation landscapes,31 protein–ligand binding affinity,36 and the interactions of proteins with water molecules.37 Denoising diffusion probabilistic models are another class of computer vision models that have been applied in protein structure prediction. They are trained to denoise existing samples, which allows them to generate novel samples by morphing random noise. This has led to major breakthroughs in image generation and the emergence of the highly successful models, such as DALL-E 238 or Stable Diffusion.39 In protein science, such models have been used to perform fast protein–ligand binding,29 generate new small-molecule ligands40 and linkers,41 and perform de novo design of large proteins.27,28

The parallels between images and protein structures can be further exploited by adapting techniques developed for video analysis to predict protein dynamics. For example, a trajectory generated in a molecular dynamics simulation can be regarded as a temporal sequence of 3D images. This makes protein dynamics analysis similar to video processing and implies that video methods for event detection42 can be applied to molecular dynamics trajectories to detect events such as the opening of a tunnel.43 Video processing techniques can thus be adapted to clarify a protein’s function by analyzing the movement of individual atoms or groups of atoms within a protein structure in a manner similar to the movement of objects or groups of objects in a video. Moreover, there have been remarkable advances in ML techniques for video synthesis44−46 that could inspire new methods for capturing and synthesizing protein dynamics.

Finally, one more domain is relevant to protein engineering: network analysis, which involves studying the properties and structures of interconnected elements. Network analysis techniques have been used successfully to study diverse social and biological networks, including the development of Covid-19-related sentiment on social networks47 and protein–protein interaction networks.48 Interactions between proteins or between a protein and a ligand can be represented as networks (graphs) in which nodes correspond to proteins and ligands while edges correspond to biological relationships between them. Once such a network has been defined, link prediction or community detection methods can be applied.49 Alternatively, a protein structure can be represented as a network where nodes correspond to residues or individual atoms and edges correspond to inter-residue interactions or interatomic bonds. This makes it possible to use graph-based ML algorithms for tasks such as predicting protein function, solubility, or toxicity.50,51 Moreover, data on protein interactomes and the structures of small molecules have been used to drive theoretical research on graph-based ML: the best-established benchmark for graph learning, OGB, includes multiple biochemical data sets of this type.52

2.3. Challenges of Machine Learning for Protein Data

As discussed above, there are striking similarities between protein engineering tasks and other ML domains, including natural language processing, computer vision, and network analysis. However, protein data also present unique challenges related to the representation of proteins, the construction of labeled data sets, and the establishment of robust training protocols.

The choice of protein representation is a key step in all protein-related computational tasks. Proteins can be represented at different levels of detail, from a discrete and accurate 1D representation of their amino acid sequence to a continuous and less accurate 3D representation of every atom position, including or excluding chemical bonds. The selected representation determines the type and amount of information available to the computational model and the range of applicable model architectures.

The go-to representation of a protein sequence is a string (word) constructed using an alphabet of 20 amino acids (letters). The length of the string equals the number of residues, and the nth character encodes the amino acid at the nth position in the protein sequence. In silico, the amino acids are typically represented using one-hot encoding. When the amount of data available for training is not enough for end-to-end learning, one-hot encoding of sequences can further be transformed into values corresponding to specific physicochemical characteristics of amino acids, e.g., using AA indices.53 These indices provide additional information and interpretability to the pipeline, although they were shown to perform on par with random vectors for some tasks.54,55 Another strategy to enrich the protein sequence representation is to include evolutionary information using a multiple sequence alignment (MSA) instead of a single protein sequence. This evolutionary information is valuable in various tasks, most notably in structure prediction because the covariance of different residue positions in the sequence can be related to the residues’ spatial proximity.56

The options for representing a protein’s structure are more varied; representations may include all atoms, only selected chemical elements (e.g., all atoms except hydrogens), or just key components of residues (e.g., the α-carbons). Moreover, they may include different types of information about these atoms and/or residues. Ideally, to enable data-efficient model training, structural representations should be invariant to rotation, translation, and reflection. However, straightforward representations based on the 3D coordinates of the residues (atoms) lack this property. It is therefore common to represent protein structure using an inter-residue or interatomic distance matrix in which each row and column is assigned to a specific residue (or atom) of the protein and the value of each matrix entry is equal to the distance between the corresponding residues (atoms). Such a matrix is necessarily symmetric; therefore, it is common to take the upper (or lower) triangular part and convert it into a 1D vector for processing, e.g., by a neural network. While this representation is rotationally and translationally invariant, it is also inherently redundant, as its spatial complexity is quadratic with respect to the number of residues (atoms).

Graph-based protein representations have recently attracted considerable attention.57 A graph consists of a set of nodes linked by a set of edges. The nodes typically represent residues, atoms, or groups of spatially close atoms, while the edges usually correspond to chemical bonds, spatial proximity (contacts) between the nodes, or both.58 Graph-based protein representations are very flexible because the definition of the nodes and edges can be tailored to specific tasks and they can be made equivariant to rotation, translation, and reflection.59 A convenient definition of the nodes and edges can also introduce an inductive bias that improves the model performance. For example, edges corresponding to chemical bonds can guide a model toward learning chemical knowledge more rapidly or with less data than would otherwise be needed. Graph neural network (GNN) architectures have recently achieved state-of-the-art performance in multiple protein-related tasks, as exemplified by the DeepFRI60 and HIGH-PPI61 methods for predicting protein function and protein–protein interactions, respectively. Special graph-based protein representations, such as point clouds (no edges) or complete graphs (a full set of edges), are especially convenient for processing using powerful transformer models.62,63

A more general approach to protein representation is to directly learn the representation by a deep learning model, a direction that is currently on the rise in biology.64 Its aim is to remove the suboptimality of human-made choices by inferring the representation parameters from existing data. Furthermore, it is often possible to learn such a representation through self-supervised training, i.e., without the need for annotated data. These representations can be obtained from the sequence data, e.g., as done by the ESM (“Evolutionary Scale Modeling”) language models,65−67 as well as from large structural data sets, e.g., as done by GearNet.68 More and more models combine both sources of data, e.g., ESM-GearNet.32

Obtaining appropriately labeled data sets can be challenging when seeking to apply ML in enzyme engineering because there is often a trade-off between data quality and quantity when selecting methods for acquiring experimental data. Most reliable biochemical methods using purpose-built instruments can only provide data for small numbers of protein variants69 and are thus generally insufficient for representative sampling of vast mutational spaces. Conversely, high-throughput methods such as Deep Mutational Scanning (DMS) are prone to data quality issues69 and face a throughput bottleneck in the case of enzymes whose screening is considerably slower than sequencing.70

The process of compiling new data sets from multiple sources can be complicated by the inconsistency of conventions in protein research. These inconsistencies include the differing biases of various experimental tools, the use of different distributions for data normalization, and inconsistent definitions of quantities such as stability, solubility, and enzyme activity. All of these can introduce errors into constructed data sets, for example, by causing contradictory labels to be applied to the same protein. Protein data may also contain biases introduced by the design strategy. For example, alanine tends to be overrepresented in mutational data due to the widely used alanine scanning technique.71 It is important to consider these biases during data set construction and when interpreting model outputs since the composition of the training set significantly affects the space of patterns explored by the model.

A significant amount of protein data has been gathered over the years. However, much of these data are proprietary and thus inaccessible to the academic community. In addition, publicly available data sets are often published in an unstructured way, which limits their usability. While large language models such as GPT-3,72 GPT-4,73 or BioGPT74 are remarkably effective at summarizing text on large scales, mining relevant data from publications still requires considerable human effort.

Some ML packages, e.g., TorchProtein,75 offer preprocessed data sets for various protein science tasks, making protein research more accessible to ML experts from other domains. Other packages, such as PyPEF76 provide frameworks for the integration of simpler ML models together with special encodings derived from the AAindex database of physicochemical and biochemical properties of amino acids.53 Despite this progress, the development of ML models for protein data requires a certain level of biochemistry domain knowledge to account for the specifics of protein data absent in other application areas of ML. These specifics include the evolutionary relationships and structural similarities between proteins. Models produced without the benefit of such expertise may lack practical utility due to data handling errors.

One common type of data handling error is data leakage between data splits. The training, validation, and test sets should not share the same (or nearly the same) data points because such overlaps could lead to over estimating the model’s performance on new data, compromising the model’s evaluation. In some data sets, all of the data points are distinct enough that randomly splitting the available data into disjoint sets is a viable strategy. However, more complex splitting strategies are often needed when dealing with protein data to avoid problems such as evolutionary data leakage.77 It may also be important to consider multiple levels of separation when dealing with protein data, e.g., consisting of mutations and their effects. For example, one might want to ensure that the same substitutions, positions, or proteins do not appear in the training and test sets. Defining protein similarity is also a challenging task for which multiple strategies exist. Many of these strategies involve clustering proteins based on sequence identity or similarity thresholds and then ensuring that all members of a given cluster are assigned to the same set when splitting the data. This strategy is particularly useful for constructing labeled data sets of protein structures because such data sets are primarily sourced from large redundant databases such as PDB78 (see Table S2). However, clustering in the sequence space can be insufficient in some cases. For example, distantly related proteins may have very similar active site geometries even though their sequence homology is low.79 Clustering may thus be performed at the level of the structural representation instead. While such strategies have only rarely been used in the past, they may become more common due to the emergence of new tools for protein structure searching such as Foldseek.80

3. Protein Engineering Tasks Solved by Machine Learning

This section provides a brief overview of the protein engineering tasks that have already drawn the attention of machine learning developers. The first of these tasks is functional annotation, which is important because the overwhelming majority of sequences in protein databases remain unannotated and in silico prediction is necessary to keep pace with the exponentially growing number of deposited sequences. We will then cover the available labeled data sets and state-of-the-art ML tools for predicting mutational effects in proteins, as well as strategies for protein design based on their predictions. In addition, we will review published methods for leveraging unlabeled protein sequence and structure data sets to help guide protein engineering. Finally, we conclude with examples showing how ML models of protein dynamics can facilitate the selection of promising mutations.

3.1. Functional Annotation of Proteins

Knowledge of protein functions is fundamental for protein engineering pipelines. For instance, in protein fitness optimization, scientists start from a characterized wild-type sequence with some degree of the desired function.81−83 Likewise, in biocatalysis, one needs to know the function of enzymes to assemble a biosynthetic pathway from plausible enzymatic reactions.84,85

Traditionally, scientists have characterized the functions of proteins via laborious, time-consuming, and costly wet lab experiments. However, owing to high-throughput DNA sequencing, the exponentially growing repertoire of protein sequences is reaching numbers far beyond the capabilities of experimental functional annotation; for example, the Big Fantastic Database (BFD, Table S2) contains 2.5 billion sequences to date. Functional annotation is particularly important for enzymes. A broad-level annotation (e.g., enzyme family) can be achieved relatively easily by sequence homology and by searching for protein domain motifs, but detailed annotation of enzyme substrates and products currently requires experimental characterization. To accelerate this process, a substantial recent effort has been dedicated to the development of novel computational methods for functional annotation.

The developed computational methods heavily rely on data sets of previously characterized enzymatic functions for training. For example, information on protein families and domains is often sourced from the Pfam,86 SUPERFAMILY,87 or CATH88 databases. Enzymatic activity data often come from databases such as Rhea,89 BRENDA,90 SABIO-RK,91 PathBank,92 ATLAS,93 and MetaNetX.94 The ENZYME database under the Expasy infrastructure95 provides the Enzyme Commission (EC) numbers, the most commonly used nomenclature for enzymatic functions. EC numbers are a hierarchical classification system categorizing enzymatic reactions at four levels of detail, with the fourth level being the most detailed. An EC number groups together proteins with the same enzymatic activity regardless of the reaction mechanisms.96 The data from the above-listed databases have also been post-processed and organized into data sets such as ECREACT97 or EnzymeMap,98 which should further facilitate the development of computational models.

The models for enzymatic activity prediction typically use enzyme amino acid sequences as input, as the goal is to directly annotate the outputs of high-throughput DNA sequencing. The incorporation of the structural inputs, facilitated by the recent breakthroughs in structure prediction,67,99 is still to be explored more by the community. The outputs of these models generally fall into three categories based on the resolution of predictions. First, the most general models predict protein families and domains. Second, the EC class-predictive models provide a more detailed estimation of enzymatic activity. Finally, the most comprehensive picture of enzymatic activity requires models for predicting an enzyme’s substrates and corresponding products. Several recent deep learning models predict protein families and domains.100,101 Although such models are crucial for studying proteins, they have only a limited applicability in enzymatic activity prediction, as a single protein family can combine enzymes catalyzing different reactions.102 To meet the needs of protein engineering, the models predicting the EC numbers appear to be more relevant, as they can capture the catalytic activities.

Over the years, the community attempted to predict EC numbers using multiple sequence alignment and position-specific scoring matrices (PSSM) or hidden Markov model (HMM) profiles,103−105k-nearest neighbor-based classifiers,104−108 support vector machines (SVM),105,109−112 random forests,113,114 and deep learning.60,115−119 Most methods approached the prediction of EC numbers as a classification problem, which led to poor performance on the under-represented EC categories. The recent deep-learning-based method119 tackled the EC number prediction via a contrastive learning approach, training a Siamese neural network on top of sequence embeddings from a pretrained protein language model.66 The resulting predictive algorithm, CLEAN, can better identify enzyme sequences that belong to any EC category, including underrepresented ones. CLEAN achieved state-of-the-art performance in EC number prediction in silico, and it was experimentally validated in vitro using high-performance liquid chromatography–mass spectrometry coupled with enzyme kinetic analysis on a set of previously misannotated halogenase enzyme sequences.

EC class prediction enables downstream applications such as retrobiosynthesis.85 For instance, planning of biosynthesis has been tackled by predicting the chemical structure of the substrate and the required enzyme EC number from the provided enzymatic product using a transformer-based neural network.97 Several other published deep learning models aspired to estimate the substrate of an enzymatic reaction based on its product.120,121 Such models can be used to prioritize enzyme selection based on the EC class or the substrate/product pair. However, the assignment of a specific enzyme sequence (i.e., not only the EC class) to a desired reaction remains a challenge for future development.

Recently, the first general models predicting interaction between individual enzymes and substrates/products were published.122,123 These DL-based models take pairs of a protein sequence and a small molecule as inputs and predict their possible interaction. Unfortunately, none of the general substrate–enzyme interaction models were validated in wet lab experiments. Furthermore, in Kroll et al. the authors admit poor generalization of the model to out-of-sample substrates.123 Moreover, enzyme-family-specific models were shown to outperform the general models in predicting the enzyme–substrate interactions.124 To sum up, the practical applicability of general enzyme–substrate interaction models has yet to be determined.

3.2. Supervised Learning to Predict the Effects of Mutations

The ability to predict mutational effects on various protein properties, such as solubility, stability, aggregation, function, and enantioselectivity, is another desirable goal of protein engineering. From the machine learning perspective, this implies having a model that takes a reference protein and its variant as the input and predicts the change in the studied property as the output. Intuitively, this could be achieved by supervised learning on the labeled data sets of wild-type proteins first (e.g., to predict a solubility score, binding energy, or melting temperature of a given protein), applying the trained model to independently predict labels of the reference protein and its variant, and then taking the difference between the two predicted scores. The attractiveness of this strategy comes from large annotated data sets of wild-type proteins available for training. For example, the Protein Structure Initiative125 generated a massive data set TargetTrack, often used for protein solubility prediction.26 Additionally, the more recent Meltome Atlas of protein stability obtained by liquid chromatography-tandem mass spectrometry126 was used for predicting melting temperatures.127 Our ongoing effort to predict highly valuable melting temperatures solely from the protein sequence resulted in the development of the TmProt software tool (https://loschmidt.chemi.muni.cz/tmprot/). Large labeled data sets usually provide enough training data for powerful end-to-end deep learning.34,128,129 Nonetheless, when the training data set does not contain mutations, a few substitutions will usually result in similar predicted labels (e.g., solubility scores), in contrast to dramatic changes often observed in experiments. Therefore, the strategy of taking the difference between the predicted labels for a reference protein and its variant typically fails to produce reliable predictors for mutational effects.130

A more promising route is to use labeled mutational data sets for training. This strategy has its own limitations, since such data sets are not only scarce but also sparse in terms of the extent of the mutational landscape that is probed (the sequence space grows exponentially with the number of mutated residues) and biased toward several overrepresented proteins.13,131 These barriers severely hinder the use of ML, which relies heavily on the availability of good quality data with a high coverage of the space of interest. Therefore, additional data curation and processing, adjustments to training protocols, and more thorough and critical data evaluations are typically needed. Such efforts will be a crucial first step in establishing reliable ML pipelines for predicting mutational effects.

The most abundant and diverse mutational data come from general biophysical characterizations that are performed routinely in most protein engineering studies, including measurements of protein expressibility, solubility, and stability. The major challenge when using such data lies in collection and curation: measurements are scattered across the literature and often reported ad hoc because they are generally complementary to a study’s main results.132 This highlights the importance of establishing and maintaining databases with protein annotations to facilitate data discoverability and reuse. For example, we recently released SoluProtMutDB,133 which currently has almost 33 000 labeled entries concerning mutational effects on the solubility and expression of over 100 proteins. This database incorporates all of the data points that were recently used to develop solubility predictors (Table 2), which achieved correct prediction ratios of around 70%.133

Table 2. Recent Applications of Supervised ML Tools in Prediction of the Effects of Mutations in Protein Engineeringa.

| targeted property | tool | training data | method | input | Web site |

|---|---|---|---|---|---|

| stability (ΔΔG) | BayeStab (134) | 2648 single mutations from 131 proteins (S2648) derived from ProTherm (135) | Bayesian neural networks | PDB files with the WT and mutant structures | http://www.bayestab.com/ |

| PROST (136) | 2647 single mutations from 130 proteins (S2648) derived from ProTherm (135) | ensemble model | sequence (FASTA) plus a list of mutations | https://github.com/ShahidIqb/PROST | |

| KORPM (137) | 2371 single mutations from 129 proteins, derived from ThermoMutDB (138) and ProTherm (135) | nonlinear regression | a list of PDB files and single-point mutations | https://github.com/chaconlab/korpm | |

| ABYSSAL (139) | 376 918 single mutations from 396 proteins (140) | siamese deep neural networks trained on ESM267 embeddings | ESM2 embeddings of the WT and mutant sequences | https://github.com/dohlee/abyssal-pytorch | |

| solubility | PON-Sol2 (141) | custom data set: 6328 single mutations from 77 proteins | LightGBM | a list of sequences (FASTA) plus lists of single-point mutations | http://139.196.42.166:8010/PON-Sol2/ |

| activity | DLKcat (142) | custom data set: 16 838 data points from BRENDA (90) and Sabio-RK (91) | graph-based and convolutional neural networks | a list of substrate SMILES and enzyme sequences | https://github.com/SysBioChalmers/DLKcat |

| MaxEnt (143) | various custom MSAs and kinetic constant data sets | statistical Potts model | single and pairwise amino acid frequencies from MSA | https://github.com/Wenjun-Xie/MEME | |

| MutCompute (31) | 19 436 protein structures | self-supervised convolutional neural network | protein structure | https://mutcompute.com/ | |

| innov’SAR (144) | custom data set: 7 variants vs 3 substrates at two pH levels | partial least-squares regression | N/A | N/A | |

| ML-variants-Hoie-et-al145 | custom data set: over 150 000 variants in 29 proteins | random forest | Custom preprocessing, derived from PRISM approach (146) | https://zenodo.org/record/5647208 | |

| https://github.com/KULL-Centre/papers/tree/main/2021/ML-variants-Hoie-et-al | |||||

| optimal catalytic temperature Topt | ML-guided directed evolution of a PETase (147) | custom data set: 2643 enzymes from BRENDA90 | random forest | N/A | N/A |

| TOMER (148) | custom data set: 2917 enzymes from BRENDA90 | bagging with resampling | a list of sequences (FASTA) | https://github.com/jafetgado/tomer | |

| substrate specificity | ML-guided directed evolution of an aldolase149 | 131 experimentally characterized variants | Gaussian process | N/A | N/A |

| protein aggregation | ANuPP150 | 1421 hexapeptides obtained from CPAD 2.0,151,152 WALTZ-DB,153,154 and AmyLoad155 databases | ensemble of 9 logistic regressors | a list of sequences (FASTA) | https://web.iitm.ac.in/bioinfo2/ANuPP/about/ |

| AggreProt | 1416 hexapeptides obtained from WALTZ-DB153,154 | deep neural network | up to 3 sequences (FASTA) and (optionally) 3D structures | https://loschmidt.chemi.muni.cz/aggreprot | |

| binding affinity (ΔΔG) | GeoPPI (156) | SKEMPI 2.0 (157) | graph neural network and random forest | PDB file, the names of interacting chains, and mutations | https://github.com/Liuxg16/GeoPPI |

N/A = not available.

Protein stability measurements are another type of widely available biophysical data that can be used in ML. Protein stability is typically quantified in terms of the melting temperature (Tm) or the Gibbs free energy difference between the folded and unfolded states (ΔΔG). Several protein stability databases exist, including FireProtDB,158 ThermoMutDB,138 and ProThermDB,135 and their data have often been used to train ML predictors that have achieved Pearson’s correlations of up to 0.6 and RMSE values of 1.5 kcal/mol when applied to independent test sets.159 Interestingly, these numbers have barely changed over the past decade, indicating that a qualitative paradigm shift might be needed to advance ML-based prediction of mutation-induced protein stability changes.132 It is possible that large new data sets could provide the necessary boost, and some exciting studies collecting such data are already appearing: cDNA display proteolysis was recently used to measure the thermodynamic stability of around 850 000 single-point and selected double-point mutants of 354 natural and 188 de novo designed protein domains between 40 and 72 amino acids in length.140

Changes in catalytic activity upon mutation also attract the attention of ML researchers. Predicting mutational effects on enzyme activity is more challenging than predicting protein stability and solubility due to the enormous diversity of enzymatic mechanisms. One rich source of such mutational data is large-scale deep mutational scanning.69 These experiments combine high-throughput screening and sequencing and typically score protein variants by comparing their abundance before and after a specific selection is applied. These data sets provide comprehensive overviews of the local mutational landscapes of various enzymes and are of significant value for ML due to their unbiased mutant coverage. Several groups have already assembled various deep mutational scanning (DMS) data sets for benchmarking effect predictors,145,160−164 and we expect this trend to continue as more data sets appear. Notable works of this type include the recently published activity landscapes of the phosphatase,165 dihydrofolate reductase,166 DNA polymerase,167 and palmitoylethanolamide transferase.168

DMS can be applied to a wide range of enzyme functions due to its high flexibility with respect to selection procedures. However, its high throughput comes at the cost of limiting the number of protein targets that can be used in a study; often, only a single case is examined. The desire to target multiple enzymes simultaneously motivated the creation of another notable database of enzyme activity changes: D3DistalMutation.169 It contains data derived from UniProt annotations representing over 90 000 mutational effects in 2130 enzymes. However, its potential in ML has not yet been explored.

Other protein characteristics may also be used as targets for protein engineering and machine learning. These targets are often selected based on the enzyme of interest and may include important functional traits such as substrate specificity,149 enzyme enantioselectivity,170,171 kinetic constants,142−144 temperature sensitivity,172 or temperature optima.147,148 In addition, several mutational data sets that can be used for ML-based tools focusing on protein folding rates, binding, and aggregation have been deposited in the VariBench benchmark data set.173 Selected recent examples of these tools are listed in Table 2. An overview of the described databases and data sets is given in Table S2.

3.3. Approaches to Design Mutations

While tools for predicting effects of mutations have become increasingly advanced in recent years, in their simple form, they can only provide labels for a given substitution. However, the desired outcome of protein engineering pipelines is to have a list of promising protein variants for experimental validation. Therefore, even if a reliable ML-based tool for predicting effects of substitutions is available, the problem of suggesting promising hypothetical designs must be addressed. This problem may become a major bottleneck, since even if the prediction of single- or multiple-point mutational effects is fast, evaluating all possible combinations of mutations remains unfeasible. Therefore, there is a growing need for tools that simultaneously predict the effects of mutations and reduce the search space, which is the focus of this subsection.

A major challenge in the development of such tools is finding ways to efficiently reduce the space of multipoint mutants. In an analysis of nine case studies, Milton and coauthors found that the effects of half of the multipoint mutations influencing enzymatic properties could not be predicted using knowledge of the corresponding single-point mutations, with the associated complexities resulting from direct interactions between residues in some cases and long-range interactions in others.174 This common nonadditive behavior, which is known as epistasis, has prompted the development of ML models and combinatorial optimization algorithms capable of scoring or searching multipoint mutants by design. Several approaches discussed below have been proposed to overcome this challenge, more on this topic can be found in a review.175

One such approach is to produce a library of variants for screening using reliable physics- and evolution-based tools. Even a time-consuming preselection of promising hotspots can drastically reduce the computational time of the downstream ML scoring and search.176 For example, HotSpot Wizard 3.0177 achieves robust selection of hotspots by using a number of sequence and structure-based filters to identify mutable residues for which mutation effects are then quantified using the well-established Rosetta and FoldX tools. Another example is the FuncLib web server, which computes promising single-point active-site mutations using evolutionary conservation analysis and Rosetta-based stability calculations.178 This tool exhaustively models each combination of mutants and ranks them by energy. Evolutionary information can also be captured by ML-based models179 and used to suggest promising substitutions, e.g., amino acids with conditional likelihoods higher than the wild-type.180

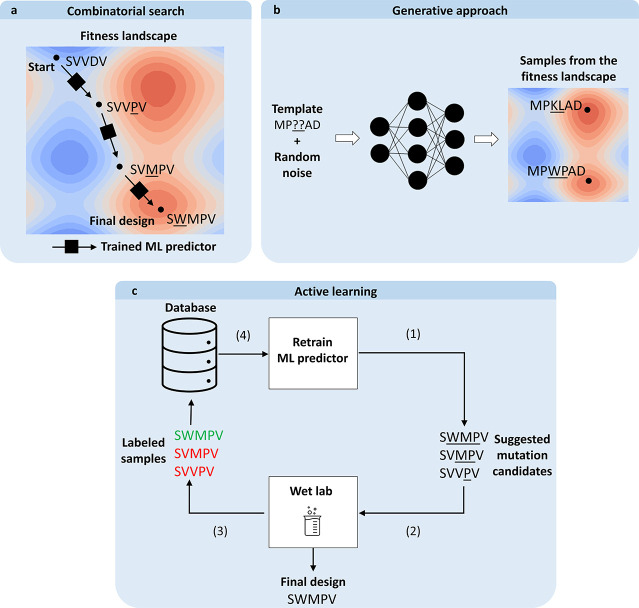

Mathematical optimization methods can generate promising protein sequence candidates in silico by iteratively producing new designs based on available ML scoring data (Figure 3). One group of such methods uses the ML predictor as a black-box oracle to evaluate existing candidates. This evaluation is then used to approximate the “fitness” of sequences, which is in turn used to navigate the sequence landscape and generate a new set of candidates using tools such as evolutionary algorithms82,181 or simulated annealing.182 However, approximating complex mutational landscapes using oracles representing estimated, simplifying distributions can harm the optimization process and prevent the optimal solution from being found.183 Adaptive sampling of the design space can be used instead to obtain better results.81 Other alternatives are to use generative models184 or rely on so-called white-box optimization, which involves using knowledge of a predictor’s internal workings to find the optimal solution. For example, linear regression coefficients can be used to suggest modifications (mutations) of the input that alter the corresponding features in the desired direction. White-box methods are discussed further in the context of explainable AI in section 6.1.

Figure 3.

Selected ML strategies for designing new enzyme variants. (a) In silico evolutionary combinatorial search for favorable mutations. A machine learning predictor is used to iteratively evaluate candidate mutations for some desired property, e.g., stability. The mutated amino acid in each step is underlined. (b) Generation of favorable mutants. The machine learning tool directly infers the sequence with high property values (e.g., stability) from a fitness landscape learned and captured in the weights of the model during the training. (c) An active learning loop: an ML predictor from (a) or (b) is used to (1) propose enzyme variants that are (2) evaluated experimentally, and the resulting data are used to (3) update the knowledge database. The ML model is then (4) retrained on the updated knowledge database, and new variants are designed.

While in silico optimization methods enable the iterative generation of promising designs, they rely entirely on the ability of a predictor to correctly score any given point in a mutational landscape, which might be an unrealistically strong assumption. A possible alternative (albeit one that is more costly and has lower throughput) is to directly incorporate experimental validation into the optimization loop. This experimental input can guide search algorithms to more promising parts of the mutational landscape in a manner that is akin to directed evolution. While advanced search methods of this type have previously been used to improve traditional directed evolution,82,182 they do not fully exploit the potential of experimentally characterizing intermediate variants. ML-based active learning methods accelerate directed evolution by iteratively extracting knowledge from all characterized variants and selecting the most promising ones.185 By relying on the new experimental data, only a limited number of training samples can be expected,186 confining the choice of ML models to those with a lower number of parameters, such as multilayer perceptrons (MLP) with as few as two layers.187 A recent advance in the area of active learning is the development of GFlowNets,188 networks designed to suggest diverse and accurate candidates in a machine–expert loop to accelerate scientific discovery. Studies using such networks have demonstrated their potential for designing small molecules;189 however, the utility for the design of proteins remains to be reliably demonstrated.

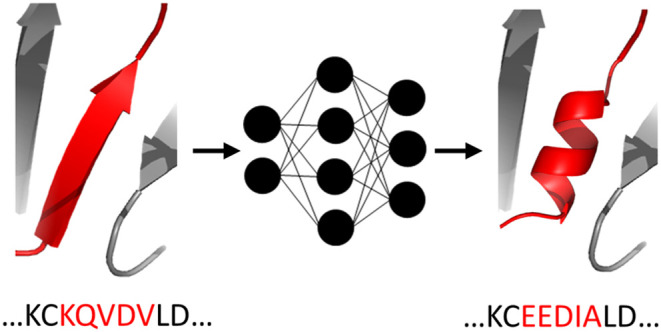

Powerful design techniques for the exploration of variants of enzymes and other proteins often rely not only on combining experimental and in silico techniques but also on combining multiple in silico approaches and sometimes different ML techniques. For example, focused training ML-assisted directed evolution (ftMLDE)186 combines unsupervised and supervised training approaches by using unsupervised clustering to construct a training set for supervised classifiers. These classifiers are then used to select promising mutants with tools such as CLADE 2.0.190 Similarly, unsupervised learning on millions of sequences was used to obtain a protein representation called UniRep,191 which was further tuned in an unsupervised manner to obtained eUniRep (evotuned UniRep) for proteins related to the target sequence, leading to an informative set of features for proteins in general as well as for the target in particular. Such representation enabled data-efficient supervised learning of a model for guiding in silico evolution.192 Alternatively, an unsupervised “probability density model” has been used to produce an “evolutionary density score”, a feature that was then used to augment a small number of labeled data points on which a light model was trained in a supervised manner. Interestingly, such an approach was shown to outperform the supervised fine-tuning of the probability density model pretrained in an unsupervised manner.193 Another approach194 combines self-supervised large protein language models with a supervised structure-to-sequence predictor in a new and more general framework called LM-design that is claimed to advance the state of the art in predicting a protein sequence corresponding to a starting backbone structure, sometimes called “inverse folding”. While inverse folding does not explicitly search the mutational landscape, it can be used to identify promising mutations by inputting an existing protein structure and a partially masked sequence and using the inverse folding tool to propose amino acids for the masked parts.

3.4. Leveraging Unlabeled Data Sets to Score Mutations

Over the past decade, large language models (LLM) have become popular tools for solving NLP problems ranging from language translation to sentiment analysis.195 This major paradigm shift was driven by the realization that even unlabeled data contain useful information: the distributional hypothesis suggests that the meaning of words can be deduced by analyzing how often and with what partner words they appear in various texts.196 Analogously, in biology, we can regard proteins as sequences based on “the grammar of the language of life”, which implies that the distribution of amino acids at specific locations can provide valuable insights that could be used to help predict the effects of substitutions on protein function, thereby reducing the reliance on external data sources.66 For example, Elnaggar et al. showed that the embeddings generated by a LLM, when used as input features, can effectively facilitate the development of small supervised models whose predictive power rivals that of state-of-the-art methods relying on evolutionary information obtained from MSAs.197 Additionally, the protein language model ESM-2 was recently trained on protein sequences from the UniRef database to predict 15% of masked amino acids in a given sequence.67 This made it possible to directly leverage sequence information to greatly improve B-cell epitope prediction198 without a supplementary MSA. The inherent attention mechanism of ESM-2 can also be used to facilitate protein structure prediction.67 We provide more examples in Table 3. Furthermore, the embeddings obtained from the language models, such as ESM-1b,65 ESM-2,67 ProtT5,197 and ProtTrans,199 have become a popular way of representing sequential data, making pretrained ESM models a frequent subject for transfer learning. The transfer of knowledge from these models has been tackled by fine-tuning the pretrained weights,197,200 by training models solely on top of the learned embeddings (keeping the pretrained weights fixed),197 and also by introducing adapter modules201 between the trained layers for parameter-efficient fine-tuning.202 The power of the learned embeddings can also be witnessed in a completely unsupervised setting. More specifically, the ESM-1v66 model was demonstrated to accurately score protein variants by relying solely on the wild-type amino acid probabilities learned by the protein language model during pretraining without any subsequent fine-tuning.

Table 3. Some Recent Self-Supervised ML Models for Protein Engineeringa.

| approach | tool | training data | method | Web site |

|---|---|---|---|---|

| large sequence-based models | ESM-2 (67) | ∼65 million unique sequences sampled from UniRef50 (203) | transformers, 15 billion parameters, masked (15%) language modeling | https://github.com/facebookresearch/esm |

| ProtTrans (197) | 2122 million sequences from Big Fantastic Database used for pretraining and fine-tuning on 45 million sequences from UniRef50 (203) | transformers, 11 billion parameters, masked (15%) language processing | https://github.com/agemagician/ProtTrans | |

| Ankh (199) | 45 million sequences from UniRef50 (203) | transformer, 1.15 billion parameters, 1-gram span partial demasking/reconstruction (20%) | https://github.com/agemagician/Ankh | |

| ProGen35,204 | 281 million nonredundant protein sequences from > 19 000 Pfam205 families | transformer-based conditional language model, 1.2-billion parameters | https://github.com/salesforce/progen | |

| Tranception (164) | 249 million sequences from UniRef100 (203) | autoregressive transformer architecture, 700 million parameters | https://github.com/OATML-Markslab/Tranception | |

| large structure-based models | MutCompute (31) | 19 436 nonredundant structures with resolution better than 2.5 Å drawn from structures in the PDB-REDO database206 | 3D convolutional neural network, amino acid local environment separated into biophysical channels | https://mutcompute.com/ |

| ProteinMPNN (207) | 19 700 single-chain protein structures,78 with resolution better than 3.5 Å and <10 000 residues in length | message-passing neural network, 1.6 billion parameters | https://huggingface.co/spaces/simonduerr/ProteinMPNN | |

| https://github.com/dauparas/ProteinMPNN | ||||

| FoldingDiff (27) | 24 316 training backbones from the CATH data set208 | denoising diffusion probabilistic model | https://github.com/microsoft/foldingdiff | |

| RFdiffusion (28) | structures sampled from PDB78 | denoising diffusion probabilistic model | https://github.com/RosettaCommons/RFdiffusion | |

| GearNet (68) | 805 000 predicted protein structures from AlphaFoldDB209 | graph neural network, 42 million parameters | https://github.com/DeepGraphLearning/GearNet | |

| Stability Oracle (33) | 22 759 PDB structures78 with resolution better than 3 Å, maximum 50% sequence similarity, and ∼144 000 free energy labeled data points for fine-tuning | graph transformer, 1.2 million parameters | N/A | |

| models for specific protein families | MSA Transformer 210 | 26 million MSAs with 1192 sequences per MSA on average | transformers, 100 million parameters, axial attention (211) | https://github.com/rmrao/msa-transformer |

| ProteinGAN (212) | 16 706 sequences of bacterial malate dehydrogenase | generative adversarial networks, 60 million parameters | https://github.com/biomatterdesigns/ProteinGAN | |

| hybrid methods | Prot-VAE (213) | 46 million sequences from UniRef sequences and ∼5300 SH3 (Src homology 3 family) homologues | transformers, 1D convolutional neural network and a custom architecture | N/A |

| ECNet (214) | protein sequences from Pfam205 | transformers with a 38 million parameter model, bidirectional LSTM215 | https://github.com/luoyunan/ECNet | |

| ESM-GearNet (32) | 805 000 predicted protein structures from AlphaFoldDB209 and ∼65 million unique sequences sampled from UniRef50 (203) | transformers, 15 billion parameters, masked (15%) language modeling (sequences), graph neural network, 42 million parameters (structures) | https://github.com/DeepGraphLearning/GearNet |

N/A = not available

Large deep-learning models are impressively capable of learning general protein properties. For the cases in which a detailed understanding of a specific protein or protein family is required rather than general patterns that hold across different protein families, sequence-based models that can learn distributional patterns from a single MSA are available. MSAs have already proven to be a rich source of evolutionary information, e.g., for identifying functionally important conserved regions, insertions, or deletions to clarify the mechanisms driving sequence divergence.