Abstract

In healthcare, the development and deployment of insufficiently fair systems of artificial intelligence can undermine the delivery of equitable care. Assessments of AI models stratified across sub-populations have revealed inequalities in how patients are diagnosed, given treatments, and billed for healthcare costs. In this Perspective, we outline fairness in machine learning through the lens of healthcare, and discuss how algorithmic biases (in data acquisition, genetic variation and intra-observer labelling variability, in particular) arise in clinical workflows and the healthcare disparities that they can cause. We also review emerging technology for mitigating biases via disentanglement, federated learning and model explainability, and their role in the development of AI-based software as a medical device.

Introduction

In healthcare, the development and deployment of insufficiently fair systems of artificial intelligence (AI) can undermine the delivery of equitable care. Assessments of AI models stratified across subpopulations have revealed inequalities in how patients are diagnosed, treated and billed. In this Perspective, we outline fairness in machine learning through the lens of healthcare, and discuss how algorithmic biases (in data acquisition, genetic variation and intra-observer labelling variability, in particular) arise in clinical workflows and the resulting healthcare disparities. We also review emerging technology for mitigating biases via disentanglement, federated learning and model explainability, and their role in the development of AI-based software as a medical device.

With the proliferation of AI algorithms in healthcare, there are growing ethical concerns regarding the disparate impact that the models may have on under-represented communities1,2,3,4,5,6,7,8. Audit studies have shown that AI algorithms may discover spurious causal structures in the data that correlate with protected-identity status. These correlations imply that some AI algorithms may use protected-identity statuses as a shortcut to predict health outcomes3,9,10. For instance, in pathology images, the intensity of haematoxylin and eosin (H&E) stains can predict ethnicity on the Cancer Genome Atlas (TCGA), owing to hospital-specific image-acquisition protocols9. On radiology images, convolutional neural networks (CNNs) may underdiagnose and misdiagnose underserved groups (in particular, Hispanic patients and patients on Medicaid in the United States) at a disproportionate rate compared with White patients, and capture implicit information about patient race10,11,12,13. Despite the large disparities in performance, there is a lack of regulation on how to train and evaluate AI models on diverse and protected subgroups. With an increasing number of algorithms receiving approval from the United States Food and Drug Administration (FDA) as AI-based software as a medical device (AI-SaMD), AI is poised to penetrate routine clinical care over the next decade by replacing or assisting human interpretation for disease diagnosis and prognosis, and for the prediction of treatment responses. However, if left unchecked, algorithms may amplify existing healthcare inequities that have already impacted underserved subpopulations14,15,16.

In this Perspective, we discuss current challenges in the development of fair AI for medicine and healthcare, through diverse viewpoints spanning medicine, machine learning and their intersection in guiding public policy on the development and deployment of AI-SaMD. Discussions of AI-exacerbated healthcare disparities have primarily debated the usage of race-specific covariates in risk calculators and have overlooked broader and systemic inequities that are often implicitly encoded in the processes generating medical data. These inequalities are not easily mitigated by ignoring race5,17,18,19,20. And vice versa, conventional bias-mitigation strategies in AI may fail to translate to real-world clinical settings, because protected health information may include sensitive attributes, such as race and gender, and because data-generating processes across healthcare systems are heterogeneous and often capture different patient demographics, causing data mismatches in model development and deployment21.

We begin by providing a succinct overview of healthcare disparities, fair machine learning and fairness criteria. We then outline current inequities in healthcare systems and their varied data-generating processes (such as the absence of genetic diversity in biomedical datasets and differing image-acquisition standards across hospitals), and their connections to fundamental machine-learning problems (in particular, dataset shift, representation learning and robustness; Fig. 1). By understanding how inequities can drive disparities in the performance of AI algorithms, we highlight federated learning, representation learning and explainability as emerging research areas for the mitigation of bias and for improving the evaluation of fairness in the deployment lifecycle for AI-SaMD. We provide a glossary of terms in Box 1.

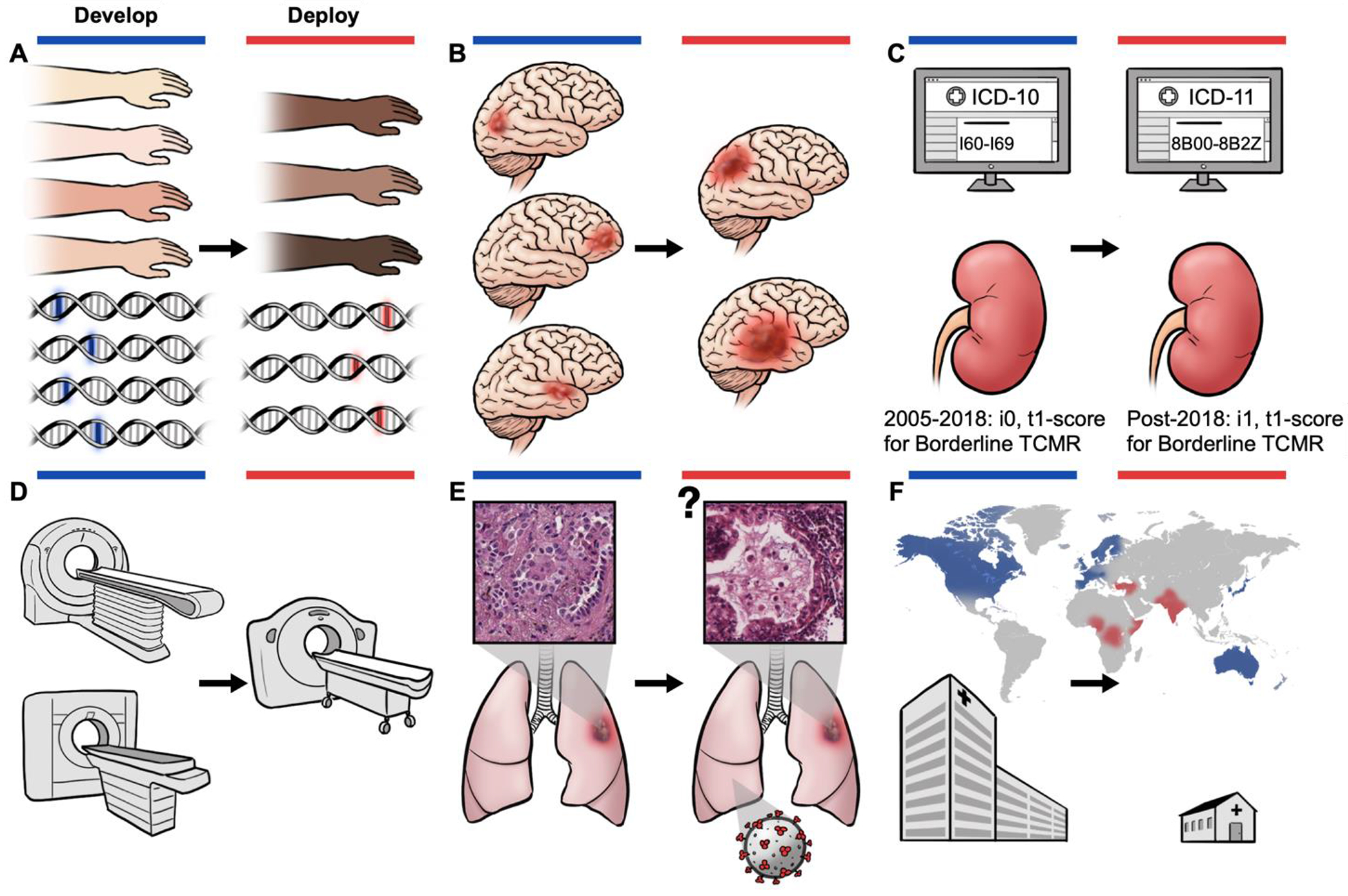

Fig. 1 |.

Connecting healthcare disparities and dataset shifts to algorithm fairness. A, Population shift as a result of genetic variation and of other population-specific phenotypes across subpopulations. Current AI algorithms for the diagnosis of skin cancer using dermoscopic and macroscopic photographs may be developed on datasets that underrepresent darker skin types, which may exacerbate health disparities of some geographic regions138,139,140,388. In developing algorithms using datasets that overrepresent individuals with European ancestry, the prevalence of certain mutations may also differ in the training and test distributions. This is the case for disparities in EGFR-mutation frequencies across European and Asian populations146. B, Population shifts and prevalence shifts resulting from disparities in social determinants of health. Differences in healthcare access may result in delayed referrals, and cause later-stage disease diagnoses and worsened mortality rates18,34. C, Concept shift as a result of the ongoing refinement of medical-classification systems, such as the recategorization of strokes, which was previously defined under diseases of the circulatory system in ICD-10 and is now defined under neurological disorders in ICD-11127,128. In other taxonomies, such as the Banff-classification system for renal allograft assessment, which updates the diagnostic criteria approximately every two years, the use of the post-2018 Banff criteria for borderline cases of T-cell-mediated rejection (TCMR), all i0,t1-score biopsies would be classified as ‘normal’189. D, Acquisition shift as a result of differing data-curation protocols (associated with the use of different MRI/CT scanners, radiation dosages, sample-preparation protocols or image-acquisition parameters) may induce batch effects in the data9,279. E, Novel or insufficiently understood occurrences, such as interactions between the SARS-CoV-2 virus and lung cancer, may arise in new types of dataset shift such as open set label shift389. F, Global-health challenges in the deployment of AI-SaMDs in low-and-middle-income countries can lead to resource constraints for AI-SaMDs, such as limitations in GPU resources, a lack of digitization of medical records and other health data, as well as dataset-shift barriers such as differing demographics, disease prevalence, classification systems, and data-curation protocols. Group fairness criteria may also be difficult to satisfy when AI-SaMD deployment faces constraints in the access of protected health information.

Box 1 |. Glossary of terms.

Health disparities: Group-level inequalities as a result of socioeconomic factors and social determinants of health, such as insurance status, education level, average income in ZIP code, language, age, gender, sexual identity or orientation, and BMI.

Race: An evolving human construct categorizing human populations. It differs from ancestry, ethnicity, nationality and other taxonomies. Race is usually self-reported.

Protected or sensitive attributes: Patient-level metadata which predictive algorithms should not discriminate against.

Protected subgroup: Patients belonging to the same category in a protected attribute.

Disparate treatment: Intentional discrimination against protected subgroups. Disparate treatment can result from machine-learning algorithms that include sensitive-attribute information as direct input, or that have confounding features that explain the protected attribute.

Disparate impact: Unintentional discrimination as a result of disproportionate impact on protected subgroups.

Algorithm fairness: A concept for defining, quantifying and mitigating unfairness from machine-learning predictions that may cause disproportionate harm to individuals or groups of individuals. Fairness is a formalization of the minimization of disparate treatment and impact. There are multiple criteria for the quantification of fairness, yet they typically involve the evaluation of differences in performance metrics (such as accuracy, true positive rate, false positive rate, or risk measures; larger differences would indicate larger disparate impacts) across protected subgroups, as defined in Box 2.

AI-SaMD (artificial-intelligence-based software as a medical device): a categorization of medical devices undergoing regulation by the United States Food and Drug Administration (FDA).

Model auditing: Post-hoc quantitative evaluation for the assessment of violations of fairness criteria. This is often coupled with explainability techniques for attributing specific features to algorithm fairness.

Dataset shift: A mismatch in the distribution of data in the source and target datasets (or of the training and testing datasets).

Domain adaptation: Correction of dataset shifts in the source and target datasets. Typically, domain-adaptation methods match the datasets’ input spaces (via importance weighting or related techniques) or feature spaces (via adversarial learning).

Federated learning: A form of privacy-preserving distributed learning that trains neural networks on local clients and sends updated weight parameters to a centralized server without sharing the data.

Fair representation learning: Learning intermediate feature representations that are invariant to protected attributes.

Disentanglement: A property of intermediate feature representations in deep neural networks, in which individual features control independent sources of variation in the data.

Fairness and machine learning

Understanding health disparities and inequities

Healthcare disparities can lead to differences in healthcare quality, access to healthcare and health outcomes across patient subgroups. These disparities are deeply shaped by both historical and current socioeconomic inequities22,23,24,25. Although they are often viewed at observable group-level characteristics—such as race, gender, age and ethnicity—the sources of these disparities encompass a wider range of observed and latent risk factors, including body mass index, education, insurance type, geography and genetics. As formalized by the United States Department of Health and Human Services, most of these factors are defined within the five domains of social determinants of health: economic stability, education access and quality, healthcare access and quality, neighbourhood and built environment, and social and community context. These factors are commonly attributed to disparate health outcomes and to mistrust in the healthcare system26,27,28,29,30,31,32. For instance, in the early 2000s, reports by the United States Surgeon General documented the disparities in tobacco use and in access to mental healthcare as experienced by different racial and ethnic groups33. In the epidemiology of maternal mortality in the United States, fatality rates for Black women are substantially higher than for White women, owing to economic instability, the lack of providers accepting public insurance and poor healthcare access (as exemplified by counties that do not offer obstetric care, also known as maternal care deserts)34,35,36.

Definition of fairness

The axiomatization of fairness is a collective societal problem that has existed beyond the evaluation of healthcare disparities. In legal history, fairness was a central problem in the development of non-discrimination laws (for example, titles VI and VII of the Civil Rights Act of 1964, which prohibit discrimination based on legally protected classes, such as race, colour, sex and national origin) in federal programs and employment46. In the Griggs versus Duke Power Company case in 1971, the Supreme Court of the United States prohibited the use of race (and other implicit variables) in hiring decisions, even if discrimination was not intended47. Naturally, fairness spans many human endeavours, such as diversity hiring in recruitment, the distribution of justice in governance, the development of moral machines in autonomous vehicles48,49,50, and more recently the revisiting of historical biases of existing algorithms in healthcare and the potential deployment of AI algorithms in AI-SaMD11,12,18,19,20,51,52,53,54. Frameworks for understanding and implementing fairness in AI have been largely aimed at learning neutral models that are invariant to protected class identities when predicting outcomes (disparate treatment), and that have non-discriminatory impact on protected subgroups with equalized outcomes (disparate impact)55,56,57.

Formally, for a sample with features X and with a target label Y, we define A as a protected attribute that denotes a sensitive characteristic about the population of the sample that a model P(Y|X) should be non-discriminatory against when predicting Y. To mitigate disparate treatment in algorithms, an intuitive (but naive) fairness strategy is ‘fairness through unawareness’; that is, knowledge of A is denied to the model.

Although removing race would seemingly debias the eGFR equation, for many applications denying protected-attribute information can be insufficient to satisfy guarantees of non-discrimination and of fairness. This is because there can be other input features that may be unknown confounders that correlate with membership to a protected group58,59,60,61,62. A canonical counterexample to ‘fairness through unawareness’ is the 1998 COMPAS algorithm, a risk tool that excluded race as a covariate in predicting criminal recidivism. The algorithm was contended to be fair in the mitigation of disparate treatment63. However, despite not using race as a covariate, a retrospective study found that COMPAS assigned medium-to-high risk scores to Black defendants twice as often than to White defendants (45% versus 23%, respectively)4. This example illustrates how differing notions of fairness can be in conflict with one another, and has since motivated the ongoing development of formal definitions of evaluation criteria for group fairness for use in supervised-learning algorithms56,64,65,66 (Box 2). For example, for the risk of re-offense, fairness via predictive parity was satisfied for White and Black defendants, whereas fairness via equalized odds was violated, owing to unequal FPRs. And, for the eGFR-based prediction of CKD, removing race can correct the overestimation of kidney function and lower the false-negative rate (FNR), yet may also lead to the underestimation of kidney function and to an increase in the FPR. Hence, depending on the context, fairness criteria can have different disparate impact. Different practical applications may thus be better served by different fairness criteria.

Box 2 |. Brief background on fairness criteria.

There are three commonly used fairness criteria for binary classification tasks, described below by adapting the notation used in refs. 56,57,64,65,66,391.

We note denote our data distribution for samples , labels , and protected subgroups . We note denote the model parameterized by that produces scores 1, and the threshold used to derive a classifier where .

For the problem of placing patients on the kidney transplant waitlist, we use represent patient covariates (e.g. - age, body size, Serum Creatinine, Serum Cystatin C), denotes self-reported race categories , be a model that produces probability risk score for needing a transplant, and be our classifier as a threshold policy that qualifies patients for the waitlist () if the risk score is greater than a therapeutic threshold . As a regression task, is equivalent to current equations for estimating glomeruli filtration rate (eGFR), with corresponding to clinical guidelines for recommending kidney transplantation if eGFR value is less than 40,41,42. Though the true prevalence for patients in subgroup that require kidney transplantation is unknown, in this simplified but illustrative example, we make assumptions that: 1) 43, and 2) all non-waitlisted patients that develop kidney failure are patients that would have needed a transplant (measurable outcome as false negative rate, FNR).

Demographic parity.

Demographic parity asserts that the fraction of positive predictions made by the model should be equal across protected subgroups, e.g. – the proportion of Black and White that qualify for the waitlist is equal65,391. Hence, for subgroups and , the predictions should satisfy the independence criterion via the constraint . The independence criterion reflects the notion that decisions should be made independently of the subgroup identity. However, demographic parity only equalizes the positive predictions and does not consider if the prevalence of transplant need across subgroups is the different. After removing disparate treatment, Black patients may still be more at-risk for kidney failure than White patients and thus should have greater proportion of patients qualifying for the waitlist. Enforcing demographic parity in this scenario would mean equalizing the positive predictions made by the model, resulting in equal treatment rates but disparate outcomes between Black and White patients.

Predictive parity.

Predictive parity asserts that the predictive positive values (PPVs) and predictive negative values (PNVs) should be equalized across subgroups65,391. Hence, the sufficiency criteria should be satisfied via the constraint for , which implies scores should have consistent meaning and correspond to observable risk across groups (also known as calibration by group). For example, under calibration, amongst patients with risk score , 20% of patients have need for transplantation to preclude kidney failure. When the risk distributions across subgroups differ, threshold policies may cause miscalibration. For example, suppose at risk score , and . This threshold policy would under-qualify certain Black patients (higher FNR, lower PNV) and over-qualify certain White patients according to their implied thresholds. Models are often naturally calibrated when trained with protected attributes392, however, this leads back to the ethical issues of introducing race in risk calculators.

Equalized odds.

Equalized odds asserts that the true positive rates (TPRs) and false positive rates (FPRs) should be equalized across protected subgroups56,65,391. Hence, the separability criteria should be satisfied via the respective TPR and FPR constraints and 2. Therefore, differently from demographic parity, equalized odds enforces similar error rates across all subgroups. However, as emphasized by Barocas, Hardt, & Narayanan391, “who bears the cost of misclassification”? In satisfying equalized odds with post-processing techniques, often group-specific thresholds need to be set for each population, which is not feasible if the ROC curves do not intersect. Moreover, an important limitation of fairness criteria is the impossibility to satisfy all criterion unless under certain scenarios. For example, when the prevalence differs from subgroups, equalized odds and predictive parity cannot be satisfied at the same time. This can be seen in the following expression:

which was previously used to highlight the impossibility of satisfying equalized odds and predictive parity in recividism prediction65. In equalizing FNR to satisfy separation for two subgroups, sufficiency is violated as from our preposition and thus PPV cannot also be equivalent. In other words, at the cost of equalizing underdiagnosis, we would over-qualify Black or White patients.

Techniques for the mitigation of fairness

To reduce violations of group fairness, bias-mitigation techniques can be used to adapt existing algorithms with pre-processing steps that blind, augment or reweight the input space to satisfy group fairness55,67,68,69,70,71,72; with in-processing steps that construct additional optimization constraints or with regularization-loss terms that penalize non-discrimination73,74,75,76,77,78; and with post-processing steps that apply corrections to calibrate model predictions across subgroups56,57,64,79,80.

Pre-processing

Algorithmic biases in healthcare often stem from historical inequities that create spurious associations linking protected class identity to disease outcome in the dataset, in particular when the underlying causal factors stem from factors that span social determinants of health. By training algorithms on health data that have internalized such biases, the distribution of outcomes across ethnicities may be skewed; for example, underserved Hispanic and Black patients have more delayed referrals, which may result in more high-grade and invasive phenotypes at the time of cancer diagnosis. Such sources of labelling prejudice are known as ‘negative legacy’ or as sample-selection bias73. To mitigate this form of bias, data pre-processing techniques such as importance weighting (Fig. 2) can be applied. Importance weighting reweights infrequent samples belonging to protected subgroups67,68,70,71,72. Similarly, resampling aims to correct for sample-selection bias by obtaining fairer subsamples of the original training dataset and can be intuitively applied to correct for the under-representation of subgroups81,82,83,84,85. For tabular-structured data, blinding, data transformation and other techniques can also be used to directly eliminate proxy variables that encode protected attributes. However, these techniques may be subject to high variance and sensitivity under dataset shift, may be sensitive to outliers and data paucity in subgroups, and may overlook joint relationships between multiple proxy variables62,86,87,88.

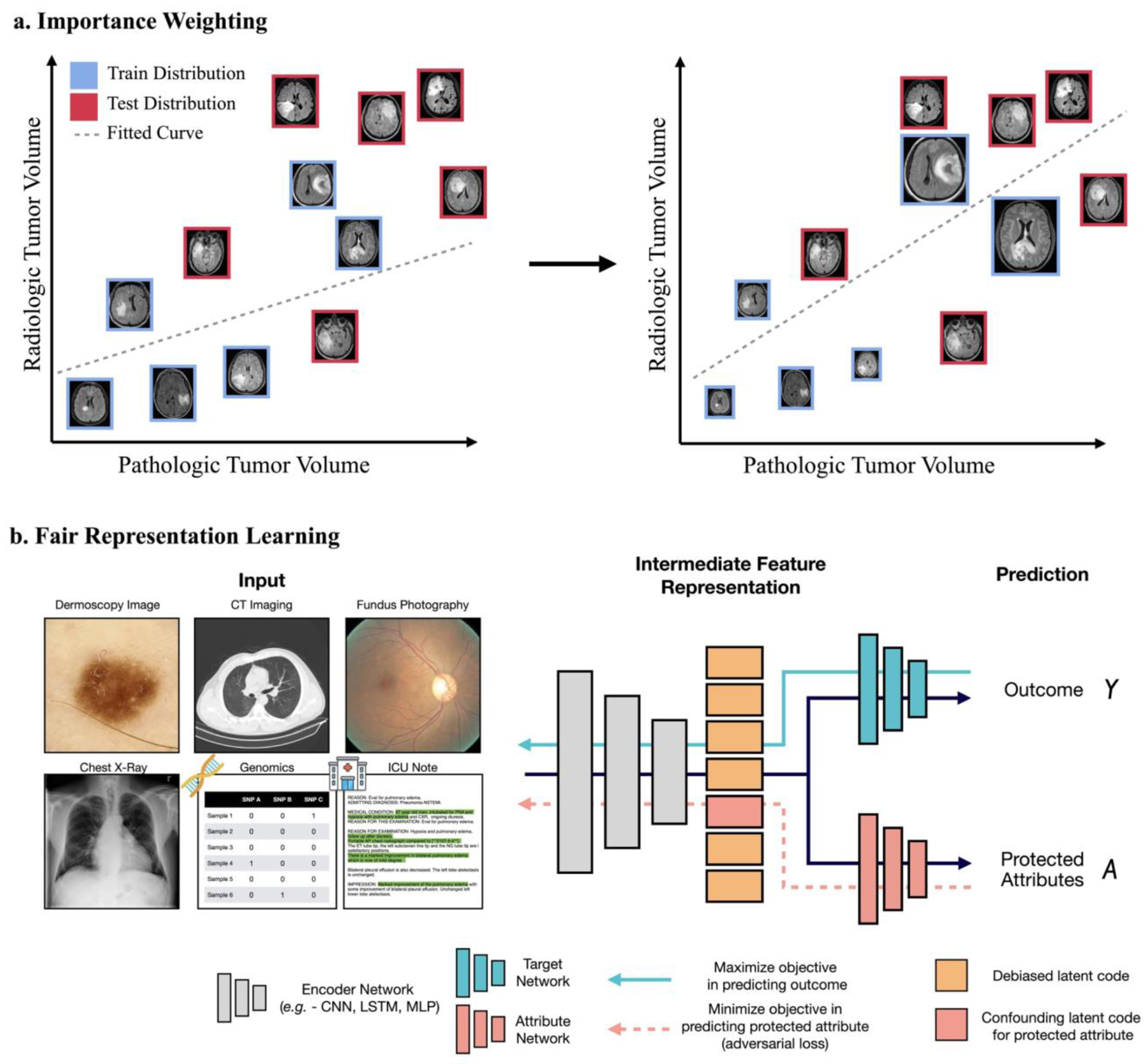

Fig. 2 |. Strategies for mitigating disparate impact.

a, For under-represented samples in the training and test datasets, importance weighting can be applied to reweight the infrequent samples so that their distribution matches in the two datasets. The schematic shows that, before importance reweighting, a model that overfits to samples with a low tumour volume in the training distribution (blue) underfits a test distribution that has more cases with large tumour volumes. For the model to better fit the test distribution, importance reweighting can be used to increase the importance of the cases with large tumour volumes (denoted by larger image sizes). b, To remove protected attributes in the representation space of structured data (CT imaging data or text data such as intensive care unit (ICU) notes), deep-learning algorithms can be further supervised with the protected attribute used as a target label, so that the loss function for the prediction of the attribute is maximized. Such strategies are also referred to as ‘debiasing’. Clinical images can include subtle biases that may leak protected-attribute information, such as age, gender and self-reported race, as has been shown for fundus photography and chest radiography. Y and A denote, respectively, the model’s outcome and a protected attribute. LSTM, long short-term memory; MLP, multilayer perceptron.

In-processing

Biased data-curation protocols may induce correlations between protected attributes and other features, which may be implicitly captured during model development. For example, medical images (such as radiographs, pathology slides and fundus photographs) can leak protected attributes, which can become ‘shortcuts’ to model predictions9,12,89,90. To mitigate the effect of confounding variables, in-processing techniques adopt a non-discrimination term within the model to penalize learning discriminative features of a protected attribute73,74,75,91,92. For instance, a logistic-regression model can be modified to include non-discrimination terms by computing the covariance of the protected attribute with the signed distance of the sample’s feature vectors to the decision boundary, or by modifying the decision-boundary parameters to maximize fairness (by minimizing disparate impact or mistreatment), subject to accuracy constraints74. For deep-learning models, such as CNNs, adversarial-loss terms (inspired by the minimax objective of a generative adversarial network; GAN) can be used to make the internal feature representations invariant to protected subgroups93 (Fig. 2). Modifications to stochastic gradient descent can also be made to weigh fairness constraints in online learning frameworks94. A limitation of in-processing approaches is that the learning objective is made non-convex when including these additional non-discriminatory terms. Moreover, metrics such as the FPR and the FNR can be sensitive to the shape of risk distributions and to label prevalence across subgroups, which may result in reduced overall performance57,95,96.

Post-processing

Post-processing refers to techniques that modify the output of a trained model (such as probability scores or decision thresholds) to satisfy group-fairness metrics. To achieve equalized odds, one can simply pick thresholds for each group such that the model achieves the same operating point across all groups. However, when the receiver operating curves do not intersect, or when the desired operating point does not correspond to an intersection point, this approach requires systematically worsening the performance for select subgroups using a randomized decision rule56. This implies that the performance on select groups may need to be artificially reduced to satisfy equalized odds. Hence, the difficulty of the task could vary between different groups11, which may raise ethical concerns. For survival models and other rule-based systems that assess risk using discrete scores (for example, by defining high cardiovascular risk as a systolic blood pressure higher than 130 mmHg; ref. 97), probability thresholds for each risk category can be selected to satisfy predictive parity. In this case, the proportion of positive-labelled samples in each category is equalized across subgroups (known as calibration; Box 2). To calibrate continuous risk scores, such as predicted probabilities, a logit transform can be applied to the predicted probabilities and then a calibration curve can be fitted for each group. This ensures that the risk scores have the same meaning regardless of group membership96. However, as was found for the COMPAS algorithm, satisfying both predictive parity and equalized odds may be impossible.

Targeted data collection

In practice, increasing the size of the dataset mitigates biases98. Although audits of publicly available and commercial AI algorithms have revealed large performance disparities, collecting data for under-represented subgroups can be an effective stopgap81,99. However, targeted data collection may require surveillance, and hence pose ethical and privacy concerns. Also, there are practical limitations in the collection of protected health information, as well as stringent data-interoperability standards100.

Healthcare disparities arising from dataset shift

Many domain-specific challenges in healthcare preclude the adoption of bias-mitigation techniques for reducing harm from AI algorithms. In particular, benchmarking these techniques in real-world healthcare applications has shown that optimizing fairness parity can result in worse model performance or in suboptimal calibration across subgroups. This is often described as an accuracy–fairness trade-off95,96,101,102,103. Benchmarks such as MEDFAIR, which evaluated 11 fairness techniques across 10 diverse medical-imaging datasets, found that current state-of-the-art methods do not outperform ‘fairness through unawareness’ with statistical significance. Also, fairness techniques often make strong assumptions about the learning scenario, such as the training and test data being independently and identically distributed, an assumption which is often violated when using data from hospitals in different geographies or when employing different data-curation protocols86,104,105,106,107. Another assumption is the availability of clean and protected attributes at test time, which is a re-occurring challenge for the development of fairness methods when working in healthcare applications that limit access to protected health information108,109,110,111,112,113,114,115. Moreover, because genetic ancestry is causally associated with many genetic traits and diseases, there are many clinical problems for which including protected attributes such as ancestry (rather than self-reported race, which is a social construct shaped by historical inequities) may promote fairness.

Many healthcare disparities in medical AI can be understood as arising from fundamental machine-learning challenges, such as dataset shift. Dataset shift can arise from differences in population demographics, genetic ancestry, image-acquisition techniques, disease prevalence and social determinants of health among other factors, and can cause disparate performance at test time31,53,106,116,117,118,119,120,121. Specifically, dataset shift occurs when there is a mismatch between the distributions of the training and test datasets during algorithm development, (that is, and ), and may lead to disparate performance at the subgroup level86,107,119,122,123. Thus, in addition to the above types of bias mitigation strategy, methods for quantifying and mitigating dataset shift such as group distributionally robust optimization (GroupDRO) are commonly used across many fairness studies103,108,123,124,125.

Group unfairness via dataset shift (also known as subpopulation shift) is particularly central in ‘black box’ AI algorithms developed for structured data such as images and text. When developing or using such algorithms, practitioners are often unaware of domain-specific cues that would ‘leak’ subgroup identity present in the input data121. For instance, an AI algorithm trained on cancer-pathology data from the United States and deployed on data from Turkey can misdiagnose Turkish cancer patients, owing to domain shifts from variations in H&E staining protocols and to population shifts from an imbalanced ethnic-minority representation126. Likewise, hospitals operating with different International Classification of Disease (ICD) taxonomies can lead to concept shifts in how algorithms are evaluated127,128. Overall, algorithms sensitive to dataset shifts can exacerbate healthcare disparities and underperform on fairness metrics.

Challenges in the deployment of fair AI-SaMD

In this section, we examine several broad and systematic challenges in the deployment of fair AI in medicine and healthcare. We discuss common dataset shifts in the settings of genomics, medical imaging, electronic medical records (EMRs) and other clinical data (Figs. 1, 3 and 4). Specifically, we examine several types of dataset shift and the impact of this failure mode on healthcare disparities129. Supplementary Table 1 provides examples. For a formal introduction to the topic, we refer to refs. 116,129.

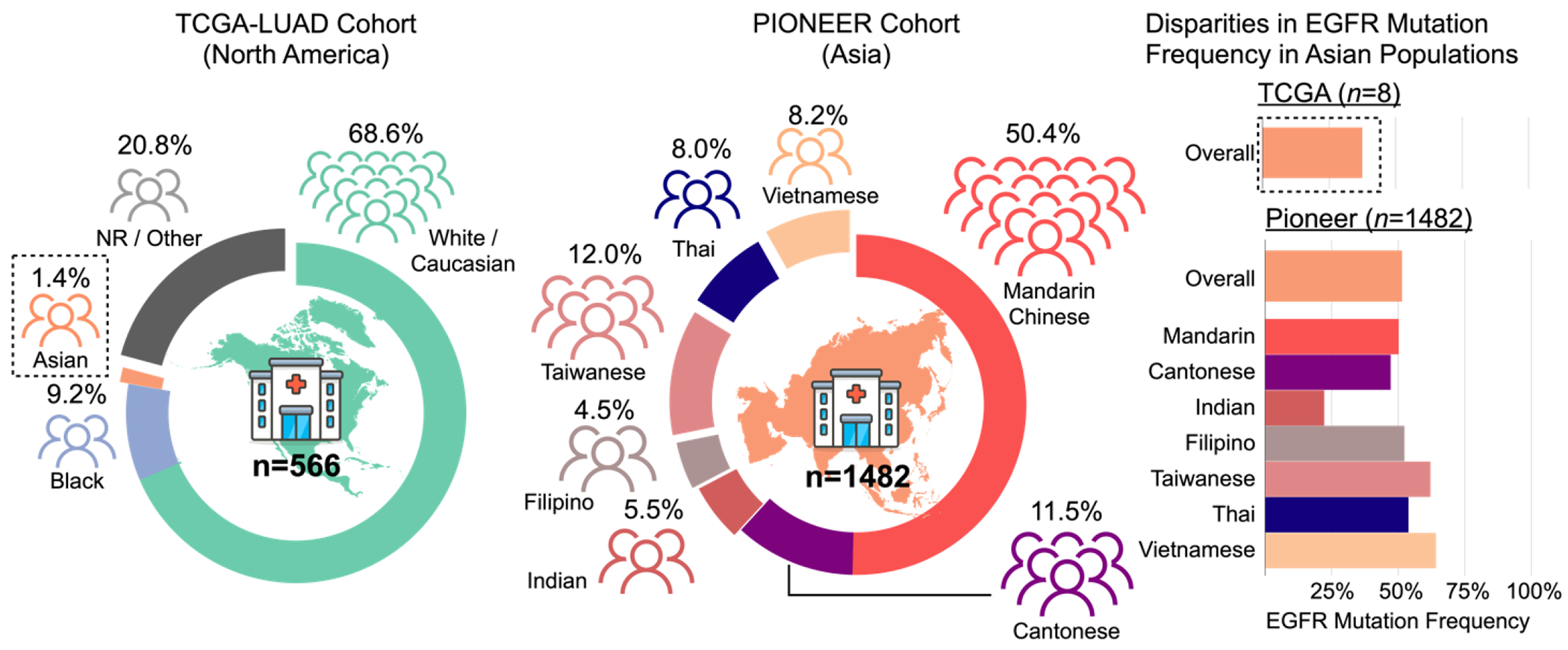

Fig. 3 |. Genetic drift as population shift.

Demographic characteristics and gene-mutation frequencies for EGFR in patients with lung adenocarcinoma in the TCGA-LUAD and PIONEER cohorts. Of the 566 patients with lung adenocarcinoma in the TCGA, only 1.4% (n = 8) self-reported as ‘Asian’; in the PIONEER cohort, 1,482 patients did. The PIONEER study included a more fine-grained characterization of self-reported ethnicity and nationality: Mandarin Chinese, Cantonese, Taiwanese and Vietnamese, Thai, Filipino and Indian. Because of the underrepresentation of Asian patients in the TCGA, the mutation frequency for EGFR, which is commonly used in guiding the use of tyrosine kinase inhibitors as treatment, was only 37.5% (n = 3). For the PIONEER cohort, the overall EGFR-mutation frequency for all Asian patients was 51.4% (n = 653), and different ethnic subpopulations had different EGFR-mutation frequencies.

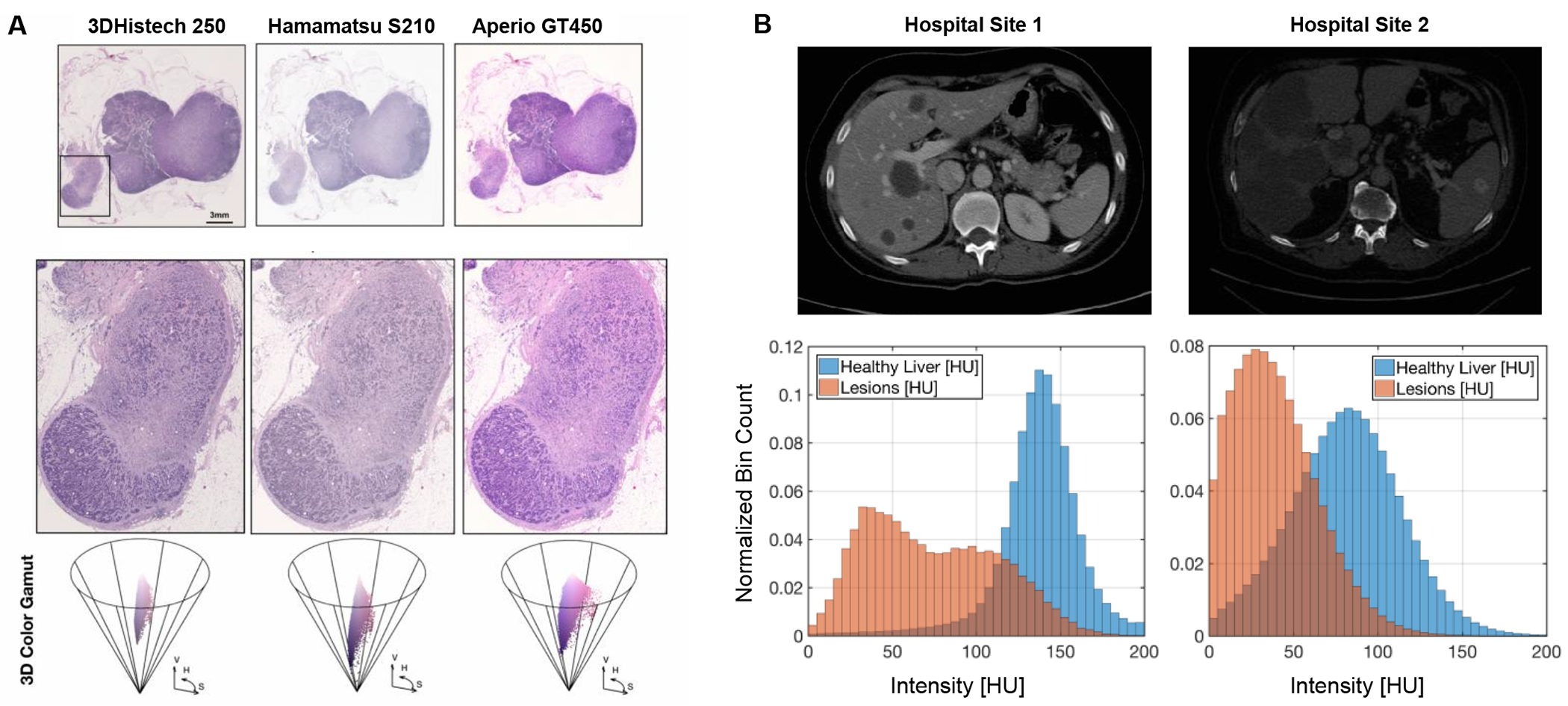

Fig. 4 |. Dataset shifts in the deployment of AI-SaMDs for a clinical-grade AI algorithms.

a, Examples of site-specific H\&E stain variability under different whole slide scanners, resulting in variable histologic tissue appearance. b, Example of variations in CT scans acquired at two different centers. The histograms shows the radiointensity in normal liver tissue and in the liver lesions. Due to differences in the acquisition protocols, there might be significant overlap between CT values of normal liver from one center and tumor values from another center.

Lack of representation in biomedical datasets

In the development and integration of AI-based computer-aided diagnostic systems in healthcare, the vast majority of models are trained on datasets that over-represent individuals of European ancestry, often without the consideration of algorithm fairness. For instance, in TCGA, across 8,594 tumour samples from 33 cancer types, 82.0% of all cases are from White patients, 10.1% are from Black or African American people, 7.5% are from Asians and 0.4% are from highly under-reported minorities (Hispanics, Native Americans, Native Hawaiians and other Pacific Islanders; denoted as ‘other’ in TCGA; Fig. 3)130. The CAMELYON16/17 challenge, which was used in validating the first ‘clinical-grade’ AI models for the detection of lymph-node metastases from diagnostic pathology images, was sourced entirely from the Netherlands131,132. In dermatology, amongst 14 publicly available skin-image datasets, a meta-analysis found that 11 of the datasets (78.6%) originated from North America, Europe and Oceania, and involved limited reporting of ethnicity (1.3%) and Fitzpatrick skin type (2.1%) as well as severe under-representation of darker-skinned patients133. Similarly, a study of 94 ophthalmological datasets found that 10 of them (10.6%) originated from Africa or the Middle East and that 2 of them (2.1%) were from South America, and that the datasets generally omitted age, sex and ethnicity (74%)134. Owing to such disparities, differences in performance across algorithms may in part result from a lack of publicly available independent cohorts that are large and diverse. Table 1 lists biomedical datasets that report sex and race demographics.

Table 1:

Reported demography data were obtained for all patient populations in the original dataset. Model auditing may use only certain subsets, owing to missing labels or to insufficient samples for evaluation in the case of extremely under-represented minorities, or owing to targeting different protected attributes (such as age, income and geography). Dashes denote demographic data that were not made publicly available or acquired.

| Dataset | Modalities | Number of patients | Female patients (%) | White (%) | Black (%) | Asian (%) | Hispanic or Latino (%) | Pacific Islander or Native Hawaiian (%) | American Indian or Alaskan Native (%) | Unknown or other (%) | Audit refs. |

|---|---|---|---|---|---|---|---|---|---|---|---|

| TCGA 356 | Pathology, MRI/CT, genomics | 10,953 | 48.5 | 67.5 | 7.9 | 5.9 | 0.3 | 0.01 | 0.2 | – | 9 |

| UK Biobank 141 | Genomics | 503,317 | 54.4 | 94.6 | 1.6 | 2.3 | – | – | – | 1.5 | 142 |

| PIONEER 146 | Genomics | 1,482 | 43.4 | – | – | 100 | – | – | – | – | N/A |

| eMerge Network172,173 | Genomics | 20,247 | – | 77.7 | 16.1 | 0.1 | – | 0.2 | 0.2 | 4.5 | 229 |

| NHANES 357 | Lab measurements | 15,560 | 50.4 | 33.9 | 26.3 | 10.5 | 22.7 | – | – | 6.5 | 339,54 |

| Undisclosed EMR data 2 | EMRs, billing transactions | 49,618 | 62.9 | 87.7 | 12.3 | – | – | – | – | – | |

| OAI358,359 | Limb X-rays | 4,172 | 57.4 | 70.9 | 29.1 | – | – | – | – | – | 3,359 |

| SIIM-ISIC360,361,362 | Dermoscopy | 2,056 | 48 | – | – | – | – | – | – | – | 361,362 |

| NIH AREDS363,364 | Fundus photography | 4,203 | 56.7 | 97.7 | 1.4 | 8 | 2 | 1.2 | 1 | – | 163 |

| RadFusion 365 | EMRs, CT | 1,794 | 52.1 | 62.6 | – | – | – | – | – | 37.4 | 365 |

| CPTAC 366 | Pathology, proteomics | 2,347 | 39.5 | 36.5 | 3.2 | 10 | 2.3 | 0.1 | 0.4 | 49.1 | N/A |

| MIMIC367,368 | Chest X-rays, EMRs, waveforms | 43,005 | 44.1 | 68.2 | 9.2 | 2.9 | 4 | 0.2 | 0.2 | – | 10,95,125,336,337,124 |

| CheXpert 310 | Chest X-rays | 64,740 | 41 | 67 | 6 | 13 | – | – | – | 11.3 | 336,369 |

| NIH NLST369,370 | Chest X-rays, spiral CT | 53,456 | 41 | 90.8 | 4.4 | 2 | 1.7 | 0.4 | 0.4 | 2 | N/A |

| RSPECT 371 | CT | 270 | 53 | 90 | 10 | – | – | – | – | – | N/A |

| DHA 372 | Limb X-rays | 691 | 49.2 | 52 | 48.2 | – | – | – | – | – | N/A |

| EMBED 373 | Mammography | 115,910 | 100 | 38.9 | 41.6 | 6.5 | 5.6 | 1 | – | 11.3 | N/A |

| Optum 372 | EMRs, billing transactions | 5,802,865 | 56.1 | 67 | 7.5 | 2.8 | 7.5 | – | – | 15.2 | 95 |

| eICU-CRD374,375 | EMRs | 200,859 | 46 | 77.3 | 10.6 | 1.6 | 3.7 | – | 0.9 | 5.9 | 375 |

| Heritage Health376,377,378,379 | EMRs | 172,731 | 54.4 | – | – | – | – | – | – | – | 170,270,379,363 |

| Pima Indians Diabetes380,381 | Population health study | 768 | 100 | – | – | – | – | – | 100 | – | 381,271 |

| Warfarin382,383 | Drug relationship | 5,052 | – | 55.3 | 8.9 | 30.3 | – | – | – | 5.4 | 383 |

| Infant Health (IDHP)384,385 | Clinical measures | 985 | 50.9 | 36.9a | 52.5 | – | 10.7 | – | – | – | 170,386 |

| DrugNet 386 | Clinical measures | 293 | 29.4 | 8.5a | 33.8 | – | 52.9 | – | – | – | 387 |

= Grouping of White and unknown/other.

AREDS, Age-Related Eye Disease Study; CPTAC, Clinical Proteomic Tumor Analysis Consortium; DHA, Digital Hand Atlas; eICU-CRD, Electronic ICU Collaborative Research Database; EMBED, Emory Breast Imaging Dataset; EMR, electronic medical record; ICU, intensive care unit; NLST, National Lung Screening Trial; N/A, not applicable; OAI, Osteoarthiritis Initative; RSPECT, RNA Pulmonary Embolism CT Dataset; SIIM-ISIC, International Skin Imaging Collaboration.

Studies that have audited AI applications in healthcare that do consider group-fairness criteria have shown that algorithms developed using problematic ethnicity-skewed datasets provide worse outcomes for under-represented populations. CNNs trained on publicly available chest X-ray datasets (such as, Medical Information Mart for Intensive Care (MIMIC)-CXR, CheXpert and National Institutes of Health (NIH) ChestX-ray15) underdiagnose underserved populations; that is, the likelihood is greater of the algorithms incorrectly predicting ‘no symptomatic conditions (findings)’ for female patients, Black patients, Hispanic patients and patients with Medicaid insurance11. These patient populations are systematically underserved and are therefore under-represented in the datasets. Thus, these algorithms may be biased by population shifts (healthcare disparities, owing to worse social determinants of health) and prevalence shifts, because a greater proportion of underserved patients are diagnosed with ‘no finding’135. Follow-up discussions to ref. 11 have proposed bias-mitigation strategies via pre-processing and post-processing techniques (such as, importance reweighting and calibration). However, the absence of diversity in the datasets makes it difficult to select thresholds for each subgroup that would balance underdiagnosis rates, which may reduce overall model performance53,121,136. Biases such as population and prevalence shifts are also heavily influenced by how the dataset is stratified into training-validation-test splits, and should also be taken into account when studying disparities13.

Inclusion of ancestry and genetic variation

Ancestry is a crucial determining factor of the mutational landscape and the pathogenesis of diseases137,138,139,140. Our understanding of many diseases has been developed using biobank repositories that predominantly represent individuals with European ancestry. Additionally, the prevalence of certain mutations is only detectable via high-throughput sequencing of large and representative cohorts141,142,143,144,145. For instance, individuals with Asian ancestry are known to have a high prevalence of mutations in the epidermal growth-factor receptor (EGFR; discovered by other high-sequencing efforts), as detected in the PIONEER cohort, which enrolled 1,482 Asian patients146 (Fig. 3). However, owing to the absence of genetic diversity in datasets such as TCGA, many such common genomic alterations may be undetectable, despite being extensively used to discover molecular subtypes and despite having helped to redefine World Health Organization (WHO) taxonomies for cancer classification147,148.

Therefore, studies that control for social determinants of health may nevertheless be affected by population shift from genetic variation, and hence may manifest population-specific phenotypes. For instance, many cancer types have well-known disparities explained by biological determinants in which, even after controlling for socioeconomic status and access to healthcare, there exist population-specific genetic variants and gene-expression profiles that contribute substantially towards disparities in clinical outcomes and treatment responses149,150,151,152,153,154,155,156,157,158,159. Glioblastomas, for instance, demonstrate sex differences in clinical features (in the left temporal lobe for males and in the right temporal lobe for females), in genetic features (the association of neurofibromatosis type 1 (NF1) inactivation with tumour growth and in whether variations in the isocitrate dehydrogenase 1 (IDH1) sequence are a prognostic marker) and in outcomes (worse survival and treatment responses in men)160,161. In aggressive cancer types such as triple negative breast cancer (TNBC), there is mounting evidence that ancestry-specific innate immune variants contribute to the higher incidence of TNBC and mortality in people of African ancestry155,156,159. In prostate cancer, diagnostic gene panels (such as OncotypeDX, developed with patients of predominantly European ancestry) predict poorer prognosis (higher risk) in people of European descent than African Americans; this introduces the notion of population-specific gene signatures that could shed light on a complex aetiology162. In ophthalmology, there are known phenotypic variations across ethnicities, such as melanin concentration within uveal melanocytes (a higher concentration leads to darker fundus pigmentation), retinal-vessel appearance as a function of retinal arteriolar calibre size, and optic-disc size163. And, for transgender women, there may be novel histological findings following complications with gender-affirming surgery164.

It may thus be beneficial to include protected attributes such as sex, ethnicity and ancestry into AI algorithms, especially when the target label is strongly associated with the protected attribute. An example is integrating histology and patient-sex information in AI algorithms, as it can improve predictions of the origin of a tumour in metastatic cancers of unknown primary. This could be used as an assistive tool in recommending diagnostic immunohistochemistry for difficult-to-diagnose cases. Although the sex of a patient can be viewed as a sensitive attribute, not including this information may result in unusual diagnoses, such as predicting the prostate as the origin of a tumour in cases of cancer of unknown primary in women165. As another example, the prediction of mutations from whole-slide images via deep learning could become a low-cost screening approach for inferring genetic aberrations without the need for high-throughput sequencing. It could be used to predict biomarkers (such as microsatellite instability) that guide the use of immune-checkpoint inhibition therapy166, or of EGFR status to guide the selection of a tyrosine kinase inhibitor in the treatment of lung cancer167. However, if the approach were to be trained on TCGA and evaluated on the PIONEER cohort, it may predict a low EGFR-mutation frequency in Asian patients and lead to incorrect cancer-treatment strategies for this population. In this particular instance, using protected class information such as ancestry as a conditional label may improve performance on mutation-prediction tasks. Yet disentangling genetic variation and measuring the contribution of ancestry towards phenotypic variation in the tissue microenvironment is currently precluded by the lack of suitable, large and publicly available datasets. Moreover, it is generally unclear where and when protected attributes can be used to improve fairness outcomes. And bias-mitigation strategies that consider the inclusion of protected attributes are few94,168,169,170,171.

The importance of developing diverse data biobanks is well-known in the context of genome-wide association studies, where variations in linkage disequilibrium structures and minor allele frequencies across ancestral populations can contribute to worsening the performance of polygenic-risk models for under-represented populations172,173,174,175,176,177. Indeed, a fixed-effect meta-analysis found that polygenic-risk models for schizophrenia trained only on European populations performed worse for East Asian populations, owing to differing allele frequencies175. Additionally, cross-ancestry association studies that include populations from divergent ancestries have uncovered new diseased loci174.

Image acquisition and measurement variation

Variations in image acquisition and measurement technique can also leak protected class information. In this type of covariate shift (also known as domain shift or acquisition shift), institution-specific protocols and other non-biological factors that affect data acquisition can induce variability in the acquired data129,178. For example, X-ray, mammography or computed tomography (CT) images are affected by radiation dosage. Similarly, in pathology, heterogeneities in tissue preparation and in staining protocols as well as scanner-specific parameters for slide digitization can affect model performance in slide-level cancer-diagnostic tasks (Fig. 4).

Domain shift as a result of site-specific or region-specific factors that correlate with demographic characteristics may introduce spurious associations with ethnicity. For example, an audit study assessing site-specific stain variability of pathology slides in TCGA found shifts in stain intensity in the only site (the University of Chicago) that had a greater prevalence of patients of African ancestry9. Hence, clinical-grade AI algorithms in pathology may be learning inadvertent cues for ethnicity owing to institution-specific staining patterns. In this instance of domain shift, variable staining intensity can be corrected using domain adaptation and optimal transport techniques that adapt the test distribution to the training dataset. This can be performed on either the input space or the representation space. For instance, deep-learning techniques using GANs can learn stain features as a form of ‘style transfer’, in which a GAN can be used to pre-process data at deployment time to match the training distribution179,180. Other in-processing techniques such as adversarial regularization can be leveraged to learn domain-invariant features using semi-supervised learning and samples from both the training and test distributions. However, a practical limitation in both mitigation strategies is that the respective style-transfer or gradient-reversal layers would need to be fine-tuned with data from the test distribution for each deployment site, which can be challenging owing to data interoperability between institutions and to regulatory requirements for AI-SaMDs14. In some applications, understanding sources of shift presents a challenge for the development of bias-mitigation strategies that remove unwanted confounding factors. For instance, CNNs can reliably predict race in chest X-ray and other radiographs after controlling for image-acquisition factors, removing bone-density information and severely degrading image quality12.

Evolving dataset shifts over time

Dataset shifts can also occur as a result of changes in diagnostic criteria and in labelling paradigms across populations. This is known as concept shift or concept drift181,182,183,184,185,186, and it involves a change in the conditional distributions P(X|Y) or P(Y|X) while the marginal distributions P(X) and P(Y) remain unchanged. Concept shift is similar to other temporal dataset shifts187,188 (such as label shift), in that an increased prevalence in disease Y (for example, pneumonia) causing X (for instance, cough) does not change the causal relationship P(X|Y). However, in concept shift, the relationship between X and Y changes. This can occur if the criteria for diagnosing disease Y are revised over time or are incongruent between populations. Frequently studied examples of concept shift include the migration from ICD-8 to ICD-9, which refactored the coding for surgical procedures; the subsequent migration from ICD-9 to ICD-10, which resulted in a large spike in opioid-related inpatient stays127,128; and the more recent recategorization of ICD-10 to ICD-11, which recategorized strokes as neurological disorders rather than cardiovascular diseases.

Taxonomies and classification systems for many diseases undergo constant evolution, owing to new scientific discoveries and to research findings from randomized control trials. These changes may cause substantial variation in the way AI-SaMDs are evaluated across countries. An example of this is the assessment of kidney transplantation using the Banff classification (which since 1991 has established diagnostic criteria for renal-allograft assessment). Since its original development, the Banff classification has been subject to several major revisions: the establishment of a diagnosis based on antibody-mediated rejection (ABMR) in 1997, the specification of chronic ABMR on the basis of a transplant glomerulopathy biomarker in 2005, and the requirement of evidence of antibody interactions with the microvascular endothelium as a prerequisite for diagnosing chronic ABMR in 2013 (which resulted in a doubling of the diagnosis rate)189. Other notable examples are the shift from the Fuhrman nuclear grading system to the WHO/International Society of Urological Pathology grading system in renal cell carcinomas, the refined WHO taxonomy of diffuse gliomas to include molecular subtyping, the ongoing refinement of American College of Cardiology/American Heart Association guidelines for defining hypertension severity and the 17 varying diagnostic criteria for Behcet’s disease that have been proposed around the world97,190,191,192. As AI algorithms in medicine are often trained on large repositories of historical data (to overcome data paucity), numerous pitfalls may be affecting AI-SaMDs: they may have poor stability in adapting to changing disease prevalence, may be trained and deployed across healthcare systems with different labelling systems and may be trained with datasets affected by historical biases or that do not include data for under-represented populations. The fairness of an AI-SaMD under concept shift has been rarely analysed, yet it is well documented that many international disease-classification systems have poor intra-observer agreement, which suggests that an algorithm trained in one country may not be evaluated under the same labelling paradigm in another country189,190. To mitigate label shifts and concept shifts, some guidelines have emphasized the importance of guaranteeing model stability to how the data were generated118, and the use of reactive or proactive approaches for intervening on temporal dataset shifts in early-warning systems such as those for the prediction of sepsis117,184. However, at the moment there are relatively few strategies for the mitigation of concept shift in AI-SaMDs122,193,194,195.

Variations in self-reported race

As with concept shift across train and test distributions, different geographic regions and countries may collect protected attribute data with varying levels of stringency and granularity, which complicates the incorporation of race as a covariate in evaluations of fairness of medical AI. In addition to historical inequities that have confounded race in elucidating biological differences, another challenge is the active evolution of the understanding of race itself196. As discussions regarding race and ethnicity have moved more into the mainstream, the medical community has begun to realize that the taxonomies of the past do not adequately represent the groups of people that they purport to. Indeed, it is now accepted that race is a social construct and that there is greater genetic variability within a particular race than there are between races197,198,199. As such, the categorization of patients by race can obscure culture, history, socioeconomic status and other confounders of fairness; indeed, they may all separately or synergistically influence a particular patient’s health200,201. These factors can also vary by location: the same person may be considered of different races in different geographic locations, as exemplified by self-reported Asian ethnicity in the TCGA and PIONEER cohorts, and by self-reported race in the COMPAS algorithm146,201.

Ideally, discussions should centre explicitly around each component of race and include ancestry, a concept that has a clear definition—the geographic origins of one’s ancestors—and that is directly connected to the patient’s underlying genetic admixture and hence to many traits and diseases. However, introducing this granularity in fairness evaluations has clear drawbacks in terms of the power of subgroup analyses, as ancestry DNA testing is not routinely performed on patients at most institutions. In practice, institutions would often fall back on the traditional ‘dropdown menu’ for selecting only a single race or ethnicity. Performing fairness evaluations without explicitly considering these potential confounders of race may mean that the AI system is sensitive to unaccounted-for factors200.

Paths forward

Using federated learning to increase data diversity

Federated learning is a distributed-learning paradigm in which a network of participants uses their own computing resources and local data to collectively train a global model stored on a server202,203,204,205. Unlike machine learning performed over a centralized pool of training data, in federated learning users in principle retain oversight of their own data and must only share the update of weight parameters or gradient signals (with privacy-preserving guarantees) from their locally trained model with the central server. In this way, algorithms can be trained on large and diverse datasets without sharing sensitive information. Federated learning has been applied to a variety of clinical settings to overcome data-interoperability standards that would usually prohibit sensitive health data from being shared and to tackle low-data regimes of clinical machine-learning tasks, for example for the prediction of rare diseases98,206,207,208,209,210,211,212,213,214,215,216,217,218,219,220. Federated learning applied to EMRs has satisfied privacy-preserving guarantees for transferring sensitive health data and has enabled the development of early-warning systems for hospitalization, sepsis and other preventive tasks215,221. In radiology, federated learning under various network architectures, privacy-preserving protocols and adversarial attacks has leveraged multi-institutional collaboration to aid the validation of AI algorithms for prostate segmentation, brain-cancer detection, the monitoring of the progression of Alzheimer’s disease using magnetic resonance imaging (MRI) and the classification of paediatric chest X-ray images211,214,222,223,224. In pathology, federated learning has been used to assess the robustness of the performance of weakly supervised algorithms for the analysis of whole-slide images under various privacy-preserving noise levels in diagnostic and prognostic tasks225. It has also been used to overcome low sample sizes in the development of AI models for COVID-19 pathology, and in independent test-cohort evaluation226,227,228.

Regarding fairness, federated learning for the development of decentralized AI-SaMDs can be naturally extended to address many of the cases of dataset shift and to mitigate disparate impact via model development on larger and more diverse patient populations229. In developing polygenic risk scores, decentralized information infrastructures have been shown to harmonize biobank protocols and to enable tangible material transfer agreements across multiple hospitals230, which can then enable model development on large and diverse biobank datasets229,231. Federated learning applied to multi-site domain adaptation across distributed clients would naturally mitigate many instances of dataset shift232,233,234,235,236. In particular, methods such as federated multi-target domain adaptation address practical scenarios, such as when centralized label data are made available only at the client (source) and the unlabelled data are distributed across multiple clients as targets237. The application of federated learning in fairness may allow for new fairness criteria, such as client-based fairness that equalizes model performance at only the client-level238,239, as well as novel formulations of existing bias-mitigation strategies that may not require centralizing information about protected attributes for evaluating fairness criteria240,241,242,243,244,245,246.

Operationalizing fairness principles across healthcare ecosystems

Although federated learning may overcome data-interoperability standards and enable the training of AI-SAMDs with diverse cohorts, the evaluation of AI biases in federated-learning settings would need to be extensively studied. Despite numerous technical advances in improving the communication efficiency, robustness and security of parameter updates, one central statistical challenge is learning from data that are not independent and/or not identically distributed (known as non-i.i.d data). This arises because of the sometimes-vast differences in local-data distribution at contributing sites, which can lead to the divergence of local model weights during training following synchronized initiation204,247,248,249. Accordingly, the performance of federated-learning algorithms (including that of the well-known FedAvg algorithm250,251) that use averaging to aggregate local model-parameter updates deteriorates substantially when applied to non-i.i.d. data252. Such statistical challenges may produce further disparate impact depending on the heterogeneity of the data distributions across clients. For instance, in using multi-site data in the TCGA invasive breast carcinoma (BRCA) cohort as individual clients for federated learning, a majority of parameter updates would come from clients that over-represent individuals with European ancestry, with only one parameter update coming from a single client that has majority representation for African ancestry. This problem can be decomposed into two phenomena, known as local drift (when clients are biased towards minimizing the loss objective of their own distribution) and global drift (when the server is updating diverging gradients from clients with of mismatched data distributions). As a result, many federated-learning approaches developed for learning in non-i.i.d. scenarios inherently adapt dataset shift and bias-mitigation techniques to resolve local and global drift in tandem with evaluating fairness236,237,239,245,246,253,254. Without bias-mitigation strategies, federated models would still be subject to persisting biases found in centralized models, such as the problems of site-specific image-stain variability, intra-observer variability, and under-representation of ethnic minorities in the multi-site TCGA-BRCA cohort9. Federated learning may enable the fine-tuning of AI-SaMDs at each deployment site; however, the evaluation of race and ethnicity for healthcare applications via federated models has yet to be benchmarked. Additionally, because race and ethnicity and other sensitive information are typically isolated in separate databases, there may be logistic barriers to accessing such protected attribute data at each site.

The difficulty of operationalizing fairness principles for the much simpler AI development and deployment life cycles of centralized models will also affect the practical adoption of fair federated-learning paradigms. In current organizational structures, the roles and responsibilities created for implementing fairness principles are typically isolated into ‘practitioner’ or data-regulator roles (which design AI fairness checklists for guiding the ethical development of algorithms in the organization) and ‘engineer’ or data-user roles (which follow the checklist during algorithm implementation255). Such binary partitioning of the roles may lead to poor practices, as fairness checklists are often too broad or abstractive, and are not co-designed with engineers to address problem-specific and technical challenges for the achievement of fairness256. For federated-learning paradigms for the development and global deployment of AI-SaMDs, the design of fairness checklists would require interdisciplinary collaboration from all relevant healthcare roles (in particular, clinicians, ethics practitioners, engineers and researchers), as well as further involvement from stakeholders at participating institutions so as to identify potential site-specific biases that may be propagated during parameter sharing or as a result of accuracy–fairness trade-offs carried out at inference time255.

Overall, although federated learning presents an opportunity to evaluate AI algorithms on diverse biomedical data at a global scale, it faces unknown challenges in the design of global fairness checklists that consider the burdens and patient preferences of each region. For instance, a federated scheduling algorithm for patient follow-ups calibrated to set a high threshold to maximize fairness criteria may not account for substantial differences in burden (which could be much higher at a low-resource setting)257. As with the problems of label shift or concept shift that may occur at various sites, there may be additional complexity arising from culture-specific or regional factors affecting access to protected information, and from definitions and criteria for fairness from differing moral and ethical philosophies48. Navigating such ethical conflicts may involve considering the preferences of diverse stakeholders, particularly of under-represented populations.

Fair representation learning

By focusing on learning intermediate representations that retain discriminative features from the input space X without any features correlating with A, typically via an adversarial-loss term (Fig. 1), fair representation learning is orthogonal to causality258, model robustness and many other subjects in machine learning, and shares techniques and goals with adversarial learning for debiasing representations. Inspired by the minimax objective in GANs, fair representation learning has been used to learn domain-invariant features of the distributions of the training and test datasets (; ref. 179), treatment-invariant representations for producing counterfactual explanations259,260,261, and race-invariant or ethnicity-invariant features to remove disparate impact in deep-learning models67,101,262,263,264,265,266,267,268.

Although fair-representation methods are typically supervised, training fair AI algorithms in an unsupervised manner would allow representations to be freely transferred to other domains without constraints on the downstream classifiers (such as being fair or enabling greater applications of fair algorithms without access to protected attributes93,269). One prominent example is the method known as learned adversarially fair and transferable representation (LAFTR)270, which first modified the GAN minimax objective with an adversarial-loss term to make the latent feature representation invariant to the protected class. LAFTR also showed that such representations are transferable (as examined in the Charlson Comorbidity Index prediction task in the Heritage Health dataset, in which LAFTR was able to transfer to other tasks without leaking sensitive attributes93,269). Across other tasks in medicine, LAFTR can be extended as a privacy-preserving machine-learning approach that allows for the transfer of useful intermediate features. This could advance multi-institutional collaboration in the fine-tuning of algorithms without leaking sensitive information. Similar to LAFTR is unsupervised fair clustering, which aims at learning attribute-invariant cluster assignments (which can also be done via adversarial learning for debiasing representations)271,272. Still, a main limitation in many of these unsupervised fairness approaches is that they depend on having the protected attribute at hand during training, which may not be possible in many clinical settings in which protected class identity is secured. Moreover, in assessing the accuracy–fairness trade-off, adding additional regularization components may decrease representation quality and thus lower performance in downstream fairness tasks103.

Despite access to protected attributes possibly constraining model training, geographical data in the client identities may be used as proxy variables for subgroup identity. This may inform the development of fairness techniques without access to sensitive information. Decentralized frameworks have shown that federated learning in combination with fair representation learning can be used to learn federated, adversarial and debiasing representations with similar privacy-preserving and transferable properties as LAFTR125,273,274,275,276,277,278,279,280,281. Using client identities as proxy variables for protected attributes in adversarial regularization may hold in certain scenarios273, as geographical location is more closely linked to genetic diversity than ethnicity282.

Debiased representations via disentanglement

Disentanglement in generative models can also be used to further promote fairness in learned representations without requiring access to protected attributes. It aims at disentangling independent and easy-to-interpret factors of data in latent space, and has allowed for the isolation of sources of variation in objects, such as colour, pose, position and shape283,284,285,286,287. BetaVAE is a method to quantify disentanglement in deep generative models. It uses a variational autoencoder (VAE) bottleneck for unsupervised learning, followed by the generation of a disentanglement score by training a linear classifier to predict the fixed factor of variation from the representation287. Disentangled VAEs with adversarial-loss components have been used to disentangle size, skin colour and eccentricity in dermoscopy images, as well as causal health conditions and anatomical factors in physiological waveforms288,289,290,291.

With regards to fairness and dataset shifts, disentanglement can be viewed as a form of data pre-processing for debiasing representations in downstream fairness tasks and for providing flexibility in terms of allowing data users to isolate and truncate specific latent codes that correspond to protected attributes in representation space255,292,293. The evaluation of unsupervised VAE-based disentangled models has shown that disentanglement scores correlate with fairness metrics, benchmarked on numerous fairness-classification tasks without the need for protected attribute information294. Disentanglement would be particularly advantageous in settings where the latent code information for including and excluding protected attributes needs to be flexibly adapted; for example, in pathology, where ethnicity may be excluded when predicting cancer stage yet included when predicting mutation status293. Disentanglement-like methods have been used to cluster faces without latent code information according to dominant features such as skin colour and hair colour272,295,296. In chest X-ray images, they have also been shown to mitigate hidden biases in the detection of SARS-CoV-2297. In combination with federated learning, FedDis and related frameworks have been used to isolate sensitive attributes in non-i.i.d. MRI lesion data; images were disentangled according to shape and appearance features, with only the shape parameter shared between clients254,298,299,300,301,302 (Fig. 5).

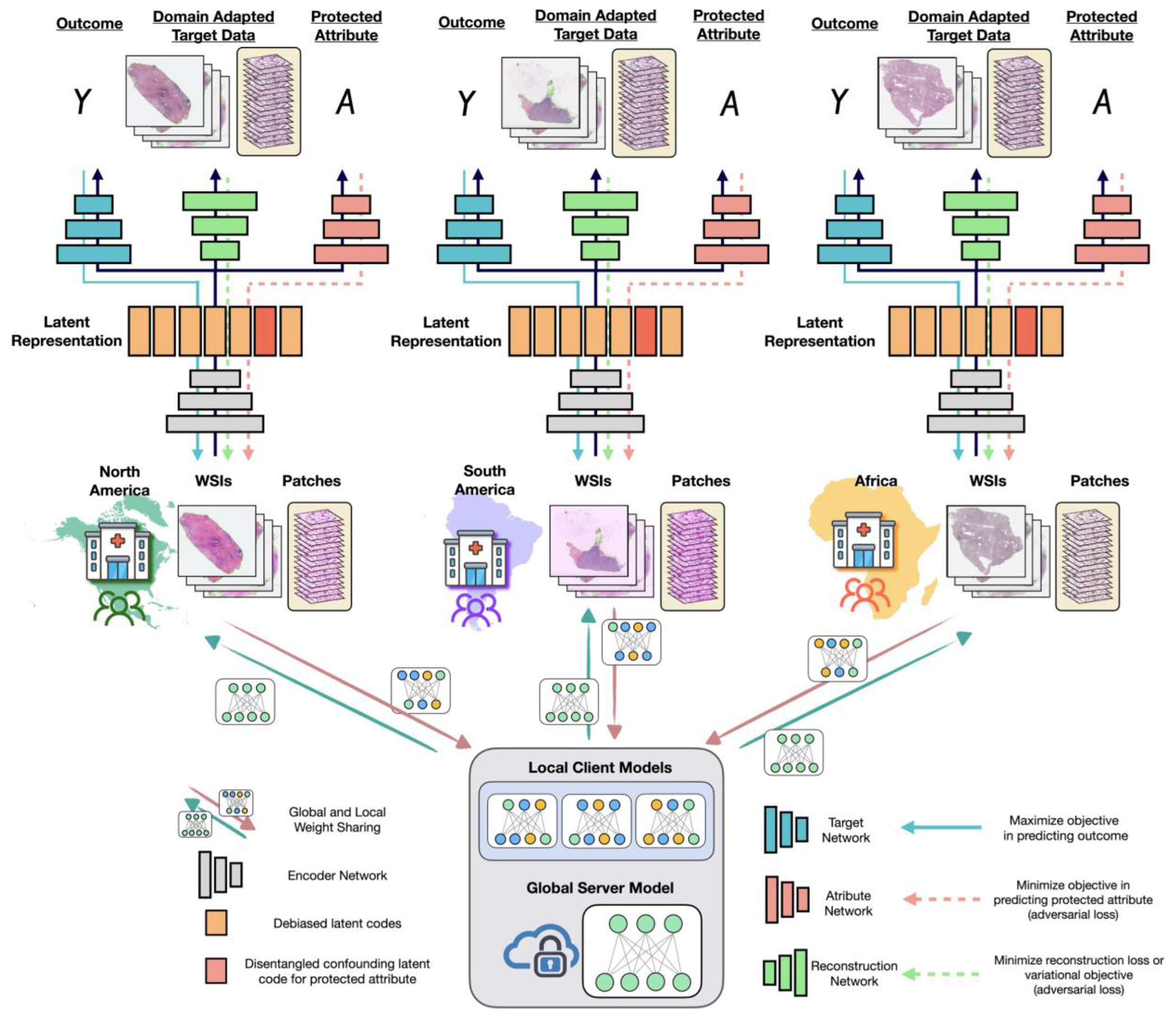

Fig. 5 |. A decentralized framework that integrates federated learning with adversarial learning and disentanglement.

In addition to aiding the development of algorithms using larger and more diverse patient populations, federated learning can be integrated with many techniques in representation learning and in unsupervised domain adaptation that can learn in the presence of unobserved protected attributes. In federated learning, global and local weights are shared between the global server and the local clients (such as different hospitals in different countries), each with different datasets of whole-slide images (WSIs) and image patches. Different domain-adaptation methods can be used with federated learning. In federated adversarial and debiasing (FADE), the client IDs were used as protected attributes, and adversarial learning was used to debias the representation so that it did not vary with geographic region273 (red). In FedDis, shape and appearance features in brain MRI scans were disentangled, with only the shape parameter shared between clients298 (orange). In federated adversarial domain adaptation (FADA), disentanglement and adversarial learning were used to further mitigate domain shifts across clients236 (red and orange). Federated learning can also be used in combination with style transfer, synthetic data generation, and image normalization. In these cases, domain-adapted target data or features would need to be shared, or other techniques employed (green)236,239,299,300,301,302,331,390. Y and A denote, respectively, the model’s outcome and a protected attribute.

Disentangling the roles of data regulators and data users in life cycles of AI-SaMDs

Within current development and deployment life cycles of AI-SaMDs and other AI algorithms, the adaptability of unsupervised fair-representation and disentanglement methods would allow the refinement of the distribution of responsibilities in organizational structures by adding the role of ‘data producers’; that is, those who produce ‘cleaned up’ versions of the input for more informative downstream tasks, as proposed previously255. In this setting, the roles of ‘data users’ and ‘data regulators’ would be separate and compatible with conventional model-development pipelines without the need to consider additional fairness constraints255. Moreover, ‘data producers’ would also quantify the potential accuracy–fairness trade-offs when using regularization components to achieve debiased and disentangled representations. It has been hypothesized that such an approach could pave a path forward for a three-party governance model that simplifies communication overhead when discussing concerns of accuracy–fairness trade-offs, and that adapts to test populations without the complexities of federated learning, which also needs access to protected attributes255. Still, fair representation learning has yet to be benchmarked against competitive self-supervised learning methods (particularly, contrastive learning), and more evaluations of fair representation learning in clinical settings are also needed303. Future work on understanding disentanglement and on adapting it to robust self-supervised learning paradigms would contribute to improving fairness in transfer-learning tasks and serve as a privacy-preserving measure for clinical machine-learning tasks.

Algorithm interpretability for building fair and trustworthy AI

In current regulatory frameworks for the development of AI-SaMDs, algorithm interpretability is pivotal in medical decision-making and in model auditing so as to understand the sources of unfairness and to detect dataset shifts304. Trust in AI algorithms is also an important consideration in current regulation of AI-SaMDs. As a conceptualization for the machine-learning community, and now broadly advocated by regulatory bodies, trust is the fulfilment of a contract in human–AI collaboration. Such contracts are AI functionalities that are anticipated to have known vulnerabilities305. For instance, model correctness is a contract that anticipates patterns that distinguish the model’s correct and incorrect cases available to the user. There are many different types of contract (concerning technical robustness, safety, non-discrimination and transparency, in particular) outlined by the European Commission’s ethical guidelines for trustworthy AI306,307. Similarly, the FDA, in its Good Machine Learning Practices guidelines outlined bias assessment and interpretability as contracts in its action plan for developing trust in AI-SaMDs16. In the remainder of this section, we discuss current applications of algorithm interpretability, and their relevance to fairness in medicine and healthcare.

Interpretability methods and model auditing

For post-hoc interpretability in deep-learning architectures, class-activation maps (CAMs, or saliency mapping) are a commonly used technique for finding sensitive input features that would explain the decision made by an algorithm. CAMs compute the partial derivatives of the predictions with respect to the pixel intensities computed during the back-propagation step of the neural network. The derivatives are then used to produce a visualization of the informative pixel regions308. To produce more fine-grained visualizations, extensions of these methods (such as Grad-CAM) instead attribute how neurons of an intermediate feature layer in a CNN would affect its output, such that the attributions for these intermediate features can be up-sampled to the original image size and viewed as a mask to identify discriminative image regions309. CAM-based methods have gained widespread adoption in the clinical interpretability of the output of CNNs, because salient regions (rather than low-level pixel intensities) would refer to high-level image features. Because these techniques can be applied without modifying the neural networks, they have been used in preclinical applications such as the detection of skin lesions, the localization of disease in chest X-ray images and the segmentation of organs in CT images3,89,310,311,312,313,314,315,316.

However, saliency-mapping techniques may not be accurate, understandable to humans or actionable, and thus insufficiently trustworthy by practitioners in medical support, decision-making, biomarker discovery and model auditing317,318,319. Indeed, interpretability via saliency mapping is typically qualitative but not sufficiently quantifiable to evaluate group differences. An audit of Grad-CAM interpretability in natural images and in echocardiograms found that saliency maps can be misleading and often equivalent to results from simple edge detectors304,320. In the diagnosis of chest radiographs, saliency-mapping techniques are insufficiently accurate if used to localize pathological features314,321. In cancer prognosis, although useful in highlighting important regions-of-interest in histology tissue, it is unclear how highly attributed pixel regions can be used by clinicians for patient stratification without further post-hoc assessment322. And in the problem of unknown dataset shifts of radiology images leaking self-reported race, model auditing via saliency mapping was ineffective in determining any explainable anatomic landmarks or image-acquisition factors associated with the misdiagnoses12. Still, saliency mapping can be used to detect spurious bugs or artefacts in the input feature space (although they do not always detect them in ‘contaminated’ models323,324). Overall, although the visual appeal of saliency mapping may be informative for some uses in medical interpretation, its many limitations preclude its use for enhancing trust into AI algorithms and for the assessment of fairness in their clinical deployment305,325.