Abstract

Controlling large-scale dynamical networks is crucial to understand and, ultimately, craft the evolution of complex behavior. While broadly speaking we understand how to control Markov dynamical networks, where the current state is only a function of its previous state, we lack a general understanding of how to control dynamical networks whose current state depends on states in the distant past (i.e. long-term memory). Therefore, we require a different way to analyze and control the more prevalent long-term memory dynamical networks. Herein, we propose a new approach to control dynamical networks exhibiting long-term power-law memory dependencies. Our newly proposed method enables us to find the minimum number of driven nodes (i.e. the state vertices in the network that are connected to one and only one input) and their placement to control a long-term power-law memory dynamical network given a specific time-horizon, which we define as the ‘time-to-control’. Remarkably, we provide evidence that long-term power-law memory dynamical networks require considerably fewer driven nodes to steer the network’s state to a desired goal for any given time-to-control as compared with Markov dynamical networks. Finally, our method can be used as a tool to determine the existence of long-term memory dynamics in networks.

Subject terms: Electrical and electronic engineering, Complex networks

Introduction

Dynamical networks, including brain networks1, quantum networks2, financial networks3, gene networks4, protein networks5, cyber-physical system networks6 (e.g. power networks2, healthcare networks7), social networks8, and physiological networks9, exhibit not only an intricate set of higher-order interactions but also distinct long-term memory dynamics, in which both the recent and the more distant past states influence the evolution of the state. Regulating these long-term memory dynamical networks in a timely fashion is critical to avoid a full-blown catastrophe. Examples include arresting a seizure in the human brain to treat epilepsy, mitigating climate-related power surges in the power grid, anticipating an undesired shock in the financial market, defending against cyber-attacks in cyber-physical systems, and thwarting the spread of misinformation in social networks.

To address the challenge of regulating long-term memory dynamical networks, we need a general mathematical method to assess and design controllable large-scale long-term memory dynamical networks within a specified time horizon (i.e. time-to-control). More specifically, we need to be able to determine the minimum number of nodes in a long-term memory dynamical network that must be connected to one and only one input to achieve a controllable dynamical network within a specified time horizon. These controlled nodes are known as driven nodes. While initial efforts on analyzing the controllability of long-term memory dynamical networks exist10, 11, these methods suffer from three main shortcomings. First, they do not assess the trade-offs between the time-to-control and the required number of driven nodes. Second, they require knowledge of the exact parametrization of the system, which makes assessing such trade-offs computationally intractable. Third, these methods are limited to a few hundred nodes, which prohibits the analysis of real-world large-scale dynamical networks. To overcome all of these limitations, we propose to analyze and design controllable large-scale long-term memory dynamical networks using only the structure of the system (i.e. which interactions are present), which remains available when the exact system parameters are not known; this makes the approach both robust and scalable.

In particular, we present a strategy for determining the minimum number of driven nodes to control a long-term memory dynamical network within a specified amount of time, i.e. time-to-control.

Furthermore, our approach investigates the trade-off between the time horizon required to steer the network behavior to a desirable state and the amount of resources necessary to correct the evolution of long-term memory dynamical networks. Consequently, our approach provides answers to the following important questions: How does the nature of the dynamics (Markov versus long-term memory) affect the required number of driven nodes in networks having the same spatial topology? In long-term power-law memory systems, how do the interactions between the nodes of a network, described as the spatial dynamics, affect the ability to steer the network’s evolution toward a desired behavior within a given time frame? How does the size of a long-term memory dynamical network affect its ability to be controlled within a specific time horizon? How do the inherent structure (i.e. topology) of a long-term power-law memory dynamical network and its properties affect how quickly the network can be steered to operate correctly within a time horizon?

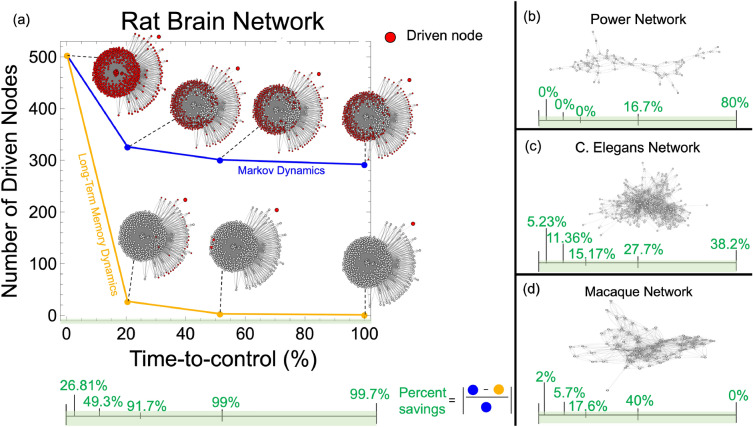

Figure 1 summarizes a few of the important outcomes of our proposed strategy. In short, given a network exhibiting either Markov or long-term memory dynamics, we compare the minimum number of driven nodes (in red) needed to steer the network to a desired goal within a given time horizon. Ultimately, our evidence suggests that long-term power-law memory dynamical networks require fewer driven nodes to steer the network to a desired behavior regardless of the given time-to-control.

Figure 1.

(a) The number of driven nodes is shown across the time-to-control for the rat brain network for both Markov and long-term memory dynamics. The rat brain network12 has 503 regions, which are captured by nodes in the network. At 0% of the time-to-control, all nodes in any dynamical network need to be driven nodes (shown in red). At just 20% of the time-to-control for the rat brain network, we see a drastic reduction in the required number of driven nodes for the long-term memory network as compared to the Markov dynamical network. As the time-to-control increases, the number of driven nodes decreases for both network dynamics; however, the decrease is much more pronounced for the long-term memory dynamical network. The relationship between the percent savings (in green) and the time-to-control is shown at the bottom (highlighted in green). (b) shows the percent savings for the power network13 (60 nodes). (c) shows the percent savings for the C. elegans network14, 15 (277 nodes). (d) shows the percent savings for the cortical brain structure of a macaque having 71 regions16, 17.

Methods

Fractional-order calculus and fractional-order dynamical networks provide efficient and compact mathematical tools for representing long-term memory with power-law memory dependencies18–29. These dependencies are captured by using a fractional-order derivative denoted by $\Delta^{\alpha}$, which is the so-called Grünwald-Letnikov discretization of the fractional derivative, where $\alpha = [\alpha_1, \ldots, \alpha_N]^\top \in \mathbb{R}^N$ is the vector of fractional-order exponents. We represent a complex dynamical network exhibiting long-term memory as a fractional-order dynamical network. A fractional-order dynamical network is given as follows:

$$\Delta^{\alpha} x[k+1] = A\, x[k] + B\, u[k], \tag{1}$$

where time is discrete, so $k \in \mathbb{N}$; $x[k] \in \mathbb{R}^N$ is a state vector that assigns a single element to each node in the network; $u[k] \in \mathbb{R}^N$ is an input vector such that each element may influence a single state in the network; and $N$ is the size of the network (i.e. the total number of nodes in the network).

The matrix $A$ describes the spatial relationship between the nodes in a network (i.e. the network topology). A network with nodal dynamics (self-loops) corresponds to $A$ having non-zero diagonal entries. The matrix $B$ describes the relationship between the external inputs and the nodes of the network, where we assume that each input element actuates only one node and each driven node receives only one input. This assumption restricts $B$ to be diagonal, with a non-zero diagonal entry for each driven node.

The vector of fractional-order exponents $\alpha$ can capture the long-term memory of each state element assigned to each node in the network. This concept is evidenced by the following relationship for a single state at node $i$:

$$\Delta^{\alpha_i} x_i[k] = \sum_{j=0}^{k} \psi(\alpha_i, j)\, x_i[k-j], \tag{2}$$

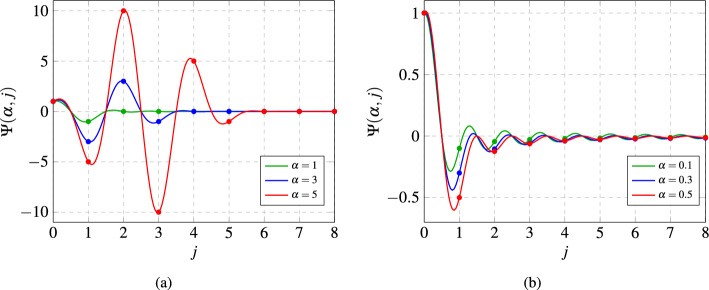

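where $\psi(\alpha_i, j)$ encodes the weight or importance of each past state, and

$$\psi(\alpha_i, j) = \frac{\Gamma(j - \alpha_i)}{\Gamma(-\alpha_i)\,\Gamma(j + 1)},$$

with $\Gamma(\cdot)$ denoting the Gamma function30. We plot the behavior of the function $\psi$ in Fig. 2.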

Figure 2.

These figures show the behavior of the function $\psi(\alpha, j)$ for different values of $\alpha$. (a) shows $\psi(\alpha, j)$ as a function of $j$ for integer values of $\alpha$. (b) shows $\psi(\alpha, j)$ as a function of $j$ for non-integer values of $\alpha$. The non-zero values of $\psi(\alpha, j)$ for non-integer values of $\alpha$ enable the fractional-order system to capture long-term memory.

We notice from (2) and Fig. 2b that, as time increases (i.e. as $j$ increases), the weights $\psi(\alpha_i, j)$ remain non-zero and decay according to a power law whenever $\alpha_i$ is a non-integer (fractional) value18, 31. Hence, each previous state is weighted by a non-zero, power-law-dependent value, which makes the dynamical network non-Markovian and endows it with what is referred to as long-term power-law memory. In contrast, if $\alpha_i$ is a non-negative integer, then $\psi(\alpha_i, j) = 0$ for all $j > \alpha_i$, as seen in Fig. 2a, so the dynamical network does not possess long-term memory.
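To make the contrast between integer and fractional orders concrete, the following minimal Python sketch evaluates the weights $\psi(\alpha, j)$ in (2). It relies on the standard recursion $\psi(\alpha, 0) = 1$, $\psi(\alpha, j) = \psi(\alpha, j-1)\,(j - 1 - \alpha)/j$, which follows from the Gamma-function ratio and avoids evaluating $\Gamma(-\alpha)$ directly (it is undefined for integer $\alpha$). The function names `gl_weights` and `fractional_difference` are hypothetical and are not part of the original work.

```python
import math

# Sketch only: hypothetical helpers illustrating the weights in (2).


def gl_weights(alpha, n_terms):
    """Weights psi(alpha, j), j = 0..n_terms-1, of the Grünwald-Letnikov difference (2).

    Uses psi(alpha, 0) = 1 and psi(alpha, j) = psi(alpha, j-1) * (j-1-alpha) / j,
    which equals the Gamma-function ratio but also works for integer alpha.
    """
    weights = [1.0]
    for j in range(1, n_terms):
        weights.append(weights[-1] * (j - 1 - alpha) / j)
    return weights


def fractional_difference(alpha, history):
    """Delta^alpha x_i[k] = sum_{j=0}^{k} psi(alpha, j) x_i[k-j], with k = len(history) - 1."""
    k = len(history) - 1
    w = gl_weights(alpha, k + 1)
    return sum(w[j] * history[k - j] for j in range(k + 1))


if __name__ == "__main__":
    # Integer order: only j = 0 and j = 1 are non-zero, i.e. x[k] - x[k-1].
    print(gl_weights(1.0, 6))
    # Fractional order: every weight is non-zero ...
    w = gl_weights(0.5, 1001)
    print(all(v != 0 for v in w))
    # ... and the tail follows the power law j^(-alpha-1) / |Gamma(-alpha)|.
    print(abs(w[1000]) / (1000 ** -1.5 / abs(math.gamma(-0.5))))
```

For $\alpha = 1$ the weights reduce to those of the first difference $x_i[k] - x_i[k-1]$, whereas for $\alpha = 0.5$ the magnitude of the 1000th weight agrees closely with the power-law asymptote $j^{-\alpha-1}/|\Gamma(-\alpha)|$.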

By combining (1) and (2), we obtain an equivalent formalism of the fractional-order dynamical network considered in this paper, which is given as follows18, 31:

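$$x[k+1] = \sum_{j=0}^{k} A_j\, x[k-j] + B\, u[k], \tag{3}$$

where $A_0 = A - D(\alpha, 1)$ and $A_j = -D(\alpha, j+1)$ for $j \geq 1$, and

$$D(\alpha, j) = \operatorname{diag}\big(\psi(\alpha_1, j), \ldots, \psi(\alpha_N, j)\big). \tag{4}$$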
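As a sanity check on the step from (1) and (2) to (3) and (4), the sketch below builds $A_0 = A - D(\alpha, 1)$ and $A_j = -D(\alpha, j+1)$, iterates (3), and confirms that the resulting trajectory satisfies (1) exactly. It uses exact rational arithmetic from Python's `fractions` module, and the two-node network, exponents, and input sequence are hypothetical. Because $\psi(\alpha_i, 1) = -\alpha_i$, the first matrix is $A_0 = A + \operatorname{diag}(\alpha)$: every node with $\alpha_i \neq 0$ acquires a self-loop, which is the structural feature behind Theorem 1.

```python
from fractions import Fraction

# Sketch only: hypothetical helpers checking that (3)-(4) reproduce (1).


def psi(alpha, j):
    """psi(alpha, j) from (2), via psi(alpha, j) = psi(alpha, j-1) * (j-1-alpha) / j."""
    value = Fraction(1)
    for m in range(1, j + 1):
        value = value * (m - 1 - alpha) / m
    return value


def memory_matrices(A, alpha, count):
    """[A_0, ..., A_{count-1}] with A_0 = A - D(alpha, 1) and A_j = -D(alpha, j+1)."""
    n = len(A)
    mats = []
    for j in range(count):
        Aj = [[Fraction(A[r][c]) if j == 0 else Fraction(0) for c in range(n)] for r in range(n)]
        for i in range(n):
            Aj[i][i] -= psi(alpha[i], j + 1)  # subtract the diagonal of D(alpha, j+1)
        mats.append(Aj)
    return mats


def simulate(A, B, alpha, inputs, x0):
    """Iterate x[k+1] = sum_{j=0}^{k} A_j x[k-j] + B u[k], i.e. equation (3)."""
    n = len(A)
    mats = memory_matrices(A, alpha, len(inputs))
    xs = [[Fraction(v) for v in x0]]
    for k, u in enumerate(inputs):
        nxt = []
        for i in range(n):
            acc = sum(Fraction(B[i][c]) * u[c] for c in range(n))
            for j in range(k + 1):
                acc += sum(mats[j][i][c] * xs[k - j][c] for c in range(n))
            nxt.append(acc)
        xs.append(nxt)
    return xs


def residual_of_eq1(A, B, alpha, inputs, xs):
    """Largest |Delta^alpha x[k+1] - A x[k] - B u[k]| over all steps and nodes."""
    n = len(A)
    worst = Fraction(0)
    for k, u in enumerate(inputs):
        for i in range(n):
            lhs = sum(psi(alpha[i], j) * xs[k + 1 - j][i] for j in range(k + 2))
            rhs = sum(A[i][c] * xs[k][c] + B[i][c] * u[c] for c in range(n))
            worst = max(worst, abs(lhs - rhs))
    return worst


if __name__ == "__main__":
    A = [[0, 0], [1, 0]]                     # hypothetical: node 1 drives node 2
    B = [[1, 0], [0, 0]]                     # node 1 is the only driven node
    alpha = [Fraction(1, 2), Fraction(1, 3)]
    inputs = [[1, 0], [0, 0], [-1, 0], [2, 0]]
    xs = simulate(A, B, alpha, inputs, [0, 0])
    print(memory_matrices(A, alpha, 1)[0])   # A + diag(alpha): self-loops 1/2 and 1/3
    print(residual_of_eq1(A, B, alpha, inputs, xs))  # 0, so (3) reproduces (1) exactly
```

Setting all exponents to zero makes every $A_j$ with $j \geq 1$ vanish and recovers the Markov model $x[k+1] = A x[k] + B u[k]$.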

A fractional-order dynamical network described by the system matrices $(\alpha, A, B)$ is controllable in $T$ time steps if there exists a sequence of inputs $u[0], \ldots, u[T-1]$ such that any initial state $x[0]$ can be steered to any desired state $x_f \in \mathbb{R}^N$ in $T$ time steps; the time-to-control is the minimum time horizon necessary for the network to achieve controllability. If we want the network to achieve controllability in one time step, then all of the nodes must be driven nodes, since after a single step only the directions spanned by the columns of $B$ can be reached. On the other hand, if the time horizon equals the size of the network, then we obtain the minimum number of driven nodes needed to attain controllability.

The two most common strategies to assess the controllability of complex networks are (i) quantitative and (ii) qualitative. Quantitative methods rely on exact knowledge of the system parameters and compute either the rank of the controllability matrix or the energy required to control the network, given by the controllability Gramian32. The qualitative approach assesses whether controllability is possible when the parameters are unknown but the structure of the network is known (or, equivalently, which entries in the state-space representation are non-zero). Such an approach is of practical use, as the network structure is often the only information available. Qualitative methods often rely on structural systems theory33, as we also do in the current paper.

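A structural matrix $\bar{A} \in \{0, \star\}^{N \times N}$ is a matrix whose entries are either fixed zeros or $\star$, where $\star$ represents an arbitrary scalar parameter; $\bar{B} \in \{0, \star\}^{N \times N}$ is defined analogously. Subsequently, a fractional-order dynamical network with structural matrices $(\bar{A}, \bar{B})$ is said to be structurally controllable in $T$ time steps if there exists a realization $(\alpha, A, B)$, with $A$ and $B$ having the same structure as $\bar{A}$ and $\bar{B}$, that is controllable in $T$ time steps.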

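Subsequently, the network is structurally controllable in $T$ time steps, i.e. $T$ is an admissible time-to-control, if and only if

$$\text{g-rank}\big(\bar{\mathcal{C}}_T\big) = N, \tag{5}$$

where g-rank is the generic rank of an $m \times n$ structural matrix $\bar{M}$, given as

$$\text{g-rank}(\bar{M}) = \max_{M \in [\bar{M}]} \operatorname{rank}(M), \tag{6}$$

where $[\bar{M}]$ denotes the set of real $m \times n$ matrices with the same structure as $\bar{M}$, and $\bar{\mathcal{C}}_T$ is the structural controllability matrix given by

$$\bar{\mathcal{C}}_T = \big[\, \bar{G}_0 \bar{B},\; \bar{G}_1 \bar{B},\; \ldots,\; \bar{G}_{T-1} \bar{B} \,\big], \tag{7}$$

where $\bar{G}_k$ is the structural counterpart of $G_k$, defined by $G_0 = I_N$ and $G_k = \sum_{j=0}^{k-1} A_j G_{k-1-j}$ for $k \geq 1$, so that the solution of (3) reads $x[k] = G_k x[0] + \sum_{j=0}^{k-1} G_{k-1-j} B u[j]$.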
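The controllability matrix in (7) can be evaluated exactly for small examples. The sketch below is an illustration under stated assumptions, not the authors' implementation: it uses a hypothetical three-node chain with exponents $\alpha_i = 1/2$, builds $G_k$ through the recursion in (7), and computes the rank by Gaussian elimination over the rationals. A rank equal to $N$ within $T$ steps means that the chosen driven nodes control the network in $T$ time steps.

```python
from fractions import Fraction

# Sketch only: hypothetical helpers evaluating the controllability matrix in (7).


def psi(alpha, j):
    """psi(alpha, j) from (2), computed recursively with exact rationals."""
    value = Fraction(1)
    for m in range(1, j + 1):
        value = value * (m - 1 - alpha) / m
    return value


def controllability_matrix(A, B, alpha, T):
    """[G_0 B, ..., G_{T-1} B] with G_0 = I and G_k = sum_{j<k} A_j G_{k-1-j}."""
    n = len(A)
    # A_0 = A - D(alpha, 1) = A + diag(alpha); A_j (j >= 1) is diagonal, stored as a list.
    A0 = [[Fraction(A[r][c]) + (alpha[r] if r == c else 0) for c in range(n)] for r in range(n)]
    diag = [None] + [[-psi(alpha[i], j + 1) for i in range(n)] for j in range(1, T)]
    G = [[[Fraction(int(r == c)) for c in range(n)] for r in range(n)]]
    for k in range(1, T):
        Gk = [[sum(A0[r][m] * G[k - 1][m][c] for m in range(n)) for c in range(n)]
              for r in range(n)]
        for j in range(1, k):
            Gk = [[Gk[r][c] + diag[j][r] * G[k - 1 - j][r][c] for c in range(n)]
                  for r in range(n)]
        G.append(Gk)
    # Place the blocks G_k B side by side (n rows, T * n columns).
    return [[sum(Gk[r][m] * B[m][c] for m in range(n)) for Gk in G for c in range(n)]
            for r in range(n)]


def exact_rank(M):
    """Rank by Gaussian elimination over the rationals (no floating-point tolerance)."""
    M = [[Fraction(v) for v in row] for row in M]
    rank = 0
    for c in range(len(M[0])):
        pivot = next((r for r in range(rank, len(M)) if M[r][c] != 0), None)
        if pivot is None:
            continue
        M[rank], M[pivot] = M[pivot], M[rank]
        for r in range(len(M)):
            if r != rank and M[r][c] != 0:
                f = M[r][c] / M[rank][c]
                M[r] = [a - f * b for a, b in zip(M[r], M[rank])]
        rank += 1
        if rank == len(M):
            break
    return rank


if __name__ == "__main__":
    A = [[0, 0, 0], [1, 0, 0], [0, 1, 0]]  # hypothetical chain 1 -> 2 -> 3
    B = [[1, 0, 0], [0, 0, 0], [0, 0, 0]]  # only node 1 is driven
    alpha = [Fraction(1, 2)] * 3
    for T in (1, 2, 3):
        print(T, exact_rank(controllability_matrix(A, B, alpha, T)))  # ranks 1, 2, 3
    B_all = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    print(exact_rank(controllability_matrix(A, B_all, alpha, 1)))  # 3
```

With a single driven node at the head of the chain, the rank grows by one per step, so $T = 3 = N$ steps are needed; with $T = 1$, only driving all three nodes gives full rank.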

Hereafter, we seek to determine the minimum number of driven nodes that ensures that $(\bar{A}, \bar{B})$ is structurally controllable in $T$ time steps. Specifically, we aim to determine a diagonal $\bar{B}$ with the minimum number of non-zero diagonal entries such that a given fractional-order dynamical network, represented as $(\bar{A}, \bar{B})$, is structurally controllable in $T$ time steps. We achieve this through the following result, which relates the number of driven nodes required to control fractional-order dynamical networks to the number of driven nodes required to control Markov dynamical networks – see Supplementary Material for details.

Theorem 1

The minimum number of driven nodes required to structurally control a fractional-order dynamical network within a specified time-to-control is generically equal to that of a Markov dynamical network with the same topology in which all nodes possess self-loops.

The nodal dynamics that emerge from analyzing the fractional-order dynamics are a result of Theorems 2 and 3 in the Supplementary Material, which relate the structural equivalence of the fractional-order system to a linear time-invariant system having nodal dynamics. These results are key in finding the conditions for structural controllability of fractional-order dynamical networks and hence fundamental in providing a solution for the minimum number of driven nodes for long-term memory dynamical networks.

There are two significant consequences of Theorem 1. First, if every node of a network has a self-loop, then the dynamical networks with and without long-term power-law memory require the same number of driven nodes for the same time-to-control horizon and are consequently, in some sense, equally difficult to control. Second, networks with long-term power-law memory dynamics are never more difficult to control than Markov dynamical networks that have the same network topology. Perhaps surprisingly, the minimum number of driven nodes for a network possessing long-term power-law memory dynamics can be significantly lower than for the same topological network possessing Markov dynamics without self-loops. Hence, it may be easier to control long-term memory networks than the corresponding Markov networks that do not contain self-loops.
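The gap between Markov networks with and without self-loops can be reproduced on a toy example. The hedged sketch below brute-forces the smallest set of dedicated driven nodes that makes the controllability matrix in (7) full rank within $T = N$ steps, for a hypothetical three-node star in which node 0 feeds nodes 1 and 2. The exponent and self-loop values are arbitrary distinct rationals standing in for generic parameters; brute force is only viable for tiny networks and is not the algorithm used in this paper.

```python
from fractions import Fraction
from itertools import combinations

# Sketch only: hypothetical brute-force check of the consequences of Theorem 1.


def psi(alpha, j):
    value = Fraction(1)
    for m in range(1, j + 1):
        value = value * (m - 1 - alpha) / m
    return value


def exact_rank(M):
    M = [[Fraction(v) for v in row] for row in M]
    rank = 0
    for c in range(len(M[0])):
        pivot = next((r for r in range(rank, len(M)) if M[r][c] != 0), None)
        if pivot is None:
            continue
        M[rank], M[pivot] = M[pivot], M[rank]
        for r in range(len(M)):
            if r != rank and M[r][c] != 0:
                f = M[r][c] / M[rank][c]
                M[r] = [a - f * b for a, b in zip(M[r], M[rank])]
        rank += 1
        if rank == len(M):
            break
    return rank


def controllable(A, alpha, driven, T):
    """True if dedicated inputs on `driven` make [G_0 B, ..., G_{T-1} B] full rank."""
    n = len(A)
    A0 = [[Fraction(A[r][c]) + (alpha[r] if r == c else 0) for c in range(n)] for r in range(n)]
    diag = [None] + [[-psi(alpha[i], j + 1) for i in range(n)] for j in range(1, T)]
    # Only the columns G_k e_d for the driven nodes d are needed.
    cols = [[[Fraction(int(r == d)) for r in range(n)] for d in driven]]
    for k in range(1, T):
        step = []
        for idx in range(len(driven)):
            v = [sum(A0[r][m] * cols[k - 1][idx][m] for m in range(n)) for r in range(n)]
            for j in range(1, k):
                v = [v[r] + diag[j][r] * cols[k - 1 - j][idx][r] for r in range(n)]
            step.append(v)
        cols.append(step)
    matrix = [[vec[r] for block in cols for vec in block] for r in range(n)]
    return exact_rank(matrix) == n


def min_driven_nodes(A, alpha):
    """Smallest set of dedicated driven nodes giving controllability within T = N steps."""
    n = len(A)
    for size in range(1, n + 1):
        for driven in combinations(range(n), size):
            if controllable(A, alpha, driven, n):
                return list(driven)
    return list(range(n))


if __name__ == "__main__":
    star = [[0, 0, 0], [1, 0, 0], [1, 0, 0]]  # hypothetical: node 0 feeds nodes 1 and 2
    markov = [0, 0, 0]                        # alpha = 0 recovers x[k+1] = A x[k] + B u[k]
    loops = [[star[r][c] + (Fraction(r + 2, 7) if r == c else 0) for c in range(3)]
             for r in range(3)]
    fractional = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 4)]
    print(min_driven_nodes(star, markov))      # Markov, no self-loops: [0, 1]
    print(min_driven_nodes(loops, markov))     # Markov, distinct self-loops: [0]
    print(min_driven_nodes(star, fractional))  # fractional order: [0]
```

The Markov network without self-loops needs two driven nodes, whereas both the Markov network with self-loops and the fractional-order network need one. Genericity matters here: if the two leaves were given identical exponents ($\alpha_1 = \alpha_2$), their rows of the controllability matrix would coincide and a single driven node would no longer suffice.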

In general, the time-to-control can be greater than the size of the network; however, because we are interested in structural controllability properties, our results establish that, generically, one can design a controllable fractional-order dynamical network by designing a controllable linear time-invariant dynamical network with nodal dynamics. Hence, from the results for linear time-invariant dynamical networks, we know that if the system is controllable, then it is controllable in at most $N$ steps (the size of the network) by the Cayley–Hamilton theorem (ref.34, Theorem 12.1).

Given a network, we define the difference in the number of driven nodes as

$$d = n_{\mathrm{M}} - n_{\mathrm{F}},$$

where $n_{\mathrm{M}}$ is the number of driven nodes needed to control the network when it possesses Markov dynamics and $n_{\mathrm{F}}$ is the number of driven nodes needed to control the network when it possesses long-term memory dynamics.

We define the percent difference as

$$\%d = 100 \times \frac{d}{N},$$

where $d$ is the difference in the number of driven nodes and $N$ is the size of the network. We also define the percentage of the time-to-control (% time-to-control) as

$$\%T = 100 \times \frac{T}{N},$$

where $T$ is the minimum time horizon needed to control a network and $N$ is the size of the network. Finally, we define the percent savings as

$$S = 100 \times \frac{d}{n_{\mathrm{M}}},$$

where $d$ is the difference in the number of driven nodes and $n_{\mathrm{M}}$ is the number of driven nodes needed to control the network when it possesses Markov dynamics.
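The four metrics above reduce to simple arithmetic once the driven-node counts are known. The Python sketch below computes them; the function name `control_metrics` and the example counts (500 nodes, 300 versus 120 driven nodes, horizon 100) are hypothetical and are not results from this study.

```python
# Sketch only: hypothetical helper; the numbers below are illustrative.


def control_metrics(n_nodes, driven_markov, driven_memory, horizon):
    """Difference, percent difference, % time-to-control and percent savings.

    driven_markov / driven_memory: minimum driven nodes for the same network under
    Markov and long-term memory dynamics at the same time-to-control `horizon`.
    """
    difference = driven_markov - driven_memory
    return {
        "difference": difference,
        "percent_difference": 100 * difference / n_nodes,
        "percent_time_to_control": 100 * horizon / n_nodes,
        "percent_savings": 100 * difference / driven_markov,
    }


if __name__ == "__main__":
    # Hypothetical counts for illustration only (not results from this study).
    print(control_metrics(n_nodes=500, driven_markov=300, driven_memory=120, horizon=100))
```

These inputs give a difference of 180 nodes, a 36% percent difference at 20% of the time-to-control, and 60% savings. Because the percent savings normalizes by the Markov count rather than by $N$, and the Markov count never exceeds $N$, the savings is always at least as large as the percent difference.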

In what follows, we conduct a detailed study to further understand the variations in the number of driven nodes for different dynamics, time horizons, and network topologies. We examined real-world and synthetic networks, including Erdős–Rényi, Barabási–Albert, and Watts-Strogatz networks35. To generate the Erdős–Rényi networks, we selected a network uniformly at random from the collection of all networks having a pre-defined number of nodes and edges. The Barabási–Albert network is a scale-free network with two parameters, the number of nodes and a parameter k, where a new vertex with k edges is added at each step in generating the network. Finally, the Watts-Strogatz network exhibits small-world properties, including high clustering and short average path lengths. The two parameters for generating Watts-Strogatz networks are the number of nodes and the rewiring probability p. The adjacency matrix associated with the network topology forms the matrix $A$.
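The three synthetic families can be generated with the Python standard library alone. The sketch below is one possible construction, not the generator used in this study. It assumes undirected graphs (so $A$ is symmetric), draws $G(n, m)$ uniformly for Erdős–Rényi, uses degree-proportional sampling for Barabási–Albert, and starts Watts-Strogatz from a ring lattice with K nearest neighbours before rewiring. The lattice degree K is an extra, hypothetical parameter, since only the number of nodes and p are specified above.

```python
import random

# Sketch only: hypothetical generators; undirected graphs and the lattice degree K are assumptions.


def erdos_renyi(n, m, rng):
    """G(n, m): a graph drawn uniformly among all graphs with n nodes and m edges."""
    pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
    return {frozenset(p) for p in rng.sample(pairs, m)}


def barabasi_albert(n, k, rng):
    """Preferential attachment: each new node links to k distinct existing nodes."""
    edges, targets, pool = set(), list(range(k)), []
    for new in range(k, n):
        edges.update(frozenset((new, t)) for t in targets)
        pool.extend(targets)      # each node appears once per incident edge, so
        pool.extend([new] * k)    # uniform draws from `pool` are degree-proportional
        chosen = set()
        while len(chosen) < k:    # repeated draws of the same node are discarded
            chosen.add(rng.choice(pool))
        targets = list(chosen)
    return edges


def watts_strogatz(n, K, p, rng):
    """Ring lattice with K nearest neighbours (K even), each edge rewired with probability p."""
    half = K // 2
    edges = {frozenset((i, (i + s) % n)) for i in range(n) for s in range(1, half + 1)}
    for i in range(n):
        for s in range(1, half + 1):
            edge = frozenset((i, (i + s) % n))
            if edge in edges and rng.random() < p:
                free = [j for j in range(n) if j != i and frozenset((i, j)) not in edges]
                if free:
                    edges.remove(edge)
                    edges.add(frozenset((i, rng.choice(free))))
    return edges


def structural_matrix(n, edges):
    """0/1 pattern of A: an undirected edge {i, j} sets A[i][j] = A[j][i] = 1."""
    A = [[0] * n for _ in range(n)]
    for edge in edges:
        i, j = tuple(edge)
        A[i][j] = A[j][i] = 1
    return A


if __name__ == "__main__":
    rng = random.Random(0)
    er = erdos_renyi(250, 300, rng)
    ba = barabasi_albert(250, 2, rng)
    ws = watts_strogatz(250, 4, 0.1, rng)
    print(len(er), len(ba), len(ws))  # 300 496 500
    A = structural_matrix(250, ws)
```

Each generator preserves its defining edge count ($m$; $(n-k)k$; $nK/2$). In practice, NetworkX offers `gnm_random_graph`, `barabasi_albert_graph`, and `watts_strogatz_graph` for the same purpose.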

Results

We performed experiments on both synthetic and real-world networks. In each experiment, we find the difference between the required number of driven nodes for the network having Markov dynamics and for the same network having long-term memory dynamics in a given time-to-control. Since the available time-to-control varies with the size of a particular network, we consider the percentage of the time-to-control (% time-to-control).

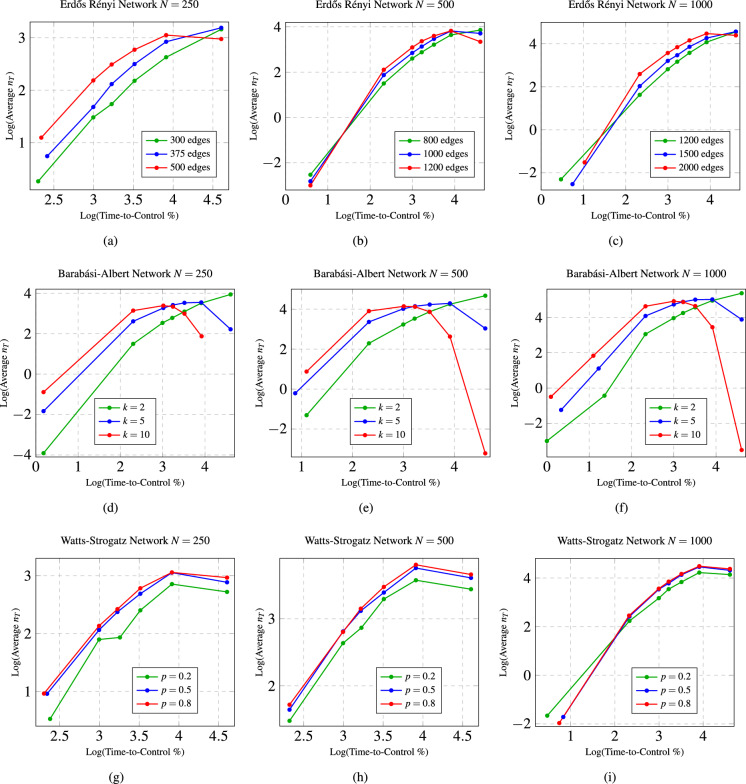

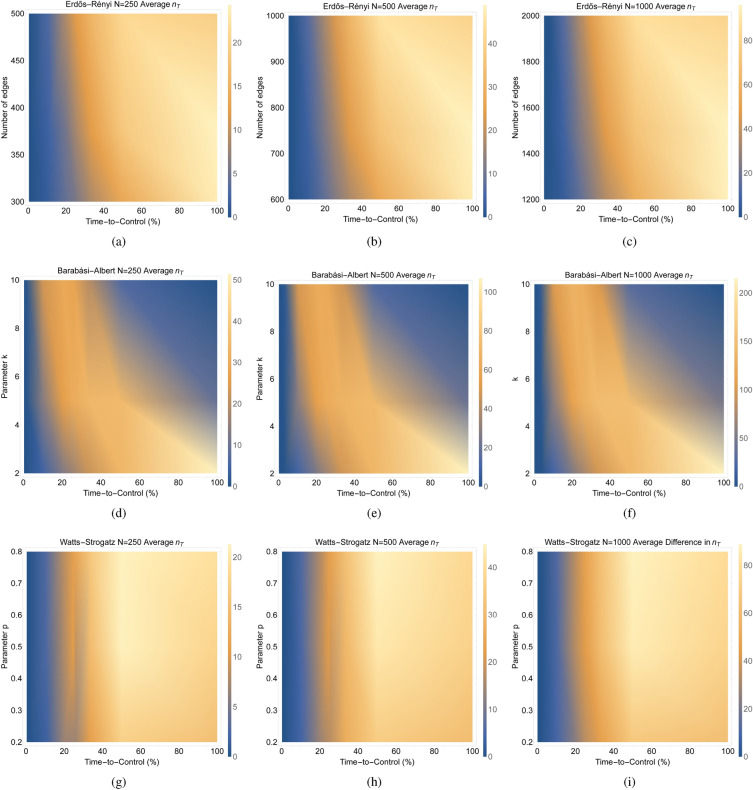

Long-term memory dynamical networks require fewer driven nodes than Markov counterparts

Our experimental results confirm our theoretical results that long-term power-law memory dynamical networks require equal or fewer driven nodes than Markov dynamical networks having the same structure. In all of the experiments, we find that the difference in the required number of driven nodes is non-negative. In fact, the experiments for the Erdős–Rényi networks (see Fig. 3a–c) suggest that the average (over 100 random networks) difference in the number of driven nodes across the time-to-control partially resembles a power law. For the Barabási–Albert networks (Fig. 3d–f), the average difference in the number of driven nodes initially scales as a power law but then quickly drops to nearly zero as the time-to-control increases. Finally, the average difference in the number of driven nodes for the Watts-Strogatz networks (Fig. 3g–i) initially scales as a power law and then levels out to a linear relationship towards the final time-to-control. The results in Fig. 3 show that, depending on the network topology, a Markov dynamical network requires up to a power-law times more driven nodes to achieve structural controllability than a long-term memory dynamical network having the same spatial structure.

Figure 3.

These figures show the relationship between the average difference (over 100 networks) in the required number of driven nodes across the time-to-control for different types of synthetic networks with different sizes and parameters. For network sizes 250, 500, and 1000, respectively, (a–c) show the log-log plot of the average difference in the required number of driven nodes versus the time-to-control (%) for 100 realizations of Erdős–Rényi networks with various edge parameters. For network sizes 250, 500, and 1000, respectively, (d–f) show the log-log plot of the average difference in the required number of driven nodes versus the time-to-control (%) for 100 realizations of Barabási–Albert networks with various k parameters. For network sizes 250, 500, and 1000, respectively, (g–i) show the log-log plot of the average difference in the required number of driven nodes versus the time-to-control (%) for 100 realizations of Watts-Strogatz networks with various p parameters.

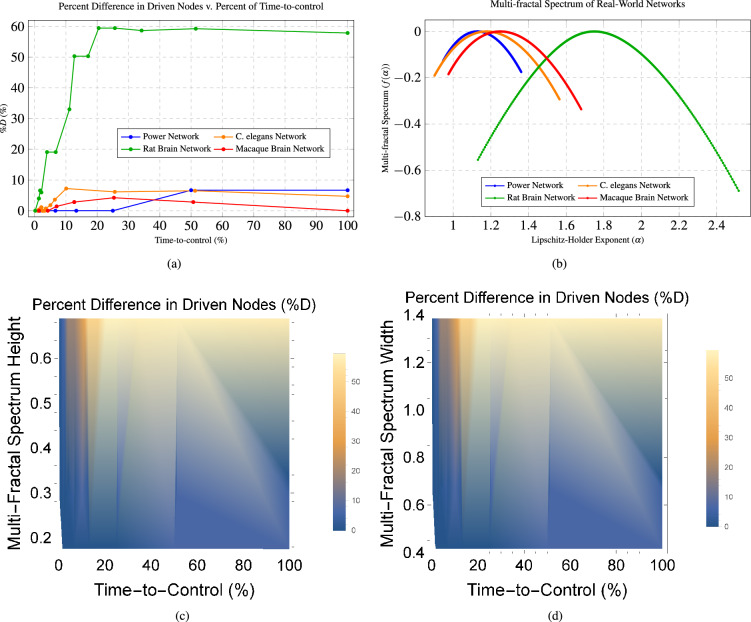

For several real-world dynamical networks, we notice similar trade-offs between the difference in the number of driven nodes and the time-to-control (see Fig. 6a). We find that the rat brain network12 gives approximately a 60% difference in the minimum number of driven nodes, which is achieved around 20% of the time-to-control (Fig. 6a). These results are significant considering that the rat brain network has 503 nodes in total. The Caenorhabditis elegans (C. elegans) network14, 15, which has 277 nodes, has approximately a 12% difference in the number of driven nodes at around 20% of the time-to-control (Fig. 6a). However, the percent difference in the number of driven nodes decreases as the time-to-control increases in the C. elegans network. The power network13, with only 60 nodes, has approximately an 8% difference in the number of driven nodes at the final time-to-control (Fig. 6a). Lastly, the macaque brain network16, 17, containing 71 regions (and hence 71 nodes), gives less dramatic results, with a peak of only 5% difference in the number of driven nodes at 25% of the time-to-control (Fig. 6a). These real-world networks show the capability of saving up to 91% of resources as early as 20% of the total time-to-control when controlling large-scale networks exhibiting long-term memory dynamics.

Figure 6.

These figures show the relationship between the percent difference in the number of driven nodes across the time-to-control (%) and the multi-fractal spectrum of four real-world networks, including a power network (60 nodes), rat brain network12 (503 nodes), C. elegans network14, 15 (277 nodes), and a macaque brain network16, 17 (71 nodes). (a) shows the plot of the percent difference of the required number of driven nodes versus the percent of time-to-control (%) for several real-world networks. (b) shows the plot of the multi-fractal spectrum of several real-world networks. (c) shows the spectrum of the difference in the required number of driven nodes compared with the multi-fractal spectrum width and the time-to-control (%) for the same four real-world networks. (d) shows the spectrum of the difference in the required number of driven nodes compared with the multi-fractal spectrum height and the time-to-control (%) for the same four real-world networks.

Network topologies, not size, determine the control trends

We generated 100 random networks having the same size and generation parameters and computed the average difference in the number of driven nodes versus the time-to-control (see Fig. 3). The results in Fig. 3 show that the same overall trend occurs for each of the three types of random networks (Erdős–Rényi, Barabási–Albert, and Watts-Strogatz) independent of the network size. These results suggest that the network topology affects controllability more strongly than other network attributes, such as the size of the network.

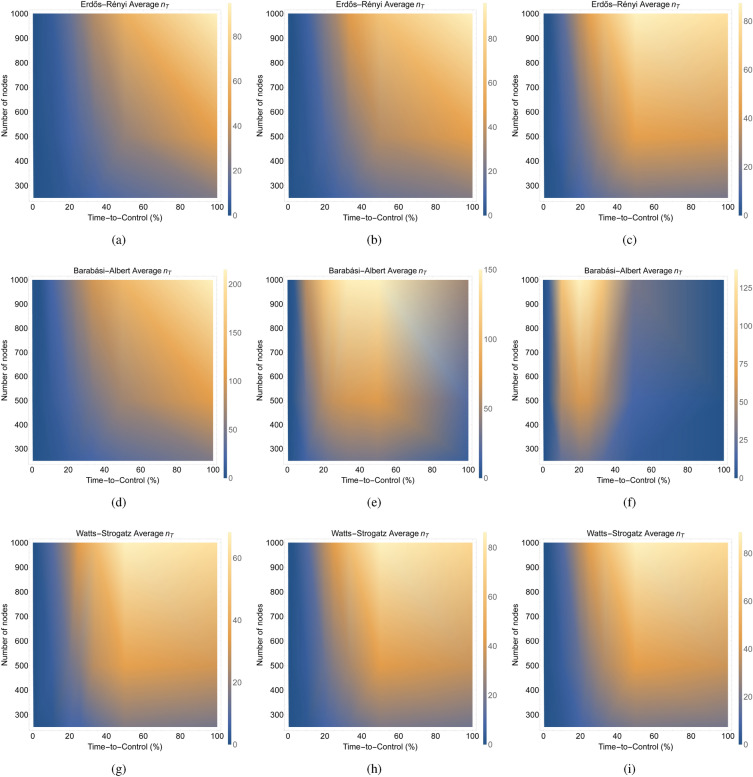

We examine the effect of varying the parameters on the average difference in the required number of driven nodes across the time-to-control for the three types of random networks. Across network sizes, we notice similar trends for the Erdős–Rényi and Watts-Strogatz networks and drastically different results for the Barabási–Albert networks (Fig. 4). The results in Fig. 4 suggest that varying the number of edges for the Erdős–Rényi networks and the p parameter for the Watts-Strogatz networks yields, in general, similar behavior in the difference in the number of driven nodes across the time-to-control. However, for the Barabási–Albert network, varying the k parameter greatly impacts the difference in the number of driven nodes across the time-to-control. For example, a lower k parameter in the Barabási–Albert network gives a higher difference in the number of driven nodes at higher time-to-control values, whereas a higher k parameter gives a lower difference at higher time-to-control values (Fig. 4). For each type of random network, the same pattern appears as the network size increases, but the difference in the required number of driven nodes increases proportionally with the network size. These results provide further evidence that the topology of the network has a significant influence on its controllability.

Figure 4.

These figures plot the average difference (over 100 networks) in the required number of driven nodes across the time-to-control (%) for different types of random networks having different sizes and parameter values. For network sizes 250, 500, and 1000, respectively, (a–c) show the average difference in the required number of driven nodes across the number of edges in the network versus the time-to-control (%) for 100 realizations of Erdős–Rényi networks. For network sizes 250, 500, and 1000, respectively, (d–f) show the average difference in the required number of driven nodes across the k parameter versus the time-to-control (%) for 100 realizations of Barabási–Albert networks. For network sizes 250, 500, and 1000, respectively, (g–i) show the average difference in the required number of driven nodes across the p parameter versus the time-to-control (%) for 100 realizations of Watts-Strogatz networks.

We aim to analyze the impact of varying network size on the average difference in the required number of driven nodes over the time-to-control for distinct types of random networks. In Fig. 5, we show the average difference (computed across 100 networks) in terms of the required number of driven nodes. The focus is on different types of random networks, each having varying sizes and parameter values.

Figure 5.

These figures plot the average difference (over 100 networks) in the required number of driven nodes across the time-to-control for different types of random networks having different sizes and parameter values. For edge counts 20%, 50%, and 100% higher than the number of nodes, respectively, (a–c) show the average difference in the required number of driven nodes along the size of the network versus the time-to-control (%) for 100 realizations of Erdős–Rényi networks. For three values of the parameter k, one per panel, (d–f) show the average difference in the required number of driven nodes along the size of the network versus the time-to-control (%) for 100 realizations of Barabási–Albert networks. For three values of the rewiring probability p, one per panel, (g–i) show the average difference in the required number of driven nodes along the size of the network versus the time-to-control (%) for 100 realizations of Watts-Strogatz networks.

Specifically, for networks with edge counts 20%, 50%, and 100% higher than the number of nodes, we plot the average difference in the required number of driven nodes as a function of both the network size and the time-to-control (%) in Fig. 5a–c from 100 instances of Erdős–Rényi networks. Similarly, for three values of the parameter k, we illustrate the average difference in the required number of driven nodes against network size and time-to-control (%) in Fig. 5d–f based on 100 realizations of Barabási–Albert networks. Furthermore, we show the average difference in the required number of driven nodes against the network size and time-to-control (%) for 100 instances of Watts-Strogatz networks, using three values of the rewiring probability p, in Fig. 5g–i.

Observing the Erdős–Rényi and Watts-Strogatz networks in Fig. 5, we identify a pattern in which the difference in the required number of driven nodes increases with both network size and time-to-control. These trends hold across different parameter values. In contrast, the difference in the number of driven nodes for Barabási–Albert networks is heavily influenced by the parameter k. These results support the claim that the network topology plays a key role in determining the controllability of the network, whereas the network size mainly scales the magnitude of the difference without changing its overall trend.

Multi-fractal spectrum dictates savings when controlling long-term memory dynamical networks

From the results in Cowan et al.36, the degree distribution of a network does not determine the required number of driven nodes to ensure controllability. Nonetheless, we analyze the relationship between the average degree of a network, the difference in the required number of driven nodes, and the time-to-control in Fig. 1 in the Supplementary Material for differently sized random networks. These results suggest that more than the average degree, the topology of the network plays a larger role in determining the difference in the required number of driven nodes.

Knowing these results, we focus on studying the relationship between the multi-fractal spectrum and the difference in the required number of driven nodes, since the multi-fractal spectrum is a measure of the heterogeneity in the structure of the network37, 38. We investigate this relationship in several real-world networks, namely the rat brain12, C. elegans14, 15, macaque brain16, 17, and power networks13. We pay particular attention to the width of the spectrum, $\Delta\alpha = \alpha_{\max} - \alpha_{\min}$, where $\alpha_{\max}$ and $\alpha_{\min}$ are the maximum and minimum Lipschitz–Hölder exponents (distinct from the fractional-order exponents $\alpha_i$), since the width reflects the degree of structural heterogeneity39. Thus, the wider the spectrum, the more heterogeneous the network structure. The results indicate that, in general, the wider and higher the multi-fractal spectrum, the larger the percent difference in the number of driven nodes – see Fig. 6a and b. From our results in Fig. 6a and b, the difference in the number of driven nodes appears to grow with the structural heterogeneity of a network: the wider the multi-fractal spectrum, the larger the difference in the number of driven nodes. This conclusion is also supported by Fig. 6d, which plots the relationship between the multi-fractal spectrum width, time-to-control, and percent difference in the number of driven nodes for the four real-world networks.

Figure 6c plots the relationship between the multi-fractal spectrum height, time-to-control, and percent difference in the number of driven nodes for the four real-world networks. The height of the multi-fractal spectrum indicates the frequency of a particular network structure39. Hence, the higher the multi-fractal spectrum, the more often such structures appear in the network. Figures 6c and d support the notion that, in general, the greater the width and height of the multi-fractal spectrum, the greater the difference in the number of driven nodes for nearly all time-to-control horizons. Hence, the greater the structural heterogeneity of the network, the greater the savings in driven nodes when controlling long-term memory dynamical networks. Therefore, the width of the multi-fractal spectrum is an indicator of the total savings of driven nodes that can be achieved when controlling long-term memory dynamical networks, and the multi-fractal spectrum is more indicative of the difference in the required number of driven nodes than the average degree.

Discussion

Fewer required driven nodes, dictated by network topology and multi-fractal spectrum

Following from the results of Theorem 1, we proved that a long-term power-law memory dynamical network requires no more driven nodes than the same network possessing Markov dynamics (see Theorem 6 in the Supplementary Material). In our experimental results, we showed that long-term power-law memory dynamical networks can provide a significant advantage in terms of the resources needed to control them. In particular, the results in Fig. 3 showed that, depending on the network topology, a Markov dynamical network requires up to a power-law times more driven nodes to achieve structural controllability than a long-term power-law memory dynamical network having the same spatial structure.

Long-term power-law memory dynamical networks can require up to 91% fewer driven nodes at 20% of the time-to-control than Markov dynamical networks. More specifically, the rat brain network showed a 60% difference in the minimum number of driven nodes at approximately 20% of the time-to-control (Fig. 6a). Hence, we provided evidence that long-term power-law memory dynamical networks may be easier to control than Markov dynamical networks.

Our experimental results showed that the network topology, not the size of the network, determines the overall trend of the difference in the minimum resources needed for control across the time-to-control (see Figs. 3, 4 and 5). The results in Fig. 3 showed that the same overall trend occurs for each of the three types of random networks (Erdős–Rényi, Barabási–Albert, and Watts-Strogatz) independent of the network size. The results in Fig. 4 suggest that, for each type of random network, the same pattern appears while the difference in the required number of driven nodes increases proportionally with the network size. Finally, the results in Fig. 5 showed that the difference in the minimum number of driven nodes increases with the time-to-control for both the Erdős–Rényi and Watts-Strogatz networks, whereas the difference in the minimum number of driven nodes for the Barabási–Albert network depends heavily on the parameter k.

It is difficult to determine which nodes are best to control because this depends on the specific connections within the network. To illustrate this, note that the algorithm that we develop and analyze in this paper first partitions the network and then identifies the source strongly connected components (SCCs) within these partitions. Therefore, our algorithm requires that one node per source SCC in every partition be selected to be controlled. A graph can be partitioned in multiple different ways, as outlined in Pequito et al.40, and these partitions and their source SCCs are not correlated with other network properties such as network communities and metrics41. Hence, it is difficult to describe which nodes are best to control.
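The source-SCC step described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes a single partition is already given as a directed graph (the partitioning procedure of Pequito et al.40 is not reproduced), uses Kosaraju's algorithm to find the SCCs, and reads an edge (u, v) as "state u influences state v". The function names are hypothetical.

```python
from collections import defaultdict

_DONE = object()  # sentinel marking an exhausted successor iterator

def strongly_connected_components(nodes, edges):
    """Kosaraju's algorithm (iterative). Returns a dict mapping node -> component id."""
    succ, pred = defaultdict(list), defaultdict(list)
    for u, v in edges:  # edge (u, v): state u influences state v
        succ[u].append(v)
        pred[v].append(u)

    # Pass 1: depth-first search on the original graph, recording finishing order.
    visited, order = set(), []
    for start in nodes:
        if start in visited:
            continue
        visited.add(start)
        stack = [(start, iter(succ[start]))]
        while stack:
            node, successors = stack[-1]
            nxt = next(successors, _DONE)
            if nxt is _DONE:
                stack.pop()
                order.append(node)
            elif nxt not in visited:
                visited.add(nxt)
                stack.append((nxt, iter(succ[nxt])))

    # Pass 2: search the reversed graph in decreasing finishing order.
    component, next_id = {}, 0
    for start in reversed(order):
        if start in component:
            continue
        component[start] = next_id
        stack = [start]
        while stack:
            node = stack.pop()
            for p in pred[node]:
                if p not in component:
                    component[p] = next_id
                    stack.append(p)
        next_id += 1
    return component


def source_scc_representatives(nodes, edges):
    """Pick one node (here the smallest label, an arbitrary choice) from each source SCC,
    i.e. each SCC that receives no edge from outside itself. Edges must use nodes in `nodes`."""
    nodes = sorted(nodes)
    component = strongly_connected_components(nodes, edges)
    receives_edge = {component[v] for u, v in edges if component[u] != component[v]}
    chosen = {}
    for node in nodes:
        c = component[node]
        if c not in receives_edge and c not in chosen:
            chosen[c] = node
    return sorted(chosen.values())
```

For example, with edges 0→1, 1→0, 1→2, 2→3, 3→2, 4→3 and an isolated node 5, the source SCCs are {0, 1}, {4} and {5}, so one driven node is needed in each of them. Any node of a source SCC satisfies this condition equally well, which is one reason why no unique set of "best" nodes follows from the topology alone.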

Remarkably, in contrast with the work by Liu et al.42, the difference in the minimum number of driven nodes to achieve controllability for an arbitrary time-to-control does not correlate with the degree distribution of the network. We provide evidence to support this claim in Fig. 2 in the Supplementary Material, where we generated 100 networks having a degree distribution similar to that of the rat brain network and computed the difference in the number of driven nodes over the time-to-control. The difference obtained for these 100 generated networks varies drastically from that of the original rat brain network. Hence, a similar degree distribution does not necessarily imply a similar difference in the number of driven nodes across the time-to-control. A related study concluded that the degree distribution of a linear time-invariant dynamical network does not dictate the nodes that must be controlled36.
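One common way to build an ensemble of networks sharing the degree sequence of a reference network is degree-preserving rewiring by repeated double-edge swaps. The text does not state how the 100 networks were generated, so the sketch below is an assumption and not the procedure behind Fig. 2 in the Supplementary Material. Each accepted swap replaces edges (a, b) and (c, d) by (a, d) and (c, b), which keeps every node's in-degree and out-degree fixed; swaps that would create self-loops or duplicate edges are rejected. The function names are hypothetical.

```python
import random
from collections import Counter

def degree_preserving_rewire(edges, n_swaps, seed=None):
    """Randomize a directed edge list while preserving every in- and out-degree."""
    rng = random.Random(seed)
    edge_list = list(edges)
    edge_set = set(edge_list)
    if len(edge_list) < 2:
        return edge_list
    done, attempts = 0, 0
    while done < n_swaps and attempts < 100 * n_swaps:  # cap attempts on dense graphs
        attempts += 1
        i, j = rng.sample(range(len(edge_list)), 2)
        (a, b), (c, d) = edge_list[i], edge_list[j]
        new1, new2 = (a, d), (c, b)
        # Reject swaps that create self-loops or edges that already exist.
        if a == d or c == b or new1 in edge_set or new2 in edge_set:
            continue
        edge_set -= {edge_list[i], edge_list[j]}
        edge_set |= {new1, new2}
        edge_list[i], edge_list[j] = new1, new2
        done += 1
    return edge_list

def in_out_degrees(edges):
    """Out-degree and in-degree counters of a directed edge list."""
    return Counter(u for u, _ in edges), Counter(v for _, v in edges)
```

Calling the function with 100 different seeds on a reference edge list yields an ensemble with exactly the same degree sequence, a stricter notion than "similar degree distribution".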

Finally, we find that the height and width of the multi-fractal spectrum serve as an indication of the total savings in the minimum number of driven nodes for a given network when considering long-term memory dynamics instead of Markov dynamics (see Fig. 6). In particular, Figs. 6c and 6d support the notion that, in general, the greater the width and height of the multi-fractal spectrum, the greater the difference in the minimum number of driven nodes for nearly all time-to-control horizons.

A scalable and robust method to determine the trade-offs between the minimum number of driven nodes and time-to-control for controlling large-scale long-term power-law memory dynamical networks

The work by Pequito et al.43 characterized the minimum number of driven nodes for controlling Markov networks and used these results to provide algorithms to design controllable Markov networks. Liu et al. showed the relationship between the required number of driven nodes in Markov networks and the degree distribution (in particular, the average degree) for different network topologies42. The work by Cowan et al.36 showed the importance of nodal dynamics in determining the minimum number of inputs (where an input can be connected to multiple nodes) required to achieve structural controllability of Markov networks.
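For reference, the Markov result of Liu et al.42 reduces the minimum number of driver nodes to a maximum-matching computation: N_D = max(N − |M*|, 1), where |M*| is the size of a maximum matching in the bipartite representation of the network and the unmatched nodes are the ones to drive. The sketch below implements that count with a simple augmenting-path (Kuhn) matching. It assumes edges (u, v) mean "state u influences state v", corresponds to the unconstrained time-to-control, and does not enforce the source-SCC condition needed when each input drives exactly one node; it illustrates the prior Markov result, not the method proposed in this paper. Function names are hypothetical.

```python
def max_matching(n, edges):
    """Maximum matching between out-copies and in-copies of nodes 0..n-1 (Kuhn's algorithm)."""
    succ = [[] for _ in range(n)]
    for u, v in edges:          # edge u -> v: state u influences state v
        succ[u].append(v)
    match_of = [-1] * n         # match_of[v] = u when edge u -> v is in the matching

    def augment(u, seen):
        # Depth-first search for an augmenting path starting at out-copy u.
        for v in succ[u]:
            if v not in seen:
                seen.add(v)
                if match_of[v] == -1 or augment(match_of[v], seen):
                    match_of[v] = u
                    return True
        return False

    for u in range(n):
        augment(u, set())
    return match_of

def markov_driver_nodes(n, edges):
    """Unmatched nodes and N_D = max(N - |M*|, 1) for a Markov (LTI) network."""
    match_of = max_matching(n, edges)
    unmatched = [v for v in range(n) if match_of[v] == -1]
    # With a perfect matching no node is unmatched, but one input is still required.
    return unmatched, max(len(unmatched), 1)
```

For a star where node 0 influences nodes 1 and 2, only one of the two edges can be matched, so two nodes must be driven; a directed chain 0→1→2 needs only node 0. For large networks, the Hopcroft–Karp algorithm would be the usual choice over this recursive version.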

Recent work by Lin et al.44 claims to control Markov networks with only two time-varying control inputs, under the assumption that these inputs can be connected to any node at any time; however, this is not a realistic assumption for many physical systems, such as the power grid or the brain. Most notably, none of these previously mentioned works takes into account the long-term memory dynamics exhibited by many real-world complex dynamical networks. Furthermore, only the work by Pequito et al.40 has analyzed the trade-offs between the time-to-control of a dynamical network and the required number of driven nodes, but that study is limited to Markov dynamical networks. Here, we have examined the role that long-term memory dynamics play in the ability to control the network while considering the time-to-control.

There are only two works10, 11 that have examined the control of networks exhibiting long-term memory dynamics, but they have several shortcomings, including limited scalability and a lack of robustness. For example, the work by Kyriakis et al.10, which uses energy-based methods to control long-term memory dynamics modeled as a linear time-invariant fractional-order system in discrete time, is not tractable as it has computational-time complexity of . Similarly, the work by Cao et al.11 uses a greedy algorithm that maximizes the rank of the controllability matrix; because it relies on computing the matrix rank, it quickly becomes intractable as the network grows and has computational-time complexity of . Furthermore, both of these works require that the precise dynamics be known10, 11. Put simply, these works do not account for the inherent uncertainty in the parametric model for long-term memory dynamics.

Hence, we present the first scalable and robust method to determine the trade-offs between the minimum number of driven nodes and time-to-control for controlling large-scale long-term power-law memory dynamical networks with a computational-time complexity of – See Theorem 11 in the Supplementary Material. Our novel method can assess the trade-offs in controlling a large-scale long-term memory dynamical network with unknown parameters in a given number of time steps, which leads us to provide answers to fundamental questions regarding the relationships between the number of driven nodes, the time-to-control, the network topology, and the size of the network.

Determining the existence of long-term memory in dynamical networks

Our approach, which assesses the trade-offs between the minimum number of driven nodes and the time-to-control for controlling long-term power-law memory dynamical networks, can also determine whether long-term memory is present in a dynamical network. We can achieve this by checking whether the network can be controlled with the minimum number of driven nodes given by the proposed method.

For example, suppose that we want to control a dynamical network in a finite amount of time, and that we know the structure of the network, including its size (i.e. the number of nodes) and the relationships between nodes (i.e. the edges and their placement in the network), but not the dynamics of the system. In this case, our approach can be used to determine whether the dynamical network possesses long-term memory: we first try to control the network in a specified time frame using the minimum number of driven nodes required under long-term memory dynamics, which our proposed approach computes. If the dynamical network can indeed be steered to a desired behavior with this number of driven nodes in the given time-to-control, then it possesses long-term power-law memory dynamics. On the other hand, if the network cannot be controlled with this number of driven nodes in the specified time frame, then it does not possess long-term power-law memory dynamics, as it requires more driven nodes to be controlled. The first conclusion relies on this number being strictly smaller than the number required by the same network under Markov dynamics, since the two can coincide (see Theorem 6 in the Supplementary Material).
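In a simulation setting, the step "try to control the network" can be made concrete with a K-step reachability test: the state can be steered anywhere in K steps exactly when the reachability matrix has full rank. The sketch below assumes a discrete-time fractional-order model of the kind used in related work10, written here as Δ^α x[k+1] = A x[k] + B u[k] with Grünwald–Letnikov weights, and compares it against the Markov baseline x[k+1] = A x[k] + B u[k]. The exponents, the three-node network and all function names are hypothetical, nodes are labeled from 0, and this is a numerical rank check with known parameters rather than the structural method of this paper.

```python
from fractions import Fraction

def psi(alpha, j):
    """Grünwald–Letnikov weight psi(alpha, j) = (-1)^j * binomial(alpha, j)."""
    value = Fraction(1)
    for i in range(1, j + 1):
        value = value * (Fraction(i - 1) - alpha) / i
    return value

def matmul(X, Y):
    return [[sum((X[i][k] * Y[k][j] for k in range(len(Y))), Fraction(0))
             for j in range(len(Y[0]))] for i in range(len(X))]

def rank(vectors):
    """Rank of a list of vectors by exact Gaussian elimination."""
    rows, r = [list(v) for v in vectors], 0
    for c in range(len(rows[0]) if rows else 0):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                f = rows[i][c] / rows[r][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def reachability_rank(A, alphas, driven, K, memory=True):
    """Rank of [G_0 B, ..., G_{K-1} B], where B gives each driven node its own input.

    memory=True : x[k+1] = sum_{j=0}^{k} A_j x[k-j] + B u[k], with A_0 = A + diag(alpha)
                  and A_j = -diag(psi(alpha_i, j + 1)) for j >= 1.
    memory=False: Markov baseline x[k+1] = A x[k] + B u[k].
    """
    n = len(A)
    eye = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    zero = [[Fraction(0)] * n for _ in range(n)]
    A0 = [[Fraction(A[i][j]) + (alphas[i] if (memory and i == j) else 0)
           for j in range(n)] for i in range(n)]
    coeffs = [A0]
    for m in range(1, K):
        coeffs.append([[(-psi(alphas[i], m + 1) if (memory and i == j) else Fraction(0))
                        for j in range(n)] for i in range(n)])
    G = [eye]  # G_0 = I, G_m = sum_{j=0}^{m-1} A_j G_{m-1-j}
    for m in range(1, K):
        acc = zero
        for j in range(m):
            prod = matmul(coeffs[j], G[m - 1 - j])
            acc = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(acc, prod)]
        G.append(acc)
    columns = [[Gm[i][d] for i in range(n)] for Gm in G for d in driven]
    return rank(columns)
```

In the toy network A = [[0, 0, 0], [1, 0, 0], [1, 0, 0]] (node 0 influences nodes 1 and 2) with exponents 1/2, 3/10 and 7/10, driving node 0 alone reaches full rank 3 after three steps under the memory model, whereas the Markov baseline stays at rank 2 and needs a second driven node. In this example the gain hinges on nodes 1 and 2 having different exponents: with equal exponents the rank also stays at 2.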

This is a powerful result that provides a significant advantage over current state-of-the-art methods for determining the existence of long-term memory dynamics in large-scale networks, which first require finding the exact parameterization of the system's dynamics from data6, 45–47. In particular, these approaches estimate the two parameters of long-term power-law dynamical networks, namely the fractional-order exponents and the spatial matrix (A). Checking whether the estimated fractional-order exponents are indeed fractional (i.e. non-integer) then determines whether long-term memory exists in the dynamics (see Fig. 2).
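Why an integer exponent means "no long-term memory" can be seen from the Grünwald–Letnikov weights that typically define the discrete-time fractional difference, Δ^α x[k] = Σ_{j=0}^{k} ψ(α, j) x[k − j] with ψ(α, j) = Γ(j − α)/(Γ(−α)Γ(j + 1)). We assume this standard form here, and α is our notation for the exponent in this sketch. Since ψ(α, 0) = 1 and ψ(α, j) = ψ(α, j − 1)(j − 1 − α)/j, the weights vanish for every lag beyond α when α is a non-negative integer, whereas for non-integer α they never vanish and decay as a power law in j. The function names are hypothetical.

```python
from fractions import Fraction

def gl_weights(alpha, length):
    """Grünwald–Letnikov weights psi(alpha, j) for lags j = 0, ..., length - 1."""
    weights = [Fraction(1)]
    for j in range(1, length):
        # psi(alpha, j) = psi(alpha, j - 1) * (j - 1 - alpha) / j
        weights.append(weights[-1] * (Fraction(j - 1) - alpha) / j)
    return weights

def last_nonzero_lag(alpha, horizon):
    """Largest lag below `horizon` with a nonzero weight, i.e. how far back x[k] reaches."""
    return max(j for j, w in enumerate(gl_weights(alpha, horizon)) if w != 0)
```

With α = 1 the weights are 1, −1, 0, 0, …, so only x[k − 1] matters (Markov dynamics); with α = 1/2 they are 1, −1/2, −1/8, −1/16, … and every past state contributes.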

There are several works that have proposed methods to estimate the parameters of long-term power-law dynamical networks. The work by Xue et al.6 proposes an approximate approach to estimate the fractional-order exponents and then, based on this result, finds the spatial matrix using a least-squares approach. The work by Flandrin48 leverages wavelets to find the fractional-order exponents. Using this wavelet approach, the work by Gupta et al.45 proposes an expectation-maximization approach to estimate the spatial matrix and the unknown inputs of a fractional-order system. In a similar vein, the work by Gupta et al.46 proposes a method to estimate, from data, the parameters of a fractional-order system having partially unknown states. Finally, the work by Chatterjee and Pequito47 considers a finite-sized augmented fractional-order system and proposes an iterative algorithm to find the fractional-order exponents and a least-squares approach to find the spatial matrix.
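The second stage of the two-step scheme described above (exponents first, then the spatial matrix by least squares) can be sketched as follows. Assumptions: the exponents are already known, the model is Δ^α x[k+1] = A x[k] with Grünwald–Letnikov weights, no inputs and no noise, and the data come from a simulated trajectory. Exact rational arithmetic (`fractions.Fraction`) is used so that the noise-free estimate recovers A exactly. Function names and parameter values are hypothetical, and this is not a reproduction of any of the cited estimators.

```python
from fractions import Fraction

def psi(alpha, j):
    """Grünwald–Letnikov weight psi(alpha, j) = (-1)^j * binomial(alpha, j)."""
    value = Fraction(1)
    for i in range(1, j + 1):
        value = value * (Fraction(i - 1) - alpha) / i
    return value

def simulate(A, alphas, x0, steps):
    """Noise-free trajectory of x[k+1] = A x[k] - sum_{j=1}^{k+1} psi(alpha_i, j) x_i[k+1-j]."""
    n = len(A)
    xs = [[Fraction(v) for v in x0]]
    for k in range(steps):
        nxt = []
        for i in range(n):
            spatial = sum((Fraction(A[i][m]) * xs[k][m] for m in range(n)), Fraction(0))
            memory = sum((psi(alphas[i], j) * xs[k + 1 - j][i] for j in range(1, k + 2)), Fraction(0))
            nxt.append(spatial - memory)
        xs.append(nxt)
    return xs

def estimate_spatial_matrix(xs, alphas):
    """Least-squares estimate of A from z[k] = Delta^alpha x[k+1] = A x[k], with alphas known."""
    n, T = len(alphas), len(xs) - 1
    Z = [[sum((psi(alphas[i], j) * xs[k + 1 - j][i] for j in range(k + 2)), Fraction(0))
          for i in range(n)] for k in range(T)]
    X = xs[:T]
    # Normal equations A M = N with M = sum_k x[k] x[k]^T and N = sum_k z[k] x[k]^T.
    M = [[sum((X[k][a] * X[k][b] for k in range(T)), Fraction(0)) for b in range(n)] for a in range(n)]
    N = [[sum((Z[k][a] * X[k][b] for k in range(T)), Fraction(0)) for b in range(n)] for a in range(n)]
    # M is symmetric, so A M = N is equivalent to M A^T = N^T; solve by Gauss-Jordan elimination.
    aug = [M[r][:] + [N[c][r] for c in range(n)] for r in range(n)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)  # StopIteration if M is singular
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    At = [row[n:] for row in aug]  # At holds A^T
    return [[At[j][i] for j in range(n)] for i in range(n)]
```

With noisy measurements, one would instead solve the same regression in floating point, for example with `numpy.linalg.lstsq`, and the estimate would only approximate A.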

While these works rely on computing the parameters of long-term power-law dynamical networks from data, the advantage of our proposed approach is that it can determine the existence of long-term memory dynamics in large-scale networks without knowing the exact dynamics of the system.

Supplementary Information

Acknowledgements

Emily A. Reed acknowledges the financial support from the National Science Foundation GRFP DGE-1842487, the University of Southern California Annenberg Fellowship, and a USC WiSE Top-Off Fellowship. Emily Reed and Paul Bogdan also acknowledge the financial support from the National Science Foundation under the Career Award CPS/CNS-1453860, the NSF award under Grant Numbers CCF-1837131, MCB-1936775, CMMI-1936624, and CNS-1932620, the U.S. Army Research Office (ARO) under Grant Nos. W911NF-17-1-0076 and W911NF-23-1-0111, and the DARPA Young Faculty Award and DARPA Director Award, under Grant Number N66001-17-1-4044, a 2021 USC Stevens Center Technology Advancement Grant (TAG) award, an Intel faculty award and a Northrop Grumman grant. The financial supporters of this work had no role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript. The views, opinions, and/or findings contained in this article are those of the authors and should not be interpreted as representing official views or policies, either expressed or implied, of the Defense Advanced Research Projects Agency, the Department of Defense or the National Science Foundation.

Author contributions

S.P. and P.B. conceived the ideas for the manuscript. E.R., G.R. and S.P. derived the theoretical results. E.R. and G.R. wrote the code to perform the experimental results. E.R., G.R., P.B. and S.P. contributed to editing the manuscript.

Data availability

The real-world networks, that is the rat brain12, C. elegans14, 15, macaque brain16, 17, and power networks13, are publicly available. The code is available upon request by contacting the corresponding author.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-023-46349-9.

References

- 1. Cook MJ, et al. The dynamics of the epileptic brain reveal long-memory processes. Front. Neurol. 2014;5:217. doi: 10.3389/fneur.2014.00217.

- 2. Shalalfeh L, Bogdan P, Jonckheere EA. Fractional dynamics of PMU data. IEEE Trans. Smart Grid. 2020;12:2578–2588. doi: 10.1109/TSG.2020.3044903.

- 3. Picozzi S, West BJ. Fractional Langevin model of memory in financial markets. Phys. Rev. E. 2002;66:046118. doi: 10.1103/PhysRevE.66.046118.

- 4. Jain S, Xiao X, Bogdan P, Bruck J. Generator based approach to analyze mutations in genomic datasets. Nat. Sci. Rep. 2021;11:1–12. doi: 10.1038/s41598-021-00609-8.

- 5. Bogdan P. Taming the unknown unknowns in complex systems: Challenges and opportunities for modeling, analysis and control of complex (biological) collectives. Front. Physiol. 2019;10:1452. doi: 10.3389/fphys.2019.01452.

- 6. Xue Y, Rodriguez S, Bogdan P. A spatio-temporal fractal model for a CPS approach to brain-machine-body interfaces. In: Proceedings of the 2016 Design, Automation & Test in Europe Conference & Exhibition. IEEE; 2016. pp. 642–647.

- 7. Bogdan P, Jain S, Marculescu R. Pacemaker control of heart rate variability: A cyber physical system perspective. ACM Trans. Embed. Comput. Syst. 2013;12:50. doi: 10.1145/2435227.2435246.

- 8. Tenreiro Machado J, Lopes AM. Complex and fractional dynamics. Entropy. 2017;19:62. doi: 10.3390/e19020062.

- 9. Bogdan P, Eke A, Ivanov PC. Fractal and multifractal facets in the structure and dynamics of physiological systems and applications to homeostatic control, disease diagnosis and integrated cyber-physical platforms. Front. Physiol. 2020;11:447. doi: 10.3389/fphys.2020.00447.

- 10. Kyriakis P, Pequito S, Bogdan P. On the effects of memory and topology on the controllability of complex dynamical networks. Nat. Sci. Rep. 2020;10:1–13. doi: 10.1038/s41598-020-74269-5.

- 11. Cao Q, Ramos G, Bogdan P, Pequito S. The actuation spectrum of spatiotemporal networks with power-law time dependencies. Adv. Complex Syst. 2019;22:1950023. doi: 10.1142/S0219525919500231.

- 12. Wolfram Data Repository. Wolfram research rat brain graph 1. 2016.

- 13. Wolfram Research. Wolfram research USA electric system operating network. 2021.

- 14. Choe Y, McCormick BH, Koh W. Network connectivity analysis on the temporally augmented C. elegans web: A pilot study. In: Society for Neuroscience Abstracts. Society for Neuroscience; 2004. p. 921.9.

- 15. White JG, Southgate E, Thomson JN, Brenner S. The structure of the nervous system of the nematode Caenorhabditis elegans. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 1986;314:1–340. doi: 10.1098/rstb.1986.0056.

- 16. Rubinov M, Sporns O. Complex network measures of brain connectivity: Uses and interpretations. Neuroimage. 2010;52:1059–1069. doi: 10.1016/j.neuroimage.2009.10.003.

- 17. Young MP. The organization of neural systems in the primate cerebral cortex. Proc. R. Soc. Lond. Ser. B Biol. Sci. 1993;252:13–18. doi: 10.1098/rspb.1993.0040.

- 18. Xue Y, Bogdan P. Constructing compact causal mathematical models for complex dynamics. In: Proc. of the 8th International Conference on Cyber-Physical Systems. 2017. pp. 97–107.

- 19. Valério D, Trujillo JJ, Rivero M, Machado JT, Baleanu D. Fractional calculus: A survey of useful formulas. Eur. Phys. J. Spec. Top. 2013;222:1827–1846. doi: 10.1140/epjst/e2013-01967-y.

- 20. West BJ. Colloquium: Fractional calculus view of complexity: A tutorial. Rev. Mod. Phys. 2014;86:1169. doi: 10.1103/RevModPhys.86.1169.

- 21. Kilbas AA, Srivastava HM, Trujillo JJ. Theory and Applications of Fractional Differential Equations. Elsevier; 2006.

- 22. Baleanu D, Diethelm K, Scalas E, Trujillo JJ. Fractional Calculus: Models and Numerical Methods. World Scientific; 2012.

- 23. Podlubny I. Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of their Solution and Some of their Applications. Elsevier; 1998.

- 24. Sabatier J, Agrawal OP, Machado JT. Advances in Fractional Calculus. Springer; 2007.

- 25. Lundstrom BN, Higgs MH, Spain WJ, Fairhall AL. Fractional differentiation by neocortical pyramidal neurons. Nat. Neurosci. 2008;11:1335–1342. doi: 10.1038/nn.2212.

- 26. Werner G. Fractals in the nervous system: Conceptual implications for theoretical neuroscience. Front. Physiol. 2010;1:1787. doi: 10.3389/fphys.2010.00015.

- 27. Chen W, Sun H, Zhang X, Korošak D. Anomalous diffusion modeling by fractal and fractional derivatives. Comput. Math. Appl. 2010;59:1754–1758.

- 28. Petráš I. Fractional-order chaotic systems. In: Fractional-Order Nonlinear Systems: Modeling, Analysis and Simulation. Springer-Verlag; 2011. pp. 103–184.

- 29. Reed E, Chatterjee S, Ramos G, Bogdan P, Pequito S. Fractional cyber-neural systems-a brief survey. Annu. Rev. Control. 2022. doi: 10.48550/arXiv.2112.08535.

- 30. Dzielinski A, Sierociuk D. Adaptive feedback control of fractional order discrete state-space systems. Proc. Int. Conf. Comput. Intell. Model. Control Autom. 2005;1:804–809.

- 31. Xue Y, Rodriguez S, Bogdan P. A spatio-temporal fractal model for a CPS approach to brain-machine-body interfaces. In: 2016 Design, Automation & Test in Europe Conference & Exhibition (DATE). 2016. pp. 642–647.

- 32. Pasqualetti F, Zampieri S, Bullo F. Controllability metrics, limitations and algorithms for complex networks. IEEE Trans. Control Netw. Syst. 2014;1:40–52. doi: 10.1109/TCNS.2014.2310254.

- 33. Ramos G, Aguiar AP, Pequito S. An overview of structural systems theory. Automatica. 2022;140:110229. doi: 10.1016/j.automatica.2022.110229.

- 34. Hespanha JP. Linear Systems Theory. Princeton University Press; 2018.

- 35. Barabási A-L. Network Science. Cambridge University Press; 2016.

- 36. Cowan NJ, Chastain EJ, Vilhena DA, Freudenberg JS, Bergstrom CT. Nodal dynamics, not degree distributions, determine the structural controllability of complex networks. PloS One. 2012;7:e38398. doi: 10.1371/journal.pone.0038398.

- 37. Yang R, Bogdan P. Controlling the multifractal generating measures of complex networks. Nat. Sci. Rep. 2020;10:1–13. doi: 10.1038/s41598-020-62380-6.

- 38. Yang R, Sala F, Bogdan P. Hidden network generating rules from partially observed complex networks. Nat. Commun. Phys. 2021;4:1–12.

- 39. Xiao X, Chen H, Bogdan P. Deciphering the generating rules and functionalities of complex networks. Nat. Sci. Rep. 2021;11:1–15. doi: 10.1038/s41598-021-02203-4.

- 40. Pequito S, Preciado VM, Barabási A-L, Pappas GJ. Trade-offs between driving nodes and time-to-control in complex networks. Nat. Sci. Rep. 2017;7:1–14. doi: 10.1038/srep39978.

- 41. Ramos G, Pequito S. Generating complex networks with time-to-control communities. PloS One. 2020;15:e0236753. doi: 10.1371/journal.pone.0236753.

- 42. Liu Y-Y, Slotine J-J, Barabási A-L. Controllability of complex networks. Nature. 2011;473:167. doi: 10.1038/nature10011.

- 43. Pequito S, Kar S, Aguiar AP. A framework for structural input/output and control configuration selection in large-scale systems. IEEE Trans. Autom. Control. 2015;61:303–318. doi: 10.1109/TAC.2015.2437525.

- 44. Lin Y, et al. Spatiotemporal input control: Leveraging temporal variation in network dynamics. IEEE/CAA J. Autom. Sin. 2022;9:635–651. doi: 10.1109/JAS.2022.105455.

- 45. Gupta G, Pequito S, Bogdan P. Dealing with unknown unknowns: Identification and selection of minimal sensing for fractional dynamics with unknown inputs. In: Proc. of the 2018 American Control Conference. IEEE; 2018. pp. 2814–2820.

- 46. Gupta G, Pequito S, Bogdan P. Learning latent fractional dynamics with unknown unknowns. In: Proc. of the 2019 American Control Conference. IEEE; 2019. pp. 217–222.

- 47. Chatterjee S, Pequito S. On learning discrete-time fractional-order dynamical systems. In: Proc. of the 2022 American Control Conference. IEEE; 2022. pp. 4335–4340.

- 48. Flandrin P. Wavelet analysis and synthesis of fractional Brownian motion. IEEE Trans. Inf. Theory. 1992;38:910–917. doi: 10.1109/18.119751.
