Abstract

Evidence-based decision making often relies on meta-analyzing multiple studies, which enables more precise estimation and investigation of generalizability. Integrative analysis of multiple heterogeneous studies is, however, highly challenging in the ultra high-dimensional setting. The challenge is even more pronounced when individual-level data cannot be shared across studies, a restriction known as the DataSHIELD constraint. Under sparse regression models that are assumed to be similar yet not identical across studies, we propose in this paper a novel integrative estimation procedure for data-Shielding High-dimensional Integrative Regression (SHIR). SHIR protects individual data through a summary-statistics-based integration procedure, accommodates between-study heterogeneity in both the covariate distribution and model parameters, and attains consistent variable selection. Theoretically, SHIR is statistically more efficient than existing distributed approaches that integrate debiased LASSO estimators from the local sites. Furthermore, the estimation error incurred by aggregating derived data is negligible compared to the statistical minimax rate, and SHIR is shown to be asymptotically equivalent in estimation to the ideal estimator obtained by sharing all data. The finite-sample performance of our method is studied and compared with existing approaches through extensive simulations. We further illustrate the utility of SHIR by deriving phenotyping algorithms for coronary artery disease using electronic health records data from multiple chronic disease cohorts.

Keywords: DataSHIELD, Distributed learning, High dimensionality, Model heterogeneity, Rate optimality, Sparsistency

1. Introduction

1.1. Background

Synthesizing information from multiple studies is crucial for evidence-based medicine and policy decision making. Meta-analyzing multiple studies allows for more precise estimates and enables investigation of generalizability. In the presence of heterogeneity across studies and high-dimensional predictors, however, such integrative analysis is highly challenging. One example is developing generalizable predictive models using electronic health records (EHR) data from different hospitals. In addition to high-dimensional features, EHR data analysis encounters privacy constraints, in that individual patient data (IPD) typically cannot be shared across local hospital sites, which makes integrative analysis even more challenging. Breach of privacy arising from data sharing is in fact a growing concern for scientific studies in general. Recently, Wolfson et al. (2010) proposed a generic individual-information-protected integrative analysis framework, named DataSHIELD, that transfers only summary statistics from each distributed local site to the central site for pooled analysis. Highly valued conceptually by research communities (see, e.g., Jones et al. 2012; Doiron et al. 2013), DataSHIELD facilitates multi-study integrative analysis when IPD pooled meta-analysis is not feasible due to ethical and/or legal restrictions (Gaye et al. 2014). In the low-dimensional setting, a number of statistical methods have been developed for distributed analysis that satisfy the DataSHIELD constraint (see, e.g., Chen et al. 2006; Wu et al. 2012; Liu and Ihler 2014; Lu et al. 2015; Huang and Huo 2015; Han and Liu 2016; He et al. 2016; Zöller, Lenz, and Binder 2018; Duan et al. 2019, 2020). Methods for distributed high-dimensional regression have largely focused on settings without between-study heterogeneity, as detailed in Section 1.2.
To the best of our knowledge, no existing distributed learning methods can effectively handle both high-dimensionality and the presence of model heterogeneity across the local sites.

1.2. Related Work

In the context of high-dimensional regression, several recently proposed distributed inference approaches can potentially be used for integrative analysis under the DataSHIELD constraint. Specifically, Tang, Zhou, and Song (2016), Lee et al. (2017), and Battey et al. (2018) proposed distributed inference procedures that aggregate the local debiased LASSO estimators (Zhang and Zhang 2014; Van de Geer et al. 2014; Javanmard and Montanari 2014). Because debiasing is built into their pipelines, the corresponding estimators can be used directly for inference. Lee et al. (2017) and Battey et al. (2018) proposed to further truncate the aggregated dense debiased estimators to achieve sparsity; see also Maity, Sun, and Banerjee (2019). Although this debiasing-based strategy can be extended to our heterogeneous modeling assumption, it still loses statistical efficiency because it fails to account for the heterogeneity of the information matrices across sites. In addition, the debiasing step at the local sites incurs additional estimation error, as detailed in Section 4.4.

Lu et al. (2015) and Li et al. (2016) proposed distributed approaches for ℓ1-regularized logistic and Cox regression. However, their methods require sequential communication between the local sites and the central machine, which may be time- and resource-consuming. Chen and Xie (2014) proposed to estimate high-dimensional parameters by first adopting majority voting to select a positive set and then combining local estimates of the coefficients belonging to this set. Wang, Peng, and Dunson (2014) proposed to aggregate the local estimators through their median rather than their mean, which was shown to be more robust to poor estimation performance at local sites with insufficient sample size (Minsker 2019). More recently, Wang et al. (2017) and Jordan, Lee, and Yang (2019) presented a communication-efficient surrogate likelihood framework for distributed statistical learning that transfers only first-order summary statistics, that is, gradients, between the local sites and the central site. Fan, Guo, and Wang (2019) extended their idea and proposed two iterative distributed optimization algorithms for general penalized likelihood problems. However, their framework, as well as the others summarized in this paragraph, is restricted to homogeneous scenarios and cannot easily be extended to settings with heterogeneous models or covariates.

1.3. Our Contributions

In this article, we fill the methodological gap in high-dimensional distributed learning methods that can accommodate cross-study heterogeneity by proposing a novel data-Shielding High-dimensional Integrative Regression (SHIR) method under the DataSHIELD constraint. While SHIR can be viewed as analogous to the integrative analysis of debiased local LASSO estimators, it achieves debiasing without having to debias the local estimators. SHIR solves a LASSO problem only once at each local site, without requiring the inverse Hessian matrices or the locally debiased estimators, and needs only one round of communication. Statistically, it serves as a tool for integrative model estimation and variable selection in the presence of high dimensionality and heterogeneity in model parameters across sites. In addition, under the ultra-high-dimensional regime where p can grow exponentially with the total sample size N, we demonstrate that SHIR can asymptotically achieve the same error rates as the ideal estimator based on the IPD pooled analysis, denoted by IPDpool, and attain consistent variable selection. Such properties are not readily available in the existing literature, and we develop some novel technical tools for the theoretical analysis. We also show theoretically that SHIR is statistically more efficient than the approach based on integrating and thresholding locally debiased estimators (see, e.g., Lee et al. 2017; Battey et al. 2018). Results from our numerical studies confirm that SHIR performs similarly to the ideal IPDpool estimator and outperforms the other methods.

1.4. Outline of the Paper

The rest of this article is organized as follows. We introduce the setting in Section 2 and describe our proposed approach, SHIR, in Section 3. Theoretical properties of the SHIR estimator are studied in Section 4. We derive upper bounds for its prediction and estimation risks, compare it with the existing approach, and show that the errors incurred by aggregating derived data are negligible compared to the statistical minimax rate. When the true model is ultra-sparse, SHIR is shown to be asymptotically equivalent to the IPDpool estimator and to achieve sparsistency. Section 5 compares the performance of SHIR to existing methods through simulations. We apply SHIR to derive classification models for coronary artery disease (CAD) using EHR data from four different disease cohorts in Section 6. Section 7 concludes the paper with a discussion. Technical proofs of the theoretical results and additional numerical results are provided in the supplementary material.

2. Problem Statement

Throughout, for any integer . For any vector and index set , , , denotes the norm of and . Suppose there are independent studies and subjects in the study, for . For the subject in the study, let and , respectively, denote the response and the -dimensional covariate vector, , , and . We assume that the observations in study , , are independent and identically distributed. Without loss of generality, assume that includes 1 as the first component and has mean . Define the population parameters of interest as

for some specified loss function . Let , , and , denote the true values of , . We consider the ultra-high-dimensional setting, where the covariate dimension can grow exponentially with the sample size .

For each , we follow typical meta-analysis practice and decompose as with and we set for identifiability. Here, represents the average effect of the covariate and captures the between-study heterogeneity of the effects. Let , , , and and be the true values of and , respectively. Consider the empirical global loss function

Minimizing is obviously equivalent to estimating using only. To improve the estimation of by synthesizing information from and to overcome the high dimensionality, we employ penalized loss functions, , with the penalty function designed to leverage prior structural information on . Under the prior assumption that is sparse and are sparse and share the same support, we impose a mixture of LASSO and group LASSO penalties: , where is a tuning parameter. A similar penalty has been used in Cheng, Lu, and Liu (2015). Our construction differs slightly from that of Cheng, Lu, and Liu (2015), where was used instead of . This modified penalty leads to two main advantages: (i) the estimator is invariant to permutations of the study indices; and (ii) it yields better theoretical estimation error bounds for the heterogeneous effects. An idealized IPDpool estimator for can then be obtained as

(1)

for some tuning parameter . However, the IPDpool estimator is not feasible under the DataSHIELD constraint. Our goal is to construct an alternative estimator that asymptotically attains the same efficiency as but only requires sharing summary data. When is small, the sparse meta-analysis (SMA) approach of He et al. (2016) achieves this goal by estimating as , where , and . The SMA method satisfies the DataSHIELD constraint since only the derived statistics and are shared in the integrative regression. The SMA estimator attains the oracle property when is relatively small but fails for large due to the failure of .

3. Data-SHIR

3.1. SHIR Method

In the high-dimensional setting, one may overcome the limitation of the SMA approach by replacing with the regularized LASSO estimator,

(2)

However, aggregating is problematic with large due to their inherent biases. To overcome the bias issue, we construct the SHIR method, motivated by SMA and the debiasing approach for LASSO (see, e.g., Van de Geer et al. 2014), which achieves debiasing without having to debias the local estimators. Specifically, we propose the SHIR estimator for as , where

(3)

is an estimate of the Hessian matrix and . Our SHIR estimator satisfies the DataSHIELD constraint, as depends on only through the summary statistics , which can be obtained within each study, and it requires only one round of data transfer from the local sites to the central node.
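As a rough illustration of the site-side step, the sketch below fits an ℓ1-penalized logistic regression at one site and forms the two quantities needed for a second-order Taylor expansion of the local loss around the LASSO fit (the expansion referred to in Remark 1): the Hessian estimate Ĥ and the linear term ĝ = Ĥβ̂ − ∇L̂(β̂). It is a minimal sketch under assumptions not stated in the text: a logistic loss averaged over the n subjects, proximal gradient (ISTA) in place of the coordinate-descent solvers mentioned below, an unpenalized intercept in the first column, and the hypothetical names `logistic_lasso` and `local_summary`. Only `(n, H_hat, g_hat)` would leave the site.

```python
import numpy as np


def sigmoid(z):
    # logistic link
    return 1.0 / (1.0 + np.exp(-z))


def logistic_lasso(X, y, lam, n_iter=500):
    """l1-penalized logistic regression by proximal gradient (ISTA).

    The first column of X is an intercept and is left unpenalized (assumption).
    """
    n, p = X.shape
    # step = 1/L, where L = ||X||_2^2 / (4n) bounds the Lipschitz constant of the average-loss gradient
    step = 4.0 * n / np.linalg.norm(X, 2) ** 2
    beta = np.zeros(p)
    for _ in range(n_iter):
        grad = X.T @ (sigmoid(X @ beta) - y) / n
        z = beta - step * grad
        # soft-threshold every coefficient except the intercept
        z[1:] = np.sign(z[1:]) * np.maximum(np.abs(z[1:]) - step * lam, 0.0)
        beta = z
    return beta


def local_summary(X, y, lam):
    """Summary statistics one site would transfer: (n, H_hat, g_hat)."""
    n = X.shape[0]
    beta_hat = logistic_lasso(X, y, lam)
    prob = sigmoid(X @ beta_hat)
    grad = X.T @ (prob - y) / n                               # gradient of the average loss at beta_hat
    H_hat = (X * (prob * (1.0 - prob))[:, None]).T @ X / n    # Hessian of the average loss at beta_hat
    g_hat = H_hat @ beta_hat - grad                           # linear term of the Taylor quadratic
    return n, H_hat, g_hat
```

Because the quadratic ½βᵀĤβ − ĝᵀβ has gradient Ĥβ − ĝ, it reproduces the local gradient exactly at β̂, which is easy to check numerically.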

With , we may implement the SHIR procedure using coordinate descent algorithms (Friedman, Hastie, and Tibshirani 2010) along with reparameterization. Let

where and

Then the optimization problem in Equation (3) can be reparameterized and represented as:

and is obtained with the transformation: for every . The above procedure is presented in Algorithm A1 in Section A.5 of the supplementary material.
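The reparameterized coordinate-descent algorithm itself is given in the supplementary material. As a stand-in, the sketch below solves a problem of the same shape by proximal gradient descent: each site contributes a quadratic ½ b_mᵀ H_m b_m − g_mᵀ b_m with b_m = μ + α_m, weighted by n_m/N, plus an ℓ1 penalty on μ and a group penalty on each covariate's vector of site deviations (α_{1j}, ..., α_{Mj}). The weighting, the two separate penalty levels `lam` and `lam_g`, and the name `shir_central` are assumptions, and the identifiability constraint on the α's from Section 2 is not imposed here. Proximal gradient is swapped in for coordinate descent because the prox of this mixed penalty splits into elementwise and groupwise soft thresholding.

```python
import numpy as np


def shir_central(H_list, g_list, n_list, lam, lam_g, n_iter=3000):
    """Proximal-gradient sketch of a SHIR-type central problem:

        min_{mu, alpha}  sum_m w_m * (0.5 * b_m' H_m b_m - g_m' b_m)
                         + lam * ||mu||_1 + lam_g * sum_j ||alpha[:, j]||_2,

    with b_m = mu + alpha[m] and w_m = n_m / N (assumed weighting).
    """
    M, p = len(H_list), H_list[0].shape[0]
    w = np.asarray(n_list, dtype=float) / np.sum(n_list)
    # b_m = A_m (mu, alpha) with ||A_m||_2^2 = 2, so this bounds the gradient's Lipschitz constant
    L = 2.0 * sum(wm * np.linalg.eigvalsh(H).max() for wm, H in zip(w, H_list))
    step = 1.0 / L
    mu, alpha = np.zeros(p), np.zeros((M, p))
    for _ in range(n_iter):
        # row m holds w_m * (H_m b_m - g_m), the gradient with respect to alpha[m]
        G = np.stack([wm * (H @ (mu + a) - g)
                      for wm, H, g, a in zip(w, H_list, g_list, alpha)])
        mu_z = mu - step * G.sum(axis=0)      # gradient w.r.t. mu sums over sites
        alpha_z = alpha - step * G
        # prox of lam * ||mu||_1: elementwise soft thresholding
        mu = np.sign(mu_z) * np.maximum(np.abs(mu_z) - step * lam, 0.0)
        # prox of lam_g * sum_j ||alpha[:, j]||_2: shrink each column (one covariate across sites)
        norms = np.linalg.norm(alpha_z, axis=0)
        shrink = np.maximum(1.0 - step * lam_g / np.maximum(norms, 1e-12), 0.0)
        alpha = alpha_z * shrink
    return mu, alpha
```

With both penalties set to zero, each b_m converges to H_m⁻¹ g_m, the minimizer of its own quadratic; sufficiently large penalties shrink μ and α to exactly zero.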

Remark 1.

The first term in is essentially the second-order Taylor expansion of at the local LASSO estimators . The SHIR method can also be viewed as approximately aggregating local debiased LASSO estimators without actually carrying out the standard debiasing process. To see this, let , where is the debiased LASSO estimator for the study with

(4)

and is a regularized inverse of . We may write

where we use in the above approximation and the term

does not depend on . We use only heuristically above to show a connection between our SHIR estimator and the debiased LASSO; the validity and asymptotic properties of the SHIR estimator do not require obtaining any or establishing a theoretical guarantee for being sufficiently close to .
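The Taylor-expansion step behind Remark 1 can be written out as follows, in notation assumed here for illustration: L̂_m for the local empirical loss, β̂^(m) for the local LASSO estimator, and Ĥ_m for the Hessian estimate. Completing the square shows that, if Ĥ_m were invertible, the quadratic would be centered at a debiased LASSO estimator whose Θ̂_m equals Ĥ_m⁻¹. That invertibility is exactly the heuristic the remark says SHIR does not rely on.

```latex
\begin{aligned}
\hat{\mathcal L}_m(\beta)
 &\approx \hat{\mathcal L}_m(\hat\beta^{(m)})
   + \nabla\hat{\mathcal L}_m(\hat\beta^{(m)})^\top(\beta-\hat\beta^{(m)})
   + \tfrac12(\beta-\hat\beta^{(m)})^\top\hat H_m(\beta-\hat\beta^{(m)}) \\
 &= \tfrac12\,\beta^\top\hat H_m\beta
   - \beta^\top\bigl\{\hat H_m\hat\beta^{(m)}-\nabla\hat{\mathcal L}_m(\hat\beta^{(m)})\bigr\} + c_m \\
 &= \tfrac12\,(\beta-\check\beta^{(m)})^\top\hat H_m(\beta-\check\beta^{(m)}) + c_m',
 \qquad
 \check\beta^{(m)} = \hat\beta^{(m)} - \hat H_m^{-1}\nabla\hat{\mathcal L}_m(\hat\beta^{(m)}),
\end{aligned}
```

Here c_m and c_m' do not depend on β, and the last line additionally assumes that Ĥ_m is invertible.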

Remark 2.

Compared with existing debiasing-based methods (Lee et al. 2017; Battey et al. 2018), the SHIR approach is both computationally and statistically efficient. It does not rely on the debiased statistics (4) and achieves debiasing without calculating , which can only be estimated well under strong conditions (Van de Geer et al. 2014; Janková and Van De Geer 2016).

3.2. Tuning Parameter Selection

The implementation of SHIR requires the selection of three sets of tuning parameters, , and . We select for the local LASSO problem via standard -fold cross-validation (CV). Selecting and needs to balance the trade-off between the model's degrees of freedom, denoted by , and the quadratic loss in (3). It is not feasible to tune and via CV since individual-level data are not available at the central site. We propose to select and as the minimizer of the generalized information criterion (GIC) (Wang and Leng 2007; Zhang, Li, and Tsai 2010), defined as

where is some prespecified scaling parameter and

Following Zhang, Li, and Tsai (2010) and Vaiter et al. (2012), we define as the trace of

where , , and the operator is defined as the second-order partial derivative with respect to , after plugging into or .
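To make the selection rule concrete, the following sketch tunes a single penalty by a GIC-type criterion using only summary statistics. Several simplifications are assumptions rather than the definitions above. Only a shared coefficient vector μ is fitted (no α), so the pooled quadratic uses H = Σ_m w_m Ĥ_m and g = Σ_m w_m ĝ_m. The criterion is taken as 2Q(μ̂_λ) + φ·df/N, which matches the familiar BIC form when Q approximates an average negative log-likelihood and φ = log N. Finally, df is approximated by the number of nonzero coefficients rather than by the trace formula above. The names `central_lasso` and `gic_select` are hypothetical.

```python
import numpy as np


def central_lasso(H, g, lam, n_iter=2000):
    """ISTA for min_mu 0.5 * mu' H mu - g' mu + lam * ||mu||_1."""
    step = 1.0 / np.linalg.eigvalsh(H).max()
    mu = np.zeros_like(g)
    for _ in range(n_iter):
        z = mu - step * (H @ mu - g)
        mu = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)
    return mu


def gic_select(H, g, lam_grid, N, phi):
    """Return (lam, mu, df) minimizing 2 * Q(mu_lam) + phi * df / N over lam_grid."""
    best = None
    for lam in lam_grid:
        mu = central_lasso(H, g, lam)
        quad = 0.5 * mu @ H @ mu - g @ mu      # summary-statistics quadratic loss Q
        df = int(np.count_nonzero(mu))         # crude df: number of nonzero coefficients
        gic = 2.0 * quad + phi * df / N
        if best is None or gic < best[0]:
            best = (gic, lam, mu, df)
    return best[1], best[2], best[3]
```

Larger φ penalizes model size more heavily, so as φ grows the selected model moves toward the sparsest fit on the grid.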

Remark 3.

As discussed in Kim, Kwon, and Choi (2012), can be chosen depending on the goal, with common choices including for AIC (Akaike 1974), for BIC (Bhat and Kumar 2010), for modified BIC (Wang, Li, and Leng 2009), and for RIC (Foster and George 1994). We used BIC with in our numerical studies.

Remark 4.

For linear models, it has been shown that a proper choice of guarantees the model selection consistency of GIC under various divergence rates of the dimension (Kim, Kwon, and Choi 2012). For example, for fixed , GIC is consistent if and . When diverges at a polynomial rate , GIC is consistent provided that (BIC) if ; (modified BIC) if . When diverges at an exponential rate with , GIC is consistent as . These results can be naturally extended to more general log-likelihood functions.

4. Theoretical Results

In this section, we present theoretical properties of for and discuss how our theoretical results can be extended to other sparse structures in Section 7. In Sections 4.2 and 4.3, we derive consistency and equivalence results for the prediction and estimation risks of SHIR under a high-dimensional sparse model and a smooth loss function . In Section 4.4, we compare the risk bounds for SHIR with those of an estimator derived from the debiasing-based aggregation approaches (Lee et al. 2017; Battey et al. 2018). In addition, Section 4.5 shows that SHIR achieves sparsistency, that is, variable selection consistency, for the nonzero sets of and . We begin with some notation and definitions that will be used throughout the article.

4.1. Notation and Definitions

Let , , , and respectively represent sequences that grow at a smaller, equal/smaller, larger, equal/larger, and equal rate compared with the sequence . Similarly, let , , , and represent each of the corresponding rates with probability approaching 1 as .

For any vector , denote the -ball around with radius as . Following Vershynin (2018), we define the sub-Gaussian norm of a random variable as and, for any random vector , its sub-Gaussian norm as . For any symmetric matrix , let and denote its minimum and maximum eigenvalues, respectively. For , denote by the sign of , and for an event , denote by the indicator of . Denote by , , , , , and . Let and . Also, let , , , and . Finally, we introduce the compatibility condition below.

Definition 1 (Compatibility Condition).

The Hessian matrix and the index set satisfy the Compatibility Condition, if for all with any constant , there exists a constant such that,

where , and represents the compatibility constant of on the set .

4.2. Prediction and Estimation Consistency

To establish theoretical properties of the SHIR estimators in terms of estimation and prediction risks, we first introduce some sufficient conditions. Throughout the following analysis, we assume that for and

Condition 1.

There exists an absolute constant such that for all , satisfying , the Hessian matrices and the index set satisfy (Definition 1) with compatibility constant .

Condition 2.

For all , is sub-Gaussian, that is, there exists some positive constant such that . In addition, there exists such that .

Condition 3.

There exists positive such that for all .

Remark 5.

Condition 1 is similar in spirit to the restricted eigenvalue or restricted strong convexity condition introduced by Negahban et al. (2012). The first part of Condition 2 controls the tail behavior of so that the random error can be bounded properly and the method can benefit from the group sparsity of (Huang and Zhang 2010). This condition can be easily verified for sub-Gaussian designs and an extensive class of models, for example, the logistic model. In addition, the condition holds for bounded designs with and for sub-Gaussian designs with . Condition 3 assumes a smooth function to guarantee that the empirical Hessian matrix is close enough to , and the term is close enough to .

The following Proposition 1 illustrates that for sub-Gaussian weighted design with regular Hessian matrix, Condition 1 (Compatibility Condition) holds with probability approaching 1. This can be viewed as an extension of the existing results, that is, sub-Gaussian design and linear model with lasso penalty (Rivasplata 2012), to our case with nonlinear model and the mixture penalty. We present the proof of Proposition 1 in Section A.1 of the supplementary material.

Proposition 1.

Assume that and Condition 3 holds. Assume in addition that there exist absolute constants , , such that for all , , and for any and , . Then Condition 1 is satisfied with probability approaching 1.

Remark 6.

As an important example in practice, it is not hard to verify that, for logistic models with , and sub-Gaussian covariates , the key assumption on the weighted design required in Proposition 1, , is satisfied.

We further assume in Condition 4 that the local LASSO estimators achieve the minimax optimal error rates up to a logarithmic factor (Raskutti, Wainwright, and Yu 2011; Negahban et al. 2012).

Condition 4.

The local estimators satisfy that , and .

Remark 7.

An extensive literature, including Van de Geer et al. (2008), Bühlmann and Van De Geer (2011), and Negahban et al. (2012), has established a complete theoretical framework for this property. See, for example, Negahban et al. (2012), in which Condition 4 can be proved for a strongly convex loss function .

Next, we present the risk bounds for the SHIR including the prediction risk and estimation risk .

Theorem 1 (Risk bounds for the SHIR).

Under Conditions 1–4, there exist and such that

Note that, in Theorem 1, the rate of the penalty coefficient for is . The second term in each of the upper bounds of Theorem 1 is the error incurred by aggregation noise of derived data instead of raw data. These terms are asymptotically negligible under sparsity as . Then achieves the same error rate as the ideal estimator obtained by combining raw data as shown in the following section, and is nearly rate optimal.

4.3. Asymptotic Equivalence in Prediction and Estimation

Under specific sparsity assumptions, we show the asymptotic equivalence, with respect to prediction and estimation risks, of the SHIR and the ideal IPDpool estimator or alternatively defined as

where is a tuning parameter.

Theorem 2.

(Asymptotic Equivalence) Under the assumptions of Theorem 1 and assuming , there exist and such that the IPDpool estimator satisfies

Furthermore, for some , the IPDpool and the SHIR defined by (3) with are equivalent in prediction and estimation in the sense that

Theorem 2 demonstrates the asymptotic equivalence between and with respect to estimation and prediction risks, and hence implies the optimality of SHIR. Specifically, when , the excess risks of compared to are of smaller order than those of IPDpool, that is, the minimax optimal rates (up to a logarithmic factor) for multi-task learning of high-dimensional sparse models (Huang and Zhang 2010; Lounici et al. 2011). A similar equivalence result was given in Theorem 4.8 of Battey et al. (2018) for the truncated debiased LASSO estimator. However, to the best of our knowledge, such results have not yet been established in the existing literature for LASSO-type estimators obtained directly from a sparse regression model. Compared with Battey et al. (2018), our result does not require the Hessian matrix to have a sparse inverse since we do not actually rely on the debiasing of . Consequently, the proof of Theorem 2 is much more involved than those in Battey et al. (2018). New technical tools are developed and presented in detail in the supplementary material.

4.4. Comparison With the Debiasing-based Strategy

To compare with existing approaches, we next consider an extension of the debiased LASSO-based procedures proposed in Lee et al. (2017) and Battey et al. (2018) that incorporates between-study heterogeneity. Specifically, at the site, we derive the debiased LASSO estimator as defined in (4) and send it to the central site, where is obtained via nodewise LASSO (Javanmard and Montanari 2014). At the central site, compute , and . Following Lee et al. (2017) and Battey et al. (2018), the final estimators for and are obtained by thresholding and as and , where

for any vector and constant , and respectively denote the hard and soft thresholded counterparts of , and vectorize the matrix by column.
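The two thresholding operators, together with one way of forming the quantities to threshold, can be sketched as follows. How the central site combines the debiased estimates is not recoverable from the text above, so the sample-size-weighted average in the hypothetical `aggregate_debiased` is an assumption. The convention that hard thresholding keeps only entries with |x_j| strictly greater than the threshold is also an assumption.

```python
import numpy as np


def hard_threshold(x, t):
    """Keep entries with |x_j| > t and zero out the rest."""
    x = np.asarray(x, dtype=float)
    return x * (np.abs(x) > t)


def soft_threshold(x, t):
    """Shrink every entry toward zero by t: sign(x_j) * max(|x_j| - t, 0)."""
    x = np.asarray(x, dtype=float)
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)


def aggregate_debiased(beta_check, n_list, t_mu, t_alpha, soft=True):
    """Hypothetical central step: average the sites' debiased estimates,
    split them into mean and deviation parts, then threshold each part."""
    B = np.asarray(beta_check, dtype=float)          # shape (M, p); row m = site m
    w = np.asarray(n_list, dtype=float) / np.sum(n_list)
    mu = w @ B                                        # sample-size-weighted average
    alpha = B - mu                                    # site-specific deviations
    thr = soft_threshold if soft else hard_threshold
    # vectorize alpha by column, threshold, and reshape back to (M, p)
    alpha_vec = thr(alpha.flatten(order="F"), t_alpha)
    return thr(mu, t_mu), alpha_vec.reshape(alpha.shape, order="F")
```

Because both operators act elementwise, thresholding the column-wise vectorization and reshaping gives the same result as thresholding the matrix directly. The vec step only mirrors the notation in the text.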

The error rates of can be derived by extending Lee et al. (2017) and Battey et al. (2018). We outline the results below and provide details in Section A.3.4 of the supplementary material. Denote by , , and . Then, analogously to Theorem 1, one can obtain that

(5)

(6)

where is as defined in Condition 2. Compared with the error rates of SHIR as presented in Theorem 1, shares the same “first term”, , representing the error of an individual-level empirical process. However, its second term incurred by data aggregation can be larger than that of SHIR as , which could happen due to the complex design in practice.

In addition, SHIR can be more efficient than the debiasing-based strategy even when the additional error term, which depends on in (6), is asymptotically negligible. Consider the setting where all 's are the same, that is, , and is moderate or small, so that regularization is unnecessary and the maximum likelihood estimator (MLE) for is feasible and asymptotically Gaussian. In this case, SHIR can be viewed as an inverse-variance-weighted estimator with asymptotic variance , while the debiasing-based approach outputs an estimator with variance . It is not hard to show that , where equality holds only if all 's are in certain proportion. Thus, SHIR is strictly more efficient than the debiasing-based approach in the low-dimensional setting with heterogeneous , which commonly arises in meta-analysis because the distributions of 's are typically heterogeneous across the local sites. Similarly, in the high-dimensional setting, SHIR is expected to benefit from the “inverse variance weight” construction, and our simulation results in Section 5 support this point.
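The low-dimensional efficiency argument can be checked numerically. The sketch below makes two assumptions: site m's MLE has asymptotic covariance H_m⁻¹/n_m, and the debiasing-based approach averages the site estimates with weights n_m/N. Under these assumptions the two covariances are (Σ_m n_m H_m)⁻¹ and N⁻² Σ_m n_m H_m⁻¹. Because matrix inversion is operator convex on positive definite matrices, the second minus the first is positive semidefinite. In this sketch the gap vanishes when all H_m coincide. The function name `compare_variances` is hypothetical.

```python
import numpy as np


def compare_variances(H_list, n_list):
    """Asymptotic covariances of two ways to combine per-site MLEs of a shared
    coefficient: inverse-variance weighting vs. sample-size-weighted averaging."""
    N = float(np.sum(n_list))
    # inverse-variance weighting: (sum_m n_m H_m)^{-1}
    V_ivw = np.linalg.inv(sum(n * H for n, H in zip(n_list, H_list)))
    # weights n_m / N on estimates with covariance H_m^{-1} / n_m
    V_avg = sum(n * np.linalg.inv(H) for n, H in zip(n_list, H_list)) / N**2
    return V_ivw, V_avg
```

For example, AR(1)-type information matrices with different correlation coefficients at each site give a strictly larger trace for `V_avg` than for `V_ivw`.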

4.5. Sparsistency

In this section, we present theoretical results concerning the variable selection consistency of the SHIR. We begin with some extra sufficient conditions for the sparsistency result.

Condition 5.

For all and satisfying , there exists such that , where denotes the submatrix of with its rows and columns corresponding to .

Condition 6.

For all and satisfying , the weighted design matrix satisfies the Irrepresentable Condition on with parameter , where is defined in Section A.2 and is given in Definition A2 of the supplementary material.

Condition 7.

Let . For the defined in Condition 6, , as .

Remark 8.

Conditions 5–7 are sparsistency assumptions similar to those of Zhao and Yu (2006) and Nardi et al. (2008). Condition 5 requires the eigenvalues of the covariance matrix of the weighted design matrix corresponding to to be bounded away from zero, so that its inverse is well behaved. Condition 6 adapts the commonly used Irrepresentable Condition (Zhao and Yu 2006) to our mixture-penalty setting. Roughly speaking, it requires that the weighted design corresponding to cannot be represented well by the weighted design for . Compared with Nardi et al. (2008), is less intuitive but essentially weaker. We justify this condition under several common correlation structures and compare it with Zhao and Yu (2006) in Section A.2 of the supplementary material. Condition 7 assumes that the minimum magnitude of the coefficients is large enough for the nonzero coefficients to be recognizable. It requires an essentially weaker assumption on the minimum magnitude than the local LASSO (Zhao and Yu 2006), because we leverage the group structure of 's to improve the efficiency of variable selection.

Theorem 3.

(Sparsistency) Let and . Denote by the event and . Under Conditions 1–7 and assuming that

we have as .

Theorem 3 establishes the sparsistency of SHIR. When , Condition 7 reduces to , the corresponding sparsistency assumption for the IPDpool estimator. In contrast, a similar condition, which could be as strong as , is required for the local LASSO estimator (Zhao and Yu 2006). Compared with the local estimator, our integrative analysis procedure can detect smaller signals under some sparsity assumptions. In this sense, the structure of helps improve the selection efficiency over the local LASSO estimator. Unlike existing work, we need to carefully address the mixture penalty and the aggregation noise of SHIR, which introduce technical difficulties into our theoretical analysis.

In both Theorems 2 and 3, we allow , the number of studies, to diverge while preserving the theoretical properties. The growth rate of is allowed to be

for the equivalence result in Theorem 2 and

for the sparsistency result in Theorem 3.

5. Simulation Study

We present simulation results in this section to evaluate the performance of our proposed SHIR estimator and compare it with several other approaches. The simulation code is available at https://github.com/moleibobliu/SHIR. Let and and set for each . For each configuration, we summarize results based on 200 simulated datasets. We consider five data-generating mechanisms:

(i) Sparse precision and correctly specified model (strong and sparse signal): Across all studies, let for for and . For each , we generate from a zero-mean multivariate normal distribution with covariance , where , , , denotes the identity matrix, denotes the correlation matrix of AR(1) with correlation coefficient , denotes the matrix with each of its columns having randomly picked entries set to or at random and the remaining entries being 0, and . Given , we generate from the logistic model with and .

(ii) Sparse precision and correctly specified model (weak and sparse signal): Use the same data-generation mechanism as in (i) but with relatively weak signals and .

(iii) Sparse precision and correctly specified model (strong and dense signal): Use the same mechanism as in (i) but with denser supports: , and , and more heterogeneous coefficients across sites (see their specific values in Section A.5 of the supplementary material).

(iv) Sparse precision and correctly specified model (weak and dense signal): Use the same mechanism as in (iii) but with weaker signals (see Section A.5 of the supplementary material).

(v) Dense precision and misspecified model: Let , , and . For each , we generate from a zero-mean multivariate normal distribution with covariance matrix , where , and . Given , we generate from a logistic model with .

Across all settings, the distributions of and the model parameters of differ across sites, mimicking the heterogeneity of covariates and models. The heterogeneity of is driven by the study-specific correlation coefficient in its covariance matrix . Under Settings (i)–(iv), the fitted logistic loss corresponds to the likelihood under a correctly specified model, with the support of and that of overlapping but not identical. Under Setting (v), the fitted loss corresponds to a misspecified model, but the true target parameter remains approximately sparse, with only the first 5 elements relatively large, 45 close to zero, and the remaining ones exactly zero. For each , there are on average 15 nonzero coefficients in the column of the precision matrix under Settings (i)–(iv), and 45 nonzero coefficients under Setting (v). Thus, Settings (i)–(iv) simulate a sparse precision matrix on the active set, while Setting (v) simulates a relatively dense precision matrix.
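A simplified generator in the spirit of Settings (i)–(iv) is sketched below. It keeps only the AR(1) part of the covariance, drops the sparse perturbation matrix described in (i), and leaves the coefficient values and the site-specific correlation ρ to the caller. These simplifications, and the names `ar1_corr` and `simulate_site`, are assumptions for illustration. This is not the exact design behind the reported results.

```python
import numpy as np


def ar1_corr(p, rho):
    """AR(1) correlation matrix with (i, j) entry rho ** |i - j|."""
    idx = np.arange(p)
    return rho ** np.abs(idx[:, None] - idx[None, :])


def simulate_site(n, beta, rho, rng):
    """Hypothetical generator for one site: Gaussian covariates with a
    site-specific AR(1) correlation, an intercept column, and a logistic response."""
    p = beta.shape[0] - 1                                  # beta[0] is the intercept
    Z = rng.multivariate_normal(np.zeros(p), ar1_corr(p, rho), size=n)
    X = np.column_stack([np.ones(n), Z])
    prob = 1.0 / (1.0 + np.exp(-(X @ beta)))
    y = rng.binomial(1, prob)
    return X, y
```

Calling `simulate_site` with a different `rho` for each site reproduces the type of covariate heterogeneity described above, in simplified form.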

For each simulated dataset, we obtain the SHIR estimator as well as the following alternative estimators: (a) the IPDpool estimator ; (b) the SMA estimator (He et al. 2016), preceded by the sure independence screening procedure (Fan and Lv 2008) that reduces the dimension to , as recommended by He et al. (2016); and (c) the debiasing-based estimator introduced in Section 4.4, denoted by . For , we used soft thresholding to be consistent with the penalty used by IPDpool, SMA, and SHIR. We used BIC to choose the tuning parameters for all methods.

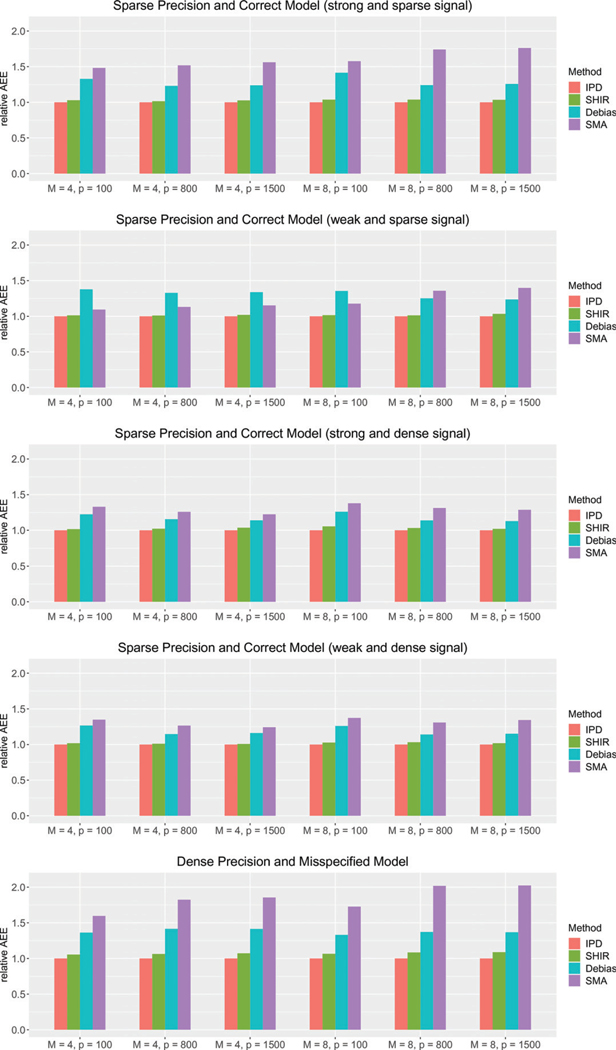

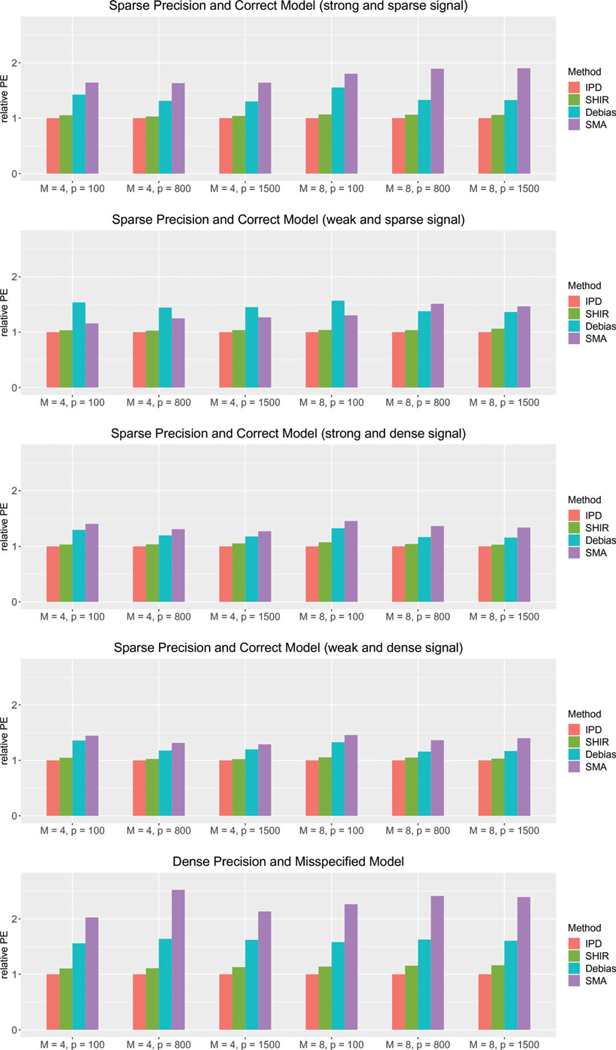

In Figures 1 and 2, we present the relative average absolute estimation error (rAEE), , and the relative prediction error (rPE), , respectively, for each estimator compared to the IPDpool estimator. Consistent with the theoretical equivalence results, the SHIR estimator attains estimation and prediction accuracy very close to that of the idealized IPDpool estimator, with rPE and rAEE around 1.03 under Setting (i), 1.02 under (ii), 1.04 under (iii), 1.03 under (iv), and 1.07 under (v). The SHIR estimator is substantially more efficient than SMA under all settings, with about a 45% reduction in both AEE and PE on average. This can be attributed to the improved performance of the local LASSO estimator over the MLE on sparse models. The advantage is more pronounced for large , such as 800 and 1500, because the screening procedure does not work well in choosing the active set, especially in the presence of correlations among the covariates. Compared with , SHIR also demonstrates a gain in efficiency. Specifically, relative to SHIR, has 15%–29% higher AEE and 18%–42% higher PE under the five settings. This is consistent with our theoretical results in Section 4.4 that SHIR has smaller error than due to the heterogeneous Hessians and aggregation errors. In addition, compared to Settings (i)–(iv), the excess error of is larger in Setting (v), where the inverse Hessian is relatively dense. This is consistent with the conclusion in Section 4.4.

Figure 1.

The relative average absolute estimation error (AEE) of IPDpool (IPD), SHIR, the debiasing-based estimator (Debias), and SMA compared to that of IPDpool under different simulation configurations and data-generation mechanisms (i)–(v) introduced in Section 5.

Figure 2.

The relative prediction error (PE) of IPDpool (IPD), SHIR, the debiasing-based estimator (Debias), and SMA compared to that of IPDpool under different simulation configurations and data-generation mechanisms (i)–(v) introduced in Section 5.

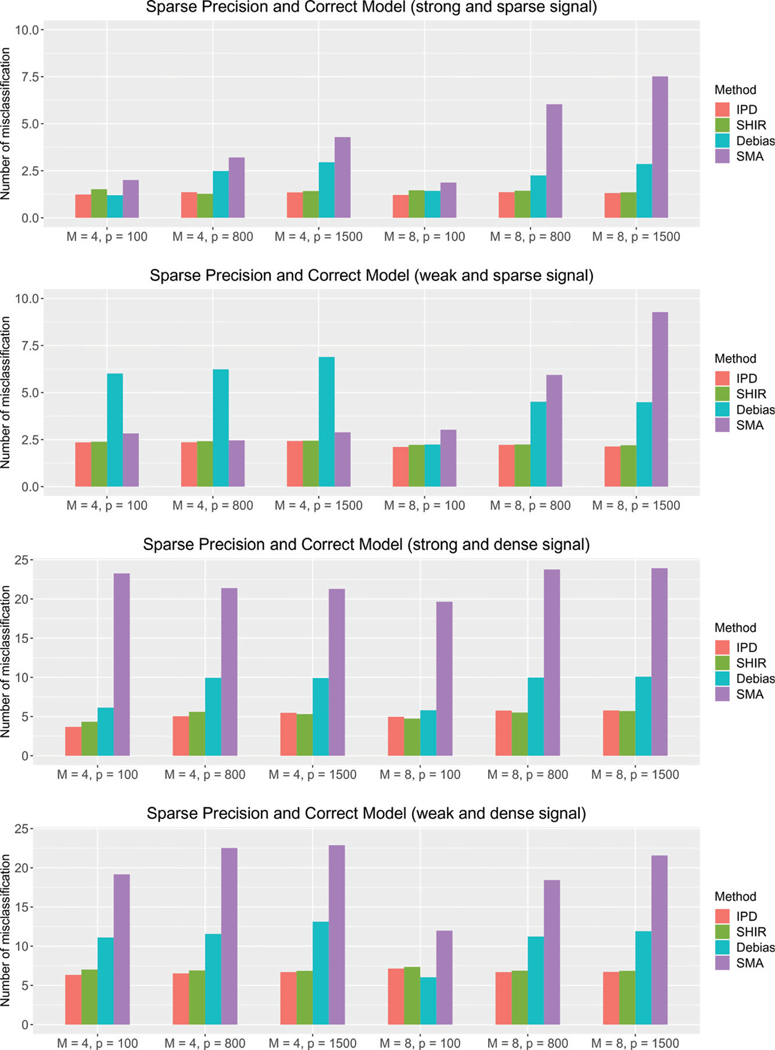

In Figure 3, we present the average number of misclassifications in recovering the support of the regression coefficients, that is, the number of coefficients whose zero or nonzero status is estimated incorrectly, for the estimators obtained via the different methods under Settings (i)–(iv), where the model is correctly specified. SMA performs poorly and has more misclassifications under nearly all settings, especially with dense signals. Both IPDpool and SHIR have good support recovery performance, with average misclassification numbers below 2.5 under all settings with sparse signals and below 7.5 under those with dense signals. These two methods attain similar misclassification numbers, with absolute differences less than 0.8 across all settings. Compared to IPDpool and SHIR, the debiasing-based estimator performs significantly worse in a subset of the settings. For weak signals, its misclassification numbers are about two to four times as large as those of IPDpool and SHIR. For strong signals, the gap between the debiasing-based estimator and SHIR is still visible, though somewhat smaller. For example, under Setting (i), the debiasing-based estimator has on average about 60% more misclassifications than SHIR in one configuration and about 110% more in another. In Figures A1 and A2 of the supplementary material, we present the average true positive rate (TPR) and false discovery rate (FDR) for recovering the support. In some configurations, the debiasing-based estimator tends to have a smaller FDR than SHIR; however, this is achieved at the expense of a substantially lower TPR. In the other configurations, SHIR attains a lower FDR than the debiasing-based estimator while attaining a higher or comparable TPR. In summary, SHIR performs similarly to IPDpool and better than the debiasing-based estimator in support recovery.
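The support-recovery summaries used in this comparison can be computed as in the sketch below. It assumes a coefficient is declared selected when its estimate is nonzero (or exceeds an optional tolerance) and takes the FDR to be 0 when nothing is selected; that convention is our assumption, not one stated in the text.

```python
import numpy as np

def support_metrics(beta_hat, beta_true, tol=0.0):
    """Return (number of misclassifications, TPR, FDR) for recovering the support of beta_true."""
    selected = np.abs(np.asarray(beta_hat, dtype=float)) > tol
    active = np.asarray(beta_true, dtype=float) != 0
    n_mis = int(np.sum(selected != active))        # false positives + false negatives
    tp = int(np.sum(selected & active))
    fp = int(np.sum(selected & ~active))
    tpr = tp / max(int(active.sum()), 1)
    fdr = fp / max(int(selected.sum()), 1)         # assumed convention: FDR = 0 if nothing selected
    return n_mis, tpr, fdr

# Toy example: one true signal missed and one noise coefficient selected.
print(support_metrics([0.9, 0.0, 0.1, 0.0, 0.0], [1.0, 0.5, 0.0, 0.0, 0.0]))
```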

Figure 3.

The average number of misclassifications in support recovery based on IPDpool (IPD), SHIR, the debiasing-based estimator (Debias), and SMA under different simulation configurations and data-generation mechanisms (i)–(iv) introduced in Section 5.

6. Application to EHR Phenotyping in Multiple Disease Cohorts

Linking EHR data with biorepositories containing “-omics” information has expanded the opportunities for biomedical research (Kho et al. 2011). With the growing availability of these high-dimensional data, the bottleneck in clinical research has shifted from a paucity of biologic data to a paucity of high-quality phenotypic data. Accurately and efficiently annotating patients with disease characteristics among millions of individuals is a critical step in fulfilling the promise of using EHR data for precision medicine. Novel machine learning-based phenotyping methods leveraging a large number of predictive features have improved the accuracy and efficiency of existing phenotyping methods (Liao et al. 2015; Yu et al. 2015).

While the portability of phenotyping algorithms across multiple patient cohorts is of great interest, existing phenotyping algorithms are often developed and evaluated for a specific patient population. To investigate the portability issue and develop EHR phenotyping algorithms for coronary artery disease (CAD) useful for multiple cohorts, Liao et al. (2015) developed a CAD algorithm using a cohort of rheumatoid arthritis (RA) patients and applied the algorithm to other disease cohorts using EHR data from the Partners HealthCare System. Here, we performed an integrative analysis of multiple EHR disease cohorts to jointly develop algorithms for classifying CAD status in four disease cohorts: type 2 diabetes mellitus (DM), inflammatory bowel disease (IBD), multiple sclerosis (MS), and RA. Under the DataSHIELD constraint, our proposed SHIR algorithm lets the data determine whether a single CAD phenotyping algorithm can perform well across the four disease cohorts or whether disease-specific algorithms are needed.

For algorithm training, clinical investigators manually curated gold-standard labels on CAD status, used as the response, for a number of patients in each of the four cohorts. The candidate features include codified features and narrative features extracted via natural language processing (NLP) (Zeng et al. 2006), as well as their two-way interactions. Examples of codified features include demographic information, lab results, medication prescriptions, and counts of International Classification of Diseases (ICD) codes and Current Procedural Terminology (CPT) codes. Since patients may not have certain lab measurements and missingness is highly informative, we also create missing indicators for the lab measurements as additional features. Examples of NLP terms include mentions of CAD, current smoking (CSMO), nonsmoking (NSMO), and CAD-related procedures. Since count variables such as the total number of CAD ICD codes are zero-inflated and skewed, we apply a transformation to each count variable and also include derived versions of each count variable (e.g., whether the count is positive; see Table 1) as additional features.
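The feature-construction steps described above might look as follows at a single site. This is a hypothetical sketch: the log(1 + x) transform is an assumption (the text does not state which transformation was used), the zero fill for missing lab values is a placeholder choice, and forming all pairwise products of the base features is only one way to create two-way interactions. The final column normalization mirrors the note under Table 1 that the covariates are normalized.

```python
import numpy as np
from itertools import combinations

def build_site_features(counts, labs):
    """Hypothetical feature matrix for one site.

    counts: (n, k) nonnegative counts (e.g., ICD, CPT, or NLP mention counts).
    labs:   (n, m) lab values, with np.nan marking a missing measurement.
    """
    counts = np.asarray(counts, dtype=float)
    labs = np.asarray(labs, dtype=float)
    log_counts = np.log1p(counts)                        # assumed transform: log(1 + count)
    positive = (counts > 0).astype(float)                # indicator that each count is positive
    missing = np.isnan(labs).astype(float)               # 1 if the lab value is missing
    labs_filled = np.where(np.isnan(labs), 0.0, labs)    # placeholder fill for missing labs
    base = np.hstack([log_counts, positive, labs_filled, missing])
    # Append all two-way interactions (pairwise products) of the base features.
    pairs = [base[:, i] * base[:, j] for i, j in combinations(range(base.shape[1]), 2)]
    full = np.hstack([base, np.column_stack(pairs)]) if pairs else base
    # Normalize each column; constant columns are only centered.
    sd = full.std(axis=0)
    return (full - full.mean(axis=0)) / np.where(sd > 0, sd, 1.0)

counts = [[0, 3], [1, 0], [2, 5]]
labs = [[1.2], [np.nan], [0.8]]
Z = build_site_features(counts, labs)
print(Z.shape)
```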

For each cohort, we randomly select 50% of the observations to form the training set for developing the CAD algorithms and use the remaining 50% for validation. We trained CAD algorithms based on SHIR, the debiasing-based estimator, and SMA. Since the true model parameters are unknown, we evaluate the different methods by the prediction performance of the trained algorithms on the validation set. We consider several standard accuracy measures, including the area under the receiver operating characteristic curve (AUC), the Brier score, defined as the mean squared residual on the validation data, and the F-score at threshold values chosen to attain false-positive rates of 5% and 10%, where the F-score is defined as the harmonic mean of the sensitivity and positive predictive value. The standard errors of the estimated prediction performance measures are obtained by bootstrapping the validation data. We only report results based on tuning parameters selected with BIC, as in the simulation studies, but note that the results obtained from AIC are largely similar in terms of prediction performance. Furthermore, to assess the improvement gained by combining the four datasets, we include the LASSO estimator fitted to each local dataset (Local) as a comparison.
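A minimal NumPy sketch of these validation metrics, assuming risk scores `s` (or predicted probabilities) from an already-trained algorithm. The cutoff for a target false-positive rate is taken here as an empirical quantile of the scores among validation controls, and standard errors come from a nonparametric bootstrap over validation patients; both are assumed implementation choices rather than details given in the text.

```python
import numpy as np

def auc(y, s):
    """AUC via the Mann-Whitney statistic; tied case-control pairs count 1/2."""
    y = np.asarray(y).astype(bool)
    s = np.asarray(s, dtype=float)
    pos, neg = s[y], s[~y]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

def brier(y, prob):
    """Mean squared residual between binary labels and predicted probabilities."""
    return float(np.mean((np.asarray(y, dtype=float) - np.asarray(prob, dtype=float)) ** 2))

def f_score_at_fpr(y, s, target_fpr):
    """F-score (harmonic mean of sensitivity and PPV) at a cutoff giving FPR of about target_fpr."""
    y = np.asarray(y).astype(bool)
    s = np.asarray(s, dtype=float)
    cut = np.quantile(s[~y], 1.0 - target_fpr)   # assumed: empirical quantile among controls
    pred = s > cut
    tp = np.sum(pred & y)
    if tp == 0:
        return 0.0
    sens = tp / y.sum()
    ppv = tp / pred.sum()
    return float(2 * sens * ppv / (sens + ppv))

def bootstrap_se(metric, y, s, n_boot=200, seed=0):
    """Bootstrap standard error of metric(y, s) over resampled validation patients."""
    rng = np.random.default_rng(seed)
    y, s = np.asarray(y), np.asarray(s)
    n, vals = len(y), []
    while len(vals) < n_boot:
        idx = rng.integers(0, n, n)
        if y[idx].min() == y[idx].max():   # skip resamples containing a single class
            continue
        vals.append(metric(y[idx], s[idx]))
    return float(np.std(vals, ddof=1))

# Toy validation data: 20 controls and 10 cases with perfectly separated scores.
y = np.array([0] * 20 + [1] * 10)
s = np.concatenate([np.arange(20) / 100, 0.5 + np.arange(10) / 100])
print(auc(y, s), f_score_at_fpr(y, s, 0.05), bootstrap_se(auc, y, s, n_boot=50))
```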

In Table 1, we present the estimated coefficients for variables that received nonzero coefficients from at least one of the included methods. Interestingly, all integrative analysis methods set all heterogeneous coefficients to zero, suggesting that a single CAD algorithm can be used across all cohorts, although different intercepts were used for different disease cohorts. The magnitudes of the coefficients from SHIR largely agree with the published algorithm, with the most important features being NLP mentions and ICD codes for CAD as well as the total number of ICD codes, which serves as a measure of healthcare utilization. SMA set all variables to zero except for age, nonsmoker, and the NLP mentions and ICD codes for CAD, while the debiasing-based estimator has a support more similar to that of SHIR.

Table 1.

Detected variables and magnitudes of their fitted coefficients for the homogeneous effects.

| Variable | SHIR | SMA | |
|---|---|---|---|
| Prescription count of statin | 0.14 | 0.07 | 0 |
| Age | 0.09 | 0.26 | 0.28 |
| Total ICD counts | −0.38 | −0.75 | 0 |
| NLP count of CAD | 0.97 | 1.34 | 0.81 |
| NLP count of CAD procedure related concepts | 0 | 0.02 | 0 |
| NLP count of nonsmoker | −0.07 | −0.25 | −0.42 |
| NLP count of nonsmoker > 0 | −0.53 | 0 | 0 |
| NLP count of current-smoker | 0 | −0.03 | 0 |
| NLP count of CAD related diagnosis or procedure ≥ 1 | 0.06 | 0.05 | 0 |
| ICD count for CAD | 1.00 | 0.67 | 0.35 |
| CPT count for stent or CABG | 0 | 0.05 | 0 |
| CPT count for echo | 0 | −0.10 | 0 |
| ICD count for CAD × CPT count for echo | 0 | −0.04 | 0 |
| NLP count of non-smoker × Oncall | 0.09 | 0 | 0 |
| NLP count of CAD × NLP count of possible-smoker | 0 | −0.02 | 0 |

NOTE: A × B denotes the interaction term of variables A and B. A transformation is applied to the count data, and the covariates are normalized.

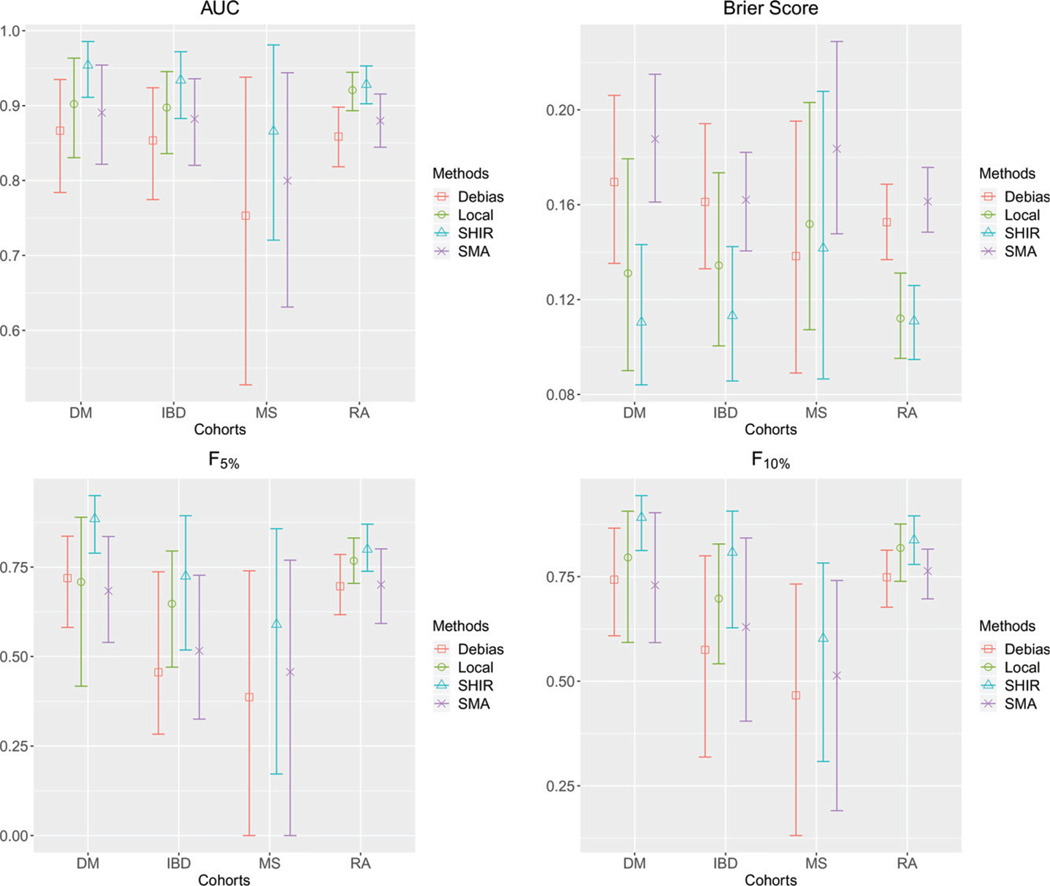

The point estimates of the accuracy measures, along with their 95% bootstrap confidence intervals, are presented in Figure 4. The results suggest that SHIR performs best among all methods on nearly all datasets and across all measures. Among the integrative methods, SMA and the debiasing-based estimator performed much worse than SHIR on all accuracy measures. For example, the AUCs (with 95% confidence intervals) of the CAD algorithm for the RA cohort trained via SHIR, SMA, and the debiasing-based estimator are 0.93 (0.90, 0.95), 0.88 (0.84, 0.92), and 0.86 (0.82, 0.90), respectively. SHIR also performs substantially better than the local estimator. For example, the AUCs of SHIR and Local for the IBD cohort are 0.93 (0.88, 0.97) and 0.90 (0.84, 0.95), respectively. The difference between the integrative procedures and the local estimator is more pronounced for the DM cohort, with an AUC of around 0.95 for SHIR and 0.90 for the local estimator trained using DM data only. The local estimator fails to produce an informative algorithm for the MS cohort due to the small size of its training set. These results again demonstrate the power of borrowing information across studies via integrative analysis.

Figure 4.

The mean and 95% bootstrap confidence interval of the AUC, Brier score, and F-scores of the debiasing-based estimator (Debias), Local, SHIR, and SMA on the validation data from the four studies.

7. Discussion

In this article, we proposed a novel approach, SHIR, for integrative analysis of high-dimensional data under the DataSHIELD framework, where only summary statistics are allowed to be transferred from the local sites to the central node to protect the individual-level data. As demonstrated via both theoretical analyses and numerical studies, the SHIR estimator is considerably more efficient than the estimators obtained from the debiasing-based strategies considered in the literature (Lee et al. 2017; Battey et al. 2018). Moreover, our method accommodates heterogeneity in both the design matrices and the coefficients of the local sites, which is not adequately handled in the ultra high-dimensional regime by the existing literature. Our approach solves the LASSO problem only once at each local site, without requiring an estimate of the inverse Hessian or debiasing. Note that SHIR targets consistent estimation and is not asymptotically unbiased. Consequently, it cannot be directly used for hypothesis testing or confidence interval construction (see, e.g., Caner and Kock 2018a,b). Future work includes developing statistical approaches for such purposes under DataSHIELD, high dimensionality, and heterogeneity. In addition, the sparsistency of our estimator relies on the Irrepresentable Condition (Condition 6), which has been commonly used in the literature (see, e.g., Yuan and Lin 2006; Nardi and Rinaldo 2008), but its rigorous verification for random designs or nonlinear models is technically highly challenging. To achieve variable selection consistency without this condition, one may use nonconcave (group) sparse penalties such as the adaptive group lasso (Wang and Leng 2008) or group bridge (Zhou and Zhu 2010) in our framework.

Regarding the choice of penalty, in this article we focus primarily on the mixture penalty. Nevertheless, other penalty functions, such as the group lasso (Huang and Zhang 2010) and the hierarchical lasso (Zhou and Zhu 2010), can be incorporated into our framework, provided that they effectively leverage certain prior knowledge. Techniques similar to those used for deriving the theoretical results of SHIR with the mixture penalty can be applied to other penalty functions, with some technical details varying according to the choice of penalty. See Section A.4 of the supplementary material for further justification.
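The exact form of the mixture penalty was lost from this copy. Purely as an assumption, the sketch below takes a penalty combining an ℓ1 term and a group ℓ2 term on the same coefficient vector, in the spirit of the sparse group lasso of Friedman, Hastie, and Tibshirani (2010) listed in the references, and shows the corresponding proximal (shrinkage) step used by proximal-gradient solvers. Setting `lam1 = 0` gives the group lasso step and `lam2 = 0` gives the lasso step, which illustrates how other penalties can slot into the same iteration. This is not SHIR's implementation, and the group labels are hypothetical.

```python
import numpy as np

def prox_sparse_group(v, groups, lam1, lam2):
    """Prox of lam1 * ||b||_1 + lam2 * sum_g ||b_g||_2 at v (unit step size)."""
    v = np.asarray(v, dtype=float)
    groups = np.asarray(groups)
    u = np.sign(v) * np.maximum(np.abs(v) - lam1, 0.0)   # l1 step: soft-threshold coordinates
    out = np.zeros_like(u)
    for g in np.unique(groups):
        idx = groups == g
        norm = np.linalg.norm(u[idx])
        if norm > lam2:                                  # group step: shrink the block norm by lam2
            out[idx] = (1.0 - lam2 / norm) * u[idx]
    return out

# Group 0 survives with a shrunken norm; group 1 is zeroed entirely.
print(prox_sparse_group([3.0, -4.0, 0.5, 0.2], [0, 0, 1, 1], lam1=0.5, lam2=1.0))
```

Applying the ℓ1 step before the group step is the known closed form for this combined penalty; it lets whole groups (e.g., all site-specific deviations of one covariate) drop out while still sparsifying within groups.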

For the consistency result in Theorem 1, SHIR requires a sparsity condition. Although this sparsity assumption is already weaker than those in the existing literature (e.g., Battey et al. 2018), as shown in Section 4.4, it may be strong in practical applications. For example, in the EHR application, the sparsity assumption may not hold. Nevertheless, the resulting SHIR algorithm appears to perform well in terms of out-of-sample classification accuracy. On the other hand, it is of interest to explore the possibility of relaxing this assumption. One potential way is to use multiple rounds of communication, as in Fan, Guo, and Wang (2019). A detailed analysis of this approach warrants future research.

Supplementary Material

Funding

The research of Yin Xia was supported in part by NSFC Grants 12022103, 11771094, and 11690013. The research of Tianxi Cai and Molei Liu was partially supported by the Translational Data Science Center for a Learning Health System at Harvard Medical School and Harvard T.H. Chan School of Public Health.

Footnotes

Supplementary Material

In the Supplement, we provide some justifications for Conditions 1 and 6, present detailed proofs of Theorems 1–3, outline theoretical analyses of SHIR for various penalty functions, and present additional simulation results.

Supplementary materials for this article are available online. Please go to www.tandfonline.com/r/JASA.

Commonly used summary statistics include the locally fitted regression coefficient and its Hessian matrix in low-dimensional parametric regression models (see, e.g., Duan et al. 2019, 2020).

References

- Akaike H. (1974), “A New Look at the Statistical Model Identification,” IEEE Transactions on Automatic Control, 19, 716–723.

- Battey H, Fan J, Liu H, Lu J, and Zhu Z. (2018), “Distributed Testing and Estimation Under Sparse High Dimensional Models,” The Annals of Statistics, 46, 1352–1382.

- Bhat HS, and Kumar N. (2010), On the Derivation of the Bayesian Information Criterion, Los Angeles: School of Natural Sciences, University of California.

- Bühlmann P, and Van De Geer S. (2011), Statistics for High-dimensional Data: Methods, Theory and Applications, Springer Science & Business Media.

- Caner M, and Kock AB (2018a), “Asymptotically Honest Confidence Regions for High Dimensional Parameters by the Desparsified Conservative Lasso,” Journal of Econometrics, 203, 143–168.

- Caner M, and Kock AB (2018b), “High Dimensional Linear GMM,” arXiv preprint arXiv:1811.08779.

- Chen X, and Xie M-g. (2014), “A Split-and-Conquer Approach for Analysis of Extraordinarily Large Data,” Statistica Sinica, 24, 1655–1684.

- Chen Y, Dong G, Han J, Pei J, Wah BW, and Wang J. (2006), “Regression Cubes With Lossless Compression and Aggregation,” IEEE Transactions on Knowledge and Data Engineering, 18, 1585–1599.

- Cheng X, Lu W, and Liu M. (2015), “Identification of Homogeneous and Heterogeneous Variables in Pooled Cohort Studies,” Biometrics, 71, 397–403.

- Doiron D, Burton P, Marcon Y, Gaye A, Wolffenbuttel BH, Perola M, Stolk RP, Foco L, Minelli C, Waldenberger M, Holle R, Kvaløy K, Hillege HL, Tassé A-M, Ferretti V, and Fortier I. (2013), “Data Harmonization and Federated Analysis of Population-based Studies: The BioSHaRE Project,” Emerging Themes in Epidemiology, 10, 12.

- Duan R, Boland MR, Liu Z, Liu Y, Chang HH, Xu H, Chu H, Schmid CH, Forrest CB, Holmes JH, Schuemie MJ, Berlin JA, Moore JH, and Chen Y. (2020), “Learning From Electronic Health Records Across Multiple Sites: A Communication-efficient and Privacy-preserving Distributed Algorithm,” Journal of the American Medical Informatics Association, 27, 376–385.

- Duan R, Boland MR, Moore JH, and Chen Y. (2019), “ODAL: A One-shot Distributed Algorithm to Perform Logistic Regressions on Electronic Health Records Data From Multiple Clinical Sites,” in Pacific Symposium on Biocomputing (PSB), eds. Altman RB, Dunker AK, Hunter L, Ritchie MD, Murray T, and Klein TE, Kohala Coast, Hawaii, USA: World Scientific Publishing, pp. 30–41.

- Fan J, Guo Y, and Wang K. (2019), “Communication-efficient Accurate Statistical Estimation,” arXiv preprint arXiv:1906.04870.

- Fan J, and Lv J. (2008), “Sure Independence Screening for Ultrahigh Dimensional Feature Space,” Journal of the Royal Statistical Society, Series B, 70, 849–911.

- Foster DP, and George EI (1994), “The Risk Inflation Criterion for Multiple Regression,” The Annals of Statistics, 1947–1975.

- Friedman J, Hastie T, and Tibshirani R. (2010), “A Note on the Group Lasso and a Sparse Group Lasso,” arXiv preprint arXiv:1001.0736.

- Gaye A, Marcon Y, Isaeva J, LaFlamme P, Turner A, Jones EM, Minion J, Boyd AW, Newby CJ, Nuotio M-L, Wilson R, Butters O, Murtagh B, Demir I, Doiron D, Giepmans L, Wallace SE, Budin-Ljøsne I, Oliver Schmidt C, Boffetta P, Boniol M, Bota M, Carter KW, de Klerk N, Dibben C, Francis RW, Hiekkalinna T, Hveem K, Kvaløy K, Millar S, Perry IJ, Peters A, Phillips CM, Popham F, Raab G, Reischl E, Sheehan N, Waldenberger M, Perola M, van den Heuvel E, Macleod J, Knoppers BM, Stolk RP, Fortier I, Harris JR, Wolffenbuttel BH, Murtagh MJ, Ferretti V, and Burton PR (2014), “DataSHIELD: Taking the Analysis to the Data, not the Data to the Analysis,” International Journal of Epidemiology, 43, 1929–1944.

- Han J, and Liu Q. (2016), “Bootstrap Model Aggregation for Distributed Statistical Learning,” in Advances in Neural Information Processing Systems, eds. Lee D, Sugiyama M, Luxburg U, Guyon I, and Garnett R, San Diego, CA, USA: Curran Associates, Inc., pp. 1795–1803.

- He Q, Zhang HH, Avery CL, and Lin D. (2016), “Sparse Meta-analysis With High-dimensional Data,” Biostatistics, 17, 205–220.

- Huang C, and Huo X. (2015), “A Distributed One-step Estimator,” arXiv preprint arXiv:1511.01443.

- Huang J, and Zhang T. (2010), “The Benefit of Group Sparsity,” The Annals of Statistics, 38, 1978–2004.

- Janková J, and Van De Geer S. (2016), “Confidence Regions for High-dimensional Generalized Linear Models Under Sparsity,” arXiv preprint arXiv:1610.01353.

- Javanmard A, and Montanari A. (2014), “Confidence Intervals and Hypothesis Testing for High-dimensional Regression,” The Journal of Machine Learning Research, 15, 2869–2909.

- Jones E, Sheehan N, Masca N, Wallace S, Murtagh M, and Burton P. (2012), “DataSHIELD–shared Individual-level Analysis Without Sharing the Data: A Biostatistical Perspective,” Norsk Epidemiologi, 21.

- Jordan MI, Lee JD, and Yang Y. (2019), “Communication-efficient Distributed Statistical Inference,” Journal of the American Statistical Association, 114, 668–681.

- Kho AN, Pacheco JA, Peissig PL, Rasmussen L, Newton KM, Weston N, Crane PK, Pathak J, Chute CG, Bielinski SJ, Kullo IJ, Li R, Manolio TA, Chisholm RL, and Denny JC (2011), “Electronic Medical Records for Genetic Research: Results of the eMERGE Consortium,” Science Translational Medicine, 3, 79re1.

- Kim Y, Kwon S, and Choi H. (2012), “Consistent Model Selection Criteria on High Dimensions,” Journal of Machine Learning Research, 13, 1037–1057.

- Lee JD, Liu Q, Sun Y, and Taylor JE (2017), “Communication-efficient Sparse Regression,” Journal of Machine Learning Research, 18, 1–30.

- Li W, Liu H, Yang P, and Xie W. (2016), “Supporting Regularized Logistic Regression Privately and Efficiently,” PLoS One, 11, e0156479.

- Liao KP, Ananthakrishnan AN, Kumar V, Xia Z, Cagan A, Gainer VS, Goryachev S, Chen P, Savova GK, Agniel D, et al. (2015), “Methods to Develop an Electronic Medical Record Phenotype Algorithm to Compare the Risk of Coronary Artery Disease Across 3 Chronic Disease Cohorts,” PLoS One, 10, e0136651.

- Liu M, Xia Y, Cai T, and Cho K. (2020), “Integrative High Dimensional Multiple Testing With Heterogeneity Under Data Sharing Constraints,” arXiv preprint arXiv:2004.00816.

- Liu Q, and Ihler AT (2014), “Distributed Estimation, Information Loss and Exponential Families,” in Advances in Neural Information Processing Systems, pp. 1098–1106.

- Lounici K, Pontil M, Van De Geer S, and Tsybakov AB (2011), “Oracle Inequalities and Optimal Inference Under Group Sparsity,” The Annals of Statistics, 39, 2164–2204.

- Lu C-L, Wang S, Ji Z, Wu Y, Xiong L, Jiang X, and Ohno-Machado L. (2015), “WebDISCO: A Web Service for Distributed Cox Model Learning Without Patient-level Data Sharing,” Journal of the American Medical Informatics Association, 22, 1212–1219.

- Maity S, Sun Y, and Banerjee M. (2019), “Communication-efficient Integrative Regression in High-dimensions,” arXiv preprint arXiv:1912.11928.

- Minsker S. (2019), “Distributed Statistical Estimation and Rates of Convergence in Normal Approximation,” Electronic Journal of Statistics, 13, 5213–5252.

- Nardi Y, and Rinaldo A. (2008), “On the Asymptotic Properties of the Group Lasso Estimator for Linear Models,” Electronic Journal of Statistics, 2, 605–633.

- Negahban SN, Ravikumar P, Wainwright MJ, and Yu B. (2012), “A Unified Framework for High-dimensional Analysis of M-estimators With Decomposable Regularizers,” Statistical Science, 27, 538–557.

- Raskutti G, Wainwright MJ, and Yu B. (2011), “Minimax Rates of Estimation for High-dimensional Linear Regression Over ℓq-Balls,” IEEE Transactions on Information Theory, 57, 6976–6994.

- Rivasplata O. (2012), “Subgaussian Random Variables: An Expository Note,” Internet publication, PDF.

- Tang L, Zhou L, and Song PX-K (2016), “Method of Divide-and-combine in Regularized Generalized Linear Models for Big Data,” arXiv preprint arXiv:1611.06208.

- Vaiter S, Deledalle C, Peyré G, Fadili J, and Dossal C. (2012), “The Degrees of Freedom of the Group Lasso,” arXiv preprint arXiv:1205.1481.

- Van de Geer S, Bühlmann P, Ritov Y, and Dezeure R. (2014), “On Asymptotically Optimal Confidence Regions and Tests for High-dimensional Models,” The Annals of Statistics, 42, 1166–1202.

- Van de Geer SA (2008), “High-dimensional Generalized Linear Models and the Lasso,” The Annals of Statistics, 36, 614–645.

- Vershynin R. (2018), High-dimensional Probability: An Introduction With Applications in Data Science, Vol. 47, Cambridge, UK: Cambridge University Press.

- Wang H, and Leng C. (2007), “Unified Lasso Estimation by Least Squares Approximation,” Journal of the American Statistical Association, 102, 1039–1048.

- Wang H, and Leng C. (2008), “A Note on Adaptive Group Lasso,” Computational Statistics & Data Analysis, 52, 5277–5286.

- Wang H, Li B, and Leng C. (2009), “Shrinkage Tuning Parameter Selection With a Diverging Number of Parameters,” Journal of the Royal Statistical Society, Series B, 71, 671–683.

- Wang J, Kolar M, Srebro N, and Zhang T. (2017), “Efficient Distributed Learning With Sparsity,” in Proceedings of the 34th International Conference on Machine Learning, Vol. 70, JMLR.org, pp. 3636–3645.

- Wang X, Peng P, and Dunson DB (2014), “Median Selection Subset Aggregation for Parallel Inference,” in Advances in Neural Information Processing Systems, pp. 2195–2203.

- Wolfson M, Wallace SE, Masca N, Rowe G, Sheehan NA, Ferretti V, LaFlamme P, Tobin MD, Macleod J, Little J, Fortier I, Knoppers BM, and Burton PR (2010), “DataSHIELD: Resolving a Conflict in Contemporary Bioscience – Performing a Pooled Analysis of Individual-level Data Without Sharing the Data,” International Journal of Epidemiology, 39, 1372–1382.

- Wu Y, Jiang X, Kim J, and Ohno-Machado L. (2012), “Grid Binary Logistic Regression (GLORE): Building Shared Models Without Sharing Data,” Journal of the American Medical Informatics Association, 19, 758–764.

- Yu S, Liao KP, Shaw SY, Gainer VS, Churchill SE, Szolovits P, Murphy SN, Kohane IS, and Cai T. (2015), “Toward High-throughput Phenotyping: Unbiased Automated Feature Extraction and Selection From Knowledge Sources,” Journal of the American Medical Informatics Association, 22, 993–1000.

- Yuan M, and Lin Y. (2006), “Model Selection and Estimation in Regression With Grouped Variables,” Journal of the Royal Statistical Society, Series B, 68, 49–67.

- Zeng QT, Goryachev S, Weiss S, Sordo M, Murphy SN, and Lazarus R. (2006), “Extracting Principal Diagnosis, Co-morbidity and Smoking Status for Asthma Research: Evaluation of a Natural Language Processing System,” BMC Medical Informatics and Decision Making, 6, Article no. 30.

- Zhang C-H, and Zhang SS (2014), “Confidence Intervals for Low Dimensional Parameters in High Dimensional Linear Models,” Journal of the Royal Statistical Society, Series B, 76, 217–242.

- Zhang Y, Li R, and Tsai C-L (2010), “Regularization Parameter Selections Via Generalized Information Criterion,” Journal of the American Statistical Association, 105, 312–323.

- Zhao P, and Yu B. (2006), “On Model Selection Consistency of Lasso,” Journal of Machine Learning Research, 7, 2541–2563.

- Zhou N, and Zhu J. (2010), “Group Variable Selection Via a Hierarchical Lasso and Its Oracle Property,” arXiv preprint arXiv:1006.2871.

- Zöller D, Lenz S, and Binder H. (2018), “Distributed Multivariable Modeling for Signature Development Under Data Protection Constraints,” arXiv preprint arXiv:1803.00422.
