Abstract

Proteomics is the large-scale study of protein structure and function in biological systems through protein identification and quantification. “Shotgun proteomics” or “bottom-up proteomics” is the prevailing strategy, in which proteins are hydrolyzed into peptides that are analyzed by mass spectrometry. Proteomics can be applied to diverse studies ranging from simple protein identification to studies of proteoforms, protein-protein interactions, protein structural alterations, absolute and relative protein quantification, post-translational modifications, and protein stability. To enable this range of experiments, there are diverse strategies for proteome analysis. The nuances of how proteomic workflows differ may be challenging for new practitioners to understand. Here, we provide a comprehensive overview of different proteomics methods to aid both novice and experienced researchers. We cover topics from biochemistry basics and protein extraction to biological interpretation and orthogonal validation. We expect this work to serve as a basic resource for new practitioners in the field of shotgun or bottom-up proteomics.

Introduction

Proteomics is the large-scale study of protein structure and function. Proteins are translated from messenger RNA (mRNA) transcripts that are transcribed from the complementary DNA-based genome. Although the genome encodes potential cellular functions and states, the study of proteins in all their forms is necessary to truly understand biology.

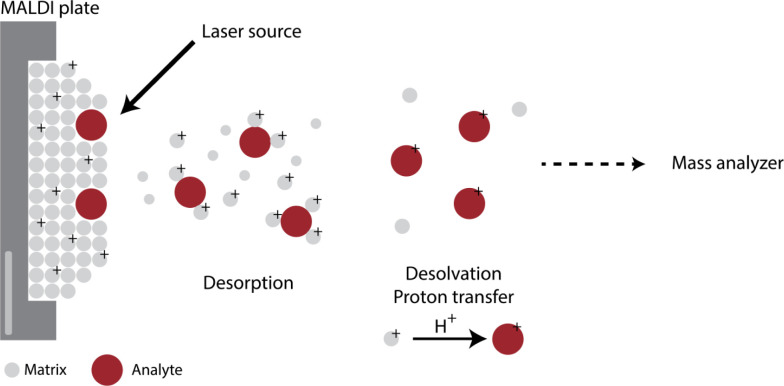

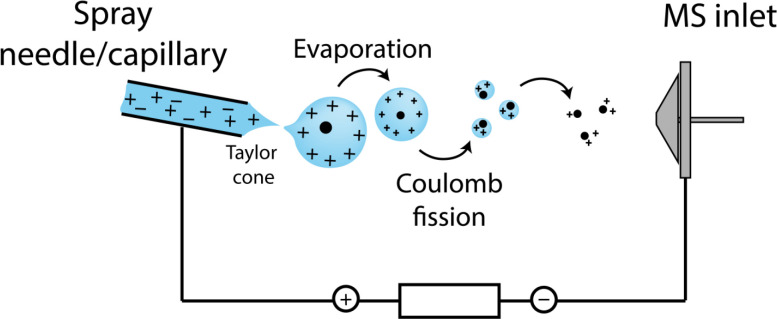

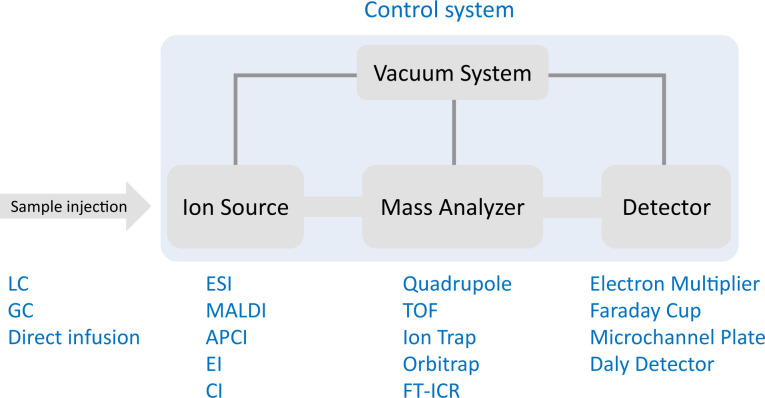

Currently, proteomics can be performed with various methods. Mass spectrometry has emerged within the past few decades as the premier tool for comprehensive proteome analysis. The ability of mass spectrometry (MS) to detect charged chemicals enables the identification of peptide sequences and modifications for diverse biological investigations. Alternative (commercial) methods based on affinity interactions of antibodies or DNA aptamers have been developed, namely Olink and SomaScan. There are also nascent methods that are either recently commercialized or still under development and not yet applicable to whole proteomes, such as motif scanning using antibodies, variants of N-terminal degradation, and nanopores [1,2,3,4]. Another approach uses parallel immobilization of peptides with total internal reflection microscopy and sequential Edman degradation [5]. However, by far the most common method for proteomics is based on mass spectrometry coupled to liquid chromatography (LC).

Modern proteomics had its roots in the early 1980s with the analysis of peptides by mass spectrometry and low-efficiency ion sources, but started ramping up around the year 1990 with the introduction of soft ionization methods that enabled, for the first time, efficient transfer of large biomolecules into the gas phase without destroying them [6,7]. Shortly afterward, the first computer algorithm for matching peptides to a database was introduced [8]. Another major milestone, which allowed identification of over 1,000 proteins, was the improvement of chromatography upstream of MS analysis [9]. As the volume of data exploded, methods for statistical analysis transitioned from the wild west of ad hoc empirical analysis to modern informatics based on statistical models [10] and false discovery rate [11].

Two strategies of mass spectrometry-based proteomics differ fundamentally by whether proteins are analyzed as a whole chain or cleaved into peptides before analysis: “top-down” versus “bottom-up”. Bottom-up proteomics (also referred to as shotgun proteomics) is defined by the intentional hydrolysis of proteins into peptide pieces using enzymes called proteases [12]. Therefore, bottom-up proteomics does not actually measure proteins, but instead infers protein presence and abundance from identified peptides [10]. Sometimes, proteins are inferred from only one peptide sequence representing a small fraction of the total protein sequence predicted from the genome. In contrast, top-down proteomics attempts to measure intact proteins [13,14,15,16]. The potential benefit of top-down proteomics is the ability to measure the many varied proteoforms [14,17,18]. However, due to myriad analytical challenges, the depth of protein coverage that is achievable by top-down proteomics is considerably less than that of bottom-up proteomics [19].

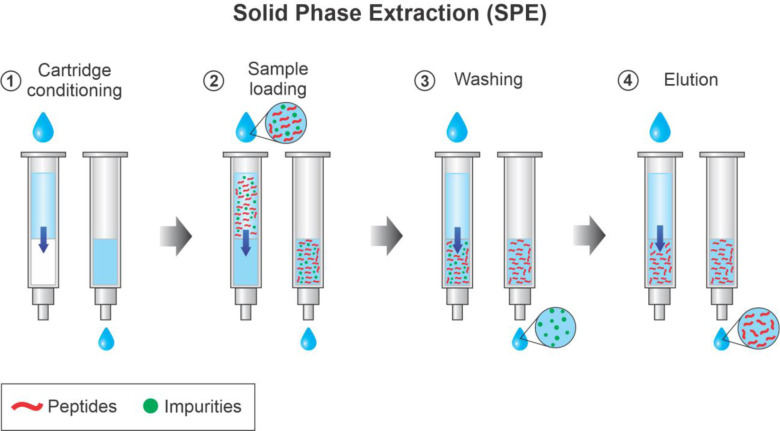

In this tutorial we focus on the bottom-up proteomics workflow. The most common version of this workflow is generally composed of the following steps. First, proteins in a biological sample must be extracted. Usually this is achieved by mechanically lysing cells or tissue while denaturing and solubilizing the proteins and disrupting DNA to minimize interference in analysis procedures. Next, proteins are hydrolyzed into peptides, most often using the protease trypsin, which generates peptides with basic C-terminal amino acids (arginine and lysine) to aid in fragment ion series production during tandem mass spectrometry (MS/MS). Peptides can also be generated by chemical reactions that induce residue-specific hydrolysis, such as treatment with cyanogen bromide, which cleaves after methionine. Peptides from proteome hydrolysis must be purified; this is often accomplished with reversed-phase liquid chromatography (RPLC) cartridges or tips to remove interfering molecules in the sample such as salts and buffers. The peptides are then almost always separated by reversed-phase LC before they are ionized and introduced into a mass spectrometer, although recent reports also describe LC-free proteomics by direct infusion [20,21,22]. The mass spectrometer then collects precursor and fragment ion data from those peptides. Peptides must be identified from the tandem mass spectra, protein groups are inferred from a proteome database, and then quantitative values are assigned. Changes in protein abundances across conditions are determined with statistical tests, and results must be interpreted in the context of the relevant biology. Data interpretation is the rate-limiting step; data collected in less than one week can take months or years to understand.
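To make the proteolysis step concrete, the following is a minimal sketch of an in silico tryptic digest. It assumes cleavage C-terminal to lysine (K) and arginine (R), and it additionally assumes the widely used convention that trypsin does not cleave when the next residue is proline, which is not stated in the text above. The function names, the `missed_cleavages` parameter, and the example sequence are hypothetical and chosen only for illustration.

```python
def cleavage_pieces(sequence):
    """Split a protein sequence after K or R, unless the next residue is P.

    The proline exception is an assumed convention, not taken from the text.
    """
    pieces, start = [], 0
    for i, residue in enumerate(sequence):
        next_residue = sequence[i + 1] if i + 1 < len(sequence) else ""
        if residue in "KR" and next_residue != "P":
            pieces.append(sequence[start:i + 1])
            start = i + 1
    if start < len(sequence):
        # Trailing piece: the protein C-terminus need not end in K or R.
        pieces.append(sequence[start:])
    return pieces


def tryptic_digest(sequence, missed_cleavages=0, min_length=1):
    """Return peptides allowing up to `missed_cleavages` skipped sites."""
    pieces = cleavage_pieces(sequence)
    peptides = []
    for i in range(len(pieces)):
        last = min(i + missed_cleavages, len(pieces) - 1)
        for j in range(i, last + 1):
            peptide = "".join(pieces[i:j + 1])
            if len(peptide) >= min_length:
                peptides.append(peptide)
    return peptides


# Hypothetical toy sequence; the R at position 6 is followed by P and is skipped.
print(tryptic_digest("AAKCCRPDDKEE", missed_cleavages=1))
```

Real search engines apply further constraints (peptide length and mass windows, variable modifications), so this sketch only illustrates why most tryptic peptides end in K or R.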

There are many variations to this workflow. The diversity of experimental goals that are achievable with proteomics technology drives an expansive array of workflows. Every choice is important as every choice will affect the results, from instrument procurement to choice of data processing software and everything in between. In this tutorial, we detail all the required steps to serve as a comprehensive overview for new proteomics practitioners.

1. Biochemistry Basics

Proteins

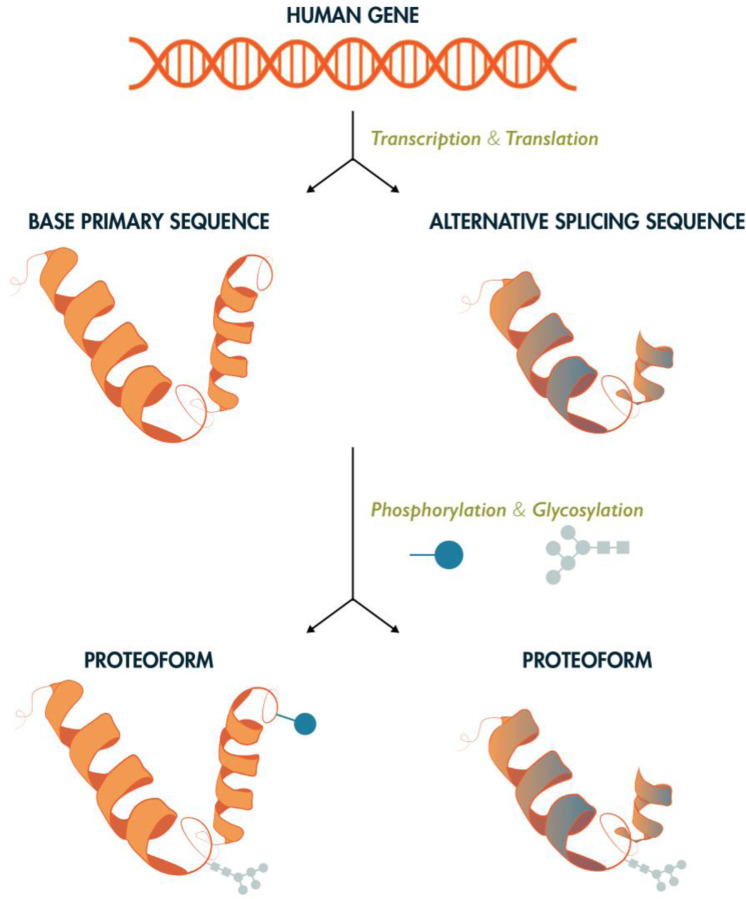

Proteins are large biomolecules or biopolymers made up of a backbone of amino acids linked by peptide bonds. They perform various functions in living organisms ranging from structural roles to functional involvement in cellular signaling and the catalysis of chemical reactions (enzymes). Proteins are made up of 20 different amino acids (not counting pyrrolysine, hydroxyproline, and selenocysteine, which only occur in specific organisms) and their sequence is encoded in their corresponding genes. The human genome encodes approximately 19,778 predicted canonical proteins (see www.neXtProt.org) [23]. Each protein is present at a different abundance depending on the cell type or bodily fluid. Previous studies have shown that protein concentrations can span at least seven orders of magnitude, up to 20,000,000 copies per cell, and that their distribution is tissue-specific [24,25]. In human blood, protein abundances can span more than ten orders of magnitude, while a few proteins make up most of the protein by weight, making blood and plasma among the most challenging matrices to analyze by mass spectrometry. Due to genetic variation, alternative splicing, and co- and post-translational modifications (PTMs), multiple different proteoforms can be produced from a single gene (Figure 1) [14,26].

Figure 1: Proteome Complexity.

Each gene may be expressed in the form of multiple protein products, or proteoforms, through alternative splicing and incorporation of post-translational modifications. As such, there are many more unique proteoforms than genes. While there exist 20,000 – 23,000 coding genes in the human genome, upwards of 1,000,000 unique human proteoforms may exist. The study of the structure, function, and spatial and temporal regulation of these proteins is the subject of mass spectrometry-based proteomics.

PTMs

After protein biosynthesis, enzymatic and nonenzymatic processes change the protein sequence through proteolysis or covalent chemical modification of amino acid side chains. Post-translational modifications (PTMs) are important biological regulators contributing to the diversity and function of the cellular proteome. Proteins can be post-translationally modified through enzymatic and non-enzymatic reactions in vivo and in vitro [27]. PTMs can be reversible or irreversible, and they change protein function in multiple ways, for example by altering substrate–enzyme interactions, subcellular localization or protein-protein interactions [28,29].

More than 400 biological PTMs have been discovered in both prokaryotic and eukaryotic cells. These modifications are crucial in controlling protein functions and signal transduction pathways [30]. There are also many chemical artifact PTMs that occur during sample preparation, such as carbamylation. The most commonly studied and biologically relevant post-translational modifications include phosphorylation (Ser, Thr, Tyr, His), glycosylation (Arg, Asp, Cys, Ser, Thr, Tyr, Trp), disulfide bonds (Cys-Cys), ubiquitination (Lys, Cys, Ser, Thr, N-term), succinylation (Lys), methylation (Arg, Lys, His, Glu, Asn, Cys), oxidation (especially Met, Trp, His, Cys), acetylation (Lys, N-term), and lipidations [31].

PTMs can alter a protein's function, activity, structure, spatiotemporal status, and interaction with other proteins or small molecules. For example, phosphorylation alters signal transduction pathways and gene expression control [32] and regulates apoptosis [33,34]. Ubiquitination generally regulates protein degradation [35], SUMOylation regulates chromatin structure, DNA repair, transcription, and cell-cycle progression [36,37], and palmitoylation regulates the maintenance of the structural organization of exosome-like extracellular vesicle membranes [38]. Glycosylation is a ubiquitous modification that regulates various T cell functions, such as cellular migration, T cell receptor signaling, cell survival, and apoptosis [39,40]. Deregulation of PTMs is linked to cellular stress and diseases [41].

Several non-MS methods exist to study PTMs, including in vitro PTM reaction tests with colorimetric assays, radioactive isotope-labeled substrates, western blot with PTM-specific antibodies and superbinders, and peptide and protein arrays [42,43,44]. While effective, these approaches have many limitations, such as inefficiency and difficulty in producing pan-specific antibodies. MS-based proteomics approaches are currently the predominant tool for identifying and quantifying changes in PTMs.

Protein Structure

Almost all proteins (except for intrinsically disordered proteins [45]) fold into three-dimensional (3D) structures either by themselves or assisted by molecular chaperones [46]. There are four levels of structure relevant to the folding of any protein:

Primary structure: The protein’s linear amino acid sequence, with amino acids connected through peptide bonds.

Secondary structure: The amino acid chain’s folding: α-helix, β-sheet or turn.

Tertiary structure: The three-dimensional structure of the protein.

Quaternary structure: The structure of several protein molecules/subunits in one complex.

Of recent note, the development of AlphaFold has enabled high-accuracy three-dimensional structure prediction for all human proteins and for proteins of many other species. This enables a more thorough study of protein folding and is used to predict the relationship between fold and function [47,48].

2. Types of Experiments

A wide range of questions are addressable with proteomics technology, which translates to a wide range of variations of proteomics workflows. In some workflows, the identification of proteins in a given sample is desired. For other experiments, the quantification of as many proteins as possible is essential for the success of the study. Therefore, proteomic experiments can be both qualitative and quantitative. The following sections give an overview of several common proteomics experiments.

Protein abundance changes

A common experiment is a discovery-based, unbiased mapping of proteins along with detection of changes in their abundance across sample groups. This is achieved using methods such as label-free quantification (LFQ) or tandem mass tags (TMT), which are described in more detail in subsequent sections. In these experiments, data should be collected from at least three biological replicates of each condition to estimate the variance of measuring each protein. Depending on the experiment design, different statistical tests are used to calculate changes in measured protein abundances between groups. If there are only two groups, the quantities might be compared with a t-test or with a Wilcoxon rank-sum (Mann-Whitney U) test; the latter is a non-parametric alternative to the t-test for independent groups (the Wilcoxon signed-rank test is the corresponding choice for paired samples). If there are more than two sample groups, then Analysis of Variance (ANOVA) is used instead. With either testing scheme, the p-values must be corrected for multiple testing, because one test is performed per protein. A common method for p-value correction is the Benjamini-Hochberg method [49]. These types of experiments have revealed wide ranges of proteomic remodeling from various biological systems.
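As an illustration of the multiple-testing correction mentioned above, here is a minimal pure-Python sketch of the Benjamini-Hochberg adjustment. The function name and the example p-values are hypothetical; in practice an established statistics package would normally be used, and this sketch is meant only to show the arithmetic.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the input order.

    Each p-value is multiplied by m / rank (m = number of tests, rank = its
    position when sorted ascending), then monotonicity is enforced by taking
    a running minimum from the largest p-value downward.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):
        index = order[rank - 1]
        running_min = min(running_min, p_values[index] * m / rank)
        adjusted[index] = running_min
    return adjusted


# Hypothetical per-protein p-values from four two-group comparisons.
raw = [0.01, 0.04, 0.03, 0.005]
for p, q in zip(raw, benjamini_hochberg(raw)):
    print(f"p = {p:.3f}  adjusted = {q:.3f}")
```

Proteins whose adjusted value falls below a chosen threshold (for example 0.05) would be reported as changing, with that threshold controlling the expected false discovery rate.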

PTMs

Proteins may become decorated with various chemical modifications during or after translation [31], or may be altered through proteolytic cleavage such as N-terminal methionine removal [50]. Several proteomics methods are available to detect and quantify each specific type of modification. See also the section on Protein/Peptide Enrichment and Depletion. The website www.unimod.org is an excellent curated and freely accessible database that lists potential modifications, their sites of attachment, and their mass differences.
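Because modifications are detected as mass differences, a short sketch of how a modification shifts peptide mass and m/z may help. The residue masses and modification deltas below are commonly tabulated monoisotopic values entered by hand for illustration; they should be verified against a curated source such as Unimod before real use. The function names and the example peptide are hypothetical.

```python
# Monoisotopic residue masses in Da (illustrative table; verify before use).
RESIDUE_MASS = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276,
    "V": 99.06841, "T": 101.04768, "C": 103.00919, "L": 113.08406,
    "I": 113.08406, "N": 114.04293, "D": 115.02694, "Q": 128.05858,
    "K": 128.09496, "E": 129.04259, "M": 131.04049, "H": 137.05891,
    "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}
WATER = 18.010565   # added once per peptide for the free N- and C-termini
PROTON = 1.007276   # mass of the charge carrier in positive-ion mode

# Monoisotopic mass shifts in Da for a few common modifications.
MOD_DELTA = {
    "Phospho": 79.966331,
    "Oxidation": 15.994915,
    "Acetyl": 42.010565,
}


def peptide_mass(sequence, mods=()):
    """Neutral monoisotopic mass of a peptide plus any listed modifications."""
    mass = sum(RESIDUE_MASS[residue] for residue in sequence) + WATER
    return mass + sum(MOD_DELTA[name] for name in mods)


def mass_to_charge(mass, charge):
    """m/z of the protonated ion at the given positive charge state."""
    return (mass + charge * PROTON) / charge


unmodified = peptide_mass("PEPTIDE")
phospho = peptide_mass("PEPTIDE", mods=["Phospho"])
print(f"unmodified: {unmodified:.4f} Da, 2+ m/z {mass_to_charge(unmodified, 2):.4f}")
print(f"phospho:    {phospho:.4f} Da, 2+ m/z {mass_to_charge(phospho, 2):.4f}")
```

Note that the observed m/z shift is the modification mass divided by the charge state, which is why the same modification appears as a different spacing at different charges.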

Phosphoproteomics

Phosphoproteomics is the study of protein phosphorylation, wherein a phosphate group is covalently attached to a protein side chain (most commonly serine, threonine, or tyrosine). Although western blotting can measure one phosphorylation site at a time, mass spectrometry-based proteomics can measure thousands of sites from a sample at the same time. After proteolysis of the proteome, phosphopeptides need to be enriched to be detected by mass spectrometry. Various methods of enrichment have been developed [51,52,53,54]. See the Peptide/Protein Enrichment and Depletion section for more details. A key challenge of phosphoproteomics is sensitivity. It is important to ensure that there is a sufficient amount of protein before starting a phosphoproteomics project, as typical enrichment workflows may extract only ~1% of the proteome. Many phosphoproteomics workflows start with at least 1 mg of total protein per sample. Newer, more sensitive instrumentation is enabling detection of protein phosphosites from much less material, down to the nanogram level of peptide loading on the LC-MS system. Despite advancement in phosphoproteomics technology, the following challenges still exist: limited sample amounts, highly complex samples, and wide dynamic range [55]. Additionally, phosphoproteomic analysis is often time-consuming and requires expensive reagents and equipment, such as enrichment kits.

Glycoproteomics

Glycosylation is gaining interest due to its ubiquity and emerging functional roles. Protein glycosylation sites can be N-linked (asparagine-linked) or O-linked (serine/threonine-linked). Understanding the function of protein glycosylation will help us understand numerous biological processes since this is a universal protein modification across all domains of life, especially at the cell surface [56,57,58,59].

Studies of phosphorylation and glycosylation share several experimental pipeline steps, including sample preparation. Protein clean-up approaches for glycoproteomics may differ from other proteomics experiments because glycopeptides are more hydrophilic than most peptides. Some approaches mentioned in the literature include filter-aided sample preparation (FASP), suspension traps (S-traps), and protein aggregation capture (PAC) [56,60,61,62,63,64,65]. Multiple proteases and glycosidases may be used to increase the sequence coverage and detect more modification sites, such as trypsin, chymotrypsin, pepsin, WaLP/MaLP [66], GluC, AspN, Pronase, Proteinase K, OgpA, StcEz, BT4244, AM0627, AM1514, AM0608, Pic, ZmpC, CpaA, IMPa, PNGase F, Endo F, Endo H, and OglyZOR [56]. Mass spectrometry has improved over the past decade, and now many strategies are available for glycoprotein structure elucidation and glycosylation site quantification [56]. See also the section on “AminoxyTMT Isobaric Mass Tags” as an example quantitative glycoproteomics method.

Structural techniques

Several proteomics methods have been developed to reveal protein structure information for simple and complex systems.

Cross-linking mass spectrometry (XL-MS)

XL-MS is an emerging technology in the field of proteomics. It can be used to determine changes in protein-protein interactions and/or protein structure. XL-MS covalently locks interacting proteins together to preserve interactions and proximity during MS analysis. XL-MS is different from traditional MS in that it requires the identification of chimeric MS/MS spectra from cross-linked peptides [67,68].

The common steps in an XL-MS workflow are as follows [69]:

1. Generate a system with protein-protein interactions of interest (in vitro or in vivo [70])

2. Add a cross-linking reagent to covalently connect adjacent protein regions (such as disuccinimidyl sulfoxide, DSSO) [68]

3. Perform proteolysis to produce peptides

4. Collect MS/MS data

5. Identify cross-linked peptide pairs using specialized software (e.g., pLink [71], Kojak [72,73], xQuest [74], XlinkX [75])

6. Generate cross-link maps for structural modeling and visualization [76,77]

7. (Optional) Use detected cross-links for protein-protein docking [78]

Hydrogen deuterium exchange mass spectrometry (HDX-MS)

HDX-MS works by detecting changes in peptide mass due to exchange of amide hydrogens of the protein backbone with deuterium from D2O [79]. The exchange rate depends on the protein solvent-accessible surface area, dynamics, and the properties of the amino acid sequence [79,80,81,82]. Although using D2O to make deuterium-labeled samples is simple, HDX-MS requires several controls to ensure that experimental conditions capture the dynamics of interest [79,83,84,85]. If the peptide dissociation process is tuned appropriately, residue-level quantification of changes in solvent accessibility is possible within a measured peptide [86]. HDX can produce precise protein structure measurements with high reproducibility. Masson et al. gave recommendations on how to prepare samples, conduct data analysis, and present findings in a detailed stepwise manner [79].
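The mass-shift readout described above can be sketched as a simple calculation. The example below estimates deuterium uptake as the difference between labeled and unlabeled peptide centroid masses, and normalizes it by the number of exchangeable backbone amides. It assumes the simple counting convention of one amide per residue except the first residue and prolines; some analyses exclude additional N-terminal residues, and no back-exchange correction is applied. All names and numbers are hypothetical.

```python
def exchangeable_amides(peptide):
    """Count backbone amide hydrogens under a simple assumed convention.

    The first residue carries the free N-terminus rather than an amide, and
    proline has no backbone amide hydrogen.
    """
    return sum(1 for residue in peptide[1:] if residue != "P")


def deuterium_uptake(labeled_centroid, unlabeled_centroid):
    """Mass increase in Da; each exchanged hydrogen adds roughly 1 Da."""
    return labeled_centroid - unlabeled_centroid


def fractional_uptake(peptide, labeled_centroid, unlabeled_centroid):
    """Uptake divided by the number of exchangeable amides (0.0 if none)."""
    sites = exchangeable_amides(peptide)
    if sites == 0:
        return 0.0
    return deuterium_uptake(labeled_centroid, unlabeled_centroid) / sites


# Hypothetical centroid masses for one peptide in two protein states.
peptide = "PEPTIDE"
apo = fractional_uptake(peptide, 802.36, 799.86)
bound = fractional_uptake(peptide, 800.86, 799.86)
print(f"apo: {apo:.2f}, ligand-bound: {bound:.2f}")
```

A lower fractional uptake in one state would be interpreted as protection of that region from exchange, subject to the controls discussed in the text.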

Radical Footprinting

This technique uses hydroxyl radical footprinting and MS to elucidate protein structures, assembly, and interactions within a large macromolecule [87,88]. In addition to proteomics applications, various approaches to generate hydroxyl radicals have also been applied in footprinting studies of nucleic acid/ligand interactions [89,90,91]. For a more detailed treatment of this topic, see [92].

There are several methods of producing radicals for protein footprinting:

Fast photochemical oxidation of proteins (FPOP) [100]

FPOP is an example of a radical footprinting method. It is a laser-based hydroxyl radical protein footprinting MS method that relies on the irreversible labeling of solvent-exposed amino acid side chains by hydroxyl radicals to probe protein structure. A laser produces 248 nm light that causes hydrogen peroxide to break into a pair of hydroxyl radicals [99,101]. The flow rate of solution through the capillary and the laser frequency are adjusted such that each protein molecule is irradiated only once. After irradiation, the sample is collected in a tube that contains catalase and free methionine in the buffer, which quench the H2O2 and hydroxyl radicals and prevent secondary modification of residues that become exposed due to unfolding after the initial labeling. Control samples are made by running the sample through the flow system without any irradiation. Another experimental control involves the addition of a radical scavenger to tune the extent of protein oxidation [102,103]. FPOP has wide application for proteins, including measurements of fast protein folding and transient dynamics.

Protein Painting [104,105]

Protein painting uses “molecular paints” to noncovalently coat the solvent-accessible surface of proteins. These paint molecules coat the protein surfaces but do not have access to the hydrophobic cores or protein-protein interface regions that solvents cannot access. If the “paint” covers free amines of lysine side chains, the painted regions will not be cleaved by trypsin, while the unpainted regions will. After proteolysis, the peptide samples are analyzed by MS. A lack of proteolysis in a region is interpreted as solvent accessibility, which gives rough structural information about complex protein mixtures or even a whole proteome.

LiP-MS (Limited Proteolysis Mass Spectrometry) [106,107,108]

Limited proteolysis coupled to mass spectrometry (LiP-MS) is a method that tracks structural changes in complex proteomes in response to a variety of perturbations or stimuli. The underlying tenet of LiP-MS is that a stimulus-induced change in native protein structure (e.g., protein-protein interaction, introduction of a PTM, ligand/substrate binding, or changes in osmolarity or ambient temperature) can be detected by a change in accessibility of a broad-specificity protease (e.g., proteinase K) to the region(s) of the protein where the structural change occurs. For example, small molecule binding may protect a disordered region from non-specific proteolysis by directly blocking access of the protease to the cleavage site. LiP-MS can therefore provide a somewhat unbiased view of structural changes at the proteome scale. Importantly, LiP-MS requires that cell lysates or individual proteins be maintained in their native state prior to and during perturbation and protease treatment. LiP-MS can also be applied to membrane suspensions to facilitate the study of membrane proteins without the need for purification or detergents [109].

For additional information about LiP-MS, please refer to the following article: [110]

Protein stability and small molecule binding

Cellular Thermal Shift Assay (CETSA) [111,112]

CETSA involves subjecting a protein sample to a thermal shift assay (TSA), in which the protein is exposed to a range of temperatures and the resulting changes in protein stability are measured by quantifying the protein remaining in the soluble fraction. This is done in live cells immediately before lysis, or in non-denaturing lysates. The original paper reported this method using immunoaffinity approaches for detecting changes in soluble protein. The assay is capable of detecting shifts in the thermal equilibrium of cellular proteins in response to a variety of perturbations, but most commonly in response to in vitro drug treatments.

Thermal proteome profiling (TPP) [113,114,115,116]

Thermal proteome profiling (TPP) follows the same principle as CETSA, but has been extended to use an unbiased mass spectrometry readout of many proteins. By measuring changes in thermal stability of thousands of proteins, binding to an unknown or unexpected protein can be discovered. During a typical TPP experiment, a protein sample is first treated with a drug of interest to stabilize protein-ligand interactions. The sample is then divided into multiple aliquots, which are subjected to different temperatures to induce thermal denaturation. The resulting drug-induced changes in protein stability curves are detected using mass spectrometry. By comparing protein stability curves across the temperatures between treatment conditions, TPP can provide insight into the proteins that bind a ligand.
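The comparison of stability curves can be sketched with a deliberately simplified calculation. The example below estimates a melting temperature as the point where the soluble fraction crosses 0.5, using linear interpolation between measured temperatures, and reports the shift between vehicle and drug-treated samples. Published TPP analyses typically fit sigmoidal melting curves instead, so this interpolation is a stand-in chosen for brevity; the function name and all data values are hypothetical.

```python
def melting_temperature(temperatures, soluble_fractions, threshold=0.5):
    """Interpolate the temperature at which the soluble fraction drops
    through `threshold`. Returns None if the curve never crosses it.

    Assumes temperatures are sorted ascending and fractions are normalized
    to the lowest temperature (so the curve starts near 1.0).
    """
    points = list(zip(temperatures, soluble_fractions))
    for (t0, f0), (t1, f1) in zip(points, points[1:]):
        if f0 >= threshold > f1:
            return t0 + (f0 - threshold) * (t1 - t0) / (f0 - f1)
    return None


# Hypothetical soluble fractions for one protein across six temperatures (deg C).
temps = [37, 41, 45, 49, 53, 57]
vehicle = [1.00, 0.95, 0.70, 0.30, 0.10, 0.05]
treated = [1.00, 0.98, 0.90, 0.60, 0.20, 0.05]

tm_vehicle = melting_temperature(temps, vehicle)
tm_treated = melting_temperature(temps, treated)
print(f"Tm vehicle: {tm_vehicle:.1f}, Tm treated: {tm_treated:.1f}, "
      f"shift: {tm_treated - tm_vehicle:+.1f}")
```

A positive shift for a protein in the treated sample would be consistent with ligand-induced stabilization, which is the signal TPP uses to nominate candidate binders.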

Protein-protein interactions (PPIs)

Affinity purification coupled to mass spectrometry (AP-MS) [117,118,119]

AP-MS is an approach that involves purification of a target protein or protein complex using a specific antibody followed by mass spectrometry analysis to identify the interacting proteins. In a typical AP-MS experiment, a protein or protein complex of interest is first tagged with a specific epitope or affinity tag, such as a FLAG or HA tag, which is used to selectively capture the target protein using an antibody. The protein complex is then purified from the sample using a series of wash steps, and the interacting proteins are identified using mass spectrometry. The success of AP-MS experiments depends on many factors, including the quality of the antibody or tag used for purification, the specificity and efficiency of the resin used for capture, and the sensitivity and resolution of the mass spectrometer. In addition, careful experimental design and data analysis are critical for accurately identifying and interpreting protein-protein interactions. AP-MS has been used to study a wide range of biological processes, including signal transduction pathways, protein complex dynamics, and protein post-translational modifications. AP-MS has been performed on a whole proteome scale as part of the BioPlex project [120,121,122]. Despite its widespread use, AP-MS has some limitations, including the potential for non-specific interactions, the difficulty in interpreting complex data sets, and the possibility of missing important interacting partners due to constraints in sensitivity or specificity. However, with continued advances in technology and data analysis methods, AP-MS is likely to remain a valuable tool for studying protein-protein interactions.

APEX peroxidase [123,124]

APEX-MS is a labeling technique that utilizes a peroxidase genetically fused to a protein of interest. When biotin-phenol is transiently added in the presence of hydrogen peroxide, nearby proteins are covalently biotinylated [125]. APEX thereby enables the discovery of interacting proteins in living cells. One of the major advantages of APEX is its ability to label proteins in their native environment, allowing for the identification of interactions that occur under physiological conditions. Despite its advantages, APEX has some limitations, including the potential for non-specific labeling, the difficulty in distinguishing between direct and indirect interactions, and the possibility of missing interactions that occur at low abundance or in regions of the cell that are not effectively labeled.

Proximity-dependent biotin identification (BioID) [126,127,128,129]

BioID is a proximity labeling technique that allows for the identification of protein-protein interactions. BioID involves the genetic tagging of a protein of interest with a promiscuous biotin ligase in live cells, which then biotinylates proteins in close proximity to the protein of interest. One of the advantages of BioID is its ability to label proteins in their native environment, allowing for the identification of interactions that occur under physiological conditions. BioID has been used to identify a wide range of protein interactions, including receptor-ligand interactions, signaling complexes, and protein localization. BioID is a slower reaction than APEX and therefore may pick up more transient interactions. BioID has the same limitations as APEX. For more information on BioID, please refer to [130].

3. Protein Extraction

Protein extraction from the sample of interest is the initial phase of any mass spectrometry-based proteomics experiment. Thought should be given to any planned downstream assays, specific needs of proteolysis (LiP-MS, PTM enrichments, enzymatic reactions, glycan purification or hydrogen-deuterium exchange experiments), long-term project goals (reproducibility, multiple sample types, low abundance samples), as well as to the initial experimental question (coverage of a specific protein, subcellular proteomics, global proteomics, protein-protein interactions or affinity enrichment of specific classes of modifications). The 2009 version of Methods in Enzymology: Guide to Protein Purification [131] serves as a deep dive into how molecular biologists and biochemists traditionally carried out protein extraction. The Protein Protocols Handbook [132] and the excellent review by Linn [133] are good sources of general proteomics protocols. Another excellent resource is “Proteins and Proteomics: A Laboratory Manual” by Richard J. Simpson [134]. This manual is 926 pages packed full of bench-tested protocols and procedures for carrying out protein-centric studies. Any change in extraction conditions should be expected to create potential changes in downstream results. Be sure to plan and optimize the protein extraction step first and use a protocol that works for your needs. To reproduce the results of another study, one should begin with the same extraction protocols.

Buffer choice

General proteomics

A common question to proteomics core facilities is, “What is the best buffer for protein extraction?” Unfortunately, there is no one correct answer. For global proteomics experiments where maximizing the number of protein or peptide identifications is a goal, a buffer of neutral pH (50–100 mM phosphate buffered saline (PBS), tris(hydroxymethyl)aminomethane (Tris), 4-(2-hydroxyethyl)-1-piperazineethanesulfonic acid (HEPES), ammonium bicarbonate, triethanolamine bicarbonate; pH 7.5–8.5) is used in conjunction with a chaotrope or surfactant to denature and solubilize proteins (e.g., 8 M urea, 6 M guanidine, 5% sodium dodecyl sulfate (SDS)) [135,136]. Often other salts like 50–150 mM sodium chloride (NaCl) are also added. Although there is a range of buffers that can be used to provide the correct working pH and ionic strength, not all buffers are compatible with downstream workflows, and some buffers can induce modifications of proteins (e.g., ammonium bicarbonate promotes methionine oxidation, and we generally suggest Tris-HCl instead to minimize oxidation). A great online resource to help calculate buffer compositions and pH values is the website by Robert Beynon at http://phbuffers.org. Complete and quick denaturation of proteins in the sample is required to limit changes to protein status by endogenous proteases, kinases, phosphatases, and other enzymes. If intact protein separations are planned (based on size or isoelectric point), choose a denaturant compatible with those methods, such as SDS [137]. Compatibility with the protease (typically trypsin) and peptide cleanup steps must be considered. Of note, detergents can be incompatible with LC-MS workflows as they can cause ion suppression and column clogging. It is therefore advisable to remove detergents using detergent-removal kits or precipitation techniques (e.g., deoxycholate precipitates at low pH and can easily be removed by filtration or centrifugation). For more information, see section “Removal of buffer/interfering small molecules”. Alternatively, mass spectrometry-compatible detergents may be used (e.g., n-dodecyl-beta-maltoside). Urea must be diluted to 2 M or less for trypsin and chymotrypsin digestions, while guanidine and SDS should be removed either through protein precipitation, through filter-aided sample preparation (FASP), or through similar solid-phase digestion techniques. Some buffers can potentially introduce modifications to proteins, such as carbamylation from urea at high temperatures [138].
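The urea dilution requirement is a simple application of C1·V1 = C2·V2. The sketch below computes how much diluent must be added to bring a lysate from 8 M urea down to 2 M before trypsin addition. The function name and the example volume are hypothetical, and the calculation ignores any change in the concentrations of other buffer components, which are diluted by the same factor.

```python
def diluent_volume(initial_conc, initial_volume, target_conc):
    """Volume of diluent to add so that concentration falls to target_conc.

    Uses C1 * V1 = C2 * V2; the returned volume is in the same units as
    initial_volume. Concentrations must share the same units.
    """
    if target_conc <= 0 or target_conc > initial_conc:
        raise ValueError("target_conc must be positive and not exceed initial_conc")
    final_volume = initial_conc * initial_volume / target_conc
    return final_volume - initial_volume


# Example: 100 uL of lysate in 8 M urea, diluted to 2 M for trypsin digestion.
to_add = diluent_volume(initial_conc=8.0, initial_volume=100.0, target_conc=2.0)
print(f"Add {to_add:.0f} uL of urea-free buffer (final volume {100.0 + to_add:.0f} uL)")
```

The fourfold increase in volume also dilutes the protein, which is worth accounting for when planning the enzyme-to-substrate ratio and downstream cleanup capacity.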

Protein-protein interactions

Denaturing conditions will efficiently extract proteins, but will also disrupt most protein-protein interactions. If you are working on an immuno- or affinity purification of a specific protein and expect to analyze enzymatic activity, structural features, and/or protein-protein interactions, a non-denaturing lysis buffer should be utilized [139,140]. Check the calculated isoelectric point (pI) and hydrophobicity (e.g., try the Expasy.org resource ProtParam) for a good idea of starting pH/conductivity, but a stability screen may be needed. In general, a good starting point for the buffer will still be close to neutral pH with 50–250 mM NaCl, but specific proteins may require pH as low as 2 or as high as 9 for stable extraction. A low percentage of a mass spectrometry-compatible detergent, such as n-dodecyl-beta-maltoside, may also be used. Newer mass spectrometry-compatible detergents are also useful for protein extraction and ease of downstream processing, including Rapigest® (Waters), N-octyl-β-glucopyranoside, MS-compatible degradable surfactant (MaSDeS) [141], Azo [142], PPS silent surfactant [143], sodium laurate [144], and sodium deoxycholate [145]. Avoid using Tween-20, Triton-X, NP-40, and polyethylene glycols (PEGs), as these compounds are challenging to remove after digestion [146].

Optional additives

For non-denaturing buffer conditions, which preserve tertiary and quaternary protein structures, additional additives may be necessary for successful extraction and to prevent proteolysis or changes in PTMs throughout the extraction process, because endogenous enzymes remain active. Protease, phosphatase and deubiquitinase inhibitors are optional additives in less denaturing conditions or in experiments focused on specific PTMs. For a broad range of inhibitors, a premixed tablet can be added to the lysis buffer, such as Roche cOmplete Mini Protease Inhibitor Cocktail tablets. Protease inhibitors may impair the desired proteolysis by the added protease, and will need to be diluted or removed prior to protease addition. To improve extraction of DNA- or RNA-binding proteins, adding a small amount of nuclease (e.g., benzonase) is useful for degradation of any bound nucleic acids and results in a more consistent digestion [147].

Mechanical or Sonic Disruption

Cell lysis

One typical lysis buffer is 8 M urea in 100 mM Tris, pH 8.5; the pH is based on optimum trypsin activity [148]. Small mammalian cell pellets and exosomes will lyse almost instantly upon addition of denaturing buffer. If non-denaturing conditions are desired, osmotic swelling and subsequent shearing or sonication can be applied [149]. Efficiency of extraction and degradation of nucleic acids can be improved using various sonication methods: 1) probe sonicator with ice; 2) water bath sonicator with ice or cooling; 3) Bioruptor® sonication device; 4) adaptive focused acoustics (AFA®) [150]. Key to these additional lysis techniques is keeping the sample temperature from rising significantly, as heating can cause proteins to aggregate or degrade. Some cell types may require additional force for effective lysis (see below). For cells with cell walls (e.g., bacteria or yeast), lysozyme is often added to the lysis buffer. Any added protein will be present in downstream results, however, so excessive addition of lysozyme is to be avoided unless tagged protein purification will occur.

Tissue/other lysis

Although small pieces of soft tissue can often be successfully extracted with the probe and sonication methods described above, larger/harder tissues as well as plants/yeast/fungi are better extracted with some form of additional mechanical force. If proteins are to be extracted from a large amount of sample, such as soil, feces, or other diffuse input, one option is to use a dedicated blender and filter the sample, followed by centrifugation. If samples are smaller, such as tissue or tumors, cryo-homogenization is recommended. The simplest form of this is grinding the sample with liquid nitrogen and a mortar and pestle. Tools such as bead beaters (e.g., FastPrep-24®) are also used, where the sample is placed in a tube with appropriately sized glass or ceramic beads and shaken rapidly. Cryo-mills are chambers where liquid nitrogen is applied around a vessel containing the sample and one or more large beads. Cryo-fractionators homogenize samples in special bags that are frozen in liquid nitrogen and smashed with various degrees of force [151]. In addition, rapid bead beating mills such as the Bertin Precellys Evolution are economical, effective and detergent compatible for many types of proteomics experiments at a scale of 96 samples per batch. After homogenization, samples can be sonicated by one of the methods above to fragment DNA and increase solubilization of proteins.

Measuring the efficiency of protein extraction

Following protein extraction, samples should be centrifuged (10,000–14,000 × g for 10–30 min depending on sample type) to remove debris and any unlysed material prior to determining protein concentration. The amount of remaining insoluble material should be noted throughout an experiment, as a large change may indicate protein extraction issues. Protein concentration can be calculated using a number of assays or tools [152,153]; colorimetric absorbance assays such as Bradford or BCA are generally facile, fast and affordable. Protein can also be estimated by tryptophan fluorescence, which has the benefit of not consuming sample [154]. A NanoDrop UV spectrophotometer may be used to measure absorbance at 280 nm. Consistency in the chosen method is important, as each method has inherent bias and error [155,156]. Extraction buffer components will need to be compatible with any assay chosen; alternatively, buffer may be removed (see below) prior to protein concentration calculation.

Reduction and alkylation

Typically, disulfide bonds in proteins are reduced and alkylated prior to proteolysis in order to disrupt structures and simplify peptide analysis. This allows better access to all residues during proteolysis and removes the crosslinked peptides created by inter-peptide S–S linkages. There are a variety of reagent options for these steps. For reduction, the typical agents are tris(2-carboxyethyl)phosphine hydrochloride (TCEP-HCl), dithiothreitol (DTT), or 2-beta-mercaptoethanol (2BME), used at 5–15 mM. TCEP-HCl is an efficient reducing agent, but it also significantly lowers sample pH, which can be mitigated by increasing the sample buffer concentration or by resuspending TCEP-HCl in an appropriate buffer system (e.g., 1 M HEPES pH 7.5). Following the reducing step, a slightly higher concentration (10–20 mM) of an alkylating agent such as chloroacetamide, iodoacetamide, or N-ethylmaleimide is used to cap the free thiols [157,158,159]. In order to monitor which cysteine residues are linked or modified in a protein, it is also possible to alkylate free cysteine residues with one reagent, reduce disulfide bonds (or other cysteine modifications), and then alkylate with a different reagent [160,161,162]. Alkylation reactions are generally carried out in the dark at room temperature to avoid excessive off-target alkylation of other amino acids.

Removal of buffer/interfering small molecules

If extraction must take place in a buffer which is incompatible with efficient proteolysis (check the guidelines for the protease of choice), then protein cleanup should occur prior to digestion. This is generally performed through precipitation of proteins. The most common types are 1) acetone, 2) trichloroacetic acid (TCA), and 3) methanol/chloroform/water [163,164]. Proteins are generally insoluble in most pure organic solvents, so cold ethanol or methanol are sometimes used. Pellets should be washed with organic solvent for complete removal of contaminants, especially detergents. Alternatively, solid phase-based digestion methods such as S-trap [165], FASP [166,167], SP3 [168,169] and on-column/bead approaches such as protein aggregation capture (PAC) [170] allow proteins to be applied to a solid phase and buffers removed prior to proteolysis [171]. Specialty detergent removal columns exist (Pierce/Thermo Fisher Scientific) but add expense and time-consuming steps to the process. Relatively low concentrations of specific detergents, such as 1% deoxycholate (DOC), or chaotropes (e.g., 1 M urea) are compatible with proteolysis by trypsin/Lys-C. Often, proteolysis-compatible concentrations of these detergents and chaotropes are achieved by diluting the sample in an appropriate buffer (e.g., 100 mM ammonium bicarbonate, pH 8.5) after cell or tissue lysis at a higher concentration. Following digestion, DOC can then be easily removed by precipitation or phase separation [172] after acidification of the sample to pH 2–3. Any small-molecule removal protocol should be tested for efficiency prior to implementing it in a workflow with many samples, as avoiding detergent (or polymer) contamination in the LC/MS is very important.

Protein quantification

After proteins are isolated from the sample matrix, they are often quantified. Protein quantification is important to assess the yield of an extraction procedure, and to adjust the scale of the downstream processing steps to match the amount of protein. For example, when purifying peptides, the amount of sorbent should match the amount of material to be bound. Presently, there is a wide variety of techniques to quantitate the amount of protein present in a given sample. These methods can be broadly divided into three types as follows:

Colorimetry-based methods:

These methods include assays such as Coomassie Blue G-250 dye binding (the Bradford assay), the Folin-Lowry assay, the bicinchoninic acid (BCA) assay and the biuret assay [173]. The most commonly used method is the BCA assay. In the BCA method, the peptide bonds of the protein reduce cupric ions [Cu2+] to cuprous ions [Cu+] at a rate which is proportional to the amount of protein present in the sample. Subsequently, the BCA reagent binds to the cuprous ions, leading to the formation of a complex which absorbs light at 562 nm. This permits a direct correlation between sample protein concentration and absorbance [174,175]. The Bradford assay is another colorimetric method for protein quantification. It relies on hydrophobic and electrostatic interactions between the Coomassie brilliant blue dye and the protein. Dye binding shifts the absorption maximum from 470 nm to 595 nm [176,177]. Similarly, the Folin-Lowry method is a two-step colorimetric assay. Step one is the biuret reaction, wherein complexes of copper with the nitrogen in the protein molecule are formed. In the second step, the complexed tyrosine and tryptophan amino acids react with Folin–Ciocalteu phenol reagent, generating an intense, blue-green color absorbing light at 650–750 nm [178].
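In practice, the relationship between absorbance and protein concentration is used by reading unknowns off a standard curve. The sketch below assumes an approximately linear response over the standard range and uses idealized, made-up absorbance values for a set of protein standards; the helper names `fit_line` and `concentration` are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical standards (µg/mL) and idealized blank-corrected A562 readings.
standards_ug_ml = [0.0, 125.0, 250.0, 500.0, 1000.0]
a562 = [0.0, 0.125, 0.25, 0.5, 1.0]

slope, intercept = fit_line(standards_ug_ml, a562)


def concentration(absorbance, dilution_factor=1.0):
    """Convert a blank-corrected absorbance to µg/mL in the undiluted sample."""
    return (absorbance - intercept) / slope * dilution_factor


# A lysate diluted 5-fold before the assay, reading A562 = 0.4.
print(f"{concentration(0.4, dilution_factor=5.0):.0f} µg/mL")
```

Real standard curves often deviate from linearity at the high end, so readings outside the range of the standards should be repeated at a different dilution rather than extrapolated.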

Another simple but less reliable method is UV-Vis absorbance at 280 nm, which estimates protein concentration from the absorption of the aromatic residues tyrosine and tryptophan [179].

Fluorescence-based methods:

Colorimetric assays are inexpensive and require common lab equipment, but colorimetric detection is less sensitive than fluorescence. Total protein in proteomic samples can be quantified using intrinsic fluorescence of tryptophan based on the assumption that approximately 1% of all amino acids in the proteome are tryptophan [180].

NanoOrange is an assay for the quantitative measurement of proteins in solution using the NanoOrange reagent, a merocyanine dye that produces a large increase in fluorescence quantum yield when it interacts with detergent-coated proteins. Fluorescence is measured using 485-nm excitation and 590-nm emission wavelengths. The NanoOrange assay can be performed using fluorescence microplate readers, fluorometers, and laser scanners that are standard in the laboratory [153].

3-(4-carboxybenzoyl)quinoline-2-carboxaldehyde (CBQCA) is a sensitive fluorogenic reagent for amine detection, which can be used for analyzing proteins in solution. As the number of accessible amines in a protein is modulated by its concentration, CBQCA has a greater sensitivity and dynamic range when measuring protein concentration [181].

Protein Extraction Summary

Learning the fundamentals and mechanisms of how and why sample preparation steps are performed is vital because it enables flexibility to perform proteomics from a wide range of samples. For bottom-up proteomics, the overarching goal is efficient and consistent extraction and digestion. A range of mechanical and non-mechanical extraction protocols have been developed, and the choice of technique is generally dictated by sample type or assay requirements (e.g., native versus non-native extraction). Extraction can be aided by the addition of detergents and/or chaotropes to the sample, but care should be taken that these additives do not interfere with the sample digestion step or downstream mass spectrometry analysis.

4. Proteolysis

Proteolysis is the defining step that differentiates bottom-up or shotgun proteomics from top-down proteomics. Hydrolysis of proteins is extremely important because it defines the population of potentially identifiable peptides. Generally, peptides between a length of 7–35 amino acids are considered useful for mass spectrometry analysis. Peptides that are too long are difficult to identify by tandem mass spectrometry or may be lost during sample preparation due to irreversible binding with solid-phase extraction sorbents. Peptides that are too short are also not useful because they may match to many proteins during protein inference. There are many choices of enzymes and chemicals that hydrolyze proteins into peptides. This section summarizes potential choices and their strengths and weaknesses.

Trypsin is the most common choice of protease for proteome hydrolysis [182]. Trypsin is favorable because of its specificity, availability, efficiency and low cost. Trypsin cleaves at the C-terminus of the basic amino acids Arg and Lys, if not immediately followed by proline. Many of the peptides generated by trypsin are short (less than ~20 amino acids), which is ideal for chromatographic separation, MS-based peptide fragmentation and identification by database search. The main drawback of trypsin is that the majority (56%) of tryptic peptides are ≤ 6 amino acids long, and hence using trypsin alone limits the observable proteome [183,184,185]. This limits the number of identifiable protein isoforms and post-translational modifications.

Although trypsin is the most common protease used for proteomics, in theory it can only cover a fraction of the proteome predicted from the genome [186]. This is due to production of peptides that are too short to be unique, for example due to R and K immediately next to each other. Peptides below a certain length are likely to occur many times in the whole proteome, meaning that even if we identify them we cannot know their protein of origin. In protein regions devoid of R/K, trypsin may also result in very long peptides that are then lost due to irreversible binding to the solid phase extraction device, or that become difficult to identify due to complicated fragmentation patterns. Thus, parts of the true proteome sequences that are present are lost after trypsin digestion due to both production of very long and very short peptides.
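The effect of the trypsin cleavage rule on peptide length can be illustrated with a small in silico digest. This is a minimal sketch: the sequence is an arbitrary made-up example, missed cleavages are ignored, and the function names are hypothetical. The 7–35 residue window is the "useful" length range given earlier in this section.

```python
import re


def trypsin_digest(sequence):
    """Cleave C-terminal to K or R, except when the next residue is P."""
    return [p for p in re.split(r"(?<=[KR])(?!P)", sequence) if p]


def observable(peptides, min_len=7, max_len=35):
    """Keep peptides within the length range considered useful for MS analysis."""
    return [p for p in peptides if min_len <= len(p) <= max_len]


# Arbitrary example sequence containing adjacent K/R residues and an R-P bond.
protein = "MKRSGEAPLTVDNYKRPAGILWK"

peptides = trypsin_digest(protein)
print(peptides)              # all fully cleaved peptides
print(observable(peptides))  # peptides long enough to be informative
```

In this example the adjacent K and R near the N-terminus yield one- and two-residue fragments that cannot be assigned to a protein, while the R-P bond is left uncleaved.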

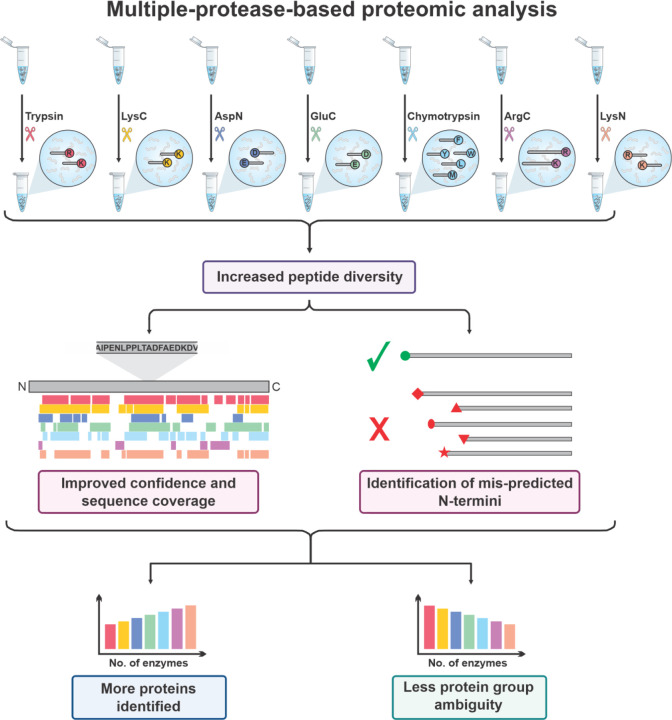

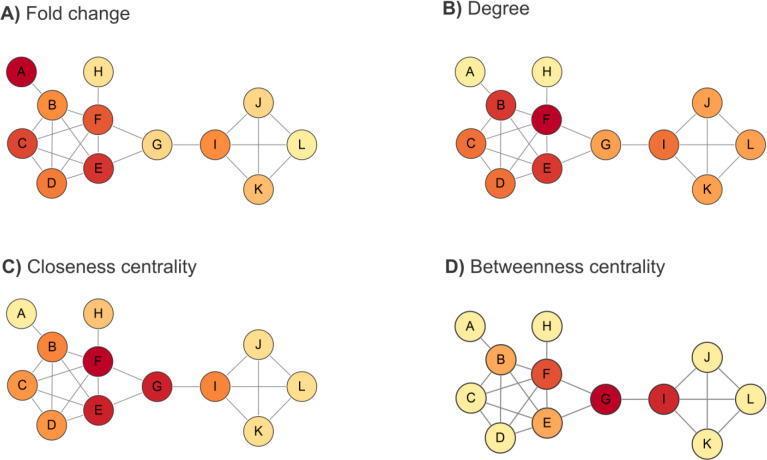

Many alternative proteases are available with different specificities that complement trypsin to reveal different protein sequences [183,187], which can help distinguish protein isoforms [188] (Figure 2). The enzyme choice mostly depends on the application. In general, for simple protein identification, trypsin is often chosen for the aforementioned reasons. However, alternative enzymes can facilitate de novo assembly when genomic information in public database repositories is limited [189,190,191,192,193]. Use of multiple proteases for proteome digestion can also improve the sensitivity and accuracy of protein quantification [194]. Moreover, by providing increased peptide diversity, the use of multiple proteases can expand sequence coverage and increase the probability of finding peptides which are unique to single proteins [66,186,195]. A multi-protease approach can also improve the identification of N-termini and signal peptides for small proteins [196]. Overall, integrating multiple-protease data can increase the number of proteins identified [197,198], increase the number of identified post-translational modifications [66,195,199] and decrease the ambiguity of the inferred protein groups [195].

Figure 2: Multiple protease proteolysis improves protein inference.

The use of other proteases beyond trypsin, such as Lysyl endopeptidase (Lys-C), Peptidyl-Asp metallopeptidase (Asp-N), Glutamyl peptidase I (Glu-C), Chymotrypsin, Clostripain (Arg-C) or Peptidyl-Lys metalloendopeptidase (Lys-N), can generate a greater diversity of peptides. This improves protein sequence coverage and allows for the correct identification of their N-termini. Increasing the number of complementary enzymes used will increase the number of proteins identified by single peptides and decrease the ambiguity of the assignment of protein groups. Therefore, this will allow more protein isoforms and post-translational modifications to be identified than using trypsin alone.

Lysyl endopeptidase (Lys-C), obtained from Lysobacter enzymogenes, is a serine protease that cleaves at the carboxyl terminus of Lys [184,200]. Like trypsin, its optimum pH range is from 7 to 9. A major advantage of Lys-C is its resistance to denaturing agents, including 8 M urea, a chaotrope commonly used to denature proteins prior to digestion [188]. Trypsin is less efficient at cleaving after Lys than after Arg, which could limit the quality of quantitation from tryptic peptides. Hence, to achieve complete protein digestion with minimal missed cleavages, Lys-C is often used together with trypsin [201].

Alpha-lytic protease (aLP) is another protease secreted by the soil bacterium Lysobacter enzymogenes [202]. Wild-type aLP (WaLP) and an active site mutant of aLP, M190A (MaLP), have been used to expand proteome coverage [66]. Based on observed peptide sequences from yeast proteome digestion, WaLP showed a specificity for small aliphatic amino acids like alanine, valine, and glycine, but also threonine and serine. MaLP showed specificity for slightly larger amino acids like methionine, phenylalanine, and surprisingly, a preference for leucine over isoleucine. The specificity of WaLP for threonine enabled the first method for mapping endogenous human SUMO sites [37].

Glutamyl peptidase I, commonly known as Glu-C or V8 protease, is a serine protease obtained from Staphylococcus aureus [203]. Glu-C cleaves at the C-terminus of glutamate, but also after aspartate [203,204].

Peptidyl-Asp metallopeptidase, commonly known as Asp-N, is a metalloprotease obtained from Pseudomonas fragi [205]. Asp-N catalyzes the hydrolysis of peptide bonds at the N-terminal of aspartate residues. The optimum activity of this enzyme occurs at a pH range between 4 and 9. As with any metalloprotease, chelators like EDTA should be avoided for digestion buffers when using Asp-N. Studies also suggest that Asp-N cleaves at the amino terminus of glutamate when a detergent is present in the proteolysis buffer [205]. Asp-N often leaves many missed cleavages [188].

Chymotrypsin or chymotrypsinogen A is a serine protease obtained from porcine or bovine pancreas with an optimum pH range from 7.8 to 8.0 [206]. It cleaves at the C-terminus of the hydrophobic amino acids Phe, Trp and Tyr and, to a much lesser extent, Met and Leu. Since the transmembrane regions of membrane proteins commonly lack tryptic cleavage sites, this enzyme works well with membrane proteins, which have more hydrophobic residues [188,207,208]. The chymotryptic peptides generated after proteolysis cover proteome space orthogonal to that of tryptic peptides, both quantitatively and qualitatively [208,209,210].

Clostripain, commonly known as Arg-C, is a cysteine protease obtained from Clostridium histolyticum [211]. It hydrolyses mostly the C-terminal Arg residues and sometimes Lys residues, but with less efficiency. The peptides generated are generally longer than that of tryptic peptides. Arg-C is often used with other proteases for improving qualitative proteome data and also for investigating PTMs [184].

LysargiNase, also known as Ulilysin, is a recently discovered protease belonging to the metalloprotease family. It is a thermophilic protease derived from Methanosarcina acetivorans that specifically cleaves at the N-terminus of Lys and Arg residues [212]. Hence, it enabled discovery of C-terminal peptides that were not observed using trypsin. In addition, it can also cleave modified amino acids such as methylated or dimethylated Arg and Lys [212].

Peptidyl-Lys metalloendopeptidase, or Lys-N, is a metalloprotease obtained from Grifola frondosa [213]. It cleaves N-terminally of Lys and has optimal activity at pH 9.0. Unlike trypsin, Lys-N is resistant to denaturing agents and can be heated up to 70°C [184]. Peptides generated from Lys-N digestion produce more c-type ions using ETD fragmentation [214]. Hence, Lys-N can be used for analysing PTMs, identifying C-terminal peptides, and for de novo sequencing strategies [214,215].

Pepsin A, commonly known as pepsin, is an aspartic protease obtained from bovine or porcine stomach [216]. Pepsin was one of several proteins crystallized by John Northrop, who shared the 1946 Nobel prize in chemistry for this work [217,218,219,220]. Pepsin works at an optimum pH range from 1 to 4 and preferentially cleaves at Trp, Phe, Tyr and Leu [184]. Since it possesses high enzyme activity and broad specificity at low pH, it is preferred over other proteases for MS-based disulphide mapping [221,222]. Pepsin is also used extensively for structural mass spectrometry studies with hydrogen-deuterium exchange (HDX) because the rate of back exchange of the amide deuteron is minimized at low pH [223,224].

Proteinase K was first isolated from the mold Tritirachium album Limber [225]. The epithet ‘K’ is derived from its ability to efficiently hydrolyze keratin [225]. It is a member of the subtilisin family of proteases and is relatively unspecific with a preference for proteolysis at hydrophobic and aromatic amino acid residues [226]. The optimal enzyme activity is between pH 7.5 and 12. Proteinase K is used at low concentrations for limited proteolysis (LiP) and the detection of protein structural changes in the eponymous technique LiP-MS [227].

Although different specificities are useful in theory to improve proteome sequence coverage, there are practical challenges because most standard workflows are optimized for tryptic peptides. For example, peptides that lack a C-terminal positive charge from an arginine or lysine side chain can have a less pronounced y-ion series. This can lead to lower scoring peptide-spectrum matches because some peptide identification algorithms preferentially score y ions higher.
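The complementary specificities described in this section can be compared with a simple rule table. This is a deliberately simplified sketch: each protease is reduced to its primary residues and cleavage side as stated above, while secondary specificities, the trypsin proline exception, and missed cleavages are ignored. The `RULES` table, the `digest` function, and the example sequence are all illustrative assumptions.

```python
# Primary specificity only: (residues, side of the residue that is cleaved).
RULES = {
    "Trypsin": ("KR", "C"),
    "Lys-C": ("K", "C"),
    "Arg-C": ("R", "C"),
    "Glu-C": ("E", "C"),
    "Chymotrypsin": ("FWY", "C"),
    "Asp-N": ("D", "N"),
    "Lys-N": ("K", "N"),
}


def digest(sequence, protease):
    """Split a sequence at every site matching the protease's primary rule."""
    residues, side = RULES[protease]
    cut_points = []
    for i, aa in enumerate(sequence):
        if aa in residues:
            cut = i + 1 if side == "C" else i
            if 0 < cut < len(sequence):  # ignore cuts at the very ends
                cut_points.append(cut)
    bounds = [0] + cut_points + [len(sequence)]
    return [sequence[a:b] for a, b in zip(bounds, bounds[1:])]


protein = "MKRSGEAPLTVDNYKRPAGILWK"  # arbitrary example sequence
for name in RULES:
    print(f"{name:>12}: {digest(protein, name)}")
```

Comparing the outputs shows how a region that trypsin fragments into very short peptides can be recovered as a longer peptide by another enzyme, such as Lys-C or Asp-N.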

Peptide quantitation assays

After peptide production from proteomes, it may be desirable to quantify the peptide yield. Quantitation of peptides is not as straightforward as quantitation of protein lysates. BCA protein assays perform poorly with peptide solutions and report erroneous values. A simple option is to use a NanoDrop device, but absorbance measurements from a drop of solution do not report accurate values either. A more standardized and reliable approach is to use a fluorescamine-based assay for peptide solutions [228,229]. This assay is based on the reaction between a labeling reagent and the N-terminal primary amine in the peptide(s); therefore, samples must be free of amine-containing buffers (e.g., Tris-based buffer and/or amino acids). This procedure has performance similar to the Pierce Quantitative Fluorometric Peptide Assay (Cat 23290).

Fluorescamine peptide digest assay (based on Udenfriend and Bantan-Polak papers cited above).

This assay is scaled to small volume reactions. Use a black flat-bottom 384 well plate such as Corning 384-Well Solid Black (Cat No. 3577). Warm solubilized fluorescamine reagent to room temperature out of light.

Dilute the peptide digest standard from 1 mg/mL down to 7.8 µg/mL in the same buffer as the sample, as 8 two-fold serial dilutions.

Include buffer blank of the sample buffer.

Dispense 35 µL of Fluorometric Peptide Assay Buffer (0.1 M sodium borate pH 8.0) to each well.

Dispense 5 µL of sample or 5 µL of peptide standards into designated wells.

Dispense 10 µL of Fluorescamine reagent, mix 3x by pipetting up/down.

Incubate 5 minutes at room temperature.

Measure fluorescence using filters at Excitation 390 nm, Emission 475 nm on a BioTek Synergy plate reader.

Excitation 360/40 nm, Emission 460/40 nm (fixed values)

Gain: scale to high wells (select highest-concentration standards)

Mirror: Top 400 nm, Read Height: 7 mm

Calculate peptide concentrations using template with quadratic curve fitting and determine yield.

Reagents:

Fluorometric Peptide Assay Buffer: 0.1 M sodium borate in Milli-Q H2O, adjust to pH 8.0 with HCl

Fluorescamine Reagent: prepare from fluorescamine (Thermo Scientific Chemicals 191675000) at 100 mg/100 mL in 100% HPLC-grade acetonitrile.

For example, weigh out 25 mg fluorescamine and dissolve in 25 mL acetonitrile.

Cover in foil, store out of light at 4°C until use.

Keep powdered fluorescamine covered in foil out of light at room temperature.
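The standard series and the quadratic curve fit used in the fluorescamine assay above can be sketched as follows. This is a hedged illustration rather than the template referred to in the protocol: the fluorescence readings are simulated from an arbitrary, slightly saturating curve, concentrations are handled in mg/mL, and all function names are hypothetical.

```python
import math


def standard_series(top_mg_ml=1.0, points=8):
    """Two-fold serial dilution starting at top_mg_ml (1 mg/mL down to ~7.8 µg/mL)."""
    return [top_mg_ml / 2 ** i for i in range(points)]


def fit_quadratic(x, y):
    """Least-squares fit of y = a*x**2 + b*x + c using the normal equations."""
    s = [sum(xi ** k for xi in x) for k in range(5)]
    t = [sum(yi * xi ** k for xi, yi in zip(x, y)) for k in range(3)]
    m = [[s[4], s[3], s[2], t[2]],
         [s[3], s[2], s[1], t[1]],
         [s[2], s[1], s[0], t[0]]]
    for col in range(3):  # Gauss-Jordan elimination with partial pivoting
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return tuple(m[i][3] / m[i][i] for i in range(3))


def concentration_from_signal(signal, a, b, c):
    """Invert the fitted curve, taking the root that reduces to the linear answer as a -> 0."""
    if abs(a) < 1e-12:
        return (signal - c) / b
    disc = b * b - 4 * a * (c - signal)
    return (-b + math.sqrt(disc)) / (2 * a)


# Simulated readings (arbitrary units): buffer blank plus the 8 standards.
conc = [0.0] + standard_series()
rfu = [-2000 * x ** 2 + 10000 * x + 50 for x in conc]

a, b, c = fit_quadratic(conc, rfu)
sample_mg_ml = concentration_from_signal(1970.0, a, b, c)
print(f"Sample: {sample_mg_ml * 1000:.0f} µg/mL")
```

Peptide yield then follows from multiplying the fitted concentration by the total sample volume, after accounting for any dilution made before the assay.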

5. Mass Spectrometry Methods for Peptide Quantification

Label-free quantification (LFQ) of peptides

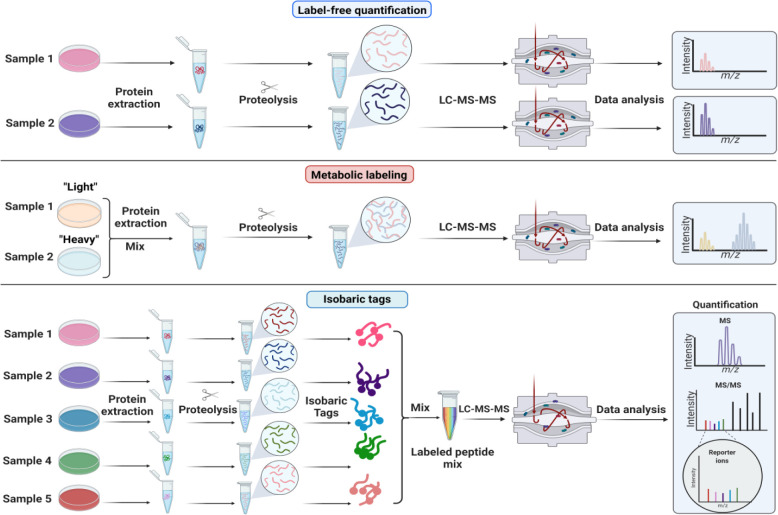

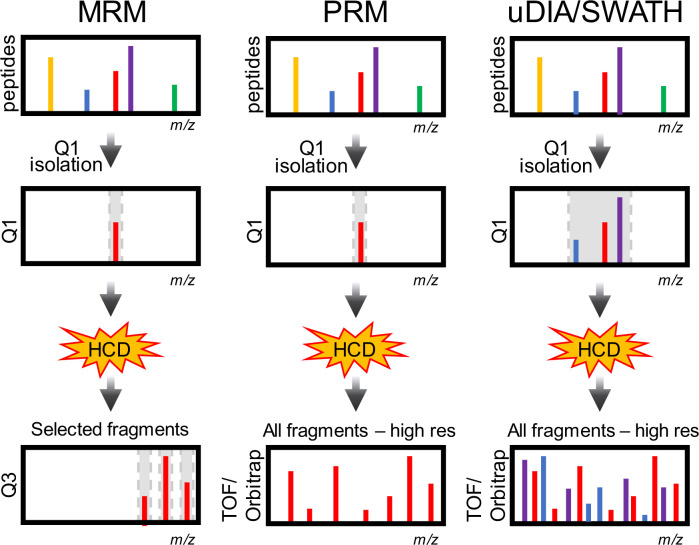

LFQ of peptide precursors requires no additional steps in the protein extraction, digestion, and peptide purification workflow (Figure 3). Samples can be taken straight to the mass spectrometer and are injected one at a time, with each sample requiring its own LC-MS/MS experiment and raw file. Quantification of peptides by LFQ is routinely performed by many commercial and freely available proteomics software packages (see Data Analysis section below). In LFQ, peptide abundances across LC-MS/MS experiments are usually calculated by computing the area under the extracted ion chromatograms for signals that are specific to each peptide; this involves aligning windows of accurate peptide mass and retention time. LFQ can be performed using precursor MS1 signals from DDA, or using multiple fragment ion signals from DIA (see Data Acquisition section).

Figure 3: Quantitative strategies commonly used in proteomics.

A) Label-free quantitation. Proteins are extracted from samples, enzymatically hydrolyzed into peptides and analyzed by mass spectrometry. Chromatographic peak areas from peptides are compared across samples that are analyzed sequentially. B) Metabolic labelling. Stable isotope labeling with amino acids in cell culture (SILAC) is based on feeding cells stable isotope labeled amino acids (“light” or “heavy”). Samples grown with heavy or light amino acids are mixed before cell lysis. The relative intensities of the heavy and light peptide are used to compute protein changes between samples. C) Isobaric or chemical labelling. Proteins are isolated separately from samples, enzymatically hydrolyzed into peptides, and then chemically tagged with isobaric stable isotope labels. These isobaric tags produce unique reporter mass-to-charge (m/z) signals that are produced upon fragmentation with MS/MS. Peptide fragment ions are used to identify peptides, and the relative reporter ion signals are used for quantification.
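For label-free quantitation (panel A), the area under an extracted ion chromatogram can be sketched with a mass tolerance window, a retention time window, and trapezoidal integration. This is a toy illustration with made-up scan data; the function name `xic_area`, the 10 ppm tolerance, and the data layout are assumptions, and real software additionally performs peak detection, retention time alignment, and isotope handling.

```python
def xic_area(scans, target_mz, ppm_tol, rt_start, rt_end):
    """Trapezoidal area of the extracted ion chromatogram for one m/z.

    scans: list of (retention_time_min, [(mz, intensity), ...]) sorted by time.
    """
    tol = target_mz * ppm_tol * 1e-6
    trace = []
    for rt, peaks in scans:
        if rt_start <= rt <= rt_end:
            signal = sum(i for mz, i in peaks if abs(mz - target_mz) <= tol)
            trace.append((rt, signal))
    return sum((t2 - t1) * (s1 + s2) / 2
               for (t1, s1), (t2, s2) in zip(trace, trace[1:]))


# Made-up MS1 scans: a peptide eluting around 10.2 min plus an unrelated ion at m/z 500.40.
scans = [
    (10.0, [(500.2502, 0.0), (500.4000, 900.0)]),
    (10.1, [(500.2501, 100.0), (500.4000, 900.0)]),
    (10.2, [(500.2500, 200.0)]),
    (10.3, [(500.2499, 100.0)]),
    (10.4, [(500.2503, 0.0)]),
]

area = xic_area(scans, target_mz=500.2500, ppm_tol=10, rt_start=10.0, rt_end=10.4)
print(f"Peak area: {area:.1f} (intensity x min)")
```

The unrelated ion at m/z 500.40 falls outside the tolerance window and does not contribute, which is why accurate mass matters for LFQ specificity.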

Stable isotope labeling of peptides

One approach to improve the throughput and quantitative completeness within a group of samples is sample multiplexing via stable isotope labeling. Multiplexing enables pooling of samples and parallel LC-MS/MS analysis within one run. Quantification can be achieved at the MS1- or MSn-level, dictated by the upstream labeling strategy.

Stable isotope labeling methods produce peptides from each sample that are chemically identical and differ only in their mass due to the incorporated stable isotopes. Methods include stable isotope labeling by amino acids in cell culture (SILAC) [230] and chemical labeling such as amine-modifying tags for relative and absolute quantification (mTRAQ) [231] or dimethyl labeling [232]. The labeling of each sample imparts mass shifts (e.g., 4 Da, 8 Da) which can be detected within the MS1 full scan. The ability to label samples in cell culture has enabled impactful quantitative biology experiments [233,234]. These approaches have nearly exclusively been performed using data-dependent acquisition (DDA) strategies. However, recent work employing faster instrumentation has shown the benefits of chemical labeling with 3-plex mTRAQ or dimethyl labels for data-independent acquisition (DIA) [235,236], an idea originally developed nearly a decade earlier using chemical labels to quantify lysine acetylation and succinylation stoichiometry [237]. As new tags with higher plexing become available, strategies like plexDIA and mDIA are sure to benefit [235,236].
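The MS1 spacing between light and heavy peptide pairs follows from the label mass shift divided by the charge state. The sketch below assumes commonly used heavy amino acids (13C6,15N2-lysine and 13C6,15N4-arginine) and standard isotope mass differences; these specific labels and numeric constants are not taken from the text above, and the function names are hypothetical.

```python
# Mass differences between heavy and light isotopes (Da); standard values, assumed here.
C13_DELTA = 1.0033548  # 13C minus 12C
N15_DELTA = 0.9970349  # 15N minus 14N


def label_shift(n_13c, n_15n):
    """Mass added by replacing n_13c carbons and n_15n nitrogens with heavy isotopes."""
    return n_13c * C13_DELTA + n_15n * N15_DELTA


# Assumed SILAC labels: Lys with six 13C and two 15N, Arg with six 13C and four 15N.
HEAVY_SHIFTS = {"K": label_shift(6, 2), "R": label_shift(6, 4)}


def heavy_light_spacing(peptide, charge, shifts=HEAVY_SHIFTS):
    """m/z difference between the heavy and light forms of a peptide at a given charge."""
    total_shift = sum(shifts.get(aa, 0.0) for aa in peptide)
    return total_shift / charge


peptide = "SGEAPLTVDNYK"  # arbitrary tryptic peptide with one lysine
for z in (1, 2, 3):
    print(f"z={z}: heavy/light spacing = {heavy_light_spacing(peptide, z):.4f} m/z")
```

Because tryptic peptides usually end in a single Lys or Arg, each peptide pair shows a predictable spacing that software can use to match light and heavy precursors.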

Peptide labeling with isobaric tags

Another approach to improve throughput and quantitative completeness within a group of samples is multiplexing via isobaric labels, a strategy which enables parallel data acquisition after pooling of samples. Commercial isobaric tags include tandem mass tags (TMT) [238] and isobaric tags for relative and absolute quantification (iTRAQ) [239] amongst others, and several non-commercial options have also been developed [240]. 10- and 11-plex TMT kits were recently supplanted by proline-based TMT tags (TMTpro), originally introduced as 16-plex kits in 2019 [241] and upgraded to an 18-plex platform in 2021 [242].

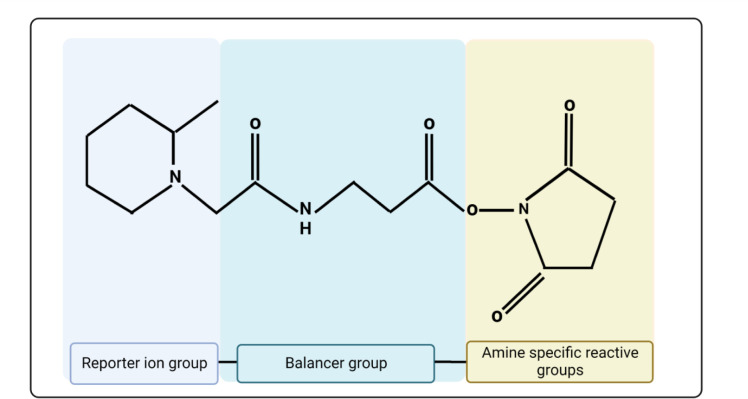

The isobaric tag labeling-based peptide quantitation strategy derivatizes each peptide sample with a different isotopic variant from a set of isobaric mass tags. All isobaric tags have a common structural theme consisting of 1) an amine-reactive group (usually a triazine ester or N-hydroxysuccinimide [NHS] ester) which reacts with peptide N-termini and the ε-amino group of lysine side chains, 2) a balancer group, and 3) a reporter ion group (Figure 4).

Figure 4: Example chemical structure of isobaric tags “Tandem Mass Tags (TMT)”.

Peptide labeling is followed by pooling the labelled samples, which undergo MS and MS/MS analysis simultaneously. Because the tags are isobaric, peptides labeled with these tags give a single MS peak with the same precursor m/z value in an MS1 scan and an identical retention time in liquid chromatography analysis. The modified parent ions undergo fragmentation during MS/MS analysis, generating two kinds of fragment ions: (a) reporter ions and (b) peptide fragment ions. Each reporter ion’s relative intensity is directly proportional to the peptide abundance in the corresponding starting sample that was pooled. As usual, b- and y-type fragment ion peaks are still used to identify amino acid sequences of peptides, from which proteins can be inferred. Since it is possible to label most tryptic peptides with an isobaric mass tag at least at the N-termini, numerous peptides from the same protein can be detected and quantified, thus leading to an increase in the confidence in both protein identification and quantification [244].
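The proportionality between reporter ion intensity and sample abundance can be sketched as a simple roll-up from peptide-spectrum matches to a protein. The intensities and three-channel layout below are made up for illustration, and the function names are hypothetical; real pipelines typically also apply isotope impurity correction, channel normalization, and filtering for co-isolation interference.

```python
from collections import defaultdict


def summed_reporters(psms):
    """Sum reporter ion intensities per channel across all PSMs of one protein."""
    totals = defaultdict(float)
    for psm in psms:
        for channel, intensity in psm.items():
            totals[channel] += intensity
    return dict(totals)


def relative_abundance(reporters):
    """Express each channel as a fraction of the total reporter signal."""
    total = sum(reporters.values())
    if total <= 0:
        raise ValueError("no reporter signal")
    return {channel: value / total for channel, value in reporters.items()}


# Two made-up PSMs from the same protein, three channels each.
psms = [
    {"126": 1000.0, "127": 2000.0, "128": 1000.0},
    {"126": 500.0, "127": 1500.0, "128": 0.0},
]

protein = relative_abundance(summed_reporters(psms))
for channel, fraction in protein.items():
    print(f"channel {channel}: {fraction:.3f}")
```

Summing intensities before taking fractions weights each PSM by its signal, which is one of several reasonable aggregation choices.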

Because the reporter ions are small and the mass difference between reporter ions is sometimes very small (e.g., a ~6 mDa difference when using 13C versus 15N), these methods nearly exclusively employ high-resolution mass analyzers, not classical ion traps [245]. There are examples, however, of using isobaric tags with pulsed q dissociation on linear ion traps (LTQs) [246]. Suitable instruments are the Thermo Q-Exactive, Exploris, Tribrid, and Astral lines, or Q-TOFs such as the TripleTOF or timsTOF platforms [247,248].
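The need for high resolution can be made concrete with the definition of resolving power, R = m/Δm. The sketch below uses standard isotope mass differences (assumed values, not from the text above) to reproduce the ~6 mDa spacing and estimates the minimum resolving power at a reporter ion m/z of about 127; the function name is hypothetical.

```python
# Mass differences between heavy and light isotopes (Da); standard values, assumed here.
C13_DELTA = 1.0033548  # 13C minus 12C
N15_DELTA = 0.9970349  # 15N minus 14N

# Two reporter ions with the same nominal mass, one carrying 13C and the other 15N.
reporter_spacing = C13_DELTA - N15_DELTA


def required_resolving_power(mz, delta_m):
    """Minimum resolving power (m / delta m) to separate two peaks delta_m apart."""
    return mz / delta_m


r_min = required_resolving_power(127.0, reporter_spacing)
print(f"Spacing: {reporter_spacing * 1000:.2f} mDa")
print(f"Minimum resolving power near m/z 127: about {r_min:,.0f}")
```

This is a lower bound for distinguishing the two peaks; instrument settings used in practice are usually chosen with additional margin so that the reporter signals are cleanly separated for quantification.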

The following are some of the isobaric labeling techniques:

isobaric Tags for Relative and Absolute Quantitation (iTRAQ)

The iTRAQ tagging method covalently labels the peptide N-terminus and side-chain primary amines with tags of different masses through the NHS-ester bond. This is followed by mass spectrometry analysis [249]. Reporter ions for an 8-plex iTRAQ are measured at roughly 113, 114, 115, 116, 117, 118, 119, and 121 m/z. Currently, two kinds of iTRAQ reagents are available: 4-plex and 8-plex [250]. Using 4-plex reagents, a maximum of four different biological conditions can be analyzed simultaneously (i.e., multiplexed), whereas using 8-plex reagents enables the simultaneous analysis of eight different biological conditions [251,252].

iTRAQ hydrazide (iTRAQH)

iTRAQH is an isobaric tagging reagent for the selective labeling and relative quantification of carbonyl (CO) groups in proteins [253]. Reactive carbonyl and oxygen groups, generated as byproducts of lipid oxidation during oxidative stress, cause protein carbonylation [254]. iTRAQH is produced from iTRAQ and a surplus of hydrazine. The reagent reacts with carbonylated peptides, forming a hydrazone group. The method analyzes carbonylation sites in proteins by combining this iTRAQ derivative with the analytical power of linear ion trap instruments (QqLIT). The strategy seems well suited for quantifying carbonylation at large scale because it avoids time-consuming enrichment of modified peptides before LC-MS/MS analysis [253].

Tandem Mass Tag (TMT)

TMT labeling is based on a similar principle as that of iTRAQ. The TMT label is based on a glycine backbone, which limits the number of sites for heavy atom incorporation. In the case of 6-plex TMT, the masses of the reporter groups are roughly 126, 127, 128, 129, 130, and 131 Da [245]. TMT works best with MS platforms that allow quantitation at the MS3 level (e.g., Thermo Fisher Orbitrap Tribrid instruments) [243,255]. In experiments performed on Q-Orbitrap or Q-TOF platforms, MS2-based sequence identification (via b- and y-type ions) and quantitation (via low m/z reporter ion intensities) are performed. In experiments performed on Q-Orbitrap-LIT platforms, MS3-based quantitation can be performed, wherein the top ~10 most abundant b- and y-type ions are synchronously co-isolated in the linear ion trap and fragmented once more before product ions are scanned out in the Orbitrap mass analyzer. This additional layer of gas-phase purification limits the ratio distortion caused by co-isolated precursors in isobaric multiplexed quantitative proteomics [256,257]. Infrared photoactivation of co-isolated TMT fragment ions improves reporter ion generation and sensitivity relative to standard beam-type collisional activation [258]. High-field asymmetric waveform ion mobility spectrometry (FAIMS) also aids the accuracy of TMT-based quantitation on Tribrid systems [259]. TMT is widely used for quantitative protein biomarker discovery. In addition, TMT labeling allows multiplexed sample analysis, enabling efficient use of instrument time. TMT labeling also controls for technical variation because, once samples are mixed, the ratios are locked in and any sample loss is equal across channels. A wide range of TMT reagents with different multiplexing capabilities is available, such as TMT zero, TMT duplex, TMT 6-plex, TMT 10-plex, and TMT 11-plex.
The recent addition of TMTpro tags, slight adaptations of the TMT structure based on proline, allows for higher plexing with TMTpro 16-plex [241] and now TMTpro 18-plex [242]. These TMT reagents have a similar chemical structure, which allows an efficient transition from method development to multiplexed peptide quantification [248].
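The ratio distortion caused by co-isolated precursors can be made concrete with a toy calculation. The sketch below assumes a deliberately simple model that is not taken from the cited studies: the interfering peptides are unchanged between the two samples, so they add the same reporter signal to both channels. The function name and all numbers are hypothetical.

```python
def observed_ratio(target_a, target_b, interference):
    """Reporter ion ratio between two channels after co-isolation.

    target_a, target_b: reporter signal from the peptide of interest in each channel.
    interference: reporter signal from co-isolated peptides, assumed equal
    in both channels (i.e., background peptides with a true ratio of 1:1).
    """
    return (target_a + interference) / (target_b + interference)


true_a, true_b = 10.0, 1.0  # hypothetical true 10:1 difference
for background in (0.0, 0.5, 1.0, 5.0):
    ratio = observed_ratio(true_a, true_b, background)
    print(f"interference {background:>4}: observed ratio {ratio:.2f}")
```

In this model the measured ratio is pulled toward 1:1 as interference grows, which is why removing co-isolated species before reporter ion readout, as in MS3-based methods, brings the measurement closer to the true ratio.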

iodoTMT

IodoTMT reagents are isobaric reagents used for tagging cysteine residues of peptides. The commercially available IodoTMT reagents are iodoTMTzero and iodoTMT 6-plex [260,261].

aminoxyTMT Isobaric Mass Tags

Also referred to as glyco-TMTs, these reagents have chemistry similar to iTRAQH. The stable isotope-labeled glyco-TMTs are used for quantitating N-linked glycans. They are derived from the original TMT reagents by the addition of carbonyl-reactive groups based on either hydrazide or aminoxy chemistry. The aminoxy TMTs perform better than their iTRAQH counterparts in terms of labeling efficiency and quantification. Because the glyco-TMT compounds contain stable isotopes, they enable (i) isobaric quantification using MS/MS spectra and (ii) quantification in MS1 spectra using heavy/light pairs. Aminoxy TMT6–128 and TMT6–131, along with the hydrazide TMT2–126 and TMT2–127 reagents, can be used for isobaric quantification. For quantification at the MS1 level, the light TMT0 and the heavy TMT6 reagents differ in mass by 5.0105 Da, which is sufficient to separate the isotopic patterns of all common N-glycans. Glycan quantification based on glyco-TMTs generates more accurate quantification in MS1 spectra over a broad dynamic range. For labeling with aminoxyTMT reagents, intact proteins or their digests obtained from biological samples are treated with PNGase F/A glycosidases to release the N-linked glycans. The free glycans are then purified and labeled with the aminoxyTMT reagent at the reducing end. The labeled glycans from individual samples are subsequently pooled and analyzed by MS to identify the glycoforms in the sample and to quantify the relative abundance of reporter ions at the MS/MS level [262].

N,N-Dimethyl leucine (DiLeu)

N,N-Dimethyl leucine, also referred to as DiLeu, is an isobaric tandem mass tag reagent whose reporter ions are isotope-encoded dimethylated leucine [263]. Each incorporated label produces a 145.1 Da mass shift. A maximum of four samples can be analyzed simultaneously using DiLeu at a highly reduced cost. MS/MS analysis shows intense reporter ions, i.e., dimethylated leucine a1 ions at 115, 116, 117, and 118 m/z. The labeling efficiency of DiLeu tags is similar to that of the iTRAQ tags. However, DiLeu-labeled peptides offer increased confidence in peptide identification and more reliable quantification because they undergo better fragmentation, generating higher reporter ion intensities [263].
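Since each incorporated label adds 145.1 Da, the total mass shift of a labeled peptide depends on how many reactive amines it carries. The sketch below is a rough illustration that assumes complete labeling of a free N-terminus and of every lysine side chain, in line with the amine-reactive chemistry described earlier for isobaric tags. The peptide sequence and helper names are hypothetical.

```python
DILEU_SHIFT_DA = 145.1  # mass shift per incorporated label, as quoted in the text


def count_label_sites(peptide):
    """One site at the free N-terminus plus one per lysine (K) residue."""
    return 1 + peptide.upper().count("K")


def total_mass_shift(peptide, shift_per_label=DILEU_SHIFT_DA):
    """Total mass added to a peptide, assuming every amine site is labeled."""
    return count_label_sites(peptide) * shift_per_label


example = "AKDLEGK"  # hypothetical tryptic peptide with a missed cleavage
print(count_label_sites(example), "label sites")
print(f"{total_mass_shift(example):.1f} Da total mass shift")
```

Search engines handle this by treating the tag as a modification on the N-terminus and on lysine, so the example is only meant to show where the shift comes from.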

Deuterium isobaric Amine Reactive Tag (DiART)

DiART is an isobaric tagging method used in quantitative proteomics [264,265]. The reporter group in DiART tags is an N,N′-dimethyl leucine reporter group with a mass-to-charge range of 114–119. DiART reagents can label a maximum of six samples, which are then analyzed by MS. The isotope purity of DiART reagents is very high; hence, correction of isotopic impurities is not needed during data analysis [266]. The performance of DiART, including the mechanism of fragmentation, the number of proteins identified, and the quantification accuracy, is similar to that of iTRAQ. Irrespective of the peptide sequence, DiART tags produce reporter ions of higher intensity than iTRAQ tags; thus, DiART labeling can be used to reliably quantify more peptides, including those of lower abundance [264]. DiART serves as a cheaper alternative to TMT and iTRAQ while having comparable labeling efficiency. These tags have also been found useful for labeling large protein quantities from cell lysates before TiO2 enrichment in quantitative phosphoproteomics studies [267].

Hyperplexing or higher-order multiplexing

Some studies have combined metabolic labels (e.g., SILAC) with chemical tags (e.g., iTRAQ or TMT) to expand the multiplexing capacity of proteomics experiments, an approach referred to as hyperplexing [268,269] or higher-order multiplexing [270,271,272]. This technique combines MS1- and MS2-based quantitative methods to achieve enhanced multiplexing by multiplying the channels used in each dimension. This allows for the quantitation of proteomes across multiple samples in a single MS run. The technique uses two types of mass encoding to label different biological samples. The labeled samples are then mixed together, which increases the MS1 peptide signal. Protein turnover rates were studied using SILAC-iTRAQ multitagging [273], while various cPILOT studies employed MS1 dimethyl labeling with iTRAQ [274,275,276,277]. SILAC-TMT hyperplexing was used to study the temporal response to rapamycin in yeast [278]. A SILAC-iTRAQ-TAILS method was developed to study matrix metalloproteinases in the secretomes of keratinocytes and fibroblasts [279]. TMT-SILAC hyperplexing was used to study synthesis and degradation rates in human fibroblasts [280]. Variants of SILAC-iTRAQ and BONCAT, namely BONPlex [281] and MITNCAT [282], were also developed to study temporal proteome dynamics.
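The multiplication of channels across the MS1 and MS2 dimensions can be sketched as a Cartesian product. The example below assumes a hypothetical design with three SILAC states and a 6-plex isobaric tag set; the label names are illustrative and do not describe any of the cited studies.

```python
from itertools import product


def hyperplex_channels(ms1_labels, ms2_tags):
    """Enumerate every (MS1 label, MS2 tag) combination available for samples."""
    return list(product(ms1_labels, ms2_tags))


silac_states = ["light", "medium", "heavy"]                   # MS1 dimension
isobaric_tags = ["126", "127", "128", "129", "130", "131"]    # MS2 dimension

channels = hyperplex_channels(silac_states, isobaric_tags)
print(f"{len(silac_states)} x {len(isobaric_tags)} = {len(channels)} samples per run")
print(channels[:3])
```

Each sample is assigned one combination, so the capacity of the combined experiment is the product, not the sum, of the channels in each dimension.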

6. Peptide/Protein Enrichment and Depletion

In order to study low abundance protein modifications, or to study rare proteins in complex mixtures, various methods have been developed to enrich or deplete specific proteins or peptides.

Peptide enrichment

Glycosylation

Mass spectrometry-based analysis of protein glycosylation has emerged as the premier technology to characterize such a universal and diverse class of biomolecules. Glycosylation is a heterogeneous post-translational modification that decorates many proteins within the proteome, conferring broad changes in protein activity [58,283]. This PTM can take many forms. The covalent linkage of mono- or oligosaccharides to polypeptide backbones through a nitrogen atom of asparagine (N) or an oxygen atom of serine (S) or threonine (T) side chains creates N- and O-glycans, respectively. The heterogeneity of proteoglycans is not directly tied to the genome, and thus cannot be inferred from it. Rather, the abundance and activity of protein glycosylation are governed by glycosyltransferases and glycosidases, which add and remove glycans, respectively. The fields of glycobiology and bioanalytical chemistry are intricately intertwined, with mass spectrometry at the center thanks in part to its power to detect any modification that imparts a mass shift.

Due to the myriad glycan structures and the proteins that harbor them, the enrichment of glycoproteins or glycopeptides is not as streamlined as that of other PTMs [284]. Enriching the glycoproteome from the greater proteome inherently introduces bias prior to LC-MS/MS analysis. For optimal LC-MS/MS results, one must decide which class or classes of glycopeptides are of interest before enrichment. Glycopeptides can be enriched via glycan affinity (for example, to glycan-binding proteins), chemical properties such as charge or hydrophilicity, chemical coupling of glycans to stationary phases, and bioorthogonal chemical biology approaches. Glycan affinity-based enrichment strategies include the use of lectins, antibodies, inactivated enzymes, immobilized metal affinity chromatography (IMAC), and metal oxide affinity chromatography (MOAC). Strategies that enrich glycopeptides by their chemical properties, for example biopolymer charge and hydrophobicity, include hydrophilic interaction chromatography (HILIC), electrostatic repulsion-hydrophilic interaction chromatography (ERLIC), and porous graphitic carbon (PGC). One variation of ERLIC, which combines strong anion exchange, electrostatic repulsion, and hydrophilic interaction chromatography (SAX-ERLIC), has risen in popularity thanks to its robustness and commercially available enrichment kits [285,286].

Chemical coupling methods most often used to enrich the glycoproteome employ hydrazide chemistry for sialylated glycopeptides. Glycans are cleaved from the stationary phase by PNGase F. The dependence of chemical coupling methods on PNGase F biases their output toward N-glycopeptides. Alkoxyamine compounds and boronic acid-based methods have also shown utility. We direct readers to several reviews on glycopeptide enrichment strategies [284,287,288,289,290].

Phosphoproteomics

Protein phosphorylation, a hallmark of protein regulation, dictates protein interactions, signaling, and cellular viability. This post-translational modification (PTM) involves the installation of a negatively charged phosphate moiety (PO4-) onto the hydroxyl side chain of serine (Ser, S), threonine (Thr, T), and tyrosine (Tyr, Y) residues on target proteins. Protein kinases catalyze the transfer of the PO4- group from ATP to the nucleophilic hydroxyl (OH) group of serine, threonine, and tyrosine residues, while protein phosphatases catalyze the removal of PO4-. Phosphorylation changes the charge of a protein, often altering protein conformation and therefore function [291]. Protein phosphorylation is one of the major PTMs and alters the stability, subcellular location, enzymatic activity, complex formation, degradation, and signaling of proteins with diverse roles in cells [293]. Phosphorylation can regulate almost all cellular processes, including metabolism, growth, division, differentiation, apoptosis, and signal transduction pathways [32]. Rapid changes in protein phosphorylation are associated with several diseases [294].

Several methods are used to characterize phosphorylation using modification-specific enrichment techniques combined with advanced MS/MS methods and computational data analysis [295]. MS-based phosphoproteomics tools are pivotal for the comprehensive study of the structure and dynamics of cellular signaling networks [296], but there are many challenges [297]. For example, phosphopeptides are present at low stoichiometry compared to non-phosphorylated peptides, which makes them difficult to identify. Phosphopeptides also exhibit low ionization efficiency [298]. To overcome these challenges and detect large numbers of phosphorylation sites, it is important to reduce sample complexity. This is accomplished by enriching the modified proteins and/or peptides [299,300,301]. Prefractionation techniques such as strong anion-exchange chromatography (SAX), strong cation-exchange chromatography (SCX), and hydrophilic interaction liquid chromatography (HILIC) are also often useful for reducing sample complexity before enrichment to observe more phosphorylation sites [302].

As with any proteomics experiment, phosphoproteomics studies require protein extraction, proteolytic enzyme digestion, phosphopeptide enrichment, peptide fractionation, LC-MS/MS, bioinformatics data analysis, and biological function inference. Special consideration is required during protein extraction, where the cell lysis buffer should include phosphatase inhibitors such as sodium orthovanadate, sodium pyrophosphate, and beta-glycerophosphate [303].