Abstract

Sepsis, a complex medical condition that involves severe infections with life-threatening organ dysfunction, is a leading cause of death worldwide. Treatment of sepsis is highly challenging. When making treatment decisions, clinicians and patients desire accurate predictions of mean residual life (MRL) that leverage all available patient information, including longitudinal biomarker data. Biomarkers are biological, clinical, and other variables reflecting disease progression that are often measured repeatedly on patients in the clinical setting. Dynamic prediction methods leverage accruing biomarker measurements to improve performance, providing updated predictions as new measurements become available. We introduce two methods for dynamic prediction of MRL using longitudinal biomarkers. In both methods, we begin by using long short-term memory networks (LSTMs) to construct encoded representations of the biomarker trajectories, referred to as “context vectors.” In our first method, the LSTM-GLM, we dynamically predict MRL via a transformed MRL model that includes the context vectors as covariates. In our second method, the LSTM-NN, we dynamically predict MRL from the context vectors using a feed-forward neural network. We demonstrate the improved performance of both proposed methods relative to competing methods in simulation studies. We apply the proposed methods to dynamically predict the restricted mean residual life (RMRL) of septic patients in the intensive care unit using electronic medical record data. We demonstrate that the LSTM-GLM and the LSTM-NN are useful tools for producing individualized, real-time predictions of RMRL that can help inform the treatment decisions of septic patients.

Keywords: Biomarker, dynamic prediction, electronic medical record, long short-term memory network, longitudinal data, MIMIC-III, neural network, residual life, sepsis, transformed mean residual life model

1. Introduction.

When making treatment decisions, clinicians and patients often desire accurate predictions of remaining life expectancy that leverage all available patient information, including longitudinal biomarker data. The National Institutes of Health (NIH) defines a biomarker as “a characteristic that is objectively measured and evaluated as an indicator of normal biological processes, pathogenic processes, or pharmacologic responses to a therapeutic intervention” (Strimbu and Tavel (2010)). Longitudinal biomarker measurements, such as blood pressure, ventilator dependence, and white blood cell count, are commonly available in electronic medical records (EMRs). The recent proliferation of EMRs has led to a growing interest in using longitudinal biomarker data with “dynamic” prediction methods, which provide updated predictions as new biomarker measurements become available. Clinicians and patients are especially interested in using longitudinal biomarker data to dynamically predict mean residual life (MRL), the remaining life expectancy of a patient at time $t$, given the patient has survived up to time $t$.

MRL prediction is of particular interest for patients diagnosed with sepsis, a complex medical condition that involves severe infections with life-threatening organ dysfunction (Singer et al. (2016)). Sepsis is a leading cause of death worldwide (Singer et al. (2016)). Although international guidelines for sepsis treatment have been established, treating septic patients remains highly challenging (Evans et al. (2021)). The heterogeneity of septic patient populations results in differing responses to medical intervention (László et al. (2015)). Dynamic predictions of mean residual life provide clinicians with individualized, real-time information that can help inform the treatment decisions of septic patients.

We dynamically predict the restricted mean residual life (RMRL) of septic patients in the intensive care unit (ICU) from EMR data. We conduct our study using a data set constructed from the Medical Information Mart for Intensive Care (MIMIC-III) database. MIMIC-III is a freely available database comprising deidentified health records for over 40,000 patients who stayed in the critical care units at Beth Israel Deaconess Medical Center between 2001 and 2012 (Johnson et al. (2016)). MIMIC-III contains data on patients’ demographics, vital signs, laboratory measurements, medications, imaging reports, chart notes, procedure codes, diagnostic codes, hospital stay, and survival. For a complete description of the MIMIC-III database, refer to Johnson et al. (2016).

In 2016, the definitions and clinical criteria for sepsis and septic shock were updated in the Third International Consensus Definitions for Sepsis and Septic Shock (Sepsis-3) (Singer et al. (2016)). Sepsis-3 defines sepsis as a “life-threatening organ dysfunction caused by a dysregulated host response to infection” and provides clinical criteria for diagnosing septic patients (Singer et al. (2016)). Komorowski (2019) developed code to identify patients in MIMIC-III fulfilling the Sepsis-3 criteria. Komorowski’s code pulls relevant physiological parameters for each patient from up to 24 hours preceding their sepsis diagnosis until 48 hours after. The code aggregates the data into four-hour time windows, recording an appropriate summary statistic when several measurements were taken in the same time window. We use the code to construct our studied data set which contains 48 covariates measured on 20,954 patients. We observe time of death for less than 15% of the septic patients.

Due to the computational burden of repeated model fitting for performance evaluation, we study a reduced set of covariates that were identified to be important predictors of mortality for septic patients in relevant studies and exploratory data analysis (Carrara, Baselli and Ferrario (2015), Hou et al. (2020)). The selected covariates include a single baseline covariate and 15 longitudinal biomarkers. The baseline covariate of interest is an indicator of whether the patient was previously admitted to the ICU during the given hospital stay. The longitudinal biomarkers of interest include two treatment variables: the median dosage of vasopressors provided to the patient in the given four-hour time-window and the cumulative amount of intravenous (IV) fluid administered to the patient since hospital admission. We also study a longitudinal indicator of mechanical ventilator dependence as well as 12 vital signs and laboratory values: albumin, calcium, magnesium, hemoglobin, arterial lactate, arterial pH, fraction of inspired oxygen (FiO2), peripheral oxygen saturation (SpO2), Sequential Organ Failure Assessment (SOFA) score, respiratory rate, heart rate, and systolic blood pressure.

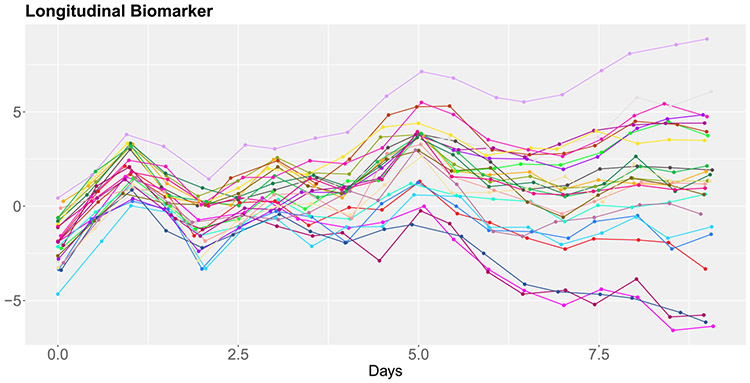

Biomarker trajectories of 25 randomly selected patients are illustrated in Figure 1. The longitudinal biomarker trajectories are complex functions of time that vary notably among patients. Additionally, the number of biomarker measurements differs among patients. These complexities make it difficult to formulate a parametric model that fully captures the biomarker processes and their relationship with MRL.

Fig. 1. The biomarker trajectories of magnesium (left) and systolic blood pressure (right) for 25 randomly selected septic patients in MIMIC-III.

The body of literature addressing how to model MRL with covariates measured only at baseline is vast. Popular baseline MRL models include proportional MRL models (Maguluri and Zhang (1994)), additive MRL models (Chen (2007)), and transformed MRL models (Sun and Zhang (2009)). Although Sun, Song and Zhang (2012) extended the family of transformed MRL models to accommodate time-dependent coefficients, none of the aforementioned models accommodate time-dependent covariates. Thus, they cannot be used to conduct dynamic prediction of MRL with longitudinal biomarker data.

A number of dynamic prediction models for survival risk have been developed via the landmarking approach (Zheng and Heagerty (2005), Van Houwelingen (2007), van Houwelingen and Putter (2012), Rizopoulos, Molenberghs and Lesaffre (2017), Zhu, Li and Huang (2019)). In a landmark analysis, a prediction model is fit at a series of time points, referred to as “landmark times,” using only data collected prior to the landmark time on patients at risk at the landmark time. Lin et al. (2018) used the landmarking approach to incorporate longitudinal covariates into the transformed MRL model presented by Sun, Song and Zhang (2012), effectively creating a dynamic prediction model for MRL.

To synthesize the longitudinal biomarker trajectories, Lin et al. (2018) proposed modeling the biomarkers using functional principal components analysis (FPCA). FPCA extracts dominant features from longitudinal trajectories as functional principal component (FPC) scores. Under the FPCA framework, Lin et al. (2018) introduced “window-specific FPC scores” that summarize a given longitudinal biomarker trajectory from baseline to the time of prediction. The authors proposed including the window-specific FPC scores as time-dependent covariates in their dynamic prediction model for MRL.

To calculate window-specific FPC scores at a given prediction time, Lin et al. (2018) presented a method that uses measurements collected from baseline to maximum follow-up time. Consequently, when the window-specific FPC scores are included as predictors in a dynamic MRL model, information collected after the time of prediction is used to predict MRL. This is undesirable in the dynamic prediction setting, where we wish to predict MRL using only the information available at the time of prediction.

Building on the work of Lin et al. (2018), we introduce two new methods to dynamically predict MRL from longitudinal biomarkers. The methods offer two potential advantages. First, the proposed methods uphold the dynamic nature of prediction. Second, the proposed methods may more effectively synthesize the complex longitudinal biomarker trajectories observed in MIMIC-III.

The proposed methods use long short-term memory networks (LSTMs) to construct “window-specific context vectors” which summarize the biomarker trajectories from baseline to the time of prediction. LSTMs are especially suitable for constructing summaries of biomarker trajectories because they are capable of modeling complex, heterogeneous functions. Thus, LSTMs are an attractive alternative to FPCA for synthesizing the MIMIC-III biomarkers. To uphold the dynamic nature of prediction, the LSTMs construct the window-specific context vectors using only the information available at the time of prediction.

The first proposed method, the long short-term memory generalized linear model (LSTM-GLM), dynamically predicts MRL using a dynamic transformed MRL model that includes the baseline covariates and window-specific context vectors as predictors. The second proposed method, the long short-term memory neural network (LSTM-NN), dynamically predicts MRL from the baseline covariates and window-specific context vectors using a feed-forward neural network. We apply the LSTM-GLM and the LSTM-NN to dynamically predict the RMRL of septic patients in MIMIC-III. We demonstrate that the LSTM-GLM and the LSTM-NN produce accurate, individualized, dynamic predictions. Thus, the LSTM-GLM and the LSTM-NN can be used to inform the challenging treatment decisions of patients diagnosed with sepsis.

In Section 2, we introduce the dynamic transformed MRL model, and we present the LSTM-GLM and the LSTM-NN. In Section 3, we describe the procedure used to evaluate prediction performance. In Section 4, we present simulation studies, and in Section 5, we apply the proposed methods to predict the RMRL of septic patients in MIMIC-III. We conclude with a discussion of implications and open problems in Section 6.

2. Methods.

2.1. Notation.

Let there be $n$ patients, and let $t_{ij}$ denote the time at which biomarker measurement $j$ was collected on patient $i$, $j = 1, \ldots, m_i$. We study $n = 20{,}954$ septic patients, each with $m_i$ measurements. Let $T_i$ and $C_i$ denote the potential times to death and censoring, respectively, for patient $i$. We observe only $U_i = \min(T_i, C_i)$ and $\delta_i = I(T_i \le C_i)$, the indicator of whether the death of patient $i$ was observed ($\delta_i = 1$) or censored ($\delta_i = 0$). Let $Z_i$ denote the $p$-dimensional vector of baseline covariates measured on patient $i$. We study $p = 1$ baseline covariate, an indicator of whether patient $i$ was previously admitted to the ICU during the given hospital stay. Let $X_i(t)$ denote the $q$-dimensional vector of longitudinal biomarkers measured on patient $i$ at time $t$. We study $q = 15$ longitudinal biomarkers, including two treatment variables, an indicator of mechanical ventilator dependence, and 12 vital signs and laboratory values. Denote the covariate history of patient $i$ at time $t$ as $H_i(t) = \{Z_i, \bar{X}_i(t)\}$, where $\bar{X}_i(t) = \{X_i(t_{ij}) : t_{ij} \le t\}$. At time $t$, the observed data for patient $i$ are $\{U_i, \delta_i, H_i(t)\}$. The mean residual life (MRL) of patient $i$ at time $t$, given the patient’s covariate history, is $m\{t \mid H_i(t)\} = E\{T_i - t \mid T_i > t, H_i(t)\}$.

To avoid infinite remaining life expectancy and extreme propensity weights (see Section 2.2), we set a restricted lifetime of $L$ days. Our potential survival time of interest is then $\min(T_i, L)$, and the restricted mean residual life (RMRL) for patient $i$ at time $t$, given the patient’s covariate history, is $E\{\min(T_i, L) - t \mid T_i > t, H_i(t)\}$. We use the dynamic prediction methods presented in Section 2 to predict the RMRL of septic patients by redefining $U_i = \min(T_i, L, C_i)$ and $\delta_i = I\{\min(T_i, L) \le C_i\}$.

2.2. Dynamic transformed MRL model.

Building on the work of Lin et al. (2018), we present a dynamic transformed MRL model that regresses residual life only on information collected prior to the time of prediction on patients at risk at the time of prediction. Let $f$ be a vector-valued function, and specify a prediction time $s$. For patients with $U_i > s$, define the $d$-dimensional vector $W_i(s) = f\{\bar{X}_i(s)\}$, where $\bar{X}_i(s)$ is the history of biomarker measurements collected on patient $i$ up to time $s$. Additionally, let $g$ be a prespecified, nonnegative link function that is twice continuously differentiable and strictly increasing. We specify the dynamic transformed MRL model as

$$E\{T_i - s \mid T_i > s, Z_i, W_i(s)\} = g\{\beta_0(s) + \beta_Z(s)^\top Z_i + \beta_W(s)^\top W_i(s)\}, \tag{1}$$

where $\beta_0(s)$ is a scalar, time-dependent parameter, $\beta_Z(s)$ is a $p$-dimensional, time-dependent parameter vector, and $\beta_W(s)$ is a $d$-dimensional, time-dependent parameter vector.

Define , where is an estimate of the survival function of censoring time. We estimate the parameters in equation (1) via a landmarking approach. Contrary to Lin et al. (2018), we do not adopt a supermodel approach for parameter estimation (Van Houwelingen (2007), van Houwelingen and Putter (2012)). Instead, we prespecify a set of positive prediction times . For each , we use penalized maximum likelihood methods (Friedman, Hastie and Tibshirani (2010)) to compute the values of , , and that minimize the objective function

where is a scalar tuning parameter and represents the -norm. The inverse probability of censoring weights account for censoring in the data. We assume censoring time is independent of the baseline covariates and longitudinal biomarkers, and we estimate using the Kaplan–Meier estimator. Alternatively, if censoring time is assumed to depend on only the baseline covariates, a Cox regression model can be used to estimate .
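The inverse probability of censoring weights can be illustrated with a short sketch. It assumes the common form in which an observed death receives weight one over the Kaplan–Meier estimate of the censoring survival function and a censored observation receives weight zero. The function names, and the choice to evaluate the estimate just before each observed time, are assumptions made for illustration rather than details confirmed by the text.

```python
import numpy as np

def km_censoring_survival(u, delta):
    """Kaplan-Meier estimate of P(C > t), treating censoring (delta == 0) as the event.

    Returns the sorted distinct observed times and the estimate just after each time.
    """
    u = np.asarray(u, dtype=float)
    delta = np.asarray(delta, dtype=int)
    times = np.unique(u)
    surv = np.empty_like(times)
    current = 1.0
    for k, t in enumerate(times):
        at_risk = np.sum(u >= t)
        n_censored = np.sum((u == t) & (delta == 0))
        current *= 1.0 - n_censored / at_risk
        surv[k] = current
    return times, surv

def ipcw_weights(u, delta):
    """Weight delta_i / S_C(U_i-): zero for censored patients (assumed convention)."""
    u = np.asarray(u, dtype=float)
    delta = np.asarray(delta, dtype=int)
    times, surv = km_censoring_survival(u, delta)
    # Index of the largest distinct time strictly below U_i (-1 if there is none).
    idx = np.searchsorted(times, u, side="left") - 1
    s_left = np.where(idx >= 0, surv[np.clip(idx, 0, None)], 1.0)
    return np.where(delta == 1, 1.0 / s_left, 0.0)

# Hypothetical observed times and death indicators for five patients.
weights = ipcw_weights([2, 3, 3, 5, 7], [1, 0, 1, 0, 1])
print(weights)
```

Patients whose deaths are observed late receive larger weights because they stand in for comparable patients who were censored earlier.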

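We impose a penalty on the regression parameters in the objective function to prevent overfitting. To ensure fair penalization, we apply proportion-of-maximum scaling (POMS) to the longitudinal biomarkers such that, for biomarker $k$,

$$X^*_{ik}(t_{ij}) = \frac{X_{ik}(t_{ij}) - \min_k}{\max_k - \min_k},$$

where $\min_k$ and $\max_k$ denote the minimum and maximum of the observed measurements of biomarker $k$.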
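Proportion-of-maximum scaling maps each biomarker onto the unit interval so that penalized coefficients are comparable across biomarkers. The following is a minimal sketch. The helper name `poms_scale` is hypothetical, and taking the minimum and maximum over all observed values of a biomarker is an assumption.

```python
import numpy as np

def poms_scale(values):
    """Rescale one biomarker to [0, 1] as (x - min) / (max - min), ignoring NaNs."""
    values = np.asarray(values, dtype=float)
    lo = np.nanmin(values)
    hi = np.nanmax(values)
    if hi == lo:
        # A constant biomarker has no spread; map observed values to 0.
        return np.where(np.isnan(values), np.nan, 0.0)
    return (values - lo) / (hi - lo)

# Hypothetical magnesium measurements with one missing value.
scaled = poms_scale([1.8, 2.0, np.nan, 2.4])
print(scaled)
```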

Conducting dynamic prediction of MRL with $W_i(s) = X_i(s)$, the biomarker measurements taken at the time of prediction, is difficult in practice. Often, not all patients have longitudinal measurements available at all desired prediction times $s$. In the MIMIC-III data set, longitudinal measurements are recorded at four-hour time intervals. Over 80% of patients are missing at least one measurement, and over 25% of patients are missing at least 10 measurements for all studied longitudinal biomarkers. Parametric models of $X_i(t)$ that could be used to impute missing measurements are likely to be misspecified due to the complex, heterogeneous nature of biomarker processes. Moreover, regressing MRL only on the biomarker measurements taken at the time of prediction discards the information contained in the history of measurements.

Intuitively, it is desirable to select a function $f$ that summarizes the biomarker trajectories from baseline to prediction time $s$. However, a simple summary function, such as average or slope, is unlikely to capture the complex trajectories of the longitudinal biomarkers. To address these complications, Lin et al. (2018) proposed summarizing the biomarker trajectories from baseline to prediction time $s$ using window-specific FPC scores. Alternatively, we propose summarizing the trajectories using window-specific context vectors constructed by LSTM autoencoders.

2.3. Context vector construction.

The window-specific context vector $v_{ik}(s)$ is an encoded representation of the trajectory of biomarker $k$ from baseline to prediction time $s$ for patient $i$. At each prediction time $s$, a distinct LSTM autoencoder is used to construct each of the $q$ sets of window-specific context vectors. An LSTM autoencoder is an unsupervised neural network that learns how to best encode temporal input into a context vector, so it can then reconstruct the original input from that context vector. To uphold the dynamic nature of prediction, the LSTM autoencoder used to construct $v_{ik}(s)$ only accepts as input the biomarker measurements collected prior to time $s$ on patients at risk at time $s$, where patient $i$ is defined to be at risk at time $s$ if $U_i > s$.
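The restriction to at-risk patients and to measurements available at the prediction time can be sketched as a simple filtering step. The function name, the long-format input layout, and the inclusion of measurements taken exactly at the prediction time are assumptions made for illustration.

```python
import numpy as np

def landmark_inputs(patient_ids, times, values, follow_up, s):
    """Collect, per at-risk patient, the measurements of one biomarker taken by time s.

    follow_up maps each patient id to the observed follow-up time U_i.
    Patients with U_i <= s are not at risk at s and are excluded.
    """
    patient_ids = np.asarray(patient_ids)
    times = np.asarray(times, dtype=float)
    values = np.asarray(values, dtype=float)
    sequences = {}
    for pid, u in follow_up.items():
        if u <= s:
            continue  # not at risk at the prediction time
        keep = (patient_ids == pid) & (times <= s)
        order = np.argsort(times[keep])
        sequences[pid] = values[keep][order]
    return sequences

# Hypothetical long-format data: three patients measured every four hours.
ids = ["a", "a", "a", "b", "b", "c"]
t = [0, 4, 8, 0, 4, 0]
x = [1.0, 2.0, 3.0, 5.0, 6.0, 9.0]
u = {"a": 20.0, "b": 3.0, "c": 10.0}
print(landmark_inputs(ids, t, x, u, s=4.0))
```

Sequences may differ in length across patients, which is why no imputation step appears in the sketch.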

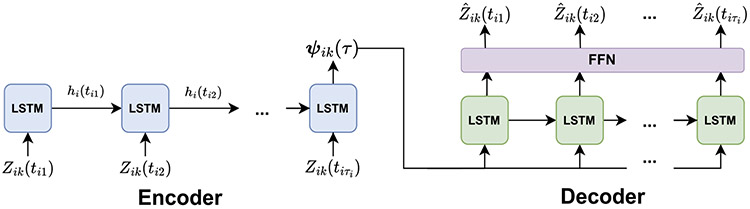

An LSTM autoencoder, which consists of an encoder and a decoder, is illustrated in Figure 2. We first apply proportion-of-maximum scaling to the biomarker data and let $X^*_{ik}(s)$ be the $m_i(s)$-dimensional vector of scaled measurements of biomarker $k$ collected on patient $i$ prior to time $s$. For each patient $i$ with $U_i > s$, $X^*_{ik}(s)$ is input into the encoder. The encoder compresses $X^*_{ik}(s)$ into the window-specific context vector $v_{ik}(s)$. The decoder then constructs an estimate of the input scaled biomarker measurements, $\hat{X}^*_{ik}(s)$, from $v_{ik}(s)$. The autoencoder is trained to minimize the reconstruction error, that is, the discrepancy between $X^*_{ik}(s)$ and $\hat{X}^*_{ik}(s)$.

Fig. 2. An LSTM autoencoder at prediction time $s$, comprising an encoder and a decoder. Both the encoder and decoder consist of a series of LSTM temporal units, labelled “LSTM.” The decoder also contains a feed-forward neural network layer, labelled “FFN.” The encoder compresses the input biomarker measurements into the window-specific context vector, and the decoder attempts to reconstruct the original biomarker measurements from that context vector. Information is passed between the LSTM temporal units via the hidden vectors.

After training the autoencoder, the decoder can be removed from the network, so the encoder outputs the context vector directly to the user.

In an LSTM autoencoder, both the encoder and decoder are a type of recurrent neural network called a “long short-term memory network.” Recurrent neural networks (RNNs) are a class of artificial neural networks designed to process sequential data. RNNs contain a feedback loop that enables information from previous time steps to be passed to future time steps. The information is passed in an $r$-dimensional vector $h$, referred to as a “hidden vector.” The parameters in an RNN are estimated via the backpropagation through time (BPTT) algorithm (Werbos (1990)). In the BPTT algorithm, derivatives are multiplied across time steps. Consequently, in RNNs with a large number of time steps, if the derivatives are consistently greater than one in magnitude, the gradients will increase exponentially and “explode.” If the derivatives are consistently less than one in magnitude, the gradients will decrease exponentially and “vanish.” This is referred to as the “vanishing and exploding gradient problem” (Aggarwal (2018)). The vanishing and exploding gradient problem makes it difficult for simple RNNs to capture long-term dependencies. LSTMs were designed specifically to mitigate the vanishing and exploding gradient problem.
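A scalar toy calculation shows how quickly repeated multiplication of derivatives changes the size of a gradient. The sketch assumes, purely for illustration, that every time step contributes the same derivative factor `w`; the function name is hypothetical.

```python
def gradient_scale(w: float, n_steps: int) -> float:
    """Magnitude of a gradient after the same per-step derivative w is multiplied n_steps times."""
    return abs(w) ** n_steps

# Factors below, at, and above one over 50 time steps.
for w in (0.5, 1.0, 1.5):
    print(w, gradient_scale(w, 50))
```

A factor of 0.5 shrinks the gradient to a negligible size within 50 steps, while a factor of 1.5 inflates it by many orders of magnitude.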

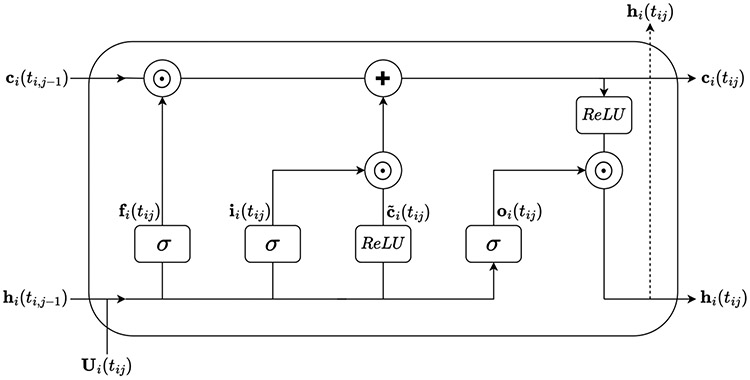

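An LSTM can be conceptualized as a network of temporal units, with a single temporal unit corresponding to each time step in the data. Figure 3 depicts the LSTM temporal unit corresponding to time $t_{ij}$. LSTM networks mitigate the vanishing and exploding gradient problem using an $r$-dimensional vector $c_{ij}$, referred to as the “cell state.” Conceptually, the cell state can be thought of as a pseudo long-term memory that retains information from previous time steps (Aggarwal (2018)). The cell state is controlled by three $r$-dimensional gate control signals: the input gate $\mathbf{i}_{ij}$, the forget gate $\mathbf{f}_{ij}$, and the output gate $\mathbf{o}_{ij}$. These gate control signals determine which information in the cell state is updated, discarded, and output to the next time step, respectively. Let $x_{ij}$ represent the $a$-dimensional input vector for patient $i$ at time $t_{ij}$, and let $h_{ij}$ represent the $r$-dimensional hidden vector output for patient $i$ at time $t_{ij}$. Then the three gate control signals for patient $i$ are characterized by the equations

$$\mathbf{i}_{ij} = \sigma(W_{\mathbf{i}} x_{ij} + V_{\mathbf{i}} h_{i,j-1} + b_{\mathbf{i}}), \quad \mathbf{f}_{ij} = \sigma(W_{\mathbf{f}} x_{ij} + V_{\mathbf{f}} h_{i,j-1} + b_{\mathbf{f}}), \quad \mathbf{o}_{ij} = \sigma(W_{\mathbf{o}} x_{ij} + V_{\mathbf{o}} h_{i,j-1} + b_{\mathbf{o}}),$$

where $\sigma(\cdot)$ is the elementwise sigmoid function and, for each gate $g \in \{\mathbf{i}, \mathbf{f}, \mathbf{o}\}$, $W_g$ is an $r \times a$ parameter matrix, $V_g$ is an $r \times r$ parameter matrix, and $b_g$ is an $r \times 1$ bias vector. Note that $W_g$, $V_g$, and $b_g$ are gate-specific and temporally shared.

Fig. 3. An LSTM temporal unit at time $t_{ij}$.

At time $t_{ij}$, an $r$-dimensional candidate cell state for patient $i$, $\tilde{c}_{ij}$, is computed as

$$\tilde{c}_{ij} = \tanh(W_c x_{ij} + V_c h_{i,j-1} + b_c),$$

where $W_c$ is an $r \times a$ parameter matrix, $V_c$ is an $r \times r$ parameter matrix, and $b_c$ is an $r \times 1$ bias vector. Again, $W_c$, $V_c$, and $b_c$ are temporally shared parameters.

At time $t_{ij}$, the cell state for patient $i$, $c_{ij}$, is then computed as

$$c_{ij} = \mathbf{f}_{ij} \odot c_{i,j-1} + \mathbf{i}_{ij} \odot \tilde{c}_{ij},$$

where $\odot$ represents the Hadamard product.

Ultimately, each temporal unit outputs a hidden vector for patient $i$, $h_{ij}$, computed as

$$h_{ij} = \mathbf{o}_{ij} \odot \tanh(c_{ij}).$$

The hidden vector is then passed to the next temporal unit. Additionally, it may be output to the next layer in the LSTM autoencoder.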
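The computations inside one temporal unit can be sketched in NumPy using the standard LSTM update. The parameter layout (a dictionary keyed by gate), the function names, and the zero initial hidden and cell states are assumptions made for illustration; in practice the networks are built with a deep learning library rather than by hand.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM temporal unit: returns the new hidden vector and cell state.

    params maps "i", "f", "o", "c" to (W, V, b) with shapes (r, a), (r, r), (r,).
    """
    gates = {}
    for name in ("i", "f", "o"):
        W, V, b = params[name]
        gates[name] = sigmoid(W @ x + V @ h_prev + b)
    W, V, b = params["c"]
    c_tilde = np.tanh(W @ x + V @ h_prev + b)         # candidate cell state
    c = gates["f"] * c_prev + gates["i"] * c_tilde     # Hadamard products
    h = gates["o"] * np.tanh(c)
    return h, c

def encode(sequence, params, r):
    """Run the units over a scalar sequence; the last hidden vector is the context vector."""
    h, c = np.zeros(r), np.zeros(r)
    for x in sequence:
        h, c = lstm_step(np.atleast_1d(float(x)), h, c, params)
    return h

def init_params(r, a, rng):
    """Random, untrained parameters (hypothetical initialization)."""
    return {name: (rng.normal(size=(r, a)), rng.normal(size=(r, r)), np.zeros(r))
            for name in ("i", "f", "o", "c")}

rng = np.random.default_rng(0)
params = init_params(r=3, a=1, rng=rng)
# Sequences of different lengths produce context vectors of the same dimension.
print(encode([0.2, 0.4, 0.9], params, r=3))
print(encode([0.5], params, r=3))
```

Because the same parameters are reused at every step, the encoder accepts any number of measurements per patient.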

At each prediction time $s$, for each of the $q$ biomarkers, we train a separate LSTM autoencoder to construct the window-specific context vectors for all patients $i$ such that $U_i > s$. Let the superscript $E$ signify elements of the encoder, and let the superscript $D$ signify elements of the decoder. Each encoder accepts as input the scaled measurements of biomarker $k$ collected at times $t_{ij} \le s$ on patients with $U_i > s$. For a given patient $i$, each biomarker measurement is input into a separate LSTM temporal unit, so $a^E = 1$ and $x^E_{ij} = X^*_{ik}(t_{ij})$ for $j = 1, \ldots, m_i(s)$, where $m_i(s)$ is the number of measurements collected on patient $i$ by time $s$. The hidden vector for patient $i$ output by the last temporal unit is taken to be the context vector for patient $i$, so $v_{ik}(s) = h^E_{i, m_i(s)}$.

Similar to the encoder, the decoder contains an LSTM temporal unit corresponding to each biomarker measurement. In the decoder, each LSTM temporal unit accepts the context vector for patient $i$ as input, so $a^D = r$ and $x^D_{ij} = v_{ik}(s)$ for $j = 1, \ldots, m_i(s)$. Each temporal unit outputs the hidden vector $h^D_{ij}$, which is fed into a feed-forward neural network (FFN) layer. At each measurement time $t_{ij} \le s$, the FFN layer constructs an estimate of the scaled biomarker measurement for patient $i$, $\hat{X}^*_{ik}(t_{ij})$, from the hidden vector $h^D_{ij}$ via linear regression. Specifically,

$$\hat{X}^*_{ik}(t_{ij}) = \gamma^\top h^D_{ij} + \gamma_0,$$

where $\gamma$ is an $r$-dimensional, temporally shared parameter vector, and $\gamma_0$ is a temporally shared scalar bias.

As previously mentioned, the LSTM autoencoder is trained to minimize the reconstruction error between the scaled biomarker measurements and their reconstructions. After training the autoencoder for biomarker $k$ at prediction time $s$, the window-specific context vector $v_{ik}(s)$ can be extracted for each patient $i$ such that $U_i > s$. Because each window-specific context vector is constructed using only measurements taken at times $t_{ij} \le s$ on patients with $U_i > s$, $v_{ik}(s)$ can be used to conduct dynamic prediction. Moreover, since the number and timing of biomarker measurements can differ between patients, imputation of missing or irregularly measured biomarkers is unnecessary.

Each LSTM autoencoder has several hyperparameters that influence how well the output window-specific context vectors summarize the input biomarker trajectories. Important hyperparameters include the dimension of the window-specific context vector, $r$, and the number of times the BPTT algorithm processes the entire data set, referred to as the number of “training epochs.” Too many training epochs can lead to overfitting the data, and too few can lead to underfitting. These hyperparameters can be selected via traditional tuning methods such as hold-out validation or cross-validation. In Section 1 of the Supplementary Material (Rhodes, Davidian and Lu (2023)), we present an automated approach for selecting these hyperparameters for the LSTM-GLM.

2.4. LSTM-GLM.

First, we dynamically predict MRL using a dynamic transformed MRL model that includes the baseline covariates and window-specific context vectors as predictors. Let $V_i(s) = \{v_{i1}(s)^\top, \ldots, v_{iq}(s)^\top\}^\top$ be an $rq$-dimensional vector containing the biomarker-specific, window-specific context vectors for patient $i$ at prediction time $s$. Additionally, let $g$ be a prespecified, nonnegative link function that is twice continuously differentiable and strictly increasing. The LSTM-GLM is specified as

$$E\{T_i - s \mid T_i > s, Z_i, V_i(s)\} = g\{\beta_0(s) + \beta_Z(s)^\top Z_i + \beta_V(s)^\top V_i(s)\}. \tag{2}$$

The LSTM-GLM is a special case of the dynamic transformed MRL model, specified in equation (1), where $W_i(s) = V_i(s)$. Accordingly, we estimate the parameters in equation (2) via penalized maximum likelihood by adopting the landmarking approach detailed in Section 2.2. Because a separate context vector is constructed for each biomarker, the parameter estimates of the LSTM-GLM can be used to gain insight into the relationship between the longitudinal biomarkers and mean residual life.

2.5. LSTM-NN.

Next, we introduce an alternative method for dynamic prediction of MRL from window-specific context vectors. The LSTM-NN dynamically predicts MRL using a feed-forward neural network that accepts the baseline covariates and window-specific context vectors as input. Compared to generalized linear models, feed-forward neural networks are more capable of modeling complex functional relationships. In fact, a neural network with a single nonlinear hidden layer and a single linear output layer can approximate almost any reasonable function, provided the hidden layer is sufficiently wide (Aggarwal (2018)). This makes neural networks well suited to modeling the complex relationship between MRL and the biomarker processes.

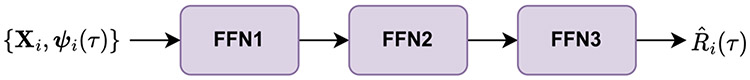

The LSTM-NN is a feed-forward neural network comprised of one or more hidden layers and an output layer. The first hidden layer takes the baseline covariates and context vectors as input, and the output layer produces estimates of MRL. Additional hidden layers can be added to the LSTM-NN to tailor the network’s flexibility to the complexity of the studied data set. For our simulation studies and MIMIC-III data application, we consider an LSTM-NN with two hidden layers, as illustrated in Figure 4.

Fig. 4. The LSTM-NN at prediction time $s$.

For a given prediction time , the first hidden layer, FFN1, accepts the baseline covariates and the window-specific context vectors as input. FFN1 then computes and outputs the -dimensional hidden vector , which is calculated as

where is a parameter matrix and is a -dimensional bias vector.

The second hidden layer, FFN2, then accepts as input. FFN2 computes and outputs the -dimensional hidden vector , which is calculated as

where is a parameter matrix and is a -dimensional bias vector.

The output layer, FFN3, then accepts as input. FFN3 computes and outputs the estimate of MRL for patient at time as

where is a -dimensional parameter vector and is a scalar bias.

As with the LSTM-GLM, we estimate the parameters of the LSTM-NN via a landmarking approach. First, we specify a set of positive prediction times . Then for each , we train the LSTM-NN to minimize the objective function

Again, we use inverse probability of censoring weights to account for censoring, where is the Kaplan–Meier estimate of the survival function of censoring time. Additionally, we apply an -penalty to the parameter matrices and to prevent overfitting. Here represents the -norm, and is a tuning parameter for the -penalties.
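The forward pass and weighted objective of a two-hidden-layer network of this kind can be sketched in NumPy. The sketch assumes ReLU activations in the hidden layers and a squared $L_2$ penalty on the hidden-layer matrices; neither choice is confirmed by the text, and all function and argument names are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def lstm_nn_forward(inputs, W1, b1, W2, b2, w3, b3):
    """Predict residual life from stacked baseline covariates and context vectors.

    inputs has one row per at-risk patient; ReLU hidden activations are an assumption.
    """
    h1 = relu(inputs @ W1.T + b1)   # first hidden layer (FFN1)
    h2 = relu(h1 @ W2.T + b2)       # second hidden layer (FFN2)
    return h2 @ w3 + b3             # linear output layer (FFN3)

def weighted_penalized_loss(residual_life, predicted, weights, matrices, lam):
    """IPCW-weighted squared error plus an assumed squared-L2 penalty on the matrices."""
    fit = np.sum(weights * (residual_life - predicted) ** 2)
    penalty = lam * sum(np.sum(M ** 2) for M in matrices)
    return float(fit + penalty)

# Hypothetical example: 4 patients, 1 baseline covariate plus a 3-dimensional context vector.
rng = np.random.default_rng(0)
inputs = rng.normal(size=(4, 4))
W1, b1 = rng.normal(size=(5, 4)), np.zeros(5)
W2, b2 = rng.normal(size=(3, 5)), np.zeros(3)
w3, b3 = rng.normal(size=3), 1.0
pred = lstm_nn_forward(inputs, W1, b1, W2, b2, w3, b3)
loss = weighted_penalized_loss(np.array([10.0, 4.0, 7.0, 2.0]), pred,
                               np.array([1.0, 0.0, 1.2, 1.5]), [W1, W2], lam=0.01)
print(pred.shape, loss)
```

Censored patients enter with weight zero, so they affect the fit only through the weights assigned to the remaining patients.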

The LSTM-NN provides more flexibility in modeling the relationship between MRL and the longitudinal biomarkers than the LSTM-GLM. However, the complexity of the feed-forward neural network makes it difficult to interpret the relationship between the biomarkers and MRL. Moreover, the LSTM-NN has a number of hyperparameters that must be tuned, including the dimension of the parameter matrices , the tuning parameter for the -penalty , and the number of epochs used to train the LSTM-NN. These hyperparameters can be tuned using traditional processes, such as hold-out validation or cross-validation. However, these processes are computationally-intensive, and imperfect tuning can result in poor prediction performance.

3. Performance evaluation.

3.1. Comparative methods.

For the LSTM-GLM and the LSTM-NN to have utility in the clinical setting, the models must produce accurate dynamic predictions of MRL relative to competing dynamic prediction methods. Consequently, we evaluate the prediction performance of the LSTM-GLM and the LSTM-NN relative to six variations of the dynamic transformed MRL model specified in equation (1). For each of the six dynamic transformed MRL models, we define a distinct function of the history of longitudinal biomarker measurements, $W_i(s) = f\{\bar{X}_i(s)\}$. To maintain the dynamic nature of prediction, we construct $W_i(s)$ using only biomarker measurements taken at times $t_{ij} \le s$ on patients with $U_i > s$. First, we define $W_i(s)$ to be a vector of the baseline biomarker measurements. Second, we define $W_i(s)$ to be a vector of the biomarker measurements collected most recently before prediction time $s$ (i.e., the “last value carried forward”). Third, we define $W_i(s)$ to be a vector of the average value of each biomarker prior to time $s$. Next, we define two vectors containing the intercept and slope of each biomarker regressed against time. The first vector is formed by conducting an independent linear regression on each patient for each biomarker. The second vector is formed by fitting a single linear mixed effects model with a random intercept and slope to all patients for each biomarker. Lastly, we define $W_i(s)$ to be a vector of FPC scores computed independently on each biomarker. For each biomarker, this vector contains the minimum number of FPC scores required to explain 99% of the total variance of that biomarker. We provide technical specifications for each comparative method in Section 2 of the Supplementary Material (Rhodes, Davidian and Lu (2023)).
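The simpler summary functions among the comparative methods can be sketched for a single patient and biomarker. The function name is hypothetical, and the fallback used when fewer than two distinct measurement times are available (zero slope) is an assumption; the mixed effects and FPCA summaries are not shown.

```python
import numpy as np

def summarize_history(times, values):
    """Baseline value, last value carried forward, average, and per-patient OLS line."""
    times = np.asarray(times, dtype=float)
    values = np.asarray(values, dtype=float)
    order = np.argsort(times)
    times, values = times[order], values[order]
    if len(values) >= 2 and times[-1] > times[0]:
        slope, intercept = np.polyfit(times, values, deg=1)
    else:
        # Assumed fallback: a single measurement gives a flat line.
        slope, intercept = 0.0, values[-1]
    return {
        "baseline": float(values[0]),
        "last": float(values[-1]),
        "mean": float(values.mean()),
        "intercept": float(intercept),
        "slope": float(slope),
    }

# Hypothetical measurements available at the prediction time, taken at hours 0, 4, and 8.
print(summarize_history([0, 4, 8], [1.0, 3.0, 5.0]))
```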

3.2. Performance metrics.

To evaluate prediction performance, we focus on measures of calibration and discrimination. In the MIMIC-III data set, the survival time of interest was observed for only 14.99% of patients. Consequently, it is important for the measures of calibration and discrimination to account for censoring. Let represent a given model’s estimate of RMRL for patient at time . We assess the calibration of each model via the inverse probability of censoring weighted mean square error

We refer to this quantity as the “testing loss.”
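An inverse probability of censoring weighted mean square error can be sketched as a weighted average of squared prediction errors. Normalizing by the sum of the weights is an assumption, as is the function name; the weights are taken as given.

```python
import numpy as np

def ipcw_mse(residual_life, predicted, weights):
    """Weighted mean of squared errors; censored patients carry weight zero."""
    y = np.asarray(residual_life, dtype=float)
    yhat = np.asarray(predicted, dtype=float)
    w = np.asarray(weights, dtype=float)
    return float(np.sum(w * (y - yhat) ** 2) / np.sum(w))

# Hypothetical test-set values: the second patient is censored (weight zero).
print(ipcw_mse([10.0, 20.0, 30.0], [12.0, 20.0, 25.0], [1.0, 0.0, 2.0]))
```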

In addition to calibration, we assess each model’s discrimination, that is, its ability to accurately predict who among a given pair of patients will live longer. We compute the following discrimination metric based on Harrell’s C-Index (Harrell, Lee and Mark (1996)):

where and . We refer to this quantity as the “testing C-index.”
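A discrimination metric in the spirit of Harrell's C-index can be sketched by counting concordant pairs among comparable pairs. The version below is the plain, unweighted form, in which a pair is comparable when the patient with the shorter observed time died; the paper's exact metric may differ, and the function name is hypothetical.

```python
def c_index(observed_time, delta, predicted_life):
    """Share of comparable pairs in which the patient who died first has the shorter prediction.

    Ties in the predictions count as one half.
    """
    concordant = 0.0
    comparable = 0
    n = len(observed_time)
    for i in range(n):
        if delta[i] != 1:
            continue  # the earlier time must be an observed death
        for j in range(n):
            if observed_time[i] < observed_time[j]:
                comparable += 1
                if predicted_life[i] < predicted_life[j]:
                    concordant += 1.0
                elif predicted_life[i] == predicted_life[j]:
                    concordant += 0.5
    return concordant / comparable

# Hypothetical data: the third patient is censored.
times = [2.0, 4.0, 6.0, 8.0]
events = [1, 1, 0, 1]
print(c_index(times, events, [1.0, 3.0, 5.0, 7.0]))
```

A value of 0.5 corresponds to predictions that order patients no better than chance.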

3.3. Software.

We conduct the simulation studies and MIMIC-III data application in Python and R. We build and train the LSTM autoencoders and LSTM-NNs in Python using the Keras library (Chollet et al. (2015)). We fit the LSTM-GLMs and the six dynamic transformed MRL models in R using the glmnet package (Friedman, Hastie and Tibshirani (2010)). We compute the Kaplan–Meier estimate of the survival function of censoring time in R using the survival package (Therneau and Grambsch (2000)). We leverage the fdapace R package (Gajardo et al. (2021)) to construct the FPC score vectors, and we use the lme4 R package (Bates et al. (2015)) to construct the linear regression vectors and the mixed effects vectors.

4. Simulations.

We conduct simulation studies to assess the prediction performance of the LSTM-GLM and the LSTM-NN relative to the performance of the six dynamic transformed MRL models described in Section 3.1. We generate a single data set of covariates for patients. For each patient we generate a single baseline covariate , where denotes the uniform distribution. Let , and let denote a normal distribution with mean and variance-covariance . For each patient we generate a single longitudinal biomarker at patient-specific measurement times , where . We generate the longitudinal biomarker measurements as , where represents the measurement error, and is a piecewise linear mixed effects model with eight interior knots. Specifically,

where and . Let rep() denote a vector containing repeated times. Then , where is the diagonal matrix . Figure 5 illustrates the longitudinal biomarker trajectories of 25 randomly selected patients in the generated data set of covariates.

Fig. 5.

The biomarker trajectories of 25 randomly selected patients in the simulated covariate data set.

We conduct two simulation studies. In each study, we conduct 500 simulations using the aforementioned data set of covariates. For each of the 500 simulations, we generate a new data set of survival times and censoring times for all patients . In both studies, we generate the censoring times as . By contrast, we generate the survival times using a different model for each study. In the first study, we generate according to the accelerated failure time (AFT) model , where , , and . In the second study, we generate according to a Cox proportional hazards model with an exponential baseline hazard function. Specifically, , where and . In both studies, we impose a restricted lifetime of . We describe the technical details of the survival time generation process in the Supplementary Material, Section 3 (Rhodes, Davidian and Lu (2023)).
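The two data-generating mechanisms can be illustrated with a deliberately simplified sketch that uses a single baseline covariate. Every numeric value below (coefficients, baseline hazard, censoring distribution, restriction time) is a hypothetical placeholder, not the setting used in the simulation studies, and the Cox model is read here as having a constant baseline hazard, which is itself an assumption. The actual generation process, including the role of the longitudinal biomarker, is described in the Supplementary Material, Section 3.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 1000
x = rng.uniform(0.0, 1.0, size=n)  # hypothetical baseline covariate

# AFT model: log T = b0 + b1 * x + sigma * eps, with eps ~ N(0, 1).
b0, b1, sigma = 1.0, 0.5, 0.25  # hypothetical values
t_aft = np.exp(b0 + b1 * x + sigma * rng.standard_normal(n))

# Cox model with constant baseline hazard lam0:
# S(t | x) = exp(-lam0 * exp(beta * x) * t), inverted at U ~ Uniform(0, 1].
lam0, beta = 0.1, -0.5  # hypothetical values
u = 1.0 - rng.uniform(size=n)  # in (0, 1], avoids log(0)
t_cox = -np.log(u) / (lam0 * np.exp(beta * x))

# Independent censoring times and restriction time (hypothetical choices).
c = rng.exponential(scale=30.0, size=n)
L = 20.0

def observe(t, c, L):
    """Apply the restriction L, then censoring; return observed time and status."""
    t_restricted = np.minimum(t, L)
    y = np.minimum(t_restricted, c)
    delta = (t_restricted <= c).astype(int)  # 1 if restricted time is observed
    return y, delta

y_aft, delta_aft = observe(t_aft, c, L)
y_cox, delta_cox = observe(t_cox, c, L)
```

Treating a patient who survives past the restriction time as having an observed restricted lifetime is one common convention and is assumed here for illustration.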

We conduct prediction at time , so . Across the 500 simulated AFT data sets, between 1824 and 1958 (mean = 1886) patients are at risk at . Across the 500 simulated Cox data sets, between 1368 and 1502 (mean = 1438) patients are at risk at . For both the AFT and Cox simulations, between 14% and 20% (mean = 17%) of the patients at risk at are censored.

We log-transform the observed restricted residual lifetimes , and we define the link function for the LSTM-GLM and the six dynamic transformed MRL models to be the identity . Since we consider only a single longitudinal biomarker, we are not concerned with overfitting the data. Consequently, we set the tuning parameter to in all dynamic prediction models.
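The response construction described here can be sketched in a few lines. The function below keeps the patients still under observation at the prediction time, truncates their observed times at the restriction time, subtracts the prediction time, and takes logs. The function and argument names are hypothetical, and how censored observations are subsequently weighted is not shown.

```python
import numpy as np

def log_restricted_residual_life(y, t, L):
    """Log of observed restricted residual lifetimes for patients at risk at t.

    y : observed times (minimum of survival and censoring time)
    t : prediction time
    L : restriction time, assumed to satisfy L > t
    """
    y = np.asarray(y, dtype=float)
    at_risk = y > t  # still under observation at time t
    residual = np.minimum(y[at_risk], L) - t
    return np.log(residual), at_risk
```

Because the response is modeled on the log scale with an identity link, predictions would be exponentiated to return to the original time scale.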

We specify the dimension of the window-specific context vectors to be , and we train the LSTM autoencoders for 500 epochs. We specify the dimension of the LSTM-NN parameter matrices to be , and we train the LSTM-NNs for 5000 epochs. We train all neural networks using the Adam optimization algorithm (Kingma and Ba (2017)). For the LSTM autoencoders, we use cross-validation to adaptively select the learning rate from the options {} (O’Malley et al. (2019)). For the LSTM-NNs, we select a fixed learning rate of 1e−3.

For each simulation we randomly divide the data into a training data set and a testing data set, stratifying on the censoring status . We specify the training and testing data sets to each include 50% of the patients at risk at time . We estimate the survival function of censoring time independently on the training and testing data sets. We then fit each model on the training data set and compute the performance metrics specified in Section 3.2 on the testing data set. We provide the Python and R code used to conduct the simulation studies in the Supplementary Material (Rhodes, Davidian and Lu (2023)).
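A split that is stratified on censoring status can be sketched as below: patients are shuffled separately within the censored and uncensored groups, and the same fraction of each group is assigned to the training set. The function name `stratified_split` and the rounding rule are assumptions for illustration and are not taken from the supplementary code.

```python
import numpy as np

def stratified_split(delta, train_frac, rng):
    """Split patient indices into train/test, stratifying on censoring status.

    delta      : 1 if the survival time was observed, 0 if censored
    train_frac : fraction of each stratum assigned to the training set
    rng        : a numpy.random.Generator
    """
    delta = np.asarray(delta)
    train_parts = []
    for status in np.unique(delta):
        idx = rng.permutation(np.flatnonzero(delta == status))
        n_train = int(round(train_frac * len(idx)))
        train_parts.append(idx[:n_train])
    train_idx = np.sort(np.concatenate(train_parts))
    test_idx = np.setdiff1d(np.arange(len(delta)), train_idx)
    return train_idx, test_idx

# Example: 20 observed and 80 censored patients, split 50/50.
delta = np.array([1] * 20 + [0] * 80)
train_idx, test_idx = stratified_split(delta, 0.5, np.random.default_rng(1))
```

Stratifying keeps the censoring rate roughly equal in the two sets, so the censoring survival function estimated on each set is based on a comparable mix of patients.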

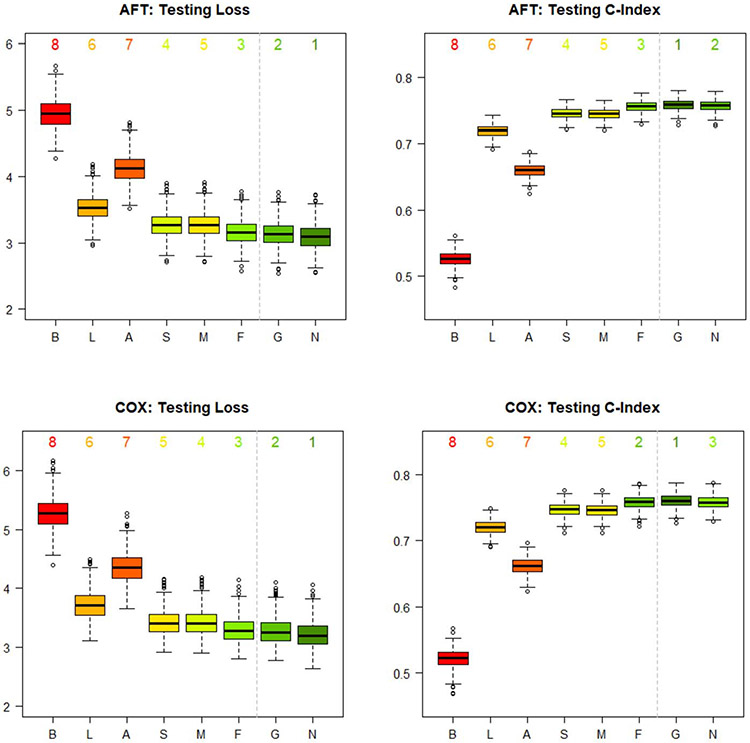

We plot the distributions of the 500 testing losses and 500 testing C-indexes for each of the eight studied dynamic prediction models in Figure 6. For both the AFT and Cox simulations, the LSTM-NN results in the lowest median testing loss, followed by the LSTM-GLM. The LSTM-GLM consistently results in the highest median testing C-index. The LSTM-NN results in the second-highest median testing C-index for the AFT simulations and in the third-highest for the Cox simulations, where it is surpassed by the FPCA model. Overall, the simulation studies indicate that the LSTM-GLM and the LSTM-NN exhibit better calibration and discrimination than the baseline, last-value carried forward, average, linear regression, and mixed effects models, and that they exhibit at least comparable performance to the FPCA model. Thus, the simulation studies support that the LSTM-GLM and the LSTM-NN are useful tools for dynamically predicting MRL from longitudinal biomarker data.

Fig. 6.

Distributions of the 500 testing losses and 500 testing C-indexes for each of the eight dynamic prediction models in the simulation studies. The models resulting in the lowest median testing loss and the highest median testing C-index are labelled 1. The models resulting in the highest median testing loss and the lowest median testing C-index are labelled 8. The six dynamic transformed MRL models are labelled according to their formulation of . “B” represents the baseline vector. “L” represents the last-value carried forward vector. “A” represents the average vector. “S” represents the linear regression vector. “M” represents the mixed effects vector. “F” represents the FPCA vector. Furthermore, “G” represents the LSTM-GLM, and “N” represents the LSTM-NN.

In Section 4 of the Supplementary Material, we repeat the simulation studies, reducing both the measurement error and the variation in measurement times (Rhodes, Davidian and Lu (2023)). Compared to the simulation studies described above, the supplemental simulation studies demonstrate a larger improvement in calibration and discrimination for the LSTM-GLM and the LSTM-NN relative to competing methods. Thus, the supplemental simulation studies indicate that the LSTM-GLM and the LSTM-NN are especially useful for producing accurate dynamic predictions of MRL in settings where the longitudinal biomarkers are measured using precise instruments.

5. Application to MIMIC-III.

5.1. Data analysis.

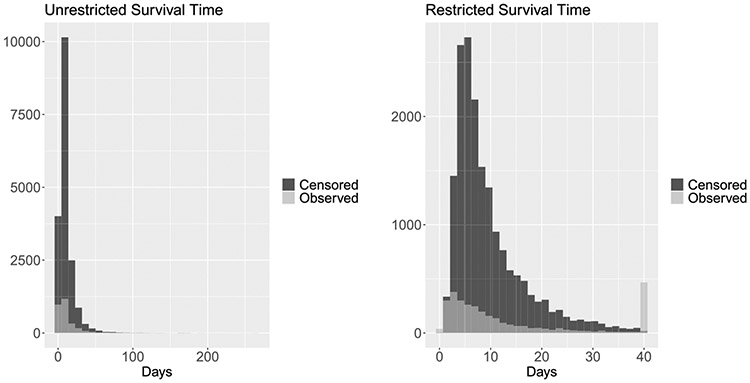

We dynamically predict the RMRL of septic patients in the ICU from EMR data using the LSTM-GLM and the LSTM-NN. To evaluate the utility of the proposed methods in this clinical setting, we compare the prediction performance of the LSTM-GLM and the LSTM-NN to the performance of the six dynamic transformed MRL models described in Section 3.1. We conduct the study on the MIMIC-III data set described in Section 1. The distributions of the unrestricted and restricted survival times of the septic patients are depicted in Figure 7. Because the distribution of restricted survival times is notably right skewed and RMRL is nonnegative by definition, we log-transform the observed restricted residual lifetimes . We then define the link function for the LSTM-GLM and the six dynamic transformed MRL models to be the identity . Because we conduct our study using longitudinal biomarkers, we face the risk of overfitting the data. Consequently, we select the -penalty tuning parameter via five-fold cross-validation for the LSTM-GLM and the six dynamic transformed MRL models.

Fig. 7.

LEFT: The distribution of unrestricted survival time, stratified by censoring status. RIGHT: The distribution of survival time restricted to L = 40 days, stratified by censoring status.

We provide a detailed description of the hyperparameter selection process for the LSTM-GLM and the LSTM-NN in the Supplementary Material, Section 5 (Rhodes, Davidian and Lu (2023)). Let denote the dimension of the window-specific context vectors, and let denote the number of epochs used to train the LSTM autoencoders. At each prediction time , we construct four sets of window-specific context vectors using the hyperparameter settings , and we fit four LSTM-GLMs that each regress on one of the four sets of context vectors. For each , we define the LSTM-GLM that results in the lowest median testing loss to be the “best” LSTM-GLM at time . The hyperparameter settings of the best LSTM-GLM at each can be seen in Table 1.

Table 1.

The hyperparameter settings of the best LSTM-GLM and the best LSTM-NN at each prediction time , where the “best” model is defined to be the one resulting in the lowest median testing loss

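| Prediction time (days) | LSTM-GLM: context vector dimension | LSTM-GLM: autoencoder epochs | LSTM-NN: context vector dimension | LSTM-NN: autoencoder epochs | LSTM-NN: penalty tuning parameter | LSTM-NN: parameter matrix dimension | LSTM-NN: training epochs |
|---|---|---|---|---|---|---|---|
| 1 | 3 | 150 | 7 | 300 | 0.01 | 1 | 2000 |
| 1.5 | 7 | 300 | 7 | 300 | 0.005 | 2 | 3000 |
| 2 | 3 | 150 | 7 | 300 | 0.005 | 2 | 2000 |
| 2.5 | 5 | 300 | 7 | 300 | 0.01 | 2 | 2000 |
| 3 | 7 | 300 | 7 | 300 | 0.01 | 2 | 3000 |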

Additionally, we fit an “automated” LSTM-GLM which selects the hyperparameter settings of the window-specific context vectors via cross-validation. We describe the technical details of the automated hyperparameter selection process in the Supplementary Material, Section 1 (Rhodes, Davidian and Lu (2023)).

Let denote the LSTM-NN -penalty tuning parameter, let denote the dimension of the LSTM-NN parameter matrices, and let denote the number of LSTM-NN training epochs. We train eight LSTM-NNs on the window-specific context vectors constructed with hyperparameter settings (7, 300) using all eight possible combinations of , , and . For each , we define the LSTM-NN that results in the lowest median testing loss to be the “best” LSTM-NN at time . The hyperparameter settings of the best LSTM-NN at each can be seen in Table 1.
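The selection rule described above, which enumerates all combinations of three two-level hyperparameters and keeps the setting with the lowest median testing loss, can be sketched as follows. The candidate values are the ones that appear in Table 1 and are used here only as illustrative placeholders, since the full candidate grid is not restated in this section. The losses are random numbers standing in for real results, and the function name `pick_best` is hypothetical.

```python
from itertools import product

import numpy as np

# Illustrative candidate values (read off Table 1; treat as an assumption).
penalties = [0.005, 0.01]
dimensions = [1, 2]
epochs = [2000, 3000]
grid = list(product(penalties, dimensions, epochs))  # 2 x 2 x 2 = 8 settings

def pick_best(losses_by_setting):
    """Return the setting whose testing losses have the lowest median.

    losses_by_setting : dict mapping a hyperparameter setting to the testing
                        losses obtained across repeated train/test splits
    """
    return min(losses_by_setting, key=lambda s: np.median(losses_by_setting[s]))

# Placeholder losses for 100 splits per setting (not real results).
rng = np.random.default_rng(0)
placeholder_losses = {s: rng.uniform(0.5, 1.5, size=100) for s in grid}
best_setting = pick_best(placeholder_losses)
```

Because the "best" setting is chosen using the testing loss itself, it serves as a reference point for what careful tuning could achieve rather than as a procedure that could be applied prospectively.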

We train all LSTM autoencoders and LSTM-NNs using the Adam optimization algorithm (Kingma and Ba (2017)). For each LSTM autoencoder, we use Bayesian optimization to adaptively select the learning rate from the options {} (O’Malley et al. (2019)). For the LSTM-NN, we select a fixed learning rate of 1e−4.

We conduct prediction at five prediction times, namely 1, 1.5, 2, 2.5, and 3 days (see Table 1), where the prediction time represents the number of days elapsed since the given patient’s first record in the data set. At each prediction time , we randomly divide the data into a training data set and a testing data set, stratifying on the censoring status . The training data set includes 70% of the patients at risk at time , and the testing data set includes the remaining 30% of patients at risk. We estimate the survival function of censoring time independently on the training and testing data sets. We then fit each model on the training data set and compute the performance metrics, specified in Section 3.2, on the testing data set. We repeat this process 100 times, using 100 unique divisions of the data.

5.2. Results.

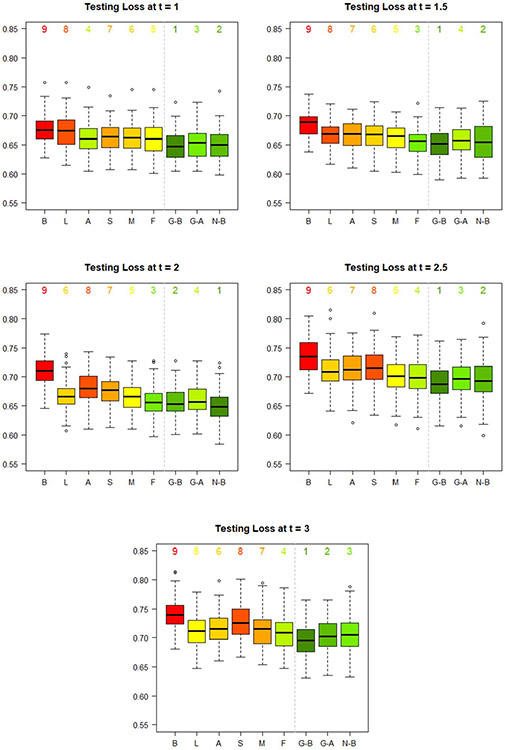

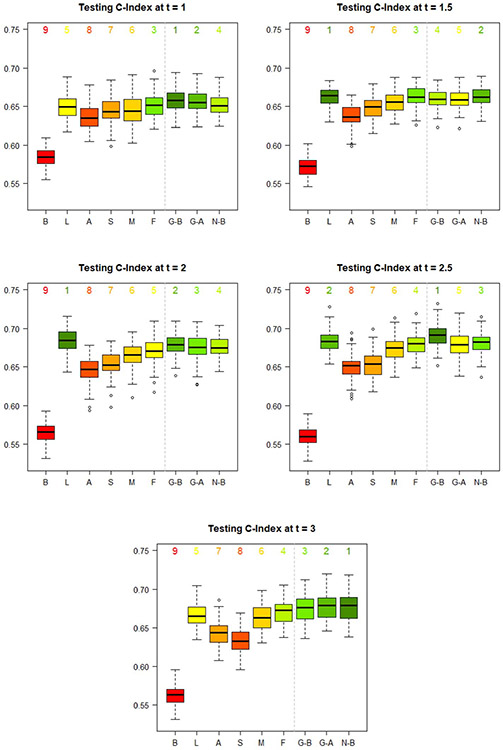

For each prediction time , we plot the distribution of 100 testing losses for the best LSTM-GLM, the automated LSTM-GLM, the best LSTM-NN, and the six dynamic transformed MRL models in Figure 8, and we plot the distribution of 100 testing C-indexes in Figure 9.

Fig. 8.

Distribution of the 100 testing losses for each of the nine studied dynamic prediction models at each prediction time . The model resulting in the lowest median testing loss is labelled 1. The model resulting in the highest median testing loss is labelled 9. The six dynamic transformed MRL models are labelled according to their formulation of . “B” represents the baseline vector. “L” represents the last-value carried forward vector. “A” represents the average vector. “S” represents the linear regression vector. “M” represents the mixed effects vector. “F” represents the FPCA vector. The best LSTM-GLM is labelled “G-B.” The automated LSTM-GLM is labelled “G-A.” The best LSTM-NN is labelled “N-B.”

Fig. 9.

Distribution of the 100 testing C-indexes for each of the nine studied dynamic prediction models at each prediction time . The model resulting in the highest median testing C-index is labelled 1. The model resulting in the lowest median testing C-index is labelled 9. The six dynamic transformed MRL models are labelled according to their formulation of . “B” represents the baseline vector. “L” represents the last-value carried forward vector. “A” represents the average vector. “S” represents the linear regression vector. “M” represents the mixed effects vector. “F” represents the FPCA vector. The best LSTM-GLM is labelled “G-B.” The automated LSTM-GLM is labelled “G-A.” The best LSTM-NN is labelled “N-B.”

First, we compare the calibration of the nine dynamic prediction models via the testing loss. Generally, the best LSTM-GLM, the automated LSTM-GLM, the best LSTM-NN, and the FPCA model result in the lowest median testing losses. The baseline model consistently results in the highest median testing loss.

At prediction times , the best LSTM-GLM results in the lowest median testing loss. At , the best LSTM-NN results in the lowest median testing loss. In this application, the added flexibility of the LSTM-NN does not offset the cost of imperfect hyperparameter tuning.

In practice, we do not know which hyperparameter settings result in the “best” LSTM-GLM and the “best” LSTM-NN. Consequently, we use cross-validation to automatically select hyperparameters for the LSTM-GLM, as detailed in Section 1 of the Supplementary Material (Rhodes, Davidian and Lu (2023)). Generally, the automated hyperparameter selection process performs well. Disregarding the best LSTM-GLM and the best LSTM-NN, the automated LSTM-GLM results in the lowest median testing loss at prediction times , and in the second-lowest median testing loss at , where it is surpassed only by the FPCA model.

Second, we compare the discrimination of the nine dynamic prediction models via the testing C-index. As with calibration, the best LSTM-GLM, the automated LSTM-GLM, the best LSTM-NN, and the FPCA model generally result in good discrimination, and the baseline model consistently results in the poorest discrimination. Interestingly, the last-value carried forward model results in the highest median testing C-index at prediction times , 2.

The best LSTM-NN results in a higher testing C-index than the best LSTM-GLM only at prediction times , 3. Again, this indicates that the added flexibility of the LSTM-NN is offset by imperfect hyperparameter tuning.

The automated hyperparameter selection process for the LSTM-GLM performs well with respect to discrimination. Disregarding the best LSTM-GLM and the best LSTM-NN, the automated LSTM-GLM results in the highest median testing C-index at prediction times , the second-highest at , and the third-highest at . In this case, the discrimination of the automated LSTM-GLM is surpassed only by that of the last-value carried forward model and the FPCA model.

In clinical application, calibration performance is often valued over discrimination performance. Typically, it is more important to accurately predict one septic patient’s remaining life expectancy than to accurately predict which of two septic patients has a longer remaining life expectancy. Taking this into consideration, these results suggest that the LSTM-GLM and the LSTM-NN exhibit the best performance when dynamically predicting the RMRL of septic patients in the ICU from EMR data, as compared to the baseline, last-value carried forward, average, linear regression, and mixed effects models. Moreover, the LSTM-GLM and the LSTM-NN exhibit at least comparable performance to the FPCA model, and if their hyperparameters are properly tuned, the LSTM-GLM and the LSTM-NN exhibit better performance than the FPCA model. This study demonstrates that the LSTM-GLM and the LSTM-NN are useful models for producing individualized, real-time predictions of the RMRL of septic patients in the ICU from EMR data.

6. Discussion.

Sepsis is a leading cause of death worldwide and remains highly challenging to treat (Singer et al. (2016)). We introduce two methods, the LSTM-GLM and the LSTM-NN, to dynamically predict the RMRL of septic patients in the ICU from EMR data. Through simulation studies and application to the MIMIC-III data set, we demonstrate that the LSTM-GLM and the LSTM-NN exhibit superior prediction performance relative to six competing methods. Thus, the LSTM-GLM and the LSTM-NN offer an automatic method to synthesize complex longitudinal biomarker trajectories and produce accurate predictions of MRL, all while upholding the dynamic nature of prediction. By producing individualized, real-time predictions of the RMRL of septic patients, the LSTM-GLM and the LSTM-NN can help clinicians make informed treatment decisions, potentially improving septic patient care.

The LSTM-GLM and the LSTM-NN can process data containing a large number of patients and biomarkers, like that typically found in EMRs. However, the methods can be computationally expensive relative to the baseline, last-value carried forward, average, linear regression, mixed effects, and FPCA dynamic transformed MRL models.

Because we propose training a distinct LSTM autoencoder to construct the context vector for each longitudinal biomarker, the relative computational cost of the LSTM-GLM and the LSTM-NN is most notable in settings with a large number of longitudinal biomarkers. To improve the computational efficiency of the proposed methods, a context vector summarizing multiple biomarkers could be constructed by training a single LSTM autoencoder on multiple biomarkers. However, this approach further obfuscates the relationship between MRL and the biomarkers. Additional research needs to be conducted to evaluate the feasibility and utility of this approach.

As an alternative to the presented methods, a joint model could be constructed to connect the longitudinal biomarkers with the hazard function of death (Tsiatis and Davidian (2004)), and MRL predictions could be derived from the hazard function. However, this procedure can make it difficult to interpret the relationship between the biomarkers and MRL. Additionally, constructing joint models with a large number of longitudinal biomarkers can be computationally challenging (Hickey et al. (2016)).

In this work, we assume censoring time is independent of the baseline covariates and longitudinal biomarkers, and we estimate the survival function of censoring time via the Kaplan–Meier method. If censoring time is dependent on both baseline and longitudinal covariates, a time-dependent Cox regression model can be used to estimate . However, further research should be conducted to determine how best to incorporate the history of longitudinal biomarker measurements into the Cox regression model. Potentially, the window-specific context vectors could be used as predictors in the Cox model.

We present an automated hyperparameter selection process for the LSTM-GLM in the Supplementary Material, Section 1 (Rhodes, Davidian and Lu (2023)). Further research is required to formulate satisfactory methods for tuning the hyperparameters of the LSTM-NN.

In this paper, we focus on obtaining accurate predictions of MRL. Consequently, we impose an -penalty on the parameters of the LSTM-GLM to prevent overfitting. To conduct variable selection with the LSTM-GLM, a group LASSO penalty could instead be imposed to ensure that the entire context vector for a given longitudinal biomarker is either included in or removed from the model (Yuan and Lin (2006)). Further research is needed to determine the efficacy of using the LSTM-GLM to conduct variable selection.
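For reference, the group LASSO of Yuan and Lin (2006) penalizes the unsquared Euclidean norm of each group of coefficients. In the sketch below, the notation is introduced here for illustration only: $\beta_k$ denotes the coefficients attached to the context vector of biomarker $k$, $p_k$ its dimension, $K$ the number of longitudinal biomarkers, $\lambda$ the tuning parameter, and $\ell(\beta)$ whatever unpenalized loss the LSTM-GLM minimizes.

```latex
\hat{\beta} \;=\; \arg\min_{\beta} \; \ell(\beta) \;+\; \lambda \sum_{k=1}^{K} \sqrt{p_k}\, \lVert \beta_k \rVert_2
```

Because the norm is not squared, an entire block $\beta_k$ can be shrunk exactly to zero, which would remove biomarker $k$ from the model as a whole rather than dropping individual elements of its context vector.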

Acknowledgments.

The authors would like to thank Han Wang at North Carolina State University. Han Wang constructed the studied MIMIC-III data set using the code made publicly available by Dr. Matthieu Komorowski of Imperial College London (Komorowski (2019)).

SUPPLEMENTARY MATERIAL

Supplement to “Dynamic prediction of residual life with longitudinal covariates using long short-term memory networks” (DOI: 10.1214/22-AOAS1706SUPPA; .pdf). Section 1 details the automated hyperparameter selection process for the LSTM-GLM. Section 2 describes the six dynamic transformed MRL models studied for performance comparison. Section 3 details the data generation process for the simulation studies. Section 4 presents supplemental simulation studies. Section 5 describes the hyperparameter selection process for the MIMIC-III data application.

Simulation code (DOI: 10.1214/22-AOAS1706SUPPB; .zip). The ZIP file contains the Python and R code used to conduct the simulation studies.

REFERENCES

- Aggarwal CC (2018). Neural Networks and Deep Learning. Springer, Cham. MR3966422 10.1007/978-3-319-94463-0

- Bates D, Mächler M, Bolker B and Walker S (2015). Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67 1–48. 10.18637/jss.v067.i01

- Carrara M, Baselli G and Ferrario M (2015). Mortality prediction model of septic shock patients based on routinely recorded data. Comput. Math. Methods Med. 2015. 10.1155/2015/761435

- Chen YQ (2007). Additive expectancy regression. J. Amer. Statist. Assoc. 102 153–166. MR2345536 10.1198/016214506000000870

- Chollet F et al. (2015). Keras. https://keras.io.

- Evans L, Rhodes A, Alhazzani W, Antonelli M, Coopersmith CM, French C, Machado FR, Mcintyre L, Ostermann M et al. (2021). Surviving sepsis campaign: International guidelines for management of sepsis and septic shock 2021. Crit. Care Med. 49 e1063–e1143. 10.1097/CCM.0000000000005337

- Friedman J, Hastie T and Tibshirani R (2010). Regularization paths for generalized linear models via coordinate descent. J. Stat. Softw. 33 1–22.

- Gajardo A, Bhattacharjee S, Carroll C, Chen Y, Dai X, Fan J, Hadjipantelis PZ, Han K, Ji H et al. (2021). fdapace: Functional data analysis and empirical dynamics. R package version 0.5.8.

- Harrell FE, Lee KL and Mark DB (1996). Tutorial in biostatistics: Multivariable prognostic models: Issues in developing models, evaluating assumptions and adequacy, and measuring and reducing errors. Stat. Med. 15 361–387. 10.1002/0470023678.ch2b(i)

- Hickey GL, Philipson P, Jorgensen A and Kolamunnage-Dona R (2016). Joint modelling of time-to-event and multivariate longitudinal outcomes: Recent developments and issues. BMC Med. Res. Methodol. 16 1–15. 10.1186/s12874-016-0212-5

- Hou N, Li M, He L, Xie B, Wang L, Zhang R, Yu Y, Sun X, Pan Z et al. (2020). Predicting 30-days mortality for MIMIC-III patients with Sepsis-3: A machine learning approach using XGboost. J. Transl. Med. 18 1–14. 10.1186/s12967-020-02620-5

- Johnson AE, Pollard TJ, Shen L, Lehman LH, Feng M, Ghassemi M, Moody B, Szolovits P, Celi LA et al. (2016). MIMIC-III, a freely accessible critical care database. Sci. Data 3 160035.

- Kingma DP and Ba J (2017). Adam: A method for stochastic optimization. arXiv:1412.6980 [cs.LG].

- Komorowski M (2019). AI Clinician. GitHub repository. Available at https://github.com/matthieukomorowski/AI_Clinician.

- László I, Trásy D, Molnár Z and Fazakas J (2015). Sepsis: From pathophysiology to individualized patient care. J. Immunol. Res. 2015. 10.1155/2015/510436

- Lin X, Lu T, Yan F, Li R and Huang X (2018). Mean residual life regression with functional principal component analysis on longitudinal data for dynamic prediction. Biometrics 74 1482–1491. MR3908164 10.1111/biom.12876

- Maguluri G and Zhang C-H (1994). Estimation in the mean residual life regression model. J. Roy. Statist. Soc. Ser. B 56 477–489. MR1278221

- O’Malley T, Bursztein E, Long J, Chollet F, Jin H, Invernizzi L et al. (2019). KerasTuner. https://github.com/keras-team/keras-tuner.

- Rhodes G, Davidian M and Lu W (2023). Supplement to “Dynamic prediction of residual life with longitudinal covariates using long short-term memory networks.” 10.1214/22-AOAS1706SUPPA

- Rizopoulos D, Molenberghs G and Lesaffre EMEH (2017). Dynamic predictions with time-dependent covariates in survival analysis using joint modeling and landmarking. Biom. J. 59 1261–1276. MR3731215 10.1002/bimj.201600238

- Singer M, Deutschman CS, Seymour CW, Shankar-Hari M, Annane D, Bauer M, Bellomo R, Bernard GR, Chiche J-D et al. (2016). The third international consensus definitions for sepsis and septic shock (Sepsis-3). J. Amer. Med. Assoc. 315 801–810. 10.1001/jama.2016.0287

- Strimbu K and Tavel JA (2010). What are biomarkers? Curr. Opin. HIV AIDS 5 463–6. 10.1097/COH.0b013e32833ed177

- Sun L, Song X and Zhang Z (2012). Mean residual life models with time-dependent coefficients under right censoring. Biometrika 99 185–197. MR2899672 10.1093/biomet/asr065

- Sun L and Zhang Z (2009). A class of transformed mean residual life models with censored survival data. J. Amer. Statist. Assoc. 104 803–815. MR2541596 10.1198/jasa.2009.0130

- Therneau TM and Grambsch PM (2000). Modeling Survival Data: Extending the Cox Model. Statistics for Biology and Health. Springer, New York. MR1774977 10.1007/978-1-4757-3294-8

- Tsiatis AA and Davidian M (2004). Joint modeling of longitudinal and time-to-event data: An overview. Statist. Sinica 14 809–834. MR2087974

- Van Houwelingen HC (2007). Dynamic prediction by landmarking in event history analysis. Scand. J. Stat. 34 70–85. MR2325243 10.1111/j.1467-9469.2006.00529.x

- Van Houwelingen HC and Putter H (2012). Dynamic Prediction in Clinical Survival Analysis. Monographs on Statistics and Applied Probability 123. CRC Press, Boca Raton, FL. MR3058205

- Werbos PJ (1990). Backpropagation through time: What it does and how to do it. Proc. IEEE 78 1550–1560. 10.1109/5.58337

- Yuan M and Lin Y (2006). Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B. Stat. Methodol. 68 49–67. MR2212574 10.1111/j.1467-9868.2005.00532.x

- Zheng Y and Heagerty PJ (2005). Partly conditional survival models for longitudinal data. Biometrics 61 379–391. MR2140909 10.1111/j.1541-0420.2005.00323.x

- Zhu Y, Li L and Huang X (2019). Landmark linear transformation model for dynamic prediction with application to a longitudinal cohort study of chronic disease. J. R. Stat. Soc. Ser. C. Appl. Stat. 68 771–791. MR3937473
