Abstract

Cells are a fundamental unit of biological organization, and identifying them in imaging data – cell segmentation – is a critical task for various cellular imaging experiments. While deep learning methods have led to substantial progress on this problem, most models in use are specialist models that work well for specific domains. Methods that have learned the general notion of “what is a cell” and can identify them across different domains of cellular imaging data have proven elusive. In this work, we present CellSAM, a foundation model for cell segmentation that generalizes across diverse cellular imaging data. CellSAM builds on top of the Segment Anything Model (SAM) by developing a prompt engineering approach for mask generation. We train an object detector, CellFinder, to automatically detect cells and prompt SAM to generate segmentations. We show that this approach allows a single model to achieve human-level performance for segmenting images of mammalian cells (in tissues and cell culture), yeast, and bacteria collected across various imaging modalities. We show that CellSAM has strong zero-shot performance and can be improved with a few examples via few-shot learning. We also show that CellSAM can unify bioimaging analysis workflows such as spatial transcriptomics and cell tracking. A deployed version of CellSAM is available at https://cellsam.deepcell.org/.

Keywords: cell segmentation, object detection, deep learning, foundation model

1. Introduction

Accurate cell segmentation is crucial for quantitative analysis and interpretation of various cellular imaging experiments. Modern spatial genomics assays can produce data on the location and abundance of 10² protein species and 10³ RNA species simultaneously in living and fixed tissues 1–5. These data shed light on the biology of healthy and diseased tissues but are challenging to interpret. Cell segmentation enables these data to be converted to interpretable tissue maps of protein localization and transcript abundances. Similarly, live-cell imaging provides insight into dynamic phenomena in bacterial and mammalian cell biology. Mechanistic insights into critical phenomena such as the mechanical behavior of the bacterial cell wall 6,7, information transmission in cell signaling pathways 8–11, heterogeneity in immune cell behavior during immunotherapy 12, and the morphodynamics of development 13 have been gained by analyzing live-cell imaging data. Cell segmentation is also a key challenge for these experiments, as cells must be segmented and tracked to create temporally consistent records of cell behavior that can be queried at scale. These methods have seen use in a number of systems, including mammalian cells in tissues 5 and cell culture 14,15, bacterial cells 16–19, and yeast 20–22.

Significant progress has been made in recent years on the problem of cell segmentation, primarily driven by advances in deep learning 23. Progress in this space has occurred mainly in two distinct directions. The first direction seeks to find deep learning architectures that achieve state-of-the-art performance on cellular imaging tasks. These methods have historically focused on a particular imaging modality (e.g., brightfield imaging) or target (e.g., mammalian tissue) and have difficulty generalizing beyond their intended domain 24–30. For example, Mesmer’s 27 representation for a cell (cell centroid and boundary) enables good performance in tissue images but would be a poor choice for elongated bacterial cells. Similar trade-offs in representations exist for the current collection of Cellpose models, necessitating the creation of a model zoo 25. The second direction is to work on improving labeling methodology. Cell segmentation is a variant of the instance segmentation problem, which requires pixel-level labels for every object in an image. Creating these labels can be expensive (10⁻² USD/label, with hundreds to thousands of labels per image) 27,31, which provides an incentive to reduce the marginal cost of labeling. A recent improvement to labeling methodology has been human-in-the-loop labeling, where labelers correct model errors rather than produce labels from scratch 25,27,32. Further reductions in labeling costs can increase the amount of labeled imaging data by orders of magnitude.

Recent work in machine learning on foundation models holds promise for providing a complete solution. Foundation models are large deep neural network models (typically transformers 33) trained on a large amount of data in a self-supervised fashion with supervised fine-tuning on one or several tasks 34. Foundation models include the GPT 35,36 family of models, which have proven transformative for natural language processing 34. The GPT and BERT families of models have recently seen use for biological sequences 37–41. These successes have inspired similar efforts in computer vision. The Vision Transformer 42 (ViT) was introduced in 2020 and has since been used as the basis architecture for a collection of vision foundation models 43–47. One recent foundation model well suited to cellular image analysis needs is the Segment Anything Model (SAM) 48. This model uses a Vision Transformer to extract information-rich features from raw images. These features are then directed to a module that generates instance masks based on user-provided prompts, which can be either spatial (e.g., an object centroid or bounding box) or semantic (e.g., an object’s visual description). Notably, the promptable nature of SAM enabled scalable dataset construction, as preliminary versions of SAM allowed labelers to generate accurate instance masks with 1–2 clicks. The final version of SAM was trained on a dataset of 11 million images containing over 1 billion masks, and demonstrated strong performance on various zero-shot learning tasks. Recent work has attempted to apply SAM to problems in biological and medical imaging, including medical image segmentation 49–51, lesion detection in dermatological images 52,53, nuclear segmentation in H&E images 54,55, and cellular image data for use in the Napari software package 56.

While promising, these studies reported challenges adapting SAM to these new use cases 49,56. These challenges include reduced performance and uncertain boundaries when transitioning from natural to medical images. Cellular images contain additional complications – they can involve different imaging modalities (e.g., phase microscopy vs. fluorescence microscopy), thousands of objects in a field of view (as opposed to dozens in a natural image), and uncertain and noisy boundaries (artifacts of projecting 3D objects into a 2D plane) 56. In addition to these challenges, SAM’s default prompting strategy does not allow for accurate inference on cellular images. Currently, the automated prompting of SAM uses a uniform grid of points to generate masks, an approach poorly suited to cellular images given the wide variation of cell densities. More precise prompting (e.g., a bounding box or mask) requires prior knowledge of cell locations. This creates a weak tautology - SAM can find the cells provided it knows where they are. This limitation makes it challenging for SAM to serve as a foundation model for cell segmentation since it still requires substantial human input for inference. A solution to this problem would enable SAM-like models to serve as foundation models and knowledge engines, as they could accelerate the generation of labeled data, learn from them, and make that knowledge accessible to life scientists via inference.

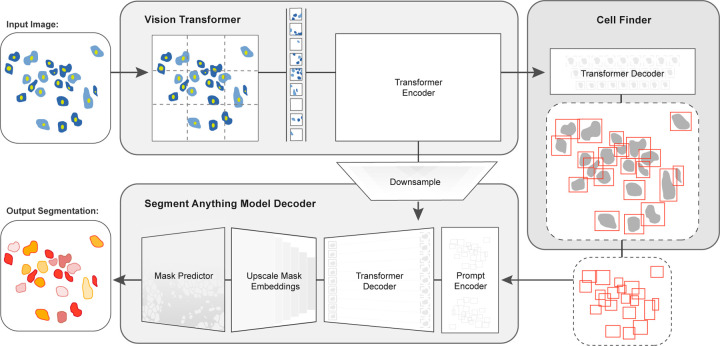

In this work, we developed CellSAM, a foundation model for cell segmentation (Fig. 1). CellSAM extends the SAM methodology to perform automated cellular instance segmentation. To achieve this, we first assembled a comprehensive dataset for cell segmentation spanning five broad data archetypes: tissue, cell culture, yeast, H&E, and bacteria. Critically, we removed data leaks between training and testing data splits to ensure an accurate assessment of model performance. To automate inference with SAM, we took a prompt engineering approach and explored the best ways to prompt SAM to generate high-quality masks. We observed that bounding boxes consistently generated high-quality masks compared to alternative approaches. To facilitate automated inference through prompting, we developed CellFinder, a transformer-based object detector that uses the Anchor DETR framework 57. CellSAM and CellFinder share SAM’s ViT backbone for feature extraction; the bounding boxes generated by CellFinder are then used as prompts for SAM, enumerating masks for all the cells in an image. We trained CellSAM on a large, diverse corpus of cellular imaging data, enabling it to achieve state-of-the-art (SOTA) performance across ten datasets. We also evaluated CellSAM’s zero-shot performance using a held-out dataset, LIVECell 58, demonstrating that it substantially outperforms existing methods for zero-shot segmentation. A deployed version of CellSAM is available at https://cellsam.deepcell.org.

Fig. 1: CellSAM: a foundational model for cell segmentation.

CellSAM combines SAM’s mask generation and labeling capabilities with an object detection model to achieve automated inference. Input images are divided into regularly sampled patches and passed through a transformer encoder (i.e., a ViT) to generate information-rich image features. These image features are then sent to two downstream modules. The first module, CellFinder, decodes these features into bounding boxes using a transformer-based encoder-decoder pair. The second module combines these image features with prompts to generate masks using SAM’s mask decoder. CellSAM integrates these two modules using the bounding boxes generated by CellFinder as prompts for SAM. CellSAM is trained in two stages, using the pre-trained SAM model weights as a starting point. In the first stage, we train the ViT and the CellFinder model together on the object detection task. This yields an accurate CellFinder but results in a distribution shift between the ViT and SAM’s mask decoder. The second stage closes this gap by fixing the ViT and SAM mask decoder weights and fine-tuning the remainder of the SAM model (i.e., the model neck) using ground truth bounding boxes and segmentation labels.

2. Results

2.1. Construction of a dataset for general cell segmentation

A significant challenge with existing cellular segmentation methods is their inability to generalize across cellular targets, imaging modalities, and cell morphologies. To address this, we curated a dataset from the literature containing 2D images from a diverse range of targets (mammalian cells in tissues and adherent cell culture, yeast cells, bacterial cells, and mammalian cell nuclei) and imaging modalities (fluorescence, brightfield, phase contrast, and mass cytometry imaging).

Our final dataset consisted of TissueNet 27, DeepBacs 59, BriFiSeg 60, Cellpose 24,25, Omnipose 61,62, YeastNet 63, YeaZ 64, the 2018 Kaggle Data Science Bowl dataset (DSB) 65, a collection of H&E datasets 66–72, and an internally collected dataset of phase microscopy images across eight mammalian cell lines (Phase400). We group these datasets into five types for evaluation: Tissue, Cell Culture, H&E, Bacteria, and Yeast. As the DSB 65 comprises cell nuclei that span several of these types, we evaluate it separately and refer to it as Nuclear, making a total of six categories for evaluation. While our method focuses on whole-cell segmentation, we included DSB 65 because cell nuclei are often used as a surrogate when the information necessary for whole-cell segmentation (e.g., cell membrane markers) is absent from an image. Fig. 2a shows the number of annotations per evaluation type. Finally, we used a held-out dataset, LIVECell 58, to evaluate CellSAM’s zero-shot performance. This dataset was curated to remove low-quality images, as well as images that did not contain sufficient information about the boundaries of closely packed cells. A detailed description of data sources and pre-processing steps can be found in Appendix A.

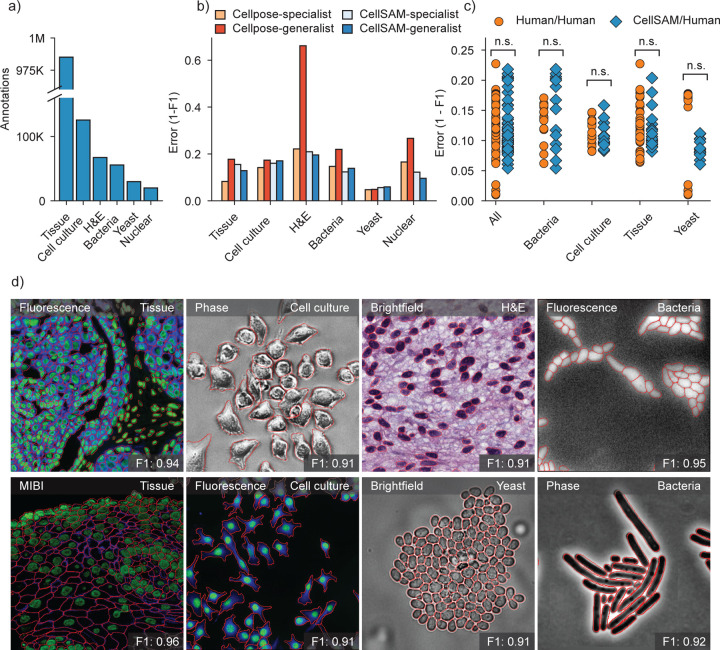

Fig. 2: CellSAM is a strong generalist model for cell segmentation.

a) For training and evaluating CellSAM, we curated a diverse cell segmentation dataset from the literature. The number of annotated cells is given for each data type. Nuclear refers to a heterogeneous dataset (DSB) 65 containing nuclear segmentation labels. b) Segmentation performance for CellSAM and Cellpose across different data types. We compared the segmentation error (1-F1) for models that were trained as specialists (i.e., on one dataset) or generalists (i.e., the full dataset). Models were trained for a similar number of steps across all datasets. We observed that CellSAM-general had a lower error than Cellpose-general on almost all tested datasets. Furthermore, we observed that generalist training either preserved or improved CellSAM’s performance compared to specialist training. c) Human vs human and CellSAM-general vs human (CS/human) inter-rater performance comparison. A t-test found no statistically significant difference between CellSAM and human performance. d) Qualitative results of CellSAM segmentations for different data and imaging modalities. Predicted segmentations are outlined in red.

2.2. Bounding boxes are accurate prompts for cell segmentation with SAM

For accurate inference, SAM needs to be provided with approximate information about the location of cells in the form of prompts. To better engineer prompts, we first assessed SAM’s ability to generate masks with provided prompts derived from ground truth labels - either point prompts (derived from the cell’s center of mass) or bounding box prompts. For these tests, we used the pre-trained model weights that were publicly released 48. Our benchmarking revealed that bounding boxes had significantly higher zero-shot performance than point prompting (Fig. S3). However, both approaches struggle to achieve the performance standards required for real-world use, which we take as an error < 0.2 (Fig. 2c). To improve SAM’s mask generation ability for cellular image data, we explored fine-tuning SAM on our compiled data to help it bridge the gap from natural to cellular images. During these fine-tuning experiments, we observed that fine-tuning all of SAM was unnecessary; instead, we only needed to fine-tune the layers connecting SAM’s ViT to its decoder, the model neck, to achieve good performance (Fig. 1). All other layers can be frozen. Fine-tuning SAM in this fashion led to a model capable of generating high-quality cell masks when prompted by ground truth bounding boxes, as seen in Fig. S3.
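To make the two prompt types concrete, the following minimal sketch derives a bounding box and a center-of-mass point for each cell in an integer label mask. It is an illustration only, not the code used in this work: the function name `prompts_from_label_mask`, the toy mask, and the `(x_min, y_min, x_max, y_max)` box ordering are assumptions made for this example.

```python
from collections import defaultdict


def prompts_from_label_mask(label_mask):
    """Derive per-cell prompts from an integer label mask (0 = background).

    Returns {cell_id: {"box": (x_min, y_min, x_max, y_max), "point": (x, y)}}.
    The box ordering is an assumption made for this sketch.
    """
    # Collect the pixel coordinates that belong to each labeled cell.
    pixels = defaultdict(list)
    for y, row in enumerate(label_mask):
        for x, cell_id in enumerate(row):
            if cell_id != 0:
                pixels[cell_id].append((x, y))

    prompts = {}
    for cell_id, coords in pixels.items():
        xs = [x for x, _ in coords]
        ys = [y for _, y in coords]
        prompts[cell_id] = {
            # Tightest axis-aligned box enclosing the cell.
            "box": (min(xs), min(ys), max(xs), max(ys)),
            # Center of mass of the cell's pixels.
            "point": (sum(xs) / len(xs), sum(ys) / len(ys)),
        }
    return prompts


# Hypothetical 3 x 5 label mask containing two cells.
toy_mask = [
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 2, 2],
]
toy_prompts = prompts_from_label_mask(toy_mask)
```

For concave or strongly curved cells, the center of mass can fall outside the cell itself, whereas the bounding box always encloses every pixel of the cell.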

2.3. CellFinder enables accurate and automated cell segmentation for CellSAM

Because the ground-truth bounding box prompts yield accurate segmentation masks from SAM across various datasets, we sought to develop an object detector that could generate prompts for SAM in an automated fashion. Given that our zero-shot experiments demonstrated that ViT features can form robust internal representations of cellular images, we reasoned that we could build an object detector using the image features generated by SAM’s ViT. Previous work has explored this space and demonstrated that ViT backbones can achieve SOTA performance on natural images 73,74. For our object detection module, we use the Anchor DETR 57 framework with the same ViT backbone as the SAM module; we call this object detection module CellFinder. Anchor DETR is well-suited for object detection in cellular images because it formulates object detection as a set prediction task. This allows it to perform cell segmentation in images with densely packed objects, a common occurrence in cellular imaging data. Alternative bounding box methods (e.g., the R-CNN family) rely on non-maximum suppression 75,76, leading to poor performance in this regime. Methods that frame cell segmentation as a dense, pixel-wise prediction task (e.g., Mesmer 27, Cellpose 24, and Hover-net 29) assume that each pixel can be uniquely assigned to a single cell and cannot handle overlapping objects.
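The difficulty that non-maximum suppression (NMS) faces with densely packed cells can be illustrated with a toy example. The sketch below implements a generic greedy NMS; it is not taken from any particular R-CNN implementation or from this work, and the boxes, scores, and IoU threshold are hypothetical values chosen for illustration.

```python
def box_iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax0, ay0, ax1, ay1 = a
    bx0, by0, bx1, by1 = b
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Keep boxes in descending score order, dropping any box whose IoU
    with an already kept box exceeds the threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        if all(box_iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept


# Hypothetical detections: the first two boxes are two distinct,
# tightly packed cells; the third is an isolated cell.
crowded_boxes = [(0, 0, 10, 10), (2, 2, 12, 12), (30, 30, 40, 40)]
crowded_scores = [0.95, 0.90, 0.85]
kept_indices = greedy_nms(crowded_boxes, crowded_scores, iou_threshold=0.4)
```

In this toy case the two neighboring boxes have an IoU of 64/136 (about 0.47), so the second cell is suppressed even though it is a true detection. A set prediction formulation avoids this post-processing step.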

We train CellSAM in two stages; the full details can be found in the supplement. In the first stage, we train CellFinder on the object detection task. We convert the ground truth cell masks into bounding boxes and train the ViT backbone and the CellFinder module. Once CellFinder is trained, we freeze the model weights of the ViT and fine-tune the SAM module as described above. This accounts for the distribution shifts in the ViT features that occur during the CellFinder training. Once training is complete, we use CellFinder to prompt SAM’s mask decoder. We refer to the collective method as CellSAM; Fig. 1 outlines an image’s full path through CellSAM during inference. We benchmarked CellSAM’s performance using a suite of metrics (Fig. 2b and Supplemental Fig. S2) and found that it outperformed generalist Cellpose models and was equivalent to specialist Cellpose models trained on comparable datasets. We highlight features of our benchmarking analyses below.

CellSAM is a strong generalist model. Generalization across cell morphologies and imaging datasets has been a significant challenge for deep learning-based cell segmentation algorithms. To evaluate CellSAM’s generalization capabilities, we compared the performance of CellSAM and Cellpose models trained as specialists (i.e., on a single dataset) to generalists (i.e., on all datasets). Consistent with the literature, we observe that Cellpose’s performance degraded when trained as a generalist (Fig. 2b). In contrast, we observed that CellSAM preserved its performance in the generalist setting. Here we defined two methods to have equivalent performance if the difference in F1 scores was < 0.05, which was the standard deviation of the human-human annotator agreement (Fig. 2c). By this definition, we found the performance of CellSAM-general was equivalent to CellSAM-specific across all data categories and datasets. Moreover, CellSAM-general performed equivalently to Cellpose-specific on five of six data categories and eight of the ten datasets (Fig. 2b and Supplemental Fig. S2), with the exceptions being the DSB and Phase400 datasets. This analysis highlights an essential feature of a foundational model: maintaining performance with increasing data diversity and scale.

CellSAM achieves human-level accuracy for generalized cell segmentation. We use the error (1-F1) to assess the consistency of segmentation predictions and annotator masks across a series of images. We compared the annotations of three experts with each other (human vs. human) and with CellSAM (human vs. CellSAM). We compared annotations across four data categories: mammalian cells in tissue, mammalian cells in cell culture, bacterial cells, and yeast cells. Our analysis revealed no significant differences between these two comparisons, indicating that CellSAM’s outputs are comparable to expert human annotators (Fig. 2c).
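The error metric (1-F1) and the equivalence criterion (a difference in F1 scores below 0.05) can be sketched as follows. This is a simplified illustration rather than the benchmarking code used here: the rule that a prediction matches a ground truth object when their IoU exceeds 0.5, the representation of each mask as a set of pixel coordinates, and all function names are assumptions of this example.

```python
def mask_iou(a, b):
    """IoU of two instance masks, each given as a set of (x, y) pixels."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def f1_score(pred_masks, true_masks, iou_threshold=0.5):
    """Object-level F1: a prediction counts as a true positive if it
    overlaps an unmatched ground truth mask with IoU above the threshold
    (the matching rule is an assumption of this sketch)."""
    matched_true = set()
    true_positives = 0
    for pred in pred_masks:
        best_j, best_iou = None, iou_threshold
        for j, true in enumerate(true_masks):
            if j in matched_true:
                continue
            iou = mask_iou(pred, true)
            if iou > best_iou:
                best_j, best_iou = j, iou
        if best_j is not None:
            matched_true.add(best_j)
            true_positives += 1
    false_positives = len(pred_masks) - true_positives
    false_negatives = len(true_masks) - true_positives
    denom = 2 * true_positives + false_positives + false_negatives
    # With no objects on either side, treat agreement as perfect.
    return 2 * true_positives / denom if denom else 1.0


def segmentation_error(pred_masks, true_masks):
    """Segmentation error as defined in the text: 1 - F1."""
    return 1.0 - f1_score(pred_masks, true_masks)


def equivalent(f1_a, f1_b, tolerance=0.05):
    """Equivalence criterion from the text: |F1_a - F1_b| < 0.05."""
    return abs(f1_a - f1_b) < tolerance


# Hypothetical toy data: one well-segmented cell, one missed cell,
# and one spurious prediction.
toy_true = [{(0, 0), (0, 1), (1, 0), (1, 1)}, {(5, 5), (5, 6)}]
toy_pred = [{(0, 0), (0, 1), (1, 0)}, {(9, 9)}]
toy_f1 = f1_score(toy_pred, toy_true)
toy_error = segmentation_error(toy_pred, toy_true)
```

Here one true positive, one false positive, and one false negative give F1 = 2/(2+1+1) = 0.5, so the error is also 0.5.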

CellSAM enables fast and accurate labeling. When provided with ground truth bounding boxes, CellSAM achieves high-quality cell masks without any fine-tuning on unseen datasets (Figure S4). Because drawing bounding boxes consumes significantly less time than drawing individual masks, this means CellSAM can be used to quickly generate highly accurate labels, even for out-of-distribution data.

CellSAM is a strong zero-shot and few-shot learner. We used the LIVECell dataset to explore CellSAM’s performance in zero-shot and few-shot settings. We stratified CellSAM’s zero-shot performance by the cell lines present in LIVECell (Supplemental Fig. S4b). We found that while performance varied by cell line, we could recover adequate performance in the few-shot regime (e.g., A172). Supplemental Figures S4 and S5 show that CellSAM can considerably improve its performance with only ten additional fields of view (10²–10³ cells) for each cell line. For cell lines with morphologies far from the training data distribution (e.g., SHSY5Y), we found fine-tuning could not recover performance. This may reflect a limitation of bounding boxes as a prompting strategy for SAM models.

2.4. CellSAM unifies biological image analysis workflows

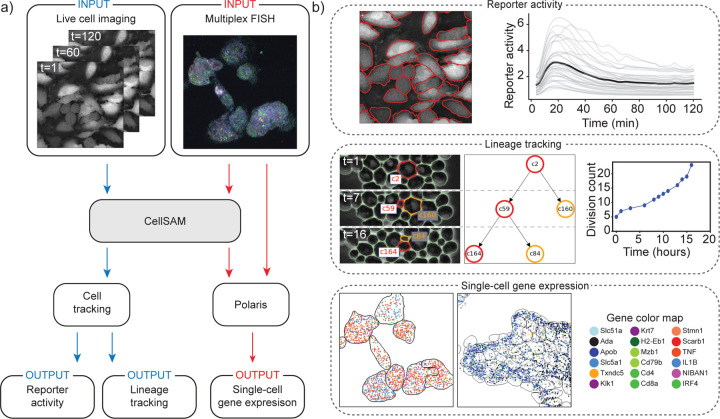

Cell segmentation is a critical component of many spatial biology analysis pipelines; a single foundation model that generalizes across cell morphologies and imaging methods would fill a crucial gap in modern biological workflows by expanding the scope of the data that can be processed. In this section, we demonstrate how CellSAM’s generality can diversify the scope of biological imaging analysis pipelines by highlighting two use cases – spatial transcriptomics and cell tracking (Fig. 3).

Fig. 3: CellSAM unifies biological imaging analysis workflows.

Because CellSAM functions across image modalities and cellular targets, it can be immediately applied across bioimaging analysis workflows without requiring task-specific adaptations. (a) We schematically depict how CellSAM fits into the analysis pipeline for live cell imaging and spatial transcriptomics, eliminating the need for different segmentation tools and expanding the scope of possible assays to which these tools can be applied. (b) Application of CellSAM to various biological imaging workflows. (Top) Segmentations from CellSAM are used to track cells 81 and quantify fluorescent live-cell reporter activity in cell culture. (Middle) CellSAM segments cells in multiple frames from a video of budding yeast cells. These cells are tracked across frames using a tracking algorithm 81 that ensures consistent identities, enabling accurate lineage construction and cell division quantification. (Bottom) Segmentations generated using CellSAM integrate with Polaris 79, a spatial transcriptomics analysis pipeline. Because of CellSAM’s generalist nature, we can apply this workflow across sample types (e.g., tissue and cell culture) and imaging modalities (e.g., seqFISH and MERFISH). Datasets of cultured macrophage cells (seqFISH) and mouse ileum tissue (MERFISH) 80 were used to generate the data in this example. MERFISH segmentations were generated with CellSAM with an image of a nuclear and membrane stain; seqFISH segmentations were generated with CellSAM with a maximum intensity projection image of all spots.

Spatial transcriptomics methods measure single-cell gene expression while retaining the spatial organization of the sample. These experiments (e.g., MERFISH 77 and seqFISH 78) fluorescently label individual mRNA transcripts; the number of spots for a gene inside a cell corresponds to that gene’s expression level in that cell. These methods enable the investigation of spatial gene expression patterns from the sub-cellular to tissue scales but require accurate cell segmentation to yield meaningful insights. Here, we use CellSAM in combination with Polaris 79, a deep learning-enabled analysis pipeline for image-based spatial transcriptomics, to analyze gene expression at the single-cell level in MERFISH 80 and seqFISH 78 data (Fig. 3). With accurate segmentation, we can assign genes to specific cells (Fig. 3). We note that CellSAM can perform segmentation on either images of nuclear and membrane stains or images derived from the spots themselves (e.g., a maximum intensity projection of all spots). CellSAM’s ability to perform nuclear and whole-cell segmentation for challenging tissue images of dense cells with complex morphologies expands the scope of datasets to which Polaris can be applied.
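The step of assigning transcripts to cells can be sketched in a few lines: each detected spot is looked up in the segmentation mask, and spots are counted per cell and gene. This is a minimal illustration and does not use the Polaris API; the function name `count_transcripts`, the spot format `(gene, x, y)`, the nearest-pixel lookup, and the gene names are assumptions of this example.

```python
from collections import Counter


def count_transcripts(label_mask, spots):
    """Count spots per (cell_id, gene) pair.

    label_mask: 2D list of integer cell labels (0 = background).
    spots: iterable of (gene, x, y) tuples in pixel coordinates.
    """
    counts = Counter()
    height = len(label_mask)
    width = len(label_mask[0]) if height else 0
    for gene, x, y in spots:
        # Look up the nearest pixel; spots outside the image are ignored.
        col, row = int(round(x)), int(round(y))
        if 0 <= row < height and 0 <= col < width:
            cell_id = label_mask[row][col]
            if cell_id != 0:  # skip spots that land on background
                counts[(cell_id, gene)] += 1
    return counts


# Hypothetical 2 x 4 segmentation with two cells and five decoded spots.
toy_cells = [
    [1, 1, 0, 2],
    [1, 1, 0, 2],
]
toy_spots = [
    ("Actb", 0.2, 0.1),   # inside cell 1
    ("Actb", 1.0, 1.0),   # inside cell 1
    ("Gapdh", 3.1, 0.0),  # inside cell 2
    ("Gapdh", 2.0, 0.0),  # background
    ("Actb", 7.0, 7.0),   # outside the image
]
toy_counts = count_transcripts(toy_cells, toy_spots)
```

The resulting counts form a sparse cell-by-gene expression table, which is why segmentation errors propagate directly into the inferred expression of each cell.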

The dynamics of cellular systems captured by live-cell imaging experiments elucidate various cellular processes such as cell division, morphological transitions, and signal transduction 32. The analysis of live-cell imaging data requires segmenting and tracking individual cells throughout whole movies. Here, we use CellSAM in combination with a cell tracking algorithm 81 (Fig. 3) in two settings. The first was a live cell imaging experiment with HeLa cells transiently expressing an AMP Kinase reporter 82 dosed with 20 mM 2-Deoxy-D-glucose, a setup reflective of many experiments exploring cell signaling dynamics 14. We imaged, segmented, and tracked the cells over 60 frames or 120 minutes to quantify AMP Kinase activity over time (Fig. 3). The second setting was lineage tracking in budding yeast cells. We again used CellSAM and cell tracking to segment and track cells; we further used a division detection algorithm to count the cumulative number of divisions over time and trace individual cell lineages (Fig. 3). While these use cases span diverse biological image data, their analysis can be simplified into a few key steps. As CellSAM demonstrates, as the algorithms that perform these steps generalize, so too do the entire pipelines.

3. Discussion

Cell segmentation is a critical task for cellular imaging experiments. While deep learning methods have made substantial progress in recent years, there remains a need for methods that can generalize across diverse images and further reduce the marginal cost of image labeling. In this work, we sought to meet these needs by developing CellSAM, a foundation model for cell segmentation. Transformer-based methods for cell segmentation are showing promising performance. CellSAM builds on these works by integrating the mask generation capabilities of SAM with transformer-based object detection to empower both scalable image labeling and automated inference. We trained CellSAM on a diverse dataset, and our benchmarking demonstrated that CellSAM achieves human-level performance on generalized cell segmentation. Compared to previous methods, CellSAM preserves its performance when trained on increasingly diverse data, which is essential for a foundational model. We found that CellSAM could be used on novel cell types in a zero-shot setting, and that re-training with few labels could yield a strong boost in performance if needed. Moreover, we demonstrated that CellSAM’s ability to generalize can be extended to entire image analysis pipelines, as illustrated by use cases in spatial transcriptomics and live cell imaging. Given its utility in image labeling and high accuracy during inference, we believe CellSAM is a valuable contribution to the field, both as a tool for spatial biology and as a means to creating the data infrastructure required for cellular imaging’s AI-powered future. To facilitate the former, we have deployed a user interface for CellSAM at https://cellsam.deepcell.org/ that allows for both automated and manual prompting.

The work described here has importance beyond aiding life scientists with cell segmentation. Foundation models are immensely useful for natural language and vision tasks and hold similar promise for the life sciences, provided they are suitably adapted to this new domain. We can see several uses for CellSAM that might be within reach of future work. First, given its generalization capabilities, it is likely that CellSAM has learned a general representation for the notion of “cells” used to query imaging data. These representations might serve as an interface between imaging data and other modalities (e.g., single-cell RNA Sequencing), provided there is suitable alignment between cellular representations for each domain 83,84. Second, much like what has occurred with natural images, we foresee that the integration of natural language labels in addition to cell-level labels might lead to vision-language models capable of generating human-like descriptors of cellular images with entity-level resolution 46. Third, the generalization capabilities may enable the standardization of cellular image analysis pipelines across all the life sciences. If the accuracy is sufficient, microbiologists and tissue biologists could use the same collection of foundation models for interpreting their imaging data even for challenging experiments 85,86.

While the work presented here highlights the potential foundation models hold for cellular image analysis, much work remains to be done for this future to manifest. Extension of this methodology to 3D imaging data is essential; recent work on memory-efficient attention kernels 87 will aid these efforts. Exploring how to enable foundation models to leverage the full information content of images (e.g., multiple stains, temporal information for movies, etc.) is an essential avenue of future work. Expanding the space of labeled data remains a priority - this includes images of perturbed cells and cells with more challenging morphologies (e.g., neurons). Data generated by pooled optical screens 88 may synergize well with the data needs of foundation models. Compute-efficient fine-tuning strategies must be developed to enable flexible adaptation to new image domains. Lastly, prompt engineering is an important area of future work, as it is critical to maximizing model performance. The work we presented here can be thought of as prompt engineering, as we leverage CellFinder to produce bounding box prompts for SAM. As more challenging labeled datasets are incorporated, the nature of the “best” prompts will likely evolve. Finding the best prompts for these new data is a task that will likely fall on both the computer vision and life science communities.

Acknowledgements

We thank Leeat Keren, Noah Greenwald, Sam Cooper, Jan Funke, Uri Manor, Joe Horsman, Michael Baym, Paul Blainey, Ian Cheeseman, Manuel Leonetti, Neehar Kondapaneni, and Elijah Cole for valuable conversations and insightful feedback. We also thank William Graf, Geneva Miller, and Kevin Yu, whose time in the Van Valen lab established the infrastructure and software tools that made this work possible. We thank Nader Khalil, Harper Carroll, Alec Fong, and the entire Brev.dev team for their support in establishing the computational infrastructure required for this work. We also thank Rosalind J. Xu and Jeffrey Moffitt for providing unpublished MERFISH data for the spatial transcriptomics workflow. We utilized images of the HeLa cell line in this research. Henrietta Lacks, and the HeLa cell line established from her tumor cells without her knowledge or consent in 1951, have contributed significantly to scientific progress and advances in human health. We are grateful to Lacks, now deceased, and the Lacks family for their contributions to biomedical research. This work was supported by awards from the Shurl and Kay Curci Foundation (to DVV), the Rita Allen Foundation (to DVV), the Susan E. Riley Foundation (to DVV), the Pew-Stewart Cancer Scholars program (to DVV), the Gordon and Betty Moore Foundation (to DVV), the Schmidt Academy for Software Engineering (to SL), the Michael J. Fox Foundation through the Aligning Science Across Parkinson’s consortium (to DVV), the Heritage Medical Research Institute (to DVV), the National Institutes of Health New Innovator program (DP2-GM149556) (to DVV), the National Institutes of Health HuBMAP consortium (OT2-OD033756) (to DVV), and the Howard Hughes Medical Institute Freeman Hrabowski Scholars program (to DVV). National Institutes of Health (R01-MH123612A) (to PP). NIH/Ohio State University (R01-DC014498) (to PP). Chen Institute (to PP). The Emerald Foundation and Black in Cancer (to UI). Caltech Presidential Postdoctoral Fellowship Program (PPFP) (to UI).

Declarations

Code Availability

The code for CellSAM is publicly available at https://github.com/vanvalenlab/cellsam.

Disclosures

David Van Valen is a co-founder and Chief Scientist of Barrier Biosciences and holds equity in the company. All other authors declare no competing interests.

Data Availability

All datasets with test/training/validation splits are publicly available at https://cellsam.deepcell.org.

References

- [1].Palla G., Fischer D. S., Regev A., and Theis F. J., “Spatial components of molecular tissue biology,” Nature Biotechnology, vol. 40, no. 3, pp. 308–318, 2022. [DOI] [PubMed] [Google Scholar]

- [2].Moffitt J. R., Lundberg E., and Heyn H., “The emerging landscape of spatial profiling technologies,” Nature Reviews Genetics, vol. 23, no. 12, pp. 741–759, 2022.

- [3].Moses L. and Pachter L., “Museum of spatial transcriptomics,” Nature Methods, vol. 19, no. 5, pp. 534–546, 2022.

- [4].Hickey J. W., Neumann E. K., Radtke A. J., Camarillo J. M., Beuschel R. T., Albanese A., McDonough E., Hatler J., Wiblin A. E., Fisher J. et al., “Spatial mapping of protein composition and tissue organization: a primer for multiplexed antibody-based imaging,” Nature Methods, vol. 19, no. 3, pp. 284–295, 2022.

- [5].Ko J., Wilkovitsch M., Oh J., Kohler R. H., Bolli E., Pittet M. J., Vinegoni C., Sykes D. B., Mikula H., Weissleder R. et al., “Spatiotemporal multiplexed immunofluorescence imaging of living cells and tissues with bioorthogonal cycling of fluorescent probes,” Nature Biotechnology, vol. 40, no. 11, pp. 1654–1662, 2022.

- [6].Wang S., Furchtgott L., Huang K. C., and Shaevitz J. W., “Helical insertion of peptidoglycan produces chiral ordering of the bacterial cell wall,” Proceedings of the National Academy of Sciences, vol. 109, no. 10, pp. E595–E604, 2012.

- [7].Rojas E. R., Billings G., Odermatt P. D., Auer G. K., Zhu L., Miguel A., Chang F., Weibel D. B., Theriot J. A., and Huang K. C., “The outer membrane is an essential load-bearing element in Gram-negative bacteria,” Nature, vol. 559, no. 7715, pp. 617–621, 2018.

- [8].Hansen A. S. and O’Shea E. K., “Limits on information transduction through amplitude and frequency regulation of transcription factor activity,” eLife, vol. 4, p. e06559, 2015.

- [9].Hansen A. S. and O’Shea E. K., “Promoter decoding of transcription factor dynamics involves a trade-off between noise and control of gene expression,” Molecular Systems Biology, vol. 9, no. 1, p. 704, 2013.

- [10].Tay S., Hughey J. J., Lee T. K., Lipniacki T., Quake S. R., and Covert M. W., “Single-cell NF-κB dynamics reveal digital activation and analogue information processing,” Nature, vol. 466, no. 7303, pp. 267–271, 2010.

- [11].Regot S., Hughey J. J., Bajar B. T., Carrasco S., and Covert M. W., “High-sensitivity measurements of multiple kinase activities in live single cells,” Cell, vol. 157, no. 7, pp. 1724–1734, 2014.

- [12].Alieva M., Wezenaar A. K., Wehrens E. J., and Rios A. C., “Bridging live-cell imaging and next-generation cancer treatment,” Nature Reviews Cancer, pp. 1–15, 2023.

- [13].Cao J., Guan G., Ho V. W. S., Wong M.-K., Chan L.-Y., Tang C., Zhao Z., and Yan H., “Establishment of a morphological atlas of the Caenorhabditis elegans embryo using deep-learning-based 4D segmentation,” Nature Communications, vol. 11, no. 1, p. 6254, 2020.

- [14].Purvis J. E. and Lahav G., “Encoding and decoding cellular information through signaling dynamics,” Cell, vol. 152, no. 5, pp. 945–956, 2013.

- [15].Schmitt D. L., Mehta S., and Zhang J., “Study of spatiotemporal regulation of kinase signaling using genetically encodable molecular tools,” Current Opinion in Chemical Biology, vol. 71, p. 102224, 2022.

- [16].Earle K. A., Billings G., Sigal M., Lichtman J. S., Hansson G. C., Elias J. E., Amieva M. R., Huang K. C., and Sonnenburg J. L., “Quantitative imaging of gut microbiota spatial organization,” Cell Host & Microbe, vol. 18, no. 4, pp. 478–488, 2015.

- [17].Peters J. M., Colavin A., Shi H., Czarny T. L., Larson M. H., Wong S., Hawkins J. S., Lu C. H., Koo B.-M., Marta E. et al., “A comprehensive, CRISPR-based functional analysis of essential genes in bacteria,” Cell, vol. 165, no. 6, pp. 1493–1506, 2016.

- [18].Jun S., Si F., Pugatch R., and Scott M., “Fundamental principles in bacterial physiology—history, recent progress, and the future with focus on cell size control: a review,” Reports on Progress in Physics, vol. 81, no. 5, p. 056601, 2018.

- [19].Wang P., Robert L., Pelletier J., Dang W. L., Taddei F., Wright A., and Jun S., “Robust growth of Escherichia coli,” Current Biology, vol. 20, no. 12, pp. 1099–1103, 2010.

- [20].Huh W.-K., Falvo J. V., Gerke L. C., Carroll A. S., Howson R. W., Weissman J. S., and O’Shea E. K., “Global analysis of protein localization in budding yeast,” Nature, vol. 425, no. 6959, pp. 686–691, 2003.

- [21].Hao N., Budnik B. A., Gunawardena J., and O’Shea E. K., “Tunable signal processing through modular control of transcription factor translocation,” Science, vol. 339, no. 6118, pp. 460–464, 2013.

- [22].Hao N. and O’Shea E. K., “Signal-dependent dynamics of transcription factor translocation controls gene expression,” Nature Structural & Molecular Biology, vol. 19, no. 1, pp. 31–39, 2012.

- [23].Schwartz M., Israel U., Wang X., Laubscher E., Yu C., Dilip R., Li Q., Mari J., Soro J., Yu K. et al., “Scaling biological discovery at the interface of deep learning and cellular imaging,” Nature Methods, vol. 20, no. 7, pp. 956–957, 2023.

- [24].Stringer C., Wang T., Michaelos M., and Pachitariu M., “Cellpose: a generalist algorithm for cellular segmentation,” Nature Methods, vol. 18, no. 1, pp. 100–106, 2021.

- [25].Pachitariu M. and Stringer C., “Cellpose 2.0: how to train your own model,” Nature Methods, pp. 1–8, 2022.

- [26].Schmidt U., Weigert M., Broaddus C., and Myers G., “Cell detection with star-convex polygons,” in Medical Image Computing and Computer Assisted Intervention–MICCAI 2018: 21st International Conference, Granada, Spain, September 16–20, 2018, Proceedings, Part II 11. Springer, 2018, pp. 265–273.

- [27].Greenwald N. F., Miller G., Moen E., Kong A., Kagel A., Dougherty T., Fullaway C. C., McIntosh B. J., Leow K. X., Schwartz M. S. et al., “Whole-cell segmentation of tissue images with human-level performance using large-scale data annotation and deep learning,” Nature Biotechnology, vol. 40, no. 4, pp. 555–565, 2022.

- [28].Hollandi R., Szkalisity A., Toth T., Tasnadi E., Molnar C., Mathe B., Grexa I., Molnar J., Balind A., Gorbe M. et al., “nucleaizer: a parameter-free deep learning framework for nucleus segmentation using image style transfer,” Cell Systems, vol. 10, no. 5, pp. 453–458, 2020.

- [29].Graham S., Vu Q. D., Raza S. E. A., Azam A., Tsang Y. W., Kwak J. T., and Rajpoot N., “Hover-net: Simultaneous segmentation and classification of nuclei in multi-tissue histology images,” Medical Image Analysis, vol. 58, p. 101563, 2019.

- [30].Wang W., Taft D. A., Chen Y.-J., Zhang J., Wallace C. T., Xu M., Watkins S. C., and Xing J., “Learn to segment single cells with deep distance estimator and deep cell detector,” Computers in Biology and Medicine, vol. 108, pp. 133–141, 2019.

- [31].Van Valen D. A., Kudo T., Lane K. M., Macklin D. N., Quach N. T., DeFelice M. M., Maayan I., Tanouchi Y., Ashley E. A., and Covert M. W., “Deep learning automates the quantitative analysis of individual cells in live-cell imaging experiments,” PLoS Computational Biology, vol. 12, no. 11, p. e1005177, 2016.

- [32].Schwartz M. S., Moen E., Miller G., Dougherty T., Borba E., Ding R., Graf W., Pao E., and Van Valen D., “Caliban: Accurate cell tracking and lineage construction in live-cell imaging experiments with deep learning,” bioRxiv, 2023. [Online]. Available: https://www.biorxiv.org/content/early/2023/09/12/803205

- [33].Vaswani A., Shazeer N., Parmar N., Uszkoreit J., Jones L., Gomez A. N., Kaiser Ł., and Polosukhin I., “Attention is all you need,” Advances in Neural Information Processing Systems, vol. 30, 2017.

- [34].Bommasani R., Hudson D. A., Adeli E., Altman R., Arora S., von Arx S., Bernstein M. S., Bohg J., Bosselut A., Brunskill E., Brynjolfsson E., Buch S., Card D., Castellon R., Chatterji N., Chen A., Creel K., Davis J. Q., Demszky D., Donahue C., Doumbouya M., Durmus E., Ermon S., Etchemendy J., Ethayarajh K., Fei-Fei L., Finn C., Gale T., Gillespie L., Goel K., Goodman N., Grossman S., Guha N., Hashimoto T., Henderson P., Hewitt J., Ho D. E., Hong J., Hsu K., Huang J., Icard T., Jain S., Jurafsky D., Kalluri P., Karamcheti S., Keeling G., Khani F., Khattab O., Koh P. W., Krass M., Krishna R., Kuditipudi R., Kumar A., Ladhak F., Lee M., Lee T., Leskovec J., Levent I., Li X. L., Li X., Ma T., Malik A., Manning C. D., Mirchandani S., Mitchell E., Munyikwa Z., Nair S., Narayan A., Narayanan D., Newman B., Nie A., Niebles J. C., Nilforoshan H., Nyarko J., Ogut G., Orr L., Papadimitriou I., Park J. S., Piech C., Portelance E., Potts C., Raghunathan A., Reich R., Ren H., Rong F., Roohani Y., Ruiz C., Ryan J., Ré C., Sadigh D., Sagawa S., Santhanam K., Shih A., Srinivasan K., Tamkin A., Taori R., Thomas A. W., Tramèr F., Wang R. E., Wang W., Wu B., Wu J., Wu Y., Xie S. M., Yasunaga M., You J., Zaharia M., Zhang M., Zhang T., Zhang X., Zhang Y., Zheng L., Zhou K., and Liang P., “On the opportunities and risks of foundation models,” 2022.

- [35].Brown T., Mann B., Ryder N., Subbiah M., Kaplan J. D., Dhariwal P., Neelakantan A., Shyam P., Sastry G., Askell A. et al., “Language models are few-shot learners,” Advances in Neural Information Processing Systems, vol. 33, pp. 1877–1901, 2020.

- [36].OpenAI, “Gpt-4 technical report,” 2023.

- [37].Lin Z., Akin H., Rao R., Hie B., Zhu Z., Lu W., Smetanin N., Verkuil R., Kabeli O., Shmueli Y. et al., “Evolutionary-scale prediction of atomic-level protein structure with a language model,” Science, vol. 379, no. 6637, pp. 1123–1130, 2023.

- [38].Brandes N., Ofer D., Peleg Y., Rappoport N., and Linial M., “Proteinbert: a universal deep-learning model of protein sequence and function,” Bioinformatics, vol. 38, no. 8, pp. 2102–2110, 2022.

- [39].Elnaggar A., Heinzinger M., Dallago C., Rehawi G., Wang Y., Jones L., Gibbs T., Feher T., Angerer C., Steinegger M., Bhowmik D., and Rost B., “Prottrans: Towards cracking the language of life’s code through self-supervised learning,” bioRxiv, 2021. [Online]. Available: https://www.biorxiv.org/content/early/2021/05/04/2020.07.12.199554

- [40].Madani A., McCann B., Naik N., Keskar N. S., Anand N., Eguchi R. R., Huang P.-S., and Socher R., “Progen: Language modeling for protein generation,” arXiv preprint arXiv:2004.03497, 2020.

- [41].Beal D. J., “Esm 2.0: State of the art and future potential of experience sampling methods in organizational research,” Annu. Rev. Organ. Psychol. Organ. Behav., vol. 2, no. 1, pp. 383–407, 2015.

- [42].Dosovitskiy A., Beyer L., Kolesnikov A., Weissenborn D., Zhai X., Unterthiner T., Dehghani M., Minderer M., Heigold G., Gelly S. et al., “An image is worth 16×16 words: Transformers for image recognition at scale,” arXiv preprint arXiv:2010.11929, 2020.

- [43].Caron M., Touvron H., Misra I., Jégou H., Mairal J., Bojanowski P., and Joulin A., “Emerging properties in self-supervised vision transformers,” 2021.

- [44].Oquab M., Darcet T., Moutakanni T., Vo H., Szafraniec M., Khalidov V., Fernandez P., Haziza D., Massa F., El-Nouby A., Assran M., Ballas N., Galuba W., Howes R., Huang P.-Y., Li S.-W., Misra I., Rabbat M., Sharma V., Synnaeve G., Xu H., Jegou H., Mairal J., Labatut P., Joulin A., and Bojanowski P., “Dinov2: Learning robust visual features without supervision,” 2023.

- [45].Fang Y., Wang W., Xie B., Sun Q., Wu L., Wang X., Huang T., Wang X., and Cao Y., “Eva: Exploring the limits of masked visual representation learning at scale,” 2022.

- [46].Radford A., Kim J. W., Hallacy C., Ramesh A., Goh G., Agarwal S., Sastry G., Askell A., Mishkin P., Clark J., Krueger G., and Sutskever I., “Learning transferable visual models from natural language supervision,” 2021.

- [47].Alayrac J.-B., Donahue J., Luc P., Miech A., Barr I., Hasson Y., Lenc K., Mensch A., Millican K., Reynolds M. et al., “Flamingo: a visual language model for few-shot learning,” Advances in Neural Information Processing Systems, vol. 35, pp. 23716–23736, 2022.

- [48].Kirillov A., Mintun E., Ravi N., Mao H., Rolland C., Gustafson L., Xiao T., Whitehead S., Berg A. C., Lo W.-Y. et al., “Segment anything,” arXiv preprint arXiv:2304.02643, 2023.

- [49].Huang Y., Yang X., Liu L., Zhou H., Chang A., Zhou X., Chen R., Yu J., Chen J., Chen C., Chi H., Hu X., Fan D.-P., Dong F., and Ni D., “Segment anything model for medical images?” 2023.

- [50].Zhang Y., Zhou T., Wang S., Liang P., and Chen D. Z., “Input augmentation with sam: Boosting medical image segmentation with segmentation foundation model,” 2023.

- [51].Lei W., Wei X., Zhang X., Li K., and Zhang S., “Medlsam: Localize and segment anything model for 3d medical images,” 2023.

- [52].Shi P., Qiu J., Abaxi S. M. D., Wei H., Lo F. P.-W., and Yuan W., “Generalist vision foundation models for medical imaging: A case study of segment anything model on zero-shot medical segmentation,” Diagnostics, vol. 13, no. 11, p. 1947, 2023.

- [53].Hu M., Li Y., and Yang X., “Skinsam: Empowering skin cancer segmentation with segment anything model,” 2023.

- [54].Deng R., Cui C., Liu Q., Yao T., Remedios L. W., Bao S., Landman B. A., Wheless L. E., Coburn L. A., Wilson K. T., Wang Y., Zhao S., Fogo A. B., Yang H., Tang Y., and Huo Y., “Segment anything model (sam) for digital pathology: Assess zero-shot segmentation on whole slide imaging,” 2023.

- [55].Hörst F., Rempe M., Heine L., Seibold C., Keyl J., Baldini G., Ugurel S., Siveke J., Grünwald B., Egger J., and Kleesiek J., “Cellvit: Vision transformers for precise cell segmentation and classification,” 2023.

- [56].Archit A., Nair S., Khalid N., Hilt P., Rajashekar V., Freitag M., Gupta S., Dengel A., Ahmed S., and Pape C., “Segment anything for microscopy,” bioRxiv, 2023. [Online]. Available: https://www.biorxiv.org/content/early/2023/08/22/2023.08.21.554208

- [57].Wang Y., Zhang X., Yang T., and Sun J., “Anchor detr: Query design for transformer-based detector,” in Proceedings of the AAAI conference on artificial intelligence, vol. 36, no. 3, 2022, pp. 2567–2575.

- [58].Edlund C., Jackson T. R., Khalid N., Bevan N., Dale T., Dengel A., Ahmed S., Trygg J., and Sjögren R., “Livecell—a large-scale dataset for label-free live cell segmentation,” Nature Methods, vol. 18, no. 9, pp. 1038–1045, 2021.

- [59].Spahn C., Gómez-de Mariscal E., Laine R. F., Pereira P. M., von Chamier L., Conduit M., Pinho M. G., Jacquemet G., Holden S., Heilemann M. et al., “Deepbacs for multi-task bacterial image analysis using open-source deep learning approaches,” Communications Biology, vol. 5, no. 1, p. 688, 2022.

- [60].Mathieu G., Bachir E. D. et al., “Brifiseg: a deep learning-based method for semantic and instance segmentation of nuclei in brightfield images,” arXiv preprint arXiv:2211.03072, 2022.

- [61].Cutler K. J., Stringer C., Wiggins P. A., and Mougous J. D., “Omnipose: a high-precision morphology-independent solution for bacterial cell segmentation,” bioRxiv, 2021.

- [62].Cutler K. J., Stringer C., Lo T. W., Rappez L., Stroustrup N., Brook Peterson S., Wiggins P. A., and Mougous J. D., “Omnipose: a high-precision morphology-independent solution for bacterial cell segmentation,” Nature Methods, vol. 19, no. 11, pp. 1438–1448, 2022.

- [63].Kim H., Shin J., Kim E., Kim H., Hwang S., Shim J. E., and Lee I., “Yeastnet v3: a public database of data-specific and integrated functional gene networks for Saccharomyces cerevisiae,” Nucleic Acids Research, vol. 42, no. D1, pp. D731–D736, 2014.

- [64].Dietler N., Minder M., Gligorovski V., Economou A. M., Lucien Joly D. A. H., Sadeghi A., Michael Chan C. H., Koziński M., Weigert M., Bitbol A.-F. et al., “Yeaz: A convolutional neural network for highly accurate, label-free segmentation of yeast microscopy images,” bioRxiv, pp. 2020–05, 2020.

- [65].Caicedo J. C., Goodman A., Karhohs K. W., Cimini B. A., Ackerman J., Haghighi M., Heng C., Becker T., Doan M., McQuin C. et al., “Nucleus segmentation across imaging experiments: the 2018 data science bowl,” Nature Methods, vol. 16, no. 12, pp. 1247–1253, 2019.

- [66].Kumar N., Verma R., Sharma S., Bhargava S., Vahadane A., and Sethi A., “A dataset and a technique for generalized nuclear segmentation for computational pathology,” IEEE Transactions on Medical Imaging, vol. 36, no. 7, pp. 1550–1560, 2017.

- [67].Mahbod A., Schaefer G., Bancher B., Löw C., Dorffner G., Ecker R., and Ellinger I., “Cryonuseg: A dataset for nuclei instance segmentation of cryosectioned H&E-stained histological images,” Computers in Biology and Medicine, vol. 132, p. 104349, 2021.

- [68].Mahbod A., Polak C., Feldmann K., Khan R., Gelles K., Dorffner G., Woitek R., Hatamikia S., and Ellinger I., “Nuinsseg: a fully annotated dataset for nuclei instance segmentation in H&E-stained histological images,” arXiv preprint arXiv:2308.01760, 2023.

- [69].Naylor P., Laé M., Reyal F., and Walter T., “Segmentation of nuclei in histopathology images by deep regression of the distance map,” IEEE Transactions on Medical Imaging, vol. 38, no. 2, pp. 448–459, 2018.

- [70].Kumar N., Verma R., Anand D., Zhou Y., Onder O. F., Tsougenis E., Chen H., Heng P.-A., Li J., Hu Z. et al., “A multi-organ nucleus segmentation challenge,” IEEE Transactions on Medical Imaging, vol. 39, no. 5, pp. 1380–1391, 2019.

- [71].Vu Q. D., Graham S., Kurc T., To M. N. N., Shaban M., Qaiser T., Koohbanani N. A., Khurram S. A., Kalpathy-Cramer J., Zhao T. et al., “Methods for segmentation and classification of digital microscopy tissue images,” Frontiers in Bioengineering and Biotechnology, p. 53, 2019.

- [72].Verma R., Kumar N., Patil A., Kurian N. C., Rane S., Graham S., Vu Q. D., Zwager M., Raza S. E. A., Rajpoot N. et al., “Monusac2020: A multi-organ nuclei segmentation and classification challenge,” IEEE Transactions on Medical Imaging, vol. 40, no. 12, pp. 3413–3423, 2021.

- [73].Li Y., Mao H., Girshick R., and He K., “Exploring plain vision transformer backbones for object detection,” in European Conference on Computer Vision. Springer, 2022, pp. 280–296.

- [74].Lin T.-Y., Maire M., Belongie S., Hays J., Perona P., Ramanan D., Dollár P., and Zitnick C. L., “Microsoft COCO: Common objects in context,” in Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6–12, 2014, Proceedings, Part V 13. Springer, 2014, pp. 740–755.

- [75].Girshick R., Donahue J., Darrell T., and Malik J., “Rich feature hierarchies for accurate object detection and semantic segmentation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2014, pp. 580–587.

- [76].Ren S., He K., Girshick R., and Sun J., “Faster R-CNN: Towards real-time object detection with region proposal networks,” 2016.

- [77].Chen K. H., Boettiger A. N., Moffitt J. R., Wang S., and Zhuang X., “Spatially resolved, highly multiplexed RNA profiling in single cells,” Science, vol. 348, no. 6233, p. aaa6090, 2015.

- [78].Eng C.-H. L., Lawson M., Zhu Q., Dries R., Koulena N., Takei Y., Yun J., Cronin C., Karp C., Yuan G.-C. et al., “Transcriptome-scale super-resolved imaging in tissues by RNA seqFISH+,” Nature, vol. 568, no. 7751, pp. 235–239, 2019.

- [79].Laubscher E., Wang X. J., Razin N., Dougherty T., Xu R. J., Ombelets L., Pao E., Graf W., Moffitt J. R., Yue Y. et al., “Accurate single-molecule spot detection for image-based spatial transcriptomics with weakly supervised deep learning,” bioRxiv, 2023.

- [80].Petukhov V., Xu R. J., Soldatov R. A., Cadinu P., Khodosevich K., Moffitt J. R., and Kharchenko P. V., “Cell segmentation in imaging-based spatial transcriptomics,” Nature Biotechnology, vol. 40, no. 3, pp. 345–354, 2022.

- [81].Bochinski E., Eiselein V., and Sikora T., “High-speed tracking-by-detection without using image information,” in 2017 14th IEEE international conference on advanced video and signal based surveillance (AVSS). IEEE, 2017, pp. 1–6.

- [82].Schmitt D. L., Curtis S. D., Lyons A. C., Zhang J.-f., Chen M., He C. Y., Mehta S., Shaw R. J., and Zhang J., “Spatial regulation of AMPK signaling revealed by a sensitive kinase activity reporter,” Nature Communications, vol. 13, no. 1, p. 3856, 2022.

- [83].Zhang X., Wang X., Shivashankar G., and Uhler C., “Graph-based autoencoder integrates spatial transcriptomics with chromatin images and identifies joint biomarkers for Alzheimer’s disease,” Nature Communications, vol. 13, no. 1, p. 7480, 2022.

- [84].Yang K. D., Belyaeva A., Venkatachalapathy S., Damodaran K., Katcoff A., Radhakrishnan A., Shivashankar G., and Uhler C., “Multi-domain translation between single-cell imaging and sequencing data using autoencoders,” Nature Communications, vol. 12, no. 1, p. 31, 2021.

- [85].Shah S., Lubeck E., Zhou W., and Cai L., “seqFISH accurately detects transcripts in single cells and reveals robust spatial organization in the hippocampus,” Neuron, vol. 94, no. 4, pp. 752–758, 2017.

- [86].Dar D., Dar N., Cai L., and Newman D. K., “Spatial transcriptomics of planktonic and sessile bacterial populations at single-cell resolution,” Science, vol. 373, no. 6556, p. eabi4882, 2021.

- [87].Nguyen E., Poli M., Faizi M., Thomas A., Birch-Sykes C., Wornow M., Patel A., Rabideau C., Massaroli S., Bengio Y., Ermon S., Baccus S. A., and Ré C., “Hyenadna: Long-range genomic sequence modeling at single nucleotide resolution,” 2023.

- [88].Feldman D., Singh A., Schmid-Burgk J. L., Carlson R. J., Mezger A., Garrity A. J., Zhang F., and Blainey P. C., “Optical pooled screens in human cells,” Cell, vol. 179, no. 3, pp. 787–799, 2019.

- [89].Pizer S. M., Amburn E. P., Austin J. D., Cromartie R., Geselowitz A., Greer T., ter Haar Romeny B., Zimmerman J. B., and Zuiderveld K., “Adaptive histogram equalization and its variations,” Computer vision, graphics, and image processing, vol. 39, no. 3, pp. 355–368, 1987.

- [90].Hosang J., Benenson R., and Schiele B., “Learning non-maximum suppression,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 4507–4515.

- [91].He K., Zhang X., Ren S., and Sun J., “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [92].Carion N., Massa F., Synnaeve G., Usunier N., Kirillov A., and Zagoruyko S., “End-to-end object detection with transformers,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part I 16. Springer, 2020, pp. 213–229.

- [93].Loshchilov I. and Hutter F., “Decoupled weight decay regularization,” arXiv preprint arXiv:1711.05101, 2017.

- [94].Paszke A., Gross S., Massa F., Lerer A., Bradbury J., Chanan G., Killeen T., Lin Z., Gimelshein N., Antiga L., Desmaison A., Kopf A., Yang E., DeVito Z., Raison M., Tejani A., Chilamkurthy S., Steiner B., Fang L., Bai J., and Chintala S., “Pytorch: An imperative style, high-performance deep learning library,” in Advances in Neural Information Processing Systems 32. Curran Associates, Inc., 2019, pp. 8024–8035. [Online]. Available: http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf

- [95].Falcon W. and The PyTorch Lightning team, “PyTorch Lightning,” Mar. 2019. [Online]. Available: https://github.com/Lightning-AI/lightning
