Abstract

A major remaining challenge for magnetic resonance-based attenuation correction (MRAC) methods is their susceptibility to sources of magnetic resonance imaging (MRI) artifacts (e.g., implants and motion) and to uncertainties due to the limitations of MRI contrast (e.g., accurate bone delineation and density, and separation of air and bone). We propose using a Bayesian deep convolutional neural network that, in addition to generating an initial pseudo-CT from MR data, also produces uncertainty estimates of the pseudo-CT to quantify the limitations of the MR data. These outputs are combined with the maximum-likelihood estimation of activity and attenuation (MLAA) reconstruction, which uses the PET emission data to improve the attenuation maps. With the proposed approach, uncertainty estimation and pseudo-CT prior for robust MLAA (UpCT-MLAA), we demonstrate accurate estimation of PET uptake in pelvic lesions and show recovery of metal implants. In patients without implants, UpCT-MLAA had acceptable but slightly higher root-mean-squared error (RMSE) than zero-echo-time and Dixon deep pseudo-CT (ZeDD-CT) when compared with CT-based attenuation correction (CTAC). In patients with metal implants, MLAA recovered the metal implant; however, anatomy outside the implant region was obscured by noise and crosstalk artifacts. Attenuation coefficients from the pseudo-CT derived from Dixon MRI were accurate in normal anatomy; however, the metal implant region was assigned the attenuation coefficients of air. UpCT-MLAA estimated the attenuation coefficients of metal implants alongside accurate anatomic depiction outside of implant regions.

Keywords: Bayesian deep learning, deep learning, magnetic resonance-based attenuation correction (MRAC), maximum-likelihood estimation of activity and attenuation (MLAA), synthetic CT

I. Introduction

The quantitative accuracy of simultaneous positron emission tomography and magnetic resonance imaging (PET/MRI) depends on accurate attenuation correction. Combined positron emission tomography and computed tomography (PET/CT) is the current clinical gold standard for PET attenuation correction, since the CT images can be converted into attenuation coefficients for 511-keV photons with piecewise-linear models [1]. Magnetic resonance imaging (MRI) measures spin density rather than electron density and, thus, cannot be used directly for PET attenuation correction.

A comprehensive review of attenuation correction methods for PET/MRI can be found in [2]. Briefly, current methods for attenuation correction in PET/MRI can be grouped into the following categories: atlas based, segmentation based, and machine learning based. Atlas-based methods generate a CT atlas and register it to the acquired MRI [3]–[6]. Segmentation-based methods use special sequences such as ultrashort echo-time (UTE) [7]–[11] or zero echo-time (ZTE) [12]–[16] to estimate bone density and Dixon sequences [17]–[19] to estimate soft-tissue densities. Machine learning-based methods, including deep learning methods, use sophisticated machine learning models to learn mappings from MRI to pseudo-CT images [20]–[26] or PET transmission images [27]. Other methods use deep learning to estimate attenuation coefficient maps from the PET emission data [28], [29] or to directly correct the PET emission data [30]–[32].

For PET alone, an alternative method for attenuation correction is "joint estimation," also known as maximum-likelihood estimation of activity and attenuation (MLAA) [33], [34]. Rather than relying on an attenuation map that was measured or estimated with another scan or modality, the PET activity image (λ-map) and the PET attenuation coefficient map (μ-map) are estimated jointly from the PET emission data only. However, MLAA suffers from numerous artifacts and high noise [35].

In PET/MRI, recent methods developed to overcome the limitations of MLAA include using MR-based priors [36], [37], constraining the region of joint estimation [38], or using deep learning to denoise the resulting λ-map and/or μ-map from MLAA [39]–[42]. Mehranian and Zaidi's [36] approach of using priors improved MLAA results; however, it was not demonstrated on metal implants. The methods of Ahn et al. and Fuin et al. [37], [38], which also use priors, were able to recover metal implants in the PET image reconstruction, but the μ-maps were missing bones and other anatomical features. Furthermore, these methods require a manual or semiautomated segmentation step to delineate the regions in which to apply the appropriate priors (such as the metal implant region). The approaches by Hwang et al. [39]–[41] and Choi et al. [42], which use supervised deep learning, produced anatomically correct and accurate μ-maps; however, they were not demonstrated in the presence of metal implants.

Supervised deep learning is considered a very promising approach for accurate and precise PET/MRI attenuation correction. However, its main limitation is the finite training data set, which needs to contain a diverse set of well-matched inputs and outputs.

In PET/MRI, metal implants complicate training because they produce artifacts in both CT and MRI. Furthermore, the artifacts appear differently: a metal implant produces a star-like streaking pattern with high Hounsfield unit values in the CT image [43] and a signal void in the MRI image [37]. This makes registration between MRI and CT images difficult, and the artifacts introduce intrinsic errors into the training dataset.

In addition, there will arguably always be edge cases and rare features that cannot be captured with enough representation in a training data set. Images of humans can have rare features not easily obtained (e.g., missing organs due to surgery, a new or uncommon implant). Under these conditions, a standard supervised deep learning approach may produce incorrect predictions and the user (or any downstream algorithm) will be unaware of the errors.

A recent study by Ladefoged et al. [44] demonstrated the importance of a high-quality data set in deep learning-based brain PET/MRI attenuation correction. A large, diverse set of at least 50 training examples was required to achieve robustness, and the authors highlighted that the remaining errors and limitations in deep learning-based MR attenuation correction were due to "abnormal bone structures, surgical deformation, and metal implants."

In this work, we propose the use of supervised Bayesian deep learning to estimate predictive uncertainty to detect rare or previously unseen image structures and estimate intrinsic errors that traditional supervised deep learning approaches cannot.

Bayesian deep learning provides tools to address the limitations of a finite training dataset: the estimation of epistemic and predictive uncertainty [45]. A general introduction to uncertainties in machine learning can be found in [46].

Epistemic uncertainty is the uncertainty on learned model parameters that arises due to incomplete knowledge or, in the case of supervised machine learning, the lack of training data. Epistemic uncertainty is manifested as a diverse set of different model parameters that fit the training data.

The epistemic uncertainty of the model can then be used to produce a predictive uncertainty that captures whether a test image contains features or structures that deviate from the training dataset. This allows rare or previously unseen image structures to be detected without explicitly training the model to identify them.

Typical supervised deep learning approaches capture neither the epistemic nor the predictive uncertainty, because only one set of model parameters is learned and only a single prediction is produced (e.g., a single pseudo-CT image).

In this work on PET/MRI attenuation correction, the predictive uncertainty is used to automatically weight the balance between the deep learning μ-map prediction from MRI and the μ-map estimate obtained from the PET emission data with MLAA. Where the model is expected to perform well on a region of a test image, MLAA contributes minimally. Where the model is expected to perform poorly, MLAA contributes more strongly to the attenuation coefficient estimates of those regions.

Specifically, we extend the framework of Ahn et al.'s MLAA regularized with MR-based priors [37] and generate the MR-based priors with a Bayesian convolutional neural network (BCNN) [47] that additionally provides a predictive uncertainty map to automatically modulate the strength of the MLAA priors. We demonstrate a proof-of-concept methodology that produces anatomically correct, accurate, and precise μ-maps with high SNR that can recover metal implants for PET/MRI attenuation correction in the pelvis.

II. Materials and Methods

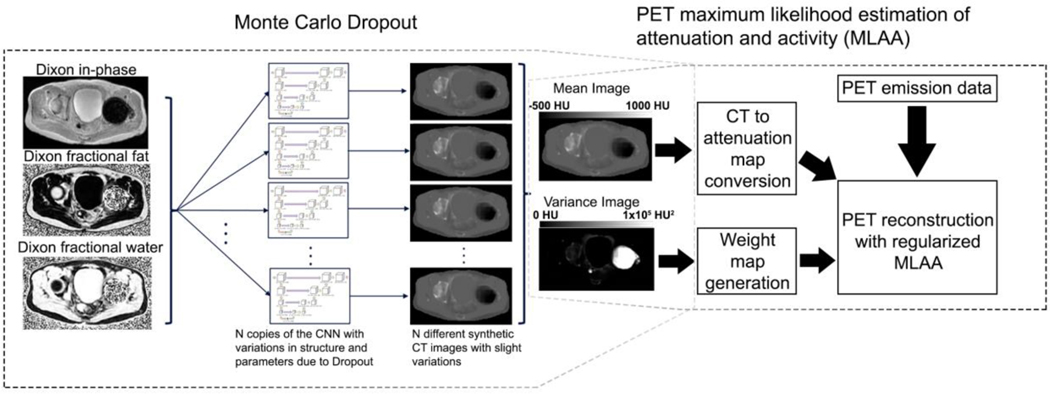

Uncertainty estimation and pseudo-CT prior for robust MLAA (UpCT-MLAA) is composed of two major elements: 1) initial pseudo-CT characterization with Bayesian deep learning through Monte Carlo Dropout [47] and 2) PET reconstruction with regularized MLAA [37]. The algorithm is depicted in Fig. 1, and each component is described in detail below.

Fig. 1.

Schematic flow of UpCT-MLAA. Monte Carlo Dropout is first performed with the BCNN, then the outputs are provided as inputs to PET reconstruction with regularized MLAA.

A. Bayesian Deep Learning

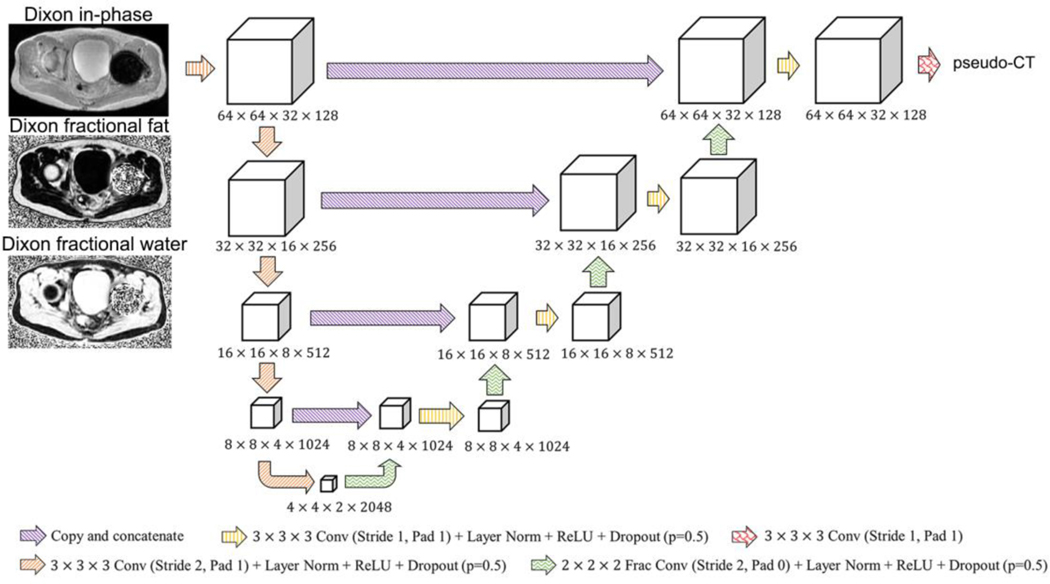

The architecture of the BCNN is shown in Fig. 2. It was based on the U-net-like network in [21] with the following modifications: 1) Dropout [47], [48] was included after every convolution; 2) the patch size was increased to 64 × 64 × 32 voxels; and 3) the number of channels in each layer was increased fourfold to compensate for the reduction in information capacity due to Dropout. The PyTorch software package [49] (v0.4.1, http://pytorch.org) was used.

Fig. 2.

Deep convolutional neural network architecture used in this work.

Inputs to the model were volume patches of size 64 × 64 × 32 voxels × 3 channels. The three channels were volume patches, at the same spatial locations, of the bias-corrected and fat-tissue-normalized Dixon in-phase image, the Dixon fractional fat image, and the Dixon fractional water image, respectively [50]. The output was a corresponding pseudo-CT image patch of size 64 × 64 × 32 voxels × 1 channel. ZTE MRI was not used as an input to this model, since it has been demonstrated that accurate HU estimates can be achieved with the Dixon MR pulse sequence alone [22], [50].
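As a minimal sketch of the input layout described above, assuming the three Dixon-derived volumes are already bias-corrected, normalized, and resampled onto a common voxel grid, a patch can be assembled with NumPy by slicing each volume at the same location and stacking the slices along a channel axis. The helper name `extract_patch` and the channel-last layout are illustrative assumptions; PyTorch convolutions conventionally expect channel-first tensors, so a transpose would be needed before passing such a patch to a network.

```python
import numpy as np

PATCH_SHAPE = (64, 64, 32)  # voxels, as stated in the text


def extract_patch(in_phase, fat_frac, water_frac, corner):
    """Stack three co-registered Dixon volumes into a 64 x 64 x 32 x 3 patch.

    Hypothetical helper: `corner` is the (x, y, z) index of the patch origin,
    and the channel order follows the text (in-phase, fat, water).
    """
    x, y, z = corner
    px, py, pz = PATCH_SHAPE
    channels = [vol[x:x + px, y:y + py, z:z + pz]
                for vol in (in_phase, fat_frac, water_frac)]
    if any(c.shape != PATCH_SHAPE for c in channels):
        raise ValueError("requested patch extends beyond the volume")
    return np.stack(channels, axis=-1)  # channel-last layout (assumption)


# Usage with random stand-in volumes (not real Dixon data).
rng = np.random.default_rng(0)
vols = [rng.random((128, 128, 64)) for _ in range(3)]
patch = extract_patch(*vols, corner=(10, 20, 5))
print(patch.shape)  # (64, 64, 32, 3)
```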

1). Model Training:

Model training was performed as in our previous work [21], [50]. The loss function was a combination of the ℓ1-loss, the gradient difference loss (GDL), and the Laplacian difference loss (LDL)

| (1) |

where is the gradient operator, is the Laplacian operator, is the ground-truth CT image patch, and is the output pseudo-CT image patch with and . The Adam optimizer [51] (, , , ) was used to train the neural network. An L2 regularization () on the weights of the network was used. He initialization [52] was used and a minibatch of four volumetric patches was used for training on two NVIDIA GTX Titan X Pascal (NVIDIA Corporation, Santa Clara, CA, USA) graphics processing units. The models were trained for approximately 68 h to achieve 100000 iterations.
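The following NumPy sketch illustrates how a composite loss of this kind can be computed for a 3-D patch. It is not the exact form of (1): it assumes a mean absolute (ℓ1) term, plus mean squared differences of forward finite-difference gradients (GDL) and of a discrete Laplacian (LDL), with hypothetical unit weights `w_gdl` and `w_ldl`. A training implementation would express the same arithmetic with differentiable tensor operations.

```python
import numpy as np


def gradient_difference(y, y_hat):
    """Mean squared difference of forward finite differences, summed over axes."""
    total = 0.0
    for axis in range(y.ndim):
        diff = np.diff(y, axis=axis) - np.diff(y_hat, axis=axis)
        total += np.mean(diff ** 2)
    return total


def laplacian(v):
    """Discrete Laplacian ((2*ndim + 1)-point stencil) on interior voxels."""
    inner = [slice(1, -1)] * v.ndim
    out = -2.0 * v.ndim * v[tuple(inner)]
    for axis in range(v.ndim):
        plus, minus = list(inner), list(inner)
        plus[axis] = slice(2, None)     # neighbour at index + 1
        minus[axis] = slice(None, -2)   # neighbour at index - 1
        out = out + v[tuple(plus)] + v[tuple(minus)]
    return out


def composite_loss(y, y_hat, w_gdl=1.0, w_ldl=1.0):
    """l1 + w_gdl * GDL + w_ldl * LDL (norms and weights are assumptions)."""
    l1 = np.mean(np.abs(y - y_hat))
    gdl = gradient_difference(y, y_hat)
    ldl = np.mean((laplacian(y) - laplacian(y_hat)) ** 2)
    return l1 + w_gdl * gdl + w_ldl * ldl


rng = np.random.default_rng(1)
ct = rng.normal(0.0, 100.0, size=(8, 8, 4))  # stand-in CT patch (HU)
print(composite_loss(ct, ct))          # 0.0
print(composite_loss(ct, ct + 10.0))   # ~10.0: a constant offset only affects the l1 term
```

The GDL and LDL terms penalize mismatched edges and curvature, so a uniformly shifted prediction is penalized only through the ℓ1 term, whereas a blurred prediction is penalized by all three.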

B. Pseudo-CT Prior and Weight Map

The pseudo-CT estimate and variance image were generated through Monte Carlo Dropout [47] with the BCNN described above. The Monte Carlo Dropout inference is outlined in Fig. 1. A total of T = 243 Monte Carlo samples were drawn to generate a pseudo-CT estimate and a variance map

$$\bar{y}(x) = \frac{1}{T}\sum_{t=1}^{T} \hat{f}_t(x) \qquad (2)$$

$$s^2(x) = \frac{1}{T}\sum_{t=1}^{T} \left(\hat{f}_t(x) - \bar{y}(x)\right)^2 \qquad (3)$$

where $\hat{f}_t$ is a sample of the BCNN with Dropout, $x$ is the input Dixon MRI, and $T$ is the number of Monte Carlo samples. Inference took approximately 40 min per patient on eight NVIDIA K80 graphics processing units. A detailed description of the sources of uncertainties and variations is included in the supplementary material.
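The mean and variance maps amount to running T stochastic forward passes with dropout left active and taking voxelwise moments. The sketch below assumes a generic `sample_fn(x, rng)` that performs one such pass and uses a 1/T variance normalization; `toy_dropout_model` is a hypothetical one-layer stand-in for the BCNN, included only so that the example runs.

```python
import numpy as np


def mc_dropout_moments(sample_fn, x, n_samples, rng):
    """Voxelwise mean and variance over n_samples stochastic forward passes."""
    samples = np.stack([sample_fn(x, rng) for _ in range(n_samples)], axis=0)
    mean = samples.mean(axis=0)
    var = samples.var(axis=0)  # 1/T normalisation (assumed)
    return mean, var


def toy_dropout_model(x, rng, p=0.5, weight=2.0):
    """Hypothetical stand-in for the BCNN: one scaled 'layer' with inverted dropout."""
    keep = rng.random(x.shape) >= p          # Bernoulli keep-mask, P(keep) = 1 - p
    return weight * x * keep / (1.0 - p)     # rescale so E[output] = weight * x


rng = np.random.default_rng(0)
x = np.ones((4, 4, 2))                       # stand-in for the Dixon input
mean, var = mc_dropout_moments(toy_dropout_model, x, n_samples=243, rng=rng)
print(mean.mean(), var.mean())               # close to 2.0 and 4.0
```

In this toy model each pass outputs either 0 or weight·x/(1 − p), so the voxelwise mean approaches weight·x and the variance approaches weight²·x²·p/(1 − p); a deterministic model would give zero variance everywhere.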

The pseudo-CT estimate was converted to a μ-map with a bilinear model [1], and the variance map was converted to a weight map ranging from 0.0 to 1.0 with the following empirical transformation:

| (4) |

which is applied to the variance at each voxel position. The sigmoidal transformation was calibrated by inspecting the resulting variance maps. It was designed such that the transition band of the sigmoid covers the range of variances in the body and saturates at the uncertainty values of bowel air and metal artifact regions. With the chosen constants, the transition band of the sigmoid corresponds to variances of 0 to ∼100 000 HU² (standard deviations of 0 to ∼300 HU). The weight map was then linearly scaled to a range of 1 × 10³ to 5 × 10⁶ to obtain the voxelwise regularization weights. Low values correspond to regions with high uncertainty; thus, the estimates for these regions are dominated by the emission data. Additional information about the empirical transformation is provided in the supplementary material.
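The two conversions in this subsection can be sketched as follows. Both sets of constants are assumptions: the bilinear HU-to-μ parameters are illustrative 120-kVp values of the kind tabulated for such models (they should be checked against [1] for the actual protocol), and the sigmoid `center` and `width` are hypothetical values chosen only so that the transition band spans roughly 0 to 10⁵ HU²; the calibrated constants of (4) are not reproduced here. The sigmoid decreases with variance, so confident voxels receive the largest prior weight.

```python
import numpy as np

# ASSUMED bilinear HU -> mu(511 keV) parameters (illustrative 120 kVp values);
# verify against [1] for the actual CT protocol before use.
SOFT_SLOPE = 9.6e-5       # cm^-1 per (HU + 1000), air-to-soft-tissue segment
BONE_SLOPE = 5.10e-5      # cm^-1 per (HU + 1000), soft-tissue-to-bone segment
BONE_INTERCEPT = 4.71e-2  # cm^-1
BREAK_HU = 47.0           # break point between the two segments (HU)


def hu_to_mu(hu):
    """Bilinear conversion of (pseudo-)CT HU to 511-keV attenuation (cm^-1)."""
    hu = np.asarray(hu, dtype=float)
    soft = SOFT_SLOPE * (hu + 1000.0)
    bone = BONE_SLOPE * (hu + 1000.0) + BONE_INTERCEPT
    return np.clip(np.where(hu <= BREAK_HU, soft, bone), 0.0, None)


def variance_to_beta(var_hu2, center=5e4, width=1e4, beta_min=1e3, beta_max=5e6):
    """Decreasing sigmoid of the variance (HU^2), rescaled to [beta_min, beta_max].

    `center` and `width` are HYPOTHETICAL constants, not the calibrated values of (4).
    Low variance -> weight near 1 -> strong prior; high variance -> emission data dominate.
    """
    var_hu2 = np.asarray(var_hu2, dtype=float)
    z = np.clip((var_hu2 - center) / width, -500.0, 500.0)  # avoid exp overflow
    weight = 1.0 / (1.0 + np.exp(z))                         # weight map in (0, 1)
    return beta_min + (beta_max - beta_min) * weight         # linear rescaling


print(hu_to_mu([-1000.0, 0.0, 1000.0]))
print(variance_to_beta([0.0, 2.5e4, 1e5, 1e6]))
```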

The weight map was additionally processed to set weights outside the body (e.g., air voxels) to 0.0 so that these voxels were not included in the MLAA reconstruction. A body mask was generated by thresholding the pseudo-CT estimate (> −400 HU). The initial body mask was morphologically eroded with a sphere of 1-voxel radius. Holes in the body were then filled with the imfill function (Image Processing Toolbox, MATLAB 2014b) in each axial slice. Finally, the body masks were refined by removing the arms as in our previous work [14].
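A minimal SciPy version of these masking steps (threshold, erosion with a radius-1 structuring element, slice-wise hole filling) is sketched below as a stand-in for the MATLAB workflow; `scipy.ndimage.binary_fill_holes` plays the role of `imfill(BW, 'holes')` on each 2-D slice. The sketch assumes that the last array axis is the axial (slice) direction and omits the arm-removal step of [14]; the function name `body_mask` is illustrative.

```python
import numpy as np
from scipy import ndimage


def body_mask(pseudo_ct_hu, threshold_hu=-400.0):
    """Threshold, erode with a radius-1 structuring element, fill holes per axial slice.

    Assumes the last axis is the axial (slice) direction; arm removal is omitted.
    """
    mask = pseudo_ct_hu > threshold_hu
    ball = ndimage.generate_binary_structure(3, 1)  # 6-connected radius-1 "sphere"
    # Note: the default border_value=0 also erodes voxels on the volume boundary.
    mask = ndimage.binary_erosion(mask, structure=ball)
    filled = np.empty_like(mask)
    for k in range(mask.shape[-1]):
        filled[..., k] = ndimage.binary_fill_holes(mask[..., k])
    return filled


# Usage: zero the prior weights outside the body (1e6 is a stand-in weight).
pseudo_ct = np.full((20, 20, 5), -1000.0)
pseudo_ct[4:16, 4:16, :] = 0.0        # "body"
pseudo_ct[8:12, 8:12, :] = -1000.0    # internal air pocket, filled by the mask
mask = body_mask(pseudo_ct)
beta = np.where(mask, 1e6, 0.0)
```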

C. Uncertainty Estimation and Pseudo-CT Prior for Robust Maximum-Likelihood Estimation of Activity and Attenuation

UpCT-MLAA is a combination of the outputs of the BCNN and regularized MLAA. The process is depicted in Fig. 1. MRI and CT images of patients without metal implants were used to train the BCNN.

We explicitly trained the network only on patients without metal implants to force the BCNN to extrapolate on the voxel regions containing metal implants (i.e., "out-of-distribution" features) and thereby maximize the uncertainty in these regions.

Thus, a high variance emerged in implant regions compared with a low variance in normal anatomy (0 to ∼2.5 × 10⁴ HU²), as can be seen in Fig. 1. The μ-map estimate and the weight map were then provided to the regularized MLAA [37] to perform the PET reconstruction (five iterations with 28 subsets; each iteration consists of one time-of-flight ordered subsets expectation maximization iteration with a point spread function model (TOF-OSEM) and five ordered subsets transmission (OSTR) iterations, as described above). Specifically, the MR-based regularization term in MLAA is

| (5) |

where the sum runs over every voxel in the volume, the prior attenuation coefficient of each voxel is determined from the mean pseudo-CT image, and the voxelwise regularization weight is determined from the variance image through the weight map transformation. The formulation in (5) is slightly different from that in [37, Sec. 2.3.2] but has the same effect.
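To show how the voxelwise weights modulate the prior, the sketch below assumes a common weighted quadratic form, 0.5·Σ_j β_j (μ_j − μ_j^prior)², which may differ from the exact expression in (5). `penalized_voxel_update` is a toy illustration, not the OSTR update used in regularized MLAA: it replaces the emission log-likelihood with a hypothetical separable quadratic of curvature `c` centred on an emission-only estimate, so its minimizer is a precision-weighted average of the two estimates.

```python
import numpy as np


def mr_prior_penalty(mu, mu_prior, beta):
    """ASSUMED weighted quadratic prior: 0.5 * sum_j beta_j * (mu_j - mu_prior_j)**2."""
    diff = np.asarray(mu, dtype=float) - np.asarray(mu_prior, dtype=float)
    return 0.5 * float(np.sum(np.asarray(beta, dtype=float) * diff ** 2))


def mr_prior_gradient(mu, mu_prior, beta):
    """Gradient of mr_prior_penalty with respect to mu."""
    diff = np.asarray(mu, dtype=float) - np.asarray(mu_prior, dtype=float)
    return np.asarray(beta, dtype=float) * diff


def penalized_voxel_update(mu_data, curvature, mu_prior, beta):
    """Toy minimiser of 0.5*c*(mu - mu_data)**2 + 0.5*beta*(mu - mu_prior)**2.

    The emission term is a HYPOTHETICAL quadratic surrogate centred on an
    emission-only estimate mu_data with curvature c; this is not OSTR.
    """
    return (curvature * mu_data + beta * mu_prior) / (curvature + beta)


# Confident pseudo-CT voxel (large beta): the result stays near the prior.
print(penalized_voxel_update(mu_data=0.11, curvature=1.0, mu_prior=0.096, beta=1e3))
# Metal voxel predicted as air (small beta): the result follows the emission data.
print(penalized_voxel_update(mu_data=0.40, curvature=1.0, mu_prior=0.0, beta=1e-3))
```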

III. Patient Studies

The study was approved by the local Institutional Review Board (IRB). Patients who were imaged with PSMA-11 signed a written informed consent form while the IRB waived the requirement for informed consent for FDG and DOTATATE studies.

Patients with pelvic lesions were scanned using an integrated 3 Tesla time-of-flight PET/MRI system [53] (SIGNA PET/MR, GE Healthcare, Chicago, IL, USA). The patient population consisted of 29 patients (age 58.7 ± 13.9 years; 16 males, 13 females): ten patients without implants were used for model training, 16 patients without implants were used for evaluation with a CT reference, and three patients with implants were used for evaluation in the presence of metal artifacts.

A. PET/MRI Acquisition.

The PET acquisition on the evaluation set was performed with different radiotracers: 18F-FDG (11 patients), 68Ga-PSMA-11 (seven patients), and 68Ga-DOTATATE (one patient). The PET scanner had a 600-mm transaxial field-of-view (FOV) and a 25-cm axial FOV, with a time-of-flight timing resolution of approximately 400 ps. The imaging protocol included a six-bed-position whole-body PET/MRI and a dedicated pelvic PET/MRI acquisition. The PET data were acquired for 15–20 min during the dedicated pelvis acquisition, during which clinical MRI sequences and the following magnetic resonance-based attenuation correction (MRAC) sequences were acquired: Dixon (FOV = 500 × 500 × 312 mm, resolution = 1.95 × 1.95 mm, slice thickness = 5.2 mm, slice spacing = 2.6 mm, and scan time = 18 s) and ZTE MR (cubical FOV = 340 × 340 × 340 mm, isotropic resolution = 2 × 2 × 2 mm, 1.36-ms readout duration, FA = 0.6°, 4-μs hard RF pulse, and scan time = 123 s).

B. CT Imaging.

Helical CT images of the patients were acquired separately on different machines (GE Discovery STE, GE Discovery ST, Siemens Biograph 16, Siemens Biograph 6, Philips Gemini TF ToF 16, Philips Gemini TF ToF 64, Siemens SOMATOM Definition AS) and were co-registered to the MR images using the method outlined below. Multiple CT protocols were used with varying parameter settings (110–130 kVp, 30–494 mA, rotation time = 0.5 s, pitch = 0.6–1.375, 11.5–55 mm/rotation, axial FOV = 500–700 mm, slice thickness = 3–5 mm, and matrix size = 512×512).

Preprocessing consisted of filling in bowel air with soft-tissue HU values and copying the arms from the Dixon-derived pseudo-CT, because of the differences in bowel air distribution and because the CT scan was acquired with the arms up, respectively [14].

MRI and CT image pairs were co-registered using the ANTS [54] registration package and the SyN diffeomorphic deformation model with combined mutual information and cross-correlation metrics [14], [21], [50].

C. PET Reconstructions

In addition to UpCT-MLAA, additional PET reconstructions were performed for comparison.

For each patient without metal implants, 1) UpCT-MLAA was performed, and TOF-OSEM [55] (transaxial FOV = 600 mm, two iterations, 28 subsets, matrix size = 192 × 192, and 89 slices of 2.78-mm thickness) was performed with three μ-maps for comparison: 2) ZeDD-CTAC; 3) the initial AC estimate of the BCNN (BpCT-AC); and 4) CTAC. BpCT-AC serves as a surrogate for ZeDD-CTAC that does not require a specialized MR sequence.

For each patient with metal implants, UpCT-MLAA was performed along with: 1) naive MLAA; 2)–4) regularized MLAA with increasing regularization parameters (constant over the volume); 5) TOF-OSEM with BpCT-AC; and 6) TOF-OSEM with CTAC, for comparison.

D. Data Analysis.

Image error analysis and lesion-based analysis were performed for patients without metal implants: the average and standard deviation of the error, the mean absolute error (MAE), and the root-mean-squared error (RMSE) were computed over voxels that met minimum signal amplitude and/or signal-to-noise criteria [21]. Global HU and PET SUV comparisons were performed only in voxels with amplitudes > −950 HU in the ground-truth CT to exclude air, and a similar threshold of > 0.01 cm⁻¹ in the CTAC was used for the comparison of AC maps. Bone and soft-tissue lesions were identified by a board-certified radiologist. Bone lesions were defined as lesions inside bone or with lesion boundaries within 10 mm of bone [56]. A Wilcoxon signed-rank test was used to compare the biases of individual lesions relative to CTAC.
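The voxelwise summary statistics above can be computed as in the following sketch. Using the population standard deviation makes RMSE² = mean² + SD², which is consistent with reporting results as RMSE (mean ± SD). The lesion comparison uses `scipy.stats.wilcoxon` for the paired signed-rank test; pairing the per-lesion percent errors of two methods, as in the hypothetical helper `compare_lesion_biases`, is an assumed setup for illustration.

```python
import numpy as np
from scipy.stats import wilcoxon


def error_summary(estimate, reference, mask):
    """Mean, SD, MAE and RMSE of (estimate - reference) over voxels in mask."""
    err = (np.asarray(estimate, dtype=float) - np.asarray(reference, dtype=float))[mask]
    return {
        "mean": err.mean(),
        "sd": err.std(),                     # population SD (ddof=0)
        "mae": np.abs(err).mean(),
        "rmse": np.sqrt(np.mean(err ** 2)),  # rmse**2 == mean**2 + sd**2
    }


def compare_lesion_biases(pct_err_a, pct_err_b):
    """Paired Wilcoxon signed-rank test on per-lesion percent errors of two methods."""
    return wilcoxon(pct_err_a, pct_err_b).pvalue


# Usage: only voxels above -950 HU in the reference CT are analysed.
ct = np.array([-1000.0, 0.0, 40.0, 900.0])
pseudo_ct = np.array([-800.0, 10.0, 20.0, 930.0])
print(error_summary(pseudo_ct, ct, mask=ct > -950.0))
```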

In the cases where a metal implant was present, we qualitatively examined the resulting AC maps of the different reconstructions and quantitatively compared the PET images with the reference CTAC PET. High-uptake lesions and lesion-like objects were identified on the PET images reconstructed with UpCT-MLAA and separated into two categories: 1) in-plane with the metal implant and 2) out-plane of the metal implant. A Wilcoxon signed-rank test was used to compare the SUV and values between the different reconstruction methods and CTAC PET.

IV. Results

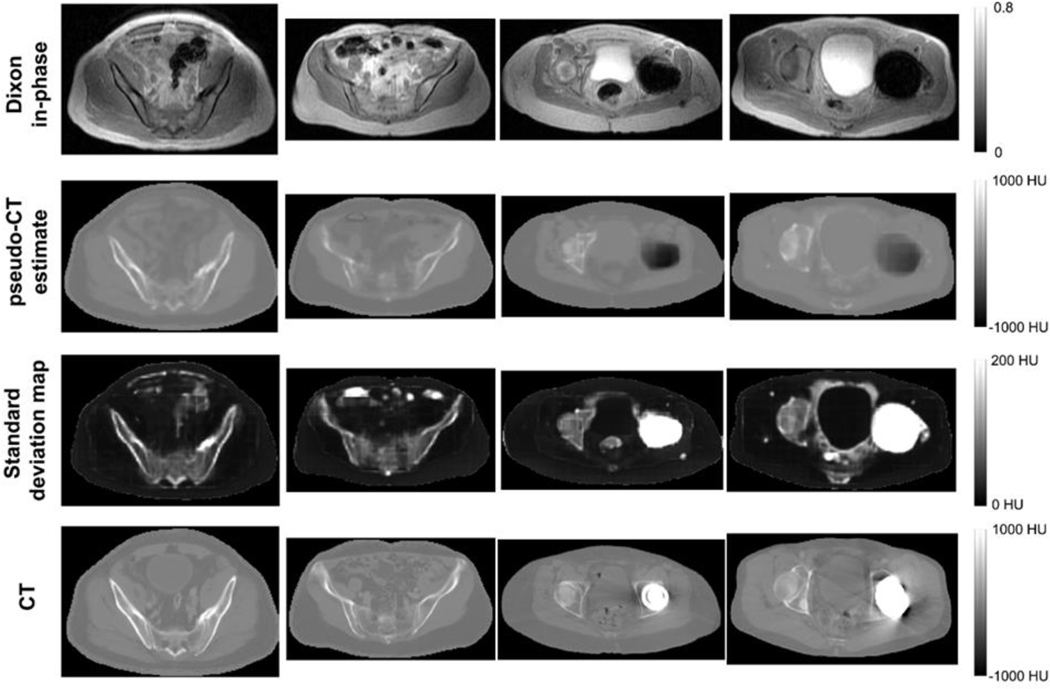

A. Monte Carlo Dropout

Representative outputs of the BCNN with Monte Carlo Dropout are shown in Fig. 3. The same mask used for the weight maps was used to remove voxels outside the body. For patients without implants, the pseudo-CT images visually resemble the ground-truth CT images. In patients with implants, however, the metal artifact region in the MRI was assigned air HU values. Nonetheless, the associated standard deviation maps highlighted image structures for which the network had high predictive uncertainty, the most important of which are air pockets and the metal implant. The BCNN highlighted these regions and structures in the standard deviation image without being explicitly trained to do so.

Fig. 3.

Representative intermediate image outputs of the BCNN with Monte Carlo Dropout compared to the reference CT images for patients without metal implants (columns 1 and 2) and patients with metal implants (columns 3 and 4). The voxelwise standard deviation map is shown instead of variance for better visual depiction. Regions with high standard deviation correspond to bone, bowel air, skin boundary, implants, blood vessels, and regions with likely modeling error (e.g., around the bladder in the standard deviation map in the rightmost column.)

An additional example of the uncertainty estimation is provided in Fig. 1 of the supplementary material. The input MRI had motion artifacts due to breathing and arm truncation due to inhomogeneity at the edge of the FOV. As with the metal implants, the BCNN highlighted the motion artifact region and the arm truncation in the variance image without being explicitly trained to do so.

B. Patients Without Implants

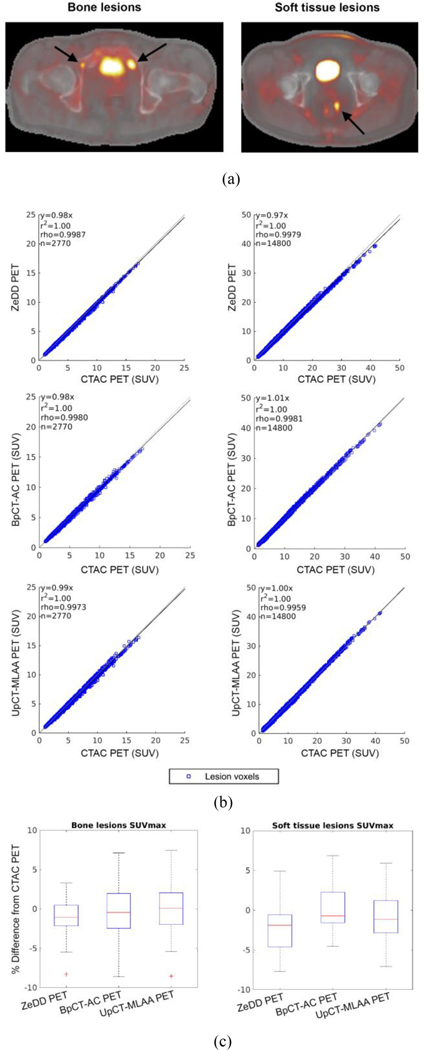

The PET reconstruction results for the patients without implants are summarized in Fig. 4. The RMSE is reported together with the mean and standard deviation of the error in the form RMSE (mean ± standard deviation). Additional results for the pseudo-CT, AC maps, and PET data are provided in Figs. 2–5 of the supplementary material.

Fig. 4.

(a) Representative images of bone and soft-tissue lesions for patients without implants [reproduced from (20)]. (b) Scatter plots of SUV in every lesion voxel. (c) Box plots of the in each lesion. BpCT-AC and UpCT-MLAA-AC are nearly equivalent to ZeDD-CTAC in patients without implants when compared with CTAC.

Fig. 5.

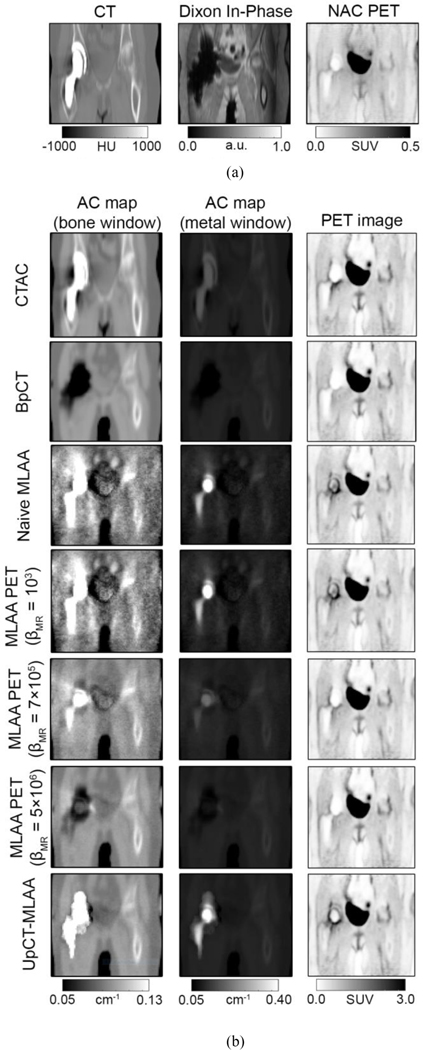

Representative images from metal implant patient #3 imaged with 18F-FDG. (a) CT, Dixon in-phase, and NAC PET images. (b, first and second column) AC maps and (b, third column) associated PET reconstructions. The AC maps are shown in two different window levels to highlight (b, first column) bone and soft tissue and (b, second column) the metal implant.

1). Pseudo-CT Results:

The total RMSE of the pseudo-CT compared with gold-standard CT across all volumes was 98 HU (−13 ± 97 HU) for ZeDD-CT and 95 HU (−6.5 ± 94 HU) for BpCT. BpCT is the same pseudo-CT image used in UpCT-MLAA.

2). Attenuation Coefficient Map Results:

The total RMSE of the AC maps compared with gold-standard CTAC across all volumes was 3.1 × 10⁻³ cm⁻¹ (−5.0 × 10⁻⁴ ± 3.1 × 10⁻³ cm⁻¹) for ZeDD-CTAC, 3.2 × 10⁻³ cm⁻¹ (−3.8 × 10⁻⁵ ± 3.2 × 10⁻³ cm⁻¹) for BpCT-AC, and 3.5 × 10⁻³ cm⁻¹ (−2.6 × 10⁻⁵ ± 3.5 × 10⁻³ cm⁻¹) for UpCT-MLAA-AC.

3). PET Images:

The total RMSE of the PET images compared with gold-standard CTAC PET across all volumes was 0.023 SUV (−0.005 ± 0.023 SUV) for ZeDD PET, 0.022 SUV (−8.1 × 10⁻⁵ ± 0.022 SUV) for BpCT-AC PET, and 0.027 SUV (1.5 × 10⁻⁴ ± 0.027 SUV) for UpCT-MLAA PET.

4). Lesion Uptake and :

The results of the lesion analysis for patients without implants are shown in Fig. 4. There were 30 bone lesions and 60 soft-tissue lesions across the 16 patient datasets. The RMSEs with respect to CTAC PET SUV and are summarized in Table I. For of bone lesions, no significant difference was found between ZeDD PET and BpCT-AC PET (p = 0.116), while ZeDD PET and UpCT-MLAA PET were significantly different (p = 0.037). For of soft-tissue lesions, ZeDD PET and BpCT-AC PET were significantly different (p < 0.001), while no significant difference was found between ZeDD PET and UpCT-MLAA PET (p = 0.16).

TABLE I.

Lesion SUV Errors Over the Volume Compared to CTAC in Patients Without Implants

| Lesion type | Method | SUV RMSE (mean ± SD) | RMSE (mean ± SD) |
|---|---|---|---|
| Bone | ZeDD-CTAC | 2.6% (−1.3 ± 2.3%) | 2.6% (−1.3 ± 2.3%) |
| Bone | BpCT-AC | 3.2% (−0.9 ± 3.1%) | 3.1% (−0.3 ± 3.1%) |
| Bone | UpCT-MLAA | 3.6% (−0.3 ± 3.6%) | 3.4% (0.03 ± 3.4%) |
| Soft tissue | ZeDD-CTAC | 4.4% (−2.9 ± 3.3%) | 4.1% (−2.3 ± 3.4%) |
| Soft tissue | BpCT-AC | 3.5% (0.01 ± 3.5%) | 3.4% (−0.1 ± 3.4%) |
| Soft tissue | UpCT-MLAA | 4.6% (−1.1 ± 4.5%) | 4.8% (−1.6 ± 4.5%) |

C. Patients With Metal Implants

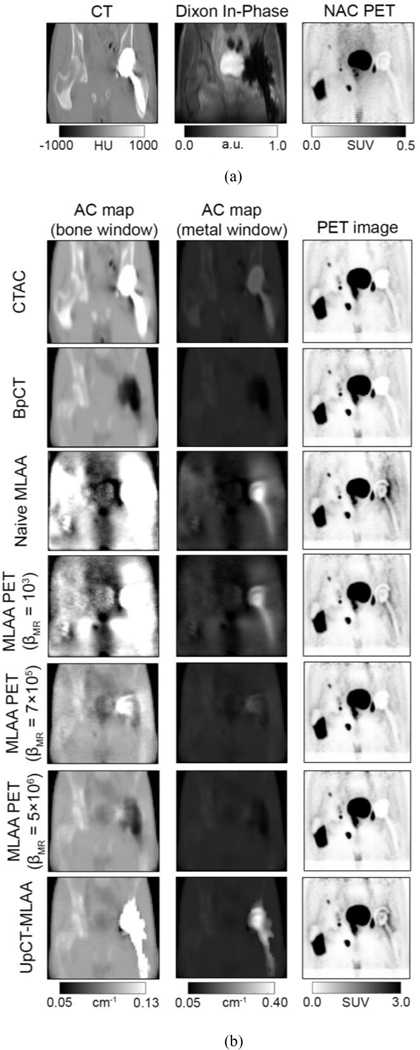

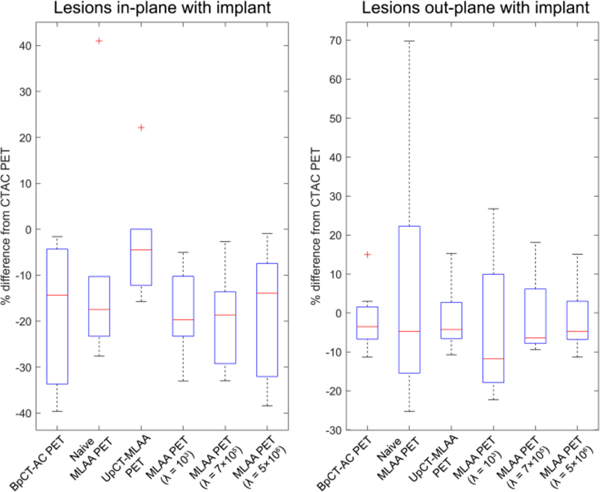

Figs. 5 and 6 show the AC maps generated with the different reconstruction methods and the associated PET image reconstructions for two different radiotracers (18F-FDG and 68Ga-PSMA), and Fig. 7 summarizes the results. Additional results for the pseudo-CT, AC maps, and PET images are provided in Figs. 6–11 of the supplementary material.

Fig. 6.

Representative images from metal implant patient #1 imaged with 68Ga-PSMA. (a) CT, Dixon in-phase, and NAC PET images. (b, first and second column) AC maps and (b, third column) associated PET reconstructions. The AC maps are shown in two different window levels to highlight (b, first column) bone and soft tissue and (b, second column) the metal implant.

Fig. 7.

Box plot summarizing the results comparing to CTAC PET for patients with implants. The red crosses denote outliers.

1). Metal Implant Recovery:

Figs. 5(b) (1st and 2nd columns) and 6(b) (1st and 2nd columns) show the AC map estimation results.

BpCT-AC filled in the metal implant region with air, since the metal artifact in MRI appears as a signal void. Although naive MLAA recovered the metal implant, the AC map was noisy and anatomical structures were difficult to depict. Adding regularization (increasing the regularization parameter) reduced the noise; however, over-regularization eliminated the metal implant. The radiotracer also influenced reconstruction performance: with low regularization, the MLAA-based methods performed worse with 68Ga-PSMA than with 18F-FDG. In contrast, UpCT-MLAA-AC recovered the metal implant while maintaining a high-SNR depiction of anatomical structures outside the implant region for both radiotracers. The high attenuation coefficients were confined to the regions where high variance was measured (equivalently, where the metal artifact was present on the BpCT AC maps).

2). PET Image Reconstruction:

Figs. 5(b) (3rd column) and 6(b) (3rd column) show the PET image reconstruction results.

Qualitatively, the MLAA-based methods (UpCT-MLAA and standard MLAA) show uptake around the implant, whereas BpCT-AC PET and CTAC PET show no uptake in the implant region. Compared with the NAC PET, the MLAA-based methods better match what is depicted within the implant region. Quantitatively, Table II summarizes the SUV results for voxels in-plane and out-plane of the metal implant.

3). Quantification:

Fig. 7 shows the comparisons of of lesions in-plane and out-plane of the metal implant, and Tables II and III list the RMSE values for SUV and . There were six lesions in-plane and 15 lesions out-plane of the metal implants across the three patients with implants. Only UpCT-MLAA provided relatively low quantification errors for lesions both in-plane and out-plane of the metal implant.

TABLE II.

SUV Errors Over the Volume Compared to CTAC

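| Region | Method | SUV RMSE | p-value |
|---|---|---|---|
| In-plane with metal implant | BpCT-AC | 0.10 SUV | < 0.001 |
| In-plane with metal implant | Naive MLAA | 0.19 SUV | < 0.001 |
| In-plane with metal implant | Regularized MLAA PET (light regularization) | 0.16 SUV | < 0.001 |
| In-plane with metal implant | Regularized MLAA PET (moderate regularization) | 0.09 SUV | < 0.001 |
| In-plane with metal implant | Regularized MLAA PET (strong regularization) | 0.09 SUV | < 0.001 |
| In-plane with metal implant | UpCT-MLAA | 0.12 SUV | < 0.001 |
| Out-plane with metal implant | BpCT-AC | 0.086 SUV | < 0.001 |
| Out-plane with metal implant | Naive MLAA | 0.14 SUV | < 0.001 |
| Out-plane with metal implant | Regularized MLAA PET (light regularization) | 0.13 SUV | < 0.001 |
| Out-plane with metal implant | Regularized MLAA PET (moderate regularization) | 0.09 SUV | < 0.001 |
| Out-plane with metal implant | Regularized MLAA PET (strong regularization) | 0.09 SUV | < 0.001 |
| Out-plane with metal implant | UpCT-MLAA | 0.086 SUV | < 0.001 |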

TABLE III.

Lesion Percent Errors

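| Region | Method | % RMSE | p-value |
|---|---|---|---|
| In-plane with metal implant (n = 6) | BpCT-AC | 24.2% | 0.03 |
| In-plane with metal implant (n = 6) | Naive MLAA | 26.9% | 0.31 |
| In-plane with metal implant (n = 6) | Regularized MLAA PET (light regularization) | 21.0% | 0.03 |
| In-plane with metal implant (n = 6) | Regularized MLAA PET (moderate regularization) | 22.2% | 0.03 |
| In-plane with metal implant (n = 6) | Regularized MLAA PET (strong regularization) | 23.1% | 0.03 |
| In-plane with metal implant (n = 6) | UpCT-MLAA | 13.6% | 0.44 |
| Out-plane with metal implant (n = 15) | BpCT-AC | 6.9% | 0.07 |
| Out-plane with metal implant (n = 15) | Naive MLAA | 27.9% | 0.72 |
| Out-plane with metal implant (n = 15) | Regularized MLAA PET (light regularization) | 18.7% | 0.28 |
| Out-plane with metal implant (n = 15) | Regularized MLAA PET (moderate regularization) | 9.6% | 0.33 |
| Out-plane with metal implant (n = 15) | Regularized MLAA PET (strong regularization) | 7.4% | 0.21 |
| Out-plane with metal implant (n = 15) | UpCT-MLAA | 7.1% | 0.19 |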

For lesions in-plane of the metal implant, BpCT-AC PET had a large underestimation of , and naive MLAA PET had a better mean estimation of but a large standard deviation. Adding light regularization to MLAA improved the RMSE by decreasing the standard deviation at the cost of an increased mean error. Further increasing the regularization increased the RMSE but reduced the bias, with an increased standard deviation. UpCT-MLAA PET had the best agreement with CTAC PET. Only for naive MLAA and UpCT-MLAA could no significant difference from CTAC be found (p > 0.05).

For lesions out-plane of the metal implant, the trend was reversed for BpCT-AC PET and the MLAA methods: BpCT-AC PET had the best agreement with CTAC PET, and the MLAA methods showed decreasing RMSE with increasing regularization. UpCT-MLAA had the second-best agreement with CTAC PET. No significant difference from CTAC was found for any method (p > 0.05).

V. DISCUSSION

This article presents the use of a Bayesian deep convolutional neural network to enhance MLAA by providing an accurate pseudo-CT prior alongside predictive uncertainty estimates that automatically modulate the strength of the priors (UpCT-MLAA). The method was evaluated in patients with pelvic lesions, both with and without metal implants, and its performance for metal implant recovery and for uptake estimation in pelvic lesions of patients with metal implants was characterized. This is the first work to demonstrate an MLAA algorithm for PET/MRI that recovers metal implants while also accurately depicting detailed anatomic structures in the pelvis. It is also the first work to synergistically combine supervised Bayesian deep learning and MLAA in a coherent framework for simultaneous PET/MRI reconstruction in the pelvis. UpCT-MLAA demonstrated quantitative uptake estimation of pelvic lesions similar to that of a state-of-the-art attenuation correction method (ZeDD-CT), while additionally enabling reasonable PET reconstruction in the presence of metal implants and removing the need for a specialized MR pulse sequence.

One of the major advantages of MLAA is that it uses the PET emission data to estimate the attenuation coefficients alongside the emission activity. This gives MLAA the capability to capture the actual imaging conditions that the PET photons undergo, which is especially important in simultaneous PET/MRI, where true ground-truth attenuation maps cannot be derived. Currently, the most successful methods for obtaining attenuation maps are deep learning-based [20]–[28]. However, these are inherently supervised, model-based techniques with limited capacity to capture imaging conditions that were not present in the training set or that cannot be reliably modeled, such as the movement and mismatch of bowel air and the presence of metal artifacts. Since MLAA derives the attenuation maps from the PET emission data, it can capture actual imaging conditions that supervised model-based techniques cannot. Furthermore, this eliminates the need for specialized MR pulse sequences (such as ZTE for bone), since bone attenuation would be estimated by MLAA instead. This would allow for more accurate and precise uptake quantification in simultaneous PET/MRI.

To the best of our knowledge, only a few other methods combine MLAA with deep learning [39]–[42]. These methods apply deep learning to denoise an MLAA reconstruction by training a deep convolutional neural network to produce a CTAC-equivalent attenuation map from the MLAA estimates of activity and attenuation. They therefore require ground-truth CTAC maps for training and share the limitations of other supervised deep learning and model-based methods. Unlike these methods, UpCT-MLAA preserves the underlying MLAA reconstruction while still providing a comparable reduction of crosstalk artifacts and noise.

Our approach differs from these approaches in that it leverages supervised Bayesian deep learning uncertainty estimation to detect rare and previously unseen structures during pseudo-CT estimation. Only a few previous works have estimated uncertainty in pseudo-CT generation [57], [58]. Klages et al. [57] used a standard deep learning approach and extracted patch uncertainty, but did not assess their method on cases with artifacts or implants. Hemsley et al. [58] used a Bayesian deep learning approach to estimate total predictive uncertainty and, as in our work, observed high uncertainty in metal artifacts. Both approaches were intended for radiotherapy planning; to our knowledge, our work is the first to apply uncertainty estimation to PET/MRI attenuation correction. We demonstrated how likely μ-map errors can be detected and then resolved using the PET emission data through MLAA.
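
Total predictive uncertainty of this kind is commonly computed following Kendall and Gal [45]: with Monte Carlo dropout [47], a network that predicts both a mean and a variance is run T times with dropout active; the aleatoric part is the average of the predicted variances, and the epistemic part is the variance of the predicted means. The NumPy sketch below assumes the T passes have already been stacked along the first axis; the function and array names are illustrative and do not come from the authors' implementation.

```python
import numpy as np


def predictive_uncertainty(sample_means, sample_vars):
    """Summarize T Monte Carlo dropout passes of a mean/variance regressor.

    sample_means: shape (T, ...), predicted pseudo-CT from each stochastic pass
    sample_vars:  shape (T, ...), predicted (aleatoric) variance from each pass
    Returns the predictive mean, aleatoric, epistemic, and total variance.
    """
    mean = sample_means.mean(axis=0)
    aleatoric = sample_vars.mean(axis=0)       # average predicted data noise
    epistemic = sample_means.var(axis=0)       # spread of the sampled means
    return mean, aleatoric, epistemic, aleatoric + epistemic


# Hypothetical stack of T = 3 passes for a 2-voxel image (HU)
means = np.array([[40.0, -900.0], [42.0, -300.0], [38.0, 200.0]])
variances = np.array([[25.0, 400.0], [25.0, 400.0], [25.0, 400.0]])
mean, alea, epi, total = predictive_uncertainty(means, variances)
print(epi)  # the second voxel, where the passes disagree, has a large epistemic variance
```

In this framing, an out-of-distribution region such as a metal artifact is expected to show up mainly through disagreement between passes (the epistemic term).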

High uncertainty was present in several distinct types of regions. Metal artifact regions had high uncertainty because they were explicitly excluded from the training process, i.e., they are out-of-distribution structures. Air pockets had high uncertainty, likely because of the inconsistent correspondence of air between MRI and CT, i.e., intrinsic dataset errors. Other image artifacts (such as motion due to breathing) had high uncertainty, likely because these features occur rarely in the training dataset and are inconsistent with the corresponding CT images. Bone had high uncertainty because there is practically no bone signal in Dixon MRI. The CNN therefore likely learned to infer bone values from the surrounding structures, and the variance image reflects the intrinsic uncertainty and limitations of estimating bone HU values from Dixon MRI. Notably, all of these regions were assigned high uncertainty without the network being explicitly trained to identify them.

In the evaluation of patients without implants, we demonstrated that BpCT was a sufficient surrogate for ZeDD-CT for attenuation correction across all lesion types: BpCT provided comparable SUV estimation in bone lesions and improved SUV estimation in soft-tissue lesions. However, BpCT did not accurately estimate bone HU values, which resulted in an average underestimation of bone lesion SUV (−0.9%). This average underestimation was reduced with UpCT-MLAA (−0.3%). Although the mean error improved, the RMSE of UpCT-MLAA was higher than that of BpCT-AC (3.6% versus 3.2%, respectively) because of an increase in standard deviation (3.6% versus 3.1%, respectively). This trend was more apparent for soft-tissue lesions, for which the RMSE, mean error, and standard deviation were all worse for UpCT-MLAA than for BpCT-AC. Since the PET/MRI and CT were acquired in separate sessions, possibly months apart, there may have been substantial changes in tissue distribution between them. Because MLAA estimates attenuation from the emission data of the PET/MRI session, such changes could explain the higher errors of UpCT-MLAA relative to BpCT-AC when CTAC is used as the reference.
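
The three statistics quoted above are linked: when the standard deviation uses the population (1/N) normalization, RMSE² = (mean error)² + SD², so a lower mean error can coexist with a higher RMSE if the SD grows. A minimal check using the rounded bone-lesion values reported above (the per-lesion error array is hypothetical and only demonstrates the identity):

```python
import math

import numpy as np


def rmse_from_bias_and_sd(mean_error, sd):
    """RMSE implied by a mean error and a population (ddof=0) standard deviation."""
    return math.sqrt(mean_error**2 + sd**2)


# Bone-lesion SUV errors (%) reported above
print(round(rmse_from_bias_and_sd(-0.9, 3.1), 1))  # BpCT-AC: 3.2
print(round(rmse_from_bias_and_sd(-0.3, 3.6), 1))  # UpCT-MLAA: 3.6

# The identity holds exactly for any set of errors when SD uses ddof=0
errors = np.array([1.0, -2.0, 4.0, 0.5])           # hypothetical per-lesion errors (%)
direct = np.sqrt(np.mean(errors**2))
decomposed = rmse_from_bias_and_sd(errors.mean(), errors.std())
print(np.isclose(direct, decomposed))  # True
```

With a sample (ddof=1) standard deviation the relationship is only approximate, which matters little for the rounded values quoted here.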

In patients with metal implants, UpCT-MLAA was the most comparable to CTAC across all lesion types. Notably, BpCT-AC and the MLAA methods showed opposing trends in the PET results for lesions in-plane and out-of-plane with the metal implant. These differences likely arise from the data sources used for reconstruction: BpCT-AC estimates attenuation coefficients only from the MRI, whereas Naïve MLAA estimates them only from the PET emission data. The input MRI was affected by large metal artifacts that made the implant regions appear as large pockets of air; thus, BpCT-AC assigned attenuation coefficients of air to the metal artifact regions. For lesions in-plane with the implant, this led to a large bias due to the bulk error in attenuation coefficients and a large variance due to the wide range of attenuation coefficients with BpCT-AC; MLAA resolved both. For lesions out-of-plane with the implant, the opposite trend arose: the variance was large for MLAA because of noise in its attenuation coefficient estimates, whereas BpCT-AC avoided this because its attenuation coefficients were learned for normal anatomical structures unaffected by metal artifacts. Combining BpCT with MLAA through UpCT-MLAA resolved these disparities.
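
The bulk-error mechanism for in-plane lesions can be made concrete with the attenuation correction factor along a single line of response, ACF = exp(∫ μ dl). The sketch below is illustrative only: 0.096 cm⁻¹ is the approximate linear attenuation coefficient of water at 511 keV, whereas the 2 cm implant length and its attenuation coefficient of 0.4 cm⁻¹ are hypothetical placeholders, not values measured in this study.

```python
import numpy as np


def attenuation_correction_factor(mu, step_cm):
    """ACF = exp(sum(mu_i * dl)) along one line of response (mu in cm^-1)."""
    return float(np.exp(np.sum(mu) * step_cm))


step = 0.1                                     # cm per sample along the line of response
soft_tissue = np.full(200, 0.096)              # 20 cm of water-equivalent tissue at 511 keV
implant_true = np.full(20, 0.4)                # 2 cm implant, hypothetical mu value
implant_as_air = np.zeros(20)                  # air assigned where the MR artifact appears

true_lor = np.concatenate([soft_tissue, implant_true])
mr_lor = np.concatenate([soft_tissue, implant_as_air])

ratio = attenuation_correction_factor(mr_lor, step) / attenuation_correction_factor(true_lor, step)
print(f"{ratio:.3f}")  # 0.449, i.e., exp(-0.4 * 2.0)
```

Under these assumptions, assigning air to the implant underestimates the ACF for this line of response by more than half, so activity along lines of response that traverse the implant would be underestimated after correction.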

A major challenge in evaluating PET reconstructions in the presence of metal implants is that typical CT protocols for CTAC produce metal implant artifacts that may cause overestimation of uptake; CTAC therefore does not serve as a true reference. Because our method relies on time-of-flight MLAA, we expect it to produce a more accurate attenuation map and, therefore, a more accurate SUV map. This expectation is consistent with the lower SUV estimates of UpCT-MLAA compared with CTAC PET. However, a more rigorous evaluation would require an artifact-free reference, which could be obtained by applying metal artifact reduction techniques to the CT acquisition [43] or by acquiring transmission PET images [59].

Accurate co-registration of CT and MRI in the presence of metal implant artifacts was a limitation, since the artifacts manifest differently in the two modalities. Furthermore, the CT and MRI images were acquired in separate sessions. These issues could be mitigated by acquiring the images sequentially in a trimodality system [60].

Another limitation of this study was the small study population. A larger population would allow evaluation across a wider variety of implant configurations and radiotracers, as well as validation of the robustness of the attenuation correction strategy.

Finally, the performance of the algorithm could be further improved. In this study, we only sought to demonstrate the utility of uncertainty estimation with a Bayesian deep learning regime for attenuation correction in the presence of metal implants: that the anatomic structure is preserved and implants can be recovered while PET uptake estimation in pelvic lesions remains similar. Our proposed UpCT-MLAA was based on MLAA regularized with MR-based priors [37], which can be viewed as unimodal Gaussian priors. We speculate that it could be further improved by using Gaussian mixture priors for MLAA, as in [36]. The main challenge in combining these methods would be learning the Gaussian mixture model parameters from patients with implants. With additional tuning of the algorithm and optimization of the BCNN, UpCT-MLAA could potentially produce more accurate and precise attenuation coefficients across tissues and imaging conditions.
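
The difference between a unimodal Gaussian prior and a Gaussian mixture prior can be illustrated on a single voxel. In the sketch below, the class means, widths, and weights are hypothetical placeholders (they are not parameters from this study or from [36]); a metal-like attenuation value that a unimodal prior centered on soft tissue penalizes heavily is penalized only mildly by a mixture that includes a metal component.

```python
import numpy as np


def neg_log_gaussian(mu, mean, sd):
    """Voxel-wise negative log density of a 1-D Gaussian."""
    mu, mean, sd = (np.asarray(a, dtype=float) for a in (mu, mean, sd))
    return 0.5 * ((mu - mean) / sd) ** 2 + np.log(sd * np.sqrt(2.0 * np.pi))


def neg_log_gmm(mu, weights, means, sds):
    """Voxel-wise negative log density of a 1-D Gaussian mixture (log-sum-exp)."""
    mu = np.asarray(mu, dtype=float)[..., None]          # add a component axis
    log_comp = np.log(np.asarray(weights, dtype=float)) - neg_log_gaussian(mu, means, sds)
    peak = log_comp.max(axis=-1, keepdims=True)          # for numerical stability
    return -(peak[..., 0] + np.log(np.exp(log_comp - peak).sum(axis=-1)))


# Hypothetical classes (cm^-1): soft tissue, bone, metal
weights, means, sds = [0.80, 0.15, 0.05], [0.096, 0.15, 0.40], [0.01, 0.02, 0.05]

metal_like = 0.40
print(neg_log_gaussian(metal_like, 0.096, 0.01))     # unimodal prior: very large penalty
print(neg_log_gmm(metal_like, weights, means, sds))  # mixture prior: small penalty
```

The log-sum-exp form keeps the mixture penalty finite even when most components assign vanishingly small density to a voxel value.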

VI. Conclusion

We have developed and evaluated an algorithm that utilizes a Bayesian deep convolutional neural network that provides accurate pseudo-CT priors with uncertainty estimation to enhance MLAA PET reconstruction. The uncertainty estimation allows for the detection of “out-of-distribution” pseudo-CT estimates that MLAA can subsequently correct. We demonstrated quantitative accuracy in pelvic lesions and recovery of metal implants in pelvis PET/MRI.


ACKNOWLEDGMENT

The Titan X Pascal used was donated by the NVIDIA Corporation.

This work was supported in part by NIH/NCI under Grant R01CA212148; in part by NIH/NIAMS under Grant R01AR074492; in part by the UCSF Graduate Research Mentorship Fellowship award; and in part by GE Healthcare.

This work involved human subjects or animals in its research. Approval of all ethical and experimental procedures and protocols was granted by the UCSF Institutional Review Board (IRB #17-21852).

Footnotes

This article has supplementary material provided by the authors and color versions of one or more figures available at https://doi.org/10.1109/TRPMS.2021.3118325.

Contributor Information

Andrew P. Leynes, Department of Radiology and Biomedical Imaging, University of California at San Francisco, San Francisco, CA 94158 USA; UC Berkeley–UC San Francisco Joint Graduate Program in Bioengineering, University of California at Berkeley, Berkeley, CA 94720 USA.

Sangtae Ahn, Biology and Physics Department, GE Research, Niskayuna, NY 12309 USA.

Kristen A. Wangerin, PET/MR, GE Healthcare, Waukesha, WI 53188 USA.

Sandeep S. Kaushik, MR Applications Science Laboratory Europe, GE Healthcare, 80807 Munich, Germany; Department of Computer Science, Technical University of Munich, 80333 Munich, Germany; Department of Quantitative Biomedicine, University of Zurich, 8057 Zurich, Switzerland.

Florian Wiesinger, MR Applications Science Laboratory Europe, GE Healthcare, 80807 Munich, Germany.

Thomas A. Hope, Department of Radiology and Biomedical Imaging, University of California at San Francisco, San Francisco, CA, USA; Department of Radiology, San Francisco VA Medical Center, San Francisco, CA 94121 USA.

Peder E. Z. Larson, Department of Radiology and Biomedical Imaging, University of California at San Francisco, San Francisco, CA 94158 USA; UC Berkeley–UC San Francisco Joint Graduate Program in Bioengineering, University of California at Berkeley, Berkeley, CA 94720 USA.

REFERENCES

- [1] Kinahan PE, Hasegawa BH, and Beyer T, “X-ray-based attenuation correction for positron emission tomography/computed tomography scanners,” Semin. Nucl. Med, vol. 33, no. 3, pp. 166–179, Jul. 2003, doi: 10.1053/snuc.2003.127307.

- [2] Lee JS, “A review of deep-learning-based approaches for attenuation correction in positron emission tomography,” IEEE Trans. Radiat. Plasma Med. Sci, vol. 5, no. 2, pp. 160–184, Mar. 2021, doi: 10.1109/TRPMS.2020.3009269.

- [3] Hofmann M. et al., “MRI-based attenuation correction for whole-body PET/MRI: Quantitative evaluation of segmentation- and atlas-based methods,” J. Nucl. Med, vol. 52, no. 9, pp. 1392–1399, Sep. 2011, doi: 10.2967/jnumed.110.078949.

- [4] Wollenweber SD et al., “Evaluation of an atlas-based PET Head attenuation correction using PET/CT and MR patient data,” IEEE Trans. Nucl. Sci, vol. 60, no. 5, pp. 3383–3390, Oct. 2013, doi: 10.1109/TNS.2013.2273417.

- [5] Arabi H. and Zaidi H, “One registration multi-atlas-based pseudo-CT generation for attenuation correction in PET/MRI,” Eur. J. Nucl. Med. Mol. Imag, vol. 43, pp. 2021–2035, Jun. 2016, doi: 10.1007/s00259-016-3422-5.

- [6] Sekine T. et al., “Evaluation of atlas-based attenuation correction for integrated PET/MR in human brain: Application of a head atlas and comparison to true CT-based attenuation correction,” J. Nucl. Med, vol. 57, no. 2, pp. 215–220, Feb. 2016, doi: 10.2967/jnumed.115.159228.

- [7] Navalpakkam BK, Braun H, Kuwert T, and Quick HH, “Magnetic resonance–based attenuation correction for PET/MR hybrid imaging using continuous valued attenuation maps,” Invest. Radiol, vol. 48, no. 5, pp. 323–332, May 2013, doi: 10.1097/RLI.0b013e318283292f.

- [8] Cabello J, Lukas M, Förster S, Pyka T, Nekolla SG, and Ziegler SI, “MR-based attenuation correction using ultrashort-echotime pulse sequences in dementia patients,” J. Nucl. Med, vol. 56, no. 3, pp. 423–429, Mar. 2015, doi: 10.2967/jnumed.114.146308.

- [9] Ladefoged CN et al., “Region specific optimization of continuous linear attenuation coefficients based on UTE (RESOLUTE): Application to PET/MR brain imaging,” Phys. Med. Biol, vol. 60, no. 20, p. 8047, 2015, doi: 10.1088/0031-9155/60/20/8047.

- [10] Juttukonda MR et al., “MR-based attenuation correction for PET/MRI neurological studies with continuous-valued attenuation coefficients for bone through a conversion from R2* to CT-Hounsfield units,” NeuroImage, vol. 112, pp. 160–168, May 2015, doi: 10.1016/j.neuroimage.2015.03.009.

- [11] Keereman V, Fierens Y, Broux T, Deene YD, Lonneux M, and Vandenberghe S, “MRI-based attenuation correction for PET/MRI using ultrashort echo time sequences,” J. Nucl. Med, vol. 51, no. 5, pp. 812–818, May 2010, doi: 10.2967/jnumed.109.065425.

- [12] Wiesinger F. et al., “Zero TE-based pseudo-CT image conversion in the head and its application in PET/MR attenuation correction and MR-guided radiation therapy planning,” Magn. Reson. Med, vol. 80, no. 4, pp. 1440–1451, 2018, doi: 10.1002/mrm.27134.

- [13] Yang J. et al., “Evaluation of sinus/edge-corrected zero-echo-time–based attenuation correction in brain PET/MRI,” J. Nucl. Med, vol. 58, no. 11, pp. 1873–1879, Nov. 2017, doi: 10.2967/jnumed.116.188268.

- [14] Leynes AP et al., “Hybrid ZTE/Dixon MR-based attenuation correction for quantitative uptake estimation of pelvic lesions in PET/MRI,” Med. Phys, vol. 44, no. 3, pp. 902–913, Mar. 2017, doi: 10.1002/mp.12122.

- [15] Delso G. et al., “ZTE-based clinical bone imaging for PET/MR,” J. Nucl. Med, vol. 56, no. 3, p. 1806, May 2015.

- [16] Sekine T. et al., “Clinical evaluation of zero-echo-time attenuation correction for brain 18F-FDG PET/MRI: Comparison with atlas attenuation correction,” J. Nucl. Med, vol. 57, no. 12, pp. 1927–1932, Dec. 2016, doi: 10.2967/jnumed.116.175398.

- [17] Wollenweber SD et al., “Comparison of 4-class and continuous fat/water methods for whole-body, MR-based PET attenuation correction,” IEEE Trans. Nucl. Sci, vol. 60, no. 5, pp. 3391–3398, Oct. 2013, doi: 10.1109/TNS.2013.2278759.

- [18] Paulus DH et al., “Whole-body PET/MR imaging: Quantitative evaluation of a novel model-based MR attenuation correction method including bone,” J. Nucl. Med, vol. 56, no. 7, pp. 1061–1066, Jul. 2015, doi: 10.2967/jnumed.115.156000.

- [19] Koesters T. et al., “Dixon sequence with superimposed model-based bone compartment provides highly accurate PET/MR attenuation correction of the brain,” J. Nucl. Med, vol. 57, no. 6, pp. 918–924, Jun. 2016, doi: 10.2967/jnumed.115.166967.

- [20] Liu F, Jang H, Kijowski R, Bradshaw T, and McMillan AB, “Deep learning MR imaging–based attenuation correction for PET/MR imaging,” Radiology, vol. 286, no. 2, Sep. 2017, Art. no. 170700, doi: 10.1148/radiol.2017170700.

- [21] Leynes AP et al., “Zero-echo-time and dixon deep pseudo-CT (ZeDD CT): Direct generation of pseudo-CT images for pelvic PET/MRI attenuation correction using deep convolutional neural networks with multiparametric MRI,” J. Nucl. Med, vol. 59, no. 5, pp. 852–858, May 2018, doi: 10.2967/jnumed.117.198051.

- [22] Torrado-Carvajal A. et al., “Dixon-VIBE deep learning (DIVIDE) pseudo-CT synthesis for pelvis PET/MR attenuation correction,” J. Nucl. Med, vol. 60, no. 3, pp. 429–435, Mar. 2019, doi: 10.2967/jnumed.118.209288.

- [23] Han PK et al., “MR-based PET attenuation correction using a combined ultrashort echo time/multi-echo Dixon acquisition,” Med. Phys, vol. 47, no. 7, pp. 3064–3077, 2020, doi: 10.1002/mp.14180.

- [24] Chen Y. et al., “Deep learning-based T1-enhanced selection of linear attenuation coefficients (DL-TESLA) for PET/MR attenuation correction in dementia neuroimaging,” Magn. Reson. Med, vol. 86, no. 1, pp. 499–513, 2021, doi: 10.1002/mrm.28689.

- [25] Gong K. et al., “MR-based attenuation correction for brain PET using 3-D cycle-consistent adversarial network,” IEEE Trans. Radiat. Plasma Med. Sci, vol. 5, no. 2, pp. 185–192, Mar. 2021, doi: 10.1109/TRPMS.2020.3006844.

- [26] Tao L, Fisher J, Anaya E, Li X, and Levin CS, “Pseudo CT image synthesis and bone segmentation From MR images using adversarial networks with residual blocks for MR-based attenuation correction of brain PET data,” IEEE Trans. Radiat. Plasma Med. Sci, vol. 5, no. 2, pp. 193–201, Mar. 2021, doi: 10.1109/TRPMS.2020.2989073.

- [27] Spuhler KD, Gardus J, Gao Y, DeLorenzo C, Parsey R, and Huang C, “Synthesis of patient-specific transmission data for PET attenuation correction for PET/MRI neuroimaging using a convolutional neural network,” J. Nucl. Med, vol. 60, no. 4, pp. 555–560, Apr. 2019, doi: 10.2967/jnumed.118.214320.

- [28] Arabi H. and Zaidi H, “Deep learning-guided estimation of attenuation correction factors from time-of-flight PET emission data,” Med. Image Anal, vol. 64, Aug. 2020, Art. no. 101718, doi: 10.1016/j.media.2020.101718.

- [29] Hashimoto F, Ito M, Ote K, Isobe T, Okada H, and Ouchi Y, “Deep learning-based attenuation correction for brain PET with various radiotracers,” Ann. Nucl. Med, vol. 35, no. 6, pp. 691–701, Jun. 2021, doi: 10.1007/s12149-021-01611-w.

- [30] Yang J, Park D, Gullberg GT, and Seo Y, “Joint correction of attenuation and scatter in image space using deep convolutional neural networks for dedicated brain 18F-FDG PET,” Phys. Med. Biol, vol. 64, no. 7, Apr. 2019, Art. no. 075019, doi: 10.1088/1361-6560/ab0606.

- [31] Dong X. et al., “Deep learning-based attenuation correction in the absence of structural information for whole-body positron emission tomography imaging,” Phys. Med. Biol, vol. 65, no. 5, Mar. 2020, Art. no. 055011, doi: 10.1088/1361-6560/ab652c.

- [32] Shiri I. et al., “Deep-JASC: Joint attenuation and scatter correction in whole-body 18F-FDG PET using a deep residual network,” Eur. J. Nucl. Med. Mol. Imag, vol. 47, no. 11, pp. 2533–2548, Oct. 2020, doi: 10.1007/s00259-020-04852-5.

- [33] Nuyts J, Dupont P, Stroobants S, Benninck R, Mortelmans L, and Suetens P, “Simultaneous maximum a posteriori reconstruction of attenuation and activity distributions from emission sinograms,” IEEE Trans. Med. Imag, vol. 18, no. 5, pp. 393–403, May 1999, doi: 10.1109/42.774167.

- [34] Rezaei A. et al., “Simultaneous reconstruction of activity and attenuation in time-of-flight PET,” IEEE Trans. Med. Imag, vol. 31, no. 12, pp. 2224–2233, Dec. 2012, doi: 10.1109/TMI.2012.2212719.

- [35] Berker Y. and Li Y, “Attenuation correction in emission tomography using the emission data—A review,” Med. Phys, vol. 43, no. 2, pp. 807–832, Jan. 2016, doi: 10.1118/1.4938264.

- [36] Mehranian A. and Zaidi H, “Joint estimation of activity and attenuation in whole-body TOF PET/MRI using constrained Gaussian mixture models,” IEEE Trans. Med. Imag, vol. 34, no. 9, pp. 1808–1821, Sep. 2015, doi: 10.1109/TMI.2015.2409157.

- [37] Ahn S. et al., “Joint estimation of activity and attenuation for PET using pragmatic MR-based prior: Application to clinical TOF PET/MR wholebody data for FDG and non-FDG tracers,” Phys. Med. Biol, vol. 63, no. 4, 2018, Art. no. 045006, doi: 10.1088/1361-6560/aaa8a6.

- [38] Fuin N. et al., “PET/MRI in the presence of metal implants: Completion of the attenuation map from PET emission data,” J. Nucl. Med, vol. 58, no. 5, pp. 840–845, May 2017, doi: 10.2967/jnumed.116.183343.

- [39] Hwang D. et al., “Improving the accuracy of simultaneously reconstructed activity and attenuation maps using deep learning,” J. Nucl. Med, vol. 59, no. 10, pp. 1624–1629, Oct. 2018, doi: 10.2967/jnumed.117.202317.

- [40] Hwang D. et al., “Generation of PET attenuation map for whole-body time-of-flight 18F-FDG PET/MRI using a deep neural network trained with simultaneously reconstructed activity and attenuation maps,” J. Nucl. Med, vol. 60, no. 8, pp. 1183–1189, Aug. 2019, doi: 10.2967/jnumed.118.219493.

- [41] Hwang D, Kang SK, Kim KY, Choi H, Seo S, and Lee JS, “Data-driven respiratory phase-matched PET attenuation correction without CT,” Phys. Med. Biol, vol. 66, no. 11, May 2021, Art. no. 115009, doi: 10.1088/1361-6560/abfc8f.

- [42] Choi B-H et al., “Accurate transmission-less attenuation correction method for amyloid-β brain PET using deep neural network,” Electronics, vol. 10, no. 15, p. 1836, Jan. 2021, doi: 10.3390/electronics10151836.

- [43] Huang JY et al., “An evaluation of three commercially available metal artifact reduction methods for CT imaging,” Phys. Med. Biol, vol. 60, no. 3, pp. 1047–1067, Jan. 2015, doi: 10.1088/0031-9155/60/3/1047.

- [44] Ladefoged CN et al., “AI-driven attenuation correction for brain PET/MRI: Clinical evaluation of a dementia cohort and importance of the training group size,” NeuroImage, vol. 222, Nov. 2020, Art. no. 117221, doi: 10.1016/j.neuroimage.2020.117221.

- [45] Kendall A. and Gal Y, “What uncertainties do we need in Bayesian deep learning for computer vision?” in Advances in Neural Information Processing Systems, vol. 30. Red Hook, NY, USA: Curran Assoc., 2017, pp. 5574–5584. Accessed: Jul. 17, 2019. [Online]. Available: http://papers.nips.cc/paper/7141-what-uncertainties-do-we-need-in-bayesian-deep-learning-for-computer-vision.pdf

- [46] Hüllermeier E. and Waegeman W, “Aleatoric and epistemic uncertainty in machine learning: An introduction to concepts and methods,” Mach. Learn, vol. 110, no. 3, pp. 457–506, Mar. 2021, doi: 10.1007/s10994-021-05946-3.

- [47] Gal Y. and Ghahramani Z, “Dropout as a Bayesian approximation: Representing model uncertainty in deep learning,” Jun. 2015. Accessed: May 29, 2018. [Online]. Available: http://arxiv.org/abs/1506.02142.

- [48] Srivastava N, Hinton G, Krizhevsky A, Sutskever I, and Salakhutdinov R, “Dropout: A simple way to prevent neural networks from overfitting,” J. Mach. Learn. Res, vol. 15, no. 56, pp. 1929–1958, Jan. 2014.

- [49] Paszke A. et al. (Oct. 2017). Automatic Differentiation in PyTorch. Accessed: Jul. 3, 2019. [Online]. Available: https://openreview.net/forum?id=BJJsrmfCZ

- [50] Leynes AP and Larson PEZ, “Synthetic CT generation using MRI with deep learning: How does the selection of input images affect the resulting synthetic CT?” in Proc. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), Apr. 2018, pp. 6692–6696, doi: 10.1109/ICASSP.2018.8462419.

- [51] Kingma DP and Ba J, “Adam: A method for stochastic optimization,” Dec. 2014. [Online]. Available: http://arxiv.org/abs/1412.6980.

- [52] He K, Zhang X, Ren S, and Sun J, “Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), Dec. 2015, pp. 1026–1034, doi: 10.1109/ICCV.2015.123.

- [53] Levin CS, Maramraju SH, Khalighi MM, Deller TW, Delso G, and Jansen F, “Design features and mutual compatibility studies of the time-of-flight PET capable GE SIGNA PET/MR system,” IEEE Trans. Med. Imag, vol. 35, no. 8, pp. 1907–1914, Aug. 2016, doi: 10.1109/TMI.2016.2537811.

- [54] Avants BB, Tustison NJ, Stauffer M, Song G, Wu B, and Gee JC, “The insight ToolKit image registration framework,” Front. Neuroinformat, vol. 8, p. 44, Apr. 2014, doi: 10.3389/fninf.2014.00044.

- [55] Alessio AM et al., “Application and evaluation of a measured spatially variant system model for PET image reconstruction,” IEEE Trans. Med. Imag, vol. 29, no. 3, pp. 938–949, Mar. 2010, doi: 10.1109/TMI.2010.2040188.

- [56] Samarin A. et al., “PET/MR imaging of bone lesions—Implications for PET quantification from imperfect attenuation correction,” Eur. J. Nucl. Med. Mol. Imag, vol. 39, no. 7, pp. 1154–1160, Apr. 2012, doi: 10.1007/s00259-012-2113-0.

- [57] Klages P. et al., “Patch-based generative adversarial neural network models for head and neck MR-only planning,” Med. Phys, vol. 47, no. 2, pp. 626–642, 2020, doi: 10.1002/mp.13927.

- [58] Hemsley M. et al., “Deep generative model for synthetic-CT generation with uncertainty predictions,” in Medical Image Computing and Computer Assisted Intervention (MICCAI). Cham, Switzerland: Springer, 2020, pp. 834–844, doi: 10.1007/978-3-030-59710-8_81.

- [59] Bowen SL, Fuin N, Levine MA, and Catana C, “Transmission imaging for integrated PET-MR systems,” Phys. Med. Biol, vol. 61, no. 15, pp. 5547–5568, Jul. 2016, doi: 10.1088/0031-9155/61/15/5547.

- [60] Veit-Haibach P, Kuhn FP, Wiesinger F, Delso G, and von Schulthess G, “PET–MR imaging using a tri-modality PET/CT–MR system with a dedicated shuttle in clinical routine,” Magn. Reson. Mater. Phys. Biol. Med, vol. 26, no. 1, pp. 25–35, 2013, doi: 10.1007/s10334-012-0344-5.
