Abstract

Brain-computer interfaces have so far focused largely on enabling the control of a single effector, for example a single computer cursor or robotic arm. Restoring multi-effector motion could unlock greater functionality for people with paralysis (e.g., bimanual movement). However, it may prove challenging to decode the simultaneous motion of multiple effectors, as we recently found that a compositional neural code links movements across all limbs and that neural tuning changes nonlinearly during dual-effector motion. Here, we demonstrate the feasibility of high-quality bimanual control of two cursors via neural network (NN) decoders. Through simulations, we show that NNs leverage a neural ‘laterality’ dimension to distinguish between left and right-hand movements as neural tuning to both hands become increasingly correlated. In training recurrent neural networks (RNNs) for two-cursor control, we developed a method that alters the temporal structure of the training data by dilating/compressing it in time and re-ordering it, which we show helps RNNs successfully generalize to the online setting. With this method, we demonstrate that a person with paralysis can control two computer cursors simultaneously. Our results suggest that neural network decoders may be advantageous for multi-effector decoding, provided they are designed to transfer to the online setting.

Subject terms: Brain-machine interface, Neural decoding

Introduction

Intracortical brain-computer interfaces (iBCIs) aim to restore movement and communication to people with paralysis by decoding movement signals from the brain via microelectrodes placed in the cortex. Advancements in BCIs have enabled functional restoration of movement and communication, including robotic arm control1–4, reanimation of paralyzed limbs through electrical stimulation5–9, cursor control10–12, translating attempted handwriting movements into text13, and decoding speech14–20. However, one area in which BCI performance remains limited is multi-effector control. Enabling high-quality, simultaneous control of multiple effectors could unlock new applications, such as the control of whole-body exoskeletons or bimanual robotic arms. While there have been some initial encouraging demonstrations of multi-effector control21–23, performance has not yet reached that of single-effector BCIs.

Prior studies have shown that motor cortex contributes to both contralateral and ipsilateral movements and that neural tuning changes nonlinearly between single and dual-limb movements21,24–28. More specifically, during dual movement we found that the neural representation for one effector (‘primary’) stays relatively constant, whereas the other effector’s (‘secondary’) representation gets suppressed while its directional tuning changes. Additionally, there is significant correlation in how movement direction is represented for contralateral and ipsilateral movements. To date, studies that have investigated bimanual BCI control21–23,29 have mainly used linear decoding algorithms (e.g., Kalman filters and ridge regression) despite the seemingly nonlinear relationship between neural activity and bimanual movement. Accounting for these correlations and nonlinear tuning changes could help to prevent unintended movements from leaking from one effector to the other.

Rapid progress in machine learning and artificial intelligence has led to an impressive collection of neural network models capable of learning complex nonlinear relationships between large amounts of data. These approaches have produced significant success in a wide variety of applications30 including, computer vision31–33, natural language processing34–36, and robotics37–39. More recently, a promising application of neural networks has been towards modeling and decoding the brain activity associated with movement via BCIs, which holds potential for improving BCI performance. Of the many network architectures, recurrent neural networks (RNNs) have been a popular decoding approach for BCIs40–42 since RNNs can learn temporal dependence within data, aligned with the dynamical systems view that neural activity in motor cortex evolves in predictable ways over time42–44. However, RNNs often require large amounts of training data and can overlearn the temporal structure within offline data which may not be present in online data, potentially reducing their utility as decoders for real-time BCI applications.

Here, we demonstrate that RNNs can leverage nonlinearities within the neural code for bimanual movements to accomplish simultaneous two-cursor control. We highlight key sources of nonlinearity underlying bimanual movements and use simulations to gain insight into how neural networks handle these nonlinearities. In addition, we show that RNN decoders calibrated on stereotyped training data achieve high offline performance (consistent with prior work40,45–47), but do so in part by overlearning the temporal structure of the task, resulting in poor performance when used for real-time control of a BCI. To solve this problem, we altered the stereotyped structure in training data to introduce temporal and behavioral variability which helps RNNs generalize to the online setting. Using this approach, we demonstrate real-time simultaneous two-cursor control via RNN decoding.

Results

Nonlinear neural coding of unimanual and bimanual directional hand movement

We first sought to understand how bimanual hand movements are represented in motor cortex, including sources of nonlinearity that would motivate the use of RNNs. We used microelectrode recordings from the hand knob area of the left (dominant) precentral gyrus in a clinical trial participant (referred to as T5) to characterize how neural tuning changes between bimanual hand movement (both hands attempting to move simultaneously) and unimanual hand movement (one hand moving individually). T5 has a C4 spinal cord injury and is paralyzed from the neck down; attempted movement resulted in little to no motion of the arms and legs (see Willett*, Deo*, et al. 2020 for more details27). T5 was instructed to attempt to move his hands as if they were controlling joysticks.

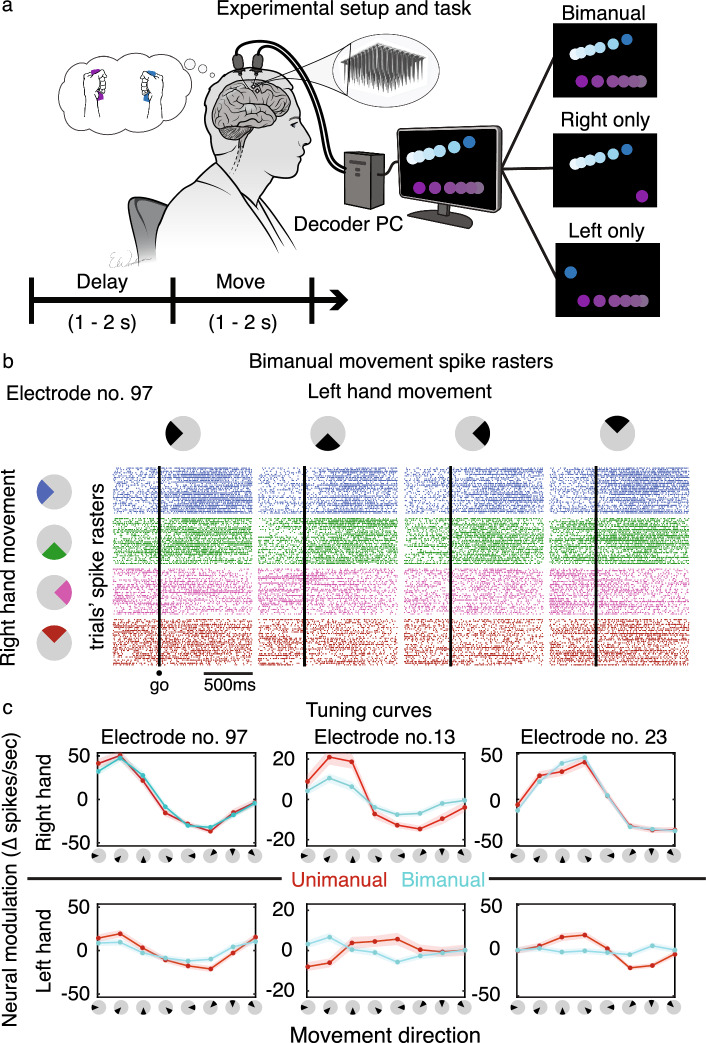

Using a delayed movement task (Fig. 1a), we measured T5’s neural modulation to attempted unimanual and bimanual hand movements. During this task, two cursors were presented on a monitor and moved autonomously to respective target locations. T5 imagined his hands on two joysticks and attempted movements as if he were independently controlling each cursor with the associated joystick. We observed changes in neural spiking activity across many individual electrodes as a function of movement direction during bimanual movements (Fig. 1b presents an example electrode’s responses; see Supplementary Fig. 1c for a count of tuned electrodes). We also observed nonlinear changes in tuning from the unimanual to bimanual context, including tuning suppression and direction changes (Fig. 1c). Here, ‘nonlinear’ is considered any departure from linear tuning to the variables we intend to decode: the x- and y-components of movement direction22, as described in the encoding model (Eq. 1) below:

| 1 |

Figure 1.

Neural tuning to unimanual and bimanual hand movement. (a) Participant T5 performed a delayed-movement task. Cursors on a screen prompted T5 to attempt to make concomitant joystick movements. One of three types of movements were cued on each trial: (1) bimanual: both hands, (2) unimanual left: only left (ipsilateral) hand, or (3) unimanual right: only right (contralateral) hand. (b) Matrix of spike rasters of example electrode no. 97 during bimanual movements. Raster plot (i,j) of the matrix corresponds to electrode 97’s response to right hand movement in direction i while the left hand moved in direction j (colored by right hand direction). Each row of a raster plot represents a trial, and each column is a millisecond time-bin. A dot indicates a threshold crossing spike at the corresponding trial’s time-bin. Different spiking activity can be seen for different bimanual movements, indicating tuning to bimanual movement direction. (c) Tuning curves of example electrodes show a range of tuning changes to each hand (rows) across movement contexts (red/blue). Solid dots indicate the mean firing rates (zero-centered) for movements in the directions indicated on the x-axes. Spikes were binned (20-ms bins) and averaged within a 300–700 ms window after the ‘go’ cue. Shaded areas are 95% CIs (computed via bootstrap resampling). Electrode no. 97 retained tuning for both hands between contexts, electrode no. 13 had suppressed tuning for both hands during bimanual movement, and electrode no. 23 had suppression in left hand tuning during bimanual movement.

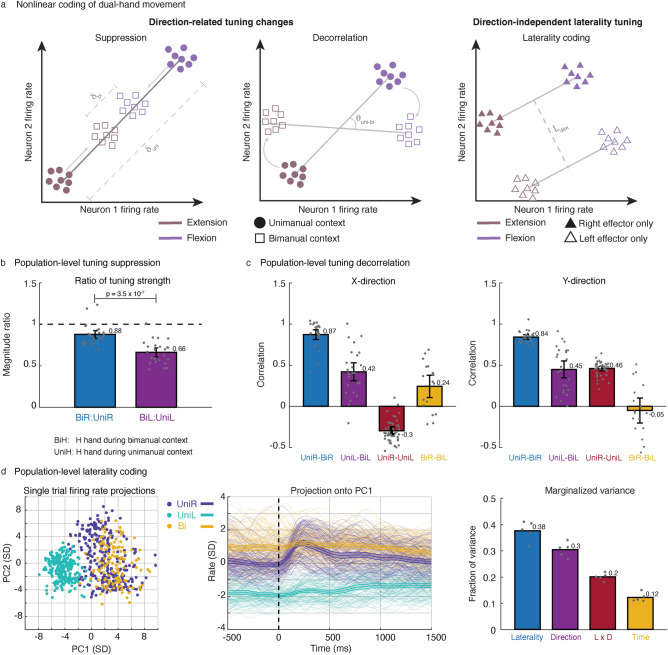

Here, is the average firing rate of a neuron, the terms are the - and -direction components of the right (, ) and left (, ) hand velocities, and the terms are the corresponding coefficients of the velocity components (and is the baseline firing rate). Tuning angle changes (“decorrelation”) and a suppressed tuning magnitude from unimanual to bimanual movement breaks linearity, since the tuning coefficients change based on movement context. In addition, direction-independent laterality tuning (i.e., coding for the side of the body irrespective of movement direction) is another potential key source of nonlinearity. For clarity, Fig. 2a illustrates these three nonlinear phenomena (decorrelation, suppression, and laterality tuning) with a schematic.

Figure 2.

Nonlinear neural code underlying bimanual hand movement. (a) Cartoon examples of three key sources of nonlinearity in the neural coding of directional bimanual and unimanual movement. Firing rates for two exemplary neurons are plotted for flexion (purple) and extension (brown) of an effector (left and middle panels) during unimanual and bimanual contexts, or for two effectors (right panel) during unimanual movement. Direction-related tuning changes consist of suppression (reduction in neural distance between movement representations), and decorrelation (change in tuning axis) between unimanual and bimanual contexts. Direction-independent laterality tuning can be viewed as a large dimension separating movements between effectors on opposite sides of the body. (b) Amount of population-level tuning suppression in offline data. Each bar indicates the mean ratio of tuning strength between bimanual and unimanual contexts for right (blue) and left (purple) hand movement. Significance was determined by a 2-sample t-test. All black intervals on bar plots indicate 95% CIs. Left hand tuning was suppressed more during the bimanual context than right hand tuning. (c) Degree of population-level tuning decorrelation in offline data. Each bar indicates the correlation between the neural population’s x- or y-direction coefficient vectors for pairs of movement types. See Supplementary Table 1 for p values. Right hand directional tuning remained largely unchanged while left hand directional tuning changed more substantially during the bimanual context. (d) Population-level laterality information in offline data. Principal component analysis (PCA) on single trial Z-scored firing rates (SD denotes standard deviation) is used to visualize how movement types cluster (left panels; each dot and line is a single trial). Demixed PCA was used to compute the marginalized variance of different movement factors (right panel). Tuning to laterality was stronger than tuning to movement direction.

Tuning decorrelation and a suppression of ipsilateral related neural activity have been seen previously during bimanual movement24,27. We reproduced these phenomena with a richer set of continuous directional movements (Fig. 2b). The population-level tuning strength of right hand (primary effector) directional movements remained relatively unchanged from unimanual to bimanual contexts (12% suppression during bimanual), whereas tuning strength of the left hand (secondary effector) was suppressed by 34% during bimanual movement. Similarly, population-level directional tuning (Fig. 2c) of the right hand remained relatively unchanged (0.87 and 0.84 correlations for x- and y-directions, respectively) while left hand directional tuning changed more substantially (0.42 and 0.45 correlations for x- and y-directions, respectively) from the unimanual to bimanual context. These results indicate that neural tuning to left hand movements exhibited suppression and decorrelation when moved simultaneously with the right hand, whereas tuning to right hand movements remained mostly unchanged.

A large neural dimension codes for laterality of the hand

Also consistent with our prior work, we found a salient laterality-related neural dimension (Fig. 2d) that codes for the side of the body of the moving hand independently of the hand’s movement direction. We used principal component analysis (PCA) on both unimanual and bimanual neural data to visualize neural activity in the top principal components (PCs). A dimension emerged within the top two PCs clearly separating right from left hand unimanual movements. Interestingly, bimanual neural activity most closely resembled that of unimanual right hand activity in the top PCs, further indicating that the right hand is more strongly represented than the left hand during bimanual movement in the contralateral precentral gyrus. Next, we used demixed PCA48 (dPCA), which decomposes neural data into a set of dimensions that each explain variance related to one marginalization of the data, to quantify the size of the laterality factor in unimanual movement data only. We marginalized the data according to the following factors: time, laterality, movement direction, and the laterality-direction interaction. The laterality marginalization contained the highest fraction of variance (39% marginalized variance) indicating that tuning to laterality was stronger than tuning to direction (30% marginalized variance). From a decoding perspective, laterality dimensions can be useful in distinguishing right hand movements from left hand movements in a unimanual context.

Overall, we found a strong presence of nonlinearities within the neural code governing bimanual hand movement, which suggests that neural networks may be particularly well-suited for decoding multi-effector movement.

Neural networks leverage laterality information for improved unimanual decoding

We hypothesized that nonlinear neural decoders would use the laterality dimensions to identify and isolate which hand (right or left) is moving. Conversely, we expected that linear decoders would be unable to utilize the laterality coding since it is independent of movement direction.

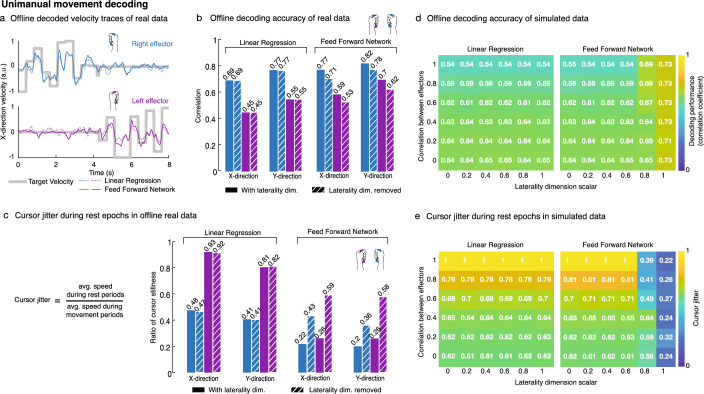

We compared a simple linear decoder (LD; built via ridge regression) to a simple densely connected feed forward neural network (FFN) to assess each decoder’s ability to use laterality information for unimanual movement decoding. These basic decoders were chosen to eliminate the temporal filtering effects present in more complex decoders such as Wiener filters and recurrent neural networks, which are able to use time history. That is, we asked the question: which decoder better predicts the movement encoded in a single time-bin of neural activity? Using data from unimanual trials, both decoders were trained to convert firing rate input features at a single time-bin (20 ms bin) to x- and y-direction velocities for both cursors. Figure 3a shows an example snippet of offline decoded x-direction velocities for unimanual movement of both hands. The FFN outperformed the LD in predicting velocity magnitudes (Fig. 3b) for the left hand, which is consistent with prior results27 indicating that ipsilateral representation is generally weaker than contralateral representation (left hand is 48% weaker; see Supplementary Fig. 1d). Figure 3b summarizes offline unimanual decoding performance where the FFN outperformed the LD across all movement dimensions, with the greatest performance boost for unimanual left hand decoding.

Figure 3.

Nonlinear decoders leverage laterality information to disentangle effectors. (a) Offline single-bin decoding on unimanual data. Neural activity was binned (20-ms bins) and truncated to 400 ms movement windows (300–700 ms after go cue). Linear ridge regression (RR) and a densely connected feed forward neural network (FFN; single layer, 512 units) were trained, using five-fold cross-validation, to decode left and right cursor velocities. Sample 8 s held-out snippets of decoded x-direction velocity traces are shown. (b) Each bar indicates the offline decoding performance (Pearson correlation coefficient) for the RR and FFN decoders across the x- and y-direction velocity dimensions, separated by left hand (purple bars) and right hand (blue bars). Striped bars indicate data where the laterality dimension was removed. The FFN outperformed the LD in decoding movements across all dimensions. Removal of the laterality dimension did not affect LD performance but did reduce FFN performance. (c) Cursor jitter is quantified as the ratio of average cursor speed during rest periods to that during movement periods. A rest period is defined as the period in which the other cursor should be active. Lower ratios indicate less cursor jitter (or more cursor stillness) while the other cursor is active. The FFN outperformed the LD, maintaining a more stable left (non-dominant) cursor position in comparison. The laterality dimension was useful to the FFN in reducing cursor jitter; again, laterality did not affect the LD. (d) Simulated neural activity during unimanual movement was generated for different directional tuning correlation values between the hands and different laterality dimension sizes. Each (i,j) cell of a matrix indicates the decoding performance (Pearson correlation coefficient) for a synthetic dataset with correlation i between hands and a laterality dimension size of j. (e) Cursor jitter for the simulated data in panel d is shown. The FFN leveraged the laterality dimension for improved decoding performance and less cursor jitter as tuning between the hands became more correlated. The LD was unable to use the laterality information to distinguish between the hands.

To further understand the extent to which the decoders used laterality information, we fit and subsequently removed the laterality dimension from neural data (see “Methods”). Removal of the laterality dimension did not affect decoding performance of the LD; however, it did result in a performance decrease across all movement dimensions for the FFN (Fig. 3b). Generally, the FFN’s decoding performance was reduced to similar levels to that of the LD’s performance, although the FFN’s left hand decoding was still better than the LD (and its decoded outputs were larger in magnitude; see Supplementary Fig. 2a for distributions of decoded output magnitudes). Additionally, the FFN was better able to isolate movement decoding to the actively moving hand, which we quantified with cursor ‘jitter’ in Fig. 3c. On average, the FFN outperformed the LD in reducing left cursor jitter during right cursor movement, and vice versa. Removal of the laterality dimension led to an increase in cursor jitter for the FFN. The LD experienced sizable left cursor jitter while the right cursor was active and removal of the laterality dimension did not alter the degree of cursor jitter for the LD.

To gain deeper insight into the role of laterality information in decoding unimanual movement, we simulated unimanual neural activity with the addition of Gaussian noise (see “Methods” and Eqs. 3, 4) where we varied the directional tuning correlation between the hands and varied the size of the laterality dimension. Figure 3d shows decoding performance of LDs and FFNs across the simulated data. As expected, LD performance degraded as the neural activity associated with the hand movements became more correlated regardless of the scale of the laterality dimension. Conversely, when the size of the laterality dimension was sufficiently large, the FFNs were able to achieve high decoding performance irrespective of how correlated the neural activity associated with hand movements became. Additionally, we saw that the LDs were unable to use laterality information in keeping the non-active cursor still and cursor jitter increased as the neural activity became increasingly correlated (Fig. 3e). The FFNs used laterality information, when it was salient enough, to disentangle the cursors which resulted in reduced cursor jitter regardless of how correlated the hands became.

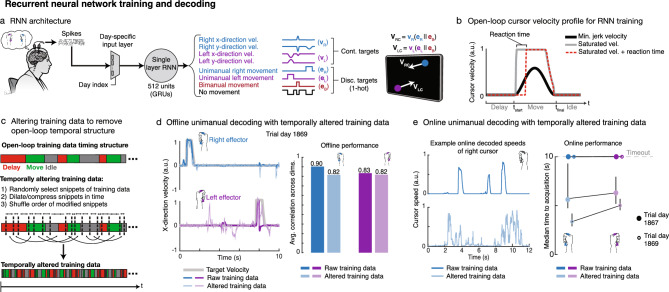

RNNs overlearn the temporal structure of offline data and generate overly stereotyped online behavior

Next, we used a simple RNN architecture (single-layer, 512 gated recurrent units)—similar to the neural network model used in our recent report on decoding attempted handwriting13—to decode bimanual movement from neural activity (Fig. 4a and Supplementary Fig. 3). During RNN calibration, neural activity was recorded while T5 attempted movements in concert with one or both cursors moving on a screen. The structure of this task followed a delayed movement paradigm where T5 prepared to move during a delay period, executed movement during a move period, and then rested at an idle state. This highly stereotyped temporal structure (prepare-move-idle) is typical of BCI calibration tasks in which neural activity can be regressed against the inferred behavior. The RNN was trained to convert neural activity into (1) left and right cursor velocities and (2) discrete movement-context signals that denoted the category of movement being made at each moment in time (unimanual left, unimanual right, bimanual, or no movement). During closed-loop cursor control, the discrete context signals were used to gate the output cursor velocities. Velocity targets for RNN training were modified by introducing a reaction time and saturating the velocity curve (Fig. 4b) to better approximate the participant’s intention to move maximally when far from the target49. More specifically, the saturated velocity profile assumes that the participant is moving with maximum velocity from movement onset up until they are very near the target, at which point they slow down as the velocity tapers to zero.

Figure 4.

Fracturing temporal structure in offline training data helps RNN decoders generalize to the online setting. (a) Diagram of the decoding pipeline. First, neural activity (multiunit threshold crossings) was binned on each electrode (20-ms bins). Then, a trainable day-specific linear input layer transformed the binned activity from a specific day into a common space to account for day-to-day variability in the neural recordings. Next, an RNN converted the day-transformed time series activity into continuous left and right cursor velocities (, and discrete movement context signals (. The movement context signals were then used to gate the cursor velocity outputs. (b) Example open-loop, minimum-jerk cursor velocity (black) and modified saturated velocities (gray/red). Saturated velocity with a prescribed reaction time of 200 ms (red) was used for RNN training since it better approximates the user’s intended behavior. (c) Diagram of data alteration technique that introduces variability in the temporal and behavioral structure of the training data. Data are subdivided into small snippets of variable length; each snippet is then dilated or compressed in time, and the order of the modified snippets is shuffled. (d) Offline decoding performance of RNNs trained with and without data alteration. Sample snippets of x-direction decoded velocities are shown for both cursors during unimanual movement with RNNs trained with and without alteration. Corresponding decoding performance (Pearson correlation coefficient) is summarized via bar plots. Offline performance is better without data alteration. (e) Decoders trained with unaltered data generated pulse-like movements online, as shown in the sample decoded cursor speeds for the right hand (top panel), whereas the RNN trained with altered data (bottom panel) allowed for quicker online corrections. Vertical black bars indicate 95% CIs (bootstrap, n = 10 K). Decoders trained with altered data acquired targets more quickly online.

To investigate the RNN’s decoding efficacy, we first focused on the unimanual movement case, which mitigates decoding challenges due to suppressed left-hand representation during bimanual movement. RNNs trained on open-loop unimanual movements achieved high offline decoding performance for both hands (Fig. 4d; average correlation of 0.9 and 0.83 for the right and left hand, respectively). However, when used for online control, these RNNs generated pulse-like movements that reflected the velocity profiles used for offline training, making it difficult for the user to perform closed-loop error corrections (Fig. 4e). Instead of being able to smoothly correct for inevitable errors that occur during online control, T5 had to make repeated attempted movements—mimicking the prepare-move-idle offline behavior—in succession to successfully acquire targets. In this scenario, offline RNN decoding on held-out test data yielded deceptively high performance which did not translate to high online performance.

Fracturing the stereotyped temporal structure of open-loop training data helps RNNs transfer to online control

Since the RNN decoders overlearned stereotyped prepare-move-idle open-loop behavior, we hypothesized that introducing variability in the temporal and behavioral structure of the training data would help generalize to the closed-loop context. To accomplish this, we altered the training data by randomly selecting snippets of data (ranging between 200 and 800 ms in duration), stretching or compressing the snippets in time using linear interpolation, and then shuffling the order of the modified snippets (Fig. 4c; see “Methods”). This approach aims to intermix variably sized windows of neural activity across the various stages of behavior (prepare, move, and idle) to make the RNN decoder more robust to the rapid changes in movement direction that occur during closed-loop control. Comparing the RNN trained with temporally altered data (altRNN) to that trained with raw data (rawRNN) as described in the previous section, we found that the altRNN was prevented from learning the open-loop task structure, resulting in slightly poorer decoding performance on offline held-out test data and noisier output velocities (Fig. 4d). However, the altRNN led to improved closed-loop control (see Supplementary Movie 4). The decoded cursor speeds were more continuous in nature and did not reflect the pulse-like velocity profiles prescribed to the cursors during the open-loop task (Fig. 4e).

In addition to enforcing that the RNN generalizes to data with less stereotyped structure, this data alteration technique allows for synthetic data generation which also helps to prevent overfitting to the limited amount of data that can be collected in human BCI research. Overall, we found that fracturing temporal and behavioral structure in the training data resulted in more continuous output velocities which translated to better closed-loop cursor control performance.

RNN decoders enable online simultaneous control of two cursors

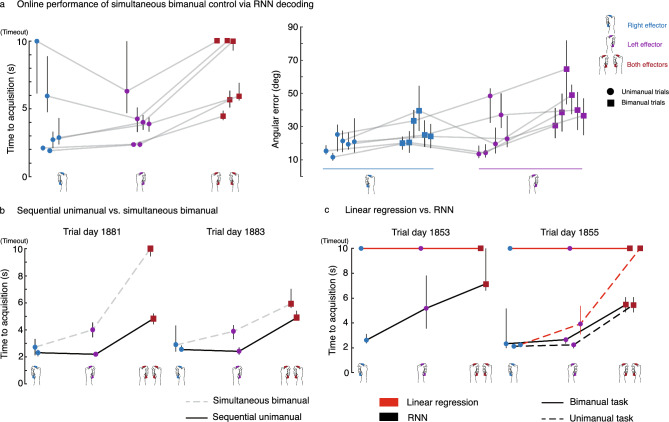

Next, we tested whether an RNN decoder trained with temporally altered data could facilitate real-time neural control of two cursors at the same time. To do so, we trained an RNN on offline and online unimanual and bimanual hand movements collected over multiple sessions (see “Methods”). T5 attempted a series of unimanual or bimanual hand movements to drive two cursors to their intended targets. To acquire targets, the cursors had to dwell simultaneously within their corresponding target for 500 ms. T5 was asked to attempt all bimanual trials with simultaneous hand movements (as opposed to sequential unimanual movement of one cursor at a time). T5 successfully achieved bimanual control across many sessions (see Supplementary Movie 1), where time-to-acquisition (TTA) for bimanual trials was only slightly longer than the TTA for unimanual trials on average (Fig. 5a). Amongst unimanual trials, the average TTA for right- and left-hand trials was similar. The average angular errors for both hands were generally higher during bimanual movement than during unimanual movement.

Figure 5.

RNN decoders enable two-cursor control and outperform simple linear decoders. (a) Median target acquisition time and angular errors are shown for 6 days of simultaneous bimanual two-cursor control as enabled by RNN decoders. Light gray lines connect data points corresponding to the same session day. Each trial had a 10 s timeout after which the trial was considered failed. Angular error was calculated within an initial movement window (300–500 ms after go cue). Vertical black bars in each panel are 95% CIs (bootstrap, n = 10 K). Performance was generally good across most days, although decoders did sometimes fail to enable consistent target acquisition. (b) A sequential unimanual control strategy (moving one cursor at a time; solid black line) was compared to simultaneous bimanual control (dashed gray line) over 2 sessions, of which the median target acquisition times are shown. The sequential unimanual control strategy led to faster target acquisition. (c) RNN decoders were compared to linear decoders on 2 session days. Each point is the median target acquisition time for the corresponding trial type. Solid lines connect points corresponding to the normal bimanual task (consisting of simultaneous dual movements and unimanual single movements). A variation of the task where only unimanual movements were tested (holding the non-active cursor fixed) was used as a control on trial day 1855 to confirm that linear decoders could succeed in a purely unimanual context (dashed lines).

During online control, T5 remarked that sequentially moving the cursors during the bimanual context instead of moving them simultaneously was a more intuitive strategy to employ. To investigate this further, we trained two separate RNNs where one was recalibrated normally as mentioned above, and the other was recalibrated with just unimanual data. On average, the sequential unimanual strategy outperformed the simultaneous bimanual strategy (Fig. 5b, Supplementary Movie 2). Interestingly, the sequential strategy often led to equal performance between unimanual right and unimanual left trials, indicating that the RNN better learned to distinguish between the hands when recalibrated on just unimanual movements.

Lastly, we compared simple linear decoders (consisting of a matrix multiplication combined with exponential smoothing, equivalent to a Kalman filter50) to RNNs for simultaneous two-cursor control. Optimizing linear decoders (LDs) during online evaluation is difficult since it often requires hand tuning of parameters such as output gain51. For the fairest comparison against RNNs, we tested a range of output gain scalars for both the LDs and RNNs. We found that RNNs outperformed the LDs on average across all tested gains (Fig. 5c, Supplementary Movie 3; statistical significance indicated by non-overlapping confidence intervals). In fact, the LDs resulted in mostly failed trials due to their inability to isolate control to one cursor (i.e., intended movements of one cursor would inadvertently move the other such that target acquisition was near impossible). We found that T5 was able to acquire unimanual targets when the non-active cursor was fixed using LDs, indicating that failures during bimanual control were due to the LD's inability to separate left from right hand control.

Discussion

We confronted a challenging nonlinear BCI problem—the simultaneous, continuous bimanual control of two cursors—using an RNN, which should be able to exploit the nonlinear structure in neural data13,40–42 better than linear methods. Prior demonstrations of bimanual control have been described in non-human primates21 and humans22,23,29,52, although most of these studies have focused on linear decoding techniques22,23,29 and/or discrete classification52. We identified key sources of nonlinearity underlying bimanual hand movements and demonstrated, with real and simulated data, that neural networks are able to learn and leverage the nonlinearities for improved decoding. Consistent with prior work40,45–47, the RNN performed exceedingly well on offline data. However, we found that the high offline performance was due to the RNN overlearning the temporal structure of the offline data, resulting in poor online performance. In response, we altered the temporal structure of the training data which helped the RNN generalize to the online setting. Using this approach, we demonstrated real-time simultaneous two-cursor control via RNN in a person with paralysis.

Magnitude suppression and direction change (decorrelation) are two key sources of nonlinearity underlying bimanual movements. We found reduced left hand tuning strength during bimanual movement, resulting in weaker left hand decoding performance (Supplementary Fig. 2b, c). Reduced left hand tuning is consistent with prior reports of weaker neural representation for the ipsilateral effector during simultaneous movement with a contralateral effector21,24,25,27,29,53. One way in which this issue may be addressed is with sensors distributed over both hemispheres21,23,54–56. Continuous bimanual movement representation across hemispheres remains to be explored and can elucidate the extent to which each hemisphere contributes to the control of its respective effector.

During unimanual movement, we found a large direction-independent ‘laterality’ dimension coding for the side of the body on which the hand resides. This laterality information was instrumental in helping neural networks (NNs) distinguish between left and right hand movements, particularly as neural tuning between the hands became increasingly correlated. Consistent with prior work, we found correlated neural representations of movement between contralateral and ipsilateral effectors26,53,57–60 which complicates decoding in that it becomes difficult to distinguish between effectors. Our results suggest that NNs can use laterality dimensions, if present, to separate the effectors. Linear decoders cannot leverage laterality information since it is direction-independent, which may be why intended movement of one effector often resulted in the inadvertent movement of the other effector when using linear decoders.

The data alteration method proposed here is one way to prevent neural networks from learning structure in the offline BCI training data that is counterproductive for online control. In our method, data alteration was accomplished by dilating/compressing smaller snippets of training data and shuffling the order of the modified snippets. There are likely many other methods of helping neural networks generalize to data with less stereotyped structure. For example, there has been a recent compelling approach in non-human primates61 which recalibrates neural networks by using movement intention estimation techniques motivated by the ReFIT (recalibrated feedback intention-trained) algorithm62. In this study, Willsey et al. deployed a shallow feed-forward network for online BCI control where only 150 ms windows of data were used at each time step. Similar to these short windows of data, we suspect that our data alteration method forced the RNN to learn smaller time histories of data, allowing it to learn the temporal characteristics of bimanual movement-related neural activity without overlearning the specific sequence of behaviors performed during open-loop trials. An additional useful feature of this method is that it generates synthetic data which helps prevent overfitting to the limited amount of data that is normally collected in human BCI research (as shown in recent work on handwriting decoding13). Typically, BCI decoder calibration tasks are on the order of minutes and generally do not generate more than a few hundred trials worth of data1,2,3,10–12,27,63, whereas this method can increase this training data quantity by orders of magnitude. Future studies could investigate the utility of altering temporal structure in training data across different network architectures and decoding algorithms. Snippet window widths and the quantity of synthetic data are additional hyperparameters that could be further optimized in future work.

Our participant demonstrated real-time simultaneous two-cursor control via a RNN decoder trained using our data alteration technique. In contrast to RNNs, we found that linear decoders (LDs) exhibited a substantial amount of cross-decoding, where intended movements of one hand resulted in decoded movements for both cursors. For LDs to separate movements across effectors, the representations of each effector’s movements would have to be largely independent. This is generally not the case as has been reported by many studies26,53,57–60, although one group has found largely independent representations between ipsilateral and contralateral arm reaching22 where linear decoding techniques could possibly work well. In this study, we employed a basic linear method for decoding to establish a baseline, however future work could compare NNs to other more advanced linear architectures. Further, there are a multitude of NN architectures, such as feedforward networks61, convolutional networks64,65, and transformers66–68 which have shown great promise in decoding movement kinematics from brain activity that can be explored for multi-effector decoding.

In summary, our results suggest that neural network decoders may be particularly well-suited to the problem of decoding multi-effector motion due to the nonlinear structure of the neural code associated with such movements. We show that it is possible to enable simultaneous control of two cursors using recurrent neural networks with good performance, if care is taken to train them in a way that enables successful transfer to online control. Insights gained from this work may help to expand the scope of BCIs from single-effector control to more challenging applications (e.g., control of a whole-body exoskeleton), unlocking greater functionality for people with paralysis.

Methods

Study permissions and participant details

This work includes data from a single human participant (identified as T5) who gave informed consent and was enrolled in the BrainGate2 Neural Interface System clinical trial (ClinicialTrials.gov Identifier: NCT00912041, registered June 3, 2009). This pilot clinical trial was approved under an Investigational Device Exemption (IDE) by the US Food and Drug Administration (Investigational Device Exemption #G090003). Permission was also granted by the Stanford University Institutional Review Board (protocol #20804) and the Mass General Brigham IRB (protocol #2009P000505). All research was performed in accordance with relevant guidelines and regulations.

Participant T5 is a right-handed man (69 years of age at the time of study) with tetraplegia due to cervical spinal cord injury (classified as C4 AIS-C) which occurred approximately 9 years prior to enrollment in the clinical trial. In August 2016, he had two 96-channel intracortical microelectrode arrays (Blackrock Microsystems, Salt Lake City, UT; 1.5 mm electrode length) placed in the hand knob area of the left (dominant) precentral gyrus. The hand knob area was identified anatomically by preoperative magnetic resonance imaging (MRI). Supplementary Fig. 1a shows array placement locations registered to MRI-derived brain anatomy. T5 has full movement of the face and head and the ability to shrug his shoulders. Below the level of spinal cord injury, T5 has very limited voluntary motion of the arms and legs. Any intentional movement of the body below the level of injury is referred to as being “attempted” movement where small amplitude movements were intermittently observed.

Neural data processing

Neural signals were recorded from two 96-channel Utah microelectrode arrays using the NeuroPort™ system from Blackrock Microsystems (see2 for basic setup). First, neural signals were analog filtered from 0.3 to 7.5 kHz and subsequently digitized at 30 kHz with 250 nV resolution. Next, common mode noise reduction was accomplished via a common average reference filter which subtracted the average signal across the array from every electrode. Finally, a digital high-pass filter at 250 Hz was applied to each electrode prior to spike detection.

Spike threshold crossing detection was implemented using a − 3.5 × RMS threshold applied to each electrode, where RMS is the electrode-specific root mean square of the time series voltage recorded on that electrode. Consistent with other recent work, all analyses and decoding were performed on multiunit spiking activity without spike sorting for single neuron activity69–71.

Session structure and two-cursor tasks

Neural data was recorded from participant T5 in 3–5 h “sessions”, with breaks, on scheduled days (see Supplementary Table 2 for a comprehensive list of data collection sessions). All research sessions were performed at the participant's place of residence. T5 either sat upright in a wheelchair that supported his back and legs or laid down on a bed with his upper body inclined and head resting on a pillow. A computer monitor was placed in front of T5 which displayed two large circles indicating targets (one colored purple and one colored white) and two smaller circles indicating cursors with corresponding colors. The left cursor was labeled ‘L’ and colored purple and the right cursor was labeled ‘R’ and colored white.

During the open-loop task, the cursors moved autonomously to their designated targets in a delayed-movement paradigm. On each trial, one of three movement types were cued randomly: (1) bimanual (simultaneous movement of both cursors), (2) unimanual right (only right cursor movement), and (3) unimanual left (only left cursor movement). Each trial began with a random delay period ranging from 1–2 s where lines appeared and connected each cursor to its intended target. During the delay period, T5 would prepare the movement. After the delay period, indicated by a beep sound denoting the ‘go’ cue, the lines disappeared and the cursors moved to their targets over a period ranging 1–2 s in length, where cursor movement was governed by a minimum-jerk trajectory27,50 (black velocity profile in Fig. 4b). Both cursors arrived at their intended target at the same time. T5’s attempted movement strategy was to imagine that his hands were gripping joysticks (as illustrated in Fig. 1a) and to push on each joystick to control the corresponding cursor’s motion. The end of each trial was indicated by another beep sound where T5 was instructed to stop all attempted movements and to begin preparing for the next trial’s movement.

The closed-loop tasks generally mimicked the open-loop task except that the cursors were controlled via neural decoders (either an RNN or linear decoder) instead of having prescribed motion to their targets. During each closed-loop trial, T5 had a maximum of 10 s to acquire both targets. Target acquisition was defined as both cursors simultaneously dwelling within their intended target for an uninterrupted duration of 500 ms. If any one cursor moved outside of its target before the dwell period elapsed then the dwell timer was restarted. Both targets were illuminated blue during a proper simultaneous dwell (see Supplementary Movie 1). If the targets were not successfully acquired within the 10 s timeout period then the trial was considered failed.

An “assisted” version of the closed-loop task was often used for decoder recalibration prior to true closed-loop evaluation blocks. Assistance was provided in the form of “error assistance” and/or “push assistance”. Error assistance1,72 was accomplished by attenuating velocity commands in the dimensions orthogonal to each cursor’s straight-line path to the respective target. The attenuation factor was determined by a scalar value ranging from 0–1 where 0 provided no error assistance and 1 would remove all orthogonal velocity commands resulting in cursor movement along the line to the target. Push assistance was given for each cursor via adding a unit velocity vector in the direction of the corresponding target (referred to as “push vector”) which was scaled by the decoded cursor speed (magnitude of the velocity vector). The degree of push assistance was also governed by a scalar value ranging from 0–1 where 0 provided no push assistance and 1 would scale the push vector to the size of the decoded cursor velocity vector. The point of push assistance was to reinforce movement to the intended target by only aiding when the participant was trying to move. The amount of push and error assistance on each block was governed by the experimenter to ensure that the participant was able to acquire most, if not all, targets for recalibration purposes.

Since performance during recalibration was generally suboptimal, unimanual trials would often result in movement of both cursors which then would require bimanual control to correct cursor deviation. This was not ideal when considering the balance of training data for trial and movement type. To address this, we instituted a “lock mode” where the non-active cursor’s motion was fixed so that the participant was able to focus on the cursor which was cued to move during unimanual trials.

Offline population-level analyses

Cross-validated estimates of neural tuning strength and tuning correlation between effectors

We used cross-validated estimates of Euclidean distance for the quantification of neural tuning strength and other statistics requiring Euclidean distance, such as Pearson’s correlation between groups of linear model tuning coefficients. These methods are discussed in greater detail in our prior report27 (Willett*, Deo*, et al. 2020; see code repository https://github.com/fwillett/cvVectorStats).

Tuning strength was quantified using a cross-validated implementation of ordinary least squares regression (cvOLS.m) to estimate the magnitude of columns of linear model coefficients. Tuning coefficients were found using the following model:

| 2 |

Here, is the N × 1 firing rate vector for a single time step where N is the number of electrode channels. is an N × 5 matrix of mean firing rates (first column; superscript denotes electrode number) and directional tuning coefficients (second to fifth columns; superscript is electrode number and subscript represents the hand as or and movement as the - or -direction). Variables and of the predictor vector represent the and components of the right () and left () hand’s intended movement defined as the corresponding difference between target position ( terms with superscript ‘target’) and cursor position ( terms with superscript ‘cursor’). was fit via five-fold cross-validated ordinary least-squares regression using 20-ms binned data within a window from 300 to 700 ms after the go cue across all trials. This was accomplished by “stacking” the response (firing rate) and predictor vectors horizontally across all candidate timesteps. Block-wise means were calculated and subtracted from all neural data prior to analyses to adjust for nonstationarities and neural drift over time73,74.

The data used in Fig. 2 were from 5 session days (trial days 1776, 1778, 1792, 1881 and 1883) where we were able to collect large amounts of unimanual and bimanual open-loop data (since cross-validation requires each fold to have enough data to properly estimate regression coefficients). For each session day, we grouped consecutive blocks together in pairs to reach around 40 repetitions, at least, per trial type (unimanual right, unimanual left, and bimanual). Within each block set, we used the cvOLS function to compute the coefficient vectors and their magnitudes for each movement type. That is, we fit a separate model to all unimanual right trials, all unimanual left trials, and all bimanual trials. Notice that fitting the unimanual models reduces the encoding matrix to three columns (e.g., the last two columns related to the left hand are removed when fitting for unimanual right movement). We defined tuning strength for each hand (right or left) under the unimanual or bimanual contexts by averaging over the corresponding model’s x- and y-direction coefficient vector magnitudes. Ratios of these tuning strengths between models across each pair of block sets are reported in Fig. 2b (gray dots; sample size of 25). Tuning correlation in Fig. 2c was quantified by computing the (cross-validated) Pearson correlation between corresponding x or y-direction coefficient vectors between models (gray dots indicate correlations between models, as listed on the x-axis, across all block-sets). Correlations were computed using the cvOLS function. The x- and y-direction correlations are shown separately since the hands are more correlated in the y-direction and anti-correlated in the x-direction, as we have previously shown27, which would result in nullifying effects if correlations were averaged across direction dimensions.

Principal component analysis (PCA) of laterality coding

We used PCA to visualize the neural activity in a lower-dimensional space as illustrated in Fig. 2d. Using data from one of the sessions (trial day 1881) described above (20-ms binned, block-wise mean removed, Z-scored), we computed each trial’s average firing rate vector within the 300–700 ms window after the go cue. We then stacked each trial’s N × 1 firing rate vector horizontally resulting in an N x T matrix where T is the number of trials. PCA was performed on this monolithic matrix and each firing rate vector was subsequently projected onto the top two principal components (PCs) as illustrated in the left panel of Fig. 2d. The single-trial projections were colored by the trial type (unimanual right trial, unimanual left trial, or bimanual trial) to show how the data clustered. Next, we projected each trial’s binned firing rates across time (− 500 ms to 1.5 s relative to the go cue) onto the top PC to visualize a population-level peristimulus time histogram. Each thin line corresponds to a single trial’s projection, colored by trial type, and the bold lines are the mean projections shaded with 95% confidence intervals computed via bootstrap resampling (n = 10 K).

In order to quantify the size of laterality-related tuning, we used a variation of demixed principal component analysis48 (dPCA; Kobak et al., 2016; https://github.com/machenslab/dPCA). A central concept of dPCA is marginalizing the neural data across different sets of experimentally manipulated variables, or factors. Each marginalization is constructed by averaging across all variables that are not in the marginalized set, resulting in a data tensor that captures the effect of the factors on the neural activity. dPCA then finds neural dimensions that explain variance in each marginalization alone, resulting in a useful interpretation of neural activity according to the factors. Leveraging the existing dPCA library, we implemented a cross-validated variance computation to reduce bias by splitting the data into two sets, marginalizing each set, element-wise multiplying the marginalized matrices together, and summing across all entries. The data was marginalized over the following four factors: laterality, movement direction, laterality × movement direction interaction, and time. For each dataset used in Fig. 2b, c (trial days 1776, 1778, 1792, 1881 and 1883), we computed the cross-validated variance in the aforementioned factors. The bar plots in Fig. 2d (rightmost panel) summarize the average cross-validated marginalized variance for each factor (labeled along the x-axis) across all 5 sessions (gray dots).

Single electrode channel tuning

To assess neural tuning to unimanual or bimanual movement on a given electrode as seen in Supplementary Fig. 1, we used a 1-way ANOVA on firing rates observed during directional hand movements within each movement context. This analysis was performed on the same dataset used in Fig. 1 (trial day 1750). We first computed the average firing rate vector for each trial within the 300–700 ms window relative to the go cue. Next, we separated each of the computed average firing rate vectors into the following sets: unimanual right trials, unimanual left trials, and bimanual trials. Within each set, we grouped the vectors into their respective movement direction (4 directions defined by each quadrant in the unit circle) for each hand. Grouping the bimanual trials for right hand movement direction ignored left hand movement direction and vice versa. This resulted in 4 total sets of firing rate vectors grouped by their respective hand’s movement direction (unimanual right directions, unimanual left directions, bimanual right directions, and bimanual left directions) and a separate 1-way ANOVA was performed within each set. If the p-value was less than 0.00001, the electrode was considered to be strongly tuned to that movement context (unimanual or bimanual). To assess the tuning strength of each strongly tuned electrode, we computed FVAF (fraction of variance accounted for) scores27,63. The FVAF score was computed using the following equations:

Here, is the total variance (sum of squares), is the movement direction-related variance, is the total number of trials, is the average firing rate vector for trial , is the average firing rate vector across all trials within the set, and is the average firing rate vector for the particular movement direction cued on trial . FVAF scores range from 0 (no direction-related variance) to 1 (all variance is direction-related).

Offline single-bin decoding of real and simulated unimanual data

Real and simulated neural data for unimanual movement

The real unimanual dataset analyzed for Fig. 3a–c was from trial day 1883. The data were binned (20-ms bins), block-wise mean removed, and each trial truncated to 400 ms movement windows (300–700 ms after the go cue). In keeping with standard BCI decoding practice and to focus on directional movement decoding, we defined the velocity target for each time step as the unit vector pointing from the cursor to the target, resulting in discrete velocity steps as seen in Fig. 3a (thick gray lines).

When generating synthetic data for simulations, we attempted to match the ‘functional’ signal-to-noise ratio (fSNR) of the real dataset for a more practical comparison. The fSNR decomposes decoder output into a signal component (a vector pointing at the target) and a noise component (random trial-to-trial variability). We first generated the decoder output using a cross-validated linear filter to predict a point-at-target unit vector (normalized target position minus cursor position) given neural activity as input.

We then fit the following linear model to describe the decoder output:

Here, is the 2 × 1 point-at-target vector, is the cursor’s predicted velocity vector at timestep t, is the 2 × 2 decoder matrix, and is the 2 × 1 vector of gaussian noise at timestep t.

We computed the functional SNR () as:

Here, and are the diagonal terms (subscripts refer to row i and column j) of the 2 × 2 matrix, and is the standard deviation of (averaged across both dimensions). We estimated D by least squares regression. We estimated by taking the sample standard deviation of the model error. Intuitively, the numerator describes the size of the point-at-target component of the decoder output, and the denominator describes the size of the trial-to-trial variability.

To simulate neural activity, we used the laterality encoding model in Eq. (4) where we varied the directional tuning correlation between the hands and the size of the laterality dimension (as labeled along the x- and y-axes of Fig. 3d, e). We began by generating a synthetic target dataset containing unimanual velocities for the left and right hands. The synthetic targets consisted of approximately 2000 unimanual right trials and 2000 unimanual left trials. Trial lengths were 400 ms in duration to match the real dataset and binned in 20-ms bins. The synthetic target data were balanced across 8 movement direction wedges evenly distributed throughout the unit circle (see x-axes in Fig. 1c for direction wedges). Specifically, a uniformly random unit velocity vector was generated within a direction wedge for each trial ensuring even distribution across all wedges for both hands. Essentially, the synthetic targets resembled the sample real-data targets seen in Fig. 3a (thick gray lines). Next, we generated random tuning coefficients ( terms in Eq. 4) for 192 synthetic neurons by sampling from a standard normal distribution. The population-level tuning vectors were then scaled to match the magnitudes of corresponding tuning vectors from the real dataset (using cvOLS). We then enforced a correlation (which was swept, see y-axes of Fig. 3d, e) between the x-direction tuning vectors for both hands as well as the y-direction vectors. Next, we passed the synthetic velocity targets through the tuning model to compute the population-level firing rates for each time bin. The fSNR for each hand was matched to the real data via adding gaussian noise to each individual channel (sweeping the standard deviation parameter) until the fSNRs of the synthetic data was close to that of real data. The simple noise model is described as follows:

| 3 |

Here, is a T × 1 time-series vector of firing rates for channel where T represents the number of 20-ms time bins, Σ is the T × T diagonal covariance matrix, and σ is the standard deviation. This was a simple noise model with a diagonal covariance matrix used for all channels (i.e., the same σ was used for all channels). We understand that more sophisticated noise models could have been used, but our simplified approach was well enough suited for single-bin decoding where one can assume independence between time bins which is further explained in Supplementary Fig. 4. After matching the fSNRs, we scaled the laterality coefficient vector where a value of 0 removed the laterality dimension completely, and a value of 1 matched the laterality coefficient magnitude of the real data. Finally, we enforced that no firing rates were below zero by clipping negative firing rates to 0.

Linear ridge regression and feed forward neural network for single-bin decoding

The real data was split into 5-folds for cross-validation with balanced unimanual right and unimanual left time steps of data within each fold. Cross-validation was necessary for the real dataset since the number of trials was relatively small (482 total trials) in comparison to the simulated dataset (4000 total trials).The simulated datasets were large enough and balanced in terms of trial types that in addition to cross-validation during decoder training, performance was based on completely held out test sets (20% of total simulated data) which were also balanced for trial type.

Simple linear ridge regression was performed on the real and simulated datasets using a neural decoding python package (https://github.com/KordingLab/Neural_Decoding) and the Scikit-Learn library (RidgeCV function). The ridge parameter was swept until decoding performance (measured as the Pearson correlation coefficient) was maximized across all output dimensions. Each feed forward neural network (FFN) was designed as a single densely connected layer of 512 units (TensorFlow v.1). The FFNs were initialized with random weights and model parameters were tuned based on an offline hyperparameter sweep on pilot data. All decoders were trained to convert firing rate input features (N × 1 vector) at a single time-bin (20 ms bin) to x- and y-direction velocities for both cursors (4 × 1 velocity vector at each time step).

Removing laterality information from real unimanual data

Laterality information was removed from real unimanual data by first fitting the linear tuning model below using cross-validation:

| 4 |

Here, the model resembles that in Eq. (2) except with the addition of a laterality predictor variable () which is + 1 for unimanual right movement or -1 for unimanual left. There is an additional column of coefficients ( terms) in the encoding matrix . After this model was fit, the neural activity was projected onto the laterality dimension (last column vector of ) and the projected neural activity was subsequently subtracted from the original neural activity. To ensure that laterality information was sufficiently removed, we built another linear filter on the laterality-removed data and confirmed that the laterality coefficients were all zero.

Training data augmentation via dilation and randomization of training snippets

The raw data as formatted for RNN training took the form of an input ‘feature’ data tensor of shape S × T × N and an output ‘target’ tensor of shape S × T × R. Here, S is the number of training snippets, T is the number of time points in a snippet, N is the number of electrode channels, and R is the number of response or output variables. The input tensor consisted of neural data which was binned at 20 ms, block-wise mean removed, and Z-scored. The output tensor contained the cursors’ velocities and movement context signals which were also binned at 20 ms (see Fig. 4 and Supplementary Fig. 3). Typically, we held our training snippet length at 10 s (T = 500 at 20-ms bins). We generated a large number of synthetic training snippets by splicing together smaller pieces of the data stream which were also dilated in time and random in order.

Our objective was to generate an augmented dataset which was balanced across movement direction and movement type. We defined 4 gross movement directions corresponding to each quadrant of the unit circle and movement type was defined as unimanual, bimanual, or no-movement. The types of no-movement were further subdivided into the following groups: (1) unimanual right delay period, (2) unimanual left delay period, (3) bimanual delay period, and (3) rest. This distinction in types of no-movement was so that we may equally account and balance for preparatory activity as well as rest activity. The training data was preprocessed to label each data sample’s movement quadrant per hand and movement type. We generated roughly 2000 synthetic training snippets (each snippet of 10 s length) for training, which was chosen based on the time it took to perform the augmentation during an average experiment session (10–15 min). A synthetic 10 s training snippet was generated by appending dilated/compressed clips of raw data. Each raw data clip was selected to begin at a random time point, varied in duration (ranging between 0.2 and 0.8 times the 10 s total snippet length), and had an associated dilation/compression factor drawn from a uniform distribution over the interval [0.5, 2], where indicates no change, indicates compression, and indicates dilation. In order for a candidate clip to be considered valid, it had to abide by the current balancing record which was kept across all of the aforementioned movement conditions. Generally, the input data was balanced in order to achieve a sufficient amount of data for each of the movement types. If the candidate clip did not meet the balancing requirements, then another random clip was drawn. Linear interpolation was used to either compress or stretch both the input and output clips of raw data based on the dilation/compression factor (e.g., a of 0.5 would compress a clip array of length 60 into an array of length 30 by sampling every other element of the original clip). The data augmentation method generated both a training and held-out validation set that did not contain overlapping data. The input data was split into a training and validation set in advance, then from these isolated pools augmented sets of training data could be created.

Online recurrent neural network decoding of two-cursors

We used a single-layer gated recurrent unit (GRU, 512 units) recurrent neural network architecture to convert sequences of threshold crossing neural firing rate vectors (which were binned at 20 ms and Z-scored) into sequences of continuous cursor velocities and discrete movement context signals. The discrete context signals coded for which movement (unimanual right, unimanual left, bimanual, or no movement) occurred at that moment in time and enabled the corresponding cursor velocity commands to be gated. We used a day-specific affine transform to account for inter-day changes in neural tuning when training data were combined across multiple days. The RNN model and training was implemented in TensorFlow v1. The online RNN decoder was deployed on our real-time system by extracting the network weights and implementing the inference step in custom software. The RNN inference step was 20 ms. A diagram of the RNN is given in Supplementary Fig. 3.

Before the first day of real-time evaluation, we collected pilot offline data across 2 session days (trial days 1752 and 1771) comprising 1 h of 780 total trials (balanced for unimanual and bimanual trials) which were combined to train the RNN. All training data were augmented to generate around 2000 training snippets of ten second length amounting to roughly 6 h of data (balancing equally for each movement type). We tuned the initial RNN model’s hyperparameters (input noise, input mean drift, learning rate, batch size, number of training batches, and L2-norm weight regularization) via a random search deployed across 100 RNNs, each with the same validation set which was 10% of the total augmented training data (~ 200 validation examples). The cost function for a single snippet of data (as used in prior work13) was expressed as the sum of an L2 weight regularization, a cross-entropy loss over the discrete variables, and a squared prediction error loss over the continuous variables. The number of GRU layers and units per layer were not optimized as our online system was compute-constrained to be able to perform low-latency real-time inference using Simulink Real-Time software.

On each subsequent day of real-time testing, additional open-loop training data were collected (approximately 25 min of 280 trials; roughly 6 h of 30 K trials after augmentation) to recalibrate the RNN which was subsequently used to collect 4 assisted closed-loop blocks (5 min each) for a final recalibration. For each RNN recalibration, all data that were used for training up until that point in time were included, where 40% of training examples were from the most recently collected dataset and the remaining 60% of training examples were evenly distributed over all other previously collected datasets. During recalibration periods in which the RNN was training, firing rate means and standard deviations were updated via an elongated open-loop block (8-min in length) which were used to Z-score the input firing rates prior to decoding. This RNN training protocol was used for the unimanual and simultaneous bimanual data presented in Fig. 5a. In total, performance was evaluated across 6 days (trial days 1752, 1771, 1776, 1778, 1790, 1792) with each day containing between 4 and 8 blocks (5 min each) with balanced trials across each movement context.

The RNN training varied slightly for the ‘sequential bimanual’ data presented in Fig. 5b. The base RNN (prior to the first day of real-time evaluation) was calibrated in the same fashion as mentioned above, however each subsequent dataset used for recalibration consisted of just unimanual trials and no bimanual trials. Data from two evaluation sessions (trial days 1881 and 1883) were used for Fig. 5b.

The data augmentation panels of Fig. 4d, e were generated based on data from two session days (trial days 1867 and 1869). The two separate RNNs used were trained only on the data gathered during those sessions and did not include any historical data to focus on the effects of our data augmentation technique. One RNN was trained with data that was augmented and the other RNN was trained on the raw non-augmented data. The open-loop results and sample speed traces shown in Fig. 4d, e are from trial day 1869.

Online two-cursor control performance assessment

Online performance was characterized by time-to-acquisition and angular error. Time-to-acquisition for a trial was defined as the amount of time after the go cue in which the targets were successfully acquired. Angular error was defined as the average difference between movement direction within the 300–500 ms window after the go cue to capture the ballistic portion of each movement prior to any error correction. Each trial timed out at 10 s, after which the trial was considered failed.

Comparing linear regression and RNN decoding

We tested a range of output gains for the comparison of online linear decoders and RNNs used for Fig. 5c (includes data from trial days 1853 and 1855) to ensure that performance differences were not due to variation in decoded output magnitudes. The range of gain values was determined on each session day by a closed-loop block (preceding data collection) where the experimenter hand-tuned values until the participant’s control degraded. Hand-tuning of gain values was done for the linear decoder and RNN, separately. Each session day had 4–5 equally spaced gain values for each decoder. For the data presented in Fig. 5c, we averaged over all swept gains to summarize performance for each decoder since it turned out that the result was not affected by what gain was used (e.g., linear decoder results include data from each swept gain).

Supplementary Information

Acknowledgements

We thank participant T5 and his caregivers for their generously volunteered time and dedicated contributions to this research as part of the BrainGate2 pilot clinical trial, Sandrin Kosasih, Beverly Davis, and Kathy Tsou for administrative support, Erika Woodrum for the drawings in Figs. 1a, 4a, and Elias Stein for help in coding the data augmentation. Support provided by the NIH National Institute of Neurological Disorders and Stroke (U01-NS123101); NIH National Institute on Deafness and Other Communication Disorders (R01-DC014034); Wu Tsai Neurosciences Institute; Howard Hughes Medical Institute; Larry and Pamela Garlick; Office of Research and Development, Rehabilitation R&D Service, US Department of Veterans Affairs (A2295R, N2864C). * The contents do not represent the views of the Department of Veterans Affairs or the US Government.

CAUTION: Investigational Device. Limited by Federal Law to Investigational Use.

Author contributions

D.R.D. conceived the study, wrote the manuscript, and led the development, analysis, and interpretation of all experiments. D.R.D and D.T.A. collected the data. L.R.H. is the sponsor-investigator of the multi-site clinical trial. J.M.H. planned and performed T5’s array placement surgery and was responsible for his ongoing clinical care. K.V.S., J.M.H., and F.R.W. supervised and guided the study. All authors reviewed and edited the manuscript.

Data availability

All neural data analyzed in this study are publicly available on Dryad (10.5061/dryad.sn02v6xbb).

Competing interests

The MGH Translational Research Center has clinical research support agreements with Neuralink, Synchron, Axoft, Precision Neuro, and Reach Neuro, for which L.R.H. provides consultative input. MGH is a subcontractor on an NIH SBIR with Paradromics. J.M.H. is a consultant for Neuralink and Paradromics, serves on the Medical Advisory Board of Enspire DBS and is a shareholder in Maplight Therapeutics. He is also an inventor on intellectual property licensed by Stanford University to Blackrock Neurotech and Neuralink. K.V.S. consulted for Neuralink and CTRL-Labs (part of Meta Reality Labs) and was on the scientific advisory boards of MIND-X, Inscopix and Heal. He was also an inventor on intellectual property licensed by Stanford University to Blackrock Neurotech and Neuralink. All other authors have no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Jaimie M. Henderson and Krishna V. Shenoy.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-024-51617-3.

References

- 1.Hochberg LR, et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485:372–375. doi: 10.1038/nature11076. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Hochberg LR, et al. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006;442:164–171. doi: 10.1038/nature04970. [DOI] [PubMed] [Google Scholar]

- 3.Collinger JL, et al. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet. 2013;381:557–564. doi: 10.1016/S0140-6736(12)61816-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Wodlinger B, et al. Ten-dimensional anthropomorphic arm control in a human brain-machine interface: Difficulties, solutions, and limitations. J. Neural Eng. 2015;12:016011. doi: 10.1088/1741-2560/12/1/016011. [DOI] [PubMed] [Google Scholar]

- 5.Ajiboye AB, et al. Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: A proof-of-concept demonstration. Lancet. 2017;389:1821–1830. doi: 10.1016/S0140-6736(17)30601-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Moritz CT, Perlmutter SI, Fetz EE. Direct control of paralysed muscles by cortical neurons. Nature. 2008;456:639–642. doi: 10.1038/nature07418. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Ethier C, Oby ER, Bauman MJ, Miller LE. Restoration of grasp following paralysis through brain-controlled stimulation of muscles. Nature. 2012;485:368–371. doi: 10.1038/nature10987. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.O’Doherty JE, et al. Active tactile exploration using a brain–machine–brain interface. Nature. 2011;479:228–231. doi: 10.1038/nature10489. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Bouton CE, et al. Restoring cortical control of functional movement in a human with quadriplegia. Nature. 2016;533:247–250. doi: 10.1038/nature17435. [DOI] [PubMed] [Google Scholar]

- 10.Gilja V, et al. Clinical translation of a high-performance neural prosthesis. Nat. Med. 2015;21:1142–1145. doi: 10.1038/nm.3953. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Pandarinath C, et al. High performance communication by people with paralysis using an intracortical brain-computer interface. Elife. 2017;6:123. doi: 10.7554/eLife.18554. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Nuyujukian P, et al. Cortical control of a tablet computer by people with paralysis. PLoS One. 2018;13:e0204566. doi: 10.1371/journal.pone.0204566. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Willett FR, Avansino DT, Hochberg LR, Henderson JM, Shenoy KV. High-performance brain-to-text communication via handwriting. Nature. 2021;593:249–254. doi: 10.1038/s41586-021-03506-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Stavisky SD, et al. Neural ensemble dynamics in dorsal motor cortex during speech in people with paralysis. Elife. 2019;8:755. doi: 10.7554/eLife.46015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Wilson GH, et al. Decoding spoken English from intracortical electrode arrays in dorsal precentral gyrus. J. Neural Eng. 2020;17:066007. doi: 10.1088/1741-2552/abbfef. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Anumanchipalli GK, Chartier J, Chang EF. Speech synthesis from neural decoding of spoken sentences. Nature. 2019;568:493–498. doi: 10.1038/s41586-019-1119-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Moses DA, et al. Neuroprosthesis for decoding speech in a paralyzed person with anarthria. N. Engl. J. Med. 2021;385:217–227. doi: 10.1056/NEJMoa2027540. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Angrick M, et al. Speech synthesis from ECoG using densely connected 3D convolutional neural networks. J. Neural Eng. 2019;16:036019. doi: 10.1088/1741-2552/ab0c59. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Willett FR, et al. A high-performance speech neuroprosthesis. Nature. 2023;620:1031–1036. doi: 10.1038/s41586-023-06377-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Metzger SL, et al. A high-performance neuroprosthesis for speech decoding and avatar control. Nature. 2023;620:1037–1046. doi: 10.1038/s41586-023-06443-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Ifft PJ, Shokur S, Li Z, Lebedev MA, Nicolelis MAL. A brain-machine interface enables bimanual arm movements in monkeys. Sci. Transl. Med. 2013;5:210–254. doi: 10.1126/scitranslmed.3006159. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Downey JE, et al. The motor cortex has independent representations for ipsilateral and contralateral arm movements but correlated representations for grasping. Cerebral Cortex. 2020;30:5400–5409. doi: 10.1093/cercor/bhaa120. [DOI] [PubMed] [Google Scholar]

- 23.Benabid AL, et al. An exoskeleton controlled by an epidural wireless brain–machine interface in a tetraplegic patient: A proof-of-concept demonstration. Lancet Neurol. 2019;18:1112–1122. doi: 10.1016/S1474-4422(19)30321-7. [DOI] [PubMed] [Google Scholar]

- 24.Rokni U, Steinberg O, Vaadia E, Sompolinsky H. Cortical representation of bimanual movements. J. Neurosci. 2003;23:11577–11586. doi: 10.1523/JNEUROSCI.23-37-11577.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Steinberg O, et al. Neuronal populations in primary motor cortex encode bimanual arm movements. Eur. J. Neurosci. 2002;15:1371–1380. doi: 10.1046/j.1460-9568.2002.01968.x. [DOI] [PubMed] [Google Scholar]

- 26.Diedrichsen J, Wiestler T, Krakauer JW. Two distinct ipsilateral cortical representations for individuated finger movements. Cereb. Cortex. 2013;23:1362–1377. doi: 10.1093/cercor/bhs120. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Willett FR, et al. Hand knob area of premotor cortex represents the whole body in a compositional way. Cell. 2020;181:396–409.e26. doi: 10.1016/j.cell.2020.02.043. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Lai D, et al. Neuronal representation of bimanual arm motor imagery in the motor cortex of a tetraplegia human, a pilot study. Front. Neurosci. 2023;17:1133928. doi: 10.3389/fnins.2023.1133928. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Wisneski KJ, et al. Unique cortical physiology associated with ipsilateral hand movements and neuroprosthetic implications. Stroke. 2008;39:3351–3359. doi: 10.1161/STROKEAHA.108.518175. [DOI] [PubMed] [Google Scholar]