Abstract

Background

MRI‐guidance techniques that dynamically adapt radiation beams to follow tumor motion in real time will lead to more accurate cancer treatments and reduced collateral healthy tissue damage. The gold standard for reconstruction of undersampled MR data is compressed sensing (CS), which is computationally slow and limits the rate at which images can be made available for real‐time adaptation.

Purpose

Once trained, neural networks can be used to accurately reconstruct raw MRI data with minimal latency. Here, we test the suitability of deep‐learning‐based image reconstruction for real‐time tracking applications on MRI‐Linacs.

Methods

We use automated transform by manifold approximation (AUTOMAP), a generalized framework that maps raw MR signal to the target image domain, to rapidly reconstruct images from undersampled radial k‐space data. The AUTOMAP neural network was trained to reconstruct images from a golden‐angle radial acquisition, a benchmark for motion‐sensitive imaging, on lung cancer patient data and generic images from ImageNet. Model training was subsequently augmented with motion‐encoded k‐space data derived from videos in the YouTube‐8M dataset to encourage motion robust reconstruction.

Results

AUTOMAP models fine‐tuned on retrospectively acquired lung cancer patient data reconstructed radial k‐space with equivalent accuracy to CS but with much shorter processing times. Validation of motion‐trained models with a virtual dynamic lung tumor phantom showed that the generalized motion properties learned from YouTube lead to improved target tracking accuracy.

Conclusion

AUTOMAP can achieve real‐time, accurate reconstruction of radial data. These findings imply that neural‐network‐based reconstruction is potentially superior to alternative approaches for real‐time image guidance applications.

Keywords: deep learning, MRI, radiotherapy

1. INTRODUCTION

Image‐guided radiotherapy is a pillar of modern cancer treatment as it enables the noninvasive treatment of tumors with millimeter‐scale accuracy while causing minimal damage to surrounding healthy tissue. 1 At the cutting‐edge of radiation oncology is a treatment machine known as an MRI‐Linac, which combines the unrivaled image quality of magnetic resonance imaging (MRI) with linear accelerator (Linac) x‐ray radiation therapy. 2 Commercial MRI‐Linacs are already achieving new standards of precision radiotherapy through image‐guided adaptation to daily anatomical changes, 3 with cutting‐edge developments including the implementation of gating techniques that dynamically shutter the radiation beam to account for patient motion. 4 The next generation of MRI‐Linac technology promises to track tumor motion with a moving radiation beam on the basis of real‐time MRI. 5 However, the accuracy of these targeting approaches, which are likely to improve patient outcomes and reduce side effects, 6 is limited by the low spatio‐temporal resolution of MRI. 7

Fast MRI acquisitions based on acquiring raw k‐space data with sparsely sampled golden‐angle radial trajectories have shown much promise for tumor tracking during MRI‐Linac treatments. These radial trajectories are unique in enabling reconstruction of high‐spatial‐resolution, motion‐robust images 8 in parallel with high‐temporal‐resolution images from the same raw data. 9 Implementation of such imaging strategies would be advantageous in the radiotherapy context where low latency imaging is often reluctantly prioritized over resolution. 10 However, gold‐standard techniques for analytic reconstruction of such undersampled MRI data are computationally slow, presenting a barrier to the real‐time imaging required for dynamic treatment adaptation. 11 , 12

Deep neural networks have fueled recent progress in computer vision, leading to new technologies across diverse fields such as autonomous vehicles, 13 molecular analysis, 14 and medical imaging. 15 , 16 Common to many of these technologies is the requirement for pipelines that convert information acquired in an abstract sensor domain to an interpretable format on which real‐world actions can be based. Recently, neural networks have enabled fast, accurate reconstruction of undersampled MRI data. 17 , 18 Frameworks that directly reconstruct target images from the raw MRI signal are of particular interest for real‐time applications. 19 , 20 , 21 However, despite these prospects, the successful deployment of neural networks for real‐time imaging applications on systems including MRI‐Linacs (see Fig 1a) still hinges on the availability of training data and utilization of a reconstruction framework suitable for more challenging, nonuniformly sampled image reconstruction. 22 , 23 , 24 , 25 In particular, there is a dearth of training data for acquisitions corrupted by nonrigid motion, as a static ground truth does not exist. 26 , 27 , 28

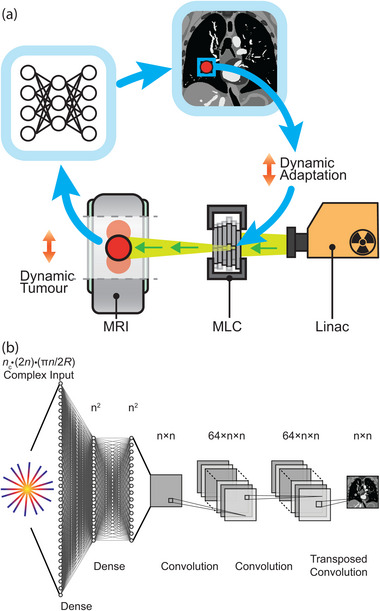

FIGURE 1.

Deep neural networks as a fast, accurate reconstruction technique for tumor tracking applications. (a) Workflow showing the potential role of AUTOMAP in a radiotherapy treatment with dynamic beam adaptation. Dynamic MRI scans are acquired on an MRI‐linac and reconstructed in real time with AUTOMAP. A template‐matching algorithm extracts the target position from images and dynamically adapts the X‐ray beam via a multi‐leaf collimator (MLC). (b) The deep neural network architecture implemented to reconstruct an image from radially sampled MRI data with AUTOMAP. Radial k‐space data are flattened into a 1D vector to create the input to a series of dense and convolutional layers that reconstruct an image.

One approach to performing real‐time radial reconstruction is the use of automated transform by manifold approximation (AUTOMAP), a generalized neural‐network reconstruction framework that learns the transformation from the raw MR signal to the target image domain from a training corpus built using the forward‐encoding model. 19 Once trained, this machine‐learning‐based framework reconstructs images in a single forward pass.

Here, we train AUTOMAP to reconstruct undersampled golden‐angle radial trajectory MR data. Using retrospectively acquired data from lung cancer patients, we compare the performance of AUTOMAP to conventional iterative methods for compressed sensing (CS) reconstruction, showing AUTOMAP gives similar reconstruction accuracy but with much faster processing times. Further, we leverage the YouTube‐8M database to synthesize radial k‐space data acquired in the presence of generic motion but with a known ground truth. We then show that our motion‐trained AUTOMAP model leads to more accurate tumor targeting in a digital lung cancer phantom. 29 These results will guide the development of neural network reconstruction techniques for low‐latency, high accuracy reconstruction in real‐time adaptive radiotherapy.

2. METHODS

2.1. Model architecture and training hyperparameters

We implemented AUTOMAP using the architecture shown in Figure 1b with Keras (2.4.3) operating on a TensorFlow backend (2.5.0). 19 The AUTOMAP architecture was created with five trainable layers. The first two layers are dense with hyperbolic tangent activations and map flattened input data through hidden layers. Data are then reshaped to an $n \times n$ matrix and pass through two convolutional layers with 64 filters, kernel size 5 × 5, and rectified linear activation functions before a transposed convolution with one filter and a 7 × 7 kernel produces the final image. Models were trained to reconstruct images at a resolution of $n = 128$, with a different model for each acceleration factor.

Training utilized the Adaptive Moment Estimation (Adam) optimizer with a learning rate of $10^{-5}$, a batch size of 20, and a mean square error (MSE) loss function. Model weights corresponding to the minimum validation cost achieved in 300 epochs of training, with an early‐stopping patience of 15 epochs, were saved for reconstruction. For reconstruction of lung cancer patient images, models trained on ImageNet were fine‐tuned for up to 100 epochs with a learning rate of $10^{-6}$. All computation utilized an NVIDIA RTX 8000 or NVIDIA A6000 graphics processing unit (GPU) on an Ubuntu 18.04 workstation with 128 GB RAM and a 10‐core 3.5 GHz Intel central processing unit (CPU).

2.2. Image data preprocessing

We begin by training AUTOMAP to perform image reconstruction under the assumption that anatomy is static. For initial model training, datasets of 20 000 training images and 1000 validation images depicting generic objects were sourced from ImageNet and augmented four times via a series of flips and rotations. 30 Such large datasets are beneficial to the data‐driven AUTOMAP training process and are easily obtained from ImageNet. We note that as dataset sizes increase, model performance benefits from an increase in the train/test split ratio. 31 ImageNet data were converted to normalized, grayscale images at 256 × 256 resolution and augmented further via the addition of synthetic phase maps. These synthetic phase maps consist of smoothly varying, two‐dimensional sinusoidal waves with randomly generated frequency and phase offsets. Examples of these phase maps are provided in Figure S1. We highlight that data augmentation with these phase maps, which are fully described in Ref. 19, prevents AUTOMAP from overfitting during training.

Using the MATLAB toolbox for realistic analytical phantoms described in Ref. 32, the ImageNet‐derived datasets were encoded with golden‐angle radial trajectories to generate single‐channel ($n_c = 1$) k‐space datasets for 128 × 128 resolution reconstruction via a nonuniform fast Fourier transform (NUFFT) operation. As is standard on clinical MRI scanners, golden‐angle trajectories were oversampled two times in the frequency‐encode direction, which yielded 256 complex data points for each readout spoke. Data were undersampled in the phase‐encode direction by reduction factors (R) of 1, 2, 4, 8, and 16, which corresponded to radial trajectories with 202, 101, 51, 26, and 13 spokes, respectively.
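The golden‐angle radial sampling pattern described above can be sketched in a few lines. This is a minimal illustration rather than the MATLAB toolbox used in this work: the function name `golden_angle_trajectory`, the coordinate units (cycles per pixel of the 128 × 128 image), and the choice to start the first spoke at 0 rad are all assumptions made for the example.

```python
import numpy as np

# Golden angle: 180 degrees divided by the golden ratio (about 111.246 degrees).
GOLDEN_ANGLE = np.pi / ((1 + np.sqrt(5)) / 2)

def golden_angle_trajectory(n_spokes, n_readout=256):
    """Return k-space coordinates with shape (n_spokes, n_readout, 2).

    Each spoke passes through the k-space centre; successive spokes are
    rotated by the golden angle. Coordinates span [-0.5, 0.5) cycles/pixel.
    """
    angles = np.arange(n_spokes) * GOLDEN_ANGLE
    # 256 readout samples per spoke corresponds to 2x oversampling for n = 128.
    radius = (np.arange(n_readout) - n_readout // 2) / n_readout
    kx = radius[None, :] * np.cos(angles[:, None])
    ky = radius[None, :] * np.sin(angles[:, None])
    return np.stack([kx, ky], axis=-1)

# Example: the 51-spoke trajectory used for R = 4.
traj = golden_angle_trajectory(n_spokes=51)
print(traj.shape)
```

Coordinates in this form could be passed to a NUFFT library to simulate the radial encoding step; the scaling such a library expects may differ from the convention assumed here.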

After preprocessing, the number of complex datapoints at the flattened AUTOMAP input is

$$N = n_c \times 2n \times \left\lceil \frac{\pi n}{2R} \right\rceil \qquad (1)$$

where $n_c$ is the number of channels, $n$ is the fully sampled image resolution, and R is the reduction factor applied in the phase‐encode direction. The $2n$ term represents 2 × oversampling in readouts and $\lceil \pi n / (2R) \rceil$ reflects the number of radial views at a reduction factor of R. Complex data are separated into real and imaginary components that are concatenated into the flattened input to the first dense layer.
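As a worked check of the input size, the sketch below computes the number of spokes and the flattened input length for each reduction factor. It assumes that the number of radial views follows the radial Nyquist criterion, $\lceil \pi n / (2R) \rceil$, which reproduces the spoke counts quoted above (202, 101, 51, 26, and 13 for $n = 128$). The function names are hypothetical.

```python
import math

def n_spokes(n, R):
    """Number of radial views, assuming ceil(pi * n / (2 * R))."""
    return math.ceil(math.pi * n / (2 * R))

def automap_input_size(n, R, n_c=1):
    """Return (complex datapoints, real-valued input length)."""
    complex_points = n_c * 2 * n * n_spokes(n, R)  # 2n: 2x readout oversampling
    # Real and imaginary parts are concatenated, doubling the vector length.
    return complex_points, 2 * complex_points

for R in (1, 2, 4, 8, 16):
    print(R, n_spokes(128, R), automap_input_size(128, R))
```

For R = 4 and a single channel this gives 13 056 complex datapoints, or a 26 112‐element real‐valued input vector.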

The 256 × 256 grayscale images were downsampled via bilinear interpolation to 128 × 128 for use as ground truth target images in model training.

For model fine‐tuning and evaluation, MRI scans from a 13‐patient lung cancer dataset were split in the ratio 9/2/2 (training/validation/testing). This lung cancer dataset is fully described in Refs. 33, 34. Radial, single‐coil k‐space data for these images were retrospectively acquired from images saved in the Digital Imaging and Communications in Medicine (DICOM) format with the procedure described above. Slices from T1‐weighted, T2‐weighted, and cine‐MRI scans were analyzed individually, yielding several hundred images per patient. To simulate real‐world sensor noise, additional k‐space datasets with 25 dB of additive white Gaussian noise (AWGN) were created with the Signal Processing Toolbox in MATLAB.
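The noise step can be illustrated with a short NumPy sketch. It is not the MATLAB routine used in this work. It assumes that "25 dB" denotes a signal‐to‐noise ratio relative to the measured mean power of the k‐space data, and that the noise power is split equally between the real and imaginary parts. The function name `add_awgn` is hypothetical.

```python
import numpy as np

def add_awgn(kspace, snr_db, rng=None):
    """Add complex white Gaussian noise at a target SNR (in dB).

    Assumption: SNR is defined against the measured mean signal power.
    """
    rng = np.random.default_rng() if rng is None else rng
    signal_power = np.mean(np.abs(kspace) ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = np.sqrt(noise_power / 2)  # per real/imaginary component
    noise = sigma * (rng.standard_normal(kspace.shape)
                     + 1j * rng.standard_normal(kspace.shape))
    return kspace + noise

# Example on synthetic data shaped like a 51-spoke, 256-sample acquisition.
rng = np.random.default_rng(0)
clean = rng.standard_normal((51, 256)) + 1j * rng.standard_normal((51, 256))
noisy = add_awgn(clean, snr_db=25, rng=rng)
```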

2.3. Motion data preprocessing

The next part of this work aims to account for intra‐acquisition motion in the neural network reconstruction by incorporating generic motion into the training corpus. Here, we aim to make the motion correction generalize by using videos from YouTube as a source of motion‐encoded training data.

For motion training, 7856 image sequences were extracted from 767 videos in the “wildlife” class of the YouTube‐8M database and split randomly into training/validation sets at the ratio 0.85/0.15. 35 The extraction process ensured that the video sequences contained continuous, smooth motion by excluding cases where the structural similarity (SSIM) between any adjacent frames was lower than 0.94. Sequences where the SSIM between first and last frames was lower than 0.5 were also excluded. These thresholds were chosen as typical of the SSIM values observed across the cine‐MRI lung data described above.
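The two SSIM criteria can be expressed as a simple filter, sketched below. For self‐containment the example uses a simplified, single‐window ("global") SSIM rather than the usual locally windowed SSIM, so its values will differ from those of a standard implementation. The function names and the assumption that frames are normalized to [0, 1] are illustrative.

```python
import numpy as np

def global_ssim(a, b, data_range=1.0):
    """Simplified SSIM computed over the whole image as a single window."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_a, mu_b = a.mean(), b.mean()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    den = (mu_a ** 2 + mu_b ** 2 + c1) * (a.var() + b.var() + c2)
    return num / den

def is_smooth_sequence(frames, adjacent_min=0.94, endpoint_min=0.5,
                       ssim=global_ssim):
    """Keep a sequence only if every adjacent pair and the first/last pair
    of frames meet the SSIM thresholds."""
    adjacent_ok = all(ssim(f0, f1) >= adjacent_min
                      for f0, f1 in zip(frames[:-1], frames[1:]))
    endpoints_ok = ssim(frames[0], frames[-1]) >= endpoint_min
    return bool(adjacent_ok and endpoints_ok)
```

A locally windowed SSIM from an image‐processing library could be passed in through the `ssim` argument without changing the filtering logic.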

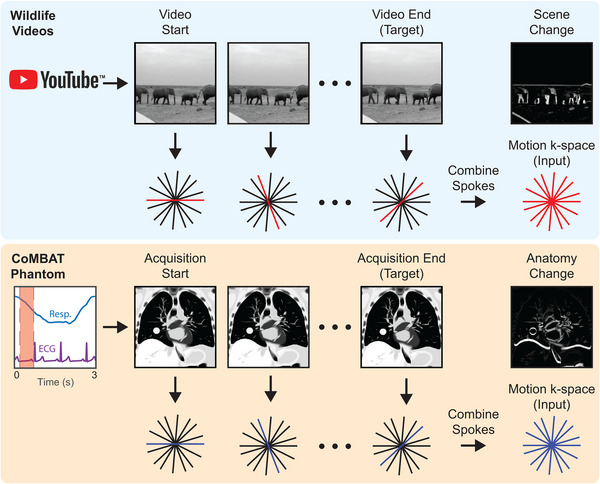

Motion‐encoded radial k‐space data were created from image sequences by combining spokes of readout data from sequential “static” four times undersampled k‐space data generated with the NUFFT procedure described above (see Figure 2). AWGN at 25 dB was added to raw k‐space data to simulate sensor noise. For ground truth data, we selected the image corresponding to the last frame in each k‐space acquisition because knowledge of the most recent anatomical state is desired for real‐time beam adaptation on an MRI‐Linac.

FIGURE 2.

Simulating patient motion during radial acquisitions. The CoMBAT phantom inputs respiratory and ECG traces to simulate patient anatomy during cardiothoracic motion. MR slices are simulated at each timepoint during the acquisition (red shading) and encoded to a golden‐angle radial trajectory. A “motion‐encoded” k‐space is derived by taking individual spokes from the “static” k‐space at individual timepoints during the acquisition. The anatomy change between start and end timepoints is shown as a difference image.
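The spoke‐combination step can be sketched as an indexing operation over a stack of "static" k‐space datasets. How spokes are allocated to frames is an assumption here: the sketch spreads spokes evenly across frames in acquisition order, so that the final spokes come from the last frame, which is the frame used as ground truth. The function name and array layout are hypothetical.

```python
import numpy as np

def motion_encoded_kspace(static_kspace, frame_of_spoke=None):
    """Assemble a motion-encoded k-space from per-frame static k-spaces.

    static_kspace: complex array, shape (n_frames, n_spokes, n_readout),
        where static_kspace[t] is the radial k-space of the anatomy at frame t.
    frame_of_spoke: optional integer array of length n_spokes giving the
        frame during which each spoke is acquired.
    Returns an array of shape (n_spokes, n_readout).
    """
    n_frames, n_spokes, _ = static_kspace.shape
    if frame_of_spoke is None:
        # Assumption: spokes are spread evenly over frames in time order.
        frame_of_spoke = (np.arange(n_spokes) * n_frames) // n_spokes
    frame_of_spoke = np.asarray(frame_of_spoke)
    return static_kspace[frame_of_spoke, np.arange(n_spokes), :]

# Toy example: frame t is filled with the value t, so the output shows
# which frame each spoke was taken from.
static = np.arange(4)[:, None, None] * np.ones((4, 8, 16), dtype=complex)
print(motion_encoded_kspace(static)[:, 0].real)
```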

Motion sequence data were augmented by a factor of 8 via a series of flips, rotations, and time‐reversal processes. Data were further augmented by the addition of random phase maps. For final model training, this YouTube‐8M dataset was combined with the ImageNet dataset described above. A separate motion test set of 1000 motion sequences and input data was created from an independent set of 218 videos in the YouTube‐8M dataset using the SSIM criteria described above.

As a testing tool, a time series of 2D lung cancer images was generated using the digital CT/MRI breathing XCAT (CoMBAT) phantom for a balanced steady‐state free precession (bSSFP) sequence with TR/TE = 10/5 ms. 29 , 36 , 37 Image sequences were transformed to 4 × undersampled k‐space data using the process shown in Figure 2.

2.4. Image reconstruction

Neural network image reconstruction was performed by running inference on flattened radial input data with the corresponding, trained AUTOMAP model. The AUTOMAP reconstruction time was measured as wall time taken to perform this inference step in Keras on an unburdened workstation as measured over 20 repeats.

Conventional CS and NUFFT image reconstruction techniques were performed using the Berkeley Advanced Reconstruction Toolbox (BART). 38 The NUFFT reconstruction interpolates k‐space data onto a Cartesian grid and then performs a fast Fourier transform. 39 The CS implementation utilizes a NUFFT with an iterative algorithm to find the l1‐regularized solution to

$$\min_{x} \; \lVert F_u x - y \rVert_2^2 + \lambda \lVert \psi x \rVert_1 \qquad (2)$$

where $y$ is the acquired k‐space, $F_u$ is the (under)sampling operator over the reconstructed image $x$, λ is a regularization parameter, and ψ is the wavelet operator. A grid search was used to optimize λ for a minimum normalized root mean square error (NRMSE) with 30 iterations; the optimized values are listed in Table 1. Reconstruction times for CS are the self‐reported time for reconstruction as measured by the BART toolbox over 20 repeats.
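To make the structure of an l1‐regularized iterative reconstruction concrete, the following toy sketch applies the iterative soft‐thresholding algorithm (ISTA) to the objective $\lVert A x - y \rVert_2^2 + \lambda \lVert x \rVert_1$. It is not the BART implementation used in this work and makes several simplifying assumptions: the forward operator $A$ is a masked Cartesian FFT rather than a NUFFT, sparsity is enforced directly in the image domain rather than in a wavelet basis, and ISTA is chosen only for its simplicity.

```python
import numpy as np

def soft_threshold(z, t):
    """Complex soft-thresholding: shrink magnitudes by t, keep the phase."""
    mag = np.abs(z)
    return z * np.maximum(1 - t / np.maximum(mag, 1e-12), 0)

def ista(y, mask, lam, n_iter=30):
    """Toy ISTA for min_x ||A x - y||_2^2 + lam * ||x||_1, A = mask * FFT."""
    A = lambda x: mask * np.fft.fft2(x, norm="ortho")
    AH = lambda k: np.fft.ifft2(mask * k, norm="ortho")
    objective = lambda x: np.sum(np.abs(A(x) - y) ** 2) + lam * np.sum(np.abs(x))
    x = np.zeros_like(y)
    history = [objective(x)]
    for _ in range(n_iter):
        # Gradient of the data term is 2 A^H (A x - y). With ||A|| <= 1 a
        # step size of 1/2 is safe, giving the update and threshold below.
        x = soft_threshold(x - AH(A(x) - y), lam / 2)
        history.append(objective(x))
    return x, history

# Example: recover a sparse image from roughly 50% of its Fourier samples.
rng = np.random.default_rng(0)
x_true = np.zeros((32, 32), dtype=complex)
x_true[rng.integers(0, 32, 10), rng.integers(0, 32, 10)] = 1.0
mask = rng.random((32, 32)) < 0.5
y = mask * np.fft.fft2(x_true, norm="ortho")
x_hat, history = ista(y, mask, lam=0.01)
```

Each iteration requires a forward and an adjoint transform, which illustrates why iterative methods carry a higher computational cost than a single forward pass through a trained network.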

The NRMSE was used as the primary metric to evaluate reconstruction quality and is calculated as the RMSE between reconstructed image and ground truth divided by the intensity range of the ground truth image. Structural similarity (SSIM) and the peak signal‐to‐noise ratio (PSNR) are also considered as additional quantitative metrics that may indicate the clinical utility of the images. 40 In results quantifying the reconstruction quality for lung cancer patient images, the bar chart values reported for SSIM/NRMSE are the mean and standard deviation across 400 image slices in the two‐patient subset of the lung cancer dataset that was unseen by AUTOMAP during training. We performed a paired t‐test to evaluate the significance of the difference between reconstruction metric distributions yielded from image slices in the testing dataset. Metric distributions were additionally evaluated with a Kolmogorov–Smirnov test to ensure that normality requirements for parametric statistical tests were satisfied.
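The NRMSE definition given above translates directly into code. The PSNR function in this sketch additionally assumes that the peak value is taken as the intensity range of the ground truth image, which is one common convention and may differ from the one used to produce the reported values. Both function names are illustrative.

```python
import numpy as np

def nrmse(recon, truth):
    """RMSE divided by the intensity range of the ground truth image."""
    rmse = np.sqrt(np.mean((recon - truth) ** 2))
    return rmse / (truth.max() - truth.min())

def psnr(recon, truth):
    """PSNR in dB, assuming the peak is the ground truth intensity range."""
    mse = np.mean((recon - truth) ** 2)
    return 10 * np.log10((truth.max() - truth.min()) ** 2 / mse)

truth = np.array([[0.0, 1.0], [2.0, 4.0]])
recon = truth + 0.4  # uniform error of 0.4 on an intensity range of 4
print(nrmse(recon, truth), psnr(recon, truth))
```

Under these definitions the two metrics are related by PSNR = −20 log10(NRMSE), so the example yields an NRMSE of 0.1 and a PSNR of 20 dB.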

2.5. Template matching

To simulate target tracking, regions of interest encompassing the tumor and diaphragm were defined in the first ground truth image of the digital phantom. Using CS and trained neural networks, stacks of 240 images were reconstructed from data retrospectively acquired with cardiothoracic motion. A template matching algorithm based on OpenCV software then calculated the closest matching target location from these reconstructed images with a normalized cross‐correlation algorithm at half‐pixel resolution (1 mm). 7
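The template‐matching step can be sketched with a brute‐force normalized cross‐correlation search. This NumPy version is written for clarity rather than speed and is not the OpenCV‐based implementation used in this work. It uses the zero‐mean variant of normalized cross‐correlation and searches at whole‐pixel resolution only; both choices are assumptions. Half‐pixel resolution could be approximated by upsampling the image and template by a factor of two before matching.

```python
import numpy as np

def match_template_ncc(image, template):
    """Return ((row, col), score) of the best zero-mean NCC match.

    (row, col) is the top-left corner of the best-matching patch.
    """
    th, tw = template.shape
    t = template - template.mean()
    t_norm = np.sqrt(np.sum(t ** 2))
    best_score, best_pos = -np.inf, (0, 0)
    for r in range(image.shape[0] - th + 1):
        for c in range(image.shape[1] - tw + 1):
            patch = image[r:r + th, c:c + tw]
            p = patch - patch.mean()
            denom = t_norm * np.sqrt(np.sum(p ** 2))
            score = np.sum(p * t) / denom if denom > 0 else 0.0
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score

# Example: define a template in one frame, then locate it after a shift.
rng = np.random.default_rng(0)
frame0 = rng.random((40, 40))
template = frame0[10:18, 20:28]
frame1 = np.roll(frame0, shift=(3, -2), axis=(0, 1))
position, score = match_template_ncc(frame1, template)
```

The displacement of the region of interest is then the difference between the matched position and the position at which the template was defined.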

2.6. Multichannel data processing and reconstruction

To explore the potential application of AUTOMAP to reconstruction of multichannel radial MRI data, we trained AUTOMAP to perform a four‐channel ($n_c = 4$) reconstruction for R = 8. AUTOMAP training was performed using the same ImageNet and lung cancer patient datasets described in Section 2.1 for the single‐channel ($n_c = 1$) case. Four‐channel sensitivity maps for an idealized birdcage coil were defined using included functionality in SigPy. 41 Four‐channel k‐space data were subsequently generated via a NUFFT operation after applying sensitivity maps to images. To simulate real‐world sensor noise, 25 dB of AWGN was applied to the two‐patient test dataset.

Multichannel reconstructions were performed using a conventional NUFFT root‐sum‐of‐squares (RSS) approach and CS for comparison to AUTOMAP. In the NUFFT RSS approach, each channel is reconstructed individually, and the result is the root‐sum‐of‐squares of images from all channels. To perform CS reconstruction, sensitivity maps were derived with the ESPIRiT tool in BART from multichannel images reconstructed at low (24 × 24) resolution with NUFFT. 42 Parallel imaging CS reconstruction was subsequently performed with the BART toolbox as described in Section 2.4 but with the additional use of the ESPIRiT‐derived sensitivity maps. The CS regularization parameter was optimized via a grid search for the R = 8, multichannel reconstruction.
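The root‐sum‐of‐squares combination is a one‐line operation once each channel has been reconstructed. The sketch below assumes the per‐channel images are stacked along the first array axis; the function name is illustrative.

```python
import numpy as np

def root_sum_of_squares(channel_images):
    """Combine per-channel complex images, shape (n_channels, ny, nx),
    into a single real-valued magnitude image of shape (ny, nx)."""
    return np.sqrt(np.sum(np.abs(channel_images) ** 2, axis=0))

# Example: four channels that each see magnitude 5 combine to magnitude 10.
channels = np.full((4, 128, 128), 3 + 4j)
combined = root_sum_of_squares(channels)
print(combined[0, 0])
```

Because RSS discards phase and does not use coil sensitivity information, it cannot exploit the spatial encoding provided by the coil array, in contrast to the sensitivity‐map‐based CS reconstruction.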

3. RESULTS

3.1. Model training

Models trained for static reconstruction on ImageNet‐derived data converged smoothly for all undersampling factors tested. The training time increased with model size (see Table 1 for the number of parameters in each model). The training time per epoch was in the range 5 min (R = 16) to 15 min (R = 1). A plateau in validation cost was found within 300 epochs of training for networks with R > 4. Models pretrained on ImageNet data were then fine‐tuned on lung cancer images. For example, with R = 4, the fine‐tuned AUTOMAP model had a validation cost 9.7 times lower than a model trained from scratch on the lung cancer training data, emphasizing the value of transfer learning from generic pretrained models when only smaller datasets are available for a given anatomy. Examples of training/validation cost dynamics and further analysis are included in Figure S2.

TABLE 1.

Computational load of image reconstruction techniques. The time to perform single‐channel image reconstruction with AUTOMAP and compressed sensing techniques for different undersampling factors is shown in addition to the number of trainable parameters in AUTOMAP. Compressed sensing reconstructions utilize one optimized hyperparameter value for each undersampling factor.

| Acceleration factor (R) | 1 | 2 | 4 | 8 | 16 |
|---|---|---|---|---|---|
| Reconstruction time (ms), AUTOMAP 1‐slice | 16.0 ± 0.3 | 10.4 ± 0.4 | 7.2 ± 0.6 | 5.3 ± 0.7 | 4.7 ± 0.9 |
| Reconstruction time (ms), AUTOMAP 10‐slices | 19.1 ± 0.4 | 13.5 ± 0.4 | 10.0 ± 0.4 | 8.2 ± 0.7 | 7.1 ± 0.8 |
| Reconstruction time (ms), compressed sensing 1‐slice | 253 ± 23 | 240 ± 17 | 235 ± 8 | 236 ± 10 | 231 ± 21 |
| Number of AUTOMAP parameters | 1.96B | 1.12B | 696M | 487M | 378M |
| CS regularization parameter (λ) | 0.005 | 0.005 | 0.005 | 0.01 | 0.05 |

3.2. Neural network reconstruction performance

With models trained for the neural network reconstruction task, we now present key results in Figures 3 and 4 that compare the reconstruction error of AUTOMAP to established methods.

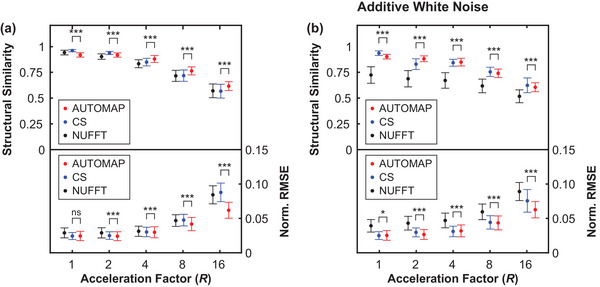

FIGURE 3.

Quality of radial AUTOMAP reconstruction in comparison to conventional techniques. Reconstruction quality as measured via structural similarity and normalized root mean square error metrics (Norm. RMSE) for different undersampling factors. In general, a more accurate image reconstruction technique yields a high structural similarity value and a low normalized RMSE value. Results are shown for AUTOMAP (red), compressed sensing (CS, blue), and nonuniform fast Fourier transform (NUFFT, black). Resulting image quality was assessed for clean radial input data (shown in a) and for the same data with 25 dB of additive white Gaussian noise (shown in b). Data markers have been offset from the acceleration factor values shown to aid visual clarity. Error bars represent the standard deviation of metrics across the test dataset. *p < 0.05, **p < 0.01, ***p < 0.001, ns = no significant difference

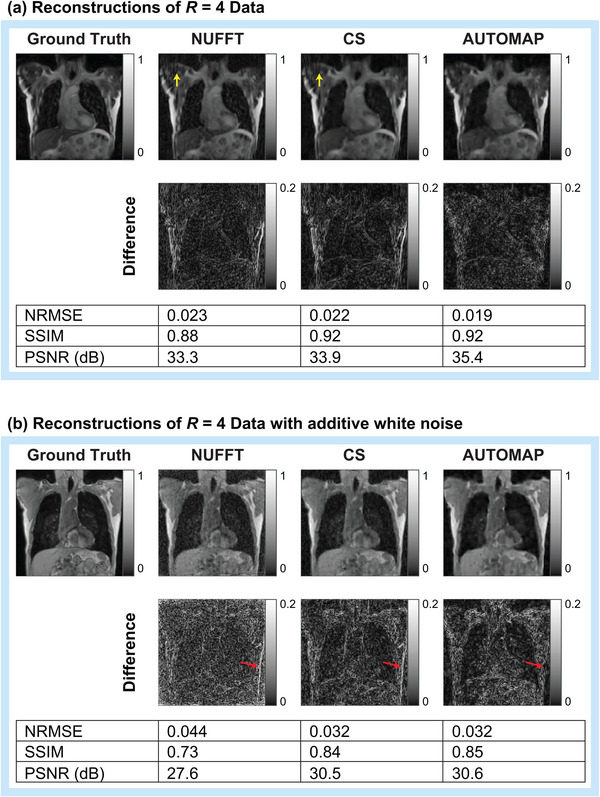

FIGURE 4.

Visual comparison of AUTOMAP reconstruction performance to conventional techniques. AUTOMAP is compared to compressed sensing (CS) and nonuniform fast Fourier transform (NUFFT) techniques in images reconstructed from 4× undersampled golden‐angle radial data. Images from clean radial data (a) and from data with 25‐dB additive white Gaussian noise (b) are shown. Normalized root‐mean‐square error (NRMSE), structural similarity (SSIM), and peak signal‐to‐noise ratio (PSNR) metrics are shown. Yellow arrows in a indicate streaking artifacts (zooming on the electronic version may aid visibility). Red arrows in (b) are provided for discussion in the text.

In Figure 3, we show structural similarity (SSIM) and NRMSE metrics for images reconstructed from golden‐angle radial k‐space data derived from the lung cancer imaging test set at a range of acceleration factors (R). We note that the SSIM metric quantifies the structural quality of reconstructions in terms of changes in the inter‐dependency of pixels that are spatially close, while the NRMSE metric quantifies the absolute error in pixel intensities. In general, higher SSIM values and lower NRMSE values correspond to reconstructions that are perceived by radiologists to be of higher diagnostic quality. 40 AUTOMAP performs most strongly with very sparsely sampled data, giving an NRMSE value 0.70 times that of CS reconstruction for data as shown in Figure 3a. We observe that NUFFT reconstructs the “clean” data effectively, having an NRMSE value that is only 1.04 times higher than the NRMSE value for CS when (Figure 3a). However, when white noise, representing the thermal noise present in a standard MRI experiment, 43 is added to the input k‐space data, we observe considerable deterioration in the accuracy of NUFFT reconstructions, with the NRMSE being 1.52 times higher than for CS at (Figure 3b). For fully sampled data with white noise added (R = 1), CS and AUTOMAP have mean NRMSE values of 0.0245 and 0.0247, indicating small differences in reconstruction performance that are on the threshold of statistical significance (p = 0.046).

Aware that NRMSE and SSIM metrics do not fully characterize artifacts that may be present in reconstructed images, we present typical reconstructions for R = 4 in Figure 4. While the overall quality trend is similar to that summarized in Figure 3, we observe that the AUTOMAP difference image shows visibly lower error at the chest wall than CS and NUFFT techniques (see red arrows in Figure 4b). We also note that subtle streaking artifacts are present in the CS and NUFFT images but absent from AUTOMAP reconstructions (see yellow arrows in Figure 4a). Increasing the regularization penalty was observed to improve the structure of CS reconstructed images at the expense of a poorer NRMSE (see Figure S3 for results showing the trade‐off between NRMSE and SSIM when optimizing the regularization penalty).

Having shown that AUTOMAP reconstructs input data with equivalent or superior fidelity to CS, we now evaluate the relative computational performance of the reconstruction methods. We found that our implementation of AUTOMAP was 16–49 times faster than CS reconstruction, reducing reconstruction time by approximately 200 ms (see Table 1). For context, the end‐to‐end imaging and targeting latency should be less than ∼500 ms for real‐time tumor tracking in MR‐guided radiotherapy. 5 The speed of NUFFT reconstruction was not quantified, as it is accepted that this less robust reconstruction technique can be completed within several milliseconds, making it sufficiently fast for real‐time applications. 44 We found that CS reconstruction was fastest running on the CPU without GPU acceleration, presumably due to the relatively small matrix sizes used, and hence, report the CPU‐based times here.
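The speed‐up range quoted above follows directly from the mean single‐slice reconstruction times in Table 1, as the short calculation below shows. The dictionary names are illustrative; the values are the tabulated means, with uncertainties omitted.

```python
# Mean single-slice reconstruction times (ms) from Table 1, keyed by R.
automap_ms = {1: 16.0, 2: 10.4, 4: 7.2, 8: 5.3, 16: 4.7}
cs_ms = {1: 253, 2: 240, 4: 235, 8: 236, 16: 231}

speedup = {R: cs_ms[R] / automap_ms[R] for R in automap_ms}
saving_ms = {R: cs_ms[R] - automap_ms[R] for R in automap_ms}

for R in automap_ms:
    print(f"R = {R:2d}: {speedup[R]:.1f}x faster, {saving_ms[R]:.0f} ms saved")
```

The ratio grows with R because the AUTOMAP inference time falls as the input vector shrinks, whereas the CS reconstruction time is nearly independent of the undersampling factor.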

The number of trainable parameters required to implement AUTOMAP was large (Table 1), reaching approximately 2 billion for the fully sampled network. A single l1‐penalty hyperparameter (λ) was optimized for CS reconstruction.
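The parameter counts in Table 1 can be approximately reproduced from the layer description in Section 2.1. The sketch below rests on assumptions that are not stated explicitly in the text: both dense layers are taken to have $n^2 = 16\,384$ output units, every layer is taken to include bias terms, and the number of spokes is taken to be $\lceil \pi n / (2R) \rceil$. Under these assumptions the totals match the tabulated values after rounding.

```python
import math

def automap_param_count(n=128, R=1, n_c=1, hidden=None):
    """Estimate trainable parameters for the five-layer architecture.

    Assumptions: dense layers of width n*n, biases in every layer,
    and ceil(pi * n / (2 * R)) radial spokes.
    """
    hidden = n * n if hidden is None else hidden
    spokes = math.ceil(math.pi * n / (2 * R))
    n_in = 2 * n_c * 2 * n * spokes          # real + imaginary parts
    dense1 = n_in * hidden + hidden
    dense2 = hidden * n * n + n * n
    conv1 = 5 * 5 * 1 * 64 + 64              # 64 filters, 5 x 5 kernel
    conv2 = 5 * 5 * 64 * 64 + 64
    deconv = 7 * 7 * 64 * 1 + 1              # 1 filter, 7 x 7 kernel
    return dense1 + dense2 + conv1 + conv2 + deconv

for R in (1, 2, 4, 8, 16):
    print(R, f"{automap_param_count(R=R) / 1e6:.0f}M")
```

Nearly all parameters sit in the two dense layers, and only the first scales with the input length, which explains why the total falls with increasing R but levels off near the fixed cost of the second dense layer.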

3.3. Motion‐compensated reconstruction

Here, we test the potential of the AUTOMAP reconstruction technique to correct for anatomical motion encountered during the acquisition process, which is of concern for MRI‐guided RT of thoracic sites in particular. In this section, we consider two AUTOMAP models trained to reconstruct data acquired with R = 4. The first AUTOMAP model is the same as the R = 4 model trained for reconstruction as described above but without fine‐tuning. The second AUTOMAP model was trained to compensate for intra‐acquisition motion through the incorporation of generic motion data from YouTube‐8M into the training dataset.

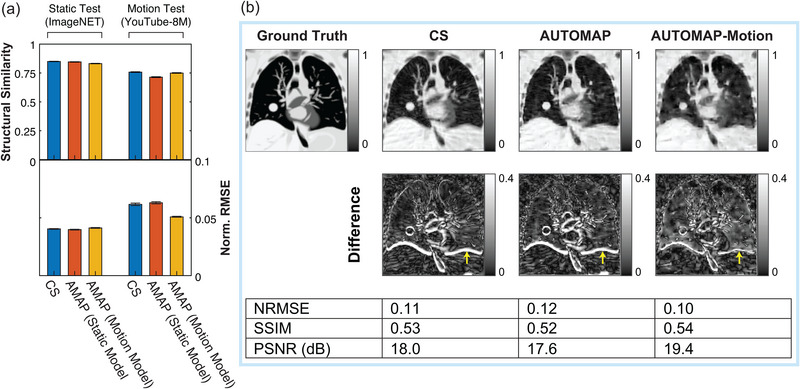

We begin analyzing these models by comparing the quality of CS reconstruction to the AUTOMAP model reconstructions for static and motion inputs in Figure 5a. The quality of all reconstructions is higher for static test data than motion test data, which reflects the increased difficulty of reconstructing motion‐corrupted data. We note that due to the large test sets that could be derived from ImageNet and YouTube‐8M, differences between all metrics summarized in Figure 5a are statistically significant at the p < 0.005 level. The best performing technique on motion test data was the motion‐trained AUTOMAP model, which had a mean NRMSE 21% lower than the CS reconstructions. Additionally, the performance of AUTOMAP was comparable on static input data whether or not the model was trained with the inclusion of motion data, indicating that motion training leverages previously underutilized capacity in the over‐parameterized model architecture. Conversely, we note that AUTOMAP trained on the YouTube‐8M dataset alone had a minimum validation loss 68% higher than when static data were included in the training set, likely due to the difficulty of fitting to the underlying manifold with variability in the motion‐encoded data.

FIGURE 5.

Reconstructing motion‐corrupted, undersampled data. (a) Reconstruction quality as measured via structural similarity and normalized root mean square error metrics (Norm. RMSE) for static test images derived from the ImageNet database and for motion‐corrupted test inputs derived from the YouTube‐8M database. Results are shown for 4× undersampled data reconstructed with compressed sensing (blue), an AUTOMAP model trained on static data (red) and an AUTOMAP model trained on motion‐encoded data. Bars and lines are the mean and standard error of the mean calculated across 1000 test inputs. (b) Images reconstructed from k‐space data simulated with the CoMBAT phantom for a patient under routine cardiothoracic motion. Results for data reconstructed with compressed sensing, an AUTOMAP model trained on static data, and an AUTOMAP model trained on motion‐encoded data are shown. Normalized root‐mean‐square error (NRMSE), structural similarity (SSIM), and peak signal‐to‐noise ratio (PSNR) image quality metrics are evaluated against the last frame of the image sequence for motion‐encoded data. Yellow arrows indicate errors associated with the position of the diaphragm in reconstructed images.

Having evaluated the performance of these reconstruction techniques on generic motion sequences, we now turn to analyze the performance of these models in reconstructing motion‐corrupted k‐space data simulated from the CoMBAT phantom for a lung cancer patient undergoing realistic cardiothoracic motion. 45 The reconstruction results for CoMBAT data encoded as per the process described in Figure 2 are shown in Figure 5b. AWGN at 25 dB was added to all inputs used to derive this figure. Given that we are reconstructing motion‐corrupted data, the quantitative metrics of reconstruction performance are consistent with the results in Figure 5a, with the motion‐trained AUTOMAP model outperforming the static‐trained model and CS. Inspecting difference images, we see that the output of the motion‐trained AUTOMAP model has the least discrepancy with the ground truth around the diaphragm as indicated by yellow arrows in Figure 5b.

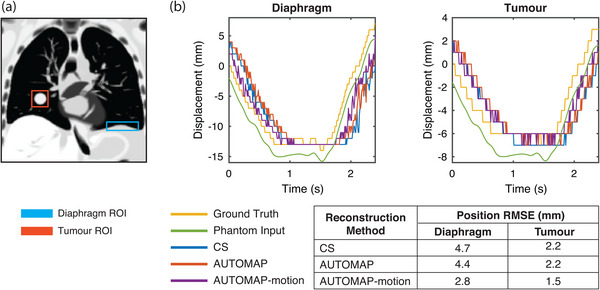

To simulate the impact of motion‐training on an MRI‐Linac tracking experiment, we performed template matching of tumor and diaphragm ROIs in our digital phantom, as shown in Figure 6. Analyzing the difference between template match locations in ground truth images and reconstructed images through one respiratory cycle, we find that the AUTOMAP‐motion model gives an RMSE value 1.9 mm smaller for the diaphragm and 0.7 mm smaller for the tumor than CS.

FIGURE 6.

Target tracking accuracy. (a) Regions of interest (ROIs) for the diaphragm (blue) and tumor (red) are defined in a ground truth image. (b) Displacement of ROIs defined in a as predicted by a template matching algorithm for the ground truth image sequence (yellow) and image sequences reconstructed using compressed sensing (CS, blue), a conventional AUTOMAP model (red) and an AUTOMAP‐motion model (purple). Steps in displacement reflect the underlying image resolution. Root mean square error values are calculated for the difference between target position in reconstructed image sequences and the ground truth image sequence. The motion trace input to the virtual phantom is shown (green) with a vertical offset for visibility.

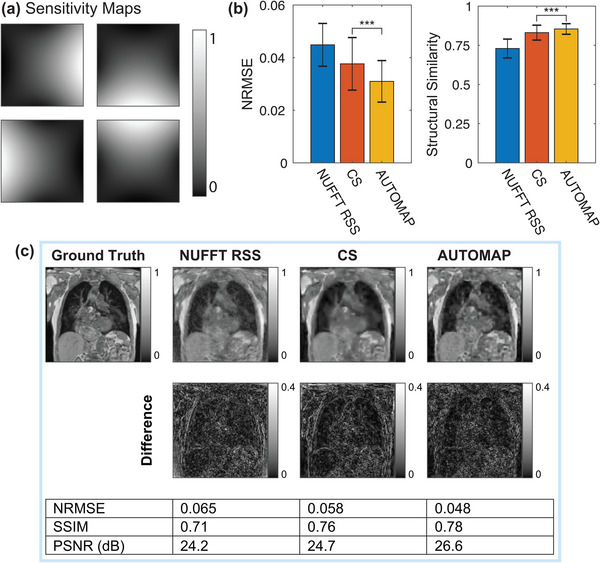

3.4. Multichannel reconstruction

Multichannel reconstructions were performed with data acquired using sensitivity maps for a four‐channel birdcage coil (maps shown in Figure 7a). Metrics quantifying the performance of AUTOMAP, NUFFT RSS, and CS reconstruction approaches are shown in Figure 7b. The multichannel results show that AUTOMAP was superior to NUFFT RSS and CS reconstruction approaches in terms of structural similarity and NRMSE metrics for the four‐channel, R = 8 case explored here. A visual comparison of multichannel reconstruction performance is shown in Figure 7c.

FIGURE 7.

Multichannel reconstruction performance of nonuniform fast Fourier transform root‐sum‐of‐squares (NUFFT RSS), compressed sensing (CS), and AUTOMAP techniques. (a) Sensitivity maps used for four‐channel data acquisition and reconstruction with a reduction factor (R) of 8. (b) Average normalized root‐mean‐square error (NRMSE) and structural similarity metrics for each technique as measured across the test set. (c) Visual comparison of reconstruction performance. NRMSE, structural similarity (SSIM), and peak signal‐to‐noise ratio (PSNR) metrics are shown. Error bars represent the standard deviation of metrics across the test dataset. *p < 0.05, **p < 0.01, ***p < 0.001, ns = no significant difference

4. DISCUSSION

Our results leverage advances in machine learning to implement fast image reconstruction of undersampled radial data from lung cancer patients with comparable accuracy to conventional iterative reconstruction techniques based on CS. The development of rapid radial reconstruction techniques is of interest for MRIgRT, where the latencies associated with iterative reconstruction are prohibitively long. 46 While our proof‐of‐principle study has focused on the application of AUTOMAP to real‐time targeting of radiotherapy in the lung, we believe our results are extensible to high‐motion sites such as the liver and prostate, where tumor movement would be optimally managed by real‐time adaptive radiotherapy. 47 , 48 We note that due to the relatively high latency of MR acquisition and reconstruction, compared to X‐ray‐based modalities, faster image reconstruction techniques are desired for real‐time beam gating and MLC tracking on MRI‐Linacs, especially for non‐Cartesian acquisition trajectories. 7 , 49 Integrating neural networks with fast data streaming tools, 50 tracking algorithms, 51 and time‐resolved 3D anatomical imaging 52 , 53 will be crucial for use with MRI‐Linac beam adaptation technologies.

The ease with which AUTOMAP generalizes to different reconstruction tasks, like the golden‐angle radial sampling used in this work, is a direct consequence of the sequential dense layers in the model architecture. While these dense layers enable data‐driven learning of the manifold between k‐space and the image domain, they also have significant memory requirements that make the translation to 3D reconstruction and tracking more challenging. 54, 55 Reconstructing images at resolutions above the relatively low ones used for motion tracking on an MRI‐Linac will require lighter‐weight reconstruction networks. 17, 56 One lightweight implementation of AUTOMAP is decomposed‐AUTOMAP (dAUTOMAP), which replaces dense layers with orthogonal “domain transform” layers. 20 While dAUTOMAP performs strongly for Cartesian trajectories, it assumes that data are acquired in orthogonal directions, making it unsuitable for reconstruction of nonuniform data. Hence, dAUTOMAP is outperformed by NUFFT reconstruction for radial data. 23 We note that lightweight architectures that take view angle into account are an active research area. 25, 57
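As a rough illustration of why dense layers dominate the memory budget, the sketch below counts the weights of a single fully connected layer that maps 2n² real inputs (the real and imaginary parts of n² complex samples) to n² outputs. The layer sizes, the 32‐bit weight assumption, and the helper name `dense_layer_cost` are illustrative assumptions and do not describe the exact architecture used in this work.

```python
def dense_layer_cost(n_inputs, n_outputs, bytes_per_weight=4):
    """Return (parameter count, memory in GiB) for one fully connected layer."""
    params = n_inputs * n_outputs + n_outputs  # weight matrix plus bias vector
    return params, params * bytes_per_weight / 2**30


# Hypothetical 2D case: n x n image, 2 * n * n real-valued input samples.
for n in (64, 128, 256):
    params, gib = dense_layer_cost(2 * n * n, n * n)
    print(f"n={n}: {params:,} parameters, {gib:.2f} GiB")
```

Under these assumptions the weight count grows as n⁴, so doubling the matrix size increases the memory of this one layer roughly sixteen‐fold, which is the scaling that makes a direct extension to 3D difficult.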

Without a detailed understanding of the AUTOMAP learning process, it may seem surprising that the neural network trains faster and performs better relative to CS at high acceleration factors (see Figure 3). However, this reflects the underlying mathematics of AUTOMAP as a tool for manifold approximation, where image reconstruction is achieved via a mapping between sparse representations in signal and image space. Hence, highly undersampled data encourage the network to learn robust, low‐dimensional, latent representations of the data that can then be used for manifold approximation. 58 Future work will aim to improve network training via the use of loss metrics other than MSE and via the imposition of layer regularization penalties that promote sparsity. In the present work, we have chosen to focus on golden‐angle radial sampling trajectories as they are relatively insensitive to motion artifacts, which is a significant benefit for applications in MRI‐guided radiotherapy. 2 However, we note that future opportunities exist to investigate how reconstruction accuracy is impacted by increasing acceleration factors for data acquired with spiral and Cartesian sampling trajectories.
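For readers unfamiliar with golden‐angle radial sampling, the following minimal sketch generates spoke coordinates, assuming the commonly used angular increment of 180° divided by the golden ratio (about 111.25°). The function name, the normalized k‐space range of [-0.5, 0.5), and the spoke and readout counts are assumptions for illustration and are not the acquisition parameters of this study.

```python
import math

# Angular increment between successive spokes: pi / golden ratio (radians).
GOLDEN_ANGLE = math.pi / ((1 + math.sqrt(5)) / 2)


def golden_angle_spokes(n_spokes, n_readout):
    """Return a list of spokes; each spoke is a list of (kx, ky) points."""
    spokes = []
    for i in range(n_spokes):
        theta = i * GOLDEN_ANGLE  # spoke i is rotated by i golden-angle steps
        spoke = []
        for j in range(n_readout):
            r = j / n_readout - 0.5  # signed radius along the spoke
            spoke.append((r * math.cos(theta), r * math.sin(theta)))
        spokes.append(spoke)
    return spokes


trajectory = golden_angle_spokes(n_spokes=8, n_readout=16)
```

Because each new spoke falls in the largest remaining angular gap, any contiguous subset of spokes covers k‐space approximately uniformly, which is one reason this ordering is attractive for motion‐sensitive imaging.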

Our experiments with motion‐encoded k‐space demonstrated that, as a highly over‐parameterized model, AUTOMAP has significant capacity to learn additional features. Here, we showed that AUTOMAP could learn generic properties of motion from YouTube videos, leading to lower NRMSE and higher tracking accuracy in reconstructed images of an in silico lung cancer phantom. The relatively low quality of images reconstructed with conventional techniques from this undersampled, motion‐corrupted data indicates the significant potential impact of improved reconstruction frameworks based on machine learning. Further, our tracking results do not account for reconstruction latency, which would be expected to further reduce tracking accuracy with CS. Additionally, there is significant scope to improve on tracking and reconstruction performance through the use of more in‐domain training data, such as medical dynamic MRI data. However, care must be taken when using dynamic MRI data during the supervised learning process, as the ground truth is generally unknown due to intra‐acquisition motion. One alternative is to train networks with synthetically generated motion applied to lung images. Such an approach could be adapted to assist with tracking of targets with out‐of‐plane motion, which represents a persistent challenge in MRI‐guided radiotherapy. 59 Temporal k‐space filtering, 9 optical flow techniques, 23 and neural networks tailored to radial reconstruction could also improve network performance. 57, 60, 61

Our results show that AUTOMAP performs well with the addition of white noise, which represents the fundamental thermal limitations to SNR in an MRI scan. However, another valuable strength of manifold‐based reconstruction models, such as AUTOMAP, is that they implicitly learn to suppress MRI artifacts that are caused by inputs outside the training domain, for example, spike noise resulting from RF leakage. 55
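A minimal sketch of how white (thermal‐like) noise can be simulated in k‐space is given below: independent zero‐mean Gaussian noise is added to the real and imaginary parts of each sample. The function name `add_white_noise` and the noise level `sigma` are hypothetical and are not the noise model or SNR values used in our experiments.

```python
import random


def add_white_noise(kspace, sigma, seed=None):
    """Add independent zero-mean Gaussian noise to real and imaginary parts."""
    rng = random.Random(seed)  # local generator so results are reproducible
    return [
        s + complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
        for s in kspace
    ]


clean = [complex(1.0, 0.0)] * 2000          # placeholder k-space samples
noisy = add_white_noise(clean, sigma=0.1, seed=1)
```

Spike noise, by contrast, affects only a few isolated k‐space samples with large amplitude and is therefore not described by this stationary Gaussian model.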

Retrospective simulation of k‐space data from DICOMs, as performed in our experiments, can lead to overly optimistic reconstruction results, and future work with raw k‐space data will be required to test the robustness of AUTOMAP to other experimental imperfections encountered in MRI, such as B0 and B1 inhomogeneity. 62 While the over‐parameterization of AUTOMAP means that it can be intentionally trained to correct for such artifacts, the incorporation of such specific examples into the training corpus will increase the risk of overfitting. Despite this risk, it is likely that the performance of AUTOMAP will benefit from some fine‐tuning of pretrained networks to the particular MRI system being used. The incorporation of new adversarial approaches into the training corpus will make neural network reconstructions more robust by identifying nonphysical input perturbations that can negatively impact reconstruction performance. 63, 64

In this work, we have focused on CS with l1‐wavelet regularization as it is widely used and well‐supported in the MRI community. However, other effective regularization methods (e.g., locally low rank) remain to be tested. We note that all standard iterative reconstruction techniques will be slow when compared to frameworks such as AUTOMAP that can reconstruct data in a single forward pass.
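For reference, CS reconstruction with l1‐wavelet regularization is usually posed as the optimization problem below. This is the standard textbook form, given here as an illustration; the symbols are our own notation for this note, and the regularization weight used in our experiments is not restated here.

```latex
\hat{x} \;=\; \arg\min_{x} \; \tfrac{1}{2}\,\lVert A x - y \rVert_2^2 \;+\; \lambda\,\lVert \Psi x \rVert_1
```

Here x is the image, y the measured k‐space data, A the forward operator (the nonuniform Fourier transform, including coil sensitivities in the multichannel case), Ψ a wavelet transform, and λ the regularization weight. The solution is found iteratively, with each iteration applying A and its adjoint, which is why such methods are slower than a single forward pass through a trained network.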

We demonstrated that AUTOMAP can be extended to parallel imaging reconstruction tasks based on multicoil acquisitions. However, the sensitivity maps used in our experiment were fixed, and future work will be required to ensure that AUTOMAP performs well when sensitivity maps vary between patients, extending the reconstruction framework to the generalized sensitivity maps encountered clinically. 65

In conclusion, we have used AUTOMAP to accurately and rapidly reconstruct retrospectively acquired lung cancer images from radial data. We have also shown that AUTOMAP can adapt to generalized properties of motion learned from generic YouTube videos for real‐time tracking applications. These results will inform the future development of dynamic adaptation technologies for MRI‐Linacs, enabling new standards of personalized radiotherapy.

CONFLICT OF INTEREST

P.J.K. is an inventor on two patents relating to MRI‐Linac systems: US#8,331,531 and US#9,099,271. M.S.R. and N.K. have received research support from GE Healthcare for MR image reconstruction projects. The remaining authors have no relevant conflicts of interest to disclose.

ACKNOWLEDGMENTS

D.E.J.W. and P.Z.Y.L. are supported by Cancer Institute NSW Early Career Fellowships 2019/ECF1015 and 2019/ECF1032, respectively. This work has been funded by the Australian National Health and Medical Research Council Program Grant APP1132471. The authors thank NVIDIA for providing the RTX 8000 GPU used in this work. M.S.R. acknowledges the gracious support of the Kiyomi and Ed Baird MGH Research Scholar Award.

Open access publishing facilitated by The University of Sydney, as part of the Wiley ‐ The University of Sydney agreement via the Council of Australian University Librarians.

Waddington DEJ, Hindley N, Koonjoo N, et al. Real‐time radial reconstruction with domain transform manifold learning for MRI‐guided radiotherapy. Med Phys. 2023;50:1962–1974. 10.1002/mp.16224

DATA AVAILABILITY STATEMENT

The primary datasets used for training in this study are publicly available. Code for preprocessing data and model training is available at https://github.com/MattRosenLab.

REFERENCES

- 1. Barton MB, Jacob S, Shafiq J, et al. Estimating the demand for radiotherapy from the evidence: a review of changes from 2003 to 2012. Radiother Oncol. 2014;112(1):140‐144. 10.1016/j.radonc.2014.03.024

- 2. Keall PJ, Brighi C, Hurst CG, et al. Integrated MRI‐guided radiotherapy—opportunities and challenges. Nat Rev Clin Oncol. 2022;19:458‐470. 10.1038/s41571-022-00631-3

- 3. van Sörnsen de Koste JR, Palacios MA, Bruynzeel AM, Slotman BJ, Senan S, Lagerwaard FJ. MR‐guided gated stereotactic radiation therapy delivery for lung, adrenal, and pancreatic tumors: a geometric analysis. Int J Radiat Oncol Biol Phys. 2018;102(4):858‐866. 10.1016/j.ijrobp.2018.05.048

- 4. Stark LS, Andratschke N, Baumgartl M, et al. Dosimetric and geometric end‐to‐end accuracy of a magnetic resonance guided linear accelerator. Phys Imaging Radiat Oncol. 2020;16:109‐112. 10.1016/j.phro.2020.09.013

- 5. Keall PJ, Sawant A, Berbeco RI, et al. AAPM Task Group 264: the safe clinical implementation of MLC tracking in radiotherapy. Med Phys. 2021;48(5):e44‐e64. 10.1002/mp.14625

- 6. Colvill E, Booth JT, O'Brien RT, et al. Multileaf collimator tracking improves dose delivery for prostate cancer radiation therapy: results of the first clinical trial. Int J Radiat Oncol Biol Phys. 2015;92(5):1141‐1147. 10.1016/j.ijrobp.2015.04.024

- 7. Liu PZ, Dong B, Trang Nguyen D, et al. First experimental investigation of simultaneously tracking two independently moving targets on an MRI‐linac using real‐time MRI and MLC tracking. Med Phys. 2020;47(12):6440‐6449. 10.1002/mp.14536

- 8. Kumar S, Rai R, Stemmer A, et al. Feasibility of free breathing lung MRI for radiotherapy using non‐Cartesian k‐space acquisition schemes. Br J Radiol. 2017;90(1080):1‐10. 10.1259/bjr.20170037

- 9. Bruijnen T, Stemkens B, Lagendijk JJ, van Den Berg CA, Tijssen RH. Multiresolution radial MRI to reduce IDLE time in pre‐beam imaging on an MR‐Linac (MR‐RIDDLE). Phys Med Biol. 2019;64(5):055011. 10.1088/1361-6560/aafd6b

- 10. Mickevicius NJ, Paulson ES. Simultaneous acquisition of orthogonal plane cine imaging and isotropic 4D‐MRI using super‐resolution. Radiother Oncol. 2019;136:121‐129. 10.1016/j.radonc.2019.04.005

- 11. Feng L, Axel L, Chandarana H, Block KT, Sodickson DK, Otazo R. XD‐GRASP: golden‐angle radial MRI with reconstruction of extra motion‐state dimensions using compressed sensing. Magn Reson Med. 2016;75(2):775‐788. 10.1002/mrm.25665

- 12. Paulson ES, Ahunbay E, Chen X, et al. 4D‐MRI driven MR‐guided online adaptive radiotherapy for abdominal stereotactic body radiation therapy on a high field MR‐Linac: implementation and initial clinical experience. Clin Transl Radiat Oncol. 2020;23:72‐79. 10.1016/j.ctro.2020.05.002

- 13. Feng S, Yan X, Sun H, Feng Y, Liu HX. Intelligent driving intelligence test for autonomous vehicles with naturalistic and adversarial environment. Nat Commun. 2021;12(1):1‐14. 10.1038/s41467-021-21007-8

- 14. Zhong ED, Bepler T, Berger B, Davis JH. CryoDRGN: reconstruction of heterogeneous cryo‐EM structures using neural networks. Nat Methods. 2021;18(2):176‐185. 10.1038/s41592-020-01049-4

- 15. Shad R, Cunningham JP, Ashley EA, Langlotz CP, Hiesinger W. Designing clinically translatable artificial intelligence systems for high‐dimensional medical imaging. Nat Mach Intell. 2021;3(11):929‐935. 10.1038/s42256-021-00399-8

- 16. Huynh E, Hosny A, Guthier C, et al. Artificial intelligence in radiation oncology. Nat Rev Clin Oncol. 2020;17(12):771‐781. 10.1038/s41571-020-0417-8

- 17. Chandra SS, Lorenzana MB, Liu X, Liu S, Bollmann S, Crozier S. Deep learning in magnetic resonance image reconstruction. J Med Imaging Radiat Oncol. 2021;65:564‐577. 10.1111/1754-9485.13276

- 18. Wang G, Ye JC, de Man B. Deep learning for tomographic image reconstruction. Nat Mach Intell. 2020;2(12):737‐748. 10.1038/s42256-020-00273-z

- 19. Zhu B, Liu JZ, Rosen BR, Rosen MS. Image reconstruction by domain transform manifold learning. Nature. 2018;555(7697):487‐492. 10.1038/nature25988

- 20. Schlemper J, Oksuz I, Clough JR, et al. dAUTOMAP: decomposing AUTOMAP to achieve scalability and enhance performance. arXiv. 2019:1909.10995. 10.48550/arXiv.1909.10995

- 21. Oh C, Kim D, Chung JY, Han Y, Park HW. A k‐space‐to‐image reconstruction network for MRI using recurrent neural network. Med Phys. 2021;48(1):193‐203. 10.1002/mp.14566

- 22. Park J, Lee J, Lee J, Lee SK, Park JY. Strategies for rapid reconstruction in 3D MRI with radial data acquisition: 3D fast Fourier transform vs two‐step 2D filtered back‐projection. Sci Rep. 2020;10(1):1‐11. 10.1038/s41598-020-70698-4

- 23. Terpstra ML, Maspero M, D'Agata F, et al. Deep learning‐based image reconstruction and motion estimation from undersampled radial k‐space for real‐time MRI‐guided radiotherapy. Phys Med Biol. 2020;65:155015. 10.1088/1361-6560/ab9358

- 24. Schlemper J, Sadegh S, Salehi M, et al. Nonuniform variational network: deep learning for accelerated nonuniform MR image reconstruction. Med Image Comput Comput Assist Interv. 2019;11766:57‐64. 10.1007/978-3-030-32248-9

- 25. Li Y, Li K, Zhang C, Montoya J, Chen GH. Learning to reconstruct computed tomography images directly from sinogram data under a variety of data acquisition conditions. IEEE Trans Med Imaging. 2019;38(10):2469‐2481. 10.1109/TMI.2019.2910760

- 26. Johnson PM, Drangova M. Conditional generative adversarial network for 3D rigid‐body motion correction in MRI. Magn Reson Med. 2019;82:901‐910. 10.1002/mrm.27772

- 27. Haskell MW, Cauley SF, Bilgic B, et al. Network Accelerated Motion Estimation and Reduction (NAMER): convolutional neural network guided retrospective motion correction using a separable motion model. Magn Reson Med. 2019;82:1452‐1461. 10.1002/mrm.27771

- 28. Lee S, Jung S, Jung KJ, Kim DH. Deep learning in MR motion correction: a brief review and a new motion simulation tool (view2Dmotion). Investig Magn Reson Imaging. 2020;24(4):196. 10.13104/imri.2020.24.4.196

- 29. Paganelli C, Summers P, Gianoli C, Bellomi M, Baroni G, Riboldi M. A tool for validating MRI‐guided strategies: a digital breathing CT/MRI phantom of the abdominal site. Med Biol Eng Comput. 2017;55(11):2001‐2014. 10.1007/s11517-017-1646-6

- 30. Deng J, Dong W, Socher R, Li LJ, Li K, Fei‐Fei L. ImageNet: a large‐scale hierarchical image database. Proceedings of the 22nd IEEE Conference on Computer Vision and Pattern Recognition 2009:248‐255. 10.1109/CVPR.2009.5206848

- 31. Joseph VR. Optimal ratio for data splitting. Stat Anal Data Min. 2022:531‐538. 10.1002/sam.11583

- 32. Guerquin‐Kern M, Lejeune L, Pruessmann KP, Unser M. Realistic analytical phantoms for parallel magnetic resonance imaging. IEEE Trans Med Imaging. 2012;31(3):626‐636. 10.1109/TMI.2011.2174158

- 33. Lee D, Greer PB, Lapuz C, et al. Audiovisual biofeedback guided breath‐hold improves lung tumor position reproducibility and volume consistency. Adv Radiat Oncol. 2017;2(3):354‐362. 10.1016/j.adro.2017.03.002

- 34. Lee D, Greer PB, Paganelli C, Ludbrook JJ, Kim T, Keall P. Audiovisual biofeedback improves the correlation between internal/external surrogate motion and lung tumor motion. Med Phys. 2018;45(3):1009‐1017. 10.1002/mp.12758

- 35. Abu‐El‐Haija S, Kothari N, Lee J, et al. YouTube‐8M: a large‐scale video classification benchmark. arXiv. 2016:1609.08675. 10.48550/arXiv.1609.08675

- 36. Segars WP, Sturgeon G, Mendonca S, Grimes J, Tsui BM. 4D XCAT phantom for multimodality imaging research. Med Phys. 2010;37(9):4902‐4915. 10.1118/1.3480985

- 37. Reynolds T, Shieh CC, Keall PJ, O'Brien RT. Dual cardiac and respiratory gated thoracic imaging via adaptive gantry velocity and projection rate modulation on a linear accelerator: a proof‐of‐concept simulation study. Med Phys. 2019;46(9):4116‐4126. 10.1002/mp.13670

- 38. Blumenthal M, Holme C, Roeloffs V, et al. BART toolbox for computational magnetic resonance imaging. Sep 24, 2022. 10.5281/zenodo.592960

- 39. Fessler JA, Sutton BP. Nonuniform fast Fourier transforms using min‐max interpolation. IEEE Trans Signal Process. 2003;51(2):560‐574. 10.1109/TSP.2002.807005

- 40. Mason A, Rioux J, Clarke SE, et al. Comparison of objective image quality metrics to expert radiologists' scoring of diagnostic quality of MR images. IEEE Trans Med Imaging. 2020;39(4):1064‐1072. 10.1109/TMI.2019.2930338

- 41. Ong F, Lustig M. SigPy: a python package for high performance iterative reconstruction. In: Proceedings of the International Society for Magnetic Resonance in Medicine; 2019:4819.

- 42. Uecker M, Lai P, Murphy MJ, et al. ESPIRiT—an eigenvalue approach to autocalibrating parallel MRI: where SENSE meets GRAPPA. Magn Reson Med. 2014;71(3):990‐1001. 10.1002/mrm.24751

- 43. Redpath TW. Signal‐to‐noise ratio in MRI. Br J Radiol. 1998;71:704‐707. 10.1259/bjr.71.847.9771379

- 44. Lin JM. Python non‐uniform fast Fourier transform (PyNUFFT): an accelerated non‐cartesian MRI package on a heterogeneous platform (CPU/GPU). J Imaging. 2018;4(3):51. 10.3390/jimaging4030051

- 45. García‐González MA, Argelagós‐palau A, Fernández‐chimeno M, Ramos‐castro J, Catalunya PD. A comparison of heartbeat detectors for the seismocardiogram. Comput Cardiol. 2013;40:461‐464.

- 46. Jafari R, Do RKG, LaGratta MD, et al. GRASPNET: fast spatiotemporal deep learning reconstruction of golden‐angle radial data for free‐breathing dynamic contrast‐enhanced MRI. NMR Biomed. 2022;35:e4861. 10.1002/nbm.4861

- 47. Bertholet J, Knopf A, Eiben B, et al. Real‐time intrafraction motion monitoring in external beam radiotherapy. Phys Med Biol. 2019;64(15):15TR01. 10.1088/1361-6560/ab2ba8

- 48. Booth J, Caillet V, Briggs A, et al. MLC tracking for lung SABR is feasible, efficient and delivers high‐precision target dose and lower normal tissue dose. Radiother Oncol. 2021;155:131‐137. 10.1016/j.radonc.2020.10.036

- 49. Fast M, van de Schoot A, van de Lindt T, Carbaat C, van der Heide U, Sonke JJ. Tumor trailing for liver SBRT on the MR‐linac. Int J Radiat Oncol Biol Phys. 2019;103(2):468‐478. 10.1016/j.ijrobp.2018.09.011

- 50. Borman PTS, Raaymakers BW, Glitzner M. ReconSocket: a low‐latency raw data streaming interface for real‐time MRI‐guided radiotherapy. Phys Med Biol. 2019;64(18):185008. 10.1088/1361-6560/ab3e99

- 51. Toftegaard J, Keall PJ, O'Brien R, et al. Potential improvements of lung and prostate MLC tracking investigated by treatment simulations. Med Phys. 2018;45(5):2218‐2229. 10.1002/mp.12868

- 52. Bauman G, Bieri O. Reversed half‐echo stack‐of‐stars TrueFISP (TrueSTAR). Magn Reson Med. 2016;76(2):583‐590. 10.1002/mrm.25880

- 53. Rabe M, Paganelli C, Riboldi M, et al. Porcine lung phantom‐based validation of estimated 4D‐MRI using orthogonal cine imaging for low‐field MR‐linacs. Phys Med Biol. 2021;66(5):055006. 10.1088/1361-6560/abc937

- 54. Paganelli C, Portoso S, Garau N, et al. Time‐resolved volumetric MRI in MRI‐guided radiotherapy: an in silico comparative analysis. Phys Med Biol. 2019;64(18):185013. 10.1088/1361-6560/ab33e5

- 55. Koonjoo N, Zhu B, Bagnall GC, Bhutto D, Rosen MS. Boosting the signal‐to‐noise of low‐field MRI with deep learning image reconstruction. Sci Rep. 2021;11:8248. 10.1038/s41598-021-87482-7

- 56. Kurz C, Buizza G, Landry G, et al. Medical physics challenges in clinical MR‐guided radiotherapy. Radiat Oncol. 2020;15:93. 10.1186/s13014-020-01524-4

- 57. Yoo J, Jin KH, Gupta H, Yerly J, Stuber M, Unser M. Time‐dependent deep image prior for dynamic MRI. IEEE Trans Med Imaging. 2021;40:3337‐3348. 10.1109/TMI.2021.3084288

- 58. Vincent P, Larochelle H, Bengio Y, Manzagol PA. Extracting and composing robust features with denoising autoencoders. Proceedings of the International Conference on Machine Learning. 2008;25:1096‐1103. 10.1145/1390156.1390294

- 59. van De Lindt TN, Fast MF, van Den Wollenberg W, et al. Validation of a 4D‐MRI guided liver stereotactic body radiation therapy strategy for implementation on the MR‐linac. Phys Med Biol. 2021;66(10):105010. 10.1088/1361-6560/abfada

- 60. Wang H, Peng H, Chang Y, Liang D. A survey of GPU‐based acceleration techniques in MRI reconstructions. Quant Imaging Med Surg. 2018;8(2):196‐208. 10.21037/qims.2018.03.07

- 61. Liu F, Samsonov A, Chen L, Kijowski R, Feng L. SANTIS: sampling‐augmented neural neTwork with incoherent structure for MR image reconstruction. Magn Reson Med. 2019;82(5):1890‐1904. 10.1002/mrm.27827

- 62. Shimron E, Tamir JI, Wang K, Lustig M. Implicit data crimes: machine learning bias arising from misuse of public data. Proc Natl Acad Sci USA. 2022;119(13):e2117203119. 10.1073/pnas.2117203119

- 63. Yu T, Hu S, Guo C, Chao WL, Weinberger KQ. A new defense against adversarial images: turning a weakness into a strength. Adv Neural Inf Process Syst. 2019;33:1633‐1644. 10.48550/arXiv.1910.07629

- 64. Antun V, Renna F, Poon C, Adcock B, Hansen AC. On instabilities of deep learning in image reconstruction and the potential costs of AI. Proc Natl Acad Sci USA. 2020;117(48):30088‐30095. 10.1073/pnas.1907377117

- 65. Knoll F, Hammernik K, Zhang C, et al. Deep learning methods for parallel magnetic resonance image reconstruction. IEEE Signal Process Mag. 2020;37:128‐140. 10.1109/MSP.2019.2950640
