Abstract

As promising candidates for the implementation of in-sensor computing, retinomorphic vision sensors can constitute built-in neural networks and directly implement multiply-and-accumulation operations using responsivities as the weights. However, existing retinomorphic vision sensors mainly rely on a sustained gate bias to maintain the responsivity because of its volatile nature. Here, we propose an ion-induced localized-field strategy to develop retinomorphic vision sensors with nonvolatile tunable responsivity in both positive and negative regimes, and we construct a broadband and reconfigurable sensory network with locally stored weights to implement in-sensor convolutional processing in the spectral range of 400 to 1800 nanometers. In addition to in-sensor computing, this retinomorphic device can implement in-memory computing, benefiting from its nonvolatile tunable conductance. A complete neuromorphic visual system involving front-end in-sensor computing and back-end in-memory computing architectures has been constructed, executing supervised and unsupervised learning tasks as demonstrations. This work paves the way for high-speed and low-power neuromorphic machine vision for time-critical and data-intensive applications.

Nonvolatile modulation of responsivity in both positive and negative regimes is realized by an ion-induced electrostatic field.

INTRODUCTION

Machine vision plays a crucial role in time-critical applications that require real-time object recognition and classification, such as autonomous driving and robotics (1). With increasing frame rates and pixel densities of sensors, large quantities of raw and unstructured data are generated at sensory terminals (2, 3), and image processing becomes a data-intensive task that calls for high-efficiency and low-power processing directly at the sensory terminals (2, 4, 5). Emerging in-sensor computing, which performs low-level and high-level image processing tasks within sensory networks, has attracted considerable attention in recent years, and various neuromorphic devices and structures, including optoelectronic synapses (6–11) and one-photosensor–one-memristor arrays (3, 12), have been developed to implement it. In particular, retinomorphic vision sensors inspired by the human retina have shown great potential for in-sensor computing because they can constitute built-in artificial neural networks (ANNs) and implement multiply-and-accumulation (MAC) operations using tunable responsivities as the weights of the ANNs (1, 13–18). However, the responsivities of these retinomorphic devices are volatile and must be maintained by a sustained gate bias (1, 13, 14, 19, 20). That is, the weights of the ANNs must be stored remotely and supplied to each vision sensor via complex external circuits, which inevitably consumes additional energy. More seriously, controlling each unit in a network individually requires extremely complex circuitry, which is impractical for large-scale sensory networks given the limited power and resources available at the edge. In addition, the limited operating spectra of these retinomorphic devices, mainly in the ultraviolet (UV) and visible (vis) ranges (1, 13, 15, 16, 19, 20), hinder the implementation of in-sensor computing in the important infrared band.
It is highly desirable to develop broadband retinomorphic vision sensors with nonvolatile tunable responsivities to constitute sensory networks for neuromorphic machine vision.

Here, we propose an ion-induced localized-field modulation strategy to realize nonvolatile modulation of responsivity and develop a broadband retinomorphic vision sensor, with an operating spectrum covering 400 to 1800 nm, based on core-sheath single-walled carbon nanotube@graphdiyne (SWNT@GDY). The responsivity can be linearly modulated in both positive and negative regimes by controlling the localized field induced by Li+ ions trapped in GDY, enabling in-sensor MAC operations and convolutional processing. A 3 × 3 × 3 sensor array is fabricated to constitute a reconfigurable convolutional neural network (CNN) with three 3 × 3 kernels, and low-level and high-level processing tasks for multiband images, including edge detection and sharpening of hyperspectral images and classification of colored letters, are performed as demonstrations. The conductance of this retinomorphic device can also be linearly modulated, so the device array can also implement in-memory computing tasks. A complete hardware emulation of the human visual system, including the retina and the visual cortex of the brain, is achieved by networking the front-end in-sensor CNN with a back-end in-memory ANN based on the same device structure, and unsupervised learning (autoencoding) is demonstrated. The nonvolatile responsivity induced by the localized field enables local storage of weights in sensory networks, which is essential for high-efficiency, low-power in-sensor computing at the edge.

RESULTS

Broadband retinomorphic vision sensor with nonvolatile responsivity

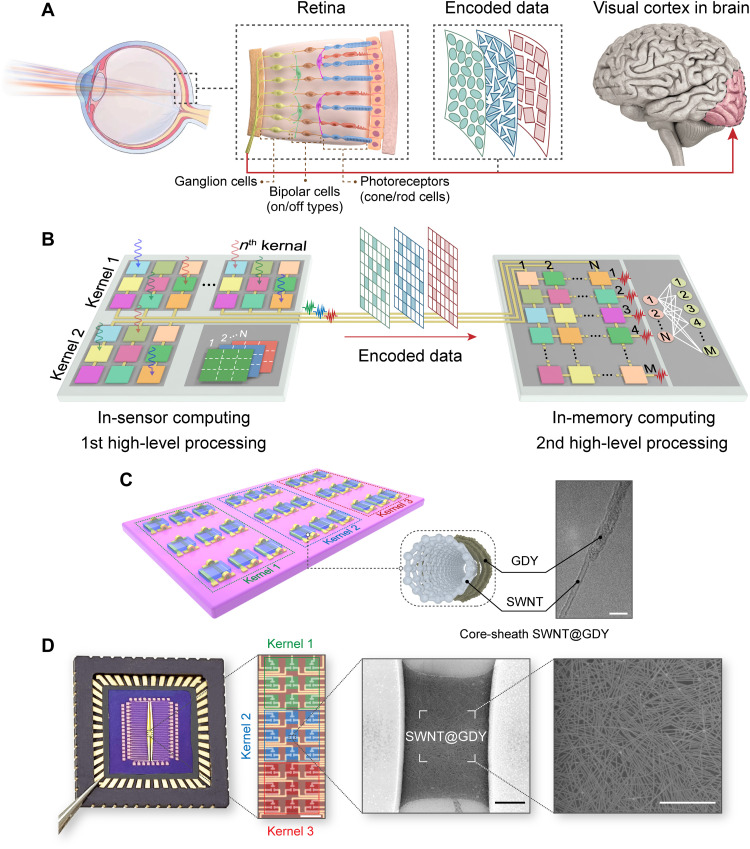

Figure 1A illustrates the schematic of the human visual system, in which visual information is sensed and preprocessed in the retina, and the extracted features (encoded data) are then transmitted to the visual cortex in the brain for further processing (21, 22). The front-end retina plays a crucial role in this system because it greatly reduces the data delivered to the brain by executing first-stage processing. Inspired by the human visual system, neuromorphic machine vision composed of front-end in-sensor computing and back-end in-memory computing architectures (Fig. 1B) provides a high-efficiency and low-power solution for real-time, data-intensive tasks by processing the captured images directly at the sensory terminals. Compared with the well-studied and increasingly mature in-memory computing architecture (23–26), in-sensor computing, although crucial, still requires further development.

Fig. 1. Neuromorphic visual systems inspired by human visual system.

(A) Schematic of the human visual system, consisting of the retina and the brain visual cortex. (B) A complete neuromorphic visual system involving front-end in-sensor computing and back-end in-memory computing. (C) Schematic of sensory networks based on core-sheath SWNT@GDY nanotubes. The middle and right panels show the structure and high-resolution transmission electron microscopy image of the core-sheath SWNT@GDY nanotube. Scale bar, 5 nm. (D) Photograph, optical microscopy, and scanning electron microscopy images of a 3 × 3 × 3 sensor array divided into three kernels. Scale bars (left to right), 50, 2, and 1 μm, respectively.

To emulate the functions of the human retina and implement in-sensor computing, retinomorphic vision sensors based on core-sheath SWNT@GDY nanotubes were fabricated by Aerosol Jet printing (Fig. 1, C and D; details in Materials and Methods and figs. S2 and S3). The core-sheath SWNT@GDY nanotubes, synthesized via a solution-phase epitaxial strategy (note S1) (27–31), were designed to realize nonvolatile modulation of responsivity in both positive and negative regimes. As described in note S2 and figs. S4 to S10, GDY is crucial for the intercalation of Li+ ions and the nonvolatile modulation of conductance and responsivity, owing to its evenly distributed large pores and high Li+-ion storage capability (32, 33). Driven by a small gate bias, Li+ ions in the electrolyte can penetrate into the GDY layer and remain stored in GDY even after the external gate bias is removed (fig. S4). The sheath GDY layer was approximately 1 nm thick, because a thicker GDY layer would obstruct light absorption by the core SWNT and restrict charge transport between the electrodes and the SWNT channel (note S2).

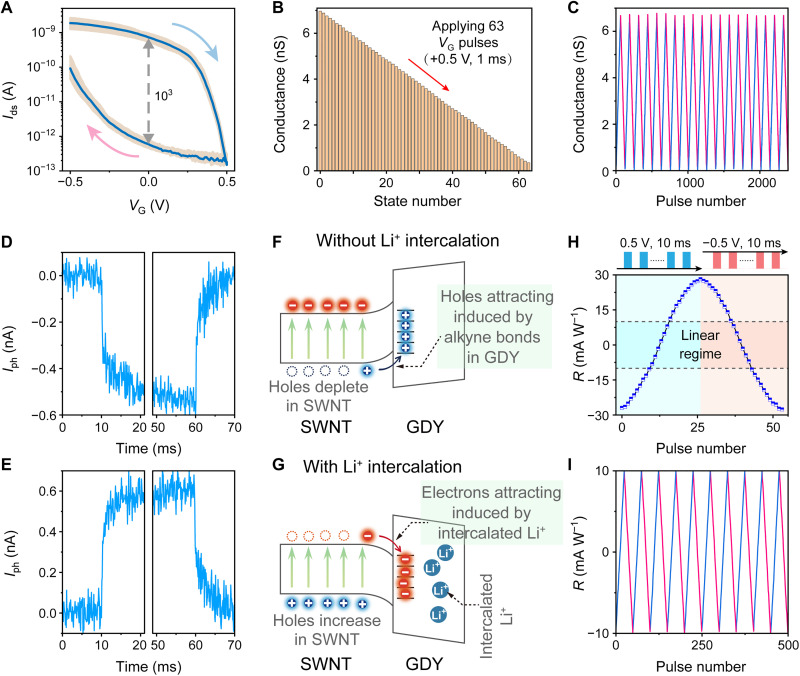

Figure 2A shows the collective transfer curves of the device with the gate voltage (VG) swept from −0.5 to 0.5 V and back to −0.5 V. A clockwise hysteresis with an on/off ratio of over 10³ at VG = 0 V is observed, which is induced by the intercalation of Li+ ions in GDY (32). The reduced conductance after sweeping is ascribed to residual Li+ ions trapped in GDY, and the conductance returns to its initial state when the time allowed for Li+-ion extraction is prolonged (fig. S12). The density of Li+ ions in GDY can be precisely controlled by applying positive or negative VG pulses. As shown in Fig. 2B and fig. S13, the conductance was linearly modulated from 7.0 to 0.3 nS with 64 discrete states by applying VG pulses (0.5 V, 1 ms). These conductance states are nonvolatile, with a retention time of over 10⁴ s (fig. S14), which indicates the stability of the intercalated Li+ ions in GDY. A reversible and repeatable conductance update with high linearity and symmetry was achieved (Fig. 2C), demonstrating its potential for neuromorphic computing (34, 35). The energy consumption of each update operation is estimated to be as low as 50 aJ (E = VG × IGS × tduration), given a leakage current IGS on the order of 10⁻¹³ A (fig. S15). The cyclic endurance of the device was measured by alternately applying positive and negative VG pulses for 10⁵ cycles (fig. S16), and no degradation of either the high- or the low-conductance state was observed during the whole test. These results demonstrate that frequent, repetitive intercalation/extraction of Li+ ions does not damage the structure or degrade the performance of the device.
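As a quick arithmetic check of the figures quoted above, the short Python sketch below reproduces the roughly 50-aJ update energy from E = VG × IGS × tduration and, assuming ideal equal spacing, the conductance step implied by 64 states between 7.0 and 0.3 nS. The leakage current is taken as exactly 10⁻¹³ A, the order of magnitude given in the text; the measured value may differ.

```python
# Worked check of the numbers quoted in the text (not a device model).

def update_energy(v_gate, i_leak, t_pulse):
    """Energy per gate-pulse update, E = VG * IGS * t_duration, in joules."""
    return v_gate * i_leak * t_pulse

# 0.5-V, 1-ms pulse with a leakage current assumed to be exactly 1e-13 A (fig. S15)
E = update_energy(0.5, 1e-13, 1e-3)
print(f"energy per update: {E * 1e18:.0f} aJ")

# Ideal, equally spaced staircase of 64 states between 7.0 nS and 0.3 nS (assumption)
G_MAX, G_MIN, N_STATES = 7.0e-9, 0.3e-9, 64
step = (G_MAX - G_MIN) / (N_STATES - 1)
print(f"conductance step: {step * 1e9:.3f} nS")
```

Run as is, it prints 50 aJ and a step of 0.106 nS per pulse.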

Fig. 2. Retinomorphic vision sensors with nonvolatile conductance and responsivity.

(A) Transfer curves with VG swept from −0.5 to 0.5 V and back to −0.5 V. (B) Sixty-four discrete conductance states realized by applying 0.5-V, 1-ms VG pulses. (C) Linear, symmetric, and repeatable conductance-update curves obtained by alternately applying 64 positive and 64 negative VG pulses (±0.5 V, 1 ms). (D and E) Negative (D) and positive (E) photoresponse triggered by a 532-nm optical pulse. A 0.1-V read voltage (Vds) was used. (F and G) Band alignments and charge transfer for the SWNT/GDY heterostructure without (F) and with (G) Li+-ion intercalation in the GDY layer. Without Li+ intercalation, the hole-attracting alkyne bonds in GDY induce the transfer of photogenerated holes from SWNT to the charge-trapping sites in GDY, decreasing the hole (majority carrier) concentration in SWNT and thus producing a negative photocurrent. With substantial Li+ intercalation, the positively charged Li+ ions attract photogenerated electrons from SWNT to GDY, increasing the hole concentration in SWNT and thus producing a positive photocurrent. (H) Symmetric and reversible modulation of the responsivity in the positive and negative regimes by applying positive and negative VG pulses (±0.5 V, 10 ms). (I) Linear, reversible, and repeatable modulation of responsivity in the range of ±10 mA W⁻¹ by controlling the density of intercalated Li+ ions in GDY.

The intercalated Li+ ions in GDY also notably modulate the photoresponse of the device. As shown in Fig. 2 (D and E), a negative photoresponse was observed for the device without Li+-ion intercalation, whereas a positive photoresponse with comparable amplitude was achieved by intercalating Li+ ions into GDY. This can be explained by a transition of GDY from hole-attracting to electron-attracting upon Li+-ion intercalation. GDY contains numerous alkyne bonds that act as highly active positive-charge–attracting sites (36, 37). Without Li+-ion intercalation, these sites facilitate the separation and transfer of photogenerated holes from SWNT to GDY (Fig. 2F), leading to a negative photoresponse given the p-type nature of SWNT. With the intercalation of Li+ ions, a localized electrostatic field forms from GDY to SWNT, which blocks hole injection and offsets the hole-attracting effect of the alkyne bonds. When this electrostatic field is strong enough, the GDY layer attracts photogenerated electrons instead, inducing a positive photoresponse (Fig. 2G). The competition between the hole-attracting alkyne bonds and the electron-attracting Li+ ions in GDY makes it possible to modulate the photoresponse continuously across the negative and positive regimes. As shown in Fig. 2H and fig. S17, by controlling the density of intercalated Li+ ions in GDY, a symmetric, reversible, and nonvolatile modulation of responsivity was achieved, which was nearly linear in the range of ±10 mA W⁻¹ (Fig. 2I). The core-sheath structure and the π-conjugated coupling of the two carbon allotropes, SWNT and GDY, enable fast carrier transfer between them, and the photoresponse occurs on a timescale of several milliseconds (Fig. 2, D and E). The response speed is mainly limited by the charge trapping/detrapping mechanism of the device.
In addition, given that the parasitic capacitance of micro/nano devices is usually on the order of femtofarads to sub-picofarads and that the device resistance is high (10⁸ to 10⁹ ohms), the resistance-capacitance (RC) time constant is on the order of microseconds to sub-milliseconds, which is another important factor limiting further improvement of the response speed.
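The RC estimate can be made concrete with τ = RC. The sketch below tabulates τ for the resistance range quoted above (10⁸ to 10⁹ ohms) and a few illustrative parasitic capacitances; the capacitance values (1 fF, 100 fF, 0.5 pF) are assumptions chosen to span the femtofarad to sub-picofarad range, not measured values.

```python
from itertools import product


def rc_time_constant(r_ohm, c_farad):
    """First-order RC time constant tau = R * C, in seconds."""
    return r_ohm * c_farad


# Illustrative values only: resistance range from the text, capacitances assumed
resistances = [1e8, 1e9]               # ohms
capacitances = [1e-15, 1e-13, 5e-13]   # farads (1 fF, 100 fF, 0.5 pF)

for r, c in product(resistances, capacitances):
    tau = rc_time_constant(r, c)
    print(f"R = {r:.0e} ohm, C = {c * 1e15:5.0f} fF -> tau = {tau * 1e6:7.1f} us")
```

The resulting τ runs from 0.1 μs to 0.5 ms, which matches the microsecond to sub-millisecond range cited above.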

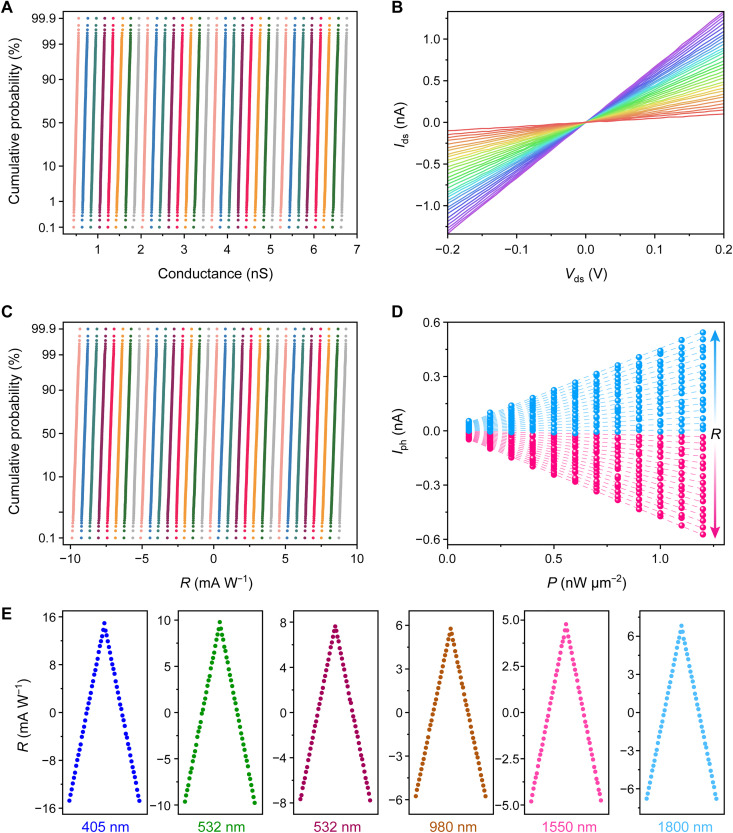

Linear and identical weight update for sensory network

A 3 × 3 × 3 retinomorphic sensor array (Fig. 1D) was fabricated to implement in-sensor computing. For neuromorphic hardware systems, uniform and reliable performance of every element in the neural network is essential for high computing accuracy. To minimize the influence of device-to-device and cycle-to-cycle variations on weight updates, we used a closed-loop programming operation (25) to program the conductance states and responsivities of all 27 vision sensors in the sensory network (note S3 and figs. S18 to S20). As described in detail in note S3, the conductance and responsivity were each divided equally into 32 levels (5 bits) within their linear regions, and all 27 devices could be programmed to these states precisely and repeatedly (figs. S21 and S22). Figure 3 (A and C) depicts the cumulative probability distributions of the 27 devices for the 32 discrete conductance and responsivity states over 1000 repetitions, in which all states are separated without any overlap (figs. S23 and S24). Figure 3B shows the I-V curves of the device measured at the 32 conductance states, with excellent linearity in the ±0.2 V regime. In addition, the light intensity–dependent photocurrents were measured (fig. S25), showing a linear relationship for all 32 responsivities (Fig. 3D). The linear dependence of the drain current on bias voltage and of the photocurrent on light intensity enables accurate analog multiplication for in-memory and in-sensor computing, respectively.
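Note S3 is not reproduced here, so the following is only a generic write-and-verify sketch of what closed-loop programming usually means: read the device, compare the reading with the target level, apply a corrective pulse, and repeat until the reading falls within a tolerance. The device model (`SimulatedDevice`), its noisy step size, the per-device step spread, and the quarter-level tolerance are hypothetical stand-ins, not the authors' parameters; only the 32 equally spaced target levels follow the text.

```python
import random

# 5-bit level grid over the linear conductance region (bounds taken from Fig. 2B)
G_MIN, G_MAX, N_LEVELS = 0.3e-9, 7.0e-9, 32
LEVEL_STEP = (G_MAX - G_MIN) / (N_LEVELS - 1)
TARGETS = [G_MIN + i * LEVEL_STEP for i in range(N_LEVELS)]
TOL = 0.25 * LEVEL_STEP  # accept readings within a quarter level (assumption)


class SimulatedDevice:
    """Hypothetical sensor: each gate pulse shifts G by a noisy step (cycle-to-cycle variation)."""

    def __init__(self, g0, step=0.05e-9, noise=0.3, seed=None):
        self.g = g0
        self.step = step
        self.noise = noise
        self.rng = random.Random(seed)

    def pulse(self, direction):
        # direction = +1 raises G, -1 lowers it; the step size fluctuates randomly
        delta = max(self.step * (1.0 + self.rng.gauss(0.0, self.noise)), 0.0)
        self.g = min(max(self.g + direction * delta, 0.0), 8e-9)

    def read(self):
        return self.g


def program(device, target, tol=TOL, max_pulses=1000):
    """Closed-loop write-and-verify: read, compare, pulse toward target until within tol."""
    for n_pulses in range(max_pulses):
        error = target - device.read()
        if abs(error) <= tol:
            return n_pulses
        device.pulse(+1 if error > 0 else -1)
    raise RuntimeError("device did not converge to the target level")


# 27 simulated devices; different step sizes mimic device-to-device variation
devices = [SimulatedDevice(g0=G_MIN, step=(0.04 + 0.001 * k) * 1e-9, seed=k) for k in range(27)]
for level in (31, 0, 16):
    for dev in devices:
        program(dev, TARGETS[level])
    worst = max(abs(dev.read() - TARGETS[level]) for dev in devices)
    print(f"level {level:2d}: worst-case error {worst / LEVEL_STEP:.2f} level steps")
```

Because every write is verified against the tolerance band, the final spread across devices is bounded by the tolerance rather than by the pulse-to-pulse variation; the price is a device-dependent number of pulses.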

Fig. 3. Linear and identical conductive and photoresponse characteristics for the sensory network.

(A) Cumulative probability distribution of all 27 devices for the 32 discrete conductance states over 1000 repetitions. (B) I-V curves of the device at the 32 conductance states with Vds swept from −0.2 to 0.2 V. (C) Cumulative probability distribution of all 27 devices for the 32 discrete responsivity states over 1000 repetitions. (D) Light-intensity dependence of the photocurrents for the device with 32 different responsivities, under 532-nm irradiation and a read voltage of 0.1 V. (E) Linear and reversible responsivity updates with 32 discrete states at wavelengths of 405, 532, 633, 980, 1550, and 1800 nm. The conductance and responsivity in this figure were updated using the closed-loop programming method.

The broadband absorption of SWNT@GDY (fig. S11) enables the retinomorphic vision sensors to operate over a wide spectral range. The photoresponse of the device to light with wavelengths of 400 to 1800 nm was investigated (fig. S26). The responsivities at these wavelengths were also updated using the closed-loop programming method. As shown in Fig. 3E, linear and reversible responsivity updates with 32 discrete levels were achieved at all six wavelengths, and fig. S27 presents the distribution of the 32 responsivities for all 27 devices. The linear relationship between light intensity and photoresponse was also confirmed at the six wavelengths (fig. S28). These results demonstrate the capability of our retinomorphic vision sensors to implement in-sensor computing for multiband images.

In-sensor convolutional processing of multiband images

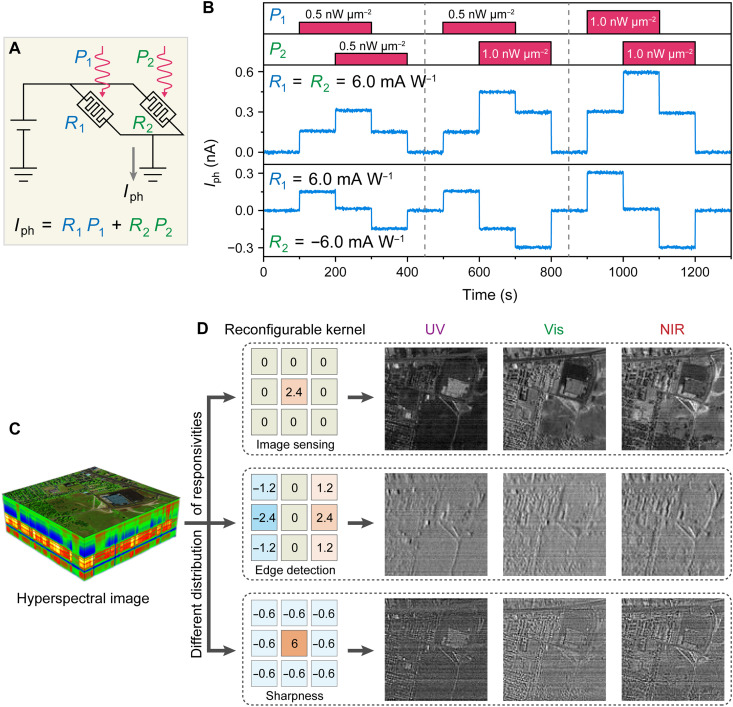

The linear modulation of responsivity and the linear light-intensity dependence of photocurrent demonstrated above make it possible to construct a reconfigurable retinomorphic sensory network by connecting the vision sensors in parallel. In-sensor MAC operation, the fundamental operation of in-sensor computing, is realized by multiplying the optically projected image in real time with the responsivity matrix of the sensor array and summing the photocurrents generated by all the vision sensors via Kirchhoff’s current law. To verify the accuracy of the MAC operation in our SWNT@GDY-based sensor array, a simple network containing two sensors was first investigated (Fig. 4A). As shown in Fig. 4B, the two responsivities, R1 and R2, were set either to the same value or to opposite values, and a sequence of optical pulses with different intensities was projected onto the sensors. The measured total photocurrent generated by the two sensors follows Iph = R1P1 + R2P2, confirming the validity of the MAC operation.
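The MAC relation above is a dot product between the responsivity vector and the vector of incident optical powers, with the summation performed physically by the shared output line. The sketch below evaluates it for the two responsivity settings of Fig. 4B; the optical powers are hypothetical, and dark current is ignored.

```python
def in_sensor_mac(responsivities, powers):
    """Total current of parallel-connected sensors (Kirchhoff's current law):
    I_ph = sum_i R_i * P_i, with R in A/W and P in W."""
    if len(responsivities) != len(powers):
        raise ValueError("one optical power per sensor is required")
    return sum(r * p for r, p in zip(responsivities, powers))


R_SAME = [6.0e-3, 6.0e-3]       # A/W: both sensors at +6.0 mA/W (Fig. 4B, top)
R_OPPOSITE = [6.0e-3, -6.0e-3]  # A/W: +6.0 and -6.0 mA/W (Fig. 4B, bottom)

# Hypothetical sequence of optical powers (P1, P2) in watts
for p1, p2 in [(1e-6, 0.0), (0.0, 1e-6), (2e-6, 1e-6)]:
    i_same = in_sensor_mac(R_SAME, [p1, p2])
    i_opp = in_sensor_mac(R_OPPOSITE, [p1, p2])
    print(f"P1={p1:.0e} W, P2={p2:.0e} W: same {i_same * 1e9:5.1f} nA, "
          f"opposite {i_opp * 1e9:5.1f} nA")
```

With opposite signs, equal illumination of the two sensors cancels; this is how a single array of positive and negative responsivities can realize signed kernel weights.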

Fig. 4. In-sensor MAC operation and convolutional processing for multiband images.

(A) Schematic circuit diagram of a sensory network containing two retinomorphic vision sensors for implementation of in-sensor MAC operation. R1 and R2 represent the responsivities of the two sensors, while P1 and P2 are the corresponding light intensities. (B) Total output photocurrent generated by the two sensors under irradiation with different intensities. Top: The responsivities of the two sensors were both set to 6.0 mA W⁻¹. Bottom: The responsivities were set to 6.0 and −6.0 mA W⁻¹, respectively. (C) Hyperspectral image of Urban (https://rslab.ut.ac.ir/data) (40). (D) Demonstration of different convolutional processing operations (image sensing, edge detection, and sharpening) in the UV, vis, and NIR bands by setting different responsivity distributions of the 3 × 3 kernel.

The broadband characteristics of the SWNT@GDY-based vision sensors enable the sensing and processing of multiband images, for example, hyperspectral images captured by aircraft or satellites. Here, a 3 × 3 sensor array with reconfigurable responsivities was used as a convolutional kernel to sense and process hyperspectral images from the Urban dataset (Fig. 4C). The convolutional processing is described in detail in Materials and Methods and fig. S29. Three images (100 × 100 pixels) were extracted from the hyperspectral images to represent the UV, vis, and near-infrared (NIR) bands, respectively. By setting the kernel to different responsivity distributions, sensing and processing (e.g., edge detection and sharpening) of the multiband images were implemented successfully. Figure 4D presents the reconstructed images obtained by sliding the corresponding kernels over the multiband images. End-members including “roof,” “grass,” “tree,” and “road” embedded in the multiband images at different wavelengths (fig. S30) were highlighted by the convolutional processing in the corresponding bands. The good agreement between the experimental results and simulations (fig. S31) verifies the reliability of in-sensor convolutional processing for multiband images in the UV-vis-NIR bands.

In-sensor CNN for classification and autoencoding

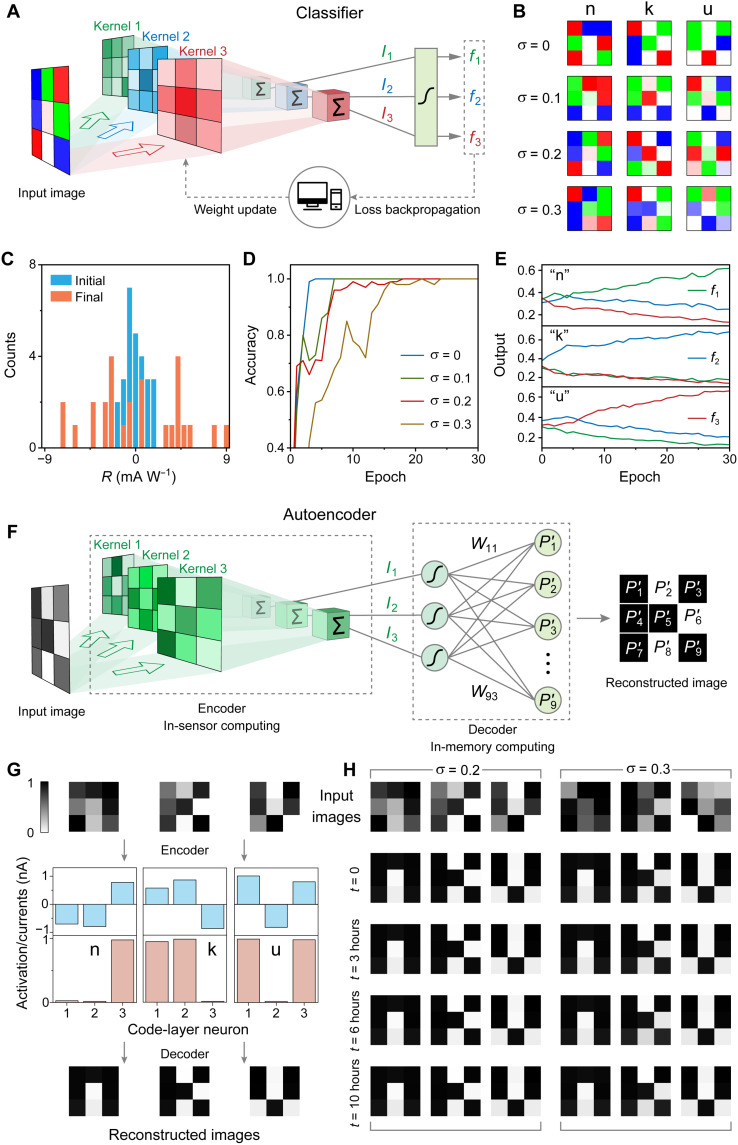

The successful implementation of the MAC operation demonstrates the potential of this retinomorphic sensor array to constitute a built-in neural network and perform high-level processing tasks, analogous to the function of the human retina. On the basis of the fabricated 3 × 3 × 3 sensor array, a CNN containing three 3 × 3 convolutional kernels was constructed to perform color-image classification (Fig. 5A). The three letters “n,” “k,” and “u” (3 × 3 pixels) with mixed colors and Gaussian noise (σ = 0, 0.1, 0.2, and 0.3) were randomly generated as training and test datasets (Fig. 5B and figs. S32 and S33). In each training epoch, a set of 20 randomly chosen letters was optically projected onto the three kernels (fig. S34), and the output photocurrent of each kernel, Im (m = 1, 2, and 3 is the kernel index), was nonlinearly activated by the Softmax function. The activated outputs f1, f2, and f3 represent the probabilities of the letters n, k, and u, respectively. The weights (responsivities) of each kernel were updated by backpropagating the gradients of the loss function (here, the cross-entropy function). Figure 5C and fig. S35 present the weight distributions of the three kernels before and after 30 epochs, and fig. S36 depicts the evolution of the loss during training. The accuracy of the network was estimated by classifying 100 letters chosen from the test datasets. Although the wavelength-dependent photoresponse slightly degrades recognition performance for mixed-color images (fig. S37), the recognition accuracy still reaches 100% within 10 epochs for σ = 0 and 0.1, and approximately 20 epochs are required for color images with larger noise (σ = 0.2 and 0.3) (Fig. 5D).
Figure 5E depicts the evolution of the outputs f1, f2, and f3 for the letters n, k, and u with σ = 0.2; the output for the target letter is well separated from the other two after 10 epochs, demonstrating successful classification of color images. Although the wide operating spectral range of the vision sensor enables the recognition of color images, the network can only recognize which letter is presented (n, k, or u) and cannot distinguish the color of each pixel. As emphasized above, the key feature of this retinomorphic vision sensor is its nonvolatile responsivity; thus, the weights can be stored locally in the CNN without an external field. Figure S38 presents the evolution of the weights after the last training epoch; the responsivity of each device degraded by approximately 5% in 10 hours. This level of degradation did not affect the classification accuracy, which remained 100% even after 10 hours (fig. S39).
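The training loop described above (Softmax over the three kernel photocurrents, cross-entropy loss, gradient update of the responsivities, 20 images per epoch, 30 epochs) can be sketched in NumPy as follows. This is a software idealization, not the authors' code: the 3 × 3 letter templates are hypothetical, the images are grayscale (the wavelength-dependent response to colored pixels is ignored), photocurrents are in arbitrary normalized units fed directly to the Softmax, the learning rate is assumed, and the weights are continuous and only clipped to ±10 (mA/W), whereas the hardware stores them in 32 discrete levels.

```python
import numpy as np

# Hypothetical 3x3 binary templates for the letters (not the paper's exact patterns)
LETTERS = {
    "n": [[1, 1, 1], [1, 0, 1], [1, 0, 1]],
    "k": [[1, 0, 1], [1, 1, 0], [1, 0, 1]],
    "u": [[1, 0, 1], [1, 0, 1], [1, 1, 1]],
}
TEMPLATES = np.array([np.ravel(v) for v in LETTERS.values()], dtype=float)  # (3, 9)
R_LIMIT = 10.0  # responsivity range, +/-10 mA/W (linear region)


def make_batch(rng, n, sigma):
    labels = rng.integers(0, 3, size=n)
    x = TEMPLATES[labels] + rng.normal(0.0, sigma, size=(n, 9))
    return np.clip(x, 0.0, 1.0), labels  # normalized optical intensities, class indices


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train(sigma=0.2, epochs=30, batch=20, lr=1.0, seed=0):
    rng = np.random.default_rng(seed)
    W = rng.normal(0.0, 0.1, size=(3, 9))  # rows = kernels m, columns = 3x3 pixels
    for _ in range(epochs):
        x, y = make_batch(rng, batch, sigma)
        I = x @ W.T                           # kernel photocurrents I_m (normalized)
        f = softmax(I)                        # f_1, f_2, f_3: class probabilities
        onehot = np.eye(3)[y]
        grad = (f - onehot).T @ x / batch     # gradient of mean cross-entropy w.r.t. W
        W = np.clip(W - lr * grad, -R_LIMIT, R_LIMIT)  # stay within the linear range
    return W, rng


def accuracy(W, rng, sigma, n=100):
    x, y = make_batch(rng, n, sigma)
    return float(np.mean(np.argmax(x @ W.T, axis=1) == y))


W, rng = train(sigma=0.2)
print(f"test accuracy: {accuracy(W, rng, sigma=0.2):.2f}")
```

A uniform drop of all responsivities (such as the roughly 5% drift mentioned above) scales every logit by the same positive factor and therefore cannot change the argmax decision; non-uniform drift has no such guarantee.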

Fig. 5. Implementation of in-sensor CNN for classification and autoencoding.

(A) Schematic of an in-sensor classifier containing three 3 × 3 kernels for color-image classification. (B) Color images of letters n, k, and u with randomly generated colors and Gaussian noise (σ = 0, 0.1, 0.2, and 0.3). (C) Responsivity distributions of the classifier before (initial) and after (final) training for σ = 0.2. (D) Classification accuracy of the classifier during training for datasets with different noise levels. (E) Average output signals (f1, f2, and f3) for each projected letter, measured during each training epoch with a noise level of σ = 0.2. (F) Schematic of an autoencoder using a 3 × 3 × 3 sensory network (in-sensor computing) as the encoder and a 3 × 9 ANN (in-memory computing) as the decoder. (G) Autoencoding process, using grayscale images with a noise level of σ = 0.2 as a demonstration. Each input image was translated into a three-element current code, which was nonlinearly activated into an approximately binary code and finally reconstructed into a 3 × 3 image by the decoder. (H) Images reconstructed by the pretrained autoencoder with locally stored weights (responsivities for the encoder and conductances for the decoder) for input images with noise levels of σ = 0.2 and 0.3. t is the time after the last weight update; autoencoding tasks were performed at different values of t.

In addition to in-sensor computing, the linear and nonvolatile modulation of the conductance states enables in-memory computing. A complete neuromorphic visual system involving front-end in-sensor computing and back-end in-memory computing, analogous to the human visual system with its retina and visual cortex, was constructed by networking a 3 × 3 × 3 in-sensor CNN with a 3 × 9 in-memory ANN. As a demonstration, unsupervised autoencoding tasks were implemented with the sensory network as the encoder and the ANN as the decoder (Fig. 5F). Grayscale images (3 × 3 pixels) with Gaussian noise (σ = 0.2 and 0.3) were generated as the training and test datasets for the autoencoding task (fig. S40). The grayscale images were projected onto the sensory kernels, and the total photocurrent of each kernel, Im, was activated by the Sigmoid function, yielding a 3 × 1 encoded vector. The encoded data were converted to a vector of voltage signals and fed into the in-memory ANN for decoding. After the in-memory MAC operation, the current measured from each output neuron, In (n = 1, 2, …, 9), was nonlinearly activated (Sigmoid function), and the corresponding outputs P′n were used to reconstruct a 3 × 3-pixel image. The network was trained by backpropagation to update the weights of the encoder and decoder (figs. S41 and S42); the loss evolution during training is shown in fig. S43. Figure 5G presents the corresponding outputs of the trained autoencoder for input images of n, k, and u. After in-sensor computing, each letter generates a unique, nearly binary code, which the subsequent in-memory computing decodes into an image. The reconstructed images represent the inputs correctly, with reduced noise and enhanced contrast. Figure 5H and fig. S44 depict the images reconstructed by the trained autoencoder at different times after the last training.
The nearly unchanged images demonstrate local storage of the responsivities and conductance states in the networks; thus, the neuromorphic visual system can perform image sensing and processing tasks without an external field.
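To make the encoder/decoder data flow concrete, here is a minimal NumPy sketch of a 9-3-9 autoencoder in which the encoder weights stand in for the sensor responsivities (9 pixels to 3 kernels, then Sigmoid) and the decoder weights for the 3 × 9 in-memory conductance array (then Sigmoid). It is an idealized software model under several assumptions: the letter templates are hypothetical; both weight matrices are signed and continuous (the hardware uses positive conductances and discrete levels, and that mapping is not modeled); no bias terms are used; the loss is a mean-squared reconstruction error against the noisy input; and full-batch gradient descent with an assumed learning rate replaces the authors' update schedule.

```python
import numpy as np

# Hypothetical 3x3 letter templates, flattened row by row
TEMPLATES = np.array([
    [1, 1, 1, 1, 0, 1, 1, 0, 1],  # n
    [1, 0, 1, 1, 1, 0, 1, 0, 1],  # k
    [1, 0, 1, 1, 0, 1, 1, 1, 1],  # u
], dtype=float)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def make_grayscale(rng, n, sigma):
    labels = rng.integers(0, 3, size=n)
    x = TEMPLATES[labels] + rng.normal(0.0, sigma, size=(n, 9))
    return np.clip(x, 0.0, 1.0)


def forward(x, w_enc, w_dec):
    code = sigmoid(x @ w_enc.T)      # encoder: 3 kernel photocurrents I_m -> Sigmoid
    recon = sigmoid(code @ w_dec.T)  # decoder: 9 output currents I_n -> Sigmoid -> P'_n
    return code, recon


def train_autoencoder(sigma=0.2, n=200, steps=5000, lr=0.5, seed=0):
    rng = np.random.default_rng(seed)
    x = make_grayscale(rng, n, sigma)
    w_enc = rng.normal(0.0, 0.5, size=(3, 9))  # stands in for responsivities
    w_dec = rng.normal(0.0, 0.5, size=(9, 3))  # stands in for conductances (signed here)
    losses = []
    for _ in range(steps):
        code, recon = forward(x, w_enc, w_dec)
        losses.append(0.5 * np.mean(np.sum((recon - x) ** 2, axis=1)))
        # Backpropagate the reconstruction error through both Sigmoid layers
        d_out = (recon - x) * recon * (1.0 - recon) / n
        grad_dec = d_out.T @ code
        d_code = (d_out @ w_dec) * code * (1.0 - code)
        grad_enc = d_code.T @ x
        w_enc -= lr * grad_enc
        w_dec -= lr * grad_dec
    return w_enc, w_dec, losses


w_enc, w_dec, losses = train_autoencoder()
code, recon = forward(TEMPLATES, w_enc, w_dec)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(np.round(code, 2))  # one 3-element code per clean letter
```

The three-unit bottleneck is what forces denoising here: the network cannot copy nine independent noisy pixels through three codes, so it tends to reproduce the shared letter structure instead.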

DISCUSSION

Table S1 compares the parameters of existing retinomorphic vision sensors with those of our device. The most important advantage of our device is nonvolatile modulation of the responsivity over a wide spectral range, which enables in-sensor computing for broadband images. Nevertheless, the device still has several limitations for practical applications. (i) A drain bias is still required during readout, inducing a high dark current and consuming more energy. (ii) The response speed is limited to milliseconds by the charge trapping/detrapping mechanism, restricting its potential for high-speed image processing. (iii) Although the device operates over a wide spectral range, discriminating colors (wavelengths) remains a great challenge, and the wavelength-dependent photoresponse affects the processing accuracy of the sensory network for color images. (iv) The scale of the sensory network is still limited, which makes it difficult to meet the requirements of machine vision for millions of pixels and tens of categories in real scenarios. Future work will address these challenges. The photovoltaic effect provides a feasible approach to high-speed photoresponse on the nanosecond timescale (1), and devices based on the photovoltaic effect require no drain bias. The RC delay, however, must also be considered for high-speed devices, because the parasitic capacitance together with the large device resistance gives a nonnegligible RC time constant that limits further improvement of the processing speed. Mimicking the various human cone cells (short-wave, middle-wave, and long-wave cones) that respond to specific wavelengths (38) may provide a route to color discrimination.
In addition, compatibility with modern complementary metal-oxide-semiconductor (CMOS) technology is crucial for large-scale fabrication of the sensory network. The polymer electrolyte used in this work is considered the main obstacle to CMOS processing because of its poor thermodynamic stability and processability. Nevertheless, inorganic solid electrolytes (e.g., Li3PO4 and LixSiO2) are promising replacements for the polymer electrolyte (39) and could improve the CMOS compatibility of our device. High-speed, low-power, and large-scale retinomorphic vision sensors with nonvolatile modulation of responsivity and color discrimination ability remain to be developed.

In conclusion, we have proposed a strategy that uses a localized field induced by trapped Li+ ions, instead of an externally controlled gate bias, to realize nonvolatile modulation of responsivity. On this basis, retinomorphic vision sensors based on specially designed core-sheath SWNT@GDY have been developed to constitute built-in neural networks with locally stored weights, which can implement in-sensor convolutional processing over a wide spectral range covering 400 to 1800 nm. Closely analogous to the human visual system, neuromorphic machine vision involving a front-end in-sensor CNN and a back-end in-memory ANN has been constructed to implement supervised and unsupervised learning tasks. This work is an important step toward in-sensor neural networks with locally stored responsivities, providing a feasible strategy for high-speed and low-power neuromorphic machine vision for real-time object recognition and classification.

MATERIALS AND METHODS

Device fabrication

Core-sheath SWNT@GDY nanotubes were synthesized via a solution-phase epitaxial strategy as described in note S1. The prepatterned source, drain, and side-gate electrodes (Cr/Au, 10/50 nm) of the device array were fabricated on an oxygen plasma–pretreated SiO2/Si substrate (300-nm SiO2) by photolithography, thermal evaporation, and liftoff. The SWNT@GDY channels, approximately 10 nm thick, were printed directly by an Aerosol Jet printer using core-sheath SWNT@GDY solution as the ink, followed by annealing at 120°C for 30 min. An insulating polymethyl methacrylate (PMMA) layer was spin-coated on the device surface to prevent the source and drain electrodes from contacting the electrolyte, and reaction windows on top of the channels and side gates were selectively opened by electron-beam lithography. The ion-gel electrolyte was prepared by dissolving 30 mg of LiClO4 (Sigma-Aldrich) and 100 mg of polyethylene oxide powder [weight-average molecular weight (Mw) = 100,000, Sigma-Aldrich] in 20 ml of anhydrous methanol. The mixture was stirred for 24 hours at 50°C. The polymer electrolyte was then spin-coated on top of the PMMA layer to a thickness of 1.2 μm, connecting the exposed SWNT@GDY channel and the side-gate electrode (32). The electrolytes of the individual devices were separated by selective etching to avoid interference between adjacent devices. Finally, the device array was mounted and wire-bonded to a chip carrier for measurement.

Measurement setup

For electrical measurements, basic electrical characteristics were measured by a semiconductor analyzer (Keithley, 4200A-SCS). The gate pulses were applied by an arbitrary waveform generator (Tektronix, AFG31052). During the implementation of in-sensor and in-memory computing, drain voltages and corresponding output currents (photocurrents) were applied and measured using a set of source-measurement units (Keithley). For optical inputs, fiber lasers with tunable intensities and wavelengths of 405, 532, 633, 980, 1550, and 1800 nm were used. The 3 × 3 images generated by nine aligned lasers with corresponding wavelengths and intensities were projected onto the device array using a microscope (fig. S34).

Convolutional processing for hyperspectral images

Hyperspectral images with band numbers of 1, 60, and 130 were selected as the UV, vis, and NIR images, respectively. For simplicity, all images were first resized to 100 × 100 pixels. Each image was divided into a series of 3 × 3–pixel subimages with a sliding step (stride) of 1 (fig. S29). The pixel values (0 to 255) of these subimages were converted to voltages that controlled the intensities of the input lasers at the corresponding wavelengths (405 nm for UV, 633 nm for vis, and 1800 nm for NIR). The subimages were optically projected onto a 3 × 3 sensor array in sequence, and the total photocurrent was measured at a drain voltage of 0.1 V. The responsivity distribution of the sensor array was set device by device using a closed-loop programming method (fig. S20). The dark currents of the sensor array were measured before and after optical projection to calibrate the photocurrents. Through in-sensor MAC operations, each subimage was converted to a single photocurrent value, and the processed images were reconstructed by arranging the measured photocurrents in scanning order.
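The scan-and-project procedure above amounts to a stride-1, 3 × 3 convolution in which the programmed responsivities act as the kernel weights. The NumPy sketch below simulates it under several assumptions. Pixel values are mapped linearly to optical power with a hypothetical full-scale power `p_max`, and device nonidealities are ignored. The hypothetical helper `calibrate` subtracts the mean of the before and after dark readings; this averaging rule is an assumption, since the text only states that both readings were taken.

```python
import numpy as np

def calibrate(i_total, i_dark_before, i_dark_after):
    """Remove the dark current, taken here as the mean of two readings (assumed rule)."""
    return i_total - 0.5 * (i_dark_before + i_dark_after)

def in_sensor_convolution(image, R, p_max=1e-6):
    """Simulate projecting every 3 x 3 subimage (stride 1, no padding) onto a sensor array.

    image : 2D array of 8-bit pixel values (0-255)
    R     : 3 x 3 array of responsivities in A/W (positive or negative)
    p_max : hypothetical optical power (W) corresponding to pixel value 255
    Returns the photocurrent map arranged in scanning order.
    """
    P = np.asarray(image, dtype=float) / 255.0 * p_max   # linear pixel-to-power mapping
    k = R.shape[0]
    h, w = P.shape
    out = np.empty((h - k + 1, w - k + 1))
    for r in range(h - k + 1):
        for c in range(w - k + 1):
            # Summed array photocurrent = sum_ij R_ij * P_ij (the in-sensor MAC)
            out[r, c] = np.sum(R * P[r:r + k, c:c + k])
    return out

# Example: a zero-sum vertical-edge kernel applied to a random 100 x 100 image
rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(100, 100))
edge = 0.1 * np.array([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]], dtype=float)
feature_map = in_sensor_convolution(img, edge)
print(feature_map.shape)   # (98, 98)
```

Because no padding is applied in this sketch, a 100 × 100 image yields a 98 × 98 feature map. Kernels with both positive and negative entries, like the edge detector above, rely on responsivities of both signs.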

Preparation of datasets

The training and test datasets for the classification and autoencoding tasks were generated in Python. For the classification task, we prepared four pairs of training and test datasets with different levels of Gaussian noise (σ = 0, 0.1, 0.2, and 0.3). For each training dataset, 200 images corresponding to the letters n, k, and u were randomly generated, and colors (blue, green, or red) and noise (with the corresponding σ) were then randomly added to produce color images. The test datasets, each containing 100 images, were prepared in the same way. For the autoencoding task, grayscale training and test datasets with different Gaussian noise levels (σ = 0.2 and 0.3) were prepared similarly, except that the coloring step was omitted.
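The dataset recipe can be sketched in a few lines of NumPy. Only the dataset sizes, the noise levels σ, the three letters, and the three colors come from the text. The following are assumptions made for illustration:

- the letter bitmaps;
- the 3 × 3 image size;
- clipping of noisy pixels to [0, 1];
- representing color as a per-image tag that would select the projection laser.

```python
import numpy as np

# Hypothetical 3 x 3 binary layouts; the letter bitmaps are not specified in this section.
LETTERS = {
    "n": [[1, 1, 1], [1, 0, 1], [1, 0, 1]],
    "k": [[1, 0, 1], [1, 1, 0], [1, 0, 1]],
    "u": [[1, 0, 1], [1, 0, 1], [1, 1, 1]],
}
COLORS = ("blue", "green", "red")

def make_dataset(n_images, sigma, colored=True, seed=None):
    """Randomly generate noisy letter images.

    Returns images (n_images, 3, 3) in [0, 1], integer labels (index into LETTERS),
    and a per-image color tag ("gray" when colored=False).
    """
    rng = np.random.default_rng(seed)
    names = list(LETTERS)
    images, labels, colors = [], [], []
    for _ in range(n_images):
        idx = int(rng.integers(len(names)))                  # random letter
        img = np.array(LETTERS[names[idx]], dtype=float)
        colors.append(COLORS[int(rng.integers(len(COLORS)))] if colored else "gray")
        img = img + rng.normal(0.0, sigma, size=img.shape)   # additive Gaussian noise
        images.append(np.clip(img, 0.0, 1.0))                # clipping is an assumption
        labels.append(idx)
    return np.stack(images), np.array(labels), colors

# Four classification training sets (one per noise level) and matching test sets
train_sets = {s: make_dataset(200, s, seed=i) for i, s in enumerate((0.0, 0.1, 0.2, 0.3))}
test_sets = {s: make_dataset(100, s, seed=100 + i) for i, s in enumerate((0.0, 0.1, 0.2, 0.3))}
# Grayscale autoencoder set; the size of 200 is assumed to mirror the classification sets
ae_train = make_dataset(200, 0.2, colored=False, seed=10)
```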

Implementation of image classification

The initial weights (responsivities) of the sensory network were drawn from a Gaussian distribution. In each training epoch, a batch of 20 color images was randomly selected from the training dataset with the corresponding noise level. These images were optically projected onto three 3 × 3 kernels, and the output photocurrent $I_m$ of each kernel is given by

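$$I_m = \sum_{i=1}^{3}\sum_{j=1}^{3} R_{m,ij}\,P_{ij} \tag{1}$$

where $i$ and $j$ are the row and column indices, respectively, $R_{m,ij}$ is the responsivity of the corresponding device in the $m$th kernel, and $P_{ij}$ is the optical power incident on that pixel. These steps were executed on the sensor array hardware, whereas the subsequent activation and backpropagation were performed in software. Specifically, the measured $I_m$ was activated by the Softmax function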

$$f_m(I_m) = \frac{e^{\xi I_m}}{\sum_{k=1}^{M} e^{\xi I_k}} \tag{2}$$

where $\xi = 10^{9}\ \mathrm{A^{-1}}$ is the scaling factor for normalization and $M = 3$ is the number of classes. The activated output $f_m$ represents the predicted probability $\hat{y}_m$ for the letters n, k, and u, respectively, and the letter with the largest $\hat{y}_m$ is taken as the classification result. The network contains a single convolutional layer and no pooling layer. After each epoch, the loss was calculated using the cross-entropy loss function

$$\mathcal{L} = -\sum_{k=1}^{M} y_k \ln \hat{y}_k \tag{3}$$

where $y_k$ and $\hat{y}_k$ are the label and the prediction, respectively. To update the weights of the network, the gradients of the loss function were backpropagated

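$$R_{m,ij} \leftarrow R_{m,ij} - \frac{\eta}{S}\sum_{s=1}^{S}\frac{\partial \mathcal{L}_s}{\partial R_{m,ij}} \tag{4}$$

with learning rate $\eta = 0.2$ and batch size $S = 20$, where $\mathcal{L}_s$ is the loss of the $s$th image in the batch and the update is applied to every responsivity $R_{m,ij}$. The updated weights were programmed into the sensor array device by device using the closed-loop programming method (note S3). The classification accuracy was measured in each epoch by projecting the 100 test images onto the network. The weight distributions during and after training were measured device by device, as shown in fig. S34E.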
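The hardware-in-the-loop training above can be mimicked entirely in software to check the update rule. For a single image, combining the MAC output, the Softmax activation, and the cross-entropy loss gives the gradient $\partial\mathcal{L}/\partial R_{m,ij} = \xi(\hat{y}_m - y_m)P_{ij}$. Here $R_{m,ij}$ is the responsivity at row $i$, column $j$ of kernel $m$, and $P_{ij}$ is the incident power. The sketch below averages this gradient over a batch of $S$ images.

This is a simulation under simplifying assumptions, not the authors' code:

- weights and inputs are dimensionless, with ξ = 1 rather than $10^{9}\ \mathrm{A^{-1}}$;
- the letter bitmaps are hypothetical;
- colors and the wavelength dependence of the responsivity are ignored;
- the closed-loop device programming step is replaced by direct assignment.

```python
import numpy as np

# Hypothetical 3 x 3 bitmaps for the letters n, k and u (not given in this section)
LETTERS = np.array([
    [[1, 1, 1], [1, 0, 1], [1, 0, 1]],   # n
    [[1, 0, 1], [1, 1, 0], [1, 0, 1]],   # k
    [[1, 0, 1], [1, 0, 1], [1, 1, 1]],   # u
], dtype=float)

def forward(R, P, xi=1.0):
    """MAC over each 3 x 3 kernel followed by a Softmax with scaling factor xi."""
    I = np.einsum("mij,ij->m", R, P)      # one photocurrent per kernel
    z = xi * I - np.max(xi * I)           # shift for numerical stability
    y_hat = np.exp(z) / np.sum(np.exp(z))
    return I, y_hat

def cross_entropy(y, y_hat, eps=1e-12):
    """Cross-entropy loss for a single image with one-hot label y."""
    return -np.sum(y * np.log(y_hat + eps))

def batch_update(R, batch_P, batch_y, eta=0.2, xi=1.0):
    """Average the per-image gradients xi * (y_hat - y) * P over the batch and step."""
    grad = np.zeros_like(R)
    for P, y in zip(batch_P, batch_y):
        _, y_hat = forward(R, P, xi)
        grad += xi * (y_hat - y)[:, None, None] * P[None, :, :]
    return R - eta * grad / len(batch_P)

def train(epochs=300, sigma=0.1, S=20, eta=0.2, seed=0):
    rng = np.random.default_rng(seed)
    R = rng.normal(0.0, 0.1, size=(3, 3, 3))   # Gaussian initial weights
    one_hot = np.eye(3)
    for _ in range(epochs):
        labels = rng.integers(0, 3, size=S)     # a batch of S random images
        noise = rng.normal(0.0, sigma, size=(S, 3, 3))
        batch_P = np.clip(LETTERS[labels] + noise, 0.0, 1.0)
        # Direct assignment stands in for closed-loop device programming
        R = batch_update(R, batch_P, one_hot[labels], eta=eta)
    return R

R_trained = train()
predictions = [int(np.argmax(forward(R_trained, P)[1])) for P in LETTERS]
```

Computing the gradient analytically keeps the software step cheap. In hardware, only the forward MAC is performed optically.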

Implementation of autoencoding

The initial weights of the front-end CNN and the back-end ANN were drawn from Gaussian distributions. The autoencoder was trained by feeding 20 randomly chosen grayscale images to the CNN kernels in each epoch. The images were projected onto the sensor array using a 3 × 3 laser array (532 nm), and the responsivities of the three kernels were programmed to the corresponding values at the 532-nm wavelength. The output photocurrent $I_m$ of each kernel was measured and then nonlinearly activated by the Sigmoid function, $f_m(I_m) = (1 + e^{-\xi I_m})^{-1}$ with $\xi = 5 \times 10^{9}\ \mathrm{A^{-1}}$. The encoded data were converted to sequential voltage pulses (6-bit precision) over six time intervals ($t_1, t_2, \ldots, t_6$) (7, 25), and the total output currents were summed (fig. S45). The nine output currents $I_n$ were also activated by the Sigmoid function (scaling factor $\xi = 3 \times 10^{8}\ \mathrm{A^{-1}}$). The mean square loss function, $\mathcal{L} = \frac{1}{9}\sum_{n=1}^{9}(P_n - P'_n)^2$, was used for backpropagation and weight update, where $P_n$ and $P'_n$ are the intensities of the $n$th pixel in the original and reconstructed images, respectively. The nonlinear activation and backpropagation in both the classification and autoencoding tasks were performed in software. After the final training epoch, the weights (responsivities of the encoder and conductances of the decoder) and the autoencoding performance were measured every hour.
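The conversion of encoder outputs into 6-bit sequential pulses can be illustrated with a bit-serial matrix-vector multiplication. The sketch below rests on assumptions that this section does not spell out:

- each Sigmoid output in [0, 1] is quantized to an integer q from 0 to 63;
- bit k of q is applied as a read pulse of amplitude `v_read` during interval $t_{k+1}$, least-significant bit first;
- the current collected in each interval is weighted by $2^k$ in a shift-and-add step.

The conductance matrix `G` (nine outputs by three inputs), its magnitude, and `v_read` are hypothetical. Only nonnegative conductances are modeled.

```python
import numpy as np

N_BITS = 6   # 6-bit precision, six time intervals t1..t6

def sigmoid(I, xi):
    """f(I) = 1 / (1 + exp(-xi * I))."""
    return 1.0 / (1.0 + np.exp(-xi * np.asarray(I, dtype=float)))

def to_bit_planes(x, n_bits=N_BITS):
    """Quantize x in [0, 1] to n_bits; return bit planes (LSB first) and integer codes."""
    q = np.round(np.clip(x, 0.0, 1.0) * (2**n_bits - 1)).astype(int)
    planes = np.array([(q >> k) & 1 for k in range(n_bits)])   # shape (n_bits, len(x))
    return planes, q

def bit_serial_mvm(G, x, v_read=0.1, n_bits=N_BITS):
    """Drive one bit plane per time interval and shift-and-add the output currents."""
    planes, _ = to_bit_planes(x, n_bits)
    total = np.zeros(G.shape[0])
    for k in range(n_bits):
        I_k = G @ (v_read * planes[k])   # output-line currents during interval t_(k+1)
        total += (2**k) * I_k            # weight by the significance of bit k
    return total / (2**n_bits - 1)       # rescale so a full-scale input maps to v_read

# Example: three encoder photocurrents -> Sigmoid (xi = 5e9 A^-1) -> 9 x 3 crossbar
rng = np.random.default_rng(1)
G = rng.uniform(1e-8, 5e-8, size=(9, 3))   # hypothetical conductances (S)
x = sigmoid([-2e-10, 0.0, 3e-10], xi=5e9)
I_out = bit_serial_mvm(G, x)
y = sigmoid(I_out, xi=3e8)                 # decoder activation with the stated scaling factor
```

Because $\sum_k 2^k b_k = q$, the shift-and-add result equals the direct product `G @ (v_read * q / 63)`. Encoding inputs as binary pulses therefore avoids the need for 64 distinct analog read voltages.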

Acknowledgments

Funding: This work was supported by the National Natural Science Foundation of China (62274119 and 51802220, X.-D.C.; 12174207, Z.-B.L.; and 11974190, J.-G.T.) and the Fundamental Research Funds for the Central Universities (010-63233006 and 010-DK2300010203, X.-D.C.).

Author contributions: X.-D.C. designed the project. X.-D.C., T.-B.L., and J.Z. supervised this project. G.-X.Z. and Z.-C.Z. fabricated the memory device and performed the electrical measurements. L.K. prepared the SWNTs, and Y.L. prepared the GDY film. F.-D.W., K.S., and L.S. performed the characterization. X.-D.C. wrote the manuscript, and Z.-B.L. and J.-G.T. revised the manuscript. All the authors discussed the results and commented on the manuscript.

Competing interests: The authors declare that they have no competing interests.

Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials.

Supplementary Materials

This PDF file includes:

Supplementary Notes 1 to 3

Figs. S1 to S45

Table S1

References

REFERENCES AND NOTES

1. L. Mennel, J. Symonowicz, S. Wachter, D. K. Polyushkin, A. J. Molina-Mendoza, T. Mueller, Ultrafast machine vision with 2D material neural network image sensors. Nature 579, 62–66 (2020).

2. Y. Chai, In-sensor computing for machine vision. Nature 579, 32–33 (2020).

3. D. Lee, M. Park, Y. Baek, B. Bae, J. Heo, K. Lee, In-sensor image memorization and encoding via optical neurons for bio-stimulus domain reduction toward visual cognitive processing. Nat. Commun. 13, 5223 (2022).

4. F. Zhou, Y. Chai, Near-sensor and in-sensor computing. Nat. Electron. 3, 664–671 (2020).

5. T. Wan, B. Shao, S. Ma, Y. Zhou, Q. Li, Y. Chai, In-sensor computing: Materials, devices, and integration technologies. Adv. Mater., 2203830 (2022).

6. K. Liu, T. Zhang, B. Dang, L. Bao, L. Xu, C. Cheng, Z. Yang, R. Huang, Y. Yang, An optoelectronic synapse based on α-In2Se3 with controllable temporal dynamics for multimode and multiscale reservoir computing. Nat. Electron. 5, 761–773 (2022).

7. Z. Zhang, X. Zhao, X. Zhang, X. Hou, X. Ma, S. Tang, Y. Zhang, G. Xu, Q. Liu, S. Long, In-sensor reservoir computing system for latent fingerprint recognition with deep ultraviolet photo-synapses and memristor array. Nat. Commun. 13, 6590 (2022).

8. L. Sun, Z. Wang, J. Jiang, Y. Kim, B. Joo, S. Zheng, S. Lee, W. J. Yu, B.-S. Kong, H. Yang, In-sensor reservoir computing for language learning via two-dimensional memristors. Sci. Adv. 7, eabg1455 (2021).

9. F. Zhou, Z. Zhou, J. Chen, T. H. Choy, J. Wang, N. Zhang, Z. Lin, S. Yu, J. Kang, H. S. P. Wong, Y. Chai, Optoelectronic resistive random access memory for neuromorphic vision sensors. Nat. Nanotechnol. 14, 776–782 (2019).

10. Y.-X. Hou, Y. Li, Z.-C. Zhang, J.-Q. Li, D.-H. Qi, X.-D. Chen, J.-J. Wang, B.-W. Yao, M.-X. Yu, T.-B. Lu, Large-scale and flexible optical synapses for neuromorphic computing and integrated visible information sensing memory processing. ACS Nano 15, 1497–1508 (2021).

11. Z.-C. Zhang, Y. Li, J.-J. Wang, D.-H. Qi, B.-W. Yao, M.-X. Yu, X.-D. Chen, T.-B. Lu, Synthesis of wafer-scale graphdiyne/graphene heterostructure for scalable neuromorphic computing and artificial visual systems. Nano Res. 14, 4591–4600 (2021).

12. B. Dang, K. Liu, X. Wu, Z. Yang, L. Xu, Y. Yang, R. Huang, One-phototransistor–one-memristor array with high-linearity light-tunable weight for optic neuromorphic computing. Adv. Mater., 2204844 (2022).

13. C.-Y. Wang, S.-J. Liang, S. Wang, P. Wang, Z. Li, Z. Wang, A. Gao, C. Pan, C. Liu, J. Liu, H. Yang, X. Liu, W. Song, C. Wang, X. Wang, K. Chen, Z. Wang, K. Watanabe, T. Taniguchi, J. J. Yang, F. Miao, Gate-tunable van der Waals heterostructure for reconfigurable neural network vision sensor. Sci. Adv. 6, eaba6173 (2020).

14. L. Pi, P. Wang, S.-J. Liang, P. Luo, H. Wang, D. Li, Z. Li, P. Chen, X. Zhou, F. Miao, T. Zhai, Broadband convolutional processing using band-alignment-tunable heterostructures. Nat. Electron. 5, 248–254 (2022).

15. B. Cui, Z. Fan, W. Li, Y. Chen, S. Dong, Z. Tan, S. Cheng, B. Tian, R. Tao, G. Tian, D. Chen, Z. Hou, M. Qin, M. Zeng, X. Lu, G. Zhou, X. Gao, J.-M. Liu, Ferroelectric photosensor network: An advanced hardware solution to real-time machine vision. Nat. Commun. 13, 1707 (2022).

16. X. Fu, T. Li, B. Cai, J. Miao, G. N. Panin, X. Ma, J. Wang, X. Jiang, Q. Li, Y. Dong, C. Hao, J. Sun, H. Xu, Q. Zhao, M. Xia, B. Song, F. Chen, X. Chen, W. Lu, W. Hu, Graphene/MoS2-xOx/graphene photomemristor with tunable non-volatile responsivities for neuromorphic vision processing. Light Sci. Appl. 12, 39 (2023).

17. S. Lee, R. Peng, C. Wu, M. Li, Programmable black phosphorus image sensor for broadband optoelectronic edge computing. Nat. Commun. 13, 1485 (2022).

18. Y. Wang, Y. Cai, F. Wang, J. Yang, T. Yan, S. Li, Z. Wu, X. Zhan, K. Xu, J. He, Z. Wang, A three-dimensional neuromorphic photosensor array for nonvolatile in-sensor computing. Nano Lett. 23, 4524–4532 (2023).

19. S. Wang, C.-Y. Wang, P. Wang, C. Wang, Z.-A. Li, C. Pan, Y. Dai, A. Gao, C. Liu, J. Liu, H. Yang, X. Liu, B. Cheng, K. Chen, Z. Wang, K. Watanabe, T. Taniguchi, S.-J. Liang, F. Miao, Networking retinomorphic sensor with memristive crossbar for brain-inspired visual perception. Natl. Sci. Rev. 8, nwaa172 (2021).

20. X. Hong, Y. Huang, Q. Tian, S. Zhang, C. Liu, L. Wang, K. Zhang, J. Sun, L. Liao, X. Zou, Two-dimensional perovskite-gated AlGaN/GaN high-electron-mobility-transistor for neuromorphic vision sensor. Adv. Sci. 9, 2202019 (2022).

21. F. Liao, Z. Zhou, B. J. Kim, J. Chen, J. Wang, T. Wan, Y. Zhou, A. T. Hoang, C. Wang, J. Kang, J.-H. Ahn, Y. Chai, Bioinspired in-sensor visual adaptation for accurate perception. Nat. Electron. 5, 84–91 (2022).

22. T. Euler, S. Haverkamp, T. Schubert, T. Baden, Retinal bipolar cells: Elementary building blocks of vision. Nat. Rev. Neurosci. 15, 507–519 (2014).

23. C. Li, M. Hu, Y. Li, H. Jiang, N. Ge, E. Montgomery, J. Zhang, W. Song, N. Dávila, C. E. Graves, Z. Li, J. P. Strachan, P. Lin, Z. Wang, M. Barnell, Q. Wu, R. S. Williams, J. J. Yang, Q. Xia, Analogue signal and image processing with large memristor crossbars. Nat. Electron. 1, 52–59 (2018).

24. D. Ielmini, H.-S. P. Wong, In-memory computing with resistive switching devices. Nat. Electron. 1, 333–343 (2018).

25. P. Yao, H. Wu, B. Gao, J. Tang, Q. Zhang, W. Zhang, J. J. Yang, H. Qian, Fully hardware-implemented memristor convolutional neural network. Nature 577, 641–646 (2020).

26. A. Sebastian, M. Le Gallo, R. Khaddam-Aljameh, E. Eleftheriou, Memory devices and applications for in-memory computing. Nat. Nanotechnol. 15, 529–544 (2020).

27. X.-H. Wang, Z.-C. Zhang, J.-J. Wang, X.-D. Chen, B.-W. Yao, Y.-X. Hou, M.-X. Yu, Y. Li, T.-B. Lu, Synthesis of wafer-scale monolayer pyrenyl graphdiyne on ultrathin hexagonal boron nitride for multibit optoelectronic memory. ACS Appl. Mater. Interfaces 12, 33069–33075 (2020).

28. Z. C. Zhang, Y. Li, J. Li, X. D. Chen, B. W. Yao, M. X. Yu, T. B. Lu, J. Zhang, An ultrafast nonvolatile memory with low operation voltage for high-speed and low-power applications. Adv. Funct. Mater. 31, 2102571 (2021).

29. F.-D. Wang, M.-X. Yu, X.-D. Chen, J. Li, Z.-C. Zhang, Y. Li, G.-X. Zhang, K. Shi, L. Shi, M. Zhang, T.-B. Lu, J. Zhang, Optically modulated dual-mode memristor arrays based on core-shell CsPbBr3@graphdiyne nanocrystals for fully memristive neuromorphic computing hardware. SmartMat 4, e1135 (2023).

30. X. Gao, Y. Zhu, D. Yi, J. Zhou, S. Zhang, C. Yin, F. Ding, S. Zhang, X. Yi, J. Wang, Ultrathin graphdiyne film on graphene through solution-phase van der Waals epitaxy. Sci. Adv. 4, eaat6378 (2018).

31. Y. Li, Z. C. Zhang, J. Li, X.-D. Chen, Y. Kong, F.-D. Wang, G.-X. Zhang, T.-B. Lu, J. Zhang, Low-voltage ultrafast nonvolatile memory via direct charge injection through a threshold resistive-switching layer. Nat. Commun. 13, 4591 (2022).

32. B. W. Yao, J. Li, X. D. Chen, M. X. Yu, Z. C. Zhang, Y. Li, T. B. Lu, J. Zhang, Non-volatile electrolyte-gated transistors based on graphdiyne/MoS2 with robust stability for low-power neuromorphic computing and logic-in-memory. Adv. Funct. Mater. 31, 2100069 (2021).

33. Z.-C. Zhang, X.-D. Chen, T.-B. Lu, Recent progress in neuromorphic and memory devices based on graphdiyne. Sci. Technol. Adv. Mater. 24, 2196240 (2023).

34. E. J. Fuller, S. T. Keene, A. Melianas, Z. Wang, S. Agarwal, Y. Li, Y. Tuchman, C. D. James, M. J. Marinella, J. J. Yang, A. Salleo, A. A. Talin, Parallel programming of an ionic floating-gate memory array for scalable neuromorphic computing. Science 364, 570–574 (2019).

35. Y. van de Burgt, E. Lubberman, E. J. Fuller, S. T. Keene, G. C. Faria, S. Agarwal, M. J. Marinella, A. Alec Talin, A. Salleo, A non-volatile organic electrochemical device as a low-voltage artificial synapse for neuromorphic computing. Nat. Mater. 16, 414–418 (2017).

36. J. Wen, W. Tang, Z. Kang, Q. Liao, M. Hong, J. Du, X. Zhang, H. Yu, H. Si, Z. Zhang, Y. Zhang, Direct charge trapping multilevel memory with graphdiyne/MoS2 van der Waals heterostructure. Adv. Sci. 8, 2101417 (2021).

37. J. Li, Z. Zhang, Y. Kong, B. Yao, C. Yin, L. Tong, X. Chen, T. Lu, J. Zhang, Synthesis of wafer-scale ultrathin graphdiyne for flexible optoelectronic memory with over 256 storage levels. Chem 7, 1284–1296 (2021).

38. B. R. Conway, Color vision, cones, and color-coding in the cortex. Neuroscientist 15, 274–290 (2009).

39. Y. Li, J. Lu, D. Shang, Q. Liu, S. Wu, Z. Wu, X. Zhang, J. Yang, Z. Wang, H. Lv, M. Liu, Oxide-based electrolyte-gated transistors for spatiotemporal information processing. Adv. Mater. 32, 2003018 (2020).

40. M. Gomroki, M. Hasanlou, P. Reinartz, STCD-EffV2T Unet: Semi transfer learning EfficientNetV2 T-Unet network for urban/land cover change detection using Sentinel-2 satellite images. Remote Sens. 15, 1232 (2023).

41. G. Li, Y. Li, H. Liu, Y. Guo, Y. Li, D. Zhu, Architecture of graphdiyne nanoscale films. Chem. Commun. 46, 3256–3258 (2010).

42. J. Yao, Y. Li, Y. Li, Q. Sui, H. Wen, L. Cao, P. Cao, L. Kang, J. Tang, H. Jin, S. Qiu, Q. Li, Rapid annealing and cooling induced surface cleaning of semiconducting carbon nanotubes for high-performance thin-film transistors. Carbon 184, 764–771 (2021).

43. Y. Liu, F. Wang, X. Wang, X. Wang, E. Flahaut, X. Liu, Y. Li, X. Wang, Y. Xu, Y. Shi, R. Zhang, Planar carbon nanotube-graphene hybrid films for high-performance broadband photodetectors. Nat. Commun. 6, 8589 (2015).

44. S. Dai, Y. Zhao, Y. Wang, J. Zhang, L. Fang, S. Jin, Y. Shao, J. Huang, Recent advances in transistor-based artificial synapses. Adv. Funct. Mater. 29, 1903700 (2019).

45. S. Jiang, S. Nie, Y. He, R. Liu, C. Chen, Q. Wan, Emerging synaptic devices: From two-terminal memristors to multiterminal neuromorphic transistors. Mater. Today Nano 8, 100059 (2019).

46. J. Jiang, J. Guo, X. Wan, Y. Yang, H. Xie, D. Niu, J. Yang, J. He, Y. Gao, Q. Wan, 2D MoS2 neuromorphic devices for brain-like computational systems. Small 13, 1700933 (2017).

47. D. Liu, Q. Shi, S. Dai, J. Huang, The design of 3D-interface architecture in an ultralow-power, electrospun single-fiber synaptic transistor for neuromorphic computing. Small 16, 1907472 (2020).

48. C. S. Yang, D. S. Shang, N. Liu, E. J. Fuller, S. Agrawal, A. A. Talin, Y. Q. Li, B. G. Shen, Y. Sun, All-solid-state synaptic transistor with ultralow conductance for neuromorphic computing. Adv. Funct. Mater. 28, 1804170 (2018).

49. E. J. Fuller, F. E. Gabaly, F. Léonard, S. Agarwal, S. J. Plimpton, R. B. Jacobs-Gedrim, C. D. James, M. J. Marinella, A. A. Talin, Li-ion synaptic transistor for low power analog computing. Adv. Mater. 29, 1604310 (2017).

50. J. Lee, L. G. Kaake, J. H. Cho, X. Y. Zhu, T. P. Lodge, C. D. Frisbie, Ion gel-gated polymer thin-film transistors: Operating mechanism and characterization of gate dielectric capacitance, switching speed, and stability. J. Phys. Chem. C 113, 8972–8981 (2009).

51. C. Sun, D. J. Searles, Lithium storage on graphdiyne predicted by DFT calculations. J. Phys. Chem. C 116, 26222–26226 (2012).

52. N. Wang, J. He, K. Wang, Y. Zhao, T. Jiu, C. Huang, Y. Li, Graphdiyne-based materials: Preparation and application for electrochemical energy storage. Adv. Mater. 31, 1803202 (2019).

53. K. Kim, C.-L. Chen, T. Quyen, A. M. Shen, Y. Chen, A carbon nanotube synapse with dynamic logic and learning. Adv. Mater. 25, 1693–1698 (2013).

54. S. Kazaoui, N. Minami, H. Kataura, Y. Achiba, Absorption spectroscopy of single-wall carbon nanotubes: Effects of chemical and electrochemical doping. Synth. Met. 121, 1201–1202 (2001).
