Abstract

Spatial transcriptomics (ST) technologies are rapidly becoming the extension of single-cell RNA sequencing (scRNAseq), holding the potential of profiling gene expression at a single-cell resolution while maintaining cellular compositions within a tissue. Having both expression profiles and tissue organization enables researchers to better understand cellular interactions and heterogeneity, providing insight into complex biological processes that would not be possible with traditional sequencing technologies. Data generated by ST technologies are inherently noisy, high-dimensional, sparse, and multi-modal (including histological images, count matrices, etc.), thus requiring specialized computational tools for accurate and robust analysis. However, many ST studies currently utilize traditional scRNAseq tools, which are inadequate for analyzing complex ST datasets. On the other hand, many of the existing ST-specific methods are built upon traditional statistical or machine learning frameworks, which have shown to be sub-optimal in many applications due to the scale, multi-modality, and limitations of spatially resolved data (such as spatial resolution, sensitivity, and gene coverage). Given these intricacies, researchers have developed deep learning (DL)-based models to alleviate ST-specific challenges. These methods include new state-of-the-art models in alignment, spatial reconstruction, and spatial clustering, among others. However, DL models for ST analysis are nascent and remain largely underexplored. In this review, we provide an overview of existing state-of-the-art tools for analyzing spatially resolved transcriptomics while delving deeper into the DL-based approaches. We discuss the new frontiers and the open questions in this field and highlight domains in which we anticipate transformational DL applications.

I. INTRODUCTION

Although multicellular organisms contain a common genome within their cells, the morphology and gene expression patterns of cells are largely distinct and dynamic. These differences arise from internal gene regulatory systems and external environmental signals. Cells proliferate, differentiate, and function in tissues while sending and receiving signals from their surroundings. These environmental factors cause cell fate to be highly dependent on the environment in which it exists. Therefore, monitoring a cell's behavior in the residing tissue is crucial to understanding cell function, as well as its past and future fate.1

Advancements in single-cell sequencing have transformed the genomics and bioinformatics fields. The advent of single-cell RNA sequencing (scRNAseq) has enabled researchers to profile gene expression levels of various tissues and organs, allowing them to create comprehensive atlases in different species.2–6 Moreover, scRNAseq enables the detection of distinct subpopulations present within a tissue, which has been paramount in discovering new biological processes, the inner workings of diseases, and effectiveness of treatments.7–14 However, high-throughput sequencing of solid tissues requires tissue dissociation, resulting in the loss of spatial information.15,16 To fully understand cellular interactions, data on tissue morphology and spatial information are needed, which scRNAseq alone cannot provide. The placement of cells within a tissue is crucial from the developmental stages (e.g., asymmetric cell fate of mother and daughter cells17) and beyond cell differentiation (such as cellular functions, response to stimuli, and tissue homeostasis18). These limitations would be alleviated by technologies that could preserve spatial information while measuring gene expression at the single-cell level.

Spatial transcriptomics (ST) provide an unbiased view of tissue organization crucial in understanding cell fate, delineating heterogeneity, and other applications.19 However, many current ST technologies suffer from lower sensitivities as compared to scRNAseq while lacking the single-cell resolution that scRNAseq provides.20 Targeted in situ technologies have tried to solve the issue of resolution and sensitivity but are limited in gene throughput and often require a priori knowledge of target genes.20 More specifically, in situ technologies [such as in situ sequencing (ISS),21 single-molecule fluorescence in situ hybridization (smFISH),22–24 targeted expansion sequencing,25 cyclic-ouroboros smFISH (osmFISH),26 multiplexed error-robust fluorescence in situ hybridization (MERFISH),27 sequential FISH (seqFISH+),28 and spatially resolved transcript amplicon readout mapping (STARmap)29] are typically limited to pre-selected genes that are on the order of hundreds, with the accuracy potentially dropping as more probes are added.29 We will refer to these methods as image-based techniques.

On the other hand, next generation sequencing (NGS)-based technologies [such as 10× Genomics' Visium and its predecessor,30,31 Slide-Seq,32 and high-definition spatial transcriptomics (HDST33)] barcode entire transcriptomes but have limited capture rates, and resolutions that are larger than a single cell34 (50–100 μm for Visium and 10 μm for Slide-Seq). Moreover, unlike image-based technologies, NGS-based methods allow for unbiased profiling of large tissue sections without necessitating a set of target genes.35,36 However, NGS-based technologies do not have a single-cell resolution, requiring cellular features to be inferred or related to the histological scale using computational approaches. Many current algorithms use traditional statistical or medical image processing frameworks that require human supervision,34,37,38 which is not ideal for large-scale analyses. Additionally, many algorithms are not generalizable across different sequencing platforms, which limits their utility and restricts multi-omics integration efforts.

Deep learning (DL) methods can use raw data to extract useful representations (or information) needed for performing a task, such as classification or detection.39 This quality makes this class of Machine Learning (ML) algorithms ideal for applications where the available data are large, higher-dimensional, and noisy, such as single-cell omics. DL models have been extensively used in scRNAseq studies (e.g., preprocessing,40,41 clustering,42,43 cell-type identification,44,45 and data augmentation46,47) and have shown to significantly improve upon traditional methods,10 suggesting the potential of such methods in ST analysis. Moreover, DL models can leverage multiple data sources, such as images and text data, to learn a set of tasks.48 Given that spatially resolved transcriptomics are inherently multi-modal (i.e., they consist of images and gene expression count data) and that downstream analysis consists of multiple tasks (e.g., clustering and cell-type detection), researchers have sought to develop ST-specific DL algorithms.

Spatially resolved transcriptomics have been utilized to unravel complex biological processes in many diseases (e.g., COVID-19,49,50 arthritis,51,52 cancer,31,33,53–55 Alzheimer's,56 diabetes,57,58 etc.). Continuous improvements and commercialization of ST technologies (such as 10×'s Visium) are resulting in wider use across individual labs. Therefore, scalable and platform-agnostic computational approaches are needed for the accurate and robust analysis of ST data. So far, DL methods have shown promising results in handling the scale and multi-modality of spatially resolved transcriptomics; however, DL-based models in this space remain nascent. Similar to scRNAseq analysis, we anticipate a suite of DL models to be developed in the near future to address many of the pressing challenges in the spatial omics field. This review aims to provide an overview of the current state-of-the-art (SOTA) DL models developed for ST analysis. Due to the potential and accessibility of NGS-based ST technologies, we primarily focus on methods and techniques developed for these technologies.

The remainder of this manuscript is organized as follows: We provide an overview of common scRNAseq and ST technologies in Sec. II, followed by a general description of common DL architectures used for ST analysis in Sec. III. Section IV is dedicated to the current DL methods developed for analyzing spatially resolved transcriptomics. In Sec. V, we conclude by discussing our outlook on the current challenges and future research directions in the ST domain. We provide an illustration of the main methods reviewed in this paper in Fig. 1 and provide the reader with a list of current SOTA methods for ST analysis in Table I. Given the pace of advancements in this field, the authors have compiled an online list of current DL methods for ST analysis on a dedicated repository (https://github.com/SindiLab/Deep-Learning-in-Spatial-Transcriptomics-Analysis), which will be maintained and continuously updated.

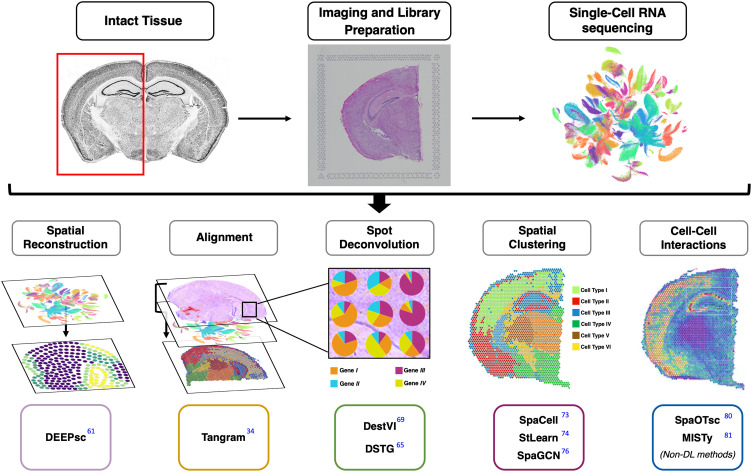

FIG. 1.

An overview of deep learning (and machine learning) methods for spatial transcriptomics presented in this review. In this work, we provide a brief background on related biological concepts, such as single-cell RNA sequencing (scRNAseq) and spatial transcriptomic (ST) technologies (Sec. II), followed by an overview of common deep learning architectures in Sec. III. We then dive deeper into specific machine learning techniques for spatial reconstruction (Sec. IV A), scRNAseq and ST alignment (Sec. IV B), ST spot deconvolution (Sec. IV C), spatial clustering (Sec. IV D), and cell–cell interaction (Sec. IV E). A more comprehensive list of the state-of-the-art methods for spatial transcriptomics is provided in Table I.

TABLE I.

A list of relevant methods for the analysis of spatial transcriptomics data. The italicized boldfaced methods are the ones that utilize deep learning (or elements closely aligned). We review these methods in depth in this paper.

| Category | Method | Year | Framework | Language | Software availability |

|---|---|---|---|---|---|

| Spatial reconstruction | Seurat59 | 2015 | Statistical | R | https://github.com/satijalab/seurat |

| novoSpaRc60 | 2019 | Optimization/statistical | Python | https://github.com/rajewsky-lab/novosparc | |

| DEEPsc 61 | 2021 | Machine learning | MATLAB | https://github.com/fmaseda/DEEPsc | |

| Alignment and integration | Spatial backmapping62 | 2015 | Scoring scheme | R | https://github.com/jbogp/nbt_spatial_backmapping |

| Tangram 34 | 2021 | Machine learning | Python | https://github.com/broadinstitute/Tangram | |

| GLUER 63 | 2021 | Machine learning | Python | https://github.com/software-github/GLUER | |

| Spot deconvolution | Stereoscope64 | 2020 | Statistical | Python | https://github.com/almaan/stereoscope |

| DSTG 65 | 2021 | Machine learning | Python, R | https://github.com/Su-informatics-lab/DSTG | |

| SPOTlight66 | 2021 | Machine learning | R | https://github.com/MarcElosua/SPOTlight | |

| RTCD67 | 2021 | Statistical | R | https://github.com/dmcable/RCTD | |

| SpatialDWLS68 | 2021 | Optimization/statistical | R | https://github.com/RubD/Giotto | |

| DestVI 69 | 2021 | Machine learning | Python | https://github.com/YosefLab/scvi-tools | |

| Cell2location70 | 2022 | Statistical | Python | https://github.com/BayraktarLab/cell2location | |

| CARD71 | 2022 | Machine learning | R, C++ | https://github.com/YingMa0107/CARD | |

| Spatial clustering | HMRF72 | 2018 | Statistical | R, Python, C | https://bitbucket.org/qzhudfci/smfishhmrf-py/ |

| SpaCell 73 | 2019 | Machine learning | Python | https://github.com/BiomedicalMachineLearning/SpaCell | |

| StLearn 74 | 2020 | Machine learning | Python | https://github.com/BiomedicalMachineLearning/stLearn | |

| BayesSpace75 | 2021 | Statistical | R, C++ | https://github.com/edward130603/BayesSpace | |

| SpaGCN 76 | 2021 | Machine learning | Python | https://github.com/jianhuupenn/SpaGCN | |

| Spatially variable genes identification | Trendsceek77 | 2018 | Statistial | R | https://github.com/edsgard/trendsceek |

| SpatialDE78 | 2018 | Statistical | Python | https://github.com/Teichlab/SpatialDE | |

| Spark79 | 2020 | Statistical | R, C++ | https://github.com/xzhoulab/SPARK | |

| Cell–cell communication | SpaOTsc80 | 2020 | Machine learning | Python | https://github.com/zcang/SpaOTsc |

| MISTy81 | 2020 | Machine learning | R | https://github.com/saezlab/mistyR | |

| Giotto82 | 2021 | Statistical | R | https://github.com/RubD/Giotto |

II. BIOLOGICAL BACKGROUND

A. Single-cell RNA sequencing (scRNAseq)

RNA sequencing (RNA-seq) provides comprehensive insight on cellular processes (such as identifying genes that are upregulated or downregulated, etc.). However, traditional bulk RNA-seq is limited to revealing the average expression from a collection of cells, and not disambiguation single-cell behavior. Thus, it is difficult to delineate cellular heterogeneity with traditional RNA-seq, which is a disadvantage since cellular heterogeneity has been shown to play a crucial role in understanding many diseases.83 Therefore, researchers have turned to single-cell RNA-seq (scRNAseq) in order to identify cellular heterogeneity within tissues. ScRNAseq technologies have been instrumental in the study of key biological processes in many diseases, such as cancer,84 Alzheimer's,85 cardiovascular diseases,86 etc. (see Ref. 83 for more details). RNA sequencing of cells at a single-cell resolution, scRNAseq, generally consists of four stages:

-

(i)

Isolation of single-cells and lysing: Cells are selected through laser microdissection, fluorescence-activated cell sorting (FACS), microfluidic/microplate technology (MT), or a combination of these methods,87 with MT being highly complementary to NGS-based technologies.88 MT encapsulates each single-cell into an independent microdroplet containing unique molecular identifiers (UMI), lysis buffer for cell lysis (to increase the capturing of as many RNA molecules as possible), oligonucleotide primers, and deoxynucleotide triphosphates (dNTPs) in addition to the cells themselves. Due to MT's higher isolation capacity, thousands of cells can be simultaneously tagged and analyzed, which is beneficial for large-scale scRNAseq studies.

-

(ii)

Reverse transcription: One challenge in RNA sequencing is that RNA cannot be directly sequenced from cells, and thus, RNA must first be converted to complementary DNA (cDNA).89 Although various technologies employ different techniques, the reverse transcription phase generally involves capturing mRNA using poly[T] sequence primers that bind to mRNA ploy[A] tail prior to cDNA conversion. Based on the sequencing platform, other nucleotide sequences are added to the reverse transcription; for example, in NGS protocols, UMIs are added to tag unique mRNA molecules so that it could be traced back to their originating cells, enabling the combination of different cells for sequencing.

-

(iii)

cDNA amplification: Given that RNA cannot be directly sequenced from cells, single-stranded RNAs must first be reverse transcribed to cDNA. However, due to the small amount of mRNA in cells, limited cDNA is produced, which is not optimal for sequencing. Therefore, the limited quantity of cDNA must be amplified prior to library preparation and sequencing.90 The amplification is often done by either PCR (an exponential amplification process with its efficiency being sequence dependent) or IVT (a linear amplification method that requires an additional round of reverse transcription of the amplified RNA) before sequencing.89,91 The final cDNA library consists of an adaptor-ligated sequencing library attached to each end.

-

(iv)

Sequencing library construction: Finally, every cell's tagged and amplified cDNA is combined for library preparation and sequencing similar to bulk RNA sequencing methods, followed by computational pipelines for processing and analysis.92

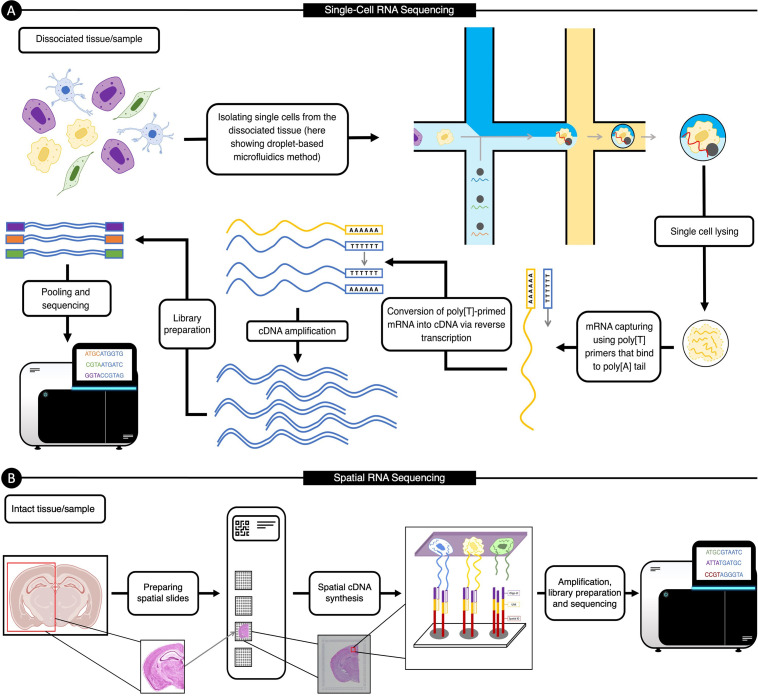

Figure 2(A) illustrates an example of the workflow for scRNAseq. For more details of each stage and various scRNAseq workflows, we refer the reader to Refs. 91 and 93–95.

FIG. 2.

Example of single-cell RNA sequencing and spatial transcriptomics workflows. (A) An illustration of droplet-based microfluidics single-cell RNA sequencing that consists of (1) dissociating a tissue or biological sample, (2) isolating single cells, unique molecular identifiers, and lysis buffer, (3) cell lysis, (4) mRNA capture and reverse transcription, (5) cDNA amplification, and (6) library construction. We describe this process in more detail in Sec. II A). (B) A visualization of the steps for next-generation sequencing-based spatial transcriptomic (as described in Sec. II B), which include (1) tissue preparation, staining, and imaging, (2) permeabilizing the tissue, (3) cDNA synthesis, amplification, and library construction, followed by (5) sequencing.

B. Spatial transcriptomics technologies

More recently, technologies that profile gene expression while retaining spatial information have emerged. These technologies are collectively known as spatial transcriptomics (ST). The various ST technologies provide different advantages and are chosen based on experimental factors, such as the size of tissue to be assayed, the number of genes to be probed, a priori knowledge of target genes, cost, etc. In general, ST technologies can be divided into two broad categories: imaging-based and next-generation sequencing (NSG)-based technologies. In this section, we provide an overview of popular techniques, with more emphasis on NGS-based approaches. For a more comprehensive and technical review of ST technologies, we refer the reader to Asp et al.,96 Rao et al.,20 and Moses and Pachter.97

1. Imaging-based technologies

Imaging-based technologies are broadly subdivided into in situ hybridization (ISH)-based methods, in situ sequencing (ISS)-based methods, or methods that borrow elements from both approaches. Unlike RNAseq methods described above, ISH and most ISS-based techniques require labeled probes. This means that the target genes must be known in advance, and, moreover, the number of genes that can be measured is limited.20

a. In situ hybridization (ISH)-based approaches

ISH-based methods aim to detect the absence or presence of target RNA (or DNA) sequences while localizing the information of the desired sequences to specific cells or chromosomal sites.98,99 ISH-based techniques use the labeled probes (usually made with DNA or RNA) that bind to desired sequences in fixed cells or tissue, therefore detecting the desired sequence through the hybridization of a complementary probe. The hybridized probes are then visualized through isotopic and nonisotopic (fluorescent and nonfluorescent) approaches.99 ISH-based techniques have been limited by the number of distinguishable transcripts; however, recent innovations have resulted in ample multiplexing capabilities.20

b. In situ sequencing (ISS)-based approaches

ISS-based approaches aim to sequence the RNA content of a cell in situ using DNA balls that amplify the RNA signals: RNA is first reverse transcribed to cDNA, followed by circular amplification (to generate a long single-stranded DNA that contains repeats of the target sequence) and sequencing.100 Although the transcript can be localized at subcellular resolution, micrometer- or nanometer-sized DNA balls are often used to amplify the signals to reach sufficient signal for imaging.96 Initially, the first ISS-based method101 used targeted padlock probes (a single-stranded DNA molecule containing regions complementary to the target cDNA) followed by sequence-by-ligation21 to detect desired genes. This method provided a subcellular resolution and an ability to detect single-nucleotide variants (SNVs). This ISS protocol is targeted and yields a detection efficiency of approximately 30%.102 Several recent ISS techniques build upon this approach and improve traditional ISS by introducing novel modifications to the experimental aspect of the protocol, as well as ways to mitigate the number of cells that can be discriminated simultaneously.103–105 For example, barcode in situ targeted sequencing (BaristaSeq)104 uses sequencing-by-synthesis and has led to increased read lengths and enabled higher throughput and cellular barcoding with improved detection efficiency compared to the initial ISS approach.104 Another ISS-based technique is spatially resolved transcript amplicon readout mapping (STARmap)29 that reduces noise and avoids the cDNA conversion complications by utilizing the improved padlock-probe and primer design; STARmap adds a second primer to target the site next to the padlock probe in order to circumvent the reverse transcription step. STARmap also uses advanced hydrogel chemistry and takes advantage of an error-robust sequencing-by-ligation method, resulting in detection efficiency that is comparable to scRNAseq methods (around 40%).29,96 Although most ISS approaches (including the ones mentioned here) are targeted, ISS-based methods could also be untargeted,25,106 but this typically leads to much lower sensitivity (around 0.005%) and molecular crowding, affecting the rolling-circle amplification bias.106,107

In imaging-based approaches, the generated image is segmented and processed to produce a cell-level gene-expression matrix. The gene-expression matrix is generated through processing the generated image(s), which can be done manually or automatically. However, given the biased and laborious nature of manual segmentation, there has been a shift toward designing general and automated techniques.108,109 The accurate and general automation of this process still remains a challenge, therefore motivating the application of recent machine learning and computer vision approaches to this field,108–111 which have shown improvements compared to the traditional methods.112 Although this manuscript focuses on methods for NGS-based technologies, many of those techniques (including the ones in Secs. IV C and IV D) can be extended to image-based technologies as well.

C. Next generation sequencing (NGS)-based technologies

Due to the unbiased capture of mRNA, NGS-based technologies can shed light on the known and unknown morphological features using only the molecular characterization of tissues.1 This unbiased and untargeted nature of NGS technologies makes them ideal for studying and exploring new systems,20 a major advantage compared to most image-based technologies that require target genes a priori. While NGS-based approaches differ in the specifics of the protocols, they all build on the idea of adding spatial barcodes before library preparation, which are then used to map transcripts back to the appropriate positions (known as spots or voxels). An example workflow of NGS-based spatial sequencing is depicted in Fig. 2(B). In this section, we provide a general overview of the four most common spatial transcriptomics technologies. For a more complete review of these technologies, we refer the readers to Refs. 20 and 96.

Ståhl et al.31 were the first to successfully demonstrate the feasibility of using NGS for spatial transcriptomics (this initial approach is often referred to as spatial transcriptomics). Their innovation was to add spatial barcodes prior to library preparation, enabling the mapping of expressions to appropriate spatial spots. More specifically, Ståhl et al. positioned oligo(dT) probes and unique spatial barcodes as microarrays of spots on the surface of slides. Next, fresh frozen tissue slices were placed on the microarray and processed to release mRNA (using enzymatic permeabilization), which was then hybridized with the probes on the surface of the slides. This approach consists of (i) collecting histological imaging [using standard fixation and staining techniques, including hematoxylin and eosin (H and E) staining] for investigating the morphological characteristics and (ii) sequencing the spatially barcoded cDNA to profile gene expressions. In the initial experiments, each slide consisted of approximately 1000 spots, each having a diameter of 100 μm with 200 μm center-to-center distance.1 This approach provides researchers with an unbiased technique for analyzing large tissue areas without the need for selecting target genes in advance.20,35,112

After the initial success of spatial transcriptomics, 10× Genomics subsequently improved the resolution (shrinking the spot diameters to 55 μm with 100 μm center-to-center distance) and sensitivity (capturing more than 104 transcripts per spot) of the approach and eventually commercializing it as Visium30,113) The development and commercialization of the spatial transcriptomics resulted in relatively rapid adoption across fields, such as cancer biology,55,114 developmental biology,115,116 and neuroscience.117,118 The histological imaging and gene expression profiling of Visium are similar to the initial approach: the staining and imaging of the tissues are through traditional staining techniques, including H and E staining for visualizing the tissue sections using a brightfield microscope and immunofluorescence staining to visualize protein detection in the tissue sections through a fluorescent microscope. Visium protocol allows for both fresh frozen (FF) or formalin-fixed paraffin-embedded (FFPE) tissues. For FF tissues, similar to Ståhl et al.,31 the tissue is permeabilized, allowing the release of mRNA, which hybridizes to the spatially barcoded oligonucleotides present on the spots. The captured mRNA then goes through a reverse transcription process that results in cDNA, which is then barcoded and pooled for generating a library.119 For FFPE tissues, tissue is permeabilized to release ligated probe pairs from the cells that bind to the spatial barcodes on the slide, and the barcoded molecules are pooled for downstream processing to library generation.119

Building on the spatial transcriptomics, Vickovic et al.33 proposed high-definition spatial transcriptomics (HDST) that improved the resolution to about 2 μm. Similar to the other approaches, HDST also employs specific barcodes ligated to beads that are coupled to a spot (prior to lysis), so that expressions are mapped to the tissue image. However, the innovation of HDST includes the use of 2 μm beads placed in hexagonal wells, enabling accurate compartmentalization and grouping of the biological materials in the experiment.33 Simultaneously, Rodriques et al.32 introduced SlideSeq that utilizes slides with randomly barcoded beads to capture mRNA, also increasing the resolution (to 10 μm) and sensitivity (500 transcripts per 54 bead) spatial-resolved sequencing compared to Ståhl et al.31 However, SlideSeq placed the barcoded beads in rubber and onto glass slides, as opposed to HDST's hexagonal beads, and determines the position of each random barcode by in situ-indexing.20,32

Despite the differences, all NGS-based technologies use spatial barcodes to tag released RNAs, which then go through conventional processes for sequencing similar to scRNAseq. After sequencing, the data are processed to construct the spatial location of each read (using the spatial barcode) and to construct a gene-expression matrix (mapping the reads to the genome to identify the transcript of origin). Given that most technologies have resolutions larger than a single cell (commonly having expression for 3–30 cells in each spot), the data processing and analysis procedures are relatively similar.

III. MACHINE LEARNING AND DEEP LEARNING BACKGROUND

With the technologies now defined, we next describe common Machine Learning (ML) methods used to analyze the ST data. In this section, we first provide a discussion of the algorithmic development of ML and deep learning (DL) models and then discuss common architectures used for spatially resolved transcriptomics (and scRNAseq data).

ML refers to a computer algorithm's ability to acquire knowledge by extracting patterns and features from the raw data.120 All ML algorithms depend on the data, which must be available before the methods can be used, and a defined mathematical objective. ML models' lifecycle consists of two phases, namely, training and evaluation. During training, ML algorithms analyze the data to extract patterns and adjust their internal parameters based on optimizing their objectives (known as loss function). In the evaluation (or inference) stage, the trained model makes predictions (or performs the task it was trained to do) on unseen data.

There are two main types of ML algorithms: supervised and unsupervised. An ML algorithm is considered to be unsupervised if it utilizes raw inputs without any labels to optimize its objective function (an example would be the K-Means clustering algorithm121). Conversely, if an algorithm uses both raw data and the associated labels (or targets) in training, then it is a supervised learning algorithm. Supervised learning is the most common form of ML.39 An example of supervised learning in scRNAseq analysis would be classifying cell subpopulations using prior annotations: this requires a labeled set of cell types for training (the available annotations), an objective function for calculating learning statistics (“teaching” the model), and testing data for measuring how well the model can predict the cell type (label) on data it has not seen before (i.e., generalizability of the model). Another common example of supervised learning is regression, where a model predicts continuous values as opposed to outputting labels or categorical values in classification. For supervised tasks, a model is trained on the majority of the data (known as training set) and then evaluated on held-out data (test set). Depending on the size of our dataset, there can also be a third data split known as a validation set, which is used to measure the performance of the model throughout training to determine early stopping:122 Early stopping is when we decide to stop the training of a model due to overfitting (or over-optimization) on the training set. Overfitting training data worsens the generalizability of the model on unseen data, which early stopping aims to avoid.122 In addition to supervised and unsupervised algorithms, there are also semi-supervised learning, where a model uses a mix of both supervised and unsupervised tasks, and self-supervised, where the computer algorithm generates new or additional labels to improve its training or to learn a new task.

Raw experimental data typically contains noise or other unwanted features, which present many challenges for ML algorithms. Therefore, it is often necessary to carefully preprocess data or to rely on domain-specific expertise in order to transform the raw data into some internal representation from which ML models can learn.39 Deep learning (DL) algorithms, however, aim to use only raw data to automatically extract and construct useful representations required for learning the tasks at hand. In a broad sense, DL models are able to learn from observations through constructing a hierarchy of concepts, where each concept is defined by its relation to simpler concepts. A graphical representation of the hierarchy of concepts (and learning) will consist of many layers, with many nodes and edges connecting the vertices, somewhat resembling humans' neural network. This graph is referred to as an artificial neural network (ANN). ANNs are composed of interconnected nodes (“artificial neurons”) that resemble and mimic our brains' neuronal functions. An ANN is considered to be a DL model if it consists of many layers—often more than three, hence being called deep.

Many tasks that humans perform can be viewed as mappings between sets of inputs and outputs. For example, humans can take a snapshot image of their surroundings (input) and detect the relevant objects (the outputs). DL, and more generally, Artificial Intelligence, aims to learn such mappings in order to model human-level intelligence. Mathematically, ANNs are universal function approximators, meaning that, theoretically, they can approximate any (continuous) function.123–125 Cybenko123 proved this result for a one-layer neural network with an arbitrary number of neurons (nodes) and a sigmoid activation function by showing that such architecture is dense within the space of continuous functions (this result has now been extended to ANNs with multiple layers124). While constructing arbitrarily-long single-layer ANNs is not possible, it has been shown that ANNs with many layers (deeper) generally learn faster and more reliably than ANNs with few wide (many neurons) layers.126 This has allowed researchers to employ deep networks for learning very complex functions through constructing simple non-linear layers that can transform the representation of each module (starting with the raw input) into a representation at a higher, slightly more abstract level.39

DL models' ability to approximate highly non-linear functions has revolutionized many domains of science, including computer vision,127–129 natural language processing,130–132 and bioinformatics.133–135 DL is becoming increasingly incorporated in many computational pipelines and studies, especially in genomics and bioinformatics, including scRNAseq and spatial transcriptomics analysis. In Secs. III A–III F, we provide a brief overview of essential deep learning architectures that have been used in spatial transcriptomics and scRNAseq analysis. In Fig. 3, we present illustrations of the architectures discussed in Secs. III A–III F. Note that for simplicity, we have categorized all graph convolution networks (GCNs)136 as DL models; this is because (i) GCNs can easily be extended to include more layers (deeper networks) and (ii) lack of other existing methods that incorporate some elements of DL. A more comprehensive description of each architecture can be found in the seminal textbook by Goodfellow et al.120

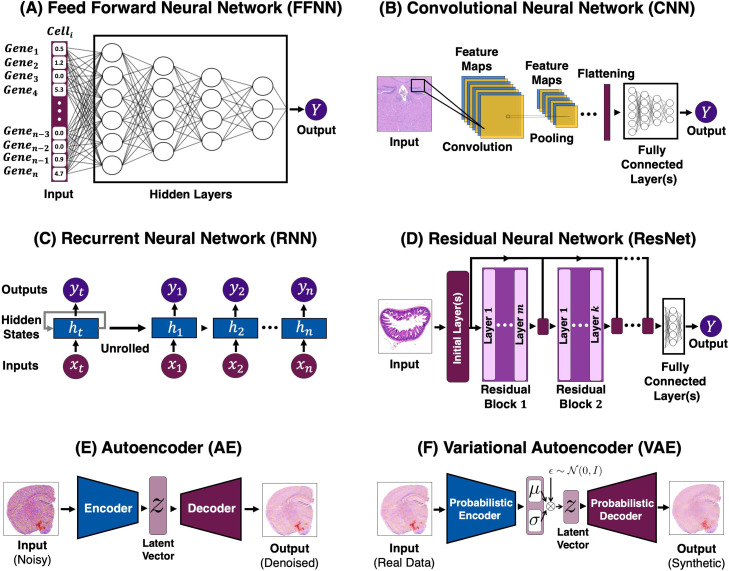

FIG. 3.

Examples of deep learning architectures. Models depicted in (a), (b), (c), and (d) are examples of supervised learning, and networks shown in (e) and (f) are unsupervised. (a) An example of an FFNN architecture with gene expression count as its input. (b) An example of CNN architecture, where the model passes the inputs through the three stages of a CNN (with non-linear activation not depicted) to extract features. Then, outputs are flattened and fed into a fully connected layer (or layers). (c) The general training flow of an RNN, with the unrolled version showing the time step-dependent inputs, hidden state, and outputs. The inputs to RNNs need to have a sequential structure (e.g., time-series data). (d) An illustration of an ResNet. In traditional ResNets, there are identity mappings (or skip connections) that pass the input of a residual block to its output (often through addition). (e) Here, we show the general architecture of a trained denoising AE in the inference stage, with a noisy histology slide as its input, yielding a denoised version of the input image. (6) A depiction of a traditional VAE in the inference stage. VAE's aim is to generate synthetic data that closely resembles the original input. This is done through regularizing the latent space of an AE with the use of a probabilistic encoder and decoder.

A. Feed forward neural network (FFNN)

FFNNs, the quintessential example of Artificial Neural Networks (ANNs), aim to approximate a function mapping a set of inputs to their corresponding targets [see Fig. 3(a)]. More specifically, given an input and a target , where , FFNNs aim to learn the optimal parameters such that . FFNNs are the building blocks of many more advanced architectures (e.g., convolutional neural networks) and, therefore, are of paramount importance in the field of ML.120 As mentioned previously, ANNs are universal function approximators, and they represent a directed acyclic graph of function compositions hierarchy within the network. Each layer of an FFNN, ( being the ith layer), is often a simple linear function: For example, we can have a linear function for outputting of the form Eq. (1), with weight parameters and a bias

| (1) |

However, a model composed of only linear functions can only approximate linear mappings. As such, we must consider non-linear activation functions to increase model capacity, enabling the approximation of complex non-linear functions. In the simplest case, neural networks (NNs) use an affine transform (controlled by learned parameters) followed by a non-linear activation function, which, theoretically, enables them to approximate any non-linear function.137 Moreover, we could compose many of such non-linear transformations to avoid infinitely wide-neural networks when approximating complex functions. However, in this context, finding a set of optimal functions ( ) is a practically impossible task. As such, we restrict the class of function that we use for to the following form in Eq. (2):

| (2) |

where superscript i enumerates the layers, is a non-linear activation function (usually a Rectified Linear Unit138), denotes the output of the layer (with indicating the input data), weights , and biases . Note that because of the dimensionality of the mapping, , and we must have a vector of biases . FFNNs are composed of such functions in chains; to illustrate, consider a three-layer neural network

| (3a) |

| (3b) |

| (3c) |

with representing the hidden states or hidden layers.

FFNNs find the optimal contribution of each parameter (i.e., weights and biases) by minimizing the desired objective. The goal is to generalize the task to data the model has never seen before (testing data). Although the non-linearity increases the capacity of FFNNs, it causes most objective functions to become non-convex. In contrast to convex optimization, non-convex loss functions do not have global convergence guarantees and are sensitive to the initial starting point (parameters of the network).139 Therefore, such optimization is often done through stochastic gradient descent (SGD) (or some variant of it). Moreover, given the sensitivity to initial values, weights are typically chosen to be small random values, with biases initialized to zero or small positive values.120,140,141

Almost all neural networks use iterative gradient descent (GD)-based optimizers to train. GD has three main variants, which differ in the amount of data utilized to calculate the gradients for updating the parameters. The classic GD variant, referred to as batch GD, uses all data points to make the updates to the parameters in one iteration. However, this approach is generally not feasible, since the amount of data required for training DL models almost never fit in memory.142 The second variant of GD is stochastic gradient descent (SGD) where the parameters are updated for every training datum. Computationally, it has been shown that the noise in SGD accelerates its convergence compared to batch GD, but SGD also has the possibility of overshooting, especially for highly non-convex optimization functions.142,143 The third variant, and the most frequently used one for deep learning, is mini-batch GD that updates the parameters for every batch of training data—if the batch size is one, then this variant is just SGD, and if batch size is the entire dataset, then it is equivalent to batch GD. Conventionally, optimization of NNs is done through gradient descent performed backward in the network, which itself consists of two components: a numerical algorithm for efficient computation of the chain rule for derivatives (backpropagation144) and a GD-based optimizer (e.g., Adam145 or AdaGrad146). The optimizer is an algorithm that performs gradient descent, while backpropagation is an algorithm for computing the expression for gradients during the backward pass of the model.

B. Convolutional neural network (CNN)

Learning from images, such as detecting edges and identifying objects, has been of interest for some time in computer science.147 Images contain a lot of information; however, only a small amount of that information is often relevant to the task at hand. For example, an image of a stained tissue contains both important information, namely, the tissue itself, and irrelevant pixels, such as the background. Prior to DL, researchers would hand-design a feature extractor to learn relevant information from the input. Much of the work had focused on the appropriate feature extractors for desired tasks (e.g., see the seminal work by Marr and Hildreth148). However, one of the main goals in ML is to extract features from raw inputs without hand-tuned kernels for feature extraction. CNNs149,150 are a specialized subset of ANNs that use the convolution operation (in at least one of their layers) to learn appropriate kernels for extracting important features beneficial to the task at hand. Mathematically, convolution between two functions f and w is defined as a commutative operation shown in the following equation:

| (4) |

Using our notation, we intuitively view convolution as the area under f(s) weighted by and shifted by x. In most applications, discrete functions are used. As an example, assume we have a 2D kernel K that can detect edges in a 2D image I with dimension m × n. Since I is discrete, we can use the discrete form of Eq. (4) for the convolution of I and K over all pixels

| (5) |

However, since there is less variation in the valid range of m and n (the dimensions of the image) and the operation is commutative, most algorithms implement Eq. (5) equivalently

| (6) |

Typical CNNs consist of a sequence of layers (usually three) which include a layer performing convolution, hence called a convolutional layer (affine transform), a detector stage (non-linear transformation), and a pooling layer. The learning unit of a convolutional layer is called a filter or kernel. Each convolutional filter is a matrix, typically of small dimensions (e.g., 3 × 3 pixels), composed of a set of weights that acts as an object detector, with the weights being continuously calibrated during the learning process. CNNs' objective is to learn an optimal set of filters (weights) that can detect the needed features for specific tasks (e.g., image classification). The result of convolution between the input data and the filter's weights is often referred to as a feature map [as shown in Fig. 3(b)]. Once a feature map is available, each value of this map is passed through a non-linearity (e.g., ReLU). The output of a convolutional layer consists of as many stacked feature maps as the number of filters present within the layer.

There are two key ideas behind the design of CNNs: First, in data with grid-like topology, local neighbors have highly correlated information. Second, equivariance to translation can be obtained if units at different locations share weights. In other words, sharing parameters in CNNs enabled the detection of features regardless of the locations where they appear in. An example of this would be detecting a car. In a dataset, a car could appear at any position in a 2D image, but the network should be able to detect it regardless of the specific coordinates.147 These design choices provide CNNs with three main benefits compared to other ANNs: (i) sparse interactions, (ii) shared weights, and (iii) equivariant representations.149

Another way of achieving equivariance to translation is to utilize pooling layers. Pooling decreases the dimension of learned representations and makes the model insensitive to small shifts and distortions.39 In the pooling layers, we use the outputs of the detector stage (at certain locations) to calculate a summary statistic for a rectangular window of values (e.g., calculating the mean of a 3 × 3 patch). There are many pooling operations, with common choices being max-pooling (taking the maximum value of a rectangular neighborhood), mean-pooling (taking the average), and L2 norm (taking the norm). In all cases, rectangular patches from one or several feature maps are inputted to the pooling layer, where semantically similar features are merged into one. CNNs typically have an ensemble of stacked convolution layers, non-linearity, and pooling layers, followed by fully connected layers that produce the final output of the network. The backpropagation of gradients through CNNs is analogous to FFNNs, enabling the model to learn an optimal set of filters for the task(s) at hand. CNNs have been effectively used in many applications in computer vision and time-series analysis and are being increasingly utilized for analysis of the ST data, since spatial-omics are multi-modal, with one of the modalities being images (as we discuss in Sec. IV).

C. Recurrent neural network (RNN)

Just as CNNs are specialized to process data with a grid-like topology, RNNs'144 special characteristics make them ideal for processing sequential data }, where denotes the ith element in the ordered sequence X. Examples of such sequence-like structures include time series and natural language. RNNs process sequential inputs one at a time and implicitly maintain a history of previous elements of the input sequence. We present an illustration of the conventional RNN architecture in Fig. 3(c). Similar to FFNNs or CNNs, RNNs can be composed of many layers, with each layer depending on the previous hidden state, , and a shared set of parameters, . A deep RNN with n hidden states can be expressed as follows:

| (7a) |

| (7b) |

| (7c) |

The idea behind sharing in RNN states is similar to CNNs: parameter sharing across different time points allows RNNs to generalize the model to sequences of variable lengths and share statistical strengths at different positions in time.120,151 Similar to FFNNs, RNNs learn by propagating the gradients of each hidden state's inputs at discrete times. This process becomes more intuitive if we consider the outputs of hidden units at various time iterations as if they were the outputs of different neurons in a deep multi-layer network. However, due to the sequential nature of RNNs, the backpropagation of gradients shrinks or grows at each time step, causing the gradients to potentially vanish or blow up. This fact and the inability to parallelize training at different hidden states (due to the sequential nature of RNNs) makes RNNs notoriously hard to train, especially for longer sequences.130,152 However, when these issues are averted (via gradient clipping or other techniques), RNNs are powerful models and gain state-of-the-art capabilities in many domains, such as natural language processing. The training challenges combined with the nature of scRNAseq data have resulted in fewer developments of RNNs for single-cell analysis. However, recently, some studies have used RNNs and long short-term memory153 (a variant of RNNs) for predicting cell types and cell motility (e.g., see Kimmel et al.154).

D. Residual neural network (RestNet)

As mentioned above, deep RNNs may suffer from vanishing or exploding gradients. Such issues can also arise in other deep neural networks as well, where gradient information could diminish as the depth increases (through approaches, such as batch normalization,155 aim to help with gradient issues). One way to alleviate vanishing gradients in very deep networks is to allow gradient information from successive layers to pass through, helping with maintaining information propagation even as networks become deeper. ResNets156 achieve this by skip (or residual) connections that add the input to a block (a collection of sequential layers) to its output. For an FFNN, consider function f in Eq. (2). Using the same notation as in Eq. (2), ResNet's inner layers take the form shown in the following equation:

| (8) |

The addition of , the input of the current layer [or the output of the th layer], to the current ith layer output is the skip or residual connection helps flow the information from the input deeper in the network, thus stabilizing training and avoiding vanishing gradient in many cases.156,157 Indeed, this approach can be contextualized within the traditional time integration framework for dynamical systems. For example, consider the following equation:

| (9) |

In the simplest case, this system can be discretized and advanced using and some scaled value of , or a combination of scaled values of . Forward Euler, perhaps the simplest time integrator, advances the solution as shown through the scheme in the following equation:

| (10) |

where h is a sufficiently small real positive value. ResNets use this idea to propose a different way of calculating the transformations in each layer, as shown in Eq. (8).

ResNets consist of residual blocks (also called modules), each of which contains a series of layers. For visual tasks, these blocks often consist of convolutional layers, followed by activation functions, with the skip connection adding the input information to the output of the residual blocks (as opposed to the individual layers inside). ResNets have different depths and architectures, with a number usually describing the depth of the model [e.g., ResNet50 means there are 50 layers (there are 48 convolution layers, one MaxPool, and one AveragePool layer)].

ResNets have transformed DL by enabling the training of very deep neural networks, setting the state-of-the-art performance in many areas, particularly in computer vision.156 The pre-trained ResNets on ImageNet dataset158 are widely used for transfer learning, where the network is either used as is or further fine-tuned on the specific dataset. Pre-trained ResNet models have also been used in spatial transcriptomics analysis, as we discuss later in this manuscript. (ImageNet is the standard dataset for benchmarking performance of machine learning algorithms in classification and object recognition. ImageNet contains more than 14 million hand-annotated images).

E. Autoencoder (AE)

AEs159,160 are neural networks that aim to reconstruct (or copy) the original input via a non-trivial mapping. Conventional AEs have an “hour-glass” architecture [see Fig. 3(e)] consisting of two networks: (i) an encoder network, , which maps an input to a latent vector where, ideally, z contains the most important information from x in a reduced space (i.e., ), (ii) the decoder network, , which takes z as the input and maps it back to , ideally, reconstructing x exactly; i.e., . AEs were traditionally used for dimensionality reduction and denoising, trained by minimizing a mean squared error (MSE) objective between the input data and the reconstructed samples (outputs of the decoder).

Over time, the AE framework has been generalized to stochastic mappings, i.e., probabilistic encoder–decoder mappings, and . A well-known example of such generalization is variational autoencoders (VAEs),161 where by using the same hour-glass architecture, one can use probabilistic encoders and decoders to generate new samples drawn from an approximated posterior. Both traditional AEs and VAEs have practical applications in many biological fields, have been used extensively in scRNAseq (see Ref. 10 for an overview of these models), and are becoming more frequently employed in spatial transcriptomics analysis, which we overview later in this work.

F. Variational autoencoder (VAE)

One can describe VAEs161 as AEs that regularize the encoding distribution, enabling the model to generate new synthetic data. The general idea behind VAEs is to encode the inputs as a distribution over the latent space, as opposed to a single point (which is done by AEs). More specifically, VAEs draw samples z from an encoding distribution, , and subsequently feed the sample through a differentiable generator network, obtaining . Then, x is sampled from a distribution . Moreover, VAEs utilize an approximate inference network (i.e., the encoder) to obtain z. With this approach, now is considered a decoder network, decoding z that comes from . VAEs can take advantage of gradient-based optimization for training through maximizing the variational lower bound, , associated with x. Figure 3(f) depicts the architecture of traditional VAEs.

Mathematically, we can express the objective function as in Eq. (11)

where denotes the entropy, and is the Kullback–Leibler divergence. The first term in Eq. (11a) is the joint log-likelihood of the hidden and visible variables under the approximate posteriors over the latent variables. The second term of Eq. (11a) is the entropy of the approximate posterior. This entropy term encourages the variational posterior to increase the probability mass on a range of z which could have produced x, as opposed to mapping to a one-point estimate of the most likely value.120

Compared to other generative models [e.g., generative adversarial networks (GANs)162], VAEs have desirable mathematical properties and training stability.120 However, they suffer from two major weaknesses: (i) classic VAEs create “blurry” samples (those that adhere to an average of the data points), rather than the sharp samples that GANs generate due to GANs' adversarial training. (ii) The other major issue with VAEs is posterior collapse: when the variational posterior and actual posterior are nearly identical to the prior (or collapse to the prior), which results in poor data generation quality.163 To alleviate these issues, different algorithms have been developed, which have been shown to significantly improve the quality of data generation.163–169 VAEs are used extensively for the analysis of single-cell RNA sequencing (see Erfanian et al.10), and we anticipate them to be applied to a wide range of spatial transcriptomics analyses as well.

IV. DEEP LEARNING MODELS FOR SPATIALLY RESOLVED TRANSCRIPTOMICS ANALYSIS

In Secs. IV A–IV E, we describe the use of ML and DL to problems emerging from spatial transcriptomics.

A. Spatial reconstruction

Prior to the advancement of spatial transcriptomics, several studies aimed to reconstruct spatial information using gene expression data, with most of these works using a statistical framework. As perhaps one of the most influential models in this space, Satija et al.59 introduced Seurat: a tool that utilized spatial reference maps constructed from a few landmark in situ patterns to infer the spatial location of cells from corresponding gene expression profiles (i.e., scRNAseq data). This approach showed promising results: Satija et al. tested Seurat's capabilities and performance on developing zebrafish embryo dataset (containing 851 cells) and a reference atlas constructed from colorigenic in situ data for 47 genes,59 confirming Seurat's accuracy with several experimental assays. Additionally, they showed that Seurat can accurately identify and localize rare cell populations. Satija et al. also demonstrated that Seurat was a feasible computational solution for handling stochastic noise in omics data and finding a correspondence between ST and scRNAseq data.

Although Seurat proved to be successful in some applications, it had the limitation of requiring spatial patterns of marker genes expression.60 To alleviate Seurat's limitations, newer methods that did not require spatial reference atlases were developed. A more recent and an influential model in this space is novoSpaRc,60 with the ability to infer spatial mappings of single cells de novo. For novoSpaRc, Nitzan et al.60 assume that cells that are closer to one another physically have similar gene expressions as well, therefore searching for spatial arrangement possibilities that place cells with similar expressions closer in space. Nitzan et al. formulate this search through a generalized optimal-transport problem for probabilistic embedding.

NovoSpaRc shows very promising results when it is applied to spatially reconstruct mammalian liver and intestinal epithelium, and embryos from fly and zebrafish from gene expression data.60 However, novoSpaRc (and similar models) use a generic framework and cannot be easily adapted to specific biological systems, which may be required given the vast diversity of biological processes and organisms. For this reason, many have utilized ML algorithms to specifically adapt to the biological system by learning from the data, as opposed to using pre-defined algorithms that remain unchanged. Indeed, we anticipate that DL models will soon play a salient role in spatial reconstruction of scRNAseq, given their ability to extract features from the raw data while remaining flexible across different applications. In this section, we review DEEPsc,61 a system-adaptive ML model that aims to impute spatial information onto non-spatial scRNAseq data.

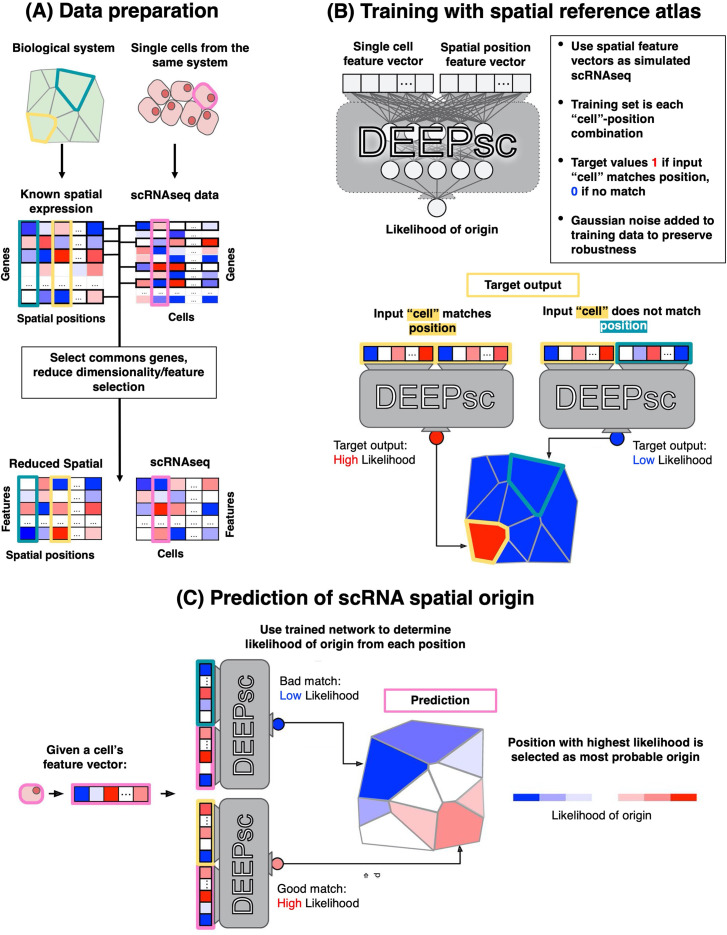

DEEPsc is a spatial reconstruction method that requires a reference atlas (see Fig. 4). This reference map can be expressed as a matrix , where is the number of spatial locations, and is the number of genes. Maseda et al. start by selecting common genes between Mspatial and the gene expression matrix, (where is the number of cells, and is the number of genes), resulting in a spatial matrix and an expression matrix , where is the common genes between the two matrices. Next, S is projected into a lower dimension using principal component analysis (PCA), and the same PCA coefficients are used to project E into these principal components. In the last step of processing, both matrices are normalized by their largest elements, resulting all elements of the matrices E and S to be in [0, 1]. Let us denote the normalized and PCA-reduced spatial and gene expression matrices as and , respectively.

FIG. 4.

An overview of DEEPsc training and inference. (a) Maseda et al. find the common genes in both spatial and scRNAseq data and perform dimensionality reduction on each data modality (with the final matrices having the same number of features). (b) During the training, DEEPsc uses spatial expression to “simulate” single-cell gene expression vectors. More specifically, every feature vector from the spatial expression is concatenated with all other vectors (labeled as “non-match”) and also itself (labeled as “match”) to form the input data to the neural network. (c) During inference, the scRNAseq feature vectors are concatenated with all spatial feature vectors, where the model should place a high probability for locations where the gene expression could have originated from. This figure was obtained from Maseda et al.61 [Front. Genet. 12, 348 (2021). Copyright 2021 Authors; licensed under a Creative Commons Attribution [CC-BY] license).

DEEPsc requires known spatial expression to learn the correct spatial positions, given the gene expression. More specifically, Maseda et al. construct training vectors of size (with N being the number of features preserved in the reduced matrix ). The first N elements of Inpij correspond to the spatial expression at the ith position, and the last N elements correspond to some position j in the reference atlas, including the position j = i. During training, DEEPsc's goal is produce the highest likelihood when (meaning that ) and assign low likelihood when . DEEPsc also adds Gaussian noise to posi (the first N elements of Inpij), which aims to preserve robustness and avoid overfitting. The addition of noise can lead to DEEPsc learning a complex non-linear mapping between the spatial positions in the reference atlas rather than a simple step-like function that activates when an exact match is inputted. During inference stage (i.e., after DEEPsc is trained), posi is replaced with the gene expression feature vector, which are the elements of , and the goal is to predict the likelihood of the expression vector being originated from all possible positions j.

DEEPsc's network is an FFNN with two hidden layers, with each , mapping to an , where y can be viewed as a likelihood that the input cell originated from the input spatial position.170 Given that for each training data Inpij there will be non-matches (labels of zero) and only one match for when j = i, the training labels will have many more zeros than ones. Therefore, Maseda et al. propose a nontraditional objective function that accounts for the imbalance between zeros and ones in the training data. This objective function is shown in the following equation:

| (12) |

where is the networks predicted outputs, and is the true target ( if it exactly matches, and otherwise). This allows DEEPsc to avoid producing trivially zero outputs, which is important given the sparsity of the data. Maseda et al. also employ strategies in data splitting which helps to account for the inherent sparsity in the targets.61 It is important to note that Maseda et al. also formulate a novel system-adaptive scoring scheme to evaluate the performance of DEEPsc using the spatial reference. However, the scoring scheme does not fall within the scope of this manuscript.

Maseda et al. apply DEEPsc for spatial imputation of four different biological systems (Zebrafish,59 Drosophila,171 Cortex,172 and Follicle173) achieving accuracy comparable to several existing models while having higher precision and robustness.61 DEEPsc also shows better consistency across the different biological systems tested, which can be attributed to its system-adaptive design. In addition, the authors attribute the performance and generalizability of DEEPsc to the use of FFNN (which have been noted before in other biological applications as well45,47) and the various strategies for robustness used during the training of DEEPsc. On the other hand, a weakness of DEEPsc is its training time, which depends non-linearly on the number of locations available. However, this issue can be potentially mitigated by considering a small subset of possible locations, or a more optimized design when training the model.

B. Alignment

Alignment in ST analysis refers to the process of mapping scRNAseq data to a physical domain while aiming to match the geometry with the available spatial data. As previously stated, NGS-based technologies suffer from limited capture rates and significant dropout174 (especially at higher resolutions). Before the use of DL in ST analysis, many computational approaches aimed to spatially reconstruct key marker genes scRNAseq data by assuming continuity in the gene space,60 or by leveraging local alignment information.59 Moreover, most techniques for alignment or deconvolution of spatial data either learned a program dictionary32 or estimated a probabilistic distribution of the data64 for the cell types at each spot. However, such approaches are not generalizable to all experimental settings, since finding the mapping of sparse or sporadically distributed genes to the spots is difficult and is error-prone due to dropouts.34

DL frameworks have the potential of providing robust models that can adapt to specific species or technologies while being generalizable to other datasets and platforms. The potential application of DL in the alignment of spatially resolved transcriptomics came to fruition recently through the work of Biancalani et al., called Tangram.34 Tangram is a framework that, among many of its capabilities, can align scRNAseq or single-nucleus(sn) RNAseq profiles to spatial data; for the sake of simplicity, we refer to both data types as scRNAseq, although there are differences between the two methods (see Ref. 175 for a systematic comparison of scRNAseq and snRNAseq approaches). Tangram aims to: (i) learn the transcriptome-wide spatial gene expression map at a single-cell resolution and (ii) relate the spatial information back to histological and anatomical data obtained from the same samples. Tangram's general workflow is to learn a mapping between the data modalities and then construct specific models for the downstream tasks (such as deconvolution, correcting low-quality data, etc.). We first summarize Tangram's alignment algorithm and then provide the applications in which DL models are utilized.

Tangram's general objective is to learn a spatial matrix describing the spatial alignments for the cells, with ncells and ngenes denoting the number of single-cells and the number of genes, respectively. Let the expression of gene k in cell i be denoted by , a non-negative value. Next, Tangram partitions (“voxelizes”) the spatial volume at the finest possible resolution (depending on the spatial technology) as a one-dimensional array. This allows Tangram to construct (1) a matrix , where Gjk is a non-negative value denoting the expression of gene k in voxel j, and (2) a cell-density vector , where is the cell density in voxel j (with the total density for each voxel summing to 1).

The learning of transcriptome-wide spatial gene expression map at a single-resolution happens through learning a mapping operator , where Mij denotes the probability of cell i being in voxel j. Moreover, given any matrix , each element of the operator M is assigned according the following equation:

| (13) |

ensuring that , i.e., assigning a probability distribution along the voxels using the well-known function. Biancalani et al. define an additional quantity, , which denotes the spatial gene expression as predicted by the operator M, and a vector where is the predicted cell density for each voxel j.

Given the preliminary quantities, we can now write Tangram's generic objective function as shown in the following equation:

| (14) |

where “ ” denotes the matrix slicing, and is the cosine similarity, defined as follows:

| (15) |

The objective function, Eq. (14), learns a proportional mapping of the genes to the voxels. Additionally, this loss function can be further modified to incorporate prior knowledge. Indeed, Biancalani et al. modify this to regularize for the learned density distributions and the cells contained within each voxel, as shown in the following equation:

| (16) |

where minimizing the divergence (first term) enforces that the learned density distribution and the expected distribution are similar, and the additional loss over the voxels (third term) penalizes the model if the predicted gene expression is not proportional to the expected gene expression. Biancalani et al. minimize the objective shown in Eq. (16) through gradient-based optimizers implemented in PyTorch.176,177 After optimizing Eq. (16), Tangram is able to map all scRNAseq profiles onto the physical space, thus performing the alignment. It is important to note that although Tangram learns a linear operator M, this mapping could be replaced with a deep neural network as well.

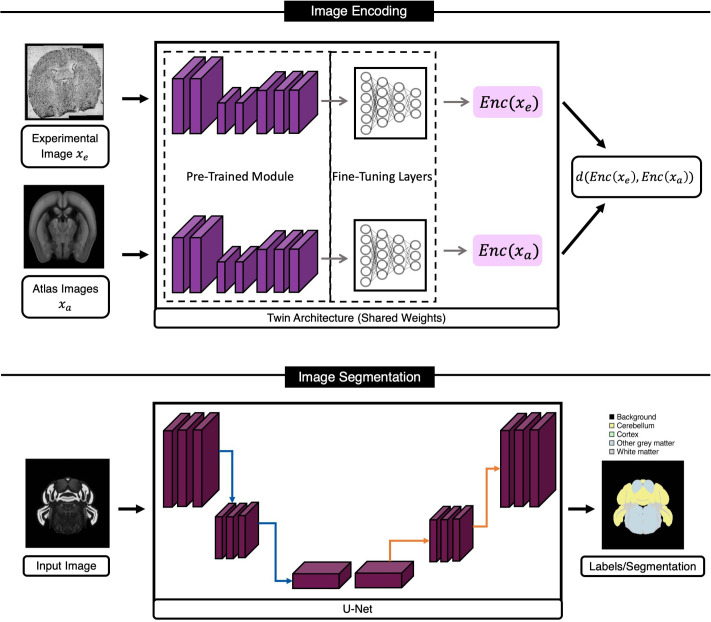

Tangram utilizes DL to integrate anatomical and molecular features, specifically for mouse brain images. To do so, the authors use an image segmentation network (U-Net178) in combination with a “Twin” network179 to produce segmentation masks of anatomical images, with both networks being CNNs. We present a general overview of these architectures in Fig. 5. The twin network uses DenseNet:180 a deep NNs that concatenates the outputs at each layer to propagate salient information to deeper layers in the network (refer to Sec. III D for the motivation behind such approaches). More specifically, Tangram uses a pre-trained DenseNet encoder (trained on ImageNet) to encode images and remove technical noise and artifacts. Biancalani et al. also add two additional layers to the pre-trained encoder, which map the outputs to a smaller latent space. The encoder of the twin network is fine-tuned on learning the prediction of spatial depth difference between two images: Two random images are inputted to the twin network with their spatial difference depth being the desired target output, dtrue. The network then tries to predict the depth, dpred, for all N inputs, ultimately comparing them against the corresponding true depth differences, dtrue (as shown in the following equation):

| (17) |

FIG. 5.

Tangram's DL framework for aligning/integrating histology and anatomical data with molecular data. Tangram's model for this task is a combination of encoding (using a twin network) and segmentation modules. The twin network learns a similarity metric for brain sections based on anatomical features in images, while the U-Net model is trained to segment five different classes on mouse brain images. This figure was recreated for this manuscript based on illustrations from Biancalani et al.34

The segmentation model of Tangram generates five custom segmentation masks (background, cortex, cerebellum, white matter, and other gray matter) that are compatible with the existing Allen ontology atlas. The segmentation model is a U-Net, which uses a pre-trained ResNet50 (Ref. 156) as its core. Finally, for each pixel in input images, the model's last layer (a softmax function) assigns a probability of belonging to one of the five segmentation classes. Tangram's segmentation model aims to optimize the superposition of the cross entropy and Jaccard index, as presented in the following equation:

| (18) |

with p denoting the model prediction and g referring to the ground truth image.

Biancalani et al. demonstrate that Tangram learns an accurate mapping between the spatial data and scRNAseq gene expression when applied to fine- or coarse-grained spatial atlases. The authors show that their approach works well across different technologies (namely, ISH, smFISH, Visium, STARmap, and MERFISH) at different resolutions and gene coverage and is able to learn a robust and accurate alignment mapping for the isocortex of the adult healthy mouse brain.34 While Tangram can offer different advantages based on spatial technology, it can produce consistent spatial mappings and overcome limitations in resolution or throughput, which is beneficial in many ST experiments and studies.

C. Spot deconvolution

One downside of using NGS-based technologies remains to be their resolution: Despite the recent technological advancements, most ST platforms (e.g., spatial transcriptomics, Visium, DBiT-seq,181 Nanostring GeoMx,182 and SlideSeq) do not have a single-cell resolution. The number of cells captured in each spot still varies based on the tissues [about 1–10 (Ref. 183)] and the technology used. On the other hand, we cannot assume that all cells within a spot are the same, due to the heterogeneity of the cells. Therefore, it is necessary to use computational approaches for inferring the cell types in each spot or voxel. Such estimations would be possible if there were a complementary scRNAseq dataset. The process of inferring the cellular composition of each spot is known as cell-type deconvolution. Deconvolution has been at the forefront of computational efforts, and it is important in building organ atlases.20,118,184 In fact, cell-type deconvolution is an existing procedure for inferring cell-type composition in RNAseq data using scRNAseq. However, methods developed for bulk RNAseq do not account for the spatial components of ST datasets and are therefore generally inadequate. Given that deconvolution is an existing practice in RNAseq studies, we will refer to the spatial deconvolution problem as spot deconvolution to distinguish between the traditional methods and the ones developed for ST analysis.

We divide spot deconvolution methods into three categories: (i) statistical methods, (ii) machine learning, and (iii) deep learning, with many of the current models falling into the first two categories. We now dive deeper into the two existing models that use DL for performing spot deconvolution.

1. DestVI

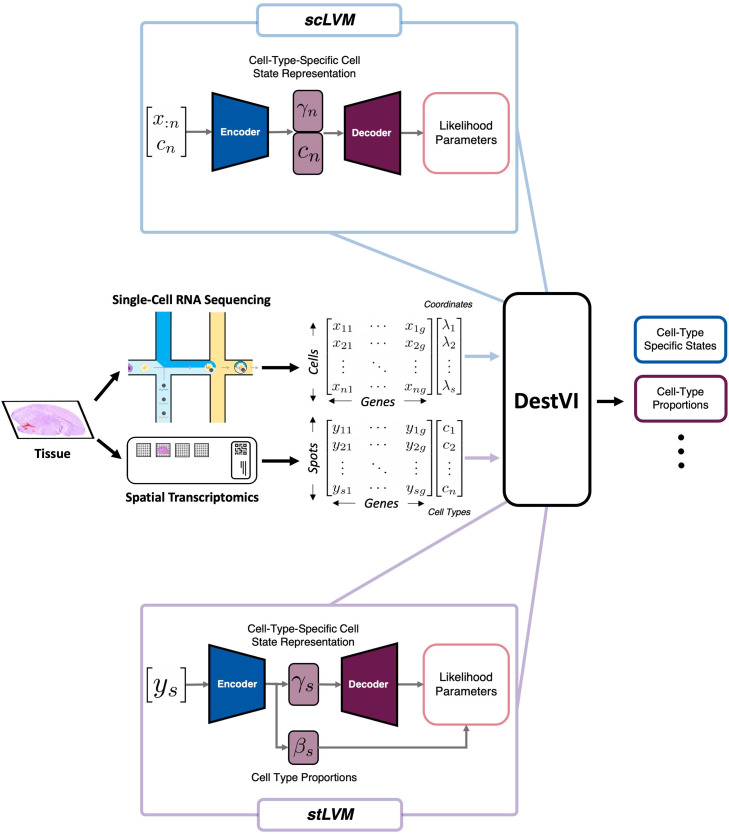

DestVI (deconvolution of spatial transcriptomics profiles using variation inference) is a Bayesian model for spot deconvolution. DestVI employs a conditional deep generative model (similar to scVI,185 a popular model for scRNAseq analysis) to learn cell-type profiles and continuous sub-cell-type variations, aiming to recover the cell-type frequency and the average transcriptions state of cell types at each spot. To do so, DestVI takes a pair of transcriptomics datasets as inputs: (i) a reference scRNA-seq data and (ii) a query spatial transcriptomics data (from the same samples). DestVI then outputs the expected proportion of cell types for every spot and continuous estimation of cell state for the cell types present in each spot, which can be viewed as the average state of the cell types in each spot, which Lopez et al. suggest as useful for downstream analysis and formulation of biological hypotheses.69

DestVI uses two different latent variable models (LVMs) for distinguishing cell-type proportions and delineating cell-type-specific sub-states (shown in Fig. 6). The first LVM is for single-cell data (therefore named scLVM) which assumes the counts follow a negative binomial (NB) distribution, which has shown to model RNAseq count data well.185–187 Specifically, Lopez et al. assume that for each gene g and cell n, the count of observed transcripts, xng, follows an NB distribution parameterized with : is a parameter that depends on the type assigned to the cell cn, the total number of detected molecules ln, and a low-dimensional latent vector γn (which Lopez et al. set ) that describes the variability of cell-type assignment to cell cn, and a neural network f parameters (in this case, a two-layer NN). The second parameter of the NB, pg, is optimized using variational Bayesian inference. We can summarize the assumptions for scLVM as shown in the following equation:

| (19) |

with the latent variable . Each cn (the annotations) are represented by a one-hot encoded vector, which is concatenated with γ to serve as the input of the NN f. Lopez et al. use a VAE to optimize for the marginal conditional likelihood .

FIG. 6.

Visualization of DestVI's computation workflow for spot deconvolution. DestVI uses information from both data modalities of the ST data (coordinates and scRNAseq). DestVI defines two latent variable models (LVMs) for each data modality: an LVM for modeling scRNAseq data (scLVM, shown at the top) and one that aims to model the ST data (stLVM, shown at the bottom). We describe each one in Sec. IV C. This figure was recreated for this manuscript based on illustrations from Lopez et al.69

Finally, for scLVM, a mean-field Gaussian distribution , parameterized by another two-layer NN g, is inferred for each cell which quantifies the cell state and the associated uncertainty. The NN g takes a concatenation of (i) the gene expression vector xn and (ii) the one-hot encoded cell annotations as its inputs. The network g outputs the mean and variance of the variational distribution for γn, obtained through optimizing Eq. (20)

| (20) |

where is the prior likelihood for γn. Similar to training any other VAE, the observations are split into mini-batches, and sampling from the variational distribution is done using the reparameterization trick described in Kingma and Welling.161 The computational workflow for scLVM is visualized in Fig. 6(b).

The second LVM aims to model the spatial transcriptomics data (hence called stLVM) with the assumption that the number of observed transcripts xsg at each spot s for each gene g follows an NB distribution. Additionally, Lopez et al. also assume that each spot has C(s) cells, with each cell n in a spot s being generated from the latent variables . For stLVM's NB distribution, the rate parameter , where αg is a correction factor for the gene-specific bias between spatial and scRNAseq data, lg is the overall number of molecules observed in each spot, and fg is an NN network with parameters . These assumptions and quantities allow Lopez et al. to formulate the total gene expression xsg as shown in the following equation:

| (21) |

Moreover, using a parameter to designate the abundance of every cell type in every spot, βsc, and NB's rate-shape parameterization property (see Aragón et al.188), Eq. (21) can be rewritten as follows:

| (22) |

supposing that cells from a given cell type c in a spot s must come from the same covariate .

The covariate in DestVI allows for the model to account for ST technology discrepancies by assuming various empirical priors (refer to Fig. 6). Lopez et al. simplify the problem of identifying every cell type in each spot to determine the density cell types, i.e., assuming that there cannot be significantly different cell states of the same cell types within a spot. Lopez et al. use a penalized likelihood method to infer point estimates for γc, α, and β. With the additional two strategies to stabilize the training of DestVI, the final objective function for stLVM consists of (i) the negative binomial likelihood, (ii) the likelihood of the empirical prior, and (iii) the variance penalization for α.

Lopez et al. use simulations to present DestVI's ability to provide higher resolution compared to the existing methods and estimate gene expression by every cell type in all spots. Furthermore, they show that DestVI is able to accurately deconvolute spatial organization when applied mouse tumor model. In the cases tested, Lopez et al. demonstrate that DestVI is capable of identifying important cell-type-specific changes in gene expression between different tissue regions or between conditions and that it can provide a high resolution and accurate spatial characterization of the cellular organization of tissues.

2. DSTG

Deconvoluting spatial transcriptomics data through the graph-based convolutional network (DSTG)65 is a recent semi-supervised model that employs graph convolutional networks (GCN)136 for spot deconvolution. DSTG utilizes scRNAseq to construct pseudo-ST data and then build a link graph that represents the similarity between all spots in both real and pseudo-ST data. The pseudo-ST is generated by combining scRNAseq transcriptomics of multiple cells to mimic the expression profiles at each spot; while the real ST data are unlabeled, the pseudo-ST has labels. To construct the link graph, DSTG first reduces the dimensionality of both real and pseudo data using canonical correlation analysis189 and then identifies mutual nearest neighbors.190 Next, a GCN is used on the link graph to propagate the real and pseudo-ST data into a latent space that is turned into a probability distribution of the cell compositions for each spot.

Song et al. form the link graph by taking the number of spots as the number of vertices, resulting in a graph , where denotes the number of spots, and E represents the edges between them. DSTG takes two inputs: (i) the adjacency matrix of graph G, represented by A, and (ii) a combination of both real and pseudo datasets , where m is the number of variable genes, and (the total number of spots in both datasets) with Spseudo and Sreal indicating the number of spots in the pseudo and real ST datasets, respectively. Next, Song et al. normalize the adjacency matrix (for efficient training of DSTG) using the diagonal degree of A, denoted by D, as shown in the following equation:

| (23) |

where (with I denoting the identity matrix). Given the two inputs, DSTG's graph convolution layers take the following form:

| (24) |