Abstract

The Visual World Paradigm (VWP) is a powerful experimental paradigm for language research. Listeners respond to speech in a “visual world” containing potential referents of the speech. Fixations to these referents provides insight into the preliminary states of language processing as decisions unfold. The VWP has become the dominant paradigm in psycholinguistics and extended to every level of language, development, and disorders. Part of its impact is the impressive data visualizations which reveal the millisecond-by-millisecond time course of processing, and advances have been made in developing new analyses that precisely characterize this time course. All theoretical and statistical approaches make the tacit assumption that the time course of fixations is closely related to the underlying activation in the system. However, given the serial nature of fixations and their long refractory period, it is unclear how closely the observed dynamics of the fixation curves are actually coupled to the underlying dynamics of activation. I investigated this assumption with a series of simulations. Each simulation starts with a set of true underlying activation functions and generates simulated fixations using a simple stochastic sampling procedure that respects the sequential nature of fixations. I then analyzed the results to determine the conditions under which the observed fixations curves match the underlying functions, the reliability of the observed data, and the implications for Type I error and power. These simulations demonstrate that even under the simplest fixation-based models, observed fixation curves are systematically biased relative to the underlying activation functions, and they are substantially noisier, with important implications for reliability and power. I then present a potential generative model that may ultimately overcome many of these issues.

Keywords: Visual World Paradigm, Eye movements, Monte Carlo simulations, Time series analysis, Psycholinguistics

Introduction

In the past 25 years, few empirical methods in cognitive science have had the wide-ranging impact of the Visual World Paradigm (VWP; Tanenhaus et al., 1995) on language research (Magnuson, 2019; Salverda et al., 2011, for reviews). The VWP starts from a simple premise. Subjects are situated in a visual world. This could be as simple as four pictures on a computer screen or as complex as a real-world conversation over real objects. They then hear spoken language referring to those objects. Objects represent possible interpretations of the speech. While they perform this task (or just scan the scene), eye movements are monitored. Since eye movements unfold continuously as the subjects interpret the speech, they thus provide insight into the degree to which listeners consider various interpretations over time.

This was initially applied to sentence processing (Eberhard et al., 1995) and word recognition (Allopenna et al., 1998). However, the ultimate impact of the VWP was inconceivable in 1995. It rapidly spread down the language chain to speech perception (McMurray et al., 2002), and up to pragmatics (Hanna & Tanenhaus, 2004; Keysar et al., 2000). It has become important in understanding development (Fernald et al., 1998; Rigler et al., 2015; Snedeker & Trueswell, 2004), and bilingualism (Spivey & Marian, 1999), and for characterizing language comprehension in clinical populations including people who have dyslexia (Desroches et al., 2006), autism (Brock et al., 2008), schizophrenia (Rabagliati et al., 2019), developmental language disorder (McMurray et al., 2010), and brain damage (Mack et al., 2013; Mirman et al., 2011). It has even been applied outside of spoken language, to reading (Hendrickson et al., 2021) and speech production (Griffin, 2001).

The VWP is not a panacea. There are limits on the types of words or objects that can be studied (picturable nouns), and it can be difficult to map complex sentences onto a visual scene while preserving some kind of task for the subject. There are ongoing concerns about whether the visual scene constrains linguistic processing (Magnuson, 2019). Such constraints could be theoretically important as a marker of the embodiment and interactivity of language and vision (Spivey, 2007). They could also be a confound, as in arguments that fixations in the VWP only reflects objects that have been “prenamed” in working memory (see Huettig et al., 2011, for a discussion), though concern has been ruled out empirically at least for word recognition (Apfelbaum et al., in press). Despite these ongoing concerns, the VWP has remained highly influential for three reasons.

First, unlike techniques such as priming, the task of the VWP is natural: understanding language. Thus, it is more ecological and more suitable for populations who may lack the meta-linguistic awareness needed for tasks like lexical decision. Second, most alternatives—including reaction times, but also neuroimaging approaches like MRI and ERPs—make only indirect inferences about language processing by identifying conditions in which processing is difficult. In contrast, the VWP offers more direct access to what interpretations are considered during processing: If a subject is fixating a referent (more than unrelated object), they are considering it.

Perhaps the most important reason for the VWP’s impact is time. The VWP has long been touted as a “real-time” measure, estimating the state of the system while processing unfolds. It is not the first real-time method, but it is richer than many. In prior approaches like cross-modal priming or response deadlines, the researcher defines a small number of time points of interest, and these are probed on distinct trials. For example, if one wanted to use priming to assess processing at 200 and 500 ms after word onset, one would present the orthographic target 200 ms after the word on half the trials, and 500 ms on the other half. Each trial assesses one time point, and the number of total time points that can be measured is limited. In contrast, VWP has been claimed to assess processing nearly continuously in time on all trials. This is an inconceivably large advance in the richness of the data.

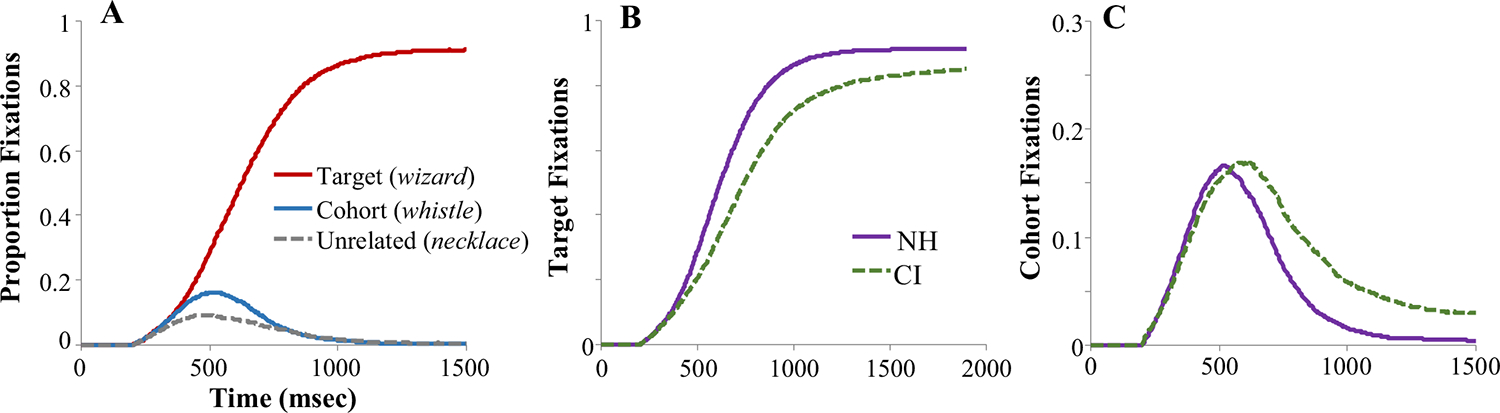

Part of this claim rests on the temporally rich visualizations of the data. From the beginning, researchers adopted visualizations like Fig. 1 (Allopenna et al., 1998) to depict the time course of fixations (termed “fixation curves” here). Multiple studies have illustrated a close correspondence between these kinds of visualizations and continuous output from models such as TRACE (McClelland & Elman, 1986) and TISK (Allopenna et al., 1998; Dahan et al., 2001; Hannagan et al., 2013; McMurray et al., 2009). And this is not mere observation—one can manipulate parameters of the model and show systematic distortions that mimic differences among people with language disorders or brain damage (Dahan et al., 2001; McMurray et al., 2010; Mirman et al., 2011). This has yielded a collective sense that these curves fairly precisely characterize the time course of processing.

Fig. 1.

Typical Visual World Paradigm (VWP) visualizations (adapted from Farris-Trimble et al., 2014, Exp. 1). In this experiment, participants (normal hearing or cochlear implant [CI] users) heard an isolated word (e.g., wizard) and selected the referent from a screen containing pictures of the target (wizard), a cohort competitor (whistle), a rhyme (lizard, not shown), and an unrelated (necklace). a Proportion fixations to the target, cohort, and unrelated in the normal hearing listeners show a precise time-locking to the unfolding ambiguity in the signal: Early on, listeners fixate the target and cohort as the input they have heard thus far (wi-) is consistent with both. Later, they suppress the competitor to hone in on the target. b–c Fixations to the target (b) or cohort (c) as a function of listener group (NH: normal hearing; CI: cochlear implant users) can reveal precise quantitative differences in the time course of processing. (Color figure online)

The power of these visualizations has led to an explosion of techniques for making precise statistical estimates about the dynamics of the fixation curve (Cho et al., 2018; McMurray et al., 2010; Porretta et al., 2018; Seedorff et al., 2018). These approaches offer evermore mathematically and statistically precise ways to characterize these nonlinear functions, and the factors that affect them. All of these approaches—including ones I helped develop—assume the face validity of the fixation curve. This validity has not been questioned.

This manuscript starts from the premise that all of these approaches fail to take seriously the nature of the fixation record as a stochastic series of discrete and fairly long-lasting physiologically constrained events. A model of this process is implemented to determine whether the form of this stochastic process alters the observed fixation curves that one should expect from a given underlying function. The short answer to this question is that the regularities of this stochastic process have both systematic and unpredictable effects on the observed fixation curves; they add not only noise but also bias. As a result, the fixation curves do not always resemble the underlying dynamics of the system, and this may necessitate alternative statistical models and caution in interpreting existing models. Critically, as the field moves to issues of power, reliability, and replicability, understanding the contributions of this stochastic component to the data is critical for designing experiments, identifying indices of psychological constructs in the fixation record, and evaluating the rigor of VWP studies more broadly.

This manuscript starts with a brief review of current analytic approaches. It then turns to a detailed discussion of how fixation curves are derived and the linking hypotheses that conceptualize the relationship between the underlying activation in the system and observed fixations before presenting the simulations.

Analytic approaches to the VWP

This project’s goal is not to evaluate analytic approaches, but its questions are motivated by current analysis methods. Three broad classes of analytic approaches have been used.

Fixation-driven approaches

The earliest studies used fixation-driven approaches, which emphasize specific properties of the fixation record: the number of fixations to an object, the duration, the likelihood of transition from one object to another, and so forth (e.g., see McMurray et al., 2008a, 2009; Spivey & Marian, 1999; Tanenhaus et al., 1995). Such approaches are historically related to work done on eye-movement control in reading, which has led to fixation-driven measures tied to theoretical constructs (e.g., first fixation duration, regressions; cf. Rayner et al., 1998). These measures are highly physiologically grounded and straightforward to estimate. However, there are several limits.

First, there are many such measures in principle. In reading, measures like first fixation time or the likelihood of refixation are linked to theoretical concepts about reading, lexical access, and sentence processing, permitting a hypothesis-driven approach. However, eye-movement control in reading may be somewhat simpler than in the VWP. In reading, eye-movement control is routinized (overpracticed) and largely directed by the problem of extracting information. However, in the VWP, eye movements simultaneously reflect visual information uptake, language processing (matching lexical activation to the scene), and response planning (and this may differ as the task/decision unfolds; Magnuson, 2019). With no clear theory to guide variable selection, this can lead to too many researcher degrees of freedom.

Second, this problem is exacerbated by the fact that an effect on the underlying decision function may be spread across multiple fixation variables. For example, increased competitor activation could increase the probability of fixating an object, extend the fixation’s duration, or increase the likelihood of returning to it. Any single measure may lack power to detect effects.

Finally, fixation-driven measures are not always temporally precise, and there are fundamental limitations on this. Consider a simple measure like the likelihood of fixating the competitor. One might like to estimate this at multiple times (e.g., between 100 and 200 ms, 200 and 300). However, given the low likelihood of fixating the competitor overall, there may be very few trials to contribute to any individual bin for a given subject.1

Fixation curves: Indices

Using the fixation curves (Fig. 1) as the basis of analysis overcomes some of these limits. When the VWP was developed, we lacked statistical tools to fully characterize these functions in time. Consequently, many studies started from these curves as a visualization tool but derived individual indices to assess aspects of them. An example is area under the curve (AUC), in which one simply averages over the time span of interest and uses that as a measure of how much the subject is fixating a given object.

Index approaches offer some advantages over fixation-based ones. First, the fixation curve is simultaneously affected by many properties of the fixation record (likelihood of fixating, transitions, duration, and so forth). These would all be independent Dependent Variables (DVs) in a fixation-based analyses (with lower power). In contrast, the fixation curves functionally collapse across these properties to a single DV. A subject could have a higher AUC because fixations were longer, they were more likely to look at the competitor, or they fixated it twice. Thus, indices may overcome some of the degrees of freedom associated with fixation-based approaches. Of course, they add their own degrees of freedom. AUC, for example, requires the researcher to specify a time window. However, recent approaches like permutation-based clustering (Maris & Oostenveld, 2007) and BDOTS (Oleson et al., 2017) can detect that there is a difference and also the time window over which it occurs.

Second, these measures can be much more temporally specific than fixation-based measures. They do not rely on having some quantity of fixation that precisely starts or stops at certain times, and the temporal precision is limited only by the sampling period.

Third, index approaches are not limited to the overall degree of looking. This can lead to measures that are highly theoretically informed, provided we accept the validity of the fixation curves. For example, indices have been identified to determine when the fixation curve crosses a threshold (Ben-David et al., 2011), to estimate the peak looking to a competitor, or the duration over which competitor fixations were above threshold (Rigler et al., 2015). Perhaps the most sophisticated approach is an onset detection technique developed by me and my colleagues (Galle et al., 2019; McMurray et al., 2008b; and see Reinisch & Sjerps, 2013). These combine both target and competitor fixations to estimate when the fixations record is biased by different factors in the input.

In the best versions, indices are hypothesis driven; some (like onset detection) have been in use sufficiently across papers that they are fairly standard (minimizing researcher degrees of freedom [d.f.]). Such indices can serve as a confirmatory hypothesis test in which the researcher proposes a measure—in advance—and tests only that DV. However, at worst, they can also be unconstrained.

These approaches have fallen out of favor, but not for the reasons you think. In their heyday, questionable research practices were not the concern that they are now. Instead, researchers saw a different glaring weakness: While they start from the fixation curves, index approaches do not model the full time course. To many (including me), this felt like a missed opportunity.

Time course analyses

In the past decade, new approaches have been developed to more precisely model the fixation curve. The first used polynomial growth curves in a mixed model (Mirman et al., 2008). Shortly later, my lab introduced nonlinear curvefitting (Farris-Trimble & McMurray, 2013; McMurray et al., 2010) to more intuitively capture the shape of the fixation curve, though only when it fit a predefined form. Newer approaches offer more flexibility and precision. generalized additive mixed models (GAMMS; Porretta et al., 2018) use “smoothing” functions to capture virtually any nonlinear effect of time. Cho et al. (2018) propose a more radical auto-regressive framework in which time is not an explicit factor. Rather, the proportion of fixations at each time is modeled as a function of the proportion at the previous time. These approaches offer even more flexibility and can accommodate curves of virtually any shape, and in a mixed-model framework that can handle cross-random effects.

The current zeitgeist—as seen in the evolution of these statistical approaches—is to model the precise time course of fixations with evermore precision and rigor. These precise specifications are then interpreted as reflecting precise read outs of cognitive states. However, this rests on the assumption of a direct linking function between underlying decision dynamics and measurable fixation curves. This linking function has not been explicitly investigated.

What are fixation curves, and where do they come from?

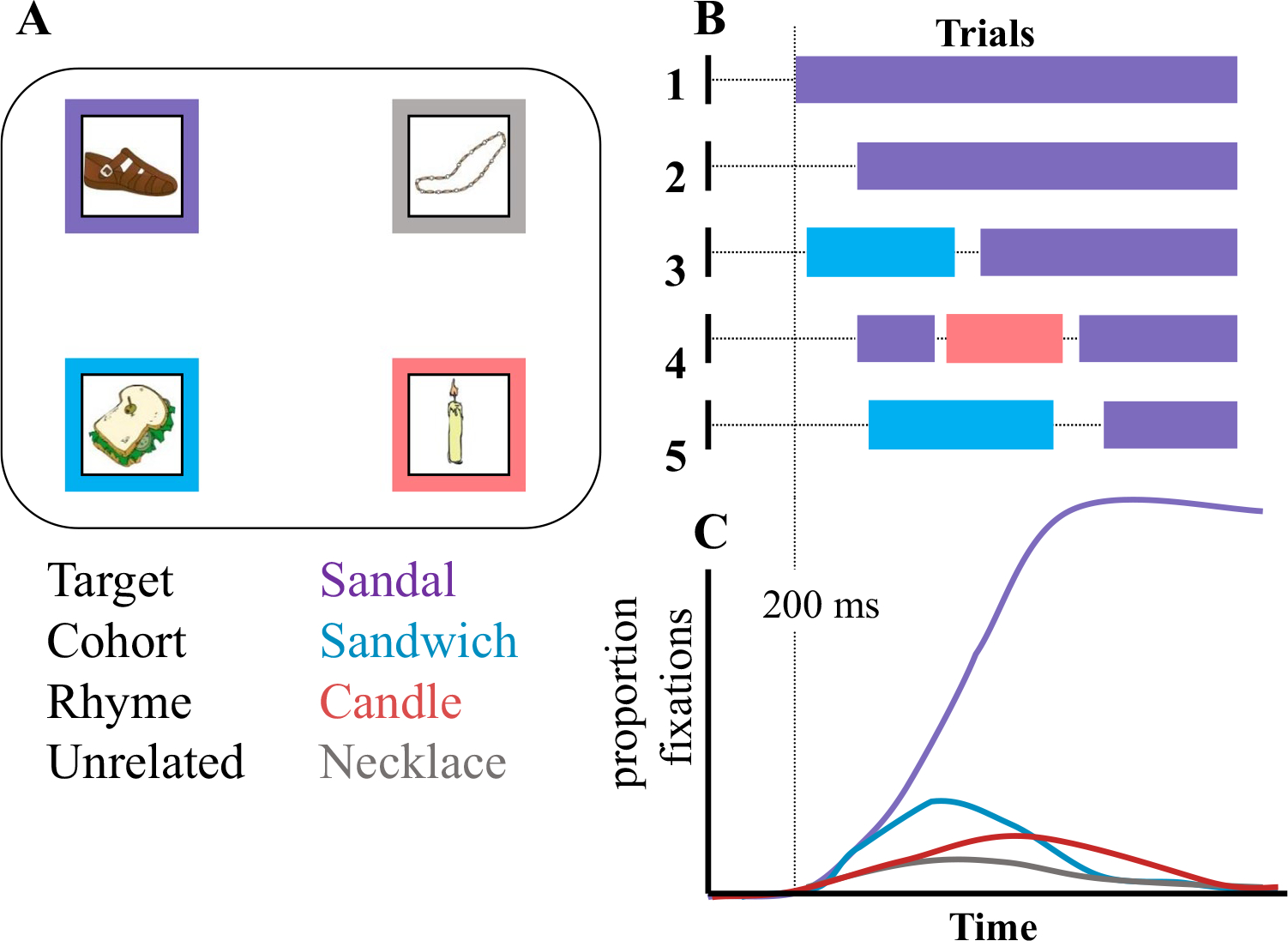

Typical fixation curves appear to be continuous and smooth, but they are actually built from discontinuous and “chunky” data. Saccades are relatively ballistic, and fixations are discrete. Listeners can only fixate one thing at a time, and once they get there, there is a roughly 200-ms refractory period until they can move. Figure 2 shows a schematic of how these smooth curves are built from this data. In this example, the visual display (Fig. 2a) might contain a target object (sandal, in purple), a cohort (sandwich, in blue), a rhyme (candle, in red), and an unrelated object (necklace, in gray). On each trial, subjects make a series of discrete fixations to these objects (Fig. 2b). On Trial 1, for example, they may look at the correct object and stay there, while on Trial 3, they may briefly fixate the cohort before moving to the target.

Fig. 2.

Schematic illustrating the source of the fixation curves (courtesy Richard Aslin). a A typical screen in the Visual World Paradigm (VWP) used to study word recognition may contain four objects: a target (corresponding to the auditory stimulus, sandal, in green), a cohort or onset competitor (sandwich, in blue), a rhyme (candle, in red), and an unrelated object. b On each trial, listeners launch a discrete series of fixations. On some trials, they may look at the target and stay; on others, they may look briefly at a competitor before the target. c To obtain the time course of fixations, one computes the proportion of trials in which the participant is fixating each object at each time slice. (Color figure online)

To construct the fixation curves, the researcher averages across trials, within times, to compute the proportion of trials on which subjects were fixating a class of referents (Fig. 2c).2 Two-hundred ms is marked here as a rough estimate of the time it takes to plan and launch an eye movement (Viviani, 1990)—fixations initiated before then are not thought to be driven by the auditory input, and a saccade launched at 600 ms (for example) was likely planned at 400 ms. It also thus represents the refractory period before the subject can make another fixation.

These curves are used to make inferences about activation that is presumed to be continuous over time. The typical (unspoken) assumption in analyzing these data can be termed the high-frequency sampling (HFS) assumption. Under this assumption, the underlying activation of a word or interpretation determines the probability of fixating the corresponding object. If the researcher is sampling at 4 ms intervals, the fixation curve thus derives from a probabilistic sample every 4 ms. To account for the oculomotor delay, the likelihood of fixating an object at a given time is a function of the underlying probability 200 ms prior.

The HFS sampling assumption is most clear in studies using the Luce Choice rule to generate VWP predictions from continuous activation models (Allopenna et al., 1998; Dahan et al., 2001; Hannagan et al., 2013; McMurray et al., 2010; Mirman et al., 2011). Here, the underlying activation is given by a model (TRACE or TISK), and this activation is transformed into a likelihood of fixating each object at each timestep. This is done with no attempt to model the intervening fixations (though see Chapter 7 in Spivey, 2007). To be fair, this works well; these studies show a very high concordance between model predictions and fixation curves.

The labs that have used HFS in this explicit way typically treat HFS as a simplifying assumption that enables one to make theoretical claims about underlying decision dynamics from the data. It has never been treated as a strong theoretical claim about how fixations are linked to underlying activation. In fact, the HFS assumption is patently untrue. Subjects cannot move their eyes every 4 ms. Once a subjects’ gaze lands on something, they must stay there for about 200 ms; and saccades (which typically last 30–50 ms) can only be altered in flight in rare circumstances. Moreover, the duration of fixations to competitors can be related to experimental conditions (such as the degree of phonemic ambiguity: McMurray et al., 2008a), and people are more likely to look at some screen locations (e.g., top left > top right > bottom left; Salverda et al., 2011), or are more likely to make transitions between nearby objects but not more distal (e.g., diagonal). The common defense is that when sampling across a reasonably large number of trials, the precise timing and duration of eye movements is fairly random. Consequently, it is reasonable to treat each time slice as an independent sample from the underlying activation function 200 ms prior. But is it safe to assume that noise across trials uniformly smears over the discrete nature of fixations?

Current statistical approaches attack this issue around the edges. For example, one of the consequences of the fact that fixations unfold as a series of discrete events is that the fixation curve at each step is related to prior steps, since adjacent samples are likely to be influenced by the same fixation. Models then may be improved by assuming autocorrelated errors (Oleson et al., 2017), or by modeling the mean in terms of this autocorrelation (Cho et al., 2018). However, this still assumes each time point is an independent draw from the underlying probability function (even though the mean or variance of that distribution might be related to prior times).

The open question addressed here is whether these systematic effects on fixations can be treated as noise or if they bias the fixation curves away from the underlying decision curves that generated them. If HFS does not hold, this may not be a major problem for index approaches as these do not attempt to precisely capture the entire time course. However, the goal of all existing time-course-based approaches is to extract precise estimates of when the fixation curves affected by experimental conditions. If the HFS does not hold, this raises a conundrum. These approaches may be more accurate at modeling the data, but the fine-grained dynamics they purport to capture may not accurately reflect the underlying dynamics we wish to assess.

Goals of the present study

As psychology and cognitive science have begun to pay more attention to methodological rigor, it has become clear that in addition to issues of statistical impropriety and researcher degrees of freedom (Bakker et al., 2012; Open Science Collaboration, 2015; Stroebe et al., 2012), one contributor to the replication crisis is poor theoretical specificity (Oberauer & Lewandowsky, 2019). There is a chain of assumptions that allow researchers to derive predictions of behavioral data from a theory that is rarely couched in behavioral terms (Meehl, 1990; Scheel et al., 2021). Fleshing out this derivation chain and validating its components are essential for generating accurate empirical predictions from theory. Without confidence in what a theory predicts, a theory could be true, but this cannot be detected by available empirical evidence. Or conversely, it may be falsely assumed to be true on the basis of invalid predictions. In this context, understanding how the sequential and chunky nature of the fixation system contributes to the smooth fixation curves is an essential component of the derivation chain for the VWP.

To accomplish this, a series of Monte Carlo simulations were run, in which an underlying activation function for a given “subject” was known, and a series of fixations were generated using both the HFS model as well as more sophisticated models that capture the chunkiness of the fixation system. The observed fixation curves were then related to the underlying activation to characterize the role of the fixation-generating system in shaping this common visualization.

This study addressed six key questions. However, it is important to note the context. Many VWP experiments ask essentially either/or questions. For example, they might ask whether listeners fixate a competitor more or less in some or are faster to converge on the target in conditions than others. These are referred to as ordinal questions. For these coarser questions, perhaps the HFS assumption is close enough. But even in this case, there are three questions that remain relevant to ordinal designs.

First, do the assumptions about the underlying generating function lead to differences in the statistical properties of the fixation curves? Does the stochasticity lead to reduced power or create the possibility of a Type I error?

Second, and relatedly, as the VWP is increasingly applied to individual differences or correlational designs, its reliability is of concern (though this is also relevant for experimental work: Hedge et al., 2018; Schmidt, 2010). Reliability is clearly a function of the task itself, but in any system in which results are sampled stochastically, some portion derives from the laws of probabilistic sampling. This is particularly unpredictable in a complex system such as the saccade system. Thus, we ask if some portion of the reliability of the VWP derives from the fixation system? This is essential for knowing the upper limit of efforts to improve reliability by improving items, task properties, and so on.

Third, since the data are probabilistic, power, Type I error, and reliability are all likely shaped by the number of samples (trials).

Understanding these factors are important for designing more rigorous experiments, planning power, identifying the cause of null effects, and avoiding spurious significant effects. Moreover, fixation-generating function clearly accounts for some proportion of the variance. To the extent that this can be understood or even modeled, we can attain a more complete characterization of the data (and this may help reveal small effects).

There is also increasing interest in going beyond simple differences, to use the fixation curves to make fine-grained claims about the time course of processing using what I term termed continuous-in-time experiments. This is seen in evermore sophisticated statistical approaches (Cho et al., 2018; Mirman et al., 2008; Porretta et al., 2018). Such designs raise new questions.

Fourth, we ask whether it is necessary to model the time course with such accuracy, or conversely, if failing to do so leads to Type I error or loss of power.

Fifth, theoretical accounts have argued that different aspects of the fixation curves mean different things. For example, we have proposed that typical development is reflected in differences in the slope of the target function (reflecting increasing rate of spreading activation) while language disorders are reflected in the asymptote (reflecting failure to resolve competition; McMurray et al., 2022a). Thus, it is important to understand the degree to which specific assertions such as these can be tested: Can a difference in the underlying slope appear as a difference in the asymptote because of the fixation-generating system? Are some aspects of the curves more corrupted by the fixation-generating functions than others?

Sixth, studies are increasingly time locking analysis of the fixation curves (and theoretical claims) to the time of linguistic events in the real world. For example, they may analyze fixations prior to a particular event to document anticipation (Altmann & Kamide, 1999; Salverda et al., 2014), or they may examine only fixations after a particular event to ask if information or decision processes persist (Dahan & Gaskell, 2007; McMurray et al., 2009). However, it is possible that the inherent dynamics of the fixation system could systematically bias the ability to map the time of change in the fixation curve to real time. Thus, it is important to know the circumstances under which such inferences can be made.

More broadly, the VWP is often used to make claims like an effect occurs as soon as incoming speech hits the language system, or that competitor activation persists surprisingly long. This is supported by modeling work showing a close time-locking of VWP results to computational models (Allopenna et al., 1998; Hannagan et al., 2013; McMurray et al., 2010; Mirman et al., 2011). This modeling work—and these colloquial assumptions—assume a linear mapping from time in the world to the timing of the fixation curves. But this assumption may not be guaranteed given the nature of the fixation system. A broader investigation could either support this conceptualization or raise the need for more cautious interpretations of VWP data.

Simulation 0: Stochastic sampling

Before discussing simulations of actual fixation curves, it is helpful to consider the simple math of random sampling. If one flips a coin four times, the most likely value is to get two heads and two tails. But that is only likely to occur on 37.5% of the “runs.” That is, less than half the time, the outcome of this experiment does not reflect the true underlying probability (it has low validity). If one were to perform this twice (test–retest reliability), reliability would be poor: On many tests, you might get three heads on one run but two on another. In fact, the chances of observing two heads on two consecutive runs is only 14%! However, in a run of 100 flips, things might look quite different—one is much more likely to get an observed value close to 50%, and to be to obtain something close to that twice in a row. These properties are also a consequence of the probability. What if the coin were loaded such that likelihood of a head is only 0.25? Here, if the coin is flipped four times, the most likely outcome is one head and three tails. That will occur slightly more often than the mostly likely outcome for a fair coin (42.2%) and the chances of getting it twice in a row is higher (17%). Thus, validity and reliability are lawful consequences of the number of coin-flips or draws and the probability.

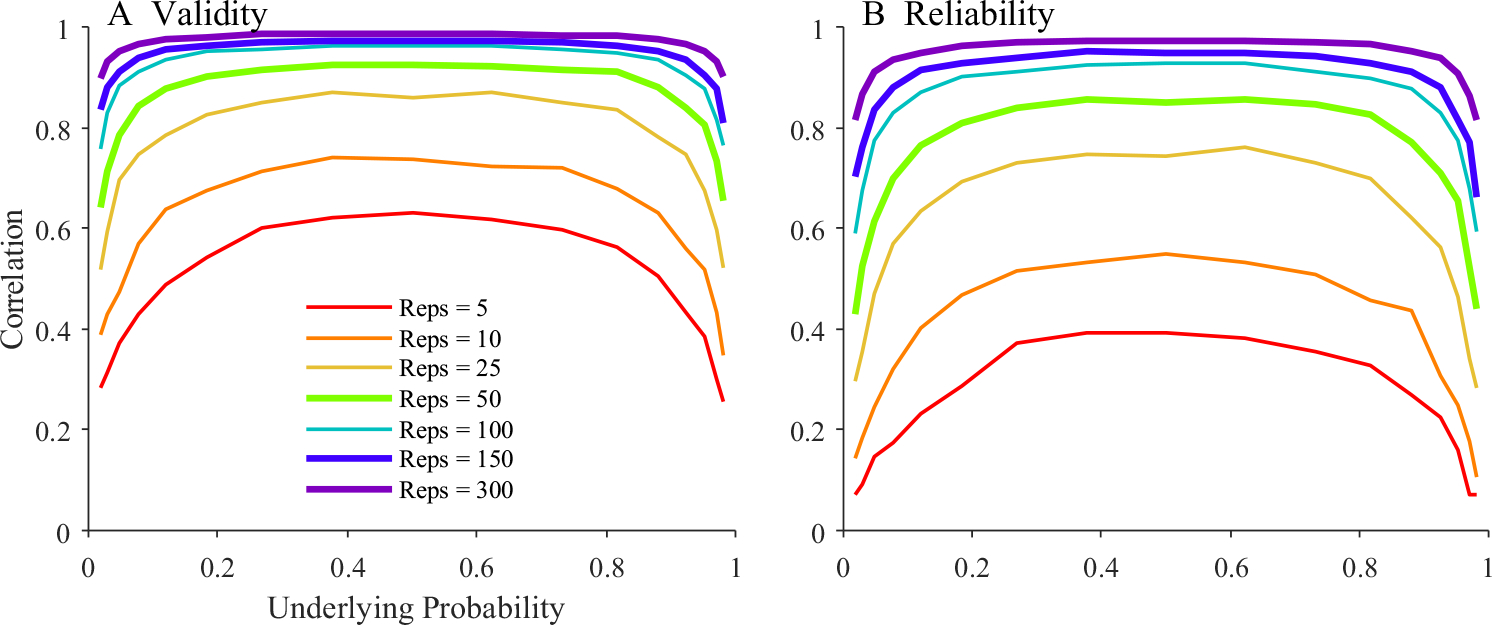

The stochastic generation of eye movements must obey these simple principles. For example, the underlying probability of a cohort fixation may be quite low, whereas the target could be closer to 50%. This will clearly influence the reliability and power of an experiment that relies on the target or the competitor; and such considerations should be taken into account when choosing the number of trials. Thus, it was important to visualize the consequences of these simple principles of stochastic sampling before considering the more complex consequences of an eye-movement generating function.

Virtually all tests based on accuracy fall prey to this issue. However, I have found no published descriptions of the relationship between number of samples, the baseline probability, and the validity/reliability of a measure. Thus, a simple simulation (see Supplement S1 for methods) was conducted in which an underlying probability was chosen, some number of draws were performed, and the mean was saved. This was done twice, and the whole process repeated for 50 subjects. The correlation (across subjects) between the mean and the original probability, and between the means from the two runs was then computed. This was done for a range of underlying probabilities, from low values like.15 (typical of cohort fixations) to higher values like.8 (typical of target fixations). This was done with a range of draws (from 5 to 300), even though typical VWP experiments might only include 15–30 trials (repetitions) per condition.

Figure 3 shows the results. At low numbers of repetitions (5–10 trials), validity is poor and only greater than 0.6 when the underlying probability was near the middle of the range. Reliability was extremely poor and did not crest 0.5 in even the best of circumstances. In fact, at low or high underlying probabilities, reliability didn’t cross 0.2! At a more reasonable 50 trials, when the underlying probability was 0.5, validity (r >.9) and reliability (r ~.8) were strong. But, at more extreme values typical of the VWP, both fell precipitously. And only with more than 150 repetitions were validity and reliability reasonable at all underlying probabilities.

Fig. 3.

Results of a simple probability sampling procedure as a function of the underlying probability and the number of trials sampled. a Validity—the correlation between underlying and observed probability. b Test–retest reliability (correlation between two runs). Thicker lines represent the numbers of repetitions used in the simulations of fixation curves below

Of course, a fixation analysis is more complex than this simple simulation—typical measures for the VWP pool across multiple samples and extract complex values (e.g., the slope). This could enhance reliability and validity (since each trial effectively contributes multiple draws) or threaten it (from compounding noise). Thus, this simulation offers only a baseline for validity and reliability; it is unclear to what extent the fixation model may impact these psychometric properties.

Overview and methods of simulations

To investigate the role of the fixation-generating model, a series of simple simulations were developed.3 These simulations started by first selecting a function that describes the probability of fixating the target (or a competitor) over time. Parameters of this function are then randomly selected to simulate a single subject. This is termed the underlying or generating function. I next randomly generated a series of fixations from this underlying function using various assumptions about how eye movements are generated. This was then done for 300 trials, and the data were averaged to produce a fixation curve for that subject. Next, new parameters of the underlying function were selected for 1,000 subjects, and the process was repeated. Finally, results were analyzed, using a curvefitting technique, and the estimated fixation curves were compared with the underlying functions.

For both the generating (underlying) function and the analysis of the derived data, a nonlinear curvefitting approach (McMurray, 2017; McMurray et al., 2010) was used for several reasons. First, it was easy to randomly select the parameters to describe a single subject (e.g., the slope and asymptotes) and still get reasonable curves (e.g., between 0 and 1). This would have been harder with polynomial growth curves (for example), where the parameters do not map clearly onto the shape of the resulting function, nor is the function limited to be between 0 and 1.

Second, this approach can precisely characterize the data in a way that is independent of the full data set. In contrast, mixed-model approaches like growth curves or GAMMs cannot analyze subjects individually. When a subject-specific parameter (e.g., a subject’s slope) is estimated, values are not based only on that subject’s data and are biased toward the mean (shrinkage). While this is a strength for analysis, it makes the interpretation of results less clear here, where the goal is to ask if a subject’s observed data matches their underlying function.

Finally, the parameters of the nonlinear function are meaningful descriptors of the fixation curves. Parameters like slope and asymptote are meaningful descriptors—even if researchers use a different approach for statistical modeling—because they describe readily observable aspects of the functions. This allows clear questions such as whether a particular fixation model makes the slope of the target function shallower than the underlying function.

Methods

Source code for all of the simulations presented here is available online (https://osf.io/wbgc7/). Curvefitting was performed using functions in McMurray (2017, Version 24), available as a standalone package at (https://osf.io/4atgv/).

Target and competitor fixations were modeled with separate functions. Each describes the (binomial) probability that the subject fixated the target (or competitor) at that time or not. This is an oversimplification: In a real experiment, probabilities would be related in a multinomial distribution (e.g., if the subject fixates the target, he or she cannot be fixating the competitor). However, the binomial case is a reasonable starting place as most studies analyze fixations to each object separately. Supplement S3 presents a multinomial model showing similar results.

Target fixations

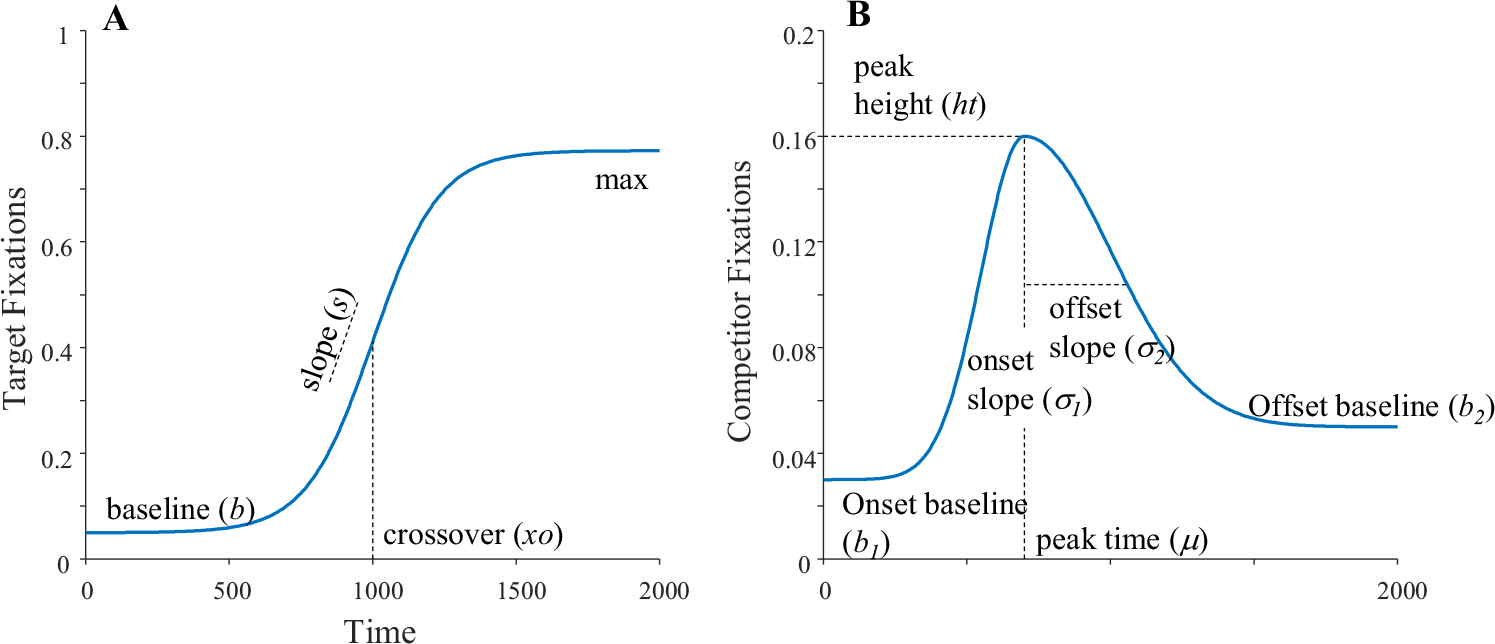

Target fixations were modeled with a four-parameter logistic (Equation 1, Fig. 4a), as in McMurray et al. (2010):

| (1) |

Fig. 4.

Functions used to generate and fit the fixation functions, annotated with the free parameters. a A four-parameter logistic function. b An asymmetric Gaussian

This function starts from a lower asymptote or baseline (), and transitions to an upper asymptote (). The transition is at (crossover), and the slope () is the derivative at the crossover.

Parameters for the underlying function were selected randomly for each run of the model. Each parameter was selected from a random normal distribution whose means and standard deviations were based on the normal hearing participants in (Farris-Trimble et al., 2014). Some constraints were put on these functions to ensure the resulting fixation function took a reasonable form (e.g., the crossover was between 0 and 2,000). See Supplement S2.

Competitors

Competitors were modeled with the asymmetric Gaussian (Equation 2, Fig. 4b):

| (2) |

This function consists of two Gaussians, each with their own asymptotes ( and ) and their own slopes (onset slope and offset slope ). They share a common peak height () and a common time at which peak is reached (peak time, ).

As before, parameters for the underlying functions were drawn from constrained normal distributions whose means and variances were taken from prior work (Farris-Trimble et al., 2014). Constraints were designed to keep the values in a reasonable range (e.g., competitors should peak below 0.4, and above the baselines).

Fixation generation

After selecting the parameters of the underlying function, a series of fixations were generated for a set number of trials to simulate an experiment. These models started from the underlying function as the probability of fixating the object, and models made progressively more sophisticated assumptions about the fixation-generating system. Data were then averaged to compute a fixation curve for that subject.

Analysis

Fixation curves were fit using a constrained gradient descent procedure used in prior studies (Farris-Trimble & McMurray, 2013; Farris-Trimble et al., 2014; McMurray et al., 2010). This uses a constrained gradient descent technique to find the parameters that minimize the least squared error between the observed fixation curve and the predicted function. There is no analytic solution to this problem, so suboptimal fits (local minima) can occur. Typically, one should visually inspect fits, and poor fits can often be corrected with hand-selected starting parameters. However, with the large number of fits here, this was impossible. Thus, three steps were taken. First, starting parameter estimates were improved with new techniques. Second, around 100 fits for each simulation were manually inspected to ensure fits were good. Finally, any fit whose correlation to the observed data was below 0.8 (across all runs) was dropped.

After obtaining fits, the parameters of the function (e.g., the slope, crossover, peak height) were the unit of analysis. Since these parameters were of the same form as the generating function, this permitted simple analyses asking whether the observed and underlying parameters were correlated, if there was bias, and so forth.

Overview of simulations

Our first simulation examined the high-frequency sampling (HFS) assumption. While this assumption is unrealistic (and one might say, inconceivable), these simulations document what the results of this Monte Carlo procedure look like under ideal circumstances. Simulation 2 turns to a simple fixation-based sampling (FBS) model in which fixations are a series of discrete units. Simulation 3 follows that up with a slightly more complex sampling scheme that acknowledges that eye movements may persist longer than average on activated objects (fixation-based sampling with enhanced target duration [FBS+T]), and Simulation 4 (Supplement S3) generalizes these to a multinomial model. The next simulations investigate the consequences of these models for measurement: Simulation 5 investigates test–retest reliability, and Simulation 6 investigates power and Type I error. Finally, Simulation 7 presents an exploratory new analysis that builds a fixation-generating model into the analysis.

Simulation 1: Stochastic high-frequency sampling

Approach

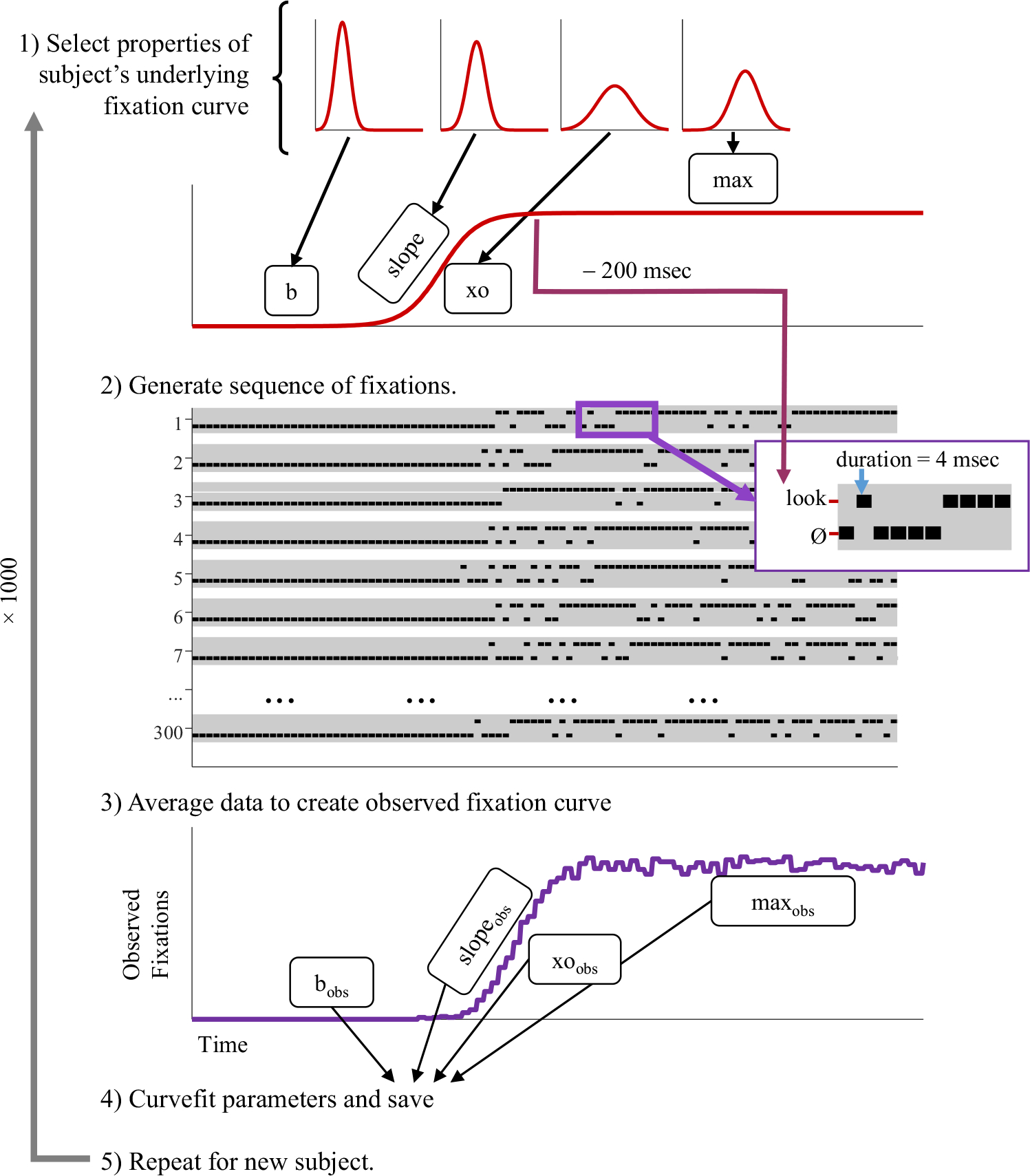

Figure 5 shows an overview of the approach. First, the parameters of a subjects’ underlying function were randomly generated. Next, 300 trials were generated. At each 4-ms time slice, the probability of fixating the target or competitor was computed from the subject’s underlying function (Eqs. 1 or 2, respectively). This probability was used to determine whether the subject was looking at the object or not at that time at each 4-ms time slice. To simulate the assumed 200-ms oculomotor delay, when computing the likelihood of fixating, the time was shifted by 200 ms. Thus, the likelihood of fixating the target at 600 ms was based on the logistic function at 400 ms. The series of fixations was then averaged across trials to generate the observed fixation curve. This was curvefit and compared with the underlying parameters.

Fig. 5.

Overview of Simulation 1. (1) Parameters of the underlying function are selected from Gaussian distributions derived from empirical data (b [baseline], xo [crossover], slope, and max). These are used to define the underlying function specifying the likelihood of looking at each time. (2) Next, a series of fixations is generated. These are sampled every 4 ms. The likelihood of fixating comes from the underlying function at 200 ms before the current fixation. (3) Next, the data are averaged to compute the observed fixations. (4) Observed functions are fitted to extract observed parameters. (5) This is repeated for 1,000 subjects and the estimated and observed parameters are compared

Results and discussion

Target

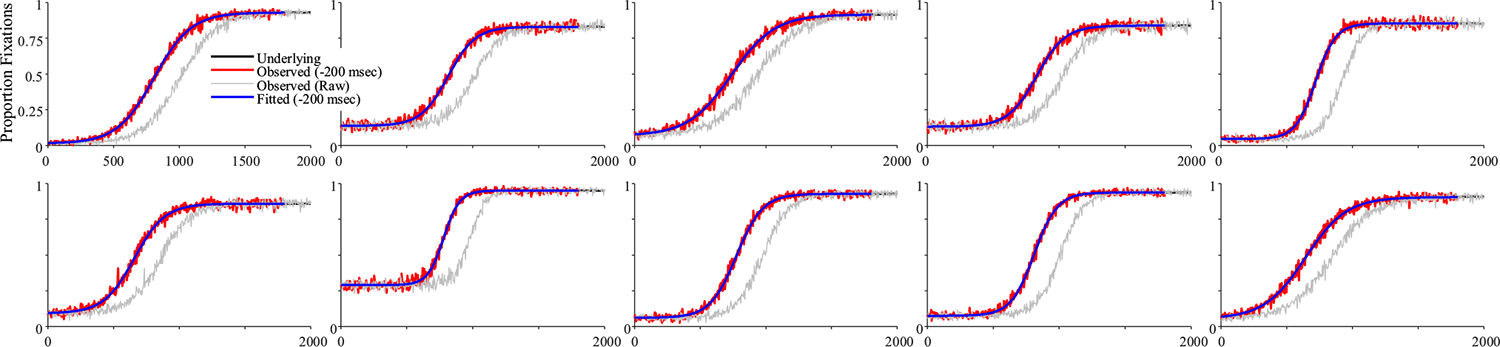

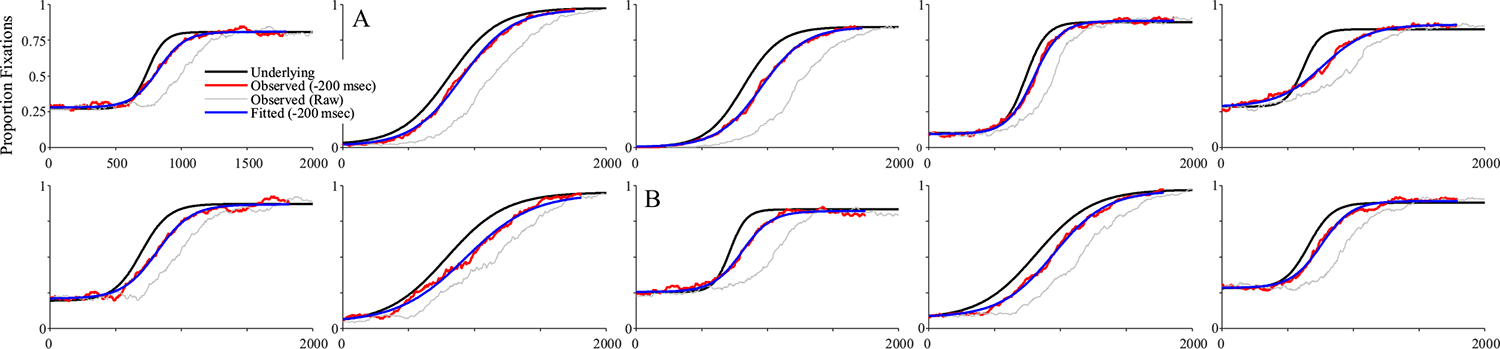

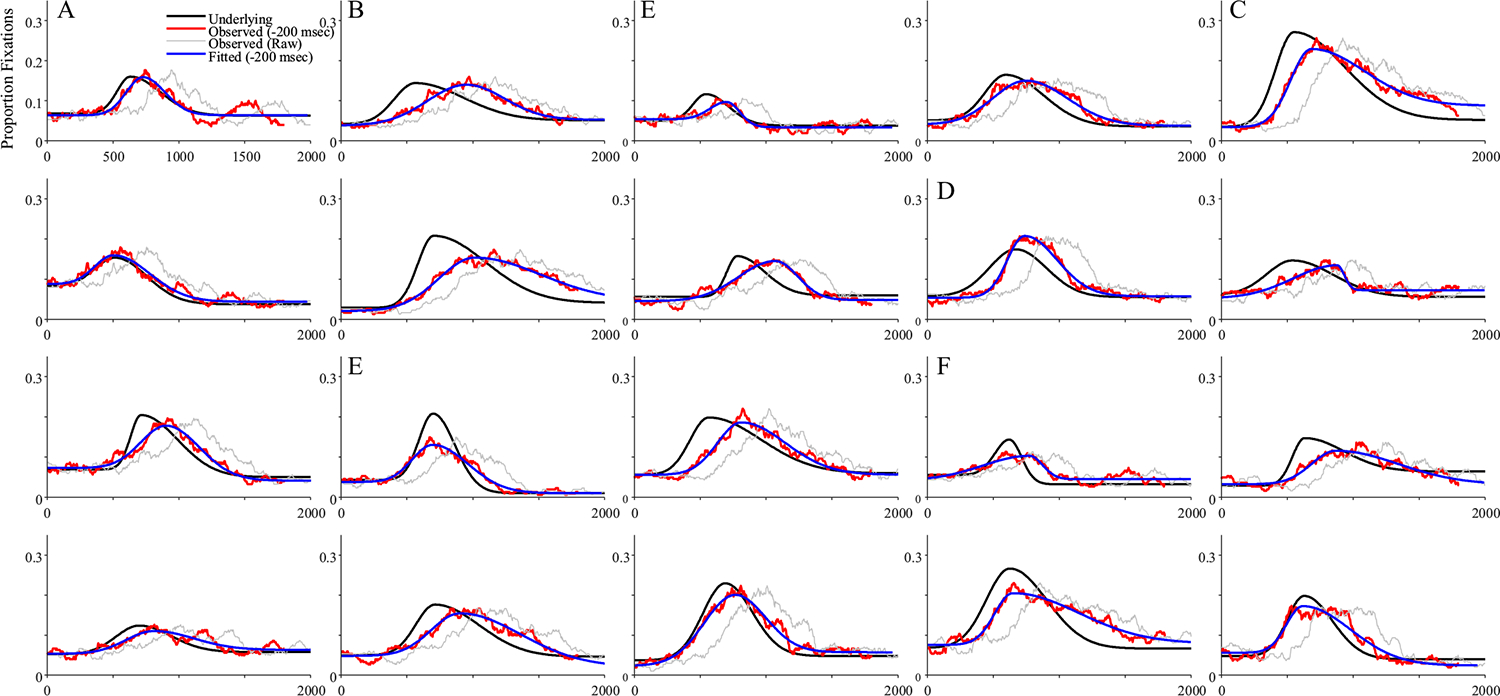

Figure 6 shows 10 representative subjects.4 Shown in black is the underlying function for that subject, and in gray is the observed data. To facilitate comparison to the underlying curves, red curves show the observed data after accounting for the oculomotor delay (200 ms). Fitted functions are shown in blue, also shifted for the oculomotor delay. Fits were good with an average correlation of 0.997 between the fits and the data, and no fits were dropped for poor correlations to the observed data.

Fig. 6.

Representative subjects from the high-frequency stochastic sampling (HFS) simulations of target fixations. Shown is the underlying and observed likelihood of fixating the target over time for a single subject (in each panel). In black is the underlying function. In gray is the observed (generated data). Red shows the same data but shifted by 200 ms to account for the oculomotor delay. Blue is the logistic curve fit to the observed data (also shifted by 200 ms). Note that in all cases shown here, the fitted curve is directly over the underlying. Under HFS assumptions, once the oculomotor delay is accounted for, the observed data are a close match to the underlying function. (Color figure online)

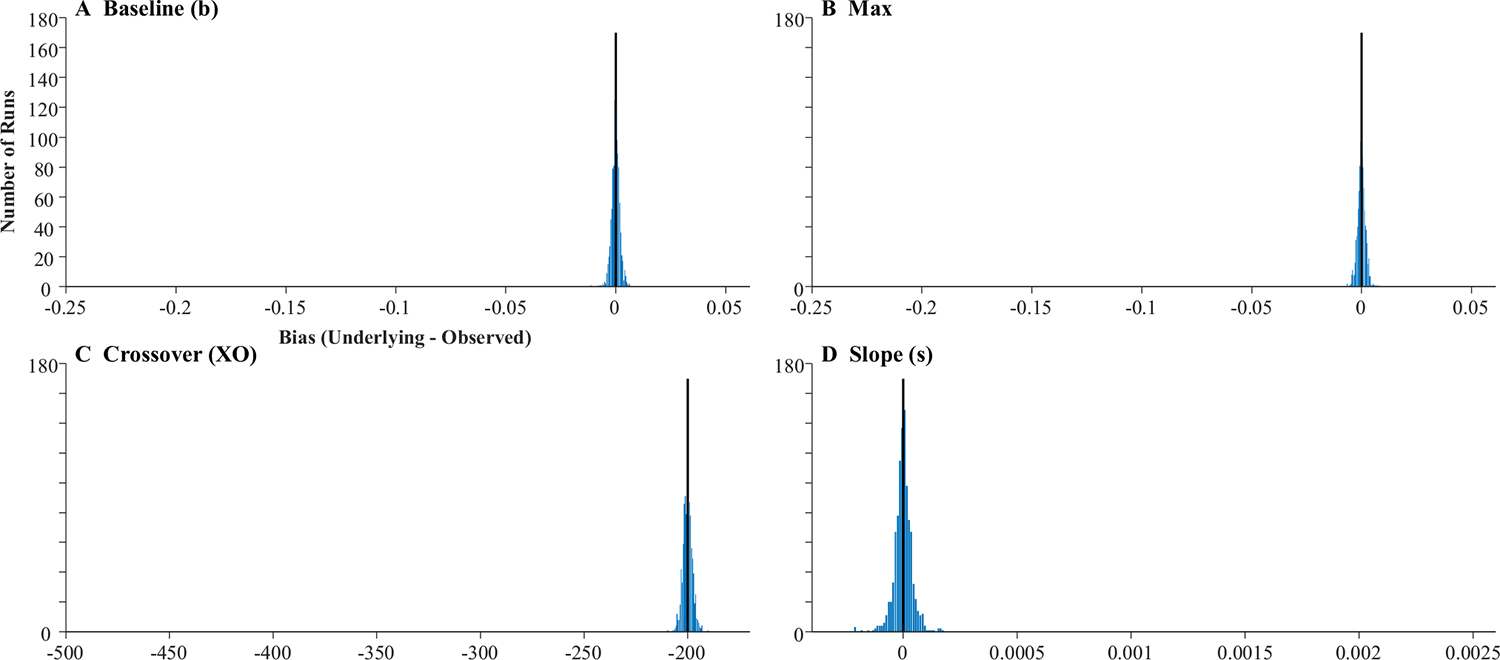

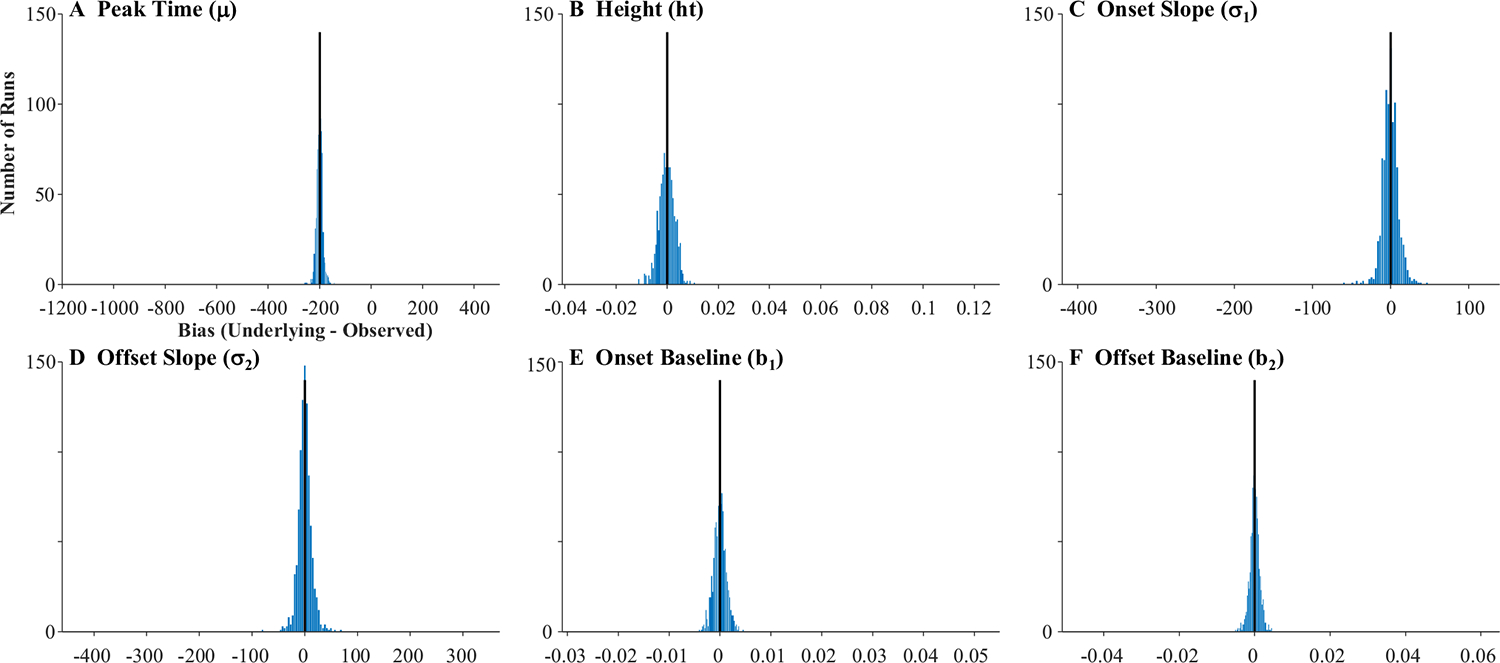

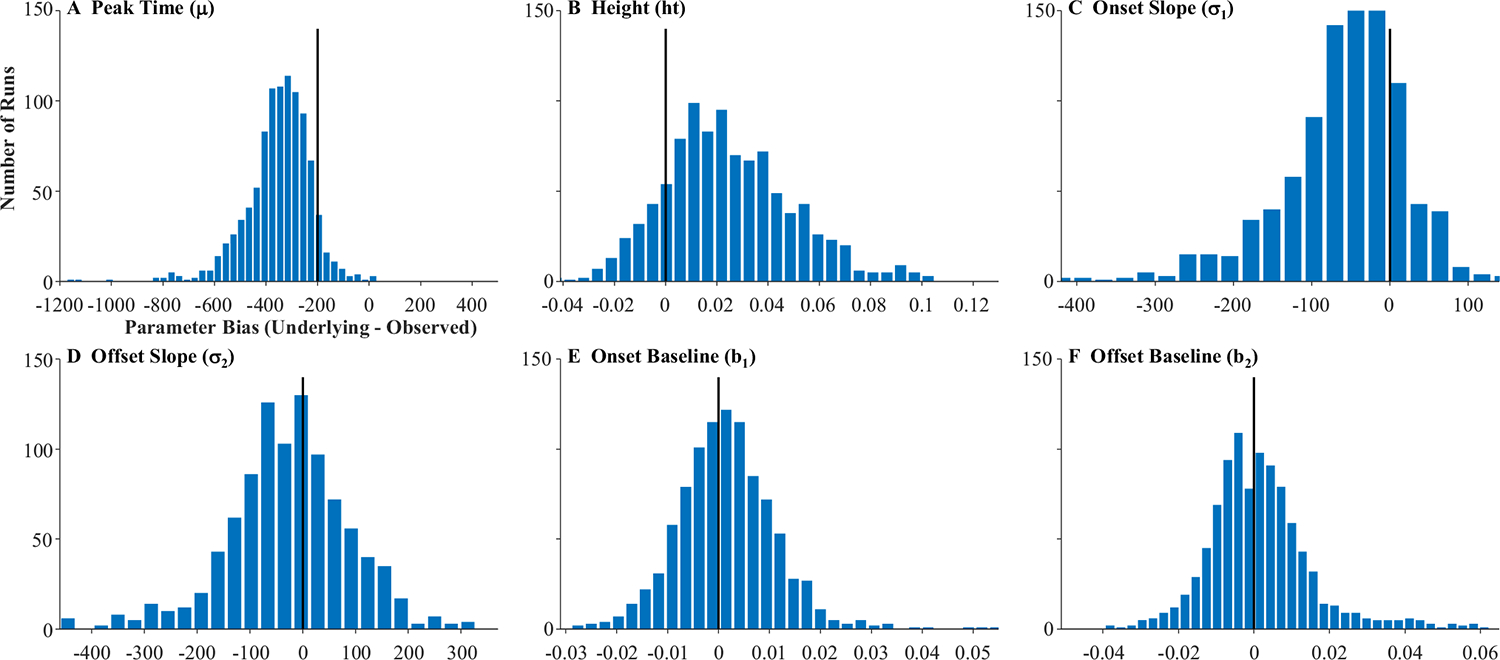

The fitted curves were almost uniformly on top of the underlying curves (the reason you cannot see the black curves in Fig. 6). This is supported by the high correlations between the underlying parameter values and the fitted values (Table 1, Column 1): the baseline, peak, and crossover were all at 1.0 and slope was at 0.998. A high correlation could still be observed even if the data were systematically biased (e.g., if fitted crossovers were consistently later than the true crossovers). Thus, for each run, a difference score was computed (underlying − observed). Table 1 shows the average bias. As Fig. 7 shows, these were near zero for all parameters except crossover, which had a bias of −200 ms (the oculomotor delay), and standard deviations were very small. Finally, the correlation among observed parameters (Table 1) was computed to determine whether anything about our sampling procedure was imposing structure on the data that was not there in underlying latent functions (the underlying parameters were uncorrelated). Cross-correlations among the observed parameters were very low and always less than.05.

Table 1.

Summary statistics for the target simulations assuming a high-frequency sampling (HFS) eye-movement model

| Parameter | Correl with underlying | Mean | Bias |

Cross correlations among observed parameters |

|||||

|---|---|---|---|---|---|---|---|---|---|

| M | SD | D | b | max | xo | s | |||

|

| |||||||||

| Baseline (b) | 1.0 | .135 | <.001 | 0.002 | −.025 | −.016 | .005 | .008 | |

| Max | 1.0 | .852 | ≥.001 | 0.0018 | −.062 | −.016 | −.007 | .036 | |

| Crossover (xo) | 1.0 | 969.3 | −200.0 | 2.26 | −88.68 | .0005 | −.007 | .007 | |

| Slope (s) | .998 | .0019 | <.0001 | <0.0001 | .0019 | .008 | .036 | .008 | |

The first column shows the correlation between the observed fits and the underlying function. Mean bias refers to the average difference between observed and underlying value for that parameter (note the −200-ms bias for crossover matches the oculomotor delay). SD refers to the standard deviation (across subjects) for bias. b: initial asymptote; max: upper asymptote, xo: crossover, s: slope

Fig. 7.

Histograms showing distribution of bias across subjects in the four parameters of the target model assuming high-frequency stochastic sampling (HFS). Histograms include 40 evenly sized bins, optimally spaced to reflect the distribution of the data. Axes are expanded to match the other histograms in this manuscript. The black line indicates what would be expected for an unbiased measure

Competitor fixations

A similar pattern was observed for competitor fixations. Figure 8 shows representative subjects. Fits were good with an average correlation of.919 (68/1,000 fits were dropped5). Again, observed fixations curves showed a very close match to the underlying curves, with the blue curves masking the black in virtually all conditions, and very high correlations between the observed and underlying parameters (Table 2; all rs >.94, and 5 were >.98). Examination of the difference scores (underlying − observed) showed little bias. The mean bias was very low, except for peak time, which was biased at −200 ms (again, the oculomotor delay). Histograms (Fig. 9) were centered where expected. Standard deviations of the bias were very low—μ, for example, scaled in ms, and had a standard deviation of only 11.7 ms.

Fig. 8.

Representative subjects from the high-frequency stochastic sampling (HFS) simulations of competitor fixations. Shown is the underlying and observed likelihood of fixating the competitor over time for a single subject (in each panel). Note that in all cases shown here, the fitted curve is directly over the underlying. Under HFS assumptions, once the oculomotor delay is accounted for, the observed data are a close match to the underlying probability function. (Color figure online)

Table 2.

Summary statistics for the competitor simulations assuming a high-frequency sampling (HFS) eye-movement model

| Parameter | Correl with underlying | Mean | Bias |

Cross correlations among observed parameters |

|||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| M | SD | D | μ | ht | σ1 | σ1 | b1 | b2 | |||

|

| |||||||||||

| Peak time (μ) | .988 | 828.2 | −200.0 | 11.8 | −16.80 | .001 | .069 | −.002 | .008 | −.004 | |

| Peak height (ht) | .997 | .187 | ≥.0002 | 0.003 | −.056 | .001 | .059 | −.025 | .054 | .050 | |

| Onset slope (σ1) | .946 | 130.7 | −.06 | 10.2 | −.033 | .069 | .059 | .008 | .009 | .009 | |

| Offset slope (σ2) | .990 | 240.0 | .13 | 12.9 | .014 | −.002 | −.025 | .008 | .024 | −.043 | |

| Onset asymp (b1) | .996 | .049 | <.0001 | .0012 | −.002 | .008 | .054 | .009 | .024 | −.021 | |

| Offset asymp (b2) | .996 | .049 | <.0001 | .0013 | .003 | −.004 | .050 | .009 | −.043 | −.021 | |

μ: Time of peak; ht: peak height; σ1: onset slope; σ2: offset slope; b1: onset asymptote; b2: offset asymptote

Fig. 9.

Histograms showing distribution of bias across subjects in the six parameters of the competitor model assuming high-frequency stochastic sampling (HFS). Histograms include 40 evenly sized bins, optimally spaced to reflect the distribution of the data. Axes are expanded to match the other histograms in this manuscript. The black line indicates what would be expected for an unbiased measure

Discussion

These simulations largely validate the modeling approach. When data were explicitly generated using an HFS model, underlying parameters of both targets and competitors could be reliably estimated. Estimates had low variance, were unbiased, and the curvefitting approach does not impose correlations on the estimated parameters. Moreover, validity was stronger than what would be expected based on the number of trials alone—even cohort asymptotes, with a mean of.05 showed correlations of greater than 0.996 (by comparison, Fig. 2 suggests these should be at.95). This is likely because these curvefit parameters are implicitly pooling across many draws (successive samples). HFS is, of course, implausible. However, this offers a picture of what results should look like when the assumptions of the analysis hold.

Simulation 2: Fixation-based sampling

Approach

Simulation 2 developed a rudimentary fixating generating model. The fixation-based sampling (FBS) model derives fixation curves from a series of discrete fixations with a reasonable refractory period. This model treats the fixations as primarily a read out of the unfolding decision. While this ignores the role of the fixation as an information gathering behavior, this is consistent with some views of the VWP that treat the fixation as primarily reflecting motor preparation (which is based on the unfolding decision), the parallel contingent independence assumption of Magnuson (2019). This is not intended as a complete model of fixations in the VWP. Rather, this model asks if the ballistic and chunky nature of the saccade/fixation system alone is sufficient to create noise and/or bias in the observed fixation curves. If even this minimal model shows enhanced noise and bias in the observed data, any more complex model is likely to as well.

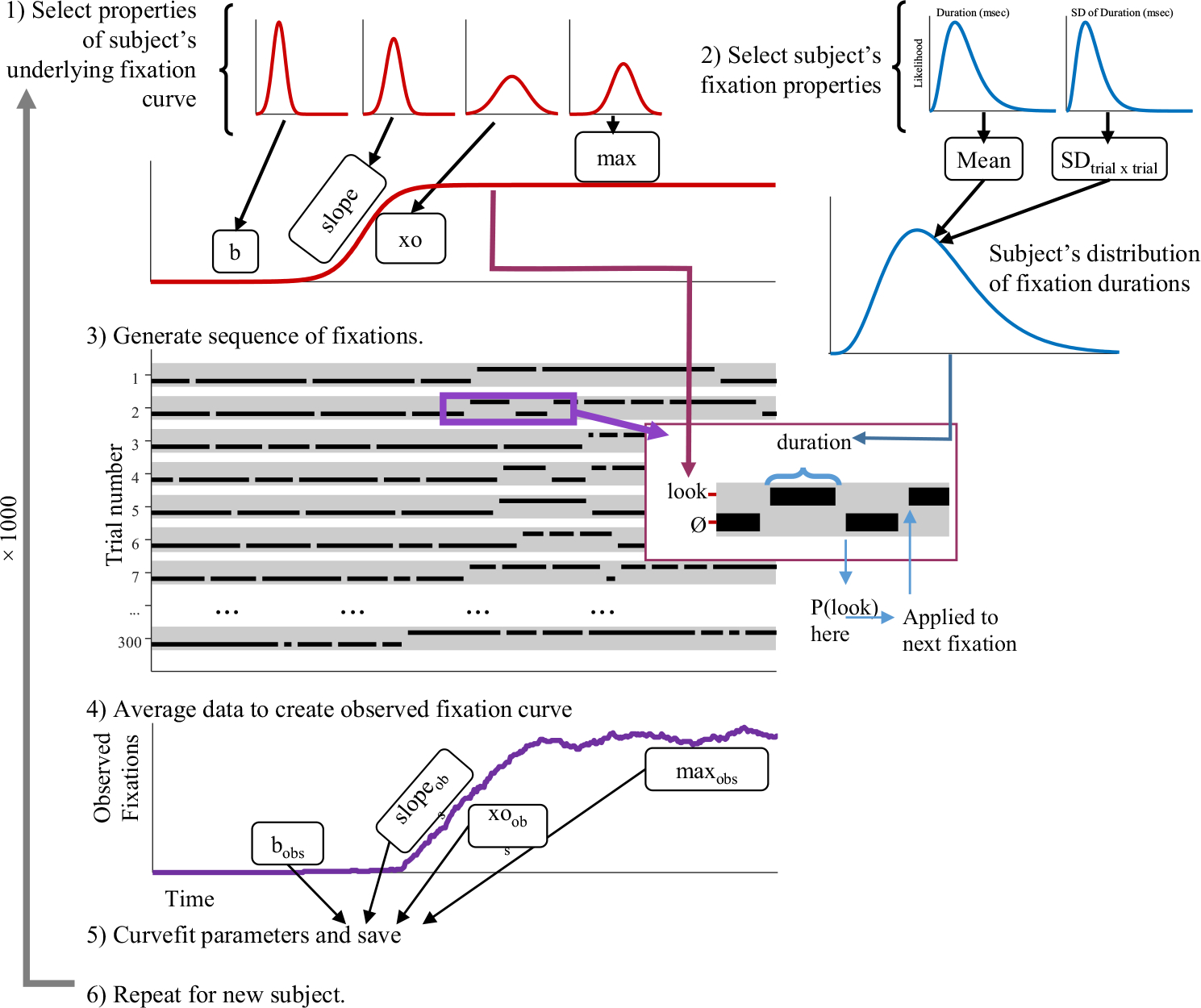

In the FBS model (Fig. 10), the underlying curves for each subject were randomly generated in the same way as Simulation 1. Unlike that model, data on each trial is generated as a series of fixations whose duration were randomly determined from fixation to fixation. Once a fixation was “drawn,” the subject was presumed to be fixating on a single object throughout this time. The likelihood of fixating the target (or competitor) or not over this fixation was again determined by the underlying time-course function (either the logistic or asymmetric Gaussian), using the onset of the previous fixation as the time parameter. This assumes that at fixation onset, the subject immediately plans whether or not to look at the next object, but then wait through the fixation (the refractory period) before fixating it.

Fig. 10.

Overview of Simulation 2 (fixation-based sampling [FBS]). (1) Parameters of the underlying function (b [baseline], xo [crossover], slope, and max) are selected from Gaussian distributions derived from empirical data, as in Simulation 1. (2) A mean fixation duration, and a trial × trial SD of fixation duration are randomly selected from Gamma distributions derive from empirical data. (3) A series of fixations is then randomly generated. For each fixation (inset) the duration is randomly chosen from that subject’s distribution of fixation durations. The likelihood of fixating the object comes from the underlying function sampled from the onset of the previous fixation. (4) Next the data are averaged to compute the observed fixations. (5) Observed functions are fitted to extract observed parameters. (6) This is repeated for 1,000 subjects, and the estimated and observed parameters are compared

In addition to each subject’s own parameters for the underlying curves, each subject had their own unique distribution of fixation durations (the mean and SD), randomly chosen for that subject. These distributions (Table 3) were based on an analysis of the normal hearing participants (N = 37 subjects, 290 trials each) of Farris-Trimble et al. (2014). Across subjects, the mean fixation duration (to nontarget objects) was about 200 ms for nontarget objects, and subjects did not vary substantially (SD ~ 30 ms). Fixations were longer to the target than to other objects (or to nothing). Simulation 2 ignored this to create a minimal contrast with the HFS model (but Simulation 3 tests this). The mean duration was thus set to the average of the three nontarget objects (the last row). This was 204 ms, a close analogue to conventional estimates of the oculomotor planning time. Each subject also had their own trial-to-trial variability. These were estimated from the within-subject standard deviation in duration (Table 3, the within-subject-columns). These standard deviations were high, with individual fixations varying by 90–100 ms (the mean of SD column). Both the mean and within-trial standard deviation were chosen randomly and fixed for that subject.

Table 3.

Properties of fixation durations

| Object | b/w Subject Mean Duration |

w/in Subject (Trial × Trial) SD |

||

|---|---|---|---|---|

| M | SD | Mean of SD | SD of SD | |

|

| ||||

| Target | 360.3 | 65.8 | 195.1 | 39.04 |

| Cohort | 211.3 | 31.1 | 91.0 | 14.7 |

| Unrelated | 202.8 | 32.9 | 80.1 | 17.1 |

| None | 200.1 | 33.9 | 118.6 | 41.9 |

| All nontarget | 204.7 | 32.6 | 96.6 | 24.6 |

The random duration of a specific fixation, and the general properties of a subject (e.g., their mean distribution), were generated from a gamma distribution. This distribution (unlike a Gaussian ) is zero bounded with a long tail, so it matches the distribution of real fixations well. It has two free parameters—shape and scale. To convert means and standard deviations of the empirical data to shape and scale, shape was set to M2/SD2 and scale to SD2/M.

Once a subject’s underlying distribution of fixations was selected, a series of trials was simulated. On these trials, fixations were simulated sequentially, so the second fixation was not randomly selected until after the first. For each fixation, the duration was selected randomly from a gamma distribution with the subject’s shape and scale. Whether or not a fixation was directed to the object (e.g., to the target or not) was based on the time of the onset of the prior fixation.

After generating a series of trials, data were averaged and analyzed as in Simulation 1. For display, when adjusting for the oculomotor delay, time was shifted by the mean fixation duration for that subject (which on average was about 200 ms).

Results and discussion

Target

Figure 11 shows representative subjects for the target simulation. Fits were good, with an average correlation of.998 and no excluded fits. Asymptotes generally lined up fairly well between the observed and underlying function. However, FBS created a much larger delay between the raw data (in gray) and the underlying function (in black) than the HFS model: Even after adjusting for that subject’s mean fixation duration (red for data, blue for fits), the observed curves were still delayed relative to the underlying curves. Slopes and crossovers were not only delayed but also less consistent. For example, in Fig. 11a, the delay was carried by a difference in crossover (slope was the same between observed and underlying), while in Fig. 11b, slope was shallower in the observed data. These observations are mimicked in the validity estimates (Table 4). While correlations between the observed and underlying values were above 0.98 for the asymptotes, they were lower (but still high) for crossover (r = .855) and slope (r = .751).

Fig. 11.

Representative subjects for target fixations assuming fixation-based sampling (FBS). Two patterns are highlighted: a The slope of the underlying function is preserved, but the crossover is delayed (even beyond the oculomotor delay). b The slope of the observed data is shallower than that of the observed data. (Color figure online)

Table 4.

Summary statistics for the target simulations assuming the fixation-based sampling eye-movement model

| Parameter | Correl with underlying | Mean | Bias |

Cross correlations among observed parameters |

|||||

|---|---|---|---|---|---|---|---|---|---|

| M | SD | D | b | max | xo | s | |||

|

| |||||||||

| Lower asymp (b) | .987 | .132 | .003 | .012 | .24 | .008 | .059 | −.155 | |

| max | .986 | .848 | .0014 | .016 | .09 | .008 | .006 | .237 | |

| crossover (xo) | .855 | 1094.0 | −327.5 | 50.8 | −6.44 | .059 | .006 | −.071 | |

| slope (s) | .751 | .0014 | .0005 | .0004 | 1.22 | −.155 | .237 | −.071 | |

b: initial asymptote; max: upper asymptote, xo: crossover, s: slope

Bias (Fig. 12) showed a similar pattern. The asymptotes were unbiased, with means near zero and fairly low variance. In contrast, the crossover was significantly biased; on average, the underlying crossover was 327.5 ms earlier than the observed. This was substantially more than one would have expected given the mean fixation duration of 203.7. Slope was also biased downward, as observed data showed generally shallower slopes than the underlying.

Fig. 12.

Histograms showing distribution of bias across subjects in the four parameters of the target model assuming fixation-based sampling (FBS). Histograms include 40 evenly sized bins, optimally spaced to reflect the distribution of the data. Axes are expanded to match the other histograms in this manuscript. The black line indicates what would be expected for an unbiased measure

Bias was highly related to the mean and standard deviation of the subject’s fixation durations. The bias in the crossover was correlated at r = −.897 with the mean duration: Subjects with longer fixations tended to have crossovers that were even later than the underlying crossover. To understand this, consider a fixation that was initiated at 500 ms—a time when the underlying probability function is just starting to rise. Whether or not it was directed to the target, however, is not dictated by the probability function at 500 ms, but by the function at 300 ms (when it was planned). However, for a participant with even longer fixation durations, it would have been planned even earlier (when the function was even lower). This would then slow the ultimate rise of the fixation curve. Moreover, once the subject decides to look (or not) that outcome is locked for the duration of the fixation, even if the underlying curve rises during that time. Thus, a long mean fixation duration acts as a drag, delaying the growth of the observed fixation curve. In contrast, the bias in slope was not highly related to the mean duration (r = .045) but was moderately related to the standard deviation (r = .269): Greater variability in the durations tended to smooth out the functions, resulting in slopes that were shallower than expected.

Finally, unlike the HFS model, the fixation-generating model appeared to impose correlations on the parameter estimates. There were moderate correlations between slope and the asymptotes (onset: r = −.155, offset: r = .237; Table 4 for complete matrix). Subjects with more extreme asymptotes tended to have larger slopes. Neither of these correlations were present in the underlying values (onset: r = .032; offset: r = .021). Thus, the fixation-generating model may make it difficult to achieve an unbiased estimate of a subject’s underlying fixation curve.

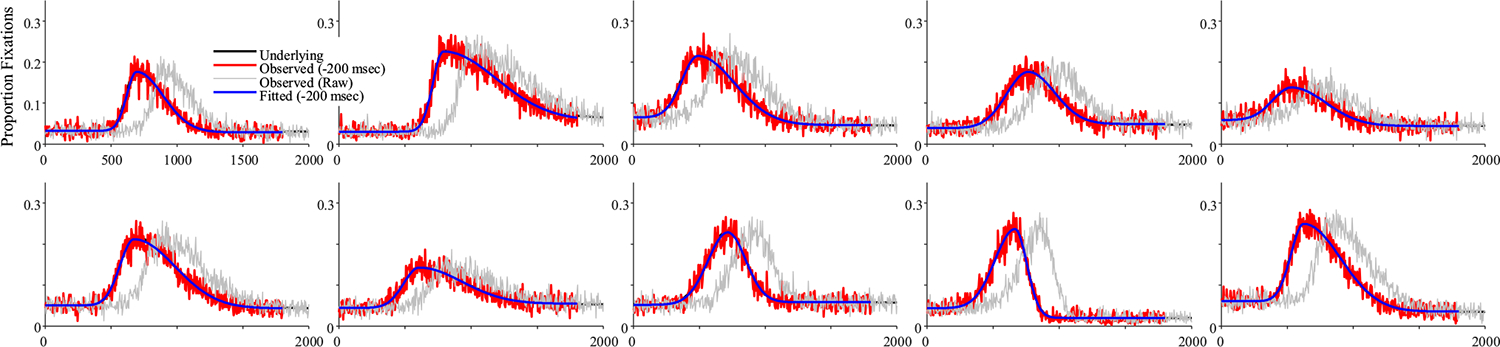

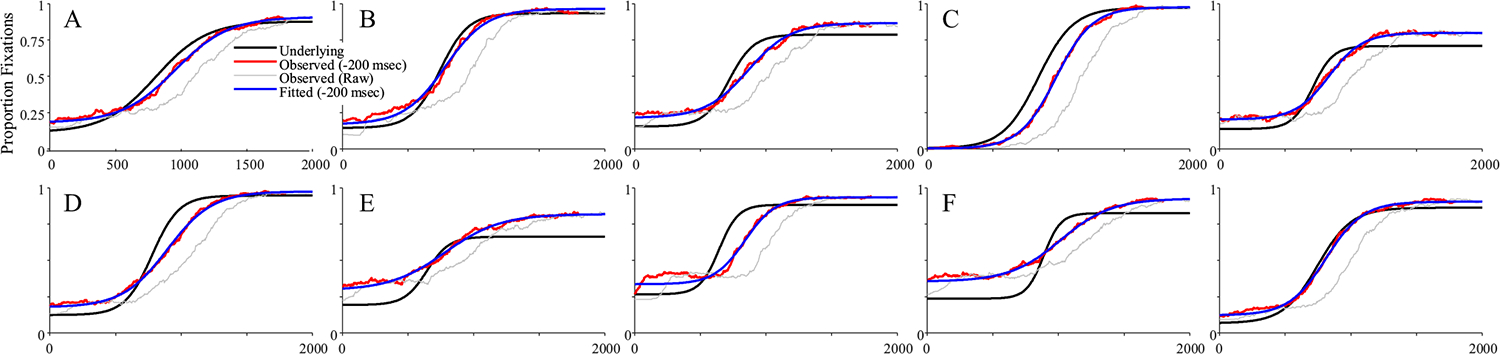

Competitor

Fits were good with an average correlation of 0.958, and 23 dropped runs. Figure 13 shows results. Competitors were much less consistent than targets. In some cases, the observed and underlying data matched (Fig. 13a); in others, there was a delayed onset, but otherwise similar functions (Fig. 13c), and in others the function was stretched (Fig. 13b). Some runs showed higher peaks (Fig. 13d), but others, lower (Fig. 13e). Thus, even with a large number of trials (300), most FBS runs were unlikely to approximate the underlying function.

Fig. 13.

Representative subjects from the fixation-based sampling (FBS) simulations of competitor fixations. Shown is the underlying and observed likelihood of fixating the competitor over time for a single subject (in each panel). Several patterns emerged: a The observed data matched the underlying (after accounting for the oculomotor delay). b The observed function is delayed and slower to build and fall. c The observed function is delayed but otherwise similar. d The observed function has a higher peak than the underlying. e The observed data have a lower peak. (Color figure online)

This was reflected in the correlations between underlying and observed parameters (Table 5). Here, only parameters directly related to the overall amount of looking (peak height and asymptotes) were reasonably correlated with their underlying values (r ~.7). Peak timing (μ) was correlated but lower (r = .50), and the slope parameters had only small correlations. This lack of validity was not directly related to any of the eye movement parameters. Only peak timing was correlated with the mean fixation duration (r = .357; other parameters |r| < .1).

Table 5.

Summary statistics for the competitor simulations assuming a fixation-based sampling (FBS) eye-movement model

| Parameter | Correl with underlying | Mean | Bias |

Cross Correlations among Observed parameters |

|||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| M | SD | D | μ | ht | σ1 | σ1 | b1 | b2 | |||

|

| |||||||||||

| peak time (μ) | .502 | 976.2 | −351.3 | 126.4 | −2.13 | −.030 | .493 | −.233 | −.104 | .038 | |

| peak height (ht) | .845 | .158 | .0245 | .025 | .85 | −.030 | −.169 | −.082 | .178 | .188 | |

| onset slope (σ1) | .112 | 202.4 | −72.0 | 121.2 | −.55 | .493 | −.169 | −.145 | −.319 | −.015 | |

| offset slope (σ2) | .327 | 271.4 | −37.3 | 140.8 | −.27 | −.233 | −.082 | −.145 | −.019 | −.453 | |

| onset asymp (b1) | .799 | .048 | .0019 | .011 | .20 | −.104 | .178 | −.319 | −.019 | .053 | |

| offset asymp (b2) | .681 | .048 | .0019 | .015 | .14 | .038 | .188 | −.015 | −.453 | .053 | |

μ: Time of peak; ht: peak height; σ1: onset slope; σ2: offset slope; b1: onset asymptote; b2: offset asymptote

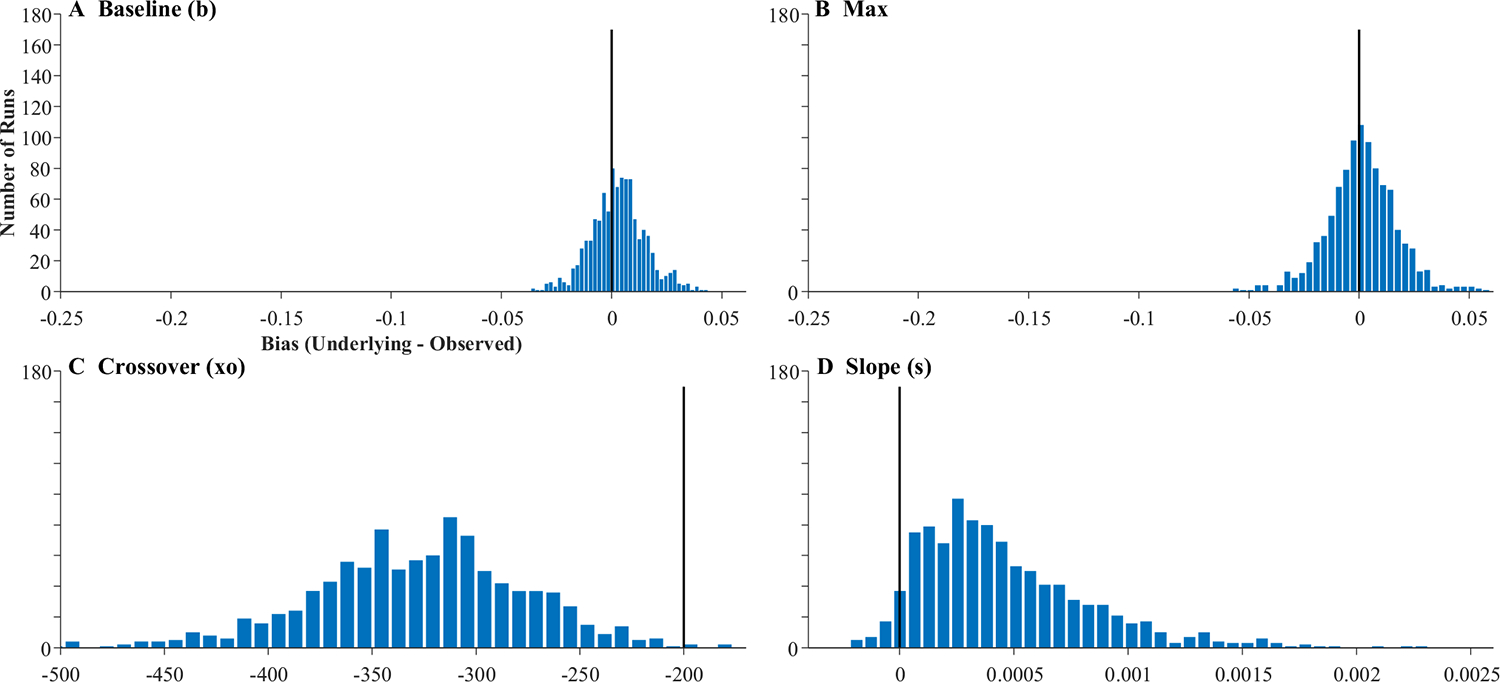

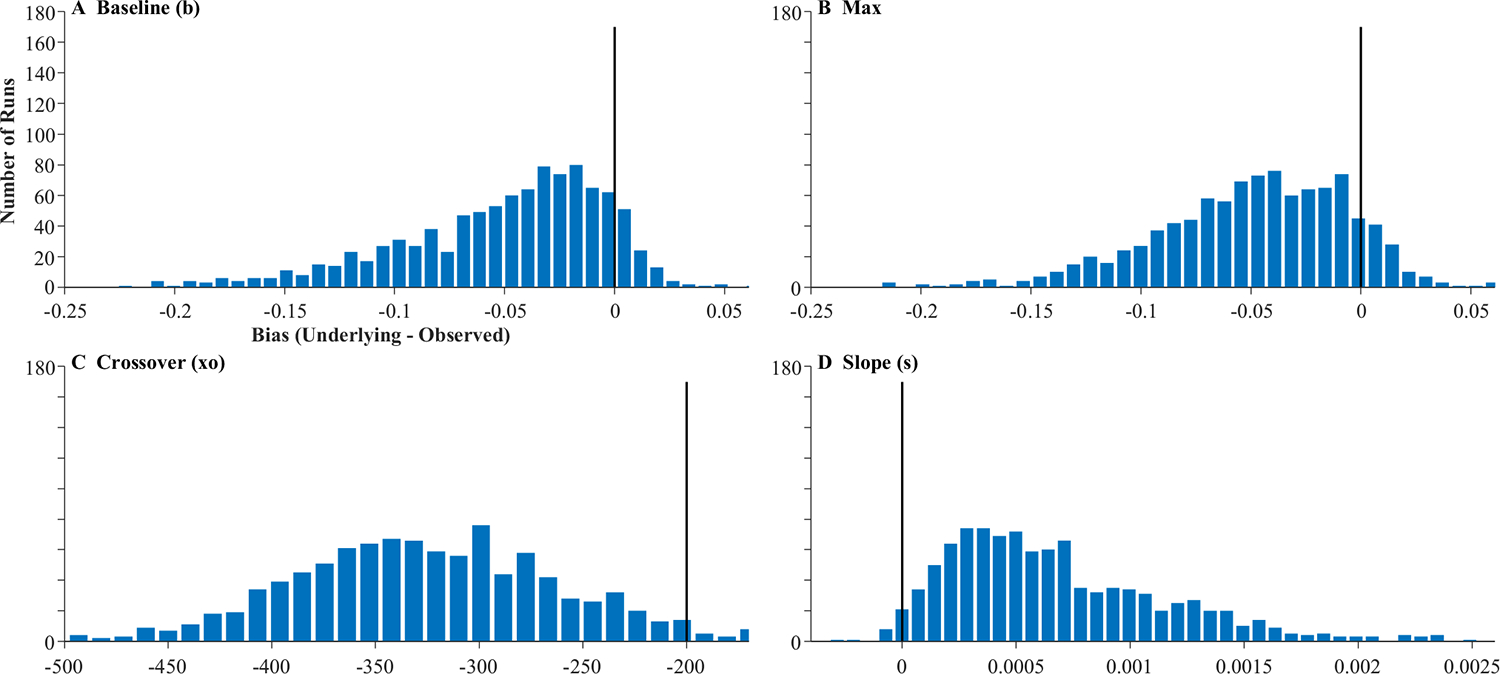

This lack of systematicity was also seen in bias (Fig. 14). While b1 and b2 were unbiased, peak timing (μ) was biased well beyond the 200-ms oculomotor delay. Height was biased downward, with lower values than the underlying. However, in quite a few runs (139/1,000), the observed ht was higher than the underlying value. Onset and offset slopes were generally lower (steeper) than the underlying values. Bias was related to the properties of the subject’s fixations. Predictably, the bias in peak timing (μ) was negatively related to the mean fixation duration (r = −.383): subjects with longer fixation durations showed more delayed peak timing values relative to their underlying values. Bias in peak height (ht) was correlated with the variability in a subject’s fixations (r = .248): subjects with more variable fixations tended to have lower heights relative to the underlying. Importantly, all parameter estimates showed substantially more variance than the HFS model (Fig. 8). Thus, with a more realistic fixation model, the observed data are biased in some parameters and there is dramatically more noise.

Fig. 14.

Histograms showing distribution of bias across subjects in the six parameters of the competitor model assuming fixation-based sampling. Histograms include 40 evenly sized bins, optimally spaced to reflect the distribution of the data. Axes are expanded to match the other histograms in this manuscript. The black line indicates what would be expected for an unbiased measure

Finally, even as the underlying parameters were uncorrelated, the FBS generating model imposed moderate correlations among several observed parameters (Table 5). Onset slope (σ1) was strongly correlated with peak timing (μ) and had a small correlation with b1. Offset slope (σ2) was also correlated with b2. All of the parameters had small but non negligible correlations above 0.1. Thus, the fixation-generating model created spurious correlations among estimates.

Discussion

These simulations suggest that assuming even a simple serial fixation model has substantial effects on the observed data. Observed target fixations rose more slowly and were delayed far beyond the expected 200 ms oculomotor delay. Competitor fixations were also delayed. Moreover, there was large variance across subjects, and in many individual runs, the competitor fixations did not provide a close fit to the underlying data. One bright spot was the asymptotes which tended to be unbiased for both types of fixations (even as the variance was higher), which seemed to be reliable even when the FBS model was assumed.

Competitor estimates were noticeably poorer—particularly for parameters related to timing (slopes and peak time). To some extent this drop in validity is to be expected. These parameters are working from a portion of the underlying curve that is low (e.g., Fig. 3a); but these estimates are far lower than would be expected by stochastic sampling alone (with 300 trials, the lowest correlation in Simulation 0 was still greater than.9). Thus, this suggests that the FBS system is creating additional (and complex) noise in the system.

Notably, when the underlying function showed only a small peak (e.g., Fig. 13e–f) the fixation curve tended to show an even smaller peak. This is because if the heightened underlying activation was short lived, it was less likely that there would be a fixation launched in that window (to reflect that). This may explain why Simmons and Magnuson (2018) did not find evidence for rhyme activation in one-syllable words and Teruya and Kapatsinski (2019) did not observe it for one-phoneme overlap cohorts. It is not that these competitors were inactive; rather, the stochastic process of generating fixations made it difficult to see it.

Simulation 3: Fixation-based sampling with enhanced target duration

Approach

In the empirical record (Table 3; Farris-Trimble et al., 2014), target fixations were about 160-ms longer than fixations to other objects: Once the subject fixated something reasonably active, they were more likely to stay. This was not accounted for by the FBS model, and it was unclear how this would change the results. It could further delay target fixations. However, it also could enhance overall target looking, speeding the function.

This simulation thus implemented a simple modification to the FBS model. Fixations were randomly selected as before. However, if a given fixation was to be to the target, its duration was drawn from a distribution with longer mean and standard deviation (Table 3). If the fixation was to be away from the target, it was drawn from the same distribution as before (shorter durations). Since this effect was not observed in competitor fixations, Simulation 3 only considered targets.

Results

Fits were good averaging r = .998, and no subjects were dropped. Figure 15 shows representative results. It is noteworthy that many of these functions are no longer symmetrical and could have shallower slopes at early times and steeper slopes at later ones (Fig. 15b), or the converse (Fig. 15e). This is commonly observed in real data (and a limit of using the four-parameter logistic). This finding suggests this asymmetry may come in part from fixation sampling issues. The degree of match between underlying and observed fixation curves was much more variable. In Fig. 15a, for example, the observed data matched the underlying data fairly closely, and do not even show the heightened delay seen of the FBS models (see Fig. 11). However, this was not consistent across runs (cf. Fig. 15c). For the first time, there was also a significant failure of the observed data to preserve the asymptotes. In Fig. 15d, for example the observed lower asymptote is above the underlying, while in Fig. 15f, both asymptotes are off. As a result, estimated parameters are less correlated with their true values than in Simulation 2 (Table 6). The asymptotes are off ceiling (though still high), and crossover (r = .792) and slope (r = .616) are quite a bit lower.

Fig. 15.

Representative subjects for target fixations assuming fixation-based sampling with enhanced target duration (FBS+T). Two patterns are highlighted: a The underlying and observed data are a fairly close match. b There is an additional delay but otherwise a close match in slope. c The slope is shallower. d Asymptotes of the observed data exceed the underlying. (Color figure online)

Table 6.

Summary statistics for the target simulations assuming a fixation-based sampling with enhanced target duration (FBS+T) eye-movement model

| Parameter | Correl with under | Mean | Bias |

Cross correlations among observed parameters |

|||||

|---|---|---|---|---|---|---|---|---|---|

| M | SD | D | b | max | xo | s | |||

|

| |||||||||

| Lower asymp (b) | .935 | .187 | −.051 | .047 | −1.08 | .147 | .002 | −.528 | |

| max | .892 | .895 | −.050 | .044 | −1.12 | .147 | −.209 | −.049 | |

| crossover (xo) | .792 | 1,087.9 | −322.6 | 66.2 | −4.88 | .002 | −.209 | 0.114 | |

| slope (s) | .616 | .0012 | .0007 | .0005 | 1.41 | −.528 | −.049 | .114 | |

b: initial asymptote; max: upper asymptote, xo: crossover, s: slope

There was bias in all four parameters (Fig. 16). Both asymptotes were systematically shifted to be greater than observed values. The crossover was later, and slope was systematically higher. We also saw increased variance—particularly in the asymptotes—over Simulation 2. However, correlations between the estimated parameters were only a little worse than in Simulation 2 (Table 6). Slope was highly correlated with the lower asymptotes (but not the upper), and crossover and the upper asymptote had a small correlation.

Fig. 16.

Histograms showing distribution of bias across subjects in the four parameters of the target model assuming fixation-based sampling with enhanced target duration (FBS+T). Histograms include 40 evenly sized bins, optimally spaced to reflect the distribution of the data. Axes are expanded to match the other histograms in this manuscript. The black line indicates what would be expected for an unbiased measure

Discussion

This simulation added realism to the FBS model in one small step: If the subject fixated the target at a given moment, that fixation was lengthened by about 160 ms. Doing so added even more variability—even to the asymptotes—and bias to all parameters.

Simulation 4 (supplement)

The forgoing simulations treated fixations to each object (target or competitor) as deriving from a binomial distribution (is the participant fixating the target/competitor or not). This is an oversimplification of the true, multinomial process, in which the participant must choose which object (of the four) to fixate. Supplement S3 thus constructed a multinomial version of Simulations 1–3. Findings were similar with a fairly close alignment between the underlying and observed fixation curves under the HFS fixation model and increasing variability and bias under more realistic FBS and FBS+T models.

Simulation 5: Reliability

Approach

Simulations 1–4 suggest that more realistic fixation-generating models lead to systematic and unsystematic differences between the observed data and the underlying function. While the systematic differences (bias) pose a problem for interpretating the data, unsystematic differences (noise) may impact the psychometric properties of the VWP: reliability, power, and Type I error (TIE). For example, in competitor models, the observed peak was sometimes higher than underlying and sometimes lower (Fig. 12d–e). This raises the question of whether the observed data are reliable. That is, if one generated a series of fixations from the same underlying function twice, would the fixation curves from each run match? It is possible that even with a reasonable number of trials, there would not be sufficient samples to converge on the “true” observed data (e.g., Fig. 3b). In contrast, the observed data could be reliable from run to run, and the unpredictability across different runs in the prior simulations is stable. This would imply that the mapping between underlying and observed functions is lawful, but highly complex.

This is a question of reliability. This issue is being confronted throughout our field as experimental measures are adapted to individual difference metrics (Enkavi et al., 2019; Hedge et al., 2018). As the replication crisis in experimental psychology has unfolded (Open Science Collaboration, 2015), there has been significant attention paid to poor scientific (Bakker et al., 2012) and statistical (J. P. Simmons et al., 2011) practices and to small effect sizes. However, equally important is reliability: A true effect may fail because the empirical measure is not reliable (Schmidt, 2010). This is particularly a problem for measures adapted from experimental psychology, which are often optimized to minimize within-subject variability (Hedge et al., 2018). In that way a failure to understand (and report) the reliability of the VWP is an example of a questionable measurement practice (Flake & Fried, 2020).

This is increasingly important for the VWP. As the VWP is applied to clinical populations, individual differences and development (Desroches et al., 2006; McMurray et al., 2019b; Mirman et al., 2008; Rigler et al., 2015; Yee et al., 2008), its reliability is the upper limit of what effect sizes can be detected. If a measure is only correlated with itself at r = .6 (test–retest reliability), it cannot have a higher correlation than.6 with any other factor. There is only one published study of the test–retest reliability of the VWP (though one hopes for more; Farris-Trimble & McMurray, 2013). It showed good to moderate (r = .5–.75) reliability depending on which aspects of the fixation curves were examined.

Test–retest reliability in the real world is a product of two things. The first is how stable the latent trait is. In the framework developed here, this corresponds to the underlying curve. Second, reliability is a product of how consistently the test assesses those latent values. Usually, we think of measurement accuracy as a function of properties of the test: The items, the timing of the stimuli, fatigue, and so on. However, the previous simulations suggest that in the VWP substantial noise may derive from the stochastic fixation-generating function. If the stochasticity of the eye-movement system also contributes to lack of reliability, there may be an upper limit to what can be achieved by optimizing the VWP along traditional dimensions (items, trial design, etc.).

Simulation 5 thus ask how much the fixation-generating process contributes to a lack of reliability by locking the underlying latent curve and examining reliability solely as a function of the stochasticity of the generating process. On each “trial” a subject’s underlying fixation curve was randomly selected. Next, fixations were generated for a fixed number of trials and fit as before. After that, a new set of trials was generated from the same underlying function. This was done for 1,000 subjects, and the estimated parameters of the fixation curve were correlated across test and retest. This was done for all three eye-movement-generating models (HFS, FBS, and FBS+T), and for three different sizes of experiments (50, 150, and 300 trials).

Note that these estimates will be higher than empirically estimated reliability, as they only reflect a single source of lack of noise (the stochasticity of the eye movements) and assume perfect stability of the latent trait. Moreover, these simulations used the Pearson correlation to estimate reliability; better estimates would be obtained with the concordance or interclass correlations (Bartko, 1966; Lin, 1989), both of which test for a one-to-one relationship (rather than mere predictability). The simpler Pearson coefficient was used for comparability to the only published work on the reliability of the VWP (Farris-Trimble & McMurray, 2013). Estimates with the concordance or interclass correlation are likely to be smaller.

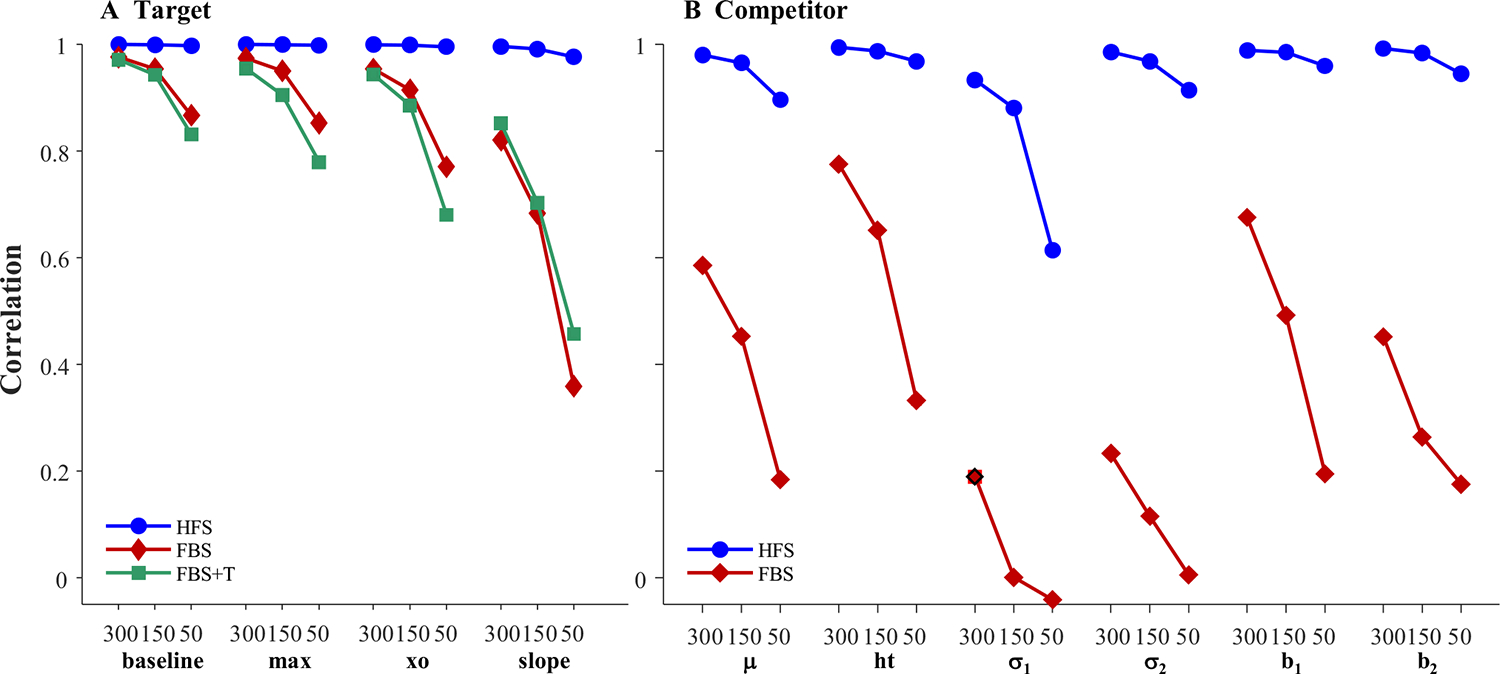

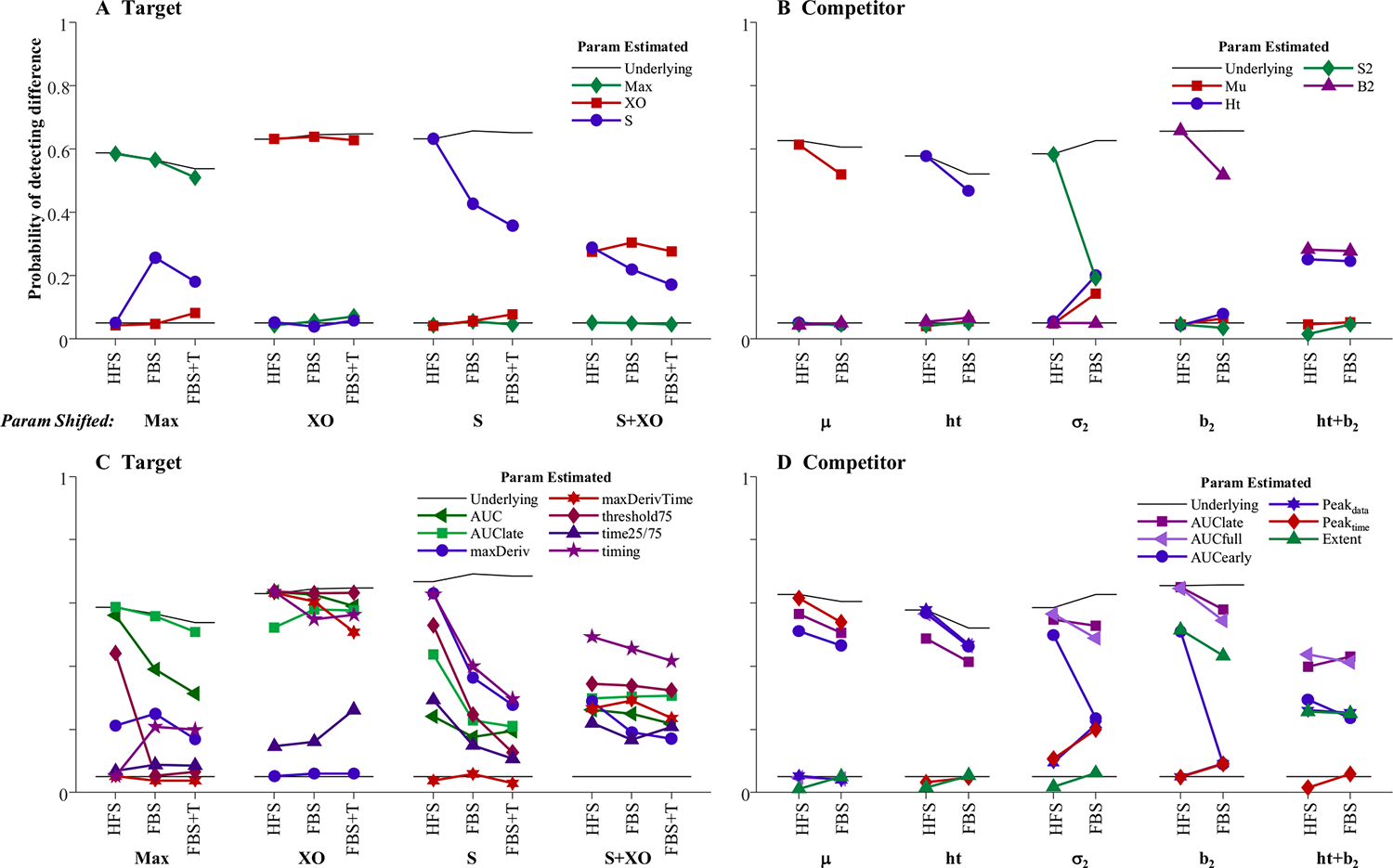

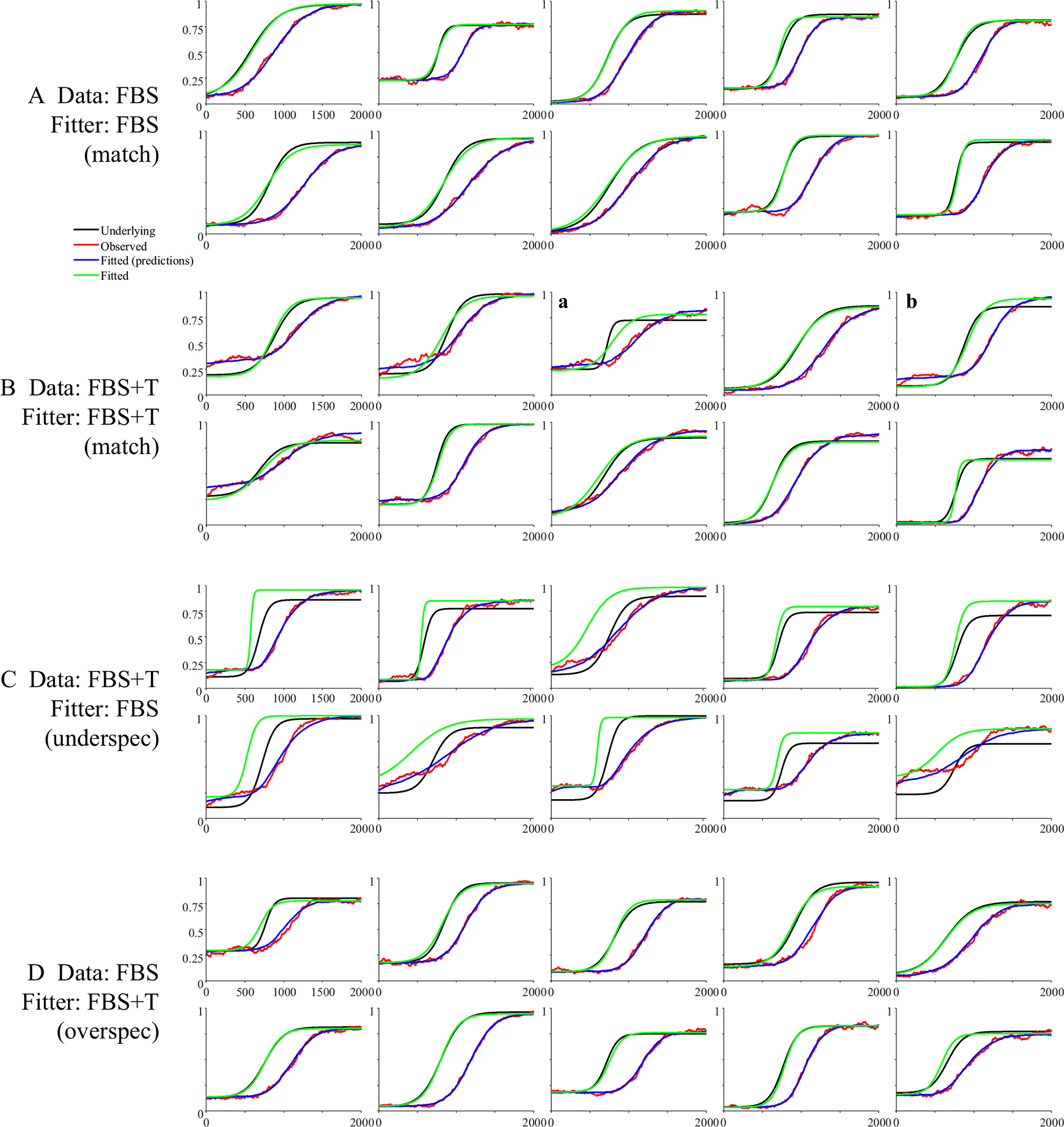

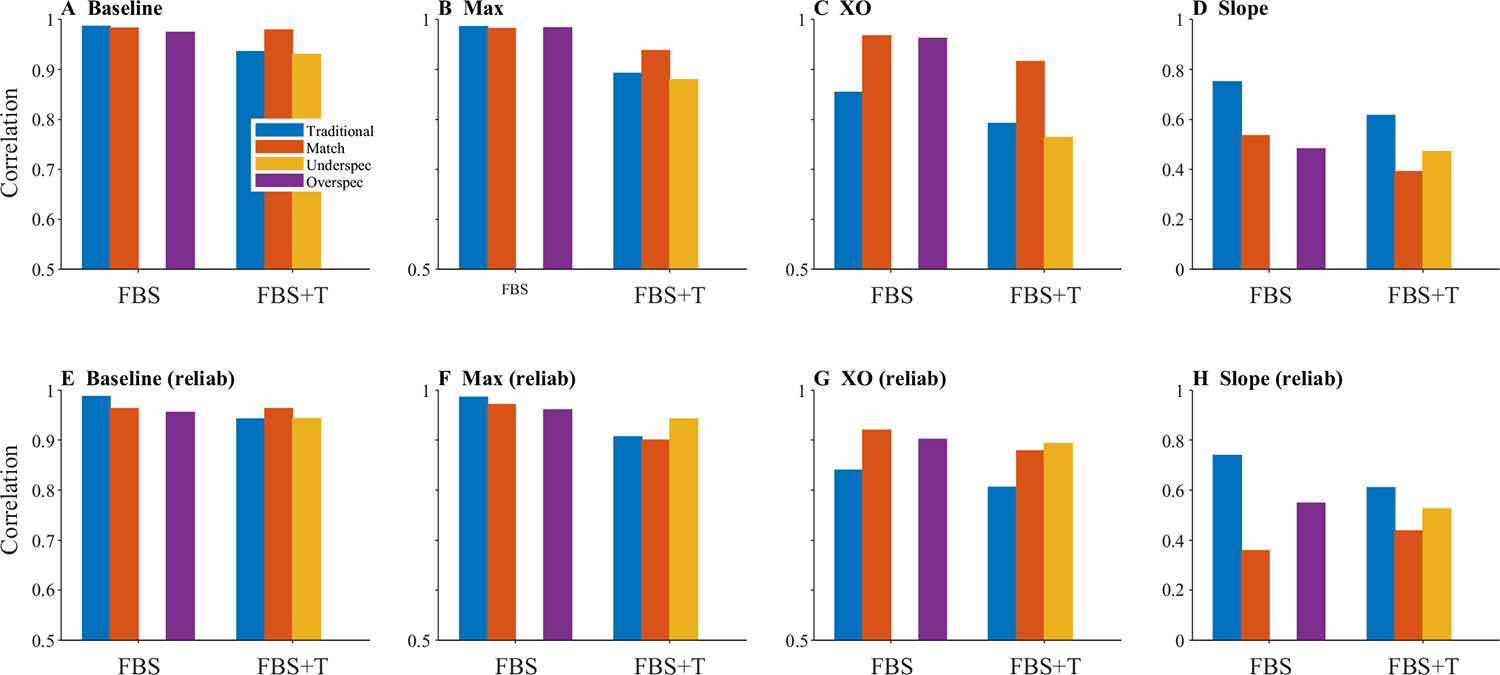

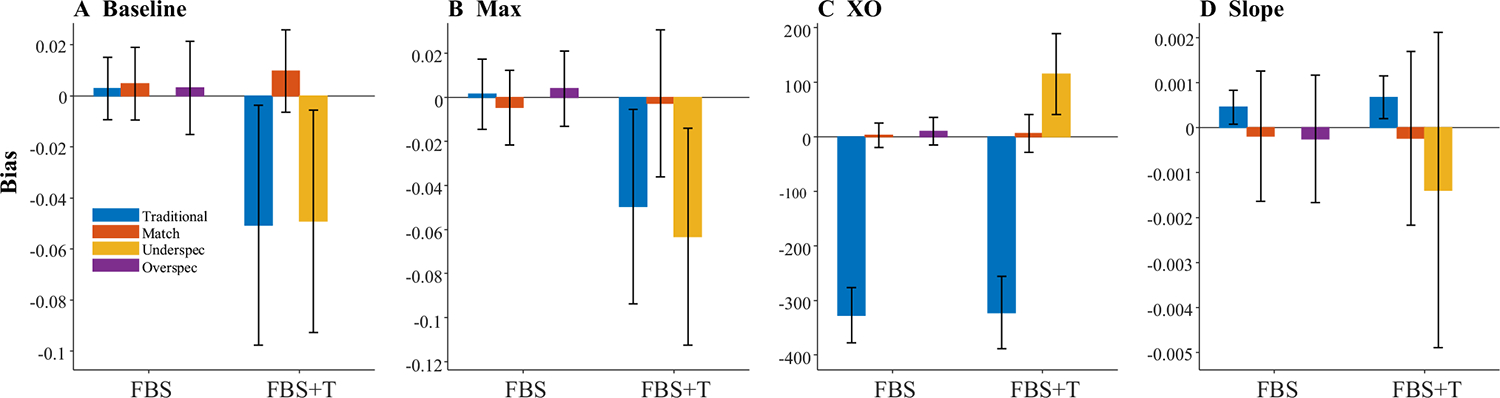

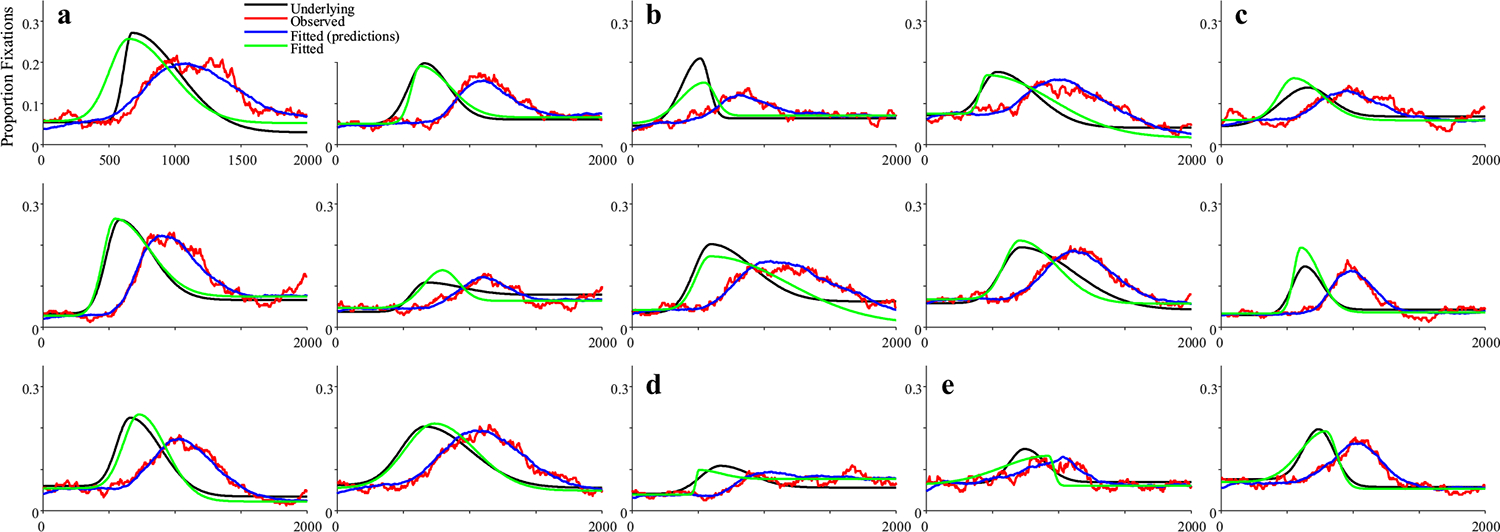

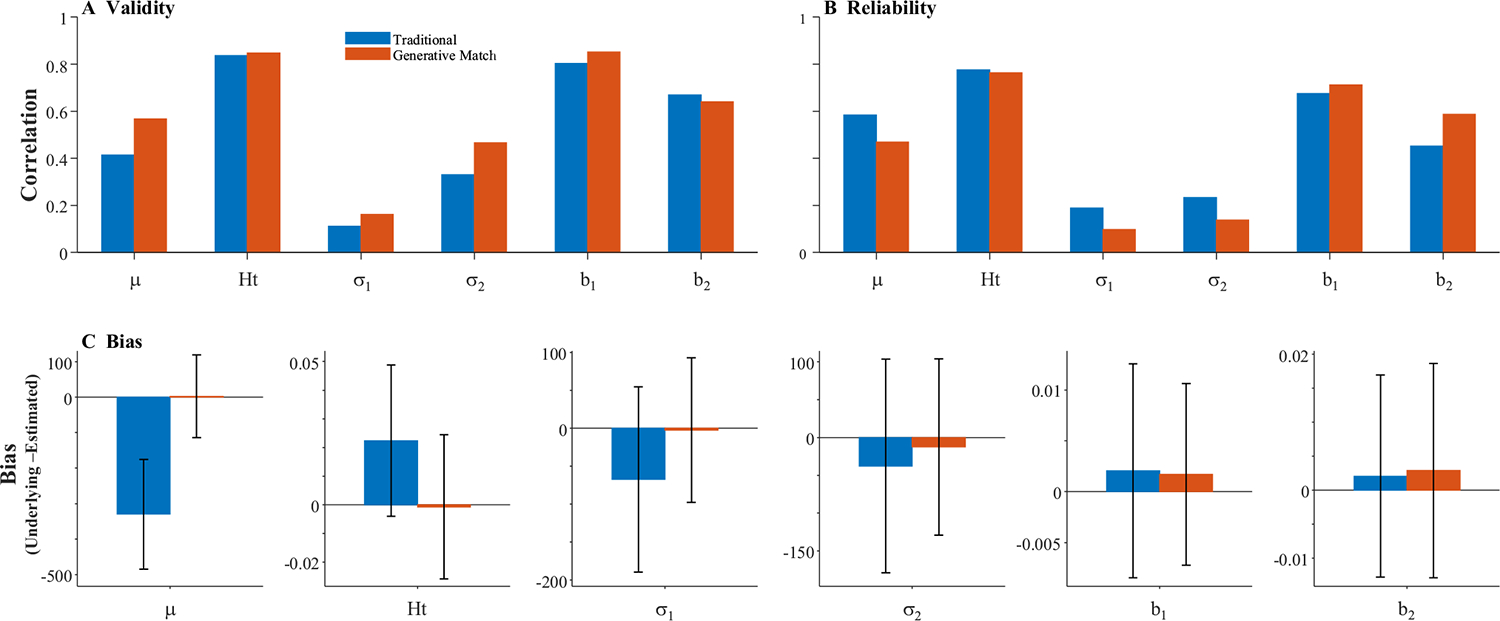

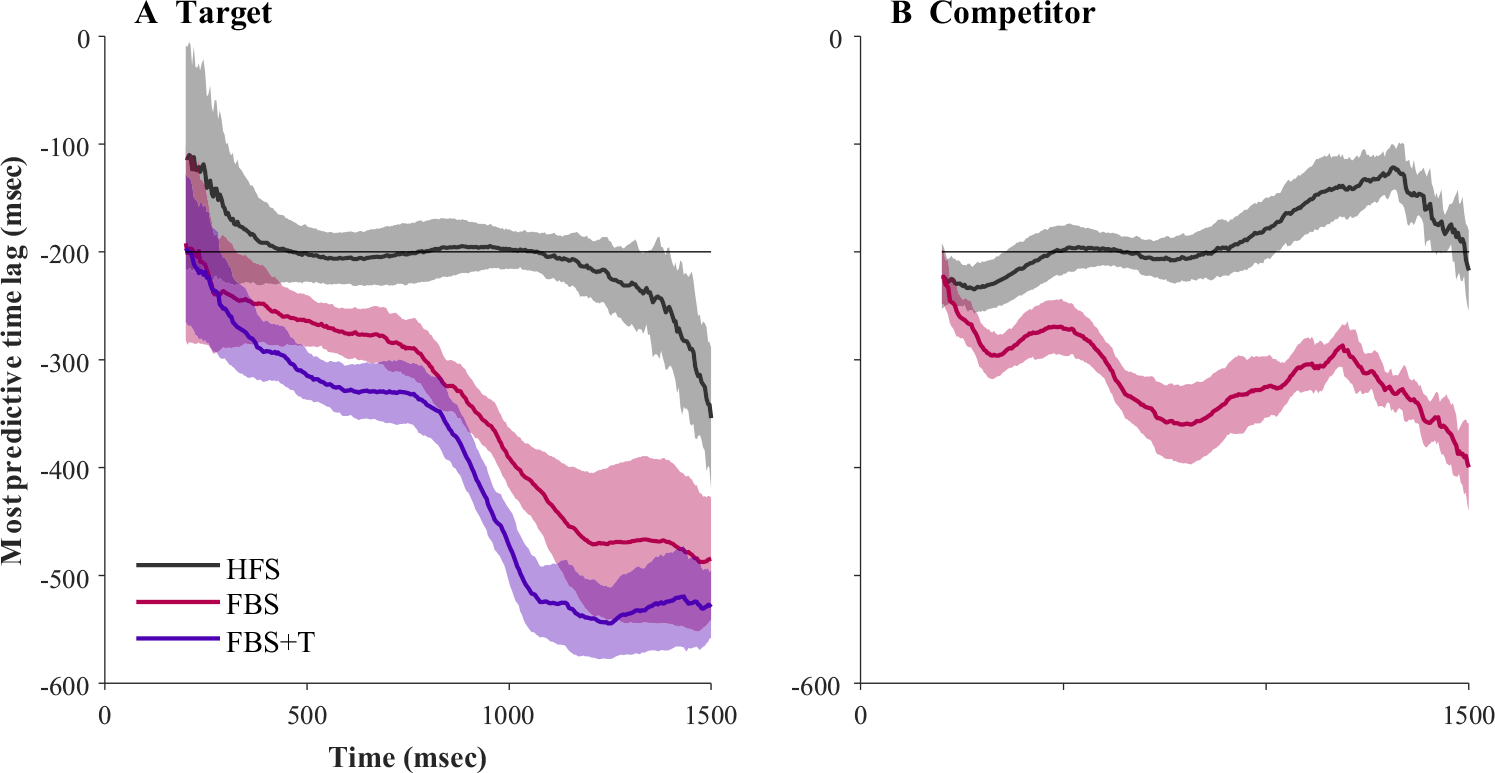

Results