Version Changes

Revised. Amendments from Version 1

The authors thank the reviewers for their kind comments and helpful suggestions. Based on these suggestions, we have updated the wording of the material and added further suggestions and ideas for implementing these ideals in the classroom.
- We tried to make it clearer in the abstract that we see “iteratively updating” as the fourth ideal.
- We removed the unnecessary mention of computational biology in the first sentence.
- We added an explanation of what “live coding” is in the context of the classroom.
- We added a suggestion that “reprex” or “reproducible examples” are a great idea for building communication skills.
- We renamed the “Practical Idea” sections to “Practical Suggestions”.
- We made it clear that silliness is only helpful if it is also appropriately inclusive.
- We added links to Software Carpentries as an example of how to contribute to curriculum.
- We added a suggestion that, in order to iteratively update, we need to set aside time to do so.
- We smoothed some other minor awkward wording.

Abstract

Data science education provides tremendous opportunities but remains inaccessible to many communities. Increasing the accessibility of data science to these communities not only benefits the individuals entering data science, but also increases the field's innovation and potential impact as a whole. Education is the most scalable solution to meet these needs, but many data science educators lack formal training in education. Our group has led education efforts for a variety of audiences: from professional scientists to high school students to lay audiences. These experiences have helped form our teaching philosophy, which we have summarized into three main ideals: 1) motivation, 2) inclusivity, and 3) realism. As a fourth ideal, we also aim to iteratively update our teaching approaches and curriculum as we find ways to better reach these ideals. In this manuscript, we discuss these ideals as well as practical ideas for how to implement these philosophies in the classroom.

Keywords: education, data science, pedagogy, teaching, informatics

Introduction

Data science is booming and many fields have rapidly evolving data science needs. 1 , 2 For these needs to be met, scalable education efforts need to be supported. 3 Data science classes are often taught by practicing data scientists who have taken on a teaching role. This means they may have an idea of the changing landscape of data science but may lack experience in education. This phenomenon has been discussed in academia more generally, and it may be especially relevant to data science teaching. 4 – 6 Data science experts can be very passionate about teaching the next generation, but educators also need training in education methods for writing curriculum, lecturing, creating assessments, constructing learning objectives, or the many other duties that accompany teaching roles. 4 , 5

Talent for data science careers is equally distributed, but opportunity for such careers is not. 7 – 10 Educational barriers in STEM often begin as early as elementary school. 11 However, access to education and resources, as well as efforts to raise awareness, have the potential to reverse this pattern. 12 As a next step, empowering educators with best practices can help make opportunities for data science careers more equitable. Data science educators must tailor their teaching methods to equip students with relevant skills, while also contextualizing the material based on the students’ interests and backgrounds. 13 Furthermore, democratizing this knowledge to increase diverse representation in data-driven fields holds the potential to increase innovation 14 while avoiding harms. 15

Data science education programs have become increasingly popular. Organizations like The Carpentries, Dataquest, and fast.ai, as well as massive open online courses (MOOCs) and formal Master’s programs and certificates in data science, indicate the great demand for these materials. 16 – 18 MOOCs, while helpful, tend to primarily benefit highly educated individuals. 19 Students often need instructors who understand their needs and are willing to work with them and build relationships with them. While excellent and inspiring data science training materials are directly accessible, educators often need more guidance on how to teach. For example, The Carpentries has an instructor training course that covers teaching approaches and skills emphasizing motivating, inclusive, and accessible practices. 16 At the high school level, the Introduction to Data Science curriculum from UCLA has materials for training teachers. 20

In this opinion article, we combine advice from existing resources with our own educational experiences for instructors who plan to take the next step in designing and implementing data science educational content. Our lab is involved in a number of data science education efforts, including training high school students, graduate students, postdocs, researchers, and university faculty. These experiences have taught us a great deal about data science teaching that may be useful for others’ education efforts. 9 , 21 , 22 We will discuss the overarching lessons we have learned as well as practical tips for applying these lessons in the classroom. Deeper learning occurs when we apply what we have learned or use it to create something. 23 We encourage data science educators to use whatever advice from this discussion best fits their classrooms and audiences. We will also strive to better apply these ideals in our own teaching as we continue to learn. For summaries and lists of resources from this manuscript, see the Supplementary Materials section.

Teaching Ideals

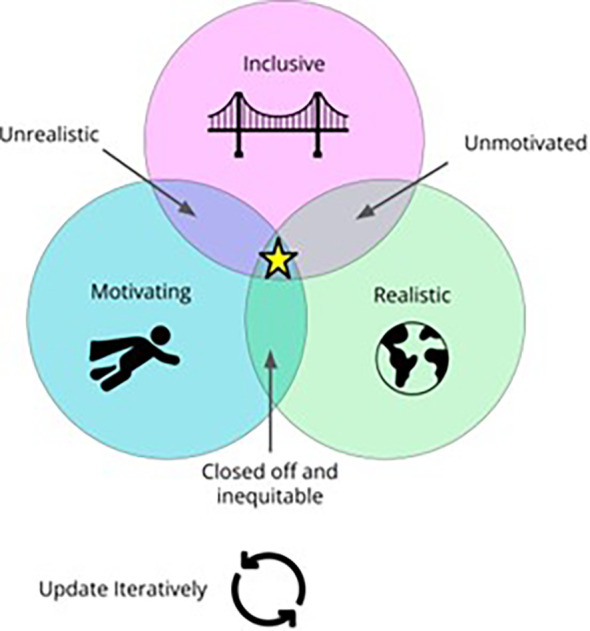

The lessons we have learned inform our teaching philosophy, summarized into these main ideals (See Figure 1).

• Motivation: Aspiring data scientists face many steep and demoralizing learning curves. As others have noted, 16 motivation is key for learners to persist and succeed despite these challenging learning curves.

• Inclusivity: Diversity is lacking in data science education. 24 Making data science more inclusive is not only the right thing to do, but also improves innovation and understanding. 14 , 25 , 26 Data science suffers when entry into the field is inequitable.

• Realism: The best learning approaches are those that attempt to prepare students for “real life” as much as possible. 27 We strive to make our curriculum hands-on and interactive in ways that reflect what our students will be doing as data scientists in their communities and outside the classroom.

• Iteratively update: Students are not the only ones learning. Data science is a fast-changing field. Not only do we need to keep up with data science as a field to best prepare our students, but we also need to learn best teaching practices from education research. 28

Figure 1. A Venn diagram illustrating our teaching philosophy.

Ideally, data science teaching should be motivating, inclusive and realistic. If any of these ideals are lacking, our teaching effectiveness suffers, which is why the fourth ideal includes iteratively updating our curriculum and approaches as we find better ways to meet these ideals.

Our teaching philosophy depends on all these ideals together. As the diagram illustrates, when one ideal is lacking, our teaching is less effective. Without motivation, students will struggle to persevere through challenging material. Without realism, our students will not be adequately prepared for careers as data scientists. Inclusivity makes these ideals more democratic by extending their benefits to all learners. These ideals are inevitably intertwined, so in this discussion you may find suggestions that promote not only inclusivity but also motivation and realism. Here, we will discuss practical implementation of motivating, realistic, and inclusive data science teaching.

Education Projects

The ideals and practical advice in this paper come from our collective teaching experience in the projects highlighted below.

DataTrail

DataTrail is a 14-week paid educational initiative that promotes inclusivity in data science education for young adults, high school graduates, and GED recipients. We provide financial, social, and academic support, including weekly check-ins, tutoring, and internships for graduates. Our initiative goes beyond programming to prepare students for real-world situations. We continue to learn how our teaching has helped our students in the internships they take after graduation. 22

GDSCN

The Genomic Data Science Community Network works to broaden participation in genomics research by supporting researchers, educators, and students from diverse institutions. These institutions include community colleges, Historically Black Colleges and Universities, Hispanic-Serving Institutions, and Tribal Colleges and Universities. Our vision is one where participation is not limited by an institution’s scientific clout, resources, geographic location, or infrastructure. These institutions play a critical role in educating underrepresented students. 29 We work with and support faculty from these institutions as they address systemic bottlenecks.

ITCR Training Network

The ITCR Training Network supports cancer research by providing training opportunities for users and developers of cancer informatics tools. These audiences are professional learners with advanced degrees. They often have specific project goals to which they want to apply data science skills. We attempt to equip the users of cancer informatics tools with the data science foundations they need. We also offer training that equips developers of cancer informatics tools with skills and principles they can use to increase the usability of their data science tools.

Open Case Studies

The Open Case Studies project provides an archive of experiential data analysis guides that cover analyses using real-world data from start to finish. 21 There are currently over 10 case studies, focused on methods in R, statistics, data science, and public health. These case studies address timely and relevant public health questions. They are intended to be used by instructors to assist with teaching courses, as supplemental resources for courses, or as standalone resources for learners. They are aimed at undergraduate and graduate learners, but also include material appropriate for teaching high school students. The case studies help showcase the data science process and demonstrate the decisions involved.

Motivation

A common barrier for individuals entering data science is the motivation 30 needed to overcome the steep learning curves involved in becoming a data scientist, namely learning programming and statistics. For many students, having a computer print “Hello world!” isn’t enough to sustain their interest through the trial and error of the background programming and statistics necessary for more inspiring data visualizations or interactive apps. Motivation is the fuel needed to successfully overcome these learning curves. 16 Budding data scientists need to be made aware that frustration, failure, and mistakes are normal and do not indicate that they are unsuited for the field!

The best motivation for data science is usually not a deep interest in programming or statistics; rather, it is a data-related question or problem that a budding data scientist cares about. This is why data science is such a broad and pervasive field: data scientists may arise from a variety of different questions, problems, and contexts. In short, the best motivation for becoming a data scientist is having a problem with a quantitative question behind it.

It’s also worth noting that some students may never truly be interested in the programming side of data science as a career, and that is also okay. Our goal is also to increase data literacy so that students who pursue other interests walk away with useful interdisciplinary skills.

Confidence and repetition are more important than talent

Learning data science is hard. As a result, learners tend to believe that they are “just not good at it” and that this is a fixed, intrinsic quality. These beliefs can affect not only a student’s confidence but also, perhaps worse, a teacher’s behavior in ways that may reinforce stereotypes. 31 We tend to attribute success to innate talent instead of recognizing that it is often due to dedication and practice. 32 In some ways, learning to program is no different from learning a spoken or written language: practice and mistakes build fluency. But many students become disheartened if they do not learn programming right away. Instead, we want to encourage a “growth” mindset. 16 , 33 A growth mindset emphasizes that success is not about where you started; you can continue to improve your skills as long as you keep practicing.

Through the DataTrail program, we have seen students become disheartened as data science skills become more difficult to learn. To better encourage our students, we reorganized our curriculum to get to the “fun” things sooner. Previously, we didn’t teach data visualization until the last quarter of the course. We realized that making visuals is something students generally enjoy and that it can boost confidence. Students often learn best when material uses different modalities (for example, images and non-images). 34 So now, we have students create visuals in the very first project (with the help of code we have pre-written for them). Not only has this helped motivate our students, but it has the added benefit of giving us more insight into what students are writing and trying with their code.

Practical suggestions for boosting confidence

• Get to the magic: Have your students see a glimpse of the “magic” early on. Images, plots, and interactive pieces of code and apps are the most fun. You can use “cooking show” magic: provide your students with something 99% processed and then have them customize or fill in the last bit of code.

• Emphasize the “yet”: Encourage a growth mindset by assuring students that with practice and perseverance they will learn this, even if they haven’t learned it “yet”. 16

• Validate trickiness: Try to stay away from phrases like “it’s easy”. Although you might be attempting to impart confidence, such phrases may make students feel inadequate if they don’t also find it easy. Validating the challenges in data science can be reassuring and help renew a student’s confidence.

• Assure togetherness: Sometimes frustration with a problem can feel lonely and impossible. Assuring the student that you are there to help can be a powerful learning tool.

• Celebrate the wins: No matter how small the win, celebrate what your student has accomplished. This can mean congratulating them, but may also mean encouraging them to share their success with their peers.

• Do not compare: Be careful not to compare your students’ skills with your own or with those of other students. Emphasize the idea that everyone has different starting points and aptitudes. What matters is not where you are today, but that everyone continues to learn.

• Have madlibs code: By madlibs code, we mean code that is mostly written but has the student fill in the blanks. This allows the student to see the control they have over code without requiring them to write a whole project from scratch before they are ready to do so.
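To make the madlibs idea concrete, here is a minimal sketch in Python. The article does not prescribe a language, and everything in the example (the `summarize` function, the `HOW_MANY` blank, and the tiny made-up candy-rating data) is hypothetical and for illustration only. The instructor pre-writes the data handling and ranking; the student changes one clearly marked value and reruns the code to see the effect.

```python
# Hypothetical "madlibs" exercise: the instructor pre-writes everything
# except one clearly marked blank, which the student fills in and reruns.
from statistics import mean

# Pre-written by the instructor: a tiny made-up dataset (not real survey data).
candy_ratings = {
    "chocolate bar": [9, 8, 10, 9],
    "candy corn": [3, 5, 2, 4],
    "peanut butter cups": [10, 9, 9, 10],
}


def summarize(ratings, top_n):
    """Return the top_n candies ranked by average rating (pre-written)."""
    averages = {name: mean(scores) for name, scores in ratings.items()}
    ranked = sorted(averages.items(), key=lambda item: item[1], reverse=True)
    return ranked[:top_n]


# ---- Student: fill in the blank below, then rerun ----
HOW_MANY = 2  # try 1, 2, or 3 to see more or fewer candies
# -------------------------------------------------------

for name, average in summarize(candy_ratings, HOW_MANY):
    print(f"{name}: {average:.1f}")
```

With `HOW_MANY = 2`, this prints `peanut butter cups: 9.5` followed by `chocolate bar: 9.0`. The student gets a visible result immediately, and later exercises can move the blank to a more substantive spot, such as the ranking rule.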

Mistakes are good and should be expected

Feeling a bit uncomfortable and making mistakes is an important part of learning. In fact, we remember better when we make mistakes. 35 We also want everyone to feel comfortable with what they do not know. This starts with the instructor! It is incredibly valuable for students to see how their instructor works through a problem or goes about finding a solution. It can be tempting to feel that you need to be a font of wisdom, and letting students see you struggle through a solution can feel like it undermines your authority. However, modeling how to tackle the unknown is just as important as demonstrating your knowledge and mastery. It can be very helpful to model the whole process of not knowing something, making mistakes, and iteratively figuring it out. Evidence shows that people who expect discomfort and challenge are more likely to overcome those challenges and perceive their accomplishment more positively. 30

In the DataTrail program, before we begin to code, we try to help manage our students’ expectations about programming by discussing the role mistakes play in data science. We have a chapter about how to learn data science that strongly emphasizes that data science involves asking questions and sometimes failing, and that this is to be expected!

Practical suggestions for encouraging mistakes

• Talk about mistakes you’ve made: As the educator, it can be helpful to tell students about times you’ve made mistakes in your work. This can help students unlearn the idea that mistakes should be hidden and see instead that mistakes are normal! This is something that we’ve embraced from the Data Mishaps Night. 36

• Model making mistakes: Although you don’t need to make mistakes on purpose while live coding, if you notice yourself starting to make one, consider not stopping right away. Try to let students catch your mistake instead of pointing it out to them. When they do point it out, be happy about the fact that, as a team, you have caught the mistake.

• Say “I don’t know”: If a student asks a question that you are unsure how to answer, say “I don’t know” proudly. Use this as an opportunity to demonstrate that not knowing something is expected. The class can also take the opportunity to look something up together. If the question is less relevant to the whole class, let the student know you will look into it and get back to them, or that you and the student can look into it together later.

• Encourage iteration: Reaffirm the idea that drafts are okay. Blank pages are harder to work from than pages full of mistakes. Rather than striving for perfection on the first try, emphasize that we can use version control and return to this code later to polish it.

• Normalize questions: Instead of “are there any questions?”, ask “what questions do people have?”. This small wording change can help lower the intimidation factor by implying that questions are to be expected.

Atmosphere boosting to encourage learning

For people to learn, they need to feel comfortable. A great way to make people feel comfortable is to be more informal and to show that it is okay to be silly. Our education group encourages using silliness as a teaching tool. Research shows that humor can increase motivation, learning, and the perception of authentic teaching. 37 – 39 Silliness defuses frustration and lowers our anxieties about a topic. 30 We prioritize being silly over seeming important and solemn. Silliness is an atmosphere booster and can help educators seem more approachable. Being silly means minimizing the use of jargon, making it okay to laugh at ourselves, and trying to connect with people. Of course, being silly is only truly beneficial if it is also appropriate and inclusive. Being comfortable with yourself as an instructor also makes you a good role model. Learners, particularly those who are underrepresented, benefit when they see that their instructors are real people. 40 , 41 Your own in-class examples and metaphors are often the ones that stick with students the longest.

Practical suggestions for being silly

• Study silly data: Data science doesn’t always have to be about life-changing questions. Sometimes it can be a lot of fun to analyze datasets about movies, the best Halloween candies, and bigfoot sightings. But it is also critical to ensure that these dataset selections are inclusive and appropriate.

• Use GIFs and cartoons: Cartoons and GIFs can be mood boosters and can even make salient points that will stick with your students after class is over.

• Use fun data examples: People like movies and pop culture. So long as the data is appropriate, it can be fun to use data examples with material that people enjoy.

• Use silly icebreakers: The more comfortable the class feels with each other, the more ready they will be to learn and participate. Let the class know it’s okay to be silly by asking a silly question.

• Take snack breaks: Snack breaks or other kinds of breaks don’t need to be silly per se, but being silly during breaks is important to help people stay refreshed and ready to learn.

Inclusivity

Data science suffers from a lack of diverse perspectives. It is disproportionately white, upper class, and male; concentrated in select geographical locations; harder to access for persons with disabilities; and challenging for first-generation students. 42 Some of the largest biomedical data science institutions are located in areas with low income mobility 8 and have likely contributed to a lack of opportunities. 43 However, education can help mitigate low income mobility. 44 Inclusive and diverse research and science benefits individuals and is also more innovative. 14 We therefore prioritize the promotion of Inclusion, Diversity, Anti-Racism, and Equity (IDARE) through our curriculum and in our classrooms. Everyone learns more and is more productive when we emphasize inclusive practices and continue to look for opportunities for learning and growth. 45 , 46

Underrepresented communities face barriers

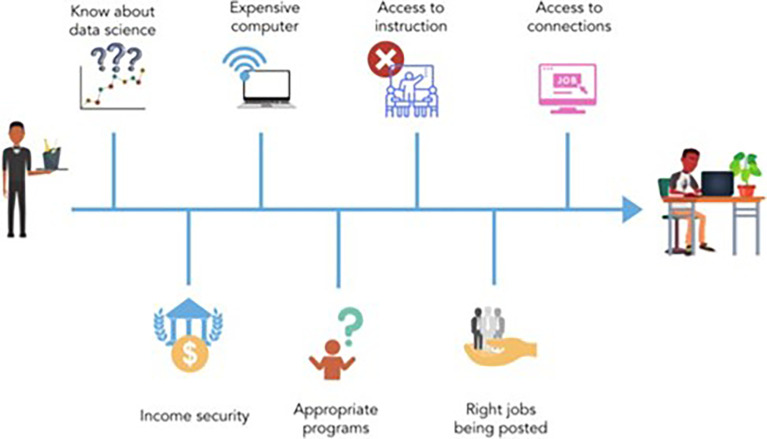

Despite increased awareness, large disparities in funding and support persist across primary and secondary education. These disparities have generally been at the expense of underrepresented groups such as students from Black and Indigenous communities. Primary schools with greater resources might be able to introduce data science at much earlier ages, enhancing students’ ability to pick it up and understand it. 47 , 48 By contrast, students from under-resourced schools might have few opportunities to learn about data science. Students might also encounter barriers when gaining access to a computer and/or internet, securing childcare or time off work, and breaking into job networks (See Figure 2). These systemic disparities in education mean that learners from underrepresented groups may need more support. 9

Figure 2. This figure represents the barriers that many members of underrepresented and underserved communities face when entering the field of data science.

Some of the barriers to entering data science include knowing about data science as a career option, income security, access to an expensive computer, access to instruction, knowledge of the appropriate education programs, having connections to the industry and having the right jobs being posted, and knowing how to look for the appropriate job openings.

You are not your student

In user experience design, there is a saying that “you are not your user”. 49 In data science education, we could just as easily say “you are not your student”. Help your students find their passion in data science, recognizing that it might differ from yours. Students will be more motivated to persevere through the challenges of data science (such as learning programming syntax and troubleshooting confusing errors) if they are fueled by a curiosity or passion behind their data science project. Instructors should ask students about their interests and what they want to do with their careers to encourage exploration that aligns with those interests. 9

Also realize that students from underrepresented backgrounds likely have talents that are unrecognized or unrewarded by our traditional educational systems. Students have diverse talents and are likely aware of important problems that instructors might miss. In the early stages of data science education, try to help students bring these skills to the surface. Data science education needs to focus on more than just technical skill sets.

If you have been coding for some time, you have likely forgotten how frustrating it was when you first started. Not only should you have empathy for your students as they approach this steep learning curve, but you should also remember that your students may have very different experiences and backgrounds from yours. Be aware that not everyone “gets it” in the same way you do. It can help to go back through the material as if you were seeing it for the first time, with empathy for students who are completely unfamiliar with it, and to notice what information you might take for granted as common knowledge. Teaching a topic that you have just learned can also help.

Practical suggestions for decreasing barriers

• Encourage or require office hours: Many students believe office hours are not relevant to them. 50 Encourage your students to drop by and introduce themselves at any time, not only when they are stuck.

• Survey your students: In-class anonymous surveys can answer questions like “why are you taking this class?” and “what career fields interest you?”, which can serve as a starting point for conversation.

• Provide mentorship: Young data scientists need support, particularly those from disadvantaged backgrounds. When possible, try to connect learners to supportive mentors who have the time and understanding to devote to the learner. Ideally, a mentor can be someone of a similar background who can encourage the learner through shared experience and understanding, but any form of mentorship is still beneficial.

• Don’t require people to buy expensive things: Income insecurity can be a massive barrier to entry into the field of data science, but it doesn’t have to be. When possible, pursue cloud-based computing resources for your students to use. 51 This will allow them to run more computationally costly analyses on nearly any machine, including relatively inexpensive computers like Chromebooks. 8

In the DataTrail program, we attempt to mitigate many of these barriers. We provide individuals in the program with inexpensive Chromebooks that allow students to use cloud programming platforms to conduct their analyses. We pay individuals to participate in our program to help mitigate the issue of income insecurity. We have also partnered with non-profit hiring partners to place graduates of the program in internships. Even with our program’s social and financial supports, it is difficult for individuals to overcome the institutional and systemic biases rooted in our society, but we hope that these supports improve access for the individuals in our program. We encourage other data science program administrators and instructors to add financial and social support for underrepresented individuals whenever possible.

Practical suggestions for inclusive classrooms

• Frequently take the temperature of the room: Silence and pauses may feel awkward, but they are critical to good teaching. Allow students time to think. Another useful tool is sticky notes, which help you keep track of whether students who are actively coding need help or are doing okay. People who are doing well can put up a green sticky note, while people who need help with something can put up a different color. 16 Try to create an atmosphere that decreases the intimidation factor of asking questions. Additionally, tools like Slido can help you collect interactive responses from your students via their smartphones or computers. 52

• Explain things in multiple different ways: Perhaps you understand things well using a particular analogy, but that analogy may not resonate with all your students. Try to think outside of the box and explain things in multiple different ways.

• Do not assume everyone knows the basics: Err on the side of explaining the most fundamental pieces of knowledge. This makes less experienced students less likely to get lost as the curriculum advances, and it also helps more experienced students, who may overestimate how well they know something. Everyone benefits from starting off on the same page with the basics.

• Use inclusive language: Refer to guidelines for creating inclusive communities. 53 Educators can unknowingly use phrases that reinforce stereotypes or perpetuate gaps in the STEM fields. 16 This also means that, as an educator, you should always be ready to be corrected and to change course should a student share how they could be better accommodated.

• Look for ways to improve the accessibility of your classroom: This includes simple things like making sure your curriculum can be read by a screen reader and testing your curriculum for color vision compatibility with tools like ColorOracle. 54 See our Supplemental information for a longer list of accessibility items to consider.

• Be aware of implicit biases and stereotype threat: Though behaviors resulting from implicit bias are unintentional, they can be very harmful all the same. 55 See our Supplemental information for resources and classes to take to combat implicit bias, stereotype threat, and related issues.

Realism

As educators, a key goal is to prepare students to pursue their interests in their chosen careers. We do learners a disservice if we do not adequately teach them the skills they will need. Instructors must take note of real-world data science needs, whether within large companies or smaller community non-profits, and teach those skills. This includes collaborative “soft” skills, like giving and receiving feedback and code review. This also means using real data and real workflows 21 as well as incorporating data ethics and domain-specific contexts. 56

Being realistic also means using the ideas of “just in time teaching”. 57 We want to bring the concepts we discuss into real-life scenarios as quickly as possible. Anyone who has been taught a complicated board game has felt the importance of just in time teaching. It is often overwhelming to be told a long list of rules and concepts that mean nothing to you before you have even begun to apply anything. Similarly, it is highly stressful to be told to remember things because “it will make sense later.” A more effective approach is to tell your students something and then have them apply it directly in an activity. Not only does this align better with a deeper level of application in Bloom’s taxonomy of educational objectives, it also avoids oversaturating or otherwise overloading students with too much too soon. 23 Application and practice are key.

Practically, this means introducing a concept and following up quickly with live coding. Live coding refers to an instructor programming in front of their class. This is most impactful when it is followed by hands-on practice by the learners themselves. Live coding is more useful than long lectures about concepts and helps ease learners into trying the code themselves. 58 Perhaps the most useful moments in live coding come when you apply the strategies discussed above or accidentally write errors yourself! 59 If you make an error while live coding, students see that everyone makes mistakes, and you have an opportunity to demonstrate how you might go about investigating an error.
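As a small illustration of investigating an error during live coding, the Python sketch below shows a typo in a column name producing a `KeyError`, with the error message pointing directly at the cause. The `movies` data and the `average_rating` function are hypothetical and not taken from any of our curricula. Reading such a message aloud and comparing it against the names that actually exist models a debugging habit students can reuse.

```python
# Hypothetical live-coding moment: a misspelled column name triggers an error,
# and the error message itself points to the cause.
movies = [
    {"title": "Movie A", "rating": 7.5},
    {"title": "Movie B", "rating": 6.0},
]


def average_rating(records, column):
    """Average a numeric column across a list of dictionaries."""
    return sum(record[column] for record in records) / len(records)


try:
    average_rating(movies, "ratting")  # typo, as can easily happen while live coding
except KeyError as err:
    # The KeyError names the missing key; compare it to the keys that exist.
    print(f"KeyError: {err}. Available columns: {list(movies[0])}")

# After spotting the typo together, rerun with the corrected name.
print(average_rating(movies, "rating"))
```

The first `print` shows `KeyError: 'ratting'. Available columns: ['title', 'rating']`, and the corrected call prints `6.75`. Catching the error here only keeps the sketch running end to end; in class, letting the traceback appear and reading it together is the point.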

Presenting concepts with a variety of real datasets and asking real data science questions that relate to a diversity of learners can also be extremely motivating and helpful for students, especially when they try applying what they learned to other contexts. 60 However, not all applications of teaching in “context” are useful. We have found that context needs to be introduced carefully, in a way that does not overwhelm learners and that intentionally describes the data analysis process. Focusing on simple examples with fewer datasets helps to first introduce topics, particularly for beginners. This can be followed up with more examples and discussion of when certain methods should be used for different types of data and analyses.

Soft skills like communication are critical but often overlooked in curricula. Students may need explicit training in areas such as asking questions about a project, giving effective presentations, writing professional emails, providing feedback to colleagues, or participating in meetings. In earlier iterations of DataTrail we did not emphasize these skills as we did not realize how much training our decades of professional experience had given us. We now include a unit on communication (both formal and informal) and a unit on career development as part of the DataTrail curriculum, and we continue to refine our approach.

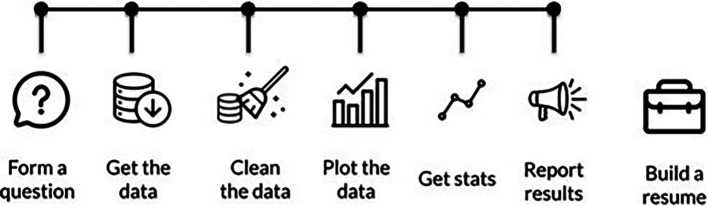

Additionally, we realized that our students need to learn in a project-based manner that better reflects “real life” data analysis. We restructured our curriculum to introduce projects earlier, so that the curriculum as a whole parallels the steps taken in a “typical” data science workflow. Our projects start out heavily scaffolded, with much of the necessary code already written so that students only have to fill in minor steps, but with each chapter we leave more of the data science process for students to determine themselves (see Figure 3).

Figure 3. Our DataTrail curriculum is now structured to reflect the typical steps performed in a data analysis.

Our earliest chapters cover how to form a data science question, and then the following chapters take the students through how to get data, clean data, create visualizations, collect statistics, and share those results.

Data science involves teamwork

Data science is best done as part of a team. It requires skills across many different fields: computer science, statistics, writing, web design, programming, etc. One person is unlikely to be an expert in all of these, which is why teamwork is vital to good data science. Help your students realize that there’s no such thing as a “lone genius”. In real life, teamwork not only helps us all learn, it also creates better end products. 61 Increasing the diversity of data science teams can increase the diversity of perspectives and potential solutions!

Practical suggestions for promoting teamwork

- Use pair programming: Set aside dedicated time for students to practice pair programming, or offer optional or required sessions in which students can pair program with you or with other tutors.

- Cover code review: Explicitly cover techniques for conducting formal code review and explain why it is important. 62 , 63

- Highlight coding communities: Introduce your students to online or in-person coding communities such as R-Ladies, StackOverflow, etc. 64

Data science requires being flexibly prescriptive

Data science projects can be quite varied, and the decisions they involve can be stressful. Being flexibly prescriptive means giving specific instructions and making choices for students now, while preparing them to deviate from these choices later depending on what their project calls for. In practice, this means showing students one way to do something while letting them know about other relevant ways to do it that they may encounter. Data science, and code in particular, can have many different solutions that reach the same endpoint. Ultimately, some decisions are based on what the data scientists working on the project are comfortable with, while other decisions are based on what the project calls for. Instructors should give students something to start with but also acknowledge that they might use different methods in the future, and that this is okay.

Practical suggestions for being flexibly prescriptive

- Be aware of the stage of your audience: What concepts do your students understand well? What concepts overwhelm them? If you are at an early stage where students are grappling with a lot of new information at once, do not bring up alternatives.

- Acknowledge the existence of alternatives: If learners are likely to encounter common alternatives to particular methods in the real world, be upfront about this. This does not mean that learners need to dive into these alternatives; simply naming such methods can help learners recognize them in the future.

- Many solutions to the same endpoint: Reinforce the idea that there may be many ways to reach the same endpoint in code. The priorities should be that the code works and is reasonably readable. This ties in well with practicing code review, which lets students see and evaluate the approaches taken by others.
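As a small illustration of many solutions reaching the same endpoint, the Python sketch below computes one mean three ways. The data values are arbitrary; each approach is correct, so choosing between them comes down to readability and what the team is comfortable with.

```python
import statistics

values = [4, 8, 15, 16, 23, 42]

# Approach 1: an explicit loop that spells out every step.
total = 0
for v in values:
    total += v
mean_loop = total / len(values)

# Approach 2: the built-in sum() and len() functions.
mean_builtin = sum(values) / len(values)

# Approach 3: the standard library's statistics module.
mean_stats = statistics.mean(values)

print(mean_loop, mean_builtin, mean_stats)
```

All three results compare equal; putting them side by side in a code review is a low-stakes way to discuss which version is easiest to read.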

Keep it simple

It is often more difficult to figure out what to skip in the curriculum than what to discuss. The best lessons are based on well-structured learning objectives with a relatively narrow scope. It is often better for students to walk away understanding a few things well, rather than many things shallowly or not at all. As an enthusiastic and knowledgeable teacher, you may have an impulse to teach your students everything all at once. As scientists, many of us feel an impulse to say “well actually” or “technically” and give more nuance than is needed at a particular stage. It is the educator’s job to curb these impulses and keep lessons as simple and focused as possible. For example, it is incorrect to say that Docker is a virtual machine, but when introducing Docker, it can be helpful to describe it as “a computer that you run on your computer to ensure the same specs as another person”. Later, when the student is ready, they can replace this partially incorrect concept with a more nuanced one. Keeping it simple also means understanding what learners need or want to know and respecting that they are also busy with other things in their lives.

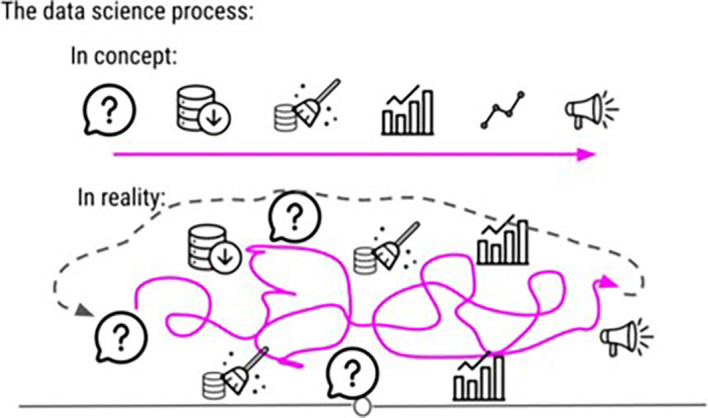

In the DataTrail program, our original curriculum covered statistical theory in disproportionate depth. We found that this did not match our students’ career goals or background knowledge and was overwhelming. We restructured our statistics lessons away from high-level theoretical concepts and toward practical examples, focusing on how to decide which tests to use and how to interpret results in plain language. What counts as simple also depends on the stage of your students. For example, in the DataTrail program, we initially keep it simple by introducing data science as a linear, stepwise process. Later in the course, we expand on this by explaining to our students that the data science process is rarely linear and usually involves many side investigations, dead ends, and sometimes starting from scratch entirely (see Figure 4).

Figure 4. This is an example image from our DataTrail course demonstrating how we sometimes use oversimplification as a tool in our curriculum.

In the earlier chapters of the course, we tell students that the data science process is linear. In the latter half of the course, we add on to this concept by letting them know that actually, data science is rarely a stepwise, linear process, but instead a process that involves a number of side investigations that may or may not lead to dead ends.

Practical suggestions for keeping it simple

- Stop yourself: Interrogate why you might give a complex answer. Is it because you want people to know you are knowledgeable? Are you very enthusiastic about the material? Remember to focus on the learner. Too much nuance, or too much focus on exceptions to rules, will likely be a disservice to their learning experience.

- Chunk it out: In code outside of the classroom, you may try to reduce the number of lines and combine similar steps. In the classroom, however, it can be beneficial to break down each step separately. This may mean splitting one chunk of code into multiple separate steps that you walk through with the students. You should also explain this technique and encourage students to chunk out code themselves when troubleshooting.

- Keep it practical: You likely know a lot more about a topic than you need to share. Ask yourself what practical information your students would need in a “real world” data science project. For many topics, they won’t need to know the deep history or the ins and outs of each parameter of a function. We need to be selective about when the history of something aids understanding and when it does not.

- Make it skimmable: You may notice we use lists and bold type in this paper to highlight main points. Respect that your students are busy and that your class is not the only thing they have going on. What is the most efficient way for you to communicate this (either in print or verbally)?

- Link it out: If you have additional information for the particularly curious student, feel free to share it, but don’t make it central. Add links or a collapsible menu where students can find more information, but don’t use lecture time to cover it.
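A minimal Python sketch of chunking out, using a hypothetical string of comma-separated temperatures: the compact one-liner and the chunked version compute the same average, but the chunked version gives students a named intermediate result to print and inspect at every step, which is also how they can troubleshoot their own code.

```python
# Hypothetical raw input: temperatures with stray spaces and one blank entry.
raw = " 21.5, 19.0, ,23.0,18.5 "

# Compact version: correct, but hard to follow (or debug) in one pass.
compact = sum(float(x) for x in raw.split(",") if x.strip()) / len([x for x in raw.split(",") if x.strip()])

# Chunked version: one step per line, and each result can be printed and checked.
pieces = raw.split(",")                  # split the text into pieces
cleaned = [p.strip() for p in pieces]    # trim surrounding whitespace
non_empty = [p for p in cleaned if p]    # drop the blank entry
numbers = [float(p) for p in non_empty]  # convert text to numbers
average = sum(numbers) / len(numbers)    # summarize

print(pieces)
print(numbers)
print(average)
```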

Encourage curiosity

Some of the best investigative data science starts off with a “that’s weird.” This concept applies to multiple scenarios. Science means chasing the “why” behind an unexpected result. It may be that the truth is weird, or there could be a mistake in the data handling. The only way to find out is to poke around at each step. Similarly, the best way to understand how code works is to take someone else’s code and change it. You will inevitably break the code, but as you break it, you will learn what each part of the code does. To find out how code works, investigate it piece by piece while examining the output of each part. Running each piece of code interactively can help you work out what a longer line of code is actually doing.

Practical suggestions for encouraging curiosity

- Pause and think: Sometimes, in an effort to complete a project quickly, we move too fast and miss a critical clue in the data. Pauses are effective tools for thinking carefully about a project and what you are seeing. Reassure students that they do not have to answer questions right away. They can walk away, think about a problem for minutes or days, and come back to it.

- Show real examples: Real data have weirdness. Your curriculum should include an example of real-data weirdness and how someone found it. What functions were used? What aspects of the data were the first red flags that the analyst followed up on? Tell the story of how this weird thing about real data was discovered.

- Give them an investigative tool belt: Give your students a set of strategies they can use to investigate weirdness. What functions or tests can they use to interrogate a piece of data or unexpected behavior in a package? Where can they go to find more information? Demonstrate Googling, StackOverflow, and package documentation as investigative tools.

- Model investigative data science: In a live coding or pair programming session, encourage your students to look for abnormalities. Ask them what they think about the results or what we might want to look out for. Model checking your data after each step.

- Leave in the side journeys: Complete analyses often involve several side explorations and dead ends. Although we often want to show a polished data science story, it can sometimes be beneficial to briefly demonstrate your development process and the side journeys it took to get there.
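One way to model checking your data after each step is a small summary helper called after every transformation. The sketch below is hypothetical: the `records` data, the planted age of 430, and the `check` function are our own, written in plain Python so it runs anywhere. In practice students would use the summary functions of whatever data library the course teaches.

```python
# Hypothetical records; the age of 430 is a deliberately planted "that's weird".
records = [
    {"id": 1, "age": 34},
    {"id": 2, "age": None},
    {"id": 3, "age": 430},
    {"id": 4, "age": 27},
]


def check(step, rows, field="age"):
    """Print a quick summary of `rows` so surprises surface right after `step`."""
    values = [r[field] for r in rows if r[field] is not None]
    summary = {
        "step": step,
        "rows": len(rows),
        "missing": len(rows) - len(values),
        "min": min(values) if values else None,
        "max": max(values) if values else None,
    }
    print(summary)
    return summary


check("raw data", records)            # a max of 430 is a red flag worth chasing
complete = [r for r in records if r["age"] is not None]
check("drop missing ages", complete)  # did we keep the number of rows we expected?
```

Asking students what looks odd in each printed summary, before moving on, is the habit the sketch is meant to build.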

Communication is critical

The most ground-breaking data science project is not worth anything if its results and importance cannot be communicated to others. While programming abilities are important, communication, documentation, and other professional skills are perhaps even more important, and unfortunately they are often harder to teach than programming. These skills have generally not been taught directly at educational institutions; historically, they have been learned passively in the workplace. But because data science work today is commonly remote, it can be challenging for learners to build their communication and task management skills. These communication skills will be critical for learners’ success in the data science environment. Data science communication involves a few different aspects:

Simple analyses with well-communicated results are always better than overly complicated analyses that are poorly communicated. Data scientists translate numbers into stories that we can act on. Presenting results is just that: telling stories about the data science project journey. This also includes recognizing misleading visualizations and avoiding them in our own communication. In code and results, we should write down our thoughts as we develop analyses. For more about good documentation, see the ITN course. 49 The example code that you use to teach should be self-explanatory. Documentation must make sense to whoever will be using the code, whether that is the instructors, team members, or the students themselves.

Communication in data science is also critical as a part of a team. Every data scientist gets stuck at some point and needs to ask someone else for help. Knowing when to keep working on something yourself, versus asking for help, is a critical soft skill for data science work.

Practical suggestions for imparting good communication skills

- Practice how to ask for help: Have students practice writing “help” posts and discuss the standard outline of what a request for help should include. 64 StackOverflow and other online communities can be very helpful, but getting useful help often starts with a well-crafted post.

- Be available: Always reiterate your availability (and truly be available). When students do come to you with questions, try to be enthusiastic and supportive.

- Automate questions: Set up systems that regularly ask your students what questions or problems they have. You can set up reminders for yourself or for them.

- Structured one-on-one sessions: Set up structured one-on-one mentoring meetings. By structured, we mean using a document that your student fills out that asks them to answer: What are they working on? What is going well? What is not going so well? And so on.

- Model good communication yourself: When live coding, add documentation and try to stick to a code style. Example code should be documented even more thoroughly. Share stories of times when you have messed up code or been stuck and asked someone for help.

- Low(er) stakes presentations: Have students practice presenting to their peers. Public speaking is notoriously scary, particularly when you are presenting on a new topic. The most effective way to make it less scary is to practice. Encourage your students to share their results regularly. When they do present, reaffirm that everyone is rooting for them and no one will interrogate them. Presentations by early-career professionals are the time to be supportive and enthusiastic, not to critique the results or code.

- Rubber ducking: Rubber ducking refers to debugging code by explaining it aloud, even if no one is listening. Encourage students to walk through their code on their own and translate it into “normal speak”. This not only helps them troubleshoot, but also builds explanatory skills and a deeper understanding of their code.

- Creating reprex examples: Asking for help as a programmer requires communication skills, particularly in the context of troubleshooting. Creating reproducible examples (often called “reprex”) is a communication skill worth devoting class time to. This helps communication within the class when help is needed, and also after students are in the field.
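The sketch below shows, in Python, the parts a reprex typically bundles together: the environment version, a tiny inline dataset, the smallest code that still shows the problem, and the expected versus actual output. The example problem (version strings that sort in a surprising order) is a hypothetical one we chose for illustration.

```python
# Minimal reproducible example (reprex) for a help post.

# 1. Environment: report the version you ran.
import platform
print("Python", platform.python_version())

# 2. Data: a tiny inline dataset instead of a private file.
versions = ["1.10", "1.9", "1.2"]

# 3. Code: the smallest snippet that still shows the problem.
result = sorted(versions)

# 4. Expected vs. actual output.
print("expected:", ["1.2", "1.9", "1.10"])
print("actual:  ", result)
```

Someone answering the post can run this in seconds and see that strings are compared character by character, so `"1.10"` sorts before `"1.2"`; sorting with `key=lambda v: tuple(int(p) for p in v.split("."))` gives the expected order.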

Iteratively update

To create education that is motivating, inclusive, and realistic, we need to continually update our curriculum and teaching approaches to better serve our students. To best prepare our students for practicing data science, we need to keep pace with the latest data science techniques and educational approaches. This means we should avoid viewing curriculum and teaching methods as set in stone. Instead, we should use systems that allow us to easily update and maintain our curriculum and teaching guides. 65 , 66 The Software Carpentries developers have their own setup, which is useful both as an example and for anyone who would like to contribute to their curriculum. 70 Continuous improvement applies in a social context as well: students’ interests, their career goals, and how they feel about the course material are part of an ongoing conversation.

Practical suggestions for iteratively updating

- Version control your curriculum: Not only should our data analyses be well tracked, documented, and version controlled, but our curriculum should be too. Where possible, curriculum should be open source and on GitHub. 65 Also consider using a permissive license, such as the Creative Commons CC-BY license, which requires attribution but otherwise allows repurposing and reuse.

- Minimize maintenance pains: Create your curriculum in a way that minimizes the pain of maintenance. We use Open-source Tools for Training Resources (OTTR) to create our curriculum. 65 We also use the exrcise package to automatically generate our exercise notebooks without solutions. 67 See the Supplementary info for more resources on how to automate curriculum maintenance.

- Take notes: In each iteration of your class, take notes and debrief with your education team about the strengths of your course and opportunities for improvement. You can easily track ideas and notes as issues in your GitHub repository.

- Survey your students: Use short and focused surveys to take the temperature of the class. Note that survey results require some interpretation. For example, if half your students feel the course is “too fast” and the other half feel it is “too slow”, the pace may be about right.

- Set aside time to update: Potentially the most important aspect of iteratively updating materials is actually setting aside time to do it. Too often, updates and maintenance are not prioritized as they should be.
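The idea behind automatically generating student notebooks without solutions can be sketched in a few lines: keep a single answer key and derive the student version from it, so an update only has to be made in one place. The marker comments and the `strip_solutions` function below are a hypothetical convention of our own, written in Python; they are not the exrcise package's actual format or API.

```python
# Hypothetical marker convention (not the exrcise package's format).
BEGIN, END = "# BEGIN SOLUTION", "# END SOLUTION"


def strip_solutions(source, placeholder="# your code here"):
    """Return `source` with every marked solution block replaced by a placeholder."""
    out, in_solution = [], False
    for line in source.splitlines():
        stripped = line.strip()
        if stripped == BEGIN:
            in_solution = True
            # Keep the block's indentation so the student version stays valid.
            indent = line[: len(line) - len(line.lstrip())]
            out.append(indent + placeholder)
        elif stripped == END:
            in_solution = False
        elif not in_solution:
            out.append(line)
    return "\n".join(out)


# The instructor's answer key: the single source of truth.
key = """def mean(values):
    # BEGIN SOLUTION
    return sum(values) / len(values)
    # END SOLUTION
"""

print(strip_solutions(key))
```

Because the student version is generated rather than hand-edited, fixing a bug in the answer key and regenerating keeps both versions in sync.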

Limitations

The tips and philosophies that we have discussed here are quite general. There are many different contexts in which data science may be taught, and many different audiences. It is up to you as the educator to determine which of these ideas are appropriate. To a certain extent, we also encourage you to experiment with tactics (you are a scientist, after all!) and see what works. Always ask your students how things are working for them. Please comment on this F1000 manuscript or open a GitHub issue on a related repository in one of our affiliated GitHub organizations: jhudsl and fhdsl.

Conclusion

Data scientists are in high demand in nearly every modern industry. There is also great potential for using data science in ways that benefit the public good. 68 The insights and power of data science are exciting, so we feel that these skills should be taught with a matching level of excitement and with thoughtfulness about the implications of our work. Educational opportunities for learning these skills are not yet equitably distributed, but they have the potential to scale to meet industry’s demand for data science. Improving data science education techniques will not only help meet this hiring demand, it will also help empower young people, researchers, and other students of data science. We have included many tools and resources to help you apply these ideals to your own teaching. We hope that this manuscript opens conversations about ways to improve data science teaching so that it empowers everyone more equitably. Equal opportunities for data science start with equal opportunities for education.

Future directions

We, the authors, will continue to strive to apply these ideals in our teaching and to expand them as we continue to learn. More useful resources for data science teaching are being made every day (see our affiliated GitHub organizations: jhudsl and fhdsl). We invite you to contact us, either through GitHub issues or email, if you know of resources that should be added to this list.

Resource guides

- Summary page of practical tips: This page collects all of the ideas and advice in this manuscript into one summarized page: Link.

- Promoting IDARE resources: This page includes links to recommended classes and resources for promoting IDARE principles in the classroom: Link.

- Curriculum tools: This page includes tools and resources that are very useful for curriculum creation and maintenance: Link.

Acknowledgements

We would like to acknowledge all our colleagues that assisted us with the various projects mentioned who taught us more about teaching data science. We would also like to acknowledge the students we have taught, who continue to inspire us to be better educators and have provided valuable and helpful feedback.

Funding Statement

This work was supported by the National Cancer Institute under Grant UE5CA254170, the National Human Genome Research Institute under Grant U24HG010263. AMH, EH, KELC, and FJT were supported by the GDSCN through a contract to Johns Hopkins University (75N92020P00235) and the AnVIL Project through cooperative agreement awards from the National Human Genome Research Institute with cofunding from OD/ODSS to the Broad Institute (U24HG010262) and Johns Hopkins University (U24HG010263). DataTrail is supported by donations from Posit, Bloomberg Philanthropies, the Abell Foundation, and Johns Hopkins Bloomberg School of Public Health.

The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

[version 2; peer review: 2 approved]

Data availability

No data is associated with this article.

References

- 1. U.S. Bureau of Labor Statistics: Computer and information research scientists. 2021. Reference Source

- 2. Przybyla M: Should You Become a Data Scientist in 2021? December 2020. Reference Source

- 3. DeMasi O, Paxton A, Koy K: Ad hoc efforts for advancing data science education. PLOS Computational Biology. May 2020;16(5):e1007695. 10.1371/journal.pcbi.1007695

- 4. Flaherty C: Required Pedagogy. 2023. Reference Source

- 5. Robinson TE, Hope WC: Teaching in Higher Education: Is There a Need for Training in Pedagogy in Graduate Degree Programs? Research in Higher Education Journal. August 2013;21. ERIC Number: EJ1064657. Reference Source

- 6. Stenhaug B: Teaching data science is broken. November 2019. Reference Source

- 7. Janah L: Leila Janah is on a mission to fight global poverty with technology. October 2014. Reference Source

- 8. Leek J: DataTrail. 2017. Reference Source

- 9. Genomic Data Science Community Network: Diversifying the genomic data science research community. Genome Research. July 2022;32(7):1231–1241. 10.1101/gr.276496.121

- 10. Hall Mark DL: Academic Achievement Gap or Gap of Opportunities? Urban Education. March 2013;48(2):335–343. 10.1177/0042085913476936

- 11. Fuller JA, Luckey S, Odean R, et al.: Creating a diverse, inclusive, and equitable learning environment to support children of color’s early introductions to STEM. Translational Issues in Psychological Science. 2021;7(4):473–486. 10.1037/tps0000313 Reference Source

- 12. Canner JE, McEligot AJ, Pérez M-E, et al.: Enhancing diversity in biomedical data science. Ethnicity & Disease. 2017;27(2):107–116. 10.18865/ed.27.2.107 Reference Source

- 13. Hazzan O, Mike K: What is data science? Guide to Teaching Data Science: An Interdisciplinary Approach. Springer; 2023; pages 19–34.

- 14. Hofstra B, Kulkarni VV, Galvez SM-N, et al.: The Diversity–Innovation Paradox in Science. Proceedings of the National Academy of Sciences. April 2020;117(17):9284–9291. 10.1073/pnas.1915378117

- 15. Hond AAH, Buchem MM, Hernandez-Boussard T: Picture a data scientist: a call to action for increasing diversity, equity, and inclusion in the age of AI. Journal of the American Medical Informatics Association. September 2022;29(12):2178–2181. 10.1093/jamia/ocac156

- 16. Software Carpentries: Instructor Training: Carpentry Teaching Practices. 2017. Reference Source

- 17. Dataquest: Learn Data Science — Python, R, SQL, PowerBI 2023. Reference Source

- 18. fast.ai - fast.ai—Making neural nets uncool again. 2023. Reference Source

- 19. Emanuel EJ: MOOCs taken by educated few. Nature. November 2013;503(7476):342. 10.1038/503342a Reference Source

- 20. Gould TAJR, Machado S, Molyneux J: Introduction to data science. 2014. Reference Source

- 21. Wright C, Meng Q, Breshock MR, et al.: Open Case Studies: Statistics and Data Science Education through Real-World Applications. January 2023. arXiv:2301.05298 [stat]. Reference Source

- 22. Kross S, Guo PJ: End-User Programmers Repurposing End-User Programming Tools to Foster Diversity in Adult End-User Programming Education. 2019 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC). October 2019; pages 65–74. 10.1109/VLHCC.2019.8818824

- 23. Bloom BS: Taxonomy of Educational Objectives: The Classification of Educational Goals. Longmans, Green; 1956. ISBN 9780582323865.

- 24. General Assembly: Data science education lags behind in diversity. September 2017. Reference Source

- 25. Tomašev N, Cornebise J, Hutter F, et al.: AI for social good: unlocking the opportunity for positive impact. Nature Communications. 2020;11(1):2468. 10.1038/s41467-020-15871-z

- 26. Gaynor KM, Azevedo T, Boyajian C, et al.: Ten simple rules to cultivate belonging in collaborative data science research teams. PLOS Computational Biology. 2022;18(11):e1010567–e1010512. 10.1371/journal.pcbi.1010567

- 27. Meyers NM, Nulty DD: How to use (five) curriculum design principles to align authentic learning environments, assessment, students’ approaches to thinking and learning outcomes. Assessment & Evaluation in Higher Education. October 2009;34(5):565–577. 10.1080/02602930802226502

- 28. Schwab-McCoy A, Baker CM, Gasper RE: Data science in 2020: Computing, curricula, and challenges for the next 10 years. Journal of Statistics and Data Science Education. 2021;29(sup1):S40–S50. 10.1080/10691898.2020.1851159

- 29. Li X, Dennis Carroll C: Characteristics of minority-serving institutions and minority undergraduates enrolled in these institutions: Postsecondary education descriptive analysis report (NCES 2008-156). Washington, DC: National Center for Education Statistics; August 2007. Reference Source

- 30. Woolley K, Fishbach A: Motivating Personal Growth by Seeking Discomfort. Psychological Science. April 2022;33(4):510–523. 10.1177/09567976211044685

- 31. Makarova E, Aeschlimann B, Herzog W: The Gender Gap in STEM Fields: The Impact of the Gender Stereotype of Math and Science on Secondary Students’ Career Aspirations. Frontiers in Education. 2019;4. 10.3389/feduc.2019.00060

- 32. Chambliss DF: The Mundanity of Excellence: An Ethnographic Report on Stratification and Olympic Swimmers. Sociological Theory. 1989;7(1):70–86. 10.2307/202063

- 33. American University: How to Foster a Growth Mindset in the Classroom. American University; December 2020. Reference Source

- 34. Clark JM, Paivio A: Dual coding theory and education. Educational Psychology Review. September 1991;3(3):149–210. 10.1007/BF01320076

- 35. Cyr A-A, Anderson ND: Mistakes as stepping stones: Effects of errors on episodic memory among younger and older adults. Journal of Experimental Psychology: Learning, Memory, and Cognition. May 2015;41(3):841–850. 10.1037/xlm0000073

- 36. Hudon C, Ellis L: Data mishaps night. 2023. Reference Source

- 37. Johnson ZD, LaBelle S: An examination of teacher authenticity in the college classroom. Communication Education. October 2017;66(4):423–439. 10.1080/03634523.2017.1324167

- 38. Banas JA, Dunbar N, Rodriguez D, Liu S-J: A Review of Humor in Educational Settings: Four Decades of Research. Communication Education. January 2011;60(1):115–144. 10.1080/03634523.2010.496867

- 39. Wanzer MB, Frymier AB, Irwin J: An Explanation of the Relationship between Instructor Humor and Student Learning: Instructional Humor Processing Theory. Communication Education. January 2010;59(1):1–18. 10.1080/03634520903367238

- 40. Reupert A, Maybery D, Patrick K, et al.: The importance of being human: Instructors’ personal presence in distance programs. International Journal of Teaching and Learning in Higher Education. 2009;21(1):47–56. Reference Source

- 41. Pacansky-Brock M, Smedshammer M, Vincent-Layton K: Humanizing online teaching to equitize higher education. Current Issues in Education. 2020;21(2):1–21. 10.13140/RG.2.2.33218.94402

- 42. Zippia: Data science demographics in the U.S. 2023. Reference Source

- 43. Dawkins CJ: Bringing institutions into the opportunity hoarding debate. Housing Policy Debate. 2023;33:793–796. 10.1080/10511482.2023.2173981

- 44. Chetty R, Stepner M, Abraham S, et al.: The Association Between Income and Life Expectancy in the United States, 2001-2014. JAMA. April 2016;315(16):1750–1766. 10.1001/jama.2016.4226

- 45. NOT-OD-20-031: Notice of NIH’s Interest in Diversity. 2023. Reference Source

- 46. Puritty C, Strickland LR, Alia E, et al.: Without inclusion, diversity initiatives may not be enough. Science. September 2017;357(6356):1101–1102. 10.1126/science.aai9054

- 47. Morgan PL, Farkas G, Hillemeier MM, et al.: Science Achievement Gaps Begin Very Early, Persist, and Are Largely Explained by Modifiable Factors. Educational Researcher. January 2016;45(1):18–35. 10.3102/0013189X16633182

- 48. Lee VR, Wilkerson MH, Lanouette K: A Call for a Humanistic Stance Toward K–12 Data Science Education. Educational Researcher. December 2021;50(9):664–672. 10.3102/0013189X211048810

- 49. Savonen C: Documentation and Usability. 2021. Reference Source

- 50. Abdul-Wahab SA, Salem NM, Yetilmezsoy K, et al.: Students’ reluctance to attend office hours: Reasons and suggested solutions. Journal of Educational and Psychological Studies. 2019;13(4):715–732. 10.53543/jeps.vol13iss4pp715-732

- 51. Kumar V, Sharma D: Cloud computing as a catalyst in STEM education. International Journal of Information and Communication Technology Education. 2017;13(2):38–51. 10.4018/IJICTE.2017040104

- 52. Webex: Slido 2023. Reference Source

- 53. Lee C: What can i do today to create a more inclusive community in cs? 2016. Reference Source

- 54. Color oracle:2018. Reference Source

- 55. American Psychological Association: Implicit bias. 2023. Reference Source

- 56. Oliver JC, McNeil T: Undergraduate data science degrees emphasize computer science and statistics but fall short in ethics training and domain-specific context. PeerJ Computer Science. March 2021;7:e441. . 10.7717/peerj-cs.441 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57. Novak G: Just-in-time teaching: Blending active learning with web technology. 1999.

- 58. Nederbragt A, Harris RM, Hill AP, et al.: Ten quick tips for teaching with participatory live coding. PLOS Computational Biology. September 2020;16(9):e1008090. doi:10.1371/journal.pcbi.1008090

- 59. Shapiro J: Teaching with live coding in R and RStudio. 2022.

- 60. Podschuweit S, Bernholt S: Composition-Effects of Context-based Learning Opportunities on Students’ Understanding of Energy. Research in Science Education. August 2018;48(4):717–752. doi:10.1007/s11165-016-9585-z

- 61. Parker H: Opinionated analysis development. Technical Report e3210v1, PeerJ Preprints. August 2017.

- 62. Resources for lab developers. 2022.

- 63. Bacchelli A, Bird C: Expectations, outcomes, and challenges of modern code review. 2013 35th International Conference on Software Engineering (ICSE). May 2013:712–721. doi:10.1109/ICSE.2013.6606617

- 64. Stack Overflow: How do I ask a good question? 2023.

- 65. Savonen C, Wright C, Hoffman AM, et al.: Open-source Tools for Training Resources – OTTR. Journal of Statistics and Data Science Education. January 2023;31(1):57–65. doi:10.1080/26939169.2022.2118646

- 66. Lau S, Eldridge J, Ellis S, et al.: The challenges of evolving technical courses at scale: Four case studies of updating large data science courses. Proceedings of the Ninth ACM Conference on Learning @ Scale (L@S ’22). New York, NY, USA: Association for Computing Machinery; 2022:201–211. ISBN 9781450391580. doi:10.1145/3491140.3528278

- 67. Shapiro J: exrcise. 2019.

- 68. Jones Q, Vindas Meléndez AR, Mendible A, Aminian M, et al.: Data science and social justice in the mathematics community. arXiv. 2023. doi:10.48550/arXiv.2303.09282

- 69. Dweck C: Mindset: The New Psychology of Success. Ballantine Books; 2016.

- 70. Kamvar Z, Nitta J: sandpaper: Create and Curate Carpentries Lessons. R package version 0.16.2. 2024. https://github.com/carpentries/sandpaper/ https://carpentries.github.io/workbench/ https://carpentries.github.io/sandpaper/