Abstract

High-quality data is crucial for accurate machine learning and actionable analytics, however, mislabeled or noisy data is a common problem in many domains. Distinguishing low- from high-quality data can be challenging, often requiring expert knowledge and considerable manual intervention. Data Valuation algorithms are a class of methods that seek to quantify the value of each sample in a dataset based on its contribution or importance to a given predictive task. These data values have shown an impressive ability to identify mislabeled observations, and filtering low-value data can boost machine learning performance. In this work, we present a simple alternative to existing methods, termed Data Valuation with Gradient Similarity (DVGS). This approach can be easily applied to any gradient descent learning algorithm, scales well to large datasets, and performs comparably or better than baseline valuation methods for tasks such as corrupted label discovery and noise quantification. We evaluate the DVGS method on tabular, image and RNA expression datasets to show the effectiveness of the method across domains. Our approach has the ability to rapidly and accurately identify low-quality data, which can reduce the need for expert knowledge and manual intervention in data cleaning tasks.

Keywords: Data Valuation, Deep Learning, Drug Response, LINCS

1. Background

1.1. Introduction

Modern research and “big data” have led to remarkable discoveries and spurred many fields toward high-throughput data collection to capitalize on emerging methods in data science, machine learning, and artificial intelligence. Scientists involved in data collection go to great efforts to generate accurate and reproducible data, however, unavoidable measurement noise, batch effects, and natural stochasticity often lead to varying levels of data quality. Many foundational high-throughput datasets are affected by reproducibility and data quality issues, which often limit the actionable results of these resources [1, 2, 3, 4].

1.1.1. Data Valuation

Data quality relates to the capacity of data to represent the underlying process. For example, the objective of photography is to gather information about a three-dimensional scene, while the purpose of measuring temperature is to reflect the kinetic energy of an object. Data quality issues can arise from many sources; for instance, chromatic aberration or lens imperfections in photography can distort images, creating inaccurate representations of a scene. Similarly, a miscalibrated thermometer might not measure temperature correctly. Data quality issues can be particularly problematic in machine learning [5, 6, 7], as a small subset of inaccurate samples can significantly degrade modeling performance even if the majority of samples are high-quality. Curating high-quality datasets can be challenging and usually requires expert knowledge of both the data generation process and the underlying process being measured. A more automated approach to quantify data quality is a class of algorithms called data valuation, which assigns a numerical value to each sample in a dataset that characterizes its usefulness toward a predictive task. In the right context, data valuation can effectively capture many aspects of data quality. While there are a number of published data valuation algorithms, many of them follow a similar overarching approach, in which the user must define:

Source dataset: The samples that will be valued. Note that this is sometimes called the training dataset1.

Target dataset: This dataset characterizes the task or goal of the data valuation, and the choice of alternative target datasets are liable to result in different data values. Note that this is sometimes called the validation dataset2.

Learning algorithm: The choice of predictive model, e.g., Logistic regression, random forest, neural network, etc.

Performance metric: The evaluation metric used to compare the learning algorithms predictions against the ground truth, e.g., Accuracy, area-under-the-receiver-operator-curve (for classification), mean-squared-error, (for regression), etc.

Provided these four user-defined elements, a Data Valuation algorithm then assigns a numerical value to each sample in the source dataset that quantifies the importance of a sample, or its contribution to the predictive performance of the learning algorithm as evaluated on the target dataset. This method can be used in a number of ways, such as:

Model Enhancement: To improve the predictive performance of a model by filtering low-quality data or identifying mis-labeled samples.

Attribution: To quantify data value for monetary recompense or to quantify fair contribution, i.e., credit.

Domain Adaptation: To identify samples from an alternative domain that are relevant to a target task.

Efficiency: Reduce the compute resources (runtime or memory) required to train machine learning models.

Existing methods for data valuation include Leave-One-Out (LOO) [10], Data Shapley [8], and Data Valuation using Reinforcement Learning (DVRL) [9]. Under some conditions, DVRL has been shown to out-perform both Data Shapley and LOO and has been applied to large datasets (more than 500k samples). In noisy or corrupted datasets, these methods can be used to significantly improve machine learning prediction performance by filtering low data values prior to model training. Additionally, data values were shown to effectively quantify data quality aspects such as the amount of noise in an image or incorrect class labels [8] (i.e., low values correlate with high-noise or mislabeled observations). As a demonstration of these methods, a recent paper used Data Shapley to value an x-ray image dataset for the prediction of pneumonia. By removing approximately 20% of their training data with the lowest data values, the authors were able to improve the test set prediction accuracy by more than 15%. Furthermore, when the authors inspected a subset of images with the lowest data values, they found it significantly enriched for mislabeled images [11].

A key aspect of Data Shapley is the definition of equitable data conditions [8], which we summarize as:

Nullity: If a sample does not affect model performance, it should have a value of zero.

Equivalency: Two samples with equal contribution should have equal values.

Additivity: The sum of samples data values should be equal to the data value of the grouped samples.

While these conditions are convenient descriptors of data in many settings, they are not required for most of the pragmatic tasks of data valuation. Furthermore, Data Shapley is the only data valuation method to our knowledge with theoretical justifications fulfilling these conditions. Other methods, such as DVRL, perform comparably or better in many data valuation applications, such as corrupted label identification [9].

1.2. Library of Integrated Network-Based Cellular Signatures

There are few, if any, datasets devoid of data quality issues, and addressing these challenges can improve the results of downstream analytics. A foundational dataset that has been highly impactful in modern research, especially in the cancer and drug-development domain, is the Library of Integrated Network-Based Cellular Signatures (LINCS) project. The LINCS program has generated high-dimension transcriptomic profiles (L1000 assay; 978 landmark genes) characterizing the effect of chemical and genetic perturbations across a range of cellular contexts, time points, and dosages [12]. This data has been used successfully in many applications; however, a continued challenge with high-throughput data pipelines is the identification of low-quality samples. In 2016, a systematic quality control analysis of LINCS L1000 data showed that differentially expressed genes (DEGs) inferred from the L1000 platform were often unreliable. For example, only 30% of DEGs overlapped between any two selected control viral vectors in short-hairpin RNA (shRNA) perturbations [4]. To address these issues, many researchers have proposed methods to improve the L1000 data analysis pipeline, including alternative approaches to peak deconvolution [13, 14], and a novel method of aggregating bio-replicates in order to improve the noise-to-signal ratio [15, 16].

A recent paper, which sought to use the LINCS L1000 dataset for the repurposing of COVID-19 drugs, proposed a simple but effective method of quantifying sample-level data quality by computing the average Pearson correlation (APC) between the replicates of a perturbation. Intuitively, if replicates are discordant, and therefore have low or negative pairwise correlations, then the resulting APC value is low; however, if the replicates are concordant and have high pairwise correlations, then the APC value is high. The authors went on to show that filtering L1000 data based on APC values could significantly improve the predictive accuracy of machine learning models [17].

Improvement of data quality in large publicly available datasets, such as the LINCS project, has the potential to markedly improve the usefulness and impact of these datasets. In addition, effective data quality metrics could be used to inform the selection of new conditions that will be most beneficial to select prediction tasks or to avoid conditions that are unlikely to be useful.

1.3. Related Work

Dataset Distillation is a related field, which attempts to distill knowledge from a large dataset into a small one by synthesizing a new dataset that is representative of the original dataset but much smaller [18, 19]. Adjacent to this domain is core-set or instance selection that focus on selecting a subset of a dataset that leads to comparable or better machine learning performance. In many pragmatic applications, data valuation can be seen as coreset or instance selection method; For instance, data valuation produces a ranked list of the samples in a given dataset, based on their value or usefulness towards a predictive task. A ranked list of observations can easily be treated as an instance selection problem by choice of a threshold. Selection of a data value threshold, either by post-hoc analysis or manual choice, reframes data valuation methods as a instance selection approach. Additionally, many of the evaluation techniques of common data valuation methods are analogous to instance selection (e.g., machine learning performance improvement goals). There is no analog for the equitable data value conditions described by Ghorbani et al. [8] in core-set or instance selection. Several notable methods of core-set or instance selection includes herding [20, 21], distribution-matching [22, 23] and incremental-gradient matching approaches [24]. There have also been instance selection approaches for large language models, which require large amounts of data to train, and the choice of prompting can have drastic impacts on model performance [25, 26].

Anomaly detection or outlier detection attempts to separate data instances that deviate from the majority of samples [27]. Data valuation, especially when used to identify corrupted labels or characterizing exogenous feature noise, can be examined from the lens of anomaly detection. For instance, the DVRL Estimator model tries to learn a joint probability distribution of exogenous and endogenous features that maximizes predictive performance of a given learning algorithm. If we make the assumption that identifying in-distribution training data will lead to test performance generalization, then DVRL can be thought of as a method for separating anomalous (out-of-distribution) from normal samples (in-distribution). There have been countless methods introduced for anomaly detection, however, of particular relevance to this paper is a gradient-based anomaly representation for autoencoders proposed by Kwon et. al, which defines an anomaly score based on both reconstruction error and the gradient. [28].

There has also been significant research on how to train machine learning models in the presence of noisy or corrupted data. These methods range broadly and include meta learning, sample re-weighting schemes [29, 30], noise-robust loss functions [31] and loss correction algorithms [32]. These methods predominately focus on training high-performing models without explicitly removing corrupted or spurious observations; however, several of these methods use re-weighting schemes that rely on interim observation-specific weights and could be considered analogous to data values.

1.4. Contributions

Data valuation is an efficient and automated approach to characterizing sample informativeness, particularly in data cleaning tasks such as identifying incorrectly labeled or noisy samples. Existing data valuation methods, however, have limitations that hinder widespread application. Data Shapley does not scale well to large datasets and underperforms in certain tasks like corrupted label identification compared to DVRL. DVRL often exhibits high performance in data valuation applications, but is sensitive to hyperparameters, choice of dataset, and predictive model. It can be inconvenient and time consuming to tune the DVRL hyperparameters and is ineffective in some predictive tasks. Furthermore, while DVRL is significantly faster than Data Shapley, this method still requires sequential training of models to accurately estimate data values, which consumes significant computational resources.

In this paper, we introduce a novel data valuation method and compare it against baselines in two key tasks: 1) identifying corrupted labels and 2) identifying samples with high exogenous feature noise. We also explore the application of data valuation in unsupervised learning settings, which to our knowledge is the first method to evaluate this. Unsupervised data valuation is ideal for quantifying sample noise in biological data types such as ‘omics sequencing data (RNA expression, DNA mutation, methylation, etc.). Finally, we apply our method to compute data values for the LINCS L1000 level 5 dataset, which contains more than 700,000 high-dimensional samples. Our method demonstrates performance comparable to that of Data Shapley [8] and DVRL [9] while being significantly more computationally efficient. The speed and scalability of our method make it applicable to large datasets, even with small compute budgets. Moreover, our method is robust to hyperparameters, making it user-friendly.

Although data quality metrics have been proposed for the LINCS L1000 dataset, such as the average Pearson correlation (APC) between replicates [17], our data valuation results offer an alternative data quality metric. We show that filtering data based on our data values results in equivalent or higher-performing models than data filtering based on APC. Additionally, we show that our method is more effective in capturing high-valued samples than the APC metric, which could be used to inform future data acquisition decisions.

2. Proposed Methods

2.1. Data Valuation with Gradient Similarity

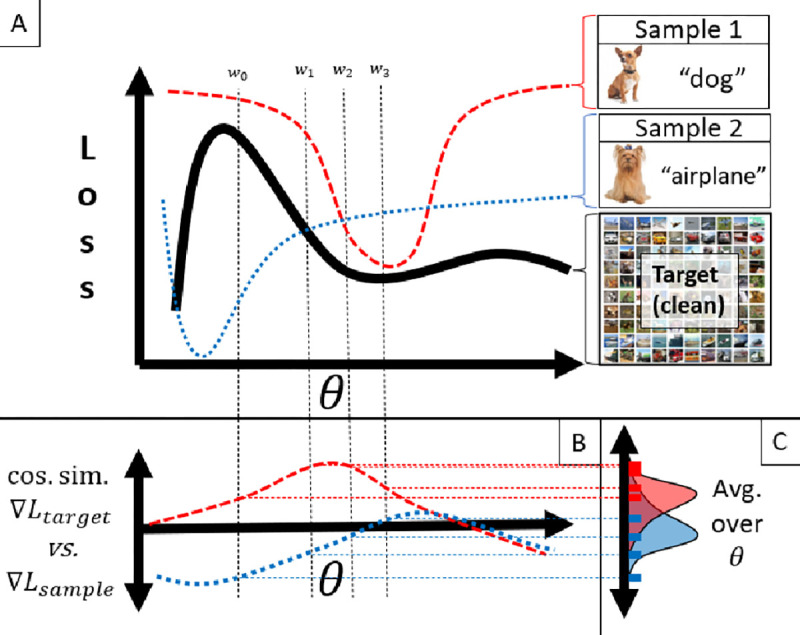

We propose a method of Data Valuation with Gradient Similarity (DVGS), based on the premise that source samples with a loss surface similar to the target loss surface will be more useful to a shared predictive task than source samples with dissimilar loss surfaces. For instance, a training dataset loss surface with a similar shape and minima to the validation dataset loss surface is likely to positively contribute to the validation predictive task. This premise is visualized by a toy example in Figure 1. Analytically computing the loss criteria for all possible parameter values (i.e., the full loss surface) is intractable for most problems, and therefore a comprehensive comparison of loss surfaces is challenging. However, we can approximate the comparison of loss surfaces by comparing gradient similarities at select parameter values. Comparison of gradients is also advantageous as it factors out the absolute loss value.

Figure 1:

We propose a method of data valuation that compares each source sample to the target samples by computing the similarity of gradients during stochastic gradient descent. In panel A, we depict a toy-example of a 1-d loss landscape. Sample 1 (red) is an accurately labeled (high-quality), whereas sample 2 (blue) is incorrectly labeled (low quality). In panel B, we plot the similarity of each source sample gradient compared to the target set gradient (black solid line in panel A). Panel C shows the marginal distribution of gradient similarities, which is averaged to obtain the final source sample data value. To make this process tractable, gradient similarities are computed over a limited number of model parameter values during traditional stochastic gradient descent. The computed gradients are visualized by dotted lines in panels A,B and . To choose the relevant values of , we use stochastic gradient descent (SGD), with gradients calculated from the target set.

Similarly to other data valuation methods, DVGS requires a target dataset that characterizes the desired predictive task. The target dataset may be of high quality, specific prediction domain, or a randomly sampled holdout set. Additionally, the user must define a differentiable predictive model that can be trained using stochastic gradient descent (SGD). The source dataset serves as input on which data valuation will occur, with the goal of characterizing useful or detrimental samples. To perform DVGS, we optimize model parameters using SGD on the target dataset and at each iteration compute the similarity of the target batch gradient to each source sample gradient. We posit that this approach will accurately estimate data values if the gradient similarities are measured in critical regions of the weight-space, such as regions commonly explored during optimization. This procedure is documented in Algorithm 1. We do not expect or justify that this approach satisfies the equitable data value conditions proposed by Ghorbani et al., however, we empirically demonstrate that this approach effectively characterizes data quality in many real-world prediction tasks while being simple, scalable, and easily extensible to a wide range of model architectures and predictive tasks.

Algorithm 1.

Data Valuation with Gradient Similarity

| Require: Differentiable model , learning rate , source dataset , target dataset , number of training iterations , target batch size , loss criteria , and similarity criteria . | |

| 1: for do | |

| 2: | ▷ sample mini-batch from target set |

| 3: for do | |

| 4: | |

| 5: | ▷ predict outcome for target batch |

| 6: end for | |

| 7: | ▷ compute target batch gradient |

| 8: for do | |

| 9: | |

| 10: | ▷ predict outcome for source sample |

| 11: | ▷ compute the gradient for the source sample |

| 12: | ▷ compute similarity of source sample gradient to the target batch gradient |

| 13: end for | |

| 14: | ▷ update model parameters using the target batch gradient |

| 15: end for | |

| 16: for do | |

| 17: | ▷ compute the average gradient similarity for each source sample |

| 18: end for | |

Calculating the similarity between the gradients of the source samples and the target dataset requires a function that takes as input two high-dimensional gradient vectors and returns a single scalar characterizing similarity. Theoretically, any distance metric is applicable here, however, we chose to use cosine similarity because it produces easily interpreted values between [−1,1] and neglects vector magnitude. We were concerned that gradient magnitudes may vary between early- and late-stage training, and to avoid biasing data values by large gradient magnitudes, we rationalize that gradient magnitude should be ignored.

In classification problems, each class is likely to induce a distinct gradient, and therefore target sets with a class imbalance are likely to introduce class-specific biases to data values. For instance, in a binary classification problem, if the target set has a majority of the positive class, then the source samples with the negative class may be particularly dissimilar, even if they are valuable to the optimization process. To avoid inadvertent bias of class-based data values, we suggest balancing class weights [33] when computing target gradients. Future approaches should explore the comparison of within-class gradient similarities, which may mitigate this problem without class balancing.

Intuitively, the choice of initialization weights is likely to produce different data values, especially if the target set has a complex multimodal loss surface. To prevent variance in DVGS data values due to weight initialization or stochastic mini-batch sampling, we add the option to run the DVGS algorithm multiple times, each with unique weight initialization and randomization seeds. Using this approach enables DVGS to explore multiple minima and compute similarity values on a wider range of parameter values. To aggregate a final data value, gradient similarities are averaged across all iterations and runs.

2.2. Time Complexity

In most applications, it is reasonable to assume that the target dataset is much smaller than the source dataset, and therefore most of the runtime is spent computing the source gradients. This can be partially mitigated by only computing gradient similarities every iterations or by pretraining the model. We estimate1 the computational complexity in big O notation:

We expect that the DVGS method will scale linearly with the number of source samples and training iterations. A particular advantage of the DVGS methods is that only a single model need be trained, whereas Data Shapley and DVRL require training many models sequentially. This time complexity makes it suitable for application to large datasets. Additionally, DVGS can be run in parallel and the results averaged to compute more accurate data values; Such an ensemble approach is ideal for large datasets and complex loss surfaces. In many tasks, such as image classification with convolutional neural networks, it can be advantageous to pretrain the convolutional layers prior to performing DVGS.

2.3. Data

In this paper, we apply our data valuation algorithm to four datasets under various conditions.

The ADULT dataset, also known as the “census income” dataset, consists of 14 categorical or integer features representative of an adult individual and labeled based on whether they make more than 50k dollars per year [34].

The BLOG dataset consists of internet blog characteristics parsed from the raw HTML file and the output is the average number of comments received; We then binarize the endogenous variable with threshold of 0 [35].

The CIFAR10 dataset, which consists of tiny images labeled as one of 10 possible objects [36]; we transform the images into an informative feature representations using a pre-trained InceptionNet prior to data valuation [37].

The LINCS L1000 dataset measures RNA expression in cell lines some time after a chemical or genetic perturbation [12] We further break the LINCS L1000 into two data partitions: 1) all data and 2) high-APC (>0.5) data (see supp. note 5.2).

We chose the first three datasets and pre-processing steps (ADULT, BLOG, and CIFAR10) to match the evaluations performed in previous work [9, 8]. Similarly, we try to match the respective dataset size (target, source, test) choices made in previous work to provide similar evaluations.

The LINCS L1000 is a widely used biological dataset that suffers from known data quality issues [12, 16, 14, 13, 1, 17] and removing inaccurate or noisy samples from this dataset could benefit the cancer drug response domain.

2.4. Dataset Corruption

To simulate poor data quality, we artificially corrupt datasets in two ways:

Label Corruption; Endogenous variable (y)

Feature Corruption; Exogenous variable (x)

Labels are corrupted by randomly relabeling a proportion of the source dataset class labels; for instance, an image of a “dog” might be re-labeled as “cat”. The corrupted sample indices are then used as the ground truth of data quality and can be compared to data values. The expectation is that corrupted labels will have lower data values indicating that they are less valuable to model performance. To summarize the ability of data values to identify corrupted samples, we use the area under the receiver operator curve (AUROC) metric:

Where is the corrupted label mask (0 = uncorrupted; 1 = corrupted) and is the data values. Notably, we flip the data value sign as we expect large data values to indicate high quality data, and small data values to indicate low quality or mislabeled observations.

To explore the ability of data valuation to capture exogenous feature sample quality, we add Gaussian noise to each observation:

where is feature of the corrupted sample , and is an observation-specific noise rate sampled from a uniform distribution. Thus, samples with larger noise rates , will have noise with greater variance. The primary evaluation task is to apply data valuation and compare the data values with the sample-specific noise rates. We expect that samples with large noise rates will have small data values, indicating that they are less valuable to model performance. To evaluate performance on this task, we use Spearman correlation [38]. Note that we change the sign of our data values as we expect that high data values should correlate with large noise rates:

3. Results

3.1. Label Corruption

To evaluate the ability of data values to capture mislabeled samples, we artificially corrupt labels in three classification datasets: ADULT, BLOG, and CIFAR10. We compare DVGS to several baseline methods:

Randomly assigned data values (null model)

Leave-out-out (LOO) [10]

Truncated Monte-Carlo Data Shapley (dshap) [8]

Data Valuation with Reinforcement learning (DVRL) [9]

The Leave-one-out and Data Shapley algorithms are only applied to the ADULT and BLOG datasets due to compute resource constraints.

In all three datasets, we corrupt 20% of the labels. For the ADULT and BLOG datasets we use 1000 source observations and 400 target observations. For the CIFAR10 dataset, we use 5000 source observations and 2000 target observations. We expect accurate data valuation to produce values such that corrupted samples data values will be smaller than uncorrupted samples, indicating that they are less valuable or useful toward our target predictive task. Additionally, we expect that filtering corrupted labels should improve model performance. In each experiment, we evaluate the ability of data values to 1) identify corrupted labels and 2) modify model performance as measured on a hold-out test set when we filter a proportion of the dataset. In this second task, we evaluate the performance changes when we filter high-values (expectation that performance will decrease) versus low-values (expectation that performance will improve or be unaffected).

For all three datasets, we use a 2-layer neural network as the learning algorithm and the area under the receiver operator curve (AUROC) as the performance metric [39]. Each experiment is run at least five times with randomly sampled data subsets and unique weight initialization. Experiments are repeated to ensure stable results across diverse subsets of data and weight initialization.

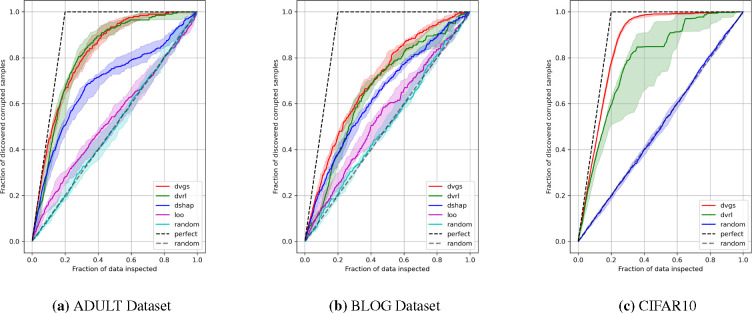

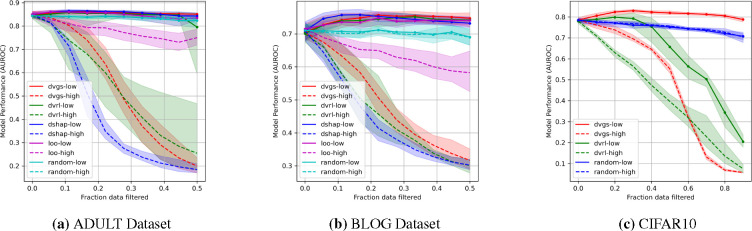

Figure 2 compares the ability of five data valuation methods to identify corrupt labels. Figure 3 compares the effects of filtering based on data values on performance. In all three datasets, DVGS performs comparably or better than baseline data valuation methods. DVGS performs particularly well on the CIFAR10 dataset, which may be due to the informative features extracted from a pretrained InceptionNet model [37].

Figure 2:

Evaluation of respective data valuation methods ability to identify corrupted labels. The Gray dashed “random” are theoretical random performance, whereas blue/cyan “random” is empirically measured random values.

Figure 3:

The evaluation of respective data valuation methods ability to impact model performance when filtering either high value (dashed lines) or low values (solid lines).

The predictive quality of the data values for the identification of corrupt labels is shown in Table 1. DVGS data values are the most predictive of corrupted labels in all three datasets, as measured by the AUROC score. DVRL often performed comparably to DVGS, however, DVRL convergence was inconsistent and occasionally resulted in a suboptimal policy, as evidenced by the wide confidence intervals of DVRL in Figure 2 and large standard deviations of CIFAR10 in Table 1. Additionally, we note that DVGS underperforms compared to Data Shapley when characterizing high data value, as seen in relative performance trends when filtering high-value data in Figure 3.

Table 1:

The Area under the receiver operator curve (AUROC) scores if the data values are used to predict corrupted labels (score = AUROC(noise_labels, -data_values)); mean ± std.

| DATASET | DVGS | DSHAP | DVRL | LOO | RANDOM |

|---|---|---|---|---|---|

| adult | 0.896 ± 0.030 | 0.731 ± 0.049 | 0.887 ± 0.042 | 0.542 ± 0.056 | 0.503 ± 0.050 |

| blog | 0.750 ± 0.028 | 0.671 ± 0.021 | 0.697 ± 0.033 | 0.558 ± 0.063 | 0.509 ± 0.028 |

| cifar10 | 0.954 ± 0.009 | NA | 0.835 ± 0.110 | NA | 0.499 ± 0.019 |

3.2. Characterization of Sample Noise

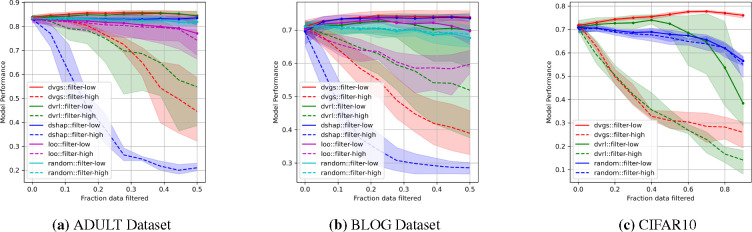

In many domains, input features may be noisy due to measurement error, natural stochasticity, or batch effects, leading to inaccurate sample informativeness. To explore the ability of data valuation to quantify input feature noise, we artificially corrupt exogenous features as described in Section 2. For this task, we evaluate data valuation in supervised (ADULT, BLOG and CIFAR10) and unsupervised learning (CIFAR10 and LINCS) settings. In the supervised setting, we use architectures and hyper-parameters identical to those described in Section 3.1. In unsupervised settings, we use an autoencoder architecture [40, 41] to create a low-dimensional representation and optimize using reconstruction mean square error (MSE). We justify that noisy samples will be more difficult to reconstruct and are likely to be detrimental to the performance. For the unsupervised setting, we apply our methods to two datasets: the CIFAR10 dataset and a high-quality subset of the LINCS L10002. The ability of the data values to characterize the exogenous feature noise rates is reported in Table 2. Compared to baseline methods, DVGS produces data values that most strongly correlate3 with ground-truth noise rates. As in Section 3.1, we also evaluate the performance impact of filtering data based on data values, and these results are shown in Figure 4. We find that DVGS can most effectively characterize noise rates across all datasets. Additionally, when we compare model performance improvements when low value data are removed, as shown by the solid lines in Figure 4, we find that the performance of the DVGS method is comparable to or better than the baseline methods. As observed in the results of the supervised setting, we find that Data Shapley outperforms DVGS in quantifying high-quality data, measured by model performance decrease when filtering high-value data in both the ADULT and BLOG datasets, shown in Figure 4 (a,b). In some of the learning tasks listed in Table 2 only one or none of the baseline methods are calculated due to compute limitations.

Table 2:

The Spearman correlation of predicted data values and artificial sample noise rates. The top performing method for each row is bolded; mean ± std.

| Dataset | Learning | DVGS | DSHAP | DVRL | LOO | RANDOM |

|---|---|---|---|---|---|---|

| adult | supervised | 0.225 ± 0.061 | 0.130 ± 0.091 | 0.159 ± 0.074 | 0.022 ± 0.076 | −0.007 ± 0.026 |

| blog | supervised | 0.106 ± 0.077 | 0.086 ± 0.074 | 0.100 ± 0.344 | 0.045 ± 0.078 | 0.011 ± 0.054 |

| cifar10 | supervised | 0.402 ± 0.081 | NA | 0.358 ± 0.103 | NA | 0.000 ± 0.018 |

| cifar10 | unsupervised | 0.757 ± 0.131 | NA | NA | NA | 0.003 ± 0.014 |

| lincs (APC>0.5) | unsupervised | 0.505 ± 0.018 | NA | NA | NA | NA |

Figure 4:

The evaluation of respective data valuation methods ability to impact model performance when filtering either high value (dashed lines) or low values (solid lines). The y-axis measures the model performance using the AUROC metric.

3.3. Computational Complexity

DVGS can be applied to large datasets and complex tasks with markedly lower computational costs than previous data valuation methods and enables application to new domains and data types. In Table 3, we show the runtime of four data valuation algorithms. On average, DVGS is roughly five times faster than DVRL and more than 100 times faster than truncated Monte-Carlo (TMC) Data Shapley. Compared to DVRL and Data Shapley, which require sequential training of models on different subsets of data, the DVGS method requires training only one model. Furthermore, by computing the gradient similarities every batches, the DVGS runtime can be reduced by a factor of . In practice, we find that using values of between 2 and 5 has a marginal impact on the performance of the data values used for corrupted label discovery. These experiments are described in more detail in Supplementary Section 5.3.

Table 3:

Average runtime (in minutes) of 8 experiments. Experiments 1–3 were for label corruption; Experiments 4–6 were for noise characterization; Experiments 7 and 8 were unsupervised characterization of noise.

| method | exp1 | exp2 | exp3 | exp4 | exp5 | exp6 | exp7 | exp8 |

|---|---|---|---|---|---|---|---|---|

| dshap | 515.2 | 774.9 | NaN | 404.5 | 631.0 | NaN | NaN | NaN |

| dvgs | 1.3 | 1.2 | 5.3 | 1.4 | 1.3 | 5.1 | 154.0 | 41.7 |

| dvrl | 9.9 | 9.5 | 13.2 | 9.8 | 9.8 | 11.7 | NaN | NaN |

| loo | 33.0 | 34.0 | NaN | 35.1 | 34.7 | NaN | NaN | NaN |

3.4. Data Valuation of the LINCS dataset

In this section, we apply our DVGS method to quantify LINCS L1000 sample quality across all chemical perturbations. In each experiment, we randomly sampled a target and a test set (5000 observations each) in two conditions:

Noisy Target set (high-APC). Target dataset sampled from all available observations.

Clean Target set (all-APC). Target dataset sampled from high-APC observations (APC > 0.5).

In both configurations, we adjust the target set sampling probabilities so that the target set is balanced by perturbation type. The source set consists of all samples that are not in the target or test sets. See Supplementary 5.2 for more information on APC calculation.

Data valuation of LINCS could be done in a supervised or unsupervised setting, however, we chose to use an unsupervised prediction task for the following reasons:

Simplicity: Encoding drug, cell line, concentration and measurement time requires additional overhead and may bias the results toward the encoding method chosen; e.g., encoded by drug targets, cell line expression, etc.

Imbalanced Dataset: drug perturbations and cell lines are not equally represented in the LINCS dataset, and this may cause bias toward the over represented drugs or cell lines. While this is a concern in an unsupervised setting, we rationalize that removing exogenous variables may help mitigate the issue. Additionally, to further mitigate this concern we select a target set with more balanced proportions of drug perturbations.

Noise Quantification: We consider measurement noise to be the primary data quality issue in the LINCS L1000 dataset and would like our data values to characterize sample noise rates. The results from Section 3 indicates that DVGS can effectively quantify sample noise using an unsupervised learning task.

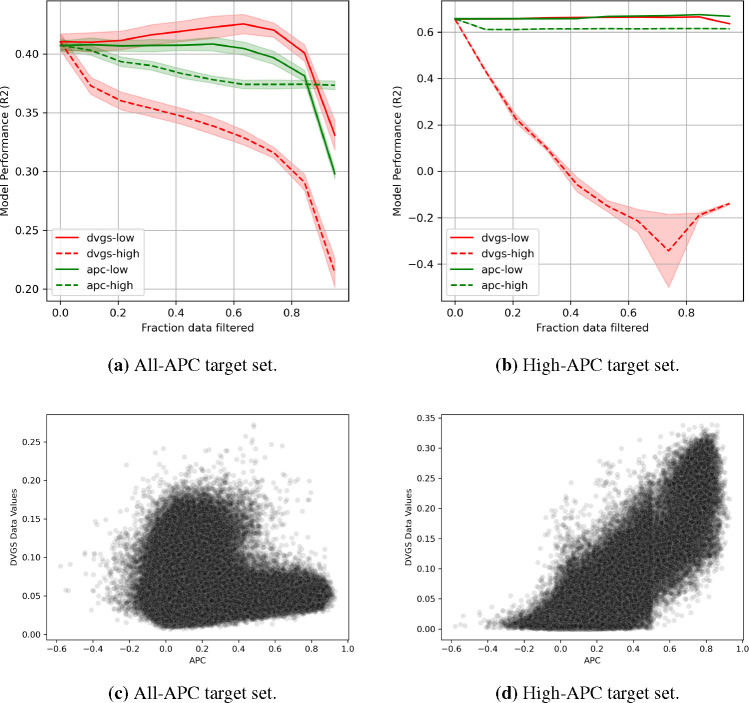

For this task, we use an autoencoder with 2-layers in the encoder and decoder networks and 32 latent channels (embedding dimension). To avoid dependence on a specific target set, we ran the experiment several times using different source, target, and test sets, as well as unique weight initializations. We compare the DVGS data values with the APC metric, proposed by Pham et al., to compare the generated data values to previous LINCS L1000 sample quality metrics. We evaluate the performance of LINCS data values by their ability to modify model performance when filtering high- and low-value data. Figure 5 shows the performance comparison between the APC and DVGS data values. In the high-APC and all-APC conditions, we see that DVGS captures low data quality much better than the APC metric. In the all-APC condition, DVGS outperforms APC in capturing high-quality data, however, the DVGS data values and APC perform comparably in the high-APC condition. Additionally, we find that DVGS values and APC values correlate in the high-APC condition (Pearson Correlation ~ 0.84) but not in the all-APC condition (Pearson Correlation ~ −0.05). More specifically, in Figure 5c we see that high APC values are depleted for high data values, suggesting that DVGS data values in the all-APC condition may characterize a different aspect of data quality or usefulness than APC.

Figure 5:

(a-b) The reconstruction performance of autoencoders applied to the LINCS L1000 data when filtering low- and high- value data. (c-d) DVGS data values compared to APC values.

4. Discussion

In this work, we address scalability limitations of current data valuation methods by proposing a fast and robust method to estimate data values. We show that this method performs comparably or better than baseline methods in several tasks, including 1) identifying corrupted labels and 2) characterizing exogenous feature noise. Additionally, we have shown that our method works well to modify model performance when filtering data based on data values, and performs comparably or better than baselines when filtering low-value data. While Data Shapley and DVRL tend to lead to larger decreases in model performances when filtering high-value data, DVGS performs exceptionally well at identifying corrupted labels and noisy samples, especially in vision tasks using pretrained models. DVGS is also, on average, 100 times faster than Data Shapley (TMC) and 5 times faster than DVRL. This improvement in time complexity makes DVGS applicable to a wide range of datasets and domains. Additionally, in the reported experiments, DVGS was stable across hyperparameters (see Supplementary note 5.1), data partition, and weight initialization. These characteristics make DVGS convenient and robust for many applications in data cleaning and machine learning.

To show the value of our DVGS method in a real world scenario and to address data quality issues in a foundational dataset, we apply DVGS to the LINCS L1000 level 5 dataset that has more than 700k high-dimensional samples. We compare our method with a previous LINCS quality metric, the Average Pearson Correlation (APC), and show that our DVGS-produced data values are better able to modify model performance when filtering based on value. Interestingly, using a target dataset drawn randomly from the dataset (not necessarily high-quality) leads to data values that 1) do not correlate well with APC, and 2) significantly outperform APC as measured on a hold-out test set drawn from the full dataset.

4.1. Limitations and Future Directions

Similarly to DVRL, our DVGS method lack the equitable data value properties proposed by Ghorbani et al., and therefore should not be interpreted in the same way; DVGS data values do not have a convenient interpretation like Data Shapley values. Rather, DVGS data values should be considered latent variables characterizing data usefulness, and we make no assumption about the linearity or magnitude of DVGS data values. These traits suggest that DVGS data values should be treated contextually as an ordered list of valuable samples. Pragmatically, ranked sample values meet the requirements of many of the evaluation techniques used by previous data valuation methods [8, 9] including identifying corrupted labels and noise quantification. Future directions may consider learning a task-specific function to estimate Data Shapley values from DVGS data values, which would allow users to interpret the DVGS data values in a way comparable to Data Shapley. This could be done by performing DVGS data valuation and calculating a limited number of Data Shapley values, which could then be used as a training set to infer Data Shapley values from DVGS values. Such an approach may help merge the scalability advantages of DVGS with the interpretability of Data Shapley.

Through the lens of anomaly detection, DVGS can be viewed as a meta-learning algorithm that quantifies the similarity of the source samples to the target dataset and could potentially be used for anomaly detection. Additionally, this perspective may help explain why the DVGS method underperforms compared to baselines in identifying high-value data. For instance, if DVGS data values are considered a metric of similarity to the target set, then it may be that the most “similar” samples are not necessarily the most useful, whereas the most “dissimilar” data are likely erroneous or detrimental. It is therefore important that large data values be treated with caution. Additionally, it raises the question: how does DVGS handle redundant (or highly-similar) data in either the target or source datasets? Future work should address these concerns and characterize how redundancy can skew or alter DVGS data values.

While DVGS works remarkably well on the evaluations listed in this paper, we do recognize that it is rare for gradient-based learning algorithms to be trained on gradient from single samples (e.g., on-line learning) and that most optimization algorithms are trained using mini-batches, thus implying that any sample’s value or usefulness toward a predictive task cannot be considered independent of the other samples. Future work may wish to address this by looking at gradient similarity within mini-batches, or by selecting samples that align mini-batch gradients to the target dataset. One can imagine bior multi-modal sample-gradients, all of which may align poorly to a target mini-batch gradient, but when source samples are averaged in a mini-batch may align far more closely.

Supplementary Material

Acknowledgements

This work is supported by the National Library of Medicine (NLM) Training Grant (T15-LM07088).

The authors thank Dr. Yoon for input on DVRL implementation details and Dr. Ben Cordier for the many discussions about data valuation.

Footnotes

Code and Data Availability

The Adult, Blog and Cifar10 datasets can be accessed from the UCI machine learning repository [34]. The LINCS data can be accessed from the CLUE data library. All code used for production of the paper figures and the methods described can be found here https://github.com/nathanieljevans/DVGS. Further questions can be directed to Nathaniel Evans (evansna@ohsu.edu).

We use this naming convention to avoid confusion later since DVGS updates model parameters based on gradient from the “Target Dataset” rather than the “Source Dataset.” The Data Shapley [8] and Data Valuation with Reinforcement Learning (DVRL) [9] would refer to this as the “Training” dataset.

The Data Shapley [8] and Data Valuation with Reinforcement Learning (DVRL) [9] would refer to this as the “Validation” dataset.

See supplementary note 5.3 for experimental evaluation of time complexity.

Observations with an average Pearson correlation between replicates greater than 0.5

More positive correlation is better performance; As described in Section 2, evaluation is performed by , since low data values are expected to correlate with high noise rates.

References

- [1].Niepel Mario, Hafner Marc, Mills Caitlin E., Subramanian Kartik, Williams Elizabeth H., Chung Mirra, Gaudio Benjamin, Anne Marie Barrette Alan D. Stern, Hu Bin, Korkola James E., Shamu Caroline E., Jayaraman Gomathi, Azeloglu Evren U., Iyengar Ravi, Sobie Eric A., Mills Gordon B., Liby Tiera, Jaffe Jacob D., Alimova Maria, Davison Desiree, Lu Xiaodong, Golub Todd R., Subramanian Aravind, Shelley Brandon, Svendsen Clive N., Ma’ayan Avi, Medvedovic Mario, Feiler Heidi S., Smith Rebecca, Devlin Kaylyn, Gray Joe W., Birtwistle Marc R., Heiser Laura M., and Sorger Peter K.. A multi-center study on the reproducibility of drug-response assays in mammalian cell lines. Cell Systems, 9(1):35–48.e5, 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Begley C Glenn and Ellis Lee M. Drug development: Raise standards for preclinical cancer research. Nature, 483(7391):531—533, March 2012. [DOI] [PubMed] [Google Scholar]

- [3].Prinz Florian, Schlange Thomas, and Asadullah Khusru. Believe it or not: how much can we rely on published data on potential drug targets? Nature Reviews Drug Discovery, 10:712–712, 2011. [DOI] [PubMed] [Google Scholar]

- [4].Cheng L and Li L. Systematic quality control analysis of LINCS data. 5(11):588–598. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [5].Chen Hong, Hailey David, Wang Ning, and Yu Ping. A review of data quality assessment methods for public health information systems. International journal of environmental research and public health, 11(5):5170–5207, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Budach Lukas, Feuerpfeil Moritz, Ihde Nina, Nathansen Andrea, Noack Nele, Patzlaff Hendrik, Naumann Felix, and Harmouch Hazar. The effects of data quality on machine learning performance, 2022. [Google Scholar]

- [7].Cai Li and Zhu Yangyong. The challenges of data quality and data quality assessment in the big data era. Data Sci. J., 14:2, 2015. [Google Scholar]

- [8].Ghorbani Amirata and Zou James. Data shapley: Equitable valuation of data for machine learning. [Google Scholar]

- [9].Yoon Jinsung, Arik Sercan O., and Pfister Tomas. Data valuation using reinforcement learning. Number: arXiv:1909.11671. [Google Scholar]

- [10].Dennis Cook R.. Detection of influential observation in linear regression. 19(1):15–18. Publisher: [Taylor & Francis, Ltd., American Statistical Association, American Society for Quality; ]. [Google Scholar]

- [11].Tang Siyi, Ghorbani Amirata, Yamashita Rikiya, Rehman Sameer, Dunnmon Jared A., Zou James, and Rubin Daniel L.. Data valuation for medical imaging using shapley value and application to a large-scale chest x-ray dataset. 11(1):8366. Number: 1 Publisher: Nature Publishing Group. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [12].Subramanian Aravind, Narayan Rajiv, Corsello Steven M., Peck David, Natoli Ted E., Lu Xiaodong, Gould Joshua, Davis John F., Tubelli Andrew A., Asiedu Jacob K., Lahr David L., Hirschman Jodi E., Liu Zihan, Donahue Melanie K., Julian Bina, Khan Mariya, Wadden David, Smith Ian, Lam Daniel, Liberzon Arthur, Toder Courtney, Bagul Mukta, Orzechowski Marek, Enache Oana M., Piccioni Federica, Johnson Sarah, Lyons Nicholas J., Berger Alice H., Shamji Alykhan F., Brooks Angela N., Vrcic Anita, Flynn Corey, Rosains Jacqueline, Takeda David Y., Hu Roger, Davison Desiree, Lamb Justin, Ardlie Kristin G., Larson J Hogstrom Peyton Greenside, Gray Nathanael S., Clemons Paul A., Silver Serena J., Wu Xiaoyun, Zhao Wen-Ning, Willis Read-Button Xiaohua Wu, Haggarty Stephen J., Ronco Lucienne V., Boehm Jesse S., Schreiber Stuart L., Doench John G., Bittker Joshua A., Root David E., Wong Bang, and Golub Todd R.. A next generation connectivity map: L1000 platform and the first 1,000,000 profiles. Cell, 171:1437–1452.e17, 2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [13].Qiu Yue, Lu Tianhuan, Lim Hansaim, and Xie Lei. A Bayesian approach to accurate and robust signature detection on LINCS L1000 data. Bioinformatics, 36(9):2787–2795, 01 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [14].Li Zhaoyang, Li Jin, and Peng YU. l1kdeconv: an r package for peak calling analysis with lincs l1000 data. BMC Bioinformatics, 18, 2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [15].Clark Neil R., Hu Kevin S., Feldmann Axel S., Kou Yan, Chen Edward Y., Duan Qiaonan, and Ma’ayan Avi. The characteristic direction: a geometrical approach to identify differentially expressed genes. BMC Bioinformatics, 15, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [16].Duan Qiaonan, Reid St. Patrick, Clark Neil R., Wang Zichen, Fernandez Nicolas F., Rouillard Andrew D., Readhead Ben, Tritsch Sarah R., Hodos Rachel, Hafner Marc, Niepel Mario, Sorger Peter K., Dudley Joel T., Bavari Sina, Panchal Rekha, and Ma’ayan Avi. L1000cds2: Lincs l1000 characteristic direction signatures search engine. NPJ Systems Biology and Applications, 2, 2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [17].Pham Thai-Hoang, Qiu Yue, Zeng Jucheng, Xie Lei, and Zhang Ping. A deep learning framework for high-throughput mechanism-driven phenotype compound screening and its application to COVID-19 drug repurposing. 3(3):247–257. Number: 3 Publisher: Nature Publishing Group. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [18].Wang Tongzhou, Zhu Jun-Yan, Torralba Antonio, and Efros Alexei A.. Dataset distillation, 2018. [Google Scholar]

- [19].Yu Ruonan, Liu Songhua, and Wang Xinchao. Dataset distillation: A comprehensive review, 2023. [DOI] [PubMed] [Google Scholar]

- [20].Welling Max. Herding dynamical weights to learn. In Proceedings of the 26th Annual International Conference on Machine Learning, ICML ‘09, page 1121–1128, New York, NY, USA, 2009. Association for Computing Machinery. [Google Scholar]

- [21].Chen Yutian, Welling Max, and Smola Alex. Supersamples from kernel herding, 2012. [Google Scholar]

- [22].Bachem Olivier, Lucic Mario, and Krause Andreas. Coresets for nonparametric estimation - the case of dp-means. In Bach Francis and Blei David, editors, Proceedings of the 32nd International Conference on Machine Learning, volume 37 of Proceedings of Machine Learning Research, pages 209–217, Lille, France, 07–09 Jul 2015. PMLR. [Google Scholar]

- [23].Feldman Dan, Faulkner Matthew, and Krause Andreas. Scalable training of mixture models via coresets. In Shawe-Taylor J., Zemel R., Bartlett P., Pereira F., and Weinberger K.Q., editors, Advances in Neural Information Processing Systems, volume 24. Curran Associates, Inc., 2011. [Google Scholar]

- [24].Mirzasoleiman Baharan, Bilmes Jeff, and Leskovec Jure. Coresets for data-efficient training of machine learning models. 2019. [Google Scholar]

- [25].Lu Pan, Qiu Liang, Chang Kai-Wei, Ying Nian Wu Song-Chun Zhu, Rajpurohit Tanmay, Clark Peter, and Kalyan Ashwin. Dynamic prompt learning via policy gradient for semi-structured mathematical reasoning, 2022. [Google Scholar]

- [26].Sang Michael Xie Shibani Santurkar, Ma Tengyu, and Liang Percy. Data selection for language models via importance resampling, 2023. [Google Scholar]

- [27].Pang Guansong, Shen Chunhua, Cao Longbing, and Anton Van Den Hengel. Deep learning for anomaly detection. ACM Computing Surveys, 54(2):1–38, mar 2021. [Google Scholar]

- [28].Kwon Gukyeong, Prabhushankar Mohit, Temel Dogancan, and Ghassan AlRegib. Backpropagated gradient representations for anomaly detection, 2020. [Google Scholar]

- [29].Ren Mengye, Zeng Wenyuan, Yang Bin, and Urtasun Raquel. Learning to reweight examples for robust deep learning, 2018. [Google Scholar]

- [30].Jiang Lu, Zhou Zhengyuan, Leung Thomas, Li LiJia, and Fei-Fei Li. Mentornet: Learning data-driven curriculum for very deep neural networks on corrupted labels. 2017. [Google Scholar]

- [31].Zhang Zhilu and Sabuncu Mert Rory. Generalized cross entropy loss for training deep neural networks with noisy labels. ArXiv, abs/1805.07836, 2018. [PMC free article] [PubMed] [Google Scholar]

- [32].Hendrycks Dan, Mazeika Mantas, Wilson Duncan, and Gimpel Kevin. Using trusted data to train deep networks on labels corrupted by severe noise. ArXiv, abs/1802.05300, 2018. [Google Scholar]

- [33].Zeng Langche. Logistic regression in rare events data 1. 1999. [Google Scholar]

- [34].Dua D. and Graff C.. UCI machine learning repository. [Google Scholar]

- [35].Búza Krisztián. Feedback prediction for blogs. In Annual Conference of the Gesellschaft für Klassifikation, 2012. [Google Scholar]

- [36].Krizhevsky Alex. Learning multiple layers of features from tiny images. pages 32–33, 2009. [Google Scholar]

- [37].Szegedy Christian, Liu Wei, Jia Yangqing, Sermanet Pierre, Reed Scott, Anguelov Dragomir, Erhan Dumitru, Vanhoucke Vincent, and Rabinovich Andrew. Going deeper with convolutions, 2014. [Google Scholar]

- [38].Spearman C.. The proof and measurement of association between two things. by c. spearman, 1904. The American journal of psychology, 100 3–4:441–71, 1987. [PubMed] [Google Scholar]

- [39].Hanley James A and McNeil Barbara J. The meaning and use of the area under a receiver operating characteristic (roc) curve. Radiology, 143(1):29–36, 1982. [DOI] [PubMed] [Google Scholar]

- [40].Rumelhart David E. and McClelland James L.. Learning Internal Representations by Error Propagation, pages 318–362. 1987. [Google Scholar]

- [41].Baldi Pierre. Autoencoders, unsupervised learning, and deep architectures. In Guyon Isabelle, Dror Gideon, Lemaire Vincent, Taylor Graham, and Silver Daniel, editors, Proceedings of ICML Workshop on Unsupervised and Transfer Learning, volume 27 of Proceedings of Machine Learning Research, pages 37–49, Bellevue, Washington, USA, 02 Jul 2012. PMLR. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.