Abstract

Endo‐microscopy is crucial for real‐time 3D visualization of internal tissues and subcellular structures. Conventional methods rely on axial movement of optical components for precise focus adjustment, limiting miniaturization and complicating procedures. A meta‐device, composed of artificial nanostructures, is an emerging flat optical device that can freely manipulate the phase and amplitude of light. Here, an intelligent fluorescence endo‐microscope is developed based on a varifocal meta‐lens and deep learning (DL). The breakthrough enables in vivo 3D imaging of mouse brains, where the focal length of the varifocal meta‐lens is adjusted through the relative rotation angle. The system offers key advantages such as invariant magnification, a large field‐of‐view, and optical sectioning at a maximum focal length tuning range of ≈2 mm with 3 µm lateral resolution. Using a DL network, image acquisition time and system complexity are significantly reduced, and in vivo high‐resolution brain images of detailed vessels and surrounding perivascular space are clearly observed within 0.1 s (≈50 times faster). The approach will benefit various surgical procedures, such as gastrointestinal biopsies, neural imaging, and brain surgery.

Keywords: deep learning, endoscopy, HiLo fluorescence imaging, metalens, optical sectioning, telecentric configuration, three‐dimensional imaging

Intelligent fluorescence endo‐microscopy demonstrates the invariant magnification, large field‐of‐view, and optical sectioning in vivo 3D imaging of mouse brains by the varifocal metalens and deep learning (DL). This study provides a pathway for the next‐generation endo‐microscopy and has excellent potential for the applications of future rapid optical biopsies, such as gastrointestinal biopsies, neural imaging, brain surgery, etc.

1. Introduction

Endo‐microscopy is a rapidly evolving field for the optical visualization of internal organs under minimally invasive conditions. It provides detailed fine features of various tissues and subcellular structures for optical biopsy, which has transformed the diagnosis and treatment of numerous medical conditions. By leveraging advanced optics and high‐speed image acquisition, wide‐field optical endo‐microscopy has emerged as a powerful imaging technique to obtain a broad field‐of‐view (FOV) and 3D images of various organs.[ 1 , 2 ] Despite these advantages, its lack of optical sectioning capability makes imaging volumetric tissue challenging because of strong out‐of‐focus background noise.[ 3 , 4 , 5 , 6 ] Confocal endo‐microscopy is the most widely adopted imaging method and excels in providing high spatial resolution and exceptional out‐of‐focus background rejection.[ 7 , 8 , 9 , 10 ] However, point‐by‐point scanning of the desired voxels within a target tissue can be time‐consuming and cause severe photobleaching, which can limit both the amount of data that can be acquired and the quality of the resulting images. Deconvolution methods and computational iterative algorithms can improve image quality by reducing noise, increasing contrast, and enhancing resolution, but they have limitations such as long computation time, the requirement of a precise point spread function, and the inability to overcome the missing cone problem, which can limit their practical utility in certain applications.[ 11 , 12 , 13 , 14 ]

Alternatively, structured illumination enables optical sectioning endo‐microscopy in an enticing wide‐field fashion.[ 15 , 16 ] It utilizes grid illumination and phase‐shifting computational post‐processing to acquire optically sectioned images at a single depth. Nonetheless, the phase‐shifting approach mandates the acquisition of at least three‐phase illuminated patterns onto the tissue, followed by a demanding demodulation process. To circumvent these limitations, a comprehensive, structured illumination for HiLo endo‐microscopes has been developed,[ 3 , 17 ] exhibiting an exceptional ability to obtain sectioning images efficiently. Furthermore, the utilization of speckle illumination in the HiLo approach can facilitate deep tissue penetration.[ 18 , 19 , 20 ] Even though HiLo endo‐microscopy renders remarkable optical sectioning images, it necessitates axial scanning by moving the endoscope probe or sample, thereby greatly increasing the risk of injuring tissue from external compression. The optically sectioned HiLo images need to be additionally computed from pairwise images.

In general, axial focal positions in endo‐microscopy can be controlled by tunable lenses of different types.[21 ] However, conventional zoom lenses, with their composite construction, present difficulties in achieving miniaturization. [22 ] Liquid lenses, which rely on tunable curvature, are limited by the effects of gravity and tend to produce aberrations that degrade image quality.[23 ] Addressing these challenges, Bernet et al. recently presented a Moiré lens, a paired diffractive optical elements (DOEs) lens, for focus tunability.[ 24 , 25 , 26 , 27 ] By tuning the mutual angles of complementary phase masks, focal lengths can be continuously varied without axial movement. Conventional DOEs are binary‐phase gratings, suffering from low efficiency and low uniformity.[28 ] In addition, the fabrication of DOEs with complex morphology and compact size remains a significant challenge that requires further attention.

The recent advent of metasurfaces, as a groundbreaking technique for manipulating light, has given rise to unparalleled solutions for optical system designs.[29 ] These ultrathin and planar optical elements possess an exceptional ability to tailor light properties,[30 ] thus promising seamless integration into an array of devices.[ 31 , 32 , 33 , 34 , 35 , 36 ] In comparison to conventional refractive optical counterparts, a metalens is lightweight and miniaturized and offers a high degree of design freedom, which gives it great potential to be integrated into optical systems.[ 37 , 38 , 39 , 40 , 41 , 42 , 43 , 44 , 45 ]

At present, the exponential advancement in computing power, particularly the remarkable progress in computer and GPU‐related technologies, has ushered in a transformative era for artificial intelligence (AI). AI has surged to the forefront in biomedical imaging, acting as a leading methodology for computer‐assisted interventions,[46 ] quantitative phase imaging,[47 ] and high‐resolution fluorescence imaging.[48 ] With its capacity to transcend hardware restrictions and consolidate the advantages of different optical techniques in imaging, classification, and regression problems,[ 49 , 50 , 51 , 52 , 53 , 54 , 55 , 56 , 57 , 58 ] AI promises significant improvements in image quality. Integrating metasurfaces with AI is a pioneering direction for future optical systems. The precise control over light by metasurfaces, coupled with the learning‐based adaptability of AI, creates a synergistic partnership, enabling novel capabilities for high‐resolution imaging, real‐time diagnostics, and intelligent decision‐making in optical‐based applications. Even with the latest endo‐microscopic optical instruments, there is still enormous scope for improvement using deep learning (DL) methods.[46 ]

So far, several works have reported the use of metalenses in endoscopic systems, including fiber bundle endoscopy,[59 ] optical coherence tomography,[ 60 , 61 ] and two‐photon micro‐endoscopy.[62 ] Nevertheless, none of these studies has demonstrated in vivo fluorescence optical sectioning imaging. In this work, a Moiré metalens based DL fluorescence endo‐microscope capable of acquiring optically sectioned in vivo images with high resolution is demonstrated. The Moiré metalens is realized by using two complementary dielectric metasurfaces.[63 ] With a telecentric configuration,[64 ] the micro‐endoscope achieves uniform image magnification, which is critical for 3D biomedical imaging. To provide optical sectioning capability, speckle illumination for HiLo imaging is applied to the endo‐microscope. Furthermore, to reduce the image acquisition time, the DL method is adopted to achieve HiLo imaging in a single shot. The imaging process is faster than the traditional HiLo imaging process, and the high‐resolution images are obtained by the DL method directly. We have experimentally demonstrated 3D in vivo mouse brain imaging using the Moiré metalens. Our system obtains high‐resolution images of both transparent and opaque mouse brains, up to 200 µm and 100 µm in depth, respectively. To the best of our knowledge, our approach is the first report on the implementation of a metalens for focus‐tunable fluorescence endo‐microscopy to achieve a long axial scanning range for deep tissue in vivo imaging. In addition, a DL model to assist in vivo optical sectioning of the mouse brain and analytical expressions for the focus tunability of endoscopic systems are presented. The endo‐microscope has multiple functionalities and clinical viability, which augment existing systems in performing rapid in situ volumetric imaging before surgeries.
With the aid of the image‐to‐image translation residual convolutional neural network (RCNN) DL model, we have accurately and rapidly performed in vivo optical sectioning images of detailed brain vessels and surrounding perivascular space. The present method can directly help in investigating the functioning of the glymphatic system in live organisms by utilizing a cerebrospinal fluid (CSF) tracer to track its flow through the perivascular spaces into the brain tissue.

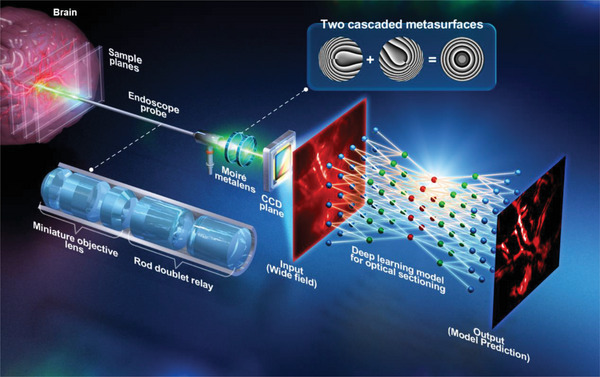

The schematic of the proposed fluorescence optical sectioning endo‐microscopy is shown in Figure 1 . A customized rigid endoscopic probe is inserted toward a target brain area, and fluorescence subcellular brain images are captured by the endoscopic probe. We introduce a miniaturized Moiré metalens, which is composed of two phase metasurfaces (highlighted box region in Figure 1), into the endo‐microscope for 3D imaging at different depths without any axially moving components. Owing to the telecentric configuration, the system FOV remains constant during axial scanning, which is performed by changing the rotation angle between the two metasurfaces. Ex vivo transparent mouse brain tissues are used to demonstrate the fine optical sectioning capability of the endo‐microscope by utilizing the HiLo imaging method with pairwise images (i.e., one under uniform illumination and one under speckle illumination). In order to shorten the entire processing time of HiLo optical sectioning, we adopt a DL[ 65 ] RCNN[ 66 , 67 ] model to obtain 3D optical sectioning images of in vivo mouse brains. It requires only one image under uniform illumination, and the well‐trained RCNN model predicts a high‐contrast optical sectioning image in a single shot in real time.

Figure 1.

Schematic of the varifocal metalens based intelligent fluorescence endo‐microscopy. A Moiré metalens is positioned at the system's Fourier plane to tune focal points for 3D imaging. Fluorescence images of the in vivo brain at different depths are captured by the endoscope probe, and the RCNN model for the HiLo process achieves optical sectioning imaging in a wide‐field manner within a single shot.

2. Results and Discussion

2.1. Customized Rigid Endoscope Probe

The customized endoscopic probe is designed and optimized by OpticStudio ray‐tracing software (Zemax, LLC).[68 ] The probe is composed of two parts: a miniature objective lens and a rod doublet relay, as shown in Figure 1. The total length of our customized rigid endoscope probe is ≈18 cm. In the first part, the miniature objective lens includes two doublet lenses and one meniscus lens, which are designed based on the Cooke triplet objective lens.[69 ] The diameter and numerical aperture (NA) of the miniature objective lens are 8 mm and 0.26, respectively. In the second part, the rod doublet relay consists of a rod relay lens and an achromatic doublet relay. The rod relay lens includes two sets of field lenses and a Hopkins rod relay with an optimized radius of curvature on all surfaces. Compared to a conventional relay system, the Hopkins rod relay incorporates a glass rod that improves light throughput and reduces vignetting.[70 ] The achromatic doublet relay consists of two kinds of achromatic doublet lenses (AC254‐50‐A and AC254‐100‐A) capable of decreasing spherical aberration and field curvature.

2.2. Design of Moiré Metalens

The Moiré metalens consists of two cascaded metasurfaces,[ 25 , 26 , 63 ] which are made of Gallium Nitride (GaN) dielectric material.[ 35 , 71 ] The complex conjugate transmission functions of the two metasurfaces (D1, D2) are

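$$D_1 = \exp\left[\,i\,\mathrm{round}\left(cR^2\right)\phi\,\right] \tag{1}$$

$$D_2 = \exp\left[-i\,\mathrm{round}\left(cR^2\right)\phi\,\right] \tag{2}$$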

where R = √(x² + y²), ϕ = atan2(y,x), and c = π⁄λf. R denotes the position in the radial coordinate, ϕ is the polar angle, and c is a constant, which can be controlled by adjusting the focal length (f) and the operation wavelength (λ). The phase profile of the metalens is restricted to the range 0–2π, analogous to a Fresnel lens.[72 ] The function round(⋅) rounds its input to the nearest integer,[ 25 ] which effectively avoids a discontinuity in the region where the polar angle equals π. In the actual operation of our Moiré metalens, the paired metasurfaces need to overlap. When the second metasurface is rotated by an angle (θ) relative to the first one, the transmission function of the Moiré metalens (D Moire) becomes the following:

$$D_{\mathrm{Moire}} = \exp\left[\,i\,\mathrm{round}\left(cR^2\right)\theta\,\right] \tag{3}$$

According to the transmission function of an ideal lens,[ 26 , 73 ] the effective focal length of the Moiré metalens can be defined as

$$f_{\mathrm{Moire}} = \frac{\pi}{\theta\,c\,\lambda} \tag{4}$$

From Equation (4), the focal length (f Moire) of our Moiré metalenses is inversely proportional to the rotation angle between the paired metasurfaces. The 800 nm‐height GaN nanopillars with various diameters are designed for 2π phase modulation of the designed Moiré phase arrangement, as shown in Section 1 of Supporting Information. The fabrication details of the Moiré metalens can be found in the Experimental Section and Section 2 of Supporting Information.
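As a numerical illustration of this design, the following minimal sketch builds two complementary phase masks of the form exp[±i·round(cR²)·ϕ], rotates the second by θ, and checks that the product reduces to exp[i·round(cR²)·θ] with a focal length of π/(θcλ). The design focal length `F_DESIGN`, the sampling grid, and all function names are hypothetical choices made for illustration; only the 532 nm wavelength and the 1.6 mm aperture diameter are taken from the text, and the sketch ignores the nanopillar implementation entirely.

```python
import numpy as np

WAVELENGTH = 532e-9   # m, green laser wavelength quoted in the text
F_DESIGN = 10e-3      # m, HYPOTHETICAL design focal length (not from the paper)
C = np.pi / (WAVELENGTH * F_DESIGN)   # c = pi / (lambda * f)


def metasurface_pair(R, phi, c=C):
    """Complex-conjugate transmission functions of the two metasurfaces."""
    n = np.round(c * R**2)            # integer-valued radial term
    return np.exp(1j * n * phi), np.exp(-1j * n * phi)


def moire_transmission(R, phi, theta, c=C):
    """Product of the first mask and the second mask rotated by theta."""
    d1, _ = metasurface_pair(R, phi, c)
    _, d2_rotated = metasurface_pair(R, phi - theta, c)
    return d1 * d2_rotated


def moire_focal_length(theta, c=C, wavelength=WAVELENGTH):
    """Effective focal length for a rotation angle theta given in radians."""
    return np.pi / (theta * c * wavelength)


# Polar sampling of a 1.6 mm diameter aperture (grid density is arbitrary).
R = np.linspace(0.0, 0.8e-3, 200)
phi = np.linspace(-np.pi, np.pi, 181)
RR, PP = np.meshgrid(R, phi)

theta = np.deg2rad(60.0)
combined = moire_transmission(RR, PP, theta)
expected = np.exp(1j * np.round(C * RR**2) * theta)

print("max deviation from exp[i*round(cR^2)*theta]:",
      np.abs(combined - expected).max())
for angle_deg in (60, 120, 180, 240, 300, 360):
    f_mm = moire_focal_length(np.deg2rad(angle_deg)) * 1e3
    print(f"theta = {angle_deg:3d} deg -> f_Moire = {f_mm:.3f} mm")
```

Because round(cR²) is an integer, the 2π jump of the polar angle does not change either mask, which is why the product is uniform over the whole aperture in this idealized model.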

2.3. Design of Telecentric Configuration

The varifocal observation capability of our system is achieved with a telecentric configuration, which has the advantage of providing invariant magnification and contrast for optical imaging.[64 ] The ray transfer matrix (i.e., ABCD matrix) is applied to perform the telecentric design.[74 ] The ABCD matrix derivation for the telecentric design is discussed in Sections 3 and 4 of Supporting Information. First, following the telecentric design, the Moiré metalens is positioned at the Fourier plane of the micro‐endoscope (Figure 1). As a result, the FOV of our endoscopic system remains constant during varifocal observation when the rotation angle between the two metasurfaces is changed. The detailed verification of the invariant magnification property and the configuration of the telecentric endo‐microscopy combined with the Moiré metalens can be found in Figure S3 (Supporting Information), and the maximum focal length tuning range is ≈2 mm with 3 µm lateral resolution. The telecentric characterization of experimentally measured beam profiles with a tuning range of ≈6 mm at three different operation wavelengths of blue (491 nm), green (532 nm), and red (633 nm) can also be found in Section 5 of Supporting Information.
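The invariant magnification can be illustrated with a thin‐lens ABCD sketch. The model below is an idealized assumption, not the derivation of the Supporting Information: an objective of focal length `F_OBJ`, a tunable lens placed exactly at its back focal (Fourier) plane, and a tube lens of focal length `F_TUBE` one focal length away on each side. Both focal lengths and the tunable‐lens values are hypothetical. In this model, the in‐focus object plane shifts by δ = −f_obj²/f_Moire while the magnification stays at −f_tube/f_obj.

```python
import numpy as np

F_OBJ = 20.0     # mm, HYPOTHETICAL objective focal length
F_TUBE = 100.0   # mm, HYPOTHETICAL tube-lens focal length


def lens(f):
    """Ray transfer matrix of a thin lens with focal length f."""
    return np.array([[1.0, 0.0], [-1.0 / f, 1.0]])


def space(d):
    """Ray transfer matrix of free-space propagation over distance d."""
    return np.array([[1.0, d], [0.0, 1.0]])


def system_matrix(delta, f_moire):
    """Object plane (F_OBJ + delta in front of the objective) to camera plane.

    Matrices are multiplied right to left in the order the ray meets them.
    """
    return (space(F_TUBE) @ lens(F_TUBE) @ space(F_TUBE)
            @ lens(f_moire)                      # tunable lens at Fourier plane
            @ space(F_OBJ) @ lens(F_OBJ) @ space(F_OBJ + delta))


def in_focus_shift(f_moire):
    """Object-plane shift that satisfies the imaging condition B = 0."""
    return -F_OBJ**2 / f_moire


for f_moire in (200.0, 400.0, 800.0):            # mm, hypothetical settings
    delta = in_focus_shift(f_moire)
    m = system_matrix(delta, f_moire)
    print(f"f_Moire = {f_moire:6.1f} mm, shift = {delta:7.3f} mm, "
          f"B = {m[0, 1]:.2e}, magnification A = {m[0, 0]:.3f}")
```

The A element of the matrix gives the lateral magnification once B vanishes, so printing it for several tunable‐lens settings shows directly that refocusing does not rescale the image in this simplified layout.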

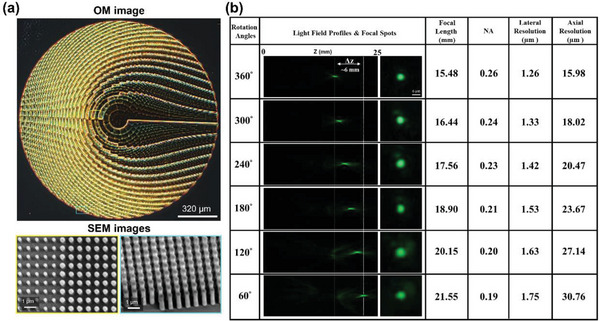

2.4. Varifocal Optical Sectioning Endo‐Microscopy

The Moiré metalens is positioned in the Fourier plane of the system. It is mounted on an electrically controlled rotation stage (GT45, Dima Inc., maximum rotation speed: 140 RPM with 0.004° maximum rotation accuracy in each step) to tune the focus by changing the relative angle between the paired metasurfaces. To precisely adjust the rotation stage, a data acquisition card (myDAQ, NI Inc.) is used for automation. The optical microscopy (OM) and scanning electron microscope (SEM) images of the Moiré metasurface are shown in Figure 2a. In the telecentric measurement shown in Figure 2b, we use the green laser (532 nm) as the light source. The rotation angles of the Moiré metalens are adjusted from 360° to 60° with a step size of 60°, and the corresponding focal length of the endoscope probe can be tuned from ≈15.5 to ≈21.6 mm (Δz ≈ 6 mm). The NA of the endoscope probe varies from ≈0.26 to ≈0.19. To quantify the optical sectioning ability of our micro‐endoscope, fluorescently labeled microspheres (Fluoresbrite YG microspheres, 90 µm in diameter, Polysciences Inc.) are sequentially scanned along the axial direction. The detailed HiLo imaging principle and the calibration of the varifocal optical sectioning endo‐microscopy system are described in Sections 6 and 7 of Supporting Information. The 3D reconstructed volume images of various perivascular space locations in the mouse brain, under uniform illumination and after the HiLo process, are shown in Section 7 of Supporting Information. In addition, the imaging results of the ex vivo mouse brain dyed with Alexa Fluor 488 can be found in Section 8 of Supporting Information.

Figure 2.

Experimental measurement of varifocal optical sectioning endo‐microscopy. a) The optical microscopy (OM) and scanning electron microscope (SEM) images of our Moiré metasurface (diameter is 1.6 mm, nano cylinder height is 800 nm, and period is 300 nm). b) Telecentric focusing measurement for green wavelength at 532 nm (the rotation angles from 360° to 60° can create the focal length difference Δz ∼ 6 mm). Different NA can generate corresponding lateral and axial resolutions.

2.5. Deep Learning for Optical Sectioning Imaging

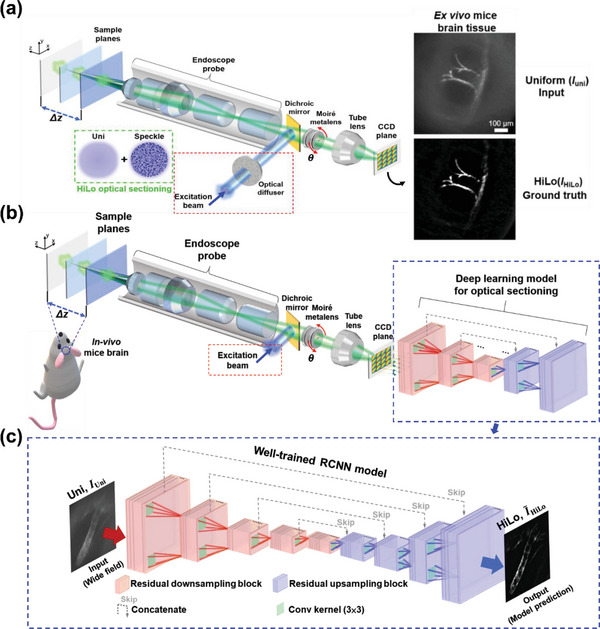

The HiLo imaging method is utilized in the endo‐microscope to generate high‐resolution images with fine optical sectioning capacity, as shown in Figure 3a. The experimental setup of the Moiré metalens optical sectioning endo‐microscopy with HiLo imaging is used to obtain the training and validation datasets of the DL model. Conventionally, HiLo imaging under speckle illumination requires paired images, one under uniform illumination (I uni) and the other under speckle illumination (I sp), to obtain an optical sectioning image (i.e., I HiLo). For the uniform imaging, the excitation green laser beam (λ = 532 nm, Cobolt Inc.) is reflected by a dichroic mirror (DMLP567R, Thorlabs Inc.), located between the endoscope probe and the Moiré metalens, and propagates through the endoscope probe to directly illuminate the specimen. The red emission light generated by the fluorescently labeled specimen is then collected by the endoscope probe and imaged onto the CCD (GE1650, Prosilica Inc.) to generate the uniform image. For the speckle imaging, the excitation laser beam passes through an optical diffuser to produce randomly distributed speckle patterns along the axial direction, which provide the speckle illumination for the HiLo imaging process. Randomly selected data of five different types of fluorescent samples with 2800 training and 700 validation dataset image pairs are shown in Section 9 of Supporting Information. The entire process of conventional HiLo is time‐consuming and makes the system complicated. To overcome these limitations, the micro‐endoscope utilizes an RCNN DL model to produce HiLo sectioning images. Figure 3b shows the configuration of the RCNN DL model for the HiLo optical sectioning imaging technique. In the proposed RCNN DL model, the input is the uniformly illuminated image I uni obtained by the endo‐microscopy, and a well‐trained RCNN model transforms the I uni images into optically sectioned HiLo images, as shown in Figure 3c.
A more detailed discussion of the proposed RCNN architecture can be found in Section 10 of Supporting Information. Using the RCNN DL model, the optical diffuser can be removed from the endo‐microscope to reduce the setup complexity, as shown in the red dashed boxes of Figure 3a,b. In addition, the acquisition time of optical sectioning images is significantly reduced to 0.1 s (≈50 times faster). The 3500 image pairs of I uni and I HiLo are composed of five different types of fluorescent samples, including ex vivo mouse brain tissue, fluorescent beads, corn stem, lily anther, and basswood stem, captured by our endo‐microscopy for both training and validation datasets of the RCNN model. The 2800 image pairs (80%) and 700 image pairs (20%) are used for training and validation, respectively, as shown in Figure S13 (Supporting Information). The validation images are not included in the training dataset. Image data of five different types provide different features to the model, such as slender, hollow, and circular structures, to expand the model's versatility. Following the supervised learning method, the I uni images are set as the input, and the corresponding I HiLo images are the ground truth. To simplify operation of the model, the input and ground truth images are scaled to the same size (256×256 pixels).
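For orientation, the conventional two‐image computation that the RCNN replaces can be sketched as follows. This is a simplified speckle HiLo fusion written under our own assumptions, not the processing pipeline of Section 6 of Supporting Information: the local speckle contrast of the difference image weights the low‐frequency in‐focus content, the high‐frequency content is taken from the uniform image, and the two are summed. The Gaussian filter width `sigma_px`, the scaling factor `eta`, and the synthetic test images are hypothetical.

```python
import numpy as np


def gaussian_lowpass(img, sigma_px):
    """Gaussian low-pass filter applied in the Fourier domain (unit DC gain)."""
    fy = np.fft.fftfreq(img.shape[0])[:, None]
    fx = np.fft.fftfreq(img.shape[1])[None, :]
    transfer = np.exp(-2.0 * (np.pi * sigma_px) ** 2 * (fx**2 + fy**2))
    return np.real(np.fft.ifft2(np.fft.fft2(img) * transfer))


def hilo(i_uni, i_sp, sigma_px=4.0, eta=1.0, eps=1e-6):
    """Simplified HiLo fusion of a uniform and a speckle-illuminated image."""
    diff = i_sp - i_uni
    # Local speckle contrast: Gaussian-weighted local std over local mean.
    local_mean = gaussian_lowpass(diff, sigma_px)
    local_var = gaussian_lowpass(diff**2, sigma_px) - local_mean**2
    local_std = np.sqrt(np.clip(local_var, 0.0, None))
    contrast = local_std / (gaussian_lowpass(i_uni, sigma_px) + eps)
    # Low frequencies: contrast-weighted uniform image (in-focus content).
    i_lo = gaussian_lowpass(contrast * i_uni, sigma_px)
    # High frequencies: inherently sectioned part of the uniform image.
    i_hi = i_uni - gaussian_lowpass(i_uni, sigma_px)
    return eta * i_lo + i_hi


# Synthetic stand-ins for a measured image pair (values are arbitrary).
rng = np.random.default_rng(0)
i_uni = rng.random((64, 64)) + 1.0
i_sp = i_uni * 2.0 * rng.random((64, 64))   # speckle-like modulation
sectioned = hilo(i_uni, i_sp)
print(sectioned.shape, float(sectioned.min()), float(sectioned.max()))
```

In practice `eta` is chosen so that the low‐ and high‐frequency bands join smoothly at the filter cutoff; it is left as a free parameter here.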

Figure 3.

RCNN DL model for optical sectioning endo‐microscopy. a) The experimental setup of the Moiré metalens optical sectioning endo‐microscopy for the DL model training and validation datasets. Randomly selected data of five different types of fluorescent samples with 2800 training and 700 validation dataset image pairs (see Section 9 of Supporting Information). An optical diffuser is added to the micro‐endoscopic system for the HiLo sectioning method to generate speckle illumination (green dash square). b) The proposed RCNN architecture for HiLo optical sectioning. Red square regions represent residual down‐sampling blocks to reduce the dimensionality of image features. Blue square regions represent residual up‐sampling blocks, which bring the low‐dimensional features back to the original input size. c) RCNN DL model for optical sectioning endo‐microscopy. In our varifocal optical sectioning endo‐microscopy, the RCNN DL model is used to replace the conventional HiLo method to simplify the optical sectioning acquisition process.

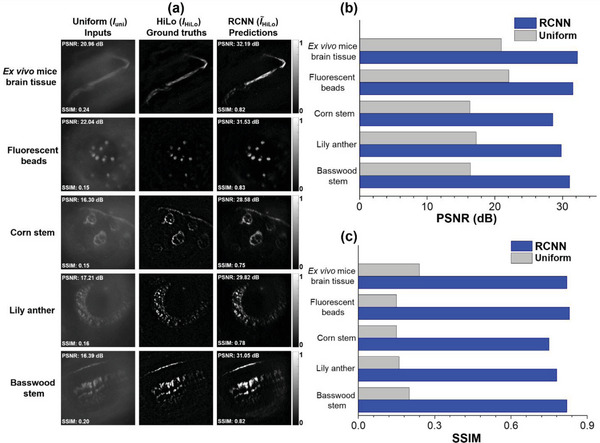

To evaluate the prediction results of the RCNN model, I uni, I HiLo, and HiLo are compared in Figure 4 . Figure 4a shows the resultant images of five fluorescent samples from the validation dataset, and the resultant images of the training dataset are discussed in Section 12 of Supporting Information. The absolute error map is utilized to visualize their difference, as shown in Figure S16 (Section 11 of Supporting Information).

Figure 4.

Comparison of inputs, ground truths, and predictions from the validation dataset. a) Resultant images of five different types of fluorescent samples, including ex vivo mouse brain tissues, fluorescent beads, corn stem, lily anther, and basswood stem, taken from the validation dataset. b) The PSNR comparison analysis bar chart for inputs and model predictions. c) The SSIM comparison analysis bar chart for inputs and model predictions.

In order to quantitatively evaluate the well‐trained RCNN model, both the peak signal‐to‐noise ratio (PSNR) and the structural similarity index (SSIM) are measured. The PSNR indicates the quality of the images, and the SSIM quantifies the similarity of the model‐predicted and ground truth images[ 75 ] (the detailed definitions of PSNR and SSIM are described in Section 13 of Supporting Information). In Figure 4a, the PSNR and SSIM of each I uni and HiLo image are shown in the corner. Moreover, the comparison bar charts for the PSNR and SSIM are shown in Figure 4b,c, respectively. Compared to the input images, the RCNN predicted images significantly improve both evaluation metrics for all five different types of fluorescent samples (the average PSNR and SSIM values for I uni and HiLo from the validation dataset are described in Section 13 of Supporting Information). To verify the versatility and stability of the RCNN model, ex vivo mouse brain tissues without the RapiClear transparent process are fluorescently labeled with Alexa Fluor 555 for the testing dataset, which is included in neither the training nor the validation dataset (Section 14 of Supporting Information).
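The two metrics can be sketched in a few lines. The PSNR below follows the standard definition 10·log10(MAX²/MSE). The SSIM is a deliberately simplified single‐window (global) variant with the commonly used constants (0.01·L)² and (0.03·L)², whereas the usual SSIM averages the same expression over local windows; the exact definitions used for the reported values are those in Section 13 of Supporting Information. Function names and the synthetic images are hypothetical.

```python
import numpy as np


def psnr(reference, test, data_range=1.0):
    """Peak signal-to-noise ratio in dB between two images."""
    mse = np.mean((reference - test) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(data_range**2 / mse)


def global_ssim(reference, test, data_range=1.0):
    """Single-window SSIM computed over the whole image (simplified)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = reference.mean(), test.mean()
    var_x, var_y = reference.var(), test.var()
    cov_xy = np.mean((reference - mu_x) * (test - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x**2 + mu_y**2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Synthetic example: a ground-truth image and a noisy copy of it.
rng = np.random.default_rng(1)
ground_truth = rng.random((256, 256))
noisy = np.clip(ground_truth + 0.1 * rng.standard_normal((256, 256)), 0, 1)
print("PSNR (dB):", psnr(ground_truth, noisy))
print("global SSIM:", global_ssim(ground_truth, noisy))
```

Both functions assume images scaled to the range given by `data_range`, so 8‐bit data would be passed with `data_range=255`.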

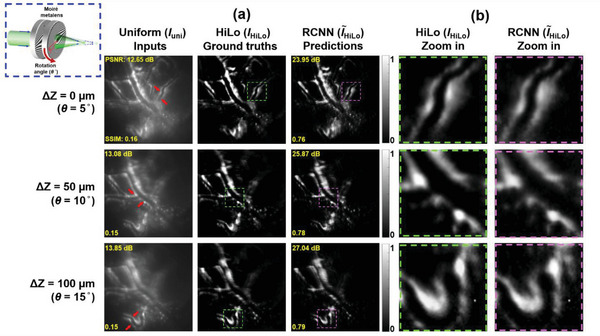

2.6. In Vivo Imaging of Mouse Brain for Preclinical Usage

To evaluate our endo‐microscopy for 3D live fluorescence imaging applications, we further acquire in vivo images of a mouse brain (image depth: 100 µm), which is labeled with a fluorescence tracer (Alexa Fluor 555). The detailed surgical and fluorescent labeling procedure for the in vivo mouse brain is described in the Experimental Section. While imaging, the anesthetized mice are fixed onto a customized stereotaxic frame (Stoelting Inc.) so that the surgical incision of the brain faces the endoscopic probe. The stereotaxic frame is positioned onto a three‐axis linear stage (TSD‐652S‐M6 & TSD‐653L‐M6, OptoSigma Inc.) for fine alignment. Resultant images of the in vivo mouse brain are shown in Figure 5 . The green excitation beam (λ = 532 nm) is used to illuminate the brain, and in vivo fluorescence images in red (λ = ∼600 nm) are observed. Figure 5a shows three in vivo images (I uni, I HiLo, and HiLo) of the parenchymal brain at different axial positions, obtained by rotating the Moiré metalens to the corresponding angles of θ = 5°, 10°, and 15°. In Figure 5a, the HiLo images using the well‐trained RCNN model provide fine optical sectioning images, which are comparable to the ground truth (i.e., I HiLo). The background inside vessels (i.e., the black tube area in Figure 5a) and the tracer of the perivascular space (i.e., the white area surrounding a vessel in Figure 5a) are clearly observed in HiLo.

Figure 5.

In vivo fluorescence images of mouse brain. a) In vivo images of wide‐field, ground truth, and model predictions at three different depths (the dashed boxes in the corners show the rotation of the Moiré metalens for the corresponding focal plane, and the arrows in I uni point out the focused area). b) Zoomed‐in results corresponding to the dash square regions in (a); the left column (green dash square) and the right column (purple dash square) show the I HiLo and HiLo zoomed‐in images, respectively.

Due to the natural scattering properties of in vivo brain tissues, strong background noise is clearly observed in I uni images. Compared to the above‐described ex vivo results of I uni in Figure S18a (Supporting Information), the vessel inside the parenchymal brain, as shown in Figure 5a, is about three times thicker, and the background noise surrounding the perivascular space is about twice as high. However, with our RCNN model, HiLo demonstrates high‐contrast optical sectioning images, and background noise is significantly suppressed, which is evident from the zoomed‐in results in Figure 5b. At all three depths, the model successfully transforms the uniform input images into high‐contrast optical sectioning images, which are equivalent to the HiLo imaging results. Furthermore, the quantitative results of PSNR and SSIM in HiLo are 25.62 dB and 0.78, respectively, which are ≈12 dB and 5 times higher than those of I uni. It is important to note that even though in vivo mouse brain fluorescent images do not exist in the training dataset, the well‐trained RCNN model still performs equally well, which demonstrates its generality. The tracer we inject into the mouse brain mainly flows into the perivascular space to show the glymphatic system, which results in negative‐contrast images of the vessels.[76 ] The additional results and discussion of in vivo fluorescence images of the left and right side of the mouse brain are shown in Section 15 of Supporting Information.

3. Conclusion

In summary, we demonstrate a high‐resolution optical sectioning meta‐device varifocal endo‐microscope, which utilizes a combination of a compact varifocal metalens and an endoscopic probe in a telecentric design. A dielectric meta‐device is designed, fabricated, and characterized for this purpose. Due to the flat and compact nature of the metasurface, focus tunability is achieved with a miniature optical component with a thickness of less than 2 µm. The telecentric configuration of the meta‐device varifocal endo‐microscopy provides uniform magnification throughout the axial scanning range. In addition, using a ray transfer matrix, we present a mathematical expression for focus tunability. The maximum focal length tuning range is ≈2 mm with 3 µm lateral resolution. Variable focus, together with the HiLo process, gives the advantage of non‐invasive multiplane imaging with fine optical sectioning.

The RCNN model is utilized to speed up image acquisition. The model is trained using various samples with different sizes and shapes. Results of the absolute error map show that images predicted by the RCNN have optical sectioning capabilities comparable to those of the conventional HiLo method. The well‐trained RCNN significantly suppresses the out‐of‐focus background noise and obtains high‐contrast fine features at the in‐focus plane. Compared to I uni, PSNR and SSIM values in the HiLo are enhanced by ≈14 dB and ≈4.5 times, respectively. The results show our RCNN model outperforms the basic U‐net model, and the HiLo imaging computational time is reduced from 5 s to 0.1 s, which makes our method conducive to high‐speed 3D imaging. With the shortcut connections, the model learns residual functions with respect to the layer inputs. Compared to the conventional U‐net, this capability helps the RCNN capture intricate hierarchical features in the data, facilitating the learning of complex patterns and enhancing model performance. It also alleviates the vanishing/exploding gradient and degradation issues of the conventional convolutional neural network. The versatility and effectiveness of our trained RCNN model have been shown by predicting in vivo mouse brain vessels and surrounding perivascular space images, which do not exist in the training and validation datasets.

Focus tunability with optical sectioning potentially augments the diagnostic yield of existing endo‐microscopic imaging. With a proper choice of fluorescent marker for near‐infrared imaging, the penetration depth can be significantly extended. Compared to the conventional two‐photon technique employed for in vivo imaging, the proposed approach offers advantages such as a large FOV, high imaging speed, and low cost. The direct impact of our volumetric endo‐microscopic imaging approach will be in performing rapid optical biopsies, which are required for various surgical procedures, including gastrointestinal biopsies, neural imaging, and brain surgery.

4. Experimental Section

The Design and Fabrication of Moiré Metalens

The commercial numerical analysis tool Computer Simulation Technology Microwave Studio (CST MWS) was used for full‐wave simulation to design and select the constituent meta‐atoms of the metalens.[ 71 , 77 ] In the simulation, unit cell boundary conditions were chosen for the cylinder meta‐atoms in the x and y directions, and an open boundary was used in the z direction. The materials of the dielectric substrate and the meta‐atoms were sapphire (Al2O3, refractive index = 1.77 at λ = 532 nm) and Gallium Nitride (GaN, refractive index = 2.42 at λ = 532 nm), respectively, as depicted in Figure S1a (Supporting Information). The height (H) of each GaN nano cylinder was 800 nm, and the period (P) of the unit cell on the Al2O3 substrate was 300 nm. From the simulated spectral response (Figure S1b, Supporting Information), only cylinder meta‐atom diameters (D) with transmission efficiency higher than 80% were selected to ensure high transmission performance of the metalens. Figure S1b (Supporting Information) shows the relationship between the meta‐atom size and the corresponding phase. Finally, using cylinder meta‐atoms (Table S1, Supporting Information) with different diameters, the phase structures of the Moiré metalens were designed.

For the fabrication procedure of the GaN Moiré metalens, the previous method was followed, as shown in Figure S2 (Supporting Information).[ 35 , 63 ] First, the 800 nm high refractive index GaN upper layer was grown on a low refractive index double‐polished Al2O3 substrate by metalorganic chemical vapor deposition (MOCVD). A 200 nm SiO2 thin film was then deposited on the GaN layer as a hard mask layer by using an electron‐gun evaporator. Next, the photoresist (PMMA A4) was coated onto the SiO2 layer by spin coating. After the substrate was prepared, the electron beam lithography system (Elionix ELS‐HS50) was utilized with 1 nA beam current and 50 kV acceleration voltage for the exposure procedure, transferring the unit cell arrangement of the Moiré metalens to the photoresist layer. Then the substrate was immersed in the developer solution (MIBK: IPA = 1:3), and a 40 nm chromium layer was deposited onto the developed substrate by an electron‐gun evaporator to form the mask for the etching process. To remove the unnecessary photoresist on the surface, the substrate was soaked in acetone for the lift‐off procedure. The etching process was divided into two steps. First, reactive‐ion etching (RIE) with 90 W plasma power was utilized to transfer the designed pattern to the SiO2 mask layer. Second, Cl2‐based inductively coupled plasma RIE (ICP‐RIE, 13.56 MHz operating radio frequency) was used to etch the 800 nm GaN layer. Finally, the designed varifocal Moiré metalens was obtained by using a buffered oxide etch (BOE) solution to remove the remaining SiO2 layer.

RCNN Model Implementation

The proposed RCNN model was implemented with the open‐source library TensorFlow (Version 2.4.0) and was trained and tested on a server‐level computer with 256 GB of RAM, two 2.4 GHz CPU cores (Xeon Silver 4210R, Intel Inc.), and dual RTX A5000 GPUs (24 GB GPU memory, NVIDIA Inc.). With the NVIDIA CUDA GPU‐accelerated library (cuDNN), the entire training process was completed in ≈14.5 h, which was 80 times faster than training without GPU acceleration. The RCNN model was trained with the gradient descent‐based Adam optimization algorithm at a learning rate of 1e‐3. The total number of training epochs was set to 3500, and the loss function was the mean absolute error (MAE).
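
To make the training settings concrete, the sketch below implements the MAE loss and the Adam update rule in plain Python and applies them to a toy one‐parameter fit, using the stated learning rate of 1e‐3 and 3500 full‐batch iterations. It is an illustration only, not the authors' TensorFlow code: the toy data, the scalar model, and the Adam hyperparameters other than the learning rate (`beta1`, `beta2`, `eps`, set to commonly used defaults) are assumptions.

```python
import math


def mae(pred, target):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def sign(z):
    """Return -1, 0, or 1 according to the sign of z."""
    return (z > 0) - (z < 0)


def adam_step(w, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update of a scalar parameter; returns the new (w, m, v)."""
    m = beta1 * m + (1 - beta1) * grad         # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad  # second-moment estimate
    m_hat = m / (1 - beta1 ** t)               # bias correction
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


# Toy data (assumed): targets generated by y = 2 * x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w, m, v = 0.0, 0.0, 0.0
for t in range(1, 3501):
    # Subgradient of the MAE with respect to w for the model y = w * x.
    grad = sum(sign(w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
    w, m, v = adam_step(w, grad, m, v, t)

final_loss = mae([w * x for x in xs], ys)
print(w, final_loss)
```

Because the MAE gradient has constant magnitude, Adam moves the parameter by roughly one learning rate per iteration here, so the fitted value approaches 2 only after about 2000 iterations and then oscillates slightly around it.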

Fixed Brain Imaging

Thirty minutes after the start of intracisternal injection, the mice were sacrificed, and the brains were fixed overnight with 4% cold paraformaldehyde in PBS. To evaluate the tissue clearing images, 250 µm coronal brain sections were cut on a vibratome (MicroSlicer DTK‐1000N, DSK, Japan), and tissue clearing was performed with RapiClear 1.47 (SunJin Lab Inc.), as described in the manufacturer's protocol.

Intracisternal Injection for In Vivo Brain Imaging

The intracisternal injection method was based on previous literature with minor modifications.[ 78 ] Mice (20–25 g) were anesthetized intraperitoneally with a combination of Zoletil (50 mg kg−1) and xylazine (2.3 mg kg−1). After confirming the loss of response to a toe pinch, mice were placed in a stereotaxic frame (Stoelting, USA), and the cisterna magna was exposed through a surgical incision. A 30‐gauge needle connected to a Hamilton syringe via a polyethylene tube (PE10) was implanted in the cisterna magna, and the catheter was secured with cyanoacrylate glue and dental cement. CSF tracer (10 µl; 5 mg ml−1 albumin‐Alexa Fluor 555, ThermoFisher Scientific, Cat no. A34786, USA) was infused at a constant rate of 1 µl min−1 with a syringe pump (Harvard Apparatus, USA). Twenty minutes later, the tracer was observed transcranially by fluorescence microscopy (Olympus, Cat No. MVX10, Japan) to confirm that it had entered the perivascular space; the skull was then opened for in vivo brain imaging with the proposed endo‐microscopy.
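
The quantities in this protocol follow from simple arithmetic, sketched below in Python. The sketch assumes that the anesthetic doses are given per kilogram of body mass (mg per kg) and uses only the numbers stated above; the function names are hypothetical.

```python
def dose_mg(body_mass_g, dose_mg_per_kg):
    """Absolute drug dose in mg for a given body mass in grams."""
    return body_mass_g / 1000.0 * dose_mg_per_kg


def infusion_minutes(volume_ul, rate_ul_per_min):
    """Duration of a constant-rate infusion in minutes."""
    return volume_ul / rate_ul_per_min


def tracer_mass_ug(volume_ul, conc_mg_per_ml):
    """Injected tracer mass in micrograms (1 mg/ml equals 1 ug/ul)."""
    return volume_ul * conc_mg_per_ml


for mass_g in (20, 25):
    zoletil = dose_mg(mass_g, 50)
    xylazine = dose_mg(mass_g, 2.3)
    print(mass_g, "g:", round(zoletil, 3), "mg Zoletil,",
          round(xylazine, 4), "mg xylazine")

print(infusion_minutes(10, 1), "min infusion")
print(tracer_mass_ug(10, 5), "ug tracer")
```

For the stated 20 to 25 g mice this corresponds to 1.0 to 1.25 mg of Zoletil per animal, a 10 min infusion, and 50 µg of tracer.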

Animals

All animal experiments were carried out at the National Taiwan University College of Medicine in accordance with the guidelines of the Institutional Animal Care and Use Committee (IACUC approval No. 20201028, 20220503 & 20230043). Mice (C57BL/6JNarl) had access to food and water ad libitum and were maintained in a climate‐controlled room under a 12 h/12 h light/dark cycle.

Conflict of Interest

The authors declare no conflict of interest.

Author Contributions

Y.H.C. and W.H.L. contributed equally to this work. Y.H.C., C.H.C., and S.V. conceived the design and performed the numerical design, optical measurement, and data analysis. W.H.L. and W.S.C. performed the in vivo and ex vivo sample preparation. C.H.C., T.Y., M.K.C., T.T., Y.L., and D.P.T. conceived the principle, design, and characterization of the metasurface and meta‐optics of the system. Y.H.C., X.L., and M.K.C. built the optical system for measurement and the deep learning model and collected the dataset for the model. Y.H.C., S.V., Y.L., Y.Y.H., W.S.C., and D.P.T. organized the project, designed experiments, analyzed the results, and prepared the manuscript. All authors discussed the results and commented on the manuscript.

Supporting information

Supporting Information

Acknowledgements

Y.H.C. and W.H.L. contributed equally to this work. The authors acknowledge the financial support from the National Science and Technology Council, Taiwan (Grant Nos. NSTC 112‐2221‐E‐002‐055‐MY3, NSTC 112‐2221‐E‐002‐212‐MY3 and MOST‐108‐2221‐E‐002‐168‐MY4), National Taiwan University (Grant Nos. NTU‐CC‐113L891102, NTU‐113L8507, NTU‐CC‐112L892902, NTU‐107L7728, NTU‐107L7807 and NTU‐YIH‐08HZT49001), the University Grants Committee / Research Grants Council of the Hong Kong Special Administrative Region, China (Project No. AoE/P‐502/20, CRF Project: C1015‐21E, C5031‐22G, and GRF Project: 15303521, 11310522, 11305223, and 11300123), the Department of Science and Technology of Guangdong Province (2020B1515120073), and City University of Hong Kong (Project No. 9380131, 9610628, and 7005867), National Health Research Institutes (NHRI‐EX113‐11327EI), and JST CREST (Grant No. JPMJCR1904).

Chia Y.‐H., Liao W.‐H., Vyas S., Chu C. H., Yamaguchi T., Liu X., Tanaka T., Huang Y.‐Y., Chen M. K., Chen W.‐S., Tsai D. P., Luo Y., In Vivo Intelligent Fluorescence Endo‐Microscopy by Varifocal Meta‐Device and Deep Learning. Adv. Sci. 2024, 11, 2307837. 10.1002/advs.202307837

Contributor Information

Mu Ku Chen, Email: mkchen@cityu.edu.hk.

Wen‐Shiang Chen, Email: wenshiangchen@ntu.edu.tw.

Din Ping Tsai, Email: dptsai@cityu.edu.hk.

Yuan Luo, Email: yuanluo@ntu.edu.tw.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

- 1. Smith B. M., The British Medical Association Complete Family Health Encyclopedia, Dorling Kindersley, 1990.

- 2. Liang R., Optical design for biomedical imaging, SPIE Press, 2011.

- 3. Santos S., Chu K. K., Lim D., Bozinovic N., Ford T. N., Hourtoule C., Bartoo A. C., Singh S. K., Mertz J., J. Biomed. Opt. 2009, 14, 030502.

- 4. Mertz J., Nat. Methods 2011, 8, 811.

- 5. Choi Y., Yoon C., Kim M., Yang T. D., Fang‐Yen C., Dasari R. R., Lee K. J., Choi W., Phys. Rev. Lett. 2012, 109, 203901.

- 6. Angelo J. P., van de Giessen M., Gioux S., Biomed. Opt. Express 2017, 8, 5113.

- 7. Rouse A. R., Gmitro A. F., Opt. Lett. 2000, 25, 1708.

- 8. Goetz M., Watson A., Kiesslich R., J. Biophotonics 2011, 4, 498.

- 9. Wang J., A confocal endoscope for cellular imaging. Engineering 2015, 1, 351.

- 10. Krishna S. G., Brugge W. R., Dewitt J. M., Kongkam P., Napoleon B., Robles‐Medranda C., Tan D., El‐Dika S., McCarthy S., Walker J., Dillhoff M. E., Manilchuk A., Schmidt C., Swanson B., Shah Z. K., Hart P. A., Conwell D. L., Gastrointest. Endosc. 2017, 86, 644.

- 11. Richardson W. H., J. Opt. Soc. Am. 1972, 62, 55.

- 12. Lucy L. B., Astron. J. 1974, 79, 745.

- 13. Biggs D. S., Curr. Protoc. Cytom. 2010, 52, 12.19.11.

- 14. Sage D., Donati L., Soulez F., Fortun D., Schmit G., Seitz A., Guiet R., Vonesch C., Unser M., Methods 2017, 115, 28.

- 15. Karadaglić D., Juškaitis R., Wilson T., Confocal endoscopy via structured illumination. Scanning 2002, 24, 301.

- 16. Bozinovic N., Ventalon C., Ford T., Mertz J., Opt. Express 2008, 16, 8016.

- 17. Ford T. N., Lim D., Mertz J., J. Biomed. Opt. 2012, 17, 021105.

- 18. Lim D., Ford T. N., Chu K. K., Mertz J., J. Biomed. Opt. 2011, 16, 016014.

- 19. Mazzaferri J., Kunik D., Belisle J. M., Singh K., Lefrançois S., Costantino S., Opt. Express 2011, 19, 14508.

- 20. Chia Y.‐H., Yeh J. A., Huang Y.‐Y., Luo Y., Opt. Express 2020, 28, 37177.

- 21. Hsiao H., Lin C.‐Y., Vyas S., Huang K.‐Y., Yeh J. A., Luo Y., J. Biophotonics 2021, 14, 202000335.

- 22. Chang Y.‐S., Hsu L., Huang K.‐L., Appl. Opt. 2018, 57, 1091.

- 23. Kuiper S., Electrowetting‐based liquid lenses for endoscopy. SPIE Moems and Miniaturized Systems X, Vol. 7930, 2011, pp. 47–54.

- 24. Burch J., Williams D., Appl. Opt. 1977, 16, 2445.

- 25. Bernet S., Ritsch‐Marte M., Appl. Opt. 2008, 47, 3722.

- 26. Bernet S., Harm W., Ritsch‐Marte M., Opt. Express 2013, 21, 6955.

- 27. Bawart M., May M. A., Öttl T., Roider C., Bernet S., Schmidt M., Ritsch‐Marte M., Jesacher A., Opt. Express 2020, 28, 26336.

- 28. Ni Y., Chen S., Wang Y., Tan Q., Xiao S., Yang Y., Nano Lett. 2020, 20, 6719.

- 29. Lee D., Gwak J., Badloe T., Palomba S., Rho J., Nanoscale Adv. 2020, 2, 605.

- 30. Chu C. H., Tseng M. L., Chen J., Wu P. C., Chen Y.‐H., Wang H.‐C., Chen T.‐Y., Hsieh W. T., Wu H. J., Sun G., Tsai D. P., Laser Photonics Rev. 2016, 10, 986.

- 31. Yu N., Genevet P., Kats M. A., Aieta F., Tetienne J.‐P., Capasso F., Gaburro Z., Science 2011, 334, 333.

- 32. Hsiao H. H., Chu C. H., Tsai D. P., Small Methods 2017, 1, 1600064.

- 33. Wang S., Nat. Commun. 2017, 8, 187.

- 34. Wang S., Wu P. C., Su V.‐C., Lai Y.‐C., Chen M.‐K., Kuo H. Y., Chen B. H., Chen Y. H., Huang T.‐T., Wang J.‐H., Lin R.‐M., Kuan C.‐H., Li T., Wang Z., Zhu S., Tsai D. P., Nat. Nanotechnol. 2018, 13, 227.

- 35. Su V.‐C., Chu C. H., Sun G., Tsai D. P., Opt. Express 2018, 26, 13148.

- 36. Wang Z., Hu G., Wang X., Ding X., Zhang K., Li H., Burokur S. N., Wu Q., Liu J., Tan J., Qiu C.‐W., Nat. Commun. 2022, 13, 2188.

- 37. Kuo H. Y., Vyas S., Chu C. H., Chen M. K., Shi X., Misawa H., Lu Y.‐J., Luo Y., Tsai D. P., Nanomaterials 2021, 11, 1730.

- 38. Luo Y., Tseng M. L., Vyas S., Hsieh T.‐Y., Wu J.‐C., Chen S.‐Y., Peng H.‐F., Su V.‐C., Huang T.‐T., Kuo H. Y., Chu C. H., Chen M. K., Chen J.‐W., Chen Y.‐C., Huang K.‐Y., Kuan C.‐H., Shi X., Misawa H., Tsai D. P., Nanophotonics 2022, 1949.

- 39. Luo Y., Tseng M. L., Vyas S., Kuo H. Y., Chu C. H., Chen M. K., Lee H.‐C., Chen W.‐P., Su V.‐C., Shi X., Misawa H., Tsai D. P., Yang P.‐C., Small Methods 2022, 2101228.

- 40. Wu P. C., Chen J.‐W., Yin C.‐W., Lai Y.‐C., Chung T. L., Liao C. Y., Chen B. H., Lee K.‐W., Chuang C.‐J., Wang C.‐M., Tsai D. P., ACS Photonics 2017, 5, 2568.

- 41. Li L., Liu Z., Ren X., Wang S., Su V.‐C., Chen M.‐K., Chu C. H., Kuo H. Y., Liu B., Zang W., Guo G., Zhang L., Wang Z., Zhu S., Tsai D. P., Science 2020, 368, 1487.

- 42. Chen M. K., Wu Y., Feng L., Fan Q., Lu M., Xu T., Tsai D. P., Adv. Opt. Mater. 2021, 9, 2001414.

- 43. Zhang S., Wong C. L., Zeng S., Bi R., Tai K., Dholakia K., Olivo M., Nanophotonics 2021, 10, 259.

- 44. Liu X., Chen M. K., Chu C. H., Zhang J., Leng B., Yamaguchi T., Tanaka T., Tsai D. P., ACS Photonics 2023, 10, 2382.

- 45. Wang X., Wang H., Wang J., Liu X., Hao H., Tan Y. S., Zhang Y., Zhang H., Ding X., Zhao W., Wang Y., Lu Z., Liu J., Yang J. K. W., Tan J., Li H., Qiu C.‐W., Hu G., Ding X., Nat. Commun. 2023, 14, 2063.

- 46. Chadebecq F., Lovat L. B., Stoyanov D., Nat. Rev. Gastroenterol. Hepatol. 2022, 171.

- 47. Li A.‐C., Vyas S., Lin Y.‐H., Huang Y.‐Y., Huang H.‐M., Luo Y., IEEE Trans. Med. Imaging 2021, 40, 3229.

- 48. Wang H., Rivenson Y., Jin Y., Wei Z., Gao R., Günaydin H., Bentolila L. A., Kural C., Ozcan A., Nat. Methods 2019, 16, 103.

- 49. Rivenson Y., Zhang Y., Günaydın H., Teng D., Ozcan A., Light Sci. Appl. 2018, 7, 17141.

- 50. Borhani N., Bower A. J., Boppart S. A., Psaltis D., Biomed. Opt. Express 2019, 10, 1339.

- 51. Wang H., Rivenson Y., Jin Y., Wei Z., Gao R., Günaydin H., Bentolila L. A., Kural C., Ozcan A., Nat. Methods 2019, 16, 103.

- 52. Dong X., Lei Y., Wang T., Higgins K., Liu T., Curran W. J., Mao H., Nye J. A., Yang X., Phys. Med. Biol. 2020, 65, 055011.

- 53. Wang F., Bian Y., Wang H., Lyu M., Pedrini G., Osten W., Barbastathis G., Situ G., Light Sci. Appl. 2020, 9, 77.

- 54. Barbastathis G., Ozcan A., Situ G., Optica 2019, 6, 921.

- 55. Li S., Deng M., Lee J., Sinha A., Barbastathis G., Optica 2018, 5, 803.

- 56. Rivenson Y., Göröcs Z., Günaydin H., Zhang Y., Wang H., Ozcan A., Optica 2017, 4, 1437.

- 57. Chen M. K., Adv. Mater. 2022, 2107465.

- 58. Chen M. K., Liu X., Sun Y., Tsai D. P., Chem. Rev. 2022, 122, 15356.

- 59. Liu Y., Yu Q.‐Y., Chen Z.‐M., Qiu H.‐Y., Chen R., Jiang S.‐J., He X.‐T., Zhao F.‐L., Dong J.‐W., Photonics Res. 2021, 9, 106.

- 60. Pahlevaninezhad H., Khorasaninejad M., Huang Y.‐W., Shi Z., Hariri L. P., Adams D. C., Ding V., Zhu A., Qiu C.‐W., Capasso F., Suter M. J., Nat. Photonics 2018, 12, 540.

- 61. Pahlevaninezhad M., Huang Y.‐W., Pahlevani M., Bouma B., Suter M. J., Capasso F., Pahlevaninezhad H., Nat. Photonics 2022, 16, 203.

- 62. Sun D., Yang Y., Liu S., Li Y., Luo M., Qi X., Ma Z., Biomed. Opt. Express 2020, 11, 4408.

- 63. Luo Y., Chu C. H., Vyas S., Kuo H. Y., Chia Y. H., Chen M. K., Shi X., Tanaka T., Misawa H., Huang Y.‐Y., Tsai D. P., Nano Lett. 2021, 21, 5133.

- 64. Kim J.‐S., Kanade T., Opt. Lett. 2011, 36, 1050.

- 65. Sinha A., Lee J., Li S., Barbastathis G., Optica 2017, 4, 1117.

- 66. He K., Zhang X., Ren S., Sun J., Deep residual learning for image recognition. IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- 67. Gao L., Song W., Dai J., Chen Y., Remote Sens. 2019, 11, 552.

- 68. Lin W. T., Lin C. Y., Singh V. R., Luo Y., J. Biophotonics 2018, 11, 201800010.

- 69. Banerjee S., Hazra L., Novel Optical Systems and Large‐Aperture Imaging 1998, 3430, 175.

- 70. Tomkinson T. H., Bentley J. L., Crawford M. K., Harkrider C. J., Moore D. T., Rouke J. L., Appl. Opt. 1996, 35, 6674.

- 71. Jr A. B., Ilegems M., Phys. Rev. B 1973, 7, 743.

- 72. Lohmann A., Paris D., Appl. Opt. 1967, 6, 1567.

- 73. Goodman J. W., Introduction to Fourier optics, Roberts and Company Publishers, 2005.

- 74. Sancataldo G., Scipioni L., Ravasenga T., Lanzanò L., Diaspro A., Barberis A., Duocastella M., Optica 2017, 4, 367.

- 75. Hore A., Ziou D., Image quality metrics: PSNR vs. SSIM, 2010 20th international conference on pattern recognition, IEEE, 2010, pp. 2366–2369.

- 76. Mestre H., Mori Y., Nedergaard M., TINS 2020, 43, 458.

- 77. Malitson I. H., J. Opt. Soc. Am. 1962, 52, 1377.

- 78. Xavier A. L., JoVE 2018, e57378.
