Members of the medical profession seem reluctant to value research into the effectiveness of educational interventions.1 One reason for this reluctance may be a fundamental difficulty in addressing the questions that everyone wants answered: what works, in what context, with which groups, and at what cost? Unfortunately, there may not be simple answers to these questions. Defining true effectiveness, separating out the part played by the various components of an educational intervention, and clarifying the real cost:benefit ratio are as difficult in educational research as they are in the evaluation of a complex treatment given to a sample of people who each have different needs, circumstances, and personalities.

Summary points

Health professionals are often reluctant to value research into the effectiveness of educational interventions

As in clinical research, an evidence base for the practice of medical education is essential

Choosing a methodology to investigate a research question in educational research is no different from choosing one for any other type of research

Rigorously designed research into the effectiveness of education is needed to attract research funding, to provide generalisable results, and to elevate the profile of educational research within the medical profession

Methodology

Choosing a methodology to investigate a research question is no different in educational research than in any other type of research. Careful attention must be paid to the aims of the research and to the validity of the methods and tools selected. Educational research uses two main designs: naturalistic and experimental.2,3

Naturalistic design

Naturalistic designs look at specific or general issues as they occur: for example, what makes practitioners change their practice, how often feedback is given in primary care settings, what processes occur over time in an educational course, what different experiences and outcomes participants have, and whether these differences can be explained. Like case reports, population surveys, and other well designed observational methodologies, naturalistic studies have a place in providing generalisable information to a wider audience.4

Experimental design

In contrast to a naturalistic research design, experimental designs usually involve an educational intervention. The parallels with clinical interventions highlight the three main areas of difficulty in performing experimental research into educational interventions.

Complex nature of education

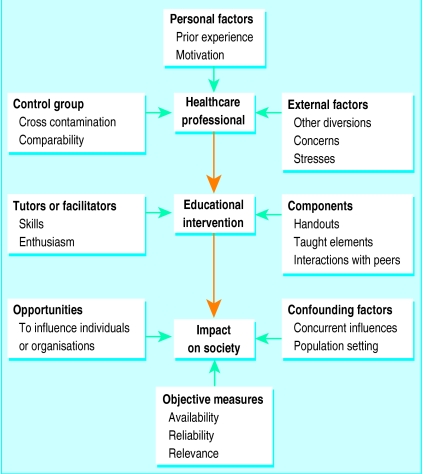

An educational event, from reading a journal article to completing a degree course, is an intervention; it is a complex intervention, and often several components act synergistically. Interventions that have been shown to be effective in one setting may, quite reasonably, not translate to other settings. Educational events are multifaceted interactions occurring in a changing world and involving the most complex of subjects. Many factors can influence the effectiveness of educational interventions (fig 1).

Figure 1.

Examples of factors which may influence the effectiveness of educational interventions

Sampling

The randomised controlled trial is regarded as essential to proving the effectiveness of a clinical intervention. In educational research, especially in postgraduate and continuing medical education, the numbers that can be enrolled in a study may not be large enough to allow researchers to achieve statistically significant quantitative results. Comparable control groups may also be contaminated if they gain access to some elements of the intervention under scrutiny (for example, students may pass their handouts to other students).
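To make the sample size problem concrete, the sketch below applies the standard normal-approximation formula for comparing two proportions with a two-sided test. The function name `n_per_group`, the pass rates (50% in a control group versus 70% after teaching), the 5% significance level, and 80% power are all hypothetical assumptions chosen for illustration, not figures from this article.

```python
import math
from statistics import NormalDist


def n_per_group(p_control: float, p_intervention: float,
                alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants needed in EACH arm to detect a difference
    between two proportions (two-sided test, normal approximation)."""
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for two-sided alpha
    z_beta = z.inv_cdf(power)           # quantile for the desired power
    variance_sum = (p_control * (1 - p_control)
                    + p_intervention * (1 - p_intervention))
    effect = p_intervention - p_control
    # Round up: a fraction of a participant cannot be enrolled.
    return math.ceil((z_alpha + z_beta) ** 2 * variance_sum / effect ** 2)


# Hypothetical example: 50% of controls vs 70% of taught learners
# reach a target standard.
print(n_per_group(0.50, 0.70))  # 91 per group
```

On these assumptions about 91 learners per arm (182 in total) would be needed, and a smaller difference, such as 50% versus 60%, pushes the figure into the hundreds per arm: more than many postgraduate courses enrol.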

Purposive sampling may give different but valid perspectives.3 For instance, if 25 out of 30 people are shown to have benefited from a course, the most interesting question might be “why didn’t the other five people benefit?” Were they demographically different? Did they have different learning styles? More detailed interviews with those five students may be more informative about the philosophy and utility of a course than the simple statistics. Analysing the deviant cases as a project proceeds is important for the validity of results.5

Outcome measures

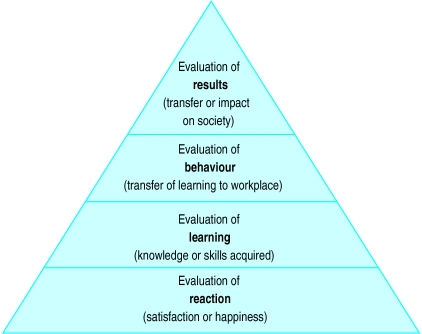

Kirkpatrick described four levels of evaluation in which the complexity of the behavioural change increases as evaluation strategies ascend to each higher level (fig 2).6 The length of time needed for the evaluation, the lack of reliable objective measures, and the number of potential confounding factors all increase with the complexity of the change. Researchers in medical education are aware that the availability of funds for research and development is limited unless a link can be made between the proposed intervention and its impact on patient care, yet this is the most difficult link to make.

Figure 2.

Kirkpatrick’s hierarchy of levels of evaluation. Complexity of behavioural change increases as evaluation of intervention ascends the hierarchy

When does evaluation become research?

Evaluations of the effectiveness of educational interventions may not reach the rigour required for research even though they may use similar study designs, methods of data collection, and analytical techniques. The evaluation of an educational event may have many purposes; each evaluation should be designed for the specific purpose for which it is required and for the stakeholders involved.7,8 For a short educational course, for example, the purpose might be to assist organisers in planning improvements for the next time it is held. The systematic collection of participants’ opinions using a specifically designed questionnaire may be appropriate. Just as a well designed audit, although not true research, may be informative and useful to a wider audience, so an evaluation may be useful if it is disseminated through publication. But that does not mean that it is research.

For an evaluation of an educational intervention to be considered as research, rigorous standards of reliability and validity must be applied regardless of whether qualitative or quantitative methodologies are used.9,10 For example, if the questionnaires used are not standardised and have not been validated for use in a population similar to the one being studied, they should be piloted and should include checks on their internal consistency. A high response rate—of 80-100%—is required.11 Numerical scores will be meaningless if they are derived from poorly designed scoring systems. If a control group is used, randomisation or case control procedures should meet accepted standards.11
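As an illustration of the checks described above, the sketch below computes Cronbach's alpha, one widely used measure of internal consistency (the article does not say which measure to use), and tests a response rate against the 80% threshold given in the text. The function names and the pilot scores are hypothetical; sample variances are used throughout, which leaves the ratio in the formula unchanged.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of scores."""
    k = len(responses[0])                # number of questionnaire items
    items = list(zip(*responses))        # transpose: one tuple per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


def response_rate(returned: int, distributed: int) -> float:
    """Proportion of distributed questionnaires that were returned."""
    return returned / distributed


# Hypothetical pilot data: 5 respondents rating 3 items on a 1-5 scale.
pilot = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 3],
]
print(round(cronbach_alpha(pilot), 2))  # 0.91

rate = response_rate(25, 30)
print(f"{rate:.0%}", "meets" if rate >= 0.80 else "is below", "the 80% threshold")
```

A pilot like this only flags items that do not hang together; it does not by itself establish that the questionnaire is valid for the population being studied.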

The Cochrane Collaboration module on effective professional practice details quality assessment criteria for randomised studies, interrupted time series, and controlled before and after studies that have been designed to evaluate interventions aimed at improving professional practice and the delivery of effective health care.11 Educational strategies and events for healthcare professionals fall into this remit.

Conclusion

As in clinical research, an evidence base for the practice of medical education is essential for targeting limited resources and informing development strategies. The complexity of the subject matter and the limited availability of reliable, meaningful outcome measures are challenges that must be faced. These difficulties are not enough to excuse complacency. Rigorous research design and application are needed to attract research funding, to provide valid generalisable results, and to elevate the profile of educational research within the medical profession.

Footnotes

Competing interests: None declared.

References

1. Buckley G. Partial truths: research papers in medical education. Med Educ. 1998;32:1–2. doi: 10.1046/j.1365-2923.1998.00187.x.

2. Patton MQ. How to use qualitative methods in evaluation. Newbury Park, CA: Sage; 1987.

3. Robson C. Real world research. Oxford: Blackwell; 1993.

4. Vandenbroucke JP. Case reports in an evidence-based world. J R Soc Med. 1999;92:159–163. doi: 10.1177/014107689909200401.

5. Green J. Grounded theory and the constant comparative method. BMJ. 1998;316:1064–1065.

6. Kirkpatrick DL. Evaluation of training. In: Craig R, Bittel L, editors. Training and development handbook. New York: McGraw-Hill; 1967.

7. Edwards J. Evaluation in adult and further education: a practical handbook for teachers and organisers. Liverpool: Workers' Educational Association; 1991.

8. Calder J. Programme evaluation and quality. London: Kogan Page; 1994.

9. Kirk J, Miller ML. Reliability and validity in qualitative research. Newbury Park, CA: Sage; 1986.

10. Green J, Britten N. Qualitative research and evidence based medicine. BMJ. 1998;316:1230–1232. doi: 10.1136/bmj.316.7139.1230.

11. Cochrane Collaboration, editors. Cochrane Library. Issue 2. Oxford: Update Software; 1998. Effective professional practice module.