Pilots and doctors operate in complex environments where teams interact with technology. In both domains, risk varies from low to high with threats coming from a variety of sources in the environment. Safety is paramount for both professions, but cost issues can influence the commitment of resources for safety efforts. Aircraft accidents are infrequent, highly visible, and often involve massive loss of life, resulting in exhaustive investigation into causal factors, public reports, and remedial action. Research by the National Aeronautics and Space Administration into aviation accidents has found that 70% involve human error.1

In contrast, medical adverse events happen to individual patients and seldom receive national publicity. More importantly, there is no standardised method of investigation, documentation, and dissemination. The US Institute of Medicine estimates that each year between 44 000 and 98 000 people die as a result of medical errors. When error is suspected, litigation and new regulations are threats in both medicine and aviation.

Summary points

In aviation, accidents are usually highly visible, and as a result aviation has developed standardised methods of investigating, documenting, and disseminating errors and their lessons

Although operating theatres are not cockpits, medicine could learn from aviation

Observation of flights in operation has identified failures of compliance, communication, procedures, proficiency, and decision making in contributing to errors

Surveys in operating theatres have confirmed that pilots and doctors have common interpersonal problem areas and similarities in professional culture

Accepting the inevitability of error and the importance of reliable data on error and its management will allow systematic efforts to reduce the frequency and severity of adverse events

Error results from physiological and psychological limitations of humans.2 Causes of error include fatigue, workload, and fear as well as cognitive overload, poor interpersonal communications, imperfect information processing, and flawed decision making.3 In both aviation and medicine, teamwork is required, and team error can be defined as action or inaction leading to deviation from team or organisational intentions. Aviation increasingly uses error management strategies to improve safety. Error management is based on understanding the nature and extent of error, changing the conditions that induce error, determining behaviours that prevent or mitigate error, and training personnel in their use.4 Though recognising that operating theatres are not cockpits, I describe approaches that may help improve patient safety.

Data requirements for error management

Multiple sources of data are essential in assessing aviation safety. Confidential surveys of pilots and other crew members provide insights into perceptions of organisational commitment to safety, appropriate teamwork and leadership, and error.3 Examples of survey results can clarify their importance. Attitudes about the appropriateness of juniors speaking up when problems are observed and leaders soliciting and accepting inputs help define the safety climate. Attitudes about the flying job and personal capabilities define pilots' professional culture. Overwhelmingly, pilots like their work and are proud of their profession. However, their professional culture shows a negative component in denying personal vulnerability. Most of the 30 000 pilots surveyed report that their decision making is as good in emergencies as under normal conditions, that they can leave behind personal problems, and that they perform effectively when fatigued.2 Such inaccurate self perceptions can lead to overconfidence in difficult situations.

A second data source consists of non-punitive incident reporting systems. These provide insights about conditions that induce errors and the errors that result. The United States, Britain, and other countries have national aviation incident reporting systems that remove identifying information about organisations and respondents and allow data to be shared. In the United States, aviation safety action programmes permit pilots to report incidents to their own companies without fear of reprisal, allowing immediate corrective action.5 Because incident reports are voluntary, however, they do not provide data on base rates of risk and error.

Sources of threat and types of error observed during line operations safety audit

Sources of threat

Terrain (mountains, buildings)—58% of flights

Adverse weather—28% of flights

Aircraft malfunctions—15% of flights

Unusual air traffic commands—11% of flights

External errors (air traffic control, maintenance, cabin, dispatch, and ground crew)—8% of flights

Operational pressures—8% of flights

Types of error—with examples

Violation (conscious failure to adhere to procedures or regulations)—performing a checklist from memory

Procedural (followed procedures with wrong execution)—wrong entry into flight management computer

Communications (missing or wrong information exchange or misinterpretation)—misunderstood altitude clearance

Proficiency (error due to lack of knowledge or skill)—inability to program automation

Decision (decision that unnecessarily increases risk)—unnecessary navigation through adverse weather

A third data source has been under development over 15 years by our project (www.psy.utexas.edu/psy/helmreich/nasaut.htm). It is an observational methodology, the line operations safety audit (LOSA), which uses expert observers in the cockpit during normal flights to record threats to safety, errors and their management, and behaviours identified as critical in preventing accidents. Confidential data have been collected on more than 3500 domestic and international airline flights—an approach supported by the Federal Aviation Administration and the International Civil Aviation Organisation.6

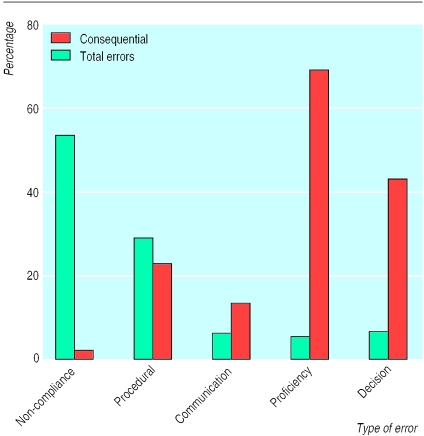

The results of the line operations safety audit confirm that threat and error are ubiquitous in the aviation environment, with an average of two threats and two errors observed per flight.7 The box shows the major sources of threat observed and the five categories of error empirically identified; fig 1 shows the relative frequency of each category. This error classification is useful because different interventions are required to mitigate different types of error.

Figure 1.

Percentage of each type of error and proportion classified as consequential (resulting in undesired aircraft states)

Proficiency errors suggest the need for technical training, whereas communications and decision errors call for team training. Procedural errors may result from human limitations or from inadequate procedures that need to be changed. Violations can stem from a culture of non-compliance, perceptions of invulnerability, or poor procedures. That more than half of observed errors were violations was unexpected. This lack of compliance is a source of concern that has triggered internal reviews of procedures and organisational cultures. Figure 1 also shows the percentage of errors that were classified as consequential—that is, those errors resulting in undesired aircraft states such as near misses, navigational deviation, or other error. Although the percentage of proficiency and decision errors is low, they have a higher probability of being consequential.7 Even non-consequential errors increase risk: teams that violate procedures are 1.4 times more likely to commit other types of errors.8
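The pairing of error types with interventions described above can be sketched as a small lookup. This is an illustrative sketch only, not the audit's own tooling: the names `ErrorType`, `INTERVENTIONS`, `suggested_interventions`, and `relative_risk` are assumptions, and the intervention labels are paraphrases of the text. The helper `relative_risk` reads the "1.4 times more likely" figure as a ratio of two rates, which is also an assumption about how the figure was derived.

```python
from enum import Enum


class ErrorType(Enum):
    """The five error categories named in the line operations safety audit."""
    VIOLATION = "violation"
    PROCEDURAL = "procedural"
    COMMUNICATIONS = "communications"
    PROFICIENCY = "proficiency"
    DECISION = "decision"


# Interventions paraphrased from the text (labels are assumptions, not official terms).
INTERVENTIONS = {
    ErrorType.PROFICIENCY: ["technical training"],
    ErrorType.COMMUNICATIONS: ["team training"],
    ErrorType.DECISION: ["team training"],
    ErrorType.PROCEDURAL: ["address human limitations", "revise inadequate procedures"],
    ErrorType.VIOLATION: ["review procedures", "review organisational culture"],
}


def suggested_interventions(observed):
    """Return de-duplicated interventions for the observed error types, in first-seen order."""
    seen = []
    for error_type in observed:
        for action in INTERVENTIONS[error_type]:
            if action not in seen:
                seen.append(action)
    return seen


def relative_risk(events_exposed, n_exposed, events_unexposed, n_unexposed):
    """Ratio of the event rate in the exposed group to the rate in the unexposed group."""
    return (events_exposed / n_exposed) / (events_unexposed / n_unexposed)


if __name__ == "__main__":
    print(suggested_interventions([ErrorType.COMMUNICATIONS, ErrorType.DECISION]))
    # Hypothetical counts chosen only to illustrate a ratio of 1.4; they are not audit data.
    print(relative_risk(70, 100, 50, 100))
```

With the hypothetical counts shown, 70 of 100 violating crews and 50 of 100 compliant crews committing a further error would give a ratio of 0.70/0.50 = 1.4. The real audit counts are not given in this article.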

Managing error in aviation

Given the ubiquity of threat and error, the key to safety is their effective management. One safety effort is training known as crew resource management (CRM).4 This represents a major change in training, which had previously dealt with only the technical aspects of flying. It considers human performance limiters (such as fatigue and stress) and the nature of human error, and it defines behaviours that are countermeasures to error, such as leadership, briefings, monitoring and cross checking, decision making, and review and modification of plans. Crew resource management is now required for flight crews worldwide, and data support its effectiveness in changing attitudes and behaviour and in enhancing safety.9

Simulation also plays an important role in crew resource management training. Sophisticated simulators allow full crews to practice dealing with error inducing situations without jeopardy and to receive feedback on both their individual and team performance. Two important conclusions emerge from evaluations of crew resource management training: firstly, such training needs to be ongoing, because in the absence of recurrent training and reinforcement, attitudes and practices decay; and secondly, it needs to be tailored to conditions and experience within organisations.7

Understanding how threat and error and their management interact to determine outcomes is critical to safety efforts. To this end, a model has been developed that facilitates analyses both of causes of mishaps and of the effectiveness of avoidance and mitigation strategies. A model should capture the treatment context, including the types of errors, and classify the processes of managing threat and error. Application of the model shows that there is seldom a single cause, but instead a concatenation of contributing factors. The greatest value of analyses using the model is in uncovering latent threats that can induce error.10

By latent threats we mean existing conditions that may interact with ongoing activities to precipitate error. For example, analysis of a Canadian crash caused by a take-off with wing icing uncovered 10 latent factors, including aircraft design, inadequate oversight by the government, and organisational characteristics including management disregard for de-icing and inadequate maintenance and training.3 Until this post-accident analysis, these risks and threats were mostly hidden. Since accidents occur so infrequently, an examination of threat and error under routine conditions can yield rich data for improving safety margins.

Applications to medical error

Discussion of applications to medical error will centre on the operating theatre, in which I have some experience as an observer and in which our project has collected observational data. This is a milieu more complex than the cockpit, with differing specialties interacting to treat a patient whose condition and response may have unknown characteristics.11 Aircraft tend to be more predictable than patients.

Though there are legal and cultural barriers to the disclosure of error, aviation's methodologies can be used to gain essential data and to develop comparable interventions. The project team has used both survey and observational methods with operating theatre staff. In observing operations, we noted instances of suboptimal teamwork and communications paralleling those found in the cockpit. Behaviours seen in a European hospital are shown in the box, with examples of negative impact on patients. These are behaviours addressed in crew resource management training.

Behaviours that increase risk to patients in operating theatres

Communication:

Failure to inform team of patient's problem—for example, surgeon fails to inform anaesthetist of use of drug before blood pressure is seriously affected

Failure to discuss alternative procedures

Leadership:

Failure to establish leadership for operating room team

Interpersonal relations, conflict:

Overt hostility and frustration—for example, patient deteriorates while surgeon and anaesthetist are in conflict over whether to terminate surgery after pneumothorax

Preparation, planning, vigilance:

Failure to plan for contingencies in treatment plan

Failure to monitor situation and other team's activities—for example, distracted anaesthetist fails to note drop in blood pressure after monitor's power fails

In addition to these observations, surveys confirm that pilots and doctors have common interpersonal problem areas and similarities in professional culture.2,12 In response to an open ended query about what is most needed to improve safety and efficiency in the operating theatre, two thirds of doctors and nurses in one hospital cited better communications.11 Most doctors deny the deleterious effects of stressors and proclaim that their decision making is as good in emergencies as in normal situations. In data just collected in a US teaching hospital, 30% of doctors and nurses working in intensive care units denied committing errors.13

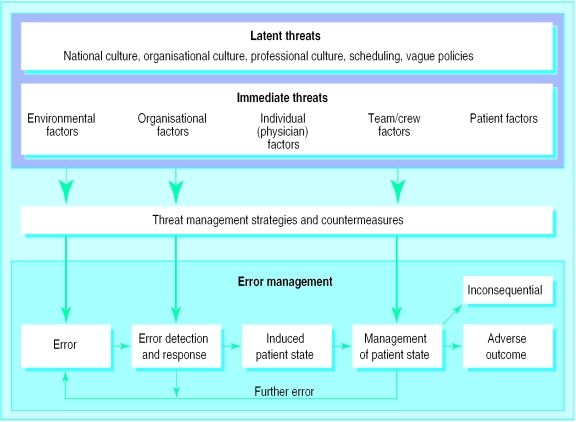

Further exploring the relevance of aviation experience, we have started to adapt the threat and error model to the medical environment. A model of threat and error management fits within a general “input-process-outcomes” concept of team performance, in which input factors include individual, team, organisational, environmental, and patient characteristics. Professional and organisational cultures are critical components of such a model.

Threats are defined as factors that increase the likelihood of errors and include environmental conditions such as lighting; staff related conditions such as fatigue and norms of communication and authority; and patient related issues such as difficult airways or undiagnosed conditions. Latent threats are aspects of the system predisposing threat or error, such as staff scheduling policies. The model is shown in fig 2 and is explained more fully, together with a case study (see box for summary), on the BMJ's website.

Case study: synopsis

An 8 year old boy was admitted for elective surgery on the eardrum. He was anaesthetised and an endotracheal tube inserted, along with internal stethoscope and temperature probe. The anaesthetist did not listen to the chest after inserting the tube. The temperature probe connector was not compatible with the monitor (the hospital had changed brands the previous day). The anaesthetist asked for another but did not connect it; he also did not connect the stethoscope.

Surgery began at 08 20 and carbon dioxide concentrations began to rise after about 30 minutes. The anaesthetist stopped entering CO2 and pulse on the patient's chart. Nurses observed the anaesthetist nodding in his chair, head bobbing; they did not speak to him because they “were afraid of a confrontation.”

At 10 15 the surgeon heard a gurgling sound and realised that the airway tube was disconnected. The problem was called out to the anaesthetist, who reconnected the tube. The anaesthetist did not check breathing sounds with the stethoscope.

At 10 30 the patient was breathing so rapidly the surgeon could not operate; he notified the anaesthetist that the rate was 60/min. The anaesthetist did nothing after being alerted.

At 10 45 the monitor showed irregular heartbeats. Just before 11 00 the anaesthetist noted extreme heartbeat irregularity and asked the surgeon to stop operating. The patient was given a dose of lignocaine, but his condition worsened.

At 11 02 the patient's heart stopped beating. The anaesthetist called for code, summoning the emergency team. The endotracheal tube was removed and found to be 50% obstructed by a mucous plug. A new tube was inserted and the patient was ventilated. The emergency team anaesthetist noticed that the airway heater had caused the breathing circuit's plastic tubing to melt and turned the heater off. The patient's temperature was 108°F. The patient died despite the efforts of the code team.

Figure 2.

Threat and error model, University of Texas human factors research project

At first glance, the case seems to be a simple instance of gross negligence during surgery by an anaesthetist who contributed to the death of a healthy 8 year old boy by failing to connect sensors and monitor his condition. When the model was applied, however, nine sequential errors were identified, including those of nurses who failed to speak up when they observed the anaesthetist nodding in a chair and the surgeon who continued operating even after the anaesthetist failed to respond to the boy's deteriorating condition. More importantly, latent organisational and professional threats were revealed, including failure to act on reports about the anaesthetist's previous behaviour, lack of policy for monitoring patients, pressure to perform when fatigued, and professional tolerance of peer misbehaviour.
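As a rough illustration of how the threat categories defined earlier and the latent threats found in this case might be recorded for analysis, the following sketch groups threats by kind. The class and function names (`ThreatKind`, `Threat`, `group_by_kind`) are hypothetical and are not part of the published model; the descriptions are taken from the text.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class ThreatKind(Enum):
    ENVIRONMENTAL = "environmental"
    STAFF = "staff related"
    PATIENT = "patient related"
    LATENT = "latent"


@dataclass(frozen=True)
class Threat:
    kind: ThreatKind
    description: str


# General examples given in the text's definition of threats.
GENERAL_EXAMPLES = [
    Threat(ThreatKind.ENVIRONMENTAL, "lighting"),
    Threat(ThreatKind.STAFF, "fatigue"),
    Threat(ThreatKind.STAFF, "norms of communication and authority"),
    Threat(ThreatKind.PATIENT, "difficult airway"),
    Threat(ThreatKind.PATIENT, "undiagnosed condition"),
    Threat(ThreatKind.LATENT, "staff scheduling policies"),
]

# Latent threats the text reports for the case study.
CASE_LATENT_THREATS = [
    Threat(ThreatKind.LATENT, "failure to act on reports about the anaesthetist's previous behaviour"),
    Threat(ThreatKind.LATENT, "lack of policy for monitoring patients"),
    Threat(ThreatKind.LATENT, "pressure to perform when fatigued"),
    Threat(ThreatKind.LATENT, "professional tolerance of peer misbehaviour"),
]


def group_by_kind(threats):
    """Group threat descriptions by kind, preserving input order within each group."""
    grouped = defaultdict(list)
    for threat in threats:
        grouped[threat.kind].append(threat.description)
    return dict(grouped)


if __name__ == "__main__":
    for kind, descriptions in group_by_kind(GENERAL_EXAMPLES + CASE_LATENT_THREATS).items():
        print(f"{kind.value}: {len(descriptions)}")
```

Keeping latent threats as their own kind reflects the point made in the text: they belong to the system rather than to any one person's action, so they call for organisational rather than individual remedies.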

Establishing error management programmes

Available data, including analyses of adverse events, suggest that aviation's strategies for enhancing teamwork and safety can be applied to medicine. I am not suggesting the mindless import of existing programmes; rather, aviation experience should be used as a template for developing data driven actions reflecting the unique situation of each organisation.

This can be summarised in a six step approach. As in the treatment of disease, action should begin with

1. History and examination; and

2. Diagnosis.

The history must include detailed knowledge of the organisation, its norms, and its staff. Diagnosis should include data from confidential incident reporting systems and surveys, systematic observations of team performance, and details of adverse events and near misses.

Further steps are:

3. Dealing with latent factors that have been detected, changing the organisational and professional cultures, providing clear performance standards, and adopting a non-punitive approach to error (but not to violations of safety procedures);

4. Providing formal training in teamwork, the nature of error, and in limitations of human performance;

5. Providing feedback and reinforcement on both interpersonal and technical performance; and

6. Making error management an ongoing organisational commitment through recurrent training and data collection.

Some might conclude that such programmes may add bureaucratic layers and burden to an already overtaxed system. But in aviation, one of the strongest proponents and practitioners of these measures is an airline that eschews anything bureaucratic, learns from everyday mistakes, and enjoys an enviable safety record.

Funding for research into medical error, latent factors in the system, incident reporting systems, and development of training is essential for implementation of such programmes. Research in medicine is historically specific to diseases, but error cuts across all illnesses and medical specialties.

I believe that if organisational and professional cultures accept the inevitability of error and the importance of reliable data on error and its management, systematic efforts to improve safety will reduce the frequency and severity of adverse events.

Acknowledgments

Thanks to David Musson, Bryan Sexton, William Taggart, and John Wilhelm for their counsel.

Footnotes

Funding: Partial support was provided by the Gottlieb Daimler und Carl Benz Stiftung.

Competing interests: RH has received grants for research in aviation from the federal government, has been a consultant for airlines, and has received honorariums for speaking to medical groups.

Website extra: A full explanation of the threat and error management model, with a case study, appears on the BMJ's website www.bmj.com

References

- 1.Helmreich RL, Foushee HC. Why crew resource management? Empirical and theoretical bases of human factors training in aviation. In: Wiener E, Kanki B, Helmreich R, editors. Cockpit resource management. San Diego: Academic Press; 1993. pp. 3–45.

- 2.Amalberti R. La conduite de systèmes à risques. Paris: Presses Universitaires de France; 1996.

- 3.Helmreich RL, Merritt AC. Culture at work: national, organisational and professional influences. Aldershot: Ashgate; 1998.

- 4.Helmreich RL, Merritt AC, Wilhelm JA. The evolution of crew resource management in commercial aviation. Int J Aviation Psychol. 1999;9:19–32. doi: 10.1207/s15327108ijap0901_2.

- 5.Federal Aviation Administration. Aviation safety action programs. Washington, DC: FAA; 1999. (Advisory circular 120-66A.)

- 6.Helmreich RL, Klinect JR, Wilhelm JA. Proceedings of the tenth international symposium on aviation psychology. Columbus: Ohio State University; 1999. Models of threat, error, and CRM in flight operations; pp. 677–682.

- 7.Klinect JR, Wilhelm JA, Helmreich RL. Proceedings of the tenth international symposium on aviation psychology. Columbus: Ohio State University; 1999. Threat and error management: data from line operations safety audits; pp. 683–688.

- 8.Helmreich RL. Culture and error. In: Safety in aviation: the management commitment: proceedings of a conference. London: Royal Aeronautical Society (in press).

- 9.Helmreich RL, Wilhelm JA. Outcomes of crew resource management training. Int J Aviation Psychol. 1991;1:287–300. doi: 10.1207/s15327108ijap0104_3.

- 10.Reason J. Managing the risks of organisational accidents. Aldershot: Ashgate; 1997.

- 11.Helmreich RL, Schaefer H-G. Team performance in the operating room. In: Bogner MS, editor. Human error in medicine. Hillsdale, NJ: Erlbaum; 1994. pp. 225–253.

- 12.Helmreich RL, Davies JM. Human factors in the operating room: Interpersonal determinants of safety, efficiency and morale. In: Aitkenhead AA, editor. Baillière's clinical anaesthesiology: safety and risk management in anaesthesia. London: Baillière Tindall; 1996. pp. 277–296.

- 13.Sexton JB, Thomas EJ, Helmreich RL. Error, stress, and teamwork in medicine and aviation: cross sectional surveys. BMJ. 2000;320:745–749. doi: 10.1136/bmj.320.7237.745.