Abstract

Both schools and caregivers play an important role in supporting children’s mental health, but there are few mechanisms for caregivers and school-based mental health providers to work collaboratively to address children’s needs. Closures of schools during the first wave of the COVID-19 pandemic left gaps in mental health support services to children and increased the burden on caregivers to ensure their children’s well-being. In this study, investigators explored the feasibility and acceptability of a motivational interviewing-based program in which school-based mental health providers were trained to connect directly to caregivers to assist them in supporting key aspects of their children’s well-being, including sleep, coping, and academic behavior. Results indicated a high degree of satisfaction with the program and a perception that it was helpful to caregivers and children. However, major challenges in recruitment of providers, as well as qualitative interviews with those providers who participated, indicated that the feasibility of implementing such a program is limited without significant additional implementation infrastructure. Findings suggest that structured support of caregivers, accessed through their children’s schools, has high potential for improving child outcomes and family well-being. Future research should explore what implementation infrastructure is needed for schools to effectively offer these types of supports.

Keywords: Intervention, Mental health, Parents, School social work

Introduction

Mental health is essential to children’s academic, social, and physical well-being (see: Bitsko et al., 2018, 2022; Kessler et al., 1995; Shomaker et al., 2011). Increasing awareness of the relationship between mental health and metrics of academic success has led to more attention to the role of schools in promoting and supporting children’s mental health (e.g., Adelman & Taylor, 2006; Atkins et al., 2010; Rossen & Cowan, 2014). In the USA, the public school system has played a central role in addressing and supporting children’s emotional, psychological, and social well-being since the early twentieth century. School-based mental health supports have primarily focused on providing direct services to children during the school day, via individual counseling, group counseling, and/or school-wide social–emotional programs (Garbacz et al., 2018). However, ecological systems theory (Bronfenbrenner, 1979) has long held that a child’s development and well-being are shaped by ongoing interactions among the child and the various contexts (“microsystems”) they experience, of which school is only one. Despite strong evidence for the efficacy of interventions that operate across ecological systems (e.g., school, home) for children’s mental health (Sheridan et al., 2019; Smith et al., 2020), there has been only limited implementation of such approaches as part of school-based mental health efforts in US public schools (Smith et al., 2020). One reason for this lack of widespread implementation may be the relatively intensive training requirements of existing family–school interventions (Sheridan et al., 2017; Stormshak et al., 2010).

The need for scalable and sustainable ecological approaches to children’s mental health became particularly urgent during the early stages of the COVID-19 pandemic, when school closures eliminated the option for providing supports to children within school buildings. Thus, the present study examined the acceptability, feasibility, and perceived effectiveness of an intervention designed to quickly train and mobilize existing school-based mental health providers (SBMHPs) to support parents in response to remote learning during the pandemic. This model was adapted from existing models of family–school partnerships to allow it to be implemented quickly by school staff. Although the study was motivated by the pandemic, it has potential utility beyond the pandemic. Below, we review the historical and theoretical context for this intervention and the prior literature on models of family–school partnerships designed to improve children’s well-being, and then describe the current intervention and research questions.

History of School-Based Mental Health Supports

School social workers began working in public schools in 1906 when the population of school children was becoming increasingly diverse (Constable, 2016; Phillippo & Blosser, 2013). Early school social work practice included improving student behavior and attendance, facilitating caregiver–teacher relationships, assisting with student and parent vocational needs, and making referrals to psychiatric clinics and social service agencies (Sugrue, 2017). School counselors and school psychologists also began to appear in the public schools in the early twentieth century. Although school counselors originally focused on vocational counseling (Gysbers, 2010) and school psychologists were charged primarily with conducting psychological testing and examinations (Fagan, 1987), both professions have increasingly engaged with supporting children’s mental health.

School-based support for children’s mental health expanded substantially in the second half of the twentieth century with the passage of federal legislation that provided both increased funding and specific mandates regarding schools’ roles in supporting the overall well-being of children (Phillippo & Blosser, 2013). During the same period, the social–emotional learning movement led to significant increases in research and programming aimed at children’s social and emotional learning and promoting its essential role in public education (Edutopia, 2011). In the late twentieth and early twenty-first centuries, school-based support has expanded to include the embedding of community-based therapists in school buildings who meet individually with students to provide billable psychotherapy sessions (Doll et al., 2017; Osagiede et al., 2018).

Theoretical Framework: Ecological Systems Theory

The current study is based on ecological systems theory (Bronfenbrenner, 1979), which suggests that a child’s development and well-being are shaped by interactions between the child and the various microsystems they experience, including home and school. The home or family microsystem is particularly critical in that it not only has important influences on children’s well-being but also interacts with the school microsystem in what Bronfenbrenner terms a “mesosystem.” Given what is known about the centrality of the home/family microsystem to children’s development, particularly at younger ages (see: Bronfenbrenner, 1979; Cicchetti & Lynch, 1993; Evans & Wachs, 2010; Krishnan, 2010), it is likely that interventions will be most effective if they include supports that go beyond the school microsystem and consider children’s social-ecological context more broadly.

The COVID-19 pandemic highlighted the need for effective ecological approaches to supporting children’s mental health, as school closures eliminated the option for providing supports to children within school buildings even as the stressors of the pandemic exacerbated children’s mental health problems (Leeb et al., 2020; Lurie Children’s Hospital of Chicago, 2021; Margolius et al., 2020). Additionally, for many months during nationwide distance learning, children were participating in the school microsystem remotely while physically in their home/family microsystem. Thus, the relationship between school and home was dramatically altered during this time, with responsibilities for maintaining discipline and academic engagement often shifting from teachers to caregivers. Likewise, traditional structures and supports available to students were upended, including the opportunities for teachers and school social workers to meet physically with children, leaving caregivers as the main, or only, mental health resource available (Sugrue et al., 2023; Capp et al., 2021; Kelly et al., 2021).

Ecological Interventions for Children’s Mental Health

The benefits of ecological interventions for children’s mental health are supported by multiple meta-analyses that have examined a variety of family–school intervention models and programs (Sheridan et al., 2019; Smith et al., 2020). For example, Smith and colleagues (2020) conducted a meta-analysis of studies on programs that they referred to as family–school partnerships (FSPs). Their results showed that FSPs led to children exhibiting decreases in disruptive behavior, improved adaptive and social skills, and improved academic achievement. Likewise, Sheridan and colleagues’ (2019) meta-analysis found significant positive effects of family–school interventions on children’s social-behavioral functioning and mental health. Finally, a model of a family–school intervention with a particularly robust evidence base is the family check-up (FCU) (Dishion & Kavanaugh, 2000). FCU is a family-centered brief parent-training intervention. Rooted in strategies from motivational interviewing (Miller & Rollnick, 2012), the FCU is a school-based intervention that involves providers meeting with a child’s primary caregivers to identify goals and concerns, assess needs and motivation for change, and provide appropriate resources (Stormshak et al., 2010). Research has shown that children who participated in FCU have lower rates of behavior and emotional problems, improved emotional regulation, and better relationships with peers, teachers, and caregivers (Dishion et al., 2008, 2014; Shaw et al., 2006; Stormshak et al., 2010, 2019; Wang et al., 2019). However, there are important challenges to implementing the FCU, including intensive training requirements for providers (e.g., several weeks of didactic training; Stormshak et al., 2010; Sheridan et al., 2017). Indeed, the FCU has often been implemented by outside providers who push into schools, rather than by trained school-based providers (e.g., Stormshak et al., 2019), although some studies have trained school staff in the FCU approach (Smolkowski et al., 2017).

The intensity of training and reliance on outside providers may be one reason that there has been only limited implementation of these family–school approaches as part of school-based mental health efforts in US public schools (Smith et al., 2020). One potential way to increase sustainability and scalability of ecological approaches is to provide expedited training to SBMHPs to help them engage caregivers within the existing school-based mental health infrastructure. This type of approach has been used in clinical settings, where training pediatric mental healthcare providers to engage with parents has been found to improve parental involvement in their child’s mental healthcare (Stadnick et al., 2016; Haine-Schlagel et al., 2022). In school settings, interventions that train teachers to communicate with parents have been found to improve children’s classroom behavior (Fefer et al., 2020). Efficacy trials training school staff to implement models such as the FCU have shown success but have generally depended on significant outside investments such as study consultants who work intensively with school staff (Dishion et al., 2020). These investments have been necessary to overcome implementation challenges such as limited school staff time for implementing the model (Fosco et al., 2014; Stormshak et al., 2016; Dishion et al., 2020), lack of staff roles dedicated to parent support (Dishion et al., 2020; Fosco et al., 2014), and limited school and district prioritization of parent engagement (Dishion et al., 2020). Importantly, however, the pandemic disrupted several of these implementation barriers. For example, during the early months of remote schooling during the pandemic, SBMHPs were not engaged in their normal day-to-day activities, opening up time to work with caregivers. Further, the reliance on caregivers for student success during remote learning increased the potential for prioritizing caregiver engagement. This unique context provided an opening for the current intervention, which was designed to help SBMHPs rapidly incorporate a relatively simple and brief model of caregiver engagement into their work.

Current Study

The current study was developed to assess the acceptability, feasibility, and perceived effectiveness of a brief ecological intervention to train SBMHPs to work directly with caregivers to address key concerns about their children in grades 3 through 6. The intervention was initially piloted during the nationwide distance-learning phase of the COVID-19 pandemic (Spring 2020), but later phases were conducted during the return to in-person schooling (Spring 2021 and Fall 2021). The intervention, “Family Connect,” draws from evidence-based approaches to school-based behavioral and mental health models, including the family check-up (FCU) (Dishion & Kavanaugh, 2000), school-based approaches to motivational interviewing (Rollnick et al., 2016), and solution-focused brief therapy (Kim & Franklin, 2009). Family Connect was designed to allow quick implementation by school staff in the context of the pandemic. Specifically, Family Connect offered expedited training for SBMHPs and provided resources, such as parenting skills videos that providers could watch with caregivers, to increase the capacity of providers with more limited training to implement the model. The model was designed to address three key domains of caregiver concerns: child’s sleep, behavior around school work (e.g., doing homework), and coping with stress, chosen because they are major determinants of child and family well-being (Compas et al., 2017; Kremer et al., 2016; Williamson et al., 2020). The goal of this pilot study was to determine: (1) whether parents and providers find the program acceptable; (2) whether providers feel the program is feasible; and (3) whether parents and providers perceive the program to be effective.

Methods

Recruitment and Consent

All study protocols were approved by the Institutional Review Board of the University of Minnesota. Providers were recruited from districts in three states (Minnesota, Ohio, and California) and were eligible for participation if they were a school-based mental health practitioner (school social worker, counselor, or psychologist) serving children in grades 3 through 6. Families were eligible to participate if they had a child in grades 3 through 6 at a school with a participating provider. We focused on grades 3–6 because we posited that, due to their younger developmental stage, the well-being of these children would be most immediately affected by parental behaviors and skills (Utech & Hoving, 1969). We did not include children younger than grade 3 due to an initial plan to conduct brief pre- and post-intervention surveys of children, which would require children to read; thus, we chose grades 3–6 as relatively young children who are of reading age. Caregivers under the age of 18 were not included in the study. During the first two waves of the pilot project, the intervention was offered in English only, and thus only English-speaking families were eligible. In the third wave, Spanish-language materials were developed, and Spanish-speaking families were included.

Participating providers were required to complete two brief web-based training modules: one on identification and recruitment of potential family participants, and the other on the Family Connect intervention (described below). After training, providers gave informed consent for their participation in the study. After consenting, each provider was asked to identify, through standard referral processes, two families in need of additional support. Providers were instructed to contact caregivers in these families to assess their interest in participating in the study, and to obtain verbal consent to share interested caregivers’ contact information with the study team. Providers then filled out a REDCap “interest form” on behalf of interested families so that the research team could follow up with families. If an interested family had more than one child who was eligible for the study, providers were asked to select one child to be the primary participant.

Once interested families were identified, research staff emailed caregivers to provide a link to an e-consent form. Caregivers were provided information on the study, including risks and benefits, and were asked to consent to the study and provide assent for their children. After consenting, each caregiver was sent a link to a pre-intervention questionnaire. Providers were emailed to notify them when their family had consented, and were encouraged to remind families to complete the pre-intervention questionnaire. Once families had completed the pre-intervention questionnaire, the study team notified providers to begin the intervention with families.

Provider Training

Participating providers were required to complete one web-based training module on the Family Connect model. This training lasted approximately one hour and was a didactic introduction to the intervention approach, including the recommended content of each session. The training also introduced providers to the toolkit provided by the study team, described in the Intervention section below. Providers were asked to complete a brief post-training survey to assess whether they had completed the training. A detailed post-training assessment of knowledge was not conducted, as the goal of this model was to expedite the process of connecting providers to caregivers by requiring only a brief training on the intervention process and offering a toolkit of resources, rather than requiring providers to build depth of knowledge.

Intervention

Family Connect consisted of structured online or telephone-based interactions between providers and caregivers, each lasting 30–60 min and held once weekly for 6–8 weeks. The goal of the intervention was to help parents develop skills in addressing key domains of children’s well-being: sleep, behavior around school work (e.g., doing homework), and coping with stress. These three domains were chosen a priori by the study team based on issues likely to pose a challenge during the pandemic. The intervention consisted of the following steps, based on motivational interviewing processes: (1) provider builds rapport with the caregiver (meeting 1); (2) provider engages caregiver in assessing their child’s well-being in each domain (meeting 1 or 2); (3) provider uses these assessments to summarize and reflect back to caregiver areas of strength and areas in need of improvement (meeting 1 or 2); (4) provider supports caregiver to focus on one of the three domains to work on (meeting 1 or 2); (5) provider works with caregiver to evoke their motivations and goals for improving this domain of their child’s well-being (meeting 2 or 3); (6) provider offers a menu of strategies and helps caregiver make a plan to address the selected topic (meeting 2 or 3); and (7) provider schedules ongoing check-in meetings with caregiver to support implementation and accountability, assess progress toward goals, and assist the caregiver to troubleshoot any challenges (remaining meetings).

To maximize ease of implementation of the Family Connect model, providers were given a set of tools to use in their interactions with parents. These tools included: (1) scripts incorporating motivational interviewing techniques to engage parents in a cycle of self-assessment, goal-setting, planning, and evaluation of strategies; (2) rating scales to allow parents to assess their children’s well-being in the three domains, and instructions on using the results from the scales to guide parents to identify a goal for improvement; (3) a menu of strategies for addressing each domain from which the parent could choose a preferred option; and (4) brief videos describing and illustrating each strategy for providers to watch with parents. The purpose of this comprehensive toolkit was to increase the efficiency and scalability of the Family Connect model by expediting provider ability to support parents after brief training.

Data Collection and Measures

Providers

During the intervention period, providers were asked to complete brief weekly process questionnaires documenting the extent to which they were able to adhere to the intervention structure. Process questionnaires asked providers to indicate how true the following statements were (Definitely True, Somewhat True, Not at all True): “I delivered each of the components/steps of the Family Connect session as planned”; “I added more components or made adjustments”; “The family was paying attention and participating”; and “The family was engaged.” Each week providers were also asked to rate their rapport with the family on a scale of 1 (low) to 10 (high). Questionnaires were developed by the study team based on established guidelines for assessing key aspects of fidelity including adherence (delivering the intervention as planned; added components or adjustments) and participant responsiveness (family paying attention; family engaged) (Bellg et al., 2004; An et al., 2020). Once the intervention was complete, providers were asked to complete a post-intervention questionnaire asking about their experiences with the intervention (see items in Table 1). Post-intervention questions were designed to assess feasibility (items i and ii), acceptability (items v and vi), and perceived effectiveness (items iii and iv).

Table 1.

Provider post-intervention rating scale

| Question stem: To what extent do you agree with the following statements? |
|---|
| Response options: Not at all, Slight extent, Moderate extent, Great extent, Very great extent |
| (i) This intervention was easy to learn |
| (ii) This intervention was easy to use |
| (iii) This intervention helped me connect with families |
| (iv) This intervention seemed to help families |
| (v) Parent(s) seemed to like the intervention |
| (vi) I would use this intervention again in the future |

Feasibility was assessed with items i and ii, acceptability with items v and vi, and perceived effectiveness with items iii and iv

One team member developed initial drafts of process and post-intervention questionnaires. All team members then reviewed and provided feedback. The final version of each question was based on consensus. Because this study was conducted rapidly in response to the pandemic, there was not time for pre-testing of these measures. In addition to questionnaire data collection, the last author also conducted brief (~15-min) post-intervention semi-structured interviews with providers via a remote meeting platform. Interview questions asked what providers liked most and least about the program, and what would improve the program, with a focus on perceived acceptability, feasibility, and effectiveness of the program.

Families

Caregivers were asked to complete pre-intervention and post-intervention questionnaires. Sociodemographics were collected on the pre-intervention questionnaire and included caregiver age, child and caregiver gender, caregiver educational attainment, caregiver income level, free/reduced lunch status, receipt of other public assistance, family structure, financial stress, food insecurity, race/ethnicity, and number of children in the household. Caregiver mental health was assessed with the Generalized Anxiety Disorder-7 Scale (GAD-7; Spitzer et al., 2007) and the Patient Health Questionnaire-9 Scale (PHQ-9; Kroenke et al., 2001). The post-intervention questionnaire (Table 2) asked caregivers about their experiences with the intervention, including feasibility (item i), acceptability (items ii, iv, and v), and perceived effectiveness (item iii). Parents were asked in open-ended questions about challenges they experienced with the intervention and suggestions for improvement. The caregiver questionnaire was developed by the study team as described above for the providers. The first and last authors also conducted brief (15-min) post-intervention semi-structured interviews with caregivers via a remote meeting platform. Interview questions were similar to those for providers.

Table 2.

Parent post-intervention rating scale

| Question stem: To what extent do you agree with the following statements? |
|---|
| Response options: Not at all, Slight extent, Moderate extent, Great extent, Very great extent |
| (i) This program was easy to participate in |
| (ii) This program focused on things that are important to me |
| (iii) This program helped me reach my parenting or family goals |
| (iv) I liked participating in this program |
| (v) I would participate in this program again in the future |

Feasibility was assessed with item i; acceptability was assessed with items ii, iv, and v; perceived effectiveness was assessed with item iii

Data Analysis

Quantitative data were used to describe the participant sample, and to gauge provider and parent assessment of feasibility, acceptability, and perceived effectiveness. Qualitative data were collected from open-ended responses to the post-intervention surveys and from post-intervention interviews with caregivers and providers. Interviews were transcribed through Rev.com and checked against audio recordings to ensure accuracy. Open-ended responses and transcribed interviews were read by team members to identify common patterns and themes. All team members who engaged in the data analysis were White, female-identifying, and had experience in qualitative data analysis. The first author is a social epidemiologist with expertise in maternal and child health. The second author is a nurse practitioner and epidemiology PhD student who has previously worked with at-risk youth. The third author is a clinical psychologist with significant experience leading intervention research with children. The fourth author has over a decade of experience as a school social worker and thus a substantial understanding of the realities of school-based mental health and family interventions.

Using a deductive approach, we organized the data across participants by question, combining the open-ended survey responses and the data from the interview transcripts. After becoming familiar with the data, the second author used “theoretical thematic coding” (Braun & Clarke, 2006, p. 84) to code the data deductively based on the questionnaire/interview question and the primary research questions. The second author began by generating initial codes related to the interview questions and then coded for themes within each initial code (Braun & Clarke, 2006). After the second author completed the thematic coding, the themes were checked by the first and fourth authors, with attention paid to the accuracy of the themes in reflecting each of the individual coded extracts and to their validity in capturing the meanings of the data as a whole (Braun & Clarke, 2006). Any inconsistencies among the authors were discussed and resolved.

Results

Descriptive Statistics of the Sample

Sample characteristics are presented in Table 3 and described below.

Table 3.

Sample characteristics

| Provider demographics (n = 9) | |
|---|---|
| Job classification | |
| School counselor/social worker | 4 (44%) |
| Counselor/social worker intern | 5 (56%) |
| State | |
| Minnesota | 3 (33%) |
| Ohio | 1 (11%) |
| California | 5 (56%) |
| Caregiver demographics (n = 14) | |
| Age of caregiver, years, mean (SD); range | 40 (8.1); 32–66 |
| Age of child, years, mean (SD); range | 10 (1.5); 8–13 |
| Number of children in the household, mean (range) | 2.3 (1–4) |
| Race/ethnicity | |
| White, non-Hispanic | 7 (50%) |
| White, Hispanic | 3 (21%) |
| Other, Hispanic | 3 (21%) |
| Asian | 1 (7%) |
| Caregiver education | |
| < High school | 1 (7%) |
| High school | 2 (14%) |
| Some college | 6 (43%) |
| ≥ College | 5 (36%) |
| Relationship status | |
| Married, living with spouse | 7 (50%) |
| Unmarried, living with romantic partner | 3 (21%) |
| Divorced | 3 (21%) |
| Not in a romantic relationship | 1 (7%) |
| Current work status | |
| Working for pay 30+ hours/week | 6 (43%) |
| Working for pay < 30 hours/week | 5 (36%) |
| Not working for pay by choice (stay-at-home parent) | 1 (7%) |
| Unemployed | 2 (14%) |
| Unemployed or reduced work hours since March 1, 2020 | 5 (36%) |

Providers

Nine providers initiated the intervention with one or more families. Five of the participating providers were social work interns, and the rest were school social workers or counselors. Providers worked in schools in Minnesota (n = 3), Ohio (n = 1), and California (n = 5). Six providers completed one or more weekly process questionnaires. Five providers completed the post-intervention questionnaire.

Families

Fourteen families completed the intervention. Caregivers ranged in age from 32 to 66, and participating children’s ages ranged from 8 to 13. On average, families had 2.3 children in the household. Half of caregivers (50%) identified as non-Hispanic White; 43% identified as Hispanic; and one caregiver (7%) identified as Asian. Approximately one-third of caregivers had a college education. Slightly over half (57%) of caregivers reported receiving public assistance, and approximately two-thirds reported that it was somewhat or very difficult to live on their income at the time of the survey; three participants reported being food insecure. Two-thirds of caregivers reported moderate-to-severe symptoms of anxiety on the GAD-7, and three-quarters reported moderate-to-severe symptoms of depression on the PHQ-9. Five caregivers completed the post-intervention questionnaire.

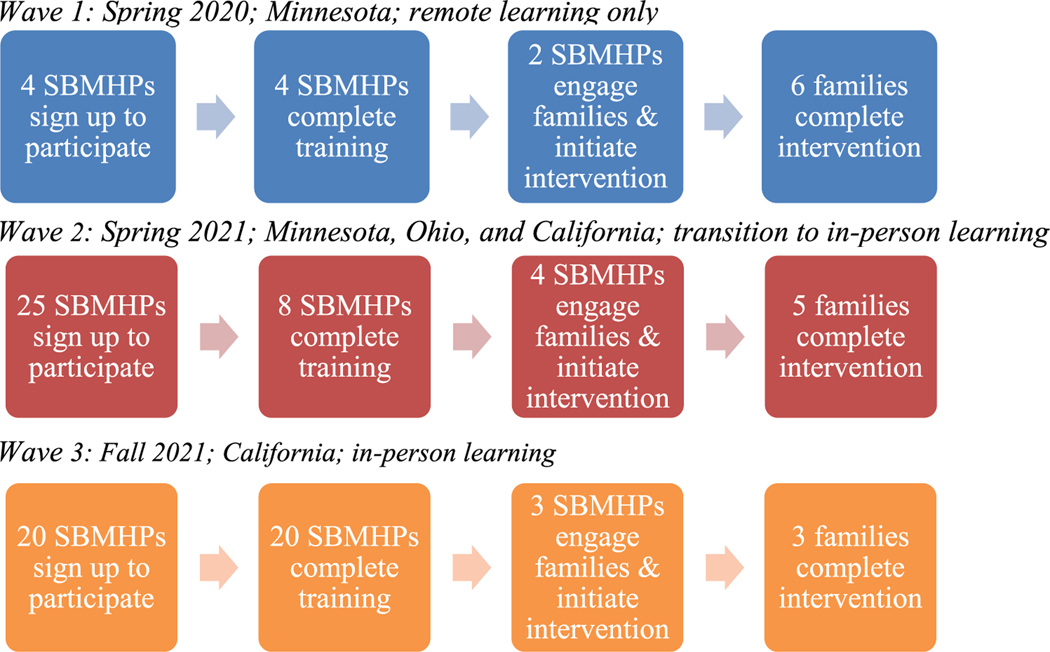

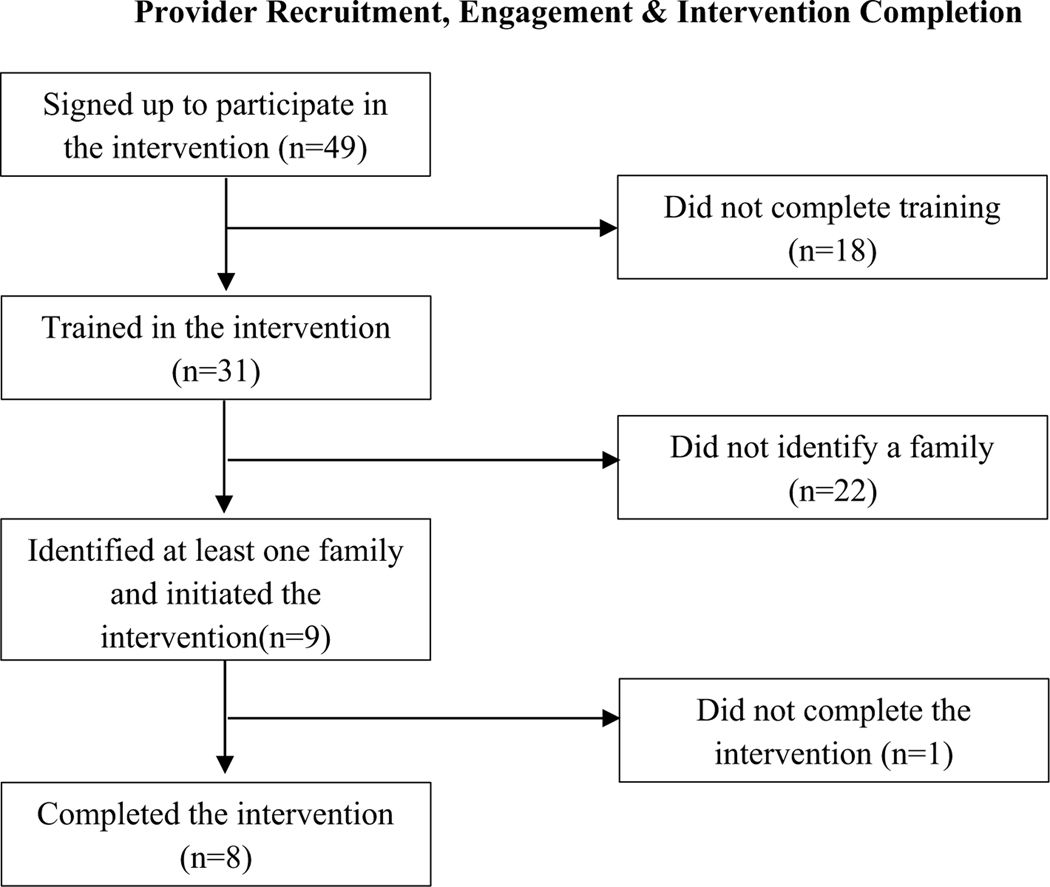

Feasibility of Intervention

Feasibility of the intervention was primarily assessed with data on the rates of participation in the program and via qualitative interviews with providers. The project was conducted in three waves: Wave 1 in late Spring 2020; Wave 2 in the Fall 2020–Spring 2021 academic year; Wave 3 in the Fall 2021–Spring 2022 academic year. Throughout the multiple waves of the pilot program, a discrepancy existed between initial interest in and support for the program and actual engagement in and completion of the intervention. Specifically, over the course of the three pilot waves, 49 providers signed up to participate in the program, 31 providers were trained on the intervention and provided consent to participate, 13 identified families with which to work, and 9 initiated the intervention with families (see Fig. 1; summary recruitment over all waves is presented in the Appendix).
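The attrition described above can be made concrete by computing the share of providers retained at each stage. The following is a minimal sketch that uses only the four counts reported in this paragraph; the stage labels and the `retention` helper are illustrative names introduced here, not part of the study's analysis.

```python
# Provider counts reported in the text, summed over the three pilot waves.
# Stage labels are illustrative shorthand, not terms from the study protocol.
FUNNEL = [
    ("signed up", 49),
    ("trained and consented", 31),
    ("identified families", 13),
    ("initiated intervention", 9),
]


def retention(stages):
    """Return (stage, count, share of previous stage, share of first stage)."""
    first = stages[0][1]
    prev = first
    rows = []
    for name, count in stages:
        rows.append((name, count, count / prev, count / first))
        prev = count
    return rows


if __name__ == "__main__":
    for name, count, step, overall in retention(FUNNEL):
        print(f"{name:<24} {count:>3}  {step:6.0%} of previous  {overall:6.0%} of sign-ups")
```

Under these counts, fewer than one in five providers who signed up went on to initiate the intervention with a family, and the largest proportional drop occurs between training and identifying families.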

Fig. 1.

Recruitment by study wave

Wave 1: Spring 2020

In the early months of the COVID-19 pandemic and the nationwide move to distance learning, the research team partnered with one suburban public school district in Minnesota whose leadership team had expressed interest in participating in Family Connect. Four SBMHPs completed the intervention training but one dropped out before beginning the program, due to a stated lack of time and capacity, and another dropped out because they were unable to recruit families to participate. Two SBMHPs ended up participating in the intervention, working with a total of six families (one provider worked with four families and the other worked with two families). All six families completed the intervention (Fig. 1).

Wave 2: Fall 2020–Spring 2021

Beginning in the fall of 2020, the research team began recruiting school districts in Minnesota to participate in a second wave but faced significant difficulties in identifying districts that were willing to commit to participating. In October 2020, members of the research team gave a live virtual presentation on Family Connect to two different school districts, including their leadership teams and their SBMHPs. Both school districts declined to participate due to a reported lack of staff capacity. In December 2020, members of the research team gave a presentation on the project to the Director of Support Services at an additional district who had expressed interest. In January 2021, the Director reported that her staff were not interested in the project at that time. In late January 2021, members of the research team presented the project to two additional school districts. Leaders and SBMHPs at both districts expressed interest and enthusiasm for the intervention. However, after being provided the link to sign up to participate in the project, no providers from either district signed up. In February 2021, members of the research team spoke with the Assistant Director of Special Services in another Minnesota public school district. The Assistant Director expressed interest but stated that School Board approval would be required. This Assistant Director never responded to follow-up communications from the research team.

In late March 2021, members of the research team presented to the counseling and social work teams in two school districts outside of Minnesota, one in Southern California and one in Ohio. Additionally, a recruitment email was sent to the University of Minnesota’s Center for Applied Research & Educational Improvement (CAREI) district member listserv. From these recruitment attempts, 25 SBMHPs filled out the interest form to participate, but only eight completed the training and consent process. Of these eight, only four SBMHPs went on to participate in the project: two from the Southern California district, one from the Ohio district, and one from a Minnesota district. Two providers worked with two families each and two providers worked with one family each, for a total of six families who initiated the intervention. Five families successfully completed the intervention (Fig. 1).

Wave 3: Summer 2021—Winter 2022

Preparation for the final wave began in Summer 2021. The research team partnered exclusively with the district in Southern California that had participated in Wave 2. The district’s Director of Special Services and Social Work Supervisor expressed significant interest in and enthusiasm for the intervention and informed the research team that all of the district’s social work interns (~20) would participate in the program as a component of the internship program. In Fall 2021, 20 social work interns completed the training and consent process. However, only three social work interns completed the project, with each intern working with one family, for a total of three families (Fig. 1).

After Waves 2 and 3, the study team asked participating SBMHPs and district administrators why they thought it was difficult to get many SBMHPs and families to fully participate in Family Connect. Various systems-level and individual-level barriers were identified. In terms of systemwide issues, SBMHPs reported that Wave 2 occurred during state standardized testing, which required significant energy and attention on their part, decreasing their ability to devote time to the intervention. Wave 2 also occurred in the late spring of 2021, and district administrators and SBMHPs reported that school staff and families were exhausted after a long and unpredictable 2020–2021 school year, resulting in little enthusiasm or capacity for beginning a new intervention program. In Wave 3, the social work interns who were assigned to participate in Family Connect were only scheduled to be on site 2 days/week, limiting the days and times that these SBMHPs could meet with caregivers. On an individual level, some SBMHPs worked mostly with students in middle and high school and had difficulty identifying students who fit the age range of the intervention (Grades 3–6). Some SBMHPs identified difficulty in getting caregivers to respond to their calls or emails as the primary barrier to successful engagement in the intervention.

Adherence to the Intervention

Six unique providers filled out at least one of the weekly process questionnaires, with a total of 39 responses. In 74% of the 39 responses, providers endorsed “definitely true” for the question of whether they had adhered to the planned components of Family Connect that week; in 26% of cases providers indicated that they had made adjustments, with most noting that they had added resources or watched additional videos with families. In 100% of responses, providers endorsed “definitely true” that the family was paying attention and participating, and 97% of responses indicated “definitely true” that the family was engaged (a single response indicated “somewhat true”). Rapport ratings ranged from 7 to 10, with both mean and median of 9.

Acceptability and Perceived Effectiveness of the Intervention

Quantitative Results

Responses to closed-ended questions in post-intervention questionnaires (n = 5) indicated that providers rated the intervention highly on ease of use (Table 4); 4 of 5 respondents indicated that they agreed to a “great extent” or “very great extent” that the intervention was easy to learn and easy to implement. Providers also reported a high level of satisfaction with the intervention, with 4 of 5 respondents indicating that they thought that the intervention had helped them connect to families to a “great extent” or “very great extent,” and the same number strongly endorsing that they would like to use the intervention again in the future. Finally, all providers believed that the intervention had been helpful to, and liked by, families.

Table 4.

Provider rating of intervention (n = 5)

| Item | Mean rating | Response range | SD |
|---|---|---|---|
| (i) This intervention was easy to learn | 3.2 | 2–4 | 0.84 |
| (ii) This intervention was easy to use | 3.4 | 2–4 | 0.89 |
| (iii) This intervention helped me connect with families | 3.4 | 2–4 | 0.89 |
| (iv) This intervention seemed to help families | 3.6 | 3–4 | 0.56 |
| (v) Parent(s) seemed to like the intervention | 3.6 | 3–4 | 0.56 |
| (vi) I would use this intervention again in the future | 3.4 | 2–4 | 0.89 |

Response options: 0 = Not at all, 1 = Slight extent, 2 = Moderate extent, 3 = Great extent, 4 = Very great extent
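
As a rough illustration of how a mean, SD, and response range are derived from ratings on a 0 to 4 scale, the sketch below summarizes one hypothetical set of five responses. The response values are an assumption, chosen only because they are consistent with a mean of 3.4 and a range of 2–4; they are not the study's raw data. The use of the sample (n − 1) standard deviation is likewise an assumption.

```python
from statistics import mean, stdev

# Hypothetical ratings on the 0-4 response scale (NOT the study's raw data).
ratings = [2, 3, 4, 4, 4]


def summarize(values):
    """Return the mean, sample SD (n - 1 denominator), and (min, max) range."""
    return {
        "mean": round(mean(values), 2),
        "sd": round(stdev(values), 2),
        "range": (min(values), max(values)),
    }


summary = summarize(ratings)
print(summary)
```

With only five respondents, changing a single rating by one point shifts the mean by 0.2, so small differences between items should be read cautiously.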

Five caregivers completed the post-intervention questionnaire. Parent responses were even more favorable toward the intervention (Table 5). Parents rated the program highly on its ease of use. All caregivers agreed to a “great extent” or “very great extent” that the program addressed things that were important to them, and 4 of 5 caregivers strongly endorsed the statement that the program had helped them reach their goals. All parent respondents indicated that they would like to participate in the program again in the future.

Table 5.

Parent rating of intervention (n = 5)

| Item | Mean rating | Response range | SD |
|---|---|---|---|
| (i) This program was easy to participate in | 3.8 | 3–4 | 0.45 |
| (ii) This program focused on things that are important to me | 3.6 | 3–4 | 0.56 |
| (iii) This program helped me reach my parenting or family goals | 3.4 | 2–4 | 0.89 |
| (iv) I liked participating in this program | 3.6 | 2–4 | 0.89 |
| (v) I would participate in this program again in the future | 3.6 | 3–4 | 0.56 |

Response options: 0 = Not at all, 1 = Slight extent, 2 = Moderate extent, 3 = Great extent, 4 = Very great extent

Qualitative Results

Provider perceptions.

Providers were asked what they liked most and least about the intervention program (acceptability), and what they thought would improve the program. When asked what they liked most, providers expressed appreciation for the framework/structure provided by the program, and the resources that they were able to share with caregivers (Theme 1: structure and resources). As one provider said, “I loved having the videos and the visuals available so I didn’t have to create anything in order to get started. I loved that. Having something ready to go is just so nice because just the time it takes to create something in the middle of a busy school year is impossible.” Another provider appreciated how the structure of the program and the resources provided allowed her to support caregivers “without making the parent feel like I’m telling you how to parent your kids.” She explained, “I could just send out those strategies... and I let the mom choose which area. I think just having that resource to be able to send to parents is really helpful [as well as] the option [for the parent] to be able to choose which strategy they were going to try and work out what worked really well for them.” Providers also liked that caregivers found the intervention helpful and accessible (Theme 2: perceived effectiveness). One provider stated, “Parents expressed how easy they felt they would be to implement and wished they would have thought of them sooner.”

Providers were also asked what they liked least about the program. Several providers indicated that logistics were challenging, particularly around scheduling meetings, and disruptions both due to regular school breaks and the pandemic (Theme 3: logistical challenges). Some providers also noted that the program was outside of their comfort zone (Theme 4: discomfort). As SBMHPs, most of their intervention work involves working directly with children and not caregivers. One provider explained, “I have worked with families, but not in this capacity, so it was a little nerve wracking...and I was concerned about doing it properly.” Another stated, “I felt a little nervous starting the intervention. I have never done parent training before.”

Finally, providers were asked what changes they thought would improve the program. Several providers indicated interest in having more resources available and the option of more domains that caregivers could choose to work on (Theme 5: program expansion). One provider noted, “I would add more activities and interventions and have more specific topics like Self-Esteem or Grief/Loss etc. I would like more resources for children who are Special Ed and can’t read/write very well.” Another provider stated, “I wish there were more resources I could draw from. I felt like I exhausted what was shared with us.” Providers suggested a number of additional improvements to the intervention (Theme 6: other improvements), including options for children to participate directly in the intervention sessions; resources that address caregivers’ mental health needs, instead of only focusing on the child’s needs; group sessions for caregivers; and in-person meetings instead of solely phone/Zoom sessions.

Caregiver perceptions.

Caregivers were also asked what they liked most and least about the program, and what they thought could improve it. With regard to what they liked most, caregivers identified the parenting strategies they learned as highlights of the intervention (Theme 1: parenting strategies). For example, a parent who was working on sleep issues with their children appreciated the sleep hygiene strategies they learned: “I was like, nope, you can have no devices. That’s why you guys cannot sleep during the night. So bedtime is at eight for them and thirty minutes before I said you cannot have no devices. Remember, that’s the number one rule... in order for you guys to sleep.” Likewise, caregivers who worked with their children on coping with stress liked the strategies offered to help their child manage difficult feelings and self-regulate, such as deep breathing and meditation practices. Parents felt that these strategies helped them communicate better and “connect on a deeper level” with their children.

When asked what they liked least, most caregivers reported that there was nothing they disliked about the program, although some wished the intervention had lasted longer (Theme 2: extending the program). Likewise, when asked for ideas for improving the intervention, increasing the length of the program was the primary suggestion offered by caregivers. In addition, caregivers suggested potentially offering more tangible tools (e.g., examples of daily schedules, worksheets for their children that help explain new skills) to support the new strategies they learned during the intervention (Theme 3: more resources). Finally, some caregivers mentioned that they would have liked their children to be more directly involved in the intervention (Theme 4: involvement of children). One parent noted, “The program, honestly, I think it really benefited my son. The only thing that I didn’t like too much was children weren’t involved as much as I would wish.”

Discussion

In this series of small pilot studies, we examined the potential for SBMHPs to advance student well-being by engaging caregivers to improve their parenting skills in key domains hypothesized to be especially challenging during the COVID-19 pandemic. Although the motivation for connecting SBMHPs to caregivers was the disruption to in-person schooling (and thus in-person supports for students) stemming from the COVID-19 pandemic, the central importance of parenting to children’s well-being suggests the potential utility of this approach beyond the pandemic. Overall, our study demonstrated a high level of initial provider interest in the program, as well as positive experiences among both providers and caregivers who participated. However, the significant drop-off in provider engagement from initial interest to execution of the program suggests that the Family Connect program is not feasible in its current form.

Interviews with providers confirmed the feasibility challenges suggested by the difficulty of recruitment: providers indicated that immediate, urgent demands overwhelm their time and attention, making investment in important long-term goals, such as sustained prevention of student mental health problems, nearly impossible. These challenges were exacerbated by continued pandemic-related disruptions, and the need for staff to pivot quickly from remote instruction to in-person and back to remote during the study period. However, the major barriers to implementation of this program, including time constraints and an incentive structure that prioritizes reaction to immediate crises, were present prior to the pandemic, and will likely persist beyond the pandemic. These findings suggest that a stronger implementation infrastructure is needed to support models such as Family Connect.

The challenges of implementing Family Connect mirror those described with other school-based mental health services. For example, a recent scoping review (Richter et al., 2022) found that an important challenge to implementation was different goals among various actors within the school; in the case of our study, SBMHPs indicated that they faced conflicts between the prevention goals of Family Connect and the immediate goals of addressing attendance and responding to crises. In addition, Richter et al. found that engagement of key stakeholders and participants was critical to implementation of many programs, and when a program “primarily focused on increasing the competence of physicians and teachers, the program did not achieve engagement of the targeted group” (Richter et al., 2022). A study scaling up a parent-school intervention involving the FCU model similarly noted that staff time and investment of key stakeholders are critical for successful implementation of such programs (Smolkowski et al., 2017). The implementation of Family Connect described here focused primarily on increasing competence of SBMHPs; more deeply engaging stakeholders including school leadership, teachers, SBMHPs, and parents may increase the feasibility of this program.

There is a strong evidence base indicating that programs that support caregivers can have significant positive impacts on child well-being (Doyle et al., 2022; Peacock et al., 2013a, 2013b; Sheridan et al., 2019; Smith et al., 2020). Among the most well-established are home visiting programs, which provide in-home supports to at-risk caregivers of infants. Home visiting programs offered prenatally and during the postpartum period have been found to improve children’s cognitive development, reduce problem behaviors, prevent child maltreatment, and reduce incidence of health problems in children (Peacock et al., 2013a, 2013b), but do not generally extend past infancy and early childhood. Other programs, such as the Triple P Parenting Program (Nogueira et al., 2022) and Incredible Years Parenting Program (Gardner et al., 2006), are designed to support families of pre-school- and early elementary-aged children and have been shown to be effective (Furlong et al., 2013), although their reach is often limited (Doyle et al., 2022). Although parenting and its challenges do not end when a child goes to kindergarten, few programs are available to address the needs of caregivers of school-aged children. The most developmentally appropriate approach to supporting the well-being of elementary and early middle-school age children considers the child’s social–ecological context and the influential role of caregivers and families (Bronfenbrenner, 1979; Garbacz et al., 2017; Gutkin, 2005; Sheridan & Gutkin, 2000). Such an approach could have a positive impact not only on individual children, but also on school environments and academic achievement. Thus, despite the sobering indications in this study that schools have somewhat limited capacity to support caregivers with their existing structure and staffing, we believe it is important to continue to seek alternative approaches that will meet caregivers’ needs.

Several strengths and limitations of this study should be noted. Strengths include development of a novel and theoretically grounded program with implementation across diverse school contexts, a trial of multiple recruitment strategies including working with entire districts as well as individual schools, and both quantitative and qualitative data collection from providers and families. The study also has several limitations. First, the small sample size precluded more sophisticated analyses, which may have yielded important additional findings. Second, initiating this study in response to the COVID-19 crisis limited the time and in-person connections needed to build deeper partnerships. Third, prioritizing expedited training and initiation of the program limited our capacity to assess fidelity. While we had planned to record provider-parent interactions to assess fidelity, the challenge with recruitment limited our ability to conduct this part of the study. Finally, because the study was conducted during the pandemic, findings may not be generalizable to non-pandemic times. That said, many of the findings and themes identified (e.g., provider time being overwhelmed by immediate crises) do not appear to be pandemic-specific, although they may have been exacerbated during the pandemic.

In conclusion, findings from this study suggest that both caregivers and providers are eager for programs that can assist caregivers in supporting their children’s well-being, and had positive reactions to this program. At the same time, it became clear through three iterations of this project that, with their current staffing and structure, schools cannot realistically offer such programs without substantially greater implementation infrastructure than what our study could offer. Given these two divergent realities, it will be important to identify alternative ways to provide caregivers with the supports that they need, and then to rigorously test such approaches to ensure they are a cost-effective use of resources. The pandemic taught a number of lessons, including that caregivers are the safety net when schools are disrupted. We hope that researchers, school leaders, and policymakers will all take this lesson to heart, and work to develop, and invest in, effective programs to ensure that this safety net remains strong.

Acknowledgements

We thank Dr. Clayton Cook for his invaluable assistance in development of this intervention and connection to school partners.

Funding

This work was funded by a University of Minnesota Office of Academic Clinical Affairs COVID-19 Rapid Response Grant (PI: Mason), and matching funds from the Minnesota Population Center.

Declarations

Conflict of interest: We have no relevant financial or non-financial interests to disclose.

References

- Adelman HS, & Taylor L. (2006). Mental health in schools and public health. Public Health Reports, 121(3), 294–298.

- An M, Dusing SC, Harbourne RT, Sheridan SM, & START-Play Consortium. (2020). What really works in intervention? Using fidelity measures to support optimal outcomes. Physical Therapy, 100(5), 757–765. 10.1093/ptj/pzaa006

- Atkins MS, Hoagwood KE, Kutash K, & Seidman E. (2010). Toward the integration of education and mental health in schools. Administration and Policy in Mental Health and Mental Health Services Research, 37(1), 40–47. 10.1007/s10488-010-0299-7

- Bellg AJ, Borrelli B, Resnick B, Hecht J, Minicucci DS, Ory M, Ogedegbe G, Orwig D, Ernst D, & Czajkowski S. (2004). Enhancing treatment fidelity in health behavior change studies: Best practices and recommendations from the NIH Behavior Change Consortium. Health Psychology, 23, 443.

- Bitsko RH, Claussen AH, Lichstein J, Black LI, Jones SE, Danielson ML, Ghandour RM, & Ghandour RM (2022). Mental health surveillance among children—United States, 2013–2019. MMWR Supplements, 71(2), 1.

- Bitsko RH, Holbrook JR, Ghandour RM, Blumberg SJ, Visser SN, Perou R, & Walkup JT (2018). Epidemiology and impact of health care provider–diagnosed anxiety and depression among US children. Journal of Developmental and Behavioral Pediatrics: JDBP, 39(5), 395.

- Braun V, & Clarke V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101.

- Bronfenbrenner U. (1979). The ecology of human development: Experiments by nature and design. Harvard University Press.

- Capp G, Watson K, Astor RA, Kelly MS, & Benbenishty R. (2021). School social worker voice during COVID-19 school disruptions: A national qualitative analysis. Children & Schools, 43(2), 79–88.

- Cicchetti D, & Lynch M. (1993). Toward an ecological/transactional model of community violence and child maltreatment: Consequences for children’s development. Psychiatry, 56(1), 96–118.

- Compas BE, Jaser SS, Bettis AH, Watson KH, Gruhn MA, Dunbar JP, Williams E, & Thigpen JC (2017). Coping, emotion regulation, and psychopathology in childhood and adolescence: A meta-analysis and narrative review. Psychological Bulletin, 143(9), 939–991. 10.1037/bul0000110

- Constable R. (2016). The role of the school social worker. In Massat CR, Kelly MS, & Constable R. (Eds.), School social work: Practice, policy, and research (8th ed., pp. 3–24). Oxford: Oxford University Press.

- Dishion TJ, Brennan LM, Shaw DS, McEachern AD, Wilson MN, & Jo B. (2014). Prevention of problem behavior through annual family check-ups in early childhood: Intervention effects from home to early elementary school. Journal of Abnormal Child Psychology, 42(3), 343–354. 10.1007/s10802-013-9768-2

- Dishion TJ, Garbacz A, Seeley JR, Stormshak EA, Smolkowski K, Moore KJ, Falkenstein C, Gau JM, Kim H, & Fosco GM (2020). Translational research on evidence-based parenting support within public schools: Strategies, challenges, and potential solutions. In Garbacz SA (Ed.), Establishing family-school partnerships in school psychology: Critical skills (pp. 223–244). Routledge/Taylor & Francis Group. 10.4324/9781138400382-11

- Dishion TJ, & Kavanagh K. (2000). A multilevel approach to family-centered prevention in schools: Process and outcome. Addictive Behaviors, 25(6), 899–911. 10.1016/S0306-4603(00)00126-X

- Dishion TJ, Shaw D, Connell A, Gardner F, Weaver C, & Wilson M. (2008). The family check-up with high-risk indigent families: Preventing problem behavior by increasing parents’ positive behavior support in early childhood. Child Development, 79(5), 1395–1414. 10.1111/j.1467-8624.2008.01195.x

- Doll B, Nastasi BK, Cornell L, & Song SY (2017). School-based mental health services: Definitions and models of effective practice. Journal of Applied School Psychology, 33(3), 179–194. 10.1080/15377903.2017.1317143

- Doyle FL, Morawska A, Higgins DJ, Havighurst SS, Mazzucchelli TG, Toumbourou JW, Middeldorp CM, Chainey C, Cobham VE, Harnett P, & Sanders MR (2022). Policies are needed to increase the reach and impact of evidence-based parenting supports: A call for a population-based approach to supporting parents, children, and families. Child Psychiatry & Human Development. 10.1007/s10578-021-01309-0

- Edutopia. (2011, October 6). Social and emotional learning: a short history. https://www.edutopia.org/social-emotional-learning-history

- Evans GW, & Wachs TD (2010). Chaos and its influence on children’s development: An ecological perspective (pp. xviii–277). American Psychological Association.

- Fagan TK (1987). Gesell: The first school psychologist part I. The road to Connecticut. School Psychology Review, 16(1), 103–107. 10.1080/02796015.1987.12085274

- Fefer SA, Hieneman M, Virga C, Thoma A, & Donnelly M. (2020). Evaluating the effect of positive parent contact on elementary students’ on-task behavior. Journal of Positive Behavior Interventions, 22(4), 234–245. 10.1177/1098300720908009

- Fosco GM, Seeley JR, Dishion TJ, Smolkowski K, Stormshak EA, Downey-McCarthy R, & Strycker LA (2014). Lessons learned from scaling up the ecological approach to family interventions and treatment (EcoFIT) program in middle schools. In Weist M, Lever N, Bradshaw C, & Owens J. (Eds.), Handbook of school mental health (2nd ed., pp. 237–253). New York: Springer.

- Furlong M, McGilloway S, Bywater T, Hutchings J, Smith SM, & Donnelly M. (2013). Cochrane review: Behavioural and cognitive-behavioural group-based parenting programmes for early-onset conduct problems in children aged 3 to 12 years. Evidence-Based Child Health: A Cochrane Review Journal, 8(2), 318–692. 10.1002/ebch.1905

- Garbacz SA, Herman KC, Thompson AM, & Reinke WM (2017). Family engagement in education and intervention: Implementation and evaluation to maximize family, school, and student outcomes. Journal of School Psychology, 62, 1–10.

- Garbacz SA, Hirano K, McIntosh K, Eagle JW, Minch D, & Vatland C. (2018). Family engagement in schoolwide positive behavioral interventions and supports: Barriers and facilitators to implementation. School Psychology Quarterly, 33(3), 448–459. 10.1037/spq0000216

- Gardner F, Burton J, & Klimes I. (2006). Randomised controlled trial of a parenting intervention in the voluntary sector for reducing child conduct problems: Outcomes and mechanisms of change. Journal of Child Psychology and Psychiatry, 47(11), 1123–1132. 10.1111/j.1469-7610.2006.01668.x

- Gutkin TB (2012). Ecological psychology: Replacing the medical model paradigm for school-based psychological and psychoeducational services. Journal of Educational and Psychological Consultation, 22(1–2), 1–20.

- Gysbers NC (2010). Remembering the past, shaping the future: A history of school counseling. American School Counselor Association.

- Haine-Schlagel R, Dickson KS, Lind T, Kim JJ, May GC, Walsh NE, Lazarevic V, Crandal BR, & Yeh M. (2022). Caregiver participation engagement in child mental health prevention programs: A systematic review. Prevention Science: The Official Journal of the Society for Prevention Research, 23(2), 321–339. 10.1007/s11121-021-01303-x

- Kazak AE (2006). Pediatric psychosocial preventative health model (PPPHM): Research, practice, and collaboration in pediatric family systems medicine. Families, Systems, & Health, 24(4), 381.

- Kelly MS, Benbenishty R, Capp G, Watson K, & Astor R. (2021). Practice in a pandemic: School social workers’ adaptations and experiences during the 2020 COVID-19 school disruptions. Families in Society: The Journal of Contemporary Social Services, 102(3), 400–413. 10.1177/10443894211009863

- Kessler RC, Foster CL, Saunders WB, & Stang PE (1995). Social consequences of psychiatric disorders, I: Educational attainment. American Journal of Psychiatry, 152(7), 1026–1032.

- Kim JS, & Franklin C. (2009). Solution-focused brief therapy in schools: A review of the outcome literature. Children and Youth Services Review, 31(4), 464–470. 10.1016/j.childyouth.2008.10.002

- Kremer KP, Flower A, Huang J, & Vaughn MG (2016). Behavior problems and children’s academic achievement: A test of growth-curve models with gender and racial differences. Children and Youth Services Review, 67, 95–104. 10.1016/j.childyouth.2016.06.003

- Krishnan V. (2010, May). Early child development: A conceptual model. Paper presented at the Early Childhood Council Annual Conference. Edmonton: University of Alberta.

- Kroenke K, Spitzer RL, & Williams JB (2001). The PHQ-9: Validity of a brief depression severity measure. Journal of General Internal Medicine, 16(9), 606–613.

- Leeb RT, Bitsko RH, Radhakrishnan L, Martinez P, Njai R, & Holland KM (2020). Mental health–related emergency department visits among children aged< 18 years during the COVID-19 pandemic—United States, January 1–October 17, 2020. Morbidity and Mortality Weekly Report, 69(45), 1675–1680. 10.15585/mmwr.mm6945a3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lurie Children’s Hospital. (2021, May 27). Children’s mental health during the COVID-19 pandemic. https://www.luriechildrens.org/en/blog/childrens-mental-health-pandemic-statistics/

- Margolius M, Doyle Lynch A, Pufall Jones E, & Hynes M. (2020). The state of young people during COVID-19: Findings from a nationally representative survey of high school youth. America’s Promise Alliance. https://eric.ed.gov/?id=ED606305 [Google Scholar]

- Miller WR, & Rollnick S. (2012). Motivational interviewing: Helping people change. Guilford Press. [Google Scholar]

- Nogueira S, Canário AC, Abreu-Lima I, Teixeira P, & Cruz O. (2022). Group Triple P intervention effects on children and parents: A systematic review and meta-analysis. International Journal of Environmental Research and Public Health, 19(4), 2113. 10.3390/ijerph19042113 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Osagiede O, Costa S, Spaulding A, Rose J, Allen KE, Rose M, & Apatu E. (2018). Teachers’ perceptions of student mental health: The role of school-based mental health services delivery model. Children & Schools, 40(4), 240–248. 10.1093/cs/cdy020 [DOI] [Google Scholar]

- Peacock S, Konrad S, Watson E, Nickel D, & Muhajarine N. (2013a). Effectiveness of home visiting programs on child outcomes: A systematic review. BMC Public Health, 13, 17. 10.1186/1471-2458-13-17 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Peacock S, Konrad S, Watson E, Nickel D, & Muhajarine N. (2013b). Effectiveness of home visiting programs on child outcomes: A systematic review. BMC Public Health, 13(1), 17. 10.1186/1471-2458-13-17 [DOI] [PMC free article] [PubMed] [Google Scholar]

- People Incorporated Mental Health Services. School-linked Mental Health Services. https://www.peopleincorporated.org/school-linked-mental-health-services/. Accessed July 14, 2022.

- Phillippo KL, & Blosser A. (2013). Specialty practice or interstitial practice? A reconsideration of school social work’s past and present. Children & Schools, 35(1), 19–31. 10.1093/cs/cds039 [DOI] [Google Scholar]

- Richter A, Sjunnestrand M, Romare Strandh M, & Hasson H. (2022). Implementing school-based mental health services: A scoping review of the literature summarizing the factors that affect implementation. International Journal of Environmental Research and Public Health, 19(6), 3489. 10.3390/ijerph19063489 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rollnick S, Kaplan SG, & Rutschman R. (2016). Motivational interviewing in schools: Conversations to improve behavior and learning. Guilford Publications. http://ebookcentral.proquest.com/lib/umn/detail.action?docID=4617094

- Rossen E, & Cowan KC (2014). Improving mental health in schools. The Phi Delta Kappan, 96(4), 8–13.

- Shaw DS, Dishion TJ, Supplee L, Gardner F, & Arnds K. (2006). Randomized trial of a family-centered approach to the prevention of early conduct problems: 2-year effects of the family check-up in early childhood. Journal of Consulting and Clinical Psychology, 74(1), 1–9. 10.1037/0022-006X.74.1.1

- Sheridan SM, & Gutkin TB (2000). The ecology of school psychology: Examining and changing our paradigm for the 21st century. School Psychology Review, 29(4), 485–502.

- Sheridan SM, Smith TE, Moorman Kim E, Beretvas SN, & Park S. (2019). A meta-analysis of family-school interventions and children’s social-emotional functioning: Moderators and components of efficacy. Review of Educational Research, 89(2), 296–332. 10.3102/0034654318825437

- Sheridan SM, Witte AL, Holmes SR, Coutts MJ, Dent AL, Kunz GM, & Wu C. (2017). A randomized trial examining the effects of conjoint behavioral consultation in rural schools: Student outcomes and the mediating role of the teacher–parent relationship. Journal of School Psychology, 61, 33–53.

- Shomaker LB, Tanofsky-Kraff M, Stern EA, Miller R, Zocca JM, Field SE, Yanovski JA, & Yanovski JA (2011). Longitudinal study of depressive symptoms and progression of insulin resistance in youth at risk for adult obesity. Diabetes Care, 34(11), 2458–2463.

- Smith TE, Sheridan SM, Kim EM, Park S, & Beretvas SN (2020). The effects of family-school partnership interventions on academic and social-emotional functioning: A meta-analysis exploring what works for whom. Educational Psychology Review, 32(2), 511–544. 10.1007/s10648-019-09509-w

- Smolkowski K, Seeley JR, Gau JM, Dishion TJ, Stormshak EA, Moore KJ, Falkenstein CA, Fosco GM, & Garbacz SA (2017). Effectiveness evaluation of the positive family support intervention: A three-tiered public health delivery model for middle schools. Journal of School Psychology, 62, 103–125.

- Spitzer RL, Kroenke K, Williams JB, & Löwe B. (2006). A brief measure for assessing generalized anxiety disorder: The GAD-7. Archives of Internal Medicine, 166(10), 1092–1097. 10.1001/archinte.166.10.1092

- Stadnick N, Haine-Schlagel R, & Martinez J. (2016). Using observational assessment to help identify factors associated with parent participation engagement in community-based child mental health services. Child and Youth Care Forum, 45(5), 745–758. 10.1007/s10566-016-9356-z

- Stormshak EA, Brown KL, Moore KJ, Dishion T, Seeley J, & Smolkowski K. (2016). Going to scale with family-centered, school-based interventions: Challenges and future directions. In Family-school partnerships in context (pp. 25–44).

- Stormshak EA, Fosco GM, & Dishion TJ (2010). Implementing interventions with families in schools to increase youth school engagement: The Family Check-Up Model. School Mental Health, 2(2), 82–92. 10.1007/s12310-009-9025-6

- Stormshak E, Seeley J, Caruthers A, Cardenas L, Moore K, Tyler M, Fleming C, Gau J, & Danaher B. (2019). Evaluating the efficacy of the Family Check-Up Online: A school-based, eHealth model for the prevention of problem behavior during the middle school years. Development and Psychopathology, 31(5), 1873–1886. 10.1017/S0954579419000907

- Sugrue EP (2017). The professional legitimation of early school social work: An historical analysis. School Social Work Journal, 42(1), 16–36.

- Sugrue E, Ball A, Harrell DR, & Daftary AH (2023). Family engagement during the COVID-19 pandemic: Opportunities & challenges for school social work. Child & Family Social Work. 10.1111/cfs.13011

- Utech DA, & Hoving KL (1969). Parents and peers as competing influences in the decisions of children of differing ages. The Journal of Social Psychology, 78(2), 267–274.

- Wang FL, Feldman JS, Lemery-Chalfant K, Wilson MN, & Shaw DS (2019). Family-based prevention of adolescents’ co-occurring internalizing/externalizing problems through early childhood parent factors. Journal of Consulting and Clinical Psychology, 87(11), 1056–1067. 10.1037/ccp0000439

- Williamson A, Mindell J, Hiscock H, & Quach J. (2020). Longitudinal sleep problem trajectories are associated with multiple impairments in child well-being. Journal of Child Psychology and Psychiatry, 61(10), 1092–1103.