Abstract

Multiple oscillating time series are typically analyzed in the frequency domain, where coherence is usually said to represent the magnitude of the correlation between two signals at a particular frequency. The correlation being referenced is complex-valued and is similar to the real-valued Pearson correlation in some ways but not others. We discuss the dependence among oscillating series in the context of the multivariate complex normal distribution, which plays a role for vectors of complex random variables analogous to the usual multivariate normal distribution for vectors of real-valued random variables. We emphasize special cases that are valuable for the neural data we are interested in and provide new variations on existing results. We then introduce a complex latent variable model for narrowly band-pass-filtered signals at some frequency, and show that the resulting maximum likelihood estimate produces a latent coherence that is equivalent to the magnitude of the complex canonical correlation at the given frequency. We also derive an equivalence between partial coherence and the magnitude of complex partial correlation, at a given frequency. Our theoretical framework leads to interpretable results for an interesting multivariate dataset from the Allen Institute for Brain Science.

Keywords: Coherence, complex normal distribution, latent variable model, oscillations, Primary 62H20, Secondary 62P10

1. INTRODUCTION

Oscillations in neural circuits have been observed under a variety of circumstances and have provoked much speculation about their physiological function (Buzsáki & Draguhn, 2004; Fries, 2005). In the past 15 years, the role of oscillations at particular frequencies has been the subject of considerable experimental investigation, including causal manipulation (Cardin et al., 2009). Of particular interest is the intriguing possibility that oscillations facilitate purposeful communication across distinct parts of the brain, such as when an organism must retrieve and hold items from memory or direct its visual attention to a particular location (Miller, Lundqvist & Bastos, 2018; Schmidt et al., 2019). This has led to the idea that alterations of circuit oscillations could indicate brain dysfunction (Mathalon & Sohal, 2015).

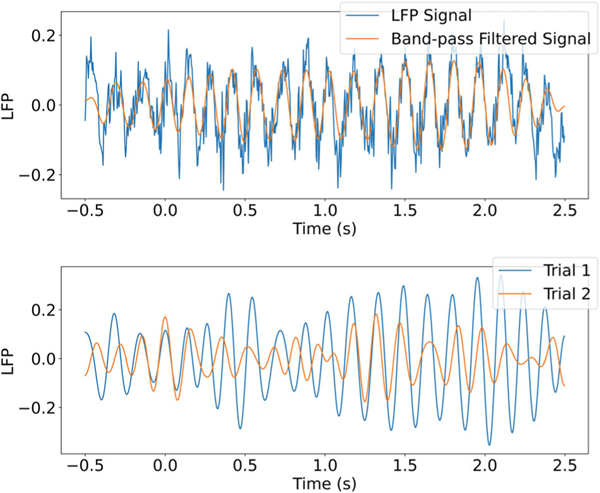

From a statistical perspective, regardless of their mechanistic function, neural oscillations can be considered useful indicators of coordinated activity across brain regions. A short snippet of data, typical of those we have analyzed, is shown in the top panel of Figure 1 together with a band-pass-filtered version. In the bottom panel are band-pass-filtered series for two trials, where at many points in time the amplitudes, phases, or both are different. When series from two electrodes (in different parts of the brain) are considered, the two phases may tend to shift forward or backward together across trials, which indicates coordinated activity. Similarly, there may be trial-to-trial correlation between the amplitudes.

Figure 1:

Three seconds of local field potential (LFP) data filtered at 6.5 Hz with a window from 6 to 7 Hz. In the top figure, we plot both the raw LFP signal, which consists of a noisy oscillation around 6.5 Hz, and the filtered signal, which removes the noise. In the bottom figure, we plot filtered data from the same electrode across two trials. At many points in time, the two trials have different phases or amplitudes.

To quantify this form of association, an obvious question is whether it might be advantageous to consider phase and amplitude together, as defining complex numbers, and model association using multivariate complex distributions. In addition, data such as those in Figure 1 often come from many recordings in each brain region that somehow must be combined. We investigate the properties of the multivariate complex normal distribution with the goal of analyzing interactions among multiple groups of oscillating time series.

A related conceptual concern comes from the standard interpretation of coherence, which is the starting point for much frequency-based analysis of co-dependence. Under the Cramér (or Cramér–Khintchine) representation of a bivariate stationary time series, coherence is usually said to be the magnitude of the time series correlation at a particular frequency (e.g., Shumway & Stoffer, 2017, Section 4.6). The difficulty with this interpretation is that “correlation” here refers to the complex correlation for complex random variables, which is analogous to the Pearson correlation for real-valued variables in certain respects, but not others.

This article reviews and reformulates ideas drawn from the literature and then provides several new results, including a summary of interacting groups of oscillating multivariate time series using a time-domain rendering of latent coherence, which turns out to be the magnitude of a complex-valued canonical correlation. Although we have been motivated by the analysis of neural data, we believe this article will be of general statistical interest as it concerns a basic topic in time series analysis. We also hope it represents a suitable tribute to Nancy Reid, whose work has often aimed to advance statistics through conceptual clarification and consolidation.

2. BACKGROUND AND SUMMARY

We are interested in the covariation of both phase and amplitude in two or more time series. The data we analyze in Section 6 come from experiments during which animals are shown a visual stimulus repeatedly, across many trials, while neural activity is recorded from electrodes inserted into the brain. Repeated measurements across many trials are typical of neurophysiological data, and these repetitions are helpful in dealing with the striking nonstationarity present in neural recordings (see, e.g., Kass et al., 2018). In addition, neurophysiological experiments often collect data from multiple electrodes embedded in multiple brain regions.

From oscillating series such as those in the top panel of Figure 1, the band-pass-filtered version can be obtained by Fourier analytic methods (though it can also be obtained using wavelets). As long as the band in the filtering is sufficiently narrow (surrounding some particular frequency), at any point in time the phase and amplitude of the real-valued, filtered time series can be recovered using the Hilbert transform (see the Appendix) under an assumption of local stationarity (Ombao & Van Bellegem, 2008). A phase and an amplitude define a complex number, and the Hilbert transform converts a real-valued signal into the corresponding complex-valued signal. For a pair of repeatedly observed complex-valued variables, correlation is more complicated than in the real case: covariance becomes complex-valued and there are two forms of linear covariation, called covariance and pseudo-covariance. Complex covariance is defined for two complex-valued random variables $Z_1$ and $Z_2$ as

$$\operatorname{cov}(Z_1, Z_2) = E\big[(Z_1 - E Z_1)\,\overline{(Z_2 - E Z_2)}\big],$$

where $\bar{z}$ is the conjugate of a complex number $z$. Note that $\operatorname{cov}(Z_1, Z_2)$ is a complex number. Complex pseudo-covariance is defined as

$$\operatorname{pcov}(Z_1, Z_2) = E\big[(Z_1 - E Z_1)(Z_2 - E Z_2)\big].$$

The variance–covariance and pseudo-variance–covariance matrices for a complex random vector $Z$ are defined analogously to the case of complex random variables, using the Hermitian and transpose operators, respectively:

$$\Gamma = \operatorname{cov}(Z) = E\big[(Z - \mu)(Z - \mu)^{H}\big], \qquad C = \operatorname{pcov}(Z) = E\big[(Z - \mu)(Z - \mu)^{T}\big],$$

where $\mu = E(Z)$. When the pseudo-covariance is zero, a complex random vector is called proper.
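For concreteness, sample analogues of the covariance matrix $E[(Z - \mu)(Z - \mu)^H]$ and the pseudo-covariance matrix $E[(Z - \mu)(Z - \mu)^T]$ can be computed from repeated observations as in the minimal NumPy sketch below. It assumes the data are stored with one row per trial and one column per component; the function name `complex_cov` and the divisor $n$ (rather than $n - 1$) are our own choices, not part of the original analysis.

```python
import numpy as np


def complex_cov(Z):
    """Sample covariance and pseudo-covariance matrices of complex data.

    Z: array of shape (n, d); each row is one observation (e.g., one trial)
    of a d-dimensional complex random vector.
    Returns (Gamma_hat, C_hat), estimates of E[(Z - mu)(Z - mu)^H] and
    E[(Z - mu)(Z - mu)^T].
    """
    Z = np.asarray(Z, dtype=complex)
    Zc = Z - Z.mean(axis=0)            # subtract the sample mean of each component
    n = Zc.shape[0]
    Gamma_hat = Zc.T @ Zc.conj() / n   # entry (j, k): mean of Zc_j * conj(Zc_k)
    C_hat = Zc.T @ Zc / n              # entry (j, k): mean of Zc_j * Zc_k
    return Gamma_hat, C_hat


rng = np.random.default_rng(0)
n, d = 20000, 3
# Proper example: independent real and imaginary parts with equal variances.
Z_proper = (rng.standard_normal((n, d)) + 1j * rng.standard_normal((n, d))) / np.sqrt(2)
G_p, C_p = complex_cov(Z_proper)   # G_p close to the identity, C_p close to zero
# Most improper case: purely real data, for which the two matrices coincide.
Z_real = rng.standard_normal((n, d))
G_r, C_r = complex_cov(Z_real)
```

For purely real data the covariance and pseudo-covariance coincide; for data with independent, equal-variance real and imaginary parts the sample pseudo-covariance is near zero, as expected for a proper vector.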

In addition to complex correlation, dependence between phase angles can be measured through phase-locking value (PLV) (Lachaux et al., 1999) which, as we show in Section 3.2.2, can be viewed as an analogue of correlation for angular random variables. For more than two phases, a recently developed class of models called torus graphs provides a thorough rendering of multivariate phase dependence (Klein et al., 2020). A torus graph is any member of the full exponential family with means and cross-products (interaction terms) on a multidimensional torus, which is the natural home for multivariate circular data because the product of circles is a torus. Thus, torus graphs are probabilistic graphical models and represent an analogue to Gaussian graphical models for circular (angular) data.

Phase coupling is also analyzed using coherence, the magnitude of coherency; coherency is a frequency-domain measure whose form resembles a correlation, expressed in terms of spectral and cross-spectral densities. One way to understand how coherence becomes complex correlation at a particular frequency is to consider the result of filtering signals with a complex band-pass filter in a band $[\omega - \delta, \omega + \delta]$ for some small $\delta > 0$. Ombao & Van Bellegem (2008) noted that the resulting narrow-band coherence, which they called “band coherence,” is the correlation between the filtered signals. We can effectively pass to the limit as $\delta \to 0$ to get the infinitesimal version that appears in the Cramér–Khintchine representation, displayed in Section 4.1. In a similar vein, in Section 4.1 we also note that a single-frequency bivariate process with stochastic amplitudes and phases yields coherence as the magnitude of complex correlation.

Coherence has been used to understand conditional dependence relationships between time series. Dahlhaus (2000) studied so-called coherence graphs for real-valued time series and showed how conditional dependence may be inferred from the partial coherence between these signals. Partial coherence arises by inverting and rescaling a matrix of coherency values for time series, and represents a form of conditional dependence between signals (Ombao & Pinto, 2022). Tugnait (2019a,b) analyzed conditional dependence between signals with arbitrary spectra by observing that the limiting distribution of a certain class of transformations of real-valued time series takes the form of a complex normal distribution.

Our setting, in which multiple signals all oscillate at the same single frequency, differs from those studied in Dahlhaus (2000) and Tugnait (2019a,b), where the spectra of the signals may be spread over many frequencies. In Section 4, we provide a comprehensive overview of how, for our setting, complex correlation and coherency are equivalent when we assume complex normality. Similarly, we show that complex partial correlation and partial coherency are equivalent, and we discuss how these concepts are directly tied to the conditional dependence between the observed oscillating time series. Thus, by modelling band-pass-filtered data with the complex normal distribution, we obtain simple interpretations of estimated correlation and partial correlation matrices in terms of coherence and partial coherence.

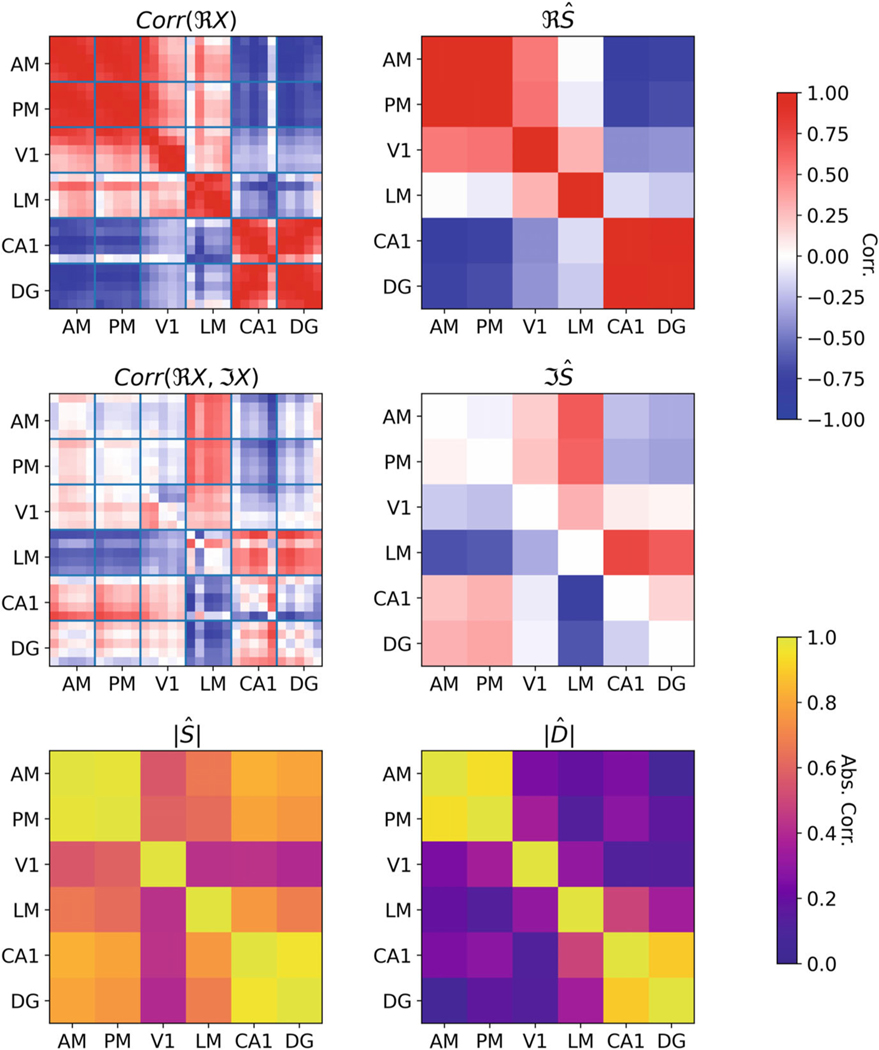

Our interest is in recordings from multiple electrodes embedded in several brain regions. To take advantage of the multiple sources of activity observed from each region, we developed a novel complex normal latent variable model in which each of several latent variables represents activity in one of the regions (at a particular point in time). Our goal is to estimate the dependence among these latent variables. We specify the model in Section 5 and apply it to data in Section 6. The data we analyze are local field potentials (LFPs), which are voltage recordings from electrodes inserted into the brain; they are low-pass-filtered (smoothed) and typically down-sampled to 1 kHz so that each second of data has 1000 observations. LFPs represent bulk activity near the electrode (roughly within 150–200 μm) involving large numbers of neurons (Buzsáki, Anastassiou & Koch, 2012; Einevoll et al., 2013; Pesaran et al., 2018). Our theoretical results enable us to obtain a simple interpretation of data from the Allen Institute for Brain Science, Seattle, WA. We examined three recordings in each of six regions of the visual cortex in response to a visual stimulus and estimated latent correlation and partial correlation matrices after band-pass-filtering at 6.5 Hz to isolate the theta rhythm. There are many large latent correlations of the theta rhythm oscillations between regions (which, as we show, may be considered large values of latent coherence). However, the unique associations, that is, the latent partial correlations, between these regions are modest in size with the exception of the unique associations between AM and PM, as well as between DG and CA1, which exhibit latent partial correlations (latent partial coherence) close to 1.

Although some of the theorems in this article may be unsurprising to experts on the complex normal distribution and frequency-based analysis, each is at least a novel extension or reformulation, and several results are entirely new. In Section 3.3.3, we discuss the conditional distribution of the angles given the amplitudes in the polar coordinate representation of the complex normal distribution. Navarro, Frellsen & Turner (2017) showed that the multivariate generalized von Mises (mGvM) distribution can be viewed as a conditional distribution of the $2d$-dimensional, real-valued multivariate normal distribution when all the amplitudes are equal to 1. Here, we move this result to the setting of the complex normal distribution, generalize it to arbitrary amplitudes, and show how the conditional distribution changes when certain relevant restrictions are made on the parameters of the complex normal distribution. These results (appearing in Theorems 2 and 3 and Corollary 4) form new characterizations of torus graphs. In Section 4 we provide a general treatment of partial correlation, conditional correlation, and conditional dependence for the complex normal distribution. These results consolidate and reformulate what was previously available in the literature (see Section 4 for specific citations). The new results in Theorem 12 and Corollary 13 show that maximum likelihood estimation in our latent variable model produces estimates that are equivalent to complex-valued versions of canonical correlation. Proofs of all theorems and corollaries are provided in the Supplementary Material Section S1.

3. DEPENDENCE OF COMPLEX-VALUED RANDOM VARIABLES

In this section, we first present the data-generating setting we are attempting to model. We observe complex-valued random vectors, and our goal is to measure associations between their entries. After introducing this setting, we mostly ignore the fact that we are dealing with oscillating signals, but we will return to this important point in Section 4 when we study the relationship between coherence, complex correlation, and the complex normal distribution. This important correspondence is the basis for the analysis presented in this paper.

We first review various measures of pairwise association between complex-valued random variables, including complex correlation, PLV, and amplitude correlation. We then discuss two multivariate models for association between complex-valued random vectors: the complex normal distribution, which is based on the linear association between complex random vectors, and the torus graph distribution, which is a multivariate model for phase. In Section 3.3.3, we show some new results that describe how the torus graph distribution arises by conditioning angles on amplitudes in the complex normal distribution.

3.1. Setting: Repeated Observations of Oscillating Signals

In this article, we assume we observe $d$ time series that are oscillating at some frequency on each of many repeated trials. We can model the data as a $d$-dimensional random vector $X(t) = (X_1(t), \dots, X_d(t))^T$, and assume these vectors are i.i.d. across trials. Throughout most of this article, we omit the trial number from our notation. We can first apply a local band-pass filter to the raw vector $X(t)$ around the frequency $\omega$ at time $t$, and then apply the Hilbert transform to obtain a complex-valued random vector $Z(t) = (Z_1(t), \dots, Z_d(t))^T$ having components that are functions of the phases and amplitudes of the oscillations: $Z_j(t) = A_j(t)\, e^{i\Phi_j(t)}$ for each time $t$ and for components $j = 1, \dots, d$. Here and everywhere else in the article, $e^{i\theta} = \cos\theta + i\sin\theta$, where $i$ represents the imaginary unit.
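The filtering and Hilbert-transform steps can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions: it uses an ideal (brick-wall) FFT filter that treats the record as periodic, which is not necessarily the filter used in the analyses reported here, and the function name `narrowband_analytic` is hypothetical. Keeping only the positive-frequency FFT bins inside the band and doubling them returns the analytic signal of the band-passed series in one step, i.e., the filtered series plus $i$ times its Hilbert transform.

```python
import numpy as np


def narrowband_analytic(x, fs, f_lo, f_hi):
    """Analytic signal of x after an ideal band-pass filter on [f_lo, f_hi] Hz.

    Zeroing every FFT bin outside the positive band (including the mirrored
    negative frequencies) and doubling the retained bins gives the filtered
    signal plus i times its Hilbert transform.
    """
    x = np.asarray(x, dtype=float)
    spectrum = np.fft.fft(x)
    freqs = np.fft.fftfreq(x.size, d=1.0 / fs)
    keep = (freqs >= f_lo) & (freqs <= f_hi)
    return np.fft.ifft(np.where(keep, 2.0 * spectrum, 0.0))


fs = 1000.0                      # 1 kHz sampling rate
t = np.arange(4000) / fs         # 4 s, so 6.5 Hz falls exactly on an FFT bin
rng = np.random.default_rng(1)
x = 2.0 * np.cos(2 * np.pi * 6.5 * t + 0.3) + 0.5 * rng.standard_normal(t.size)
z = narrowband_analytic(x, fs, 6.0, 7.0)
amplitude, phase = np.abs(z), np.angle(z)   # A_j(t) and Phi_j(t) for this channel
```

In practice a filter with tapered band edges reduces ringing at the ends of the record; the brick-wall version is used here only because it makes the construction of the analytic signal transparent.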

3.2. Pairwise Association Between Complex-Valued Random Variables

3.2.1. Complex Correlation

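Linear association between complex-valued random variables is measured by complex correlation. We defined both covariance and pseudo-covariance between complex random variables and random vectors in Section 2. The corresponding complex correlations are

$$\operatorname{corr}(Z_1, Z_2) = \frac{\operatorname{cov}(Z_1, Z_2)}{\sqrt{\operatorname{var}(Z_1)\operatorname{var}(Z_2)}}, \qquad \operatorname{pcorr}(Z_1, Z_2) = \frac{\operatorname{pcov}(Z_1, Z_2)}{\sqrt{\operatorname{var}(Z_1)\operatorname{var}(Z_2)}},$$

where $\operatorname{var}(Z_j) = \operatorname{cov}(Z_j, Z_j) = E|Z_j - E Z_j|^2$.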
3.2.2. Angular Association: Phase-Locking Value

We can study the association between two phases by considering their representations as unit-length complex random variables $e^{i\Phi_1}$ and $e^{i\Phi_2}$. The set of such pairs is the product of two circles, a two-dimensional torus.

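The strength of the association between two angular random variables $\Phi_1$, $\Phi_2$ can be measured using PLV (Lachaux et al., 1999). When we have repeated observations $\Phi_1^{(r)}$, $\Phi_2^{(r)}$, $r = 1, \dots, R$, across $R$ trials of angular observations, then we can define

$$\mathrm{PLV} = \left|\frac{1}{R}\sum_{r=1}^{R} e^{i(\Phi_1^{(r)} - \Phi_2^{(r)})}\right|,$$

which is widely used to measure the angular dependence between oscillating signals (Lepage & Vijayan, 2017).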
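The definition translates directly into code. A minimal sketch, assuming the phases for the two channels are stored as equal-length arrays indexed by trial (the function name `plv` is our own):

```python
import numpy as np


def plv(phi1, phi2):
    """Phase-locking value: modulus of the trial average of exp(i(phi1 - phi2))."""
    phi1 = np.asarray(phi1, dtype=float)
    phi2 = np.asarray(phi2, dtype=float)
    return np.abs(np.mean(np.exp(1j * (phi1 - phi2))))


rng = np.random.default_rng(2)
R = 10000
phi1 = rng.uniform(0, 2 * np.pi, R)
locked = plv(phi1, phi1 + 0.7)                          # constant lag on every trial
independent = plv(phi1, rng.uniform(0, 2 * np.pi, R))   # no coupling between phases
```

A constant phase lag leaves the PLV at 1, because PLV measures the consistency of the phase difference across trials rather than its size; for independent uniform phases it is close to 0.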

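To better understand PLV, we provide a theoretical analysis of the components of phase-based association. Suppose that $Z_j = e^{i\Phi_j}$ and $\Phi_j \in [0, 2\pi)$ for $j = 1, 2$. The first moment of $Z_j$ is

$$E(Z_j) = E(\cos\Phi_j) + i\,E(\sin\Phi_j) = \rho_j e^{i\mu_j},$$

where $\rho_j = |E(Z_j)|$ and $\mu_j$ is the angle of the vector $(E\cos\Phi_j, E\sin\Phi_j)$ relative to (1,0) for $j = 1, 2$. The complex covariance of $Z_1$ and $Z_2$ is

$$\operatorname{cov}(Z_1, Z_2) = E\big(e^{i(\Phi_1 - \Phi_2)}\big) - \rho_1\rho_2 e^{i(\mu_1 - \mu_2)},$$

and the pseudo-covariance of $Z_1$ and $Z_2$ is

$$\operatorname{pcov}(Z_1, Z_2) = E\big(e^{i(\Phi_1 + \Phi_2)}\big) - \rho_1\rho_2 e^{i(\mu_1 + \mu_2)}.$$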

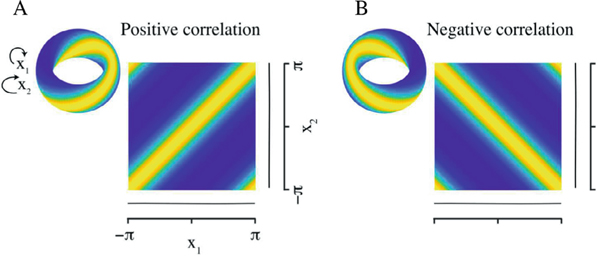

Covariance and pseudo-covariance, respectively, represent rotational and reflectional association between $Z_1$ and $Z_2$. Rotational covariance measures clockwise–clockwise association, and reflectional covariance measures clockwise–anticlockwise association. Both types of association are shown in Figure 2. The amplitude of the rotational covariance controls the width of the yellow high-probability band, and the phase of this complex number specifies the shift of this band, which is seen along the diagonal of the Cartesian plane; the band shifts in direction from bottom-right toward top-left (Figure 2a). The same notion applies to the reflectional covariance magnitude, but the band is positioned on the other diagonal (Figure 2b). The presence of both types of covariation is possible and can lead to concentrated marginals. However, in our experience with phases extracted from LFP neural data, we have almost always observed exclusively rotational dependence. In addition, the marginals are close to uniform. If $\rho_1 = \rho_2 = 0$ (e.g., if the $\Phi_j$ are uniform), then $\operatorname{var}(Z_j) = E|Z_j|^2 = 1$ and

$$\operatorname{corr}(Z_1, Z_2) = \operatorname{cov}(Z_1, Z_2) = E\big(e^{i(\Phi_1 - \Phi_2)}\big).$$

The quantity $|E(e^{i(\Phi_1 - \Phi_2)})|$ is the theoretical counterpart of PLV. Thus, in the absence of reflectional covariation, if $\rho_1 = \rho_2 = 0$, then PLV is the analogue of Pearson correlation for angular random variables.
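The distinction between rotational and reflectional dependence can be seen in a small simulation. The sketch below draws uniform $\Phi_1$ and generates $\Phi_2$ either by a noisy rotation ($\Phi_2 = \Phi_1 + c + \varepsilon$) or by a noisy reflection ($\Phi_2 = -\Phi_1 + c + \varepsilon$), then estimates the complex covariance and pseudo-covariance of $e^{i\Phi_1}$ and $e^{i\Phi_2}$. The offset $c = 1$, the von Mises noise with concentration 4, and the helper name `angular_cov_pcov` are illustrative assumptions.

```python
import numpy as np


def angular_cov_pcov(phi1, phi2):
    """Sample covariance and pseudo-covariance of exp(i*phi1) and exp(i*phi2)."""
    z1, z2 = np.exp(1j * phi1), np.exp(1j * phi2)
    z1c, z2c = z1 - z1.mean(), z2 - z2.mean()
    return np.mean(z1c * z2c.conj()), np.mean(z1c * z2c)


rng = np.random.default_rng(3)
R = 20000
phi1 = rng.uniform(0, 2 * np.pi, R)     # uniform marginal for Phi_1
noise = rng.vonmises(0.0, 4.0, R)       # concentrated angular noise
rot_cov, rot_pcov = angular_cov_pcov(phi1, phi1 + 1.0 + noise)    # rotational coupling
ref_cov, ref_pcov = angular_cov_pcov(phi1, -phi1 + 1.0 + noise)   # reflectional coupling
```

Under rotation only the covariance is large (its modulus estimates the theoretical PLV, here $I_1(4)/I_0(4) \approx 0.86$), whereas under reflection only the pseudo-covariance is.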

Figure 2:

The torus is the natural domain for a pair of circular random variables. Illustration of both types of circular covariance in a torus graph bivariate density, with uniform marginal densities, plotted side by side on a two-dimensional torus and a Cartesian plane. (a) Positive rotational dependence. (b) Negative reflectional dependence. The figure has been adapted from a figure in Klein et al. (2020).

3.2.3. Amplitude Correlation

For two amplitudes $A_1$, $A_2$, the simplest way to characterize amplitude correlation is through the ordinary Pearson correlation of real-valued random variables, $\operatorname{corr}(A_1, A_2)$. Other approaches involve calculating the correlation between the log of the amplitudes or between the square of the amplitudes (Nolte et al., 2020).

3.3. Multivariate Models

3.3.1. The Complex Normal Distribution

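A complex random vector is said to be complex normal (CN) if its real and imaginary parts are jointly multivariate normal (Andersen et al., 1995). Suppose that $Z = X + iY$, where

$$\begin{pmatrix} X \\ Y \end{pmatrix} \sim N_{2d}(m, \Sigma), \qquad m = \begin{pmatrix} \mu_X \\ \mu_Y \end{pmatrix}, \qquad \Sigma = \begin{pmatrix} \Sigma_{XX} & \Sigma_{XY} \\ \Sigma_{YX} & \Sigma_{YY} \end{pmatrix},$$

so that $E(X) = \mu_X$, $E(Y) = \mu_Y$, $\operatorname{cov}(X) = \Sigma_{XX}$, $\operatorname{cov}(Y) = \Sigma_{YY}$, and $E[(X - \mu_X)(Y - \mu_Y)^T] = \Sigma_{XY} = \Sigma_{YX}^T$. Then

$$\mu = \mu_X + i\mu_Y, \qquad \Gamma = \Sigma_{XX} + \Sigma_{YY} + i(\Sigma_{YX} - \Sigma_{XY}), \qquad C = \Sigma_{XX} - \Sigma_{YY} + i(\Sigma_{YX} + \Sigma_{XY}),$$

giving a bijection between $(\mu, \Gamma, C)$ and $(m, \Sigma)$. Thus, the complex normal distribution is well defined by the parameter set $(\mu, \Gamma, C)$. We denote the distribution by $CN(\mu, \Gamma, C)$. Recall that the distribution of $Z$ is said to be proper if the pseudo-covariance matrix $C$ is zero. Further, $Z$ is circularly symmetric, meaning that its pdf satisfies $f(e^{i\theta}z) = f(z)$ for all real $\theta$ (every component rotated by the same angle), if and only if $Z$ is proper and has mean zero (Adali, Schreier & Scharf, 2011).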
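The bijection can be checked numerically. The following sketch (helper names hypothetical) maps the blocks of a real $2d \times 2d$ covariance matrix to $(\mu, \Gamma, C)$ using $\Gamma = \Sigma_{XX} + \Sigma_{YY} + i(\Sigma_{YX} - \Sigma_{XY})$ and $C = \Sigma_{XX} - \Sigma_{YY} + i(\Sigma_{YX} + \Sigma_{XY})$, with $\Sigma_{YX} = \Sigma_{XY}^T$, and then inverts the map via $\Sigma_{XX} = \operatorname{Re}(\Gamma + C)/2$, $\Sigma_{YY} = \operatorname{Re}(\Gamma - C)/2$, and $\Sigma_{XY} = \operatorname{Im}(C - \Gamma)/2$.

```python
import numpy as np


def to_complex_params(mu_x, mu_y, Sxx, Sxy, Syy):
    """(mu_X, mu_Y, Sigma_XX, Sigma_XY, Sigma_YY) -> (mu, Gamma, C) for Z = X + iY."""
    Syx = Sxy.T
    mu = mu_x + 1j * mu_y
    Gamma = Sxx + Syy + 1j * (Syx - Sxy)
    C = Sxx - Syy + 1j * (Syx + Sxy)
    return mu, Gamma, C


def to_real_params(mu, Gamma, C):
    """Inverse map (mu, Gamma, C) -> (mu_X, mu_Y, Sigma_XX, Sigma_XY, Sigma_YY)."""
    Sxx = (Gamma + C).real / 2
    Syy = (Gamma - C).real / 2
    Sxy = (C - Gamma).imag / 2
    return mu.real, mu.imag, Sxx, Sxy, Syy


rng = np.random.default_rng(4)
d = 3
B = rng.standard_normal((2 * d, 2 * d))
Sigma = B @ B.T + np.eye(2 * d)          # a valid 2d x 2d real covariance matrix
m = rng.standard_normal(2 * d)
Sxx, Sxy, Syy = Sigma[:d, :d], Sigma[:d, d:], Sigma[d:, d:]
mu, Gamma, C = to_complex_params(m[:d], m[d:], Sxx, Sxy, Syy)
back = to_real_params(mu, Gamma, C)      # should reproduce the real parameters
```

Note that $\Gamma$ is Hermitian and $C$ is symmetric (not Hermitian), which is why the pseudo-covariance carries information that $\Gamma$ alone cannot.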

Circularly symmetric, proper, and unrestricted CN distributions form full regular exponential families. This ensures that the maximum likelihood estimator, which involves first- and second-order moment statistics, is sufficient. In addition, these families form probabilistic graphical models, so that conditional independence is easily characterized; we return to this in Theorem 8 and Corollary 9. We give an abbreviated version of the exponential-family result here as Theorem 1; for full details, see the Supplementary Material Section S1.

Theorem 1.

Each of the following families forms a full and regular exponential family: (i) the family of CN distributions represented by $\{CN(\mu, \Gamma, C)\}$; (ii) the family of proper CN distributions $\{CN(\mu, \Gamma, 0)\}$; and (iii) the family of circularly symmetric CN distributions $\{CN(0, \Gamma, 0)\}$.

3.3.2. Torus Graphs

Multivariate models for angular random variables are most naturally defined on the torus rather than on Euclidean space. Such a model is given in Klein et al. (2020). Here, we briefly review its construction.

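To define an exponential family on a torus with a given mean and covariance structure, first- and second-order sufficient statistics are needed. Using two-dimensional rectangular coordinates (involving cosines and sines), the first-order sufficient statistics for an angle $\phi_j$ are $\cos\phi_j$ and $\sin\phi_j$. Second-order behaviour of a pair of angles $(\phi_j, \phi_k)$ is summarized by

$$h_{jk}(\phi) = (\cos\phi_j\cos\phi_k,\ \cos\phi_j\sin\phi_k,\ \sin\phi_j\cos\phi_k,\ \sin\phi_j\sin\phi_k)^T.$$

We can then write a natural exponential family density for $\Phi = (\Phi_1, \Phi_2)$ with a canonical parameter $\eta$ consisting of $\eta_{12} \in \mathbb{R}^4$ and $\eta_j \in \mathbb{R}^2$ for $j = 1, 2$, as

$$p(\phi; \eta) \propto \exp\Big\{\sum_{j=1}^{2} \eta_j^T \begin{pmatrix}\cos\phi_j \\ \sin\phi_j\end{pmatrix} + \eta_{12}^T h_{12}(\phi)\Big\}. \tag{1}$$

For $d > 2$, (1) extends to an exponential family on a $d$-dimensional torus as

$$p(\phi; \eta) \propto \exp\Big\{\sum_{j=1}^{d} \eta_j^T \begin{pmatrix}\cos\phi_j \\ \sin\phi_j\end{pmatrix} + \sum_{j<k} \eta_{jk}^T h_{jk}(\phi)\Big\}. \tag{2}$$

Based on simple trigonometric identities, Klein et al. (2020) reparameterized this family into a more interpretable form

$$p(\phi; \lambda) \propto \exp\Big\{\sum_{j=1}^{d} \lambda_j^T \begin{pmatrix}\cos\phi_j \\ \sin\phi_j\end{pmatrix} + \sum_{j<k} \Big[\alpha_{jk}^T \begin{pmatrix}\cos(\phi_j - \phi_k) \\ \sin(\phi_j - \phi_k)\end{pmatrix} + \beta_{jk}^T \begin{pmatrix}\cos(\phi_j + \phi_k) \\ \sin(\phi_j + \phi_k)\end{pmatrix}\Big]\Big\}, \tag{3}$$

where $\lambda$ collects the $\lambda_j$, $\alpha_{jk}$, and $\beta_{jk}$. This form uses the first- and second-order statistics seen in the definitions of complex covariance ($e^{i(\phi_j - \phi_k)}$) and pseudo-covariance ($e^{i(\phi_j + \phi_k)}$). Klein et al. (2020) then defined a $d$-dimensional torus graph (TG) to be any member of the family of distributions specified by (2) or (3). If $\Phi$ is distributed according to $p(\phi; \eta)$ in (2), then we say that $\Phi \sim TG(\eta)$; likewise, if $\Phi$ is distributed according to $p(\phi; \lambda)$ in (3), then we say that $\Phi \sim TG(\lambda)$.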

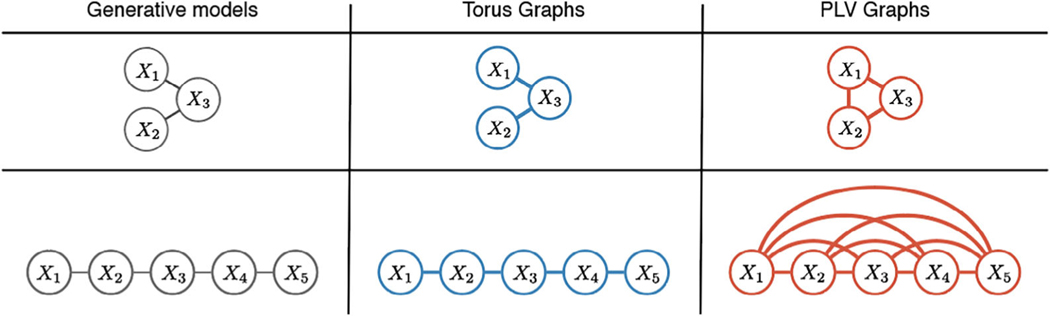

Because torus graphs form exponential families, a pair of random variables $\Phi_j$ and $\Phi_k$ will be conditionally independent given all other variables if and only if the four elements in the pairwise interaction parameter $\eta_{jk}$ are zero. Thus, torus graphs define probabilistic graphical models.

Torus graphs can uncover conditional dependence relationships, meaning pairwise dependence edges that are still present after conditioning on the rest of the random variables in the model. Conditional dependence is obscured with simple correlation-type measures such as PLV because for each edge, they consider only two random variables (see Figure 3, which displays a conditional independence graph from Klein et al. (2020)).

Figure 3:

Torus graphs can recover conditional dependence graphs when PLV fails in simulated data; see Klein et al. (2020) for details. The figure has been adapted from one in Klein et al. (2020).

There are three parameter groups in (3): marginal concentrations, rotational covariance, and reflectional covariance. Klein et al. (2020) showed that the submodel with only rotational covariance parameters (which corresponds to a proper distribution when the unit-modulus variables $e^{i\Phi_j}$ are treated as complex random variables) does a good job of fitting phase angles extracted from neural LFP data. In addition, they described how several alternative families of distributions can be seen as special cases of torus graphs. They then applied their torus graph model to characterize a network graph of interactions among recordings from four brain regions during a memory task.

Although torus graphs provide interesting exponential families that could have been defined long ago, they would have been irrelevant to data analysis before recent practical developments used by Klein et al. (2020) to estimate the parameters of the model.

3.3.3. Characterization of Torus Graphs Using the Complex Normal Distribution

We consider the polar coordinate representation of a random vector with a complex normal distribution and prove that the conditional distribution of the phases, given the amplitudes, forms a torus graph. We provide a general statement and then offer additional theorems in the proper and circularly symmetric cases.

Suppose that $A_j$ and $\Phi_j$ are the amplitude and phase of $Z_j$ for $j = 1, \dots, d$, so that $Z_j = A_j e^{i\Phi_j}$. In other words, $(A_j, \Phi_j)$ is the polar coordinate representation of $Z_j$. We consider the conditional distribution of the phases $\Phi = (\Phi_1, \dots, \Phi_d)^T$ given the amplitudes $A = (A_1, \dots, A_d)^T$. Under certain parameter restrictions on a complex normal distribution, this conditional distribution forms a torus graph.

Theorem 2.

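Let $Z = X + iY$ be a complex normal random vector such that $(X^T, Y^T)^T \sim N_{2d}(m, \Sigma)$. Let $P = \Sigma^{-1}$ be the inverse covariance matrix. If $P_{j,j} = P_{d+j,d+j}$ and $P_{j,d+j} = 0$ for $j = 1, \dots, d$, then $\Phi \mid A = a \sim TG(\eta)$ in the parameterization (2), where $\eta_j = a_j\big((Pm)_j, (Pm)_{d+j}\big)^T$ and $\eta_{jk} = -a_j a_k\big(P_{j,k}, P_{j,d+k}, P_{d+j,k}, P_{d+j,d+k}\big)^T$ for $j, k = 1, \dots, d$, $j < k$.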

In Theorem 2, the components of the conditioning vector $a$ appear as multipliers in the natural parameters, but the graph is the same for every vector $a$.

There exists another distribution in the literature, known as the mGvM distribution, which is a more general version of the TG distribution that includes all second-moment terms (Navarro, Frellsen & Turner, 2017). Navarro et al. showed that, given a $2d$-dimensional normal distribution analogous to the complex normal distribution, the conditional distribution of the angles when all amplitudes equal 1 is an mGvM distribution, without requiring any restrictions on the parameters of the original normal distribution. In the Supplementary Material Section S1, we generalize this result to conditioning on arbitrary amplitudes and then leverage it to prove Theorem 2 (see the Supplementary Material Section S1.2).

We can obtain a more precise characterization of the conditional distribution under the parameter restrictions $C = 0$ and $\mu = 0$.

Theorem 3.

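If $C = 0$, so that the complex normal distribution of $Z$ is proper, then $\Phi \mid A = a \sim TG(\lambda)$ as in (3), where the marginal parameters are $\lambda_j = 2a_j\big(\operatorname{Re}(\Gamma^{-1}\mu)_j, \operatorname{Im}(\Gamma^{-1}\mu)_j\big)^T$, the rotational parameters are $\alpha_{jk} = -2a_j a_k\big(\operatorname{Re}(\Gamma^{-1})_{jk}, \operatorname{Im}(\Gamma^{-1})_{jk}\big)^T$, the reflectional parameters are $\beta_{jk} = 0$, and $(\Gamma^{-1}\mu)_j$ denotes the $j$th component of $\Gamma^{-1}\mu$.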

Corollary 4.

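If $\mu = 0$ and $C = 0$, so that the complex normal distribution of $Z$ is circularly symmetric, then $\Phi \mid A = a \sim TG(\lambda)$ with the same restrictions as in Theorem 3 and with the additional restriction that the marginal parameters satisfy $\lambda_j = 0$ for all $j$.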
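The proper case can be checked numerically. For fixed amplitudes $a$, the polar-coordinate Jacobian $\prod_j a_j$ does not depend on the phases, so the proper complex normal log density evaluated at $z = (a_1 e^{i\phi_1}, \dots, a_d e^{i\phi_d})$ should differ from $\sum_j \lambda_j^T(\cos\phi_j, \sin\phi_j)^T + \sum_{j<k}\alpha_{jk}^T(\cos(\phi_j - \phi_k), \sin(\phi_j - \phi_k))^T$ only by a constant, with $\lambda_j = 2a_j(\operatorname{Re}(\Gamma^{-1}\mu)_j, \operatorname{Im}(\Gamma^{-1}\mu)_j)^T$ and $\alpha_{jk} = -2a_ja_k(\operatorname{Re}(\Gamma^{-1})_{jk}, \operatorname{Im}(\Gamma^{-1})_{jk})^T$. These expressions follow from expanding $-(z - \mu)^H\Gamma^{-1}(z - \mu)$; the sketch below (hypothetical function names) is a sanity check under that parameterization, not the proof in the Supplementary Material.

```python
import numpy as np


def proper_cn_logdensity(z, mu, Gamma):
    """Log density of CN(mu, Gamma, 0) at z, up to an additive constant."""
    r = z - mu
    return -np.real(r.conj() @ np.linalg.solve(Gamma, r))


def tg_params_from_proper_cn(a, mu, Gamma):
    """Marginal (lam, shape (d, 2)) and rotational (alpha, shape (d, d, 2)) parameters."""
    Q = np.linalg.inv(Gamma)
    v = Q @ mu
    lam = 2 * a[:, None] * np.column_stack([v.real, v.imag])
    alpha = -2 * np.outer(a, a)[..., None] * np.stack([Q.real, Q.imag], axis=-1)
    return lam, alpha


def tg_logdensity(phi, lam, alpha):
    """Unnormalized torus graph log density with marginal and rotational terms only."""
    out = np.sum(lam[:, 0] * np.cos(phi) + lam[:, 1] * np.sin(phi))
    d = len(phi)
    for j in range(d):
        for k in range(j + 1, d):
            diff = phi[j] - phi[k]
            out += alpha[j, k, 0] * np.cos(diff) + alpha[j, k, 1] * np.sin(diff)
    return out


rng = np.random.default_rng(5)
d = 3
B = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
Gamma = B @ B.conj().T + d * np.eye(d)    # Hermitian positive definite covariance
mu = rng.standard_normal(d) + 1j * rng.standard_normal(d)
a = rng.uniform(0.5, 2.0, d)              # amplitudes held fixed (the conditioning values)
lam, alpha = tg_params_from_proper_cn(a, mu, Gamma)

gaps = []
for _ in range(5):
    phi = rng.uniform(0, 2 * np.pi, d)
    z = a * np.exp(1j * phi)
    gaps.append(proper_cn_logdensity(z, mu, Gamma) - tg_logdensity(phi, lam, alpha))
```

If the parameter formulas were wrong, the entries of `gaps` would vary with the randomly drawn phases; agreement to rounding error indicates that the two log densities differ only by a function of $a$.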

These theorems say that when the complex normal distribution is proper, the resulting torus graph family has only rotational dependence. The absence of reflectional dependence is intuitive from the definition in Section 3.2.2 of reflectional covariance as pseudo-covariance. Under circular symmetry, the conditional density depends on the phases only through the differences $\phi_j - \phi_k$, so the conditional distribution of each $\Phi_j$ given $A$ is uniform over $[0, 2\pi)$ and, additionally, each $\Phi_j$ is marginally uniform. We emphasize again that the combination of uniformity and only rotational dependence is a particularly important special case, partly because this is the case in which PLV becomes a circular analogue to Pearson correlation. From a theoretical perspective, according to an argument given by Picinbono (1994), stationary band-pass-filtered signals with sufficiently narrow bands are proper. In the context of the particular neural data application reported here, we provide empirical evidence for circularity in Figure S2, which shows that the sample covariance matrix has much larger entries than the sample pseudo-covariance matrix.

4. COHERENCY AND THE COMPLEX NORMAL DISTRIBUTION

Assume we have a $d$-dimensional time series for which every dimension contains an oscillation at a given frequency. As discussed previously, these oscillations can be extracted by band-pass-filtering. Spectral dependence and coherency were analyzed using the complex normal distribution by Tugnait (2019a,b), who considered a more general setting in which the spectrum of the signals may be distributed across numerous frequencies. However, when band-pass-filtered signals all oscillate at the same frequency, we get simpler and more interpretable results, which we leverage using our latent variable model in Section 5.

The key to our approach is a well-known result that coherency is a form of complex correlation for stationary signals oscillating at a particular frequency (Ombao & Van Bellegem, 2008). For single-frequency signals, it further turns out that partial coherency, which is used to study conditional dependence among time series, is equivalent to partial correlation (Dahlhaus, 2000; Ombao & Pinto, 2022). For the complex normal distribution, we provide a systematic study of complex conditional correlation, complex partial correlation, and conditional independence. We also review the relationship between complex correlation and other measures of pairwise dependence.

4.1. Coherency and Pairwise Complex Correlation

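Suppose we have stationary signals $X_1(t)$ and $X_2(t)$ on $t \in \mathbb{R}$, which may be complex-valued. Assume they have auto-covariance functions $\gamma_{jj}(h) = \operatorname{cov}\big(X_j(t + h), X_j(t)\big)$ for $j = 1, 2$, and a cross-covariance function $\gamma_{12}(h) = \operatorname{cov}\big(X_1(t + h), X_2(t)\big)$. These definitions do not depend on $t$ because of stationarity. For $\omega \in \mathbb{R}$, the spectrum and cross-spectrum of the signals are

$$f_{jj}(\omega) = \frac{1}{2\pi}\int_{-\infty}^{\infty} \gamma_{jj}(h)\, e^{-i\omega h}\, dh, \qquad f_{12}(\omega) = \frac{1}{2\pi}\int_{-\infty}^{\infty} \gamma_{12}(h)\, e^{-i\omega h}\, dh,$$

and the coherency is

$$\rho_{12}(\omega) = \frac{f_{12}(\omega)}{\sqrt{f_{11}(\omega)\, f_{22}(\omega)}}.$$

The coherency is complex-valued. Coherence is the magnitude of the coherency, $|\rho_{12}(\omega)|$. The Cramér–Khintchine decomposition (see Ch. 3 of Brémaud, 2014) is

$$X_j(t) = \int_{-\infty}^{\infty} e^{i\omega t}\, d\xi_j(\omega), \qquad j = 1, 2,$$

where $(\xi_1, \xi_2)$ is a bivariate orthogonal-increment random process. In this case, the coherency between $X_1$ and $X_2$ at the frequency $\omega$ is often considered as the complex-valued correlation coefficient between the infinitesimal increments of $\xi_1$ and $\xi_2$ at $\omega$, which is to say

$$\rho_{12}(\omega) = \operatorname{corr}\big(d\xi_1(\omega), d\xi_2(\omega)\big).$$

A quick way to see that this characterization makes sense is to consider two time series oscillating at a single frequency $\omega_0$, i.e.,

$$X_j(t) = A_j e^{i(\omega_0 t + \Phi_j)}, \qquad j = 1, 2,$$

where $A_j$ and $\Phi_j$ are random variables, which we think of as representing the trial-specific amplitude and phase of $X_j$, respectively, for $j = 1, 2$. Stationarity requires $E(A_j e^{i\Phi_j}) = 0$, and then the auto-covariance and cross-covariance kernels are $\gamma_{jj}(h) = E(A_j^2)\, e^{i\omega_0 h}$ and $\gamma_{12}(h) = E\big(A_1 A_2 e^{i(\Phi_1 - \Phi_2)}\big)\, e^{i\omega_0 h}$. The spectrum and cross-spectrum are therefore point masses at $\omega_0$, with weights at the frequency $\omega_0$ given by

$$f_{jj}(\omega_0) = E(A_j^2) \tag{4}$$

and

$$f_{12}(\omega_0) = E\big(A_1 A_2 e^{i(\Phi_1 - \Phi_2)}\big), \tag{5}$$

respectively. In this case, the coherency is the complex-valued correlation between $X_1(t)$ and $X_2(t)$ for every $t$:

$$\rho_{12}(\omega_0) = \frac{E\big(A_1 A_2 e^{i(\Phi_1 - \Phi_2)}\big)}{\sqrt{E(A_1^2)\, E(A_2^2)}} = \operatorname{corr}\big(X_1(t), X_2(t)\big).$$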
As a generalization of the single-frequency case, if a pair of signals are band-pass-filtered over a small frequency band, it is possible to define “band coherency” over a narrow band of frequencies. In this case, the band coherency of the filtered signals is equal to the complex correlation of the filtered signals (Ombao & Van Bellegem, 2008).
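A small simulation illustrates the single-frequency argument. Assuming discrete time, an oscillation frequency $\omega_0 = 2\pi k_0/T$ that falls exactly on a DFT bin, and illustrative distributions for the amplitudes and phases (all our own choices), the trial-averaged cross-periodogram coherency at $\omega_0$ coincides with the across-trial complex correlation of $X_1(t)$ and $X_2(t)$ at any fixed $t$, because the factor $e^{i\omega_0 t}$ cancels in both. The helper name `complex_corr_at` is hypothetical.

```python
import numpy as np


def complex_corr_at(X1, X2, t0):
    """Across-trial complex correlation of X1(t0) and X2(t0).

    The theoretical means are zero here, so sample means are not subtracted.
    """
    z1, z2 = X1[:, t0], X2[:, t0]
    return np.sum(z1 * z2.conj()) / np.sqrt(np.sum(np.abs(z1) ** 2) * np.sum(np.abs(z2) ** 2))


rng = np.random.default_rng(6)
R, T, k0 = 500, 256, 10            # trials, samples per trial, DFT bin of the oscillation
omega0 = 2 * np.pi * k0 / T        # oscillation frequency in radians per sample
t = np.arange(T)

# Trial-specific amplitudes (correlated through g[:, 0]) and rotationally coupled phases.
g = rng.standard_normal((R, 2))
A1 = np.exp(0.3 * g[:, 0])
A2 = np.exp(0.2 * g[:, 0] + 0.2 * g[:, 1])
Phi1 = rng.uniform(0, 2 * np.pi, R)
Phi2 = Phi1 + 0.8 + rng.vonmises(0.0, 3.0, R)

# X_j(t) = A_j exp(i(omega0 t + Phi_j)), one row per trial.
X1 = A1[:, None] * np.exp(1j * (omega0 * t + Phi1[:, None]))
X2 = A2[:, None] * np.exp(1j * (omega0 * t + Phi2[:, None]))

# Coherency at omega0 from trial-averaged (cross-)periodograms at bin k0.
D1 = np.fft.fft(X1, axis=1)[:, k0]
D2 = np.fft.fft(X2, axis=1)[:, k0]
coherency = np.sum(D1 * D2.conj()) / np.sqrt(np.sum(np.abs(D1) ** 2) * np.sum(np.abs(D2) ** 2))
```

With frequencies off the DFT grid, or with additive noise, the agreement would be only approximate.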

4.2. Partial Correlation, Conditional Correlation, and Conditional Independence in the Complex Normal Distribution

In the case of real-valued random variables, conditional correlation and partial correlation have been studied extensively (Baba, Shibata & Sibuya, 2004). For such real-valued random variables, conditional correlation and partial correlation are distinct quantities, and conditional dependence between random variables need not imply that either quantity is nonzero. However, real random variables that are jointly normal have the following special properties: (i) conditional correlation and partial correlation are equal, and (ii) zero conditional correlation (or partial correlation) is equivalent to conditional independence.

In the complex case, the relationship between these quantities has been partially studied in Tugnait (2019a,b) and Andersen et al. (1995). Andersen et al. (1995) define conditional covariance for the proper complex normal distribution and show how it is related to various entries in the complex precision matrix. They further relate conditional dependence to conditional covariation for the proper complex normal distribution. Tugnait (2019a,b) discusses how to assess conditional dependence for proper and improper complex normal distributions.

In this section, we define partial correlation for complex random vectors in a manner analogous to the real case. We describe the differences between partial correlation and conditional correlation for complex random variables, and we provide results relating partial correlation and conditional independence, even in the improper complex normal case.

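In what follows, assume we have some complex-valued random vector $X = (X_1, \dots, X_p)^\top$ with finite first and second moments. For any subset $S \subseteq \{1, \dots, p\}$, let $X_S$ denote the random vector given by the elements of $X$ whose indices are in $S$ (in order). Let $\underline{X}_S$ be the concatenation of $X_S$ and its conjugate, i.e., $\underline{X}_S = (X_S^\top, \overline{X}_S^{\top})^\top$. Let $\mathcal{L}_S$ be the linear space spanned by the entries of $\underline{X}_S$ and, for every $j \in \{1, \dots, p\}$, let $\hat{X}_{j \mid S}$ be the projection of $X_j$ onto that space.

Definition 5.

For $j \neq k$ with $j, k \notin S$, write $\varepsilon_{j \mid S} = X_j - \hat{X}_{j \mid S}$. The partial correlation between $X_j$ and $X_k$ given $X_S$ is

$$\rho_{jk \cdot S} = \frac{\operatorname{cov}(\varepsilon_{j \mid S}, \varepsilon_{k \mid S})}{\sqrt{\operatorname{var}(\varepsilon_{j \mid S})\, \operatorname{var}(\varepsilon_{k \mid S})}},$$

and the partial pseudo-correlation between $X_j$ and $X_k$ given $X_S$ is

$$\tilde{\rho}_{jk \cdot S} = \frac{\operatorname{pcov}(\varepsilon_{j \mid S}, \varepsilon_{k \mid S})}{\sqrt{\operatorname{var}(\varepsilon_{j \mid S})\, \operatorname{var}(\varepsilon_{k \mid S})}}.$$

The conditional correlation is

$$\rho_{jk \mid S} = \frac{\operatorname{cov}(X_j, X_k \mid X_S)}{\sqrt{\operatorname{var}(X_j \mid X_S)\, \operatorname{var}(X_k \mid X_S)}}.$$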
The conditional pseudo-correlation is defined analogously by replacing cov with pcov.
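The partial correlation and partial pseudo-correlation of Definition 5 can be estimated from samples by replacing the projection onto the span of the conditioning variables and their conjugates with a least-squares (widely linear) regression and then correlating the residuals. This is a minimal plug-in sketch under that assumption; the function names and the simulated example, in which two variables depend on each other only through a third, are hypothetical.

```python
import numpy as np

def partial_corr_complex(X, j, k, S):
    """Sample partial correlation and partial pseudo-correlation of X_j and X_k given X_S.

    X is an (n, p) complex array of i.i.d. draws and S a list of column indices. X_j and
    X_k are each regressed on an intercept, X_S and conj(X_S) (widely linear projection).
    """
    n = X.shape[0]
    design = np.column_stack([np.ones(n), X[:, S], np.conj(X[:, S])])
    resid = []
    for idx in (j, k):
        coef, *_ = np.linalg.lstsq(design, X[:, idx], rcond=None)
        resid.append(X[:, idx] - design @ coef)
    rj, rk = resid
    scale = np.sqrt(np.mean(np.abs(rj) ** 2) * np.mean(np.abs(rk) ** 2))
    rho = np.mean(rj * np.conj(rk)) / scale   # based on cov(U, V) = E[U conj(V)]
    rho_pseudo = np.mean(rj * rk) / scale     # based on pcov(U, V) = E[U V]
    return rho, rho_pseudo

def proper_cn(rng, n, p):
    """Proper standard complex normal draws with identity covariance."""
    return (rng.standard_normal((n, p)) + 1j * rng.standard_normal((n, p))) / np.sqrt(2)

rng = np.random.default_rng(1)
n = 20000
W = proper_cn(rng, n, 3)
x3 = W[:, 2]
# X_1 and X_2 are linked only through X_3, so their partial correlation given X_3 is zero.
x1 = (0.8 + 0.3j) * x3 + W[:, 0]
x2 = (0.5 - 0.6j) * x3 + W[:, 1]
X = np.column_stack([x1, x2, x3])

print(partial_corr_complex(X, 0, 1, []))   # marginal correlation: clearly nonzero
print(partial_corr_complex(X, 0, 1, [2]))  # given X_3: both values close to 0
```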

We start by stating a correspondence between partial correlation and entries in a transformed precision matrix. The results in this section are proven in the Supplementary Material Section S1.

Theorem 6.

For any complex-valued random vector , let . Denote by diag() the diagonal matrix, with diagonal entries the same as . Let

Then and .

Although conditional correlation and partial correlation may be different in general (Baba, Shibata & Sibuya, 2004), they coincide in the complex normal case.

Theorem 7.

If , then, for ,

and

In the real-valued case, if the partial correlation is zero for jointly normal random variables, then the two variables are conditionally independent. Because in the complex case we have to consider both types of second-order association, we must consider both the partial correlation and the partial pseudo-correlation. For the following theorems, given random variables $U$, $V$, and $W$, we write $U \perp\!\!\!\perp V \mid W$ if $U$ and $V$ are conditionally independent given $W$, that is, if the conditional distribution of $(U, V)$ given $W$ is the product of the conditional distributions of $U$ given $W$ and of $V$ given $W$.

Theorem 8.

If , then, and if and only if .

A corollary of this theorem allows us to drop the reliance on the partial pseudo-correlation in the proper complex normal case, since that quantity must then be zero.

Corollary 9.

If , then if and only if .

By applying Theorems 6 and 8, we get the following corollary:

Corollary 10.

If , then if and only if and . In addition, if , then , are conditionally independent if and only if for .
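Corollaries 9 and 10 separate the proper case, where the pseudo-covariance vanishes, from the general case. One simple empirical check is to compare the sample covariance, based on $E[XX^H]$, with the sample pseudo-covariance, based on $E[XX^\top]$. The sketch below, with an illustrative covariance matrix and hypothetical helper names, draws a proper complex normal vector by coloring circularly symmetric noise with a Cholesky factor, and an improper vector with the same covariance by coloring real-valued noise instead.

```python
import numpy as np

def sample_cov_and_pcov(X):
    """Sample covariance E[(X - mu)(X - mu)^H] and pseudo-covariance E[(X - mu)(X - mu)^T]; rows are draws."""
    Xc = X - X.mean(axis=0)
    n = X.shape[0]
    return Xc.T @ Xc.conj() / n, Xc.T @ Xc / n

rng = np.random.default_rng(2)
n, p = 200000, 3
Gamma = np.array([[2.0, 0.5 + 0.5j, 0.0],
                  [0.5 - 0.5j, 1.5, 0.3j],
                  [0.0, -0.3j, 1.0]])
L = np.linalg.cholesky(Gamma)  # Gamma = L L^H

# Proper: color circularly symmetric noise, so E[X X^T] = L E[W W^T] L^T = 0.
W_circ = (rng.standard_normal((n, p)) + 1j * rng.standard_normal((n, p))) / np.sqrt(2)
X_proper = W_circ @ L.T
# Improper: the same covariance Gamma, but real noise gives pseudo-covariance L L^T != 0.
X_improper = rng.standard_normal((n, p)) @ L.T

for name, X in [("proper", X_proper), ("improper", X_improper)]:
    cov, pcov = sample_cov_and_pcov(X)
    print(name, np.abs(cov - Gamma).max().round(3), np.abs(pcov).max().round(3))
```

Both vectors share the covariance matrix, so a test based only on the covariance cannot tell them apart; the pseudo-covariance is what distinguishes them.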

4.3. Partial Coherency and Partial Correlation

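We now leverage the results from Section 4.2 to show a correspondence between partial coherency, a modification of coherency to account for conditional dependence, and partial correlation. Partial coherency was developed to better differentiate unique pairwise associations from associations that are shared with one or more other observed variables (Ombao & Pinto, 2022). Assume we have $p$ signals $X_1(t), \dots, X_p(t)$ on $t \in \mathbb{R}$. Let $j, k \in \{1, \dots, p\}$, and define the spectra and cross-spectra $f_{jk}(\omega)$ as in Section 4.1. Consider the $p \times p$ matrix $f(\omega)$ formed by assembling the entries $f_{jk}(\omega)$, and let $G(\omega) = f(\omega)^{-1}$. Let $D(\omega)$ be the matrix containing the diagonal elements of $G(\omega)$ and zero on the off-diagonals. Let

$$\Pi(\omega) = -D(\omega)^{-1/2}\, G(\omega)\, D(\omega)^{-1/2}.$$

We call $\Pi_{jk}(\omega)$, $j \neq k$, the partial coherency between signals $j$ and $k$. If $X_1(t), \dots, X_p(t)$ are all signals oscillating at some frequency $\omega_0$, we obtain the following theorem.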

Theorem 11.

If for all and , then

This theorem follows from observing that, by our results in Section 4.1, for such signals. We can then apply Theorem 6 and, observing that the upper-right block of is zero because , we obtain the desired result.

Researchers have previously studied partial coherency as a way to construct conditional independence graphs for time series (Dahlhaus, 2000). For instance, Tugnait (2019a,b) estimates conditional independence between time series by first constructing a sufficient statistic that accounts for the oscillations in a time series at all observable frequencies and then, after observing that the limiting distribution of this statistic is a complex normal random variable, deriving conditional independence tests based on this limiting distribution. As we have seen, because we are dealing with signals oscillating at a single frequency, we can estimate partial coherency simply by computing the partial correlation between the observed variables. We will use this to interpret our latent-variable model. The Supplementary Material Section S2 details various simple examples in which PLV, amplitude correlation, and coherence are compared.
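Partial coherency can be computed from an (estimated) spectral matrix at one frequency by inverting it and rescaling, following the convention of Dahlhaus (2000) in which the partial coherency between signals $j$ and $k$ is $-G_{jk}/\sqrt{G_{jj}G_{kk}}$, with $G$ the inverse spectral matrix. The sketch below assumes a Hermitian positive-definite input; the example matrix is made up so that signals 0 and 2 are marginally coherent but partially incoherent given signal 1.

```python
import numpy as np

def partial_coherency(spec):
    """Partial coherency matrix from a Hermitian positive-definite spectral matrix at one frequency.

    Off-diagonal entry (j, k) is -G[j, k] / sqrt(G[j, j] * G[k, k]) with G = inv(spec);
    the diagonal is set to 1 by convention.
    """
    G = np.linalg.inv(spec)
    d = np.sqrt(np.real(np.diag(G)))
    P = -G / np.outer(d, d)
    np.fill_diagonal(P, 1.0)
    return P

# Made-up inverse spectral matrix with a zero in position (0, 2).
G_true = np.array([[2.0, -0.5 + 0.5j, 0.0],
                   [-0.5 - 0.5j, 2.0, 0.4j],
                   [0.0, -0.4j, 1.5]])
spec = np.linalg.inv(G_true)

P = partial_coherency(spec)
coherency_02 = spec[0, 2] / np.sqrt(spec[0, 0].real * spec[2, 2].real)
print(np.round(np.abs(P), 3))
print(round(abs(coherency_02), 3))  # nonzero marginal coherence between signals 0 and 2
```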

4.4. Alternative Measures of Pairwise Association Between Oscillating Signals

Thus far, we have focused on coherence as a measure of association for oscillating signals. As described in Section 3, other measures exist for studying the dependence between oscillating signals. In particular, we described PLV, which many investigators use as an alternative to coherence. Since PLV was first introduced, questions have arisen over whether to use PLV, coherence, or amplitude correlation to measure connectivity between oscillatory neural signals (e.g., Lachaux et al., 1999; Srinath & Ray, 2014; Lowet et al., 2016; Lepage & Vijayan, 2017). Our intention here is to review briefly some of these arguments to understand how coherence is situated among these other possible measures of association.

Clearly, coherence depends on both the phase and amplitude of oscillatory signals. Dependence on amplitude, however, has invited criticism of coherence as a pure representation of the degree of synchrony among the phases of oscillatory signals. PLV was introduced in part to overcome the perceived limitations of this dependence (Lachaux et al., 1999). Later work showed that, particularly in nonstationary settings, estimators of coherence are not well behaved and can fail to accurately represent synchrony (Lowet et al., 2016). Other authors have criticized coherence on the grounds that it can be biased by amplitude correlation (Srinath & Ray, 2014). However, some investigators have responded to these criticisms by pointing out that coherence may up-weight trials with larger amplitude oscillations, where information about phase is likely to be stronger (Lepage & Vijayan, 2017). Thus, the inclusion of amplitude information may be beneficial.

Further, some researchers have analyzed the relationship between PLV, amplitude correlation, and coherence when particular parametric models are assumed for the data. For instance, Aydore, Pantazis & Leahy (2013) found that when data are distributed according to the complex normal distribution, PLV can be written as a function of coherence, which indicates that coherence provides all the information that PLV does. The same authors found that both the von Mises distribution and Gaussian models are effective for studying phase associations in LFP data, and argued that PLV and cross-correlation capture the same information. More generally, Nolte et al. (2020) remarked that, because cross-correlation provides all possible information about the second-order statistical properties of circularly symmetric, bivariate, complex normal random variables, any pairwise measure of association can be written as a function of cross-correlation (and thus coherency). Nolte et al. found that PLV and related phase-based coupling statistics, as well as amplitude–amplitude correlational statistics, can be written as functions of coherence for complex normal random variables.

Thus, if the data are assumed to be proper and complex-normal, then complex correlation, and thus coherence, provides the most detailed measure of association available. For the oscillating neural signals that are the focus of this work, the complex normal model appears to be a reasonable approximation of the data, and so we focus on coherence as our measure of pairwise association since coherence may provide much of the information contained within other measures of association.
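To make the PLV–coherence relationship concrete: for a proper bivariate complex normal pair with coherence $|\rho|$, the expression we take from Aydore, Pantazis & Leahy (2013) is $\mathrm{PLV} = \tfrac{\pi}{4}|\rho|\,{}_2F_1(\tfrac12, \tfrac12; 2; |\rho|^2)$. The sketch below checks this formula by Monte Carlo; the truncated hypergeometric series, the coherence value, and the sample size are our own choices, and the formula should be checked against the original reference before being relied on.

```python
import numpy as np

def hyp2f1_series(a, b, c, z, n_terms=200):
    """Truncated Gauss hypergeometric series 2F1(a, b; c; z); adequate for 0 <= z well below 1."""
    term, total = 1.0, 1.0
    for k in range(n_terms):
        term *= (a + k) * (b + k) / ((c + k) * (k + 1)) * z
        total += term
    return total

def plv_from_coherence(coh):
    """PLV implied by coherence |rho| for a proper bivariate complex normal pair."""
    return np.pi / 4 * coh * hyp2f1_series(0.5, 0.5, 2.0, coh ** 2)

rng = np.random.default_rng(3)
n, rho = 200000, 0.6
z1 = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)
w = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)
z2 = rho * z1 + np.sqrt(1 - rho ** 2) * w   # complex correlation rho, so coherence 0.6

# PLV: magnitude of the mean unit phasor of the phase difference.
plv_mc = np.abs(np.mean(np.exp(1j * (np.angle(z1) - np.angle(z2)))))
print(round(plv_mc, 3), round(plv_from_coherence(rho), 3))
```

For small coherence the formula gives $\mathrm{PLV} \approx (\pi/4)|\rho|$, so under this model PLV carries no information beyond coherence.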

5. A COMPLEX-NORMAL, LATENT-VARIABLE MODEL

5.1. Model

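We assume that we have recordings from $K$ brain regions, and that the activity of each brain region $k$ is recorded by $p_k$ electrodes over $N$ repeated experimental trials. We assume that each electrode records an oscillating signal at some frequency, and we band-pass-filter the data to extract this oscillating signal as a preprocessing step to obtain a complex number that represents the phase and amplitude of the oscillation. For each trial $n = 1, \dots, N$, the observation $X_k^{(n)}$ from brain region $k$ is a $p_k$-dimensional complex-valued variable. We further assume that each $X_k^{(n)}$ is driven by a univariate latent factor $Z_k^{(n)}$, which is also complex-valued, for the model

$$X_k^{(n)} = \beta_k Z_k^{(n)} + \epsilon_k^{(n)}, \qquad \epsilon_k^{(n)} \sim \mathcal{CN}(0, \Psi_k), \qquad k = 1, \dots, K, \tag{6}$$

$$Z^{(n)} = \big(Z_1^{(n)}, \dots, Z_K^{(n)}\big)^\top \sim \mathcal{CN}(0, \Sigma), \tag{7}$$

where $\beta_k \in \mathbb{C}^{p_k}$ is a complex-valued factor loading, $\Sigma$ is the $K \times K$ latent covariance matrix, which is assumed to be positive definite, and $\epsilon_k^{(n)}$ is a region-specific noise, independent of $Z^{(n)}$ and of the noise in the other regions, with the covariance parameter $\Psi_k$, which also is assumed to be positive definite.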

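Using basic properties of the complex normal distribution, the marginal distribution of the vector $X^{(n)} = \big(X_1^{(n)\top}, \dots, X_K^{(n)\top}\big)^\top$ of all the observed signals is

$$X^{(n)} \sim \mathcal{CN}\big(0,\; B \Sigma B^{H} + \Psi\big), \qquad B = \operatorname{blockdiag}(\beta_1, \dots, \beta_K), \quad \Psi = \operatorname{blockdiag}(\Psi_1, \dots, \Psi_K). \tag{8}$$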

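This representation shows that the model is nonidentifiable without additional constraints. In particular, $\beta_k$ can be scaled by an arbitrary nonzero complex number $c$, and the $k$th row and column of $\Sigma$ scaled by $c^{-1}$ and $\bar{c}^{-1}$, respectively, with no change to the marginal distribution. Additionally, given an arbitrary real value $\delta$, we have that $\beta_k \Sigma_{kk} \beta_k^H + \Psi_k = \beta_k(\Sigma_{kk} + \delta)\beta_k^H + (\Psi_k - \delta \beta_k \beta_k^H)$, which indicates that, because $\beta_k \beta_k^H$ is positive semidefinite (PSD), we can add $\delta$ to the $k$th diagonal entry of $\Sigma$ and subtract $\delta \beta_k \beta_k^H$ from $\Psi_k$ and retain the same marginal distribution, as long as both matrices remain positive definite.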

Thus, to ensure the identifiability of the parameters, we require that

and , where is the first component of , and

.
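The marginal covariance in (8) and the two sources of nonidentifiability described after it can be checked numerically. The sketch below assembles the marginal covariance block by block (block $(k, l)$ equal to $\Sigma_{kl}\beta_k\beta_l^H$, plus the noise covariance of region $k$ on the diagonal blocks), confirms it by simulating from the model, and then verifies that rescaling a loading, or moving variance between $\Sigma$ and a noise covariance, leaves it unchanged. The dimensions, parameter values, and helper names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(4)

def crandn(*shape):
    """Draws from the proper standard complex normal (E|z|^2 = 1)."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

def marginal_cov(betas, Sigma, Psis):
    """Block (k, l) is Sigma[k, l] * beta_k beta_l^H, plus the noise covariance Psi_k when k == l."""
    K = len(betas)
    return np.block([[Sigma[k, l] * np.outer(betas[k], betas[l].conj()) + (Psis[k] if k == l else 0)
                      for l in range(K)] for k in range(K)])

# Illustrative parameters: K = 3 regions with 2, 3, and 2 electrodes.
p = [2, 3, 2]
betas = [np.array([1.0, 0.5 - 0.5j]), np.array([1.0, -0.3 + 0.8j, 0.6j]), np.array([1.0, 0.7 + 0.1j])]
Sigma = np.array([[1.0, 0.6 + 0.2j, 0.3],
                  [0.6 - 0.2j, 1.0, 0.4j],
                  [0.3, -0.4j, 1.0]])
Psis = [0.5 * np.eye(pk) for pk in p]
C = marginal_cov(betas, Sigma, Psis)

# Monte Carlo check: simulate latent factors, then observations, and compare E[X X^H].
n = 200000
Z = crandn(n, len(p)) @ np.linalg.cholesky(Sigma).T
X = np.hstack([Z[:, [k]] * betas[k][None, :] + crandn(n, pk) @ np.linalg.cholesky(Psis[k]).T
               for k, pk in enumerate(p)])
C_hat = X.T @ X.conj() / n
print(np.abs(C_hat - C).max())  # small Monte Carlo error

# (i) Rescale beta_k by c and the k-th row and column of Sigma by 1/c and 1/conj(c).
k, c = 1, 2.0 - 1.5j
betas_c = [b.copy() for b in betas]
betas_c[k] = c * betas[k]
d = np.ones(len(p), dtype=complex)
d[k] = 1 / c
Sigma_c = d[:, None] * Sigma * d.conj()[None, :]
# (ii) Move delta from Psi_k into Sigma[k, k]; Psi_k must stay positive definite.
delta = 0.2
Sigma_d = Sigma.copy()
Sigma_d[k, k] += delta
Psis_d = [P.copy() for P in Psis]
Psis_d[k] = Psis[k] - delta * np.outer(betas[k], betas[k].conj())
print(np.allclose(marginal_cov(betas_c, Sigma_c, Psis), C),
      np.allclose(marginal_cov(betas, Sigma_d, Psis_d), C))  # True True
```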

To better understand the model, we show how it can produce maximum likelihood estimates that are related to the solutions for a complex extension of canonical correlation analysis (CCA; Hotelling, 1936). Bong et al. (2023) found that a real-valued, latent-variable model, which bears some similarity to the complex-valued, latent-variable model described here, could be viewed as a form of probabilistic CCA, first described in Bach & Jordan (2005). Because CCA has a distribution-free definition, the correspondence we show below with an analogue of CCA for complex-valued random vectors provides a similar distribution-free motivation for the estimation procedure we derive here.

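If we observe a pair of real random vectors $X \in \mathbb{R}^{q_1}$ and $Y \in \mathbb{R}^{q_2}$, CCA finds weights $a$, $b$ (where $a \in \mathbb{R}^{q_1}$, $b \in \mathbb{R}^{q_2}$) that maximize $\operatorname{corr}(a^\top X, b^\top Y)$. Kettenring (1971) extended CCA to multiple vector observations. For $X_1, \dots, X_K$, this so-called “multiset CCA” finds weights $w_1, \dots, w_K$ maximizing a notion of the cross-correlation among $w_1^\top X_1, \dots, w_K^\top X_K$. Multiset CCA easily extends to complex-valued random vectors $X_k \in \mathbb{C}^{p_k}$, $k = 1, \dots, K$. In particular, we can write the optimization problem solved by complex multiset CCA as

$$(w_1^*, \dots, w_K^*) = \arg\min_{w_1, \dots, w_K} \det \operatorname{corr}\big((w_1^{H} X_1, \dots, w_K^{H} X_K)^\top\big), \qquad R^* = \operatorname{corr}\big((w_1^{*H} X_1, \dots, w_K^{*H} X_K)^\top\big). \tag{9}$$

The solution weights $w_1^*, \dots, w_K^*$ that achieve the optimum of (9) are called canonical weights, and the resulting correlation matrix $R^*$ is called a canonical correlation matrix. The sample estimates $\hat{w}_k$ and $\hat{R}$ are obtained by replacing corr in (9) with the sample version based on the observed signals during experimental trials $n = 1, \dots, N$. We now state a theorem describing the equivalence between the canonical weights, the canonical correlation matrix, and the maximum likelihood estimator of the parameters in the model given in (6) and (7) under the given identifiability constraints. A proof is given in the Appendix.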

Theorem 12.

Suppose that , , and are the maximum likelihood estimators of the parameters in (6) and (7) under the given identifiability constraints, labelled as 1 and 2 following (8), based on observed tuples . We have the following equivalence between the maximum likelihood estimators, the canonical weights, and the canonical correlation matrix:

for , where is the diagonal matrix with entries .

As a corollary of the theorem, if there are only two regions ($K = 2$), then our model is equivalent to the pairwise CCA for two complex random vectors $X_1$ and $X_2$. To demonstrate this equivalence, let us write

let be the resulting canonical correlation coefficient, and let and be the corresponding sample estimators. We can then state the following corollary.

Corollary 13.

If , , and are the maximum likelihood estimators of (6) and (7) under the given identifiability constraints, then they are related to the weights and canonical correlation values through

In the general case with more than two latent factors, fitting the marginal likelihood directly is an intractable optimization problem. Instead, we fit the model using the expectation maximization (EM) algorithm; we describe our fitting procedure in the Appendix. In Figure S1, we provide evidence that, for simulated datasets similar to the real dataset we analyze in Section 6, the estimates provided by the EM fitting procedure converge to the true parameters as the size of the dataset increases. Code for performing inference and analysis with our model is available at https://github.com/urbkn7/latent_cn.

In applying Theorem 12 to the data in Section 6, we first band-pass-filter the data so that the magnitude of the complex correlation becomes the coherence (as discussed in Section 4.1). We also decompose the covariance matrix to get partial coherence (Section 4.3).
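For two regions, the pairwise complex CCA behind Corollary 13 can be computed in closed form by whitening each set of variables and taking a singular value decomposition of the whitened cross-covariance. The sketch below does this with sample covariances; it is the standard two-set construction, not the EM-based procedure used for more regions, and the function names and simulated data (one latent factor per region, observed with noise) are hypothetical.

```python
import numpy as np

def inv_sqrt_psd(C):
    """Inverse square root of a Hermitian positive-definite matrix via its eigendecomposition."""
    vals, vecs = np.linalg.eigh(C)
    return (vecs / np.sqrt(vals)) @ vecs.conj().T

def complex_cca(X1, X2):
    """Leading complex canonical pair for (n, p1) and (n, p2) arrays of trials.

    Returns w1, w2 and r such that the sample correlation of X1 @ w1.conj() and
    X2 @ w2.conj() (i.e. w1^H x1 and w2^H x2) equals r, the largest singular value
    of C11^{-1/2} C12 C22^{-1/2}.
    """
    n = X1.shape[0]
    X1c, X2c = X1 - X1.mean(axis=0), X2 - X2.mean(axis=0)
    C11 = X1c.T @ X1c.conj() / n
    C22 = X2c.T @ X2c.conj() / n
    C12 = X1c.T @ X2c.conj() / n
    W1, W2 = inv_sqrt_psd(C11), inv_sqrt_psd(C22)
    U, s, Vh = np.linalg.svd(W1 @ C12 @ W2)
    return W1 @ U[:, 0], W2 @ Vh[0].conj(), s[0]

rng = np.random.default_rng(5)

def crandn(*shape):
    """Proper standard complex normal draws."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

n = 5000
Z1 = crandn(n)
Z2 = 0.7 * Z1 + np.sqrt(1 - 0.7 ** 2) * crandn(n)   # latent factors with correlation 0.7
X1 = np.outer(Z1, [1.0, 0.5j, -0.4]) + 0.5 * crandn(n, 3)
X2 = np.outer(Z2, [0.8 - 0.2j, 1.0]) + 0.5 * crandn(n, 2)

w1, w2, r = complex_cca(X1, X2)
print(round(r, 3))  # below 0.7, because observation noise attenuates the latent correlation
```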

5.2. Inference

We study the strength of the associations among the estimated latent factors in our model. To do so, we develop a parametric bootstrap procedure to test for conditional dependence between brain areas. Recall from Section 4 that for vectors distributed according to the proper complex normal distribution, zero entries in the precision matrix correspond to conditional independence relationships between the associated dimensions of the random vector. Therefore, for two regions with indices $k \neq l$, we want to test the null hypothesis $H_0\colon \Omega_{kl} = 0$ against the alternative hypothesis $H_1\colon \Omega_{kl} \neq 0$, where $\Omega$ denotes the latent precision matrix.

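To employ the parametric bootstrap, we first estimate the parameters of the model on the original dataset. Let $\mathcal{D}$ denote the original dataset, let $\theta$ denote the collection of model parameters, and let $L(\theta; \mathcal{D})$ denote the likelihood of the parameters under the model given by (8) for the dataset $\mathcal{D}$. We first estimate $\theta$ with the EM algorithm to obtain $\hat{\theta}$, our approximation of the maximum likelihood estimator for the dataset $\mathcal{D}$. Then, we optimize the likelihood with a modified EM algorithm under the additional constraint that the $(k, l)$ entry of the latent precision matrix is zero (see the Appendix for details on how we solve this optimization problem) to obtain $\hat{\theta}_0$. We then simulate datasets $\mathcal{D}^{(m)}$ from the model with parameters $\hat{\theta}_0$, where $m = 1, \dots, M$ and $M$ is the total number of bootstrap datasets. Let

$$\lambda^{(m)} = 2\Big[\log L\big(\hat{\theta}^{(m)}; \mathcal{D}^{(m)}\big) - \log L\big(\hat{\theta}_0^{(m)}; \mathcal{D}^{(m)}\big)\Big],$$

where $\hat{\theta}^{(m)}$ and $\hat{\theta}_0^{(m)}$ are the unconstrained and constrained estimates computed from $\mathcal{D}^{(m)}$.

We can observe the quantile of the likelihood ratio statistic for the original data, $\lambda = 2\big[\log L(\hat{\theta}; \mathcal{D}) - \log L(\hat{\theta}_0; \mathcal{D})\big]$, relative to the set of likelihood ratio statistics $\lambda^{(1)}, \dots, \lambda^{(M)}$ for the bootstrapped datasets to obtain a $p$-value. We can also obtain confidence intervals for parameters of interest by employing the ordinary nonparametric bootstrap. The parameters we are most interested in are contained in the latent correlation and conditional correlation matrices. We have the latent correlation matrix $R = \operatorname{diag}(\Sigma)^{-1/2}\, \Sigma\, \operatorname{diag}(\Sigma)^{-1/2}$ and the conditional correlation matrix $P = -\operatorname{diag}(\Omega)^{-1/2}\, \Omega\, \operatorname{diag}(\Omega)^{-1/2}$, where $\Omega = \Sigma^{-1}$; the off-diagonal entries of $P$ are the latent partial correlations.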
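Once the likelihood ratio statistics and resampled estimates are available, the two bootstrap computations described above take only a few lines. In the sketch below, the $p$-value uses the common $(1 + \text{count})/(1 + M)$ convention, which is our assumption rather than a detail stated here, and the percentile interval is shown for a generic statistic (a sample coherence on simulated data); the latent-model fitting itself is not reproduced, and all names are hypothetical.

```python
import numpy as np

def bootstrap_p_value(lr_obs, lr_boot):
    """Share of null-simulated LR statistics at least as large as the observed one: (1 + count) / (1 + M)."""
    lr_boot = np.asarray(lr_boot, dtype=float)
    return (1 + np.sum(lr_boot >= lr_obs)) / (1 + lr_boot.size)

def percentile_ci(data, statistic, n_boot=2000, level=0.95, rng=None):
    """Nonparametric bootstrap percentile interval obtained by resampling trials (rows) with replacement."""
    rng = np.random.default_rng() if rng is None else rng
    n = data.shape[0]
    stats = np.array([statistic(data[rng.integers(0, n, size=n)]) for _ in range(n_boot)])
    alpha = 1.0 - level
    return np.quantile(stats, [alpha / 2, 1 - alpha / 2])

def coherence(d):
    """Magnitude of the sample complex correlation between the two columns of d."""
    return np.abs(np.corrcoef(d[:, 0], d[:, 1])[0, 1])

# Placeholder numbers for illustration only (not results from the data analysis).
print(bootstrap_p_value(12.3, [0.4, 1.1, 2.7, 0.9, 13.0, 0.2, 1.8, 0.6, 3.1]))  # 0.2

rng = np.random.default_rng(6)
z = (rng.standard_normal((500, 2)) + 1j * rng.standard_normal((500, 2))) / np.sqrt(2)
z[:, 1] += 0.8 * z[:, 0]   # population coherence 0.8 / sqrt(1.64), about 0.62
print(percentile_ci(z, coherence, n_boot=500, rng=rng))
```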

6. DATA ANALYSIS

We apply the techniques we have discussed so far to a dataset of LFP data from the Allen Institute (Siegle et al., 2021). In the experiment, six electrode probes were simultaneously inserted into the mouse brain, with each probe targeting some area of the visual cortex but also recording other regions. During the experiment, the mice were presented with a variety of visual stimuli; here, we focus on presentations of drifting grating stimuli, which appear as bars moving across a screen in the mouse’s field of view. In the interest of utilizing as much data as possible, we ignore differences in the direction and size of the stimuli presented. This gives us 630 trials.

The experimenters marked every electrode with the anatomical brain area in which it resided during the experiment. We observed an oscillation at 6.5 Hz in many of these areas. Therefore, we band-pass-filtered the LFP signal around this frequency and then used the Hilbert transform to recover the analytic signal. We selected a single time point, 2 s into the trial, which occurs well after the stimulus disappears from the screen. Selecting this time point removes the influence of trial-locked changes in brain activity. In addition, we used five electrodes from each of the six regions for which we have data to form the set of time series we analyze.
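The preprocessing step can be sketched as follows: an ideal FFT-domain band-pass filter that also discards negative frequencies returns the analytic signal of the band-limited component directly, and the complex value 2 s into each trial is kept for every electrode. The sampling rate, the 5.5–7.5 Hz passband, the 4 s trial length, the synthetic signals, and the function name are illustrative assumptions, not the settings used for the Allen Institute data; in practice a smooth filter, with care at the edges, would usually be preferred to a brick-wall mask.

```python
import numpy as np

def analytic_band(x, fs, f_lo, f_hi):
    """Analytic signal of the [f_lo, f_hi] Hz component of real signals x (time on the last axis).

    Ideal FFT mask: positive frequencies inside the band are doubled; all other
    frequencies, including every negative frequency, are set to zero.
    """
    n = x.shape[-1]
    X = np.fft.fft(x, axis=-1)
    freqs = np.fft.fftfreq(n, d=1 / fs)
    mask = (freqs >= f_lo) & (freqs <= f_hi)
    return np.fft.ifft(2 * X * mask, axis=-1)

fs = 1250.0                      # sampling rate in Hz (assumed)
t = np.arange(5000) / fs         # 4 s trials (assumed)
rng = np.random.default_rng(7)
n_trials, n_electrodes = 10, 5
amp = rng.uniform(0.5, 2.0, size=(n_trials, n_electrodes, 1))
phase = rng.uniform(-np.pi, np.pi, size=(n_trials, n_electrodes, 1))
# Synthetic LFP: a 6.5 Hz oscillation plus a 40 Hz component that the filter should remove.
lfp = amp * np.cos(2 * np.pi * 6.5 * t + phase) + 0.7 * np.cos(2 * np.pi * 40 * t)

z = analytic_band(lfp, fs, 5.5, 7.5)
idx = int(round(2.0 * fs))       # sample index 2 s into the trial
z_2s = z[..., idx]               # (n_trials, n_electrodes) complex values used as model data
print(z_2s.shape)                # (10, 5)
```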

We then applied the latent variable model to this dataset. For all analyses, we used one latent variable per brain area analyzed. Each brain area has multiple electrodes embedded, which record the LFP signals; we select five electrodes from each region, and the signals from these electrodes are the observed variables in our model. In Figure 4, we show data from a single probe passing through four visual regions (AM, PM, V1, and LM) and two hippocampal regions (CA1 and DG). We display the empirical correlation matrices of the observed data as well as the latent correlation matrices and partial correlation matrices estimated using our model. These matrices of latent correlation and partial correlations are of interest since, as discussed in Section 4, in our setting they are equivalent to the coherency and partial coherency between regions. While the estimated latent marginal correlations are relatively large between many areas, the estimated latent partial correlations are strongest between two pairs of areas: between AM and PM as well as between CA1 and DG.

Figure 4:

An example of an application of the latent variable model to LFP data from the Allen Institute. The entries in the correlation matrices are arranged consecutively according to their vertical position in the inserted probe. The sample correlation for the real part of the data and the sample correlation between the real and imaginary parts of the data are denoted by and . The estimated latent correlation matrix is denoted by , and the estimated latent partial correlation matrix is denoted by . The notations and denote the absolute value of each entry in the corresponding matrices. Electrodes are labelled by the anatomical region in which they resided during the experiment. We observe that while the estimated latent correlation matrix has large values between many regions, the estimated latent partial correlation primarily has large values between two pairs of regions: between DG and CA1 as well as between AM and PM.

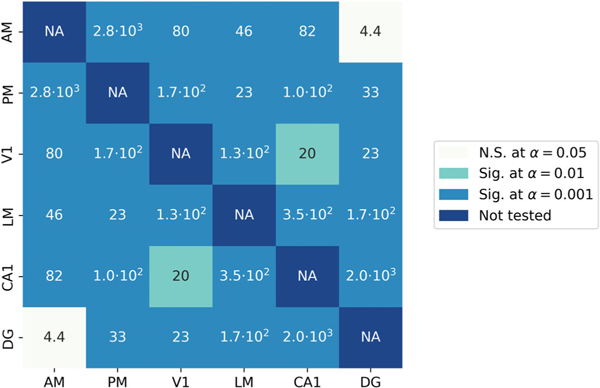

We perform the parametric bootstrap test discussed in Section 5.2 to study the significance of the entries in the latent partial correlation matrix. In Figure 5, we plot the results of this analysis. We observe significant partial correlations between the majority of pairs of areas at 6.5 Hz.

Figure 5:

Results of parametric bootstrap test performed on all pairs of regions to test if a significant conditional correlation exists between each pair of areas. Numbers inside the boxes are likelihood ratio statistics testing the hypothesis that . The colour of each box represents the results of significance tests for three values of , the false-positive rate, where each significance test is done with a Bonferroni correction for multiple comparisons. The level is the smallest we are able to test given our simulation settings. The likelihood ratio values indicate that some pairs would be significant at much smaller values of . There are significant partial correlations between most pairs of areas at , except between DG and AM as well as between V1 and CA1.

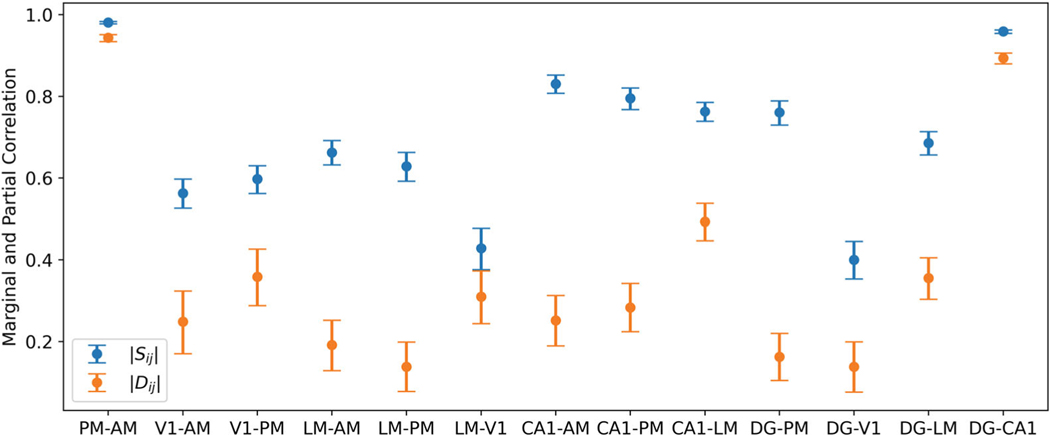

To further understand how strong the correlations between these areas are, we report bootstrap confidence intervals for the significantly nonzero parameters of the correlation and partial correlation matrices in Figure 6. For nearly all pairs of regions, the partial correlations are of modest size and are substantially smaller than the corresponding marginal correlations. However, the pairs PM–AM and DG–CA1 have large partial correlations close to 1. Also of interest are the patterns of association for the LM area. Note that the strength of the correlation between the LM and CA1 areas is larger than that between LM and any other visual region, suggesting that LM has stronger oscillatory associations at 6.5 Hz with hippocampal regions than with other visual regions, despite LM itself being a visual region.

Figure 6:

Point estimates and confidence intervals of correlations (blue) and partial correlations (orange) for all pairs of regions with significantly nonzero latent partial correlations. All confidence intervals have 95% coverage. The partial correlations are smaller than the corresponding marginal correlations, with the exception of the large partial correlations between PM and AM and between DG and CA1.

7. DISCUSSION

We began with two separate goals, and ended by merging them. On the one hand, we wanted to see whether the multivariate complex normal distribution might be useful in analyzing dependence among groups of oscillating time series. On the other hand, we wished to better understand complex correlation. Our investigation, built on existing literature concerning dependence among complex random variables and complex normal variables in particular, uncovered several interesting relationships that were either not known previously or not spelled out clearly. For complex random vectors, we reviewed the distinction between partial correlation and conditional correlation (Baba, Shibata & Sibuya, 2004), which are equivalent for real, normal random vectors, and we showed that the complex partial correlation and conditional correlation also coincide under complex normality. We phrased many of our theorems in terms of the general form of complex normality, including both covariance and pseudo-covariance, and then specialized to the proper case where pseudo-covariances vanish. We showed that in the proper case, pairwise conditional independence coincides with zero partial correlation, as it does for real multivariate normal distributions, and that partial coherency may be considered a partial complex correlation at a given frequency. These facts are important for analytic interpretation, as we demonstrated in our real data example.

The scientific backdrop for our work on the analysis of neural data is one of the most pressing problems in the application of statistics to neurophysiology. Specifically, the problem lies in identifying coordinated activity across two or more regions of the brain based on recordings of multiple time series that are highly nonstationary but are repeated across experimental trials. When there are many repetitions of multiple recorded values in two regions at a single time point, CCA provides a solution to the problem of determining their dependence. In the two-region case, we showed that maximum likelihood estimation for our latent variable model produces a form of latent coherence which is equivalent to the magnitude of the complex canonical correlation. In the multi-region case, maximum likelihood estimation for our model produces a generalized canonical correlation.

The latent coherencies and partial coherencies from our latent-variable model are not computed in the frequency domain but are obtained instead in the time domain with band-pass-filtering in a narrow band. For this we leaned on a key observation by Ombao & Van Bellegem (2008) that “band coherence” (a version of coherence written in terms of integrals over the band) could be interpreted as the magnitude of complex correlation of two signals. We used our results for the complex normal distribution to interpret the complex correlations and partial correlations we found in the data.

Part of understanding coherence requires a comparison to PLV, which can be generalized to the multivariate setting with torus graphs. The original motivation for PLV was discomfort with the dependence of coherence on amplitude (Lachaux et al., 1999). Like PLV, torus graphs ignore amplitude variation and any possible cross-covariation between amplitudes and phases. Thus, torus graphs might be considered models of covariation among phases after marginalizing over amplitudes. The complex normal results characterizing torus graphs as conditional distributions after conditioning on amplitudes show that even though the graphical structure (the conditional independence structure) of torus graphs does not depend on amplitude, calibration of the magnitude of interaction effects apparently depends on the amplitudes. When the amplitudes are roughly constant, coherence and PLV provide essentially equivalent results (see the Supplementary Material Section S2 and Lepage & Vijayan, 2017).

The results we found in the data are striking and intriguing. There are large partial coherences in two pairs of areas, one involving higher visual areas (AM and PM, the anterior and posterior parts of the medial visual area, which are further downstream than primary visual cortex, V1) and the other involving the hippocampus (CA1, a subregion of the cornu ammonis, and DG, the dentate gyrus). Both pairs involve areas that are contiguous, but that alone does not explain their interaction because all of these areas share anatomical boundaries with some other areas. In the case of CA1 and DG, these are the only hippocampal areas in the data. The coherence between the AM and PM areas could indicate close collaboration in neural processing and may be worth further investigation. In addition, we observed stronger coherences between LM and hippocampal regions than between LM and other visual regions, which could also be a subject of further investigation.

Often, there are dozens of time series in each region, and latent-variable models are attractive ways to reduce dimensionality for examining cross-region interactions. In an unpublished work (Orellana & Kass, 2023), a hierarchical model based on torus graphs has been used to describe large numbers of phase measurements made in each of several brain areas. This hierarchical structure has the advantage of reducing the total number of parameters, which results in better statistical inference when the amount of data is small relative to its dimensionality. To assess time-varying amplitude interaction, Bong et al. (2023) developed a time-series generalization of a factor analysis model with one latent factor for each region. They allowed for nonstationarity and included all relevant time-lagged cross-correlations. They first showed how the model leads to a time-series generalization of probabilistic CCA, based on multiset CCA (analogous to Theorem 12 here). Because of nonstationarity, each combination of a time point in one region and a time point in another region could have a unique correlation across trials, which made the covariance matrix have a large number of free parameters. The authors adapted sparse estimation methods to solve the high-dimensional inference problem and showed how it produced interpretable and interesting results when applied to their data. Closely related work can be found in Bong et al. (2020).

It would be straightforward to apply the approach here at many time points, but modelling time lags, as is done in Bong et al. (2023), would require additional work. It would also be possible to estimate partial coherence, even at a single point, using a latent, multivariate time series model, but this would require either a very high dimensional formulation along the lines of Bong et al. (2023) or a specific time series model, both of which present their own challenges.

To simplify interpretation, it was important for us to assume that the complex normal distributions were proper. In our data, this seemed to be a reasonable assumption, and in all the data we have examined using torus graphs, reflectional dependence is, similarly, either absent or difficult to detect. Perhaps future uses of the complex normal distribution, along the lines outlined here, will reveal situations in which pseudo-covariance needs to be considered. The substantial additional complication of such cases would likely present new challenges, but we hope the framework we have summarized here would provide a useful starting point.

Supplementary Material

ACKNOWLEDGEMENTS

This work was supported in part by the National Institute of Mental Health (grant RO1 MH064537). Urban was supported in part by the National Institute on Drug Abuse (grant 5T90DA022762).

APPENDIX

A NOTE ON THE HILBERT TRANSFORM

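Here we provide an overview of the Hilbert transform, which is commonly used to recover phase and amplitude from oscillating signals (Cohen, 2014). Let $z(t)$ be the output of a complex signal that has been filtered in a band $(\omega_0 - \Delta, \omega_0 + \Delta)$ around a frequency $\omega_0 > 0$.

We only observe the real part $x(t) = \operatorname{Re} z(t)$, and the problem is to recover $z(t)$, which is possible when $\Delta$ is sufficiently small relative to $\omega_0$. The Hilbert transform operates on $x$ to produce $y$ according to

$$y(t) = \frac{1}{\pi}\, \mathrm{p.v.}\!\int \frac{x(s)}{t - s}\, ds, \tag{A1}$$

where the integral is over the domain of $x$. The notation p.v. denotes the Cauchy principal value; this formula is given in numerous sources (e.g., Pandey, 2011), but because we have not seen a concise derivation, we provide one here.

Let us write the Fourier transforms of $z$ and its complex conjugate $\bar{z}$ as $\mathcal{F}z$ and $\mathcal{F}\bar{z}$, and the evaluation of such transforms at a frequency $\omega$ by $\mathcal{F}z(\omega)$, etc., using the convention $\mathcal{F}u(\omega) = \int u(t)\, e^{-i\omega t}\, dt$. From the general relations

$$x(t) = \tfrac{1}{2}\big(z(t) + \bar{z}(t)\big), \qquad \mathcal{F}\bar{z}(\omega) = \overline{\mathcal{F}z(-\omega)},$$

we have the Fourier transform

$$\mathcal{F}x(\omega) = \tfrac{1}{2}\big(\mathcal{F}z(\omega) + \mathcal{F}\bar{z}(\omega)\big).$$

Note that $\mathcal{F}z$ is, from the band-pass filtering, concentrated around $\omega_0$, and $\mathcal{F}\bar{z}$ is concentrated around $-\omega_0$. Therefore, when $\omega > 0$ the formula above gives $\mathcal{F}x(\omega) = \tfrac{1}{2}\mathcal{F}z(\omega)$ and, similarly, when $\omega < 0$, $\mathcal{F}x(\omega) = \tfrac{1}{2}\mathcal{F}\bar{z}(\omega)$. Thus, using $\operatorname{sgn}(\omega)$ to denote the sign of $\omega$, when we multiply $\mathcal{F}x$ by $-i\operatorname{sgn}(\omega)$, we get

$$-i\operatorname{sgn}(\omega)\, \mathcal{F}x(\omega) = \begin{cases} -\tfrac{i}{2}\, \mathcal{F}z(\omega), & \omega > 0, \\ \tfrac{i}{2}\, \mathcal{F}\bar{z}(\omega), & \omega < 0, \end{cases} \qquad \text{so that} \qquad \mathcal{F}x(\omega) + i\big[-i\operatorname{sgn}(\omega)\, \mathcal{F}x(\omega)\big] = \mathcal{F}z(\omega).$$

As a result, since $h(t) = 1/(\pi t)$ has (principal-value) Fourier transform $\mathcal{F}h(\omega) = -i\operatorname{sgn}(\omega)$, the convolution $y = h * x$ satisfies

$$\mathcal{F}y(\omega) = -i\operatorname{sgn}(\omega)\, \mathcal{F}x(\omega), \qquad \text{and hence} \qquad z = x + iy.$$

Inserting the formula for $h$ into the definition of convolution produces (A1), giving the Hilbert transform $y$ of $x$. The resulting $z = x + iy$ is called the analytic signal.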
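The frequency-domain form of the derivation translates directly into a discrete sketch: multiply the DFT of the real signal by $-i\operatorname{sgn}(\omega)$ to obtain the Hilbert transform $y$, then form $z = x + iy$. This assumes a uniformly sampled signal that is periodic over the window, so that the DFT is a reasonable stand-in for the Fourier transform; the function name is ours.

```python
import numpy as np

def hilbert_fft(x):
    """Discrete Hilbert transform of a real, periodic, uniformly sampled signal via the multiplier -i*sgn(omega)."""
    omega_sign = np.sign(np.fft.fftfreq(len(x)))
    return np.real(np.fft.ifft(-1j * omega_sign * np.fft.fft(x)))

fs = 1000.0
t = np.arange(1000) / fs
x = np.cos(2 * np.pi * 5 * t + 0.3)   # observed real signal: exactly 5 cycles in the window
y = hilbert_fft(x)                    # expected: sin(2*pi*5*t + 0.3)
z = x + 1j * y                        # analytic signal: exp(i(2*pi*5*t + 0.3))
print(np.max(np.abs(y - np.sin(2 * np.pi * 5 * t + 0.3))))  # round-off level
```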

FITTING PROCEDURE FOR THE LATENT FACTOR MODEL IN SECTION 5

In this section, we discuss the procedure for fitting the latent factor model (Eqs. 6 and 7) in Section 5 to the data from independent repeated observations. The procedure provides estimates of the model parameters , , and using the EM algorithm (Dempster, Laird & Rubin, 1977). The EM algorithm starts with initial estimates for the parameters, denoted by , , and , and iteratively updates the estimates to optimize the likelihood through alternating E-steps and M-steps, which we will describe next. We denote the estimates after the update by , , and .

At the iteration, the E-step calculates the sufficient statistics of the latent factors conditional on the observed data and the parameter estimates , , and after the iteration. The full joint probability density of the observed data and the latent factors, given the parameter estimates, is

where is the concatenation of the latent factors. Further,

where is a diagonal matrix with entries , is a vector of elements , and is the zero matrix. For brevity, we denote and by and . (We note that is invariant over .)
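Under the model in (6) and (7), the latent factors given the observations are again complex normal, so the E-step needs their conditional means and conditional covariance, and the latter is the same for every trial. The sketch below computes these moments with the standard factor-analysis identities, assuming a proper, zero-mean model with block-diagonal loading matrix and noise covariance; it is not a transcription of the update formulas in this appendix, and the names and example parameters are hypothetical.

```python
import numpy as np

def e_step(X, B, Sigma, Psi):
    """Posterior moments of latent factors for rows x_n of X under x_n = B z_n + eps_n.

    Assumes z_n ~ CN(0, Sigma) and eps_n ~ CN(0, Psi), independent, proper, zero mean.
    Returns the (N, K) posterior means, the (K, K) posterior covariance (the same for
    every trial), and sum_n E[z_n z_n^H | x_n].
    """
    Psi_inv = np.linalg.inv(Psi)
    post_cov = np.linalg.inv(np.linalg.inv(Sigma) + B.conj().T @ Psi_inv @ B)
    gain = post_cov @ B.conj().T @ Psi_inv        # E[z_n | x_n] = gain @ x_n
    post_mean = X @ gain.T
    second_moment = X.shape[0] * post_cov + post_mean.T @ post_mean.conj()
    return post_mean, post_cov, second_moment

# Illustrative sizes: two regions with 2 and 3 electrodes, one latent factor each.
betas = [np.array([1.0, 0.4 - 0.3j]), np.array([1.0, 0.2j, -0.5])]
B = np.zeros((5, 2), dtype=complex)
B[:2, 0], B[2:, 1] = betas
Sigma = np.array([[1.0, 0.5 - 0.3j], [0.5 + 0.3j, 1.0]])
Psi = np.diag([0.3, 0.4, 0.2, 0.5, 0.3]).astype(complex)

rng = np.random.default_rng(8)
X = (rng.standard_normal((4, 5)) + 1j * rng.standard_normal((4, 5))) / np.sqrt(2)
post_mean, post_cov, second_moment = e_step(X, B, Sigma, Psi)
print(post_mean.shape, post_cov.shape)  # (4, 2) (2, 2)
```

Working with the $K \times K$ matrix $\Sigma^{-1} + B^H\Psi^{-1}B$ avoids inverting the full marginal covariance, which is larger when each region has many electrodes.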

In the M-step, we find the parameters maximizing the expectation of the full log-likelihood function with respect to both and conditional on , , , and . The conditional expectation of the full log-likelihood function for the model is

The parameters are complex-valued, so we use Wirtinger calculus to take derivatives. Using formulas from Adali, Schreier & Scharf (2011), we take derivatives and set them equal to zero to achieve the update steps

We also have the identifiability constraints , , and . To produce estimates that satisfy these constraints, we utilize the following procedure. First, we adjust the elements of and so that , and ; this can be accomplished by multiplying the rows and columns in and the vectors by a complex-valued scalar. To ensure that , we solve a convex optimization problem by maximizing under the constraint that . We then subtract from and add to .

We use a procedure based on the method of moments to obtain starting values for the EM algorithm, a strategy that has been shown theoretically to often result in near-optimal parameter estimates (Balakrishnan, Wainwright & Yu, 2017). To do so, we start by computing the sample covariance matrix over the data. Then, we estimate the parameter vectors by examining the submatrices given by . In particular, because under the correct model specification, we have that , we can take any column of and normalize it according to the identifiability constraints to obtain an estimate of . We do this and average over all the corresponding columns to get an overall estimate of . Once and have been estimated, it is then straightforward to obtain estimates of by dividing the entries in by those in .
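The method-of-moments initialization can be sketched as follows. Because each column of an off-diagonal block of the marginal covariance is a multiple of the loading vector of the row region, normalizing those columns and averaging them recovers the loading up to normalization, after which the off-diagonal latent covariances follow by entrywise division. For illustration we assume the normalization is unit norm with a real, positive first entry; this is a hypothetical stand-in for the identifiability constraints, as are the function names. The check uses an exact population covariance, so recovery is exact; with a sample covariance it would be approximate.

```python
import numpy as np

def normalize(v):
    """Unit norm with a real, positive first entry (hypothetical stand-in for the identifiability constraints)."""
    v = v / np.linalg.norm(v)
    return v * np.exp(-1j * np.angle(v[0]))

def mom_init(C, p):
    """Starting loadings and off-diagonal latent covariances from a marginal covariance C with block sizes p."""
    edges = np.cumsum([0] + list(p))
    K = len(p)

    def block(k, l):
        return C[edges[k]:edges[k + 1], edges[l]:edges[l + 1]]

    # Every column of an off-diagonal block C_kl is a multiple of beta_k.
    betas = []
    for k in range(K):
        cols = [normalize(block(k, l)[:, j]) for l in range(K) if l != k for j in range(p[l])]
        betas.append(normalize(np.mean(cols, axis=0)))
    # Divide C_kl entrywise by beta_k beta_l^H and average; the diagonal of Sigma and the
    # noise covariances are left to the EM iterations in this sketch.
    Sigma = np.eye(K, dtype=complex)
    for k in range(K):
        for l in range(K):
            if k != l:
                Sigma[k, l] = np.mean(block(k, l) / np.outer(betas[k], betas[l].conj()))
    return betas, Sigma

# Check on an exact covariance built from known, already-normalized loadings.
p = [2, 3, 2]
true_betas = [normalize(np.array(v)) for v in ([1.0, 0.5 - 0.5j], [0.8 + 0.6j, -0.3j, 0.4], [1.0, 0.7 + 0.1j])]
Sigma_true = np.array([[1.0, 0.6 + 0.2j, 0.3], [0.6 - 0.2j, 1.0, 0.4j], [0.3, -0.4j, 1.0]])
edges = np.cumsum([0] + p)
B = np.zeros((sum(p), len(p)), dtype=complex)
for k, b in enumerate(true_betas):
    B[edges[k]:edges[k + 1], k] = b
C = B @ Sigma_true @ B.conj().T + 0.5 * np.eye(sum(p))
betas_hat, Sigma_hat = mom_init(C, p)
```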

Now we briefly address our strategy for parameter estimation under a null hypothesis, as is done in the inference method we introduce. Under the null hypothesis, we are assuming that some entry of the latent precision matrix, , is zero. To satisfy this assumption, we need to modify our estimation procedure. Observe that the conditional expectation of the log-likelihood depends on through

Thus, we need to optimize this expression over under the constraints that while ensuring that remains a Hermitian PSD matrix. Fortunately, this is a convex optimization problem, and while there is no explicit expression for the optimal under these constraints, we can solve this problem numerically using a convex optimization package. Then, we can estimate the remaining variables using the explicit formulas given above.
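The text solves the constrained precision problem numerically. A deliberately simplified version can be shown in closed form, under these assumptions:

- The objective has the usual Gaussian form log det Ω − tr(SΩ).
- There is a single zero constraint Ω_jk = 0 and none of the paper's other constraints.
- `S` is a hypothetical stand-in for the expected second-moment matrix produced by the E-step.

Under this simplification the problem is a decomposable complex Gaussian graphical model. The cliques are all indices except k and all indices except j, and the separator excludes both. The maximizer is then assembled from inverses of sub-blocks. This is not the paper's procedure, which must handle additional constraints numerically.

```python
import numpy as np


def pad_inverse(S, idx, p):
    """Invert the sub-block S[idx, idx] and embed it in a p x p zero matrix."""
    out = np.zeros((p, p), dtype=complex)
    if len(idx) > 0:
        out[np.ix_(idx, idx)] = np.linalg.inv(S[np.ix_(idx, idx)])
    return out


def precision_with_zero(S, j, k):
    """Maximize log det(Omega) - tr(S Omega) subject to Omega[j, k] = 0 only.

    Cliques: all indices except k, and all indices except j.
    Separator: all indices except j and k.
    """
    p = S.shape[0]
    idx = np.arange(p)
    c1 = idx[idx != k]
    c2 = idx[idx != j]
    sep = idx[(idx != j) & (idx != k)]
    return pad_inverse(S, c1, p) + pad_inverse(S, c2, p) - pad_inverse(S, sep, p)


# Usage with a complex sample second-moment matrix (rows of X are observations).
rng = np.random.default_rng(3)
n, p = 50, 4
X = rng.standard_normal((n, p)) + 1j * rng.standard_normal((n, p))
S = X.T @ X.conj() / n
Omega = precision_with_zero(S, 0, 2)
Sigma_hat = np.linalg.inv(Omega)

# Optimality: the fitted covariance matches S everywhere except the constrained pair.
mask = np.ones((p, p), dtype=bool)
mask[0, 2] = mask[2, 0] = False
```

The optimality conditions of this log-det problem are that Ω⁻¹ − S vanishes on the unconstrained entries, with Ω positive definite. The usage code sets up exactly these conditions for checking. With extra equality constraints, no such closed form is available in general, which is consistent with the text's use of a convex optimization package.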

PROOF OF THEOREM 12

Let and , where is a submatrix of the marginal covariance matrix of and if and otherwise, for , . Then the second identifiability constraint can be rewritten as and

That is, is orthogonal to . Denoting the block diagonal matrix of by , we have that has submatrices

Because of the orthogonality between and , the calculation of and is straightforward: and consists of submatrices

where is the inverse correlation matrix and and are the pseudo-determinant and Moore–Penrose pseudo-inverse of a PSD matrix . Notice that and hence . In turn, the negative log-likelihood under the model (Eqs. 6 and 7) of the parameter set with respect to the observed time series is

where

and and indicate the sample variance and covariance operators, for , . The maximum likelihood estimator minimizes with respect to . That is,

for some and for all . Because ,

Therefore, the two terms and may be rewritten as

and

The maximum likelihood estimation problem then reduces to minimizing

with the restriction that . Let , , and . The likelihood can be rewritten as

which is maximized when , given that is fixed for . Thus, maximum likelihood estimation is equivalent to finding minimizing under for , which is the GENVAR procedure of Kettenring (1971).
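The proof identifies maximum likelihood estimation with Kettenring's (1971) GENVAR criterion. Below is a minimal sketch of one way to compute GENVAR for complex-valued blocks:

- R is the correlation matrix of the block variates w_gᴴx_g, with entries R_gh = w_gᴴS_gh w_h.
- The sketch runs block-coordinate descent on det R. Each block update is a generalized Hermitian eigenproblem, reduced to a standard one with a Cholesky factor.

This is an illustrative implementation, not the paper's code. The function names, the random initialization and the fixed iteration count are assumptions. For two blocks, det R = 1 − |R_12|², so GENVAR recovers the leading complex canonical correlation. The usage code computes that canonical correlation directly for comparison.

```python
import numpy as np


def variate_corr(S, blocks, W):
    """R[g, h] = w_g^H S_gh w_h for the block variates w_g^H x_g."""
    G = len(blocks)
    R = np.empty((G, G), dtype=complex)
    for g in range(G):
        for h in range(G):
            R[g, h] = W[g].conj() @ S[np.ix_(blocks[g], blocks[h])] @ W[h]
    return R


def genvar(S, blocks, n_iter=200, seed=0):
    """Block-coordinate GENVAR: minimize det R subject to w_g^H S_gg w_g = 1."""
    rng = np.random.default_rng(seed)
    G = len(blocks)
    W = []
    for idx in blocks:
        w = rng.standard_normal(len(idx)) + 1j * rng.standard_normal(len(idx))
        S_gg = S[np.ix_(idx, idx)]
        W.append(w / np.sqrt(np.real(w.conj() @ S_gg @ w)))
    for _ in range(n_iter):
        for g in range(G):
            others = [h for h in range(G) if h != g]
            # Columns S_gh w_h, and the correlation matrix of the other variates.
            C = np.column_stack([S[np.ix_(blocks[g], blocks[h])] @ W[h] for h in others])
            R_rest = variate_corr(S, [blocks[h] for h in others], [W[h] for h in others])
            # det R = det(R_rest) * (1 - w_g^H M w_g): maximize w_g^H M w_g.
            M = C @ np.linalg.solve(R_rest, C.conj().T)
            L_inv = np.linalg.inv(np.linalg.cholesky(S[np.ix_(blocks[g], blocks[g])]))
            A = L_inv @ M @ L_inv.conj().T
            _, U = np.linalg.eigh((A + A.conj().T) / 2)
            # Top eigenvector, mapped back so that w_g^H S_gg w_g = 1.
            W[g] = L_inv.conj().T @ U[:, -1]
    return W, variate_corr(S, blocks, W)


# Usage: three blocks driven by one shared complex latent signal.
rng = np.random.default_rng(4)
n, sizes = 2000, [3, 3, 2]
blocks = np.split(np.arange(sum(sizes)), np.cumsum(sizes)[:-1])
z = rng.standard_normal(n) + 1j * rng.standard_normal(n)
a = rng.standard_normal(sum(sizes)) + 1j * rng.standard_normal(sum(sizes))
noise = rng.standard_normal((n, sum(sizes))) + 1j * rng.standard_normal((n, sum(sizes)))
X = np.outer(z, a) + 0.5 * noise
S = X.T @ X.conj() / n

W3, R3 = genvar(S, blocks)
R0 = genvar(S, blocks, n_iter=0)[1]  # same seed, so this is the starting point
W2, R2 = genvar(S, blocks[:2])

# Leading complex canonical correlation of blocks 0 and 1, via Cholesky whitening.
L1 = np.linalg.cholesky(S[np.ix_(blocks[0], blocks[0])])
L2 = np.linalg.cholesky(S[np.ix_(blocks[1], blocks[1])])
K = np.linalg.solve(L1, S[np.ix_(blocks[0], blocks[1])]) @ np.linalg.inv(L2).conj().T
rho1 = np.linalg.svd(K, compute_uv=False)[0]
```

Each block update minimizes det R over one weight vector with the others held fixed, so the criterion never increases. With more than two blocks, this scheme can stop at a local rather than a global minimum, as is typical of block-coordinate methods.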

Footnotes

Additional Supporting Information may be found in the online version of this article at the publisher’s website.

REFERENCES

- Adali T, Schreier PJ, & Scharf LL (2011). Complex-valued signal processing: The proper way to deal with impropriety. IEEE Transactions on Signal Processing, 59(11), 5101–5125.

- Andersen HH, Hojbjerre M, Sorensen D, & Eriksen PS (1995). Linear and Graphical Models: For the Multivariate Complex Normal Distribution, Springer, New York.

- Aydore S, Pantazis D, & Leahy RM (2013). A note on the phase locking value and its properties. Neuroimage, 74, 231–244.

- Baba K, Shibata R, & Sibuya M (2004). Partial correlation and conditional correlation as measures of conditional independence. Australian & New Zealand Journal of Statistics, 46(4), 657–664.

- Bach FR & Jordan MI (2005). A Probabilistic Interpretation of Canonical Correlation Analysis, Technical Report 688, University of California, Berkeley.

- Balakrishnan S, Wainwright MJ, & Yu B (2017). Statistical guarantees for the EM algorithm: From population to sample-based analysis. The Annals of Statistics, 45(1), 77–120.

- Bong H, Liu Z, Ren Z, Smith M, Ventura V, & Kass RE (2020). Latent dynamic factor analysis of high-dimensional neural recordings. In Advances in Neural Information Processing Systems, Vol. 33, Neural Information Processing Systems Foundation Inc., San Diego, 16446–16456.

- Bong H, Ventura V, Yttri EA, Smith MA, & Kass RE (2023). Cross-population amplitude coupling in high-dimensional oscillatory neural time series. arXiv preprint, arXiv:2105.03508.

- Brémaud P (2014). Fourier Analysis and Stochastic Processes, Springer, Cham, Switzerland.

- Buzsáki G, Anastassiou CA, & Koch C (2012). The origin of extracellular fields and currents—EEG, ECoG, LFP and spikes. Nature Reviews Neuroscience, 13(6), 407–420.

- Buzsáki G & Draguhn A (2004). Neuronal oscillations in cortical networks. Science, 304(5679), 1926–1929.

- Cardin JA, Carlén M, Meletis K, Knoblich U, Zhang F, Deisseroth K, Tsai L-H, & Moore CI (2009). Driving fast-spiking cells induces gamma rhythm and controls sensory responses. Nature, 459(7247), 663–667.

- Cohen MX (2014). Analyzing Neural Time Series Data: Theory and Practice, MIT Press, Cambridge, MA.

- Dahlhaus R (2000). Graphical interaction models for multivariate time series. Metrika, 51(2), 157–172.

- Dempster AP, Laird NM, & Rubin DB (1977). Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society: Series B (Methodological), 39, 1–22.

- Einevoll GT, Kayser C, Logothetis NK, & Panzeri S (2013). Modelling and analysis of local field potentials for studying the function of cortical circuits. Nature Reviews Neuroscience, 14(11), 770–785.

- Fries P (2005). A mechanism for cognitive dynamics: Neuronal communication through neuronal coherence. Trends in Cognitive Sciences, 9(10), 474–480.

- Hotelling H (1936). Relations between two sets of variates. Biometrika, 28(3–4), 321–377.

- Kass RE, Amari S-I, Arai K, Brown EN, Diekman CO, Diesmann M, Doiron B et al. (2018). Computational neuroscience: Mathematical and statistical perspectives. Annual Review of Statistics and its Application, 5, 183–214.

- Kettenring JR (1971). Canonical analysis of several sets of variables. Biometrika, 58(3), 433–451.

- Klein N, Orellana J, Brincat SL, Miller EK, & Kass RE (2020). Torus graphs for multivariate phase coupling analysis. The Annals of Applied Statistics, 14(2), 635–660.

- Lachaux J-P, Rodriguez E, Martinerie J, & Varela FJ (1999). Measuring phase synchrony in brain signals. Human Brain Mapping, 8(4), 194–208.

- Lepage KQ & Vijayan S (2017). The relationship between coherence and the phase-locking value. Journal of Theoretical Biology, 435, 106–109.

- Lowet E, Roberts MJ, Bonizzi P, Karel J, & De Weerd P (2016). Quantifying neural oscillatory synchronization: A comparison between spectral coherence and phase-locking value approaches. PLoS One, 11(1), e0146443.

- Mathalon DH & Sohal VS (2015). Neural oscillations and synchrony in brain dysfunction and neuropsychiatric disorders: It’s about time. Journal of the American Medical Association Psychiatry, 72(8), 840–844.

- Miller EK, Lundqvist M, & Bastos AM (2018). Working memory 2.0. Neuron, 100(2), 463–475.

- Navarro A, Frellsen J, & Turner R (2017). The multivariate generalised von Mises distribution: Inference and applications. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 31, Association for the Advancement of Artificial Intelligence, Washington, DC.

- Nolte G, Galindo-Leon E, Li Z, Liu X, & Engel AK (2020). Mathematical relations between measures of brain connectivity estimated from electrophysiological recordings for Gaussian distributed data. Frontiers in Neuroscience, 14, 577574. https://www.frontiersin.org/articles/10.3389/fnins.2020.577574/

- Ombao H & Pinto M (2022). Spectral dependence. Econometrics and Statistics. doi:10.1016/j.ecosta.2022.10.005

- Ombao H & Van Bellegem S (2008). Evolutionary coherence of nonstationary signals. IEEE Transactions on Signal Processing, 56(6), 2259–2266.

- Orellana J & Kass RE (2023). Latent Torus Graphs for Dense Recordings and Cross-Region Phase Coupling Analysis (unpublished manuscript).

- Pandey JN (2011). The Hilbert Transform of Schwartz Distributions and Applications, Wiley, New York.

- Pesaran B, Vinck M, Einevoll GT, Sirota A, Fries P, Siegel M, Truccolo W, Schroeder CE, & Srinivasan R (2018). Investigating large-scale brain dynamics using field potential recordings: Analysis and interpretation. Nature Neuroscience, 21(7), 903–919.

- Picinbono B (1994). On circularity. IEEE Transactions on Signal Processing, 42(12), 3473–3482.

- Schmidt R, Ruiz MH, Kilavik BE, Lundqvist M, Starr PA, & Aron AR (2019). Beta oscillations in working memory, executive control of movement and thought, and sensorimotor function. Journal of Neuroscience, 39(42), 8231–8238.

- Shumway RH & Stoffer DS (2017). Time Series Analysis and its Applications: With R Examples, Springer, Cham, Switzerland.

- Siegle JH, Jia X, Durand S, Gale S, Bennett C, Graddis N, Heller G et al. (2021). Survey of spiking in the mouse visual system reveals functional hierarchy. Nature, 592(7852), 86–92.

- Srinath R & Ray S (2014). Effect of amplitude correlations on coherence in the local field potential. Journal of Neurophysiology, 112(4), 741–751.

- Tugnait JK (2019a). Edge exclusion tests for graphical model selection: Complex Gaussian vectors and time series. IEEE Transactions on Signal Processing, 67(19), 5062–5077.

- Tugnait JK (2019b). Edge exclusion tests for improper complex Gaussian graphical model selection. IEEE Transactions on Signal Processing, 67(13), 3547–3560.
