Abstract

The Metaverse has gained wide attention for being the application interface for the next generation of Internet. The potential of the Metaverse is growing, as Web 3·0 development and adoption continue to advance medicine and healthcare. We define the next generation of interoperable healthcare ecosystem in the Metaverse. We examine the existing literature regarding the Metaverse, explain the technology framework that delivers an immersive experience, and provide a technical comparison of legacy and novel Metaverse platforms that are publicly released and in active use. The potential applications of different features of the Metaverse, including avatar-based meetings, immersive simulations, and social interactions, are examined for different roles, from patients to healthcare providers and healthcare organizations. Present challenges in the development of the Metaverse healthcare ecosystem are discussed, along with potential solutions including capabilities requiring technological innovation, use cases requiring regulatory supervision, and sound governance. This proposed concept and framework of the Metaverse could potentially redefine the traditional healthcare system and enhance digital transformation in healthcare. Similar to AI technology at the beginning of this decade, real-world development and implementation of these capabilities are relatively nascent. Further pragmatic research is needed for the development of an interoperable healthcare ecosystem in the Metaverse.

Keywords: Metaverse, Healthcare, Ecosystem, Artificial intelligence, Web 3·0

Highlights

-

•

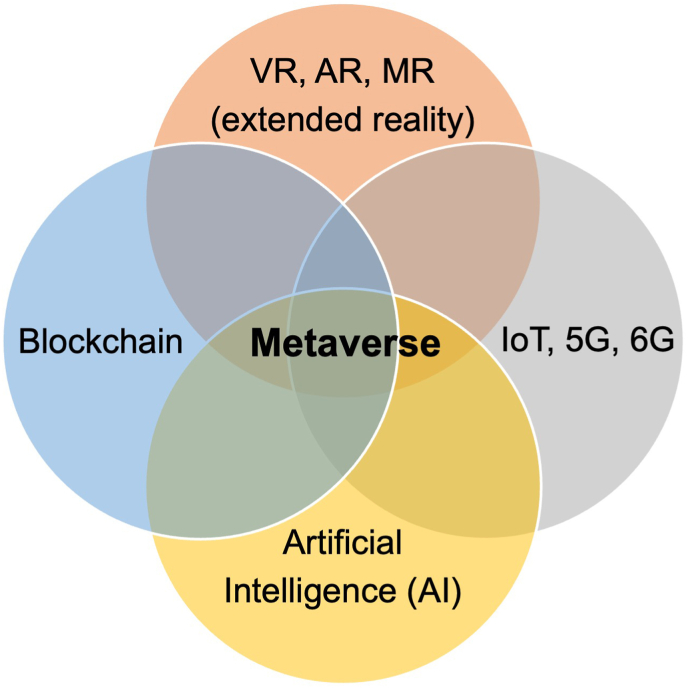

The Metaverse combines extended reality, AI, blockchain, Internet of Things, 5G and 6G.

-

•

The applications in healthcare: avatar-based meetings, immersive simulations, social interactions.

-

•

Patients, healthcare providers, and organizations, can achieve these applications.

-

•

Further research is needed for developing the healthcare ecosystem in the Metaverse.

1. Introduction

Digital health innovations are transforming medicine, with change accelerated through necessity during the COVID-19 pandemic [1]. Telemedicine helps reduce pressures on physical clinic capacity, and improves access to healthcare services [2]. Virtual care using telemedicine is primarily delivered using mobile phone calls and cloud-based video conferencing software today [3]. However, patient engagement and satisfaction in their interactions with virtual care or autonomous digital applications can vary depending on clinical context [4,5,6], which can potentially hinder long-term patient engagement and impair therapeutic relationships between patients and their providers. The Metaverse offers innovative solutions to address these limitations, especially for patients facing access barriers due to physical disabilities or geographical distance.

Besides healthcare service delivery, the Metaverse holds significant potential for other healthcare applications, including enhanced collaboration between clinicians and researchers. While existing technology has facilitated remote communication, challenges in team bonding and creative tasks persist, underscoring the need for more immersive platforms. Existing platforms still have room for improvement in simulating aspects of communication that depend on gestures and the physical characteristics of objects.

Virtual reality (VR) and augmented reality (AR) create immersive Metaverse environments, and interest is growing as VR and AR technology becomes more accessible, with an estimated 50,000 users in October 2021 [7]. Examining the capabilities and ethical considerations of the emerging Metaverse is critical for healthcare innovation [8], enabling improved collaboration, education, and healthcare service delivery [9]. The latest developments in web-based Metaverse platforms facilitate customization, expanding the scope of potential applications across medicine [10], including multidisciplinary team meetings and conferences. Potential barriers to implementation include cost, accessibility, infrastructure, cybersecurity, and ethical considerations; these must be overcome to develop an effective Metaverse for all [11].

In this study, we define the Metaverse, explore its technical framework, discuss how a virtual healthcare ecosystem may be developed in the context of the Metaverse, and describe how such a system may look to patients, healthcare providers, and healthcare organizations. We also outline challenges and potential solutions in the development of the Metaverse, emphasizing its transformative potential in telemedicine, healthcare communication, education, training, and clinical research collaboration, contingent on rigorous development, validation, and governance. Understanding and addressing these challenges is pivotal for the future of healthcare innovation.

2. Defining the metaverse

As a term, ‘The Metaverse’ was first coined by writer Neal Stephenson in 1992 in the science fiction novel Snow Crash [12], and has seen a surge in interest across sectors in recent years. It pictures a three-dimensional (3D) environment of interconnected virtual spaces, for users to interact freely as avatars and generate content supported by a decentralized economy [Fig. 1] [13]. With ongoing experimentation and incorporation of new technological developments in cryptography, our understanding of the Metaverse today has advanced greatly. It is now regarded as the likely application layer for the next generation of the internet, or Web 3·0. The Metaverse can enable immersive digital experiences in extended reality including VR, AR, and mixed reality (MR) through the convergence of advancements in supporting technologies such as AI, blockchain, Internet of Things (IoT), 5G and 6G [Fig. 1].

Fig. 1.

The Metaverse is a technological convergence of extended reality, AI, blockchain, IoT, 5G and 6G technology. VR, virtual reality; AR, augmented reality; MR, mixed reality; IoT, Internet of Things.

Immersive experiences are now enabled on different interfaces and application platforms, such as smartphones, web desktop, gaming consoles, and AR and VR headsets [14]. This is facilitated by the shifting emphasis of leading technology giants like Google, Apple, and Meta (previously known as Facebook) to drive new technological innovations, such as the Oculus Quest 2 VR headset, the Vision Pro, and the finger dexterity and haptic feedback tracking developed by Meta's Reality Labs Research teams [15]. These experiences have opened up a multitude of possibilities to redefine traditional systems across sectors, from sales and retail [16], music and art [17], social networking, and journalism [18], to healthcare [9].

The use of digital avatars and digital twins, as embodiments of the individual self and of physical environments, breaks the boundaries of current 2D platforms to further enhance the sense of self. They also open opportunities for modelling and prediction of physical processes using their digital counterparts [19]. Another key feature of blockchain-enabled Metaverse platforms is the use of non-fungible tokens (NFTs) [20], which provide a digital warranty of authenticity to allow trustless exchange and secure storage of content in a decentralized manner [9,21]. The foundation for connectivity is the Internet, supported by rapid innovations in Internet technology, including 5G and 6G. IoT can also be leveraged in the Metaverse, mapping real-time IoT data from real life into the virtual world. The IoT can also supplement the users' experience interface with the virtual world [Fig. 1] [22,23].

3. Technology background

Metaverse applications are user-consumable interactive multimedia experiences that combine immersive 3D renders, distributed real-world data, personalized digital assets, and metadata that bridge the digital and physical worlds.

3.1. Immersive technologies

Immersive technologies, including VR, AR, and MR, enable users to perceive and interact with these applications. The level of immersion offered by these technologies depends on the extent to which the human senses are engaged and their hardware capabilities [24]. Typical audio-visual experience can allow users to perceive the digital world visually through volumetric content and aurally through spatial audio in three dimensions. For example, VR can be used for medical training simulations, allowing surgeons to practice procedures in a realistic virtual environment before performing on patients. AR games like Pokémon Go overlay digital creatures onto the real world, creating an interactive gaming experience.

3.2. Multisensory immersion

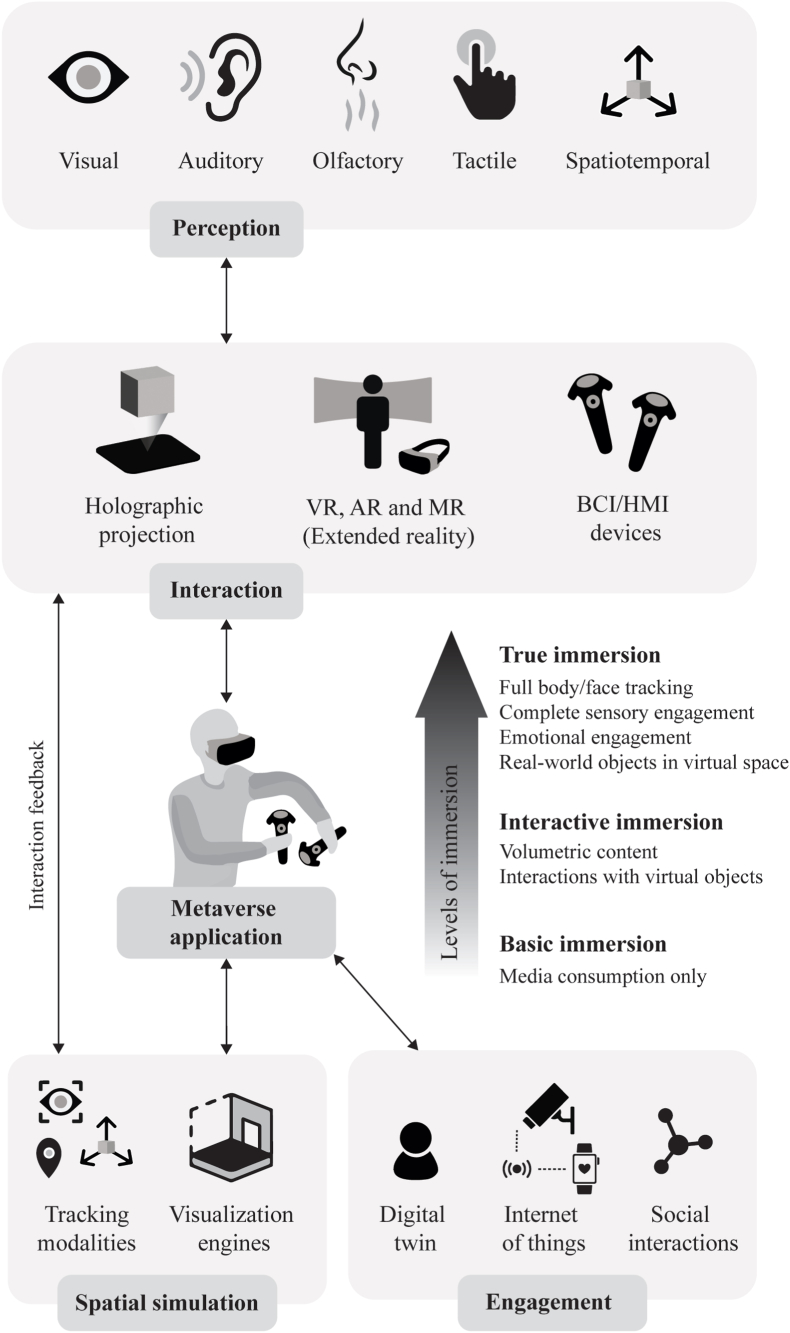

The inclusion of haptic feedback and spatial awareness can further augment these experiences. Spatial awareness changes the field of vision and stereo sound perceived by the user based on their position in physical space. At the same time, haptic feedback simulates the sensation of touch while interacting with virtual objects. In addition, digital scent technology can stimulate olfactory perception, allowing users to experience different odors to provide a more immersive, real-world experience. Furthermore, eye-tracking uses eye-facing cameras that collect user data on gaze, facial expressions, and pupil dilation from the real world. These data also support realistic avatar reconstructions (digital twins) in the digital world that accurately reflect the user's expressions and eye movements. Ultimately, these technologies aim towards a fully immersive multisensory experience to engage users at cognitive and affective levels. Figure 2 illustrates the underlying framework that delivers an immersive multisensory experience.

Fig. 2.

Underlying framework to deliver an immersive Metaverse experience. VR, virtual reality; AR, augmented reality; MR, mixed reality; BCI, brain computer interface; HMI, human machine interface.

3.3. Near-eye displays (NEDs)

While hardware technologies allow users to interact and immerse themselves, software technologies enable rendering virtual environments and processing user data for a more dynamic experience. Near-eye displays (NEDs) are head-mounted display technologies for AR and VR that have become ubiquitous for metaverse interactions. The display hardware is gaining usability and wider adoption for their performance improvements with the field of view, brightness, spatial and angular resolution. They are also becoming more ergonomic, providing for smaller form factors and mitigating vergence-accommodation conflict [25,26]. NED headsets also integrate several sensors like inertial measurement units (IMUs), Global Positioning System (GPS), light detection and ranging (LIDAR), and head-mounted cameras to facilitate spatial awareness. By utilizing these sensors, they also trigger haptic feedback. Thus, the integration of sensor technology into NED headsets makes interactions via joysticks and pointing devices obsolete and augments visual immersion to the next level [24]. For example, smart glasses like Google Glass overlay digital information onto the user's field of view; devices like the Oculus Rift provide immersive VR experiences with high-quality visuals and tracking capabilities.

3.4. Brain-computer interfaces (BCI)

In addition to VR, AR, and MR technologies, holographic displays and brain-computer interfaces (BCI) are also gaining popularity. Holographic projection enables users to see the virtual image with the naked eye at different viewing angles without any wearable device [27]. On the other hand, BCI would decode brain signals via electroencephalography in order to send user commands as a means of interaction in the Metaverse [28]. BCI can potentially transduce user commands and stimulate sensory perception by activating corresponding areas of the brain [29]. Thus, BCI may deliver the most natural form of interaction among these hardware technologies in future. For instance, BCI can assist individuals with paralysis by enabling them to control computers or prosthetic devices through brain signals. It can also offer more natural and immersive control in VR games by interpreting the user's thoughts and intentions.

3.5. Data processing and networks

The Metaverse unlocks the true potential of immersiveness by processing a large amount of data at high speeds for content generation. For a comfortable and immersive experience, real-time 3D rendering must be done at 60–90 FPS with very low latency [30]. Cloud computing is deployed to run visualization engines (software applications) that process high-resolution graphics for rendering the virtual world. In contrast, real-time processing tasks, such as handling data from AR/VR devices, gaming commands, and haptic feedback, are offloaded to edge devices at the user end. 5G/6G networks are gaining prominence as they enhance mobile broadband for personal devices and large-scale IoT infrastructure with high data rates, low latency, and reliability [31].
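To make the 60–90 FPS requirement concrete, the short Python sketch below converts a target frame rate into the time available to produce each frame. The function name `frame_budget_ms` is illustrative and is not taken from any cited platform.

```python
def frame_budget_ms(fps: float) -> float:
    """Return the time available to render one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


if __name__ == "__main__":
    # The 60-90 FPS range quoted in the text.
    for fps in (60, 90):
        print(f"{fps} FPS -> {frame_budget_ms(fps):.1f} ms per frame")
    # prints:
    # 60 FPS -> 16.7 ms per frame
    # 90 FPS -> 11.1 ms per frame
```

Rendering, network transfer, and sensor processing all have to fit inside this per-frame window, which is one way of seeing why latency-sensitive work is pushed to edge devices.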

4. Metaverse platforms

Most internet activity currently operates on ‘Web 2·0’, whereby large technology companies develop and provide users access to centralized digital platforms. These platforms have often hosted marketplaces for products and services such as social networks and multimedia streaming [Table 1]. These platforms provide avenues for individuals and businesses to create and share user generated content (UGC). Benefits to users include access to these platforms at little to no cost [32,33]. Many users have made careers out of monetizing UGC shared on these platforms through reviews and podcasts, while others have incorporated use of digital platforms within their core business models and lifestyles [34]. The early users and adopters of these platforms are central to the platforms' popularity and success, which often hinges on the quantity, quality, and variety of UGC contributed within them [35].

Table 1.

List of web 2.0 legacy and web 3.0 novel metaverse platforms.

| Name | Web | Terminal | Dimension | Technical Capabilities | Site Visits | Average Duration |
|---|---|---|---|---|---|---|
| Gathertown | 2.0 | Mobile, desktop (web-based) | 2D | Virtualize physical events; spaces integration between customizable physical avatar-fluid video chat | 2.9 M | 0:04:23 |
| Engage VR | 2.0 | Mobile, desktop, VR | 2D | Simulate physical interactions, host multi-user events, collaboration, training, education | 16.4K | 0:03:03 |
| Queppelin | 2.0 | Desktop, VR | 3D | Conduct seminars, meetings, business events in a virtual environment | <5K | NA |
| serl.io | 2.0 | Mobile, desktop, MR | 3D | Create MR content, deploy in collaborative sessions to engage participants in real-time 3D | <5K | NA |
| Decentraland | 3.0 | Desktop, VR | 3D | Land NFT for Social Metaverse built on Ethereum chain. | 599K | 0:07:48 |
| Sandbox | 3.0 | Desktop (web-based) | 3D | Land NFT for Games and Entertainment built on Ethereum and Matic chains. | 4.7 M | 0:09:35 |
| The Otherside | 3.0 | Mobile, desktop (web-based) | 3D | Land NFT for Games and Social metaverse built on Ethereum chain. | 66.3K | 0:03:09 |
| NFTworlds | 3.0 | Desktop (web-based) using Minecraft | 3D | Land NFT for Games built on Ethereum chain. | 47.7K | 0:01:37 |
| Worldwide webb | 3.0 | Mobile, desktop (web-based) | 2D | Land NFT for Games and Social metaverse built on Ethereum chain. | 25.7K | 0:03:18 |
| Metapolis | 3.0 | Desktop, VR | 3D | Metaverse-as-a-Service (MaaS) built on Zilliqa and for Multi-chain compatibility. | <5K | NA |
| Somnium space | 3.0 | Desktop, VR | 3D | Land NFT for Games built on Ethereum and Solana chains. | 70.5K | 0:01:54 |
| Chillchat | 3.0 | Desktop (web-based) | 2D | Origin NFT for Metaverse-as-a-Service (MaaS) built on Solana chain. | 7.5K | 0:00:11 |
| Portals | 3.0 | Desktop (web-based) | 3D | Land NFT of Social metaverse for sports fans built on Solana chain. | 41.3K | 0:01:25 |

Abbreviations: VR: virtual reality; MR: mixed reality; K: thousand; M: million.

However, the low cost of adoption for users comes with several trade-offs, as these digital platforms are reliant on exploiting their users to attain financial sustainability. Firstly, individual privacy is a common trade-off whereby personal data and behavioral patterns of users are collected and monetized by these platforms through sales of data to third parties for the marketing of goods and services [36]. Secondly, new fee structures and paywalls can be added by providers without any notice given to users. These are often predatory and designed to penalize the users with inelastic demand, i.e., users that are most reliant on the platform or most likely to experience disruptions should they lose access to it. Thirdly, these Web 2·0 platforms are operationalized in a permissioned manner. For instance, providers controlling these digital platforms may make unilateral decisions to revoke permissions or access to the platforms. At times, this can be to the detriment of groups of users who were regular contributors of the UGC that enabled these platforms to gain popularity in the first place, even if they are reliant on the platforms to make a living.

In contrast, ‘Web 3·0’ is centered on the empowerment of individual users through technology. This is enabled by the applications of blockchain, including smart contracts and NFTs. Blockchain technology is increasingly used in healthcare to enhance cybersecurity. It secures patient records by creating an immutable ledger for medical data. This ensures data integrity and reduces the risk of data breaches. Data interoperability is improved as blockchain provides a standardized format for sharing information among healthcare providers. Patients can manage data access through smart contracts, enhancing consent management.
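The ideas of an immutable ledger and patient-managed consent can be illustrated with a minimal Python sketch. It is a toy, single-process hash chain and not a real blockchain or smart contract: it has no network, consensus, or signatures, and all names (`Ledger`, `has_access`, the record fields) are hypothetical. Only consent events are recorded here, on the assumption that clinical data would be stored elsewhere.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, record: dict, prev_hash: str) -> str:
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "record": record, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class Ledger:
    """Append-only list of blocks, each linked to its predecessor by hash."""

    def __init__(self) -> None:
        self.blocks: list[dict] = []

    def append(self, record: dict) -> dict:
        prev_hash = self.blocks[-1]["hash"] if self.blocks else GENESIS_HASH
        index = len(self.blocks)
        block = {
            "index": index,
            "record": record,
            "prev_hash": prev_hash,
            "hash": block_hash(index, record, prev_hash),
        }
        self.blocks.append(block)
        return block

    def is_valid(self) -> bool:
        """Recompute every hash; any edit to an earlier record breaks the chain."""
        prev_hash = GENESIS_HASH
        for i, block in enumerate(self.blocks):
            if block["index"] != i or block["prev_hash"] != prev_hash:
                return False
            expected = block_hash(block["index"], block["record"], block["prev_hash"])
            if block["hash"] != expected:
                return False
            prev_hash = block["hash"]
        return True


def has_access(ledger: Ledger, patient: str, grantee: str) -> bool:
    """Replay consent events in order; the latest grant or revoke wins."""
    allowed = False
    for block in ledger.blocks:
        record = block["record"]
        if record.get("patient") == patient and record.get("grantee") == grantee:
            allowed = record.get("action") == "grant"
    return allowed


if __name__ == "__main__":
    ledger = Ledger()
    ledger.append({"patient": "patient-1", "grantee": "clinic-a", "action": "grant"})
    print(has_access(ledger, "patient-1", "clinic-a"))  # True
    ledger.append({"patient": "patient-1", "grantee": "clinic-a", "action": "revoke"})
    print(has_access(ledger, "patient-1", "clinic-a"))  # False
    print(ledger.is_valid())  # True
```

Because each block's hash covers the previous block's hash, changing an earlier consent record makes `is_valid()` return `False`, which is the tamper-evidence property described in the text.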

The concepts of the Metaverse and Web 3·0 are closely related. They share common goals of decentralization, semantic data, interoperability, trust, and security. The Metaverse can be seen as a prominent use case and a manifestation of the principles and technologies that Web 3·0 seeks to advance, creating a more immersive and interconnected digital experience for users. These two concepts are likely to shape the future of the internet and digital interactions significantly. Examples were collated in [Table 1] for popular applications based on market capitalization or applications with liquid cryptocurrencies [37], as well as transactional volume and unique niche applications based on listings in leading NFT marketplaces [38,39]. In Web 3·0 metaverse applications, users may retain ownership of the UGC that they create, set usage rights for others to repurpose their content, and monetize their content independently. This is facilitated and governed at scale through smart contracts which manage the terms of sale for assets such as UGC. Innovations include programmable royalties collected as a percentage of each sale, at the point of sale. The composability of blockchains also reduces the friction for individuals to switch digital platform providers, given that they own their UGC and can implement it into other platforms. This is quite unlike Web 2·0, where UGC ownership is retained by the technology companies that host UGC on digital platforms, which introduces significant friction for producers and consumers of UGC who wish to switch digital platform providers.
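A programmable royalty of the kind described above amounts to a fixed-percentage split applied at each sale. The Python sketch below shows the arithmetic only; the function name `split_sale`, the use of basis points, and integer "smallest units" are illustrative assumptions rather than the rules of any particular marketplace or token standard.

```python
def split_sale(price_units: int, royalty_bps: int) -> tuple[int, int]:
    """Split a sale price into (royalty, seller_proceeds).

    price_units: sale price in the smallest indivisible unit of the currency.
    royalty_bps: royalty rate in basis points (100 bps = 1%).
    Integer arithmetic avoids floating-point rounding; the royalty rounds down.
    """
    if price_units < 0:
        raise ValueError("price_units must be non-negative")
    if not 0 <= royalty_bps <= 10_000:
        raise ValueError("royalty_bps must be between 0 and 10000")
    royalty = price_units * royalty_bps // 10_000
    return royalty, price_units - royalty


if __name__ == "__main__":
    # A 5% royalty on a sale of 1,000,000 units.
    print(split_sale(1_000_000, 500))  # (50000, 950000)
```

The two amounts always sum to the sale price, so nothing is lost to rounding; the royalty portion is what would flow to the creator or to a shared treasury.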

Royalties can be accrued to a treasury of the NFT collection, which can enable owners to vote democratically on the funding of new initiatives and development of new commercial directions within affiliated platforms. This is enabled by the composable and transparent nature of public blockchains, as snapshots of on-chain ownership can be saved at key milestones. This ensures that active stakeholders, who have partial ownership and aligned incentives with the success of the NFT collection, are enfranchised with the ability to participate in the governance and operationalization of these digital platforms.
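Snapshot-based governance can be sketched as a tally in which each ballot is weighted by the number of tokens the voter held when the snapshot was taken. Weighting by holdings is an assumption made for illustration, since collections may instead use one vote per owner, and the names `tally`, `snapshot`, and `ballots` are hypothetical.

```python
from collections import Counter


def tally(snapshot: dict[str, int], ballots: dict[str, str]) -> Counter:
    """Count votes weighted by token holdings recorded in the snapshot.

    snapshot: owner address -> number of tokens held at the snapshot.
    ballots:  owner address -> chosen option.
    Ballots from addresses that held nothing at the snapshot are ignored.
    """
    totals: Counter = Counter()
    for voter, choice in ballots.items():
        weight = snapshot.get(voter, 0)
        if weight > 0:
            totals[choice] += weight
    return totals


if __name__ == "__main__":
    snapshot = {"alice": 3, "bob": 1, "carol": 1}
    ballots = {"alice": "fund", "bob": "reject", "carol": "reject", "dave": "reject"}
    print(tally(snapshot, ballots))  # Counter({'fund': 3, 'reject': 2})
```

Fixing the weights at a snapshot means that tokens acquired after the milestone do not change the outcome of a vote already under way.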

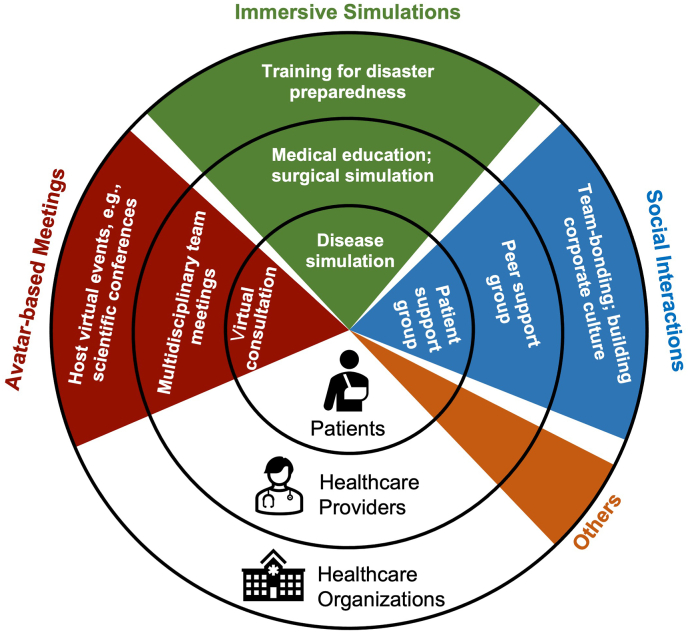

5. Developing an interoperable healthcare ecosystem

Healthcare ecosystems involve a wide range of actors, including patients, physicians, allied health practitioners, state departments, academic institutions, non-governmental organizations (NGOs), and corporations. They incorporate knowledge flows originating from or co-produced by all of these actors with the aim of pursuing a collective goal [40]. For conciseness, discussion focuses here on the three primary actors in the healthcare ecosystem: patients, healthcare providers (physicians, nurses, and other allied health practitioners), and healthcare organizations. The different dimensions of features in the Metaverse, including avatar-based meetings, immersive simulations, social interactions, and others can be applied to all of these actors, creating an interoperable healthcare ecosystem [Fig. 3].

Fig. 3.

The interoperable healthcare ecosystem in the Metaverse.

5.1. Avatar-based meetings

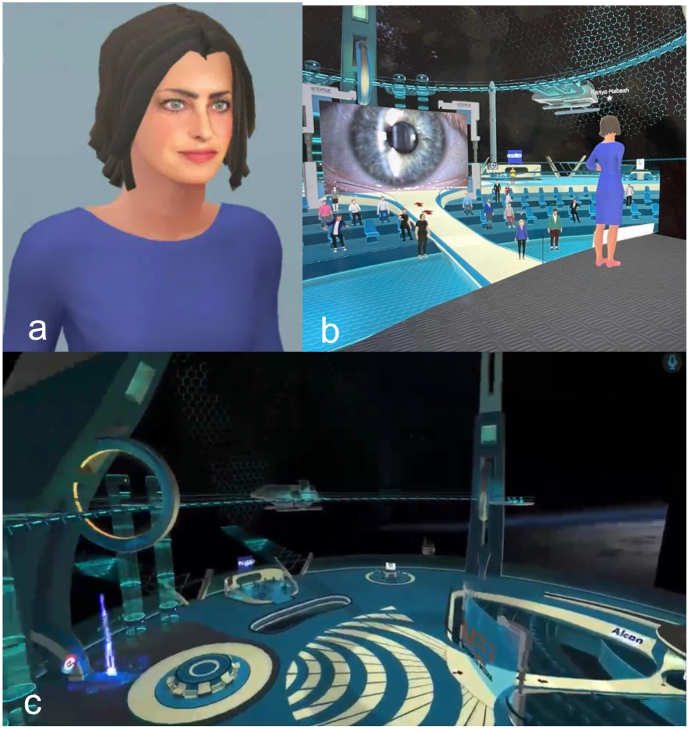

One of the important features in the Metaverse is the capability to host avatar-based meetings. As the Metaverse integrates all kinds of apps and different dimensions of virtual worlds, the avatar can act as the single point of entry and unique identity, where individuals explore, interact, and engage [Fig. 4a]. With the digital avatars, people can interact with each other and the virtual environment to a much greater extent, compared with conventional online video meetings.

Fig. 4.

An ophthalmic conference hosted by the Digital Ophthalmic Society in the Metaverse. (A) The digital avatar of one of the attendees; (B) the experience of the attendee at the conference; (C) the virtual setting of the conference.

Patients are able to attend virtual consultations in the Metaverse. Traditional online consultations, which are limited to voice or video calls, are often inefficient for real-time communication between patients and medical staff. Compared with video or teleconsultation, deeper interaction and communication with clinicians could be achieved through avatars that mirror their behaviors. VR- and AR-based virtual diagnostic and therapeutic tools are becoming more tangible through avatars [41,42], lending realism and a “personal touch” to remote consultations and reducing the requirement for face-to-face interaction. In addition, informed consent for surgery and clinical research includes elements of voluntarism, capacity, disclosure, understanding, and decision [43], which require the interaction of psychological and intellectual characteristics of an individual. Avatar-based communication with clinicians could enhance patients’ understanding regarding various components of the informed consent process. Lastly, patient outcomes can be improved through VR- and AR-based digital health tools which offer more successful diagnostic and therapeutic options [44,45].

For healthcare providers, the Metaverse could enhance multidisciplinary team meetings (MDTs). MDTs are patient case discussions involving groups of specialists with diverse expertise relevant to the clinical management of a given patient. These are a core element of collaborative work in hospitals, particularly in caring for clinically complicated patients [46]. The extent of organization and the type of communication in MDTs have a direct effect on the quality of patient care provided [46]. However, traditional face-to-face MDTs are costly in both time and money, with virtual MDTs exhibiting promise to ameliorate these costs [47]. By incorporating participation as avatars, MDTs in the Metaverse can overcome the barriers of traditional online MDTs, including difficulty in building a sense of identity, or misunderstandings that can jeopardize team cohesion. Another advantage of MDTs in the Metaverse is facilitation of the human-computer MDT, in which consultation is based on human-computer communication and interaction [48]. The human-computer MDT aims to provide comprehensive diagnosis and treatment plans that combine clinicians’ expertise, computerized data, and AI-derived knowledge and guidance.

For healthcare organizations, including hospitals, clinics, institutions, and international medical societies, avatar-based virtual events such as scientific conferences can be hosted in the Metaverse. The advantages of avatar-based virtual events include creating a sense of co-presence, which allows for shared experiences in the virtual environments, and building deeper connection and interactivity between the avatars. The 3D spatial audio capability even allows avatars to hold intimate conversations based on proximity, just as in live meetings. Recently the Digital Ophthalmic Society, hosted by MetaMed Media, held the first Metaverse ophthalmic meeting, serving as a proof of concept for hosting scientific conferences in the Metaverse [Fig. 4]. These experiences provide numerous benefits over in-person conferences, including freedom from physical constraints such as venue capacity, and reduced barriers to participation given that expenses for travel and accommodation would not be required.
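Proximity-based conversation relies on attenuating each speaker's volume with distance from the listener. The Python sketch below uses an inverse-distance curve, which is one common choice in audio engines; it is an assumed model for illustration, not the method of any platform named in this article, and the parameter names `ref_distance` and `rolloff` are likewise assumptions.

```python
import math


def proximity_gain(
    listener: tuple[float, float, float],
    speaker: tuple[float, float, float],
    ref_distance: float = 1.0,
    rolloff: float = 1.0,
) -> float:
    """Return a volume multiplier in (0, 1] that falls off with distance.

    Within ref_distance the speaker is heard at full volume; beyond it the
    gain decays as ref / (ref + rolloff * (d - ref)).
    """
    if ref_distance <= 0 or rolloff < 0:
        raise ValueError("ref_distance must be > 0 and rolloff must be >= 0")
    d = max(math.dist(listener, speaker), ref_distance)
    return ref_distance / (ref_distance + rolloff * (d - ref_distance))


if __name__ == "__main__":
    me = (0.0, 0.0, 0.0)
    for x in (0.5, 1.0, 3.0, 10.0):
        print(f"distance {x:>4}: gain {proximity_gain(me, (x, 0.0, 0.0)):.2f}")
```

With the defaults, an avatar 3 units away is heard at one third of full volume and one 10 units away at one tenth, so nearby conversations dominate without distant ones cutting off abruptly.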

5.2. Immersive simulations

The Metaverse may host more immersive simulation experiences than are currently possible, through extended reality that incorporates developing technologies and augments electronic, digital environments where data are represented and projected [49]. In these extended reality environments, humans interact with and observe partially or fully synthetic digital environments constructed by technology.

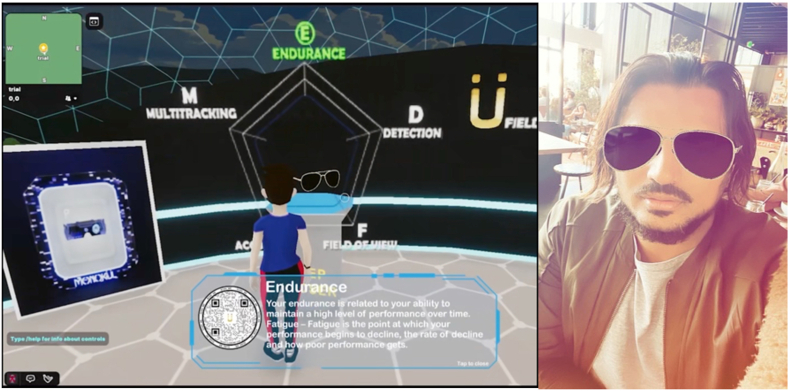

For patient-centered communication, clinicians need to elicit and understand patient perspectives within their unique psychosocial and cultural context, and finally reach a shared understanding of patient problems [50]. Chronic disease self-management and preventive health programs focus on promoting informed lifestyle choices, risk factor modification, and active patient self-management of chronic diseases [51], all of which rely heavily on better information and communication practices. The immersive simulation platform in the Metaverse can be applied for disease simulation and patient education. Significant clinical benefits have been identified from self-management or lifestyle interventions across conditions including coronary heart disease, chronic kidney disease, diabetes, and rheumatoid arthritis [51]. In addition, it could also be possible to perform clinical examinations and treatment in the Metaverse, such as visual acuity testing [52], visual function assessment [53,54], psychotherapy [55,56], and rehabilitation therapy [44,45,57]. An example of performing clinical examination in the Metaverse can be found at the Stanford Vision Performance Center at the Byers Eye Institute, where patients can visit a clinic in the Metaverse and play videogames designed to assess visual, cognitive, and motor function via the Vision Performance Index [Fig. 5].

Fig. 5.

A patient visiting a virtual clinic in the Metaverse.

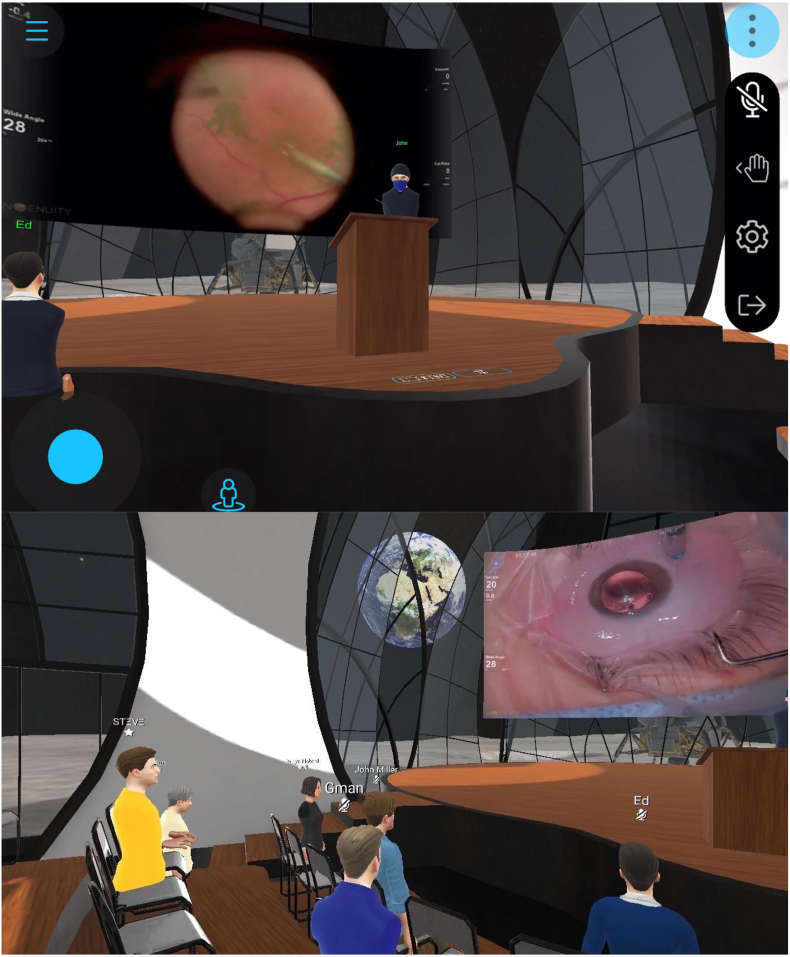

For healthcare providers, the Metaverse can be applied for medical education, including clinical simulation training, surgical simulation training, and code blue training. A recent prospective study demonstrated that the Metaverse can be an effective system for facilitating and enabling interactions amongst international colleagues for scientific instruction and mentorship [58]. For example, MetaMed Media hosts regular 3D surgical rounds with case discussions between ophthalmology key opinion leaders from across the world and trainees from several academic institutions [Fig. 6]. Furthermore, 3D volumetric capture allows attendees to revisit the event in immersive fashion, similar to the Halliday Journals archive in Ready Player One [59]. With the development of AR and VR devices, the immersive experience is more effective in areas like surgical training that require advanced hand skills and interaction [60]. A growing number of studies support the efficacy of using VR for surgical training initiatives. For example, a 38% reduction in cataract surgery complication rates was associated with the use of VR training in a group of first- and second-year surgical trainees [61]. In orthopaedic surgery, VR has been identified as an effective method of improving technical skill acquisition among trainees learning arthroscopic procedures [62]. Promising findings pertaining to the viability of using extended reality technology for training initiatives have similarly been reported in plastic surgery [63], cardiothoracic surgery [64], and neurosurgery [65]. Besides medical education, the Metaverse also provides a virtual environment for remote surgeons to conduct actual surgical procedures, such as through surgical robots [66]. This may extend surgical expertise geographically, as is already occurring at scale in radiology, allowing high-precision operations to be scheduled around the clock without reducing accuracy and flexibility [60].

Fig. 6.

3D ophthalmic surgical rounds hosted by MetaMed Media.

For healthcare organizations, the Metaverse is a promising training platform for disaster preparedness. The preparedness of a hospital for disaster response and mass-casualty incidents includes activities, programs, and systems developed and implemented before the event [67]. A well-established preparedness program is essential for healthcare systems to respond effectively to emergencies. Virtual reality simulation systems, for example, have been shown to be a viable, cost-effective approach for professional training in in-hospital disaster preparedness [68]. In addition, virtual simulation methods and platforms designed for pandemic preparedness and response emerged during the COVID-19 pandemic [69,70,71], and these could potentially be further upgraded and incorporated into the Metaverse. The reproducibility, repeatability, and real-time training features of the Metaverse platform, in addition to its low cost of implementation, suggest that it could serve as a promising adjunct to conventional approaches to training [68].

5.3. Social interactions

Currently, the majority applications of the Metaverse are focused on social and gaming industries. Therefore, enhanced social interactions within the healthcare ecosystem are perhaps the most accessible function of the Metaverse. Besides interactions between the patients and healthcare providers discussed above, the Metaverse also provides virtual platforms for patients' and medical professionals’ communities. Patients may even find them useful to form virtual patient support groups. Studies show that patients benefit from engagement in groups offering emotional support, confidence, strength, and hope; leading to measurable improvements in quality of life [72]. The Metaverse provides the technical infrastructure to create virtual spaces where patients with similar medical conditions or challenges can gather. These platforms may include VR or AR environments, web-based applications, or even mobile apps with augmented reality features. Ensuring the privacy and security of patient data within these virtual support groups is paramount. Utilizing blockchain or decentralized identity systems can help manage user identities and control access to sensitive information. Technical solutions must support real-time interactions, such as voice and video chat, and even gestures within virtual environments. This requires robust network infrastructure and low-latency communication protocols. For healthcare providers, virtual peer support groups could similarly provide accessible emotional support for them by combatting stigma and by creating a supportive forum for healthcare professionals to communicate with each other [73]. Healthcare professionals need secure channels to communicate with each other. Implementing end-to-end encryption and secure messaging protocols can protect sensitive discussions within virtual peer support groups. 
Technologies such as digital badges or certificates issued on a blockchain can verify the qualifications and credentials of participants in healthcare provider communities, helping to ensure that they are genuine professionals. Healthcare organizations may use the Metaverse to host team-bonding activities and build a positive company culture. The Metaverse can facilitate virtual team-building events, workshops, and conferences. These events may involve virtual reality environments, live streaming, and interactive elements such as polls and Q&A sessions. Technical aspects include avatar customization so that users are represented accurately in the Metaverse. Healthcare organizations vary in size, so technical solutions need to scale to accommodate large numbers of participants in virtual events and activities. Cloud-based infrastructure and content delivery networks (CDNs) can help ensure scalability.
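The credential check mentioned in this section (digital badges or certificates recorded on a blockchain) can be illustrated with a minimal sketch. It is not a description of any existing platform: the `CredentialRegistry` class, the issuer name, and the badge fields are hypothetical, and an in-memory dictionary stands in for the on-chain record. The sketch only shows the underlying idea that an issuer publishes a hash of a credential and a verifier later recomputes that hash.

```python
import hashlib
import json


def credential_fingerprint(credential):
    """Return a SHA-256 digest of a credential in canonical JSON form."""
    canonical = json.dumps(credential, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class CredentialRegistry:
    """Hypothetical stand-in for an on-chain registry of issued badges."""

    def __init__(self):
        # Maps credential fingerprint -> name of the issuing body.
        self._issued = {}

    def issue(self, issuer, credential):
        """Record the fingerprint of a credential under the issuer's name."""
        fingerprint = credential_fingerprint(credential)
        self._issued[fingerprint] = issuer
        return fingerprint

    def verify(self, issuer, credential):
        """Check that this exact credential was issued by this issuer."""
        return self._issued.get(credential_fingerprint(credential)) == issuer


# Illustrative values only; none of these names come from the article.
registry = CredentialRegistry()
badge = {"holder": "clinician-001", "qualification": "Ophthalmologist", "year": 2020}
registry.issue("Example Medical Board", badge)

genuine = registry.verify("Example Medical Board", badge)
forged = registry.verify("Example Medical Board", {**badge, "qualification": "Surgeon"})
```

Because only a hash is recorded, the registry itself need not expose the credential contents; any alteration of the badge changes the fingerprint and the check fails.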

5.4. Others

The Metaverse also includes the retail sector of the healthcare ecosystem. Early consumer adoption has been focused on social platforms, so leveraging the camera capabilities of mobile devices through AR-enabled platforms such as Instagram and Snap allows for bidirectional experiences in the Metaverse. One example is a visit to a virtual clinic in the Metaverse, such as the Stanford Vision Performance Center at the Byers Eye Institute [Fig. 7]. Patients can visit a virtual clinic that offers services including a virtual optical shop, where one can move from a virtual experience to an augmented experience, as illustrated in Fig. 7. The virtual try-on, delivered as an AR experience in Instagram, allows an individual to explore the Metaverse and interact with an experience that can lead to a real-world transaction, such as buying a pair of glasses in both the digital and physical worlds.

Fig. 7.

Virtual optical shop in the Metaverse. It can activate an augmented reality try-on and purchase via a QR code.

6. Challenges and future directions of the metaverse

There remain several potential barriers to widespread implementation of the Metaverse. These include cybersecurity risks, limited internet connectivity in some regions, lack of technology literacy, and physical issues that may impair accessibility, such as visual impairment.

Firstly, the Metaverse carries inherent cybersecurity risks, including hacking and exposure of private patient data. However, advances in the cybersecurity protocols of healthcare organizations offer measures to reduce these risks. For example, the increasing application of blockchain technology could provide a “protect and permit” mechanism for secure sharing of patients’ data [74]. Several healthcare applications of blockchain have been reported, including health record management and security support for mobile and hardware solutions [75].
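The “protect and permit” idea can be sketched as a hash-chained log of consent events, in which each entry commits to the one before it so that later tampering is detectable. This is a simplified illustration under stated assumptions rather than the design of any system cited above: the function names, the `grant`/`revoke` actions, and the patient and clinic identifiers are hypothetical, and a plain Python list stands in for a distributed ledger.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash preceding the first entry


def block_hash(previous_hash, payload):
    """Hash a payload together with the hash of the previous entry."""
    body = json.dumps(payload, sort_keys=True) + previous_hash
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(chain, payload):
    """Append a consent event that is linked to the current chain tip."""
    previous_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({
        "payload": payload,
        "previous_hash": previous_hash,
        "hash": block_hash(previous_hash, payload),
    })


def chain_is_valid(chain):
    """Recompute every link; any edited entry makes the check fail."""
    previous_hash = GENESIS_HASH
    for entry in chain:
        if entry["previous_hash"] != previous_hash:
            return False
        if entry["hash"] != block_hash(previous_hash, entry["payload"]):
            return False
        previous_hash = entry["hash"]
    return True


def has_access(chain, patient, reader):
    """Replay grant/revoke events; the latest event for the pair wins."""
    allowed = False
    for entry in chain:
        event = entry["payload"]
        if event["patient"] == patient and event["reader"] == reader:
            allowed = event["action"] == "grant"
    return allowed


# Illustrative identifiers only.
ledger = []
append_entry(ledger, {"patient": "patient-42", "reader": "clinic-A", "action": "grant"})
append_entry(ledger, {"patient": "patient-42", "reader": "clinic-B", "action": "grant"})
append_entry(ledger, {"patient": "patient-42", "reader": "clinic-B", "action": "revoke"})

valid_before = chain_is_valid(ledger)
clinic_a_allowed = has_access(ledger, "patient-42", "clinic-A")
clinic_b_allowed = has_access(ledger, "patient-42", "clinic-B")

# Rewriting a past event without recomputing its hash breaks the chain.
ledger[2]["payload"]["action"] = "grant"
valid_after = chain_is_valid(ledger)
```

Here "permit" corresponds to the replayed grant and revoke events, and "protect" to the fact that a silently altered entry no longer matches its stored hash.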

Secondly, the increasing bandwidth requirement of immersive experiences in the 3D environment may present challenges for applying these technologies in less technologically developed areas [76]. Sparse internet connectivity has challenged recent efforts to implement AI in healthcare, and latency has also been reported as a limitation in real-world clinical settings [77,78]. Nevertheless, there are emerging solutions to improve connectivity in the field of 5G telecommunications, laying the foundation for a pragmatic avenue to assist application in less-developed areas [79]. These advancements can support the implementation of immersive healthcare solutions and help overcome connectivity limitations.
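To give a sense of scale for the bandwidth requirement, the following back-of-the-envelope sketch estimates the bitrate of a stereoscopic video stream and compares it with an available link. Every number in it (resolution, frame rate, colour depth, compression ratio, link speeds, and the 80% headroom factor) is an illustrative assumption and not a figure taken from the cited studies.

```python
def stream_bitrate_mbps(width, height, fps, bits_per_pixel=24, views=2,
                        compression_ratio=100.0):
    """Estimate the compressed bitrate of a video stream in Mbit/s.

    views=2 models one image per eye; compression_ratio is an assumed
    codec efficiency, not a measured value.
    """
    raw_bits_per_second = width * height * bits_per_pixel * fps * views
    return raw_bits_per_second / compression_ratio / 1_000_000


def link_can_sustain(required_mbps, link_mbps, headroom=0.8):
    """Return True if the stream fits within a fraction of the link capacity."""
    return required_mbps <= link_mbps * headroom


# Assumed example: 1920x1080 per eye at 60 frames per second.
required = stream_bitrate_mbps(1920, 1080, 60)

fits_fast_link = link_can_sustain(required, link_mbps=100)  # assumed fast link
fits_slow_link = link_can_sustain(required, link_mbps=20)   # assumed constrained link
```

Under these assumptions the stream needs roughly 60 Mbit/s, which fits a 100 Mbit/s link with headroom but not a 20 Mbit/s one. The estimate covers throughput only and says nothing about latency, which the text identifies as a separate limitation.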

Thirdly, the learning curve associated with new technology is another important barrier to the development of the Metaverse. Difficulty in interpreting the user interface could lead to clinically significant misunderstandings [[80], [81], [82]]. User-centered design and training programs can ease the learning curve, making immersive technology more accessible and understandable for healthcare professionals. In addition, there may be issues of privacy and corporate overreach relating to the commercialization of personal data, as on mainstream Web 2·0 platforms. These potential barriers may be addressed with focused research that identifies stakeholders’ capabilities to apply these technologies and their attitudes towards these solutions [83]. Early research in the field has explored the implementation of immersive technology, including AR and VR, in healthcare, and most of the patients studied showed interest in these technological solutions.

Finally, despite the great potential for implementation of immersive technology in healthcare [84], its use by patients with visual or hearing impairment may remain challenging. For instance, patients with severe visual impairment may not be able to appreciate the full content of a 3D or VR simulation [85]. Therefore, new technology that can customize simulations for patients with special visual or auditory needs is warranted to facilitate comprehensive development of the Metaverse.

7. Conclusions

The Metaverse, as the anticipated application interface for the next generation of the Internet (Web 3·0), is poised to revolutionize healthcare. In this context, we have defined a vision of the next-generation interoperable healthcare ecosystem within the Metaverse. The Metaverse offers a wealth of possibilities for healthcare, from avatar-based meetings facilitating remote consultations, to immersive simulations aiding medical training, to social interactions fostering patient engagement. To unlock the full potential of the Metaverse in healthcare, several critical factors must be addressed. These include the need for continuous technological innovation to refine and expand Metaverse capabilities, the requirement for robust regulatory supervision to ensure data security and ethical use cases, and the implementation of sound governance structures to guide this evolving digital landscape. The concept and framework we propose for the Metaverse have the potential to redefine traditional healthcare systems and accelerate digital transformation in the field. While the Metaverse is currently nascent, its potential is considerable, comparable to that of AI technology at the beginning of the 2010s. Further research into technology, security, and applications is necessary for the real-world development of an interoperable healthcare ecosystem in the Metaverse.

Author contributions

All authors conceptualised the manuscript, researched its contents, wrote the manuscript, edited all revisions, and approved the final version. Authors YL and DVG are co-first authors who contributed equally to the manuscript.

Funding

This work was supported by the following funding: National Medical Research Council, Singapore: MOH-000655-00 & MOH-001014-00; Duke-NUS Medical School: Duke-NUS/RSF/2021/0018 & 05/FY2020/EX/15-A58; Agency for Science, Technology and Research: A20H4g2141 & H20C6a0032.

Data availability statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Declaration of competing interest

Author DVG reports investment in DoctorBell (acquired by MaNaDr, Mobile Health), AskDr, VISRE, Healthlink, and Shyfts. He reports serving as senior lecturer and faculty advisor to the medical innovation program of NUS, and appointment as physician leader (telemedicine) and general practitioner at Raffles Medical Group (RMG, SGX:$BSL).

Footnotes

Peer review under responsibility of Chang Gung University.

References

- 1.Gunasekeran D.V., Tham Y.C., Ting D.S.W., Tan G.S.W., Wong T.Y. Digital health during COVID-19: lessons from operationalising new models of care in ophthalmology. The Lancet Digital Health. 2021;3(2):e124–34. doi: 10.1016/S2589-7500(20)30287-9. [DOI] [PubMed] [Google Scholar]

- 2.Tuckson R.V., Edmunds M., Hodgkins M.L. Telehealth. N Engl J Med. 2017;377(16):1585–1592. doi: 10.1056/NEJMsr1503323. [DOI] [PubMed] [Google Scholar]

- 3.Ting D.S., Gunasekeran D.V., Wickham L., Wong T.Y. Next generation telemedicine platforms to screen and triage. Br J Ophthalmol. 2020;104(3):299–300. doi: 10.1136/bjophthalmol-2019-315066. [DOI] [PubMed] [Google Scholar]

- 4.Pogorzelska K., Chlabicz S. Patient satisfaction with telemedicine during the COVID-19 pandemic—a systematic review. Int J Environ Res Publ Health. 2022;19(10):6113. doi: 10.3390/ijerph19106113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Wargnier P., Benveniste S., Jouvelot P., Rigaud A.S. Usability assessment of interaction management support in LOUISE, an ECA-based user interface for elders with cognitive impairment. Technol Disabil. 2018;30(3):105–126. [Google Scholar]

- 6.Bin Sawad A., Narayan B., Alnefaie A., Maqbool A., Mckie I., Smith J., et al. A systematic review on healthcare artificial intelligent conversational agents for chronic conditions. Sensors. 2022;22(7):2625. doi: 10.3390/s22072625. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Statista Total users of selected virtual platforms worldwide 2021, https://www.statista.com/statistics/1280597/global-total-users-virtual-platforms/

- 8.Vial G. In: Managing Digital Transformation: Understanding the Strategic Process. 1st ed. Hinterhuber A., Vescovi T., Checchinato F., editors. Routledge; London: 2021. Understanding digital transformation: A review and a research agenda; pp. 13–66. [Google Scholar]

- 9.Thomason J. MetaHealth-how will the metaverse change health care? Journal of Metaverse. 2021;1(1):13–16. [Google Scholar]

- 10.Wiederhold B.K. Metaverse games: game changer for healthcare? Cyberpsychol Behav Soc Netw. 2022;25(5):267–269. doi: 10.1089/cyber.2022.29246.editorial. [DOI] [PubMed] [Google Scholar]

- 11.Scott Kruse C., Karem P., Shifflett K., Vegi L., Ravi K., Brooks M. Evaluating barriers to adopting telemedicine worldwide: a systematic review. J Telemed Telecare. 2018;24(1):4–12. doi: 10.1177/1357633X16674087. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Wu J., Cao Z., Chen P., He C., Ke D. Users’ information behaviour from the metaverse perspective: framework and prospects. J Inf Resources Manag. 2022;12(1):4–20. [Google Scholar]

- 13.Zhang T., Gao L., He C., Zhang M., Krishnamachari B., Avestimehr A.S. Federated learning for the internet of things: applications, challenges, and opportunities. IEEE Internet of Things Magazine. 2022;5(1):24–29. [Google Scholar]

- 14.Park S., Kim S.P., Whang M. Individual’s social perception of virtual avatars embodied with their habitual facial expressions and facial appearance. Sensors (Basel) 2021;21(17):5986. doi: 10.3390/s21175986. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Meta Inside reality Labs research: bringing touch to the virtual world. 2021. https://about.fb.com/news/2021/11/reality-labs-haptic-gloves-research/

- 16.Bourlakis M., Papagiannidis S., Li F. Retail spatial evolution: paving the way from traditional to metaverse retailing. Electron Commer Res. 2009;9(1):135–148. [Google Scholar]

- 17.Choi HS, Kim SH. A content service deployment plan for metaverse museum exhibitions—centering on the combination of beacons and HMDs. Int J Inf Manag. 2017;37(1):1519–1527. [Google Scholar]

- 18.Brennen B, Dela Cerna E. Journalism in second life. Journal Stud. 2010;11(4):546–554. [Google Scholar]

- 19.Croatti A, Gabellini M, Montagna S, Ricci A. On the integration of agents and digital twins in healthcare. J Med Syst. 2020;44(9):161. doi: 10.1007/s10916-020-01623-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Bamakan SMH, Nezhadsistani N, Bodaghi O, Qu Q. Patents and intellectual property assets as non-fungible tokens; key technologies and challenges. Sci Rep. 2022;12(1):2178. doi: 10.1038/s41598-022-05920-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Tan T.F., Li Y., Lim J.S., Gunasekeran D.V., Teo Z.L., Ng W.Y., et al. Metaverse and virtual health care in ophthalmology: opportunities and challenges. Asia Pac J Ophthalmol (Phila) 2022;11(3):237–246. doi: 10.1097/APO.0000000000000537. [DOI] [PubMed] [Google Scholar]

- 22.Li K., Cui Y., Li W., Lv T., Yuan X., Li S., et al. 2022. When internet of things meets metaverse: convergence of physical and cyber worlds. arXiv preprint arXiv:220813501. [Google Scholar]

- 23.Mozumder MAI, Sheeraz MM, Athar A, Aich S, Kim HC. In: 2022 24th International Conference on Advanced Communication Technology (ICACT). 2022. Overview: technology roadmap of the future trend of metaverse based on IoT, blockchain, AI technique, and medical domain metaverse activity; pp. 256–61. [Google Scholar]

- 24.Kim H, Kwon YT, Lim HR, Kim JH, Kim YS, Yeo WH. Recent advances in wearable sensors and integrated functional devices for virtual and augmented reality applications. Adv Funct Mater. 2021;31(39) [Google Scholar]

- 25.Jang HJ, Lee JY, Baek GW, Kwak J, Park JH. Progress in the development of the display performance of AR, VR, QLED and OLED devices in recent years. Journal of Information Display. 2022;23(1):1–17. [Google Scholar]

- 26.Zhan T, Yin K, Xiong J, He Z, Wu ST. Augmented reality and virtual reality displays: perspectives and challenges. iScience. 2020;23(8) doi: 10.1016/j.isci.2020.101397. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Xiong J, Hsiang EL, He Z, Zhan T, Wu ST. Augmented reality and virtual reality displays: emerging technologies and future perspectives. Light Sci Appl. 2021;10(1):216. [Google Scholar]

- 28.Coogan CG, He B. Brain-computer interface control in a virtual reality environment and applications for the internet of things. IEEE Access. 2018;6:10840–10849. doi: 10.1109/ACCESS.2018.2809453. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Bjånes DA, Moritz CT. In: Handbook of Neuroengineering. Thakor NV, editor. Springer; Singapore: 2022. Artificial sensory feedback to the brain: somatosensory feedback for neural devices and BCI; pp. 1–23. [Google Scholar]

- 30.Gunkel SNB, Dijkstra-Soudarissanane S, Stokking HM, Niamut OA. From 2D to 3D video conferencing: modular RGB-D capture and reconstruction for interactive natural user representations in immersive extended reality (XR) communication. Front Signal Process. 2023;3:1139897. [Google Scholar]

- 31.Silva BN, Khan M, Han K. Internet of things: a comprehensive review of enabling technologies, architecture, and challenges. IETE Tech Rev. 2018;35(2):205–220. [Google Scholar]

- 32.Heinonen K. Consumer activity in social media: managerial approaches to consumers’ social media behavior. J Consum Behav. 2011;10(6):356–364. [Google Scholar]

- 33.Geng R, Chen J. The influencing mechanism of interaction quality of UGC on consumers’ purchase intention–an empirical analysis. Front Psychol. 2021;12:697382 doi: 10.3389/fpsyg.2021.697382. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Plangger K. The power of popularity: how the size of a virtual community adds to firm value. J Publ Aff. 2012;12(2):145–153. [Google Scholar]

- 35.Archak N, Ghose A, Ipeirotis PG. Deriving the pricing power of product features by mining consumer reviews. Manag Sci. 2011;57(8):1485–1509. [Google Scholar]

- 36.Goldfarb A, Tucker CE. Privacy regulation and online advertising. Manag Sci. 2011;57(1):57–71. [Google Scholar]

- 37.Top metaverse tokens by market capitalization. 2022. https://coinmarketcap.com/view/metaverse/

- 38.OpenSea Explore, collect, and sell NFTs. 2022. https://opensea.io/

- 39.Magic Eden 2022. https://magiceden.io/

- 40.Secundo G, Toma A, Schiuma G, Passiante G. Knowledge transfer in open innovation. Bus Process Manag J. 2019;25(1):144–163. [Google Scholar]

- 41.Liu Y, Tan W, Chen C, Liu C, Yang J, Zhang Y. A review of the application of virtual reality technology in the diagnosis and treatment of cognitive impairment. Front Aging Neurosci. 2019;11:280. doi: 10.3389/fnagi.2019.00280. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Chen H, Zou Q, Wang Q. Clinical manifestations of ultrasonic virtual reality in the diagnosis and treatment of cardiovascular diseases. J Healthc Eng. 2021;2021:1746945. doi: 10.1155/2021/1746945. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Falagas ME, Korbila IP, Giannopoulou KP, Kondilis BK, Peppas G. Informed consent: how much and what do patients understand? Am J Surg. 2009;198(3):420–435. doi: 10.1016/j.amjsurg.2009.02.010. [DOI] [PubMed] [Google Scholar]

- 44.Falcone MM, Hunter DG, Gaier ED. Emerging therapies for amblyopia. Semin Ophthalmol. 2021;36(4):282–288. doi: 10.1080/08820538.2021.1893765. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Luminopia FDA-approved digital therapeutic for amblyopia. 2022. https://luminopia.com/

- 46.Ruhstaller T, Roe H, Thürlimann B, Nicoll JJ. The multidisciplinary meeting: an indispensable aid to communication between different specialities. Eur J Cancer. 2006;42(15):2459–2462. doi: 10.1016/j.ejca.2006.03.034. [DOI] [PubMed] [Google Scholar]

- 47.Munro AJ, Swartzman S. What is a virtual multidisciplinary team (vMDT)? Br J Cancer. 2013;108(12):2433–2441. doi: 10.1038/bjc.2013.231. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Yang D., Zhou J., Chen R., Chen R., Song Y., Song Z., et al. Expert consensus on the metaverse in medicine. Clinical eHealth. 2022;5:1–9. [Google Scholar]

- 49.Mystakidis S. Metaverse. Encyclopedia. 2022;2(1):486–497. [Google Scholar]

- 50.King A, Hoppe RB. “Best practice” for patient-centered communication: a narrative review. J Grad Med Educ. 2013;5(3):385–393. doi: 10.4300/JGME-D-13-00072.1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Adams RJ. Improving health outcomes with better patient understanding and education. Risk Manag Healthc Policy. 2010;3:61–72. doi: 10.2147/RMHP.S7500. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Thirunavukarasu AJ, Mullinger D, Rufus-Toye RM, Farrell S, Allen LE. Clinical validation of a novel web-application for remote assessment of distance visual acuity. Eye (Lond) 2022;36(10):2057–2061. doi: 10.1038/s41433-021-01760-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Skalicky SE, Kong GY. Novel means of clinical visual function testing among glaucoma patients, including virtual reality. J Curr Glaucoma Pract. 2019;13(3):83–7. doi: 10.5005/jp-journals-10078-1265. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Goldbach A.H., Shousha M.A., Duque C., Kashem R., Mohder F., Shaheen A.R., et al. Visual field measurements using Heru Visual Field Multi-platform application downloaded on two different commercially available augmented reality devices. Investigative Ophthalmology & Visual Science. 2021;62(8):1017. [Google Scholar]

- 55.Bioulac S, Micoulaud-Franchi JA, Maire J, Bouvard MP, Rizzo AA, Sagaspe P, et al. Virtual remediation versus methylphenidate to improve distractibility in children with ADHD: a controlled randomized clinical trial study. J Atten Disord. 2020;24(2):326–335. doi: 10.1177/1087054718759751. [DOI] [PubMed] [Google Scholar]

- 56.Lee J., Lee T.S., Lee S., Jang J., Yoo S., Choi Y., et al. Development and application of a metaverse-based social skills training program for children with autism spectrum disorder to improve social interaction: protocol for a randomized controlled trial. JMIR Res Protoc. 2022;11(11) doi: 10.2196/35960. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Loureiro APC, Ribas CG, Zotz TGG, Chen R, Ribas F. Feasibility of virtual therapy in rehabilitation of Parkinson’s disease patients: pilot study. Fisioterapia em movimento. 2012;25(3):659–666. [Google Scholar]

- 58.Radford D, Ahmed S, Tai Loy Lee T, et al. 81 Meeting in the metaverse - a new paradigm for scientific mentorship. Heart. 2022;108(Suppl 1):A59. [Google Scholar]

- 59.Condis MA. Playing the game of literature: ready player one, the ludic novel, and the geeky “canon” of white masculinity. Jml: J Mod Lit. 2016;39(2):1–19. [Google Scholar]

- 60.Chen D, Zhang R. Exploring research trends of emerging technologies in health metaverse: A Bibliometric Analysis. SSRN J. 2022 [Google Scholar]

- 61.Ferris JD, Donachie PH, Johnston RL, Barnes B, Olaitan M, Sparrow JM. Royal College of Ophthalmologists’ National Ophthalmology Database study of cataract surgery: report 6. The impact of EyeSi virtual reality training on complications rates of cataract surgery performed by first and second year trainees. Br J Ophthalmol. 2020;104(3):324–329. doi: 10.1136/bjophthalmol-2018-313817. [DOI] [PubMed] [Google Scholar]

- 62.Aïm F, Lonjon G, Hannouche D, Nizard R. Effectiveness of virtual reality training in orthopaedic surgery. Arthroscopy. 2016;32(1):224–232. doi: 10.1016/j.arthro.2015.07.023. [DOI] [PubMed] [Google Scholar]

- 63.Sayadi L.R., Naides A., Eng M., Fijany A., Chopan M., Sayadi J.J., et al. The new frontier: a review of augmented reality and virtual reality in plastic surgery. Aesthet Surg J. 2019;39(9):1007–1016. doi: 10.1093/asj/sjz043. [DOI] [PubMed] [Google Scholar]

- 64.Sadeghi A.H., Mathari S.E., Abjigitova D., Maat A.P.W.M., Taverne Y.J.H.J., Bogers A.J.J.C., et al. Current and future applications of virtual, augmented, and mixed reality in cardiothoracic surgery. Ann Thorac Surg. 2022;113(2):681–691. doi: 10.1016/j.athoracsur.2020.11.030. [DOI] [PubMed] [Google Scholar]

- 65.Dadario NB, Quinoa T, Khatri D, Boockvar J, Langer D, D’Amico RS. Examining the benefits of extended reality in neurosurgery: a systematic review. J Clin Neurosci. 2021;94:41–53. doi: 10.1016/j.jocn.2021.09.037. [DOI] [PubMed] [Google Scholar]

- 66.Penza V, Soriero D, Barresi G, Pertile D, Scabini S, Mattos LS. The GPS for surgery: a user‐centered evaluation of a navigation system for laparoscopic surgery. Int J Med Robot. 2020;16(5):1–13. doi: 10.1002/rcs.2119. [DOI] [PubMed] [Google Scholar]

- 67.Goniewicz K, Goniewicz M. Disaster preparedness and professional competence among healthcare providers: pilot study results. Sustainability. 2020;12(12):4931. [Google Scholar]

- 68.Jung Y. Virtual reality simulation for disaster preparedness training in hospitals: integrated review. J Med Internet Res. 2022;24(1) doi: 10.2196/30600. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Blaak MJ, Fadaak R, Davies JM, Pinto N, Conly J, Leslie M. Virtual tabletop simulations for primary care pandemic preparedness and response. BMJ Simul Technol Enhanc Learn. 2021;7(6):487–93. doi: 10.1136/bmjstel-2020-000854. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Ghiga I, Richardson S, Álvarez AMR, et al. PIPDeploy: development and implementation of a gamified table top simulation exercise to strengthen national pandemic vaccine preparedness and readiness. Vaccine. 2021;39(2):364–371. doi: 10.1016/j.vaccine.2020.11.047. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Zhang D., Liao H., Jia Y., Yang W., He P., Wang D., et al. Effect of virtual reality simulation training on the response capability of public health emergency reserve nurses in China: a quasiexperimental study. BMJ Open. 2021;11(9) doi: 10.1136/bmjopen-2021-048611. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Van Uden-Kraan CF, Drossaert CH, Taal E, Smit WM, Moens HJB, Van de Laar MA. Determinants of engagement in face-to-face and online patient support groups. J Med Internet Res. 2011;13(4) doi: 10.2196/jmir.1718. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Van Pelt F. Peer support: healthcare professionals supporting each other after adverse medical events. Qual Saf Health Care. 2008;17(4):249–252. doi: 10.1136/qshc.2007.025536. [DOI] [PubMed] [Google Scholar]

- 74.Ng W.Y., Tan T.E., Xiao Z., Movva P.V.H., Foo F.S.S., Yun D., et al. Blockchain technology for ophthalmology: coming of age? Asia Pac J Ophthalmol (Phila) 2021;10(4):343–347. doi: 10.1097/APO.0000000000000399. [DOI] [PubMed] [Google Scholar]

- 75.Ng WY, Tan TE, Movva PVH, Fang AHS, Yeo KK, Ho D, et al. Blockchain applications in health care for COVID-19 and beyond: a systematic review. Lancet Digit Health. 2021;3(12):e819–29. doi: 10.1016/S2589-7500(21)00210-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Singh G, Casson R, Chan W. The potential impact of 5G telecommunication technology on ophthalmology. Eye (Lond) 2021;35(7):1859–1868. doi: 10.1038/s41433-021-01450-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77.Beede E., Baylor E., Hersch F., et al. In: Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems. 2020. A human-centered evaluation of a deep learning system deployed in clinics for the detection of diabetic retinopathy; pp. 1–12. [Google Scholar]

- 78.Gunasekeran DV, Wong TY. Artificial intelligence in ophthalmology in 2020: a technology on the cusp for translation and implementation. Asia Pac J Ophthalmol (Phila) 2020;9(2):61–66. doi: 10.1097/01.APO.0000656984.56467.2c. [DOI] [PubMed] [Google Scholar]

- 79.Chávez-Santiago R, Szydełko M, Kliks A, Foukalas F, Haddad Y, Nolan KE, et al. 5G: The convergence of wireless communications. Wirel Pers Commun. 2015;83(3):1617–1642. doi: 10.1007/s11277-015-2467-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Rahimi B, Nadri H, Lotfnezhad Afshar H, Timpka T. A systematic review of the technology acceptance model in health informatics. Appl Clin Inform. 2018;9(3):604–634. doi: 10.1055/s-0038-1668091. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81.Gogia S.B., Maeder A., Mars M., Hartvigsen G., Basu A., Abbott P. Unintended consequences of tele health and their possible solutions. Contribution of the IMIA Working Group on Telehealth. Yearb Med Inform. 2016;(1):41–46. doi: 10.15265/IY-2016-012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Gunasekeran DV. Technology and chronic disease management. Lancet Diabetes Endocrinol. 2018;6(2):91. doi: 10.1016/S2213-8587(17)30441-2. [DOI] [PubMed] [Google Scholar]

- 83.Gunasekeran DV, Tseng RMWW, Tham YC, Wong TY. Applications of digital health for public health responses to COVID-19: a systematic scoping review of artificial intelligence, telehealth and related technologies. NPJ Digit Med. 2021;4(1):40. doi: 10.1038/s41746-021-00412-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Ong CW, Tan MCJ, Lam M, Koh VTC. Applications of extended reality in ophthalmology: systematic review. J Med Internet Res. 2021;23(8) doi: 10.2196/24152. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Deemer A.D., Bradley C.K., Ross N.C., Natale D.M., Itthipanichpong R., Werblin F.S., et al. Low vision enhancement with head-mounted video display systems: are we there yet? Optom Vis Sci. 2018;95(9):694–703. doi: 10.1097/OPX.0000000000001278. [DOI] [PMC free article] [PubMed] [Google Scholar]
