Abstract

Predicting the stability and fitness effects of amino-acid mutations in proteins is a cornerstone of biological discovery and engineering. Various experimental techniques have been developed to measure mutational effects, providing us with extensive datasets across a diverse range of proteins. By training on these data, machine learning approaches have advanced significantly in predicting mutational effects. Here, we introduce HERMES, a 3D rotationally equivariant structure-based neural network model for mutation effect prediction. Pre-trained to predict amino-acid propensities from their surrounding 3D atomic environments, HERMES can be efficiently fine-tuned to predict mutational effects, thanks to its symmetry-aware parameterization of the output space. Benchmarking against other models demonstrates that HERMES often outperforms or matches their performance in predicting mutation effects on stability, binding, and fitness, using either computationally or experimentally resolved protein structures. HERMES offers a versatile suite of tools for evaluating mutation effects and can be easily fine-tuned for specific predictive objectives using our open-source code.

1. Introduction and Related Work

Understanding the effects of amino acid mutations on a protein’s function is a hallmark of biological discovery and engineering. Identifying disease-causing mutations [1, 2], enhancing enzymes’ catalytic activity [3, 4], forecasting viral escape [5, 6, 7], and engineering high-affinity antibodies [8] are just some of the areas of study that rely on accurate modeling of mutational effects. Effects on protein stability are likely the most studied, as sufficient stability is usually a prerequisite for a protein to successfully carry out its function [9]. Understanding the impact of mutations on a protein’s binding affinity to its partner is also crucial, as most functions are mediated by binding events. These effects can be accurately measured experimentally, for example via thermal or chemical denaturation assays [10], by surface plasmon resonance [11], and, more recently, by Deep Mutational Scanning (DMS) [12, 13, 14]. However, these experiments are laborious and limited in throughput.

Computational modeling of mutational effects remains an attractive alternative to costly experiments. Methods based on molecular dynamics simulations are accurate for short-time (nanosecond) protein responses but are limited in predicting the substantial changes in proteins often inflicted by amino acid mutations [15]. Models using physical energy functions such as FoldX [16] and Rosetta [17] are well-established and remain widely used for predicting the stability effect of mutations, though they often lack accuracy and are slow [2]. Recently, machine learning models have shown substantial progress in this domain. Sequence-based [18, 19] or structure-based [20, 21, 22, 2, 23, 24] approaches are used to predict the propensity of amino acids and, by extension, the effect of mutations in a protein [18, 19, 20]. These pre-trained models serve as robust baselines, upon which additional fine-tuning on smaller protein stability datasets can significantly enhance the accuracy of predictions for mutational effects [2, 22].

Here, we introduce HERMES, which is built upon the self-supervised structure-based model H-CNN [20] and fine-tuned to predict mutational effects in proteins. Similar to H-CNN, HERMES has a 3D rotationally equivariant architecture, but with improved performance. During pre-training, HERMES is trained to predict a residue’s amino acid identity from its surrounding atomic neighborhood within a 10 Å radius in the 3D structure. To fine-tune HERMES for mutational effects, we take the pre-trained model’s logits corresponding to the amino acid pair of interest, and train the model to match the experimental data for the functional difference between them. With our parametrization, HERMES automatically respects the permutational anti-symmetry in the mutational effects, which other models achieve through data augmentation [22]. We extensively benchmarked HERMES across various datasets, demonstrating state-of-the-art performance in predicting stability effects and highly competitive results in predicting binding effects of mutations, even when using computationally resolved protein structures. Our code is open source at https://github.com/StatPhysBio/hermes/tree/main, and allows users both to run the models presented in this paper and to easily fine-tune HERMES on their own data.

2. Methods

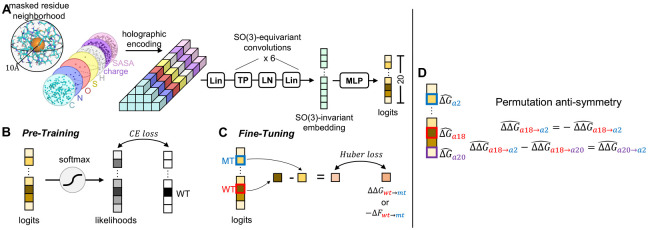

HERMES is trained in two steps (Figure 1). First, following [20], we train an improved version of the Holographic Convolutional Neural Network (H-CNN) model to predict the identity of an amino acid from its surrounding structural neighborhood. Specifically, we remove (mask) all atoms associated with the focal residue and predict its identity using all atoms within 10 Å of the focal residue’s C-α (Figure 1B). Second, we develop a procedure to fine-tune HERMES on mutation effects ΔF in general, with a specific focus on predicting the stability effect of mutations ΔΔG (Figure 1).
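The masking step can be illustrated with a minimal NumPy sketch. This is not the HERMES preprocessing code: the function name `masked_neighborhood`, the flat arrays of coordinates and residue identifiers, and the inclusive distance cutoff are all assumptions made for illustration.

```python
import numpy as np

def masked_neighborhood(coords, residue_ids, focal_residue, ca_coord, radius=10.0):
    """Indices of atoms within `radius` angstroms of the focal C-alpha,
    excluding every atom of the focal residue itself (the masking step).
    Hypothetical helper, not part of the HERMES code base."""
    coords = np.asarray(coords, dtype=float)
    residue_ids = np.asarray(residue_ids)
    dist = np.linalg.norm(coords - np.asarray(ca_coord, dtype=float), axis=1)
    keep = (dist <= radius) & (residue_ids != focal_residue)
    return np.flatnonzero(keep)

# Toy structure with five atoms; residue 1 is the focal residue.
coords = np.array([
    [0.0, 0.0, 0.0],    # focal C-alpha (residue 1), masked
    [1.5, 0.0, 0.0],    # focal side-chain atom (residue 1), masked
    [3.0, 4.0, 0.0],    # residue 2, 5.0 angstroms from the C-alpha
    [0.0, 0.0, 9.9],    # residue 3, 9.9 angstroms from the C-alpha
    [12.0, 0.0, 0.0],   # residue 4, outside the 10 angstrom sphere
])
residue_ids = np.array([1, 1, 2, 3, 4])

idx = masked_neighborhood(coords, residue_ids, focal_residue=1, ca_coord=coords[0])
print(idx)
```

Only the atoms of residues 2 and 3 survive: the focal residue is masked even though its atoms lie inside the sphere, and residue 4 is too far away.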

Figure 1: Schematic of HERMES.

(A) Model architecture. We refer the reader to [25, 26] for details. (B) Pre-training procedure. We train HERMES to predict the identity of the neighborhood’s central amino acid, whose atoms have been masked. (C) Fine-tuning procedure over mutation effects. We fine-tune HERMES so that the difference of logits for two amino acids regresses over the corresponding mutation’s score. (D) Our fine-tuning procedure makes the 20 logit values effectively converge to predicted ΔG (or, more broadly, F) values, up to a site-specific constant. This ensures that permutation anti-symmetry is respected without the need for data augmentation. This symmetry is, however, only approximate, as the output is conditioned on a neighborhood bearing the signature of the wildtype amino acid.

Preprocessing of protein structures.

To pre-process the protein structure data, we devise two distinct pipelines, relying either on (i) PyRosetta [27], or (ii) Biopython [28] and other open-source tools, with code adapted from [2]; see Section A.1.1 for details. The PyRosetta pipeline is considerably faster but requires a license, whereas the Biopython pipeline is open-source. We train models using both pipelines. The pipeline used at inference must match the one used at training. Differences in results between the two pipelines are minimal (see Figs. S2, S5 and Table S1); for our main analyses, we report only the results using PyRosetta.

HERMES architecture and pre-training.

Similar to H-CNN, HERMES has a 3D rotationally equivariant architecture, with a comparable number of parameters (~3.5M), but with a ~2.75× faster forward pass and a higher accuracy (Figure 1A). In short, atomic neighborhoods - i.e., featurized point clouds - are first projected onto the orthonormal Zernike Fourier basis, centered at the (masked) central residue’s C-α. We term the resulting Fourier encoding of the data a holographic encoding, as it presents a superposition of 3D spherical holograms [20]. Then, the resulting holograms are fed to a stack of SO(3)-equivariant layers, which convert the holograms to an SO(3)-invariant embedding - i.e., a representation that is invariant to 3D rotations about the center of the initial holographic projection. These embeddings are then passed through an MLP to generate the desired predictions. Each HERMES model is an ensemble of 10 individually trained architectures. We also trained versions of HERMES with Gaussian noise added to the 3D coordinates (standard deviation 0.5 Å), using a different random seed for each of the 10 models. We refer the reader to [26, 25] for further details on the architecture, and for a mathematical introduction to SO(3)-equivariant models in Fourier space. We implement HERMES using e3nn [29].
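The two training-time ingredients named above, coordinate noise and a 10-member ensemble, can be sketched as follows. This is an illustration only: the stand-in "models" simply return fixed logit vectors, and averaging logits across members is an assumption, since the text does not state how ensemble outputs are combined.

```python
import numpy as np

def add_coordinate_noise(coords, sigma=0.5, seed=0):
    """Perturb 3D coordinates with isotropic Gaussian noise (sigma in angstroms)."""
    rng = np.random.default_rng(seed)
    return coords + rng.normal(0.0, sigma, size=coords.shape)

def make_stand_in_model(seed):
    """Hypothetical placeholder for one trained ensemble member.
    It ignores its input and returns a fixed vector of 20 logits."""
    logits = np.random.default_rng(seed).normal(size=20)
    return lambda neighborhood: logits

def ensemble_logits(models, neighborhood):
    """Average the 20 amino-acid logits over all ensemble members
    (assumed combination rule)."""
    return np.mean([model(neighborhood) for model in models], axis=0)

coords = np.zeros((4, 3))
noisy = add_coordinate_noise(coords, sigma=0.5, seed=3)

# One model per random seed, mirroring the 10-member ensemble.
models = [make_stand_in_model(seed) for seed in range(10)]
avg = ensemble_logits(models, noisy)
print(noisy.shape, avg.shape)
```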

We pre-train HERMES on neighborhoods from protein chains in ProteinNet’s CASP12 set with 30% similarity cutoff [30], and featurize atomic neighborhoods using atom type - including computationally-added hydrogens - partial charge, and Solvent Accessible Surface Area.

Predicting fitness effect of mutations with HERMES.

HERMES can be seen as a generative model for amino-acid labels for a residue, conditioned on the atomic environment surrounding the residue. Conditional generative models of amino-acid labels have been shown to make successful zero-shot predictions of mutational effects [18, 19, 20]. The log-likelihood difference between the original amino acid (often wildtype) aa0 and the mutant aa1 at a given residue i, conditioned on the surrounding neighborhood Xi, can approximate mutational effects well:

$$\log \frac{p\left(aa_1 \mid X_i^{aa_1}\right)}{p\left(aa_0 \mid X_i^{aa_0}\right)} \tag{1}$$

The superscripts on Xi indicate the structure from which the atomic neighborhood is extracted, highlighting that mutations at a residue can reorganize the surrounding structural neighborhood. Computational tools like Rosetta [27] can be used to relax the structural neighborhoods subject to mutations, when the mutant structure is not available [20]. However, this procedure can be inaccurate and computationally expensive. For practical use of HERMES, we only consider the use of a single (often the original wildtype) structure to predict all possible variant effects, approximating eq. 1 by

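$$\log \frac{p\left(aa_1 \mid X_i^{aa_0}\right)}{p\left(aa_0 \mid X_i^{aa_0}\right)} = z\left(aa_1 \mid X_i^{aa_0}\right) - z\left(aa_0 \mid X_i^{aa_0}\right) \tag{2}$$

where $z\left(\cdot \mid X_i^{aa_0}\right)$ is the logit associated with the indicated amino acid, conditioned on the surrounding neighborhood for the initial amino acid aa0 (e.g. wildtype). A similar approach was taken by [22].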
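The single-structure approximation of eq. 2 scores a mutation by a difference of two logits from one forward pass. The sketch below checks numerically that this difference equals the log-probability ratio under a softmax, because the normalization constant cancels. The amino-acid ordering and the random logits are assumptions for illustration; a real model defines its own output ordering.

```python
import numpy as np

# Assumed alphabetical one-letter ordering of the 20 output classes.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
AA_INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}

def log_softmax(logits):
    """Numerically stable log-softmax over the 20 amino-acid logits."""
    shifted = logits - np.max(logits)
    return shifted - np.log(np.sum(np.exp(shifted)))

def mutation_score(logits, aa0, aa1):
    """Zero-shot score for aa0 -> aa1: logit of the mutant minus logit of
    the original amino acid, both from the same neighborhood."""
    return float(logits[AA_INDEX[aa1]] - logits[AA_INDEX[aa0]])

# Stand-in for the model output at one site (random numbers, not real logits).
logits = np.random.default_rng(0).normal(size=20)
log_p = log_softmax(logits)

score = mutation_score(logits, "A", "W")
log_ratio = float(log_p[AA_INDEX["W"]] - log_p[AA_INDEX["A"]])
print(np.isclose(score, log_ratio))
```

Because all 20 scores come from one neighborhood, a full site-saturation scan needs a single forward pass per site rather than one per mutation.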

Fine-tuning on mutation effects.

We fine-tune pre-trained HERMES models on mutation effects, similar to prior work [2, 22]. However, unlike those works which train a separate regression head using as input embeddings from the pre-trained model, we simply fine-tune the model itself to make the predicted logit differences in eq. 2 regress over mutation effects (Figure 1C); see Section A.1.2 for details. We fine-tune HERMES on several datasets, as reported in the Results section.
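To make the regression target concrete, the toy example below treats the 20 logits of a single site as free parameters and fits them so that logit differences match measured mutation effects. It is a closed-form least-squares stand-in, not the gradient-based fine-tuning of the network; the squared-error objective, the function name `fit_site_scores`, and the synthetic data are assumptions.

```python
import numpy as np

def fit_site_scores(pairs, effects, n_aa=20):
    """Least-squares fit of per-amino-acid scores z such that
    z[aa1] - z[aa0] matches the measured effect of each aa0 -> aa1 mutation.
    Returns the minimum-norm solution, which sums to zero when the
    measured pairs connect all amino acids."""
    design = np.zeros((len(pairs), n_aa))
    for row, (aa0, aa1) in enumerate(pairs):
        design[row, aa1] = 1.0
        design[row, aa0] = -1.0
    z, *_ = np.linalg.lstsq(design, np.asarray(effects, dtype=float), rcond=None)
    return z

# Synthetic ground truth: one score per amino acid at this site.
rng = np.random.default_rng(0)
true_scores = rng.normal(size=20)

# Measurements from the wildtype (index 0) to each of the 19 other amino acids.
wildtype = 0
pairs = [(wildtype, aa1) for aa1 in range(1, 20)]
effects = [true_scores[aa1] - true_scores[aa0] for aa0, aa1 in pairs]

fitted = fit_site_scores(pairs, effects)

# The fit recovers the scores only up to an additive, site-specific constant.
print(np.allclose(fitted - fitted.mean(), true_scores - true_scores.mean()))
```

The additive constant is unidentifiable because only differences are observed, which matches the statement in Figure 1D that logits converge to F values up to a site-specific constant.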

Permutational anti-symmetry for mutation effects.

The thermodynamic change in the stability of a protein ΔΔGaa0→aa1 caused by a mutation aa0 → aa1 is simply equal to the difference between the free energy of the mutant structure ΔGaa1 and that of the original structure ΔGaa0. Thus, the back mutation should have the opposite effect on stability, ΔΔGaa1→aa0 = −ΔΔGaa0→aa1; a similar property holds for triplets of amino acids, ΔΔGaa1→aa0 = ΔΔGaa2→aa0 − ΔΔGaa2→aa1. The same anti-symmetric effects are present for the effect of mutations on protein fitness. HERMES is parametrized to automatically account for the anti-symmetric nature of mutations by learning a score for each of the 20 amino acids at a given site, which is associated with their fitness or thermodynamic free energy contribution, up to a site-specific constant (Figure 1D). This is in contrast to other popular methods for mutation effect prediction [22, 2]. For example, Stability-Oracle achieves this anti-symmetric property only through data augmentation, by training on all 380 possible ordered amino acid pairs at each site, resulting in a dataset augmented from 117k to 2.2M examples [22].
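The anti-symmetry properties follow directly from predicting one score per amino acid and taking differences, as the short check below illustrates. The scores are random placeholders and the helper `ddg` is hypothetical; the check also confirms that 20 amino acids give 20 × 19 = 380 ordered pairs per site.

```python
import numpy as np
from itertools import permutations

# Placeholder per-amino-acid scores at one site (not model output).
scores = np.random.default_rng(1).normal(size=20)

def ddg(scores, aa0, aa1):
    """Predicted effect of aa0 -> aa1 as a difference of per-site scores."""
    return scores[aa1] - scores[aa0]

# All ordered pairs of distinct amino acids at a site.
ordered_pairs = list(permutations(range(20), 2))
print(len(ordered_pairs))

# Reverse mutations have exactly the opposite predicted effect.
antisymmetric = all(
    np.isclose(ddg(scores, a, b), -ddg(scores, b, a)) for a, b in ordered_pairs
)

# Triplet identity: (aa1 -> aa0) = (aa2 -> aa0) - (aa2 -> aa1).
triplet_ok = all(
    np.isclose(ddg(scores, a1, a0), ddg(scores, a2, a0) - ddg(scores, a2, a1))
    for a0, a1, a2 in permutations(range(20), 3)
)
print(antisymmetric, triplet_ok)
```

Both identities hold for any score vector, so no augmentation with reversed pairs is needed, provided all predictions are made from the same neighborhood.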

3. Predicting stability effect of mutations

We evaluate the performance of HERMES on datasets used by RaSP [2] and Stability-Oracle [22]. RaSP was fine-tuned on stability effects computed with Rosetta [27] for 35 protein structures, then tested on Rosetta-computed stability effects for 10 other proteins, as well as on experimentally determined stability effects; we indicate models fine-tuned on this data by “+ Ros”. Stability-Oracle was trained on a curated dataset of experimentally measured stability effects termed “cDNA117K”, and tested on a dataset termed “T2837”; we indicate models fine-tuned on this data by “+ cDNA117K”.

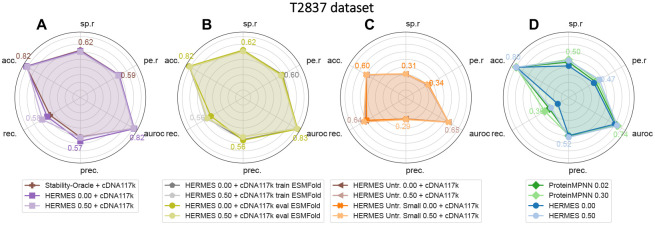

HERMES achieves state-of-the-art performance compared to RaSP (Figs. S1,S2) and Stability-Oracle (Figs. 2A,S4A,S3), using the same fine-tuning data, and without any data augmentation. Moreover, HERMES’ predictions are robust to the use of structures computationally resolved by ESMFold [31] at either training or testing time (Figure 2B). Our results also indicate that pre-training on the wildtype amino-acid classification task provides significant help for downstream stability predictions. Notably, models that are pre-trained only, without any fine-tuning, perform significantly better than models trained solely on mutation effects without pre-training (Figs. 2C, 2D). In fact, we could not prevent overfitting in the non-pre-trained HERMES model, even after significantly reducing the model size from 3.5M to 50k parameters (Figure 2C).

Figure 2: Predicting stability effect of mutations in T2837 dataset.

The Pearson correlation (pe.r), Spearman correlation (sp.r), accuracy (acc.), recall (rec.), precision (prec.), and AUROC are shown for different models. (A) When fine-tuned on the same dataset, HERMES models perform equivalently to Stability-Oracle [22]. (B) HERMES performance does not change even when trained on ESMFold-predicted structures and evaluated on crystal structures, and vice-versa. (C) Non-pre-trained HERMES models perform the worst, and reducing their size from 3.5M to 50k parameters does not improve performance. (D) Models without fine-tuning show decent performance. Adding noise to structures during training consistently enhances their performance, though this effect is not observed in fine-tuned models.
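For reference, the six reported metrics can be computed as in the following NumPy sketch. The classification metrics require binarizing the continuous scores; the threshold of 0 and the convention that values above it form the positive class are assumptions here, since the binarization rule is not stated in this section. The toy measurements are made up.

```python
import numpy as np

def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="stable")
    ranks = np.empty(len(values), dtype=float)
    ranks[order] = np.arange(1, len(values) + 1)
    for v in np.unique(values):
        tied = values == v
        ranks[tied] = ranks[tied].mean()
    return ranks

def pearson(x, y):
    return float(np.corrcoef(x, y)[0, 1])

def spearman(x, y):
    """Spearman correlation is the Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

def classification_metrics(measured, predicted, threshold=0.0):
    """Accuracy, precision, recall and AUROC after thresholding (assumed rule)."""
    measured = np.asarray(measured, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    y_true, y_pred = measured > threshold, predicted > threshold
    tp = int(np.sum(y_true & y_pred))
    fp = int(np.sum(~y_true & y_pred))
    fn = int(np.sum(y_true & ~y_pred))
    tn = int(np.sum(~y_true & ~y_pred))
    n_pos, n_neg = tp + fn, fp + tn
    ranks = average_ranks(predicted)
    nan = float("nan")
    return {
        "accuracy": (tp + tn) / len(measured),
        "precision": tp / (tp + fp) if tp + fp else nan,
        "recall": tp / n_pos if n_pos else nan,
        # Rank-sum (Mann-Whitney) form of the area under the ROC curve.
        "auroc": float((ranks[y_true].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg))
        if n_pos and n_neg else nan,
    }

measured = [-1.0, -0.5, 0.2, 1.0, 2.0]    # toy values, not real data
predicted = [-0.8, 0.1, 0.3, 0.7, 1.5]
metrics = classification_metrics(measured, predicted)
print(pearson(measured, predicted), spearman(measured, predicted), metrics)
```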

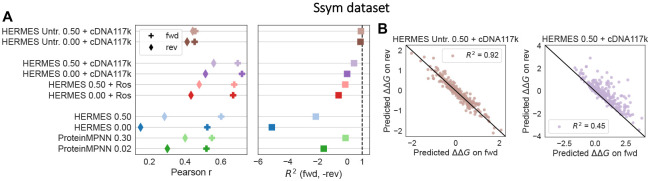

Note that HERMES uses only the starting structure to predict mutational effects (eq. 2), and thus its predictions are only approximately permutation anti-symmetric with respect to mutations. Specifically, the predicted effect of a forward mutation, using the initial structure, is only approximately the negative of the effect of the reverse mutation, using the final structure. To assess the extent of deviation from anti-symmetry resulting from our approximation, we use the Ssym dataset, which includes measurements for the stability effect of 352 mutations across 19 different protein structures, together with the experimentally determined structures of all 352 mutants [32].

We find that HERMES models, as well as ProteinMPNN, consistently predict the stability effect of mutations in the “forward” direction (from wildtype) more accurately than in the reverse direction (Figure 3A). Although none of the Ssym structures were included in our training data, we hypothesize that this effect arises from our models being pre-trained to classify amino acids in wildtype structures, some of which may be homologous to the Ssym structures. Indeed, we observe that removing the pre-training step lessens the discrepancy between forward and reverse predictions, though this comes at the cost of reduced accuracy in both cases (Figure 3A; brown points). Moreover, HERMES models with pre-training tend to predict a larger magnitude of stability effect for forward mutations compared to reverse mutations, further underscoring the bias of these models toward wildtype structures (Figure 3B). Adding noise during training partly mitigates the bias, as it can reduce the model’s wildtype preferences (Table S1).

Figure 3: Permutational anti-symmetry of stability effect of mutations.

(A) Pearson correlations between the measured stability effects of mutations from the Ssym dataset and the predictions for the forward and reverse mutations are shown (left). The effects of reverse mutations are computed using the mutant structures. The R² between the forward and (negative) reverse predictions is shown, with higher values indicating greater respect for permutational anti-symmetry (right). (B) Models with pre-training (right) tend to predict a larger magnitude of stability effect for forward mutations than for reverse mutations, in contrast to non-pre-trained models (left).
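The two diagnostics in Figure 3 can be sketched as below. We take R² to mean the squared Pearson correlation between forward predictions and negated reverse predictions, which is an assumption about the caption; the bias measure (mean of forward plus reverse prediction, zero under perfect anti-symmetry) and the toy numbers are likewise illustrative.

```python
import numpy as np

def antisymmetry_r2(forward, reverse):
    """Squared Pearson correlation between forward predictions and the
    negative of the reverse predictions (assumed definition of R^2)."""
    forward = np.asarray(forward, dtype=float)
    reverse = np.asarray(reverse, dtype=float)
    r = np.corrcoef(forward, -reverse)[0, 1]
    return float(r ** 2)

def antisymmetry_bias(forward, reverse):
    """Mean of forward + reverse predictions; zero if each reverse
    prediction is exactly the negative of its forward prediction."""
    return float(np.mean(np.asarray(forward) + np.asarray(reverse)))

# Toy predictions: reverse effects have the right sign and ordering,
# but only half the magnitude of the forward effects.
forward = [1.0, 2.0, 0.5, 3.0]
reverse = [-0.5, -1.0, -0.25, -1.5]

r2 = antisymmetry_r2(forward, reverse)
bias = antisymmetry_bias(forward, reverse)
print(r2, bias)
```

In this toy case the correlation is perfect while the bias is nonzero, which shows why a magnitude comparison (Figure 3B) is informative in addition to a correlation-based score (Figure 3A).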

4. Predicting binding effect of mutations

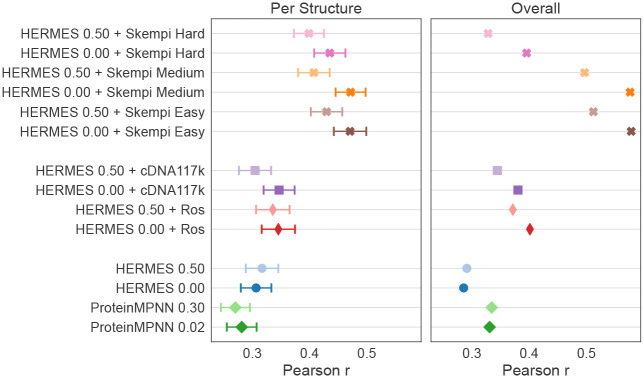

We tested the accuracy of HERMES in predicting the binding effect of mutations on the SKEMPI v2.0 dataset [33], which, to our knowledge, is the most comprehensive dataset of mutational effects on protein-protein binding interactions, with the associated crystal structures of the wildtype bound complexes. We evaluate pre-trained-only ProteinMPNN and HERMES models, as well as HERMES models fine-tuned on stability changes, on predicting binding affinity changes using the wildtype bound structures. Furthermore, for single-point mutations only, we fine-tune HERMES models on SKEMPI itself using a 3-fold cross-validation scheme, thus ensuring that every point of SKEMPI is evaluated. Using structural homology, we provide three splitting strategies with increasing levels of difficulty; see Section A.1.5 for details.
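A grouped 3-fold split of the kind described here can be sketched as follows: every data point is tested exactly once, and points sharing a group label never appear on both sides of a split. How groups are derived from structural homology is described in Section A.1.5 and is not reproduced; the cluster labels and the function `grouped_kfold` below are hypothetical.

```python
import numpy as np

def grouped_kfold(groups, n_folds=3, seed=0):
    """Yield (train_idx, test_idx) pairs such that each group label is
    assigned to exactly one test fold."""
    groups = np.asarray(groups)
    unique_groups = np.unique(groups)
    shuffled = np.random.default_rng(seed).permutation(unique_groups)
    # Deal the shuffled groups round-robin into folds.
    fold_of_group = {g: i % n_folds for i, g in enumerate(shuffled)}
    fold_ids = np.array([fold_of_group[g] for g in groups])
    for k in range(n_folds):
        yield np.flatnonzero(fold_ids != k), np.flatnonzero(fold_ids == k)

# Hypothetical homology-cluster label for each mutation in the dataset.
groups = ["cluster_a", "cluster_a", "cluster_b", "cluster_b",
          "cluster_c", "cluster_d", "cluster_d", "cluster_e"]

folds = list(grouped_kfold(groups, n_folds=3))
for train_idx, test_idx in folds:
    print(train_idx, test_idx)
```

Stricter splitting strategies correspond to coarser group labels, so that more distantly related complexes are kept together in the same fold.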

Following [21], we report the accuracy of our predictions both across mutations within each structure individually (“per-structure” correlations), and across mutations pooled from all structures (“overall” correlations). Note that “per-structure” accuracy is particularly relevant when optimizing the binding of a specific protein to its target. As shown in Fig. 4 and Table S2, pre-trained-only models demonstrate some predictive power, and fine-tuning on stability effects enhances the accuracy of binding effect predictions, confirming that transfer learning can be leveraged between the two tasks. Fine-tuning directly on the SKEMPI dataset offers even greater improvements, achieving state-of-the-art performance for “per-structure” analysis and competitive results for “overall” analysis (Table S2).
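The difference between the two reporting schemes is easy to see in a small example. The sketch below averages Pearson correlations computed within each structure and compares the result with a single correlation over the pooled data; the `min_points` filter, the structure labels, and the numbers are assumptions made for illustration.

```python
import numpy as np

def pearson(x, y):
    return float(np.corrcoef(x, y)[0, 1])

def per_structure_and_overall(structure_ids, measured, predicted, min_points=2):
    """Return (mean per-structure Pearson, overall pooled Pearson).
    Structures with fewer than `min_points` mutations are skipped
    (hypothetical filter)."""
    structure_ids = np.asarray(structure_ids)
    measured = np.asarray(measured, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    per_structure = []
    for s in np.unique(structure_ids):
        mask = structure_ids == s
        if mask.sum() >= min_points:
            per_structure.append(pearson(measured[mask], predicted[mask]))
    return float(np.mean(per_structure)), pearson(measured, predicted)

# Toy data: predictions rank mutations perfectly within each structure,
# but structure "B" carries a large constant offset.
structure_ids = ["A", "A", "A", "B", "B", "B"]
measured = [0.0, 1.0, 2.0, 0.0, 1.0, 2.0]
predicted = [0.0, 1.0, 2.0, 10.0, 11.0, 12.0]

per_structure_r, overall_r = per_structure_and_overall(structure_ids, measured, predicted)
print(per_structure_r, overall_r)
```

A structure-specific offset leaves the per-structure correlation untouched but depresses the overall correlation, which is one reason the two numbers can diverge for the same model.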

Figure 4: Single-point mutational effects on binding affinity from SKEMPI.

Averaged “per-structure” and “overall” Pearson correlations between the predicted binding effect of mutations and the measurements from the SKEMPI data are shown, for non-fine-tuned models (bottom), models fine-tuned for stability prediction (middle), and models fine-tuned on the SKEMPI data (top).

5. Discussion

Here, we presented HERMES, an efficient deep learning method for inferring the effects of mutations on protein function, conditioned on the local atomic environment surrounding the mutated residue. HERMES is pre-trained to model amino-acid preferences in protein structures, and can optionally be fine-tuned on arbitrary mutation effect datasets. We provide HERMES models pre-trained on a large non-redundant portion of the protein structure universe, as well as the same models fine-tuned on stability and binding effects of mutations. We thoroughly benchmark HERMES against other state-of-the-art models, showing robust performance on a wide variety of proteins and functions: stability effects, binding affinity, and several deep mutational scanning assays. We open-source our code and the data used for our experiments, and provide easy-to-use scripts to run HERMES models on desired protein structures and mutation effects, as well as code to fine-tune our pre-trained HERMES models on the user’s own mutation effect data.

Supplementary Material

Acknowledgement

This work has been supported by the National Institutes of Health MIRA award (R35 GM142795), the CAREER award from the National Science Foundation (grant No: 2045054), the Royalty Research Fund from the University of Washington (no. A153352), and the Allen School Computer Science & Engineering Research Fellowship from the Paul G. Allen School of Computer Science & Engineering at the University of Washington. This work is also supported, in part, through the Departments of Physics and Computer Science and Engineering, and the College of Arts and Sciences at the University of Washington.

Contributor Information

Gian Marco Visani, Paul G. Allen School of Computer Science and Engineering, University of Washington.

Michael N. Pun, Department of Physics, University of Washington.

William Galvin, Paul G. Allen School of Computer Science and Engineering, University of Washington.

Eric Daniel, Paul G. Allen School of Computer Science and Engineering, University of Washington.

Kevin Borisiak, Department of Physics, University of Washington.

Utheri Wagura, Department of Physics, MIT.

Armita Nourmohammad, Department of Physics, Applied Math, and CSE, University of Washington, Fred Hutch Cancer Research Center, Seattle, WA.

References

- [1] Gerasimavicius Lukas, Liu Xin, and Marsh Joseph A. Identification of pathogenic missense mutations using protein stability predictors. Scientific Reports, 10(1):15387, September 2020. Publisher: Nature Publishing Group.

- [2] Blaabjerg Lasse M, Kassem Maher M, Good Lydia L, Jonsson Nicolas, Cagiada Matteo, Johansson Kristoffer E, Boomsma Wouter, Stein Amelie, and Lindorff-Larsen Kresten. Rapid protein stability prediction using deep learning representations. eLife, 12:e82593, May 2023. Publisher: eLife Sciences Publications, Ltd.

- [3] Ishida Toyokazu. Effects of Point Mutation on Enzymatic Activity: Correlation between Protein Electronic Structure and Motion in Chorismate Mutase Reaction. Journal of the American Chemical Society, 132(20):7104–7118, May 2010. Publisher: American Chemical Society.

- [4] Wang Xiaoyu, Zhang Xinben, Peng Cheng, Shi Yulong, Li Huiyu, Xu Zhijian, and Zhu Weiliang. D3DistalMutation: a Database to Explore the Effect of Distal Mutations on Enzyme Activity. Journal of Chemical Information and Modeling, 61(5):2499–2508, May 2021. Publisher: American Chemical Society.

- [5] Thadani Nicole N., Gurev Sarah, Notin Pascal, Youssef Noor, Rollins Nathan J., Ritter Daniel, Sander Chris, Gal Yarin, and Marks Debora S. Learning from prepandemic data to forecast viral escape. Nature, 622(7984):818–825, October 2023. Publisher: Nature Publishing Group.

- [6] Łuksza Marta and Lässig Michael. A predictive fitness model for influenza. Nature, 507(7490):57–61, February 2014.

- [7] Neher Richard A, Russell Colin A, and Shraiman Boris I. Predicting evolution from the shape of genealogical trees. eLife, 3, November 2014.

- [8] Hie Brian L., Shanker Varun R., Xu Duo, Bruun Theodora U. J., Weidenbacher Payton A., Tang Shaogeng, Wu Wesley, Pak John E., and Kim Peter S. Efficient evolution of human antibodies from general protein language models. Nature Biotechnology, 42(2):275–283, February 2024. Publisher: Nature Publishing Group.

- [9] Rocklin Gabriel J., Chidyausiku Tamuka M., Goreshnik Inna, Ford Alex, Houliston Scott, Lemak Alexander, Carter Lauren, Ravichandran Rashmi, Mulligan Vikram K., Chevalier Aaron, Arrowsmith Cheryl H., and Baker David. Global analysis of protein folding using massively parallel design, synthesis, and testing. Science, 357(6347):168–175, July 2017. Publisher: American Association for the Advancement of Science.

- [10] Lindorff-Larsen Kresten and Teilum Kaare. Linking thermodynamics and measurements of protein stability. Protein Engineering, Design and Selection, 34:gzab002, February 2021.

- [11] Karlsson Robert. SPR for molecular interaction analysis: a review of emerging application areas. Journal of Molecular Recognition, 17(3):151–161, 2004.

- [12] Fowler Douglas M. and Fields Stanley. Deep mutational scanning: a new style of protein science. Nature Methods, 11(8):801–807, August 2014. Publisher: Nature Publishing Group.

- [13] Kinney Justin B and McCandlish David M. Massively parallel assays and quantitative Sequence–Function relationships. Annu. Rev. Genomics Hum. Genet., 20:99–127, August 2019.

- [14] Starr Tyler N., Greaney Allison J., Hilton Sarah K., Ellis Daniel, Crawford Katharine H.D., Dingens Adam S., Navarro Mary Jane, Bowen John E., Tortorici M. Alejandra, Walls Alexandra C., King Neil P., Veesler David, and Bloom Jesse D. Deep Mutational Scanning of SARS-CoV-2 Receptor Binding Domain Reveals Constraints on Folding and ACE2 Binding. Cell, 182(5):1295–1310.e20, September 2020.

- [15] Gapsys Vytautas, Michielssens Servaas, Seeliger Daniel, and de Groot Bert L. Accurate and Rigorous Prediction of the Changes in Protein Free Energies in a Large-Scale Mutation Scan. Angewandte Chemie International Edition, 55(26):7364–7368, 2016.

- [16] Schymkowitz Joost, Borg Jesper, Stricher Francois, Nys Robby, Rousseau Frederic, and Serrano Luis. The FoldX web server: an online force field. Nucleic Acids Research, 33(suppl_2):W382–W388, July 2005.

- [17] Kellogg Elizabeth H., Leaver-Fay Andrew, and Baker David. Role of conformational sampling in computing mutation-induced changes in protein structure and stability. Proteins: Structure, Function, and Bioinformatics, 79(3):830–838, 2011.

- [18] Riesselman Adam J., Ingraham John B., and Marks Debora S. Deep generative models of genetic variation capture the effects of mutations. Nature Methods, 15(10):816–822, October 2018. Publisher: Nature Publishing Group.

- [19] Meier Joshua, Rao Roshan, Verkuil Robert, Liu Jason, Sercu Tom, and Rives Alex. Language models enable zero-shot prediction of the effects of mutations on protein function. In Advances in Neural Information Processing Systems, volume 34, pages 29287–29303. Curran Associates, Inc., 2021.

- [20] Pun Michael N., Ivanov Andrew, Bellamy Quinn, Montague Zachary, LaMont Colin, Bradley Philip, Otwinowski Jakub, and Nourmohammad Armita. Learning the shape of protein microenvironments with a holographic convolutional neural network. Proceedings of the National Academy of Sciences, 121(6), February 2024. Publisher: Proceedings of the National Academy of Sciences.

- [21] Luo Shitong, Su Yufeng, Wu Zuofan, Su Chenpeng, Peng Jian, and Ma Jianzhu. Rotamer Density Estimator is an Unsupervised Learner of the Effect of Mutations on Protein-Protein Interaction. September 2022.

- [22] Diaz Daniel J., Gong Chengyue, Ouyang-Zhang Jeffrey, Loy James M., Wells Jordan, Yang David, Ellington Andrew D., Dimakis Alexandros G., and Klivans Adam R. Stability Oracle: a structure-based graph-transformer framework for identifying stabilizing mutations. Nature Communications, 15(1):6170, July 2024. Publisher: Nature Publishing Group.

- [23] Li Bian, Yang Yucheng T., Capra John A., and Gerstein Mark B. Predicting changes in protein thermodynamic stability upon point mutation with deep 3D convolutional neural networks. PLOS Computational Biology, 16(11):e1008291, November 2020. Publisher: Public Library of Science.

- [24] Benevenuta S., Pancotti C., Fariselli P., Birolo G., and Sanavia T. An antisymmetric neural network to predict free energy changes in protein variants. Journal of Physics D: Applied Physics, 54(24):245403, March 2021. Publisher: IOP Publishing.

- [25] Visani Gian Marco, Galvin William, Pun Michael, and Nourmohammad Armita. H-Packer: Holographic Rotationally Equivariant Convolutional Neural Network for Protein Side-Chain Packing. In Proceedings of the 18th Machine Learning in Computational Biology meeting, pages 230–249. PMLR, March 2024. ISSN: 2640–3498.

- [26] Visani Gian Marco, Pun Michael N., Angaji Arman, and Nourmohammad Armita. Holographic-(V)AE: An end-to-end SO(3)-equivariant (variational) autoencoder in Fourier space. Physical Review Research, 6(2):023006, April 2024. Publisher: American Physical Society.

- [27] Chaudhury Sidhartha, Lyskov Sergey, and Gray Jeffrey J. PyRosetta: a script-based interface for implementing molecular modeling algorithms using Rosetta. Bioinformatics (Oxford, England), 26(5):689–691, March 2010.

- [28] Cock Peter J. A., Antao Tiago, Chang Jeffrey T., Chapman Brad A., Cox Cymon J., Dalke Andrew, Friedberg Iddo, Hamelryck Thomas, Kauff Frank, Wilczynski Bartek, and de Hoon Michiel J. L. Biopython: freely available Python tools for computational molecular biology and bioinformatics. Bioinformatics, 25(11):1422–1423, June 2009.

- [29] Geiger Mario and Smidt Tess. e3nn: Euclidean Neural Networks, July 2022. arXiv:2207.09453 [cs].

- [30] AlQuraishi Mohammed. ProteinNet: a standardized data set for machine learning of protein structure. BMC Bioinformatics, 20(1):311, June 2019.

- [31] Lin Zeming, Akin Halil, Rao Roshan, Hie Brian, Zhu Zhongkai, Lu Wenting, Smetanin Nikita, Verkuil Robert, Kabeli Ori, Shmueli Yaniv, dos Santos Costa Allan, Fazel-Zarandi Maryam, Sercu Tom, Candido Salvatore, and Rives Alexander. Evolutionary-scale prediction of atomic-level protein structure with a language model. Science, 379(6637):1123–1130, March 2023. Publisher: American Association for the Advancement of Science.

- [32] Pancotti Corrado, Benevenuta Silvia, Birolo Giovanni, Alberini Virginia, Repetto Valeria, Sanavia Tiziana, Capriotti Emidio, and Fariselli Piero. Predicting protein stability changes upon single-point mutation: a thorough comparison of the available tools on a new dataset. Briefings in Bioinformatics, 23(2):bbab555, March 2022.

- [33] Jankauskaite Justina, Jiménez-García Brian, Dapkūnas Justas, Fernández-Recio Juan, and Moal Iain H. SKEMPI 2.0: an updated benchmark of changes in protein–protein binding energy, kinetics and thermodynamics upon mutation. Bioinformatics, 35(3):462–469, February 2019.

- [34] Eastman Peter, Friedrichs Mark S., Chodera John D., Radmer Randall J., Bruns Christopher M., Ku Joy P., Beauchamp Kyle A., Lane Thomas J., Wang Lee-Ping, Shukla Diwakar, Tye Tony, Houston Mike, Stich Timo, Klein Christoph, Shirts Michael R., and Pande Vijay S. OpenMM 4: A Reusable, Extensible, Hardware Independent Library for High Performance Molecular Simulation. Journal of Chemical Theory and Computation, 9(1):461–469, January 2013. Publisher: American Chemical Society.

- [35] Word J. Michael, Lovell Simon C., Richardson Jane S., and Richardson David C. Asparagine and glutamine: using hydrogen atom contacts in the choice of side-chain amide orientation. Journal of Molecular Biology, 285(4):1735–1747, January 1999.

- [36] Ponder Jay W. and Case David A. Force Fields for Protein Simulations. In Advances in Protein Chemistry, volume 66 of Protein Simulations, pages 27–85. Academic Press, January 2003.

- [37] Dauparas J., Anishchenko I., Bennett N., Bai H., Ragotte R. J., Milles L. F., Wicky B. I. M., Courbet A., de Haas R. J., Bethel N., Leung P. J. Y., Huddy T. F., Pellock S., Tischer D., Chan F., Koepnick B., Nguyen H., Kang A., Sankaran B., Bera A. K., King N. P., and Baker D. Robust deep learning–based protein sequence design using ProteinMPNN. Science, 378(6615):49–56, October 2022. Publisher: American Association for the Advancement of Science.

- [38] Armstrong David R, Berrisford John M, Conroy Matthew J, Gutmanas Aleksandras, Anyango Stephen, Choudhary Preeti, Clark Alice R, Dana Jose M, Deshpande Mandar, Dunlop Roisin, Gane Paul, Gáborová Romana, Gupta Deepti, Haslam Pauline, Koča Jaroslav, Mak Lora, Mir Saqib, Mukhopadhyay Abhik, Nadzirin Nurul, Nair Sreenath, Paysan-Lafosse Typhaine, Pravda Lukas, Sehnal David, Salih Osman, Smart Oliver, Tolchard James, Varadi Mihaly, Svobodova-Vařeková Radka, Zaki Hossam, Kleywegt Gerard J, and Velankar Sameer. PDBe: improved findability of macromolecular structure data in the PDB. Nucleic Acids Research, 48(D1):D335–D343, January 2020.

- [39] Jumper John, Evans Richard, Pritzel Alexander, Green Tim, Figurnov Michael, Ronneberger Olaf, Tunyasuvunakool Kathryn, Bates Russ, Žídek Augustin, Potapenko Anna, Bridgland Alex, Meyer Clemens, Kohl Simon A. A., Ballard Andrew J., Cowie Andrew, Romera-Paredes Bernardino, Nikolov Stanislav, Jain Rishub, Adler Jonas, Back Trevor, Petersen Stig, Reiman David, Clancy Ellen, Zielinski Michal, Steinegger Martin, Pacholska Michalina, Berghammer Tamas, Bodenstein Sebastian, Silver David, Vinyals Oriol, Senior Andrew W., Kavukcuoglu Koray, Kohli Pushmeet, and Hassabis Demis. Highly accurate protein structure prediction with AlphaFold. Nature, 596(7873):583–589, August 2021. Publisher: Nature Publishing Group.

- [40] Hsu Chloe, Verkuil Robert, Liu Jason, Lin Zeming, Hie Brian, Sercu Tom, Lerer Adam, and Rives Alexander. Learning inverse folding from millions of predicted structures. In Proceedings of the 39th International Conference on Machine Learning, pages 8946–8970. PMLR, June 2022. ISSN: 2640–3498.

- [41] Shan Sisi, Luo Shitong, Yang Ziqing, Hong Junxian, Su Yufeng, Ding Fan, Fu Lili, Li Chenyu, Chen Peng, Ma Jianzhu, Shi Xuanling, Zhang Qi, Berger Bonnie, Zhang Linqi, and Peng Jian. Deep learning guided optimization of human antibody against SARS-CoV-2 variants with broad neutralization. Proceedings of the National Academy of Sciences, 119(11):e2122954119, March 2022. Publisher: Proceedings of the National Academy of Sciences.

- [42] Notin Pascal, Dias Mafalda, Frazer Jonathan, Marchena-Hurtado Javier, Gomez Aidan N., Marks Debora, and Gal Yarin. Tranception: Protein Fitness Prediction with Autoregressive Transformers and Inference-time Retrieval. In Proceedings of the 39th International Conference on Machine Learning, pages 16990–17017. PMLR, June 2022. ISSN: 2640–3498.

- [43] Rao Roshan M., Liu Jason, Verkuil Robert, Meier Joshua, Canny John, Abbeel Pieter, Sercu Tom, and Rives Alexander. MSA Transformer. In Proceedings of the 38th International Conference on Machine Learning, pages 8844–8856. PMLR, July 2021. ISSN: 2640–3498.

- [44] Delgado Javier, Radusky Leandro G, Cianferoni Damiano, and Serrano Luis. FoldX 5.0: working with RNA, small molecules and a new graphical interface. Bioinformatics, 35(20):4168–4169, October 2019.

- [45] Park Hahnbeom, Bradley Philip, Greisen Per Jr., Liu Yuan, Mulligan Vikram Khipple, Kim David E., Baker David, and DiMaio Frank. Simultaneous Optimization of Biomolecular Energy Functions on Features from Small Molecules and Macromolecules. Journal of Chemical Theory and Computation, 12(12):6201–6212, December 2016. Publisher: American Chemical Society.
