Abstract

Endometrial cancer screening is crucial for clinical treatment. Analysis of cytopathology images by cytopathologists is currently a popular screening method, but manual diagnosis is time-consuming and laborious. Deep learning can provide objective guidance and improve efficiency. However, endometrial cytopathology images often come from different medical centers with different staining styles, which decreases the generalization ability of deep learning models in cytopathology image analysis and leads to poor performance. This study presents a robust automated screening framework for endometrial cancer that can be applied to cytopathology images with different staining styles and provides an objective diagnostic reference for cytopathologists, thus contributing to clinical treatment. We collected and built the XJTU-EC dataset, the first cytopathology dataset that includes both segmentation and classification labels. We also propose an efficient two-stage framework that adapts to images with different staining styles and screens for endometrial cancer at the cellular level. Specifically, in the first stage, a novel CM-UNet with a channel attention (CA) module and a multi-level semantic supervision (MSS) module is used to segment cell clumps. These modules allow the network to ignore staining variance and focus on extracting semantic information for segmentation. In the second stage, we propose ECRNet, a robust and effective classification algorithm based on contrastive learning. Through momentum-based updating and labeled memory banks, it reduces most false negative results. On the XJTU-EC dataset, CM-UNet achieves excellent segmentation performance, and ECRNet obtains an accuracy of 98.50%, a precision of 99.32% and a sensitivity of 97.67% on the test set, outperforming other competitive classical models. 
Our method robustly predicts endometrial cancer on cytopathologic images with different staining styles, which will further advance research in endometrial cancer screening and support early diagnosis for patients. The code will be available on GitHub.

Introduction

Endometrial cancer is one of the most common tumors of the female reproductive system and usually occurs in postmenopausal women [1, 2]. It is one of the leading causes of cancer-related deaths in women worldwide [3], with approximately 76,000 deaths each year [1], and its incidence and mortality are expected to continue rising in the coming decades [4, 5]. Studies have shown that endometrial screening can help detect cellular lesions and improve long-term patient outcomes [6, 7], and that it can significantly improve survival rates [8]. Endometrial cancer screening is therefore crucial.

However, few tools are available for endometrial cancer screening. Minimally invasive, cytopathology-based screening for endometrial cancer is an active topic of current research and future development [9], and it has been widely used in countries such as Japan [10, 11]. Moreover, it is considered cost-effective and more useful for early screening than invasive endometrial biopsy and hysteroscopy [12–15]. Nevertheless, advancing cytopathological screening still faces many difficulties.

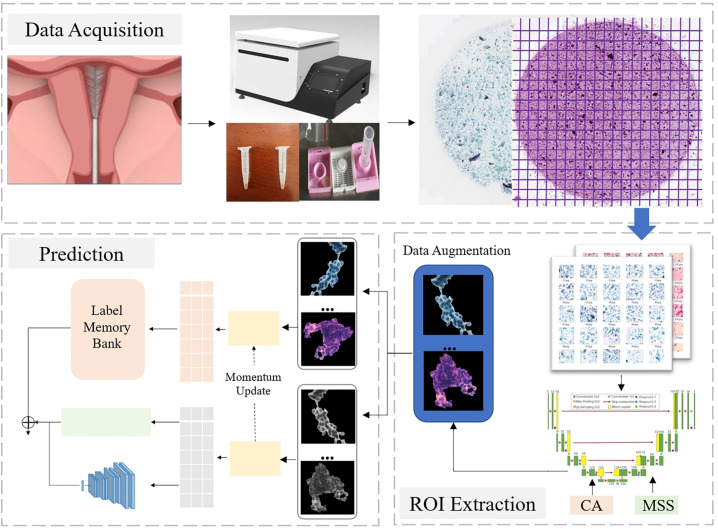

Firstly, no endometrial cytopathology dataset contains segmentation and classification labels, owing to the difficulty of data acquisition and the high cost of high-quality annotation. To address this challenge, our team collected 139 cytopathology whole slide images (WSIs) with our own endometrial sampling device, the Li Brush (20152660054, Xi’an Meijiajia Medical Technology Co., Ltd., China). Of these, 39 WSIs are Papanicolaou stained and 100 WSIs are hematoxylin and eosin (H&E) stained. These WSIs were annotated by two cytopathologists to build a dataset for cytological screening of endometrial cancer. To the best of our knowledge, this is the first cytopathology dataset that includes both segmentation and classification labels.

Secondly, diagnosing cytopathological slides is a time-consuming and complex task [16]. Subjective discrepancies and heavy workloads affect the productivity of cytopathologists [17]. As a powerful tool, deep learning can provide objective references for doctors and further improve their work efficiency [18, 19]. It has therefore been widely applied to thyroid cancer [20], cervical cancer [21], liver cancer [22, 23], and other diseases to improve diagnostic efficiency [24]. In endometrial diagnosis, deep learning is usually applied to segmentation and classification tasks. For segmentation, Erlend Hodneland et al. used a UNet-based 3D convolutional neural network (CNN) to segment endometrial tumors on radiology images, aiming to generate tumor models for better individualized treatment strategies [25]. Zhiyong Xia et al. designed a dense pyramidal attention U-Net for hysteroscopic and ultrasound images, which can help doctors accurately localize the lesion site [26]. In addition, segmentation algorithms are often used to help confirm the depth of myometrial infiltration in endometrial cancer [27–30]. For classification, Christina Fell et al. used CNNs to classify endometrial histopathology images at the WSI level into “malignant”, “other or benign” and “insufficient” [31]. Sarah Fremond et al. proposed an interpretable endometrial cancer classification system that can further predict four molecular subtypes of endometrial cancer through self-supervised learning [32]. Min Feng et al. developed a deep learning model that predicts lymph node metastasis from histopathologic images of endometrial cancer, with the aim of improving the accuracy of metastatic status prediction [33]. In summary, deep learning models in endometrial studies are commonly applied to radiology images [34, 35] and histopathology images [36–38].
However, endometrial cancer screening algorithms based on cytopathologic images are still lacking. The aim of our study is to develop a new algorithm that learns cytopathological features and provides an assisted diagnostic strategy.

Here we propose an innovative two-stage framework for endometrial cancer screening. In this study, we found that slide staining styles differ across medical centers [39]: some endometrial samples were stained with H&E, while others were stained with Papanicolaou. In addition, stained slides can vary considerably owing to the preservation environment, scanner changes, and other factors [39], which can affect the final diagnostic results [40, 41]. We therefore improved the automated screening framework to increase its robustness and accuracy under these variations.

In clinical diagnosis, cell clumps are the regions of interest (ROIs) for cytopathologists, while the background contains irrelevant noise [42]. Therefore, in the first stage, we propose an improved segmentation network, CM-UNet, which extracts ROIs from cytopathology images. We introduce a channel attention (CA) module and a multi-level semantic supervision (MSS) module to obtain richer local and global contextual representations. In addition, we add novel skip connections to efficiently extract multi-scale features.

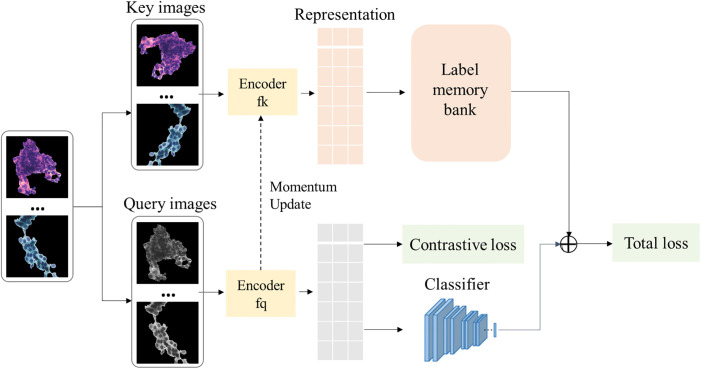

In the second stage, we classify the ROIs to screen for positive cell clumps. Because the obtained ROIs vary in shape and size, differences in their representations may affect the performance of the classification model. We therefore propose ECRNet, a contrastive learning-based algorithm, to classify ROIs. Whereas current contrastive learning methods treat different augmentations of the same image as positive pairs, we introduce a label memory bank that stores image representations together with their labels. ECRNet treats two instances with the same label as a positive pair and two instances with different labels as a negative pair. As a result, different images with the same semantics are aggregated in the representation space, while negative pairs are pushed apart. This makes better use of class-level discriminative information and improves the generalization of the algorithm to some extent.

Finally, our experimental results show that the two-stage framework performs well on cytopathology images with different staining styles. The framework can accurately present negative and positive cell clumps to cytopathologists, providing objective decision support.

The main contributions of our study are as follows:

Computer-aided diagnostic studies for endometrial cancer screening are scarce and there is a lack of available datasets. Therefore, our team created an endometrial cancer cytology dataset, which was annotated by two cytopathologists. This dataset contains segmentation labels and classification labels that can be used for deep learning.

Compared with histopathology images, endometrial cytology images contain more noise and sparser semantic features, which poses a challenge to segmentation algorithms. We propose a segmentation model based on the UNet architecture and, to better extract semantic features from cytology images, introduce the CA module and the MSS module to learn more local and global contextual representations.

Images with the same classification label may be represented differently, e.g., because of variations in staining style, which may degrade classification performance. To make full use of both image content and label information, we propose ECRNet and introduce a label memory bank so that ECRNet focuses more on class-level discriminative information.

The framework performs well on H&E-stained and Papanicolaou-stained cytopathology images and shows cytopathologists the negative and positive cell clumps. On the test set, it achieved an average accuracy of 98.50% and an area under the curve (AUC) of 93.66%, outperforming other classical models. These results show that our model can contribute to medical decision-making.

The rest of this paper is organized as follows: Section 2 describes the materials and methods; Section 3 analyzes our results; Section 4 and Section 5 discuss and conclude our work, respectively.

Methods

Data collection

Since July 2015, we have collected endometrial cells using a sampling device of our own design, the Li Brush (20152660054, Xi’an Meijia Medical Technology Co., Ltd., Xi’an, China), as part of routine hospital work that lasted seven years (XJTU1AHCR2014-007). Only endometrial cells obtained with the Li Brush after 2019 were used in this study (XJTU1AFCRC2019SJ-002). Although our team spent seven years collecting endometrial cells, all data used for analysis were obtained after 2019, so no retrospective ethical approval was involved. The endometrial cells used in this study were collected from 03/12/2019 to 03/12/2020 under IRB approval.

Our team collected images from 2019 to 2020. From 2020 to 2022, we mainly worked on annotation and on building the endometrial cytopathology image dataset. A total of 139 women who underwent curettage or hysterectomy at the First Affiliated Hospital of Xi’an Jiaotong University were registered in the Obstetrics and Gynecology Registry. Patient exclusion criteria were as follows: (1) suspected or confirmed pregnancy; (2) acute inflammation of the reproductive system; (3) previous hysterectomy for cervical cancer, cervical intraepithelial neoplasia, ovarian cancer, or similar diagnoses; (4) coagulation disorders; and (5) body temperature of 37.5°C or higher twice a day.

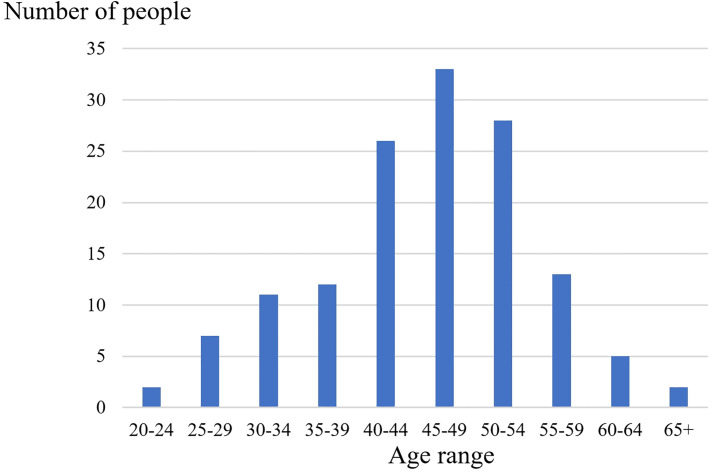

The study was approved by the Ethics Committee of the First Affiliated Hospital of Xi’an Jiaotong University (XJTU1AFCRC2019SJ-002), and written consent was obtained from all patients. Minors were not included in the study, and the authors did not have access to information that could identify individual participants. After three years of collection, 139 patients were included in the study; their details, including age, childbirth history, menstrual status, and other diseases, are shown in Table 1. The age distribution is shown in Fig 1. Histopathological diagnoses were also collected and are summarized in Table 2. All protocols used in this study were in accordance with the ethical principles for medical research of the Declaration of Helsinki [43].

Table 1. Patient characteristics.

| Characteristics | Number |
|---|---|
| SOURCE | |
| IPD | 81 |
| OP | 58 |
| AGE | |
| <40 years old | 32 |
| ≥40 years old | 107 |
| MENSTRUAL STATUS | |
| Premenopausal | 77 |
| Postmenopausal | 35 |
| AUB | 27 |
| OTHER DISEASE | |
| Ovarian cancer | 18 |
| Hypertension | 24 |
| Diabetes | 24 |
| Hormone replacement therapy | 32 |
| CHILDBIRTH EXPERIENCE | |
| Yes | 101 |
| No | 28 |

IPD, inpatient department; OP, outpatient; AUB, abnormal uterine bleeding. Some patient information is missing.

Fig 1. The age distribution of the patients.

Table 2. Pathological diagnosis.

| Histological diagnostic results | Number |
|---|---|
| Proliferative endometrium | 14 |
| Secretory endometrium | 8 |
| Atrophic endometrium | 10 |
| Mixed endometrium | 2 |
| Endometrial hyperplasia without atypia | 39 |
| Endometrial atypical hyperplasia | 4 |
| ENDOMETRIAL CARCINOMA | 62 |
| Endometrioid carcinoma, G1/G2 | 47 |
| Endometrioid carcinoma, G3 | 11 |
| Serous carcinoma | 2 |
| Clear cell carcinoma | 2 |

G1, G2, G3 represent grade 1, grade 2, grade 3 respectively.

Our study was based on all cases collected from 2019 to 2020. The data in this work were de-identified so that they contained no private patient information. The datasets used or analysed during the current study are available from the corresponding author on reasonable request.

We collected endometrial cells with the Li Brush and prepared liquid-based cytology specimens with H&E or Papanicolaou staining. The histopathological diagnosis of each patient was collected at the same time, and a case was included in the study only when its cytopathologic diagnosis was consistent with its histopathologic diagnosis. In total, 39 WSIs were Papanicolaou stained and 100 WSIs were H&E stained.

Cytopathological slides were scanned in a counterclockwise spiral with a MOTIC digital biopsy scanner (EasyScan 60, 20192220065, China) using a ×20 lens and automatic focusing. Because each WSI is very large (e.g., 95200 × 87000 pixels) and cannot be input directly into a deep learning model, we crop the WSIs into images of the same size (1024 × 1024 pixels). A simple but effective thresholding algorithm then removes meaningless background images: we first calculate the mean and standard deviation of each image in RGB space, and then retain only images whose mean lies between 50 and 230 and whose standard deviation is above 20. These images usually contain meaningful cell clumps.
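The cropping and background-filtering step can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions: tiles do not overlap, edge strips narrower than one tile are dropped, the mean and standard deviation are computed jointly over all R, G and B values of a tile, and the 50/230 bounds are treated as exclusive. The names `tile_origins` and `keep_tile` are hypothetical and not taken from the authors' code.

```python
import math
from typing import Iterable, List, Tuple

TILE = 1024  # tile side length in pixels, as stated in the text


def tile_origins(width: int, height: int, tile: int = TILE) -> List[Tuple[int, int]]:
    """Return (x, y) top-left corners of non-overlapping tile x tile crops.

    Assumption: edge strips narrower than one tile are discarded.
    """
    return [
        (x, y)
        for y in range(0, height - tile + 1, tile)
        for x in range(0, width - tile + 1, tile)
    ]


def keep_tile(rgb_values: Iterable[float],
              mean_lo: float = 50.0,
              mean_hi: float = 230.0,
              min_std: float = 20.0) -> bool:
    """Decide whether a tile is likely to contain cell clumps.

    `rgb_values` holds every R, G and B intensity (0-255) of the tile.
    Near-white background has a high mean, and empty or uniform regions
    have a low standard deviation; both are rejected.
    """
    values = list(rgb_values)
    if not values:
        return False
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return mean_lo < mean < mean_hi and std > min_std
```

For the 95200 × 87000 example WSI, `len(tile_origins(95200, 87000))` gives 92 × 84 = 7728 candidate tiles before filtering.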

The image annotation process included segmentation label annotation and classification label annotation. Segmentation labels were produced by two experienced pathologists using Adobe Photoshop CC (2019 v20.0.2.30): one senior cytopathologist segmented the cell clumps, and the other cytopathologist reviewed the results. After the segmentations were confirmed, the pathologists labeled the cell clumps according to the International Society of Gynecologic Pathologists and the 2014 World Health Organization classification of uterine tumors. All cell clumps were classified into two categories: malignant (atypical cells of undetermined significance, suspected malignant tumor cells, and malignant tumor cells) and benign (non-malignant tumor cells). A benign diagnosis was defined as cell clumps with neat edges, oval or spindle-shaped nuclei, and evenly distributed, finely granular chromatin. A malignant diagnosis referred to cell clumps with a three-dimensional appearance, irregular edges (including dilated, branched, protruding, and papillotubular edges), and disordered or lost nuclear polarity (including megakaryocyte appearance, nuclear membrane thickening, and coarsely granular or coarse block chromatin). Both benign and malignant cases were followed up histologically. All cell clumps in negative slides are negative, but positive slides contain both negative and positive cell clumps. Therefore, the two cytopathologists voted again on the label of each cell clump; when their votes disagreed, they discussed the case, and if the discussion did not reach an accurate diagnosis, the cell clump was discarded. These measures ensure the accuracy and consistency of the segmentation and classification labels.

Based on the pathologists' annotations, we established the XJTU-EC dataset, which contains 3,620 positive images (endometrial cancer and endometrial atypical hyperplasia cell clumps) and 2,380 negative images.

Endometrial cancer screening

In this paper, we propose a novel framework for endometrial cytology image analysis that applies two convolutional neural networks for early diagnosis. First, a densely connected semantic segmentation network with CA and MSS modules is used to extract ROIs; second, a model based on contrastive learning is applied to classify the ROIs. The final results confirm the effectiveness of this strategy. The full pipeline is shown in Fig 2.

Fig 2. The proposed pipeline for cancer screening using endometrial cytology data.

CM-UNet

To eliminate interference from neutrophils, dead cells and other impurities in the background, and to help the pathologist better localize lesions, the first step is to segment the endometrial cell clumps.

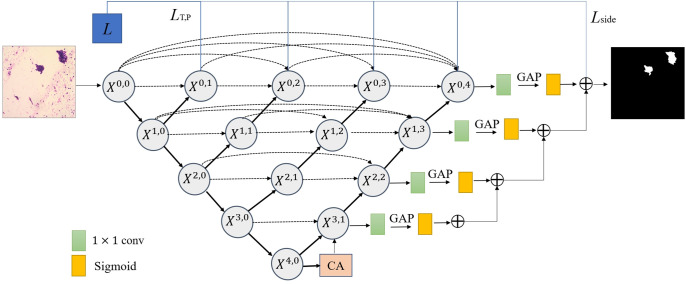

CM-UNet uses ResNet-101 as the backbone and aggregates all feature maps at each node through dense connections [44–46], as shown in Fig 3.

Fig 3. Pipeline of the proposed framework for cell clumps segmentation.

CM-UNet allows more flexible feature fusion at decoder nodes through densely connected skip connections. L is the loss function. Bold links represent the required deep supervision, and light-colored links represent optional supervision.

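Let $x^{i,j}$ denote the output of node $X^{i,j}$; then $x^{i,j}$ can be computed as follows:

$$
x^{i,j}=\begin{cases}
H\left(D\left(x^{i-1,j}\right)\right), & j=0\\
H\left(\left[\left[x^{i,k}\right]_{k=0}^{j-1},\ U\left(x^{i+1,j-1}\right)\right]\right), & j>0
\end{cases} \tag{1}
$$

where $H(\cdot)$ is a convolution operation followed by an activation function, $D(\cdot)$ and $U(\cdot)$ denote a down-sampling layer and an up-sampling layer respectively, and $[\cdot]$ denotes the concatenation layer.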
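The dense wiring of Eq (1), taken in the form used by UNet++ [45], can be made concrete by listing which tensors feed each node. The sketch below assumes that form: node (0, 0) takes the input image, other nodes with j = 0 take the down-sampled output of the node one level above, and nodes with j > 0 concatenate all earlier nodes at the same level with the up-sampled output of node (i + 1, j − 1). `node_inputs` is a hypothetical helper that returns symbolic names only; it performs no convolutions.

```python
from typing import List


def node_inputs(i: int, j: int) -> List[str]:
    """Symbolic inputs of node X^{i,j} in a densely connected UNet++-style grid.

    i: depth level (0 = full resolution); j: position along the skip pathway.
    """
    if i < 0 or j < 0:
        raise ValueError("indices must be non-negative")
    if j == 0:
        # Encoder (backbone) node: down-sample the node one level above.
        return ["input image"] if i == 0 else [f"D(x^{{{i - 1},0}})"]
    # Decoder node: dense skip connections from every earlier node at level i,
    # concatenated with the up-sampled output of the node one level below.
    same_level = [f"x^{{{i},{k}}}" for k in range(j)]
    return same_level + [f"U(x^{{{i + 1},{j - 1}}})"]
```

For example, `node_inputs(0, 2)` returns `['x^{0,0}', 'x^{0,1}', 'U(x^{1,1})']`, the concatenated input that H receives at the top-level decoder node X^{0,2}.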

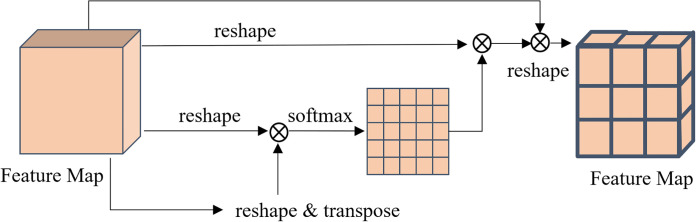

To better accommodate different image staining styles, and inspired by [47], we apply a CA module at the bottleneck of the encoder-decoder network. The CA module integrates the semantic relationships between different channel maps and emphasizes strongly interdependent channel maps by adjusting their weights [48], as shown in Fig 4.

Fig 4. The details of the channel attention module.

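We reshape the feature map $x^{4,0}$ to $K \times N$ (with channels $A_1, \dots, A_K$ and $N$ spatial positions), perform matrix multiplication between it and its transpose, and then apply a softmax layer to calculate the influence $s_{ba}$ of the $a$th channel on the $b$th channel:

$$
s_{ba}=\frac{\exp\left(A_a \cdot A_b\right)}{\sum_{a=1}^{K}\exp\left(A_a \cdot A_b\right)} \tag{2}
$$

where $K$ is the number of channels. On this basis, the final output $E$ is described as follows:

$$
E_b=\beta\sum_{a=1}^{K}\left(s_{ba}A_a\right)+A_b \tag{3}
$$

where the scale parameter $\beta$ is initialized to 0 and its weight is learned gradually during training.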
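The channel attention described above reduces to a K × K channel-affinity matrix followed by a residual re-weighting. The plain-Python sketch below assumes the standard dual-attention (DANet-style) channel-attention formulation and treats the bottleneck feature map x^{4,0} as K channels, each flattened to N values; the function name and the max-subtraction used for numerical stability are our own choices, not details from the text.

```python
import math
from typing import List

Matrix = List[List[float]]  # K channels, each a flattened list of N values


def channel_attention(A: Matrix, beta: float) -> Matrix:
    """Return E with E_b = beta * sum_a s_ba * A_a + A_b (residual channel attention)."""
    K = len(A)
    # Channel affinity: energy[b][a] = <A_b, A_a>, i.e. entry (b, a) of A A^T.
    energy = [[sum(p * q for p, q in zip(A[b], A[a])) for a in range(K)]
              for b in range(K)]
    E: Matrix = []
    for b in range(K):
        # Softmax over a: influence of channel a on channel b.
        top = max(energy[b])
        exps = [math.exp(e - top) for e in energy[b]]
        total = sum(exps)
        s_b = [v / total for v in exps]
        # Attention-weighted mix of all channels, scaled by beta, plus the original channel.
        mixed = [sum(s_b[a] * A[a][n] for a in range(K)) for n in range(len(A[b]))]
        E.append([beta * v + x for v, x in zip(mixed, A[b])])
    return E
```

Because β starts at 0, the module initially passes x^{4,0} through unchanged (`channel_attention(A, 0.0) == A`), and the attention term is blended in only as β is learned.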

To alleviate the vanishing/exploding gradient problem, we introduce the MSS module [49]. By setting appropriate weights for different side-output layers, a deeper semantic representation is learned. Let R denote the original input image and d the depth of the CM-UNet model. Each side-output layer S performs a 1 × 1 convolution followed by global average pooling to extract global contextual information, and we assign a weight factor α to each layer of the model. The side loss can then be defined as:

| (4) |

where θi denotes the parameters of the ith output layer, and α3, α2, α1 and α0 are set to 0.1, 0.3, 0.6 and 0.9, respectively.
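Since the explicit form of the side loss is not recoverable from the text, the sketch below shows only what is specified: each side output contributes its own loss, scaled by the fixed weights α0 = 0.9, α1 = 0.6, α2 = 0.3 and α3 = 0.1. How each per-output loss is computed (here it is simply passed in as a number) and the plain weighted sum are assumptions; `side_loss` and `SIDE_WEIGHTS` are hypothetical names.

```python
from typing import Dict

# Side-output weights alpha_i from the text, keyed by output-layer index i.
SIDE_WEIGHTS: Dict[int, float] = {0: 0.9, 1: 0.6, 2: 0.3, 3: 0.1}


def side_loss(per_output_losses: Dict[int, float],
              weights: Dict[int, float] = SIDE_WEIGHTS) -> float:
    """Weighted sum of side-output losses, sum_i alpha_i * loss_i.

    Assumption: per_output_losses[i] is the already-computed loss of the
    i-th side output; this function only applies the alpha weighting.
    """
    missing = set(weights) - set(per_output_losses)
    if missing:
        raise KeyError(f"missing side outputs: {sorted(missing)}")
    return sum(weights[i] * per_output_losses[i] for i in weights)
```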

In addition, we introduce a hybrid segmentation loss $L_{T,P}$ to address class imbalance in the segmentation task:

$$
L_{T,P}=-\frac{1}{N}\sum_{c=1}^{C}\sum_{n=1}^{N}\left(t_{n,c}\log p_{n,c}+\frac{2\,t_{n,c}\,p_{n,c}}{t_{n,c}^{2}+p_{n,c}^{2}}\right) \tag{5}
$$

where $t_{n,c} \in T$ and $p_{n,c} \in P$ denote the ground-truth label and predicted probability for class $c$ and the $n$th pixel in the batch. $T$ represents the ground truth, $P$ the predicted probabilities, $C$ the number of categories, and $N$ the number of pixels in one batch.
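Assuming the hybrid loss takes the UNet++ form [45], i.e. pixel-wise cross-entropy plus a soft Dice term summed over classes and pixels and divided by N, it can be computed directly from per-pixel one-hot targets and predicted probabilities. The clamping constant `eps` is our addition to avoid log(0) and division by zero; `hybrid_loss` is a hypothetical name.

```python
import math
from typing import List


def hybrid_loss(T: List[List[float]], P: List[List[float]], eps: float = 1e-7) -> float:
    """Cross-entropy + soft-Dice hybrid segmentation loss.

    T[n][c]: ground-truth (one-hot) value for pixel n and class c.
    P[n][c]: predicted probability for pixel n and class c.
    """
    N = len(T)
    total = 0.0
    for t_row, p_row in zip(T, P):
        for t, p in zip(t_row, p_row):
            ce = t * math.log(max(p, eps))               # cross-entropy term
            dice = 2.0 * t * p / (t * t + p * p + eps)   # soft Dice term
            total += ce + dice
    return -total / N
```

With one-hot targets, a perfect prediction gives −1 (up to `eps`), and any less confident prediction gives a larger value.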

The overall loss function of CM-UNet is defined as the weighted sum of the hybrid segmentation loss LT,P and the side loss Lside, as follows:

| (6) |

where d represents the depth of the CM-UNet model.

We trained the segmentation network on the dataset annotated by pathologists using ten-fold cross-validation. Segmentation results often contain holes and flaws, so we eliminate gaps and noise by morphological processing. Finally, we obtain an ROI dataset of cell clumps for the next stage.
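The text does not specify which morphological operations were used. A common choice, assumed here, is a closing (dilation then erosion) to fill small holes, followed by an opening (erosion then dilation) to remove isolated noise, both with a 3 × 3 square structuring element. The pure-Python version below works on a binary mask stored as a list of rows and ignores neighbours that fall outside the image; all function names are hypothetical.

```python
from typing import List

Mask = List[List[int]]  # binary mask: 1 = cell clump, 0 = background


def _apply(mask: Mask, need_all: bool) -> Mask:
    """3x3 erosion (need_all=True) or dilation (need_all=False).

    Out-of-bounds neighbours are ignored rather than padded.
    """
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            neigh = [mask[yy][xx]
                     for yy in range(max(0, y - 1), min(h, y + 2))
                     for xx in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = int(all(neigh)) if need_all else int(any(neigh))
    return out


def erode(mask: Mask) -> Mask:
    return _apply(mask, need_all=True)


def dilate(mask: Mask) -> Mask:
    return _apply(mask, need_all=False)


def clean_mask(mask: Mask) -> Mask:
    closed = erode(dilate(mask))   # closing: fill small holes and gaps
    return dilate(erode(closed))   # opening: remove small isolated specks
```

A larger structuring element would remove larger specks but would also erode thin clump boundaries, so its size would need tuning on real masks.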

ECRNet

After segmentation of the cytopathological images, noise such as single cells and leukocytes is removed from the background, leaving only the ROIs, as shown in Fig 2. Each ROI is then padded with zero-valued pixels to a size of 512 × 512 for subsequent analysis.
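A minimal sketch of the padding step, assuming the ROI is centred on the 512 × 512 canvas and already fits within it (the text does not say how larger ROIs are handled, so this version raises an error). Images are lists of rows; for RGB data each entry would be a pixel tuple rather than a scalar, and `fill` would be `(0, 0, 0)`. `pad_to_square` is a hypothetical name.

```python
from typing import Any, List

Image = List[List[Any]]


def pad_to_square(roi: Image, size: int = 512, fill: Any = 0) -> Image:
    """Centre `roi` on a size x size canvas filled with `fill` (zero padding)."""
    h = len(roi)
    w = len(roi[0]) if h else 0
    if h > size or w > size:
        raise ValueError(f"ROI {h}x{w} does not fit in {size}x{size}")
    top, left = (size - h) // 2, (size - w) // 2
    canvas = [[fill] * size for _ in range(size)]
    for y, row in enumerate(roi):
        # Replace a slice of equal length, so every canvas row keeps `size` entries.
        canvas[top + y][left:left + w] = list(row)
    return canvas
```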

Because endometrial cell features are complex, a powerful deep learning classifier is needed to learn and classify them. To meet this need, we propose ECRNet, a method based on contrastive learning. Its details are shown in Fig 5.

Fig 5. The details of ECRNet.

ECRNet consists of two parts: contrastive learning and supervised learning. In the contrastive learning part, we incorporate the classification labels of the training data to improve classification performance, and therefore introduce a label memory bank [50]. Two instances with the same label are treated as a positive pair, while two instances with different labels are treated as a negative pair. This process can be viewed as a dictionary look-up task: given an encoded query q with label y, we look up the corresponding positive keys in a dictionary. Assuming a dictionary of n encoded labeled keys {(k1, y1), (k2, y2), …, (kn, yn)}, the label contrastive loss Lcon for the encoded query (q, y) is calculated as:

| (7) |

where II(·) is an indicator function that equals 1 if y (the label of q) and yi (the label of ki) are the same and 0 otherwise, sim(·) is a similarity function, and τ is a temperature parameter.
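The exact form of the label contrastive loss is not recoverable from the text. The sketch below assumes a supervised-contrastive form consistent with the definitions given: cosine similarity for sim(·), the indicator selecting keys whose label matches the query's, and an average over those positive keys of −log(exp(sim(q, k_i)/τ) / Σ_j exp(sim(q, k_j)/τ)). The averaging, the cosine choice, and returning 0 when the bank holds no positive key are our assumptions, and the function names are hypothetical.

```python
import math
from typing import Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; assumes both vectors are non-zero."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def label_contrastive_loss(q: Sequence[float], y: int,
                           keys: Sequence[Sequence[float]], key_labels: Sequence[int],
                           tau: float = 0.07) -> float:
    """Supervised-contrastive loss of one query against a labeled key dictionary.

    Every key sharing the query's label is a positive; all keys form the denominator.
    """
    logits = [cosine(q, k) / tau for k in keys]
    # Numerically stable log-sum-exp over all keys.
    top = max(logits)
    log_denom = top + math.log(sum(math.exp(z - top) for z in logits))
    positives = [z for z, yi in zip(logits, key_labels) if yi == y]  # indicator = 1
    if not positives:
        return 0.0  # assumption: no positive key in the bank gives no contrastive signal
    return -sum(z - log_denom for z in positives) / len(positives)
```

With τ = 0.07 the logits are sharply scaled, so a query that is aligned with its positive keys yields a loss near 0, while one aligned with a negative key yields a large loss.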

To store a large number of image representations and labels in the label memory bank, we introduce the momentum update method to dynamically build a large and consistent dictionary [51]. This not only reduces computational overhead but also allows the learned representations to transfer well to the downstream task. The update equation is as follows:

$$
\theta_k \leftarrow m\,\theta_k+(1-m)\,\theta_q \tag{8}
$$

Here $m \in [0, 1)$ is a momentum coefficient, $\theta_k$ is the parameter of the encoder $f_k$, and $\theta_q$ is the parameter of the encoder $f_q$.
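Assuming the update follows the standard momentum rule of MoCo [51], θ_k ← m·θ_k + (1 − m)·θ_q with m = 0.9 in this study, it can be applied to flat parameter lists as sketched below. The sketch pairs it with a fixed-size FIFO label memory bank; the queue length and the FIFO eviction policy follow MoCo and are assumptions here, as are the names `momentum_update` and `LabelMemoryBank`.

```python
from collections import deque
from typing import Deque, List, Sequence, Tuple


def momentum_update(theta_k: Sequence[float], theta_q: Sequence[float],
                    m: float = 0.9) -> List[float]:
    """Key-encoder update: theta_k <- m * theta_k + (1 - m) * theta_q."""
    if not 0.0 <= m < 1.0:
        raise ValueError("m must lie in [0, 1)")
    return [m * k + (1.0 - m) * q for k, q in zip(theta_k, theta_q)]


class LabelMemoryBank:
    """Fixed-size FIFO store of (key embedding, label) pairs."""

    def __init__(self, capacity: int) -> None:
        self._items: Deque[Tuple[List[float], int]] = deque(maxlen=capacity)

    def enqueue(self, keys: Sequence[Sequence[float]], labels: Sequence[int]) -> None:
        # New keys come from the slowly updated key encoder f_k;
        # the oldest entries are dropped automatically once the bank is full.
        for k, y in zip(keys, labels):
            self._items.append((list(k), y))

    def keys_and_labels(self) -> Tuple[List[List[float]], List[int]]:
        return [k for k, _ in self._items], [y for _, y in self._items]
```

With m = 0.9, the key encoder moves one tenth of the way toward the query encoder at each step, which keeps keys already stored in the bank roughly consistent with newly encoded ones.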

In the supervised learning part, we choose VGG-16 as the classifier [52, 53]. The classifier is trained with the cross-entropy loss, defined as follows:

$$
L_{cls}=-\sum_{q_i \in Q}\sum_{j \in Y}\mathbb{1}\left(y_i=j\right)\log p_{ij} \tag{9}
$$

where $Q$ is the set of query image representations, $Y$ is the label set, $p_{ij}$ is the predicted probability that the query image $q_i$ belongs to class $j$, and $\mathbb{1}(\cdot)$ is an indicator function that equals 1 if the true label $y_i$ of $q_i$ is $j$ and 0 otherwise.

Finally, the ECRNet total loss is calculated as follows:

| (10) |

where β is a hyperparameter that adjusts the relative weight of the classification loss and the contrastive loss. We set β to 0.5.
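The classifier loss and the combined objective can be sketched as follows. The exact form of the total loss is not recoverable from the text, so the additive form L = L_cls + β·L_con with β = 0.5 is an assumption based only on the sentence describing β; averaging the cross-entropy over the batch is also our choice. `cross_entropy` and `ecrnet_total_loss` are hypothetical names.

```python
import math
from typing import List, Sequence


def cross_entropy(probs: List[Sequence[float]], labels: Sequence[int],
                  eps: float = 1e-12) -> float:
    """Mean of -log p_{i, y_i} over the query images in the batch."""
    return -sum(math.log(max(p[y], eps)) for p, y in zip(probs, labels)) / len(labels)


def ecrnet_total_loss(l_cls: float, l_con: float, beta: float = 0.5) -> float:
    """Assumed combination: classification loss plus beta-weighted contrastive loss."""
    return l_cls + beta * l_con
```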

Evaluation metrics

We chose the Dice coefficient to evaluate the segmentation model. It is a measure of the similarity between two samples and is one of the commonly used evaluation criteria for segmentation [54]. When the Dice coefficient is 1, it means that the segmentation model achieves perfect results. The Dice coefficient is then calculated as follows:

$$
\mathrm{Dice}=\frac{2\left|T \cap P\right|}{\left|T\right|+\left|P\right|} \tag{11}
$$

where T is the set of ground truth, and P is the set of corresponding segmentation results, respectively.
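Treating each mask as the set of its foreground pixel coordinates, the Dice coefficient becomes a few set operations. The convention that two empty masks score 1.0 is our assumption; the text does not cover that case. `dice` is a hypothetical name.

```python
from typing import AbstractSet, Hashable


def dice(T: AbstractSet[Hashable], P: AbstractSet[Hashable]) -> float:
    """Dice = 2|T & P| / (|T| + |P|); two empty masks count as a perfect match."""
    if not T and not P:
        return 1.0
    return 2.0 * len(T & P) / (len(T) + len(P))
```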

To better evaluate the classification model, we use four common quantitative metrics: accuracy, sensitivity, specificity, and F1-score, defined as follows:

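$$
\mathrm{Accuracy}=\frac{TP+TN}{TP+TN+FP+FN} \tag{12}
$$

$$
\mathrm{Sensitivity}=\frac{TP}{TP+FN} \tag{13}
$$

$$
\mathrm{Specificity}=\frac{TN}{TN+FP} \tag{14}
$$

$$
\mathrm{F1}=\frac{2\,TP}{2\,TP+FP+FN} \tag{15}
$$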

where TP, TN, FP and FN represent true positives (correctly classified as positive), true negatives (correctly classified as negative), false positives (incorrectly classified as positive) and false negatives (incorrectly classified as negative), respectively.
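These metrics, together with the precision reported in Tables 4 and 5, can be computed from the four confusion-matrix counts. A minimal sketch; it assumes every denominator is non-zero, and `classification_metrics` is a hypothetical name.

```python
from typing import Dict


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Confusion-matrix metrics for the positive (malignant) class."""
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)  # also called recall or TPR
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        # Harmonic mean of precision and sensitivity; equals 2TP / (2TP + FP + FN).
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }
```

For a single confusion matrix, F1 is the harmonic mean of precision and sensitivity and therefore always lies between those two values, which makes it a quick consistency check on reported results.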

In addition, to compare the performance of the classifiers, we use ROC curves and AUC values to visualize the classification results of each classifier. The ROC curve reflects the trade-off between sensitivity and specificity: its abscissa is the false positive rate (FPR, 1 − specificity), and its ordinate is the true positive rate (TPR, sensitivity). The AUC is the area under the ROC curve; a higher AUC indicates better discrimination between positive and negative samples.
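The AUC can be computed without explicitly tracing the ROC curve: it equals the probability that a randomly chosen positive ROI receives a higher score than a randomly chosen negative one, with ties counted as one half (the Mann-Whitney formulation). The sketch below is O(P·N) and adequate for checking small result sets; `auc` is a hypothetical name.

```python
from typing import Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))
```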

Experiments and results

Implementation details

All cytopathology images are augmented by vertical flipping, horizontal flipping, random rotation (90°, 180°, 270°), scaling and conversion to grayscale to improve the framework's performance. We initialize the encoders of the segmentation and classification models with ImageNet pre-trained weights, while the decoder weights are randomly initialized. The models are optimized with the Adam optimizer [55] with an initial learning rate of 5 × 10−3. The temperature parameter τ is 0.07, the momentum parameter m is 0.9, and the training batch size is 32.
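For reference, the hyperparameters stated in this paper can be collected in one place. The dataclass below only summarizes reported values; the field names are hypothetical, and it is not the authors' configuration file.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass(frozen=True)
class TrainingConfig:
    learning_rate: float = 5e-3          # Adam initial learning rate
    batch_size: int = 32
    temperature: float = 0.07            # tau in the label contrastive loss
    momentum: float = 0.9                # m in the key-encoder momentum update
    loss_beta: float = 0.5               # weight between classification and contrastive losses
    side_weights: Dict[int, float] = field(
        default_factory=lambda: {0: 0.9, 1: 0.6, 2: 0.3, 3: 0.1})  # alpha_i for MSS
    rotations_deg: Tuple[int, ...] = (90, 180, 270)  # rotation augmentations
    roi_size: int = 512                  # ROIs zero-padded to roi_size x roi_size
    tile_size: int = 1024                # WSI crop size in pixels
    folds: int = 10                      # ten-fold cross-validation
```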

We used ten-fold cross-validation to evaluate our models. All networks are implemented in TensorFlow and trained on two NVIDIA GeForce GTX 1080 GPUs, with Python 3.6.12 (Python Software Foundation, Wilmington, DE, USA), Keras 2.4.3 (Google Brain, Mountain View, CA, USA) and TensorFlow 2.2.0 (Google Brain, Mountain View, CA, USA).

Segmentation results

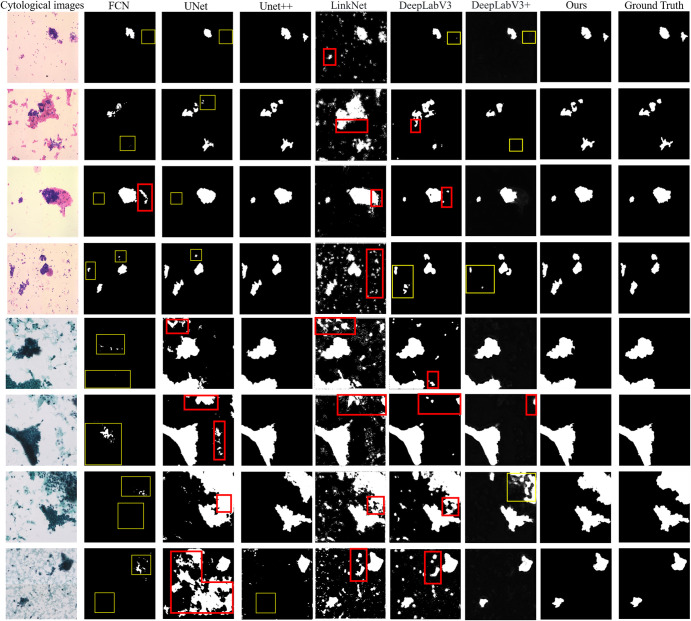

We applied our segmentation algorithm and other classical segmentation algorithms, namely fully convolutional networks (FCN) [56], UNet [44], UNet++ [45], LinkNet [57], DeepLabV3 [58] and DeepLabV3+ [59], to H&E-stained and Papanicolaou-stained images. The experimental results are shown in Table 3. Our model shows strong performance in segmenting cell clumps, with an average Dice value of 0.89. In addition, we conducted ablation experiments, also shown in Table 3, to verify the contributions of the CA module and the MSS module to the segmentation model.

Table 3. Comparison experiments.

| Model | Dice | Training time | Inference time | Params |
|---|---|---|---|---|
| FCN | 0.61 | 2.17h | 0.045s | 270M |
| UNet | 0.75 | 2.50h | 0.022s | 33M |
| UNet++ | 0.85 | 1.49h | 0.029s | 30M |
| LinkNet | 0.79 | 1.21h | 0.030s | 12M |
| DeepLabV3 | 0.81 | 2.30h | 0.030s | 54M |
| DeepLabV3+ | 0.85 | 1.80h | 0.025s | 41M |
| UNet++ (with CA) | 0.86 | 1.52h | 0.030s | 31M |
| UNet++ (with MSS) | 0.88 | 1.88h | 0.039s | 33M |
| CM-UNet | 0.89 | 1.90h | 0.039s | 33M |

Comparison of our segmentation algorithm with other classical segmentation algorithms. Inference time is measured per image.

The segmentation results are shown in Fig 6. The first column shows the cytological image; the second to eighth columns show the results of FCN, UNet, UNet++, LinkNet, DeepLabV3, DeepLabV3+ and CM-UNet, respectively; and the ninth column shows the ground truth annotated by the pathologist. Red boxes mark over-segmented areas, and yellow boxes mark under-segmented areas. As the figure shows, FCN and UNet under-segment more often and fail to identify all cell clumps, making them unsuitable for cytopathology image segmentation. LinkNet and DeepLabV3, in contrast, tend to over-segment, mistaking mucus and single cells for cell clumps; this does not benefit the subsequent classification task, so they are also unsuitable. The segmentation results of UNet++, DeepLabV3+ and CM-UNet basically conform to the gold standard. However, UNet++ performed moderately well on H&E-stained images but poorly on Papanicolaou-stained images, occasionally missing cell clumps, whereas DeepLabV3+ made fewer errors on Papanicolaou-stained images but missed cell clumps on H&E-stained images. Overall, the segmentation results of CM-UNet are closest to the pathologists' annotations: it segments all cell clumps and extracts the ROIs, demonstrating the effectiveness of our segmentation network.

Fig 6. Comparison with classical segmentation algorithms.

Segmentation results for four randomly selected H&E-stained and four randomly selected Papanicolaou-stained cytopathology images. Red boxes mark over-segmented areas, and yellow boxes mark under-segmented areas.

Classification results

We input the ROIs into ECRNet for ten-fold cross-validation. Table 4 shows the results of the ablation experiments. The backbone (VGG-16) classified the extracted ROI dataset with an accuracy of 91.07%, whereas VGG-16 with the contrastive learning component achieved an accuracy 7.43 percentage points higher.

Table 4. Ablation experiments.

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| VGG-16 (One stage) | 84.29 | 83.23 | 88.74 | 85.90 |
| VGG-16 (Two stage) | 91.07 | 90.38 | 93.38 | 91.86 |
| ECRNet (One stage) | 89.17 | 88.03 | 90.67 | 89.33 |
| ECRNet (Two stage) | 98.50 | 99.32 | 97.67 | 99.33 |

Table 4 also shows the importance of the segmentation strategy. In the two-stage strategy, we first segment the cytopathology images to obtain ROIs and then classify the ROIs; in the one-stage strategy, the classifier directly classifies the cytopathology images, background included. All images are scaled to 512 × 512. VGG-16 performed worst under the one-stage strategy, with an accuracy of 84.29%, whereas ECRNet performed best under the two-stage strategy.

As shown in Table 5, we compared ECRNet with several classical deep learning models: MobileNet [60], ResNet-101 [61], Inception-V3 [62], ViT [63], ResNeXt-101 [64], EfficientNet [65], DenseNet-121 [66] and VGG-16 [67]. All network parameters remain the same as previously described, and all models are initialized with ImageNet pre-trained weights. These results are obtained in the two-stage framework, that is, by classifying cell clumps.

Table 5. Comparison with baseline methods.

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) | Params |
|---|---|---|---|---|---|
| VGG-16 +SVM | 78.83 | 75.62 | 78.15 | 76.86 | 138M |
| ResNet-101 +SVM | 88.55 | 84.30 | 85.71 | 85.00 | 24M |
| Inception-V3 +SVM | 87.60 | 80.77 | 88.24 | 84.34 | 22M |
| MobileNet-V1 | 82.99 | 79.11 | 74.79 | 76.89 | 5M |
| Inception-V3 | 82.17 | 81.43 | 83.33 | 82.37 | 22M |
| ViT | 65.00 | 62.43 | 95.58 | 75.52 | 343M |
| ResNeXt-101 | 86.50 | 81.16 | 99.12 | 89.24 | 79M |
| EfficientNet-B7 | 88.50 | 88.79 | 91.15 | 89.96 | 66M |
| ResNet-101 | 92.17 | 97.03 | 87.00 | 91.74 | 24M |
| DenseNet-121 | 93.50 | 92.23 | 95.00 | 93.59 | 8M |
| Ours | 98.50 | 99.32 | 97.67 | 99.33 | 138M |

In this comparison, ViT performs poorly, possibly because our dataset is small and ViT overfits it. MobileNet-V1 also performs poorly, possibly because lightweight networks are less able to learn complex cytopathological features. In contrast, Inception-V3, ResNeXt-101, EfficientNet-B7, ResNet-101, DenseNet-121, and ECRNet perform better, with mean accuracy, precision, recall, and F1-score all above 80%. Specifically, ResNeXt-101 has the highest recall (99.12%), but its accuracy is 12 percentage points lower than that of ECRNet. ResNet-101 has a precision of 97.03%, second only to ECRNet, which indicates a low false positive rate. However, its recall is only 87.00%, which indicates a higher false negative rate, so it is more likely to miss cancerous cell clumps. Overall, ECRNet achieves the best classification performance, outperforming the other models in accuracy, precision, and F1-score.

Furthermore, previous work [68] shows that extracting features with CNNs and training linear support vector machines (SVMs) on them can outperform end-to-end CNN classifiers. We therefore use three classical CNNs, namely VGG-16, ResNet-101, and Inception-V3, to extract image feature vectors, which are then used to train an SVM that classifies each ROI as positive or negative. Because the feature maps are high-dimensional, we apply principal component analysis (PCA) to reduce the dimensionality of the image features. The SVM uses a radial basis function (RBF) kernel with γ = 0.0078 and C = 2. All other experimental settings are the same as described previously. As Table 5 shows, all three results are unsatisfactory; VGG-16+SVM reaches an accuracy of only 78.83%.
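A sketch of the CNN-feature + PCA + RBF-SVM baseline using scikit-learn is shown below. Only the kernel type, γ = 0.0078 and C = 2 come from the text; the number of PCA components (64) is an assumption, the helper name `build_feature_svm` is hypothetical, and the random vectors are placeholders for the pooled CNN activations of each ROI.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC


def build_feature_svm(n_components=64):
    """PCA for dimensionality reduction followed by an RBF-kernel SVM.

    gamma and C follow the values reported in the text; n_components is assumed.
    """
    return make_pipeline(
        PCA(n_components=n_components),
        SVC(kernel="rbf", gamma=0.0078, C=2),
    )


# Placeholder features: in the real pipeline each row would be the CNN feature
# vector (e.g. from VGG-16, ResNet-101 or Inception-V3) of one ROI.
rng = np.random.default_rng(0)
n_per_class, dim = 100, 512
X = np.vstack([rng.normal(0.0, 1.0, (n_per_class, dim)),    # class 0: negative
               rng.normal(1.0, 1.0, (n_per_class, dim))])   # class 1: positive
y = np.array([0] * n_per_class + [1] * n_per_class)

clf = build_feature_svm()
clf.fit(X[::2], y[::2])            # train on every other sample
pred = clf.predict(X[1::2])        # evaluate on the remaining samples
accuracy = float((pred == y[1::2]).mean())
```

Putting PCA and the SVM in one pipeline means the projection is fitted only on the training ROIs of each fold, so no information from the test fold leaks into the dimensionality reduction.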

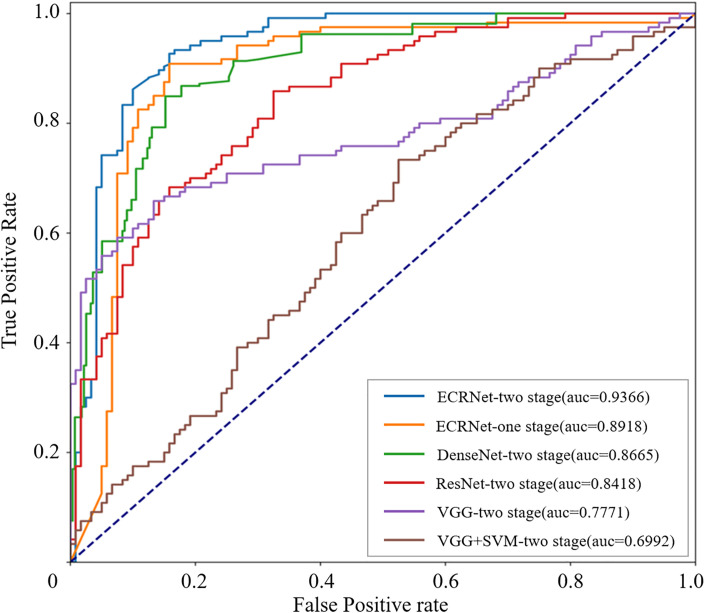

We further plotted ROC curves for the classifiers in the binary classification task. As Fig 7 shows, ECRNet outperforms all the other models. This experiment suggests that the models benefit more from the two-stage framework than from the one-stage framework, supporting the effectiveness of our two-stage strategy.

Fig 7. ROC curves of the classifiers. The x-axis represents the false positive rate, and the y-axis represents the true positive rate.

The dashed diagonal line corresponds to a random classifier (AUC = 0.5); curves above it indicate better-than-random classification.
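ROC curves and AUC values such as those in Fig 7 can be computed by sweeping a decision threshold over a classifier's scores. The pure-Python sketch below is illustrative (function names hypothetical): it treats label 1 as cancer, groups tied scores into a single threshold, assumes both classes are present, and integrates with the trapezoidal rule, so a random classifier yields the diagonal and an AUC of 0.5.

```python
def roc_curve_points(labels, scores):
    """(FPR, TPR) points from sweeping the threshold from the highest score down.

    labels: 1 = positive (cancer), 0 = negative; scores: higher = more likely cancer.
    Samples with tied scores are added together, giving one point per distinct score.
    Assumes at least one positive and one negative sample.
    """
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc_trapezoid(points):
    """Area under a piecewise-linear ROC curve via the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

For example, `auc_trapezoid(roc_curve_points([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.6]))` returns 0.75, which equals the fraction of positive/negative pairs that the scores rank correctly.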

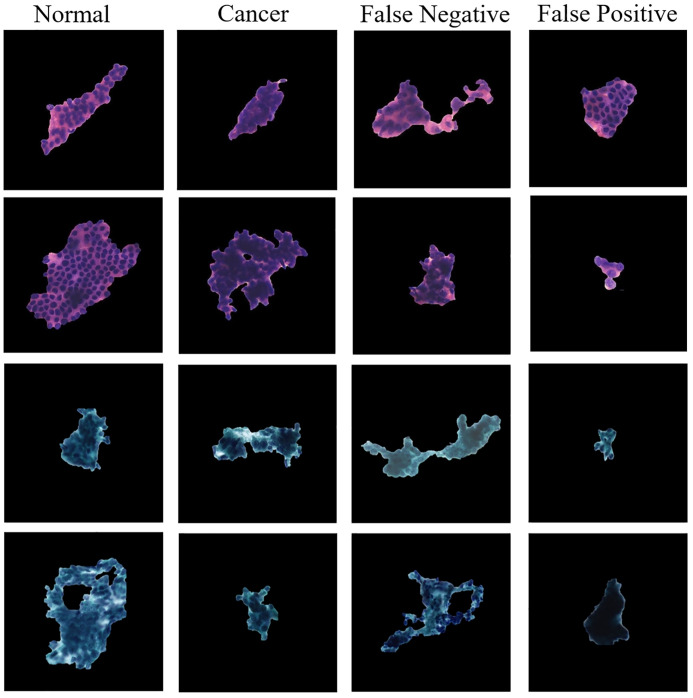

Finally, we discuss ECRNet's classification failures. Fig 8 shows 8 randomly selected correctly classified cell clumps and 8 failure cases from the test set. The 4 false-negative (missed diagnosis) cases comprise 1 well-differentiated and 3 poorly differentiated endometrial adenocarcinomas. The 4 false-positive (overdiagnosis) cases include 3 normal cell clumps that were classified as cancerous. In some of these failures, cell stacking and indistinct structural features made it difficult for the model to extract deeper features. In other failures, the images contained only a few cells, which made them more prone to misclassification. This suggests that ECRNet's ability to classify small targets should be strengthened in future work.

Fig 8. Examples of correct and incorrect predictions of ECRNet.

In addition, we externally validated our algorithm on a public dataset obtained from the public data platform AIstudio [69]. Because this dataset does not include segmentation labels, the external validation mainly evaluates the classification performance of ECRNet.

The external validation dataset consists of 848 negative and 785 positive endometrial cytopathology images, a negative-to-positive ratio of 1.08:1, and all images are Papanicolaou-stained. For comparison, we chose four classification models (ResNet-101, DenseNet-121, EfficientNet-B7, and ResNeXt-101), because they performed well in the previous classification task, second only to ECRNet. Specifically, these models were first trained on images (1024 × 1024 pixels) from the XJTU-EC dataset rather than on cell clumps (ROIs), and then tested directly on the external dataset. Table 6 shows the results.

Table 6. External validation comparison results.

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| ResNeXt-101 | 64.50 | 86.20 | 44.20 | 58.44 |
| EfficientNet-B7 | 83.53 | 78.72 | 59.20 | 67.58 |
| ResNet-101 | 73.50 | 100.00 | 53.10 | 69.37 |
| DenseNet-121 | 80.10 | 77.20 | 67.20 | 71.90 |
| Ours | 95.32 | 94.57 | 96.17 | 95.37 |

In this external validation experiment, ResNet-101 has the highest precision (100%), which means it produces no false positives. However, ResNeXt-101, EfficientNet-B7, ResNet-101, and DenseNet-121 all have low recall, which means they can easily miss positive patients; this is unacceptable in clinical screening. In contrast, our model achieves the highest recall, 96.17%. ECRNet also has the highest accuracy, 95.32%, followed by EfficientNet-B7 with 83.53%. Notably, ECRNet substantially outperforms the other four classifiers in F1-score. The F1-score is the harmonic mean of precision and recall; it combines both into a single, more comprehensive measure of classifier performance, and a higher F1-score indicates a better classifier. In summary, ECRNet performs best in this external validation experiment.
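The metrics in Tables 4–6 follow the standard definitions from confusion-matrix counts. The minimal sketch below (helper names hypothetical) makes explicit that F1 is the harmonic mean of precision and recall; applied to the percentages reported for ResNet-101 in Table 6 (precision 100.00%, recall 53.10%), it reproduces the listed F1-score of 69.37%, showing how a perfect precision is dragged down by a low recall.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (works for fractions or percentages)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall (sensitivity) and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0   # share of predicted positives that are cancer
    recall = tp / (tp + fn) if tp + fn else 0.0      # share of cancer cases that are detected
    return accuracy, precision, recall, f1_score(precision, recall)


# ResNet-101 in Table 6: precision 100.00 %, recall 53.10 %
print(round(f1_score(100.00, 53.10), 2))  # 69.37
```

Because the harmonic mean is dominated by the smaller of its two inputs, a classifier cannot reach a high F1-score by maximizing precision alone, which is why F1 penalizes the low-recall models in Table 6.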

Discussion

Currently, there is no well-established screening method for endometrial cancer. The main screening tests include ultrasound, hysteroscopy, and endometrial biopsy. Serial transvaginal ultrasound is a less invasive method of assessment but lacks high specificity. To date, the collection of endometrial tissue samples and the analysis of histopathological images by physicians remain the gold standard for diagnosing endometrial cancer. However, both endometrial biopsy and hysteroscopy are invasive and require the involvement of anaesthetists, which makes them expensive. As a result, cytopathology-based screening for endometrial cancer is becoming increasingly desirable.

Owing to the lack of relevant data and the complexity of cell morphology, cytopathology-based endometrial cancer screening has been difficult to promote. Therefore, our team spent three years collecting and annotating WSIs from 139 patients to build an endometrial cytopathology image dataset, named XJTU-EC. Because the dataset contains both Papanicolaou- and H&E-stained images, the most common staining methods for cytology images, it can be considered reasonably representative. In addition, it includes patients of different ages, which increases its diversity. Based on this dataset, we developed the first clinically oriented, automated deep learning framework for extracting endometrial cell clumps and identifying them as normal or cancerous. Its results are intended to be presented to cytopathologists as a reference.

To adapt to different staining styles, we use UNet, a robust architecture that has generalized well across many datasets, as the backbone of the cell clump extraction stage. On this basis, we introduce the CA module to capture global contextual information and the MSS module to aggregate semantic features at multiple scales, which improves segmentation. Experiments show that CM-UNet performs well on both H&E-stained and Papanicolaou-stained images.
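The CA module is not detailed in this part of the paper. As one plausible formulation, the NumPy sketch below follows the channel attention module of DANet [48], in which each output channel is a softmax-weighted mix of all channels, with weights computed from channel-to-channel affinities over every pixel; this is how such a module captures global context. It is an illustration, not necessarily CM-UNet's exact design: `beta` is a learnable scalar in DANet (initialized to 0) and is fixed here, and the function name is hypothetical.

```python
import numpy as np


def channel_attention(features, beta=1.0):
    """DANet-style channel attention for a (C, H, W) feature map.

    Each output channel j is  beta * sum_i x_ji * A_i + A_j, where x_ji is a
    softmax over channels i of the affinity A_i . A_j computed over all pixels.
    """
    c, h, w = features.shape
    a = features.reshape(c, h * w)                          # C x N, N = H*W
    energy = a @ a.T                                        # C x C channel affinities
    energy = energy - energy.max(axis=1, keepdims=True)     # stabilise the softmax
    attn = np.exp(energy)
    attn = attn / attn.sum(axis=1, keepdims=True)           # each row sums to 1
    out = beta * (attn @ a) + a                             # residual connection
    return out.reshape(c, h, w)
```

Because the affinities are computed over all H × W positions rather than a local window, every output channel depends on the whole image, which is what makes this kind of module a global-context operator.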

In the cell clump classification stage, we design ECRNet based on contrastive learning, which takes into account both instance-level and label information. Specifically, images with different staining styles but the same class label are treated as similar. In addition, we learn meaningful and consistent anatomical features through a label contrastive loss, and introduce a label memory bank and a momentum-updated encoder to keep the encoded features consistent. Experimental results show that our method performs well on datasets with mixed staining styles, which indirectly demonstrates its robustness. Compared with the other methods, ECRNet achieves the best classification performance under both the two-stage and the one-stage strategy.
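The two ingredients named above, a momentum-updated key encoder and a labelled memory bank, can be sketched in NumPy. This is an illustrative approximation in the spirit of MoCo [51] and label contrastive coding [50], not ECRNet's exact loss: the supervised-contrastive form (averaging log-probabilities over same-label bank entries), the temperature τ = 0.07 and the momentum m = 0.999 (the values used by MoCo), and all function and class names are assumptions.

```python
import numpy as np


def momentum_update(query_params, key_params, m=0.999):
    """MoCo-style update: each key-encoder weight slowly tracks the query encoder."""
    return [m * k + (1.0 - m) * q for q, k in zip(query_params, key_params)]


def l2_normalize(x):
    """Scale vectors (along the last axis) to unit length."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def label_contrastive_loss(query, query_label, bank_keys, bank_labels, tau=0.07):
    """Contrastive loss of one query embedding against a labelled memory bank.

    Bank entries with the query's label are positives, all others negatives;
    the loss is the mean negative log-softmax probability of the positives.
    """
    positives = bank_labels == query_label
    if not positives.any():
        raise ValueError("memory bank holds no entry with the query's label")
    logits = l2_normalize(bank_keys) @ l2_normalize(query) / tau  # cosine sim / temperature
    logits = logits - logits.max()                                # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum())
    return float(-log_prob[positives].mean())


class LabelMemoryBank:
    """FIFO queue of (key embedding, label) pairs from the momentum encoder."""

    def __init__(self, dim, size):
        self.size = size
        self.keys = np.zeros((0, dim))
        self.labels = np.zeros(0, dtype=int)

    def enqueue(self, keys, labels):
        # Append the newest keys and drop the oldest ones beyond `size`.
        self.keys = np.vstack([self.keys, keys])[-self.size:]
        self.labels = np.concatenate([self.labels, labels])[-self.size:]
```

During training, each ROI's query embedding would be scored against the bank, the key encoder refreshed with `momentum_update` after each step, and the new keys enqueued with their labels. Because positives are chosen by label rather than by augmentation of the same image, H&E- and Papanicolaou-stained clumps of the same class are pulled together, while the slowly moving key encoder keeps the stored keys comparable across steps.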

Finally, this work has two limitations. First, because the XJTU-EC data come from a single institution, our approach has not been validated on datasets from other medical centers. Although we tried to ensure as much diversity as possible during data collection and used contrastive learning to enhance the generalization of the screening framework, external validation on data from different medical centers is still lacking. In future work, we will extend our method to datasets from other medical centers for external validation. Second, annotation remains challenging because cytopathologists are scarce. We will therefore investigate self-supervised learning to reduce the annotation workload of cytopathologists.

Conclusions

In this paper, we present a clinically motivated deep learning framework for screening endometrial cancer cell clumps. In the first stage, we propose CM-UNet to obtain the set of ROIs; its CA and MSS modules fuse features from different scales to capture richer semantic information. In the second stage, we use ECRNet to classify the ROIs. Contrastive learning pulls instances of the same class closer together in the representation space and pushes instances of different classes apart. Experiments show that our framework performs well on the XJTU-EC dataset. Our future work will focus on providing objective and complementary diagnostic input for clinical diagnosis and on supporting the effective deployment of advanced algorithms. We believe that this can help reduce the burden on patients and physicians.

Data Availability

Data cannot be shared publicly because of privacy protection. The data are owned by the Ethics Committee of the First Affiliated Hospital of Xi’an Jiaotong University, which imposed these restrictions, and are therefore not freely available. Data are available from the Ethics Committee (xjyfyllh@163.com) for researchers who meet the criteria for access to confidential data. The external validation data were obtained from the public data platform AIstudio (https://aistudio.baidu.com/datasetdetail/273988). Anyone can access these data after registering an account on the platform.

Funding Statement

This work is supported by National Natural Science Foundation of China (No. 62376211; 62206218), Natural Science Foundation of Zhejiang Province (No. LTGG23F030006), Special Project for Technological Innovation Guidance of Shaanxi Province (No. 2024ZC-YYDP-24).

References

1. Urick ME, Bell DW. Clinical actionability of molecular targets in endometrial cancer. Nature Reviews Cancer. 2019;19(9):510–21. doi: 10.1038/s41568-019-0177-x

2. Kong A, Johnson N, Kitchener HC, Lawrie TA. Adjuvant radiotherapy for stage I endometrial cancer. Cochrane Database Syst Rev. 2012;2012(4):Cd003916. Epub 2012/04/20. doi: 10.1002/14651858.CD003916.pub4

3. Weiderpass E, Hashim D, Labrèche F. Malignant Tumors of the Female Reproductive System. In: Anttila S, Boffetta P, editors. Occupational Cancers. Cham: Springer International Publishing; 2020. p. 439–53.

4. Lortet-Tieulent J, Ferlay J, Bray F, Jemal A. International patterns and trends in endometrial cancer incidence, 1978–2013. JNCI: Journal of the National Cancer Institute. 2018;110(4):354–61. doi: 10.1093/jnci/djx214

5. Raglan O, Kalliala I, Markozannes G, Cividini S, Gunter MJ, Nautiyal J, et al. Risk factors for endometrial cancer: An umbrella review of the literature. International Journal of Cancer. 2019;145(7):1719–30. doi: 10.1002/ijc.31961

6. Nishida N, Murakami F, Kuroda A, Sakamoto Y, Tasaki K, Tasaki S, et al. Clinical utility of endometrial cell block cytology in postmenopausal women. Acta Cytologica. 2017;61(6):441–6. doi: 10.1159/000479307

7. Pei Z, Cao S, Lu L, Chen W. Direct Cellularity Estimation on Breast Cancer Histopathology Images Using Transfer Learning. Computational and Mathematical Methods in Medicine. 2019;2019:3041250. doi: 10.1155/2019/3041250

8. Bao H-j, Chen X, Liu X, Wu W, Li Q-h, Xian J-y, et al. Box C/D snoRNA SNORD89 influences the occurrence and development of endometrial cancer through 2’-O-methylation modification of Bim. Cell Death Discovery. 2022;8(1):309. doi: 10.1038/s41420-022-01102-5

9. Liu Y-S, Wang H-M, Gao Y. Controversy on Positive Peritoneal Cytology of Endometrial Carcinoma. Computational and Mathematical Methods in Medicine. 2022;2022:1906769. doi: 10.1155/2022/1906769 [Retracted]

10. Kondo E, Tabata T, Koduka Y, Nishiura K, Tanida K, Okugawa T, et al. What is the best method of detecting endometrial cancer in outpatients?-endometrial sampling, suction curettage, endometrial cytology. Cytopathology. 2008;19(1):28–33. doi: 10.1111/j.1365-2303.2007.00509.x

11. Buccoliero AM, Gheri CF, Castiglione F, Garbini F, Barbetti A, Fambrini M, et al. Liquid-based endometrial cytology: cyto-histological correlation in a population of 917 women. Cytopathology. 2007;18(4):241–9. doi: 10.1111/j.1365-2303.2007.00463.x

12. Wadhwa N, Jatawa SK, Tiwari A. Non-invasive urine based tests for the detection of bladder cancer. Journal of Clinical Pathology. 2012;65(11):970–5. doi: 10.1136/jclinpath-2012-200812

13. Sawaya GF, Smith-McCune K, Kuppermann M. Cervical cancer screening: more choices in 2019. JAMA. 2019;321(20):2018–9. doi: 10.1001/jama.2019.4595

14. O’Flynn H, Ryan NAJ, Narine N, Shelton D, Rana D, Crosbie EJ. Diagnostic accuracy of cytology for the detection of endometrial cancer in urine and vaginal samples. Nature Communications. 2021;12(1):952. doi: 10.1038/s41467-021-21257-6

15. Wang Q, Wang Q, Zhao L, Han L, Sun C, Ma S, et al. Endometrial Cytology as a Method to Improve the Accuracy of Diagnosis of Endometrial Cancer: Case Report and Meta-Analysis. Frontiers in Oncology. 2019;9. doi: 10.3389/fonc.2019.00256

16. Ke J, Shen Y, Lu Y, Deng J, Wright JD, Zhang Y, et al. Quantitative analysis of abnormalities in gynecologic cytopathology with deep learning. Laboratory Investigation. 2021;101(4):513–24. doi: 10.1038/s41374-021-00537-1

17. Landau MS, Pantanowitz L. Artificial intelligence in cytopathology: a review of the literature and overview of commercial landscape. Journal of the American Society of Cytopathology. 2019;8(4):230–41. doi: 10.1016/j.jasc.2019.03.003

18. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115–8. doi: 10.1038/nature21056

19. Lilli L, Giarnieri E, Scardapane S. A Calibrated Multiexit Neural Network for Detecting Urothelial Cancer Cells. Computational and Mathematical Methods in Medicine. 2021;2021:5569458. doi: 10.1155/2021/5569458

20. Dov D, Kovalsky SZ, Assaad S, Cohen J, Range DE, Pendse AA, et al. Weakly supervised instance learning for thyroid malignancy prediction from whole slide cytopathology images. Medical Image Analysis. 2021;67:101814. doi: 10.1016/j.media.2020.101814

21. Ma J, Yu J, Liu S, Chen L, Li X, Feng J, et al. PathSRGAN: Multi-Supervised Super-Resolution for Cytopathological Images Using Generative Adversarial Network. IEEE Transactions on Medical Imaging. 2020;39(9):2920–30. doi: 10.1109/TMI.2020.2980839

22. Wei J, Ji Q, Gao Y, Yang X, Guo D, Gu D, et al. A multi-scale, multi-region and attention mechanism-based deep learning framework for prediction of grading in hepatocellular carcinoma. Medical Physics. doi: 10.1002/mp.16127

23. Sun SW, Xu X, Liu QP, Chen JN, Zhu FP, Liu XS, et al. LiSNet: An artificial intelligence-based tool for liver imaging staging of hepatocellular carcinoma aggressiveness. Medical Physics. 2022;49(11):6903–13. doi: 10.1002/mp.15972

24. Zhao Q, He Y, Wu Y, Huang D, Wang Y, Sun C, et al. Vocal cord lesions classification based on deep convolutional neural network and transfer learning. Medical Physics. 2022;49(1):432–42. doi: 10.1002/mp.15371

25. Hodneland E, Dybvik JA, Wagner-Larsen KS, Šoltészová V, Munthe-Kaas AZ, Fasmer KE, et al. Automated segmentation of endometrial cancer on MR images using deep learning. Scientific Reports. 2021;11(1):179. doi: 10.1038/s41598-020-80068-9

26. Xia Z, Zhang L, Liu S, Ran W, Liu Y, Tu J. Deep learning-based hysteroscopic intelligent examination and ultrasound examination for diagnosis of endometrial carcinoma. The Journal of Supercomputing. 2022;78(9):11229–44. doi: 10.1007/s11227-021-04046-2

27. Xiong L, Chen C, Lin Y, Mao W, Song Z. A computer-aided determining method for the myometrial infiltration depth of early endometrial cancer on MRI images. BioMedical Engineering OnLine. 2023;22(1):103. doi: 10.1186/s12938-023-01169-w

28. Otani S, Himoto Y, Nishio M, Fujimoto K, Moribata Y, Yakami M, et al. Radiomic machine learning for pretreatment assessment of prognostic risk factors for endometrial cancer and its effects on radiologists’ decisions of deep myometrial invasion. Magnetic Resonance Imaging. 2022;85:161–7. doi: 10.1016/j.mri.2021.10.024

29. Mao W, Chen C, Gao H, Xiong L, Lin Y. Quantitative evaluation of myometrial infiltration depth ratio for early endometrial cancer based on deep learning. Biomedical Signal Processing and Control. 2023;84:104685. doi: 10.1016/j.bspc.2023.104685

30. Dong H-C, Dong H-K, Yu M-H, Lin Y-H, Chang C-C. Using Deep Learning with Convolutional Neural Network Approach to Identify the Invasion Depth of Endometrial Cancer in Myometrium Using MR Images: A Pilot Study. International Journal of Environmental Research and Public Health. 2020;17(16):5993. doi: 10.3390/ijerph17165993

31. Fell C, Mohammadi M, Morrison D, Arandjelović O, Syed S, Konanahalli P, et al. Detection of malignancy in whole slide images of endometrial cancer biopsies using artificial intelligence. PLoS ONE. 2023;18(3):e0282577. doi: 10.1371/journal.pone.0282577

32. Fremond S, Andani S, Wolf JB, Dijkstra J, Melsbach S, Jobsen JJ, et al. Interpretable deep learning model to predict the molecular classification of endometrial cancer from haematoxylin and eosin-stained whole-slide images: a combined analysis of the PORTEC randomised trials and clinical cohorts. The Lancet Digital Health. 2023;5(2):e71–e82. doi: 10.1016/S2589-7500(22)00210-2

33. Feng M, Zhao Y, Chen J, Zhao T, Mei J, Fan Y, et al. A deep learning model for lymph node metastasis prediction based on digital histopathological images of primary endometrial cancer. Quant Imaging Med Surg. 2023;13(3):1899–913. Epub 2023/03/15. doi: 10.21037/qims-22-220

34. Kurata Y, Nishio M, Moribata Y, Kido A, Himoto Y, Otani S, et al. Automatic segmentation of uterine endometrial cancer on multi-sequence MRI using a convolutional neural network. Scientific Reports. 2021;11(1):14440. doi: 10.1038/s41598-021-93792-7

35. Urushibara A, Saida T, Mori K, Ishiguro T, Inoue K, Masumoto T, et al. The efficacy of deep learning models in the diagnosis of endometrial cancer using MRI: a comparison with radiologists. BMC Medical Imaging. 2022;22(1):80. doi: 10.1186/s12880-022-00808-3

36. Zhang Y, Chen S, Wang Y, Li J, Xu K, Chen J, et al. Deep learning-based methods for classification of microsatellite instability in endometrial cancer from HE-stained pathological images. Journal of Cancer Research and Clinical Oncology. 2023;149(11):8877–88. doi: 10.1007/s00432-023-04838-4

37. Zhang X, Ba W, Zhao X, Wang C, Li Q, Zhang Y, et al. Clinical-grade endometrial cancer detection system via whole-slide images using deep learning. Frontiers in Oncology. 2022;12. doi: 10.3389/fonc.2022.1040238

38. Hong R, Liu W, DeLair D, Razavian N, Fenyö D. Predicting endometrial cancer subtypes and molecular features from histopathology images using multi-resolution deep learning models. Cell Reports Medicine. 2021;2(9):100400. doi: 10.1016/j.xcrm.2021.100400

39. Lin H, Chen H, Wang X, Wang Q, Wang L, Heng P-A. Dual-path network with synergistic grouping loss and evidence driven risk stratification for whole slide cervical image analysis. Medical Image Analysis. 2021;69:101955. doi: 10.1016/j.media.2021.101955

40. Shaban MT, Baur C, Navab N, Albarqouni S. Staingan: Stain Style Transfer for Digital Histological Images. 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019); 8–11 April 2019.

41. Geng X, Liu X, Cheng S, Zeng S. Cervical cytopathology image refocusing via multi-scale attention features and domain normalization. Medical Image Analysis. 2022;81:102566. doi: 10.1016/j.media.2022.102566

42. Maksem JA, Meiers I, Robboy SJ. A primer of endometrial cytology with histological correlation. Diagnostic Cytopathology. 2007;35(12):817–44. doi: 10.1002/dc.20745

43. World Medical Association. World Medical Association Declaration of Helsinki: ethical principles for medical research involving human subjects. JAMA. 2013;310(20):2191–4. doi: 10.1001/jama.2013.281053

44. Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. International Conference on Medical Image Computing and Computer-Assisted Intervention; 2015: Springer.

45. Zhou Z, Siddiquee MMR, Tajbakhsh N, Liang J. UNet++: Redesigning Skip Connections to Exploit Multiscale Features in Image Segmentation. IEEE Transactions on Medical Imaging. 2020;39(6):1856–67. doi: 10.1109/TMI.2019.2959609

46. Khan SD, Alarabi L, Basalamah S. An Encoder–Decoder Deep Learning Framework for Building Footprints Extraction from Aerial Imagery. Arabian Journal for Science and Engineering. 2023;48(2):1273–84. doi: 10.1007/s13369-022-06768-8

47. Liu J, Guo X, Li B, Yuan Y. COINet: Adaptive Segmentation with Co-Interactive Network for Autonomous Driving. 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); 27 Sept.–1 Oct. 2021.

48. Fu J, Liu J, Tian H, Li Y, Bao Y, Fang Z, et al. Dual Attention Network for Scene Segmentation. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 15–20 June 2019.

49. Wu H, Wang W, Zhong J, Lei B, Wen Z, Qin J. SCS-Net: A Scale and Context Sensitive Network for Retinal Vessel Segmentation. Medical Image Analysis. 2021;70:102025. doi: 10.1016/j.media.2021.102025

50. Ren Y, Bai J, Zhang J. Label Contrastive Coding Based Graph Neural Network for Graph Classification. Database Systems for Advanced Applications; 2021; Cham: Springer International Publishing.

51. He K, Fan H, Wu Y, Xie S, Girshick R. Momentum Contrast for Unsupervised Visual Representation Learning. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 13–19 June 2020.

52. Woo S, Park J, Lee J-Y, Kweon IS. CBAM: Convolutional Block Attention Module. Computer Vision – ECCV 2018; 2018; Cham: Springer International Publishing.

53. Liu S, Deng W. Very deep convolutional neural network based image classification using small training sample size. 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR); 3–6 Nov. 2015.

54. Dice LR. Measures of the Amount of Ecologic Association Between Species. Ecology. 1945;26(3):297–302. doi: 10.2307/1932409

55. Kingma DP, Ba J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980. 2014.

56. Shelhamer E, Long J, Darrell T. Fully Convolutional Networks for Semantic Segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2017;39(4):640–51. doi: 10.1109/TPAMI.2016.2572683

57. Chaurasia A, Culurciello E. LinkNet: Exploiting encoder representations for efficient semantic segmentation. 2017 IEEE Visual Communications and Image Processing (VCIP); 10–13 Dec. 2017.

58. Chen L-C, Papandreou G, Schroff F, Adam H. Rethinking atrous convolution for semantic image segmentation. arXiv preprint arXiv:1706.05587. 2017.

59. Chen L-C, Zhu Y, Papandreou G, Schroff F, Adam H. Encoder-decoder with atrous separable convolution for semantic image segmentation. Proceedings of the European Conference on Computer Vision (ECCV); 2018.

60. Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, et al. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861. 2017.

61. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2016.

62. Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z. Rethinking the inception architecture for computer vision. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2016.

63. Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929. 2020.

64. Xie S, Girshick R, Dollár P, Tu Z, He K. Aggregated Residual Transformations for Deep Neural Networks. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 21–26 July 2017.

65. Tan M, Le Q. Efficientnet: Rethinking model scaling for convolutional neural networks. International Conference on Machine Learning; 2019: PMLR.

66. Huang G, Liu Z, Van Der Maaten L, Weinberger KQ. Densely connected convolutional networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2017.

67. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556. 2014.

68. Xu Y, Jia Z, Wang LB, Ai Y, Zhang F, Lai M, et al. Large scale tissue histopathology image classification, segmentation, and visualization via deep convolutional activation features. BMC Bioinformatics. 2017;18(1):281. Epub 2017/05/28. doi: 10.1186/s12859-017-1685-x

69. Endometrial Cancer; 2024 [cited 2024 Jun 4]. Database: AIstudio [Internet]. https://aistudio.baidu.com/datasetdetail/273988.