Abstract

Superresolution tools, such as PALM and STORM, provide nanoscale localization accuracy by relying on rare photophysical events, limiting these methods to static samples. By contrast, here, we extend superresolution to dynamics without relying on photodynamics by simultaneously determining emitter numbers and their tracks (localization and linking) with the same localization accuracy per frame as widefield superresolution on immobilized emitters under similar imaging conditions (≈50 nm). We demonstrate our Bayesian nonparametric track (BNP-Track) framework on both in cellulo and synthetic data. BNP-Track develops a joint (posterior) distribution that learns and quantifies uncertainty over emitter numbers and their associated tracks propagated from shot noise, camera artifacts, pixelation, background and out-of-focus motion. In doing so, we integrate spatiotemporal information into our distribution, which is otherwise compromised by modularly determining emitter numbers and localizing and linking emitter positions across frames. For this reason, BNP-Track remains accurate in crowding regimens beyond those accessible to other single-particle tracking tools.

Subject terms: Computational biophysics, Single-molecule biophysics

Bayesian nonparametric Track (BNP-Track) simultaneously determines emitter numbers and their tracks alongside uncertainty, extending the superresolution paradigm from static samples to single-particle tracking even in dense environments.

Main

Characterizing macromolecular assembly kinetics1, quantifying intracellular motility2–4 or interrogating pairwise biomolecular interactions5 requires accurate decoding of spatiotemporal processes at single-molecule scales, that is, high-nanometer spatial and rapid, often millisecond, temporal scales. These tasks ideally require superresolving positions of dynamic targets, typically fluorescently labeled molecules (light emitters), to tens of nanometer spatial resolution6–9 and, when more than one target is involved, discriminating between signals from multiple targets simultaneously.

Assessments using fluorescence experiments at the required scales suffer from inherent limitations often arising from the diffraction limit of light (≈250 nm in the visible range for typical applications), below which conventional fluorescence techniques cannot resolve neighboring emitters. To overcome limitations of conventional tools and achieve superresolution, improvements have been achieved through structured illumination10, structured detection11–14, photoresponse of fluorophore labels to excitation light6–8,15,16 or combinations thereof17–20.

Here, we focus on widefield superresolution microscopy (SRM), which typically relies on fluorophore photodynamics to achieve superresolution. SRM is regularly used both in vitro21,22 and in cellulo7,8,23–26. Specific widefield SRM image acquisition protocols, such as STORM15, PALM8 and PAINT21, through their associated image analyses, decode positions of light emitters separated by distances below the diffraction limit, often down to tens of nanometer resolution8,15. These widefield SRM protocols can be broken down into the following three conceptual steps: (1) specimen preparation, (2) imaging and (3) computational processing of the acquired images (frames). The success of step 3 is ensured by steps 1 and 2. In particular, in step 1, engineered fluorophores are selected, enabling the desired photodynamics, for example, photoswitching in STORM15, photoactivation/photobleaching in PALM8 or fluorophore binding/unbinding in PAINT21. Step 2 is then performed over extended periods, while rare photophysical (or binding–unbinding) events occur, and sufficient photons are collected to achieve superresolved localizations in step 3. For well-isolated bright spots, step 3 achieves superresolved localization6,7,27 while accounting for effects such as light diffraction, resulting in spot sizes of roughly twice 0.61λ/NA (the Rayleigh diffraction limit), set by the emitter wavelength (λ), the microscope objective’s numerical aperture (NA)28, the camera and its photon shot noise and spot pixelization.
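As a quick numerical illustration of the Rayleigh criterion quoted above, the following minimal sketch evaluates 0.61λ/NA and the corresponding approximate spot size. The wavelength and numerical aperture used here are hypothetical values chosen for illustration; they are not the parameters of the experiments reported in this work.

```python
def rayleigh_limit_nm(wavelength_nm: float, numerical_aperture: float) -> float:
    """Rayleigh diffraction limit, 0.61 * lambda / NA, in nanometers."""
    if wavelength_nm <= 0 or numerical_aperture <= 0:
        raise ValueError("wavelength and NA must be positive")
    return 0.61 * wavelength_nm / numerical_aperture


# Hypothetical optics for illustration only (not taken from this work).
limit_nm = rayleigh_limit_nm(wavelength_nm=600.0, numerical_aperture=1.4)

# The text describes spot sizes of roughly twice the Rayleigh limit.
spot_size_nm = 2.0 * limit_nm

print(f"Rayleigh limit: {limit_nm:.1f} nm; approximate spot size: {spot_size_nm:.1f} nm")
```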

Here, we show that computation alone may overcome the reliance on the photophysics of step 1 and the long acquisition times of step 2, which not only largely limit widefield SRM to spatiotemporally fixed samples but also induce sample photodamage. For example, although a moving emitter’s motion blur distributed over frames and pixels is typically a net disadvantage in the implementation of step 3, we conversely demonstrate that such a distribution of the photon budget in both space and time provides information that can be leveraged to superresolve emitter tracks, determine emitter numbers and help discriminate targets from their neighbors, even in the complete absence of photophysical processes (Fig. 1).

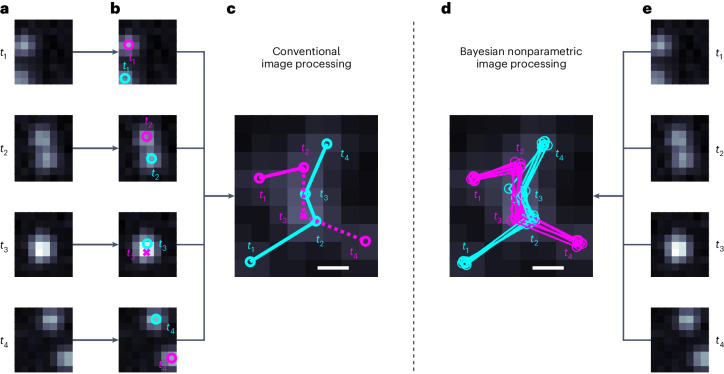

Fig. 1. Conceptual comparison between widely available tracking frameworks and BNP-Track.

a,e, Four frames from a dataset showing two emitters. b, Existing tracking approaches either completely or partially separate the task of first identifying and then localizing light emitters in the FOV of each frame independently. c, Conventional approaches then link emitter positions across frames. d, Our nonparametric approach (BNP-Track) simultaneously determines the number of emitters, localizes them and links their positions across frames. In b–d, circles denote correctly identified emitters, and crosses (×) denote missed emitters. In c and d, the scale bars indicate a distance equal to the nominal diffraction limit given by the Rayleigh diffraction limit of 0.61λ/NA.

Although captured in more detail in the framework put forward in Methods and Supplementary Information, here, we briefly highlight how our tracking framework, Bayesian nonparametric Track (BNP-Track), fundamentally differs from conventional tracking tools that determine emitter numbers, localize emitters and link emitter locations in sequential (modular) steps. In the language of Bayesian statistics, resolving emitter tracks and emitter numbers amounts to constructing the probability distribution $\mathbb{P}(\text{emitter numbers}, \text{locations}, \text{links} \mid \text{data})$, which reads ‘the joint posterior probability distribution of emitter numbers, locations and links given data’. The best set of emitter numbers and tracks are those globally maximizing this probability distribution. Without further approximation, this probability distribution can be decomposed as the following product:

$$
\mathbb{P}(\text{emitter numbers}, \text{locations}, \text{links} \mid \text{data}) = \mathbb{P}(\text{links} \mid \text{locations}, \text{emitter numbers}, \text{data}) \times \mathbb{P}(\text{locations} \mid \text{emitter numbers}, \text{data}) \times \mathbb{P}(\text{emitter numbers} \mid \text{data}) \tag{1}
$$

Single-particle tracking (SPT) tools performing emitter number determination, emitter localization and linking as separate steps (for example, see refs. 6,7,29–36 and many more reviewed therein) invariably approximate the joint distribution’s maximization as a serial maximization of three terms. This process often involves additional approximations, such as using to approximate . Approximations such as these are acceptable for well-isolated and in-focus emitters. However, they have fundamentally limited our ability to superresolve emitters, especially as these move within light’s diffraction limit. By contrast, BNP-Track avoids such approximations and leverages all sources of information to construct the joint posterior, yielding superresolved emitter tracks.
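To make the difference between joint and serial maximization concrete, the toy sketch below builds a small discrete posterior over emitter number, locations and links and compares the two strategies. Every label and probability is made up for illustration, and the serial procedure shown maximizes the exact factors one after another, whereas real modular tools typically introduce further approximations at each step.

```python
from collections import defaultdict

# Toy joint posterior P(emitter number, locations, links | data).
# All candidates and probabilities are hypothetical.
joint = {
    (1, "x_a", "l_p"): 0.40,
    (2, "x_a", "l_p"): 0.20,
    (2, "x_a", "l_q"): 0.15,
    (2, "x_b", "l_p"): 0.15,
    (2, "x_b", "l_q"): 0.10,
}


def joint_map(posterior):
    """Globally maximize the joint posterior."""
    return max(posterior, key=posterior.get)


def serial_map(posterior):
    """Maximize number, then locations, then links, one step at a time."""
    # Step 1: emitter number maximizing the marginal P(number | data).
    p_number = defaultdict(float)
    for (n, _, _), p in posterior.items():
        p_number[n] += p
    n_hat = max(p_number, key=p_number.get)

    # Step 2: locations maximizing P(locations | number, data).
    # Unnormalized sums suffice because normalization does not move the argmax.
    p_loc = defaultdict(float)
    for (n, x, _), p in posterior.items():
        if n == n_hat:
            p_loc[x] += p
    x_hat = max(p_loc, key=p_loc.get)

    # Step 3: links maximizing P(links | locations, number, data).
    p_link = {l: p for (n, x, l), p in posterior.items() if n == n_hat and x == x_hat}
    l_hat = max(p_link, key=p_link.get)
    return (n_hat, x_hat, l_hat)


best_joint = joint_map(joint)
best_serial = serial_map(joint)
print("joint maximum :", best_joint, joint[best_joint])
print("serial maximum:", best_serial, joint[best_serial])
```

In this toy case, the serial route commits to two emitters because that number carries the largest marginal probability, even though the single most probable joint configuration contains one emitter.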

The overall input to BNP-Track includes both raw image sequences and known information on the imaging system, including the microscope optics and camera electronics, as further detailed in Methods. Using Bayesian nonparametrics, we estimate unknowns, including the number of emitters and their associated tracks. We demonstrate BNP-Track on experimental SPT data, detailing how the simultaneous determination of emitter numbers and tracks can be computationally achieved. We also benchmark BNP-Track’s performance in Results against TrackMate30, to which we confer some advantage because direct comparison is impossible as existing tools do not simultaneously learn emitter numbers and associated tracks.

Results

To demonstrate our approach, we use BNP-Track first to analyze single mRNA molecules diffusing in live U-2 OS cells imaged under single-plane HILO illumination37 on a fluorescence microscope as previously described2,38,39 and use a beamsplitter to divide the single-color signal onto two cameras (Fig. 2). The dual-camera setup allows us to test for consistency of BNP-Track’s emitter number and track determination across cameras. In subsequent tests, we use noise-overlaid synthetic data for which the ground truth is known30,34 (Figs. 3 and 4 and Supplementary Fig. 2) and finally challenge BNP-Track with experimental data of crowded emitters (Fig. 5).

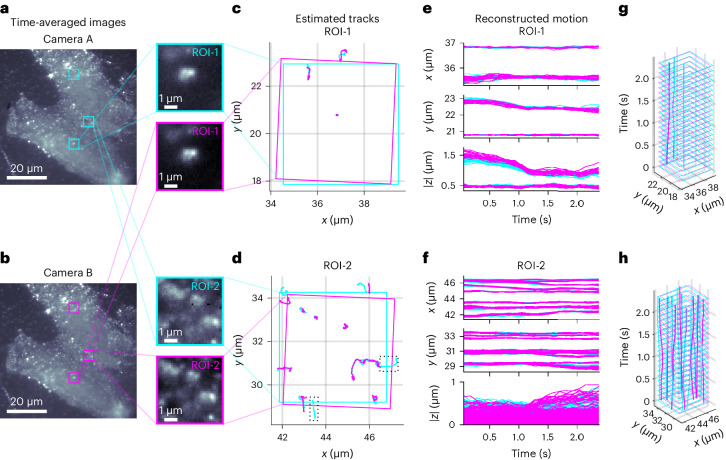

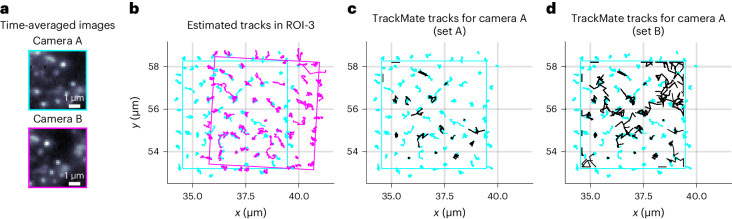

Fig. 2. Testing BNP-Track’s performance on two 5-μm-wide regions of interest with different emitter densities based on an experimental dataset from fluorophore-labeled mRNA molecules diffusing in live U-2 OS cells imaged on a dual-camera microscope.

a,b, For convenience only, we show time averages of all 22 frames analyzed from cameras A (a) and B (b). The selected ROIs are boxed, and the zoomed-in images of the indicated ROIs are shown on the right. The remaining region, ROI-3, is only highlighted and is analyzed later in the text (Fig. 5). c,d, Estimated tracks within the selected ROIs from both cameras, with solid boxes indicating the corresponding ROIs after image registration. e,f, Reconstructed time courses for individual tracks from the selected ROIs. The dotted boxes in d highlight two emitter tracks only detected by camera A. g, Time course reconstruction by combining the top and middle of e. h, Time course reconstruction by combining the top and middle of f.

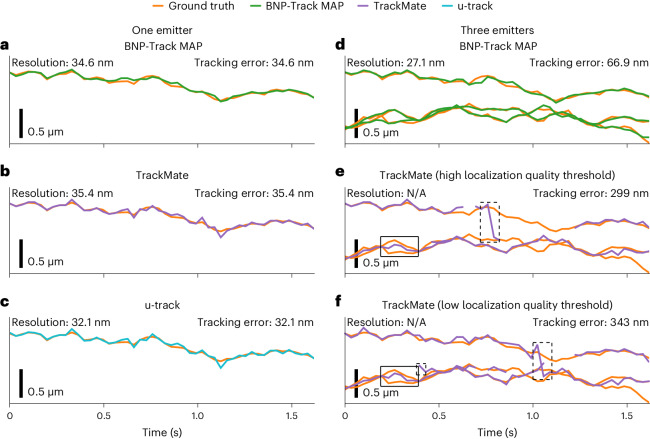

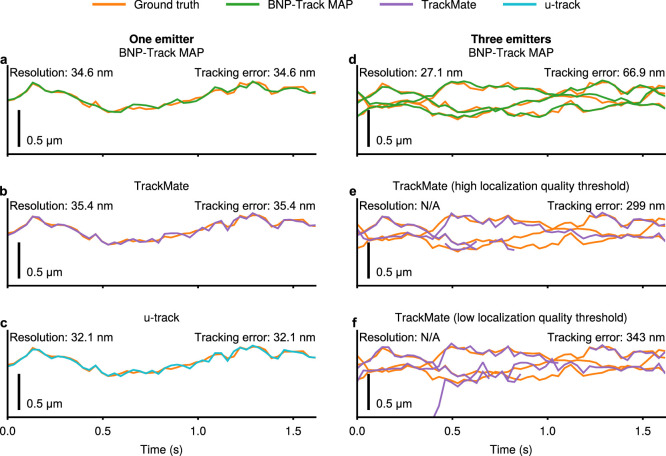

Fig. 3. A comparison of tracking performance among BNP-Track, TrackMate and u-track using two synthetic datasets, with one emitter and three emitters, shown for the y coordinate.

See Extended Data Fig. 2 for the same figure but with the x coordinate. a, BNP-Track’s MAP estimate compared to the one-emitter ground truth. b, TrackMate’s estimate compared to the one-emitter ground truth. Sets A and B are equivalent in this case. c, u-track’s estimate compared to the one-emitter ground truth. d, BNP-Track MAP estimates compared to the three-emitter ground truth. e,f, TrackMate estimates compared to the three-emitter ground truth with high localization quality threshold (e) and low localization quality threshold (f). In d–f, the top track of ground truth is the same as the ground truth in a–c. The boxed regions in e and f highlight areas where TrackMate performs relatively poorly. See Supplementary Data 5 and 6. Localization resolution is not applicable (N/A) to TrackMate estimates due to missing track segments.

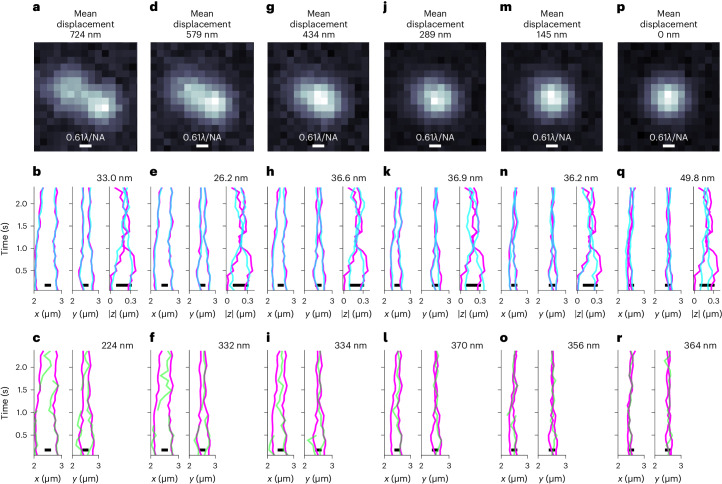

Fig. 4. Benchmarks of BNP-Track regarding the mean displacement between simulated tracks.

TrackMate tracks (set B) are also provided for comparison. a, Time-averaged image for the synthetic scenario (Supplementary Data 18) where the mean displacement between tracks is 724 nm. b, BNP-Track’s reconstructed tracks for all coordinates. The reconstructed tracks are in low-opacity cyan, and the ground truths are magenta. Lateral tracking errors (see Eq. (3)) are listed at the top right. c, TrackMate’s estimated tracks (low-opacity green) of the same datasets with the ground truths (magenta). Lateral tracking errors are listed at the top right. Also, no axial (∣z∣) result is plotted in c as TrackMate does not provide axial tracks for 2D images. d–f, The same layout as a–c but with a mean displacement of 579 nm (Supplementary Data 19). g–i, The same layout as a–c but with a mean displacement of 434 nm (Supplementary Data 20). j–l, The same layout as a–c but with a mean displacement of 289 nm (Supplementary Data 21). m–o, The same layout as a–c but with a mean displacement of 145 nm (Supplementary Data 22). p–r, The same layout as a–c but with a mean displacement of 0 nm (Supplementary Data 23).

Fig. 5. BNP-Track’s performance in increasingly crowded environments.

a, Time-averaged images over 22 frames from both cameras show many diffraction-limited emitters within the ROI analyzed here (ROI-3). b, BNP-Track’s tracking results after registering FOVs. c, Comparison between the BNP-Track result and the TrackMate result with a high localization quality threshold (set A) for the data from camera A. TrackMate tracks are in black; see Tinevez et al.30 for the definition of quality. d, A comparison between the BNP-Track result and the TrackMate result with a low localization quality threshold (set B) for the data from camera A. TrackMate tracks are in black.

As emitters move in three dimensions (3D), it is possible, and indeed helpful in more accurate lateral localization, for BNP-Track to estimate emitter axial distance (∣z∣) from the in-focus plane from two-dimensional (2D) images by modeling the dependence of the width of the emitter’s point spread function (PSF) on ∣z∣40. For this reason, although the axial distance from the in-focus plane is always less accurately determined than the lateral positions, we nonetheless report BNP-Track’s axial estimates for experimental data in Figs. 2 and 4.
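The idea of reading axial distance from PSF width can be sketched as follows. The functional form used here (a Gaussian-beam-like broadening of the width with defocus) and all parameter values are assumptions for illustration; the model actually used by BNP-Track is the one cited above and detailed in Methods. The sketch also shows why only ∣z∣ is recoverable: the width is symmetric in z.

```python
import math


def psf_width_nm(z_nm: float, w0_nm: float, z_r_nm: float) -> float:
    """Assumed PSF width at axial offset z: w0 * sqrt(1 + (z / z_r)^2)."""
    return w0_nm * math.sqrt(1.0 + (z_nm / z_r_nm) ** 2)


def abs_z_from_width_nm(width_nm: float, w0_nm: float, z_r_nm: float) -> float:
    """Invert the assumed width model; only the magnitude |z| is recoverable."""
    if width_nm < w0_nm:
        raise ValueError("width cannot be smaller than the in-focus width")
    return z_r_nm * math.sqrt((width_nm / w0_nm) ** 2 - 1.0)


# Hypothetical in-focus width and axial scale, in nm.
W0_NM, Z_R_NM = 120.0, 400.0

width_above = psf_width_nm(+300.0, W0_NM, Z_R_NM)
width_below = psf_width_nm(-300.0, W0_NM, Z_R_NM)

# Emitters above and below the in-focus plane produce the same width,
# so the sign of z cannot be determined from the width alone.
print(width_above == width_below)
print(abs_z_from_width_nm(width_above, W0_NM, Z_R_NM))
```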

Before showing the results, we note an important feature of Bayesian inference. Developed within the Bayesian paradigm41–43, BNP-Track goes beyond providing mere point estimates for unknown variables like the number of emitters and their corresponding tracks. It offers posterior probability distributions for these quantities, from which 95% credible intervals (CIs) can be computed. As we cannot easily visualize the output of multidimensional distributions over all candidate emitter numbers and associated tracks, we often report estimates for emitters that coincide with the number of emitters maximizing the posterior, termed maximum a posteriori (MAP) point estimates44. Having determined the MAP number of emitters, we then collect their associated tracks in figures, such as in Figs. 2 and 5.
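The reporting strategy described above can be sketched with a few lines of code operating on posterior samples. The sample format (one emitter number and one coordinate value per posterior sample) and the numbers are hypothetical, and the equal-tailed interval is one common way to form a credible interval; it is not necessarily the construction used in this work.

```python
import math
from collections import Counter


def map_emitter_number(number_samples):
    """Most frequently sampled emitter number (sample-based MAP estimate)."""
    return Counter(number_samples).most_common(1)[0][0]


def credible_interval(samples, mass=0.95):
    """Equal-tailed credible interval from samples (conservative rank choice)."""
    ordered = sorted(samples)
    if not ordered:
        raise ValueError("need at least one sample")
    tail = (1.0 - mass) / 2.0
    last = len(ordered) - 1
    low = ordered[math.floor(tail * last)]
    high = ordered[math.ceil((1.0 - tail) * last)]
    return low, high


# Hypothetical posterior samples: (emitter number, x position in nm of one
# emitter at one frame).
samples = [(3, 101.0), (3, 98.5), (4, 110.2), (3, 99.7), (2, 90.1),
           (3, 100.4), (3, 102.3), (4, 108.8), (3, 97.9), (3, 100.9)]

n_map = map_emitter_number([n for n, _ in samples])

# Keep only the samples consistent with the MAP emitter number, then
# summarize the position with a 95% credible interval.
x_given_map = [x for n, x in samples if n == n_map]
print(n_map, credible_interval(x_given_map, mass=0.95))
```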

BNP-Track superresolves sparse emitter tracks in cellulo

Because no direct ground truth is available for tracks from experimental SPT data, we use two cameras behind a beamsplitter to assess the success of BNP-Track. Using image registration (to correct for camera misalignment), we independently process two datasets for subsequent comparison and error estimation, knowing that, in principle, both cameras should have the same tracks (our ground truth). However, the noise realizations on both cameras are different, and emitters may move closer to each other than the nominal diffraction limit of 231 nm. To estimate tracking error quantitatively, based on Chenouard et al.34, we define a tracking error metric; see Eqs. (2) to (4) in Methods for details.

In Fig. 2a,b, we show, for illustrative purposes alone, time averages of a sequence of 22 successive frames spanning ≈2.5 s of real time in both detection channels, reflecting the complexity of the data to which BNP-Track applies. In data processing, we analyze the underlying frames without averaging. All raw data are provided in Supplementary Data 1–4.

From these frames, we track well-separated or dilute emitters (that is, whose PSFs are always well separated in space) in a 5-μm-wide square region of interest (ROI), named ROI-1. Fig. 2a also zooms in on ROI-1. As these are real experimental image stacks, there is no reason to assume a priori that the motion model is normally diffusive. The BNP-Track-derived track estimates are shown in Fig. 2c, while in Fig. 2e, all samples drawn from the posterior distribution are superposed. Due to fundamental optical limitations, we cannot determine whether the emitter’s axial position lies above or below the in-focus plane, so we only report the absolute value of the emitters’ axial position. As evident from Fig. 2c, BNP-Track successfully identifies and localizes the same tracks within the selected ROI in the two parallel camera datasets of ROI-1 despite different background and noise realizations on each camera. Of note, the two square ROI-1s are rotated relative to one another based on image registration in postprocessing. Fig. 2e shows tracks of all well-separated emitters identified within this field of view (FOV). We note that, although the center of one emitter’s PSF near the top of ROI-1 in Fig. 2c lies outside ROI-1 for both cameras in all 22 frames, this emitter is, surprisingly, picked up independently in the analysis of the data from both cameras. However, we exclude this single track outside the FOV from Fig. 2e and further analysis.

Using the metric defined above in Eq. (4) for Fig. 2e, we report a tracking error of about 37 nm in the lateral direction, consistent with the prior superresolution values for immobilized targets6–8. As BNP-Track provides estimates of the lateral as well as the magnitude of the axial emitter position (see details in Methods), we can also assess the full 3D tracking error. This results in a ≈48-nm 3D tracking error. Having shown that we can track emitters in a dilute regimen similar to ROI-1 of Fig. 2c,e,g, we next analyze a more challenging ROI, ROI-2, where emitter PSFs now occasionally overlap.

As before, for illustrative purposes only, in Fig. 2b we show time averages of a sequence of 22 successive frames spanning ≈2.5 s of real time. Fig. 2d,f,h reflects the same information as described for ROI-1 but for ROI-2. As before, BNP-Track tracks emitters even as these diffuse away from a camera’s FOV. Using Eq. (4), we find that for ROI-2, the tracking error is slightly higher at 64 nm in the lateral direction, remaining below the nominal diffraction limit of 231 nm. Additionally, the pairing distance in 3D now grows to 159 nm, resulting in a 3D tracking error of about 80 nm.

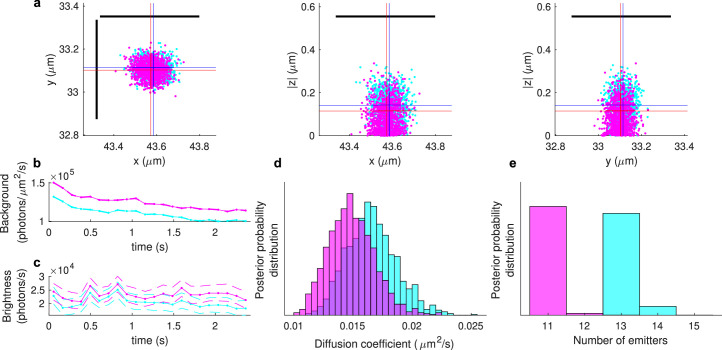

BNP-Track also estimates other dynamical quantities, including the background photon flux (photons per unit area per unit time), emitter brightness (photons per unit time), (effective) diffusion coefficient and number of emitters. Estimates for these quantities are summarized in Extended Data Fig. 1. From Extended Data Fig. 1b,c, the system’s background flux and emitter brightness vary substantially over time. Despite the agreement between tracks deduced from both cameras below light’s diffraction limit in ROI-2, discrepancies in some quantities (such as the diffusion coefficient in Extended Data Fig. 1d) highlight the sensitivity of these quantities to small track differences below light’s diffraction limit. Similarly, small discrepancies in the emitter brightness estimates (Extended Data Fig. 1c) may be induced by minute dissimilarities in the optical path leading to each camera.

Extended Data Fig. 1. Testing BNP-Track’s performance for ROI-2.

ROI-2 is shown in Fig. 2, and the color scheme here is the same as in Fig. 2 (cyan for camera A and magenta for camera B). a, Localization estimates in the lateral and axial directions at a selected frame of a selected emitter. Dots indicate individual positions sampled from the joint posterior distribution (as detailed in Methods), and blue and red crosses indicate average values for cyan and magenta, respectively. The black line segments mark the diffraction limit in the lateral direction. b,c, Estimated background photon fluxes (b) and emitter brightnesses (c) for both cameras throughout imaging. Dotted lines represent median estimates, and dashed lines map the 1%–99% credible interval. d,e, The posterior distributions of the diffusion coefficient (d) and number of emitters (e) for both cameras.

Finally, the number of emitters detected in the two cameras differs (Extended Data Fig. 1), with the additional tracks detected by camera A highlighted by dotted boxes in Fig. 2d. This is unsurprising for three reasons. First, the two cameras have slightly different FOVs. Second, as highlighted by the dotted boxes in Fig. 2d, a notable portion of the two extra tracks lies outside the FOV of either camera and is thus challenging to detect under any circumstance. Third, as out-of-focus emitters can mathematically model background noise and because the two cameras draw slightly different conclusions on background photon emission rates and emitter brightnesses (Extended Data Fig. 1b,c), this may also naturally lead to slightly different estimates of the number of emitters, especially those out of focus or beyond the FOV. BNP-Track detects in-focus emitters and uses what it learns from in-focus emitters to extrapolate outside the FOV or in-focus plane. In such regions, the number of photons that BNP-Track uses to draw inferences on tracks is naturally limited.

Benchmarking BNP-Track with synthetic data

Next, we validate BNP-Track by using synthetically generated data where ground truth tracks are known. To ensure realistic data, we adopt the procedure outlined in Methods and Supplementary Information for data generation. The parameters (NA, pixel size, frame rate, diffusion coefficient, emitter brightness and background photon flux) used in generating synthetic data are identical or similar to those used in the earlier experiments. All parameter values are specified in Supplementary Table 1. Using simulated data with knowledge of the ground truth tracks, we evaluate BNP-Track’s performance in two ways. First, we compare BNP-Track’s tracking accuracy to that of TrackMate30, an established SPT tool built on a leading tracking tool34. Second, we test BNP-Track’s robustness across different parameter regimens and motion models (beyond normal diffusion).
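One ingredient of such synthetic data, a ground truth track undergoing normal diffusion, can be sketched as below. This is only a minimal illustration under stated assumptions: the diffusion coefficient and frame time are hypothetical, and the full data generation procedure (optics, pixelation, background and camera noise) is the one described in Methods and Supplementary Information, which this sketch does not reproduce.

```python
import math
import random


def simulate_diffusion_2d(n_frames, diff_coeff_um2_s, frame_time_s,
                          start_um=(0.0, 0.0), seed=0):
    """Simulate a 2D normally diffusive track sampled once per frame.

    Each coordinate takes independent Gaussian steps with standard
    deviation sqrt(2 * D * dt).
    """
    rng = random.Random(seed)
    step_sd = math.sqrt(2.0 * diff_coeff_um2_s * frame_time_s)
    x, y = start_um
    track = [(x, y)]
    for _ in range(n_frames - 1):
        x += rng.gauss(0.0, step_sd)
        y += rng.gauss(0.0, step_sd)
        track.append((x, y))
    return track


def mean_squared_step(track):
    """Mean squared frame-to-frame displacement; expected value is 4 * D * dt in 2D."""
    squared = [(x1 - x0) ** 2 + (y1 - y0) ** 2
               for (x0, y0), (x1, y1) in zip(track[:-1], track[1:])]
    return sum(squared) / len(squared)


# Hypothetical parameters: D = 0.1 um^2/s, 22 frames, 0.1 s per frame.
track = simulate_diffusion_2d(n_frames=22, diff_coeff_um2_s=0.1, frame_time_s=0.1)
print(len(track), mean_squared_step(track))
```

With only 22 frames, the mean squared step fluctuates considerably around 4DΔt, which is one reason diffusion coefficient estimates from short tracks carry wide uncertainty.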

Comparing BNP-Track to other SPT methods fairly and directly poses challenges. For instance, no other existing method simultaneously estimates emitter numbers alongside their associated tracks (alongside diffusion coefficients, time-dependent emitter brightnesses and time-dependent background photon fluxes). We simulated data with emitter brightness and background photon flux constant over time to address this. In the analyses conducted by BNP-Track, we fix the emitter brightness and no longer estimate its value, although diffusion coefficients and the constant background flux are still inferred. By contrast, the ground truth values for emitter brightness, background photon flux and diffusion coefficient are supplied to the conventional SPT tools. This deliberate approach gives a substantial advantage to these tools.

Additionally, considering that the dimension of the SPT problem (the number of estimated variables) can easily exceed hundreds (compare Eq. (1)), tuning the parameters of any participating SPT tool to optimize their tracking performance becomes impractical within finite time. Consequently, we allow 1 day to optimize tracking performance for BNP-Track, u-track and TrackMate. This limitation rules out options including writing customized code to optimize the tracking performance of conventional SPT tools.

Although the numerical value of the posterior distribution is an important performance metric for BNP-Track, no single metric exists to assess the performance of conventional SPT tools45. Without a quantitative numerical criterion such as a posterior value, we rely on preselected metrics, for example, tracks with minimal spurious detections or the fewest missed links (termed false negatives). Also, although it is generally preferable to have tracks with no false positives (spurious detections or tracks) and no false negatives (missed detections or tracks), competing methods often struggle to achieve both simultaneously. This difficulty arises because most methods cannot set frame-specific thresholds. Consequently, when false negatives and false positives cannot be reduced to 0 simultaneously for a conventional SPT tool, we output two sets of tracks for this SPT tool from the data. Set A, with ground truth tracks presented, prioritizes minimizing false positives during the localization (spot detection) phase and subsequently minimizes false negatives during the linking phase. By contrast, set B, again with ground truth tracks presented, focuses on reducing false negatives during the localization phase and then addresses false positives during the linking phase. Finally, if the resulting tracks consist of separate segments belonging to a single ground truth track, we manually fuse them, providing another advantage to competing tools.

To summarize, throughout this study, we give a critical advantage to existing tools that we compare to BNP-Track by (1) manually tuning the parameters of these tools to have them best match the ground truth emitter numbers, locations and links and (2) asking BNP-Track to estimate parameters (diffusion coefficient and background photon flux) from the data while the ground truth values for these same parameters are used to tune competing methods to optimize their performance. See Methods for exactly how the aforementioned parameters are provided to existing methods and how track segments are fused. As we will show, although competing methods have substantial advantages, BNP-Track still exceeds the resolution of existing tools and yields reduced error rates (percentage of wrong links).

To quantitatively compare SPT tools to BNP-Track, we continue using the pairing distance and tracking error metrics previously used (Eqs. (2) to (4)). In addition, we use a finite gate value (see ϵ in Eq. (2)) of five pixels (presented in Methods). This gate value allows us to benchmark SPT tools that otherwise face challenges in localizing emitters within specific frames (that is, have missing segments) using a defined threshold. Using reasonably different gate values does not alter the subsequent discussion (see Supplementary Tables 2 and 3).
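The role of the gate value can be illustrated with a simplified gated distance between two tracks, written in the spirit of the metrics of Chenouard et al. The exact definitions used in this work are Eqs. (2) to (4) in Methods and are not reproduced here; the function below, its treatment of missing positions and the example coordinates are assumptions for illustration. The gate of 665 nm corresponds to five pixels at the pixel size implied by the text (5 pixels = 665 nm).

```python
import math


def gated_track_distance(track_a, track_b, gate):
    """Mean per-frame distance between two tracks, capped at `gate`.

    Tracks are equal-length lists whose entries are (x, y) tuples, or None
    where the track has no position in that frame. A frame where only one
    track has a position contributes the full gate value.
    """
    if len(track_a) != len(track_b) or not track_a:
        raise ValueError("tracks must be non-empty and cover the same frames")
    total = 0.0
    for pos_a, pos_b in zip(track_a, track_b):
        if pos_a is None and pos_b is None:
            continue  # both absent: no penalty for this frame
        if pos_a is None or pos_b is None:
            total += gate  # missing segment: penalized at the gate value
        else:
            total += min(math.dist(pos_a, pos_b), gate)
    return total / len(track_a)


GATE_NM = 665.0  # five pixels

# Hypothetical ground truth and estimate, in nm; the estimate misses frame 3.
truth = [(30.0, 40.0), (100.0, 0.0), (200.0, 0.0), (2000.0, 0.0)]
estimate = [(0.0, 0.0), (100.0, 0.0), None, (300.0, 0.0)]

print(gated_track_distance(estimate, truth, GATE_NM))
```

Capping each frame's contribution keeps a single missed or grossly wrong localization from dominating the score, which is what allows tools with missing segments to be benchmarked at all.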

Nevertheless, pairing distance and tracking error metrics do not fully reflect track quality because linking error (mislink) penalties are included in the assessment. Including mislinks can be problematic when two or more emitters largely overlap in a frame, with emitters losing their identities and becoming indistinguishable. In such cases, penalizing mislinks is irrelevant to assessing overall track quality.

Therefore, to better capture localization accuracy, we introduce another metric called localization resolution, or simply resolution, that does not consider mislinks. Instead of pairing tracks, localization resolution independently pairs emitter positions in each frame without using a gate value. Consequently, localization resolution is undefined whenever the compared sets of tracks differ in their number of emitters in at least one frame. In addition, if there are no mislinks, false positives or false negatives, tracking error and localization resolution are equivalent.
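A minimal sketch of such a per-frame pairing is given below. It assumes that resolution is summarized as the mean paired distance over all frames and emitters, which is an assumption made here for illustration; the brute-force search over pairings is practical only for a handful of emitters per frame. Function names and coordinates are hypothetical.

```python
import math
from itertools import permutations


def frame_pairing_cost(points_a, points_b):
    """Smallest total distance over all one-to-one pairings within a frame."""
    best = math.inf
    for candidate in permutations(points_b):
        cost = sum(math.dist(p, q) for p, q in zip(points_a, candidate))
        best = min(best, cost)
    return best


def localization_resolution(frames_a, frames_b):
    """Mean paired distance over frames, ignoring emitter identities.

    Returns None (undefined) if any frame has differing emitter numbers.
    """
    if len(frames_a) != len(frames_b):
        raise ValueError("both inputs must cover the same frames")
    total, count = 0.0, 0
    for points_a, points_b in zip(frames_a, frames_b):
        if len(points_a) != len(points_b):
            return None
        total += frame_pairing_cost(points_a, points_b)
        count += len(points_a)
    return total / count if count else None


# Hypothetical positions in nm. The estimate lists the emitters in swapped
# order in frame 1, which pairing within each frame does not penalize.
truth = [[(0.0, 0.0), (100.0, 0.0)], [(0.0, 0.0), (100.0, 0.0)]]
estimate = [[(100.0, 30.0), (0.0, 40.0)], [(3.0, 4.0), (100.0, 0.0)]]

print(localization_resolution(truth, estimate))
```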

Comparison with TrackMate

We begin by tracking a single emitter, where BNP-Track (Fig. 3a), TrackMate (Fig. 3b) and u-track (Fig. 3c) all accurately follow the emitter throughout a video sequence, achieving resolutions of around 34.6 nm, 35.4 nm and 32.1 nm, respectively, demonstrating typical performance in straightforward scenarios. As the complexity increases to a three-emitter setup (see Supplementary Fig. 1g and Supplementary Data 6), BNP-Track outperforms TrackMate under most criteria (Fig. 3d and Supplementary Table 3), particularly in situations with overlapping PSFs, maintaining a resolution of 27.1 nm. TrackMate, however, struggles with issues like the misinterpretation of diffraction-limited emitters and spurious detections (Fig. 3e,f), which severely impair its ability to resolve emitters, leading to notable tracking errors and diffusion coefficient overestimates. Detailed data and further analyses, including comparative metrics and the impact of tracking errors, are available in the Supplementary Information.

Robustness tests

We present in the Supplementary Information robustness results for BNP-Track by considering data drawn from different motion models and varying diffusion coefficients for normal diffusion in addition to varying emitter brightnesses, background photon fluxes and emitter numbers. As an example, in Fig. 4, we test how closely two emitters can come together while retaining the ability of BNP-Track to enumerate the number of emitters and track them.

BNP-Track’s performance in increasingly crowded environments

So far, we have evaluated the performance of BNP-Track on two distinct ROIs from an experimental dataset and computed its resolution using synthetic data. To further test the limits of BNP-Track, we analyzed a densely packed ROI, ROI-3 (Supplementary Data 30 and 31), selected from the same dataset (Fig. 2a).

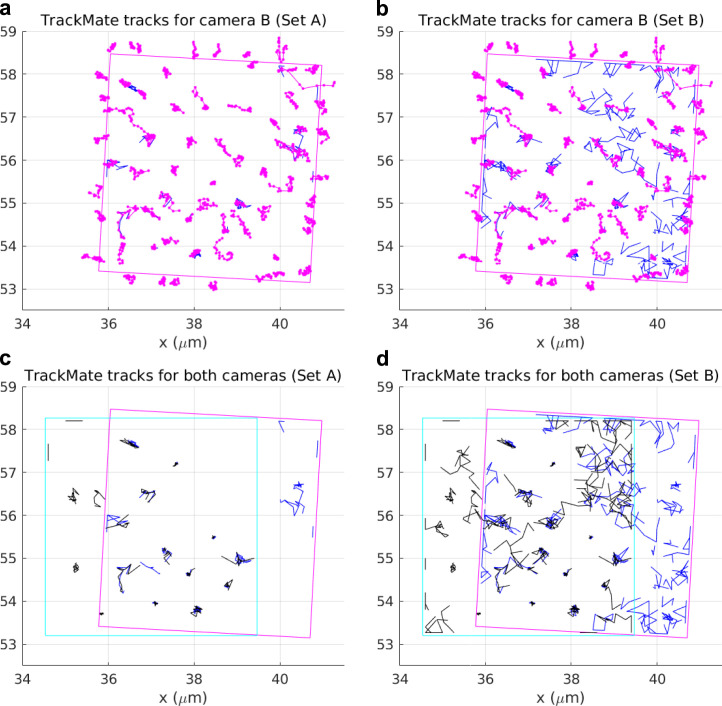

In Fig. 5a, similar to Fig. 2a,b, for illustrative purposes alone, we show time-averaged images from both cameras. These images reveal that ROI-3 contains tens of closely positioned and out-of-focus emitters. Furthermore, within ROI-3, cameras A and B observe slightly different FOVs, offset by approximately 1.5 μm and rotated by 5°. To provide an assessment of BNP-Track’s performance compared to that of TrackMate, we selected emitters whose z positions are within 150 nm of the in-focus plane in the overlapping region and calculated the pairing distance between tracks. The results show a pairing distance of approximately 136.4 nm, which corresponds to a tracking error of 68.2 nm compared to the ground truth. These results are consistent with the performance for ROI-2 in Fig. 2.
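The dual-camera consistency check can be sketched as a rigid registration followed by a pairing distance. The registration below (a rotation about the origin followed by a shift) is a simplified stand-in for the image registration actually used, and the track coordinates are hypothetical. Halving the pairing distance to obtain a tracking error follows the numbers quoted above (136.4 nm and 68.2 nm); the authoritative definition is Eq. (4) in Methods.

```python
import math


def register_rigid(points, angle_deg, shift_nm):
    """Rotate points about the origin, then translate (rigid 2D transform)."""
    angle = math.radians(angle_deg)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    shift_x, shift_y = shift_nm
    return [(cos_a * x - sin_a * y + shift_x, sin_a * x + cos_a * y + shift_y)
            for x, y in points]


def pairing_distance_nm(track_a, track_b):
    """Mean frame-by-frame distance between two equal-length tracks."""
    if len(track_a) != len(track_b) or not track_a:
        raise ValueError("tracks must be non-empty and cover the same frames")
    return sum(math.dist(p, q) for p, q in zip(track_a, track_b)) / len(track_a)


# Hypothetical tracks of one emitter as seen by each camera, in nm.
camera_a = [(1500.0, 100.0), (1550.0, 180.0), (1620.0, 240.0)]
camera_b_raw = [(20.0, 60.0), (90.0, 130.0), (150.0, 200.0)]

# Map camera B into camera A's frame: 5 degree rotation and 1.5 um offset,
# matching the magnitudes quoted in the text (the direction is assumed).
camera_b = register_rigid(camera_b_raw, angle_deg=5.0, shift_nm=(1500.0, 0.0))

pairing = pairing_distance_nm(camera_a, camera_b)
tracking_error = pairing / 2.0  # assumed: error is half the pairing distance
print(f"pairing distance: {pairing:.1f} nm, tracking error: {tracking_error:.1f} nm")
```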

As illustrated in Fig. 5a,b, ROI-3 presents a challenge in estimating the number of emitters due to crowding and overlapping PSFs as well as a larger number of out-of-focus emitters. These features pose severe challenges to conventional tracking tools that rely on manually setting thresholds to fix the number of emitters, especially dim or out-of-focus ones. To demonstrate this point, we used TrackMate again to analyze ROI-3. The results for camera A are illustrated in Fig. 5c,d. Specifically, for Fig. 5c, we set a high localization quality threshold (set A) relative to the nominal diffraction limit of 231 nm at 5 pixels (665 nm) to minimize spurious detection, resulting in a total of 18 tracks. Each of these tracks can be paired with one of BNP-Track’s 78 emitter tracks using the Tracking Performance Measures tool34 in Icy46, with the maximum pairing distance set at 2 pixels (266 nm). With this high threshold, TrackMate produces notably fewer emitter tracks than BNP-Track due to out-of-focus dim emitters and difficulties arising from overlapping PSFs. By contrast, lowering the quality threshold in TrackMate to 0 to detect more emitters (set B) increases the total number of TrackMate tracks to 64 (Fig. 5d). Forty-one of these tracks can be paired with a subset of BNP-Track’s emitter tracks using the same pairing distance threshold. However, the remaining 23 tracks are spurious and would contaminate further analysis. Similar results are also obtained for data from camera B (Extended Data Fig. 3a,b). Furthermore, in Extended Data Fig. 3c,d, we show that, given the same image registration as used in Fig. 5b, TrackMate does not produce matching tracks for both cameras, underscoring why emitter numbers must be learned simultaneously with the tracks rather than precalibrated.

Extended Data Fig. 3. BNP-Track’s performance in increasingly crowded environments.

a, A comparison between BNP-Track’s result and TrackMate’s result with a high localization quality threshold (set A) for the data from camera B. TrackMate tracks are in blue. b, A comparison between BNP-Track’s result and TrackMate’s result with a low localization quality threshold (set B) for the data from camera B. TrackMate tracks are in blue. c, Comparison between TrackMate tracks (set A) from both cameras with the same image registration as Fig. 5. d, Comparison between TrackMate tracks (set B) from both cameras.

Discussion

We present an image processing framework, BNP-Track, superresolving emitters in cellulo without leveraging fluorophore photodynamics. Our framework analyzes continuous image measurements from diffraction-limited light emitters throughout image acquisition. BNP-Track extends the scope of widefield SRM by exploiting spatiotemporal information encoded across all frames and pixels. Additionally, BNP-Track unifies many existing approaches to localization microscopy and SPT and extends beyond them by simultaneously and self-consistently estimating emitter numbers.

By operating in three interlaced stages (preparation, imaging and processing), existing approaches to widefield SRM estimate locations of individual static emitters with a generally accepted resolution of ≈50 nm or less6–8. Such resolution for widefield applications is substantially improved relative to conventional microscopy’s diffraction limit of ≈250 nm. Although our processing framework cannot lift the limitations imposed by optics nor eliminate the degradation induced by noise, we show that our framework can substantially extend our ability to estimate emitter numbers and tracks from existing images with uncertainty both for more straightforward in-focus cases where emitters are well separated and more challenging cases where emitters are crowded, move out of focus and appear partly out of the FOV. In particular, because BNP-Track provides full distributions over unknowns, it readily computes error bars (often termed CIs within a Bayesian setting) associated with emitter numbers warranted by images (for example, Extended Data Fig. 1 and Supplementary Fig. 1), localization events for both isolated emitters and emitters closer than light’s diffraction limit and other parameters including diffusion coefficients.

Many scenarios challenge all tracking tools, including out-of-focus motion, crowded environments, inhomogeneous illumination, optical aberrations, hot pixels or detector saturation. Although quantifying when BNP-Track fails depends on the specifics of any given circumstance, BNP-Track leverages broad spatiotemporal information that traditional tools typically discard when they separate the tracking task into modular steps, as highlighted earlier. It does so by modeling the entire process from emitter motion to detector output simultaneously and self-consistently, thus maximizing the amount of information extracted from individual frames. As such, when BNP-Track fails to track for a particular system setting, conventional tracking methods typically fail earlier (for example, as shown in Fig. 4), indicating the need for an alternative experimental protocol.

The analysis of FOVs like those shown in Results (5 μm × 5 μm or about 1,500 pixels and 22 frames) requires about 300 min of computational time on an average laptop (Apple MacBook 2020 with macOS Ventura). The computational cost scales linearly with frame number, total pixel number and total emitter numbers. Larger-scale applications are within the existing computational capacity, although additional algorithmic improvements and computational optimization are possible. For instance, as part of future technological development, parallelism can be used in Eq. (6), the most costly part of our algorithm, given that the average photon numbers incident on each pixel are independent of the others. This approach results in a theoretical speed-up factor equal to the smaller of the two numbers: the total number of pixels and the number of processor cores. What is more, as single-photon detector arrays become available, it may be possible to imagine generalizing our emission model to consider binary (detection or nondetection) or other few-bit emission models extending beyond the continuum emission model invoked herein, thereby generalizing BNP-Track to faster diffusion processes.

Despite BNP-Track’s higher computational cost, we argue the following. First, as demonstrated in Figs. 3 to 5, cheaper conventional tracking methods not only fail to surpass the diffraction limit but also do not learn emitter numbers. Yet learning emitter numbers is critical for correctly linking emitter locations across frames, especially in crowded environments. Second, BNP-Track’s execution time is primarily computational wall time, as BNP-Track is unsupervised and largely free from manual tuning. This stands in contrast to methods such as TrackMate used in the generation of Figs. 3 to 5, which require manual tuning and thresholding for proper execution and, even so, remain diffraction limited.

As BNP-Track is a framework, it can be adapted to accommodate specialized illumination modalities including TIRF47 and light-sheet48 or even multicolor imaging. Indeed, microscopy modalities collecting data across axial planes may help discriminate between background and out-of-focus emitters that are currently difficult to distinguish. For example, BNP-Track attributes variations in the appearance of spots (changes in shape, size and emission intensity) to (1) emitters moving in and out of the in-focus plane, (2) overlapping emitters and (3) motion blur. Along these same lines, in Methods, we made common modeling choices and used typical experimental parameters. For example, we used an EMCCD camera model and assumed a Gaussian PSF. Other choices can be made by simply changing the mathematical form of the camera model or the PSF, provided that these assume known precalibrated forms. None of these changes break BNP-Track’s conceptual framework.

Similarly, while BNP-Track uses a Brownian motion model, one may wonder about BNP-Track’s performance when emitters evolve according to alternative motion models. A preliminary answer to this question lies in the Results, where BNP-Track yields accurate tracking results consistent across two cameras for an experimental dataset with an unknown emitter motion model despite assuming normal diffusion (Brownian) motion. Moreover, further simulations shown in Supplementary Figs. 3 and 7 to 9 illustrate how BNP-Track applies and maintains its performance in cases where emitter motion is dictated by motion models extending beyond Brownian motion, highlighting the dominant contribution of the detector and photon emission model in tracking over the details of the motion model. This motivates our thinking of Brownian motion as justifying the use of Gaussian transition probabilities, following from the central limit theorem, between locations across frames.

Perhaps more fundamentally, these results imply that the amount of diffraction-limited tracking data analyzed may be insufficient to infer motion models, given that tracks learned by BNP-Track remain accurate even when the underlying motion model differs from normal diffusion. Either way, if we believe that a specific motion model is warranted and not accommodated by Gaussian transition probabilities, we may incorporate this change into our framework (Supplementary Note 6.3). The insensitivity of track determination to the motion model does raise the question of how sensitive tracking is to the boundary conditions of cells and to obstacles encountered within cells, which we have yet to explore.

Building on the results and discussions presented thus far, we posit that BNP-Track applies to a broad spectrum of particle tracking scenarios. Its utility becomes especially pronounced in systems where traditional modular localization and linking faces challenges, specifically in scenarios featuring relatively dim emitters, intense background noise, fast diffusing emitters or a high local emitter number density. For systems containing consistently well-separated bright in-focus emitters within a large FOV, conventional tracking approaches may be preferred. Yet, it is difficult to control a fixed separation between emitters, even under low crowding conditions.

Postprocessing tools are frequently used to extract useful information from single-particle tracks, such as diffusion coefficients and diffusive states. These tools range from simple approaches, such as MSD, to more complex methods, such as Spot-On49 and SMAUG50. Because our framework produces tracks, these tracks can be analyzed by these tools, and our ability to make full distributions over tracks may also help estimate errors over postprocessed parameters. It is also conceivable that our output could be used as a training set for neural networks51 or be used to make predictions of molecular tracks in dense environments52, such as in Fig. 5, previously considered outside the scope of existing tools.

The framework that we present here is a proof-of-principle demonstration that computation can feasibly achieve superresolution of evolving targets by avoiding the existing tracking paradigm’s modular structure, which limits tracking to dilute and in-focus samples.

Methods

Tracking error metrics

The agreement between tracks across both cameras within their FOV can be quantified according to a pairing distance metric34. Briefly, the distance between two tracks is defined using the gated Euclidean distance given by

$$d(\psi, \phi) = \sum_{n=1}^{N} \min\left(\lVert \psi_n - \phi_n \rVert,\ \epsilon\right) \tag{2}$$

Here, ϵ is called the gate value (representing the maximum distance for two detections to be paired), N is the total number of frames, and ∥ψn − ϕn∥ denotes the Euclidean distance between the emitter positions ψn and ϕn at time tn in both cameras. If a track fails to localize any emitter in a particular frame, the distance at that frame is considered to be ϵ (numerically defined shortly). When comparing BNP-Track’s tracking results for both cameras in the dual-camera setup, we use an infinite value for ϵ. This is because, by design, BNP-Track only outputs tracks with no missing segments, as emitters are assumed to originate from outside of the FOV or out of focus.

On the other hand, when comparing the track estimates from SPT tools to known ground truth tracks, the gate value should be configured to ensure that detections deemed ‘well separated’ or those exceeding twice the nominal diffraction limit are never paired. In the synthetic datasets, the nominal diffraction limit is approximately 280 nm, derived using parameters outlined in Supplementary Table 1. Consequently, we selected a gate value of 5 pixels, equivalent to approximately 665 nm. Despite this argument, using reasonably different gate values does not alter the subsequent discussion (refer to Supplementary Tables 2 and 3).

Based on Eq. (2), the pairing distance between two sets of tracks, denoted $d(\Psi, \Phi)$, is defined as the minimum total gated Euclidean distance among all possible track pairings between the sets. In this context, Mψ and Mϕ represent the number of tracks in each set. For a comprehensive understanding of the methodology used to determine this minimal distance, see Chenouard et al.34.
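As an illustration of the two definitions above, the following Python sketch computes the gated Euclidean distance between two tracks and, by brute force, the pairing distance between two small track sets. It is a simplified stand-in for the procedure of Chenouard et al.34, not their implementation: the function names, the use of `None` for a missing detection, the convention that a frame missing from both tracks contributes zero, and the padding of the smaller set with empty dummy tracks are all assumptions made here for illustration.

```python
import itertools
import math


def gated_distance(track_a, track_b, gate):
    """Sum over frames of min(Euclidean distance, gate).

    Each track is a list with one entry per frame: an (x, y) tuple,
    or None when the track has no detection in that frame.
    A frame missing from exactly one track contributes the gate value.
    Assumption: a frame missing from both tracks contributes zero.
    """
    total = 0.0
    for pos_a, pos_b in zip(track_a, track_b):
        if pos_a is None and pos_b is None:
            continue
        if pos_a is None or pos_b is None:
            total += gate
        else:
            total += min(math.dist(pos_a, pos_b), gate)
    return total


def pairing_distance(tracks_a, tracks_b, gate):
    """Minimum total gated distance over all one-to-one track pairings.

    Assumption: the smaller set is padded with empty (all-None) dummy
    tracks, so an unpaired track costs `gate` per detection.
    Brute force over permutations; only suitable for small sets.
    """
    size = max(len(tracks_a), len(tracks_b))
    if size == 0:
        return 0.0
    n_frames = len(tracks_a[0]) if tracks_a else len(tracks_b[0])
    dummy = [None] * n_frames
    padded_a = list(tracks_a) + [dummy] * (size - len(tracks_a))
    padded_b = list(tracks_b) + [dummy] * (size - len(tracks_b))
    return min(
        sum(gated_distance(a, b, gate) for a, b in zip(padded_a, perm))
        for perm in itertools.permutations(padded_b)
    )


# Two hypothetical ground truth tracks and two estimates listed in swapped order.
truth = [[(0, 0), (1, 0), (2, 0)], [(0, 5), (1, 5), (2, 5)]]
estimate = [[(0, 5.5), (1, 5.5), (2, 5.5)], [(0, 0.5), (1, 0.5), (2, 0.5)]]
print(pairing_distance(truth, estimate, gate=5.0))  # prints 3.0
```

The factorial cost of enumerating permutations is acceptable only for a handful of tracks; for realistic track counts, a linear assignment solver would be the natural replacement.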

Moreover, to enable a more straightforward comparison with the diffraction limit, we introduce the concept of tracking error, which is defined as

$$\text{Tracking error} = \frac{d(\Psi, \Phi)}{N M_\phi} \tag{3}$$

Here, the notation is consistent with that of Eq. (2), and Φ is the reference track set, which is the ground truth track set when available.

For the dual-camera experimental dataset, given the absence of ground truth, the tracking error is calculated slightly differently,

$$\text{Tracking error} = \frac{d(\Psi, \Phi)}{2 N M} \tag{4}$$

Here, the extra factor of two appears in the denominator as the tracking error now sums the distances from both tracks, and thus the error per track is half. In practice, we have found that both track sets share the same number of tracks, M, because BNP-Track reports the same number of emitters in the shared FOV between the two cameras in the experimental datasets discussed below. Otherwise, if the detectors are very different and the number of tracks detected is not the same, then by convention, M can be understood as the mean.

Image processing

As we demonstrate in Results, our analysis goal is to determine the probability distribution termed the posterior, $p(\theta \mid w)$. In this distribution, we use θ to gather the unknown quantities of interest, for instance, emitter tracks and photon emission rates, and $w$ to collect the data under processing, for example, timelapse images. Below, we present how this distribution is derived and its underlying assumptions.

To facilitate the presentation, we first give a detailed formulation of the physical processes involved in forming the acquired images, as required by the quantitative analysis above. This formulation captures microscope optics and camera electronics and can be modified to accommodate more specialized imaging setups. In this formulation, the unknowns of interest are encoded by parameters. Next, we present the mathematical tools needed to estimate values for the unknown parameters. That is, we address the core challenge in SRM arising from the unknown number of emitters and their associated tracks. To overcome the challenge of estimating emitter numbers, we apply Bayesian nonparametrics. Our approach differs from the likelihood-based approaches currently used in localization microscopy, allowing us to relax the SRM photodynamical requirements.

As several of the notions in our description are stochastic (for example, parameters with unknown values and random emitter dynamics), we use probabilistic descriptions. Although our notation is standard for the statistical community, we provide an introduction more appropriate for a broader audience in Supplementary Information.

Model description

Our starting point consists of image measurements obtained in an SRM experiment denoted by $w_n^p$, where subscripts n = 1,…, N indicate the exposures, and superscripts p = 1,…, P indicate pixels. For example, $w_2^3$ denotes the raw image value, typically reported in analog-to-digital units or counts and stored in TIFF format, measured in pixel 3 during the second exposure. Similarly, $w_2^{1:P}$ denotes every image value (that is, entire frame) measured during the second exposure. Because the image values are related to the specimen under imaging, we aim to develop a mathematical model encoding the physical processes that relate the system imaged with the acquired measurements.

Noise

The recorded images mix electronic signals that depend only stochastically on an average amount of incident photons53–56. For commercially available cameras, the overall relationship, from incident photons to recorded images, is linear and contaminated with multiplicative noise that results from shot noise, amplification and readout. Our formulation below applies to image data acquired with EMCCD-type cameras, as commonly used in superresolution imaging6,55. However, the expression below can be modified to accommodate other detector architectures. Here, in our formulation,

$$w_n^p \mid \Lambda_n^p \sim \text{Normal}\left(\mu + \xi \Lambda_n^p,\ \upsilon + f \xi^2 \Lambda_n^p\right) \tag{5}$$

where $\Lambda_n^p$ is the average number of photons incident on pixel p during exposure n. The parameter f is a camera-dependent excess noise factor, and ξ is the overall gain that combines the effects of quantum efficiency, preamplification, amplification and quantization. The values of μ, υ, ξ and f are specific to the camera that acquires the images of interest, and their values can be calibrated as described in Supplementary Information.
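To make the role of the four camera parameters concrete, the short Python sketch below draws raw pixel values for a given average photon number. It assumes, for illustration only, a Gaussian readout model with mean μ + ξΛ and variance υ + fξ²Λ, which is a common approximation for EMCCD cameras; the function name, argument names and numerical values are hypothetical and do not correspond to calibrated values from this work.

```python
import random


def sample_pixel_value(mean_photons, offset, gain, readout_var, excess_noise, rng=random):
    """Draw one raw pixel value (in counts) for a given average photon number.

    Assumed illustrative model: Normal distribution with
      mean     = offset + gain * mean_photons
      variance = readout_var + excess_noise * gain**2 * mean_photons
    """
    mean = offset + gain * mean_photons
    variance = readout_var + excess_noise * gain ** 2 * mean_photons
    return rng.gauss(mean, variance ** 0.5)


# Hypothetical camera parameters; real values must come from calibration.
rng = random.Random(0)
draws = [sample_pixel_value(10.0, offset=100.0, gain=50.0, readout_var=4.0,
                            excess_noise=2.0, rng=rng) for _ in range(20000)]
print(sum(draws) / len(draws))  # close to 100 + 50 * 10 = 600
```

Under this assumed form, the variance grows with the signal, so brighter pixels are noisier in absolute terms even though their relative noise decreases.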

Pixelization

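As shot noise is already captured, $\Lambda_n^p$ depends deterministically on the underlying photon flux

$$\Lambda_n^p = \int_{t_n^{\min}}^{t_n^{\max}} dt \int_{x_{\min}^p}^{x_{\max}^p} dx \int_{y_{\min}^p}^{y_{\max}^p} dy \, U(x, y, t) \tag{6}$$

where $t_n^{\min}$ and $t_n^{\max}$ mark the integration time of the nth exposure; $x_{\min}^p$, $x_{\max}^p$, $y_{\min}^p$ and $y_{\max}^p$ mark the region monitored by pixel p; and U(x, y, t) is the photon flux at position x, y at time t. We detail our spatiotemporal frames of reference in Supplementary Information.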
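For intuition, the pixel and exposure integration can be evaluated in closed form in a simplified special case. The Python sketch below assumes a single in-focus emitter that does not move during the exposure, a two-dimensional Gaussian PSF of width `sigma` and a spatially uniform background, none of which is required by the general formulation; all function and parameter names are hypothetical.

```python
import math


def gaussian_span(lower, upper, center, sigma):
    """Integral of a normalized 1D Gaussian between lower and upper."""
    scale = sigma * math.sqrt(2.0)
    return 0.5 * (math.erf((upper - center) / scale)
                  - math.erf((lower - center) / scale))


def expected_photons(x_edges, y_edges, exposure, emitter_xy,
                     brightness, sigma, background_rate):
    """Average photon number in every pixel for one exposure.

    x_edges, y_edges: pixel boundaries (number of pixels + 1 values each).
    brightness: emitter photon emission rate (photons per unit time).
    background_rate: background photons per unit time per unit area.
    Returns a list of rows indexed as image[row (y)][column (x)].
    """
    ex, ey = emitter_xy
    image = []
    for y_lo, y_hi in zip(y_edges[:-1], y_edges[1:]):
        row = []
        for x_lo, x_hi in zip(x_edges[:-1], x_edges[1:]):
            # Fraction of the emitter's photons that lands in this pixel.
            psf_fraction = (gaussian_span(x_lo, x_hi, ex, sigma)
                            * gaussian_span(y_lo, y_hi, ey, sigma))
            area = (x_hi - x_lo) * (y_hi - y_lo)
            row.append(exposure * (brightness * psf_fraction
                                   + background_rate * area))
        image.append(row)
    return image


# Hypothetical 20 x 20 pixel grid with unit-sized pixels.
edges = [float(i) for i in range(21)]
image = expected_photons(edges, edges, exposure=0.1, emitter_xy=(10.0, 10.0),
                         brightness=1000.0, sigma=1.0, background_rate=0.0)
print(sum(sum(row) for row in image))  # close to 0.1 * 1000 = 100 photons
```

Because each pixel value is computed independently of the others, the two loops are the natural place to introduce parallelism.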

Optics

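We model U(x, y, t) as consisting of background Uback(x, y, t) and fluorophore photon contributions (that is, flux) from every imaged light emitter, $U_{\text{fluor}}^m(x, y, t)$. These are additive,

$$U(x, y, t) = U_{\text{back}}(x, y, t) + \sum_{m=1}^{B} U_{\text{fluor}}^m(x, y, t) \tag{7}$$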

Specifically, for the latter, we consider a total of B emitters that we label with m = 1,…, B. Each of our emitters is characterized by a position Xm(t), Ym(t), Zm(t), all of which may change through time. Here, we use uppercase letters X, Y and Z for random variables and lowercase letters x, y and z for general variables (realizations of the corresponding random variables). Because the total number B of imaged emitters is a critical unknown quantity, in the next section, we describe how we modify the flux U(x, y, t) to allow for a variable number of emitters. In Supplementary Information, we describe how this flux is related to Xm(t), Ym(t), Zm(t).

Model inference

The quantities that we wish to estimate, for example, the positions X1:B(t), Y1:B(t), Z1:B(t), are unknown variables in the preceding formulation. The total number of such variables depends on the number of imaged emitters B, which in SRM remains unknown, thus prohibiting the processing of the images under flux U(x, y, t). Because B has such a subtle effect, we modify our formulation to make it compatible with the nonparametric paradigm of data analysis, allowing for processing under an unspecified number of variables41,42,57–59.

In particular, following the nonparametric latent feature paradigm41,42, we introduce indicator parameters bm that adopt only values 0 or 1 and recast U(x, y, t) in the form

$$U(x, y, t) = U_{\text{back}}(x, y, t) + \sum_{m=1}^{M} b_m U_{\text{fluor}}^m(x, y, t) \tag{8}$$

Specifically, with the introduction of indicators, we increase the number of emitters represented in our model from B to a number M > B that may be arbitrarily large. The critical advantage is that the total number of model emitters M can now be set before processing, whereas the total number of actual emitters B remains unknown. With this formulation, we infer the values of b1:M during processing simultaneously with the other parameters of interest. In this way, we can actively recruit (that is, bm = 1) or discard (that is, bm = 0) light emitters, consistently avoiding underfitting/overfitting. After image processing, our analysis recovers the total number of imaged emitters by the sum $\sum_{m=1}^{M} b_m$ and the positions of the emitters Xm(t), Ym(t), Zm(t) by the estimated positions of the model emitters with bm = 1. However, a side effect of introducing M is that the results of our analysis may depend on the particular value chosen. To relax this dependence, we use a specialized nonparametric prior on bm that we describe in detail in Supplementary Information. This prior specifically allows for image processing at the formal limit M → ∞.
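The bookkeeping implied by the indicators can be sketched in a few lines of Python. The sketch only illustrates how indicator values switch model emitters on and off and how the emitter number and positions are read out from them; it does not implement the nonparametric prior or the inference itself, and all names and values are hypothetical.

```python
def total_flux(background_flux, emitter_fluxes, indicators):
    """Flux at one position and time: background plus active emitters.

    emitter_fluxes[m] is the flux that model emitter m would contribute;
    indicators[m] is 1 (recruited) or 0 (discarded).
    """
    if len(emitter_fluxes) != len(indicators):
        raise ValueError("one indicator is required per model emitter")
    return background_flux + sum(b * u for b, u in zip(indicators, emitter_fluxes))


def active_emitter_count(indicators):
    """Number of recruited emitters, that is, the sum of the indicators."""
    return sum(indicators)


def active_positions(indicators, positions):
    """Positions of the model emitters whose indicator equals 1."""
    return [pos for b, pos in zip(indicators, positions) if b == 1]


# Hypothetical example with M = 4 model emitters, two of which are recruited.
indicators = [1, 0, 1, 0]
fluxes = [5.0, 7.0, 3.0, 9.0]
positions = [(1.0, 2.0), (4.0, 4.0), (6.0, 1.0), (0.0, 0.0)]
print(total_flux(2.0, fluxes, indicators))      # prints 10.0
print(active_emitter_count(indicators))         # prints 2
print(active_positions(indicators, positions))  # prints [(1.0, 2.0), (6.0, 1.0)]
```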

Our overall formulation also includes additional parameters (for example, background photon flux and fluorophore brightness) that may or may not be of immediate interest. To provide a flexible computational scheme that works around both unknown types (that is, parametric and nonparametric) and also allows for future extensions, we adopt a Bayesian approach in which we prescribe prior probability distributions on every unknown parameter beyond just the indicators bm. These priors, combined with the preceding formulation, lead to the posterior probability distribution $p(\theta \mid w)$, where θ gathers every unknown, on which our results rely. We describe the full posterior distribution and its evaluation in Supplementary Information.

After generating samples from the posterior probability distribution (see Supplementary Note 10 for details), numerous, often thousands of, instances of θ are acquired. Each θ contains values for every variable of interest. Subsequently, by aggregating all samples corresponding to each variable, their respective 95% CIs can be computed as the range between the 2.5th and 97.5th percentiles.
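The percentile computation described above can be sketched as follows in Python. The linear interpolation between neighboring order statistics is one common convention and is an assumption here, as are the function names; the input stands for the posterior samples of a single scalar variable.

```python
def percentile(sorted_values, q):
    """Percentile q (0 to 100) of pre-sorted data, with linear interpolation."""
    if not sorted_values:
        raise ValueError("at least one sample is required")
    rank = (len(sorted_values) - 1) * q / 100.0
    lower = int(rank)  # rank is non-negative, so int() acts as floor
    upper = min(lower + 1, len(sorted_values) - 1)
    weight = rank - lower
    return sorted_values[lower] * (1.0 - weight) + sorted_values[upper] * weight


def credible_interval(samples, level=95.0):
    """Central credible interval from posterior samples of one variable."""
    ordered = sorted(samples)
    tail = (100.0 - level) / 2.0
    return percentile(ordered, tail), percentile(ordered, 100.0 - tail)


# Hypothetical samples: the integers 0 to 100 in arbitrary order.
samples = list(range(100, -1, -1))
print(credible_interval(samples))  # prints (2.5, 97.5)
```

For a full track, the same function would be applied separately to each coordinate at each frame.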

TrackMate, u-track and Tracking Performance Measure

Besides its widespread use, ongoing maintenance and updates and being built upon leading methods in Chenouard et al.34, we opt for TrackMate30 specifically because it combines various localization and linking methods and multiple thresholding options.

To generate tracks for comparison in Results, we first export simulated movies as TIFF files and import them in Fiji60 v1.54b for analysis with TrackMate v7.9.2. As part of implementing TrackMate, the Laplacian of Gaussian detector with subpixel localization and the linear assignment problem mathematical framework29 are used in spot detection. Spots are then filtered based on quality, contrast, sum intensity and radius (based on the actual PSF size used in data simulation). For the linear assignment problem tracker, we allow gap closing and tune based on diffusion coefficients, the parameters for maximum (interframe) distance, maximum frame gap and the number of spots in tracks to find the best tracks. No extra feature penalties are added. All aforementioned parameters are tuned to minimize tracking errors.

u-track29 is used for additional comparison, following the same tuning process described earlier. As an advantage to u-track, we provide u-track with the background emission (termed ‘absolute background’ in the manual), which we instead learn from the simulated data using BNP-Track.

The benchmarks in Supplementary Tables 2 and 3 were created using the Tracking Performance Measure34 plugin in Icy46 2.4.3.0. We exported the TrackMate tracks, the BNP-Track MAP estimates and the ground truth tracks as XML files to generate these benchmarks. All tracks were imported into Icy’s TrackManager using the ‘Import from TrackMate’ feature, and the Tracking Performance Measure plugin was started using the ‘add Track Processor’ option. The only required input for this plugin is the ‘maximum distance between detections’ (gate value), for which we used the following three values: 2, 5 and 10 pixels.

Manual connection of track segments was also performed in TrackManager. Initially, we established links between track segments by dragging the last piece of one segment to the first piece of the next segment in the left panel (‘Track View’) of TrackManager. To ensure recognition of these manual connections by the Tracking Performance Measure plugin, we also selected ‘Edit’ and ‘Fuse All track segments’.

Image acquisition

Experimental timelapse images

Fluorescence timelapse images of U-2 OS (HTB-96, ATCC) cells injected with chemically labeled firefly luciferase mRNAs were acquired simultaneously on two cameras. This cell line was genotyped for authentication and subjected to biweekly mycoplasma contamination checks. This U-2 OS cell line is not in the list of known misidentified cell lines. Cell culture and handling of U-2 OS cells before injections were performed as previously described61. Firefly luciferase mRNAs were in vitro transcribed, capped and polyadenylated, and a variable number of Cy3 dyes were nonspecifically added to the poly(A) tail using Click chemistry39. Cells were injected with a solution of Cy3-labeled mRNAs and Cascade Blue-labeled 10-kDa dextran (Invitrogen, D1976) using a Femtojet pump and Injectman NI2 micromanipulator (Eppendorf) at 20 hPa for 0.1 s with 20 hPa of compensation pressure. Cells that were successfully injected were identified by the presence of a fluorescent dextran and were imaged 30 min after injection. The cells were continuously illuminated with a 532-nm laser in HILO mode, and Cy3 fluorescence was collected using a ×60/1.49-NA oil objective. Images were captured simultaneously on two Andor X-10 EMCCD cameras using a 50:50 beamsplitter with a 100-ms exposure time.

Synthetic timelapse images

We acquire validation and benchmarking data through standard computer simulations. We start from ground truth as specified in the captions of Figs. 3 and 4 and Supplementary Figs. 2 to 9 and then add noise with values that we estimated from the experimental timelapse images according to Supplementary Note 4.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Online content

Any methods, additional references, Nature Portfolio reporting summaries, source data, extended data, supplementary information, acknowledgements, peer review information; details of author contributions and competing interests; and statements of data and code availability are available at 10.1038/s41592-024-02349-9.

Supplementary information

Supplementary Figs. 1–9, Tables 1–5, Discussion and Notes.

Source data

Raw experimental image stacks.

Raw synthetic image stacks.

Acknowledgements

We acknowledge NIH NIGMS R01GM130745 (to S.P.) for supporting early efforts in nonparametrics and tracking, R01GM134426 (to S.P.) for supporting single-photon efforts, R35GM148237 (to S.P.) entitled ‘Toward high spatiotemporal resolution models of single molecules for in cellulo applications’ combining both prior R01s and NSF 2310610 (to S.P.) for providing support to build the lab’s expertise in competing tracking tools (in the context of bacterial tracking). We also acknowledge R01GM122803 and R35GM131922 (to N.G.W.) for enabling experimental data acquisition as well as the NSF MRI-ID grant DBI-0959823 (to N.G.W.) for seeding the Single Molecule Analysis in Real-Time (SMART) Center, whose Single Particle Tracker TIRFM equipment was used for acquiring experimental tracking data with support from J. D. Hoff. NIH T-32-GM007315 partially supported A.P.J.

Extended data

Extended Data Fig. 2. Tracking performance comparison among BNP-Track, TrackMate, and u-track.

Same layout as Fig. 3 but for the x coordinate. a, BNP-Track’s MAP estimate compared to the one-emitter ground truth. b, TrackMate’s estimate compared to the one-emitter ground truth. Sets A and B are equivalent in this case. c, u-track’s estimate compared to the one-emitter ground truth. d, BNP-Track MAP estimates compared to the three-emitter ground truth. e,f, TrackMate estimates compared to the three-emitter ground truth with high localization quality threshold (e) and low localization quality threshold (f). In d–f, the top track of ground truth is the same as the ground truth in a–c. The boxed regions in e and f highlight areas where TrackMate performs relatively poorly. See Supplementary Data 5 and 6. Localization resolution is not applicable (N/A) to TrackMate estimates due to missing track segments.

Author contributions

I.S. and L.W.Q.X. prepared the manuscript. I.S., L.W.Q.X. and Z.K. contributed to the analysis methods, computational implementation and software development. A.P.J. and N.G.W. provided the experimental data and provided feedback on the manuscript. I.S. and S.P. conceived the research, and S.P. supervised the entire project.

Peer review

Peer review information

Nature Methods thanks Tamiki Komatsuzaki and Jean-Baptiste Masson for their contribution to the peer review of this work. Primary Handling Editor: Rita Strack, in collaboration with the Nature Methods team. Peer reviewer reports are available.

Data availability

All data discussed in this manuscript are provided as Supplementary Data. Source data are provided with this paper.

Code availability

All algorithms are implemented in MATLAB R2022b62, tested in R2024a. Code can be accessed at the GitHub page for the S.P. laboratory at https://github.com/LabPresse/BNP-Track (ref. 63). Figure-generating scripts are in MATLAB or Julia64 v1.10.3 using Makie65 v0.20.9. These scripts are available upon request.

Competing interests

S.P., I.S. and L.W.Q.X. are co-inventors on a patent application that incorporates the methods outlined in this manuscript. S.P. is a cofounder at Saguaro Solutions. The other authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Ioannis Sgouralis, Lance W. Q. Xu.

Extended data is available for this paper at 10.1038/s41592-024-02349-9.

Supplementary information

The online version contains supplementary material available at 10.1038/s41592-024-02349-9.

References

- 1.Cisse, I. I. et al. Real-time dynamics of RNA polymerase II clustering in live human cells. Science341, 664–667 (2013). 10.1126/science.1239053 [DOI] [PubMed] [Google Scholar]

- 2.Pitchiaya, S. et al. Dynamic recruitment of single RNAs to processing bodies depends on RNA functionality. Mol. Cell74, 521–533 (2019). 10.1016/j.molcel.2019.03.001 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Jalihal, A. P. et al. Multivalent proteins rapidly and reversibly phase-separate upon osmotic cell volume change. Mol. Cell79, 978–990 (2020). 10.1016/j.molcel.2020.08.004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Jalihal, A. P. et al. Hyperosmotic phase separation: condensates beyond inclusions, granules and organelles. J. Biol. Chem. 296, 100044 (2021). [DOI] [PMC free article] [PubMed]

- 5.Shayegan, M. et al. Probing inhomogeneous diffusion in the microenvironments of phase-separated polymers under confinement. J. Am. Chem. Soc.141, 7751–7757 (2019). 10.1021/jacs.8b13349 [DOI] [PubMed] [Google Scholar]

- 6.Lee, A., Tsekouras, K., Calderon, C., Bustamante, C. & Pressé, S. Unraveling the thousand word picture: an introduction to super-resolution data analysis. Chem. Rev.117, 7276–7330 (2017). 10.1021/acs.chemrev.6b00729 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Von Diezmann, L., Shechtman, Y. & Moerner, W. Three-dimensional localization of single molecules for super-resolution imaging and single-particle tracking. Chem. Rev.117, 7244–7275 (2017). 10.1021/acs.chemrev.6b00629 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Betzig, E. et al. Imaging intracellular fluorescent proteins at nanometer resolution. Science313, 1642–1645 (2006). 10.1126/science.1127344 [DOI] [PubMed] [Google Scholar]

- 9.Fazel, M. et al. Fluorescence microscopy: a statistics-optics perspective. Rev. Mod. Phys.96, 025003 (2024). 10.1103/RevModPhys.96.025003 [DOI] [Google Scholar]

- 10.Gustafsson, M. G. Surpassing the lateral resolution limit by a factor of two using structured illumination microscopy. J. Microsc.198, 82–87 (2000). 10.1046/j.1365-2818.2000.00710.x [DOI] [PubMed] [Google Scholar]

- 11.Jazani, S., Xu, L. W., Sgouralis, I., Shepherd, D. P. & Pressé, S. Computational proposal for tracking multiple molecules in a multifocus confocal setup. ACS Photonics9, 2489–2498 (2022). 10.1021/acsphotonics.2c00614 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Wells, N. P., Lessard, G. A. & Werner, J. H. Confocal, three-dimensional tracking of individual quantum dots in high-background environments. Anal. Chem.80, 9830–9834 (2008). 10.1021/ac8021899 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Wells, N. P. et al. Time-resolved three-dimensional molecular tracking in live cells. Nano Lett. 10, 4732–4737 (2010). 10.1021/nl103247v

- 14. Perillo, E. P. et al. Deep and high-resolution three-dimensional tracking of single particles using nonlinear and multiplexed illumination. Nat. Commun. 6, 7874 (2015). 10.1038/ncomms8874

- 15. Rust, M. J., Bates, M. & Zhuang, X. Sub-diffraction-limit imaging by stochastic optical reconstruction microscopy (STORM). Nat. Methods 3, 793–795 (2006). 10.1038/nmeth929

- 16. Bryan IV, J. S., Sgouralis, I. & Pressé, S. Diffraction-limited molecular cluster quantification with Bayesian nonparametrics. Nat. Comput. Sci. 2, 102–111 (2022). 10.1038/s43588-022-00197-1

- 17. Hell, S. W. & Wichmann, J. Breaking the diffraction resolution limit by stimulated emission: stimulated-emission-depletion fluorescence microscopy. Opt. Lett. 19, 780–782 (1994). 10.1364/OL.19.000780

- 18. Klar, T. A., Jakobs, S., Dyba, M., Egner, A. & Hell, S. W. Fluorescence microscopy with diffraction resolution barrier broken by stimulated emission. Proc. Natl Acad. Sci. USA 97, 8206–8210 (2000). 10.1073/pnas.97.15.8206

- 19. Willig, K. I., Rizzoli, S. O., Westphal, V., Jahn, R. & Hell, S. W. STED microscopy reveals that synaptotagmin remains clustered after synaptic vesicle exocytosis. Nature 440, 935–939 (2006). 10.1038/nature04592

- 20. Balzarotti, F. et al. Nanometer resolution imaging and tracking of fluorescent molecules with minimal photon fluxes. Science 355, 606–612 (2017). 10.1126/science.aak9913

- 21. Schnitzbauer, J., Strauss, M. T., Schlichthaerle, T., Schueder, F. & Jungmann, R. Super-resolution microscopy with DNA-PAINT. Nat. Protoc. 12, 1198–1228 (2017). 10.1038/nprot.2017.024

- 22. Jungmann, R. et al. Multiplexed 3D cellular super-resolution imaging with DNA-PAINT and Exchange-PAINT. Nat. Methods 11, 313–318 (2014). 10.1038/nmeth.2835

- 23. Holden, S. J. et al. High throughput 3D super-resolution microscopy reveals Caulobacter crescentus in vivo Z-ring organization. Proc. Natl Acad. Sci. USA 111, 4566–4571 (2014). 10.1073/pnas.1313368111

- 24. Verweij, F. J. et al. The power of imaging to understand extracellular vesicle biology in vivo. Nat. Methods 18, 1013–1026 (2021). 10.1038/s41592-021-01206-3

- 25. Kim, J. et al. Super-resolution localization photoacoustic microscopy using intrinsic red blood cells as contrast absorbers. Light Sci. Appl. 8, 103 (2019). 10.1038/s41377-019-0220-4

- 26. Otterstrom, J. et al. Super-resolution microscopy reveals how histone tail acetylation affects DNA compaction within nucleosomes in vivo. Nucleic Acids Res. 47, 8470–8484 (2019). 10.1093/nar/gkz593

- 27. Khater, I. M., Nabi, I. R. & Hamarneh, G. A review of super-resolution single-molecule localization microscopy cluster analysis and quantification methods. Patterns 1, 100038 (2020). 10.1016/j.patter.2020.100038

- 28. Rayleigh, L. XXXI. Investigations in optics, with special reference to the spectroscope. Lond. Edinb. Dubl. Phil. Mag. 8, 261–274 (1879). 10.1080/14786447908639684

- 29. Jaqaman, K. et al. Robust single-particle tracking in live-cell time-lapse sequences. Nat. Methods 5, 695–702 (2008). 10.1038/nmeth.1237

- 30. Tinevez, J.-Y. et al. TrackMate: an open and extensible platform for single-particle tracking. Methods 115, 80–90 (2017). 10.1016/j.ymeth.2016.09.016

- 31. Sgouralis, I., Nebenführ, A. & Maroulas, V. A Bayesian topological framework for the identification and reconstruction of subcellular motion. SIAM J. Imaging Sci. 10, 871 (2017). 10.1137/16M1095755

- 32. Martens, K. J. A., Turkowyd, B., Hohlbein, J. & Endesfelder, U. Temporal analysis of relative distances (TARDIS) is a robust, parameter-free alternative to single-particle tracking. Nat. Methods 21, 1074–1081 (2024).

- 33. Chenouard, N., Bloch, I. & Olivo-Marin, J.-C. Multiple hypothesis tracking for cluttered biological image sequences. IEEE Trans. Pattern Anal. Mach. Intell. 35, 2736–2750 (2013). 10.1109/TPAMI.2013.97

- 34. Chenouard, N. et al. Objective comparison of particle tracking methods. Nat. Methods 11, 281–289 (2014). 10.1038/nmeth.2808

- 35. Saxton, M. J. & Jacobson, K. Single-particle tracking: applications to membrane dynamics. Annu. Rev. Biophys. 26, 373–399 (1997). 10.1146/annurev.biophys.26.1.373

- 36. Cheng, H.-J., Hsu, C.-H., Hung, C.-L. & Lin, C.-Y. A review for cell and particle tracking on microscopy images using algorithms and deep learning technologies. Biomed. J. 45, 465–471 (2022). 10.1016/j.bj.2021.10.001

- 37. Tokunaga, M., Imamoto, N. & Sakata-Sogawa, K. Highly inclined thin illumination enables clear single-molecule imaging in cells. Nat. Methods 5, 159–161 (2008). 10.1038/nmeth1171

- 38. Pitchiaya, S., Heinicke, L. A., Custer, T. C. & Walter, N. G. Single molecule fluorescence approaches shed light on intracellular RNAs. Chem. Rev. 114, 3224–3265 (2014). 10.1021/cr400496q

- 39. Custer, T. C. & Walter, N. G. In vitro labeling strategies for in cellulo fluorescence microscopy of single ribonucleoprotein machines. Protein Sci. 26, 1363–1379 (2017). 10.1002/pro.3108

- 40. Zhang, B., Zerubia, J. & Olivo-Marin, J.-C. Gaussian approximations of fluorescence microscope point-spread function models. Appl. Opt. 46, 1819–1829 (2007). 10.1364/AO.46.001819

- 41. Van de Schoot, R. et al. Bayesian statistics and modelling. Nat. Rev. Methods Primers 1, 1 (2021). 10.1038/s43586-020-00001-2

- 42. Ghahramani, Z. Probabilistic machine learning and artificial intelligence. Nature 521, 452–459 (2015). 10.1038/nature14541

- 43. Von Toussaint, U. Bayesian inference in physics. Rev. Mod. Phys. 83, 943–999 (2011). 10.1103/RevModPhys.83.943

- 44. Pressé, S. & Sgouralis, I. Data Modeling for the Sciences: Applications, Basics, Computations (Cambridge University Press, 2023).

- 45. Roudot, P. et al. u-track3D: measuring, navigating, and validating dense particle trajectories in three dimensions. Cell Rep. Methods 3, 100655 (2023). 10.1016/j.crmeth.2023.100655

- 46. De Chaumont, F. et al. Icy: an open bioimage informatics platform for extended reproducible research. Nat. Methods 9, 690–696 (2012). 10.1038/nmeth.2075

- 47. Fish, K. N. Total internal reflection fluorescence (TIRF) microscopy. Curr. Protoc. Cytom. 12, Unit 12.18 (2009).

- 48. Reynaud, E. G., Peychl, J., Huisken, J. & Tomancak, P. Guide to light-sheet microscopy for adventurous biologists. Nat. Methods 12, 30–34 (2015). 10.1038/nmeth.3222

- 49. Hansen, A. S. et al. Robust model-based analysis of single-particle tracking experiments with Spot-On. eLife 7, e33125 (2018). 10.7554/eLife.33125

- 50. Karslake, J. D. et al. SMAUG: analyzing single-molecule tracks with nonparametric Bayesian statistics. Methods 193, 16–26 (2021). 10.1016/j.ymeth.2020.03.008

- 51. Kowalek, P., Loch-Olszewska, H. & Szwabiński, J. Classification of diffusion modes in single-particle tracking data: feature-based versus deep-learning approach. Phys. Rev. E 100, 032410 (2019). 10.1103/PhysRevE.100.032410

- 52. Galvanetto, N. et al. Extreme dynamics in a biomolecular condensate. Nature 619, 876–883 (2023). 10.1038/s41586-023-06329-5

- 53. Ram, S., Prabhat, P., Chao, J., Sally Ward, E. & Ober, R. J. High accuracy 3D quantum dot tracking with multifocal plane microscopy for the study of fast intracellular dynamics in live cells. Biophys. J. 95, 6025–6043 (2008). 10.1529/biophysj.108.140392

- 54. Kilic, Z. et al. Extraction of rapid kinetics from smFRET measurements using integrative detectors. Cell Rep. Phys. Sci. 2, 100409 (2021). 10.1016/j.xcrp.2021.100409

- 55. Hirsch, M., Wareham, R. J., Martin-Fernandez, M. L., Hobson, M. P. & Rolfe, D. J. A stochastic model for electron multiplication charge-coupled devices—from theory to practice. PLoS ONE 8, e53671 (2013). 10.1371/journal.pone.0053671

- 56. Harpsøe, K. B., Andersen, M. I. & Kjægaard, P. Bayesian photon counting with electron-multiplying charge coupled devices (EMCCDs). Astron. Astrophys. 537, A50 (2012). 10.1051/0004-6361/201117089

- 57. Wasserman, L. All of Nonparametric Statistics (Springer Science & Business Media, 2006).

- 58. Orbanz, P. & Teh, Y. W. in Encyclopedia of Machine Learning 1st edn (eds Sammut, C. & Webb, G. I.) 81–89 (Springer Science & Business Media, 2010).

- 59. Müller, P., Quintana, F. A., Jara, A. & Hanson, T. Bayesian Nonparametric Data Analysis (Springer, 2015).

- 60. Schindelin, J. et al. Fiji: an open-source platform for biological-image analysis. Nat. Methods 9, 676–682 (2012). 10.1038/nmeth.2019

- 61. Pitchiaya, S., Krishnan, V., Custer, T. C. & Walter, N. G. Dissecting non-coding RNA mechanisms in cellulo by single-molecule high-resolution localization and counting. Methods 63, 188–199 (2013). 10.1016/j.ymeth.2013.05.028

- 62. The MathWorks, Inc. MATLAB Version: 9.13.0 (R2022b) (MathWorks, 2022).

- 63. LabPresse/BNP-Track. GitHub https://github.com/LabPresse/BNP-Track (2023).

- 64. Bezanson, J., Edelman, A., Karpinski, S. & Shah, V. B. Julia: a fresh approach to numerical computing. SIAM Rev. 59, 65 (2017). 10.1137/141000671

- 65. Danisch, S. & Krumbiegel, J. Makie.jl: flexible high-performance data visualization for Julia. J. Open Source Softw. 6, 3349 (2021). 10.21105/joss.03349

Associated Data

Supplementary Materials

Supplementary Figs. 1–9, Tables 1–5, Discussion and Notes.

Raw experimental image stacks (four items).

Raw synthetic image stacks (three items).

Data Availability Statement

All data discussed in this manuscript are provided as Supplementary Data. Source data are provided with this paper.

All algorithms are implemented in MATLAB R2022b (ref. 62) and were tested in R2024a. Code can be accessed at the GitHub page for the S.P. laboratory at https://github.com/LabPresse/BNP-Track (ref. 63). Figure-generating scripts are written in MATLAB or Julia (ref. 64) v1.10.3 using Makie (ref. 65) v0.20.9. These scripts are available upon request.