Abstract

The limited representation of minorities and disadvantaged populations in large-scale clinical and genomics research poses a significant barrier to translating precision medicine research into practice. Prediction models are likely to underperform in underrepresented populations due to heterogeneity across populations, thereby exacerbating known health disparities. To address this issue, we propose FETA, a two-way data integration method that leverages a federated transfer learning approach to integrate heterogeneous data from diverse populations and multiple healthcare institutions, with a focus on a target population of interest having limited sample sizes. We show that FETA achieves performance comparable to the pooled analysis, where individual-level data is shared across institutions, with only a small number of communications across participating sites. Our theoretical analysis and simulation study demonstrate how FETA’s estimation accuracy is influenced by communication budgets, privacy restrictions, and heterogeneity across populations. We apply FETA to multisite data from the electronic Medical Records and Genomics (eMERGE) Network to construct genetic risk prediction models for extreme obesity. Compared to models trained using target data only, source data only, and all data without accounting for population-level differences, FETA shows superior predictive performance. FETA has the potential to improve estimation and prediction accuracy in underrepresented populations and reduce the gap in model performance across populations.

Keywords: Federated learning, health equity, precision medicine, risk prediction, transfer learning

1. Introduction.

Precision medicine holds promises to improve individual health by integrating a person’s genetics, environment, and lifestyle information to determine the best approach to prevent or treat diseases (Ashley (2016)). Precision medicine research has attracted considerable interest and investment during the past few decades (Collins and Varmus (2015)). With the emergence of electronic health records (EHR) linked with biobank specimens, massive environmental data, and health surveys, we now have increasing opportunities to develop accurate personalized risk prediction models in a cost-effective way (Li et al. (2020)).

Despite the increasing availability of large-scale biomedical data, many demographic sub-populations are observed to be underrepresented in precision medicine research (Kraft et al. (2018), West, Blacksher and Burke (2017)). For example, a disproportionate majority (>75%) of participants in existing genomics studies are of European descent (Martin et al. (2019)). The UK Biobank, one of the largest biobanks, has more than 95% of European-ancestry participants (Sudlow et al. (2015)). Mounting evidence has shown that the transferability and generalizability of models trained in a particular population can be low due to a substantial amount of heterogeneity in underlying distributions of data across populations (Duncan et al. (2019), Kraft et al. (2018), Landry et al. (2018), West, Blacksher and Burke (2017)). For example, the performance of existing genetic risk prediction models in non-European populations has generally been found to be much poorer than in European-ancestry populations, most notably in African-ancestry populations (Duncan et al. (2019)). It remains challenging to optimize prediction model performance for underrepresented populations accounting for both the heterogeneity and the insufficient sample sizes. However, to advance precision medicine it is crucial to improve the performance of statistical and machine learning models in underrepresented populations so as not to exacerbate health disparities.

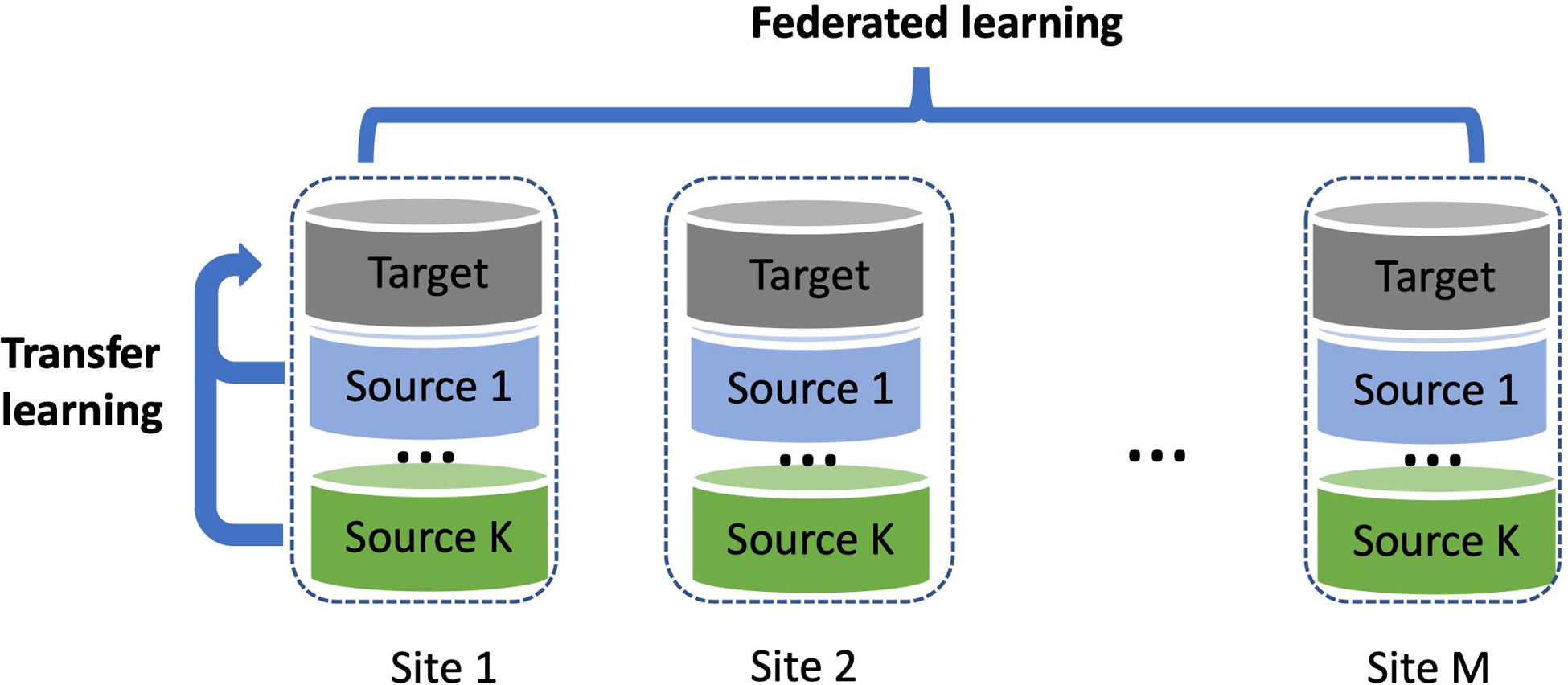

We propose to address the lack of representation and disparities in model performance through two data integration strategies: (1) leveraging the shared knowledge from diverse populations and (2) integrating larger bodies of data from multiple healthcare institutions. Data across multiple populations may share a certain amount of similarity that can be leveraged to improve the model performance in an underrepresented population (Cai et al. (2021)). However, conventional methods, where all data are combined and used indistinctly in training and testing, cannot tailor the prediction models to work well for a specific population; if using a population-specific training strategy, the sample size from an underrepresented population is usually not sufficient to train a model with reliable performance. To account for such heterogeneity and lack of representation, we propose to use transfer learning to transfer the shared knowledge learned from diverse populations to an underrepresented population so that comparable model performance can be reached with much less data for training (Weiss, Khoshgoftaar and Wang (2016)). In addition, multiinstitutional data integration can improve the sample size of the underrepresented populations and the diversity of data (McCarty et al. (2011)). In recent years, many research consortia and data networks have been built which provide infrastructures for multiinstitutional data integration. For example, the electronic Medical Records and Genomics (eMERGE) Network is a consortium of ten participating sites to investigate the use of EHR systems for genomic research (Gottesman et al. (2013)). In 2019, the Global Biobank Meta Initiative (GBMI) was founded which brings together 19 biobanks to work together to understand the genetic basis of human health and disease (Zhou, analysis Initiative et al. (2021)). We propose to use federated learning to unlock the multiinstitutional health records/biobank data, which overcomes two main barriers to institutional data integration. One is that the individual-level information can be highly sensitive and cannot be shared across institutions (van der Haak et al. (2003)). The other one is that the often-enormous size of the health records/biobanks data makes it infeasible or inefficient to pool all data together due to challenges in data storage, management, and computation (Kushida et al. (2012)). Therefore, as illustrated in Figure 1, we proposed a FEderated Transfer Algorithm (FETA) to incorporate data from diverse populations that are stored at multiple institutions to improve the model performance in a target underrepresented population.

Fig. 1.

A schematic illustration of the federated transfer learning framework and the problem setting.

Existing transfer learning methods primarily focus on settings where individual-level data can be shared. For example, Cai and Wei (2021) study the minimax and adaptive methods for nonparametric classification in the transfer learning setting. Bastani (2020) studies estimation and prediction in high-dimensional linear models, and the sample size of the auxiliary study is larger than the number of covariates. Li, Cai and Li (2022) propose a transfer learning algorithm in high-dimensional linear models and studies the adaptation to the unknown similarity level. Li, Cai and Li (2020) study transfer learning in high-dimensional Gaussian graphical models with false discovery rate control. Tian and Feng (2021) study transfer learning under high-dimensional generalized linear models. These individual data-based methods cannot be directly extended to the federated settings due to data sharing constraints and the potential heterogeneity across sites.

Under data sharing constraints, most federated learning methods focus on settings where the true models are the same across studies. For example, many algorithms fit a common model to data from each institution and then aggregate these local estimates through a weighted average, for example, Chen and Xie (2014), Lee et al. (2017), Li, Lin and Li (2013), Wang et al. (2019a). To improve efficiency, surrogate likelihood approaches have been adopted in recently proposed distributed algorithms (Duan et al. (2019), Duan et al. (2020), Jordan, Lee and Yang (2019)) to approximate the global likelihood. These methods cannot be easily extended to the federated transfer learning setting where training a model for a target population is of interest and both data sharing constraints and heterogeneity are present. Recently, Liu et al., 2021 and Cai, Liu and Xia (2022) propose distributed multitask learning approaches, which jointly train site-specific regression parameters based on derived summary data. Different from their work which assumes site-level homogeneous populations, we consider that data in each site are from multiple populations, and the sample sizes can be highly unbalanced. Without making assumptions that model parameters across populations have the same support, our methods are robust to the cases where some populations differ significantly from the target.

To summarize, our work contributes to the current literature from the following perspectives: (1) adopting transfer learning ideas, FETA tackles an important issue in precision medicine where sample sizes from different populations can be highly unbalanced. We provide theoretical analysis, extensive numerical experiments, and real data analysis to demonstrate the improved accuracy and the robustness to the level of heterogeneity; (2) FETA enables federated model training across multiple datasets, which only require a small number of communications across participating sites and can achieve performance comparable to the pooled analysis. Our theoretical analysis and numerical experiments demonstrated how the estimation accuracy is influenced by communication budgets, privacy restrictions, and heterogeneity among populations, and (3) our real data application shows the promise of applying FETA to improve the risk prediction in underrepresented population using data from multicenter large-scale clinical/genomic datasets with communication-efficiency and privacy protection.

The rest of the paper is organized as follows. In Section 2 we introduce FETA with detailed discussions on potential options for initialization, tuning parameter selection, and reducing communication costs. In Sections 3 and 4, we provide theoretical guarantees and simulation studies, respectively, to evaluate the performance of the methods and how it is influenced by the communication constraints and level of heterogeneity across populations. In Section 5 we apply our methods to the construction of prediction models for extreme obesity, which is an emerging public health issue and an important risk factor for many complex diseases. We combine multicenter data from eMERGE Network and target the underrepresented African-ancestry population.

2. Method.

2.1. Problem setup and notation.

We build our federated transfer learning methods based on sparse high-dimensional regression models (Tibshirani (1996), Bickel, Ritov and Tsybakov (2009)). These models have been widely applied to precision medicine research for both association studies and risk prediction models, due to the benefits of simultaneous model estimation and variable selection, and the desirable interpretability (Qian et al. (2020)).

We assume there are subjects in total from populations. We treat the underrepresented population of interest as the target population, indexed by , while the other populations are treated as source populations, indexed by . We assume data for the subjects are stored at different sites where, due to privacy constraints, no individual-level data are allowed to be shared across sites. We consider the case where is finite but is allowed to grow as the total sample size grows to infinity.

Let be the index sets of the data from the th population in the th site and denote the corresponding sample size, for and . We assume the index sets are known and do not overlap with one another, that is, for any . In precision medicine research, these index sets may be obtained from indicators of minority and disadvantaged groups, such as race, gender, and socioeconomic status. Denote and . We are particularly interested in the challenging scenario , where the underrepresentation is severe. However, at certain sites the relative sample compositions can be arbitrary. It is possible that some sites may not have data from certain populations, that is, for some but not all for . We consider the high-dimensional setting where can be larger and much larger than and .

For the th subject, we observe an outcome variable and a set of predictors , including the intercept term. We assume that the target data on the th site, , follow a generalized linear model

with a canonical link function and a negative log-likelihood function

for some unknown parameter and uniquely determined by . Similarly, the data from the th source population in the th site are , and they follow a generalized linear model

with negative log-likelihood

for some unknown parameter .

Our goal is to estimate , using data from populations and sites. These data are heterogeneous at two levels: for data from different populations, differences may exist in terms of both the regression coefficients, which characterize the conditional distribution , as well as the underlying distribution of the covariates, also known as covariate shift in some related work (Guo (2020)). For data from a given population, the distribution of covariates might also be heterogeneous across sites. We assume the regression parameters to be distinct across populations. In addition, we consider the setting where only summary-level data can be shared across sites.

Despite the presence of between-population heterogeneity, it is reasonable to believe that the population-specific models may share some degree of similarity. For example, in genetic risk prediction models the genetic architectures, captured by regression coefficients, of many complex traits and diseases are found to be highly concordant across ancestral groups (Lam et al. (2019)). It is important to characterize and leverage such similarities so that knowledge can be transferred from the source to the target population.

Under our proposed modeling framework, we characterize the similarities between the th source population and the target based on the difference between their regression parameters, . We consider the following parameter space:

where and are the upper bounds for the support size of and , respectively. Intuitively, a smaller indicates a higher level of similarity so that the source data can be more helpful for estimating in the target population. When is relatively large, incorporating data from source populations may be worse than only using data from the target population to fit the model, also known as negative transfer in the machine learning literature (Weiss, Khoshgoftaar and Wang (2016)). With unknown and , in practice, we aim to devise an adaptive estimator to avoid negative transfer under unknown levels of heterogeneity across populations.

Throughout, for real-valued sequences , we write , if for some universal constant , and , if for some universal constant . We say if and . We let , denote some universal constants. For a vector and an index set , we use to denote the subvector of corresponding to . For any vector , let be formed by setting all but the largest (in magnitude) elements of to zero.

2.2. The proposed algorithm.

To motivate our proposed FETA framework, we first consider the ideal case when site-level data can be shared. The transfer learning estimator of can be obtained via the following three-step procedure:

Step 1: Fit a regression model in each source population. For , we obtain

| (2.1) |

Step 2: Adjust for differences using target data. For , we obtain

| (2.2) |

Threshold .

Step 3: Joint estimation using source and target data. We obtain

| (2.3) |

where , and are tuning parameters. Instead of learning directly from the target data with a limited sample size, we learn from the source populations and use them to “jumpstart” the model fitting in the target population. More specifically, we learn the difference by offsetting each . In Step 3 we pool all the data to jointly learn , where the estimated differences are adjusted for data from the th source population. In contrast to the existing transfer learning methods based on generalized linear models, the above procedure has benefits in estimation accuracy and flexibility to be implemented in the federated setting. Compared to recent work (Tian and Feng (2021)), the above procedure has a faster convergence rate, which is, in fact, minimax optimal under mild conditions. Moreover, our method learns independently in Step 1 and Step 2, while in other related methods (Li, Cai and Li (2022), Tian and Feng (2021)), a pooled analysis is conducted with data from multiple populations. In a federated setting, finding a proper initialization for such a pooled estimator is challenging due to various levels of heterogeneity.

To generalize (2.1)–(2.3) to the federated setting, we consider an approximation of by the second-order expansion of at . That is,

The higher-order terms are omitted, given that the initial value is sufficiently close to the true parameter. Using these surrogate losses, the sites only need to share three sets of summary statistics, the current estimate , the score vector , and the Hessian matrix . For , we define

| (2.4) |

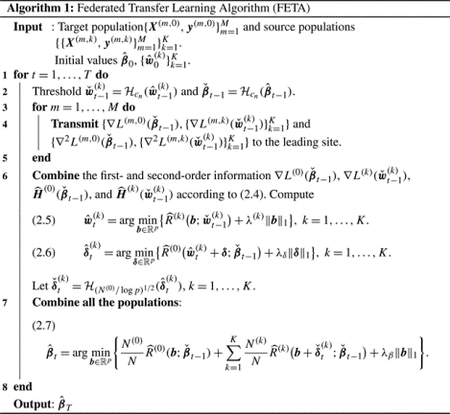

The functions are the combined surrogate log-likelihood functions for the th population, based on the previous estimate and corresponding gradients obtained from the sites. We then follow similar strategies as (2.1)–(2.3) but replace the full likelihood with the surrogate losses to construct a federated transfer learning estimator for , as detailed in Algorithm 1.

We discuss strategies for the initialization of and in Section 2.3. Algorithm 1 requires iterations, where within each iteration we collect the first- and second-order derivatives calculated at each site based on the current parameter values. In practice, when iterative communication across sites is not preferred, we can choose . We show in Section 3 that additional iterations can improve the estimation accuracy.

Remark 1. A special case of Algorithm 1 concerns the linear regression models where . In such a case, we can directly obtain summary statistics and . We can reconstruct , using and , as . Thus, each site only needs to transfer and to perform Steps 1–3 (in equations (2.1)–(2.3)), using summary statistics, and no iterative communication is needed.

For the proper choices of tuning parameters, we provide theoretical investigation in Section 3. In practical implementation, they can be chosen by cross-validation. In a federated setting, minimizing the communication cost of choosing tuning parameters is important, and we provide an efficient procedure in the following remark.

Remark 2. In a federated setting, cross-validation is preferred to be done locally, since otherwise the communication costs would be much higher if different models need to be fitted and validated in a federated way. To reduce communication costs, we suggest the following procedure. We first learn the best tuning parameter for each population using local data. For the th population, we find , which is the site with the largest sample size from the th population. Using data at site from the th population, we fit a local penalized GLM and use cross-validation to choose the best tuning parameter (denoted by ). With , based on the theoretical conclusion (van de Geer (2008)), which suggests the best tuning parameter is roughly , where is some positive constant not related to or , we can obtain . When fitting FETA, we choose tuning parameters by rescaling the local best tuning parameters. More specifically, in equation (2.5) we choose . In equation (2.6), we choose . In equation (2.7) we choose . This procedure is implemented in both our simulation study and real data application and is demonstrated to be effective. Most importantly, it does not increase the communication cost since tuning parameter selection is performed locally within a single site.

In practice, it is likely that some source models are substantially different from the target model. In such a case, excluding source populations that are highly different from the target may lead to better performance than including all source populations. In an extreme case, if all the source populations are not helpful, it is desired that our algorithm can at least maintain a comparable performance than the estimator obtained using the target data alone. We thus proposed to increase the robustness of the transfer learning by an aggregation step, based on a small target validation dataset in the leading site, which can guarantee that, loosely speaking, the aggregated estimator has prediction performance comparable to the best prediction performance among all the candidate estimators (Rigollet and Tsybakov (2011), Tsybakov (2014), Lecué and Rigollet (2014)). In the leading site (denoted as the th site), we denote the validation data to be , with sample size for some , where is the sample size of the training data in the leading site from the target population. We hope to first obtain a ranking of the source populations based on their similarity to the target. We can then obtain candidate estimators obtained by only incorporating the top source populations, for . Using the validation data, we select the estimator with the best performance. We summarize the details of the above procedures in Algorithm 2.

In the aggregation step, can be viewed as a weight vector for . Through optimization (2.9) one can find the optimal combination which maximizes the log-likelihood in some validation samples, and existing work has shown that the performance of the optimal combination is no worse than any of the candidate estimators in , when the sample size of the validation data is not too small (Rigollet and Tsybakov (2011), Tsybakov (2014), Lecué and Rigollet (2014)). Based on our simulation study and real data example, the size of the validation data can be relatively small, compared to the training data, and cross-fitting may be used to make full use of all the data. In practice, if there is strong prior knowledge indicating that the level of heterogeneity is low across populations, the aggregation step may be skipped.

Remark 3 (Avoid sharing Hessian matrices). Algorithm 1 requires each site to transmit Hessian matrices to the leading site, which may not be a concern when is relatively small. When is large, we provide possible options to reduce the communication cost of sharing Hessian matrices: (1) If the distributions of covariate variables are homogeneous across sites for a certain population, we propose to use Algorithm B.1 in Section B of the Supplementary Material (Li, Cai and Duan (2023)), which only requires the gradient vector from each site. (2) When the distributions of covariate variables are heterogeneous across sites, another possibility is to fit a density ratio model between each dataset and the target data in the leading site. Then we can still use the leading target data to approximate the Hessian matrices of the other datasets, through the density ratio tilting technique proposed in Duan, Ning and Chen, 2022. (3) We can leverage the sparsity structures of the population-level Hessian matrices, denoted by , to reduce the communication cost. For example, when constructing polygenic risk prediction, the existing knowledge on the structures of linkage disequilibrium may infer similar block-diagonal structures of the Hessian matrices. In such cases we can apply thresholding to the Hessian matrices and only share the resulting blocks. (4) As demonstrated in our simulation study and real data application, our algorithm with one round of iteration already achieves comparable performance as the pooled analysis. Thus, if choosing , each site will only need to share Hessian matrices once. If more iterations are allowed, we propose to only update the first-order gradients in the rest of the iterations.

2.3. Initialization strategies.

The initialization determines the convergence rate and the number of iterations that Algorithm 1 and Algorithm B.1 need to reach convergence. With data from more than one population, one needs to balance the sample sizes and similarities across populations.

Here we offer two initialization strategies, namely, the single-site initialization and the multisite initialization. The ideal scenario for initialization is that one site has relatively large sample sizes for all populations. In such a case, we initialize and using the single-site initialization. If we cannot find a site with enough data from all the populations, the multisite initialization can be used:

Strategy 1: Single-site initialization. Find , such that the sample sizes satisfy for all . In site , we initialize and by applying the pooled transfer learning approach introduced in equations (2.1)–(2.3). For example, the All of Us Precision Medicine Initiative aims to recruit one million Americans, with estimates of early recruitment showing up to 75% of participants are from underrepresented populations (Sankar and Parker (2017)). Such a database can be treated as an initialization site or leading site.

Strategy 2: Multisite initialization. We first find , which is the site with the largest sample size from the th population. In site , if the sample size of the th population is much smaller than the total sample size, we initialize by treating the th population as the target and other populations as the source and apply the transfer learning approach introduced in equations (2.1)–(2.3). If the th population is the dominating population, we can simply initialize using only its own data. The same procedure applies to the initialization of . For example, when constructing polygenic prediction models, the UK Biobank has around 500,000 European-ancestry samples but only 3000 African-ancestry samples (Collins (2012)). Thus, if UK Biobank is selected for initialization of the European-ancestry population, it can be done by using only the European-ancestry samples. On the contrary, if the UK Biobank is selected for initialization of the African-ancestry population, a transfer learning approach is needed to improve the accuracy of initialization by incorporating both European-ancestry and African-ancestry samples.

In Section 3 we show that the convergence rates of Algorithm 1 depend on the accuracy of the initial estimators. For the above two strategies, we derive their corresponding convergence rates in the next section. The corresponding theoretical results for Algorithm B.1 can be found in Section D of the Supplementary Material.

3. Theoretical guarantees.

We denote the population Hessian matrices for the th population at the th site as and . We denote the population Hessian matrices across all sites to be . We assume the following condition for the theoretical analysis.

Condition 1. The predictors are independent and uniformly bounded with mean zero and covariance with

The covariance matrices and the Hessian matrices are all positive definite for and .

Condition 2 (Lipschitz condition of ). The random noises are independent sub-Gaussian with mean zero for . The second-order derivative is uniformly bounded and for any .

Condition 1 assumes uniformly bounded designs with positive definite covariance matrices. The distribution of can be different for different and . This assumption is more realistic in practical settings than the homogeneity assumptions in, say, Jordan, Lee and Yang (2019). In fact, the heterogeneous covariates are allowed because the Hessian matrices from different sites are transmitted in Algorithm 1. In contrast, Algorithm B.1, which only requires transmitting the gradients across sites, would require a stricter version of Condition 1, as stated in Condition B.1 in the Supplementary Material. On the other hand, the positive definiteness assumption is only required for the Hessian matrices involved in the initialization and the pooled Hessian matrix . Moreover, when having unbounded covariates, one may consider relaxing the uniformly bounded designs to sub-Gaussian designs. We comment that our theoretical analysis still carries through with sub-Gaussian designs, but the convergence rate will be inflated with some factors of log . Condition 2 assumes a Lipschitz condition on the link function which holds for linear, logistic, and multinomial models.

For the tuning parameters, we take

for some constants and . We set at the magnitude of to simplify the theoretical analysis. Indeed, can be chosen as , where is the sample size for the th population at its initialization site. In practice, a common practice is to select the tuning parameters by cross-validation, as in Remark 2.

We first show the convergence rate of the pooled transfer learning estimator in the following lemma.

Lemma 1 (Pooled transfer learning estimator). Assume Conditions 1 and 2, , and . Then for defined in (2.3) and some constant ,

Lemma 1 demonstrates that the pooled estimator has optimal rates under mild conditions. Its convergence rate is faster than the target-only minimax rate when and . The sample size condition of Lemma 1 is relatively mild. First, is reasonable, as our target population is underrepresented. The condition suggests that it is beneficial to exclude too-small samples as source data. The condition that and requires that the similarity among different populations is sufficiently high.

Lemma 1 also shows the benefits of our two data integration strategies. When we integrate data across multiple sites, becomes larger, which relaxes the sparsity conditions and improves the convergence rate. On the other hand, when we incorporate data from diverse populations, the total sample size is increased, which also improves the convergence rate.

In the federated setting, we first provide in Theorem 3.1 a general conclusion that describes how the convergence rate of Algorithm 1 relies on the initial values. We then provide the convergence rates of Algorithm 1 under initialization Strategies 1 and 2 in Corollaries 1 and 2, respectively.

Theorem 3.1 (Error contraction of Algorithm 1). Assume Conditions 1 and 2 and the true parameters are in the parameter space . Assume that and . If event in (C.16) in the Supplementary Material holds for the initial estimators and , then with probability at least , for any finite ,

| (3.1) |

Theorem 3.1 establishes the convergence rate of under certain conditions on the initializations. As the conditions in event guarantee that for all and and converge to and in -norm, respectively. For large enough , the convergence rate of is , which is the minimax rate for estimating in . Hence, the FETA estimators converge to the global minimax estimators. With proper initialization the number of iterations for convergence can be very small. Detailed analysis based on the initialization strategies, proposed in Section 2.3, is provided in the sequel.

Comparing Theorem 3.1 with Lemma 1, we see some important trade-offs in federated learning. First, the larger estimation error of with small in comparison to the pooled version is a consequence of leveraging summary information rather than the individual data. Second, while the accuracy of improves as increases, the communication cost also increases. A balance between communication efficiency and estimation accuracy needs to be determined based on the practical constraints.

To better understand the convergence rate, we investigate the initialization strategies proposed in Section 2.3. Under the single-site strategy, we have the following conclusion.

Corollary 1 (Algorithm 1 with single-site initialization). We compute and via (2.1)–(2.3) based on the individual data in site . Assume Conditions 1 and 2 and the true parameters are in the parameter space . Assume that , and

Then with probability at least , for any fixed ,

where is an independent test sample with .

Corollary 1 provides estimation and prediction errors for FETA after iterations. If the response is binary, then it is of more interest to bound the classification errors, which will be proved in Remark 4. Corollary 1 uses the results that and under the current conditions. We see that, after number of iterations, has the same convergence rate as the pooled estimator . If and for some finite and , then only constant number of iterations are needed.

Remark 4 (Classification error under logistic link). Assume the conditions of Corollary 1 and logistic model . For an independent test sample , let . If and , it holds that

for some positive constants , and .

Remark 4 states that if the true signal is strong enough with high probability, then, under the conditions of previous theorems, the misclassification rate is .

Next, we study the performance of Algorithm 1 when using multisite initialization strategy. For simplicity, we study the case where . That is, the th population is abundant in site , and hence can be initialized based on the data from only the th population in site . Specifically,

| (3.2) |

Corollary 2 (Algorithm 1 with multisite initialization). We compute and via (3.2) based using data in site for . We take and with some large enough constant . Assume Conditions 1 and 2 and the true parameters are in the parameter space . Assume that , and . Then with probability at least , for any fixed ,

where is an independent test sample with .

Corollary 2 uses the results that and , given the optimization (3.2). In this case, the number of iterations needed for to reach the same rate as the pooled estimator is about .

4. Simulation studies.

4.1. Predictive performance under different levels of heterogeneity when .

Motivated from our real data application, which is introduced in Section 5, we first consider the setting where . We generate data to mimic genetic risk prediction in a federated network with sites. In site , we have samples from the target population and samples from a source population. We set the dimension of to be and generate to mimic the genotype data which take values (0, 1, and 2). The distribution of in the target population differs with the source population in terms of LD structures and minor allele frequencies; see Section E of the Supplementary Material for more details. For each subject we generate the binary outcome variable through a logistic regression model

where . The regression coefficients is subject is from the target population, otherwise . The regression-coefficient has nonzero entries which are generated from . The source coefficient is generated from the following two settings:

(S1) , where is a random subset of with and is sampled from with . This is corresponding to the setting that a small number of genetic variants have relatively large differences in effect sizes across populations.

(S2) where is a random subset with . We generate . This is corresponding to the setting that a large number of genetic variants have relatively small differences in effect sizes across populations.

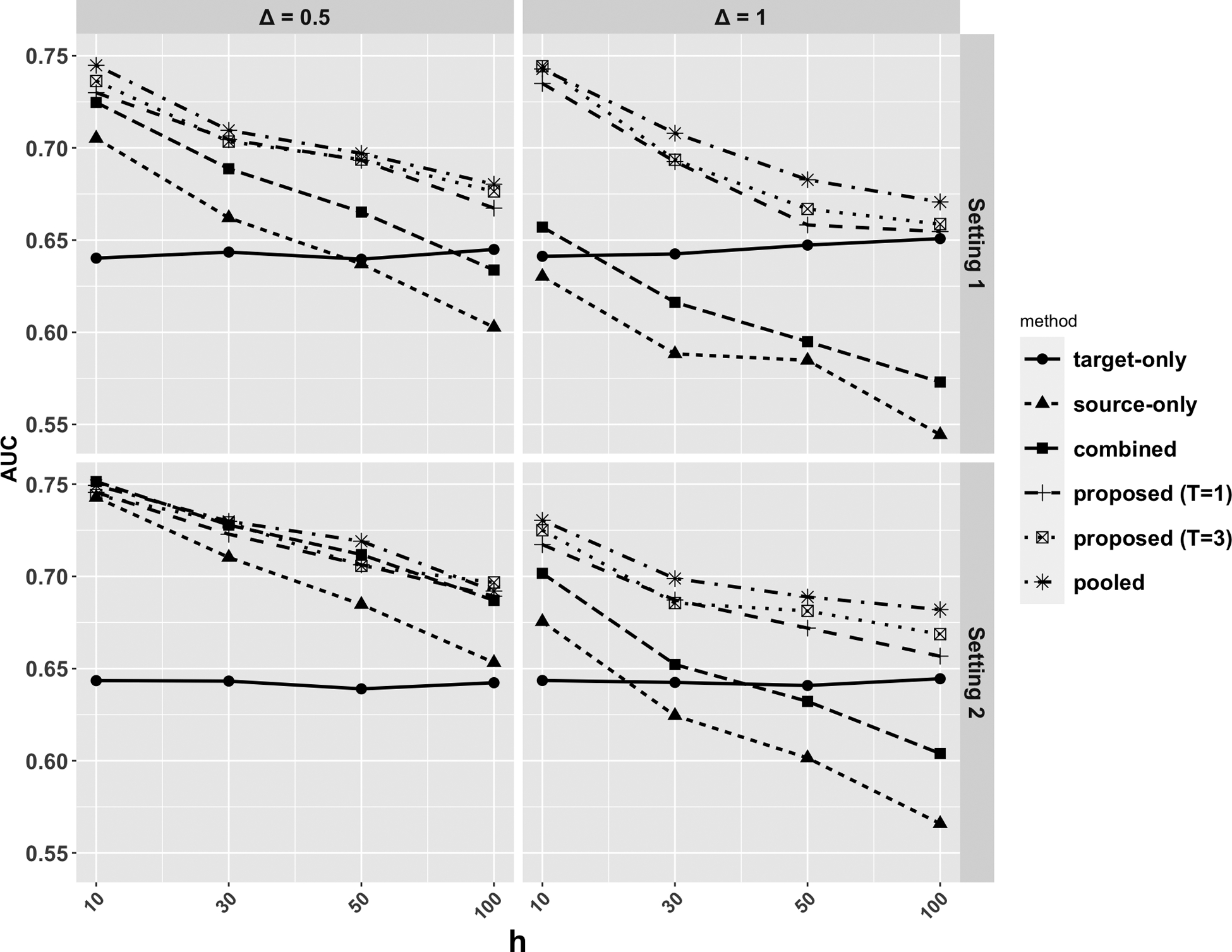

In both settings the level of heterogeneity increases with and . We compare a list of methods including: (1) federated learning based on all the target data (target-only), (2) federated learning based on all the source data (source-only), (3) federated learning based on all data combing both the source and target (combined), (4) Algorithm 2 with (proposed ), (5) Algorithm 2 with (proposed ), and (6) the pooled transfer learning equations (2.1)–(2.3) method where data from all sites are pooled together (pooled). The methods are evaluated based on the out-sample area under the receiver operating characteristic curve (AUC), based on a randomly generated testing sample with sample size .

We present in Figures 2 the AUC over 200 replications under different simulation settings. In setting 1 with the increase of and , the prediction performance of all methods decreases. This is consistent with our intuition that a source population more different to the target is less helpful. The estimator combining source and target data outperforms the source-only estimator in all scenarios, as it incorporates target samples. Our proposed estimators, even with only one round of communication , achieve better performance than these benchmarks. With , our methods can further improve the performance, especially when heterogeneity is high. Our estimators have comparable performance to the pooled estimator when or is small. When the level of heterogeneity is high, we see that the combining source and target data lead to worse performance than the target-only model, while the transfer learning and the proposed methods, due to the aggregation step, can prevent negative transfer from the source population, which is highly different from the target. Results from setting 2 convey similar conclusions. We see that numerically when is relatively large (even bigger than ), our methods still have improvement, given the magnitude of entries are small. We also evaluated the effect of aggregation by comparing the proposed estimator with from Algorithm 1 (see Figures E.1 and E.2 in the Supplementary Material). Across all scenarios the proposed estimators with aggregation perform no worse than the estimators without aggregation. The improvement is substantial when the level of heterogeneity is moderate or large.

Fig. 2.

Comparison of AUC over 200 replications under simulation settings 1 and 2.

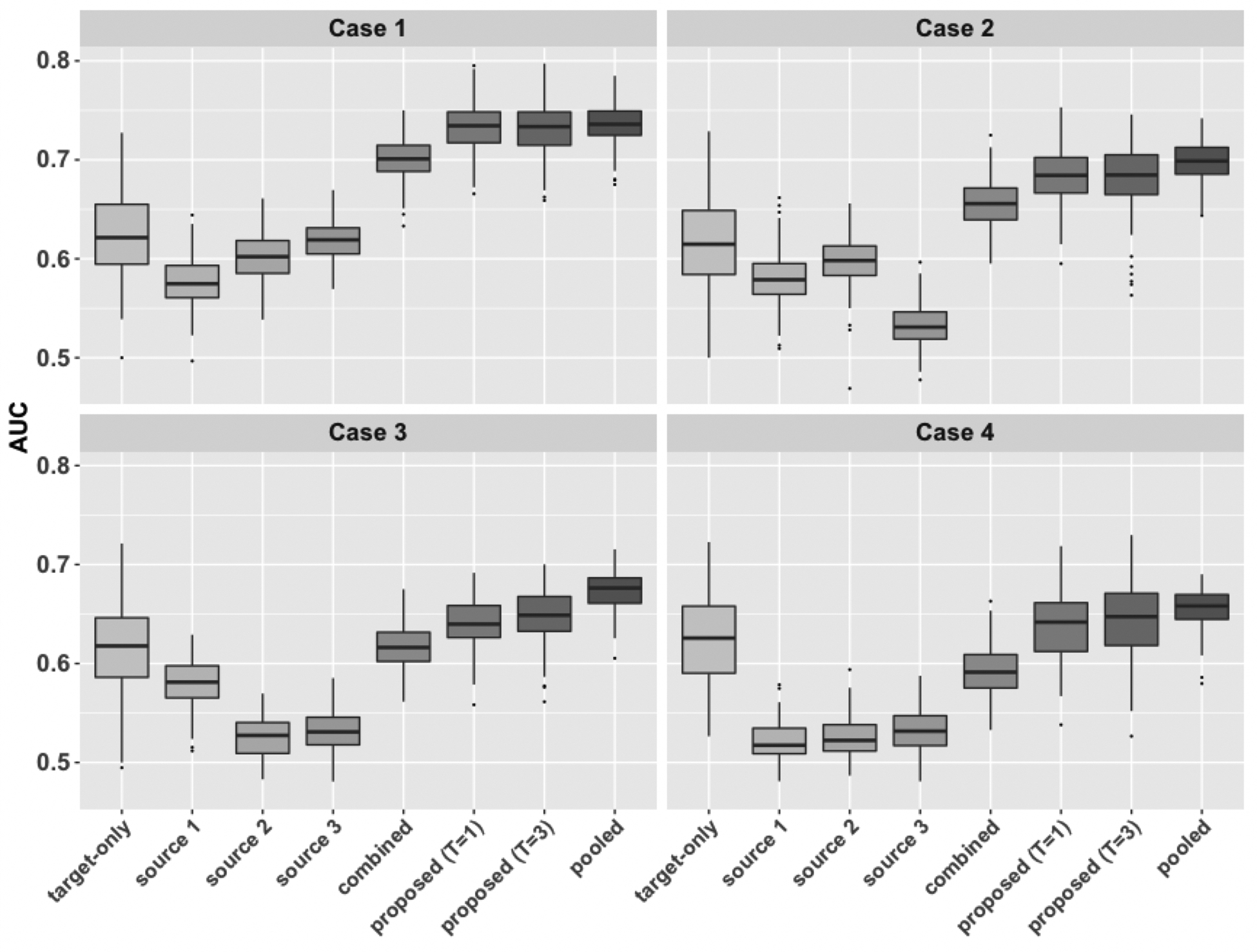

4.2. Robustness under different levels of heterogeneity when .

We then consider the setting with multiple source populations and investigate the robustness of the proposed method when some source populations are more helpful than others. In particular, we choose and , that is, data from three source populations and one target population are collected from five sites. We set for all . We generate in the same way, as described in Section 4.1. To generate , we choose the same procedure described in setting (S1) of Section 4.1, where for a “good” source population, we choose and and for a “bad” source, we choose and . We consider the following cases:

Case 1: No bad source, that is, for .

Case 2: One bad source, that is, for , and .

Case 3: Two bad sources, that is, and for .

Case 4: Three bad sources, that is, for .

From Figure 3, when all the source populations are helpful (Case 1), the proposed method with and has nearly the same AUC as the pooled transfer learning method. The combined approach also performs well in Case 1, although it has slightly lower AUC than the proposed method. From Case 1 to Case 4, the number of helpful source populations increases, and the performance of the proposed method also decreases, but it has performance no worse than the target-only estimator across all four cases. In Case 4 all the source data are not helpful, and the combined approach has AUC lower than the target-only approach. The proposed method has nearly the same performance than the target-only model, while the pooled transfer learning method is slightly better than the target-only approach probably due to the loss of federated learning.

Fig. 3.

Comparison of AUC over 200 replications under Case 1 to Case 4, where we set and . The number of helpful source populations decreases from Case 1 to Case 4.

In sum, the simulation studies demonstrate that our proposed methods have improved predictive accuracy compared to the benchmark methods. Compared to the ideal case where data are pooled together, FETA with only one iteration has comparable or slightly worst performance in most of the scenarios. When the level of heterogeneity is high, extra iterations can improve the accuracy and fill the gap between the federated analysis and the pooled analysis. In general, we recommend the aggregation step to ensure the robustness of the results and effectively leverage source data which are more helpful to the target.

5. Risk prediction of extreme obesity using data from the eMERGE network.

Obesity is one of the most significant risk factors for many complex human diseases, including hypertension, coronary heart disease, and type II diabetes (Kaplan (1989)). The prevalence of obesity among adults has more than doubled in the past three decades, and obesity continues to be a public health concern (Pan et al. (2011)). Many studies have suggested that genetics is likely to play a substantial role in the predisposition to obesity and may contribute up to 70% risk for the disease (Golden and Kessler (2020)). Over a hundred genes and gene variants related to excess weight have been discovered (Loos and Yeo (2022)), which has built the foundations for genetic risk prediction models. Similar as we have discussed in Section 1, existing genomics studies of obesity were mostly conducted in European ancestry populations (Zillikens et al. (2017)), raising concerns regarding the generalizability and transferibility of the study results.

5.1. Lack of representation of non-EA populations in eMERGE network.

To study this problem, we applied our proposed method to construct risk predication models using multicenter data from the eMERGE network, where EHR-derived phenotypes for 55,029 subjects from 10 participating sites were linked with DNA samples from biorepositories (Gottesman et al. (2013)). Among the 10 participating sites, three sites are children’s hospitals and are not included in this study. Trained EHR phenotyping algorithms are applied to derive the case-control status of extreme obesity, where participants are classified to either a “case,” a “control,” or “Unknown” (meaning the algorithm is not able to determine the case-control status based on the data available at the EHR system). In our study we exclude participants with unknown case-control status. We consider self-reported race as a population indicator, and Table 1 shows the sample size distribution across race and sites.

Table 1.

Sample sizes by self-reported races and sites.

| American Indian or Alaska Native | Asian | Black or African American | Unknown | White | |

|---|---|---|---|---|---|

| Geisinger Health System | * | * | * | * | 1023 |

| Group Health Cooperative | * | * | * | * | 252 |

| Marshfield Clinic | * | * | * | * | 1477 |

| Mayo Clinic | * | * | * | 47 | 1278 |

| Mount Sinai | * | * | 1148 | 343 | 185 |

| Northwestern University | * | * | 167 | * | 1306 |

| Vanderbilt University | * | * | 646 | 35 | 1472 |

indicates sample sizes less than 20.

A majority proportion of participants are White (>75%) across all seven sites, and in some sites, such as the Geisinger Health System, Marshfield Clinic, Mayo Clinic, more than 95% of the participant are White. There are around 20% of the participants who are Black or African American (AA), mostly coming from Mount Sinai, Northwestern University and Vanderbilt University. Except for Mount Sinai, White participants are overrepresented in all six sites. There are very few participants who are Asian (<1%), American Indian or Alaska Native (<1%), where all the sites have less than 20 participants in these racial groups.

In this application we treat the Black and AA population as our target population and treat the White population as the source. Due to the extremely small sample sizes from the other racial groups, a population-specific model is hard to be fitted, and we, therefore, incorporate them to the source population. As it is shown in Appendix F of the Supplementary Material, compared to a sensitivity which removes participants whose self-reported race is Asian, American Indian or Alaska Native, or unknown, the study results are consistent with the current findings, probably due to the extremely small sample sizes.

5.2. Data processing and feature selection.

Among the seven sites, Mount Sinai, Northwestern, and Vanderbilt have substantial target samples, while Geisinger, Group Health, Marshfield, and Mayo each has a target sample size less than 14, which may have only limited contributions to the model fitting and can increase the communication cost. Therefore, we exclude the target samples from Geisinger, Group Health, Marshfield, and Mayo.

To test the performance of the proposed method, we select three testing datasets each from Mount Sinai, Northwestern, and Vanderbilt, respectively. Specifically, from Vanderbilt and Northwestern we randomly select two internal testing datasets, each with size 300 target samples. We then choose all the target sample (n = 167) from Northwestern as an external testing dataset, whose data will be held out in the entire model training stage. We expect that this external testing dataset can help to evaluate the portability of the trained model when applied to a new dataset.

Predictors include age (coded as birth decades), gender, top 3 principal components (PCs) calculated from the genotypic data as well as top single nucleotide polymorphisms (SNPs), coded as 0,1, and 2 and selected by their marginal associations with the phenotypes. In particular, we applied regression between each phenotype and SNP controlling for age, gender, and 3 PCs and then select the top 2047 SNPs associated with each phenotype after filtering out SNPs with high LD, missing proportions and low minor allele frequency. The detailed filtering procedure and selected SNPs can be found in Section F of the Supplementary Material.

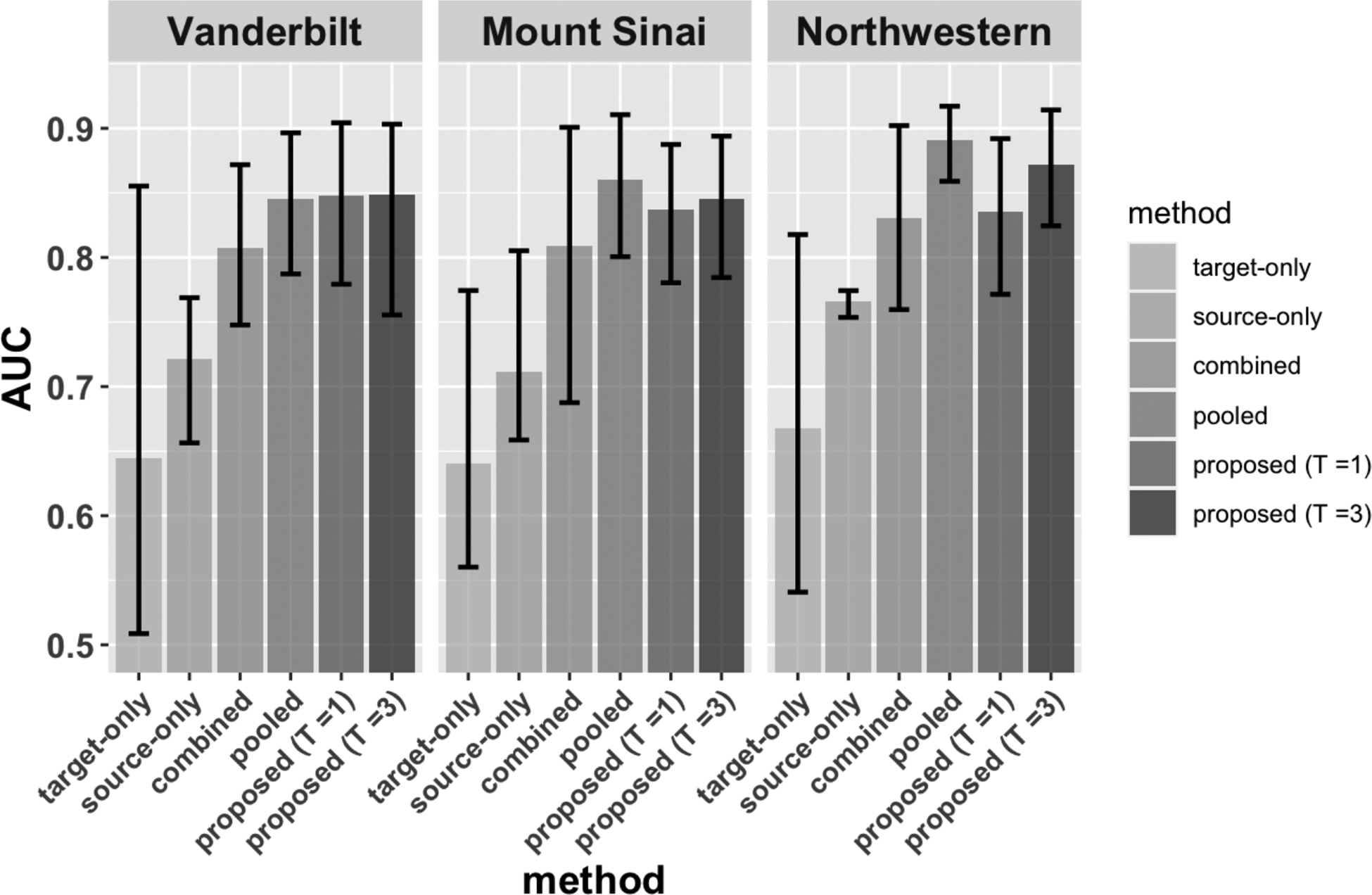

We applied our proposed method with a logistic regression. Similar as the simulation study, we choose the number of iterations to be and and compared them with the methods defined in Section 4. Methods are evaluated on the three testing datasets from Mount Sinai, Vanderbilt, and Northwestern. To account for sampling variability when selecting the testing datasets from Vanderbilt and Mount Sinai, we repeat the evaluation 20 times, and within each replication, prediction performance are evaluated separately on the three testing sets. Mount Sinai was chosen to be the leading site, whose individual-level data was used for the aggregation step.

5.3. Evidence for data heterogeneity between the source and the target populations.

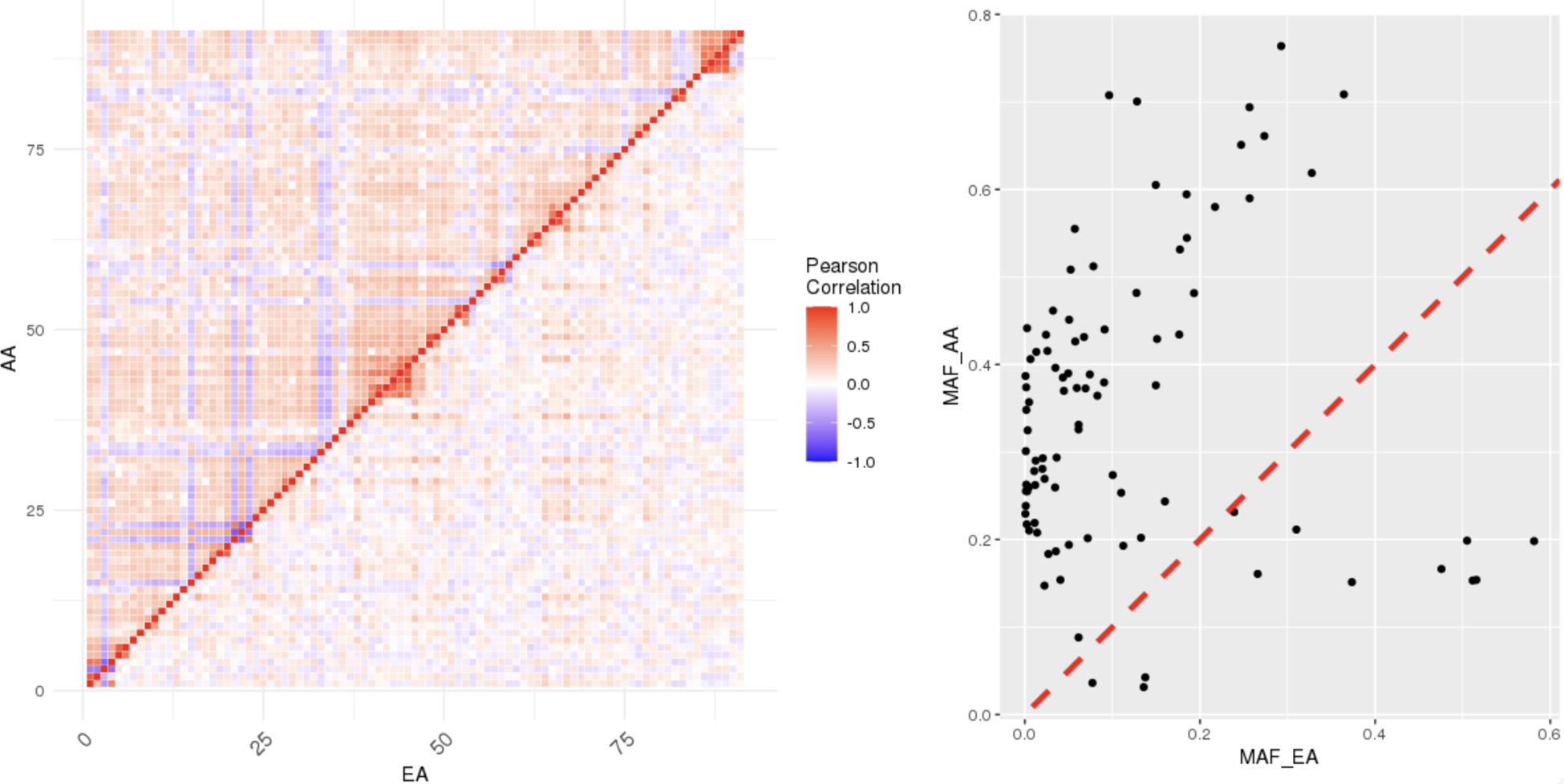

We first investigate the heterogeneity of the distributions of the predictors between the target and the source populations. Using the genotypic data from the target and the source samples, we calculate the minor allele frequencies and pairwise correlations of the SNPs. For more clear presentation, we only plot the SNPs in chromosome 1 in Figure 4, while the comparisons of SNPs in chromosomes 2–22 lead to consistent conclusions.

Fig. 4.

Comparisons of correlations and minor allele frequencies (MAFs) calculated from the source and the target populations. Among the 2047 selected SNPs, we show the SNPs on chromosome 1 for illustration purpose. The left panel shows the pairwise correlations between SNPs calculated from the source (the lower triangle) and the target (the upper triangle). The right panel compares the MAFs.

In the left panel of Figure 4, we compare the correlations of SNPs calculated from the target samples (the upper triangle) to the correlations calculated from the source samples (the lower triangle). Overall, the magnitude of correlations are larger and more structured in the target population than the source population. The weaker correlations, observed in the source population, are possibly due to the fact that we use the LD structure obtained from all data to remove SNPs with high correlations, which is closer to the LD structure of the White population due to the dominant sample size. In the right panel of Figure 4, we also observe that the MAFs of SNPs in the target population are highly different from the source population. Figure 4 is consistent with the existing findings, suggesting substantial differences in the LD structures and MAFs across different ancestral and ethnic groups (Martin et al. (2019)).

In addition, our method yields estimated values for the differences of the coefficients between the source and the target models, that is, , and Table 2 shows the SNPs with top differential effects between the two populations. Among the 25 SNPs, We found that most of them are in introns and intergenic regions. Existing literature found that rs6673201 is associated with interaction between insulin sensitivity index and BMI (Walford et al. (2016)); MEGF6 (multiple epidermal growth factor-like domains protein 6) was reported to contribute to the periotic mesenchyme and its derivatives, skin epidermis, certain cells in brain and ribs (Wang et al. (2019b)); HPSE2 was reported to be related to childhood BMI trajectory and adult lung function (Rathod et al. (2022)); ALOX5AP and TPD52 were reported to be associated with body weight (Kaaman et al. (2006)), and STK39 was shown to be a hypertension susceptibility gene (Wang et al. (2009)). Although there have not been enough multiethnic studies supporting the potentially heterogeneous effects of the above genes and SNPs on obesity across different ancestries, our analysis provides potential evidence for further investigations.

Table 2.

Top 25 SNPs having different effects in the source and the target populations

| SNPID | Gene name | Function | |

|---|---|---|---|

| 1 | rs6673201 | C1orf198 | 3_prime_UTR |

| 2 | rs41315290 | MEGF6 | intron |

| 3 | rs546147 | HPSE2 | intron |

| 4 | rs57588150 | ALOX5AP | intergenic |

| 5 | rs13410278 | AC016723.4 | intergenic |

| 6 | rs10497334 | STK39 | intron |

| 7 | rs9975258 | AP000233.4 | intron |

| 8 | rs75401219 | – | intergenic |

| 9 | rs845884 | RP11–157J24.2 | intergenic |

| 10 | rs7741633 | TPD52L1 | intron |

| 11 | rs4395726 | TCTE3 | intron |

| 12 | rs7763865 | KIAA0319 | intron |

| 13 | rs2143457 | TMEM14C | intergenic |

| 14 | rs2485835 | RP11–374I15.1 | intergenic |

| 15 | rs11975134 | NPY | intergenic |

| 16 | rs10282500 | TBRG4 | intergenic |

| 17 | rs7836712 | ZFPM2 | intron |

| 18 | rs11782183 | PVT1 | intron |

| 19 | rs55641476 | LRRC6 | intron |

| 20 | rs113235293 | KHDRBS3 | intron |

| 21 | rs672918 | GLIS3 | intron |

| 22 | rs10823830 | CDH23 | 3_prime_UTR |

| 23 | rs8008067 | RGS6 | – |

| 24 | rs6747667 | C2orf76 | intron |

| 25 | rs4848615 | AC018866.1 | intergenic |

5.4. Evaluation of the predictive performance.

Figure 5 presents the prediction performance of the compared methods. Due to the limited sample size, the target-only estimator has the lowest AUC among all the methods, where the average AUC is around 0.64–0.66, when evaluated across the three testing datasets. The variation of the target-only estimator across different random replications, however, is the largest, where the AUC can be as low as 0.51 and up to 0.85. The source-only estimator has better performance compared to the target-only estimator, having an average AUC around 0.71. Combining source and target data leads to better performance than using a population-specific training strategy, having an average AUC of 0.8. The pooled transfer learning estimator has the highest AUC which is around 0.85–0.89. With only one round of communication, FETA can achieve comparable performance to the pooled transfer learning estimator (ratios of AUC range from 88% to 107% with a median at 97%). With two additional rounds of communications, the AUC is nearly the same as the pooled estimator (ratios of AUC range from 92% to 102% with a median at 99%).

Fig. 5.

Comparisons of prediction performance across three testing datasets. Each colored bar denoted the average AUC over 20 replications, and the error bar indicates the highest and lowest performance.

Interestingly, the performance of FETA with and is similar when applied to the internal testing sets at Vanderbilt and Mount Sinai. When being evaluated at the external testing set from Northwestern University, FETA with is shown to perform better than , indicating additional iterations might be helpful for the portability of the prediction models. Overall, the results from external evaluation are similar to the internal evaluation, indicating relatively low between-site heterogeneity. Depending on the goal of the prediction model, one can modify our method to treat a specific site as the target population and treat other sites as source data. We also perform sensitivity study in which we exclude participants who have unknown self-reported race or who are nonWhite from the source samples; the prediction performance is nearly the same as the current results. More details can be found in Appendix F of the Supplementary Material.

In sum, this application demonstrates the feasibility and the promise of our methods to be implemented in large heal data networks for the construction of risk prediction models. It may also be applied in other use cases, such as phenotyping or risk profiling, using multi-center data.

6. Discussion.

In this paper we propose federated transfer learning methods to improve the performance of estimation and prediction in underrepresented populations, which enables incorporating data from diverse populations that are stored at different institutions in a communication-efficient and individual-data protected manner. Our theoretical analysis and numerical experiments demonstrate the improved accuracy and robustness of the proposed methods compared with benchmark methods. The application to eMERGE network for constructing prediction models for extreme obesity in the African-ancestry population demonstrates the feasibility of applying our methods to large clinical/genomics consortia for improved risk prediction and stratification among underrepresented groups.

Our proposed FETA framework and the theoretical analyses are illustrated based on high-dimensional GLM with -penalty, due to their wide application in biomedical applications (Morin et al. (2021), Wu, Roy and Stewart (2010), Lange et al. (2014)). However, the proposed framework can be extended to machine-learning methods with different penalty terms or model formulations. For example, if the difference of regression parameters between a source and the target population is nonsparse, we can change the -penalty to the -penalty or elastic net type of penalties. In addition, GLM can be replaced with other methods, such as the support vector machine which has been studied in the distributed setting (Stolpe, Bhaduri and Das (2016)). To account for the heterogeneity across populations, we can also allow a discrepancy term between the source and the target model parameters. However, some of the machine learning models may not be directly extended to the FETA framework. For instance, random forest has not been formally studied in the distributed setting as far as we know.

We account for population-level heterogeneity by allowing both the conditional distribution and the marginal distribution to be different across sites. We use an aggregation method to improve the robustness of our methods to the level of heterogeneity. To account for site-level heterogeneity, our methods allow to vary across sites, while is assumed to be shared across sites, given a specific population. In practice, there might still be site-level heterogeneity that causes differences in and robust methods to account for such heterogeneity need to be incorporated, which we will consider in our future work.

Our proposed methods improve the fairness of statistical models by reducing the gap of estimation accuracy across populations due to lack of representation. Our theoretical conclusion provides insights for future data collection, as it reveals how the level of heterogeneity impacts the accuracy gain and the sample size from the target population needed to achieve comparable accuracy as the source populations. This is different from methods that impose constraints on model fitting to ensure that the prediction accuracy of an algorithm has to be at the same level across different populations (Mehrabi et al. (2021)). Since we are focusing on improving the performance of models in an underrepresented population, our work cannot guarantee complete fairness in prediction accuracy across all groups. In the future we can incorporate fairness corrections and constraints into our framework and in the meantime consider a wide variety of models in longitudinal and survival analysis, causal inference, to advance algorithmic fairness in precision medicine.

Funding.

Supplementary Material

Acknowledgments.

The authors would like to thank the anonymous referees, an Associate Editor, and the Editor for their constructive comments that improved the quality of this paper. The datasets used for the analyses described in this manuscript were obtained from dbGaP at http://www.ncbi.nlm.nih.gov/gap through dbGaP accession number phs000888.v1.p1.

Rui Duan was supported by National Institutes of Health (R01 GM148494) and Tianxi Cai was supported by National Institutes of Health (R01 LM013614, R01 HL089778).

Footnotes

SUPPLEMENTARY MATERIAL

Supplementary Material to “Targeting underrepresented populations in precision medicine: A federated transfer learning approach” (DOI: 10.1214/23-AOAS1747SUPP;.pdf). This supplementary file contains alternative algorithms (Sections A and B), conditions, proofs to theorems, corollaries and additional lemmas (Sections C and D), additional simulation results (Section E) and data processing details (Section F).

REFERENCES

- Ashley EA (2016). Towards precision medicine. Nat. Rev. Genet 17 507–522. [DOI] [PubMed] [Google Scholar]

- Bastani H (2020). Predicting with proxies: Transfer learning in high dimension. Manage. Sci 67 2657–3320. [Google Scholar]

- Bickel PJ, Ritov Y and Tsybakov AB (2009). Simultaneous analysis of lasso and Dantzig selector. Ann. Statist 37 1705–1732. MR2533469 10.1214/08-AOS620 [DOI] [Google Scholar]

- Cai T, Liu M and Xia Y (2022). Individual data protected integrative regression analysis of high-dimensional heterogeneous data. J. Amer. Statist. Assoc 117 2105–2119. MR4528492 10.1080/01621459.2021.1904958 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cai TT and Wei H (2021). Transfer learning for nonparametric classification: Minimax rate and adaptive classifier. Ann. Statist 49 100–128. MR4206671 10.1214/20-AOS1949 [DOI] [Google Scholar]

- Cai M, Xiao J, Zhang S et al. (2021). A unified framework for cross-population trait prediction by leveraging the genetic correlation of polygenic traits. Am. J. Hum. Genet 108 632–655. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen X and Xie M (2014). A split-and-conquer approach for analysis of extraordinarily large data. Statist. Sinica 24 1655–1684. MR3308656 [Google Scholar]

- Collins R (2012). What makes uk biobank special? The Lancet (London, England) 379 1173–1174. [DOI] [PubMed] [Google Scholar]

- Collins FS and Varmus H (2015). A new initiative on precision medicine. N. Engl. J. Med 372 793–795. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Duan R, Ning Y and Chen Y (2022). Heterogeneity-aware and communication-efficient distributed statistical inference. Biometrika 109 67–83. MR4374641 10.1093/biomet/asab007 [DOI] [Google Scholar]

- Duan R, Boland MR, Moore JH and Chen Y (2019). ODAL: A one-shot distributed algorithm to perform logistic regressions on electronic health records data from multiple clinical sites. Pacific Symposium on Biocomputing 30–41. [PMC free article] [PubMed] [Google Scholar]

- Duan R, Luo C, Schuemie MJ et al. (2020). Learning from local to global: An efficient distributed algorithm for modeling time-to-event data. J. Amer. Med. Inform. Assoc 27 1028–1036. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Duncan L, Shen H, Gelaye B, Meijsen J, Ressler K, Feldman M, Peterson R and Domingue B (2019). Analysis of polygenic risk score usage and performance in diverse human populations. Nat. Commun 10 1–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Golden A and Kessler C (2020). Obesity and genetics. J. Amer. Assoc. Nurse Pract 32 493–496. [DOI] [PubMed] [Google Scholar]

- Gottesman O, Kuivaniemi H, Tromp G, Faucett WA, Li R, Manolio TA, Sander-son SC, Kannry J, Zinberg R et al. (2013). The electronic medical records and genomics (emerge) network: Past, present, and future. Genet. Med 15 761–771. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guo Z (2020). Inference for high-dimensional maximin effects in heterogeneous regression models using a sampling approach. Preprint, arXiv:2011.07568 [Google Scholar]

- Jordan MI, Lee JD and Yang Y (2019). Communication-efficient distributed statistical inference. J. Amer. Statist. Assoc 114 668–681. MR3963171 10.1080/01621459.2018.1429274 [DOI] [Google Scholar]

- Kaaman M, Rydén M, Axelsson T, Nordström E, Sicard A, Bouloumie A, Langin D, Arner P and Dahlman I (2006). Alox5ap expression, but not gene haplotypes, is associated with obesity and insulin resistance. Int. J. Obes 30 447–452. [DOI] [PubMed] [Google Scholar]

- Kaplan NM (1989). The deadly quartet: Upper-body obesity, glucose intolerance, hypertriglyceridemia, and hypertension. Arch. Intern. Med 149 1514–1520. [DOI] [PubMed] [Google Scholar]

- Kraft SA, Cho MK, Gillespie K et al. (2018). Beyond consent: Building trusting relationships with diverse populations in precision medicine research. Am. J. Bioethics 18 3–20 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kushida CA, Nichols DA, Jadrnicek R et al. (2012). Strategies for de-identification and anonymization of electronic health record data for use in multicenter research studies. Med. Care 50 S82. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lam M, Chen C-Y, Li Z et al. (2019). Comparative genetic architectures of schizophrenia in East Asian and European populations. Nat. Genet 51 1670–1678. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Landry LG, Ali N, Williams DR et al. (2018). Lack of diversity in genomic databases is a barrier to translating precision medicine research into practice. Health Aff 37 780–785. [DOI] [PubMed] [Google Scholar]

- Lange K, Papp JC, Sinsheimer JS and Sobel EM (2014). Next generation statistical genetics: Modeling, penalization, and optimization in high-dimensional data. Annu. Rev. Stat. Appl 1 279. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lecué G and Rigollet P (2014). Optimal learning with Q-aggregation. Ann. Statist 42 211–224. MR3178462 10.1214/13-AOS1190 [DOI] [Google Scholar]

- Lee JD, Liu Q, Sun Y and Taylor JE (2017). Communication-efficient sparse regression. J. Mach. Learn. Res. 18 Paper No 5, 30. MR3625709 [Google Scholar]

- Li S, Cai T and Duan R (2023). Supplement to “Targeting underrepresented populations in precision medicine: A federated transfer learning approach.” 10.1214/23-AOAS1747SUPP [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li S, Cai TT and Li H (2020). Transfer learning in large-scale gaussian graphical models with false discovery rate control [DOI] [PMC free article] [PubMed]

- Li S, Cai TT and Li H (2022). Transfer learning for high-dimensional linear regression: Prediction, estimation and minimax optimality. J. R. Stat. Soc. Ser. B. Stat. Methodol 84 149–173. MR4400393 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li R, Lin DKJ and Li B (2013). Statistical inference in massive data sets. Appl. Stoch. Models Bus. Ind 29 399–409. MR3117826 10.1002/asmb.1927 [DOI] [Google Scholar]

- Li R, Chen Y, Ritchie MD et al. (2020). Electronic health records and polygenic risk scores for predicting disease risk. Nat. Rev. Genet 21 493–502. [DOI] [PubMed] [Google Scholar]

- Liu M, Xia Y, Cho K and Cai T (2021). Integrative high dimensional multiple testing with heterogeneity under data sharing constraints. J. Mach. Learn. Res. 22 Paper No. 126, 26. MR4279777 [PMC free article] [PubMed] [Google Scholar]

- Loos RJ and Yeo GS (2022). The genetics of obesity: From discovery to biology. Nat. Rev. Genet 23 120–133. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Martin AR, Kanai M, Kamatani Y et al. (2019). Clinical use of current polygenic risk scores may exacerbate health disparities. Nat. Genet 51 584–591. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mccarty CA, Chisholm RL, Chute CG et al. (2011). The eMERGE network: A consortium of biorepositories linked to electronic medical records data for conducting genomic studies. BMC Med. Genom 41–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mehrabi N, Morstatter F, Saxena N, Lerman K and Galstyan A (2021). A survey on bias and fairness in machine learning. ACM Comput. Surv 54 1–35. [Google Scholar]

- Morin O, Vallières M, Braunstein S, Ginart JB, Upadhaya T, Woodruff HC, Zwanenburg A, Chatterjee A, Villanueva-Meyer JE et al. (2021). An artificial intelligence framework integrating longitudinal electronic health records with real-world data enables continuous pan-cancer prognostication. Nat. Cancer 2 709–722. [DOI] [PubMed] [Google Scholar]

- Pan L, Freedman DS, Gillespie C, Park S and Sherry B (2011). Incidences of obesity and extreme obesity among us adults: Findings from the 2009 behavioral risk factor surveillance system. Popul. Health Metr 9 1–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Qian J, Tanigawa Y, Du W et al. (2020). A fast and scalable framework for large-scale and ultrahigh-dimensional sparse regression with application to the UK Biobank. PLoS Genet 16 e1009141. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rathod R, Zhang H, Karmaus W, Ewart S, Mzayek F, Arshad SH and Holloway JW (2022). Association of childhood bmi trajectory with post-adolescent and adult lung function is mediated by pre-adolescent dna methylation. Respir. Res 23 1–11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rigollet P and Tsybakov A (2011). Exponential screening and optimal rates of sparse estimation. Ann. Statist 39 731–771. MR2816337 10.1214/10-AOS854 [DOI] [Google Scholar]

- Sankar PL and Parker LS (2017). The precision medicine initiative’s all of us research program: An agenda for research on its ethical, legal, and social issues. Genet. Med 19 743–750. [DOI] [PubMed] [Google Scholar]

- Stolpe M, Bhaduri K and Das K (2016). Distributed support vector machines: An overview. In Solving Large Scale Learning Tasks. Lecture Notes in Computer Science 9580 109–138. Springer, Cham. MR3537495 10.1007/978-3-319-41706-6_5 [DOI] [Google Scholar]

- Sudlow C, Gallacher J, Allen N et al. (2015). Uk biobank: An open access resource for identifying the causes of a wide range of complex diseases of middle and old age. PLoS Med 12 e 1001779. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tian Y and Feng Y (2022). Transfer Learning under High-dimensional Generalized Linear Models. J. Amer. Statist. Assoc 0 1–14. 10.1080/01621459.2022.2071278 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tibshirani R (1996). Regression shrinkage and selection via the lasso. J. Roy. Statist. Soc. Ser. B 58 267–288. MR1379242 [Google Scholar]

- Tsybakov AB (2014). Aggregation and minimax optimality in high-dimensional estimation. In Proceedings of the International Congress of Mathematicians-Seoul 2014 Vol. IV 225–246. Kyung Moon Sa, Seoul. MR3727610 [Google Scholar]

- van de Geer SA (2008). High-dimensional generalized linear models and the lasso. Ann. Statist 36 614–645. MR2396809 10.1214/009053607000000929 [DOI] [Google Scholar]

- van Der Haak M, Wolff AC, Brandner R et al. (2003). Data security and protection in cross-institutional electronic patient records. Int. J. Med. Inform 70 117–130. [DOI] [PubMed] [Google Scholar]

- Walford GA, Gustafsson S, Rybin D, Stančáková A, Chen H, Liu C-T, Hong J, Jensen RA, Rice K et al. (2016). Genome-wide association study of the modified stumvoll insulin sensitivity index identifies bcl2 and fam19a2 as novel insulin sensitivity loci. Diabetes 65 3200–3211. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wang Y, O’Connell JR, Mcardle PF, Wade JB, Dorff SE, Shah SJ, Shi X, Pan L, Rampersaud E et al. (2009). Whole-genome association study identifies stk39 as a hypertension susceptibility gene. Proc. Natl. Acad. Sci. USA 106 226–231. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wang X, Yang Z, Chen X and Liu W (2019a). Distributed inference for linear support vector machine. J. Mach. Learn. Res. 20 Paper No 113, 41. MR3990467 [Google Scholar]

- Wang Y, Song H, Wang W and Zhang Z (2019b). Generation and characterization of megf6 null and cre knock-in alleles. Genesis 57 e23262. [DOI] [PubMed] [Google Scholar]

- Weiss K, Khoshgoftaar TM and Wang D (2016). A survey of transfer learning. J. Big Data 3 1–40. [Google Scholar]

- West KM, Blacksher E and Burke W (2017). Genomics, health disparities, and missed opportunities for the nation’s research agenda. JAMA 317 1831–1832. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wu J, Roy J and Stewart WF (2010). Prediction modeling using ehr data: Challenges, strategies, and a comparison of machine learning approaches. Medical care S106–S113. [DOI] [PubMed] [Google Scholar]

- Zhou W et al. (2021). Global biobank meta-analysis initiative: Powering genetic discovery across human diseases. MedRxiv [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zillikens MC, Demissie S, Hsu Y-H, Yerges-Armstrong LM, Chou W-C, Stolk L, Livshits G, Broer L, Johnson T et al. (2017). Large meta-analysis of genome-wide association studies identifies five loci for lean body mass. Nat. Commun 8 1–13. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.