Abstract

Data-driven methods for personalizing treatment assignment have garnered much attention from clinicians and researchers. Dynamic treatment regimes formalize this through a sequence of decision rules that map individual patient characteristics to a recommended treatment. Observational studies are commonly used for estimating dynamic treatment regimes due to the potentially prohibitive costs of conducting sequential multiple assignment randomized trials. However, estimating a dynamic treatment regime from observational data can lead to bias in the estimated regime due to unmeasured confounding. Sensitivity analyses are useful for assessing how robust the conclusions of the study are to a potential unmeasured confounder. A Monte Carlo sensitivity analysis is a probabilistic approach that involves positing and sampling from distributions for the parameters governing the bias. We propose a method for performing a Monte Carlo sensitivity analysis of the bias due to unmeasured confounding in the estimation of dynamic treatment regimes. We demonstrate the performance of the proposed procedure with a simulation study and apply it to an observational study examining tailoring the use of antidepressant medication for reducing symptoms of depression using data from Kaiser Permanente Washington.

Keywords: adaptive treatment strategies, bias, precision medicine

1 |. INTRODUCTION

Precision medicine focuses on data-driven methods for personalizing treatment assignment to improve health care. Dynamic treatment regimes (DTRs) operationalize clinical decision-making through a sequence of functions that map individual patient characteristics to a recommended treatment (Chakraborty and Moodie, 2013; Tsiatis et al., 2019). An optimal DTR is one that maximizes the mean of some desirable measure of clinical outcome when applied to select treatment for all patients in the population of interest (Murphy, 2003; Robins, 2004). Optimal DTRs have been estimated to improve care for many different medical conditions including HIV (Cain et al., 2010; van der Laan et al., 2005), bipolar disorder (Wu et al., 2015; Zhang et al., 2018), diabetes (Ertefaie and Strawderman, 2018; Luckett et al., 2018), and cancer (Murray et al., 2018; Thall et al., 2000).

Data for estimating an optimal DTR are ideally collected through the use of a sequential multiple assignment randomized trial (SMART) (Lavori and Dawson, 2000, 2004; Murphy, 2005). However, longitudinal observational studies are frequently used due to the availability of data collected from electronic health records (EHRs) and the potentially prohibitive cost of conducting a SMART. These analyses rely on assuming that there is no unmeasured confounding; this assumption is not verifiable from the observed data. Not adjusting for all of the confounding variables can result in a biased estimate of the optimal DTR.

Sensitivity analyses have been used as a way to assess how the estimated effect would change if there was an unmeasured confounder. There has been much work on conducting sensitivity analyses for unmeasured confounding when estimating average causal effects from observational data beginning with Cornfield et al. (1959), in which they studied whether the causal link found between smoking and lung cancer could be due to unmeasured confounding. A wide array of different approaches have been proposed since. Rosenbaum and Rubin (1983) proposed a method for examining how sensitive the conclusions of a study with a binary outcome are to a binary unmeasured confounder by assessing what odds ratios between the unmeasured confounder and each of the treatment and the outcome would cause the effect to be no longer significant. Lin et al. (1998) proposed positing a regression model for the outcome containing the effect of the unmeasured confounder and probability distributions for the unmeasured confounder in each treatment group. Under certain assumptions, this allows for simple expressions of the bias in the treatment effect that can then be used to calculate treatment effects for a wide range of different effect sizes of the unmeasured confounder on the outcome and exposure.

An alternative to formulaic approaches is a probabilistic sensitivity analysis, which posits probability distributions for the bias parameters and averages over the distributions to obtain bias-adjusted estimates. There are two existing approaches to implementing a probabilistic sensitivity analysis: Bayesian sensitivity analysis and Monte Carlo sensitivity analysis (McCandless and Gustafson, 2017). A Bayesian sensitivity analysis (McCandless et al., 2007) posits prior distributions for the bias parameters and uses Bayes' theorem to generate a posterior distribution for the causal effect that accounts for the uncertainty due to the unmeasured confounder. In a Monte Carlo sensitivity analysis (Greenland, 2005; Phillips, 2003; Steenland and Greenland, 2004), we posit prior distributions for the bias parameters in the same way and then draw values from the prior distributions and calculate a bias-adjusted estimate for the parameter of interest for each Monte Carlo repetition. This allows for a straightforward implementation that corrects bias while incorporating uncertainty due to the unmeasured confounding within a frequentist framework.

There has been some work on estimating DTRs in the presence of unmeasured confounding. This has mainly focused on using instrumental variables to estimate an optimal DTR (Chen and Zhang, 2021; Cui and Tchetgen Tchetgen, 2021; Liao et al., 2021; Qiu et al., 2021). An instrumental variable is a pretreatment variable that is correlated with treatment, independent of all unmeasured confounders, and has no direct effect on the outcome of interest. In some applications, there is not an obvious choice for an instrumental variable based on domain knowledge and it is impossible to empirically check whether a variable satisfies the assumptions for being an instrumental variable (Hernan and Robins, 2006). Zhang et al. (2021) proposed a method for ranking individualized treatment rules when there is unmeasured confounding by creating a partial order under the framework proposed by Rosenbaum (1987). Kallus and Zhou (2019) examined estimating a DTR that maximizes the value for the worst-case scenario of an uncertainty set that quantifies the degree of confounding that is unmeasured.

We propose using a Monte Carlo sensitivity analysis for the estimation of DTRs. Section 2 details setup and notation. In Section 3, we provide an overview of our proposed sensitivity analysis procedure. In Section 4, we examine the performance of our proposed method using a simulation study. Section 5 demonstrates applying the proposed procedure to EHRs when estimating DTRs to reduce symptoms of depression using data from Kaiser Permanente Washington (KPWA). A discussion of the proposed procedure is contained in Section 6.

2 |. SETUP AND NOTATION

Suppose we have data from an observational study in which patients are treated throughout the course of stages. We collect data of the form , which consists of i.i.d. replicates of such that denotes baseline patient characteristics, denotes the treatment assigned at stage for for denotes patient information recorded during the course of the treatment, and denotes the continuous patient outcome coded such that higher values are better. We let denote an unmeasured time-fixed confounding variable that can be continuous or binary. This unmeasured confounder is measured at baseline and does not change over time, but influences treatment choices at any of the stages as well as the outcome . Let and for denote the history of a patient such that is all of the information available to a clinical decision maker at stage . Let denote a DTR such that for is a function that maps a patient’s history to a recommended treatment, where dom indicates the domain or set of possible treatments. Therefore, DTR would recommend treatment at stage for a patient with history .

To allow us to reference components of the history and regimes, we will use overbar notation to indicate up to stage such that , and . We will suppress the subscript when denoting the entire sequence, for example, . Similarly, we will use an underbar to denote treatments, covariates, and regimes after and including stage such that and likewise for and .

We will use the potential outcomes framework to define an optimal DTR (Rubin, 1978). Let denote the potential outcome under the treatment sequence and denote the potential history at stage under treatments . Denote the set of all potential outcomes as , , , . The potential outcome of a DTR, , is then defined as

where 𝕀 is the indicator function (Murphy, 2003). Define the value of a DTR by . An optimal regime, , is defined as a regime that satisfies for all regimes .

When estimating DTRs, it is standard to make the following causal assumptions (Robins, 2004): (i) the stable unit treatment value assumption (SUTVA), and for (ii) positivity, with probability one for each for ; and (iii) sequential ignorability, for . We will assume that SUTVA and positivity hold, but that sequential ignorability does not hold because we have an unmeasured confounder, . Sequential ignorability is a key assumption; if it is violated, standard methods for estimating DTRs will lead to biased estimates of the optimal DTR. In addition, sequential ignorability is unverifiable from data and requires domain expertise to assess whether it is a reasonable assumption. Our proposed Monte Carlo sensitivity analysis requires making the following additional assumptions. We will assume that is the only unmeasured confounder and for . We will also assume that the unmeasured confounder has an additive effect on the outcome and is conditionally independent of later-stage covariates, so we have that for . These last two assumptions can be relaxed, though that would require more complex assumptions about the distribution and effect of the unmeasured confounder as well as a more complex expression for the bias.

2.1 |. Dynamic weighted ordinary least squares

Dynamic weighted ordinary least squares (dWOLS) is a regression-based method for estimating DTRs (Wallace and Moodie, 2015). This method estimates an optimal DTR by estimating a contrast function that represents the difference in expected outcome of receiving a given treatment at stage when compared to the reference treatment, which we take to be treatment , assuming that treatment is assigned optimally after stage . This contrast function is referred to as the optimal blip-to-zero function, , and is defined as

The optimal blip-to-zero function characterizes the optimal DTR (Robins, 2004). The optimal treatment rule at stage is given by recommend treatment if , otherwise recommend . In general, when considering more than two treatment options, the optimal DTR at stage is given by . dWOLS estimates an optimal DTR by positing models for the blip functions and estimating their parameters through a series of weighted ordinary least squares regressions. Let denote our posited model for the blip function. We will assume that the blip-to-zero function is correctly specified, so we have that for all . The estimated optimal DTR is then given by such that .

Denote the treatment-free outcome at stage by that represents a patient’s observed outcome adjusted by the expected difference in a patient’s outcome had they received treatment 0 at stage and then treated optimally via the blip models with parameters after stage . Therefore, . We posit a model for the treatment-free outcome which we denote by . We assume linear models for the treatment-free and blip models, so a model for the outcome at stage is given by

where and are components of with a leading one for the intercept and . We also model the propensity score, , which we denote by for . We estimate the blip model parameters using weighted ordinary least squares using weights that satisfy . Examples of weights that satisfy this equality are given by the inverse probability of treatment weights (IPTWs), and overlap weights, . First, we estimate the blip parameters for the final stage using our posited model for the outcome. Then, form the pseudo-outcome which we define as

At each stage starting with and moving backwards, we model the pseudo-outcome by

and fit the model using weighted least squares. The resulting estimate of is consistent as long as the blip model is correctly specified and at least one of the treatment-free and propensity score models is correctly specified. When we have an unmeasured confounder, , both the treatment-free and propensity score models will be misspecified, leading to bias in the estimator of .
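The single-stage case of the dWOLS fit described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes a binary treatment coded 0/1, one measured covariate `x`, linear treatment-free and blip models, a logistic propensity model, and overlap weights. All function names, variable names, and simulated numbers are hypothetical.

```python
import numpy as np

def expit(t):
    return 1.0 / (1.0 + np.exp(-t))

def fit_logistic(X, a, n_iter=25):
    """Logistic regression of binary a on X by Newton-Raphson (illustrative helper)."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = expit(X @ beta)
        hess = (X * (p * (1.0 - p))[:, None]).T @ X
        beta = beta + np.linalg.solve(hess, X.T @ (a - p))
    return beta

def dwols_one_stage(x, a, y):
    """Fit y ~ b0 + b1*x + a*(psi0 + psi1*x) by weighted least squares.

    Uses overlap weights |a - pi_hat(x)|.
    Returns the blip estimates (psi0, psi1), the weights, and pi_hat.
    """
    H = np.column_stack([np.ones_like(x), x])   # covariates shared by all models
    pi_hat = expit(H @ fit_logistic(H, a))      # estimated propensity score
    w = np.abs(a - pi_hat)                      # overlap weights
    D = np.column_stack([H, a[:, None] * H])    # columns: 1, x, a, a*x
    WD = D * w[:, None]
    coef = np.linalg.solve(D.T @ WD, WD.T @ y)  # weighted least squares
    return coef[2:], w, pi_hat

# Simulated data with no unmeasured confounding (all numbers are made up).
rng = np.random.default_rng(0)
n = 5000
x = rng.normal(size=n)
a = rng.binomial(1, expit(0.5 * x)).astype(float)
y = 1.0 + x + a * (1.0 - 1.5 * x) + rng.normal(size=n)

psi_hat, w, pi_hat = dwols_one_stage(x, a, y)
# Estimated rule: recommend treatment 1 when the fitted blip is positive.
recommended = (psi_hat[0] + psi_hat[1] * x > 0).astype(int)
```

In this simulated setting the blip estimates should land near the generating values (1, -1.5). A multistage fit would repeat the same regression backwards through the stages on the pseudo-outcome.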

3 |. MONTE CARLO SENSITIVITY ANALYSIS

A Monte Carlo sensitivity analysis is a probabilistic approach to sensitivity analysis in which probability distributions are posited for the parameters governing the bias due to the unmeasured confounder. The posited probability distributions are repeatedly sampled from and used to calculate a series of bias corrected effect estimates (Greenland, 2005; McCandless and Gustafson, 2017). In what follows, we detail a Monte Carlo approach that seeks to posit models (and associated parameters) to describe the confounding due to the unmeasured variable through its relationship to the outcome, the treatment, and other covariates, and to use that to impute the unmeasured confounder and hence assess and correct bias. Ideally, a secondary data set that contains data on the unmeasured confounder as well as the confounders, treatment, and outcomes measured in the current study with the potential unmeasured confounding is used to learn about the probability distributions for the bias parameters that must be posited. This allows us to fit the postulated unmeasured confounder models to the data from the secondary study and use the estimated parameters and standard errors to posit distributions that capture the uncertainty related to the unmeasured confounder. These data could be historical data or gathered from a concurrent or follow-up study and can be significantly smaller than the full study. If a secondary data set is unavailable, we can instead use subject matter expertise to posit probability distributions for the bias parameters with wide distributions to capture the additional uncertainty. For example, if we only know that the unmeasured confounder is positively associated with the outcome, we could posit a uniform distribution from 0 to a conservative estimate of the maximum for the presumed effect of the unmeasured confounder on the outcome. 
If there are bias parameters for which we have no information, we could posit a uniform distribution that is centered at 0 with conservative estimates for the bounds of the distribution. When noninformative distributions for the bias parameters are used, the bias-adjusted point estimate of the parameters indexing the optimal regime will be closer to the unadjusted estimate, but the confidence intervals will still capture the uncertainty in the optimal regime due to both sampling variability and unmeasured confounding.
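The three ways of positing a distribution mentioned above (from a secondary data set, from a known sign, and with no information) could be encoded along the following lines. This is only a sketch: the function names and every numeric value are hypothetical placeholders, and the normal distribution for the secondary-data case is an assumption made for illustration.

```python
import numpy as np

def draw_from_secondary(estimate, std_err, size, rng):
    """Normal draws centered at a secondary-study estimate (assumed form)."""
    return rng.normal(estimate, std_err, size)

def draw_positive_effect(upper, size, rng):
    """Uniform draws on [0, upper] when only a positive association is known."""
    return rng.uniform(0.0, upper, size)

def draw_no_information(bound, size, rng):
    """Uniform draws centered at 0 with conservative bounds."""
    return rng.uniform(-bound, bound, size)

rng = np.random.default_rng(0)
informed = draw_from_secondary(estimate=0.8, std_err=0.1, size=1000, rng=rng)
positive = draw_positive_effect(upper=2.0, size=1000, rng=rng)
vague = draw_no_information(bound=2.0, size=1000, rng=rng)
```

Wider distributions propagate more uncertainty into the bias-adjusted estimates, which is the intended behavior when little is known about the unmeasured confounder.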

Alternative approaches to sensitivity analyses for unmeasured confounding typically make assumptions about the association between the unmeasured confounder and treatment as well as the unmeasured confounder and outcome, often capturing these by a single sensitivity parameter (Groenwold et al., 2010; Hernan and Robins, 2020; Lash et al., 2009). When the bias due to unmeasured confounding is controlled by one or two parameters, a conditional analysis in which we examine the estimated effect for a fixed value of the bias parameters is appropriate. This type of analysis then allows for examining what values of the bias parameters would significantly change the conclusions of the study (Rosenbaum and Rubin, 1983). For the estimation of DTRs, we are interested in treatment interaction effects in addition to the main effect of treatment. To better quantify the bias in the interaction effects, we make additional assumptions about the association between the unmeasured confounder and the measured covariates. These assumptions lead to the bias being dictated by many more parameters. The additional parameters make it very difficult to succinctly describe the potential bounds of the bias parameters with a conditional analysis, as the regions for which the results would be consistent could be very complex in addition to being multidimensional. By instead positing distributions for the bias parameters, we can quantify the uncertainty in the estimated DTR while accounting for the uncertainty in the posited models for the unmeasured confounder and can integrate over the posited distributions to calculate a bias-adjusted estimate.

Recall that we assume that the unmeasured confounder has an additive effect on the outcome in addition to linear models for the treatment-free and blip models. Therefore, a model for the outcome is given by

We will also posit a model for the conditional mean of the unmeasured confounder given by such that denotes the parameters of the model. This will typically be given by a linear or logistic regression model depending on whether is continuous or binary, but there are no restrictions on the form of this model. We then have that and are the parameters that dictate the bias due to the unmeasured confounding with indexing the model for the conditional mean of , and controlling the effect of the unmeasured confounder on the outcome .

Let and for . If the unmeasured confounder is not included in the regression, then the estimated coefficients are given by

such that denotes the empirical expectation. Recall we assume that the unmeasured confounder, , has an additive effect on the outcome and let denote the coefficient for the unmeasured confounder. In Section A of the Supporting Information, we show that the bias is given by

| (1) |

We can estimate the bias in finite samples by replacing the expectations with empirical expectations and imputing an estimate of for which we will denote by . Note that this is then a function of the data and the parameters of the unmeasured confounder models.
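The finite-sample bias calculation described above can be illustrated with the standard omitted-variable identity for weighted least squares. The sketch below uses its own notation (design matrix `D`, weights `w`, confounder `u`, coefficient `beta_u`), which is an assumption and may differ from the exact form of Equation (1). The identity it checks is that the coefficients from the regression without `u` equal the coefficients from the regression with `u` plus `beta_u` times the weighted regression of `u` on `D`.

```python
import numpy as np

def wls(D, w, y):
    """Weighted least squares coefficients (D' W D)^{-1} D' W y."""
    WD = D * w[:, None]
    return np.linalg.solve(D.T @ WD, WD.T @ y)

# Simulated data; all numbers are made up for illustration.
rng = np.random.default_rng(1)
n = 2000
x = rng.normal(size=n)
u = 0.5 * x + rng.normal(size=n)                       # confounder
a = rng.binomial(1, 1.0 / (1.0 + np.exp(-(0.5 * x + u)))).astype(float)
y = 1.0 + x + a * (1.0 - 1.5 * x) + 2.0 * u + rng.normal(size=n)
w = rng.uniform(0.5, 1.5, size=n)                      # any positive weights

D = np.column_stack([np.ones(n), x, a, a * x])
short = wls(D, w, y)                                   # u omitted
long_ = wls(np.column_stack([D, u]), w, y)             # u included
beta_u_hat = long_[-1]

# Bias of the short regression: beta_u times the weighted projection of u on D.
bias = beta_u_hat * wls(D, w, u)
adjusted = short - bias
```

In the sensitivity analysis, `u` is not observed, so it would be replaced by an imputed conditional mean and `beta_u_hat` by a value drawn from its posited distribution.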

If we proceeded with dWOLS, the bias in the blip parameters would bias the pseudo-outcome given by . Additionally, estimating the blip model at stage without accounting for the unmeasured confounder would introduce additional bias that further compounds as we move backward through the stages. We can use the bias-corrected estimate of to calculate the pseudo-outcome and, if we assume that for , we have that Equation (1) for holds for . This assumption can be relaxed at the expense of additional assumptions about the effect of on and a more complex expression for the bias. Being able to accurately posit models and parameter distributions for when a more complex relationship exists could be potentially difficult without data. Therefore, a different approach to sensitivity analysis may be more appropriate when secondary data are unavailable if this assumption is unreasonable.

ALGORITHM 1.

Monte Carlo sensitivity analysis

| Step | Operation |
|---|---|
| 1: | Posit a model for given by |
| 2: | Posit distributions and for the bias parameters and |
| 3: | for Monte Carlo/bootstrap repetitions do |
| 4: | Draw a bootstrap sample from |
| 5: | Sample , from the distributions posited in step 2 |
| 6: | Impute from |
| 7: | Estimate with the propensity score model and calculate weights |
| 8: | Estimate using weighted least squares, i.e. perform a dWOLS estimation using the bootstrap sample |
| 9: | Calculate bias in with equation 1 using and as estimates for and |
| 10: | Calculate bias-adjusted estimate |
| 11: | for do |
| 12: | Calculate pseudo-outcome |
| 13: | Estimate with the propensity score model and calculate weights |
| 14: | Estimate using weighted least squares |
| 15: | Calculate bias in with equation 1 using and as estimates for and |
| 16: | Calculate bias-adjusted estimate |
| 17: | end for |
| 18: | end for |
| 19: | Calculate bias-adjusted estimate for |

The primary objective of conducting sensitivity analyses in this context is to quantify the uncertainty in the estimated DTR when there is bias due to unmeasured confounding. If we generated confidence intervals for the bias by taking the percentiles of our adjusted results across the Monte Carlo samples, these interval estimates would only capture the variability due to the uncertainty in our bias parameters and would not account for the uncertainty in our estimate of the parameters of interest. Therefore, we take a bootstrap sample for each Monte Carlo repetition and confidence intervals are formed by taking percentiles of the bias-adjusted values of . To account for nonregularity in estimators resulting from the nonsmoothness in the pseudo-outcome when estimating a multistage DTR, we will adapt the m-out-of-n bootstrap (Chakraborty et al., 2013).

We perform a Monte Carlo sensitivity analysis by first positing a model for the conditional mean of the unmeasured confounder given by . We also posit distributions and for the bias parameters and . We then draw bootstrap samples which we will denote by for . For each bootstrap sample, we sample and from the posited distributions, and . We use the sampled value of and the bootstrap data to impute values for the unmeasured confounder, , which we denote by . Then use the bootstrap data, the sampled bias parameter , and the imputed values of to calculate a bias-adjusted estimate of which we will denote by . This is done by implementing dWOLS to estimate and then using Equation (1) to calculate a bias-adjusted estimate, . This bias-adjusted estimate is then used to calculate the pseudo-outcome . We continue implementing dWOLS to estimate at each stage by estimating and using Equation (1) to adjust for the bias due to the unmeasured confounder. A bias-adjusted estimate for is then given by for . A step-by-step procedure for generating bias-adjusted estimates for the parameters indexing the optimal regime, , is given in Algorithm 1.
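For a single stage, the procedure described above reduces to a short loop. The following is a rough sketch under stated assumptions rather than the authors' code: binary treatment, one measured covariate, a linear model for the conditional mean of the unmeasured confounder given covariate and treatment, normal distributions for the bias parameters, overlap weights, and an ordinary bootstrap. Every name and number is hypothetical.

```python
import numpy as np

def expit(t):
    return 1.0 / (1.0 + np.exp(-t))

def fit_logistic(X, a, n_iter=25):
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):                      # Newton-Raphson
        p = expit(X @ beta)
        hess = (X * (p * (1.0 - p))[:, None]).T @ X
        beta = beta + np.linalg.solve(hess, X.T @ (a - p))
    return beta

def wls(D, w, y):
    WD = D * w[:, None]
    return np.linalg.solve(D.T @ WD, WD.T @ y)

def dwols_fit(x, a, y):
    """Return design matrix, overlap weights, and coefficients (b0, b1, psi0, psi1)."""
    H = np.column_stack([np.ones_like(x), x])
    w = np.abs(a - expit(H @ fit_logistic(H, a)))
    D = np.column_stack([H, a[:, None] * H])
    return D, w, wls(D, w, y)

def mc_sensitivity(x, a, y, zeta_mean, zeta_sd, beta_mean, beta_sd, n_rep=200, seed=0):
    rng = np.random.default_rng(seed)
    n = len(y)
    draws = np.empty((n_rep, 2))
    for b in range(n_rep):
        idx = rng.integers(0, n, n)              # bootstrap sample
        xb, ab, yb = x[idx], a[idx], y[idx]
        zeta = rng.normal(zeta_mean, zeta_sd)    # sampled confounder-model parameters
        beta_u = rng.normal(beta_mean, beta_sd)  # sampled effect of U on the outcome
        u_hat = zeta[0] + zeta[1] * xb + zeta[2] * ab   # imputed E[U | x, a]
        D, w, theta = dwols_fit(xb, ab, yb)
        bias = beta_u * wls(D, w, u_hat)         # omitted-variable bias
        draws[b] = (theta - bias)[2:]            # bias-adjusted blip parameters
    return draws.mean(axis=0), np.percentile(draws, [2.5, 97.5], axis=0)

# Simulated data in which u is left out of the analysis (numbers are made up).
rng = np.random.default_rng(1)
n = 2000
x = rng.normal(size=n)
a = rng.binomial(1, expit(0.5 * x)).astype(float)
u = 0.5 * x + 1.0 * a + rng.normal(size=n)
y = 1.0 + x + a * (1.0 - 1.5 * x) + 1.0 * u + rng.normal(size=n)

unadjusted = dwols_fit(x, a, y)[2][2:]
adjusted, ci = mc_sensitivity(x, a, y, np.array([0.0, 0.5, 1.0]), 0.1, 1.0, 0.1)
```

Here the posited distributions are centered on the generating values, so the adjusted main treatment effect should move from roughly 2 back toward 1. A multistage version would add the pseudo-outcome step and a reduced resample size.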

To construct a confidence interval for for a single-stage DTR, we calculate the percentiles of the bias-adjusted estimates from each bootstrap sample. When the number of stages is greater than 1, we have that the pseudo-outcome is a nonsmooth function of the generative model, and therefore, the estimator for for is nonregular (Laber et al., 2014; Moodie and Richardson, 2009; Robins, 2004). Therefore, we adapt the m-out-of-n bootstrap, a method for producing confidence intervals for nonsmooth functionals in which we take bootstrap samples with a resample size smaller than the sample size (Bickel et al., 1997; Dumbgen, 1993; Shao, 1994; Swanepoel, 1986). Chakraborty et al. (2013) proposed using a resample size of such that is a tuning parameter and to construct a confidence interval for when . The tuning parameter controls the minimum resample size because, for a fixed , the resample size takes values within the interval . Chakraborty et al. (2013) proposed using a double bootstrap procedure to choose the tuning parameter . The nonsmoothness in the pseudo-outcome occurs at and near the point(s) where , so large values of indicate a high degree of nonregularity. They proposed using a plug-in estimator for given by

where denotes an estimate of and denotes the percentile of a chi-squared distribution with 1 degree of freedom. To generalize this to stages, we define and construct an estimate, , for each stage (Rose et al., 2022). We first calculate a resample size such that

We then take bootstrap samples of size to construct a confidence interval for for each in the sample. This confidence interval is created by estimating for each bootstrap sample by drawing from the posited distributions for the bias parameters and adjusting the estimated parameters using Equation (1) as before. This allows us to obtain an estimate, , of by calculating the proportion of individuals in the study for which the confidence interval for contains zero. This procedure is repeated for . We then calculate and the corresponding resample size . Finally, we take bootstrap samples of size and estimate for each bootstrap sample by again sampling from the posited distributions for the bias parameters, and , and adjusting for the bias using Equation (1). To construct a confidence region for , calculate and percentiles of , denoted as and , respectively, for . A confidence region for is then given by for . A step-by-step procedure for constructing a confidence interval for that accounts for uncertainty in the unmeasured confounder and random sampling variability is outlined in Algorithm 2.
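The resample-size step can be sketched as follows. The exponent used here, (1 + alpha(1 - p)) / (1 + alpha), is the adaptive choice as recalled from Chakraborty et al. (2013) and should be treated as an assumption, as should the rounding up to an integer. The function names and example intervals are hypothetical.

```python
import math

def proportion_containing_zero(lower, upper):
    """Share of individuals whose blip confidence interval contains zero."""
    flags = [lo <= 0.0 <= hi for lo, hi in zip(lower, upper)]
    return sum(flags) / len(flags)

def resample_size(n, p_hat, alpha):
    """Adaptive resample size n ** ((1 + alpha * (1 - p_hat)) / (1 + alpha)).

    Assumed form; p_hat = 0 gives n and p_hat = 1 gives n ** (1 / (1 + alpha)).
    """
    exponent = (1.0 + alpha * (1.0 - p_hat)) / (1.0 + alpha)
    return min(n, math.ceil(n ** exponent))

# Four made-up confidence intervals for the blip, two of which contain zero.
p_hat = proportion_containing_zero([-0.2, 0.1, -0.5, 0.3], [0.4, 0.9, 0.1, 0.8])
m = resample_size(1000, p_hat, alpha=0.1)
```

A larger estimated degree of nonregularity gives a smaller resample size, which in turn widens the resulting percentile intervals.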

4 |. SIMULATION EXPERIMENTS

We conducted a series of simulation experiments to evaluate the effectiveness of the proposed method. We applied the proposed method to two different data-generating models, the first being a one-stage study and the second a two-stage study. Additional simulations are contained in Sections B and C of the Supporting Information with B containing simulations with a binary unmeasured confounder and C containing a different generative model in which the bias does not meaningfully affect the performance of the estimated regime. The data-generating model for the one-stage study was given by:

ALGORITHM 2.

Monte Carlo sensitivity analysis: confidence intervals

| Step | Operation |
|---|---|
| 1: | Calculate where denotes an estimate of |
| 2: | Calculate resample size |
| 3: | for do |
| 4: | Draw bootstrap samples of size |
| 5: | Estimate for |
| 6: | For each given , construct a confidence interval for |
| 7: | Calculate by the proportion of confidence intervals for that contain zero |
| 8: | Calculate the resample size |
| 9: | end for |
| 10: | Calculate and |
| 11: | Draw bootstrap sample of size |
| 12: | Estimate for , |
| 13: | Calculate and percentiles of which we denote by and respectively for |
| 14: | Calculate a confidence interval for by for |

We conducted 1000 repetitions for the simulation study. We let the sample size be given by and conducted Monte Carlo repetitions for the sensitivity analysis. The parameter values were given by:

We posited four different sets of distributions for the parameters in the unmeasured confounder models. This allowed us to assess how sensitive our proposed method is to bias in the distributions for these parameters. The four different simulation scenarios were given by: (i) narrow normal, centered properly; (ii) wide normal, centered properly; (iii) narrow normal, off-center; (iv) wide normal, off-center. For scenario (i), we posited models given by and for such that . For the wide distribution settings, the variance was increased to 0.5 and for the off-center scenarios, the distribution was centered at the true mean plus 0.1.

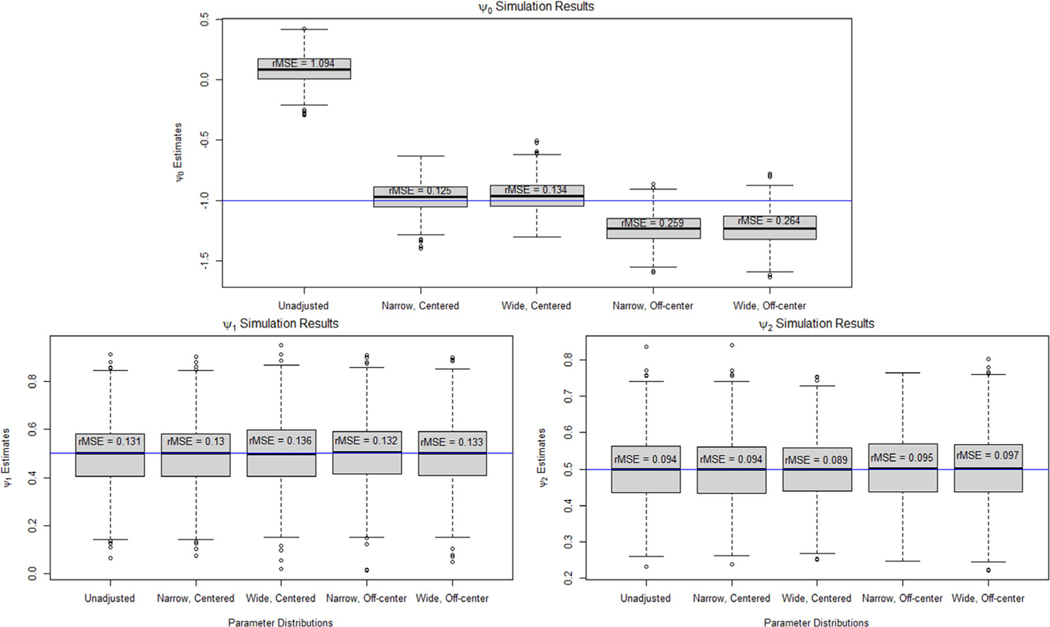

Figure 1 shows boxplots of the point estimates of , , and across 1000 repetitions when not adjusting and adjusting for the unmeasured confounder using a Monte Carlo sensitivity analysis with different distributions for the bias parameters. We can see that when we did not adjust for bias due to the unmeasured confounder, the estimate of is biased with a root mean squared error (rMSE) of 1.094. When the parameter distributions were centered on the true value, the adjusted estimate of was unbiased. There was some bias in the adjusted values of when the distribution is off-center as expected. We can see that for the narrow distribution, the rMSE increased from 0.125 to 0.259 and for the wide distribution, rMSE increased from 0.134 to 0.264. The unmeasured confounder did not cause bias in the estimation of and , so the adjusted estimates using a Monte Carlo sensitivity analysis were very similar to the unadjusted results.

FIGURE 1.

Boxplots of the point estimates for under an unadjusted model and when using Monte Carlo sensitivity analysis to adjust for bias due to unmeasured confounding for the one-stage data-generating model.

Bias in the blip model parameters that index the optimal DTR can cause the estimated regime to differ from the true optimal regime. For each repetition of the simulation study, we simulated 10,000 new patients and assessed what proportion of the patients’ treatment would match the recommended treatment of the true optimal DTR when following each one of the adjusted and unadjusted estimates of the optimal regime. Table 1 displays the proportion of patients who would receive the treatment recommended by the true optimal regime across all 1000 repetitions of the simulation study. We can see that the bias in causes the treatment recommended by the estimated regime to match the optimal regime only 52.8% of the time. When adjusting for the unmeasured confounder, this increased to 95.6% in the ideal scenario of a narrow, centered parameter distribution and to 95.8% when we used a wide, off-center parameter distribution.
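The agreement measure described above can be computed as in the sketch below. It assumes, purely for illustration, a linear blip with an intercept and one covariate and a binary treatment; the parameter values are hypothetical and are not those of the simulation study.

```python
import numpy as np

def recommend(psi, x):
    """Recommend treatment 1 when the linear blip psi0 + psi1 * x is positive."""
    return (psi[0] + psi[1] * x > 0).astype(int)

def proportion_optimal(psi_hat, psi_true, x_new):
    """Share of new patients whose recommendation matches the true optimal rule."""
    return float(np.mean(recommend(psi_hat, x_new) == recommend(psi_true, x_new)))

rng = np.random.default_rng(0)
x_new = rng.normal(size=10_000)             # 10,000 simulated new patients
psi_true = np.array([1.0, -1.5])            # hypothetical true blip parameters
psi_biased = np.array([2.0, -1.5])          # intercept shifted by confounding bias

match_true = proportion_optimal(psi_true, psi_true, x_new)
match_biased = proportion_optimal(psi_biased, psi_true, x_new)
```

Only patients whose covariate falls between the two decision boundaries receive a different recommendation, so the size of the loss depends on how much of the population lies in that region.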

TABLE 1.

Proportion of patients whose recommended treatment when following each of the estimated regimes matches the recommendation of the true optimal regime for the one-stage data-generating model.

| Parameter Distr. | Proportion optimal |
|---|---|
| Unadjusted | 0.528 |
| Narrow, Centered | 0.956 |
| Wide, Centered | 0.953 |
| Narrow, Off-center | 0.959 |
| Wide, Off-center | 0.958 |

In practice, if we do not have external data to help posit the mean model for the unmeasured confounder and distributions for the parameters, we will have less confidence that the bias-adjusted DTR is close to the true optimal regime. The proposed method should be used to examine confidence intervals to assess whether the unmeasured confounder could be introducing bias to our estimated regimes. Table 2 displays the coverage and width of 95% confidence intervals generated using a Monte Carlo sensitivity analysis and from the unadjusted analysis that assumes no unmeasured confounding. For the unadjusted analysis, the empirical coverage of the confidence interval for was 0%. Even for and , which did not appear to have any bias in the estimation, the coverage was still below the nominal rate of 95%. For all of the different posited parameter distributions, Monte Carlo sensitivity analysis produced confidence intervals that attained or were close to the nominal coverage probability. The width of the confidence intervals for increased substantially when conducting sensitivity analysis, indicating that this parameter is sensitive to the unmeasured confounding. Note that the width of the confidence intervals increased when the variability in the parameter distributions was increased.

TABLE 2.

Coverage (Cvr.) and average width (Wth.) of the 95% confidence intervals for for the unadjusted analysis and sensitivity analysis under each of the posited parameter distributions for the one-stage data-generating model.

| Parameter Distr. | Cvr. (Wth.) | Cvr. (Wth.) | Cvr. (Wth.) |
|---|---|---|---|
| Unadjusted | 0.000* (0.359) | 0.897* (0.426) | 0.887* (0.300) |
| Narrow, Centered | 1.000* (2.628) | 0.946 (0.520) | 0.947 (0.365) |
| Wide, Centered | 1.000* (6.212) | 0.954 (0.520) | 0.959 (0.365) |
| Narrow, Off-center | 1.000* (2.764) | 0.950 (0.519) | 0.951 (0.366) |
| Wide, Off-center | 1.000* (6.495) | 0.955 (0.519) | 0.936* (0.367) |

\* indicates coverage that is significantly different from 95%.

To compare our proposed procedure to an alternative method for sensitivity analysis, we conducted a simulation study for estimating an optimal DTR with G-estimation using the same single-stage generative model (Hernan and Robins, 2020; Robins et al., 1999). G-estimation for a single-stage study proceeds by defining (Robins, 2004). then represents a candidate potential outcome for for a given value of . Consider the model

| (2) |

We assume sequential ignorability holds, which implies that for the true value of where denotes the zero vector. Therefore, to estimate the true value of , we find the value of in that results in when fitting the logistic regression model in Equation (2). When there is unmeasured confounding, we have that . Therefore, we can conduct a sensitivity analysis by setting to a range of different fixed values and estimating for each to examine how sensitive the estimated DTR is to an unmeasured confounder.
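The G-estimation search just described can be sketched for the simplest case. The example assumes a scalar blip parameter (a constant treatment effect), one measured covariate, and a logistic treatment model that is linear in the covariate and the candidate outcome. That model form, the grid search, and all names and numbers are assumptions made for illustration and need not match Equation (2).

```python
import numpy as np

def expit(t):
    return 1.0 / (1.0 + np.exp(-t))

def fit_logistic(X, a, n_iter=25):
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):                      # Newton-Raphson
        p = expit(X @ beta)
        hess = (X * (p * (1.0 - p))[:, None]).T @ X
        beta = beta + np.linalg.solve(hess, X.T @ (a - p))
    return beta

def h_coefficient(psi, x, a, y):
    """Coefficient on the candidate outcome H(psi) = y - psi * a in the treatment model."""
    h = y - psi * a
    X = np.column_stack([np.ones_like(x), x, h])
    return fit_logistic(X, a)[2]

def g_estimate(x, a, y, target=0.0, grid=np.linspace(0.0, 2.0, 41)):
    """Grid value of psi whose H(psi) coefficient is closest to the fixed target."""
    coefs = np.array([h_coefficient(p, x, a, y) for p in grid])
    return grid[np.argmin(np.abs(coefs - target))]

# Simulated data with no unmeasured confounding; the generating effect is 1.0.
rng = np.random.default_rng(2)
n = 3000
x = rng.normal(size=n)
a = rng.binomial(1, expit(0.5 * x)).astype(float)
y = 1.0 + x + 1.0 * a + rng.normal(size=n)

psi_ignorable = g_estimate(x, a, y, target=0.0)   # assumes sequential ignorability
psi_sensitivity = g_estimate(x, a, y, target=0.3) # fixed nonzero sensitivity value
```

Repeating the last call over a range of `target` values traces out how the estimated treatment effect moves as the assumed departure from ignorability grows.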

This approach leads to bias parameters that are far more difficult to interpret than the bias parameters in our proposed Monte Carlo sensitivity analysis. A sensitivity analysis could conclude that a given value of would significantly alter the conclusions of the study, but it is unclear what degree of confounding would result in that value of . To avoid this problem, we can use a similar approach to our proposed Monte Carlo sensitivity analysis by collecting data from a small, secondary study that includes the unmeasured confounder to learn about the true value of the bias parameters . First, we use the secondary study to estimate using G-estimation with the unmeasured confounder included in the logistic regression model given in Equation (2). We refit the logistic regression model with included and the unmeasured confounder removed to estimate . We then proceed in the same manner as the proposed Monte Carlo procedure. We posit distributions for using the point estimate and standard error from the model fit to the secondary study. We then use the bootstrap, sample from the posited distribution for each repetition, and calculate a bias-adjusted estimate of for each Monte Carlo repetition. We then take the mean of the estimates for to get a point estimate and take percentiles to construct a confidence interval.
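The resampling loop described above (bootstrap the data, draw the bias parameter from its posited distribution, compute a bias-adjusted estimate, then take the mean and percentiles) can be sketched as follows. This is a deliberately simplified illustration and not the G-estimation or dWOLS procedure itself: the estimator is a plain difference in means, the bias enters additively, and the names `diff_in_means` and `mc_sensitivity` are hypothetical.

```python
import random
import statistics

def diff_in_means(sample):
    """Unadjusted treatment-effect estimate from (treatment, outcome) pairs."""
    treated = [y for a, y in sample if a == 1]
    control = [y for a, y in sample if a == 0]
    return statistics.fmean(treated) - statistics.fmean(control)

def mc_sensitivity(data, bias_mean, bias_sd, n_rep=1000, seed=0):
    """Monte Carlo sensitivity analysis with one additive bias parameter.

    The bias parameter is drawn from Normal(bias_mean, bias_sd), for example
    a point estimate and standard error taken from a secondary study.
    Returns the mean adjusted estimate and a 95% percentile interval.
    """
    if {a for a, _ in data} != {0, 1}:
        raise ValueError("data must contain both treatment arms")
    rng = random.Random(seed)
    n = len(data)
    adjusted = []
    while len(adjusted) < n_rep:
        # Bootstrap resample to capture sampling variability.
        boot = [data[rng.randrange(n)] for _ in range(n)]
        if {a for a, _ in boot} != {0, 1}:
            continue  # redraw if a resample lost one arm
        # Draw the bias parameter to capture uncertainty about the confounding.
        bias = rng.gauss(bias_mean, bias_sd)
        adjusted.append(diff_in_means(boot) - bias)
    point = statistics.fmean(adjusted)
    # quantiles(n=40) returns cut points at 2.5%, 5%, ..., 97.5%.
    cuts = statistics.quantiles(adjusted, n=40)
    return point, (cuts[0], cuts[-1])
```

Because each repetition combines a bootstrap resample with a fresh draw of the bias parameter, the resulting interval reflects both sampling error and uncertainty about the confounding, which is why a wider posited distribution yields a wider interval.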

We conducted a simulation study of this procedure using comparable distributions for the bias parameters to those used for the previous simulations. For this generative model, is given by (0.52, 0, 0). For the simulation study, we will assume and and only posit a distribution for . The four different simulation settings will again be given by (i) narrow normal, centered properly; (ii) wide normal, centered properly; (iii) narrow normal, off-center; and (iv) wide normal, off-center. For scenario (i), we posited a distribution for given by . For the wide distribution settings, we increased the variance to 0.5, and for the off-center settings, we centered the distribution at the true value of plus 0.1.
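The four posited distributions can be laid out programmatically, as in the sketch below. The function name is hypothetical, and the narrow variance is left as a required argument because its value is not recoverable from the text; only the wide variance (0.5) and the off-center shift (0.1) are taken from the description above.

```python
import math

def posited_distributions(true_value, narrow_var, wide_var=0.5, shift=0.1):
    """Return {scenario: (mean, sd)} for the four posited normal distributions.

    narrow_var has no default because the source value is not available here;
    any number passed in is the caller's assumption.
    """
    narrow_sd = math.sqrt(narrow_var)
    wide_sd = math.sqrt(wide_var)
    return {
        "narrow_centered": (true_value, narrow_sd),
        "wide_centered": (true_value, wide_sd),
        "narrow_off_center": (true_value + shift, narrow_sd),
        "wide_off_center": (true_value + shift, wide_sd),
    }

# Example call with the true value 0.52 and a placeholder narrow variance.
scenarios = posited_distributions(0.52, narrow_var=0.05)
```

Note that a variance of 0.5 corresponds to a standard deviation of about 0.71, so the wide settings place substantial mass far from the true value of 0.52.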

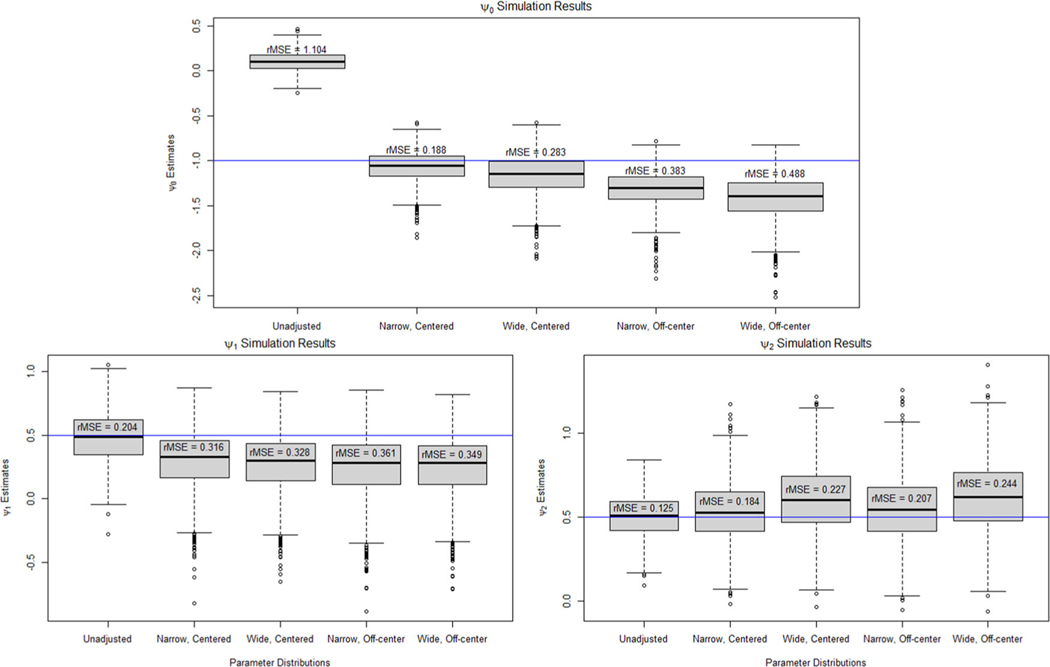

Figure 2 shows boxplots of the point estimates of across the 1000 repetitions. For , adjusting for the unmeasured confounder using the narrow, correctly centered distribution reduced the rMSE from 1.104 to 0.188. This was worse than our proposed procedure, which reduced the rMSE to 0.125. The G-estimation sensitivity analysis was also more sensitive to misspecification of the bias parameter distribution, with the incorrectly centered distribution resulting in an rMSE of 0.383 and 0.488 for the narrow and wide distributions, respectively. For the interaction effect parameters, and , the bias-adjusted estimates had a greater rMSE than the unadjusted estimates, with the narrow, correctly centered bias distribution increasing the rMSE from 0.204 to 0.316 for and from 0.125 to 0.184 for . Section D of the Supporting Information contains additional results on the coverage of confidence intervals and the proportion of new patients whose recommended treatment from the estimated regimes matched the optimal DTR for this simulation study. Section D also contains an additional simulation study in which we do not assume and . The results for this were very poor, with all four of the posited bias parameter distributions resulting in an increase in the rMSE in the estimates of , , and . These simulation results demonstrate how simpler sensitivity analysis methods that avoid positing a model for the relationship between the unmeasured confounder and the other covariates can reduce the number of models and bias parameter distributions that need to be posited, but can produce considerably less reliable results about the effect of the unmeasured confounding on the estimated DTR.

FIGURE 2.

Boxplots of the point estimates for under an unadjusted model and when using G-estimation sensitivity analysis to adjust for bias due to unmeasured confounding for the one-stage data-generating model when we assume we know and .
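For reference, the rMSE values quoted for these simulations summarise the point estimates across repetitions in the usual way. The sketch below uses hypothetical function names and also returns the bias and standard deviation, since the squared rMSE equals the squared bias plus the variance of the estimates.

```python
import math

def rmse(estimates, true_value):
    """Root mean squared error of the estimates around the true value."""
    return math.sqrt(sum((e - true_value) ** 2 for e in estimates) / len(estimates))

def bias_and_sd(estimates, true_value):
    """Bias and (population) standard deviation of the estimates."""
    n = len(estimates)
    mean = sum(estimates) / n
    bias = mean - true_value
    sd = math.sqrt(sum((e - mean) ** 2 for e in estimates) / n)
    return bias, sd
```

This decomposition helps explain how a bias adjustment can raise the rMSE of a parameter that was not biased to begin with: the adjustment adds variance without removing any bias.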

We conducted a similar simulation study with a two-stage study to assess the effectiveness of a Monte Carlo sensitivity analysis for the estimation of multistage DTRs. We let the data-generating model be given by:

As before, we conducted 1000 repetitions for the simulation study, set the sample size to , and conducted the sensitivity analysis using Monte Carlo repetitions. The parameter values for this data-generating process were given by:

We again varied the posited distributions for the parameters of the unmeasured confounder model and the effect of the unmeasured confounder using the same posited distributions as the single-stage simulation study.

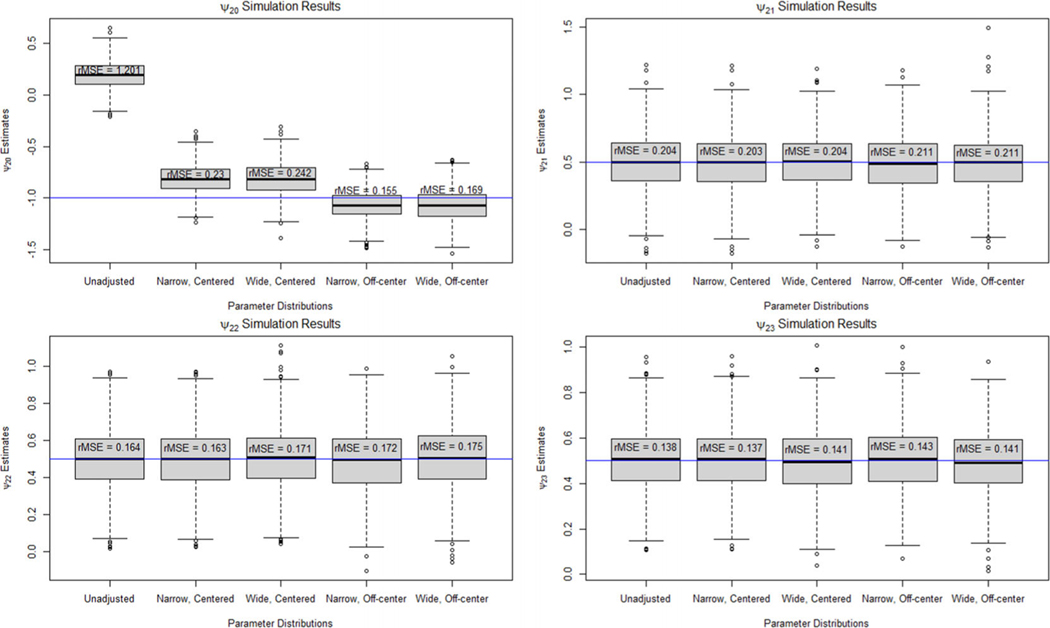

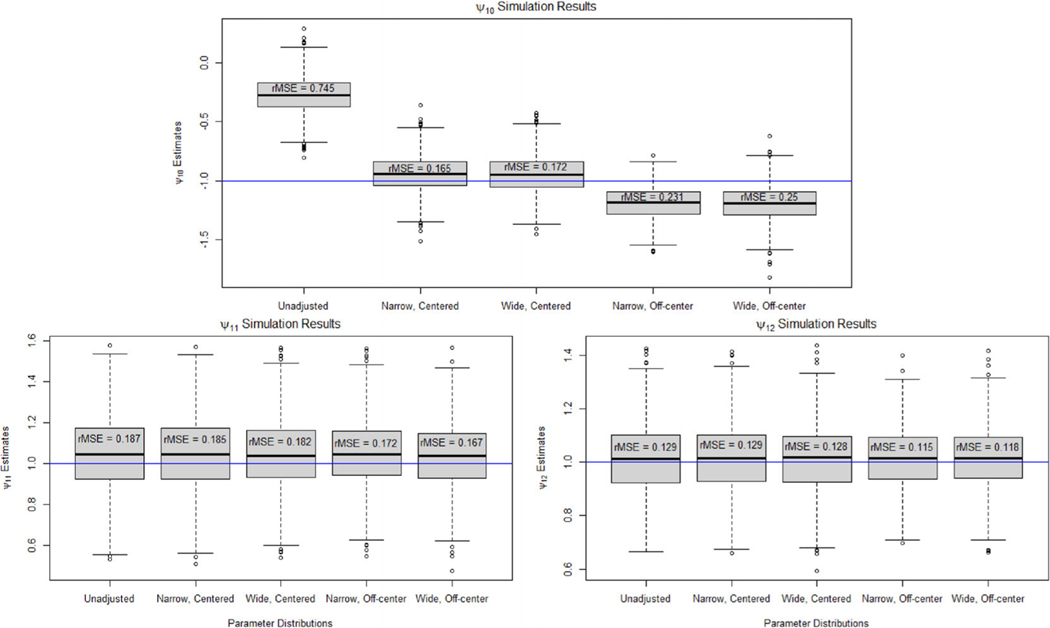

Figures 3 and 4 show boxplots of the point estimates for and , respectively, across the 1000 repetitions. The results for the two-stage study were very similar to the one-stage results. In the second-stage models, the unmeasured confounder caused significant bias in in the unadjusted analysis leading to an rMSE of 1.201. Applying our Monte Carlo sensitivity analysis reduced the bias significantly with all scenarios having an rMSE of 0.242 or less. We found that the unmeasured confounder did not cause bias in the estimates of , , and , leading to similar results between the unadjusted and adjusted estimates. There was significant bias in the unadjusted estimate of for the first-stage blip model. The rMSE of the unadjusted estimate is 0.745, whereas the rMSE of the bias-adjusted estimate under the narrow, centered parameter distribution is 0.165. The rMSE increased to 0.25 when using a wide parameter distribution that is not centered on the true parameter value.

FIGURE 3.

Boxplots of the point estimates for under an unadjusted model and when using Monte Carlo sensitivity analysis to adjust for bias due to unmeasured confounding for the two-stage data-generating model.

FIGURE 4.

Boxplots of the point estimates for under an unadjusted model and when using Monte Carlo sensitivity analysis to adjust for bias due to unmeasured confounding for the two-stage data-generating model.

As before, we simulated 10,000 additional patients and assessed whether the treatment recommended by each of the estimated regimes matched the recommendation of the true optimal regime for each of the 1000 repetitions. Table 3 displays the proportion of patients who were recommended the same treatment as the true optimal regime when following each of the different estimated regimes. The bias from the unmeasured confounder caused the unadjusted estimated regime to recommend the same treatment as the true optimal regime 81.4% of the time at the first stage and 70.2% of the time at the second stage. After performing Monte Carlo sensitivity analysis using the narrow, centered posited distribution, the proportion increased to 95.1% at the first stage and 93.7% at the second stage. The alternative parameter distributions produced similar results.

TABLE 3.

Proportion of patients whose recommended treatment when following each of the estimated regimes matches the recommendation of the true optimal regime at each stage for the two-stage data-generating model.

| Parameter Distr. | Stage 1 | Stage 2 |
|---|---|---|
| Unadjusted | 0.814 | 0.702 |
| Narrow, Centered | 0.951 | 0.937 |
| Wide, Centered | 0.949 | 0.936 |
| Narrow, Off-center | 0.951 | 0.948 |
| Wide, Off-center | 0.948 | 0.947 |
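The agreement proportions in Table 3 compare, patient by patient, the treatment recommended by an estimated regime with that of the true optimal regime. A minimal single-stage sketch follows, assuming a linear decision rule that recommends treatment when the blip is positive; the function names and the tuple layout of the parameters are hypothetical.

```python
def recommend(psi, x):
    """Recommend treatment 1 if psi[0] + psi[1:] . x > 0, otherwise 0.

    psi : (intercept, coefficient_1, ..., coefficient_k)
    x   : tailoring covariates (x_1, ..., x_k) for one patient
    """
    score = psi[0] + sum(p * xi for p, xi in zip(psi[1:], x))
    return 1 if score > 0 else 0

def agreement(psi_hat, psi_true, patients):
    """Proportion of patients for whom both rules recommend the same treatment."""
    matches = sum(recommend(psi_hat, x) == recommend(psi_true, x) for x in patients)
    return matches / len(patients)
```

Agreement depends only on the sign of the estimated blip, so a biased intercept matters most for patients whose true blip is close to zero.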

We also examined the coverage and width of the confidence intervals generated using a Monte Carlo sensitivity analysis for the two-stage study. Table 4 contains the empirical coverage and width of 95% confidence intervals for the parameters of the second-stage blip model. The empirical coverage of for the unadjusted analysis was 0% as it was significantly biased by the unmeasured confounder. Monte Carlo sensitivity analysis produced intervals for with 100% coverage for all of the simulation scenarios at the expense of being much wider. The remaining second-stage blip parameters, , , and , were not biased in the unadjusted analysis, but the unmeasured confounder led to confidence intervals that did not achieve the nominal coverage probability. Monte Carlo sensitivity analysis produced confidence intervals that achieved the nominal coverage for all sets of posited parameter distributions.

TABLE 4.

Coverage (Cvr.) and average width (Wth.) of the 95% confidence intervals for for the unadjusted analysis and sensitivity analysis under each of the posited parameter distributions for the two-stage data-generating model.

| Parameter Distr. | Cvr. (Wth.) | Cvr. (Wth.) | Cvr. (Wth.) | Cvr. (Wth.) |
|---|---|---|---|---|
| Unadjusted | 0.000* (0.339) | 0.810* (0.529) | 0.810* (0.435) | 0.801* (0.359) |
| Narrow, Centered | 1.000* (2.634) | 0.948 (0.803) | 0.953 (0.658) | 0.943 (0.541) |
| Wide, Centered | 1.000* (6.208) | 0.948 (0.804) | 0.951 (0.654) | 0.941 (0.537) |
| Narrow, Off-center | 1.000* (2.775) | 0.932* (0.803) | 0.949 (0.657) | 0.936* (0.542) |
| Wide, Off-center | 1.000* (6.483) | 0.934* (0.805) | 0.937 (0.659) | 0.941 (0.540) |

An asterisk (*) indicates coverages that are significantly different from 95%.

Table 5 contains the empirical coverage and average width of 95% confidence intervals for the parameters of the first-stage blip model. The unadjusted confidence interval for had a coverage of only 0.1%, whereas the sensitivity analysis resulted in conservative confidence intervals that had coverage of 100%. For and , the unadjusted analysis resulted in confidence intervals that did not attain nominal coverage, with empirical coverages of 86.1% and 86.8%, respectively, despite the unmeasured confounder not resulting in bias in the parameter estimation. The sensitivity analysis intervals were instead conservative, with coverages of 100% and 98.9% for and , respectively, for the narrow, centered parameter distributions. The confidence intervals resulting from the sensitivity analysis for the first-stage blip model were considerably wider than those for the second-stage blip model parameters.

TABLE 5.

Coverage (Cvr.) and average width (Wth.) of the 95% confidence intervals for for the unadjusted analysis and sensitivity analysis under each of the posited parameter distributions for the two-stage data-generating model.

| Parameter Distr. | Cvr. (Wth.) | Cvr. (Wth.) | Cvr. (Wth.) |
|---|---|---|---|
| Unadjusted | 0.001* (0.456) | 0.861* (0.542) | 0.868* (0.382) |
| Narrow, Centered | 1.000* (4.236) | 1.000* (2.609) | 0.989* (2.439) |
| Wide, Centered | 1.000* (7.362) | 1.000* (2.828) | 1.000* (2.590) |
| Narrow, Off-center | 1.000* (4.607) | 1.000* (2.833) | 0.995* (2.663) |
| Wide, Off-center | 1.000* (7.857) | 1.000* (3.081) | 1.000* (2.840) |

An asterisk (*) indicates coverages that are significantly different from 95%.

5 |. ILLUSTRATION USING KPWA EHR DATA

We applied the proposed method to data from EHRs. KPWA is a health system that provides health care and insurance to its members. This study used data from EHRs and health insurance claims for all KPWA clients to study the use of antidepressants for treating depression. The data include information on demographics, prescription fills, and depressive symptom severity for 82,691 patients with depression who received antidepressant medication from 2008 through 2018. Severity of depression symptoms was assessed through the use of the Patient Health Questionnaire-8 (PHQ) (Kroenke et al., 2001). This is a self-report questionnaire that produces a score ranging from 0 to 24, such that higher values indicate more severe symptoms of depression. The inclusion criteria for the study were that patients must have been 13 years or older, have been enrolled in KPWA insurance for at least a year, have been diagnosed with a depressive disorder in the year before or 15 days after treatment initiation, had no prescription fills for antidepressant medications in the past year, and had no diagnosis for personality, bipolar, or psychotic disorders in the past year. We additionally required, for this analysis, that patients did not have missing information on obesity, baseline PHQ, or follow-up PHQ at 1 year after baseline. Our analysis focuses on demonstrating the impact of our sensitivity analysis but does not adjust for missing information; therefore, the estimated DTRs must be interpreted as potentially biased, and any clinical findings should be viewed in this light.

Research has suggested that obesity increases the risk of depression (Luppino et al., 2010). Weight gain has been found to be a side effect of taking antidepressants (Fava, 2000), with different classes of antidepressants affecting weight differently; treatment guidelines for clinicians recommend using the current weight of a patient as a consideration when prescribing antidepressants (Santasieri and Schwartz, 2015). Obesity is therefore a potentially important confounder that, if unmeasured, could lead to bias in observational studies of the effects of antidepressants, whether average or individualized. We considered a patient to be obese if they had a body mass index (BMI) of 30 or larger. We used these data in two different ways. First, we assumed that obesity was unavailable and estimated a DTR to minimize depression symptoms without adjusting for obesity in the analysis. We then applied the proposed procedure to assess how sensitive the estimated regime was to obesity being unmeasured and compared this to the estimated regime with obesity measured. Second, we conducted a plasmode simulation study in which we used the real data with simulated obesity and patient outcomes. This allowed us to know the true optimal DTR and distribution of obesity.

5.1 |. Application to the study of first-line choice of antidepressant

Our outcome is the negative of the PHQ score after 1 year so that higher values correspond with better patient outcomes. Equally, we could use the PHQ score and find the regime that minimizes instead of maximizes the mean outcome. The PHQ score after 1 year was given by the PHQ score recorded between 305 and 425 days after initiating treatment that was measured closest to 365 days after medication initiation. Patients were treated with 1 of 17 different antidepressants initially. Selective serotonin reuptake inhibitors (SSRIs) are a class of antidepressants that increase serotonin levels in the brain by decreasing reabsorption by nerve cells and are commonly prescribed due to having generally milder side effects. We classified the initial treatment received as an antidepressant from either the SSRI class or an alternative class of antidepressants.
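The outcome definition above can be sketched as a small selection rule over a patient's recorded PHQ scores. The function names and the `(days, score)` input layout are hypothetical, and treating the 305 and 425 day bounds as inclusive is an assumption.

```python
def one_year_phq(measurements, lo=305, hi=425, target=365):
    """Return the PHQ score recorded closest to `target` days after initiation.

    measurements : list of (days_since_initiation, phq_score) pairs
    Only measurements with lo <= days <= hi are eligible (inclusive bounds are
    an assumption). Returns None if no measurement falls in the window. If two
    measurements are equally close, the one listed first is used.
    """
    in_window = [(d, s) for d, s in measurements if lo <= d <= hi]
    if not in_window:
        return None
    _, score = min(in_window, key=lambda m: abs(m[0] - target))
    return score

def outcome(measurements):
    """Negative one-year PHQ score, so that larger values are better."""
    score = one_year_phq(measurements)
    return None if score is None else -score
```

Patients for whom `outcome` returns `None` correspond to those excluded for missing follow-up PHQ.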

We let denote the negative of the PHQ score after 1 year. We denote treatment by , such that if assigned an SSRI and if assigned a non-SSRI. We considered sex, age, baseline PHQ, and obesity as confounders and examined the bias that occurs if obesity was unmeasured. We centered the age variable to make the intercept more interpretable. Our outcome model was given by:

| (3) |

such that the optimal DTR is to recommend an SSRI if () is greater than zero.

To posit the models for the unmeasured confounder and the distributions for the bias parameters in practice, we would ideally conduct a small, secondary study. To replicate this process for this example, we took a random subsample of 250 patients from our data and treated this as a secondary data set. We found that obesity was correlated with age, baseline PHQ, race, census block education level (EDU), and diagnosis of an anxiety disorder in the prior year (ANX). Race was categorized as Asian, Black or African American, Hispanic, Native Hawaiian/Pacific Islander, American Indian/Native Alaskan, White, other, or unknown and was coded using dummy coding with Asian as the reference group. EDU was given by an indicator for whether less than 25% of people living in the patient’s census block had a college degree. Therefore, to conduct a Monte Carlo sensitivity analysis for the bias if obesity is unmeasured, we posited a model for obesity given by:

| (4) |

We first estimated and using the secondary data set. We then posited normal distributions for and that were centered at the estimated values of the parameters with standard deviations equal to the estimated standard errors.
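As an illustration of this step, each Monte Carlo repetition draws the bias parameters from normal distributions centered at the secondary-study estimates, with standard deviations equal to the estimated standard errors. The sketch below uses a hypothetical function name and hypothetical parameter labels and values; none of them are taken from the study.

```python
import random

def draw_bias_parameters(estimates, std_errors, rng):
    """One Monte Carlo draw of each bias parameter from Normal(estimate, SE).

    estimates, std_errors : dicts keyed by parameter label
    rng                   : a random.Random instance
    """
    return {name: rng.gauss(estimates[name], std_errors[name]) for name in estimates}

# Hypothetical point estimates and standard errors from a secondary data set.
estimates = {"confounder_effect": -1.0, "obesity_intercept": -1.5}
std_errors = {"confounder_effect": 0.4, "obesity_intercept": 0.3}
draw = draw_bias_parameters(estimates, std_errors, random.Random(0))
```

A smaller secondary study yields larger standard errors and hence more dispersed draws, which carries through to wider intervals for the regime parameters.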

We also examined the use of our proposed procedure when we do not have a secondary data set to help specify distributions for the bias parameters and instead need to use subject matter expertise. Recall that research has found that obesity increases the risk of depression, which implies that obesity is negatively correlated with the negative of the PHQ score. Therefore, we will let the distribution for be given by a uniform distribution from −2 to 0. Since we centered the age variable, expit() can be interpreted as the probability of being obese, given that an individual is 45 years old, Asian, has a baseline PHQ score of 0, has no diagnosis of an anxiety disorder, is not from a low education census block, and received a non-SSRI. We used the overall prevalence of obesity in Asian people over 18 years old in the United States obtained from the 2017 National Health Interview Survey to center the distribution for (Blackwell and Villarroel, 2018). Since the overall prevalence was given by 0.119, the distribution for was selected to be a uniform distribution with support [, ]. We chose to use a uniform distribution because using the prevalence in the entire Asian population in the United States as a surrogate for the conditional probability given by expit() is an imperfect approach. We chose the distributions for each component of in the same manner, with a uniform distribution centered using the overall prevalence in the United States by race with a width of 1. The remaining parameters in were given by a uniform distribution from −1 to 1 because we are unsure of how they are related to obesity.
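To make the prevalence-based specification concrete, the sketch below converts a prevalence to the logit scale and builds a uniform distribution centered there. A prevalence of 0.119 corresponds to a logit of approximately −2.00. Applying a width of 1 to the intercept is an assumption carried over from the description of the race components, and the function names are hypothetical.

```python
import math
import random

def logit(p):
    """Log-odds of a probability p in (0, 1)."""
    return math.log(p / (1 - p))

def expit(x):
    """Inverse of logit."""
    return 1 / (1 + math.exp(-x))

def centered_uniform(prevalence, width=1.0):
    """Support (lower, upper) of a uniform distribution centered at logit(prevalence)."""
    center = logit(prevalence)
    return center - width / 2, center + width / 2

def draw_intercepts(prevalence, n_draws, seed=0, width=1.0):
    """Draw intercept values from the prevalence-centered uniform distribution."""
    lower, upper = centered_uniform(prevalence, width)
    rng = random.Random(seed)
    return [rng.uniform(lower, upper) for _ in range(n_draws)]
```

Under this assumed width, the endpoints map back through `expit` to obesity probabilities of roughly 0.08 and 0.18, which gives a sense of how much uncertainty the uniform distribution encodes.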

Table 6 contains estimates and confidence intervals for from the full model, the model with obesity unmeasured, and after adjusting for the bias with and without a secondary data set to posit the bias parameter distributions. The adjusted estimates of using the secondary data set with obesity unmeasured were close to the estimates from the full model with obesity included; however, uncertainty was increased reflecting the lack of information available on the unmeasured confounder. The adjusted estimates using uniform distributions posited with subject matter expertise resulted in estimates close to the unadjusted estimate as expected. Both sensitivity analyses also produced confidence intervals that were wider for the parameters that were biased due to the unmeasured confounding. Section E of the Supporting Information contains a similar sensitivity analysis in which we used the full data set with obesity included to posit distributions for the bias parameters.

TABLE 6.

Estimates and 95% confidence intervals for treatment decision rule parameters from models with and without obesity and adjusted estimates from the sensitivity analysis for unmeasured confounding of obesity.

| Covariate | Full model | Obesity unmeasured | Adjusted est., secondary data | Adjusted est., subject expertise |
|---|---|---|---|---|
| | −1.56 (−3.11, 0.00) | −1.46 (−3.02, 0.10) | −1.60 (−3.37, 0.12) | −1.48 (−3.13, 0.42) |
| | 0.72 (−0.34, 1.79) | 0.69 (−0.38, 1.76) | 0.73 (−0.58, 2.05) | 0.69 (−0.70, 1.98) |
| | 0.00 (−0.03, 0.03) | 0.00 (−0.03, 0.03) | 0.00 (−0.04, 0.04) | 0.00 (−0.04, 0.04) |
| | 0.12 (0.02, 0.22) | 0.12 (0.02, 0.22) | 0.12 (0.00, 0.25) | 0.12 (−0.01, 0.24) |

5.2 |. Plasmode simulations

We also conducted a plasmode simulation study in which we used the real data with simulated obesity and patient outcomes. This allowed us to know the true optimal DTR and unmeasured confounder distribution. The data were simulated using Equations (3) and (4), the same models that we used for the real data analysis, with the outcome having a random error given by .

We let the value of , , and be given by the estimated values from the data that can be found in Section F of the Supporting Information. For conducting the sensitivity analysis, we posited the same normal distributions for and that were posited using the small, secondary study for the empirical evaluation in addition to the uniform distributions posited using subject matter expertise. We simulated 1000 data sets and estimated the adjusted parameter estimates for each data set. Table 7 contains the estimates of averaged over the 1000 simulated data sets in addition to the empirical coverage and average width of 95% confidence intervals for . The unadjusted estimate for was biased with an average estimate of −1.432 as opposed to the true value of −1.556. Adjusting for obesity using a secondary study reduced the bias and resulted in an estimate of −1.538. Positing the bias parameter distributions with subject matter expertise resulted in an estimate of −1.446 that was closer to the unadjusted estimate than the true value. The 95% confidence intervals from the unadjusted analysis did not achieve the nominal rate for any of the parameters. Monte Carlo sensitivity analysis resulted in slightly wider intervals that had the correct coverage when we posited the bias parameter distributions using a secondary data set or subject matter expertise.

TABLE 7.

Estimates (Est.), coverage (Cvr.), and average width (Wth.) of 95% confidence intervals for treatment decision rule parameters after adjusting for the unmeasured confounder obesity averaged over 1000 plasmode data sets using data from KPWA.

| | Est. | Est. | Est. | Est. |
|---|---|---|---|---|
| True Value | −1.556 | 0.724 | 0.001 | 0.119 |
| Unadjusted | −1.432 | 0.714 | 0.001 | 0.118 |
| Adjusted (Secondary Data) | −1.538 | 0.721 | 0.001 | 0.119 |
| Adjusted (Subject Expertise) | −1.446 | 0.715 | 0.001 | 0.119 |
| | Cvr. (Wth.) | Cvr. (Wth.) | Cvr. (Wth.) | Cvr. (Wth.) |
| Unadjusted | 0.822* (0.665) | 0.880* (0.455) | 0.870* (0.012) | 0.880* (0.042) |
| Adjusted (Secondary Data) | 0.975* (0.958) | 0.949 (0.586) | 0.958 (0.016) | 0.959 (0.054) |
| Adjusted (Subject Expertise) | 0.940 (0.889) | 0.953 (0.588) | 0.975* (0.018) | 0.981* (0.057) |

An asterisk (*) indicates coverages that are significantly different from 95%.

6 |. DISCUSSION

We proposed a method for conducting sensitivity analysis for bias due to unmeasured confounding in the estimation of DTRs. We used a Monte Carlo sensitivity analysis to estimate a bias-adjusted DTR and construct confidence intervals for the parameters indexing the optimal regime that account for the uncertainty due to unmeasured confounding. This procedure is straightforward to implement and can be used for continuous or binary unmeasured confounders. This approach was found to perform well for both simulated and real data.

This method requires positing parametric models for the relationship between the unmeasured confounder(s) and the outcome, treatment, and measured confounders. Moreover, the model for the conditional mean of the unmeasured confounder needs to be of high quality, which can be challenging without external data or domain expertise. We also must posit probability distributions for the parameters indexing these models. These distributions do not need to be centered on the true value of the parameter to construct confidence intervals; they only need the true value to not be in the tails of the distribution. If there are high levels of uncertainty about these parameters, wider distributions can be posited, resulting in wider confidence intervals for the parameters indexing the estimated optimal DTR. We posited normal distributions for these parameters, but any probability distribution that accurately reflects the prior information about the true value of these parameters can be used. Here, we focused on DTRs that are estimated using dWOLS, though this approach can be applied to any regression-based method of estimation. Direct-search or value-search estimators are an alternative approach to estimating DTRs to which our proposed method could not be directly applied (Laber and Zhao, 2015; Orellana et al., 2010). We leave extensions to direct-search estimators as future work.

ACKNOWLEDGMENTS

This work was supported by the National Institute of Mental Health of the National Institutes of Health under Award Number R01 MH114873. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. EEMM is a Canada Research Chair (Tier 1) and acknowledges the support of a chercheur de mérite career award from the Fonds de Recherche du Québec, Santé.

Funding information

Fonds de Recherche du Québec, Santé; National Institute of Mental Health, Grant/Award Number: R01 MH114873

Footnotes

SUPPORTING INFORMATION

Additional supporting information can be found online in the Supporting Information section at the end of this article.

CONFLICT OF INTEREST STATEMENT

The authors have declared no conflict of interest.

DATA AVAILABILITY STATEMENT

Research data are not shared.

REFERENCES

- Bickel PJ, Gotze F, & van Zwet WR (1997). Resampling fewer than n observations: Gains, losses, and remedies for losses. Statistica Sinica, 7, 1–31. [Google Scholar]

- Blackwell DL, & Villarroel MA (2018). Tables of summary health statistics for U.S. adults: 2017 National Health Interview Survey. http://www.cdc.gov/nchs/nhis/SHS/tables.htm

- Cain LE, Robins JM, Lanoy E, Logan R, Costagliola D, & Hernán MA (2010). When to start treatment? A systematic approach to the comparison of dynamic regimes using observational data. The International Journal of Biostatistics, 6(2), 1557–4679. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chakraborty B, Laber EB, & Zhao Y. (2013). Inference for optimal dynamic treatment regimes using an adaptive 𝑚-out-of-𝑛 bootstrap scheme. Biometrics, 69(3), 714–723. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chakraborty B, & Moodie EEM (2013). Statistical methods for dynamic treatment regimes. Springer. [Google Scholar]

- Chen S, & Zhang B. (2021). Estimating and improving dynamic treatment regimens with a time-varying instrumental variable. arXiv: 2104.07822.

- Cornfield J, Haenszel W, Hammond EC, Lilienfeld AM, Shimkin MB, & Wynder EL (1959). Smoking and lung cancer: Recent evidence and a discussion of some questions. Journal of the National Cancer Institute, 22(1), 173–203. [PubMed] [Google Scholar]

- Cui Y, & Tchetgen Tchetgen E. (2021). A semiparametric instrumental variable approach to optimal treatment regimes under endogeneity. Journal of the American Statistical Association, 116(533), 162–173. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dumbgen L. (1993). On nondifferentiable functions and the bootstrap. Probability Theory and Related Fields, 95, 125–140. [Google Scholar]

- Ertefaie A, & Strawderman RL (2018). Constructing dynamic treatment regimes in infinite-horizon settings. Biometrika, 105(4), 963–977. [Google Scholar]

- Fava M. (2000). Weight gain and antidepressants. Journal of Clinical Psychiatry, 61(11), 37–41. [PubMed] [Google Scholar]

- Greenland S. (2005). Multiple-bias modelling for analysis of observational data. Journal of the Royal Statistical Society: Series A, 168(2), 267–306. [Google Scholar]

- Groenwold RHH, Nelson DB, Nichol KL, Hoes AW, & Hak E. (2010). Sensitivity analyses to estimate the potential impact of unmeasured confounding in causal research. International Journal of Epidemiology, 39, 107–117. [DOI] [PubMed] [Google Scholar]

- Hernan MA, & Robins JM (2006). Instruments for causal inference: An epidemiologist’s dream. Epidemiology, 17(4), 360–372. [DOI] [PubMed] [Google Scholar]

- Hernan MA, & Robins JM (2020). Causal inference: What if. Chapman & Hall/CRC. [Google Scholar]

- Kallus N, & Zhou A. (2019). Confounding-robust policy improvement. arXiv: 1805.08593.

- Kroenke K, Spitzer RL, & Williams JBW (2001). The PHQ-9: Validity of a brief depression severity measure. Journal of General Internal Medicine, 16(9), 606–613. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Laber EB, Lizotte DJ, Qian M, Pelham WE, & Murphy SA (2014). Dynamic treatment regimes: Technical challenges and applications. Electronic Journal of Statistics, 8(1), 1225–1272. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Laber EB, & Zhao YQ (2015). Tree-based methods for estimating individualized treatment regimes. Biometrika, 102(3), 501–514. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lash TL, Fox MP, & Fink AK (2009). Applying quantitative bias analysis to epidemiologic data. Springer. [Google Scholar]

- Lavori P, & Dawson R. (2000). A design for testing clinical strategies: Biased adaptive within-subject randomization. Journal of the Royal Statistical Society: Series A (Statistics in Society), 163(1), 29–38. [Google Scholar]

- Lavori PW, & Dawson R. (2004). Dynamic treatment regimes: Practical design considerations. Clinical Trials, 1(1), 9–20. [DOI] [PubMed] [Google Scholar]

- Liao L, Fu Z, Yang Z, Kolar M, & Wang Z. (2021). Instrumental variable value iteration for causal offline reinforcement learning. arXiv: 2104.09907.

- Lin DY, Psaty BM, & Kronmal RA (1998). Assessing the sensitivity of regression results to unmeasured confounders in observational studies. Biometrics, 54(3), 948–963. [PubMed] [Google Scholar]

- Luckett DJ, Laber EB, Kahkoska AR, Maahs DM, Mayer-Davis E, & Kosorok MR (2018). Estimating dynamic treatment regimes in mobile health using V-learning. Journal of the American Statistical Association, 115(530), 692–706. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Luppino FS, de Wit LM, Bouvy PF, Stijnen T, Cuijpers P, Penninx BWJH, & Zitman FG (2010). Overweight, obesity, and depression: A systematic review and meta-analysis of longitudinal studies. Archives of General Psychiatry, 67(3), 220–229. [DOI] [PubMed] [Google Scholar]

- McCandless LC, Gustafon P, & Levy A. (2007). Bayesian sensitivity analysis for unmeasured confounding in observational studies. Statistics in Medicine, 26(11), 2331–2347. [DOI] [PubMed] [Google Scholar]

- McCandless LC, & Gustafson P. (2017). A comparison of Bayesian and Monte Carlo sensitivity analysis for unmeasured confounding. Statistics in Medicine, 36(18), 2887–2901. [DOI] [PubMed] [Google Scholar]

- Moodie EEM, & Richardson TS (2009). Estimating optimal dynamic regimes: Correcting bias under the null. The Scandinavian Journal of Statistics, 37, 126–146. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Murphy SA. (2003). Optimal dynamic treatment regimes. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 65(2), 331–355. [Google Scholar]

- Murphy SA (2005). An experimental design for the development of adaptive treatment strategies. Statistics in Medicine, 24(10), 1455–1481. [DOI] [PubMed] [Google Scholar]

- Murray T, Yuan Y, & Thall P. (2018). A Bayesian machine learning approach for optimizing dynamic treatment regimes. Journal of the American Statistical Association, 113(523), 1255–1267. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Orellana L, Rotnitzky A, & Robins J. (2010). Dynamic regime marginal structural mean models for estimation of optimal dynamic treatment regimes, part I: Main content. International Journal of Biostatistics, 6(2), 1–49. [PubMed] [Google Scholar]

- Phillips CV (2003). Quantifying and reporting uncertainty from systemic errors. Epidemiology, 14(4), 459–466. [DOI] [PubMed] [Google Scholar]

- Qiu H, Carone M, Sadikova E, Petukhova M, Kessler RC, & Luedtke A. (2021). Optimal individualized decision rule using instrumental variable methods. Journal of the American Statistical Association, 116(533), 174–191. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Robins J. (2004). Optimal structural nested models for optimal sequential decisions. In Lin DY & Heagerty P. (Eds.), Proceedings of the Second Seattle Symposium in Biostatistics: Analysis of Correlated Data (pp. 189–326). Springer. [Google Scholar]

- Robins JM, Rotnitzky A, & Scharfstein DO (1999). Sensitivity analysis for selection bias and unmeasured confounding in missing data and causal inference models. In Halloran ME & Berry D. (Eds.), Statistical models in epidemiology: The environment and clinical trials (pp. 1–92). Springer-Verlag.

- Rose EJ, Moodie EEM, & Shortreed S. (2022). Using pilot data to size observational studies for the estimation of dynamic treatment regimes. arXiv: 2202.09451.

- Rosenbaum PR (1987). Sensitivity analysis for certain permutation inferences in matched observational studies. Biometrika, 74(1), 13–26.

- Rosenbaum PR, & Rubin DB (1983). Assessing sensitivity to an unobserved binary covariate in an observational study with binary outcome. Journal of the Royal Statistical Society: Series B, 45(2), 212–218.

- Rubin D. (1978). Bayesian inference for causal effects: The role of randomization. The Annals of Statistics, 6(1), 34–58.

- Santasieri D, & Schwartz TL (2015). Antidepressant efficacy and side-effect burden: A quick guide for clinicians. Drugs in Context, 4.

- Shao J. (1994). Bootstrap sample size in nonregular cases. Proceedings of the American Mathematical Society, 122(4), 1251–1262.

- Steenland K, & Greenland S. (2004). Monte Carlo sensitivity analysis and Bayesian analysis of smoking as an unmeasured confounder in a study of silica and lung cancer. American Journal of Epidemiology, 160(4), 384–392.

- Swanepoel JWH (1986). A note on proving that the (modified) bootstrap works. Communications in Statistics-Theory and Methods, 15, 3193–3203.

- Thall PF, Millikan RE, & Sung H-G (2000). Evaluating multiple treatment courses in clinical trials. Statistics in Medicine, 19(8), 1011–1028.

- Tsiatis AA, Davidian M, Holloway ST, & Laber EB (2019). Dynamic treatment regimes: Statistical methods for precision medicine. CRC Press.

- van der Laan MJ, Petersen ML, & Joffe MM (2005). History-adjusted marginal structural models and statically-optimal dynamic treatment regimens. The International Journal of Biostatistics, 1(1).

- Wallace MP, & Moodie EEM (2015). Doubly-robust dynamic treatment regimen estimation via weighted least squares. Biometrics, 71(3), 636–644.

- Wu F, Laber EB, Lipkovich IA, & Severus E. (2015). Who will benefit from antidepressants in the acute treatment of bipolar depression? A reanalysis of the STEP-BD study by Sachs et al. 2007, using Q-learning. International Journal of Bipolar Disorders, 3(1), 7.

- Zhang B, Small DS, & Zhao Q. (2021). Selecting and ranking individualized treatment rules with unmeasured confounding. Journal of the American Statistical Association, 116(533), 295–308.

- Zhang Y, Laber E, Tsiatis A, & Davidian M. (2018). Interpretable dynamic treatment regimes. Journal of the American Statistical Association, 113(524), 1541–1549.

Data Availability Statement

Research data are not shared.