Abstract

The human brain seamlessly integrates internally generated thoughts with incoming sensory information, yet the networks supporting internal (default network, DN) and external (dorsal attention network, dATN) processing are traditionally viewed as antagonistic. This raises a crucial question: how does the brain integrate information between these seemingly opposed systems? Here, using precision neuroimaging methods, we show that these internal/external networks are not as dissociated as traditionally thought. Using densely-sampled 7T fMRI data, we defined individualized whole-brain networks from participants at rest and calculated the retinotopic preferences of individual voxels within these networks during a visual mapping task. We show that while the overall network activity between the DN and dATN is independent at rest, considering a latent retinotopic code reveals a complex, voxel-scale interaction stratified by visual responsiveness. Specifically, the interaction between the DN and dATN at rest is structured at the voxel-level by each voxel’s retinotopic preferences, such that the spontaneous activity of voxels preferring similar visual field locations is more anti-correlated than that of voxels preferring different visual field locations. Further, this retinotopic scaffold integrates with the domain-specific preferences of subregions within these networks, enabling efficient, parallel processing of retinotopic and domain-specific information. Thus, DN and dATN are not independent at rest: voxel-scale interaction between these networks preserves and encodes information in both positive and negative BOLD responses, even in the absence of visual input or task demands. These findings suggest that retinotopic coding may serve as a fundamental organizing principle for brain-wide communication, providing a new framework for understanding how the brain balances and integrates internal cognition with external perception.

A fundamental goal of neuroscience is to understand how activity distributed across the brain’s functional networks gives rise to cognition 1–9. Central to this aim is understanding the principles that govern interactions between different brain networks, particularly those involved in externally-oriented attention (e.g., processing sensory input) and internally-oriented attention (e.g., introspection and memory) 10–14, which remain poorly understood. This knowledge gap confounds our understanding of human cognition: the interaction between internally- and externally-oriented neural systems is foundational to how we perceive, remember, and navigate our world, yet the ‘common language’ facilitating their communication remains controversial.

One reason this knowledge gap exists is that the neural systems that subserve internally- and externally-oriented attention, such as the default network (DN) and dorsal attention network (dATN) respectively, are typically considered competitive10–12,14,15. Seminal neuroimaging studies investigating externally-oriented attention (visual processing, working memory, etc.) showed that visual tasks reliably activate brain areas in lateral occipital temporal cortex, dorsal parietal cortex, and prefrontal cortex, now collectively referred to as the dATN10,14,16,17. In contrast, visual tasks reliably deactivate regions in the internally-oriented DN, including lateral and medial parietal cortex, anterior temporal lobe, and medial prefrontal cortex13,18. This pattern reverses during introspective tasks, e.g., scene construction or theory of mind tasks: the DN systematically activates and the dATN deactivates6,19–23. The network-level independence between the dATN and DN has also been observed in spontaneous activity at rest. Prior work has found that these networks’ resting-state activity is independent (i.e., not correlated) or anti-correlated11,12,24,25 (depending on the application of global signal regression11,26). Together, DN/dATN independence is thought to facilitate our ability to attend to external stimuli without competition from internal mnemonic representations, and vice versa 10,12,27,28. However, if internally- and externally-oriented networks are functionally dissociated, it is not clear how the brain accomplishes tasks that require integrating perceptual and mnemonic information (e.g., anticipatory saccades, memory-based attention tasks, and mental imagery).

Two recent findings have shed light on this question. First, while the internally-oriented DN traditionally is thought to use an abstract or semantic neural code 2,28–30, recent work suggests that a neural code that is typically associated with externally-oriented visual information processing, retinotopy, also manifests in the DN 31–34. The retinotopic code in the DN is unlike that in classically visually-responsive areas, including portions of the dATN. In the DN, retinotopic coding manifests as an “inverted retinotopic code”: while stimulation of the retina causes canonical visual areas to increase neural activity in a position-dependent manner, the DN exhibits position-specific decreases in activity 31,33,34. Thus, the DN’s deactivation reflects specific properties of the attended external stimulus and is informative during visual attention tasks.

Second, recently the inverted retinotopic code has been proposed to play a functional role in structuring mnemonic-perceptual interactions, specifically across scene-selective visual and memory areas near the DN34. During familiar scene processing, activity in brain areas specialized for scene perception and memory differentially increases during perception and recall tasks, respectively35,36. However, at the voxel level, the visual and memory responsive areas exhibit an interlocked, retinotopically specific opponent dynamic 34. In other words, stimuli in specific visual field locations activate perception voxels and suppress memory area voxels monitoring that location. This pattern reverses during memory tasks. This challenges the traditional view of internally- and externally-oriented brain networks as functionally-opposed via global opponent dynamics, suggesting instead that they are part of a common information processing stream. It also emphasizes the importance of voxel-wise activity patterns in uncovering neural codes that underpin global brain dynamics.

Based on these findings, we reasoned that retinotopic coding could be a widespread mechanism that scaffolds global interactions across large-scale internally- and externally-oriented brain networks37. We leveraged a high-resolution 7T fMRI dataset38 and voxel-wise modeling to test whether retinotopic coding structures global interactions between the DN and dATN. We tested three hypotheses. First, the voxel-wise retinotopic push-pull dynamic observed in the highly-specialized subareas of the DN/dATN during visual tasks34 will generalize to the overall networks’ spontaneous neural activity, even in the absence of experimenter-imposed task demands. Second, among subareas of the DN/dATN, retinotopic coding will be integrated (multiplexed) with an area’s functional domain to allow fine-grained control of information exchange across cortex. Finally, retinotopic coding will be intrinsic throughout the brain, even in areas of the brain associated with internal attention, so the retinotopic code will be evident in both top-down and bottom-up interactions between perceptual and mnemonic areas. Together, these results would suggest retinotopy is a unifying framework organizing brain-wide information processing and internal versus external attention dynamics.

Retinotopic coding in internally and externally oriented networks

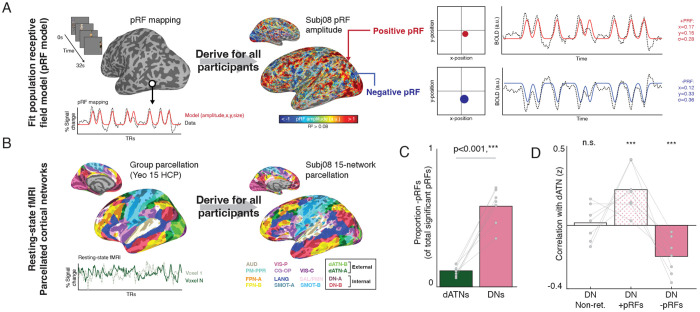

To investigate the role of retinotopic coding in structuring activity between internally- and externally-oriented brain networks, we used voxel-wise visual population receptive field (pRF) modeling and resting-state fMRI data from the Natural Scenes Dataset38 (Fig. 1A). We assessed the retinotopic responsiveness of all voxels in the brain by modeling BOLD activity in response to a sweeping bar stimulus 39. We considered any voxel with >8% variance explained by our pRF model to be exhibiting a retinotopic code, and hereafter we refer to these voxels as “pRFs”. PRF amplitude maps for all participants are shown in Fig. S1. Because we did not observe any hemispheric differences in pRF size, contra-laterality, distribution of pRF amplitude, or effects in subsequent analyses (ps > 0.55), data are presented collapsed across hemispheres.
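The thresholding and sign-labelling step described above can be illustrated with a minimal sketch. It is not the analysis code used for this study: the function names and the idea of passing a per-voxel signed amplitude array are assumptions made for illustration, and only the 8% variance-explained cutoff comes from the text.

```python
import numpy as np

R2_THRESHOLD = 0.08  # variance-explained cutoff stated in the text


def classify_prfs(r2, amplitude, threshold=R2_THRESHOLD):
    """Label voxels as +1 (+pRF), -1 (-pRF), or 0 (sub-threshold).

    r2        : (n_voxels,) variance explained by the pRF model, in [0, 1]
    amplitude : (n_voxels,) signed response amplitude (hypothetical input)
    """
    r2 = np.asarray(r2, dtype=float)
    amplitude = np.asarray(amplitude, dtype=float)
    # Sub-threshold voxels get 0; suprathreshold voxels keep the amplitude sign.
    return np.where(r2 > threshold, np.sign(amplitude), 0).astype(int)


def proportion_negative(labels):
    """Proportion of -pRFs among all suprathreshold voxels in a network."""
    n_supra = np.count_nonzero(labels)
    if n_supra == 0:
        return float("nan")
    return np.count_nonzero(labels == -1) / n_supra


labels = classify_prfs(r2=[0.50, 0.05, 0.20, 0.09],
                       amplitude=[1.2, -3.0, -0.4, -1.0])
print(labels, proportion_negative(labels))
```

Applied separately to the voxels of each network, `proportion_negative` corresponds to the quantity plotted per network in Fig. 1C.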

Fig. 1.

Inversion of retinotopic coding between externally- and internally-oriented networks in the human brain. A. Population receptive field (pRF) modeling with fMRI. A visual pRF model was fit for all participants to determine visual field preferences for each voxel. Voxels with positive BOLD responses to the visual stimulus are referred to as positive pRFs (+pRFs), and those with negative BOLD responses are referred to as negative pRFs (−pRFs). B. Individualized resting-state network parcellation. Resting-state fMRI was collected in all participants (N=7; 34-102 minutes per participant) and used to derive individualized cortical network parcellations. Parcellations were generated using the multi-session hierarchical Bayesian modelling approach8,40 with the Yeo 15 HCP atlas44 as a prior. C. Differential concentrations of +/−pRFs in task-negative and task-positive (internally/externally oriented) brain networks. Bars show the proportion of −pRFs (of total suprathreshold voxels) within each individual’s cortical networks. The dATN (combined dATN-A/B) had the lowest proportion of −pRFs, while the DN (combined DN-A/B) had the highest. The concentration of −pRFs in all networks is shown in Fig. S3. D. Interaction between the DN and dATN differs by visual field preference of DN voxels. DN voxels with +pRFs had a positive correlation with the dATN (mean correlation = 0.22±0.144, t(6) = 3.99, p = 0.0072), while non-retinotopic DN voxels (i.e., pRF model R2 < 0.08) were not significantly correlated with the dATN (mean correlation = 0.02±0.115, t(6) = 0.37, p = 0.72). On the other hand, DN voxels with −pRFs were anti-correlated with the dATN (mean correlation = −0.20±0.12, t(6) = 4.22, p = 0.0055). To conduct these analyses, we accounted for variance associated with other cortical networks using partial correlation43.

Then, we used resting-state fMRI data to identify each participant’s idiosyncratic cortical networks 8,40 and assessed their visual responsiveness. Resting-state data were preprocessed using ICA with manual noise component selection41,42 and no global signal regression was performed26. We established 15 cortical networks using multi-session hierarchical Bayesian modeling8,40 and combined the Default Networks A-B and the Dorsal Attention Networks A-B to constitute our internal and externally oriented networks, respectively (hereafter, DN and dATN) (Fig. 1B; Fig. S2).

Consistent with the opponent interaction between the DN and dATN during visual tasks11,12,18, we saw a strong distinction in the visual responses of these internally- and externally-oriented brain networks, as previously observed by others 31 (Fig. 1C; Fig. S3). Across participants, a larger proportion of dATN voxels than DN voxels exceeded our retinotopic variance explained threshold (55.3% of dATN voxels, 26.95% of DN voxels), consistent with their role as externally- and internally-oriented networks, respectively. However, the nature of retinotopy in these two networks differed significantly in their response amplitude (i.e., whether a stimulus in their preferred visual field location evoked a positive or a negative BOLD response) (Fig. 1C). On average more than half of all suprathreshold pRFs in the DNs were inverted (i.e., had a negative BOLD response to visual stimulation in their population receptive field, −pRFs). In contrast, less than 20% of the suprathreshold voxels in the dATN were −pRFs (i.e., the majority of the voxels had positive BOLD responses to visual stimulation in their receptive field, +pRFs). This distinction is particularly remarkable given the proximity of the dATN and DN clusters in posterior cerebral cortex (Fig. 1B). The response amplitude of these pRF populations was reliable (Fig. S4). Together, these results establish the necessary foundation to assess whether the voxel-wise retinotopic code structures global interactions between the internally- and externally-oriented networks.

Do interactions between the DN and dATN depend on visual responsiveness of individual voxels? Across resting-state runs, we saw that the average activity of the DN and dATN was modestly positively correlated after accounting for variance across all cortical networks using partial correlation43 (mean correlation = 0.117±0.12 s.d., t(6) = 2.60, p = 0.041). Crucially, however, we found a strong dissociation in the nature of the interaction between the DN and dATN when we split the DN voxels by their visual responsiveness (Fig. 1D). DN voxels that responded positively to visual stimulation (+DN pRFs) had a positive correlation with the dATN (mean correlation = 0.22±0.144, t(6) = 3.99, p = 0.0072), and the activity of DN voxels that did not systematically respond to visual stimulation (i.e., pRF model R2 < 0.08) was not significantly correlated with the dATN (mean correlation = 0.02±0.115, t(6) = 0.37, p = 0.72). On the other hand, the activity of DN voxels with systematic negative responses to visual stimulation (−DN pRFs) was anti-correlated with the dATN (mean correlation = −0.20±0.12, t(6) = 4.22, p = 0.0055). Thus, the relationship between the DN and dATN is stratified by the visual responsiveness of the voxels within the DN, and opponency at rest between the DN and dATN can be attributed to voxels with negative responses to visual stimulation in the DN.
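For readers unfamiliar with partial correlation, the idea behind the network-level comparison can be sketched as follows. This is a generic residual-based formulation, assumed here for illustration; it is not necessarily the exact implementation of the cited method43, and the variable names are hypothetical.

```python
import numpy as np


def partial_corr(x, y, covariates):
    """Correlation between x and y after regressing out the covariates.

    x, y       : (T,) time series (e.g., mean DN and mean dATN activity)
    covariates : (T,) or (T, k) time series of the other networks
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Design matrix: intercept plus one column per covariate.
    Z = np.column_stack([np.ones(len(x)), np.asarray(covariates, dtype=float)])
    # Residuals of x and y after least-squares fits to the covariates.
    res_x = x - Z @ np.linalg.lstsq(Z, x, rcond=None)[0]
    res_y = y - Z @ np.linalg.lstsq(Z, y, rcond=None)[0]
    return float(np.corrcoef(res_x, res_y)[0, 1])


# Toy example: a shared signal makes x and y look positively correlated,
# while the unique components are perfectly opposed.
rng = np.random.default_rng(0)
shared = 3.0 * rng.standard_normal(200)
unique = rng.standard_normal(200)
x, y = shared + unique, shared - unique
print(np.corrcoef(x, y)[0, 1], partial_corr(x, y, shared))
```

In the toy example, removing the shared component reverses the sign of the relationship, which is the kind of structure that a raw network-average correlation can hide.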

Retinotopic coding scaffolds DN and dATN interactions

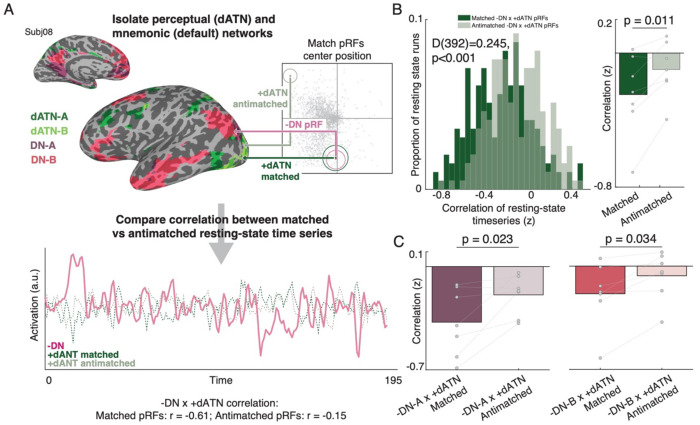

To test whether the retinotopic code structured the interaction between the internally- and externally-oriented brain networks at a voxel level, we examined whether the visual field preferences of individual voxels within these networks predicted their correlation at rest. Specifically, because of the hypothesized competition between the DN and dATN, we asked whether the opponent dynamic between −pRFs in the DN and +pRFs in the dATN is stronger for voxels with similar visual field preferences.

For each pRF in the DN and dATN, we calculated the pairwise distance between the RF center position (x, y parameter estimates) of −pRFs in the DN and +pRFs in dATN (Fig. 2A). For each DN −pRF, we found the 10 closest (Matched) and furthest (Antimatched) dATN +pRF centers. Interestingly, matched pRFs were distributed across the dATN, spanning posterior areas in retinotopic cortex through prefrontal cortical regions not typically associated with visual analysis (Fig. S5). We then averaged these matched and antimatched pRFs’ resting-state time series together, and we compared the correlation of these matched/antimatched time series with the time series from the DN −pRFs (see methods). This resulted in two correlation values per resting-state run, which represented the correlation of the average matched and antimatched pRFs’ time series between these areas. Importantly, the primary statistics relevant to the conclusions in the manuscript replicated when considering randomly sampled pRF pairs, and therefore our conclusions do not depend on how pRFs are selected to be compared to the best matched pRFs (see Supplemental methods and results).
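The matching procedure described above can be sketched roughly as follows. This is an illustrative sketch under stated assumptions rather than the code used in the study: Euclidean distance between (x, y) centers is assumed, selected dATN voxels are pooled with repeats before averaging (a voxel chosen by several DN pRFs counts several times), and the partial-correlation step is omitted. All function names are hypothetical.

```python
import numpy as np


def match_prfs(dn_centers, datn_centers, k=10):
    """For each -DN pRF, return indices of the k closest and k furthest +dATN pRFs.

    dn_centers   : (n_dn, 2) pRF centers (x, y) of -pRFs in the DN
    datn_centers : (n_datn, 2) pRF centers (x, y) of +pRFs in the dATN
    """
    dn = np.asarray(dn_centers, dtype=float)
    datn = np.asarray(datn_centers, dtype=float)
    # Pairwise Euclidean distances, shape (n_dn, n_datn).
    dist = np.linalg.norm(dn[:, None, :] - datn[None, :, :], axis=-1)
    order = np.argsort(dist, axis=1)
    return order[:, :k], order[:, -k:]  # matched, antimatched


def pooled_correlations(dn_ts, datn_ts, matched, antimatched):
    """Correlate the mean -DN time series with the mean matched/antimatched series.

    dn_ts : (n_dn, T) and datn_ts : (n_datn, T) resting-state time series.
    """
    dn_ts = np.asarray(dn_ts, dtype=float)
    datn_ts = np.asarray(datn_ts, dtype=float)
    dn_mean = dn_ts.mean(axis=0)
    # datn_ts[idx] has shape (n_dn, k, T); average over pRFs and selections.
    matched_mean = datn_ts[matched].mean(axis=(0, 1))
    anti_mean = datn_ts[antimatched].mean(axis=(0, 1))
    r_matched = np.corrcoef(dn_mean, matched_mean)[0, 1]
    r_anti = np.corrcoef(dn_mean, anti_mean)[0, 1]
    return float(r_matched), float(r_anti)
```

Running `pooled_correlations` once per resting-state run yields the two correlation values per run referred to in the text. If a network contains fewer than 2k +pRFs, the matched and antimatched sets overlap, so k would need to be reduced in that case.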

Fig. 2.

Retinotopic coding organizes spontaneous interaction between internally and externally oriented brain networks. A. Determining spatially-matched pRFs in dATNs and DNs. We assessed the influence of retinotopic coding on the interaction between internally- and externally-oriented brain areas’ spontaneous activity during resting-state fMRI, by comparing the correlation in activation between pRFs in these networks that represent similar (vs. different) regions of visual space. For each −DN pRF, we established the top 10 closest +dATN pRF voxels’ centers (“matched”) and the 10 furthest pRF centers (“antimatched”). For each resting-state fMRI scan, we extracted the average time series from −DN pRFs and correlated that time series with the average time series from the +dATN matched and antimatched pRFs. We repeated this procedure for all resting-state runs in all participants. Plot shows one example resting-state time series from a participant’s −DN, +dATN matched and +dATN antimatched pRFs and their associated correlation values. B. Spatially matched −DN/+dATN pRFs have a greater opponent interaction than antimatched pRFs, showing that opponent dynamics depend on retinotopic preferences. Histogram shows the distribution in correlation values between matched (dark green) and antimatched (light green) pRF pairs for each resting-state run in all participants, which were significantly different (matched versus antimatched: D(392)=0.245, p<0.001). Bar plot shows the average correlation for each participant (matched versus antimatched: t(6)=3.49, p=0.011). C. DN subnetworks A and B both evidenced a retinotopic opponent interaction (DN-A: t(6)=3.02, p=0.023, DN-B: t(6)=2.72, p=0.034; DN-A vs. DN-B: t(6)=1.64, p<0.15), although the opponency was stronger overall in DN-A compared to DN-B (difference in average correlation between −DN/+dATN pRFs: t(6)=5.41, p=0.002).

If the −DN and +dATN opponent interaction is scaffolded by a retinotopic code, the correlation between spatially matched pRFs should be significantly more negative than the antimatched pRFs. For this analysis we partialled out variance associated with +DN pRFs to isolate the specific relationship between −DN and +dATN pRFs (and vice versa) 34,43. Our results were consistent with this hypothesis. We observed a negative correlation between both matched and antimatched −DN and +dATN pRFs in the overwhelming majority of resting-state runs (Fig. 2B, left) 29,37 (see methods). Critically, the distribution of correlation values for matched +dATN and −DN pRFs was significantly shifted compared to antimatched pRFs (D(392)=0.22, p<0.001), confirming an overall stronger negative correlation, and thus a stronger opponent interaction between matched compared to antimatched pRFs. The stronger opponent interaction for matched versus antimatched pRFs was clear when resting-state runs were averaged within each participant (7/7 participants; t(6)=3.63, p=0.010, Fig. 2B, right). Interestingly, retinotopy played a similarly strong role in structuring the opponent dynamic between the dATN and both subnetworks of the DN (DN-A: t(6)=3.02, p=0.023, DN-B: t(6)=2.72, p=0.034; DN-A vs. DN-B: t(6)=1.511, p=0.182), although the opponency was stronger overall in DN-A compared to DN-B (t(6)=5.532, p=0.002). Importantly, tSNR of the matched and antimatched pRFs’ resting-state time series did not differ (t(6)=1.007, p=0.353), suggesting that differences in signal quality did not underlie the difference in correlation.
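The two levels of comparison reported above (a run-level comparison of distributions and a participant-level comparison of means) can be illustrated with a short sketch. It assumes a two-sample Kolmogorov-Smirnov statistic for the run-level test and a paired t statistic across participants; p-values are omitted, and the function names are hypothetical rather than taken from the analysis code.

```python
import numpy as np


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov D: largest gap between empirical CDFs."""
    a = np.sort(np.asarray(a, dtype=float))
    b = np.sort(np.asarray(b, dtype=float))
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    return float(np.max(np.abs(cdf_a - cdf_b)))


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for per-participant means."""
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    n = d.size
    t = d.mean() / (d.std(ddof=1) / np.sqrt(n))
    return float(t), n - 1


# Toy usage: per-run correlations for matched vs. antimatched pRFs.
print(ks_statistic([-0.4, -0.3, -0.2], [-0.1, 0.0, 0.1]))
print(paired_t([1.0, 2.0, 4.0], [0.0, 0.0, 1.0]))
```

With seven participants, the paired comparison has six degrees of freedom, consistent with the t(6) values reported in the text.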

Retinotopic coding scaffolded the interaction between +DN and +dATN pRFs, as well. Consistent with their overall positive interaction, both matched and antimatched +DN pRFs had a positive correlation with dATN pRFs after accounting for variance associated with −DN pRFs, and retinotopic coding enhanced this positive correlation, as matched pRFs had a significantly greater positive correlation than antimatched pRFs (D(392) = 0.245, p<0.001; t(6)=−3.99, p=0.007; Fig. S6). Thus, retinotopic coding also structures interactions between positively responsive voxels in the DN and dATN.

Together, these results suggest that the retinotopic code plays a role in structuring the overall interaction between the brain’s internally- and externally-oriented cortical networks, and the nature of the DN/dATN interaction depends on the visual response of DN pRFs. DN pRFs with positive responses to visual stimulation exhibit a retinotopically-specific positive correlation to dATN pRFs, while DN pRFs with negative responses to visual stimulation exhibit a retinotopically-specific opponent interaction with dATN pRFs.

Retinotopic coding organizes activity within functional domains

Our results so far clearly establish that retinotopic coding structures spontaneous interactions between internally- and externally-oriented neural systems in the absence of task demands. However, high-level cortical areas generally associate into networks based on their functional domain. For example, within the visual system, brain areas with differing retinotopic preferences (e.g., the scene-selective areas on the lateral and ventral surfaces of the brain) 45,46 nevertheless form functional networks based on their apparent preference for specific visual categories (e.g., faces, objects, or scenes)47,48. This raises a question: do retinotopic and domain-specific organizational principles interact to facilitate or constrain information flow across internally- and externally-oriented networks? Addressing this question would shed light on mechanisms that enable the brain to integrate information while maintaining functional specialization.

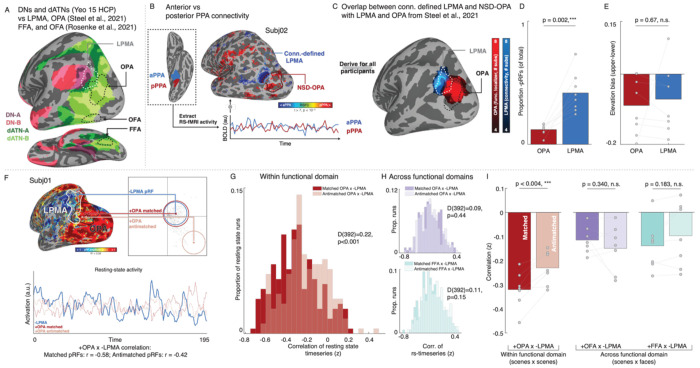

To address this question, we focused on the functional interplay between a set of areas in posterior cerebral cortex that are established models for mnemonic and visual processing in the domains of scene and face perception. Specifically, we considered the mnemonic lateral place memory area (LPMA35,36), an area on the brain’s lateral surface that is implicated in processing mnemonic information relevant to visual scenes at the border between DN-A and the dATNs (Fig. 3A). We examined how LPMA activation co-fluctuates with the adjacent, scene-perception area “occipital place area” on the brain’s lateral surface (OPA49,50) compared to two face-perception regions on the lateral and ventral surfaces, the occipital and fusiform face areas (OFA and FFA 51,52). At a group level, these perceptual regions are situated within the dATNs and are at the same level of the visual hierarchy (Fig. 3A), but they are differentially associated with the domains of scene (OPA) and face (OFA, FFA) processing, making them ideal model systems to examine the impact of domain-specificity and retinotopic coding in organizing neural activity.

Fig. 3.

Retinotopic coding structures the spontaneous interaction between functionally-coupled mnemonic and perceptual areas during resting-state fMRI. A. Isolating functionally coupled internally- and externally-oriented brain areas within the DNs and dATNs. We identified brain areas that were specialized in two domains: scene and face processing. Specifically, we focused on the lateral place memory area (LPMA; white; from 35), a memory area in the domain of scene perception at the posterior edge of the DN-A (purple; from 44). We examined LPMA’s relationship to three different category-selective visual areas in the dATN (green; from 44): 1) the occipital place area (OPA; from 35), an area within the domain of scene perception, along with 2) the occipital face area (OFA) and 3) the fusiform face area (FFA), two areas involved in the domain of face perception (white; from 55). B-C. We localized LPMA in all participants by contrasting the correlation in resting-state activity between anterior and posterior parahippocampal place area (PPA) (B). This yielded a region in lateral occipital-parietal cortex that overlapped with the LPMA defined in an independent group of participants (C). D-E. Consistent with prior work, the connectivity-defined LPMA had a greater concentration of −pRFs compared to OPA (D), and exhibited a lower visual field bias similar to OPA (E), consistent with an opponent interaction between these areas during perception. F. We assessed the influence of retinotopic coding on the interaction between −pRFs in mnemonic and +pRFs in perceptual areas using the same pRF matching and correlation procedure described above. We compared pRFs within functional domain (scene memory x perception – LPMA to OPA) as well as across domains (scene memory x face perception – LPMA to the occipital face area (OFA) and fusiform face area (FFA)). G. Within functional domain opponent interaction reflects voxel-wise retinotopic coding. We observed a stronger negative correlation between matched compared to antimatched −LPMA/+OPA pRFs (−LPMA x +OPA matched versus antimatched pRFs: D(392)=0.22, p<0.001). H. Retinotopic coding did not impact the interaction between areas across functional domains. We found no significant difference between matched and antimatched pRFs between the scene memory area LPMA and the face perception areas FFA and OFA (−LPMA x +OFA: D(392)=0.09, p=0.44; −LPMA x +FFA: D(392)=0.11, p=0.15). Histograms depict the distribution of correlation values between matched (dark) and antimatched (light) pRFs for all runs in all participants. I. Retinotopic coding organizes interactions within a domain, but not across domains. When the correlation values were averaged within each participant, we observed a significant difference between matched versus antimatched pRFs within functional domain (−LPMA x +OPA: t(6)=4.45, p=0.004) but not across domains (−LPMA x +OFA: t(6)=1.04, p=0.34; −LPMA x +FFA: t(6)=1.48, p=0.188).

We first defined the mnemonic area LPMA in the NSD participants. To do this, we contrasted functional connectivity between the anterior and posterior halves of the parahippocampal place area using each participant’s resting-state data53,54 (Fig. 3B; see Methods), which revealed a cluster in lateral parietal cortex with a similar topographic profile as LPMA based on a group analysis from our prior work 35 (Fig. 3C). Replicating our previous findings34, this connectivity-defined LPMA had a higher concentration of robust −pRFs compared to OPA (t(7)=5.26, p=0.002) (Fig. 3D, Supplemental Fig. S4, S7) and exhibited a lower visual field bias similar to that of OPA (OPA: 8/8 participants, t(7)=3.13, p=0.016; LPMA: 7/8 participants, t(7)=2.11, p=0.07; OPA v LPMA: t(7)=0.441, p=0.67) (Fig. 3E).

We next considered whether the retinotopic opponent dynamic we have previously shown in the domain of scenes (i.e., between −LPMA and +OPA pRFs) during perceptual and mnemonic tasks34 was also present at rest (Fig. 3F). As we observed for the overall −DN and +dATN pRFs, we found that −LPMA and +OPA pRFs are interlocked in a retinotopically-grounded opponent interaction. Resting-state activity of −LPMA and +OPA pRFs was reliably negatively correlated after accounting for variance associated with +DN pRFs, and, critically, this negative correlation was stronger for matched compared with antimatched pRFs (K-S test: D(392)=0.22, p<0.001; t(6)=4.45, p=0.004) (Fig. 3G–I; Fig. S8). This pattern was consistent when matched/antimatched pRFs were equated for eccentricity and size (matched vs. antimatched: D(392)=0.148, p=0.028; t(6)=2.03, p=0.087; 5/7 participants) (Fig. S9). The resting-state tSNR of matched and antimatched +OPA pRFs (t(6)=1.922, p=0.103) and the variance explained by the pRF model (t(6)=0.47, p=0.65) did not differ, suggesting that idiosyncratic voxels did not drive these results. Additionally, the opponent interaction was specific to −pRFs in LPMA: the activity of the best matched +pRFs in LPMA and OPA was positively correlated, and significantly more so than antimatched +pRFs in LPMA and OPA (t(6)=3.22, p=0.018; Supplementary Fig. S10). Taken together, these results show that the retinotopic code scaffolds the spontaneous interaction between perceptual and mnemonic brain areas within a functional domain, conceptually replicating our previous findings from task-fMRI 34.

Having established that the retinotopic opponent dynamic between −/+pRFs is present among functionally-paired brain areas within the domain of scenes, we next tested whether this opponent dynamic was modified by functional domain (i.e., the scene memory area LPMA paired with the face perception areas FFA and OFA). Remarkably, when matching across functional domains we observed no significant difference between the distributions of correlation values for matched and antimatched pRFs (matched versus antimatched pRFs; −LPMA x +OFA: D(392)=0.09, p=0.44, t(6)=1.04, p=0.34; −LPMA x +FFA: D(392)=0.11, p=0.15, t(6)=1.48, p=0.18; Fig. 3G–I). Retinotopic opponency was greater for within- compared to across-domain matching (scene-memory x scene-perception versus average scene-memory x face-perception: t(6)=2.46, p=0.049). Importantly, matched pRFs within domain had a significantly stronger opponent interaction than across domains (within vs. across domains; −LPMA x +OPA vs. −LPMA x +FFA: t(6)=5.94, p=0.001; −LPMA x +OPA vs. −LPMA x +OFA: t(6)=7.03, p<0.0005). These results indicate that retinotopic coding does not structure the interaction between pairs of regions associated with distinct functional domains. Instead, retinotopic scaffolding appears to be selective, operating only within a given functional domain. This coding scheme could allow for efficient, parallel processing of domain-specific information (e.g., faces, scenes) that can be flexibly adapted depending on task demands.

Retinotopic coding is inherent to internally-oriented areas

Our findings support the crucial role of retinotopic coding in scaffolding spontaneous interactions between functionally-coupled mnemonic and perceptual brain areas. However, a fundamental question remains: is retinotopy intrinsic to internally-oriented cortical areas’ top-down influence over externally-oriented areas, or is the internally-oriented areas’ retinotopic code merely adopted in response to bottom-up perceptual input? Resolving this distinction is critical to understanding if the retinotopic scaffold is a general-purpose mechanism for cross-network interaction that transcends specific task demands and cognitive domains (i.e., memory vs. perception).

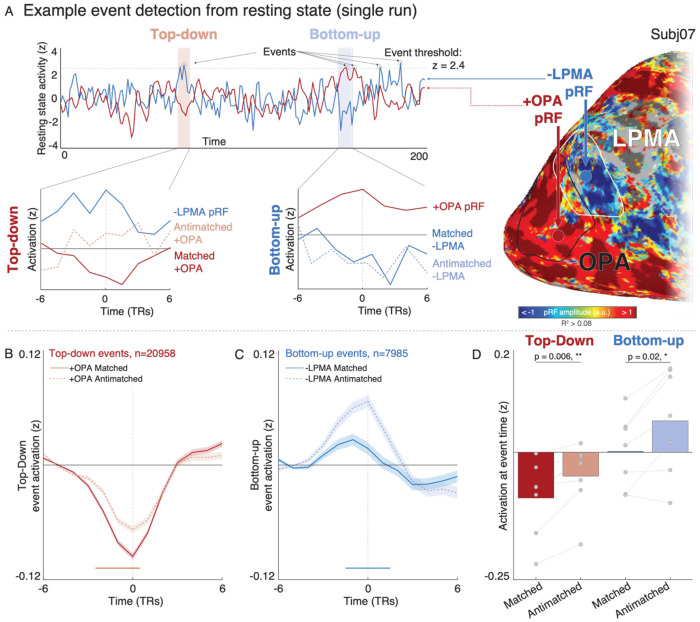

To address this question, we developed an analytical approach to disambiguate bottom-up perceptual signals from top-down mnemonic signals. This allowed us to determine whether top-down or bottom-up signals had the greater influence on the overall opponent relationship. We only considered the interaction between −LPMA and +OPA pRFs because we were specifically interested in whether the opponent dynamic would be present during periods of top-down drive. We identified periods where spontaneous activity of individual −LPMA or +OPA pRFs was unusually high (z-scored BOLD signal of a given voxel exceeded the 99th percentile of activity in a resting-state run). We considered these time points neural “events.” Events detected in −LPMA pRFs were considered top-down, and events in +OPA pRFs were considered bottom-up, and we analyzed the peri-event activation in the target area’s matched and antimatched pRFs (Fig. 4A). Because participants do not have any experimentally imposed task demands during resting-state fMRI, any structured interaction between areas will reflect these regions’ spontaneous dynamics.
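The event-detection logic described above can be sketched as follows. This is a minimal illustration, not the analysis code: the 99th-percentile criterion on a z-scored time series comes from the text, whereas the peri-event window lengths (`pre`, `post`), the handling of consecutive suprathreshold time points (each is counted separately here), and all function names are assumptions.

```python
import numpy as np


def detect_events(ts, percentile=99.0):
    """Indices where one voxel's z-scored signal exceeds its own percentile cutoff.

    Assumes the time series is not constant (non-zero standard deviation).
    """
    ts = np.asarray(ts, dtype=float)
    z = (ts - ts.mean()) / ts.std()
    return np.flatnonzero(z > np.percentile(z, percentile))


def peri_event_average(target_ts, event_idx, pre=2, post=4):
    """Average the target time series in a window around each event.

    Events too close to the start or end of the run are skipped.
    `pre` and `post` (in volumes) are hypothetical window lengths.
    """
    target_ts = np.asarray(target_ts, dtype=float)
    windows = [target_ts[t - pre:t + post + 1]
               for t in event_idx
               if t - pre >= 0 and t + post < target_ts.size]
    if not windows:
        return np.full(pre + post + 1, np.nan)
    return np.mean(windows, axis=0)


# Toy usage: two spikes in a source voxel, target is a simple ramp.
source = np.zeros(200)
source[[50, 120]] = 10.0
events = detect_events(source)
print(events, peri_event_average(np.arange(200.0), events, pre=1, post=1))
```

In the analysis described in the text, the source would be an individual −LPMA pRF (top-down) or +OPA pRF (bottom-up), and the target would be the averaged time series of that pRF's matched or antimatched counterparts in the other area.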

Our analysis detected 20,958 top-down events and 7,193 bottom-up events. Top-down and bottom-up events occurred at the same rate per pRF (t(6)=0.71, p=0.50). Individual participants averaged 116±70.4 top-down and 32±11.76 bottom-up events per run (mean±sd). All pRFs had between 0 and 4 events per run (Fig. S11A–C). The wide distribution of events in time suggested that individual pRF-level correlation could be isolated from global fluctuations in regional activity (Fig. S11A), making this approach suitable for evaluating distinct interactions at the individual voxel level.

Intriguingly, although both top-down and bottom-up events tended to reduce target area activity via a retinotopic code, only top-down events resulted in suppression of the target pRFs (relative to the pre-event baseline). During top-down events, +OPA pRFs showed significant deactivation relative to the pre-event baseline, and this deactivation was more pronounced in matched compared to antimatched pRFs (Fig. 4B). On the other hand, relative to their pre-event baseline, −LPMA pRFs had elevated activity during bottom-up events. However, despite the lack of opponency, the activation of matched pRFs was significantly reduced compared to antimatched pRFs, suggesting an inhibitory influence of bottom-up activity of +OPA on −LPMA pRFs. The influence of retinotopic coding was similar for both bottom-up and top-down events, indicating a symmetric inhibitory interaction: target area activity was significantly lower for matched compared to antimatched pRFs in both directions (top-down: t(6)=4.13, p=0.006; bottom-up: t(6)=3.17, p=0.02), with no difference between event types (t(6)=0.86, p=0.42). The balanced dynamic between −LPMA and +OPA pRFs mirrors other nervous system interactions 56–59, extending a framework that is well established in sensory and motor domains to higher-order cognitive functions.

We further tested whether top-down events detected across all DN −pRFs would show this opponent dynamic. Indeed, we observed a clear, retinotopic opponency during top-down events in −DN pRFs, relative to the activity of the dATN (Matched vs antimatched: t(6)=10.43, p<0.001, Fig. S12). This is clear evidence that both top-down and bottom-up events evoke retinotopically-specific inhibitory responses in the target region, supporting the hypothesis that retinotopic coding is intrinsic to mnemonic cortical areas, even in the absence of task demands or overt visual input.

Discussion

In summary, it is well-established that internally- and externally-oriented brain networks, including DNs and dATNs, support higher cognition in humans 1–8,11–13. Yet we lack an understanding of what coding principles, if any, underpin interactions across these distributed brain networks 30,60–64. Here, we show that a retinotopic code scaffolds the voxel-scale interaction between internally- and externally-oriented brain networks, even in the absence of overt visual demands. Moreover, by examining functionally-linked perceptual and mnemonic areas straddling the boundary between the DNs and dATNs, we found that this retinotopic information is multiplexed with domain-specific information, which may enable effective parallel processing of representations depending on retinotopic location, attentional-state, and task-demands. Finally, analysis of neural events in these functionally-linked regions showed that retinotopic opponency is present in top-down as well as bottom-up events, suggesting that the retinotopic code is intrinsic to both perceptual and mnemonic cortical areas. Collectively, our results provide a unified framework for understanding the flow of neural activity between brain areas, whereby macro-scale neural dynamics are organized at the meso-scale by functional domain and at the voxel-scale by a low-level retinotopic code. This multi-scale view of information processing has broad implications for understanding how the brain’s distributed networks give rise to attention, perception, and memory.

Because of the DN’s importance in many cognitive processes, including spatial and episodic memory, social processing, and executive functioning 13,65–70, resolving the coding principles inherent to the DN is central to understanding human cognition12,61–66. The traditional view of neural coding posits that sensory codes like retinotopy are shed in favor of abstract, amodal codes moving up the cortical hierarchy towards the DN 2,29,30. Our data contrast strikingly with this view. Instead of shedding the retinotopic code at the cortical apex, our prior work has shown that the low-level retinotopic code structures interactions between functionally paired perceptual and mnemonic areas in posterior cerebral cortex involved in visual scene analysis during visual and memory tasks34. Here we significantly extend that earlier finding by showing that the retinotopic code organizes spontaneous interactions between the brain’s primary internally- and externally-oriented large-scale networks, DN and dATN, even in the absence of task demands.

Our finding of retinotopic, voxel-scale opponency between DN and dATN pRFs is at odds with theories that posit that these networks’ activity is independent11,23,27,28 or even competitive10,12,71. Moreover, the traditional conception of the DN suggests that it does not participate in sensory processing10–13. Instead of independence between these networks, our results suggest that the activation of the visually-responsive voxels in the DN and dATN is complementary, with the precise relationship stratified by each voxel’s positive or negative responsiveness to visual stimuli. Without considering voxel-wise information, these voxel-level distinctions appear to “average out” into a global independence or opponency 11,12, which has led to the conclusion that these networks are engaged in fundamentally different cognitive processes. In contrast, our results emphasize that the DN and dATNs jointly process common, retinotopic information. Their mutual retinotopic code enables these networks to encode retinotopic information at a voxel level in both positive and negative BOLD responses.

What purpose might this retinotopically-specific opponent dynamic coding serve in the context of overall brain function? We propose two complementary functions. One possibility is that oppositional coding of −DN pRFs, in particular, may serve as a neural mechanism for maintaining separate channels for perceptual and mnemonic information 61. Representing mnemonic signals in topographically distinct regions may prevent this internally-generated information from being interpreted as external. Similarly, by encoding remembered information in an inverted retinotopic format, the brain could process memory and perception simultaneously while preventing cross-talk—much like how opposing radio frequencies can carry different signals without interference. A second possibility is that oppositional coding could be a mechanism for signaling mnemonic predictions via top-down inhibitory processes72. Specifically, when the DN generates predictions about incoming sensory information, the inverted retinotopic code could act as a suppressive signal that sharpens tuning in perceptual areas73,74. This suppression would effectively enhance the neural representation of predicted stimuli by reducing background noise. These mechanisms likely work in concert: the inverted code simultaneously maintains separate channels for memory/perception while enabling precise top-down predictive signaling. Future work combining cellular recording techniques with behavior will be crucial for testing these proposed mechanisms and understanding how they contribute to cognitive function.

Retinotopic coding between the DN and dATN was clear in both subnetworks of the DN, DN-A and DN-B3,8,20. This is surprising given that these subnetworks are thought to subserve very different cognitive functions. Between these subnetworks, the role of DN-A is more easily tied to visual processing. DN-A is thought to be involved in memory tasks with a visual component, like episodic projection and scene construction 8,20, and at the group-level DN-A overlaps with areas of the brain that we have previously shown implement a retinotopic code and respond to visual tasks34–36. On the other hand, DN-B is thought to be involved with theory of mind tasks that do not obviously rely on visual information, like interpretation of false belief and emotional/physical pain 8,20. In this case, retinotopic coding might still be a useful format for transforming these abstract signals into sensory-grounded representations that can inform behavioral decisions (e.g., eye movements) and sensory predictions (e.g., anticipating facial expressions or body postures). Despite these differences, both DN subnetworks implemented a retinotopic code with respect to their interaction with the dATN, which further supports retinotopic coding as a core principle underpinning the brain’s functional organization.

The importance of retinotopic coding is further underscored when assessing “top-down” events (i.e., events that originate in the DN) detected at rest, which had a retinotopically-specific suppressive influence on pRFs in their downstream target area. This result joins mounting evidence demonstrating retinotopic coding in the DN 31–34, as well as new evidence supporting the importance of visual coding in the DN during visual tasks 34,75. Our findings align with other recent studies suggesting an important role of the DN in shaping visual responses. For example, others have shown that ongoing prestimulus DN activity influences the sensitivity of near-threshold visual object recognition 75. Other work has shown that subareas of the DN represent the visuospatial context that is associated in memory with a perceived scene 34–36,76. Relatedly, DN activity reflects semantic-level attentional priorities during visual search 77. Taken together, these findings show that, rather than being disengaged during visual tasks, the DN actively shapes responses in perceptually-oriented cortex, and that retinotopic coding is part of the DN’s “native language”. Along with prior results31–34, our findings prompt a reevaluation of the role of the DN in perceptual processing, and of the extent to which “internally- and externally-oriented attention” adequately captures the roles of the DN and dATN in cognition.

These findings and others31–34 raise a fundamental question: is the DN a “visual” network? One possible interpretation of retinotopic coding in the DN is that the DN directly and obligatorily represents retinal position, like low-level visual areas, and that the DN is engaged in processing visual features in addition to more abstract features39,78. Under this hypothesis, the retinotopic code indicates that the information represented in the DN is, in part, sensory. Alternatively, the apparent visual coding may simply represent an underlying connectivity structure79 , which enables associative 80 and semantic 30,81 information that the DN directly represents to be effectively transferred to sensory areas of the brain. Under this alternative hypothesis, the information represented in the DN is not itself sensory in nature; the retinotopic code in the DN simply serves as a “highway” between the abstract representations in the DN and sensory representations in the dATN. Notably, in either case the retinotopic scaffolding represents a latent structure linking high-level and low-level areas through consistent, spatially-organized interactions79. This provides more evidence that low-level sensory codes are distributed across the brain, joining recent work showing latent somatotopic codes in regions of the brain that are not typically associated with somatosensation, including visual cortex 82. The wide and overlapping distribution of sensory codes across the brain may allow multiplexing of complex information to structure cross-network interactions37,82. Further studies investigating this framework with diverse tasks and stimuli could reshape our understanding of how the brain processes and integrates information across different levels of cognitive complexity.

Finally, our findings paint a clear picture of cortical dynamics spanning levels of analysis, from macro-scale brain networks, to meso-scale domain-specific subareas, to small-scale voxel-level interactions. Prior work has emphasized the importance of each of these organizational scales independently1,8,16,31,32,34,39,44,48,51,83,84. Here, our results suggest a comprehensive framework for understanding the brain’s functional organization that spans these levels of description. At the smallest scale, we demonstrate that retinotopic coding underpins voxel-scale interactions that are observable across the brain. At the meso-scale, these retinotopic interactions are constrained by specific brain areas’ functional domains (e.g., processing visual scenes). At a macro-scale, interplay among these domain-specific regions underpins the organization of large-scale brain networks, whose interactions give rise to specific complex behavior, like memory recall or visual attention. This nested hierarchy of neural interactions may account for the efficiency of parallel information processing in the brain and our ability to flexibly adapt to ongoing task demands.

In summary, our results show that a retinotopic code organizes the spontaneous interactions of large-scale internally- and externally-oriented networks in the human brain. These findings challenge our classic understanding of internally-oriented networks like the DN, showing that the independence of the DN and dATN does not reflect disengagement from visual processing. Rather, their dynamic is structured by a voxel-wise retinotopic code that scaffolds interactions across these large-scale internally- and externally-oriented networks. Taken together, these results indicate that retinotopic coding, the human brain’s foundational visuo-spatial reference frame37,79, structures large-scale neural dynamics and may be a “common currency” or subspace for information exchange across the brain’s functional networks.

Methods

The data analyzed here are part of the Natural Scenes Dataset (NSD), a large 7T dataset of precision MRI data from 8 participants, including retinotopic mapping, anatomical segmentations, functional localizers for visual areas, and task and resting-state fMRI. A full description of the dataset can be found in the original manuscript 38. Here we detail the data processing and analysis steps relevant to the present work.

Subjects

The NSD comprises data from 8 participants collected at the University of Minnesota (two male, six female, ages 19-32). One subject (subj03) was excluded from resting-state analyses for having insufficient resting-state runs that passed our quality metrics. All participants had normal or corrected-to-normal vision and no known neurological impairments. Informed consent was collected from all participants, and the study was approved by the University of Minnesota institutional review board.

MRI acquisition and processing

For this study we made use of the following data from the NSD: anatomical (T1 and FreeSurfer segmentation/reconstruction85,86), functional regions of interest (ROIs), and minimally preprocessed retinotopy and resting-state time series. All analyses were conducted in original subject volume space, and data were projected on the surface for visualization purposes only.

Anatomical data

Anatomical data was collected using a 3T Siemens Prisma scanner and a 32-channel head coil. We used the anatomical data provided at 0.8mm resolution as well as the registered output from FreeSurfer recon-all, aligned to the 1.8mm functional data. For visualization purposes, we projected statistical and retinotopy data to the cortical surface using SUMA 87 from the afni software package88.

Defining functional regions of interest (PPA, OPA, OFA, FFA)

We used the volumetric functional regions of interest provided with the NSD at 1.8mm resolution. Specifically, we used the parahippocampal place area (PPA), occipital place area (OPA), iog-faces (referred to here as occipital face area (OFA)), and pfus-faces (fusiform face area, FFA-1) regions of interest. In brief, these regions were defined in the NSD using within-subject data collected from 6 runs of a multi-category visual localizer paradigm 38.

Defining functional region LPMA

Because the NSD did not include mnemonic localizers 35, we used the resting-state data to define the lateral place memory area (LPMA). Briefly, the LPMA is a region anterior to OPA and near caudal inferior parietal lobule53,54 that selectively responds during recall of personally familiar places compared to other stimulus types. We have previously shown that OPA and LPMA are functionally linked and work jointly to process knowledge of visuospatial context out of view during scene perception.

Prior work has suggested that a mnemonic area linked to scenes on the lateral surface can be localized by comparing resting-state co-fluctuations of anterior versus posterior PPA (aPPA and pPPA, respectively)19,53,54, and we adopted that approach here. We preprocessed the resting-state fixation fMRI data, runs 1 and 14 from NSD sessions 22 and 23, in all participants (prior to data exclusion) and extracted the average time series of aPPA, pPPA, aFFA, and pFFA. We used these time series as regressors in a general linear model, and we compared the beta-values from the aPPA and pPPA. We considered any voxels with a t-statistic > 5 within posterior parietal-occipital cortex on the lateral surface as an LPMA ROI (individual ROIs can be found in Supplemental Fig. 4). Across subjects, there was considerable overlap between this connectivity-defined area and a group-level LPMA defined based on our prior work (Fig. 2).

Functional MRI data acquisition and processing

All analyses were conducted on 1.8mm isotropic resolution minimally processed runs of a sweeping-bar retinotopy task and resting-state fixation provided in the NSD.

Quality assessment

To ensure only high-quality resting-state data were included, we trimmed the first 25 TRs (40s) from each run 76, which left 4.25 min of resting-state data per run. We then used afni’s quality control assessment tool (APQC89) on the raw trimmed resting-state time series to assess the degree of motion in the resting-state scans. We excluded runs with greater than 1.8mm maximum displacement per run or 0.12mm framewise displacement from analysis and assessed runs with greater than 1mm displacement or 0.10mm framewise displacement on a case-by-case basis8. After exclusions, one participant (subj03) had only a single run that survived our criteria (4.25 minutes of data), so we excluded them from resting-state analyses. The remaining 7 participants had at least 8 resting-state runs (> 34 minutes of data; mean number of runs: 14±6.13 (sd), range: 8-24 runs).
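The motion criteria above amount to a three-way triage of each run. A minimal sketch follows, assuming each run has already been summarized by one maximum-displacement value and one framewise-displacement value in mm; the function name and the choice of summary statistic are hypothetical and are not part of APQC.

```python
def triage_run(max_displacement_mm, framewise_displacement_mm):
    """Classify a resting-state run from its motion summary.

    Thresholds follow the text: exclude above 1.8 mm / 0.12 mm,
    inspect case by case above 1.0 mm / 0.10 mm, otherwise keep.
    How the two summary values are computed is an assumption here.
    """
    if max_displacement_mm > 1.8 or framewise_displacement_mm > 0.12:
        return "exclude"
    if max_displacement_mm > 1.0 or framewise_displacement_mm > 0.10:
        return "review"
    return "keep"
```

Runs labelled "review" would still require the manual, case-by-case judgment described in the text.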

ICA denoising

To further denoise the retinotopy and remaining resting-state data, we used manual ICA classification of signal and noise on the minimally-preprocessed time series35,41,42. We used manual classification because automated tools perform poorly on the high spatial and temporal resolution data of the NSD90. For each retinotopy and resting state run, we decomposed the data into independent spatial components and their associated temporal signals using ICA (FSL’s melodic91,92). We then manually classified each component as signal or noise using the criteria established in42. Noise signals were projected out of the data using fsl_regfilt41.

We did not perform global signal regression26. Data were normalized to percent signal change. A 2.5mm FWHM smooth was applied to resting-state fixation data used in functional connectivity network identification. The analysis of voxel-scale interactions was performed on unsmoothed data.

Data analysis - retinotopy

pRF Modelling

The NSD retinotopy stimuli feature a mosaic of faces, houses, and objects superimposed on pink noise that are revealed through a continuously drifting aperture. For our analysis, we considered only the bar stimulus time series, which is consistent with other studies investigating −pRFs in high-level cortical areas 31,33,34. We did not consider the wedge/ring stimulus for any analyses.

After denoising the retinotopy data, we averaged the three retinotopy runs with the bar aperture together to form the final retinotopy time series. We performed population receptive field modeling using afni following the procedure described in 45. First, because the pRF stimulus in the NSD is continuous, we resampled the stimulus time series to the fMRI temporal resolution (TR = 1.333s). Next, we implemented afni’s pRF mapping procedure (3dNLfim). Given the position of the stimulus in the visual field at every time point, the model estimates the pRF parameters that yield the best fit to the data: pRF amplitude (positive, negative), pRF center location (x, y) and size (diameter of the pRF). Both Simplex and Powell optimization algorithms are used simultaneously to find the best time series/parameter sets (amplitude, x, y, size) by minimizing the least-squares error of the predicted time series with the acquired time series for each voxel. Relevant to the present work, the amplitude measure refers to the signed (positive or negative) degree of linear scaling applied to the pRF model, which reflects the sign of the neural response to visual stimulation of its receptive field.
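To make the role of the signed amplitude concrete, the following is a minimal sketch of a Gaussian pRF forward model and the least-squares error it is fit against. It is not afni's 3dNLfim implementation: it omits hemodynamic convolution and the optimizer, treats the size parameter as the Gaussian sigma (the text reports size as a diameter), and all function names are hypothetical.

```python
import math

def gaussian_prf(x0, y0, sigma, grid):
    """Evaluate an isotropic 2D Gaussian centered at (x0, y0) on each
    (x, y) point of the visual-field grid."""
    return [math.exp(-((x - x0) ** 2 + (y - y0) ** 2) / (2 * sigma ** 2))
            for x, y in grid]

def predict_response(amplitude, prf, apertures):
    """Predicted time series: signed amplitude times the overlap between
    the pRF and the binary stimulus aperture at each TR."""
    return [amplitude * sum(w * m for w, m in zip(prf, mask))
            for mask in apertures]

def sse(predicted, observed):
    """Sum of squared errors between predicted and observed time series."""
    return sum((p - o) ** 2 for p, o in zip(predicted, observed))

# Three visual-field positions and two stimulus frames.
grid = [(-1.0, 0.0), (0.0, 0.0), (1.0, 0.0)]
apertures = [[1, 0, 0], [0, 1, 0]]
prf = gaussian_prf(0.0, 0.0, 1.0, grid)
negative_prf_response = predict_response(-2.0, prf, apertures)
```

With a negative amplitude, stimulation of the receptive field yields a predicted decrease in signal, which is the defining property of a −pRF in this framework.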

Visual field coverage

Visual-field coverage (VFC) plots represent the sensitivity of an ROI across the visual field. We followed the procedure in Steel et al., 2024 to compute these34, which we have reproduced here. Individual participant VFC plots were first derived. These plots combine the best Gaussian receptive field model for each suprathreshold voxel within each ROI. Here, a max operator is used, which stores, at each point in the visual field, the maximum value from all pRFs within the ROI. The resulting coverage plot thus represents the maximum envelope of sensitivity across the visual field. Individual participant VFC plots were averaged across participants to create group-level coverage plots.

To compute the elevation biases, we calculated the mean pRF value (defined as the mean value in a specific portion of the visual-field coverage plot) in the contralateral upper visual field (UVF) and contralateral lower visual field (LVF) and computed the difference (UVF–LVF) for each participant, ROI and amplitude (+/−) separately. A positive value thus represents an upper visual-field bias, whereas a negative value represents a lower visual-field bias. Analysis of the visual-field biases considers pRF center, as well as pRF size and R2.
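The max-envelope coverage and the UVF–LVF difference can be sketched as follows. This is an illustration under simplifying assumptions rather than the original analysis code: pRFs are isotropic Gaussians given as hypothetical (x0, y0, sigma) tuples, the visual field is a coarse grid, and the contralateral hemifield is selected with a sign flag introduced only for this example.

```python
import math

def coverage(prfs, grid):
    """Max-envelope coverage: at each grid point, keep the largest value
    across all pRFs. `prfs` holds (x0, y0, sigma) tuples."""
    cov = []
    for x, y in grid:
        values = [math.exp(-((x - x0) ** 2 + (y - y0) ** 2) / (2 * s ** 2))
                  for x0, y0, s in prfs]
        cov.append(max(values))
    return cov

def elevation_bias(cov, grid, contralateral_sign=1):
    """Mean coverage in the contralateral upper field minus the
    contralateral lower field. contralateral_sign=+1 selects x > 0."""
    upper = [c for c, (x, y) in zip(cov, grid)
             if x * contralateral_sign > 0 and y > 0]
    lower = [c for c, (x, y) in zip(cov, grid)
             if x * contralateral_sign > 0 and y < 0]
    return sum(upper) / len(upper) - sum(lower) / len(lower)

grid = [(x, y) for x in (-2, -1, 1, 2) for y in (-2, -1, 1, 2)]
upper_cov = coverage([(1.0, 1.5, 1.0)], grid)
lower_cov = coverage([(1.0, -1.5, 1.0)], grid)
upper_bias = elevation_bias(upper_cov, grid)   # positive: upper-field bias
lower_bias = elevation_bias(lower_cov, grid)   # negative: lower-field bias
```

Because the coverage map is built from full Gaussian profiles, the bias reflects pRF size as well as center location, consistent with the description above.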

Reliability of pRF amplitude estimate

To assess the reliability of pRF amplitude (i.e., positive versus negative), we iteratively compared the amplitude of significant pRFs from individual runs of pRF data. Specifically, for each single run of pRF data, we fit our pRF model. We then binarized vertices according to significance and amplitude – voxels that surpassed our significance threshold (R2 > 0.08) in the full model were assigned a value of 0 or 1. When investigating +pRF reliability in OPA, significant vertices with a positive amplitude were assigned to 1, all other vertices 0. For −pRF reliability in LPMA, the opposite was done: significant negative amplitude vertices were assigned a value of 1, and all other vertices set to 0.

For each participant, after binarization, we calculated a Dice-like coefficient within each ROI, which considered all three retinotopy runs. We compared this value against 5000 iterations of the same number of voxels randomly sampled from all voxels, both significant and non-significant, in the ROI. For each participant, this resulted in one “observed” Dice coefficient, along with 5000 bootstrapped values representing the distribution of Dice coefficients expected by chance, which we used to evaluate the significance of each pRF amplitude’s run-to-run consistency.
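One way to express this reliability test in code is sketched below. The text does not specify how the three runs enter the Dice-like coefficient, so averaging the pairwise Dice values is an assumption made here, as are the function names and the p-value convention.

```python
import random

def dice(a, b):
    """Dice coefficient between two binary lists of equal length."""
    overlap = sum(1 for i, j in zip(a, b) if i and j)
    total = sum(a) + sum(b)
    return 2 * overlap / total if total else 0.0

def mean_pairwise_dice(runs):
    """Average Dice over all pairs of runs (assumed three-run extension)."""
    pairs = [(i, j) for i in range(len(runs))
             for j in range(i + 1, len(runs))]
    return sum(dice(runs[i], runs[j]) for i, j in pairs) / len(pairs)

def chance_test(runs, n_iter=5000, seed=0):
    """Compare the observed value with maps that keep each run's number of
    labelled voxels but place them at random within the ROI."""
    rng = random.Random(seed)
    observed = mean_pairwise_dice(runs)
    n_vox = len(runs[0])
    exceed = 0
    for _ in range(n_iter):
        shuffled = []
        for run in runs:
            chosen = set(rng.sample(range(n_vox), sum(run)))
            shuffled.append([1 if v in chosen else 0 for v in range(n_vox)])
        if mean_pairwise_dice(shuffled) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_iter + 1)

# Toy ROI of 40 voxels in which the same 5 voxels are labelled in every run.
toy_run = [1] * 5 + [0] * 35
observed, p_value = chance_test([toy_run, toy_run, toy_run], n_iter=200)
```

In this toy case the labelled voxels are identical across runs, so the observed coefficient is at ceiling and far exceeds the chance distribution.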

Voxel-wise pRF matching

We matched −pRFs in source (i.e., the DN) with target (i.e., +pRFs in the dATN) using the following procedure. Within each participant, we computed the pairwise Euclidean distance between the center (x,y) of each source pRF with each target pRF. For each source pRF, we considered the top 10 closest target pRFs the “matched pRFs” and the 10 furthest target pRFs the “antimatched pRFs.” So, each pRF within a memory area yielded 10 matched and 10 antimatched pRFs.
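The matching step can be sketched as a nearest/furthest neighbor search over pRF centers. The function below is a minimal illustration with hypothetical names; it returns target indices rather than time series and assumes each participant has at least k target pRFs.

```python
import math

def match_prfs(source_centers, target_centers, k=10):
    """For each source pRF center, return the indices of the k nearest
    ("matched") and k furthest ("antimatched") target pRF centers."""
    matched, antimatched = [], []
    for sx, sy in source_centers:
        order = sorted(
            range(len(target_centers)),
            key=lambda t: math.hypot(target_centers[t][0] - sx,
                                     target_centers[t][1] - sy))
        matched.append(order[:k])
        antimatched.append(order[-k:])
    return matched, antimatched

# One source pRF at fixation and four target pRFs, with k reduced to 2.
targets = [(0.0, 1.0), (5.0, 5.0), (0.5, 0.0), (-4.0, 3.0)]
matched, antimatched = match_prfs([(0.0, 0.0)], targets, k=2)
```

Because matching is done per source pRF, the same target pRF can appear in the matched set of several source pRFs.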

To investigate the opponent interaction between areas for top-down interactions (below), we conducted this procedure using −LPMA pRFs as the source and +OPA pRFs as targets. To investigate bottom-up interactions, we considered +OPA pRFs as the source and −LPMA pRFs as targets. To investigate the importance of functional domain in retinotopic interactions, we considered −LPMA pRFs as the source and 1) +OFA and 2) +FFA pRFs as targets.

Resting-state analyses

Individual-specific cerebral network estimation

In each participant, we identified a set of 15 distributed networks on the cerebral cortical surface using a multi-session hierarchical Bayesian model (MS-HBM) functional connectivity approach 8,40. Briefly, the MS-HBM approach calculates a connectivity profile for every vertex on the cortical surface derived from that vertex’s correlation to all other vertices during each run of resting-state fixation. The MS-HBM then uses the resulting run- and participant-level profiles, along with a 15 network group-level prior created from a portion of the HCP S900 data44, to create a unique 15-network parcellation for each individual.

The MS-HBM has two primary advantages compared to other approaches used to parcellate functional connectivity data, such as k-means clustering. First, it accounts for differences in connectivity both within an individual (likely the result of confounding variables such as scanner variability, time of day, etc.), and between participants (reflecting potentially meaningful individual differences), allowing for more reliable estimates. Second, by incorporating a group prior which includes all the networks of interest, we ensure that all networks will be identified in all participants, while allowing for idiosyncratic topographic differences.

Correlation among cortical networks

We calculated the unique correlation between the combined DNs and dATNs using partial correlation to account for variation associated with other cortical networks 43. We averaged the time series from separate subnetworks of the DN and dATN prior to calculating the partial correlation. Then, to determine whether the activity of the different populations of visually responsive voxels (−pRFs, +pRFs, and subthreshold (i.e., non-retinotopic) voxels) within the DNs was differentially correlated with the dATNs, we re-calculated the partial correlation between the average time series of these populations of DN voxels with the dATNs while accounting for the activity of all other cortical networks.
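Partial correlation can be computed by regressing the covariate time series out of both signals and correlating the residuals. The sketch below does this with Gram-Schmidt orthogonalization in plain Python; it illustrates the general technique, not the original Matlab implementation, and the toy series are invented for the example.

```python
import math

def demean(v):
    m = sum(v) / len(v)
    return [a - m for a in v]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def project_out(v, basis):
    """Remove from v its projection onto each mutually orthogonal basis vector."""
    for b in basis:
        coef = dot(v, b) / dot(b, b)
        v = [a - coef * c for a, c in zip(v, b)]
    return v

def partial_correlation(x, y, covariates):
    """Correlation between x and y after regressing the covariates
    (e.g., mean time series of all other networks) out of both."""
    basis = []
    for c in covariates:
        r = project_out(demean(c), basis)   # Gram-Schmidt step
        if dot(r, r) > 1e-12:               # skip redundant covariates
            basis.append(r)
    rx = project_out(demean(x), basis)
    ry = project_out(demean(y), basis)
    return dot(rx, ry) / math.sqrt(dot(rx, rx) * dot(ry, ry))

# A shared signal z masks an opposing component e in the raw correlation.
z = [0, 1, 2, 3, 4, 5]
e = [1, -1, 0, 0, -1, 1]
x = [a + b for a, b in zip(z, e)]
y = [a - b for a, b in zip(z, e)]
raw_r = partial_correlation(x, y, [])
partial_r = partial_correlation(x, y, [z])
```

In the toy example the raw correlation is positive because of the shared signal, whereas the partial correlation exposes the anti-correlated component, which is why controlling for other networks matters when testing for opponency.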

Opponent interaction at rest

To assess the influence of retinotopy on the correlation of areas at rest, we used the following procedure. First, we established matched source (i.e., −DN or −LPMA) and target (i.e., +dATN or +OPA) pRF pairs using the procedure described above. For this analysis, our primary focus was on the −pRFs in the DN or LPMA and their relationship with perceptual areas (dATN, OPA, OFA, FFA). Among these highly connected areas, large-scale fluctuations due to attention and motion will cause these voxels to be highly correlated. To control for this, consistent with prior work, we extracted the average time series of all +pRFs in the DN or LPMA and partialed out the variance associated with these pRFs from the −pRFs in the DN or LPMA and +pRFs in the perceptual areas of interest from each resting-state run 34,43. This provided an ROI-level analog to the whole-brain partial correlation described above (Section: Correlation among cortical networks), allowing us to isolate the unique contribution of the pRFs of interest from overall activity in the region as well as to control for global fluctuations that cause widespread positive correlations like motion and attentional state34,43.

To examine the opponent interaction for matched pRFs, we considered each resting state run separately. In each resting state run, we first calculated the average time series of all −pRFs in LPMA. We then calculated the average time series for the top 10 best matched +pRFs in OPA of all voxels. For example, if a participant had 211 −pRFs in LPMA, these voxels were averaged together to get a single −LPMA pRF time series, and the top 10 matched +pRFs in OPA (i.e., 2110 time series) would be averaged together to constitute the +OPA time series. Note that the matched +OPA pRFs were not unique for each voxel. We then correlated these time series (−LPMA and +OPA pRFs). A negative correlation was considered an opponent interaction.

To compare the importance of retinotopy in structuring the interaction between LPMA and OPA, we performed the same averaging and correlation procedure described above with the 10 worst matched pRFs (antimatched). For each participant, all Fisher-transformed correlation values (z) for matched versus antimatched pRFs were averaged together and we compared these matched and antimatched correlation values using a paired t-test. To examine the specificity within each functional network, we repeated this procedure for −LPMA matched/antimatched with OFA and FFA-1.
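The run-level correlation, Fisher transformation, and paired comparison described above can be sketched as follows. Function names are hypothetical, the example values are illustrative rather than data from the study, and the p-value lookup from the t distribution is omitted.

```python
import math

def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def mean_timeseries(series):
    """Average a list of equal-length time series TR by TR."""
    return [sum(vals) / len(vals) for vals in zip(*series)]

def fisher_z(r):
    """Fisher r-to-z transform (undefined for |r| = 1)."""
    return math.atanh(r)

def paired_t(a, b):
    """Paired t statistic and degrees of freedom."""
    d = [p - q for p, q in zip(a, b)]
    n = len(d)
    mean_d = sum(d) / n
    sd = math.sqrt(sum((v - mean_d) ** 2 for v in d) / (n - 1))
    return mean_d / (sd / math.sqrt(n)), n - 1

# Illustrative per-participant mean z values (not data from the study).
matched_z = [-0.30, -0.20, -0.40]
antimatched_z = [0.00, 0.05, -0.05]
t_stat, dof = paired_t(matched_z, antimatched_z)
```

For one run, the matched value would be `fisher_z(pearson(mean_timeseries(source_ts), mean_timeseries(matched_ts)))`, where `source_ts` and `matched_ts` are placeholder lists of voxel time series; the antimatched value is obtained the same way.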

To confirm that retinotopic coding structured the opponent interaction, we repeated this analysis with two key differences: 1) to ensure that choosing the bottom 10 voxels did not drive our results, we randomly sampled 10 pRFs from the furthest 33% of pRFs from each participant, and 2) to ensure that the ROI-level effect was present at the individual pRF level, we performed the analysis without averaging the pRFs’ resting-state time series within each ROI. For each resting-state run, we extracted each −pRF time series and correlated it with the average time series of its top 10 best matched +pRFs in OPA. We performed this matching and random sampling procedure 1000 times for each pRF in the source area. For example, if a participant had 211 −pRFs in LPMA, we would correlate each of these pRFs with the average time series from their top 10 best matched +pRFs in OPA, resulting in 211 individual r-values for each resting-state run, and compare each of these 211 values with the correlation of 1000 randomly paired pRFs for that source pRF. We then Fisher-transformed these matched and randomly sampled values and averaged them to constitute a single value for each run. We repeated this procedure for all runs in all participants. The mean Fisher-transformed values were compared using a paired t-test. These results are described in the supplemental methods and results.
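The random sampling from the furthest third of target pRFs can be sketched as below. The function name and seeding are hypothetical, and the pool size is computed by integer division as an approximation of the 33% cutoff.

```python
import random

def sample_far_targets(distances, k=10, seed=None):
    """Randomly pick k target indices from the furthest third of targets.

    distances[t] is the distance from one source pRF center to the center
    of target pRF t. The pool is never smaller than k.
    """
    rng = random.Random(seed)
    order = sorted(range(len(distances)), key=lambda t: distances[t])
    n_far = max(k, len(distances) // 3)
    far_pool = order[-n_far:]
    return rng.sample(far_pool, k)

# Sixty targets whose distance equals their index: the pool is 40..59.
picks = sample_far_targets(list(range(60)), k=10, seed=1)
```

Repeating this draw 1000 times per source pRF, each time with a different seed, would give the randomly paired comparison values described above.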

Resting-state event detection

We reasoned that the influence of top-down versus bottom-up drive on the spontaneous interaction between regions could be isolated by examining periods of unusually high activity in voxels in these respective areas (“events”)93,94. Specifically, we tested the hypothesis that top-down events in −LPMA pRFs would co-occur with periods of lower activity in +OPA pRFs, and that the suppressive influence of top-down events would be stronger compared with bottom-up events. To test these hypotheses, we isolated neural events in each source region’s (−LPMA (top-down) and +OPA (bottom-up)) pRF time series and examined the activity in the corresponding target region at these event times.

Event detection was performed for each +/− pRF independently. To detect events, we z-scored each pRF’s resting-state time series and identified TRs with unusually high activity in source region pRFs. Specifically, we considered each time point with a z-value greater than 2.4 (i.e., 99.18th percentile) as a neural event. Results were comparable with varying thresholds between 2.1 < z < 2.9. At each event, we extracted the average time series of that pRF’s top-10 matched pRFs in the target region for the 6 TRs before and after the event time (i.e., 13 TRs). To make time series comparable across events, we normalized the event time series to the mean of the first 4 TRs. We repeated this procedure for all −LPMA/+OPA pRFs for top-down and bottom-up events.
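A minimal sketch of the event detection and peri-event extraction follows. Several details are assumptions made for illustration: the z-score uses the population standard deviation, baseline normalization is implemented as subtraction of the baseline mean, events too close to the run edges are dropped, and the function names are hypothetical.

```python
import statistics

def zscore(ts):
    m, s = statistics.mean(ts), statistics.pstdev(ts)
    return [(v - m) / s for v in ts]

def detect_events(source_ts, threshold=2.4):
    """Indices of TRs whose z-scored activity exceeds the threshold."""
    return [t for t, z in enumerate(zscore(source_ts)) if z > threshold]

def peri_event(target_ts, event, half_window=6, n_baseline=4):
    """Extract event +/- half_window TRs from the target time series and
    subtract the mean of the first n_baseline TRs. Returns None when the
    window would extend past the start or end of the run."""
    start, stop = event - half_window, event + half_window + 1
    if start < 0 or stop > len(target_ts):
        return None
    window = target_ts[start:stop]
    baseline = sum(window[:n_baseline]) / n_baseline
    return [v - baseline for v in window]

# Toy source series with a single large deflection at TR 20.
source = [0.0] * 40
source[20] = 10.0
events = detect_events(source)
target = [float(t) for t in range(40)]   # stands in for the matched-pRF average
window = peri_event(target, events[0])
```

Here `target` stands in for the average time series of the top-10 matched pRFs in the target region; the same extraction applied to the antimatched average gives the comparison trace.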

For top-down and bottom-up events, we compared the activity of the target region (top-down: +OPA pRFs; bottom up: −LPMA pRFs) at event time using paired t-tests. We only considered matched pRFs for this analysis.

Statistical tests

Statistical analyses were implemented in Matlab (Mathworks, Inc). Given the small number of subjects in this dataset, we used two statistical analysis methods to ensure robustness of any detected effects. First, borrowing analytical methods from neuroscientific studies using animal models, we leveraged the large within participant data by pooling observations (e.g., resting-state runs or pRFs) from each participant. We then tested for differences in distributions using two-sample Kolmogorov-Smirnov goodness-of-fit hypothesis tests. Second, we adopted more classic statistical methods to test for effects within participants. We used paired-sample t-tests and corrected for multiple comparisons where appropriate.

Supplementary Material

Fig. 4.

Top-down vs. bottom-up neural events detected in spontaneous resting-state dynamics show evidence for retinotopically-specific suppression. A. Event detection and analysis procedure, with example events from a single resting-state run. To detect events, we extracted the time series from each pRF in the source regions (top-down: −LPMA; bottom-up: +OPA) and isolated time points where the z-scored time series exceeded 2.4 s.d. (99th percentile). We then examined the activity of matched and antimatched pRFs from the target region in the peri-event time frame (6 TRs (8 s) before and after the event). Overall, this event detection procedure yielded 20,958 top-down and 7,985 bottom-up events that were well distributed in time (Fig. S11). B. −LPMA pRF events co-occur with suppression of retinotopically-matched +OPA pRFs. Peri-event time series depict the grand average activity of matched (dark) and antimatched +OPA pRFs. Time series are baselined to the mean of the first three TRs (TRs −6 to −4 relative to event onset, dotted line). Red significance line shows time points with a significant difference between matched and antimatched activation, corrected for multiple comparisons (alpha-level: p<0.05/13 = 0.0038). C. Suppression of ongoing activity in retinotopically matched −LPMA pRFs during +OPA events. Peri-event time series depict the grand average activity of matched (dark) and antimatched −LPMA pRFs. As predicted, −LPMA activity is elevated during resting-state. This elevated ongoing activity is suppressed during events in retinotopically matched +OPA pRFs. Time series are baselined to the mean of the first three TRs (TRs −6 to −4 relative to event onset, dotted line). Blue significance line shows time points with a significant difference between matched and antimatched activation, corrected for multiple comparisons. D. Target area shows retinotopically-specific suppression of activity for both top-down and bottom-up events. Bars show the average activation at event time of the target areas’ matched and antimatched pRFs for each participant. Activity in matched pRFs was significantly lower than in antimatched pRFs for both top-down (t(6)=4.13, p=0.006) and bottom-up (t(6)=3.17, p=0.02) events, and there was no difference in the influence of retinotopic coding between these event types (t(6)=0.86, p=0.42).
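The event detection and peri-event averaging described in panel A can be sketched as follows. This is a minimal illustration rather than the authors' MATLAB implementation. The threshold (2.4 s.d.), window (6 TRs either side), baseline (first three TRs), and the 13-point Bonferroni correction come from the caption. Everything else is an assumption made for the sketch: the function names, the use of the population standard deviation for z-scoring, treating every supra-threshold time point as its own event, and discarding events too close to the run edges.

```python
from statistics import mean, pstdev

Z_THRESHOLD = 2.4   # s.d. threshold stated in the caption
HALF_WINDOW = 6     # TRs before and after each event
N_BASELINE = 3      # TRs -6 to -4 form the baseline

# 13 peri-event time points (-6..+6) give the corrected alpha of ~0.0038.
BONFERRONI_ALPHA = 0.05 / (2 * HALF_WINDOW + 1)


def zscore(ts):
    """Z-score a time series (population s.d.; an assumption of this sketch)."""
    mu, sd = mean(ts), pstdev(ts)
    return [(x - mu) / sd for x in ts]


def detect_events(source_ts):
    """Time points where the z-scored source pRF exceeds the threshold.

    Events without a full peri-event window are dropped (assumption).
    """
    z = zscore(source_ts)
    return [t for t, value in enumerate(z)
            if value > Z_THRESHOLD and HALF_WINDOW <= t < len(z) - HALF_WINDOW]


def peri_event_average(target_ts, events):
    """Average baselined target activity across events (13 time points)."""
    epochs = []
    for t in events:
        epoch = target_ts[t - HALF_WINDOW: t + HALF_WINDOW + 1]
        baseline = mean(epoch[:N_BASELINE])
        epochs.append([x - baseline for x in epoch])
    return [mean(column) for column in zip(*epochs)]
```

Running `peri_event_average` separately on the matched and antimatched target pRFs yields the two traces compared in panels B and C; index `HALF_WINDOW` of each trace is the activation at event time summarized in panel D.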

Acknowledgements:

The authors would like to thank the authors of the Natural Scenes Dataset for making these data publicly available. This work was supported by an award from the National Institute of Mental Health (R01MH130529) to CER. AS was supported by the Neukom Institute for Computational Science. EHS was supported by the Biotechnology and Biological Sciences Research Council (BB/V003917/1).

Footnotes

Competing interests: The authors declare no competing interests.

Code availability: This paper does not report any original code. Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

Data availability:

All data are publicly available via the Natural Scenes Dataset (https://naturalscenesdataset.org/).

References

- 1. Yeo B.T.T., Krienen F.M., Sepulcre J., Sabuncu M.R., Lashkari D., Hollinshead M., Roffman J.L., Smoller J.W., Zöllei L., Polimeni J.R., et al. (2011). The organization of the human cerebral cortex estimated by intrinsic functional connectivity. J Neurophysiol 106, 1125–1165. 10.1152/JN.00338.2011.

- 2. Margulies D.S., Ghosh S.S., Goulas A., Falkiewicz M., Huntenburg J.M., Langs G., Bezgin G., Eickhoff S.B., Castellanos F.X., Petrides M., et al. (2016). Situating the default-mode network along a principal gradient of macroscale cortical organization. Proc Natl Acad Sci U S A 113, 12574–12579. 10.1073/PNAS.1608282113.

- 3. Braga R.M., and Buckner R.L. (2017). Parallel Interdigitated Distributed Networks within the Individual Estimated by Intrinsic Functional Connectivity. Neuron 95, 457–471.e5. 10.1016/j.neuron.2017.06.038.

- 4. Gordon E.M., Laumann T.O., Gilmore A.W., Newbold D.J., Greene D.J., Berg J.J., Ortega M., Hoyt-Drazen C., Gratton C., Sun H., et al. (2017). Precision Functional Mapping of Individual Human Brains. Neuron 95, 791–807.e7. 10.1016/J.NEURON.2017.07.011.

- 5. Ranganath C., and Ritchey M. (2012). Two cortical systems for memory-guided behaviour. Preprint at Nature Publishing Group, https://doi.org/10.1038/nrn3338.

- 6. Andrews-Hanna J.R., Reidler J.S., Sepulcre J., Poulin R., and Buckner R.L. (2010). Functional-Anatomic Fractionation of the Brain’s Default Network. Neuron 65, 550–562. 10.1016/j.neuron.2010.02.005.

- 7. Barnett A.J., Reilly W., Dimsdale-Zucker H.R., Mizrak E., Reagh Z., and Ranganath C. (2021). Intrinsic connectivity reveals functionally distinct cortico-hippocampal networks in the human brain. PLoS Biol 19, e3001275. 10.1371/JOURNAL.PBIO.3001275.

- 8. Du J., DiNicola L.M., Angeli P.A., Saadon-Grosman N., Sun W., Kaiser S., Ladopoulou J., Xue A., Yeo B.T.T., Eldaief M.C., et al. (2024). Organization of the human cerebral cortex estimated within individuals: networks, global topography, and function. J Neurophysiol 131, 1014–1082. 10.1152/jn.00308.2023.

- 9. Laumann T.O., Gordon E.M., Adeyemo B., Snyder A.Z., Joo S.J., Chen M.Y., Gilmore A.W., McDermott K.B., Nelson S.M., Dosenbach N.U.F., et al. (2015). Functional System and Areal Organization of a Highly Sampled Individual Human Brain. Neuron 87, 657–670. 10.1016/J.NEURON.2015.06.037.

- 10. Chun M.M., Golomb J.D., and Turk-Browne N.B. (2011). A Taxonomy of External and Internal Attention. Annu Rev Psychol 62, 73–101. 10.1146/annurev.psych.093008.100427.

- 11. Dixon M.L., Andrews-Hanna J.R., Spreng R.N., Irving Z.C., Mills C., Girn M., and Christoff K. (2017). Interactions between the default network and dorsal attention network vary across default subsystems, time, and cognitive states. Neuroimage 147, 632–649. 10.1016/j.neuroimage.2016.12.073.

- 12. Fox M.D., Snyder A.Z., Vincent J.L., Corbetta M., Van Essen D.C., and Raichle M.E. (2005). The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc Natl Acad Sci U S A 102, 9673–9678. 10.1073/PNAS.0504136102.

- 13. Raichle M.E. (2015). The Brain’s Default Mode Network. Annu Rev Neurosci 38, 433–447. 10.1146/annurev-neuro-071013-014030.

- 14. Fox M.D., Corbetta M., Snyder A.Z., Vincent J.L., and Raichle M.E. (2006). Spontaneous neuronal activity distinguishes human dorsal and ventral attention systems. Proceedings of the National Academy of Sciences 103, 10046–10051. 10.1073/pnas.0604187103.

- 15. Raichle M.E., MacLeod A.M., Snyder A.Z., Powers W.J., Gusnard D.A., and Shulman G.L. (2001). A default mode of brain function. Proceedings of the National Academy of Sciences 98, 676–682. 10.1073/pnas.98.2.676.

- 16. Fedorenko E., Duncan J., and Kanwisher N. (2013). Broad domain generality in focal regions of frontal and parietal cortex. Proceedings of the National Academy of Sciences 110, 16616–16621. 10.1073/pnas.1315235110.

- 17. Vossel S., Geng J.J., and Fink G.R. (2013). Dorsal and Ventral Attention Systems: Distinct Neural Circuits but Collaborative Roles. The Neuroscientist 20, 150–159. 10.1177/1073858413494269.

- 18. Shulman G.L., Fiez J.A., Corbetta M., Buckner R.L., Miezin F.M., Raichle M.E., and Petersen S.E. (1997). Common Blood Flow Changes across Visual Tasks: II. Decreases in Cerebral Cortex. J Cogn Neurosci 9, 648–663. 10.1162/JOCN.1997.9.5.648.

- 19. Silson E.H., Steel A., Kidder A., Gilmore A.W., and Baker C.I. (2019). Distinct subdivisions of human medial parietal cortex support recollection of people and places. Elife 8. 10.7554/eLife.47391.

- 20. DiNicola L.M., Braga R.M., and Buckner R.L. (2020). Parallel distributed networks dissociate episodic and social functions within the individual. J Neurophysiol 123, 1144–1179. 10.1152/jn.00529.2019.

- 21. Thakral P.P., Madore K.P., and Schacter D.L. (2017). A Role for the Left Angular Gyrus in Episodic Simulation and Memory. J Neurosci 37, 8142–8149. 10.1523/JNEUROSCI.1319-17.2017.

- 22. Gilmore A.W., Quach A., Kalinowski S.E., Gotts S.J., Schacter D.L., and Martin A. (2021). Dynamic Content Reactivation Supports Naturalistic Autobiographical Recall in Humans. Journal of Neuroscience 41, 153–166. 10.1523/JNEUROSCI.1490-20.2020.

- 23. Christoff K., Gordon A.M., Smallwood J., Smith R., and Schooler J.W. (2009). Experience sampling during fMRI reveals default network and executive system contributions to mind wandering. Proc Natl Acad Sci U S A 106, 8719–8724. 10.1073/PNAS.0900234106.

- 24. Greicius M.D., Krasnow B., Reiss A.L., and Menon V. (2003). Functional connectivity in the resting brain: A network analysis of the default mode hypothesis. Proceedings of the National Academy of Sciences 100, 253–258. 10.1073/pnas.0135058100.

- 25. Menon V. (2023). 20 years of the default mode network: A review and synthesis. Neuron 111, 2469–2487. 10.1016/j.neuron.2023.04.023.

- 26. Saad Z.S., Gotts S.J., Murphy K., Chen G., Jo H.J., Martin A., and Cox R.W. (2012). Trouble at Rest: How Correlation Patterns and Group Differences Become Distorted After Global Signal Regression. Brain Connect 2, 25–32. 10.1089/brain.2012.0080.

- 27. Murphy C., Wang H.-T., Konu D., Lowndes R., Margulies D.S., Jefferies E., and Smallwood J. (2019). Modes of operation: A topographic neural gradient supporting stimulus dependent and independent cognition. Neuroimage 186, 487–496. 10.1016/j.neuroimage.2018.11.009.

- 28. Murphy C., Jefferies E., Rueschemeyer S.A., Sormaz M., Wang H.-T., Margulies D.S., and Smallwood J. (2018). Distant from input: Evidence of regions within the default mode network supporting perceptually-decoupled and conceptually-guided cognition. Neuroimage 171, 393–401. 10.1016/J.NEUROIMAGE.2018.01.017.

- 29. Bellmund J.L.S., Gärdenfors P., Moser E.I., and Doeller C.F. (2018). Navigating cognition: Spatial codes for human thinking. Science 362, eaat6766. 10.1126/SCIENCE.AAT6766.

- 30. Popham S.F., Huth A.G., Bilenko N.Y., Deniz F., Gao J.S., Nunez-Elizalde A.O., and Gallant J.L. (2021). Visual and linguistic semantic representations are aligned at the border of human visual cortex. Nature Neuroscience 24, 1628–1636. 10.1038/s41593-021-00921-6.

- 31. Szinte M., and Knapen T. (2020). Visual Organization of the Default Network. Cerebral Cortex 30, 3518–3527. 10.1093/CERCOR/BHZ323.

- 32. Knapen T. (2021). Topographic connectivity reveals task-dependent retinotopic processing throughout the human brain. Proc Natl Acad Sci U S A 118. 10.1073/PNAS.2017032118.

- 33. Klink P.C., Chen X., Vanduffel W., and Roelfsema P.R. (2021). Population receptive fields in non-human primates from whole-brain fMRI and large-scale neurophysiology in visual cortex. Elife 10. 10.7554/ELIFE.67304.

- 34. Steel A., Silson E.H., Garcia B.D., and Robertson C.E. (2024). A retinotopic code structures the interaction between perception and memory systems. Nat Neurosci 27, 339–347. 10.1038/s41593-023-01512-3.

- 35. Steel A., Billings M.M., Silson E.H., and Robertson C.E. (2021). A network linking scene perception and spatial memory systems in posterior cerebral cortex. Nature Communications 12, 1–13. 10.1038/s41467-021-22848-z.

- 36. Steel A., Garcia B.D., Goyal K., Mynick A., and Robertson C.E. (2023). Scene Perception and Visuospatial Memory Converge at the Anterior Edge of Visually Responsive Cortex. The Journal of Neuroscience 43, 5723. 10.1523/JNEUROSCI.2043-22.2023.

- 37. Groen I.I.A., Dekker T.M., Knapen T., and Silson E.H. (2022). Visuospatial coding as ubiquitous scaffolding for human cognition. Trends Cogn Sci 26, 81–96. 10.1016/J.TICS.2021.10.011.

- 38. Allen E.J., St-Yves G., Wu Y., Breedlove J.L., Prince J.S., Dowdle L.T., Nau M., Caron B., Pestilli F., Charest I., et al. (2021). A massive 7T fMRI dataset to bridge cognitive neuroscience and artificial intelligence. Nature Neuroscience 25, 116–126. 10.1038/s41593-021-00962-x.

- 39. Dumoulin S.O., and Wandell B.A. (2008). Population receptive field estimates in human visual cortex. Neuroimage 39, 647. 10.1016/J.NEUROIMAGE.2007.09.034.

- 40. Kong R., Yang Q., Gordon E., Xue A., Yan X., Orban C., Zuo X.-N., Spreng N., Ge T., Holmes A., et al. (2021). Individual-Specific Areal-Level Parcellations Improve Functional Connectivity Prediction of Behavior. Cerebral Cortex 31, 4477–4500. 10.1093/cercor/bhab101.

- 41. Griffanti L., Salimi-Khorshidi G., Beckmann C.F., Auerbach E.J., Douaud G., Sexton C.E., Zsoldos E., Ebmeier K.P., Filippini N., Mackay C.E., et al. (2014). ICA-based artefact removal and accelerated fMRI acquisition for improved resting state network imaging. Neuroimage 95, 232–247. 10.1016/j.neuroimage.2014.03.034.