Abstract

Representation learning for the electronic structure problem is a major challenge of machine learning in computational condensed matter and materials physics. Within quantum mechanical first principles approaches, density functional theory (DFT) is the preeminent tool for understanding electronic structure, and the high-dimensional DFT wavefunctions serve as building blocks for downstream calculations of correlated many-body excitations and related physical observables. Here, we use variational autoencoders (VAE) for the unsupervised learning of DFT wavefunctions and show that these wavefunctions lie in a low-dimensional manifold within latent space. Our model autonomously determines the optimal representation of the electronic structure, avoiding limitations due to manual feature engineering. To demonstrate the utility of the latent space representation of the DFT wavefunction, we use it for the supervised training of neural networks (NN) for downstream prediction of quasiparticle bandstructures within the GW formalism. The GW prediction achieves a low error of 0.11 eV for a combined test set of two-dimensional metals and semiconductors, suggesting that the latent space representation captures key physical information from the original data. Finally, we explore the generative ability and interpretability of the VAE representation.

Subject terms: Electronic structure, Computational methods

Representation learning for the electronic structure problem is a major challenge in computational physics. Here, the authors learn a low-dimensional representation of the wave function and apply it for downstream prediction of many-body effects.

Introduction

Recently, machine learning (ML) has emerged as a powerful tool in condensed matter and materials physics, achieving substantial progress across areas including the identification of phase transitions1–7, quantum state reconstruction8–10, prediction of topological order11,12, symmetry13–19 and the study of electronic structure20–23. Among these ML applications, exploring the electronic structure of real materials is of particular interest, since it allows computationally intensive predictive quantum theories to be extended to larger and more complex systems, such as moiré systems13,24–32 and defect states33–39. Within atomistic first principles theories, density functional theory (DFT)40 is the most commonly used approach for studying the electronic structure of materials. In principle, DFT gives accurate descriptions of the ground-state charge density, but quantitative prediction of excited-state properties (including bandstructure) requires the introduction of excitations from the many-body ground state41,42. Nevertheless, DFT can serve as a starting point for many-body calculations, in which the wavefunctions from Kohn–Sham (KS) DFT are used to construct correlation functions for the excited states43,44. Therefore, harnessing the information embedded in the KS wavefunction40 becomes crucial for downstream applications. Here, a key challenge lies in distilling a succinct representation of the electronic structure while preserving the essential information45.

In contrast to the notable achievements of ML descriptors for crystal geometry and chemical composition46–50, the electronic structure of materials remains extremely challenging to learn. First, the high dimensionality of the KS wavefunction of real materials creates a complex data structure that hinders direct pattern detection. Second, electronic structure is highly nonlinear and sensitive to both crystal configuration and intricate non-local correlations, making it difficult to develop a general ML model applicable across a broad spectrum of materials. ML models for electronic structure have been mostly confined to specific subsets of materials, such as molecules51, perovskites52–54, or layered transition metal dichalcogenides (TMDs)55. In most of these approaches, ML has focused on the prediction of single-valued properties, such as band gaps, largely due to the challenge of identifying well-defined, interpretable, and efficient representations of the electronic structure56–59. Recently, the development of operator representations, which capture more nuanced information about the underlying quantum states, has opened new opportunities for the prediction of full band structures. These techniques involve the physically informed selection of specific operators as fingerprints. Examples include the early successful use of energy decomposed operator matrix elements (ENDOME), combined with radially decomposed projected density of states (RAD-PDOS), to predict quasiparticle (QP) band structures60, and the use of spectral operator representations to predict material transparency61. However, the choice of descriptors in such approaches is guided by human physical intuition from the domain science. This raises the question of whether more fundamental or generalizable descriptors can be learned, independent of human selection.

Variational autoencoders (VAEs)62,63, a class of probabilistic models that combine variational inference with autoencoders used to compress and decompress high dimensional data, stand out as a promising tool for learning electronic structure in a way that allows for unsupervised training and thus avoids feature engineering. The effectiveness of VAE compression has been demonstrated for various applications across condensed matter physics, including quantum state compression64, detection of critical features in phase transitions65 and decoupled subspaces66,67.

Here, we show that a well-crafted VAE is capable of representing KS-DFT wavefunctions on a manifold within a significantly compressed latent space, far smaller than the original input. Importantly, these succinct representations retain the physical information inherent in the initial data. To validate the efficacy of the VAE latent space in practical applications, we then build a supervised deep learning model for downstream prediction of k-resolved quasiparticle energies within the many-body GW approximation68,69, whose input includes VAE representations of KS states. This model yields a mean absolute error (MAE) of 0.11 eV when applied to a test set of two-dimensional (2D) metals and insulators, which is comparable to the intrinsic numerical error of the GW approach32,44,70. Moreover, the VAE inherently predicts a smooth, physically realistic band structure, in contrast with previous models, which required an additional manually imposed smoothing function to remove unphysical variations60. Lastly, we address the interpretability of the VAE representation and show that the success of electronic structure representation learning and downstream GW prediction derives from the smoothness of the VAE latent space, which corresponds to the smoothness of the wavefunction in k-space. Because of this property, the VAE can serve as a promising wavefunction generator capable of predicting realistic wavefunctions at arbitrary points in latent space. This has potential uses both for wavefunction interpolation in k-space and for materials design and discovery across the compositional phase space.

Results and discussion

VAE for electronic structure representation

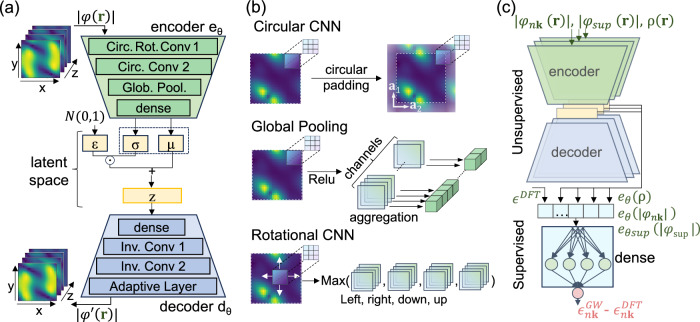

As Fig. 1a shows, the architecture of our VAE consists of two complementary NNs: the encoder and the decoder. The encoder maps the input, the high-dimensional moduli of the KS wavefunctions, to a low-dimensional vector of variational means and deviations in the latent space. The dimension of the latent space is much smaller than the dimension of the real-space wavefunction ϕnk(r) in the unit cell, where the wavefunction is indexed by the band n and the crystal momentum (k-point) k. Conversely, the decoder mirrors the structure of the encoder and performs the opposite function: it reconstructs the input wavefunction from its low-dimensional latent vector. In essence, the VAE can be conceptualized as an information bottleneck for electronic structure. The encoder acts as a data refinement process, discarding redundant information and noise from the input wavefunction, while the decoder ensures that vital physical information is preserved for wavefunction recovery. Since the KS state is essentially a spatial distribution with local patterns, convolutional neural networks (CNNs)71–74 provide a practical framework for the first two layers of the encoder and decoder.
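The caption of Fig. 1 notes that the latent vector Z is drawn using the reparameterization trick. The NumPy sketch below shows that sampling step. It assumes the encoder outputs a mean vector and a log-variance vector, which is a common parameterization that the text does not confirm. The function name is hypothetical.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Draw z ~ N(mu, diag(exp(log_var))) as a deterministic function of (mu, log_var).

    All randomness sits in eps ~ N(0, I), so in an autodiff framework gradients
    can flow through mu and log_var during training.
    """
    sigma = np.exp(0.5 * log_var)          # standard deviation from log-variance
    eps = rng.standard_normal(mu.shape)    # parameter-free noise
    return mu + sigma * eps

rng = np.random.default_rng(0)
mu = np.array([0.5, -1.0, 2.0])            # hypothetical 3-dimensional latent mean
log_var = np.log(np.array([0.01, 0.04, 0.09]))
samples = np.stack([reparameterize(mu, log_var, rng) for _ in range(20000)])
print(samples.mean(axis=0))                # approximately mu
print(samples.std(axis=0))                 # approximately [0.1, 0.2, 0.3]
```

After training, the deterministic mean vector is what serves as the state's low-dimensional representation. Sampling is needed only during training and for generation.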

Fig. 1. Neural network architecture of variational autoencoders (VAE) for crystal systems.

a Schematic of the VAE. The encoder (green trapezoid) consists of a rotational convolutional neural network (CNN), a circular CNN, a global pooling layer and one flattened dense layer, which are explicitly presented in (b). The encoder maps a real-space wavefunction to a latent-space vector of variational means and variances. Z is the sampled latent vector, drawn from a variational Gaussian distribution using a reparameterization trick63. The decoder (blue trapezoid) has a symmetric neural network (NN) structure. The latent space serves as an information bottleneck for the VAE, as its dimensions are smaller than those of the input and output (represented by the colormaps of the wavefunction in real space). b The circular CNN layer uses circular padding based on the periodic boundary conditions. The width of the circular padding equals the size of the CNN kernel, so that the output of the CNN has the same periodicity as the input. Global pooling layers output the average value of each channel of the CNN feature map. Rotational CNN layers scan the input along four directions and output the maximum of the (left, right, up, down) feature maps to the next layer. Details of the model parameters and additional benchmarks are listed in Supplementary Note 1. c Schematic of the overall semi-supervised learning model, including both the unsupervised VAE and the supervised dense NN. The VAE inputs are the Kohn–Sham (KS) wavefunction moduli, all super states and the charge density in real space. The input layer of the supervised dense NN comprises the density functional theory (DFT) energies along with low-dimensional effective representations of the KS states, super states and charge density. These representations are encoded within the VAE latent space (yellow square) by encoders that are trained without supervision on all KS wavefunctions, super states and charge density.

By imposing a distribution of latent variables close to a standard normal distribution during training, the VAE learns a smooth mapping from connected (neighboring) KS states to nearby points in the latent space, which gives the trained model its generative power. We define the total VAE loss function as:

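$$\mathcal{L}=\frac{1}{N}\sum_{i=1}^{N}\left\lVert\,|\phi_{i}|-|\tilde{\phi}_{i}|\,\right\rVert^{2}-\frac{\beta}{2N}\sum_{i=1}^{N}\sum_{j}\left(1+\ln\sigma_{ij}^{2}-\mu_{ij}^{2}-\sigma_{ij}^{2}\right)\tag{1}$$

where the first term is the mean squared error (MSE) between the input wavefunction moduli $|\phi_i|$ and their reconstructions $|\tilde{\phi}_i|$. The second term is the Kullback–Leibler (KL) divergence, which forces the latent distribution to approach a standard normal distribution. The parameter $\beta$ is the weight of the KL divergence and tunes the degree of regularization of the latent space. $N$ is the total number of KS states in the training set and $j$ is an index in the latent space. $\mu_{ij}$ and $\sigma_{ij}$ are the variational mean and standard deviation of latent dimension $j$ for state $i$.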
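The loss in Eq. (1) can be written in a few lines of NumPy. This sketch makes three assumptions not confirmed by the text:

- the encoder outputs log-variances;
- the reconstruction term is summed over real-space grid points and averaged over states;
- the KL term uses the standard closed form for a diagonal Gaussian against N(0, I).

The function name is hypothetical.

```python
import numpy as np

def vae_loss(x, x_rec, mu, log_var, beta):
    """beta-weighted VAE loss: reconstruction error plus KL(q(z|x) || N(0, I)).

    x, x_rec    : (N, P) input and reconstructed wavefunction moduli on P grid points
    mu, log_var : (N, d) variational means and log-variances of the latent variables
    beta        : weight of the KL term (degree of latent-space regularization)
    """
    # Squared error summed over grid points, averaged over the N states
    rec = np.mean(np.sum((x - x_rec) ** 2, axis=1))
    # Closed-form KL divergence of N(mu, sigma^2) from N(0, 1), summed over latent dims
    kl = -0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=1)
    return rec + beta * np.mean(kl)
```

Raising beta pulls the latent codes toward the standard normal prior, which regularizes the latent space at some cost in reconstruction accuracy.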

Additionally, we design our VAE to respect the rotational and translational symmetries of the crystal, so that downstream predictions of physical observables do not vary with the choice of unit cell. As shown in Fig. 1a, b, we insert a global average pooling (GAP)75 layer between the CNN and the dense layer in the encoder. The GAP layer allows the CNNs to accept inputs of varying sizes and produce a fixed-length output, which is crucial for the subsequent fully connected layers in the encoder. By aggregating the spatial information of the feature maps, the GAP layer also enforces translational invariance with respect to the unphysical degree of freedom that arises from the choice of the origin of the unit cell. We also include a circular padding technique in the CNNs76 to account for the periodic boundary conditions of the crystal system. The first layer of the encoder features a discrete rotational CNN77 along the lattice vector directions. This layer selects the combined feature maps with the maximum summation value, preserving invariance to the choice of lattice vectors and their direction. With such a CNN, any complete periodic cell of a given KS state in a given material generates the same latent vector. This allows us to capture properties associated with the physical symmetries of the crystal without explicitly including symmetry labels. Finally, we include an adaptive layer as the final layer of the decoder. It ensures that the VAE-generated wavefunction has the same size as the input, so that a proper loss function can be defined.

By feeding DFT wavefunctions to our VAE model, we can interpret the resulting latent vector as a low-dimensional effective representation of the individual KS state. This representation has several advantages: (i) it is smooth, owing to the smoothness of the neural network mapping; (ii) it handles translational invariance and periodic boundary conditions; (iii) it can handle any symmetry and cell size; and (iv) even when trained with unit-cell data, it can be extended to supercells beyond a single unit cell. More details and benchmarks for the VAE model are given in Supplementary Notes 1–3.

Neural network for downstream prediction of GW band structures

Due to its ground-state nature, DFT can yield inaccurate single-particle band structures and tends to underestimate the band gaps of semiconductors compared to experiment78–80. To incorporate the many-electron correlation effects that are missing in DFT and obtain accurate quasiparticle (QP) bandstructures, one can replace the effective single-particle exchange-correlation potential of DFT with a non-local, energy-dependent self-energy calculated within the GW approximation of many-body perturbation theory43,68,81,82. This approach proves highly effective for computing QP bandstructures in a wide range of materials43. However, in practice, constructing a GW self-energy, even for small systems70,81,83–86, is much more computationally expensive than DFT, and this remains a bottleneck to the broader adoption of the GW approach in high-throughput studies. Therefore, in the context of understanding materials’ excited-state properties, a natural approach is to use low-fidelity techniques such as DFT to predict the results of high-fidelity, computationally intensive many-body calculations. Currently, most ML work in this field uses indirect predictors as model inputs, for instance crystal geometry, chemical composition, and the DFT band gap46–49,56,57. These methods, however, are generally limited to single-value prediction of the band gap and are effective only within specific subsets of materials, such as inorganic solids59. Operator fingerprint methods such as ENDOME and RAD-PDOS extend predictive capability to k-resolved band structures. However, feature selection in these models relies heavily on human intuition and can introduce unphysical fluctuations in band structures, which are then smoothed in post-processing60.
There have also been ML models dedicated to predicting the screened dielectric function87–89, which can speed up GW calculations but do not provide information that is generalizable to different systems.
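For reference, consider the widely used one-shot (G0W0) perturbative scheme, assumed here for illustration since the text does not spell out the update step at this point. In that scheme, swapping the exchange-correlation potential for the self-energy gives the quasiparticle energy of each KS state to first order:

$$E^{\mathrm{QP}}_{n\mathbf{k}}=\varepsilon_{n\mathbf{k}}+\langle\phi_{n\mathbf{k}}|\,\Sigma\!\left(E^{\mathrm{QP}}_{n\mathbf{k}}\right)-V_{\mathrm{xc}}\,|\phi_{n\mathbf{k}}\rangle$$

Here εnk is the KS-DFT eigenvalue and V_xc is the DFT exchange-correlation potential. The GW correction to a given state is therefore E^QP_nk − εnk, which in general differs from state to state rather than acting as a uniform shift.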

Because non-local, frequency-dependent correlations in the electronic structure are challenging to capture, we select the downstream prediction of GW bandstructures as a proof of principle for the effectiveness of our VAE latent vector representation of the KS wavefunctions. We develop a supervised deep NN on top of the VAE latent space to predict many-body GW corrections. The goal of this NN is to learn the diagonal matrix elements of the GW self-energy68,69:

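$$\Sigma_{n\mathbf{k}}(E)=\frac{i}{2\pi}\int d\omega\,e^{-i\omega0^{+}}\sum_{m}\sum_{\mathbf{q},\mathbf{G},\mathbf{G}'}\frac{\langle n\mathbf{k}|e^{i(\mathbf{q}+\mathbf{G})\cdot\mathbf{r}}|m\mathbf{k}-\mathbf{q}\rangle\langle m\mathbf{k}-\mathbf{q}|e^{-i(\mathbf{q}+\mathbf{G}')\cdot\mathbf{r}}|n\mathbf{k}\rangle}{E-\omega-\varepsilon_{m\mathbf{k}-\mathbf{q}}+i0^{+}\,\mathrm{sgn}(\varepsilon_{m\mathbf{k}-\mathbf{q}}-E_{F})}\,W_{\mathbf{G}\mathbf{G}'}(\mathbf{q};\omega)\tag{2}$$

where $\mathbf{G}$ and $\mathbf{G}'$ are reciprocal lattice vectors, and $\mathbf{q}$ is the difference between two k-vectors, integrated over the Brillouin zone (BZ). $\varepsilon_{m\mathbf{k}-\mathbf{q}}$ is the KS-DFT energy, $E_F$ is the Fermi energy and $0^{+}$ is a positive infinitesimal. $W_{\mathbf{G}\mathbf{G}'}(\mathbf{q};\omega)$ is the screened Coulomb interaction calculated within the random phase approximation. $E$ is the energy (frequency) argument of the self-energy, and $\omega$ is the integration frequency. Notably, the calculation of the GW self-energy includes a sum over infinitely many bands, m. In practice, the sum over states is treated as a convergence parameter, and the number of bands included in the summation is of the same order as the number of reciprocal lattice vectors included in $W$.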

The diagonal self-energy matrix element for a specific state in Eq. (2) can be expressed as $\Sigma_{n\mathbf{k}}=f\left(\phi_{n\mathbf{k}},\{\phi_{m\mathbf{k}'}\}_{m=1}^{N_b},\rho,\varepsilon_{n\mathbf{k}},\{\varepsilon_{m\mathbf{k}'}\}_{m=1}^{N_b}\right)$. Here, $\phi_{n\mathbf{k}}$ and $\phi_{m\mathbf{k}'}$ are the KS state vectors, $\rho$ is the charge density, and $N_b$ is the total number of occupied and unoccupied states used in the GW calculation. $\varepsilon_{n\mathbf{k}}$ and $\varepsilon_{m\mathbf{k}'}$ are the corresponding DFT eigenvalues. We note that $\{\phi_{m\mathbf{k}'}\}$ includes $\phi_{n\mathbf{k}}$ and $\{\varepsilon_{m\mathbf{k}'}\}$ includes $\varepsilon_{n\mathbf{k}}$, but we include both terms explicitly in the function f to make later steps more transparent. The output of f is the GW self-energy. Because the self-energy has a closed-form expression, we expect in principle that a simple dense NN can learn the nonlinear mapping from the KS wavefunctions and energies to $\Sigma_{n\mathbf{k}}$90,91.

However, two significant challenges prevent the direct application of a simple NN model that maps the raw inputs of f to a predicted self-energy. (i) Due to the high computational cost of the GW algorithm, only a limited subset of GW energies near the Fermi level can be explicitly calculated and used as the supervised training set. As a result, the number of labeled samples is far smaller than the dimension of the input, and overfitting is inevitable (see Supplementary Fig. 8). (ii) The curse of dimensionality makes it formidable to learn the nonlinear mapping from a high-dimensional, sparse wavefunction space to the self-energy space. To address these challenges, we adopt the manifold assumption92–95 that the DFT electronic wavefunction in real space can be modeled as lying on a low-dimensional manifold. We further assume that two DFT wavefunctions mapped to nearby points on the manifold should have comparable contributions to the final GW energy corrections. If these two assumptions hold, the VAE is ideal for downstream prediction of GW self-energies.

Here, to capture the non-local correlation, the pseudobands approximation96–98 is further employed for all states. That is, DFT wavefunctions with close energies are summed into effective super states (see Supplementary Note 6). We assume that the manifold assumption also applies to the super states and the charge density, and the VAE is used to further remove redundant information from them. Our full semi-supervised, physics-informed model reads:

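$$\tilde{\Sigma}_{n\mathbf{k}}=\mathrm{NN}\left(\mathcal{E}_{\mathrm{KS}}(|\phi_{n\mathbf{k}}|),\ \mathcal{E}_{\mathrm{SS}}(\{|\bar{\phi}_{s\mathbf{k}'}|\}),\ \mathcal{E}_{\rho}(\rho),\ \varepsilon_{n\mathbf{k}},\ \{\varepsilon_{m\mathbf{k}'}\}\right)\tag{3}$$

where $\mathcal{E}_{\mathrm{KS}}$, $\mathcal{E}_{\mathrm{SS}}$ and $\mathcal{E}_{\rho}$ are encoders trained exclusively on KS states, super states $\bar{\phi}_{s\mathbf{k}'}$ and the charge density $\rho$, respectively. The schematic workflow of our model is shown in Fig. 1c. The output of the NN is the predicted GW diagonal self-energy $\tilde{\Sigma}_{n\mathbf{k}}$. The inputs are the wavefunction $\phi_{n\mathbf{k}}$, the super states corresponding to occupied and unoccupied states on a uniform k-grid, and the ground-state energies. These inputs are the same as those of an explicit GW calculation, which eliminates bias due to feature selection.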
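The super states that enter Eq. (3) come from the pseudobands approximation described above, in which DFT wavefunctions with close energies are summed into effective super states (details are in Supplementary Note 6). As an illustration only, the NumPy sketch below forms one super state per energy window as a random-phase sum of the wavefunctions in that window and assigns it the window's mean energy. The uniform window width, the random phases and the helper name are assumptions, not the authors' exact recipe. The modulus of each super state would then be the VAE input.

```python
import numpy as np

def make_super_states(energies, wavefunctions, window, rng):
    """Compress bands into one hypothetical 'super state' per energy window.

    energies      : (M,) DFT eigenvalues in eV
    wavefunctions : (M, P) orthonormal wavefunctions on P real-space grid points
    window        : width of each (uniform) energy window in eV
    Returns (super_energies, super_states, counts).
    """
    bins = np.floor((energies - energies.min()) / window).astype(int)
    super_e, super_wf, counts = [], [], []
    for b in np.unique(bins):
        idx = np.flatnonzero(bins == b)
        phases = np.exp(2j * np.pi * rng.random(idx.size))  # random phase per band
        super_wf.append(phases @ wavefunctions[idx])        # random-phase sum over the slice
        super_e.append(energies[idx].mean())                # representative energy
        counts.append(idx.size)
    return np.array(super_e), np.array(super_wf), np.array(counts)

rng = np.random.default_rng(0)
M, P = 12, 64
q, _ = np.linalg.qr(rng.standard_normal((P, M)) + 1j * rng.standard_normal((P, M)))
wf = q.T                                  # M orthonormal toy "bands" on P grid points
e = np.linspace(-3.0, 2.5, M)
super_e, super_wf, counts = make_super_states(e, wf, 1.0, rng)
```

Because the input bands are orthonormal, the squared norm of each super state equals the number of bands it replaces. Each super state therefore carries the spectral weight of its energy slice while reducing the number of states to process.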

Application to dataset of 2D materials

To benchmark the predictive power of our model, we select 302 materials with unit cells of 3 to 4 atoms, including both metals and insulators, from the Computational 2D Materials Database (C2DB)99–101 for training and validation. For the unsupervised VAE training, our dataset comprises 68,384 DFT electronic states sampled on a 6 × 6 × 1 uniform k-grid, which are randomly split into a 90% training set and a 10% test set. For supervised training of GW corrections, the entire GW energy dataset is randomly partitioned into two subsets: 10% (2201 electronic states) is allocated to the test set, while the remaining 90% (19801 electronic states) forms the training set. We further exclude a subset of 30 materials entirely from the VAE training set. Three monolayer TMDs (MoS2, WS2 and CrS2) are excluded from both the VAE and GW training datasets. Comprehensive details of the dataset, training dynamics and additional benchmarks are given in Supplementary Note 5.
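The GW split described above can be sketched as a state-level random split performed after held-out materials are removed entirely. The 90/10 fractions and the held-out materials come from the text. The function name and the choice to round the test-set size up are assumptions; rounding up happens to reproduce 2201 test and 19801 training states from 22002 labeled states.

```python
import numpy as np

def split_states(material_ids, held_out, test_fraction, rng):
    """Random state-level train/test split after removing held-out materials entirely.

    material_ids  : (N,) material label of each electronic state
    held_out      : materials excluded from both sets (e.g. MoS2, WS2, CrS2)
    test_fraction : fraction of the remaining states assigned to the test set
    """
    pool = np.flatnonzero(~np.isin(material_ids, list(held_out)))
    pool = rng.permutation(pool)
    n_test = int(np.ceil(test_fraction * pool.size))  # rounding rule is an assumption
    return pool[n_test:], pool[:n_test]                # (train indices, test indices)

ids = np.array(["A"] * 12000 + ["B"] * 10002 + ["MoS2"] * 50)  # toy labels
train_idx, test_idx = split_states(ids, {"MoS2"}, 0.1, np.random.default_rng(0))
```

Splitting at the level of individual states tests interpolation within known materials. Withholding whole materials, as done for the three TMDs, tests transfer to unseen chemistry.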

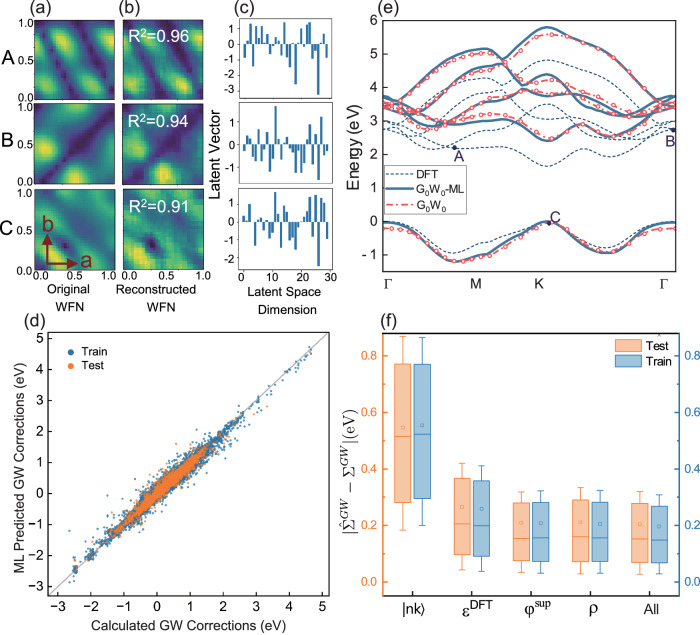

Figure 2a shows three DFT electronic wavefunctions, A, B and C, of monolayer MoS2 (which is completely excluded from the training data) in the x-y plane of the unit cell. They correspond to the dark blue circles in Fig. 2e. Figure 2b shows the VAE-reconstructed wavefunctions of states A, B and C, which are nearly identical to the originals after recovery from the low-dimensional latent space. Their coefficients of determination, R2, are 0.96, 0.94 and 0.91, respectively. Figure 2c shows the VAE variational mean vectors for states A, B and C. Across the test set, the VAE-reconstructed wavefunctions reach an R2 of 0.92 relative to the amplitude of the original DFT wavefunctions, and the normalization of the wavefunction is preserved within 1% (see Supplementary Fig. 10). Remarkably, the VAE-reconstructed wavefunctions reproduce the ground-state charge density, the physically relevant quantity in DFT, with a high R2, even though the total charge density is never used in the VAE training. These results demonstrate that the original electronic wavefunction in high-dimensional real space can be effectively compressed into a low-dimensional representative vector while still preserving the vital information needed for reconstruction. Our autonomously determined representation is over 100 times more compact than previous electronic fingerprint approaches60.
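The reconstruction metrics quoted above can be computed as in the sketch below. It assumes that R2 is evaluated pointwise over the real-space grid of the wavefunction modulus and that normalization is measured as the sum of |ϕ|² over the grid, where a common grid-volume factor cancels in the ratio. Both choices and the function names are assumptions.

```python
import numpy as np

def r_squared(y_true, y_pred):
    """Coefficient of determination R^2 = 1 - SS_res / SS_tot over all grid points."""
    y_true = np.ravel(np.asarray(y_true, dtype=float))
    y_pred = np.ravel(np.asarray(y_pred, dtype=float))
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def norm_deviation(modulus_true, modulus_pred):
    """Relative change of the norm, sum of |phi|^2 over the grid, after reconstruction."""
    n_true = np.sum(np.abs(modulus_true) ** 2)
    n_pred = np.sum(np.abs(modulus_pred) ** 2)
    return abs(n_pred - n_true) / n_true
```

Under this convention, "normalization preserved within 1%" corresponds to `norm_deviation(...) < 0.01` for each reconstructed state.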

Fig. 2. Application of crystal variational autoencoders (VAE) and downstream GW predictions.

a Density functional theory (DFT) calculated wavefunctions of states A, B and C in MoS2 (see (e)), used as input to the VAE encoder, in crystal coordinates in units of the lattice vectors. WFN denotes wavefunction. b VAE-reconstructed wavefunctions of states A, B and C obtained through latent space decoding. c Low-dimensional variational mean latent vectors for states A, B and C. d Parity plot comparing the explicitly calculated values (x-axis) to the machine learning (ML) predicted values (y-axis) of the GW correction for individual states. Blue (orange) dots represent the training (test) set. The mean absolute errors (MAE) for the training and test sets are 0.06 and 0.11 eV, respectively. The training (test) set contains 19801 (2201) data points. e ML-predicted GW band structures (blue solid curves) and calculated PBE band structures (blue dashed lines) for monolayer MoS2. The red circles are the explicitly calculated quasiparticle (QP) energies from the GW self-energy. The red dashed lines are the interpolated GW band structures. f Whisker plot of the ML-predicted GW error when the representations of the KS states, the DFT energies, the super states or the charge density are excluded from the input. "All" denotes using all information. The orange (blue) boxes represent the test (training) set, with 2201 (19801) data points per box. Each box plot shows the absolute error distribution of the self-energies: the median (box center line, Q2), the 25th–75th percentiles (lower and upper box boundaries, Q1 and Q3) and the mean (small square within the box). The lower and upper whiskers mark the outlier cutoffs Q1 − 0.2(Q3 − Q1) and Q3 + 0.2(Q3 − Q1); all data points beyond this range are considered outliers. The training process spans 1,000 epochs. Source data are provided as a Source Data file.

Figure 2d compares the GW corrections calculated explicitly with those predicted by the downstream ML model, where orange (blue) dots represent the test (training) sets. The model yields MAEs of 0.06 eV and 0.11 eV for the training and test sets, respectively, confirming that the VAE-learned representations of the DFT wavefunctions contain sufficient information to describe the non-local GW self-energy. We emphasize that the GW correction is more than a simple scissor shift. The average standard deviation of GW corrections across all bands and k-points in each material is 0.54 eV, much larger than the prediction error of 0.11 eV (see Supplementary Fig. 4). Figure 2e shows the ML-predicted GW band structure of monolayer MoS2, which agrees remarkably well with the results of explicit GW calculations (red circles in the bandstructure). Neither MoS2 nor any electronic states along the high-symmetry path are included in the training set. Owing to the generative power of the VAE latent space63, our model nonetheless accurately predicts a smooth GW bandstructure for MoS2 along the high-symmetry path. Figure 2f shows how each individual input affects the accuracy of the GW NN model. Excluding the latent-space representation of the wavefunction from training reduces R2 by 0.5, directly showing the importance of the VAE representation of individual KS states for GW prediction. GW calculations can also be performed through the self-consistent Sternheimer equation using only the occupied electronic states102–104. In principle, therefore, including empty-state information through super states is not strictly necessary. Here, excluding the super states that encode the empty states reduces R2 by 0.05. More details on the training dynamics are presented in Supplementary Figs. 5–7.
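The two numbers contrasted above are the test MAE and the average within-material spread of GW corrections. The sketch below shows how each can be computed. A scissor shift applies one constant correction per material and therefore gives zero spread. A spread (0.54 eV) well above the MAE (0.11 eV) thus indicates that the model resolves state-dependent corrections. The use of the population standard deviation (ddof=0) and the function names are assumptions.

```python
import numpy as np

def mae(pred, true):
    """Mean absolute error between predicted and reference GW corrections (eV)."""
    return float(np.mean(np.abs(np.asarray(pred) - np.asarray(true))))

def mean_within_material_std(corrections, material_ids):
    """Average over materials of the std of GW corrections across bands and k-points.

    A pure scissor shift (one constant correction per material) gives exactly zero.
    """
    corrections = np.asarray(corrections, dtype=float)
    material_ids = np.asarray(material_ids)
    stds = [corrections[material_ids == m].std() for m in np.unique(material_ids)]
    return float(np.mean(stds))
```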

Interpretability and generative power of the latent space

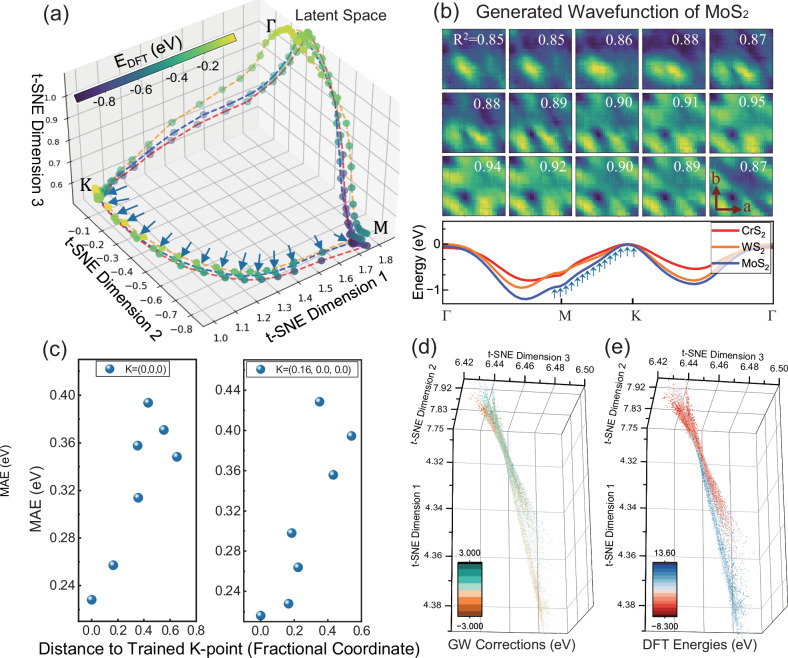

Next, to understand the generative power of our model, we open up the black box of the VAE and explore the meaning of the latent space obtained from unsupervised learning. We use a 3D t-distributed stochastic neighbor embedding (t-SNE) to visualize the VAE latent space for the electronic structure. As depicted in Fig. 3a, the circles linked by blue dashed lines represent the latent points of the first valence band states along the Γ-K-M-Γ high-symmetry path of monolayer MoS2. These points form a continuous, closed trajectory in the latent space, corresponding to the smooth closed path in k-space shown in the lower bandstructure of Fig. 3b. For comparison, the latent trajectories of two other TMD monolayers, WS2 (orange) and CrS2 (red), are also shown in Fig. 3a. The similarity in the electronic structures of these three TMDs (see Fig. 3b) is encoded in the similarity of their paths in latent space. To further demonstrate the generative power of the VAE, Fig. 3b shows the continuous evolution of 15 VAE-generated real-space KS wavefunction moduli between K and M in the first valence band of monolayer MoS2. These wavefunctions are produced by the VAE decoder from points sampled along a smooth curve between K and M in the latent space, denoted by the blue arrows in Fig. 3a. The VAE training set comprises only states sampled on a uniform k-point grid, and all three TMDs are entirely excluded from it. Even so, the generated wavefunctions in Fig. 3b reach a high R2 of around 0.9, showing that the VAE accurately generates wavefunctions it has never seen during training. The underlying reason is that the three trajectories in Fig. 3a lie on the smooth latent surface learned by the well-trained VAE, where similar states are mapped to neighboring points.
Therefore, the smoothness and regularity of the VAE latent space can be used to gauge the extrapolative and generative power of our model. They also suggest that any point sampled from the learned latent surface is physically meaningful and can be decoded back to a wavefunction.
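The generation step described above amounts to decoding a sequence of latent points. The sketch below uses a straight line between two latent vectors as the simplest stand-in for the smooth latent curve; the exact curve used for Fig. 3b is not specified here. The toy decoder, a fixed linear map followed by a modulus, is purely hypothetical and stands in for the trained VAE decoder. The function names are also hypothetical.

```python
import numpy as np

def latent_path(z_start, z_end, n_points):
    """Points on a straight line between two latent vectors, endpoints included."""
    t = np.linspace(0.0, 1.0, n_points)[:, None]
    return (1.0 - t) * z_start + t * z_end

def generate_wavefunctions(decoder, z_start, z_end, n_points=15):
    """Decode every latent point on the path into a real-space wavefunction modulus."""
    return np.stack([decoder(z) for z in latent_path(z_start, z_end, n_points)])

W = np.random.default_rng(0).standard_normal((10, 4))  # toy decoder weights (hypothetical)

def toy_decoder(z):
    """Stand-in for the trained VAE decoder: latent vector -> non-negative modulus."""
    return np.abs(W @ z)

z_K, z_M = np.zeros(4), np.ones(4)   # hypothetical latent codes of the K and M states
generated = generate_wavefunctions(toy_decoder, z_K, z_M)
```

Each row of `generated` is one decoded modulus. With a trained decoder, these rows would be compared against explicitly calculated wavefunctions through R2, as in Fig. 3b.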

Fig. 3. Interpretation and generative ability of low dimensional representation of Kohn–Sham (KS) states learnt by variational autoencoder (VAE).

a 3D t-distributed stochastic neighbor embedding (t-SNE) visualization of the latent space for KS wavefunctions in the first valence band along Γ-K-M-Γ for the transition metal dichalcogenide (TMD) monolayers MoS2, WS2 and CrS2, whose band structures are shown in the lower panel of (b). The dots (latent points) are mapped from the high-dimensional electronic wavefunctions and color-coded by density functional theory (DFT) energies. The blue, orange and red dashed lines connect latent points corresponding to the KS states of the first valence band of MoS2, WS2 and CrS2, respectively. Blue arrows denote the latent points of 15 states between K and M in MoS2, as shown in (b). b Generated real-space wavefunction moduli for each KS state between K and M in the first valence band of monolayer MoS2. They are decoded from latent points sampled along the smooth latent curve. The inset white numbers are the R2 values with respect to the explicitly calculated wavefunctions. The states are arranged in row-major order, from left to right. The lower panel shows the DFT bandstructures of monolayer MoS2 (blue), WS2 (orange) and CrS2 (red). The blue arrows indicate the KS states shown in the upper panels; the leftmost arrow indicates the first (top-left) state. c Mean absolute error (MAE) of GW prediction at different k-points, illustrating the generative power for k-point interpolation. Here, the model is trained exclusively using GW energies at the Γ point (left panel) or at a single finite-momentum k-point (right panel). The x-axis is the distance in reciprocal space from the trained k-point to the untrained k-points. d, e 3D t-SNE components of the VAE latent vectors of 22002 KS wavefunctions with GW labels, colored by the GW corrections (d) and DFT energies (e), respectively. Source data are provided as a Source Data file.

Additionally, to explain the success of our model in predicting GW corrections at arbitrary k-points, we investigate how supervised training at certain k-points affects the GW prediction at untrained k-points in the BZ. Figure 3c shows the average GW MAE at different k-points, as predicted by a dense NN trained only with GW energies at the Γ point (left panel) or at a single finite-momentum k-point (right panel). Notably, the prediction error tends to be lower for k-points close to the trained k-point and higher for those farther away. This trend originates from the smoothness of the VAE latent space. Two states that are close in the latent space should contribute comparably to the GW corrections, since they are also expected to be close in k-space, as shown in Fig. 3b. This explains the capability of our model to accurately predict k-resolved GW band structures when trained with limited, uniform k-grid data.
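The x-axis of Fig. 3c is a distance in reciprocal space, which must account for periodicity: a k-point and its periodic images are the same state. The sketch below computes that distance and bins the errors by it. It assumes k-points given in fractional coordinates and checks only in-plane periodic images, which is appropriate for a 6 × 6 × 1 grid of a 2D material. The function names and the binning scheme are hypothetical.

```python
import numpy as np

def k_distance(k_frac, k0_frac, recip_lattice):
    """Shortest Cartesian distance between two k-points, accounting for periodicity.

    k_frac, k0_frac : fractional (crystal) coordinates, shape (3,)
    recip_lattice   : (3, 3) array whose rows are the reciprocal lattice vectors b1, b2, b3
    """
    dk = np.asarray(k_frac, dtype=float) - np.asarray(k0_frac, dtype=float)
    dk -= np.round(dk)  # fold into roughly [-0.5, 0.5]
    # Folding alone can miss the shortest vector on non-orthogonal (e.g. hexagonal)
    # lattices, so also check neighbouring in-plane images.
    shifts = np.array([[i, j, 0] for i in (-1, 0, 1) for j in (-1, 0, 1)])
    return float(np.min(np.linalg.norm((dk + shifts) @ recip_lattice, axis=1)))

def mae_vs_distance(distances, abs_errors, bin_width):
    """Mean absolute error grouped into equal-width distance bins."""
    bins = np.floor(np.asarray(distances) / bin_width).astype(int)
    errors = np.asarray(abs_errors, dtype=float)
    return {float(b * bin_width): float(errors[bins == b].mean()) for b in np.unique(bins)}

# Hexagonal reciprocal lattice (unit length, 60 degrees between b1 and b2) for a 2D material
B = np.array([[1.0, 0.0, 0.0], [0.5, np.sqrt(3) / 2, 0.0], [0.0, 0.0, 1.0]])
```

For example, the point (0.5, 0.5) in fractional coordinates lies 0.866 from Γ after naive folding. Its periodic image (−0.5, 0.5) is only 0.5 away, which is the distance the function returns.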

Finally, we further verify the manifold assumption for electronic structure in real materials and explore how GW energies correlate with the VAE-encoded representation. To do so, we apply 3D t-SNE to the latent mean vectors of all 22002 states with GW labels, colored by their calculated GW corrections (Fig. 3d) and DFT energies (Fig. 3e). The t-SNE analysis provides direct evidence for the manifold assumption: the VAE maps the electronic wavefunctions onto a smooth manifold in latent space, even across different materials. More intriguingly, the unsupervised VAE learning procedure is entirely independent of GW labels. Even so, both the GW corrections and the DFT energies show a distinct rather than random pattern, with different branches of the manifold corresponding to different magnitudes of the GW corrections or DFT energies. The t-SNE analysis therefore serves as a crucial validation, demonstrating that unsupervised representation learning can capture the inherent statistical correlation between GW energies and the electronic wavefunction. Thus, the unsupervised VAE acts as a form of pre-learning, which can significantly lower the barrier for the subsequent supervised learning of GW self-energies.

In summary, we demonstrate that a properly designed VAE can learn KS DFT wavefunctions in an unsupervised manner, compressing them into a low-dimensional latent space in a way that preserves physical observables such as the total charge density, as well as the fundamental information needed for downstream prediction of excited-state properties. Because our model autonomously determines which information to preserve through unsupervised reconstruction of wavefunction data, it establishes a low-dimensional representation that avoids the limitations of manual feature engineering and selection. The representation preserves the translational invariance, discrete rotational invariance, and periodic boundary conditions of crystals with different cell sizes and symmetries. Our VAE model achieves an R² score of 0.92 when reconstructing the wavefunctions in the test set. To further test the effectiveness of the VAE representation of KS states, we train a supervised dense NN on top of the latent space of KS states for downstream prediction of GW self-energies. The resulting model achieves a low MAE of 0.11 eV on the test set and can predict both arbitrary k-points and materials held back from the training set. While other ML models have been used to predict GW band structures, the main advantage of our approach is that the smoothness of the latent space can be interpreted in terms of the completeness of our training set, across both k-space and the space of material chemical and structural composition. This eliminates unphysical oscillations seen in previous models and allows us to confidently evaluate how well our model generalizes to states outside the training set, improving its generative power.
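The two metrics quoted above, the reconstruction R² score and the GW mean absolute error, can be written out explicitly. This sketch assumes the R² score is computed over the flattened grid values of the wavefunction moduli; the text does not specify how the score is aggregated over grid points and states, so treat that as an assumption.

```python
import numpy as np

def r2_score(y_true, y_pred):
    """Coefficient of determination, R^2 = 1 - SS_res / SS_tot, over all flattened values."""
    y_true = np.ravel(np.asarray(y_true, dtype=float))
    y_pred = np.ravel(np.asarray(y_pred, dtype=float))
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def mean_absolute_error(y_true, y_pred):
    """MAE, e.g. in eV for GW quasiparticle energies."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(diff)))

# Toy "wavefunction moduli" on a 2 x 2 grid and a slightly imperfect reconstruction.
moduli = np.array([[1.0, 2.0], [3.0, 4.0]])
reconstruction = np.array([[1.5, 2.0], [3.0, 4.0]])
print(r2_score(moduli, reconstruction), mean_absolute_error(moduli, reconstruction))
```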
The smooth evolution of KS states in k-space maps onto a smooth trajectory in the latent space; points sampled along this continuous trajectory are physically meaningful and can be decoded, enabling the generation of wavefunctions (and related physical observables) at uncalculated points at no additional cost. In this first demonstration, we focus on predicting GW band structures of 2D materials, but we expect this framework to generalize to other crystalline systems and downstream applications, given expanded training sets. Notably, VAE wavefunction generation suggests a general route to building ML models for many post-Hartree–Fock (HF) corrections, including quantum Monte Carlo (QMC)105, density matrix downfolding106, coupled cluster singles and doubles (CCSD)107,108, and complete active space configuration interaction (CASCI)109,110, all of which rely on constructing higher-order wavefunctions, Green's functions, and density matrices from DFT wavefunctions.
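Sampling along a latent trajectory and decoding each sample can be sketched as follows. The sketch assumes the simplest possible trajectory, a straight line between the latent vectors of two computed states; the trajectory described in the text follows the k-path and need not be linear. `toy_decoder`, its random weights, and the sizes are hypothetical stand-ins for the trained VAE decoder.

```python
import numpy as np

def interpolate_latent(z_start, z_end, n_points):
    """Evenly spaced points on the straight line between two latent vectors (endpoints included)."""
    t = np.linspace(0.0, 1.0, n_points)[:, None]
    return (1.0 - t) * np.asarray(z_start)[None, :] + t * np.asarray(z_end)[None, :]

rng = np.random.default_rng(0)
latent_dim, n_grid = 30, 64  # hypothetical sizes
W = rng.normal(size=(latent_dim, n_grid))

def toy_decoder(z):
    """Hypothetical stand-in for the trained decoder: linear map followed by a sigmoid."""
    return 1.0 / (1.0 + np.exp(-z @ W))

# e.g. encodings of the same band at two computed k-points
z_a, z_b = rng.normal(size=latent_dim), rng.normal(size=latent_dim)
path = interpolate_latent(z_a, z_b, n_points=5)
generated = toy_decoder(path)  # one decoded "modulus map" per sampled latent point
print(path.shape, generated.shape)
```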

Methods

This section describes the construction of the translationally invariant variational autoencoder (VAE) model and the downstream neural networks, as well as the first-principles density functional theory (DFT) and GW dataset. The mathematical proof of invariance, training dynamics, ground state applications of the latent space, additional benchmarks, and analysis of the pseudobands approximation are given in the Supplementary Notes. The atomic structures of the materials used in this study are taken from the open Computational 2D Materials Database (C2DB)99–101.

Translationally invariant VAE

Our model is implemented with the PyTorch package111, using the parameters listed in Table 1. The first column identifies each layer by its type, together with its index. 'Conv2d' denotes a two-dimensional CNN layer, and 'RotationalConv2d' denotes the two-dimensional rotational CNN layer. A kernel size of 3 × 3 and 320 channels per CNN layer are sufficient to recover KS states with an overall R² score exceeding 0.87. 'ReLU' stands for the rectified linear unit, an activation function widely used in neural networks112. 'MaxPool2d' denotes the max pooling layer, while the 'Flatten' layer transforms the multi-dimensional outputs of the preceding layers into a vector suitable for the subsequent fully connected layers. 'AdaptiveAvgPool2d' denotes the global average pooling (GAP) layer, with its output size set to 1 × 1. 'Linear' denotes a fully connected layer. 'Sigmoid' is a nonlinear activation function113 whose output ranges from 0 to 1, which makes it well suited to modeling the reconstructed wavefunction moduli. The second column lists the number of parameters in each layer.

Table 1.

VAE Parameters

| Layer (type) | Number of parameters |
|---|---|
| RotationalConv2d-1 | 115,520 |
| MaxPool2d-3 | 0 |
| Conv2d-4 | 921,920 |
| ReLU-5 | 0 |
| AdaptiveAvgPool2d-6 | 0 |
| Flatten-7 | 0 |
| Linear-8 | 19,260 |
| Linear-9 | 48,608 |
| ReLU-10 | 0 |
| Unflatten-11 | 0 |
| ConvTranspose2d-12 | 17,340 |
| ReLU-13 | 0 |
| ConvTranspose2d-14 | 9,640 |
| Sigmoid-15 | 0 |
| Total trainable parameters | 1,132,288 |

Layer types and the number of trainable parameters in each layer of the variational autoencoder (VAE).
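One PyTorch layer stack that reproduces every parameter count in Table 1 is sketched below. The channel numbers, kernel sizes, the 30-dimensional latent space, and the (32, 7, 7) unflatten shape are our inferences from the parameter counts (for example, Conv2d-4 with 320 input and 320 output channels gives 320 × 320 × 9 + 320 = 921,920 only for a 3 × 3 kernel); they are assumptions, not values stated in the text. A plain circular `nn.Conv2d` stands in for the custom RotationalConv2d, and the decoder output resolution is a by-product of the assumed unflatten shape rather than the authors' design.

```python
import torch
from torch import nn

class VAESketch(nn.Module):
    """Hypothetical encoder/decoder whose layer-wise parameter counts match Table 1."""

    def __init__(self, in_ch=40, ch=320, latent=30):
        super().__init__()
        self.encoder = nn.Sequential(
            # Stand-in for RotationalConv2d-1; circular padding respects periodic boundaries.
            nn.Conv2d(in_ch, ch, 3, padding=1, padding_mode="circular"),
            nn.ReLU(),
            nn.MaxPool2d(2),                                           # MaxPool2d-3
            nn.Conv2d(ch, ch, 3, padding=1, padding_mode="circular"),  # Conv2d-4
            nn.ReLU(),                                                 # ReLU-5
            nn.AdaptiveAvgPool2d(1),                                   # AdaptiveAvgPool2d-6 (GAP)
            nn.Flatten(),                                              # Flatten-7
            nn.Linear(ch, 2 * latent),                                 # Linear-8: mean and log-variance
        )
        self.decoder = nn.Sequential(
            nn.Linear(latent, 32 * 7 * 7),                             # Linear-9
            nn.ReLU(),                                                 # ReLU-10
            nn.Unflatten(1, (32, 7, 7)),                               # Unflatten-11
            nn.ConvTranspose2d(32, 60, 3),                             # ConvTranspose2d-12
            nn.ReLU(),                                                 # ReLU-13
            nn.ConvTranspose2d(60, in_ch, 2),                          # ConvTranspose2d-14
            nn.Sigmoid(),                                              # Sigmoid-15: outputs in [0, 1]
        )

    def forward(self, x):
        mu, logvar = self.encoder(x).chunk(2, dim=1)
        # Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, I).
        z = mu + torch.randn_like(mu) * torch.exp(0.5 * logvar)
        return self.decoder(z), mu, logvar
```

Because the GAP layer collapses the spatial grid, the encoder accepts inputs of any even grid size, and the latent mean is unchanged by even circular shifts of the input or by tiling it into a supercell.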

The input grid size (resolution) of wavefunctions in real space within a primitive unit cell depends on both the crystal lattice and the kinetic energy cutoff of the plane-wave basis used in the density functional theory (DFT) calculations. Although the number of trainable parameters in the convolutional neural network (CNN) layers is independent of the input grid size, the fully connected dense layer requires an input of fixed size from the output of the CNN layer. A commonly used preprocessing technique to address this is cropping or resizing the input before passing it to the CNN. However, this straightforward approach does not account for the freedom to choose the origin of the unit cell, nor for the invariance of physical properties under discrete translational and rotational symmetries.

The main bottlenecks of using a rigid, uniform grid are (i) lack of translational invariance, (ii) lack of discrete rotational symmetry, (iii) improper handling of periodic boundary conditions (PBC), and (iv) loss of information about unit cell size and symmetry. To address these issues and improve the generalizability of the VAE, we integrate a circular CNN layer, a global average pooling layer, and a rotational CNN technique into the encoder, as shown in Fig. 1a, b. As a result, the latent vectors of KS states are invariant to the unphysical degrees of freedom of arbitrary translation and lattice constant selection. In addition, for a given crystal structure, the latent vector $\mathbf{z}_{n\mathbf{k}}$ is a representation of the KS state $\psi_{n\mathbf{k}}$ over all of real space, so it is identical for different supercells constructed from the same unit cell, i.e.,

$$
\mathbf{z}_{n\mathbf{k}} = f\!\left(\frac{1}{N_1 N_2}\sum_{\mathbf{r}\in\Omega}\mathbf{h}(\mathbf{r})\right) = f\!\left(\frac{1}{n_1 n_2 N_1 N_2}\sum_{\mathbf{r}\in\Omega_{n_1\times n_2}}\mathbf{h}(\mathbf{r})\right),
$$

where $\mathbf{h}(\mathbf{r})\in\mathbb{R}^{C}$ is the output of the last CNN layer at grid point $\mathbf{r}$, $C$ is the number of channels, $\Omega$ is the $N_1\times N_2$ grid of this output over one primitive cell, $\Omega_{n_1\times n_2}$ is the corresponding grid over a supercell, and $n_1$ and $n_2$ are arbitrary positive integers defining the number of primitive cells in the supercell. $f$ is the fully connected neural network following the CNN. The mathematical demonstration, model parameters, and corresponding benchmark are detailed in Supplementary Note 1.
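The translation and supercell invariance described above can be checked numerically with a toy single-layer encoder: a circular (periodic) convolution, a ReLU, and global average pooling. This NumPy sketch is our own illustration, not the authors' code; `circular_conv2d` and `gap_features` are hypothetical helpers, and random kernels stand in for trained filters.

```python
import numpy as np

def circular_conv2d(x, kernel):
    """2D cross-correlation of a single-channel map with periodic boundary conditions."""
    kh, kw = kernel.shape
    out = np.zeros(x.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            # np.roll wraps around the cell edges, which is equivalent to circular padding.
            out += kernel[i, j] * np.roll(x, shift=(kh // 2 - i, kw // 2 - j), axis=(0, 1))
    return out

def gap_features(x, kernels):
    """One channel per kernel: ReLU(circular conv), then global average pooling."""
    return np.array([np.maximum(circular_conv2d(x, k), 0.0).mean() for k in kernels])

rng = np.random.default_rng(0)
cell = rng.random((12, 9))            # toy |psi| on the real-space grid of one primitive cell
kernels = rng.normal(size=(4, 3, 3))  # C = 4 random 3 x 3 filters
features = gap_features(cell, kernels)

shifted = np.roll(cell, shift=(5, 2), axis=(0, 1))  # different choice of unit-cell origin
supercell = np.tile(cell, (2, 3))                   # n1 = 2, n2 = 3 supercell
print(np.allclose(gap_features(shifted, kernels), features),
      np.allclose(gap_features(supercell, kernels), features))  # True True
```

Any fully connected network applied to `features` inherits the same invariance, since its input does not change.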

For the downstream prediction of GW band structures, we employ a vanilla neural network consisting of three fully connected layers with 13,801 trainable parameters. To reduce the risk of dependence on a particular training set, we also adopt k-fold cross-validation to evaluate our model, where the dataset is split evenly into 10 subsets (see Supplementary Fig. 6c). To visualize the latent space learned by the VAE, we employ 3D t-distributed stochastic neighbor embedding (t-SNE). The perplexity in the t-SNE calculations is set to 10,000 (for the 22,002 data points in the dataset) to balance the preservation of the global and local structure of the high-dimensional data.
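The 10-fold cross-validation loop can be sketched as below. To stay self-contained, the sketch uses an ordinary least-squares model as a stand-in for the three-layer dense NN, and `kfold_indices` and `cross_validate` are hypothetical helpers; only the fold logic (each of the 10 subsets is held out once while the other nine are used for training) mirrors the text.

```python
import numpy as np

def kfold_indices(n_samples, n_folds=10, seed=0):
    """Shuffle sample indices and split them into n_folds nearly equal subsets."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_samples), n_folds)

def cross_validate(X, y, fit, predict, n_folds=10, seed=0):
    """Hold out each fold once, train on the rest, and return the per-fold test MAE."""
    folds = kfold_indices(len(y), n_folds, seed)
    maes = []
    for k, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for i, f in enumerate(folds) if i != k])
        model = fit(X[train_idx], y[train_idx])
        maes.append(np.mean(np.abs(predict(model, X[test_idx]) - y[test_idx])))
    return np.array(maes)

def fit_linear(X, y):
    """Stand-in regressor: least squares with a bias column (not the paper's dense NN)."""
    A = np.column_stack([X, np.ones(len(X))])
    return np.linalg.lstsq(A, y, rcond=None)[0]

def predict_linear(w, X):
    return np.column_stack([X, np.ones(len(X))]) @ w

rng = np.random.default_rng(1)
X = rng.normal(size=(103, 30))     # hypothetical 30-dim latent vectors
y = X @ rng.normal(size=30) + 0.5  # noiseless toy "GW corrections"
fold_mae = cross_validate(X, y, fit_linear, predict_linear)
print(fold_mae.mean())
```

Reporting the spread of `fold_mae` across folds, rather than a single split, is what guards against a result that depends on one lucky partition.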

DFT and GW dataset

To benchmark the predictive power of our model, we select 302 materials from the Computational 2D Materials Database (C2DB)99–101, which was also used in the work of Knøsgaard et al.60, for training and validation. The dataset includes both metals and semiconductors across all crystal systems (we note that previous ML models for GW prediction on this database were restricted to the subset of semiconducting materials), with the number of atoms ranging from 3 to 4. For the unsupervised VAE training, our dataset comprises 68,384 DFT electronic states sampled on a 6 × 6 × 1 uniform k-grid for the 302 2D materials, which are randomly split into a 90% training set and a 10% test set. We include the same number of conduction states as valence states for each material in the VAE training, so that the representation covers the electronic structure of both occupied and unoccupied states. For supervised training of GW corrections, the entire GW energy dataset is randomly partitioned into two subsets: 10% (2,201 electronic states) is allocated to the test set, while the remaining 90% (19,801 electronic states) forms the training set. In addition, we exclude a subset of 30 materials entirely from the VAE training set, and three monolayer TMDs (MoS2, WS2, and CrS2) from both the VAE and GW training sets. The DFT and GW datasets are generated using GPAW114,115. Comprehensive details regarding the dataset, training dynamics, and additional benchmarks are given in Supplementary Note 5.
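The state-level random split combined with material-level hold-outs can be sketched as below. `split_states` is a hypothetical helper, and the toy material indices are placeholders. Rounding the training size down with `int(0.9 * n)` is our assumption, chosen because it reproduces the reported 19,801/2,201 partition of the 22,002 GW-labelled states.

```python
import numpy as np

def split_states(material_ids, held_out=(), train_frac=0.9, seed=0):
    """Remove every state of the held-out materials, then randomly split the remaining states."""
    material_ids = np.asarray(material_ids)
    keep = ~np.isin(material_ids, list(held_out))
    pool = np.random.default_rng(seed).permutation(np.flatnonzero(keep))
    n_train = int(train_frac * len(pool))
    return pool[:n_train], pool[n_train:]

# Toy bookkeeping: 22,002 GW-labelled states spread over 302 material indices.
material_ids = np.arange(22_002) % 302
train_idx, test_idx = split_states(material_ids)
print(len(train_idx), len(test_idx))  # 19801 2201

# Hold out three materials entirely (indices 0-2 stand in for MoS2, WS2 and CrS2).
train_ho, test_ho = split_states(material_ids, held_out=(0, 1, 2))
```

Holding out whole materials, rather than individual states, is what makes the held-out TMDs a test of transfer to unseen chemistry.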

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Acknowledgements

We gratefully acknowledge helpful discussions with Mr. Xingzhi Sun at Yale University. This work was primarily supported by the U.S. DOE, Office of Science, Basic Energy Sciences under Early Career Award No. DE-SC0021965 (DYQ). Excited-state code development was supported by the Center for Computational Study of Excited-State Phenomena in Energy Materials (C2SEPEM) at the Lawrence Berkeley National Laboratory, funded by the U.S. DOE, Office of Science, Basic Energy Sciences, Materials Sciences and Engineering Division, under Contract No. DE-AC02-05CH11231 (DYQ). The calculations used resources of the National Energy Research Scientific Computing Center (NERSC), a DOE Office of Science User Facility operated under contract no. DE-AC02-05CH11231; the Advanced Cyberinfrastructure Coordination Ecosystem: Services & Support (ACCESS), which is supported by National Science Foundation grant number ACI-1548562; and the Texas Advanced Computing Center (TACC) at The University of Texas at Austin.

Author contributions

B.H. and D.Y.Q. conceived and designed the workflow of the project. B.H. and J.W. developed the Python code for the VAE model and the downstream prediction NNs. All authors analyzed the data and wrote the manuscript. D.Y.Q. supervised the work and helped interpret the results.

Peer review

Peer review information

Nature Communications thanks Shivesh Pathak and the other anonymous reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Data availability

The data (KS wavefunction and GW energies of 302 materials) generated in this study have been deposited in the Materials Data Facility repository under 10.18126/rpa1-yp91. Source data are provided with this paper.

Code availability

Code associated with this work is available from the public GitHub repository https://github.com/bwhou1997/VAE-DFT, archived on Zenodo under 10.5281/zenodo.13617033 (ref. 116), and from Code Ocean (ref. 117).

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

The online version contains supplementary material available at 10.1038/s41467-024-53748-7.

References

- 1. Carrasquilla, J. & Melko, R. G. Machine learning phases of matter. Nat. Phys. 13, 431–434 (2017).

- 2. Broecker, P., Carrasquilla, J., Melko, R. G. & Trebst, S. Machine learning quantum phases of matter beyond the fermion sign problem. Sci. Rep. 7, 8823 (2017).

- 3. Ch’ng, K., Carrasquilla, J., Melko, R. G. & Khatami, E. Machine learning phases of strongly correlated fermions. Phys. Rev. X 7, 031038 (2017).

- 4. Zhang, Y. & Kim, E.-A. Quantum loop topography for machine learning. Phys. Rev. Lett. 118, 216401 (2017).

- 5. van Nieuwenburg, E. P. L., Liu, Y.-H. & Huber, S. D. Learning phase transitions by confusion. Nat. Phys. 13, 435–439 (2017).

- 6. Costa, N. C., Hu, W., Bai, Z. J., Scalettar, R. T. & Singh, R. R. P. Principal component analysis for fermionic critical points. Phys. Rev. B 96, 195138 (2017).

- 7. Ponte, P. & Melko, R. G. Kernel methods for interpretable machine learning of order parameters. Phys. Rev. B 96, 205146 (2017).

- 8. Carrasquilla, J., Torlai, G., Melko, R. G. & Aolita, L. Reconstructing quantum states with generative models. Nat. Mach. Intell. 1, 155–161 (2019).

- 9. Lohani, S., Searles, T. A., Kirby, B. T. & Glasser, R. T. On the experimental feasibility of quantum state reconstruction via machine learning. IEEE Trans. Quant. Eng. 2, 1–10 (2021).

- 10. Lohani, S., Kirby, B. T., Brodsky, M., Danaci, O. & Glasser, R. T. Machine learning assisted quantum state estimation. Mach. Learn. Sci. Technol. 1, 035007 (2020).

- 11. Scheurer, M. S. & Slager, R.-J. Unsupervised machine learning and band topology. Phys. Rev. Lett. 124, 226401 (2020).

- 12. Zhang, P., Shen, H. & Zhai, H. Machine learning topological invariants with neural networks. Phys. Rev. Lett. 120, 066401 (2018).

- 13. Gong, X. et al. General framework for E(3)-equivariant neural network representation of density functional theory Hamiltonian. Nat. Commun. 14, 2848 (2023).

- 14. Gasteiger, J., Becker, F. & Günnemann, S. GemNet: universal directional graph neural networks for molecules. Adv. Neural Inform. Process. Syst. 34, 6790–6802 (2021).

- 15. Batzner, S. et al. E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials. Nat. Commun. 13, 2453 (2022).

- 16. Qiao, Z. et al. Informing geometric deep learning with electronic interactions to accelerate quantum chemistry. Proc. Natl Acad. Sci. USA 119, e2205221119 (2022).

- 17. Xie, T. & Grossman, J. C. Crystal graph convolutional neural networks for an accurate and interpretable prediction of material properties. Phys. Rev. Lett. 120, 145301 (2018).

- 18. Unke, O. T. & Meuwly, M. PhysNet: a neural network for predicting energies, forces, dipole moments, and partial charges. J. Chem. Theory Comput. 15, 3678–3693 (2019).

- 19. Li, H. et al. Deep-learning density functional theory Hamiltonian for efficient ab initio electronic-structure calculation. Nat. Comput. Sci. 2, 367–377 (2022).

- 20. Xia, R. & Kais, S. Quantum machine learning for electronic structure calculations. Nat. Commun. 9, 4195 (2018).

- 21. Fiedler, L. et al. Predicting electronic structures at any length scale with machine learning. npj Comput. Mater. 9, 115 (2023).

- 22. Jackson, N. E. et al. Electronic structure at coarse-grained resolutions from supervised machine learning. Sci. Adv. 5, eaav1190 (2019).

- 23. Schütt, K. T. et al. How to represent crystal structures for machine learning: towards fast prediction of electronic properties. Phys. Rev. B 89, 205118 (2014).

- 24. Guo, H., Zhang, X. & Lu, G. Shedding light on moiré excitons: a first-principles perspective. Sci. Adv. 6, eabc5638 (2020).

- 25. Cao, Y. et al. Unconventional superconductivity in magic-angle graphene superlattices. Nature 556, 43–50 (2018).

- 26. Andrei, E. Y. et al. The marvels of moiré materials. Nat. Rev. Mater. 6, 201–206 (2021).

- 27. Liu, D., Luskin, M. & Carr, S. Seeing moiré: convolutional network learning applied to twistronics. Phys. Rev. Res. 4, 043224 (2022).

- 28. Willhelm, D. et al. Predicting Van der Waals heterostructures by a combined machine learning and density functional theory approach. ACS Appl. Mater. Interfaces 14, 25907–25919 (2022).

- 29. Sobral, J. A., Obernauer, S., Turkel, S., Pasupathy, A. N. & Scheurer, M. S. Machine learning the microscopic form of nematic order in twisted double-bilayer graphene. Nat. Commun. 14, 5012 (2023).

- 30. Yang, H. et al. Identification and structural characterization of twisted atomically thin bilayer materials by deep learning. Nano Lett. 24, 2789–2797 (2024).

- 31. Tritsaris, G. A., Carr, S. & Schleder, G. R. Computational design of moiré assemblies aided by artificial intelligence. Appl. Phys. Rev. 8, 031401 (2021).

- 32. Louie, S. G., Chan, Y.-H., da Jornada, F. H., Li, Z. & Qiu, D. Y. Discovering and understanding materials through computation. Nat. Mater. 20, 728–735 (2021).

- 33. Stolz, S. et al. Spin-stabilization by Coulomb blockade in a vanadium dimer in WSe2. ACS Nano 17, 23422–23429 (2023).

- 34. Frey, N. C., Akinwande, D., Jariwala, D. & Shenoy, V. B. Machine learning-enabled design of point defects in 2D materials for quantum and neuromorphic information processing. ACS Nano 14, 13406–13417 (2020).

- 35. Wang, C., Tan, X. P., Tor, S. B. & Lim, C. S. Machine learning in additive manufacturing: state-of-the-art and perspectives. Addit. Manuf. 36, 101538 (2020).

- 36. Arrigoni, M. & Madsen, G. K. H. Evolutionary computing and machine learning for discovering of low-energy defect configurations. npj Comput. Mater. 7, 71 (2021).

- 37. Cubuk, E. D. et al. Identifying structural flow defects in disordered solids using machine-learning methods. Phys. Rev. Lett. 114, 108001 (2015).

- 38. Schattauer, C., Todorović, M., Ghosh, K., Rinke, P. & Libisch, F. Machine learning sparse tight-binding parameters for defects. npj Comput. Mater. 8, 116 (2022).

- 39. Wu, X., Chen, H., Wang, J. & Niu, X. Machine learning accelerated study of defect energy levels in perovskites. J. Phys. Chem. C 127, 11387–11395 (2023).

- 40. Kohn, W. & Sham, L. J. Self-consistent equations including exchange and correlation effects. Phys. Rev. 140, A1133–A1138 (1965).

- 41. Mattuck, R. D. A Guide to Feynman Diagrams in the Many-Body Problem 2nd edn, Vol. 464 (Dover Publications, 1992).

- 42. Cohen, M. L. & Louie, S. G. Fundamentals of Condensed Matter Physics Vol. 460 (Cambridge University Press, 2016).

- 43. Aryasetiawan, F. & Gunnarsson, O. The GW method. Rep. Prog. Phys. 61, 237 (1998).

- 44. Reining, L. The GW approximation: content, successes and limitations. Wiley Interdiscip. Rev. Comput. Mol. Sci. 8, e1344 (2018).

- 45. Carleo, G. et al. Machine learning and the physical sciences. Rev. Mod. Phys. 91, 045002 (2019).

- 46. Schleder, G. R., Padilha, A. C., Acosta, C. M., Costa, M. & Fazzio, A. From DFT to machine learning: recent approaches to materials science – a review. J. Phys. Mater. 2, 032001 (2019).

- 47. Li, Y., Dong, R., Yang, W. & Hu, J. Composition based crystal materials symmetry prediction using machine learning with enhanced descriptors. Comput. Mater. Sci. 198, 110686 (2021).

- 48. Sonpal, A., Afzal, M. A. F., An, Y., Chandrasekaran, A. & Halls, M. D. Benchmarking machine learning descriptors for crystals. In Machine Learning in Materials Informatics: Methods and Applications (ed. An, Y.) 1416 (American Chemical Society, 2022).

- 49. Patala, S. Understanding grain boundaries – the role of crystallography, structural descriptors and machine learning. Comput. Mater. Sci. 162, 281–294 (2019).

- 50. Himanen, L. et al. DScribe: library of descriptors for machine learning in materials science. Comput. Phys. Commun. 247, 106949 (2020).

- 51. Venturella, C., Hillenbrand, C., Li, J. & Zhu, T. Machine learning many-body Green’s functions for molecular excitation spectra. J. Chem. Theory Comput. 20, 143–154 (2024).

- 52. Tao, Q., Xu, P., Li, M. & Lu, W. Machine learning for perovskite materials design and discovery. npj Comput. Mater. 7, 23 (2021).

- 53. Yılmaz, B. & Yıldırım, R. Critical review of machine learning applications in perovskite solar research. Nano Energy 80, 105546 (2021).

- 54. Mattur, M. N., Nagappan, N., Rath, S. & Thomas, T. Prediction of nature of band gap of perovskite oxides (ABO3) using a machine learning approach. J. Mater. 8, 937–948 (2022).

- 55. Ryu, B., Wang, L., Pu, H., Chan, M. K. & Chen, J. Understanding, discovery, and synthesis of 2D materials enabled by machine learning. Chem. Soc. Rev. 51, 1899–1925 (2022).

- 56. Lee, J., Seko, A., Shitara, K., Nakayama, K. & Tanaka, I. Prediction model of band gap for inorganic compounds by combination of density functional theory calculations and machine learning techniques. Phys. Rev. B 93, 115104 (2016).

- 57. Rajan, A. C. et al. Machine-learning-assisted accurate band gap predictions of functionalized MXene. Chem. Mater. 30, 4031–4038 (2018).

- 58. Na, G. S., Jang, S., Lee, Y.-L. & Chang, H. Tuplewise material representation based machine learning for accurate band gap prediction. J. Phys. Chem. A 124, 10616–10623 (2020).

- 59. Zhuo, Y., Mansouri Tehrani, A. & Brgoch, J. Predicting the band gaps of inorganic solids by machine learning. J. Phys. Chem. Lett. 9, 1668–1673 (2018).

- 60. Knøsgaard, N. R. & Thygesen, K. S. Representing individual electronic states for machine learning GW band structures of 2D materials. Nat. Commun. 13, 468 (2022).

- 61. Zadoks, A., Marrazzo, A. & Marzari, N. Spectral operator representations. arXiv 10.48550/arXiv.2403.01514 (2024).

- 62. Rezende, D. J., Mohamed, S. & Wierstra, D. Stochastic backpropagation and approximate inference in deep generative models. arXiv 10.48550/arXiv.1401.4082 (2014).

- 63. Kingma, D. P. & Welling, M. Auto-encoding variational Bayes. arXiv 10.48550/arXiv.1312.6114 (2013).

- 64. Rocchetto, A., Grant, E., Strelchuk, S., Carleo, G. & Severini, S. Learning hard quantum distributions with variational autoencoders. npj Quant. Inform. 4, 28 (2018).

- 65. Yin, J., Pei, Z. & Gao, M. C. Neural network-based order parameter for phase transitions and its applications in high-entropy alloys. Nat. Comput. Sci. 1, 686–693 (2021).

- 66. Szołdra, T., Sierant, P., Lewenstein, M. & Zakrzewski, J. Unsupervised detection of decoupled subspaces: many-body scars and beyond. Phys. Rev. B 105, 224205 (2022).

- 67. Wetzel, S. J. Unsupervised learning of phase transitions: from principal component analysis to variational autoencoders. Phys. Rev. E 96, 022140 (2017).

- 68. Hybertsen, M. S. & Louie, S. G. Electron correlation in semiconductors and insulators: band gaps and quasiparticle energies. Phys. Rev. B 34, 5390–5413 (1986).

- 69. Hedin, L. New method for calculating the one-particle Green’s function with application to the electron-gas problem. Phys. Rev. 139, A796–A823 (1965).

- 70. Qiu, D. Y., da Jornada, F. H. & Louie, S. G. Optical spectrum of MoS2: many-body effects and diversity of exciton states. Phys. Rev. Lett. 111, 216805 (2013).

- 71. Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inform. Process. Syst. 60, 84–90 (2012).

- 72. Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 10.48550/arXiv.1409.1556 (2014).

- 73. LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998).

- 74. Szegedy, C. et al. Going deeper with convolutions. arXiv 10.48550/arXiv.1409.4842 (2015).

- 75. Lin, M., Chen, Q. & Yan, S. Network in network. arXiv 10.48550/arXiv.1312.4400 (2013).

- 76. Schubert, S., Neubert, P., Pöschmann, J. & Protzel, P. Circular convolutional neural networks for panoramic images and laser data. In 2019 IEEE Intelligent Vehicles Symposium (IV) (IEEE, 2019).

- 77. Jaderberg, M., Simonyan, K. & Zisserman, A. Spatial transformer networks. Adv. Neural Inform. Process. Syst. 10.48550/arXiv.1506.02025 (2015).

- 78. Cohen, A. J., Mori-Sánchez, P. & Yang, W. Challenges for density functional theory. Chem. Rev. 112, 289–320 (2012).

- 79. Burke, K. Perspective on density functional theory. J. Chem. Phys. 136, 150901 (2012).

- 80. Becke, A. D. Perspective: Fifty years of density-functional theory in chemical physics. J. Chem. Phys. 140, 18A301 (2014).

- 81. Deslippe, J. et al. BerkeleyGW: a massively parallel computer package for the calculation of the quasiparticle and optical properties of materials and nanostructures. Comput. Phys. Commun. 183, 1269–1289 (2012).

- 82. Rojas, H. N., Godby, R. W. & Needs, R. J. Space-time method for ab initio calculations of self-energies and dielectric response functions of solids. Phys. Rev. Lett. 74, 1827–1830 (1995).

- 83. Bruneval, F. & Gonze, X. Accurate GW self-energies in a plane-wave basis using only a few empty states: towards large systems. Phys. Rev. B 78, 085125 (2008).

- 84. Berger, J., Reining, L. & Sottile, F. Ab initio calculations of electronic excitations: collapsing spectral sums. Phys. Rev. B 82, 041103 (2010).

- 85. Shih, B.-C., Xue, Y., Zhang, P., Cohen, M. L. & Louie, S. G. Quasiparticle band gap of ZnO: high accuracy from the conventional G0W0 approach. Phys. Rev. Lett. 105, 146401 (2010).

- 86. Samsonidze, G., Jain, M., Deslippe, J., Cohen, M. L. & Louie, S. G. Simple approximate physical orbitals for GW quasiparticle calculations. Phys. Rev. Lett. 107, 186404 (2011).

- 87. Dong, S. S., Govoni, M. & Galli, G. Machine learning dielectric screening for the simulation of excited state properties of molecules and materials. Chem. Sci. 12, 4970–4980 (2021).

- 88. Morita, K., Davies, D. W., Butler, K. T. & Walsh, A. Modeling the dielectric constants of crystals using machine learning. J. Chem. Phys. 153, 024503 (2020).

- 89. Zauchner, M. G., Horsfield, A. & Lischner, J. Accelerating GW calculations through machine-learned dielectric matrices. npj Comput. Mater. 9, 184 (2023).

- 90. Hornik, K. Approximation capabilities of multilayer feedforward networks. Neural Netw. 4, 251–257 (1991).

- 91. Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control Sign. Syst. 2, 303–314 (1989).

- 92. Lin, B., He, X. & Ye, J. A geometric viewpoint of manifold learning. Appl. Inform. 2, 3 (2015).

- 93. Bengio, Y., Courville, A. & Vincent, P. Representation learning: a review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 35, 1798–1828 (2013).

- 94. Psenka, M., Pai, D., Raman, V., Sastry, S. & Ma, Y. Representation learning via manifold flattening and reconstruction. J. Mach. Learn. Res. 25, 132 (2024).

- 95. Pandey, A., Fanuel, M., Schreurs, J. & Suykens, J. A. Disentangled representation learning and generation with manifold optimization. Neural Comput. 34, 2009–2036 (2022).

- 96. Altman, A. R., Kundu, S. & da Jornada, F. H. Mixed stochastic-deterministic approach for many-body perturbation theory calculations. Phys. Rev. Lett. 132, 086401 (2024).

- 97. Del Ben, M. et al. Large-scale GW calculations on pre-exascale HPC systems. Comput. Phys. Commun. 235, 187–195 (2019).

- 98. Gao, W., Xia, W., Gao, X. & Zhang, P. Speeding up GW calculations to meet the challenge of large scale quasiparticle predictions. Sci. Rep. 6, 36849 (2016).

- 99. Gjerding, M. N. et al. Recent progress of the computational 2D materials database (C2DB). 2D Mater. 8, 044002 (2021).

- 100. Haastrup, S. et al. The computational 2D materials database: high-throughput modeling and discovery of atomically thin crystals. 2D Mater. 5, 042002 (2018).

- 101. Rasmussen, A., Deilmann, T. & Thygesen, K. S. Towards fully automated GW band structure calculations: what we can learn from 60.000 self-energy evaluations. npj Comput. Mater. 7, 22 (2021).

- 102. Giustino, F., Cohen, M. L. & Louie, S. G. GW method with the self-consistent Sternheimer equation. Phys. Rev. B 81, 115105 (2010).

- 103. Umari, P., Stenuit, G. & Baroni, S. GW quasiparticle spectra from occupied states only. Phys. Rev. B 81, 115104 (2010).

- 104. Govoni, M. & Galli, G. Large scale GW calculations. J. Chem. Theory Comput. 11, 2680–2696 (2015).

- 105. Foulkes, W. M., Mitas, L., Needs, R. & Rajagopal, G. Quantum Monte Carlo simulations of solids. Rev. Mod. Phys. 73, 33 (2001).

- 106. Zheng, H., Changlani, H. J., Williams, K. T., Busemeyer, B. & Wagner, L. K. From real materials to model Hamiltonians with density matrix downfolding. Front. Phys. 6, 43 (2018).

- 107. Bartlett, R. J. & Musiał, M. Coupled-cluster theory in quantum chemistry. Rev. Mod. Phys. 79, 291–352 (2007).

- 108. Purvis, G. D. & Bartlett, R. J. A full coupled-cluster singles and doubles model: the inclusion of disconnected triples. J. Chem. Phys. 76, 1910–1918 (1982).

- 109. Roos, B. O., Taylor, P. R. & Siegbahn, P. E. M. A complete active space SCF method (CASSCF) using a density matrix formulated super-CI approach. Chem. Phys. 48, 157–173 (1980).

- 110. Knowles, P. J. & Handy, N. C. A new determinant-based full configuration interaction method. Chem. Phys. Lett. 111, 315–321 (1984).

- 111. Paszke, A. et al. PyTorch: an imperative style, high-performance deep learning library. Adv. Neural Inform. Process. Syst. 32, 8026–8037 (2019).

- 112. Hinton, G. E. et al. Improving neural networks by preventing co-adaptation of feature detectors. arXiv 10.48550/arXiv.1207.0580 (2012).

- 113. Bishop, C. M. & Nasrabadi, N. M. Pattern Recognition and Machine Learning 1st edn, Vol. 778 (Springer, 2006).

- 114. Mortensen, J. J. et al. GPAW: an open Python package for electronic structure calculations. J. Chem. Phys. 160, 092503 (2024).

- 115. Larsen, A. H. et al. The atomic simulation environment – a Python library for working with atoms. J. Phys. Condens. Matter 29, 273002 (2017).

- 116. Hou, B., Wu, J. & Qiu, D. Y. Code for ‘Unsupervised representation learning of Kohn-Sham states and consequences for downstream predictions of many-body effects’. Zenodo 10.5281/zenodo.13617033 (2024).

- 117. Hou, B., Wu, J. & Qiu, D. Y. Code Ocean for ‘Unsupervised learning of individual Kohn-Sham states: interpretable representations and consequences for downstream predictions of many-body effects’. arXiv 10.48550/arXiv.2404.14601 (2024).
