Abstract

Objective

To assess the effectiveness of evidence‐based quality improvement (EBQI) as an implementation strategy to expand the use of medications for opioid use disorder (MOUD) within nonspecialty settings.

Data Sources and Study Setting

We studied eight facilities in one Veterans Health Administration (VHA) region from October 2015 to September 2022 using administrative data.

Study Design

The project began as a pilot and then expanded; we sequentially engaged seven of eight facilities from April 2018 to September 2022 using EBQI, which consisted of multilevel stakeholder engagement, technical support, practice facilitation, and data feedback. We established facility‐level interdisciplinary quality improvement (QI) teams and a regional‐level cross‐facility collaborative. We used a nonrandomized stepped wedge design with repeated cross sections to accommodate the phased implementation. Using aggregate facility‐level data from October 2015 to September 2022, we analyzed changes in the proportion of patients receiving MOUD using hierarchical multiple logistic regression.

Data Collection/Extraction Methods

Eligible patients had an opioid use disorder (OUD) diagnosis from an outpatient or inpatient visit in the previous year. Receiving MOUD was defined as having been prescribed an opioid agonist or antagonist treatment or having visited an opioid substitution clinic.

Principal Findings

The probability of patients with OUD receiving MOUD improved significantly over time at all eight facilities (average marginal effect [AME]: 0.0057, 95% CI: 0.0044, 0.0070), likely reflecting ongoing VHA initiatives. On average, the probability of receiving MOUD increased by 0.57 percentage points each quarter, totaling approximately 16 percentage points over the evaluation period. The seven facilities engaging in EBQI experienced, on average, an additional 5.25 percentage point increase in the probability of receiving MOUD (AME: 0.0525, 95% CI: 0.0280, 0.0769). EBQI duration was not associated with changes in MOUD receipt.

Conclusions

EBQI was effective for expanding access to MOUD in nonspecialty settings. It increased the proportion of patients receiving MOUD beyond what was associated with temporal trends. Additional research is needed in light of recent MOUD expansion legislation.

Keywords: buprenorphine, implementation science, methadone, naltrexone, opioid use disorder, quality improvement

What is known on this topic

In the last 10 years, the United States has seen a dramatic increase in opioid‐related mortality, fueled by the prevalence of fentanyl and exacerbated by the COVID‐19 pandemic.

Opioid‐related mortality can be reduced by treating opioid use disorder (OUD) with medications for opioid use disorder (MOUD), such as methadone, buprenorphine, and extended‐release naltrexone.

MOUD adoption has been slow, particularly in nonspecialty settings, due to numerous implementation challenges, such as stigma, logistical barriers, and lack of knowledge and training about MOUD.

What this study adds

Evidence‐based quality improvement (EBQI) was an effective implementation strategy for expanding access to MOUD in nonspecialty settings. It increased the proportion of patients receiving MOUD beyond what was associated with temporal trends.

Quality improvement (QI) teams participated in a learning collaborative and developed action plans, resulting in an additional 5.25 percentage point increase in the probability of patients receiving MOUD during the evaluation period.

This project demonstrates that EBQI can be successfully used to implement evidence‐based practices, such as OUD treatment in nonspecialty settings.

1. INTRODUCTION

In the last 10 years, the United States has seen a dramatic increase in opioid‐related mortality; the number of deaths from opioid overdose has quadrupled since 1999. 1 Much of this increase can be attributed to fentanyl 2 and other synthetic opioids. According to provisional CDC data, these were responsible for over 90% of all drug overdose deaths in 2023. 3 The COVID‐19 pandemic further worsened the opioid epidemic, with monthly overdose deaths surging in the first year of the pandemic. 3 Fortunately, opioid‐related mortality can be reduced by treating opioid use disorder (OUD) with medications for opioid use disorder (MOUD), such as methadone, buprenorphine, and extended‐release naltrexone. 4 These medications effectively treat OUD, significantly reducing the risk of comorbid disease, overdose, and death. 5 , 6 , 7 , 8 , 9

The Veterans Health Administration (VHA) has strongly endorsed MOUD 10 ; however, MOUD adoption has been slow, particularly in nonspecialty settings (e.g., primary care, mental health), due to numerous implementation challenges. Barriers over the last decade have included:

- patient and provider stigma;
- logistical barriers, such as the previously mandated training to obtain the Drug Enforcement Administration (DEA) X‐waiver to prescribe buprenorphine, and the X‐waiver itself;
- lack of knowledge and training about MOUD. 11 , 12 , 13

For example, in one study of prescribers who received VHA‐sponsored X‐waiver training, only 7% reported prescribing buprenorphine at 9‐month follow‐up. 14 Providers in nonspecialty settings may be wary of discussing treatment with patients with OUD because of their own stigmatizing attitudes toward patients with substance use disorders (SUD). 13 As a result, most buprenorphine prescribers work in specialty SUD clinics, which many patients are reluctant to access due to stigma. 14

We sought to expand the use of MOUD in nonspecialty settings within one VHA region. This effort began as a pilot project 15 and then expanded into a larger trial under the umbrella of a national VHA initiative to increase access to MOUD. We engaged support from multidisciplinary champions (i.e., primary care, SUD, pain, pharmacy, inpatient) and facility leadership at seven VHA facilities to promote these therapies for patients with OUD in any setting. Using a “no wrong door” approach, we had two aims. First, we aimed to educate nonspecialty providers about how to recognize OUD and its treatment options. Second, we aimed to adapt MOUD delivery models to the unique needs of each facility and setting.

We used evidence‐based quality improvement (EBQI) as a systematic approach to engage facilities in OUD treatment from April 2018 to September 2022. EBQI involves cultivating a research–clinical partnership and engaging national and regional senior organizational leadership and front‐line staff in adapting and implementing evidence‐based practices through a bundle of implementation strategies. Evidence‐based practices are adapted collaboratively to local conditions, with researchers providing technical assistance, formative feedback, and practice facilitation. 16 EBQI has been successfully used in multiple previous large multisite studies, such as implementation of a collaborative care model for depression, 17 , 18 women's health primary care, 19 and patient‐centered medical home, 20 as well as in multiple smaller evaluations of diverse EBQI initiatives in primary care settings. 16

We sought to assess the effectiveness of EBQI as an implementation strategy to expand the use of MOUD within nonspecialty settings, with the hypothesis that EBQI would be an effective strategy to expand access to these medications in settings outside of specialty SUD treatment.

2. METHODS

This project began as a pilot in 2018 and transitioned into a larger trial in 2020 as part of the national VHA initiative (Clinical Trial No. NCT04178551). This larger trial was a hybrid type III effectiveness‐implementation trial. 21 , 22 Our primary aim was to assess the effectiveness of EBQI as an implementation strategy. Our secondary aim was to examine the change over time in the proportion of patients with OUD who received MOUD at the sites. To evaluate the change in OUD treatment rates, we used a nonrandomized stepped wedge repeated cross‐sectional design across eight facilities in one VHA region. This design accommodated phased implementation based on site readiness 23 , 24 ; implementation took place from April 2018 to September 2022.

We partnered with VHA regional leaders for facilities located in Southern California, Arizona, and New Mexico. VHA regional leaders selected Facilities A and B during the pilot phase of the project in 2018, given their relatively greater resources and interest in implementing MOUD in primary care and developing tools to expand access (i.e., organizational readiness 25 ). While not an explicit criterion, these two sites were the top two performers in MOUD treatment rates in the region and ranked in the top 20th percentile nationally. Later, as part of the larger national VHA initiative, VHA regional leaders selected Facilities C, D, and E as the next phase of sites starting in 2020. Facilities F, G, and H made up the final phase, beginning EBQI in 2021. We initiated engagement with leadership and providers at Facility H, but this site declined to receive EBQI implementation services as part of this project. It chose instead to focus on preexisting internal efforts around MOUD at the facility (Table 1).

TABLE 1.

Facilities in the participating Veterans Health Administration (VHA) region.

| Site | Rural versus urban | Unique patients FY18 Q1 | Baseline MOUD treatment rate FY18 Q1 | MOUD treatment rate FY22 Q4 | EBQI participation |
|---|---|---|---|---|---|
| Facility A | Urban | 63,436 | 364/848 (42.9%) | 500/1051 (47.6%) | Yes |
| Facility B | Urban | 4034 | 189/571 (33.1%) | 311/454 (68.5%) | Yes |
| Facility C | Urban | 52,488 | 139/660 (21.1%) | 172/404 (42.6%) | Yes |
| Facility D | Urban | 47,258 | 382/871 (43.9%) | 373/840 (44.4%) | Yes |
| Facility E | Urban | 62,074 | 144/658 (21.9%) | 198/474 (41.8%) | Yes |
| Facility F | Urban | 21,190 | 67/224 (29.9%) | 200/329 (60.8%) | Yes |
| Facility G | Urban | 36,261 | 122/451 (27.1%) | 154/406 (37.9%) | Yes |
| Facility H | Urban | 38,081 | 257/667 (38.5%) | 248/540 (45.9%) | No |

Abbreviations: EBQI, evidence‐based quality improvement; FY, fiscal year; MOUD, medications for opioid use disorder; Q, quarter.
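The rates in Table 1 follow directly from the reported counts, so they can be rechecked. The minimal Python sketch below is an illustrative aid, not part of the authors' analysis. The dictionary transcribes the counts in Table 1, and `rate_pct` and `change_pp` are hypothetical helper names. The change is computed from unrounded rates, so it can differ by 0.1 from the difference of the rounded table entries.

```python
# Counts transcribed from Table 1:
# facility -> ((MOUD recipients, patients with OUD) at FY18 Q1,
#              (MOUD recipients, patients with OUD) at FY22 Q4)
TABLE1 = {
    "A": ((364, 848), (500, 1051)),
    "B": ((189, 571), (311, 454)),
    "C": ((139, 660), (172, 404)),
    "D": ((382, 871), (373, 840)),
    "E": ((144, 658), (198, 474)),
    "F": ((67, 224), (200, 329)),
    "G": ((122, 451), (154, 406)),
    "H": ((257, 667), (248, 540)),
}


def rate_pct(numerator: int, denominator: int) -> float:
    """Treatment rate as a percentage, rounded to one decimal as in Table 1."""
    return round(100 * numerator / denominator, 1)


def change_pp(facility: str) -> float:
    """Percentage-point change from FY18 Q1 to FY22 Q4, from unrounded rates."""
    (n0, d0), (n1, d1) = TABLE1[facility]
    return round(100 * (n1 / d1 - n0 / d0), 1)


for site, ((n0, d0), (n1, d1)) in TABLE1.items():
    print(f"{site}: {rate_pct(n0, d0)}% -> {rate_pct(n1, d1)}% ({change_pp(site):+} pp)")
```

Every facility, including the non‐EBQI Facility H, shows a positive change between these two quarters. This is consistent with the secular trend described in the Results.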

2.1. Ethics statement

Per VHA Handbook 1200.21 (Veterans Health Administration 2019), this project was conducted as a nonresearch evaluation under the authority of the Veterans Affairs (VA) Office of Mental Health and Suicide Prevention.

2.2. Data source

We analyzed VHA performance measure data, 26 which combine encounter data, diagnoses, and pharmacy data from the VHA Corporate Data Warehouse. We used VHA performance measure data, as these were a priority for our operational partners and routinely monitored for quality improvement (QI) purposes. 27

2.3. Primary outcome

Our outcome was a binary indicator (yes vs. no) of whether a patient with an OUD diagnosis in the past year received MOUD. For each quarter of the evaluation period, we obtained aggregated facility‐level data for this VHA performance measure. 26 , 27 At each facility, the number of patients diagnosed with OUD served as the denominator, and the number of those patients receiving MOUD served as the numerator. From these counts, we calculated the number of patients diagnosed with OUD who did and did not receive MOUD. In total, our data contained 131,388 patient‐level observations during the evaluation period.
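Converting aggregated numerator/denominator counts into patient‐level binary observations is a simple expansion. The minimal Python sketch below assumes only the counts described above; `FacilityQuarter` and `expand_to_patients` are hypothetical names. Summing the denominators over all facility‐quarters gives the total number of patient‐level observations.

```python
from typing import Iterable, Iterator, NamedTuple


class FacilityQuarter(NamedTuple):
    """Aggregated performance-measure counts for one facility in one quarter."""
    facility: str
    quarter: int  # 1..28 within the evaluation period
    n_oud: int    # denominator: patients with an OUD diagnosis in the past year
    n_moud: int   # numerator: those patients who received MOUD


def expand_to_patients(
    rows: Iterable[FacilityQuarter],
) -> Iterator[tuple[str, int, int]]:
    """Yield one (facility, quarter, received_moud) record per patient with OUD.

    The first n_moud records get received_moud = 1 and the remaining
    n_oud - n_moud get 0, so the mean outcome equals the facility rate.
    """
    for r in rows:
        if not 0 <= r.n_moud <= r.n_oud:
            raise ValueError(f"invalid counts: {r}")
        for i in range(r.n_oud):
            yield (r.facility, r.quarter, 1 if i < r.n_moud else 0)


# Usage with Facility A's FY18 Q1 (Quarter 9) counts from Table 1.
records = list(expand_to_patients([FacilityQuarter("A", 9, 848, 364)]))
n_records = len(records)
n_moud = sum(outcome for _, _, outcome in records)
```

Because the same patient can appear in several quarters, these are repeated cross‐sectional observations, not unique patients.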

Patients with an OUD diagnosis were identified using ICD‐10 codes (F11.1**, F11.2**) from an inpatient admission or outpatient encounter in the previous year. MOUD was defined as either of the following in VHA and non‐VHA pharmacy data:

- at least one visit to an opioid substitution clinic;
- a prescription for at least one opioid antagonist (naltrexone) or one of the specified opioid agonists (buprenorphine, buprenorphine/naloxone [Suboxone], methadone, or extended‐release buprenorphine [Sublocade]).
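The cohort and outcome definitions above can be expressed as two predicates. The sketch below is a simplification under stated assumptions. It assumes dotted ICD‐10 codes (e.g., "F11.20") and medication names already normalized to the generic names listed above. The constant and function names are hypothetical; the actual VHA performance measure logic operates on Corporate Data Warehouse records and is not reproduced here.

```python
# Hypothetical encoding of the definitions in the text (not the VHA measure code).
OUD_ICD10_PREFIXES = ("F11.1", "F11.2")  # the F11.1** and F11.2** code families

MOUD_MEDICATIONS = frozenset({
    "naltrexone",                      # opioid antagonist
    "buprenorphine",                   # partial opioid agonist
    "buprenorphine/naloxone",          # e.g., Suboxone
    "methadone",                       # full opioid agonist
    "extended-release buprenorphine",  # e.g., Sublocade
})


def has_oud_diagnosis(icd10_codes) -> bool:
    """True if any past-year inpatient/outpatient code is in F11.1* or F11.2*."""
    return any(code.strip().upper().startswith(OUD_ICD10_PREFIXES) for code in icd10_codes)


def received_moud(medications, opioid_substitution_clinic_visits: int) -> bool:
    """True if >= 1 opioid substitution clinic visit or >= 1 qualifying prescription."""
    meds = {m.strip().lower() for m in medications}
    return opioid_substitution_clinic_visits >= 1 or bool(meds & MOUD_MEDICATIONS)
```

For example, `has_oud_diagnosis(["E11.9", "F11.20"])` is true, while a code outside the two families, such as "F11.90", does not qualify.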

2.4. EBQI implementation activities

EBQI core elements (Table 2) included multilevel stakeholder engagement that was both “top‐down” (i.e., leadership) and “bottom‐up” (i.e., front‐line clinical staff). External facilitators on the project team provided technical support, EBQI training, practice facilitation, and routine data feedback. They also led across‐site learning collaboratives.

TABLE 2.

Descriptions of evidence‐based quality improvement activities.

| EBQI activity | Description | Example(s) from project |
|---|---|---|
| Multilevel interdisciplinary stakeholder engagement 28 , 29 | Engagement of multiple levels of the healthcare organization (e.g., national, regional, facility, and clinic level), using data to agree on priorities and target metrics at the start of the project. | Convened advisory committee meeting; met with VHA regional workgroup monthly; met with QI teams from all facilities monthly on cross‐facility interdisciplinary collaborative; met monthly with facility champions within each discipline. |
| EBQI training for Facility Champions 28 | Provide local QI team with problem‐solving skills (Plan–Do–Study–Act) 30 , 31 and leadership skills to engage healthcare leaders using data. | Trained facility clinical champions in Plan–Do–Study–Act. Discussed metrics of success meaningful to leadership. |
| Practice facilitation through regular calls with the local quality improvement teams 28 , 29 | Coach local QI team in QI principles to support improvement in clinical practices. | Collaborated with facilities to help them consider underlying problems, potential solutions, potential explanations for results, and alternative solutions that are specific to their facility. |
| Structured QI action plan 28 | Local QI teams develop QI action plans that follow the Plan–Do–Study–Act cycles, 30 including data collection and measurement. | Facility champions developed and presented 15 quality improvement action plans to the cross‐facility interdisciplinary collaborative and to VHA regional workgroup. |
| Regular discussions of formative data feedback 28 , 29 | Review of progress towards target metrics using administrative data and qualitative data. | Provided quarterly updates on data to cross‐facility interdisciplinary collaborative and to VHA regional workgroup. |
| Across‐site collaborative 28 , 29 | Multiple sites participate in a collaborative that enables across‐site learning, sharing best practices, and problem‐solving. | Facilities participated in monthly cross‐facility interdisciplinary collaborative meeting. Champions within each discipline across facilities met monthly. |

Abbreviations: EBQI, evidence‐based quality improvement; QI, quality improvement; VHA, Veterans Health Administration.

Stakeholders were enlisted across national, regional, and facility levels. In the pilot phase of the project, we twice convened a national advisory board consisting of national VHA experts in pain and SUD, VHA regional and facility leaders in primary care and mental health, and a VHA health economist to ensure alignment with national priorities, develop consensus, and advise on benchmarks of success. We met monthly with VHA regional leaders throughout all phases of the project to review formative data on progress, discuss methods to leverage facilitators, and address implementation barriers.

At each facility, we established an interdisciplinary QI team (e.g., primary care, SUD, pain, pharmacy). Each facility identified clinical champions from various disciplines (e.g., primary care, mental health, pharmacy), who received training in EBQI from a subject matter expert (N = 19 champions trained). Champions then developed and implemented 15 QI action plans across all facilities for their facilities and disciplines. We also convened monthly discipline‐specific meetings (i.e., primary care, pharmacy) across facilities to help develop and refine QI action plans.

The cornerstone of our EBQI activities was a cross‐facility interdisciplinary collaborative in which facility QI teams shared tools, lessons learned, and experiences. During these cross‐facility calls, we:

- performed practice facilitation;
- provided education and additional training (Plan–Do–Study–Act cycles, using data to monitor progress, and topics relevant to MOUD);
- developed tools;
- reviewed data.

Champions presented their QI action plans to the collaborative and received feedback before presenting them to facility and regional leadership. For example, one champion's QI action plan involved reviewing existing VHA dashboards to identify patients who might benefit from MOUD. The collaborative advised against excluding patients in remission, because including them could help address relapse concerns and other issues proactively. During the pilot phase (Facilities A and B), the collaborative met twice monthly for a total of 23 meetings (average attendance = 6 QI team members/meeting). During the national VHA initiative (Facilities A–G), it met monthly for a total of 27 meetings (average attendance = 20 QI team members/meeting, with an average of 4 representatives per facility) (Table 2).

2.5. Stepped wedge design

The phased implementation period for this project ran from April 2018 to September 2022. The evaluation period extended further, from October 2015 to September 2022. This allowed us to evaluate changes over time in our outcome measure (the proportion of patients with OUD who received MOUD), regardless of EBQI implementation.

We used a stepped wedge design to evaluate the phased implementation 23 of EBQI across facilities from October 2015 to September 2022. The rollout of EBQI was nonrandomized; that is, its timing was not within the researchers' control. All facilities were in the usual state from Quarter 1 to Quarter 10. Implementation started in the following quarters (Table 3):

- Facilities A and B: Quarter 11;
- Facility C: Quarter 19;
- Facilities D and E: Quarter 20;
- Facility F: Quarter 23;
- Facility G: Quarter 24.

Facility H never implemented EBQI and remained in the usual state for the entire evaluation period. In all, 7 of the 8 facilities implemented EBQI at 5 distinct time points across 28 quarters.
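The rollout can be encoded compactly as each facility's first implementation quarter. From that encoding, every cell of the U/I grid in Table 3 follows. The Python sketch below is illustrative; `START_QUARTER`, `phase`, and `schedule_row` are hypothetical names. It reproduces the grid and recovers the counts stated above: 7 implementing facilities and 5 distinct steps.

```python
# Assumed encoding of Table 3: first EBQI quarter per facility
# (Quarter 1 = Oct-Dec 2015); None = never implemented (Facility H).
START_QUARTER = {"A": 11, "B": 11, "C": 19, "D": 20, "E": 20,
                 "F": 23, "G": 24, "H": None}
N_QUARTERS = 28


def phase(facility: str, quarter: int) -> str:
    """Return 'I' (implementation) or 'U' (usual state) for one cell of Table 3."""
    start = START_QUARTER[facility]
    return "I" if start is not None and quarter >= start else "U"


def schedule_row(facility: str) -> list[str]:
    """All 28 quarterly cells for one facility, in order."""
    return [phase(facility, q) for q in range(1, N_QUARTERS + 1)]


# Distinct quarters in which at least one facility crossed over (the "steps").
steps = sorted({s for s in START_QUARTER.values() if s is not None})
n_implementing = sum(s is not None for s in START_QUARTER.values())

for facility in START_QUARTER:
    print(facility, " ".join(schedule_row(facility)))
```

Storing only the start quarter, rather than the full grid, makes the stepped wedge property explicit. Once a facility crosses over, it stays in the implementation state.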

TABLE 3.

Implementation schedule.

| Calendar | Oct–Dec 2015 | Jan–Mar 2016 | Apr–Jun 2016 | Jul–Sep 2016 | Oct–Dec 2016 | Jan–Mar 2017 | Apr–Jun 2017 | Jul–Sep 2017 | Oct–Dec 2017 | Jan–Mar 2018 | Apr–Jun 2018 | Jul–Sep 2018 | Oct–Dec 2018 | Jan–Mar 2019 | Apr–Jun 2019 | Jul–Sep 2019 | Oct–Dec 2019 | Jan–Mar 2020 | Apr–Jun 2020 | Jul–Sep 2020 | Oct–Dec 2020 | Jan–Mar 2021 | Apr–Jun 2021 | Jul–Sep 2021 | Oct–Dec 2021 | Jan–Mar 2022 | Apr–Jun 2022 | Jul–Sep 2022 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Fiscal | Q1 FY16 | Q2 FY16 | Q3 FY16 | Q4 FY16 | Q1 FY17 | Q2 FY17 | Q3 FY17 | Q4 FY17 | Q1 FY18 | Q2 FY18 | Q3 FY18 | Q4 FY18 | Q1 FY19 | Q2 FY19 | Q3 FY19 | Q4 FY19 | Q1 FY20 | Q2 FY20 | Q3 FY20 | Q4 FY20 | Q1 FY21 | Q2 FY21 | Q3 FY21 | Q4 FY21 | Q1 FY22 | Q2 FY22 | Q3 FY22 | Q4 FY22 |
| Quarter | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 | 27 | 28 |
| Concurrent VHA initiatives | N | N | N | N | N | M, N | M, N | M, N | M, N | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T | M, N, R, T |
| Facility A | U | U | U | U | U | U | U | U | U | U | I | I | I | I | I | I | I | I | I | I | I | I | I | I | I | I | I | I |
| Facility B | U | U | U | U | U | U | U | U | U | U | I | I | I | I | I | I | I | I | I | I | I | I | I | I | I | I | I | I |
| Facility C | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | I | I | I | I | I | I | I | I | I | I |
| Facility D | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | I | I | I | I | I | I | I | I | I |
| Facility E | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | I | I | I | I | I | I | I | I | I |
| Facility F | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | I | I | I | I | I | I |
| Facility G | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | I | I | I | I | I |
| Facility H | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U | U |

Abbreviations: FY, Fiscal Year; I, Implementation; M, Academic detailing; N, Naloxone prescribing; Q, Quarter; R, Dashboard review; T, Train‐the‐trainer; U, Usual Care.

2.6. Data analysis

Our main independent variables were facility, time, implementation status, and duration of implementation:

- Facility (Facilities A–H) captured differences among the eight facilities.
- Time (Quarter 1–Quarter 28) represented a linear time trend over the evaluation period for all facilities.
- Implementation status (usual state vs. implementation) indicated whether a facility was implementing EBQI in each quarter.
- Duration of implementation (0–18) represented the number of quarters a facility had been in the implementation state.
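Per facility‐quarter, the four explanatory variables can be derived from the rollout schedule alone. The Python sketch below is illustrative, and `covariates` is a hypothetical name. It assumes that the first implementation quarter counts as 1 for duration. That convention reproduces the stated 0–18 range, since Facilities A and B implement from Quarter 11 through Quarter 28. Whether the authors used exactly this convention is not stated.

```python
# First EBQI quarter per facility, from Table 3; None = never implemented.
START_QUARTER = {"A": 11, "B": 11, "C": 19, "D": 20, "E": 20,
                 "F": 23, "G": 24, "H": None}


def covariates(facility: str, quarter: int) -> dict:
    """Explanatory variables for one facility-quarter, as defined in the text."""
    start = START_QUARTER[facility]
    implementing = start is not None and quarter >= start
    return {
        "facility": facility,                 # categorical, A-H
        "time": quarter,                      # linear trend, 1..28
        "implementation": int(implementing),  # 0 = usual state, 1 = EBQI
        # Assumption: the first implementation quarter counts as 1, which
        # reproduces the stated 0-18 range (Facilities A/B, Quarters 11-28).
        "duration": quarter - start + 1 if implementing else 0,
    }


panel = [covariates(f, q) for f in START_QUARTER for q in range(1, 29)]
```

Across all 224 facility‐quarters, 75 are in the implementation state (18 + 18 + 10 + 9 + 9 + 6 + 5).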

Our data were multilevel and hierarchical, 32 with three components at different levels:

- implementation status (i.e., EBQI), targeted at the facility level;
- the explanatory variables, at the time (quarter) level;
- the outcome of interest, at the patient level.

Patients were nested within quarters, and quarters were nested within facilities. The three‐level data were structured as a repeated cross‐sectional design. Patients formed level 1, the quarter in which the outcome was assessed formed level 2, and facilities formed level 3. The repeated cross‐sectional design allowed us to examine MOUD trends at the facility level while controlling for the compositional makeup (e.g., demographics) of the patients at those facilities. 33 Because the outcome was binary and the data were multilevel, we used hierarchical multiple logistic regression. 34 Our model included facility and quarterly time trends as controls to account for variation between sites and changes over time. After fitting the model, we obtained average marginal effects for the explanatory variables.
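Average marginal effects (AMEs) translate logistic regression coefficients into changes in predicted probability, averaged over observations. The minimal NumPy sketch below follows the standard definitions:

- For a 0/1 variable, the AME is the mean discrete change in predicted probability when the variable is switched from 0 to 1.
- For a continuous variable, the AME is the mean of the derivative β·p·(1 − p).

This sketch makes several assumptions. It uses only the fixed‐effect linear predictor, and the text does not say how the authors handled random effects when computing predictions. The coefficients in the example are placeholders, not the fitted values. The function names are hypothetical.

```python
import numpy as np


def expit(z: np.ndarray) -> np.ndarray:
    """Logistic function: maps a linear predictor to a probability."""
    return 1.0 / (1.0 + np.exp(-z))


def ame_binary(X: np.ndarray, beta: np.ndarray, j: int) -> float:
    """AME of a 0/1 column j: average discrete change in predicted probability
    when column j is switched from 0 to 1 for every observation."""
    X1, X0 = X.copy(), X.copy()
    X1[:, j] = 1.0
    X0[:, j] = 0.0
    return float(np.mean(expit(X1 @ beta) - expit(X0 @ beta)))


def ame_continuous(X: np.ndarray, beta: np.ndarray, j: int) -> float:
    """AME of a continuous column j: average of dp/dx_j = beta_j * p * (1 - p)."""
    p = expit(X @ beta)
    return float(np.mean(beta[j] * p * (1.0 - p)))


# Toy example with placeholder coefficients: columns = intercept, indicator, trend.
X = np.array([[1.0, 0.0, 0.0],
              [1.0, 1.0, 0.0]])
beta = np.array([0.0, np.log(3.0), 0.4])
effect_indicator = ame_binary(X, beta, 1)   # 0.75 - 0.5 for both rows
effect_trend = ame_continuous(X, beta, 2)
```

Read on the probability scale, an implementation AME of 0.0525 corresponds to the 5.25 percentage point difference reported in the Results.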

In addition, we ran a facility‐level model as a sensitivity analysis. It used the aggregated facility‐level proportions of patients with an OUD diagnosis who received MOUD. We used a multiple linear regression model with the same explanatory variables (site, time, EBQI implementation status, and duration) and obtained average marginal effects. The facility‐level data contained 224 observations (8 facilities × 28 quarters) during the evaluation period.
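The facility‐level sensitivity model's design matrix can be built directly from the schedule. The NumPy sketch below constructs it; the names are hypothetical. It assumes that Facility H is the omitted reference site, which the absence of an H row in Table 4 suggests but the text does not state. The columns are an intercept, seven site indicators, time, implementation status, and duration, giving 224 rows and 11 columns. A rank check confirms that implementation status and duration are separately identifiable alongside the time trend.

```python
import numpy as np

# First EBQI quarter per facility, from Table 3; None = never implemented.
START_QUARTER = {"A": 11, "B": 11, "C": 19, "D": 20, "E": 20,
                 "F": 23, "G": 24, "H": None}
SITES = list(START_QUARTER)  # A..H
REFERENCE = "H"              # assumption: omitted site category
N_QUARTERS = 28


def design_matrix() -> np.ndarray:
    """Rows = facility-quarters; columns = intercept, site dummies (A-G),
    time, implementation status, duration."""
    rows = []
    for site in SITES:
        start = START_QUARTER[site]
        for q in range(1, N_QUARTERS + 1):
            impl = int(start is not None and q >= start)
            duration = q - start + 1 if impl else 0
            dummies = [int(site == s) for s in SITES if s != REFERENCE]
            rows.append([1, *dummies, q, impl, duration])
    return np.array(rows, dtype=float)


X = design_matrix()
```

With a vector `y` of the 224 observed proportions, ordinary least squares would be `coef, *_ = np.linalg.lstsq(X, y, rcond=None)`. Because this model is linear in the columns as specified, each coefficient is also its average marginal effect.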

3. RESULTS

3.1. Implementation activities

Multidisciplinary champions developed and implemented 15 QI action plans for their facilities and presented them to the cross‐facility QI team and to regional and facility leadership. QI action plans included expanding buprenorphine/naloxone prescribing in clinical teams outside of SUD treatment settings, using existing VHA dashboards to identify patients who may benefit from MOUD or were coded inappropriately for OUD, improving care transitions for OUD patients in several different clinical settings (emergency room, inpatient, specialty substance use disorder care, pain), and increasing access to harm reduction supplies (e.g., fentanyl test strips).

Teams also developed a variety of tools for providers and for patient marketing:

- Tools for providers included provider education sessions, dashboard guides, screening tools, and resources for accessing X‐waiver training and certification.
- Patient marketing tools included flyers to distribute in clinics, letters mailed to patients taking opioids, and messaging for public areas of the hospital, such as e‐boards and “table toppers.”

In addition, teams created email and social media campaigns featuring Veteran narratives. These narratives were developed with multiple rounds of feedback from VHA provider stakeholders and a Veterans Engagement Board. Each included a paragraph about how to access treatment. The campaigns were featured in facility emails to Veterans and on facility social media channels.

3.2. Clinical effectiveness

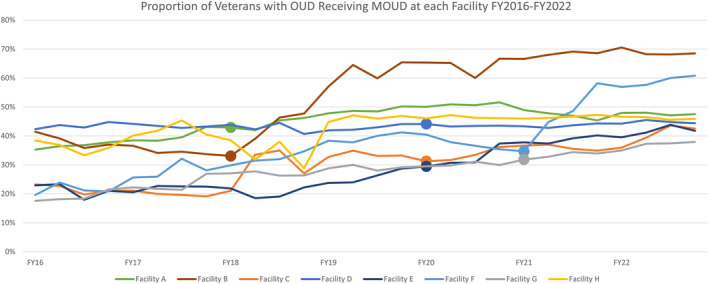

The proportion of patients with OUD receiving MOUD increased at all eight facilities during the 7‐year evaluation period (Figure 1). According to our analytic model, the probability of patients with OUD receiving MOUD improved significantly over time at all eight facilities, even without the EBQI implementation strategy (average marginal effect [AME]: 0.0057, 95% CI: 0.0044, 0.0070). The probability of receiving MOUD increased by 0.57 percentage points on average each quarter, totaling an increase of 15.96 percentage points over the 28‐quarter evaluation period (Table 4). The seven facilities engaging in EBQI experienced an additional 5.25 percentage point increase, on average, in the probability of receiving MOUD (AME: 0.0525, 95% CI: 0.0280, 0.0769). This gives a combined improvement of 21.21 percentage points during the evaluation period. The duration of EBQI implementation was not associated with changes in patients receiving MOUD. The sensitivity analysis yielded similar results (Table A1).
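The totals reported above are linear extrapolations of the AMEs in Table 4. The per‐quarter time AME (0.57 percentage points) is treated as a constant increment over 28 quarters, and the implementation AME is then added. This is a simplification, since a logistic model's marginal effects vary across observations:

$$
\underbrace{28 \times 0.57}_{\text{time trend}} = 15.96\ \text{pp},
\qquad
15.96 + \underbrace{5.25}_{\text{EBQI}} = 21.21\ \text{pp}
$$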

FIGURE 1.

Proportion of Veterans with opioid use disorder (OUD) receiving medications for opioid use disorder (MOUD) at each facility, FY2016–FY2022. A dot on a facility's line marks the beginning of its implementation phase. FY, fiscal year; MOUD, medications for opioid use disorder; OUD, opioid use disorder.

TABLE 4.

Average marginal effects for the three‐level multiple logistic regression model with site, time, evidence‐based quality improvement (EBQI) implementation status, and duration as explanatory variables.

| Variable | AME estimate (SE) | 95% CI |
|---|---|---|
| Site | | |
| A | −0.0134 (0.0166) | (−0.0465, 0.0186) |
| B | 0.0745** (0.0170) | (0.0412, 0.1079) |
| C | −0.1419** (0.0137) | (−0.1686, −0.1151) |
| D | −0.00749 (0.0141) | (−0.0351, 0.0202) |
| E | −0.1574** (0.0134) | (−0.1836, −0.1312) |
| F | −0.0627** (0.0144) | (−0.0910, −0.0345) |
| G | −0.1532** (0.0132) | (−0.1790, −0.1274) |
| Time | 0.0057** (0.00079) | (0.0044, 0.0070) |
| Implementation status | 0.05245** (0.0125) | (0.0280, 0.0769) |
| Duration | 0.00042 (0.0014) | (−0.0023, 0.0031) |

Abbreviations: AME, average marginal effect; CI, confidence interval; SE, standard error.

** p < 0.0001.

4. DISCUSSION

In response to the accelerating national opioid epidemic, we partnered with VHA regional leaders for Southern California, Arizona, and New Mexico to increase MOUD treatment rates among patients diagnosed with OUD. Beginning with a pilot project and then expanding under the umbrella of a larger national VHA initiative, we used EBQI as an implementation strategy to engage facilities in a multifaceted intervention to expand the use of MOUD within nonspecialty settings with support from multidisciplinary champions and facility leadership at seven VHA facilities.

We found that the probability of patients with OUD receiving MOUD treatment improved significantly over time (16 percentage points over 7 years) for all eight facilities in the region, even without EBQI. Given the significant national attention on preventing opioid‐related mortality, legislative changes around prescribing buprenorphine, and clinical practice guidelines for opioid prescribing, 35 we were not surprised by these findings. VHA initiatives during the evaluation period 2015–2022 included academic detailing focused on opioid safety and OUD treatment, 36 , 37 the use of interdisciplinary team review of dashboards, 38 , 39 , 40 widespread naloxone prescribing to prevent opioid‐related mortality, 41 and train‐the‐trainer initiatives for OUD treatment. 42 All of these VHA national initiatives were already underway by the time this project began and were continued throughout this project (Table 3). Within the VHA, higher rates of OUD treatment have been driven by increases in buprenorphine prescribing. 43

With the phased EBQI implementation from April 2018 to September 2022, the seven facilities engaged in EBQI experienced an additional improvement of 5.25 percentage points, on average. This came on top of the 16 percentage point increase over time, indicating that this implementation strategy effectively augmented the MOUD expansion efforts already under way within the facilities and the overall VHA healthcare system. In comparison, a recently published review 16 of the EBQI literature found positive but varying effect sizes for a variety of QI targets, with only one study reporting a statistically significant effect. This suggests that MOUD treatment expansion may be particularly well suited to the EBQI approach. It is also an extremely salient QI target in the context of a worsening epidemic.

Implementation challenges to expanding access to MOUD persist across many medical settings, despite significant work to address them. As of 2018, few providers in nonspecialty settings had completed the mandatory training to obtain the DEA X‐waiver. This waiver was required to prescribe buprenorphine, the most cost‐effective and convenient MOUD option. 11 Recognizing this requirement as a major barrier to MOUD access, the DEA enacted a series of changes to the X‐waiver training requirement to ease access. These changes responded to the dramatic increase in overdoses seen during the COVID pandemic. In December 2022, the Mainstreaming Addiction Treatment Act eliminated the DATA‐Waiver program, thus removing the X‐waiver requirement to prescribe buprenorphine. 44 However, most providers with an X‐waiver have never prescribed buprenorphine. 45 Challenges to increasing access to MOUD are therefore likely to persist and will continue to require implementation strategies. The results of this project suggest that EBQI is an effective strategy to support MOUD implementation, and healthcare leadership should consider it to augment existing efforts. Further, the duration of EBQI implementation was not associated with changes in patients receiving MOUD. This suggests that engagement in EBQI itself, rather than a lengthy duration of engagement, may be the critical ingredient. This has implications for healthcare leaders, who increasingly need to prioritize staffing bandwidth among a plethora of competing demands.

The results of this project demonstrate that EBQI can be successfully used to implement OUD treatment in nonspecialty settings, across diverse facilities within one VHA region. This multisite initiative expands the demonstration of EBQI effectiveness beyond the previous evidence base of use in large multisite studies, such as implementation of a collaborative care model for depression, 17 , 18 women's health primary care, 19 and patient‐centered medical home, 20 as well as the multiple smaller evaluations of EBQI initiatives in primary care settings. 16

The limitations of this project include limited generalizability outside of VHA, the analytic design, and the use of aggregated facility‐level data.

Veterans have more comorbidities and tend to be more complex than patients in most healthcare systems. 46 In addition, VHA offers integrated healthcare delivery and strong opioid prescribing safety practices. 47 However, EBQI may also benefit other healthcare organizations that have 16 , 48 :

1. multilevel structures (e.g., regional, local);
2. executive leadership interest in evidence and in solving the problem, along with willingness to engage front‐line staff;
3. resources to conduct QI.

We also selected a pragmatic study design that most closely matched the conditions of our partnered project. We used a stepped wedge design, which traditionally randomizes sites sequentially to an intervention until all sites are exposed. Because we collaborated closely with the VHA region, sites were selected based on site readiness 23 , 24 rather than randomized. This allowed us to engage sites in EBQI when they were ready to participate. We did not evaluate whether observed effects were similar among sites that initiated the intervention early versus late in the project. The length of the post‐implementation period also differed between early and late sites; however, we included variables in our model to account for time and duration of implementation.

Finally, we used VHA performance measure data as our outcome measure, as these data were a priority for our operational partners and routinely monitored for QI purposes. 27 However, these data combine encounter data, diagnoses, and pharmacy data and are aggregated at the facility level, which limits deeper exploration.

In this initiative, EBQI strategies used to engage healthcare teams in nonspecialty settings were effective in expanding access to MOUD. QI teams used an assortment of QI interventions and developed action plans to improve access to MOUD at their facilities, resulting in an increase in patients receiving MOUD above and beyond that associated with temporal trends. Given the recent MOUD expansion legislation, additional work will be needed to ensure that these changes are implemented consistently and equitably and that improvements are maintained.

FUNDING INFORMATION

This initiative was funded by Veterans Health Administration Quality Enhancement Research Initiative (QUERI) Partnered Implementation Initiative (PII) 18‐179 and 19‐321.

CONFLICT OF INTEREST STATEMENT

Dr. Evelyn Chang served as a consultant to Behavioral Health Services, Inc, during this study. This was discussed with the VA Office of the General Counsel at the beginning of the study. The authors do not have any other conflicts of interest.

Supporting information

TABLE A1 Marginal effects for the facility‐level multiple linear regression model with site, time, evidence‐based quality improvement (EBQI) implementation status, and duration as explanatory variables (n = 8 sites).

ACKNOWLEDGMENTS

All views expressed are those of the authors and do not represent the views of the US Government or the Department of Veterans Affairs. The authors would like to thank the VA Consortium to Disseminate and Understand Implementation of Opioid Use Disorder Treatment (CONDUIT), and the VISN22 leadership, participating sites, and providers. The authors would also like to thank Dr. Melissa M. Farmer, Dr. Alison Hamilton, Dr. Martin Lee, and Dr. Lisa Rubenstein for their guidance and contributions.

Oberman RS, Huynh AK, Cummings K, et al. Engaging healthcare teams to increase access to medications for opioid use disorder. Health Serv Res. 2024;59(Suppl. 2):e14371. doi: 10.1111/1475-6773.14371

[Correction added on 4 October 2024, after first online publication: The copyright line was changed.]

REFERENCES

- 1. Hedegaard H, Minino AM, Warner M. Drug overdose deaths in the United States, 1999‐2019. NCHS Data Brief. 2020;394:1‐8.

- 2. Ciccarone D. The rise of illicit fentanyls, stimulants and the fourth wave of the opioid overdose crisis. Curr Opin Psychiatry. 2021;34(4):344‐350. doi: 10.1097/YCO.0000000000000717

- 3. Centers for Disease Control and Prevention, National Center for Health Statistics. Provisional Drug Overdose Death Counts. Accessed September 14, 2023. https://www.cdc.gov/nchs/nvss/vsrr/drug-overdose-data.htm

- 4. Sordo L, Barrio G, Bravo MJ, et al. Mortality risk during and after opioid substitution treatment: systematic review and meta‐analysis of cohort studies. BMJ. 2017;357:j1550. doi: 10.1136/bmj.j1550

- 5. Kampman K, Jarvis M. American Society of Addiction Medicine (ASAM) National Practice Guideline for the use of medications in the treatment of addiction involving opioid use. J Addict Med. 2015;9(5):358‐367. doi: 10.1097/ADM.0000000000000166

- 6. Mattick RP, Breen C, Kimber J, Davoli M. Buprenorphine maintenance versus placebo or methadone maintenance for opioid dependence. Cochrane Database Syst Rev. 2014;(2):CD002207. doi: 10.1002/14651858.CD002207.pub4

- 7. McNamara KF, Biondi BE, Hernandez‐Ramirez RU, Taweh N, Grimshaw AA, Springer SA. A systematic review and meta‐analysis of studies evaluating the effect of medication treatment for opioid use disorder on infectious disease outcomes. Open Forum Infect Dis. 2021;8(8):ofab289. doi: 10.1093/ofid/ofab289

- 8. Tanum L, Solli KK, Latif ZE, et al. Effectiveness of injectable extended‐release naltrexone vs daily buprenorphine‐naloxone for opioid dependence: a randomized clinical noninferiority trial. JAMA Psychiatry. 2017;74(12):1197‐1205. doi: 10.1001/jamapsychiatry.2017.3206

- 9. Samples H, Williams AR, Crystal S, Olfson M. Impact of long‐term buprenorphine treatment on adverse health care outcomes in Medicaid. Health Aff. 2020;39(5):747‐755. doi: 10.1377/hlthaff.2019.01085

- 10. Opioid Use Disorder: A VA Clinician's Guide to Identification and Management of Opioid Use Disorder. US Department of Veterans Affairs; 2016.

- 11. Frank JW, Wakeman SE, Gordon AJ. No end to the crisis without an end to the waiver. Subst Abus. 2018;39(3):263‐265. doi: 10.1080/08897077.2018.1543382

- 12. Gordon AJ, Kavanagh G, Krumm M, et al. Facilitators and barriers in implementing buprenorphine in the Veterans Health Administration. Psychol Addict Behav. 2011;25(2):215‐224. doi: 10.1037/a0022776

- 13. Mackey K, Veazie S, Anderson J, Bourne D, Peterson K. Barriers and facilitators to the use of medications for opioid use disorder: a rapid review. J Gen Intern Med. 2020;35(Suppl 3):954‐963. doi: 10.1007/s11606-020-06257-4

- 14. Cernasev A, Hohmeier KC, Frederick K, Jasmin H, Gatwood J. A systematic literature review of patient perspectives of barriers and facilitators to access, adherence, stigma, and persistence to treatment for substance use disorder. Explor Res Clin Soc Pharm. 2021;2:100029. doi: 10.1016/j.rcsop.2021.100029

- 15. Chang ET, Oberman RS, Cohen AN, et al. Increasing access to medications for opioid use disorder and complementary and integrative health services in primary care. J Gen Intern Med. 2020;35(Suppl 3):918‐926. doi: 10.1007/s11606-020-06255-6

- 16. Hempel S, Bolshakova M, Turner BJ, et al. Evidence‐based quality improvement: a scoping review of the literature. J Gen Intern Med. 2022;37(16):4257‐4267. doi: 10.1007/s11606-022-07602-5

- 17. Rubenstein LV, Chaney EF, Ober S, et al. Using evidence‐based quality improvement methods for translating depression collaborative care research into practice. Fam Syst Health. 2010;28(2):91‐113. doi: 10.1037/a0020302

- 18. Rubenstein LV, Meredith LS, Parker LE, et al. Impacts of evidence‐based quality improvement on depression in primary care: a randomized experiment. J Gen Intern Med. 2006;21(10):1027‐1035. doi: 10.1111/j.1525-1497.2006.00549.x

- 19. Yano EM, Darling JE, Hamilton AB, et al. Cluster randomized trial of a multilevel evidence‐based quality improvement approach to tailoring VA Patient Aligned Care Teams to the needs of women Veterans. Implement Sci. 2016;11(1):101. doi: 10.1186/s13012-016-0461-z

- 20. Rubenstein LV, Stockdale SE, Sapir N, et al. A patient‐centered primary care practice approach using evidence‐based quality improvement: rationale, methods, and early assessment of implementation. J Gen Intern Med. 2014;29(Suppl 2):S589‐S597. doi: 10.1007/s11606-013-2703-y

- 21. Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness‐implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50(3):217‐226. doi: 10.1097/MLR.0b013e3182408812

- 22. Landes SJ, McBain SA, Curran GM. An introduction to effectiveness‐implementation hybrid designs. Psychiatry Res. 2019;280:112513. doi: 10.1016/j.psychres.2019.112513

- 23. Huynh AK, Lee ML, Farmer MM, Rubenstein LV. Application of a nonrandomized stepped wedge design to evaluate an evidence‐based quality improvement intervention: a proof of concept using simulated data on patient‐centered medical homes. BMC Med Res Methodol. 2016;16(1):143. doi: 10.1186/s12874-016-0244-x

- 24. Mdege ND, Man MS, Taylor Nee Brown CA, Torgerson DJ. Systematic review of stepped wedge cluster randomized trials shows that design is particularly used to evaluate interventions during routine implementation. J Clin Epidemiol. 2011;64(9):936‐948. doi: 10.1016/j.jclinepi.2010.12.003

- 25. Weiner BJ, Amick H, Lee SY. Conceptualization and measurement of organizational readiness for change: a review of the literature in health services research and other fields. Med Care Res Rev. 2008;65(4):379‐436. doi: 10.1177/1077558708317802

- 26. Center for Strategic Analytics and Reporting (CSAR). Strategic Analytics for Improvement and Learning (SAIL), Links to Relevant Tools and Reports. Accessed October 2, 2023. https://reports.vssc.med.va.gov/ReportServer/Pages/ReportViewer.aspx?/MgmtReports/SAIL/SAIL_Hyperlinks&rs:Command=Render

- 27. Gustavson AM, Hagedorn HJ, Jesser LE, et al. Healthcare quality measures in implementation research: advantages, risks and lessons learned. Health Res Policy Syst. 2022;20(1):131. doi: 10.1186/s12961-022-00934-y

- 28. Hamilton AB, Brunner J, Cain C, et al. Engaging multilevel stakeholders in an implementation trial of evidence‐based quality improvement in VA women's health primary care. Transl Behav Med. 2017;7(3):478‐485. doi: 10.1007/s13142-017-0501-5

- 29. Stockdale SE, Hamilton AB, Bergman AA, et al. Assessing fidelity to evidence‐based quality improvement as an implementation strategy for patient‐centered medical home transformation in the Veterans Health Administration. Implement Sci. 2020;15(1):18. doi: 10.1186/s13012-020-0979-y

- 30. Varkey P, Reller MK, Resar RK. Basics of quality improvement in health care. Mayo Clin Proc. 2007;82(6):735‐739. doi: 10.4065/82.6.735

- 31. Deming WE. Elementary Principles of the Statistical Control of Quality. Nippon Kagaku Gijutsu Renmei; 1950.

- 32. Raudenbush SW, Bryk AS. Hierarchical linear models: applications and data analysis methods. Advanced Quantitative Techniques in the Social Sciences. Vol 1. Sage; 2002.

- 33. U.S. Department of Health and Human Services. National Institutes of Health, Office of Behavioral & Social Science Research (OBSSR). e‐Source: Behavioral and Social Science Research, Multilevel Modeling. Accessed October 19, 2023. https://obssr.od.nih.gov/sites/obssr/files/Multilevel-Modeling.pdf

- 34. Deeks J. When can odds ratios mislead? Odds ratios should be used only in case‐control studies and logistic regression analyses. BMJ. 1998;317(7166):1155‐1156; author reply 1156‐7. doi: 10.1136/bmj.317.7166.1155a

- 35. Dowell D, Haegerich TM, Chou R. CDC guideline for prescribing opioids for chronic pain‐United States, 2016. JAMA. 2016;315(15):1624‐1645. doi: 10.1001/jama.2016.1464

- 36. Sandbrink F, Oliva EM, McMullen TL, et al. Opioid prescribing and opioid risk mitigation strategies in the Veterans Health Administration. J Gen Intern Med. 2020;35(Suppl 3):927‐934. doi: 10.1007/s11606-020-06258-3

- 37. Wyse JJ, Gordon AJ, Dobscha SK, et al. Medications for opioid use disorder in the Department of Veterans Affairs (VA) health care system: historical perspective, lessons learned, and next steps. Subst Abus. 2018;39(2):139‐144. doi: 10.1080/08897077.2018.1452327

- 38. Oliva EM, Bowe T, Tavakoli S, et al. Development and applications of the Veterans Health Administration's stratification tool for opioid risk mitigation (STORM) to improve opioid safety and prevent overdose and suicide. Psychol Serv. 2017;14(1):34‐49. doi: 10.1037/ser0000099

- 39. Minegishi T, Frakt AB, Garrido MM, et al. Randomized program evaluation of the Veterans Health Administration Stratification Tool for Opioid Risk Mitigation (STORM): a research and clinical operations partnership to examine effectiveness. Subst Abus. 2019;40(1):14‐19. doi: 10.1080/08897077.2018.1540376

- 40. Stratification Tool for Opioid Risk Mitigation (STORM). 2022.

- 41. Oliva EM, Christopher MLD, Wells D, et al. Opioid overdose education and naloxone distribution: Development of the Veterans Health Administration's national program. J Am Pharm Assoc (2003). 2017;57(2S):S168‐S179 e4. doi: 10.1016/j.japh.2017.01.022

- 42. Gordon AJ, Drexler K, Hawkins EJ, et al. Stepped Care for Opioid Use Disorder Train the Trainer (SCOUTT) initiative: expanding access to medication treatment for opioid use disorder within Veterans Health Administration facilities. Subst Abus. 2020;41(3):275‐282. doi: 10.1080/08897077.2020.1787299

- 43. Wyse JJ, Shull S, Lindner S, et al. Access to medications for opioid use disorder in rural versus urban Veterans Health Administration facilities. J Gen Intern Med. 2023;38(8):1871‐1876. doi: 10.1007/s11606-023-08027-4

- 44. Substance Abuse and Mental Health Services Administration. Waiver Elimination (MAT Act). Accessed September 14, 2023. https://www.samhsa.gov/medications-substance-use-disorders/waiver-elimination-mat-act

- 45. Gordon AJ, Liberto J, Granda S, Salmon‐Cox S, Andree T, McNicholas L. Outcomes of DATA 2000 certification trainings for the provision of buprenorphine treatment in the Veterans Health Administration. Am J Addict. 2008;17(6):459‐462. doi: 10.1080/10550490802408613

- 46. Betancourt JA, Granados PS, Pacheco GJ, et al. Exploring health outcomes for U.S. veterans compared to non‐veterans from 2003 to 2019. Healthcare. 2021;9(5):604. doi: 10.3390/healthcare9050604

- 47. Gellad WF, Good CB, Shulkin DJ. Addressing the opioid epidemic in the United States: lessons from the Department of Veterans Affairs. JAMA Intern Med. 2017;177(5):611‐612. doi: 10.1001/jamainternmed.2017.0147

- 48. Kaplan HC, Brady PW, Dritz MC, et al. The influence of context on quality improvement success in health care: a systematic review of the literature. Milbank Q. 2010;88(4):500‐559. doi: 10.1111/j.1468-0009.2010.00611.x
