Summary

Current societal challenges exceed the capacity of humans operating either alone or collectively. As AI evolves, its role within human collectives will vary from an assistive tool to a participatory member. Humans and AI possess complementary capabilities that, together, can surpass the collective intelligence of either humans or AI in isolation. However, the interactions in human-AI systems are inherently complex, involving intricate processes and interdependencies. This review incorporates perspectives from complex network science to conceptualize a multilayer representation of human-AI collective intelligence, comprising cognition, physical, and information layers. Within this multilayer network, humans and AI agents exhibit varying characteristics; humans differ in diversity from surface-level to deep-level attributes, while AI agents range in degrees of functionality and anthropomorphism. We explore how agents’ diversity and interactions influence the system’s collective intelligence and analyze real-world instances of AI-enhanced collective intelligence. We conclude by considering potential challenges and future developments in this field.

Keywords: AI, collective intelligence, hybrid intelligence, multi-agent systems, human-machine networks, human-machine intelligence

The bigger picture

The challenges facing society today are becoming increasingly complex, surpassing what human efforts alone can manage, whether individually or collectively. As artificial intelligence (AI) evolves and improves, some might believe that replacing human intelligence with AI could solve our societal challenges. This notion has been criticized. Instead, we believe that AI can enhance human collective intelligence rather than replace it. Humans bring intuition, creativity, and diverse experiences, while AI offers vast computational power and rapid data processing. Combining these strengths can create a level of collective intelligence greater than the sum of its parts. However, understanding how humans and AI can effectively collaborate to achieve this collective intelligence is a complex and intellectually stimulating task. This is a new territory for us and requires multidisciplinary research to be harnessed effectively.

This paper reviews the state of the art and prospects of AI-enhanced collective intelligence. Drawing on insights from complexity science and network science, the authors propose a multilayer representation of the collective intelligence system. The paper examines factors influencing the emergence of collective intelligence and various AI modes of contribution. It also discusses real-world applications, implications, limitations, challenges, and future directions for advancing understanding and development in this field.

Introduction

Our societies form, sustain, and function because of our intelligence. In the animal kingdom, the more intelligent the animals are, the more social they are. Dunbar’s “social brain theory”1 even suggests that significantly superior human intelligence compared with other primates is the result of our need to be able to manage and maintain our social lives; it is humans’ outstanding sociality that forced them to become more intelligent. The bottom line is that, in human societies, collectives are intelligent, and intelligence is collective. As a concept, collective intelligence (CI) refers to the emergent outcome of the collective efforts of many individuals. The superiority of CI to the intelligence of any of the individuals who contributed to it has been demonstrated scientifically and practically in numerous instances.2

In every new chapter in the history of information and communication technologies (ICTs), CI has been elevated to a higher level through more readily and cheaply available platforms for collaboration and exchanging ideas. The Internet, especially the World Wide Web, was designed to foster large-scale collaboration. Tim Berners-Lee’s initial intention in developing hypertext transfer protocol (HTTP), which later led to the development of the WWW, was to facilitate collaboration between CERN researchers. Some 30 years later, the Web facilitates the largest collaborative projects in human history, such as Wikipedia,3 citizen science projects,4 collaborative software development, and collaborative tagging projects,5 all exemplifying technology-enhanced CI.

Even though large-scale knowledge-generating collaborations, crowdsourcing, and, in a more general form, CI are not Internet phenomena per se, we can see this new technology has undoubtedly boosted the state of the art in collaborative knowledge creation, task execution, and collective decision making. Artificial intelligence (AI), similar to the Web and Internet-based technologies, is reshaping different aspects of our lives at a tremendous pace. In light of this rapidly evolving situation, it is essential to consider how, similar to previous disruptions by new ICTs, AI can magnify and enhance our CI.

CI

Intelligent agents (humans, animals, and intelligent artifacts) often interact with others to tackle complex problems. They combine their knowledge and personal information with information acquired through interaction and social information, resulting in superior CI. The concept of CI has been redefined over the years, primarily due to different research streams that have employed the term in qualitatively different ways.6

One stream of research uses CI to refer to the outcome of collaboration produced by amalgamation of the input from a large crowd, exemplified in scenarios such as online contests or crowdsourced science.7,8,9 Here, the concept of CI is often mixed up with the wisdom of crowds (WoC). Both rely on the idea that collective input from a diverse group typically yields better outcomes than any individual member’s. The WoC involves aggregating independent judgments from a large, diverse group to arrive at collective decisions or predictions, often through statistical averaging.10 In contrast, CI pertains to the achievements of collaborating groups, whose sizes can vary. It emerges from the synergy of interactions and mutual feedback among intelligent agents11 working toward a common goal through interconnected efforts. However, social information and interconnection have also been studied in the context of WoC.12,13 Despite the conflict in the literature on the boundaries and overlaps between the two, almost all the AI enhancements we discuss in this paper in the context of CI can also be implemented in WoC scenarios.14 Nevertheless, for the sake of simplicity, we only use the term CI for the rest of the paper. Table 1 compares key aspects of the two concepts.

Table 1.

Comparison between WoC and CI

| Aspect | WoC | CI |

|---|---|---|

| Interaction | minimal | high, involving significant collaboration and coordination |

| Dependence | independent | interdependent and adaptable processes to changing conditions |

| Mechanism | aggregation | emergent and adaptable integration from coordinated efforts |

| Nature | static, immediate collective judgment | dynamic and adaptive, evolving to meet complex needs |

Another stream of research defines CI as an ability that “can be designed to solve a wide range of problems over time in the face of complex and changing environmental conditions.”6 Converging evidence suggests the presence of a general CI factor (c-factor) that serves as a predictor for a group’s performance across a wide range of tasks,15,16 similar to the individual’s general intelligence (g-factor) but extended to groups. Research has found that this c-factor is not strongly correlated with the average or maximum individual intelligence of the group members, suggesting that CI is more than just the sum of the individual intelligence present in the group.15 In this sense, this definition is virtually compatible with the one above.

A group’s CI is fundamentally influenced by its composition and interactions. Past work indicates a positive correlation between the c-factor and factors such as the average social sensitivity,15 the equitable distribution of conversational participation,15 and the percentage of female members in the group.15 Groups with diverse perspectives and skill sets are likelier to cultivate innovative solutions that might not emerge in a more homogeneous group. Effective interaction processes, encompassing coordination, cooperation,17 and communication patterns,18 are crucial. Prior studies have identified three distinct socio-cognitive transactive systems19 responsible for managing collective memory,20 collective attention,21 and collective reasoning,22 all of which are essential for the emergence of CI.

AI

The definition of AI has undergone numerous evolutions in the past semi-century. AI is the simulation of human intelligence processes by machines,23 especially computer systems. Previous research considers the classification of AI in distinct ways.24 The first classification categorizes AI based on its human-like cognitive abilities, such as thinking and feeling, and contains four primary AI types24: reactive AI, limited-memory AI, theory-of-mind (ToM) AI, and self-aware AI. Limited-memory AI is the most common at the current time, ranging from virtual assistants to chatbots to self-driving vehicles. Such AI can learn from historical data to recognize patterns, generate new knowledge and understanding, and inform subsequent decisions.

Yet another classification is a technology-oriented approach that categorizes AI into artificial narrow intelligence (ANI), artificial general intelligence (AGI), and artificial superintelligence (ASI). ANI involves creating computer systems capable of performing specific tasks like human intelligence,25 but it often surpasses humans in efficiency and accuracy.26 ANI machines have a narrow range of capabilities and can represent all existing AI. AGI refers to machines that exhibit the human ability to learn, perceive, and understand a wide range of intellectual tasks.27,28 Finally, ASI’s primary goal is to develop a machine with cognitive abilities higher than those of humans.24

Existing AI can perform tasks including but not limited to complex calculations, language translation, facial recognition, and financial market prediction. Recent developments in generative AI, such as OpenAI’s text-to-video model Sora (openai.com/sora), exemplify AI’s potential in creative industries. As AI technology evolves, it is poised to bring groundbreaking advancements across various fields. Beyond content creation, as AI deepens the understanding of the physical world and develops its simulation ability at the perceptual and cognitive level, it is possible to develop more super-intelligent tools in various fields.

Current AI systems can process vast amounts of data on a scale far beyond human capabilities. However, many real-world challenges cannot yet be solved solely by AI. Currently, AI lacks the deep conceptual and emotional understanding humans possess about objects and experiences.29 AI cannot interpret human language’s nuances, including contextual, symbolic, and cultural meanings.30,31 AI cannot be an ethical decision maker because it lacks the human attributes of intentionality, care, and responsibility.29 Therefore, combining human insight with AI’s analytical power is crucial for addressing complex real-world challenges, leveraging the strengths to compensate for each other’s weaknesses.

Human-AI hybrid CI

Even though the field of CI initially focused solely on groups of people, in recent years, it has gradually expanded to include AI as group members in a new framework referred to as “hybrid intelligence.”32 Proponents of the hybrid-intelligence perspective stress that humans and AI can connect in ways that allow them to collectively act more intelligently and achieve goals unreachable by any individual entities alone.33 Researchers investigating this hybrid CI explore “how people and computers can be connected so that collectively they act more intelligently than any person, group, or computer has ever done before.”34

Acknowledging the complementary capabilities of humans and AI as discussed in the previous section, researchers identify the need for developing socio-technological ensembles of humans and intelligent machines that possess the ability to collectively achieve superior results and continuously improve by learning from each other.35 Previous research provides evidence that teaming humans with AI has the potential to achieve complementary team performance (CTP), a level of performance that AI or humans cannot reach in isolation.36 For instance, a study demonstrates that humans can use contextual information to adjust the AI’s decision.37 Research on mixed teams composed of humans and AI shows hybrid teams could achieve higher performance metrics, such as team situational awareness and score, than all-human teams.36 Finally, recent advancements in developing large language models (LLMs) have also been proposed to reshape CI.38

Empirical evidence, theoretical gaps, and a new framework

Technological advances have constantly disrupted how we produce, exchange, collect, and analyze information and consequently make decisions, individually or collectively. Automation through smart devices based on machine learning, knowledge graph-based machine reasoning, and walking and talking household devices leveraging natural language processing have already reshaped our personal, professional, and social lives. CI cannot be an exception. Inevitably, our collective decision-making processes have been, and will be, disrupted by AI.

For example, machine learning and automation can increase the efficiency and scalability of CI in citizen science projects, where citizens volunteer to help scientists tag and classify their large-scale datasets.39 Members of a crowd with different interests and areas of competence can be matched to other tasks by recommendation systems.40 Generative algorithms can extrapolate human solutions and generate new ideas.41 Clustering algorithms can reduce a complex task’s solution space as humans explore possible solutions.42 Machines can unify similar solutions to mitigate statistical noise.43 Matching algorithms can match individuals and build efficient groups.44

In this review of AI-enhanced CI, we adopted a narrative review methodology guided by a complexity-theory and network-science framework. We began with a conceptual narrative and systematically identified relevant literature for each section. Therefore, we do not claim we have exhausted the vast and fast-growing literature. However, our approach integrates diverse theoretical perspectives and empirical studies using these theories to explain CI and its enhancement by AI. This approach bridges interdisciplinary insights, offering a holistic understanding of AI-CI.

This review focuses on integrating AI to bolster the CI of human groups, addressing a notable theoretical gap in understanding performance enhancements, particularly in hybrid human-AI configurations. With AI integration becoming pervasive across various sectors, exploring how this collaboration can unlock optimal capabilities is imperative. This exploration is essential for boosting productivity, fostering innovation, and ensuring that AI complements and enhances human skills rather than replacing them outright. The industry’s pivotal role in driving current AI-CI research underscores the absence of a comprehensive theoretical framework.45

In subsequent sections, we introduce a framework to deepen our comprehension of AI-enhanced CI systems. We then elaborate on the applications and discuss the implications of such fusion. Finally, we conclude by addressing existing challenges in designing AI-CI systems and speculate on the field’s future trajectory.

AI-enhanced CI framework

Multilayer representation of the CI system

In advancing the field of human-AI CI, prior research shows the necessity of formulating theories encompassing CI, combining both humans and AI.46 Developing such theoretical frameworks requires an in-depth comprehension and interpretation of human-AI systems, characterized by their high complexity and interrelated processes. Network science offers tools that enable us to understand the complexity of social systems47 where a multitude of interacting elements give rise to the collective behavior of the whole system.48 To enhance our understanding of CI in the human-AI system and explore how “the whole is more than the sum of its parts,”49,50 we integrate complexity science and network science approaches and propose a multilayer representation of the complex system involving human and AI agents.

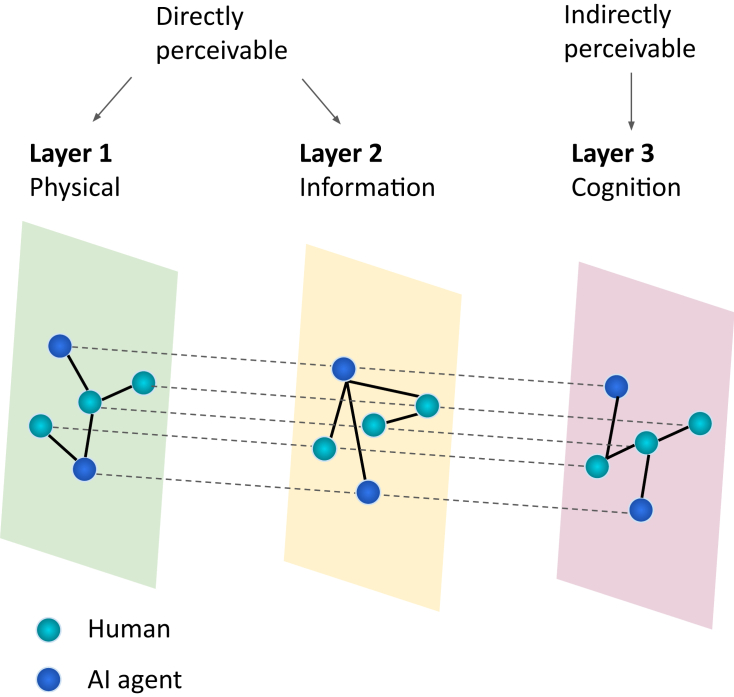

Complex system thinking has been used to understand diverse biological, physical, and social domains.51,52,53 Inspired by this approach, a real-world CI system can be mapped into a multilayer network with three interconnected layers: cognition, physical, and information. In this network, nodes represent interacting agents, and links represent relationships between them. The construction of such networks and the meaning of the links are context dependent. A node can also represent a group of agents instead of an individual agent in situations where the group behaves or is treated as a single entity within the network. Figure 1 illustrates a multiplex network,54 a special type of multilayer network where nodes remain the same but links differ across layers.

Figure 1.

A multilayer representation of a CI system

A multilayer representation can be used to untangle the processes within the complex system of human and AI agents. It consists of three interdependent layers: cognition, physical, and information. External factors and a changing environment can also influence the entire system’s emergent CI.

The cognition layer, which contains mental processes, is only indirectly perceivable. Intelligent processes are involved during problem solving, such as sense making, remembrance, creation, coordination, and decision making, often happening in the cognition layer. The links are hard to determine, but these processes exist and are fundamental to the emergence of CI in the whole system. In opposition to this indirectly perceivable layer is the physical layer, where humans and AI have tangible physical interactions. Information interaction in the information layer refers to exchanging information between agents through various communication channels. Besides intra-layer interactions, interactions in one layer can lead to information transfer and trigger interactions in other layers, represented as inter-layer links in the network.55 This interdependence and cross-layer influence are common features of multilayer networks. Moreover, even though our current framework revolves around pairwise interactions between nodes, recent advancements in the study of higher-order networks, going beyond pairwise interactions,56 may enrich our understanding of the collective emergence of intelligence.

In addition to internal processes, it is essential to acknowledge the influence of the environment on the system.57 The human-AI complex system functions in a potentially dynamic environment. Previous research suggests that collective intelligent behavior depends on the environment,57 as certain behaviors or strategies that work well in one setting may not be as effective or relevant in another.58

Human-AI hybrid systems can be viewed as complex adaptive systems, continually evolving and adapting through interactions within dynamic environments.59 The concept of emergence in complex systems can be used to describe the phenomenon where new properties emerge at the collective level, which is not present at the level of individual components.59,60,61,62 Here, the CI of the whole system can be seen as an emergent property superior to the micro-level individual intelligence. This property, which includes outcomes, abilities, characteristics, and behaviors, is aligned with and encompasses the major existing definitions of CI. It emerges through complex nonlinear relationships between the agents and is likely a result of bottom-up and top-down processes.16 The former encompasses the aggregation of group-member characteristics that foster collaboration. The latter includes structures, norms, and routines that govern collective behavior, thereby influencing the efficacy of coordination and collaboration.16 The modeling of the emergence of CI has leveraged analogies from a diverse array of fields,63 ranging from statistical physics64 to neuroscience,65 both within and beyond.

Employing a multilayer representation of this complex system in a changing environment can facilitate a deeper understanding of the interactions and relationships between humans and AI agents, untangling the intricate processes involved in emerging and maintaining CI. Given the interdisciplinary nature of this approach and the variety of terminologies used, key terms and main concepts are listed in Table S1 for more clarity. Table 2 provides a summary and breakdown of the components and features within the proposed multilayer network. The immediate benefit of this framework is that we can learn from the vast literature on multilayer networks and the studies on their robustness,66 adaptivity,67 scalability,68 resilience,69 and interoperability.70 We discuss this further in the following sections.

Table 2.

Summary and breakdown of the multilayer network of the human-AI complex system

| Components | Features |

|---|---|

| Layers | cognition layer information layer physical layer |

| Nodes | human: surface-level, deep-level diversity AI agents: diversity in functionality and anthropomorphism |

| Links | links can have directions. intra-layer links: interactions within layers inter-layer links: interactions between layers |

| Structure | size, centrality, density, hierarchy, community |

| Dynamics | communication patterns cognitive processes etc. |

The goal and the task

In the previous section, we view the hybrid human-AI groups as complex systems that often function in dynamic and complex task environments. Addressing challenges within such systems necessitates a clear understanding of the specific tasks’ goals and nature. The system’s goal might diverge from individual objectives, highlighting the need for strategic coordination to balance collective aims with personal pursuits.

Task types can vary significantly, from generative activities such as brainstorming, which requires creative and divergent thinking, to analytical tasks such as solving Sudoku, which demands logical reasoning and pattern recognition.71 The diversity of the tasks requires different abilities, including memory utilization, creative imagination, sense making, and critical analysis. In the following subsections, we focus on the details of group-member diversity’s influence, the structure and dynamics of interaction networks, and AI’s role in enhancing group performance.

Group diversity

The relationship between group diversity and CI is highly complex due to diversity’s complexity and the range of its effects under different conditions. A group can exhibit surface-level diversities.72 The surface-level diversity comes from readily observable social categories, including gender, age, and ethnicity.73 The deep-level diversity refers to differences in psychological characteristics,74 including personality, cognitive thinking styles, and values.

Diversity benefits teams by enhancing creativity, improving decision making, and expanding access to a broader talent pool.75,76 However, diversity can also have adverse effects, such as emotional conflict,77,78 stress,79 poor work relationships, and poor overall performance.80 There is a lack of consensus on which aspects of group diversity are likely to result in positive outcomes and which aspects could produce negative outcomes.80,81 The effects of diversity on group performance depend on the complexity of the task and various moderators, such as the density of the interaction network.82 A review underscores the need for in-depth research into surface and deep-level diversity within hybrid teams,81 exploring their impact across various outcomes and contexts over time.

Surface-level diversity

Gender

The evidence concerning the effect of gender diversity on team performance is equivocal and contingent upon various contextual factors.83 Past research found that gender-diverse teams outperform gender-homogeneous teams when perceived time pressure is low.84 One study showed gender diversity was negatively associated with performance, but only in large groups.73 Previous evidence strongly suggests that team collaboration is greatly improved by the presence of females in the group.15,83 Gender-diverse teams produce more novel and higher-impact scientific ideas.85 Other studies show that gender composition seems to matter for team performance. However, when controlling for the individual abilities of team members, the relation between gender composition and team performance vanishes.86 One study points out that the “romance” of working together can benefit group performance.87 Gender-diverse groups perform better than homogeneous groups by decreasing relationship and task conflict.87

Age

Age diversity may enhance group performance, but the benefits may depend on the context. Several studies have found that team age diversity positively correlated with team performance when completing complex tasks.88 Past research points out the positive effects were only seen under conditions of positive team climate and low age discrimination.88 Another study shows teams with more age diversity reported more age discrimination,89 associated with lower commitment and worse performance.

Ethnicity

There are inconsistent results in the case of ethnic diversity. One study90 found racially diverse groups produced significantly more feasible and effective ideas than homogeneous groups. Another study found racial similarity in groups associated with higher self-rated productivity and commitment.91 Under the right conditions, teams may benefit from diversity in ethnicity and nationality.92

AI surface-level diversity

AI exhibits surface-level diversity through different anthropomorphic features in robots, such as voice, avatars, and human-like characteristics. Research has indicated that the gendering of AI can affect perceptions and trust levels in users. For instance, studies have found that users may exhibit more trust in AI personal assistants whose voice gender matches their own.93 Assigning a female gender to AI bots enhances their perceived humanness and acceptance.94 Previous studies have indicated a perception bias in AI agents based on gender: male AI agents tend to be viewed as more competent, whereas female AI agents are often perceived as warmer.95 The perceived gender of the machine can make the social dynamics in hybrid teams even more complicated. However, these gendered characteristics in AI also raise concerns about reinforcing stereotypes and biases.96 The influence of AI’s gendered traits on human interactions is an ongoing area of research, with implications for how AI is designed and utilized. Despite substantial research on AI gender, there is a notable gap in understanding the influence of other characteristics, such as AI age and ethnicity, on human interactions, highlighting the need for further exploration in this area.

Deep-level diversity

Personality

Some work proposes that investigations into the relationship between personality and work-related behaviors should expand beyond the linearity assumptions,97 showing that extraversion, agreeableness, and conscientiousness have inverted U-shaped relationships with peer-rated contributions to teamwork.97 Similar work demonstrates a curvilinear relationship between a team’s average proactive personality and performance.98 This body of work also identifies the moderating effects of personality dispersion, team potency, and cohesion on this relationship.98 Previous research has found that a team’s collective openness to experience and emotional stability moderates how task conflict affects team performance.99 Specifically, in teams with high openness or emotional stability, task conflict positively influences performance.99

Cognitive style

Team cognitive-style diversity refers to the variation in team members’ ways of encoding, organizing, and processing information.100 Research indicates its significance in fostering innovation within teams.101 A certain level of cognitive diversity contributes to CI by providing diverse cognitive inputs and viewpoints necessary for task work.101 However, excessive diversity may lead to high coordination costs,102 as team members with differing perspectives struggle to understand each other.103,104 Studies suggest that the relationship between cognitive style diversity and CI is curvilinear, forming an inverted U shape.100 Additionally, the beneficial effects of cognitive diversity might be mediated through factors such as task reflexivity and relationship conflict.105

Value judgment

Values are internalized beliefs that can guide behavior and enhance motivation.106 Past research found higher diversity in values was associated with more conflict107 in relationships, tasks, and processes. Overall, existing research consistently indicates that value congruence within teams positively influences performance, satisfaction, and conflict81 and moderates the effects of informational diversity.108

AI deep-level diversity

While AI systems can display various behaviors or responses based on their programming and training, this diversity is a product of different algorithms, models, and data inputs. Unlike humans, AI does not have personal experiences, innate personality traits, cognitive thinking, or beliefs contributing to deep-level diversity. Although AI lacks inherent deep-level traits, AI might influence deep-level diversity in human teams, primarily by shaping social interactions and decision-making processes. The optimal composition of teams remains uncertain.109

Interactions

Interaction distinguishes a group from a mere collection of individuals57: one person’s behavior forms the basis for the responses of others.110 Therefore, the interaction between two members establishes a link in the interaction network. In terms of the group’s interaction network structure, past research suggests that the small-worldness of a collaboration network (small network diameter and high clustering) improves performance up to a certain point, after which the effect will reverse.111 An inverted-U relationship is found between connectedness and performance,112 suggesting an optimum number of connections for any given size of the collaboration network. A modular structure (a large number of connections within small groups that are loosely connected) can increase efficiency.113 A study on forbidden triads among jazz groups shows that heavily sided open triangles are associated with lower success (measured by the number of group releases).114

AI can contribute to human groups in various ways by augmenting existing human skills or complementing capabilities that humans lack. AI has attributes beyond humans, such as more extended memory, higher computational speed, and a more vital ability to work with large, diverse datasets. AI’s augmentation of human cognition enables teams to navigate complex situations effectively.19 AI may also help reduce the harm of implicit bias in humans115 and shape better decisions. Taking advantage of the differences between humans and AI can contribute to the performance of hybrid human-AI groups. In the following sections, we discuss the rules and incentives that govern the interaction processes and the structure and dynamics of the group’s interaction network.

Rules and incentives

Decision rules function as prescribed norms that direct interactions and play a crucial role in shaping the communication and information integration within a group.116 Previous research has focused on disentangling decision rules guiding the team’s interaction, ultimately fostering synergy. Some literature emphasizes that the decision rules themselves are intelligent.117 In addition to the rules guiding behavior, the group members interact with each other under specific incentive schemes. The intrinsic and extrinsic incentives are the driving forces118 for the agents to behave in a direction such that the social network becomes dynamic and moves toward a collective goal. Some research proposes that an incentive scheme rewarding accurate predictions by a minority can foster optimal diversity and collective predictive accuracy.119

AI can augment human cognition120 to help teams adapt to complexity. While AI can simulate certain human-like incentives through programming and learning algorithms,121 fully capturing the depth and nuance of human behavior, such as irrationality or altruism, is challenging. A recent study applying a Turing test to compare AI chatbots and humans found that AI chatbots exhibited more altruistic and cooperative behavior than their human counterparts in behavioral games.122 Machines can be programmed to mimic human behaviors, but whether they genuinely “exhibit” them like humans is debatable. In CI, integrating such human-like behaviors in AI may enhance collaboration and decision making within diverse teams. However, the extent to which AI can be truly self-aware and authentically replicate complex human traits remains a subject of ongoing research and philosophical debate.

Group structure and dynamics

Group size

Much research on CI has been dedicated to finding a group’s optimum size and structure. Several studies have reported that performance improves with group size through enhanced diversity,17 whereas others suggest the opposite: small groups are more efficient.123 Another study suggests that large teams develop and small teams innovate.124 Larger groups can better utilize diverse perspectives and knowledge to solve complex problems but also tend to experience more coordination problems and communication difficulties.15 The consideration of optimal group size can be related to the dynamics of the group, whether it is static, growing, or diminishing.

In human-AI hybrid teams, group size becomes nuanced with AI integration, especially when AI entities such as LLMs are involved. The countability of AI agents, particularly if multiple agents are derived from a single LLM, raises questions about their distinctiveness and individuality in a team context. Unlike humans, AI agents can simultaneously process multiple tasks, blurring the traditional boundaries of group size. Applying past research on human group dynamics to these hybrid systems requires rethinking the notions of individual contribution, team cohesion, and communication. It is important to consider how AI’s unique capabilities and scale impact these dynamics and whether multiple AI agents from a single model represent distinct entities or a CI resource.

Structure

Research shows that network structure, specifically hierarchy and link intensity, can affect information distribution,125 influencing group performance. Another study observed that the presence of structural holes126 in leaders’ networks and the adoption of core-periphery and hierarchical structures in groups correlated negatively with their performance.127 Incorporating the temporal dimension, adaptive social networks can significantly impact CI by allowing the network’s structure to evolve based on feedback from its members.128

Team size moderates the relationship between network structure and performance.129 Studies have found central members can coordinate with other team members more easily in smaller teams,130 whereas, in larger teams, communication challenges may arise.131 A recent study shows a more central connected leader in advice-giving networks has a more positive impact on the performance of larger teams, but this effect is reversed in smaller teams.129

Determining the optimal size, structure, and human-AI ratio for hybrid teams requires further study, particularly concerning task-specific requirements. Moreover, incorporating AI into human teams can reshape group hierarchies, norms, and rules, affecting CI.

Communication patterns

Teams often outperform individuals primarily due to explicit communication and feedback within the team.132 Understanding the communication network in crowds is crucial for effectively designing and managing crowdsourcing tasks.133 Various studies highlight that centralized and decentralized communication patterns can effectively promote team performance, contingent on the nature of the task and the team’s composition.134,135 In particular, teams handling complex tasks tend to be more productive with decentralized communication networks.136 Conversely, the centralization of communication around socially dominant or less reflective individuals can negatively impact the utilization of expertise and overall team performance.137 Communication patterns, such as equal participation and turn-taking of team members, can positively affect CI.15

The integration of AI in human groups can influence the conversation dynamics in different ways, depending on the role of AI. AI chat interventions can improve online political conversations at scale,138 reducing the chance of conflicts. While AI machines might struggle with capturing the subtle and ineffable social expressions that make up the dynamics of human groups, this can be advantageous. In certain contexts, AI can be social catalysts139 to promote communication where human capacity is limited. Striking the right balance in AI’s role is key to maintaining the natural dynamics of human interaction.

Cognitive processes

Cognitive processes are foundations for the emergence of CI,6 encompassing knowledge acquisition, information processing, problem solving, decision making, language, perception, memory, attention, and reasoning. Human cognition processes evolve and interact with each other during various tasks and experiences. Humans can represent the mental states of others, including desires, beliefs, and intentions,140 known as the ToM. Past research, inspired by ToM, showed that team members’ ability to assess others’ mental state is positively associated with team performance both in face-to-face settings15 and online.141 Regarding collective understanding within a group, the shared mental models (SMMs) theory is helpful for understanding, predicting, and improving performance in human teams.142

Applying theories such as SMMs to human-AI teams is a natural progression in research. These models can enhance team performance by ensuring that human and AI members have a similar understanding of tasks and each other,142 facilitating better prediction of needs and behaviors. Developing ToM in AI also improves coordination and adaptability in human-AI teams.143 This involves AI’s capability to anticipate and respond to new information and behaviors. Moreover, recent studies indicate that incorporating “hot” cognition, influenced by emotional states in AI, can further improve human-machine interactions.144 Understanding the transactive systems19 of memory, attention, and reasoning within these teams is key to grasping the members’ knowledge and task preferences.

AI modes of contribution

AI’s role in hybrid groups varies based on its autonomous agency and functionality. AI may serve as a technical tool for human assistance or as an active agent that interacts with and influences humans. Functionally, AI’s role can range from an assistant to a teammate, coach, or manager. Furthermore, the degree of AI’s anthropomorphism, from non-physical voice-only interfaces to avatars and physically embodied robots, also significantly affects its role in the group. Table 3 outlines the key roles of AI in human-AI contexts, accompanied by descriptions.

Table 3.

Key roles of AI in hybrid groups

| Role | Description | Examples |

|---|---|---|

| Assistant | complements or augments human abilities by performing tasks such as language translation, administrative duties, and smart-home device control | Google Translate, Alexa, or Siri managing smart-home devices |

| Teammate | collaborates with humans, offering complementary skills and enhancing team performance in various fields, such as healthcare and creative industries | AI collaborating with radiologists in image diagnosis; Google’s Magenta collaborating with artists |

| Coach | provides guidance, feedback, and strategic oversight, helping individuals and teams improve their skills and coordination | AI coaching in team sports, providing strategic advice; AI mentoring employees in skill development |

| Manager | assists in decision-making processes, reduces biases, promotes diversity, and optimizes task allocation and team dynamics | AI in hiring and promotion decisions; AI optimizing task allocation in project management |

| Embodied partner | integrates AI with robotics, enabling physical interactions and augmenting human capabilities in tasks requiring a physical presence | robotic arms in factories; autonomous vehicles aiding in logistics and transportation |

Assistant-type AI systems generally function as technical tools with limited autonomy, designed to complement or augment human abilities and enhance efficiency in task performance. For example, language translation AI can assist in translating text or spoken language, facilitating communication across language barriers. LLMs in education can assist students’ learning by adapting to different roles based on the prompts provided by the students.145 Administrative AI assistants can help schedule meetings, manage emails, and organize tasks. Smart home AI assistants such as Alexa and Siri can help manage smart home devices, play music, provide weather updates, answer questions, and assist with daily routines. AI assistants are technical tools that help humans coordinate tasks efficiently, optimize their decision making, and personalize their experiences.

AI can function as teammates, working alongside humans with complementary abilities. AI teammates are already used in real-world settings; most employees using AI already see it as a coworker. In healthcare, radiologists and AI work together to diagnose from the pictures of the patients. In creative industries, AI collaborators in music, art, and literature, such as Google’s Magenta project, collaborate with musicians and artists to create new compositions or artworks using AI algorithms. Furthermore, AI robots can serve as teammates in various workplaces, a topic we discuss in more detail later.

As a coach, AI can provide guidance, support, and personalized feedback to individuals seeking to improve their skills and achieve their goals. In team settings, one perspective highlights the AI coach’s ability to provide a comprehensive, global view of the team’s environment, guiding players who have only partial views.146 This approach involves the AI coach strategizing and coordinating, distributing tailored strategies to each team member based on their unique perspectives and roles. Another dimension of AI coaching focuses on assessing and improving teamwork by closely monitoring team members during collaborative tasks.147 Here, the AI coach actively intervenes with timely suggestions based on the team members’ inferred mental model misalignment.147

AI has been used in working environments with supervision or managerial roles, such as making decisions of hiring, promotion, and reassigning tasks.148 An AI manager may mitigate human biases149 in hiring and promotions, contributing to a more diverse workforce, which is a key aspect of CI. Additionally, by analyzing individual strengths and weaknesses and efficiently allocating tasks, an AI manager can optimize team dynamics and workflows, improving overall group performance.

With the advancement of AI in areas such as natural language processing, object recognition, and creative idea generation, there’s a trend to integrate AI with robotics, creating physical entities in the real world. Embodied AI150 refers to intelligent agents interacting with their environment through a physical body. These AI-enabled machines, embodied in robotic arms or autonomous vehicles, can augment the capabilities of human workers. They are equipped with advanced sensors, motors, and actuators, enabling recognition of people and objects and safe collaboration with humans in diverse settings, including factories, warehouses, and laboratories. Furthermore, advanced AI-robotics integration allows robots to recognize and respond to human speech and gestures, impacting conversational dynamics within mixed human-robot environments. Research has shown that a vulnerable robot’s social behavior positively shapes the conversation dynamics among human participants in a human-robot group.151 In scenarios where conflicts emerge within a human group or in groups of strangers, a humorous robot can potentially serve as an ice breaker to intervene and resolve the awkward situation,152 demonstrating the potential of robots to influence the nature of human-human interactions notably.

Human-AI CI emerging factors

Perception and reaction

In addition to investigating how humans perceive individual AI, previous research suggests the necessity of additional work to explore human perceptions of AI in collaborative team environments.153 For human-AI teams to succeed, human team members must be receptive to their new AI counterparts.154 People’s pre-existing attitudes toward AI were found to be significantly related to their willingness to be involved in human-AI teams.155 Research finds that two aspects of social perception, warmth and competence, are critical predictors of human receptivity to a new AI teammate. Psychological acceptance is positively related to perceived human-AI team viability.154 Regarding human perception of AI behaviors, one study finds that people cooperate less with benevolent AI agents than with benevolent humans.156

Humans tend to be more accepting of AI when it makes mistakes. Some studies find that users have a more favorable impression of the imperfect robot than the perfect robot when the robot behaves adequately after making mistakes.157 When errors occur in human-machine shared-control vehicles, the blame assigned to the machine is reduced if both drivers make mistakes.158 Research has found that errors occasionally performed by a humanoid robot can increase its perceived human likeness and likability.159 A recent study shows humans accept their AI teammate’s decision less often when they are deceived about the identity of the AI as another human.160 The nature of the task requested by the robot, e.g., whether its effects are revocable as opposed to irrevocable, significantly impacts participants’ willingness to follow its instructions.161 When taking AI’s advice, humans must know the AI system’s error boundary and decide when to accept or override AI’s recommendation.162

Trust

In AI-assisted decision making, where the individual strengths of humans and AI optimize the joint-decision outcome, the key to success is appropriately calibrating human trust.163 Trust between humans and AI plays a pivotal role in realizing CI.164 Previous research highlights the necessity of understanding factors that enable or hinder the formation, maintenance, and repair of trust in human-AI collaborations.164 Key factors influencing this trust include the perceived competence, benevolence, and integrity165 of the AI systems, mirroring the trust dynamics in human relationships.

The level of anthropomorphism of machines can be a predictor of the trust level of the human participants.166 Studies indicate that, while anthropomorphic features in AI agents initially create positive impressions, this effect is often short lived.164 Trust tends to decline, especially when the agent’s capabilities are not clearly presented and its performance fails to meet users’ expectations.167

Trust in a teammate may evolve over time irrespective of team performance.168 Knowing when to trust the AI allows human experts to appropriately apply their knowledge, improving decision outcomes in cases where the model is likely to perform poorly.163 A previous study also proposes a framework highlighting trust relationships within human-AI teams. It acknowledges the multilevel nature of team trust, which considers individual, dyads, and team levels as a whole.169 Another study points out that the trust level of a team needs to be uniform, as uncooperative members could undermine the team’s ability to reach intended goals.170

Explainability

In human-AI decision-making scenarios, people’s awareness of how AI works and its outcomes is critical in building a relationship with the system. AI explanation can support people in justifying their decisions.171 A study on content moderation found that, when explanations were provided for why the content was taken down, removal decisions made jointly by humans and AI were perceived as more trustworthy and acceptable than the same decisions made by humans alone.172 Many researchers have proposed using explainable AI (XAI) to enable humans to rely on AI advice appropriately and reach complementary team performance.37 However, some studies suggest that XAI can be associated with a white-box paradox,173 potentially leading to null or detrimental effects.

Previous research finds that AI explanations can increase human acceptance of AI recommendations, regardless of their correctness,174 leading to an over-reliance, threatening the performance of human-AI decision making. However, another study finds that there are scenarios where AI explanations can reduce over-reliance175 using a cost-benefit framework. The impact of AI explanations on decision-making tasks is contingent upon individuals’ diverse levels of domain expertise.176 Additionally, some studies advocate for the concept of causability,177 which measures whether and to what extent humans can understand a given machine explanation to develop effective human-AI interfaces, particularly in medical AI.

Applications and implications

The AI-enhanced CI approach is increasingly applied across various domains, offering innovative solutions to challenges such as community response to climate change, environment, and sustainability challenges.178 AI serves as a vital decision-support tool for policymakers in detecting misinformation179 and real-time crisis management, exemplified during the COVID-19 pandemic. Its application extends to high-stake areas, including medical diagnosis180 and criminal justice.181 AI’s integration into CI efforts enables significant amplification of its impact and scalability.182

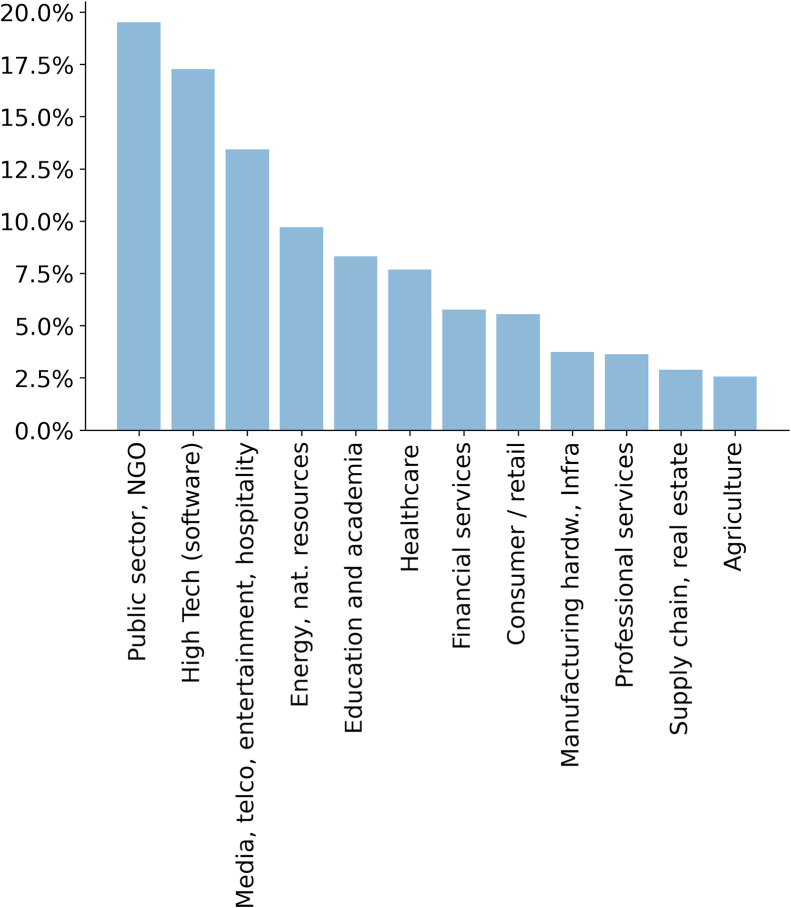

Here, we present our analysis utilizing the Supermind Design database,183 which includes over 1,000 actual examples of AI-enhanced CI, with 938 categorized into 12 application areas. Notably, while some cases might not directly involve AI or CI depending on the exact definitions, the database collectively offers a comprehensive overview. It provides insights into the state of the art in AI-CI applications. As shown in Figure 2, the majority of applications, approximately 20%, are found in the public sector and non-governmental organizations (NGOs), followed by high tech, media, telecommunications, entertainment, and hospitality. Fewer instances are found in supply chain, real estate, and agriculture. It is important to note that several cases classified under “Public sector, NGO” are private sector initiatives addressing public issues. In the upcoming sections, we analyze and highlight specific AI-CI examples across these diverse domains. Table S2 provides a summary of the CI and AI aspects of these examples.

Figure 2.

AI-CI cases by application area

Distribution of AI-enhanced CI cases by application area based on dataset curated by Supermind Design.

Public sector, NGO

Red Dot Foundation (reddotfoundation.in), formerly known as Safecity, is a platform that crowdsources personal stories of sexual harassment and abuse in public spaces. It collaborates with the AI partner OMDENA to build solutions to help women at risk of sexual abuse by predicting places at a high risk of sexual harassment incidents utilizing convolutional neural networks and long short-term memory (LSTM).

Ushahidi (ushahidi.com) is a non-profit online platform for crowdsourcing information, mapping, and visualizing data to respond to and inform decisions on social issues and crises. It aggregates crowdsourced information and reports from individuals across the globe. Ushahidi is developing new tools to enhance data management and analysis, such as leveraging machine-learning techniques to improve the efficiency of processing crisis reports, thus enabling faster response time.184

While NGOs and the public sector might seem like fertile grounds for AI-CI applications, it is crucial to consider the potential high-risk issues that may arise and their impact during the design and deployment of such AI-CI systems. A notable instance of a data-driven tool for social good that diverged from its original accuracy and effect is the infamous case of Google Flu.185

High tech (software)

Just as AI-enhanced CI helps address social issues and crises in the public sector and NGOs, it also drives innovation and efficiency in the high-tech industry. Bluenove (bluenove.com), a technology and consulting company that pioneers massive CI, focuses on mobilizing communities on a large scale. Their Assembl platform (bluenove.com/en/offers/assembl) facilitates open conversations among participants, employing advanced AI technologies such as natural language recognition, semantic analysis, and emotion analysis to categorize content published on the platform automatically.

Figure Eight (formerly known as CrowdFlower) was a human-in-the-loop machine-learning and AI company acquired by Appen (appen.com) in 2019. It leverages crowdsourced human intelligence to perform tasks such as text transcription or image annotation to train machine-learning algorithms, which can be used in various applications.

The recent advancements in high-tech technologies, especially the burgeoning field of LLM software, hold the potential for revolutionary developments. Nevertheless, the high risk associated with errors in automated or AI-generated software186 necessitates the inclusion of human intelligence in these projects.

Media, telecommunication, entertainment, hospitality

Similar to high tech, more traditional ICTs, such as the media sector, harness AI-enhanced CI for purposes such as investigative journalism, historical research, and combating misinformation.

Civil War Photo Sleuth (civilwarphotosleuth.com) combines facial recognition technology and community effort to uncover lost identities in photographs from the American Civil War era. It leverages a dedicated community with a keen interest in the US Civil War and focuses on more accurately tagging and identifying individuals in historical photographs.

Bellingcat (bellingcat.com) is an independent investigative online journalism community specializing in fact checking and open-source intelligence. It brings together a collective of researchers, investigators, and citizen journalists to publish investigation findings. Utilizing various AI tools in investigative work, Bellingcat obtains insights from massive open-source data, such as satellite imagery, photographs, video recordings, and social media posts.

Facing the emerging challenge of deepfake videos, research indicates that a hybrid system combining human judgment with AI models outperforms either humans or models alone in detecting deepfakes,187 underscoring the value of integrating human perceptual skills with AI technology in addressing the complexities posed by deepfake content.

Energy, natural resources

Switching to a more industrial environment, in the energy and natural resources sector, CI combined with AI can play a role in addressing environmental challenges through community-driven data collection and analysis. Litterati188 is a mobile app and community platform that combats litter by empowering people to take action. The app allows the worldwide community to contribute their observations and findings through photographing and documenting occurrences, creating a crowdsourced database of litter data. It also provides a platform for individuals to connect, share, and support each other’s initiatives. The platform uses AI, such as machine-learning and computer-vision techniques, to automatically recognize different objects and materials and efficiently organize the collected litter data. Similarly, OpenLitterMap (openlittermap.com) is another example of making a cleaner planet using AI-enhanced CI.

eBird189 is a mobile app and community platform for biodiversity and supports conservation initiatives to protect bird species and their habitats. It harnesses the power of a global community of birdwatchers and citizen scientists to collectively report bird observations and contribute to a shared knowledge base. eBird uses computer vision techniques to identify bird species from submitted photographs and recordings and employs machine-learning predictive models to forecast bird migration patterns and species distributions.

Tackling environmental challenges is crucial, and large-scale, crowd-based AI-enhanced CI projects play a significant role in this endeavor. Nevertheless, integrating AI with human efforts might dehumanize the work and demotivate human participants.190 This balance between efficiently leveraging AI and maintaining human engagement is a key consideration in such projects.

Education and academia

Education and academia are other areas that benefit from AI-enhanced CI by fostering collaborative learning environments and supporting large-scale research projects. Kialo Edu (kialo-edu.com) is an argument mapping and debate site dedicated to facilitating collaborative and critical thinking. Kialo uses natural language processing techniques to analyze arguments, cluster topics, and identify discussion patterns. It allows users to participate in structured debates and discussions, promoting constructive engagement among students. This interaction helps them deeply understand and analyze the core aspects of the topics under discussion.

Zooniverse (zooniverse.org) is a platform for people-powered research where volunteers assist professional researchers. Galaxy Zoo is a prominent project within the Zooniverse platform. This crowdsourced astronomy project invites people to assist in the morphological classification of large numbers of galaxies. It uses AI algorithms to learn patterns and characteristics indicative of different galaxy types by training on large datasets previously classified by citizen scientists. The combination of efforts from both the crowd and AI enables Galaxy Zoo to harness the power of human expertise and the efficiency of AI to scale up the analysis and understanding of galaxies.

A study analyzing the contributor demographics of the Zooniverse project revealed uneven geographical distribution and a gender imbalance, with approximately 30% of the citizen scientists being female.4 This finding highlights concerns about representativeness and diversity in citizen science initiatives, indicating potential biases in the data and insights from such projects. AI can be used to train human contributors,191 to de-bias CI, and to evaluate and combine human solutions.192

Healthcare

In a similar vein, the healthcare sector also leverages AI-enhanced CI, addressing challenges such as diagnostic accuracy and medical data analysis, which are critical for improving patient outcomes and advancing medical research. The Human Diagnosis Project (humandx.org) is an open online system of collective medical insights that provides healthcare support to patients. Human Dx enables healthcare professionals worldwide to contribute expertise and collaborate on complex diagnostic cases. The platform facilitates idea exchange and mutual learning to ultimately benefit patient care by utilizing machine-learning techniques that automatically learn from classifying patterns in crowdsourced data. Human Dx makes the most of limited medical resources by harnessing the CI of human physicians and AI, enabling more accurate, affordable, and accessible care for those in need.

CrowdEEG193 is a collaborative annotation tool for medical time series data. The project combines human and machine intelligence for scalable and accurate human clinical electroencephalogram (EEG) data analysis. It trains machine-learning algorithms using feedback from clinical experts and non-expert crowds to perform feature detection or classification tasks on medical time series data.

In healthcare, the use of AI-CI poses the risk of amplifying existing biases in human-only diagnosis once we combine AI with human judgment.194 Consequently, errors resulting from such biased decision making can erode patients’ trust not only in the AI systems but also in the medical professionals utilizing them.

Financial services

AI-enhanced CI in financial services optimizes investment strategies and improve market predictions, demonstrating the powerful synergy between human expertise and machine learning. Numerai (numerai.fund) is an AI-run quant hedge fund built on crowdsourced machine-learning models. Numerai hosts an innovative data science tournament in which a global network of data scientists develops machine-learning models to predict stock markets. Based on the combined knowledge of the participants, Numerai combines and aggregates these crowdsourced predictive models into an ensemble model to derive investment strategies in the financial markets.

CryptoSwarm AI is a forecasting service that provides rigorous insights and intelligence on cryptocurrencies and other Web3 assets. A combination of AI technology and real-time human insights powers it. CryptoSwarm AI uses Swarm AI to amplify the CI of the online communities quickly, enabling networked groups to converge on optimized solutions in real time.

In financial markets, research generally indicates positive impacts on market efficiency due to the superior information processing and optimization capabilities of machines, coupled with human anticipation of these effects. However, this improvement is sometimes disrupted by rapid price spikes and crashes, resulting from the herding behavior of machines and the challenges humans face in intervening at extremely fast timescales.195

Supply chain, real estate

The supply chain and real estate sectors also benefit from AI-enhanced CI, improving efficiency, transparency, and decision making through real-time data integration and analysis. MarineTraffic (marinetraffic.com) is a web-based platform that creates a global network of vessel tracking information, enabling users to track vessels, monitor maritime traffic, analyze trends, and make informed decisions. It relies on the collective contributions of the maritime community, who voluntarily transmit real-time information about vessel positions and movements from various sources, including automatic identification system (AIS) data and satellite data. MarineTraffic employs neural network architectures for automatic maritime object detection using satellite imagery.196

Waze (waze.com) is a GPS navigation software that works on smartphones and other computers. It analyzes crowdsourced GPS data and user-submitted information along the route in real time and uses community editing to ensure the accuracy of the map data. Waze uses AI algorithms to predict traffic, optimize routes,197 and provide personalized recommendations by analyzing driver behaviors. Waze has implemented integration with Google Assistant, enabling users to use the application by utilizing the voice command “Hey Google.”

By improving efficiency, reducing waste, and enabling more equitable distribution and accessibility of resources and spaces, AI can play a significant role in addressing inequalities in access to resources, paving the way for sustainable and efficient infrastructure development.198

Agriculture

Finally, the agriculture sector leverages AI-enhanced CI to improve farming practices, resource management, and crop yields, addressing global food security challenges. WeFarm is a social networking platform connecting the small-scale farming community. It helps millions of African farmers meet, exchange solutions to their questions, and trade equipment and supplies. Farmers ask each other questions about agriculture and promptly receive content and ideas from other farmers worldwide through crowdsourcing. Natural language processing processes and understands the text-based messages farmers send in different regions. Machine-learning matching algorithms consider farmers’ needs and expertise, identify the most suitable responses, and present them to the farmer who posed the question.

Mercy Corps AgriFin (mercycorpsagrifin.org) harnesses the power of AI and a global network of partners to transform agriculture for smallholder farmers. By utilizing state-of-the-art imagery, modeling, and analysis, AI enables precise management of crops and adaptation strategies against climate change impacts. AgriFin’s integration of digital technology and data, supported by a global network, empowers these farmers in a digitally interconnected world.

In light of the global food crisis and the quest for sustainable solutions, the importance of AI-enhanced CI platforms becomes increasingly critical. These platforms can significantly contribute to addressing agricultural challenges and transitioning toward global sustainable food systems.199

Limitations

The previous examples across various application domains demonstrate that CI and AI leverage the power of crowdsourced data, collaborative problem solving, and advanced data-processing techniques to enhance decision making, optimize processes, and improve outcomes. However, there are limitations and concerns associated with the AI-CI approach.

Scalability

AI-enhanced CI can scale up problem-solving efficiency for real-world challenges, but scalability remains a limitation. As the system grows, scaling the multilayer framework and ensuring its applicability across various domains and scales can be challenging. Integrating diverse fields provides a comprehensive approach but poses difficulties in coordination, consistency, and computational resources.

Bias

While AI can help mitigate certain human biases, they also have the possibility to introduce new ones. These biases can stem from various stages of AI development and deployment. AI systems learn from the data they are trained on, and biased training data can cause the AI to replicate these biases. Additionally, algorithmic design choices may inadvertently favor certain groups over others. Human-in-the-loop bias is another concern, where human inputs during training and feedback, such as biased labeling by annotators, can be learned by the AI. Moreover, user interactions with AI systems can also influence and reinforce these biases. AI biases pose risks to fair and equitable outcomes in society, especially in sensitive areas such as healthcare and education. Bias in AI algorithms can lead to unfair treatment or misdiagnosis in healthcare, and biased educational tools can perpetuate existing inequalities. Understanding how AI biases and human biases interact, and how to avoid doubly biased decisions made by human-machine intelligence,200 are crucial areas for further study.

Explainability

Many AI models, particularly deep-learning algorithms, operate as “black boxes,”201 making understanding how they arrive at specific decisions or recommendations difficult. This opacity can hinder trust and acceptance among users, especially in high-stakes domains such as healthcare and law. Explainability is essential for ensuring accountability, enabling users to understand and challenge AI decisions, and identifying and correcting biases within the system. Developing AI models that are both effective and interpretable requires ongoing research and innovation.

Challenges and outlooks

The application areas of AI-enhanced CI continually expand as technology advances and new opportunities emerge. As introduced in the previous section, successful examples of AI-enhanced CI exist in various domains. However, challenges coexist with opportunities. When realizing human-AI CI, many aspects must be considered, such as human-machine communication, trust, crowd retention, technology design, and ethical issues.202 Here, we briefly discuss some of these challenges and call for further work on each aspect.

Communication

Clear and effective communication between humans and AI is the engine for CI to emerge.164 Communication refers to the process of exchanging information between teammates.203 It is essential for team performance as it contributes to the development and maintenance of SMMs and the successful execution of necessary team processes.203,204

For effective communication in human-AI teamwork, AI must first be able to model human information comprehension accurately and then effectively communicate in a manner understandable to humans. Current AI technologies face limitations in verbal and contextual understanding204 and cognitive capabilities, such as reasoning about others’ mental states and intentions.205 Detection, interpretation, and reasoning about social cues from a human perspective is imperative to ensure effective coordination, but AI has yet to achieve this. Although recent developments in LLMs appear promising, further advancing our understanding of how LLMs can be utilized in such a way is crucial.206,207

AI’s inability to interpret nonverbal cues and limited self-explanation hinder human collaboration. Addressing these challenges, research suggests the development of AI agents with cognitive architectures that can facilitate both a machine theory of the human mind (MToHM) and a human theory of the machine mind (HToMM) will be especially important for supporting the emergence of CI.164

Trust

In team success, a human member’s trust in a machine is pivotal.208 Research indicates that users interact differently with AI than humans, often exhibiting more openness and self-disclosure with human partners.209 Developing explanatory methods that foster appropriate trust in AI is challenging yet crucial for enhancing performance in human-AI teams.174 Meanwhile, over-reliance on AI, especially when faced with incorrect AI advice, can be detrimental and lead to human skill degradation. Hence, it is essential for humans to judiciously rely on AI when appropriate and exercise self-reliance in the face of inaccurate AI guidance,210 especially in high-stakes situations. More tech literacy, public understanding of AI, and advancements in the technology itself will help in overcoming these challenges.

Crowd retention

Albeit beneficial in terms of efficiency, employing AI and citizen scientists together has been reported to damage the retention of citizen scientists.190 There is a trade-off between efficiency and retention of the crowds when deploying AI in citizen science projects, and ultimately, if all the crowds leave the projects, the performance of AI alone would decline again. One of the factors contributing to the retention of volunteers is reported to be the social ties they make in their teams.211 The challenge of forming social bonds with AI could increase human feelings of loneliness and isolation, potentially affecting mental health. Questions about identity and recognition could arise when humans are rewarded in conjunction with AI. Studies highlight concerns regarding the anthropomorphic appearance of social robots potentially threatening human distinctiveness.212 Additionally, collaboration with AI may demotivate humans due to a lack of competitive drive and an over-reliance on AI, which could diminish human participation and initiative. Understanding how to balance AI collaboration with maintaining human motivation and retention is crucial.

Technology design

The interface design of AI technology influences user experiences, engagement, and acceptance of AI-driven communication. Interfaces should be user friendly, promoting seamless information exchange and aligning with human cognitive processes. Since AI systems need time to process human input and generate output, there may be a significant time lag between the interactions in some instances. The delay of feedback between human input and AI responses can adversely affect213 the coordination and interaction efficiency between humans and AI, especially in collaborative tasks. On the other hand, human behavior possesses specific temporal patterns, mainly determined by circadian rhythm.214 It is essential to design such hybrid systems so that the temporal characteristics of both entities match, support mutual understanding, and balance the cognitive load and workload distribution. Moreover, these systems should be designed to augment human contributions.215 To effectively cater to diverse user groups, human-centered AI design approaches, including the use of personas,216 can be employed to develop adaptive and inclusive human-AI interfaces.

Ethical considerations

When integrating AI into human interactions, several ethical considerations emerge. Addressing these concerns involves balancing benefits and minimizing potential harms. Key issues include the ethical implications of mandatory AI use, especially when humans are reluctant or do not require AI assistance. When it comes to AI transparency, a study reported that humans who were paired with bots to play a repeated prisoner’s dilemma game outperformed human-human teams initially but later started to defect once they became aware that they were playing with a bot, showing a trade-off between efficiency and transparency.217 Another critical aspect is addressing biases inherent in AI outputs, which are influenced by the data inputs. It is essential to consider how AI integration might exacerbate or introduce new biases in decision making.200 Furthermore, liability questions arise when AI errors cause serious harm, an issue compounded by the lack of comprehensive regulations and laws. For instance, a robot failed in object recognition and crushed a human worker to death in a factory in South Korea.218 This highlights the urgent need for comprehensive legal frameworks to address such liabilities and ensure safety in environments where AI interacts with humans. As AI becomes more prevalent in the workplace, constructing ethical human-AI teams becomes a complex, evolving challenge.219

Conclusions

The advancement of AI technologies has led to enhanced and evolved approaches to tackling existing challenges. Approaches relying solely on human intelligence or AI encounter significant limitations. Therefore, a synergistic combination of both, complementing each other, is considered the ideal strategy for effective problem solving.

In current real-world AI-CI applications, AI is predominantly utilized as a technical tool to facilitate data analysis through techniques such as natural language processing and computer vision. Implementing AI not only scales up tasks but, in combination with human intelligence, leads to superior performance. Although AI is critical in some decision-making processes, humans make the ultimate decisions and contributions. It is essential to recognize that AI’s function is to support and enhance human collaborative processes rather than to replace human intelligence. Nevertheless, it is also worth considering CI emerging from a group of AI entities in future research. Past work has demonstrated that the collective behavior of autonomous machines can lead to the emergence of complex social behavior.220

AI-CI approaches present numerous challenges. Success in this field relies on multiple factors beyond the initial idea and readiness of technological solutions. Critical aspects include attracting the audience, designing user-friendly interfaces, scalability, and effectively integrating AI with human CI. Understanding how to combine human intelligence and AI to address social challenges remains a significant and worthy area of study that requires interdisciplinary collaboration.

While interdisciplinary collaboration presents challenges, such as differences in terminology, methodologies, and objectives across fields, which can create communication barriers and slow progress, it also offers benefits. Interdisciplinary collaboration fosters a comprehensive understanding of complex problems and enables developing more effective, adaptable, and ethical AI-enhanced CI systems.

Complex system thinking has illuminated various biological, physical, and social domains. Based on this, we can model real-world CI systems as multilayer networks. This framework allows us to leverage extensive research on multilayer networks, focusing on their robustness, adaptivity, scalability, resilience, and interoperability.

Our theoretical model of human-AI CI, a multilayer network with cognition, physical, and information layers, offers valuable insights into complex phenomena in such hybrid systems. For example, in the case of the Flash Crash 2012,221 the cognition layer includes high-frequency traders (algorithms) responding to market signals, the physical layer involves the actual financial transactions, and the information layer encompasses the data streams and communication networks connecting these elements. By understanding the interplay and feedback loops within these layers, our model helps identify vulnerabilities, such as how a single large sell order propagated through the network, causing a cascade of automated responses that led to the crash.195

Similarly, regarding the reported issue of human user retention while AI is being deployed in citizen science projects,190 our model elucidates the interaction between human volunteers and AI classifiers within the different layers. It highlights the importance of maintaining a balance between efficiency (enhanced by AI) and volunteer engagement (rooted in human cognition). This approach prepares us to preemptively address potential pitfalls, ensuring more resilient and effective CI systems in the future.

Future research directions

Future research in this field will require strong interdisciplinary communication between different domains and research fields. The following are a few directions for future research.

Behavioral studies