Abstract

Recently, Deep Learning (DL) models have shown promising accuracy in the analysis of medical images. Alzheimer’s Disease (AD), a prevalent form of dementia, is commonly assessed with Magnetic Resonance Imaging (MRI) scans, which can then be analysed by DL models. To address the computational constraints of these models, Cloud Computing (CC) is integrated with the DL models. Recent articles on DL-based MRI analysis have not discussed datasets specific to different diseases, which makes it difficult to build disease-specific DL models. This article therefore takes a systematic, tutorial approach: we first discuss a classification taxonomy of medical imaging datasets. Next, we present a case study on AD MRI classification using DL methods. We analyse three distinct models, Convolutional Neural Networks (CNN), Visual Geometry Group 16 (VGG-16), and an ensemble approach, for classification and prediction. In addition, we design a novel framework that offers insight into how the various layers interact with the dataset. Our architecture comprises an input layer, a cloud-based layer responsible for preprocessing and model execution, and a diagnostic layer that issues alerts after successful classification and prediction. In our simulations, CNN outperformed the other models with a test accuracy of 99.285%, followed by VGG-16 with 85.113%, while the ensemble model lagged with a test accuracy of 79.192%. Our cloud computing framework serves as an efficient mechanism for medical image processing while safeguarding patient confidentiality and data privacy.

Keywords: Smart healthcare, Blockchain, Internet of things, Security & privacy, Patient data, Challenges

Subject terms: Computational platforms and environments, Health care, Biomedical engineering, Computer science

Introduction

In recent years, the healthcare industry has become increasingly interested in the latest technologies. Cloud computing (CC)1 and Deep Learning (DL)2 are two main domains that have revolutionized the healthcare ecosystem, with great potential to provide effective and innovative solutions to the challenges faced by healthcare professionals. One such challenge is the early diagnosis and treatment of Alzheimer’s Disease (AD), a neurological disorder that affects millions of people worldwide. CC allows users to access and process data by storing data and applications on remote servers via the Internet. Because CC lets healthcare providers store and process enormous amounts of data economically and at scale, it has fundamentally changed the healthcare sector3. However, using CC in healthcare carries significant security and privacy risks, especially when dealing with sensitive patient data4. Healthcare services today also use drones to deliver medical aid to remote places, extending the reach of medical facilities5,6.

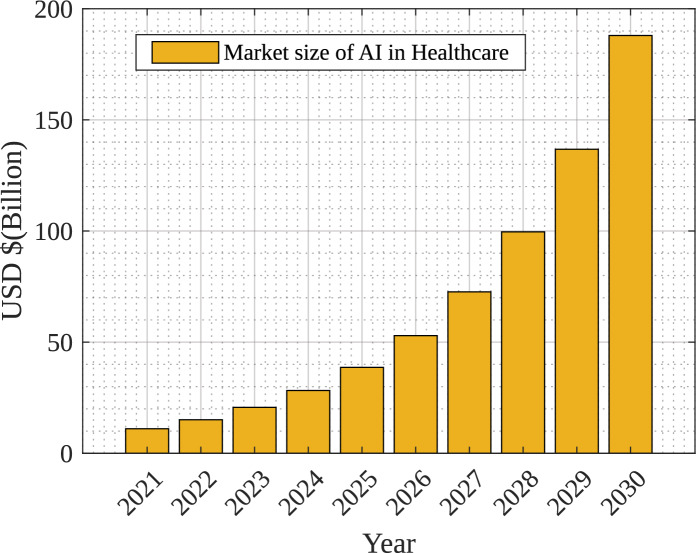

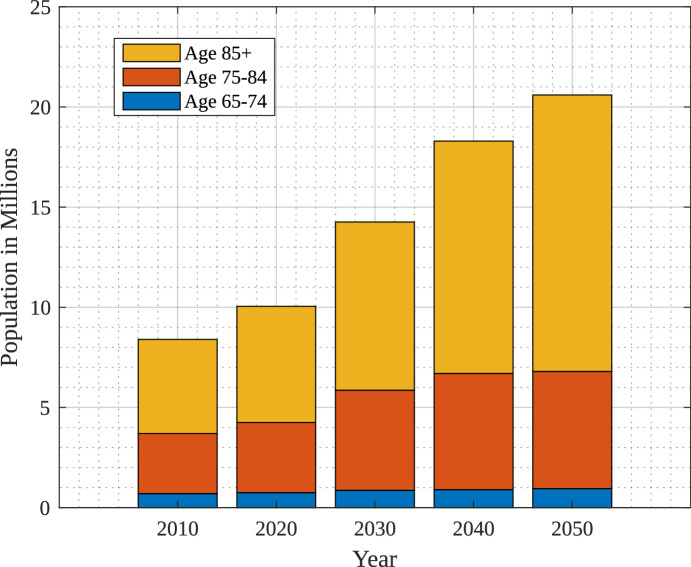

The market size of AI in healthcare was estimated at around USD 15 billion in 2022 and is expected to reach USD 187 billion by 2030, a compound annual growth rate (CAGR) of about 36%. Figure 1 shows the growth of AI in healthcare over the years7. The use of digital technologies and improved patient care are the main factors driving the growth of this market. Over the years, DL-based diagnostic solutions have strengthened the medical community’s trust in primary research8. Moreover, explainable AI has added a new dimension by verifying and explaining the decisions made9. The projected number of people in different age groups in the U.S. with Alzheimer’s Disease from 2010 to 2050 is shown in Fig. 2, based on the study in10. Figure 2 also indicates a drastic projected increase in the number of people with Alzheimer’s Disease, from 4.7 million to 13.8 million, with much of the growth among people aged 85 years and older, attributed to social and environmental conditions.
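As a quick arithmetic check on these growth figures, the compound annual growth rate is (end / start)^(1 / years) − 1. The sketch below applies it to the rounded endpoints quoted in the text (USD 15 billion in 2022, USD 187 billion in 2030). With these rounded inputs the implied rate comes out at roughly 37%, close to the quoted 36%; the small gap is presumably due to rounding of the endpoints.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end_value / start_value) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Rounded endpoints quoted in the text: ~USD 15 billion (2022) -> ~USD 187 billion (2030).
implied = cagr(15.0, 187.0, 2030 - 2022)
print(f"Implied CAGR: {implied:.1%}")  # prints "Implied CAGR: 37.1%"
```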

Figure 1.

Market size of AI in healthcare domain7.

Figure 2.

Impact of Alzheimer on age10.

DL is a promising approach that has demonstrated impressive performance in a number of areas, including Speech Recognition, Medical Image Classification, and Natural Language Processing11–14. Consequently, there has been a lot of interest in using DL models in healthcare in recent years. DL models in healthcare can help with early disease detection, effective diagnosis, and individualized treatment15. The early detection and diagnosis of AD is one of the most promising uses of DL in healthcare.

The field of medical imaging is progressing rapidly, and developments reported for 2019 and beyond offer interesting insights into both technology adoption and market dynamics. Ultrasound imaging, a critical part of diagnostic procedures, accounted for a large share (20.6%) of the global market in 2019. North America was also prominent in this period, holding a leading market share of 42.1% and emphasizing the pioneering nature of the region’s healthcare innovation16. An important trend is the integration of Artificial Intelligence (AI) into medical imaging, a market that estimates anticipate will reach $4.3 billion by 2026. The global market for these diagnostic imaging processes is expected to reach 7.6 billion by 2030. Additionally, X-ray systems dominated the market with around 30% of total sales in 2019, while multi-slice CT scanners accounted for about three quarters of the CT scanner market in 2018. One estimate valued this total at $3,199 million in FY2020, with notable valuations also reported for the MRI scanner market (anticipated for FY2027) and the PET scanner market (FY2019). Moreover, the push towards advanced imaging techniques is mirrored in the growth predictions for nuclear medicine imaging and medical imaging software, both poised for significant growth by 2026. The Asia-Pacific region is projected to see a 5.9% CAGR in the medical imaging market between 2018 and 2023, reflecting a global tilt towards more sophisticated diagnostic capabilities. Teleradiology is emerging as a pivotal technology, with its market projected to reach $10.8 billion by 2026. Amid these advancements, General Electric’s 15.2% market share in 2019 illustrates the competitive nature of the industry.
Despite these technological strides, challenges remain: about 20% of breast cancers go undetected by mammography alone, which highlights the ongoing need for innovation and improvement in imaging modalities, including the promising Optical Coherence Tomography (OCT) systems, whose market is expected to grow at a CAGR of 10.4% from 2021 to 2028. These developments reflect the current state of medical imaging and chart a course for its future, blending technological advancement, market shifts, and the perpetual aim of improving diagnostic accuracy and patient care.

Dataset images must be processed on a platform that provides a high-performance computational environment. CC provides such a platform for storing, processing, and analyzing massive datasets, and it has transformed image-based classification in the healthcare system. With the growing digitization of medical data and the explosion in high-resolution medical imaging, CC offers a scalable and economical way to process, store, and analyze enormous numbers of medical images. Healthcare organizations can use the enormous computing capacity of the cloud to speed up challenging image classification tasks, such as locating cancers, lesions, or anomalies in radiological scans. By outsourcing resource-intensive computations to the cloud, healthcare providers can drastically cut processing time, enabling quicker diagnosis and treatment17.
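The offloading idea can be illustrated with a minimal sketch in which a local thread pool stands in for remote cloud workers: a batch of images is fanned out, each worker runs a preprocessing step, and the results come back in input order. The function names and the choice of min-max scaling as the "heavy" step are illustrative assumptions; a real deployment would submit the work to a cloud service rather than a local pool.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def normalize(image: Sequence[float]) -> List[float]:
    """Min-max scale one flattened image to [0, 1] (stand-in for a costly preprocessing step)."""
    lo, hi = min(image), max(image)
    span = (hi - lo) or 1.0  # avoid division by zero for constant images
    return [(px - lo) / span for px in image]


def offload_batch(images: Sequence[Sequence[float]], workers: int = 4) -> List[List[float]]:
    """Fan a batch out to a pool of workers; pool.map returns results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(normalize, images))


batch = [[0, 5, 10], [2, 2, 2]]
print(offload_batch(batch))
```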

Additionally, the collaborative nature of the cloud enables seamless data exchange and inter-institutional collaboration, allowing medical professionals to access and examine images remotely. This is especially valuable in telemedicine and second-opinion settings, where experts can share their opinions regardless of geographic limitations18. Advanced ML and AI techniques can also be combined with cloud-based image analysis. These algorithms can learn from diverse datasets and progressively improve their precision, which allows them to spot patterns and anomalies in medical images. By harnessing CC, the healthcare sector can increase diagnostic precision, improve patient outcomes, and streamline the entire healthcare workflow19. Its scalability and ubiquitous accessibility therefore make CC well suited to storing and processing massive amounts of patient data. Using such data, DL models can be trained on cloud platforms to find patterns and forecast outcomes20. Because CC resources are widely available, DL models specifically suited to cloud deployment in the healthcare industry need to be developed21.

CC has completely changed how data is processed and stored22. It has made it possible for healthcare workers to communicate effectively, share medical data, and monitor patients remotely. Together, the cloud and DL allow image classification to be performed efficiently and quickly. In this study, we focus on applying DL models to the early identification and diagnosis of AD in the healthcare industry. Alzheimer’s Disease (AD) is a degenerative neurological condition that impairs elderly people’s memory and cognitive abilities. Diagnosing and treating AD requires a great deal of patient information, including medical histories, blood tests, and brain imaging studies. Effective and precise tools are needed to analyze and derive valuable insights from the growing amount of data held by healthcare providers. DL, a subset of ML, has performed well in addressing this demand and has been used in a number of healthcare fields, including AD23.

In the field of AD classification, Machine Learning (ML) and DL have become crucial tools, providing previously unattainable insights into the complicated patterns in neuroimaging, clinical, and other medical data24. ML approaches such as Support Vector Machines (SVMs) and Random Forests have demonstrated proficiency in extracting discriminative features from neuroimaging modalities like Magnetic Resonance Imaging (MRI) and Positron Emission Tomography (PET) scans. These techniques work well in lower-dimensional feature spaces, where the extracted features reflect distinct neuroanatomical differences linked to different disease stages. The development of DL has also driven important advances in AD classification. Convolutional Neural Networks (CNNs) have demonstrated a special aptitude for identifying spatial patterns in neuroimaging data, catching minute structural anomalies that would challenge conventional analysis. Recurrent architectures, in turn, preserve context from earlier time steps, which gives them the sensitivity to spot early alterations in brain activity linked to disease. Transfer Learning (TL), which allows DL models pre-trained on large datasets to be fine-tuned for a new task, has also proved a good option for identifying AD from the available datasets25.
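To make the idea of a CNN "identifying spatial patterns" concrete, the sketch below applies a single hand-made 3×3 vertical-edge filter to a toy image whose right half is bright, followed by a ReLU. The filter weights and the toy image are illustrative assumptions; in a trained CNN such as the one evaluated in this study, many filters are learned from data rather than set by hand.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like most DL frameworks, this computes cross-correlation (the kernel is not flipped).
    """
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    """Rectified linear unit: keep positive responses, zero out the rest."""
    return np.maximum(x, 0.0)


# Toy 6x6 "slice": dark left half, bright right half (a vertical intensity boundary).
image = np.zeros((6, 6))
image[:, 3:] = 1.0
# Hand-made vertical-edge filter; a trained CNN would learn its weights instead.
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)
feature_map = relu(conv2d_valid(image, kernel))
print(feature_map)  # strong responses only in the columns that straddle the boundary
```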

For both medical professionals and patients, accurate classification of medical images using cutting-edge image analysis algorithms has important consequences26,27. Accurate image classification is a powerful decision-making tool for clinicians, complementing their clinical knowledge with quantitative, data-driven insights28,29. By using ML and DL models to identify small patterns and anomalies that may be invisible to the human eye, physicians can improve diagnostic accuracy30. This is especially important in fields like radiology, where proper classification of medical images can support early detection of disease, allowing prompt treatment and better patient health31. Additionally, automated image analysis lessens the cognitive burden on doctors, letting them devote more time to tailored patient care and nuanced interpretation rather than lengthy routine image assessments32. By incorporating computational models into their workflow, doctors can diagnose illnesses with greater confidence, create personalized treatment plans, and track disease progression more precisely.

Accurate medical image classification benefits patients through earlier identification, more effective therapies, earlier initiation of suitable treatments, an improved overall healthcare experience, and possibly the prevention of disease progression in its early stages. Advanced image analysis can also reduce diagnostic ambiguity, which lowers patient anxiety, since people are better able to make informed decisions about their healthcare when information is communicated quickly and accurately33. As medical technology develops, patients benefit from a healthcare ecosystem in which clinical knowledge34 and AI-powered image analysis work together to provide optimized care pathways35. This environment promotes trust and well-being across the patient population36. In this study, three DL models, CNN, Visual Geometry Group 16 (VGG-16), and an ensemble model, are implemented and analyzed for classifying medical images for Alzheimer’s Disease. The proposed architecture and algorithm address the early identification and detection of AD.
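The text does not specify here how the ensemble model combines its members. One common choice is soft voting, sketched below under that assumption: each base model (for example, the CNN and VGG-16) outputs class probabilities, the probabilities are averaged (optionally with per-model weights), and the class with the highest average is predicted. The probability arrays in the example are made-up numbers.

```python
import numpy as np


def soft_vote(prob_sets, weights=None):
    """Soft-voting ensemble.

    prob_sets: list of arrays of shape (n_samples, n_classes), one per base model.
    weights:   optional sequence of per-model weights (equal weighting if None).
    Returns (predicted class indices, averaged probabilities).
    """
    stacked = np.stack([np.asarray(p, dtype=float) for p in prob_sets])  # (n_models, n_samples, n_classes)
    averaged = np.average(stacked, axis=0, weights=weights)              # weighted mean over models
    return averaged.argmax(axis=1), averaged


# Hypothetical softmax outputs for two samples and three classes.
cnn_probs = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]]
vgg_probs = [[0.4, 0.5, 0.1], [0.2, 0.5, 0.3]]
labels, probs = soft_vote([cnn_probs, vgg_probs])
print(labels.tolist())  # [0, 2]
```

Passing `weights=[1, 3]` would favour the second model; with the numbers above, the second sample then switches to class 1.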

Research contributions

This research offers insights into the current status of DL and CC research in healthcare. Through a comprehensive review of existing literature, it uncovers research gaps. By utilizing MRI images, the study enhances AD diagnosis and prognosis, potentially leading to faster and more precise treatment.

The paper compares the performance of three distinct DL models, namely CNN, VGG-16, and an ensemble model, for the classification and prediction of Alzheimer’s Disease. This evaluation offers important insights into the applicability and efficiency of various models for medical image analysis.

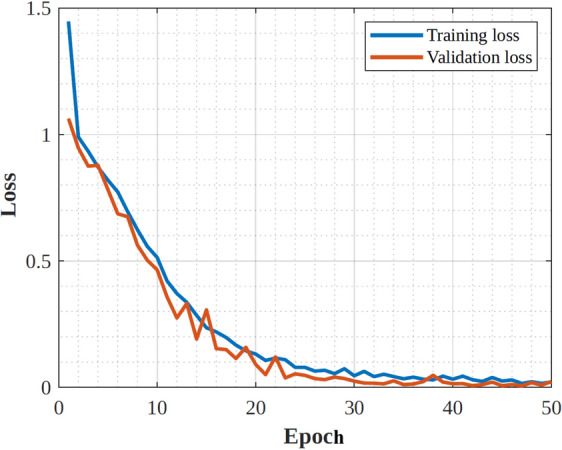

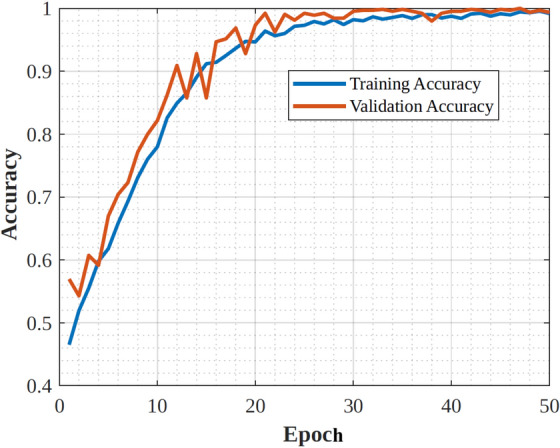

Simulation results show that the CNN and VGG-16 models outperform the ensemble model in accuracy and prediction for medical image analysis.

The rest of the paper is organized as follows. Section “Related work” surveys the existing state of the art. Section “Classification of medical imaging datasets” classifies diseases and their related databases available in the public domain for research purposes. Section “Case study: Alzheimer’s MRI classification using DL methods” presents the architecture and algorithm for the proposed case study on AD using DL and transfer learning. Section “Simulation results” presents the results and a comparative analysis of the CNN, VGG-16, and ensemble models on different datasets. Finally, Section “Conclusion” summarizes the comprehensive analysis of the different datasets.

Related work

This section presents a detailed analysis of the existing state-of-the-art approaches summarized in Table 1. In recent years, DL techniques for medical image processing have attracted increasing interest from medical practitioners. Researchers have presented a variety of DL architectures for different medical imaging modalities, such as ultrasound and MRI. In healthcare, Nancy et al.37 proposed a smart healthcare system that integrates the Internet of Things (IoT) with a cloud-based monitoring system to predict heart disease using DL. The method predicts heart disease with 92.8% accuracy, and the authors conclude that it can effectively predict heart disease and be used for real-time healthcare monitoring. Ahmed et al.38 presented a cloud-based encrypted communication system for diagnosing tuberculosis (TB). The system seeks to protect the privacy and security of medical images while enabling efficient and accurate diagnosis using DL techniques. The authors in39 propose a hybrid DL network to enhance the classification of Mild Cognitive Impairment (MCI) from brain MRI scans. The model integrates the Swin Transformer, a Dimension Centric Proximity Aware Attention Network (DCPAN), and an Age Deviation Factor (ADF) to improve feature representation. By combining global, local, and proximal features with dimensional dependencies, the network achieves better classification results. The experimental evaluation on the ADNI dataset shows the model’s effectiveness, with an accuracy of 79.8%, precision of 76.6%, recall of 80.2%, and an F1-score of 78.4%. Some studies have also focused on the security of medical images. For instance, Gupta et al.40 present a DL approach for enhancing the security of multi-cloud healthcare systems that outperforms other methods in detection accuracy and false alarm rate.
Qamar et al.41 proposed a DL-based method for analyzing healthcare data while ensuring cloud-based security; it achieves high accuracy in classifying healthcare data and can serve as an effective tool for healthcare data analysis with cloud-based cybersecurity.
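The metrics reported for the hybrid MCI model39 are internally consistent: the F1-score is the harmonic mean of precision and recall, and the small check below reproduces the reported 78.4% from the reported precision (76.6%) and recall (80.2%). Accuracy cannot be recovered the same way, because it also depends on the true negatives.

```python
def f1_score(precision: float, recall: float) -> float:
    """F1-score: harmonic mean of precision and recall (both in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall reported for the hybrid MCI classifier (ref. 39).
print(f"{f1_score(0.766, 0.802):.1%}")  # 78.4%
```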

Table 1.

Comparative analysis of the existing state of the art.

| References | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Pros | Cons |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Gupta et al.55 | Y | N | N | N | N | N | Y | Y | Y | Y | Blockchain-enabled system for early classification and detection of monkeypox using transfer learning on a skin lesion dataset | Increased latency and bandwidth when accessing the blockchain |
| Akhtar et al.56 | Y | N | N | N | N | Y | Y | Y | Y | N | Internet of Medical Things-based healthcare monitoring system that uses an improved advanced feature set RNN | Requires high bandwidth and large storage for patient data, which is not secure |
| Nancy et al.37 | Y | Y | Y | N | N | Y | N | N | N | Y | Healthcare monitoring system for remote monitoring of patients’ health and real-time analysis using IoT and cloud | Handling IoT data requires higher bandwidth |
| Ahmed et al.38 | Y | Y | Y | N | Y | Y | Y | N | Y | Y | Perceptual encryption method, applicable to both color and grayscale images, that improves robustness against different attacks | Assumes a private cloud computation server, which may not be applicable in all settings |
| Gupta et al.40 | Y | N | N | Y | Y | Y | Y | N | N | N | Hierarchical model that integrates seamlessly with the healthcare network’s hierarchical structure | Increased risk of malicious agents stealing or altering sensitive patient data |
| Qamar et al.41 | Y | N | N | N | N | N | Y | N | Y | Y | Uses DL-based classification and feature selection to analyze EHR data with a focus on cybersecurity | Additional complexity and potential security risks |
| Simeone et al.42 | Y | N | N | Y | Y | N | N | N | Y | Y | Cloud-based platform for worker health monitoring in hazardous manufacturing environments | Potential cost and complexity of implementation |
| Bolhasani et al.57 | Y | N | N | Y | N | N | N | N | N | N | Comprehensive analysis of the possible uses of DL in IoT-based healthcare systems | Data privacy and security concerns; increased risk of data breaches |
| Cotroneo et al.44 | Y | N | N | N | N | N | Y | Y | Y | Y | Yields results comparable or superior to manual clustering, which requires significant human effort and expertise | High hardware requirements |
| Motwani et al.45 | Y | Y | N | N | N | Y | N | Y | Y | Y | Smart monitoring architecture that monitors chronic patients in real time and predicts Emergency, Alert, Warning, and Normal scenarios equally well locally and in the cloud | May incur high implementation and maintenance costs |
| Aazam et al.58 | Y | N | N | N | N | N | Y | N | Y | Y | Explores the use of ML in healthcare applications with edge computing | Interoperability challenges between different devices and systems, which can limit large-scale deployment |
| Hossain et al.46 | Y | Y | Y | Y | Y | Y | Y | N | Y | Y | Well suited to parallelization | Needs a reliable, high-speed Internet connection |
| Praveen et al.59 | Y | Y | Y | N | N | Y | Y | N | Y | Y | OGSO-DNN, an energy-efficient illness detection and clustering approach for IoT-based sustainable healthcare systems | Scalability, data privacy, and security can be issues |
| Shah et al.60 | Y | Y | N | Y | Y | Y | N | Y | Y | Y | Improves data accuracy and processing speed in IoT environments | Requires high bandwidth and robust infrastructure |
| Yan et al.50 | Y | N | N | N | N | N | Y | N | Y | Y | RSIF framework enhances healthcare data access in the cloud for users and service providers | May require significant computational resources |
| Tuli et al.52 | Y | N | N | Y | Y | Y | Y | Y | Y | Y | HealthFog: a portable, cost-effective solution for heart disease diagnosis using ensemble DL | Requires high compute resources for training and prediction |
| Durga et al.61 | Y | N | N | N | N | N | N | N | N | N | Explores algorithms for enhancing IoT-based healthcare systems | High complexity and computation time |

1-Accuracy, 2-Sensitivity, 3-Specificity, 4-Latency, 5-Bandwidth Utilization, 6-Robustness, 7-Security, 8-Fault Tolerance, 9-Efficiency, 10-Reliability.

In risky manufacturing environments, Simeone et al.42 offer an AI-based cloud platform for monitoring risk. According to the authors, their platform can significantly lower the frequency of workplace accidents and increase employee productivity43. Cotroneo et al.44 proposed a DL-based technique to improve the analysis of software failures in CC systems, using CNNs to identify and categorize software errors. To monitor patients in real time and provide individualized recommendations, Motwani et al.45 proposed the Smart Patient Monitoring and Recommendation framework. The framework was evaluated on a real-world dataset, and the results demonstrated that it can accurately forecast patient conditions and offer useful personalized recommendations, thereby enhancing patient care. Hossain et al.46 proposed a DL-based pathology detection system for smart, connected healthcare. The system diagnoses skin cancer from images taken with a smartphone and sent to a cloud-based server for analysis, and it achieved an accuracy of 87.31%. Ghaffar et al.47 presented a comparative analysis of COVID-19 detection from chest X-ray images, using pretrained models such as ResNet, DenseNet, and VGG to improve diagnostic accuracy. The study further builds on these findings with a detailed comparison of models such as MobileNet, EfficientNet, and InceptionV3, emphasizing their high accuracy in classifying COVID-19 infections and contributing valuable insights into effective methods for rapid and reliable disease detection in clinical practice. Illakiya and Karthik48 focused on the significant role of DL algorithms in medical image processing, particularly in helping radiologists diagnose disease accurately.
Their research reviews 103 articles to evaluate deep learning methods like CNNs, RNNs, and Transfer Learning for detecting Alzheimer’s Disease (AD) using neuroimaging modalities such as PET and MRI. The study highlights the effectiveness of these models in the detection of AD, while also emphasizing the need for a more detailed analysis of the progression from Mild Cognitive Impairment (MCI) to AD. Kang et al.49 address the challenge of overfitting in 3D CNN models for AD diagnosis due to limited labeled training samples. They propose a three-round learning strategy combining transfer learning and generative adversarial learning. This involves training a 3D Deep Convolutional Generative Adversarial Network (DCGAN) on sMRI data, followed by fine-tuning for AD versus cognitively normal (CN) classification, and transferring the learned weights for Mild Cognitive Impairment (MCI) diagnosis. The model achieves notable accuracies of 92.8% for AD versus CN, 78.1% for AD versus MCI, and 76.4% for MCI versus CN.

Yan et al.50 proposed a framework for DL-based concurrent processing and storage of healthcare data in a distributed CC setting51. The framework aims to address the difficulties involved in processing and analyzing massive amounts of healthcare data in real time. An evaluation using a prototype system shows that it can process and store large volumes of health data effectively. Tuli et al.52 proposed “HealthFog” for the automatic diagnosis of heart disease by integrating IoT and a fog computing environment. HealthFog uses an ensemble DL model to classify heart diseases based on ECG signals, and this system has improved the accuracy and efficiency of heart disease diagnosis53. Illakiya et al.54 present a novel Adaptive Hybrid Attention Network (AHANet) for the early detection of AD using brain MRI. AHANet integrates Enhanced Non-Local Attention (ENLA) to capture global spatial and contextual information and Coordinate Attention to extract local features. In addition, an Adaptive Feature Aggregation (AFA) module fuses these features, enhancing the network’s performance. Trained on the ADNI dataset, AHANet achieves a classification accuracy of 98.53%, outperforming existing methods in AD detection.

Novelty of the work

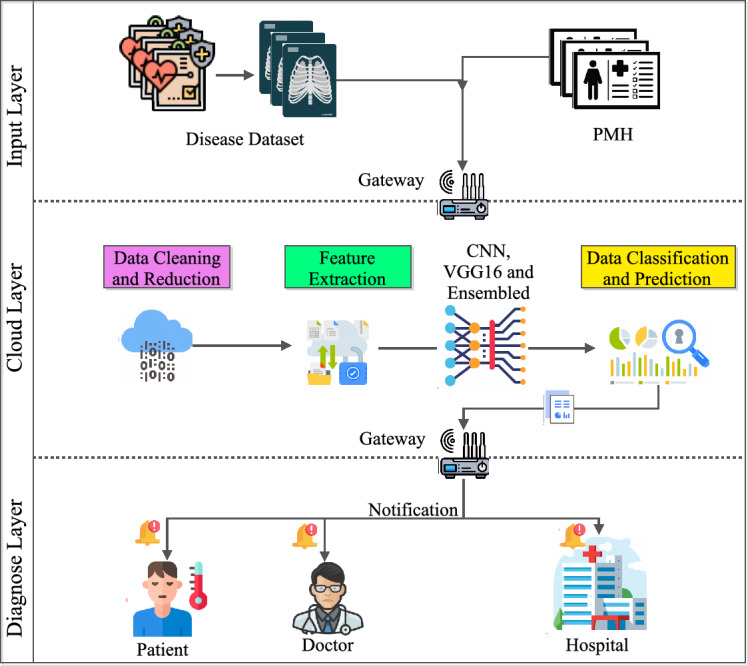

The article outlines a novel method for utilizing DL in healthcare that focuses on medical image processing, specifically MRI-based AD diagnosis, with the aim of increasing the accuracy of Alzheimer’s diagnosis. The study also conducts a thorough analysis of DL algorithms and CC, and it discusses the analysis of various healthcare imaging databases. For the experimental analysis, three models, CNN, VGG-16, and an ensemble model, are analyzed for classification on a carefully chosen Alzheimer’s dataset. A standout addition is the proposed design with three essential layers: the input layer, the cloud layer for preprocessing and model implementation, and the diagnostic layer for classification and notification generation. This layered approach improves model comprehension and provides guidelines for future implementations in healthcare systems. Beyond model performance, the research presents a CC framework for medical image processing that puts patient privacy and data confidentiality first. These elements position the study as a contribution to advancing DL in the healthcare ecosystem.
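The three-layer design can be sketched as a chain of plain functions. This is a minimal sketch, not the authors’ implementation: the `Scan` and `Diagnosis` types, min-max scaling as the preprocessing step, the toy threshold classifier standing in for the CNN, and the "Demented"/"NonDemented" labels are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional, Set, Tuple

Model = Callable[[List[float]], Tuple[str, float]]  # returns (label, confidence)


@dataclass
class Scan:
    patient_id: str
    pixels: List[float]  # flattened MRI slice (toy representation)


@dataclass
class Diagnosis:
    patient_id: str
    label: str
    confidence: float
    alert: Optional[str]


def input_layer(records: Iterable[Tuple[str, List[float]]]) -> List[Scan]:
    """Input layer: wrap incoming (patient_id, pixels) records."""
    return [Scan(pid, list(pixels)) for pid, pixels in records]


def cloud_layer(scans: List[Scan], model: Model) -> List[Tuple[str, str, float]]:
    """Cloud layer: preprocess each scan (min-max scaling) and run the classifier."""
    results = []
    for scan in scans:
        lo, hi = min(scan.pixels), max(scan.pixels)
        span = (hi - lo) or 1.0  # constant images map to all zeros
        features = [(p - lo) / span for p in scan.pixels]
        label, confidence = model(features)
        results.append((scan.patient_id, label, confidence))
    return results


def diagnostic_layer(results, alert_labels: Set[str], threshold: float = 0.5) -> List[Diagnosis]:
    """Diagnostic layer: record each prediction and raise an alert for flagged labels."""
    diagnoses = []
    for pid, label, confidence in results:
        alert = None
        if label in alert_labels and confidence >= threshold:
            alert = f"ALERT {pid}: {label} ({confidence:.0%})"
        diagnoses.append(Diagnosis(pid, label, confidence, alert))
    return diagnoses


def toy_model(features: List[float]) -> Tuple[str, float]:
    """Hypothetical stand-in for the trained CNN: thresholds the mean intensity."""
    mean = sum(features) / len(features)
    return ("Demented", mean) if mean > 0.5 else ("NonDemented", 1.0 - mean)


records = [("p1", [0, 10, 10, 10]), ("p2", [0, 0, 0, 10])]
for d in diagnostic_layer(cloud_layer(input_layer(records), toy_model), {"Demented"}):
    print(d.patient_id, d.label, d.alert)
```

Keeping each layer a separate function mirrors the layered design: the cloud layer could be moved to a remote service without changing the input or diagnostic layers.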

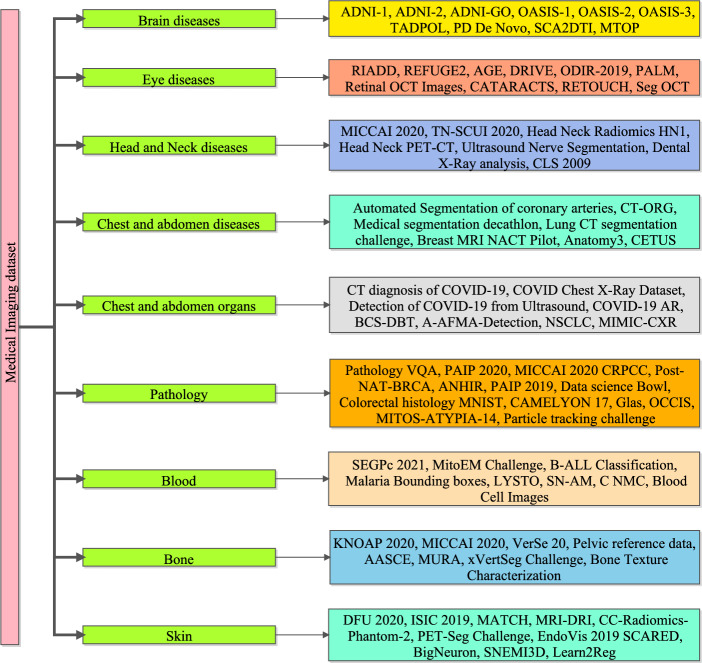

Classification of medical imaging datasets

This section presents an overview of the image datasets, the associated diseases, and the techniques/technologies used to acquire the medical data. [NOTE: All the datasets presented in this work are public, and links are provided in the appendix. As of April 4, 2024, the links provided for accessing the datasets were verified to be functional. However, the authors cannot be held responsible for any changes, removal, or updates to the datasets, as these links were sourced from the Internet. Any alterations to the datasets, or additional information not accessible through these links, are beyond the control and responsibility of the authors. Users are advised to verify the availability and integrity of the datasets independently.] The subsections provide vital information about the techniques/technologies and about the associated diseases or body parts considered. Medical imaging datasets are essential for the evolution of diagnostic and prognostic techniques in healthcare. Figure 3 presents the taxonomy of medical imaging datasets, grouped into brain disease, eye disease, head and neck disease, chest and abdomen, pathology, blood, bone, and skin databases. These datasets include a wide range of medical images taken in various clinical contexts, such as X-ray, MRI, CT, and ultrasound images. Medical imaging datasets are useful because they allow the development and testing of cutting-edge algorithms, predictive models, and AI-based applications that improve medical diagnosis, treatment planning, and patient care. They are also significant because they provide researchers and healthcare practitioners with a wealth of visual information about a patient’s internal anatomical structures, physiological processes, and potential problems.
This knowledge allows for the early detection and accurate characterization of a wide range of medical problems, including, but not limited to, malignancies, cardiovascular diseases, neurological disorders, and orthopedic pathologies. The following section discusses the datasets available for various known diseases.

Figure 3.

Taxonomy of medical imaging dataset.
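The taxonomy can be represented as a simple mapping from the top-level categories named for Figure 3 to the diseases filed under them. In this sketch only the diseases described in the following subsections are filled in; the remaining branches are left empty because their contents are not listed in the text, and the lookup helper is an illustrative assumption.

```python
from typing import Dict, List, Optional

# Top-level categories as named for Figure 3; leaves cover only diseases described in this section.
TAXONOMY: Dict[str, List[str]] = {
    "brain disease": ["Alzheimer's disease", "Parkinson's disease",
                      "Spinocerebellar ataxia type II", "Mild traumatic brain injury"],
    "eye disease": ["AMD", "DR", "Glaucoma", "Pathologic myopia"],
    "head and neck disease": [],
    "chest and abdomen": [],
    "pathology": [],
    "blood": [],
    "bone": [],
    "skin": [],
}


def category_of(disease: str) -> Optional[str]:
    """Return the taxonomy branch a disease is filed under (case-insensitive), or None."""
    needle = disease.casefold()
    for category, diseases in TAXONOMY.items():
        if any(needle == d.casefold() for d in diseases):
            return category
    return None


print(category_of("glaucoma"))
```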

Associated diseases

This section contains necessary information related to the diseases mentioned in all medical image datasets.

Alzheimer’s disease

AD is a brain disorder that gradually damages the brain, resulting in loss of memory and thinking skills and, eventually, of the ability to function normally. As the disease progresses, individuals with Alzheimer’s may have difficulty with everyday activities, eventually losing the ability to recognize and communicate with people. The exact cause of Alzheimer’s is still under investigation, but it is believed to involve a complex interaction between genetic, lifestyle, and environmental factors62.

Parkinson’s disease

Parkinson’s disease is a neurological condition that causes a chronic, progressive movement disorder. It results from the decline of dopamine-producing neurons in a particular region of the brain, which lowers dopamine levels63. Tremors, stiffness, slowness of movement, and issues with balance and coordination are some signs of Parkinson’s disease64. Non-motor symptoms such as cognitive decline and mood disorders can also be brought on by the disease. There is currently no cure for Parkinson’s disease, which typically develops gradually and gets worse over time65.

Spinocerebellar ataxia type II

Spinocerebellar ataxia type II (SCA2) is an uncommon genetic disorder that affects the central nervous system. It is caused by a mutation in the ATXN2 gene, which causes the cerebellum and other areas of the brain to deteriorate. Progressive movement problems, such as poor coordination, balance issues, slurred speech, and muscle stiffness, are a hallmark of SCA2. It can also cause psychological and cognitive symptoms like anxiety, depression, and memory problems. SCA2 is inherited in an autosomal dominant manner, which means that inheriting a single copy of the mutated gene from one parent is enough for a person to be affected. SCA2 currently has no known cure; instead, efforts focus on controlling the illness’s symptoms66.
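The "one copy is enough" rule of autosomal dominant inheritance can be made concrete by enumerating the equally likely allele combinations a child can receive. The sketch assumes full penetrance and a single gene whose mutated allele is labelled "A" (a hypothetical label); it ignores SCA2-specific effects such as CAG repeat length, so it illustrates the inheritance pattern rather than clinical risk.

```python
from fractions import Fraction
from itertools import product


def offspring_risk(parent_a: str, parent_b: str, dominant: str = "A") -> Fraction:
    """Probability that a child inherits at least one copy of the dominant (mutated) allele.

    Each parent genotype is a two-letter string such as "Aa"; each parent passes on
    one of its two alleles with equal probability, giving 4 equally likely pairings.
    """
    pairings = list(product(parent_a, parent_b))
    affected = sum(1 for pair in pairings if dominant in pair)
    return Fraction(affected, len(pairings))


# Affected heterozygous parent ("Aa") and unaffected parent ("aa").
print(offspring_risk("Aa", "aa"))  # 1/2
```

For an affected heterozygous parent and an unaffected parent, each child therefore has a 1 in 2 chance of inheriting the mutated allele.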

Mild traumatic brain injury

Mild traumatic brain injury (MTBI), also referred to as concussion, is a type of brain injury caused by a blow to the head or body. The injury is called “mild” because it is usually not life-threatening, but it can still result in many physical and mental symptoms, including dizziness, confusion, memory issues, and mood swings. These symptoms may need medical attention and rehabilitation and can last for days, weeks, or even months67.

AMD

AMD stands for Age-related Macular Degeneration. It is a chronic eye condition that damages the macula, the region of the retina that controls central vision. AMD can result in a progressive loss of vision, making it challenging to read, drive, or recognize people68.

DR

Diabetic Retinopathy (DR) is a complication of diabetes that affects the eyes and can cause blindness69. High blood sugar damages the blood vessels of the retina, causing them to leak and constrict. DR is progressive and, if untreated, can lead to vision loss. Routine eye examinations and blood sugar control are crucial to stop or slow its progression70.

Glaucoma

Glaucoma is a disease that damages the optic nerve, which transmits visual information from the eye to the brain. The damage is often caused by increased pressure inside the eye and, if left untreated, can lead to vision loss and ultimately blindness. Glaucoma occurs in several forms, including primary angle-closure, open-angle, and normal-tension glaucoma71.

Pathologic myopia

Pathologic myopia is a severe form of myopia (nearsightedness) characterized by a refractive error of −6 diopters or worse and by associated structural changes in the eye. The eye elongates, thinning and stretching the retina, which can lead to complications such as retinal detachment, choroidal neovascularization, and macular atrophy72.

Techniques/Technologies

In medicine, a range of imaging technologies is used to capture images of the human body for diagnosis and treatment. Each modality has its own strengths and weaknesses, and the choice of modality depends on the condition being diagnosed or treated.

DWI

Diffusion-Weighted Imaging (DWI) is an MRI technique that generates image contrast from the diffusion of water molecules in tissue. It is sensitive to changes in tissue microstructure, such as those caused by stroke, inflammation, or tumors73, and is particularly useful for detecting early signs of stroke and for monitoring the response to treatment74.
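A common way to quantify DWI is the apparent diffusion coefficient (ADC). Assuming the standard mono-exponential model S_b = S_0 · exp(−b · ADC), which ignores perfusion and non-Gaussian diffusion effects, the ADC can be estimated from one unweighted (b = 0) and one diffusion-weighted acquisition. The sketch below shows this for a single voxel; the function name and the example b-value and ADC are illustrative assumptions, not values from a specific dataset.

```python
import math


def apparent_diffusion_coefficient(s0: float, sb: float, b: float) -> float:
    """Estimate ADC (mm^2/s) from two DWI signals, assuming a mono-exponential model.

    s0: signal without diffusion weighting (b = 0)
    sb: signal acquired with diffusion weighting b (s/mm^2)
    """
    if s0 <= 0 or sb <= 0 or b <= 0:
        raise ValueError("signals and b-value must be positive")
    # S_b = S_0 * exp(-b * ADC)  =>  ADC = ln(S_0 / S_b) / b
    return math.log(s0 / sb) / b


# Illustrative voxel: b = 1000 s/mm^2 and a true ADC of 0.8e-3 mm^2/s
s0 = 1000.0
sb = s0 * math.exp(-1000.0 * 0.8e-3)
adc = apparent_diffusion_coefficient(s0, sb, 1000.0)
print(f"ADC = {adc:.2e} mm^2/s")
```

Restricted diffusion, as in acute ischemic stroke, lowers the ADC, which is why affected tissue appears bright on DWI and dark on ADC maps.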

PT

Positron emission tomography (PT; “PT” is also the DICOM modality code for PET) is an imaging technique that uses radioactive tracers to measure and visualize metabolic processes. Different tracers are used to monitor blood flow, absorption, and the chemical composition of blood. It is widely used as a clinical and research tool, notably for tumor imaging75.

T1 and T2

T1- and T2-weighted imaging are MRI modalities that provide different types of tissue contrast. T1-weighted images are acquired with a short echo time (TE) and a short repetition time (TR), which makes them sensitive to tissue composition, particularly fat content (fat appears bright). T2-weighted images are acquired with a long TE and a long TR, which makes them sensitive to tissue water content, so fluid-filled structures such as cysts or edema appear bright76.
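The effect of echo time (TE) and repetition time (TR) can be illustrated with the simplified spin-echo signal equation S = PD · (1 − exp(−TR/T1)) · exp(−TE/T2). The sketch below assumes equal proton density (PD) for both tissues, and uses approximate, field-strength-dependent relaxation times for fat and cerebrospinal fluid together with hypothetical protocol settings; it is meant to show the direction of the contrast, not to reproduce a scanner protocol.

```python
import math


def spin_echo_signal(pd: float, t1: float, t2: float, tr: float, te: float) -> float:
    """Relative spin-echo signal: S = PD * (1 - exp(-TR/T1)) * exp(-TE/T2).

    All times are in milliseconds; PD is the relative proton density.
    """
    return pd * (1.0 - math.exp(-tr / t1)) * math.exp(-te / t2)


# Approximate (T1, T2) relaxation times in ms; illustrative values only
TISSUES = {"fat": (260.0, 80.0), "csf": (4000.0, 2000.0)}
# Hypothetical (TR, TE) settings in ms for each weighting
PROTOCOLS = {"T1-weighted": (500.0, 15.0), "T2-weighted": (4000.0, 100.0)}

# Equal proton density (1.0) is assumed so only relaxation drives contrast
signals = {
    (proto, tissue): spin_echo_signal(1.0, t1, t2, tr, te)
    for proto, (tr, te) in PROTOCOLS.items()
    for tissue, (t1, t2) in TISSUES.items()
}
for (proto, tissue), s in signals.items():
    print(f"{proto:12s} {tissue:4s} {s:.3f}")
```

With these settings fat gives a stronger signal than CSF on the T1-weighted protocol, and CSF gives a stronger signal than fat on the T2-weighted protocol, matching the contrast described above.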

MRI FLAIR

Fluid Attenuated Inversion Recovery (FLAIR) is an MRI pulse sequence that suppresses the signal from fluids such as Cerebrospinal Fluid (CSF), highlighting pathological tissue with high water content, such as tumors, inflammation, and demyelinating lesions. It is commonly used in neuroimaging to visualize subtle abnormalities in the brain and spinal cord77.
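Fluid suppression in FLAIR relies on choosing an inversion time (TI) at which the longitudinal magnetization of CSF crosses zero. Assuming an ideal 180° inversion and ignoring T2 decay, Mz(TI)/M0 = 1 − 2·exp(−TI/T1) + exp(−TR/T1), so the null point is TI = T1 · ln(2 / (1 + exp(−TR/T1))), which tends to T1 · ln 2 when TR is much longer than T1. The CSF T1 and TR values below are approximate, hypothetical settings chosen for illustration.

```python
import math


def inversion_recovery_mz(t1: float, ti: float, tr: float) -> float:
    """Relative longitudinal magnetization at inversion time TI (times in ms).

    Simplified model assuming an ideal inversion pulse:
    Mz(TI)/M0 = 1 - 2*exp(-TI/T1) + exp(-TR/T1)
    """
    return 1.0 - 2.0 * math.exp(-ti / t1) + math.exp(-tr / t1)


def null_inversion_time(t1: float, tr: float) -> float:
    """TI at which tissue with longitudinal relaxation time T1 gives zero signal."""
    # Solve 1 - 2*exp(-TI/T1) + exp(-TR/T1) = 0 for TI
    return t1 * math.log(2.0 / (1.0 + math.exp(-tr / t1)))


CSF_T1 = 4000.0  # ms, approximate; varies with field strength (assumption)
TR = 9000.0      # ms, hypothetical FLAIR repetition time
ti = null_inversion_time(CSF_T1, TR)
print(f"TI to null CSF: {ti:.0f} ms")
```

Tissues with shorter T1 than CSF have already recovered past zero at this TI, so lesions with high water content remain visible while the CSF signal is suppressed.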

CT

Computed Tomography (CT) uses X-rays to produce detailed images of the inside of the body. It is frequently used to detect and monitor many conditions, including tumors, internal bleeding, and bone fractures78. The CT scanner acquires X-ray images of the region of interest from many angles and combines them computationally into cross-sectional images of the body part being examined79.
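CT intensities are usually expressed in Hounsfield units (HU), which rescale the reconstructed linear attenuation coefficient μ so that water is 0 HU and air is −1000 HU: HU = 1000 · (μ − μ_water) / (μ_water − μ_air). The sketch below applies this definition; the attenuation coefficient of water used here is an approximate, illustrative value for a typical effective beam energy.

```python
def hounsfield_units(mu: float, mu_water: float, mu_air: float = 0.0) -> float:
    """Convert a linear attenuation coefficient to Hounsfield units (HU).

    HU = 1000 * (mu - mu_water) / (mu_water - mu_air)
    """
    return 1000.0 * (mu - mu_water) / (mu_water - mu_air)


MU_WATER = 0.19  # cm^-1, approximate value near 70 keV (illustrative assumption)

print(hounsfield_units(MU_WATER, MU_WATER))  # water maps to 0 HU by definition
print(hounsfield_units(0.0, MU_WATER))       # air maps to -1000 HU by definition
```

Because the scale is anchored to water and air, HU values are comparable across scans, which is why DL preprocessing pipelines for CT commonly operate on HU rather than on raw detector values.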

Ultrasound

The medical imaging method known as ultrasound uses high-frequency sound waves to produce pictures of the organs and tissues of the body. The internal organs, tissues, and blood flow can be seen using this non-invasive, painless procedure. While ultrasound is frequently used in obstetrics to see the growing fetus, it can also be used to diagnose and track a number of medical conditions in other areas of the body, including the abdomen, pelvis, and heart80.

Computed radiography

Computed Radiography (CR) is a medical imaging technique that creates digital radiographic images using photostimulable phosphor plates. After the imaging plate is exposed to X-rays, it is scanned with a laser beam to produce a digital image that can be viewed on a computer. CR has been widely used in radiography because of its image quality and flexibility, but it has largely been replaced by direct digital radiography, which offers faster image acquisition and lower radiation exposure for patients81.

MR

Magnetic Resonance (MR) imaging uses a strong magnetic field and radio waves to produce detailed images of the body’s internal structures. It is frequently used to visualize the brain, spine, joints, and soft tissues, and is particularly helpful in diagnosing conditions that may not be visible with other techniques such as X-rays or CT scans. Because it does not use ionizing radiation, MR imaging can be a safer alternative for some patients82.

Coronary CT angiography

Coronary CT Angiography (CTA) is a non-invasive CT technique for imaging the coronary arteries, which supply blood to the heart. A contrast dye is injected into a vein and circulates through the coronary arteries; the CT scanner images the arteries as the dye passes through them, and these images are reconstructed into a 3D view of the coronary arteries83. The method can be used to identify or assess coronary artery disease, blockages, and other heart conditions84.

Radiotherapy structure set

A radiotherapy structure set is a collection of anatomical structures that are identified on medical images, such as organs or tumors, and which are typically used in the planning and administration of radiation therapy for the treatment of cancer. The structure set makes it easier to precisely direct radiation towards the tumor while minimizing damage to healthy cells and organs85.

PET

Positron Emission Tomography (PET) is a medical imaging procedure that uses radioactive substances called radiotracers to create 3D images of bodily functions. PET scans are frequently used to diagnose cancer and guide its treatment, as well as to assess heart and brain diseases86.
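PET uptake is often summarized as a body-weight standardized uptake value (SUV), which normalizes the measured tissue activity concentration by the injected dose per unit body weight. The sketch below assumes the injected dose is already decay-corrected to the scan time and treats tissue density as 1 g/mL so that the result is dimensionless; the patient values are hypothetical.

```python
def suv_body_weight(tissue_kbq_per_ml: float, injected_dose_mbq: float,
                    body_weight_kg: float) -> float:
    """Body-weight standardized uptake value (SUV).

    SUV = tissue activity concentration / (injected dose / body weight).
    With kBq/mL, MBq and kg, MBq/kg equals kBq/g, so the ratio has units of
    g/mL, treated as dimensionless by assuming a tissue density of 1 g/mL.
    The dose is assumed to be decay-corrected to the scan time.
    """
    if injected_dose_mbq <= 0 or body_weight_kg <= 0:
        raise ValueError("dose and body weight must be positive")
    return tissue_kbq_per_ml / (injected_dose_mbq / body_weight_kg)


# Hypothetical example: 370 MBq injected into a 74 kg patient
print(suv_body_weight(10.0, 370.0, 74.0))
```

An SUV of 1 corresponds to uptake equal to a uniform distribution of the tracer throughout the body; values well above 1 indicate focal accumulation.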

Endoscopy

Endoscopy involves inserting a long, thin tube with a camera at its tip to examine internal organs or tissues. It is frequently used to examine the joints, respiratory system, and digestive system. Endoscopy can also be used to obtain tissue samples for biopsy and to perform minor surgical procedures87.

General microscopy

General microscopy is the use of a microscope to examine samples too small to be seen with the unaided eye, such as microorganisms, cells, tissues, and small structures. The microscope magnifies the sample so that its features and characteristics can be examined in greater detail. General microscopy is used in many disciplines, including biology, medicine, materials science, and forensics88.

Electron microscope

An electron microscope uses a beam of electrons, rather than light, to illuminate a sample and form an image. There are two main variants: transmission electron microscopy (TEM) and scanning electron microscopy (SEM). The beam’s electrons interact with the sample’s atoms and generate signals that are detected and used to build images at very high magnification and resolution. Electron microscopes are used in many scientific disciplines, including materials science, biology, and nanotechnology89.

Datasets associated with the classification of brain diseases

The Alzheimer’s Disease Neuroimaging Initiative (ADNI) aims to understand how AD develops and to identify biomarkers for its early diagnosis and treatment. The study is divided into several phases, such as ADNI-1, ADNI-2, ADNI-3, and ADNI-GO (Grand Opportunity)90,91. Based on their cognitive abilities, participants are divided into three groups: Normal Cognition (NC), Mild Cognitive Impairment (MCI), and AD; MCI is further divided into two subgroups, Early MCI (EMCI) and Late MCI (LMCI). The Open Access Series of Imaging Studies (OASIS) provides neuroimaging datasets, including structural and functional MRI scans, together with demographic and clinical data. The OASIS project has released three datasets, OASIS-1, OASIS-2, and OASIS-392–94, which are used primarily for AD research, although researchers have also used them for related tasks such as functional area segmentation. Table 2 gives an overview of the datasets and challenges associated with classifying brain diseases.
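Because some ADNI phases split MCI into EMCI and LMCI while others use a single MCI class (see Table 2), pooling phases requires a consistent label scheme. One simple option, sketched below, collapses the subgroups back into MCI. The label strings are hypothetical placeholders; the coding used in an actual ADNI release should be checked before relying on this mapping.

```python
# Hypothetical label strings; verify the coding in the ADNI release you use.
ADNI_TO_THREE_CLASS = {
    "NC": "NC",
    "MCI": "MCI",
    "EMCI": "MCI",  # early MCI is a subgroup of MCI
    "LMCI": "MCI",  # late MCI is a subgroup of MCI
    "AD": "AD",
}


def harmonize_labels(labels: list[str]) -> list[str]:
    """Map phase-specific diagnostic labels onto the NC/MCI/AD scheme."""
    unknown = sorted(set(labels) - set(ADNI_TO_THREE_CLASS))
    if unknown:
        # Fail loudly instead of silently dropping or mislabeling subjects
        raise KeyError(f"unmapped labels: {unknown}")
    return [ADNI_TO_THREE_CLASS[label] for label in labels]


print(harmonize_labels(["NC", "EMCI", "LMCI", "AD"]))
```

Collapsing the subgroups discards the EMCI/LMCI distinction, so a four-class study would instead keep the finer labels and restrict itself to the phases that provide them.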

Table 2.

An overview of the datasets and challenges associated with classifying brain diseases.

| Dataset | Year | Techniques | Associated disease | Classification |
|---|---|---|---|---|
| ADNI-190 | 2004 | T1, T2, DWI and PT | Alzheimer’s | NC, MCI and AD |
| ADNI-291 | 2015 | T1, T2, DWI and PT | Alzheimer’s | NC, EMCI, LMCI and AD |
| ADNI-GO90 | 2009 | T1, T2, DWI and PT | Alzheimer’s | NC, MCI and AD |
| OASIS 192 and OASIS 293 | 2007, 2009 | T1 | Alzheimer’s | NC and AD |
| OASIS 394 | 2019 | T1, T2, PT and MRI FLAIR | Alzheimer’s | NC, AD |
| TADPOLE95 | 2017 | T1, T2, DWI and PT | Alzheimer’s | NC, MCI, AD |
| PD De Novo96 | 2019 | T1 | Parkinson’s | NC and PD |
| SCA2 DTI97 | 2018 | T1 and DWI | Spinocerebellar ataxia type II | NC and SCA2 |
| MTOP98 | 2016 | T1 and DWI | Mild traumatic brain injury | Healthy, category I or category II |

The TADPOLE (The Alzheimer’s Disease Prediction Of Longitudinal Evolution) dataset95 was released as part of an international effort to advance the understanding, detection, and care of AD and related diseases. It contains clinical and imaging information from people diagnosed with Mild Cognitive Impairment (MCI) or AD and from cognitively normal (CN) individuals. PD De Novo is a dataset of individuals newly diagnosed with Parkinson’s disease (PD) who were followed for up to three years to track disease progression96. It includes clinical assessments, imaging data, genetic information, and biospecimen samples, and is intended to help researchers better understand the early stages of PD and develop new treatments. Other datasets focus on diagnosing mild traumatic brain injury and Spinocerebellar Ataxia type II (SCA2)97.

Datasets associated with eye diseases

Retinal Image Analysis for Multi-disease Detection (RIADD) is a public dataset of 2,740 retinal fundus images99, intended for developing algorithms that detect and categorize common retinal diseases, including diabetic retinopathy, glaucoma, and age-related macular degeneration. The Retinal Fundus Glaucoma Challenge (REFUGE2) dataset is a public collection of retinal fundus images of healthy and glaucomatous eyes100, introduced as part of a challenge to develop ML algorithms for automated glaucoma diagnosis. The AGE (Angle closure Glaucoma Evaluation) dataset, also related to glaucoma, consists of OCT images of patients with glaucoma and of healthy individuals101, and is intended for developing and testing algorithms for automated glaucoma detection and diagnosis. The PALM (PAthoLogic Myopia) dataset102 contains 1159 color fundus images and segmentation masks of two ophthalmologically relevant regions of interest, the optic nerve head (ONH) and the macula. The retinal OCT (Optical Coherence Tomography) images dataset is used to train and evaluate DL models for detecting retinal diseases such as macular degeneration, diabetic retinopathy, and glaucoma103,104. The CATARACTS dataset consists of videos of cataract surgery and is used for detecting surgical tools. The Seg OCT (DME) dataset is an openly accessible collection of retinal Optical Coherence Tomography (OCT)105 scans of eyes with Diabetic Macular Edema (DME)106. Table 3 gives an overview of eye disease datasets and challenges.

Table 3.

An overview of datasets and challenges related to tasks involving eye diseases (FP: fundus photography; OCT: optical coherence tomography).

| Dataset | Year | Techniques | Associated disease or task |
|---|---|---|---|
| RIADD99 | 2020 | FP | AMD, DR |
| REFUGE2100 | 2020 | FP | Glaucoma |
| AGE101 | 2019 | OCT | Angle-closure glaucoma |
| DRIVE107 | 2019 | FP | Vessel extraction |
| ODIR-2019108 | 2019 | FP | AMD, glaucoma, diabetes, cataract, hypertension |
| PALM102 | 2019 | FP | Pathologic myopia |
| Retinal OCT Images103,104 | 2018 | OCT | DR |
| CATARACTS105 | 2017 | Video | Surgery tools detection |
| RETOUCH109 | 2017 | OCT | Fluid segmentation |
| Seg OCT(DME)106 | 2015 | OCT | Diabetic macular edema |

Datasets associated with head and neck diseases

The MICCAI 2020 HECKTOR challenge dataset is a multi-institutional dataset of head and neck PET/CT scans. It includes scans of 198 patients with head and neck tumors, along with annotations of Organs at Risk (OARs) and Gross Tumor Volumes (GTVs)110, and is intended for developing and evaluating algorithms for automated segmentation of OARs and GTVs. TN-SCUI 2020 is a dataset for thyroid nodule classification and segmentation in ultrasound images111; it consists of 5949 ultrasound images of thyroid nodules. The Head Neck Radiomics HN1 dataset is a collection of medical images and clinical data from head and neck cancer patients, including CT, MRI, and PET-CT images of the head and neck region together with clinical information such as tumor stage and treatment outcomes. The Head Neck PET-CT dataset consists of Positron Emission Tomography (PET) and Computed Tomography (CT) images of the head and neck region, used for diagnosis and treatment planning of head and neck cancer; it includes images from 58 patients, each with a PET and a CT scan112,113.

The Ultrasound Nerve Segmentation dataset contains ultrasound images of patients’ neck region, with the goal of segmenting nerve structures. The Dental X-Ray Analysis 1 dataset is a publicly available collection of dental X-ray images for dental pathology detection, covering five classes: dental caries, dental fillings, dental implants, dental infections, and dental fractures. The CLS 2009 dataset focuses on the carotid bifurcation and contains 200 contrast-enhanced CT Angiography (CTA) scans of the neck region from 100 patients113–116. Table 4 gives an overview of the challenges and datasets related to head and neck diseases.

Table 4.

An overview of the challenges and datasets related to head and neck diseases.

| Dataset | Year | Techniques | Emphasis |
|---|---|---|---|
| MICCAI 2020: HECKTOR110 | 2020 | CT, PT | Tumors originating in the head and neck area |
| TN-SCUI 2020111 | 2020 | Ultrasound | Diagnosis of nodules in the thyroid gland |
| Head Neck Radiomics HN1112 | 2019 | CT | Malignant growths in the head and neck |
| Head Neck PET-CT113 | 2017 | CT, PT | Tumor |
| Ultrasound Nerve Segmentation114 | 2016 | Ultrasound | Nerve |
| Dental X-Ray Analysis115 | 2015 | Computed Radiography | Caries |
| CLS 2009116 | 2009 | CT Angiography | Carotid bifurcation |

Datasets associated with chest and abdomen

The Automated Segmentation of Coronary Arteries dataset contains cardiac CT Angiography (CTA) images for coronary artery segmentation; it consists of 101 CTA volumes with an average of 291 slices each. The CT-ORG dataset is a collection of Computed Tomography (CT) volumes with multiple organ segmentations. It consists of 1,010 volumetric images of the thoracic and abdominal regions, with segmentations of organs such as the liver, lungs, kidneys, and bladder. The Medical Segmentation Decathlon (MSD) dataset comprises ten medical image segmentation tasks across CT and MRI, including segmentation of brain tumors, the heart, liver, lungs, pancreas, and prostate117,118.

The Lung CT Segmentation Challenge dataset is a collection of lung CT scans. Automated lung segmentation from CT scans is a crucial step in the diagnosis and treatment of many lung diseases, including cancer and emphysema, and the challenge was designed to develop and evaluate algorithms for this task. The Breast MRI NACT Pilot dataset consists of breast MRI scans from breast cancer patients who received Neoadjuvant Chemotherapy (NACT), and may include annotations of breast structures such as breast tissue, tumor, and surrounding anatomy. Anatomy3 (2015) focuses on segmenting organs such as the liver, lung, kidney, aorta, and trachea in both MR and CT scans, which are widely used for diagnosis and treatment planning; it may contain scans from various patients with annotations of these organs. CETUS (2014) focuses on segmenting the heart in ultrasound images, which are commonly used for cardiac imaging; it may include ultrasound images from different patients with annotations of cardiac structures such as the left ventricle, right ventricle, and myocardium119–122. Table 5 gives an overview of the datasets and challenges for organ segmentation tasks in the chest and abdomen.

Table 5.

Overview of datasets and challenges for tasks involving segmentation of organs in the chest and abdomen.

| Dataset | Year | Techniques | Considered body parts |
|---|---|---|---|
| Automated Segmentation of coronary arteries117 | 2020 | Coronary CT angiography | Coronary arteries |
| CT-ORG118 | 2019 | CT | Liver, lung, kidney and bladder |
| Medical segmentation decathlon119 | 2019 | MR, CT | Liver, lung, heart, pancreas and colon |
| Lung CT segmentation challenge120,123 | 2017 | Radiotherapy structure set | Lung and heart |
| Breast MRI NACT pilot121 | 2016 | MR | Breast |
| Anatomy3124 | 2015 | MR, CT | Liver, lung, kidney, aorta and trachea |
| CETUS122 | 2014 | Ultrasound | Heart |

Datasets associated with segmentation of organs in chest and abdomen regions

The COVID-19 CT Diagnosis dataset is a collection of CT scan data from patients who tested positive and negative for the virus125–127. Studies have employed CNNs and other machine learning models to extract features from chest X-rays and CT scans, achieving significant improvements in diagnostic accuracy. For instance, authors in128 demonstrated the efficacy of using CNNs in combination with recurrent neural networks (RNNs) for automated disease detection, underscoring the potential of hybrid models in enhancing diagnostic capabilities.

To build the COVID-19 CT Diagnosis dataset, 2,973 CT scans were obtained from 1,173 individuals: 888 COVID-19-positive and 285 COVID-19-negative cases. The COVID Chest X-Ray Dataset is a collection of chest X-ray scans of patients who tested positive for COVID-19, of normal cases, and of patients with other kinds of pneumonia. It consists of two parts: metadata, which contains the patients’ data, and image files, which contain the chest X-ray scans in Portable Network Graphics (PNG) format, for a total of 12,721 images. COVID-19 AR, introduced in 2020, combines CT and CR images to support organ segmentation in patients with COVID-19. BCS-DBT, a digital breast tomosynthesis dataset introduced in the same year, focuses on breast cancer segmentation129–133.
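The 888 positive versus 285 negative patients make this dataset imbalanced, which matters when training a DL classifier on it. One common remedy, shown here as a generic technique and not as the dataset authors’ method, is to weight the loss by inverse class frequency, w_c = N / (K · n_c), where N is the total count and K the number of classes. The sketch uses the reported patient counts; weighting at the scan level would require per-class scan counts, which are not given here.

```python
def inverse_frequency_weights(counts: dict[str, int]) -> dict[str, float]:
    """Class weights w_c = N / (K * n_c), a common way to balance a loss."""
    total = sum(counts.values())
    k = len(counts)
    return {label: total / (k * n) for label, n in counts.items()}


# Patient counts reported for the COVID-19 CT Diagnosis dataset
patients = {"covid_positive": 888, "covid_negative": 285}
assert sum(patients.values()) == 1173  # consistent with the reported total

weights = inverse_frequency_weights(patients)
print(weights)
```

With these weights each class contributes the same total weight (N/K) to the loss, so the minority negative class is not drowned out by the positive class.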

A-AFMA-Detection, a 2020 dataset, uses ultrasound imaging to detect amniotic fluid in the abdominal region. The NSCLC-Radiomics-Interobserver1 dataset, introduced in 2019, is a collection of medical images and annotations, provided as radiotherapy structure sets, for patients with Non-Small Cell Lung Cancer (NSCLC). It includes chest CT images of 30 NSCLC patients, with annotations of various Regions of Interest (ROIs) in each image. The MIMIC-CXR (Medical Information Mart for Intensive Care Chest X-ray) dataset, also introduced in 2019, pairs chest X-ray images with radiology reports and electronic health record data for chest image analysis. It includes more than 350,000 chest X-ray images with corresponding reports from more than 65,000 patients, covering frontal and lateral views of the chest together with the patients’ demographic and clinical information134–136. Table 6 gives an overview of datasets and challenges in tasks related to the segmentation of organs in the chest and abdomen regions.

Table 6.

An overview of datasets and challenges in tasks related to the segmentation of organs in the chest and abdomen regions.

| Dataset | Year | Techniques | Emphasis |
|---|---|---|---|
| CT Diagnosis of COVID-19129 | 2020 | CT | COVID-19 |
| COVID Chest X-Ray Dataset130 | 2020 | CR | COVID-19 |
| Detection of COVID-19 from Ultrasound131 | 2020 | Ultrasound | COVID-19 |
| COVID-19 AR132 | 2020 | CT and CR | COVID-19 |
| BCS-DBT133 | 2020 | Digital breast tomosynthesis | Breast cancer |
| A-AFMA-Detection134 | 2022 | Ultrasound | Amniotic fluid detection |
| NSCLC-Radiomics-Interobserver1135 | 2019 | Radiotherapy structure set | Non-small cell lung cancer |
| MIMIC-CXR136 | 2019 | Electronic health record and report | Chest image analysis |

Datasets related to image analysis in pathology

Pathology VQA (2020): This dataset was created specifically for pathology-related Visual Question Answering (VQA) tasks, which involve answering questions about pathology images and therefore require understanding their visual content137.

PAIP 2020 (2020): This dataset focuses on colorectal cancer and supports the analysis of patterns and features in pathology images of this cancer type138.

MICCAI 2020 CRPCC (2020): To investigate the synergy between radiology and pathology in disease diagnosis, this dataset combines radiology and pathology data for classification tasks139.

Post-NAT-BRCA (2019): This dataset of cell images focuses on the effects of post-neoadjuvant therapy in breast cancer, enabling analysis of changes in cell morphology and structure after treatment140.

ANHIR (2019): This dataset was created for pathology image registration, which entails aligning and registering images from various sources or time points to allow for additional analysis and comparison141.

PAIP 2019 (2019): This dataset emphasizes the segmentation of liver tumors and tumor burden, offering information for the creation of algorithms and models for the precise segmentation of liver tumors and the estimation of tumor burden142.

Data Science Bowl 2018: The goal of this dataset, which contains images of cells, was to test participants’ ability to create algorithms for cell detection and classification tasks143.

Colorectal Histology MNIST (2018): This dataset, designed for patch-based analysis of colorectal pathology, can be used to examine and identify patterns in histology images of colorectal cancer144.

CAMELYON 17 (2017): This dataset focuses on breast cancer metastases and provides information for creating models and algorithms for identifying and classifying metastatic lesions in breast cancer pathology images145.

Glas (2015): This dataset, which is based on patches of colorectal cancer pathology, offers information for examining and identifying features in patches of colorectal cancer pathology images146.

OCCIS (2014): Data for studying cell morphology and structure in relation to cervical cancer screening is provided by this dataset, which consists of overlapping images of cervical cells147.

MITOS-ATYPIA-14 (2014): This dataset focuses on the identification of mitotic cells with the goal of creating algorithms for the precise identification of these crucial markers of cell division and proliferation148.

Particle Tracking Challenge (2012): This dataset involves particle tracking in microscopy images, providing data for developing algorithms and models for accurately tracking particles and analyzing their dynamics149.

Table 7 gives an overview of datasets and challenges associated with image analysis in pathology.

Table 7.

An overview of datasets and challenges associated with image analysis in pathology.

| Dataset | Year | Emphasis |
|---|---|---|
| Pathology VQA137 | 2020 | Visual question answering |
| PAIP 2020138 | 2020 | Colorectal cancer |
| MICCAI 2020 CRPCC139 | 2020 | Classification combining radiology and pathology data |
| Post-NAT-BRCA140 | 2019 | Cell |
| ANHIR141 | 2019 | Pathology image registration |
| PAIP 2019142 | 2019 | Liver cancer and tumor burden segmentation |
| Data Science Bowl143 | 2018 | Cell |
| Colorectal Histology MNIST144 | 2018 | Patch-based analysis of colorectal pathology |
| CAMELYON 17145 | 2017 | Breast cancer metastases |
| Glas146 | 2015 | Colorectal cancer pathology patch |
| OCCIS147 | 2014 | Overlapping cervical cell |
| MITOS-ATYPIA-14148 | 2014 | Mitotic |
| Particle Tracking Challenge149 | 2012 | Particle |

Datasets associated with the analysis of blood-related images

Medical datasets for blood-related image analysis have proven useful in advancing both research and clinical applications. They typically consist of digital images of blood samples annotated with information such as cell types, morphologies, and potential disease markers. These datasets have supported the development of ML algorithms that identify and categorize blood components, and researchers have used them to advance the early diagnosis of diseases such as leukemia, anemia, and blood-borne infections. Because they cover a wide range of blood properties, these datasets provide a solid basis for training AI algorithms and contribute to a better understanding of hematological disorders. Such data-driven approaches have also supported more tailored treatment strategies, improving the overall quality of patient care. Examples are described below.

SegPc 2021—Myeloma Plasma Cell: The SegPc 2021 dataset focuses on myeloma plasma cells, the malignant plasma cells (a type of white blood cell) that characterize multiple myeloma, a plasma cell cancer. It can be used for tasks such as classifying, quantifying, and segmenting myeloma plasma cells in blood-related images150.

MitoEM Challenge 2020—Mitochondria: The MitoEM Challenge dataset focuses on mitochondria, the organelles responsible for generating energy in cells. It can be used for tasks such as segmentation, detection, and classification of mitochondria in Electron Microscopy (EM) images, supporting the study of mitochondrial function and dysfunction in various diseases151.

B-ALL Classification 2019—Leukemic Blasts in an Immature Stage: The B-ALL Classification dataset focuses on the analysis of immature leukemic blasts. B-ALL (B-cell Acute Lymphoblastic Leukaemia) is a blood cancer that mainly affects lymphoid cells. This dataset can support the development of B-ALL diagnostic and prognostic tools through tasks such as classifying, detecting, and quantifying leukemic blasts in blood-related images151.

Malaria Bounding Boxes 2019—Cells in Blood: The analysis of blood cells infected with malaria parasites is done using the Malaria Bounding Boxes dataset. Millions of people worldwide suffer from malaria, a mosquito-borne illness, and accurate identification and quantification of infected blood cells is essential for both diagnosis and treatment. This dataset can be used for tasks like object recognition, blood-related image segmentation, and classification of infected blood cells152.

LYSTO 2019—Lymphocytes: The analysis of lymphocytes, a category of white blood cells that is crucial to the immune response, is the main focus of the LYSTO dataset. The understanding of lymphocyte function in various diseases and conditions can be improved with the help of this dataset, which can be used for tasks like the segmentation, classification, and quantification of lymphocytes in images related to blood153.

SN-AM Dataset 2019—Stain Normalization: For the analysis of stain normalization methods in images involving blood, the SN-AM Dataset was created. In order to address staining variability across various samples and laboratories, stain normalization is a crucial preprocessing step in medical image analysis. For image analysis tasks involving blood, this dataset can be used to evaluate and benchmark stain normalization techniques154.
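To make the idea of stain normalization concrete, the sketch below shows the statistics-matching core of Reinhard-style normalization: each color channel of a source image is shifted and scaled to match the mean and standard deviation of a reference image. This is one of several normalization methods and is not specific to the SN-AM dataset; Reinhard et al. perform the matching in the lαβ color space, and that conversion is omitted here, so the function operates on whatever channels it is given. The random arrays stand in for real stained images.

```python
import numpy as np


def match_channel_statistics(source: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Shift and scale each channel of `source` to the mean/std of `target`.

    Both arrays have shape (H, W, C). Reinhard-style normalization applies
    this in the l-alpha-beta colour space; the colour conversion is omitted,
    so this only illustrates the statistics-matching step.
    """
    src = source.astype(np.float64)
    tgt = target.astype(np.float64)
    src_mean = src.mean(axis=(0, 1))
    src_std = src.std(axis=(0, 1))
    tgt_mean = tgt.mean(axis=(0, 1))
    tgt_std = tgt.std(axis=(0, 1))
    # Guard against flat channels to avoid division by zero
    src_std = np.where(src_std > 0, src_std, 1.0)
    return (src - src_mean) / src_std * tgt_std + tgt_mean


# Synthetic stand-ins for a source slide patch and a reference patch
rng = np.random.default_rng(0)
source = rng.normal(loc=[150, 90, 160], scale=[20, 15, 25], size=(64, 64, 3))
reference = rng.normal(loc=[200, 120, 180], scale=[10, 8, 12], size=(64, 64, 3))
normalized = match_channel_statistics(source, reference)
print(normalized.mean(axis=(0, 1)), normalized.std(axis=(0, 1)))
```

After normalization, the per-channel means and standard deviations of the source patch match those of the reference, which reduces stain variability between samples and laboratories before further analysis.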

C NMC Dataset 2019—Classification of Immature Leukemic Cells: The C NMC Dataset focuses on immature leukemic cells, abnormal white blood cells that are indicative of leukemia. It can be used for tasks such as categorizing, detecting, and quantifying immature leukemic cells in blood images, and for developing automated tools for leukemia diagnosis and monitoring154.

Blood Cell Images 2018—Cells in Blood: Red blood cells, white blood cells, and platelets are just a few of the different types of blood cells included in the Blood Cell Images dataset. This dataset can be used for tasks like classifying, quantifying, and segmenting blood cells in images of the blood. It can also be a useful tool for researching blood cell morphology and comprehending various blood-related diseases and conditions155.

Table 8 gives an overview of datasets and challenges related to the analysis of blood-related images.

Table 8.

An overview of datasets and challenges related to the analysis of blood-related images.

| Dataset | Year | Emphasis |
|---|---|---|
| SegPc 2021150 | 2020 | Myeloma plasma cell |
| MitoEM challenge151 | 2020 | Mitochondria |
| B-ALL Classification156 | 2019 | Leukemic blasts in an immature stage |
| Malaria bounding boxes152 | 2019 | Cells in blood |
| LYSTO153 | 2019 | Lymphocytes |
| SN-AM dataset154 | 2019 | Stain normalization |
| C NMC dataset154 | 2019 | Classification of immature leukemic cells |
| Blood cell images155 | 2018 | Cells in blood |

Datasets associated with the analysis of bone-related images

Medical datasets for bone-related image processing are a valuable asset in orthopedics and radiology. They frequently include a wide range of imaging modalities, such as X-rays, CT scans, and MRI images, depicting diverse bone structures and associated disorders. Annotations may include information about fractures, osteoporosis, bone cancers, and other orthopedic diseases. ML and AI algorithms trained on these large datasets can help healthcare professionals make more precise diagnoses, prognoses, and treatment plans. The availability of such datasets has driven the development of automated diagnostic systems that save time and may reduce human error in analyzing complex bone images. These data-driven techniques are changing bone health care by encouraging early intervention and leading to better patient outcomes. Some examples are described below.

KNOAP 2020—MR and CR Knee Osteoarthritis: The MR and CR images used to analyze knee osteoarthritis are the primary focus of the KNOAP 2020 dataset. This dataset can be used for tasks like segmenting, classifying, and quantifying features related to knee osteoarthritis, and it can help with the creation of early detection and monitoring methods for this degenerative joint disease157.

MICCAI 2020 RibFrac Challenge 2020—CT Detection and Classification of Rib Fractures: The CT images used in the MICCAI 2020 RibFrac Challenge dataset are intended for the detection and classification of rib fractures. This dataset can be used for tasks like fracture localization, classification, and detection, and it can aid in the creation of automated tools for managing and assessing rib fractures158.

VerSe 20 2020—CT Vertebra: The VerSe 20 dataset is dedicated to the CT-based analysis of vertebrae. This dataset can be used for tasks like categorizing, quantifying, and segmenting vertebral structures and abnormalities. It can also be used to help with the diagnosis and planning of treatments for a variety of conditions and diseases involving the spine159.

Pelvic Reference Data 2019—CT Pelvic Images: For use in a variety of pelvic-related image analysis tasks, the Pelvic Reference Data dataset offers CT images of the pelvic anatomy. This dataset can be used to perform operations on the pelvic structures, such as segmentation, registration, and measurement, and it can help with the creation of automated tools for pelvic imaging analysis in clinical and academic settings160.

AASCE 19 2019—CR Spinal Curvature: The CR images used for the analysis of spinal curvature are the main focus of the AASCE 19 dataset. This dataset can be used for tasks like segmentation, measurement, and classification of abnormal spinal curvature, which can help us understand conditions and deformities affecting the spine161.

MURA 2018—CR Abnormality in Musculoskeletal: The MURA dataset contains CR images showing a range of musculoskeletal injuries and abnormalities, including those involving bones. Because it supports tasks such as the localization, classification, and detection of abnormalities in CR images, it is a helpful resource for research on musculoskeletal disorders and injuries162.

xVertSeg Challenge 2016—CR Fractured Vertebrae: The CR images of fractured vertebrae are the focus of the xVertSeg Challenge dataset. This dataset can be used for tasks like segmenting, classifying, and localizing vertebral fractures. It can also help with the creation of automated tools for managing and assessing vertebral fractures163.

Bone Texture Characterization 2014—CR Bone Crisps: Using computed radiography (CR) images, the Bone Texture Characterization dataset is intended for the analysis of bone crisps. This dataset can be used for tasks like bone crisp classification, feature extraction, and texture analysis, which can help us understand the characteristics of bone texture and how they relate to bone health164.

Table 9 summarizes the datasets and challenges related to the analysis of bone-related images.

Table 9.

An overview of datasets and challenges related to the analysis of bone-related images.

| Dataset | Year | Techniques | Emphasis |
|---|---|---|---|
| KNOAP 2020157 | 2020 | MR and CR | Knee osteoarthritis |
| MICCAI 2020 RibFrac Challenge158 | 2020 | CT | Detection and classification of rib fractures |
| VerSe 20159 | 2020 | CT | Vertebra |
| Pelvic reference data160 | 2019 | CT | Pelvic images |
| AASCE 19161 | 2019 | CR | Spinal curvature |
| MURA162 | 2018 | CR | Abnormality in musculoskeletal |
| xVertSeg Challenge163 | 2016 | CR | Fractured vertebrae |
| Bone texture characterization164 | 2014 | CR | Bone crisps |

Datasets associated with skin and animal image analysis tasks

The fields of dermatology, veterinary medicine, and wildlife biology all place high importance on medical datasets for skin analysis and animal image analysis. Skin-related datasets frequently include images of dermatological disorders such as melanoma, psoriasis, and eczema, enabling AI-driven diagnostic tools that support early detection and treatment planning. Animal image databases also offer important information about the morphology, behavior, and health of both domestic and wild animals, drawn, for example, from wildlife monitoring for conservation and demographic studies or from veterinary medical imaging for disease diagnosis. ML models trained on such a wide range of datasets encourage healthcare and ecology researchers to take a multidisciplinary approach, opening up new possibilities for better medical diagnosis, individualized treatment plans, and greater knowledge of animal species. Combined with technological improvements, skin and animal image collections represent a cutting-edge frontier in scientific inquiry into ecology and medicine. A few examples follow.

DFU 2020: This dataset focuses on the detection of foot ulcers in diabetic patients. Foot ulcers are a common complication in diabetic patients, and early detection is crucial for timely intervention and for preventing serious complications such as infections and amputations. The emphasis of this dataset is on developing image analysis techniques using RGB imaging to accurately detect foot ulcers from medical images, which can aid in early diagnosis and effective management of diabetic foot ulcers165.

ISIC 2019: This dataset is focused on the classification of 9 different skin diseases, such as melanoma, benign nevi, and dermatofibroma. The emphasis of this dataset is on developing image analysis techniques using RGB imaging for accurate and automated classification of skin diseases, which can assist dermatologists in clinical decision-making and improve patient outcomes. Early and accurate classification of skin diseases can aid in timely treatment and management, and potentially prevent the progression of malignant skin conditions166.

MATCH 2020: This dataset focuses on tumor tracking in markerless lung CT images. The emphasis of this dataset is on developing advanced image analysis techniques using CT imaging to track lung tumors in dynamic medical images, which can aid in radiation therapy planning, assessment of tumor response to treatment, and monitoring disease progression. Accurate tumor tracking can improve treatment efficacy and minimize radiation exposure to healthy tissues167.

MRI-DIR: This dataset focuses on multi-modality registration with phantom images in MR and CT images. The emphasis of this dataset is on developing image registration techniques using MR and CT imaging to align different imaging modalities for accurate fusion and analysis of medical images. Accurate multi-modality registration can improve image-guided interventions, treatment planning, and diagnosis by providing a comprehensive view of anatomical and functional information from different imaging modalities168.

CC-Radiomics-Phantom-2: This dataset focuses on assessing feature variability using phantom images in CT imaging. The emphasis of this dataset is on developing image analysis techniques to assess the variability of radiomic features extracted from phantom images, which can help in evaluating the robustness and reproducibility of radiomics-based image analysis methods. Understanding feature variability in phantom images is crucial for ensuring the reliability and generalizability of radiomics-based models in clinical practice169.

PET-Seg Challenge 2016: This dataset focuses on phantom registration and research using CT and PET imaging. The emphasis of this dataset is on developing image registration techniques to accurately align CT and PET images of phantoms, which are used for quality assurance and calibration purposes in PET imaging. Accurate phantom registration can ensure the accuracy and reliability of PET imaging results, which are widely used for cancer diagnosis, staging, and treatment response assessment170.

EndoVis SCARED 2019: This dataset focuses on depth estimation from endoscopic data. The emphasis of this dataset is on developing computer vision and image processing techniques to accurately estimate the depth information from endoscopic images or videos. Accurate depth estimation can aid in 3D reconstruction, virtual reality visualization, and surgical planning during minimally invasive surgeries, improving the safety and efficacy of these procedures171.

BigNeuron 2016: This dataset focuses on general microscopy for animal neuron reconstruction. The emphasis of this dataset is on developing image analysis techniques to reconstruct the complex 3D structures of animal neurons from microscopy images. Accurate neuron reconstruction can provide insights into neural networks and connectivity, aiding in understanding brain function and neurological diseases172.

SNEMI3D 2013: This dataset focuses on the segmentation of neurites from electron microscopy images. The emphasis of this dataset is on developing advanced image segmentation techniques to accurately segment neurites, which are the elongated projections of nerve cells, from electron microscopy images. Accurate neurite segmentation is crucial for studying the morphology and connectivity of neurons, understanding neural circuits, and investigating neurological disorders173.

Learn2Reg 2020: This dataset focuses on image registration in medical imaging using MR and CT images. The emphasis of this dataset is on developing image registration techniques to align and fuse MR and CT images for various medical imaging tasks such as image-guided interventions, treatment planning, and disease diagnosis. Accurate image registration can improve the accuracy and precision of medical image analysis, aiding in better patient care and treatment outcomes174.

Table 10 summarizes the datasets and challenges related to skin, phantom, and animal image analysis tasks.

Table 10.

An overview of the datasets and challenges related to skin, phantom, and animal image analysis tasks.

| Dataset | Year | Techniques | Emphasis |
|---|---|---|---|
| DFU 2020165 | 2020 | RGB | Detection of foot ulcers in diabetic patients |
| ISIC 2019166 | 2019 | RGB | Classification of 9 diseases |
| MATCH167 | 2020 | CT | Tumor tracking in lung |
| MRI-DIR168 | 2018 | MR, CT | Multi-modality registration with phantom |
| CC-Radiomics-Phantom-2169 | 2018 | CT | Feature assessment with phantom |
| PET-Seg Challenge170 | 2016 | CT, PET | Phantom registration and research |
| EndoVis 2019 SCARED171 | 2019 | Endoscopy | Depth estimation from endoscopic data |
| BigNeuron172 | 2016 | General microscopy | Animal neuron reconstruction |
| SNEMI3D173 | 2013 | Electron microscope | Segmentation of neurites |
| Learn2Reg174 | 2020 | MR, CT | Image registration |

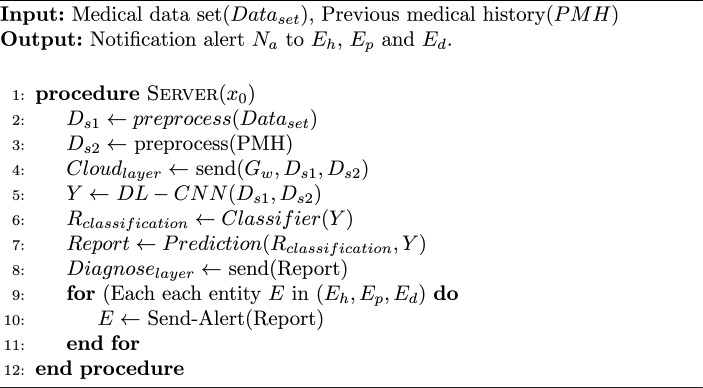

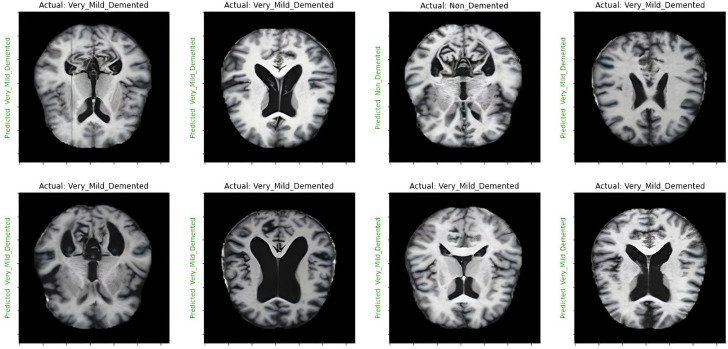

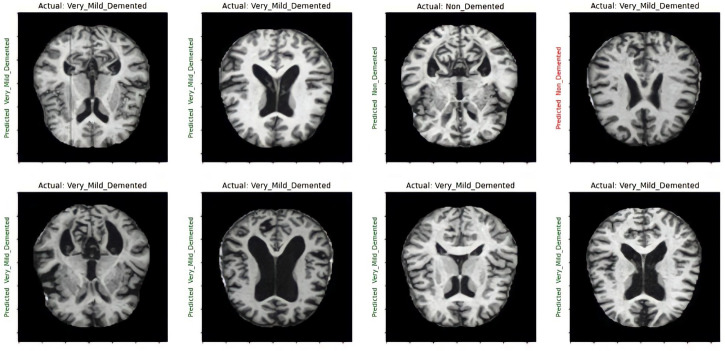

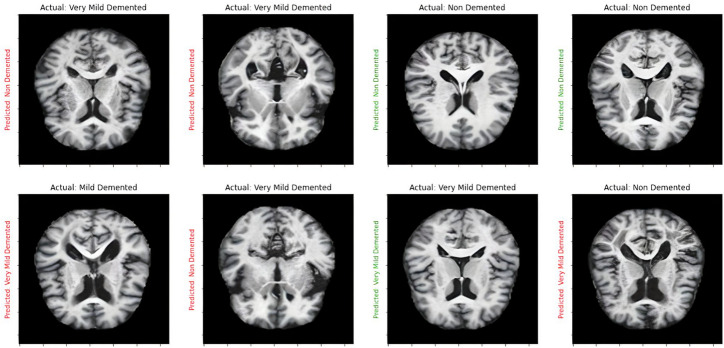

Case study: Alzheimer’s MRI classification using DL methods

DL techniques, notably CNNs and pre-trained models such as VGG-16, have been used successfully in neuroimaging to classify AD from MRI images175. CNNs, a class of deep neural networks designed primarily for image processing, excel at extracting features from high-dimensional input176. They learn complex patterns in the data through convolutional, pooling, and fully connected layers, which makes them well suited to MRI image analysis. VGG-16, a CNN variant, is commonly pre-trained on the ImageNet dataset of more than a million images, which gives it a robust feature-extraction capability. Owing to its depth (16 weight layers), it can capture subtle patterns in MRI scans, which can help diagnose AD and distinguish it from other types of dementia.

The fundamental difficulty with DL models, however, is that they require large volumes of training data to avoid overfitting and make accurate predictions. Obtaining large amounts of labeled data in medical imaging, particularly for AD, can be difficult because of privacy concerns and the time-consuming nature of labeling. One remedy is to use ensemble ML approaches. Ensemble learning is a method in which multiple models, or ‘learners’, are trained and strategically combined to solve a specific computational intelligence problem. Combining the output of a CNN or VGG-16 with other ML algorithms, for example, can refine the decision-making process and improve the accuracy and robustness of the final model. Ensemble methods can also help address the class imbalance frequently seen in medical datasets by learning more effectively from minority classes. In summary, DL algorithms such as CNN and VGG-16, together with ensemble ML approaches, hold considerable promise for Alzheimer’s MRI classification, potentially improving diagnostic precision and enabling earlier therapy.
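To make the depth of VGG-16 concrete, the sketch below traces the standard VGG-16 convolutional configuration (13 convolutional layers with 3 × 3 kernels and "same" padding, interleaved with five 2 × 2 max-pooling steps; the 3 fully connected layers of the original classifier head bring the count to 16 weight layers) over a 128 × 128 input, the image size used in this study. It tracks the feature-map shape and counts the convolutional parameters. It assumes a 3-channel input, as in the ImageNet-pretrained model; the helper `vgg16_feature_shapes` is hypothetical and not part of any library.

```python
# Standard VGG-16 convolutional configuration: integers are 3x3 conv layers
# with that many output channels, "M" is 2x2 max pooling with stride 2.
VGG16_CONFIG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_feature_shapes(height, width, channels=3):
    """Trace (H, W, C) through the VGG-16 conv base and count its parameters.

    Hypothetical helper for illustration. Assumes 'same' padding for every
    3x3 convolution (spatial size unchanged) and 2x2/stride-2 pooling
    (spatial size halved, rounding down).
    """
    shapes = [(height, width, channels)]
    params = 0
    conv_layers = 0
    for layer in VGG16_CONFIG:
        if layer == "M":
            height, width = height // 2, width // 2
        else:
            # 3x3 weights for every (input, output) channel pair, plus one bias per output channel
            params += 3 * 3 * channels * layer + layer
            channels = layer
            conv_layers += 1
        shapes.append((height, width, channels))
    return shapes, params, conv_layers


shapes, params, conv_layers = vgg16_feature_shapes(128, 128)
print("final feature map:", shapes[-1])   # (4, 4, 512)
print("conv layers:", conv_layers)        # 13 (+3 fully connected = 16 weight layers)
print("conv-base parameters:", params)    # 14714688
```

With 128 × 128 inputs, the convolutional base ends in a 4 × 4 × 512 map (8192 values after flattening), which is what any classifier head placed on top of it would receive.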
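One simple way to combine CNN and VGG-16 outputs, in the spirit of the ensemble idea described above, is soft voting: average the per-class probabilities from each model (optionally weighted) and pick the class with the highest mean. This is shown purely as an illustrative assumption, not as the ensemble used in this study. The class names follow the four dataset categories, `soft_vote` is a hypothetical helper, and the probability vectors are made-up placeholders rather than real model outputs.

```python
# Illustrative soft-voting sketch; not the ensemble configuration used in this study.
CLASSES = ["Mild Demented", "Moderate Demented", "Non-Demented", "Very Mild Demented"]


def soft_vote(prob_lists, weights=None):
    """Weighted average of per-model class probabilities.

    Returns (index of the winning class, list of averaged probabilities).
    """
    if weights is None:
        weights = [1.0] * len(prob_lists)
    total = sum(weights)
    n_classes = len(prob_lists[0])
    avg = [
        sum(w * probs[c] for w, probs in zip(weights, prob_lists)) / total
        for c in range(n_classes)
    ]
    best = max(range(n_classes), key=lambda c: avg[c])
    return best, avg


# Placeholder softmax outputs for one MRI slice (illustrative numbers only).
cnn_probs = [0.10, 0.05, 0.25, 0.60]
vgg_probs = [0.40, 0.05, 0.35, 0.20]

label, avg = soft_vote([cnn_probs, vgg_probs])
print(CLASSES[label], [round(p, 3) for p in avg])  # Very Mild Demented [0.25, 0.05, 0.3, 0.4]
```

Soft voting keeps each model's confidence, whereas hard (majority) voting with only two models can easily end in a tie; the `weights` argument lets a stronger model count for more.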
The following sections describe the dataset used in this study and present the results obtained with each of these methods.

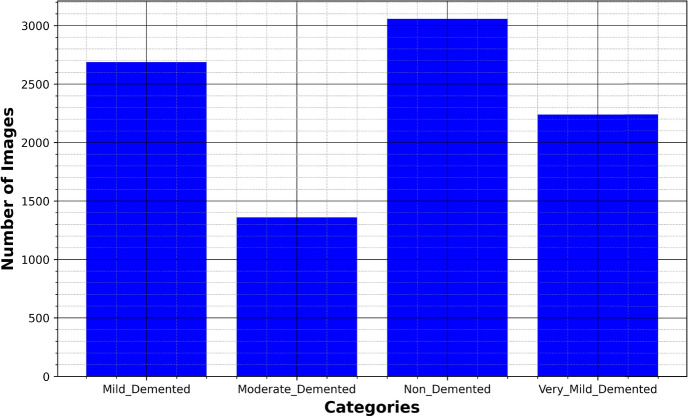

Dataset used

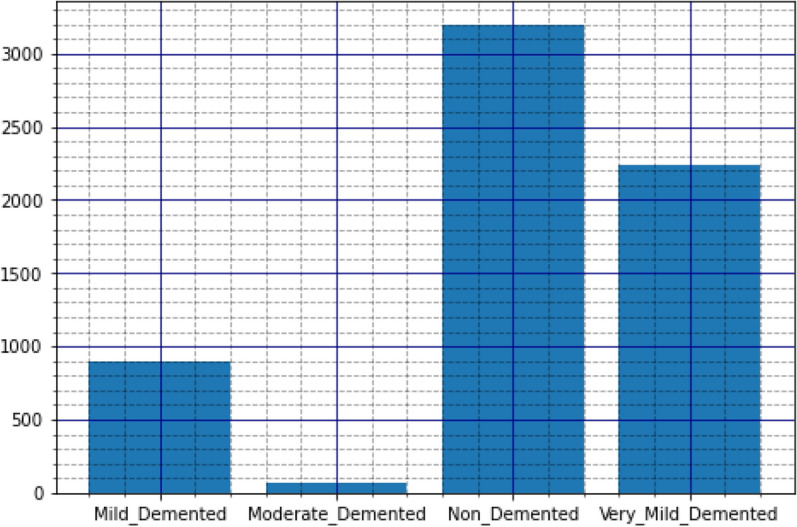

The disease considered in this study is AD, and the dataset used is the Alzheimer’s MRI dataset. Figure 4 shows the distribution of images across the classes of the dataset.

Figure 4.

Original dataset distribution.

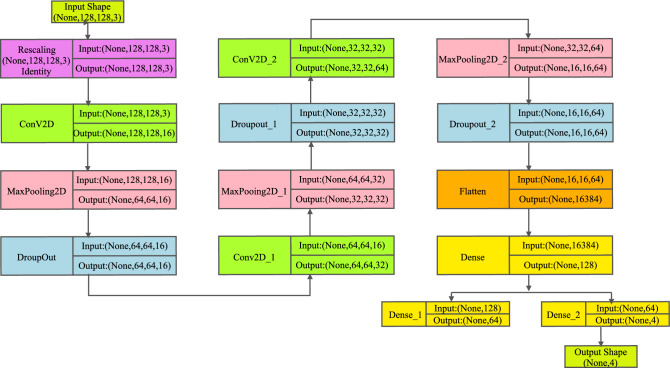

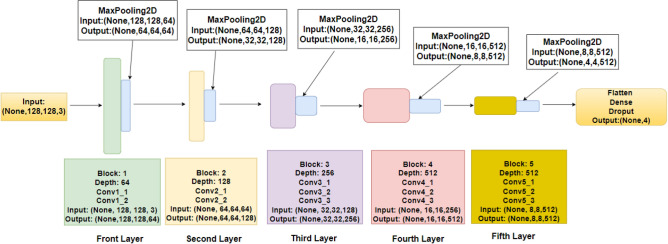

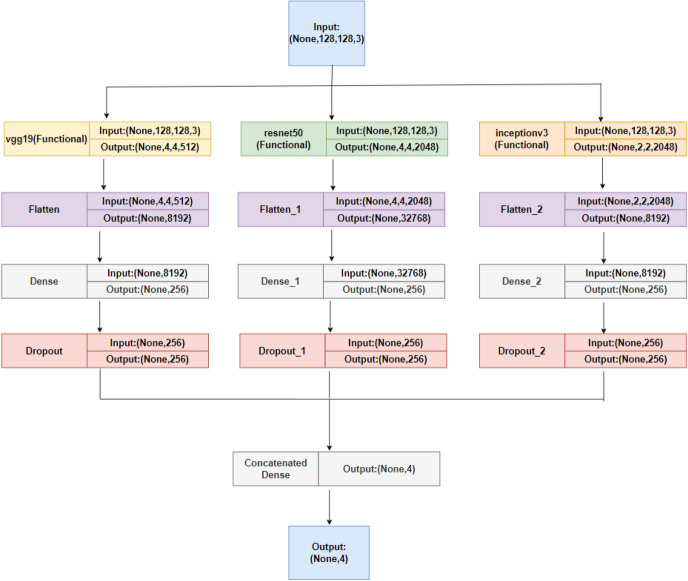

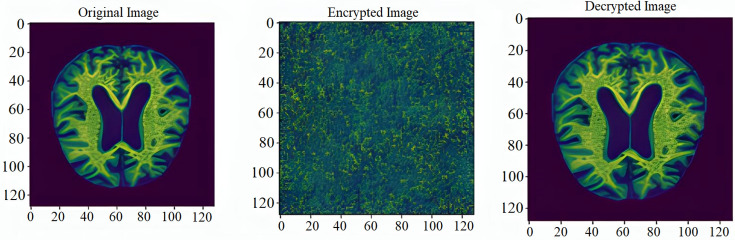

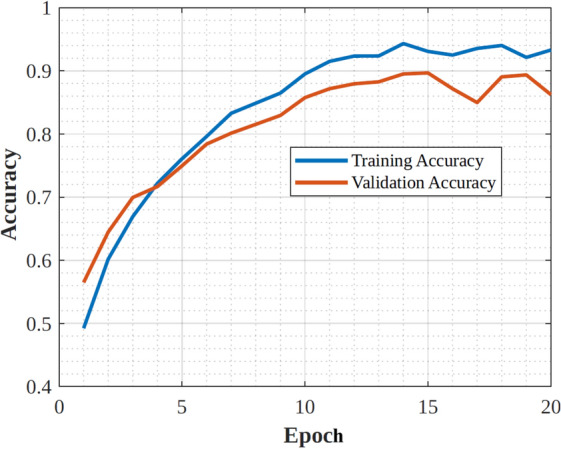

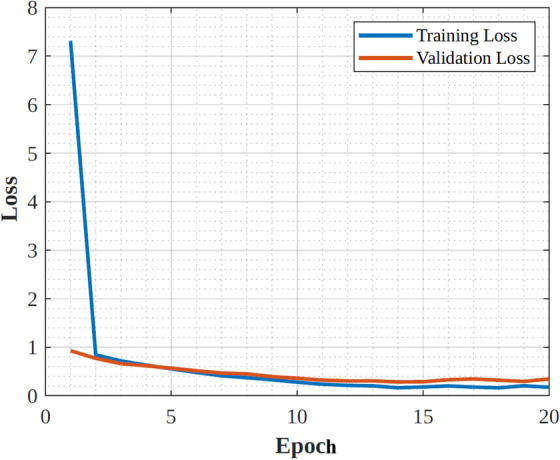

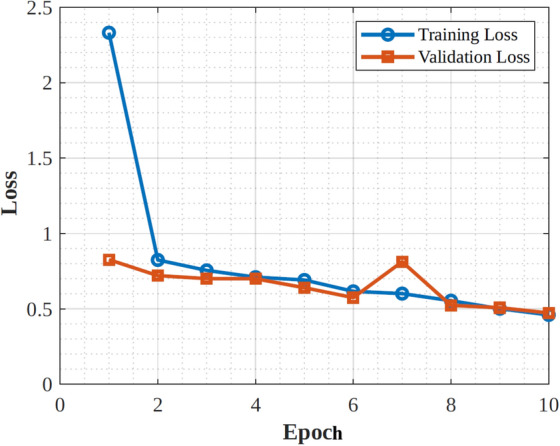

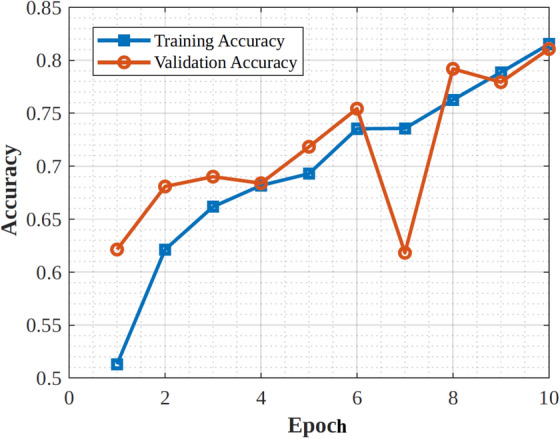

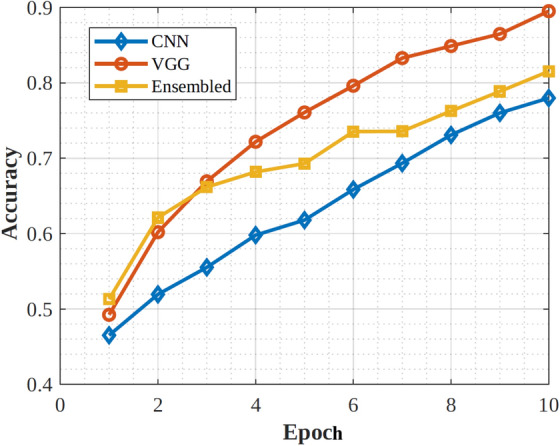

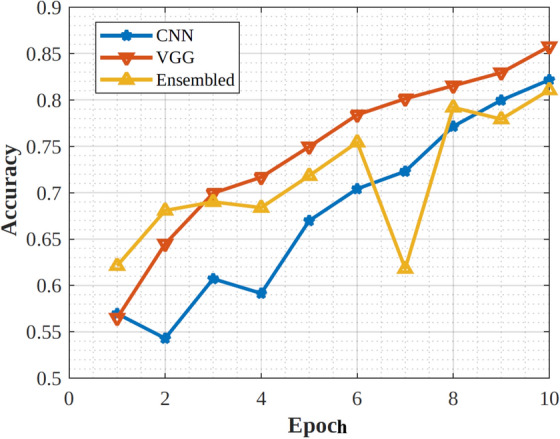

The dataset contains 6400 images in four classes: Mild Demented (896 images), Moderate Demented (64 images), Non-Demented (3200 images), and Very Mild Demented (2240 images). The images were preprocessed to remove noise and artifacts and then resized to 128 × 128 pixels for consistency. Each image carries a class label (1–4) corresponding to the severity of dementia, as determined by a clinical expert.
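These class counts are heavily imbalanced: Moderate Demented accounts for 1% of the images and Non-Demented for 50%. A common mitigation, given here as an assumption rather than a step reported for this study, is inverse-frequency ("balanced") class weighting, w_c = N / (K · n_c), where N is the total number of images, K the number of classes, and n_c the count of class c. The sketch computes the class shares and these weights from the reported counts; `balanced_class_weights` is a hypothetical helper.

```python
# Class counts as reported for the Alzheimer's MRI dataset (6400 images in total).
COUNTS = {
    "Mild Demented": 896,
    "Moderate Demented": 64,
    "Non-Demented": 3200,
    "Very Mild Demented": 2240,
}


def balanced_class_weights(counts):
    """Inverse-frequency weights w_c = N / (K * n_c).

    Every class then contributes the same total weight N / K,
    so the count-weighted mean weight is exactly 1.
    """
    total = sum(counts.values())
    k = len(counts)
    return {name: total / (k * n) for name, n in counts.items()}


total = sum(COUNTS.values())
weights = balanced_class_weights(COUNTS)
for name, n in COUNTS.items():
    print(f"{name:20s} {n:5d} ({100 * n / total:5.2f}%)  weight={weights[name]:.3f}")
```

Under this scheme a Moderate Demented image would count 50 times as much as a Non-Demented one (weight 25.0 versus 0.5); such weights are typically passed to the training loss or to a framework's class-weight option.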