Abstract

Acute flaccid paralysis (AFP) case surveillance is pivotal for the early detection of potential poliovirus, particularly in endemic countries such as Ethiopia. The community-based surveillance system implemented in Ethiopia has significantly improved AFP surveillance. However, challenges like delayed detection and disorganized communication persist. This work proposes a simple deep learning model for AFP surveillance, leveraging transfer learning on images collected from Ethiopia's community key informants through mobile phones. The transfer learning approach is implemented using a vision transformer model pretrained on the ImageNet dataset. The proposed model outperformed convolutional neural network-based deep learning models and vision transformer models trained from scratch, achieving superior accuracy, F1-score, precision, recall, and area under the receiver operating characteristic curve (AUC). It emerged as the optimal model, demonstrating the highest average AUC of 0.870 ± 0.01. Statistical analysis confirmed the significant superiority of the proposed model over alternative approaches (P < 0.001). By bridging community reporting with health system response, this study offers a scalable solution for enhancing AFP surveillance in low-resource settings. The study is limited in terms of the quality of image data collected, necessitating future work on improving data quality. The establishment of a dedicated platform that facilitates data storage, analysis, and future learning can strengthen data quality. Nonetheless, this work represents a significant step toward leveraging artificial intelligence for community-based AFP surveillance from images, with substantial implications for addressing global health challenges and disease eradication strategies.

Keywords: Acute flaccid paralysis, Surveillance, Community, Deep learning model, Transfer learning, Computer vision

1. Introduction

Acute flaccid paralysis (AFP) surveillance involves monitoring and reporting cases of acute flaccid paralysis, which could be indicative of poliovirus infection (Masa-Calles et al., 2018). In Ethiopia, as in other polio endemic countries, AFP surveillance is crucial for early detection of potential polio cases and for implementing rapid response measures to prevent the spread of the virus (Tegegne et al., 2017). The surveillance system typically involves health workers and surveillance officers at various levels of the healthcare system, including hospitals, health centers, and communities (Tegegne et al., 2018). They are trained to recognize and report cases of AFP promptly. Ethiopia has been actively involved in polio eradication efforts and has made significant progress in reducing polio transmission (Deressa et al., 2020). AFP case surveillance plays a vital role in ensuring that any cases of acute flaccid paralysis are promptly investigated and that appropriate measures are taken to prevent the spread of the disease (Ali et al., 2023). Beyond being a critical tool in the eradication of polio, AFP surveillance also contributes to the overall strengthening of the country's disease surveillance and response systems, which can help in detecting and controlling other infectious diseases (Ayana et al., 2023; Mohammed et al., 2021).

Surveillance of AFP in hard-to-reach parts of the world is hindered by inadequate healthcare infrastructure, limited access to services, poor transportation and communication, high population mobility, security issues, cultural and language barriers, and low community awareness, leading to underreporting and delayed detection (Bessing et al., 2023; Datta et al., 2016). Efforts to overcome these challenges involve improving healthcare infrastructure, increasing community awareness, and utilizing mobile clinics and community health workers to enhance disease surveillance and control in these remote areas (Ahmed et al., 2022; Gwinji et al., 2022; Worsley-Tonks et al., 2022). However, little work has been done on bridging the community and the health facility/system for disease surveillance (Ibrahim et al., 2023; Jamison et al., 2006). Ethiopia has implemented community-based disease surveillance in hard-to-reach parts of the country through the CORE Group Partners Project (CGPP) Ethiopia (https://coregroup.org/cgpp-ethiopia/) for two decades, improving surveillance sensitivity by 30% in its implementation sites (reporting one-third of total surveillance cases that could have been missed by the health system) (Asegedew et al., 2019). CGPP trained and dispatched more than 10,000 community volunteers (CVs), giving them the training needed to report AFP cases in their communities immediately (Stamidis et al., 2019). These CVs report suspected AFP cases immediately to the nearest health facilities under their assigned health extension worker (HEW) (Biru et al., 2024). Based on the CVs' information and the HEWs' confirmation, the CGPP field officers, along with government health workers and World Health Organization (WHO) surveillance workers, investigate the reported suspected AFP case and take the necessary action (Lewis et al., 2020). The CGPP field officers fill in the case information and use different platforms to submit it from their smartphones to the CGPP central server in the form of text, image, audio, and video.

Two stool specimens must be collected from every suspected AFP case within 14 days of onset of paralysis. If the samples cannot be collected within 14 days, they should still be collected up to 60 days from onset of paralysis, a process that takes days if not weeks (Asegedew et al., 2019). Such a delay may create a serious public health problem if the child is infected with poliovirus, because the child continues to interact with the population (Quarleri, 2023). Moreover, the community workers and the stakeholders communicate on different platforms. For instance, text messages are sent via direct SMS, audio calls are made via direct calls, and images and videos are sent via social media apps like Telegram, WhatsApp, and Messenger. As a result, the surveillance system is not well organized and important information is not stored on a dedicated platform, causing a loss of valuable data that could be used for future learning and pandemic preparedness (Donelle et al., 2023).

To overcome these challenges, a unified platform powered by artificial intelligence (AI) can be built, where all the data types (text, audio, image, and video) are stored and a model trained on these data is used to promptly perform AFP surveillance. To this end, a fully automated AI-powered system for use by government, communities, and non-governmental organizations (NGOs) has been developed in Ethiopia. When a registered user suspects an AFP case, the user opens the Polio antenna mobile app (a dedicated app developed for polio surveillance in Ethiopia), enters the information requested by the app about the child, takes a picture, records a short video, and uploads them. The app analyzes the data entered and instantly reports it to all the stakeholders, including the vicinity health officer, designated surveillance worker, Ethiopian Public Health Institute (EPHI) officer, Ministry of Health (MoH) officer, NGOs, and WHO officer (see Fig. 1). At the same time, the app recommends to the user what to do to contain and monitor the case. Moreover, the app tracks the stool sample taken using QR technology that holds all the information about the suspected case, automating stool transportation and tracking. The surveillance data are visualized on the dashboard of the repository developed for this system (https://polioantenna.org/). The repository is unique in capturing AFP surveillance data not only in text form but also in image and video formats, automating decision making and enabling AI models to be trained on it.

Fig. 1.

A unified automated platform for community-based AFP surveillance.

Despite the relatively limited research on harnessing AI for AFP surveillance, a few pioneering efforts have emerged (Ayana, Dese, Daba, et al., 2024; Dese et al., 2024). These endeavors, albeit scarce, signify a growing recognition of the potential of AI in augmenting disease surveillance and prediction systems, particularly in the context of AFP surveillance. Hemedan et al. (2020) developed a hybrid machine learning method for predicting incidences of vaccine-derived poliovirus (VDPV) outbreaks. The study aimed to address the critical need for accurate VDPV surveillance by combining Random Vector Functional Link (RVFL) networks with the Whale Optimization Algorithm (WOA). The results showed that the WOA-RVFL algorithm outperformed traditional RVFL methods in detecting VDPV outbreaks. Draz et al. (2020, pp. 224–229) designed a multi-step approach to identify specific deoxyribonucleic acid (DNA) motifs associated with the presence of the poliovirus during its early stages of infection. The researchers collected diverse data related to the poliovirus, including DNA and ribonucleic acid (RNA) sequences, from various sources such as genomic databases and experimental studies. These sequences were then subjected to rigorous computational analysis using bioinformatics tools specifically designed for motif discovery and analysis. By integrating computational analysis with data visualization, the methodology provided a comprehensive framework for understanding the genetic signatures of poliovirus infection at an early stage. Furthermore, Khan et al. (2020, pp. 223–227) employed an AI method to predict the likelihood of polio outbreaks. The study used diverse datasets collected from sources like the National Institute of Health (NIH), medical store databases, and transport logs. Subsequently, the K-means clustering algorithm was applied to identify patterns within the data that may indicate potential polio outbreaks based on factors such as medical sales records and passenger travel patterns.

However, none of these works utilized images for AFP surveillance, and none drew their data from the community; rather, the studies used data from the conventional health care system. The work reported in this paper developed the first deep learning model for identifying AFP cases from images collected by community volunteers to improve the sensitivity of AFP surveillance. Using suspected case images of AFP for polio surveillance offers a valuable advantage by enabling more precise identification of polio's characteristic signs (Dese et al., 2024). Paralytic polio typically manifests as asymmetric muscle weakness, which peaks within 3–5 days of onset and can progress for about a week (Gemechu et al., 2024). The paralysis caused by polio is generally flaccid and more severe in the proximal muscles, meaning the arms are often more affected than the legs, with absent or diminished deep tendon reflexes but preserved sensation (Daba et al., 2024; Demlew et al., 2024). These visual cues, such as asymmetry, floppiness, and the degree of muscle involvement, can be captured effectively in images, helping to distinguish polio from other conditions, like Guillain-Barré syndrome, that may present differently (e.g., more symmetrical paralysis or with sensory loss). Images offer the added benefit of allowing healthcare professionals, especially in remote or under-resourced areas, to assess these clinical signs more easily. By visually documenting signs like the asymmetry of weakness, the flaccid appearance of affected limbs, and the rate of progression, image-based surveillance can enhance the accuracy of early detection efforts. Combining visual assessments with traditional surveillance methods helps expedite diagnosis and response, leading to quicker interventions such as vaccination drives or outbreak containment, thus supporting broader global efforts to eradicate polio.

2. Materials and methods

2.1. Dataset

The data for this study were collected from the CORE Group Partner Project Ethiopia implementation catchment area. The CGPP Ethiopia was established in 1999 and started implementation in Ethiopia in November 2001. CGPP Ethiopia has supported and coordinated efforts of Private Voluntary Organizations (PVOs) and NGOs involved in polio eradication activities. The CGPP Ethiopia Secretariat is hosted within the Consortium of Christian Relief and Development Associations (CCRDA) and closely collaborates with five international and four local NGOs. Additionally, CGPP Ethiopia works closely with the Ministry of Health, WHO, UNICEF, and Rotary International (Perry et al., 2019).

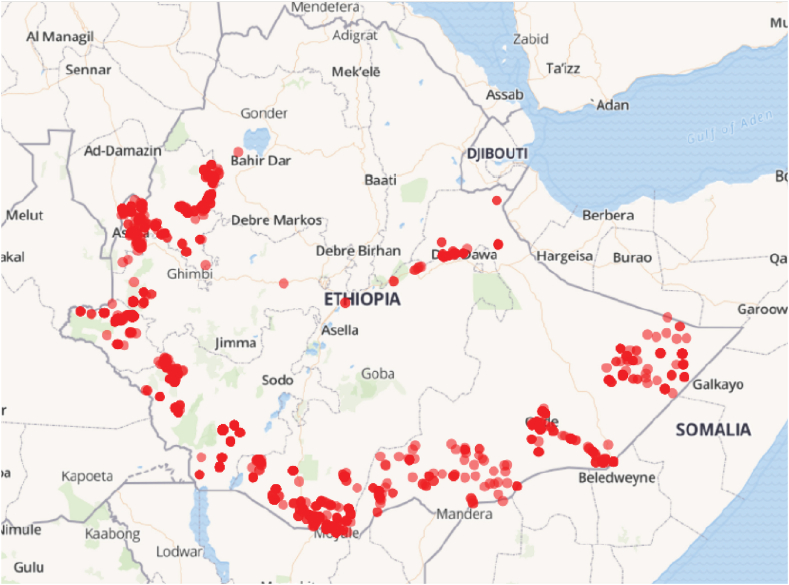

Currently, CGPP Ethiopia works in 82 rural pastoralist and hard-to-reach districts (called Woredas) located in six regional states: Benishangul-Gumuz, Gambella, Oromia, Somali, Southern Ethiopia, and Southwest Ethiopia. In the routine system, children with sudden changes in movement are detected during a visit to a health facility. In contrast, CVs carry out community-based surveillance by searching house to house for children with symptoms of AFP. CGPP Ethiopia deployed more than 10,000 CVs in more than 1700 Kebeles (the grassroots administrative body in Ethiopia). For this study, an AFP surveillance dataset of 428 images, comprising 228 suspected AFP cases and 200 normal cases, was created using images of Ethiopian children collected over the past five years through CGPP Ethiopia's community-based surveillance system. The images were anonymized by removing the parts above the neck, and no additional personal information was used for the purposes of this study. Fig. 2 shows sample images from the AFP image dataset utilized in this study and Fig. 3 shows a map of the dataset collection points.

Fig. 2.

Sample suspected AFP case images from the dataset.

Fig. 3.

Locations of the dataset collection points.

2.2. Proposed method

2.2.1. Rationale

AFP case images are hard to obtain for several reasons. First, polio has been eradicated from all but a few endemic countries, so it is difficult to collect images of positive AFP cases. Second, there are ethical issues related to the use of personal information. Third, the cases occur in low-income countries, where it is expensive to collect and store AFP case images. Fourth, long-term investment is needed to build a surveillance system that works in coordination to collect and store the images. For these and other reasons, it is hard to find the large number of AFP case images needed to train data-intensive deep learning models so that they can extract important features and generalize well to new, previously unseen images. This is the main reason why a transfer learning method is proposed in this work (Ayana, Dese, Abagaro, et al., 2024; Ayana et al., 2021).

2.2.2. Proposed model

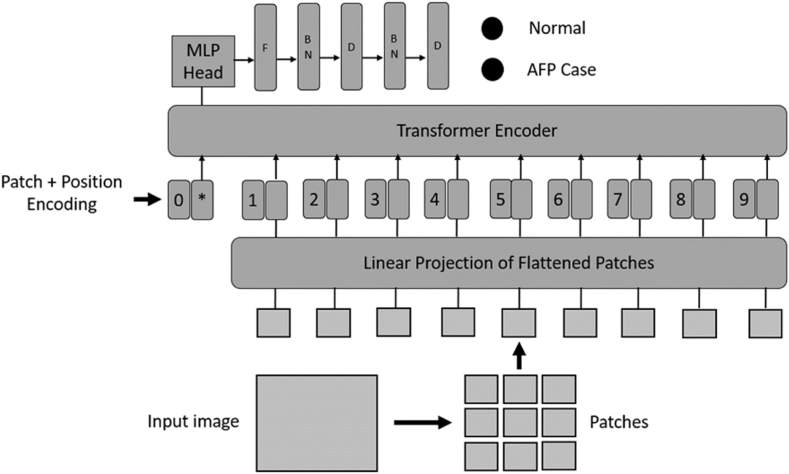

This study utilized the pretrained vision transformer model for distinguishing between normal and suspected AFP images (Dosovitskiy et al., 2020). Vision transformers (ViTs) offer several advantages over convolutional neural networks (CNNs) for AFP surveillance image analysis. ViTs use a global attention mechanism, allowing them to capture long-range dependencies across the entire image, which is crucial for identifying subtle patterns associated with AFP. They handle varying image sizes more flexibly, reducing the risk of losing important details through resizing. ViTs also generalize well with limited data, especially when pretrained on large datasets, making them suitable for medical imaging tasks where data is often scarce. Additionally, their attention maps provide better interpretability, helping medical professionals understand which areas of the image are critical for diagnosis. With fewer inductive biases, ViTs are more adaptable to complex patterns in medical images, making them a promising choice for AFP surveillance (Raghu et al., 2021).

Three vision transformer versions that have been pre-trained on the ImageNet dataset are used in the proposed method to perform the classification task. Depending on the architecture type, pretrained vision transformer models have different numbers of parameters. The fine-tuning process removed the last layer of the pretrained model and replaced it with a flattening layer, followed by two batch normalization and fully connected layers, with the final fully connected layer outputting the number of classes required by the target dataset. This application of transfer learning enables the utilization of features learned on the large ImageNet dataset by the pretrained model, as shown in Fig. 4.

Fig. 4.

The proposed vision transformer-based transfer learning model architecture. MLP, multi-layer perceptron; F, flatten; BN, batch normalization; D, dense layer.

2.2.3. Problem formulation and model definition

Preparing the image as a series of patches is a crucial step in the use of vision transformers for computer vision tasks. The input image is transformed into patches, or tokens, to do this. The primary reason for selecting patches over individual pixels is to adapt the transformer architecture, initially designed for NLP tasks, to handle images. Furthermore, patches are chosen over individual pixels because they reduce the cost of the attention matrix computation, which grows quadratically with the sequence length. If every pixel were used as a token, the attention matrix would have to relate every pixel to every other pixel, which is very computationally demanding and requires substantial hardware.
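
As a rough illustration of this cost argument, the sketch below counts tokens and attention-matrix entries for pixel-level and patch-level tokenization. The 224 × 224 input size is an assumption made only for the arithmetic (it is not stated here), the function name is hypothetical, and the extra class token is ignored.

```python
def attention_matrix_entries(height, width, patch_size):
    """Return (tokens, attention entries) for non-overlapping square patches."""
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by the patch size")
    tokens = (height // patch_size) * (width // patch_size)
    # Self-attention relates every token to every token: tokens x tokens.
    return tokens, tokens * tokens


# Assumed 224 x 224 input, for illustration only.
pixel_tokens, pixel_entries = attention_matrix_entries(224, 224, 1)
p16_tokens, p16_entries = attention_matrix_entries(224, 224, 16)
p32_tokens, p32_entries = attention_matrix_entries(224, 224, 32)

print(pixel_tokens, pixel_entries)  # one token per pixel
print(p16_tokens, p16_entries)      # 16 x 16 patches
print(p32_tokens, p32_entries)      # 32 x 32 patches
```

Under this assumption, moving from pixels to 16 × 16 patches shrinks the sequence by a factor of 256 and the attention matrix by a factor of 256².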

Let the input image space be denoted by $\mathcal{X}$. Given an input image $x \in \mathbb{R}^{H \times W \times C}$ and a patch size of $P \times P$, we are interested in extracting image patches (Algorithm 1) given as $x_p \in \mathbb{R}^{N \times (P^2 \cdot C)}$, with $N = HW/P^2$. Patch embedding and positional embedding are then carried out. For patch embedding, the patches are first flattened and then passed through a linear transformation that projects each sequence element to a $D$-dimensional output. Dosovitskiy et al. (2020) used square patches to simplify the patch and positional encodings. Transformer sequences are not time sequences, so the model has no inherent way to determine the patch order; positional encoding compensates for this. To accomplish this, a randomly initialized embedding matrix is added to the concatenated matrix that holds the patch embeddings and the learnable class token. Lastly, the transformer encoder receives the combined patch and positional encoding matrix.
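
The patch and positional embedding steps described above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: the function names, the tiny 8 × 8 image, the patch size of 4, and the embedding dimension of 6 are all hypothetical, and the projection, class token, and positional embeddings are random initial values that would be learned during training.

```python
import random


def extract_patches(image, patch_size):
    """Split an H x W x C nested-list image into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                value
                for row in image[top:top + patch_size]
                for pixel in row[left:left + patch_size]
                for value in pixel
            ]
            patches.append(patch)
    return patches


def linear(vector, weights):
    """Project a vector with a (len(vector) x D) weight matrix."""
    return [sum(v * w for v, w in zip(vector, column)) for column in zip(*weights)]


def embed(image, patch_size, dim, rng):
    """Return the token sequence: class token plus projected patches, plus positions."""
    patches = extract_patches(image, patch_size)
    weights = [[rng.gauss(0, 0.02) for _ in range(dim)] for _ in range(len(patches[0]))]
    class_token = [rng.gauss(0, 0.02) for _ in range(dim)]
    tokens = [class_token] + [linear(patch, weights) for patch in patches]
    positions = [[rng.gauss(0, 0.02) for _ in range(dim)] for _ in tokens]
    # Positional embeddings are added element-wise to the token embeddings.
    return [[t + p for t, p in zip(token, pos)] for token, pos in zip(tokens, positions)]


rng = random.Random(0)
image = [[[rng.random() for _ in range(3)] for _ in range(8)] for _ in range(8)]
sequence = embed(image, patch_size=4, dim=6, rng=rng)
print(len(sequence), len(sequence[0]))  # tokens (patches + class token), embedding size
```

With an 8 × 8 × 3 image and 4 × 4 patches, each flattened patch has 4 · 4 · 3 = 48 values and the encoder receives four patch tokens plus one class token.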

The goal is to learn a mapping $f: \mathcal{X}_p \rightarrow \mathcal{Y}$, where $\mathcal{X}_p$ represents the $D$-dimensional patches and $\mathcal{Y}$ represents the output class space. Thus, we are interested in establishing a mapping from a sequence of input image patches to their matching output class probabilities. The transformer encoder unit and the multilayer perceptron (MLP) head are responsible for achieving this mapping. The transformer encoder unit is a stack of encoder blocks that together convert an input patch sequence into an output sequence. The primary components of each block are multi-head attention and feed-forward layers. Equation (1) provides the mechanism of attention. In equation (1), one patch in the input sequence is represented by the query vector $Q$, all of the patches in the sequence are represented by $K$ as keys, and all of the patches are represented by $V$ as values, with $d_k$ denoting the dimension of the keys. Thus, $\mathrm{softmax}\left(QK^{T}/\sqrt{d_k}\right)$ is the attention weight by which the values in the sequence are weighted and summed.

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V \tag{1}$$

The SoftMax function produces values between 0 and 1. In the multi-head attention process, this attention mechanism is executed many times concurrently, as shown in Algorithm 2, by multiplying $Q$, $K$, and $V$ with weight matrices learnt during the learning phase.
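
To make the attention mechanism of equation (1) concrete, the following is a minimal single-head sketch of scaled dot-product attention in plain Python. The three two-dimensional tokens are made-up values, and the learned projection matrices used in multi-head attention are omitted, so queries, keys, and values are all the raw tokens here.

```python
import math


def softmax(scores):
    """Numerically stable softmax; outputs lie in [0, 1] and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Each output is the attention-weighted sum of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs, all_weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # illustrative values only
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to one, so every output token is a convex combination of the value vectors.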

The feed-forward layers follow the multi-head attention process in each encoder block and apply a transformation to every element in the sequence independently. After that, the MLP head receives the transformer encoder outputs and uses them to convert the features into an output classification function. Although the transformer encoder produces one output per token, the MLP head uses only the output corresponding to the learnable class token and disregards the other outputs. The MLP head then produces a probability distribution over the classes to which the image may be assigned.

Transfer learning: Transfer learning aims to improve the learning of a target function, $f_T(\cdot)$, in a target domain, $D_T$, using the knowledge gained from a source domain, $D_S$, and learning task, $T_S$. The typical definition of transfer learning involves a single-step transfer learning algorithm. Transfer learning in this study uses an ImageNet pre-trained model (natural images) for the task of classifying AFP case images. The pre-trained model's weights, $W_0$, are used to produce $W_1$ by minimizing the cross-entropy objective function in (2), which uses the SoftMax unit's output probability, $P$, and bias, $b$. Given $n$ training samples within the ImageNet dataset, $\{(x_i, y_i)\}_{i=1}^{n}$, where $x_i$ is the $i$th input and $y_i$ is the corresponding label, the objective function is given by (2).

$$L(W_0, b) = -\frac{1}{n}\sum_{i=1}^{n} \log P\left(y_i \mid x_i; W_0, b\right) \tag{2}$$
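
As a small worked example of a cross-entropy objective of the kind referred to in (2), the sketch below computes SoftMax probabilities from a linear layer with weights and a bias, then averages the negative log-probability of the true labels. The weights, bias, and inputs are made-up numbers and the function names are hypothetical; this illustrates the loss only, not the training procedure.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(x, weights, bias):
    """Class probabilities from a linear layer followed by SoftMax."""
    logits = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]
    return softmax(logits)


def cross_entropy(probabilities, labels):
    """Mean negative log-probability assigned to the true class."""
    eps = 1e-12  # guards against log(0)
    return -sum(math.log(max(p[y], eps)) for p, y in zip(probabilities, labels)) / len(labels)


# Two classes (0 = normal, 1 = suspected AFP); all numbers are illustrative.
weights = [[1.0, -1.0], [-1.0, 1.0]]
bias = [0.0, 0.0]
inputs = [[2.0, 0.0], [0.0, 2.0]]
labels = [0, 1]

confident_loss = cross_entropy([predict_proba(x, weights, bias) for x in inputs], labels)
uncertain_loss = cross_entropy([[0.5, 0.5], [0.5, 0.5]], labels)
print(confident_loss, uncertain_loss)
```

Predictions that place more probability on the correct class give a lower loss, which is what minimizing the objective during fine-tuning pushes the weights towards.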

2.3. Implementation

We used three variants of the vision transformer architecture. The models were trained for 50 epochs using the Adam optimizer at an initial learning rate of 0.0001 with a batch size of 16. Prior to training, the images were resized to pixels. To enrich the dataset, we applied augmentation to the training set, including random rotation, shift, and flip.

The CNN models were subjected to the same protocol as the ViT architectures, except that the CNN architectures employ ReLU as an activation function, whereas the ViT architectures use GELU. Three CNN models (ResNet50, EfficientNetB2, and InceptionV3) were selected based on a pilot study of popular pre-trained models that are commonly used for medical image classification. For transfer learning on AFP images, each model was pre-trained on ImageNet, with the pre-trained ImageNet weights loaded through Keras. Only the final layer of the pre-trained model was removed when transferring learning from the ImageNet pre-trained model to AFP images. In its place, a flattening layer was added, followed by two batch normalization and fully connected layers. The final fully connected layer, as in the ViT architecture shown in Fig. 4, outputs the number of classes needed by the target dataset. Starting at a learning rate of 0.0001, all of the weights from the ImageNet pre-trained model, with the exception of the final layer, were adjusted using an exponentially decaying learning rate. Augmentation through random rotation, shift, and flip was used to expand the AFP image training set. All other parameters were identical to those used for the ViT models.
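
The exponentially decaying learning rate mentioned above can be sketched as follows. Only the starting rate of 0.0001, the 50 epochs, and the batch size of 16 come from the text; the decay rate of 0.9, the choice to decay once per epoch, and the training-set size of 342 images per fold (about four-fifths of 428) are assumptions made for illustration.

```python
import math


def exponential_decay(step, initial_rate=1e-4, decay_rate=0.9, decay_steps=22):
    """Learning rate after `step` updates: initial_rate * decay_rate ** (step / decay_steps)."""
    return initial_rate * decay_rate ** (step / decay_steps)


# Assumed: about 342 training images per fold with a batch size of 16.
steps_per_epoch = math.ceil(342 / 16)
rates = [exponential_decay(epoch * steps_per_epoch, decay_steps=steps_per_epoch)
         for epoch in range(50)]

print(steps_per_epoch)
print(rates[0], rates[1], rates[-1])
```

With these assumed settings the rate shrinks by 10% per epoch; a different decay rate or decay interval would change how quickly the pre-trained weights stop moving.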

2.4. Evaluation metrics

Because stratified cross-validation arranges the data so that each fold is a good representative of the whole dataset, assessments were conducted using stratified five-fold cross-validation (Tougui et al., 2021). Accuracy, F1-score, recall, precision, and area under the receiver operating characteristic (ROC) curve (AUC) were used to assess our model's performance. Performance was averaged over the five folds, and every result is reported with a 95% confidence interval. We also performed Student's t-test to evaluate the significance of the proposed model's improvement over vision transformer base models trained from scratch and CNN-based transfer learning models.
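
The evaluation protocol can be sketched in plain Python as below. The class counts (228 suspected AFP, 200 normal) come from the dataset description; everything else is an assumption for illustration: the helper names are hypothetical, the fold assignment is a simple round-robin rather than the authors' exact splitter, the confidence interval uses the normal critical value 1.96 (with only five folds, a t critical value such as 2.776 would give a wider interval), and the per-fold scores passed to `mean_with_ci95` are placeholders, not results.

```python
import math
import random
import statistics


def stratified_folds(labels, k=5, seed=0):
    """Assign sample indices to k folds, dealing each class out round-robin."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        indices = [i for i, label in enumerate(labels) if label == cls]
        rng.shuffle(indices)
        for position, index in enumerate(indices):
            folds[position % k].append(index)
    return folds


def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 with class 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


def auc(y_true, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count half)."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


def mean_with_ci95(values, critical=1.96):
    """Mean and half-width of an approximate 95% confidence interval."""
    half_width = critical * statistics.stdev(values) / math.sqrt(len(values))
    return statistics.mean(values), half_width


labels = [1] * 228 + [0] * 200          # 228 suspected AFP, 200 normal
folds = stratified_folds(labels)
fold_sizes = [len(fold) for fold in folds]
positives_per_fold = [sum(labels[i] for i in fold) for fold in folds]
print(fold_sizes, positives_per_fold)

# Placeholder per-fold scores, not results from this study.
mean, half_width = mean_with_ci95([0.5, 0.6, 0.7, 0.8, 0.9])
print(f"{mean:.3f} ± {half_width:.3f}")
```

Each fold keeps roughly the same 228:200 class ratio as the full dataset, which is the property that motivates stratification on a small dataset.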

2.5. Experimental settings

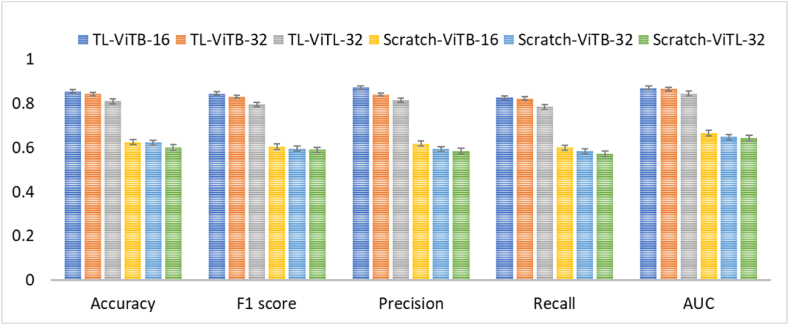

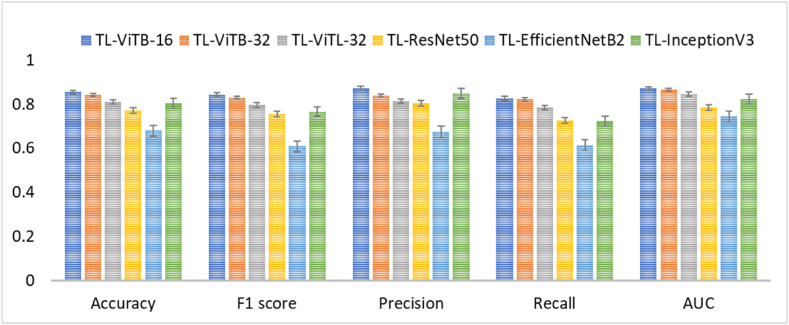

Three experiments were carried out to evaluate the performance of the proposed model. The first measured the performance of the proposed method on different vision transformer architectures. For this purpose, we used three vision transformer variants: ViTB-16, ViTB-32, and ViTL-32, which differ in their patch size and model size. ViTB-16 uses a smaller patch size (16x16 pixels), providing a more detailed image representation, with 87 million parameters. ViTB-32 increases the patch size to 32x32 pixels, capturing less granular detail, with a similar model size of 88 million parameters. ViTL-32 also uses a 32x32 patch size but is much larger, with 304 million parameters, making it a more powerful model for tasks requiring greater capacity. The second experiment compared the proposed model against vision transformer models trained from scratch on the images. The third experiment directly compared the proposed vision transformer models with state-of-the-art convolutional neural network models based on ResNet50, EfficientNetB2, and InceptionV3. The key differences between ResNet50, EfficientNetB2, and InceptionV3 lie in their architectural design and model efficiency. ResNet50 employs a deep residual learning architecture with skip connections to facilitate training in deeper networks, featuring around 26 million parameters. EfficientNetB2 focuses on model efficiency through a compound scaling method that balances depth, width, and resolution, making it highly efficient with about 21 million parameters. In contrast, InceptionV3 utilizes Inception modules that perform convolutions at multiple scales in parallel, allowing it to capture features at various levels of detail with approximately 24 million parameters. In general, ResNet50 emphasizes depth and simplicity, EfficientNetB2 prioritizes efficiency, and InceptionV3 is versatile in capturing diverse image features. In all cases, transfer learning was done by removing the last layer of each model and replacing it with the same output layers.
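
To show why the two base models have nearly the same size despite different patch sizes, the sketch below estimates ViT parameter counts from the architecture hyperparameters. The hidden sizes, depths, and MLP widths are the standard ViT-Base and ViT-Large settings from Dosovitskiy et al. (2020); the 224 × 224 input and the 1000-class ImageNet head are assumptions, so the totals are approximate and will differ slightly from counts reported with a different input size or head.

```python
def vit_parameter_count(patch, hidden, layers, mlp, image=224, channels=3, classes=1000):
    """Approximate parameter count of a ViT with a linear classification head."""
    tokens = (image // patch) ** 2 + 1                 # patches plus class token
    patch_embedding = patch * patch * channels * hidden + hidden
    class_token = hidden
    position_embedding = tokens * hidden
    layer_norm = 2 * hidden                            # scale and shift
    attention = 4 * (hidden * hidden + hidden)         # Q, K, V, and output projections
    feed_forward = hidden * mlp + mlp + mlp * hidden + hidden
    block = 2 * layer_norm + attention + feed_forward
    head = hidden * classes + classes
    return (patch_embedding + class_token + position_embedding
            + layers * block + layer_norm + head)


vitb16 = vit_parameter_count(patch=16, hidden=768, layers=12, mlp=3072)
vitb32 = vit_parameter_count(patch=32, hidden=768, layers=12, mlp=3072)
vitl32 = vit_parameter_count(patch=32, hidden=1024, layers=24, mlp=4096)

for name, count in [("ViTB-16", vitb16), ("ViTB-32", vitb32), ("ViTL-32", vitl32)]:
    print(f"{name}: {count / 1e6:.1f}M parameters")
```

Under these assumptions, the patch size only affects the patch-embedding projection and the number of positional embeddings, while the encoder blocks, which hold most of the parameters, are unchanged between ViTB-16 and ViTB-32. The estimates land close to the rounded figures quoted above.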

3. Results

Performance of the proposed vision transformer-based transfer learning method: The proposed method produced slightly varying results across vision transformer architectures. ViTB-16 showed the best performance, with AUCs of 0.870 ± 0.01, 0.865 ± 0.02, and 0.845 ± 0.02 for ViTB-16, ViTB-32, and ViTL-32, respectively. The detailed results for the different vision transformer-based architectures across the performance metrics are presented in Table 1.

Table 1.

Performance results of vision transformer-based transfer learning models (ViT-TL), vision transformer models trained from scratch (ViT-scratch), and convolutional neural network-based transfer learning models (CNN-TL) approaches.

| Approach | Model | Accuracy | F1 score | Precision | Recall | AUC |
|---|---|---|---|---|---|---|
| ViT-TL | ViTB-16 | 0.854 ± 0.01 | 0.844 ± 0.01 | 0.872 ± 0.01 | 0.826 ± 0.01 | 0.870 ± 0.01 |
| ViT-TL | ViTB-32 | 0.842 ± 0.02 | 0.830 ± 0.03 | 0.840 ± 0.03 | 0.822 ± 0.03 | 0.865 ± 0.02 |
| ViT-TL | ViTL-32 | 0.810 ± 0.02 | 0.796 ± 0.02 | 0.814 ± 0.02 | 0.784 ± 0.02 | 0.845 ± 0.02 |
| ViT-scratch | ViTB-16 | 0.624 ± 0.02 | 0.604 ± 0.03 | 0.618 ± 0.03 | 0.600 ± 0.04 | 0.666 ± 0.01 |
| ViT-scratch | ViTB-32 | 0.622 ± 0.02 | 0.596 ± 0.03 | 0.594 ± 0.03 | 0.584 ± 0.04 | 0.648 ± 0.01 |
| ViT-scratch | ViTL-32 | 0.600 ± 0.02 | 0.590 ± 0.03 | 0.584 ± 0.03 | 0.572 ± 0.04 | 0.644 ± 0.01 |
| CNN-TL | ResNet50 | 0.772 ± 0.02 | 0.756 ± 0.02 | 0.804 ± 0.02 | 0.726 ± 0.03 | 0.785 ± 0.02 |
| CNN-TL | EfficientNetB2 | 0.680 ± 0.07 | 0.608 ± 0.12 | 0.674 ± 0.07 | 0.614 ± 0.10 | 0.744 ± 0.06 |
| CNN-TL | InceptionV3 | 0.804 ± 0.02 | 0.766 ± 0.02 | 0.848 ± 0.02 | 0.722 ± 0.03 | 0.823 ± 0.01 |

Comparison against vision transformers trained from scratch on AFP dataset: The AFP image dataset was used to train vision transformer models from scratch for the classification task. A large drop in performance was observed compared to the proposed method, in which transfer learning is implemented. The vision transformer models trained from scratch on the AFP dataset provided AUCs of 0.666 ± 0.01, 0.648 ± 0.01, and 0.644 ± 0.01 for ViTB-16, ViTB-32, and ViTL-32, respectively, compared to the proposed method's AUCs of 0.870 ± 0.01, 0.865 ± 0.02, and 0.845 ± 0.02 for ViTB-16, ViTB-32, and ViTL-32, respectively. Table 1 presents the detailed performance results of vision transformer models trained from scratch on the AFP dataset. Fig. 5 summarizes the comparison, showing the superiority of transfer learning-based ViTs over ViTs trained from scratch for AFP images.

Fig. 5.

Performance of vision transformer models trained from scratch compared against transfer learning-based vision transformers on AFP dataset.

Comparison against convolutional neural network-based transfer learning: To assess the effectiveness of the proposed vision transformer-based transfer learning, a comparison against CNN-based transfer learning for AFP image classification was performed. The CNN-based transfer learning method provided AUCs of 0.785 ± 0.02, 0.744 ± 0.06, and 0.823 ± 0.01 for ResNet50, EfficientNetB2, and InceptionV3, respectively, compared to the proposed vision transformer-based transfer learning method's AUCs of 0.870 ± 0.01, 0.865 ± 0.02, and 0.845 ± 0.02 for ViTB-16, ViTB-32, and ViTL-32, respectively. Table 1 presents detailed performance results of the CNN-based transfer learning methods on the AFP dataset, and Fig. 6 summarizes the comparison of ViT- and CNN-based transfer learning visually. As can be seen, ViT-based TL is more effective than CNN-based TL for AFP images.

Fig. 6.

Performance of the transfer learning method using different convolutional neural network and vision transformer architectures.

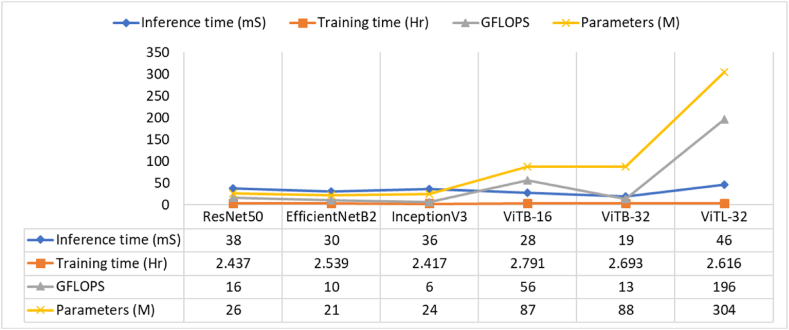

For practical application, it is essential to report the computational cost and inference time of the vision transformer and CNN-based models. Therefore, the models used in this study were evaluated in terms of inference time, training time, and Giga Floating Point Operations Per Second (GFLOPS). Fig. 7 depicts the evaluation results. The comparison of CNN-based models (ResNet50, EfficientNetB2, InceptionV3) with ViTs (ViTB-16, ViTB-32, ViTL-32) highlights differences in computational efficiency and practicality. ViTs, especially ViTB-32 with a fast inference time of 19 ms, show promise in real-time applications, but they generally require higher GFLOPS and have more parameters than CNNs. For example, ViTL-32 has 304 million parameters and 196 GFLOPS, making it computationally expensive, while ViTB-16 (87 million parameters) also demands significant resources with 56 GFLOPS. In contrast, CNN models like EfficientNetB2 (30 ms inference time, 10 GFLOPS) and InceptionV3 (36 million parameters, 36 ms inference time, and 6 GFLOPS) offer a more balanced trade-off between speed and computational cost, making them suitable for environments with limited hardware resources. For practical deployment, the choice between these models depends on the specific application requirements. ViTs might be better for tasks where high accuracy justifies the higher computational load, but CNNs are more efficient for tasks requiring quick inference times with fewer resources. EfficientNetB2 stands out for its parameter efficiency (21M) and low GFLOPS, making it a good fit for lightweight applications. On the other hand, ViTL-32's large parameter count and GFLOPS suggest that it is better suited for performance-critical scenarios where accuracy is prioritized over computational efficiency.

Fig. 7.

Computational cost analysis of the different models used in implementing the proposed approach.

4. Discussion

This work developed a simple transfer learning approach for the early detection of suspected AFP cases from images collected by community volunteers, using state-of-the-art vision transformer architectures. It is the first of its kind to use image-based deep learning analysis for AFP case surveillance. The dataset used in the study is also new: it was collected from the community using mobile phones, which makes this work highly valuable because the data come from the general public. A transfer learning approach is proposed because such community-collected images are rare, and the disease under consideration is itself rare as it is close to eradication. The vision transformer was chosen as the main architecture for the transfer learning approach because of its stronger capability in recognizing global features from images.

The results of the experimental study highlight the superior performance of the vision transformer-based transfer learning method for AFP case image classification. The findings demonstrate that the choice of vision transformer architecture influences classification performance, with ViTB-16 consistently outperforming ViTB-32 and ViTL-32 across the evaluation metrics. In terms of AUC, ViTB-16 achieved the highest average score of 0.870 ± 0.01, followed by ViTB-32 with 0.865 ± 0.02 and ViTL-32 with 0.845 ± 0.02 (Table 1). These results indicate that smaller transformer architectures may be more effective for AFP image classification. Moreover, our detailed analysis of accuracy, F1-score, precision, recall, and AUC further supports the superior performance of ViTB-16 compared with the other architectures.

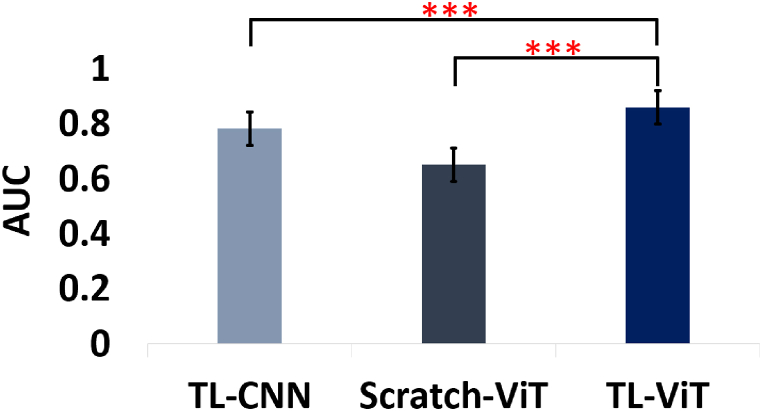
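The AUC values above are reported as an average with a spread (for example, 0.870 ± 0.01). As a minimal sketch of how such a summary can be obtained, the code below computes AUC as the probability that a randomly chosen positive image is scored higher than a randomly chosen negative one, then summarizes several runs as a mean and a sample standard deviation. The function names and all numbers in the example are hypothetical placeholders, not data from this study, and the use of the sample standard deviation across runs is an assumption.

```python
from statistics import mean, stdev


def roc_auc(labels, scores):
    """AUC via pairwise comparison of positive and negative scores.

    labels: iterable of 0/1 ground-truth labels.
    scores: iterable of predicted scores for the positive class.
    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def summarize(run_aucs):
    """Return (mean, sample standard deviation) of per-run AUC values."""
    return mean(run_aucs), stdev(run_aucs)


if __name__ == "__main__":
    # Placeholder labels and scores for one hypothetical validation fold.
    fold_labels = [0, 0, 1, 1, 1, 0]
    fold_scores = [0.2, 0.6, 0.7, 0.9, 0.4, 0.1]
    print("fold AUC:", roc_auc(fold_labels, fold_scores))

    # Placeholder per-fold AUCs (hypothetical values only).
    avg, sd = summarize([0.81, 0.84, 0.79, 0.83, 0.82])
    print(f"AUC = {avg:.3f} ± {sd:.3f}")
```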

Furthermore, we compared the performance of the proposed vision transformer-based transfer learning approach with vision transformers trained from scratch on the AFP image dataset used for this study and with CNN-based transfer learning methods. The comparison revealed a substantial performance drop for vision transformers trained from scratch, with AUC scores ranging from 0.644 to 0.666, compared with 0.845 to 0.870 for the proposed vision transformer-based transfer learning method. This stark contrast underscores the effectiveness of transfer learning in leveraging pre-trained vision transformer models for AFP image classification. Additionally, when compared with CNN-based transfer learning methods using popular architectures such as ResNet50, EfficientNetB2, and InceptionV3, the proposed approach consistently achieved superior AUC scores. This suggests that vision transformers are better suited than state-of-the-art CNN architectures for transfer learning in AFP image classification. Fig. 8 summarizes the comparison of the proposed vision transformer-based transfer learning against vision transformer models trained from scratch and the state-of-the-art CNN-based transfer learning models. The evaluation of statistical significance, based on P-values for the average AUC results, indicates the superior performance of the proposed method over the others. For this evaluation, the AUC results of the three vision transformer-based transfer learning architectures used in this study (ViTB-16, ViTB-32, and ViTL-32) were averaged and compared against the average of the three vision transformer models trained from scratch (ViTB-16, ViTB-32, and ViTL-32) and the average of the three CNN architectures (ResNet50, EfficientNetB2, and InceptionV3) to determine whether the performance improvement brought about by vision transformer-based transfer learning is statistically significant. The proposed method showed a statistically significant improvement (P < 0.001).

Fig. 8.

Comparison of the statistical significance of the proposed method in terms of area under the receiver operating characteristic (ROC) curve (AUC). ∗∗∗ represents a statistical significance value of P < 0.001. TL-CNN, convolutional neural network-based transfer learning; TL-ViT, vision transformer-based transfer learning; Scratch-ViT, vision transformer model trained from scratch. Error bars represent the standard deviation of AUCs.
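This section does not state which statistical test produced the P-values. As one possible approach, and purely as an assumption, the sketch below runs an exact two-sided permutation test on the difference in mean AUC between two groups of runs. `permutation_p_value` and the AUC lists are hypothetical placeholders rather than the study's data.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test on the difference of group means.

    Every way of splitting the pooled values into groups of the original
    sizes is enumerated; the p-value is the fraction of splits whose
    absolute mean difference is at least as large as the observed one.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a == 0 or n_b == 0:
        raise ValueError("both groups must be non-empty")

    pooled = list(group_a) + list(group_b)
    total = sum(pooled)
    observed = abs(mean(group_a) - mean(group_b))

    extreme = 0
    splits = 0
    for idx in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in idx)
        diff = abs(sum_a / n_a - (total - sum_a) / n_b)
        # Small tolerance so floating-point noise does not hide exact ties.
        if diff >= observed - 1e-12:
            extreme += 1
        splits += 1
    return extreme / splits


if __name__ == "__main__":
    # Hypothetical per-run AUCs for two methods (placeholders only).
    transfer_learning = [0.86, 0.87, 0.85, 0.88]
    from_scratch = [0.65, 0.66, 0.64, 0.67]
    print("p-value:", permutation_p_value(transfer_learning, from_scratch))
```

The smallest p-value this test can return is limited by the number of runs per group, so a result below 0.001 is only reachable when each group contains enough individual AUC values (for example, per-fold results rather than a single value per architecture).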

This work not only addresses a critical gap in rare disease surveillance but also contributes to the broader goal of strengthening healthcare systems in low-resource settings. By bridging the gap between community-based reporting and health facility/system response, this study offers a scalable and sustainable solution for enhancing disease surveillance and control. Moreover, the establishment of a dedicated platform for data storage and analysis ensures the preservation of valuable information for future learning and preparedness, facilitating more effective responses to public health threats. Overall, this work has the potential to significantly improve AFP surveillance and contribute to the broader efforts in global health security and disease eradication.

This study is limited by the quality of the image data collected. The images were taken by community workers with little knowledge of how to capture pictures suitable for machine learning. Moreover, the quality of the devices used to take the pictures was low compared with the devices used to collect publicly available images for general machine learning use. Future work should focus on improving the quality of the collected image data, both by improving how community volunteers take pictures and by using better mobile devices, as device quality may affect model performance.

CRediT authorship contribution statement

Gelan Ayana: Writing – review & editing, Writing – original draft, Visualization, Validation, Supervision, Software, Resources, Project administration, Methodology, Investigation, Funding acquisition, Formal analysis, Data curation, Conceptualization. Kokeb Dese: Writing – review & editing, Writing – original draft, Visualization, Validation, Supervision, Software, Resources, Project administration, Methodology, Investigation, Funding acquisition, Formal analysis, Data curation, Conceptualization. Hundessa Daba Nemomssa: Writing – review & editing, Writing – original draft, Visualization, Validation, Supervision, Software, Resources, Project administration, Methodology, Investigation, Funding acquisition, Formal analysis, Data curation. Hamdia Murad: Writing – review & editing, Methodology, Formal analysis, Data curation. Efrem Wakjira: Writing – review & editing, Methodology, Formal analysis, Data curation. Gashaw Demlew: Writing – review & editing, Methodology, Formal analysis, Data curation. Dessalew Yohannes: Writing – review & editing, Methodology, Formal analysis, Data curation. Ketema Lemma Abdi: Writing – review & editing, Methodology, Formal analysis, Data curation. Elbetel Taye: Writing – review & editing, Methodology, Formal analysis, Data curation. Filimona Bisrat: Writing – review & editing, Visualization, Methodology, Formal analysis, Data curation. Tenager Tadesse: Writing – review & editing, Visualization, Methodology, Formal analysis, Data curation. Legesse Kidanne: Writing – review & editing, Visualization, Methodology, Formal analysis, Data curation. Se-woon Choe: Writing – review & editing, Resources, Methodology, Formal analysis, Data curation. Netsanet Workneh Gidi: Writing – review & editing, Methodology, Formal analysis, Data curation. Bontu Habtamu: Writing – review & editing, Methodology, Formal analysis, Data curation. Jude Kong: Writing – review & editing, Writing – original draft, Visualization, Validation, Supervision, Software, Resources, Project administration, Methodology, Investigation, Funding acquisition, Formal analysis, Data curation, Conceptualization.

Informed consent statement

Informed consent was obtained from all subjects involved in the study.

Institutional Review Board statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of Jimma University Institute of Health (protocol code JUIH/IRB/581/23 and date of approval, July 22, 2023).

Data availability statement

Image data is unavailable due to privacy or ethical restrictions.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

This work was supported by Canada's International Development Research Centre (IDRC) (Grant No. 109981-001). We acknowledge support from IDRC and the UK's Foreign, Commonwealth and Development Office (FCDO) (Grant No. 110554-001). JK also acknowledges support from the NSERC Discovery Grant (Grant No. RGPIN-2022-04559), the NSERC Discovery Launch Supplement (Grant No. DGECR-2022-00454), and the New Frontier in Research Fund-Exploratory (Grant No. NFRFE-2021-00879).

Handling Editor: Dr Daihai He

Contributor Information

Gelan Ayana, Email: gelan.ayana@ju.edu.et.

Jude Kong, Email: jude.kong@utoronto.ca.

References

- Ahmed S., Chase L.E., Wagnild J., Akhter N., Sturridge S., Clarke A., Chowdhary P., Mukami D., Kasim A., Hampshire K. Community health workers and health equity in low- and middle-income countries: Systematic review and recommendations for policy and practice. International Journal for Equity in Health. 2022;21(1):49. doi: 10.1186/s12939-021-01615-y.

- Ali A.S.M.A., Allzain H., Ahmed O.M., Mahgoub E., Bashir M.B.M., Gorish B.M.T. Evaluation of acute flaccid paralysis surveillance system in the River Nile State - northern Sudan, 2021. BMC Public Health. 2023;23(1):125. doi: 10.1186/s12889-023-15019-w.

- Asegedew B., Tessema F., Perry H.B., Bisrat F. The CORE Group polio project's community volunteers and polio eradication in Ethiopia: Self-reports of their activities, knowledge, and contributions. The American Journal of Tropical Medicine and Hygiene. 2019;101(4_Suppl):45–51. doi: 10.4269/ajtmh.18-1000.

- Ayana G., Dese K., Abagaro A.M., Jeong K.C., Yoon S.-D., Choe S. Multistage transfer learning for medical images. Artificial Intelligence Review. 2024;57(9):232. doi: 10.1007/s10462-024-10855-7.

- Ayana G., Dese K., Choe S. Transfer learning in breast cancer diagnoses via ultrasound imaging. Cancers. 2021;13(4):738. doi: 10.3390/cancers13040738.

- Ayana G., Dese K., Daba H., Mellado B., Badu K., Yamba E.I., Faye S.L., Ondua M., Nsagha D., Nkweteyim D., Kong J.D. Decolonizing global AI governance: Assessment of the state of decolonized AI governance in Sub-Saharan Africa. SSRN Electronic Journal. 2023. doi: 10.2139/ssrn.4652444.

- Ayana G., Dese K., Daba H., Wakjira E., Demlew G., Yohannes D., Lemma K., Murad H., Zewde E.T., Habtamu B., Bisrat F., Tadesse T., Gidi N.W., Kong J.D. Deep learning for improved community-based acute flaccid paralysis surveillance in Ethiopia. SSRN Electronic Journal. 2024. doi: 10.2139/ssrn.4750946.

- Bessing B., Dagoe E.A., Tembo D., Mwangombe A., Kanyanga M.K., Manneh F., Matapo B.B., Bobo P.M., Chipoya M., Eboh V.A., Kayeye P.L., Masumbu P.K., Muzongwe C., Bakyaita N.N., Zomahoun D., Tuma J.N. Evaluation of the acute flaccid paralysis surveillance indicators in Zambia from 2015–2021: A retrospective analysis. BMC Public Health. 2023;23(1):2227. doi: 10.1186/s12889-023-17141-1.

- Biru G., Gemechu H., Gebremeskel E., Daba H., Dese K., Wakjira E., Demlew G., Yohannes D., Lemma K., Murad H., Zewde E.T., Habtamu B., Tefera M., Alayu M., Gidi N.W., Bisrat F., Tadesse T., Kidanne L., Choe S.…Ayana G. Community-based surveillance of acute flaccid paralysis: A review on detection and reporting strategy. 2024.

- Daba H., Dese K., Wakjira E., Demlew G. Epidemiological trends and clinical features of polio in Ethiopia: A preliminary pooled data analysis and literature review. 2024. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4926664

- Datta S.S., Ropa B., Sui G.P., Khattar R., Krishnan R.S.S.G., Okayasu H. Using short-message-service notification as a method to improve acute flaccid paralysis surveillance in Papua New Guinea. BMC Public Health. 2016;16(1):409. doi: 10.1186/s12889-016-3062-5.

- Demlew G., Tefera M., Dese K., Daba H., Wakjira E., Yohannes D., Lemma K., Murad H., Zewde E.T., Habtamu B., Alayu M., Gidi N.W., Choe S., Kong J.D., Ayana G. Insights and implications from the analysis of acute flaccid paralysis surveillance data in Ethiopia. 2024.

- Deressa W., Kayembe P., Neel A.H., Mafuta E., Seme A., Alonge O. Lessons learned from the polio eradication initiative in the democratic republic of Congo and Ethiopia: Analysis of implementation barriers and strategies. BMC Public Health. 2020;20(S4):1807. doi: 10.1186/s12889-020-09879-9.

- Dese K., Daba H., Wakjira E., Demlew G., Yohannes D., Lemma K., Murad H., Zewde E.T., Habtamu B., Tefera M., Alayu M., Gidi N.W., Bisrat F., Tadesse T., Kidanne L., Choe S., Kong J.D., Ayana G. Leveraging artificial intelligence for acute flaccid paralysis surveillance: A survey. 2024.

- Donelle L., Comer L., Hiebert B., Hall J., Shelley J.J., Smith M.J., Kothari A., Burkell J., Stranges S., Cooke T., Shelley J.M., Gilliland J., Ngole M., Facca D. Use of digital technologies for public health surveillance during the COVID-19 pandemic: A scoping review. Digital Health. 2023;9. doi: 10.1177/20552076231173220.

- Dosovitskiy A., Beyer L., Kolesnikov A., Weissenborn D., Zhai X., Unterthiner T., Dehghani M., Minderer M., Heigold G., Gelly S., Uszkoreit J., Houlsby N. An image is worth 16x16 words: Transformers for image recognition at scale. 2020. http://arxiv.org/abs/2010.11929

- Draz U., Ali T., Yasin S., Waqas U., Ahmad W., Qazi A.S., Gul S. A symptoms motif detection of polio at early stages by using data visual analytics: Polio free Pakistan. 2020 17th International Bhurban Conference on Applied Sciences and Technology (IBCAST). 2020.

- Gemechu H., Biru G., Gebremeskel E., Daba H., Dese K., Wakjira E., Demlew G., Yohannes D., Lemma K., Murad H., Zewde E.T., Habtamu B., Tefera M., Alayu M., Gidi N.W., Bisrat F., Tadesse T., Kidanne L., Choe S., Ayana G. A survey on acute flaccid paralysis health system-based surveillance. 2024.

- Gwinji P.T., Musuka G., Murewanhema G., Moyo P., Moyo E., Dzinamarira T. The re-emergence of wild poliovirus type 1 in Africa and the role of environmental surveillance in interrupting poliovirus transmission. IJID Regions. 2022;5:180–182. doi: 10.1016/j.ijregi.2022.11.001.

- Hemedan A.A., Abd Elaziz M., Jiao P., Alavi A.H., Bahgat M., Ostaszewski M., Schneider R., Ghazy H.A., Ewees A.A., Lu S. Prediction of the vaccine-derived poliovirus outbreak incidence: A hybrid machine learning approach. Scientific Reports. 2020;10(1):1–13. doi: 10.1038/s41598-020-61853-y.

- Ibrahim M., Abdelmagid N., AbuKoura R., Khogali A., Osama T., Ahmed A., Alabdeen I.Z., Ahmed S.A.E., Dahab M. Finding the fragments: Community-based epidemic surveillance in Sudan. Global Health Research and Policy. 2023;8(1):20. doi: 10.1186/s41256-023-00300-7.

- Jamison D., Breman J., Measham A., Alleyne G., Claeson M., Evans D., Jha P., Mills A., Musgrove P. Disease control priorities in developing countries. 2nd ed. Oxford University Press; 2006.

- Khan S., Azam F., Anwar M.W., Rasheed Y., Saleem M., Ejaz N. A novel data mining approach for detection of polio disease using spatio-temporal analysis. Proceedings of the 2020 6th International Conference on Computing and Artificial Intelligence. 2020.

- Lewis J., LeBan K., Solomon R., Bisrat F., Usman S., Arale A. The critical role and evaluation of community mobilizers in polio eradication in remote settings in Africa and Asia. Global Health Science and Practice. 2020;8(3):396–412. doi: 10.9745/GHSP-D-20-00024.

- Masa-Calles J., Torner N., López-Perea N., Torres de Mier M. de V., Fernández-Martínez B., Cabrerizo M., Gallardo-García V., Malo C., Margolles M., Portell M., Abadía N., Blasco A., García-Hernández S., Marcos H., Rabella N., Marín C., Fuentes A., Losada I., Gutiérrez J.G.…Castrillejo D. Acute flaccid paralysis (AFP) surveillance: Challenges and opportunities from 18 years' experience, Spain, 1998 to 2015. Euro Surveillance. 2018;23(47). doi: 10.2807/1560-7917.ES.2018.23.47.1700423.

- Mohammed A., Tomori O., Nkengasong J.N. Lessons from the elimination of poliomyelitis in Africa. Nature Reviews Immunology. 2021;21(12):823–828. doi: 10.1038/s41577-021-00640-w.

- Perry H.B., Solomon R., Bisrat F., Hilmi L., Stamidis K.V., Steinglass R., Weiss W., Losey L., Ogden E. Lessons learned from the CORE Group polio Project and their relevance for other global health priorities. The American Journal of Tropical Medicine and Hygiene. 2019;101(4_Suppl):107–112. doi: 10.4269/ajtmh.19-0036.

- Quarleri J. Poliomyelitis is a current challenge: Long-term sequelae and circulating vaccine-derived poliovirus. GeroScience. 2023;45(2):707–717. doi: 10.1007/s11357-022-00672-7.

- Raghu M., Unterthiner T., Kornblith S., Zhang C., Dosovitskiy A. Do vision transformers see like convolutional neural networks? NeurIPS. 2021. http://arxiv.org/abs/2108.08810

- Stamidis K.V., Bologna L., Bisrat F., Tadesse T., Tessema F., Kang E. Trust, communication, and community networks: How the CORE Group polio Project community volunteers led the fight against polio in Ethiopia's most at-risk areas. The American Journal of Tropical Medicine and Hygiene. 2019;101(4_Suppl):59–67. doi: 10.4269/ajtmh.19-0038.

- Tegegne A.A., Braka F., Shebeshi M.E., Aregay A.K., Beyene B., Mersha A.M., Ademe M., Muhyadin A., Jima D., Wyessa A.B. Characteristics of wild polio virus outbreak investigation and response in Ethiopia in 2013–2014: Implications for prevention of outbreaks due to importations. BMC Infectious Diseases. 2018;18(1):9. doi: 10.1186/s12879-017-2904-9.

- Tegegne A.A., Fiona B., Shebeshi M.E., Hailemariam F.T., Aregay A.K., Beyene B., Asemahgne E.W., Woyessa D.J., Woyessa A.B. Analysis of acute flaccid paralysis surveillance in Ethiopia, 2005-2015: Progress and challenges. Pan African Medical Journal. 2017;27. doi: 10.11604/pamj.supp.2017.27.2.10694.

- Tougui I., Jilbab A., Mhamdi J. El. Impact of the choice of cross-validation techniques on the results of machine learning-based diagnostic applications. Healthcare Informatics Research. 2021;27(3):189–199. doi: 10.4258/HIR.2021.27.3.189.

- Worsley-Tonks K.E.L., Bender J.B., Deem S.L., Ferguson A.W., Fèvre E.M., Martins D.J., Muloi D.M., Murray S., Mutinda M., Ogada D., Omondi G.P., Prasad S., Wild H., Zimmerman D.M., Hassell J.M. Strengthening global health security by improving disease surveillance in remote rural areas of low-income and middle-income countries. Lancet Global Health. 2022;10(4):e579–e584. doi: 10.1016/S2214-109X(22)00031-6.
