Abstract

Introduction

Ultrasound is an important tool in cardiac diagnostics, but performing cardiac ultrasound effectively requires training. While current strategies incorporate digital learning and ultrasound simulators, the effectiveness of these simulators for learning remains uncertain. This study evaluates the effectiveness of simulator-based versus human-based training in Focus Assessed Transthoracic Echocardiography (FATE).

Materials and methods

This single-centre, prospective, randomised controlled study was conducted during an extracurricular FATE workshop (approximately 420 min) for third-year medical students. Participants were randomly assigned to the study group (training solely on simulators) or the control group (training on human subjects). Both groups completed a theory test and a self-assessment questionnaire before the course (T1) and at the end of the training (T2). At T2, all participants also completed two Direct Observation of Procedural Skills (DOPS) tests—one on the simulator (DOPSSim) and one on humans (DOPSHuman).

Results

Data from 128 participants were analysed (n = 63 study group; n = 65 control group). Both groups exhibited increased competency between the T1 and T2 self-assessments and theory tests (p < 0.01). In the DOPSHuman assessment at T2, the control group performed significantly better (p < 0.001) than the study group. While motivation remained consistently high among both groups, the study group rated their “personal overall learning experience” and the “realistic nature of the training” significantly worse than the control group (p < 0.0001). Both groups supported the use of ultrasound simulators as a “supplement to human training” (study: 1.6 ± 1.1 vs. control: 1.7 ± 1.2; p = 0.38), but not as a “replacement for human training” (study: 5.0 ± 2.3 vs. control: 5.4 ± 2.1; p = 0.37).

Conclusion

Both simulator- and human-based training effectively developed theoretical and practical skills in FATE. However, the simulator group demonstrated significantly poorer performance when applying their skills to human subjects, indicating limitations in the transferability of this simulator-based training to real-life patient care. These limitations of simulator-based ultrasound training should be considered in future training concepts.

Clinical trial number

Not Applicable.

Supplementary Information

The online version contains supplementary material available at 10.1186/s12909-024-06564-y.

Keywords: Simulation-based training, High-Fidelity Simulation, Ultrasound education, FATE, FOCUS, Randomized controlled trials

Introduction

Examination of the heart using ultrasound is an important diagnostic method in cardiology [1]. Cardiac ultrasound is recommended in nearly all cardiac guidelines due to its ready availability, speed of implementation, and cost-effectiveness [1]. Nevertheless, achieving standardised and diagnostically reliable echocardiographic imaging can be challenging [2], requiring thorough training to ensure safety, accurate technique, and proper documentation [3, 4]. Basic skills are initially developed in training courses and reinforced through numerous supervised echocardiographic examinations, as outlined in the certification requirements of various professional societies [5].

Recently, specialised training concepts for students have been introduced at universities as part of medical degree programs [6–12], allowing students to acquire basic skills in performing echocardiography or focused sonography of the heart (FOCUS) at an early stage [8]. This reflects the advice of international professional societies, which advocate early training of students in ultrasound diagnostics and provide guidelines for its integration into education [13].

The COVID-19 pandemic, with its social distancing measures, further increased the demand for innovative, digitally supported teaching methods such as blended learning and simulator-based training [5, 14–25]. A wide range of ultrasound simulators is now commercially available. They vary in technical implementation, diagnostic application area, and the type of simulated image displayed on the screen, ranging from real CT and ultrasound images of patients to computer-generated images [21, 26].

Ultrasound simulators are frequently evaluated for their usefulness and applicability in training [7, 23, 27–34], particularly as an alternative or supplement to practising on live subjects [7, 14, 15, 17, 22, 23, 35–43]. Simulator-based training has shown benefits, particularly in improving diagnostic accuracy during patient examinations [28, 30].

Although there is evidence that theoretical and practical skills transfer from simulators to real patient care [44–47], larger randomised trials directly comparing simulator-based with live-subject training for echocardiography skill transfer among medical students are lacking, and current results vary considerably [23, 30, 42, 48]. This heterogeneity extends to studies of simulation-based echocardiography training more broadly [7, 14, 36–38, 42, 49–51]. This study addresses these gaps by investigating how theoretical and practical skills in Focus Assessed Transthoracic Echo (FATE) develop through simulator-based training compared with training on live subjects, and how effectively these skills translate to real patient examinations.

The goal of this study is to better understand differences in training effectiveness in echocardiography and, ultimately, to improve ultrasound education. In this randomised, prospective study, we hypothesised that students’ ability to apply their training to real patients differs depending on whether they were trained on simulators or on live subjects.

Materials and methods

Study design

This single-centre, prospective randomised controlled study (Fig. 1) was designed and conducted at a university hospital in 2022 [52, 53].

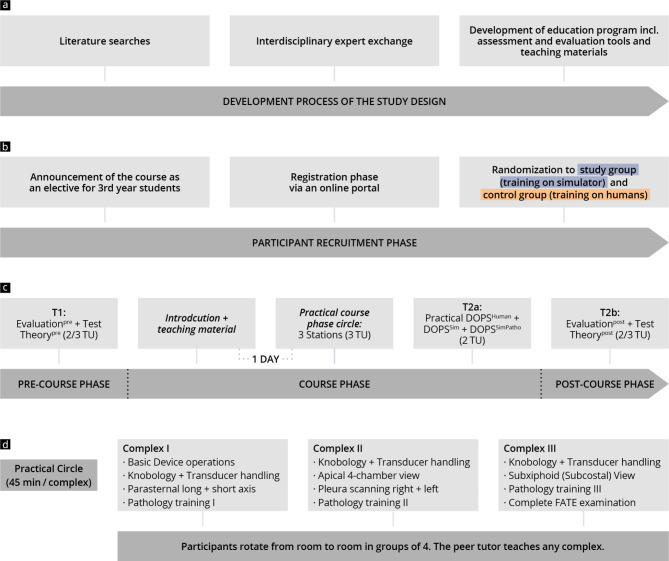

Fig. 1.

Chronological presentation of the study design and course concept, including data collection times (T1, T2a, and T2b). (a) The development process of the course design and content; (b) recruitment; (c) course concept; (d) course modules including learning objectives

This study used a validated ultrasound simulator (Vimedix, CAE Healthcare, Sarasota, Florida, US), approved by experts [54], in voluntary extracurricular workshops teaching the FATE protocol [55], and compared it with traditional training on live human subjects. The third year of the undergraduate medical degree programme corresponds to the first year of clinical training. Only third-year students were included to ensure that participants had no prior experience in echocardiography. Students were invited to participate via an official announcement distributed to a mailing list by the Dean’s Office. Volunteers registered for the workshops via an online portal.

After the enrolment deadline, participants were randomly assigned to either the study group (training on the simulator) or the control group (training on human subjects). Assessments, including self-evaluations (Evaluationpre, Evaluationpost), theory tests (Theorypre, Theorypost), and practical exams (DOPSSim, DOPSSimPatho, DOPSHuman), were conducted before (T1) and after the training (T2a and T2b) [56, 57]. Inclusion criteria required participants to have completed the first state examination, to have given informed consent to participate in the study, and to have attended the introductory event, workshop, and examinations in full.

The primary endpoints of the study are threefold: an improvement in competency, as measured by theory tests; an improved practical skill level, as assessed by a practical FATE examination on either a simulator or live subject; and an ability to detect pathology. Secondary endpoints include a subjective increase in competency and motivation; the subjective achievement of the defined learning objectives; and an evaluation of the training concept.

Course concept and learning objectives

A FATE-specific workshop [55] was developed based on a training concept for focused sonography of the heart (FOCUS) [8] utilizing cross-sections in transthoracic echocardiography [58], as proposed by the World Interactive Network Focused on Critical Ultrasound (WINFOCUS). The 360-minute workshop included an introductory session in plenary (90 min), practical exercises in small groups with practical tests (225 min), and a final plenary session (45 min). The learning objectives and the developed module sequence are presented in Fig. 1 and Supplement 1.

During the introductory session, held one day before the practical part of the workshop, participants completed a theory test (Theorypre) and an initial self-evaluation (Evaluationpre). This was followed by a brief guided tour of the FATE protocol in a live pre-workshop session, and a study poster was distributed. During the practical exercises, participants rotated through three stations (135 min in total), each focusing on orientation in cross-sections and pathology training. At the stations, the control group was shown pre-recorded real ultrasound clips of pathologies, while the study group practised pathology case scenarios on the simulator. To ensure that the learning objectives were met uniformly, detailed instructions and station tasks for small-group teaching were prepared in advance for each station (see Supplements 2 and 3).

At the end of the workshop, all participants completed the same Direct Observation of Procedural Skills tests (90 min) on both the simulator (DOPSSim, DOPSSimPatho) and real people (DOPSHuman). They then took the theory post-test (Theorypost) and the self-evaluation (Evaluationpost) in plenary (45 min). The control group completed the tests on humans first and then on the simulator; the study group did the reverse.

A total of six workshops were held, each with six stations (three for the control group and three for the study group) running in parallel. Each station had a group of four students supervised by one tutor.

Tutors and equipment

A total of ten didactically and professionally trained peer tutors [8] (i.e., students from clinical semesters) taught the participants during the workshop under the supervision of two consultants. All tutors underwent systematic training on the simulator to master its operating functions.

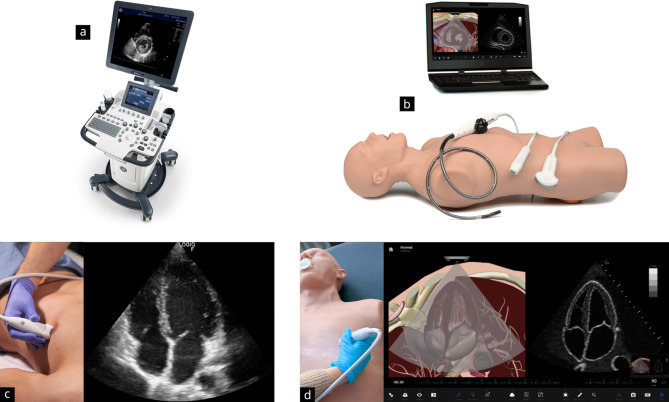

A total of three ultrasound devices from GE HealthCare (GE F8; General Electric Company, Boston) and three simulators (Vimedix, CAE Healthcare, Sarasota, Florida, US) were used (see Fig. 2). The ultrasound simulators have a life-sized sector transducer that can be applied realistically to a human torso model. The system provides a wide range of cardiology training sets and simulates an array of pathologies (see Supplement 4). In addition to displaying an anatomical cross-sectional image via animation, the monitor also projects an animated ultrasound image. Various measurement tools, Doppler functions, and other device functions can be used realistically. Additionally, transoesophageal echocardiography can be practised by changing the ultrasound probe.

Fig. 2.

Presentation of the training equipment used in the course. The control group trained on the ultrasound system GE F8 by General Electric Company, Boston (Fig. 2a and c), and the study group trained on the ultrasound simulator Vimedix by CAE Healthcare, Sarasota, Florida, US (Fig. 2b and d)

Assessments

The test design and evaluation instruments used are based on the consensus of ultrasound experts, instructors, and current professional recommendations [56–59].

Questionnaires

Evaluationpre and Evaluationpost (approximately 5 min each) covered multiple items on the following topics: “personal data”, “previous experience”, “simulator usage”, “motivation/expectations”, “learning goals”, “subjective competency assessment”, “course evaluation”, “teaching material”, and “tutor evaluation”. Answers were recorded on a seven-point Likert scale (1 = strongly agree; 7 = strongly disagree), as dichotomous responses (“yes”/“no”), or as free text.

Pre- and post-test

The theory test (max. 74 points in Theorypre and max. 83 points in Theorypost) comprised the competency areas “anatomy” (max. 11 points); “basic principles” (max. 14 points); “normal findings = section assignments” (max. 10 points); “section labelling = normal findings or structure recognition in orientation sections” (50 points); and “pathology (recognition)” (max. 9 points, exclusively at T2), each derived from the learning objectives. The test used labelling, fill-in-the-blank, and multiple-choice questions [56, 60] (see Supplement 5 for example questions). The processing time per test was 25 min.

Practical tests (DOPS)

Practical skills were assessed by DOPS adapted from previous studies [61]. The DOPS tests, with a processing time of 10 min each, were carried out on the simulator (DOPSSim, max. 78 points) and on human test subjects (DOPSHuman, max. 78 points). A total of six standard cross-sections of the FATE protocol [55] were tested as part of a case study (see Supplement 6). The test subjects were volunteer students, all of whom had a similar BMI.

The competency areas “communication” (max. 8 points); “transducer handling/device operation/patient guidance” (max. 14 points); “examination procedure FATE 1–6” (max. 36 points); “image explanation FATE 1–6” (max. 12 points); and “overall performance” (max. 8 points) were assessed in the tests.

Subsequently, four case scenarios were completed on the simulator in the DOPSSimPatho (max. 48 points in total; total processing time 10 min) to assess pathology recognition (see Supplement 7). In each case, the competency areas “examination procedure or skill” (max. 2 points), “pathology recognition” (max. 2 points), and “overall impression” (max. 8 points) were assessed.
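The point budgets of the practical tests can be checked with simple arithmetic: 8 + 14 + 36 + 12 + 8 = 78 points per DOPS, and 4 × (2 + 2 + 8) = 48 points for the DOPSSimPatho. The short Python sketch below encodes these maxima as listed in the text; the dictionary layout and the helper `percent_of_max` are illustrative assumptions, not part of the study materials. Expressing a raw score as a percentage of the attainable maximum makes results on the 78-point and 48-point scales easier to compare.

```python
# Maximum points per competency area in DOPSSim and DOPSHuman, as listed above.
DOPS_MAX = {
    "communication": 8,
    "transducer handling/device operation/patient guidance": 14,
    "examination procedure FATE 1-6": 36,
    "image explanation FATE 1-6": 12,
    "overall performance": 8,
}
# Each of the four DOPSSimPatho cases is scored on the same three areas.
PATHO_CASE_MAX = {
    "examination procedure or skill": 2,
    "pathology recognition": 2,
    "overall impression": 8,
}
N_PATHO_CASES = 4

dops_total = sum(DOPS_MAX.values())                          # 78
patho_total = N_PATHO_CASES * sum(PATHO_CASE_MAX.values())   # 48


def percent_of_max(score, max_score):
    """Express a raw score as a percentage of the attainable maximum."""
    return 100 * score / max_score


print(dops_total, patho_total)
# A DOPSSim score of 64 points (the group means reported in the Results):
print(round(percent_of_max(64, dops_total), 1))  # 82.1
```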

Statistics

Data from the evaluations and from the theoretical and practical assessments were processed manually in Microsoft Excel and then analysed in RStudio (RStudio Team [2020]. RStudio: Integrated Development for R. RStudio, PBC, http://www.rstudio.com, last accessed 09 02 2024) with R 4.0.3 (A Language and Environment for Statistical Computing, R Foundation for Statistical Computing, http://www.R-project.org; last accessed 09 02 2024). Binary and categorical baseline parameters are expressed as absolute numbers and percentages. Continuous data are expressed as median and interquartile range (IQR) or mean and standard deviation (SD). Categorical parameters were compared using the chi-squared test and continuous parameters using the Mann-Whitney test. In addition, pairwise correlations between metric variables were calculated, together with their effect sizes and significance, for each group separately. The Fisher z-transformation was then used to compare correlations between the two groups. Finally, a multivariable linear regression model was used to assess the influence of individual factors (“participation in an abdominal ultrasound course”, “already had contact with simulator-based training”, “already had contact with ultrasound simulators”, “number of independent sonographic examinations”, “number of independent echocardiographies”, and membership of the control group). P-values < 0.05 were considered statistically significant. A power analysis was conducted to determine the sample size required to detect a statistically significant effect. Based on an expected effect size of 0.6, a significance level of 0.05, and a desired power of 0.90, the required sample size was set at 120 participants.
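The stated sample size can be reproduced approximately. The text does not name the test behind the power analysis, so the sketch below assumes that the effect size of 0.6 is Cohen’s d for a two-sided comparison of two equally sized groups. It uses the normal approximation n = 2(z₁₋α/₂ + z₁₋β)² / d² per group plus the common small-sample correction z₁₋α/₂² / 4, which brings the result closer to a t-test calculation. The function name `n_per_group` is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.90):
    """Approximate per-group sample size for a two-sided, two-sample
    comparison with equal group sizes and standardised effect size d.

    Assumption: d is Cohen's d; the z_alpha**2 / 4 term is a common
    small-sample correction to the normal approximation.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = std_normal.inv_cdf(power)           # about 1.28 for power = 0.90
    n = 2 * (z_alpha + z_beta) ** 2 / d ** 2 + z_alpha ** 2 / 4
    return math.ceil(n)  # round up: participants come in whole numbers


per_group = n_per_group(0.6)
print(per_group, 2 * per_group)  # 60 per group, 120 in total
```

Under these assumptions the formula gives about 59.3, rounded up to 60 per group, i.e. 120 participants in total, which is consistent with the reported target.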

Results

Baseline

A total of 128 students were included in the study, with 63 in the study group and 65 in the control group (see Supplement 8). The baseline characteristics of both groups were similar (see Table 1), with both having almost equivalent demographic characteristics and prior training profiles. Both groups had a similar average age (study: 24 ± 4 years vs. control: 25 ± 4 years), were mainly female (study: 67% vs. control: 62%), and most had not previously used ultrasound simulators (study: 97% vs. control: 91%). Most had already taken an abdominal sonography course (study: 57% vs. control: 57%) but had not performed any independent echocardiograms (study: 95% vs. control: 85%; p = 0.02).

Table 1.

Group statistics at baseline

| Item | Parameter | Control group | Study group | p-value |
|---|---|---|---|---|
| Age | Years (mean ± SD) | 25 ± 4 | 24 ± 4 | 0.19 |
| Sex | Male (n) | 25 | 21 | 0.67 |
| | Female (n) | 40 | 42 | |
| Prior education | Yes (n) | 36 | 24 | 0.07 |
| | No (n) | 29 | 39 | |
| University radiology course | n | 1 | 3 | 0.59 |
| Course in abdominal sonography | n | 37 | 36 | 1.0 |
| Number of observed sonographic examinations | None | 6 | 5 | 0.37 |
| | ≤ 5 | 1 | 0 | |
| | < 20 | 41 | 49 | |
| | 20–40 | 9 | 5 | |
| | ≥ 40 | 8 | 4 | |
| Number of independent sonographic examinations | None | 12 | 9 | 0.62 |
| | < 20 | 51 | 52 | |
| | 20–40 | 1 | 2 | |
| | ≥ 40 | 1 | 0 | |
| Number of independent echocardiographies | None | 55 | 60 | 0.02 |
| | < 20 | 9 | 1 | |
| | 20–40 | 1 | 0 | |
| Prior simulator training | n | 28 | 33 | 0.38 |
| Prior use of ultrasound simulator | n | 6 | 2 | 0.29 |
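Categorical baseline parameters were compared with the chi-squared test. As a sketch, assuming Pearson’s chi-squared test with Yates’ continuity correction (the correction R’s `chisq.test()` applies to 2 × 2 tables by default), the binary rows of Table 1 can be re-derived from the published counts alone. The “no” cells are obtained by subtracting the “yes” counts from the group sizes of 65 (control) and 63 (study). The function name `chisq_2x2_yates` is hypothetical.

```python
import math


def chisq_2x2_yates(a, b, c, d):
    """Pearson chi-squared test with Yates' continuity correction for the
    2 x 2 table [[a, b], [c, d]] (rows = groups, columns = categories).

    The 0.5 correction is capped at |O - E| (as in R's chisq.test) so that
    the statistic can never become negative.
    Returns (statistic, p-value with 1 degree of freedom).
    """
    observed = [[a, b], [c, d]]
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    expected = [[row_totals[i] * col_totals[j] / n for j in range(2)]
                for i in range(2)]
    # In a 2 x 2 table |O - E| is the same in every cell.
    correction = min(0.5, abs(a - expected[0][0]))
    stat = sum((abs(observed[i][j] - expected[i][j]) - correction) ** 2
               / expected[i][j]
               for i in range(2) for j in range(2))
    # Upper tail of a chi-squared(1) variable equals P(|Z| > sqrt(stat)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Counts from Table 1 as (control first category, control second,
# study first category, study second).
rows = {
    "Sex (male, female)": (25, 40, 21, 42),
    "Course in abdominal sonography (yes, no)": (37, 28, 36, 27),
    "Prior use of ultrasound simulator (yes, no)": (6, 59, 2, 61),
}
for label, counts in rows.items():
    stat, p = chisq_2x2_yates(*counts)
    print(f"{label}: X-squared = {stat:.2f}, p = {p:.2f}")
```

With these counts the function returns p ≈ 0.67 for sex, p = 1.0 for the abdominal sonography course, and p ≈ 0.29 for prior simulator use, in line with Table 1. Rows with more than two categories would need the general r × c form of the test.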

Motivation, learning objectives, simulators and course concept

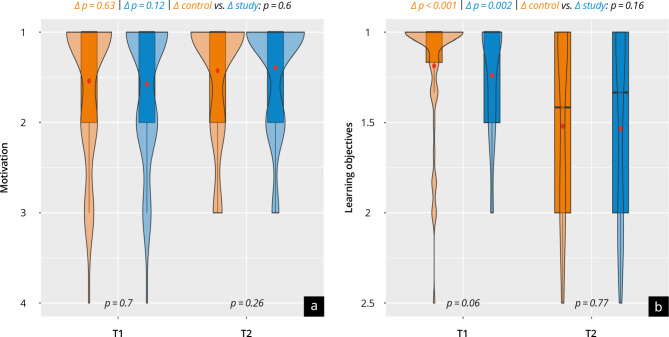

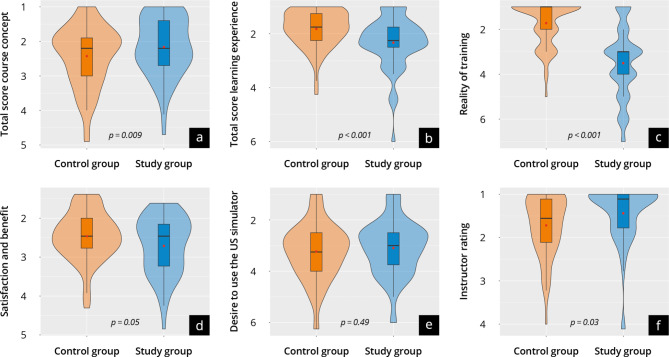

The evaluation of personal motivation, achievement of learning objectives, and the course concept is shown in Figs. 3 and 4 and Supplements 9 and 10. Both groups were similarly highly motivated at T1 and T2 (scale points 1.4–1.6). Most participants in both groups stated that they had achieved the learning objectives of the course, both overall and for each subitem (scale point difference T1–T2: 0.2–0.4). No significant differences were found in “personal satisfaction and benefit of the course” (study: 2.7 ± 0.7 vs. control: 2.4 ± 1.0; p = 0.97) or “overall course evaluation” (study: 2.2 ± 0.9 vs. control: 2.4 ± 0.9; p = 0.09). The overall tutor evaluation was significantly more positive in the study group (study: 1.4 ± 0.7 vs. control: 1.7 ± 0.7; p = 0.03), although both groups rated the tutors very highly. The study group rated their subjective “personal overall learning experience” significantly worse than the control group (study: 2.3 ± 0.9 vs. control: 1.8 ± 0.7; p < 0.0001), mainly because of their significantly worse rating of the “realism of the training” (study: 3.5 ± 1.6 vs. control: 1.7 ± 0.9; p < 0.0001). Both groups supported the use of an ultrasound simulator for training purposes as a “supplement to training on humans” (study: 1.6 ± 1.1 vs. control: 1.7 ± 1.2; p = 0.38), but not as a “replacement for training on humans” (study: 5.0 ± 2.3 vs. control: 5.4 ± 2.1; p = 0.37).

Fig. 3.

Self-evaluation of (a) motivation and (b) achievement of learning objectives at time points T1 and T2. The control group is shown in orange and the study group in blue

Fig. 4.

Evaluation results at T2 with the control group represented in orange and the study group represented in blue. (a) Results of the evaluation of the course concept; (b) the learning experience; (c) the realism of training; (d) the satisfaction and benefit; (e) the desire to use the ultrasound simulator; (f) the instructor rating

Subjective gain in competencies

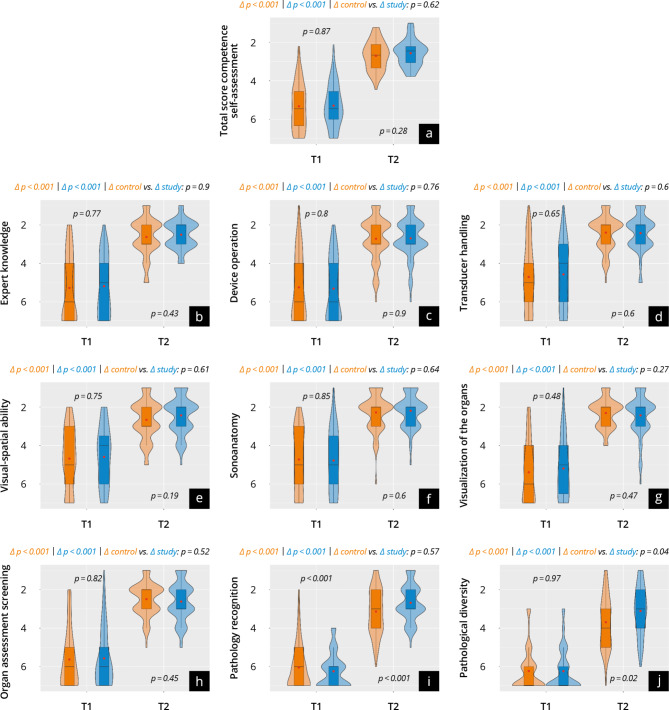

The results of the self-evaluation at T1 and T2 are shown in Fig. 5 and Supplement 11. No significant difference was found in the overall score at T1 (study: 5.3 ± 1.1 vs. control: 5.3 ± 1.2; p = 0.87). Both groups reported a significant increase in competency from T1 to T2 (p < 0.001 for the change) and reached similarly high self-reported competency (study: 2.6 ± 0.7 vs. control: 2.7 ± 0.7; p = 0.23). This pattern applied to almost all subcategories except “pathology recognition”, in which the study group reported a significantly greater increase in competency than the control group (study: Δ 3.7 ± 1.2 vs. control: Δ 2.8 ± 1.8; p < 0.01).

Fig. 5.

Results of subjective competence development at time points T1 and T2, with the control group represented in orange and the study group in blue. (a) The total score; (b–j) the subitems

Objective gain in competencies

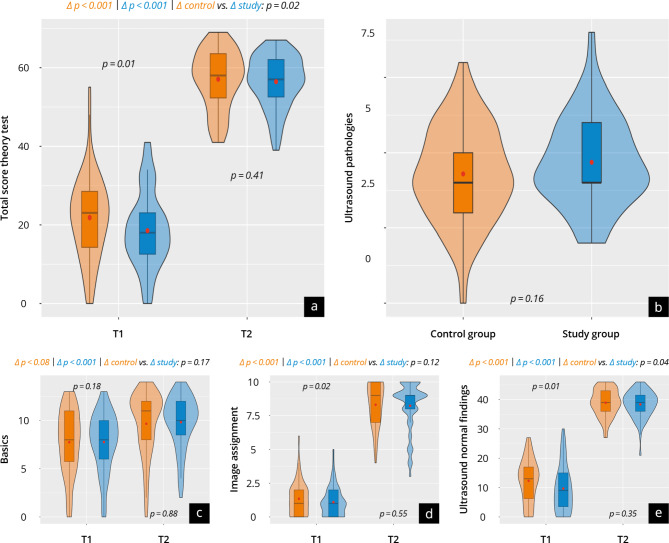

Theory test

The results of the theory tests at T1 (TheoryPre) and T2b (TheoryPost) are shown in Supplement 12 and Fig. 6. In the overall score, the control group performed better than the study group at T1 (study: 19 ± 10 vs. control: 24 ± 16; p = 0.01). Both groups achieved a significant (p < 0.001) objective increase in competency and reached a similar level at T2 (study: 56 ± 7 vs. control: 57 ± 8; p = 0.41), with the study group showing a significantly larger increase (study: Δ 38 ± 9 vs. control: Δ 33 ± 14; p = 0.02). For the pathology items tested at T2, no significant difference was found between the groups (study: 5 ± 2 vs. control: 4 ± 2; p = 0.16).

Fig. 6.

Results of the theory test at T1 and T2b with the control group represented in orange and the study group in blue. (a) The total score; (b) the subcategories ultrasound pathologies; (c) basics; (d) image assignment; (e) ultrasound normal findings

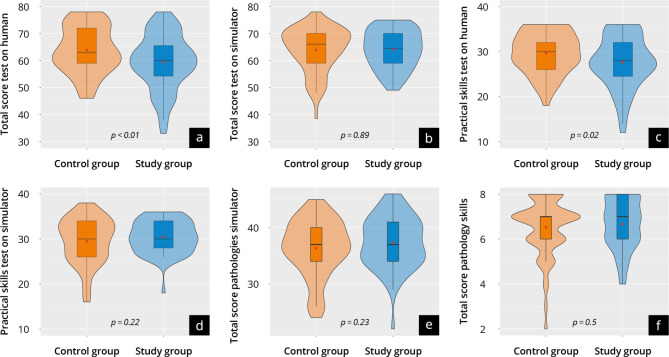

Practical test

The results of the practical tests DOPSSim, DOPSSimPatho, and DOPSHuman at T2 are shown in Supplement 13 and Fig. 7. Overall, both groups scored well in the DOPSSim (study: 64 ± 7 vs. control: 64 ± 8; p = 0.89) and in the DOPSSimPatho (study: 37 ± 5 vs. control: 36 ± 5; p = 0.23). The control group achieved significantly better results in the DOPSHuman (study: 59 ± 10 vs. control: 64 ± 9; p < 0.01). These patterns also held for the subcategories of the respective DOPS, especially “device operation”. Comparing DOPSSim with DOPSHuman within each group, the control group achieved comparable results in both tests (p = 0.97), whereas the study group scored significantly worse in the DOPSHuman (p < 0.01).
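Between-group comparisons of continuous scores, such as the DOPSHuman results, were made with the Mann-Whitney test. A minimal sketch of that test, using average ranks for ties and the large-sample normal approximation, is given below. It is a simplified stand-in, not the analysis code used in the study: R’s `wilcox.test()` by default uses an exact distribution for small samples without ties and otherwise applies a continuity correction, so p-values from this version may differ slightly. The function names and the score lists are hypothetical.

```python
import math


def average_ranks(values):
    """1-based ranks of `values`; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected
    variance, no continuity correction). Returns (U for x, p-value)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u_x = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    tie_sizes = {}
    for v in pooled:
        tie_sizes[v] = tie_sizes.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_sizes.values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:  # all observations identical: no evidence of a shift
        return u_x, 1.0
    z = (u_x - n1 * n2 / 2) / math.sqrt(var_u)
    return u_x, math.erfc(abs(z) / math.sqrt(2))  # 2 * (1 - Phi(|z|))


# Hypothetical DOPS-like scores, for illustration only.
control_scores = [64, 70, 58, 66, 61, 72, 67, 63]
study_scores = [59, 55, 62, 50, 57, 60, 64, 53]
u, p = mann_whitney_u(control_scores, study_scores)
print(f"U = {u}, p = {p:.3f}")
```

Because the test works on ranks, it does not assume normally distributed scores, which suits bounded point scales such as the DOPS.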

Fig. 7.

Results of the practical test at time T2a of the control group (orange) and study group (blue). The violin plots present the results of: (a, d) DOPSHuman; (b, c) DOPSSim; (e, f) DOPSSimPatho

Influencing factors and correlations

The multivariable linear regression analysis of the theory tests and practical examinations yielded several influencing factors. In the T1 theory test (TheoryPre), these factors were “participation in an abdominal ultrasound course” (β = 6.51; p = 0.004); “already had contact with simulator-based training” (β = 5.13; p = 0.019); “already had contact with ultrasound simulators” (β = 13.51; p = 0.003); and “membership of the control group” (β = 4.24; p = 0.048). In the T2 theory test (TheoryPost), the factor “already had contact with ultrasound simulators” (β = 3.52; p = 0.004) had a significant influence. In the linear regression analysis of DOPSSim, DOPSSimPatho, and DOPSHuman, only DOPSHuman was significantly influenced by “membership of the control group” (β = 4.76; p = 0.005), with no other influencing factors detected.
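The reported β coefficients come from a multivariable linear regression in which each predictor, including group membership, enters as a column of the design matrix. With a 0/1 indicator for control-group membership, a coefficient such as β = 4.76 for DOPSHuman is read as the difference in points between the groups after adjusting for the other predictors. The sketch below shows only the point-estimate step, ordinary least squares via the normal equations, in dependency-free Python on a small hypothetical, noise-free design (intercept, control-group indicator, prior abdominal course). It is not the authors’ model; standard errors and p-values, which a routine such as R’s `lm()` would also report, are omitted.

```python
def ols(X, y):
    """Ordinary least squares coefficients via the normal equations
    (X^T X) b = X^T y, solved by Gauss-Jordan elimination with partial
    pivoting. X must already include a column of 1s for the intercept."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    aug = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix [X^T X | X^T y]
    for col in range(k):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Eliminate this column from every other row.
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


# Hypothetical, noise-free design: intercept, control-group indicator,
# prior abdominal ultrasound course (three participants per combination).
X = [[1, group, course] for group in (0, 1) for course in (0, 1) for _ in range(3)]
y = [50 + 5 * row[1] + 2 * row[2] for row in X]
intercept, beta_control, beta_course = ols(X, y)
print(round(intercept, 6), round(beta_control, 6), round(beta_course, 6))
```

On noise-free data the fit recovers the generating coefficients (50, 5, 2); with real scores the estimates would carry sampling error, which is why significance testing of each β is needed.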

The correlations between the objective test results of DOPSHuman and DOPSSim, DOPSHuman and TheoryPost, DOPSHuman and DOPSSimPatho were significantly higher in the control group than in the study group (p < 0.03).
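To compare a correlation between the two groups, each coefficient r is mapped to z = artanh(r), whose sampling distribution is approximately normal with variance 1/(n − 3). The difference of the two transformed values, divided by √(1/(n₁ − 3) + 1/(n₂ − 3)), is then referred to the standard normal distribution. The sketch below assumes Pearson correlations from two independent samples, which fits the design because each participant belongs to only one group. The function name and the r values in the usage line are hypothetical; the group-level coefficients are not reported in this section.

```python
import math


def compare_correlations(r1, n1, r2, n2):
    """Two-sided test of H0: rho1 == rho2 for Pearson correlations from two
    independent samples, using Fisher's z-transformation z = atanh(r)."""
    z1, z2 = math.atanh(r1), math.atanh(r2)
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))  # SE of the difference z1 - z2
    z = (z1 - z2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))   # 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical correlations (not taken from the study) with the group sizes
# of the control (n = 65) and study (n = 63) groups.
z, p = compare_correlations(0.6, 65, 0.2, 63)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these hypothetical inputs the sketch gives z ≈ 2.71 and a two-sided p of about 0.007. The transformation is needed because the sampling distribution of r itself is skewed when the true correlation is far from zero.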

Discussion

Summary of key findings and relevance of the research

This study compares the effectiveness of simulator-based ultrasound training with traditional training on human subjects for teaching theoretical and practical skills in Focus Assessed Transthoracic Echocardiography (FATE). To our knowledge, it is the first simulator-focused randomised echocardiography study to examine an entire semester of students during the clinical phase of a medical degree program. Our results demonstrate that both training approaches can lead to a significant increase in skills, but participants trained on a simulator alone did not perform as well when examining real humans. Additionally, participants considered ultrasound simulators a useful “supplement” to training on humans, but did not accept them as a “replacement”. The results provide important insights into the potential advantages, disadvantages, and challenges of using ultrasound simulators in medical education and offer a basis for future training strategies.

Gain in competencies

The effectiveness of simulation-based training is still being investigated, especially regarding the transfer of skills from simulator training to real patient care in cardiac ultrasound diagnostics [17, 18, 20–22, 38, 42, 48, 62]. Previous studies have demonstrated that ultrasound simulators promote theoretical [7, 14–16, 21, 30, 31, 33, 34, 36–38, 41–43] and practical [7, 14, 16, 21, 30, 31, 34, 36–38, 41, 43] skill acquisition. These studies also suggested that simulation training was at least as effective as lecture-based education [16, 33], textbook learning [32], video-based training [15], or e-learning [21, 30, 36]. Simulators offer a forgiving environment in which early learners can make and learn from errors without risk of harm, fostering a safe and effective training experience. The participants in our study were also able to build theoretical and practical skills in FATE through simulator training, and they reported a subjective increase in their skills. At the end of the training, the subjective and objective theoretical skill levels of both groups were comparable and high [14, 29, 31, 33, 37, 38, 49]. Interestingly, the study group (training with a simulator) achieved a significantly greater objective increase in theoretical skills from T1 to T2 than the control group (training on humans), although it also started from a lower score at T1. This suggests that simulator-based training can effectively support the transfer of theoretical knowledge.

While the groups performed almost identically in the practical tests on the simulator, the study group performed significantly worse than the control group in the test on human subjects. This suggests that training on humans currently cannot be replaced and offers a realistic environment that current simulators cannot fully reproduce [63]. This finding is consistent with some previous studies [7, 41] but contrasts with others [14, 36–38, 42]. Whereas previous studies assessed practical competency either on a simulator [36] or on human subjects [7, 14, 32, 37, 38, 41, 42], the multiple tests used in our study allowed us to draw specific conclusions about the groups’ overall and relative skill development and, most importantly, about skill transfer from simulator-based training to real human-subject examinations. The comparative results of the practical assessments on humans differed from previous findings [14, 36–38]. These differences may be explained by the longer duration of our training concept compared with earlier studies, which may have allowed finer differences between the trained groups to emerge. The much larger number of participants and the use of multiple dedicated testing tools [57] might also explain the differences in our findings and underline the robustness of the data collected.

Attitude towards simulation, motivation and evaluation of course

Various user groups have demonstrated a positive attitude towards simulators [19, 32, 36, 38, 39, 64]. Our results support the use of an ultrasound simulator for training purposes as a supplement to training on humans. Participants showed high motivation for training before and after the course, with no decrease in motivation regardless of training modality [65]. However, participants in both groups did not view ultrasound simulator training as a replacement for human-based training, in line with recommendations from professional associations and previous studies [18, 19, 39]. The study group gave a significantly poorer subjective assessment of the overall learning experience and the realism of the training, further emphasising the need for training on real humans [19, 23, 63]. The general acceptance of this broader training concept [6, 8, 9, 66] supports the future use of a combination of teaching methods. Such a combination could close the observed gap in transferring skills from simulator training to real-patient practice and make the training experience more realistic, providing the best possible learning environment [19, 40]. A practical way to achieve this would be a longitudinal blended learning concept [67] that includes simulator self-learning programmes in paired teams [68] alongside practical training on humans. Furthermore, other innovative teaching strategies, such as artificial intelligence, virtual reality, and telemedicine, could be integrated into ultrasound training to enable multimodal training [69].

Strengths and limitations of the study

The strengths of this study include its randomized design, clearly defined teaching methods, and consistent multiple assessment criteria, all of which provide an objective basis for interpreting the results.

However, there are also limitations. These include the voluntary nature of participation, the absence of a control group that received no training, and the reliance on ultrasound simulator models from only one manufacturer. In addition, the study did not explicitly assess the impact of the training modalities on patient safety or quality of care, although previous research suggests that simulator training improves the efficiency of care and reduces patient discomfort and the need for repeated examinations and trainee supervision [19].

A specific economic cost-benefit analysis was also not conducted [70]. While this can be seen as a limitation, certain benefits of simulator-based ultrasound training—such as preparing students for clinical practice, improving patient care and safety, and increasing satisfaction with the educational experience—cannot easily be quantified in monetary terms. Moreover, the study focuses mainly on quantitative assessments of knowledge and practical skills and may overlook qualitative aspects such as learning style preferences.

Despite accounting for several influencing factors through multivariable regression analysis, other personal characteristics of the participants that were not captured by the evaluations may have influenced the results. Additionally, the different test sequences (the study group completed the practical test on the simulator first and then on the human model; the control group did the reverse) may have influenced the results in ways we could not measure.

Finally, the study’s emphasis on the immediate effects of simulator-based training compared to human-model training does not consider long-term retention of skills. Future studies should explore how well these competencies are maintained over time to better evaluate the effectiveness of training methods [15, 36–38].

Conclusion

This study enhances our understanding of the effectiveness of different approaches to ultrasound teaching. Ultrasound simulators offer promising opportunities, especially for conveying theoretical knowledge and for the focused practice of basic skills. Nevertheless, hands-on training with human subjects remains indispensable for effective competency development, supporting the need for future multimodal training strategies. Implementing such innovative ultrasound training programs early in medical degree curricula and specialist training could improve the quality of medical education and, ultimately, patient care.


Acknowledgements

This study includes parts of the doctoral thesis of one of the authors (F.M.S.). We thank all participating students and lecturers for supporting our study. We would also like to thank C. Christe for her help in revising the figures. We would like to express our gratitude to Kay Stankov for his contributions to this publication. His dedicated efforts in consulting, supervising, and meticulously reviewing all statistical aspects have been instrumental in ensuring the rigor and accuracy of our research findings.

Author contributions

Conceptualization: J.M.W., F.M.S. and H.B.; methodology and software: J.M.W., F.M.S., R.K. and J.W.M.; validation: J.M.W., F.M.S., S.G., R.K. and J.W.M.; formal analysis: J.M.W. and F.M.S.; investigation: J.M.W., F.M.S. and J.W.M.; resources: H.B., R.K. and J.M.W.; data curation: J.M.W., F.M.S., R.K. and E.W.; writing – original draft preparation: J.M.W. and P.W.; writing – review and editing: J.M.W., F.M.S., T.V., S.G., A.D., R.K., J.B., A.M.W., H.B., R.K., L.P., J.H., E.W., P.W. and J.W.M.; visualization: J.M.W.; supervision: J.M.W., H.B., R.K. and J.M.W.; project administration: J.W. and J.M.W. All authors have read and agreed to the published version of the manuscript.

Funding

Open Access funding enabled and organized by Projekt DEAL.

This research received no external funding.

Data availability

Data cannot be shared publicly because of institutional and national data policy restrictions imposed by the Ethics Committee, as the data contain potentially identifying information about study participants. Data are available on request from the Johannes Gutenberg University Mainz Medical Center (contact via weimer@uni-mainz.de) for researchers who meet the criteria for access to confidential data (please include the manuscript title in your enquiry).

Declarations

Ethics approval and consent to participate

The requirement for ethical approval of this study was waived by the local ethics committee of the State Medical Association of Rhineland-Palatinate (“Ethik-Kommission der Landesärztekammer Rheinland-Pfalz”, Mainz, Germany). All procedures involving human participants were performed in accordance with the ethical standards of the institutional and national research committee and with the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards. Written informed consent was obtained from all participants.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Authors’ information

J.M.W. is a resident in internal medicine and coordinator of the student ultrasound education program at the University Medical Center Mainz. He has more than 10 years of experience in the field of didactics and also examined ultrasound training methods in his doctoral thesis. He is a member of the DEGUM, EFSUMB and GMA.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Hagendorff A, Fehske W, Flachskampf FA, Helfen A, Kreidel F, Kruck S, et al. Manual zur Indikation und Durchführung der Echokardiographie – Update 2020 der Deutschen Gesellschaft für Kardiologie. Der Kardiologe. 2020;14(5):396–431.

2. Mitchell C, Rahko PS, Blauwet LA, Canaday B, Finstuen JA, Foster MC, et al. Guidelines for performing a comprehensive transthoracic echocardiographic examination in adults: recommendations from the American Society of Echocardiography. J Am Soc Echocardiogr. 2019;32(1):1–64.

3. Patel AR, Sugeng L, Lin BA, Smith MD, Sorrell VL. Communication and documentation of critical results from the Echocardiography Laboratory: a call to action. J Am Soc Echocardiogr. 2018;31(6):743–5.

4. Wharton G, Steeds R, Allen J, Phillips H, Jones R, Kanagala P, et al. A minimum dataset for a standard adult transthoracic echocardiogram: a guideline protocol from the British Society of Echocardiography. Echo Res Pract. 2015;2(1):G9–24.

5. Pezel T, Coisne A, Michalski B, Soliman H, Ajmone N, Nijveldt R, et al. EACVI SIMULATOR-online study: evaluation of transoesophageal echocardiography knowledge and skills of young cardiologists. Eur Heart J Cardiovasc Imaging. 2023;24(3):285–92.

6. Gradl-Dietsch G, Menon AK, Gürsel A, Götzenich A, Hatam N, Aljalloud A, et al. Basic echocardiography for undergraduate students: a comparison of different peer-teaching approaches. Eur J Trauma Emerg Surg. 2018;44(1):143–52.

7. Cawthorn TR, Nickel C, O’Reilly M, Kafka H, Tam JW, Jackson LC, et al. Development and evaluation of methodologies for teaching focused cardiac ultrasound skills to medical students. J Am Soc Echocardiogr. 2014;27(3):302–9.

8. Weimer J, Rolef P, Müller L, Bellhäuser H, Göbel S, Buggenhagen H, et al. FoCUS cardiac ultrasound training for undergraduates based on current national guidelines: a prospective, controlled, single-center study on transferability. BMC Med Educ. 2023;23(1):80.

9. Skrzypek A, Gorecki T, Krawczyk P, Podolec M, Cebula G, Jablonski K, et al. Implementation of the modified four-step approach method for teaching echocardiography using the FATE protocol: a pilot study. Echocardiography (Mount Kisco NY). 2018;35(11):1705–12.

10. Prosch H, Radzina M, Dietrich CF, Nielsen MB, Baumann S, Ewertsen C, et al. Ultrasound Curricula of Student Education in Europe: Summary of the experience. Ultrasound Int Open. 2020;6(1):E25–33.

11. Weimer J, Ruppert J, Vieth T, Weinmann-Menke J, Buggenhagen H, Künzel J, et al. Effects of undergraduate ultrasound education on cross-sectional image understanding and visual-spatial ability: a prospective study. BMC Med Educ. 2024;24(1):619.

12. Neubauer R, Bauer CJ, Dietrich CF, Strizek B, Schäfer VS, Recker F. Evidence-based Ultrasound Education? – a systematic literature review of undergraduate Ultrasound Training studies. Ultrasound Int Open. 2024;10(continuous publication).

13. Dietrich CF, Hoffmann B, Abramowicz J, Badea R, Braden B, Cantisani V, et al. Medical Student Ultrasound Education: a WFUMB position paper, part I. Ultrasound Med Biol. 2019;45(2):271–81.

14. Hempel C, Turton E, Hasheminejad E, Bevilacqua C, Hempel G, Ender J, et al. Impact of simulator-based training on acquisition of transthoracic echocardiography skills in medical students. Ann Card Anaesth. 2020;23(3):293–7.

15. Zhao Y, Yuan ZY, Zhang HY, Yang X, Qian D, Lin JY, et al. Simulation-based training following a theoretical lecture enhances the performance of medical students in the interpretation and short-term retention of 20 cross-sectional transesophageal echocardiographic views: a prospective, randomized, controlled trial. BMC Med Educ. 2021;21(1):336.

16. Neelankavil J, Howard-Quijano K, Hsieh TC, Ramsingh D, Scovotti JC, Chua JH, et al. Transthoracic echocardiography simulation is an efficient method to train anesthesiologists in basic transthoracic echocardiography skills. Anesth Analg. 2012;115(5):1042–51.

17. Singh K, Chandra A, Lonergan K, Bhatt A. Using Simulation to assess Cardiology Fellow performance of Transthoracic Echocardiography: lessons for Training in the COVID-19 pandemic. J Am Soc Echocardiogr. 2020;33(11):1421–3.

18. Pezel T, Coisne A, Bonnet G, Martins RP, Adjedj J, Bière L, et al. Simulation-based training in cardiology: state-of-the-art review from the French Commission of Simulation Teaching (Commission d’enseignement par simulation-COMSI) of the French Society of Cardiology. Arch Cardiovasc Dis. 2021;114(1):73–84.

19. Dietrich CF, Lucius C, Nielsen MB, Burmester E, Westerway SC, Chu CY, et al. The ultrasound use of simulators, current view, and perspectives: requirements and technical aspects (WFUMB state of the art paper). Endosc Ultrasound. 2023;12(1):38–49.

20. Biswas M, Patel R, German C, Kharod A, Mohamed A, Dod HS, et al. Simulation-based training in echocardiography. Echocardiography (Mount Kisco NY). 2016;33(10):1581–8.

21. Nazarnia S, Subramaniam K. Role of Simulation in Perioperative Echocardiography Training. Semin Cardiothorac Vasc Anesth. 2017;21(1):81–94.

22. Le KDR. Principles of Effective Simulation-based Teaching Sessions in Medical Education: a narrative review. Cureus. 2023;15(11):e49159.

23. Bradley K, Quinton AE, Aziz A. Determining if simulation is effective for training in ultrasound: a narrative review. Sonography. 2019;7:22–32.

24. Buckley E, Barrett A, Power D, Whelton H, Cooke J. A competitive edge: developing a simulation faculty using competition. Clin Teach. 2024;21(1):e13641.

25. Chernikova O, Heitzmann N, Stadler M, Holzberger D, Seidel T, Fischer F. Simulation-based learning in higher education: a Meta-analysis. Rev Educ Res. 2020;90(4):499–541.

26. Østergaard ML, Konge L, Kahr N, Albrecht-Beste E, Nielsen MB, Nielsen KR. Four virtual-reality simulators for Diagnostic Abdominal Ultrasound Training in Radiology. Diagnostics (Basel). 2019;9(2).

27. Hempel D, Sinnathurai S, Haunhorst S, Seibel A, Michels G, Heringer F, et al. Influence of case-based e-learning on students’ performance in point-of-care ultrasound courses: a randomized trial. Eur J Emerg Med. 2016;23(4):298–304.

28. Ostergaard ML, Rue Nielsen K, Albrecht-Beste E, Kjaer Ersboll A, Konge L, Bachmann Nielsen M. Simulator training improves ultrasound scanning performance on patients: a randomized controlled trial. Eur Radiol. 2019;29(6):3210–8.

29. Rosen H, Windrim R, Lee YM, Gotha L, Perelman V, Ronzoni S. Simulator Based Obstetric Ultrasound Training: a prospective, randomized single-blinded study. J Obstet Gynaecol Can. 2017;39(3):166–73.

30. Ostergaard ML, Ewertsen C, Konge L, Albrecht-Beste E, Bachmann Nielsen M. Simulation-based abdominal Ultrasound training: a systematic review. Ultraschall Med. 2016;37(3):253–61.

31. Weimer J, Recker F, Hasenburg A, Buggenhagen H, Karbach K, Beer L, et al. Development and evaluation of a simulator-based ultrasound training program for university teaching in obstetrics and gynecology: the prospective GynSim study. Front Med. 2024;11.

32. Kusunose K, Yamada H, Suzukawa R, Hirata Y, Yamao M, Ise T, et al. Effects of Transthoracic Echocardiographic Simulator Training on performance and satisfaction in medical students. J Am Soc Echocardiogr. 2016;29(4):375–7.

33. Ding K, Chen M, Li P, Xie Z, Zhang H, Kou R, et al. The effect of simulation of sectional human anatomy using ultrasound on students’ learning outcomes and satisfaction in echocardiography education: a pilot randomized controlled trial. BMC Med Educ. 2024;24(1):494.

34. Elison DM, McConnaughey S, Freeman RV, Sheehan FH. Focused cardiac ultrasound training in medical students: using an independent, simulator-based curriculum to objectively measure skill acquisition and learning curve. Echocardiography (Mount Kisco NY). 2020;37(4):491–6.

35. Sidhu HS, Olubaniyi BO, Bhatnagar G, Shuen V, Dubbins P. Role of simulation-based education in ultrasound practice training. J Ultrasound Med. 2012;31(5):785–91.

36. Weber U, Zapletal B, Base E, Hambrusch M, Ristl R, Mora B. Resident performance in basic perioperative transesophageal echocardiography: comparing 3 teaching methods in a randomized controlled trial. Medicine. 2019;98(36):e17072.

37. Edrich T, Seethala RR, Olenchock BA, Mizuguchi AK, Rivero JM, Beutler SS, et al. Providing initial transthoracic echocardiography training for anesthesiologists: simulator training is not inferior to live training. J Cardiothorac Vasc Anesth. 2014;28(1):49–53.

38. Canty D, Barth J, Yang Y, Peters N, Palmer A, Royse A, et al. Comparison of learning outcomes for teaching focused cardiac ultrasound to physicians: a supervised human model course versus an eLearning guided self-directed simulator course. J Crit Care. 2019;49:38–44.

39. Gibbs V. The role of ultrasound simulators in education: an investigation into sonography student experiences and clinical mentor perceptions. Ultrasound (Leeds England). 2015;23(4):204–11.

40. Nayahangan LJ, Dietrich CF, Nielsen MB. Simulation-based training in ultrasound: where are we now? Ultraschall Med. 2021;42(3):240–4.

41. Juo YY, Quach C, Hiatt J, Hines OJ, Tillou A, Burruss S. Comparative analysis of simulated versus live patient-based FAST (Focused Assessment with Sonography for Trauma) training. J Surg Educ. 2017;74(6):1012–8.

42. Bentley S, Mudan G, Strother C, Wong N. Are live Ultrasound models replaceable? Traditional versus simulated Education Module for FAST exam. West J Emerg Med. 2015;16(6):818–22.

43. Koratala A, Paudel HR, Regner KR. Nephrologist-led Simulation-based focused Cardiac Ultrasound Workshop for Medical students: insights and implications. Am J Med Open. 2023;10:100051.

44. Issenberg SB, McGaghie WC, Petrusa ER, Lee Gordon D, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27(1):10–28.

45. McGaghie WC, Draycott TJ, Dunn WF, Lopez CM, Stefanidis D. Evaluating the impact of simulation on translational patient outcomes. Simul Healthc. 2011;6(Suppl):S42–7.

46. Zendejas B, Brydges R, Wang AT, Cook DA. Patient outcomes in simulation-based medical education: a systematic review. J Gen Intern Med. 2013;28(8):1078–89.

47. Dawe SR, Pena GN, Windsor JA, Broeders JA, Cregan PC, Hewett PJ, et al. Systematic review of skills transfer after surgical simulation-based training. Br J Surg. 2014;101(9):1063–76.

48. Singh J, Matern LH, Bittner EA, Chang MG. Characteristics of Simulation-based point-of-care Ultrasound Education: a systematic review of MedEdPORTAL Curricula. Cureus. 2022;14(2):e22249.

49. Rambarat CA, Merritt JM, Norton HF, Black E, Winchester DE. Using Simulation to teach Echocardiography: a systematic review. Simul Healthc. 2018;13(6):413–9.

50. Breitkreutz R, Price S, Steiger HV, Seeger FH, Ilper H, Ackermann H, et al. Focused echocardiographic evaluation in life support and peri-resuscitation of emergency patients: a prospective trial. Resuscitation. 2010;81(11):1527–33.

51. Sheehan FH, Zierler RE. Simulation for competency assessment in vascular and cardiac ultrasound. Vasc Med. 2018;23(2):172–80.

52. Moher D, Hopewell S, Schulz KF, Montori V, Gøtzsche PC, Devereaux PJ, et al. CONSORT 2010 explanation and elaboration: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c869.

53. Duncan E, O’Cathain A, Rousseau N, Croot L, Sworn K, Turner KM, et al. Guidance for reporting intervention development studies in health research (GUIDED): an evidence-based consensus study. BMJ Open. 2020;10(4):e033516.

54. Platts DG, Humphries J, Burstow DJ, Anderson B, Forshaw T, Scalia GM. The Use of Computerised simulators for Training of Transthoracic and Transoesophageal Echocardiography. Future Echocardiographic Training? Heart Lung Circ. 2012;21(5):267–74.

55. Nagre AS. Focus-assessed transthoracic echocardiography: implications in perioperative and intensive care. Ann Card Anaesth. 2019;22(3):302–8.

56. Puthiaparampil T, Rahman MM. Very short answer questions: a viable alternative to multiple choice questions. BMC Med Educ. 2020;20(1):141.

57. Höhne E, Recker F, Dietrich CF, Schäfer VS. Assessment methods in Medical Ultrasound Education. Front Med (Lausanne). 2022;9:871957.

58. Via G, Hussain A, Wells M, Reardon R, ElBarbary M, Noble VE, et al. International evidence-based recommendations for focused cardiac ultrasound. J Am Soc Echocardiogr. 2014;27(7):683.e1–683.e33.

59. Price S, Via G, Sloth E, Guarracino F, Breitkreutz R, Catena E, et al. Echocardiography practice, training and accreditation in the intensive care: document for the World Interactive Network focused on critical Ultrasound (WINFOCUS). Cardiovasc Ultrasound. 2008;6:49.

60. Raymond MR, Stevens C, Bucak SD. The optimal number of options for multiple-choice questions on high-stakes tests: application of a revised index for detecting nonfunctional distractors. Adv Health Sci Educ Theory Pract. 2019;24(1):141–50.

61. Weimer JM, Rink M, Müller L, Dirks K, Ille C, Bozzato A, et al. Development and Integration of DOPS as formative tests in Head and Neck Ultrasound Education: Proof of Concept Study for exploration of perceptions. Diagnostics. 2023;13(4):661.

62. Bowman A, Reid D, Bobby Harreveld R, Lawson C. Evaluation of students’ clinical performance post-simulation training. Radiography (Lond). 2021;27(2):404–13.

63. Moak JH, Larese SR, Riordan JP, Sudhir A, Yan G. Training in transvaginal sonography using pelvic ultrasound simulators versus live models: a randomized controlled trial. Acad Med. 2014;89(7):1063–8.

64. Hani S, Chalouhi G, Lakissian Z, Sharara-Chami R. Introduction of Ultrasound Simulation in Medical Education: exploratory study. JMIR Med Educ. 2019;5(2):e13568.

65. Pless A, Hari R, Harris M. Why are medical students so motivated to learn ultrasound skills? A qualitative study. BMC Med Educ. 2024;24(1):458.

66. Ray JJ, Meizoso JP, Hart V, Horkan D, Behrens V, Rao KA, et al. Effectiveness of a Perioperative Transthoracic Ultrasound Training Program for students and residents. J Surg Educ. 2017;74(5):805–10.

67. Stockwell BR, Stockwell MS, Cennamo M, Jiang E. Blended learning improves science education. Cell. 2015;162(5):933–6.

68. See KC, Chua JW, Verstegen D, Van Merrienboer JJG, Van Mook WN. Focused echocardiography: Dyad versus individual training in an authentic clinical context. J Crit Care. 2019;49:50–5.

69. Daum N, Blaivas M, Goudie A, Hoffmann B, Jenssen C, Neubauer R, et al. Student ultrasound education, current view and controversies. Role of Artificial Intelligence, virtual reality and telemedicine. Ultrasound J. 2024;16(1):44.

70. Zendejas B, Wang AT, Brydges R, Hamstra SJ, Cook DA. Cost: the missing outcome in simulation-based medical education research: a systematic review. Surgery. 2013;153(2):160–76.
