Abstract

Identifying informative low-dimensional features that characterize dynamics in molecular simulations remains a challenge, often requiring extensive manual tuning and system-specific knowledge. Here, we introduce geom2vec, in which pretrained graph neural networks (GNNs) are used as universal geometric featurizers. By pretraining equivariant GNNs on a large dataset of molecular conformations with a self-supervised denoising objective, we obtain transferable structural representations that are useful for learning conformational dynamics without further fine-tuning. We show how the learned GNN representations can capture interpretable relationships between structural units (tokens) by combining them with expressive token mixers. Importantly, decoupling training the GNNs from training for downstream tasks enables analysis of larger molecular graphs (such as small proteins at all-atom resolution) with limited computational resources. In these ways, geom2vec eliminates the need for manual feature selection and increases the robustness of simulation analyses.

I. INTRODUCTION

Molecular dynamics simulations can provide atomistic insight into complex reaction dynamics, but their high dimensionality makes them hard to interpret. Analyzing simulations thus relies on identifying low-dimensional representations (features), but care is needed in choosing them because they can strongly impact conclusions1–4. Often features are selected manually, but doing so relies on system-specific intuition. Because developing intuition is often the goal of simulations, many researchers instead have turned to machine-learning methods for constructing features that capture observed variance (e.g., principal component analysis)5–7 or decorrelate slowly according to the simulation data (e.g., the variational approach for Markov processes, VAMP)8–12. While these objectives are not always aligned with the reaction of interest3,4,13,14, such features can serve as useful intermediaries for computing reaction-specific statistics14,15 that provide a principled way for evaluating mechanisms16–18.

Generally the inputs to the machine-learning methods above are internal coordinates such as distances between selected atoms and dihedral angles because they are invariant to translations and rotations of the system. However, the nonlocal nature of these coordinates and/or their effects (e.g., the rotation of a dihedral angle in a polymer backbone) can make the resulting features both ineffective and hard to interpret, and these issues become more significant with system size16,17. Additionally, it is not obvious how to represent permutationally invariant species such as solvent molecules with internal coordinates; recently introduced machine learning approaches for treating such species do not scale well with system size19.

Because atoms and their interactions (through bond or through space) can be viewed as the nodes and edges of graphs, molecular information can be readily encoded in graph representations (e.g., graph neural networks, GNNs). Importantly, graph representations can be constructed in ways that respect the symmetries of molecular systems, with translational, rotational, and permutational invariance and equivariance. Equivariance allows, for example, GNNs to output forces that rotate with the system, and appears to improve learning20–22. Owing to both their conceptual appeal and their performance, GNNs now dominate machine learning for force fields and molecular property prediction22–26. They also are being successfully used to learn representations of larger molecules for tasks such as protein structure prediction, design, fold classification, and function prediction27–30.

The tasks above concern prediction of static properties, including structures. Because molecular dynamics trajectories consist of sequences of structures, GNNs should be useful for identifying features for computing reaction statistics, and several groups have combined GNNs with VAMP8 to learn metastable states and relaxation time scales of both materials and biomolecules31–35. These groups report improved variational scores, convergence for shorter lag times, and more interpretable learned representations relative to VAMPnets based on fully connected networks. However, existing GNNs for analyzing dynamics do not readily scale to large numbers of atoms, so the graphs in these studies are small, either because the molecules are small, or only a subset of atoms (e.g., the Cα atoms of proteins) are used as inputs. Furthermore, training these GNNs is computationally costly, limiting the number of architectures that can be explored and their use for other types of analyses.

The key idea of this paper is that GNNs can be pretrained using independent structural data prior to their use to analyze dynamics, thus decoupling GNN training from training for downstream tasks. Pretraining has transformed other domains such as natural language processing (NLP) and computer vision, enabling high-dimensional latent representations of words (“tokens”)36 or images (“patches”)37 to be learned by self-supervised, auto-regressive training. In NLP, word2vec pioneered the idea of using learnable vector representations for words by assigning them based on the word itself and its surrounding context38; these representations could then be used for diverse downstream tasks. Inspired by word2vec, we propose geom2vec, an approach that leverages pretrained GNNs to learn transferable vector representations for molecular geometries.

Various pretraining strategies have been tried in molecular contexts27,28,30,39–41, but in contrast to complex pretraining and encoding schemes devised for specific classes of molecules27,30, we use a scheme that can be applied generally. Building on the idea that corrupting data with noise and training a model to reconstruct the original data (denoising) can lead to learning meaningful representations for generative models42,43, Zaidi et al.40 showed that denoising atomic coordinates of structures of organic molecules significantly improved GNN performance on a number of molecular property prediction benchmarks.

Here, we show that this simple pretraining scheme also enables analysis of molecular dynamics simulations. Specifically, we pretrain GNNs using the same denoising objective and a dataset of structures of organic molecules obtained with density functional theory44 and analyze protein molecular dynamics simulations with the resulting representations. We consider two tasks: learning slowly decorrelating modes with VAMP8 and identifying metastable states with the state predictive information bottleneck (SPIB) framework45,46. We show that neural networks trained using the GNN representations can readily take all non-hydrogen atoms in a small protein as inputs, enabling, for example, discovery of side chain dynamics that are important for folding. By decoupling learning molecular representations from training for specific tasks, our method naturally accommodates alternative pretraining schemes27,47 and datasets48 (e.g., ones specific to particular classes of molecules), as well as other possible tasks49–52.

II. METHODS

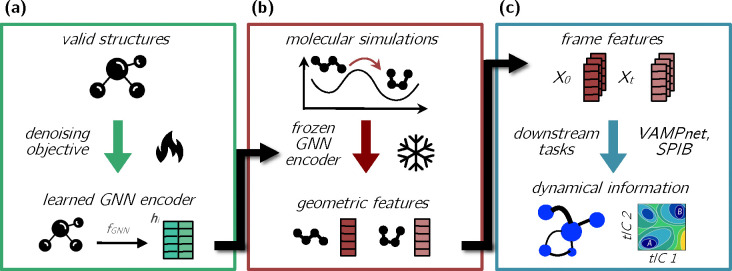

The basic idea of our method is to pretrain a GNN using a suitable task (here, denoising molecular coordinates) and then to use it with the resulting parameter values fixed as a feature encoder for other (downstream) tasks, as summarized in Figure 1. In this section, we provide an overview of the network architecture that we use and then describe its elaboration for the pretraining and downstream tasks; further details are provided in the Appendices. We refer to the workflow of transforming the atomic coordinates to representation vectors (i.e., features) and the use of those vectors as “geom2vec.”

FIG. 1.

The geom2vec workflow. (a) A GNN encoder is pretrained using a denoising objective on a dataset of structures of diverse molecules. (b) Geometric representations for configurations from molecular simulations are obtained by performing inference with the pretrained GNN encoder. (c) The representations are used as inputs to a downstream task head (here, a VAMPnet or SPIB), which is trained separately.

A. Network architecture

As noted above, our goal is to learn a mapping from Cartesian coordinates to representation vectors via a GNN. There are many existing GNN architectures from which to choose23. Here, we use the ViSNet53 architecture, which is built on TorchMD-ET54. These are both equivariant geometric graph transformers with modified attention mechanisms that suppress interactions between distant atoms; ViSNet goes beyond TorchMD-ET in using (standard and improper) dihedral angle information in its internal representations. We take the activations after the last message-passing layer as the representations for downstream computations. These representations include three-dimensional vector features, which change appropriately with molecular translation and rotation, and one-dimensional scalar features, which are invariant to molecular translation and rotation. That is,

$$
\mathbf{r} \in \mathbb{R}^{N \times 3} \;\mapsto\; \mathbf{h} \in \mathbb{R}^{N \times (3+1) \times d}, \tag{1}
$$

where $N$ is the number of atoms, and $d$ is the number of features associated with each atom (equal to the dimension of the last update layer). We represent the combined vector ($\mathbf{v}_i$) and scalar ($x_i$) features for atom $i$ by $\mathbf{h}_i$. We choose ViSNet because it was previously shown to give accurate molecular property predictions and accurate conformational distributions when used to learn a potential for molecular dynamics simulations53. We briefly introduce the TorchMD-ET and ViSNet architectures in Appendices A2 and A3, and we refer interested readers to the original publications and Ref. 55 for further details.

B. Pretraining by denoising

For the pretraining, we draw random displacements from a multivariate Gaussian distribution and add them to the Cartesian coordinates of the molecules in the training set; we then train the GNN to predict the displacements. This process is designed to encourage the network to learn representations that capture the geometry of the molecular conformations, and it can be viewed as learning a force field with energy minima close to the training set geometries40. We choose this objective because structural data are more readily available than energetic data, especially for large molecules such as proteins.

Here, we use the OrbNet Denali dataset, which consists of 215,000 molecules and complexes (with an average of 45 atoms) with 2.3 million conformations sampled from molecular trajectories44. We randomly select 10,000 conformations for validation and use the remainder for training.

Following Zaidi et al.40, we pretrain the model by passing the features for each atom (graph node) from the ViSNet architecture through a gated equivariant block (Algorithm 1) that combines the scalar and vector features to obtain a three-dimensional vector that represents the predictions for the displacement of that atom. We train for a fixed number of epochs and save the parameters resulting in the lowest mean squared error (MSE) between the predicted and added atom displacements for the validation set; for this comparison, we normalize the added atom displacements to have zero mean and unit variance. Further details, including hyperparameters, are given in Appendix D. Depending on the network architecture, choice of hyperparameters, and graphics card available, the one-time pretraining can take from several hours to a few days.
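The denoising objective can be illustrated with a minimal NumPy sketch. It corrupts a set of coordinates with isotropic Gaussian noise and evaluates the MSE against the normalized displacements. The function names, the noise scale `sigma`, and the choice to normalize over all entries of one structure are assumptions made for illustration; the actual settings are those of Appendix D, and the real predictor is the ViSNet encoder followed by the gated equivariant block, not the stand-in used here.

```python
import numpy as np

def corrupt(coords, sigma, rng):
    """Add isotropic Gaussian displacements to (N, 3) coordinates."""
    noise = rng.normal(scale=sigma, size=coords.shape)
    return coords + noise, noise

def normalize(noise):
    """Rescale displacements to zero mean and unit variance (over all entries)."""
    return (noise - noise.mean()) / noise.std()

def denoising_mse(predicted, noise):
    """MSE between predicted displacements and the normalized added displacements."""
    return float(np.mean((predicted - normalize(noise)) ** 2))

rng = np.random.default_rng(0)
coords = rng.normal(size=(45, 3))            # hypothetical 45-atom molecule
noisy, noise = corrupt(coords, sigma=0.05, rng=rng)  # sigma is an assumed value

perfect = normalize(noise)                   # stand-in for an ideal network output
trivial = np.zeros_like(noise)               # stand-in for a network that predicts nothing
print(denoising_mse(perfect, noise))         # 0.0
print(denoising_mse(trivial, noise))         # about 1.0 (the variance of the targets)
```

With normalized targets, a model that always predicts zero displacement scores an MSE of about 1, which gives a convenient baseline when monitoring the validation loss.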

C. Use of the representations

In this section, we discuss the operational details of using the pretrained GNN for downstream tasks in general (summarized in Figure 1). The specific downstream tasks that we use for our numerical demonstrations are described in Section III.

1. Atom selection

We first select the atoms of interest. For example, when analyzing simulations with explicit solvent, we may select only the solute coordinates. Given the molecular coordinates, the pretrained GNN yields a learned representation $\mathbf{h}_i$ for each selected atom $i$. One can choose to base the calculations for the downstream tasks on the atomic representation vectors and/or the sums over their scalar and vector elements, but in most cases we reduce the size of the graph by coarse-graining it.

2. Coarse-graining

Let $\{S_1, \ldots, S_M\}$ be a partition of the atoms into $M$ disjoint subsets, where each subset contains atoms belonging to a structural unit, such as a functional group or monomer in a polymer, depending on the system. We pool the atomic representations $\mathbf{h}_i$ for each $S_m$:

$$
\mathbf{h}_{S_m} = \sum_{i \in S_m} \mathbf{h}_i. \tag{2}
$$

Each resulting coarse-grained representation vector represents the geometric information of the atoms within its structural unit in an average sense. Following the NLP literature, we refer to the coarse-grained representations as structural “tokens.”

How to partition the atoms is the user’s choice, but many systems have a natural structure. For example, here we study proteins and pool the representations for the atoms in each amino acid. In this case, the number of nodes in the graph is reduced by an order of magnitude. Coarse-graining reduces the computational cost and memory, and it facilitates both learning long-range relationships and interpretation of the results (e.g., attention maps).
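The coarse-graining step amounts to a scatter-add over structural units. The sketch below assumes that the atomic features are stored as an array of shape `(N, 4, d)` (three vector components plus one scalar channel) and that `unit_index` maps each atom to its residue; both the layout and the names are illustrative choices, not the storage format of any particular implementation.

```python
import numpy as np

def pool_tokens(atom_feats, unit_index, n_units):
    """Sum atomic features of shape (N, 4, d) into tokens of shape (M, 4, d).

    unit_index[i] is the structural unit (e.g., residue) that atom i belongs to.
    """
    tokens = np.zeros((n_units,) + atom_feats.shape[1:], dtype=atom_feats.dtype)
    np.add.at(tokens, unit_index, atom_feats)   # unbuffered scatter-add
    return tokens

rng = np.random.default_rng(0)
n_atoms, d = 12, 8
atom_feats = rng.normal(size=(n_atoms, 4, d))
unit_index = np.array([0, 0, 0, 0, 1, 1, 1, 2, 2, 2, 2, 2])  # hypothetical 3 residues
tokens = pool_tokens(atom_feats, unit_index, n_units=3)
print(tokens.shape)  # (3, 4, 8)
```

Because the subsets are disjoint and cover all selected atoms, summing the tokens gives the same result as summing the atomic features directly.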

3. Feature combination

To use the coarse-grained tokens in a learning task, we must combine their information in a useful fashion. How best to do so depends on the structural properties of interest, but we can generally lump approaches into two categories:

Pooling: We sum (or average) the coarse-grained tokens to obtain a single, global representation of the molecular system. Note that, for sums, this approach is equivalent to pooling the atomic representation vectors directly.

Token mixing: This approach applies a learnable mixing operation to the coarse-grained tokens to capture their interactions and dependencies56. Token mixers allow for greater expressivity than direct pooling and can capture complex interactions between structural units.

In this work, we employ two basic token mixers: (1) a standard transformer architecture36, which we refer to as “SubFormer,”57 and (2) an MLP-mixer architecture58, which we refer to as “SubMixer.” Because the GNNs here employ a message passing architecture (Appendix A), the resulting features typically do not encode global geometric information. We show that this issue can be addressed by combining SubFormer and SubMixer with a special token that encodes global information59 such as pairwise distances. Alternatively, they can be combined with geometric vector perceptrons (GVPs), equivariant GNNs introduced for biomolecular modeling60 to learn expressive positional encodings (see Algorithms 2 and 3 in Appendices B and C).
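To make the role of the global token concrete, the following sketch applies one single-head self-attention layer to a set of scalar tokens with a global token prepended. It is a generic scaled dot-product attention with random weights, not the SubFormer or SubMixer implementation; the dimensions, weight matrices, and function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_with_global_token(tokens, global_token, Wq, Wk, Wv):
    """Single-head self-attention over M tokens plus one prepended global token."""
    x = np.vstack([global_token[None, :], tokens])     # (M + 1, d)
    q, k, v = x @ Wq, x @ Wk, x @ Wv
    attn = softmax(q @ k.T / np.sqrt(k.shape[1]))       # (M + 1, M + 1)
    return attn @ v, attn

rng = np.random.default_rng(0)
M, d = 20, 16                                           # e.g., 20 residue tokens
tokens = rng.normal(size=(M, d))
global_token = rng.normal(size=d)                       # would encode, e.g., pairwise distances
Wq, Wk, Wv = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))
mixed, attn = attention_with_global_token(tokens, global_token, Wq, Wk, Wv)
print(mixed.shape, attn.shape)  # (21, 16) (21, 21)
```

Every token can attend to every other token and to the global token in a single layer, which is how a mixer of this kind can supply information that local message passing does not.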

D. Output layers

Ultimately, we combine the features from the different graph nodes and any graph-wide information (e.g., the CLS token of the transformer) and use an MLP to output quantities specific to a downstream task. Here, we consider downstream tasks that require only scalar quantities, so we input only the scalar features to the MLP, but generally scalar (invariant) and vector (equivariant) quantities can be input and output. We summarize the overall scheme in Algorithm 3.

III. DOWNSTREAM TASKS

To assess whether the geom2vec representations are useful for learning protein dynamics, we apply them to learning slowly decorrelating modes with VAMP8 and identifying metastable states with the state predictive information bottleneck (SPIB) framework45. As described previously, we apply the pretrained GNN to the coordinates from molecular dynamics trajectories and then use the resulting features as inputs to the desired task without further fine-tuning the GNN parameters. In this section, we briefly describe the two downstream tasks mathematically.

A. VAMPnets

Let $X_t$ be a Markov process and define the correlation functions

$$
C_{00} = \mathbb{E}_{\mu}\left[ f(X_0)\, f^{\top}(X_0) \right], \tag{3}
$$

$$
C_{0\tau} = \mathbb{E}_{\mu}\left[ f(X_0)\, g^{\top}(X_\tau) \right], \tag{4}
$$

$$
C_{\tau\tau} = \mathbb{E}_{\mu}\left[ g(X_\tau)\, g^{\top}(X_\tau) \right], \tag{5}
$$

where $f$ and $g$ are vectors of functions and the expectation is over trajectories initialized from an arbitrary distribution $\mu$. In our case, $X_t$ represents the molecular coordinates at time $t$, and $f(X_t)$ and $g(X_t)$ are the molecular features from a pretrained GNN. The variational approach for Markov processes (VAMP)8–12 states that $f$ and $g$ represent the slowest decorrelating modes (or collective variables; CVs) of the system when maximizing the VAMP-2 score

$$
\mathrm{VAMP\text{-}2} = \left\| C_{00}^{-1/2}\, C_{0\tau}\, C_{\tau\tau}^{-1/2} \right\|_F^2, \tag{6}
$$

where the subscript $F$ denotes the Frobenius norm.

Operationally, the components of $f$ and $g$ are learned from data by representing them by parameterized functions (e.g., neural networks in VAMPnets8,10; the output of geom2vec in our case) and maximizing (6). VAMPnets require one to specify the output dimension $k$ a priori. For the benchmark systems that we consider, we choose $k$ based on previous results in the literature.
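As a minimal sketch of how the VAMP-2 score can be estimated from a batch, the code below forms the uncentered correlation matrices from paired features and evaluates $\| C_{00}^{-1/2} C_{0\tau} C_{\tau\tau}^{-1/2} \|_F^2$. The eigenvalue floor `eps` is an assumed regularization for the matrix inversions, and the function names are illustrative; production implementations typically differ in how they regularize and differentiate through this step.

```python
import numpy as np

def inv_sqrt(mat, eps=1e-6):
    """Inverse square root of a symmetric PSD matrix, with eigenvalues floored at eps."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.maximum(vals, eps)
    return (vecs / np.sqrt(vals)) @ vecs.T

def vamp2_score(f0, gt):
    """VAMP-2 score from paired features f(X_0) and g(X_tau), each of shape (B, k)."""
    n = f0.shape[0]
    c00 = f0.T @ f0 / n
    c0t = f0.T @ gt / n
    ctt = gt.T @ gt / n
    koopman = inv_sqrt(c00) @ c0t @ inv_sqrt(ctt)
    return float(np.sum(koopman ** 2))          # squared Frobenius norm

rng = np.random.default_rng(0)
f = rng.normal(size=(5000, 3))
g_independent = rng.normal(size=(5000, 3))
print(vamp2_score(f, f))               # close to k = 3: perfectly correlated features
print(vamp2_score(f, g_independent))   # close to 0: no time-lagged correlation
```

The two limiting cases show why the score is bounded by the output dimension and why small batches, which give poorly conditioned correlation matrices, make the inversion unstable.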

As discussed below (Section IV D), we split each dataset into training and validation sets, evaluating the validation score every 10 training steps. At each training or validation step, we randomly draw a batch of trajectory-frame pairs spaced by the lag time $\tau$ to compute (6). We found that a large batch size (at least 1000 and usually 5000 for the examples here) was required to achieve a high validation score. With smaller batch sizes, we encountered numerical instabilities when inverting the correlation matrices in the VAMP-2 loss function (6). A large batch size is also needed to minimize variance in the late phase of training because neural network outputs exhibit large changes between metastable states, where fewer trajectory-frame pairs contribute. To prevent overfitting, we apply an early stopping criterion12: we stop training when the training VAMP score does not increase for 500 batches or the validation VAMP score does not increase for 10 batches. Further training details are given in Table S3.
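The batching and stopping logic described above can be sketched as follows. The helper names and the bookkeeping are hypothetical; only the patience values (500 training batches, 10 validation evaluations) and the idea of drawing frame pairs separated by a fixed lag come from the text.

```python
import numpy as np

def sample_lagged_pairs(n_frames, lag, batch_size, rng):
    """Return index arrays (t, t + lag) for randomly drawn trajectory-frame pairs."""
    start = rng.integers(0, n_frames - lag, size=batch_size)
    return start, start + lag

class EarlyStopper:
    """Signal a stop when the training score stalls for `train_patience` updates
    or the validation score stalls for `val_patience` updates."""

    def __init__(self, train_patience=500, val_patience=10):
        self.patience = {"train": train_patience, "val": val_patience}
        self.best = {"train": -np.inf, "val": -np.inf}
        self.stale = {"train": 0, "val": 0}

    def update(self, kind, score):
        """Record a score for 'train' or 'val'; return True if training should stop."""
        if score > self.best[kind]:
            self.best[kind] = score
            self.stale[kind] = 0
        else:
            self.stale[kind] += 1
        return self.stale[kind] >= self.patience[kind]

rng = np.random.default_rng(0)
t0, t1 = sample_lagged_pairs(n_frames=100_000, lag=50, batch_size=5000, rng=rng)
stopper = EarlyStopper()
stop_now = stopper.update("train", 1.2)   # False on the first improvement
```

In a training loop, `update("train", ...)` would be called after every batch and `update("val", ...)` after every validation evaluation.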

B. State Predictive Information Bottleneck (SPIB)

In the information bottleneck (IB) framework, an encoder-decoder setup is used to learn a low-dimensional (latent) representation $z$ that minimizes the information from a high-dimensional input $X$ while maximizing the information about a target $y$. The associated loss function is

$$
\mathcal{L}_{\mathrm{IB}} = I(z, y) - \beta\, I(X, z), \tag{7}
$$

where $I$ refers to the mutual information between two random variables:

$$
I(X, Y) = \int p(x, y) \ln \frac{p(x, y)}{p(x)\, p(y)} \, dx \, dy, \tag{8}
$$

and the parameter $\beta$ controls the tradeoff between prediction accuracy and the complexity of the latent representation.
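For intuition about the mutual information, the discrete analogue of its definition can be evaluated directly for a small joint probability table. The sketch below is a worked example only; the function name and the two test distributions are illustrative and are not part of the SPIB training procedure.

```python
import numpy as np

def mutual_information(joint):
    """Mutual information (in nats) of a discrete joint distribution p(x, y)."""
    joint = np.asarray(joint, dtype=float)
    joint = joint / joint.sum()
    px = joint.sum(axis=1, keepdims=True)
    py = joint.sum(axis=0, keepdims=True)
    mask = joint > 0                        # terms with p(x, y) = 0 contribute nothing
    return float(np.sum(joint[mask] * np.log(joint[mask] / (px @ py)[mask])))

independent = np.outer([0.5, 0.5], [0.3, 0.7])       # p(x, y) = p(x) p(y)
correlated = np.array([[0.5, 0.0], [0.0, 0.5]])      # y is determined by x
print(mutual_information(independent))   # 0 up to rounding
print(mutual_information(correlated))    # ln 2, about 0.693
```

A latent variable that carries no information about its input sits at the first extreme, and one that determines the target exactly sits at the second; the tradeoff parameter balances the two terms of the bottleneck objective between these limits.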

In the state predictive information bottleneck (SPIB) extension of IB45, the inputs are molecular features $X_t$ at time $t$, and the targets are state labels $y_{t+\tau}$ that indicate the state of the system at time $t+\tau$. The latent representation and state labels are learned simultaneously by predicting the state labels at time $t+\tau$ given the molecular features at time $t$.

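To learn the latent representation $z$, SPIB maximizes the objective

$$
\mathcal{L}_{\mathrm{SPIB}} = \mathbb{E}_{X_t,\, y_{t+\tau}} \left[ \mathbb{E}_{z \sim p_\theta(z \mid X_t)} \left[ \ln q_\theta(y_{t+\tau} \mid z) - \beta \ln \frac{p_\theta(z \mid X_t)}{r_\theta(z)} \right] \right]. \tag{9}
$$

The encoder generates the latent representation $z$ from the input $X_t$ with probability $p_\theta(z \mid X_t)$, which is a multivariate normal distribution with learned mean $\mu_\theta(X_t)$ and learned covariance $\Sigma_\theta(X_t)$. The decoder $q_\theta(y \mid z)$ takes the latent representation and returns the probability of each state label; it is represented by a neural network with output dimension equal to the number of possible state labels. The quantity $r_\theta(z)$ is a prior. The state labels are updated during training as follows:

$$
y_{t+\tau} = \operatorname*{arg\,max}_{y}\; q_\theta\!\left( y \mid \mu_\theta(X_{t+\tau}) \right). \tag{10}
$$

It is important to note that (10) allows the number of distinct states that are populated to fluctuate (unpopulated states are ignored).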
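The label update can be sketched as an argmax over decoder outputs evaluated at the mean latent code of each frame, which is one common reading of the SPIB refinement step and is assumed here. The linear encoder and decoder, the dimensions, and all names below are hypothetical stand-ins for the trained networks.

```python
import numpy as np

def update_labels(features, encoder_mean, decoder_logits):
    """Assign each frame the most probable state given its mean latent code."""
    z_mean = encoder_mean(features)          # (T, latent_dim)
    logits = decoder_logits(z_mean)          # (T, n_states)
    return np.argmax(logits, axis=1)

rng = np.random.default_rng(0)
T, n_feat, latent_dim, n_states = 1000, 6, 2, 10
W_enc = rng.normal(size=(n_feat, latent_dim))    # hypothetical linear encoder mean
W_dec = rng.normal(size=(latent_dim, n_states))  # hypothetical linear decoder
features = rng.normal(size=(T, n_feat))

labels = update_labels(features, lambda x: x @ W_enc, lambda z: z @ W_dec)
populated = np.unique(labels)                # states that currently hold frames

lag = 50                                     # assumed lag in frames
inputs, targets = features[:-lag], labels[lag:]  # predict the label at t + lag from X_t
print(len(populated), "of", n_states, "states populated")
```

Because only states that win the argmax for at least one frame remain populated, the number of distinct labels can shrink over successive updates without being fixed in advance.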

We follow Wang and Tiwary45 and use a variational mixture of posteriors for the prior61:

$$
r_\theta(z) = \frac{\sum_{i} \omega_i \, p_\theta(z \mid u_i)}{\sum_{i} \omega_i}, \tag{11}
$$

where $\omega_i$ and $u_i$ are learned parameters. In this work, we prepared the initial state labels by performing $k$-means clustering on the CVs learned from VAMPnets based on distances between atoms with clusters. Training reduces the number of distinct states that are populated to the estimated number of metastable states. Further training details are given in Table S2.

IV. SYSTEMS STUDIED

We examine the performance of geom2vec for analyzing data from long molecular dynamics simulations of three well-characterized fast-folding proteins (chignolin, trp-cage, and villin)62. The data for each system is a single, unbiased simulation, which we assume approximately samples the equilibrium distribution. In this section, we introduce each system and briefly describe its structure and dynamics.

A. Chignolin

Chignolin is a 10-residue fast-folding protein with sequence YYDPETGTWY. The folded state consists of three β-hairpin structures that are distinguished by hydrogen bonding between the threonine side chains and their dihedral angles, which interconvert on the nanosecond timescale63. The trajectory that we analyze is 106 μs long at 340 K and saved every 0.2 ns62. For VAMPnet fitting, we choose .

B. Trp-cage

Trp-cage is a 20-residue fast-folding protein64; here we study the K8A mutant with sequence DAYAQWLADGGPSSGRPPPS. Its secondary structure consists of an α-helix (residues 2–9), a short 3$_{10}$-helix (residues 11–14), and a polyproline II helix (residues 17–19); the protein takes its name from Trp6, which is in the core of the folded state. The trajectory is 208 μs long at 290 K and saved every 0.2 ns62. Previous studies of this trajectory generally identified the folded and unfolded states, with varying numbers of intermediates and misfolded states46,65. For VAMPnet fitting, we choose .

C. Villin

The 35-residue villin headpiece subdomain (HP35)66 is a fast-folding protein with sequence LSDEDFKAVFGMTRSAFANLPLWnLQQHLnLKEKGLF, where nL refers to the unnatural amino acid norleucine. The K65nL/N68H/K70nL mutant was engineered to fold more rapidly67. The secondary structure of villin consists of three α-helices at residues 3–10, 14–19, and 22–32. Villin has a hydrophobic core centered on residues Phe6, Phe10, and Phe17. The trajectory that we study is 125 μs long at 360 K and saved every 0.2 ns62. Previous studies typically identified three states: a folded state, an unfolded state, and a misfolded state12. Wang et al.68 proposed two primary folding pathways, where either the C-terminus or the N-terminus folds first, ultimately leading to the native state. Additionally, a cooperative hydrophobic interaction may facilitate a third folding pathway. For VAMPnet fitting, we choose .

D. Training-validation split

Although previous studies used a random split8,33,50, we observe that, due to the strong correlation between successive structures sampled by molecular dynamics simulations, a random split allows networks to achieve high validation scores even when they have memorized the training data rather than learned useful abstractions from it; the models then perform poorly on independent data. Consequently, for all our numerical experiments, we split the data into training and validation sets by time. That is, we select the first 50% of the long trajectory for training and the remainder for validation. If we had access to multiple independent trajectories, randomly choosing trajectories for training and validation would also be appropriate. Some studies split the trajectory into equal segments and draw random segments for training and validation46,65 ($k$-fold cross-validation). When there are only two segments, this approach is identical to ours. When every structure is its own segment, one recovers the random split. Intermediate numbers of segments result in intermediate amounts of correlation between the training and validation sets. In cases where we vary the amount of training data, we first select the first 50% of the trajectory and then divide only this half of the trajectory into segments that we draw randomly for training; the second half of the trajectory is used as a holdout validation set. This approach is fundamentally different from cross-validation and minimizes the correlation between the training and validation datasets.
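The splitting scheme can be written compactly in terms of frame indices. In this sketch the function names are hypothetical, and `fraction` is interpreted as the fraction of training-half segments that are kept, which is an assumption about how the training-set size is varied.

```python
import numpy as np

def time_split(n_frames, train_fraction=0.5):
    """Split frame indices by time: the first part for training, the rest for validation."""
    cut = int(n_frames * train_fraction)
    return np.arange(cut), np.arange(cut, n_frames)

def subsample_training(train_idx, n_segments, fraction, rng):
    """Divide the training half into contiguous segments and keep a random subset."""
    segments = np.array_split(train_idx, n_segments)
    n_keep = max(1, round(fraction * n_segments))
    chosen = rng.choice(n_segments, size=n_keep, replace=False)
    return np.concatenate([segments[i] for i in sorted(chosen)])

rng = np.random.default_rng(0)
train_idx, val_idx = time_split(n_frames=1000)
subset = subsample_training(train_idx, n_segments=20, fraction=0.5, rng=rng)
print(len(train_idx), len(val_idx), len(subset))  # 500 500 250
```

The validation indices never change when the training subset is redrawn, so scores obtained with different amounts of training data remain directly comparable.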

V. RESULTS

A. VAMPnets

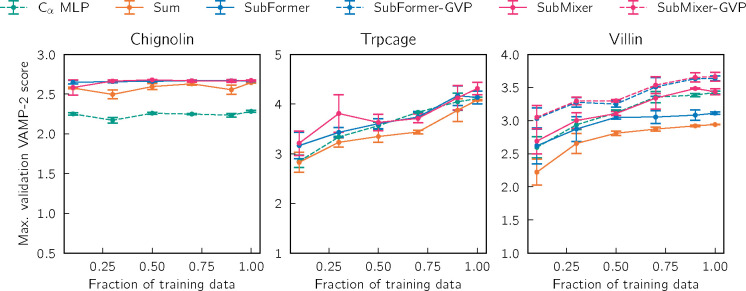

For each system and token mixer architecture pair that we consider, we independently train three VAMPnets using different random number generator seeds (and the training-validation split described in Section IV D). We report the training and validation VAMP-2 scores for the different token mixer architectures for each of the three systems in Figures S1 and S2. For chignolin, GNNs with pooling (summing), SubMixer, and SubFormer reach approximately the same maximum validation score. GNNs with SubMixer and SubFormer require fewer epochs to reach the convergence criteria, but they require more computational time per epoch, as we discuss in Section VI. For both villin and trp-cage, the token mixers generally outperform pooling.

Figure 2 displays the VAMP-2 scores for VAMPnets trained with different training dataset sizes, varying from 5% to 50% of the available data. For chignolin, the GNNs clearly outperform a multilayer perceptron (MLP) that takes distances between pairs of atoms as inputs; there is not a significant difference between pooling and the mixers considered. For trp-cage and villin, the GNN with pooling consistently achieves the lowest scores. The distance-based MLP and GNN with SubMixer perform comparably, presumably because the distances between pairs of atoms are sufficient to describe the folding (in contrast to chignolin, as we discuss below). For villin, we combined SubMixer and SubFormer with GVP and augmented them with a global token; this enables the GNNs to outperform the distance-based MLP. The improvement is particularly striking for SubFormer. The models with GVP are more expressive because they use the equivariant features at the token mixing stage and directly mix them with global features after message-passing. We note that the VAMP scores that we achieve are lower than published ones owing to our choice of the training-validation split (Section IV D) and output dimension $k$. While the amount of data does not significantly impact the performance for chignolin, the trp-cage and villin results suggest that additional data would permit achieving higher scores.

FIG. 2.

VAMPnets with various geom2vec architectures. The amount of training data was varied by dividing the training data into 20 trajectory segments of equal length and then randomly selecting the indicated fraction for training. The validation set is held fixed as the second half of each trajectory. Error bars show standard errors over three independent runs.

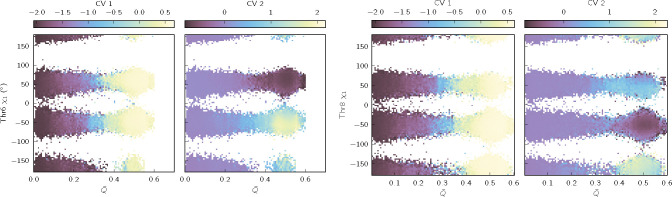

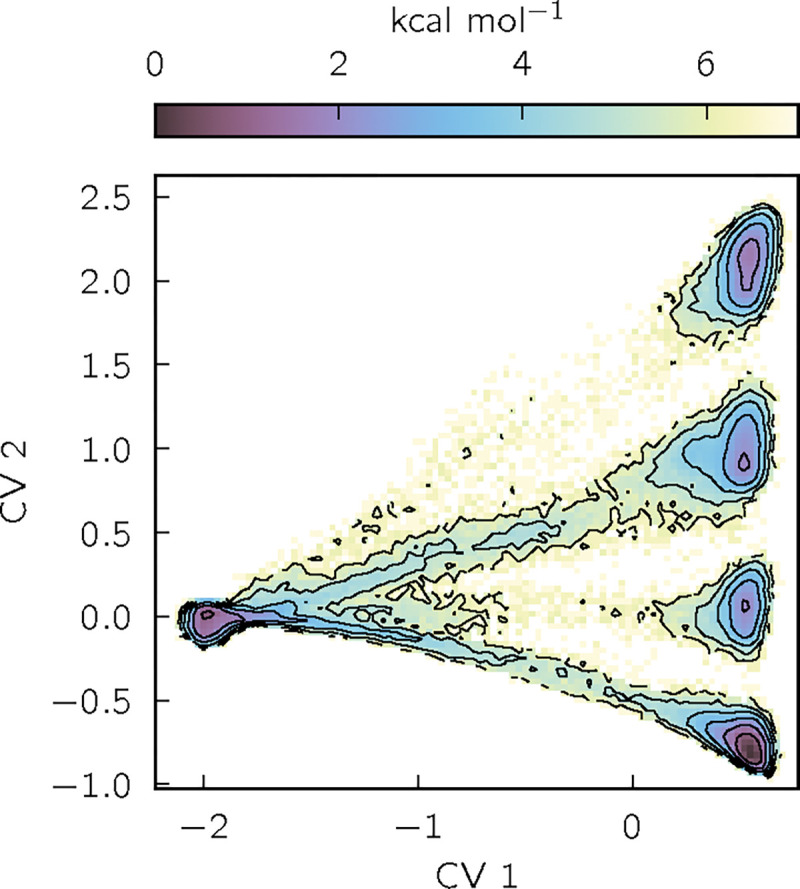

To visualize the results, we build histograms of learned CV-value pairs, which we convert to potentials of mean force (PMFs) by taking the negative logarithm (Figures 3, S3, S4, and S5). We also show average values of CVs as functions of physically-motivated coordinates (Figures 4, S6, and S7).
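Converting a histogram of CV pairs into a PMF can be sketched as below. Multiplying the negative logarithm by $k_\mathrm{B} T$ to obtain units of kcal/mol, shifting the minimum to zero, and the bin count are assumptions made here for illustration; the samples are synthetic.

```python
import numpy as np

KB = 0.0019872041  # Boltzmann constant in kcal/(mol K)

def pmf_2d(cv1, cv2, temperature, bins=50):
    """PMF in kcal/mol from a 2D histogram; empty bins are assigned +inf."""
    hist, xedges, yedges = np.histogram2d(cv1, cv2, bins=bins, density=True)
    with np.errstate(divide="ignore"):
        pmf = -KB * temperature * np.log(hist)
    pmf -= pmf[np.isfinite(pmf)].min()       # shift the global minimum to zero
    return pmf, xedges, yedges

rng = np.random.default_rng(0)
cv1 = rng.normal(size=20_000)                # synthetic stand-ins for learned CVs
cv2 = rng.normal(size=20_000)
pmf, xedges, yedges = pmf_2d(cv1, cv2, temperature=340.0)
print(pmf.shape)  # (50, 50)
```

The returned array can be passed to a contour plotting routine with levels spaced by 1 kcal/mol; bins that were never visited stay at infinity and are left blank.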

FIG. 3.

Potential of mean force (PMF) of chignolin as a function of the first two CVs learned by a VAMPnet trained with SubMixer. Contours are drawn every 1 kcal/mol. See Figures S3, S4, and S5 for corresponding plots for other architectures and proteins.

FIG. 4.

Chignolin VAMPnet (with SubMixer) CVs as a function of two physical coordinates: the fraction of native contacts and the side chain dihedral angle of Thr6 (left) or Thr8 (right). The fraction of native contacts is smoothed with a 1-ns moving window centered on each time point. We define native contacts as pairs of residues that are three or more positions apart in sequence and have at least one distance between non-hydrogen atoms that is less than 4.5 Å in the crystal structure (PDB ID 5AWL, Ref. 69). See Figures S6 and S7 for analogous plots for trp-cage and villin.

The advantages of the GNN architecture are well illustrated by the results for chignolin. A previous machine-learning study of chignolin identified two slow CVs, one for the folding-unfolding transition and one distinguishing competing folded states63. Our VAMPnets appear to recover these two CVs (Figures 3 and S3; results shown are with SubMixer), distinguishing folded and unfolded states with CV 1 and four folded states with CV 2. To understand the physical differences between the folded states, we plot CVs 1 and 2 as functions of the fraction of native contacts and the side chain dihedral of Thr6 or Thr8 (Figure 4). The fraction of native contacts clearly correlates with CV 1, consistent with earlier studies. CV 2 distinguishes the folded states by the configurations of Thr6 and Thr8 side chains, which can each occupy two rotamers, yielding four possible folded states. These side chain dynamics could not be detected by VAMPnets that take backbone internal coordinates as inputs, as is common, or even GNNs limited to backbone atoms (Bonati, Piccini, and Parrinello63 included distances to side chain atoms and then manually curated the inputs). This makes clear the usefulness of the pre-training approach that we take here, which enables treating all the atoms with an architecture that supports both scalar and vector features.
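The native-contact coordinate used in this analysis can be sketched from its definition: residue pairs at least three positions apart whose minimum heavy-atom distance in the reference structure is below 4.5 Å. How a contact is counted as formed in a given frame is not specified, so the hard cutoff in `fraction_native` is an assumption, as are the function names and the toy one-atom-per-residue geometry.

```python
import numpy as np

def min_residue_distances(coords, res_index, n_res):
    """Minimum heavy-atom distance between every pair of residues."""
    diff = coords[:, None, :] - coords[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)                    # (N, N) atom distances
    out = np.full((n_res, n_res), np.inf)
    np.minimum.at(out, (res_index[:, None], res_index[None, :]), dist)
    return out

def native_contacts(ref_coords, res_index, n_res, cutoff=4.5, min_sep=3):
    """Boolean upper-triangular mask of native contacts in the reference structure."""
    d = min_residue_distances(ref_coords, res_index, n_res)
    sep = np.abs(np.arange(n_res)[:, None] - np.arange(n_res)[None, :])
    return np.triu((d < cutoff) & (sep >= min_sep))

def fraction_native(coords, res_index, contacts, cutoff=4.5):
    """Fraction of native contacts formed in one frame (hard cutoff, an assumption)."""
    d = min_residue_distances(coords, res_index, contacts.shape[0])
    return float(np.mean(d[contacts] < cutoff))

def smooth(values, window=5):
    """Centered moving average; 5 frames cover roughly 1 ns at 0.2 ns per frame."""
    return np.convolve(values, np.ones(window) / window, mode="same")

res_index = np.arange(5)                      # toy system: one atom per residue
ref = np.zeros((5, 3))
ref[:, 0] = 1.4 * np.arange(5)                # residues spaced 1.4 Å along x
contacts = native_contacts(ref, res_index, n_res=5)
q_ref = fraction_native(ref, res_index, contacts)
q_stretched = fraction_native(2.0 * ref, res_index, contacts)
print(int(contacts.sum()), q_ref, q_stretched)  # 2 1.0 0.0
```

Applied to a trajectory, `fraction_native` would be evaluated per frame and the resulting series passed through `smooth` before plotting against the learned CVs.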

B. SPIB

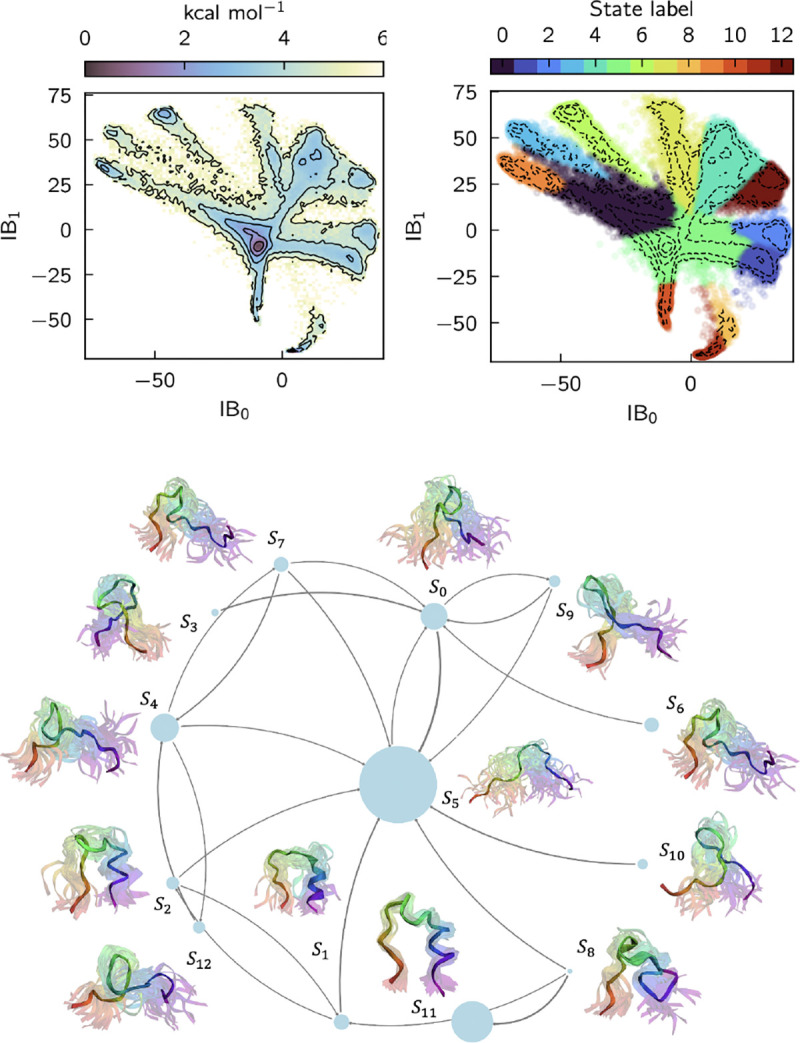

1. Trp-cage

Figure 5 shows a Markov state model based on the states learned for trp-cage with a lag time of 20 ns. There are 13 metastable states. State S11 represents the folded ensemble with a well-defined structure. In states and the α-helix is folded, and the 3$_{10}$-helix and polyproline II helix are unfolded. In contrast, in state the 3$_{10}$-helix is folded, and the α-helix and polyproline II helix are unfolded. State represents a fully unfolded state, which acts as a hub that connects all intermediate states and the folded state, S11. States , and are also largely unfolded and differ with regard to the specific conformations of the helices.
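A Markov state model of this kind can be estimated from the sequence of state labels by counting transitions at the chosen lag. The sketch below uses a simple row-normalized count matrix, which is an assumption; it does not enforce detailed balance, and the estimator used for the figure is not specified. The label sequence is synthetic.

```python
import numpy as np

def transition_matrix(labels, n_states, lag):
    """Row-normalized transition counts between states at the given lag (in frames)."""
    counts = np.zeros((n_states, n_states))
    np.add.at(counts, (labels[:-lag], labels[lag:]), 1.0)
    rows = counts.sum(axis=1, keepdims=True)
    # Rows of states that are never visited are left as zeros.
    return np.divide(counts, rows, out=np.zeros_like(counts), where=rows > 0)

frame_spacing_ns = 0.2
lag_frames = int(round(20.0 / frame_spacing_ns))   # 20 ns lag corresponds to 100 frames

rng = np.random.default_rng(0)
labels = rng.integers(0, 13, size=5000)            # synthetic labels for 13 states
T = transition_matrix(labels, n_states=13, lag=lag_frames)
print(T.shape)  # (13, 13)
```

Each row of the matrix gives the probabilities of moving from one state to every other state after one lag time, which is the information summarized by the arrows in a state diagram.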

FIG. 5.

SPIB for trp-cage. All results are obtained from a GNN with SubFormer-GVP token mixer. (top left) PMF as a function of the first two information bottleneck coordinates (IBs). Contours are drawn every 1 kcal/mol. (top right) Same contours colored by SPIB assigned labels. (bottom) Learned Markov State Model. The highlighted structures are chosen randomly from the trajectory. The N-terminus is violet and the C-terminus is red.

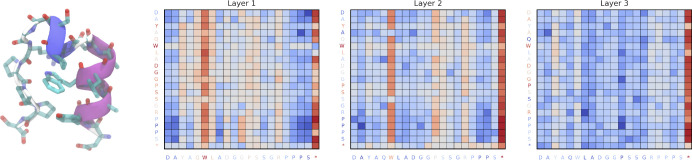

We show attention maps of the SubFormer blocks averaged over all structures in Figure 6 and over the structures in each state in Figures S8, S9, and S10. The attention maps are averaged over all heads of the transformer, yielding $(M+1) \times (M+1)$ matrices, where $M = 20$ for trp-cage represents the number of sequence positions (tokens), and the additional row and column correspond to a global token that encodes pairwise distances between the atoms57,59. The learned attention maps can be interpreted in terms of the structure. The residues that are most consistently activated across states and layers are Trp6 (W6) and Asp9–Ser14 (D9–S14), which roughly correspond to the 3$_{10}$-helix. Tyr3 (Y3), Gln5 (Q5), Gly15 (G15), and Arg16 (R16) are activated in selected states. The attention thus appears to track the packing of residues around Trp6. Across all three layers, the global token remains highly active, consistent with the fact that the metastable states of trp-cage are reasonably well-characterized by the distances between the atoms14,65. The fact that tokens other than the global token participate in the attention mechanism again underscores the ability of GNNs to go beyond distances between atoms.
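The averaging used to build such maps can be sketched for one transformer layer as follows. The array layout `(frames, heads, M + 1, M + 1)`, the function names, and the synthetic attention weights and labels are assumptions made for illustration.

```python
import numpy as np

def average_attention(attn):
    """Average attention of shape (frames, heads, M + 1, M + 1) over frames and heads."""
    return attn.mean(axis=(0, 1))

def token_importance(avg_attn):
    """Row-wise (query) and column-wise (key) sums of an averaged attention map."""
    return avg_attn.sum(axis=1), avg_attn.sum(axis=0)

rng = np.random.default_rng(0)
n_frames, n_heads, n_tokens = 50, 4, 21          # 20 residue tokens plus a global token
raw = np.exp(rng.normal(size=(n_frames, n_heads, n_tokens, n_tokens)))
attn = raw / raw.sum(axis=-1, keepdims=True)     # synthetic row-normalized attention

avg = average_attention(attn)
query_sum, key_sum = token_importance(avg)
log_map = np.log10(avg)                          # log scale for plotting

labels = rng.integers(0, 3, size=n_frames)       # synthetic state labels
avg_state0 = average_attention(attn[labels == 0])  # per-state average
print(avg.shape)  # (21, 21)
```

Since each attention row sums to one, the row-wise sums are uninformative on their own for a single layer, whereas the column-wise sums show which tokens receive the most attention.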

FIG. 6.

SPIB SubFormer-GVP attention. (left) A typical fully folded trp-cage structure (classified as S11) with the central tryptophan residue (Trp6/W6) highlighted. (right three plots) Log-scaled averaged attention maps from three layers of the SubFormer block in the SubFormer-GVP architecture. The sequence is indicated by one-letter amino acid codes, and * represents a global token that encodes pairwise distances between the atoms. Tokens from query and key projections are colored according to the row-wise and column-wise sum of layer-wise attention weights. Results shown are for all structures in the trajectory. Results for individual states are in Figures S8, S9, and S10.

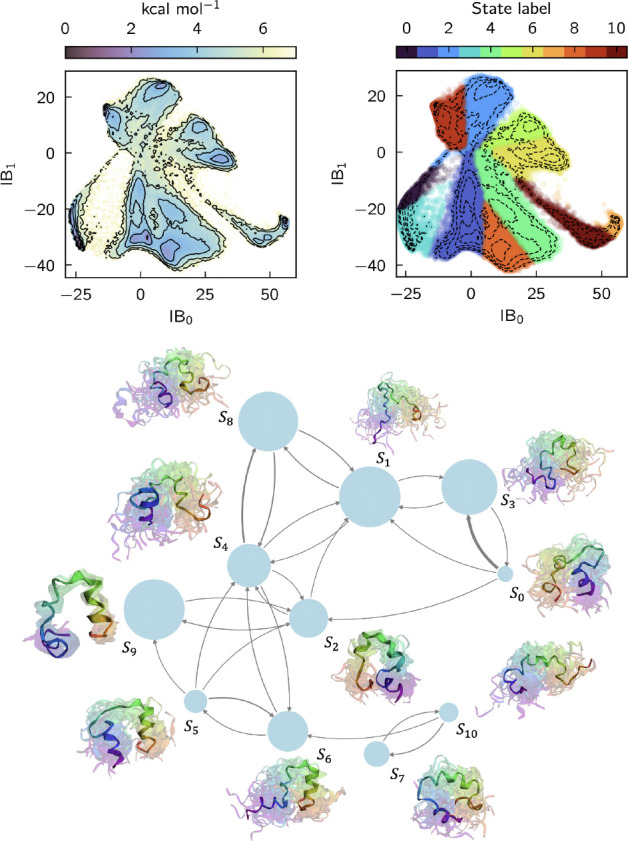

2. Villin

Figure 7 shows a Markov state model based on the states learned for villin HP35 with a lag time of 10 ns. There are 11 metastable states. State represents the fully folded structure, in which all three α-helices are folded and packed compactly. States and correspond to fully unfolded states. In states and helix 1 is folded, suggesting a pathway in which folding initiates at the N-terminus, while in states and helix 3 is folded, suggesting a pathway in which folding initiates at the C-terminus. Both of these pathways are discussed in the literature (see Ref. 68 and references therein).

FIG. 7.

SPIB for villin. All results are obtained from a GNN with a SubFormer-GVP token mixer. (top left) PMF as a function of the first two information bottleneck coordinates (IBs). Contours are drawn every 1 kcal/mol. (top right) Same contours colored by SPIB-assigned labels. (bottom) Learned Markov state model. The highlighted structures are chosen randomly from the trajectory. The N-terminus is violet and the C-terminus is red.

The attention maps (Figures S11, S12, and S13) exhibit patterns that correspond to features of the folded structure. Notably, the attention consistently focuses on the tokens representing Val9 (V9), Gly11 (G11), Met12 (M12), Arg14 (R14), Pro21 (P21), and Trp23 (W23). These residues correspond roughly to the turns between helices. In the attention maps for states 0, 2, 5, and 6, the tokens corresponding to helix 3 feature prominently; the tokens corresponding to helix 1 are also activated in state 0. The attention maps thus suggest that the network tracks the folding and packing of the helices.

It is interesting to compare our attention maps with those of Ghorbani et al.33 and Huang et al.35. Those studies leverage a graph attention network (GAT)70 to enhance expressive power and interpretability of their models. GAT computes representations of each node by attending to its one-hop neighboring nodes, which captures local dependencies but fails to model long-range interactions. In contrast, GNN-Transformer hybrids such as SubFormer57 allocate short-range interactions to the MP-GNN and use the self-attention mechanism for long-range interactions. This approach not only supports multimodal features (e.g., a global token) but also enables distant nodes to attend to each other, regardless of graph distance. This difference is evident in the attention map patterns: GAT attention maps33,35 show predominantly diagonal patterns, reflecting a focus on local neighborhoods, while SubFormer-GVP attention maps reveal vertical, blockwise, and global patterns, reflecting instead a focus on specific amino acids and their long-range interactions.
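The structural difference between the two attention schemes can be illustrated with a toy example. The sketch below applies the same random scores under a one-hop neighborhood mask and under no mask. It does not reproduce the GAT scoring function or the SubFormer architecture; the chain graph, the random scores, and all names are assumptions for illustration only.

```python
import numpy as np

def masked_softmax(scores, mask):
    """Softmax over keys, restricted to entries where mask is True."""
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)  # masked entries become exactly zero
    return weights / weights.sum(axis=-1, keepdims=True)

n_nodes = 6
rng = np.random.default_rng(0)
scores = rng.normal(size=(n_nodes, n_nodes))
idx = np.arange(n_nodes)

# One-hop neighborhoods of a linear chain (with self-loops): a banded mask.
one_hop = np.abs(idx[:, None] - idx[None, :]) <= 1
full = np.ones((n_nodes, n_nodes), dtype=bool)

local_attn = masked_softmax(scores, one_hop)   # weight confined near the diagonal
global_attn = masked_softmax(scores, full)     # any node can attend to any other
```

In the one-hop case the attention matrix can only be nonzero near the diagonal, whereas the unmasked case can place weight on arbitrary pairs, including pairs far apart in the graph.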

VI. COMPUTATIONAL REQUIREMENTS

Equivariant geometric GNNs use both invariant and equivariant features to capture the three-dimensional structure of molecules. For typical numbers of features, the memory and time requirements are high even for small graphs. To illustrate, we show the memory and time requirements for inference using a TorchMD-ET GNN with a small batch size with varying numbers of hidden channels (numbers of features) for trp-cage (144 non-hydrogen atoms) and villin (272 non-hydrogen atoms) in Figure S14. Even this already requires tens of gigabytes of memory and several seconds; a more complex architecture like ViSNet is expected to increase the memory and time requirements by roughly 50%. The memory and time scale linearly with both the number of atoms in the graph and the batch size. We use a batch size of 5000 for VAMP (except for GVP variants, for which we use 1000) and 1000 for SPIB, making training a GNN on the fly prohibitive, as we discuss in further detail below.

Geom2vec decouples training the GNNs and the networks for the downstream tasks. This allows us to use a small batch size for pretraining the GNNs (which need be done only once), and the networks for the downstream tasks take as inputs the tokens, which are fewer in number than the number of graph nodes. For example, here, the number of graph nodes is the number of non-hydrogen atoms, while the number of tokens is the number of amino acids, which is an order of magnitude smaller.
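The reduction from graph nodes to tokens can be sketched as a pooling of atom-level features within each residue. The example below assumes that the pooling is a sum and that features are stored with shapes (atoms, channels) for scalars and (atoms, 3, channels) for vectors; these conventions and all names are illustrative assumptions rather than the geom2vec implementation.

```python
import numpy as np

def pool_to_tokens(scalar, vector, atom_to_token, n_tokens):
    """Sum atom-level features over the atoms assigned to each token.

    scalar: (n_atoms, d); vector: (n_atoms, 3, d);
    atom_to_token: (n_atoms,) integer token (residue) index of each atom.
    """
    scalar_tokens = np.zeros((n_tokens, scalar.shape[1]))
    vector_tokens = np.zeros((n_tokens, 3, vector.shape[2]))
    np.add.at(scalar_tokens, atom_to_token, scalar)
    np.add.at(vector_tokens, atom_to_token, vector)
    return scalar_tokens, vector_tokens

# Toy example: five atoms, the first two in residue 0 and the rest in residue 1.
atom_to_token = np.array([0, 0, 1, 1, 1])
scalar = np.ones((5, 4))
vector = np.ones((5, 3, 4))
scalar_tokens, vector_tokens = pool_to_tokens(scalar, vector, atom_to_token, n_tokens=2)
```

Because a sum of vectors rotates with its summands, pooling of this kind preserves the equivariance of the vector features.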

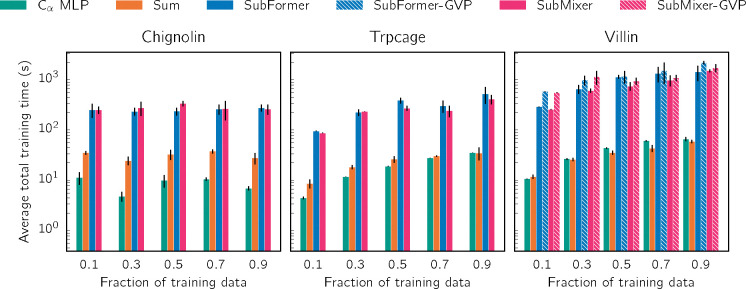

The computational costs for training VAMPnets with different token mixers are shown in Figure 8. The simplest GNN using pooling is not much more computationally costly than an MLP that takes distances between atoms as inputs. The GNNs with token mixers are about an order of magnitude more computationally costly but still manageable (hundreds of seconds) even without advanced acceleration techniques such as flash-attention or compilation. We expect the memory and computational requirements to scale with token number quadratically for SubFormer and subquadratically for SubMixer (depending on the expansion dimension in the token-mixing blocks); these requirements should scale linearly with respect to embedding dimension and network depth.

FIG. 8.

Computational time for training a single VAMPnet. We employ early stopping and stop training when the training VAMP score does not increase for 1000 batches or the validation VAMP score does not increase for 10 batches. Times reported are averages over three training runs, with validation performed at each step using the second half of each trajectory, as depicted in Figure S2. All times are for training on a single NVIDIA A40 GPU.
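The stopping rule described in the caption can be sketched with two patience counters, one per score. The class and function names below are hypothetical, and the toy scores are made up; the sketch only illustrates the logic of stopping when either score has stalled for its allotted number of batches.

```python
class PatienceCounter:
    """Signals a stop once a score has failed to increase `patience` times in a row."""

    def __init__(self, patience):
        self.patience = patience
        self.best = float("-inf")
        self.stale = 0

    def update(self, score):
        if score > self.best:
            self.best = score
            self.stale = 0
        else:
            self.stale += 1
        return self.stale >= self.patience

train_monitor = PatienceCounter(patience=1000)  # training VAMP score
val_monitor = PatienceCounter(patience=10)      # validation VAMP score

def should_stop(train_score, val_score):
    # Update both counters on every batch, then stop if either has run out.
    stop_train = train_monitor.update(train_score)
    stop_val = val_monitor.update(val_score)
    return stop_train or stop_val

# Toy run: the training score keeps rising while the validation score is flat.
stopped_at = None
for batch in range(100):
    if should_stop(train_score=0.01 * batch, val_score=1.0):
        stopped_at = batch
        break
```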

To estimate the memory usage and training time for a VAMPnet based on a GNN without pretraining, we consider a TorchMD-ET model with specific settings: a batch size of 1000, a hidden dimension of 64, and 6 layers; we assume 50 epochs are required to converge. For trp-cage, a batch size of 100 required 10.64 GB of memory. Since memory usage and training time should scale linearly with batch size, we estimate that increasing the batch size to 1000 would raise the memory usage to approximately 10.64 GB × 10 = 106.4 GB. Similarly, we estimate the training time at this larger batch size to be around 1.8 hours (excluding validation). The corresponding numbers for villin, which is about twice the size, are proportionally larger (219.0 GB and 4.0 hours for training). As noted above, employing a more complex architecture like ViSNet would further increase both time and memory requirements by roughly 50%. In the present study, we employ a batch size of 5000 for VAMP (except for GVP variants, as noted above); the batch size for SPIB is 1000, but it generally requires more iterations to converge. While rough, these estimates show that, without pretraining, equivariant GNNs for analyzing molecular dynamics are beyond the resources available to most researchers. By contrast, with pretraining, they are well within reach (Figure 8).
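The trp-cage memory estimate is a linear extrapolation in batch size, which the short sketch below reproduces. The function name is hypothetical, and the factor of 1.5 simply encodes the rough 50% overhead quoted above for a ViSNet-like architecture.

```python
def scale_linearly(measured, measured_batch, target_batch):
    """Extrapolate a cost that is assumed to be proportional to batch size."""
    return measured * target_batch / measured_batch

# Measured: 10.64 GB at a batch size of 100 (trp-cage, TorchMD-ET).
trp_cage_memory_gb = scale_linearly(10.64, measured_batch=100, target_batch=1000)

# Rough allowance for a more complex architecture (about 50% more).
visnet_like_memory_gb = 1.5 * trp_cage_memory_gb

print(f"TorchMD-ET, batch 1000: {trp_cage_memory_gb:.1f} GB")
print(f"with ~50% overhead:     {visnet_like_memory_gb:.1f} GB")
```

Both figures exceed the memory of a single 48 GB GPU such as the NVIDIA A40 used for the timings in Figure 8.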

VII. CONCLUSIONS

In this paper, we use pretrained GNNs to convert molecular conformations into rich vector representations that can then be used for diverse downstream tasks. Decoupling the training of the GNNs and the networks for the downstream tasks dramatically decreases the memory and computational time requirements. Here, we focused on downstream tasks concerned with analyzing dynamics in molecular simulations, specifically VAMP and SPIB. For these tasks, we were able to use equivariant GNNs that take all non-hydrogen atoms of small proteins as inputs for the first time. The results for folding and unfolding of small proteins show that the GNNs use information beyond distances between atoms, which are commonly used as input features.

For pretraining, we used a simple denoising task with a dataset of structures of diverse molecules. Given that this dataset was not specific to proteins and/or VAMP and SPIB, we expect the present models to generalize to other classes of molecules and tasks, but quantitative tests on a wider variety of systems and tasks remain to be done. It would be interesting to investigate whether more complex pretraining strategies47,71 together with datasets specific to the class of molecules of interest (e.g., those specific to proteins48,72,73) can improve performance. We also explored a variety of token mixers and observed that more complex architectures were able to yield better results, which suggests there is scope for further engineering in this regard; these should be informed by ablation studies.

In our tests, we took care to split the dataset in a way that minimized the correlation between the training and validation datasets, and we believe that this should be standard practice. Because the data consisted of long, unbiased trajectories62, there were relatively few events of interest (here, folding and unfolding). Adapting our approach to methods that take short trajectories51,74, which allow for greater control of sampling74,75, is an important area of study for the future.

Supplementary Material

ACKNOWLEDGMENTS

We thank D. E. Shaw Research for making the molecular dynamics trajectories available to us. This work was supported by National Institutes of Health award R35 GM136381 and National Science Foundation award DMS-2054306. S.C.G. acknowledges support from the National Science Foundation Graduate Research Fellowship under Grant No. 2140001. Z.P. was supported with funding from the University of Chicago Data Science Institute’s AI+Science Research Initiative. This work was completed with computational resources administered by the University of Chicago Research Computing Center, including Beagle-3, a shared GPU cluster for biomolecular sciences supported by the NIH under the High-End Instrumentation (HEI) grant program award 1S10OD028655–0.

Appendix A: GNN architectures

1. Equivariant GNNs

Assuming $\mathbf{R} = (\mathbf{r}_1, \ldots, \mathbf{r}_N)$ defines the molecular conformation, where $\mathbf{r}_i$ represents the three-dimensional (3D) coordinates of the $i$-th atom, two of the most important symmetries for a GNN to obey are translation and rigid-body rotation invariances. These invariances require that the output of the GNN, $f(\mathbf{R})$, remains unchanged when the molecule is translated by a vector $\mathbf{t}$, i.e., $f(\mathbf{r}_1 + \mathbf{t}, \ldots, \mathbf{r}_N + \mathbf{t}) = f(\mathbf{R})$, or when the molecule undergoes a rigid-body rotation represented by a rotation matrix $Q$, i.e., $f(Q\mathbf{r}_1, \ldots, Q\mathbf{r}_N) = f(\mathbf{R})$. Translation invariance can be easily realized by using the relative displacements or internal coordinates of atoms. On the other hand, rotational invariance and equivariance can be realized in two ways:

- representing the relative atom positions by internal coordinates (e.g., bond distances, bond angles, and dihedral angles) as in conventional classical force fields (“scalar-based network”)53,54,76,77;

- encoding the relative displacement vectors with spherical harmonics, which are then propagated with tensor products (“group-equivariant network”)20–22.

The two approaches are theoretically and practically equivalent53,54,78, but group-equivariant networks need to store the features for each irreducible representation and are more computationally costly owing to the tensor products. Therefore, we chose to employ scalar-based GNNs.
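The distinction between invariance and equivariance can be checked numerically on a toy configuration. In the sketch below, pairwise distances stand in for an invariant (scalar) feature and a displacement vector stands in for an equivariant feature; the random coordinates and all names are illustrative assumptions.

```python
import numpy as np

def pairwise_distances(coords):
    """All interatomic distances for coordinates of shape (n_atoms, 3)."""
    diff = coords[:, None, :] - coords[None, :, :]
    return np.linalg.norm(diff, axis=-1)

rng = np.random.default_rng(0)
coords = rng.normal(size=(6, 3))
shift = rng.normal(size=3)

# Random rotation: orthogonalize a random matrix and enforce det = +1.
rot, _ = np.linalg.qr(rng.normal(size=(3, 3)))
if np.linalg.det(rot) < 0:
    rot[:, 0] *= -1.0

moved = (coords + shift) @ rot.T  # translate, then rotate every atom

# Invariant feature: distances are unchanged by the rigid-body motion.
dist_ref = pairwise_distances(coords)
dist_moved = pairwise_distances(moved)

# Equivariant feature: a displacement vector rotates with the molecule.
disp_ref = coords[1] - coords[0]
disp_moved = moved[1] - moved[0]
```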

2. TorchMD-ET architecture

The TorchMD-ET architecture54 is structured around three components that process and encode molecular geometric information effectively.

- Embedding layer: encodes atomic types and interatomic distances using exponential radial basis functions (eRBFs). Each distance within a cutoff is transformed by Eq. (A1), which includes a cosine cutoff function that ensures a smooth transition to zero beyond the cutoff.

- Modified attention mechanism: incorporates edge information through an extended dot-product attention mechanism that integrates interatomic distances with distance kernels derived from eRBFs, combined by element-wise multiplication [Eq. (A2)]. The attention weights are then used to compute scalar features and filters for the update layer [Eqs. (A3) and (A4)], using attention value and distance projections analogous to those that enter the attention weights.

- Update layer: updates both scalar and vector features using these scalar features and filters [Eqs. (A5) and (A6)].

This combines scalar and directional vector information describing the local atomic environment.
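The embedding step can be sketched as follows, assuming the exponential radial basis form with a cosine cutoff described for TorchMD-NET in Ref. 54. The cutoff value, the number of basis functions, and the initialization of the centers and widths are illustrative assumptions, and the notation of this paper may differ.

```python
import numpy as np

def cosine_cutoff(d, d_cut):
    """Decays smoothly from 1 at d = 0 to 0 at d = d_cut; zero beyond the cutoff."""
    return np.where(d < d_cut, 0.5 * (np.cos(np.pi * d / d_cut) + 1.0), 0.0)

def exp_rbf(d, mu, beta, d_cut):
    """Expand distances into exponential radial basis functions.

    d: array of distances; mu: (n_rbf,) centers in exp(-d) space;
    beta: width parameter. Returns an array of shape d.shape + (n_rbf,).
    """
    d = np.asarray(d, dtype=float)[..., None]
    return cosine_cutoff(d, d_cut) * np.exp(-beta * (np.exp(-d) - mu) ** 2)

d_cut, n_rbf = 5.0, 16                             # assumed settings
mu = np.linspace(np.exp(-d_cut), 1.0, n_rbf)       # assumed initialization
beta = (2.0 / n_rbf * (1.0 - np.exp(-d_cut))) ** -2
features = exp_rbf([0.0, 1.5, 5.0, 7.0], mu, beta, d_cut)
```

Placing the centers uniformly in the transformed variable exp(-d) gives finer resolution at short distances, where the local atomic environment varies most.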

3. ViSNet

ViSNet53 builds on TorchMD-ET by incorporating additional scalar features into its architecture. These additional features include information about angles, dihedral angles, and improper dihedral angles. This information is computed in an efficient fashion by first associating with each atom information about its neighbors [Eqs. (A7)–(A9)], based on the unit vectors pointing from the atom to each of its neighboring atoms and the sum of all such unit vectors around the atom. An inner product of the resulting per-atom quantities [Eq. (A10)] then represents the dihedral angle information around a pair of inner atoms, each with its own set of neighbors.
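The efficiency of this construction rests on a simple identity: the squared norm of the sum of unit vectors around an atom equals the sum of the cosines of all angles formed by pairs of its neighbors, so angular information is obtained without looping over triplets. The sketch below checks this identity numerically for the angle case only; the random coordinates and all names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
center = rng.normal(size=3)
neighbors = rng.normal(size=(5, 3))

# Unit vectors pointing from the central atom to each neighbor.
unit = neighbors - center
unit /= np.linalg.norm(unit, axis=1, keepdims=True)

# Cost linear in the number of neighbors: sum the unit vectors, then take the norm.
summed = unit.sum(axis=0)
norm_squared = summed @ summed

# Explicit double loop over neighbor pairs: cosine of every angle at the center.
# Pairs with j = k contribute cos(0) = 1.
cosines = unit @ unit.T
cosine_sum = cosines.sum()
```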

4. Gated equivariant block

The output layer of the networks consists of gated equivariant blocks79,80, each of which combines the scalar and vector features from each graph node of the previous layer (Algorithm 1).

Algorithm 1.

Gated equivariant block (GEB)

| Line | Statement | Comment |
| --- | --- | --- |
| Require | | |
| 1 | | |
| 2 | | |
| 3 | | |
| 4 | | split |
| 5 | | |
| 6 | | scales vector features, maintains equivariance |
| 7 | return | |
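A gated equivariant block of the kind described in Refs. 79 and 80 can be sketched as follows. This is a minimal sketch under stated assumptions: the layer sizes, the random weights standing in for trained parameters, the absence of biases, and the array layout (nodes, 3, channels) are all illustrative and may differ from Algorithm 1.

```python
import numpy as np

def silu(z):
    return z / (1.0 + np.exp(-z))

class GatedEquivariantBlock:
    """Scalars x: (n, d_in); vectors v: (n, 3, d_in)."""

    def __init__(self, d_in, d_out, rng):
        self.d_out = d_out
        self.w1 = rng.normal(size=(d_in, d_in)) / np.sqrt(d_in)
        self.w2 = rng.normal(size=(d_in, d_out)) / np.sqrt(d_in)
        self.wa = rng.normal(size=(2 * d_in, d_in)) / np.sqrt(2 * d_in)
        self.wb = rng.normal(size=(d_in, 2 * d_out)) / np.sqrt(d_in)

    def __call__(self, x, v):
        v1_norm = np.linalg.norm(v @ self.w1, axis=1)  # invariant, (n, d_in)
        v2 = v @ self.w2                               # equivariant, (n, 3, d_out)
        hidden = silu(np.concatenate([x, v1_norm], axis=-1) @ self.wa) @ self.wb
        x_out = hidden[:, :self.d_out]                 # split: scalar output ...
        gate = hidden[:, self.d_out:]                  # ... and invariant gate
        v_out = gate[:, None, :] * v2                  # gate scales vector features
        return x_out, v_out

rng = np.random.default_rng(0)
block = GatedEquivariantBlock(d_in=8, d_out=4, rng=rng)
x = rng.normal(size=(5, 8))
v = rng.normal(size=(5, 3, 8))
rot, _ = np.linalg.qr(rng.normal(size=(3, 3)))         # random orthogonal matrix

x_out, v_out = block(x, v)
x_rot, v_rot = block(x, np.einsum("ab,nbd->nad", rot, v))
```

Because the gate depends on the vectors only through their norms, rotating the input vectors leaves the scalar output unchanged and rotates the vector output by the same matrix.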

Appendix B: Geometric vector perceptron

The geometric vector perceptron (GVP) was one of the first architectures to incorporate vector features for protein modeling60. Here, we use the GVP architecture as an equivariant token mixer to combine scalar and vector features (Algorithm 2).

Algorithm 2.

Geometric vector perceptron (GVP)

| Line | Statement | Comment |
| --- | --- | --- |
| Require | | |
| 1 | | project vector features |
| 2 | | compute the (row-wise) norm of projected vectors |
| 3 | | |
| 4 | | |
| 5 | | |
| 6 | | project vector features to the output dimension |
| 7 | | column-wise multiplication with vector gate (on row-wise norm) |
| 8 | return | |
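The steps of a GVP can be sketched as below, following the original formulation of Ref. 60. The hidden dimension, the choice of ReLU and sigmoid nonlinearities, the random weights, and the array layout (tokens, 3, channels) are assumptions for illustration; with this layout, the "row-wise" norm of the algorithm becomes a norm over the Cartesian axis.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class GVP:
    """Scalars s: (n, n_s); vectors V: (n, 3, n_v)."""

    def __init__(self, n_s, n_v, m_s, m_v, rng):
        h = max(n_v, m_v)  # hidden vector dimension (assumed choice)
        self.w_h = rng.normal(size=(n_v, h)) / np.sqrt(n_v)
        self.w_mu = rng.normal(size=(h, m_v)) / np.sqrt(h)
        self.w_m = rng.normal(size=(n_s + h, m_s)) / np.sqrt(n_s + h)
        self.b = np.zeros(m_s)

    def __call__(self, s, V):
        V_h = V @ self.w_h                              # project vector features
        s_h = np.linalg.norm(V_h, axis=1)               # norms of projected vectors
        s_cat = np.concatenate([s, s_h], axis=-1)       # scalars plus invariant norms
        s_out = np.maximum(s_cat @ self.w_m + self.b, 0.0)
        V_mu = V_h @ self.w_mu                          # project to output dimension
        gate = sigmoid(np.linalg.norm(V_mu, axis=1))    # gate from vector norms
        V_out = gate[:, None, :] * V_mu                 # scale each output vector
        return s_out, V_out

rng = np.random.default_rng(0)
gvp = GVP(n_s=6, n_v=4, m_s=5, m_v=3, rng=rng)
s = rng.normal(size=(7, 6))
V = rng.normal(size=(7, 3, 4))
rot, _ = np.linalg.qr(rng.normal(size=(3, 3)))          # random orthogonal matrix

s_out, V_out = gvp(s, V)
s_rot, V_rot = gvp(s, np.einsum("ab,nbd->nad", rot, V))
```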

Appendix C: Pseudo-code of overall architecture

Algorithm 3.

geom2vec

| Line | Statement | Comment |
| --- | --- | --- |
| Require | Atomic numbers, positions, coarse-grained mapping, mixer method ∈ {None, SubFormer, SubMixer, SubGVP}, global features | |
| 1 | | atomistic featurization |
| 2 | | coarse-graining with mapping |
| 3 | if mixer method is None then | |
| 4 | | sum over indices for direct pooling |
| 5 | | Algorithm 1 |
| 6 | else if mixer method is SubFormer then | |
| 7 | | apply GEB to input features |
| 8 | if global token then | |
| 9 | | project global features to the token embedding dimension |
| 10 | | pass regular and global tokens through the transformer encoder |
| 11 | else | |
| 12 | | pass only the regular tokens through the transformer encoder |
| 13 | end if | |
| 14 | else if mixer method is SubMixer then | |
| 15 | | apply GEB to input features |
| 16 | if global token then | |
| 17 | | project global features to the token embedding dimension |
| 18 | | pass regular and global tokens through the MLP-Mixer |
| 19 | else | |
| 20 | | pass only the regular tokens through the MLP-Mixer |
| 21 | end if | |
| 22 | else if mixer method is SubGVP then | |
| 23 | | Algorithm 2 |
| 24 | if subformer or submixer then | |
| 25 | | apply subformer or submixer block to GVP features |
| 26 | end if | |
| 27 | end if | |
| 28 | | |
| 29 | return | |

Appendix D: Training details and hyperparameters

For pretraining, we use the OrbNet Denali dataset from Ref. 44, which consists of 2.3 million conformations of small- to medium-sized molecules. These conformations are obtained with semi-empirical methods and contain nonequilibrium geometries, alternative tautomers, and non-bonded interactions. We randomly take 10,000 configurations as the validation set and use the remainder as the training set.
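A random split of this kind can be sketched with a single permutation of the configuration indices. The total count below is the rounded figure of 2.3 million quoted above, and the seed and function name are arbitrary assumptions; the actual dataset size and random state used for pretraining may differ.

```python
import numpy as np

def split_indices(n_total, n_validation, seed=0):
    """Randomly partition configuration indices into training and validation sets."""
    rng = np.random.default_rng(seed)
    permutation = rng.permutation(n_total)
    return permutation[n_validation:], permutation[:n_validation]

n_total = 2_300_000  # rounded dataset size (assumption)
train_idx, val_idx = split_indices(n_total, n_validation=10_000)
```

Fixing the seed makes the split reproducible, so the same validation configurations can be reused when comparing pretraining runs.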

DATA AVAILABILITY

Data sharing is not applicable to this article as no new data were created or analyzed in this study. Code for our implementation and examples are available at https://github.com/dinner-group/geom2vec.

REFERENCES

- 1.Husic B. E., McGibbon R. T., Sultan M. M., and Pande V. S., “Optimized parameter selection reveals trends in Markov state models for protein folding,” Journal of Chemical Physics 145, 194103 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Scherer M. K., Husic B. E., Hoffmann M., Paul F., Wu H., and Noé F., “Variational selection of features for molecular kinetics,” Journal of Chemical Physics 150, 194108 (2019). [DOI] [PubMed] [Google Scholar]

- 3.Nagel D., Sartore S., and Stock G., “Selecting features for Markov modeling: A case study on HP35,” Journal of Physical Chemistry Letters 14, 6956–6967 (2023).37504674 [Google Scholar]

- 4.Arbon R. E., Zhu Y., and Mey A. S. J. S., “Markov state models: To optimize or not to optimize,” Journal of Chemical Theory and Computation 20, 977–988 (2024). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Tribello G. A., Ceriotti M., and Parrinello M., “Using sketch-map coordinates to analyze and bias molecular dynamics simulations,” Proceedings of the National Academy of Sciences 109, 5196–5201 (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Rohrdanz M. A., Zheng W., Maggioni M., and Clementi C., “Determination of reaction coordinates via locally scaled diffusion map,” Journal of Chemical Physics 134, 124116 (2011). [DOI] [PubMed] [Google Scholar]

- 7.Boninsegna L., Gobbo G., Noé F., and Clementi C., “Investigating molecular kinetics by variationally optimized diffusion maps,” Journal of Chemical Theory and Computation 11, 5947–5960 (2015). [DOI] [PubMed] [Google Scholar]

- 8.Mardt A., Pasquali L., Wu H., and Noé F., “VAMPnets for deep learning of molecular kinetics,” Nature Communications 9, 5 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Noé F. and Nüske F., “A variational approach to modeling slow processes in stochastic dynamical systems,” Multiscale Modeling & Simulation 11, 635–655 (2013). [Google Scholar]

- 10.Chen W., Sidky H., and Ferguson A. L., “Nonlinear discovery of slow molecular modes using state-free reversible VAMPnets,” Journal of Chemical Physics 150, 214114 (2019). [DOI] [PubMed] [Google Scholar]

- 11.Wu H. and Noé F., “Variational approach for learning Markov processes from time series data,” Journal of Nonlinear Science 30, 23–66 (2020). [Google Scholar]

- 12.Lorpaiboon C., Thiede E. H., Webber R. J., Weare J., and Dinner A. R., “Integrated variational approach to conformational dynamics: A robust strategy for identifying eigenfunctions of dynamical operators,” Journal of Physical Chemistry B 124, 9354–9364 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Trstanova Z., Leimkuhler B., and Lelièvre T., “Local and global perspectives on diffusion maps in the analysis of molecular systems,” Proceedings of the Royal Society A 476, 20190036 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Strahan J., Antoszewski A., Lorpaiboon C., Vani B. P., Weare J., and Dinner A. R., “Long-time-scale predictions from shorttrajectory data: A benchmark analysis of the trp-cage miniprotein,” Journal of Chemical Theory and Computation 17, 2948–2963 (2021). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Thiede E. H., Giannakis D., Dinner A. R., and Weare J., “Galerkin approximation of dynamical quantities using trajectory data,” Journal of Chemical Physics 150, 244111 (2019). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Bolhuis P. G., Dellago C., and Chandler D., “Reaction coordinates of biomolecular isomerization,” Proceedings of the National Academy of Sciences 97, 5877–5882 (2000). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Ma A. and Dinner A. R., “Automatic method for identifying reaction coordinates in complex systems,” Journal of Physical Chemistry B 109, 6769–6779 (2005). [DOI] [PubMed] [Google Scholar]

- 18.Guo S. C., Shen R., Roux B., and Dinner A. R., “Dynamics of activation in the voltage-sensing domain of Ciona intestinalis phosphatase Ci-VSP,” Nature Communications 15, 1408 (2024). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Herringer N. S., Dasetty S., Gandhi D., Lee J., and Ferguson A. L., “Permutationally invariant networks for enhanced sampling (PINES): Discovery of multimolecular and solvent-inclusive collective variables,” Journal of Chemical Theory and Computation 20, 178–198 (2023). [DOI] [PubMed] [Google Scholar]

- 20.Thomas N., Smidt T., Kearnes S., Yang L., Li L., Kohlhoff K., and Riley P., “Tensor field networks: Rotation-and translation-equivariant neural networks for 3D point clouds,” arXiv preprint arXiv:1802.08219 (2018). [Google Scholar]

- 21.Anderson B., Hy T.-S., and Kondor R., “Cormorant: Covariant molecular neural networks,” in Proceedings of the 33rd International Conference on Neural Information Processing Systems (Curran Associates Inc., Red Hook, NY, USA, 2019) pp. 14537–14546. [Google Scholar]

- 22.Batzner S., Musaelian A., Sun L., Geiger M., Mailoa J. P., Kornbluth M., Molinari N., Smidt T. E., and Kozinsky B., “E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials,” Nature Communications 13, 2453 (2022). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Han J., Cen J., Wu L., Li Z., Kong X., Jiao R., Yu Z., Xu T., Wu F., Wang Z., et al. , “A survey of geometric graph neural networks: Data structures, models and applications,” arXiv preprint arXiv:2403.00485 (2024). [Google Scholar]

- 24.Gilmer J., Schoenholz S. S., Riley P. F., Vinyals O., and Dahl G. E., “Neural message passing for quantum chemistry,” in International Conference on Machine Learning (PMLR, 2017) pp. 1263–1272. [Google Scholar]

- 25.Battaglia P. W., Hamrick J. B., Bapst V., Sanchez-Gonzalez A., Zambaldi V., Malinowski M., Tacchetti A., Raposo D., Santoro A., Faulkner R., Gulcehre C., Song F., Ballard A., Gilmer J., Dahl G., Vaswani A., Allen K., Nash C., Langston V., Dyer C., Heess N., Wierstra D., Kohli P., Botvinick M., Vinyals O., Li Y., and Pascanu R., “Relational inductive biases, deep learning, and graph networks,” arXiv preprint arXiv:1806.01261 (2018). [Google Scholar]

- 26.Husic B. E., Charron N. E., Lemm D., Wang J., Pérez A., Majewski M., Krämer A., Chen Y., Olsson S., de Fabritiis G., et al. , “Coarse graining molecular dynamics with graph neural networks,” Journal of Chemical Physics 153, 194101 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Jamasb A. R., Morehead A., Zhang Z., Joshi C. K., Didi K., Mathis S. V., Harris C., Tang J., Cheng J., Lio P., and Blundell T. L., “Evaluating representation learning on the protein structure universe,” in The Twelfth International Conference on Learning Representations (2023). [Google Scholar]

- 28.Hermosilla P. and Ropinski T., “Contrastive representation learning for 3D protein structures,” arXiv preprint arXiv:2205.15675 (2022). [Google Scholar]

- 29.Wang L., Liu H., Liu Y., Kurtin J., and Ji S., “Learning hierarchical protein representations via complete 3D graph networks,” arXiv preprint arXiv:2207.12600 (2023). [Google Scholar]

- 30.Zhang Z., Xu M., Jamasb A., Chenthamarakshan V., Lozano A., Das P., and Tang J., “Protein representation learning by geometric structure pretraining,” arXiv preprint arXiv:2203.06125 (2023). [Google Scholar]

- 31.Xie T., France-Lanord A., Wang Y., Shao-Horn Y., and Grossman J. C., “Graph dynamical networks for unsupervised learning of atomic scale dynamics in materials,” Nature Communications 10, 2667 (2019). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Soltani S., Sinclair C. W., and Rottler J., “Exploring glassy dynamics with Markov state models from graph dynamical neural networks,” Physical Review E 106, 025308 (2022). [DOI] [PubMed] [Google Scholar]

- 33.Ghorbani M., Prasad S., Klauda J. B., and Brooks B. R., “Graph-VAMPNet, using graph neural networks and variational approach to Markov processes for dynamical modeling of biomolecules,” Journal of Chemical Physics 156, 184103 (2022). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Liu B., Xue M., Qiu Y., Konovalov K. A., O’Connor M. S., and Huang X., “GraphVAMPnets for uncovering slow collective variables of self-assembly dynamics,” Journal of Chemical Physics 159, 094901 (2023). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Huang Y., Zhang H., Lin Z., Wei Y., and Xi W., “RevGraph-VAMP: A protein molecular simulation analysis model combining graph convolutional neural networks and physical constraints,” Methods 229, 163–174 (2024). [DOI] [PubMed] [Google Scholar]

- 36.Vaswani A., Shazeer N., Parmar N., Uszkoreit J., Jones L., Gomez A. N., Kaiser Ł., and Polosukhin I., “Attention is all you need,” in Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS’17 (Curran Associates Inc., Red Hook, NY, USA, 2017) pp. 6000–6010. [Google Scholar]

- 37.Dosovitskiy A., Beyer L., Kolesnikov A., Weissenborn D., Zhai X., Unterthiner T., Dehghani M., Minderer M., Heigold G., Gelly S., et al. , “An image is worth 16×16 words: Transformers for image recognition at scale,” arXiv preprint arXiv:2010.11929 (2020). [Google Scholar]

- 38.Mikolov T., Sutskever I., Chen K., Corrado G., and Dean J., “Distributed representations of words and phrases and their compositionality,” in Proceedings of the 26th International Conference on Neural Information Processing Systems - Volume 2, NIPS’13 (Curran Associates Inc., Red Hook, NY, USA, 2013) pp. 3111–3119. [Google Scholar]

- 39.Guo Y., Wu J., Ma H., and Huang J., “Self-supervised pretraining for protein embeddings using tertiary structures,” in Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 36 (2022) pp. 6801–6809. [Google Scholar]

- 40.Zaidi S., Schaarschmidt M., Martens J., Kim H., Teh Y. W., Sanchez-Gonzalez A., Battaglia P., Pascanu R., and Godwin J., “Pre-training via denoising for molecular property prediction,” arXiv preprint arXiv:2206.00133 (2022). [Google Scholar]

- 41.Chen C., Zhou J., Wang F., Liu X., and Dou D., “Structure-aware protein self-supervised learning,” Bioinformatics 39, btad189 (2023). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Sohl-Dickstein J., Weiss E. A., Maheswaranathan N., and Ganguli S., “Deep unsupervised learning using nonequilibrium thermodynamics,” in Proceedings of the 32nd International Conference on International Conference on Machine Learning - Volume 37, ICML’15 (JMLR.org, Lille, France, 2015) pp. 2256–2265. [Google Scholar]

- 43.Ho J., Jain A., and Abbeel P., “Denoising diffusion probabilistic models,” in Advances in Neural Information Processing Systems, Vol. 33 (Curran Associates, Inc., 2020) pp. 6840–6851. [Google Scholar]

- 44.Christensen A. S., Sirumalla S. K., Qiao Z., O’Connor M. B., Smith D. G., Ding F., Bygrave P. J., Anandkumar A., Welborn M., Manby F. R., et al. , “OrbNet Denali: A machine learning potential for biological and organic chemistry with semiempirical cost and DFT accuracy,” Journal of Chemical Physics 155, 204103 (2021). [DOI] [PubMed] [Google Scholar]

- 45.Wang D. and Tiwary P., “State predictive information bottleneck,” Journal of Chemical Physics 154, 134111 (2021). [DOI] [PubMed] [Google Scholar]

- 46.Wang D., Qiu Y., Beyerle E. R., Huang X., and Tiwary P., “Information bottleneck approach for Markov model construction,” Journal of Chemical Theory and Computation 20, 5352–5367 (2024). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Ni Y., Feng S., Hong X., Sun Y., Ma W.-Y., Ma Z.-M., Ye Q., and Lan Y., “Pre-training with fractional denoising to enhance molecular property prediction,” Nature Machine Intelligence 6, 1169–1178 (2024). [Google Scholar]

- 48.Vander Meersche Y., Cretin G., Gheeraert A., Gelly J.-C., and Galochkina T., “ATLAS: protein flexibility description from atomistic molecular dynamics simulations,” Nucleic Acids Research 52, D384–D392 (2024). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Hernández C. X., Wayment-Steele H. K., Sultan M. M., Husic B. E., and Pande V. S., “Variational encoding of complex dynamics,” Physical Review E 97, 062412 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Chen H., Roux B., and Chipot C., “Discovering reaction pathways, slow variables, and committor probabilities with machine learning,” Journal of Chemical Theory and Computation 19, 4414–4426 (2023). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Strahan J., Guo S. C., Lorpaiboon C., Dinner A. R., and Weare J., “Inexact iterative numerical linear algebra for neural network-based spectral estimation and rare-event prediction,” Journal of Chemical Physics 159, 014110 (2023). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Jung H., Covino R., Arjun A., Leitold C., Dellago C., Bolhuis P. G., and Hummer G., “Machine-guided path sampling to discover mechanisms of molecular self-organization,” Nature Computational Science 3, 334–345 (2023). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Wang Y., Wang T., Li S., He X., Li M., Wang Z., Zheng N., Shao B., and Liu T.-Y., “Enhancing geometric representations for molecules with equivariant vector-scalar interactive message passing,” Nature Communications 15, 313 (2024). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Thölke P. and De Fabritiis G., “TorchMD-NET: Equivariant transformers for neural network based molecular potentials,” arXiv preprint arXiv:2202.02541 (2022). [Google Scholar]

- 55.Pengmei Z., Shen Z., Wang Z., Collins M., and Rangwala H., “Pushing the limits of all-atom geometric graph neural networks: Pre-training, scaling and zero-shot transfer,” arXiv preprint arXiv:2410.21683 (2024). [Google Scholar]

- 56.Carion N., Massa F., Synnaeve G., Usunier N., Kirillov A., and Zagoruyko S., “End-to-end object detection with transformers,” in Computer Vision – ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part I (Springer-Verlag, Berlin, Heidelberg, 2020) pp. 213–229. [Google Scholar]

- 57.Pengmei Z., Li Z., chan Tien C., Kondor R., and Dinner A. R., “Transformers are efficient hierarchical chemical graph learners,” arXiv preprint arXiv:2310.01704 (2023). [Google Scholar]

- 58.Tolstikhin I. O., Houlsby N., Kolesnikov A., Beyer L., Zhai X., Unterthiner T., Yung J., Steiner A., Keysers D., Uszkoreit J., Lucic M., and Dosovitskiy A., “MLP-Mixer: An all-MLP architecture for vision,” in Advances in Neural Information Processing Systems, Vol. 34 (Curran Associates, Inc., 2021) pp. 24261–24272. [Google Scholar]

- 59.Pengmei Z. and Li Z., “Technical report: The graph spectral token – enhancing graph transformers with spectral information,” arXiv preprint arXiv:2404.05604 (2024). [Google Scholar]

- 60.Jing B., Eismann S., Suriana P., Townshend R. J. L., and Dror R., “Learning from protein structure with geometric vector perceptrons,” in International Conference on Learning Representations (2020). [Google Scholar]

- 61.Tomczak J. and Welling M., “VAE with a VampPrior,” in Proceedings of the Twenty-First International Conference on Artificial Intelligence and Statistics (PMLR, 2018) pp. 1214–1223. [Google Scholar]

- 62.Lindorff-Larsen K., Piana S., Dror R. O., and Shaw D. E., “How fast-folding proteins fold,” Science 334, 517–520 (2011). [DOI] [PubMed] [Google Scholar]

- 63.Bonati L., Piccini G., and Parrinello M., “Deep learning the slow modes for rare events sampling,” Proceedings of the National Academy of Sciences 118, e2113533118 (2021). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Barua B., Lin J. C., Williams V. D., Kummler P., Neidigh J. W., and Andersen N. H., “The Trp-cage: optimizing the stability of a globular miniprotein,” Protein Engineering, Design and Selection 21, 171–185 (2008). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Sidky H., Chen W., and Ferguson A. L., “High-resolution Markov state models for the dynamics of trp-cage miniprotein constructed over slow folding modes identified by state-free reversible VAMPnets,” Journal of Physical Chemistry B 123, 7999–8009 (2019). [DOI] [PubMed] [Google Scholar]

- 66.McKnight J. C., Doering D. S., Matsudaira P. T., and Kim P. S., “A thermostable 35-residue subdomain within villin headpiece,” Journal of Molecular Biology 260, 126–134 (1996). [DOI] [PubMed] [Google Scholar]

- 67.Kubelka J., Chiu T. K., Davies D. R., Eaton W. A., and Hofrichter J., “Sub-microsecond protein folding,” Journal of Molecular Biology 359, 546–553 (2006). [DOI] [PubMed] [Google Scholar]

- 68.Wang E., Tao P., Wang J., and Xiao Y., “A novel folding pathway of the villin headpiece subdomain HP35,” Physical Chemistry Chemical Physics 21, 18219–18226 (2019). [DOI] [PubMed] [Google Scholar]

- 69.Honda S., Akiba T., Kato Y. S., Sawada Y., Sekijima M., Ishimura M., Ooishi A., Watanabe H., Odahara T., and Harata K., “Crystal structure of a ten-amino acid protein,” Journal of the American Chemical Society 130, 15327–15331 (2008). [DOI] [PubMed] [Google Scholar]

- 70.Veličković P., Cucurull G., Casanova A., Romero A., Lio P., and Bengio Y., “Graph attention networks,” arXiv preprint arXiv:1710.10903 (2017).

- 71.Liao Y.-L., Smidt T., Shuaibi M., and Das A., “Generalizing denoising to non-equilibrium structures improves equivariant force fields,” arXiv preprint arXiv:2403.09549 (2024).

- 72.Sillitoe I., Bordin N., Dawson N., Waman V. P., Ashford P., Scholes H. M., Pang C. S. M., Woodridge L., Rauer C., Sen N., Abbasian M., Le Cornu S., Lam S. D., Berka K., Varekova I., Svobodova R., Lees J., and Orengo C. A., “CATH: increased structural coverage of functional space,” Nucleic Acids Research 49, D266–D273 (2021).

- 73.Barrio-Hernandez I., Yeo J., Jänes J., Mirdita M., Gilchrist C. L. M., Wein T., Varadi M., Velankar S., Beltrao P., and Steinegger M., “Clustering predicted structures at the scale of the known protein universe,” Nature 622, 637–645 (2023).

- 74.Strahan J., Finkel J., Dinner A. R., and Weare J., “Predicting rare events using neural networks and short-trajectory data,” Journal of Computational Physics 488, 112152 (2023).

- 75.Strahan J., Lorpaiboon C., Weare J., and Dinner A. R., “BADNEUS: Rapidly converging trajectory stratification,” Journal of Chemical Physics 161, 084109 (2024).

- 76.Schütt K. T., Sauceda H. E., Kindermans P.-J., Tkatchenko A., and Müller K.-R., “SchNet–a deep learning architecture for molecules and materials,” Journal of Chemical Physics 148, 241722 (2018).

- 77.Gasteiger J., Groß J., and Günnemann S., “Directional message passing for molecular graphs,” arXiv preprint arXiv:2003.03123 (2020).

- 78.Villar S., Hogg D. W., Storey-Fisher K., Yao W., and Blum-Smith B., “Scalars are universal: Equivariant machine learning, structured like classical physics,” Advances in Neural Information Processing Systems 34, 28848–28863 (2021).

- 79.Weiler M., Geiger M., Welling M., Boomsma W., and Cohen T. S., “3D steerable CNNs: Learning rotationally equivariant features in volumetric data,” in Advances in Neural Information Processing Systems, Vol. 31 (Curran Associates, Inc., 2018).

- 80.Schütt K. T., Unke O. T., and Gastegger M., “Equivariant message passing for the prediction of tensorial properties and molecular spectra,” arXiv preprint arXiv:2102.03150 (2021).

- 81.Everett K., Xiao L., Wortsman M., Alemi A. A., Novak R., Liu P. J., Gur I., Sohl-Dickstein J., Kaelbling L. P., Lee J., and Pennington J., “Scaling exponents across parameterizations and optimizers,” arXiv preprint arXiv:2407.05872 (2024).

Data Availability Statement

Data sharing is not applicable to this article as no new data were created or analyzed in this study. The code for our implementation, along with examples, is available at https://github.com/dinner-group/geom2vec.