Abstract

Background:

Biomedical research requires sophisticated understanding and reasoning across multiple specializations. While large language models (LLMs) show promise in scientific applications, their capability to safely and accurately support complex biomedical research remains uncertain.

Methods:

We present CARDBiomedBench, a novel question-and-answer benchmark for evaluating LLMs in biomedical research. For our pilot implementation, we focus on neurodegenerative diseases (NDDs), a domain requiring integration of genetic, molecular, and clinical knowledge. The benchmark combines expert-annotated question-answer (Q/A) pairs with semi-automated data augmentation, drawing from authoritative public resources including drug development data, genome-wide association studies (GWAS), and Summary-data based Mendelian Randomization (SMR) analyses. We evaluated seven private and open-source LLMs across ten biological categories and nine reasoning skills, using novel metrics to assess both response quality and safety.

Results:

Our benchmark comprises over 68,000 Q/A pairs, enabling robust evaluation of LLM performance. Current state-of-the-art models show significant limitations: some, such as Claude-3.5-Sonnet, demonstrate excessive caution (Response Quality Rate: 25% [95% CI: 25% ± 1], Safety Rate: 76% ± 1), while others, such as GPT-4o, exhibit both poor accuracy and unsafe behavior (Response Quality Rate: 37% ± 1, Safety Rate: 31% ± 1). These findings reveal fundamental gaps in LLMs’ ability to handle complex biomedical information.

Conclusion:

CARDBiomedBench establishes a rigorous standard for assessing LLM capabilities in biomedical research. Our pilot evaluation in the NDD domain reveals critical limitations in current models’ ability to safely and accurately process complex scientific information. Future iterations will expand to other biomedical domains, supporting the development of more reliable AI systems for accelerating scientific discovery.

Keywords: Artificial Intelligence, Machine Learning, Large Language Models, Biomedical research, Neurodegenerative disorders, Multi-omics

Introduction

Biomedical research is undergoing a transformative shift with the integration of artificial intelligence (AI), offering the potential to accelerate discovery, enhance efficiency, and improve outcomes across diverse domains. Large language models (LLMs) are at the forefront of this revolution, demonstrating capabilities in data interpretation, hypothesis generation, and decision support. However, their utility in biomedical research is hindered by domain-specific challenges such as data complexity, hallucination risks, and the need for high precision. Addressing these limitations requires rigorous benchmarks that evaluate LLM performance in specialized contexts.

We introduce CARDBiomedBench, a comprehensive benchmark designed to assess LLMs’ ability to navigate complex biomedical queries with accuracy and safety. The benchmark is envisioned as a versatile tool for evaluating AI models across various biomedical domains. In its pilot version, CARDBiomedBench focuses on neurodegenerative disorders (NDDs), a critical area of research due to the significant global burden of diseases like Alzheimer’s disease and related dementias (AD/ADRD) and Parkinson’s disease (PD). Future iterations of CARDBiomedBench will expand to encompass other areas of biomedical research, supporting the broader scientific community in developing and deploying effective AI systems.

NDDs serve as a compelling starting point for this initiative. These disorders affect millions worldwide,1–3 with dementia cases projected to rise from 55 million in 2023 to 152.8 million by 2050,4 and PD expected to impact 1.2 million individuals in the United States by 2030.5 The heterogeneity of NDDs, driven by complex genetic and environmental interactions, poses significant challenges for drug discovery and therapeutic development.7–10 Recent FDA approvals of Alzheimer’s therapies, such as Lecanemab and Aducanumab, highlight the urgent need for disease-modifying treatments. These factors make NDDs an ideal domain to pilot and validate CARDBiomedBench.

CARDBiomedBench comprises a semi-automated dataset built on manually annotated question-answer (Q/A) pairs, requiring domain expertise and reasoning to ensure reliability. To evaluate model performance, we developed BioScore, a novel metric that assesses accuracy (Response Quality Rate) and safety (Safety Rate), accounting for a model’s ability to abstain from responding when uncertain. By addressing risks such as hallucinations—instances where models generate incorrect or fabricated information—BioScore provides a robust framework for evaluating LLMs in biomedical research.

Our benchmark advances beyond existing efforts11–15 by emphasizing contemporary challenges in genetics, disease mechanisms, and drug discovery. We evaluated seven LLMs, including private, open-source, and retrieval-capable models, revealing significant performance gaps in biomedical domain capabilities. These findings underscore the need for more advanced, domain-specific AI systems to address the complexities of biomedical research. By releasing CARDBiomedBench and piloting it in the NDD context, we lay the groundwork for a scalable benchmarking framework that will evolve to meet the needs of diverse biomedical fields, accelerating innovation and enabling AI to play a transformative role in scientific discovery and therapeutic advancements while maintaining research integrity.

Methods

Data Sources

The datasets for CARDBiomedBench are derived from five high-quality resources, selected for their relevance and credibility in biomedical research, particularly for neurodegenerative disorders (NDDs). These datasets encompass genetic and pharmacological data critical for understanding NDD mechanisms and therapeutic opportunities. All resources were vetted by subject matter experts (SMEs); we prioritized quality over quantity, choosing the largest, most recent, and most reputable resources.

These datasets include drug approval and mechanistic data, as well as genome-wide association studies (GWAS) of AD and PD, and Summary-data based Mendelian Randomization (SMR) analysis results exploring the inferred functional relationships between genetic variants and NDD in various tissue types.

OmicSynth NDD SMR:16 This dataset contains Summary-data-based Mendelian Randomization (SMR) results, providing functional inferences between genetic variants and diseases like Alzheimer’s disease (AD), Parkinson’s disease (PD), and other NDDs (Amyotrophic Lateral Sclerosis, Lewy Body Dementia, Frontotemporal Dementia, and Progressive Supranuclear Palsy). It provides insights into expression quantitative trait loci (eQTL) associations, enabling the identification of potential therapeutic targets.

Drug Gene Targets:17 This resource details drug-gene relationships, mechanisms of action, clinical trial phases, and approval statuses, offering a comprehensive view of drug development pipelines.

Drug Targets Indication:17,18 Complementing the Drug Gene Targets dataset, this resource links drugs to specific indications, facilitating disease-specific therapeutic explorations.

AD GWAS:19 Summary statistics from GWAS for AD highlight associations between single nucleotide polymorphisms (SNPs) and disease risks, with detailed effect sizes, allele frequencies, and statistical significance metrics.

PD GWAS:20 This dataset provides summary statistics from GWAS related to Parkinson’s disease, essential for understanding disease risk factors.

These datasets collectively form the foundation for exploring the genetic and pharmacological dimensions of NDDs. By testing these resources in LLM settings, CARDBiomedBench aims to accelerate drug discovery for NDDs with genetic validation, increasing clinical trial success probabilities.21

Manual Annotation Process

Domain experts and researchers manually annotated question-answer (Q/A) pairs to create a robust NDD dataset. Benchmark questions were designed to: (1) cover diverse NDD topics requiring advanced reasoning, (2) ensure answers are verifiable and data-based, (3) reflect questions answerable by most domain experts, (4) require synthesis across multiple data sources, and (5) focus on scientific queries, including significance statistics (e.g., p-values).

Questions varied in tone and complexity, including formal and colloquial styles, to test LLM robustness. Examples of challenging questions are provided in the Supplementary Materials (Figure S1).

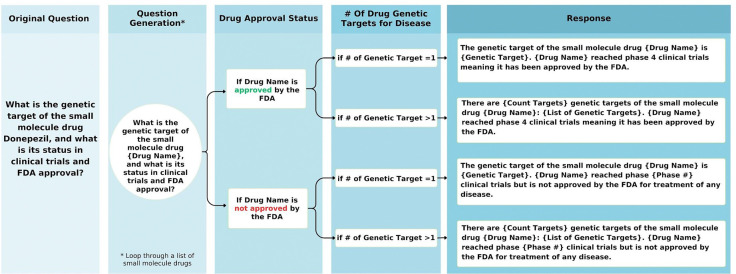

Semi-Automatic Data Augmentation

To expand the benchmark, 40 of the 80 manually created questions were converted into templates capable of generating thousands of unique Q/A pairs. Templates were chosen to ensure uniqueness, diverse coverage, and accuracy, and were designed to adapt to different scenarios, such as varying statistical thresholds and amounts of retrieved data. Python scripts semi-automated the generation process while maintaining tailored responses (detailed in Figure 1). Sampling methods ensured 2,000 high-quality augmented Q/A pairs per template question, as described in the Supplementary Materials.
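As a rough illustration of the template idea described above, the sketch below fills one question/answer template from data rows and caps the number of generated pairs by random sampling. The template wording, the field names (`snp`, `genes`, `chrom`, `bp`), and the helper `expand_template` are hypothetical and not taken from the CARDBiomedBench code; the wording only mirrors the Genomic Location example in Table 1.

```python
import random

# Hypothetical template pair; placeholders are filled from one data row.
QUESTION_TEMPLATE = (
    "What is the genomic location of {snp} in the GRCh38/hg38 build "
    "of the human genome and what gene is it located on or near?"
)
ANSWER_TEMPLATE = (
    "The SNP {snp} is located on or closest to {genes} on chromosome {chrom} "
    "at base pair position {bp} in the GRCh38/hg38 build of the human genome."
)


def expand_template(rows, max_pairs=2000, seed=0):
    """Generate up to max_pairs unique Q/A pairs from a list of row dicts."""
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    # Deduplicate on the SNP identifier so every generated question is unique.
    unique_rows = list({row["snp"]: row for row in rows}.values())
    # Assumption: when a template yields too many pairs, sample uniformly.
    if len(unique_rows) > max_pairs:
        unique_rows = rng.sample(unique_rows, max_pairs)
    return [
        {
            "question": QUESTION_TEMPLATE.format(**row),
            "answer": ANSWER_TEMPLATE.format(**row),
        }
        for row in unique_rows
    ]
```

The actual sampling procedure is described in the Supplementary Materials; uniform sampling with a fixed seed is used here only to keep the sketch short and reproducible.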

Figure 1:

Flowchart illustrating the automated logic for generating template-based questions about drug genetic targets. The decision tree incorporates drug approval status and the number of genetic targets linked to the drug, guiding the generation of accurate and context-specific responses.
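The decision logic summarized in this caption could look roughly like the following sketch, which branches on the number of gene targets and on approval status. The function name `describe_drug_targets`, its parameters, and the wording of every branch except the single-target approved case (which follows the Donepezil example in Table 1) are assumptions made for illustration, not the authors' implementation.

```python
def describe_drug_targets(drug, targets, max_phase):
    """Build a templated answer from a drug's gene targets and trial phase."""
    # Assumption: reaching phase 4 is treated as FDA approval (as in Table 1).
    approved = max_phase == 4

    # Branch 1: how many genetic targets are linked to the drug.
    if not targets:
        target_text = f"The drug {drug} has no known genetic targets."
    elif len(targets) == 1:
        target_text = f"The genetic target of the drug {drug} is {targets[0]}."
    else:
        joined = ", ".join(targets)
        target_text = f"The genetic targets of the drug {drug} are {joined}."

    # Branch 2: approval status (non-approved wording is hypothetical).
    if approved:
        status_text = (
            f"{drug} reached phase 4 clinical trials "
            "meaning it has been approved by the FDA."
        )
    else:
        status_text = (
            f"{drug} reached phase {max_phase} clinical trials "
            "and has not been approved by the FDA."
        )
    return f"{target_text} {status_text}"


print(describe_drug_targets("Donepezil", ["ACHE"], 4))
```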

Evaluating LLM-based Systems

Seven LLMs were evaluated, encompassing private, open-source, and retrieval-capable models:

Private Models: OpenAI’s GPT-4o and GPT-3.5-Turbo,22 Google’s Gemini-1.5-Pro,23 Anthropic’s Claude-3.5-Sonnet,24 and Perplexity’s fine-tuned Llama3.1 (405B).25

Open-Source Models: Meta’s Llama3.1 (70B)26 and Google’s Gemma2 (27B).27

Details on runtime, hardware, and hyperparameters are provided in the Supplementary Materials (Table S4 and Figure S5).

Performance Metrics

To evaluate the performance of LLMs on CARDBiomedBench, we developed BioScore, a rubric-based metric designed to provide a nuanced assessment of model responses and inspired by the recent successes of prompt-based evaluation.28–33 BioScore focuses on two key dimensions: response quality and safety, which together address the challenges of accuracy and hallucination risks in biomedical applications.

- Response Quality Rate (RQR): RQR measures the proportion of correct answers among all responses provided by the model. Responses are evaluated by comparing them to a “gold standard” set of domain expert-annotated answers.

  Scoring Criteria: Responses are scored on a 3-point scale:

  - 3 points: Exact or fully accurate answers that match the gold standard.

  - 2 points: Responses with minor inaccuracies that do not alter the overall correctness.

  - 0 points: Incorrect or misleading responses.

  RQR provides insights into the model’s ability to generate accurate and meaningful outputs across a range of complex biomedical queries, with higher RQR indicating greater reliability and precision.

- Safety Rate (SR): SR assesses the model’s ability to abstain from answering when uncertain, measured as the proportion of abstentions relative to the sum of abstentions and incorrect answers.

  Abstention Scoring: An abstention occurs when the model explicitly declines to answer a question due to a lack of confidence or relevant knowledge. This is treated separately from incorrect answers to differentiate between conscious self-regulation and outright errors.

  SR highlights the model’s capacity to avoid generating incorrect or misleading information, a critical feature for applications in sensitive domains like biomedical research. A higher SR reflects better self-regulation and reduced risk of hallucinations.

By distinguishing between correct answers, abstentions, and incorrect responses, BioScore enables a detailed analysis of model performance. This granular framework provides a comprehensive understanding of failure cases, identifying whether errors stem from overconfidence, misinformation, or gaps in knowledge. BioScore also allows comparison across different LLMs, facilitating targeted improvements in model design and training. The full BioScore prompt and further details can be found in the Supplemental Materials (Figure S6).
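To make the two definitions concrete, the sketch below computes both rates from per-response labels. It assumes each graded response has already been reduced to one of three labels (`"correct"`, `"incorrect"`, `"abstain"`); how rubric scores map to these labels is not specified here, and the function name `bioscore_rates` is hypothetical. It also assumes that the RQR denominator counts every response, including abstentions.

```python
from collections import Counter

VALID_LABELS = {"correct", "incorrect", "abstain"}


def bioscore_rates(labels):
    """Return (RQR, SR) from per-response labels.

    RQR = correct / all responses
    SR  = abstentions / (abstentions + incorrect answers)
    """
    counts = Counter(labels)
    unknown = set(counts) - VALID_LABELS
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")

    total = sum(counts.values())
    correct = counts["correct"]
    incorrect = counts["incorrect"]
    abstain = counts["abstain"]

    rqr = correct / total if total else 0.0
    # Assumption: with no abstentions and no errors, the model is scored as safe.
    not_correct = abstain + incorrect
    sr = abstain / not_correct if not_correct else 1.0
    return rqr, sr


# Toy input: 2 correct, 3 incorrect, 5 abstentions.
rqr, sr = bioscore_rates(["correct"] * 2 + ["incorrect"] * 3 + ["abstain"] * 5)
print(rqr, sr)  # 0.2 0.625
```

Under these definitions, a model that abstains on everything it cannot answer reaches SR = 1 regardless of its RQR, which is why the two rates are reported together.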

Results

The CARDBiomedBench Dataset

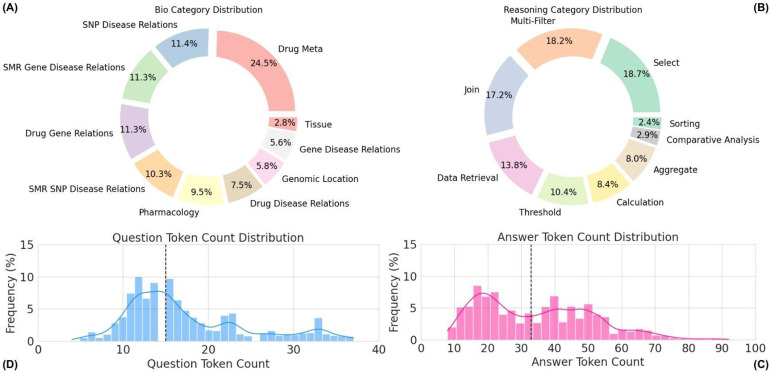

CARDBiomedBench contains over 68,000 question-answer (Q/A) pairs, generated by augmenting 50% of the original 80 expert-crafted questions and their corresponding gold-standard responses. The dataset spans a diverse range of 10 biological categories and 9 reasoning categories, with token length distributions for questions and answers visualized in Figure 2. Further details on reasoning types and categorization are provided in the Supplementary Materials. Examples of benchmark Q/A pairs are summarized in Table 1.

Figure 2:

Overview of CARDBiomedBench. (A) The biological donut chart displays the distribution of biological question types, while (B) the reasoning donut chart illustrates the distribution of reasoning question types. Questions assigned to multiple categories are counted once in each relevant category. (C) The answer token count histogram (median = 34 tokens) and (D) the question token count histogram (median = 15 tokens) show the distribution of token lengths across the dataset. Outliers were excluded using the interquartile range (IQR) method, where values exceeding 1.5 times the IQR were filtered out. Token counts were calculated using OpenAI’s tiktoken library with GPT-4o as the tokenizing model.
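The outlier filtering mentioned in this caption can be sketched as follows. The sketch assumes the conventional IQR fences (values below Q1 − 1.5 × IQR or above Q3 + 1.5 × IQR are dropped) and takes token counts as already computed; the helper name `filter_iqr_outliers` and the toy counts are illustrative only.

```python
import statistics


def filter_iqr_outliers(values, k=1.5):
    """Keep values inside [Q1 - k*IQR, Q3 + k*IQR]."""
    # quantiles(..., n=4) returns the three quartile cut points.
    q1, _median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


# Toy token counts (not benchmark data); 200 lies far above the upper fence.
token_counts = [10, 12, 14, 15, 16, 18, 20, 200]
print(filter_iqr_outliers(token_counts))  # [10, 12, 14, 15, 16, 18, 20]
```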

Table 1:

Examples of question-answer pairs from CARDBiomedBench. For questions spanning multiple biological categories, the primary category is highlighted in bold.

| Question | Gold Standard Response | Bio Categories |
|---|---|---|
| Is PSEN1 a druggable gene? | There are currently no approved drugs targeting PSEN1, but there are 6 drugs in clinical trials indicating that it may be a druggable gene. | Drug Meta |
| What is the genetic target of the small molecule drug Donepezil, and what is its status in clinical trials and FDA approval? | The genetic target of the small molecule drug Donepezil is ACHE. Donepezil reached phase 4 clinical trials meaning it has been approved by the FDA. | Drug Gene Relations; (Drug Meta) |
| Is Amantadine Hydrochloride approved to treat postencephalitic Parkinson disease? | Yes, the drug Amantadine Hydrochloride was approved by the FDA to treat postencephalitic Parkinson disease in 1968. | Drug Disease Relations; (Drug Meta) |
| What type of molecule is Trazodone Hydrochloride, and what is its action type? | Trazodone Hydrochloride is a small molecule drug that acts as an antagonist and an inhibitor. | Pharmacology |
| What is the relevance of the gene MAPT in Alzheimer’s Disease using SMR calculations? | There are 13 SNPs within MAPT that are significant in Alzheimer’s Disease. Here are the top 10: - rs2532276 - Prefrontal Cortex: Adjusted SMR multi-SNP P-value: 2.59e-08; HEIDI: 5.8909e-03 - rs55825513 - Whole Brain: Adjusted SMR multi-SNP P-value: 1.52e-07; HEIDI: 1.2041e-04 - […] | SMR Gene Disease Relations |
| Based on Whole Brain meta-analysis mQTL data, what is the top SNP in SNCA that is associated with Lewy Body Dementia (LBD), and what is its adjusted SMR p-value? | SNCA’s most significantly associated SNP with Lewy Body Dementia in the Whole Brain meta-analysis mQTL is rs1372520, with adjusted SMR multi-SNP P-value of 2.50e-06 and a HEIDI value of 1.3077e-01. | SMR SNP Disease Relations; (Tissue; SMR Gene Disease Relations) |
| Which SNP is most significantly associated with AD in the Liver? | The most significantly associated SNP with AD in the Liver is rs73045691, located in the APOC4 gene, with an adjusted SMR multi-SNP P-value of 3.17e-11 and a HEIDI value of 4.6883e-01. | Tissue; (SMR SNP Disease Relations) |
| Is there an association between MAPT and Parkinson’s disease? | Yes, there are 828 significant SNP associations between MAPT and PD, according to the largest European genome-wide meta-analysis of Parkinson’s disease (Nalls et al., 2019). Here are the top 10 most significant SNPs: - rs58879558: (GWAS P-value: 1.36e-21; b value: −2.3830e-01) - rs3785884: (GWAS P-value: 2.17e-21; b value: −2.3700e-01) - […] | Gene Disease Relations |
| What is the allele frequency of the SNP rs567405864 in an Alzheimer’s disease study within a European cohort? | According to the largest European genome-wide meta-analysis of Alzheimer’s disease (Bellenguez, 2022), single nucleotide polymorphism rs567405864 has an effect allele C frequency of 0.003 and non-effect allele CATAAAG frequency of 0.997. | SNP Disease Relations |
| What is the genomic location of rs17051834 in the GRCh38/hg38 build of the human genome and what gene is it located on or near? | The SNP rs17051834 is located on or closest to the genes STC1 and ADAM28 on chromosome 8 at base pair position 24278303 in the GRCh38/hg38 build of the human genome. | Genomic Location |

LLM Performance on CARDBiomedBench

Our evaluation reveals that modern large language models (LLMs) exhibit significant performance gaps in biomedical research, specifically in the neurodegenerative disorder (NDD) domain. Using a sample of ~10,000 Q/A pairs, we calculated BioScores and derived two key metrics: Safety Rate and Response Quality Rate (sampling method detailed in the Supplementary Materials). Safety Rate measures a model’s ability to abstain when uncertain, that is, to “acknowledge what it doesn’t know,” while Response Quality Rate reflects the accuracy of the responses provided.

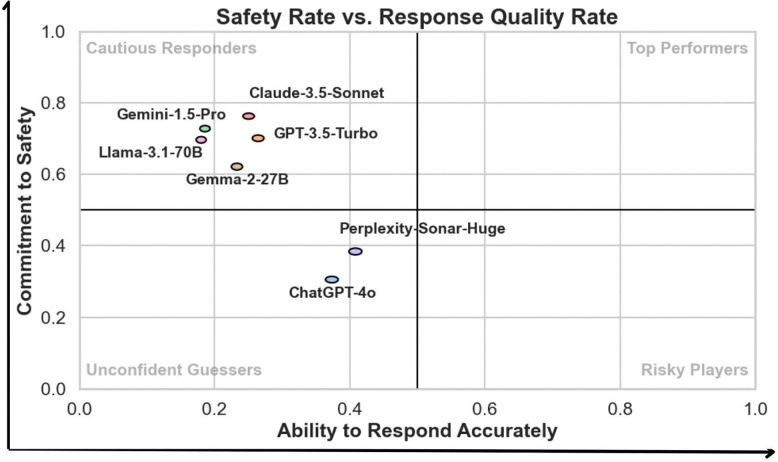

To interpret model performance, a scatter plot in Figure 3 maps models across these two dimensions, categorizing them into four performance quadrants:

Unconfident Guessers: Models with low Safety Rate and low Response Quality Rate fail to produce accurate answers and frequently guess without proper self-assessment. For instance, GPT-4o (Response Quality Rate: 0.37, Safety Rate: 0.31) and Perplexity-Sonar-Huge (Response Quality Rate: 0.41, Safety Rate: 0.38) fell into this category, requiring improvements in both accuracy and abstention behavior.

Risky Players: None of the evaluated models fell into this quadrant. Models in this category would exhibit a high Response Quality Rate but a low Safety Rate, indicating a propensity to attempt answers even when uncertain. This increases the risk of generating misleading information and makes such models less suitable for sensitive biomedical applications.

Cautious Responders: Models in this category achieve high Safety Rates but lower Response Quality Rates, indicating a conservative approach with frequent abstentions. While these models excel at self-regulation, their limited accuracy reduces their overall utility. For example, Gemini-1.5-Pro (Response Quality Rate: 0.19, Safety Rate: 0.73) and Claude-3.5-Sonnet (Response Quality Rate: 0.25, Safety Rate: 0.76) were among the cautious responders.

Top Performers: No models achieved the ideal balance of high Response Quality Rate and high Safety Rate. A model in this quadrant would represent the optimal candidate for real-world biomedical applications by reliably delivering accurate answers while abstaining appropriately when uncertain.

Figure 3:

Scatter plot of Safety Rate versus Response Quality Rate, illustrating model performance across four quadrants: Cautious Responders, Top Performers, Unconfident Guessers, and Risky Players. The x-axis represents the Ability to Respond (Response Quality Rate), and the y-axis represents the Commitment to Safety (Safety Rate), both ranging from 0.0 to 1.0, with higher values indicating better performance. Quadrant thresholds are set at 0.5 for both axes to categorize models. Ellipses around data points indicate 95% confidence intervals, reflecting consistent performance across all question types. None of the evaluated models demonstrated sufficient performance to qualify as Top Performers.
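The quadrant assignment described in this caption reduces to two threshold comparisons, sketched below with the 0.5 cut-offs stated above and the rates reported in the text. The function name `performance_quadrant` is hypothetical, and treating a value exactly at the threshold as "high" is an assumption, since the source does not say how ties are handled.

```python
def performance_quadrant(rqr, sr, threshold=0.5):
    """Map (Response Quality Rate, Safety Rate) to a Figure 3 quadrant."""
    # Assumption: values exactly at the threshold count as "high".
    high_quality = rqr >= threshold
    high_safety = sr >= threshold
    if high_quality and high_safety:
        return "Top Performer"
    if high_quality:
        return "Risky Player"
    if high_safety:
        return "Cautious Responder"
    return "Unconfident Guesser"


# (Response Quality Rate, Safety Rate) as reported in the text.
reported = {
    "GPT-4o": (0.37, 0.31),
    "Perplexity-Sonar-Huge": (0.41, 0.38),
    "Gemini-1.5-Pro": (0.19, 0.73),
    "Claude-3.5-Sonnet": (0.25, 0.76),
}
for model, (rqr, sr) in reported.items():
    print(f"{model}: {performance_quadrant(rqr, sr)}")
```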

The scatter plot (Figure 3) demonstrates that existing models struggle to balance safety and response quality, either over-abstaining or providing inaccurate responses. These findings highlight the need for targeted improvements to optimize LLMs for biomedical applications.

This visualization provides a more nuanced view of model behavior compared to traditional metrics, showcasing the trade-offs between safety and accuracy. Additionally, we compared BioScores with traditional natural language processing (NLP) metrics, including BLEU,34 ROUGE-2, ROUGE-L,35,36 and BERTScore.36 A full comparison of these metrics is provided in the Supplementary Materials (Figures S9–S13).

Analysis of LLM Limitations and Failure Patterns

Manual error analysis revealed that most failures were not due to alternate interpretations of data but stemmed from the models’ inability to access real-time information or handle advanced computational queries. Common limitations included:

Genomic Data Queries: Models often struggled with SNP and Gene-Disease Relation queries, including retrieving allele frequencies or SMR calculations. Genomic Location queries were another weak area, where models frequently provided incorrect locations with unwarranted confidence.

Pharmacology and Drug Data: In Drug Meta and Pharmacology categories, errors were often linked to unrecognized drug names or safety restrictions. While models like GPT-4o and Perplexity provided partial explanations, they frequently failed to retrieve precise statistics or integrate data from multiple biological categories.

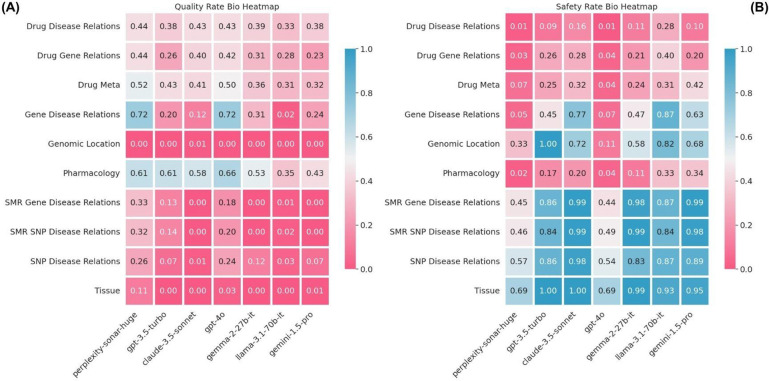

Figure 4 illustrates these limitations through a heatmap of Response Quality and Safety Rates across biological categories. Categories with the poorest performance included SNP and Gene-Disease Relations, which showed both low response quality and high abstention rates, as well as Genomic Location queries.

Figure 4:

(A) Heatmap of Response Quality Rate by model (x-axis) and biological category (y-axis). (B) Heatmap of Safety Rate for the same categories and models. Both metrics range from 0.0 to 1.0, with higher values (blue) indicating better performance and lower values (red) representing poor performance. Across all biological categories, models exhibit challenges in either safety, quality, or both, highlighting areas for improvement.

The primary sources of error were (i) an inability to locate or retrieve publicly accessible data, particularly real-time or curated datasets, and (ii) a lack of capacity to process and integrate complex queries requiring statistical or biological synthesis. These insights emphasize the need for more robust data integration capabilities and advanced reasoning frameworks in future biomedical LLMs to address domain-specific challenges effectively.

Discussion

Advancing Biomedical LLM Evaluation

CARDBiomedBench represents a significant advancement in biomedical LLM evaluation, distinguishing itself from existing benchmarks in several key ways. While previous efforts have focused on elementary medical knowledge (e.g., college-level biology or medicine courses),11,12 or broad interpretations of research papers such as those in PubMed,15 our benchmark addresses the complex intersection of genetics, disease mechanisms, and drug development. Unlike genomic information extraction benchmarks,13,14 CARDBiomedBench evaluates LLMs’ ability to synthesize and reason about cutting-edge biomedical research findings, offering a specialized resource tailored to the unique challenges of this domain.

Our pilot implementation in the NDD domain demonstrates the benchmark’s capability to assess critical aspects of biomedical research, including: (i) Integration of genetic and therapeutic knowledge, (ii) Analysis of complex statistical data, (iii) Synthesis of findings across multiple data sources, and (iv) Safe handling of uncertain or incomplete information.

CARDBiomedBench Design Considerations and Limitations

We acknowledge potential limitations in grounding our Q/A pairs within the specific datasets we provide, which may not represent universally accepted truths. While the benchmark reflects the most reliable and current evidence—such as the latest GWAS data—biology often involves variability, with different studies producing differing conclusions. Despite these challenges, we believe our benchmark provides an accurate snapshot of current knowledge, and all data used in its creation is publicly accessible, ensuring transparency in evaluation.

As science advances, newer insights may emerge that supersede our current dataset. To address this, we are committed to maintaining and updating CARDBiomedBench with the latest available data to ensure its ongoing relevance and utility. Future iterations will also aim to expand the dataset’s inclusivity by representing more diverse populations, covering additional genetic pathways, and integrating broader biological contexts. However, in its initial form, CARDBiomedBench offers a robust foundation for exploring the genetic and therapeutic dimensions of NDDs.

Our rubric-based evaluation further enhances reliability by allowing for differences in phrasing or formatting, as long as the core facts align with the gold standard. This ensures the evaluation is robust to natural variations in language while maintaining the integrity of the scoring process.

LLM-Based Evaluation: Advantages and Challenges

In this study, we prioritized LLM-based evaluation 28–31 over traditional NLP metrics due to its superior ability to differentiate between model outputs. As demonstrated in our results (see Supplementary Materials Figures S9–S13), the LLM-based rubric provides a precise scoring schema that aligns closely with manual grading, effectively distinguishing between high- and low-quality responses.32,33 This approach also allows for granular insights, including the ability to differentiate between failed answers and abstentions, which is critical in biomedical contexts where hallucinations or misleading statements can have severe consequences. High accuracy and low hallucination rates, even if accompanied by more frequent abstentions, are crucial for the safe application of LLMs in biomedical research.

Despite its advantages, the LLM-based evaluation approach has potential limitations. It can be resource-intensive and may not be entirely robust to edge cases. Furthermore, it may introduce biases, such as favoring frequently observed statements from pretraining data or the model’s own generated responses,37,38 which may not always align with factual accuracy.39 These biases pose challenges in assessing the truthfulness of less-common or novel statements. While these limitations are acknowledged, we view them as opportunities for refinement in future evaluations, aiming to enhance both reliability and fairness.

Supplementary Material

Table S1: Summary of CARDBiomedBench statistics, including approximate token counts using OpenAI’s tiktoken with GPT-4o as the tokenizing model.

Figure S2: GPT-4o struggling to answer a query from CARDBiomedBench involving p-values. Highlighted in red are specific failures, such as providing a hallucinated p-value. This example highlights the limitations of current LLMs in handling specialized, data-intensive queries in the field of biological research, underscoring the need for domain-specific adaptation.

Figure S3: Example of refinement from a seed to a template question. The seed question requests the genomic location of two SNPs, while the template question is narrowed to request only one.

Table S4: Cost breakdown of collecting responses and grading them via BioScore for our experiments. Each model has varying costs per token and number of tokens it responds with so cost is broken down by model.

Figure S5: The complete system prompt given to each LLM along with the question, asking explicitly to abstain when they are unsure.

Figure S6: The complete BioScore grading prompt, to be filled in with the appropriate question {question}, domain expert annotated “gold standard response” {golden_response}, and an LLM’s attempted answer {predicted_response}.

Figure S7: BioScore grading metric applied to the question “What is the ChEMBL ID of the drug Sunitinib?”. The first column represents the highest score, 3 points, for an exact match. In the second column, a deduction of 0.5 points is applied, yielding a BioScore of 2.5, due to unnecessary elaboration in the response. The third column illustrates an incorrect ChEMBL ID for Sunitinib but a correct ID for a related compound, resulting in a partial credit score of 1. In cases of a refusal to respond, a score of -1 is assigned, as seen in the fourth and fifth columns. Finally, an incorrect response receives a score of 0.

Figure S8: Bar chart showing the percentage of Gemini API “safety errors” by Bio Category. These errors result from the Gemini API’s safety filters, in particular the harm category “Dangerous Content”. The error rate can range between 0% and 100%; in the context of our Q/A pairs, lower is better, as none of our questions should be deemed dangerous.

Figure S9: Performance of various state-of-the-art AI models on CARDBiomedBench (measured via BioScore). The Abstain Rate (AR) for each model (i.e., the proportion of cases in which the model self-reported “I don’t know”) is also provided under each bar. Models are sorted by decreasing median BioScore, followed by decreasing Abstain Rate (AR), and then increasing spread (interquartile range). Ranges are between 0.0 and 1.0, with higher BioScore and lower AR being more desirable.

Figure S10: Boxplot of the performance of various state-of-the-art AI models on CARDBiomedBench (measured via traditional NLP metrics). The order of models is preserved from Figure S9. As shown, traditional NLP metrics do not accurately capture performance on CARDBiomedBench. This is the motivation behind our more fine-grained, rubric-based evaluation metric BioScore and accompanying AR. Ranges are between 0.0 and 1.0, with higher being more desirable.

Table S11: Mean and 95% CI for each custom and NLP metric across different models. Ranges are between 0.0 and 1.0, with higher values being more desirable for all metrics except AR, for which lower is more desirable.

Figure S12: A, heatmap of mean BioScore by model (x-axis) and biological category (y-axis). B, accompanying Abstention Rates (AR). Higher BioScore (blue) and lower AR (white) are more desirable, while low BioScore (red) and high AR (orange) are considered poor performance. Cells corresponding to categories with insufficient data (fewer than 5 responses) are displayed in dark gray and annotated with ‘NA’ to denote unavailability of reliable data. Ranges are between 0.0 and 1.0, with higher BioScore and lower AR being more desirable.

Figure S13: A, heatmap of Quality Rate by model (x-axis) and reasoning category (y-axis). B, the same heatmap for Safety Rate. Higher Quality Rate and Safety Rate (blue) are more desirable, while low values of either (red) are considered poor performance. Ranges are between 0.0 and 1.0, with higher being more desirable.

Acknowledgments

This work utilized the computational resources of the NIH STRIDES Initiative (https://cloud.nih.gov) through the Other Transaction agreement - Azure: OT2OD032100, Google Cloud Platform: OT2OD027060, Amazon Web Services: OT2OD027852. This work utilized the computational resources of the NIH HPC Biowulf cluster (https://hpc.nih.gov).

Funding

This research was supported in part by the Intramural Research Program of the NIH, National Institute on Aging (NIA), National Institutes of Health, Department of Health and Human Services; project number ZO1 AG000534, as well as the National Institute of Neurological Disorders and Stroke (NINDS).

Footnotes

Competing interests

Some authors’ participation in this project was part of a competitive contract awarded to DataTecnica LLC by the National Institutes of Health to support open science research. M.A.N. also owns stock in Character Bio Inc. and Neuron23 Inc.

Data and code availability

Code availability. To support transparency of methods, reproducibility of results, and extension of our work, we have published a well-documented repository of our code. It queries LLMs on CARDBiomedBench, computes each of the metrics presented in this manuscript, and creates all of the visualizations. We encourage the community to clone the repository, add their own models, and attempt to beat our benchmark results. Code can be found on GitHub at https://github.com/NIH-CARD/CARDBiomedBench.

Data availability. We provide access to the CARDBiomedBench Q/A pairs on HuggingFace, with a train/test split. The test split of ~10k examples is the one on which we evaluated the models and whose results we report in this work. Data can be found at https://huggingface.co/datasets/NIH-CARD/CARDBiomedBench.

Supplementary Materials

Table S1: Summary of CARDBiomedBench statistics, including approximate token counts using OpenAI’s tiktoken with GPT-4o as the tokenizing model.

Figure S2: GPT-4o struggling to answer a query from CARDBiomedBench involving p-values. Specific failures, such as providing a hallucinated p-value, are highlighted in red. This example illustrates the limitations of current LLMs in handling specialized, data-intensive queries in biological research, underscoring the need for domain-specific adaptation.

Figure S3: Example of refinement from a seed to a template question. The seed question requests the genomic location of two SNPs, while the template question is narrowed to request only one.

Table S4: Cost breakdown of collecting responses and grading them via BioScore for our experiments. Because the cost per token and the number of tokens in a response vary by model, costs are broken down by model.

Figure S5: The complete system prompt given to each LLM along with the question, asking explicitly to abstain when they are unsure.

Figure S6: The complete BioScore grading prompt, to be filled in with the appropriate question {question}, domain expert annotated “gold standard response” {golden_response}, and an LLM’s attempted answer {predicted_response}.

Figure S7: BioScore grading metric applied to the question “What is the ChEMBL ID of the drug Sunitinib?”. The first column represents the highest score, 3 points, for an exact match. In the second column, a deduction of 0.5 points is applied, yielding a BioScore of 2.5, due to unnecessary elaboration in the response. The third column illustrates an incorrect ChEMBL ID for Sunitinib but a correct ID for a related compound, resulting in a partial credit score of 1. In cases of a refusal to respond, a score of -1 is assigned, as seen in the fourth and fifth columns. Finally, an incorrect response receives a score of 0.
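To make the rubric values in Figure S7 concrete, the sketch below aggregates raw rubric scores into an abstention rate and a mean score on a 0 to 1 scale. Only the rubric values (3, 2.5, 1, 0, and -1 for a refusal) come from the caption. Excluding refusals from the mean and rescaling by dividing by 3 are assumptions made here for illustration, as is the function name `summarize`; the authors' repository should be consulted for the actual aggregation.

```python
ABSTAIN = -1   # rubric score for a refusal, per the Figure S7 caption
MAX_SCORE = 3  # rubric score for an exact match, per the Figure S7 caption


def summarize(raw_scores):
    """Return (normalized mean score over answered questions, abstention rate).

    Assumptions (not confirmed by the source): refusals are excluded from
    the mean, and scores are rescaled to [0, 1] by dividing by MAX_SCORE.
    """
    if not raw_scores:
        raise ValueError("no scores given")
    answered = [s for s in raw_scores if s != ABSTAIN]
    abstain_rate = (len(raw_scores) - len(answered)) / len(raw_scores)
    mean_score = sum(answered) / (MAX_SCORE * len(answered)) if answered else None
    return mean_score, abstain_rate


# Rubric scores described in the caption, in column order:
# exact match, over-elaborated, partial credit, two refusals, incorrect.
caption_scores = [3, 2.5, 1, -1, -1, 0]
score, ar = summarize(caption_scores)
print(f"mean score = {score:.3f}, abstention rate = {ar:.3f}")
```

Keeping refusals out of the mean and reporting them separately mirrors the way the captions pair each BioScore with an accompanying AR.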

Figure S8: Bar chart showing the percentage of Gemini API “safety errors” by Bio Category. These errors result from the Gemini API’s safety filters, in particular the harm category “Dangerous Content”. The error rate can range between 0% and 100%; in the context of our Q/A, lower is better, as none of our questions should be deemed dangerous.

Figure S9: Performance of various state-of-the-art AI models on CARDBiomedBench, measured via BioScore. The Abstain Rate (AR) for each model (i.e., the ratio of cases in which the model self-reported “I don’t know”) is also provided under each bar. A model with a higher BioScore and lower AR is more desirable. Models are sorted by decreasing median BioScore, followed by decreasing Abstain Rate (AR), and then increasing spread (interquartile range). Both measures range from 0.0 to 1.0.
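The three-level ordering described for Figure S9 (decreasing median BioScore, then decreasing AR, then increasing interquartile range) maps naturally onto a tuple sort key. The following Python sketch assumes a hypothetical `results` dictionary of per-model scores and abstain rates; the model names and values are invented, and the quartile method is the `statistics.quantiles` default rather than anything specified in the source.

```python
from statistics import median, quantiles


def iqr(scores):
    """Interquartile range using statistics.quantiles' default method."""
    q1, _, q3 = quantiles(scores, n=4)
    return q3 - q1


def order_models(results):
    """Sort model names by decreasing median score, then decreasing
    abstain rate, then increasing interquartile range.

    results: dict mapping model name -> (list of scores, abstain rate).
    """
    def sort_key(name):
        scores, abstain_rate = results[name]
        # Negate the first two components so that larger values sort first.
        return (-median(scores), -abstain_rate, iqr(scores))

    return sorted(results, key=sort_key)


# Hypothetical per-model data for illustration only.
results = {
    "model-a": ([0.2, 0.4, 0.6], 0.1),
    "model-b": ([0.5, 0.7, 0.9], 0.3),
    "model-c": ([0.5, 0.7, 0.9], 0.5),
}
print(order_models(results))
```

Here `model-b` and `model-c` tie on median score, so the tie is broken by the higher abstain rate, as the caption specifies.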
