Abstract

Background: Unintended consequences of computerized patient care system interventions may increase resource use, foster clinical errors, and reduce users' confidence.

Objective: To evaluate three successive interventions designed to reduce serum magnesium test ordering through a care provider order entry (CPOE) system. The second, modeled after a previously successful intervention, caused paradoxical increases in magnesium test ordering rates.

Design: A time-series analysis modeled weekly rates of magnesium test ordering, underlying trends, the impact of the three successive interventions, and the impact of potential covariates. The first intervention exhorted users to discontinue unnecessary tests recurring more than 72 hours into the future. The second displayed recent magnesium, calcium, and phosphorus test results, limited testing to one test instance per order, and provided education regarding appropriate indications for testing. The third targeted only magnesium ordering, displayed recent results, limited testing to one instance per order, summarized indications for testing, and required users to select an indication.

Participants: Clinicians at Vanderbilt University Hospital, a 609-bed academic inpatient tertiary care facility, from 1998 through 2003.

Measurements: Weekly rates of new serum magnesium test orders, instances, and results.

Results: At baseline, there were 539 magnesium tests ordered per week. This decreased to 380 per week (p = 0.001) after the first intervention, increased to 491 per week (p < 0.001) after the second, and decreased to 276 per week (p < 0.001) after the third.

Conclusion: A clinical decision support intervention intended to regulate testing unintentionally increased test ordering rates. CPOE implementers must carefully design resource-related interventions and monitor their impact over time.

Clinical decision support (CDS) systems are computerized tools developed to assist clinical decision making by presenting to health care providers relevant patient-, disease-, or institution-specific evidence.1 When integrated into workflows that include care provider order entry (CPOE), CDS systems increase adherence to guidelines and protocols.1,2,3,4,5,6,7,8,9 For example, decision support tools described in the medical literature have improved compliance with testing guidelines,10,11,12,13,14,15,16,17,18,19 primary care and preventive medicine guidelines,20,21,22,23,24,25,26 and disease-specific management protocols.27,28,29,30,31,32,33,34,35,36,37,38,39,40

Despite their potential utility, a growing body of evidence has demonstrated that computerized patient care information systems (PCISs), such as CDS and CPOE systems, may cause unintended consequences.41 Unintended consequences of PCISs and CDS tools can lead to workflow inefficiency, excessive resource use, reduced confidence in such systems, and actual clinical errors. Studies investigating the factors leading to unintended consequences of decision support remain uncommon.

The current study evaluates an unintended consequence fostered by a decision support intervention designed to regulate inpatient serum magnesium testing at Vanderbilt University Medical Center (VUMC). The intervention was one of three developed at the request of the VUMC Resource Utilization Committee (RUC), which has periodically requested modifications in the institutional CPOE system to deliver targeted decision support interventions to reduce excessive or variable resource consumption.19 A similar project had previously decreased rates of serum chemistry test ordering (e.g., sodium, potassium, chloride, bicarbonate, blood urea nitrogen, creatinine, and glucose) at Vanderbilt University Hospital (VUH) by 51% below baseline.19 Between 1999 and 2002, the RUC commissioned development of three sequential interventions to regulate serum magnesium test ordering via the CPOE system. According to the RUC, the impetus for managing serum magnesium testing was twofold: (1) Analysis of CPOE system log files and laboratory results indicated that over 99% of the magnesium test results fell into a clinically acceptable “normal” range; 77% of tests represented repeat tests on individual patients, and patients' serum magnesium levels, when checked, were tested an average of 4.2 times per admission. (2) The RUC knew of evidence in the literature indicating poor correlation of serum magnesium levels with total body magnesium stores42 and that improved clinical outcomes rarely resulted from magnesium screening.43

Design

Study Setting and Participants

At the time of the study, from 1998 through 2003, VUH was a 609-bed inpatient tertiary care facility with large local and regional primary referral bases, cared for 31,000 inpatients annually, was staffed by approximately 900 attending physicians, and annually trained more than 650 house staff and fellows in over 50 subspecialty areas. Vanderbilt University Medical Center (VUMC), through its Department of Biomedical Informatics, Informatics Center, and clinical infrastructure,44 has developed and implemented clinical information systems, including CPOE45 and clinical data repository46 systems. At the start of the study period, the CPOE system was implemented on 30 of 33 inpatient wards, and over 12,000 orders were entered into the system daily. On active CPOE units, nearly 100% of orders were entered into the CPOE system. All hospital units using the CPOE system at the beginning of the study period were included in the study; this excluded only the pediatric and neonatal intensive care units, the Emergency Department, and the General Clinical Research Center (GCRC).

Using the standard Vanderbilt CPOE system prior to any of the current study interventions, health care providers could freely order laboratory testing on inpatients; there were no system-imposed requirements that users know appropriate indications for the tests, that they review the patient's prior results, or that they limit test frequency and duration. Certain hospital units also had protocols for nonphysicians to order recurring magnesium testing: testing on the hematology/oncology care unit was driven primarily by disease-specific standard protocols for bone marrow transplantation (e.g., one order set protocol allowed magnesium testing every Monday and Thursday, regardless of the patients' clinical status). On the trauma surgical unit, nursing protocols permitted testing triggered by clinical conditions (e.g., magnesium was tested whenever patients had certain cardiac arrhythmias).

Decision Support Interventions Targeting Magnesium Ordering

In developing an approach to determining whether some of the orders for serum magnesium tests were excessive or inappropriate, the RUC identified two “normal” ranges for serum magnesium results. One was the institutional laboratory's statistically based normal range, 1.5–2.5 mg/dL. The other was a “physiologically appropriate” range, within which a serum magnesium level was unlikely to cause clinical sequelae directly, since the body's magnesium stores are primarily intracellular rather than in the serum. The RUC defined the physiologically appropriate serum magnesium range as 1.0–3.9 mg/dL.
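The two reference ranges, and the "proportion of results outside each range" measure reported later in the Results, can be expressed as a short classification rule. The following Python sketch is illustrative only: the function names are hypothetical, and treating both range boundaries as inclusive is an assumption not stated in the study.

```python
# Ranges taken from the text, in mg/dL; inclusive bounds are an assumption.
LAB_NORMAL = (1.5, 2.5)    # laboratory's statistically based normal range
PHYSIOLOGIC = (1.0, 3.9)   # RUC's "physiologically appropriate" range


def in_range(value: float, bounds: tuple[float, float]) -> bool:
    """True if value lies within the closed interval given by bounds."""
    low, high = bounds
    return low <= value <= high


def classify_magnesium(value: float) -> str:
    """Hypothetical helper: label one serum magnesium result (mg/dL)."""
    if in_range(value, LAB_NORMAL):
        return "lab normal"
    if in_range(value, PHYSIOLOGIC):
        return "abnormal but physiologically acceptable"
    return "outside physiologic range"


def fraction_outside(results: list[float], bounds: tuple[float, float]) -> float:
    """Share of results falling outside the given range (0.0 when there are no results)."""
    if not results:
        return 0.0
    return sum(not in_range(r, bounds) for r in results) / len(results)


sample = [2.0, 1.2, 0.8, 2.2]  # made-up values, not study data
print(classify_magnesium(1.2))                # abnormal but physiologically acceptable
print(fraction_outside(sample, LAB_NORMAL))   # 0.5
print(fraction_outside(sample, PHYSIOLOGIC))  # 0.25
```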

Three decision support interventions were designed and implemented to regulate serum magnesium test ordering (Table 1). The study's decision support interventions were displayed to all physicians, nurse practitioners, and medical students entering orders for any patient receiving care on VUH hospital units where CPOE was implemented. The first intervention, active from December 5, 1999 through March 21, 2000, globally targeted all open-ended laboratory and radiology orders. Intervention-generated feedback to CPOE system users indicated daily when any test order was scheduled to recur more than 72 hours into the future (e.g., twice daily serum magnesium levels for five days), prompting users to consider discontinuing the order.
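The first intervention's rule reduces to a look-ahead check on each order's scheduled instances. The Python sketch below is a minimal illustration, assuming a recurring order can be modeled as a start time, a fixed interval, and a duration; the helper names are hypothetical, and `now` stands for the moment of the daily check described above.

```python
from datetime import datetime, timedelta

# The 72-hour look-ahead comes from the text; the order model is an assumption.
HORIZON = timedelta(hours=72)


def expand_schedule(start: datetime, interval_hours: float, duration_hours: float) -> list[datetime]:
    """Hypothetical model: expand a recurring order into its scheduled instance times."""
    count = int(duration_hours // interval_hours)
    return [start + timedelta(hours=i * interval_hours) for i in range(count)]


def needs_discontinue_prompt(instances: list[datetime], now: datetime) -> bool:
    """Flag an order if any instance is scheduled more than 72 hours after `now`."""
    return any(t - now > HORIZON for t in instances)


now = datetime(1999, 12, 6, 8, 0)
five_days = expand_schedule(now, interval_hours=12, duration_hours=120)  # twice daily x 5 days
two_days = expand_schedule(now, interval_hours=12, duration_hours=48)    # twice daily x 2 days
print(len(five_days), needs_discontinue_prompt(five_days, now))  # 10 True
print(len(two_days), needs_discontinue_prompt(two_days, now))    # 4 False
```

Because the check is re-run each day, the same five-day order stops being flagged once its last instance falls within 72 hours of the check time.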

Table 1.

Decision support interventions designed to implement an institutional magnesium testing protocol*

| Intervention | Dates | Target and Description |
|---|---|---|
| Baseline | 1/1/98 – 12/4/99 | No protocols restricting the frequency, context, or volume of magnesium testing |
| First intervention† | 12/5/99 – 3/21/00 | Targeted all laboratory testing instances. Instances scheduled for more than 72 hours into the future were flagged, and users were prompted to discontinue them. |
| Second intervention† | 6/20/00 – 11/30/01 | Targeted magnesium, calcium, and phosphorus testing instances. Included a graphical display of recent serum magnesium, calcium, and phosphorus results; educational material outlining indications for magnesium testing and interpretation; and restrictions on the volume of magnesium, calcium, and phosphorus testing |
| Third intervention‡ | 12/1/01 – 12/31/03 | Targeted magnesium testing orders. Included the most recent serum magnesium result; a graphical display of a calculated corrected magnesium value; restriction of testing volume to one test per order; and a requirement to select a magnesium testing indication |

*For the purpose of this study, orders are finalized interactions with the CPOE system that lead to one or more actions on patients (such as laboratory testing). Instances are the individual actions that occur on patients as the result of an order (e.g., one order for twice-daily magnesium testing to occur over 5 days leads to 10 magnesium testing instances).

†Broad-based tools, globally addressing multiple laboratory tests simultaneously.

‡Focused tool targeting only serum magnesium testing orders.

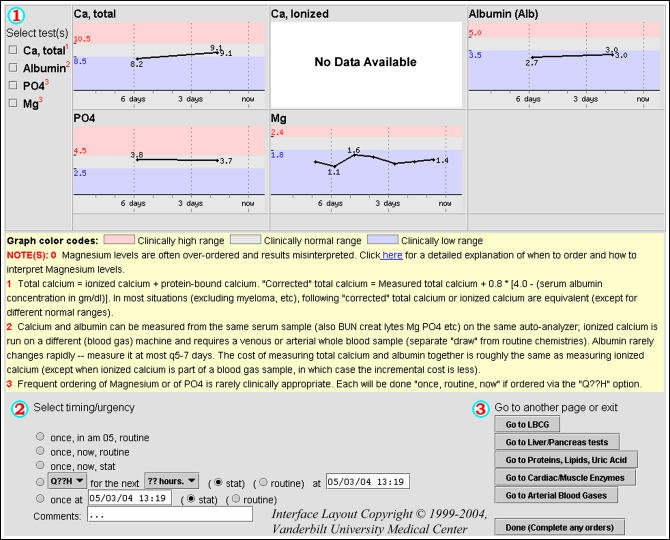

The second study-related intervention (Figure 1), active from June 20, 2000 through November 30, 2001, emulated a previously successful technique used to regulate serum chemistry testing (e.g., sodium, potassium, chloride, bicarbonate, blood urea nitrogen, creatinine, and glucose).19 The second intervention forced any care provider who ordered a serum magnesium, calcium, or phosphorus level to view a common Web page that displayed the patient's previous results for those tests and included a link to a list of testing guidelines (Table 2). This intervention also limited orders to one test per order (i.e., no recurrent testing was possible). System users could bypass the second intervention only by ordering magnesium testing from disease-specific order sets or by specifically ordering a single magnesium test (rather than recurrent testing) from the standard CPOE system user interface.

Figure 1.

The second decision support tool was designed to reduce unnecessary magnesium, calcium, and phosphorus testing. The tool's interface included graphical displays of any magnesium, calcium, and phosphorus testing results from the previous seven days. Albumin results were also included to allow the user to “correct” the measured magnesium and calcium values. The interface included additional educational material and a link to testing guidelines under “Notes.” At the bottom of the tool, the user was prompted to enter the timing of tests, and orders were restricted to one-time testing (for magnesium and phosphorus) or to repeat testing occurring only within the following 24-hour period (for calcium and albumin).
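The per-test restrictions described in the Figure 1 caption can be sketched as a simple validation rule. This Python example is a hypothetical illustration, not the WizOrder implementation: the `LabRequest` record, the representation of scheduled draws as hour offsets, and the reading of "within the following 24-hour period" as offsets between 0 and 24 hours inclusive are all assumptions.

```python
from dataclasses import dataclass

# Per-test rules paraphrased from the Figure 1 caption; this encoding is an assumption.
ONE_TIME_ONLY = {"magnesium", "phosphorus"}
REPEAT_WITHIN_24H = {"calcium", "albumin"}


@dataclass
class LabRequest:
    test: str
    offsets_hours: list[float]  # scheduled draw times, in hours after order entry


def allowed_by_second_tool(req: LabRequest) -> bool:
    """Hypothetical check mirroring the second tool's ordering restrictions."""
    if not req.offsets_hours:
        return False  # an order must schedule at least one draw
    if req.test in ONE_TIME_ONLY:
        return len(req.offsets_hours) == 1
    if req.test in REPEAT_WITHIN_24H:
        # Treating "within the following 24-hour period" as 0 <= offset <= 24 is an assumption.
        return all(0 <= h <= 24 for h in req.offsets_hours)
    return True  # tests not shown on this page were not governed by the tool


print(allowed_by_second_tool(LabRequest("magnesium", [0])))        # True
print(allowed_by_second_tool(LabRequest("magnesium", [0, 12])))    # False
print(allowed_by_second_tool(LabRequest("calcium", [0, 12, 24])))  # True
print(allowed_by_second_tool(LabRequest("calcium", [0, 36])))      # False
```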

Table 2.

Institutional Guidelines for Serum Magnesium Testing*

| Guideline |
|---|
| 1. Routine or repeated magnesium testing is not indicated unless evidence from the clinical evaluation of the patient suggests magnesium deficiency. |
| 2. If magnesium is thought to have therapeutic value and the patient does not have renal failure, then simply give it, since serum magnesium levels are not sensitive enough to guide this kind of empirical replacement therapy. |
| 3. Healthy-eating persons generally do not require any magnesium supplementation unless their levels are less than 1.0 mg/dL, and repeated magnesium testing is not needed in such individuals, unless new indications arise. |
| 4. Almost never give magnesium to someone with significant renal impairment. |
| 5. Correct any serum magnesium level for albumin before treating an asymptomatic patient. |

*Links to these guidelines were available from the second and third decision support tools described in this article.

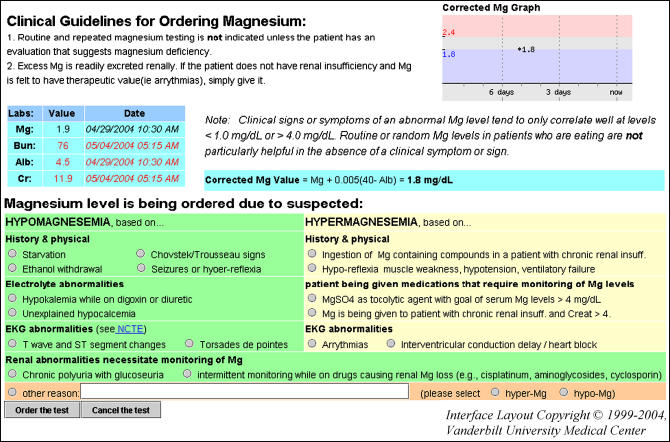

The third study-related intervention (Figure 2), active from December 1, 2001 (two years after introduction of the first intervention and 1½ years after the second) onward, was designed solely to reduce orders for magnesium testing and did not address calcium or phosphorus testing. Like the second intervention, the third displayed the patient's recent results, limited orders to one test per order, and summarized guidelines for testing. The third intervention also added a requirement for the user to enter a reason for testing after reviewing indications. CPOE users could bypass the third intervention only by ordering magnesium tests from a disease-specific order set.
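The third intervention's two hard constraints, a single test instance and a mandatory indication, with disease-specific order sets as the only bypass, can be sketched as a validation function. This is a minimal Python illustration under stated assumptions; the `MagnesiumOrder` record and its field names are hypothetical and do not describe the actual CPOE data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MagnesiumOrder:
    instances: int                # number of draws requested by the order
    indication: Optional[str]     # reason selected or typed by the user
    from_order_set: bool = False  # disease-specific order sets bypassed the tool


def third_tool_problems(order: MagnesiumOrder) -> list[str]:
    """Hypothetical validation: return reasons to block the order (empty list = accept)."""
    if order.from_order_set:
        return []  # per the text, order sets were the only remaining bypass
    problems: list[str] = []
    if order.instances != 1:
        problems.append("only a single magnesium test may be ordered")
    if not (order.indication and order.indication.strip()):
        problems.append("an indication for testing is required")
    return problems


print(third_tool_problems(MagnesiumOrder(1, "new arrhythmia")))  # []
print(third_tool_problems(MagnesiumOrder(3, None)))
```

Compared with the earlier second-tool sketch, the added friction is the indication requirement, which is the constraint the Discussion argues was missing from the second intervention.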

Figure 2.

The third decision support tool specifically targeted magnesium testing. The interface included graphical and tabular display of the most recent results for magnesium, albumin, and a corrected magnesium, if available. Blood urea nitrogen and creatinine were also displayed to indicate the patient's renal function. Users were also required to select or enter an indication for testing. All magnesium orders entered through this tool were for single test instances.

Data Sources

After Institutional Review Board approval was obtained for this study, a computer program extracted from CPOE system log files all new orders for serum magnesium, calcium, and phosphorus testing and all related test order “instances” (e.g., one order for twice-daily magnesium testing to occur over five days would lead to ten magnesium testing instances). Net instances were calculated by excluding any previously scheduled instances that did not occur due to an intercurrent “discontinue” order (e.g., an order for twice-daily magnesium testing over five days might be discontinued after two days, leading to a net of four magnesium testing instances). All inpatient serum magnesium, calcium, and phosphorus test results from July 25, 1999 (when the current VUH laboratory system was implemented) to December 31, 2003 were extracted from the institutional clinical data repository. Patient data, including age, gender, mortality, hospital length of stay, and disease complexity (as measured by diagnosis-related group weight, assigned at discharge) were extracted from institutional demographic databases to include in the analyses as covariates.
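The instance and net-instance counts in the worked example above follow from simple arithmetic on an order's schedule. The Python sketch below reproduces that example, assuming instances occur at a fixed interval from order entry and that a discontinue order cancels every instance scheduled at or after its time; the actual log-file format and extraction program are not described in the text, so the function names are hypothetical.

```python
from typing import Optional


def scheduled_offsets(interval_hours: float, duration_hours: float) -> list[float]:
    """Hours after order entry at which each instance of a recurring order is scheduled."""
    count = int(duration_hours // interval_hours)
    return [i * interval_hours for i in range(count)]


def net_instances(offsets: list[float], discontinued_at: Optional[float] = None) -> int:
    """Count instances that still occur, dropping those at or after a discontinue order."""
    if discontinued_at is None:
        return len(offsets)
    return sum(1 for h in offsets if h < discontinued_at)


schedule = scheduled_offsets(12, 120)  # twice daily for five days
print(len(schedule))                   # 10 instances from one order
print(net_instances(schedule, 48))     # 4 net instances if discontinued after two days
```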

Statistical Analysis

The primary outcomes were weekly rates of new serum magnesium test orders, net serum magnesium order instances, and reported magnesium results. Secondary outcomes included rates of concurrent orders for serum magnesium and calcium or phosphorus tests, and weekly rates of serum magnesium orders on units with a high proportion of nurse-entered or protocol-driven orders. Interrupted time-series analysis allows the evaluation of an outcome of interest in a single population to determine whether changes were coincident with a specific point in time47,48,49 and may be especially useful for studying decision support interventions deployed in academic teaching hospitals.50 Outcomes were modeled using an interrupted time-series analysis that included moving averages, autoregressive terms, and structural variables corresponding with each intervention. The structural variables in our analysis included components to model the preintervention period and the immediate change (i.e., the intercept change) and trend change (i.e., the slope change) in ordering rates following each intervention. The analysis accounted for potential confounding by including demographic covariates such as weekly admission rates and mean patient age.51 Outcomes were considered statistically significant if p-values were below 0.050. All statistical analyses were performed using Stata SE version 8.0 (Stata Corp, College Station, TX).
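The structural variables described above (a baseline intercept and trend, plus an intercept change and a slope change at each intervention) form a standard segmented-regression design matrix. The Python sketch below builds that matrix with NumPy and fits it by ordinary least squares on a synthetic, noise-free series. It deliberately omits the moving-average and autoregressive error terms and the demographic covariates used in the study, and the breakpoint weeks and coefficients are invented for illustration.

```python
import numpy as np


def segmented_design(n_weeks: int, breakpoints: list[int]) -> np.ndarray:
    """Design matrix: intercept, baseline trend, then level and slope changes per intervention."""
    t = np.arange(n_weeks, dtype=float)
    columns = [np.ones(n_weeks), t]
    for b in breakpoints:
        after = (t >= b).astype(float)
        columns.append(after)            # immediate (intercept) change at week b
        columns.append(after * (t - b))  # change in slope from week b onward
    return np.column_stack(columns)


def fit_segmented(y: np.ndarray, breakpoints: list[int]) -> np.ndarray:
    """Ordinary least squares fit; ignores the autocorrelated errors the study modeled."""
    X = segmented_design(len(y), breakpoints)
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


# Synthetic, noise-free series (not study data): 260 weeks, three interventions.
breaks = [100, 128, 205]
true_coef = np.array([540.0, 0.0, -160.0, 0.0, 110.0, 0.5, -210.0, 1.5])
y = segmented_design(260, breaks) @ true_coef
print(np.round(fit_segmented(y, breaks), 3))
```

To approximate the study's ARMA error structure in Python, one option (not the authors' tool) is to pass the same design matrix as the `exog` argument of statsmodels' `SARIMAX` with a nonzero `order`.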

Results

Patient Characteristics

During the six-year study period, VUH admitted a total of 194,192 inpatients; weekly admissions increased from an initial 548 to a final 720 (an upward trend of 0.6 admissions per week; p = 0.035). Mean patient age, proportional admissions by gender, proportional hospital deaths, and patient complexity did not appreciably change throughout the study period.

Decision Support Impact on Ordering Rates and Reported Results

System users ordered a baseline mean 0.87 magnesium net instances per admitted patient on all study units. This decreased to 0.59 net instances per patient (p < 0.001) with the first intervention, increased to 0.87 per patient (p = 0.001) after the second intervention, and decreased to 0.39 per patient after the third intervention (p = 0.003). There were no significant trend changes in net instances per patient with any of the interventions. At the end of the study period, the expected rate of magnesium testing had dropped to 0.41 instances per patient. Overall, magnesium testing was ordered on 21% of all admitted patients at the start of the study period, dropping to 14% at the end of the study period.
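The per-patient measures in this section (net instances per admitted patient, the share of admitted patients tested at all, and, in the subgroup analysis below, instances per tested patient) are three ratios over the same counts. A minimal Python sketch, assuming each admission is identified by a single hypothetical patient ID and that every tested patient also appears among the admissions:

```python
from collections import Counter


def per_patient_summary(admitted_ids: list[str], instance_patient_ids: list[str]) -> dict[str, float]:
    """instance_patient_ids has one entry per net magnesium instance (the patient tested).

    Assumes every tested patient also appears in admitted_ids.
    """
    per_patient = Counter(instance_patient_ids)
    n_admitted = len(set(admitted_ids))
    n_tested = len(per_patient)
    total = sum(per_patient.values())
    return {
        "instances_per_admission": total / n_admitted,
        "share_of_admissions_tested": n_tested / n_admitted,
        "instances_per_tested_patient": total / n_tested if n_tested else 0.0,
    }


# Made-up example: four admissions, two of them tested.
print(per_patient_summary(["a", "b", "c", "d"], ["a", "a", "a", "b"]))
```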

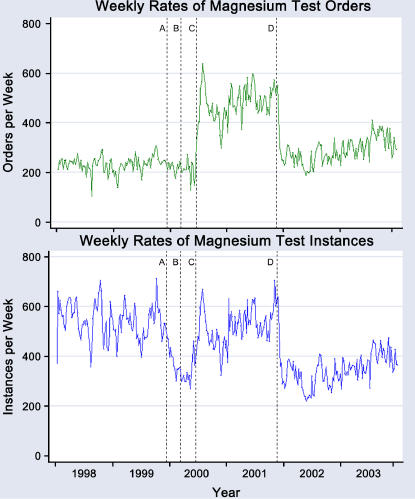

Figure 3 shows the impact of each of the three interventions on the order and instance rates for serum magnesium test orders. Table 3 reports the weekly rates for serum magnesium, phosphorus, and calcium test ordering during the baseline period and after each intervention. Weekly rates of serum magnesium tests performed on inpatient study units had no underlying trends, dropped 29% with the first intervention (p < 0.001), increased 30% with the second intervention (p = 0.001), and dropped 46% with the third intervention (p < 0.001). During all periods of the study, 14% of serum magnesium results fell outside the laboratory normal range, and 0.4% of results fell outside the physiologically acceptable range; there was no change with any of the interventions.

Figure 3.

Weekly rates of magnesium orders (top) and net instances (bottom) over the entire study period. Rates of instances and results track closely together and have an overall correlation coefficient of 0.87. Study-related events affecting ordering rates included implementation of the first decision support tool (A), removal of the first decision support tool (B), implementation of the second decision support tool (C), and replacement of the second decision support tool with the third (D). The change in rates at C brings net instances and results back to the preintervention baseline. When corrected for increasing hospital admission rates, the apparent upward trend following D remains significant for net instances.

Table 3.

Changes in Test Order Rates Associated With Decision Support Interventions

| Tests | Orders*, value | Orders*, p value† | Instances*, value | Instances*, p value† |
|---|---|---|---|---|
| Magnesium | | | | |
| Mean weekly tests during baseline period | 234 | — | 539 | — |
| Baseline trend in weekly tests | 0.1 | 0.845 | −0.5 | 0.163 |
| Weekly test rate after the first intervention‡ | 219 | 0.640 | 378 | 0.001 |
| Weekly test rate after the second intervention | 485 | <0.001 | 491 | 0.022 |
| Trend change in the weekly test rate after the second intervention | 0.6 | 0.315 | 1.5 | 0.050 |
| Weekly test rate after the third intervention | 230 | <0.001 | 277 | <0.001 |
| Trend change in the weekly test rate after the third intervention | 0.9 | 0.076 | 1.6 | 0.007 |
| Mean weekly test rate at the end of study period | 320 | — | 379 | — |
| Calcium | | | | |
| Mean weekly tests during baseline period | 200 | — | 400 | — |
| Baseline trend in weekly tests | 0.1 | 0.924 | −0.5 | 0.361 |
| Weekly test rate after the first intervention‡ | 190 | 0.822 | 336 | 0.643 |
| Weekly test rate after the second intervention | 368 | 0.113 | 452 | 0.541 |
| Trend change in the weekly test rate after the second intervention | 2.5 | 0.012 | 3.6 | 0.003 |
| Weekly test rate after the third intervention | 462 | 0.045 | 621 | 0.001 |
| Trend change in the weekly test rate after the third intervention | 7.6 | <0.001 | 8.0 | <0.001 |
| Mean weekly test rate at the end of study period | 1259 | — | 1417 | — |
| Phosphorus | | | | |
| Mean weekly tests during baseline period | 108 | — | 282 | — |
| Baseline trend in weekly tests | 0.1 | 0.662 | 0.1 | 0.777 |
| Weekly test rate after the first intervention‡ | 107 | 0.823 | 227 | 0.056 |
| Weekly test rate after the second intervention | 292 | <0.001 | 313 | 0.011 |
| Trend change in the weekly test rate after the second intervention | 1.1 | 0.008 | 1.5 | 0.015 |
| Weekly test rate after the third intervention | 229 | 0.008 | 289 | 0.189 |
| Trend change in the weekly test rate after the third intervention | 0.7 | 0.054 | 0.8 | 0.057 |
| Mean weekly test rate at the end of study period | 309 | — | 363 | — |

*All outcomes are corrected for changes in weekly admission rates.

†p values for “weekly test rate” test the hypothesis that the current rate is the same as the preceding rate. p values for “trend change” test the hypothesis that the current trend is equal to zero.

‡Trends during the period when the first tool was implemented could not be tested due to an inadequate sample size.

During the baseline period, 33% of orders for calcium testing were entered simultaneously with orders for magnesium testing. Simultaneous calcium and magnesium test orders did not immediately change with implementation of the first intervention, but dropped over time to 23% (p < 0.001) prior to implementation of the second intervention. Concurrent magnesium and calcium ordering increased to 37% (p < 0.001) after implementation of the second intervention and dropped to 25% (p < 0.001) following the third intervention. During the baseline period, 48% of phosphorus test orders were entered simultaneously with orders for magnesium tests. Simultaneous phosphorus and magnesium test orders increased to 80% (p < 0.001) with the second decision support intervention and decreased to 47% (p < 0.001) with the third.
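The concurrent-ordering percentages above are shares of one test's orders that were entered together with another test's order. The text does not say how simultaneity was determined, so this Python sketch assumes, hypothetically, that two orders are simultaneous when they share a patient and an order-entry session identifier; the record shape is likewise an assumption.

```python
from collections import defaultdict

Order = tuple[str, str, str]  # (patient_id, entry_session_id, test): hypothetical record shape


def co_ordered_share(orders: list[Order], target: str, companion: str) -> float:
    """Share of `target` orders entered in the same patient session as a `companion` order."""
    tests_by_session: dict[tuple[str, str], set[str]] = defaultdict(set)
    for patient, session, test in orders:
        tests_by_session[(patient, session)].add(test)
    target_keys = [(p, s) for p, s, test in orders if test == target]
    if not target_keys:
        return 0.0
    together = sum(companion in tests_by_session[key] for key in target_keys)
    return together / len(target_keys)


orders = [
    ("p1", "s1", "calcium"), ("p1", "s1", "magnesium"),
    ("p2", "s2", "calcium"),
    ("p3", "s3", "calcium"), ("p3", "s3", "phosphorus"), ("p3", "s3", "magnesium"),
]
print(co_ordered_share(orders, "calcium", "magnesium"))     # 0.6666666666666666
print(co_ordered_share(orders, "phosphorus", "magnesium"))  # 1.0
```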

Decision Support Impact on Nurse-Entered or Protocol-Driven Ordering Rates

The analysis was repeated excluding the hematology/oncology and trauma surgical units, where magnesium orders were often placed by protocol. On the remaining units, there were at baseline 194 orders per week for 423 net magnesium testing instances. The order rate increased to 362 per week after the second decision support intervention (p < 0.001) and decreased to 212 per week after the third (p < 0.001), while net instances dropped to 281 per week after the first intervention (p = 0.092), increased to 357 per week with the second (p = 0.011), and dropped to 310 per week with the third (p = 0.012). On the remaining units, magnesium testing was obtained 3.3 times per admitted patient when ordered at all, compared with 4.9 times per patient on the hematology/oncology and trauma surgical units (p < 0.001).

On the hematology/oncology care unit, there were at baseline 59 net instances weekly, decreasing by five tests every 20 weeks (p = 0.003). After the third intervention, net instances increased by eight tests every 20 weeks (p = 0.006) over baseline, leading to a final expected mean rate of 48 net instances per week. On the trauma surgical units alone, there were 34 orders for 82 net instances per week at baseline. After the third intervention, orders dropped from 114 per week to 43 per week (p = 0.006), and net instances dropped from 121 per week to 37 per week (p = 0.002). There were no other changes in new order or net instance rates in these units.

Discussion

The current study evaluated the impact of three sequential CDS interventions implemented through a CPOE system to regulate magnesium test ordering rates. Despite emulating a previously successful decision support intervention, the second intervention caused several unintended consequences, returning testing rates to the preintervention baseline and doubling the rate at which users placed study test orders into the CPOE system. These results ultimately required development of a third intervention. The paradoxically increased rates of magnesium testing following the second intervention were observed across multiple analyses, whether measured as CPOE system orders, individual tests requested per week, tests performed per week, or per-patient test rates. The increases were not observed on hospital units with relatively high proportions of protocol-driven rather than physician-driven orders for magnesium testing but were pronounced when these units were excluded from the analysis. The second intervention was also associated with increased rates of simultaneously entered orders for magnesium and calcium or phosphorus testing.

Patient care information systems and CDS interventions can lead to unintended consequences and paradoxical effects. Such consequences can cause clinical errors, fragment health care providers' workflow, increase resource use for testing and medication prescribing, and reduce users' confidence in PCISs.41 The paradoxical increase in order rates described in this study likely resulted from an unintended consequence: inadvertent prompting by the decision support system that led users to order more tests. For the second intervention, system developers placed measures meant individually to limit serum magnesium, calcium, and phosphorus testing together on the same user interface page. In this setting, offering the ability to order all three tests from a single CPOE user interface page may have unintentionally suggested that the tests should be ordered together, prompting users to order tests they had not originally planned to order.

Inadvertent prompting results when a decision support system unexpectedly stimulates system users to perform an action that was not anticipated by the system developers. This may occur when system users, such as health care providers, make decisions based on habitual patterns or complex reasoning52 that were not obvious to system developers. For example, a health care provider might routinely request (often unnecessary) tests such as serum magnesium and phosphorus levels when ordering serum calcium tests; system developers unaware of this clinical approach would not plan to avert such behaviors.

In addition to inadvertent prompting, combining the three tests on a single Web page created a workflow convenience. The intervention's developers had placed the measures together because serum magnesium testing is often ordered concurrently with serum calcium and phosphorus testing. The standard CPOE system interface required users to enter a separate order for each of the three tests, each with its own frequency, urgency, and timing. The second study intervention allowed system users to order magnesium, calcium, and phosphorus simultaneously, specifying frequency, urgency, and timing once for all three tests. The intervention did not balance this increased ease of ordering with any rational constraints on ordering, such as requiring users to review the graphical presentation of previous magnesium results, to become familiar with local guidelines and educational material about magnesium testing, or to provide reasons for test ordering. The result of this imbalance was a paradoxical increase in magnesium test ordering rates. The third study intervention addressed the imbalance and lowered magnesium test ordering rates.

Limitations

The current investigation has limitations that merit discussion. First, as is the case for all time-series analyses, it is possible that unmeasured events occurring coincident with the CDS interventions caused the observed outcomes rather than the interventions themselves. Second, because the second decision support intervention was removed from the CPOE system at the same time that the third was introduced, the study could not determine which event led to the drop in testing rates occurring in the transition between them. Rates of serum phosphorus testing did not change in the transition between the second and third interventions and therefore served as a partial “control”; it is reasonable to postulate that the third intervention caused the drop in magnesium testing. Third, the study did not include an analysis of other unintended consequences of decision support interventions, including adverse clinical impact or poor cost-effectiveness of implementation. While this study could not directly evaluate whether system users ordered serum magnesium testing more or less appropriately with any of the interventions, it did include an analysis of rates of abnormal results. It is likely that had the interventions improved appropriateness of test ordering, they would have reduced the proportion of normal results. Fourth, the results may not generalize to other health care settings. This study was performed in a teaching hospital where the majority of order entry was performed by house staff physicians and may not be representative of smaller community hospitals.

Conclusion

Combining ordering interventions aimed at decreasing testing for multiple tests into a single user interface Web page inadvertently caused health care providers to order more tests than prior to the interventions. The increase in testing occurred because the intervention inadvertently prompted users who might otherwise have ordered only a serum calcium or phosphorus test also to order serum magnesium tests and inadvertently made it efficient for them to do so. Designers of CDS interventions should take into account the paradoxical prompting that such interventions might generate. Upon deploying decision support interventions, developers should also monitor their impact and assess any unintended consequences.

The project was supported by United States National Library of Medicine Grants (5 T15 LM007450-02 and 5 R01 LM 06226), the Vanderbilt General Clinical Research Center (M01-RR00095), the Vanderbilt Physician Scientist Development Program, and Vanderbilt University Medical Center Funds.

The WizOrder Care Provider Order Entry (CPOE) system described in this manuscript was developed by Vanderbilt University Medical Center faculty and staff within the School of Medicine and Informatics Center beginning in 1994. In May 2001, Vanderbilt University licensed the product to a commercial vendor, who is modifying the software. The system as described represents the Vanderbilt noncommercialized software code. Dr. Rosenbloom, the manuscript's lead author, has been paid by the commercial vendor in the past to demonstrate to potential clients the noncommercialized product. Drs. Miller and Talbert have been recognized by Vanderbilt as contributing to the authorship of the WizOrder software and have received and will continue to receive royalties from Vanderbilt under the University's intellectual property policies. While these involvements could potentially be viewed as a conflict of interest with respect to the submitted manuscript, the authors have taken a number of concerted steps to avoid an actual conflict, specifically by including additional authors and a statistician not involved in development or commercialization of the CPOE system.

References

- 1.Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003;10:523–30. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Tierney WM, Overhage JM, Takesue BY, et al. Computerizing guidelines to improve care and patient outcomes: the example of heart failure. J Am Med Inform Assoc. 1995;2:316–22. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Overhage JM, Middleton B, Miller RA, Zielstorff RD, Hersh WR. Does national regulatory mandate of provider order entry portend greater benefit than risk for health care delivery? The 2001 ACMI debate. The American College of Medical Informatics. J Am Med Inform Assoc. 2002;9:199–208. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Miller RA. Medical diagnostic decision support systems—past, present, and future: a threaded bibliography and brief commentary. J Am Med Inform Assoc. 1994;1:8–27.

- 5. McDonald CJ. Protocol-based computer reminders, the quality of care and the non-perfectability of man. N Engl J Med. 1976;295:1351–5.

- 6. Cimino C, Barnett GO, Blewett DR, et al. Interactive query workstation: a demonstration of the practical use of UMLS knowledge sources. Proc Annu Symp Comput Appl Med Care. 1992:823–4.

- 7. Sittig DF, Stead WW. Computer-based physician order entry: the state of the art. J Am Med Inform Assoc. 1994;1:108–23.

- 8. Warner HR, Olmsted CM, Rutherford BD. HELP—a program for medical decision-making. Comput Biomed Res. 1972;5:65–74.

- 9. Stead WW, Miller RA, Musen MA, Hersh WR. Integration and beyond: linking information from disparate sources and into workflow [see comments]. J Am Med Inform Assoc. 2000;7:135–45.

- 10. Tierney WM, Miller ME, McDonald CJ. The effect on test ordering of informing physicians of the charges for outpatient diagnostic tests [see comments]. N Engl J Med. 1990;322:1499–504.

- 11. Bates DW, Kuperman GJ, Rittenberg E, et al. A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests [see comments]. Am J Med. 1999;106:144–50.

- 12. Cummings KM, Frisof KB, Long MJ, Hrynkiewich G. The effects of price information on physicians' test-ordering behavior. Ordering of diagnostic tests. Med Care. 1982;20:293–301.

- 13. Gortmaker SL, Bickford AF, Mathewson HO, Dumbaugh K, Tirrell PC. A successful experiment to reduce unnecessary laboratory use in a community hospital. Med Care. 1988;26:631–42.

- 14. Hawkins HH, Hankins RW, Johnson E. A computerized physician order entry system for the promotion of ordering compliance and appropriate test utilization. J Healthc Inf Manag. 1999;13:63–72.

- 15. Shojania KG, Yokoe D, Platt R, Fiskio J, Ma'luf N, Bates DW. Reducing vancomycin use utilizing a computer guideline: results of a randomized controlled trial. J Am Med Inform Assoc. 1998;5:554–62.

- 16. Studnicki J, Bradham DD, Marshburn J, Foulis PR, Straumfjord JV. A feedback system for reducing excessive laboratory tests. Arch Pathol Lab Med. 1993;117:35–9.

- 17. Teich JM, Merchia PR, Schmiz JL, Kuperman GJ, Spurr CD, Bates DW. Effects of computerized physician order entry on prescribing practices. Arch Intern Med. 2000;160:2741–7.

- 18. Sanders DL, Miller RA. The effects on clinician ordering patterns of a computerized decision support system for neuroradiology imaging studies. Proc AMIA Symp. 2001:583–7.

- 19. Neilson EG, Johnson KB, Rosenbloom ST, et al. The impact of peer management on test-ordering behavior. Ann Intern Med. 2004;141:196–204.

- 20. McDonald CJ, Hui SL, Smith DM, et al. Reminders to physicians from an introspective computer medical record. A two-year randomized trial. Ann Intern Med. 1984;100:130–8.

- 21. Dexter PR, Perkins S, Overhage JM, Maharry K, Kohler RB, McDonald CJ. A computerized reminder system to increase the use of preventive care for hospitalized patients. N Engl J Med. 2001;345:965–70.

- 22. Balas EA, Weingarten S, Garb CT, Blumenthal D, Boren SA, Brown GD. Improving preventive care by prompting physicians. Arch Intern Med. 2000;160:301–8.

- 23. Dexter PR, Wolinsky FD, Gramelspacher GP, et al. Effectiveness of computer-generated reminders for increasing discussions about advance directives and completion of advance directive forms. A randomized, controlled trial [see comments]. Ann Intern Med. 1998;128:102–10.

- 24. Eccles M, Grimshaw J, Steen N, et al. The design and analysis of a randomized controlled trial to evaluate computerized decision support in primary care: the COGENT study. Fam Pract. 2000;17:180–6.

- 25. Montgomery AA, Fahey T, Peters TJ, MacIntosh C, Sharp DJ. Evaluation of computer based clinical decision support system and risk chart for management of hypertension in primary care: randomised controlled trial. BMJ. 2000;320:686–90.

- 26. Shea S, DuMouchel W, Bahamonde L. A meta-analysis of 16 randomized controlled trials to evaluate computer-based clinical reminder systems for preventive care in the ambulatory setting [see comments]. J Am Med Inform Assoc. 1996;3:399–409.

- 27. Balcezak TJ, Krumholz HM, Getnick GS, Vaccarino V, Lin ZQ, Cadman EC. Utilization and effectiveness of a weight-based heparin nomogram at a large academic medical center. Am J Manag Care. 2000;6:329–38.

- 28. Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review [see comments]. JAMA. 1998;280:1339–46.

- 29. Johnston ME, Langton KB, Haynes RB, Mathieu A. Effects of computer-based clinical decision support systems on clinician performance and patient outcome. A critical appraisal of research. Ann Intern Med. 1994;120:135–42.

- 30. Kuperman GJ, Teich JM, Tanasijevic MJ, et al. Improving response to critical laboratory results with automation: results of a randomized controlled trial. J Am Med Inform Assoc. 1999;6:512–22.

- 31. Margolis A, Bray BE, Gilbert EM, Warner HR. Computerized practice guidelines for heart failure management: the HeartMan system. Proc Annu Symp Comput Appl Med Care. 1995:228–32.

- 32. McDonald CJ, Overhage JM, Tierney WM, Abernathy GR, Dexter PR. The promise of computerized feedback systems for diabetes care. Ann Intern Med. 1996;124:170–4.

- 33. Persson M, Bohlin J, Eklund P. Development and maintenance of guideline-based decision support for pharmacological treatment of hypertension. Comput Methods Programs Biomed. 2000;61:209–19.

- 34. Raschke RA, Gollihare B, Wunderlich TA, et al. A computer alert system to prevent injury from adverse drug events: development and evaluation in a community teaching hospital [see comments]. JAMA. 1998;280:1317–20. Erratum in: JAMA. 1999;281:420.

- 35. Schriger DL, Baraff LJ, Rogers WH, Cretin S. Implementation of clinical guidelines using a computer charting system. Effect on the initial care of health care workers exposed to body fluids [see comments]. JAMA. 1997;278:1585–90.

- 36. Schriger DL, Baraff LJ, Buller K, et al. Implementation of clinical guidelines via a computer charting system: effect on the care of febrile children less than three years of age. J Am Med Inform Assoc. 2000;7:186–95.

- 37. Selker HP, Beshansky JR, Griffith JL. Use of the electrocardiograph-based thrombolytic predictive instrument to assist thrombolytic and reperfusion therapy for acute myocardial infarction. A multicenter, randomized, controlled, clinical effectiveness trial. Ann Intern Med. 2002;137:87–95.

- 38. Smith SA, Murphy ME, Huschka TR, et al. Impact of a diabetes electronic management system on the care of patients seen in a subspecialty diabetes clinic. Diabetes Care. 1998;21:972–6.

- 39. Starmer JM, Talbert DA, Miller RA. Experience using a programmable rules engine to implement a complex medical protocol during order entry. Proc AMIA Symp. 2000:829–32.

- 40. Sintchenko V, Coiera E, Iredell JR, Gilbert GL. Comparative impact of guidelines, clinical data, and decision support on prescribing decisions: an interactive Web experiment with simulated cases. J Am Med Inform Assoc. 2004;11:71–7.

- 41. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11:104–12.

- 42. Brenner BM, Rector FC. Brenner and Rector's the kidney. Philadelphia: Saunders, 2000, p. xix, 2653, xlix.

- 43. Rose WD, Martin JE, Abraham FM, Jackson RL, Williams JM, Gunel E. Calcium, magnesium, and phosphorus: emergency department testing yield. Acad Emerg Med. 1997;4:559–63.

- 44. Stead WW, Bates RA, Byrd J, Giuse DA, Miller RA, Shultz EK. Case Study: The Vanderbilt University Medical Center information management architecture. In: Van De Velde R, Degoulet P, editors. Clinical information systems: a component-based approach. New York: Springer-Verlag, 2003.

- 45. Geissbuhler A, Miller RA. A new approach to the implementation of direct care-provider order entry. Proc AMIA Annu Fall Symp. 1996:689–93.

- 46. Giuse DA, Mickish A. Increasing the availability of the computerized patient record. Proc AMIA Annu Fall Symp. 1996:633–7.

- 47. Box GEP, Jenkins GM, Reinsel GC. Time series analysis: forecasting and control. Englewood Cliffs, NJ: Prentice-Hall, 1994, p. xvi, 598.

- 48. Diggle P. Time series: a biostatistical introduction. Oxford statistical science series; 5. Oxford, England/New York: Clarendon Press/Oxford University Press, 1990, p. xi, 257.

- 49. Ray WA. Policy and program analysis using administrative databases. Ann Intern Med. 1997;127:712–8.

- 50. Grimshaw J, Campbell M, Eccles M, Steen N. Experimental and quasi-experimental designs for evaluating guideline implementation strategies. Fam Pract. 2000;17(Suppl 1):S11–6.

- 51. Sun GW, Shook TL, Kay GL. Inappropriate use of bivariable analysis to screen risk factors for use in multivariable analysis. J Clin Epidemiol. 1996;49:907–16.

- 52. McDonald CJ. Medical heuristics: the silent adjudicators of clinical practice. Ann Intern Med. 1996;124:56–62.