Abstract

Federated learning (FL)–a distributed machine learning that offers collaborative training of global models across multiple clients. FL has been considered for the design and development of many FL systems in various domains. Hence, we present a comprehensive survey and analysis of existing FL systems, drawing insights from more than 250 articles published in 2019-2024. Our review elucidates the functioning of FL systems, particularly in comparison with alternative distributed learning approaches. Considering the healthcare domain as an example, we define the building blocks of a typical FL healthcare system, including system architecture, federation scale, data partitioning, open-source frameworks, ML models, and aggregation algorithms. Furthermore, we identify and discuss key challenges associated with the design and implementation of FL systems within the healthcare sector while outlining the directions of future research. In general, through systematic categorization and analysis of existing FL systems, we offer insights to design efficient, accurate, and privacy-preserving healthcare applications using cutting-edge FL techniques.

Keywords: Federated learning, Aggregation algorithms, Data privacy, Data partitioning, Non-identically distributed data, Attacks

Graphical abstract

1. Introduction

In the present age of big data, artificial intelligence (AI) experts are keen on leveraging massive datasets for precise model training. Meanwhile, approaches capable of efficiently training models while preserving privacy are critical in domains such as healthcare, finance, defense, and Internet of Things (IoT) networks. In most industries, data are often scattered across various entities in isolated silos, primarily due to privacy concerns, security risks, business competition, and organizational complexities, limiting data sharing among organizations. However, machine learning (ML) models should be trained on aggregated data for optimal performance and impartiality. Traditionally, such aggregated data are centralized for training. However, privacy and security risks, single-center failures, and challenges in sharing massive amounts of data often limit the feasibility of such data centralization.

The healthcare sector has immense potential for AI applications, which shows considerable promise in revolutionizing patient care strategies and operational efficiency, as evidenced by the implementation of various tools, such as clinical decision support systems that leverage large amounts of pre-existing biomedical data to perform accurate diagnoses and create customized treatment plans [1], [2], [3]. However, current AI-based developments in the healthcare sector have yet to fully exploit their potential due to several barriers–including data availability–as highlighted in a recent report published by the US Government Accountability Office [4]. In particular, this report highlights the significant challenges associated with data access and curation, impeding research, data utilization, and progress in the healthcare sector. Stringent regulations restricting the sharing of biomedical data such as the EU's General Data Protection Regulation [5]. Even when good quality individual-level data is available, the process often involves lengthy applications mandating strict adherence to legal requirements for proper data usage and protection [6], [7], further limiting progress. In particular, Google has recently introduced FL [8], which allows users to leverage data without data centralization. This innovation ensures data privacy while enabling efficient, accurate and highly secure ML training, demonstrating immense potential for revolutionizing healthcare practices.

FL- also known as collaborative learning-is a technique of constructing high-quality, robust ML models via approaches involving data distribution across multiple clients instead of data storage on a single centralized server. Within this framework, independent clients develop local ML models using private data and store these models on a central server. These individual local models are further aggregated to create a new global model, which is subsequently shared with individual clients for further refinement (Fig. 1).

Fig. 1.

Different steps in the FL Framework for one iteration (Local models are represented in gray boxes near the clients and the global model is displayed next to the server).

Fig. 1 shows the primary components of an FL system, including a server, a communication computation framework, and a set of clients. Let us assume a scenario involving N clients (, , ..., ) each holding private data (, ,..., , respectively) with the aim of collectively training the model without sharing their respective data. FL facilitates such model training, where data from individual clients are collaboratively processed to train model without requiring direct sharing of data among them (i ϵ ). During this process, the server first selects an initial global model and shares this with all clients. The clients then train their local models using private data and relay it back to the server. Subsequently, the server aggregates the received models and updates the global model on the basis of the derived result. Finally, the server shares the global model with all clients. This iterative process continues until the model converges or a termination condition is satisfied.

The application of FL to biomedical and health data ensures the privacy of patients and healthcare providers, including hospitals. This technique enables data holders–such as hospitals or patients with medical data stored on their smartphones-to collectively analyze raw data without sharing it. In such a scenario, explicit sharing of collaborative models instead of local models specific to individual healthcare providers helps enforce privacy guarantees. However, this practice could have negative implications, including loss of information on in-hospital-acquired bacteria. In particular, the application of FL in medicine extends to the prediction of mortality rates, durations of hospital stay, durations of intensive care unit (ICU) stay, and identification of clinically similar patients with respect to their electronic health records (EHR). Furthermore, FL can facilitate brain tumor detection and whole brain segmentation in magnetic resonance imaging (MRI). Furthermore, FL applications can offer promising solutions to address pandemic situations [3], [9], [10], [11] and advance academic research in cancer and tumor treatments, mammograms, and genetic disorders [12]. ML FL models are reported to outperform those trained on a single datapoint, while also being more generalizable [4].

Healthcare presents unique and pressing challenges that make it an ideal but complex domain for FL. Patient data is often fragmented between multiple institutions, each with different electronic health record (EHR) systems, formats, and sampling protocols, leading to extreme data heterogeneity. Additionally, regulatory constraints such as the Health Insurance Portability and Accountability Act (HIPAA) in the US and the General Data Protection Regulation (GDPR) in the EU severely limit direct data sharing between hospitals, making traditional centralized machine learning approaches infeasible. The need for decentralized, privacy-preserving learning solutions is further amplified by the sensitivity of healthcare data and the critical consequences of model mispredictions. FL offers a promising solution by enabling collaborative model training without transferring raw patient data, but its deployment in healthcare is far from straightforward, requiring adaptation to clinical workflows, trust models, and compliance frameworks. Table 1 describes the significance statement, which summarizes the key motivations, contributions, and implications of this survey in the context of FL for healthcare.

Table 1.

Statement of Significance Table.

| Aspect | Description |

|---|---|

| Study Focus | A comprehensive review and analysis of FL systems with a focus on healthcare applications. |

| Innovation | Synthesizes insights from over 250 publications (2019-2024), providing a systematic categorization of FL systems. |

| Healthcare Relevance | Identifies the building blocks of FL systems tailored for healthcare, addressing privacy and data distribution challenges. |

| Key Contributions | - Comparative evaluation of FL with alternative distributed learning approaches. - In-depth discussion of federation scale, data partitioning, aggregation algorithms, and open-source frameworks. - Recommendations for future research in privacy-preserving and efficient FL systems. |

| Impact on Field | Serves as a resource for researchers and practitioners, guiding the design of robust FL systems for privacy-preserving healthcare applications. |

| Future Directions | Outlines challenges and opportunities, including addressing non-identical data distributions, system scalability, and robust privacy mechanisms. |

Recent developments in brain data analytics have been largely focused on integrating brain computer interfaces (BCI), neuromodulation, and FL to create secure, efficient, and personalized neurotechnological systems for treating neurological disorders or enhancing cognitive functions [13], [14]. BCIs, which facilitate direct communication between the brain and external devices, and neuromodulation, which can change neural activity occurring in the brain by directly stimulating it for therapeutic purposes, generate voluminous amounts of sensitive data. This requires robust data privacy, security, and computational scalability, and decentralized processing methods. Federated learning addresses these challenges by allowing machine learning models to be trained across many decentralized devices without sharing raw data, respecting user privacy and data protection regulations. This promising solution enables decentralized model training across multiple edge devices or institutions without requiring sensitive data to be shared. By keeping brain data local while sharing model updates, FL ensures privacy preservation and regulatory compliance, particularly important in clinical applications. In addition, FL allows for continuous personalized model refinement in real-time BCI applications and adaptive neuromodulation, fostering more accurate, responsive, and ethically aligned neurotechnological systems. Research studies show how FL can mitigate data heterogeneity and improve EEG-based classification tasks [15], [16]. In addition, the Augmented Robustness Ensemble algorithm incorporates privacy-protection techniques into BCI decoding, and security and performance are enhanced. Researchers confirm that FL can be on par with or even better than centralized models in the classification of emotions and motor imagery even under non-IID data conditions [17], [18]. Moreover, it has been illustrated that subject-adaptive FL methods achieve better classification results while maintaining serious data privacy [19]. Finally, some papers highlighted the imperativeness of federated techniques in the construction of strong and safe neurotechnologies based on ethics [20]. Overall, these efforts represent a promising way toward large-scale, privacy-conscientious brain data analytics and enabling real-time, personalized neuromodulation, and BCI applications.

The rest of the paper is organized as follows. Section 2 explains the research methodology used to select and analyze relevant studies. Section 3 reviews the related literature. Section 4 outlines the core components of FL-based healthcare systems. Section 5 discusses key challenges and open problems. Section 6 explores FL applications in healthcare and the underlying ML models. Finally, Section 7 summarizes the findings and outlines future directions.

2. Research methodology

2.1. Gathering data and strategic insights

For data collection, literature research was conducted in multiple databases-including Scopus, IEEE Xplore, JMIR, PubMed and Web of Science-targeting materials published from January 2017 to January 2024. This search used the keyword combinations of “federated learning” and “health *” to identify articles published from January 2020 to January 2024 in the selected databases. This strategy ensured a comprehensive collection of relevant scholarly articles. To efficiently extract essential information and minimize selection biases, we predominantly relied on abstract analysis to facilitate an objective evaluation of content related to our research topic.

2.2. Inclusion and exclusion criteria

Notably, we primarily focused on FL applications within healthcare settings, exploring specific ways through which FL can foster data privacy and collaboration without the need to centralize sensitive information. To ensure focus, articles introducing new FL techniques not explicitly designed for health-related applications were excluded, regardless of their potential relevance to general FL advances. Furthermore, the inclusion criteria were stringent, including only peer-reviewed articles, books, and conference proceedings while excluding conference abstracts and commentaries.

2.3. Study selection

The selection process began with the collection of 2,284 records through comprehensive searches performed in the selected databases, including Scopus, IEEE, JMIR, PubMed, and Web of Science. Of these, 774 duplicate records were promptly excluded. The further selection of the remaining 1,510 records eliminated 1,246 records for several reasons, such as non-adherence to the FL principles, publishing dates before 2020, or lack of relevance to healthcare. Subsequently, 264 reports were sought for retrieval. Following diligent eligibility evaluations, all 264 reports were deemed appropriate for inclusion. However, further detailed evaluations resulted in the exclusion of 105 reports that focused on generalized FL application scenarios in healthcare. The remaining 159 studies were included in the review and comprised various studies, including 22 considering centralized and decentralized ML models, 31 involving aggregation algorithms, 25 related to healthcare privacy, and 65 assorted studies.

This selection process was meticulously executed to ensure the compliance with the inclusion criteria and maintain the integrity of the scoping review. Fig. 2 outlines the selection process for this study, while Table 2 compares existing FL-based healthcare surveys with the current survey.

Fig. 2.

Preferred Reporting Items for Systematic Reviews and Meta-Analyses 2024; flow diagram of the selection process.

Table 2.

Comparisons of existing FL-based healthcare surveys with our survey.

| Contribution | Healthcare |

Research gap identification | Possible Solutions identification | Research review scope | ||

|---|---|---|---|---|---|---|

| Taxonomy comparison (All) | Underlying ML model | Privacy concern | ||||

| [21] | x | x | ✓ | ✓ | x | 2019-2023 |

| [22] | x | x | ✓ | ✓ | ✓ | 2017-2022 |

| [23] | x | x | x | ✓ | x | 2021-2022 |

| [24] | x | x | x | ✓ | ✓ | 2020-2023 |

| [25] | x | x | x | ✓ | ✓ | 2018-2023 |

| [26] | x | x | x | ✓ | x | 2019-2023 |

| [27] | x | x | ✓ | ✓ | x | 2017-2021 |

| [28] | x | x | ✓ | ✓ | ✓ | 2021-2023 |

| [29] | x | x | ✓ | ✓ | x | 2019-2022 |

| [30] | x | x | ✓ | ✓ | x | 2017-2020 |

| [31] | x | x | ✓ | ✓ | x | 2018-2021 |

| [32] | x | ✓ | x | ✓ | ✓ | 2019-2022 |

| [33] | x | ✓ | x | ✓ | x | 2015-2021 |

| Our work | ✓ | ✓ | ✓ | ✓ | ✓ | 2019-2024 |

2.4. Critical analysis of existing FL surveys in healthcare

While several surveys (e.g. [21], [22], [23]) have addressed federated learning (FL) in general, most lack a focused analysis of its application in healthcare. For example, the survey by Yang et al. [21] provides a broad overview of FL techniques, but lacks relevance to healthcare and domain-specific implications. Similarly, the work in [22] provides good coverage of the timeline and scope of FL, but provides minimal discussion of privacy issues or healthcare-specific challenges. Technical overviews such as those of [24], [25] and [26] offer useful architectural insights, but do not identify clear research gaps or practical implementation challenges in clinical settings. Some works (e.g., [27], [28], [29]) attempt to identify research challenges and propose solutions but lack depth in evaluating model robustness, scalability, and data heterogeneity issues typical in healthcare. Although [32] and [33] incorporate healthcare perspectives, they do not deeply compare data partitioning effects or modern privacy risks in multi-institutional setups. In contrast, our work provides a comprehensive and healthcare-specific review of FL, offering critical analysis, a unified taxonomy, and identified gaps, thus contributing a meaningful direction for future research.

3. Related literature

With the exponential growth of data in the modern world, traditional data mining and research analysis tools have become impractical due to the challenges in managing and sharing massive datasets [12]. Consequently, distributed algorithms for multiinstitutional learning have been developed as viable alternatives (Fig. 3).

Fig. 3.

Different approaches for distributed multi-institutional learning).

Secure multiparty computation (SMC)– a related but relatively primitive concept-enables joint computations of arbitrary functions among distributed institutions or parties without necessarily sharing personal raw data or intermediate computation steps. In this approach, only the final output is shared with the participants. Although SMC algorithms guarantee data privacy and demonstrate high precision by transforming centralized algorithms into distributed ones using cryptographic concepts, their efficiency is often hindered by the use of many cryptographic tools and the requirements for extensive communication [34], [35].

Split Learning (SL)– is a technique for distributed ML that preserves data privacy while allowing multiple parties to collaborate on model development. Within the SL framework, each party owns a portion of the data and performs local computations before sharing a small portion of the results with a central server [36]. Subsequently, the server aggregates all results to generate a global model, which is further shared with all parties for further refinement of individual local models. Considering that SL algorithms only allow sharing of local computation results while preventing data transfers among parties, they provide an efficient means of maintaining the privacy of sensitive data while allowing multi-party collaborations for model development. Consequently, SL has emerged as a vital technique for distributed multi-institutional learning, enabling multiple institutions to collaborate on model construction using personal data without compromising data privacy.

Specifically, the SL process involves the following steps:

-

•

Initialization: Each party initializes a local model using personal data.

-

•

Forward pass: Each party executes a forward pass on their local data using a local model.

-

•

Communication: Each party transmits a small portion of its forward-pass results to the central server using secure communication protocols to ensure data privacy.

-

•

Aggregation: The server aggregates the results of each party, generating a global model.

-

•

Backward pass: The server transmits the global model to individual parties, and using this global model, individual parties perform backward passes on their local data to update their local models.

-

•

Iteration: The above mentioned process is iterated over a fixed number of turns until the desired level of accuracy is achieved.

Previous studies have demonstrated the effectiveness of SL algorithms in various ML tasks, including image classification and natural language processing. Furthermore, several open-source frameworks, including TensorFlow Federated and PySyft, have been developed for SL implementation.

Statistical Distributed Learning (SDL)– creates distributed algorithms to compute the statistical parameters of the data. Specifically, in the SDL process of big data, a common approach involves randomly splitting available data (along with observations) into multiple subsets, which independently execute the required computations. Subsequent aggregations of all such local computations yield the final results, limiting communication (and information sharing) to the final step, thereby notably mitigating complexities and generating simpler algorithms. Standard SDL algorithms prioritize the estimation of statistical parameters while minimizing communication between participating entities [37], [38], [39], without considering privacy concerns.

The FL approach– introduced in 2016–aimed to facilitate efficient model training among distributed clients without sharing personal raw data. Thus, FL is a distributed ML approach that enables multiple clients to train a global model while ensuring their data privacy under the coordination of a central server. Within FL, localized data train individual models, which are further transmitted to a server for aggregation. The resulting aggregated and updated model is then relayed to all participants, who generate the final learning model through refinements. This process can be compared with the integration of SDL with a focus on privacy considerations. Furthermore, unlike SMC, FL focuses on preserving the privacy of client raw data rather than the privacy of intermediate aggregated data.

Consequently, FL has emerged as a crucial technique for distributed multiinstitutional learning, enabling multiple institutions to collaborate on model construction using personal data without compromising data privacy. Compared to traditional centralized ML approaches, FL offers numerous advantages-including enhanced privacy, reduced communication overhead, and increased scalability [40].

In particular, our extensive survey provides detailed comparisons among various FL applications in the healthcare sector (Table 3). Furthermore, by addressing typical topics such as taxonomy, ML models, and privacy concerns, identifying research gaps, and suggesting new approaches, our survey sets itself apart from previous surveys within this field. In addition, it presents in-depth reviews of relevant literature published in 2019-2024 and summarizes the latest developments in FL applications. In addition, it emphasizes privacy concerns and sets benchmarks against previous surveys to highlight trends and innovation opportunities. Our comprehensive approach follows the evolution of the field and serves as a critical reference for ongoing research and future developments, positioning itself as a key resource for FL-based healthcare practices. We aim to dissect the taxonomies of various FL approaches (including those yet to be discussed in this paper) and to detail the building blocks guiding the design of FL-based healthcare systems. The results of our comprehensive survey are anticipated to guide researchers in choosing the appropriate components when designing federated ML systems. This paper also summarizes current challenges and future research directions to pave the way for a new era of FL solutions.

Table 3.

Comparisons of various methods for distributed multi-institutional learning.

| Approach | Computation | Communication | Data Heterogeneity | Privacy | Design Purpose |

|---|---|---|---|---|---|

| SDL | Distributed computation requirements | Low communication overhead, communication cost increases with the number of participating clients | Data is assumed to be independent and identically distributed across all parties | No privacy guarantees, but can be enhanced with additional methods | Efficient statistical model training |

| SL | Distributed computation and communication requirements, less than FL | Low communication overhead, communication cost increases with the number of participating clients | Able to handle data heterogeneity | High privacy guarantees | Privacy-preserving model training |

| SMC | Heavy use of cryptographic tools and significant communication requirements | High communication overhead, communication cost increases with the number of parties involved | Able to handle data heterogeneity | High privacy guarantees | Privacy-preserving computation without data sharing |

| FL | Distributed computation and communication requirements, less than SMC | Low communication overhead, communication cost increases with the number of participating clients | Aspires to deal with heterogeneous datasets | High privacy guarantees | Privacy-preserving model training |

4. Basic building blocks of FL-based healthcare systems

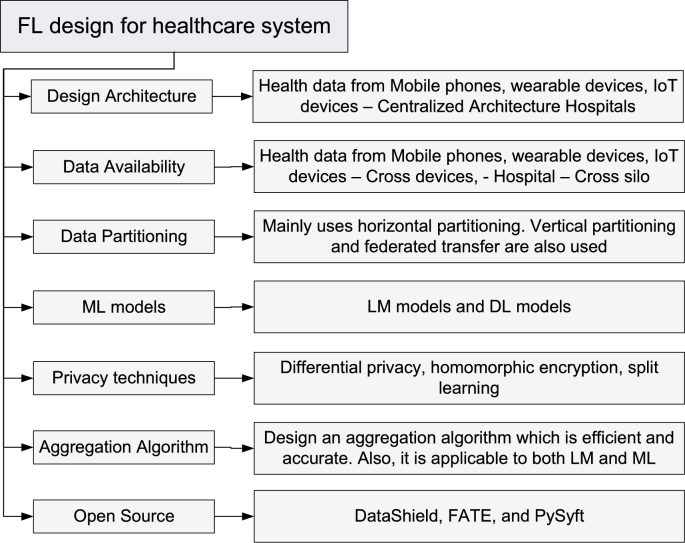

The key building blocks of an FL-based healthcare system include system architectures, data availability, data partitioning schemes, ML models, privacy techniques, aggregation algorithms, and available open source frameworks for FL system design [64], [65], [66], [67]. This section elaborates on each of these blocks, comparing and emphasizing their roles in supporting FL-based healthcare practices.

4.1. System architecture

Centralized and decentralized architectures represent primary FL system architectures.

-

•

In the centralized architecture, the central server shares an initial model with all clients, who subsequently compute local models using personal local data and relay these local models back to the central server for model aggregation, yielding a global model. The central server is entrusted with the execution of correct calculations, preserving the privacy of client data, and orchestrating the complete training process. Small reliable devices- such as mobile devices-often adopt a centralized architecture. However, the primary drawback of this architecture is the potential disruption of all client training processes in case of a central server failure.

-

•

In contrast, in the decentralized architecture, peer-to-peer communication among individual clients replaces communication with the central server, allowing each client to directly update the global parameters of the model, thereby minimizing the risk of a single-point failure. However, in this peer-to-peer communication approach, one client acts as a leader, initiating communication, establishing learning tasks, and aggregating and forwarding the summarized results to all other clients. Although organizations such as hospitals typically favor this architecture, the selection of reliable and available leaders can be challenging. Furthermore, in scenarios where the current leaders crash or are unavailable, the network needs a new leader out of the remaining clients.

Table 4 summarizes recent studies that adopt centralized and decentralized architectures. Typically, most healthcare applications adopt the centralized architecture.

Table 4.

Comparison of centralized and decentralized architecture and some of the recent applications.

| System Architecture | Advantages | Disadvantages | Applications | Ref. |

|---|---|---|---|---|

| Centralized |

Central server orchestrates the entire training process | Single point of failure | Healthcare-Hospital data | [41] |

| Healthcare-Hospital data | [42] | |||

| Healthcare-Clinical and hospital data | [43] | |||

| Simple compared to decentralized architecture | Healthcare-Clinical data | [44] | ||

| Healthcare-Covid 19 patients' data | [45] | |||

| Healthcare, Medical image data | [46] | |||

| IoT devices | [47] | |||

| Health data from wearable devices | [48] | |||

| Small reliable devices are generally use centralized architecture |

All clients are required to agree on one |

Cyber security-IoT and IIoT data | [49] | |

| Cyber security-IoT data | [50] | |||

| Networking-Intrusion detection dataset. | [51] | |||

| IoT devices-Electrical load data | [50] | |||

| Decentralized | Each client can directly update the global parameters of the model | Selecting a leader to initiate and aggregate the process is challenging. | Networking-sensor data | [52] |

| Public-social survey, census and sales data | [53] | |||

| Healthcare-hospital data | [54] | |||

| Reduces the risk of a single-point failure | Each client has its own behavior, it is hard to achieve collective tasks and global knowledge. | 5G enabled UAVs | [55] | |

| Vehicular network-Autonomous Driving Cars | [56] | |||

| Not suitable for small systems due to inefficient system management and performance | Medicine-drug discovery | [57] | ||

| Generally used by organizations | Vehicular network | [58] | ||

| Scaling and performance problems | Healthcare–X–ray images | [59] | ||

| Healthcare-clinical dataset | [60] | |||

| Healthcare-Cancer dataset | [61] | |||

| Cyber security | [62] | |||

| Anomaly detection-IoT data | [63] | |||

4.2. Data availability

Usually, within an FL-distributed data set framework, potential clients include organizations such as hospitals and banks or mobile and edge equipment, including wearable devices, routers, and integrated access devices. The “cross-silo” and “cross-device” architectures represent two primary design architectures for federation in FL systems. The cross-silo scheme typically involves some relatively reliable clients, while the cross-device scheme includes numerous clients. In the cross-silo approach, participating organizations, such as hospitals, collaborate to train a model using their private data, and each entity participates in all computation rounds [68], [69]. Meanwhile, the cross-device scheme involves multiple clients, each with their private data. All devices within this framework-including mobile and wearable devices-can be active or inactive at any timepoint, with device inactivity primarily resulting from several issues such as power loss or network concerns [70]. Within this scheme, clients are often active and participate in one round of computation. Consequently, a new set of active clients contributes their local data for model training in each round. In particular, both schemes execute model training without sharing individual private data. Table 5 compares the cross-silo and cross-device architectures. In general, cross-silo structures are widely adopted by organizations, while cross-device structures are used in mobile devices [65]. Furthermore, cross-silo structures demonstrate strong hardware capacities, better storage capabilities, and better performance than cross-device structures. Cross-silo structures typically deal with nonindependent and identically distributed (nonIID) data, with all clients being active participants. These architectures are powerful and have fewer failures, making them more stable than cross-device structures. However, cross-silo architectures are only scalable over a relatively small number of clients (approximately 2-100).

Table 5.

Comparison between FL schemes.

| Properties | Cross-Silo | Cross-Devices |

|---|---|---|

| Entities | Hospitals/ Organizations | Mobile/ IoT devices/ Wearable devices |

| Performance | High | Low |

| Scale | Relatively small [15] | Large |

| Stability | High stability [43] | Low stability |

| Communication reliability | Reliable – All clients participate in communication [14] | Unreliable – Communication of some clients may be lost during computation [15] |

| Number of clients | Typically, around 2-100 | Very large (millions) |

| Participation of clients for the computation | All clients in the communication should participate | Fraction of the clients only participates in the computation |

| Data distribution | Usually, non-IID | Usually, IID |

| Primary bottleneck | Computation/ communication | Communication. (Uses wi-fi or slower connections) [15] |

| Stateful/stateless | Clients are Stateful- They save the models of the previous round [16] | Clients are Stateless- do not save previous round information [16] |

| Reliability | Clients have comparatively few failures [43] | Clients are highly unreliable |

| Computation power | Entities often have high computation power | The only central server is assumed to have high computation power. |

4.3. Data partitioning in FL

Data partitioning in FL typically relies on the distribution of clients' data across the sample and attribute spaces. Notably, FL data partitioning schemes are categorized into horizontal, vertical, and federated transfer learning (FTL) data partitioning.

-

•

Horizontal data partitioning divides client datasets featuring the same attributes but different participants (the sample space). For instance, in a speech disorder-detection system, multiple users utter a given sentence (attribute space) into their smartphones with varying voices (sample space). Thereafter, local speech updates are averaged by a parameter server to generate a global speech recognition model [71]. Most FL-based medical applications adopt horizontal data partitioning [72], [73], [74], [75], [76], [77], [78], [79], [80], [81].

-

•

Vertical data partitioning divides client datasets featuring the same participants but varying attributes (with possible overlap). For instance, medical records maintained by hospitals-which can aid the decision-making efforts of insurance companies-and the records maintained by the insurance companies themselves may share patient details (same sample space) yet differ in their data fields (attribute space). The applications of vertical data partitioning in medicine are comparatively less widespread than those of horizontal data partitioning [82], [83], [84].

-

•

FTL data partitioning combines the horizontal and vertical partitioning approaches [82]. In other words, client datasets feature some overlap in the sample (participants) and attribute spaces, such as any collaborative disease diagnosis performed by hospitals worldwide (sample space) with varying features (attribute space), resulting in improved diagnosis accuracy [85], [86], [87]. However, research on FTL data partitioning is still in its nascent stages.

In horizontal and vertical FL data partitioning, the training data owned by various organizations must share identical attributes or sample spaces. In healthcare organizations where this is not feasible, the FTL partitioning scheme emerges as a viable alternative [85]. Table 6 summarizes the distinctions between these three data partitioning schemes.

Table 6.

Differences between the three data partitioning methods.

| Properties | Horizontal | Vertical | Federated Transfer |

|---|---|---|---|

| Advantage | Increases the total number of training samples, Improve accuracy | Increasing total feature dimension, Allows richer hypothesis building | Increases user samples and feature dimensions, Remedies the problem of inadequate data [88] |

| Methods | FedAvg [72], [8] | SecureBoost [89] | Transfer learning [67] |

| Application | IoT, Healthcare | Finance | Healthcare |

4.4. Machine-learning models

Theoretically, the FL framework can collaboratively train any ML model. However, in actual practice, FL applications have stringent requirements, presenting several challenges:

-

•

The complexity of the selected model must match the capacities of user devices. Although introducing surrogate parties can address this, they may compromise user privacy.

-

•

The availability, nature, and distribution of training data across clients must be considered during model selection.

Given the unavailability of a single model that is universally applicable across all datasets, selecting appropriate ML models is paramount. Supervised ML models commonly implemented in FL include linear models (LMs), tree models (TMs), and neural networks (NNs). However, unsupervised ML models include clustering approaches, the K-nearest neighbor (K-NN) algorithm, and principal component analysis (PCA).

There is no single model that works on every dataset. Therefore, selecting an appropriate ML model is very significant. The supervised ML models implemented in FL include linear models, tree models, and neural networks, while unsupervised ML models include clustering, K-NN, and PCA.

4.4.1. Linear models

LMs typically assume a linear relationship among two or more independent and dependent variables. These models include linear regression (LR) models [90], logistic regression models, ridge regression approaches, and support vector machines [65]. LMs are generally concise, straightforward, easy to implement, and robust [60]; hence, these approaches are extensively utilized for patient data (HER) prediction in FL. The stochastic gradient descent (SGD) algorithm-an efficient iterative optimization algorithm-is typically employed in FL for the implementation of ML models, including LMs. However, previous studies have implemented distributed linear regression without iterations [37].

4.4.2. Tree models

TMs facilitate easy training and feature smaller model dimensions than deep-learning models such as NNs. Decision trees (DTs) and the random forest algorithm represent the primary TMs commonly implemented in FL [91]. Among these, DTs often utilize gradient boosting decision trees (GBDTs) [89], which are adept at processing high-dimensional data and are commonly employed for decision-making applications, including those in clinical practices. However, in certain applications, GBDTs may suffer from inefficiency or low accuracy, hindering their practical applications [92], [53]. Recent FL studies have focused on improving the efficiency and accuracy of GBDTs [93], [94], [89], which are crucial for medical applications.

4.4.3. Advanced ML models: deep learning and unsupervised learning

FL in healthcare leverages both advanced deep learning models and unsupervised learning techniques to address diverse clinical data types and analytical needs. Deep learning models, such as convolutional and recurrent neural networks, are particularly effective for capturing complex, non-linear patterns in high-dimensional data like medical imaging and physiological time-series. At the same time, unsupervised models, including clustering and dimensionality reduction methods, are valuable in settings where labeled data is scarce or unavailable—facilitating tasks like patient stratification, anomaly detection, and exploratory analysis. This section presents an integrated overview of both model classes, highlighting their relevance, advantages, and limitations within FL-based healthcare systems.

Deep learning models

Neural Networks (NNs) are powerful models widely used in healthcare for capturing non-linear relationships in complex data. Applications span medical imaging, diagnostics, and time-series analysis [95], [96], [97]. However, their limited interpretability poses challenges in critical domains such as genomics and radiology [12].

In FL-based healthcare systems, CNNs are used for image analysis, RNNs and LSTMs for sequential data like ECGs, and MLPs for structured datasets. These models are typically trained using stochastic gradient descent (SGD), with global aggregation via algorithms such as FedAvg. Despite their effectiveness, training deep NNs in FL requires large datasets, high computational resources, and multiple communication rounds, making them costly and time-consuming.

However, training deep NNs in FL presents several computational and convergence challenges. Compared to linear models, NNs require substantially larger datasets to achieve generalizable performance. The training process becomes more resource-intensive as data volume, model depth, and the number of participating clients increase. Furthermore, in federated setups, NN training via SGD may demand numerous communication rounds for convergence, resulting in prolonged training times and higher energy costs. These limitations necessitate careful model selection and optimization in practical healthcare FL deployments. A comparative overview of ML models adopted in FL-based healthcare applications is provided in Table 7.

Table 7.

List of ML models used in FL medical applications.

| Work | Linear Models | DL models | Datasets |

Applications | |

|---|---|---|---|---|---|

| Name | Availability | ||||

| [37] | LR | - | Diabetic | Private | Prediction- length of stay in hospital |

| [50] | - | NN | eICU Collaborative research Database | Public | Mortality prediction |

| [42] | LoR | MLP | MIMIC-III | Public | In-hospital mortality prediction |

| [43] | SVM Perceptron LoR | - | LCED, MIMIC-III | Private, Public | Adversary drug reaction prediction, Mortality prediction |

| [45] | LoR | MLP | Covid-19 EHR | Private | Covid 19 patients' mortality prediction |

| [98] | SVM | ANN | MIMIC-III, i2b2 | Public | Patient representation, Phenotyping |

| [99] | LoR | NN | eICU Collaborative research Database | Public | Mortality prediction, Prolonged length of stay |

| [100] | - | RNN | Cerner Health Facts-HER database | Public | Preterm-birth prediction |

| [101] | - | CNN | Covid patient hospital data-Wuhan | Private | Pneumonia detection |

| [102] | LR, LoR | - | COPDGene, SHIP dataset | Public, Public | Genetic association |

| [48] | - | CNN, RNN | Health records from wearable devices | Public | Human activity prediction |

| [103] | - | CNN | Health data- X-ray, Ultrasound images | Private | Diagnosis of COVID-19 |

| [104] | LoR, SVM | - | INTERSPEECH 2020[82] | Public | Alzheimer's disease detection |

| [105] | - | MLP | MNIST, CIFAR10, CIFAR100 | Public | General |

| [60] | - | NN | BraTS, Clinical data | Public, Private | Cancer diagnosis |

| [46] | - | GNN | TCGA dataset[83] | Public | Histopathology |

Unsupervised models

Existing FL applications are predominantly based on supervised learning. However, unsupervised learning may prove beneficial in scenarios featuring either unlabeled data or data with limited labels. Examples of unsupervised learning methods frequently adopted in FL include clustering methods [69], PCA [106], and the K-NN classifier [107]. Notably, traditional centralized unsupervised learning methods face several limitations, especially regarding the protection of raw data and the computational burden associated with processing large-scale centralized datasets. Remarkably, FL clustering methods overcome these constraints, facilitating the handling of large datasets while preserving privacy [108]. ML models are promising for developing robust, accurate, and efficient FL-based healthcare systems. For instance, FL aids in predicting the rates of hospitalization because of cardiac events, tumors, cancer, and diabetes; rates of mortality; and durations of ICU stay. Furthermore, the benefits and application scope of FL extend to medical imaging, MRI-based whole brain segmentation, and brain tumor segmentation. The applicable ML model changes according to the dataset and prediction variables. Table 7 lists a few primary ML models employed in FL-based medical applications from 2019 to 2024.

4.4.4. Critical analysis of the performance of key FL algorithms in healthcare applications

Table 8 provides a comprehensive summary of performance metrics across a diverse range of FL algorithms applied in healthcare; however, certain limitations and conflicting observations merit deeper analysis. For instance, while the study by [48] reports an exceptionally high accuracy of 99.67% using FedAvg with BiLSTM+DRL, such results are uncommon in real-world cross-silo FL environments, raising questions about potential overfitting or limited dataset diversity. In contrast, other studies using FedAvg (e.g., [42], [43], and [45] consistently demonstrate modest accuracy and AUC values (e.g., 84% and 0.789 AUC), especially in the presence of DP, which typically introduces a trade-off between privacy and performance. The study by [43] using DP reports lower F1 scores (e.g., 0.820 for Perceptron) compared to non-private settings, reflecting a quantifiable privacy-utility trade-off. Moreover, several studies (e.g., [104], [111])) lack clear dataset size disclosure or data heterogeneity context, which limits the generalizability of reported metrics. Studies such as [109], [110]), which achieve 97% accuracy through added techniques like SMOTE and feature selection or EfficientNet architectures, may be difficult to reproduce in decentralized real-world hospital settings. These variations highlight not only algorithmic diversity but also a gap in standardized benchmarks and evaluation protocols across studies.

Table 8.

Performance Comparison of Key FL Algorithms in Healthcare Applications.

| Study | Aggregation Algorithm | ML/DL Model | Privacy Mechanism | Performance Metrics (Accuracy / AUC / Dice / F1) |

|---|---|---|---|---|

| [2] | Custom FedAvg | Neural Network | HE | Accuracy: 90% |

| [37] | SecureAvg | LoR | SMC | R2: 0.98 |

| [42] | FedAvg | LoR / MLP | FL | AUC: 0.7890 (LoR), 0.7769 (MLP) |

| [43] | FedAvg | LoR / SVM / Perceptron | FL + DP | F1 Score (MIMIC-III): 0.860 (LoR), 0.845 (SVM), 0.820 (Perceptron) |

| [45] | FedAvg | LoR / MLP | DP | Accuracy: 84% |

| [48] | FedAvg | BiLSTM + DRL (DL) | FL | Accuracy: 99.67% |

| AUC: 0.98, F1 Score: 98.92 | ||||

| [60] | FedAvg + CFA | U-Net (CNN-based) | FL | Dice Score: |

| 0.88 (Centralized), 0.85 (Decentralized) | ||||

| [79] | FSVRG | ANN / SVM (NLP) | Apache cTAKES (UIDs) | Accuracy: 81.5% |

| [100] | FUALA | RNN | FL | AU-ROC: 67.8 ± 2.7 |

| [103] | Clustered FL | VGG16 + FC layers | FL (edge-based) | F1 Score: |

| X-ray: 0.76 (COVID), 0.96 (Healthy) | ||||

| [104] | FedAvg + Secure Aggregation | LoR | FL + DP | Accuracy: 81.9% |

| [109] | FedAvg | DNN | FL with SMOTE + L1 Feature Selection | Accuracy: 97.54% |

| F1 Score: 97% | ||||

| [110] | FedAvg | EfficientNetB0 | FL + HE + DP | Accuracy: 98.60% |

| [111] | Weighted Avg | CNN | DP | Accuracy: 89% |

| [112] | FedAvg | CNN | Not Specified | Accuracy: 87% |

| [113] | FedAvg + LoRA | LLM (MobileBERT, MiniLM) | FL + LoRA (HIPAA, GDPR compliant) | F1 Score: 80.43 (BERT-base-uncasedFL) |

| MobileBERTFL: 67.11, MiniLMFL: 68.08 |

While deep learning and unsupervised models play vital roles in FL-based healthcare systems, their use introduces several practical limitations, particularly around training efficiency, communication cost, and system heterogeneity. Rather than elaborating on these challenges here, we provide a more detailed analysis of these issues-including convergence delays, client variability, and non-IID data-in Section 5.

4.5. Privacy techniques

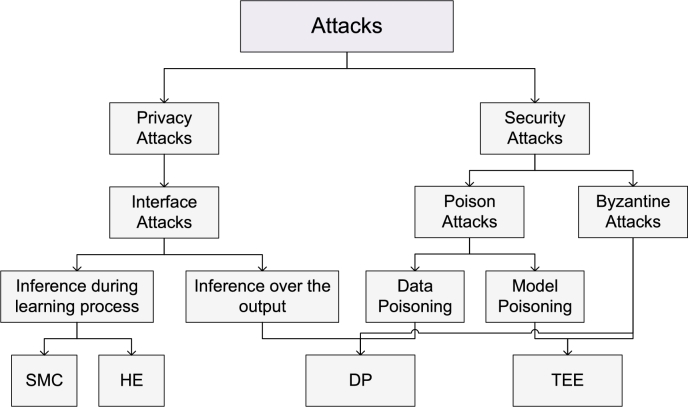

FL facilitates collaborative model learning without necessitating raw data sharing. However, with continuous model updates throughout the training process, intermediate model parameters are shared among all clients, potentially leaking sensitive client data [114]. FL models adopt one of the four techniques- SMC, homomorphic encryption (HE) [115], differential privacy (DP) [116], and trusted execution environments (TEEs) [117]-to avoid privacy breaches.

4.5.1. Homomorphic encryption

HE is a cryptographic technique that enables the computation of encrypted data without data decryption. This approach facilitates data computations without requiring data exposure to unauthorized parties [118]. Considering distributed multi-institutional learning, HE allows multiple institutions to collaborate on ML model construction without sharing their raw data. Here, each institution can encrypt its data before transmitting it to the server for computation. Next, the server can perform computations on the encrypted data without retaining any related information. Subsequently, the generated model can be relayed to participating institutions for personalized applications [90], [119], [120].

-

•

Fully homomorphic encryption (FHE) enables operations on encrypted data without the need for decryption. Although FHE provides strong privacy, it is too slow for most practical uses.

-

•

Partially homomorphic encryption (PHE) supports the execution of one operation type on encrypted data-such as addition or multiplication-making it less powerful but more efficient than FHE.

-

•

Somewhat homomorphic encryption (SHE) allows the implementation of a limited set of operations on encrypted data -such as addition and multiplication -with some complexity restrictions. Therefore, SHE is less powerful than FHE but more efficient than PHE.

HE enables direct computation on encrypted data. For a ciphertext , the encryption scheme ensures:

where ∘ is an operation on ciphertexts and ⁎ is the corresponding plaintext operation. In FHE, both additions and multiplications are supported an arbitrary number of times. However, due to bootstrapping and ciphertext expansion, FHE is computationally infeasible for large models or real-time scenarios.

PHE schemes like Paillier (additive) and RSA (multiplicative) support a single operation (e.g., encrypted addition):

HE approaches have become critical in distributed multiinstitutional learning applications, where multiple institutions must collaborate on ML model construction without sharing individual raw data. As highlighted, the HE technique can encrypt data before data transmission to the server, which can perform computations on the data without accessing the plaintext. The generated encrypted model can then be transmitted to participating institutions that can decrypt and implement the model in personalized applications [121]. Popular HE libraries-including Microsoft SEAL [122], HElib [123], and Palisade [124]-can facilitate efficient and secure implementations of HE schemes in various applications, including multi-institutional learning.

4.5.2. Secure multiparty computation

SMC is a cryptographic tool that enables multiple parties to perform joint computations of the aggregated data set without sharing their raw data. SMC allows n parties to collaboratively compute a function on their private inputs without revealing them. One common approach is incremental secret sharing, where a value v is split into shares such that:

SMC employs various protocols, including secret sharing, oblivious transfer, and garbled circuits. Among these, in secret sharing, private data are divided into multiple encoded parts known as secret shares, which reveal no information regarding the original data. The minimum number of shares required to reveal the secret is identified as the threshold. During an oblivious transfer, another widely used cryptographic protocol-a sender-transmits one value out of several to a receiver, but retains no information regarding this value. Meanwhile, in Yao's garbled circuit protocol, multiple clients can jointly compute functions using garbled Boolean circuits on their sensitive data while preserving input privacy. Although SMC techniques are usually lossless and preserve accuracy while maintaining privacy, they incur significant communication overhead. Although SMC techniques have been used to preserve the privacy of intermediate model results in FL, their communication costs can be unrealistic, particularly in cross-device configurations, because FL requires multiple model updates until convergence [35], [125], [126], [127].

4.5.3. Differential privacy

DP is a privacy-enhancing technique that introduces random noise into query results, or, in our case, into model parameters. DP provides a mathematically rigorous privacy guarantee by ensuring that the output of a computation is statistically indistinguishable whether or not a specific individual's data are included. A random mechanism satisfies -DP if for all measurable subsets S of the output space and all datasets D and differ in at most one record:

In FL, the most commonly used method is DP-SGD, where each client clips the per-sample gradient to a fixed norm C, and Gaussian noise is added before averaging:

DP provides a tunable privacy-utility trade-off but introduces stochastic noise that can impair model convergence, especially in imbalanced or small medical datasets.

DP facilitates the sharing of statistical information regarding a dataset while protecting individual privacy. Furthermore, the introduction of noise ensures minimal changes in model parameters in the event of record removal [116]. Consequently, this approach ensures that no participant exerts a “detectable” influence on the generated model. Previous studies have implemented DP mechanisms in FL to prevent information leakage from intermediate and final model parameters. Furthermore, in these techniques, noise is typically introduced into the client-side local parameters before uploading them to the server for aggregation, potentially preventing privacy breaches. However, such noise additions can adversely affect the accuracies of local and global models, along with their convergence speeds. Furthermore, configuring DP parameters is often challenging [128], [129], but the DP approach is generally favored for its lower computation overhead compared to other privacy mechanisms [130], [131], [132].

4.5.4. Trusted execution environment -TEE

TEEs are hardware-based privacy-preserving approaches featuring separation kernels as their fundamental components. TEEs are isolated processing environments that facilitate secure data processing, without interference from other system components. TEEs create secure environments within central processors, offering robust privacy and integrity guarantees for stored or processed data and codes. In FL systems, TEEs are often employed on the client side for local training and on the server side for secure aggregation. However, TEEs typically incur high communication overhead and require remarkable storage capacities [117]. Furthermore, TEEs may experience substantial performance deterioration due to additional internal operations to ensure security. Moreover, TEEs are susceptible to hardware attacks that compromise the integrity of the underlying codes [133], [134].

Table 9 summarizes the privacy mechanisms commonly adopted in FL to enhance user privacy protection.

Table 9.

Various privacy protection mechanisms and their comparisons.

| Mechanism | Approach | Technique | Advantages | Disadvantages |

|---|---|---|---|---|

| Differential Privacy | Addition of random noise to shared parameters | Noise addition | Preserves privacy Low computation overhead Not vulnerable to inference from the final output (as the final output is protected by noise addition) | Accuracy loss from added random noise Privacy parameters are hard to set |

| Secure Multiparty Computation | Application of SMC protocols on shared parameters | Secret sharing, Oblivious Transfer, Garbled Circuit | Preserves privacy and accuracy | Computation overhead Communication complexity Vulnerable to inference from the final output |

| Homomorphic Encryption | Application of HE on shared parameters | Partial homomorphic encryption (Additive or multiplicative) | Preserves privacy Relatively small accuracy loss | Computation overhead Communication complexity Vulnerable to inference from the final output. |

| Trusted execution environments | Perform aggregation on secured Hardware | Separation kernel isolation method | Strong privacy and security. | Expensive Computational overhead Vulnerable to hardware attacks |

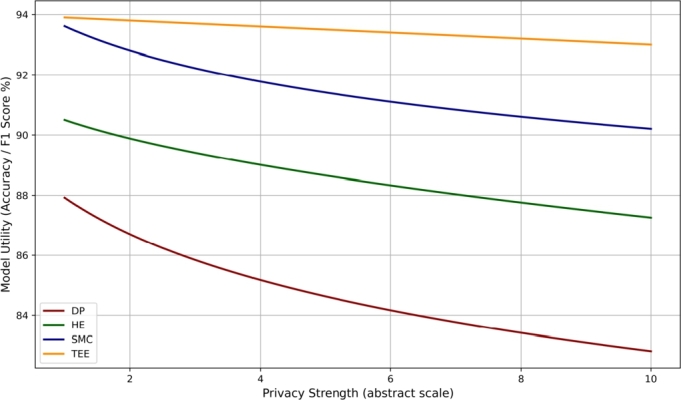

Tradeoff Between Privacy and Utility: While privacy-preserving techniques such as HE, SMC, and DP enhance data confidentiality, they often come with a trade-off in terms of utility-particularly model accuracy or training efficiency. For example, a stronger noise in DP improves privacy, but degrades accuracy. Similarly, while fully homomorphic encryption offers strong confidentiality, it significantly increases computational overhead.

Fig. 4 provides a clear visualization of the conceptual trade-off between privacy strength and model utility (e.g., accuracy or F1 score) for DP, HE, SMC, and TEE. The privacy strength is plotted on the x-axis (abstract scale from 1 to 10), while the corresponding model utility is shown on the y-axis as a percentage. Utility values are generated using hypothetical but representative mathematical functions that mimic trends reported in the literature. As the privacy level increases, DP shows a steep decline in utility due to excessive noise, whereas HE and SMC maintain moderate accuracy but suffer from computational overhead. TEE, on the other hand, preserves utility more effectively with only a slight linear drop, which represents a minimal impact on performance.

Fig. 4.

Trade-off Between Privacy Strength and Model Utility Graph.

4.6. Aggregation algorithms

LM and deep-learning model computations typically rely on optimization algorithms that are inherently sequential and require numerous iterations to converge to a local minimum of a function. Hence, despite being computationally efficient, these approaches require numerous training rounds to produce satisfactory models. GD and its variants-such as SGD variants, minibatch GD, and distributed GD-are most commonly employed as optimization algorithms in FL [135], [136], with the application of SGD prominent in local model computations.

Usually, in FL frameworks, aggregation algorithms are essential to integrate local model parameters derived from all clients at each iteration stage to generate a global model. Subsequently, the clients iterate through the learning process beginning with this new global model until an adequate model is obtained or a stopping criterion is reached. In particular, ideal aggregation algorithms are expected to (1) limit the number of iterations, thereby ensuring rapid convergence; (2) reduce the local computation duration per iteration on the client side; and (3) reduce communication overheads between clients and the server.

The FedAvg algorithm [8] is the first and most widely adopted aggregation algorithm in FL. Within its framework, a randomly selected subset of clients implements SGD on their local data to generate local model parameters, which are transmitted to the server for parameter averaging and subsequent global model generation. Subsequently, this process is repeated with the selection of a new random subset of clients. FedAvg [8] is the foundational aggregation algorithm for FL. In each communication round, a subset of clients performs local stochastic gradient descent (SGD) for multiple epochs and sends the resulting model weights to a central server. The server computes the global model w as a weighted average:

where is the number of data samples in client i and is the total number of samples in all selected clients. Although FedAvg is communication efficient, its convergence is hindered under non-IID data due to client drift and weight divergence.

Although FedAvg is the most popular aggregation algorithm, it may not always yield a suitable model in practical scenarios. In particular, when dealing with heterogeneous, unbalanced, and variable-distribution client data, FedAvg can adversely affect model aggregation, convergence, and accuracy, introducing communication burdens. Recent studies have attempted to improve the accuracy of FedAvg while reducing its computational overhead through modifications such as FedProx and FSVRG [137], [138], [139], [140], [141], [142], [143], [144], [145].

The FedAvg algorithm and its variants are predominantly adopted in FL horizontal data partitioning frameworks. FedProx [140] addresses the instability of FedAvg by modifying the local target with a proximal term that penalizes deviation from the global model . The local loss function on client i becomes:

where μ is a tunable regularization parameter. This term reduces local model divergence and improves robustness to data heterogeneity, making it better suited for healthcare applications where client data distributions can vary significantly. The frame [139] introduces control variates to mitigate the impact of client drift in non-IID settings. Each client maintains a local control variate and the server maintains a global control variate c. These are used to correct the update direction during local training:

where η is the learning rate. The stack is particularly effective in scenarios where clients perform many local updates between communication rounds, such as resource-constrained healthcare IoT environments. Given that vertical data partitioning systems require collaborations between parties in every iteration, implementations of the FedAvg algorithm and its variants can be challenging [72], [146], [147]. However, some vertically partitioned FL techniques employ concatenation for local model aggregation [148]. In particular, TMs cannot be optimized using SGD. Instead, they require specialized frameworks, such as the GBDT [89], [94], [149] Other aggregation algorithms leverage the architectures of models for training [150], [37], [115], [151], [148], [152]. Although specialized methods can yield higher accuracy, their applications are limited compared to those of other models. Recent studies have integrated privacy mechanisms-such as SecureBoost [149] for DT and SecAgg [153] for NN—-into the framework of aggregation algorithms. Table 10 summarizes recent (2020-2022) popular state-of-the-art studies on aggregation algorithms, classified according to (1) algorithm employed for local model computation, (2) adopted aggregation method, (3) utilized ML models and (4) adopted data partitioning scheme. In particular, some of these algorithms are tailored for medical applications.

Table 10.

Aggregation Algorithms.

| FL Aggregation Algorithm | Algorithm for local models | Aggregation Method | ML Model | Data Partitioning |

|---|---|---|---|---|

| FedAvg [8] | Stochastic GD | Average | NN, LM | Horizontal |

| FedProx [140] | LogR, NN | Horizontal | ||

| FEDPER [137] | NN | Horizontal | ||

| SCAFFOLD [139] | LogR, NN | Horizontal | ||

| FedPage [145] | LM | Horizontal | ||

| FedNova [143] | LogR, NN | Horizontal | ||

| Per-FedAvg [138] | NN | Horizontal | ||

| FedAdam [142] | LogR, NN | Horizontal | ||

| FedCM [144] | NN | Horizontal | ||

| FedDF [141] | NN | Horizontal | ||

| FedRobust [154] | NN | Horizontal | ||

| PFedMe [155] | LogR, NN | Horizontal | ||

| VANE [156] | LM | Horizontal | ||

| FedSGD [157] | LM, NN | Horizontal | ||

| FedDPGAN [81] | NN, KNN, SVM | Horizontal | ||

| Weighted FedAvg [81] | NN | Horizontal | ||

| FedV [147] | LM | Vertical | ||

| FedBCD [146] | LogR, NN | Vertical | ||

| FedCluster [150] | Cluster | Horizontal and Vertical | ||

| CORK [158] | NN | Horizontal | ||

| FedMDFG [159] | NN | Horizontal | ||

| FedDELTA [160] | LogR, CNN | Horizontal | ||

| CROWDFL [161] | NN | Horizontal | ||

| FedHealth [85] | NN | FTL | ||

| FedMA [152] | NN Specialized | NN | Horizontal | |

| FedGraphNN [151] | Sum | NN | Vertical | |

| GBDT [89] | DT Specialized | DT | Horizontal | |

| SecureBoost [149] | DT | Vertical | ||

| Fed-EINI [94] | DT | Vertical | ||

| FeARH [75] | Distributed GD | Average | LM, NN | FTL |

| FastSecAgg [162] | Mini-batch GD | NN | Horizontal |

Most aggregation methods assume that all clients work reliably and use similar models, which is often unrealistic. In practice, healthcare FL deployments face issues such as straggler clients, hardware heterogeneity, and nonstationary data, which necessitate adaptive and fault-tolerant aggregation protocols. In addition, there is a lack of comprehensive benchmarking of these algorithms under realistic clinical workloads, which limits their practical generalizability and adoption in real-world healthcare environments.

4.7. Open-source FL systems

Using software libraries for FL implementation can improve the effectiveness of FL. The seven primary open-source FL frameworks currently under active development include Google TensorFlow Federated [163], Federated AI Technology Enabler (FATE) [164], OpenMined PySyft (PySyft) [165], Baidu PaddleF [166], FL and Differential Privacy Framework [167], FedML [168], and DataSHIELD [169], [170] (Table 11).

Table 11.

Features of Open-Source FL Systems.

| Properties | TFF | FATE | PySyft | PFL | FL&DP | FedML | DataSHIELD |

|---|---|---|---|---|---|---|---|

| System Architecture | Centralized, Decentralized | Centralized | Decentralized | Centralized, Decentralized | Centralized | Centralized, Decentralized | Centralized |

| Data Partitioning | Horizontal | Horizontal, Vertical | Horizontal | Horizontal, Vertical | Horizontal | Horizontal, Vertical | Horizontal, Vertical |

| Scale | Cross-Silo | Cross-Silo | Cross-Silo, Cross-Devices | Cross-Silo, Cross-Devices | Cross-Silo | Cross-Silo, Cross-Devices | Cross-Silo |

| Machine Learning Models | NN, LM | NN, DT, LM | NN, LM | NN, LM | NN, LM, Clustering | NN, LM | PCA, LM, NN, KNN, Clustering |

| Privacy Mechanisms | DP | DP, SMC, HE | DP, SMC, HE | DP, SMC | DP | SMC | Anonymization |

| Aggregation Method | FedAvg, FedSGD | SecureBoost, SecAgg, FedAvg | FedAvg, SplitNN | SecAgg, FedAvg, SplitNN | FedAvg, Weighted FedAvg, FedCluster | FedAvg, SplitNN | Customizable |

As depicted in Table 11, FATE is the most comprehensive system that supports numerous ML models under horizontal and vertical partitioning conditions with varying federation scales. Furthermore, compared with other open-source systems, FATE offers more privacy mechanisms and implements numerous aggregation algorithms. However, FATE exclusively supports centralized and cross-device architectures. In contrast, FedML integrates several state-of-the-art FL algorithms, supporting centralized and decentralized setups. Furthermore, it supports cross-device and cross-silo architectures. However, its application scope is restricted to fewer ML models and privacy mechanisms.

DataSHIELD–subjected to continuous developments is a framework tailored to the domains of healthcare and social sciences and enables advanced statistical analyses of individual-level data from multiple sources without data pooling. The approach adopted by DataSHIELD differs from that of other systems. Specifically, for a research question of interest, participating clients select a set of variables to be analyzed and subsequently create local problem-specific datasets. The individual clients then independently analyze their generated datasets and anonymize their results before sharing them with the central server for aggregation. The process repeats until a final result is obtained, and the aggregation method of the central server is custom-defined to efficiently address the question. Furthermore, the specific anonymization process is not predefined within the system, but is specified during the computation based on the best practices and data protection rules frequently employed within the given domain. This flexibility makes DataSHIELD more versatile than other available systems. However, it may still encounter challenges such as result inconsistencies and/or disagreements on anonymization methods.

4.8. Representative healthcare datasets in FL

To enhance the depth of our comparative analysis, we identify and summarize the five main data sets that are most frequently used in FL healthcare research. These datasets are selected based on their recurrence in literature, data richness, public availability, and relevance to core healthcare prediction tasks such as mortality estimation, disease diagnosis, and physiological signal analysis. Table 12 categorizes these datasets into three major types: tabular medical data, one-dimensional time-series data, and image-based data. The MIMIC-III, eICU and Cerner Health Facts datasets represent structured clinical data commonly used in FL for tasks such as mortality and prediction of adverse events. The MIT-BIH dataset provides standardized ECG signals and is widely applied in signal-based FL experiments. Lastly, publicly available chest X-ray and CT datasets related to COVID-19 have been instrumental in validating FL models for image-based disease diagnosis. These datasets are favored due to their large sample sizes, diverse patient demographics, and compatibility with privacy-preserving FL frameworks across real-world healthcare settings.

Table 12.

Top Five Datasets Used in FL for Healthcare: Categorized by Data Type.

| Dataset | Data Modality | Data Type | Why It Ranks Top |

|---|---|---|---|

| MIMIC-III | ICU EHR Records | Tabular Medical Data | Public, large-scale dataset used for mortality and ADR prediction across multiple FL studies. |

| eICU Collaborative DB | ICU Patient Data | Tabular Medical Data | Realistic multi-institution EHR; widely used for mortality prediction in horizontal FL settings. |

| Cerner Health Facts | EHR from 50 Hospitals | Tabular Medical Data | Massive real-world dataset; supports non-IID federated prediction of preterm birth. |

| MIT-BIH ECG | ECG Signal (Time-Series) | One-Dimensional Data | Gold-standard ECG signal dataset for arrhythmia detection; benchmarks FL performance on time-series data. |

| Chest X-ray / CT (COVID) | X-ray / CT Images | Image-Based Data | Widely used for FL-based pneumonia/COVID-19 diagnosis using CNNs; high clinical relevance. |

Fig. 5 presents a summarized design of a FL-based healthcare system, highlighting its key components such as design architecture, data availability and partitioning methods, machine learning models, privacy techniques, aggregation algorithms, and open-source platforms commonly used for implementation. While open-source FL frameworks provide flexible platforms for implementation, real-world deployments in healthcare introduce several technical and domain-specific challenges. The following section highlights these challenges and examines ongoing efforts to address them.

Fig. 5.

Summarized FL design healthcare system.

5. Challenges and open problems in federated learning

The volumes of medical datasets grow rapidly over time as patients undergo treatment and monitoring, resulting in rapidly growing, massive, and dynamic datasets. The design of efficient FL algorithms capable of demonstrating high accuracy, minimum processing durations, and reduced memory usage is crucial. FL provides a means of accessing extensive patient data obtained from various locations, paving the way for future innovations. This section discusses the main challenges associated with FL systems [171], [140], [172], [173], [155], [140], [174] and their impacts on FL implementation in healthcare services compared to conventionally distributed data computations and traditional privacy-preserving learning techniques.

5.1. Communication overhead

Medical data is often distributed across numerous locations. Given that distributed learning trains a model on the entire distributed dataset, intense communication among clients is required to obtain the final result. These extensive communication requirements are among the main bottlenecks of traditionally distributed learning approaches. Remarkably, SDL and FL attempt to minimize communication overhead by restricting raw data to the client side and transmitting local model parameters for global model training. However, FL systems often involve numerous clients (hundreds of hospitals or millions of smart mobile phones and wearable devices), requiring several-parameter communications for global model updates. For example, the authors of [175], [156] used 350 and 1,000 iterations to train their global regression models, while those of [72] adopted 200 iterations to train their NN model. Meanwhile, the authors of [176] utilized 25 iterations to train a DT model, implying that healthcare applications require high-performance algorithms to improve the quality of healthcare service.

To improve efficiency and reduce communication costs of FL systems [177], [178], [179], three parameters must be considered: (i) total iterations performed, along with the communication load per iteration; (ii) sizes of messages shared between clients and the server at each iteration; and (iii) complexity of the aggregation algorithm adopted by the server at each iteration.

-

•

Number of iterations and communication load per iteration: researchers have devoted considerable efforts toward the minimization of the number of iterations required to obtain a desired model, as well as the overall communication load encountered during each iteration. Several optimization algorithms for local model computations have been introduced to enhance model convergence durations in FL systems [146]. However, some of these algorithms reduce communication costs at the expense of partial deterioration in accuracy [180], [181]. Other methods recommend the transmission of model updates from a selected client set instead of all, substantially reducing communication costs per iteration [182], [183].

-

•

Message size at each iteration: further efficiency improvements can be realized by reducing the message size. Dankar et al. [37], [90] designed a secure feature selection method for a distributed LR model, which reduced the communication cost per client by decreasing the number of features. Furthermore, while compression techniques and quantization methods are adopted to reduce message sizes [184], [185], [186], [187], the former approaches can compromise the accuracy of the model. Given that high analysis accuracies are required in the medical domain, adopting compression techniques is not advisable.

-

•

Complexity of the aggregate algorithm: The complexity of the adopted aggregation algorithm is another critical parameter that influences efficiency. Remarkable research efforts have been devoted to the development of faster aggregation methods (Table 10). For instance, Bonawitz et al. [188] implemented a quantization method for efficient communication, highlighting certain challenges encountered during parameter configurations. Furthermore, the study reported that manipulations of the learning rate, model update frequency, and number of quantization bits could reduce overall communication costs.

5.2. Systems diversity -systems heterogeneity

Devices like wearables and smartphones often have limited storage, power, and network access, making FL more difficult [189]. For instance, poor network connectivity can result in interrupted communication between clients and the server. Meanwhile, limited memory storage and computational capacities of mobile phones and wearable devices can cause delays in model transmission and hinder active client participation, leading to difficulties in gradient updates. Several key strategies for addressing system heterogeneity have been proposed by previous studies; These include improving system fault tolerances (improved system ability to continue operations despite failures of one or more components), implementing asynchronous communication (facilitating model update transfers without time constraints) and performing active device sampling (selecting active client devices) [140]. Among these, fault tolerance is critical for cross-device FL to ensure uninterrupted operations despite possible dropouts of participating devices before completing training iterations, which can compromise computations and bias device sampling schemes (if only failure-prone devices are ignored). Standard techniques for improving fault tolerance in FL involve reducing the number of participating clients per round and excluding faulty devices [188], [169]. However, these techniques may yield less efficient models, which is undesirable in the medical field with high critical efficiencies.

5.3. Non-IID data -statistical heterogeneity

Most healthcare institutions adopting FL-including hospitals, clinical organizations, and pharmaceutical and insurance companies-may not possess independent and IID datasets. Several factors-including variations in geographic locations and data collection times-can contribute to these differences. Local datasets often differ in features and labels, making training inconsistent across clients [190], [191], [192], [193]. Recent experiments have demonstrated that the FedAvg algorithm using non-IID data demonstrates poor accuracies and convergence speeds [8]. Zhao et al. [194] explain that non-IID data leads to convergence latency, loss of accuracy, and decrease in model utility. Hence, in medical applications requiring high accuracies and efficiencies, addressing non-IID data is crucial. Thus, Jeong et al. [195] proposed a data augmentation scheme using generative adversarial networks (GANs). Within this scheme, local datasets are augmented with synthetic data to transform them into balanced and independent datasets, thereby reducing communication overhead and improving accuracy. However, this method necessitates additional complex training of the GAN (on the server side), compromising raw data privacy as each client needs to upload a few data samples to the server. Alternative approaches for improving accuracy and model applicability [196], [197], [198]-as well as for accelerating model convergence rates [199]-have been introduced; however, finding a low-complexity-burden solution that preserves privacy remains a challenge.

While GAN-based data augmentation has shown promise in alleviating non-IID challenges by generating synthetic samples to balance data distributions across clients, recent studies have raised concerns about potential privacy leakage through GAN outputs [115], [200]. Adversarial clients could exploit GANs to reconstruct sensitive attributes or even identifiable features of training data, especially in healthcare settings where patterns can be patient-specific.

As a safer alternative, [201], [138] has been proposed to improve the generalization of the model across heterogeneous client distributions without relying on synthetic data generation. Meta-learning frameworks, such as Model-Agnostic Meta-Learning (MAML), aim to train a global initialization that can be quickly adapted to each client's local distribution using a few steps of fine-tuning. This approach naturally fits non-IID scenarios, as it allows personalization while preserving the shared structure of the task.