ABSTRACT

Antibodies are versatile therapeutic molecules that use combinatorial sequence diversity to cover a vast fitness landscape. Designing optimal antibody sequences, however, remains a major challenge. Recent advances in deep learning provide opportunities to address this challenge by learning sequence–function relationships to accurately predict fitness landscapes. These models enable efficient in silico prescreening and optimization of antibody candidates. By focusing experimental efforts on the most promising candidates guided by deep learning predictions, antibodies with optimal properties can be designed more quickly and effectively. Here we present AlphaBind, a domain-specific model that uses protein language model embeddings and pre-training on millions of quantitative laboratory measurements of antibody–antigen binding strength to achieve state-of-the-art performance for guided affinity optimization of parental antibodies. We demonstrate that an AlphaBind-powered antibody optimization pipeline can deliver candidates with substantially improved binding affinity across four parental antibodies (some of which were already affinity-matured) and using two different types of training data. The resulting candidates, which include up to 11 mutations from parental sequence, yield a sequence diversity that allows optimization of other biophysical characteristics, all while using only a single round of data generation for each parental antibody. AlphaBind weights and code are publicly available at: https://github.com/A-Alpha-Bio/alphabind.

KEYWORDS: Antibody engineering, computational protein design, machine learning, yeast display

Introduction

Therapeutic antibodies play an increasingly pivotal role in the biotherapeutics market, and the ability of antibody variable fragments to participate in high-affinity and highly specific protein–protein interactions makes them ideal for therapeutic modalities, including antibody-drug conjugates, bispecific antibodies, and CAR T-cell therapies. Their development has historically relied on labor-intensive experimental methods including directed evolution via display techniques or via animal immunization, followed by manual optimization through iterative assessment of a small number of human-designed variants.1,2

For the development of such molecules, it is essential to control and optimize the binding affinity of an antibody to its target, sometimes to multiple targets, while also ensuring maintenance of a valid antibody-like structure and favorable biochemical properties like solubility, aggregation, and potential immunogenicity.3–6 However, the plausible search space is both enormous (e.g., each amino acid change from a parental antibody of length 100 increases the search space by a factor of approximately 2,000) and sparse (most sequences derived from any given parental antibody will be poor binders, poorly behaved as recombinant protein, or both).7 The challenge is increased when a candidate antibody must bind more than one target (e.g., cross-reactivity across a phylogeny of target sequences in an infectious disease context), or when properties other than affinity make orthogonal demands on primary sequence (e.g., multi-objective optimization for affinity plus solubility, thermostability).

Recently, deep learning methods have greatly improved the ability to effectively sample valid antibody sequences, including evolution- and structure-informed approaches, and both unguided methods, and guided methods incorporating additional in vitro data.8–11 Among these approaches, effective model scaling has been demonstrated according to model complexity as well as the amount and quality of training data.12–14 Unguided generative sampling of valid sequences near a parental sequence can produce proposal sets, including many that can be expressed as functional proteins and may have similar or improved function compared to the parental sequence.15–17 Given any such technique for sampling valid sequences, design of a novel therapeutic sequence can be further accelerated via prioritizing proposals for in vitro validation based on an appropriate fitness landscape, assuming a suitable prediction of fitness is available. Taking this guided approach, several studies have demonstrated the effectiveness of deep learning approaches incorporating large, high-quality datasets to train highly effective affinity prediction models used to prioritize candidate sequences for in vitro validation.18–20

The AlphaSeq assay,21 a yeast display system that quantitatively measures large numbers of protein–protein interaction affinities in parallel, has been leveraged to generate such datasets characterizing antibody affinity using large libraries of antibody and antigen sequence variants, including a large dataset of largely scFv and VHH antibodies vs. a SARS-CoV-2 receptor-binding domain (RBD) mutation library;22 a dataset of scFv-format antibodies vs. a SARS-CoV-2-derived peptide;23 and several large panels comprising variants of VHH72, a camelid antibody targeting SARS-CoV-1 RBD with some SARS-CoV-2 RBD cross-reactivity.18 Multiple studies using these datasets have previously demonstrated that AlphaSeq data are highly effective for training machine learning (ML) models for guided antibody sequence optimization.18,19

Here, we combine high-throughput antibody–antigen affinity datasets that explore the local fitness landscape around a parental antibody with AlphaBind, a domain-specific model pre-trained on approximately 7.5 million quantitative affinity measurements from unrelated antibody–antigen systems, to perform guided antibody optimization on three diverse parental antibodies using AlphaSeq datasets to fine-tune our pretrained model. We demonstrate that (1) given adequate local training data, sequence optimization using AlphaBind quickly generates thousands of high-affinity antibody derivatives, (2) both protein language model embeddings and pre-training on unrelated antibody–antigen binding data contribute significantly to model performance, and (3) the AlphaBind model retains good performance even on local data generated via a different assay than its own training data, using previously published high throughout mammalian display data exploring CDRH3 mutations of trastuzumab to conduct a fourth optimization campaign. Furthermore, we show that a fine-tuned AlphaBind model can guide sequence optimization for predicted developability and immunogenicity while maintaining affinity, resulting in an optimized variant of our anti-TIGIT scFv with no predicted sequence liabilities and extensive sequence reversion to human germline with no loss in affinity. Finally, we present the necessary background, model architecture and code, including a pre-trained model checkpoint, to enable broad application of the AlphaBind model for affinity optimization of therapeutic antibody candidates.

Results

Antibody optimization campaigns

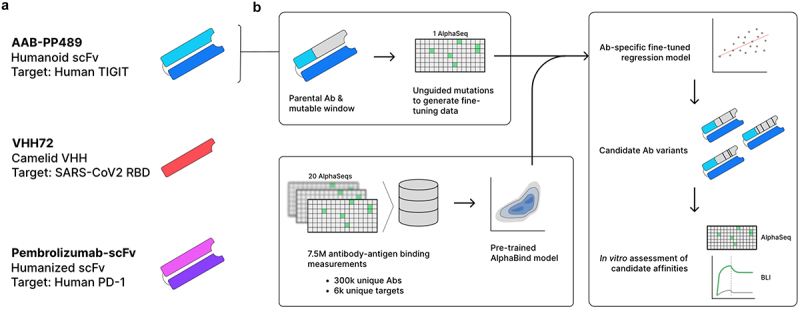

Three different antibody–antigen systems were chosen for benchmarking of AlphaSeq-based antibody optimization with AlphaBind: AAB-PP489, a previously undescribed humanoid scFv targeting human TIGIT; Pembrolizumab-scFv, an scFv-formatted humanized mouse antibody targeting human PD-1;24 and VHH72, a camelid single-chain antibody targeting the RBD of SARS-CoV1 with cross-reactivity to SARS-CoV2.25 Figure 1 provides an overview of the AlphaBind fine-tuning and optimization process, and additional details for each system are given in Table S1.

Figure 1.

Experimental overview.

(a): Summary of the three parental antibodies used to benchmark AlphaBind, including a humanoid scFv binding human TIGIT (AAB-PP489), a camelid single-domain heavy chain binding SARS-CoV2 RBD (VHH72), and a humanized mouse scFv binding human PD-1 (Pembrolizumab-scFv). (b): For each parental antibody, a contiguous 300nt region (including 5’ and 3’ primer sites) was identified, defining the mutable window; AlphaSeq was used to generate a training dataset of approximately 30,000 variants within the mutable window. The AlphaBind regressor, pre-trained on unrelated antibody–antigen affinity data, was fine-tuned with each training dataset to generate an antibody-specific sequence-to-affinity regressor, and then used to generate candidate sequences optimized for predicted binding affinity. Candidate variants of each parental antibody were validated in vitro using AlphaSeq for large-scale validation and biolayer interferometry for a subset of candidates.

For each parental antibody, a fine-tuning dataset was generated by constructing a library of approximately 30,000 antibody variants. A contiguous region of approximately 83 amino-acid residues was selected for each antibody covering as much of the CDRH regions as possible; limiting the mutable region to 83 amino acids allowed generation of large variant libraries using 300-nt oligonucleotides including fixed flanks for cloning. Within each mutable region, we constructed a yeast display library comprising essentially all single missense mutations (except for cysteine), a limited number of insertions and deletions, many randomly selected double and triple missense mutations, and several replicates of the parental antibody sequence. These libraries were then used to generate AlphaSeq data, providing quantitative affinity values for binding between each library member and its associated target; in each campaign, most variants had measurable binding to their targets with a minority showing improved affinity, establishing the quantitative data necessary to learn an antibody-specific fitness landscape for each parental antibody. Fine-tuning AlphaSeq datasets are available as part of Supplemental Materials, and Table S2 provides a summary of datasets generated as part of this work.

Model training & optimization

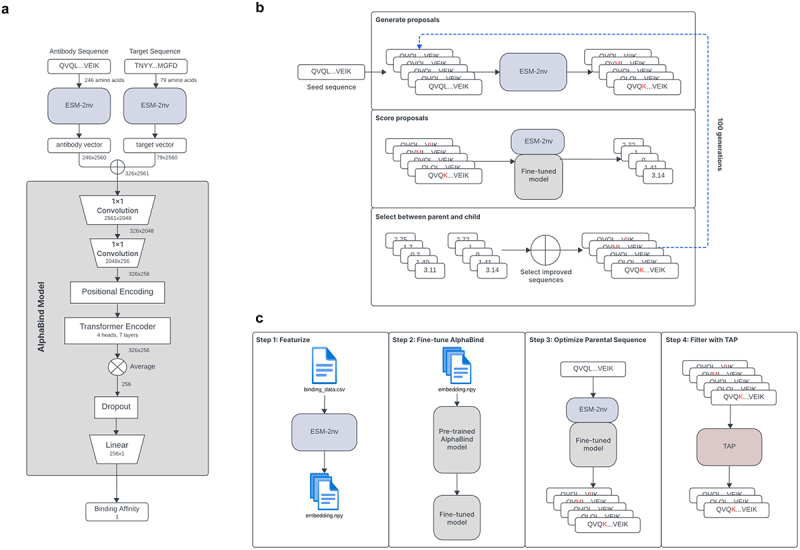

Figure 2 summarizes the architecture and pre-training of the AlphaBind regression model and our optimization strategy. Briefly, the AlphaBind model was pre-trained on antibody–antigen AlphaSeq affinity data from unrelated systems. Twenty distinct AlphaSeq datasets were used for pre-training, comprising approximately 7.5 million rows of antibody–antigen affinity data, including roughly 300,000 distinct antibody constructs and 6,000 distinct target constructs. Thirteen datasets included camelid VHH antibodies, and nine datasets included human, humanoid or humanized scFv-formatted antibodies (including two datasets with both types of antibodies). The pre-training data included 11 datasets primarily comprising variant libraries (i.e., tens of thousands of sequence variants of their respective parental antibodies), as well as 9 datasets comprising primarily diverse libraries (e.g., libraries cloned from animal immunization or phage panning experiments – thus including tens of thousands of distinct parental antibodies). Median overall size among the 20 training datasets was 720,000 PPIs, including a median of 24,000 antibodies or antibody variants and 47 targets or target variants. Figure S1 provides a visual representation of antibody and target sequence diversity with respect to appropriate baselines. Among antibodies, the pretraining data covers a wide range of diversity, spanning essentially the same scope as is present in the Observed Antibody Space (OAS) database, though coverage is sparse except for several clusters of density corresponding to parental antibodies for which variant libraries were present in the training data. Among targets, which were compared to a sample of Uniclust30 sequences, coverage is substantially more sparse.

Figure 2.

AlphaBind Architecture.

(a): Antibody and target are encoded using ESM-2nv, then those tensors are concatenated and input into the AlphaBind model, including a transformer encoder with four heads and seven layers and a total of 15 M parameters. (b): During optimization, ESM-2nv masking is used to perturb seed sequences, which are scored according to the fine-tuned regression model and accepted if they have improved predicted affinity, then the process is iterated. (c): Overall campaign architecture: training data are featurized, the AlphaBind regressor is fine-tuned, optimization is performed to generate a pool of candidates, and TAP5,6 is used to filter the resulting candidates to ensure no major predicted developability issues.

Training data were screened to prevent inclusion of any data related to the parental antibodies or targets being optimized herein. As a result, only Pembrolizumab-scFv used the full pre-trained model for fine-tuning – AAB-PP489 and VHH72 utilized a smaller pre-trained model (see Materials and Methods).

After pre-training, AlphaSeq datasets for each parental antibody were used to fine-tune the AlphaBind model. Each fine-tuned regression model was used to generate approximately 6 million sequence proposals, from which approximately 7,500 candidate sequences were selected for in vitro validation in AlphaSeq. To explore AlphaBind’s ability to extrapolate beyond its training data, candidates were selected according to a uniform distribution for 2 through 11 mutations with respect to the parental antibody. Among these 7,500 candidates, the top 5 candidates with the strongest predicted affinity were selected for expression and validation using biolayer interferometry (BLI), without regard to the number of mutations. Detailed information on model training, optimization, and sequence selection can be found below in the Materials and Methods section.

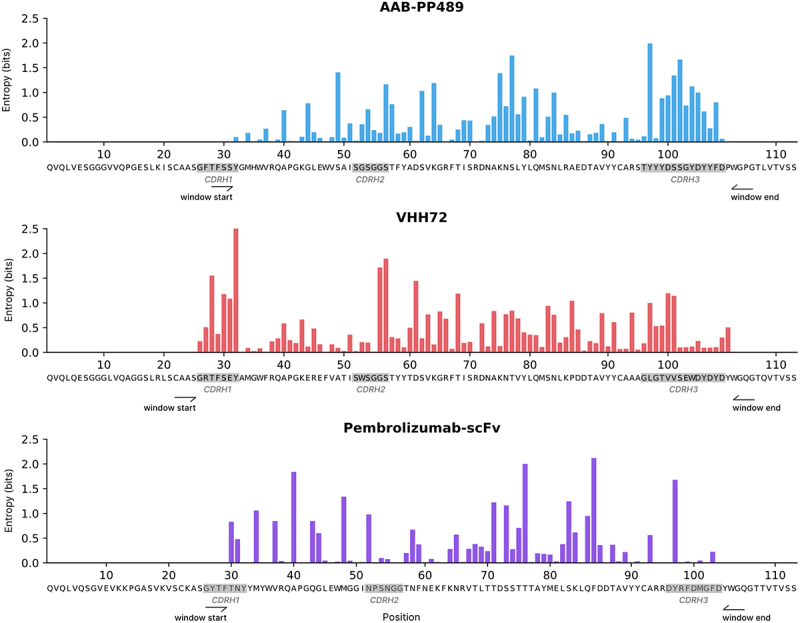

An analysis of sequence diversity among the 7,500 candidates chosen for AlphaSeq validation for each parental antibody demonstrates a broad range of both positions mutated and variety of mutations selected, as shown in Figure 3 (CDRs annotated using ANARCI, according to Chothia nomenclature).26,27 For AAB-PP489, AlphaBind preferred to mutate CDRH3 (as might be expected for a phage panning hit not otherwise affinity-matured to a local optimum), while also making many mutations in FR3. For VHH72, diversity was highest in CDRH1 and CDRH2, also with many mutations in FR3. For Pembrolizumab-scFv, only a single position in CDRH3 had high sequence diversity among optimized candidates, again with many mutations made in FR3. Figure S2 shows logo plots of the mutations proposed among optimized candidates for each parental antibody, identifying a handful of residues in each antibody that AlphaBind repeatedly favors for specific mutations, as well as a broader range of residues at which some sequence diversity is tolerated. We calculated information gain relative to parental sequences and compared these results to known and predicted antibody structures (Figure S3), identifying important residues at the paratope–epitope interface, but also across the antibody structure, underscoring the model’s ability to identify advantageous residues beyond the immediate paratope region. This analysis demonstrates that AlphaBind candidates cover a wide space with respect to both sequence and structure, providing substantial freedom for downstream selection on additional properties such as predicted biophysical properties.

Figure 3.

Sequence diversity of optimized candidates.

For each parental antibody, we annotate CDRH regions and plot the primary sequence diversity (Shannon entropy, measured in bits) at each position within the designated mutational windows. While there is substantial variation in the mutability of each position, AlphaBind proposes a broad variety of mutations throughout the CDR and framework regions, with many different positions mutated and many different mutations observed at key positions.

In vitro validation of AlphaBind candidates

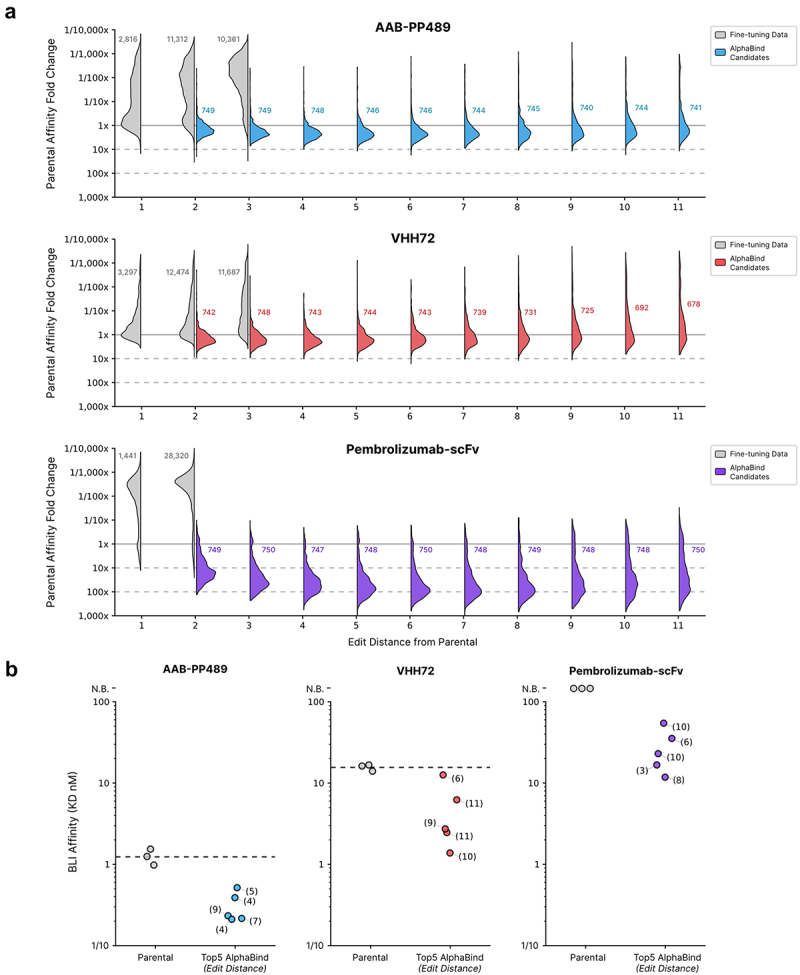

AlphaSeq validation results for each parental antibody are summarized in Figure 4a; across all parental antibodies, AlphaBind fine-tuning and optimization was able to generate thousands of candidates with binding affinity stronger than the parental. Up to 8 mutations away from the parental for VHH72, and up to 11 mutations away from parental (the maximum tested) for Pembrolizumab-scFv and AAB-PP489, the median binding affinity for an AlphaBind-proposed candidate was better than its parent. Across campaigns, candidates were specific for their intended targets: among candidates with ≤1 µM AlphaSeq affinity to their target, 80% of VHH72 candidates, 92% of Pembrolizumab-scFv candidates, and 97% of AAB-PP489 candidates demonstrated specific binding defined as on-target affinity ≥100x higher than average off-target affinity measured to irrelevant control proteins in AlphaSeq.

Figure 4.

AlphaBind validation results.

(a): Fine-tuned AlphaBind models were trained for each of three parental antibodies and used to generate 750 candidates at each edit distance from 2 through 11 mutations relative to parental. AlphaSeq was then used to prospectively assess the affinity of optimized candidates. For each parental antibody, we report the distribution of affinities for optimized candidates at each edit distance as affinity relative to the mean affinity among 50 parental antibody replicate measurements. Numerical annotations represent the number of candidates tested at each edit distance. AlphaBind-derived candidates have a median affinity better than the parental molecule up to 8 edits away from the parent sequence for VHH72 and up to 11 edits from the parent sequence for Pembrolizumab-scFv and AAB-PP489. (b): BLI validation results for the top five AlphaBind candidates for each parental antibody. Mean parental affinities are displayed as dashed lines. Across the three systems, 15/15 (100%) top candidates were successfully expressed, and of those 10/10 (100%) had better affinity than the parental antibody. Affinity relative to parental antibody could not be assessed for Pembrolizumab-scFv since the parental antibody was not successfully expressed and thus no BLI measurement was available. No candidates had any measurable binding to an irrelevant negative control target. Single-point BLI values for certain candidates were confirmed via either multi-point BLI or KinExA for candidates with off-rates at or near the BLI limit of detection, confirming a 74x improvement in affinity for the best AAB-PP489 candidate and a 14x improved affinity for the best VHH72 candidate.

Figure 4b summarizes the results of the top 15 optimized candidates selected for BLI validation (5 per parental antibody); 15 of 15 candidates (100%) were successfully expressed (as scFv-FC for scFv antibodies), and 10 of 10 (100%) had superior binding affinity than their parental antibodies. The parental Pembrolizumab-scFv could not be expressed in scFv-FC format; all five top variants did express, but their affinity could, therefore, not be compared to parental. No candidates had any binding to an irrelevant negative control target by BLI. Affinity for the top VHH72 candidates was confirmed by multi-point BLI. Several AAB-PP489 candidates had binding affinities outside the accurate range of BLI and failed to generate a reliable off-rate (i.e., reported as <1.0E–4/s). Affinity for the top performing AAB-PP489 variant and matched parental was confirmed via kinetic exclusion assay (KinExA). We confirmed an affinity improvement of 74x for the best AAB-PP489 variant (from 56.5 pM for parental to 766 fM, by KinExA) and 14x for the best VHH72 variant (from 16.8 nM to 1.17 nM, by multi-point BLI).

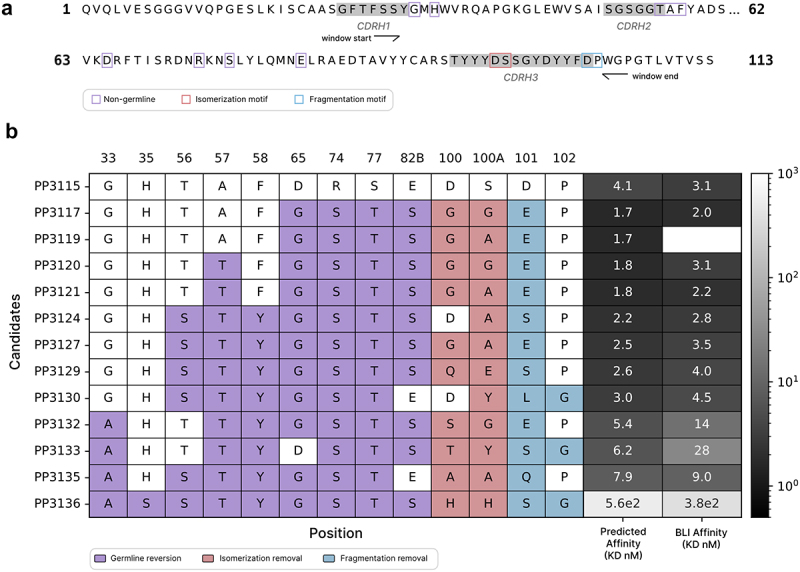

Affinity-guided developability engineering

To demonstrate the utility of a fine-tuned AlphaBind affinity model for downstream engineering, we used the fine-tuned AAB-PP489 model to design variants with improved developability attributes. We selected AAB-PP3115, an affinity-optimized variant of AAB-PP489, for further engineering due to its strong affinity and observed biophysical properties (see Supplemental Materials for the sequence of AAB-PP3115 and its variants). This engineering campaign is summarized in Figure 5; briefly, AAB-PP3115 contains two potential sequence liabilities, a DS isomerization motif and a DP fragmentation motif in CDRH3, and has nine residues that do not match the closest human germline (IGHV3–23*04 and IGHJ5*02) within its heavy chain mutable window. Our goal was to ablate those sequence liabilities (improving predicted developability) and revert as many residues as possible to germline (improving predicted immunogenicity) while maintaining or improving affinity to human TIGIT. First, we generated (194 = 130,321) candidates with all possible mutations to the four residues involved in the liability motifs, keeping 736 that removed both predicted liabilities and had an AlphaBind-predicted affinity within 0.5 logs of parental AAB-PP3115. Then, for each of those 736 we generated 29 (512) sequences with all combinations of germline-reverted residues, for a total of 376,832 candidates. From these candidates we selected 11 with the most germline reversions, best AlphaBind-predicted affinity, and favorable predicted developability and immunogenicity metrics according to TAP and NaturalAntibody,5,28 then expressed those candidates for in vitro validation alongside parental AAB-PP3115. As a control for the trivial case of full germline reversion, we expressed one candidate with the best predicted liability-reversion mutations and all nine positions reverted to germline, predicted by AlphaBind to have a 337-fold reduced affinity compared to parental. This process led to the identification of AAB-PP3117, with no liability motifs in the mutable window, four of nine residues reverted to human germline, improved expression compared to its parental molecule (334.1 µg/mL compared to 259.1 µg/mL), and improved binding to human TIGIT. We measured an affinity of 310 fM in IgG format by KinExA, a 182-fold improvement over the original AAB-PP489 (56.5 pM scFv-FC by KinExA), and a 3.4-fold improvement over its parent AAB-PP3115 (1.04 pM IgG by KinExA), despite making seven additional mutations guided only by the fine-tuned AlphaBind regression model. As predicted, the fully germlined variant was a poor binder, underscoring the need for ML guidance to maintain affinity when undertaking additional sequence engineering. Details of all tested candidates, as well as full BLI and KinExA results, are available in the Supplemental Materials, including BLI and protein analytics in Table S5 and KinExA results in Table S7.

Figure 5.

Affinity-guided developability engineering.

(a): AAB-PP3115 is an AlphaBind variant of AAB-PP489 with optimized affinity. Within its mutable window, AAB-PP3115 contains nine non-germline residues along with one isomerization liability motif and one fragmentation liability motif. (b): The fine-tuned AAB-PP489 AlphaBind regressor was used to assess approximately 500,000 derivatives of AAB-PP3115, to ablate an isomerization motif and a fragmentation motif in CDRH3, and revert as much of FR2/FR3 as possible to human germline; 11 candidates were chosen for in vitro validation by BLI. 10/11 (91%) candidates expressed, 7/11 (64%) maintained affinity within 2x and 3/11 (27%) had improved affinity for human TIGIT, all while making an additional 7–9 mutations in the successful sequences. Full analytics results including titer, BLI affinity, ASEC and DSF are provided in Table S5.

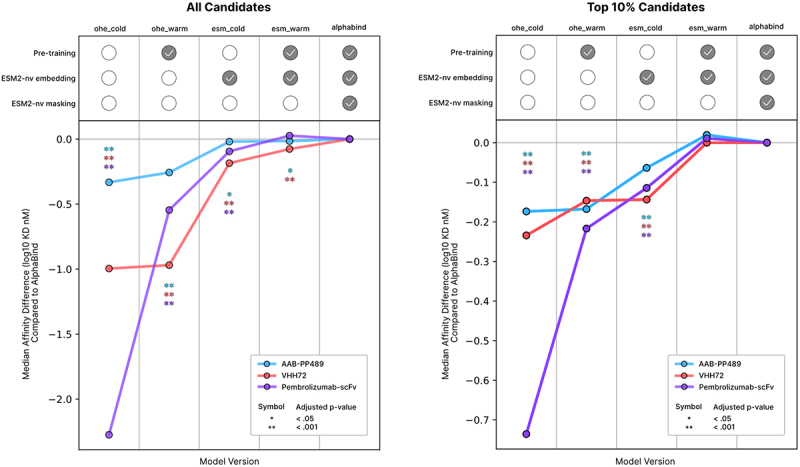

Assessment of ablated AlphaBind models

To investigate the relative contribution of different AlphaBind architectural components, we performed analogous regressor training and affinity optimization using four ablated versions of the AlphaBind model. Results are summarized in Figure 6, with additional details in Materials and Methods. Briefly, we compared the optimized candidate sequences from each ablated model, either in full (all candidates) or among top candidates after in vitro validation with AlphaSeq (i.e., just those most likely be chosen for further development). Each ablated model was compared to the full AlphaBind model according to median candidate affinity, with p-values computed via Mann–Whitney U test, assessing the null hypothesis that candidates from the full AlphaBind model had no better affinity than candidates from each ablated model. The simplest transformer-based regression model with one-hot encoded sequences (‘ohe_cold’), while still generating many candidates with improved affinity over parental and extrapolating several mutations farther than its training data, generated candidates much worse than the full AlphaBind model. Two other ablated regressor architectures, one-hot encoded sequences plus pretraining (‘ohe_warm’) and ESM-2nv embedded sequences without pretraining (‘esm_cold’), demonstrated intermediate results with the combination of ESM-2nv-embedded input sequences and pretraining together accounting for AlphaBind performance. An ablated model with an identical regressor but lacking ESM-2nv masking during the optimization step (‘esm_warm’) was nearly equivalent in candidate performance, but substantially increased overall optimization cost (the ablated model had to be optimized for 300 generations, rather than 100 for the full AlphaBind model, to generate sufficient candidates at edit distances of up to 11 mutations). Overall, the full AlphaBind model had significantly improved performance over models that lacked either ESM-2nv embedding or pre-training on related data, with a substantial difference in the quality of the top candidates in particular.

Figure 6.

AlphaBind ablation analysis.

(a): For each experimental system, all 7,500 candidates were compared for each of five models. The difference in median affinity between AlphaBind candidates and candidates from each ablated model is plotted, and significance is calculated according to a Mann–Whitney U test on candidate affinities with Benjamini–Hochberg procedure for control of false discovery. The removal of ESM-2nv embedding or pre-training on unrelated AlphaSeq data both significantly degrade overall model performance, while removal of ESM-2nv masking during optimization is equivalent or marginally superior, though less computationally efficient. (b): Ablated model performance, according to the same procedure and metrics as above, but focused only on the top 10% of candidates according to AlphaSeq validation, i.e., the candidates most likely to be chosen for additional validation or engineering; again, the effect of ESM-2nv masking during optimization is marginal to slightly negative, but removal of either ESM-2nv embedding or pre-training substantially degrades performance.

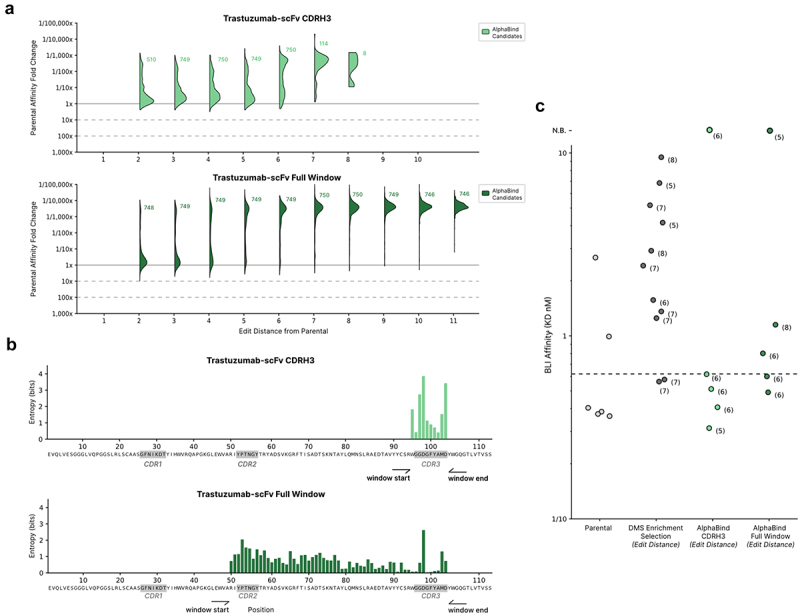

Antibody optimization with alternative fine-tuning data

To assess the utility of the AlphaBind pre-trained model with fine-tuning data from sources other than our own AlphaSeq assay, we performed an optimization campaign on Trastuzumab-scFv, a humanized mouse antibody targeting human HER2.29 Trastuzumab has been a common benchmarking system for the design and optimization of antibody variants using ML, with many studies assessing different methods,15,16,20,30 and variant binding datasets are readily available for fine-tuning. We utilized the affinity-sorted mammalian display dataset of approximately 36,000 CDRH3 variants from Mason et al.20 as fine-tuning data, and performed two different optimization experiments with the resulting fine-tuned AlphaBind model: first, we optimized just the CDRH3 region, concordant with the domain of the training dataset; second, we allowed our optimization routine to edit a larger contiguous window comprising the CDRH2-FR3-CDRH3 region, with edits to the CDRH2 and FR3 regions representing out-of-distribution prediction. As with the other optimization campaigns, we selected 7,500 candidates for AlphaSeq validation and 5 candidates for BLI validation, for each approach.

Our results are shown in Figure 7: when mutating only the CDR, we observed 60% successful candidates (i.e., an AlphaSeq reported affinity ≤1 uM), and in BLI we observed expression and binding from four of five top candidates, with three candidates within 10× of parental Trastuzumab affinity and one candidate with improved affinity over parental. When we optimized the entire CDRH1-CDRH3 window, we observed 23% success in AlphaSeq and four of five candidates with expression and binding in BLI, of which all four candidates were within 10× of parental affinity.

Figure 7.

Optimization of Trastuzumab-scFv.

(a): AlphaBind models fine-tuned on mammalian display enrichment data for a combinatorial DMS (deep mutational scan) library of Trastuzumab-scFv CDRH3 variants, then used to generate candidates across two spans: only CDRH3 (concordant with the DMS data), and a larger mutable window covering CDRH2 through CDRH3. (b): Sequence diversity as measured by Shannon entropy for the two spans is shown. Despite not being fine-tuned on any mutations in the framework regions, AlphaBind makes many edits in FR2 when allowed to mutate there. (c): BLI validation results for parental Trastuzumab-scFv, DMS-optimized candidates selected by enrichment in the fine-tuning dataset, and AlphaBind-optimized candidates. Three of five AlphaBind candidates with only mutations in CDRH3 were superior to the best directly observed in the fine-tuning dataset, and AlphaBind candidates in the full mutable window were comparable to those chosen by enrichment in the fine-tuning data despite being out of distribution with respect to sequences in the fine-tuning data. Edit distance for each candidate is shown in parentheses.

KinExA was used to confirm affinities for the top candidate from each optimization strategy, resulting in final relative affinity gains of 2.8x for the best CDRH3-only variant of Trastuzumab-scFv (from 7.43 pM to 2.74 pM, by KinExA) and 2.1x worse than parental for the best full-window variant of Trastuzumab-scFv (from 7.43 pM to 15.12 pM, by KinExA).

Overall, these results are comparable to those reported in the original study, which used in silico sequence proposal plus a purpose-built CNN classifier to create CDRH3 variants and found that 13 of 14 candidates bound HER2 (generally within 10× of parental) and 1 of 14 candidates had improved binding. Across both contexts, the candidates optimized with AlphaBind showed good affinity with respect to the most-enriched candidates taken directly from the Mason et al. dataset used for fine-tuning: three of five (60%) of the AlphaBind candidates were better than any candidate from the training dataset when editing CDRH3. These data indicate that AlphaBind can optimize antibodies effectively with a different data format than what it was trained on, that AlphaBind-based optimization can substantially improve candidate quality over candidates assayed directly in the training dataset with minimal additional time and compute cost, and that our results are concordant with those previously demonstrated via an ML model built specifically for the Trastuzumab optimization task.

In silico assessment

To assess the presence of any zero-shot optimization capability (i.e., prediction of antibody–antigen binding affinity in the absence of any fine-tuning data), we performed inference using the pre-trained AlphaBind model on the fine-tuning datasets for each parental antibody system (i.e., a library of single, double, and triple mutants). Results are summarized in Table S8; AlphaBind predictions have no correlation with measured affinities in a zero-shot context for three parental antibodies and a modest correlation for VHH72; ESM-2nv maskless pseudo-log likelihoods alone have a modest correlation with variant affinities for the three parental antibodies with full fine-tuning datasets (as expected, given that those datasets saturate single mutants including many highly disfavored mutations across the framework regions) and no correlation with affinities from the Trastuzumab fine-tuning dataset, which is restricted to CDRH3 variants.

We also performed an in silico analysis to compare the performance of supervised optimization using AlphaBind against current zero-shot modeling approaches. Recently, Chinery et al.31 conducted an in silico evaluation of various methods for their ability to generate HER2-binding variants of the 10-amino acid Trastuzumab HCDR3 sequence, based on the same dataset we used in our study for fine-tuning and optimizing Trastuzumab binders.20

In their approach, Chinery et al. train a convolutional neural network (CNN) classifier on the Mason et al. HCDR3 dataset and use this model as an oracle to assess the zero-shot performance of several sequence generation strategies. The methods evaluated include: (1) random sequence generation, (2) BLOSUM-based mutation, (3) AbLang with all positions masked simultaneously, (4) AbLang with one position masked at a time, (5) ProteinMPNN, and (6) ESM-2, with results ranging from 13% to 30% success rate. We applied the CNN oracle from Chinery et al. to the AlphaBind candidates measured in vitro with AlphaSeq. The CNN oracle predicts that 43% of the AlphaBind candidates will bind, notably higher than any of the zero-shot methods in Chinery et al., as expected for a supervised model. Binarizing according to AlphaSeq results (≤1 uM affinity) rather than CNN predictions results in an even higher success rate of 60% binders. These analyses indicate that while supervised optimization using AlphaBind is highly effective in practice, and superior to available zero-shot methods, AlphaBind does require fine-tuning data for effective optimization.

Discussion

Across four separate parental systems, including humanized, humanoid, and camelid antibodies, we demonstrated that AlphaBind, with a combination of antibody-specific training data generated via unguided high-throughput affinity measurement, protein language models, and transfer learning from unrelated antibody–antigen affinity data, generates highly diverse antibody variants with substantial affinity improvements, although the scale of affinity improvement varied widely between 2x-74x for the four systems tested.

Exact comparisons between the scope of affinity gains from ML-guided optimizations are difficult due to widespread differences in parental antibodies, optimization methodology, and assessment techniques across experiments. However, we believe that these results are among the best reported for ML-guided optimization of antibody sequences, especially given only a single round of optimization rather than an iterative process. Angermueller et al.18 recently reported a similar experiment to optimize VHH72, also using AlphaSeq training and validation datasets but utilizing an additional iteration of model training and optimization, and report 12 of 12 top candidates with improved affinity to SARS-CoV2-RBD by BLI, compared to 5 of 5 top candidates with improved affinity by BLI in the present study. Frey et al.15 used an unguided method on Trastuzumab and reported 70% of designs expressed and bound target, compared to 80% (4/5) of our BLI tested designs that expressed and bound target. Li et al.19 published a similar experiment optimizing naïve human Fabs targeting a SARS-CoV2 peptide, discovered by phage panning (i.e., similar to our discovery and optimization of AAB-PP489 but against a different target): they reported greater than 90% success rate of ML designs in AlphaSeq validation, including binders with AlphaSeq affinities up to 29x improved over any sequences in the training dataset. Finally, Krause et al.32 recently used antibody-specific affinity data and language model guided design to optimize five parental antibodies binding CD40L and validated designs with surface plasmon resonance. They reported strong binders up to eight mutations away from parental sequences, candidates with improved affinity for four of five parental antibodies, and an overall rate of approximately 40% of designed candidates with improved affinity compared to parental. In this study, we demonstrated binding on candidates with up to 11 mutations, and 10 of 10 (100%) tested candidates showed improved affinity by BLI.

As is evident in the comparisons above, the differences we observed in the scope of affinity gains across parental antibodies roughly accord with differences observed in other ML-guided antibody optimization papers, which report results ranging from a majority of sequences showing expression and some binding, but with affinity gains exceedingly rare,15,20 to 90%+ of candidates showing improved binding compared to a parental antibody.19 We speculate that these differences are primarily a function of how close each parental antibody is to a local optimum. It is notable that our work on AAB-PP489, an antibody that was optimized directly after discovery from a phage display library without in vitro or in vivo affinity maturation to drive it to a local optimum, had the best demonstrated improvement in affinity, at approximately 74×.

Additionally, while our study demonstrates the effectiveness of AlphaBind across four distinct antibody–antigen systems, future work will be required to validate the model’s generalizability across a broader range of antibody classes, including complex cases such as antibodies targeting GPCRs, antibodies requiring conditional binding (e.g., pH dependence or conformation-specific binding), and antibodies that require broad cross-reactivity across homologs, paralogs, or strain variants of microbial targets. We anticipate that any antibody whose binding behavior can be interrogated with sufficiently high-throughput fine-tuning data will be amenable to supervised optimization with AlphaBind, but further studies will be required to demonstrate efficacy in optimizing diverse antibody therapeutics. We expect that a variety of in vitro techniques may be necessary to generate adequate affinity datasets for such studies (e.g., acquisition of binding datasets under multiple specific pH conditions for pH-dependent binding; generation of stabilized target variants locked into a particular conformation for conformation-dependent binding), but that once fine-tuning datasets are available training and optimization should perform well.

Taken together, AlphaBind demonstrated similar or improved performance compared to other recent studies across four different antibody optimization campaigns with a simple architecture, low training and optimization costs, significant transfer learning from unrelated antibody–antigen binding data, and a standardized codebase for easy adaptation to new contexts. While the affinity gains using this method varied widely between parental antibodies – from Pembrolizumab-scFv where almost all optimized candidates had improved affinity over the parental molecule, to Trastuzumab where only a small proportion of candidates had improved affinity – AlphaBind was able to generate candidates with improved affinity in each case. However, while we have demonstrated substantial affinity improvements across several parental antibodies, the most striking feature of these results is the breadth of primary sequence available among optimized candidates; for each of the parental antibodies studied, we identified thousands of variants with high affinity but highly diverse primary sequence. We anticipate that the ability to maintain or improve parental binding while opening a massive space of primary sequence will be critical to make best use of emerging capabilities in the prediction and engineering of non-affinity phenotypes, including solubility, thermostability, and expression in mammalian cell culture systems, and we demonstrated this capability on an AlphaBind-derived variant of AAB-PP489, using the fine-tuned AlphaBind model to guide sequence-based improvements to predicted developability while further improving affinity. AlphaBind thus guided the optimization to a candidate with good expression, no observed aggregation, good thermostability, full ablation of sequence liabilities in the heavy chain, and femtomolar binding affinity by KinExA.

Finally, a key factor contributing to AlphaBind’s improved performance is the availability of large-scale (~7.5 million AlphaSeq data points), high-quality, quantitative antibody–antigen interaction data for pre-training, allowing the model to capture general sequence–function relationships that enable transfer learning across parental antibodies. While this pre-training enhances AlphaBind’s ability to fine-tune effectively on specific datasets, it does not yet fully generalize across diverse binding landscapes. Additionally, we have not rigorously explored the scaling behavior of either pretraining data, which will be important in assessing the degree to which scaling pretraining dataset size within a similar architecture is an effective strategy for continued improvements in performance or fine-tuning data, which is critical for defining the types of datasets and assays that can be relied upon to train an effective affinity prediction model. Such scaling questions are an important avenue for continued research.

Our results are, however, already sufficient to demonstrate that achieving a truly generalizable model – capable of predicting optimal antibody sequences without the need for local fine-tuning – will require some combination of significantly larger and more diverse datasets, spanning a wider range of structural antibody–antigen systems, or advances in architecture. We think that the path forward will likely include multi-modal models able to integrate high-volume affinity labeled sequence data with much lower-volume structural training data; in contrast to structural models whose training datasets are growing very slowly as new solved structures accumulate, our corpus of quantitative molecular affinity data via AlphaSeq is large and growing rapidly, meaning that a model that can simultaneously benefit from the many recent advances in structural understanding while making effective use of abundant sequence-based affinity labels is a promising direction for future research. Looking ahead, such advancements in data collection and ML models will be critical for enabling zero-shot antibody engineering, where therapeutic antibodies can be optimized entirely in silico. This will dramatically reduce the reliance on wet lab screening, accelerating early-stage drug discovery and making biologics development faster, more cost-effective, and more accessible.

Materials and methods

AlphaSeq data generation

AlphaSeq analysis to generate large-scale affinity datasets for fine-tuning and large-scale candidate validation was performed as previously described.21,22 Briefly, plasmids encoding yeast surface display cassettes were constructed and linearized for integration into the yeast genome. A 300 bp oligonucleotide pool ordered from Twist Bioscience (South San Francisco, CA) was PCR amplified and inserted into each antibody backbone using Gibson assembly and subsequently PCR amplified. For the yeast library transformation, MATa and MATalpha AlphaSeq yeast were grown in YPAD media. Yeast cells were washed, resuspended, and incubated with the DNA library and unique barcodes using electroporation to pair each library member with multiple barcodes. DNA from the yeast libraries was extracted, and fragments containing the genes and associated DNA barcodes were PCR amplified. The amplified fragments were sequenced using a GridION sequencer from Oxford Nanopore Technologies (Oxford, UK) to link the DNA barcodes and antibody sequences. Library-on-library AlphaSeq assays were performed for each campaign by combining MATa and MATalpha libraries in YPAD media with a low concentration of Tween 20 and incubating for 16 h. Control yeast strains with known interaction affinities were included as standard controls. DNA fragments were amplified by qPCR with Illumina sequencing adaptors, which were then sequenced using Illumina NextSeq 500 (San Diego, CA). Sequencing data were analyzed to identify MATa and MATalpha barcode pairs, normalized based on haploid frequencies, and assigned estimated affinities using a linear regression derived from the control strains.

AlphaBind model training

Briefly, AlphaBind takes as input ESM-2nv embeddings14,33 of both an antibody and a target sequence, then applies a transformer model with four attention heads and seven layers trained to predict affinity with MSE loss (see Figure 2). AlphaBind network weights were pretrained using approximately 7.5 million measurements of AlphaSeq affinity data from unrelated antibody–antigen systems; due to a large quantity of relevant AlphaSeq data comprising antibodies targeting human TIGIT and SARS-CoV2-RBD, the full pretrained AlphaBind model was fine-tuned only for Pembrolizumab-scFv; a reduced AlphaBind model pretrained on approximately 1 million rows of unrelated antibody–antigen data was fine-tuned for VHH72 and AAB-PP489, in order to prevent information leakage.

After pretraining, antibody-specific AlphaBind models were fine-tuned using the AlphaSeq fine-tuning datasets for each parental antibody system. Fine-tuning each model took approximately 1 h per parental antibody on a single H100 GPU, using a p5.48xlarge instance from Amazon Web Services (Seattle, WA). Models were trained for 100 epochs and the checkpoint with lowest validation score (using a uniform random 90/10 train/validation split) was chosen as the final model. We observed a relatively flat training curve after 40 epochs, suggesting that in routine use fewer epochs may be acceptable.

AlphaBind sequence optimization and candidate selection

Each fine-tuned regression model was used to generate candidates for in vitro validation by stochastic greedy optimization of predicted binding affinity. Briefly, 60,000 trajectories for each parental antibody were optimized for 100 generations, during which a proposed sequence for each trajectory was randomly generated by masking 1–3 positions (not necessarily contiguous) within the mutational window (with the probability distribution: [1: 0.5, 2: 0.3, 3: 0.2]) in that trajectory’s current proposal and using ESM-2nv logits to sample mutations; if the new sequence had better predicted affinity according to the appropriate fine-tuned regression model, it was kept.

ESM-2nv embeddings and AlphaBind inference calls during this optimization process represent the main computational bottleneck for this work: optimization for each parental antibody was performed on AWS p5.48xlarge instances with 8 H100 GPUs and took approximately 5 h per candidate optimization batch, for an overall cost of roughly 200 USD per parental antibody at the time of publication.

Once all trajectories were proposed and scored, we stratified the candidate sequences from all generations of all trajectories by their edit distance from the parental sequence, for the closed interval [2, 11], yielding 10 bins in total. From each bin, we selected the top 1,500 sequences by predicted affinity and screened them for predicted developability properties using TAP,5,6 discarding sequences that had amber or red flags for any of the five TAP metrics. Finally, from each bin, we selected up to 750 of the top remaining sequences by predicted affinity, yielding a total of approximately 7,500 selected sequences per target.

Ablated AlphaBind model training and optimization

Ablated AlphaBind models were constructed as follows: for ‘esm_warm,’ training and optimization were identical to the full AlphaBind model, except that during the sequence proposal step of optimization, mutations were sampled at random rather than by using ESM-2nv logits, which necessitated 300 generations of optimization to accumulate sufficient candidates for validation. ‘esm_cold’ is similar to ‘esm_warm,’ with the additional change that AlphaBind network weights were not pre-trained on unrelated antibody–antigen AlphaSeq data, and was likewise optimized for 300 generations; ‘ohe_warm’ and ‘ohe_cold’ were trained using a network architecture analogous to AlphaBind, but using one-hot encoding rather than ESM-2nv for sequence embedding, and additionally comprised an ensemble of five models differentiated by random seed, due to very poor performance of single models; ‘ohe_warm’ was pre-trained on the same unrelated AlphaSeq data as the full AlphaBind model, and ‘ohe_cold’ was not pre-trained. ‘ohe_warm’ and ‘ohe_cold’ both generated sufficient candidates for validation within 100 generations due to high acceptance rates. We provide the AlphaBind GitHub repository (https://github.com/A-Alpha-Bio/alphabind) for additional technical details on ablated model training and optimization.

Biolayer interferometry and kinetic exclusion assays

Sequences for BLI validation were chosen by a similar method as above, incorporating an additional filtering criterion of zero introduced liabilities compared to the parental sequence, scored using software from NaturalAntibody (Szczecin, Poland).28 The top five sequences by predicted affinity were then selected for BLI validation. In addition to our experimental candidates, three replicates of the parental antibody sequence were submitted for expression and BLI at Twist Bioscience. All three parental replicates for Pembrolizumab-scFv had insufficient expression to enable BLI measurements.

In order to confirm measured affinity values and establish better quantitative comparisons of parental and variant affinity for the best AlphaBind candidates, single-point BLI at Twist was followed by either multi-point BLI (for VHH72 variants), or KinExA34, 35, 36, 37, 38 where single-point BLI indicated an off-rate near or below the limit of detection (i.e., for the best AAB-PP489 and Trastuzumab-scFv variants from our initial optimization campaigns, as well as AAB-PP3115 and AAB-PP3117). Additional information on BLI and KinExA methods and results is available in Supplemental Materials.

Supplementary Material

Acknowledgments

We would like to acknowledge Dasha Krayushkina, Leah Homad, Jacob Brockerman, and the rest of the Technical Operations team from A-Alpha Bio for their assistance with the AlphaSeq and BLI data generation for this work. We would also like to acknowledge Darren Hsu, John Judge, Thomas Grilli, Vega Shah, and Neha Tadimeti from Nvidia for additional support with technical details and application of BioNeMo ESM-2nv.

AI use: GPT-3.5 and GPT-4-turbo models were used during the preparation of this manuscript, solely for language improvement.

Funding Statement

The author(s) reported there is no funding associated with the work featured in this article.

Disclosure statement

All A-Alpha Bio-affiliated authors were employees of A-Alpha Bio, Inc. (A-Alpha Bio) at the time the research was performed, and own stock/stock options of A-Alpha Bio. A-Alpha Bio has a patent application relating to certain research described in this article.

Code and data availability

Code used to train fine-tuned AlphaBind models and to conduct sampling, AlphaSeq fine-tuning and validation datasets, notebooks to reproduce the results and figures in this study, and trained AlphaBind model weights are all included in an open-source GitHub repository under the MIT license: https://github.com/A-Alpha-Bio/alphabind.

Supplementary material

Supplemental data for this article can be accessed online at https://doi.org/10.1080/19420862.2025.2534626

References

- 1.Lu RM, Hwang Y-C, Liu I-J, Lee C-C, Tsai H-Z, Li H-J, Wu H-C.. Development of therapeutic antibodies for the treatment of diseases. J Biomed Sci. 2020;27(1). doi: 10.1186/s12929-019-0592-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Wang Z, Wang G, Lu H, Li H, Tang M, Tong A. Development of therapeutic antibodies for the treatment of diseases. Mol Biomed. 2022;3(35). doi: 10.1186/s43556-022-00100-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Bashour H, Smorodina E, Pariset M, Zhong J, Akbar R, Chernigovskaya M, Lê Quý K, Snapkow I, Rawat P, Krawczyk K, et al. Biophysical cartography of the native and human-engineered antibody landscapes quantifies the plasticity of antibody developability. Commun Biol. 2024;7(1):922. doi: 10.1038/s42003-024-06561-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Jain T, Sun T, Durand S, Hall A, Houston NR, Nett JH, Sharkey B, Bobrowicz B, Caffry I, Yu Y. et al. Biophysical properties of the clinical-stage antibody landscape. Proc Natl Acad Sci USA. 2017;114(5):944–17. doi: 10.1073/pnas.1616408114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Raybould MIJ, Deane CM. The therapeutic antibody profiler for computational developability assessment. Methods Mol Biol. 2022;2313:115–125. doi: 10.1007/978-1-0716-1450-1_5. [DOI] [PubMed] [Google Scholar]

- 6.Raybould MIJ, Marks C, Krawczyk K, Taddese B, Nowak J, Lewis AP, Bujotzek A, Shi J, Deane CM. Five computational developability guidelines for therapeutic antibody profiling. Proc Natl Acad Sci USA. 2019;116(10):4025–4030. doi: 10.1073/pnas.1810576116. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Chen C, Roberts VA, Rittenberg MB. Generation and analysis of random point mutations in an antibody CDR2 sequence: many mutated antibodies lose their ability to bind antigen. J Exp Med. 1992;176(3):855–866. doi: 10.1084/jem.176.3.855. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Bennett NR, Watson JL, Ragotte RJ, Borst AJ, See DL, Weidle C, Biswas R, Shrock, EL, Leung PJ, Huang B, et al. Atomically accurate de novo design of single-domain antibodies. bioRxiv. 2024; 2024.2003.2014.585103. doi: 10.1101/2024.03.14.585103. [DOI]

- 9.Kim J, McFee M, Fang Q, Abdin O, Kim PM. Computational and artificial intelligence-based methods for antibody development. Trends Pharmacol Sci. 2023;44(3):175–189. doi: 10.1016/j.tips.2022.12.005. [DOI] [PubMed] [Google Scholar]

- 10.Makowski EK, Chen HT, Tessier PM. Simplifying complex antibody engineering using machine learning. Cell Syst. 2023;14(8):667–675. doi: 10.1016/j.cels.2023.04.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Wossnig L, Furtmann N, Buchanan A, Kumar S, Greiff V. Best practices for machine learning in antibody discovery and development. arXiv: 2312.08470. 2023; doi: 10.1016/j.drudis.2024.104025. [DOI] [PubMed]

- 12.Fournier Q, Vernon RM, van der Sloot A, Schulz B, Chandar S, Langmead CJ. Protein language models: is scaling necessary? bioRxiv. 2024; 2024.2009.2023.614603. doi: 10.1101/2024.09.23.614603. [DOI]

- 13.Lin Z, Akin H, Rao R, Hie B, Zhu Z, Lu W, Smetanin N, Verkuil R, Kabeli O, Shmueli Y, et al. Evolutionary-scale prediction of atomic-level protein structure with a language model. Science. 2023;379(6637):1123–1130. doi: 10.1126/science.ade2574. [DOI] [PubMed] [Google Scholar]

- 14.Rives A, Meier J, Sercu T, Goyal S, Lin Z, Liu J, Guo D, Ott M, Zitnick CL, Ma J, et al. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proc Natl Acad Sci USA. 2021;118(15). doi: 10.1073/pnas.2016239118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Frey NC, Berenberg D, Zadorozhny K, Kleinhenz J, Lafrance-Vanasse J, Hotzel I, Wu Y, Ra S, Bonneau R, Cho K, et al. Protein discovery with discrete walk-jump sampling. arXiv: 2306.12360. 2023; https://ui.adsabs.harvard.edu/abs/2023arXiv230612360F.

- 16.Gruver N, Stanton S, Frey N, Rudner TG, Hotzel I, Lafrance-Vanasse J, Rajpal A, Cho K, Wilson AG. Protein design with guided discrete diffusion. Adv Neural Inform Process Syst. 2023;36:12489–517. https://ui.adsabs.harvard.edu/abs/2023arXiv230520009G. [Google Scholar]

- 17.Shanker VR, Bruun TUJ, Hie BL, Kim PS. Unsupervised evolution of protein and antibody complexes with a structure-informed language model. Science. 2024;385(6704):46–53. doi: 10.1126/science.adk8946. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Angermueller C, Mariet Z, Jester B, Engelhart E, Emerson R, Alipanahi B, Lin C, Shikany C, Guion D, Nelson J, et al. High-throughput ML-guided design of diverse single-domain antibodies against SARS-CoV-2. bioRxiv. 2023; 2023.2012.2001.569227. doi: 10.1101/2023.12.01.569227. [DOI]

- 19.Li L, Gupta E, Spaeth J, Shing L, Jaimes R, Engelhart E, Lopez R, Caceres RS, Bepler T, Walsh ME, et al. Machine learning optimization of candidate antibody yields highly diverse sub-nanomolar affinity antibody libraries. Nat Commun. 2023;14(1):3454. doi: 10.1038/s41467-023-39022-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Mason DM, Friedensohn S, Weber CR, Jordi C, Wagner B, Meng SM, Ehling RA, Bonati L, Dahinden J, Gainza P, et al. Optimization of therapeutic antibodies by predicting antigen specificity from antibody sequence via deep learning. Nat Biomed Eng. 2021;5(6):600–612. doi: 10.1038/s41551-021-00699-9. [DOI] [PubMed] [Google Scholar]

- 21.Younger D, Berger S, Baker D, Klavins E. High-throughput characterization of protein-protein interactions by reprogramming yeast mating. Proc Natl Acad Sci USA. 2017;114(46):12166–12171. doi: 10.1073/pnas.1705867114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Engelhart E, Lopez R, Emerson R, Lin C, Shikany C, Guion D, Kelley M, Younger D. Massively multiplexed affinity characterization of therapeutic antibodies against SARS-CoV-2 variants. Antib Ther. 2022;5(2):130–137. doi: 10.1093/abt/tbac011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Engelhart E, Emerson R, Shing L, Lennartz C, Guion D, Kelley M, Lin C, Lopez R, Younger D, Walsh ME, et al. A dataset comprised of binding interactions for 104,972 antibodies against a SARS-CoV-2 peptide. Sci Data. 2022;9(1):653. doi: 10.1038/s41597-022-01779-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Hamid O, Robert C, Daud A, Hodi FS, Hwu W-J, Kefford R, Wolchok JD, Hersey P, Joseph RW, Weber JS, et al. Safety and tumor responses with lambrolizumab (anti-PD-1) in melanoma. N Engl J Med. 2013;369(2):134–144. doi: 10.1056/NEJMoa1305133. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Wrapp D, De Vlieger D, Corbett KS, Torres GM, Wang N, Van Breedam W, Roose K, van Schie L, Hoffmann M, Pöhlmann S, et al. Structural basis for potent neutralization of betacoronaviruses by single-domain camelid antibodies. Cell. 2020;181(5):1004–1015.e1015. doi: 10.1016/j.cell.2020.04.031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Al-Lazikani B, Lesk AM, Chothia C. Standard conformations for the canonical structures of immunoglobulins 1 1Edited by I. A. Wilson. J Mol Biol. 1997;273(4):927–948. doi: 10.1006/jmbi.1997.1354. [DOI] [PubMed] [Google Scholar]

- 27.Dunbar J, Deane CM. ANARCI: antigen receptor numbering and receptor classification. Bioinformatics. 2016;32(2):298–300. doi: 10.1093/bioinformatics/btv552. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.NaturalAntibody . NaturalAntibody research App. 2024. https://abstudio.naturalantibody.com.

- 29.Carter P, Presta L, Gorman CM, Ridgway JB, Henner D, Wong WL, Rowland AM, Kotts C, Carver ME, Shepard HM. et al. Humanization of an anti-p185HER2 antibody for human cancer therapy. Proc Natl Acad Sci USA. 1992;89(10):4285–4289. doi: 10.1073/pnas.89.10.4285. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Martinkus K, Ludwiczak J, Liang WC, Lafrance-Vanasse J, Hotzel I, Rajpal A, Wu Y, Cho K, Bonneau R, Gligorijevic V, et al. AbDiffuser: full-atom generation of in vitro functioning antibodies. Adv Neural Inform Process Syst. 2023;2308(5027). https://ui.adsabs.harvard.edu/abs/2023arXiv230805027M. [Google Scholar]

- 31.Chinery L, Hummer AM, Mehta BB, Akbar R, Rawat P, Slabodkin A, Quy, KL, Lund-Johansen F, Greiff V, Jeliazkov JR, et al. Baselining the buzz trastuzumab-HER2 affinity, and beyond. bioRxiv. 2024; 2024.2003.2026.586756. doi: 10.1101/2024.03.26.586756. [DOI]

- 32.Krause B, Subramanian S, Yuan T, Yang M, Sato A, Naik N. Improving antibody affinity using laboratory data with language model guided design. bioRxiv. 2023; 2023.2009.2013.557505. doi: 10.1101/2023.09.13.557505. [DOI]

- 33.John PS, Lin D, Binder P, Greaves M, Shah V, John, JS, Lange A, Hsu P, Illango R, Ramanathan A, et al. BioNeMo framework: a modular, high-performance library for AI model development in drug discovery. arXiv. 2024;2411(10548). https://ui.adsabs.harvard.edu/abs/2024arXiv241110548S. [Google Scholar]

- 34.Erasmus MF, Dovner M, Ferrara F, D’Angelo S, Teixeira AA, Leal-Lopes C, Spector L, Hopkins E, Bradbury ARM. Determining the affinities of high-affinity antibodies using KinExA and surface plasmon resonance. MAbs. 2023;15(1):2291209. doi: 10.1080/19420862.2023.2291209. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.McInnes L, Healy J, Melville J. UMAP: uniform manifold approximation and projection for dimension reduction. arXiv: 1802.03426. 2018. https://ui.adsabs.harvard.edu/abs/2018arXiv180203426M.

- 36.Raschka S, Patterson J, Nolet C. Machine learning in Python: main developments and technology trends in data science, machine learning, and artificial intelligence. Information. 2020;11(4):193. doi: 10.3390/info11040193. [DOI] [Google Scholar]

- 37.Olsen TH, Boyles F, Deane CM. Observed antibody space: a diverse database of cleaned, annotated, and translated unpaired and paired antibody sequences. Protein Sci: Publ Protein Soc. 2022;31(1):141–146. doi: 10.1002/pro.4205. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Mirdita M, von den Driesch L, Galiez C, Martin MJ, Söding J, Steinegger M. Uniclust databases of clustered and deeply annotated protein sequences and alignments. Nucleic Acids Res. 2016;45(D1):D170–D176. doi: 10.1093/nar/gkw1081. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

Code used to train fine-tuned AlphaBind models and to conduct sampling, AlphaSeq fine-tuning and validation datasets, notebooks to reproduce the results and figures in this study, and trained AlphaBind model weights are all included in an open-source GitHub repository under the MIT license: https://github.com/A-Alpha-Bio/alphabind.